Abstract

Electromagnetic (EM) motion tracking systems are suitable for many research and clinical applications, including in vivo measurements of whole-arm movements. Unfortunately, the methodology for in vivo measurements of whole-arm movements using EM sensors is not well described in the literature, making it difficult to perform new measurements and all but impossible to make meaningful comparisons between studies. The recommendations of the International Society of Biomechanics (ISB) have provided a great service, but by necessity they do not provide clear guidance or standardization on all required steps. The goal of this paper was to provide a comprehensive methodology for using EM sensors to measure whole-arm movements in vivo. We selected methodological details from past studies that were compatible with the ISB recommendations and suitable for measuring whole-arm movements using EM sensors, filling in gaps with recommendations from our own past experiments. The presented methodology includes recommendations for defining coordinate systems (CSs) and joint angles, placing sensors, performing sensor-to-body calibration, calculating rotation matrices from sensor data, and extracting unique joint angles from rotation matrices. We present this process, including all equations, for both the right and left upper limbs, models with nine or seven degrees-of-freedom (DOF), and two different calibration methods. Providing a detailed methodology for the entire process in one location promotes replicability of studies by allowing researchers to clearly define their experimental methods. It is hoped that this paper will simplify new investigations of whole-arm movement using EM sensors and facilitate comparison between studies.

Keywords: inverse kinematics, electromagnetic sensors, in vivo measurements, whole arm, upper limb, ISB, landmark, postural, sensor-to-segment, sensor-to-body

1 Introduction

For some applications, electromagnetic (EM) motion capture sensors are a practical alternative to the more commonly used optoelectronic (OE) sensors. Although EM sensors have some disadvantages [1], including a small sensing volume [2] (a sphere with a radius of the order of 4 ft) and susceptibility to electromagnetic interference from ferromagnetic materials [2–8] and electrical equipment [8], they also have several advantages over OE sensors. EM sensors do not require a direct line of sight, output six degrees-of-freedom (DOF) per sensor, sample at relatively high frequencies (between 40 and 360 samples/s), and are relatively low-cost. In addition, they have relatively high accuracy (1–5 mm for translation, 0.5–5 deg for rotation) and resolution (of the order of 0.05 mm for translation and 0.001 deg for rotation). These characteristics make them well-suited for many short-range applications, including evaluation of upper-limb movement in research and clinical settings [1,9].

Unfortunately, the methodology for in vivo measurements of whole-arm movements using EM sensors is not well described in the literature. To be clear, the recommendations of the International Society of Biomechanics (ISB) [10] have provided a great service in clearly defining body coordinate systems (BCSs) for individual bones and joint coordinate systems (JCSs) between bones. However, by necessity, the ISB recommendations do not provide clear guidance or standardization on all steps required to measure joint angles. In particular, for measuring whole-arm movements in vivo using EM sensors, the following steps are not well defined. First, although the BCS and JCS are clearly defined for individual joints, they are not clearly defined for whole-arm movements. Simply concatenating the various JCS is ambiguous because JCS definitions sometimes differ for different limb regions. Similarly, although the ISB recommendations include guidelines for adapting the JCS, originally defined for the right upper limb, to the left upper limb, these guidelines are not consistent among the joints of the upper limb. Second, some landmarks recommended for calibration are difficult or impossible to access in vivo. Third, the ISB recommendations do not include guidelines for the placement of EM sensors. Fourth, the ISB recommendations deliberately exclude the calibration process, leaving it “up to the individual researcher to relate the marker or other (e.g., electromagnetic) coordinate systems (CSs) to the defined anatomic system through digitization, calibration movements, or population-based anatomical relationships.” Fifth, the ISB recommendations do not include the inverse kinematics algorithms required to extract joint angles from EM sensor data.

Although some of these gaps have been filled in by individual studies, the added recommendations are usually specific to OE motion capture. Although the overall approach is similar, methods for tracking motion using EM sensors differ significantly from those for OE sensors in several respects, including sensor placement and portions of the inverse kinematics process. Also, although motion analysis software packages are commercially available, they are likewise usually made for OE systems and do not recommend methodological details for EM sensors, nor do they provide the underlying equations necessary for customization (such as inclusion of soft-tissue artifact compensation). In addition, the few studies involving EM sensors often lack sufficient detail in the description of their methods to enable replication. Finally, although a small number of these gaps are easily overcome, for in vivo measurements of whole-arm movements using EM sensors these gaps are numerous, complex, and inter-related, making it difficult to choose the best course of action and all but impossible to make meaningful comparisons between studies.

In this paper, we describe in detail the process for using EM sensors to measure whole-arm movements in vivo and obtain upper limb joint angles defined as much as possible according to ISB recommendations. This process includes defining joint angles, placing sensors, calibrating the sensor system, calculating rotation matrices from sensor data, and extracting unique joint angles from rotation matrices. We present this process for both the right and left upper limbs using the landmark and postural calibration methods. Models with 9DOF (3 each at the shoulder, elbow/forearm, and wrist) and 7DOF (3 at the shoulder, 2 at the elbow/forearm, and 2 at the wrist) are presented. All equations required to complete the entire process are included in the appendices. Although this process is described for all major DOF of the upper limb, a subset of these descriptions can be used for any combination of upper-limb DOF.

2 Methods

2.1 Definitions: Body-Segment Coordinate Systems, Joint Coordinate Systems, and Joint Angles.

The ISB recommendations [10] define joint angles through the use of BCS and JCS. Each body segment is represented by a BCS that is fixed in, and rotates with, the body segment. Rotation of one BCS relative to an adjacent BCS constitutes a joint, which is defined by its JCS. Because finite rotations do not commute, the definition of a JCS includes both the axes of rotation and the sequence of rotation (e.g., rotate first about the first JCS axis by $\alpha$, then about the second JCS axis by $\beta$, and finally about the third JCS axis by $\gamma$). Angles $\alpha$, $\beta$, and $\gamma$, which are examples of Euler/Cardan angles, are the joint angles.
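To make the role of the rotation sequence concrete, the sketch below composes a JCS rotation matrix from three angles applied about axes that move with the distal segment, $R = R_1(\alpha)R_2(\beta)R_3(\gamma)$. It assumes radians, right-handed elementary rotations, and that the proximal and distal BCS coincide when all three angles are zero; joint-specific sign conventions are not modeled, and the helper names (`rot_x`, `jcs_matrix`, and so on) are hypothetical rather than taken from the appendices.

```python
import math


def rot_x(t):
    """Rotation by t radians about the x axis (right-hand rule)."""
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(t):
    """Rotation by t radians about the y axis."""
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(t):
    """Rotation by t radians about the z axis."""
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


ELEMENTARY = {"x": rot_x, "y": rot_y, "z": rot_z}


def jcs_matrix(sequence, alpha, beta, gamma):
    """Distal BCS relative to proximal BCS for a moving-axis sequence.

    sequence is a string such as "zxy" or "yxy". Rotations about axes that
    move with the distal segment compose by post-multiplication:
    R = R1(alpha) R2(beta) R3(gamma). Assumes both BCS coincide when all
    three angles are zero."""
    r1, r2, r3 = (ELEMENTARY[axis] for axis in sequence)
    return matmul(matmul(r1(alpha), r2(beta)), r3(gamma))


# Finite rotations do not commute: z-then-x differs from x-then-z.
a, b = math.radians(30), math.radians(40)
zx = matmul(rot_z(a), rot_x(b))
xz = matmul(rot_x(b), rot_z(a))
print(max(abs(zx[i][j] - xz[i][j]) for i in range(3) for j in range(3)))
```

Swapping the order of the same two rotations changes the resulting orientation, which is why a JCS must specify the sequence as well as the axes.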

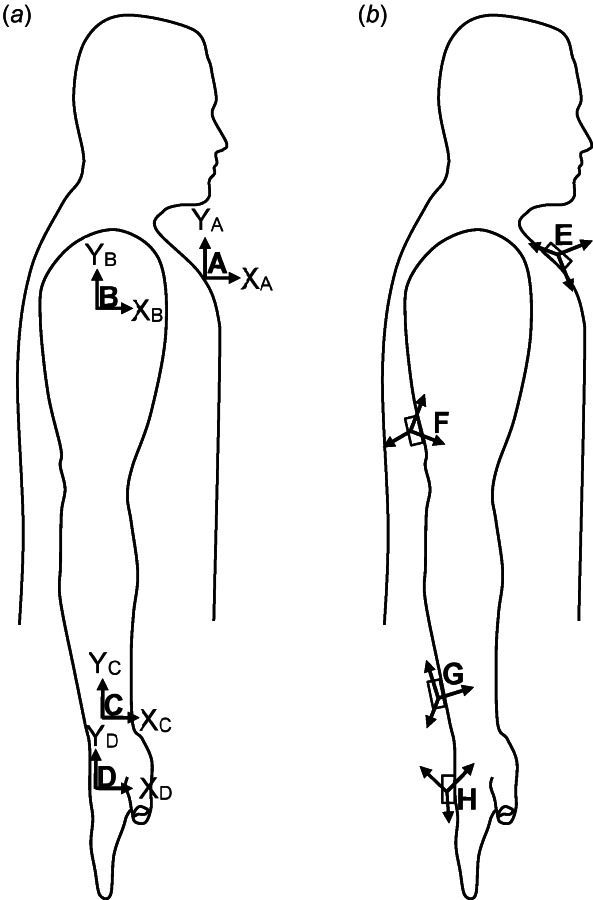

While the ISB recommendations focus mostly on describing rotation between two articulating bones, they can also be used to describe global motion caused by the aggregate rotation of multiple bones. This paper focuses on global limb motion and follows the ISB recommendations on global motion when specified in Ref. [10]. Specifically, we defined four body segments (from proximal to distal): thorax, upper arm, forearm, and hand (represented by the third metacarpal), which are represented by BCS A, B, C, and D, respectively (Fig. 1(a) and Table 1). These four body segments are connected by three joints (Table 2): the thorax and humerus are connected by the thoracohumeral joint; the humerus and distal forearm articulate via the elbow (humeroulnar) and forearm (radioulnar) joints, which are grouped as one joint in this paper; and the distal forearm and hand are connected by the wrist joint. Here, the three joints are referred to as the shoulder, elbow-forearm, and wrist joints, all of which are examples of global motion. These JCS (Table 2) were taken from among the options proposed in the ISB recommendations.

Fig. 1.

Body segment coordinate systems (BCS) and sensor coordinate systems (SCS) of the right arm are shown in (a) and (b), respectively. (a) The BCS of the thorax, upper arm, forearm, and hand align in anatomical position. (b) In general, the SCS are not aligned to each other or to their respective BCS.

Table 1.

Body coordinate systems and SCS suggested for in vivo measurements of whole-arm movements, chosen from among the multiple definitions advocated by the ISB recommendations [10]

| Label | Description | Reference to ISB recommendation [10] |
|---|---|---|
| A | BCS of thorax | 2.3.1 |
| B | BCS of upper arm (humerus) | 2.3.5 = 3.3.1 |
| C | BCS of forearm (distal forearm) | 2.3.6 = 3.3.2 |
| D | BCS of hand (third metacarpal) | 4.3.4 |
| E | SCS of sensor on thorax | N/A |
| F | SCS of sensor on upper arm | N/A |
| G | SCS of sensor on forearm | N/A |
| H | SCS of sensor on hand | N/A |
| U | Stationary frame of transmitter | N/A |

Table 2.

Joint coordinate systems suggested for in vivo measurements of whole-arm movements, chosen from among the multiple definitions advocated by the ISB recommendations [10]

| Joint | Axis | Angle | Description | Positive direction | Origin (0 deg) | ISB equivalent |
|---|---|---|---|---|---|---|
| Shoulder (humerus relative to thorax) | | $\alpha_s$ | Plane of elevation | | Anatomical position | e1 of humerus rel. to thorax (2.4.7) |
| | | $\beta_s$ | Elevation | | Anatomical position | e2 of humerus rel. to thorax (2.4.7) |
| | | $\gamma_s$ | Axial rotation | Internal rotation | Anatomical position | e3 of humerus rel. to thorax (2.4.7) |
| Elbow-forearm (forearm relative to humerus) | | $\alpha_e$ | Elbow flexion–extension | Flexion | Fully extended | e1 of elbow/forearm joint (3.4.1) |
| | | $\beta_e$ | Carrying angle | | | e2 of elbow/forearm joint (3.4.1) |
| | | $\gamma_e$ | Forearm pronation–supination | Pronation | Fully supinated | e3 of elbow/forearm joint (3.4.1) |
| Wrist (third metacarpal relative to forearm) | | $\alpha_w$ | Wrist flexion–extension | Flexion | Third metacarpal parallel to line from US to EL-EM midpoint | e1 of wrist joint (4.4.1) |
| | | $\beta_w$ | Wrist radial–ulnar deviation | Ulnar deviation | | e2 of wrist joint (4.4.1) |
| | | $\gamma_w$ | Wrist axial rotation | | | e3 of wrist joint (4.4.1) |

Each JCS is defined by axes of rotation, listed in order from first to third rotation axis. The rotation axes are given in terms of axes of the BCS of the distal segment and, in parentheses, in terms of axes embedded in the proximal and distal segments. The table also gives the names of the angles of rotation used in this paper, along with their descriptions, their positive directions, and their origins (0 deg). Finally, the last column lists the equivalent axes and angles defined in the ISB recommendations (with references).

Some may be concerned that global definitions of joints neglect the contributions of some bones. It is important to understand that even though the proposed model does not explicitly provide all of the information provided by more detailed models, the information it does provide is equally accurate. For example, even though the proposed model does not explicitly parse rotation of the thoracohumeral joint into rotation at the sternoclavicular, acromioclavicular, and glenohumeral joints, it does include scapular and clavicular contributions. In fact, defining the thoracohumeral joint simply as the orientation of the humerus relative to the thorax forces it to include all contributions of the scapula and clavicle to rotation of the humerus relative to the thorax. Researchers interested in whole-arm movement often do not parse thoracohumeral rotation into rotations at these three joints but instead group them into a single “shoulder joint.” Therefore, we present here the thoracohumeral joint as the “shoulder joint,” as recommended by the ISB (Sec. 2.4 of Ref. [10]). In our opinion, this global definition of shoulder motion is the safest way to promote accurate reports of shoulder motion during whole-arm movements, albeit at the sacrifice of detail. Furthermore, researchers who wish to parse rotation of the thoracohumeral joint into rotation at the sternoclavicular, acromioclavicular, and glenohumeral joints can easily expand our procedures by including sensors on the scapula and clavicle. Recommendations for the BCS of the scapula and clavicle, and for the JCS of the sternoclavicular, acromioclavicular, and glenohumeral joints, are given by the ISB [10]. Prior studies using EM sensors to investigate the shoulder complex include Refs. [7,14–16].

In addition to the shoulder JCS recommended by the ISB (Table 2), we provide an alternative shoulder JCS (Table 3) that does not suffer from gimbal lock in anatomical shoulder position. Gimbal lock is a mathematical singularity: when a joint is in gimbal lock, the first and third joint angles cannot be determined uniquely (only their sum or difference is defined). In addition, close to gimbal lock, small changes in the actual orientation (in this case, the orientation of the upper arm) produce large changes in the first and third joint angles. Gimbal lock is an unavoidable property of Euler/Cardan angles: any JCS includes two orientations (180 deg apart) that suffer from gimbal lock. The only remedy is to choose a JCS whose two gimbal-lock orientations are as far as possible from the orientations of interest. The ISB recommends a Y-X-Y rotation sequence for the shoulder, which suffers from gimbal lock when the shoulder is in neutral abduction–adduction (and in 180 deg of abduction). This JCS definition is appropriate for studies that focus on movements with abduction angles around 90 deg, such as overhead tasks and some athletic tasks. In contrast, studies that focus on movements involving small abduction–adduction angles (i.e., when the upper arm is at the side of the thorax), which include many activities of daily living, are better served by a Z-X-Y sequence (Table 3). This sequence places gimbal lock at 90 deg of abduction (and 90 deg of adduction, which is beyond the range of motion). Note that gimbal lock is not a problem for the elbow-forearm and wrist joints because it would occur only when the carrying angle or the radial-ulnar deviation reaches 90 deg, which is far beyond the range of motion in these DOF.
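The gimbal-lock behavior of the two shoulder sequences can be checked numerically. The sketch below assumes both sequences are applied as moving-axis rotations, $R = R_Y(\alpha)R_X(\beta)R_Y(\gamma)$ for Y-X-Y and $R = R_Z(\alpha)R_X(\beta)R_Y(\gamma)$ for Z-X-Y, and it ignores the sign conventions of the individual angles; the function names are hypothetical.

```python
import math


def rot_x(t):
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def yxy(alpha, beta, gamma):
    """ISB-style shoulder: plane of elevation, elevation, axial rotation."""
    return matmul(matmul(rot_y(alpha), rot_x(beta)), rot_y(gamma))


def zxy(alpha, beta, gamma):
    """Alternative shoulder: flexion, ab/adduction, axial rotation."""
    return matmul(matmul(rot_z(alpha), rot_x(beta)), rot_y(gamma))


def max_diff(a, b):
    """Largest element-wise difference between two 3x3 matrices."""
    return max(abs(a[i][j] - b[i][j]) for i in range(3) for j in range(3))


# Arm at the side (beta = 0): Y-X-Y depends only on alpha + gamma.
print(max_diff(yxy(0.3, 0.0, 0.5), yxy(0.8, 0.0, 0.0)))
# Z-X-Y tells the same two angle sets apart at beta = 0 ...
print(max_diff(zxy(0.3, 0.0, 0.5), zxy(0.8, 0.0, 0.0)))
# ... but locks instead when beta = +/-90 deg.
print(max_diff(zxy(0.3, math.pi / 2, 0.5), zxy(0.8, math.pi / 2, 0.0)))
```

With the arm at the side, two different $(\alpha, \gamma)$ pairs with the same sum give identical Y-X-Y matrices and therefore cannot be distinguished from sensor data; the Z-X-Y sequence separates them but shows the same degeneracy at 90 deg of abduction or adduction.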

Table 3.

Alternative JCS for the shoulder (Z-X-Y)

| Joint | Axis | Angle | Description | Positive direction | Origin (0 deg) |
|---|---|---|---|---|---|
| Shoulder (humerus rel. to thorax) | | | Shoulder flexion–extension | Flexion | Anatomical position |
| | | | Shoulder abduction–adduction | Adduction | Anatomical position |
| | | | Shoulder internal–external humeral rotation | Internal rotation | Anatomical position |

This JCS exhibits gimbal lock in 90 deg of shoulder abduction instead of anatomical position (0 deg of abduction).

2.2 Sensor Placement.

Attached to each body segment is an EM motion capture sensor (a receiver) with its own sensor coordinate system (SCS), labeled (proximal to distal) E, F, G, and H, respectively (Fig. 1(b) and Table 1). Theoretically, each sensor can be attached to its respective limb segment at any location and in any orientation. However, judicious placement can minimize the effects of soft-tissue artifact, especially for longitudinal rotations such as humeral internal–external rotation and forearm pronation–supination [17]. Because humeral rotation causes the skin of the distal portion of the upper arm to rotate more than the skin of the proximal portion, it is recommended that the upper arm sensor be attached to the distal portion of the upper arm. Similarly, because the calculation of forearm pronation–supination relies on the sensor attached to the forearm, and the distal portion of the forearm rotates much more than the proximal portion, it is recommended that the forearm sensor be attached to the distal portion of the forearm, just proximal to the wrist joint [17]. Attaching the hand sensor to the dorsal aspect of the hand so that it straddles the third and fourth metacarpals makes it particularly stable. The thorax sensor is attached to the sternum [14]. EM systems typically include a stationary transmitter whose coordinate frame, U, is fixed in space and serves as the universal frame for describing the orientation of all other frames.

2.3 Calibration.

Because the sensors can be affixed to the limb segments in any orientation and are therefore generally not aligned with the BCS of the corresponding body segment, a calibration is required to determine the orientation of each BCS relative to the corresponding SCS. This calibration can take one of three forms [18]: (1) using an instrumented stylus to define a number of anatomical landmarks [7,9], (2) placing the limb in a calibration posture in which all joint angles are known [19], or (3) performing functional movements to define functional axes [20]. The first method, the landmark calibration method, is recommended by the ISB [10], but the second method, the postural calibration method, is simpler and more common, especially for in vivo experiments. Therefore, we present both the landmark and postural calibration methods below (with equations in Appendix B).

2.3.1 Landmark Calibration.

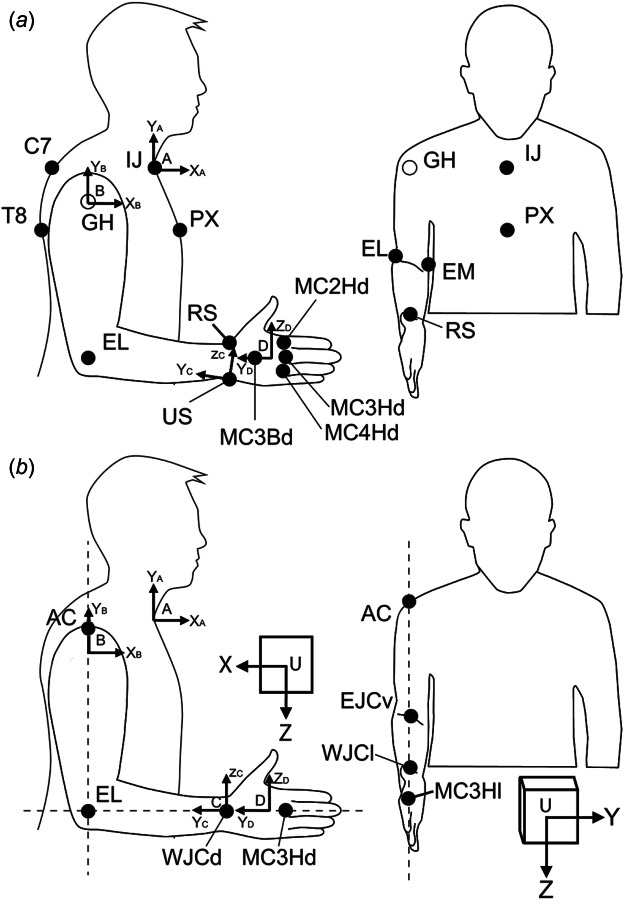

The landmark calibration method determines the relationship between a BCS and its corresponding SCS through the use of landmarks and therefore requires that the experimenter determine the positions of a number of landmarks on the subject (Table 4, Fig. 2(a)). The landmarks on the thorax, upper arm, and forearm included here are identical to those recommended by the ISB [10], but the landmarks on the hand were altered for in vivo use. Specifically, the third metacarpal was used to represent the orientation of the hand, as suggested in 4.3.4 of the ISB recommendations. However, instead of using the centers of the head and base of the third metacarpal to determine its long axis, because these centers are not easily accessed in living subjects, we used the projections of those centers onto the dorsum of the hand, i.e., the dorsal-most points of the head and base of the third metacarpal (MC3Hd and MC3Bd). The base of the third metacarpal can be palpated on the dorsum of the hand by moving proximally along the length of the third metacarpal. Also, instead of using the plane of symmetry of the bone to determine the other two axes (because it is difficult to identify in vivo), we used the dorsal projections of the heads of the second and fourth metacarpals (MC2Hd and MC4Hd). These two landmarks, together with a third landmark, form a plane that defines a second axis of the hand BCS, from which the remaining axis can be calculated. We suggest that these landmarks on the hand be located when the fingers are in a relaxed position (neither fully extended nor fully flexed).

Table 4.

Anatomical landmarks used in the landmark calibration method

| Abbreviation | Description |
|---|---|
| C7 | Processus Spinosus (spinous process) of the seventh cervical vertebra |
| T8 | Processus Spinosus (spinous process) of the eighth thoracic vertebra |
| IJ | Deepest point of Incisura Jugularis (suprasternal notch) |
| PX | Processus Xiphoideus (xiphoid process), most caudal point on the sternum |
| GH | Glenohumeral rotation center, estimated by motion recordings |
| EL | Most caudal point on lateral epicondyle |
| EM | Most caudal point on medial epicondyle |
| RS | Most caudal-lateral point on the radial styloid |
| US | Most caudal-medial point on the ulnar styloidᵃ |
| MC2Hd | Dorsal projection of midpoint of head of second metacarpalᵇ |
| MC3Hd | Dorsal projection of midpoint of head of third metacarpalᵇ |
| MC4Hd | Dorsal projection of midpoint of head of fourth metacarpalᵇ |
| MC3Bd | Dorsal projection of midpoint of base of third metacarpalᵇ |

Note: The descriptions of landmarks C7 through US are taken directly from the ISB recommendations [10], but landmarks for the hand (MC2Hd through MC3Bd) were altered for in vivo use.

ᵃ According to 2.3.5 of the ISB recommendations, this landmark must be located when the elbow is flexed 90 deg and the forearm is fully pronated.

ᵇ The fingers should be in a relaxed position.

Fig. 2.

Landmarks needed for the landmark calibration method (a) and the postural calibration method (b). (a) In the landmark method, the landmarks given by solid circles are localized with the help of the stylus. The center of the glenohumeral joint (GH, open circle) cannot be palpated and is estimated from shoulder movements. Note that some landmarks, such as the ulnar styloid, should be located in a different posture (see Table 4). (b) In the postural method, the illustrated landmarks are aligned parallel to the axes of the universal frame of the transmitter (U). Laser levels used to aid in this process are depicted as dashed lines. Abbreviations in (a) and (b) are defined in Tables 4 and 5, respectively.

With the exception of the center of rotation of the glenohumeral joint (see below), the positions of these landmarks can be recorded with the help of a stylus, which is available in some EM systems or is, alternatively, easily constructed by attaching an EM sensor to the end of a long slender object [7]. The location of the tip of the stylus relative to the SCS of the EM sensor can be determined experimentally by calculating the pivot point of the instantaneous helical axes, analogous to determining the center of rotation of the glenohumeral joint (see below).
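Recording a landmark with the stylus amounts to two frame transformations: the tip offset is rotated into U and added to the stylus-sensor position, and the resulting point is then expressed relative to the sensor on the segment being digitized so that it moves with that segment (as is done for the glenohumeral center, which is stored relative to the upper-arm sensor). The sketch below assumes each sensor reports a position and a rotation matrix whose columns are the sensor axes expressed in U; the function names and the example reading are hypothetical.

```python
import math


def mat_vec(r, v):
    """r v for a 3x3 matrix r and a 3-vector v."""
    return [sum(r[i][k] * v[k] for k in range(3)) for i in range(3)]


def mat_t_vec(r, v):
    """r^T v, i.e. the inverse rotation, since r is orthonormal."""
    return [sum(r[k][i] * v[k] for k in range(3)) for i in range(3)]


def stylus_tip_in_u(p_stylus, r_stylus, tip_offset):
    """Stylus-tip position in the transmitter frame U.

    p_stylus:   stylus-sensor origin in U
    r_stylus:   stylus-sensor orientation (columns = sensor axes in U)
    tip_offset: tip position in the stylus-sensor frame (determined once)"""
    rotated = mat_vec(r_stylus, tip_offset)
    return [p_stylus[i] + rotated[i] for i in range(3)]


def landmark_in_sensor(p_landmark, p_sensor, r_sensor):
    """Express a landmark given in U in the frame of the sensor on the
    same segment, so the stored point moves with the segment afterward."""
    return mat_t_vec(r_sensor, [p_landmark[i] - p_sensor[i] for i in range(3)])


# Hypothetical reading: stylus sensor at (1, 2, 3), rotated 90 deg about z,
# with the tip 0.1 units along the stylus sensor's own x axis.
c, s = math.cos(math.pi / 2), math.sin(math.pi / 2)
r_stylus = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
tip = stylus_tip_in_u([1.0, 2.0, 3.0], r_stylus, [0.1, 0.0, 0.0])
```

Storing landmarks in the segment sensor's frame rather than in U means the digitization posture does not need to be held during later processing.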

The center of rotation of the glenohumeral joint is one of the landmarks required for landmark calibration (Table 4), but it cannot be palpated. The ISB recommendations suggest estimating it as the pivot point of the instantaneous helical axes, following Refs. [21,22] (see also Ref. [23]), who implemented the method described in Ref. [24]. This method requires a sensor on the scapula and a sensor on the upper arm. The scapular sensor is needed only to find the center of rotation of the glenohumeral joint and may be removed immediately afterward, since the center of rotation is recorded relative to the upper-arm sensor. Subjects are asked to make a number of shoulder rotations, from which the center of rotation of the glenohumeral joint can be estimated as described in Appendix B.1.1.
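The core of the pivot-point estimate is a least-squares intersection of many axes: the point $x$ minimizing $\sum_i \lVert (I - n_i n_i^T)(x - p_i) \rVert^2$ solves $\left[\sum_i (I - n_i n_i^T)\right] x = \sum_i (I - n_i n_i^T)\, p_i$. The sketch below assumes the helical axes (a point $p_i$ on each axis and its direction $n_i$) have already been computed from successive upper-arm poses relative to the scapular sensor, and it omits any weighting of the axes by rotation magnitude; it illustrates the idea and is not the procedure of Appendix B.1.1. Function names are hypothetical.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 system a x = b by Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("axes are (nearly) parallel; pivot point undetermined")
    x = []
    for col in range(3):
        m = [[b[i] if j == col else a[i][j] for j in range(3)] for i in range(3)]
        x.append(det3(m) / d)
    return x


def pivot_point(points, directions):
    """Point with the least summed squared distance to a set of axes.

    Axis i passes through points[i] with direction directions[i]."""
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for p, n in zip(points, directions):
        length = math.sqrt(sum(c * c for c in n))
        n = [c / length for c in n]
        # Projector onto the plane perpendicular to this axis.
        proj = [[(1.0 if i == j else 0.0) - n[i] * n[j] for j in range(3)]
                for i in range(3)]
        for i in range(3):
            for j in range(3):
                a[i][j] += proj[i][j]
            b[i] += sum(proj[i][k] * p[k] for k in range(3))
    return solve3(a, b)


# Three hypothetical axes that all pass through (1, 2, 3):
center = pivot_point(
    [[0.0, 2.0, 3.0], [1.0, 0.0, 3.0], [1.0, 2.0, 0.0]],
    [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]],
)
# center is [1.0, 2.0, 3.0]
```

The same least-squares step applies to locating the stylus tip relative to its sensor, with the stylus pivoted about its tip instead of the humerus about the glenohumeral joint.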

Once the landmarks are localized, one can calculate the relationship between each SCS and its associated BCS following the process outlined in Appendix B.1.
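As an illustration of how a BCS and its calibration matrix follow from digitized landmarks, the sketch below builds the thorax BCS from IJ, C7, PX, and T8 using the ISB thorax definition (Y from the PX–T8 midpoint to the IJ–C7 midpoint, pointing up; Z normal to the plane through IJ, C7, and the PX–T8 midpoint, pointing right; X = Y × Z, pointing forward) and then forms the calibration matrix ${}^{S}_{B}R = ({}^{U}_{S}R)^{T}\,{}^{U}_{B}R$, where S is the thorax sensor frame and B the thorax BCS. It assumes the landmarks are expressed in U and that the thorax-sensor orientation was recorded at the same instant (if the landmarks are instead stored relative to the sensor, that orientation is simply the identity). Function names and landmark coordinates are hypothetical.

```python
import math


def sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def midpoint(a, b):
    return [(a[i] + b[i]) / 2.0 for i in range(3)]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def unit(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(a):
    return [list(row) for row in zip(*a)]


def thorax_bcs(ij, c7, px, t8):
    """Thorax BCS orientation in U (columns X, Y, Z) from landmarks in U."""
    upper, lower = midpoint(ij, c7), midpoint(px, t8)
    y = unit(sub(upper, lower))                   # points up
    z = unit(cross(sub(ij, c7), sub(ij, lower)))  # plane normal, points right
    x = cross(y, z)  # points forward; unit length because y lies in the plane
    return [[x[i], y[i], z[i]] for i in range(3)]


def calibration_matrix(r_u_sensor, r_u_bcs):
    """^S_B R = (^U_S R)^T ^U_B R, both recorded at the same instant."""
    return matmul(transpose(r_u_sensor), r_u_bcs)


# Hypothetical landmarks (m) for a subject facing +x with +y up:
r_bcs = thorax_bcs([0.10, 1.40, 0.0], [-0.10, 1.40, 0.0],
                   [0.10, 1.20, 0.0], [-0.10, 1.20, 0.0])
c, s = math.cos(0.3), math.sin(0.3)
r_sensor = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]  # sensor yawed 0.3 rad
cal = calibration_matrix(r_sensor, r_bcs)
```

Because the landmark-defined BCS and the sensor orientation are captured together, the calibration matrix stays valid for as long as the sensor does not slip on the skin.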

2.3.2 Postural Calibration.

The postural calibration method is meant to be a simple and quick approximation of the landmark calibration method; it does not require the use of a stylus or determination of the center of rotation of the glenohumeral joint (GH). In this method, the subject assumes a posture in which his/her BCS frames have a known orientation with respect to the transmitter frame U. The orientation of each SCS is recorded in this posture, from which the relationships between the BCS and SCS can be determined. This approach requires only a single posture, often the one shown in Fig. 2(b), referred to as the neutral position. This posture is preferred over the anatomical posture because the anatomical posture places the elbow and forearm at or near the end of their range of motion, which varies between subjects.

Aligning the BCS frames to the transmitter frame is accomplished with the use of landmarks that are marked on the skin (e.g., with a pen) and aligned in the parasagittal, frontal, and transverse planes, as shown in Fig. 2. A variety of landmarks have been used in the literature. We present here landmarks (Table 5) that are as close as possible to those suggested in the ISB recommendations [10] but do not require the use of a stylus or determination of GH. The acromion approximates the position of GH in the anteroposterior and mediolateral directions (the position of GH in the superior–inferior direction is not required). Note that the forearm and hand are aligned when the lateral epicondyle, wrist joint center, and head of the third metacarpal are collinear. To allow easy alignment of all BCS frames at once, this suggested alignment differs from the ISB standard, which defines the long axis of the forearm as passing through the ulnar styloid (as opposed to the wrist joint center). Finally, the head of the second metacarpal is visible in the parasagittal plane and approximates the position of the head of the third metacarpal in that plane.

Table 5.

Anatomical landmarks used in the postural calibration method

| Abbreviation | Description |
|---|---|
| AC | Acromion |
| EL | Most caudal point on lateral epicondyle |
| EJCv | Ventral projection of elbow joint center (EJC) into antecubital fossa, where EJC is assumed midway between EL and EM |
| WJCd | Dorsal projection of wrist joint center (WJC), where WJC is assumed midway between RS and US |
| WJCl | Lateral projection of wrist joint center (WJCl = RS) |
| MC3Hd | Dorsal projection of midpoint of head of third metacarpal |
| MC2Hl | Lateral projection of midpoint of head of second metacarpal |

Aligning this many landmarks at once can be accomplished with the use of three laser levels that project lines onto the subject's upper limb (Fig. 2). If the laser-level lines are parallel to the axes of the transmitter frame, then aligning the landmarks to the laser levels will place the BCS frames in a known orientation relative to the transmitter frame. Once the subject is in the correct position, one can calculate the relationship between each SCS and its associated BCS following the process outlined in Appendix B.2.
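For the postural method, each calibration matrix follows from a single recorded posture: if ${}^{U}_{B}R_0$ is the known BCS orientation in the posture and ${}^{U}_{S}R_0$ the recorded sensor orientation, then ${}^{S}_{B}R = ({}^{U}_{S}R_0)^{T}\,{}^{U}_{B}R_0$. The sketch below computes this for several segments at once. Segments without an explicitly supplied BCS orientation are assumed to be aligned with U, which is an assumption to be checked against the posture actually used; the segment keys, readings, and function name are hypothetical.

```python
import math


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(a):
    return [list(row) for row in zip(*a)]


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def postural_calibration(sensor_orientations, known_bcs=None):
    """Calibration matrices ^S_B R from one calibration posture.

    sensor_orientations: {segment: ^U_S R recorded in the posture}
    known_bcs: {segment: ^U_B R in the posture}; segments missing from it
    are ASSUMED to be aligned with U (identity)."""
    known_bcs = known_bcs or {}
    return {segment: matmul(transpose(r_u_s), known_bcs.get(segment, IDENTITY))
            for segment, r_u_s in sensor_orientations.items()}


# Hypothetical readings: thorax sensor aligned with U, upper-arm sensor
# yawed 0.4 rad about z.
c, s = math.cos(0.4), math.sin(0.4)
readings = {"thorax": IDENTITY,
            "upper_arm": [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]}
calibration = postural_calibration(readings)
```

A quick sanity check is that, in the calibration posture itself, ${}^{U}_{S}R_0\,{}^{S}_{B}R$ reproduces the known BCS orientation, so all joint angles read zero when every BCS is aligned with U.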

2.4 Inverse Kinematics.

The process of calculating joint angles from sensor angles requires four steps, represented by the columns of blocks in Fig. 3.

Fig. 3.

![Schematic of the inverse kinematics process for whole-arm movements. Inputs include angles [a,e,r] (representing azimuth, elevation, and roll) of each sensor (E–H) and the rotation matrices between each sensor and its BCS (A–D) established during calibration. The output consists of the three joint angles ([α,β,γ]) for each of the shoulder (s), elbow-forearm (e), and wrist (w) joints. The inverse kinematics process includes the four steps described above, each represented by a column of boxes: (1) aer→RSCS converts sensor angles into rotation matrices describing the orientation of each SCS with respect to the universal frame, (2) RSCS→RBCS multiplies each SCS rotation matrix by its calibration matrix, yielding the rotation matrices describing the orientation of each BCS related to the universal frame, (3) RBCS→RJCS multiplies the rotation matrices of adjacent BCS to obtain JCS rotation matrices, and (4) RJCS→αβγ extracts joint angles from each JCS rotation matrix. The leading superscript and subscript of rotation matrices indicate the original and final CS; for example, RBA is the rotation matrix that describes B relative to A (see Appendix A for more detail).](https://cdn.ncbi.nlm.nih.gov/pmc/blobs/d128/10782867/9612a38aa67e/bio-19-1278_074502_g003.jpg)

Schematic of the inverse kinematics process for whole-arm movements. Inputs include the angles $[a, e, r]$ (representing azimuth, elevation, and roll) of each sensor (E–H) and the rotation matrices between each sensor and its BCS (A–D) established during calibration. The output consists of the three joint angles ($[\alpha, \beta, \gamma]$) for each of the shoulder ($s$), elbow-forearm ($e$), and wrist ($w$) joints. The inverse kinematics process includes the four steps described in the text, each represented by a column of boxes: (1) $aer \rightarrow R_{SCS}$ converts sensor angles into rotation matrices describing the orientation of each SCS with respect to the universal frame, (2) $R_{SCS} \rightarrow R_{BCS}$ multiplies each SCS rotation matrix by its calibration matrix, yielding the rotation matrices describing the orientation of each BCS relative to the universal frame, (3) $R_{BCS} \rightarrow R_{JCS}$ multiplies the rotation matrices of adjacent BCS to obtain JCS rotation matrices, and (4) $R_{JCS} \rightarrow \alpha\beta\gamma$ extracts joint angles from each JCS rotation matrix. The leading superscript and subscript of rotation matrices indicate the original and final CS; for example, ${}^{A}_{B}R$ is the rotation matrix that describes B relative to A (see Appendix A for more detail).

Step 1: The set of angles (azimuth, elevation, and roll) describing the orientation of each SCS relative to the transmitter frame U is converted to a rotation matrix.

Step 2: These rotation matrices are multiplied by the rotation matrices describing the orientation of each BCS relative to its associated SCS (determined during calibration) to obtain the orientation of each BCS relative to U.

Step 3: The rotation matrices of adjacent BCS (each describing the orientation of a BCS relative to U) are multiplied to calculate the orientation of one BCS relative to its adjacent BCS, which gives the JCS rotation matrix.

Step 4: The joint angles are extracted from the JCS rotation matrices.
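Steps 1 to 3 reduce to a handful of matrix products. The sketch below assumes the tracker reports azimuth, elevation, and roll in degrees as successive rotations about the sensor's z, y, and x axes, i.e., ${}^{U}_{S}R = R_z(a)R_y(e)R_x(r)$; this is a common convention, but vendors differ, so the tracker's documentation should be checked before reuse. Function names and readings are hypothetical.

```python
import math


def rot_x(t):
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(a):
    return [list(row) for row in zip(*a)]


def aer_to_matrix(azimuth, elevation, roll):
    """Step 1: ^U_S R from sensor angles in degrees.

    ASSUMED convention: about z (azimuth), then the new y (elevation),
    then the newest x (roll), so R = Rz(a) Ry(e) Rx(r)."""
    a, e, r = (math.radians(v) for v in (azimuth, elevation, roll))
    return matmul(matmul(rot_z(a), rot_y(e)), rot_x(r))


def bcs_in_u(r_u_sensor, r_sensor_bcs):
    """Step 2: ^U_B R = ^U_S R ^S_B R (calibration matrix on the right)."""
    return matmul(r_u_sensor, r_sensor_bcs)


def jcs_rotation(r_u_proximal, r_u_distal):
    """Step 3: distal BCS relative to proximal BCS, (^U_P R)^T ^U_D R."""
    return matmul(transpose(r_u_proximal), r_u_distal)


# Usage with identity calibration matrices and hypothetical readings:
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
r_thorax = bcs_in_u(aer_to_matrix(10.0, 0.0, 0.0), IDENTITY)
r_upper_arm = bcs_in_u(aer_to_matrix(40.0, 0.0, 0.0), IDENTITY)
shoulder = jcs_rotation(r_thorax, r_upper_arm)  # a 30 deg rotation about z
```

Keeping the calibration matrix on the right in step 2 reflects that it describes the BCS relative to its own sensor, while the transpose in step 3 re-expresses the distal BCS in the proximal BCS.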

Though the second and third steps in this process involve only simple matrix multiplications, defining the proper rotation matrices (in step 1 and during calibration) and extracting joint angles can be challenging. All of the equations needed to perform each of these steps are provided in Appendix C.
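For step 4, the extraction formulas follow from writing out the JCS matrix symbolically. The sketch below assumes a Z-X-Y moving-axis sequence, $R = R_Z(\alpha)R_X(\beta)R_Y(\gamma)$, which has the same axis order as the ISB elbow-forearm and wrist JCS (flexion–extension, then a floating axis, then the long axis of the distal segment); other sequences need their own formulas. Restricting $|\beta| < 90$ deg makes the solution unique, and the function refuses to return angles at gimbal lock. Names and the tolerance value are hypothetical.

```python
import math


def rot_x(t):
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def zxy_matrix(alpha, beta, gamma):
    """R = Rz(alpha) Rx(beta) Ry(gamma) (moving axes)."""
    return matmul(matmul(rot_z(alpha), rot_x(beta)), rot_y(gamma))


def zxy_angles(r, lock_tol=1e-6):
    """Recover (alpha, beta, gamma) from R = Rz(alpha) Rx(beta) Ry(gamma).

    Writing R out gives R[2][1] = sin b,
    R[0][1] = -sin a cos b,  R[1][1] = cos a cos b,
    R[2][0] = -cos b sin g,  R[2][2] = cos b cos g.
    With |beta| < 90 deg, cos b > 0 and the answer is unique."""
    beta = math.asin(max(-1.0, min(1.0, r[2][1])))  # clamp rounding noise
    if math.cos(beta) < lock_tol:
        raise ValueError("gimbal lock: alpha and gamma are not unique")
    alpha = math.atan2(-r[0][1], r[1][1])
    gamma = math.atan2(-r[2][0], r[2][2])
    return alpha, beta, gamma
```

Using `atan2` on pairs of matrix elements, rather than `asin` or `acos` of single elements, keeps $\alpha$ and $\gamma$ in the correct quadrant over their full range.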

2.5 Seven-Degrees-of-Freedom Model.

The upper limb is often modeled as having 7DOF (instead of 9DOF) by assuming that the carrying angle of the elbow ($\beta_e$) and the amount of axial rotation at the wrist ($\gamma_w$) are constant. These assumptions simplify the extraction of joint angles from rotation matrices (Appendix C.4).
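One possible way to handle the 7DOF model, sketched below under the same Z-X-Y assumption, is to hold the constant angle at a fixed value and solve only for the remaining two. For the elbow-forearm joint (fixed carrying angle), $\alpha$ comes from the second column of R, which does not depend on $\gamma$, and $\gamma$ is the least-squares rotation about y of $M = R_X(\beta_0)^{T} R_Z(\alpha)^{T} R$; for the wrist (fixed axial rotation), post-multiplying by $R_Y(\gamma_0)^{T}$ leaves $R_Z(\alpha)R_X(\beta)$. This is not necessarily the method of Appendix C.4, and the function names are hypothetical.

```python
import math


def rot_x(t):
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(a):
    return [list(row) for row in zip(*a)]


def elbow_angles_fixed_beta(r, beta0):
    """(alpha, gamma) of R = Rz(alpha) Rx(beta0) Ry(gamma), beta0 known.

    Column 1 of R is (-sin a cos b, cos a cos b, sin b), independent of
    gamma, so alpha follows directly (valid for |beta0| < 90 deg).
    M = Rx(beta0)^T Rz(alpha)^T R is then ideally a pure rotation about y;
    gamma = atan2(M02 - M20, M00 + M22) is its least-squares fit."""
    alpha = math.atan2(-r[0][1], r[1][1])
    m = matmul(transpose(matmul(rot_z(alpha), rot_x(beta0))), r)
    gamma = math.atan2(m[0][2] - m[2][0], m[0][0] + m[2][2])
    return alpha, gamma


def wrist_angles_fixed_gamma(r, gamma0):
    """(alpha, beta) of R = Rz(alpha) Rx(beta) Ry(gamma0), gamma0 known.

    N = R Ry(gamma0)^T = Rz(alpha) Rx(beta), whose first column is
    (cos a, sin a, 0) and whose last row is (0, sin b, cos b)."""
    n = matmul(r, transpose(rot_y(gamma0)))
    alpha = math.atan2(n[1][0], n[0][0])
    beta = math.atan2(n[2][1], n[2][2])
    return alpha, beta
```

Only two angles are returned per joint, which together with the three shoulder angles gives the 7DOF description; the fixed values would come from the study's chosen constants.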

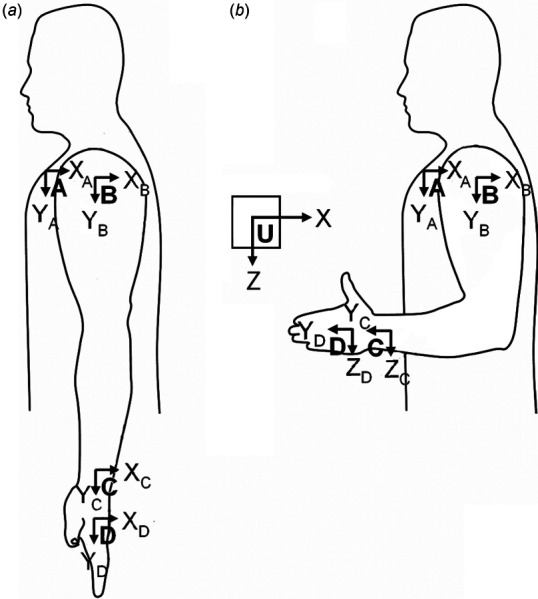

2.6 Left Arm.

For clinical motions of the left limb to have the same sign convention as those for the right limb (e.g., wrist flexion is positive, wrist extension is negative), the BCS of the left limb must be defined differently than the BCS of the right limb. In anatomical posture, the BCS frames of the left limb and thorax must have y-axes that point distally and x-axes that point dorsally, with z-axes completing the right-handed triad as shown in Fig. 4 (compare to Fig. 1). Using the postural calibration method to calibrate the left arm requires additional care (see Appendix B.2.2).

Fig. 4.

Body coordinate systems are defined differently for the left arm, shown here in anatomical position (a) and neutral position (b).

3 Discussion

In vivo measurement of joint angles during whole-arm movements requires many steps, including body and joint coordinate system definitions, sensor placement, calibration, and inverse-kinematics algorithms. Some of these steps are not well defined in the literature, particularly for EM sensors. Important details are often omitted, spread across many different sources, incompatible across sources (sometimes even across joints within the same source), or under-constrained. A small number of such gaps are easily overcome by the individual researcher. Unfortunately, the number, complexity and inter-relatedness of these gaps become almost intractable for in vivo measurements of whole-arm movements using EM sensors, rendering it difficult to choose the best course of action and compare results between studies. Therefore, the purpose of this paper was to provide a comprehensive methodology for using EM motion capture to track joint angles of the whole arm in vivo.

3.1 Comparison to International Society of Biomechanics Recommendations

3.1.1 Conformance.

The method presented in this paper is based as closely as possible on the ISB recommendations and the studies that formed the basis for the ISB recommendations. BCS definitions for all four limb segments (Table 1) were selected following ISB recommendations for global limb motion. JCS definitions also followed ISB recommendations for global motion (Table 2). We used global definitions because they are more common in the disciplines of motor control, clinical evaluation, rehabilitation, and occupational therapy.

The ISB recommendations do not include guidelines for calibration but state that “it is up to the individual researcher to relate the marker or other (e.g., electromagnetic) coordinate systems to the defined anatomic system” [10]. The landmark calibration method described in this paper uses an anatomical system that follows the ISB landmarks used to define the BCS as closely as possible (with a minor adaptation for the third metacarpal).

3.1.2 Differences.

In select instances, the methods presented in this paper deviated from the ISB recommendations. These deviations concern the landmarks of the third metacarpal and the BCS definitions of the left limb. The landmarks used to define the third metacarpal in the landmark method were altered from those specified by the ISB, which are not accessible in vivo. We chose landmarks that, in addition to being accessible in vivo, would result in a calibration similar to that obtained with the inaccessible landmarks. The BCS frames for the left limb are defined such that right- and left-limb motions follow the same sign convention. This follows the ISB recommendations for the left elbow-forearm and wrist but differs from the ISB recommendations for the left shoulder, which suggest mirroring marker data with respect to the XY plane. Since this practice creates inconsistencies between the joints of the same limb and is not directly applicable to EM sensors (which output sensor orientation directly instead of just marker position), we provided explicit BCS definitions for EM motion capture of the left shoulder that are compatible with the other methods presented here.

3.1.3 Additions.

Some information provided in this paper is not addressed in the ISB recommendations but is still necessary for in vivo measurement of whole-arm movements. Examples include proper sensor placement, explanations of gimbal lock for specific rotation sequences, adaptations for a 7DOF model, and the process and accompanying equations needed to estimate the center of rotation for the glenohumeral joint. Likewise, the equations and algorithms needed to perform inverse kinematics on EM data are presented in full.

In addition to the landmark calibration method, we also presented the postural calibration method. It differs slightly from the ISB guidelines (in its definition of the long axis of the forearm) but provides a quick and simple approximation of the landmark method and is commonly used in the disciplines mentioned above.

We also provided the equations for a shoulder angle sequence (Z-X′-Y′′) that, unlike the ISB-recommended Y-X′-Y′′ sequence, does not suffer from gimbal lock in anatomical position. Studies focusing on large abduction angles should use the Y-X′-Y′′ sequence, which places gimbal lock in neutral abduction–adduction, whereas studies focusing on small shoulder abduction angles are better off using the Z-X′-Y′′ sequence, which places gimbal lock at 90 deg of abduction (see Sec. 2.1 for more details).

3.2 Implementation.

The methods presented here have been tested and successfully implemented in a whole-arm study of tremor [25,26]. We are currently working on a quantitative comparison of postural versus landmark calibration methods to allow for more informed comparison between studies.

3.3 Limitations.

The methodology given in this paper has two noteworthy limitations in the inverse kinematics process: the inverse kinematics algorithms do not (1) take advantage of the position information of the sensors or (2) compensate for the effects of soft-tissue artifact. It is possible to use the partially redundant nature of the position and orientation data from the sensors to minimize errors [20] or compensate for soft-tissue artifact. Soft-tissue artifact refers to the error in calculated joint angle caused by movement of the skin (and the sensor placed on the skin) relative to the underlying skeletal structures. This error is especially large in axial rotation of the humerus and in forearm pronation–supination. During axial rotation of the humerus, for example, the tissues close to the glenohumeral joint remain mostly static, whereas tissues close to the elbow joint rotate, with varying amounts of movement in between. Consequently, sensors placed at different locations on the upper arm will detect different amounts of rotation, resulting in errors of the order of 20–50% of the axial rotation of the humerus [27–29]. Multiple methods have been developed to compensate for soft-tissue artifact [27,28,30–34], but with the exception of Ref. [28], these methods were developed for optoelectronic motion capture systems and cannot be directly applied to electromagnetic motion capture systems because the algorithms take advantage of the individual markers used in optoelectronic systems. The first step in developing soft-tissue artifact compensation methods for electromagnetic systems is to establish a self-consistent framework for calibration and inverse kinematics, which is the focus of this paper. We are currently working on extending the inverse kinematics presented here to include soft-tissue artifact compensation.

3.4 Additional Methodological Considerations.

This paper focuses on the steps necessary for tracking joint angles: defining joint angles, placing sensors, calibrating the sensor system, calculating rotation matrices from sensor data, and extracting unique joint angles from rotation matrices. However, there are additional considerations that must be taken into account when using EM sensors. Yaniv et al. described a broad set of factors influencing the utility of EM motion capture systems in clinical settings [1]. Here, we briefly discuss some of these and other factors.

One of the chief concerns is accuracy; it is important to verify the instrument's accuracy within one's own testing environment. This can be accomplished in a variety of ways. Some studies have placed sensors at known distances from the transmitter or from each other, often using assessment phantoms such as grid boards [1–4,6,8,35]. Other studies have characterized the accuracy of EM systems by comparing EM measurements to a standard, such as a robot [36] or materials testing device [8], optoelectronic motion capture system [5,36,37], inclinometer [15], pendulum potentiometer [38], inertial-ultrasound hybrid motion capture system [39], or linkage digitizer [7].

Factors affecting accuracy include transmitter–receiver separation distance, distortion of the electromagnetic field, and limitations in dynamic response.

Transmitter–receiver separation. In EM systems, the signal drops off with the third power of transmitter–sensor separation, so errors are reduced by keeping the sensor(s) as close as possible to the transmitter [2]. The effect of distance from the transmitter can be assessed by measuring the distance and relative orientation between two sensors fixed relative to each other as the sensors are moved throughout the testing environment. If it is known that the sensor will remain within a certain distance from the transmitter, it is possible in some EM systems to increase the resolution by decreasing the range of the analog-to-digital conversion.
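The two-sensor check described above can be sketched as follows. This is an illustration, not a prescribed protocol: it assumes each sample provides the position and rotation matrix of both sensors relative to the transmitter, and that the true separation and relative orientation of the rigidly connected pair are known. The function names are hypothetical; the orientation error is taken as the rotation angle of the residual between the known and measured relative orientations.

```python
import math


def mat_t_mul(A, B):
    """Return A^T B for 3x3 matrices stored as lists of rows."""
    return [[sum(A[k][i] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_angle_deg(R):
    """Rotation angle (deg) of a rotation matrix, from trace(R) = 1 + 2 cos(angle)."""
    c = (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0
    return math.degrees(math.acos(max(-1.0, min(1.0, c))))  # clamp round-off


def rigid_pair_errors(samples, ref_dist, R_ref):
    """Distance and orientation errors for two sensors fixed to each other
    (hypothetical helper).

    samples: iterable of (p1, R1, p2, R2), the position and orientation of each
        sensor relative to the transmitter at one instant.
    ref_dist: known separation of the two sensors.
    R_ref: known orientation of sensor 2 relative to sensor 1 (R1^T R2).
    Returns a list of (distance error, orientation error in deg).
    """
    errors = []
    for p1, R1, p2, R2 in samples:
        d_err = math.dist(p1, p2) - ref_dist
        R12 = mat_t_mul(R1, R2)                            # measured: sensor 2 relative to sensor 1
        a_err = rotation_angle_deg(mat_t_mul(R_ref, R12))  # residual rotation R_ref^T R12
        errors.append((d_err, a_err))
    return errors
```

Plotting both errors against the distance of the pair from the transmitter gives a simple picture of how accuracy degrades across the testing volume.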

Distortion of electromagnetic field. Since EM motion capture systems use an electromagnetic field to measure the position and orientation of the sensors, distortions of this field cause measurement errors. Many studies have investigated the magnitude of such errors due to ferromagnetic materials or electrical equipment (power lines, monitors, accelerometers) close to the motion capture system [1–4,6–8,35], or in specialized environments such as clinical suites [1,3,8,40], specialized laboratories [41], and virtual-reality environments [39]. These studies have made it clear that the most direct approach for decreasing such errors is to increase the distance between ferromagnetic materials and the transmitter and/or sensor, since metal effects decrease as the third power of transmitter-metal separation and sensor-metal separation, and as the sixth power of separation of metal from both transmitter and sensor [2]. Interference from electrical equipment can be reduced with appropriate sampling synchronization and filtering [2]. Further reductions in errors may be possible by applying correcting algorithms [5,37] or choosing the sampling rate based on the type of metal [6].
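The power laws above imply large gains from modest increases in separation. The snippet below assumes, for illustration only, that the metal-induced error scales as 1/(d_tm^3 d_sm^3), where d_tm is the transmitter–metal and d_sm the sensor–metal separation. This product form is our assumption; it is chosen because it reproduces the third-power dependence on either separation and the sixth-power dependence when both change together. The function name is hypothetical.

```python
def metal_error_factor(tx_metal_scale, sensor_metal_scale):
    """Relative change in metal-induced error when the transmitter-metal and
    sensor-metal separations are multiplied by the given factors, under the
    assumed model error ~ 1 / (d_tm**3 * d_sm**3)."""
    return 1.0 / (tx_metal_scale ** 3 * sensor_metal_scale ** 3)


print(metal_error_factor(2.0, 1.0))  # 0.125: doubling only the transmitter-metal distance
print(metal_error_factor(2.0, 2.0))  # 0.015625 (= 1/64): doubling both distances
```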

Limitations in dynamic response. For applications in which fast dynamic response is required (e.g., visual feedback in virtual-reality environments), one may have to take additional factors into account. Adelstein et al. characterized the latency, gain, and noise of two EM systems at a variety of frequencies spanning the bandwidth of volitional human movement [42].

There are, of course, additional considerations specific to each application. For example, in their study on using EM systems to localize electrodes and natural landmarks on the head, Engels et al. found that skin and hair softness and head movements affected the localization precision [4].

3.5 Conclusion.

The purpose of this paper was to provide a detailed methodology for in vivo measurements of whole-arm movements using EM sensors, following the ISB recommendations [10] as much as possible. This methodology includes consistent definitions of joint angles for global motions of the whole arm, recommendations for placing sensors, processes required for calibration, and complete equations for performing inverse kinematics. We present this methodology for both the right and left upper limbs and for the landmark and postural calibration methods. Although presented here for the entire upper limb (9 or 7 DOF), the methodology can be adapted to a subset of upper-limb joints. It is hoped that this paper will simplify new investigations of whole-arm movement using EM sensors and facilitate comparison between studies.

Published Code

Accompanying code and instructions can be found online.

Acknowledgment

The authors thank Eric Stone for his editing contributions.

Appendix A. Notation

In this paper, we use the following common notation described in more detail in Ref. [11]. The unit vectors defining a coordinate system (CS) are labeled with the name of the CS as a trailing subscript. For example, CS is defined by unit vectors , , and . Vectors can be expressed in (i.e., decomposed into the unit vectors of) any CS, and the preceding superscript indicates the CS in which a vector is expressed. For example, , , and are the unit vectors of CS , expressed in CS . Rotation matrices, which describe the orientation of one CS relative to another, have a leading superscript and subscript that indicate the original and final CS. For example, is the rotation matrix that describes relative to , i.e., . The product means that is the rotation matrix describing a sequence of three rotations: first about the -axis by , then about the once-rotated -axis by , then about the twice-rotated ′′-axis by . In general, rotation matrices vary with time, and denotes the rotation matrix at some time t, whereas denotes the rotation matrix established during calibration. The symbol represents the cross-product operation, and represents the magnitude (L2 norm) of vector .
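As a concrete illustration of this notation, the sketch below builds elementary rotation matrices, composes a sequence about body-fixed (rotating) axes by post-multiplication, and checks two properties used throughout the appendices: the columns of a rotation matrix are the rotated unit vectors expressed in the original CS, and the transpose of a rotation matrix is its inverse. It assumes right-handed, active rotations; the helper names (`rot`, `matmul`, `transpose`, `intrinsic`) are hypothetical.

```python
import math


def rot(axis, angle):
    """Elementary rotation matrix (list of rows) about 'x', 'y', or 'z' (angle in rad)."""
    c, s = math.cos(angle), math.sin(angle)
    if axis == "x":
        return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]
    if axis == "y":
        return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]
    if axis == "z":
        return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    raise ValueError(f"unknown axis {axis!r}")


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(R):
    return [list(col) for col in zip(*R)]


def intrinsic(sequence, angles):
    """Rotation about the first axis, then the once-rotated second axis, then
    the twice-rotated third axis, e.g., sequence="zxy" for Z, X', Y''.
    Rotations about moving axes compose by post-multiplication."""
    R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    for axis, angle in zip(sequence, angles):
        R = matmul(R, rot(axis, angle))
    return R


# The first column of rot("z", 90 deg) is the rotated x-axis expressed in the
# original CS, approximately (0, 1, 0).
R = rot("z", math.pi / 2)
x_rotated = [R[i][0] for i in range(3)]
```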

Appendix B. Calibration

Step 2 of the inverse kinematics process (Fig. 3) requires the rotation matrices describing the orientation of each SCS relative to its associated BCS . We provide here the equations necessary to obtain these rotation matrices using two methods: landmark calibration and postural calibration.

B.1 Landmark Calibration

The landmark calibration method requires the positions of the landmarks listed in Table 4, which can be obtained using a stylus (see Sec. 2.3.1). Here, we use the following notation: represents the vector location of the C7 landmark in the frame of Sensor E at the time C7 was located with the stylus; represents the rotation matrix describing the SCS frame E with respect to the universal frame at the time C7 was located with the stylus; and is a vector pointing along the y-axis of BCS A, expressed in SCS E (but unlike , does not generally have unit length). Rotation matrices , , , and can be obtained as follows:

Matrix

Matrix (must be calculated before because requires )

Matrix

(calculated as described in Appendix B.1.1)

Matrix
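For the thorax, the landmark construction can be sketched as below. This is a minimal illustration, assuming the thorax landmarks are IJ, PX, C7, and T8 and that the BCS follows the ISB thorax definition [10]: y from the PX–T8 midpoint to the IJ–C7 midpoint, z normal to the plane through IJ, C7, and the PX–T8 midpoint and pointing right, and x completing the triad and pointing forward. Each stylus-tip position is first expressed in the thorax sensor frame using the sensor pose recorded at the moment that landmark was digitized. The function names are hypothetical, and the code normalizes the y-direction vector, which in general does not have unit length.

```python
import math


def sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def unit(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def to_sensor_frame(p_tip, p_sensor, R_sensor):
    """Stylus-tip position expressed in a sensor frame: R^T (p_tip - p_sensor).

    p_tip and p_sensor are positions in the universal (transmitter) frame, and
    R_sensor is the sensor orientation relative to the universal frame, all
    recorded at the instant the landmark was digitized (hypothetical helper).
    """
    d = sub(p_tip, p_sensor)
    return [sum(R_sensor[k][i] * d[k] for k in range(3)) for i in range(3)]


def thorax_bcs_in_sensor(IJ, PX, C7, T8):
    """Thorax BCS (ISB definition) from landmarks expressed in the thorax sensor
    frame (hypothetical helper). Returns the matrix whose columns are the BCS
    x, y, z axes, i.e., the orientation of the thorax BCS relative to the sensor."""
    mid_up = [(IJ[i] + C7[i]) / 2.0 for i in range(3)]
    mid_low = [(PX[i] + T8[i]) / 2.0 for i in range(3)]
    y = unit(sub(mid_up, mid_low))   # long axis, pointing upward
    z = unit(cross(sub(IJ, C7), y))  # normal to the IJ, C7, PX-T8-midpoint plane; points right
    x = cross(y, z)                  # points forward; unit length because y is perpendicular to z
    return [[x[i], y[i], z[i]] for i in range(3)]
```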

B.1.1 Estimating the Center of Rotation of the Glenohumeral Joint (Adapted From Ref. [21]).

To estimate the location of the GH used in the landmark calibration method, subjects are asked to make a number of shoulder rotations involving flexion–extension, abduction–adduction, and internal–external humeral rotation. Given the position and orientation of the upper arm sensor relative to the transmitter ( and , respectively), and the position and orientation of the scapular sensor relative to the transmitter ( and ), we can express the position and orientation of the scapular sensor relative to the upper arm sensor

The rotation of the scapula relative to the humerus is described by the instantaneous helical axis (IHA), whose direction is given by the angular velocity vector (superscript denotes the transpose). The elements of (expressed in terms of frame ) can be determined from the rotation matrix and its derivative [24]

The position of the IHA at any time is expressed in terms of frame as

The center of rotation over time is the mean “pivot” closest to all IHA. For a set of IHA with positions and angular velocity vectors , the optimal position (in the least-squares sense) of the center of rotation of the glenohumeral joint is

with

where is the 3-by-3 identity matrix and is the unit vector along , i.e., . Because this method is sensitive to low angular velocities, Stokdijk et al. excluded samples with angular velocity below 0.25 rad/s from the calculation [21].
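The estimation procedure above can be sketched in code. The sketch assumes uniformly sampled sensor data and approximates the derivatives with central differences (a numerical choice of ours, not necessarily the one used in Ref. [21]). It recovers the angular velocity from the skew-symmetric matrix dR/dt R^T, locates each IHA by the point s = p + (w x dp/dt)/|w|^2 nearest to the sensor origin, and solves (sum of Q_i) c = sum of Q_i s_i with Q_i = I - n_i n_i^T. All function names are hypothetical; the 0.25 rad/s threshold follows Stokdijk et al. [21].

```python
import math


def transpose(A):
    return [list(r) for r in zip(*A)]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def matvec(A, v):
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def det3(M):
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))


def solve3(A, b):
    """Solve A x = b for a 3x3 system by Cramer's rule."""
    D = det3(A)
    return [det3([[b[i] if j == c else A[i][j] for j in range(3)]
                  for i in range(3)]) / D for c in range(3)]


def estimate_gh_center(samples, dt, min_omega=0.25):
    """Least-squares pivot of the instantaneous helical axes (hypothetical helper).

    samples: list of (p_ua, R_ua, p_sc, R_sc), positions and orientations of the
        upper-arm and scapular sensors relative to the transmitter, every dt s.
    Returns the pivot expressed in the upper-arm sensor frame.
    """
    rel = []  # pose of the scapular sensor relative to the upper-arm sensor
    for p_ua, R_ua, p_sc, R_sc in samples:
        Rt = transpose(R_ua)
        rel.append((matvec(Rt, [p_sc[i] - p_ua[i] for i in range(3)]),
                    matmul(Rt, R_sc)))
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for k in range(1, len(rel) - 1):
        (p0, R0), (p, R), (p1, R1) = rel[k - 1], rel[k], rel[k + 1]
        # Central-difference derivatives of position and rotation matrix.
        pdot = [(p1[i] - p0[i]) / (2 * dt) for i in range(3)]
        Rdot = [[(R1[i][j] - R0[i][j]) / (2 * dt) for j in range(3)] for i in range(3)]
        W = matmul(Rdot, transpose(R))  # approximately the skew matrix of omega
        w = [(W[2][1] - W[1][2]) / 2, (W[0][2] - W[2][0]) / 2, (W[1][0] - W[0][1]) / 2]
        w2 = sum(c * c for c in w)
        if math.sqrt(w2) < min_omega:  # skip slow, noise-sensitive samples
            continue
        wxv = cross(w, pdot)
        s = [p[i] + wxv[i] / w2 for i in range(3)]  # point on the IHA nearest p
        n = [c / math.sqrt(w2) for c in w]          # unit vector along the IHA
        Q = [[(1.0 if i == j else 0.0) - n[i] * n[j] for j in range(3)] for i in range(3)]
        Qs = matvec(Q, s)
        for i in range(3):
            b[i] += Qs[i]
            for j in range(3):
                A[i][j] += Q[i][j]
    return solve3(A, b)
```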

B.2 Postural Calibration

In the postural calibration method, the subject assumes a posture in which his/her BCS frames have a known orientation with respect to the transmitter frame (see Sec. 2.3.2). Since the BCS frames are different for the right and left arms (see Secs. 2.1 and 2.6), we present the process separately for the right and left arms.

B.2.1 Right Arm.

With the right upper limb and transmitter positioned as shown in Fig. 2(b), the rotation matrices describing the orientation of the BCS relative to the universal frame are

where we made explicit that rotation matrices are functions of time , i.e., , with representing the moment of calibration (when landmarks were aligned). From the orientation of the SCS at the moment all landmarks are aligned , , , and , the relationship between the BCS and SCS can be calculated as

The relationship between an SCS and its corresponding BCS was approximated as constant over time, i.e., . For more detail, see the Limitations section of the Discussion.
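A minimal sketch of this calibration step, assuming the orientation of the BCS in the universal frame at the calibration instant t0 is known from the posture. One way to write the relation, consistent with the notation of Appendix A, is R^S_B = (R^U_S(t0))^T R^U_B(t0), where R^S_B describes the BCS relative to its SCS. The function name is hypothetical, and the posture in the test (BCS aligned with the transmitter, sensor yawed 90 deg) is illustrative only.

```python
def mat_t_mul(A, B):
    """A^T B for 3x3 matrices stored as lists of rows."""
    return [[sum(A[k][i] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def postural_calibration(R_U_S0, R_U_B0):
    """Orientation of a BCS relative to its SCS from one calibration instant t0
    (hypothetical helper).

    R_U_S0: sensor orientation relative to the universal frame at t0.
    R_U_B0: BCS orientation relative to the universal frame at t0, known from
        the calibration posture.
    The result is then treated as constant over time.
    """
    return mat_t_mul(R_U_S0, R_U_B0)
```

The same function applies to the left arm (Appendix B.2.2); only the known BCS orientations at the calibration instant change.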

B.2.2 Left Arm.

With the left upper limb and transmitter positioned as shown in Fig. 4(b), the orientations of the BCS frames relative to the transmitter frame are

From the orientation of the SCS at the moment all landmarks are aligned ( , , , and ), the relationship between the BCS and SCS can be calculated with the same equations used for the right upper limb

Appendix C. Inverse Kinematics

As described in Sec. 2.4, the process of calculating joint angles from sensor angles requires four steps (Fig. 3).

C.1 Step 1: Calculating Rotation Matrices From Sensor Angles

Most electromagnetic motion tracking systems provide the orientation of each sensor as a set of Euler angles or as a rotation matrix (between transmitter and sensor). If the output is given as a rotation matrix, step 1 can be skipped, but before moving on to step 2, one should ensure that the rotation matrix describes the SCS relative to the universal frame and not the universal frame relative to the SCS (e.g., instead of ). If the output is given as the rotation of the universal frame relative to the SCS, one can obtain its inverse by simply transposing the matrix (e.g., ).
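Whether a reported matrix describes the sensor relative to the universal frame or the reverse cannot be detected from the matrix alone, since both are valid rotation matrices; that direction has to come from the system documentation. A basic sanity check and the transpose-based inversion can nevertheless be sketched as follows (hypothetical helper names).

```python
def transpose(R):
    return [list(col) for col in zip(*R)]


def is_rotation(R, tol=1e-6):
    """True if R is orthonormal (R R^T = I) with determinant +1."""
    P = [[sum(R[i][k] * R[j][k] for k in range(3)) for j in range(3)] for i in range(3)]
    orthonormal = all(abs(P[i][j] - (1.0 if i == j else 0.0)) < tol
                      for i in range(3) for j in range(3))
    det = (R[0][0] * (R[1][1] * R[2][2] - R[1][2] * R[2][1])
           - R[0][1] * (R[1][0] * R[2][2] - R[1][2] * R[2][0])
           + R[0][2] * (R[1][0] * R[2][1] - R[1][1] * R[2][0]))
    return orthonormal and abs(det - 1.0) < tol


# If the system reports the universal frame relative to the sensor, the
# transpose gives the sensor relative to the universal frame.
R_S_U = [[0.0, 1.0, 0.0], [-1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
R_U_S = transpose(R_S_U)
```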

If the sensor orientation is given in terms of angles (e.g., azimuth, elevation, and roll), the rotation matrices must be calculated before moving on to step 2. Calculating the rotation matrices requires knowledge of the Euler angle axes and sequence used by the system. For example, for trakSTAR, the Euler angles are defined as follows: rotation about by , followed by rotation about by , followed by rotation about by , where , , and are the angles of azimuth (yaw), elevation (pitch), and roll, respectively, and , , are axes of the rotating sensor frame. From this, the rotation matrix can be calculated. For example, can be calculated from the angles associated with sensor E as

where ≡ cos and ≡ sin. The same equations can be used to calculate , , and . Using angles , , and at time , this equation can be used to calculate , , , and . Alternatively, using angles , , and obtained during calibration, this equation can be used to calculate , , , and .
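The same construction can be sketched in code. The sketch assumes the azimuth–elevation–roll convention described above is an intrinsic sequence about z, then y′, then x′′, with angles reported in degrees; the axis labels are an assumption (the common azimuth–elevation–roll convention), so confirm them, and the direction of the reported rotation, against the documentation of the system in use. The function name is hypothetical.

```python
import math


def aer_to_matrix(azimuth, elevation, roll):
    """Rotation matrix (list of rows) of the sensor relative to the transmitter
    for an assumed intrinsic Z-Y'-X'' sequence (angles in deg):
    R = Rz(azimuth) Ry(elevation) Rx(roll), expanded element by element."""
    a, e, r = (math.radians(v) for v in (azimuth, elevation, roll))
    ca, sa = math.cos(a), math.sin(a)
    ce, se = math.cos(e), math.sin(e)
    cr, sr = math.cos(r), math.sin(r)
    return [
        [ca * ce, ca * se * sr - sa * cr, ca * se * cr + sa * sr],
        [sa * ce, sa * se * sr + ca * cr, sa * se * cr - ca * sr],
        [-se, ce * sr, ce * cr],
    ]
```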

C.2 Step 2: Obtaining Body Coordinate System Orientation in Universal Frame

The rotation matrices found in Step 1, which describe the orientations of the SCS relative to , are multiplied with the rotation matrices describing the orientation of each SCS relative to its associated BCS (determined during calibration). The resulting product is the orientation of each BCS relative to
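Step 2 reduces to one matrix product per sample. A minimal sketch, assuming orientations are stored as 3x3 lists of rows and that the calibration result R_S_B (orientation of the BCS relative to the SCS) is held constant over time; the function name is hypothetical.

```python
def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def bcs_orientations(R_U_S_series, R_S_B):
    """Step 2: R^U_B(t) = R^U_S(t) R^S_B for every sample t (hypothetical helper)."""
    return [matmul(R_U_S, R_S_B) for R_U_S in R_U_S_series]
```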

C.3 Step 3: Obtaining Joint Coordinate Systems Rotation Matrices

The rotation matrices describing the orientation of each BCS relative to found in Step 2 are then used to calculate the JCS rotation matrices. More specifically, adjacent BCS are multiplied to obtain the JCS rotation matrices describing the orientation of one BCS relative to its adjacent BCS
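Step 3 can be sketched in the same style. For adjacent segments with proximal BCS P and distal BCS D, the JCS matrix describing D relative to P is (R^U_P)^T R^U_D; the helper name `joint_rotation` is hypothetical.

```python
def mat_t_mul(A, B):
    """A^T B for 3x3 matrices stored as lists of rows."""
    return [[sum(A[k][i] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def joint_rotation(R_U_prox, R_U_dist):
    """Step 3: orientation of the distal BCS relative to the proximal BCS,
    (R^U_prox)^T R^U_dist (hypothetical helper)."""
    return mat_t_mul(R_U_prox, R_U_dist)
```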

C.4 Step 4: Extracting Joint Angles From Rotation Matrices

The final step in the inverse kinematics process is to extract joint angles from the rotation matrix associated with each JCS ( and ). The relationship between the joint angles and rotation matrix associated with a JCS is prescribed by the rotation sequence of that JCS. Consequently, different algorithms must be used for different JCS. The 9DOF case is presented first, with simplifications for the 7DOF case presented afterwards.

C.4.1 Nine-Degrees-of-Freedom Model

Shoulder Y-X′-Y′′.

According to the ISB recommendations [10], the rotation sequence associated with the JCS of the thoracohumeral joint is Y-X′-Y′′, so its rotation matrix is

where ≡ cos and ≡ sin. The elements of must equal the numeric values of the elements of calculated through steps 1–3 of the inverse kinematics process, resulting in 9 equations and 3 unknowns. Unfortunately, these nine equations do not contain enough information to determine a unique solution; there are two sets of joint angles ( and ) that satisfy these nine equations. Which set is correct? They are both correct in the sense that both sets produce the same joint configuration (i.e., the same orientation of the distal limb segment relative to the proximal limb segment); therefore, mathematically it does not matter which set is chosen as long as one consistently chooses the same set to avoid discontinuities in joint angles from one sample to the next. That said, for ease of interpretation it may be useful to choose the set that is within the range of motion of the joint, as follows. The cosine of is given in , and the sine of can be calculated from and as . Therefore, can be computed as

where atan2 is the four-quadrant inverse tangent function. Choosing the negative square root as the first argument of atan2 (as opposed to the positive square root) forces the second angle to lie in the range [−180 deg, 0 deg], which is appropriate for shoulder abduction–adduction (abduction is negative according to the ISB convention). Having chosen this range for the second angle, one can find unique solutions for the other two angles

These equations work well unless the second angle equals 0 or 180 deg, which results in division by zero. In these configurations, the joint is in gimbal lock, and it is not possible to differentiate between the first and third angles because their axes (the y-axes of the thorax and humerus BCS) are parallel (or antiparallel). To clarify, after a rotation about Y by the first angle, then a “rotation” about X′ by 0 (or 180 deg), and finally a rotation about Y′′ by the third angle, it is not possible to determine how much of the total rotation came from the first rotation versus the last rotation. However, it also does not matter, since the final joint orientation will be the same no matter how much of the rotation is assigned to the first versus the third angle. Therefore, one may choose the proportions to assign to each angle. It is common to set one of these two angles to zero, assigning all of the rotation to the other. In this case ( ), the rotation matrix degenerates to

and can be uniquely determined as

Although the calculation of the first and third angles results in division by zero only when the second angle exactly equals 0 or 180 deg, the effects of gimbal lock are felt in the vicinity of these values. More specifically, close to gimbal lock, small changes in limb orientation may cause very large changes in joint angles. While the resulting joint angles may not be easily interpreted, they are nonetheless correct in the sense that they represent the correct joint configuration.
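The extraction above, including the gimbal-lock branch, can be sketched as follows, with a1, a2, a3 denoting the first, second, and third angles. The sketch assumes right-handed active rotations, so that R = Ry(a1) Rx(a2) Ry(a3) with elements obtained by multiplying the elementary matrices. The helper names and the tolerance `eps` are hypothetical, and in gimbal lock the sketch sets the first angle to zero, which is one of the admissible choices discussed above.

```python
import math


def yxy_matrix(a1, a2, a3):
    """R = Ry(a1) Rx(a2) Ry(a3), expanded (angles in rad)."""
    c1, s1 = math.cos(a1), math.sin(a1)
    c2, s2 = math.cos(a2), math.sin(a2)
    c3, s3 = math.cos(a3), math.sin(a3)
    return [
        [c1 * c3 - s1 * c2 * s3, s1 * s2, c1 * s3 + s1 * c2 * c3],
        [s2 * s3, c2, -s2 * c3],
        [-s1 * c3 - c1 * c2 * s3, c1 * s2, -s1 * s3 + c1 * c2 * c3],
    ]


def yxy_angles(R, eps=1e-9):
    """Angles (a1, a2, a3), with a2 in [-pi, 0], from a Y-X'-Y'' rotation matrix."""
    s2 = -math.sqrt(R[0][1] ** 2 + R[2][1] ** 2)  # negative root selects a2 <= 0
    a2 = math.atan2(s2, R[1][1])
    if abs(s2) < eps:
        # Gimbal lock (a2 near 0 or -180 deg): the first and third axes are
        # (anti)parallel. This sketch sets a1 = 0 and assigns the rotation to a3.
        return 0.0, a2, math.atan2(R[0][2], R[0][0])
    a1 = math.atan2(R[0][1] / s2, R[2][1] / s2)
    a3 = math.atan2(R[1][0] / s2, -R[1][2] / s2)
    return a1, a2, a3
```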

Shoulder Z-X′-Y′′.

If one uses the Z-X′-Y′′ sequence to describe the shoulder (see Sec. 2.1), the rotation matrix is

where ≡ cos and ≡ sin. Analogous to the derivation above, can be calculated as

Choosing the positive square root for the second argument of atan2 forces the second angle to lie in the range [−90 deg, 90 deg] and results in a unique set of joint angles

Positive values of the second angle represent adduction beyond neutral position and are generally outside the range of motion of the shoulder. However, the second angle will be positive when the joint is in extreme positions, e.g., when the shoulder is abducted beyond 90 deg. Although it may be difficult to interpret such extreme joint angles as clinical motions, they are nonetheless mathematically correct in the sense that they produce the correct joint configuration.

For this rotation sequence (Z-X′-Y′′), gimbal lock occurs when the second angle equals −90 deg (arm abducted into the horizontal plane) or +90 deg (not physically possible). As for the Y-X′-Y′′ sequence, in gimbal lock the first and third axes are parallel, and it is not possible to determine how much of the rotation should be assigned to the first versus the third axis; it is common to set one of these two angles to zero, assigning all of the rotation to the other. In this case ( and ), the rotation matrix degenerates to

and can be uniquely determined as
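Analogous to the extraction for the ISB shoulder sequence, the Z-X′-Y′′ case can be sketched as follows under the same assumptions (right-handed active rotations, hypothetical helper names, first angle set to zero in gimbal lock). Because the positive root makes cos(a2) nonnegative, dividing both atan2 arguments by cos(a2) would not change the result, so the sketch omits those divisions.

```python
import math


def zxy_matrix(a1, a2, a3):
    """R = Rz(a1) Rx(a2) Ry(a3), expanded (angles in rad)."""
    c1, s1 = math.cos(a1), math.sin(a1)
    c2, s2 = math.cos(a2), math.sin(a2)
    c3, s3 = math.cos(a3), math.sin(a3)
    return [
        [c1 * c3 - s1 * s2 * s3, -s1 * c2, c1 * s3 + s1 * s2 * c3],
        [s1 * c3 + c1 * s2 * s3, c1 * c2, s1 * s3 - c1 * s2 * c3],
        [-c2 * s3, s2, c2 * c3],
    ]


def zxy_angles(R, eps=1e-9):
    """Angles (a1, a2, a3), with a2 in [-pi/2, pi/2], from a Z-X'-Y'' rotation matrix."""
    c2 = math.sqrt(R[0][1] ** 2 + R[1][1] ** 2)  # positive root selects |a2| <= pi/2
    a2 = math.atan2(R[2][1], c2)
    if c2 < eps:
        # Gimbal lock (a2 near +/-90 deg): set a1 = 0 and assign the rotation to a3.
        return 0.0, a2, math.atan2(R[0][2], R[0][0])
    a1 = math.atan2(-R[0][1], R[1][1])
    a3 = math.atan2(-R[2][0], R[2][2])
    return a1, a2, a3
```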

Elbow-forearm.

In accordance with the ISB guidelines, we used the Z-X′-Y′′ rotation sequence for the elbow-forearm joint. The derivation of the rotation matrix is identical to that of the shoulder when using the Z-X′-Y′′ sequence

where ≡ cos and ≡ sin. Extraction of the joint angles is analogous to the shoulder joint; the elements of must equal the numeric values of the elements of calculated through steps 1–3 of the inverse kinematics process, resulting in 9 equations and 3 unknowns, which are satisfied by two sets of joint angles: , and The two angles are

In this case, the physical limitations of the range of motion of the second angle (i.e., carrying angle) make the choice between the two solution sets obvious. The carrying angle will always be well within the range [−90 deg, 90 deg]; therefore, the positive square root will always yield clinically interpretable joint angles. Having selected the second angle, the first and third angles can be found as

For the elbow-forearm joint, gimbal lock occurs when the carrying angle equals 90 or −90 deg. Neither of these orientations is physically possible, so gimbal lock is not a problem for the elbow.
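The two solution sets mentioned above are related by (a1 + 180 deg, 180 deg − a2, a3 + 180 deg), an identity that follows from the expanded Z-X′-Y′′ matrix. The sketch below (hypothetical helper names, illustrative angles) checks numerically that both sets produce the same matrix and shows why the positive root is the natural choice for the elbow: a 10-deg carrying angle appears as 170 deg in the other set.

```python
import math


def rot(axis, angle):
    """Elementary rotation matrix (list of rows) about 'x', 'y', or 'z'."""
    c, s = math.cos(angle), math.sin(angle)
    return {"x": [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]],
            "y": [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]],
            "z": [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]}[axis]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def zxy(a1, a2, a3):
    return matmul(matmul(rot("z", a1), rot("x", a2)), rot("y", a3))


def other_solution(a1, a2, a3):
    """Second set of Z-X'-Y'' angles that yields the same rotation matrix."""
    return a1 + math.pi, math.pi - a2, a3 + math.pi


# Elbow flexion 60 deg, carrying angle 10 deg, pronation 20 deg (illustrative values).
first = tuple(math.radians(v) for v in (60.0, 10.0, 20.0))
second = other_solution(*first)
same = all(abs(p - q) < 1e-12
           for rp, rq in zip(zxy(*first), zxy(*second)) for p, q in zip(rp, rq))
print(same, round(math.degrees(second[1]), 6))  # True 170.0
```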

Wrist.

In accordance with the ISB guidelines, we defined the wrist joint using the Z-X′-Y′′ rotation sequence. The derivation of the rotation matrix is identical to that of the elbow

where ≡ cos and ≡ sin. Extraction of the joint angles is analogous to the elbow-forearm joint. The elements of must equal the numeric values of the elements of calculated through steps 1–3 of the inverse kinematics process, resulting in 9 equations and 3 unknowns. Again, these equations yield two sets of joint angles: , and

Calculating and involves the same equation as the elbow-forearm joint

In this case, the physical limitations of the second angle (i.e., radial/ulnar deviation) also make the choice between the two solution sets obvious. The wrist will never deviate radially or ulnarly beyond the range [−90 deg, 90 deg]; therefore, the positive square root will always yield clinically interpretable joint angle values. Having selected the second angle, the first and third angles can be found as

For the wrist joint, gimbal lock occurs when the second angle equals 90 or −90 deg (i.e., radial or ulnar deviation of 90 deg). Neither of these orientations is physically possible, so gimbal lock is not a problem for the wrist.

C.4.2 Seven-Degrees-of-Freedom Model.

The 7DOF model of the arm is a simplification that assumes the elbow carrying angle and wrist axial rotation to be constant (see Table 2). The wrist experiences only small amounts of axial rotation, so one may wish to approximate wrist axial rotation as zero. The carrying angle has been measured to be of the order of 5–15 deg for men and 10–25 deg for women [17]. Depending on the application, one may wish to approximate the carrying angle as zero or as a constant value in the measured range. Alternatively, the carrying angle could be measured for individual subjects as the angle between the ulna and the extension of the humerus when the arm is in anatomical position (Fig. 1) [17]

where denotes the dot product. Unit vectors and must be expressed in the same frame. Choosing frame B,

where and describes the orientation of C relative to B when the arm is in anatomical position.
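A minimal sketch of the carrying-angle measurement, assuming the two unit vectors above are the long (y) axes of the humerus BCS (B) and forearm BCS (C), and that R_B_C is the orientation of C relative to B recorded in anatomical position. Expressed in frame B, the humerus axis is (0, 1, 0) and the forearm axis is the second column of R_B_C, so the dot product reduces to a single matrix element. Note that acos returns only the magnitude of the angle; the function name is hypothetical.

```python
import math


def carrying_angle_deg(R_B_C):
    """Angle (deg) between the long axes of the humerus (B) and forearm (C)
    (hypothetical helper). In frame B, y_B = (0, 1, 0) and y_C is the second
    column of R_B_C, so y_B . y_C = R_B_C[1][1]."""
    d = max(-1.0, min(1.0, R_B_C[1][1]))  # clamp against round-off before acos
    return math.degrees(math.acos(d))
```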

Having chosen a value for , one can calculate and directly using the equations in Appendix C.4.1

Since and depend on , all three joint angles will differ from those calculated with the 9DOF model. In contrast, choosing a value for does not affect and (see Appendix C.4.1)

Note that the simplicity associated with the 7DOF model comes at a cost: the resulting joint angles no longer satisfy the rotation matrices perfectly.

Footnotes

Commercially available systems include 3D Guidance trakSTAR™ and Aurora® (Northern Digital Inc., Waterloo, ON, Canada) and FASTRAK® and LIBERTY™ (Polhemus, Colchester, VT).

For example, the ISB recommends one definition for the BCS of the radius and ulna for studying the elbow and forearm joints (see Secs. 3.3.3 and 3.3.4 in Ref. [10]) but another definition of the same BCS for studying the wrist joint (Secs. 4.3.1 and 4.3.2).

For example, the guidelines for the shoulder (see Sec. 2.1 in Ref. [10]) differ from those for the wrist and hand (Sec. 4.3).

For example, the landmarks needed to define the recommended BCS for the third metacarpal (needed to define global wrist motion) include the centers of the base and head of the third metacarpal, which cannot be accessed in vivo without time-consuming and expensive imaging.

Technically, joint angles defined for JCS in which the first and third body-fixed axes are the same (X-Y-X, X-Z-X, Y-X-Y, Y-Z-Y, Z-X-Z, or Z-Y-Z) are called proper Euler angles, whereas joint angles defined for JCS in which all three axes are different (X-Y-Z, X-Z-Y, Y-X-Z, Y-Z-X, Z-X-Y, or Z-Y-X) are Cardan angles. A more detailed discussion of Euler angles and rotation sequences can be found in many mechanics texts, including Refs. [11–13].

In this paper, we approximated the relative orientation between BCS and SCS as constant over time, even though in reality, it varies slightly because of movement of soft tissue relative to the underlying skeletal structures (see Limitations section of Discussion for more detail).

For example, in terms of the BCS of the distal segment, the configuration of the shoulder joint is defined by first rotating the humerus about the axis of the BCS of the humerus, then about the axis of the once-rotated BCS of the humerus ( ), and finally about the axis of the twice-rotated BCS of the humerus ( ). The configuration of the shoulder can equivalently be defined in terms of axes embedded in the proximal and distal frame: the first rotation is about the axis of the proximal BCS (thorax, A), the third rotation is about the axis of the distal BCS (humerus, B) in its final orientation, and the second rotation is about an intermediate axis (Int.) that is perpendicular to both the first and third axes ( and ).

Funding Data

NSF (Grant No. 1806056; Funder ID: 10.13039/100000001).

CBET (Funder ID: 10.13039/100000146).

References

- [1]. Yaniv, Z. , Wilson, E. , Lindisch, D. , and Cleary, K. , 2009, “ Electromagnetic Tracking in the Clinical Environment,” Med. Phys., 36(3), pp. 876–892. 10.1118/1.3075829 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2]. Nixon, M. A. , McCallum, B. C. , Fright, W. R. , and Price, N. B. , 1998, “ The Effects of Metals and Interfering Fields on Electromagnetic Trackers,” Presence, 7(2), pp. 204–218. 10.1162/105474698565587 [DOI] [Google Scholar]

- [3]. Franz, A. M. , Seitel, A. , Cheray, D. , and Maier-Hein, L. , 2019, “ Polhemus EM Tracked Micro Sensor for CT-Guided Interventions,” Med. Phys., 46(1), pp. 15–24. 10.1002/mp.13280 [DOI] [PubMed] [Google Scholar]

- [4]. Engels, L. , De Tiege, X. , Op de Beeck, M. , and Warzée, N. , 2010, “ Factors Influencing the Spatial Precision of Electromagnetic Tracking Systems Used for MEG/EEG Source Imaging,” Neurophysiol. Clin./Clin. Neurophysiol., 40(1), pp. 19–25. 10.1016/j.neucli.2010.01.002 [DOI] [PubMed] [Google Scholar]

- [5]. Hagemeister, N. , Parent, G. , Husse, S. , and de Guise, J. A. , 2008, “ A Simple and Rapid Method for Electromagnetic Field Distortion Correction When Using Two Fastrak Sensors for Biomechanical Studies,” J. Biomech., 41(8), pp. 1813–1817. 10.1016/j.jbiomech.2008.02.030 [DOI] [PubMed] [Google Scholar]

- [6]. LaScalza, S. , Arico, J. , and Hughes, R. , 2003, “ Effect of Metal and Sampling Rate on Accuracy of Flock of Birds Electromagnetic Tracking System,” J. Biomech., 36(1), pp. 141–144. 10.1016/S0021-9290(02)00322-6 [DOI] [PubMed] [Google Scholar]

- [7]. Meskers, C. G. M. , Fraterman, H. , van der Helm, F. C. T. , Vermeulen, H. M. , and Rozing, P. M. , 1999, “ Calibration of the “Flock of Birds” Electromagnetic Tracking Device and Its Application in Shoulder Motion Studies,” J. Biomech., 32(6), pp. 629–633. 10.1016/S0021-9290(99)00011-1 [DOI] [PubMed] [Google Scholar]

- [8]. Milne, A. D. , Chess, D. G. , Johnson, J. A. , and King, G. J. W. , 1996, “ Accuracy of an Electromagnetic Tracking Device: A Study of the Optimal Operating Range and Metal Interference,” J. Biomech., 29(6), pp. 791–793. 10.1016/0021-9290(96)83335-5 [DOI] [PubMed] [Google Scholar]

- [9]. van Andel, C. J. , Wolterbeek, N. , Doorenbosch, C. A. M. , Veeger, D. , and Harlaar, J. , 2008, “ Complete 3D Kinematics of Upper Extremity Functional Tasks,” Gait Posture, 27(1), pp. 120–127. 10.1016/j.gaitpost.2007.03.002 [DOI] [PubMed] [Google Scholar]

- [10]. Wu, G. , van der Helm, F. C. T. , (DirkJan) Veeger, H. E. J. , Makhsous, M. , Van Roy, P. , Anglin, C. , Nagels, J. , Karduna, A. R. , McQuade, K. , Wang, X. , Werner, F. W. , and Buchholz, B. , 2005, “ ISB Recommendation on Definitions of Joint Coordinate Systems of Various Joints for the Reporting of Human Joint motion—Part II: Shoulder, Elbow, Wrist and Hand,” J. Biomech., 38(5), pp. 981–992. 10.1016/j.jbiomech.2004.05.042 [DOI] [PubMed] [Google Scholar]

- [11]. Craig, J. J. , 2005, Introduction to Robotics, 3rd ed., Pearson Prentice Hall, Upper Saddle River, NJ. [Google Scholar]

- [12]. Spong, M. W. , Hutchinson, S. , and Vidyasagar, M. , 2006, Robot Modeling and Control, Wiley, Hoboken, NJ. [Google Scholar]

- [13]. Goldstein, H. , Poole, C. , and Safko, J. , 2002, Classical Mechanics, 3rd ed., Addison Wesley, San Francisco, CA. [Google Scholar]

- [14]. Meskers, C. G. M. , Vermeulen, H. M. , de Groot, J. H. , van der Helm, F. C. T. , and Rozing, P. M. , 1998, “ 3D Shoulder Position Measurements Using a Six-Degree-of-Freedom Electromagnetic Tracking Device,” Clin. Biomech., 13(4–5), pp. 280–292. 10.1016/S0268-0033(98)00095-3 [DOI] [PubMed] [Google Scholar]

- [15]. Scibek, J. S. , and Carcia, C. R. , 2013, “ Validation and Repeatability of a Shoulder Biomechanics Data Collection Methodology and Instrumentation,” J. Appl. Biomech., 29(5), pp. 609–615. 10.1123/jab.29.5.609 [DOI] [PubMed] [Google Scholar]

- [16]. Barnett, N. D. , Duncan, R. D. D. , and Johnson, G. R. , 1999, “ The Measurement of Three Dimensional Scapulohumeral Kinematics—A Study of Reliability,” Clin. Biomech., 14(4), pp. 287–290. 10.1016/S0268-0033(98)00106-5 [DOI] [PubMed] [Google Scholar]

- [17]. Anglin, C., and Wyss, U. P., 2000, “Review of Arm Motion Analyses,” Proc. Inst. Mech. Eng., Part H, 214(5), pp. 541–555. 10.1243/0954411001535570

- [18]. Kontaxis, A., Cutti, A. G., Johnson, G. R., and Veeger, H. E. J., 2009, “A Framework for the Definition of Standardized Protocols for Measuring Upper-Extremity Kinematics,” Clin. Biomech., 24(3), pp. 246–253. 10.1016/j.clinbiomech.2008.12.009

- [19]. Aizawa, J., Masuda, T., Koyama, T., Nakamaru, K., Isozaki, K., Okawa, A., and Morita, S., 2010, “Three-Dimensional Motion of the Upper Extremity Joints During Various Activities of Daily Living,” J. Biomech., 43(15), pp. 2915–2922. 10.1016/j.jbiomech.2010.07.006

- [20]. Biryukova, E. V., Roby-Brami, A., Frolov, A. A., and Mokhtari, M., 2000, “Kinematics of Human Arm Reconstructed From Spatial Tracking System Recordings,” J. Biomech., 33(8), pp. 985–995. 10.1016/S0021-9290(00)00040-3

- [21]. Stokdijk, M., Nagels, J., and Rozing, P. M., 2000, “The Glenohumeral Joint Rotation Centre In Vivo,” J. Biomech., 33(12), pp. 1629–1636. 10.1016/S0021-9290(00)00121-4

- [22]. Veeger, H. E. J., Yu, B., and An, K. N., 1997, “Orientation of Axes in the Elbow and Forearm for Biomechanical Modeling,” Conference of the International Shoulder Group, Delft, The Netherlands, Shaker Publishing, Maastricht, The Netherlands, pp. 83–87.

- [23]. Veeger, H. E. J., Yu, B., An, K. N., and Rozendal, R. H., 1997, “Parameters for Modeling the Upper Extremity,” J. Biomech., 30(6), pp. 647–652. 10.1016/S0021-9290(97)00011-0

- [24]. Woltring, H. J., 1990, “Data Processing and Error Analysis,” Biomechanics of Human Movement: Applications in Rehabilitation, Sport and Ergonomics, Cappozzo, A., and Berme, N., eds., Bertec Corporation, Worthington, OH, pp. 203–237.

- [25]. Geiger, D. W., Eggett, D. L., and Charles, S. K., 2018, “A Method for Characterizing Essential Tremor From the Shoulder to the Wrist,” Clin. Biomech., 52, pp. 117–123. 10.1016/j.clinbiomech.2017.12.003

- [26]. Pigg, A., “Distribution of Essential Tremor Among the Degrees of Freedom of the Upper Limb,” Clin. Neurophysiol., under review.

- [27]. Cutti, A. G., Paolini, G., Troncossi, M., Cappello, A., and Davalli, A., 2005, “Soft Tissue Artefact Assessment in Humeral Axial Rotation,” Gait Posture, 21(3), pp. 341–349. 10.1016/j.gaitpost.2004.04.001

- [28]. Cao, L., Masuda, T., and Morita, S., 2007, “Compensation for the Effect of Soft Tissue Artifact on Humeral Axis Rotation Angle,” J. Med. Dent. Sci., 54(1), pp. 1–7.

- [29]. Karduna, A. R., McClure, P. W., Michener, L. A., and Sennett, B., 2001, “Dynamic Measurements of Three-Dimensional Scapular Kinematics: A Validation Study,” ASME J. Biomech. Eng., 123(2), pp. 184–190. 10.1115/1.1351892

- [30]. Cutti, A. G., Cappello, A., and Davalli, A., 2005, “A New Technique for Compensating the Soft Tissue Artefact at the Upper-Arm: In Vitro Validation,” J. Mech. Med. Biol., 5(2), pp. 333–347. 10.1142/S0219519405001485

- [31]. Schmidt, R., Disselhorst-Klug, C., Silny, J., and Rau, G., 1999, “A Marker-Based Measurement Procedure for Unconstrained Wrist and Elbow Motions,” J. Biomech., 32(6), pp. 615–621. 10.1016/S0021-9290(99)00036-6

- [32]. Roux, E., Bouilland, S., Godillon-Maquinghen, A. P., and Bouttens, D., 2002, “Evaluation of the Global Optimisation Method Within the Upper Limb Kinematics Analysis,” J. Biomech., 35(9), pp. 1279–1283. 10.1016/S0021-9290(02)00088-X

- [33]. Zhang, Y., Lloyd, D. G., Campbell, A. C., and Alderson, J. A., 2011, “Can the Effect of Soft Tissue Artifact Be Eliminated in Upper-Arm Internal-External Rotation?,” J. Appl. Biomech., 27(3), pp. 258–265. 10.1123/jab.27.3.258

- [34]. Begon, M., Dal Maso, F., Arndt, A., and Monnet, T., 2015, “Can Optimal Marker Weightings Improve Thoracohumeral Kinematics Accuracy?,” J. Biomech., 48(10), pp. 2019–2025. 10.1016/j.jbiomech.2015.03.023

- [35]. Ribeiro, D. C., Sole, G., Abbott, J. H., and Milosavljevic, S., 2011, “The Reliability and Accuracy of an Electromagnetic Motion Analysis System When Used Conjointly With an Accelerometer,” Ergonomics, 54(7), pp. 672–677. 10.1080/00140139.2011.583363

- [36]. Lugade, V., Chen, T., Erickson, C., Fujimoto, M., Juan, J. G. S., Karduna, A., and Chou, L.-S., 2015, “Comparison of an Electromagnetic and Optical System During Dynamic Motion,” Biomed. Eng., 27(5), p. 1550041. 10.4015/S1016237215500416

- [37]. Hassan, E. A., Jenkyn, T. R., and Dunning, C. E., 2007, “Direct Comparison of Kinematic Data Collected Using an Electromagnetic Tracking System Versus a Digital Optical System,” J. Biomech., 40(4), pp. 930–935. 10.1016/j.jbiomech.2006.03.019

- [38]. McQuade, K. J., Finley, M. A., Harris-Love, M., and McCombe-Waller, S., 2002, “Dynamic Error Analysis of Ascension's Flock of Birds (TM) Electromagnetic Tracking Device Using a Pendulum Model,” J. Appl. Biomech., 18(2), pp. 171–179. 10.1123/jab.18.2.171

- [39]. Kindratenko, V., 2001, “A Comparison of the Accuracy of an Electromagnetic and a Hybrid Ultrasound-Inertia Position Tracking System,” Presence, 10(6), pp. 657–663. 10.1162/105474601753272899

- [40]. Bottlang, M., Marsh, J. L., and Brown, T. D., 1998, “Factors Influencing Accuracy of Screw Displacement Axis Detection With a DC-Based Electromagnetic Tracking System,” ASME J. Biomech. Eng., 120(3), pp. 431–435. 10.1115/1.2798011

- [41]. Murphy, A. J., Bull, A. M. J., and McGregor, A. H., 2011, “Optimizing and Validating an Electromagnetic Tracker in a Human Performance Laboratory,” Proc. Inst. Mech. Eng., Part H, 225(4), pp. 343–351. 10.1177/2041303310393231

- [42]. Adelstein, B. D., Johnston, E. R., and Ellis, S. R., 1996, “Dynamic Response of Electromagnetic Spatial Displacement Trackers,” Presence, 5(3), pp. 302–318. 10.1162/pres.1996.5.3.302