Abstract

Ovarian cancer, a potentially life-threatening disease, is often difficult to treat. There is a critical need for innovations that can improve therapy selection. Although deep learning models are showing promising results, they are employed as “black boxes” and require enormous amounts of data. Therefore, we explore the transferable and interpretable prediction of treatment effectiveness for ovarian cancer patients. Unlike existing works that focus on histopathology images alone, we propose a multimodal deep learning framework that takes into account not only large histopathology images but also clinical variables, broadening the information available to the model. The results demonstrate that the proposed models achieve high prediction accuracy and interpretability, and can also be transferred to other cancer datasets without significant loss of performance.

1 Introduction

In the United States, approximately 22,000 people are diagnosed with ovarian cancer each year, and approximately 14,000 die of the disease. There is a critical need for innovations that can assist in the selection of chemotherapy for these patients. For example, current clinical practice follows a series of prescribed chemotherapeutic regimens, even though a significant number of patients will not respond to these standard drugs. If resistance could be identified prior to treatment, patients could be offered alternative, potentially more appropriate, drugs. Deep learning techniques have shown promise in addressing this need by harnessing large amounts of available data to discover individualized disease factors and improve treatment decisions. In the context of ovarian cancer, deep learning models have been applied to tasks such as tumor segmentation and classification1,2, survival prediction3,4, and treatment effectiveness prediction5,6.

Despite promising results, several challenges remain in applying deep learning models to ovarian cancer research. One major challenge is the need for large and diverse datasets to train these models. The digitization of patient tissue samples enables global distribution of the data and high-throughput screening of patients in diagnostic and research settings7. Specifically, digital whole slide images (WSIs) are increasingly used in both ovarian cancer research and routine diagnostics. While WSIs have the potential to further improve pathologists’ workflows, collecting WSIs requires significant technical expertise, resources, and infrastructure, and may not be feasible in all settings8. Furthermore, a lack of model interpretability can hinder understanding of the underlying biological mechanisms and make ethical considerations difficult to address.

In this work, we explore the interpretable and transferable prediction of treatment effectiveness for ovarian cancer patients. Unlike existing works that focus solely on histopathology images, we propose a multimodal deep learning framework that takes into account both large histopathology images and clinical variables. In addition to predicting treatment effectiveness, our approach improves interpretability by providing insights into the factors that influence treatment effectiveness in ovarian cancer patients. We further apply our model to kidney cancer, showing that it is transferable across cancers at the feature level when WSIs and clinical variables are available. Our interpretable and transferable approach has the potential to assist the selection of chemotherapy in a trustworthy and data-efficient fashion. The specific contributions of our work are as follows.

Multimodal deep learning framework. We propose a multimodal deep learning framework that utilizes not only large histopathology images but also clinical variables. In particular, we predict treatment effectiveness from histopathology images and clinical variables, without requiring any pathologist-provided locally annotated regions.

Interpretable prediction. Beyond predicting treatment effectiveness, our approach interprets its predictions via state-of-the-art interpretability methods that visualize feature interactions and patch attention scores. These visualizations provide insights into the classifier’s predictions, and thus into the factors that influence treatment effectiveness in ovarian cancer patients, helping to inform personalized treatment decisions.

Feature-level transferring to kidney cancer. We further explore deep learning (DL) prediction of cancer recurrence on a kidney cancer dataset, leveraging what is learned from ovarian cancer. Through the proposed feature-level transferring, we are able to perform prediction on kidney cancer in a data-efficient manner.

1.1 Related Works

Treatment effectiveness prediction is an important research area that can help clinicians identify the most appropriate treatment options for patients. Deep learning has shown promising results in predicting treatment effectiveness for cancer patients from various data sources, including gene expression profiles9, radiomic features10, and clinical information11. Whole slide images (WSIs)12 are another data source that has been widely explored in deep learning approaches to treatment effectiveness prediction.

A key challenge in applying digital WSIs to predict post-treatment response is handling their huge size and complex content. Without requiring access to any pathologist-provided locally annotated regions, Wang et al.5 proposed a weakly supervised learning approach for treatment effectiveness prediction in ovarian cancer. Multiple instance learning (MIL) has been shown to be effective for WSI analysis. Li et al.13 proposed a dual-stream MIL (DSMIL) network trained with self-supervised contrastive learning, in which instance-level and bag-level embeddings are learned simultaneously. Along this line, Qu et al.14 proposed a distribution-guided MIL framework to further improve WSI classification performance. Cascade learning has also been explored for WSI classification problems15. Although these deep learning methods improve WSI classification, they do not address the interpretability of their predictions and usually assume that sufficient training data are available.

2 Methodology

We describe the datasets considered in this work, and then present the proposed approach.

2.1 Dataset

Ovarian Bevacizumab Dataset12. This dataset consists of hematoxylin and eosin (H&E) stained WSIs. In total, there are 288 de-identified H&E-stained WSIs, of which 162 are labeled effective and 126 are labeled invalid (i.e., the treatment was ineffective). The WSIs were acquired with a Leica AT2 digital scanner using a 20x objective lens. The average WSI size is 54,342 × 41,048 pixels. Clinical information for epithelial ovarian cancer (EOC) and peritoneal serous papillary carcinoma (PSPC) patients is also provided; these clinical variables were collected from 78 patients at the Tri-Service General Hospital and the National Defense Medical Center, Taipei, Taiwan. They comprise age, diagnosis, FIGO stage, operation type, method of Avastin use, days from the operation date to the start of Avastin use, and BMI.

Kidney Dataset16. This dataset consists of 10 WSIs, one from each of ten patients with clear cell renal cell carcinoma (ccRCC), a subtype of kidney cancer. The stained slides were scanned at 20x objective magnification on a Zeiss Axioscan digital scanner. The WSIs are around 100,000 × 100,000 pixels in size. Clinical variables are also provided and include gender, age at surgery, disease-free months, Fuhrman nuclear grade, ISUP nuclear grade, tumour stage, tumour size, node status, necrosis, Leibovich score (Fuhrman), and Leibovich score (ISUP). The dataset contains equal numbers of patients with and without cancer recurrence within the next five years.

2.2 Preprocessing

Image Preprocessing. WSIs were processed using the OpenSlide library, a software tool for reading WSIs. Each WSI was downsampled three times and then converted to grayscale. An edge detection algorithm was applied to the grayscale WSI to extract edges. These steps reduce the computational resources required for subsequent model training. The WSI was then tiled into 64 × 64-pixel tiles, since WSIs are too large to be input directly into a deep learning model. Following DSMIL13, we kept only tiles with image entropy greater than five, which removes tiles that contain no tissue. This process was applied without any pathologist-provided locally annotated regions.
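To make the tile-filtering step concrete, the following is a minimal Python/NumPy sketch, not the authors’ code. It assumes that “image entropy” means the Shannon entropy (in bits) of a tile’s 8-bit grayscale intensity histogram, so values range from 0 for a uniform tile to 8; DSMIL’s own tiling code may define or compute entropy differently. The function names `shannon_entropy` and `tile_and_filter` are hypothetical, and the OpenSlide reading and edge-detection steps are omitted.

```python
import numpy as np

TILE_SIZE = 64           # tile side length in pixels (from the text)
ENTROPY_THRESHOLD = 5.0  # keep tiles whose entropy exceeds this (from the text)


def shannon_entropy(tile: np.ndarray) -> float:
    """Shannon entropy (in bits) of an 8-bit grayscale tile's intensity histogram."""
    counts = np.bincount(tile.ravel(), minlength=256)
    probs = counts[counts > 0] / tile.size
    return float(-(probs * np.log2(probs)).sum())


def tile_and_filter(gray: np.ndarray, tile_size: int = TILE_SIZE,
                    threshold: float = ENTROPY_THRESHOLD):
    """Split a 2-D uint8 image into non-overlapping tiles and keep high-entropy ones.

    Returns a list of (row, col, tile) for tiles whose entropy exceeds `threshold`.
    Partial tiles at the right and bottom edges are discarded.
    """
    kept = []
    rows, cols = gray.shape[0] // tile_size, gray.shape[1] // tile_size
    for r in range(rows):
        for c in range(cols):
            tile = gray[r * tile_size:(r + 1) * tile_size,
                        c * tile_size:(c + 1) * tile_size]
            if shannon_entropy(tile) > threshold:
                kept.append((r, c, tile))
    return kept
```

Under this definition a blank background region has entropy 0 and is discarded, while textured tissue typically exceeds the threshold of five bits.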

Tabular Data Preprocessing. For the clinical variables, experts selected features based on their expected predictive importance. Categorical features were one-hot encoded, which converts them to numbers without implying that higher values are more important. Ordinal features were label encoded, preserving their natural order. All features were then normalized using z-scores.
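A minimal NumPy sketch of the tabular encoding described above is shown below. The column values (a categorical diagnosis, an ordinal FIGO stage, and a numeric age) are hypothetical examples, and the helper names are illustrative only; in practice a library such as scikit-learn would typically be used.

```python
import numpy as np


def one_hot(values, categories):
    """One-hot encode categorical values against a fixed category list."""
    index = {cat: i for i, cat in enumerate(categories)}
    out = np.zeros((len(values), len(categories)))
    for row, v in enumerate(values):
        out[row, index[v]] = 1.0
    return out


def label_encode(values, ordered_levels):
    """Map ordinal values to integers that preserve their order (0, 1, 2, ...)."""
    rank = {level: i for i, level in enumerate(ordered_levels)}
    return np.array([[rank[v]] for v in values], dtype=float)


def zscore(x):
    """Column-wise z-score normalization; constant columns are left centered at 0."""
    mean = x.mean(axis=0)
    std = x.std(axis=0)
    std[std == 0] = 1.0
    return (x - mean) / std


# Hypothetical records for three patients (not taken from the dataset).
diagnosis = ["EOC", "PSPC", "EOC"]        # categorical -> one-hot
figo = ["II", "IV", "III"]                # ordinal -> label encoded
age = np.array([[55.0], [63.0], [48.0]])  # numeric -> used as-is

features = np.hstack([
    one_hot(diagnosis, ["EOC", "PSPC"]),
    label_encode(figo, ["I", "II", "III", "IV"]),
    age,
])
X = zscore(features)  # every column now has mean 0 and unit variance
```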

2.3 Multimodal Deep Learning for Treatment Effectiveness Prediction

Multimodal deep learning makes use of information from different sources (i.e., multiple modalities) to improve model performance17. Intuitively, it mimics real-world diagnostic procedures, in which clinicians combine complementary observations gathered during medical screening and diagnosis. Multimodal deep learning approaches have been shown to improve prediction accuracy in medical research18–22. To leverage the available multimodal features, we propose a multimodal deep learning framework to predict treatment effectiveness, and we analyze its predictions using state-of-the-art interpretability techniques.

The proposed multimodal deep learning framework for treatment effectiveness prediction is shown in Fig. 1. For the imaging data, we adopt the backbone proposed in DSMIL13, but unlike DSMIL, we feed both the image embeddings and additional clinical variables into the MIL classifier. We combine the two data sources by concatenating the image embeddings with the tabular clinical variables, and then input the combined features into the MIL classifier for prediction.
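The MIL classifier that receives the fused features is taken from DSMIL13. As a rough illustration only, the sketch below implements a simplified single-class forward pass in the spirit of DSMIL’s dual-stream aggregator: one stream scores each instance and picks the highest-scoring (critical) instance, and the other pools all instances with attention weights derived from their similarity to the critical instance; the two scores are then averaged. The weights are random placeholders rather than trained parameters, the scaling by the square root of the query dimension is an assumption, and `dual_stream_forward` is a hypothetical name; the DSMIL paper and code remain the reference for the actual architecture.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()


def dual_stream_forward(h, w_inst, W_q, W_v, w_bag):
    """Simplified single-class dual-stream MIL forward pass for one bag.

    h      : (n, d) instance features (e.g. fused image + clinical features)
    w_inst : (d,)   instance classifier weights
    W_q    : (d, k) query projection
    W_v    : (d, d) value projection
    w_bag  : (d,)   bag classifier weights
    Returns (bag_score, attention), with attention of shape (n,).
    """
    inst_scores = h @ w_inst                 # stream 1: one score per instance
    m = int(np.argmax(inst_scores))          # index of the critical instance
    q = h @ W_q                              # queries for all instances
    v = h @ W_v                              # values for all instances
    # attention: similarity of every instance's query to the critical query
    attn = softmax(q @ q[m] / np.sqrt(q.shape[1]))
    bag_embedding = attn @ v                 # (d,) attention-weighted sum
    bag_score = bag_embedding @ w_bag        # stream 2: bag-level score
    return 0.5 * (inst_scores[m] + bag_score), attn
```

The returned attention vector is also what an attention-based heatmap would visualize, which is one reason attention pooling pairs naturally with interpretability.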

Figure 1:

Overview of the proposed interpretable and transferable multimodal deep learning framework.

2.4 Model Specifics and Training

For the feature embedder, we use a ResNet18 model trained from scratch on all WSIs. The learned bag embedding has shape (number of instances in the bag, 512). We concatenate these bag embeddings with 22 tabular clinical variables and linearly reduce the result to 20 components using PCA. The resulting vectors are input into the MIL classifier, which is trained with the Adam optimizer (learning rate 1e-5, weight decay 1e-5) for 100 epochs with a batch size of 128 using the cross-entropy loss. The ovarian Bevacizumab dataset was split into 80% training and 20% test sets, and the kidney dataset into 50% training and 50% test sets.
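The text does not state exactly how a bag embedding of shape (n, 512) is concatenated with a single 22-dimensional clinical vector. The sketch below assumes the clinical vector is repeated for every instance, giving rows of length 534, and that PCA is fit on instance rows pooled across training bags. The `fuse` helper and the SVD-based `PCA` class are hypothetical stand-ins; `sklearn.decomposition.PCA(n_components=20)` would be the usual library choice.

```python
import numpy as np


def fuse(bag_embedding, clinical):
    """Append the same clinical vector to every instance row of a bag.

    bag_embedding : (n, 512) tile embeddings for one slide
    clinical      : (22,)    encoded clinical variables for the patient
    returns       : (n, 534)
    """
    n = bag_embedding.shape[0]
    return np.hstack([bag_embedding, np.tile(clinical, (n, 1))])


class PCA:
    """Minimal PCA via SVD: fit on training rows, then project any rows."""

    def __init__(self, n_components=20):
        self.n_components = n_components

    def fit(self, x):
        self.mean_ = x.mean(axis=0)
        # rows of vt are principal directions, sorted by decreasing singular value
        _, _, vt = np.linalg.svd(x - self.mean_, full_matrices=False)
        self.components_ = vt[: self.n_components]
        return self

    def transform(self, x):
        return (x - self.mean_) @ self.components_.T


# Random placeholder bags of different sizes (not real data).
rng = np.random.default_rng(0)
bags = [fuse(rng.normal(size=(n, 512)), rng.normal(size=22)) for n in (30, 45, 25)]
pca = PCA(20).fit(np.vstack(bags))
reduced = [pca.transform(b) for b in bags]  # each bag becomes (n, 20)
```

Fitting the projection on training rows only, and reusing it for test bags, avoids leaking test information into the reduced features.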

2.5 Interpretability

Deep neural networks trained as black-box predictors do not give insight into the reasoning behind their recommendations. In this work, we leverage feature interaction detection and additive modeling to provide interpretable insights from our machine learning models23,24. Specifically, we adapt Sparse Interaction Additive Networks (SIAN), which use feature interaction detection to select the essential feature combinations in large-scale tabular datasets. SIAN advances the fusion of interpretable models and neural networks through an efficient training implementation that accommodates higher-order interactions. We use SIAN’s interaction detection procedure to select relevant features and feature interactions from the tabular medical records, and then build a simple, interpretable model on the selected interactions.
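SIAN’s training and interaction-selection procedures are beyond a short example, but the structure of the resulting model can be sketched. Assuming the selected model is a sparse additive model, its logit is a bias plus one shape function per selected feature plus one function per selected feature pair, so each term’s contribution can be read off directly; this is what shape-function plots visualize. The shape functions below are hand-written toy placeholders, not learned ones, and `additive_logit` is a hypothetical name rather than part of the SIAN code.

```python
import math


def additive_logit(x, bias, main_effects, interactions):
    """Evaluate bias + sum_i f_i(x_i) + sum_(i,j) f_ij(x_i, x_j).

    x            : dict mapping feature name -> value
    main_effects : dict mapping feature name -> 1-D shape function
    interactions : dict mapping (name_a, name_b) -> 2-D shape function
    Returns the logit and the per-term contributions used to explain it.
    """
    contributions = {name: f(x[name]) for name, f in main_effects.items()}
    for (a, b), f in interactions.items():
        contributions[f"{a} x {b}"] = f(x[a], x[b])
    logit = bias + sum(contributions.values())
    return logit, contributions


# Toy shape functions (hand-written placeholders, NOT learned from data).
toy_main = {
    "figo_stage": lambda stage: 0.4 * stage,
    "bmi": lambda bmi: 0.05 * (bmi - 25.0),
}
toy_pairs = {
    ("age", "bmi"): lambda age, bmi: -0.01 * (age - 60.0) if bmi > 30.0 else 0.0,
}

patient = {"figo_stage": 3, "bmi": 27.0, "age": 70.0}  # hypothetical patient
logit, parts = additive_logit(patient, -1.0, toy_main, toy_pairs)
risk = 1.0 / (1.0 + math.exp(-logit))  # toy probability of ineffective treatment
```

Because the prediction is a plain sum, plotting each `f_i` over its feature range (or each `f_ij` over a grid) fully describes how the model uses that feature or pair.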

In addition, we use the CLAM25 attention-based learning framework to generate attention heatmaps of our WSIs. CLAM first extracts patches from each WSI and encodes them with a pretrained CNN; an attention network then assigns each patch a score reflecting its relative importance to the slide-level prediction. To create an attention heatmap, the attention scores are converted to percentiles and mapped spatially onto the original slide. Visualizing attention scores as a heatmap shows the relative contribution of each patch to the model’s prediction and can help identify key diagnostic features.
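The percentile-and-map step can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not CLAM’s implementation: percentiles are computed from ranks (ties broken by input order), each patch is assumed to occupy one cell of a regular patch grid given by hypothetical `(row, col)` coordinates, and cells without a patch are left as NaN. CLAM’s own heatmap utilities handle coordinates, overlap, and rendering in their own way.

```python
import numpy as np


def attention_heatmap(scores, coords, grid_shape):
    """Convert raw patch attention scores to percentiles and place them on a grid.

    scores     : (n,) raw attention score per patch
    coords     : (n, 2) integer (row, col) grid position of each patch
    grid_shape : (rows, cols) of the patch grid covering the slide
    Returns a float array in which each patch cell holds its percentile in
    [0, 100]; cells with no patch (e.g. background) are NaN.
    """
    scores = np.asarray(scores, dtype=float)
    # rank patches from lowest (0) to highest (n - 1); ties keep input order
    order = np.argsort(scores, kind="stable")
    ranks = np.empty(len(scores))
    ranks[order] = np.arange(len(scores))
    percentiles = 100.0 * ranks / max(len(scores) - 1, 1)
    heatmap = np.full(grid_shape, np.nan)
    rows, cols = np.asarray(coords).T
    heatmap[rows, cols] = percentiles
    return heatmap
```

Converting to percentiles makes heatmaps comparable across slides, because raw attention values depend on how many patches a slide has.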

2.6 Metrics

We evaluate performance using the area under the Receiver Operating Characteristic (ROC) curve (AUC), where the ROC curve plots the True Positive Rate (TPR) against the False Positive Rate (FPR). We also report accuracy, computed as the number of correctly predicted WSIs divided by the total number of evaluated WSIs. On the kidney dataset, we perform 3-fold cross-validation and report the average scores across folds.
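For a dataset as small as the kidney set, it can help to see exactly how the metrics are computed. The NumPy sketch below computes AUC through its equivalent rank interpretation (the probability that a randomly chosen positive is scored higher than a randomly chosen negative, with ties counted as one half), accuracy as the fraction of correctly classified WSIs, and a shuffled 3-fold split whose per-fold scores would then be averaged. The helper names are hypothetical; `sklearn.metrics.roc_auc_score` and `sklearn.model_selection.KFold` are the usual library equivalents.

```python
import numpy as np


def roc_auc(y_true, y_score):
    """AUC as P(score of random positive > score of random negative), ties = 1/2.

    Equals the area under the ROC curve (TPR vs FPR). Requires at least one
    positive and one negative example.
    """
    y_true = np.asarray(y_true, dtype=bool)
    y_score = np.asarray(y_score, dtype=float)
    pos, neg = y_score[y_true], y_score[~y_true]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))


def accuracy(y_true, y_pred):
    """Fraction of WSIs whose predicted label matches the true label."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))


def k_fold_indices(n, k=3, seed=0):
    """Shuffle 0..n-1 and split into k nearly equal folds of test indices."""
    idx = np.random.default_rng(seed).permutation(n)
    return np.array_split(idx, k)
```

With only a handful of test slides per fold, a single misranked pair moves the AUC substantially, which is why averaging across folds is reported.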

3 Results

Comparison to baselines. To show the effectiveness of the proposed multimodal deep learning framework, we compare our model with two baselines: 1) an image-only baseline (DSMIL) that predicts treatment effectiveness from WSIs only; and 2) a clinic-only baseline that predicts treatment effectiveness from tabular clinical variables only. The AUC and accuracy results for the proposed and baseline methods are shown in Table 1.

Table 1:

Comparison to baseline models

| Methods | AUC | Accuracy |
|---|---|---|
| DSMIL | 0.780 | 0.780 |
| Clinic-only | 0.767 | 0.730 |
| Multimodal (ours) | 0.796 | 0.783 |

Deciding whether to administer a therapy is a complex problem that does not rely on WSIs or clinical variables alone; clinicians must also consider patient-specific factors and treatment risks. Our multimodal framework reflects this by combining both data sources. As shown in Table 1, it achieves a higher AUC (0.796) and accuracy (0.783) than either the image-only or the clinic-only baseline, demonstrating that the proposed approach can effectively combine WSIs and clinical variables.

Interpretability. The resulting model can be visualized through the shape functions generated by SIAN, shown in Figure 2.

Figure 2:

Shape functions for four selected clinical variable features.

In Figure 2a, we expect suboptimal debulking and no debulking to correspond to a high risk of ineffective treatment, and optimal debulking to a low risk, because more residual tumor remains after suboptimal or no debulking. In Figure 2b, we observe that the risk of ineffective treatment increases with FIGO stage. This observation aligns with clinical expertise, since earlier-stage cancers are more likely to be treated effectively than later-stage cancers.

We observe the feature interaction between patient BMI and the risk of Avastin ineffectiveness in Figure 2c. The plot suggests that a lower BMI corresponds to a lower risk of ineffective treatment and that the risk increases with BMI. In Figure 2d, the feature interaction suggests that older patients may have a moderately lower risk of ineffective treatment than younger patients.

In clinical settings, it is important to be able to communicate high-stakes decisions not only to the doctor but also to the patient. When algorithms cannot explain their decisions, medical professionals often choose to disregard black-box outputs rather than compromise their patients’ ability to fully understand the risks and benefits of a procedure. For this reason, we present visualizations of feature interactions and show that these interactions align with clinical expertise, which supports the reliability of the predictions.

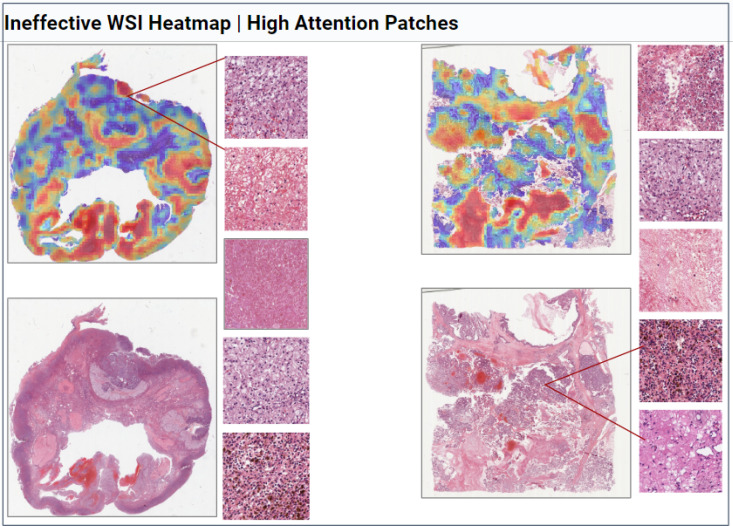

WSI Heatmaps. Figure 3 and Figure 4 display the output of the adapted CLAM framework. We observe that high-attention patches (red) often occur in tumor regions of the WSI, while low-attention patches (blue) often occur in benign fibrous tissue and other non-tumor regions. These findings are consistent with previous literature25 and with clinical expertise. The high-attention patches provide additional interpretability, allowing clinicians to inspect finer details within the WSI tissue, such as necrosis, growth patterns, and cell nuclei.

Figure 3:

Selected WSIs, heatmaps, and their high attention patches (red) from Bevacizumab effective patients.

Figure 4:

Selected WSIs, heatmaps, and their high attention patches (red) from Bevacizumab effective patients.

Feature-level Transferring to Kidney Cancer. We perform feature-level transferring to the kidney cancer dataset to predict cancer recurrence within the next five years. In particular, we use the transferable feature embedder trained on the ovarian Bevacizumab dataset to extract embeddings for the WSIs in the kidney dataset. To demonstrate the effectiveness of feature-level transferring, we compare against a baseline whose feature embedder is not trained on the Bevacizumab dataset. The AUC and accuracy results for the proposed and baseline methods are shown in Table 2.

Table 2:

Feature-level transferring to kidney dataset.

| Methods | AUC | Accuracy |
|---|---|---|
| w/o Bevacizumab | 0.400 | 0.640 |
| w/ Bevacizumab (ours) | 0.582 | 0.720 |

As shown in Table 2, feature-level transferring improves performance on the kidney cancer dataset: training the feature embedder on the ovarian Bevacizumab dataset yields an AUC of 0.582 and an accuracy of 0.720, compared with 0.400 and 0.640 for the baseline. These results suggest potential for transfer learning between different cancer datasets.

4 Discussion

Conclusions. In this work, we proposed a transferable and interpretable approach to treatment effectiveness prediction for ovarian cancer. In particular, we proposed a multimodal deep learning framework that predicts treatment effectiveness from both large histopathology images and tabular clinical information. In addition to predicting treatment effectiveness, we interpreted the predictions using state-of-the-art interpretability methods. To improve data efficiency, we explored feature-level transferring from ovarian cancer to kidney cancer. Our results show that our approach achieves competitive prediction accuracy while being transferable and interpretable.

Limitations and Future Work. One limitation of our work lies in the feature-level transferring to the kidney dataset: because the kidney dataset and the Bevacizumab dataset have different prediction tasks, we only consider transfer between them at the feature level. To study decision-level transferring in ovarian cancer research, we plan to collect a private dataset with settings similar to those of the Bevacizumab dataset. In particular, we are currently collecting a Platinum Resistant and Platinum Sensitive Dataset. It will describe people with ovarian cancer, who typically present at an advanced stage and for whom the standard of care is extensive surgery followed by platinum-based chemotherapy. Most patients are then cancer-free until they relapse. Relapse usually occurs after approximately 12–18 months, and these patients are described as “platinum sensitive”. However, approximately 15% of patients relapse within 6 months of their chemotherapy; they are deemed “platinum resistant”. The Platinum Resistant Dataset could enable the automatic identification of patients with platinum resistance. Clinically, this would allow oncologists to identify different patient groups and offer them individualised chemotherapy rather than the standard regimen.

5 Acknowledgments

This project was funded by the Ming Hsieh Institute (MHI) for Research on Engineering Medicine for Cancer.

References

- [1]. Srilatha K, Jayasudha FV, Sumathi M, Chitra P. Advances in Electrical and Computer Technologies: Select Proceedings of ICAECT 2021. Springer; 2022. Automated ultrasound ovarian tumour segmentation and classification based on deep learning techniques; pp. 59–70.

- [2]. Zhang Zheng, Han Yibo. Detection of ovarian tumors in obstetric ultrasound imaging using logistic regression classifier with an advanced machine learning approach. IEEE Access. 2020;8:44999–45008.

- [3]. Avesani Giacomo, Tran Huong Elena, Cammarata Giulio, Botta Francesca, Raimondi Sara, Russo Luca, Persiani Salvatore, Bonatti Matteo, Tagliaferri Tiziana, Dolciami Miriam, et al. CT-based radiomics and deep learning for BRCA mutation and progression-free survival prediction in ovarian cancer using a multicentric dataset. Cancers. 2022;14(11):2739. doi: 10.3390/cancers14112739.

- [4]. Kaur Ishleen, Doja MN, Ahmad Tanvir, Ahmad Musheer, Hussain Amir, Nadeem Ahmed, El-Latif Abd, Ahmed A, et al. An integrated approach for cancer survival prediction using data mining techniques. Computational Intelligence and Neuroscience. 2022;2021. doi: 10.1155/2021/6342226.

- [5]. Wang Ching-Wei, Chang Cheng-Chang, Lee Yu-Ching, Lin Yi-Jia, Lo Shih-Chang, Hsu Po-Chao, Liou Yi-An, Wang Chih-Hung, Chao Tai-Kuang. Weakly supervised deep learning for prediction of treatment effectiveness on ovarian cancer from histopathology images. Computerized Medical Imaging and Graphics. 2022;99:102093. doi: 10.1016/j.compmedimag.2022.102093.

- [6]. Wang Ching-Wei, Lee Yu-Ching, Chang Cheng-Chang, Lin Yi-Jia, Liou Yi-An, Hsu Po-Chao, Chang Chun-Chieh, Sai Aung-Kyaw-Oo, Wang Chih-Hung, Chao Tai-Kuang. A weakly supervised deep learning method for guiding ovarian cancer treatment and identifying an effective biomarker. Cancers. 2022;14(7):1651. doi: 10.3390/cancers14071651.

- [7]. Dimitriou Neofytos, Arandjelović Ognjen, Caie Peter D. Deep learning for whole slide image analysis: An overview. Frontiers in Medicine. 2019;6. doi: 10.3389/fmed.2019.00264.

- [8]. Kumar Neeta, Gupta Ruchika, Gupta Sanjay. Whole slide imaging (WSI) in pathology: current perspectives and future directions. Journal of Digital Imaging. 2020;33(4):1034–1040. doi: 10.1007/s10278-020-00351-z.

- [9]. Gunther Erik C, Stone David J, Gerwien Robert W, Bento Patricia, Heyes Melvyn P. Prediction of clinical drug efficacy by classification of drug-induced genomic expression profiles in vitro. Proceedings of the National Academy of Sciences. 2003;100(16):9608–9613. doi: 10.1073/pnas.1632587100.

- [10]. Lian Chunfeng, Ruan Su, Denœux Thierry, Jardin Fabrice, Vera Pierre. Selecting radiomic features from FDG-PET images for cancer treatment outcome prediction. Medical Image Analysis. 2016;32:257–268. doi: 10.1016/j.media.2016.05.007.

- [11]. Yang Jialiang, Ju Jie, Guo Lei, Ji Binbin, Shi Shufang, Yang Zixuan, Gao Songlin, Yuan Xu, Tian Geng, Liang Yuebin, et al. Prediction of HER2-positive breast cancer recurrence and metastasis risk from histopathological images and clinical information via multimodal deep learning. Computational and Structural Biotechnology Journal. 2022;20:333–342. doi: 10.1016/j.csbj.2021.12.028.

- [12]. Wang Ching-Wei, Chang Cheng-Chang, Khalil Muhammad Adil, Lin Yi-Jia, Liou Yi-An, Hsu Po-Chao, Lee Yu-Ching, Wang Chih-Hung, Chao Tai-Kuang. Histopathological whole slide image dataset for classification of treatment effectiveness to ovarian cancer. Scientific Data. 2022;9(1):25. doi: 10.1038/s41597-022-01127-6.

- [13]. Li Bin, Li Yin, Eliceiri Kevin W. Dual-stream multiple instance learning network for whole slide image classification with self-supervised contrastive learning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2021. pp. 14318–14328.

- [14]. Qu Linhao, Luo Xiaoyuan, Liu Shaolei, Wang Manning, Song Zhijian. Medical Image Computing and Computer Assisted Intervention–MICCAI 2022: 25th International Conference, Singapore, September 18–22, 2022, Proceedings, Part II. Springer; 2022. DGMIL: Distribution guided multiple instance learning for whole slide image classification; pp. 24–34.

- [15]. Wang Junwen, Du Xin, Farrahi Katayoun, Niranjan Mahesan. Deep cascade learning for optimal medical image feature representation. 2022.

- [16]. Wölflein Georg, Um In Hwa, Harrison David J, Arandjelović Ognjen. Whole-slide images and patches of clear cell renal cell carcinoma tissue sections counterstained with Hoechst 33342, CD3, and CD8 using multiple immunofluorescence. Data. 2023;8(2):40.

- [17]. Ngiam Jiquan, Khosla Aditya, Kim Mingyu, Nam Juhan, Lee Honglak, Ng Andrew Y. Multimodal deep learning. ICML. 2011.

- [18]. Song Dezhao, Kim Edward, Huang Xiaolei, Patruno Joseph, Muñoz-Avila Héctor, Heflin Jeff, Long L Rodney, Antani Sameer. Multimodal entity coreference for cervical dysplasia diagnosis. IEEE Transactions on Medical Imaging. 2014;34(1):229–245. doi: 10.1109/TMI.2014.2352311.

- [19]. Xu Tao, Zhang Han, Huang Xiaolei, Zhang Shaoting, Metaxas Dimitris N. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2016. Multimodal deep learning for cervical dysplasia diagnosis; pp. 115–123.

- [20]. Lee Garam, Nho Kwangsik, Kang Byungkon, Sohn Kyung-Ah, Kim Dokyoon. Predicting Alzheimer’s disease progression using multi-modal deep learning approach. Scientific Reports. 2019;9(1):1–12. doi: 10.1038/s41598-018-37769-z.

- [21]. Zhang Fan, Li Zhenzhen, Zhang Boyan, Du Haishun, Wang Binjie, Zhang Xinhong. Multi-modal deep learning model for auxiliary diagnosis of Alzheimer’s disease. Neurocomputing. 2019;361:185–195.

- [22]. Gessert Nils, Nielsen Maximilian, Shaikh Mohsin, Werner René, Schlaefer Alexander. Skin lesion classification using ensembles of multi-resolution EfficientNets with meta data. MethodsX. 2020;7:100864. doi: 10.1016/j.mex.2020.100864.

- [23]. Tsang Michael, Rambhatla Sirisha, Liu Yan. How does this interaction affect me? Interpretable attribution for feature interactions. Advances in Neural Information Processing Systems. 2020;33.

- [24]. Enouen James, Liu Yan. Sparse interaction additive networks via feature interaction detection and sparse selection. Advances in Neural Information Processing Systems. 2022.

- [25]. Lu Ming Y., Williamson Drew F. K., Chen Tiffany Y., Chen Richard J., Barbieri Matteo, Mahmood Faisal. Data efficient and weakly supervised computational pathology on whole slide images. 2020.