Abstract

With widespread electronic health record (EHR) adoption and improvements in health information interoperability in the United States, troves of data are available for knowledge discovery. Several data sharing programs and tools have been developed to support research activities, including efforts funded by the National Institutes of Health (NIH), EHR vendors, and other public- and private-sector entities. We surveyed 65 leading research institutions (77% response rate) about their use of and value derived from ten programs/tools, including NIH’s Accrual to Clinical Trials, Epic Corporation’s Cosmos, and the Observational Health Data Sciences and Informatics consortium. Most institutions participated in multiple programs/tools but reported relatively low usage (even when they participated, they frequently indicated that fewer than one individual/month benefitted from the platform to support research activities). Our findings suggest that investments in research data sharing have not yet achieved desired results.

Introduction

By 2021, electronic health records (EHR) had been adopted by 96% of hospitals and 78% of office-based physician practices in the United States, providing unprecedented access to digital health information. [1] This widespread adoption paved the way for large-scale analysis of data to advance biomedical discovery. [2,3] While data from a single healthcare organization may be limited in volume and generalizability, combining data from multiple organizations offers greater opportunities to gain insights from the collective experience of diverse patient populations. [4]

Although the cross-institution sharing of clinical data sets holds promise for improving healthcare, the lack of interoperability among siloed EHR systems, together with financial, technical, and regulatory hurdles, has historically posed significant challenges. [5] The 21st Century Cures Act expanded requirements to facilitate sharing of clinical data, though actual sharing has thus far fallen short of some expectations. [6-8] Barriers to participation in data aggregation and exchange initiatives include concerns for patient privacy and confidentiality, technical challenges associated with variation in clinical data models, resource limitations, and a perceived lack of value. Despite these challenges, collaborations involving both commercial and publicly funded entities have made significant investments in developing technologies and communities to support data sharing and accelerate biomedical research.

Beginning in 2006, the National Center for Advancing Translational Sciences (NCATS) Clinical and Translational Science Awards (CTSA) Program established a national network of academic research centers, or program hubs. [9] Strategic goals for the CTSA program include an emphasis on translation and consortium-wide, cross-disciplinary collaboration including capabilities for integration of data from diverse sources. [10] Informatics and digital health capabilities are described as “essential characteristics of successful CTSA Hubs.” [11] Consequently, investigators at CTSA program hubs may be ideally positioned to leverage the capabilities of tools designed to facilitate interinstitutional collaboration.

This study’s objective was to describe trends in the use of clinical data sharing programs and tools at leading research institutions in the United States as identified by their status as CTSA-funded program hubs. We investigated the institutions’ perceptions of the value these programs and tools have yielded to date. We also inquired about governance and leadership of data sharing efforts. [12]

Methods

Study Participants

CTSA program hubs that received NCATS funding in federal fiscal year 2022 were identified from the directory of the Center for Leading Innovation & Collaboration website. [13] In February 2023, an informatics lead listed on each website was sent a personalized email with an invitation to complete a brief survey. In some cases, the initial contact identified a counterpart within the same CTSA program hub who was invited to complete the survey. The study was reviewed by the Geisinger IRB and determined to be non-human subject research.

Health Data Sharing Programs and Technologies

Based on our knowledge of the market as well as a review of CTSA recipients’ websites, we identified 10 health data sharing programs and technologies to be included in the survey. Though not an exhaustive list, the survey included commercial, open-source, and federally supported programs and technologies using a variety of approaches to enable the exchange of clinical data. The list with brief self-descriptions is shown in Table 1.

Table 1.

Health Data Sharing Programs and Technologies.

| Health Data Sharing Program/Technology | Reported Patient Records Included | Reported Number of Participating Organizations | Self-Description |

|---|---|---|---|

| Accrual To Clinical Trials (ACT) / Evolve to Next-Gen ACT (ENACT) | 150 million | 50 | The ACT Network is a real-time platform that allows researchers to explore and validate feasibility for clinical studies across the CTSA consortium from their desktops. ACT helps researchers design and complete clinical studies and is secure and HIPAA-compliant. [14,15] |

| All of Us Research Program | 417,000+ | 340+ | The National Institutes of Health leads this ambitious effort to gather health data from one million or more people living in the United States to accelerate research that may improve health. [16,17] |

| Cerner Real-World Data | 100 million | 70 | Cerner Real-World Data™ is a national, de-identified, person-centric data set solution that enables researchers to leverage longitudinal record data from contributing organizations. [18] |

| Datavant Switchboard | Does not store data | 500+ | The Datavant Switchboard makes it easy for companies to securely manage the data that they send out and bring into their institution with the click of a button. It is designed to connect disparate datasets, control how their data is used, and comply with applicable regulations regarding patient privacy. [19] |

| Epic Cosmos | 178 million | 191 | The Cosmos data set is unlike any other used in health research today. Cosmos combines billions of clinical data points in a way that forms a high quality, representative, and integrated data set that can be used to change the health and lives of people everywhere. [20] |

| National COVID Cohort Collaborative (N3C) | 18 million | 77 | The N3C is a partnership among the CTSA Program hubs and the National Center for Data to Health (CD2H) to contribute and use COVID-19 clinical data to answer critical research questions to address the pandemic. [21] |

| Observational Health Data Sciences and Informatics (OHDSI) | 810 million | 150+ | Observational Health Data Sciences and Informatics (OHDSI) is an international collaborative whose goal is to create and apply open-source data analytic solutions to a large network of health databases to improve human health and wellbeing. [22,23] |

| PCORnet | 60 million | 70+ | PCORnet data are accessible via a distributed research network model of eight large Clinical Research Networks (CRNs) and facilitated by a Coordinating Center. [24] |

| TriNetX | 250 million | 120 | Our longitudinal data combines diagnostics, laboratory results, treatments and additional information like genomics and visit types for full view of the patient. Most queries start broad, with a diagnosis, then layer on requirements for record longevity, a lab value range, or medication history. We give you the flexibility to define your precision cohort in whatever way your research demands. [25,26] |

| Truveta Studio | 70 million | 27 | Motivated by the lack of useful data on how best to respond during COVID-19, 27 health systems providing 16% of all US healthcare, formed Truveta. Data from Truveta’s members is normalized, de-identified, and made available for research. [27] |

Survey

In addition to asking about the 10 health data sharing programs and technologies listed in Table 1, the survey allowed respondents to list any additional technologies used by their organizations. For each program or technology, respondents were asked whether their organization participated in the program or used the technology, and they were also asked to report the approximate number of monthly users of each at their institution using four options: <1, 1-5, 6-10, or >10. Respondents were also asked to rate the perceived value of each technology to their organization using a Likert scale with 1 representing “very low” and 5 representing “very high” perceived value.
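As an illustration only, the per-program survey items described above could be captured in a small data model. The class and field names below are hypothetical and are not taken from the survey instrument. The four usage categories and the 1 to 5 value scale come from the text, and a rating of 4 or 5 is treated as "high" or "very high," as in the analysis.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class MonthlyUsers(Enum):
    """The four answer options for approximate monthly users."""
    LESS_THAN_1 = "<1"
    ONE_TO_FIVE = "1-5"
    SIX_TO_TEN = "6-10"
    MORE_THAN_10 = ">10"


@dataclass(frozen=True)
class ToolResponse:
    """One site's answers for one program/tool (hypothetical structure)."""
    tool: str
    participates: bool
    monthly_users: Optional[MonthlyUsers] = None
    perceived_value: Optional[int] = None  # Likert: 1 = very low, 5 = very high

    def __post_init__(self):
        # Reject ratings outside the 1-5 Likert range.
        if self.perceived_value is not None and not 1 <= self.perceived_value <= 5:
            raise ValueError("perceived_value must be between 1 and 5")

    @property
    def high_value(self) -> bool:
        """True when a participating site rated the tool 4 or 5."""
        return (
            self.participates
            and self.perceived_value is not None
            and self.perceived_value >= 4
        )


# Made-up example response, not study data.
example = ToolResponse("Example Tool", True, MonthlyUsers.ONE_TO_FIVE, 4)
print(example.high_value)
```

Keeping the usage categories as an enumeration rather than free text makes it harder to record an answer that was not one of the four options.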

The survey also inquired whether a respondent’s organization had a guiding principles or policy document about data sharing, and if so, whether it was for internal use only or public facing. Respondents were also asked whether their organization had a Chief Research Informatics Officer (CRIO) or equivalent, and they were requested to provide the number of full-time equivalent staff employed to support research data management activities, including the programs and tools listed. Finally, respondents were invited to provide any free-text comments related to the programs or technologies included in the survey.

Data Analysis

Responses to each survey question were aggregated and presented as percentages of respondents, along with other descriptive statistics. Free-text responses were reviewed and grouped thematically. A chi-square test was performed to identify differences in the number of programs/tools receiving a 4 or 5 (“high” or “very high”) score for value at sites with a CRIO compared to those without.
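For readers unfamiliar with the test, the following is a minimal sketch of a Pearson chi-square test on a 2x2 contingency table. It is not the authors' analysis code, and the exact table they constructed is not described. The sketch assumes one plausible layout (rows: sites with and without a CRIO; columns: ratings that were or were not high), and the counts are placeholders, not study data.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test for a 2x2 table, without continuity correction.

    Returns (statistic, p_value) for 1 degree of freedom.
    Assumes every row and column total is non-zero.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected

    # With 1 degree of freedom, P(X > x) equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


# Placeholder counts for illustration only (NOT study data):
# rows = [CRIO, no CRIO]; columns = [high rating, not high rating]
hypothetical_counts = [[10, 20], [20, 10]]
stat, p = chi_square_2x2(hypothetical_counts)
print(f"chi-square = {stat:.2f}, p = {p:.4f}")
```

In practice a statistics package would normally be used. The hand-rolled version is shown only to make the expected-count calculation visible.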

Results

Table 2 lists the CTSA-funded organizations. Sixty-five surveys were sent to informatics contacts at the 64 program hubs, and 50 (77%) were completed. Sixty-four percent of respondents reported having a CRIO or equivalent role at their organization. With respect to the number of research data staff, 22% of respondents reported having 1-5 staff, another 22% reported having 6-10 staff, 16% reported having 11-15 staff, and the remaining 40% reported having more than 15 staff.

Table 2.

Names, locations, and websites representing organizations with a Clinical and Translational Science Award from the National Center for Advancing Translational Sciences in 2022.

| Organization | Location | CTSA Website |

|---|---|---|

| Albert Einstein College of Medicine | Bronx, NY | https://www.einsteinmed.edu/centers/ictr/ |

| Boston University | Boston, MA | https://www.bu.edu/ctsi/about/leadership/ |

| Case Western Reserve University | Cleveland, OH | https://case.edu/medicine/ctsc/ |

| Columbia University Health Sciences | New York, NY | https://www.irvinginstitute.columbia.edu/ |

| Duke University | Durham, NC | https://ctsi.duke.edu/ |

| Emory University (Georgia CTSA) | Atlanta, GA | https://georgiactsa.org/ |

| Georgetown University/Howard University | Washington, DC | http://www.georgetownhowardctsa.org/research |

| Harvard Medical School | Boston, MA | https://catalyst.harvard.edu/ |

| Icahn School of Medicine at Mount Sinai | New York, NY | https://icahn.mssm.edu/research/conduits |

| Indiana University-Purdue University | Indianapolis, IN | https://indianactsi.org/ |

| Johns Hopkins University | Baltimore, MD | https://ictr.johnshopkins.edu/ |

| Mayo Clinic Rochester | Rochester, MN | https://www.mayo.edu/research/centers-programs/center-clinical-translational-science |

| Medical College of Wisconsin | Milwaukee, WI | https://ctsi.mcw.edu/ |

| Medical University of South Carolina | Charleston, SC | https://research.musc.edu/resources/sctr |

| New York University School of Medicine | New York, NY | https://med.nyu.edu/departments-institutes/clinical-translational-science/ |

| Northwestern University at Chicago | Chicago, IL | https://www.nucats.northwestern.edu/ |

| Ohio State University | Columbus, OH | https://ccts.osu.edu/ |

| Oregon Health & Science University | Portland, OR | https://www.ohsu.edu/octri |

| Pennsylvania State University Hershey Medical Center | Hershey, PA | https://ctsi.psu.edu/ |

| Rockefeller University | New York, NY | https://www2.rockefeller.edu/ccts/ |

| Rutgers Biomedical/Health Sciences-RBHS | New Brunswick, NJ | https://njacts.rbhs.rutgers.edu/ |

| Scripps Research Institute | La Jolla, CA | https://www.scripps.edu/science-and-medicine/translational-institute/ |

| Stanford University | Stanford, CA | https://med.stanford.edu/spectrum/ctsa-cores-and-programs.html |

| State University of New York at Buffalo | Amherst, NY | https://www.buffalo.edu/ctsi.html |

| Tufts University Boston | Boston, MA | https://www.tuftsctsi.org/ |

| University of Arkansas for Medical Sciences | Little Rock, AR | https://tri.uams.edu/tag/ctsa/ |

| University of Massachusetts Medical School Worcester | Worcester, MA | https://www.umassmed.edu/ccts/ |

| University of North Carolina Chapel Hill | Chapel Hill, NC | https://tracs.unc.edu/ |

| University of Alabama at Birmingham | Birmingham, AL | https://www.uab.edu/ccts/ |

| University of California at Davis | Sacramento, CA | https://health.ucdavis.edu/ctsc/ |

| University of California Los Angeles | Los Angeles, CA | https://ctsi.ucla.edu/ |

| University of California San Diego | La Jolla, CA | https://actri.ucsd.edu/ |

| University of California, San Francisco | San Francisco, CA | https://ctsi.ucsf.edu/ |

| University of California-Irvine | Irvine, CA | https://www.icts.uci.edu/ |

| University of Chicago | Chicago, IL | https://chicagoitm.org/ |

| University of Cincinnati | Cincinnati, OH | https://www.cctst.org/ |

| University of Colorado Denver | Aurora, CO | https://cctsi.cuanschutz.edu/ |

| University of Florida | Gainesville, FL | https://www.ctsi.ufl.edu/ |

| University of Illinois at Chicago | Chicago, IL | https://ccts.uic.edu/ |

| University of Iowa | Iowa City, IA | https://icts.uiowa.edu/ |

| University of Kansas Medical Center | Kansas City, KS | https://frontiersctsi.org |

| University of Kentucky | Lexington, KY | https://www.ccts.uky.edu/ |

| University of Miami School of Medicine | Miami, FL | https://miamictsi.org/ |

| University of Michigan at Ann Arbor | Ann Arbor, MI | https://michr.umich.edu/ |

| University of Minnesota | Minneapolis, MN | https://ctsi.umn.edu/ |

| University of New Mexico Health Sciences Center | Albuquerque, NM | https://hsc.unm.edu/ctsc/ |

| University of Pennsylvania | Philadelphia, PA | https://www.itmat.upenn.edu/ctsa-home.html |

| University of Pittsburgh | Pittsburgh, PA | https://ctsi.pitt.edu/ |

| University of Rochester | Rochester, NY | https://www.urmc.rochester.edu/clinical-translational-science-institute.aspx |

| University of Southern California | Los Angeles, CA | https://sc-ctsi.org/ |

| University of Texas Health Science Center San Antonio | San Antonio, TX | https://iims.uthscsa.edu/ |

| University of Texas Health Sciences Center Houston | Houston, TX | https://www.uth.edu/ccts/ |

| University of Texas Medical Branch Galveston | Galveston, TX | https://its.utmb.edu/ |

| University of Utah | Salt Lake City, UT | https://ctsi.utah.edu/ |

| University of Virginia | Charlottesville, VA | https://www.ithriv.org/ |

| University of Washington | Seattle, WA | https://www.washington.edu/research/research-centers/institute-of-translational-health-sciences/ |

| University of Wisconsin-Madison | Madison, WI | https://ictr.wisc.edu/ |

| UT Southwestern Medical Center | Dallas, TX | https://www.utsouthwestern.edu/research/ctsa/ |

| Vanderbilt University Medical Center | Nashville, TN | https://victr.vumc.org/ |

| Virginia Commonwealth University | Richmond, VA | https://cctr.vcu.edu/collaborations/ctsa-network/ |

| Wake Forest University Health Sciences | Winston-Salem, NC | https://ctsi.wakehealth.edu/ |

| Washington University in St. Louis | Saint Louis, MO | https://icts.wustl.edu/ |

| Weill Medical College of Cornell University | New York, NY | https://ctscweb.weill.cornell.edu/ |

| Yale University | New Haven, CT | https://medicine.yale.edu/ycci/ |

Table 3 shows the reported use (at any level) and perceived value of health data sharing programs and tools. Organizations reported participating in a median of 5 (mean of 5.1) of the 10 resources. The National COVID Cohort Collaborative (N3C) had the highest participation rate (94% of respondents) and Truveta, with just three sites reporting participation, had the lowest (6%).

Table 3.

Proportion of 50 respondents who reported using each data sharing program/tool at any level, as well as the percentage of those participating sites reporting high or very high on the perceived value scale.

| Program / Tool | Sites reporting any participation (N) | Sites reporting any participation (% of 50 respondents) | Participating sites reporting high or very high value (N) | Participating sites reporting high or very high value (% of participants) |

|---|---|---|---|---|

| ACT/ENACT | 39 | 78% | 6 | 15% |

| All of Us | 37 | 74% | 13 | 35% |

| Cerner Real-World Data | 4 | 8% | 1 | 25% |

| Datavant Switchboard | 12 | 24% | 6 | 50% |

| Epic Cosmos | 16 | 32% | 3 | 19% |

| National COVID Cohort Collaborative (N3C) | 47 | 94% | 12 | 26% |

| OHDSI | 38 | 76% | 20 | 53% |

| PCORnet | 32 | 64% | 14 | 44% |

| TriNetX | 26 | 52% | 14 | 54% |

| Truveta | 3 | 6% | 1 | 33% |

On average, the 10 programs/tools received high or very high ratings of perceived value from 35% of participating organizations. TriNetX received a high or very high rating from the largest percentage (54% of 26 participating sites). ACT/ENACT received a high or very high rating from the smallest percentage (15% of 39 participants). At sites with CRIOs, 1.7 programs/tools received a high or very high value rating on average compared to 1.9 from institutions without a CRIO, a non-significant difference (p=0.72).
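The percentages in Table 3 and the summary figures quoted above can be rechecked from the reported counts. The short script below is a sketch of that arithmetic. The counts are transcribed from Table 3, while the variable and function names are illustrative rather than taken from the study.

```python
# (sites participating, participating sites rating value high or very high),
# transcribed from Table 3.
TABLE_3 = {
    "ACT/ENACT": (39, 6),
    "All of Us": (37, 13),
    "Cerner Real-World Data": (4, 1),
    "Datavant Switchboard": (12, 6),
    "Epic Cosmos": (16, 3),
    "N3C": (47, 12),
    "OHDSI": (38, 20),
    "PCORnet": (32, 14),
    "TriNetX": (26, 14),
    "Truveta": (3, 1),
}
RESPONDENTS = 50


def percent(numerator, denominator):
    """Percentage as a float; rounding is applied only when reporting."""
    return 100.0 * numerator / denominator


# Share of all respondents participating in each program/tool.
participation_pct = {
    tool: percent(n, RESPONDENTS) for tool, (n, _) in TABLE_3.items()
}
# Share of participating sites that rated the program/tool 4 or 5.
high_value_pct = {
    tool: percent(high, n) for tool, (n, high) in TABLE_3.items()
}

# Mean number of programs/tools per responding site.
mean_programs_per_site = sum(n for n, _ in TABLE_3.values()) / RESPONDENTS
# Unweighted mean of the ten high-value percentages.
mean_high_value_pct = sum(high_value_pct.values()) / len(high_value_pct)

for tool in TABLE_3:
    print(f"{tool}: {participation_pct[tool]:.0f}% participating, "
          f"{high_value_pct[tool]:.0f}% high or very high value")
print(f"Mean programs per site: {mean_programs_per_site:.1f}")
print(f"Mean high-value share: {mean_high_value_pct:.0f}%")
```

Note that the 35% average is an unweighted mean across the ten programs/tools, so a program with three participating sites counts as much as one with 47.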

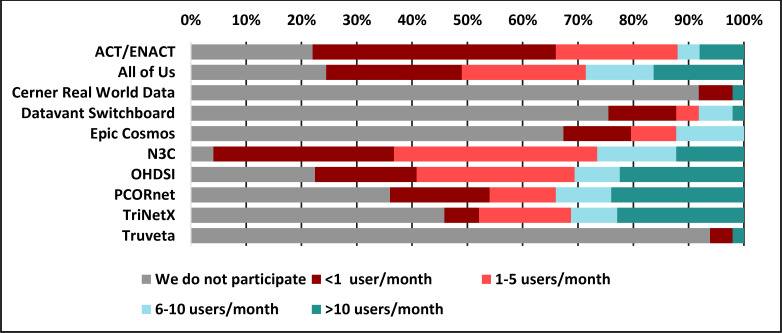

Figure 1 displays the reported level of use of the 10 surveyed programs/tools among the 50 respondents. In response to an open question about other tools used, the following were identified: The Consortium for Clinical Characterization of COVID-19 by EHR (4CE), FHIR (Fast Healthcare Interoperability Resources), Informatics for Integrating Biology & the Bedside (i2b2), Leaf, Linkja, MarketScan, MDClone, Medical Imaging and Data Resource Center (MIDRC), nference, Open Specimen, RECOVER (Researching COVID to Enhance Recovery), REDCap, and Visual NLP, as well as various regional or state-supported resources.

Figure 1.

Usage rates among 10 data-sharing programs and tools

Ninety percent of respondents reported having a guiding principles or policy document to help govern participation in data sharing programs and tools. Of those who reported having a document, 69% said it was for internal use only, and the remaining 31% indicated that their document was public facing (e.g., posted on a website).

Of the 50 respondents, 31 provided free-text comments in response to the question, “Do you have any comments related to the programs/tools listed above that you wish to share?” We grouped the responses into the five themes shown in Table 4. We redacted portions of the responses to preserve anonymity.

Table 4.

Themes derived from free-text survey comments.

| Themes | Example Responses (anonymized) |

|---|---|

| Limited benefits reported from participation | “We have participated with [X] for quite a while now but have had only a handful of users.” “...has always felt like a good idea that never really got much use.” “It became too much of a hassle for too little investigator return.” “If tools are hard to use, then access to data is inhibited, people simply give up.” “The majority of the functionality is duplicative with / could be accomplished with [other listed programs].” |

| Programs facilitate collaboration | “The ability to quickly form broad groups / teams is helpful, but they sometimes lack cohesion, and there is no guarantee strong analytic principles will be followed.” “Without these systems the relatively small number of users would not be doing the important work that they are doing... for these users the systems have incredible value.” |

| Lack of resources required to participate | “Good people are expensive.” “The bar is high to participate...the efforts are underfunded.” “Keeping our data refreshed in [A] requires additional research support effort in addition to keeping other platforms such as [X, Y, Z] up-to-date with data refreshes.” “...getting staff to support these areas is challenging as it is neither ‘IT’ nor ‘Research’.” “Funding agency support is grossly inadequate & results in need for substantial subsidy that is rate-limiting step in providing services” “What is lacking ... tends to be local concierge, training and refinement to engage use – simply making them available does not usually generate interest and use.” |

| Data require content expertise to be useful | “The most valuable resource we have continues to be expert research data analysts...” “Data require deep understanding.” “...the work is meaningful but is not particularly accessible for the average clinical researcher.” “[X] has been available with limited client interest due to skillset required.” |

| Concerns about how data are used are a consideration for participation | “We are not participating due to concerns related to our ability to decide how our data are used and ownership of the data...there is no way for us to opt out of how data are used and no mechanism for our data to be removed after it has been submitted.” |

Discussion

Are top research institutions consistent in their participation with various programs/tools?

Given the multiple opportunities for organizations to participate in data sharing programs and tools, prioritizing allocation of limited resources is a challenge. The survey revealed that most respondents’ institutions (74%) participated in five or more programs/tools. The National COVID Cohort Collaborative (N3C) and ACT/ENACT were the most common among respondents, with participation rates of 94% and 78%, respectively.

Selection of programs and tools to participate in appears to be influenced by various factors, including alignment with institutional and funding organizations’ priorities, integration with the institution’s EHR, staffing and infrastructure requirements, and budget considerations. Lack of resources poses a significant challenge, as 22% of respondents reported having five or fewer full-time staff to support data management activities including the programs/tools in the survey. As one respondent pointed out, “The bar is high to participate... the efforts are underfunded.” Further, some respondents highlighted the overlap in functionality of the surveyed programs leading to selection of the tool most suitable for the organization’s needs and resources, rather than trying to participate in multiple programs.

How much value is being generated by participation with various programs/tools?

Determining the value of a data sharing program or tool is complex. Several respondents observed that estimating usage was difficult due to a lack of reporting capabilities for many of the programs. We found that while some respondents reported low perceived value in their participation in a specific program/tool, others indicated that the same program/tool was highly valued. It is likely that the level of investment, not only in implementing a program/tool but also in cultivating its use, influences the value generated. It is also likely that a lack of resources contributes to organizations’ inability to unlock the potential value of various programs/tools.

Participation and usage rates varied across the surveyed organizations, as did the perceived value of the various programs. It is noteworthy that certain tools were consistently rated more highly than others. N3C had the highest rate of participation (94%), though just 26% ranked the program as providing high or very high value. ACT/ENACT had the second highest rate of participation (78%), though 56% of participants estimated that fewer than one individual per month at their institution used the resource, and only 15% of participants indicated that it provided high or very high value. At least half of respondents described participation in OHDSI (76%), All of Us (74%), PCORnet (64%) and TriNetX (52%) with comparatively favorable usage and value. Fifty-four percent of TriNetX participants reported high or very high value; 53% of OHDSI, 44% of PCORnet, and 35% of All of Us participants reported the same.

How can usability and user experience be improved?

Tools that are difficult to use can impede data access and lead to low participation rates. As one respondent observed, “The ability to quickly form broad groups / teams is helpful, but they sometimes lack cohesion, and there is no guarantee strong analytic principles will be followed.” Improving the use and usefulness of data sharing tools and programs is not only a technology challenge, but perhaps even more a people and process challenge. To encourage adoption of data sharing programs and tools once they are implemented in an organization, concierge services, training, and tool refinement are necessary. Providing clunky tools that purport to support “self-service” may not be enough to achieve broad adoption.

How do organizations decide what is ethically appropriate when it comes to data sharing, and what is the appetite for commercial “profit-driven” programs/tools?

When sharing data among institutions, especially for non-clinical purposes such as education, research, or activities that involve commercialization, local governance is essential. Cole and colleagues highlighted the importance of evaluating the suitability of sharing data for non-clinical purposes and provided a framework of common questions to consider, including the rights of providers, patients, and organizations, as well as considerations of privacy, ownership, intellectual property, scientific publication, and transparency. [28] Institutional policies or guiding principles addressing these questions may influence participation in specific data sharing initiatives.

Our study disproportionately reflects the viewpoint of large academic medical centers compared to other healthcare organizations. As per the CTSA program goals, academic centers may be more predisposed towards participation in data integration and sharing programs, particularly those that do not involve commercial applications. This likely impacted the results of the study, and our findings may not be representative of other types of organizations. It is noteworthy that there is virtually no overlap between Truveta’s membership [29] and the CTSA-funded sites. Truveta claims to have a membership of 700 hospitals across 43 states. Epic reports that 191 organizations participate in its Cosmos platform, and Cerner claims 70 members in its Real-World Data platform. This suggests that there is significant participation in data sharing and integration programs outside of traditional academic medical centers.

According to a 2022 report, Epic claimed that 38% of its customers were contributing data to Cosmos, with 50% signed up to contribute. [30] This figure is broadly consistent with the 32% participation in Cosmos reported in our survey. Just 40% of surveyed organizations reported participation in the commercial solutions provided by Epic or Cerner, despite a potentially lower implementation barrier compared to other programs and tools. This suggests that open-source and government-supported tools, governance structures, and user communities may better align with the values and interests of academic health systems compared to some of the solutions provided by commercial vendors.

Recommendations for research institutions, funders, and vendors

Quotes such as “this has always felt like a good idea that never really got much use” and “it became too much of a hassle for too little investigator return” suggest there are opportunities for the informatics community to help improve adoption, usability, and return on investments in data sharing programs and technologies.

For research institutions, we recommend:

- Developing approaches to optimize the usefulness and value of the programs/tools in which they choose to participate.
- Collaborating with policymakers/funders and commercial vendors to combine efforts where feasible.
- Providing training and education about available resources and developing user engagement strategies.

For policymakers and funding organizations, we recommend:

- Being mindful of the resources required to participate in programs/tools and making efforts to simplify and standardize programs and underlying technologies where possible.
- Considering how to evaluate the success of various programs/tools.
- Highlighting ethical considerations about confidentiality, privacy, and monetization of data assets.

For technology developers, we recommend:

- Designing governance models that facilitate user input and feedback.
- Promoting collaboration and coordination among a community of users as well as transparency across all data sharing and analysis efforts.
- Being aware of ethical considerations regarding confidentiality, privacy, and data ownership.
- Including feedback from an independent advisory board that includes consumer advocates.
- Leveraging data standards like FHIR and competing on value-adds rather than proprietary data models.
- Tracking usage metrics to help institutions assess the impact of programs/tools.

Limitations

Our survey did not include an exhaustive list of data sharing programs or tools, nor did it include programs focused on the exchange of non-phenotypic health data such as genomic or image data, which are likely to be increasingly important to future initiatives. Nor did the survey include claims-based data resources such as Medicare Provider Analysis and Review (MEDPAR), Optum, or state resources such as all-payer databases. According to the Agency for Healthcare Research and Quality, 18 states have legislation mandating the creation and use of all-payer claims databases (APCDs) or are actively establishing APCDs, and more than 30 states maintain, are developing, or have a strong interest in developing an APCD. [31] How these all-payer databases will be used relative to the tools and programs investigated in this study is unknown. Future studies should consider a wider range of data sharing resources that encompass a broader set of health data.

Conclusion

There is considerable variability in the use and perceived value of data sharing programs and tools across top academic health systems. In our survey of 50 organizations, most indicated their participation in multiple programs/tools but reported relatively low usage (even when they participated, they frequently reported that fewer than one individual per month benefitted from the platform to support research activities). Our results suggest that major investments to support research data sharing have not yet achieved desired results.

References

- 1. Office of the National Coordinator for Health Information Technology [Internet] National Trends in Hospital and Physician Adoption of Electronic Health Records | HealthIT.gov. [cited 2023 Mar 4]. Available from: https://www.healthit.gov/data/quickstats/national-trends-hospital-and-physician-adoption-electronic-health-records.

- 2. Institute of Medicine (US) Roundtable on Evidence-Based Medicine. The Learning Healthcare System: Workshop Summary. 2007.

- 3. Friedman C, Rubin J, Brown J, Buntin M, Corn M, Etheredge L, et al. Toward a science of learning systems: a research agenda for the high-functioning Learning Health System. J Am Med Inform Assoc. 2015;22(1):43–50. doi: 10.1136/amiajnl-2014-002977.

- 4. Tarabichi Y, Frees A, Honeywell S, Huang C, Naidech AM, Moore JH, et al. The Cosmos Collaborative: A Vendor-Facilitated Electronic Health Record Data Aggregation Platform. ACI open. 2021;5(1):e36–e46. doi: 10.1055/s-0041-1731004.

- 5. Lenert L, Sundwall DN. Public health surveillance and meaningful use regulations: a crisis of opportunity. Am J Public Health. 2012;102(3):e1–7. doi: 10.2105/AJPH.2011.300542.

- 6. Danchev V, Min Y, Borghi J, Baiocchi M, Ioannidis JPA. Evaluation of Data Sharing After Implementation of the International Committee of Medical Journal Editors Data Sharing Statement Requirement. JAMA Netw Open. 2021;4(1):e2033972. doi: 10.1001/jamanetworkopen.2020.33972.

- 7. Majumder MA, Guerrini CJ, Bollinger JM, Cook-Deegan R, McGuire AL. Sharing data under the 21st Century Cures Act. Genet Med. 2017;19(12):1289–94. doi: 10.1038/gim.2017.59.

- 8. Butte AJ. Trials and Tribulations-11 Reasons Why We Need to Promote Clinical Trials Data Sharing. JAMA Netw Open. 2021;4(1):e2035043. doi: 10.1001/jamanetworkopen.2020.35043.

- 9. National Center for Research Resources. NCRR Fact Sheet: Clinical and Translational Science Awards. 2009. [cited 18 Feb 2023]. Available from: https://web.archive.org/web/20100527171210/http://www.ncrr.nih.gov/publications/pdf/ctsa_factsheet.pdf.

- 10. Ragon B, Volkov BB, Pulley C, Holmes K. Using informatics to advance translational science: Environmental scan of adaptive capacity and preparedness of Clinical and Translational Science Award Program hubs. J Clin Transl Sci. 2022;6(1):e76. doi: 10.1017/cts.2022.402.

- 11. National Center for Advancing Translational Sciences. PAR-21-293: Clinical and Translational Science Award (UM1 Clinical Trial Optional) National Center for Advancing Translational Sciences. 2021. [cited 18 Feb 2023]. Available from: https://grants.nih.gov/grants/guide/pa-files/PAR-21-293.html.

- 12. Sanchez-Pinto LN, Mosa ASM, Fultz-Hollis K, Tachinardi U, Barnett WK, Embi PJ. The Emerging Role of the Chief Research Informatics Officer in Academic Health Centers. Appl Clin Inform. 2017;8(3):845–53. doi: 10.4338/ACI-2017-04-RA-0062.

- 13.National Center for Advancing Translational Sciences. CTSA Program Hub Directory. [cited 2023 Feb 20]. Available from: https://clic-ctsa.org/ctsa-program-hub-directory.

- 14.Visweswaran S, Becich MJ, D’Itri VS, Sendro ER, MacFadden D, Anderson NR, et al. Accrual to Clinical Trials (ACT): A Clinical and Translational Science Award Consortium Network. JAMIA Open. 2018;1(2):147–52. doi: 10.1093/jamiaopen/ooy033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.National Center for Advancing Translational Sciences. ENACT: A New CTSA Consortium-Wide EHR Research Platform. [cited 2023 Feb 20]. Available from: https://clic-ctsa.org/news/enact-new-ctsa-consortium-wide-ehr-research-platform.

- 16.National Institutes of Health. National Institutes of Health All of Us Program. [cited 2023 Feb 20]. Available from: https://allofus.nih.gov.

- 17.Denny JC, Rutter JL, Goldstein DB, Philippakis A, Smoller JW, Jenkins G, et al. The “All of Us” Research Program. N Engl J Med. 2019;381(7):668–76. doi: 10.1056/NEJMsr1809937. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Oracle Cerner. Real-World Data. [cited 2023 Feb 20]. Available from: https://www.cerner.com/solutions/real-world-data.

- 19.Datavant. [cited 2023 Feb 20]. Available from: https://datavant.com/products/switchboard/

- 20.Epic. Epic Cosmos. [cited 2023 Feb 20]. Available from: https://cosmos.epic.com.

- 21.National Center for Advancing Translational Sciences. National COVID Cohort Collaborative (N3C) [cited 2023 Feb 20]. Available from: https://ncats.nih.gov/n3c.

- 22.OHDSI. OHDSI Observational Health Data Sciences and Informatics. [cited 2023 Feb 20]. Available from https://www.ohdsi.org.

- 23.Hripcsak G, Duke JD, Shah NH, Reich CG, Huser V, Schuemie MJ, et al. Observational Health Data Sciences and Informatics (OHDSI): Opportunities for Observational Researchers. Stud Health Technol Inform. 2015;216:574–8. [PMC free article] [PubMed] [Google Scholar]

- 24.PCORnet, the National Patient-Centered Clinical Research Network. [cited 2023 Feb 20]. Available from https://pcornet.org.

- 25.TriNetX. Real-World Data. [cited 2023 Feb 20]. Available from: https://trinetx.com/real-world-data.

- 26.Topaloglu U, Palchuk MB. Using a Federated Network of Real-World Data to Optimize Clinical Trials Operations. JCO Clin Cancer Inform. 2018;2:1–10. doi: 10.1200/CCI.17.00067. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Truveta. Saving Lives with Data. [cited 2023 Feb 20]. Available from: https://www.truveta.com.

- 28.Cole CL, Sengupta S, Rossetti Née Collins S, Vawdrey DK, Halaas M, Maddox TM, et al. Ten principles for data sharing and commercialization. J Am Med Inform Assoc. 2021;28(3):646–9. doi: 10.1093/jamia/ocaa260. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Truveta. Members. [cited 2023 Mar 3]. Available from: https://www.truveta.com/members.

- 30.TechTarget. How Epic’s Cosmos Supported Clinical Research with De-Identified Data. [cited 2023 Mar 13]. Available from https://ehrintelligence.com/features/how-epics-cosmos-supported-clinical-research-with-de-identified-data.

- 31.Agency for Healthcare Research and Quality. All-Payer Claims Databases. [cited 2023 Mar 1]. Available from: https://www.ahrq.gov/data/apcd/index.html.