Abstract

The concept of evidence-based practice has persisted over several years and remains a cornerstone in clinical practice, representing the gold standard for optimal patient care. However, despite widespread recognition of its significance, practical application faces various challenges and barriers, including a lack of skills in interpreting studies, limited resources, time constraints, limited linguistic competencies, and more. Recently, we have witnessed the emergence of a groundbreaking technological revolution known as artificial intelligence. Although artificial intelligence has become increasingly integrated into our daily lives, some reluctance persists among certain segments of the public. This article explores the potential of artificial intelligence as a solution to some of the main barriers encountered in the application of evidence-based practice. It highlights how artificial intelligence can assist in staying updated with the latest evidence, enhancing clinical decision-making, addressing patient misinformation, and mitigating time constraints in clinical practice. The integration of artificial intelligence into evidence-based practice has the potential to revolutionize healthcare, leading to more precise diagnoses, personalized treatment plans, and improved doctor-patient interactions. This proposed synergy between evidence-based practice and artificial intelligence may necessitate adjustments to the core concept of evidence-based practice, heralding a new era in healthcare.

Keywords: Evidence, Clinicians, Patients, Artificial intelligence, Evidence-based practice

Core Tip: Evidence-based practice principles remain crucial in clinical care. However, practical application faces challenges. The recent emergence of artificial intelligence offers solutions for the main barriers. Artificial intelligence can swiftly provide evidence, enhance clinical decision-making, combat patient misinformation, and improve clinical consultations. The integration of artificial intelligence into evidence-based practice represents a potential paradigm shift, requiring some adjustments to the core concept of evidence-based practice.

INTRODUCTION

The principles of evidence-based practice (EBP) are several years old[1], yet they remain as relevant as ever. EBP continues to be considered the gold standard for best clinical practice[2]. Since its development, it has undergone some changes (e.g., evidence-informed practice), but its foundational principles persist, with the best evidence, clinical expertise, and patient preferences playing central roles[3]. However, despite the widespread acknowledgment of its importance in daily clinical practice, its practical application can be challenging, and several barriers to its implementation arise. These barriers include[4]: lack of skills to understand studies; insufficient resources and funding; time constraints; lack of informatics and linguistic competencies; overtasking, heavy workload and competing priorities; inadequate training and stakeholder support; patient personal characteristics and health illiteracy; lack of motivation, confidence, interest, and commitment to change; and evidence that is “unrealistic”, inaccessible, “unreadable”, conflicting, or overwhelming in volume.

While the concept is not new[5-9], only recently have we witnessed the emergence of, interest in, and adoption of a new technological revolution known as artificial intelligence (AI)[5,10]. AI encompasses the creation of machine learning technology capable of performing high-level executive functions that typically require human intelligence (such as reasoning, learning, planning, and creativity)[7,11-17]. Applications can be found in technologies (e.g., smart cars, smart homes, smartphones, computers, robots, and drones), navigation systems, code writing, facial recognition, chatbots, image and data analysis, translators, audio output, and more[11-13,18-23]. Like any other technological tool, AI has incrementally become part of our lives, despite the reluctance of some members of the public[13,16,24,25]. The use of AI brings both advantages and disadvantages, which are summarized in Table 1[7,14,24,26-35].

Table 1. Example of artificial intelligence advantages and disadvantages

| Advantages | Disadvantages |
| --- | --- |
| Efficiency | Job displacement |
| Accuracy | Bias |
| Cost reduction | Lack of empathy |
| Constant availability | Complexity |
| Data analysis | Security risks |
| Customization | Lack of transparency |
| Scalability | Fairness |
| Natural language processing | Regulation |
| Automation | Ethical concerns |
| Productivity | |
| Accessibility | |

Once its pros and cons are understood and carefully evaluated, AI could be considered a potential solution for overcoming some of the main barriers encountered in the application and implementation of EBP. Here are some examples:

(1) Inability to stay updated with the best evidence. As is well known, the pace of scientific production is currently at its peak. The number of articles is growing exponentially[36], making it nearly impossible for a human to search for and read all the information published every day. AI can assist in the search process by summarizing the latest literature within seconds, thus saving clinicians time for other tasks[24,26,31,37-39]. However, as it currently stands, AI still has some “bugs” (known as AI hallucinations) and is not yet able to critically analyze the literature[12,13,21,34,37,39-51], making it essential that clinicians continue to do their own “homework”[51,52]. In addition, there is another evidence-related barrier: comprehension. Studies often employ specialized scientific language, with English as the predominant language[53,54]. Many clinicians still do not have sufficient scientific and linguistic understanding to stay updated[55]. AI is already capable of providing definitions, complete document translations, (re)writing, and summarizing[13,18,21,22,25,37,39,54,56-61], which helps overcome this barrier;

(2) Enhanced clinical decision-making. During a clinical session, it is up to the clinician to assess the patient's clinical situation and, based on the results, present the best intervention plan to the patient, considering the best and most recent literature along with their clinical experience[62]. Therefore, the first phase of clinical reasoning relies heavily on the clinician's “isolated” judgment. AI has already evolved to the point where it can integrate information from imaging and clinical findings, typical disease progression patterns, treatment responses (risks and benefits), and scientific information[7,8,12,16,23,24,35,38,39,57,63-70]. Consequently, AI can act as a second clinician in the decision-making process, where the human clinical expert can interact with the “artificial clinical expert”, leading to more accurate decisions and more precise diagnoses, prognoses, and personalized/tailored intervention plans for patients[6,14,16,23,52,57,65,69-73]. This human-machine interaction may be particularly valuable for those just starting out in their careers. As explored, one of the foundations of better clinical decision-making is clinical experience. However, those who are just starting out in the profession do not yet have enough clinical experience to be experts in the field, and often have to make the first stage of the decision based solely on scientific evidence[74]. Therefore, AI could act as the expert in this situation, helping novice clinicians with their clinical decision-making;

(3) Patient misinformation. While patients often do not actively participate in clinical decision-making, their beliefs and preferences should, in accordance with the principles of EBP, be considered when devising an intervention plan[1]. In this way, there is a mutual partnership between the clinician and the patient, as the patient's beliefs and preferences can help (positively) limit the number of possible interventions/therapies/treatments, making a treatment plan easier to adhere to[75]. However, patients' beliefs and preferences can sometimes pose a barrier. Patients frequently arrive at clinical appointments misinformed about their clinical condition and some of the interventions/therapies/treatments[76]. AI, if less biased[34,40], could provide patients with correct information about their clinical condition and the most appropriate interventions/therapies/treatments[14,71,77]. This would greatly facilitate the doctor-patient interaction, thus enhancing the quality of intervention planning and clinical management[71,77]; and

(4) Time constraints in clinical practice and consultations. As is well known, many clinicians spend a substantial amount of time on bureaucratic and administrative tasks, leaving them with insufficient time for proper patient care[7,38,75]. AI can assist in scheduling, triage, filling out forms, billing, monitoring, and responding to routine tasks (almost like an artificial assistant or secretary)[7,28,38,69,73,78]. This would allow clinicians to free up more time for tasks that involve essential human interaction, simply by issuing a few basic and quick commands[31,38,52].

CONCLUSION

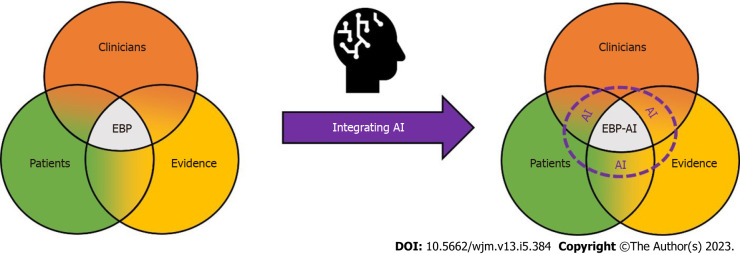

As explored, AI can be considered a useful tool in clinical management, encompassing various aspects such as time management, assessment, interaction, prescription, monitoring, decision-making, information processing, and more. This could potentially usher in a paradigm shift in EBP, requiring minor adjustments to its core concept. Consequently, the new EBP-AI proposal is presented in Figure 1.

Figure 1. Evidence-based practice artificial intelligence. EBP: Evidence-based practice; AI: Artificial intelligence.

Footnotes

Conflict-of-interest statement: Ricardo Maia Ferreira has no conflict of interest to declare.

Provenance and peer review: Invited article; Externally peer reviewed.

Peer-review model: Single blind

Corresponding Author's Membership in Professional Societies: Polytechnic Institute of Maia; Polytechnic Institute of Coimbra; Polytechnic Institute of Castelo Branco; Sport Physical Activity and Health Research & Innovation Center.

Peer-review started: October 17, 2023

First decision: October 24, 2023

Article in press: November 8, 2023

Specialty type: Medicine, research and experimental

Country/Territory of origin: Portugal

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): 0

Grade C (Good): C

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Gisbert JP, Spain; S-Editor: Liu JH; L-Editor: A; P-Editor: Liu JH

References

- 1. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn't. BMJ. 1996;312:71–72. doi: 10.1136/bmj.312.7023.71.

- 2. Groah SL, Libin A, Lauderdale M, Kroll T, DeJong G, Hsieh J. Beyond the evidence-based practice paradigm to achieve best practice in rehabilitation medicine: a clinical review. PM R. 2009;1:941–950. doi: 10.1016/j.pmrj.2009.06.001.

- 3. Woodbury MG, Kuhnke JL. Evidence-based practice vs. evidence-informed practice: what’s the difference. Wound Care Canada. 2014;12:18–21.

- 4. Li S, Cao M, Zhu X. Evidence-based practice: Knowledge, attitudes, implementation, facilitators, and barriers among community nurses-systematic review. Medicine (Baltimore). 2019;98:e17209. doi: 10.1097/MD.0000000000017209.

- 5. Katritsis DG. Artificial Intelligence, Superintelligence and Intelligence. Arrhythm Electrophysiol Rev. 2021;10:223–224. doi: 10.15420/aer.2021.61.

- 6. Hamet P, Tremblay J. Artificial intelligence in medicine. Metabolism. 2017;69S:S36–S40. doi: 10.1016/j.metabol.2017.01.011.

- 7. Aung YYM, Wong DCS, Ting DSW. The promise of artificial intelligence: a review of the opportunities and challenges of artificial intelligence in healthcare. Br Med Bull. 2021;139:4–15. doi: 10.1093/bmb/ldab016.

- 8. Frith KH. Swarm Thinking: Can Humans Beat Artificial Intelligence? Nurs Educ Perspect. 2023;44:69. doi: 10.1097/01.NEP.0000000000001087.

- 9. Zarbin MA. Artificial Intelligence: Quo Vadis? Transl Vis Sci Technol. 2020;9:1. doi: 10.1167/tvst.9.2.1.

- 10. Alawi F. Artificial intelligence: the future might already be here. Oral Surg Oral Med Oral Pathol Oral Radiol. 2023;135:313–315. doi: 10.1016/j.oooo.2023.01.002.

- 11. Chan PP, Lee VWY, Yam JCS, Brelén ME, Chu WK, Wan KH, Chen LJ, Tham CC, Pang CP. Flipped Classroom Case Learning vs Traditional Lecture-Based Learning in Medical School Ophthalmology Education: A Randomized Trial. Acad Med. 2023;98:1053–1061. doi: 10.1097/ACM.0000000000005238.

- 12. Kung TH, Cheatham M, Medenilla A, Sillos C, De Leon L, Elepaño C, Madriaga M, Aggabao R, Diaz-Candido G, Maningo J, Tseng V. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLOS Digit Health. 2023;2:e0000198. doi: 10.1371/journal.pdig.0000198.

- 13. Wittmann J. Science fact vs science fiction: A ChatGPT immunological review experiment gone awry. Immunol Lett. 2023;256-257:42–47. doi: 10.1016/j.imlet.2023.04.002.

- 14. Sallam M. ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare (Basel). 2023;11. doi: 10.3390/healthcare11060887.

- 15. Marreiros A, Cordeiro C. Clinical research and artificial intelligence. Port J Card Thorac Vasc Surg. 2022;29:13–15. doi: 10.48729/pjctvs.273.

- 16. Nicolas J, Pitaro NL, Vogel B, Mehran R. Artificial Intelligence - Advisory or Adversary? Interv Cardiol. 2023;18:e17. doi: 10.15420/icr.2022.22.

- 17. Razavian N, Knoll F, Geras KJ. Artificial Intelligence Explained for Nonexperts. Semin Musculoskelet Radiol. 2020;24:3–11. doi: 10.1055/s-0039-3401041.

- 18. Lecler A, Duron L, Soyer P. Revolutionizing radiology with GPT-based models: Current applications, future possibilities and limitations of ChatGPT. Diagn Interv Imaging. 2023;104:269–274. doi: 10.1016/j.diii.2023.02.003.

- 19. Ang TL, Choolani M, See KC, Poh KK. The rise of artificial intelligence: addressing the impact of large language models such as ChatGPT on scientific publications. Singapore Med J. 2023;64:219–221. doi: 10.4103/singaporemedj.SMJ-2023-055.

- 20. The Lancet Digital Health. ChatGPT: friend or foe? Lancet Digit Health. 2023;5:e102. doi: 10.1016/S2589-7500(23)00023-7.

- 21. van Dis EAM, Bollen J, Zuidema W, van Rooij R, Bockting CL. ChatGPT: five priorities for research. Nature. 2023;614:224–226. doi: 10.1038/d41586-023-00288-7.

- 22. Salvagno M, Taccone FS, Gerli AG. Can artificial intelligence help for scientific writing? Crit Care. 2023;27:75. doi: 10.1186/s13054-023-04380-2.

- 23. Niazi MKK, Parwani AV, Gurcan MN. Digital pathology and artificial intelligence. Lancet Oncol. 2019;20:e253–e261. doi: 10.1016/S1470-2045(19)30154-8.

- 24. Temsah O, Khan SA, Chaiah Y, Senjab A, Alhasan K, Jamal A, Aljamaan F, Malki KH, Halwani R, Al-Tawfiq JA, Temsah MH, Al-Eyadhy A. Overview of Early ChatGPT's Presence in Medical Literature: Insights From a Hybrid Literature Review by ChatGPT and Human Experts. Cureus. 2023;15:e37281. doi: 10.7759/cureus.37281.

- 25. Dupps WJ Jr. Artificial intelligence and academic publishing. J Cataract Refract Surg. 2023;49:655–656. doi: 10.1097/j.jcrs.0000000000001223.

- 26. Ferreira RM. Artificial intelligence in health and science: an introspection. Journal of Evidence-Based Healthcare. 2023;5:e5236–e5236.

- 27. Lee D, Yoon SN. Application of Artificial Intelligence-Based Technologies in the Healthcare Industry: Opportunities and Challenges. Int J Environ Res Public Health. 2021;18. doi: 10.3390/ijerph18010271.

- 28. Sonawane A, Shah S, Pote S, He M. The application of artificial intelligence: perceptions from healthcare professionals. Health and Technology. 2023.

- 29. Lee H. The rise of ChatGPT: Exploring its potential in medical education. Anat Sci Educ. 2023. doi: 10.1002/ase.2270.

- 30. Anderson N, Belavy DL, Perle SM, Hendricks S, Hespanhol L, Verhagen E, Memon AR. AI did not write this manuscript, or did it? Can we trick the AI text detector into generated texts? The potential future of ChatGPT and AI in Sports & Exercise Medicine manuscript generation. BMJ Open Sport Exerc Med. 2023;9:e001568. doi: 10.1136/bmjsem-2023-001568.

- 31. Khan RA, Jawaid M, Khan AR, Sajjad M. ChatGPT - Reshaping medical education and clinical management. Pak J Med Sci. 2023;39:605–607. doi: 10.12669/pjms.39.2.7653.

- 32. Baumgartner C. The potential impact of ChatGPT in clinical and translational medicine. Clin Transl Med. 2023;13:e1206. doi: 10.1002/ctm2.1206.

- 33. Vaishya R, Misra A, Vaish A. ChatGPT: Is this version good for healthcare and research? Diabetes Metab Syndr. 2023;17:102744. doi: 10.1016/j.dsx.2023.102744.

- 34. Nelson GS. Bias in Artificial Intelligence. N C Med J. 2019;80:220–222. doi: 10.18043/ncm.80.4.220.

- 35. Chattu VK. A review of artificial intelligence, big data, and blockchain technology applications in medicine and global health. Big Data Cogn Comput. 2021;5:41.

- 36. Moseley AM, Elkins MR, Van der Wees PJ, Pinheiro MB. Using research to guide practice: The Physiotherapy Evidence Database (PEDro). Braz J Phys Ther. 2020;24:384–391. doi: 10.1016/j.bjpt.2019.11.002.

- 37. Ariyaratne S, Iyengar KP, Nischal N, Chitti Babu N, Botchu R. A comparison of ChatGPT-generated articles with human-written articles. Skeletal Radiol. 2023;52:1755–1758. doi: 10.1007/s00256-023-04340-5.

- 38. Patel SB, Lam K. ChatGPT: the future of discharge summaries? Lancet Digit Health. 2023;5:e107–e108. doi: 10.1016/S2589-7500(23)00021-3.

- 39. Dahmen J, Kayaalp ME, Ollivier M, Pareek A, Hirschmann MT, Karlsson J, Winkler PW. Artificial intelligence bot ChatGPT in medical research: the potential game changer as a double-edged sword. Knee Surg Sports Traumatol Arthrosc. 2023;31:1187–1189. doi: 10.1007/s00167-023-07355-6.

- 40. Belenguer L. AI bias: exploring discriminatory algorithmic decision-making models and the application of possible machine-centric solutions adapted from the pharmaceutical industry. AI Ethics. 2022;2:771–787. doi: 10.1007/s43681-022-00138-8.

- 41. Metze K, Morandin-Reis RC, Lorand-Metze I, Florindo JB. The Amount of Errors in ChatGPT's Responses is Indirectly Correlated with the Number of Publications Related to the Topic Under Investigation. Ann Biomed Eng. 2023;51:1360–1361. doi: 10.1007/s10439-023-03205-1.

- 42. Wen J, Wang W. The future of ChatGPT in academic research and publishing: A commentary for clinical and translational medicine. Clin Transl Med. 2023;13:e1207. doi: 10.1002/ctm2.1207.

- 43. Najafali D, Camacho JM, Reiche E, Galbraith LG, Morrison SD, Dorafshar AH. Truth or Lies? The Pitfalls and Limitations of ChatGPT in Systematic Review Creation. Aesthet Surg J. 2023;43:NP654–NP655. doi: 10.1093/asj/sjad093.

- 44. Kleesiek J, Wu Y, Stiglic G, Egger J, Bian J. An Opinion on ChatGPT in Health Care-Written by Humans Only. J Nucl Med. 2023;64:701–703. doi: 10.2967/jnumed.123.265687.

- 45. Haman M, Školník M. Using ChatGPT to conduct a literature review. Account Res. 2023:1–3. doi: 10.1080/08989621.2023.2185514.

- 46. Fulton JS. Authorship and ChatGPT. Clin Nurse Spec. 2023;37:109–110. doi: 10.1097/NUR.0000000000000750.

- 47. Goto A, Katanoda K. Should We Acknowledge ChatGPT as an Author? J Epidemiol. 2023;33:333–334. doi: 10.2188/jea.JE20230078.

- 48. Zheng H, Zhan H. ChatGPT in Scientific Writing: A Cautionary Tale. Am J Med. 2023;136:725–726.e6. doi: 10.1016/j.amjmed.2023.02.011.

- 49. Macdonald C, Adeloye D, Sheikh A, Rudan I. Can ChatGPT draft a research article? An example of population-level vaccine effectiveness analysis. J Glob Health. 2023;13:01003. doi: 10.7189/jogh.13.01003.

- 50. Alkaissi H, McFarlane SI. Artificial Hallucinations in ChatGPT: Implications in Scientific Writing. Cureus. 2023;15:e35179. doi: 10.7759/cureus.35179.

- 51. Salvagno M, Taccone FS, Gerli AG. Artificial intelligence hallucinations. Crit Care. 2023;27:180. doi: 10.1186/s13054-023-04473-y.

- 52. Homolak J. Opportunities and risks of ChatGPT in medicine, science, and academic publishing: a modern Promethean dilemma. Croat Med J. 2023;64:1–3. doi: 10.3325/cmj.2023.64.1.

- 53. Karin H, Filip S, Jo G, Bert A. Obstacles to the implementation of evidence-based physiotherapy in practice: a focus group-based study in Belgium (Flanders). Physiother Theory Pract. 2009;25:476–488. doi: 10.3109/09593980802661949.

- 54. Chen TJ. ChatGPT and other artificial intelligence applications speed up scientific writing. J Chin Med Assoc. 2023;86:351–353. doi: 10.1097/JCMA.0000000000000900.

- 55. Ferreira RM, Martins PN, Pimenta N, Gonçalves RS. Measuring evidence-based practice in physical therapy: a mix-methods study. PeerJ. 2022;9:e12666. doi: 10.7717/peerj.12666.

- 56. Marchandot B, Matsushita K, Carmona A, Trimaille A, Morel O. ChatGPT: the next frontier in academic writing for cardiologists or a pandora's box of ethical dilemmas. Eur Heart J Open. 2023;3:oead007. doi: 10.1093/ehjopen/oead007.

- 57. Cascella M, Montomoli J, Bellini V, Bignami E. Evaluating the Feasibility of ChatGPT in Healthcare: An Analysis of Multiple Clinical and Research Scenarios. J Med Syst. 2023;47:33. doi: 10.1007/s10916-023-01925-4.

- 58. Graf A, Bernardi RE. ChatGPT in Research: Balancing Ethics, Transparency and Advancement. Neuroscience. 2023;515:71–73. doi: 10.1016/j.neuroscience.2023.02.008.

- 59. Zimmerman A. A Ghostwriter for the Masses: ChatGPT and the Future of Writing. Ann Surg Oncol. 2023;30:3170–3173. doi: 10.1245/s10434-023-13436-0.

- 60. Zhu JJ, Jiang J, Yang M, Ren ZJ. ChatGPT and environmental research. ESE. 2023. doi: 10.1021/acs.est.3c01818.

- 61. Else H. Abstracts written by ChatGPT fool scientists. Nature. 2023;613:423. doi: 10.1038/d41586-023-00056-7.

- 62. Hamilton DK. Evidence, Best Practice, and Intuition. HERD. 2017;10:87–90. doi: 10.1177/1937586717711366.

- 63. Zhou Z, Wang X, Li X, Liao L. Is ChatGPT an Evidence-based Doctor? Eur Urol. 2023;84:355–356. doi: 10.1016/j.eururo.2023.03.037.

- 64. Morreel S, Mathysen D, Verhoeven V. Aye, AI! ChatGPT passes multiple-choice family medicine exam. Med Teach. 2023;45:665–666. doi: 10.1080/0142159X.2023.2187684.

- 65. Shen Y, Heacock L, Elias J, Hentel KD, Reig B, Shih G, Moy L. ChatGPT and Other Large Language Models Are Double-edged Swords. Radiology. 2023;307:e230163. doi: 10.1148/radiol.230163.

- 66. Gilson A, Safranek CW, Huang T, Socrates V, Chi L, Taylor RA, Chartash D. How Does ChatGPT Perform on the United States Medical Licensing Examination? The Implications of Large Language Models for Medical Education and Knowledge Assessment. JMIR Med Educ. 2023;9:e45312. doi: 10.2196/45312.

- 67. Subramani M, Jaleel I, Krishna Mohan S. Evaluating the performance of ChatGPT in medical physiology university examination of phase I MBBS. Adv Physiol Educ. 2023;47:270–271. doi: 10.1152/advan.00036.2023.

- 68. Verhoeven F, Wendling D, Prati C. ChatGPT: when artificial intelligence replaces the rheumatologist in medical writing. Ann Rheum Dis. 2023;82:1015–1017. doi: 10.1136/ard-2023-223936.

- 69. Cutler DM. What Artificial Intelligence Means for Health Care. JAMA Health Forum. 2023;4:e232652. doi: 10.1001/jamahealthforum.2023.2652.

- 70. Wang DY, Ding J, Sun AL, Liu SG, Jiang D, Li N, Yu JK. Artificial intelligence suppression as a strategy to mitigate artificial intelligence automation bias. J Am Med Inform Assoc. 2023;30:1684–1692. doi: 10.1093/jamia/ocad118.

- 71. Beltrami EJ, Grant-Kels JM. Consulting ChatGPT: Ethical dilemmas in language model artificial intelligence. J Am Acad Dermatol. 2023. doi: 10.1016/j.jaad.2023.02.052.

- 72. DiGiorgio AM, Ehrenfeld JM. Artificial Intelligence in Medicine & ChatGPT: De-Tether the Physician. J Med Syst. 2023;47:32. doi: 10.1007/s10916-023-01926-3.

- 73. Krittanawong C, Kaplin S. Artificial Intelligence in Global Health. Eur Heart J. 2021;42:2321–2322. doi: 10.1093/eurheartj/ehab036.

- 74. Fernández-Domínguez JC, Sesé-Abad A, Morales-Asencio JM, Oliva-Pascual-Vaca A, Salinas-Bueno I, de Pedro-Gómez JE. Validity and reliability of instruments aimed at measuring Evidence-Based Practice in Physical Therapy: a systematic review of the literature. J Eval Clin Pract. 2014;20:767–778. doi: 10.1111/jep.12180.

- 75. Mathieson A, Grande G, Luker K. Strategies, facilitators and barriers to implementation of evidence-based practice in community nursing: a systematic mixed-studies review and qualitative synthesis. Prim Health Care Res Dev. 2019;20:e6. doi: 10.1017/S1463423618000488.

- 76. Ayoubian A, Nasiripour AA, Tabibi SJ, Bahadori M. Evaluation of Facilitators and Barriers to Implementing Evidence-Based Practice in the Health Services: A Systematic Review. Galen Med J. 2020;9:e1645. doi: 10.31661/gmj.v9i0.1645.

- 77. Will ChatGPT transform healthcare? Nat Med. 2023;29:505–506. doi: 10.1038/s41591-023-02289-5.

- 78. Arif TB, Munaf U, Ul-Haque I. The future of medical education and research: Is ChatGPT a blessing or blight in disguise? Med Educ Online. 2023;28:2181052. doi: 10.1080/10872981.2023.2181052.