Summary

The becoming of the human being is a multisensory process that starts in the womb. By integrating spontaneous neuronal activity with inputs from the external world, the developing brain learns to make sense of itself through multiple sensory experiences. Over the past ten years, advances in neuroimaging and electrophysiological techniques have allowed researchers to explore the neural correlates of multisensory processing in the newborn and infant brain, adding an important piece of information to the behavioral evidence of early sensitivity to multisensory events. Here, we review recent behavioral and neuroimaging findings on the origins and early development of multisensory processing, showing in particular that the human brain appears naturally tuned to multisensory events at birth but requires multisensory experience to fully mature. We conclude the review by discussing emerging studies in preterm infants, which highlight the potential uses and benefits of multisensory interventions in promoting healthy development.

Subject areas: Behavioral neuroscience, Developmental neuroscience, Sensory neuroscience


Early sensitivity to multisensory events: Insights from behavioral newborn studies

Growing evidence shows that a large part of the human brain is intrinsically multisensory, that is, able to process and integrate sensory information regardless of its modality, not only in higher-order association cortices but even at very low levels of processing, in the putatively modality-specific primary sensory areas.1,2 The multisensory organization of the cerebral cortex is supported by neurophysiological studies in animals, which have shown the existence of multisensory neurons in various brain structures, as well as direct feedforward connections between the primary sensory cortices and feedback pathways from higher-order cortical regions to low-level sensory areas and subcortical structures.3,4,5

At the behavioral level, the primary advantage of multisensory integration lies in enhancing the salience of sensory stimuli, which in turn facilitates behavioral responses to them.6 The term ‘multisensory integration’ typically refers to situations in which multiple stimuli presented in different sensory modalities, but in close spatial and temporal proximity, are bound together,7,8 mimicking the multisensory enhancement effects observable at the cellular level. By contrast, stimuli from different sensory modalities that are spatially and temporally incongruent are not integrated into a unified percept and may even hamper sensory processing.

Of particular relevance to understanding how our brain deals with multiple incoming sensory inputs has been the discovery of multisensory neurons in the cat’s superior colliculus (SC), so much so that it became a representative model for studying multisensory integration in the human brain and perceptual systems.3 Most importantly, these studies have served the developmental cause, documenting, first, that the multisensory layers of the SC of newborn animals are not silent at birth but become overtly responsive to sensory stimuli as each sensory organ becomes ‘active’. In other words, at birth the cat’s SC neurons respond only to tactile stimulation, because touch is the only afferent input available at that stage of development; the ear canals and eyelids open approximately 10 days after birth, and around this time auditory and visual responsiveness appears as well. However, the ability to synthesize these different sensory inputs (indexed by the enhancement of neural activity by multisensory stimuli) appears weeks later, suggesting that the animal requires more prolonged sensory experience to optimize multisensory processing through a shared neural code.

This view is supported by neurophysiological evidence in experimentally deprived animals (e.g.,9): cats reared in the dark up to 6 months of age show a degree of immaturity in their multisensory neurons, which do not benefit from multisensory stimulation. That is, their responses to congruent audiovisual stimulation are no greater than their responses to unisensory (auditory or visual) stimuli, a pattern that corresponds to impaired behavioral responses to combinations of sensory stimuli (see10).
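In single-neuron studies such as those summarized above, multisensory enhancement is commonly expressed as the percentage change of the combined-modality response relative to the largest unisensory response. The following is a minimal sketch of that index, assuming mean spike counts per trial as input. The function name and all spike counts are hypothetical. They were chosen to mimic the normal versus dark-reared contrast described in the text and are not taken from any cited data.

```python
from statistics import mean


def multisensory_enhancement(av_counts, a_counts, v_counts):
    """Percent enhancement of the combined response over the best unisensory one.

    ME = (CM - SMmax) / SMmax * 100, where CM is the mean combined-modality
    response and SMmax is the larger of the two mean unisensory responses.
    """
    cm = mean(av_counts)
    sm_max = max(mean(a_counts), mean(v_counts))
    if sm_max == 0:
        raise ValueError("unisensory responses must be non-zero")
    return (cm - sm_max) / sm_max * 100.0


# Hypothetical spike counts per trial (audiovisual, auditory, visual).
normal = multisensory_enhancement([12, 14, 13], [6, 5, 7], [4, 5, 3])
dark_reared = multisensory_enhancement([6, 7, 5], [6, 5, 7], [4, 5, 3])
print(f"normally reared: {normal:.0f}%")   # normally reared: 117%
print(f"dark-reared: {dark_reared:.0f}%")  # dark-reared: 0%
```

A value near zero, as in the hypothetical dark-reared example, corresponds to a combined response no greater than the best unisensory one. Negative values would indicate response depression.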

This evidence converges with findings in cataract-reversal patients, who are the human counterpart of dark-reared cats. These individuals are born blind due to cataracts, but their vision can be restored through surgery, even after only a short period of visual deprivation. However, even when vision is restored within the first year of life, the early deprivation has long-term consequences for the development of multisensory capabilities: these patients show impaired multisensory processing in adulthood, regardless of their visual performance, which develops typically. These findings, together with the animal studies mentioned above, suggest that multisensory input during the first weeks of life is necessary for the full maturation of multisensory interactions (see11,12).

To date, studies in newborns investigating multisensory integration as defined above are sparse, whereas there is evidence that, from the very first hours of life, newborns are sensitive to crossmodal interactions, both for low-level object characteristics (e.g., shape, see13) and for social stimuli, such as the match between the mother’s voice and face.14 The term crossmodal interaction is not synonymous with multisensory integration. It refers to situations in which stimuli in one sensory system influence the perception of, or the ability to respond to, stimuli presented in another sensory modality, without resulting in a unified representation directly traceable to the activity of multisensory neurons.8 This process reflects the dynamic exchange of information between sensory systems, without necessarily involving the convergence of different sensory inputs on a single neuron. At the behavioral level, crossmodal interactions may cause perceptual distortions or illusory effects, which occur when a discordant sensory input overpowers another, less reliable one.7 Alternatively, they may favor the matching of distinct features or dimensions of experience across different senses (i.e., crossmodal correspondence).

The study of crossmodal interactions in newborns has capitalized on classic preferential looking techniques. In these techniques, longer looking/visual attention, or a larger number of head turns, directed toward one stimulus over another is taken as a marker that newborns not only discriminate between the two stimuli but actually prefer one over the other. Notably, a preference for paired bimodal stimuli over unimodal stimuli can also be seen as evidence of multisensory facilitation in processing, for example, the mother’s face (as seen in14).
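Looking-time preferences of this kind are often summarized as the proportion of total looking time directed at one display, with group means compared against the chance level of 0.5. The sketch below assumes per-newborn looking times in seconds for two side-by-side displays. The data and function names are hypothetical, and a one-sample t statistic is only one of several analyses used in practice.

```python
import math
from statistics import mean, stdev


def preference_score(target_s, other_s):
    """Proportion of total looking time spent on the target display."""
    total = target_s + other_s
    return target_s / total if total > 0 else float("nan")


def one_sample_t(values, chance=0.5):
    """t statistic for the mean preference score against chance level."""
    n = len(values)
    return (mean(values) - chance) / (stdev(values) / math.sqrt(n))


# Hypothetical looking times in seconds: (bimodal display, unimodal display).
looking = [(30.0, 20.0), (25.0, 25.0), (36.0, 24.0), (28.0, 12.0)]
scores = [preference_score(t, o) for t, o in looking]
print([round(s, 2) for s in scores])  # [0.6, 0.5, 0.6, 0.7]
print(round(one_sample_t(scores), 2))
```

Scores above 0.5 indicate longer looking at the target display. The t statistic indicates whether the group mean departs reliably from chance.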

Interestingly, work by Lewkowicz et al.15 (2010, see Figure 1, panel A) has shown that crossmodal interactions are broadly tuned in early infancy. This translates, for example, into newborns’ ability to match not only native faces and voices but also non-native social stimuli, such as the facial and vocal gestures of a non-human primate. Indeed, newborns look longer at a non-human primate face producing a visible call when it is accompanied by the corresponding audible call than at a face producing no sound (Experiment 1) or a face accompanied by a complex tone different from the corresponding call (Experiment 2). Note that this broad crossmodal tuning disappears with age owing to ‘perceptual narrowing’, a concept that reflects the growing specialization of the brain for its native environment.16 Thus, by the end of the first year of life, infants lose the ability to discriminate between non-native stimuli while becoming increasingly able to discriminate among native stimuli (e.g., identities, phonemes).

Figure 1.

Graphical adaptation of the results of behavioral studies depicting examples of multisensory interactions at birth and early infancy

(A) Displays the study by Lewkowicz et al.15 (2010), showing that multisensory perceptual tuning is broad at birth, enabling newborns to integrate the facial and vocal gestures of non-native social stimuli.

(B) Displays the study by Anobile et al.17 (2021), revealing that multisensory cues help newborns match audiovisual stimuli related to number.

(C) Displays the study by Filippetti et al.18 (2013), in which newborns preferred watching an infant’s face being stroked synchronously with the stroking of their own cheek, but only when the face was presented upright.

(D) Displays the study by Orioli et al.19 (2018), showing that newborns can match auditory and visual stimuli that apparently move toward or away from them.

Another line of investigation has focused on the role of crossmodal information in the development of abstract concepts, such as number.17,20 Adapting a behavioral paradigm developed by Izard et al.20 (2009), Anobile et al.17 (2021, see Figure 1, panel B) explored crossmodal influences on number cognition. Newborns were presented with auditory sequences containing a fixed number of sounds (i.e., 4 or 12 syllables). During the first minute of auditory stimulation, the screen remained black; then, two consecutive images containing either the same or a different number of items were displayed. Newborns looked longer at the numerically incongruent visual stimuli, suggesting that audiovisual interactions favor the development of numerical cognition.

Crossmodal interactions have also been explored with respect to the development of body representation. In human adults, body representation relies on the stability of multisensory cues for the construction and maintenance of the self.21 For example, Filippetti et al.18 (2013, see Figure 1, panel C) presented newborns with videos of upright or inverted infant faces being stroked with a paintbrush on one cheek. At the same time, the experimenter stroked one of the newborn participant’s cheeks, either synchronously or asynchronously with the stroking seen in the video. Newborns preferred the synchronous condition, but only when the viewed face was upright. That is, newborns do not prefer the amodal properties of multisensory stimuli per se (i.e., the synchrony of observed and felt stroking). Instead, they prefer congruence with their own body, that is, a body that looks like their own or is at least in an anatomically possible (upright) position.

Another study by Filippetti et al.22 (2015) showed that newborns prefer synchronous visuo-tactile stimulation only if the seen and felt touches are spatially congruent, as in adults (e.g.,23,24). Newborns were presented with videos of infant faces touched either on the cheek or on the forehead. The touches newborns received were always synchronous with the viewed touch. However, they could be delivered to the same, congruent body part (e.g., cheek-cheek) or to a different, incongruent body part (e.g., cheek-forehead) with respect to the viewed face. Newborns preferred congruent over incongruent visuo-tactile stimulation. Together with the authors’ previous studies, these data corroborate the notion that soon after birth newborns are equipped with a rudimentary sense of bodily self, whose origins and mechanisms are rooted in the temporal coherence of multisensory stimuli.

Again in relation to body representation and perception, a recent study by Orioli et al.19 (2018, see Figure 1, panel D) showed that newborns prefer approaching stimuli and show no preference for receding stimuli. Newborns were presented with two side-by-side visual displays, one looming toward and the other receding from the participant. A sound that either increased or decreased in intensity accompanied the visual displays. This created either a congruent audiovisual approaching stimulus (i.e., a visual stimulus increasing in size accompanied by a sound rising in intensity) or a congruent receding stimulus (i.e., a visual stimulus decreasing in size accompanied by a sound decreasing in intensity). Newborns looked longer at the congruent visual display only when the sound increased in intensity, not when it decreased, revealing a preference for approaching audiovisual stimuli. This pattern is interesting with respect to two aspects of crossmodal correspondence. First, newborns are able to match dynamic, congruent audiovisual information in which no redundant amodal feature is provided. Second, the fact that this crossmodal matching occurred only for approaching stimuli suggests that, from the early stages of life, newborns are equipped with some multisensory knowledge about their own body. They can thus ‘recognize’ the evolutionary significance of a looming stimulus approaching the body, which is often fundamental for activating defensive reactions.

While these studies all support the notion that newborns appear to be tuned to multisensory events from very early in ontogeny, only a few studies have investigated the neural correlates of this precocious ability. Because of the scarcity of studies conducted in newborns, the following sections discuss studies conducted in the first year of life and the contribution of different methods to unveiling the development of the multisensory human brain.

We conclude the review with a paragraph on the role of multisensory interventions in promoting well-being in preterm infants, highlighting the benefit of multisensory processing early in life and the importance of investigating its functioning from a behavioral and a neurophysiological point of view.

The idea of multisensory intervention is familiar and has been explored in both typical and atypical development. In typical development, in particular, there is evidence that multisensory training can translate into better performance even in unisensory tasks.25,26 For example, individuals trained with audiovisual stimuli show improved visual and auditory processing when each modality is presented alone. This phenomenon also extends to social stimuli. Individuals trained with faces and voices are then better at recognizing a voice without any visual cue,27 and individuals trained to encode objects with multisensory features then process the same objects more easily, even under unisensory conditions.28

Of particular interest is the suggestion that even mere multisensory experience, without any training, may affect higher-level cognitive functions, such as memory and reasoning. Denervaud et al.29 (2020) provided evidence for this in a small group of children and adolescents who performed two tasks. The first was a simple detection task with unisensory (visual or auditory stimuli presented alone) and multisensory (audiovisual) stimuli. The second was a multisensory recognition task involving the discrimination of common objects presented with or without congruent auditory stimuli. These two measures of multisensory abilities were then correlated with the children’s working memory and abstract non-verbal reasoning performance, measured with a backward digit span and with Raven’s Colored Progressive Matrices, respectively. Multisensory abilities (in terms of multisensory integration and crossmodal interaction effects) predicted memory and intelligence scores. Although these data do not demonstrate a causal role of multisensory experience in promoting cognitive functioning, they at least suggest that multisensory processes are associated with mental processes. These findings are further supported by evidence in the typically aging population: older people with better multisensory integration abilities show higher cognitive profiles on tasks of sustained attention, memory, processing speed, and executive functions.30

The fact that multisensory abilities are tightly linked to cognitive processes suggests that potentiating multisensory mechanisms might also improve cognitive processes; this, in turn, opens a new avenue of research, particularly for studying the effects of such training in preterm infants, who have an increased risk for learning disabilities but whose future outcome might be protected by early interventions.31,32,33

Electroencephalographic (EEG) studies

Audiovisual interactions

Among the many multisensory experiences, seeing talking faces is likely the most immediate and intense stimulation infants experience from birth. To observe the neural signatures of audiovisual speech perception, Hyde et al.34 (2011) presented 5-month-old infants with a female face saying ‘hi’; the auditory component, presented 400 ms before the face onset, could be synchronous or asynchronous with respect to the face.

Interestingly, and contrary to what is commonly observed in adults, in whom EEG activity is modulated by synchronous stimuli (e.g.,35,36), infants exhibited an enhanced Nc, a large, slow negative component recorded over frontocentral sites, in the asynchronous but not the synchronous condition. This suggests that infants found the asynchronous event particularly unfamiliar and novel, so that it captured their attention.

Importantly, the ‘perceptual narrowing’16,37 account mentioned above proposes that development ‘pushes’ toward the specialization of perceptual systems and, in so doing, progressively sharpens the perceptual abilities of the individual. From a behavioral and functional point of view, this translates into ‘being able to do less, but better’ within a certain developmental period. It likely corresponds to the pruning of unused connections and the strengthening of highly used ones. For example, Pons et al.38 (2009) showed that 6-month-old Spanish-learning infants can match English speech sounds to the corresponding visual articulations but lose this ability by 11 months of age, as they develop a specialized system for detecting and discriminating their native language.

The neural correlates of this narrowing were investigated by Grossmann et al.39 (2012). They recorded event-related potentials (ERPs) in 4- and 8-month-old infants while the infants viewed human and non-human primate faces producing facial expressions that either matched or mismatched the heard vocalizations (‘grunt’ and ‘coo’). The electrophysiological responses mirrored those observed in behavioral studies. Four-month-old infants were sensitive to the congruent match between face and voice irrespective of species (human or non-human primate), whereas 8-month-olds were sensitive only to congruent human faces and vocalizations and did not detect the incongruency between non-human primate faces and vocalizations. In particular, ERP analyses revealed recruitment of the N290 (a typical component signaling face processing in early infancy, see40) in both younger and older infants. However, while there was no difference between human and non-human primate faces in 4-month-olds, the N290 in 8-month-old infants was more negative for non-human primate than for human faces. This reveals a specialized system for own-species faces that emerges by 8 months of age. This study, and the general idea of perceptual narrowing, suggest that humans are broadly tuned to multisensory information at birth, but that postnatal experience with one’s own species is associated with neural changes in multisensory processing.

Odor-visual

Category acquisition in the first months of life seems to be promoted by multisensory integration, with early-maturing systems, such as olfaction, driving the acquisition of categories in later-developing systems, such as vision. In their elegant study, Rekow et al.41 (2021) provided original evidence supporting this view. Fast periodic visual stimulation with natural images of objects and face-like stimuli was coupled with EEG frequency tagging to track infants’ visual responses to the category ‘face’. The images were presented together with one of two odors, the mother’s odor or a neutral (control/baseline) odor. Within the sequence, a face-like image appeared as every sixth stimulus, corresponding to a periodic rate of 1 Hz. A periodic response at this frequency in the EEG spectrum reflects a response specific to faces, distinct from the response to the other object categories displayed in the sequence. The results revealed that, by 4 months of age, infants’ ability to categorize face-like stimuli is initiated by maternal odor. Indeed, cerebral activity is comparable between the two hemispheres when infants are presented with the control odor. With the maternal odor, by contrast, it is larger over the right than the left hemisphere, where the specialized response to faces usually occurs in adults (e.g.,42). Thus, the developing visual system builds on multisensory experiences (e.g., maternal odor and face-like stimuli) to learn to categorize complex unimodal sensory inputs, such as faces.
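The logic of frequency tagging can be illustrated with a simulated signal. The simulation combines a general visual response at a 6 Hz base rate with a weaker 1 Hz face-selective response, embedded in noise. The 6 Hz base rate is the stimulation rate implied by a face-like image at every sixth position producing a 1 Hz oddball. The face-selective response is then read out from the amplitude spectrum at 1 Hz, relative to neighbouring frequency bins. The sampling rate, duration, amplitudes, noise level, and this neighbour-bin SNR definition are assumptions for illustration, not the parameters of the cited study.

```python
import numpy as np

FS = 250.0          # assumed sampling rate (Hz)
DURATION = 40.0     # assumed recording length (s); frequency resolution = 1/40 Hz
BASE_HZ, ODDBALL_HZ = 6.0, 1.0

t = np.arange(int(FS * DURATION)) / FS
rng = np.random.default_rng(0)

# Simulated EEG: general visual response at 6 Hz, face-selective response at 1 Hz,
# plus Gaussian noise (all amplitudes are hypothetical).
eeg = (1.0 * np.sin(2 * np.pi * BASE_HZ * t)
       + 0.3 * np.sin(2 * np.pi * ODDBALL_HZ * t)
       + rng.normal(0.0, 1.0, t.size))

amplitude = np.abs(np.fft.rfft(eeg)) / t.size
freqs = np.fft.rfftfreq(t.size, d=1.0 / FS)


def snr_at(freq_hz, n_neighbours=10, skip=1):
    """Amplitude at freq_hz divided by the mean amplitude of surrounding bins.

    The `skip` bins immediately adjacent to the target on each side are excluded;
    `n_neighbours` bins are then taken on each side.
    """
    idx = int(np.argmin(np.abs(freqs - freq_hz)))
    lo = amplitude[idx - skip - n_neighbours: idx - skip]
    hi = amplitude[idx + skip + 1: idx + skip + 1 + n_neighbours]
    return amplitude[idx] / np.mean(np.concatenate([lo, hi]))


print(f"SNR at 1 Hz (face-selective): {snr_at(ODDBALL_HZ):.1f}")
print(f"SNR at 6 Hz (general visual): {snr_at(BASE_HZ):.1f}")
print(f"SNR at 2.5 Hz (no signal):    {snr_at(2.5):.1f}")
```

Because the recording spans a whole number of cycles of both frequencies, each response falls into a single spectral bin. A peak at 1 Hz well above its neighbours therefore isolates the category-selective response from the general visual response at 6 Hz.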

An important finding of Rekow et al.’s study41 concerns individual differences in reactions to multisensory events: the infants who showed the weakest responses in the baseline odor condition had the largest visual responses with the maternal odor. This effect is reminiscent of the inverse effectiveness principle, according to which, at the neural level, maximal multisensory response enhancement occurs when the constituent unisensory stimuli are minimally effective in evoking responses.43,44 Applied to the current data, this principle would indicate that the more ambiguous a visual stimulus is to the infant, the more the maternal odor can promote face categorization, suggesting that multisensory input is crucial for developing specialized systems.
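One simple way to look for the inverse-effectiveness pattern described above is to correlate, across infants, the response in the baseline condition with the gain produced by the maternal odor. A negative correlation matches the pattern. The per-infant values below are hypothetical, and the difference-score approach is an assumption for illustration, not the analysis reported in the cited study.

```python
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical face-selective response amplitudes (microvolts), one per infant.
baseline_odor = [0.2, 0.4, 0.6, 0.8, 1.0]
maternal_odor = [1.0, 1.0, 1.0, 1.1, 1.1]

# Gain: how much the maternal odor adds over the baseline condition.
gain = [m - b for m, b in zip(maternal_odor, baseline_odor)]
r = pearson_r(baseline_odor, gain)
print(f"r = {r:.2f}")  # negative: the weakest baseline responders gain the most
```

A difference score shares its baseline term with the predictor. Such correlations are therefore partly expected by construction and by regression to the mean, and should be interpreted with caution.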

Audio-tactile

Finally, it is worth mentioning a recent newborn study that used EEG to investigate the neural correlates of peripersonal space, which is closely linked to body representation. Ronga et al.45 (2021) touched one of the newborns’ hands while presenting auditory stimuli near or far from the touched hand (see Figure 2, panel A). Unisensory and multisensory stimuli were presented either close to or far from the body, under the assumption that stimuli occurring close to the body might speed up neural activity46 (i.e., peripersonal space has a protective function, and events occurring within it are more relevant to the organism). Indeed, newborns showed significant multisensory integration effects for stimuli presented in near space, specifically in a time window between 222 ms and 338 ms after stimulus onset, which corresponds to the latency of the P2 component. Overall, this study shows that multisensory integration processes are already active at birth. These processes can be detected not only in preferential looking studies,9 but also at the level of neural activity.
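The text does not detail the statistical contrast used by Ronga et al. A common approach in EEG studies, assumed here only for illustration, is the additive model. In this model, the response to the bimodal audio-tactile stimulus (AT) is compared with the sum of the unisensory responses (A + T) within a time window, here the 222 to 338 ms window reported above. The Gaussian-shaped waveforms are simulated, and the helper names and sampling rate are hypothetical.

```python
import numpy as np

FS = 500.0                                      # assumed sampling rate (Hz)
times_ms = np.arange(-100, 600, 1000.0 / FS)    # epoch from -100 to 598 ms


def window_mean(erp, start_ms, end_ms):
    """Mean amplitude of an ERP within [start_ms, end_ms]."""
    mask = (times_ms >= start_ms) & (times_ms <= end_ms)
    return float(erp[mask].mean())


def gaussian_peak(center_ms, width_ms, amp):
    """Simulated ERP deflection shaped as a Gaussian (illustrative only)."""
    return amp * np.exp(-0.5 * ((times_ms - center_ms) / width_ms) ** 2)


# Simulated grand-average ERPs (microvolts) at one electrode, near-space condition.
erp_a = gaussian_peak(280, 40, 2.0)    # auditory alone
erp_t = gaussian_peak(280, 40, 1.5)    # tactile alone
erp_at = gaussian_peak(280, 40, 5.0)   # audio-tactile

# Additive-model contrast: AT - (A + T); a non-zero value suggests an interaction.
msi = window_mean(erp_at, 222, 338) - window_mean(erp_a + erp_t, 222, 338)
print(f"AT - (A+T) in 222-338 ms: {msi:.2f} microvolts")
```

A positive difference, as in this simulation, would be read as a superadditive multisensory effect. In real data, the contrast is computed per participant and tested statistically.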

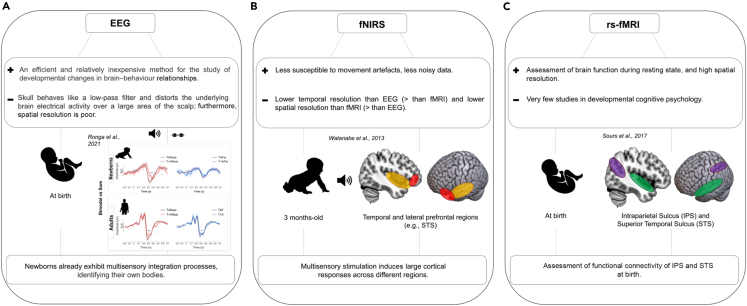

Figure 2.

Comparison of three neurophysiological techniques used to assess multisensory interactions in newborns

(A) Displays the study by Ronga et al.45 (2021), in which newborns’ electrophysiological (EEG) responses to audio-tactile stimuli presented close to or far from the body were recorded. The authors found an electrophysiological pattern of multisensory integration (MSI), with older newborns showing a larger MSI effect.

(B) Shows the fNIRS study of Watanabe et al.47 (2013) aimed at assessing spatiotemporal cortical hemodynamic responses of 3-month-old infants while they perceived visual objects with or without accompanying sounds. The comparison between the two stimulus conditions revealed that the effect of sound manipulation was pervasive throughout the diverse cortical regions, and the effects were specific to each cortical region.

Finally, (C) shows the rs-fMRI study by Sours et al.48 (2017), in which the functional connectivity of two typical multisensory cortical areas, the intraparietal sulcus and the superior temporal sulcus, was found to be already established at birth.

Functional near-infrared spectroscopy (fNIRS)

Functional near-infrared spectroscopy (fNIRS) is a relatively new neuroimaging technique well suited to studying infant brain responses to basic sensory stimuli, as well as to more complex interactive social contexts49 (see50 for a review of the technique). fNIRS measures cerebral function by tracking changes in chromophores that absorb light at different wavelengths (oxygenated hemoglobin, deoxygenated hemoglobin, and cytochrome c-oxidase) and relating their time course to events/stimuli. For developmental research, the major advantage of fNIRS is that its signal is less susceptible to infant movement artifacts than that of other techniques. Its temporal resolution is higher than that of fMRI but lower than that of EEG, and its spatial resolution is higher than that of EEG but lower than that of fMRI.
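The conversion from light measurements to hemoglobin concentrations is usually based on the modified Beer-Lambert law. The change in optical density at each wavelength is a weighted sum of the concentration changes of oxygenated (HbO) and deoxygenated (HbR) hemoglobin, scaled by the source-detector distance and a differential pathlength factor (DPF). With two wavelengths, this gives a 2 x 2 linear system. The extinction coefficients, distance, and DPF below are placeholder values chosen for illustration, not published constants. The sketch also ignores cytochrome c-oxidase, whose separation requires additional wavelengths.

```python
import numpy as np

# Placeholder extinction coefficients [HbO, HbR] per wavelength (arbitrary units).
# Real analyses must take these from published absorption spectra.
EXTINCTION = np.array([
    [0.6, 1.5],   # shorter wavelength (around 760 nm): HbR absorbs more
    [1.2, 0.8],   # longer wavelength (around 850 nm): HbO absorbs more
])
DISTANCE_CM = 3.0  # assumed source-detector separation
DPF = 5.0          # assumed differential pathlength factor


def od_to_hb(delta_od):
    """Solve delta_OD = (EXTINCTION * distance * DPF) @ [dHbO, dHbR]."""
    return np.linalg.solve(EXTINCTION * DISTANCE_CM * DPF, delta_od)


# Forward-simulate an activation (HbO up, HbR down), then invert it.
true_change = np.array([0.02, -0.005])
delta_od = EXTINCTION @ true_change * DISTANCE_CM * DPF
d_hbo, d_hbr = od_to_hb(delta_od)
print(f"dHbO = {d_hbo:.3f}, dHbR = {d_hbr:.3f}")  # dHbO = 0.020, dHbR = -0.005
```

The round trip recovers the simulated changes. This is why at least two wavelengths, with different relative absorption by HbO and HbR, are needed to separate the two chromophores.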

Despite these shortcomings, fNIRS has been increasingly used in developmental research, including recent studies investigating the multisensory nature of cortices in early infancy.

Watanabe et al.47 (2013) measured fNIRS responses in 3- to 4-month-old infants while they viewed visual objects presented alone or with a concurrent sound (Figure 2, panel B). The analysis assumed that an increase in the oxy-Hb signal accompanied by a decrease in the deoxy-Hb signal indicates cortical activation (positive), while a decrease in oxy-Hb accompanied by an increase in deoxy-Hb indicates cortical deactivation (negative). Under this assumption, in the audiovisual condition the data showed positive oxy-Hb changes that emerged in the earliest time window in the temporal auditory regions. These changes persisted until the later windows (up to 13 s after stimulus onset) in the early visual, posterior temporal, and prefrontal areas. In contrast, in the unisensory condition, in which only the visual stimulus was present, positive oxy-Hb changes were observed in temporal and lateral prefrontal regions in early time windows but disappeared later.
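The sign rule stated above can be sketched as a small classifier applied to mean oxy-Hb and deoxy-Hb changes in consecutive time windows. The sampling rate, window length, signal values, and label names are hypothetical, loosely patterned on the audiovisual versus visual-only contrast just described. Published analyses test such changes statistically rather than relying on sign alone.

```python
import numpy as np


def classify_window(d_oxy, d_deoxy):
    """Label a window using the sign rule for oxy-Hb and deoxy-Hb changes."""
    if d_oxy > 0 and d_deoxy < 0:
        return "activation"
    if d_oxy < 0 and d_deoxy > 0:
        return "deactivation"
    return "no clear change"


def windowed_labels(oxy, deoxy, fs_hz, window_s):
    """Average each signal in consecutive, non-overlapping windows and classify them."""
    step = int(window_s * fs_hz)
    labels = []
    for start in range(0, len(oxy) - step + 1, step):
        labels.append(classify_window(oxy[start:start + step].mean(),
                                      deoxy[start:start + step].mean()))
    return labels


# Hypothetical 15-s responses sampled at 10 Hz, analysed in 5-s windows.
fs = 10.0
t = np.arange(150) / fs
oxy_av = 0.5 * np.ones_like(t)               # sustained oxy-Hb increase
deoxy_av = -0.1 * np.ones_like(t)            # with a deoxy-Hb decrease
oxy_v = np.where(t < 5, 0.4, 0.0)            # early increase that later disappears
deoxy_v = np.where(t < 5, -0.1, 0.0)

print("audiovisual:", windowed_labels(oxy_av, deoxy_av, fs, 5))
print("visual only:", windowed_labels(oxy_v, deoxy_v, fs, 5))
```

Requiring opposite-signed changes in the two chromophores, rather than an oxy-Hb increase alone, makes the labeling more conservative against artifacts that shift both signals in the same direction.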

In general, it should be noted that the presence or absence of sound dramatically affected the activation and/or deactivation of the diverse cortical regions. Furthermore, the difference between sound and no-sound conditions was compared within different brain regions, revealing different activation and deactivation patterns depending on the cortical site. For example, while the superior temporal sulcus (STS) and the middle temporal gyrus (MTGa) showed activation with large amplitudes in the sound condition and deactivation in the later time windows in the no-sound condition, the inferior frontal gyrus showed equal activation in both conditions.

The major finding of Watanabe et al.’s study47 (2013) was that, in 3- to 4-month-old infants, multisensory stimulation induces large cortical responses across multiple regions. These include the heteromodal association areas, namely the middle temporal gyrus (MTG), middle occipital gyrus (MOG), and inferior parietal lobe (IPL), as well as the prefrontal regions, namely the inferior frontal gyrus (IFG) and superior frontal gyrus (SFG).

Importantly, the comparison between adding and removing the sound accompanying the visual image captured key characteristics of multisensory processing in infancy. Auditory input did not merely enhance the overall activation related to visual perception but also induced specific changes in each cortical region. For instance, removing the sound produced active suppression of activity in the superior temporal gyrus (STG, an early auditory region) and the MTG. Removing the sound also resulted in no activity in the SFG, revealing that this region responds only to multisensory information. Similarly, removing the sound reduced the activation of the MTG, MOG, and IPL, revealing the multisensory properties of these regions and demonstrating that their functions are already in place very early in development.

fNIRS studies have also begun to shed light on the brain connectivity underlying multisensory processing in infancy. For example, Werchan et al.51 (2018) presented familiarization events in which infants viewed a ball bouncing either up and down or back and forth across the screen. A sound was presented either as the ball changed direction (synchronous condition) or 450 ms before the ball changed direction (asynchronous condition). Before and after these familiarization events, infants saw two unisensory events: the bouncing ball without sound (unisensory visual) and the sound alone without the ball (unisensory auditory). The authors hypothesized that functional connectivity between the occipital and temporal regions during synchronous multisensory events would suggest an experience-driven process, in which the unisensory cortices somehow ‘train’ each other to become multisensory. Accordingly, infants with higher occipitotemporal connectivity exhibited occipital activation to unisensory auditory stimuli, revealing the multisensory properties of the occipital cortex. Although the temporal cortex did not show the same pattern, the results nevertheless support the notion that functional connectivity enhances sensory processing by amplifying neural responses in unisensory cortices.52 Because the unisensory cortices did not respond to multisensory stimuli, this study raises the possibility that multisensory processing, rather than being innate, is a learned response to the naturally multisensory environment in which the child grows up.

Localizing multisensory areas in the infant brain through resting-state functional magnetic resonance imaging (rs-fMRI)

Despite its great potential and general interest, the use of resting-state functional magnetic resonance imaging (rs-fMRI) in developmental studies is still in its infancy. fMRI is a non-invasive functional brain imaging technique that provides relatively high spatial resolution compared with the techniques mentioned above (EEG and fNIRS). Task-based fMRI measures BOLD signals evoked by tasks and cognitive stimuli, whereas rs-fMRI assesses brain function during rest, thus reflecting spontaneous neural activity. This activity is believed to reflect the brain’s intrinsic organization, supported by extensive cerebral connectivity,53 which forms coordinated patterns of BOLD fluctuation known as functional connectivity (FC). Spontaneous brain activity supports a range of behaviors, such as preparing for task execution54 or consolidating memories.55
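In its simplest seed-based form, functional connectivity in rs-fMRI is estimated as the Pearson correlation between the BOLD time series of a seed region and that of another region. The correlation is typically Fisher z-transformed before group statistics. The sketch below simulates three regional time series and omits real preprocessing steps such as motion correction and band-pass filtering. The region names, the shared-fluctuation model, and all values are assumptions for illustration, not the pipeline of any study reviewed here.

```python
import numpy as np

rng = np.random.default_rng(1)
n_volumes = 200  # assumed number of resting-state volumes

# Simulated BOLD signals: the seed and region_b share a common fluctuation.
shared = rng.normal(size=n_volumes)
seed = shared + 0.5 * rng.normal(size=n_volumes)      # hypothetical seed region
region_b = shared + 0.5 * rng.normal(size=n_volumes)  # coupled region
region_c = rng.normal(size=n_volumes)                 # uncoupled region


def seed_fc(seed_ts, target_ts):
    """Seed-based FC: Pearson r and its Fisher z transform."""
    r = np.corrcoef(seed_ts, target_ts)[0, 1]
    return r, np.arctanh(r)


for name, ts in [("region_b", region_b), ("region_c", region_c)]:
    r, z = seed_fc(seed, ts)
    print(f"{name}: r = {r:.2f}, z = {z:.2f}")
```

Under this simulation, the coupled region shows a high correlation with the seed (around 0.8 in expectation), while the uncoupled region stays near zero. The Fisher transform makes correlation values better suited to averaging and testing across participants.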

The benefits of using rs-fMRI in the neonatal population appear almost obvious. Task-free recording of brain activity makes it possible to establish the existence of neural networks in a population that can perform tasks only indirectly (e.g., through the assessment of visual preference). A recent rs-fMRI study48 explored the functional connectivity at birth of two typical areas of multisensory convergence in human adults, the intraparietal sulcus (IPS) and the STS, to probe the innateness of the cortical multisensory architecture. The IPS is involved in multisensory attention and exhibits distinct connections to auditory, visual, somatosensory, and default mode networks, suggesting local specialization within these regions across multiple sensory modalities.56 The STS receives inputs from visual, auditory, and somatosensory areas and is involved in a range of behaviorally relevant processes, such as object recognition, speech, and face-voice integration.57,58

Sours et al.48 (2017) found that in newborns the IPS connects with visual and somatosensory areas, whereas the STS connects with visual, auditory, and somatosensory regions; all these connections appeared to be very similar to those of adults (see Figure 2, panel C). Thus, this evidence suggests that at the earliest stages of development, the brain already exhibits patterns of crossmodal connectivity that remain stable in adulthood.

What do the studies reviewed so far tell us about the origins of multisensory processing in humans?

First, it is important to revisit the terminological distinction presented in the introduction. The current literature has investigated the development of multisensory abilities with different experimental paradigms and physiological techniques. Neuroimaging and electrophysiological techniques (EEG, fNIRS, and rs-fMRI) show that a multisensory cortical and subcortical substrate is present at or near birth, as documented by distinct brain responses (at a local or circuit level) to unisensory and multisensory stimuli.45,47,48 On the one hand, the fact that multisensory areas are already present near birth and refine their functioning in the first months of life parallels findings in animal models.1,48,59 On the other hand, the lack of behavioral data accompanying the neural data does not allow us to conclude that multisensory integration is present at birth. Similarly, behavioral paradigms (typically using looking times to assess preference) have revealed greater attention and sensitivity to multisensory events than to modality-specific, unisensory stimuli.17,18,19 However, such effects cannot be taken as evidence of multisensory integration, given the lack of a neural marker such as the multisensory enhancement of brain activity in areas of sensory convergence. Thus, our knowledge of the development of multisensory integration (and specifically of the integrative properties of multisensory neurons and their correlation with overt behavior) has so far been derived mainly from animal models, in which single-cell recording is possible. Given the difficulty of applying the neurophysiological model of multisensory integration to human development, one may ask: is it really so crucial to establish whether multisensory integration is present at birth in human beings? How would such knowledge benefit newborns and infants, whose overt behavior is very limited?
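In single-neuron studies, the multisensory enhancement mentioned above is commonly quantified by comparing the response to a crossmodal stimulus with the strongest unisensory response, and the crossmodal response is further classed as superadditive, additive, or subadditive relative to the sum of the unisensory responses.43 The minimal sketch below encodes these two criteria. The function names and the spike counts are hypothetical and serve only to show why such a marker requires measuring unisensory and multisensory responses in the same neural population, something looking-time paradigms do not provide.

```python
import math


def enhancement_index(multi: float, uni_a: float, uni_b: float) -> float:
    """Multisensory enhancement (%) relative to the best unisensory response:
    100 * (CM - SMmax) / SMmax, where CM is the crossmodal response."""
    sm_max = max(uni_a, uni_b)
    if sm_max <= 0:
        raise ValueError("the largest unisensory response must be positive")
    return 100.0 * (multi - sm_max) / sm_max


def additivity(multi: float, uni_a: float, uni_b: float,
               rel_tol: float = 1e-9) -> str:
    """Compare the crossmodal response with the sum of the unisensory ones."""
    total = uni_a + uni_b
    if math.isclose(multi, total, rel_tol=rel_tol):
        return "additive"
    return "superadditive" if multi > total else "subadditive"


if __name__ == "__main__":
    # Hypothetical mean spike counts per trial for a single neuron
    auditory, visual = 4.0, 6.0
    for audiovisual in (15.0, 10.0, 8.0):
        me = enhancement_index(audiovisual, auditory, visual)
        label = additivity(audiovisual, auditory, visual)
        print(f"AV={audiovisual}: enhancement={me:.1f}%, {label}")
```

Note that the AV = 8 case shows enhancement relative to the best unisensory response (about 33%) while still being subadditive, which is why the two criteria are usually reported separately.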

Our review has provided evidence that the functional architecture needed for multisensory processing in the mature brain is already present within the first days or weeks of life. We believe that this organization, consistent with animal and deprivation studies,3,9,11,12,60,61 may prepare the infant to receive, process, and integrate various sensory inputs. As in adults, synchronous multisensory experience in infants drives functional coupling between occipital and temporal regions, in turn enhancing unisensory processing. Furthermore, multisensory information appears to increase activation in both stimulated sensory cortices, which produce a stronger signal than when only one sensory region is stimulated.47,48,51

While this pattern suggests that the human brain is already ‘prepared’ for multisensory processing, displaying a basic functional structure that might support an early sensitivity to multisensory information (see59 for a summary of the behavioral studies reported above), a large body of evidence indicates that nativist views of development should be abandoned in favor of experience-dependent, gene-environment interactive views.

Sensory deprivation studies demonstrate that only after considerable early-life experience with the statistics of multisensory events can the brain make skillful use of the basic principles of multisensory integration, such as the spatial and temporal principles.62 For example, cats reared in the dark9 and humans born with dense bilateral cataracts63 do not typically develop adult-like multisensory circuits. This is likely because congenital visual deprivation alters the ability of cortical areas to process different sensory inputs, favoring reorganization of the visual cortex and, in turn, altering the functioning of other sensory areas (both auditory and tactile; see, e.g.,64,65). Similar conclusions have been drawn from studies of individuals born deaf who received one or two cochlear implants at different stages of development. Stevenson et al.66 (2017) emphasized the role of sensitive periods in multisensory development and the need to implant as early as possible, so that children can optimize the crossmodal interactions that support communication skills.

The complexity and outcome of multisensory processing, and the behavioral responses derived from it, are further influenced by genes. For instance, studies conducted across different neuropsychiatric disorders have revealed that disturbed expression levels of specific genes during critical periods of development may lead to hyperplasticity within sensory circuits,67 resulting in atypical responses and hypersensitivity to sensory stimulation, as observed in autism spectrum disorder. Furthermore, social functioning is inherently multisensory, as social cues must be gathered by integrating facial expressions, speech, and body language. These cues are integrated at a high level of multisensory processing to allow an appropriate behavioral response; because social dysfunction is a common symptom across neuropsychiatric disorders, impaired multisensory processing might be one of its causes.68

These findings underscore the importance of exposure to multisensory environments, which interact with genetic factors to allow the optimal development of both the sensory systems and their interactions. Therefore, having a brain endowed with primitive multisensory mechanisms at birth is not sufficient, as its development is a long and multifactorial process.

In the remainder of this review, we offer some insight into how early sensitivity to multisensory experiences might prove helpful for infants at risk of neurodevelopmental delays (or disorders), such as preterm infants, who are more likely than full-term infants to show delayed and atypical cognitive and socio-affective development that may not resolve even with age.

Studies conducted in populations with diagnosed neurodevelopmental disorders, such as autism spectrum disorder (ASD) and attention-deficit/hyperactivity disorder (ADHD), have shown that disrupted multisensory processing is one of the core characteristics of these conditions.69,70 This research has suggested that major difficulties in cognitive and social functioning correlate with the efficiency of multisensory integration.71,72,73 Under a cascade model (e.g., of ASD or ADHD), atypical processing of sensory information would impair multisensory integration, in turn affecting the higher-order functions that depend on it. It follows that interventions aimed at refining multisensory integration could have a bottom-up impact on the development of cognitive and social abilities in neurodevelopmental disorders.69,74

In light of this evidence, multisensory-based rehabilitation programs have been promoted over the last decades as a useful approach to neurological and neuropsychological rehabilitation.75,76,77 For example, infant massage (IM) provides intensive, affective multisensory stimulation through a variety of sensory inputs (e.g., touch, kinesthetic manipulation, and the caregiver’s voice and facial expressions). IM has been shown to have a marked positive effect on visual system development (e.g., maturation of visual acuity and stereopsis) in both preterm infants and children with Down syndrome,78,79 and to facilitate the maturation of brain electrical activity in low-risk preterm infants in a way similar to the maturation observed in utero in infants born at term.80 Multisensory-integration-based interventions have also been shown to significantly decrease autistic mannerisms81,82,83 and to significantly improve concentration in children with ADHD, in turn reducing behavioral symptoms of impulsivity and hyperactivity.74

Although a causal link between multisensory integration impairments and the emergence of neurodevelopmental problems has not yet been established, evidence that multisensory training positively influences cognitive and social functioning suggests a close relationship between the two.

Clinical implications for studying the early development of multisensory processes: Insights from preterm infants

Recently, there has been growing interest in understanding the cognitive, motor, and socio-affective outcomes of preterm infants, who are at risk of brain injury and delayed development, sometimes associated with long-term deficits across different physical and cognitive domains.84,85 In particular, advances in neuroimaging techniques have revealed widespread alterations in cortical mass, surface area, and whole-brain connectivity86,87,88,89 in preterm infants, which in turn predict later deficits in cognitive processing and psychiatric diagnoses.

Interestingly, brain responses to multisensory stimuli differ significantly between preterm and full-term infants, and these differences can predict later behavioral problems. In one study, Maitre et al.90 (2020) recorded continuous EEG in preterm and full-term infants during unisensory tactile, unisensory auditory, and audio-tactile (multisensory) stimulation. To assess the long-term consequences of prematurity, families completed a questionnaire on internalizing and externalizing tendencies when the children were 24 months old. Several group differences emerged in ERP waveforms and topographies: whereas multisensory processing in full-term infants was characterized by a linear addition of unisensory signals and by a single ERP topography for both summed unisensory and bimodal stimuli, preterm infants showed non-linear neural responses across multiple topographies. Furthermore, atypical brain activity predicted internalizing problems in preterm toddlers at 24 months of age, suggesting that dysfunctional multisensory processing in infancy may have long-term consequences for later behavior.
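The distinction between linear and non-linear responses drawn in this study rests on the additive model widely used in EEG research on multisensory processing, in which the response to the bimodal stimulus is compared with the sum of the two unisensory responses. A schematic formulation, written in our own notation rather than taken from Maitre et al.,90 is:

$$
\Delta(t) = \mathrm{ERP}_{AT}(t) - \left[\mathrm{ERP}_{A}(t) + \mathrm{ERP}_{T}(t)\right]
$$

where $\mathrm{ERP}_{A}$, $\mathrm{ERP}_{T}$, and $\mathrm{ERP}_{AT}$ denote the responses to auditory, tactile, and audio-tactile stimulation at a given electrode and latency $t$. A response is linear (additive) when $\Delta(t) \approx 0$, that is, when the bimodal ERP does not reliably differ from the summed unisensory ERPs; a reliable $\Delta(t) \neq 0$ indicates a non-linear interaction. Topographic analyses apply the same comparison to the scalp distribution of the summed and bimodal responses, which is how a single shared topography (as in full-term infants) can be distinguished from multiple topographies (as in preterm infants).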

If preterm infants display atypical multisensory processing, and multisensory processing appears crucial for typical development, then early multisensory interventions might promote healthy cognitive and behavioral development. Some support for this hypothesis comes from studies investigating the effects of multisensory exposure on several indices of healthy neurodevelopment, such as motor, cognitive, and emotional behavior.

For example, the audio-tactile-visual-vestibular (ATVV) intervention consists of 10 min of auditory (a female voice), tactile (massage), and visual (eye contact) stimulation, followed by 5 min of vestibular stimulation (horizontal rocking), and has proved useful in improving alertness and feeding behavior (see91 for a recent review; 92,93).

Another intervention, the Family Nurture Intervention (FNI), was designed to facilitate affective connections between infants and their mothers through a multisensory approach.94 In particular, the FNI consists of a 1-h interaction with the infant, during which the caregiver is asked to establish eye contact (visual stimulation), soothe the infant vocally (auditory stimulation), and engage in skin-to-skin contact (tactile stimulation). Welch et al.95 (2014) found that preterm infants who received this multisensory intervention showed increased EEG power in frontal polar regions across several frequency bands during sleep, a cerebral pattern commonly associated with healthy neurodevelopment.

In a follow-up study, the same authors96 found that, 18 months after preterm birth, FNI had improved sensorimotor development, concept formation, memory, and language, as assessed with the Bayley Scales III, a standardized and validated assessment of general infant development. The FNI also reduced behavioral problems, as assessed with the Child Behavior Checklist, which measures externalizing and internalizing behavior as well as emotional, attention, sleep, anxiety, and aggression problems in early childhood.

These studies have several limitations that prevent their findings from being considered conclusive. As a recent meta-analysis noted,97 most studies used very small samples and longitudinal data are scarce, leaving open the question of whether these interventions are beneficial in the long term.

Given the increasing number of preterm births, there is an urgent need to intervene as early as possible to promote the healthy development of these infants during a period of life in which brain plasticity can support faster and more efficient learning.

Furthermore, the multisensory interventions developed so far capitalize on two decades of studies showing that multisensory learning can benefit individuals because our brains have evolved to process the natural, multisensory environment.98 As shown in the first section of this review, human infants are naturally attracted to multisensory stimuli, and their brains appear to be tuned to process such information. This is reflected in their facilitated processing of multisensory relative to unisensory information, a pattern that persists into adulthood. As shown by behavioral, neuroimaging, and computational data,99,100,101 multisensory learning outperforms unisensory learning and alters both the underlying multisensory structures and the connectivity between unisensory structures. Thus, promoting multisensory training in preterm infants holds promise for the development of healthy and successful individuals.

In conclusion, this review has illustrated that human newborns enter the world with a brain that is already prepared to receive and process multisensory stimuli. Throughout postnatal life, multisensory experience shapes and reinforces the neural circuits laid down for processing multisensory stimuli. We have concluded the review with insights from the preterm literature and highlighted the potential benefits of multisensory stimulation in the clinical setting. We believe that this is the path multisensory research should take to promote healthy development in newborns and infants born at risk of developmental delays (Figure 3).

Figure 3.

The graph illustrates a proposed model of how multisensory processing could influence higher-order cognition, which in turn affects how individuals benefit from it at a behavioral and functional level.

Acknowledgments

Research partially funded by the Italian Ministry of Health (to N.B.).

Author contributions

E.N. and N.B. conceptualized the manuscript. E.N. drafted the manuscript. M.G. made the figures. M.G. and N.B. reviewed and edited the manuscript.

Declaration of interests

The authors declare no competing interests.

References

1. Murray M.M., Lewkowicz D.J., Amedi A., Wallace M.T. Multisensory Processes: A Balancing Act across the Lifespan. Trends Neurosci. 2016;39:567–579. doi: 10.1016/j.tins.2016.05.003.

2. Ghazanfar A.A., Schroeder C.E. Is neocortex essentially multisensory? Trends Cognit. Sci. 2006;10:278–285. doi: 10.1016/j.tics.2006.04.008.

3. Stein B.E., Stanford T.R., Rowland B.A. Development of multisensory integration from the perspective of the individual neuron. Nat. Rev. Neurosci. 2014;15:520–535. doi: 10.1038/nrn3742.

4. Cappe C., Morel A., Barone P., Rouiller E.M. The Thalamocortical Projection Systems in Primate: An Anatomical Support for Multisensory and Sensorimotor Interplay. Cerebr. Cortex. 2009;19:2025–2037. doi: 10.1093/cercor/bhn228.

5. Falchier A., Schroeder C.E., Hackett T.A., Lakatos P., Nascimento-Silva S., Ulbert I., Karmos G., Smiley J.F. Projection from Visual Areas V2 and Prostriata to Caudal Auditory Cortex in the Monkey. Cerebr. Cortex. 2010;20:1529–1538. doi: 10.1093/cercor/bhp213.

6. Alais D., Newell F.N., Mamassian P. Multisensory Processing in Review: from Physiology to Behaviour. Seeing Perceiving. 2010;23:3–38. doi: 10.1163/187847510X488603.

7. Bolognini N., Russo C., Vallar G. Crossmodal illusions in neurorehabilitation. Front. Behav. Neurosci. 2015;9:212. doi: 10.3389/fnbeh.2015.00212.

8. Spence C., Senkowski D., Röder B. Crossmodal processing. Exp. Brain Res. 2009;198:107–111. doi: 10.1007/s00221-009-1973-4.

9. Wallace M.T., Perrault T.J., Hairston W.D., Stein B.E. Visual Experience Is Necessary for the Development of Multisensory Integration. J. Neurosci. 2004;24:9580–9584. doi: 10.1523/JNEUROSCI.2535-04.2004.

10. Smyre S.A., Wang Z., Stein B.E., Rowland B.A. Multisensory enhancement of overt behavior requires multisensory experience. Eur. J. Neurosci. 2021;54:4514–4527. doi: 10.1111/ejn.15315.

11. Guerreiro M.J.S., Putzar L., Röder B. The effect of early visual deprivation on the neural bases of multisensory processing. Brain. 2015;138:1499–1504. doi: 10.1093/brain/awv076.

12. Putzar L., Goerendt I., Lange K., Rösler F., Röder B. Early visual deprivation impairs multisensory interactions in humans. Nat. Neurosci. 2007;10:1243–1245. doi: 10.1038/nn1978.

13. Sann C., Streri A. Perception of object shape and texture in human newborns: evidence from cross-modal transfer tasks. Dev. Sci. 2007;10:399–410. doi: 10.1111/j.1467-7687.2007.00593.x.

14. Sai F.Z. The role of the mother’s voice in developing mother’s face preference: Evidence for intermodal perception at birth. Infant Child Dev. 2005;14:29–50.

15. Lewkowicz D.J., Leo I., Simion F. Intersensory Perception at Birth: Newborns Match Nonhuman Primate Faces and Voices. Infancy. 2010;15:46–60. doi: 10.1111/j.1532-7078.2009.00005.x.

16. Lewkowicz D.J., Ghazanfar A.A. The decline of cross-species intersensory perception in human infants. Proc. Natl. Acad. Sci. USA. 2006;103:6771–6774. doi: 10.1073/pnas.0602027103.

17. Anobile G., Arrighi R., Castaldi E., Burr D.C. A Sensorimotor Numerosity System. Trends Cognit. Sci. 2021;25:24–36. doi: 10.1016/j.tics.2020.10.009.

18. Filippetti M.L., Johnson M.H., Lloyd-Fox S., Dragovic D., Farroni T. Body Perception in Newborns. Curr. Biol. 2013;23:2413–2416. doi: 10.1016/j.cub.2013.10.017.

19. Orioli G., Bremner A.J., Farroni T. Multisensory perception of looming and receding objects in human newborns. Curr. Biol. 2018;28:R1294–R1295. doi: 10.1016/j.cub.2018.10.004.

20. Izard V., Sann C., Spelke E.S., Streri A. Newborn infants perceive abstract numbers. Proc. Natl. Acad. Sci. USA. 2009;106:10382–10385. doi: 10.1073/pnas.0812142106.

21. Blanke O. Multisensory brain mechanisms of bodily self-consciousness. Nat. Rev. Neurosci. 2012;13:556–571. doi: 10.1038/nrn3292.

22. Filippetti M.L., Orioli G., Johnson M.H., Farroni T. Newborn Body Perception: Sensitivity to Spatial Congruency. Infancy. 2015;20:455–465. doi: 10.1111/infa.12083.

23. Tsakiris M., Schütz-Bosbach S., Gallagher S. On agency and body-ownership: Phenomenological and neurocognitive reflections. Conscious. Cognit. 2007;16:645–660. doi: 10.1016/j.concog.2007.05.012.

24. Kilteni K., Maselli A., Kording K.P., Slater M. Over my fake body: body ownership illusions for studying the multisensory basis of own-body perception. Front. Hum. Neurosci. 2015;9:141. doi: 10.3389/fnhum.2015.00141.

25. Kim R.S., Seitz A.R., Shams L. Benefits of Stimulus Congruency for Multisensory Facilitation of Visual Learning. PLoS One. 2008;3. doi: 10.1371/journal.pone.0001532.

26. Seitz A.R., Kim R., Shams L. Sound Facilitates Visual Learning. Curr. Biol. 2006;16:1422–1427. doi: 10.1016/j.cub.2006.05.048.

27. von Kriegstein K., Giraud A.-L. Implicit Multisensory Associations Influence Voice Recognition. PLoS Biol. 2006;4:e326. doi: 10.1371/journal.pbio.0040326.

28. Lehmann S., Murray M.M. The role of multisensory memories in unisensory object discrimination. Brain Res. Cogn. Brain Res. 2005;24:326–334. doi: 10.1016/j.cogbrainres.2005.02.005.

29. Denervaud S., Gentaz E., Matusz P.J., Murray M.M. Multisensory Gains in Simple Detection Predict Global Cognition in Schoolchildren. Sci. Rep. 2020;10:1394. doi: 10.1038/s41598-020-58329-4.

30. Hirst R.J., Setti A., De Looze C., Kenny R.A., Newell F.N. Multisensory integration precision is associated with better cognitive performance over time in older adults: A large-scale exploratory study. Aging Brain. 2022;2. doi: 10.1016/j.nbas.2022.100038.

31. Spittle A., Orton J., Anderson P.J., Boyd R., Doyle L.W. Early developmental intervention programmes provided post hospital discharge to prevent motor and cognitive impairment in preterm infants. Cochrane Database Syst. Rev. 2015;2015:CD005495. doi: 10.1002/14651858.CD005495.pub4.

32. Spittle A.J., Barton S., Treyvaud K., Molloy C.S., Doyle L.W., Anderson P.J. School-Age Outcomes of Early Intervention for Preterm Infants and Their Parents: A Randomized Trial. Pediatrics. 2016;138. doi: 10.1542/peds.2016-1363.

33. Brooks-Gunn J., Liaw F.R., Klebanov P.K. Effects of early intervention on cognitive function of low birth weight preterm infants. J. Pediatr. 1992;120:350–359. doi: 10.1016/s0022-3476(05)80896-0.

34. Hyde D.C., Jones B.L., Flom R., Porter C.L. Neural signatures of face-voice synchrony in 5-month-old human infants. Dev. Psychobiol. 2011;53:359–370. doi: 10.1002/dev.20525.

35. Timora J.R., Budd T.W. Steady-State EEG and Psychophysical Measures of Multisensory Integration to Cross-Modally Synchronous and Asynchronous Acoustic and Vibrotactile Amplitude Modulation Rate. Multisensory Res. 2018;31:391–418. doi: 10.1163/22134808-00002549.

36. Schneider T.R., Debener S., Oostenveld R., Engel A.K. Enhanced EEG gamma-band activity reflects multisensory semantic matching in visual-to-auditory object priming. Neuroimage. 2008;42:1244–1254. doi: 10.1016/j.neuroimage.2008.05.033.

37. Lewkowicz D.J., Ghazanfar A.A. The emergence of multisensory systems through perceptual narrowing. Trends Cognit. Sci. 2009;13:470–478. doi: 10.1016/j.tics.2009.08.004.

38. Pons F., Lewkowicz D.J., Soto-Faraco S., Sebastián-Gallés N. Narrowing of intersensory speech perception in infancy. Proc. Natl. Acad. Sci. USA. 2009;106:10598–10602. doi: 10.1073/pnas.0904134106.

39. Grossmann T., Missana M., Friederici A.D., Ghazanfar A.A. Neural correlates of perceptual narrowing in cross-species face-voice matching. Dev. Sci. 2012;15:830–839. doi: 10.1111/j.1467-7687.2012.01179.x.

40. Halit H., de Haan M., Johnson M.H. Cortical specialisation for face processing: face-sensitive event-related potential components in 3- and 12-month-old infants. Neuroimage. 2003;19:1180–1193. doi: 10.1016/s1053-8119(03)00076-4.

41. Rekow D., Baudouin J.-Y., Poncet F., Damon F., Durand K., Schaal B., Rossion B., Leleu A. Odor-driven face-like categorization in the human infant brain. Proc. Natl. Acad. Sci. USA. 2021;118. doi: 10.1073/pnas.2014979118.

42. Rossion B. Twenty years of investigation with the case of prosopagnosia PS to understand human face identity recognition. Part II: Neural basis. Neuropsychologia. 2022;173. doi: 10.1016/j.neuropsychologia.2022.108279.

43. Stanford T.R., Stein B.E. Superadditivity in multisensory integration: putting the computation in context. Neuroreport. 2007;18:787–792. doi: 10.1097/WNR.0b013e3280c1e315.

44. Senkowski D., Saint-Amour D., Höfle M., Foxe J.J. Multisensory interactions in early evoked brain activity follow the principle of inverse effectiveness. Neuroimage. 2011;56:2200–2208. doi: 10.1016/j.neuroimage.2011.03.075.

45. Ronga I., Galigani M., Bruno V., Castellani N., Rossi Sebastiano A., Valentini E., Fossataro C., Neppi-Modona M., Garbarini F. Seeming confines: Electrophysiological evidence of peripersonal space remapping following tool-use in humans. Cortex. 2021;144:133–150. doi: 10.1016/j.cortex.2021.08.004.

46. Bernasconi F., Noel J.-P., Park H.D., Faivre N., Seeck M., Spinelli L., Schaller K., Blanke O., Serino A. Audio-Tactile and Peripersonal Space Processing Around the Trunk in Human Parietal and Temporal Cortex: An Intracranial EEG Study. Cerebr. Cortex. 2018;28:3385–3397. doi: 10.1093/cercor/bhy156.

47. Watanabe H., Homae F., Nakano T., Tsuzuki D., Enkhtur L., Nemoto K., Dan I., Taga G. Effect of auditory input on activations in infant diverse cortical regions during audiovisual processing. Hum. Brain Mapp. 2013;34:543–565. doi: 10.1002/hbm.21453.

48. Sours C., Raghavan P., Foxworthy W.A., Meredith M.A., El Metwally D., Zhuo J., Gilmore J.H., Medina A.E., Gullapalli R.P. Cortical multisensory connectivity is present near birth in humans. Brain Imaging Behav. 2017;11:1207–1213. doi: 10.1007/s11682-016-9586-6.

49. McDonald N.M., Perdue K.L. The infant brain in the social world: Moving toward interactive social neuroscience with functional near-infrared spectroscopy. Neurosci. Biobehav. Rev. 2018;87:38–49. doi: 10.1016/j.neubiorev.2018.01.007.

50. Lloyd-Fox S., Blasi A., Elwell C.E. Illuminating the developing brain: The past, present and future of functional near infrared spectroscopy. Neurosci. Biobehav. Rev. 2010;34:269–284. doi: 10.1016/j.neubiorev.2009.07.008.

51. Werchan D.M., Baumgartner H.A., Lewkowicz D.J., Amso D. The origins of cortical multisensory dynamics: Evidence from human infants. Dev. Cogn. Neurosci. 2018;34:75–81. doi: 10.1016/j.dcn.2018.07.002.

52. Lewis R., Noppeney U. Audiovisual Synchrony Improves Motion Discrimination via Enhanced Connectivity between Early Visual and Auditory Areas. J. Neurosci. 2010;30:12329–12339. doi: 10.1523/JNEUROSCI.5745-09.2010.

53. Lowe M.J. The emergence of doing “nothing” as a viable paradigm design. Neuroimage. 2012;62:1146–1151. doi: 10.1016/j.neuroimage.2012.01.014.

54. Smith S.M., Fox P.T., Miller K.L., Glahn D.C., Fox P.M., Mackay C.E., Filippini N., Watkins K.E., Toro R., Laird A.R., Beckmann C.F. Correspondence of the brain’s functional architecture during activation and rest. Proc. Natl. Acad. Sci. USA. 2009;106:13040–13045. doi: 10.1073/pnas.0905267106.

55. Buckner R.L., Vincent J.L. Unrest at rest: Default activity and spontaneous network correlations. Neuroimage. 2007;37:1091–1096. Discussion 1097–1099. doi: 10.1016/j.neuroimage.2007.01.010.

56. Anderson J.S., Ferguson M.A., Lopez-Larson M., Yurgelun-Todd D. Topographic maps of multisensory attention. Proc. Natl. Acad. Sci. USA. 2010;107:20110–20114. doi: 10.1073/pnas.1011616107.

57. Tyll S., Bonath B., Schoenfeld M.A., Heinze H.-J., Ohl F.W., Noesselt T. Neural basis of multisensory looming signals. Neuroimage. 2013;65:13–22. doi: 10.1016/j.neuroimage.2012.09.056.

58. Marchant J.L., Ruff C.C., Driver J. Audiovisual synchrony enhances BOLD responses in a brain network including multisensory STS while also enhancing target-detection performance for both modalities. Hum. Brain Mapp. 2012;33:1212–1224. doi: 10.1002/hbm.21278.

59. Bremner A.J., Lewkowicz D.J., Spence C. Multisensory Development. Oxford University Press; 2012. The multisensory approach to development; pp. 1–26.

60. Wallace M.T., Stein B.E. Sensory and Multisensory Responses in the Newborn Monkey Superior Colliculus. J. Neurosci. 2001;21:8886–8894. doi: 10.1523/JNEUROSCI.21-22-08886.2001.

- 61.Meredith M.A., Stein B.E. Spatial determinants of multisensory integration in cat superior colliculus neurons. J. Neurophysiol. 1996;75:1843–1857. doi: 10.1152/jn.1996.75.5.1843. [DOI] [PubMed] [Google Scholar]

- 62.Wallace M.T., Stein B.E. Early Experience Determines How the Senses Will Interact. J. Neurophysiol. 2007;97:921–926. doi: 10.1152/jn.00497.2006. [DOI] [PubMed] [Google Scholar]

- 63.Guerreiro M.J.S., Putzar L., Röder B. Persisting Cross-Modal Changes in Sight-Recovery Individuals Modulate Visual Perception. Curr. Biol. 2016;26:3096–3100. doi: 10.1016/j.cub.2016.08.069. [DOI] [PubMed] [Google Scholar]

- 64.Occelli V., Spence C., Zampini M. Auditory, tactile, and audiotactile information processing following visual deprivation. Psychol. Bull. 2013;139:189–212. doi: 10.1037/a0028416. [DOI] [PubMed] [Google Scholar]

- 65.Kupers R., Ptito M. Compensatory plasticity and cross-modal reorganization following early visual deprivation. Neurosci. Biobehav. Rev. 2014;41:36–52. doi: 10.1016/j.neubiorev.2013.08.001. [DOI] [PubMed] [Google Scholar]

- 66.Stevenson R.A., Sheffield S.W., Butera I.M., Gifford R.H., Wallace M.T. Multisensory Integration in Cochlear Implant Recipients. Ear Hear. 2017;38:521–538. doi: 10.1097/AUD.0000000000000435. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.LeBlanc J.J., Fagiolini M. Autism: A “Critical Period” Disorder? Neural Plast. 2011;2011:921680–921717. doi: 10.1155/2011/921680. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Hornix B.E., Havekes R., Kas M.J.H. Multisensory cortical processing and dysfunction across the neuropsychiatric spectrum. Neurosci. Biobehav. Rev. 2019;97:138–151. doi: 10.1016/j.neubiorev.2018.02.010. [DOI] [PubMed] [Google Scholar]

- 69.Kawakami S., Otsuka S. 2021. Multisensory Processing in Autism Spectrum Disorders. [PubMed] [Google Scholar]

- 70.Panagiotidi M., Overton P.G., Stafford T. Multisensory integration and ADHD-like traits: Evidence for an abnormal temporal integration window in ADHD. Acta Psychol. 2017;181:10–17. doi: 10.1016/j.actpsy.2017.10.001. [DOI] [PubMed] [Google Scholar]

- 71.Kawakami S., Uono S., Otsuka S., Yoshimura S., Zhao S., Toichi M. Atypical Multisensory Integration and the Temporal Binding Window in Autism Spectrum Disorder. J. Autism Dev. Disord. 2020;50:3944–3956. doi: 10.1007/s10803-020-04452-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Stevenson R.A., Zemtsov R.K., Wallace M.T. Individual differences in the multisensory temporal binding window predict susceptibility to audiovisual illusions. J. Exp. Psychol. Hum. Percept. Perform. 2012;38:1517–1529. doi: 10.1037/a0027339. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73. Kawakami S., Uono S., Otsuka S., Zhao S., Toichi M. Everything has Its Time: Narrow Temporal Windows are Associated with High Levels of Autistic Traits Via Weaknesses in Multisensory Integration. J. Autism Dev. Disord. 2020;50:1561–1571. doi: 10.1007/s10803-018-3762-z.

- 74. Ning K., Wang T. Multimodal Interventions Are More Effective in Improving Core Symptoms in Children With ADHD. Front. Psychiatr. 2021;12:759315. doi: 10.3389/fpsyt.2021.759315.

- 75. Purpura G., Cioni G., Tinelli F. Multisensory-Based Rehabilitation Approach: Translational Insights from Animal Models to Early Intervention. Front. Neurosci. 2017;11:430. doi: 10.3389/fnins.2017.00430.

- 76. Bolognini N., Vallar G. Neglect, Hemianopia and their rehabilitation. In: Sathian K., Ramachandran V.S., editors. Multisensory Perception: From Laboratory to Clinic. Elsevier/Academic Press; 2019. In press.

- 77. Bolognini N., Convento S., Rossetti A., Merabet L.B. Multisensory processing after a brain damage: Clues on post-injury crossmodal plasticity from neuropsychology. Neurosci. Biobehav. Rev. 2013;37:269–278. doi: 10.1016/j.neubiorev.2012.12.006.

- 78. Guzzetta A., Baldini S., Bancale A., Baroncelli L., Ciucci F., Ghirri P., Putignano E., Sale A., Viegi A., Berardi N., et al. Massage Accelerates Brain Development and the Maturation of Visual Function. J. Neurosci. 2009;29:6042–6051. doi: 10.1523/JNEUROSCI.5548-08.2009.

- 79. Purpura G., Tinelli F., Bargagna S., Bozza M., Bastiani L., Cioni G. Effect of early multisensory massage intervention on visual functions in infants with Down syndrome. Early Hum. Dev. 2014;90:809–813. doi: 10.1016/j.earlhumdev.2014.08.016.

- 80. Guzzetta A., D’acunto M.G., Carotenuto M., Berardi N., Bancale A., Biagioni E., Boldrini A., Ghirri P., Maffei L., Cioni G. The effects of preterm infant massage on brain electrical activity. Dev. Med. Child Neurol. 2011;53:46–51. doi: 10.1111/j.1469-8749.2011.04065.x.

- 81. Schaaf R.C., Benevides T.W., Kelly D., Mailloux-Maggio Z. Occupational therapy and sensory integration for children with autism: a feasibility, safety, acceptability and fidelity study. Autism. 2012;16:321–327. doi: 10.1177/1362361311435157.

- 82. Pfeiffer B.A., Koenig K., Kinnealey M., Sheppard M., Henderson L. Effectiveness of Sensory Integration Interventions in Children With Autism Spectrum Disorders: A Pilot Study. Am. J. Occup. Ther. 2011;65:76–85. doi: 10.5014/ajot.2011.09205.

- 83. Schoen S.A., Lane S.J., Mailloux Z., May-Benson T., Parham L.D., Smith Roley S., Schaaf R.C. A systematic review of Ayres sensory integration intervention for children with autism. Autism Res. 2019;12:6–19. doi: 10.1002/aur.2046.

- 84. Lindström K., Lindblad F., Hjern A. Preterm Birth and Attention-Deficit/Hyperactivity Disorder in Schoolchildren. Pediatrics. 2011;127:858–865. doi: 10.1542/peds.2010-1279.

- 85. Chehade H., Simeoni U., Guignard J.-P., Boubred F. Preterm Birth: Long Term Cardiovascular and Renal Consequences. Curr. Pediatr. Rev. 2018;14:219–226. doi: 10.2174/1573396314666180813121652.

- 86. Batalle D., Hughes E.J., Zhang H., Tournier J.-D., Tusor N., Aljabar P., Wali L., Alexander D.C., Hajnal J.V., Nosarti C., et al. Early development of structural networks and the impact of prematurity on brain connectivity. Neuroimage. 2017;149:379–392. doi: 10.1016/j.neuroimage.2017.01.065.

- 87. Inder T.E., Warfield S.K., Wang H., Hüppi P.S., Volpe J.J. Abnormal Cerebral Structure Is Present at Term in Premature Infants. Pediatrics. 2005;115:286–294. doi: 10.1542/peds.2004-0326.

- 88. Fischi-Gómez E., Vasung L., Meskaldji D.-E., Lazeyras F., Borradori-Tolsa C., Hagmann P., Barisnikov K., Thiran J.-P., Hüppi P.S. Structural Brain Connectivity in School-Age Preterm Infants Provides Evidence for Impaired Networks Relevant for Higher Order Cognitive Skills and Social Cognition. Cerebr. Cortex. 2015;25:2793–2805. doi: 10.1093/cercor/bhu073.

- 89. Tokariev A., Stjerna S., Lano A., Metsäranta M., Palva J.M., Vanhatalo S. Preterm Birth Changes Networks of Newborn Cortical Activity. Cerebr. Cortex. 2019;29:814–826. doi: 10.1093/cercor/bhy012.

- 90. Maitre N.L., Key A.P., Slaughter J.C., Yoder P.J., Neel M.L., Richard C., Wallace M.T., Murray M.M. Neonatal Multisensory Processing in Preterm and Term Infants Predicts Sensory Reactivity and Internalizing Tendencies in Early Childhood. Brain Topogr. 2020;33:586–599. doi: 10.1007/s10548-020-00791-4.

- 91. Embarek-Hernández M., Güeita-Rodríguez J., Molina-Rueda F. Multisensory stimulation to promote feeding and psychomotor development in preterm infants: A systematic review. Pediatr. Neonatol. 2022;63:452–461. doi: 10.1016/j.pedneo.2022.07.001.

- 92. White-Traut R.C., Nelson M.N., Silvestri J.M., Vasan U., Littau S., Meleedy-Rey P., Gouguang G., Patel M. Effect of auditory, tactile, visual, and vestibular intervention on length of stay, alertness, and feeding progression in preterm infants. Dev. Med. Child Neurol. 2002;44:91–97. doi: 10.1017/s0012162201001736.

- 93. Medoff-Cooper B., Rankin K., Li Z., Liu L., White-Traut R. Multisensory Intervention for Preterm Infants Improves Sucking Organization. Adv. Neonatal Care. 2015;15:142–149. doi: 10.1097/ANC.0000000000000166.

- 94. Welch M.G., Hofer M.A., Brunelli S.A., Stark R.I., Andrews H.F., Austin J., Myers M.M., Family Nurture Intervention FNI Trial Group. Family nurture intervention (FNI): methods and treatment protocol of a randomized controlled trial in the NICU. BMC Pediatr. 2012;12:14. doi: 10.1186/1471-2431-12-14.

- 95. Welch M.G., Myers M.M., Grieve P.G., Isler J.R., Fifer W.P., Sahni R., Hofer M.A., Austin J., Ludwig R.J., Stark R.I., FNI Trial Group. Electroencephalographic activity of preterm infants is increased by Family Nurture Intervention: A randomized controlled trial in the NICU. Clin. Neurophysiol. 2014;125:675–684. doi: 10.1016/j.clinph.2013.08.021.

- 96. Welch M.G., Firestein M.R., Austin J., Hane A.A., Stark R.I., Hofer M.A., Garland M., Glickstein S.B., Brunelli S.A., Ludwig R.J., Myers M.M. Family Nurture Intervention in the Neonatal Intensive Care Unit improves social-relatedness, attention, and neurodevelopment of preterm infants at 18 months in a randomized controlled trial. J. Child Psychol. Psychiatry. 2015;56:1202–1211. doi: 10.1111/jcpp.12405.

- 97. Aita M., De Clifford Faugère G., Lavallée A., Feeley N., Stremler R., Rioux É., Proulx M.-H. Effectiveness of interventions on early neurodevelopment of preterm infants: a systematic review and meta-analysis. BMC Pediatr. 2021;21:210. doi: 10.1186/s12887-021-02559-6.

- 98. Shams L., Seitz A.R. Benefits of multisensory learning. Trends Cognit. Sci. 2008;12:411–417. doi: 10.1016/j.tics.2008.07.006.

- 99. Rao A.R. An oscillatory neural network model that demonstrates the benefits of multisensory learning. Cogn. Neurodyn. 2018;12:481–499. doi: 10.1007/s11571-018-9489-x.

- 100. Paraskevopoulos E., Kuchenbuch A., Herholz S.C., Pantev C. Evidence for Training-Induced Plasticity in Multisensory Brain Structures: An MEG Study. PLoS One. 2012;7:e36534. doi: 10.1371/journal.pone.0036534.

- 101. Gnaedinger A., Gurden H., Gourévitch B., Martin C. Multisensory learning between odor and sound enhances beta oscillations. Sci. Rep. 2019;9. doi: 10.1038/s41598-019-47503-y.