Why might deception be of interest to a clinical readership? Is it not a moral issue, more relevant to legal or theological discourse? How can we determine, scientifically, whether another human being is lying to us? Should we want to?

The answers to all of these questions might depend on the clinical setting envisaged. The significance of a lie about adherence to a treatment will depend on the clinical necessity of that treatment. In the forensic arena, a lie about plans for future conduct might have profound consequences: will the paedophile avoid playgrounds? In psychiatry, neurology, medicolegal practice and perhaps certain other areas of medicine, doctors are called upon to judge the veracity of their patients’ accounts (even though this may not be made explicit). Doctors commonly imply a judgement of veracity in the terms that they use.

Consider the distinction between feigned physical symptoms (‘malingering’) and those ascribed to conversion disorder (‘hysteria’). These diagnoses have very different meanings, yet what objective grounds are there for differentiating between them?1 Notwithstanding the findings of brain imaging experiments,2 it would seem that, phenomenologically, there is little objective evidence that would favour one above the other, and the diagnosis reached may be influenced by circumstantial factors and the physician’s opinion of the patient’s personality or background. Also, the subtle ‘tricks’ used to elicit hysterical motor inconsistency (e.g. the unintentional movement of the ‘paralysed’ limb) might just as well be used to indicate deception.1,3 The point is not that these disorders are equivalent, rather that they lack objective differentiation. Yet, when recording these diagnoses, the physician implies whether the patient is to be believed.1

Are doctors especially good at detecting deception? This seems unlikely. When psychologists have studied various groups trying to decide whether others are lying to them, doctors have performed at the level of chance;4,5 with the possible exception of security personnel, it seems to make little difference whether the putative ‘lie detector’ is a judge, a police officer or a doctor.

However, there is a more subtle aspect to deception that emerges when human behaviour is conceptualized in terms of its higher, executive, control processes. For it would appear that deception ‘behaves’ as if it is a skill—something that must be worked at, for which attention is required, and in which fatigue may lead to inconsistency or unintended confession.5

The question of whether lying relies upon higher brain systems is important because such systems may be differentially affected in neuropsychiatric disorders. In the case of some of the most difficult patients with whom psychiatrists interact (such as ‘psychopaths’ and sex offenders), deception may be a feature of that interaction. A psychopath who is a skilled liar may be demonstrating preserved, or possibly superior, executive brain function. This may have profound implications for our understanding of responsibility and mitigation.

LEARNING TO LIE

‘[L]ie—a false statement made with the intention of deceiving...’6

‘[D]eception—a successful or unsuccessful deliberate attempt, without forewarning, to create in another a belief which the communicator considers to be untrue.’5

On the evidence of religious texts dating from antiquity, lying and deception have been of concern to humans for millennia.7 However, despite the apparent premium placed upon honesty in ancient and modern life, there is emerging evidence from the disciplines of evolutionary studies,8 child development and developmental psychopathology that the ability to deceive is acquired and, indeed, ‘normal’. Such behaviours follow a predictable developmental trajectory in human infants and are ‘impaired’ among human beings with specific neurodevelopmental disorders, such as autism.9,10 Hence, there seems to be a tension between what is apparently socially undesirable but ‘normal’ (i.e. lying) and what is socially commendable but pathological (i.e. always telling the truth). Higher organisms have evolved the ability to deceive each other, whether consciously or otherwise,11 while humans, in a social context, are encouraged to refrain from deception. It might be hypothesized that it is precisely because the human organism has such an ability to deceive that it is called upon to exercise control over the potential use of this ability.

THE BENEFITS OF DECEPTION

Given the ‘normal’ appearance of lying and deception during childhood, several commentators have speculated upon the purpose served by such behaviours. One view has been that deceit delineates a boundary between ‘self’ and ‘other’, originally between child and parent.12 Knowing something that the mother does not know shows the child the limits of the mother’s omniscience, while giving the child a measure of power. Indeed, such power over information might drive the ‘pathological lying’ seen among dysfunctional adolescents and adults.12

Lying also eases social interaction, by way of compliments and information management. Precisely truthful communication at all times would be difficult and perhaps rather brutal.5 Deception can sometimes denote consideration for others. Hence, it is unsurprising that the man-in-the-street admits to telling lies most days.5 Social psychological studies, often of college students, suggest that lying facilitates impression management, especially early in a romantic relationship.5

Deception may also be a vital skill in the context of conflict—for instance, between social groups, countries or intelligence agencies. When practised under these circumstances it might even be perceived as a ‘good’. However, when a person is branded ‘a liar’, any advantage formerly gained may be lost. Though fluent liars might make entertaining companions, to become known as a liar is likely to be disadvantageous in the long run.5

PRINCIPLES OF EXECUTIVE CONTROL

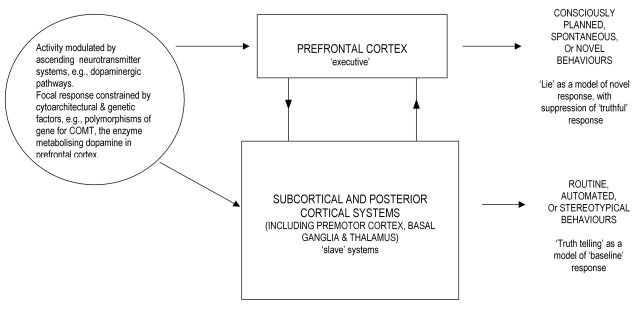

Control of voluntary behaviour in everyday human life is constrained by the availability of cognitive, neurobiological resources.13 Control (or executive) functions are not necessarily ‘conscious’, though they may access awareness.14,15 Executive functions include planning, problem solving, the initiation and inhibition of behaviours, and the manipulation of useful data in conscious ‘working’ memory (e.g. the telephone number about to be dialled). These processes come to the fore in non-routine situations, when the need arises to ‘think on one’s feet’. Such processes have been shown to engage specific regions of prefrontal cortex, though they also rely upon normal function in lower brain systems. There seems to be a principle to the cognitive architecture of executive control: higher centres, such as prefrontal cortex, are essential to adaptive behaviour in novel or difficult circumstances, while lower, ‘slave’ systems may suffice to perform routine or automated tasks (e.g. riding a bicycle while thinking of something else; Figure 1).16

Figure 1.

Schematic diagram illustrating neural basis of behavioural control. Prefrontal systems are implicated in control of complex and novel behaviour patterns, modulating ‘lower’ brain systems (such as basal ganglia and premotor cortices). However, constraints are imposed by genetic and neurodevelopmental factors (left) and function is modulated by neurotransmitters. These constraints impose limits on the envelope of possible responses emitted by the organism (right) (Ref. 13)

A recurring theme in the psychology of deception is the difficulty of deceiving in high-stake situations: information previously divulged must be remembered, emotions and behaviours controlled, information managed.5 These are quintessentially executive functions. Hence, much of the liar’s behaviour may be seen, from a cognitive neurobiological perspective, as an exercise in behavioural control, making use of limited cognitive resources. Some examples may serve to illustrate the principles underlying such behavioural control.

TESTING CONTROL PROCESSES IN THE CLINIC

One of the clinical means by which a psychiatrist can assess whether a patient receiving neuroleptic medication exhibits involuntary movements is distraction, e.g. asking the patient to stand and perform complex hand movements. While distracted by this manual task the patient may begin to tramp (shuffle repetitively) on the spot, his tongue protruding and exhibiting dyskinesia.17 Hence, while executive control systems are engaged in complex manual tasks they are unable to inhibit other movements (of the legs and tongue) that are, by their nature, involuntary. Similarly, patients with hysterical conversion symptoms, such as motor paralysis, may move the affected limb when distracted or sedated, their executive processes momentarily diverted or obtunded. This suggests that the executive is engaged in the maintenance of such symptoms.1

In certain situations, liars betray deception by their bodily movements. While telling complex lies they may make fewer hand and arm movements (‘illustrators’).5 The slow rigid behaviour exhibited by liars has been termed the motivational impairment effect; police officers have been advised to observe witnesses from ‘head to toe’ rather than concentrating upon their eyes.5

LYING AS A COGNITIVE PROCESS

Deceiving another person is likely to involve multiple cognitive processes, including social cognitions concerning the victim’s thoughts (his or her current beliefs) and the monitoring of responses made by both the liar and the victim in the context of their interaction. In the light of the above, we can posit that the liar is called upon to do at least two things simultaneously: to construct a new item of information (the lie) and to withhold a factual item (the truth). Within such a theoretical framework the truthful response constitutes a form of baseline, or pre-potent response: the information that we would expect to be forthcoming if an honest person were asked the question, or if the liar were distracted or fatigued. We might, therefore, hypothesize that responding with a lie demands something ‘extra’, and that it will engage executive prefrontal systems more than does telling the truth. Hence, we have a hypothesis that we can test by means of functional neuroimaging.18

IMAGING OF DECEPTION

In our own work we hypothesized that the generation of lie responses (in contrast to ‘truths’) would be associated with greater dorsolateral prefrontal activity;18 and that the concomitant inhibition of relatively pre-potent responses (truths) would be associated with greater activation of ventral prefrontal regions (systems known to be involved in response inhibition).19

We used a simple computerized protocol in which volunteers answered questions with a ‘yes’ or a ‘no’, pressing specified single computer keys.18 All the questions concerned activities the individuals could have performed on the day they were studied. We had previously acquired information about their activities from each of them when they were first interviewed. However, there was an added feature: the volunteers performed these tests in the presence of an investigator acting as a ‘stooge’, who would be required to judge afterwards whether the responses were truths or lies. The computer screen presenting the questions also carried a green or red prompt (the sequence counterbalanced across subjects). Without the stooge knowing the ‘colour rule’, participants registered truth responses in the presence of one colour and lie responses in the presence of the other. All questions were presented twice, once under each colour condition, so that we were able to compare response times and brain activity during ‘truth’ and ‘lie’ responses. We have studied three cohorts ‘outside the scanner’ (30–48 participants in each)7 and one sample of 10 ‘inside the scanner’,18 using two variants of this experimental protocol and confirming internal validity. The brain imaging technique applied was functional magnetic resonance imaging (fMRI).
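The within-subject design described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the software used in the studies. The question wording, the `Trial` record, the `colour_rule` and `build_trials` helpers, and the parity-based counterbalancing are all hypothetical. Under that counterbalancing scheme, even-numbered participants lie on red and odd-numbered participants lie on green, which is only one possible reading of what was counterbalanced. What the sketch does show is that every question is paired with both colours, so each participant contributes one ‘truth’ and one ‘lie’ response per question.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    question: str
    colour: str       # prompt colour shown on screen: "green" or "red"
    instruction: str  # "truth" or "lie"; known to the participant, not the stooge


def colour_rule(participant_index: int) -> dict[str, str]:
    """Hypothetical counterbalancing of the colour rule across participants."""
    if participant_index % 2 == 0:
        return {"green": "truth", "red": "lie"}
    return {"green": "lie", "red": "truth"}


def build_trials(questions: list[str], participant_index: int, seed: int = 0) -> list[Trial]:
    """Present every question twice, once under each colour, in random order."""
    rule = colour_rule(participant_index)
    trials = [
        Trial(question, colour, rule[colour])
        for question in questions
        for colour in ("green", "red")
    ]
    random.Random(seed).shuffle(trials)  # seeded so a session can be reproduced
    return trials


# Hypothetical questions about activities on the day of testing.
for trial in build_trials(["Did you make a phone call today?", "Did you buy a newspaper today?"], 0):
    print(trial)
```

Because each question appears under both instructions, any truth/lie difference can be compared question by question rather than across different question sets.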

Our analyses revealed that, whether the participants were inside or outside the scanner, response time was about 200 ms longer for lying than for truth-telling. In the scanned sample, lie responses were associated with increased activation in several prefrontal regions, including ventrolateral prefrontal and anterior cingulate cortices. These data support the hypothesis that prefrontal systems exhibit greater activation when the participant is called upon to generate experimental ‘lies’ and they show that, on average, a longer processing time is required to answer with a lie.
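One way to summarize such latency data is sketched below, assuming per-question response times in milliseconds for each participant. The approach first computes each participant's mean lie-minus-truth difference and then tests the group mean with a paired (one-sample) t statistic. This is an illustrative sketch only. The function names are hypothetical, and the original analyses may have differed.

```python
from math import sqrt
from statistics import mean, stdev


def participant_lie_cost(rt_lie_ms: dict[str, float], rt_truth_ms: dict[str, float]) -> float:
    """Mean extra latency (ms) for lying, paired question by question.

    Both dicts map the same question IDs to response times, because each
    question was answered once under the 'truth' and once under the 'lie' colour.
    """
    if rt_lie_ms.keys() != rt_truth_ms.keys():
        raise ValueError("each question needs one truth and one lie response")
    return mean(rt_lie_ms[q] - rt_truth_ms[q] for q in rt_truth_ms)


def group_lie_cost(costs_ms: list[float]) -> tuple[float, float]:
    """Group mean lie-minus-truth cost and its paired t statistic (mean / SE).

    Needs at least two participants; the t statistic has len(costs_ms) - 1 df.
    """
    m = mean(costs_ms)
    standard_error = stdev(costs_ms) / sqrt(len(costs_ms))
    return m, m / standard_error
```

A large positive t statistic indicates a consistent latency cost for lying across participants. A wide spread of individual costs would instead reflect the kind of inter-subject variation that group averages can conceal.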

There are considerable limitations to our methodology, not least the artificiality of the experimental setting, the low-stake nature of the lying required, and the fact that most of the volunteers were academics and students. Also, we analyse our data on the basis of groups; there may be considerable inter-subject variation. Overall, there is a need for more ‘ecological’ studies of deception.

Nevertheless, our finding of increased response time during lying is congruent with a 2001 report of a convicted murderer filmed while lying and telling the truth.20 Although recounting similar material on both occasions, this person exhibited slower speech with longer pauses and more speech disturbance when lying. He also displayed fewer bodily movements.20 Previous meta-analyses of behavioural lying studies have likewise pointed to speech disturbance, increased response latency and a decrease in other motor behaviours in the context of attempted deception.20 Although responses on our computerized tasks were non-verbal, the behavioural and functional anatomical profile may indicate a common process underlying these findings and others,18,20 namely an inhibitory mechanism used by those attempting to withhold the truth (a process associated with increased response latency). It is noteworthy that the difference between lying and truth times for all groups in our studies was around 200 ms.7,18 This figure is consistent with behavioural data from investigators using ‘guilty knowledge’ tasks.21,22

Other groups using fMRI have similarly found prefrontal cortex to be implicated in deception. Although the foci reported differ in some cases, the principle of preferential engagement of executive brain regions seems to hold, as does the notion that ‘truth’ constitutes a baseline.23,24 None of these studies has identified areas of greater activation during truthful responding relative to ‘lying’.18,23,24 Recent conference abstracts again describe greater activation of prefrontal systems during lying,25 while a single study of motor evoked potentials points to increased excitability, bilaterally, in motor regions of the brain.26

Taken together, these data seem to support the contention that lying is an executive process, engaging ‘higher’ brain regions, notably within frontal systems. However, they also seem to imply that truth-telling constitutes a relative baseline in human cognition and communication. The caveat here is that the absence of foci of greater activation during truth-telling may represent a type II error (a false negative): conceivably, as the sensitivity of imaging techniques increases, more subtle activations may be detected during truthfulness.
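The type II caveat can be made concrete with a standard normal-approximation power calculation. The sketch below is a hedged illustration rather than an analysis of any imaging data. It assumes a two-sided one-sample test of a standardized effect size d with n observations, and the function name and the effect size of 0.5 are hypothetical. It shows how a modest true effect can easily be missed with a sample of 10, the size of the scanned group described above.

```python
from math import sqrt
from statistics import NormalDist


def approx_type_ii_error(effect_size_d: float, n: int, alpha: float = 0.05) -> float:
    """Approximate beta, the probability of missing a true effect.

    Uses the normal approximation for a two-sided one-sample test and ignores
    the negligible chance of rejecting in the opposite tail.
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z_crit = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    power = std_normal.cdf(effect_size_d * sqrt(n) - z_crit)
    return 1 - power


for n in (10, 40):
    # Hypothetical moderate effect (d = 0.5): beta falls as the sample grows.
    print(n, round(approx_type_ii_error(0.5, n), 2))
```

Under these assumptions beta is roughly 0.65 for n = 10 and roughly 0.11 for n = 40. Voxel-wise imaging analyses, which apply much stricter thresholds to correct for many comparisons, would usually have even less power than this simple calculation suggests.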

Acknowledgments

I thank Mrs Jean Woodhead for her assistance with the manuscript, and colleagues and volunteers who contributed to the studies described.

References

- 1. Spence SA. Hysterical paralyses as disorders of action. Cognitive Neuropsychiatry 1999;4: 203–26

- 2. Spence SA, Crimlisk HL, Cope H, et al. Discrete neurophysiological correlates in prefrontal cortex during hysterical and feigned disorder of movement. Lancet 2000;355: 1243–4

- 3. Merskey H. The Analysis of Hysteria: Understanding Conversion and Dissociation. London: Gaskell, 1995

- 4. Ekman P, O’Sullivan M. Who can catch a liar? Am Psychologist 1991;46: 913–20

- 5. Vrij A. Detecting Lies and Deceit: The Psychology of Lying and the Implications for Professional Practice. Chichester: Wiley, 2001

- 6. Chambers Concise Dictionary. Edinburgh: Chambers Harrap, 1991

- 7. Spence S, Farrow T, Leung D, et al. Lying as an executive function. In: Halligan PW, Bass C, Oakley DA, eds. Malingering and Illness Deception. Oxford: Oxford University Press, 2003: 255–66

- 8. Dunbar R. On the origin of the human mind. In: Carruthers P, Chamberlain A, eds. Evolution and the Human Mind: Modularity, Language and Meta-cognition. Cambridge: Cambridge University Press, 2000: 238–53

- 9. O’Connell S. Mindreading: An Investigation into How we Learn to Love and Lie. London: Arrow Books, 1998

- 10. Happé F. Autism: an Introduction to Psychological Theory. Hove: Psychology Press, 1994

- 11. Giannetti E. Lies we Live By: the Art of Self-deception (transl. J Gledson). London: Bloomsbury, 2000

- 12. Ford CV, King BH, Hollender MH. Lies and liars: psychiatric aspects of prevarication. Am J Psychiatry 1988;145: 554–62

- 13. Spence SA, Hunter MD, Harpin G. Neuroscience and the will. Curr Opin Psychiatry 2002;15: 519–26

- 14. Badgaiyan RD. Executive control, willed actions, and nonconscious processing. Human Brain Mapping 2000;9: 38–41

- 15. Jack AI, Shallice T. Introspective physicalism as an approach to the science of consciousness. Cognition 2001;79: 161–96

- 16. Shallice T. From Neuropsychology to Mental Structure. Cambridge: Cambridge University Press, 1988

- 17. Barnes TRE, Spence SA. Movement disorders associated with antipsychotic drugs: clinical and biological implications. In: Reveley MA, Deakin JFW, eds. The Psychopharmacology of Schizophrenia. London: Arnold, 2000: 178–210

- 18. Spence SA, Farrow TFD, Herford AE, et al. Behavioural and functional anatomical correlates of deception in humans. NeuroReport 2001;12: 2849–53

- 19. Starkstein SE, Robinson RG. Mechanism of disinhibition after brain lesions. J Nerv Ment Dis 1997;185: 108–14

- 20. Vrij A, Mann S. Telling and detecting lies in a high-stake situation: the case of a convicted murderer. Appl Cogn Psychol 2001;15: 187–203

- 21. Farwell LA, Donchin E. The truth will out: interrogative polygraphy (“lie detection”) with event-related brain potentials. Psychophysiology 1991;28: 531–47

- 22. Seymour TL, Seifert CM, Shafto MG, Mosmann AL. Using response time measures to assess “guilty knowledge”. J Appl Psychol 2000;85: 30–7

- 23. Langleben DD, Schroeder L, Maldjian JA, et al. Brain activity during simulated deception: an event-related functional magnetic resonance study. NeuroImage 2002;15: 727–32

- 24. Lee TMC, Liu H-L, Tan L-H, et al. Lie detection by functional magnetic resonance imaging. Hum Brain Mapping 2002;15: 157–64

- 25. Nunez J, Gutman D, Hirsch J. Fabrication versus truth telling can be distinguished as a measure of cognitive load using FMRI in human subjects. Human Brain Mapping 2003, New York City, New York, June 18–22, 2003 [abstract]

- 26. Lo YL, Fook-Chong S, Tan EK. Increased cortical excitability in human deception. NeuroReport 2003;14: 1021–4