Abstract

Hippocampal place cells fire in sequences that span spatial environments and non-spatial modalities, suggesting that hippocampal activity can anchor to the most behaviorally salient aspects of experience. As reward is a highly salient event, we hypothesized that sequences of hippocampal activity can anchor to rewards. To test this, we performed two-photon imaging of hippocampal CA1 neurons as mice navigated virtual environments with changing hidden reward locations. When the reward moved, the firing fields of a subpopulation of cells moved to the same relative position with respect to reward, constructing a sequence of reward-relative cells that spanned the entire task structure. The density of these reward-relative sequences increased with task experience as additional neurons were recruited to the reward-relative population. Conversely, a largely separate subpopulation maintained a spatially-based place code. These findings thus reveal that separate hippocampal ensembles can flexibly encode multiple behaviorally salient reference frames, reflecting the structure of the experience.

INTRODUCTION

Memories of positive experiences are essential for reinforcing rewarding behaviors. These memories must also have the capacity to update when knowledge of reward changes (e.g. a water source moves to a new location)1,2. How does the brain both amplify memories of events surrounding a reward and maintain a stable representation of the external world? The hippocampus provides a potential neural circuit for this process. Hippocampal place cells fire in one or a few spatially restricted locations, defined as their place fields3. In different environments, place cells “remap”, such that the preferred location or firing rate of their place field changes4–12. Together, the population of place cells creates reliable sequences of activity as an animal traverses an environment, resulting in a unique neural code for each environment and a unique sequence of spiking for each traversal9,13–21. While these patterns support spatial navigation22–26, recent evidence has revealed that hippocampal neurons also show reliable tuning relative to a variety of non-spatial modalities and create sequences of activity for these modalities17,27,28. For instance, sequential hippocampal firing has been observed across time29–35, in relation to progressions of auditory36, olfactory37–42, or multisensory stimuli43–45, and during accumulation of evidence for decision-making tasks46. Together, these findings suggest that the hippocampus can be understood as generating sequential activity13,17,27,29,47,48 to encode the progression of events as they unfold in a given experience27,28,49–51.

Multiple lines of evidence demonstrate that the hippocampus prioritizes coding for aspects of experience that are particularly salient or that can affect the animal’s behavior27,33–46,52–62. The presence of food or water reward is one such highly salient event that is consistently prioritized, as demonstrated by prior work finding that place cells tend to cluster near (i.e. “over-represent”) reward locations1,2,54,55,63–66. In addition, a small subpopulation of hippocampal cells are active precisely at rewarded locations54,64, even when the reward is moved to a new location54. Moreover, optogenetic activation of cells with place fields near reward drives the animal to engage in reward-seeking actions24, suggesting a causal role for hippocampal activity in reward-related behaviors. Yet it remains unclear whether the hippocampus encodes complete sequences of events around rewards separately from the influence of other sensory stimuli. Moreover, it is incompletely understood how different aspects of experience are represented simultaneously.

We therefore hypothesized that reward may anchor the sequential activity of the hippocampus in a subpopulation of pyramidal cells. Remapping dynamics supporting this hypothesis have been reported at locations very close to reward sites1,2,54,55,63–66, but in order to support memory of events leading up to and following rewarding experiences, hippocampal activity encoding events at distances further away from reward must also be able to update when reward conditions change. Yet predictable remapping that spans the entire environment in response to changing reward locations has not been demonstrated. We reasoned that previously reported reward-specific cells54 may comprise a subset of a larger population encoding an entire sequence of events relative to reward. This hypothesis predicts that moving the reward within a constant environment should induce remapping even at locations far from reward, in a manner that preserves sequential firing relative to each reward location. In parallel, we predicted that a subset of hippocampal neurons should preserve their firing relative to the spatial environment, reflecting an ability of the hippocampus to flexibly anchor to both the spatial environment and the experience of finding reward as two salient reference frames45,59,60,67–73 that are required to solve the task at hand.

To investigate whether the hippocampus simultaneously encodes sequential experience relative to reward and the spatial environment, we used 2-photon (2P) calcium imaging74,75 to monitor large populations of CA1 neurons in head-fixed mice learning a virtual reality (VR) task. The use of VR provided tight control of the sensory stimuli and constrained the animal’s trajectory, allowing us to more readily observe remapping of neurons in relation to distant rewards, a phenomenon potentially less visible in freely moving scenarios. Further, the task included multiple updates to hidden reward zones across two environments, allowing us to dissociate spatially-driven and reward-driven remapping. We found a subpopulation of neurons that, when rewards moved, remapped to the same relative position with respect to reward and thus constructed a sequence of activity relative to the reward location. With increasing experience, more neurons were recruited to these “reward-relative sequences”. These results suggest that the hippocampus learned a generalized representation of the task anchored to reward, while also maintaining a spatial map in largely separate neural ensembles.

RESULTS

Monitoring neural activity in mice during a virtual reality navigation and reward learning task

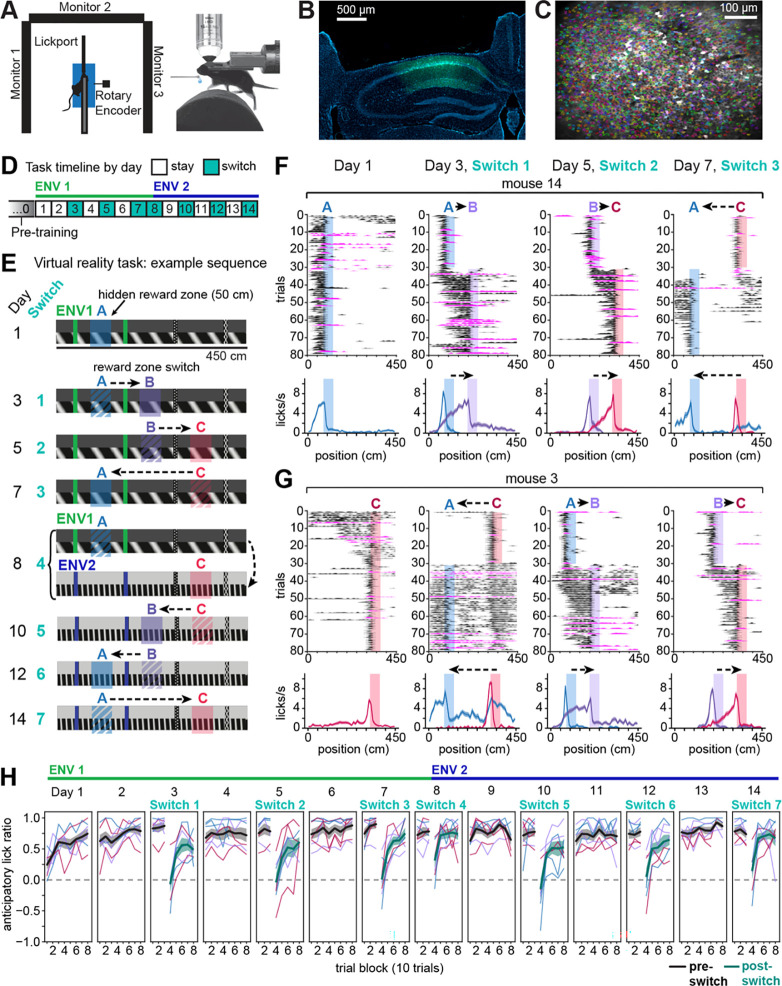

We performed 2P calcium imaging to monitor CA1 neurons expressing GCaMP7f in head-fixed mice learning a VR linear navigation task (Fig. 1A–C). To observe putative remapping related to rewards, and examine how this remapping might develop with experience, we designed a task with multiple changing hidden reward locations. In the task, animals were required to run down a 450 cm virtual linear environment (Env 1) with a single hidden, 50-cm reward zone. Sucrose water reward was delivered operantly for licking within the hidden reward zone (Fig. 1D–E). Imaging began on day 1 of task acquisition. On day 3, the reward zone was moved within a “switch” session to a new location on the track. The reward switch was signaled by automatic reward delivery on the first 10 trials after the switch only if the mouse failed to lick in the new zone. On day 5, a third reward zone was introduced, followed by a return to the original zone on day 7. On day 8, the reward switch coincided with the introduction of a novel environment (Env 2) that evokes spatially-driven global remapping7. The sequence of switches was subsequently reversed in Env 2 (Fig. 1E). On intervening non-switch (“stay”) days, the last reward location from the previous day was maintained. Reward switch sequences were counterbalanced across animals (n=7 “switch” mice, Fig. S1A–D). The three possible reward locations were positioned equidistant from each of the “tower” landmarks along the track, thus controlling for proximity of each reward to visual landmarks8,55,76–80. A separate “fixed-condition” group of mice (n=3) experienced only one reward location in a single environment (Env 1) (Fig. S1D, F), to control for the effect of extended experience alone compared to learning about the reward switches. To keep animals engaged and prevent the movement of the reward zone from being a completely novel experience, on ~15% of trials the reward was randomly omitted for all animals. 
At the end of the track, the mouse passed through a brief gray “teleport” tunnel (~50 cm + temporal jitter up to 10 s; see Methods) before starting the next lap in the same direction. Time in the teleport tunnel was randomly jittered so that exact time of track entry could not be anticipated.

Figure 1. 2P imaging of CA1 neurons in a VR task with changing hidden reward locations.

A) Top-down view (left) and side view (right) of head-fixed VR set-up. 5% sucrose water reward is automatically delivered when the mouse licks a capacitive lick port.

B) Coronal histology showing imaging cannula implantation site and calcium indicator GCaMP7f expression under the pan-neuronal synapsin promoter (AAV1-Syn-jGCaMP7f, green) in dorsal CA1 (DAPI, blue).

C) Example field of view (mean image) from the same mouse in (B) (mouse m12) with identified CA1 neurons in shaded colors (n=1780 cells).

D) Task timeline. On “stay” days, the reward remains at the same location throughout the session; on “switch” days, it moves to a new location after the first 30 trials (typically 80 trials/session).

E) Side views of virtual linear tracks showing an example reward switch sequence. Shaded regions indicate hidden reward zones, for illustration only. On intervening days, the last reward location from the previous day is maintained.

F) Licking behavior for an example mouse (m14) on the first day and first 3 switches. Top row: Smoothed lick rasters. Rewarded trials in black; omission trials in magenta. Shaded regions indicate the active reward zone. Bottom row: Mean ± s.e.m. lick rate for trials at each reward location (blue=A, purple=B, red=C).

G) Licking behavior for a second example mouse (m3) that experienced a different starting reward zone and switch sequence from the mouse in (F). See Fig. S1D for the full set of sequence allocations per mouse.

H) Behavior across animals performing reward switches (n=7 mice). An anticipatory lick ratio of 1 indicates licking exclusively in the 50 cm before a reward zone (“anticipatory zone”, see Fig. S1E); 0 (horizontal dashed line) indicates chance licking across the whole track outside of the reward zone. Thin lines represent individual mice, with lines colored according to the reward zone active for each mouse on a given set of trials (blue=A, purple=B, red=C); thick black and teal lines show mean ± s.e.m. across mice for pre- and post-switch trials, respectively. See also Figure S1.

We observed that mice developed an anticipatory ramp of licking that, on average, peaked at the beginning of the reward zone (Fig. 1F–G, Fig. S1A–C). Because this is the earliest location where operant licking triggered reward delivery, this licking pattern demonstrates successful task acquisition. After a reward switch, mice entered an exploratory period of licking but then adapted their licking to anticipate each new zone, typically within a session (Fig. 1F–G, Fig. S1A–C). We quantified this improvement in licking precision across the switch as the ratio of the anticipatory lick rate in the 50 cm before the currently rewarded zone to the lick rate everywhere else outside the zone (Fig. S1E). We found that all mice were able to improve and retain their licking precision for the new reward zone (Fig. 1H, n=7 mice), demonstrating accurate spatial learning and memory.
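As an illustration of how such a lick-precision index could be computed, the sketch below contrasts the lick rate in the 50 cm anticipatory zone with the lick rate elsewhere outside the reward zone. The normalized form (difference over sum) is an assumption chosen so that 1 corresponds to licking exclusively in the anticipatory zone and 0 to equal rates, as described for Fig. 1H; the exact definition is given in Fig. S1E and the Methods, and the function name and inputs here are hypothetical.

```python
import numpy as np

def anticipatory_lick_ratio(lick_counts, occupancy_s, bin_centers_cm,
                            zone_start_cm, zone_len_cm=50.0, antic_len_cm=50.0):
    """Hypothetical lick-precision index (assumed form, not the paper's code).

    lick_counts / occupancy_s: licks and time spent per spatial bin.
    Returns (rate_antic - rate_else) / (rate_antic + rate_else), so that
    1 = licks only in the anticipatory zone and 0 = equal lick rates.
    """
    lick_counts = np.asarray(lick_counts, dtype=float)
    occupancy_s = np.asarray(occupancy_s, dtype=float)
    x = np.asarray(bin_centers_cm, dtype=float)

    # Spatial masks: reward zone, anticipatory zone, and everything else.
    in_zone = (x >= zone_start_cm) & (x < zone_start_cm + zone_len_cm)
    antic = (x >= zone_start_cm - antic_len_cm) & (x < zone_start_cm)
    elsewhere = ~in_zone & ~antic

    rate_antic = lick_counts[antic].sum() / occupancy_s[antic].sum()
    rate_else = lick_counts[elsewhere].sum() / occupancy_s[elsewhere].sum()

    total = rate_antic + rate_else
    return np.nan if total == 0 else (rate_antic - rate_else) / total
```

In this sketch the reward zone itself is excluded from both terms, matching the description that the comparison is made against licking outside the zone.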

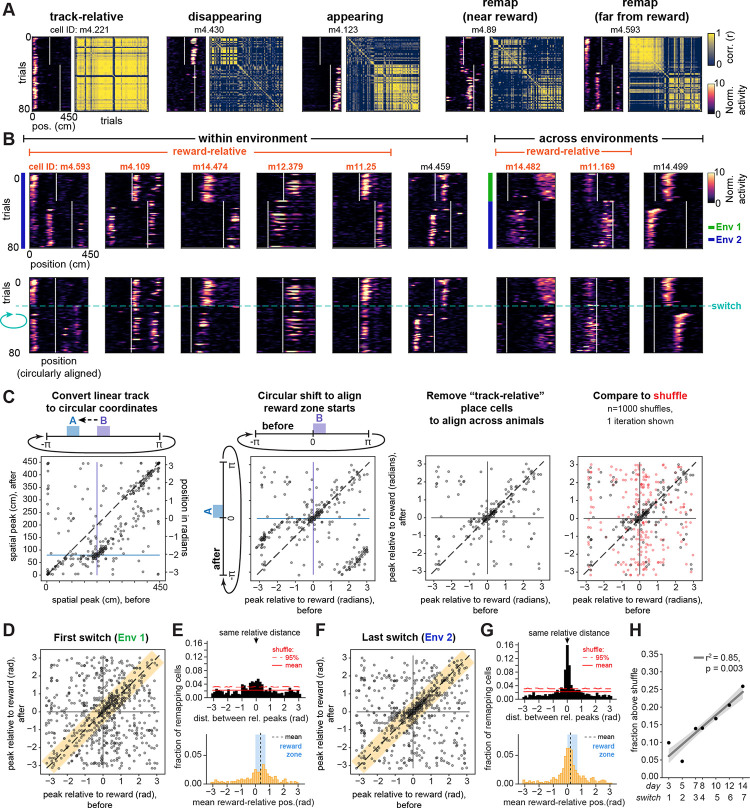

Moving the reward induces remapping that spans a constant environment

We focused on the switch days to analyze single-cell remapping patterns. First, we identified place cells active either before or after the reward switch (see Methods; means ± standard deviation [s.d.] across 7 mice and 7 switch sessions: 408 ± 216 place cells out of 868 ± 356 cells imaged; 48.6% ± 16.7% place cells active before or after the switch). After a reward switch within an environment, a subset of place cells maintained a stable field at the same location on the track (“track-relative” cells, 20.2% ± 8.8%, mean ± s.d. of all place cells, averaged across all 6 switches within environment, 7 mice; Fig. 2A, Fig. S2A). However, many place cells remapped, despite the constant visual stimuli and visual landmarks (Fig. 2A, Fig. S2A–D). First, we observed subsets of cells with place fields that disappeared (“disappearing”, 11.5% ± 7.8%) or appeared (“appearing”, 8.3% ± 5.2%) after the reward location switched (Fig. 2A, Fig. S2A). Other cells precisely followed the reward location (“remap near reward”, 4.7% ± 3.9%, Fig. 2A, Fig. S2A–D), consistently firing within ±50 cm of the beginning of the reward zone, similar to previous reports54. Notably, a subset of place cells with fields distant from reward (>50 cm) also remapped after the reward switch (“remap far from reward”, 15.6% ± 3.7%; Fig. 2A, Fig. S2A, right). A remaining 39.9% ± 19.3% of place cells did not show sufficiently stereotyped remapping patterns to be classified by these criteria. At the population level, we observed that a reward switch within a constant environment induced more remapping than the spontaneous instability seen with a fixed reward and fixed environment81–85, but less remapping than the introduction of a novel environment with the reward switch5–11 (Fig. S2B–D).

Figure 2. A subpopulation of CA1 cells remaps relative to reward.

A) Five place cells from an example mouse (m4) with fields that are either track-relative, disappearing, appearing, following the reward location closely (“remap near reward”, with spatial firing peaks ≤ 50 cm from both reward zone starts), or “remap far from reward” (peaks >50 cm from at least one reward zone start; see Methods). Data are from an example switch day in Env 2 (day 14). Left of each cell: Spatial activity per trial shown as smoothed deconvolved calcium events normalized to the mean activity for each cell within session. White lines indicate beginnings of reward zones. Right of each cell: Trial-by-trial correlation matrix for that cell.

B) Example place cells that remap to the same relative distance from reward (orange cell ID labels), compared to cells that do not (black cell ID labels). Top row: Mean-normalized spatial activity plotted on the original track axis. Bottom row: Post-switch trials (after the horizontal blue dashed line) are circularly shifted to align the reward locations, to illustrate alignment of fields at a similar relative distance from reward both within (left, examples from day 14) and across environments (right, day 8).

C) Illustration of method to quantify remapping relative to reward. Left: A scatter of peak activity positions before vs. after the reward switch in linear track coordinates reveals track-relative place cells along the diagonal (dashed line; shown for example animal m12 on day 14, switching from reward zone “B” before to “A” after). An off-diagonal band at the intersection of the starts of reward zones suggests cells remapped relative to both rewards. Middle left: We center this reward-relative band by converting the linear coordinates to periodic and rotating the data to align the starts of reward zones at 0. Middle right: Track-relative place cells are removed to isolate remapping cells. Right: The data are then compared to a random remapping shuffle (one shuffle iteration shown in red). Together, these shuffles (n=1000) produce the distribution marked in red lines in (E) and (G). All points are jittered slightly for visualization (see Methods) and are translucent; darker shades indicate overlapping points.

D) Peak activity positions relative to reward for all animals on the first switch (day 3), for remapping cells with significant spatial information both before and after the switch (n=677 cells, 7 mice). Orange shaded region indicates a range of ≤50 cm (~0.698 radians) between relative peaks, corresponding to points included in the histogram in (E, bottom).

E) The fraction of cells remapping to a consistent reward-relative position (≤ 50 cm between relative peaks) on the first switch day is higher than expected by chance. Top: histogram of the circular distance between relative peaks after minus before the switch (i.e. orthogonal to the unity line in D), compared to the mean and upper 95% of the shuffle distribution (solid and dotted red lines, respectively). Bottom: Distribution of mean firing position relative to reward for cells with ≤ 50 cm distance between relative peaks, corresponding to orange shaded area along the unity line in (D) (n=261 cells, 7 mice, non-zero mean = 0.358 radians, 95% confidence interval [lower, upper] = [0.196, 0.519], circular mean test). Black dotted line marks the mean, blue shading marks the extent of the reward zone.

F) Same as (D) but for the last switch (day 14) (n=707 cells, 7 mice).

G) Same as (E) but for the last switch (day 14). Bottom: n=398 cells with ≤ 50 cm distance between relative peaks (non-zero mean = 0.206 radians, 95% confidence interval [lower, upper] = [0.103, 0.309], circular mean test).

H) Fraction of cells above chance that remap relative to reward grows linearly with task experience (number of switches). Each dot shown is the combined fraction across n=7 mice; mean ± confidence interval of linear regression is shown in gray.

See also Figure S2.

An above-chance fraction of reward-relative remapping cells

We next investigated whether a subpopulation of cells encoded experience anchored to reward. To test this hypothesis, we attempted to identify neurons with fields far from the start of the reward zone (>50 cm) that shifted their place fields to match the shift in reward location. To visualize this form of remapping, we circularly shifted the spatial activity of cells on trials following the switch to align the reward locations (Fig. 2B). This approach revealed that a subset of cells remapped to a similar relative distance from the reward, both within and across environments (“reward-relative” cells; see below and Methods for full criteria) (Fig. 2B). This field alignment relative to reward was observed even for fields that, from the perspective of the linear track position, remapped from the latter half of the track to the beginning, or vice versa (e.g. Fig. 2B cells m4.109, m14.482). Such alignment occurred despite the variable length of the teleport zone, suggesting that these cells do not simply track distance run from the last reward. Consistent with this interpretation, the distance run in the teleport zone did not predict the cells’ spatial firing variability on the following lap in the vast majority (~92%) of reward-relative cells (Fig. S2E–F). Instead, reward-relative cells seemed to maintain their preferred firing position with respect to reward according to where the animal was in the progression of the overall task, which spans across the teleport and the trial start but is informed by these boundaries. Altogether, these observations suggest that a reward-relative subpopulation maps a periodic axis of experience from reward to reward, consistent with the cyclical manner in which the animal repeats the task structure. At the same time, other cells seemed to remap randomly, as circular shifting did not align their fields (“non-reward-relative remapping”, 12.3% ± 3.1% of all place cells; Fig. 2B, black cell ID labels).

To assess whether reward-relative remapping occurred at greater than chance levels, we plotted the peak spatial firing of all cells that maintained significant spatial information before versus after the switch. This revealed the track-relative place cells along the diagonal, as they maintained their peak firing positions pre- to post-switch. In addition, this analysis revealed an off-diagonal band of cells at the intersection of the reward locations, which extended linearly away from the cluster of reward cells that has been previously described54 (Fig. 2C, far left panel; Fig. S2B, middle). As noted above, we reasoned that the animals may experience the task as periodic instead of a sequence of discrete linear episodes. We therefore transformed the linear track coordinates to the phase of a periodic variable (i.e. 0 to 450 cm becomes −π to π radians), allowing us to shift the data to align the reward zones at zero and isolate the band of cells with peaks at this intersection (Fig. 2C, middle-left panel). Cells that fall along the unity line in this analysis thus putatively remap to the same relative distance from reward. We then excluded the track-relative cells to focus on the remapping cells across animals (Fig. 2C, middle-right panel). We measured the circular distance of each cell’s peak firing position relative to the reward zone pre- or post-switch (Fig. 2D) and calculated the difference between each of these relative peaks. A difference between relative peak positions close to zero indicates reward-relative remapping. We compared the distribution of differences to a “random-remapping” shuffle (Fig. 2C, far right panel) and found that the fraction of place cells that exhibited reward-relative remapping across animals exceeded chance on the first switch day (Fig. 2E, top). Further, the fraction of cells that exceeded the chance level increased by the last switch day (Fig. 2F–G, Fig. S2G) and significantly increased linearly across task experience with each switch (Fig. 2H). A linear mixed effects model accounting for mouse identity revealed a similar, significant increase in reward-relative remapping over experience (Fig. S2H). Across days and animals, the fraction of cells exceeding chance spanned a mean range of ~0.65 radians around the unity line, or nearly 50 cm, suggesting there is some variability in the precision of reward-relative remapping (Fig. 2E, G, Fig. S2G). This distance thus provided a candidate region to quantitatively identify reward-relative remapping cells. We next analyzed the distribution of reward-relative peak firing positions within this candidate zone (i.e. position along the unity line in Fig. 2D) and observed that the mean of the distribution was significantly greater than zero (Fig. 2E, bottom). This indicates that more reward-relative place fields are in locations following reward delivery (as indicated by maximal licking, Fig. 1F–G, Fig. S1A–C) than in locations preceding the reward. This post-reward shift of the distribution of cells was consistent across days (Fig. 2G, bottom).
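The coordinate transform and the comparison of relative peaks described above can be sketched as follows. This is a minimal illustration, not the analysis code used in the study: it assumes the 450 cm track maps onto one full 2π cycle (consistent with 50 cm ≈ 0.698 radians in Fig. 2D), and the shuffle shown here, which permutes post-switch peaks across cells, is only one plausible stand-in for the “random-remapping” shuffle defined in the Methods. All function names are hypothetical.

```python
import numpy as np

TRACK_CM = 450.0  # track length; one lap maps onto one 2*pi cycle

def cm_to_rad(pos_cm, track_cm=TRACK_CM):
    """Map linear position in [0, track_cm) to phase in [-pi, pi)."""
    return np.asarray(pos_cm, dtype=float) / track_cm * 2 * np.pi - np.pi

def wrap(angle):
    """Wrap angles to [-pi, pi)."""
    return (np.asarray(angle) + np.pi) % (2 * np.pi) - np.pi

def reward_relative_peak(peak_cm, reward_start_cm):
    """Peak position as a circular distance from the reward zone start."""
    return wrap(cm_to_rad(peak_cm) - cm_to_rad(reward_start_cm))

def relative_peak_shift(peak_pre_cm, peak_post_cm, rew_pre_cm, rew_post_cm):
    """Difference between reward-relative peaks (post minus pre).
    Values near zero indicate reward-relative remapping."""
    pre = reward_relative_peak(peak_pre_cm, rew_pre_cm)
    post = reward_relative_peak(peak_post_cm, rew_post_cm)
    return wrap(post - pre)

def fraction_within(shift_rad, window_cm=50.0, track_cm=TRACK_CM):
    """Fraction of cells whose relative peaks differ by <= window_cm."""
    window_rad = window_cm / track_cm * 2 * np.pi
    return np.mean(np.abs(shift_rad) <= window_rad)

def shuffle_fraction(peak_pre_cm, peak_post_cm, rew_pre_cm, rew_post_cm,
                     n_shuffles=1000, seed=0):
    """Assumed null: permute post-switch peaks across cells."""
    rng = np.random.default_rng(seed)
    post = np.asarray(peak_post_cm, dtype=float)
    fracs = np.empty(n_shuffles)
    for i in range(n_shuffles):
        shift = relative_peak_shift(peak_pre_cm, rng.permutation(post),
                                    rew_pre_cm, rew_post_cm)
        fracs[i] = fraction_within(shift)
    return fracs
```

For example, a cell peaking 70 cm before the reward on both sides of the switch yields a shift of zero even if its field moves from the end of the track to the beginning, because the difference is taken on the circle and not on the linear track axis.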

Next, to investigate the degree to which cells active in close proximity to the reward contribute to the above-chance levels of reward-relative remapping, we excluded cells with peaks within ± 50 cm of the start of both reward zones. Note that this excludes both the reward and anticipatory zones. With this exclusion, we still found above-chance reward-relative remapping (Fig. S2I–L). In addition, the mean of the remaining reward-relative firing positions continued to follow the reward zone (Fig. S2J, L, bottom). However, there was not a significant increase in remapping at these distances across days (Fig. S2M), suggesting there is more growth in the population of reward-relative cells at closer proximities to reward. Nevertheless, this analysis confirmed that reward-relative remapping is not limited to neurons with close proximity to reward.

Finally, we implemented two main criteria to identify a robust subpopulation of reward-relative cells for further analysis. First, we identified candidate place cells with reward-relative spatial peaks that were within 50 cm of each other before versus after a reward switch (i.e. within the orange candidate zone highlighted in Fig. 2D, F). This criterion included cells with peaks both very close to and potentially very far from the reward. Second, to reduce the influence of noise in spatial peak detection and take into account the shape of the firing fields, we performed a cross-correlation of the cells’ activity aligned to the reward zone pre- vs. post- switch. We required the offset at the maximum of this cross-correlation to be ≤50 cm from zero lag and exceed chance levels relative to a shuffle for each cell (Fig. S2N–P). This approach provides a metric for how stable each cell’s mean firing position is relative to reward. We confirmed that these criteria identified a subpopulation of cells whose fields were maximally correlated in periodic coordinates relative to reward, in contrast to the track-relative cells which were maximally correlated relative to the original linear track coordinates (Fig. S2O–P). From here on, we refer to cells that passed both of these criteria as “reward-relative” (RR) (15.4% ± 5.3% of place cells averaged over all switch days; 12.4% ± 3.3% on the first switch, 18.9% ± 6.5% on the last switch).
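The second criterion can be illustrated with a circular cross-correlation between a cell's reward-aligned tuning curves before and after the switch. The sketch below is an assumption-laden example, not the implementation from the Methods: it treats the tuning curves as periodic, mean-subtracts and normalizes them, and reports the lag at the cross-correlation maximum. The per-cell shuffle used to establish chance levels is not shown, and the function names are hypothetical.

```python
import numpy as np

def circular_xcorr_peak(curve_pre, curve_post, bin_cm):
    """Lag (cm) and value at the maximum of a circular cross-correlation.

    curve_pre / curve_post: spatially binned activity, already aligned to
    the reward zone start. A positive lag means the post-switch field sits
    later, relative to reward, than the pre-switch field.
    """
    a = np.asarray(curve_pre, dtype=float)
    b = np.asarray(curve_post, dtype=float)
    a = a - a.mean()
    b = b - b.mean()
    n = a.size

    # Correlate a with b shifted back by k bins, for every circular lag k.
    xc = np.array([np.dot(a, np.roll(b, -k)) for k in range(n)])
    norm = np.linalg.norm(a) * np.linalg.norm(b)
    if norm > 0:
        xc = xc / norm

    k_best = int(np.argmax(xc))
    # Express lags beyond half the track as negative lags.
    lag_bins = k_best if k_best <= n // 2 else k_best - n
    return lag_bins * bin_cm, float(xc[k_best])

def passes_lag_criterion(lag_cm, max_lag_cm=50.0):
    """True if the best lag is within max_lag_cm of zero lag."""
    return abs(lag_cm) <= max_lag_cm
```

Using the whole tuning curve in this way, instead of a single peak bin, is what makes the measure less sensitive to noise in peak detection.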

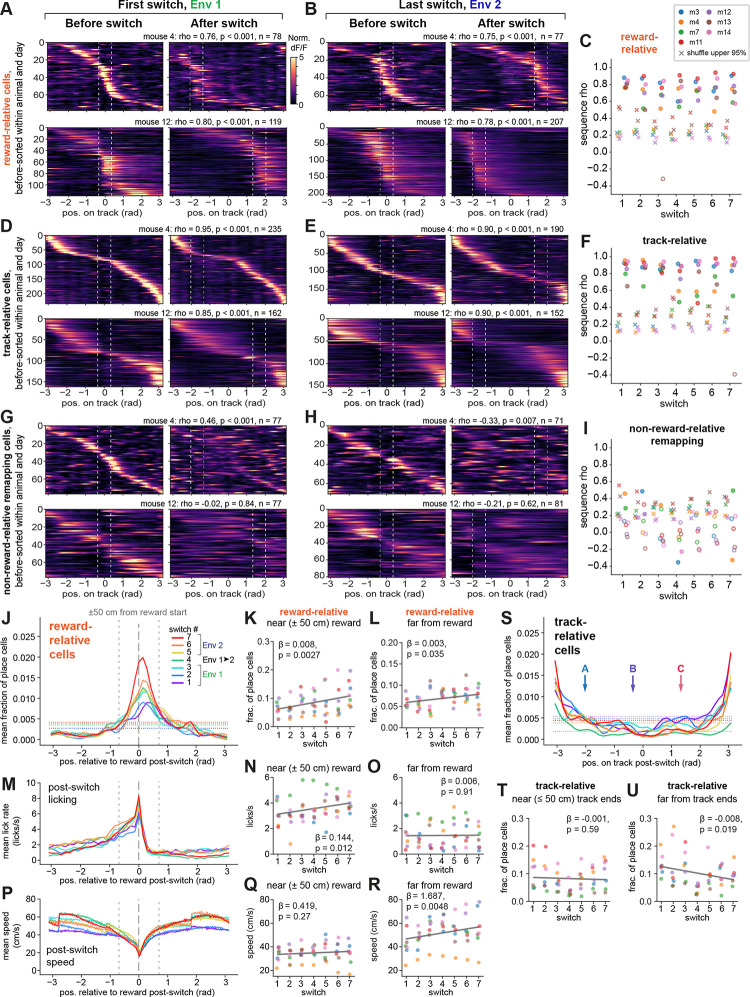

Reward-relative sequences are preserved when the reward moves

Next, to ask whether the reward-relative cells constructed sequences of activity anchored to reward, we sorted the reward-relative cells within each animal by their peak firing position on trials before the reward switch, using split-halves cross-validation (see Methods). We applied this sort to the post-switch trials and found that the reward-relative subpopulation indeed constructed sequences that strongly preserved their firing order relative to reward across the switch within an environment (Fig. 3A–B, Fig. S3A). Sequence preservation was quantified as a circular-circular correlation coefficient between the peak firing positions in the pre- and post-switch sequences, which was much higher than the shuffle of cell identities for nearly all animals and days (48 out of 49 sessions positively correlated, with rho >95% of the shuffle, two-sided permutation test) (Fig. 3C). These sequences spanned the task structure from reward to reward, such that cells firing at the furthest distances from reward would occasionally “wrap around” from the beginning to the end of the linear track or vice versa (e.g. Fig. 3A, mouse 4 and Fig. S3A). When we introduced the novel environment on day 8, despite apparent global remapping in the total population of place cells (Fig. S3B), reward-relative sequences were clearly preserved (Fig. S3C, top). The track-relative place cells likewise constructed robust sequences (48 out of 49 sessions positively correlated, with rho >95% of the shuffle, two-sided permutation test) both within (Fig. 3D–F) and even across environments (Fig. S3C, middle) in a minority of place cells (20.2% ± 8.8% of place cells within vs. 8.4% ± 3.6% across, mean ± s.d. across 7 mice). The sequence stability of both the reward-relative and track-relative subpopulations contrasted starkly with the absence of sequence preservation for most of the non-reward-relative remapping cells (40 out of 49 sessions did not exceed the shuffle; Fig. 3G–I; Fig. S3C, bottom) and for the disappearing and appearing cells (Fig. S3D–E), as expected.
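To make the sequence-preservation measure concrete, the following sketch computes a circular-circular correlation between pre- and post-switch peak positions and compares it to a shuffle of cell identities. The text does not specify which circular correlation formula was used; the one below (a sine-based coefficient centered on each variable's circular mean, often attributed to Jammalamadaka and SenGupta) is an assumption, as are the function names and the way the two-sided p-value is computed.

```python
import numpy as np

def circ_mean(theta):
    """Circular mean of angles in radians."""
    return np.angle(np.mean(np.exp(1j * np.asarray(theta, dtype=float))))

def circ_corr(a, b):
    """Circular-circular correlation coefficient (assumed definition)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    sa = np.sin(a - circ_mean(a))
    sb = np.sin(b - circ_mean(b))
    return np.sum(sa * sb) / np.sqrt(np.sum(sa ** 2) * np.sum(sb ** 2))

def permutation_test(peaks_pre, peaks_post, n_shuffles=1000, seed=0):
    """Compare observed rho to a null built by shuffling cell identities.

    Returns (rho, null_rhos, p), where p is the fraction of shuffles whose
    |rho| is at least as large as the observed |rho| (two-sided).
    """
    rng = np.random.default_rng(seed)
    rho = circ_corr(peaks_pre, peaks_post)
    post = np.asarray(peaks_post, dtype=float)
    null = np.array([circ_corr(peaks_pre, rng.permutation(post))
                     for _ in range(n_shuffles)])
    p = np.mean(np.abs(null) >= np.abs(rho))
    return rho, null, p
```

With 1000 shuffles, an observed rho that exceeds every shuffle gives p = 0 in this sketch, which would be reported as p < 0.001. One caveat of this formula is that the circular mean becomes poorly defined when peaks are spread almost uniformly around the circle, so the coefficient should be interpreted with care in that regime.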

Figure 3. Preserved sequences relative to reward and space.

A) Preserved firing order of reward-relative sequences across the 1st switch (day 3) (2 example mice, top and bottom rows; m4 is the same mouse shown in Fig. 2A, m12 had the maximum number of cells). Reward-relative remapping cells within each animal are sorted by their cross-validated peak activity before the switch, and this sort is applied to activity after the switch. Data are plotted as the spatially-binned, unsmoothed dF/F normalized to each cell’s mean within session, from which peak firing positions are identified in linear track coordinates that have been converted to radians (see Fig. 2C). White dotted lines indicate starts and ends of each reward zone. Each sequence’s circular-circular correlation coefficient (rho), p-value relative to shuffle, and number of cells is shown above, where p < 0.001 indicates that the observed rho exceeded all 1000 shuffles. Color scale is applied in (B), (D-E), (G-H).

B) Reward-relative sequences on the last switch (day 14), for the same mice shown in (A).

C) Circular-circular correlation coefficients for reward-relative sequences before vs. after the reward switch, for each mouse (n=7), with the upper 95th percentile of 1000 shuffles of cell IDs marked as “x”. Closed circles indicate coefficients with p < 0.05 compared to shuffle using a two-sided permutation test; open circles indicate p ≥ 0.05.

D) Sequences of track-relative place cells on the 1st switch day for the same mice as in (A-B).

E) Sequences of track-relative place cells on the last switch day for the same mice as in (A-B,D).

F) Circular-circular correlation coefficients for track-relative place cell sequences (n=7 mice), colored as in (C).

G-H) Sequences of non-reward-relative remapping cells with significant spatial information before and after the switch, for the same mice as above and on the 1st (G) and last (H) switch day. Note that a cross-validated sort of the “before” trials produces a sequence, but in general this sequence is not robustly maintained across the switch.

I) Circular-circular correlation coefficients for non-reward-relative remapping cells (n=7 mice), colored as in (C).

J) Distribution of peak firing positions for the reward-relative sequences after the switch on each switch day, shown as a fraction of total place cells within each animal, averaged across animals (n=7 mice). Standard errors are omitted for visualization clarity. Horizontal dotted lines indicate the expected uniform distribution for each day (see Methods); vertical gray dashed lines indicate the reward zone start and surrounding ±50 cm.

K-L) Quantification of changes in reward-relative sequence shape across animals and days, using linear mixed effects models with switch index as a fixed effect and mice as random effects (n=7 mice). β is the coefficient for switch index, shown with corresponding p-value (Wald test). Each point is the fraction of place cells within that mouse (colored as in C) in the reward-relative sequences, specifically within ±50 cm (K) or >50 cm (L) from the start of the reward zone.

M) Mean lick rate across post-switch trials and across animals (10 cm bins), colored by switch as in (J).

N-O) Quantification of change in lick rate across days within ±50 cm of the reward zone start (N) or >50 cm (O), using a linear mixed effects model as in (K-L).

P) Mean running speed across post-switch trials and across animals (2 cm bins), colored by switch as in (J).

Q-R) Quantification of change in speed across days, as in (K-L) and (N-O).

S) Distribution of peak firing positions for the track-relative sequences after the switch on each switch day, shown on the track coordinates converted to radians (not reward-relative). Arrows indicate the start position of each reward zone (“A”, “B”, “C”). Horizontal dotted lines indicate the expected uniform distribution for each day.

T-U) Quantification of changes in track-relative sequence shape across animals and days as in (K-L), here within ≤50 cm of either end of the track (T) or >50 cm from the track ends (U).

See also Figure S3.

We next quantified the mean sequence shape for each subpopulation, either relative to reward or to the VR track coordinates. The reward-relative sequences strongly over-represented the reward zone1,2,54,55,63–66 and were most concentrated just following the start of the reward zone (Fig. 3J). Further, this density near the reward zone grew across task experience (Fig. 3J–K). The fraction of place cells at further positions (>50 cm) from the start of the reward zone showed a modest increase over days, but to a much lesser degree than positions near the reward (Fig. 3L). In parallel, mice increased their average post-switch lick rate prior to the reward zone (Fig. 3M–O) and their running speed away from the reward zone over days (Fig. 3P–R). These changes suggest that reward-relative sequence strength increases as behavioral performance becomes more robust. In addition, on any given day, the precision of the animal’s licking correlated with the precision of the reward-relative sequence (i.e. the narrowness of the cell distribution around reward, measured as the circular variance) (Fig. S3F–I). In contrast to the reward-relative sequences, the track-relative place cell sequences tended to over-represent the ends of the track, as opposed to the reward zones (Fig. 3S). In addition, the track-relative sequences showed a subtle but significant drop in density at positions away from the ends of the track across days, consistent with selective stabilization of place fields near key landmarks8,55,76–80,86 (Fig. 3S–U). These differences in sequence shape between the reward-relative and track-relative cells suggest that each of these subpopulations anchors to the most salient point in the “space” they encode. For the reward-relative sequences, this is the reward zone, and for the track-relative sequences, this is likely the start and end of the track where the animal exits and enters the teleport zone. 
By comparison, the disappearing, appearing, and non-reward-relative remapping cells encoded a more uniform representation of the track, with only subtle over-representation of the track ends and an observable decrease in density across the track for the disappearing cells across days (Fig. S3J–K).
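As an illustration of the circular variance used above to summarize sequence precision, the following minimal sketch computes it for a set of peak firing positions. It assumes positions are expressed in cm relative to the start of the reward zone and wrapped onto a circular track; the function name, the 400 cm track length, and the example values are hypothetical and are not taken from the analysis code.

```python
import cmath
import math

def circular_variance(rel_positions_cm, track_len_cm):
    """Circular variance: 0 = all peaks at one position, 1 = maximally dispersed."""
    # Map each reward-relative position onto an angle around the circular track.
    angles = [2 * math.pi * (p % track_len_cm) / track_len_cm for p in rel_positions_cm]
    # Mean resultant vector of the unit phasors.
    resultant = sum(cmath.exp(1j * a) for a in angles) / len(angles)
    return 1.0 - abs(resultant)

# Hypothetical peak positions (cm from reward-zone start) on an illustrative 400 cm track.
tight = [-10.0, 0.0, 5.0, 10.0, 15.0]
broad = [-150.0, -60.0, 0.0, 90.0, 180.0]
print(circular_variance(tight, 400.0) < circular_variance(broad, 400.0))  # True
```

A lower value indicates a cell distribution concentrated more narrowly around reward.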

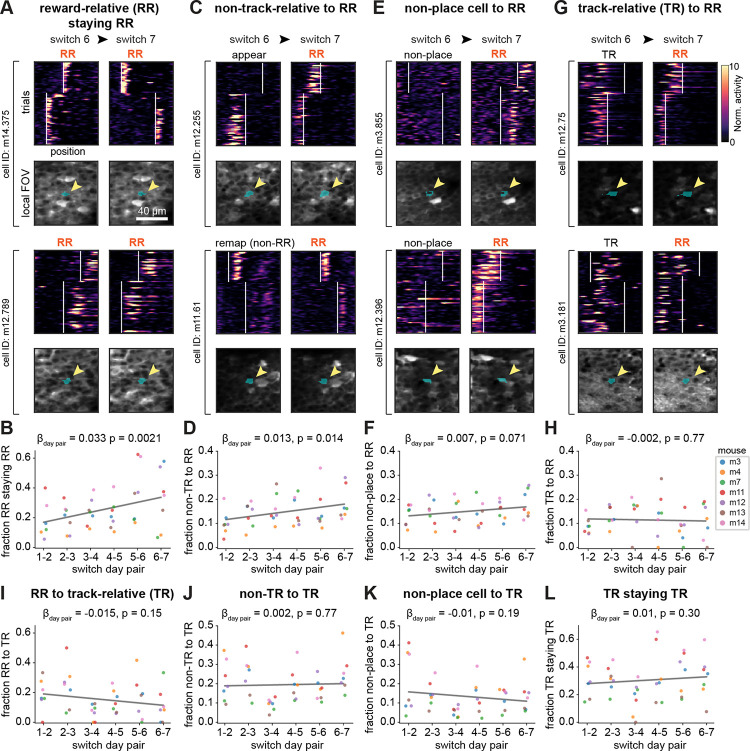

Non-track-relative place cells are increasingly recruited to the reward-relative population over days

The growth in reward-relative sequence density across task experience raised three possibilities: (1) reward-relative cells may become more consistent at remapping to precise reward-relative positions with extended learning, (2) more cells might be recruited from other populations to the reward-relative population, or (3) a combination of both changes occurs. To investigate these possibilities and understand how individual neurons are dynamically reallocated to represent updated reward information within and across environments, we followed the activity of the same neurons across days by matching the aligned regions of interest (ROIs) for each cell. Specifically, we followed single neurons across pairs of switch days and analyzed their spatial firing patterns from one switch to the next (Fig. 4). Using linear mixed effects models to account for variance across animals, we found that there was an increase in the fraction of cells that remained reward-relative on consecutive reward switches, indicating that reward-relative cells are more likely to maintain their reward-relative coding across experience (Fig. 4A–B). In addition, an increasing proportion of non-track-relative cells (appearing, disappearing, and non-reward-relative remapping) were recruited into the reward-relative population with task experience (Fig. 4C–D). Non-place cells and track-relative cells likewise were recruited into the reward-relative population, though this occurred at a constant rate of turnover that did not increase over days (Fig. 4E–H). In addition, the track-relative cell population did not recruit more cells from any other population (Fig. 4I–K) and did not show an increased likelihood of remaining track-relative across experience (Fig. 4L), in contrast to the reward-relative population. 
These results suggest that the track-relative place cells remain a more independent population from the reward-relative cells, and that cells exhibiting high flexibility (such as appearing/disappearing) are more likely to be recruited into the reward-relative representation with extended experience in this task.
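The per-pair fractions that enter these comparisons can be sketched as follows. This is a minimal illustration under stated assumptions: cell identities, class labels, and the function name are hypothetical, and the sketch stops at the fraction for one pair of switch days. It does not fit the linear mixed effects models used for the statistics.

```python
def conversion_fraction(labels_prev, labels_next, from_classes, to_class):
    """Fraction of tracked cells in `from_classes` on one switch that are `to_class` on the next."""
    # Only cells tracked on both switch days can be followed.
    tracked = [c for c in labels_prev if c in labels_next]
    source = [c for c in tracked if labels_prev[c] in from_classes]
    if not source:
        return float("nan")
    converted = sum(1 for c in source if labels_next[c] == to_class)
    return converted / len(source)

# Hypothetical labels: RR = reward-relative, TR = track-relative,
# APP/DIS/NRR = appearing, disappearing, non-RR remapping, NP = non-place.
switch6 = {1: "RR", 2: "RR", 3: "APP", 4: "NRR", 5: "TR", 6: "NP", 7: "DIS"}
switch7 = {1: "RR", 2: "TR", 3: "RR", 4: "NRR", 5: "TR", 6: "RR", 7: "RR"}

# 1 of 2 RR cells remains RR.
print(conversion_fraction(switch6, switch7, {"RR"}, "RR"))
# 2 of 3 non-track-relative cells convert to RR.
print(conversion_fraction(switch6, switch7, {"APP", "DIS", "NRR"}, "RR"))
```

Computing one such fraction per mouse and switch day pair yields the values that are then modeled as a function of switch day pair.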

Figure 4. Tracking cells over days reveals increased recruitment into the reward-relative population.

A) Example cells from 2 separate mice that were tracked from switch 6 to 7 (days 12–14) that were identified as reward-relative (RR) on both switch 6 and switch 7, and which maintained their firing position relative to reward. Top of each cell: spatial firing over trials and linear track position on each day (deconvolved activity normalized to the mean of each cell). White vertical lines indicate the beginnings of the reward zones. Bottom of each cell: Mean local field of view (FOV) around the ROI of the cell on both days (blue shading highlights ROI, indicated by the yellow arrow).

B) Fraction of tracked RR cells on each switch day that remain RR on subsequent switch days increases over task experience. All β coefficients and p-values in (B, D, F, H, and I-L) are from linear mixed effects models with switch day pair as a fixed effect and mice as random effects (n=7 mice in each plot, key in H). Coefficients correspond to the fractional increase in recruitment per switch day pair, from the model fit (gray line).

C) Same as (A) but for 2 example cells that were identified as appearing (top) or non-RR remapping (bottom) on switch 6 but were converted to RR on switch 7.

D) Fraction of “non-track-relative” place cells (combined appearing, disappearing, and non-RR remapping) that convert into RR cells on subsequent switch days increases over task experience.

E) Same as (A) but for 2 example non-place cells on switch 6 (did not have significant spatial information in either trial set) that were converted to RR place cells on switch 7.

F) Fraction of non-place cells converting to RR cells on subsequent switch days shows a modest increase over task experience.

G) Same as (A) but for 2 example track-relative (TR) cells on switch 6 that were converted to RR on switch 7.

H) Fraction of TR cells converting to RR cells does not increase over task experience.

I) Fraction of RR cells converting to TR cells does not change significantly over task experience.

J) Fraction of non-TR cells (combined appearing, disappearing, and non-RR remapping) converting to TR does not change significantly.

K) Fraction of non-place cells converting to TR does not change significantly.

L) Fraction of TR cells remaining TR does not change significantly.

See also Figure S4.

The track-relative population itself showed a steady rate of turnover over days (Fig. 4L), consistent with representational drift81–85. Indeed, when we analyzed place cell sequences identified on each reference day and followed two days later, we observed drift even in the fixed-condition animals (n=3 mice) that experienced a single environment and reward location for all 14 days (Fig. S4A, F). Drift was prevalent as well in the reward-relative and track-relative sequences tracked across consecutive switch days (Fig. S4B–E, G–J), at a slightly higher degree than the fixed-condition animals (Fig. S4F), consistent with our observation that moving a reward induces more remapping than not moving a reward (Fig. 2A, Fig. S2A–D). In a subset of animals and day pairs, cells that remained reward-relative or track-relative tended to maintain their firing order across days. However, sequence preservation across days was generally lower (i.e. closer to the shuffle) (Fig. S4C, E, H, J) than within-day sequences (Fig. 3C, F; Fig. S3A, C). These results indicate that rather than being dedicated cell classes, reward-relative and track-relative ensembles are both subject to the same plasticity across days of experience as the overall hippocampal population81–85. However, the increasing recruitment of cells into the reward-relative population, despite this population having different membership on different days, suggests an increased allocation of resources to the reward-relative representation. We conclude that as animals become more familiar with the task demands—specifically the requirement to estimate a hidden reward location that can be dissociated from spatial stimuli—the hippocampus builds an increasingly dense representation of experience relative to reward, while preserving a fixed representation of the spatial environment in a largely separate population of cells on any given day.

Encoding of reward proximity versus movement covariates in reward-relative cells

The animals’ approach to and departure from the reward zone in this task is associated with stereotyped running and licking behaviors that comprise an important aspect of the animal’s experience surrounding reward. For instance, these behaviors must be timed with an accurate estimate of distance from reward to inform behavioral adaptations after the reward switch. We therefore took two complementary approaches, detailed below, to disentangle which features of the reward-relative experience are encoded in the reward-relative subpopulation. These approaches further allowed us to control for the potential influence of movement covariates on the neural activity.

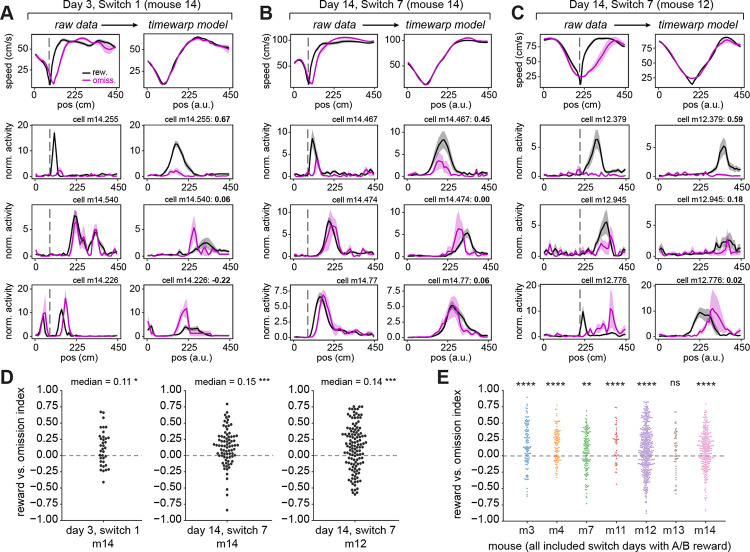

First, we tested the hypothesis that reward-relative firing following the reward could be locked to the running speed profile of the animal, as opposed to the location where the animal expected to receive reward. For this, we leveraged the ~15% omission trials to compare the activity of reward-relative neurons on rewarded versus unrewarded trials. To consider the activity of reward-relative cells under conditions where running speed was generally comparable between rewarded and omission trials, we restricted our analysis to: reward-relative cells with peaks following the start of the reward zone, sessions in which the reward zone began at location “A” (80 cm) or “B” (200 cm), and trials before a reward switch. To then align the running speed profiles across rewarded and omission trials, we fit a time warping model87 on the running speed data and applied this time warped transformation to the neural data (Fig. S5A–D, see Methods). We then computed a “reward versus omission index” (RO index) to quantify the difference in each cell’s firing rate following rewards versus omissions (Fig. S5E).

Reward-relative cells exhibited heterogeneity in their firing rates following rewards versus omissions (Fig. 5A–C). A subset of cells fired at the same position relative to reward regardless of the animal’s running speed (Fig. 5A, top and middle row of cells, Fig. 5B, middle, Fig. 5C, top and middle), with firing rates often differing between rewarded and omission trials (e.g. Fig. 5A, top, Fig. 5C, top and middle). Another subset of cells fired relative to a particular phase of the speed profile (Fig. 5A, bottom, Fig. 5B, top and bottom, Fig. 5C, bottom). However, at the population level, the distribution of RO indices for reward-relative cells was skewed toward reward-preferring (Fig. 5D). This reward-preference was also clearly observable at the single-cell and population levels in trials after the reward switch (Fig. S5F–I), although running speed is also more variable on these trials (Fig. S5F). Finally, combining RO indices across days (Fig. S5J) revealed that, while there is heterogeneity among individual cells, there is a significant preference for the reward-relative population to fire more on rewarded rather than omission trials (Fig. 5E, Fig. S5K; note results were similar when all sessions were included, Fig. S5L).
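To make the sign convention of the RO index concrete, the sketch below uses a normalized difference between mean activity on rewarded and omission trials. This form is an assumption chosen for illustration. The exact definition is given in the Methods and may differ, and the function name and example values are hypothetical. Activity is assumed to be non-negative, as for deconvolved calcium signals.

```python
def ro_index(rewarded_activity, omission_activity):
    """Assumed normalized difference: +1 = active only on rewarded trials, -1 = only on omissions."""
    r = sum(rewarded_activity) / len(rewarded_activity)
    o = sum(omission_activity) / len(omission_activity)
    if r + o == 0:
        return 0.0  # cell silent in both conditions
    return (r - o) / (r + o)

# Hypothetical per-trial activity in a window following the start of the reward zone.
print(ro_index([2.0, 3.0, 1.0], [1.0, 1.0, 1.0]) > 0)  # True: reward-preferring
print(ro_index([0.5, 0.5], [1.5, 1.5]) < 0)            # True: omission-preferring
```

Under this convention, a population distribution skewed above zero corresponds to the reward preference described above.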

Figure 5. Diversity in reward-relative cell coding on rewarded vs. omission trials, with a population preference for reward.

A) Raw and time warp model-aligned speed (first row) and neural activity for 3 reward-relative example cells (second to fourth rows) on the first switch day, in mouse 14. Throughout Fig. 5, analysis is performed only on trial sets before the reward switch that have at least 3 omission trials. In (A-C), the left columns show raw (untransformed) data, with the start of the reward zone indicated by the gray dashed line. The right columns show data transformed by the time warp model fit to the trial-by-trial speed in that session (see Methods, Fig. S5). All included cells have peak firing following the start of the reward zone. First row: mean ± s.e.m. speed profiles for rewarded (black) and omission (magenta) trials. Note precise alignment of the transformed speed profiles on the right. Second to fourth rows: mean ± s.e.m. deconvolved activity for rewarded and omission trials, normalized to each cell’s mean within the session, showing an example reward-relative cell in each row. The difference in model-transformed activity between rewarded and omission trials is captured as a “reward vs. omission” index (bold text, top right of each panel; see Methods). The top row highlights neurons with much higher activity on rewarded trials. A positive index indicates higher activity on rewarded trials; a negative index indicates higher activity on omission trials.

B) Raw and time warp model-aligned speed and neural activity for 3 reward-relative example cells on the last switch day, in mouse 14. Note cell m14.474 is also shown in Fig. 2B.

C) Raw and time warp model-aligned speed and neural activity for 3 reward-relative example cells on the last switch day, in mouse 12. Note that speed is still aligned well with the reward zone at position “B” (at 200 cm) for m12 vs. position “A” (at 80 cm) for m14. Note cell m12.379 is also shown in Fig. 2B.

D) Reward vs. omission index for the population of reward-relative cells that have spatial firing peaks following the start of the reward zone, in the session shown in (A) (left), (B) (middle), and (C) (right). Each dot is a cell. *p<0.05, ***p<0.001, one-sample Wilcoxon signed-rank test against a 0 median. Switch 1, m14: W = 183, p = 0.018, n = 36 cells; Switch 7, m14: W = 721, p = 2.69e-5, n = 79 cells; Switch 7, m12: W = 2358, p = 3.70e-4, n = 122 cells.

E) Reward vs. omission index across all switch days where the reward zone was at “A” or “B”, for the population of reward-relative cells that have spatial firing peaks following the start of the reward zone within each mouse (n = 7 mice). Each dot is a cell, colors correspond to individual mice. Significance level is set at p<0.007 with Bonferroni correction. **p<0.007, ****p<0.0001. m3: median = 0.14, W = 1.50e3, p = 4.66e-7, n = 3 days, 114 cells; m4: median = 0.20, W = 1.03e3, p = 5.93e-10, n = 3 days, 112 cells; m7: median = 0.09, W = 4.44e3, p = 2.08e-3, n = 4 days, 157 cells; m11: median = 0.23, W = 194, p = 5.32e-5, n = 4 days, 48 cells; m12: median = 0.09, W = 3.41e4, p = 4.40e-8, n = 5 days, 441 cells; m13: median = 0.09, W = 523, p = 0.13, n = 3 days, 52 cells; m14: median = 0.11, W = 1.39e4, p = 3.74e-8, n = 4 days, 296 cells. See also Figure S5.

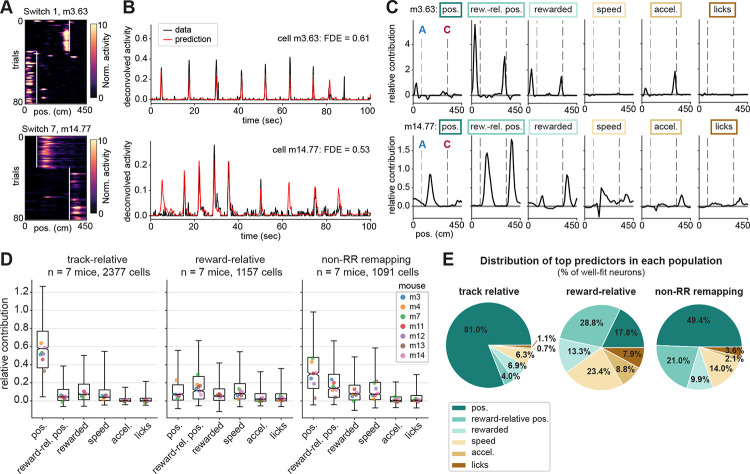

Second, we implemented a generalized linear model (GLM)88 to dissociate the contribution of task versus movement variables to the moment-to-moment deconvolved calcium activity of each cell. For task variables, we included linear track position, reward-relative position, and whether the animal was rewarded on each trial, convolved with the linear track position. For movement variables, we included running speed, acceleration, and licking (Fig. S6A, see Methods for details). We trained the GLM using 5-fold cross-validation and tested its prediction on the activity of each neuron in held out trials (Fig. 6A–B, Fig. S6B–C). Only well-fit neurons (fraction deviance explained > 0.15)88 were included in the analysis (35% of place cells across 7 mice and 7 switch days; Fig. S6B–C). This included 61% (2377/3901) of track-relative cells, 37% (1157/3104) of reward-relative cells (Fig. 6A–B), and 43% (1091/2518) of non-RR remapping cells.
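The inclusion criterion can be illustrated with a short sketch of fraction Poisson deviance explained. It assumes the standard Poisson deviance and a null model that predicts the mean of the observed activity. The function names and example values are hypothetical, and the sketch is not the fitting code used for the GLM.

```python
import math

def poisson_deviance(y, mu):
    """Poisson deviance between observed activity y and predictions mu (mu > 0 wherever y > 0)."""
    dev = 0.0
    for yi, mi in zip(y, mu):
        # The y*log(y/mu) term is taken as 0 when y == 0.
        term = yi * math.log(yi / mi) if yi > 0 else 0.0
        dev += 2.0 * (term - (yi - mi))
    return dev

def fraction_deviance_explained(y, mu_model):
    """1 - D(model) / D(null), where the null model predicts the mean of y everywhere."""
    mean_y = sum(y) / len(y)
    d_null = poisson_deviance(y, [mean_y] * len(y))
    d_model = poisson_deviance(y, mu_model)
    return 1.0 - d_model / d_null

# Hypothetical held-out activity and model prediction for one cell.
y_test = [0.0, 1.0, 4.0, 6.0, 3.0, 0.0]
mu_hat = [0.2, 1.0, 3.5, 5.0, 3.0, 0.3]
fde = fraction_deviance_explained(y_test, mu_hat)
print(fde > 0.15)  # True: this cell would pass the well-fit threshold
```

A value of 0 means the model does no better than predicting the mean, and a value of 1 means the prediction matches the data exactly.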

Figure 6. A generalized linear model reveals reward-relative position as a leading predictor of neural activity in the reward-relative population.

A) Example spatial firing maps of two reward-relative cells that were fit well (fraction deviance explained > 0.5) by the GLM, on the first switch day (top) and last switch day (bottom). White lines mark the starts of reward zones. Note that cell m14.77 (bottom) is the same cell as shown in Fig. 5B, bottom row.

B) Deconvolved calcium activity (black) and GLM prediction (red) of held-out test data for the two example cells shown in (A). Fraction Poisson deviance explained (FDE) of the test data is reported as a measure of model performance.

C) Relative contribution (“fraction explained deviance”, see Methods), or encoding strength, for each variable included in the GLM binned by track position, for the two example cells shown in (A). Task variables are “pos.”: linear track position; “rew.-rel. pos.”: reward-relative position; “rewarded”: whether the animal was rewarded as a function of position (i.e. a binary that stays high from the reward delivery time to the end of the trial). Movement variables are speed, acceleration, and licking (see Fig. S6A for implementation). Relative contribution is calculated from an ablation procedure where each variable is individually removed from the full model (see Methods). Gray dashed lines mark the starts of the two reward zones in each session, also indicated in (A). Boxes correspond to color coding in (E). Note that reward-relative position provides the maximum relative contribution for both example cells, including m14.77 even though it fired equivalently on rewarded and omission trials (see Fig. 5B).

D) Distributions of relative contribution of each variable in the track-relative, reward-relative, and non-reward-relative remapping subpopulations across animals. Boxes indicate the interquartile range, whiskers extend from the 2.5th to 97.5th percentile, horizontal line indicates the median, and notches indicate the confidence interval around the median computed from 10,000 bootstrap iterations. Colored dots mark the medians of each individual mouse.

E) Distributions of top predictor variables (maximum relative contribution) for individual cells within each subpopulation, shown as % of cells in the subpopulation; same n as in (D).

See also Figure S6.

After testing the full model, we performed an ablation procedure to measure the relative contribution of each predictor variable (see Methods). This ablation procedure revealed clear peaks in the contribution of reward-relative position to the activity of many reward-relative cells as a function of position along the track, recapitulating their firing fields (Fig. 6C). We then quantified the relative contribution of each variable within the track-relative, reward-relative, and non-RR remapping subpopulations that were well-fit by the full model (Fig. 6D). We found that linear track position was the strongest predictor for the track-relative population (Fig. 6D) and the top predictor for 81% of included track-relative cells (Fig. 6E). This result provided confirmation of our classification of these cells as stable place cells that remain locked to the spatial environment within a session. The non-RR remapping cells likewise were best predicted by linear track position followed by reward-relative position (Fig. 6E), consistent with their recruitment to the reward-relative population over experience (Fig. 4). In contrast, for the reward-relative population, reward-relative position provided the highest relative contribution (Fig. 6D) and was the top predictor for 28.8% of reward-relative cells (Fig. 6E). Reward-relative position was followed by speed (top predictor for 23.4% of reward-relative cells), linear track position (17.8%), and the receipt of reward (13.3%), with minimal contributions of acceleration and licking. Within the reward-relative cells that exhibited reward-relative position or speed as their top predictors, we observed a mixed contribution of the other variables as well (Fig. S6D–E). These results suggest that the reward-relative population supplies a heterogeneous code for multiple aspects of the reward-related experience. 
While movement covariates are challenging to fully disentangle from the animal’s progression through the task, the GLM, combined with our analyses of rewarded versus omission trials, provides evidence that reward and reward-relative position are strong predictors of activity in the reward-relative cell population.
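The logic of ranking predictors by ablation can be sketched as follows. The normalization here is an assumption for illustration: each variable's contribution is taken as the loss in fraction deviance explained when that variable is removed, scaled so that contributions sum to 1. The procedure described in the Methods (including binning by track position) may differ, and all names and values below are hypothetical.

```python
def relative_contributions(fde_full, fde_ablated):
    """Assumed form: loss in deviance explained per removed variable, normalized to sum to 1."""
    # Negative losses (ablated model fits as well or better) are clipped to zero.
    losses = {name: max(fde_full - fde, 0.0) for name, fde in fde_ablated.items()}
    total = sum(losses.values())
    if total == 0:
        return {name: 0.0 for name in losses}
    return {name: loss / total for name, loss in losses.items()}

def top_predictor(contributions):
    """Variable with the maximum relative contribution."""
    return max(contributions, key=contributions.get)

# Hypothetical fraction deviance explained for the full model and for each ablated model.
fde_full = 0.40
fde_ablated = {"pos": 0.35, "rew_rel_pos": 0.20, "rewarded": 0.38,
               "speed": 0.30, "accel": 0.40, "lick": 0.41}
contrib = relative_contributions(fde_full, fde_ablated)
print(top_predictor(contrib))  # rew_rel_pos
```

Tallying the top predictor across cells within a subpopulation gives percentages of the kind reported above.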

DISCUSSION

Here, we reveal that the hippocampus encodes an animal’s physical location and its position relative to rewards through parallel sequences of neural activity. We employed 2-photon calcium imaging to observe large groups of CA1 neurons in the hippocampus as mice navigated virtual linear tracks with changing hidden reward locations. This paradigm allowed us to identify reward-relative sequences that are dissociable from the spatial visual stimuli, as they instead remain anchored to the movable reward location. This suggests that the brain employs a parallel coding system to simultaneously track an animal’s physical and reward-relative positions, pointing to a mechanism for how the brain could monitor an animal’s experience relative to multiple frames of reference45,59,60,67–73,89. Further, we found that the reward-relative sequences, but not the “track-relative” sequences locked to the spatial environment, show a notable increase in the number of neurons involved as the animal gains more experience with the task. These results suggest that the hippocampus builds a strengthening representation of generalized events surrounding reward as animals become more familiar with the task structure. Moreover, this work raises the possibility that the hippocampal code for space, provided by a subpopulation of place cells, can update the progression of reward-relative sequences when the reward location changes. In this way, hippocampal ensembles encoding different aspects of experience may interact to support accurate memory while amplifying behaviorally salient features such as reward.

We validated prior work that found a subpopulation of hippocampal neurons precisely encodes reward locations54. Here, we found that this subset of reward-relative neurons, which fire very close to rewards, comprised a central component of a more extensive reward-relative sequence. Our finding of reward-relative coding at farther distances from reward is reminiscent of hippocampal cells that encode distances and directions to goals in freely flying bats90. At the same time, we extended these previous findings in two key ways: (1) we found that the reward-relative code spans the entire environment, involving cells firing at distances up to hundreds of centimeters away (i.e. as far as from one reward to the next), and (2) additional neurons are recruited into the reward-relative sequences over time, demonstrating a dynamic and evolving coding strategy. Specifically, the cells firing closest to reward were most likely to increase in number across days (Fig. 2D–H, Fig. S2I-M, Fig. 3J–L), suggesting that they may be the most detectable in other paradigms. Importantly, we also observed a noticeable turnover in the neurons that form the reward-relative population from day to day, indicating a high degree of reorganization in terms of which neurons participate. Despite this daily fluctuation in individual neurons, the overall sequential structure appears to be maintained within the population.

This latter finding of day to day fluctuation in the reward-relative population is consistent with recent studies which have highlighted a phenomenon known as representational drift81–85. In the hippocampus81–85 and in other cortical areas91–93, this drift is characterized by changing spatial tuning and stability of single neurons as a function of extended experience82,83. Despite these single neuron changes, the population often continues to accurately code for the spatial environment81 or task91. This flexibility is thought to increase the storage capacity of neuronal networks94–97. Related changes over time have also been proposed to support the decoding of temporal separation between unique events50,92,94,98, which could be particularly important for separating memories of events surrounding different rewarding experiences. We observed population drift in both the reward-relative and track-relative sequence membership. This frequent reorganization suggests that these are not dedicated subpopulations; neurons in the reward-relative population occasionally switched coding modes—though this flexibility may vary by anatomical location within CA154,99,100. At the same time, we found that more resources (i.e. neurons) are allocated to the reward-relative representation at the population level with extended experience. This finding indicates an increasingly robust network code that can be reliably anchored to reward within a day despite day-to-day drift. This stability amidst flux offers insight into the brain’s ability to balance dynamic coding with the preservation of population-level representations91,92,97.

In the hippocampus, spatial firing can also be highly flexible at short timescales even within a fixed environment. This flexibility is evident in the variability of place cell firing even within a field57,85 and especially when an animal takes different routes101–106 or targets a different goal89,90,107–109 through the same physical position. Our work is consistent with such trajectory specific coding and raises the possibility that the reward-relative population may split even further by specific destinations or reward sites when there are multiple possible reward locations. Indeed, in cases where multiple rewards are present at once, hippocampal activity may multiplex spatial and reward-relative information to dissociate different goal locations64,89,110. This discrimination is enhanced over learning110, consistent with the manner in which hippocampal maps are likewise dependent on learned experience4,6,7,12,52,53,111.

Furthermore, place cells undergo significant remapping in response to changes in the animal’s motivational state, such as during task engagement61,62,70 or disengagement112, or upon removal of a reward113. Importantly, in our task the animal must remain engaged when the reward zone updates in order to accurately recall and seek out the new reward location. Consistent with this enhanced attention58,59,61,62, we see predictable structure in how the neurons remap surrounding reward (i.e. they maintain their firing order relative to reward), in contrast to the more random reorganization observed with task disengagement112 or the absence of reward113. However, an important consideration for all of these short timescale firing differences, and for representational drift, is the contribution of behavioral variability to neural activity changes114,115. For example, small changes in path shape116 and the movement dynamics involved in path integration117–121 may influence aspects of hippocampal activity. Our results from a generalized linear model indicate that such movement dynamics influence but do not dominate the activity in the reward-relative population, as reward-relative position was a leading predictor of activity. Further work will be required to disentangle which aspects of reward-seeking behavior are encoded in the hippocampus.

The origin of the input that informs reward-relative neurons remains an open question. One possibility is that subpopulations of neurons in CA1 receive stronger neuromodulatory inputs than others1, increasing their plasticity in response to reward-related information122,123. CA1 receives reward-related dopaminergic input from both the locus coeruleus (LC)124,125 and the ventral tegmental area (VTA)113,126,127 that could potentially drive plasticity to recruit neurons into reward-relative sequences. Both of these inputs have recently been shown to restructure hippocampal population activity, with both LC and VTA input contributing to the hippocampal reward over-representation113,125,126, and VTA input additionally stabilizing place fields113 in a manner consistent with reward expectation113,128. Alternatively, it is possible that upstream cortical regions, such as the prefrontal cortex129, contribute to shaping these reward-relative sequences. Supporting this idea, the prefrontal cortex has been observed to exhibit comparable neural activity sequences that generalize across multiple reward-directed trajectories130–134 to encode the animal’s progression towards a goal135,136. Another potential source of input is the medial entorhinal cortex, which exhibits changes in both firing rate and the location where cells are active in response to hidden reward locations137,138. In particular, layer III of the medial entorhinal cortex is thought to contribute significantly to the over-representation of rewards by the hippocampus63. Additionally, the lateral entorhinal cortex, which also projects to the hippocampus, exhibits a strong over-representation of positions and cues just preceding and following rewards120,139–141 and provides critical input for learning about updated reward locations139,140.

These insights point to a complex network of brain regions potentially interacting to inform reward-relative sequences in the hippocampus. In turn, the hippocampal-entorhinal network may send information about distance from expected rewards back to the prefrontal cortex and other regions where similar activity has been observed1, such as the nucleus accumbens142–147. Such goal-related hippocampal outputs to prefrontal cortex148 and nucleus accumbens149 have recently been reported, specifically in the deep sublayer of CA1148, where goal-related remapping is generally more prevalent148,150. Over learning, reward-relative sequences generated between the entorhinal cortices and hippocampus may become linked with codes for goal-related action sequences in the prefrontal cortex and striatum, helping the brain to generalize learned information surrounding reward to similar experiences1. Understanding these interactions in future work will provide new insight regarding how the brain integrates spatial and reward information, which is crucial for navigation and decision-making processes.

The significance of reward-relative sequences in the brain may lie in their connection to hidden state estimation7,43,151–155, a concept closely related to generalization28,156. Hidden state estimation refers to the process by which an animal or agent infers an unobservable state, such as the probability of receiving a reward under a certain set of conditions, in order to choose an optimal behavioral strategy151. For example, in our study involving mice, reward-relative sequences could be instrumental in helping the animals discern different reward states, such as whether they are in a state of the task associated with reward A, B, or C. By building a code during learning that allows the hippocampus to accurately infer such states, this inference process can generalize to similar future scenarios in which the animal must make predictions about which states lead to reward151,152. Interestingly, however, the density of the reward-relative sequences we observed was highest following the beginning of the zone where animals were most likely to get reward, rather than preceding it. This finding contrasts with computational simulations of predictive codes in the hippocampus that are skewed preceding goals157. Instead, the shape of the reward-relative sequences may be more consistent with anchoring to the animal’s estimate of the reward zone, with decreasing density around the zone as a function of distance which mirrors the organization seen around other salient landmarks86,158.

Our work is consistent with previous proposals that suggest a primary function of the hippocampus is to generate sequential activity at a baseline state17,27,29,47,48. These sequences could then act as a substrate that can become anchored to particular salient reference points16,17,27,47, allowing maximal flexibility to learn new information as it becomes relevant and layer it onto existing representations159–161. This role for hippocampal sequences extends to computational studies28,159,162 and human research163–167, where such coding schemes have been linked to the organization of knowledge and the chronological order of events in episodic memory27,49,163–166,168–170. The ability of the hippocampus to parallelize coding schemes for different features of experience may help both build complete memories and generalize knowledge about specific features without interfering with the original memory. As observed in our study, the hippocampus may then amplify the representations of some of these features over others according to task demands. A similar effect has been observed in humans, in that memory recall is particularly strong for information that either precedes or follows a reward171,172. This suggests that rewards can significantly enhance the strength and precision of memory storage and retrieval, especially for events closely associated with these rewards. Thus, reward-relative sequences may play a crucial role in how we form and recall our experiences, with implications stretching beyond spatial navigation.

METHODS

Subjects

All procedures were approved by the Institutional Animal Care and Use Committee at Stanford University School of Medicine. Male and female (n = 5 male, 5 female) C57BL/6J mice were acquired from Jackson Laboratory. Mice were housed in a transparent cage (Innovive) in groups of five same-sex littermates prior to surgery, with access to an in-cage running wheel for at least 4 weeks. After surgical implantation, mice were housed in groups of 1–3 same-sex littermates, with all mice per cage implanted. All mice were kept on a 12-h light–dark schedule, with experiments conducted during the light phase. Mice were ~2.5 to 4.5 months old at the time of surgery (weighing 18–31 g). Before surgery, animals had ad libitum access to food and water; they retained ad libitum access to food throughout the experiment. Mice were excluded from the study if they failed to perform the behavioral pre-training task described below.

Surgery: calcium indicator virus injections and imaging window implants

We adapted previously established procedures for 2-photon imaging of CA1 pyramidal cells7,173. The imaging cannula was constructed using a ~1.3-mm long stainless-steel cannula (3 mm outer diameter, McMaster) affixed to a circular cover glass (Warner Instruments, number 0 cover glass, 3 mm in diameter; Norland Optics, number 81 adhesive). Any excess glass extending beyond the cannula edge was removed with a diamond-tipped file. During the imaging cannula implantation procedure, animals were anesthetized through intraperitoneal injection of ketamine (~85 mg/kg) and xylazine (~8.5 mg/kg). Anesthesia was maintained during the procedure with inhaled 0.5–1.5% isoflurane and oxygen at a flow rate of 0.8–1 L/min using a standard isoflurane vaporizer. Prior to surgery, animals received a subcutaneous administration of ~2 mg/kg dexamethasone and 5–10 mg/kg Rimadyl (to reduce inflammation and promote analgesia, respectively). First, the viral injection site targeting the left dorsal CA1 was determined by stereotaxis (AP −1.94 mm, ML −1.10 to −1.30 mm) and an initial hole was drilled only deep enough to expose the dura. An automated Hamilton syringe microinjector (World Precision Instruments) was used to lower the syringe (35-gauge needle) to the target depth at the CA1 pyramidal layer (DV −1.33 to −1.37 mm) and inject 500 nL of adeno-associated virus (AAV) at 50 nL/min, to express the genetically encoded calcium indicator GCaMP under the pan-neuronal synapsin promoter (AAV1-Syn-jGCaMP7f-WPRE, Addgene, viral prep 104488-AAV1, titer 2 × 10^12). The needle was left in place for 10 min to allow for virus diffusion.

After retracting the needle, a 3 mm diameter circular craniotomy was then performed over the left hippocampus using a robotic surgery drill for precision (Neurostar). The craniotomy was centered at AP −1.95 mm, ML −1.8 to −2.1 mm (avoiding the midline suture). During drilling, the skull was kept moist by applying cold sterile artificial cerebrospinal fluid (ACSF; sterilized using a vacuum filter). The dura was then delicately removed using a bent 30-gauge needle. To access CA1, the cortex overlying hippocampus was carefully aspirated using a blunt aspiration needle, with continuous irrigation of ice-cold, sterile ACSF. Aspiration was stopped when the fibers of the external capsule were clearly visible, leaving the external capsule intact. Following hemostasis, the imaging cannula was gently lowered into the craniotomy until the cover glass lightly contacted the fibers of the external capsule. To optimize an imaging plane tangential to the CA1 pyramidal layer while minimizing structural distortion, the cannula was positioned at an approximate 10° roll angle relative to horizontal. The cannula was affixed in place using cyanoacrylate adhesive. A thin layer of adhesive was also applied to the exposed skull surface, which was pre-scored with a number 11 scalpel before the craniotomy to provide increased surface area for adhesive binding. A headplate featuring a left-offset 7-mm diameter beveled window and lateral screw holes for attachment to the imaging rig was positioned over the imaging cannula at a matching 10° angle. The headplate was cemented to the skull using Metabond dental acrylic dyed black with India ink or black acrylic powder.

Upon completion of the procedure, animals were administered 1 mL of saline and 10 mg/kg of Baytril, then placed on a warming blanket for recovery. Monitoring continued for the next several days, and additional Rimadyl and Baytril were administered if signs of discomfort or infection appeared. A minimum recovery period of 10 days was required before initiation of water restriction, head-fixation, and virtual reality training.

Histology

After the conclusion of all experiments, mice were deeply anesthetized and administered an overdose of Euthasol, then perfused transcardially with PBS followed by 4% paraformaldehyde (PFA) in 0.1M PB. Brains were removed and post-fixed in PFA for 24 hours, followed by incubation in 30% sucrose in PBS for >4 days. 50 μm coronal sections were cut on a cryostat, mounted on gelatin-coated slides, and coverslipped with DAPI mounting medium (Vectashield). Histological images were taken on a Zeiss widefield fluorescence microscope.

Virtual Reality (VR) Design

All VR tasks were designed and operated using custom code written for the Unity game engine (https://unity.com). Virtual environments were displayed on three 24-inch LCD monitors surrounding the mouse at 90° angles relative to each other. The VR behavior system included a rotating fixed-axis cylinder that served as the animal’s treadmill and a rotary encoder (Yumo) that read axis rotations to record the animal’s running. A capacitive lick port, consisting of a feeding tube (Kent Scientific) wired to a capacitive sensor, detected licks and delivered sucrose water reward via a gravity solenoid valve (Cole-Parmer). Two separate Arduino Uno microcontrollers operated the rotary encoder and lick detection system. Unity was run on a separate computer from the calcium imaging acquisition computer. Behavioral data were sampled at 50 Hz, matching the VR frame rate. Both the start of the VR task and each 50 Hz frame were synchronized with the ~15.5 Hz sampled imaging data via Unity-generated TTL pulses sent from an Arduino to the imaging computer.
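As an illustration of how 50 Hz behavioral samples could be related to ~15.5 Hz imaging frames once both are timestamped on a shared clock, the sketch below assigns each behavioral sample to the nearest imaging frame. It is not the acquisition or alignment code used in this study: the function name, the example timestamps, and the assumption that TTL pulse times have already been converted to seconds on a common clock are all hypothetical.

```python
from bisect import bisect_left

def nearest_imaging_frame(behavior_times, imaging_times):
    """Map each behavioral timestamp (s) to the index of the closest imaging frame.

    Both lists are assumed to be sorted and expressed on the same clock.
    """
    indices = []
    for t in behavior_times:
        j = bisect_left(imaging_times, t)  # first imaging frame at or after t
        if j == 0:
            indices.append(0)
        elif j == len(imaging_times):
            indices.append(len(imaging_times) - 1)
        else:
            before, after = imaging_times[j - 1], imaging_times[j]
            indices.append(j if (after - t) < (t - before) else j - 1)
    return indices

# Hypothetical timestamps: 1 s of behavior at 50 Hz, imaging at 15.5 Hz.
behavior_times = [i / 50.0 for i in range(50)]
imaging_times = [i / 15.5 for i in range(16)]
frame_index = nearest_imaging_frame(behavior_times, imaging_times)
```

Because the behavioral rate is roughly three times the imaging rate, several behavioral samples map onto each imaging frame; how those samples are then combined (for example, averaged) is a separate analysis choice not addressed here.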

Behavioral training and VR tasks

Handling and pre-training

After ~1 week of recovery, the mice were handled for 10 minutes per day for 2–3 days, then acclimated to head-fixation (at least 10 days after surgery) on the cylindrical treadmill in a stepwise fashion over 2–3 days, in sessions of at least 15 min (increasing to 30 min–1 h by the second or third day). Mice were then acclimated to the lick port by 1–2 days of pre-training to lick for water rewards delivered at 2-second intervals (i.e. a minimum of 2 s between rewards if mice were actively licking; otherwise no reward was delivered). For this pre-training and all subsequent behavior, sucrose water reward (5% sucrose w/v) was delivered when the mouse licked the capacitive lick port. The water delivery system was calibrated to release ~4 μL of liquid per drop. To motivate behavior, mice were water restricted to 85% or higher of their baseline body weight. Mice were weighed daily to monitor health and underwent hydration assessments (via skin tenting). The total volume of water consumed during the training and behavioral sessions was measured, and after the experiment each day, supplemental water was supplied up to a total of ~0.045 mL/g per day (typically 0.8–1 mL per day, adjusted to maintain body weight). After the training and water consumption, the animals were returned to their home cage each day.
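The daily water arithmetic described above can be sketched as follows. This is only a worked example of the stated ~0.045 mL/g target and ~4 μL drop size; the function names are hypothetical, and estimating intake from the number of rewards is an assumption made here for illustration (the text states that consumed volume was measured, and that the total was further adjusted to maintain body weight).

```python
def consumed_from_rewards_ml(n_rewards, drop_volume_ul=4.0):
    """Estimate intake (mL) from a reward count, assuming ~4 uL per drop."""
    return n_rewards * drop_volume_ul / 1000.0

def supplemental_water_ml(body_weight_g, consumed_ml, daily_target_ml_per_g=0.045):
    """Return the water (mL) still owed after the session, never negative."""
    target_ml = daily_target_ml_per_g * body_weight_g
    return max(0.0, target_ml - consumed_ml)

# Hypothetical example: a 20 g mouse that collected 150 rewards.
consumed = consumed_from_rewards_ml(150)       # 0.6 mL
owed = supplemental_water_ml(20.0, consumed)   # about 0.3 mL of a 0.9 mL daily total
```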

Once acclimated to the lick port, mice were pre-trained on a “running” task on a 350 cm virtual linear track with random black and white checkerboard walls and a white floor to collect a cued reward. The reward location was marked by two large gray towers, initially positioned at 50 cm down the track. If the mouse licked within 25 cm of the towers, it received a liquid reward; otherwise, an automatic reward was given at the towers. After dispensing the reward, the towers advanced (disappeared and quickly reappeared further down the track). Covering the distance to the current reward in under 20 s increased the inter-reward distance by 10 cm, but if the mouse took longer than 30 s, the distance decreased by 10 cm, with a maximum reward location of 300 cm. Upon consistent completion of 300 cm distance in under 20 s, the automatic reward was turned off, requiring the mice to lick to receive reward. At the end of the track, the mice passed through a black “curtain” and into a gray teleport zone (50 cm long) that was equiluminant with the VR environment before re-entering the track from the beginning. Once mice were reliably licking and completing 200 laps of the pre-training track within a 30–40 min period, they were advanced to the main task and imaging (mean ± s.d.: 6.1 ± 1.8 days of running pre-training across 10 mice).
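The adaptive rule for advancing the cued reward during pre-training can be summarized in a short sketch. The actual task was implemented in custom Unity code, so the Python below is only an illustration of the stated thresholds; the function name and the 50 cm lower bound (taken from the initial tower position) are assumptions, since the text specifies only the 300 cm maximum.

```python
def update_reward_distance(distance_cm, elapsed_s,
                           step_cm=10, fast_s=20, slow_s=30,
                           min_cm=50, max_cm=300):
    """Return the next reward distance given the time taken to reach the last reward.

    min_cm is an assumed floor; the source states only the maximum.
    """
    if elapsed_s < fast_s:
        distance_cm += step_cm    # fast run: make the next reward farther away
    elif elapsed_s > slow_s:
        distance_cm -= step_cm    # slow run: bring the next reward closer
    # Runs taking between 20 and 30 s leave the distance unchanged.
    return max(min_cm, min(max_cm, distance_cm))

# Hypothetical sequence of run times (s) starting from the initial 50 cm distance.
distance = 50
for elapsed in (15, 12, 25, 35, 18):
    distance = update_reward_distance(distance, elapsed)
```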

Hidden reward zone “switch” VR task

The main “switch” task reported in this study involved two virtual environments highly similar to those previously used to study hippocampal remapping7, each visually distinct from the pre-training environment. Both environments consisted of a 450 cm linear track, with two colored towers and two patterned towers along the walls. Environment 1 (Env 1) had diagonal low-frequency black and white gratings on the walls, a gray floor and dark gray sky, and began with two green towers. Environment 2 (Env 2) had higher frequency gratings on the wall, a gray floor, a very light gray sky, and began with two blue towers. The reward zone was a “hidden” (not explicitly marked) 50-cm span at one of 3 possible locations along the track, each equidistantly spaced between the towers but with different proximity to the start or end of the track: zone A: 80–130 cm, zone B: 200–250 cm, zone C: 320–370 cm. Only one reward zone was ever active at a time. On the first 10 trials of any new condition, including the first day and each switch subsequently described, the reward was automatically delivered at the end of the zone if the mouse had not yet licked within the zone, to signal reward availability. Otherwise, reward was delivered at the first location within the zone where the mouse licked. After these 10 trials, reward was operantly delivered for licking within the zone. Reward was randomly omitted on approximately 15% of trials (i.e. when a randomly generated number exceeded 0.85). Each lap terminated in a black curtain and gray teleportation “tunnel” to return the mouse to the beginning of the track. Time in the teleport tunnel was randomly jittered between 1 and 5 s (5–10 s on trials following reward omissions or trials where the mouse did not lick in the reward zone), followed by 50 cm of running during which the beginning of the track was visible to provide smooth optic flow.
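The per-trial reward contingencies described above can be expressed as a small decision function. This is a sketch under stated assumptions rather than the Unity task code: the function and variable names are hypothetical, omissions are applied to every trial (the text does not say whether they also applied during the first 10 automatic-delivery trials), and the choice between the short and long teleport delay is passed in as a flag rather than derived from trial history.

```python
import random

REWARD_ZONES_CM = {"A": (80, 130), "B": (200, 250), "C": (320, 370)}

def reward_position(zone, first_lick_cm, trial_in_condition, rng,
                    n_auto_trials=10, omit_threshold=0.85):
    """Return the track position (cm) of reward delivery, or None if no reward.

    first_lick_cm: position of the first lick inside the zone (None if no lick).
    trial_in_condition: 0-based trial count since the last change of condition.
    """
    start_cm, end_cm = REWARD_ZONES_CM[zone]
    if rng.random() > omit_threshold:
        return None                   # reward omitted (~15% of trials)
    if first_lick_cm is not None and start_cm <= first_lick_cm <= end_cm:
        return first_lick_cm          # delivered at the first lick within the zone
    if trial_in_condition < n_auto_trials:
        return end_cm                 # automatic delivery at the end of the zone
    return None                       # operant phase and no lick in the zone

def teleport_duration_s(long_delay, rng):
    """Draw the time in the teleport tunnel: 1-5 s normally, 5-10 s if long_delay."""
    low, high = (5.0, 10.0) if long_delay else (1.0, 5.0)
    return rng.uniform(low, high)

rng = random.Random(0)  # seeded only to make the example reproducible
position = reward_position("B", first_lick_cm=None, trial_in_condition=3, rng=rng)
delay = teleport_duration_s(long_delay=position is None, rng=rng)
```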

Each mouse encountered a different starting reward zone and sequence of reward zone switches, counterbalanced across mice (n = 7 mice). Mice were allowed to learn an initial reward zone (e.g. A as in Fig. 1E) for days 1–2 of the task. On day 3 (Switch 1), the zone was moved to one of the two other possible locations on the track after 30 trials (e.g. B). For the first 10 trials at the new location, automatic delivery was activated as stated above, but empirically, we observed that the mice often started licking at the new location before those 10 trials had elapsed (Fig. S1A–C). The new reward zone was maintained on day 4. On day 5 (Switch 2), the zone was moved to the third possible reward zone (e.g. C), maintained on day 6, and moved back to the original location on day 7 (e.g. A). Each switch occurred after 30 trials. On day 8, the reward zone switch coincided with a switch into Env 2, where the sequence of zone switches was then reversed on the same day-to-day schedule for a total of 14 days (Fig. 1, Fig. S1).
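For concreteness, the Env 1 portion of this schedule (days 1–7) can be written as a lookup table. The sketch below is illustrative only: the function names are hypothetical, trials are indexed from 0 so that "after 30 trials" means the switch takes effect on the 31st trial, and days 8–14 in Env 2 are deliberately not encoded because the exact reversed ordering is specified in Fig. 1 rather than in the text.

```python
def env1_schedule(zone_order):
    """Return {day: (zone_at_start, zone_after_switch or None)} for days 1-7.

    zone_order is the counterbalanced order of the three zones, e.g. ("A", "B", "C").
    """
    first, second, third = zone_order
    return {
        1: (first, None),
        2: (first, None),
        3: (first, second),   # Switch 1
        4: (second, None),
        5: (second, third),   # Switch 2
        6: (third, None),
        7: (third, first),    # return to the original zone
    }

def active_zone(schedule, day, trial_index, switch_after=30):
    """Return the active reward zone for a 0-based trial index on a given day."""
    start_zone, switched_zone = schedule[day]
    if switched_zone is not None and trial_index >= switch_after:
        return switched_zone
    return start_zone

schedule = env1_schedule(("A", "B", "C"))
zone_before = active_zone(schedule, day=3, trial_index=29)  # last trial before Switch 1
zone_after = active_zone(schedule, day=3, trial_index=30)   # first trial after Switch 1
```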

An additional “fixed-condition” cohort (n = 3 mice) experienced only Env 1 and one fixed reward location throughout the 14 days (Fig. S1D,F).

We targeted 80–100 trials per session with simultaneous two-photon imaging (described below), with 1 imaging session per day (mean ± s.d.: 80.9 ± 8.8 trials across 10 mice). The session was terminated early if the mouse ceased licking for an extended period of time or ceased running consistently, and/or if the imaging time exceeded 50 minutes. Prior to the imaging session for this task, mice were provided 30 “warm-up” trials using the task and reward zone from the previous day to re-acclimate them to the VR setup. On day 1 of imaging, this warm-up session used the pre-training environment from prior days. From day 2 onward, the warm-up session used whichever environment and reward zone was active at the end of the previous day. Following the completion of the daily imaging session, mice were given another ~100 training trials without imaging on the last reward zone seen in the imaging session until they acquired their daily water allotment.

Two-photon (2P) imaging