Abstract

Measuring the function of decision-making systems is a central goal of computational psychiatry. Individual measures of decisional function could be used to describe neurocognitive profiles that underpin psychopathology and offer insights into deficits that are shared across traditional diagnostic classes. However, there are few demonstrably reliable and mechanistically relevant metrics of decision making that can accurately capture the complex overlapping domains of cognition whilst also quantifying the heterogeneity of function between individuals. The WebSurf task is a reverse-translational human experiential foraging paradigm which indexes naturalistic and clinically relevant decision-making. To determine its potential clinical utility, we examined the psychometric properties and clinical correlates of behavioural parameters extracted from WebSurf in an initial exploratory experiment and a pre-registered validation experiment. Behaviour was stable over repeated administrations of the task, as were individual differences. The ability to measure decision making consistently supports the potential utility of the task in predicting an individual’s propensity for response to psychiatric treatment, in evaluating clinical change during treatment, and in defining neurocognitive profiles that relate to psychopathology. Specific aspects of WebSurf behaviour also correlate with anhedonic and externalising symptoms. Importantly, these behavioural parameters may measure dimensions of psychological variance that are not captured by traditional rating scales. WebSurf and related paradigms might therefore be useful platforms for computational approaches to precision psychiatry.

Keywords: decision making, reliability, foraging, computational psychiatry, WebSurf

1.0. Psychometric validation and clinical correlates of an experiential foraging task

Foraging is a core behaviour in every motile species, requiring the integration of multiple cognitive functions. It requires an organism to accurately monitor the environment and its contingencies, including the availability of resources and the costs and risks of collecting them, whilst assessing its own homeostatic state (Lima & Bednekoff, 1999; Mobbs et al., 2018; Stephens, 2008; Stephens & Krebs, 1986). Importantly, given that these behaviours have been subject to strong selective pressure over evolution (Stephens, 2008; Stephens & Krebs, 1986), foraging paradigms offer a naturalistic way to understand the decision-making processes relevant to the problems that the brain evolved to solve (Mobbs et al., 2018). Foraging paradigms can therefore be a useful lens through which to study psychopathology, because many mental disorders are thought to arise fundamentally from problems in decision-making systems (Adams et al., 2016; Kishida et al., 2010; Redish, 2013). Neuroeconomic tasks model the behavioural strategies that animals adopt and are increasingly being used in psychiatric research to elucidate the relationship between decision-making and psychopathology (Hasler, 2012; Redish et al., 2021).

Previous efforts to translate animal foraging paradigms to human research have often used secondary reinforcers such as points or money (Kolling et al., 2012; Reynolds & Schiffbauer, 2004; Shenhav et al., 2014). This may undermine the validity of these tasks because secondary rewards, as opposed to primary consummatory rewards, may evoke activity in different neurobiological reward circuitry (Abram et al., 2016). This is pertinent to the use of these tasks in understanding psychopathology, because aberrant reward processing is prevalent transdiagnostically (Zald & Treadway, 2017). The WebSurf task (Abram et al., 2016) and its rodent counterpart, Restaurant Row (Steiner & Redish, 2014; Sweis et al., 2018), are serial foraging paradigms developed to address this issue. In these tasks, subjects decide whether to accept or reject time delays for primary rewards (entertaining videos in WebSurf; flavoured food pellets in Restaurant Row) (Abram et al., 2016; Abram, Hanke, et al., 2019; Abram, Redish, et al., 2019; Huynh et al., 2021; Steiner & Redish, 2014; Sweis et al., 2018). In the WebSurf task, participants move sequentially between four video 'galleries' and decide whether to invest time from a limited budget in order to receive a reward, or to forgo the time delay and move to the next video gallery. Importantly, multiple decisional constructs can be derived from task behaviour, and behavioural patterns are consistent across human and rodent species (Abram, et al., 2019; Kazinka et al., 2021; Sweis et al., 2018).

Applying foraging paradigms may be useful in establishing neurocognitive profiles that underpin psychopathology and are mechanistically relevant, rather than relying solely on clinical rating scales. Establishing such neurocognitive profiles may facilitate the development of animal models in psychiatry by allowing parallel investigation across species, and may offer insights into psychiatric comorbidities by defining deficits that are shared across traditional psychiatric classifications (Adams et al., 2016; Huys et al., 2016; Redish et al., 2021; Redish & Gordon, 2016; Robbins et al., 2012). However, the absence of psychometric evidence, such as good retest reliability and minimal ceiling and floor effects, impedes further progress, because one cannot assume that psychophysical tasks accurately capture individual differences in behaviour (Poldrack & Yarkoni, 2016). For example, tasks that are traditionally used to quantify decision-making are typically optimised to index individual domains of cognition, at the expense of capturing the interactions between multiple overlapping constructs. Furthermore, these tasks often demonstrate only modest retest reliability and poor inter-individual variability, because they are designed to maximise observable differences between experimental conditions, which suppresses variability between individuals (Dang et al., 2020; Enkavi et al., 2019; Goschke, 2014; Poldrack & Yarkoni, 2016; Redish et al., 2021; Strauss et al., 2006). More naturalistic behavioural tasks, which do not rely on a specific A/B contrast, may be more useful in examining decisional systems that reflect psychopathology (Dang et al., 2020; Enkavi et al., 2019; Poldrack & Yarkoni, 2016); however, this hypothesis awaits confirmation.

The WebSurf task holds value as a clinically meaningful tool for examining aberrant decisional processing because it addresses several issues faced by many other neurocognitive tools. However, it has yet to be determined whether behaviour in WebSurf is reliable over time and whether it has predictive utility for psychopathological symptoms. In rodents, behaviour in this paradigm remains consistent over multiple days of testing (Steiner & Redish, 2014; Sweis et al., 2018) but differs from animal to animal. While humans show between-individual variability consistent with that of animals (Abram, Hanke, et al., 2019; Huynh et al., 2021; Kazinka et al., 2021; Redish et al., 2022; Sweis et al., 2018), within-individual behavioural consistency across multiple days of testing has not been confirmed. Establishing the reliability of a measure can indicate both its signal-to-noise ratio and its ability to capture trait constructs. Reliability must be established before a task can be used as a valid metric of clinical change (such as that during treatment), as a predictor of response to treatment, or to determine who should receive treatment. Furthermore, variants of the WebSurf task have identified behavioural patterns that are associated with trait externalising features, which are a risk factor for addiction (Abram, Redish, et al., 2019). However, it remains unclear whether task behaviour can predict a wider range of psychopathological symptoms. Finally, for the task to be clinically scalable, it should be valid in an unsupervised environment (Lavigne et al., 2022). Given that the primary cost in WebSurf is boredom, unsupervised administration of the task may threaten its computational validity, because participants have unlimited access to distractions that relieve task-evoked boredom (Weydmann et al., 2022). For example, when the task is administered online, some participants demonstrate uneconomical WebSurf behaviour (preferring longer delays) that may indicate self-distraction (Huynh et al., 2021; Kazinka et al., 2021). Therefore, if the task is to be used as a scalable clinical tool, it must be validated to determine whether behavioural quantification is repeatable and reliable when participants are unsupervised.

Here, we examined the clinical utility of the WebSurf task in this unsupervised context. Specifically, we tested the stability of task parameters over time, and applied behavioural checks to determine how often obvious task inattentiveness occurred in an uncontrolled and unsupervised online environment. Finally, we administered a battery of questionnaires assessing symptoms of internalising and externalising psychopathology to determine how patterns of task behaviour may relate to particular psychopathological profiles. We conducted these analyses in an initial exploratory experiment and validated the findings in a pre-registered confirmatory experiment.

2.0. Methods

2.1. Participants

Participants (n = 201) were recruited from the online platform Prolific for participation in Experiment One (exploratory sample). To receive an invitation to participate in the study, participants were required to be residents of the United States and aged 18 years or older. Participants were also required to have access to a desktop computer or laptop with audio capabilities. Approximately half of these participants completed a single session of the WebSurf task (Group A) and the other half completed repeated sessions (Group B). This study was approved for human subjects research by the University of Minnesota local Institutional Review Board (STUDY00007274). All participants provided informed consent in accordance with the Declaration of Helsinki.

We recruited a second sample of 200 participants from Prolific to perform validation analyses of our findings. The demographics of the exploratory (Experiment One) and validation (Experiment Two) samples are reported in Table 1.

Table 1:

Demographics of recruited samples

| Characteristic | Measure | Exp. One: Group A (n = 101) | Exp. One: Group B (n = 100) | Exp. One: All (n = 201) | Exp. Two: Group A (n = 100) | Exp. Two: Group B (n = 100) | Exp. Two: All (n = 200) |
|---|---|---|---|---|---|---|---|
| Age | Min (years) | 18 | 19 | 18 | 20 | 21 | 20 |
| | Max (years) | 65 | 71 | 71 | 78 | 72 | 78 |
| | Did not specify (n) | 3 | 0 | 3 | 0 | 5 | 5 |
| | Mean (SD) [years] | 35.06 (11.60) | 34.83 (12.35) | 34.94 (12.48) | 36.47 (12.97) | 36.87 (11.11) | 36.67 (12.07) |
| Gender identity | Female (n) | 49 | 56 | 105 | 41 | 45 | 86 |
| | Male (n) | 49 | 42 | 91 | 58 | 53 | 111 |
| | Another gender (n) | 2 | 2 | 4 | 0 | 0 | 0 |
| | Did not specify (n) | 1 | 0 | 1 | 1 | 2 | 3 |
| Ethnicity | % Asian | 4.95 | 8 | 6.46 | 5 | 9 | 7 |
| | % Black | 12.87 | 7 | 9.95 | 13 | 14 | 13.5 |
| | % Mixed | 4.95 | 10 | 7.46 | 8 | 6 | 7 |
| | % Another ethnicity | 2.97 | 6 | 4.48 | 5 | 4 | 4.5 |
| | % White | 67.33 | 68 | 67.66 | 68 | 63 | 65.5 |
| | % Did not specify | 6.93 | 1 | 3.98 | 1 | 4 | 2.5 |

2.2. Procedure

The same data collection procedures were used in both experiments. After consenting to participate in the study, participants were directed to a battery of self-report clinical rating scales measuring facets of compulsivity, psychosis, depression, and other related psychopathology. Participants were compensated with $5 USD for completing the questionnaire battery. Twenty-four hours after completing the questionnaire battery, participants were invited to complete a session of the informational foraging task WebSurf. The task lasted 30 minutes, and participants received $6 USD for completing it. In addition, a random subset of 100 participants (referred to as Group B) was invited to participate in two further sessions of the WebSurf task; this group was intended to assess the reliability of task behaviour. An invitation was sent 24 hours after completion of the first WebSurf session, and another 72 hours after completion of the second WebSurf session. Participants were compensated with $6 and $7 USD for completion of the second and third WebSurf sessions, respectively.

2.2.1. Measures

2.2.1.1. WebSurf Task

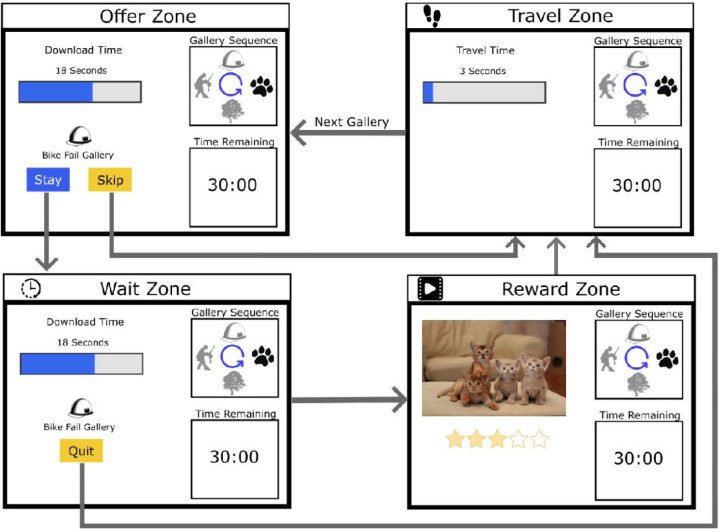

The WebSurf task simulates foraging behaviour in humans: participants move between four galleries of videos (kittens, dance, bike accidents, landscapes), which function as a reward for investing time (Abram et al., 2016). The task sequence is shown in Figure 1. Upon arriving at a video gallery, participants are informed of the time delay they must wait for the current gallery's video to download. This delay is drawn from a uniform distribution between 3 and 30 seconds. Participants can choose to accept or reject (skip) the offer. If the participant skips, they move to the next video gallery, where they receive another offer that they must likewise accept or reject. If the participant accepts the offer, they must wait for the reward. During the wait period, participants can choose to quit the wait and move to the next gallery. If participants wait for the entire duration, a video from the current gallery plays for approximately four seconds. At the completion of each video, participants are asked to rate it on a five-star scale, where one star = extremely dislike and five stars = extremely like. Once the video is rated, participants move to the next video gallery and are presented with another offer. The four video galleries were the same across sessions, but unique videos within each gallery were presented in each session.
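To make the trial structure concrete, the sketch below simulates one session for a hypothetical agent that accepts any offer at or below a fixed per-gallery threshold. It is an illustration rather than the task's implementation: the uniform 3 to 30 second delays, the roughly four-second videos, the three-second travel period, and the 30-minute session come from the description above, but the agent, the function name `simulate_session`, and the omission of quitting, rating time, and decision time are simplifying assumptions.

```python
import random

# Timing constants from the task description; the threshold agent and the
# omission of quitting, rating time and decision time are assumptions.
GALLERIES = ["kittens", "dance", "bike accidents", "landscapes"]
MIN_DELAY, MAX_DELAY = 3.0, 30.0    # offer delays drawn uniformly (s)
TRAVEL_TIME = 3.0                   # Travel Zone duration (s)
VIDEO_TIME = 4.0                    # approximate video length (s)
SESSION_BUDGET = 30 * 60.0          # 30-minute session (s)


def simulate_session(thresholds, seed=0):
    """Simulate one session for a hypothetical agent that accepts an offer
    whenever its delay is at or below that gallery's threshold."""
    rng = random.Random(seed)
    clock, gallery, trials = 0.0, 0, []
    while clock < SESSION_BUDGET:
        name = GALLERIES[gallery]
        delay = rng.uniform(MIN_DELAY, MAX_DELAY)
        accept = delay <= thresholds[name]
        trials.append({"gallery": name, "delay": delay, "accept": accept})
        if accept:
            clock += delay + VIDEO_TIME   # Wait Zone, then Reward Zone
        clock += TRAVEL_TIME              # Travel Zone before the next offer
        gallery = (gallery + 1) % len(GALLERIES)
    return trials


if __name__ == "__main__":
    demo = simulate_session({"kittens": 25, "dance": 20,
                             "bike accidents": 15, "landscapes": 8})
    print(len(demo), sum(t["accept"] for t in demo))
```

Because every accepted offer consumes its delay from the shared budget, an agent with higher thresholds completes fewer trials per session, which is the opportunity cost the task is designed to impose.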

Figure 1.

Sequence of the WebSurf task. Participants start in the Offer Zone, where they are presented with the time delay they must wait for reward. If they accept the offer, they move to the Wait Zone. If they reject the offer, they move to the Travel Zone. In the Wait Zone, participants must wait the accepted duration (3 – 30 seconds) before they move to the Reward Zone. If participants decide to quit, they move to the Travel Zone. Once the wait has elapsed, participants are presented with a video from the current video gallery in the Reward Zone. At the completion of the video, participants provide a rating from 1 – 5 stars and move to the Travel Zone. In the Travel Zone, participants must wait three seconds before moving to the next video gallery.

The core measures of behaviour we extracted from the task are decision threshold, probability of accepting offers (P(Accept)), average video rating, average decision latency, and number of threshold violations. Decision thresholds, P(Accept), and video ratings are clinically relevant constructs because they assess responses to positive motivational contexts, including reward responsiveness and valuation, which are implicated in a range of psychiatric disorders (Barch et al., 2009). Decision latencies can reflect the engagement of deliberative decisional processes as individuals evaluate options and their outcomes (Pleskac et al., 2019). Decision latencies may also indicate the efficiency of decisional systems and the engagement of impulsive or habitual decision strategies. The number of threshold violations is relevant for assessing how well decisions maximise the ratio of reward to time invested, and relates to impulsive decisional styles. An outline of these parameters is provided in Table 2.

Table 2.

Definition of task parameters

| Task parameter | Calculation | Construct measured |
|---|---|---|
| Decision threshold | Derived by fitting a Heaviside step function to stays (1) and skips (0) as a function of offer delay, for each video gallery | Revealed preference: the duration of time the respondent is willing to wait for a video from a particular gallery |
| P(Accept) | N(Accept) / N(Trials) | Probability of accepting offers regardless of offer value |
| Average video rating | Mean(Video rating \| Gallery) | Explicit gallery preference and desirability of videos |
| Average decision latency | Median(Decision time) | Deliberation |
| Number of threshold violations | N(Accept where offer > threshold) + N(Skip where offer < threshold) | Optimality of decisions |
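The Table 2 definitions can be computed from trial-level data roughly as follows. This is a sketch under stated assumptions, not the study's code: the step function is fitted by choosing, from a grid of candidate thresholds (the task bounds plus midpoints between observed delays), the one that minimises threshold violations, with ties broken toward the lowest candidate; the field names `delay`, `accept`, `latency`, and `rating` are hypothetical; and the original analysis may have fitted thresholds differently.

```python
from statistics import mean, median


def count_violations(delays, accepts, threshold):
    """Accepted offers longer than the threshold plus skipped offers
    shorter than it (the Table 2 definition)."""
    return sum((a and d > threshold) or (not a and d < threshold)
               for d, a in zip(delays, accepts))


def fit_threshold(delays, accepts, lo=3.0, hi=30.0):
    """Fit a Heaviside step (stay below, skip above) by choosing the
    candidate threshold with the fewest violations. Candidates are the task
    bounds plus midpoints between successive observed delays; ties go to
    the smallest candidate (both choices are assumptions)."""
    ds = sorted(set(delays))
    candidates = sorted({lo, hi, *((x + y) / 2 for x, y in zip(ds, ds[1:]))})
    return min(candidates, key=lambda t: count_violations(delays, accepts, t))


def gallery_parameters(trials):
    """Summarise one gallery's trials. Each trial is a dict with hypothetical
    keys 'delay' (s), 'accept' (bool), 'latency' (s) and, when a video was
    watched, 'rating' (1-5)."""
    delays = [t["delay"] for t in trials]
    accepts = [t["accept"] for t in trials]
    threshold = fit_threshold(delays, accepts)
    ratings = [t["rating"] for t in trials if t.get("rating") is not None]
    return {
        "threshold": threshold,
        "p_accept": sum(accepts) / len(accepts),
        "mean_rating": mean(ratings) if ratings else float("nan"),
        "median_latency": median(t["latency"] for t in trials),
        "violations": count_violations(delays, accepts, threshold),
    }
```

Because the threshold is chosen to minimise violations, the violation count is the residual inconsistency that no single step function can explain, which is why it can serve as an index of decision optimality.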

2.2.1.2. Questionnaire Battery

A battery of previously validated self-report rating scales was administered to participants after initial recruitment into the study. The questionnaire battery included the Altman Self-Rating Mania Scale (ASRM; Altman et al., 1997), the Alcohol Use Disorders Identification Test (AUDIT; Saunders et al., 1993), the Aberrant Salience Inventory (ASI; Cicero et al., 2010), the Centre for Epidemiological Studies – Depression (CES-D; Radloff, 2016), the Daily Sessions, Frequency, Age of Onset, and Quantity of Cannabis Use Inventory (DFAQ-CU; Cuttler & Spradlin, 2017), the Eating Attitudes Test (EAT-26; Garner et al., 1982), the Mini Mood and Anxiety Symptoms Questionnaire (Mini-MASQ; Watson et al., 1995), the Obsessive Compulsive Inventory – Revised (OCI-R; Foa et al., 2002), the Snaith-Hamilton Pleasure Scale (SHAPS; Snaith et al., 1995), the Barratt Impulsiveness Scale (BIS-11; Patton et al., 1995), the Schizotypal Personality Questionnaire – Brief (SPQ-B; Raine & Benishay, 1995), the Temporal Experience of Pleasure Scale (TEPS; Gard et al., 2006), and the World Health Organization Alcohol, Smoking, and Substance Involvement Screening Test (WHO-ASSIST; WHO ASSIST Working Group, 2002). These data were used to assess the clinically relevant predictive utility of task behavioural parameters. To this end, we examined whether task behaviour is predictive of symptomatology, as measured by individual questionnaire scores, and of psychopathological profiles, as measured by a principal component analysis (PCA) of questionnaire scores. The questionnaires were administered in a fixed order for all participants, with attention-check items interspersed among the questionnaire items. Participants were excluded from the study if they failed more than one attention-check item in the questionnaire battery. A participant's responses to a given scale were discarded if they failed the attention-check item embedded in that scale. Descriptions of each measure and its subscales are outlined in Supplementary Table S1.

Questionnaire items were reverse scored where necessary, and scored items were summed to produce a total score for each questionnaire. The exception was the DFAQ-CU, whose three subscales measure cannabis use on different scales of measurement (frequency, age of onset, and quantity). Its items were therefore z-transformed within each subscale and then summed to produce a total score.
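A minimal sketch of the two scoring rules follows. Reverse scoring maps a response r on a scale from min to max to (min + max − r). For the DFAQ-CU, the text leaves the exact unit of standardisation open, so this sketch assumes one reading: each subscale score is z-transformed across participants (using the sample standard deviation) and the z-scores are summed per participant. The function names are hypothetical.

```python
from statistics import mean, stdev


def total_score(responses, reverse_items, min_val, max_val):
    """Sum item responses, reverse-scoring the items whose (0-based)
    indices are listed in reverse_items on a min_val..max_val scale."""
    return sum(min_val + max_val - r if i in reverse_items else r
               for i, r in enumerate(responses))


def zscores(values):
    """Standardise values using the sample mean and standard deviation."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def dfaq_cu_totals(subscales):
    """Hypothetical DFAQ-CU total: z-score each subscale across participants
    so that frequency, age of onset and quantity share a common scale, then
    sum the z-scores per participant. `subscales` maps subscale name ->
    list of per-participant scores, in the same participant order."""
    standardised = [zscores(scores) for scores in subscales.values()]
    return [sum(per_person) for per_person in zip(*standardised)]
```

Standardising first prevents the subscale with the largest numeric range from dominating the summed total.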

2.2.2. Statistical Analyses

All data processing and analyses were conducted using R (R Core Team, 2016). Prior to analysing behavioural data from the WebSurf task, we screened data for evidence that subjects were inattentive during their participation (see Supplementary Materials). Once total scores were calculated for each questionnaire and WebSurf task parameters were derived, we subjected these data to a series of exploratory analyses in Experiment One. First, we examined the reliability and stability of behaviour in the WebSurf task over repeated administrations. These repeatability tests were conducted with the subset of participants who completed three sessions of the task (Group B). To evaluate reliability of task behaviour, we calculated intraclass correlations (ICCs) of WebSurf task parameters with two-way mixed effects and based on absolute agreement (ICC(2,1); Koo & Li, 2016; McGraw & Wong, 1996; Shrout & Fleiss, 1979). We calculated ICCs for all session combinations (i.e. across all sessions, across the first and second session, across the first and third session, and across the second and third session). Based on criteria proposed by Koo and Li (2016), reliability was deemed adequate if the ICC of a parameter was > 0.75.
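The absolute-agreement, single-measure ICC can be computed from the two-way ANOVA mean squares of a participants × sessions matrix. The sketch below implements McGraw and Wong's ICC(A,1), which uses the same formula as Shrout and Fleiss' ICC(2,1); McGraw and Wong note that this formula is the same whether session effects are treated as random or fixed. It is an illustrative reimplementation, not the code used in the study, and assumes a complete matrix with no missing sessions.

```python
def icc_a1(data):
    """ICC for absolute agreement, single measurement (McGraw & Wong's
    ICC(A,1), equivalent to Shrout & Fleiss' ICC(2,1)).
    data: list of rows, one per participant, one column per session."""
    n, k = len(data), len(data[0])
    grand = sum(map(sum, data)) / (n * k)
    row_means = [sum(r) / k for r in data]
    col_means = [sum(r[j] for r in data) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between sessions
    ss_total = sum((x - grand) ** 2 for r in data for x in r)
    ss_err = ss_total - ss_rows - ss_cols                     # residual
    ms_r = ss_rows / (n - 1)
    ms_c = ss_cols / (k - 1)
    ms_e = ss_err / ((n - 1) * (k - 1))
    return (ms_r - ms_e) / (ms_r + (k - 1) * ms_e + k * (ms_c - ms_e) / n)
```

Because the session mean square enters the denominator, a uniform shift between sessions lowers this ICC even when participants keep their rank order: `icc_a1([[1, 2], [2, 3], [3, 4]])` gives 2/3, whereas a consistency-type ICC would give 1. This is why absolute agreement is the stricter criterion for the > 0.75 threshold.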

In addition, we examined whether task parameters changed systematically with repeated task administration, which may indicate that participants become satiated with the task. If task parameters are stable over repeated administrations, then the main effect of session number should not be significant. Because this prediction amounts to accepting the null hypothesis, which a non-significant frequentist test cannot establish, we evaluated it with a series of Bayesian linear mixed-effects models, with WebSurf task parameters (decision thresholds, P(Accept), average video ratings, median decision latencies, and number of threshold violations) as response variables, session number and gallery rank as predictor variables, and subject ID as a random effect. We calculated BF01 values to quantify evidence for the null over the alternative hypothesis and interpreted these according to the criteria outlined by Jeffreys (2006).
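The sketch below shows the interpretation step, plus one common way of approximating BF01 from two fitted models. Both parts are assumptions rather than the authors' procedure: the verbal labels use a widely used adaptation of Jeffreys' categories with cut-offs at 3, 10, 30 and 100 (Jeffreys' original boundaries differ slightly, for example 10^1.5 ≈ 31.6 rather than 30), and the BIC approximation BF01 ≈ exp((BIC_alt − BIC_null) / 2) is a swapped-in shortcut, not necessarily how the reported Bayes factors were computed.

```python
import math


def bf01_from_bic(bic_null, bic_alt):
    """BIC approximation to the Bayes factor in favour of the null model:
    BF01 ~= exp((BIC_alt - BIC_null) / 2)."""
    return math.exp((bic_alt - bic_null) / 2)


def evidence_label(bf):
    """Verbal label for a Bayes factor >= 1, using a common adaptation of
    Jeffreys' categories (cut-offs at 3, 10, 30 and 100)."""
    for cutoff, label in [(3, "anecdotal"), (10, "moderate"),
                          (30, "strong"), (100, "very strong")]:
        if bf < cutoff:
            return label
    return "extreme"
```

Under these cut-offs, a BF01 of 32.20 is labelled "very strong" and values of 17.80 or 18.81 are labelled "strong", matching how such values are described in the Results.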

For the subsequent analyses, we included all first-session data across Groups A and B. We evaluated hedonic reliability within sessions by calculating correlations between different indices of gallery preference (gallery decision thresholds, average video ratings for each gallery, and explicit gallery rankings). For each combination of these preference indices, we calculated Kendall’s τb and determined that hedonic reliability was adequate if these values were > 0.3 (Schaeffer & Levitt, 1958).
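With only four galleries per participant, ties are likely (for example, two galleries with the same mean rating), which is why the tie-corrected τb is the appropriate variant. A minimal pure-Python version of the standard τb formula is sketched below; the function name is hypothetical, and in R or SciPy a library routine would normally be used instead.

```python
import math
from itertools import combinations


def kendall_tau_b(x, y):
    """Kendall's tau-b with the standard correction for ties."""
    concordant = discordant = ties_x = ties_y = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue                  # tied on both: ignored by tau-b
        if dx == 0:
            ties_x += 1               # tied only on x
        elif dy == 0:
            ties_y += 1               # tied only on y
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    denom = math.sqrt((concordant + discordant + ties_x) *
                      (concordant + discordant + ties_y))
    return (concordant - discordant) / denom if denom else float("nan")
```

One practical point, offered as an assumption about how the indices are coded: if rank 1 denotes the most preferred gallery, explicit rankings run opposite to thresholds and ratings, so the ranks would need to be reversed (or the sign of τb flipped) before applying the > 0.3 criterion.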

To identify profiles of psychiatric symptoms that cut across traditional psychiatric classification, we subjected our questionnaire scores to principal component analysis (PCA). Total scores from each questionnaire were standardised via z-transformation prior to running PCA. PCs were retained on the basis of their eigenvalues and the percentage of questionnaire score variance accounted for by each PC. The PCA rotation (loading) matrix was used to calculate individual subject scores for each PC extracted from the questionnaire data. The resulting components are presented in the Supplementary Materials. These PC scores were then used as predictors of WebSurf task behaviour in subsequent analyses. We also ran a series of least absolute shrinkage and selection operator (LASSO) models to identify the specific symptom measures (questionnaire scores) that predicted task behaviour.
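The PCA step can be sketched as an eigendecomposition of the correlation matrix of z-scored questionnaire totals. This is a minimal illustration assuming NumPy and complete data; it should agree with R's `prcomp(x, scale. = TRUE)` up to the arbitrary sign of each component, but it is not the study's code, and the function name is hypothetical.

```python
import numpy as np


def pca_scores(totals):
    """PCA on z-scored questionnaire totals.
    totals: (n_participants, n_questionnaires) array of total scores.
    Returns eigenvalues (descending), proportion of variance explained,
    loadings (columns = PCs) and per-participant PC scores."""
    X = np.asarray(totals, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)   # z-transform each scale
    corr = Z.T @ Z / (Z.shape[0] - 1)                   # correlation matrix
    eigvals, eigvecs = np.linalg.eigh(corr)             # ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, loadings = eigvals[order], eigvecs[:, order]
    scores = Z @ loadings                               # PC scores per participant
    return eigvals, eigvals / eigvals.sum(), loadings, scores
```

Because the inputs are standardised, the eigenvalues sum to the number of questionnaires, so an eigenvalue can be read directly as "how many questionnaires' worth of variance" a component captures when deciding which PCs to retain.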

We ran a series of tests to evaluate how psychiatric symptomatology predicts behaviour in the WebSurf task. To assess how study dropout may have affected the representativeness of these data, we evaluated the probability of completing all parts of the study as a function of psychiatric symptomatology by running logistic regressions with study completion as the dependent variable. Predictive questionnaire scores were identified via LASSO, and predictive PCs were identified by examining the significance of their main effects on study completion. We also evaluated predictors of behavioural reliability over time by entering the standard deviations of task behavioural parameters across all three sessions of the task as response variables in a series of linear models, with predictive questionnaire scores (as identified by LASSO) entered as predictor variables. PC scores were also entered separately into linear models to evaluate which PCs were significant predictors of behavioural reliability. Given that standard deviations are right-skewed, we normalised them via z-transformation prior to entering them as response variables in the linear models. Predictors of hedonic reliability were also evaluated. After calculating, for each participant, the correlations between decision thresholds and average video ratings, between decision thresholds and explicit gallery rankings, and between average video ratings and explicit gallery rankings, we entered these correlation coefficients as response variables into a series of linear models, with either the LASSO-selected questionnaire predictors or the PC scores as predictor variables. Finally, we entered behavioural parameters from the WebSurf task directly as response variables in a series of linear models, identifying predictive questionnaire scores via LASSO and evaluating the main effect of each PC on the response variable.
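To illustrate what LASSO variable selection does, the sketch below fits a linear LASSO by cyclic coordinate descent, minimising (1/2n)‖y − Xβ‖² + λ‖β‖₁ on standardised predictors and a centred outcome. It is a teaching sketch assuming NumPy, not the software used in the study: the penalty `lam` is supplied by hand (in practice it would usually be chosen by cross-validation), and the binary study-completion outcome would require a logistic LASSO, which this sketch does not cover.

```python
import numpy as np


def lasso_cd(X, y, lam, n_iter=1000, tol=1e-8):
    """LASSO by cyclic coordinate descent, minimising
    (1 / (2n)) * ||y - X b||^2 + lam * ||b||_1
    on standardised predictors and a centred outcome (no intercept)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    X = (X - X.mean(axis=0)) / X.std(axis=0)   # so that x_j'x_j / n = 1
    y = y - y.mean()
    n, p = X.shape
    b = np.zeros(p)
    r = y.copy()                                # residual y - X b
    for _ in range(n_iter):
        max_change = 0.0
        for j in range(p):
            rho = X[:, j] @ r / n + b[j]        # fit of x_j to its partial residual
            new = np.sign(rho) * max(abs(rho) - lam, 0.0)   # soft-threshold
            r -= X[:, j] * (new - b[j])         # keep residual in sync
            max_change = max(max_change, abs(new - b[j]))
            b[j] = new
        if max_change < tol:
            break
    return b
```

Questionnaire scores whose coefficients remain non-zero at the chosen λ are the "selected" predictors; the soft-thresholding step is what sets weak predictors exactly to zero, which is the property that makes LASSO useful for picking a small subset from a large questionnaire battery.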
For all frequentist tests, statistical significance was determined at α = 0.05 and post hoc tests were corrected for multiple comparisons via the false discovery rate method (Benjamini & Hochberg, 1995). Linear mixed-effects models were conducted with Satterthwaite’s approximation for degrees of freedom (Kuznetsova et al., 2017).
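The Benjamini-Hochberg correction can be expressed as adjusted p-values: sort the m p-values, scale each by m / rank, and enforce monotonicity from the largest p-value downwards. The minimal sketch below is intended to reproduce R's `p.adjust(p, method = "BH")`; the function name is hypothetical.

```python
def bh_adjust(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in the input order
    (intended to match R's p.adjust(p, method = "BH"))."""
    m = len(pvalues)
    # Visit p-values from largest to smallest so a running minimum
    # enforces monotonicity of the adjusted values.
    order = sorted(range(m), key=lambda i: pvalues[i], reverse=True)
    adjusted = [0.0] * m
    running_min = 1.0
    for rank_from_top, i in enumerate(order):
        rank = m - rank_from_top              # 1-based rank in ascending order
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

A post hoc contrast is then declared significant when its adjusted p-value is below α = 0.05, which controls the expected proportion of false discoveries among the significant contrasts rather than the family-wise error rate.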

In Experiment Two, we performed a validation of the effects we observed in Experiment One. As such, we conducted the same analyses as Experiment One, but rather than using LASSO regression for variable selection, we directly tested the effects observed in Experiment One. These analyses were pre-registered and the registration report is available at https://osf.io/8h6tz.

3.0. Results

3.1. Study drop out

First, we report the number of participants who did not complete all parts of the study. One source of attrition was the longitudinal design: some participants did not complete parts of the study within the allocated timeframe. In addition, participants were rejected from the study if they failed our online attention-checking procedure (see Supplementary Materials). Tables 3 and 4 outline the number of participants in each group who were invited to complete each part of Experiment One (exploratory sample) and Experiment Two (validation sample), and the number of task failures in each.

Table 3:

Group A sample size – Experiments One and Two

| Sample | Completed questionnaire battery | Questionnaire responses rejected due to failed attention checks | Invited to complete WebSurf | Returned and started WebSurf | Failed WebSurf | Completed WebSurf |
|---|---|---|---|---|---|---|
| N (Exp. 1) | 101 | 1 | 100 | 94 | 27 | 67 |
| N (Exp. 2) | 100 | 0 | 100 | 84 | 29 | 55 |

Table 4:

Group B sample size – Experiments One and Two

| Sample | Completed questionnaire battery | Questionnaire responses rejected due to failed attention checks | Invited to complete WebSurf Day 1 | Returned and started WebSurf Day 1 | Failed WebSurf Day 1 | Completed WebSurf Day 1 |
|---|---|---|---|---|---|---|
| N (Exp. 1) | 100 | 0 | 100 | 83 | 18 | 65 |
| N (Exp. 2) | 100 | 0 | 100 | 84 | 30 | 54 |

| Sample | Invited to complete WebSurf Day 2 | Returned and started WebSurf Day 2 | Failed WebSurf Day 2 | Completed WebSurf Day 2 |
|---|---|---|---|---|
| N (Exp. 1) | 65 | 57 | 9 | 48 |
| N (Exp. 2) | 54 | 51 | 4 | 47 |

| Sample | Invited to complete WebSurf Day 3 | Returned and started WebSurf Day 3 | Failed WebSurf Day 3 | Completed WebSurf Day 3 |
|---|---|---|---|---|
| N (Exp. 1) | 48 | 44 | 4 | 40 |
| N (Exp. 2) | 47 | 46 | 3 | 43 |

3.2. Task Behavioural Parameters

3.2.1. Behavioural Reliability

We examined decision thresholds, P(Accept), average video ratings, median decision times, and number of threshold violations across video gallery ranks and across sessions for the sample of participants who completed all three sessions of WebSurf. Prior to behavioural analysis, we screened data for poor task engagement via our behavioural screening criteria. In total, using these criteria, 11 participants were rejected from Experiment One and eight participants were rejected from Experiment Two.

To examine the reliability of task behavioural parameters over multiple sessions, we calculated ICCs for each combination of sessions, using data from participants who completed all sessions of the task. ICCs of decision thresholds, P(Accept), average video ratings, median decision times, and number of threshold violations are plotted in Figure 2. ICCs of behavioural parameters between sessions and across video gallery rankings are plotted in Supplementary Figure S3 for Experiment One and in Supplementary Figure S4 for Experiment Two. In Experiment One, the most reliable parameters were average video rating and median decision latency, whereas P(Accept) and the number of threshold violations generally did not meet reliability criteria. Notably, ICCs between the second and third sessions were consistently > .75, suggesting that task behaviour becomes more stable after initial exposure to the task. The ICC patterns in Experiment One generally replicated in Experiment Two (see Figure 2), although decision latencies generally showed poorer reliability in Experiment Two than in Experiment One.

Figure 2.

Intraclass correlations (ICCs) of behavioural parameters across sessions of the WebSurf task. Black points represent ICCs from Experiment One. Grey points represent ICCs from Experiment Two. Error bars represent the 95% confidence interval.

3.2.2. Behaviour over repeated sessions

We further ran a series of linear mixed-effects models to examine whether behavioural parameters drifted systematically across repeated administrations of the task and whether they varied as a function of gallery preference. A systematic change in behaviour over repeated administrations may indicate that participants are prone to satiation after repeated exposure to the task. We report the results of these tests in Table 5. Decision thresholds did not change systematically across repeated administrations of the task, with no significant main effect of session number and no interaction of session number with video gallery. A Bayesian linear model supported this conclusion, with very strong evidence against an effect of session number on decision thresholds, BF01 = 32.20. Decision thresholds varied between galleries, as indicated by the significant main effect of video gallery rank: thresholds were highest for the most preferred gallery and decreased as gallery preference decreased, as would be expected from basic economic principles (Rank 1: M = 28.02, SD = 6.38; Rank 2: M = 25.69, SD = 5.97; Rank 3: M = 23.3, SD = 7.1; Rank 4: M = 14.88, SD = 10.01). Pairwise contrasts indicated a significant difference in decision threshold between all gallery ranks. These results replicated in Experiment Two. Decision thresholds were stable across sessions, with no main effect of session number and no interaction of session number with video gallery. Again, decision thresholds varied between galleries, with a significant main effect of gallery rank, and decreased as gallery preference decreased (Rank 1: M = 27.83, SD = 6.49; Rank 2: M = 26.20, SD = 7.00; Rank 3: M = 21.27, SD = 8.63; Rank 4: M = 11.13, SD = 10.15). Pairwise contrasts indicated a significant difference in decision threshold between all gallery ranks except ranks one and two. Supplementary Figures S1 – S2 show the parameters as a function of session number and gallery rank for Experiments One and Two.

Table 5.

Results of linear mixed-effects models of task behavioural parameters as a function of session number and gallery rank, across Experiments One and Two.

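| Experiment | Behavioural parameter | Predictor | Degrees of freedom | F | p |
|---|---|---|---|---|---|
| 1 | Decision threshold | Gallery rank | 3, 401.06 | 79.39 | < .001 |
| 2 | Decision threshold | Gallery rank | 3, 403.16 | 113.81 | < .001 |
| 1 | Decision threshold | Session number | 2, 404.76 | 1.30 | .273 |
| 2 | Decision threshold | Session number | 2, 423.13 | 0.94 | .391 |
| 1 | Decision threshold | Gallery rank × Session number | 6, 401.06 | 0.94 | .466 |
| 2 | Decision threshold | Gallery rank × Session number | 6, 403.16 | 0.33 | .921 |
| 1 | P(Accept) | Gallery rank | 3, 400.97 | 107.84 | < .001 |
| 2 | P(Accept) | Gallery rank | 3, 402.92 | 171.36 | < .001 |
| 1 | P(Accept) | Session number | 2, 404.71 | 2.85 | .059 |
| 2 | P(Accept) | Session number | 2, 423.66 | 2.06 | .130 |
| 1 | P(Accept) | Gallery rank × Session number | 6, 400.97 | 0.59 | .738 |
| 2 | P(Accept) | Gallery rank × Session number | 6, 402.92 | 0.21 | .975 |
| 1 | Average video rating | Gallery rank | 3, 383.48 | 136.61 | < .001 |
| 2 | Average video rating | Gallery rank | 3, 383.11 | 190.70 | < .001 |
| 1 | Average video rating | Session number | 2, 385.49 | 0.57 | .568 |
| 2 | Average video rating | Session number | 2, 398.41 | 0.56 | .569 |
| 1 | Average video rating | Gallery rank × Session number | 6, 385.15 | 0.23 | .968 |
| 2 | Average video rating | Gallery rank × Session number | 6, 283.50 | 0.86 | .527 |
| 1 | Median decision latency | Gallery rank | 3, 401.11 | 0.90 | .442 |
| 2 | Median decision latency | Gallery rank | 3, 403.94 | 8.10 | < .001 |
| 1 | Median decision latency | Session number | 2, 401.96 | 0.56 | .572 |
| 2 | Median decision latency | Session number | 2, 411.16 | 9.99 | < .001 |
| 1 | Median decision latency | Gallery rank × Session number | 6, 401.11 | 0.31 | .929 |
| 2 | Median decision latency | Gallery rank × Session number | 6, 403.94 | 0.32 | .931 |
| 1 | Number of threshold violations | Gallery rank | 3, 401 | 10.49 | < .001 |
| 2 | Number of threshold violations | Gallery rank | 3, 403.90 | 15.07 | < .001 |
| 1 | Number of threshold violations | Session number | 2, 403.79 | 2.13 | .120 |
| 2 | Number of threshold violations | Session number | 2, 416.39 | 2.0 | .137 |
| 1 | Number of threshold violations | Gallery rank × Session number | 6, 401 | 0.77 | .599 |
| 2 | Number of threshold violations | Gallery rank × Session number | 6, 403.90 | 1.58 | .152 |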

In both Experiment One and Experiment Two, the probability of accepting offers did not vary systematically across sessions. Bayesian linear models supported the stability of P(Accept) across sessions, providing strong evidence against an effect of session number on P(Accept) in both Experiment One, BF01 = 18.81, and Experiment Two, BF01 = 28.47. The probability of accepting offers did vary across gallery ranks. In Experiment One, the probability of accepting offers increased with increasing gallery preference (Rank 1: M = .95, SD = .19; Rank 2: M = .86, SD = .17; Rank 3: M = .77, SD = .21; Rank 4: M = .49, SD = .31), and pairwise contrasts indicated a significant difference in the probability of accepting offers between all gallery ranks. This pattern was replicated in Experiment Two (Rank 1: M = .90, SD = .19; Rank 2: M = .83, SD = .20; Rank 3: M = .66, SD = .25; Rank 4: M = .31, SD = .28).

Average video ratings were also stable across sessions. In Experiment One, a Bayesian linear model provided strong evidence against a change in video ratings as a function of session number, BF01 = 17.80, and this stability was replicated in Experiment Two, BF01 = 32.61. The linear mixed-effects models indicated that average video ratings differed across gallery ranks, increasing with gallery preference in both Experiment One (Rank 1: M = 4.09, SD = 0.54; Rank 2: M = 3.74, SD = 0.54; Rank 3: M = 3.17, SD = 0.66; Rank 4: M = 2.54, SD = 0.87) and Experiment Two (Rank 1: M = 4.05, SD = 0.63; Rank 2: M = 3.73, SD = 0.61; Rank 3: M = 3.01, SD = 0.63; Rank 4: M = 2.27, SD = 0.79). Pairwise contrasts indicated a significant difference in average video ratings between all gallery ranks in both experiments.

In Experiment One, log-transformed median decision times showed no main effect of session number and no interaction of session number with gallery preference. A Bayesian linear model supported the stability of decision times across sessions, yielding very strong evidence against a change in decision times as a function of session number, BF01 = 32.61. The main effect of gallery preference was also not significant, indicating that decision latencies did not differ systematically as a function of gallery preference. In Experiment Two, however, decision latencies changed systematically across sessions. Median decision latencies differed significantly between session one (M = 7.46, SD = 0.18) and session three (M = 7.37, SD = 0.20), p < .001, and between session two (M = 7.43, SD = 0.21) and session three, p = .003, but not between session one and session two, p = .132. In addition, in contrast to Experiment One, there was a main effect of gallery preference in Experiment Two: decision latencies decreased with increasing gallery preference (Rank 1: M = 7.38, SD = 0.17; Rank 2: M = 7.41, SD = 0.19; Rank 3: M = 7.42, SD = 0.19; Rank 4: M = 7.49, SD = 0.20). The difference in median decision latency was significant between gallery ranks one and four, two and four, and three and four.

The number of threshold violations appeared stable across sessions in Experiment One. Neither the main effect of session number nor the interaction of session number with gallery rank was significant, and a Bayesian model provided strong evidence against an effect of session number on threshold violations, BF01 = 14.91. The stability of threshold violations across sessions was replicated in Experiment Two, where the Bayesian model provided substantial evidence against a change in the number of threshold violations as a function of session number, BF01 = 4.69. The number of threshold violations varied between galleries, decreasing as gallery preference increased in Experiment One (Rank 1: M = 1.06, SD = 1.96; Rank 2: M = 1.81, SD = 2.09; Rank 3: M = 2.04, SD = 2.01; Rank 4: M = 2.50, SD = 2.47). Pairwise contrasts indicated a significant difference in threshold violations between all gallery ranks except ranks two and three (p = .424) and ranks three and four (p = .087). This was replicated in Experiment Two, with threshold violations generally decreasing as gallery preference increased (Rank 1: M = 1.85, SD = 2.35; Rank 2: M = 2.12, SD = 2.30; Rank 3: M = 3.52, SD = 2.99; Rank 4: M = 3.49, SD = 2.91). In Experiment Two, the difference in the number of threshold violations was significant between all gallery ranks except ranks one and two and ranks three and four.

3.3. Hedonic Reliability

We ranked each participant's video galleries according to decision threshold, average video rating, and explicit gallery ranking, and calculated Kendall's τb for each pair of these rankings to determine hedonic reliability. In Experiment One, there was a significant relationship between average video rating rank and decision threshold rank, τb [95% CI] = .48 [.41, .55], p < .001, between average video rating rank and explicit gallery rank, τb [95% CI] = .69 [.64, .73], p < .001, and between decision threshold rank and explicit gallery rank, τb [95% CI] = .49 [.43, .56], p < .001. The frequency of congruency and the correlations between revealed and stated preferences are shown in Figure 3. These results were replicated in Experiment Two: average video rating rank and decision threshold rank, τb [95% CI] = .49 [.41, .58], p < .001, average video rating rank and explicit gallery rank, τb [95% CI] = .70 [.63, .76], p < .001, and decision threshold rank and explicit gallery rank, τb [95% CI] = .51 [.43, .60], p < .001, again showed hedonic reliability.
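Kendall's τb counts concordant and discordant pairs of galleries and corrects the denominator for ties. Ties are likely here because each participant ranks only four galleries. The pure-Python sketch below computes τb for one pair of rankings and should agree with `scipy.stats.kendalltau`, whose default `variant` is `'b'`. This section does not describe how rankings were pooled across participants or how the 95% CIs were obtained, so the sketch covers only the coefficient itself. The example rankings are hypothetical.

```python
from itertools import combinations
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall's tau-b between two equal-length sequences of ranks or scores.

    tau_b = (n_c - n_d) / sqrt(P_x * P_y), where P_x and P_y are the numbers
    of pairs that are not tied on x and on y respectively.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    s = 0         # concordant minus discordant pairs
    untied_x = 0  # pairs with x_i != x_j
    untied_y = 0  # pairs with y_i != y_j
    for i, j in combinations(range(len(x)), 2):
        dx = (x[i] > x[j]) - (x[i] < x[j])  # sign of the x difference
        dy = (y[i] > y[j]) - (y[i] < y[j])  # sign of the y difference
        s += dx * dy
        untied_x += dx != 0
        untied_y += dy != 0
    denom = sqrt(untied_x * untied_y)
    if denom == 0:
        raise ValueError("tau-b is undefined when one variable is constant")
    return s / denom


# Hypothetical participant: gallery ranks by average rating vs. by decision threshold
rating_rank = [1, 2, 3, 4]
threshold_rank = [1, 3, 2, 4]
print(kendall_tau_b(rating_rank, threshold_rank))
```

In this example one of the six gallery pairs is discordant, so τb = (5 − 1)/6 ≈ .67.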

Figure 3.

Hedonic reliability of revealed and stated preferences. (A-C) Matrix of revealed and stated preference congruency in Experiment 1. (D-F) Matrix of revealed and stated preference congruency in Experiment 2. (G-H) Correlation of revealed preference (decision thresholds) with stated preference (average video ratings) for Experiments 1 and 2. (I, K, M) Individual subject correlation coefficients between revealed and stated preferences in Experiment 1. (J, L, N) Individual subject correlation coefficients between revealed and stated preferences in Experiment 2.

3.4. Predicting Behavioural Reliability

We calculated z-scored standard deviations of the task variables across sessions as an index of reliability of the task over time. We then used a series of LASSO models to identify any questionnaire predictors of behavioural reliability. In addition, a PCA of our questionnaire data (see Supplementary Materials) indicated two underlying components – internalising symptoms (PC1) and externalising symptoms (PC2). We ran a series of linear models to identify which PCs were significant predictors of behavioural reliability (see Table 6).
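One reading of the reliability index described above is as follows. For each participant, take the standard deviation of a task variable across sessions, then z-score those standard deviations across participants, so that positive values mark less stable behaviour than the sample average. The sketch below implements that reading with the standard library. Several details are assumptions: that the z-scoring was applied to the standard deviations rather than to the raw variables, and that the sample (n − 1) standard deviation was used. The participant data are hypothetical.

```python
from statistics import mean, stdev


def session_sds(values_by_participant):
    """Sample SD of one task variable across sessions, per participant."""
    return {pid: stdev(vals) for pid, vals in values_by_participant.items()}


def z_score(scores):
    """Z-score a {participant: value} mapping across participants."""
    vals = list(scores.values())
    mu, sigma = mean(vals), stdev(vals)
    if sigma == 0:
        raise ValueError("cannot z-score values with zero spread")
    return {pid: (v - mu) / sigma for pid, v in scores.items()}


# Hypothetical decision thresholds for three sessions per participant
thresholds = {
    "p01": [20.0, 22.0, 24.0],
    "p02": [10.0, 10.0, 10.0],
    "p03": [30.0, 18.0, 24.0],
}
instability = z_score(session_sds(thresholds))
# Higher values = more session-to-session variability than the sample average
print(instability)
```

The resulting scores can then serve as outcomes in the LASSO and linear models, one task variable at a time.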

Table 6.

Predictors of behavioural stability across sessions in Experiment One.

| Variable | Questionnaire predictors | PCA predictors |
|---|---|---|
| SD[Decision threshold] | OCI-R, Mini-MASQ | None |
| SD[P(Accept)] | ASRM | PC2 |
| SD[Median RT] | Mini-MASQ | None |

LASSO regression of the standard deviation of decision thresholds across sessions retained obsessive-compulsive (OCI-R), F(1, 37) = 6.83, p = .013, R² = .05, and mood/anxiety (Mini-MASQ), F(1, 37) = 7.52, p = .009, R² = .05, symptoms as predictors. For the LASSO model of P(Accept) variability, mania symptoms (ASRM) were retained as a predictor, F(1, 36) = 5.33, p = .028, R² = .09. PC2 was also a significant predictor of P(Accept) variability, F(1, 36) = 5.19, p = .030, R² = .226. Finally, mood and anxiety symptoms (Mini-MASQ) were a significant predictor of variability in decision times across sessions, F(1, 38) = 6.15, p = .018, R² = .21. Predictors of behavioural reliability in Experiment One are plotted in Figures 4A–E and 4G. The predictive utility of mania symptoms (ASRM), F(1, 36) = 5.87, p = .020, R² = .12, and PC2 scores, F(1, 36) = 5.04, p = .030, R² = .11, for P(Accept) variability was replicated in Experiment Two (see Figures 4F and 4H).
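LASSO shrinks regression coefficients toward zero with an L1 penalty, so weak predictors drop out entirely and only a subset of questionnaires is retained. The minimal coordinate-descent sketch below minimises (1/(2n))‖y − Xb‖² + λ‖b‖₁. This is the same objective that scikit-learn's `Lasso` minimises, with its `alpha` playing the role of λ. The sketch assumes standardised predictors and a centred outcome and fits no intercept. How λ was chosen in this study (for example, by cross-validation) is not stated here, and the toy data are hypothetical.

```python
def soft_threshold(rho: float, lam: float) -> float:
    """Soft-thresholding operator S(rho, lam) used in LASSO updates."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def lasso_cd(X, y, lam, n_iter=200):
    """Coordinate-descent LASSO without intercept.

    Minimises (1/(2n)) * ||y - X b||^2 + lam * ||b||_1.
    X is a list of n rows of p standardised predictors; y is centred.
    """
    n, p = len(X), len(X[0])
    b = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            # Correlation of predictor j with the partial residual (excluding j)
            rho = sum(
                X[i][j] * (y[i] - sum(X[i][k] * b[k] for k in range(p) if k != j))
                for i in range(n)
            ) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            b[j] = soft_threshold(rho, lam) / z
    return b


# Hypothetical toy data: two orthogonal, standardised predictors
X = [[1, 1], [-1, 1], [1, -1], [-1, -1]]
y = [2.1, -1.9, 1.9, -2.1]  # = 2 * x1 + 0.1 * x2
print(lasso_cd(X, y, lam=0.5))  # the weak predictor x2 is shrunk to exactly zero
```

With λ = 0 the sketch recovers the ordinary least-squares coefficients. As λ grows, predictors are dropped in order of their weakening association with the outcome, which is what "retained as a predictor" refers to.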

Figure 5.

Model predictions of hedonic reliability in Experiment One.

Figure 4.

Model predictions of behavioural stability across sessions in Experiment One (A–E) and Experiment Two (F–G).

3.5. Predicting Hedonic Reliability

For each participant, we calculated correlation coefficients between each combination of their decision thresholds, average video ratings, and explicit gallery rankings. With these correlation coefficients, we ran a series of LASSO models to identify which questionnaires could predict hedonic reliability. We also ran a series of linear models to identify which PCs were significant predictors of these correlation coefficients (see Table 7).

Table 7.

Predictors of hedonic reliability in Experiment One.

| Correlation variables | Questionnaire predictors | PCA predictors |
|---|---|---|
| Decision threshold × average video rating | TEPS | None |
| Average video rating × explicit gallery ranking | None | None |
| Decision threshold × explicit gallery ranking | TEPS, WHO-ASSIST | None |

For the correlations of average video ratings with decision thresholds, pleasure-seeking (TEPS) was retained as a predictor, F(1, 111) = 7.38, p = .007, R² = .07. For the correlations of decision thresholds with explicit gallery rankings, pleasure-seeking (TEPS), F(1, 109) = 4.83, p = .030, R² = .06, and drug use (WHO-ASSIST), F(1, 109) = 6.42, p = .013, R² = .06, were retained as predictors. Predictors of hedonic reliability in Experiment One are plotted in Figure 5. We ran the same linear models of hedonic reliability with data from our validation sample; none of the effects observed in Experiment One were replicated in Experiment Two.

3.6. Behavioural Predictions

Using LASSO regression, no questionnaires were retained as predictors of average decision threshold, P(Accept), average video ratings, or median decision time. Similarly, in linear mixed models, PC1, PC2, and PC3 did not significantly predict average decision threshold, P(Accept), or median decision latency. However, PC2 significantly predicted average video ratings, F(1, 107) = 14.46, p < .001, R² = .129, and this prediction was replicated in Experiment Two, F(1, 109) = 7.26, p = .008, R² = .06. Model predictions for Experiments One and Two are shown in Figure 6.

Figure 6.

Model predictions of behaviour in Experiment One (A) and Experiment Two (B).

4.0. Discussion

4.1. Foraging as an etiological approach to investigate psychopathology

Quantifying underlying neurocognitive profiles may be a useful approach for understanding the etiological commonalities of psychopathology and for improving therapies to address them. A key challenge for this approach is the lack of demonstrably reliable and predictive behavioural assays that can index clinically relevant neurocognitive profiles. Psychophysical tasks are often used to measure specific facets of cognition at the expense of capturing multiple overlapping constructs. To address this, psychiatric research increasingly studies complex behaviour to understand how typical and pathological cognition relate to naturalistic behaviour (Hasler, 2012; Kishida et al., 2010). In particular, foraging paradigms can provide a computational account of naturalistic behaviour, allowing an examination of the maladaptive decisional processes that may underlie psychopathology (Adams et al., 2016; Huys et al., 2016; Redish & Gordon, 2016).

The WebSurf foraging paradigm offers a mechanistically relevant, translational platform for investigating decision making. This is particularly valuable for psychiatric research, because translational accounts of neurocognitive processing are critical for developing successful interventions. An obvious next step is therefore to examine whether the WebSurf paradigm can objectively quantify psychopathology. Behavioural patterns within the WebSurf task have previously been linked to psychopathology. For example, Abram, Redish, et al. (2019) added a risk component to the WebSurf task, in which the delay before reward was presented as a range of delays and the true delay became known to the participant only once they had accepted an offer. In non-risk trials, participants were informed of the exact delay before reward when choosing to accept or reject an offer. Individuals higher in externalising disorder vulnerability, and thus more vulnerable to addiction, were more likely to accept a risky offer after receiving a bad outcome on the previous trial, and valued bad outcomes after risky decisions as more pleasurable. Two separate variants of the WebSurf task have been applied in humans: one in which participants navigate a virtual maze for video rewards (Movie Row) and one in which participants receive candy rather than videos as rewards (Candy Row). In the Movie Row task, participants with a BMI > 25 were more likely to accept offers whose delay exceeded their decision threshold (Huynh et al., 2021). Task behaviour may therefore relate to psychobiological processes. However, it is unclear whether task behaviour can predict broader psychopathological profiles. Furthermore, while behaviour remains relatively stable over multiple sessions of the rodent Restaurant Row task, the retest reliability of WebSurf has not been demonstrated.

4.2. The challenge of remote behavioural assessment

The first challenge in validating the neurocognitive utility of the WebSurf task is administering it online in uncontrolled and unsupervised environments. Similar behavioural patterns have been observed in the WebSurf task across samples tested remotely and in person (Huynh et al., 2021; Kazinka et al., 2021; Redish et al., 2022). However, participants may engage in task-irrelevant distractions to counteract the boredom elicited by the imposed delays before reward. To ensure data validity, our platform monitored the status of the browser window in which the task was running and could detect whether the window was minimised or browser tabs had been switched (see Supplementary Materials). If an inactive task window was detected, the task quit and the participant was excluded from further participation. A large proportion of participants failed this attention check, despite being informed that they would be unable to complete the task if they did not keep the task window active; across our administrations of the task, the failure rate was as high as 35.7%. Furthermore, although we employed additional post-hoc attention checks, we cannot confirm that participants were not engaging in other task-irrelevant distractions. In addition, attrition occurred among participants returning to complete repeated administrations of the task within the allocated timeframe, and the completion rate for all study components was as low as 40%. Increasing engagement and retention would be an important consideration for studies seeking to deploy WebSurf or similar attention-demanding tasks in clinical populations at scale. Interestingly, depression, mania, and schizotypy symptoms predicted study completion in Experiment One, and, when accounting for depression and mania symptoms, the predictive value of schizotypy for study completion was validated in Experiment Two.

4.3. Stability of task behaviour and the exploration/exploitation trade-off

WebSurf behaviour is generally stable over time. Our Bayesian analyses mostly indicated strong to very strong evidence against an effect of session number on behavioural parameters. An exception is the number of threshold violations, for which the evidence of stability across sessions was weaker. This suggests that the optimality of choices made in the task may be less stable over time. Our calculation of ICCs over repeated sessions further supports the reliability of task behaviour. When all three sessions were considered, ICCs of task parameters showed good reliability, with the exception of P(Accept) and the number of threshold violations. However, examining individual pairs of sessions shows where the stability of parameters breaks down. Across all behavioural parameters, and across both our exploratory and validation samples, ICCs showed excellent retest reliability when only the second and third administrations of the task were considered. Behavioural stability was consistently lower for ICCs across the first and second, and the first and third, administrations of the task. This suggests that behaviour in the first session is prone to change. At initial exposure to the task, participants may adopt an exploratory approach in which they sample unfamiliar options for reward (Addicott et al., 2017). This exploration of options and rewards likely involves the internal formation of preferences, a willingness to wait, and a strategy for reward maximisation, all of which are reflected in task behaviour. During this time, participants may form decision rules that heuristically optimise the decision-making process but may also manifest as the overly rigid and habitual behaviour common to psychopathology. The stability of behaviour in subsequent sessions suggests that participants largely switch to an exploitation strategy for reward maximisation.
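The ICC analyses discussed above compare between-participant variance with session-to-session variance. This section does not state which ICC form was used, so the sketch below computes two common single-measure forms from a participants × sessions table using two-way ANOVA mean squares (McGraw & Wong, 1996): ICC(2,1), absolute agreement (ICC(A,1) in McGraw and Wong's notation), and ICC(3,1), consistency (ICC(C,1)). It labels values with the Koo and Li (2016) guideline bands. Restricting the table to particular session columns gives session-pair comparisons like those discussed above. The function names and the example table are illustrative, not the study's data.

```python
def _mean_squares(data):
    """Two-way ANOVA mean squares for an n-participant x k-session table."""
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    row_means = [sum(row) / k for row in data]
    col_means = [sum(row[j] for row in data) / n for j in range(k)]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between sessions
    ss_err = ss_total - ss_rows - ss_cols                   # residual
    return (n, k, ss_rows / (n - 1), ss_cols / (k - 1),
            ss_err / ((n - 1) * (k - 1)))


def icc_2_1(data):
    """Two-way random effects, absolute agreement, single measure."""
    n, k, msr, msc, mse = _mean_squares(data)
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


def icc_3_1(data):
    """Two-way mixed effects, consistency, single measure."""
    _, k, msr, _, mse = _mean_squares(data)
    return (msr - mse) / (msr + (k - 1) * mse)


def koo_li_label(icc):
    """Guideline bands from Koo and Li (2016)."""
    if icc < 0.5:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc < 0.9:
        return "good"
    return "excellent"


scores = [  # rows = participants, columns = sessions (illustrative values)
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(round(icc_2_1(scores), 2), round(icc_3_1(scores), 2))  # 0.29 0.71
later = [row[1:3] for row in scores]  # keep only the second and third columns
print(koo_li_label(icc_3_1(later)))
```

The gap between the two forms in this example shows why the choice matters. ICC(2,1) penalises systematic shifts in the mean between sessions, whereas ICC(3,1) ignores them.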
A future study could examine whether momentary task behaviour indicates state-like changes in exploration and exploitation. Given the role of the noradrenergic system in the exploration/exploitation trade-off, pupillometry could be particularly useful for capturing these state-like changes, as pupil diameter is thought to index locus coeruleus activity (Joshi et al., 2016), which plays a key role in the release of noradrenaline throughout the central nervous system (Foote et al., 1983; Jepma & Nieuwenhuis, 2011).

Furthermore, our finding that behaviour in the first administration is less reliable, whereas behaviour in later administrations is stable, is informative for future applications of the WebSurf task. These data suggest that, to obtain stable behavioural performance in WebSurf, it may be useful for participants to complete an initial exposure to the task before completing the full assay, because subsequent measures are likely to be more stable and retention was much greater between the second and third sessions. The stability of task behaviour across repeated administrations supports its utility as a clinical tool, but the high rate of attrition poses a threat to its application. In addition, mania symptoms and externalising symptoms (PC2) predicted variability in P(Accept) in both experiments, and mood and anxiety symptoms predicted variability in decision thresholds and decision times in Experiment One. These differences in behavioural stability suggest that individuals with psychiatric symptoms may approach the task differently, which may similarly reflect shifts between exploratory and exploitative strategies for reward maximisation.

4.4. Hedonic reliability

Correlating stated preferences with revealed preferences can provide an index of hedonic reliability. Low hedonic reliability may suggest that conscious deliberative processes are disrupted or that affective motivation is altered (Barch et al., 2017). Across our samples, the consistency between stated and revealed preferences was generally high, although it varied between individuals. None of the administered questionnaires predicted hedonic reliability in a replicable manner, suggesting that hedonic reliability is a construct that traditional psychometric scales do not reliably capture.

4.5. The link from symptoms to behaviour

In our exploratory analysis, we observed a relationship between externalising symptoms and task behaviour, reflected in P(Accept). This is consistent with the behavioural alterations observed in the risk-taking variant of the WebSurf task among individuals with high trait externalising symptoms (Abram, Redish, et al., 2019). However, our metric of externalising symptoms was not taken directly from an externalising symptom measure but was derived from a PCA of our questionnaire battery. These results therefore suggest that task behaviour has some predictive utility for broad psychopathological symptom patterns. Although the effect replicated in our confirmatory sample, many of the parameters tested in our exploratory sample showed no predictive utility. These findings suggest that the behavioural parameters investigated here may capture dimensions of psychological variance that differ from those captured by traditional psychometric rating scales. Although method variance may contribute to the modest correlations of extracted parameters with self-reported distress, a critical point is that task behaviour may reflect psychopathological variance that self-report does not capture. Importantly, many emerging therapies for psychiatric disorders directly target aberrant decisional processing (Amidfar et al., 2019; Basu et al., 2023; Fisher et al., 2010; Haber et al., 2020; Subramaniam et al., 2014). Behavioural metrics that can repeatably quantify complex cognitive constructs are therefore necessary to develop these technologies further. Given that WebSurf is demonstrably reliable, assesses multiple aspects of decision making simultaneously, and is clinically scalable, it offers a promising paradigm for assessing and tracking psychiatric phenomena.

4.6. Conclusion

In summary, we have validated a mechanistically relevant, reverse-translational, and clinically viable assessment of multiple decision-making systems. Psychopathology is increasingly thought to arise from dysfunctional information processing that is often highly variable between individuals, but many neurocognitive assessments suppress between-individual variance, limiting their ability to capture the heterogeneity of psychiatric phenomena. The WebSurf task addresses this by using complex, naturalistic behaviour to assess mechanistically relevant, multi-system function and to track behavioural variance between individuals. Here we demonstrate that behaviour within individuals is highly stable after initial exposure to the task, supporting its utility as a tool for tracking clinical phenomena. Behavioural parameters extracted from the task can predict anhedonic or externalising symptomatology, but, importantly, task parameters may measure dimensions of psychological variance that are not captured by traditional rating scales. Integrating such etiological paradigms into the quantification of psychiatric phenomena may therefore help to understand and treat psychiatric disorders by providing a more holistic platform for measuring the complex systems that underpin them.

Supplementary Material

Acknowledgements

We thank A. David Redish for critical intellectual discussions around the overall design and concept of the project, as well as for contributions to the original WebSurf task. We acknowledge the technical support provided by the University of Minnesota Department of Psychiatry & Behavioral Sciences Computerized Psychiatric Assessment Suite (COMPAS) development team, and particularly Dr. Karrie Fitzpatrick.

Funding Information

Research reported in this publication was supported by the National Institute of Mental Health of the National Institutes of Health under award number R21MH120785-01, by the Minnesota’s Discovery, Research, and Innovation Economy (MnDRIVE) initiative, and the Minnesota Medical Discovery Team on Addictions.

References

- Abram S. V., Breton Y. A., Schmidt B., Redish A. D., & MacDonald A. W. (2016). The Web-Surf Task: A translational model of human decision-making. Cognitive, Affective and Behavioral Neuroscience, 16(1), 37–50. 10.3758/S13415-015-0379-Y/FIGURES/9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Abram S. V., Hanke M., Redish A. D., & MacDonald A. W. (2019). Neural signatures underlying deliberation in human foraging decisions. Cognitive, Affective, & Behavioral Neuroscience, 19(6), 1492–1508. 10.3758/s13415-019-00733-z [DOI] [PMC free article] [PubMed] [Google Scholar]

- Abram S. V., Redish A. D., & MacDonald A. W. (2019). Learning From Loss After Risk: Dissociating Reward Pursuit and Reward Valuation in a Naturalistic Foraging Task. Frontiers in Psychiatry, 10, 359. 10.3389/fpsyt.2019.00359 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Adams R. A., Huys Q. J. M., & Roiser J. P. (2016). Computational Psychiatry: Towards a mathematically informed understanding of mental illness. Journal of Neurology, Neurosurgery & Psychiatry, 87(1), 53–63. 10.1136/jnnp-2015-310737 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Addicott M. A., Pearson J. M., Sweitzer M. M., Barack D. L., & Platt M. L. (2017). A Primer on Foraging and the Explore/Exploit Trade-Off for Psychiatry Research. Neuropsychopharmacology, 42(10), Article 10. 10.1038/npp.2017.108 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Altman E. G., Hedeker D., Peterson J. L., & Davis J. M. (1997). The Altman Self-Rating Mania Scale. Biological Psychiatry, 42(10), 948–955. 10.1016/S0006-3223(96)00548-3 [DOI] [PubMed] [Google Scholar]

- Amidfar M., Ko Y.-H., & Kim Y.-K. (2019). Neuromodulation and Cognitive Control of Emotion. In Kim Y.-K. (Ed.), Frontiers in Psychiatry: Artificial Intelligence, Precision Medicine, and Other Paradigm Shifts (pp. 545–564). Springer. 10.1007/978-981-32-9721-0_27 [DOI] [PubMed] [Google Scholar]

- Barch D. M., Carter C. S., Arnsten A., Buchanan R. W., Cohen J. D., Geyer M., Green M. F., Krystal J. H., Nuechterlein K., Robbins T., Silverstein S., Smith E. E., Strauss M., Wykes T., & Heinssen R. (2009). Selecting paradigms from cognitive neuroscience for translation into use in clinical trials: Proceedings of the third CNTRICS meeting. Schizophrenia Bulletin, 35(1), 109–114. 10.1093/schbul/sbn163 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barch D. M., Gold J. M., & Kring A. M. (2017). Paradigms for Assessing Hedonic Processing and Motivation in Humans: Relevance to Understanding Negative Symptoms in Psychopathology. Schizophrenia Bulletin, 43(4), 701–705. 10.1093/schbul/sbx063 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Basu I., Yousefi A., Crocker B., Zelmann R., Paulk A. C., Peled N., Ellard K. K., Weisholtz D. S., Cosgrove G. R., Deckersbach T., Eden U. T., Eskandar E. N., Dougherty D. D., Cash S. S., & Widge A. S. (2023). Closed-loop enhancement and neural decoding of cognitive control in humans. Nature Biomedical Engineering, 7(4), 576–588. 10.1038/s41551-021-00804-y [DOI] [PMC free article] [PubMed] [Google Scholar]

- Benjamini Y., & Hochberg Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society: Series B (Methodological), 57(1), 289–300. 10.1111/j.2517-6161.1995.tb02031.x [DOI] [Google Scholar]

- Cicero D. C., Kerns J. G., & McCarthy D. M. (2010). The Aberrant Salience Inventory: A new measure of psychosis proneness. Psychological Assessment, 22(3), 688–701. 10.1037/a0019913 [DOI] [PubMed] [Google Scholar]

- Cuttler C., & Spradlin A. (2017). Measuring cannabis consumption: Psychometric properties of the Daily Sessions, Frequency, Age of Onset, and Quantity of Cannabis Use Inventory (DFAQ-CU). PLOS ONE, 12(5), e0178194. 10.1371/journal.pone.0178194 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dang J., King K. M., & Inzlicht M. (2020). Why Are Self-Report and Behavioral Measures Weakly Correlated? Trends in Cognitive Sciences, 24(4), 267–269. 10.1016/j.tics.2020.01.007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Enkavi A. Z., Eisenberg I. W., Bissett P. G., Mazza G. L., MacKinnon D. P., Marsch L. A., & Poldrack R. A. (2019). Large-scale analysis of test–retest reliabilities of self-regulation measures. Proceedings of the National Academy of Sciences, 116(12), 5472–5477. 10.1073/pnas.1818430116 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fisher M., Holland C., Subramaniam K., & Vinogradov S. (2010). Neuroplasticity-based cognitive training in schizophrenia: An interim report on the effects 6 months later. Schizophrenia Bulletin, 36(4), 869–879. 10.1093/schbul/sbn170 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Foa E. B., Huppert J. D., Leiberg S., Langner R., Kichic R., Hajcak G., & Salkovskis P. M. (2002). The Obsessive-Compulsive Inventory: Development and validation of a short version. Psychological Assessment, 14(4), 485. 10.1037/1040-3590.14.4.485 [DOI] [PubMed] [Google Scholar]

- Foote S. L., Bloom F. E., & Aston Jones G. (1983). Nucleus locus ceruleus: New evidence of anatomical and physiological specificity. Physiological Reviews, 63(3), 844–914. 10.1152/physrev.1983.63.3.844 [DOI] [PubMed] [Google Scholar]

- Gard D. E., Gard M. G., Kring A. M., & John O. P. (2006). Anticipatory and consummatory components of the experience of pleasure: A scale development study. Journal of Research in Personality, 40(6), 1086–1102. 10.1016/j.jrp.2005.11.001 [DOI] [Google Scholar]

- Garner D. M., Bohr Y., & Garfinkel P. E. (1982). The Eating Attitudes Test: Psychometric features and clinical correlates. Psychological Medicine, 12(4), 871–878. 10.1017/S0033291700049163 [DOI] [PubMed] [Google Scholar]

- Goschke T. (2014). Dysfunctions of decision-making and cognitive control as transdiagnostic mechanisms of mental disorders: Advances, gaps, and needs in current research. International Journal of Methods in Psychiatric Research, 23(S1), 41–57. 10.1002/mpr.1410 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haber S. N., Tang W., Choi E. Y., Yendiki A., Liu H., Jbabdi S., Versace A., & Phillips M. (2020). Circuits, Networks, and Neuropsychiatric Disease: Transitioning From Anatomy to Imaging. Biological Psychiatry, 87(4), 318–327. 10.1016/j.biopsych.2019.10.024 [DOI] [PubMed] [Google Scholar]

- Hasler G. (2012). Can the neuroeconomics revolution revolutionize psychiatry? Neuroscience & Biobehavioral Reviews, 36(1), 64–78. 10.1016/j.neubiorev.2011.04.011 [DOI] [PubMed] [Google Scholar]

- Huynh T., Alstatt K., Abram S. V., & Schmitzer-Torbert N. (2021). Vicarious Trial-and-Error Is Enhanced During Deliberation in Human Virtual Navigation in a Translational Foraging Task. Frontiers in Behavioral Neuroscience, 15. https://www.frontiersin.org/articles/10.3389/fnbeh.2021.586159 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huys Q. J. M., Maia T. V., & Frank M. J. (2016). Computational psychiatry as a bridge from neuroscience to clinical applications. Nature Neuroscience, 19(3), Article 3. 10.1038/nn.4238 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jeffreys H. (2006). Theory of probability. In Community Care. Oxford University Press. 10.2307/3028701 [DOI] [Google Scholar]

- Jepma M., & Nieuwenhuis S. (2011). Pupil Diameter Predicts Changes in the Exploration–Exploitation Trade-off: Evidence for the Adaptive Gain Theory. Journal of Cognitive Neuroscience, 23(7), 1587–1596. 10.1162/jocn.2010.21548

- Joshi S., Li Y., Kalwani R. M., & Gold J. I. (2016). Relationships between pupil diameter and neuronal activity in the locus coeruleus, colliculi, and cingulate cortex. Neuron, 89(1), 221–234. 10.1016/j.neuron.2015.11.028

- Kazinka R., MacDonald A. W., & Redish A. D. (2021). Sensitivity to Sunk Costs Depends on Attention to the Delay. Frontiers in Psychology, 12, 604843. 10.3389/fpsyg.2021.604843

- Kishida K. T., King-Casas B., & Montague P. R. (2010). Neuroeconomic approaches to mental disorders. Neuron, 67(4), 543–554. 10.1016/j.neuron.2010.07.021

- Kolling N., Behrens T. E., Mars R. B., & Rushworth M. F. (2012). Neural Mechanisms of Foraging. Science, 336(6077), 95–98. 10.1126/science.1216930

- Koo T. K., & Li M. Y. (2016). A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. Journal of Chiropractic Medicine, 15(2), 155–163. 10.1016/j.jcm.2016.02.012

- Kuznetsova A., Brockhoff P. B., & Christensen R. H. B. (2017). lmerTest Package: Tests in Linear Mixed Effects Models. Journal of Statistical Software, 82(13), 1–26. 10.18637/jss.v082.i13

- Lavigne K. M., Sauvé G., Raucher-Chéné D., Guimond S., Lecomte T., Bowie C. R., Menon M., Lal S., Woodward T. S., Bodnar M. D., & Lepage M. (2022). Remote cognitive assessment in severe mental illness: A scoping review. Schizophrenia, 8(1), 14. 10.1038/s41537-022-00219-x

- Lima S. L., & Bednekoff P. A. (1999). Temporal Variation in Danger Drives Antipredator Behavior: The Predation Risk Allocation Hypothesis. The American Naturalist, 153(6), 649–659. 10.1086/303202

- McGraw K. O., & Wong S. P. (1996). Forming inferences about some intraclass correlation coefficients. Psychological Methods, 1(1), 30–46. 10.1037/1082-989X.1.1.30

- Mobbs D., Trimmer P. C., Blumstein D. T., & Dayan P. (2018). Foraging for foundations in decision neuroscience: Insights from ethology. Nature Reviews Neuroscience, 19(7), 419–427. 10.1038/s41583-018-0010-7

- Patton J. H., Stanford M. S., & Barratt E. S. (1995). Factor structure of the Barratt Impulsiveness Scale. Journal of Clinical Psychology, 51(6), 768–774.

- Pleskac T. J., Yu S., Hopwood C., & Liu T. (2019). Mechanisms of deliberation during preferential choice: Perspectives from computational modeling and individual differences. Decision, 6(1), 77–107. 10.1037/dec0000092

- Poldrack R. A., & Yarkoni T. (2016). From Brain Maps to Cognitive Ontologies: Informatics and the Search for Mental Structure. Annual Review of Psychology, 67(1), 587–612. 10.1146/annurev-psych-122414-033729

- R Core Team. (2016). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria.

- Radloff L. S. (1977). The CES-D Scale: A Self-Report Depression Scale for Research in the General Population. Applied Psychological Measurement, 1(3), 385–401. 10.1177/014662167700100306

- Raine A., & Benishay D. (1995). The SPQ-B: A Brief Screening Instrument for Schizotypal Personality Disorder. Journal of Personality Disorders, 9(4), 346–355. 10.1521/pedi.1995.9.4.346

- Redish A. D. (2013). The mind within the brain: How we make decisions and how those decisions go wrong. Oxford University Press.

- Redish A. D., Abram S. V., Cunningham P. J., Duin A. A., Durand-de Cuttoli R., Kazinka R., Kocharian A., MacDonald A. W., Schmidt B., Schmitzer-Torbert N., Thomas M. J., & Sweis B. M. (2022). Sunk cost sensitivity during change-of-mind decisions is informed by both the spent and remaining costs. Communications Biology, 5(1). 10.1038/s42003-022-04235-6

- Redish A. D., & Gordon J. A. (2016). Computational Psychiatry: New Perspectives on Mental Illness. MIT Press.

- Redish A. D., Kepecs A., Anderson L. M., Calvin O. L., Grissom N. M., Haynos A. F., Heilbronner S. R., Herman A. B., Jacob S., Ma S., Vilares I., Vinogradov S., Walters C. J., Widge A. S., Zick J. L., & Zilverstand A. (2021). Computational validity: Using computation to translate behaviours across species. Philosophical Transactions of the Royal Society B: Biological Sciences, 377(1844), 20200525. 10.1098/rstb.2020.0525

- Reynolds B., & Schiffbauer R. (2004). Measuring state changes in human delay discounting: An experiential discounting task. Behavioural Processes, 67(3), 343–356. 10.1016/j.beproc.2004.06.003

- Robbins T. W., Gillan C. M., Smith D. G., de Wit S., & Ersche K. D. (2012). Neurocognitive endophenotypes of impulsivity and compulsivity: Towards dimensional psychiatry. Trends in Cognitive Sciences, 16(1), 81–91. 10.1016/j.tics.2011.11.009

- Saunders J. B., Aasland O. G., Babor T. F., De La Fuente J. R., & Grant M. (1993). Development of the Alcohol Use Disorders Identification Test (AUDIT): WHO Collaborative Project on Early Detection of Persons with Harmful Alcohol Consumption-II. Addiction, 88(6), 791–804. 10.1111/j.1360-0443.1993.tb02093.x

- Schaeffer M. S., & Levitt E. E. (1956). Concerning Kendall’s tau, a nonparametric correlation coefficient. Psychological Bulletin, 53(4), 338. 10.1037/h0045013

- Shenhav A., Straccia M. A., Cohen J. D., & Botvinick M. M. (2014). Anterior Cingulate Engagement in a Foraging Context Reflects Choice Difficulty, Not Foraging Value. Nature Neuroscience, 17(9), 1249–1254. 10.1038/nn.3771

- Shrout P. E., & Fleiss J. L. (1979). Intraclass correlations: Uses in assessing rater reliability. Psychological Bulletin, 86(2), 420–428. 10.1037/0033-2909.86.2.420

- Snaith R. P., Hamilton M., Morley S., Humayan A., Hargreaves D., & Trigwell P. (1995). A Scale for the Assessment of Hedonic Tone: The Snaith–Hamilton Pleasure Scale. The British Journal of Psychiatry, 167(1), 99–103. 10.1192/bjp.167.1.99

- Steiner A. P., & Redish A. D. (2014). Behavioral and neurophysiological correlates of regret in rat decision-making on a neuroeconomic task. Nature Neuroscience, 17(7), 995–1002. 10.1038/nn.3740

- Stephens D. W. (2008). Decision ecology: Foraging and the ecology of animal decision making. Cognitive, Affective & Behavioral Neuroscience, 8(4), 475–484. 10.3758/CABN.8.4.475

- Stephens D. W., & Krebs J. R. (1986). Foraging Theory. Princeton University Press.

- Strauss E., Sherman E. M. S., & Spreen O. (2006). A Compendium of Neuropsychological Tests: Administration, Norms, and Commentary. Oxford University Press.

- Subramaniam K., Luks T. L., Garrett C., Chung C., Fisher M., Nagarajan S., & Vinogradov S. (2014). Intensive cognitive training in schizophrenia enhances working memory and associated prefrontal cortical efficiency in a manner that drives long-term functional gains. NeuroImage, 99, 281–292. 10.1016/j.neuroimage.2014.05.057

- Sweis B. M., Abram S. V., Schmidt B. J., Seeland K. D., MacDonald A. W., Thomas M. J., & Redish A. D. (2018). Sensitivity to “sunk costs” in mice, rats, and humans. Science, 361(6398), 178–181. 10.1126/science.aar8644

- Watson D., Clark L. A., Weber K., Assenheimer J. S., Strauss M. E., & McCormick R. A. (1995). Testing a tripartite model: II. Exploring the symptom structure of anxiety and depression in student, adult, and patient samples. Journal of Abnormal Psychology, 104(1), 15–25. 10.1037/0021-843X.104.1.15

- Weydmann G., Palmieri I., Simões R. A. G., Centurion Cabral J. C., Eckhardt J., Tavares P., Moro C., Alves P., Buchmann S., Schmidt E., Friedman R., & Bizarro L. (2022). Switching to online: Testing the validity of supervised remote testing for online reinforcement learning experiments. Behavior Research Methods. 10.3758/s13428-022-01982-6

- WHO ASSIST Working Group. (2002). The Alcohol, Smoking and Substance Involvement Screening Test (ASSIST): Development, reliability and feasibility. Addiction, 97(9), 1183–1194. 10.1046/j.1360-0443.2002.00185.x

- Zald D. H., & Treadway M. (2017). Reward Processing, Neuroeconomics, and Psychopathology. Annual Review of Clinical Psychology, 13, 471–495. 10.1146/annurev-clinpsy-032816-044957
