Abstract

How is the fundamental sense of one’s body, a basic aspect of selfhood, incorporated into memories for events? Disrupting bodily self-awareness during encoding impairs functioning of the left posterior hippocampus during retrieval, which implies weakened encoding. However, how changes in bodily self-awareness influence neural encoding is unknown. We investigated how the sense of body ownership, a core aspect of the bodily self, impacts encoding in the left posterior hippocampus and additional core memory regions including the angular gyrus. Furthermore, we assessed the degree to which memories are reinstated according to body ownership during encoding and vividness during retrieval as a measure of memory strength. We immersed participants in naturalistic scenes where events unfolded while we manipulated feelings of body ownership with a full-body-illusion during functional magnetic resonance imaging scanning. One week later, participants retrieved memories for the videos during functional magnetic resonance imaging scanning. A whole brain analysis revealed that patterns of activity in regions including the right hippocampus and angular gyrus distinguished between events encoded with strong versus weak body ownership. A planned region-of-interest analysis showed that patterns of activity in the left posterior hippocampus specifically could predict body ownership during memory encoding. Using the wider network of regions sensitive to body ownership during encoding and the left posterior hippocampus as separate regions-of-interest, we observed that patterns of activity present at encoding were reinstated more during the retrieval of events encoded with strong body ownership and high memory vividness. Our results demonstrate how the sense of physical self is bound within an event during encoding, which facilitates reactivation of a memory trace during retrieval.

Keywords: body ownership, memories for events, fMRI, naturalistic stimuli, hippocampus

Introduction

Every time we experience or remember an event, our conscious self is at the center of the episode (Tulving 1985). This intimate association between the conscious self and memory suggests a fundamental relationship. The spatial and perceptual experience of the body as one’s own (i.e. body ownership) is the most basic form of conscious self-experience (Blanke et al. 2015), rooted in a coherent multisensory representation of one’s body based on the continuous integration of bodily-related sensory signals, including touch, vision, proprioception, and interoception (Ehrsson 2020). This perceptual bodily-self defines the egocentric reference frame that is crucial for the processing of sensory and cognitive information. Emerging literature suggests that the multisensory experience of one’s own body at encoding influences memory upon retrieval (Bergouignan et al. 2014; Bréchet et al. 2019, 2020; Tacikowski et al. 2020; Iriye and Ehrsson 2022). The idea is that the perception of one’s own body binds sensory and cognitive information into a unified experience with the self in the center during encoding, and that impairing this fundamental binding weakens the effectiveness of the encoding process leading to reduced memory at recall. Behavioral studies that manipulated the sense of body ownership by using bodily illusions during encoding (Tacikowski et al. 2020; Iriye and Ehrsson 2022) found reductions in vividness and memory accuracy during later recall for events that were encoded with a reduced sense of body ownership. Bergouignan et al. (2014) observed a reversal of the normal activation pattern of the left posterior hippocampus during repeated retrieval of social interactions encoded while experiencing an out-of-body illusion, which correlated with reduced vividness. 
However, hippocampal activity was not measured at encoding and the specific effect of body ownership cannot be disentangled from effects of changes in self-location and visual perspective that are also components of the out-of-body illusion (Ehrsson 2007; Guterstam and Ehrsson 2012). Furthermore, the angular gyrus in the inferior posterior parietal cortex may integrate body ownership within memory (Bréchet et al. 2018). The angular gyrus supports multisensory encoding (Bonnici et al. 2016; Jablonowski and Rose 2022) by combining cross-modal information into a common egocentric framework (Bonnici et al. 2018; Humphreys et al. 2021), and encodes self-relevant stimuli (Singh-Curry and Husain 2009). However, whether angular gyrus activity reflects body ownership during memory encoding has not been directly tested.

During retrieval, brain activity patterns that were present when events were initially experienced are reinstated, a process coordinated by the hippocampus (e.g. Hebscher et al. 2021). The higher the overlap between patterns of neural activity at encoding and retrieval, the stronger the memory (Bird et al. 2015; Oedekoven et al. 2017). Hence, if the hippocampus is sensitive to own-body perception during encoding, as has been hypothesized (Bergouignan et al. 2014), this may affect the strength of memory reinstatement in a manner that scales with memory vividness. Encoding naturalistic multisensory events with a strong sense of body ownership could facilitate access to hippocampal representations of the memory trace during vivid recall, which would lead to greater reinstatement of these memories in the cortical regions initially involved in encoding.

Here, we investigate how changes in body ownership during encoding influence neural processes related to memory encoding and memory reinstatement. Participants watched immersive stereoscopic videos of naturalistic scenes through a head-mounted display (HMD) during functional magnetic resonance imaging (fMRI) scanning while we manipulated their feelings of ownership over a mannequin’s body in the center of the scene with synchronous/asynchronous visuotactile stimulation in a well-established full-body illusion paradigm (Petkova and Ehrsson 2008; Ehrsson 2020). One week later, participants recalled these experiences during functional scanning. We predicted that patterns of activity during encoding in the left posterior hippocampal region identified by Bergouignan et al. (2014) and in the angular gyrus would reflect whether an event was experienced with a strong or weak sense of body ownership. We also predicted that memory reinstatement in the left posterior hippocampus and in the distributed cortical regions associated with the multisensory experience during encoding would be greater for memories formed with strong illusory body ownership and for memories retrieved with high vividness.

Materials and methods

Participants

Participants included 30 healthy, right-handed young adults (age range 19–29 yr) with no history of neurological or psychiatric impairment who were not currently taking medication that affected mood or cognitive function (18 men, 12 women; mean age = 25.13 yr, SD = 3.06; mean years of education = 16.31, SD = 3.01). Importantly, we only recruited participants who had not previously taken part in any experiments involving body illusions, to ensure they would be naïve to the illusion. Participants provided written informed consent as approved by the Swedish Ethical Review Authority. Six participants were excluded from the fMRI analyses due to technical issues during scanning (i.e. scanner overheating, n = 1) and excessive head movement during the memory encoding session (i.e. >3 mm in pitch, yaw, and/or roll, n = 5). Thus, the final fMRI analyses were performed on 24 participants (15 men, 9 women; mean age = 25.33 yr, SD = 3.31; mean years of education = 16.31, SD = 3.14). The sample size in the present study (n = 24) is comparable with that of Bergouignan et al. (2014; n = 21).

Materials

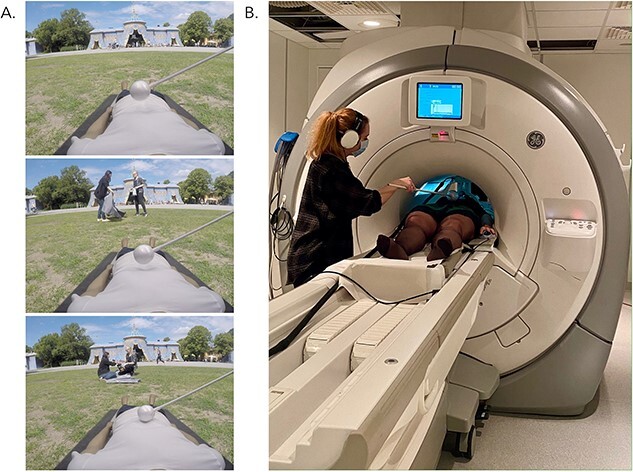

Stimuli consisted of 26 high-resolution digital 3D videos depicting everyday events in various famous locations around Stockholm, Sweden, which have been described in a previous experiment (Iriye and Ehrsson 2022). We chose recognizable local places of interest to recreate natural real-life experiences for participants and enhance the ecological validity of our study. These stimuli were carefully matched for visual, audio, narrative, and emotional complexity so that observed behavioral/fMRI effects of experimental condition would not be due to differences in video content (see Supplementary Material). Each video included a stereoscopic view of a mannequin’s body seen from a supine, first-person perspective. Stereoscopic vision was achieved by filming each event with two GoPro Hero 7 cameras (San Mateo, California, United States) mounted side-by-side on a tripod to mimic left and right eye positions. The recordings from the left and right cameras were presented to the left and right eye, respectively, on a pair of MR-compatible LCD displays in the HMD (VisualSystem HD, Nordic Neurolab, Norway), creating a stereoscopic effect. Twenty-four videos were used for the main memory task, and the remaining two were used in the illusion induction testing portion of the experiment.

Throughout each video, a white Styrofoam ball (6.5 cm in diameter) attached to a wooden stick (1 m long) stroked the mannequin’s torso downwards from the sternum to the belly button once every 2 s (O’Kane and Ehrsson 2021; Iriye and Ehrsson 2022). Each stroke lasted ~1 s, and stroking continued for the full duration of each video (i.e. 40 s). For the initial 20 s, the footage of the mannequin being stroked was overlaid on a still image of the video’s opening frame.

After 20 s, an everyday scene unfolded in front of the mannequin’s body, always involving the same two actors engaged in a short conversation centered on a unique, visible item. One of the actors was an author of the present study referred to as “Heather” in the videos, and the second was a fellow lab member referred to as “Vicki.” The background of each scene consisted of a typical summer day in a well-known location in Stockholm, Sweden (e.g. Central Station, Gamla Stan, Kungsträdgården), complete with members of the public going about their lives as usual. For example, in the video titled “Central Station,” Vicki and Heather are walking together toward the main entrance of the city’s main train station, discussing a vacation they are about to take to Oslo, Norway. The two discuss details of the trip, including how long they’ll be gone for and who they are staying with. Vicki pauses and mentions she thinks she has left her travel pillow at home. She removes the backpack she is wearing, rifles through it to check, finds she has packed it after all, and shows a blue travel pillow to Heather. Then, the two continue to make their way to the entrance of the station. A gray van is parked on the street to the right and two green bicycles are locked to poles next to the station. Several people are seen entering and exiting the station in the background. The video footage of the mannequin being stroked by the wooden stick was filmed separately against a green screen and superimposed onto the footage of the everyday scenes using Final Cut Pro X 10.4.7. An audio track of the dialog was recorded separately from the video in a soundproof environment using a Røde Videomic Go microphone (Sydney, Australia). Background noises appropriate to each video (e.g. birds, wind, distant conversations, traffic) were downloaded from an open-source repository (https://pixabay.com/sound-effects/search/background) and layered underneath the dialog using Audacity® 2.3.2.
The final audio track was integrated with its respective video using Final Cut Pro X 10.4.7. A second audio track only heard by the experimenter through a separate set of headphones was added to each video to cue precise timing and duration of the tactile stimuli. Audio tracks delivered to the experimenter and participant were separated by an Edirol USB AudioCapture sound card (model number: UA-25EX, 24-bit, 96 kHz) and played through MR-compatible headphones (AudioSystem, Nordic Neurolab, Norway).

Procedure

Session one: memory encoding

The study involved two separate fMRI scanning sessions spaced 7 d apart. During the first session, participants were fitted with an HMD containing a pair of MR-compatible LCD visual displays (VisualSystem HD, Nordic Neurolab, Norway) and repeatedly watched 24 of the immersive videos during functional scanning (Fig. 1A). The location of the participants’ real bodies lying on the scanner table was aligned with the first-person view of the mannequin’s body in the video (i.e. the experimenter ensured that the participants’ arms and legs were straight and that their body was positioned in the center of the table to match the mannequin in the videos; see Fig. 1B). Each video was associated with a unique title (i.e. the location depicted in the scene) that was presented in the center of the screen for 2.5 s before the video began. In half (i.e. 12) of the videos, to induce a bodily illusion of the mannequin feeling like one’s own body, with proprioceptive and tactile sensations seemingly originating from the fake body, participants were instructed to relax and focus on the mannequin as touches were delivered to their torso at the same time and in the same direction as they saw the mannequin’s torso being touched in the video, i.e. synchronous visuotactile stimulation (e.g. Petkova and Ehrsson 2008). As a control condition, in the other half (i.e. 12) of the videos participants saw and felt touches in an alternating temporal pattern, i.e. asynchronous visuotactile stimulation, which significantly reduces the illusion (Petkova and Ehrsson 2008). Critically, the strokes in both conditions were identical in magnitude and location, and the only difference between conditions was the 1 s delay between seen and felt strokes in the asynchronous condition.
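To make the timing contrast between conditions concrete, the sketch below generates seen and felt stroke intervals for one 40 s video. It assumes, for illustration only, that the first seen stroke begins at t = 0 s, that each ~1 s stroke lasts exactly 1 s, and that the asynchronous condition is a fixed 1 s delay of every felt stroke; the function and constant names are hypothetical and are not taken from the original experiment scripts.

```python
from __future__ import annotations

VIDEO_S = 40.0         # total video duration
STROKE_PERIOD_S = 2.0  # one stroke every 2 s
STROKE_S = 1.0         # each stroke lasts ~1 s (modelled here as exactly 1 s)
ASYNC_DELAY_S = 1.0    # assumed delay between seen and felt strokes in the control condition


def stroke_schedule(condition: str) -> tuple[list[tuple[float, float]], list[tuple[float, float]]]:
    """Return (seen, felt) stroke intervals as (onset, offset) pairs in seconds."""
    if condition not in ("synchronous", "asynchronous"):
        raise ValueError(f"unknown condition: {condition!r}")
    n_strokes = int(VIDEO_S // STROKE_PERIOD_S)  # 20 strokes per video
    seen = [(i * STROKE_PERIOD_S, i * STROKE_PERIOD_S + STROKE_S) for i in range(n_strokes)]
    delay = 0.0 if condition == "synchronous" else ASYNC_DELAY_S
    felt = [(on + delay, off + delay) for on, off in seen]
    return seen, felt


def overlap_s(a, b) -> float:
    """Total time (s) during which intervals in a overlap intervals in b."""
    return sum(max(0.0, min(a_off, b_off) - max(a_on, b_on))
               for a_on, a_off in a for b_on, b_off in b)


for cond in ("synchronous", "asynchronous"):
    seen, felt = stroke_schedule(cond)
    print(cond, len(seen), overlap_s(seen, felt))
# synchronous 20 20.0
# asynchronous 20 0.0
```

Under these assumptions, seen and felt strokes coincide for the full 20 s of stroking in the synchronous condition and never overlap in the asynchronous condition, which is the alternating pattern described above; stroke location, direction, and number are identical in both.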

Fig. 1.

Screenshots of an example video: Hagaparken. Each immersive video involved a first-person view of a mannequin’s body that was continuously stroked with the Styrofoam ball as an event unfolded within the scene (A). Participants watched videos through an HMD and felt strokes on their actual torso either synchronously or asynchronously with the strokes in the video (B). Note that footage from only one eye is shown here. Actual stimuli consisted of two videos taken from slightly different positions corresponding to a left and right eye centered in the middle of the HMD to create a stereoscopic effect. Video stimuli were divided into two carefully matched groups to control for differences in visual, auditory, narrative, and emotional complexity (see Supplementary Material).

After the initial 20 s of visuotactile stimulation had elapsed, the unique event unfolded over the subsequent 20 s, during which participants were instructed to remember as much as possible about each video, including the title, the dialog, and the visual details present in the scene. Visuotactile stimulation continued throughout the entire 40 s of each video to either maintain illusory ownership over the mannequin’s body in the synchronous condition or reduce it in the asynchronous condition. Videos were separated by an active baseline consisting of a left versus right decision task that lasted between 2.5 and 10 s, with shorter inter-trial intervals occurring more frequently than longer ones (4 × 2.5 s, 3 × 5 s, 2 × 7 s, 1 × 10 s; Iriye and St. Jacques 2020; Stark and Squire 2001). We chose an active baseline because activity in the medial temporal lobes can be higher when participants rest between trials than when they perform a memory task during a trial, a pattern that can be reversed by adopting a left–right decision task as the baseline (Stark and Squire 2001). During rest, participants have the opportunity for mind-wandering and self-reflection, which can activate core memory regions. A low-demand task, such as deciding whether an arrow points left or right, is therefore a more appropriate baseline against which to compare memory encoding and retrieval.

Participants viewed each video a total of three times over the course of nine functional runs, each consisting of eight videos, for a total of 36 trials per condition. Videos were repeated three times to ensure that participants would be able to recall each video one week later during session two; this number was determined by pilot testing on a separate group of participants (data not shown). Video presentation was pseudo-randomized across runs such that no condition was repeated more than twice in a row and each video recurred every three runs. Prior to scanning, the experimenter verified that the participant understood the task (i.e. to remember as much as possible about each video, including the title, dialog, and visual scene) by having the participant verbally repeat the instructions. Participants were also immersed within two videos not used for the main memory experiment, one with synchronous and one with asynchronous visuotactile stimulation, inside a mock scanner to become accustomed to the experimental stimuli and the active baseline task.
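The run structure and ordering constraint can be illustrated with a small rejection-sampling sketch. It assumes, hypothetically, that each block of three runs contains all 24 videos once, with four videos per condition in each eight-trial run (the split implied by the four beta estimates per condition per run in the GLM), and that the "no more than twice in a row" rule is enforced within each run only. The original randomization procedure may differ, and all names are illustrative.

```python
import random

N_CYCLES = 3               # assumed: every video appears once per block of three runs
RUNS_PER_CYCLE = 3         # 3 cycles x 3 runs = 9 encoding runs
PER_CONDITION_PER_RUN = 4  # 4 synchronous + 4 asynchronous videos per 8-trial run
MAX_REPEAT = 2             # no condition more than twice in a row


def max_streak(labels):
    """Length of the longest run of identical consecutive labels."""
    longest = current = 1
    for prev, cur in zip(labels, labels[1:]):
        current = current + 1 if cur == prev else 1
        longest = max(longest, current)
    return longest


def order_run(trials, rng):
    """Reshuffle (video, condition) pairs until the repetition constraint holds."""
    trials = list(trials)
    while True:
        rng.shuffle(trials)
        if max_streak([cond for _, cond in trials]) <= MAX_REPEAT:
            return trials


def make_schedule(sync_videos, async_videos, seed=0):
    """Return a list of runs, each a list of (video, condition) tuples."""
    rng = random.Random(seed)
    runs = []
    for _ in range(N_CYCLES):
        sync_order = rng.sample(sync_videos, len(sync_videos))
        async_order = rng.sample(async_videos, len(async_videos))
        for r in range(RUNS_PER_CYCLE):
            chunk = slice(r * PER_CONDITION_PER_RUN, (r + 1) * PER_CONDITION_PER_RUN)
            trials = ([(v, "synchronous") for v in sync_order[chunk]]
                      + [(v, "asynchronous") for v in async_order[chunk]])
            runs.append(order_run(trials, rng))
    return runs


videos = [f"video_{i:02d}" for i in range(24)]
schedule = make_schedule(videos[:12], videos[12:], seed=1)
print(len(schedule), [len(run) for run in schedule])  # 9 [8, 8, 8, 8, 8, 8, 8, 8, 8]
```

Rejection sampling is the simplest way to satisfy a "no more than k in a row" constraint for short sequences like these; with four trials per condition per run, an acceptable order is found after a few reshuffles.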

Session one: cued recall and subjective ratings

Immediately after scanning, participants completed a cued recall test consisting of five questions per video to assess objective memory accuracy, in a nearby testing room outside the scanning environment. Three questions were related to the main storyline (e.g. Which animal in the museum was Heather surprised to see?) and two questions concerned peripheral background details not important to the storyline (e.g. How many scooters were parked outside the museum?). To assess memory phenomenology, participants rated reliving, emotional intensity, vividness, and degree of belief in memory accuracy on 7-point Likert scales (1 = none, 7 = high; Iriye and Ehrsson 2022; Iriye and St. Jacques 2020; see Supplementary Material for specific instructions). Cued recall questions and subjective ratings were completed on a computer. Cued recall questions from each video were randomly assigned to either session one or session two for each participant and presented in random order.

Session two: memory retrieval

One week after session one, participants were asked to repeatedly retrieve each of the videos from memory. We chose a 1-week interval between testing sessions to maintain consistency with previous studies in the field, which have shown declines in the richness of recollection during retrieval over a delay of this length (Bergouignan et al. 2014; Iriye and Ehrsson 2022).

Each trial began with the video title presented in the center of the field of view for 2.5 s, followed by an instruction for participants to close their eyes and retrieve the memory in as much detail as possible for 17.5 s. After 17.5 s had elapsed, an auditory cue sounded through the headphones, signaling participants to stop retrieving their memory and open their eyes (2.5 s). Immediately following each trial, participants rated the vividness of their memory on a visual analog scale (0 = low, 100 = high) within 2.5 s, responding with an MR-compatible mouse. We chose a 17.5 s retrieval window because, even though participants were instructed not to begin retrieving the memory associated with the cue title until the prompt to close their eyes appeared, we anticipated that some participants would inadvertently and automatically start searching for the memory as soon as the title appeared. The cue title presentation (2.5 s) plus the retrieval period (17.5 s) thus matches the 20 s encoding period. The onset of each retrieval trial was locked to the onset of the “close eyes” prompt, the point at which we could be sure participants were genuinely retrieving memories. Each video was retrieved a total of three times over the course of six functional runs (12 trials each), resulting in 36 trials per condition. Trials were separated by the same active baseline task as in session one, adapted to the larger number of trials per functional run (6 × 2.5 s, 3 × 5 s, 2 × 7.5 s, 1 × 10 s). Video presentation was pseudo-randomized such that a given condition was not repeated more than twice in a row.
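The trial timing of a retrieval run can be laid out as a short sketch. It assumes that each trial consists only of the four phases described above (title, retrieval, stop cue, rating), followed by one active-baseline interval drawn without replacement from the stated jitter pool, with no other gaps; `retrieval_run_onsets` and the constant names are hypothetical. The returned onsets correspond to the “close eyes” prompt to which retrieval trials were time-locked.

```python
import random

# Trial phases (s) for session two, as described above.
TITLE_S = 2.5      # cue title on screen
RETRIEVE_S = 17.5  # eyes closed; the modelled retrieval period
STOP_CUE_S = 2.5   # auditory tone, eyes open
RATING_S = 2.5     # vividness rating on the visual analog scale

# Active-baseline jitter pool for one 12-trial run: 6 x 2.5, 3 x 5, 2 x 7.5, 1 x 10 s.
ITI_POOL_S = [2.5] * 6 + [5.0] * 3 + [7.5] * 2 + [10.0]


def retrieval_run_onsets(seed=0):
    """Return ('close eyes' onsets in s from run start, total run length in s).

    Hypothetical simplification: each trial is followed by exactly one
    baseline interval and there are no other gaps in the run.
    """
    itis = random.Random(seed).sample(ITI_POOL_S, len(ITI_POOL_S))
    t, onsets = 0.0, []
    for iti in itis:
        onsets.append(t + TITLE_S)  # retrieval regressors start at the prompt
        t += TITLE_S + RETRIEVE_S + STOP_CUE_S + RATING_S + iti
    return onsets, t


onsets, run_length = retrieval_run_onsets(seed=1)
print(len(onsets), run_length)  # 12 355.0
```

Because only the order of the intervals is randomized, every run in this sketch lasts 12 × 25 s + 55 s = 355 s, and the title plus retrieval phases (2.5 s + 17.5 s) add up to the 20 s encoding period.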

Prior to scanning, participants were presented with the video titles and asked to indicate with a yes/no response whether they were able to recall the associated video, to verify that they would be able to retrieve a memory during the experiment. If the response was “no,” the participant received a word cue related to the theme of the video (e.g. “picnic” for the video Hagaparken) and was asked to indicate whether this helped them recover a specific memory. If the response was still “no,” the participant was instructed to focus on trying to recover their memory for that video during the 17.5 s retrieval period in the scanner. Participants were unable to retrieve a memory for 23 of the 576 total videos (~4% of total trials). The experimenter also verified that the participant understood the task (i.e. to close their eyes and vividly recall the video in as much detail as possible, including not just the words that were said but also details of the surroundings, objects, and people in the scene) by having the participant verbally repeat the instructions before entering the scanner.

Session two: illusion testing

We measured the strength of the full-body illusion during session two, directly after participants had finished retrieving their memories of the videos. Participants were immersed within two previously unseen videos that were not viewed during the main memory portion of the experiment, one with synchronous and the other with asynchronous visuotactile stimulation, while lying on the MR scanner bed to mimic how the videos were experienced during the encoding session. However, the scanner was not acquiring functional images during illusion testing. Video presentation order was counterbalanced across participants, and each video was viewed twice. These new videos were shown purely to confirm that the bodily illusion manipulation worked as expected, i.e. that a stronger illusion was experienced in the synchronous than in the asynchronous condition. The videos were similar to those used in the main experiment: they involved an initial 20 s in which a mannequin’s body, viewed from a first-person perspective, was stroked on the torso within a static scene depicting a unique location around Stockholm, Sweden, followed by an additional 20 s of two characters engaged in an everyday conversation. However, unlike the videos in the main experiment, a knife appeared and slid quickly just above the mannequin’s stomach at two points in each video (i.e. at 18 and 32 s), each time for ~2 s. No tactile stimulation was applied to the participants’ bodies during the knife threat or for 2 s following it. The knife threats trigger stronger emotional defense reactions when the illusion is experienced than when it is not, and the resulting increase in sweating can be registered as a change in skin conductance. This threat-evoked skin conductance response (SCR) therefore serves as an indirect psychophysiological index of the illusion (Ehrsson et al. 2007; Petkova et al. 2008; Guterstam et al. 2015; Tacikowski et al. 2020).
We did not include knife threats in the videos used for the main experiment to avoid confounding effects on memory.

We recorded threat-evoked SCRs with the MR-compatible BIOPAC System (MP160, Goleta, California, United States; sampling rate = 100 Hz). The data were processed with AcqKnowledge® software (Version 5.0, BIOPAC). Two electrodes with electrode gel (BIOPAC, Goleta, California, United States) were placed on the distal phalanges of the participants’ left index and middle fingers. The PsychoPy script (v3.2.4; Peirce et al. 2019) used to present the videos sent a signal to the recording software to mark the beginning of each knife threat. We also tracked participants’ eye movements while they watched the illusion testing videos to determine whether there were any systematic differences in gaze patterns between the synchronous and asynchronous conditions that might have influenced the results of the memory task. MR-compatible binocular eye tracking cameras mounted inside the VisualSystem HD (acquisition frequency: 60 Hz; NordicNeuroLab, 2018, Norway) recorded fixation coordinates as participants watched the videos.

Immediately after participants had viewed the first illusion testing video, they were led to the MR control room and completed two questionnaires that assessed the degree of illusory ownership they felt over the mannequin’s body and the level of presence they felt within the immersive scene presented in the HMD (see below). The illusion induction questionnaire consisted of three statements pertaining to the multisensory bodily illusion of the mannequin as one’s own body and three control statements, rated on 7-point Likert scales (−3 = strongly disagree, 0 = neutral, 3 = strongly agree; see Table 1) and presented in random order on a computer screen. S1 and S2 assessed “referral of touch” from the participant’s actual body to the mannequin, indicating coherent multisensory binding of the seen and felt strokes on the mannequin’s body, while S3 assessed the degree of explicitly sensed ownership over the mannequin’s body (Petkova and Ehrsson 2008). The presence questionnaire was included to monitor the overall feeling of “being there” inside the immersive 3D scene, which we reasoned might have unintended effects on participants’ ability to recall specific details about each scene, independent of the bodily illusion. For a description of the presence questionnaire and its results, see Supplementary Material. The same process was repeated for the second illusion testing video associated with the opposite condition (i.e. if the first illusion testing video involved synchronous visuotactile stimulation, the second involved asynchronous visuotactile stimulation). After completing the illusion testing and questionnaires for the second video, participants returned to the scanner bed a final time, where they watched the same two videos back-to-back in the same order while we measured their SCRs and eye movements (as described above).
Thus, for each condition we obtained one set of questionnaire ratings, skin conductance data from four knife threats (i.e. two per video), and eye movement data from both presentations of each video. We counterbalanced the order of video presentation and the condition assigned to each video across participants.

Table 1.

Illusion testing questionnaire.

| Item | Statement |
| --- | --- |
| S1 | I felt the touch of the brush on the mannequin in the location where I saw the brush moving |
| S2 | I experienced that the touch I felt was caused by the brush touching the mannequin |
| S3 | I felt as if the mannequin I saw was my body |
| S4 | I could no longer feel my body |
| S5 | I felt as if I had two bodies |
| S6 | When I saw the brush moving, I experienced the touch on my back |

Session two: cued recall and subjective ratings

At the end of session two, participants completed a cued recall accuracy test with new questions (three pertaining to central event details, and two pertaining to peripheral details of the visual scene) and the same subjective ratings made during session one outside of the scanning environment in a nearby testing room on a laptop. Cued recall questions were randomly assigned to either session one or session two for each participant and presented in random order. Thus, the cued recall questions participants answered during session two were different from those previously seen in session one, which ensured that participants retrieved their memories of the videos rather than relying on simply remembering their answers to cued recall questions from session one. The instructions and procedure for completing the cued recall and subjective ratings were identical to those for session one. Figure 2 outlines a schematic of the complete experimental protocol.

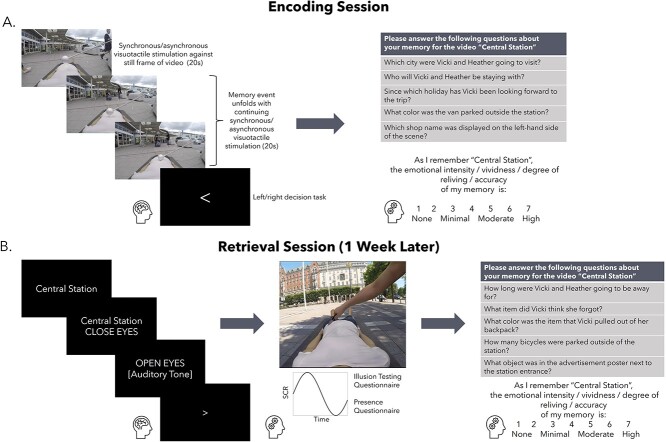

Fig. 2.

The experimental protocol. (A) During the encoding session, participants viewed immersive 3D videos through an HMD during fMRI scanning. For the first 20 s of each video, either synchronous (12 videos) or asynchronous (12 videos) visuotactile stimulation between the participant’s and mannequin’s body was administered to induce a sense of ownership over the mannequin’s body or to reduce/abolish the illusion, respectively. The mannequin’s body was superimposed against a still frame image depicting the location of each video. Next, a unique event took place in front of the mannequin as visuotactile stimulation continued, lasting an additional 20 s. Participants were asked to remember as much as possible about each scene. After scanning, participants answered cued recall questions and completed subjective ratings about the videos. (B) One week later, participants retrieved memories for the videos during a second fMRI session. After participants finished retrieving memories but while they were still lying on the scanner table, we assessed the strength of the full-body illusion induction by measuring SCRs to knife threats embedded within two new videos (one with synchronous, one with asynchronous visuotactile stimulation) and questionnaire measures of the degree of ownership over the mannequin’s body. Participants’ eye movements were tracked as they watched the illusion testing videos. The sense of presence within the immersive scene was also assessed for each condition. Finally, participants answered a new set of cued recall questions and completed the same subjective ratings as in the previous session. SCR = skin conductance response.

MRI data acquisition and preprocessing

Functional and structural images were collected on a GE750 3 Tesla MRI scanner. Detailed anatomical data were collected using a T1-weighted BRAVO sequence. Functional images were acquired using a T2*-weighted echo planar sequence (TR = 2500 ms, TE = 30 ms, FOV = 230 × 230 mm, slice thickness = 3 mm). Whole brain coverage was obtained via 48 coronal oblique slices, acquired at an angle corresponding to AC-PC alignment in an interleaved ascending fashion, with a 2.4 × 2.4 mm in-plane resolution. The first ten volumes of each run were discarded to allow for T1 equilibrium.

Preprocessing of functional images was performed using SPM12 (Wellcome Department of Imaging Neuroscience, London, United Kingdom). Functional images were corrected for differences in slice acquisition time within each whole-brain volume (slice-timing correction), realigned within and across runs to correct for head movement, spatially normalized to the Montreal Neurological Institute (MNI) template (resampled to 2 × 2 × 2 mm voxels), and spatially smoothed with a Gaussian kernel (3 mm full-width at half maximum). BOLD response patterns for each condition were estimated with general linear models (GLMs), separately for the encoding and retrieval sessions. To facilitate the multivariate analyses (see below), a separate regressor was estimated for every trial in each functional run, resulting in eight beta estimates (i.e. four per condition) for each of the nine functional runs in the encoding session and twelve beta estimates (i.e. six per condition) for each of the six functional runs in the retrieval session. We estimated a response for each trial (Turner et al. 2012), rather than one mean estimate per condition and run as is standard in GLM analyses, to provide the multivariate classifiers with as much training data as possible for each condition and thereby improve classification accuracy. Thus, there were 36 beta estimates per condition in each scanning session. For the encoding session, regressors were time-locked to the onset of the natural social event in the video, which signaled the beginning of the memory task, and extended until the end of the video (i.e. 20 s). The initial 20 s of each video consisted of visuotactile stimulation that served only to trigger the bodily illusion (no memorable event was presented) and was excluded from the main analyses of interest. This period allowed time to induce illusory ownership of the mannequin’s body in the synchronous condition, which has previously been shown to occur within approximately this time period (Petkova and Ehrsson 2008; O’Kane and Ehrsson 2021). The corresponding initial 20 s of visuotactile stimulation was also excluded from the asynchronous condition fMRI data so that the conditions were matched in this respect. For the retrieval session, regressors were time-locked to the onset of the memory retrieval cue, and their duration covered the retrieval period (i.e. 17.5 s), excluding the auditory tone and the vividness rating. For both sessions, six movement parameters were included as separate regressors, and the SPM canonical hemodynamic response function was used to model brain responses. Trial-wise beta estimates were used as input for the decoding analyses of the encoding and retrieval sessions. For the representational similarity analysis (RSA), we averaged beta estimates across the three repetitions of each video (n = 24 videos), separately for each scanning session, so that patterns of activity at encoding and retrieval could be compared according to condition, i.e. illusion (synchronous) or control (asynchronous).
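The trial-wise GLM described above can be illustrated with a minimal NumPy sketch. It assumes an LSA-style model (one boxcar regressor per trial, convolved with an approximation of the SPM canonical HRF, plus a constant) fitted by ordinary least squares. It is not the authors’ SPM pipeline: motion regressors, high-pass filtering, and temporal autocorrelation modeling are omitted, and the helper names and example onsets are hypothetical. `average_by_video` illustrates the averaging over the three repetitions of each video used for the RSA.

```python
import math

import numpy as np


def canonical_hrf(dt, length=32.0):
    """Double-gamma HRF sampled every `dt` seconds, normalised to unit sum.

    Assumption: SPM-like shape (response gamma shape 6, undershoot gamma
    shape 16, undershoot ratio 1/6).
    """
    t = np.arange(0.0, length, dt)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    hrf = peak - undershoot / 6.0
    return hrf / hrf.sum()


def trialwise_design(onsets, durations, n_scans, tr, dt=0.1):
    """One HRF-convolved boxcar column per trial, sampled at each scan."""
    n_fine = int(round(n_scans * tr / dt))
    hrf = canonical_hrf(dt)
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    X = np.zeros((n_scans, len(onsets)))
    for j, (onset, dur) in enumerate(zip(onsets, durations)):
        boxcar = np.zeros(n_fine)
        boxcar[int(round(onset / dt)):int(round((onset + dur) / dt))] = 1.0
        # Convolve at fine time resolution, then keep one sample per scan.
        X[:, j] = np.convolve(boxcar, hrf)[:n_fine][scan_idx]
    return X


def estimate_trial_betas(Y, X):
    """Least-squares betas per trial regressor; the constant term is dropped."""
    X_full = np.column_stack([X, np.ones(X.shape[0])])
    betas, *_ = np.linalg.lstsq(X_full, Y, rcond=None)
    return betas[:-1]


def average_by_video(betas, video_ids):
    """Average trial-wise patterns over repetitions of the same video."""
    video_ids = np.asarray(video_ids)
    videos = np.unique(video_ids)
    means = np.stack([betas[video_ids == v].mean(axis=0) for v in videos])
    return videos, means
```

Modeling each 20 s event as its own regressor yields one beta pattern per trial, which is what the classifiers and the encoding-retrieval comparisons take as input.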

Behavioral data analysis

To assess the strength of the bodily illusion from the questionnaire data, we corrected illusion statement ratings for suggestibility and response bias by subtracting the average control statement rating from the average illusion statement rating, separately for each condition (Iriye and Ehrsson 2022). To analyze SCRs collected during illusion induction testing, we used a custom MATLAB toolbox (Tacikowski et al. 2020) to identify the amplitude of each response, defined as the difference between the maximum and minimum conductance values 0–6 s after a knife threat (Tacikowski et al. 2020). We then performed a paired-samples t-test comparing SCR magnitudes to knife threats between conditions. All trials, including null responses, were included in the SCR analysis, which means that we assessed the magnitude of the SCR for each condition (Dawson et al. 2000). To determine whether gaze behavior and overt attention differed between experimental conditions, we calculated average fixation coordinates for each condition and participant from the eye tracking data and then conducted a 2 (visuotactile congruence: synchronous, asynchronous) × 2 (fixation coordinate: X, Y) repeated-measures ANOVA (Guterstam et al. 2015). Because of technical issues with the recording equipment, we were unable to collect SCR data from four participants and eye tracking data from two participants. Thus, the SCR analysis was conducted on 26 participants and the eye tracking analysis on 28 participants.
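The two behavioral illusion measures described above can be sketched as follows. This is a minimal NumPy illustration, not the custom MATLAB toolbox used in the study; the function names, the sampling-rate argument `fs`, and the inclusive 0–6 s window indexing are assumptions.

```python
import numpy as np


def corrected_illusion_score(illusion_ratings, control_ratings):
    """Mean illusion-statement rating minus mean control-statement rating."""
    return float(np.mean(illusion_ratings) - np.mean(control_ratings))


def scr_amplitude(conductance, fs, threat_onset, window=(0.0, 6.0)):
    """Maximum minus minimum skin conductance within a post-threat window.

    `fs` is the sampling rate in Hz and `window` is in seconds relative to
    the threat onset (hypothetical parameters for illustration).
    """
    start = int(round((threat_onset + window[0]) * fs))
    stop = int(round((threat_onset + window[1]) * fs)) + 1
    segment = np.asarray(conductance, float)[start:stop]
    return float(segment.max() - segment.min())


def condition_magnitude(conductance, fs, threat_onsets):
    """Mean SCR over all threats of one condition, null responses included."""
    return float(np.mean([scr_amplitude(conductance, fs, onset)
                          for onset in threat_onsets]))
```

Averaging over every threat, including those with no visible response, gives a magnitude rather than an amplitude measure, in line with the distinction drawn by Dawson et al. (2000).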

Cued recall questions were coded for accuracy using strict criteria in which responses had to exactly match the correct response to be scored as correct (e.g. What type of injury was Heather recovering from? Correct answer: A sprained ankle, Incorrect answer: A foot injury). Using more lenient criteria that allowed for partial marks did not change the overall pattern of results. The percentage of correct responses for central and peripheral details for both conditions and testing points was calculated for each participant. Subjective ratings of memory phenomenology were averaged across videos to create a mean score for each rating of reliving, emotional intensity, vividness, and degree of belief in memory accuracy for each condition and testing session (see above).

Shapiro–Wilk tests were used to assess the normality of the data. We used repeated-measures ANOVAs to analyze normally distributed data and Wilcoxon signed-rank tests to analyze data that were not normally distributed. Follow-up t-tests were corrected for multiple comparisons using Bonferroni correction. Greenhouse–Geisser corrections were applied where the data violated the assumption of sphericity. Significance was defined as P < 0.05. All behavioral data were analyzed in JASP (JASP Team 2023).
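For the 2 × 2 repeated-measures designs used here, each effect has one numerator degree of freedom, so its F statistic equals the squared one-sample t on a subject-wise contrast score. The NumPy sketch below illustrates that arithmetic together with a Bonferroni adjustment; it is an independent illustration, not JASP’s implementation, and the function names are hypothetical. The 2 × 2 × 2 cued recall design extends the same logic with additional contrasts.

```python
import numpy as np


def one_sample_t(x):
    x = np.asarray(x, float)
    return x.mean() / (x.std(ddof=1) / np.sqrt(x.size))


def rm_anova_2x2(cells):
    """2 x 2 within-subject ANOVA from cell means of shape (n_subjects, 2, 2).

    F(1, n - 1) is the squared one-sample t of each contrast score, and
    partial eta squared is F / (F + n - 1). With two levels per factor,
    sphericity holds trivially, so no Greenhouse-Geisser correction applies.
    """
    d = np.asarray(cells, float)
    n = d.shape[0]
    contrasts = {
        "A": d[:, 0, :].sum(axis=1) - d[:, 1, :].sum(axis=1),
        "B": d[:, :, 0].sum(axis=1) - d[:, :, 1].sum(axis=1),
        "AxB": (d[:, 0, 0] - d[:, 0, 1]) - (d[:, 1, 0] - d[:, 1, 1]),
    }
    results = {}
    for name, score in contrasts.items():
        F = one_sample_t(score) ** 2
        results[name] = {"F": F, "df": (1, n - 1), "eta_p2": F / (F + n - 1)}
    return results


def bonferroni(p_values):
    """Multiply each P-value by the number of tests, capping at 1."""
    p = np.asarray(p_values, float)
    return np.minimum(p * p.size, 1.0)
```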

fMRI analyses

Multivariate pattern analysis (MVPA) of encoding and retrieval sessions

All decoding analyses were performed with the CoSMoMVPA toolbox (Oosterhof et al. 2016). For each scanning session separately, we conducted a whole-brain searchlight analysis on the trial-wise beta estimates to identify regions where patterns of neural activity could distinguish between the synchronous (illusion) and asynchronous (control) conditions during memory encoding and retrieval. For each participant, a sphere comprising 100 voxels was centered on each voxel in the acquired volumes, yielding participant-specific searchlight maps of classification accuracy determined by k-fold cross-validation with a linear discriminant analysis (LDA) classifier. To assess group-level effects corrected for multiple comparisons, we submitted the subject-specific classification accuracy maps to threshold-free cluster enhancement (TFCE). TFCE computes a combined value for each voxel after the raw statistical map has been thresholded over an extensive set of values (Smith and Nichols 2009). This approach capitalizes on the heightened sensitivity of cluster-based thresholding methods without requiring an initial cluster-forming threshold to be fixed a priori (Smith and Nichols 2009; Oosterhof et al. 2016), and it is less affected by nonstationarity within the data (Salimi-Khorshidi et al. 2011). A total of 10,000 Monte Carlo simulations were run to identify brain regions where classification accuracy was higher than chance level (i.e. 50%). The resulting group-level whole-brain classification accuracy map was thresholded at z = 2.19, corresponding to a false discovery rate of q < 0.05 (q is the P-value adjusted using the FDR approach). Significant clusters were extracted and anatomically labeled using the xjview toolbox (https://www.alivelearn.net/xjview). The anatomical labels were then cross-referenced for accuracy with the mni2atlas toolbox (https://github.com/dmascali/mni2atlas), using the Harvard-Oxford Cortical and Subcortical Structural Atlases. The group-level statistical map was additionally compared manually with the mean normalized structural scans of the current group of participants.
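The classification step applied within each searchlight sphere can be sketched with a plain NumPy LDA and run-wise cross-validation. Two assumptions apply: each fold holds out one run as test data, as the Results imply for the original partition scheme, and the covariance is regularized with a small ridge term. CoSMoMVPA’s own LDA regularization and partitioning may differ, and the function names are hypothetical.

```python
import numpy as np


def lda_fit(X, y, shrinkage=0.01):
    """LDA with a pooled covariance plus a ridge term (an assumption).

    The ridge is `shrinkage` times the mean variance, added to the diagonal
    so the covariance stays invertible when voxels outnumber trials.
    """
    classes = np.unique(y)
    means = np.stack([X[y == c].mean(axis=0) for c in classes])
    centered = X - means[np.searchsorted(classes, y)]
    cov = centered.T @ centered / (X.shape[0] - classes.size)
    cov += shrinkage * np.trace(cov) / cov.shape[0] * np.eye(cov.shape[0])
    W = np.linalg.solve(cov, means.T).T   # rows: Sigma^-1 mu_c
    b = -0.5 * np.sum(W * means, axis=1)  # equal class priors assumed
    return classes, W, b


def lda_predict(model, X):
    classes, W, b = model
    return classes[np.argmax(X @ W.T + b, axis=1)]


def leave_one_run_out_accuracy(X, y, runs):
    """Mean accuracy over folds, each fold testing on one held-out run."""
    accuracies = []
    for run in np.unique(runs):
        test = runs == run
        model = lda_fit(X[~test], y[~test])
        accuracies.append(np.mean(lda_predict(model, X[test]) == y[test]))
    return float(np.mean(accuracies))
```

In a searchlight, `X` would hold the trial-wise betas of the 100 voxels in one sphere, and the resulting accuracy would be written to the sphere’s center voxel.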

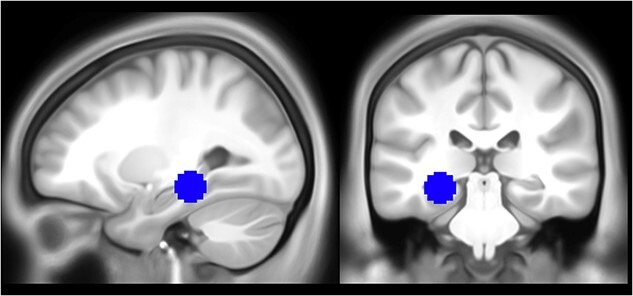

We then performed two separate region-of-interest (ROI) analyses (i.e. one for each scanning session) to investigate whether patterns of activity in the hippocampus could predict whether a memory was associated with the body ownership illusion (synchronous) or not (asynchronous) during memory encoding and retrieval. The hippocampal ROI was created with the MarsBar toolbox for SPM (Brett et al. 2002) as a 10 mm sphere centered on the coordinates reported by Bergouignan et al. (2014) (X = −27, Y = −31, Z = −11; see Fig. 3). The ROI was converted to binary format and resampled to 2 mm cubic voxels. Decoding accuracy within the hippocampal ROI was estimated for each participant and session (i.e. encoding, retrieval) using an LDA classifier. Subject-level accuracies were then submitted to a two-sided, one-sample t-test against the null hypothesis of chance-level classification accuracy (i.e. 50%). To verify that the expected brain regions were engaged by the encoding and retrieval tasks, we performed univariate analyses on each session (see Supplemental Material).
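The spherical ROI and the group test against chance can be sketched as below. The sketch assumes a standard 2 mm MNI152 grid (91 × 109 × 91 voxels with the affine shown), not MarsBar itself, and the function names are hypothetical. Because such a grid has even millimeter coordinates and the center (−27, −31, −11) has odd ones, the 10 mm sphere contains exactly 552 voxels, which is consistent with the 552 possible hippocampal ROI voxels reported in the Results.

```python
import numpy as np

# Assumption: a typical 2 mm MNI152 grid (91 x 109 x 91) with this affine.
MNI_2MM_AFFINE = np.array([
    [-2.0, 0.0, 0.0, 90.0],
    [0.0, 2.0, 0.0, -126.0],
    [0.0, 0.0, 2.0, -72.0],
    [0.0, 0.0, 0.0, 1.0],
])


def sphere_mask(center_mm, radius_mm, affine, shape):
    """Boolean mask of voxels whose centres lie within radius_mm of center_mm."""
    ijk = np.indices(shape).reshape(3, -1)
    xyz = (affine @ np.vstack([ijk, np.ones(ijk.shape[1])]))[:3]
    dist = np.linalg.norm(xyz - np.asarray(center_mm, float)[:, None], axis=0)
    return (dist <= radius_mm).reshape(shape)


def t_against_chance(accuracies, chance=0.5):
    """One-sample t statistic and df for subject accuracies versus chance."""
    diff = np.asarray(accuracies, float) - chance
    t = diff.mean() / (diff.std(ddof=1) / np.sqrt(diff.size))
    return float(t), diff.size - 1
```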

Fig. 3.

The hippocampal ROI used in the MVPA and RSA, based on MNI coordinates reported by Bergouignan et al. (2014) (X = −27, Y = −31, Z = −11).

Representational similarity analysis: encoding-retrieval pattern similarity

We employed RSA to investigate whether the condition (synchronous or asynchronous) associated with each video was related to the degree of similarity between spatial patterns of BOLD activity during memory encoding and retrieval (i.e. encoding-retrieval similarity) at the whole brain and ROI level. We carried out additional whole brain and ROI analyses to test whether a stronger body ownership illusion and memory vividness interacted to influence encoding-retrieval similarity.

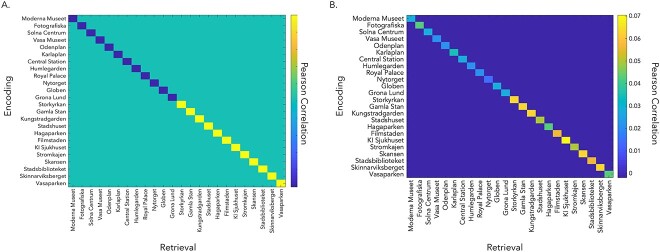

First, we created subject-specific contrast matrices to assess whether correlations between patterns of activity at encoding and retrieval were higher in the synchronous than in the asynchronous condition (see Fig. 4A). Normalized beta estimates for each video were averaged across its three repetitions within each session (i.e. encoding, retrieval), resulting in 24 beta estimates per session (i.e. 12 per condition, 1 per video). On the diagonal of the contrast matrix, videos encoded in the synchronous condition were weighted positively and videos encoded in the asynchronous condition were weighted negatively, such that the diagonal summed to zero. For example, if the Moderna Museet video was encoded with synchronous visuotactile stimulation, it received a weight of +1, whereas if the Central Station video was encoded with asynchronous visuotactile stimulation, it received a weight of −1. This analysis sought to identify brain regions where a stronger body ownership illusion (synchronous condition) led to greater memory reinstatement than the suppressed illusion of the asynchronous condition.
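A minimal sketch of this condition contrast, assuming that only same-video (diagonal) correlations are weighted and that off-diagonal cells are zero (our reading of the text). Rows of `enc` and `ret` are the 24 video-averaged patterns, and the correlations are Fisher-transformed before weighting. The function names are hypothetical.

```python
import numpy as np


def er_similarity(enc, ret):
    """Pearson r between each encoding pattern (row) and each retrieval pattern."""
    ez = (enc - enc.mean(axis=1, keepdims=True)) / enc.std(axis=1, keepdims=True)
    rz = (ret - ret.mean(axis=1, keepdims=True)) / ret.std(axis=1, keepdims=True)
    return ez @ rz.T / enc.shape[1]


def condition_contrast(is_sync):
    """Diagonal +1 for synchronous videos and -1 for asynchronous; 0 elsewhere."""
    return np.diag(np.where(np.asarray(is_sync, bool), 1.0, -1.0))


def contrast_score(enc, ret, contrast):
    """Contrast-weighted sum of Fisher-z encoding-retrieval correlations."""
    r = np.clip(er_similarity(enc, ret), -0.999999, 0.999999)
    return float(np.sum(contrast * np.arctanh(r)))
```

A positive score means that same-video reinstatement is, on balance, stronger for synchronously encoded videos.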

Fig. 4.

RSA contrast matrices. (A) We created individual subject contrast matrices comparing pattern similarity between encoding and retrieval of the same video (on-diagonal), where similarity is expected to be higher in the synchronous condition. Videos encoded with the body ownership illusion (synchronous condition) received positive weights (i.e. yellow on-diagonal values), while videos encoded with a reduced illusion (asynchronous condition) received negative weights (i.e. blue on-diagonal values). (B) Same-video correlations (on-diagonal) were weighted according to condition (i.e. synchronous = positive/yellow on-diagonal values, asynchronous = negative/blue on-diagonal values) and the factor of interest (e.g. memory vividness). This RSA detects regions where reinstatement is greater for videos encoded with the body ownership illusion and retrieved with increasing memory vividness. An example contrast matrix from a single participant is shown here.

Second, we conducted an analysis to identify regions where encoding-retrieval pattern similarity scaled with both the body ownership illusion (synchronous > asynchronous) and the vividness of retrieval. Correlations were weighted positively or negatively by combining condition (i.e. synchronous = positive, asynchronous = negative) with memory vividness, measured as the average vividness rating reported post-scanning in sessions one and two (see Fig. 4B; Oedekoven et al. 2017). Specifically, one was added to a participant’s vividness rating for a video encoded with synchronous visuotactile stimulation and subtracted from it for a video encoded with asynchronous stimulation. Each resulting value was then divided by the sum of the values for all videos, so that the contrast values for all videos summed to one. For example, if the Moderna Museet video was encoded with synchronous visuotactile stimulation and received a vividness rating of four, it received a contrast value of five before this normalization. These contrast values were assigned to the diagonal of the participant’s contrast matrix. Off-diagonal elements were set to sum to negative one (i.e. −1/552 ≈ −0.0018 for each of the 552 off-diagonal cells of the 24 × 24 matrix), such that the final contrast matrix summed to zero.
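The construction of the vividness-weighted contrast matrix can be checked with a short NumPy sketch (the function name is hypothetical). With 24 videos, each off-diagonal cell receives −1/552, and the diagonal carries the normalized condition-adjusted vividness values.

```python
import numpy as np


def vividness_contrast(is_sync, vividness):
    """Contrast matrix weighting same-video similarity by condition and vividness.

    Diagonal: vividness + 1 (synchronous) or vividness - 1 (asynchronous),
    normalised to sum to +1 (undefined if these values sum to zero).
    Off-diagonal cells share -1 equally, so the whole matrix sums to zero.
    """
    is_sync = np.asarray(is_sync, bool)
    n = is_sync.size
    weights = np.asarray(vividness, float) + np.where(is_sync, 1.0, -1.0)
    contrast = np.full((n, n), -1.0 / (n * n - n))
    np.fill_diagonal(contrast, weights / weights.sum())
    return contrast
```

For example, with twelve synchronous and twelve asynchronous videos all rated 4, the diagonal values are 5/96 and 3/96, respectively.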

For the whole-brain analyses, a spherical searchlight of 100 voxels was centered on each voxel in the acquired volumes. The summed difference in Fisher-transformed Pearson correlations between encoding and retrieval of the same video was assigned to the center voxel of the searchlight. The individual searchlight maps were analyzed at the group level with a one-sample t-test corrected for multiple comparisons using TFCE (Smith and Nichols 2009) with 10,000 Monte Carlo simulations, to identify regions where correlations differed significantly from zero (e.g. Oedekoven et al. 2017).
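The TFCE step used for the group-level searchlight maps can be illustrated in one dimension. The sketch uses the default exponents E = 0.5 and H = 2 from Smith and Nichols (2009) and, as an assumption, a sign-flipping Monte Carlo null with a maximum-statistic correction. It is not CoSMoMVPA’s 3-D implementation, and the function names are hypothetical. For accuracy maps, the subject values would first have chance (0.5) subtracted.

```python
import numpy as np


def tfce_1d(stat, dh=0.1, E=0.5, H=2.0):
    """Threshold-free cluster enhancement for a 1-D map of positive statistics.

    At each height h (step dh), every supra-threshold cluster adds
    extent**E * h**H * dh to each of its members.
    """
    stat = np.asarray(stat, float)
    out = np.zeros_like(stat)
    for h in np.arange(dh, stat.max() + dh / 2, dh):
        above = np.concatenate(([0], (stat >= h).astype(int), [0]))
        edges = np.diff(above)
        starts, stops = np.flatnonzero(edges == 1), np.flatnonzero(edges == -1)
        for start, stop in zip(starts, stops):
            out[start:stop] += (stop - start) ** E * h ** H * dh
    return out


def group_t(maps):
    """One-sample t across subjects (rows) at each location (columns)."""
    return maps.mean(axis=0) / (maps.std(axis=0, ddof=1) / np.sqrt(maps.shape[0]))


def signflip_tfce_p(maps, n_iter=1000, seed=0):
    """Max-statistic P-values from random sign flips of subject maps."""
    rng = np.random.default_rng(seed)
    observed = tfce_1d(np.clip(group_t(maps), 0, None))
    null_max = np.empty(n_iter)
    for i in range(n_iter):
        signs = rng.choice([-1.0, 1.0], size=(maps.shape[0], 1))
        null_max[i] = tfce_1d(np.clip(group_t(maps * signs), 0, None)).max()
    exceed = (null_max[None, :] >= observed[:, None]).sum(axis=1)
    return (1 + exceed) / (n_iter + 1)
```

Integrating over all heights is what removes the need to choose a single cluster-forming threshold: a broad, moderate effect and a narrow, strong one can both accumulate large TFCE values.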

For the ROI analyses, we tested whether the body ownership illusion (synchronous > asynchronous), alone and in interaction with memory vividness during retrieval, influenced encoding-retrieval pattern similarity, our measure of memory reinstatement, in (i) the hippocampus and (ii) the brain regions that distinguished between memories encoded with a stronger (synchronous condition) versus weaker (asynchronous condition) body ownership illusion. We used the same hippocampal ROI as in the MVPA of the encoding and retrieval sessions. The ROI of regions able to decode the synchronicity of visuotactile stimulation during encoding was created from the group-level MVPA results, converted into binary format using the MarsBar toolbox for SPM (Brett et al. 2002), and resampled to 2 mm cubic voxels. For both ROIs, encoding-retrieval pattern similarity was estimated using Pearson correlations and compared with the relevant contrast matrix for each participant. The resulting subject-level correlations were Fisher-transformed to better approximate a normal distribution and submitted to a two-sided, one-sample t-test against the null hypothesis of zero. Each ROI contained one outlier, which was removed from the analysis. To verify that the neural data extracted from each ROI were of high quality, we counted the number of voxels retained in the RSAs for each participant and ROI separately; a voxel was dropped from the analysis if it contained missing or non-numeric data.
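One possible reading of the ROI procedure is sketched below. Voxels with non-finite values are dropped, the encoding-retrieval correlation matrix is correlated with the participant’s contrast matrix, and the Fisher-transformed result enters a group t-test against zero. How the comparison with the contrast matrix was implemented is an assumption here, and the function names are hypothetical. The outlier criterion is not specified in the text and is not modeled.

```python
import numpy as np


def drop_nonfinite_voxels(enc, ret):
    """Keep only voxels (columns) with finite values in both sessions."""
    keep = np.all(np.isfinite(enc), axis=0) & np.all(np.isfinite(ret), axis=0)
    return enc[:, keep], ret[:, keep], int(keep.sum())


def roi_rsa_z(enc, ret, contrast):
    """Fisher-z of the Pearson r between the encoding-retrieval similarity
    matrix and a contrast matrix (assumed reading of the ROI analysis)."""
    enc, ret, _ = drop_nonfinite_voxels(enc, ret)
    n = enc.shape[0]
    sim = np.corrcoef(enc, ret)[:n, n:]  # rows: encoding, columns: retrieval
    r = np.corrcoef(sim.ravel(), contrast.ravel())[0, 1]
    return float(np.arctanh(r))


def group_t_vs_zero(z_scores):
    """One-sample t statistic and df for subject-level Fisher-z values."""
    z = np.asarray(z_scores, float)
    return float(z.mean() / (z.std(ddof=1) / np.sqrt(z.size))), z.size - 1
```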

Verification of the involvement of the left posterior hippocampus in memory retrieval

We carried out a univariate analysis to verify that the left posterior hippocampal ROI was implicated in memory retrieval in general, regardless of condition, as justification for using this ROI in the RSAs. We used the same trial-wise beta estimates from the encoding and retrieval sessions as in the multivariate analyses, except that they were spatially smoothed with an 8 mm full-width at half maximum Gaussian kernel, consistent with standards for univariate analyses (Mikl et al. 2008). Whitened, filtered beta estimates reflecting the contrast of memory retrieval (synchronous + asynchronous condition) versus rest were extracted from the hippocampal ROI (Fig. 3) and submitted to a two-sided, one-sample t-test against the null hypothesis of zero.

Results: illusion induction

Questionnaire responses

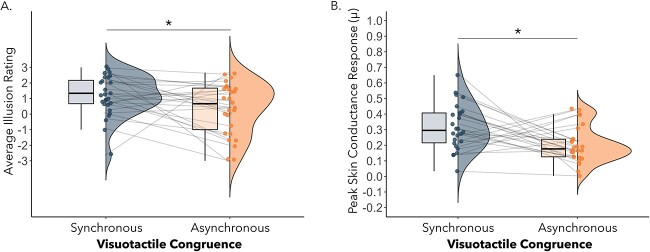

We performed a paired-samples t-test comparing the average illusion minus average control ratings between the synchronous and asynchronous conditions to assess the subjective strength of the illusion induction. Critically, difference ratings were significantly higher in the synchronous (M = 3.13, SD = 1.52) than in the asynchronous condition (M = 2.31, SD = 1.87), t(29) = 2.08, P = 0.047, Cohen’s d = 0.38. Restricting the analysis to the participants included in the fMRI analyses (N = 24) led to the same pattern of results, t(23) = 3.33, P = 0.003, Cohen’s d = 0.68. Consistent with this finding, a direct comparison of average illusion statement ratings between the synchronous (M = 1.19, SD = 1.33) and asynchronous visuotactile conditions (M = 0.23, SD = 1.78) was also significant, t(29) = 2.55, P = 0.02, Cohen’s d = 0.38 (see Fig. 5A), and this was again the case when the analysis was restricted to the participants included in the fMRI analyses (N = 24), t(23) = 2.35, P = 0.03, Cohen’s d = 0.48. These findings confirm that, as expected, participants felt a stronger illusion of embodying the mannequin following synchronous than asynchronous visuotactile stimulation (Petkova and Ehrsson 2008; Guterstam et al. 2015; Iriye and Ehrsson 2022; O’Kane and Ehrsson 2021). For responses to individual illusion and control statement items, see Supplementary Fig. 1.
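Assuming that the reported Cohen’s d for paired and one-sample comparisons is d_z (the mean difference divided by the standard deviation of the differences, the usual paired-samples definition), it follows directly from t and the sample size n. This gives a quick consistency check on reported values, for example the first two comparisons above and the hippocampal deactivation reported in the fMRI results, t(23) = −4.54, d = −0.93:

$$
d_z = \frac{\bar{D}}{s_D} = \frac{t}{\sqrt{n}}, \qquad
\frac{2.08}{\sqrt{30}} \approx 0.38, \qquad
\frac{3.33}{\sqrt{24}} \approx 0.68, \qquad
\frac{-4.54}{\sqrt{24}} \approx -0.93
$$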

Fig. 5.

(A) The average illusion statement scores were significantly higher following synchronous compared with asynchronous visuotactile stimulation, P = 0.02. (B) The peak magnitudes of SCRs were higher for knife threats experienced during the synchronous compared with asynchronous condition, P = 0.01.

Skin conductance responses

A paired-samples t-test comparing SCR magnitudes between conditions revealed a significant effect of visuotactile congruence, t(25) = 2.69, P = 0.01, Cohen’s d = 0.53, with higher SCR magnitudes in the synchronous (M = 0.32, SD = 0.15) than in the asynchronous condition (M = 0.20, SD = 0.12; see Fig. 5B). Restricting the analysis to the participants included in the fMRI analyses (N = 22) led to a marginally significant difference between the synchronous (M = 0.29, SD = 0.13) and asynchronous (M = 0.20, SD = 0.14) visuotactile stimulation conditions, t(21) = 1.93, P = 0.068, Cohen’s d = 0.41. Together with the questionnaire responses, this finding provides additional evidence that illusory ownership of the mannequin’s body was stronger in the synchronous than in the asynchronous condition.

Eye tracking data

Average fixation coordinates were plotted for both experimental conditions for each participant (see Supplementary Fig. 3A and B). A 2 (visuotactile congruence: synchronous, asynchronous) × 2 (fixation coordinate: X, Y) repeated-measures ANOVA revealed neither a significant main effect of visuotactile congruence nor an interaction between visuotactile congruence and fixation coordinate (P’s > 0.22; see Supplementary Fig. 2). There was a significant main effect of coordinate, F(1,27) = 10.00, P = 0.004, ηp² = 0.27, but this is irrelevant here because it did not interact with visuotactile congruence. Restricting the analysis to the participants included in the fMRI analyses (N = 23) led to the same pattern of results: a main effect of coordinate, F(1,22) = 5.85, P = 0.024, ηp² = 0.21, reflecting greater mean X than Y coordinates, but no significant main effect of visuotactile congruence and no interaction between coordinate and visuotactile congruence (P’s > 0.17). Thus, average gaze location did not differ between conditions, implying that eye movements and overt attention were reasonably well matched between conditions.

Results: memory accuracy and phenomenology

Cued recall accuracy

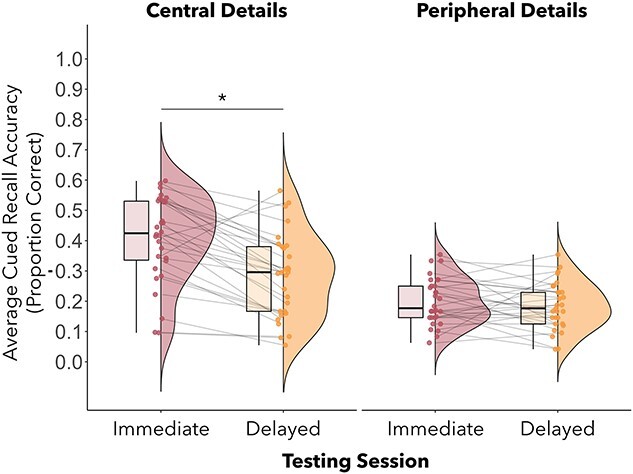

A 2 (testing session: immediate, delayed) × 2 (detail type: central, peripheral) × 2 (visuotactile congruence: synchronous, asynchronous) repeated-measures ANOVA revealed significant main effects of testing session, F(1,28) = 27.10, P < 0.001, ηp² = 0.49, and detail type, F(1,28) = 49.84, P < 0.001, ηp² = 0.64, but not of visuotactile congruence, F(1,28) = 0.01, P = 0.92, ηp² = 0.0004. These main effects were qualified by a significant interaction between testing session and detail type, F(1,28) = 0.78, P < 0.001, ηp² = 0.39. Bonferroni-corrected post hoc tests indicated that memory accuracy for central details decreased between testing points (immediate: M = 0.41, SD = 0.14; delayed: M = 0.28, SD = 0.14; see Fig. 6), P < 0.001. In contrast, memory accuracy for peripheral details did not change between testing points (immediate: M = 0.20, SD = 0.08; delayed: M = 0.18, SD = 0.18; see Fig. 6), P = 1.00. Restricting the analysis to the participants included in the fMRI analyses (N = 24) led to the same pattern of results, with main effects of session, F(1,22) = 24.73, P < 0.001, ηp² = 0.53, and detail type, F(1,22) = 30.84, P < 0.001, ηp² = 0.58, and an interaction between session and detail type, F(1,22) = 11.25, P < 0.003, ηp² = 0.34, but no main effect or interaction involving visuotactile congruence.

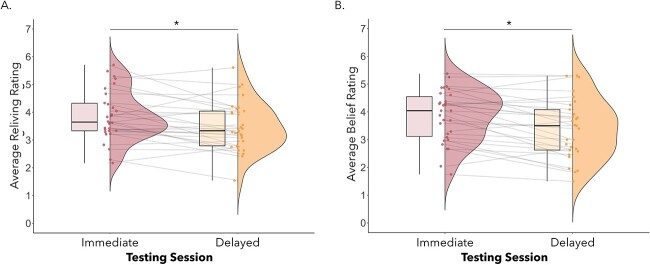

Fig. 7.

Average reliving (A) and belief in memory accuracy (B) ratings were higher at immediate compared with delayed testing, P’s < 0.02.

Fig. 6.

Average cued recall accuracy was higher at immediate testing compared with delayed testing for central event details (P < 0.001), but not peripheral details.

Subjective ratings

We conducted four separate 2 (testing session: immediate, delayed) × 2 (visuotactile congruence: synchronous, asynchronous) repeated-measures ANOVAs on participants’ ratings of vividness, reliving, perceived memory accuracy, and emotional intensity. For vividness ratings, there was a significant interaction between session and visuotactile congruence, F(1,27) = 4.51, P = 0.04, ηp² = 0.14. However, Bonferroni-corrected post hoc tests did not reveal any significant effects, all P’s > 0.63. Restricting the analysis to the participants included in the fMRI analyses led to the same interaction between session and visuotactile congruence, F(1,21) = 4.89, P = 0.038, ηp² = 0.19, again without significant effects in Bonferroni-corrected follow-up t-tests (P’s > 0.48). For reliving ratings, there was a main effect of testing session, F(1,27) = 6.00, P = 0.02, ηp² = 0.18, indicating higher reliving at immediate (M = 3.86, SD = 0.91) than at delayed testing (M = 3.51, SD = 0.91; see Fig. 7A). This effect was not significant when only data from participants included in the fMRI analyses were considered, F(1,21) = 1.49, P = 0.08, ηp² = 0.14. No other significant main effects or interactions were observed, P’s > 0.08. For perceived accuracy ratings, there was also a main effect of testing session, F(1,27) = 15.03, P < 0.001, ηp² = 0.36, whereby participants believed in the accuracy of their memories to a higher degree at immediate (M = 3.85, SD = 0.94) than at delayed testing (M = 3.35, SD = 1.06; see Fig. 7B). This main effect was also present for participants included in the fMRI analyses, F(1,21) = 9.87, P = 0.005, ηp² = 0.32. There was no main effect of visuotactile congruence and no interaction, P’s > 0.20. For emotional intensity ratings, there were no significant main effects or interactions; the same was true when only participants included in the fMRI analyses were considered (P’s > 0.19).

fMRI results

Multivariate analysis of memory encoding

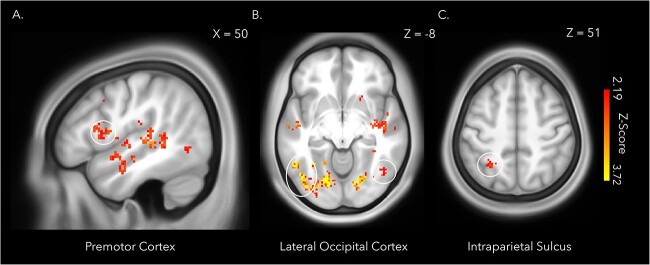

A whole-brain MVPA of the memory encoding period in session one revealed a distributed set of regions where patterns of neural activity could distinguish between the synchronous and asynchronous conditions, thereby decoding whether ownership of the mannequin’s body was stronger or weaker (Table 2). The identified regions included the right ventral premotor cortex, left intraparietal sulcus, bilateral lateral occipital cortex (Fig. 8), putamen, and lateral cerebellum (Petkova et al. 2011; Guterstam et al. 2015; Preston and Ehrsson 2016), regions that have previously been linked to feelings of body ownership in full-body illusion paradigms and rubber hand illusion studies (Ehrsson et al. 2004; Gentile et al. 2015; Limanowski et al. 2014). These findings thus confirm that participants experienced a stronger illusion in the synchronous than in the asynchronous condition, in line with the questionnaire and threat-evoked SCR results reported above.

Table 2.

MVPA encoding session whole brain searchlight results.

| Anatomical region | BA | Extent (voxels) | z-score | q-value | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|---|---|
| Middle Frontal Gyrus | 8 | 13 | 2.447 | 0.014 | 46 | 10 | 36 |
| Precentral Gyrus (cluster extends into Premotor Cortex) | 44 | 123 | 3.195 | 0.006 | 48 | 8 | 10 |
| Central Opercular Cortex | 41 | 23 | 2.770 | 0.011 | 62 | −12 | 8 |
| Insular Cortex | 13 | 10 | 3.121 | 0.007 | −42 | −2 | 2 |
| Planum Polare | 22 | 186 | 3.353 | 0.006 | −48 | −12 | −6 |
| | 22 | 10 | 2.447 | 0.014 | −56 | 0 | −4 |
| Heschl’s Gyrus | 41 | 17 | 2.678 | 0.011 | −36 | −26 | 8 |
| | 41 | 21 | 2.697 | 0.011 | 50 | −8 | 0 |
| Superior Temporal Gyrus | 22 | 259 | 3.291 | 0.006 | 52 | −26 | 4 |
| | 22 | 105 | 3.195 | 0.006 | 48 | −18 | −6 |
| Middle Temporal Gyrus | 21 | 76 | 2.770 | 0.011 | 50 | −4 | −22 |
| | 21 | 12 | 2.628 | 0.012 | 60 | −12 | −10 |
| | 21 | 80 | 2.549 | 0.012 | 58 | −58 | 6 |
| Parahippocampal Gyrus | 36 | 7 | 2.597 | 0.012 | 20 | −40 | −4 |
| | 36 | 8 | 2.562 | 0.012 | 26 | −30 | −20 |
| | 36 | 11 | 2.562 | 0.012 | 34 | −30 | −14 |
| Amygdala | – | 105 | 2.807 | 0.011 | 30 | −8 | −12 |
| Hippocampus | – | 12 | 2.759 | 0.011 | 34 | −18 | −14 |
| Parietal Operculum Cortex | 40 | 8 | 2.549 | 0.012 | 50 | −22 | 18 |
| Superior Parietal Lobule (cluster extends into the Intraparietal Sulcus) | 7 | 57 | 2.759 | 0.011 | −26 | −56 | 52 |
| Supramarginal Gyrus | 40 | 9 | 2.549 | 0.012 | 60 | −44 | 18 |
| | 40 | 7 | 2.549 | 0.012 | 54 | −22 | 26 |
| Angular Gyrus | 39 | 18 | 2.549 | 0.012 | −48 | −70 | 30 |
| Precuneus | 7 | 5 | 2.501 | 0.013 | 8 | −72 | 32 |
| Cingulate Gyrus (Posterior Division) | 30 | 64 | 3.195 | 0.006 | −16 | −42 | 2 |
| Temporal Occipital Fusiform Cortex | 37 | 13 | 3.719 | 0.003 | −32 | −56 | −8 |
| | 37 | 11 | 2.697 | 0.011 | 34 | −42 | −10 |
| | 37 | 62 | 2.669 | 0.011 | 30 | −42 | −16 |
| Occipital Fusiform Cortex | 18 | 1582 | 3.719 | 0.007 | 18 | −84 | −10 |
| | 37 | 6 | 2.834 | 0.011 | 32 | −70 | −10 |
| | 37 | 62 | 2.678 | 0.011 | 32 | −62 | −16 |
| Lateral Occipital Cortex | 37 | 1582 | 3.719 | 0.007 | −46 | −66 | −12 |
| (cluster extends into the Angular Gyrus) | 39 | 36 | 2.863 | 0.011 | −50 | −64 | 26 |
| | 19 | 60 | 2.863 | 0.011 | −18 | −84 | 26 |
| | 19 | 5 | 2.697 | 0.011 | −30 | −82 | 12 |
| | 19 | 20 | 3.195 | 0.006 | 28 | −84 | 6 |
| | 37 | 80 | 2.697 | 0.011 | 48 | −64 | −12 |
| Supracalcarine Cortex | 18 | 14 | 2.770 | 0.011 | −22 | −60 | 18 |
| Calcarine Cortex | 17 | 11 | 2.707 | 0.011 | −26 | −64 | 12 |
| Cuneal Cortex | 19 | 35 | 2.697 | 0.011 | −6 | −82 | 30 |
| | 18 | 21 | 2.620 | 0.012 | 8 | −72 | 24 |
| Occipital Pole | 18 | 23 | 3.719 | 0.003 | −20 | −94 | 6 |
| | 18 | 7 | 2.473 | 0.014 | −14 | −90 | 22 |
| Lingual Gyrus | 18 | 1582 | 3.719 | 0.003 | −14 | −72 | −10 |
| | 19 | 64 | 3.195 | 0.006 | −20 | −56 | −12 |

Cluster peaks within gray matter with cluster extents greater than five voxels are listed.

Results are thresholded following FDR correction (q < 0.05).

BA, Brodmann area.

Fig. 8.

The whole-brain searchlight analysis decoding illusion condition (synchronous or asynchronous) during encoding of the immersive videos identified several regions previously implicated in the sense of body ownership, including the right premotor cortex (A), bilateral lateral occipital cortex (B), and the left intraparietal sulcus (C). The statistical map was thresholded at q(FDR) < 0.05 and overlaid onto the average T1w images of 440 subjects obtained from the WU-Minn HCP dataset (van Essen et al. 2013). Cluster peaks within gray matter and cluster extent thresholds of greater than five voxels are depicted.

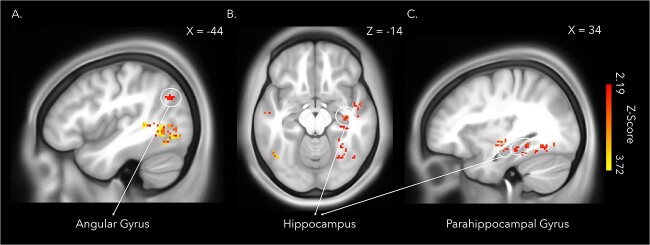

More importantly for the main questions of the present study, the whole-brain analysis also revealed a set of medial temporal and inferior posterior parietal regions known to be essential for successful memory encoding, including the right hippocampus, right parahippocampal gyrus (extending into the fusiform gyrus), and left angular gyrus (Fig. 9). Lateral frontal and temporal regions were also sensitive to the full-body illusion during encoding. Although patterns of activity in the left hippocampus did not survive correction for multiple comparisons at the whole-brain level, the ROI analysis revealed that patterns of activity in the hippocampal region previously identified by Bergouignan et al. (2014) could significantly predict whether a memory was encoded with (synchronous) or without (asynchronous) illusory ownership, t(23) = 2.17, P = 0.04, Cohen’s d = 0.50 (mean accuracy minus chance = 0.03, SD = 0.07).

Fig. 9.

Patterns of activity in posterior parietal and medial temporal lobe regions known to be crucial to successful memory formation predicted body illusion condition (synchronous or asynchronous) during the encoding of the immersive videos in a whole-brain searchlight analysis. The regions identified included the left angular gyrus (A), the right hippocampus (B&C), and the right parahippocampal gyrus (C). The statistical map was thresholded at q(FDR) < 0.05 and overlaid onto the average T1w images of 440 subjects obtained from the WU-Minn HCP dataset (van Essen et al. 2013). Cluster peaks within gray matter and cluster extent thresholds of greater than five voxels are depicted.

Memory retrieval

A whole-brain MVPA of the memory retrieval period in session two did not reveal any regions that could significantly distinguish between memories encoded in the synchronous and asynchronous conditions in the preceding session (q < 0.05). The results were not affected by whether the onset of memory retrieval was set to the presentation of the memory cue or to the instruction for participants to close their eyes and begin retrieving their memory of the specified video.

In the hippocampal ROI, classification accuracy for the condition under which memories had been encoded (synchronous versus asynchronous) was significantly below chance level, t(23) = −2.22, P = 0.04, Cohen’s d = −0.43 (mean accuracy minus chance = −0.03, SD = 0.07). Because significantly below-chance decoding accuracy can result from unstable classifier performance across cross-validation folds (Jamalabadi et al. 2016), we extracted the classification accuracy for each fold, averaged over participants. Classifier accuracy did indeed vary across folds, with folds one and four performing worst (see Supplementary Fig. 3). To minimize the effect of this variation, we modified the cross-validation partition scheme so that each fold used two runs as test data instead of the single run used in the original analysis (Etzel 2013). With the modified partition scheme, classification accuracy in the hippocampal ROI no longer differed significantly from chance level, t(23) = −2.05, P = 0.05, Cohen’s d = −0.43 (mean accuracy minus chance = −0.03, SD = 0.08).
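The change in partition scheme can be illustrated as follows. With the six retrieval runs, holding out one run per fold gives six folds, whereas holding out two runs gives three folds with larger test sets. The hypothetical helper below groups consecutive runs; which runs were actually paired in the study is not specified.

```python
import numpy as np


def run_partitions(runs, runs_per_fold=1):
    """(train_mask, test_mask) pairs, each fold holding out `runs_per_fold` runs.

    Assumption: consecutive runs are grouped into the same test fold.
    """
    runs = np.asarray(runs)
    unique = np.unique(runs)
    folds = []
    for i in range(0, unique.size, runs_per_fold):
        test = np.isin(runs, unique[i:i + runs_per_fold])
        folds.append((~test, test))
    return folds
```

Larger test sets make each fold’s accuracy estimate less variable, which is the rationale for reducing below-chance artifacts (Etzel 2013).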

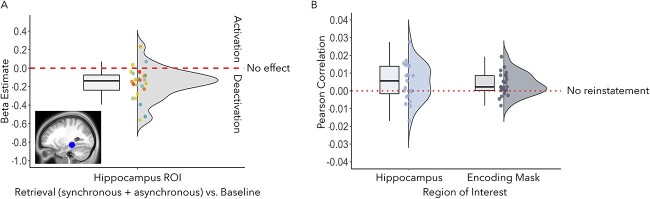

Univariate hippocampal ROI analysis: retrieval (synchronous + asynchronous) versus rest

To confirm that the region of the left posterior hippocampus on which we based our hypotheses was implicated in memory retrieval generally, and to support our decision to include it in the RSAs, we extracted beta estimates from the hippocampal ROI (see Fig. 3) and submitted them to a two-sided one-sample t-test against the null hypothesis of zero. Beta estimates were significantly below zero, t(23) = −4.54, P = 0.001, Cohen’s d = −0.93, indicating that this region was deactivated as participants retrieved memories of the previously seen videos (Fig. 10A). Hippocampal deactivations have been reported during effortful memory retrieval (Reas et al. 2011; Reas and Brewer 2013) and during memory retrieval based on environmental landmarks (Nilsson et al. 2013). Landmarks were likely prominent in participants’ memories because the retrieval cues referred to famous locations in Stockholm defined by their landmarks. Thus, the left posterior hippocampus was included as an ROI in the encoding-retrieval pattern similarity analyses because (i) we had theory-driven predictions concerning this precise region’s involvement in integrating body ownership within memories, (ii) it was involved in memory retrieval at the univariate level, regardless of condition, and (iii) it was sensitive to strong versus weak body ownership during encoding (see the multivariate analysis of memory encoding above).

Fig. 10.

(A) We extracted beta estimates from the hippocampal ROI, based on coordinates identified by Bergouignan et al. (2014) (X = −27, Y = −31, Z = −11), for the univariate contrast comparing memory retrieval, regardless of condition, with the baseline task. Beta estimates were significantly below zero (P = 0.001), indicating deactivation of the left posterior hippocampus during memory retrieval. The dotted line indicates a null effect (i.e. no effect of retrieval on activity in the hippocampal mask). (B) Memory reinstatement (i.e. encoding-retrieval pattern similarity) was stronger for memories encoded with strong body ownership (synchronous versus asynchronous condition) and retrieved with increasing vividness, both in the left posterior hippocampal ROI (x-axis: hippocampus), P = 0.03, and in the larger set of regions that decoded strong versus weak body ownership during encoding (x-axis: encoding mask), P = 0.01. Pearson correlations between the neural data extracted from each ROI and the individual contrast matrices specifying stronger reinstatement for memories encoded with strong body ownership and retrieved with high vividness (see Fig. 4B) are significantly greater than zero. The dotted line indicates a null effect (i.e. no encoding-retrieval pattern similarity).

RSA

The whole brain searchlight analyses did not reveal any regions where encoding-retrieval pattern similarity was significantly higher according to condition, or condition convolved with average vividness (q < 0.05). Similarly, encoding-retrieval similarity was not significantly higher in the synchronous compared with asynchronous condition in the hippocampal ROI, t(23) = −0.24, P = 0.81, Cohen’s d = −0.05 (M = −0.002, SD = 0.0331), or the ROI specifying regions whose patterns of activity could decode body illusion condition (synchronous versus asynchronous) during encoding, t(23) = 1.32, P = 0.20, Cohen’s d = 0.27 (M = −0.001, SD = 0.003).
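The condition-only comparison above can be sketched as follows, assuming that "reinstatement" for a video is the Pearson correlation between its encoding and retrieval voxel patterns within an ROI, averaged separately over synchronous and asynchronous videos. The data layout (dicts keyed by hypothetical video IDs) and the function names are illustrative assumptions, not the authors’ pipeline; published encoding-retrieval analyses often also subtract different-video similarity or apply a Fisher z-transform, which this sketch omits.

```python
import math
import statistics


def pearson(x, y):
    """Pearson correlation between two equal-length numeric vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("vectors must have equal length >= 2")
    mx, my = statistics.fmean(x), statistics.fmean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    den = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return num / den


def reinstatement_by_condition(enc, ret, conditions):
    """Mean same-video encoding-retrieval similarity per condition.

    enc, ret: dicts mapping a (hypothetical) video ID to an ROI voxel pattern.
    conditions: dict mapping each video ID to "sync" or "async".
    """
    scores = {"sync": [], "async": []}
    for video, cond in conditions.items():
        scores[cond].append(pearson(enc[video], ret[video]))
    return {cond: statistics.fmean(vals) for cond, vals in scores.items()}
```

For each participant, the sync minus async difference would then be tested against zero across participants, as in the t(23) values reported above.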

However, as hypothesized, once vividness was factored into the analysis, encoding-retrieval pattern similarity in the left posterior hippocampal ROI (based on coordinates from Bergouignan et al. 2014; Fig. 3) was higher for memories encoded with a stronger full-body illusion (synchronous condition) than for memories encoded with a weaker illusion (asynchronous condition), t(22) = 2.30, P = 0.03, Cohen’s d = 0.50 (M = 0.005, SD = 0.013; see Fig. 10B). The same was true for the ROI comprising the regions that decoded the synchronous versus asynchronous conditions during encoding, which included the angular gyrus and right posterior hippocampus, t(22) = 2.84, P = 0.01, Cohen’s d = 0.59 (M = 0.004, SD = 0.006; see Fig. 10B). Thus, memories for the immersive 3D videos that were formed while participants experienced the mannequin’s body in the center of the scene as their own, and that were retrieved with high vividness, showed increased reinstatement in the same brain regions that were sensitive to the full-body illusion during encoding, including the bilateral posterior hippocampus.
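The vividness-weighted analysis correlates each participant’s encoding-retrieval similarity with a contrast that predicts the strongest reinstatement for vividly recalled synchronous videos. The exact contrast matrix is given in Fig. 4B and is not reproduced here. The sketch below assumes one possible coding, labeled hypothetical: a per-video weight of +vividness for synchronous videos and −vividness for asynchronous videos, applied to same-video similarity values only. Function and argument names are illustrative.

```python
import math
import statistics


def pearson(x, y):
    """Pearson correlation between two equal-length numeric vectors."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    den = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return num / den


def contrast_fit(er_similarity, conditions, vividness):
    """Correlate same-video encoding-retrieval similarity with a contrast.

    Hypothetical contrast: +vividness for "sync" videos and -vividness for
    "async" videos, so predicted reinstatement is highest for vividly
    recalled videos encoded with strong body ownership.
    """
    videos = sorted(er_similarity)
    sign = {"sync": 1.0, "async": -1.0}
    contrast = [sign[conditions[v]] * vividness[v] for v in videos]
    observed = [er_similarity[v] for v in videos]
    return pearson(observed, contrast)
```

One correlation per participant would then be tested against zero (the t(22) values above). A signed interaction-style weight was chosen here only because it encodes "condition convolved with vividness" in a single predictor; other codings are equally plausible.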

We verified that the neural data extracted from the hippocampal and encoding-mask ROIs were of high quality by counting the number of voxels in each ROI that were retained in the RSAs. On average, 542.83 (SD = 11.87) of 552 possible voxels (98.34%) were retained across participants in the hippocampal ROI (Supplementary Fig. 4A), and all 4,874 possible voxels were retained across participants in the encoding-mask ROI (Supplementary Fig. 4B). Together, these results indicate that the data extracted from each ROI and used as input to the RSAs were reliable and of high quality.
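The voxel-retention check reduces to counting usable voxels per participant and averaging. The text does not state the retention criterion, so the sketch below assumes a hypothetical one: a voxel is kept if all of its values are finite and it is not constant (a constant voxel would make correlations undefined). Names are illustrative.

```python
import math
import statistics


def voxel_retained(values):
    """Hypothetical criterion: all values finite and not constant."""
    return all(math.isfinite(v) for v in values) and len(set(values)) > 1


def retention_summary(rois, total_voxels):
    """Summarize voxel retention across participants.

    rois: one entry per participant, each a list of per-voxel value lists.
    Returns (mean retained, SD retained, mean percentage of total_voxels).
    """
    counts = [sum(voxel_retained(vox) for vox in roi) for roi in rois]
    mean = statistics.fmean(counts)
    sd = statistics.stdev(counts) if len(counts) > 1 else 0.0
    return mean, sd, 100.0 * mean / total_voxels
```

As a check on the reported figure, 100 × 542.83 / 552 ≈ 98.34%.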

Discussion

The fundamental sense of our own body is at the center of every event we experience, which affects how events are encoded and recalled. During encoding, a whole brain searchlight analysis revealed that experiencing or not experiencing a mannequin’s body as one’s own in the center of a naturalistic multisensory scene led to different patterns of activity in regions associated with memory formation, i.e. the right hippocampus, right parahippocampal gyrus, and left angular gyrus. A hypothesis-driven ROI analysis focusing on the left posterior hippocampal region identified by Bergouignan et al. (2014) additionally indicated that patterns of activity could predict strong versus weak body ownership during encoding. Our findings show that regions critical to memory encoding form memories for events according to the degree of body ownership experienced as an event unfolds, which supports the hypothesis that bodily self-awareness is intrinsically part of episodic memories as they form. Furthermore, ROI analyses demonstrated that reinstatement of the original memory trace was greater for memories formed with a strong compared with weak sense of illusory body ownership and high levels of vividness in the set of regions sensitive to body ownership during encoding, including the hippocampus. Thus, a coherent multisensory experience of a body as one’s own during encoding strengthens how core hubs of memory, including the hippocampus, reinstate the past during vivid recall. Collectively, these findings provide new insights into how the perceptual awareness of one’s own body in the center of one’s multisensory experience influences memory by binding incoming information into a common egocentric framework during encoding, which facilitates memory reinstatement.

Our full-body illusion paradigm successfully manipulated the illusory sensation of body ownership, as confirmed by significant differences in illusion questionnaire ratings, threat-evoked SCR, and activation of key areas associated with such illusions when comparing the synchronous and asynchronous conditions, including the premotor cortex, intraparietal cortex, lateral occipital cortex, and cerebellum (Petkova et al. 2011; Guterstam et al. 2015; Preston and Ehrsson 2016). However, we did not find that disrupting body ownership impaired memory accuracy and subjective re-experiencing, as previously observed (Iriye and Ehrsson 2022), perhaps due to a weaker illusion induction in the present study, participant fatigue from lengthened experimental sessions, and/or the distracting nature of the MR environment. Nevertheless, the lack of differences between experimental conditions in memory accuracy and subjective memory ratings clarifies the interpretation of our neuroimaging results: the neural effects of body ownership illusions on activity in areas related to encoding and reinstatement are linked to changes in the multisensory experience of one’s own body, rather than being indirectly mediated through changes in memory accuracy and phenomenology.

Body ownership influences memory encoding and reinstatement in the hippocampus

Patterns of activity in the hippocampus distinguished between events encoded with unified compared with disrupted body ownership. The hippocampus plays a crucial role in encoding by logging distributed cortical activity elicited in response to an event, extracting associated spatial, temporal, and sensory contextual details, and merging them into a cohesive engram that can later be reinstated during retrieval (Kim 2015; Simons et al. 2022). Our finding suggests that the information bound in the hippocampus includes the multisensory signals that create a fundamental sense of body ownership, which may define a common egocentric reference frame (Ehrsson 2007; Guterstam et al. 2015) that can be used to bind contextual details and center spatial representations of an external scene (Burgess 2006). Bergouignan et al. (2014) proposed that encoding events from an out-of-body perspective, which alters the sense of body ownership, self-location, and visual perspective, impaired the ability of the hippocampus to integrate event details within this common egocentric framework, which would explain the enhanced hippocampal activation across repeated retrieval attempts and the reduced memory vividness. However, the authors could not test this prediction because fMRI data were collected only during retrieval. Here, we provide evidence that body ownership, isolated from manipulations of self-location and visual perspective, is linked to memory formation in the hippocampus.

The ability of the hippocampus to categorize memories according to the level of body ownership during encoding affected how the resulting memory trace was reinstated. We observed that unified body ownership and high retrieval vividness increased memory reinstatement in the left posterior hippocampus. We expected body ownership to interact with vividness in the present study based on previous evidence that vividness is correlated with hippocampal activity for events encoded with atypical bodily self-awareness (Bergouignan et al. 2014) and with the vividness of retrieval generally (Geib et al. 2017; Thakral, Benoit, and Schacter 2017a; Thakral, Madore, and Schacter 2017b). Thus, a coherent sense of one’s body and the vividness of recollection together enhance how the hippocampus represents the past. Memory strength is determined by the degree to which a retrieval cue matches an encoding context (for reviews, see Roediger et al. 2002; Rugg, Johnson, and Uncapher 2018). A strong match between encoding and retrieval contexts increases the chance that the index of cortical activity present when the remembered event was experienced will be activated in the hippocampus during retrieval, setting off a cascade of cortical activity that reinstates those original patterns and enabling the memory to be re-experienced (e.g. Hebscher et al. 2021). Accordingly, reinstatement was higher for events encoded with a stronger sense of body ownership and recalled with increasing levels of vividness, both in the hippocampus, which we hypothesize initiated reactivation of the memory, and in the brain regions sensitive to illusory body ownership during initial encoding, where the patterns of activity present during encoding were stored as a memory trace. Hence, feelings of body ownership during encoding are likely a fundamental contextual memory cue based on spatial relationships that delineate oneself from the external world.
During retrieval, a coherent sense of body ownership matching the one experienced during encoding in the illusion condition, when the mannequin felt like one’s own body, may facilitate access to a memory representation in the hippocampus by increasing reinstatement. Further evidence that body ownership provides a crucial context for memory comes from our finding that patterns of activity in the right parahippocampal gyrus discriminated between events experienced with stronger or weaker illusory body ownership during encoding. The parahippocampal gyrus represents information about contexts in memory, which is bound with item-level information in the hippocampus (Diana et al. 2007; Eichenbaum et al. 2007). Future studies that manipulate body ownership at both encoding and retrieval are needed to directly determine whether a coherent sense of body ownership constitutes a fundamental context for memory processing.

Patterns of activity in the angular gyrus, dorsolateral prefrontal cortex, and occipital cortex reflect integration of body ownership into memory

Additional brain regions outside the medial temporal lobes discriminated between stronger and weaker illusory body ownership as participants formed and reinstated memories. These regions included the angular gyrus, dorsolateral prefrontal cortex, and occipital regions, which have not previously been implicated in body ownership illusions; their involvement may instead reflect the incorporation of information related to own-body perception into memory. Previous research has found that successful recall of a stimulus is predicted by the similarity of patterns of brain activity in each of these regions at encoding (Kuhl et al. 2012; Ward et al. 2013; Xue et al. 2010, 2013; Lu et al. 2015; Hasinski and Sederberg 2016). Increased similarity in patterns of activity across repetitions in prefrontal, ventral posterior parietal, and sensory cortices optimizes memory by providing a more reliable, less noisy representation of encoded stimuli, which is fed to medial temporal lobe regions that rely on pattern separation mechanisms to distinguish between memories (Xue 2018). We posit that the ability of frontoparietal and visual cortices to separate the patterns of activity underlying memory encoding with and without body ownership may improve the distinctiveness of the information sent to medial temporal lobe regions, where it would be registered as uniquely identifiable memory traces stored in the hippocampus, ultimately facilitating memory reinstatement. We suggest that the ability of the angular gyrus to discriminate between events encoded with strong and weak body ownership reflects the integration of self-relevant bodily information into multisensory memory. Previous research has implicated this region in successful encoding (Uncapher and Wagner 2009; Rugg et al. 2018; Lee et al. 2017; Rugg and King 2018), especially of multisensory memories (Bonnici et al. 2016; Jablonowski and Rose 2022). The angular gyrus identifies cross-modal information perceived as behaviorally self-relevant (Cabeza et al. 2008; Singh-Curry and Husain 2009; Uncapher et al. 2011) and integrates it into a common egocentric framework to support re-experiencing during retrieval (Yazar et al. 2014; Bonnici et al. 2018; Bréchet et al. 2018; Humphreys et al. 2021). We add to this literature by showing that the multisensory signals underpinning a stable sense of body ownership frame how the angular gyrus encodes an event. In sum, strong compared with weak body ownership during encoding led to distinct patterns of activity in parietal, frontal, and occipital areas, which may have enhanced the uniqueness of memory representations later processed in medial temporal lobe regions.

Conclusion