Abstract

Many specialist societies present ‘best poster’ prizes, yet without generally agreed assessment methods. 31 posters at a neurology meeting were divided randomly into two sets; 14 neurologists, randomized into two groups, were each assigned one poster set. They ‘quick scored’ the first half, viewing posters for 10-15 seconds, and ‘detailed scored’ the others. 11 administrators and pharmaceutical representatives quick scored all posters. Neurologists' quick score ranking correlated highly (r=0.75) with other neurologists' detailed score ranking, and identified four of their six top-ranked posters. Correlations were strongest for presentation (r=0.65), message (r=0.65) and star-quality (r=0.64), but weak for facts (r=0.09), originality (r=0.15) or science (r=0.02). Non-neurologists could not identify the posters ranked highest by neurologists. We conclude that quick ranking by specialists can efficiently identify the best posters for more detailed assessment. On this basis we offer poster-scoring guidelines for use at scientific meetings.

INTRODUCTION

Posters are used widely at medical meetings to present a concise overview of clinical and scientific research, and generally provide a more relaxed environment for exchanging ideas than the crowded auditorium. Many specialist societies award ‘best poster’ prizes, but without uniformly agreed assessment methods. We evaluated posters at a recent Association of British Neurologists' meeting in order to generate poster assessment guidelines.

METHODS

31 of the expected 34 posters were presented; 14 of the 16 participating neurologists (9 consultants, 5 specialist registrars) completed score sheets. Posters were divided randomly into two sets. Neurologists were randomized into two groups, and each participant was assigned one poster set. They scored their first impression (10-15 seconds viewing: ‘quick score’ out of 50) of their poster set, and made a detailed assessment (out of 50) on the other set, using a specifically designed score sheet based upon practical guidelines,1 scoring as follows:

- 0-1 points for each of 12 presentation characteristics: correct format, title, introduction, conclusions, references, subheadings, no overcrowding, readable from 2 metres, pictures and tables as well as text, appropriate colouring, correct spelling and grammar, written handout.
- 0-8 points each for factually correct content, originality, and scientific merit.
- 0-7 bonus points each for a quickly understandable message and ‘star quality’.
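The arithmetic of the score sheet can be sketched in a few lines of Python. This is only an illustration of how the three components add up to 50; the function and constant names are hypothetical and are not taken from the original score sheet.

```python
PRESENTATION_ITEMS = 12  # presentation characteristics, 0-1 point each
MERIT_MAX = 8            # facts, originality, science: 0-8 points each
BONUS_MAX = 7            # message, star quality: 0-7 points each


def detailed_score(presentation, facts, originality, science, message, star_quality):
    """Return a detailed score out of 50 for one poster.

    `presentation` is a sequence of 12 marks, each between 0 and 1.
    """
    if len(presentation) != PRESENTATION_ITEMS:
        raise ValueError("expected 12 presentation marks")
    if any(not 0 <= mark <= 1 for mark in presentation):
        raise ValueError("presentation marks must lie between 0 and 1")
    if any(not 0 <= mark <= MERIT_MAX for mark in (facts, originality, science)):
        raise ValueError("merit marks must lie between 0 and 8")
    if any(not 0 <= mark <= BONUS_MAX for mark in (message, star_quality)):
        raise ValueError("bonus marks must lie between 0 and 7")
    return sum(presentation) + facts + originality + science + message + star_quality


# Maximum possible mark: 12 x 1 + 3 x 8 + 2 x 7 = 50
max_score = detailed_score([1] * 12, 8, 8, 8, 7, 7)
```

The maximum confirms the 'out of 50' total: 12 points for presentation, 24 for the three merit items, and 14 for the two bonus items.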

Individual neurologists therefore generated quick and detailed scores on different posters. To pool their results, all scores were corrected according to the observer's mean score. The mean corrected scores for each poster were then ranked in order.
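The pooling step can be illustrated as follows. The exact correction is not specified here, so this sketch assumes the simplest reading: each observer's own mean score is subtracted from each of that observer's scores, and quick and detailed scores are processed separately. The function names and data layout are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def corrected_poster_means(scores):
    """Map each poster to its mean corrected score.

    `scores` has the form {observer: {poster: raw_score}}.
    Assumed correction: subtract the observer's own mean from each raw score.
    """
    per_poster = defaultdict(list)
    for observer, sheet in scores.items():
        observer_mean = mean(sheet.values())
        for poster, raw in sheet.items():
            per_poster[poster].append(raw - observer_mean)
    return {poster: mean(values) for poster, values in per_poster.items()}


def rank_posters(poster_means):
    """Return poster labels ordered from highest to lowest mean corrected score."""
    return sorted(poster_means, key=poster_means.get, reverse=True)


# Two observers who agree on the order but mark at different overall levels
example = {
    "observer_A": {"P1": 40, "P2": 30},
    "observer_B": {"P1": 25, "P2": 15},
}
means = corrected_poster_means(example)
ranking = rank_posters(means)
```

In this toy example the generous and the severe observer contribute identical corrected scores (+5 and -5), which is the purpose of the correction: differences in marking level between observers no longer affect the pooled ranking.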

RESULTS

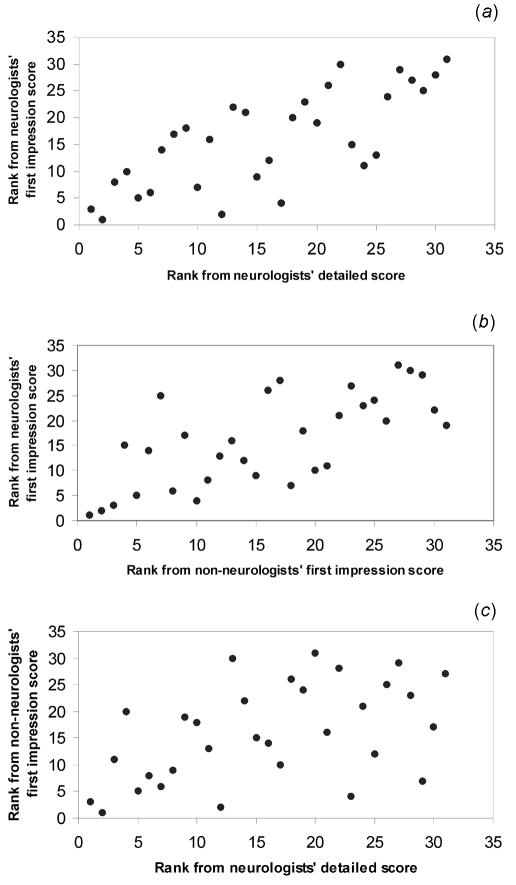

Neurologists' mean quick scores were 30.6 SD 2.8 and 31.2 SD 4.2 (overall mean 30.9); mean detailed scores were 32.1 SD 5.9 and 35.1 SD 3.7 (overall mean 33.6). The neurologists' corrected quick score rankings correlated well with colleagues' corrected detailed score rankings (r=0.75) (Figure 1a). Four of the six posters placed highest on detailed scoring were among the top-ranked six on quick scores, and vice versa. The quick ranking correlated best with presentation (r=0.65), quickly understandable message (r=0.65) and star quality (r=0.64), but poorly with factually correct content (r=0.09), originality (r=0.15) and scientific merit (r=0.02).

Figure 1.

Correlation of ranked corrected mean scores from neurologists' first impression with (a) ranked corrected mean scores from neurologists' detailed assessment (r=0.75) and (b) non-neurologists' first impression rankings (r=0.70); (c) shows the poor correlation between non-neurologists' first impression rankings and neurologists' detailed score rankings (r=0.49).

11 non-neurologists (8 pharmaceutical representatives, 3 administrators) also scored their first impression of each poster; all returned their scores. Their mean quick score was 33.7 SD 3.7. Their ranked mean scores correlated well with neurologists' ranked quick scores (r=0.70)—with clear agreement on the lowest ranked posters (Figure 1b)—but correlated less well with neurologists' ranked detailed scores (r=0.49) (Figure 1c); they were unable to identify the neurologists' top ranked posters.
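For readers wishing to reproduce this kind of comparison, the sketch below shows one way to correlate two sets of poster rankings and to count agreement among the top-ranked posters. The correlation statistic used in the study is not named; this example assumes a Pearson correlation computed on ranks (that is, Spearman's rank correlation), and all function names are hypothetical.

```python
def ranks(values):
    """Rank values from 1 (highest) downward, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over any run of tied values
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def rank_correlation(x, y):
    """Pearson correlation of the ranks of x and y (undefined if either is constant)."""
    rx, ry = ranks(x), ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


def top_k_overlap(x, y, k):
    """Count posters that appear among the k highest scores in both x and y."""
    top_x = set(sorted(range(len(x)), key=lambda i: x[i], reverse=True)[:k])
    top_y = set(sorted(range(len(y)), key=lambda i: y[i], reverse=True)[:k])
    return len(top_x & top_y)
```

With the study's data, `x` and `y` would hold the mean corrected quick and detailed scores for the 31 posters, and `top_k_overlap(x, y, 6)` would correspond to the 'four of the six' comparison.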

COMMENT

Neurologists' ‘first impression’ of a poster's quality correlated highly with other neurologists' detailed assessment, and identified four of their six top-ranked posters. Non-neurologists' quick scores correlated poorly with neurologists' detailed scores, and did not identify the highest ranked posters. Thus, in practice, neurologists (but not non-neurologists) could rapidly have identified the best few posters to be scored in more detail. Quick scores inevitably reflected posters' presentational qualities rather than scientific merit. An unattractive poster with high scientific merit risked being overlooked on first impression. However, we felt that a prize-winning poster should show both presentational and scientific excellence.

Individual neurologists made their quick and detailed assessments on different posters. Since the posters were randomized into two groups, there was no reason to suppose that one group contained consistently better posters than the other. We therefore assumed that any difference in mean scores between the two groups related mainly to observer differences, especially as some used the full range of marks while others marked consistently higher or lower than the group mean. In order to pool the results from both groups, we therefore corrected individual observers' scores before ranking the posters' mean scores.

Our mark sheet attempted to balance presentational and scientific qualities: about a quarter of the marks (12/50) for formal presentational characteristics, about half (24/50) for more scientific qualities (factually correct content, originality, scientific merit), and about a quarter (14/50) for characteristics combining the two (message, star quality).

Potential sources of observer bias in this study included unblinded scoring and non-uniform scoring conditions. The poster assessments were undertaken opportunistically during the meeting. Poster lists, although randomized, were typically scored sequentially. Also, there was a variable amount of interaction with the poster presenter. Using delegates as observers meant that scoring inevitably competed with their time constraints and other distractions at a busy meeting. However, this was a pragmatic study performed within a scientific meeting, with the aim of producing guidelines applicable to similar meetings.

Our results allow us to suggest preliminary guidelines for poster assessment at scientific meetings, as follows:

- Emphasize to presenters that the presentational qualities of their poster are important, as well as its scientific merit.
- Several observers from the profession give ‘first impression’ scores for all the posters.
- The mean first impression scores are then ranked in order.
- The top 20% of posters identified by first impression are then assessed in more detail by several observers from the profession.
- The detailed assessment might give equal weighting to presentation, factually correct content, originality, scientific merit, a quickly understandable message, and star quality.
- The mean detailed scores are then ranked to give the best poster.
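The two-stage procedure suggested above could be sketched as follows. This is an illustration under stated assumptions rather than a prescribed method: the shortlist size is rounded up to a whole number of posters, ties are not treated specially, and the function names and data layout are hypothetical.

```python
import math
from statistics import mean


def shortlist(quick_scores, fraction=0.2):
    """Return the top `fraction` of posters by mean first impression score.

    `quick_scores` has the form {poster: [score from each observer]}.
    The shortlist size is rounded up (an assumption), with a minimum of one.
    """
    means = {poster: mean(scores) for poster, scores in quick_scores.items()}
    size = max(1, math.ceil(fraction * len(means)))
    return sorted(means, key=means.get, reverse=True)[:size]


def best_poster(detailed_scores):
    """Return the poster with the highest mean detailed score."""
    means = {poster: mean(scores) for poster, scores in detailed_scores.items()}
    return max(means, key=means.get)


# Stage 1: several observers give first impression scores for all posters
quick = {
    "P1": [30, 32], "P2": [40, 42], "P3": [20, 22],
    "P4": [35, 35], "P5": [10, 12],
}
finalists = shortlist(quick, fraction=0.4)

# Stage 2: only the shortlisted posters are scored in detail
detailed = {"P2": [38, 36], "P4": [41, 43]}
winner = best_poster(detailed)
```

The toy example uses a 40% shortlist only so that more than one of the five posters reaches the second stage. Under the rounding-up assumption, the suggested 20% shortlist would carry seven of the 31 posters in this study forward for detailed assessment.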

References

- 1. Griffiths MD. Giving poster presentations. BMJ Careers 2003;326:S46