Abstract

We develop a new approach to estimating the causal effects of treatments or instruments that combine multiple sources of variation according to a known formula. Examples include treatments capturing spillovers in social or transportation networks and simulated instruments for policy eligibility. We show how exogenous shocks to some, but not all, determinants of such variables can be leveraged while avoiding omitted variables bias. Our solution involves specifying counterfactual shocks that may as well have been realized and adjusting for a summary measure of non-randomness in shock exposure: the average treatment (or instrument) across shock counterfactuals. We use this approach to address bias when estimating employment effects of market access growth from Chinese high-speed rail construction.

1. Introduction

Many questions in economics involve the causal effects of treatments which are computed from multiple sources of variation, and sometimes observed at different “levels,” according to a known formula. Consider three examples. First, when estimating spillovers from a randomized intervention, one might count the number of an individual’s neighbors who were selected for the intervention. This spillover treatment combines variation in who was selected with variation in who neighbors whom. Second, in studies of transportation infrastructure effects, one might measure the growth of regional market access: a treatment computed from the location and timing of transportation upgrades and the spatial distribution of economic activity in a country. A third example is a treatment capturing individual eligibility for a public program, such as Medicaid, which is jointly determined by the eligibility policy in the individual’s state and her household’s demographics and income.1

This paper develops a new approach to estimating the effects of such composite variables when some, but not all, of their determinants are generated by a true or natural experiment. We ask, for example, how one can estimate market access effects by leveraging the timing of new railroad line construction as exogenous shocks, when the other determinants of market access (such as the pre-determined location of large markets and planned lines) are non-random.

We first show that omitted variable bias (OVB) may confound conventional regression approaches in such settings. Bias arises from different observations receiving systematically different values of the treatment because of their individual non-random “exposure” to the exogenous shocks. For example, even when construction is delayed for a random set of lines, regions that are economically or geographically more central will tend to see a larger growth in market access because they are closer to a typical potential line (and thus closer to a typical constructed line). Regression estimation of market access effects then fails without an additional assumption on the exogeneity of economic geography: that more exposed (e.g., central) regions do not differ in their relevant unobservables, such as changes in local productivity or amenities. Intuitively, randomizing transportation upgrades does not randomize the market access growth generated by them.

Our solution to the OVB challenge is based on the specification of counterfactual exogenous shocks that might as well have been realized. This approach views the observed shocks as one realization of some data-generating process—what we call the shock assignment process—which can be simulated to obtain counterfactuals. In a true experiment, the shock assignment process is given by the randomization protocol. In natural experiments, shock counterfactuals make explicit the contrasts which the researcher wishes to leverage, for instance by specifying permutations of the shocks that were as likely to have occurred. For example, if line construction delays are considered as-good-as-random, one might produce counterfactual network maps by randomly exchanging the lines which were completed earlier and later.

Valid shock counterfactuals can be used to avoid OVB by a “recentering” procedure which involves measuring and appropriately adjusting for a single confounder: the expected treatment. To do so, a researcher draws counterfactual shocks from the assignment process and recomputes the treatment many times. Then, for each observation, the treatment is averaged across these many draws to obtain the expected treatment. Finally, the expected treatment is subtracted from the realized treatment to obtain the recentered treatment. We show that using this recentered treatment as an instrument for the realized treatment removes the bias from non-random shock exposure. Intuitively, observations only get high vs. low values of the recentered treatment because the observed shocks were drawn instead of the counterfactuals, which is assumed to happen by chance. For example, when the expected treatment is constructed by permuting the timing of new line construction, regressions that instrument with recentered market access growth compare regions which received higher vs. lower market access growth because proximate lines were constructed early vs. late, and not because of the economic geography. Another closely related solution to OVB is to include the expected treatment as a control in the regression of an outcome on the realized treatment; this can be viewed as recentering the treatment while also removing some residual variation in the outcome, in a control function approach.2

This approach to causal inference with composite variables, in which some determinants are labeled as exogenous and characterized by an assignment process, can be seen as formalizing the natural experiment of interest and bringing composite variables to familiar econometric territory.3 Indeed, the conditions we impose on the exogenous shocks are similar to those which might be used if the shocks were directly used as treatments: e.g., if shocks to the timing of railroad line upgrades were used in a regression of outcomes defined at the “level” of those lines. Recentering ensures identification from the natural experiment, even when the regression is estimated at a different level (e.g., across regions instead of lines).

Our framework further allows the treatment to have endogenous or unobserved determinants. In this case one may construct candidate composite instruments based on the treatment’s exogenous and predetermined components. The same OVB problem arises in this instrumental variable (IV) case, and it can again be solved by recentering the candidate instrument by its expectation over the shock assignment process. Controlling for the expected instrument is again another solution.

We establish several attractive properties of the recentering approach, beyond our primary results on OVB. First, recentered estimators are consistent provided the exogenous shocks induce sufficient cross-sectional variation in the instrument and treatment—regardless of the correlation structure of unobservables. Second, shock counterfactuals can be used for exact finite-sample inference and specification tests via randomization inference (RI). Finally, while our consistency and RI results rely on an assumption of constant treatment effects, recentered IV estimators generally capture a convex average of heterogeneous effects under a natural first-stage monotonicity condition.

We apply this framework to estimate the employment effects of market access (MA) growth due to the new high-speed rail (HSR) system in China. We show how recentering can help leverage variation in the timing of transportation upgrades to purge OVB. Simple regressions of employment growth on MA growth suggest a large and statistically significant effect, which is only partially reduced by conventional geography-based controls. But this effect is eliminated when we adjust for expected MA growth, measured by permuting constructed HSR lines with similar ones that were planned but not built. The unadjusted estimates thus reflect the fact that employment grew in regions which were more exposed to planned high-speed rail construction, whether or not construction actually occurred.

Econometrically, expected treatment and instrument adjustment is similar to propensity score methods for removing OVB (Rosenbaum and Rubin 1983), with two key differences. First, we propose using the structure of composite treatments and instruments to compute their expectation from more primitive assumptions on the assignment process for exogenous shocks. This approach is similar to how Borusyak et al. (2022) and Aronow and Samii (2017) address OVB when using linear shift-share instruments and network treatments, respectively. It differs from conventional methods of directly estimating propensity scores; such methods are typically infeasible in the settings we consider because the exposure to exogenous shocks is intractably high-dimensional. Second, our regression-based adjustment differs from conventional approaches of weighting by or matching on propensity scores.4 Regression adjustment is more popular in applied research, avoids practical issues of limited overlap (due to, e.g., propensity scores that are close to zero or one), does not require the treatments or instruments to be binary, and is natural for estimating constant structural parameters or convex averages of heterogeneous treatment effects.

The remainder of this paper is organized as follows. The next section motivates our analysis with three examples related to network spillovers, market access effects, and Medicaid eligibility effects. Section 3 develops our general framework and results. Section 4 presents our application, and Section 5 concludes. Additional results and extensions are given in an earlier working paper, Borusyak and Hull (2021, henceforth BH).

2. Motivating Examples

We develop three stylized examples, inspired respectively by the settings of Miguel and Kremer (2004), Donaldson and Hornbeck (2016), and Currie and Gruber (1996), to illustrate the main insights of this paper. In each example we consider estimating the parameter $\beta$ of a causal or structural model which relates an outcome $y_i$ to a treatment $x_i$,

$$ y_i = \beta x_i + \varepsilon_i, \tag{1} $$

for a set of units $i = 1, \dots, N$ with an unobserved error $\varepsilon_i$. The common feature of the examples is that $x_i$ is computed from multiple sources of variation by a known formula.

Network spillovers:

Suppose $y_i$ is student $i$’s educational achievement and $x_i$ counts the number of $i$’s neighbors who have been dewormed in an intervention:

$$ x_i = \sum_{j \neq i} a_{ij} d_j. $$

Here $d_j \in \{0, 1\}$ is an indicator for student $j$ being selected for the deworming intervention and $a_{ij} \in \{0, 1\}$ indicates that $i$ and $j$ are neighbors (i.e., connected by an observed network link). The error term $\varepsilon_i$ captures $i$’s educational outcome when none of her neighbors are dewormed. This example is a stylized version of the main specification in Miguel and Kremer (2004).5

Market access:

Suppose $y_i$ is the growth of land values in region $i$ between two dates $t = 0$ and $t = 1$, and $x_i$ is the growth of regional market access (MA) due to improvements to the interregional railroad network. Market access is computed as

$$ x_i = \log\Big(\sum_{j \neq i} \tau(\ell_i, \ell_j; G_1)^{-\theta}\, \text{pop}_j\Big) - \log\Big(\sum_{j \neq i} \tau(\ell_i, \ell_j; G_0)^{-\theta}\, \text{pop}_j\Big), $$

following standard models of economic geography (e.g. Redding and Venables (2004)). Here $\text{pop}_j$ is the time-invariant population of region $j$, $G_t$ is the set of railway lines and other types of transit which comprise the transportation network in operation at time $t$, $\ell_i$ is the location of region $i$ on the map, $\tau(\ell_i, \ell_j; G_t)$ is a function giving the travel time between regions $i$ and $j$ over network $G_t$, and $\theta > 0$ is a known elasticity parameter. The error term $\varepsilon_i$ captures location $i$’s land value growth in the absence of market access growth, due to some regional amenity and productivity shocks. Similar market access growth specifications are considered in, for example, Donaldson and Hornbeck (2016).

Medicaid eligibility:

Suppose $y_i$ is individual $i$’s health outcome and $x_i \in \{0, 1\}$ indicates her eligibility for Medicaid. Let $c_i$ be a vector of individual income and demographics, $s(i)$ index $i$’s state of residence, and $P_s$ be state $s$’s eligibility policy: i.e. the set of income and demographic groups eligible for Medicaid in that state. Then

$$ x_i = \mathbf{1}\big[c_i \in P_{s(i)}\big]. $$

The error term $\varepsilon_i$ captures individual $i$’s outcome when she is ineligible for Medicaid. This example comes from Currie and Gruber (1996).

To estimate $\beta$ in each example, we consider a true or natural experiment that manipulates some of the determinants of $x_i$. Formally, we partition the variables from which $x_i$ is computed into two groups: a set of shocks $g = (g_1, \dots, g_K)$ and a set of predetermined variables $w$. The shocks are assumed to be exogenous, i.e. independent of the errors $\varepsilon = (\varepsilon_1, \dots, \varepsilon_N)$. Shock exogeneity combines two conceptually distinct assumptions: that $g$ is as-good-as-randomly assigned, and that this assignment only affects the outcome of each unit via its treatment (an exclusion restriction). The shocks can be assigned at a different “level” than the observations, with $K \neq N$ in general. The remaining variables $w$ have an arbitrary structure and govern the mapping from the exogenous shocks to each unit’s treatment, i.e. the observation’s “exposure” to the shocks.6 We assume that $w$ is determined prior to the (natural) experiment and is unaffected by the shocks.

Network spillovers (cont.)

Suppose deworming is assigned in a randomized controlled trial (RCT) and $x_i$ fully captures its spillover effects. Then $g = (d_1, \dots, d_N)$ collects the exogenous shocks, with $K = N$.7 The remaining determinants of the spillover treatments of all units, $w = (a_{ij})_{i,j=1}^N$, are fixed in the experiment.

Market access (cont.)

Suppose the timing of new railroads is exogenous. Specifically, suppose that among $K$ lines planned to be constructed by $t = 1$, some are randomly delayed by unexpected engineering problems (unrelated to the trends in regional land values). Suppose also that the model of economic geography is correctly specified, so $x_i$ fully captures the effects of transportation upgrades. Then $g = (g_1, \dots, g_K)$ collects the exogenous shocks, where $g_k \in \{0, 1\}$ is an indicator for whether planned line $k$ faces no delays. Assuming no other changes to the network at $t = 1$, we can partition the determinants of MA growth into $g$ and $w$, where $w$ collects $G_0$, the set of planned lines, and the locations $\ell_j$ and populations $\text{pop}_j$ of all regions, as $G_1$ is fully determined by $G_0$ and the set of newly opened lines.

Medicaid eligibility (cont.)

Suppose Medicaid policies across the $K$ states are exogenous, i.e. are chosen irrespective of the potential health outcomes and affect individual outcomes only via Medicaid eligibility. Then $g = (P_1, \dots, P_K)$ collects the exogenous shocks, with the other determinants of eligibility collected in $w = (c_i, s(i))_{i=1}^N$.

The first point of this paper is that ordinary least squares (OLS) estimation of $\beta$ can suffer from OVB, despite the experimental variation underlying $x_i$.8 The OVB problem arises because some units receive systematically higher values of $x_i$ than others, as a consequence of their non-random exposure to the shocks. This systematic variation may be cross-sectionally correlated with the errors $\varepsilon_i$, generating bias in OLS estimation of equation (1).

Network spillovers (cont.)

Even when deworming is randomly assigned to students, those with more neighbors (e.g., because they live in dense urban areas) will tend to have more dewormed neighbors and therefore be more exposed to the deworming intervention. Urban areas may have different educational outcomes for reasons unrelated to deworming, generating OVB.

Market access (cont.)

Even when the opening status of lines is as-good-as-randomly assigned, regions in the economic and geographic center of the country will tend to see more market access growth than peripheral regions as the former are closer to a typical potential line. Central regions may face different amenity and productivity shocks, generating OVB.

Medicaid eligibility (cont.)

Even when Medicaid policies are as-good-as-randomly assigned to states, poorer individuals will tend to see higher rates of eligibility. Poor individuals may face different health shocks, generating OVB.

Our second insight is that this OVB problem has a conceptually simple solution, which follows from viewing the set of realized shocks $g$ as one draw from a shock assignment process and considering what counterfactual sets of exogenous shocks could as likely have been drawn. The specification of such counterfactuals allows one to measure and remove the systematic component of variation in the treatment which drives OVB. Specifically, the researcher recomputes the treatment of each unit across many counterfactual sets of shocks and takes their average to measure the expected treatment, $\mu_i = E[x_i \mid w]$. We show that this $\mu_i$, which is co-determined by the exposure of unit $i$ to the shocks and the shock assignment process, is the sole confounder in equation (1). OVB can then be purged by “recentering” the treatment, i.e. instrumenting $x_i$ with $\tilde{x}_i = x_i - \mu_i$ in equation (1), or by simply adding $\mu_i$ as a control in OLS estimation. The key to removing bias with this approach is thus to credibly specify and average over shock counterfactuals: a task which is trivial in true experiments and which otherwise formalizes the natural experiment of interest.

Network spillovers (cont.)

With deworming assigned in an RCT, the shock assignment process is given by the known randomization protocol. If, say, each student has a 30% chance of being dewormed, the expected number of $i$’s dewormed neighbors over repeated draws of deworming shocks is 0.3 times their number of neighbors: $\mu_i = 0.3 \sum_{j \neq i} a_{ij}$. OVB is thus purged by controlling for the number of neighbors, or by using the recentered number of dewormed neighbors $x_i - \mu_i$ to instrument for $x_i$. With either adjustment, the regression will only compare students who had more neighbors dewormed than expected (given the network) to those with fewer-than-expected dewormed neighbors.
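To make the network-spillover calculation concrete, the following Python sketch approximates $\mu_i$ by redrawing deworming shocks many times while holding the network fixed, and compares the result with the closed form $0.3 \sum_{j} a_{ij}$. The random network, the sample size, and the helper name `simulate_expected_treatment` are hypothetical choices for illustration; only the 30% assignment probability mirrors the text.

```python
import numpy as np


def simulate_expected_treatment(adj, p, n_draws, rng):
    """Approximate mu_i = E[x_i | w] for x_i = sum_j a_ij d_j by Monte Carlo,
    redrawing each d_j as Bernoulli(p) while holding the network fixed."""
    n = adj.shape[0]
    total = np.zeros(n)
    for _ in range(n_draws):
        d = (rng.random(n) < p).astype(float)  # counterfactual deworming shocks
        total += adj @ d                       # dewormed neighbors under this draw
    return total / n_draws


rng = np.random.default_rng(0)
n = 200
# Hypothetical undirected network without self-links (part of w)
upper = np.triu(rng.random((n, n)) < 0.05, k=1)
adj = (upper | upper.T).astype(float)

mu_mc = simulate_expected_treatment(adj, p=0.3, n_draws=2000, rng=rng)
mu_exact = 0.3 * adj.sum(axis=1)  # closed form: 0.3 times the number of neighbors
print("max abs. Monte Carlo error:", np.max(np.abs(mu_mc - mu_exact)))
```

In this simple protocol the simulation is unnecessary, since the closed form is available; the same loop, however, applies unchanged to protocols where no closed form exists.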

Market access (cont.)

The as-good-as-random assignment of opening status can be formalized by each planned line facing an equal and independent chance of opening. Then, if $K_1$ of the $K$ planned railway lines open by $t = 1$, every counterfactual network in which $K_1$ lines from the plan opened was as likely to have occurred. One can thus compute expected MA growth $\mu_i$ as the average MA growth of region $i$ across these counterfactuals (or a random subset of them). Recentering by or controlling for this $\mu_i$ ensures that the regressions only compare regions which saw higher MA growth than expected (given pre-existing economic geography and the plan) to those which saw less-than-expected MA growth.

Medicaid eligibility (cont.)

The as-good-as-random assignment of Medicaid policies can be formalized by each state randomly drawing from a pool of potential policies, such that every permutation of the realized policies across states was equally likely to have occurred. Averaging individual $i$’s eligibility across these permutations yields an expected eligibility $\mu_i = \frac{1}{K}\sum_{s=1}^{K} \mathbf{1}[c_i \in P_s]$, which equals the share of states in which she would be eligible. Our solution is to instrument actual eligibility $x_i$ with recentered eligibility $x_i - \mu_i$, or to control for $\mu_i$ in an OLS regression. Either approach would, for example, effectively remove from the sample “always-eligible” or “never-eligible” individuals (with $\mu_i = 1$ or $\mu_i = 0$) whose income and demographics make them unaffected by policy variation.
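A small numerical sketch of the Medicaid case follows. It assumes, hypothetically, that each individual is summarized by a scalar income $c_i$ and each state's policy by an income threshold, so that $x_i = 1$ when $c_i$ lies below the threshold of $i$'s state. Averaging eligibility over all $K!$ permutations of policies across states reproduces the share of states in which each individual would be eligible, and the recentered eligibility is zero for the always- and never-eligible.

```python
from itertools import permutations

import numpy as np

# Hypothetical realized policies: income eligibility thresholds of states 0..3
thresholds = np.array([1.0, 1.33, 1.85, 2.5])
K = len(thresholds)

# Hypothetical individuals: income c_i and state of residence s(i)
income = np.array([0.8, 1.2, 1.5, 2.0, 3.0])
state = np.array([0, 1, 2, 3, 0])


def eligibility(policy, income, state):
    """x_i = 1[c_i below the threshold of i's state] for one assignment of policies to states."""
    return (income < policy[state]).astype(float)


# Expected eligibility: average over all K! equally likely permutations of policies across states
mu = np.mean(
    [eligibility(thresholds[list(perm)], income, state) for perm in permutations(range(K))],
    axis=0,
)
share = np.array([np.mean(c < thresholds) for c in income])  # share of states where i is eligible

x = eligibility(thresholds, income, state)  # realized eligibility
x_recentered = x - mu
print(mu)            # [1.   0.75 0.5  0.25 0.  ]
print(x_recentered)  # zero for the first (always-eligible) and last (never-eligible) person
```

Because each state receives every policy in exactly $(K-1)!$ of the $K!$ permutations, the permutation average and the cross-state share coincide here.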

The recentering solution generally dominates more conventional ones, such as instrumenting directly by the shocks or controlling for the other determinants of $x_i$. Instrumenting with the shocks is infeasible when the shocks are assigned at a different level than the units, and generally discards variation in treatment due to $w$. Controlling flexibly for an observation’s non-random shock exposure is typically infeasible, because such exposure is high-dimensional. Conversely, low-dimensional controls are only guaranteed to purge OVB (absent additional non-experimental restrictions on the error term) when they linearly span $\mu_i$, which is difficult to establish except when $\mu_i$ is known and recentering is feasible. If either the assignment process or the shock exposure mapping is complex, $\mu_i$ is unlikely to be a simple function of observed characteristics.

Network spillovers (cont.)

Using student $i$’s own deworming status $d_i$ as an instrument is infeasible, as it does not predict the number of dewormed neighbors; incorporating the non-random network adjacency matrix is necessary. Controlling for the entire row of the adjacency matrix (which characterizes student $i$’s exposure) is also infeasible, as it would absorb all cross-sectional variation in the treatment. Controlling for the number of $i$’s neighbors is enough to purge OVB under completely random assignment of deworming, since this control is proportional to $\mu_i$. However, such simple controls would not linearly span $\mu_i$ with more complex randomization protocols, such as with two tiers (by school, then by student) or stratification (e.g., with girls dewormed with a known higher probability). Simple controls are also generally insufficient with more complex specifications of spillovers.9
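Under the stratified protocol just described, linearity of expectation gives $\mu_i = \sum_j a_{ij} p_j$, where $p_j$ is student $j$'s known probability of being dewormed. The sketch below, with a hypothetical network and hypothetical probabilities of 0.5 for girls and 0.2 for boys, measures how much of $\mu_i$ a linear control for the number of neighbors leaves unexplained; a positive residual variance means that degree controls alone do not span $\mu_i$.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 300
# Hypothetical undirected network without self-links
upper = np.triu(rng.random((n, n)) < 0.03, k=1)
adj = (upper | upper.T).astype(float)

# Hypothetical stratified protocol: girls dewormed with prob. 0.5, boys with prob. 0.2
girl = rng.random(n) < 0.5
p = np.where(girl, 0.5, 0.2)

mu = adj @ p              # E[x_i | w] = sum_j a_ij p_j by linearity of expectation
degree = adj.sum(axis=1)  # the "simple" control: number of neighbors

# Linear projection of mu on a constant and degree
X = np.column_stack([np.ones(n), degree])
coef, *_ = np.linalg.lstsq(X, mu, rcond=None)
resid = mu - X @ coef
r2 = 1 - resid.var() / mu.var()
print(f"R^2 of mu on degree: {r2:.3f}")  # below 1: degree does not span mu
```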

Market access (cont.)

Railroad timing shocks vary at the level of lines, so it is infeasible to use them as instruments for regional market access without incorporating some non-random features of economic geography. Controlling perfectly for these features is also infeasible, as each region’s market access depends on the entire spatial distribution of economic activity. Simple sets of controls, such as polynomials in the latitude and longitude of a region, need not linearly span $\mu_i$ given the complexity of the market access formula, and thus are not guaranteed to purge OVB.

Medicaid eligibility (cont.)

Currie and Gruber (1996) propose instrumenting individual eligibility with a measure of the overall policy generosity of her state: a so-called “simulated instrument.” Such instruments are simple functions of $P_{s(i)}$ for all individuals $i$ in state $s(i)$ and are thus exogenous and relevant under random policy assignment. However, they discard relevant within-state variation in $i$’s income and demographics $c_i$ and are thus likely to yield a less powerful first-stage prediction of $x_i$ than recentered eligibility.10

We conclude this section by noting that the OVB problem and recentering solution both extend to the case with an arbitrary endogenous treatment $x_i$ and a candidate instrument $z_i$ which is constructed from exogenous shocks and other variables by a known formula. This approach is natural when the treatment can be represented as a function of exogenous shocks $g$, predetermined variables $w$, and endogenous (and possibly unobserved) variables $\eta$: i.e. when $x_i = h_i(g, w, \eta)$ for a known $h_i$. An intuitive candidate instrument for $x_i$ is the prediction of $x_i$ in the scenario when the endogenous variables are ignored, e.g. held at some fixed baseline value $\eta^0$: $z_i = h_i(g, w, \eta^0)$. Our framework shows that these candidate instruments are generally invalid, again because of the non-random exposure of $z_i$ to $g$. Yet OVB can again be purged by measuring the expected instrument $\mu_i = E[z_i \mid w]$ (the average of $z_i$ across counterfactual $g$) and either instrumenting with the recentered IV $\tilde{z}_i = z_i - \mu_i$ or controlling for $\mu_i$ while instrumenting with $z_i$.

Market access (cont.)

Suppose population sizes also change between $t = 0$ and $t = 1$, and the observable changes are not exogenous (e.g. they respond to house price shocks in $\varepsilon_i$). Then one can consider instrumenting the realized change in MA by a predicted change in MA which keeps population sizes fixed at their $t = 0$ levels. Without recentering, this IV regression may suffer from the same OVB as the OLS regression discussed above. OVB is now avoided by recentering the MA prediction via counterfactual railroad networks.

Medicaid eligibility (cont.)

Suppose one is interested in the effects of Medicaid takeup, instead of eligibility. Takeup is the product of eligibility and $1 - \eta_i$, where $\eta_i \in \{0, 1\}$ indicates that individual $i$ would decline Medicaid if eligible and is unobserved. Under the appropriate exclusion restriction, one can consider instrumenting takeup with eligibility; our recentering strategy then again removes OVB from non-random variation in policy exposure.

3. Theory

We now develop a general econometric framework for settings with non-random exposure to exogenous shocks. We introduce the baseline setting, develop our approach to estimation based on the recentering procedure, and discuss how this recentering can be performed by specifying counterfactual shocks in Sections 3.1-3.3. We then discuss conditions for consistency of recentered IV estimators in Section 3.4, and how inference can be conducted in Section 3.5. Several extensions are summarized in Section 3.6.

3.1. Setting

We consider estimation of $\beta$ in the causal or structural model

$$ y_i = \beta x_i + \varepsilon_i \tag{2} $$

from a dataset of scalar and demeaned $y_i$ and $x_i$, $i = 1, \dots, N$. Below we discuss extensions to heterogeneous causal effects, nonlinear models, multiple treatments, and additional control variables. Although we use a single index $i$ for observations, we note that our framework accommodates repeated cross-sections and panel data.

Importantly for the applicability of our framework, we do not assume that the observations of $(y_i, x_i)$ are independently and identically distributed (i.i.d.), as when arising from random sampling. This allows for complex dependencies across the units due to their common exposure to observed and potentially unobserved shocks. It is also consistent with settings where the units represent a population (for example, all regions of a country) and conventional random sampling assumptions are inappropriate (Abadie et al., 2020).11

We suppose that to estimate $\beta$ a researcher has constructed a candidate instrument

$$ z_i = f_i(g, w), \tag{3} $$

where $f = (f_1, \dots, f_N)$ is a list of known non-stochastic functions, $g = (g_1, \dots, g_K)$ is a vector of shocks, and $w$ is a list of other variables of unrestricted dimension. Equation (3) is very general: any $z_i$ that can be computed from a set of observed data, according to a known formula, can be described in this way.12 It also allows $z_i = x_i$, in which case $\beta$ is the causal effect of the composite treatment.

We assume that the shocks are exogenous, which we formalize by their conditional independence from the vector of errors $\varepsilon = (\varepsilon_1, \dots, \varepsilon_N)$ given the other sources of instrument variation $w$:

Assumption 1. (Shock exogeneity): $g \perp \varepsilon \mid w$.

As noted in Section 2, this notion of shock exogeneity combines two conceptually distinct conditions. First, it imposes an exclusion restriction, reflecting an economic model of how $g$ can affect $y_i$. Second, it requires as-good-as-random shock assignment. This latter condition is satisfied when the shocks are fully randomly assigned, as in an RCT (i.e., $g \perp (\varepsilon, w)$), but also allows $w$ to contain variables that govern the shock assignment process.13 Importantly, Assumption 1 allows $E[\varepsilon_i \mid w]$ to vary arbitrarily across $i$; this reflects the lack of non-experimental assumptions, such as parallel trends, constraining the error in equation (2).14 Assumption 1 is consistent with a two-step data-generating process, where $w$ is determined prior to the realization of the shocks $g$ and errors $\varepsilon$, which then together determine $(y_i, x_i, z_i)$.15

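We start by considering an instrumental variable (IV) regression of $y_i$ on $x_i$ that instruments $x_i$ with $z_i$. As usual, this strategy requires $z_i$ to be relevant to the treatment and orthogonal to the error term. In our non-i.i.d. setting, we formalize these two conditions in terms of the full-sample IV moments $E\big[\frac{1}{N}\sum_i z_i x_i\big]$ and $E\big[\frac{1}{N}\sum_i z_i \varepsilon_i\big]$. Since (2) implies $E\big[\frac{1}{N}\sum_i z_i y_i\big] = \beta E\big[\frac{1}{N}\sum_i z_i x_i\big] + E\big[\frac{1}{N}\sum_i z_i \varepsilon_i\big]$, $\beta$ is recoverable from the ratio $E\big[\frac{1}{N}\sum_i z_i y_i\big] / E\big[\frac{1}{N}\sum_i z_i x_i\big]$ (what we term identification) under the relevance condition $E\big[\frac{1}{N}\sum_i z_i x_i\big] \neq 0$ and the orthogonality condition $E\big[\frac{1}{N}\sum_i z_i \varepsilon_i\big] = 0$.16 To start we assume the two IV moments are known, in order to focus on the potential for OVB when the orthogonality condition fails. We discuss conditions for consistent estimation in Section 3.4.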

3.2. OVB and Instrument Recentering

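We define the expected instrument $\mu_i = E[z_i \mid w]$ as the average value of $z_i$ across different realizations of the shocks, conditional on $w$. Our first result shows that OVB may arise when predetermined exposure to the natural experiment is endogenous, and that the potential for such bias is entirely governed by the relationship between $\mu_i$ and the error $\varepsilon_i$. Formally, under Assumption 1 instrument orthogonality need not hold: $E\big[\frac{1}{N}\sum_i z_i \varepsilon_i\big] \neq 0$ in general. Rather,

$$ E\Big[\frac{1}{N}\sum_i z_i \varepsilon_i\Big] = E\Big[\frac{1}{N}\sum_i \mu_i \varepsilon_i\Big]. \tag{4} $$

This result follows from the law of iterated expectations: $E[z_i \varepsilon_i] = E\big[E[z_i \varepsilon_i \mid w]\big] = E\big[\mu_i E[\varepsilon_i \mid w]\big] = E[\mu_i \varepsilon_i]$ for all $i$, where the second equality uses Assumption 1 and the definition of $\mu_i$.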

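The central role of $\mu_i$ in governing OVB suggests the recentering solution: even though OVB results from potentially high-dimensional variation in units’ exposure to shocks, adjustment for the one-dimensional confounder $\mu_i$ is sufficient for instrument orthogonality. We adjust by defining the recentered instrument $\tilde{z}_i = z_i - \mu_i$. By equation (4), orthogonality always holds for this instrument:

$$ E\Big[\frac{1}{N}\sum_i \tilde{z}_i \varepsilon_i\Big] = E\Big[\frac{1}{N}\sum_i z_i \varepsilon_i\Big] - E\Big[\frac{1}{N}\sum_i \mu_i \varepsilon_i\Big] = 0. $$

Thus, if $\tilde{z}_i$ is also relevant, $\beta$ is identified by the IV regression which uses the recentered instrument $\tilde{z}_i$ instead of $z_i$.17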

A closely related solution, also suggested by equation (4), is to include the expected instrument $\mu_i$ as a control in specification (2) while using the original $z_i$ as the instrument, in a control function approach (Wooldridge, 2015). Controlling for $\mu_i$ can be thought of as recentering $z_i$ while also removing the residual variation in $y_i$ which is cross-sectionally correlated with $\mu_i$. As usual, removing this residual variation may generate precision gains in large samples; similar gains may arise from including (a fixed number of) any predetermined controls in a recentered IV regression.18
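The following Monte Carlo sketch illustrates equation (4) and both adjustments in the network-spillover example with $z_i = x_i$. It assumes a hypothetical random (Erdős–Rényi) network, deworming with probability 0.3, $\beta = 1$, and errors that load on each student's number of neighbors, so that naive OLS is biased upward. The recentered IV uses the sample analogue of the moment ratio, $\sum_i \tilde z_i y_i / \sum_i \tilde z_i x_i$, and the control approach adds $\mu_i$ as a regressor. None of these parameter values come from the paper.

```python
import numpy as np

rng = np.random.default_rng(42)
n, beta, p = 2000, 1.0, 0.3

# Hypothetical network (part of w): Erdos-Renyi graph without self-links
upper = np.triu(rng.random((n, n)) < 0.005, k=1)
adj = (upper | upper.T).astype(float)
degree = adj.sum(axis=1)

# Errors correlated with exposure: better-connected students differ systematically
eps = 0.5 * (degree - degree.mean()) + rng.standard_normal(n)

# Realized shocks and composite treatment x_i = sum_j a_ij d_j
d = (rng.random(n) < p).astype(float)
x = adj @ d
y = beta * x + eps

mu = p * degree     # expected treatment under Bernoulli(p) assignment
z_tilde = x - mu    # recentered treatment, used as the instrument


def ols(X, y):
    """Least-squares coefficients of y on the columns of X."""
    return np.linalg.lstsq(X, y, rcond=None)[0]


ones = np.ones(n)
beta_ols = ols(np.column_stack([ones, x]), y)[1]       # naive OLS, subject to OVB
beta_iv = (z_tilde @ y) / (z_tilde @ x)                 # recentered IV moment ratio
beta_ctrl = ols(np.column_stack([ones, x, mu]), y)[1]  # OLS controlling for mu
print(f"OLS: {beta_ols:.3f}  recentered IV: {beta_iv:.3f}  control for mu: {beta_ctrl:.3f}")
```

In this design the errors are linear in the number of neighbors, which is proportional to $\mu_i$, so controlling for $\mu_i$ also absorbs the confounded part of the outcome and tends to be more precise than the recentered IV, consistent with the precision argument above.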

Equation (4) further shows that adjusting for $\mu_i$ is generally necessary for identification, absent additional restrictions on the unobserved error. Conventional controls and fixed effects are only guaranteed to purge OVB when they linearly span $\mu_i$: a condition that is difficult to verify except when recentering is also feasible.19

Adjustments based on $\mu_i$, as the sole confounder of $z_i$, are similar to more conventional propensity score methods. There are three key differences, concerning the setting, adjustment method, and computation of $\mu_i$. First, propensity score methods have mostly been applied to binary treatments, starting from Rosenbaum and Rubin (1983). While generalizations to binary instruments (e.g. Abadie (2003)) and non-binary treatments (e.g. Imbens (2000)) have been proposed, our setting allows for arbitrary treatments or instruments. Second, the propensity score literature has mostly used non-regression adjustment methods, such as matching or binning (Abadie and Imbens, 2016; King and Nielsen, 2019). A notable exception is the E-estimator of Robins et al. (1992), which similarly leverages linearity of an outcome model like (2) to recenter by a scalar variable. Third, and most importantly, propensity scores are usually estimated from the data by relating the treatment to a vector of observation-specific covariates. This approach is generally not feasible because exposure to exogenous shocks is high-dimensional: for instance, as noted in Section 2, the expected market access of any region depends on the entire economic geography of the country. We therefore take a different approach to computing $\mu_i$, which we turn to next.

3.3. Computing the Expected Instrument via Shock Counterfactuals

We propose computing the expected instrument by specifying an assignment process for the shocks, drawing many sets of counterfactual shocks from this process, recomputing the candidate instrument each time, and averaging it across the counterfactuals. Here we formalize this approach, discuss general ways in which counterfactual shocks can be specified, and highlight the advantages of our approach over alternatives.

We define the shock assignment process as the conditional distribution of $g$ given $w$, with cumulative distribution function $G(g \mid w)$. When $G(\cdot \mid w)$ is known, the expected instrument $\mu_i = \int f_i(g, w)\, dG(g \mid w)$ can be computed and either used to recenter $z_i$ or added as a regression control.20 To emphasize the importance of a known shock assignment process, we write it as an assumption:

Assumption 2. (Known assignment process): $G(\cdot \mid w)$ is known for all $w$ in the support of $w$.
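When $G(\cdot \mid w)$ is known but $\mu_i$ has no convenient closed form, $\mu_i$ can be approximated by simulating from the assignment process. The sketch below assumes a hypothetical two-tier protocol (schools treated with probability 0.5, then students within treated schools with probability 0.6) and a hypothetical nonlinear spillover treatment, an indicator for having at least one dewormed neighbor. The generic helper `expected_instrument` is an illustrative name, not an established API; the exact benchmark exploits independence across schools.

```python
import numpy as np


def expected_instrument(f, draw_shocks, n_draws, rng):
    """Approximate mu_i = E[f_i(g, w) | w] by averaging f over simulated draws g ~ G(. | w).

    `f` maps a shock vector to the length-N vector of instruments, holding w fixed;
    `draw_shocks` draws one shock vector from the (assumed known) assignment process.
    """
    return np.mean([f(draw_shocks(rng)) for _ in range(n_draws)], axis=0)


rng = np.random.default_rng(7)
n, n_schools = 120, 6
school = rng.integers(n_schools, size=n)  # hypothetical school of each student
upper = np.triu(rng.random((n, n)) < 0.04, k=1)
adj = (upper | upper.T).astype(float)     # hypothetical network


def draw_two_tier(rng, p_school=0.5, p_student=0.6):
    """Hypothetical two-tier protocol: randomize schools, then students within treated schools."""
    treated_school = rng.random(n_schools) < p_school
    return (treated_school[school] & (rng.random(n) < p_student)).astype(float)


def any_dewormed_neighbor(d):
    """Hypothetical nonlinear spillover treatment: 1 if at least one neighbor is dewormed."""
    return (adj @ d >= 1).astype(float)


mu_mc = expected_instrument(any_dewormed_neighbor, draw_two_tier, n_draws=5000, rng=rng)

# Exact benchmark using independence across schools:
# P(no dewormed neighbor) = prod_s [0.5 + 0.5 * 0.4 ** (number of i's neighbors in school s)]
nbrs_in_school = np.stack([adj[:, school == s].sum(axis=1) for s in range(n_schools)], axis=1)
mu_exact = 1 - np.prod(0.5 + 0.5 * 0.4 ** nbrs_in_school, axis=1)
print("max abs. Monte Carlo error:", np.max(np.abs(mu_mc - mu_exact)))
```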

This assumption is unrestrictive when the shocks are determined by a known randomization protocol, as in an RCT or with policy randomizations (such as tie-breaking lottery numbers in centralized assignment mechanisms; Abdulkadiroglu et al. (2017)). The assignment process may also be given by scientific knowledge when the shocks are randomized naturally, such as when $g$ captures weather or seismic shocks governed by meteorological or geological processes (e.g., Carvalho et al. (2021); Madestam et al. (2013)). Policy discontinuities (as in regression discontinuity designs) can also yield a known $G$ when viewed as generating local randomization around known cutoffs (Lee, 2008; Cattaneo et al., 2015).

In observational data, where the distribution of shocks is unknown, Assumption 2 can be satisfied by specifying some permutations of shocks that were as likely to have occurred. For instance, if one is willing to assume the shocks are i.i.d. across $k$, it follows that all permutations of the observed $g$ are equally likely. In this case $G(\cdot \mid w)$ is uniform over the permutation class $\{\pi(g) : \pi \in \Pi_K\}$ when $w$ is augmented by this class, where $\Pi_K$ denotes the set of permutation operators on vectors of length $K$ (e.g. Lehmann and Romano, 2006, p. 634). The distribution of each $g_k$ (conditionally on other components of $w$) then need not be specified; the expected instrument is the average of $z_i$ across all permutations of the shocks, which serve as counterfactuals:

$$ \mu_i = \frac{1}{K!} \sum_{\pi \in \Pi_K} f_i\big(\pi(g), w\big). $$

Such $\mu_i$ are easy to compute (or to approximate with a random set of permutations).
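The permutation average can be sketched in a few lines of Python. This is a minimal illustration, assuming exchangeable shocks and an instrument formula supplied as a function `f(g, w)` that returns the length-$N$ vector of $z_i$; the name `expected_instrument` and this calling convention are hypothetical, not taken from the paper. Exact enumeration is only feasible for small $K$; otherwise a random subset of permutations approximates $\mu_i$.

```python
import itertools

import numpy as np


def expected_instrument(f, g, w, n_draws=None, seed=None):
    """Average the candidate instrument f(g, w) over permutations of the shocks g.

    f       : callable (g, w) -> length-N array of z_i (hypothetical convention)
    g       : length-K array of observed shocks
    n_draws : None enumerates all K! permutations (small K only);
              an integer averages over that many random permutations instead
    """
    g = np.asarray(g, dtype=float)
    if n_draws is None:
        draws = [f(g[list(p)], w) for p in itertools.permutations(range(len(g)))]
    else:
        rng = np.random.default_rng(seed)
        draws = [f(rng.permutation(g), w) for _ in range(n_draws)]
    return np.mean(draws, axis=0)


# Example: a linear exposure-weighted instrument z_i = sum_k w_ik g_k
w = np.array([[1.0, 0.0, 0.0],
              [0.5, 0.5, 0.0],
              [0.0, 0.0, 2.0]])
g = np.array([1.0, 2.0, 6.0])
linear = lambda g, w: w @ g

mu = expected_instrument(linear, g, w)   # exact average over all 3! permutations
z_tilde = linear(g, w) - mu              # recentered instrument
print(mu, z_tilde)
```

For a linear instrument, $\mu_i$ reduces to $(\sum_k w_{ik})\,\bar g$, since each shock is equally likely to land in each position: units with more total exposure have a higher expected instrument even though the shocks themselves are exchangeable.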

Similar expected instrument calculations follow under weaker shock exchangeability conditions, such as when the $g_k$ are i.i.d. within, but not across, a set of known clusters and the class of within-cluster permutations is used to draw counterfactuals. We illustrate this approach in Section 4. In BH we discuss how our framework can also apply with $G$ specified up to a low-dimensional vector of consistently estimable parameters (Appendix C.5); we also show how Assumption 2 can derive from an economic model (e.g. of transportation network formation) with stochastic shocks or from symmetries of the joint shock distribution (Appendices D.1 and D.2).

We note that even when $G$ is challenging to specify, a possibly incorrect specification can be useful as a sensitivity check. Specifically, if Assumption 1 holds and there is already no OVB because the included regression controls perfectly capture either the endogenous features of exposure or the expected instrument, then controlling for any candidate expected instrument cannot introduce bias. In this case the researcher may safely control for one or several candidate $\mu_i$ based on some guesses of the assignment process.21 More generally, researchers may achieve additional robustness by controlling for multiple candidate $\mu_i$ based on multiple guesses of the shock assignment process; only one such guess needs to be right to purge OVB.
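The two adjustments can be compared in a small simulation. The sketch below is illustrative only and assumes a hypothetical design (not the paper's data): each unit is exposed to a single shock with a heterogeneous weight $s_i$, and the error loads on $s_i$, so unadjusted OLS suffers from OVB. The helper names `recentered_iv` and `controlled_ols` are our own; `controlled_ols` accepts several candidate expected instruments, one of which is deliberately a wrong guess.

```python
import numpy as np


def recentered_iv(y, x, z, mu):
    """IV estimate of beta in y = beta * x + eps, instrumenting x with z - mu."""
    z_tilde = z - mu
    return (z_tilde @ y) / (z_tilde @ x)


def controlled_ols(y, x, controls):
    """Coefficient on x from OLS of y on a constant, x, and the given controls."""
    X = np.column_stack([np.ones_like(x), x, *controls])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[1]


# Simulated design (assumption): unit i is exposed only to shock k(i) with weight s_i
rng = np.random.default_rng(0)
N, K, beta = 5000, 100, 1.0
k_of_i = np.arange(N) % K                   # 50 units per shock
s = rng.uniform(0.5, 1.5, size=N)           # non-random exposure
g = rng.normal(1.0, 1.0, size=K)            # exchangeable shocks
z = s * g[k_of_i]                           # treatment = instrument here
mu = s * g.mean()                           # permutation-based expected instrument
eps = 3.0 * s + rng.normal(size=N)          # error correlated with exposure
y = beta * z + eps

naive = controlled_ols(y, z, [])                # omits mu: biased upward
b_iv = recentered_iv(y, z, z, mu)
b_ctrl = controlled_ols(y, z, [mu, s ** 2])     # s**2 is a wrong "guess" of mu
print(round(naive, 3), round(b_iv, 3), round(b_ctrl, 3))
```

Adding the wrong candidate `s ** 2` alongside the correct `mu` leaves the controlled estimate close to the truth, which is the robustness property described above.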

3.4. Recentered IV Consistency

With the ratio of recentered IV moments identifying $\beta$, we now consider whether the corresponding IV estimator $\hat\beta$ is consistent, i.e. whether $\hat\beta \xrightarrow{p} \beta$ as the number of observed outcomes and treatments grows large ($N \to \infty$). To formalize consistency in our context we consider a sequence of distributions for the complete data, indexed by $N$. Only in this section, to make the asymptotic sequence explicit, we index moments by $N$: e.g., we write the recentered IV moments as $E[\frac{1}{N}\sum_{i=1}^{N}\tilde z_i\varepsilon_i]$ and $E[\frac{1}{N}\sum_{i=1}^{N}\tilde z_i x_i]$. We allow the number of observed shocks, $K$, and the dimensions of $w$ to change arbitrarily with $N$.

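We first consider mean-square convergence of $\frac{1}{N}\sum_i\tilde z_i\varepsilon_i$ to zero under Assumption 1: i.e., whether $E[(\frac{1}{N}\sum_i\tilde z_i\varepsilon_i)^2 \mid w] \to 0$. Since $\hat\beta - \beta = \frac{\frac{1}{N}\sum_i\tilde z_i\varepsilon_i}{\frac{1}{N}\sum_i\tilde z_i x_i}$ by (2), such convergence implies $\hat\beta \xrightarrow{p} \beta$ so long as the instrument is asymptotically relevant (a condition we return to below). We establish this convergence under a regularity condition on $\varepsilon_i$ and a substantive restriction on $\tilde z_i$, which we term weak mutual dependence: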

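Assumption 3. (Weak mutual dependence): $\frac{1}{N^2}\sum_{i=1}^{N}\sum_{j=1}^{N}\left|\mathrm{Cov}[\tilde z_i, \tilde z_j \mid w]\right| \to 0$.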

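Proposition 1. Suppose Assumptions 1-3 hold and $E[\varepsilon_i^2 \mid w] \le B$ for some $B < \infty$, uniformly across $i$ and $N$. Then $E[(\frac{1}{N}\sum_i\tilde z_i\varepsilon_i)^2 \mid w] \to 0$.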

Proof. See Appendix D.

Assumption 3 holds when the shocks induce rich cross-sectional variation in the recentered instrument, through heterogeneous exposure, such that most pairs ($\tilde z_i, \tilde z_j$) have a weak covariance across possible realizations of $g$. The proof shows this is enough for a law of large numbers to apply to $\frac{1}{N}\sum_i\tilde z_i\varepsilon_i$.22
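The key bound behind this argument can be sketched as follows. This is our own illustrative derivation rather than the proof in Appendix D; it reads Assumption 1 as independence of $g$ and $\varepsilon = (\varepsilon_1, \dots, \varepsilon_N)$ conditionally on $w$, and uses the moment bound $E[\varepsilon_i^2 \mid w] \le B$ from Proposition 1.

```latex
\begin{aligned}
E\Big[\Big(\tfrac{1}{N}\textstyle\sum_{i}\tilde z_i\varepsilon_i\Big)^2 \,\Big|\, w\Big]
 &= \frac{1}{N^2}\sum_{i}\sum_{j} E[\tilde z_i\tilde z_j \mid w]\; E[\varepsilon_i\varepsilon_j \mid w]
 && \text{($g \perp \varepsilon \mid w$; $\tilde z$ depends only on $g$ and $w$)}\\
 &\le \frac{1}{N^2}\sum_{i}\sum_{j} \big|\mathrm{Cov}[\tilde z_i,\tilde z_j \mid w]\big|
      \sqrt{E[\varepsilon_i^2 \mid w]\,E[\varepsilon_j^2 \mid w]}
 && \text{($E[\tilde z_i \mid w]=0$; Cauchy--Schwarz)}\\
 &\le B \cdot \frac{1}{N^2}\sum_{i}\sum_{j} \big|\mathrm{Cov}[\tilde z_i,\tilde z_j \mid w]\big|
 \;\longrightarrow\; 0
 && \text{(Assumption 3)}.
\end{aligned}
```

No restriction on $E[\varepsilon_i\varepsilon_j \mid w]$ beyond the second-moment bound is needed, which is why the errors may be arbitrarily dependent across observations.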

Note that, in line with our approach to identification, Proposition 1 makes no substantive restrictions on the errors $\varepsilon_i$ beyond Assumption 1 (in particular, it puts no restrictions on the dependence of $\varepsilon_i$ across observations). In the absence of such restrictions, Proposition 2 in Appendix C shows that Assumption 3 is not only sufficient for the mean-square convergence of $\frac{1}{N}\sum_i\tilde z_i\varepsilon_i$ to zero but, under regularity conditions, also necessary. Of course, more conventional restrictions on the mutual dependence of errors (such as independent or clustered $\varepsilon_i$) may also suffice for convergence when weak mutual dependence of $\tilde z_i$ fails.

Three additional results in Appendix C, which extend the results on consistency with linear shift-share instruments from Borusyak et al. (2022), unpack Assumption 3 further. First, a large number of exogenous shocks is essentially necessary for the recentered instrument to avoid many strong cross-sectional dependencies. Proposition 3 formalizes this intuition by showing that, with sufficiently smooth $f_i$, Assumption 3 can only hold with $K \to \infty$. Moreover, the concentration of exposure to this growing number of shocks matters. Proposition 4 formalizes this idea by considering a concentration measure for average shock exposure which is similar to a Herfindahl-Hirschman Index (HHI). For binary $g_k$ and weakly monotone $f_i$ (as in the network spillovers and market access examples) and with mutually-independent shocks, Assumption 3 is satisfied when this measure converges to zero, such that the average impact of any finite set of shocks vanishes.23 Proposition 5 considers a different low-level condition in a case covering the Medicaid eligibility example: Assumption 3 holds when most pairs of observations of $z_i$ are affected by non-overlapping sets of shocks.
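The role of the number of shocks can be illustrated by estimating the Assumption 3 quantity by simulation. The sketch below assumes a hypothetical design in which each of $N$ units is exposed to exactly one of $K$ independent Bernoulli(1/2) shocks; the function names `wmd_statistic` and `one_shock_design` are our own. It approximates $\frac{1}{N^2}\sum_{i,j}|\mathrm{Cov}[z_i, z_j \mid w]|$ from draws of the assumed assignment process.

```python
import numpy as np


def wmd_statistic(f, draw_shocks, w, n_draws=2000, seed=0):
    """Monte Carlo estimate of (1/N^2) * sum_{i,j} |Cov[z_i, z_j | w]|.

    f           : callable (g, w) -> length-N instrument vector
    draw_shocks : callable (rng) -> one draw of g from the assumed assignment process
    """
    rng = np.random.default_rng(seed)
    Z = np.array([f(draw_shocks(rng), w) for _ in range(n_draws)])  # (n_draws, N)
    C = np.cov(Z, rowvar=False)          # N x N covariance across counterfactual draws
    return np.abs(C).sum() / Z.shape[1] ** 2


def one_shock_design(N, K):
    """Hypothetical design: unit i is exposed only to shock i mod K; g_k ~ Bernoulli(1/2)."""
    w = np.arange(N) % K
    f = lambda g, w: g[w].astype(float)
    draw = lambda rng: rng.binomial(1, 0.5, size=K)
    return f, draw, w


few = wmd_statistic(*one_shock_design(60, 2))     # exposure concentrated on 2 shocks
many = wmd_statistic(*one_shock_design(60, 30))   # exposure spread over 30 shocks
print(round(few, 4), round(many, 4))
```

In this design the population value is $0.25/K$ (0.125 for $K = 2$, about 0.008 for $K = 30$); the Monte Carlo estimate is biased slightly upward by sampling noise in the off-diagonal covariances.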

Convergence of $\frac{1}{N}\sum_i\tilde z_i\varepsilon_i$ implies consistency of the recentered IV estimator so long as (i) $E[\frac{1}{N}\sum_i\tilde z_i x_i \mid w]$ remains bounded away from zero and (ii) $\frac{1}{N}\sum_i\tilde z_i x_i - E[\frac{1}{N}\sum_i\tilde z_i x_i \mid w] \xrightarrow{p} 0$.24 Condition (i) follows when the relationship between $x_i$ and $z_i$ is strong and when most observations of $z_i$ have exposure concentrated in a small number of exogenous shocks, such that $\mathrm{Var}[z_i \mid w]$ does not dissipate even as $K \to \infty$. Proposition 6 in Appendix C formalizes these conditions with a linear first-stage model of $x_i$ and a different measure of shock exposure concentration: the HHI of the effects of different shocks on $z_i$, averaged across observations $i$. In the case of mutually-independent binary shocks, we require (among other regularity conditions) that the expectation of this concentration measure be bounded away from zero. The HHI conditions from Propositions 4 and 6 may simultaneously hold when most observations are mostly exposed to a small number of shocks, differentially across a large number of shocks. Conditions similar to Assumption 3 can be derived to ensure convergence of the sample first stage, (ii).25

3.5. Randomization Inference and Specification Tests

In some applications of our framework, natural assumptions on the mutual independence of $\varepsilon_i$ or $\tilde z_i$ across observations can make conventional (e.g. clustered) asymptotic inference valid. Generally, however, the common exposure of observations to observed and unobserved shocks generates complex dependencies across observations, making conventional asymptotic analysis inapplicable.26 In such cases, it may be attractive to construct confidence intervals for the constant effect $\beta$ and tests for Assumptions 1 and 2 based on the specification of the shock assignment process, following a long tradition of randomization inference (RI; Fisher, 1935). The RI approach guarantees correct coverage in finite samples of both observations and shocks.27 We focus on a particular type of RI test which is tightly linked to the recentered IV estimator $\hat\beta$.

RI tests and confidence intervals for $\beta$ are based on a scalar test statistic $T(b)$, where $b$ is a candidate parameter value. Under the null hypothesis $\beta = b$ and Assumption 1, the distribution of $T(b)$ conditional on $\varepsilon$ and $w$ is implied by the shock assignment process $G$. One may simulate this distribution by redrawing the shocks and recomputing $T(b)$. If the original value of $T(b)$ is far in the tails of the simulated distribution, one has grounds to reject the null. Inverting such tests yields a confidence interval for $\beta$ that collects all $b$ that are not rejected. These intervals have correct coverage, both conditionally on ($\varepsilon, w$) and unconditionally (see Appendix C.3 of BH for details).

We propose addressing the practical issue of choosing a randomization test statistic by picking a $T(b)$ that is tightly linked to the recentered IV estimator, building on the theory of Hodges and Lehmann (1963) and Rosenbaum (2002). Specifically, we consider the sample covariance of the recentered instrument and implied residual: $T(b) = \frac{1}{N}\sum_i \tilde z_i (y_i - b x_i)$. Lemma 2 of BH shows that $\hat\beta$ is a Hodges-Lehmann estimator corresponding to this $T(b)$, meaning that $\hat\beta$ equates $T(\hat\beta)$ with its expectation across counterfactual shocks (specifically, zero).28 This connection makes RI tests and confidence intervals based on $T(b)$ inherit the consistency of $\hat\beta$: the test power asymptotically increases to one for any fixed alternative under additional regularity conditions (Proposition S2 of BH).29
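The RI test and its inversion can be sketched as follows. This is a minimal illustration under stated assumptions: counterfactual shocks come from a user-supplied function that returns the recentered instrument recomputed from one shock draw (the names `ri_pvalue`, `ri_confidence_set`, and `draw_z_tilde` are hypothetical), a two-sided test on $|T(b)|$ is used, and the data are simulated rather than taken from the application.

```python
import numpy as np


def ri_pvalue(b, y, x, z_tilde, draw_z_tilde, n_draws=999, seed=0):
    """Randomization p-value for H0: beta = b with T(b) = mean(z_tilde * (y - b * x)).

    draw_z_tilde : callable (rng) -> recentered instrument recomputed from one
                   counterfactual draw of the shocks (hypothetical helper)
    Under H0 the implied residual y - b * x equals the error, which is held
    fixed while the shocks are redrawn.
    """
    rng = np.random.default_rng(seed)
    resid = y - b * x
    t_obs = np.mean(z_tilde * resid)
    t_sim = np.array([np.mean(draw_z_tilde(rng) * resid) for _ in range(n_draws)])
    # two-sided test on |T|, counting the observed draw among the n_draws + 1
    return (1 + np.sum(np.abs(t_sim) >= abs(t_obs))) / (n_draws + 1)


def ri_confidence_set(y, x, z_tilde, draw_z_tilde, grid, alpha=0.05, **kw):
    """Invert the test: keep every candidate b that is not rejected at level alpha."""
    return [b for b in grid if ri_pvalue(b, y, x, z_tilde, draw_z_tilde, **kw) > alpha]


# Hypothetical simulated design with permutation counterfactuals
rng = np.random.default_rng(1)
N, K, beta = 500, 50, 1.0
k_of_i = np.arange(N) % K
s = rng.uniform(0.5, 1.5, size=N)
g = rng.normal(0.0, 1.0, size=K)
x = s * g[k_of_i]
z_tilde = s * (g[k_of_i] - g.mean())
y = beta * x + 2.0 * s + rng.normal(size=N)
draw = lambda r: s * (r.permutation(g)[k_of_i] - g.mean())

grid = np.round(np.arange(0.0, 2.01, 0.05), 2)
cs = ri_confidence_set(y, x, z_tilde, draw, grid, n_draws=199)
print(cs)
```

Reusing the same seed for every candidate $b$ draws the same counterfactual shocks across the grid, which keeps the inverted set from being jagged due to simulation noise alone.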

Randomization inference can also be used to perform falsification tests on our key Assumptions 1 and 2. Recentering implies a testable prediction that $\tilde z_i$ is orthogonal to any variable $r_i$ satisfying $r_i \perp g \mid w$, such as any function of $w$ or other observables (either predetermined or contemporaneous) thought to be conditionally independent of $g$. To test this restriction, one may check that the sample covariance $\frac{1}{N}\sum_i \tilde z_i r_i$ is sufficiently close to zero by drawing counterfactual shocks and checking that it is not in the tails of its conditional-on-($r, w$) distribution. Multiple falsification tests, based on a vector of predetermined variables $r_i$, can be combined by an appropriate RI procedure, e.g. by taking the test statistic to be the sample sum of squared fitted values from regressing $\tilde z_i$ on $r_i$.30
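A minimal sketch of the combined falsification test with the sum-of-squared-fitted-values statistic follows. It assumes a hypothetical simulated design, a regressor matrix `R` that includes a constant, and our own helper names `ss_fitted` and `balance_pvalue`. It contrasts a correctly specified permutation process with a false one that assumes mean-zero shocks when the true shocks have mean one.

```python
import numpy as np


def ss_fitted(z_tilde, R):
    """Sum of squared fitted values from OLS of z_tilde on the columns of R."""
    coef, *_ = np.linalg.lstsq(R, z_tilde, rcond=None)
    fitted = R @ coef
    return float(fitted @ fitted)


def balance_pvalue(z_tilde, R, draw_z_tilde, n_draws=999, seed=0):
    """RI p-value: is the observed statistic typical of the counterfactual draws?"""
    rng = np.random.default_rng(seed)
    t_obs = ss_fitted(z_tilde, R)
    t_sim = np.array([ss_fitted(draw_z_tilde(rng), R) for _ in range(n_draws)])
    return (1 + np.sum(t_sim >= t_obs)) / (n_draws + 1)


# Hypothetical example: one shock per unit, exposure weight s_i, shocks with mean 1
rng = np.random.default_rng(2)
N, K = 500, 50
k_of_i = np.arange(N) % K
s = rng.uniform(0.5, 1.5, size=N)
g = rng.normal(1.0, 1.0, size=K)
R = np.column_stack([np.ones(N), s])   # constant plus a predetermined variable

# Correct specification: permutation counterfactuals, recentered by s_i * mean(g)
z_ok = s * (g[k_of_i] - g.mean())
p_ok = balance_pvalue(z_ok, R, lambda r: s * (r.permutation(g)[k_of_i] - g.mean()))

# False specification: assumes g_k ~ N(0, 1), so the expected instrument is 0
z_bad = s * g[k_of_i]
p_bad = balance_pvalue(z_bad, R, lambda r: s * r.normal(0.0, 1.0, size=K)[k_of_i])
print(p_ok, p_bad)
```

The misspecified process leaves a systematic component $s_i \bar g$ in the "recentered" instrument, which the constant and $s_i$ pick up, so the test rejects.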

Falsification tests can be useful in two ways. First, when $r_i$ is a lagged outcome or another variable thought to proxy for $\varepsilon_i$, they provide an RI implementation of conventional placebo and covariate balance tests of Assumption 1. While the use of RI for inference on causal effects may be complicated by treatment effect heterogeneity, the sharp hypothesis of zero placebo effects is a natural null. Second, RI tests will generally have power to reject false specifications of the shock assignment process, i.e. violations of Assumption 2, even when $r_i$ does not proxy for $\varepsilon_i$. For $r_i = 1$, for example (which is trivially conditionally independent of $g$), the test verifies that the sample mean of $\tilde z_i$ is typical for the realizations of the specified assignment process. Setting $r_i = \mu_i$ instead checks that the recentered instrument is not correlated with the expected instrument that it is supposed to remove.

3.6. Extensions

While we analyze the constant-effect model (2), identification by $\mu$-adjusted regressions extends to settings with heterogeneous treatment effects. Namely, Appendix C.1 of BH shows that the recentered IV estimator generally identifies a convex-weighted average of heterogeneous effects under an appropriate monotonicity condition, extending Imbens and Angrist (1994). The weights are proportional to the conditional variance of $z_i$ across counterfactual shocks, $v_i = \mathrm{Var}[z_i \mid w]$. These $v_i$, like $\mu_i$, are given by the shock assignment process (Assumption 2) and therefore can be computed by the researcher. Moreover, they can be used to identify more conventional weighted average effects. For example, in reduced-form models of the form $y_i = \beta_i z_i + \varepsilon_i$, a recentered and rescaled IV $\tilde z_i / v_i$ identifies the average effect $\frac{1}{N}\sum_i \beta_i$. Similarly, in IV settings with binary $z_i$ and $x_i$, this rescaled instrument identifies the local average treatment effect of Imbens and Angrist (1994).31
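The average-effect result can be checked with a short calculation. This is a sketch under stated assumptions: it uses the reduced-form model above, treats each $\beta_i$ (like $\varepsilon_i$) as independent of $g$ given $w$, and relies on $E[\tilde z_i \mid w] = 0$ and $v_i = \mathrm{Var}[z_i \mid w] > 0$.

```latex
\begin{aligned}
E\Big[\tfrac{\tilde z_i}{v_i}\, z_i \,\Big|\, w\Big]
  &= \frac{E[\tilde z_i(\tilde z_i + \mu_i) \mid w]}{v_i} = \frac{\mathrm{Var}[z_i \mid w]}{v_i} = 1,\\
E\Big[\tfrac{\tilde z_i}{v_i}\, y_i \,\Big|\, w\Big]
  &= \beta_i\, E\Big[\tfrac{\tilde z_i}{v_i}\, z_i \,\Big|\, w\Big]
     + \frac{E[\tilde z_i \mid w]\,E[\varepsilon_i \mid w]}{v_i} = \beta_i,\\
\Rightarrow\quad
\frac{E\big[\sum_i (\tilde z_i / v_i)\, y_i \mid w\big]}{E\big[\sum_i (\tilde z_i / v_i)\, z_i \mid w\big]}
  &= \frac{1}{N}\sum_i \beta_i .
\end{aligned}
```

Repeating the calculation with the unscaled instrument $\tilde z_i$ gives $\sum_i v_i \beta_i / \sum_j v_j$, i.e. the variance weights described above.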

Further extensions are given in the appendix of BH. Appendix C.6 shows how predetermined observables can be included as regression controls to reduce residual variation and potentially increase power. Appendix C.7 discusses identification and inference with multiple treatments or instruments. Finally, Appendix C.8 extends the framework to nonlinear outcome models.

4. Application: Effects of Transportation Infrastructure

We now present an empirical application showing how our theoretical framework can be used to avoid OVB in practice. Specifically, we estimate the effect of market access growth on Chinese regional employment growth over 2007–2016, leveraging the recent construction of high-speed rail (HSR). We show how counterfactual HSR shocks can be specified, and how correcting for expected market access growth can help purge OVB.

The recent construction of Chinese HSR has produced a network longer than in all other countries combined (Lawrence et al., 2019). The network mostly consists of dedicated passenger lines and has developed rapidly since 2007.32 Construction objectives included freeing up capacity on the low-speed rail network and supporting economic development by improving regional connectivity (Lawrence et al., 2019; Ma, 2011). While affordable fares make HSR popular for multiple purposes, business travel is an important component of rail traffic, ranging between 28% and 62%, depending on the line (Ollivier et al., 2014; Lawrence et al., 2019). The role of HSR may also extend beyond directly connected regions, as passengers frequently transfer between HSR and traditional lines (and between intersecting HSR lines). An early analysis by Zheng and Kahn (2013) finds positive effects of HSR on housing prices, while Lin (2017) similarly finds positive effects on regional employment.

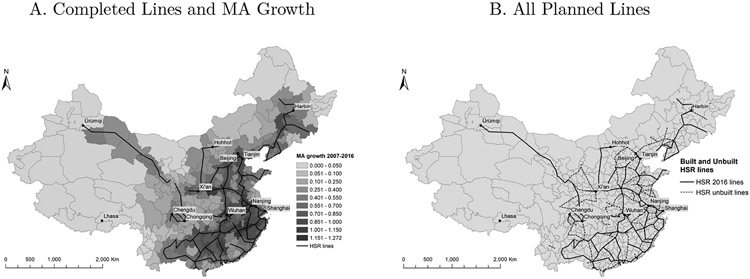

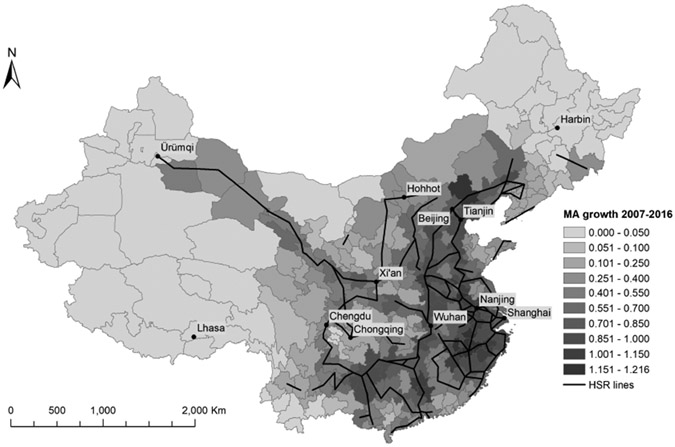

We analyze HSR-induced market access effects for 340 sub-province-level administrative divisions in mainland China, referred to as prefectures.33 We measure market access growth between 2007 and 2016 by combining data on the development of the HSR network with each prefecture’s location and population (as measured in the 2000 census). A total of 83 HSR lines opened between these years, with the first in 2008; a further 66 lines were completed or under construction as of April 2019.34 We compute a simple market access (MA) measure for each prefecture $i$ and year $t$ based on the formula in Zheng and Kahn (2013), which sums the year-2000 populations $P_j$ of other prefectures $j$, discounted by the predicted travel time $\tau_{ijt}$ between regions $i$ and $j$ in year $t$ (in minutes). Travel time predictions are based on the operational speed of each HSR line as well as geographic distance, which proxies for the travel time by car or a low-speed train. We relate MA growth, $\log MA_{i,2016} - \log MA_{i,2007}$, to the corresponding growth in each prefecture’s urban employment from Chinese City Statistical Yearbooks. This yields a set of 275 prefectures with non-missing outcome data; see Appendix A for details on the sample construction and MA measure. Panel A of Figure 1 shows the Chinese HSR network as of the end of 2016, along with the implied MA growth relative to 2007.

Figure 1: Chinese High-Speed Rail and Market Access Growth, 2007–2016. Panel A: Completed Lines and MA Growth. Panel B: All Planned Lines.

Notes: Panel A shows the completed Chinese high-speed rail network by the end of 2016, with shading indicating MA growth (i.e. log-change in MA) relative to 2007. Panel B shows the network of all HSR lines, including those planned but not yet completed as of 2016.

Column 1 of Table I, Panel A, reports the coefficient from a regression of employment growth on MA growth.35 The estimated elasticity of 0.23 is large: with an average MA growth of 0.54 log points, it implies 12.4% employment growth attributable to HSR for an average prefecture, almost half of the 26.6% average employment growth. The estimate is also highly statistically significant using Conley (1999) spatially-clustered standard errors.
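The back-of-the-envelope calculation uses the Column 1 coefficient, the average MA growth of 0.536 log points (the Column 1 constant in Table II, where regressors are demeaned), and the 26.6% average employment growth:

```latex
0.232 \times 0.536 \approx 0.124, \qquad \frac{0.124}{0.266} \approx 0.47 .
```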

Table I: Employment Effects of Market Access: Unadjusted and Recentered Estimates

| | Unadjusted OLS (1) | Recentered IV (2) | Controlled OLS (3) |
|---|---|---|---|
| Panel A: No Controls | | | |
| Market Access Growth | 0.232 (0.075) | 0.084 (0.097) [−0.245, 0.337] | 0.072 (0.093) [−0.169, 0.337] |
| Expected Market Access Growth | | | 0.317 (0.096) |
| Panel B: With Geography Controls | | | |
| Market Access Growth | 0.133 (0.064) | 0.056 (0.089) [−0.135, 0.280] | 0.047 (0.092) [−0.146, 0.280] |
| Expected Market Access Growth | | | 0.214 (0.073) |
| Recentered | No | Yes | Yes |
| Prefectures | 275 | 275 | 275 |

Notes: This table reports coefficients from regressions of employment growth on MA growth in Chinese prefectures from 2007–2016. MA growth is unadjusted in Column 1. In Column 2 this treatment is instrumented by MA growth recentered by permuting the opening status of built and unbuilt HSR lines with the same number of cross-prefecture links. Column 3 instead estimates an OLS regression with recentered MA growth as treatment and controlling for expected MA growth given by the same HSR counterfactuals. The regressions in Panel B control for distance to Beijing, latitude, and longitude. Standard errors which allow for linearly decaying spatial correlation (up to a bandwidth of 500km) are reported in parentheses. 95% RI confidence intervals based on the HSR counterfactuals are reported in brackets.

Panel A of Figure 1, however, gives reason for caution against causally interpreting the OLS coefficient. Prefectures with high MA growth, which serve as the effective treatment group, tend to be clustered in the main economic areas in the southeast of the country where HSR lines and large markets are concentrated. A comparison between these prefectures and the economic periphery may be confounded by the effects of unobserved policies, both contemporaneous and historical, that differentially affected the economic center.

We quantify the systematic nature of spatial variation in MA growth in Column 1 of Table II, by regressing it on a prefecture’s distance to Beijing, latitude, and longitude. These predictors capture over 80% of the variation in MA growth (as measured by the regression’s $R^2$), reinforcing the OVB concern: for a causal interpretation of the Table I regression, one would need to assume that all unobserved determinants of employment growth (e.g. local productivity shocks) are uncorrelated with these geographic features. While one could of course control for the specific geographic variables from Table II (as we explore below), controlling perfectly for geography is impossible without removing all variation in MA growth.

Table II: Regressions of Market Access Growth on Measures of Economic Geography

| | Unadjusted (1) | Recentered (2) | Recentered (3) | Recentered (4) |
|---|---|---|---|---|
| Distance to Beijing | −0.291 (0.062) | 0.069 (0.039) | | 0.088 (0.045) |
| Latitude/100 | −3.324 (0.646) | −0.342 (0.276) | | −0.182 (0.319) |
| Longitude/100 | 1.321 (0.458) | 0.485 (0.237) | | 0.440 (0.240) |
| Expected Market Access Growth | | | 0.026 (0.056) | 0.054 (0.069) |
| Constant | 0.536 (0.029) | 0.018 (0.018) | 0.018 (0.021) | 0.018 (0.018) |
| Joint RI p-value | | 0.443 | 0.711 | 0.492 |
| R² | 0.824 | 0.083 | 0.010 | 0.086 |
| Prefectures | 275 | 275 | 275 | 275 |

Notes: This table reports coefficients from regressing the unadjusted and recentered MA growth of Chinese prefectures (2007–2016) on geographic controls. Recentering is done by permuting the opening status of built and unbuilt lines with the same number of cross-prefecture links. All regressors are measured for the prefecture’s main city and demeaned such that the constant in each regression captures the average outcome. Distance to Beijing is measured in 1,000km. Standard errors which allow for linearly decaying spatial correlation (up to a bandwidth of 500km) are reported in parentheses. Joint RI p-values are based on the 1,999 HSR counterfactuals and the sum-of-square fitted values statistic, as described in footnote 30.

Our solution is to view certain features of the HSR network as realizations of a natural experiment. By specifying a set of counterfactual HSR networks we can compute the appropriate function of geography which removes the systematic variation in MA growth.

Our specification of counterfactuals exploits the heterogeneous timing of HSR construction. Specifically, we permute the 2016 completion status of the built and unbuilt (but planned) lines, assuming that the timing of line completion is conditionally as-good-as-random. Panel B of Figure 1 compares the built and unbuilt lines which form our counterfactuals. Unbuilt lines tend to be concentrated in the same areas of China as built lines, reinforcing the fact that construction is not uniformly distributed in space. Moreover, built lines tend to connect more regions: the average number of cross-prefecture “links” is 3.19 for built lines and 2.44 for unbuilt lines, a statistically significant difference (p = 0.048). To account for this difference, we construct counterfactual upgrades by permuting the 2016 completion status only among lines with the same number of links. For example, the main Beijing–Shanghai HSR line, which has the greatest number of links, is always included in the counterfactuals. This procedure generates 1,999 counterfactual HSR maps that are visually similar to the actual 2016 network; Appendix Figure A1 gives an illustrative example.
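The stratified permutation can be sketched as follows. This is an illustration only: the line-level arrays and the function name `permute_within_strata` are hypothetical, and recomputing travel times and MA for each counterfactual network is not shown.

```python
import numpy as np


def permute_within_strata(completed, n_links, rng):
    """Shuffle 2016 completion status only among lines with the same number of links.

    completed : bool array, True if the line had opened by the end of 2016
    n_links   : int array, number of cross-prefecture links of each line
    """
    completed = np.asarray(completed)
    n_links = np.asarray(n_links)
    out = completed.copy()
    for m in np.unique(n_links):
        idx = np.flatnonzero(n_links == m)
        out[idx] = rng.permutation(completed[idx])
    return out


# Hypothetical toy line list: the last line is alone in its stratum,
# so (like the Beijing-Shanghai line) it keeps its status in every draw
completed = np.array([True, False, True, False, False, True])
n_links = np.array([2, 2, 3, 3, 3, 9])
rng = np.random.default_rng(0)
draws = [permute_within_strata(completed, n_links, rng) for _ in range(1999)]
```

Each draw preserves the number of completed lines within every link-count stratum; expected MA growth would then be the average of MA growth across the networks implied by the draws.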

Columns 2–4 of Table II validate this specification of the HSR assignment process by the test described in Section 3.5. Column 2 shows that this recentering successfully removes the systematic geographic variation in market access. Specifically, we regress recentered MA growth on a constant and the same geographic controls as in Column 1. The regression coefficients and $R^2$ fall dramatically relative to Column 1, while a permutation-based p-value for their joint significance (based on the sum of squared fitted values, as suggested in footnote 30) is 0.44. Columns 3 and 4 further show that recentered MA growth is uncorrelated with expected MA growth.36

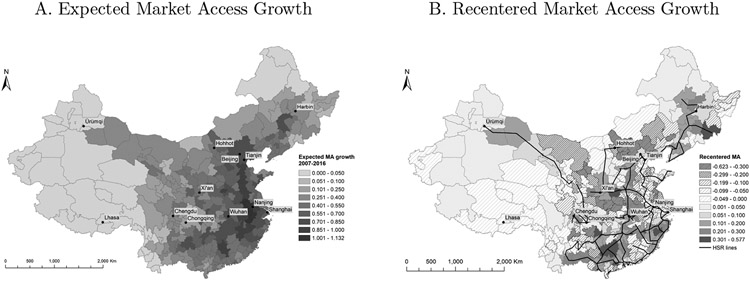

Figure 2 plots expected and recentered MA growth given by the permutations of built and unbuilt lines. The effect of recentering is apparent from contrasting the solid and striped regions in Panel B of Figure 2 (indicating high and low recentered MA growth) with the dark- and light-shaded regions in Panel A of Figure 1 (indicating high and low MA growth). The recentered treatment no longer places western prefectures in the effective control group, as their MA growth is as low as expected. Similarly, some prefectures in the east (such as Tianjin) are no longer in the effective treatment group, as their large increase in MA was expected. At the same time, recentering provides a justification for retaining other regional contrasts. Hohhot, for example, had higher expected MA growth than Harbin due to its planned connection to Beijing. This line was still under construction in 2016, however, resulting in lower MA growth in Hohhot than in Harbin.

Figure 2: Expected and Recentered Market Access Growth from Chinese HSR.

Notes: Panel A shows the variation in expected 2007–16 MA growth across Chinese prefectures, computed from 1,999 HSR counterfactuals that permute the opening status of built and unbuilt lines with the same number of cross-prefecture links. Panel B plots the variation in corresponding recentered MA growth: the difference between the MA growth shown in Panel A of Figure 1 and expected MA growth. The HSR network as of 2016 is also shown in this panel.

Column 2 of Table I, Panel A, shows that instrumenting MA growth with recentered MA growth reduces the estimated employment elasticity substantially, from 0.23 to 0.08. Controlling for expected MA growth yields a similar estimate of 0.07 in Column 3. Neither of the two adjusted estimates is statistically distinguishable from zero according to either Conley (1999) spatially-clustered standard errors or permutation-based inference (which yields a wider confidence interval in this setting). The difference between the unadjusted and adjusted estimates is explained by the fact that employment growth is strongly predicted by expected MA growth: in Column 3 we find a large coefficient on expected MA growth, $\mu_i$, of 0.32, meaning that employment grew faster in prefectures that were more highly exposed to potential HSR construction, whether or not the nearby lines were built yet.

Panel B of Table I shows that the geographic controls from Table II do not isolate the same variation as the expected MA growth adjustment. Including these controls in the unadjusted regression of Column 1 yields a smaller but still economically and statistically significant coefficient of 0.13. In contrast, Columns 2 and 3 show that the finding of no significant MA effect after adjusting for expected MA growth is robust to including geographic controls. The adjustment alone appears sufficient to remove the geographic dependence of MA, as Table II also showed.37

While our primary interest is to illustrate the recentering approach, we note that there are several possible explanations for the substantive finding of a small employment effect of MA. Unlike other transportation networks used for trading goods, the Chinese HSR network primarily operates passenger trains. Its scope for directly affecting production is therefore smaller, although it could still facilitate cross-regional business relationships. In addition, the employment effects of growing market access could be positive for some regions but negative for others, as easier commuting between regions relocates employers. We leave analyses of such mechanisms and heterogeneity for future study.

In BH we discuss how market access recentering relates to other approaches in the long literature estimating transportation infrastructure upgrade effects (Redding and Turner, 2015). We first contrast the well-known challenge of strategically chosen transportation upgrades with the less discussed problem that regional exposure to exogenous upgrades may be unequal. We then explain how common strategies to address the former issue (e.g. by leveraging historical routes or inconsequential places) can be incorporated in our framework, at least in principle. At the same time, we highlight that recentering may still be needed to address the latter issue. We further discuss how some of the existing approaches naturally yield specifications of counterfactual networks (e.g. the placebos in Donaldson (2018) and Ahlfeldt and Feddersen (2018)) and summarize the conceptual and practical advantages of our approach relative to employing more conventional controls. We emphasize that even when it is challenging to obtain a convincing specification of counterfactuals, any specification can yield a robustness check on these alternative strategies (see footnote 21).

5. Conclusion

Many studies in economics use treatments or instruments which combine multiple sources of variation, which are sometimes observed at different “levels,” according to a known formula. We develop a general approach to causal inference when some, but not all, of this variation is exogenous. Non-random exposure to the exogenous shocks can bias conventional regression estimators, but this problem can be solved by specifying a shock assignment process: namely, a set of counterfactual shocks that might as well have been realized. Averaging the treatment or instrument over these counterfactuals yields its expected value, $\mu_i$, which can be adjusted for to achieve identification and consistency. The specification of counterfactuals also yields a natural form of valid finite-sample inference.

In practice, researchers face a choice in how to use $\mu_i$ in a regression analysis: recentering by it or controlling for it. When the assignment process is given by a true randomization protocol, as in an RCT, we recommend that researchers recenter first to purge OVB. Then any predetermined controls (i.e. functions of exposure) can be included to remove variation in the error term and likely increase estimation efficiency. While $\mu_i$ is one possible control, which automatically recenters the treatment or instrument, it need not be the best choice in terms of predicting the residual variation. Our recommendation is different in natural experiments where assumptions must be placed on the assignment process. Then controlling for candidate $\mu_i$ instead of recentering can have a valuable “double-robustness” property. Researchers can compute and control for several candidate $\mu_i$ based on different assignment processes, such that OVB is purged if at least one of the processes is specified correctly (or if there is no OVB to begin with).

We conclude by noting that our framework bears practical lessons for a range of common treatments and instruments, well beyond the market access measure in our empirical application. In our working paper (Borusyak and Hull, 2021), we discuss and illustrate some of these implications for policy eligibility treatments, network spillover treatments, linear and nonlinear shift-share instruments, model-implied instruments, instruments from centralized school assignment mechanisms, “free-space” instruments for mass media access, and weather instruments. We expect other settings may also benefit from explicit specification of shock counterfactuals and appropriate adjustment for non-random shock exposure.

A. Data Appendix

Our analysis of market access effects uses data on 340 prefectures of mainland China. This excludes the islands of Hainan and Taiwan and the special administrative regions of Hong Kong and Macau, but includes six sub-prefecture-level cities (e.g. Shihezi) that do not belong to any prefecture. We use United Nations shapefiles to geocode each prefecture by the location of its main city (or, in a few cases, by the prefecture centroid).38

We use a variety of sources to assemble a comprehensive database of the HSR network in 2016 as well as the lines planned (and in many cases under construction) as of April 2019 but not opened yet by the end of 2016. Our starting points are Map 1.2 of Lawrence et al. (2019), China Railway Yearbooks (China Railway Yearbook Editorial Board, 2001-2013), and the replication files of Lin (2017). We cross-check network links across these sources and use Internet resources such as Wikipedia and Baidu Baike to confirm and fill in missing information. Our database includes various types of HSR lines, including the National HSR Grid (4+4 and 8+8) and high-speed intercity railways. However, we only consider newly built HSR lines, excluding traditional lines upgraded to higher speeds. We do not put further restrictions on the class of trains (e.g. to G- and D-classes only) or specify an explicit minimum speed. The operating speed therefore ranges between 160 and 380kph, although the majority of lines are at 250kph. For each line we collect the date of its official opening (if it has opened), the actual or planned operating speed, and the list of prefecture stops. When different sections of the same line opened in a staggered way, we classify each section as a separate line for the purposes of constructing our 1,999 counterfactuals, following the definition of a line in footnote 34. We include only one contiguous stop per prefecture and drop lines that do not cross prefecture borders.

We compute travel time between all pairs of prefectures $i$ and $j$ as of the ends of 2007 and 2016 for both the actual and counterfactual networks. Travel time combines traditional modes of transportation (car or low-speed train) with HSR, where available. We allow for unlimited changes between different HSR lines and between HSR and traditional modes without a layover penalty, as HSR trains tend to operate frequently and traditional modes also involve downtime. Following the existing literature, we proxy for travel time by traditional modes using the straight-line distance and assign these modes a speed of 100 kph (= 120/1.2), where 120 kph is their typical speed and the factor of 1.2 adjusts for actual routes being longer than a straight line. For two prefectures connected by an HSR line, we compute the distance along the line as the sum of straight-line distances between adjacent prefectures on the line. We divide the operating speed of each line by an adjustment factor of 1.3 to capture the fact that the average speed is lower than the nominal speed we record. Computing MA further requires the population of each of the 340 prefectures from the 2000 population Census, which we obtain from Brinkhoff (2018).39
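The travel-time construction amounts to an all-pairs shortest-path problem on a graph with a traditional-mode link between every pair of prefectures and an HSR link between adjacent stops of each line. A minimal Python sketch using the Floyd–Warshall algorithm follows, assuming planar coordinates in kilometers (a simplification of geographic distance) and a hypothetical `(stops, speed_kph)` representation of each line; the function name is our own.

```python
import numpy as np


def travel_time_minutes(coords_km, hsr_lines, trad_kph=120 / 1.2, hsr_adjust=1.3):
    """All-pairs travel time in minutes, combining traditional modes and HSR.

    coords_km : (N, 2) planar coordinates in km (assumption; the paper uses geographic distance)
    hsr_lines : list of (stops, speed_kph), stops being prefecture indices in line order
    """
    xy = np.asarray(coords_km, dtype=float)
    dist = np.linalg.norm(xy[:, None, :] - xy[None, :, :], axis=-1)
    T = dist / trad_kph * 60.0                      # car / low-speed train on the straight line
    for stops, speed in hsr_lines:
        v = speed / hsr_adjust                      # average speed below nominal speed
        for a, b in zip(stops[:-1], stops[1:]):     # links between adjacent stops
            T[a, b] = T[b, a] = min(T[a, b], dist[a, b] / v * 60.0)
    # Floyd-Warshall: unlimited free transfers between HSR lines and traditional modes
    for k in range(len(T)):
        T = np.minimum(T, T[:, [k]] + T[[k], :])
    return T


coords = [(0, 0), (300, 0), (600, 0), (300, 100)]
T = travel_time_minutes(coords, [([0, 1, 2], 390)])
print(np.round(T, 1))
```

In the toy example, prefecture 3 is reached from prefecture 0 in 120 minutes by riding HSR to prefecture 1 and then switching to the traditional mode, faster than the 190-minute direct trip.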

We measure prefecture employment in the 2008–2017 China City Yearbooks (China Statistics Press, 2000-2017).40 Each yearbook covers the previous year (so our data cover 2007–2016). While the yearbooks provide several employment variables, we use “The Average Number of Staff and Workers” (from the “People’s Living Conditions and Social Security” chapter), as measured in the entire prefecture and not just the main urban core. This employment series has by far the lowest number of strong year-to-year deviations which may indicate data quality issues.

We finally apply a data cleaning procedure to the outcome variable. We first mark a prefecture-year observation as exhibiting a “structural break” if (i) the outcome changes by more than a factor of two in either direction relative to the previous non-missing value for the prefecture, (ii) this change is not followed by a change in the opposite direction that is between 3/4 and 4/3 as large in terms of log-changes (which we view as a one-off jump and ignore), and (iii) the previous change does not satisfy (i). We view the outcome change between 2007 and 2016 as valid only if there are no structural breaks in any year in between. This reduces the sample from 283 to the final set of 275 prefectures.
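A minimal Python sketch of these cleaning rules, as we read them, is given below; the function names are hypothetical, and each series is assumed to be a list of yearly values with `None` for missing years.

```python
import math


def structural_breaks(values):
    """Return year indices flagged as structural breaks in one prefecture's series.

    values : yearly outcomes in order, with None for missing years.
    """
    obs = [(t, v) for t, v in enumerate(values) if v is not None]
    # log-change of each observation relative to the previous non-missing value
    changes = [(obs[j][0], math.log(obs[j][1] / obs[j - 1][1])) for j in range(1, len(obs))]
    big = [abs(d) > math.log(2) for _, d in changes]            # rule (i)
    breaks = []
    for j, (t, d) in enumerate(changes):
        if not big[j]:
            continue
        if j + 1 < len(changes):                                 # rule (ii): one-off jump?
            d_next = changes[j + 1][1]
            if d * d_next < 0 and 0.75 <= abs(d_next) / abs(d) <= 4 / 3:
                continue
        if j > 0 and big[j - 1]:                                 # rule (iii)
            continue
        breaks.append(t)
    return breaks


def change_is_valid(values):
    """The 2007-2016 change is kept only if no year is flagged as a break."""
    return not structural_breaks(values)


print(structural_breaks([100, 105, 250, 110, 112]))  # one-off spike -> []
print(structural_breaks([100, 105, 250, 255, 260]))  # persistent level shift -> [2]
```

The spike in the first series is reversed by a change of similar size, so neither the jump nor its reversal is flagged; the level shift in the second series is not reversed and marks the series as invalid.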

B. Additional Exhibits

Figure A1: Simulated HSR Lines and Market Access Growth.

Notes: This figure shows an example map of simulated Chinese HSR lines and market access growth over 2007–2016, obtained by permuting the opening status of built and unbuilt lines with the same number of cross-prefecture links.
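The permutation behind Figure A1 can be sketched as a shuffle of opening indicators within groups of lines that have the same number of cross-prefecture links, which keeps the number of opened lines in each group fixed. The data layout (tuples of a line identifier, its link count, and a 0/1 opening status) and the function name are hypothetical, and the paper's actual procedure may impose further restrictions.

```python
import random
from collections import defaultdict


def permute_opening_status(lines, rng):
    """Shuffle 0/1 opening indicators within groups of equal link counts.

    lines: list of (line_id, n_links, opened) tuples (hypothetical layout).
    Returns a dict mapping line_id to its counterfactual opening status.
    """
    groups = defaultdict(list)
    for line_id, n_links, opened in lines:
        groups[n_links].append((line_id, opened))
    counterfactual = {}
    for members in groups.values():
        statuses = [opened for _, opened in members]
        rng.shuffle(statuses)  # a permutation keeps the number opened per group
        for (line_id, _), status in zip(members, statuses):
            counterfactual[line_id] = status
    return counterfactual


lines = [("A", 3, 1), ("B", 3, 0), ("C", 5, 1), ("D", 5, 1), ("E", 5, 0)]
draw = permute_opening_status(lines, random.Random(0))
```

Each draw defines one counterfactual network, for which travel times and MA growth can be recomputed exactly as for the actual network.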

C. Additional Results

Throughout the results and later proofs we omit the phrase “almost surely with respect to ” for brevity. We also abbreviate weak mutual dependence (Assumption 3) as WMD.

Proposition 2 (Convergence for all errors implies WMD). Suppose uniformly. If, for some , for every sequence of distributions of such that for all and then WMD holds.

Proposition 3 (WMD implies growing number of shocks). Suppose the support of is bounded uniformly across and . Suppose further that, uniformly across , is Lipschitz-continuous in with the Lipschitz constant below and that for at least units , with . Then WMD implies .

Proposition 4 (WMD and dispersed shock exposure). Suppose is weakly monotone in for all , the components of are jointly independent conditionally on , and for . Consider three cases: conditionally on , (i) all components of are normally distributed and , (ii) all components of have the Bernoulli distribution, or (iii) is linear in . In each case:

If , WMD holds;

If WMD holds, ,

where we define in the Bernoulli shock case.

Proposition 5 (WMD and non-overlapping exposure sets). For each , let be a fixed set of functions of to subsets of such that does not depend on for any . Suppose the components of are jointly independent conditionally on , and , uniformly across and . Then WMD holds if .

Proposition 6 (First stage and concentrated individual exposure). Suppose with , for all and . Suppose further that, conditionally on , the components of are mutually independent with uniformly across and . Moreover, suppose one of three conditions holds: (i) all components of are mutually independent and , (ii) all components of have the Bernoulli distribution, or (iii) all are linear in . Then uniformly across , is also uniformly bounded away from zero (by .

D. Proofs

We drop the subscripts on moments in all proofs to simplify notation. We again abbreviate weak mutual dependence (Assumption 3) as WMD.

Proof of Proposition 1. By Assumption 1 and the Cauchy-Schwarz inequality

(5)

When is approximated by for and for a finite number of random draws from , the same argument holds with a variance upper bound that is at most twice as large. Indeed, since

we have, repeating the steps in (5),

Proof of Proposition 2. For each consider where (, ) is distributed as (, ), and . Then . Moreover,

By the Cauchy-Schwarz inequality, .

And by Jensen’s inequality, .

Proof of Proposition 3.41 We prove this result by contradiction. Without loss of generality suppose is constant along the asymptotic sequence; whenever , there is a subsequence of bounded by some , and the proof follows for that subsequence without change. Also without loss, we condition on and suppress the notation. We denote the upper bound on the support of by and extend the domain of each to preserving its Lipschitz constant, by the Kirszbraun theorem.

Let denote the upward-rounding function for some . Consider which rounds both the shocks and the values of Note that

where the second inequality uses the Lipschitz condition and since for each .

By the Lipschitz condition for any . Since and , this implies and consequently . Thus, for all and there is only a finite number of possible “rounded” functions. Therefore, at least of observations with have the same rounded function, and thus there are at least such pairs of observations (). For any such pair, since ,

Setting , we have , and therefore

Thus, weak mutual dependence does not hold, establishing the contradiction.

To establish Proposition 4, we first state and prove four lemmas. We assume all moments relevant for those lemmas exist.

Lemma 1. If is weakly increasing and random variables are independent, then for any the conditional expectation is weakly increasing.

Proof. Fix and such that for , and define the vectors and . Note . For , denote the cumulative distribution function of by . Then

Lemma 2. For any weakly increasing , , for with independent components.

Proof. For this is well known. The proof for follows by induction. Suppose it is true for . Then by the law of total covariance

The first term is the expectation of a covariance of two monotone (by Lemma 1) functions of variables. The second term, again by Lemma 1, is a covariance of two monotone functions of random scalars. Thus both terms are non-negative.
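Lemma 2 is a version of the Harris (or FKG) inequality for product measures. As a numerical illustration only, the Python sketch below computes exact covariances by enumerating all outcomes of three independent Bernoulli components and checks that several weakly increasing functions are pairwise non-negatively correlated. The particular functions and probabilities are arbitrary choices, not objects from the paper.

```python
from itertools import product


def expect(fn, p):
    """E[fn(g)] for g with independent Bernoulli(p[k]) components (exact)."""
    total = 0.0
    for g in product((0, 1), repeat=len(p)):
        weight = 1.0
        for gk, pk in zip(g, p):
            weight *= pk if gk else 1.0 - pk
        total += weight * fn(g)
    return total


def cov(f, h, p):
    return expect(lambda g: f(g) * h(g), p) - expect(f, p) * expect(h, p)


# A few weakly increasing functions of g (arbitrary illustrative choices).
increasing = {
    "sum": lambda g: float(sum(g)),
    "max": lambda g: float(max(g)),
    "min": lambda g: float(min(g)),
    "majority": lambda g: float(2 * sum(g) > len(g)),
    "g0*g1+g2": lambda g: float(g[0] * g[1] + g[2]),
}
p = [0.2, 0.5, 0.9]
covariances = {(a, b): cov(increasing[a], increasing[b], p)
               for a in increasing for b in increasing}
print(min(covariances.values()) >= -1e-12)  # True, as Lemma 2 predicts
```

Replacing one function by its negation (a weakly decreasing function) flips the sign, which is why Lemma 3 applies Lemma 2 to negations when the mapping is weakly decreasing.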

Lemma 3. If is weakly monotone in for all and components of are jointly independent conditionally on , then for all and . Furthermore WMD simplifies to for .

Proof. Applying Lemma 2 to and (or their negations, if is weakly decreasing) and conditioning on everywhere, we obtain . Thus, WMD simplifies to

where the second line rearranges terms and the third line follows by .

Lemma 4. Suppose is jointly independent with and consider a scalar function on the support of . Then, if (i) all components of are normally distributed and or (ii) all components of have the Bernoulli distribution,

(6)

with defined in the Bernoulli case as in Proposition 4. Further, (iii) if is linear, (6) holds with equalities, regardless of the distributions of the components of .

Proof. For part (i), the lower bound is established by Cacoullos (1982, Proposition 3.7), and the upper bound on is established by Chen (1982, Corollary 3.2). For part (ii), the lower bound follows from restricting the results for binomial distributions in Cacoullos and Papathanasiou (1989, p. 355), and the upper bound is similarly a special case of the result in Cacoullos and Papathanasiou (1985, p. 183). Part (iii) follows trivially from the fact that is non-stochastic.
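Because the symbols of (6) were lost, the sketch below does not reproduce it. Instead it illustrates numerically, for independent Bernoulli components with probabilities p_k, a standard pair of variance bounds of the same type: the first-order (Hoeffding) lower bound, the sum over k of p_k(1 - p_k)(E Δ_k f)^2, and the Efron-Stein upper bound, the sum over k of p_k(1 - p_k) E[(Δ_k f)^2], where Δ_k f is the change in f when component k switches from 0 to 1. As in part (iii), both bounds hold with equality when f is linear. The notation and function names are ours.

```python
from itertools import product


def expect(fn, p):
    """E[fn(g)] for g with independent Bernoulli(p[k]) components (exact)."""
    total = 0.0
    for g in product((0, 1), repeat=len(p)):
        weight = 1.0
        for gk, pk in zip(g, p):
            weight *= pk if gk else 1.0 - pk
        total += weight * fn(g)
    return total


def variance_and_bounds(f, p):
    """Exact Var f(g) with first-order lower and Efron-Stein upper bounds."""
    var = expect(lambda g: f(g) ** 2, p) - expect(f, p) ** 2
    lower = upper = 0.0
    for k, pk in enumerate(p):
        # Change in f when component k switches from 0 to 1, others held fixed.
        def delta(g, k=k):
            return f(g[:k] + (1,) + g[k + 1:]) - f(g[:k] + (0,) + g[k + 1:])
        lower += pk * (1 - pk) * expect(delta, p) ** 2
        upper += pk * (1 - pk) * expect(lambda g: delta(g) ** 2, p)
    return lower, var, upper


p = [0.3, 0.5, 0.7]
print(variance_and_bounds(lambda g: float(max(g)), p))  # lower <= var <= upper
print(variance_and_bounds(lambda g: 2 * g[0] - g[1] + 0.5 * g[2], p))  # all equal
```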

Proof of Proposition 4. By Lemma 3, WMD is equivalent to . Applying Lemma 4 conditionally on and using the bounds on ,

The upper bound, the law of iterated expectations, and imply that if , , and thus WMD holds. The lower bound and imply that if WMD holds and thus , we have .

Proof of Proposition 5. For any and fixed in the support of and for and such that we have because and are functions of two non-overlapping subvectors of , the components of which are conditionally independent. Thus for such (, ) pairs, and we obtain

and therefore .

Proof of Proposition 6. By the law of iterated expectations, . The result then follows directly from Lemma 4 applied to each :

and thus .

Footnotes

Examples of these three settings include Miguel and Kremer (2004), Donaldson and Hornbeck (2016), and Currie and Gruber (1996), respectively. Our working paper (Borusyak and Hull, 2021) discusses other common treatments and instruments nested in our framework: linear and nonlinear shift-share variables, model-implied optimal instruments, instruments based on centralized school assignment mechanisms, “free-space” instruments for access to mass media, and variables leveraging weather shocks.

While recentering is the key step that removes OVB, removing residual variation is likely to increase the efficiency of estimation in large samples. We give practical recommendations for each adjustment in the paper’s conclusion.

Our approach is “design-based,” in that identification is achieved by specifying the assignment process of some observed shocks (see, e.g., Lee (2008), Athey and Imbens (2022), Shaikh and Toulis (2021), and de Chaisemartin and Behaghel (2020)). This strategy for analyzing observational data builds on a long tradition in the analysis of randomized experiments, going back to Neyman (1923). It contrasts with other identification strategies that instead model the residual determinants of the outcome, such as difference-in-differences strategies (e.g. de Chaisemartin and D’Haultfoeuille (2020) and Athey et al. (2021)) or fully-specified structural models.

A notable exception is the E-estimator of Robins et al. (1992), a recentering-type regression adjustment in the traditional propensity score setting.

Nothing is changed in what follows if one instead considers the number of not-dewormed neighbors as the treatment. For simplicity here we consider only the spillover treatment and not also the direct deworming treatment; see Section 3.6 on the extension to multiple treatments.

Aronow and Samii (2017) use a similar “exposure mapping” terminology for objects like in the network spillover context. We depart from this literature by referring to the realized as the “treatment” or “candidate instrument” and not the realized “exposure.”
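In the network spillover example, the treatment is the number of an individual's neighbors selected for the intervention. The sketch below assumes, purely for illustration, that each individual is selected independently with the same probability p (the assignment process in a given application may differ). Under that assumption the expected treatment is p times the individual's number of neighbors, so better-connected individuals are systematically more exposed; subtracting this expectation gives a recentered treatment. Function names are hypothetical.

```python
def treated_neighbors(adj, selected):
    """Spillover treatment: number of each individual's neighbors selected."""
    return [sum(selected[j] for j in adj[i]) for i in range(len(adj))]


def expected_treated_neighbors(adj, p):
    """Expected treatment if each individual is selected independently with
    probability p (an illustrative assumption): p times the number of neighbors."""
    return [p * len(adj[i]) for i in range(len(adj))]


# A star network: individual 0 is linked to 1, 2 and 3.
adj = [[1, 2, 3], [0], [0], [0]]
selected = [0, 1, 0, 1]
z = treated_neighbors(adj, selected)             # [2, 0, 0, 0]
mu = expected_treated_neighbors(adj, 0.5)        # [1.5, 0.5, 0.5, 0.5]
recentered = [zi - mi for zi, mi in zip(z, mu)]  # [0.5, -0.5, -0.5, -0.5]
```

The hub's expected treatment is three times that of each leaf, so without recentering any outcome determinant correlated with network position would contaminate the comparison.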

For simplicity here we assume away any direct effects of deworming; see Section 3.6 on the extension to multiple treatments.

Such OVB may arise even if (as in the network spillovers and market access examples) variation in the treatment “results” from the experimental shocks, in the sense that whenever .

An example is given by Carvalho et al. (2021), where is a Japanese firm and is the distance in the firm-to-firm supply network from to the nearest firm located in the area hit by an earthquake. Unlike the number of treated neighbors, this spillover treatment is a nonlinear function of the earthquake shock dummies. The earthquake assignment process is also more complex, exhibiting spatial correlation. Our recentering approach still applies naturally in cases like this.
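A spillover treatment of this type can be computed with a multi-source breadth-first search started from all hit firms at once. The sketch assumes an undirected adjacency list for the supply network and returns None for firms with no path to a hit firm; both are simplifying assumptions, and the function name is hypothetical. The example shows the nonlinearity: with two hit firms, each firm's treatment is its distance to the nearer one, not a sum over shocks.

```python
from collections import deque


def distance_to_nearest_hit(adj, hit):
    """Network distance from each firm to the nearest hit firm (None if none)."""
    dist = [None] * len(adj)
    queue = deque()
    for i, is_hit in enumerate(hit):
        if is_hit:
            dist[i] = 0
            queue.append(i)
    # Breadth-first search visits firms in order of distance from the hit set.
    while queue:
        i = queue.popleft()
        for j in adj[i]:
            if dist[j] is None:
                dist[j] = dist[i] + 1
                queue.append(j)
    return dist


# A supply chain 0 - 1 - 2 - 3.
chain = [[1], [0, 2], [1, 3], [2]]
print(distance_to_nearest_hit(chain, [0, 0, 0, 1]))  # [3, 2, 1, 0]
print(distance_to_nearest_hit(chain, [1, 0, 0, 1]))  # [0, 1, 1, 0]
```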

With completely random policy assignment, flexibly controlling for may purge OVB as this is the only source of variation in . However, even in this setting the relevant demographics in and their interactions can be high-dimensional, as discussed by Gruber (2003). This problem is exacerbated under more complex assignment processes, e.g. if policies can be viewed as random only within some groups of states, in which case group indicators and their interactions with the demographics would also have to be included. Recentering extends naturally and avoids the curse of dimensionality.

Formally, we assume and the and variables introduced below are all drawn from some joint distribution which is unrestricted at this point.

In some cases (such as the network spillover and Medicaid eligibility examples in the previous section) the candidate instrument can be naturally written as with a common function and a unit-specific measure of exposure . In other cases a more general notation is necessary: in the market access example, for instance, the MA of each region depends on the entire country’s economic geography. An alternative way to formalize general composite variables is for a common and unit-specific . This notation is equivalent to (3); we use as it is more compact. We also note that equation (3) does not contain a residual: it formalizes an algorithm for computing an instrument rather than characterizing an economic relationship.

The exclusion and as-good-as-random assignment assumptions are isolated in Appendix C.1 of BH, via a general potential outcomes model.

Our identification results hold under the weaker conditional mean independence assumption of . This assumption can be understood as defining a partially linear model, as in Robinson (1988): where and for . A difference from Robinson (1988) arises because we do not assume i.i.d. data; for instance, we do not assume for .

Throughout, we allow (, ) to be stochastic (as when some components are sampled from a superpopulation) or fixed (as in a more conventional “design-based” analysis; e.g. Athey and Imbens (2022)). In the Medicaid eligibility example it may be more natural to view the observed (, ) as sampled from the national population, along with untreated potential outcomes . Conversely, in the market access example, it may be more natural to view the set of observed regions as a finite population, with fixed geography . With fixed (, ), Assumption 1 holds trivially but Assumption 2 below is still restrictive.

It is worth emphasizing that in our non-i.i.d. setup these conditions combine two dimensions of variation: over the stochastic realizations of , , , and , and across the cross-section of observations . In the i.i.d. case they reduce to the more familiar conditions of and .

There exist constructions that yield a relevant recentered instrument whenever the shocks induce some variation in treatment. Formally, when is not almost-surely zero at least for some , the recentered instrument constructed as is relevant. This again follows by the law of iterated expectations: .

Formally, the regression with as a control yields the reduced-form and first-stage moments and , where denotes the residuals from a cross-sectional projection of on . We show in Appendix B.1 of BH that these moments also identify under Assumption 1. Appendix C.9 of BH shows that controlling for always reduces asymptotic variance of the estimator when is homoskedastic, while also giving a counterexample under heteroskedasticity.

In panel data with , for example, unit fixed effects generally purge OVB only when the expected instrument is time-invariant, which typically requires the mapping, the value of , and the distribution of to be time-invariant. While plausible in some applications, these conditions (in particular, stationarity of the shock distribution) can be quite restrictive. For instance, when new railroad lines are built more often than existing ones are closed, expected market access will tend to grow over time.

For the identification results, it is enough to approximate by an average of for any number of drawn from , independently of each other and of . We have, for example, by iterated expectations, since . We discuss how the number of draws affects the asymptotic behavior of the recentered IV estimator below.
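The approximation described in this note can be sketched as a Monte Carlo average of the candidate instrument over counterfactual shock draws, followed by a just-identified IV estimate that uses the recentered instrument. In this sketch, z_fn (the known formula mapping a shock vector to instruments for all units) and draw_g (a sampler from the assumed shock assignment process) are hypothetical user-supplied functions, and the estimator omits an intercept and controls; it is a simplified illustration rather than the paper's full procedure.

```python
import random


def simulate_expected_instrument(z_fn, draw_g, n_draws, rng):
    """Monte Carlo approximation of mu_i: the average of the candidate
    instrument over n_draws counterfactual shock vectors."""
    mu = None
    for _ in range(n_draws):
        z_s = z_fn(draw_g(rng))
        if mu is None:
            mu = [0.0] * len(z_s)
        for i, zi in enumerate(z_s):
            mu[i] += zi / n_draws
    return mu


def recentered_iv(y, x, z, mu):
    """Just-identified IV slope using z - mu as the instrument (no intercept
    or controls, a simplification)."""
    z_tilde = [zi - mi for zi, mi in zip(z, mu)]
    num = sum(zt * yi for zt, yi in zip(z_tilde, y))
    den = sum(zt * xi for zt, xi in zip(z_tilde, x))
    return num / den
```

For instance, with spillover instruments counting treated neighbors and independent Bernoulli(0.5) shocks, the simulated mu should approach one half of each unit's number of neighbors as n_draws grows.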

Formally, suppose either or for each , where denotes the cross-sectional residualization of variable on some functions of used as controls. Then , where here denotes the residuals from a cross-sectional projection of on . See Appendix C.6 of BH for our framework extended to predetermined controls.