Abstract

Social determinants of health (SDoH) surveys are data sets that provide useful health-related information about individuals and communities. This study aims to develop a user-friendly web application that uses SDoH survey data to give clinicians predictive insight into the social needs of their patients before their in-patient visits, enabling improved and personalized service. The study used a longitudinal survey that consisted of 108,563 patient responses to 12 questions. Each question was designed to have a binary response, and each patient's most recent response to each question was modeled independently by incorporating explanatory variables. Multiple classification and regression techniques were used, including logistic regression, Bayesian generalized linear models, extreme gradient boosting, gradient boosting, neural networks, and random forests. Based on area under the curve (AUC) values, the gradient boosting models generally showed the best predictive performance. Finally, the models were incorporated into an R Shiny application, enabling users to predict and compare the impact of SDoH on patients’ lives. The tool is freely hosted online by the University of Kansas Medical Center's Department of Biostatistics and Data Science. The supporting materials for the application are publicly accessible on GitHub.

Keywords: social determinants of health, predictive modeling, binary classification, R Shiny

The social needs of patients, social determinants of health (SDoH), and social disparities have long been known to influence the health care people receive and, in turn, their health outcomes.1,2,3 As a result, there has been increasing research and advocacy focused on addressing these matters. SDoH can present a significant roadblock that prevents individuals from receiving the care they need.4,5 Health care policies aimed at whole populations without consideration for marginalized communities can exacerbate this issue, as they fail to account for the unique circumstances that individuals face.6 Several studies have identified significant effects of SDoH on disease risk and health outcomes, as well as an awareness of those effects among health care professionals.7,8,9,10,11 With obvious gaps still present in health outcomes due to SDoH, novel analysis methods can be employed to assist health care providers in delivering appropriate care to individuals affected by SDoH.

Predictive modeling through machine learning could be used to detect the impact of SDoH-related disparities on patient care. Machine learning uses computational modeling to learn from data, meaning that performance at executing a specific task improves with more data.12 In the field of epidemiology, the use of machine learning is on the rise for analyzing questions, predicting service utilization, and suggesting suitable interventions.13 In addition, predictive modeling could enable optimal preventive care by identifying those at greatest risk.14,15 Deep neural network models have demonstrated the ability to identify a patient's SDoH in clinical notes from electronic health records and have been increasingly used for that purpose in recent years.16,17 This presents an opportunity to further this critical research in improving patient care.

In a previous University of Kansas Medical Center study, gradient boosting was applied to evaluate data gathered from self-administered questionnaires completed by patients throughout Kansas and Western Missouri. This method has also been applied previously to assess risk in health care settings.12 Applying these methods to integrated SDoH measures in electronic health records (EHRs) could improve the quality of patient care at both the individual and population level.18,19 The results of these predictive models could also guide new developments in how to analyze and gather data on SDoH.20 Similarly, natural language processing models can be used to develop screening tools, risk prediction models, and decision-making support systems.21 For these benefits to be feasible, the integration of SDoH measures in EHRs is also a necessity.22

This study was conducted to assess the performance of various machine learning methods in the prediction of SDoH questionnaire responses based on demographic and location data. It was further expanded by the development of a user-friendly web application for physicians to assess the risk of SDoH-related outcomes in their patients.

Materials and Methods

The primary data element utilized for this study is the SDoH survey responses. Patients complete this survey during their primary care visit at The University of Kansas Health System (TUKHS). The survey was designed by TUKHS based on the Health Leads SDoH Toolkit 14 and data were retrieved from the HERON (a.k.a. i2b2) data warehouse source.

The data set consisted of 108,563 unique patient responses to 12 SDoH survey questions, along with demographic information from 2016 to 2022. For each of these questions, patients provided binary responses (yes/no).

The twelve SDoH questions on the survey are:

1. In the last 12 months, did you ever eat less than you should because there wasn’t enough?
2. In the last 12 months, has your utility company shut off your service for not paying your bill?
3. Are you worried that in the next 2 months, you may not have stable housing?
4. Are you afraid you might be hurt in your home by someone you know?
5. Are you afraid you might be hurt in your apartment building or neighborhood?
6. Do problems getting childcare make it difficult for you to work or study?
7. In the last 12 months, have you needed to see a doctor, but could not because of cost?
8. In the last 12 months, did you skip medications to save money?
9. In the last 12 months, have you ever had to go without health care?
10. Do you have problems understanding what is told to you about your medical conditions?
11. Do you often feel that you lack companionship?
12. If you answered YES to any questions above, would you like to discuss help?

The data were split into two groups, with 60 percent used for model training and 40 percent for testing and validation of the model. The statistical analyses were performed using the caret package and base R in the R programming language (version 4.1.2). Patient responses to each of these questions were modeled independently using the explanatory variables county, gender, race, ethnicity, age, and the Area Deprivation Index taken from the 2020 Neighborhood Atlas published by the University of Wisconsin. Several machine learning models were used: logistic regression (glm), Bayesian generalized linear model (bayesglm), extreme gradient boosting (xgbTree), gradient boosting (gbm), neural net (nnet), and random forest (ranger).23 For the machine learning models, 25-fold cross-validation was used for model selection.
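The split and resampling scheme described above can be illustrated with a short sketch. This is not the authors' R code (the analysis used the caret package); it is a plain Python illustration, and the function names, the seed, and the toy row count of 1,000 are assumptions made for the example.

```python
import random


def train_test_split(n_rows, train_fraction=0.6, seed=0):
    """Shuffle row indices and split them into training and held-out sets."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    cut = int(round(train_fraction * n_rows))
    return indices[:cut], indices[cut:]


def k_fold_indices(train_indices, k=25):
    """Partition the training indices into k folds.

    Yields (fit, validate) pairs: each fold is held out once for
    validation while the remaining k - 1 folds are used for fitting.
    """
    folds = [train_indices[i::k] for i in range(k)]
    for i, validate in enumerate(folds):
        fit = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield fit, validate


# Hypothetical toy size; the study itself had 108,563 responses.
train, test = train_test_split(1000)
splits = list(k_fold_indices(train, k=25))
print(len(train), len(test), len(splits))  # 600 400 25
```

Each candidate model would be fitted on `fit` and scored on `validate` for every pair, and the average score across folds would then be used to compare candidates before the final check on the 40 percent held-out set.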

A total of 72 models (6 models for each of the 12 questions) were fitted using default tuning parameters, and all of these models were used to predict patients' responses. For each model, the receiver operating characteristic (ROC) curve was graphed and the area under the curve (AUC) was calculated. Models were compared against the logistic regression model using the DeLong test for the difference in AUCs, as well as the net reclassification improvement (NRI) and the integrated discrimination improvement (IDI). Finally, these models and model statistics were packaged into an R Shiny application that allows users to make predictions and comparisons among the models.
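As a reminder of what the AUC measures, the sketch below computes it directly from its rank interpretation: the probability that a randomly chosen "yes" respondent receives a higher predicted score than a randomly chosen "no" respondent, with ties counted as one half. It is an illustration only, not the authors' implementation; the function name and the toy labels and scores are assumptions.

```python
def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    labels: 1 for a "yes" response, 0 for a "no" response.
    scores: predicted probability of "yes" for each respondent.
    This O(n_pos * n_neg) form is meant for clarity, not for large data.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 3 of the 4 positive/negative pairs are ordered correctly.
print(auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

A value of 0.5 corresponds to random ordering and 1.0 to perfect separation, which is the scale on which the fitted models are compared.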

Results

The AUC statistics for each question based on the model are presented in Table 1.

Table 1.

AUC Values for Each Question and Fitted Model. The 95 Percent Confidence Interval for Each AUC Is Given in Brackets.

| Question | Logistic Regression | Bayesian GLM | XGBoosting | Gradient Boosting | Neural Network | Random Forest |
|---|---|---|---|---|---|---|
| 01 | 0.707 [0.688-0.725] | 0.707 [0.688-0.725] | 0.716 [0.698-0.734] | 0.716 [0.698-0.734] | 0.707 [0.689-0.725] | 0.706 [0.688-0.724] |
| 02 | 0.738 [0.715-0.760] | 0.738 [0.716-0.760] | 0.754 [0.733-0.775] | 0.755 [0.734-0.777] | 0.746 [0.723-0.768] | 0.746 [0.724-0.768] |
| 03 | 0.676 [0.653-0.700] | 0.676 [0.653-0.700] | 0.706 [0.683-0.729] | 0.706 [0.684-0.729] | 0.703 [0.680-0.726] | 0.696 [0.672-0.720] |
| 04 | 0.589 [0.525-0.653] | 0.592 [0.527-0.656] | 0.600 [0.536-0.664] | 0.625 [0.566-0.685] | 0.602 [0.542-0.661] | 0.595 [0.531-0.658] |
| 05 | 0.678 [0.644-0.712] | 0.680 [0.647-0.713] | 0.692 [0.660-0.725] | 0.703 [0.671-0.735] | 0.682 [0.649-0.715] | 0.696 [0.663-0.728] |
| 06 | 0.753 [0.723-0.783] | 0.753 [0.723-0.784] | 0.798 [0.771-0.826] | 0.803 [0.776-0.830] | 0.799 [0.772-0.826] | 0.777 [0.748-0.807] |
| 07 | 0.685 [0.671-0.700] | 0.686 [0.672-0.700] | 0.724 [0.711-0.738] | 0.726 [0.713-0.739] | 0.719 [0.706-0.732] | 0.708 [0.694-0.721] |
| 08 | 0.646 [0.629-0.663] | 0.646 [0.629-0.663] | 0.689 [0.673-0.705] | 0.688 [0.672-0.705] | 0.681 [0.665-0.698] | 0.674 [0.657-0.690] |
| 09 | 0.685 [0.664-0.707] | 0.685 [0.664-0.707] | 0.702 [0.680-0.723] | 0.701 [0.679-0.722] | 0.687 [0.664-0.709] | 0.689 [0.668-0.711] |
| 10 | 0.670 [0.650-0.690] | 0.670 [0.650-0.690] | 0.689 [0.670-0.708] | 0.688 [0.668-0.707] | 0.675 [0.655-0.695] | 0.685 [0.665-0.704] |
| 11 | 0.604 [0.588-0.620] | 0.604 [0.588-0.620] | 0.620 [0.604-0.635] | 0.618 [0.602-0.634] | 0.615 [0.599-0.630] | 0.615 [0.599-0.631] |
| 12 | 0.630 [0.609-0.650] | 0.630 [0.610-0.650] | 0.641 [0.621-0.661] | 0.648 [0.628-0.667] | 0.631 [0.611-0.652] | 0.639 [0.619-0.659] |

For every question and model, the AUC estimate and the lower bound of its 95 percent confidence interval exceed 0.5 (Table 1); hence it can be concluded with 95 percent confidence that all the fitted models predict better than random guessing. Comparing the AUC values across the models within each question, in most cases either the gradient boosting or the extreme gradient boosting model has a higher AUC than the other prediction models. This implies that these models are more likely to make correct predictions.

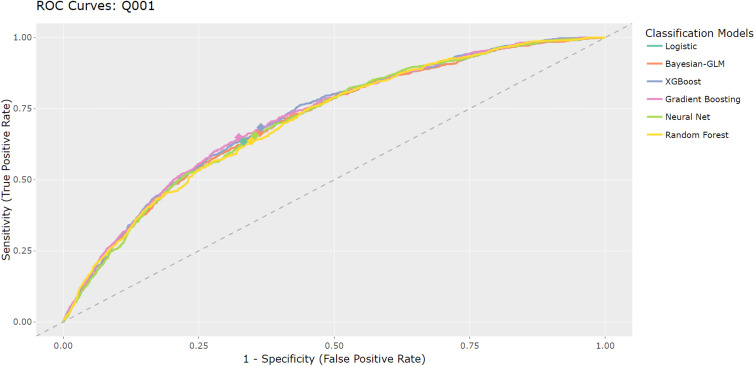

The false positive and true positive rates differ for each model and each question. For these models, false positives are not a critical concern, as the models only predict survey responses. Therefore, model predictions were optimized for sensitivity and specificity by selecting the point on the ROC curve closest to the top left corner of the plot. Figure 1 shows that the gradient boosting and extreme gradient boosting models perform similarly as binary classifiers.

Figure 1.

Question 1 ROC curves for six classification models.
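The "closest to the top left corner" rule for choosing a classification cutoff can be sketched as follows. This is an illustrative Python version under stated assumptions, not the code used in the study: the function names and toy data are hypothetical, and a respondent is predicted "yes" when the score is at or above the threshold.

```python
import math


def roc_points(labels, scores):
    """Return (threshold, fpr, tpr) for every distinct score used as a cutoff."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = []
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 0)
        points.append((t, fp / n_neg, tp / n_pos))
    return points


def closest_to_top_left(labels, scores):
    """Pick the cutoff whose ROC point is nearest to (fpr=0, tpr=1)."""
    return min(roc_points(labels, scores),
               key=lambda p: math.hypot(p[1], 1.0 - p[2]))


# Hypothetical toy data: 4 "yes" and 3 "no" respondents.
labels = [0, 0, 1, 0, 1, 1, 1]
scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.8, 0.9]
threshold, fpr, tpr = closest_to_top_left(labels, scores)
print(threshold, fpr, tpr)  # 0.6 0.0 0.75
```

Minimizing the distance to the corner weighs sensitivity and specificity equally; a setting where missed "yes" responses are costlier than false alarms could weight the two terms differently.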

Because the 95 percent confidence intervals for the AUCs overlap heavily, there is no clear best model across all the questions (see Table 1). Therefore, in addition to the simple AUC comparison, the IDI and NRI were considered as measures of prediction performance relative to logistic regression. Within each question, each model was compared with the logistic regression model to test whether it improved prediction performance. Based on the results presented in Table 2, it can be concluded with 95 percent confidence that for questions 1–3, the extreme gradient boosting model has a significantly better IDI and NRI than the logistic model. Additionally, for question 4, the gradient boosting model has a significant NRI. For questions 5–12, the extreme gradient boosting model has a significant NRI.
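For readers unfamiliar with these two measures, the sketch below shows how IDI and NRI are commonly computed when a new model is compared with a reference model (here, logistic regression). The article does not state which NRI variant was used; the category-free (continuous) form is assumed here, and the function names and toy probabilities are hypothetical.

```python
def idi(labels, p_ref, p_new):
    """Integrated discrimination improvement of p_new over p_ref.

    Mean change in predicted probability among events ("yes")
    minus the mean change among non-events ("no").
    """
    def mean_change(target):
        diffs = [n - r for y, r, n in zip(labels, p_ref, p_new) if y == target]
        return sum(diffs) / len(diffs)

    return mean_change(1) - mean_change(0)


def continuous_nri(labels, p_ref, p_new):
    """Category-free net reclassification improvement (assumed variant).

    Net share of events whose predicted risk moves up, plus the
    net share of non-events whose predicted risk moves down.
    """
    def net_up(target):
        diffs = [n - r for y, r, n in zip(labels, p_ref, p_new) if y == target]
        up = sum(1 for d in diffs if d > 0)
        down = sum(1 for d in diffs if d < 0)
        return (up - down) / len(diffs)

    return net_up(1) - net_up(0)


# Hypothetical toy data: two events followed by two non-events.
labels = [1, 1, 0, 0]
p_ref = [0.5, 0.25, 0.5, 0.25]   # reference model (e.g., logistic regression)
p_new = [0.75, 0.5, 0.25, 0.25]  # candidate model
print(idi(labels, p_ref, p_new))             # 0.375
print(continuous_nri(labels, p_ref, p_new))  # 1.5
```

Positive values indicate that the candidate model moves predicted risks in the right direction more often (NRI) or by a larger average amount (IDI) than the reference; a confidence interval that excludes zero is what is meant by a significant improvement.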

Table 2.

Model Comparison Between XGBoosting and Gradient Boosting Based on IDI and NRI Indexes.

| Question | XGBoosting IDI [95% CI] | XGBoosting NRI [95% CI] | Gradient Boosting IDI [95% CI] | Gradient Boosting NRI [95% CI] |
|---|---|---|---|---|
| 01 | 0.003 [0.002, 0.005] | 0.339 [0.266, 0.411] | <0.001 [−0.001, 0.001] | −0.135 [−0.209, −0.061] |
| 02 | 0.002 [0.001, 0.003] | 0.454 [0.361, 0.546] | −0.001 [−0.001, 0.000] | −0.298 [−0.392, −0.204] |
| 03 | 0.002 [0.002, 0.003] | 0.346 [0.261, 0.431] | <0.000 [−0.001, 0.000] | −0.162 [−0.250, −0.073] |
| 04 | <0.001 [0.000, 0.001] | 0.044 [−0.179, 0.267] | 0.000 [0.000, <0.001] | 0.251 [0.031, 0.471] |
| 05 | 0.001 [0.000, 0.001] | 0.389 [0.264, 0.515] | 0.000 [−0.001, 0.001] | −0.282 [−0.412, −0.153] |
| 06 | 0.004 [0.002, 0.005] | 0.627 [0.502, 0.752] | <0.001 [−0.001, 0.001] | −0.244 [−0.376, −0.111] |
| 07 | 0.008 [0.007, 0.009] | 0.477 [0.424, 0.530] | <0.001 [0.000, 0.001] | −0.132 [−0.190, −0.074] |
| 08 | 0.005 [0.004, 0.006] | 0.339 [0.279, 0.400] | <0.001 [0.000, 0.001] | 0.026 [−0.039, 0.091] |
| 09 | 0.002 [0.002, 0.003] | 0.345 [0.266, 0.424] | <0.001 [0.000, 0.001] | −0.121 [−0.201, −0.042] |
| 10 | 0.005 [0.004, 0.007] | 0.177 [0.108, 0.245] | <0.001 [−0.001, 0.001] | −0.178 [−0.247, −0.110] |
| 11 | 0.002 [0.002, 0.003] | 0.174 [0.117, 0.230] | −0.001 [−0.001, 0.000] | −0.098 [−0.154, −0.041] |
| 12 | 0.001 [0.001, 0.002] | 0.190 [0.120, 0.261] | <0.001 [−0.001, 0.001] | −0.110 [−0.180, −0.039] |

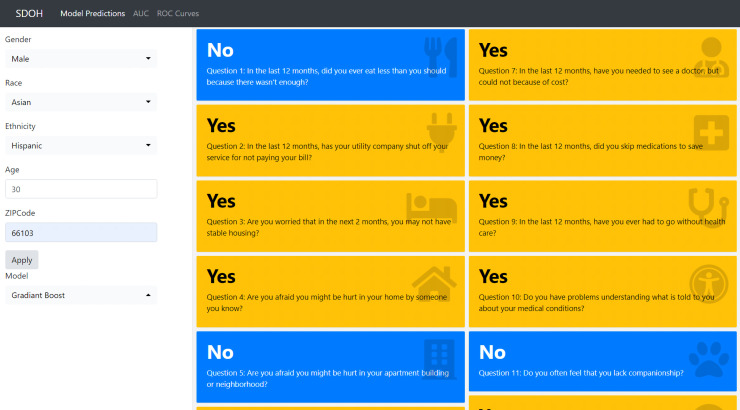

The dashboard was developed in R and is hosted on an R Shiny web server, allowing anyone in Kansas and Western Missouri to access the application easily and predict their responses (https://biostats-shinyr.kumc.edu/Predicting_SDOH/). Additionally, the R code used to create the dashboard can be found on GitHub (https://github.com/CRISsupport/SDOH-Predictions-KS-WestMO). This web application gives clinicians the ability to predict the yes/no response to each SDoH survey question based on the values entered for the explanatory variables and the selected model. All the models and outputs were packaged into the R Shiny application, where users can experiment and explore the results. Figure 2 shows sample output for different explanatory variable values and model choices.

Figure 2.

SDOH web application dashboard.

Discussion

The future of medicine is trending toward personalized health care; gathering more insight about patients will allow the health care system to better address their needs. Predictive models are being used elsewhere to identify individuals at risk of disease. For example, a predictive model for pancreatic cancer risk can be used to identify those at high risk and improve the early treatment of their disease.24 Suicide risk is another condition that has been modeled with high overall classification accuracy to help identify individuals at risk, but these models are largely not useful in practice because their accuracy in predicting future events is low.25

This study produced predictive models bundled in an interactive dashboard that allows users to input high-level demographic data and receive a personalized social needs prediction. In addition to its predictive capabilities, the dashboard allows users to compare different models and displays their respective descriptive statistics. While not perfect, these models were able to predict better than random assignment, as indicated by their AUCs. It should be noted, however, that social needs surveys contain a high proportion of "no" responses, which skews the response distributions.

We collaborated with a family medicine physician to gather this information, and we found that the application is crucial for understanding location-based risks. To the best of our knowledge, no such application currently exists. As SDoH survey data continue to be collected at the University of Kansas, these models should be run against future data to gauge their usefulness. In the meantime, these models can be accessed to provide users insight into predicted social needs. It is important to note that these models produce predictions and that predictions can be incorrect. As more data become available, we should continue to explore how predictive insight can help clinicians.

Conclusion

We propose SDOH, a user-friendly tool that will help treating physicians and clinical teams better address patients in need of assistance and, by extension, improve the communities that they serve. This predictive tool is hosted online and is made freely available by the University of Kansas Medical Center's Department of Biostatistics and Data Science (https://biostats-shinyr.kumc.edu/Predicting_SDOH/). The R source code used to host the models and take user input in the web application has been made publicly available on GitHub (https://github.com/CRISsupport/SDOH-Predictions-KS-WestMO). This tool could be expanded to include different modeling techniques and more explanatory variables to improve AUCs. Model tuning should also be explored to improve the efficiency of the current techniques. Our future work aims to extend our tool to provide summary statistics around key social needs stratified by county, highlighting the hot spots based on the social risk factor that is selected. This work paves a path for evidence-based policy approaches, which could address the social risk factors among underserved communities.

Author Biographies

Isuru Ratnayake, PhD, is an Education Assistant Professor of Biostatistics and Data Science at the University of Kansas Medical Center. His primary research areas focus on modeling the heteroskedasticity of high-frequency data, classification modeling, geospatial modeling, and spatial-temporal modeling of health data. With over six years of experience in developing time series models, his work includes modeling zero-inflated count data, long-term and short-term volatilities in time series data, time series data for infectious diseases, and developing spatial-temporal disease models. In this field, he has made contributions to the time series literature by proposing methodological frameworks for analyzing the conditional heteroskedastic structure.

Sam Pepper is a Senior Data Scientist in the University of Kansas Medical Center Department of Biostatistics and Data Science with research experience in classification modeling, machine learning, clinical trials, and public health. He has a master's degree in Biostatistics from the University of Kansas Medical Center.

Aliyah Anderson received her bachelor's in health science with a minor in Public and Population Health from the University of Kansas and is currently earning a master's in public health with a concentration in Infectious Diseases and Microbiology at the University of Pittsburgh. As an intern at the KUMC Department of Biostatistics and Data Science, she has contributed to research in the study start-up process for preventative clinical trials for breast cancer and designed a DATA Wrangling course.

Alexander Alsup, MS, is a Data Scientist with previous research experience in technological applications for clinical trial execution, public health, genomics, and immunology. He is a current PhD Student at the University of Kansas Medical Center Department of Biostatistics and Data Science, researching analysis methods for DNA methylation data. He has worked previously as a senior research analyst for the University of Kansas Medical Center Department of Pulmonary, Sleep, and Critical Care where he gained research experience in survival analysis, mixed-effects models, unsupervised statistical learning, clinical trial design, and database administration.

Dinesh Pal Mudaranthakam, PhD, MBA, is Assistant Professor and Director of Research Information Technology at the University of Kansas Medical Center. His work focuses on developing novel technological applications that improve the availability, affordability, and accessibility of life-saving care to cancer patients. He has published work investigating social determinants of health, financial toxicity, and barriers to clinical trial participation in the context of cancer patients. Dr. Mudaranthakam has led the creation of informatics teams overseeing cancer and non-cancer research data warehousing, clinical trial data management across phases and sites, and integration of diverse research data sources into a research informatics ecosystem.

Footnotes

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The authors received no financial support for the research, authorship, and/or publication of this article.

ORCID iDs: Isuru Ratnayake https://orcid.org/0000-0001-9596-781X

Sam Pepper https://orcid.org/0000-0003-0696-3820

Alexander Alsup https://orcid.org/0000-0002-9487-4686

References

- 1. Braveman P, Egerter S, Williams DR. The social determinants of health: Coming of age. Annu Rev Public Health. 2011;32:381–398. 10.1146/annurev-publhealth-031210-101218

- 2. Braveman P, Gottlieb L. The social determinants of health: It’s time to consider the causes of the causes. Public Health Reports (Washington, D.C. : 1974). 2014;129(Suppl 2):19–31. 10.1177/00333549141291S206

- 3. Penman-Aguilar A, Talih M, Huang D, Moonesinghe R, Bouye K, Beckles G. Measurement of health disparities, health inequities, and social determinants of health to support the advancement of health equity. Journal of Public Health Management and Practice: JPHMP. 2016;2(Suppl 1):S33–S42. 10.1097/PHH.0000000000000373

- 4. Morone JF, Teitelman AM, Cronholm PF, Hawkes CP, Lipman TH. Influence of social determinants of health barriers to family management of type 1 diabetes in Black single parent families: A mixed methods study. Pediatr Diabetes. 2021;22(8):1150–1161. 10.1111/pedi.13276

- 5. Butler AM. Social determinants of health and racial/ethnic disparities in type 2 diabetes in youth. Curr Diab Rep. 2017;17(8):60. 10.1007/s11892-017-0885-

- 6. Gottlieb L, Sandel M, Adler NE. Collecting and applying data on social determinants of health in health care settings. JAMA Intern Med. 2013;173(11):1017–1020. 10.1001/jamainternmed.2013.560

- 7. Robert Wood Johnson Foundation. Health care’s blind side: The overlooked connection between social needs and good health. Robert Wood Johnson Foundation; 2011. https://www.rwjf.org/en/library/research/2011/12/health-care-s-blind-side.html

- 8. Physicians Foundation. 2018 Survey of America’s Physicians: practice patterns and perspectives. 2018. Available at: https://www.merritthawkins.com/uploadedFiles/MerrittHawkins/Content/Pdf/MerrittHawkins_PhysiciansFoundation_Survey2018.pdf

- 9. Marmot M, Bell R. Social inequalities in health: A proper concern of epidemiology. Ann Epidemiol. 2016;26(4):238–240. 10.1016/j.annepidem.2016.02.003

- 10. Cuddapah G, Vallivedu Chennakesavulu P, Pentapurthy P, et al. Complications in diabetes mellitus: Social determinants and trends. Cureus. April 23, 2022;14(4):e24415. doi: 10.7759/cureus.24415

- 11. Walker RJ, Strom Williams J, Egede LE. Influence of race, ethnicity and social determinants of health on diabetes outcomes. Am J Med Sci. 2016;351(4):366–373. 10.1016/j.amjms.2016.01.008

- 12. Shung DL, Au B, Taylor RA, et al. Validation of a machine learning model that outperforms clinical risk scoring systems for upper gastrointestinal bleeding. Gastroenterology. 2020;158(1):160–167. 10.1053/j.gastro.2019.09.009

- 13. Kino S, Hsu YT, Shiba K, et al. A scoping review on the use of machine learning in research on social determinants of health: Trends and research prospects. SSM - Population Health. 2021;15:100836. 10.1016/j.ssmph.2021.100836

- 14. Hammond G, Johnston K, Huang K, Joynt Maddox KE. Social determinants of health improve predictive accuracy of clinical risk models for cardiovascular hospitalization, annual cost, and death. Circ Cardiovasc Qual Outcomes. 2020;13(6):e006752. 10.1161/CIRCOUTCOMES.120.006752

- 15. Chen M, Tan X, Padman R. Social determinants of health in electronic health records and their impact on analysis and risk prediction: A systematic review. Journal of the American Medical Informatics Association : JAMIA. 2020;27(11):1764–1773. 10.1093/jamia/ocaa143

- 16. Han S, Zhang RF, Shi L, et al. Classifying social determinants of health from unstructured electronic health records using deep learning-based natural language processing. J Biomed Inform. 2022;127:103984.

- 17. Kino S, Hsu YT, Shiba K, et al. A scoping review on the use of machine learning in research on social determinants of health: Trends and research prospects. SSM - Population Health. 2021;15:100836. 10.1016/j.ssmph.2021.100836

- 18. Chen M, Tan X, Padman R. Social determinants of health in electronic health records and their impact on analysis and risk prediction: A systematic review. J Am Med Inform Assoc. 2020 Nov 1;27(11):1764–1773. doi: 10.1093/jamia/ocaa143. PMID: 33202021; PMCID: PMC7671639.

- 19. Thomas Craig KJ, Fusco N, Gunnarsdottir T, Chamberland L, Snowdon JL, Kassler WJ. Leveraging data and digital health technologies to assess and impact social determinants of health (SDoH): A state-of-the-art literature review. Online J Public Health Inform. 2021 Dec 24;13(3):E14. doi: 10.5210/ojphi.v13i3.11081. PMID: 35082976; PMCID: PMC8765800.

- 20. Wallace JW, Decosimo KP, Simon MC. Applying data analytics to address social determinants of health in practice. N C Med J. 2019 Jul-Aug;80(4):244–248. doi: 10.18043/ncm.80.4.244. PMID: 31278189.

- 21. Patra BG, Sharma MM, Vekaria V, et al. Extracting social determinants of health from electronic health records using natural language processing: A systematic review. J Am Med Inform Assoc. 2021 Nov 25;28(12):2716–2727. doi: 10.1093/jamia/ocab170. PMID: 34613399; PMCID: PMC8633615.

- 22. Cantor MN, Thorpe L. Integrating data on social determinants of health into electronic health records. Health Aff (Millwood). 2018 Apr;37(4):585–590. doi: 10.1377/hlthaff.2017.1252. PMID: 29608369.

- 23. Kuhn M. Building predictive models in R using the caret package. J Stat Softw. 2008;28(5):1–26. 10.18637/jss.v028.i05

- 24. Lee HA, Chen KW, Hsu CY. Prediction model for pancreatic cancer: A population-based study from NHIRD. Cancers (Basel). 2022;14(4):882.

- 25. Belsher BE, Smolenski DJ, Pruitt LD, et al. Prediction models for suicide attempts and deaths: A systematic review and simulation. JAMA Psychiatry. 2019;76(6):642–651.