Abstract

The ever-increasing number of chemicals has raised public concerns due to their adverse effects on human health and the environment. To protect public health and the environment, it is critical to assess the toxicity of these chemicals. Traditional in vitro and in vivo toxicity assays are complicated, costly, and time-consuming and may face ethical issues. These constraints raise the need for alternative methods for assessing the toxicity of chemicals. Recently, due to the advancement of machine learning algorithms and the increase in computational power, many toxicity prediction models have been developed using various machine learning and deep learning algorithms such as support vector machine, random forest, k-nearest neighbors, ensemble learning, and deep neural network. This review summarizes the machine learning- and deep learning-based toxicity prediction models developed in recent years. Support vector machine and random forest are the most popular machine learning algorithms, and hepatotoxicity, cardiotoxicity, and carcinogenicity are the frequently modeled toxicity endpoints in predictive toxicology. It is known that datasets impact model performance. The quality of datasets used in the development of toxicity prediction models using machine learning and deep learning is vital to the performance of the developed models. The different toxicity assignments for the same chemicals among different datasets of the same type of toxicity have been observed, indicating benchmarking datasets is needed for developing reliable toxicity prediction models using machine learning and deep learning algorithms. This review provides insights into current machine learning models in predictive toxicology, which are expected to promote the development and application of toxicity prediction models in the future.

Keywords: Toxicity, machine learning, deep learning, model, dataset, data quality

Impact Statement

Machine learning- and deep learning-based toxicity prediction models have become popular due to their ability to predict the toxicity of chemicals accurately and economically. There is not a comprehensive review that summarizes current developments and applications of machine learning and deep learning models for predicting various toxicity endpoints and discusses factors impacting model performance, especially the quality of datasets. This review aims to fill this cap by discussing the current machine learning and deep learning models to aid the development of more reliable toxicity prediction models using machine learning. We examine current machine learning and deep learning models for toxicity prediction from common toxicities, machine learning algorithms, and datasets. We also discuss the efforts that are crucial to improving the performance of toxicity prediction models in the future.

Introduction

The safety of chemical-containing products and the risks of environmental chemicals have become one of the most serious problems for people all over the world due to the ever-increasing number of chemicals. To reduce the potential adverse effects of chemicals on human health, it is crucial to assess the toxic effects associated with exposure to chemicals. Toxicity assessments have been used by regulatory decision-making bodies such as the U.S. Food and Drug Administration (FDA), U.S. Environmental Protection Agency (EPA), European Environment Agency, and European Medicines Agency (EMA) to ensure public safety by reducing human and environmental exposure to harmful chemicals. Currently, the standard methods of toxicity evaluation are based on animal experiments. However, these tests are constrained by time, cost, and ethical issues. Moreover, it is impossible to test such a large number of compounds for toxicological, regulatory, or drug development purposes via animal experimentation. To address these challenges, it is crucial to develop fast and economical alternatives to avoid conducting animal toxicity tests, including in vitro and in silico methods.

In recent decades, various computational methods such as structural alerts, read-across, and quantitative structure-activity relationship (QSAR) have been used to predict the toxicological effects of chemicals.1–14 QSAR builds a quantitative relationship between the structural or physicochemical characteristics of chemicals and their toxic effects. It has been one of the widely used methods to build toxicity prediction models. Recently, due to the continuous improvement of computational power, the emergence of big data, and the rapid development of machine learning (ML) and deep learning (DL) techniques, QSAR based on ML and DL has become increasingly prominent in predictive toxicology. The ability to automatically learn from data to perform predictions makes ML and DL very attractive computational techniques to predict toxicity for a large number of chemicals. Our group has used ML to estimate various physicochemical properties and toxicological activities of chemicals.1,2,4,6,8,9,15,16

Although enormous progress has been made in implementing ML- and DL-based models in predictive toxicology, there are growing interests in developing more reliable toxicity prediction models using ML and DL. A comprehensive review to summarize the current development and applications of ML and DL models in predictive toxicology may provide insight and promote and improve the development of more reliable ML and DL models in predictive toxicology. This review recapitulates current ML and DL models in predictive toxicology and discusses various factors related to the models and their performance.

Toxicity types

Many ML and DL models have been built to predict a variety of toxicity types. In Table 1, ML algorithms and their performance were analyzed for models from 82 papers. For paper selection, we conducted searches on PubMed (https://pubmed.ncbi.nlm.nih.gov/) using a combination of keywords including (“toxicity” or “carcinogenicity” or “cardiotoxicity” or “cytotoxicity” or “genotoxicity” or “hepatoxicity” or “acute toxicity” or “skin toxicity” or “reprotoxicity”) and (“machine learning” or “deep learning”). To ensure the reports are current, we only considered papers published after 2008. Furthermore, we focused on papers with classification models that reported balanced accuracy in their cross validation (on the entire dataset, not just the training dataset), holdout, and external validation. From this analysis, we summarized a total of 82 papers, specifically addressing models for predicting carcinogenicity, cardiotoxicity, cytotoxicity, genotoxicity, hepatoxicity, acute toxicity, skin toxicity, and reprotoxicity. This review intentionally excludes models geared toward predicting other types of toxicity to maintain a focused scope. The balanced accuracy values for cross validation, holdout validation, and external validation are given in this table. For some external validations, the external dataset was obtained by splitting the same dataset into training and external datasets, and we listed them as holdout validations in the table. For models without balanced accuracy reported, the reported sensitivity and specificity were used to calculate balance accuracy.

Table 1.

Summary of machine learning and deep learning models for toxicity prediction.

| Toxicity type | Dataset | Algorithm | Descriptors | Feature selection | Model validation | Ref | |||

|---|---|---|---|---|---|---|---|---|---|

| Endpoint | Size | CV | Holdout | External | |||||

| Carcinogenicity | In vivo (dog) | 25 | RF | MOE, MACCS |

SW, PCA1 |

0.72 | 0.7 | 59 | |

| In vivo (hamster) | 72 | RF | MOE, MACCS |

SW, PCA1 |

0.72 | 0.54 | 59 | ||

| In vivo (rat) | 829 | DT | PaDEL | NS | 0.697 a | 67 | |||

| 829 | kNN | PaDEL | NS | 0.806 a | 0.700 a | 67 | |||

| 829 | NB | PaDEL | NS | 0.640 a | 67 | ||||

| 829 | RF | PaDEL | NS | 0.734 a | 0.724 | 67 | |||

| 829 | SVM | PaDEL | NS | 0.802 a | 0.692 a | 67 | |||

| 852 | SVM | MOE, MACCS |

SW, PCA2 |

0.738 a | 0.825 a | 66 | |||

| 897 | RF | MOE, MACCS |

SW, PCA1 |

0.64 | 0.665 | 59 | |||

| 1003 | CNN | Multiple1 | NA | 0.663 a | 0.679 a | 64 | |||

| 1003 | EL b | PaDEL | PCA2 | 0.676 a | 0.665 | 65 | |||

| 1003 | EL c | PaDEL | PCA2 | 0.670 a | 0.687 | 65 | |||

| 1003 | EL d | PaDEL | PCA2 | 0.682 a | 0.709 | 65 | |||

| 1003 | kNN | Multiple1 | NA | 0.599 a | 0.648 a | 64 | |||

| 1003 | RF | PaDEL | PCA2 | 0.647 a | 65 | ||||

| 1003 | RF | Multiple1 | NA | 0.656 a | 0.663 a | 64 | |||

| 1003 | SVM | PaDEL | PCA2 | 0.638 a | 65 | ||||

| 1003 | SVM | Multiple1 | NA | 0.618 a | 0.701 a | 64 | |||

| 1003 | XGBoost | PaDEL | PCA2 | 0.647 a | 65 | ||||

| 1003 | XGBoost | Multiple1 | NA | 0.641 a | 0.609 a | 64 | |||

| 1042 | NB | Multiple2 | NS | 0.643 a | 63 | ||||

| 844 | MLP | Multiple3 | PCA2, F-score, MC-SA |

0.824 | 70 | ||||

| 844 | SVM | Multiple3 | PCA2, F-score, MC-SA |

0.834 | 70 | ||||

| 854 | RF | PaDEL | CAS | 0.782 | 0.58 | 61 | |||

| 374 | RF | PaDEL | CAS | 0.6 | 61 | ||||

| In vivo | 172 | SVM | SMILES | NS | 0.909 a | 0.904 | 69 | ||

| 665 | SVM | SMILES | NS | 0.756 a | 0.76 | 69 | |||

| Multicell | 818 | RF | MOE, MACCS |

SW, PCA1 |

0.685 | 0.685 | 59 | ||

| Single-cell | 1121 | RF | MOE, MACCS |

SW, PCA1 |

0.625 | 0.665 | 59 | ||

| In vivo (mouse) | 1391 | RF | MACCS, Morgan |

NS | 0.812 | 0.833 | 30 | ||

| In vivo (rat and mice) | 314 | kNN | MCZ | NS | 0.675 a | 62 | |||

| 384 | kNN | MCZ | NS | 0.764 a | 0.615 | 62 | |||

| Cardiotoxicity | IC50 (hERG) | 172 | EL e | PaDEL | NA | 0.703 a | 0.578 a | 51 | |

| 172 | kNN | PaDEL | NA | 0.656 a | 0.556 a | 51 | |||

| 368 | RF | Multiple4 | NS | 0.745 a | 45 | ||||

| 368 | SVM | Multiple4 | NS | 0.77 a | 45 | ||||

| 476 | RF | Multiple4 | NS | 0.49 a | 45 | ||||

| 476 | SVM | Multiple4 | NS | 0.63 a | 45 | ||||

| 620 | Bayesian | Multiple5 | NS | 0.828 a | 44 | ||||

| 620 | RP | ECFP_8 | NS | 0.845 a | 44 | ||||

| 697 | MLP | Multiple6 | LV, HC | 0.775 a | 0.556 a | 49 | |||

| 697 | RF | Multiple6 | LV, HC | 0.782 a | 0.546 a | 49 | |||

| 740 | Bayesian | Multiple5 | NS | 0.852 a | 0.658 a | 44 | |||

| 740 | RP | ECFP_8 | NS | 0.805 a | 44 | ||||

| 1163 | DT | Multiple7 | NS | 0.664 a | 54 | ||||

| 1163 | kNN | Multiple7 | NS | 0.700 a | 0.612 a | 54 | |||

| 1163 | NB | Multiple7 | NS | 0.649 a | 54 | ||||

| 1163 | RF | Multiple7 | NS | 0.641 a | 54 | ||||

| 1163 | SVM | Multiple7 | NS | 0.701 a | 0.597 a | 54 | |||

| 1668 | SVM | Multiple8 | NS | 0.912 a | 0.706 a | 46 | |||

| 1865 | EL f | PaDEL | LV, HC | 0.726 a | 0.68 | 50 | |||

| 1865 | RF | PaDEL | LV, HC | 0.693 a | 50 | ||||

| 1865 | SVM | PaDEL | LV, HC | 0.690 a | 50 | ||||

| 1865 | XGBoost | PaDEL | LV, HC | 0.712 a | 50 | ||||

| 1939 | ASNN | Multiple9 | PW | 0.846 a | 0.783 a | 48 | |||

| 1939 | kNN | Multiple9 | PW | 0.838 a | 0.735 a | 48 | |||

| 1939 | SVM | Multiple9 | PW | 0.853 a | 0.770 a | 48 | |||

| 1939 | RF | Multiple9 | PW | 0.836 a | 0.753 a | 48 | |||

| 2117 | kNN | Multiple4 | NS | 0.58 a | 45 | ||||

| 2117 | RF | Multiple4 | NS | 0.515 a | 45 | ||||

| 2117 | SVM | Multiple4 | NS | 0.505 a | 45 | ||||

| 2130 | LR | DRAGON, ECFP | HC | 0.701 | 47 | ||||

| 2130 | MLP | DRAGON, ECFP | HC | 0.644 | 47 | ||||

| 2130 | RR | DRAGON, ECFP | HC | 0.68 | 47 | ||||

| 2217 | kNN | Multiple4 | NS | 0.77 a | 45 | ||||

| 2217 | RF | Multiple4 | NS | 0.675 a | 45 | ||||

| 2217 | SVM | Multiple4 | NS | 0.66 | 45 | ||||

| 2317 | kNN | Multiple4 | NS | 0.855 a | 45 | ||||

| 2317 | RF | Multiple4 | NS | 0.815 a | 45 | ||||

| 2317 | SVM | Multiple4 | NS | 0.78 | 45 | ||||

| 2389 | Bayesian | PC | NS | 0.83 | 0.625 | 55 | |||

| 2389 | Bayesian | PC, ECFP_14 |

NS | 0.91 | 0.59 | 55 | |||

| 2389 | RF | Multiple4 | NS | 0.89 | 45 | ||||

| 2389 | SVM | Multiple4 | NS | 0.83 | 45 | ||||

| 2660 | GCN | MG | NA | 0.863 | 52 | ||||

| 2660 | SVM | Morgan | LV, HC, RFE |

0.837 | 52 | ||||

| 3024 | RF | Multiple4 | NS | 0.76 a | 45 | ||||

| 3024 | SVM | Multiple4 | NS | 0.75 a | 45 | ||||

| 3223 | kNN | Multiple4 | NS | 0.848 a | 45 | ||||

| 3223 | RF | Multiple4 | NS | 0.865 a | 0.789 a | 45 | |||

| 3223 | SVM | Multiple4 | NS | 0.79 a | 0.748 a | 45 | |||

| 3591 | RF | Multiple4 | NS | 0.765 a | 45 | ||||

| 3591 | SVM | Multiple4 | NS | 0.75 a | 45 | ||||

| 3634 | GCN | MG | NA | 0.81 | 52 | ||||

| 3634 | SVM | MD | NA | 0.809 | 52 | ||||

| 3699 | RF | Multiple4 | NS | 0.84 a | 45 | ||||

| 3699 | SVM | Multiple4 | NS | 0.785 a | 45 | ||||

| 3721 | ASNN g1 | Multiple9 | PW | 0.743 a | 0.770 a | 48 | |||

| 3721 | ASNN g2 | Multiple9 | PW | 0.727 a | 0.742 a | 48 | |||

| 3721 | EL g1h | Multiple9 | PW | 0.757 a | 0.776 a | 48 | |||

| 3721 | kNN g1 | Multiple9 | PW | 0.718 a | 0.629 a | 48 | |||

| 3721 | kNN g2 | Multiple9 | PW | 0.674 | 0.678 | 48 | |||

| 3721 | SVM g1 | Multiple9 | PW | 0.714 a | 0.736 a | 48 | |||

| 3721 | SVM g2 | Multiple9 | PW | 0.722 a | 0.737 a | 48 | |||

| 3721 | RF g1 | Multiple9 | PW | 0.687 a | 0.690 a | 48 | |||

| 3721 | RF g2 | Multiple9 | PW | 0.704 a | 0.716 a | 48 | |||

| 4556 | GCN | MG | NA | 0.756 | 52 | ||||

| 4556 | GCN | MG | NA | 0.802 | 52 | ||||

| 4556 | GCN | MG | NA | 0.778 | 52 | ||||

| 4556 | RF | Morgan | LV, HC, RFE |

0.734 | 52 | ||||

| 4556 | RF | Morgan | LV, HC, RFE |

0.743 | 52 | ||||

| 4556 | SVM | Morgan | LV, HC, RFE |

0.755 | 52 | ||||

| 5612 | RF | Multiple4 | NS | 0.91 a | 45 | ||||

| 5612 | SVM | Multiple4 | NS | 0.86 a | 45 | ||||

| 5804 | kNN g1 | Multiple4 | NS | 0.74 a | 45 | ||||

| 5804 | kNN g2 | Multiple4 | NS | 0.715 a | 45 | ||||

| 5804 | RF g1 | Multiple4 | NS | 0.718 a | 45 | ||||

| 5804 | RF g2 | Multiple4 | NS | 0.72 a | 45 | ||||

| 5804 | SVM g1 | Multiple4 | NS | 0.623 a | 45 | ||||

| 5804 | SVM g2 | Multiple4 | NS | 0.633 a | 45 | ||||

| 5984 | EL b | Multiple10 | HC | 0.798 a | 28 | ||||

| 5984 | NN-MDRA | Multiple10 | HC | 0.765 a | 28 | ||||

| 6247 | RF | Multiple4 | NS | 0.82 a | 45 | ||||

| 6247 | SVM | Multiple4 | NS | 0.80 a | 45 | ||||

| 7889 | MLP | Mol2vec, MOE | NS | 0.873 a | 42 | ||||

| 12,620 | MLP | Multiple11 | NS | 0.843 a | 122 | ||||

| 12,620 | GCNN | Multiple11 | NS | 0.81 | 122 | ||||

| 12,620 | CNN | Multiple11 | NS | 0.818 a | 122 | ||||

| 12,620 | EL t | Multiple11 | NS | 0.847 a | 0.770 a | 122 | |||

| 14,440 | MLP | Multiple12 | NA | 0.822 a | 0.830 a | 43 | |||

| 14,440 | DL i | Multiple13 | NA | 0.811 | 0.738 | 43 | |||

| 14,440 | GCNN | MG | NA | 0.8 | 0.797 | 43 | |||

| Cytotoxicity | Human cell line | 50 | SVM | Multiple14 | NS | 0.536 a | 82 | ||

| 547 | RF | PC | NS | 0.782 | 81 | ||||

| 651 | RF | PC | NS | 0.761 | 81 | ||||

| 965 | RF | PC | NS | 0.854 | 81 | ||||

| 1099 | RF | PC | NS | 0.862 | 81 | ||||

| 1244 | RF | PC | NS | 0.809 | 0.692 | 81 | |||

| 1300 | RF | Multiple14 | NS | 0.521 a | 82 | ||||

| 1300 | SVM | Multiple14 | NS | 0.529 a | 82 | ||||

| 1659 | RF | PC | NS | 0.808 | 81 | ||||

| 1685 | RF | PC | NS | 0.767 | 81 | ||||

| 2041 | RF | PC | NS | 0.796 | 81 | ||||

| 2258 | RF | PC | NS | 0.826 | 81 | ||||

| 3316 | EL b | Multiple15 | LV, HC | 0.725 a | 0.67 | 84 | |||

| 3316 | RF | Multiple15 | LV, HC | 0.582 a | 84 | ||||

| 5201 | RF | PC | NS | 0.783 | 81 | ||||

| 5429 | RF | PC | NS | 0.8 | 81 | ||||

| 5487 | RF | MACCS, Morgan |

NS | 0.85 | 0.836 | 30 | |||

| 5784 | RF | ECFP_4 | NS | 0.775 a | 85 | ||||

| 8833 | RF | PC | NS | 0.783 | 81 | ||||

| 27,492 | MLP | Morgan | 5-time | 0.689 | 83 | ||||

| 27,492 | RF | Morgan | 5-time | 0.683 | 83 | ||||

| 41,198 | EL b | Multiple15 | LV, HC | 0.704 | 84 | ||||

| 52,513 | EL b | Multiple15 | LV, HC | 0.746 | 84 | ||||

| 62,655 | EL b | Multiple15 | LV, HC | 0.74 | 78 | ||||

| Mouse cell line | 338 | RF | PC | NS | 0.83 | 81 | |||

| 378 | RF | PC | NS | 0.605 | 81 | ||||

| 4080 | RF | PC | NS | 0.759 | 81 | ||||

| 12,388 | EL b | Multiple15 | LV, HC | 0.856 | 84 | ||||

| Rat cell line | 3727 | RF | PC | NS | 0.783 | 81 | |||

| Genotoxicity | Combined r | 230 | DF | PC, OFG |

NS | 0.989 | 92 | ||

| 230 | DT | PC, OFG |

NS | 0.652 a | 92 | ||||

| Comet assay | 49 | DT | MLB | NS | 0.75 | 94 | |||

| GreenScreen assay | 1415 | RF | PaDEL | CAS | 0.908 | 0.541 | 55 | ||

| In vivo micronucleus assay | 641 | MLP | Multiple16 | LV, HC, RFE | 0.841 a | 0.906 a | 93 | ||

| 641 | DT | Multiple16 | LV, HC, RFE | 0.810 a | 93 | ||||

| 641 | kNN | Multiple16 | LV, HC, RFE | 0.806 a | 93 | ||||

| 641 | NB | Multiple16 | LV, HC, RFE | 0.819 a | 0.86 | 93 | |||

| 641 | RF | Multiple16 | LV, HC, RFE | 0.817 a | 0.937 | 93 | |||

| 641 | SVM | Multiple16 | LV, HC, RFE | 0.863 a | 0.877 a | 93 | |||

| Mammalian cells | 85 | MLP | PC, OFG |

NS | 0.915 | 92 | |||

| 85 | EL j | PC, OFG |

NS | 0.927 | 92 | ||||

| 85 | LR | PC, OFG |

NS | 0.902 | 92 | ||||

| 85 | RF | PC, OFG |

NS | 0.816 | 92 | ||||

| Ames assay | 49 | DT | MLB | NS | 0.83 | 94 | |||

| 658 | RF | MOE, MACCS |

PCA1, SW |

0.74 | 0.715 | 59 | |||

| 2262 | MLP | DRAGON, TSAR | SFFS, HC |

0.607 | 78 | ||||

| 2262 | Bayesian | DRAGON, TSAR | SFFS, HC |

0.67 | 78 | ||||

| 2262 | SVM | DRAGON, TSAR | SFFS, HC |

0.717 | 78 | ||||

| 4361 | EL b | Multiple9 | HC | 0.788 a | 28 | ||||

| 4361 | NN-MDRA | Multiple9 | HC | 0.793 a | 28 | ||||

| 6156 | RF | MACCS, Morgan |

NS | 0.84 | 0.85 | 30 | |||

| 6307 | GCNN | MG | NA | 0.805 a | 0.759 a | 77 | |||

| 6448 | NB | Multiple17 | NS | 0.694 a | 0.624 a | 76 | |||

| 6448 | RP | Multiple18 | NS | 0.757 | 0.653 | 76 | |||

| 6509 | MLP | Multiple19 | NS | 0.715 a | 75 | ||||

| 6509 | Light GBM | Multiple19 | NS | 0.793 a | 75 | ||||

| 6509 | RF | Multiple19 | NS | 0.726 a | 75 | ||||

| 6509 | SVM | Multiple19 | NS | 0.779 a | 75 | ||||

| 6509 | XGBoost | Multiple19 | NS | 0.776 a | 75 | ||||

| 6512 | AdaBoost | Multiple20 | NA | 0.788 a | 74 | ||||

| 6512 | DT | Multiple20 | NA | 0.767 | 74 | ||||

| 6512 | EL k | Multiple20 | NA | 0.801 a | 74 | ||||

| 6512 | EL e | Multiple20 | NA | 0.746 a | 74 | ||||

| 6512 | EL c | Multiple20 | NA | 0.813 a | 74 | ||||

| 6512 | kNN | Multiple20 | NA | 0.779 | 74 | ||||

| 6512 | RF | PaDEL | CAS | 0.815 | 0.532 | 55 | |||

| 6512 | SVM | Multiple20 | NA | 0.797 | 74 | ||||

| 8348 | MLP | PaDEL | NS | 0.795 | 73 | ||||

| 18,947 | MLP | ECFP | LRFS | 0.875 | 72 | ||||

| 18,947 | LR | ECFP | LRFS | 0.878 | 72 | ||||

| 18,947 | LSTM | ECFP | LRFS | 0.873 a | 72 | ||||

| Hepatotoxicity | DILI | 96 | Bayesian l1 | ECPF6 | NS | 0.702 | 0.586 a | 24 | |

| 96 | Bayesian l2 | ECPF6 | NS | 0.636 | 0.589 a | 24 | |||

| 102 | EL b | Multiple9 | HC | 0.490 a | 28 | ||||

| 102 | NN-MDRA | Multiple9 | HC | 0.509 a | 28 | ||||

| 116 | SVM | Toxicogenomics | NS | 0.731 a | 27 | ||||

| 221 | Bayesian l3 | ECPF6 | NS | 0.733 | 0.614 a | 24 | |||

| 221 | Bayesian l4 | ECPF6 | NS | 0.716 | 0.615 a | 24 | |||

| 221 | Bayesian l5 | ECPF6 | NS | 0.718 | 0.660 a | 24 | |||

| 221 | Bayesian l6 | ECPF6 | NS | 0.793 | 0.603 a | 24 | |||

| 312 | RF | PaDEL | NS | 0.574 a | 117 | ||||

| 312 | SVM | PaDEL | NS | 0.575 a | 117 | ||||

| 387 | DF | Mold2 | CR1 | 0.69 | 0.632 a | 138 | |||

| 401 | RF | ECFP4 | NS | 0.734 | 0.741 a | 0.583 a | 32 | ||

| 401 | SVM | ECFP5 | NS | 0.714 | 0.736 a | 0.598 a | 32 | ||

| 451 | DF | Mold2 | CR2 | 0.713 | 1 | ||||

| 617 | EL m | Multiple21 | CR3 | 0.65 a | 29 | ||||

| 617 | GLM | CCR | HC | 0.56 a | 29 | ||||

| 617 | MLP | Multiple22 | HC | 0.59 a | 29 | ||||

| 617 | QDA | CCR | HC | 0.63 | 29 | ||||

| 617 | RF | GA | HC | 0.61 a | 29 | ||||

| 617 | RPART | GA | HC | 0.54 | 29 | ||||

| 617 | SVM | Multiple23 | FT | 0.634 a | 29 | ||||

| 627 | SVM | PaDEL | CR4 | 0.98 | 35 | ||||

| 640 | kNN | Transcriptomic | KS | 0.698 | 26 | ||||

| 640 | MLP | Transcriptomic | KS | 0.721 | 26 | ||||

| 640 | RF | Transcriptomic | KS | 0.7 | 26 | ||||

| 640 | SVM | Transcriptomic | KS | 0.709 | 26 | ||||

| 661 | SVM | ECFP5 | NS | 0.671 | 0.697 | 32 | |||

| 694 | EL n | Dragon | LV, HC | 0.728 a | 121 | ||||

| 694 | EL o | Dragon | CR5 | 0.746 | 121 | ||||

| 694 | EL b | Dragon | CR5 | 0.744 | 121 | ||||

| 705 | Bayesian l7 | ECPF6 | NS | 0.748 | 0.572 | 24 | |||

| 705 | Bayesian l8 | ECPF6 | NS | 0.699 | 0.532 | 24 | |||

| 850 | RF | MACCS, Morgan |

NS | 0.82 | 0.86 | 30 | |||

| 914 | Bayesian | ECPF6 | NS | 0.736 | 0.711 a | 24 | |||

| 923 | SVM | ECFP5 | NS | 0.643 | 0.709 | 32 | |||

| 938 | Bayesian l9 | ECPF6 | NS | 0.657 | 0.56 | 24 | |||

| 938 | Bayesian l10 | ECPF6 | NS | 0.676 | 0.602 | 24 | |||

| 938 | Bayesian l11 | ECPF6 | NS | 0.721 | 0.558 | 24 | |||

| 966 | RF | Multiple24 | NS | 0.642 a | 0.611 a | 118 | |||

| 988 | MLP | gene | CR6 | 0.953 a | 34 | ||||

| 988 | SVM | gene | CR6 | 0.884 a | 34 | ||||

| 1087 | EL e | PaDEL | CR1 | 0.684 | 0.611 a | 120 | |||

| 1087 | EL p | PaDEL | CR1 | 0.637 | 0.608 a | 120 | |||

| 1241 | EL f | PaDEL | LV, HC | 0.700 a | 0.812 | 116 | |||

| 1241 | RF | PaDEL | LV, HC | 0.665 a | 0.804 a | 116 | |||

| 1241 | SVM | PaDEL | LV, HC | 0.657 a | 0.762 a | 116 | |||

| 1241 | XGBoost | PaDEL | LV, HC | 0.659 a | 0.741 a | 116 | |||

| 1254 | AdaBoost | Multiple25 | LV, HC | 0.749 | 33 | ||||

| 1254 | Bagging | Multiple25 | LV, HC | 0.759 | 33 | ||||

| 1254 | DT | Multiple25 | LV, HC | 0.667 | 33 | ||||

| 1254 | EL q | Multiple25 | LV, HC | 0.783 | 0.716 | 33 | |||

| 1254 | kNN | Multiple25 | LV, HC | 0.777 | 33 | ||||

| 1254 | KStar | Multiple25 | LV, HC | 0.736 | 33 | ||||

| 1254 | MLP | Multiple25 | LV, HC | 0.6 | 33 | ||||

| 1254 | NB | Multiple25 | LV, HC | 0.629 | 33 | ||||

| 1254 | RF | Multiple25 | LV, HC | 0.761 | 33 | ||||

| 1274 | EL e | PaDEL | CR1 | 0.83 | 120 | ||||

| 1274 | EL o | PaDEL | CR1 | 0.772 a | 120 | ||||

| 1597 | CNN | Morgan1 | NA | 0.89 | 37 | ||||

| 2144 | DT | Multiple26 | NS | 0.684 a | 0.667 | 25 | |||

| 2144 | kNN | Multiple26 | NS | 0.727 a | 0.702 a | 25 | |||

| 2144 | NB | Multiple26 | NS | 0.675 a | 25 | ||||

| 2144 | NN | CDK | NS | 0.715 | 25 | ||||

| 2144 | RF | Multiple26 | NS | 0.696 a | 0.725 | 25 | |||

| 2144 | SVM | Multiple26 | NS | 0.714 a | 0.741 a | 25 | |||

| In vivo (mouse) | 233 | EL b | Multiple27 | LV, HC | 0.735 a | 31 | |||

| 233 | RF | Multiple27 | LV, HC | 0.614 a | 31 | ||||

| Rat liver hypertrophy | 677 | DT | Multiple28 | CR1 | 0.817 a | 131 | |||

| 677 | EL u | Multiple28 | CR1 | 0.760 a | 131 | ||||

| 677 | KNN | Multiple28 | CR1 | 0.747 a | 131 | ||||

| 677 | LDA | Multiple28 | CR1 | 0.727 a | 131 | ||||

| 677 | NB | Multiple28 | CR1 | 0.727 a | 131 | ||||

| 677 | SVM | Multiple28 | CR1 | 0.745 a | 131 | ||||

| Rat liver hypertrophy | 677 | DT | Multiple28 | CR1 | 0.787 a | 131 | |||

| 677 | EL u | Multiple28 | CR1 | 0.720 a | 131 | ||||

| 677 | KNN | Multiple28 | CR1 | 0.710 a | 131 | ||||

| 677 | LDA | Multiple28 | CR1 | 0.697 a | 131 | ||||

| 677 | NB | Multiple28 | CR1 | 0.697 a | 131 | ||||

| 677 | SVM | Multiple28 | CR1 | 0.714 a | 131 | ||||

| Rat liver proliferative | 677 | DT | Multiple28 | CR1 | 0.780 a | 131 | |||

| 677 | EL u | Multiple28 | CR1 | 0.703 a | 131 | ||||

| 677 | KNN | Multiple28 | CR1 | 0.700 a | 131 | ||||

| 677 | LDA | Multiple28 | CR1 | 0.677 a | 131 | ||||

| 677 | NB | Multiple28 | CR1 | 0.687 a | 131 | ||||

| 677 | SVM | Multiple28 | CR1 | 0.700 a | 131 | ||||

| Acute toxicity | LC50 (Daphina magna) | 485 | ASNN | SIRMS | PW | 0.886 | 104 | ||

| 485 | DNN | Chemaxon | PW | 0.832 | 104 | ||||

| 485 | DNN | SIRMS | PW | 0.838 | 104 | ||||

| 485 | XGBoost | FCFP4 | PW | 0.861 | 104 | ||||

| 485 | EAGCNG | SMILES | NA | 0.828 | 104 | ||||

| 485 | EL x | Multiple29 | PW | 0.902 | 104 | ||||

| 660 | SVM | Multiple30 | LV, HC | 0.795 a | 95 | ||||

| LC50 (fathead minnow) | 400 | EL c | PaDEL | LV, HC | 0.843 | 106 | |||

| 573 | PNN | Multiple31 | CR7 | 0.813 | 107 | ||||

| 573 | MLPN | Multiple31 | CR7 | 0.803 | 107 | ||||

| 573 | RBFN | Multiple31 | CR7 | 0.798 | 107 | ||||

| 573 | SVC | Multiple31 | CR7 | 0.842 | 107 | ||||

| 573 | DT | Multiple31 | CR7 | 0.867 | 107 | ||||

| 961 | ASNN | SIRMS | PW | 0.857 | 104 | ||||

| 961 | XGBOOST | SIRMS | PW | 0.824 | 104 | ||||

| 961 | RF | SIRMS | PW | 0.873 | 104 | ||||

| 961 | RF | Chemaxon | PW | 0.838 | 104 | ||||

| 961 | TRANSNNI | SMILES | NA | 0.815 | 104 | ||||

| 961 | EL x | Multiple29 | PW | 0.852 | 104 | ||||

| IG50 (Tetrahymena pyriformis assay) | 1129 | SVM | Multiple32 | RFE | 0.837 | 105 | |||

| 1129 | SVM | Multiple32 | NA | 0.878 | 105 | ||||

| 1129 | LR | Multiple32 | RFE | 0.819 | 0.842 | 105 | |||

| 1129 | DT | Multiple32 | RFE | 0.812 | 0.864 | 105 | |||

| 1129 | kNN | Multiple32 | RFE | 0.829 | 0.863 | 105 | |||

| 1129 | PNN | Multiple32 | RFE | 0.872 | 0.95 | 105 | |||

| 1129 | SVM | Multiple32 | RFE | 0.878 | 0.941 | 105 | |||

| 1129 | LR | Multiple32 | NA | 0.666 | 105 | ||||

| 1129 | DT | Multiple32 | NA | 0.807 | 105 | ||||

| 1129 | kNN | Multiple32 | NA | 0.848 | 105 | ||||

| 1129 | PNN | Multiple32 | NA | 0.856 | 105 | ||||

| 1129 | SVM | Multiple32 | NA | 0.837 | 105 | ||||

| 1438 | ASNN | Chemaxon | PW | 0.924 | 104 | ||||

| 1438 | ASNN | SIRMS | PW | 0.927 | 104 | ||||

| 1438 | RF | SIRMS | PW | 0.91 | 104 | ||||

| 1438 | TCNN | SMILES | NA | 0.939 | 104 | ||||

| 1438 | GIN | SMILES | NA | 0.929 | 104 | ||||

| 1438 | EL x | Multiple29 | PW | 0.945 | 104 | ||||

| LD50 (oral, rat) | 80 | MLP | PaDEL | NS | 0.698 a | 0.589 a | 101 | ||

| 80 | LR | PaDEL | NS | 0.675 a | 0.735 a | 101 | |||

| 80 | RF | PaDEL | NS | 0.7 | 0.54 | 101 | |||

| 80 | SVM | PaDEL | NS | 0.66 | 0.825 | 101 | |||

| 1296 | EL Y | PaDEL, CDK2 |

FS | 0.84 | 103 | ||||

| 1153 | EL Y | PaDEL, CDK2 |

FS | 0.78 | 103 | ||||

| 1089 | EL Y | PaDEL, CDK2 |

FS | 0.74 | 103 | ||||

| 1083 | EL Y | PaDEL, CDK2 |

FS | 0.74 | 103 | ||||

| 8515 | AdaBoost | ECFP6 | NS | 0.581 a | 97 | ||||

| 8515 | Bayesian | ECFP6 | NS | 0.770 a | 0.756 a | 97 | |||

| 8515 | MLP | ECFP6 | NS | 0.685 a | 97 | ||||

| 8515 | kNN | ECFP6 | NS | 0.715 a | 97 | ||||

| 8515 | NB | ECFP6 | NS | 0.648 a | 97 | ||||

| 8515 | RF | ECFP6 | NS | 0.702 a | 97 | ||||

| 8515 | SVM | ECFP6 | NS | 0.745 a | 97 | ||||

| 8582 | AdaBoost | ECFP6 | NS | 0.597 a | 97 | ||||

| 8582 | Bayesian | ECFP6 | NS | 0.795 a | 0.783 a | 97 | |||

| 8582 | MLP | ECFP6 | NS | 0.688 a | 97 | ||||

| 8582 | kNN | ECFP6 | NS | 0.719 a | 97 | ||||

| 8582 | NB | ECFP6 | NS | 0.616 a | 97 | ||||

| 8582 | RF | ECFP6 | NS | 0.718 a | 97 | ||||

| 8582 | SVM | ECFP6 | NS | 0.684 a | 97 | ||||

| 8613 | AdaBoost | ECFP6 | NS | 0.623 | 97 | ||||

| 8613 | Bayesian | ECFP6 | NS | 0.653 | 0.753 a | 97 | |||

| 8613 | MLP | ECFP6 | NS | 0.754 | 97 | ||||

| 8613 | kNN | ECFP6 | NS | 0.731 | 97 | ||||

| 8613 | NB | ECFP6 | NS | 0.698 | 97 | ||||

| 8613 | RF | ECFP6 | NS | 0.735 | 97 | ||||

| 8613 | SVM | ECFP6 | NS | 0.718 | 97 | ||||

| 10,863 | EL z | ISIDA | GTM | 0.69 | 100 | ||||

| 11,981 | EL z | ISIDA | GTM | 0.72 | 100 | ||||

| 11,981 | RF | ISIDA | GTM | 0.74 | 100 | ||||

| 11,981 | SVM | ISIDA | GTM | 0.73 | 100 | ||||

| 11,981 | NB | ISIDA | GTM | 0.64 | 100 | ||||

| 13,544 | EL z | ISIDA | GTM | 0.87 | 100 | ||||

| 132,979 | LLL | ECFP_4 | lV, HC | 0.692 | 0.7365 | 114 | |||

| 132,979 | LLL | FCFP_4 | lV, HC | 0.679 | 114 | ||||

| 132,979 | LLL | Interactions | lV, HC | 0.62 | 114 | ||||

| Reprotoxicity | AR binding | 1662 | EL v | PaDEL | CR8 | 0.78 | 140 | ||

| AR agonist | 1659 | EL v | PaDEL | CR8 | 0.86 | 140 | |||

| AR antagonist | 1525 | EL v | PaDEL | CR8 | 0.74 | 140 | |||

| DIDT | 284 | AdaBoost | Multiple33 | LV, HC | 0.748 | 90 | |||

| 284 | DT | Multiple33 | LV, HC | 0.733 | 90 | ||||

| 284 | kNN | Multiple33 | LV, HC | 0.74 | 90 | ||||

| 284 | NB | Multiple33 | LV, HC | 0.819 | 90 | ||||

| 284 | RF | Multiple33 | LV, HC | 0.723 | 90 | ||||

| 284 | RP | Multiple33 | LV, HC | 0.78 | 90 | ||||

| 284 | SVM | Multiple33 | LV, HC | 0.794 | 90 | ||||

| 286 | EL c | Multiple34 | LV | 0.949 | 89 | ||||

| 286 | SVM | Multiple34 | LV | 0.878 a | 89 | ||||

| 290 | NB | Multiple35 | GA | 0.751 a | 91 | ||||

| ECTA | 356 | RF | PaDEL | CAS | 0.808 | 0.567 | 61 | ||

| ER binding | 222 | SVM | SMILES | CR9 | 0.838 a | 0.817 | 69 | ||

| 1812 | DF | Mold2 | LV | 0.744 | 0.562 | 9 | |||

| 3308 | DF | Mold2 | LV | 0.862 | 0.576 a | 2 | |||

| 1677 | EL w | Multiple36 | CR10 | 0.59 | 88 | ||||

| In vivo s | 1458 | MLP | PaDEL | NS | 0.810 a | 18 | |||

| 1458 | DT | PaDEL | NS | 0.776 a | 18 | ||||

| 1458 | kNN | PaDEL | NS | 0.805 a | 18 | ||||

| 1458 | NB | PaDEL | NS | 0.730 a | 18 | ||||

| 1458 | RF | PaDEL | NS | 0.801 a | 18 | ||||

| 1823 | MLP | PaDEL | NS | 0.785 a | 18 | ||||

| 1823 | DT | PaDEL | NS | 0.757 a | 18 | ||||

| 1823 | EL f | PaDEL | LV, HC | 0.857 a | 0.829 a | 17 | |||

| 1823 | kNN | PaDEL | NS | 0.768 a | 18 | ||||

| 1823 | NB | PaDEL | NS | 0.720 a | 18 | ||||

| 1823 | RF | PaDEL | LV, HC | 0.815 a | 0.793 a | 17 | |||

| 1823 | RF | PaDEL | NS | 0.780 a | 18 | ||||

| 1823 | SVM | PaDEL | LV, HC | 0.808 a | 0.785 a | 17 | |||

| 1823 | SVM | PaDEL | NS | 0.799 a | 18 | ||||

| 1823 | XGBoost | PaDEL | LV, HC | 0.811 a | 0.794 a | 17 | |||

| Skin | LLNA | 194 | DT | gene | NS | 0.825 | 111 | ||

| LLNA | 1416 | SVM | Multiple37 | NS | 0.734a | 0.735a | 110 | ||

| LLNA | 1416 | RF | Multiple37 | NS | 0.716a | 0.658a | 110 | ||

| GARD assay | 108 | SVM | gene | NS | 0.884a | 111 | |||

| Human cell line | 102 | DT | Multiple38 | NS | 0.85 | 112 | |||

| Irritation | 6415 | LLL | PC | LV, HC | 0.668 | 114 | |||

| 6415 | LLL | ECFP_4 | LV, HC | 0.68 | 0.7565 | 114 | |||

| 6415 | LLL | FCFP_4 | LV, HC | 0.678 | 114 | ||||

| 6415 | LLL | Interactions | LV, HC | 0.59 | 114 | ||||

In descriptors: MACCS: Molecular Access System descriptors. MOE: a set of molecular descriptors calculated using the MOE (Molecular Operating Environment) software package. PaDEL: PaDEL (Prediction and Activity of Chemicals) descriptors refer to a set of molecular descriptors generated by the PaDEL-Descriptor software tool. MCZ: MolConnZ chemical descriptors. Morgan: Morgan circular fingerprints. PC: physicochemical descriptors OFG: organic functional groups. MD: molecular descriptor. MLB: metal-ligand binding-derived descriptors including covalent index (CI), cation polarizing power (CPP), their reverse values (1/CI) and (1/CPP), and combined descriptor. TSAR: Topological Surface Area and Reactivity descriptors. LRRS: the L1 regularization/Lasso regression to remove irrelevant descriptors. CCR: concentration-response curve ranks. GA: gender and age demographic features. ISIDA: ISIDA property-label molecular descriptors.

Multiple1: Seven types of molecular fingerprints were utilized: CDK, CDKExt, CDKGraph, MACCS, PubChem, KR, and KRC. Each of these fingerprints, along with six physicochemical and structural descriptors, was used to construct seven models. The validation results display the average performance of these models. Multiple2: the combination of ECFPs (a type of molecular fingerprint) and 22 physicochemical and structural descriptors. Multiple3: 3778 descriptors, encompassing various categories, including constitutional descriptors, electronic descriptors, physicochemical properties, topological indices, geometrical molecular descriptors, and quantum chemistry descriptors. Multiple4: Four molecular fingerprints were utilized: Molecular Accession System (MACCS) keys, PubChem fingerprints, Extended Connectivity Fingerprints (ECFP), and Morgan fingerprints. Each model was constructed using one type of fingerprint, and the validation results display the average values. Multiple5: six fingerprints: ECFP, FCFP, LCFP, EPFP, FPFP, and LPFP. Multiple6: 2D Chemopy, 2D MOE, and PaDEL descriptors were used. Three combinations of descriptors (only 2D, only fingerprint, and 2D with fingerprint) were explored for each model. The validation results display the average performance of the three models. Multiple7: 13 molecular descriptors and 5 PaDEL descriptors were used. Both the fingerprints and molecular descriptors were used to build models. The validation results display the average values of these models. Multiple8: Models were built using only 4D-FP, only MOE, and combinations of 4D-FP and MOE. The averages of the models were shown in the validation results. Multiple9: CDK (3D, 274 descriptors), Dragon v.6 (3D, 4885 descriptors grouped in 29 different blocks), Dragon6_part (blocks: 1 28), OEstate and ALogPS, ISIDA Fragments (length 2–4), GSFrag, Mera, and Mersy (3D), Chemaxon (3D, 499 descriptors), Inductive (3D), Adriana (3D, 211 descriptors), Spectrophores (3D), QNPR(length 1–3), Structural Alerts, and Simplex Representation of Molecular Structure (SIRMS). All the above descriptor packages were used individually to create classification models. The averages of the models were shown in the validation results. Multiple10: The combination of ECFP4-like circular fingerprints (Morgan), PaDEL, SiRMS, and DRAGON. Multiple11: The combination of 2D and 3D physicochemical descriptors (DESC) from Mordred, molecular graph features, EFCP2 and PubChem from PyBioMed, SMILES vectorizer, and fingerprint vectorizer. Multiple12: Models were built using 995 molecular descriptors and molecular fingerprints from PyBioMed (1024 EFCP fingerprints and 881 PubChem fingerprints) separately. The average values of the models were shown in the validation results. Multiple13: 995 molecular descriptors, molecular fingerprints from PyBioMed (1024 EFCP fingerprints and 881 PubChem fingerprints), and graph-based GCN were used to train the model. Multiple14: The models were constructed using 4D-FPs, MOE (1D, 2D, and 2.5D), noNP (4D Fingerprints excluding NP) combined with MOE, and CATS2D trial descriptor pools. The validation results display the average results of the models. Multiple15: Models were constructed using 10 descriptors, including nine PaDEL descriptors (AD2D, APC2D, Estate, KR, KRC, MACCSFP, PubChem, FP4C, and FP4) along with ECFP. The validation results display the average performance of these models. Multiple16: Models were built using six fingerprints (CDK fingerprint, CDK Extended fingerprint, Estate fingerprint, MACCS fingerprint, PubChem Substructure fingerprint, and 325 physicochemical + structural descriptors). The validation results show the average values of these models. Multiple17: Models were constructed using four molecular descriptors (Apol, No. of H donors, Num-Rings, and Wiener) combined with ECFP_14, 22 molecular descriptors (physicochemical and structural descriptors) combined with ECFP_14, and again, four molecular descriptors (Apol, No. of H donors, Num-Rings, and Wiener). The validation results display the average values of these models. Multiple18: Models were built using four molecular descriptors (Apol, No. of H donors, Num-Rings, and Wiener) combined with ECFP_14. Multiple19: Models were constructed using 97 structural and physicochemical descriptors as well as ECFP fingerprints. The validation results show the average values of the models’ performance. Multiple20: 117 descriptors, including constitutional, topological, hybrid, and van der Waals surface descriptors. Multiple21: Ensemble models were constructed using three models built on gene expression data, 20 features corresponding to information on the percentage of reported adverse events for each drug compound by gender and age group demographic (FAERS), 32 features corresponding to concentration-response curve ranks (Tox21), and MOLD2. The average values of the model performance were shown in the validation results. Multiple22: Models were built using 20 features corresponding to information on the percentage of reported adverse events for each drug compound by gender and age group demographic (FAERS) as well as 32 features corresponding to concentration-response curve ranks (Tox21). The average results of the two models were shown in the validation results. Multiple23: Models were built on gene expression and MOLD2 separately. Average results were calculated for the validation results. Multiple24: The combination of MOE, PaDEL, ECFP6, and transporter inhibition profile. Multiple25: 30 physicochemical properties and 55 topological geometry properties. Multiple26: Eight Models were constructed using each of seven fingerprints (Estate, CDK, CDK extended, Klekota–Roth, MACCS, PubChem, SubFP) and a set of molecular descriptors containing 12 key physical–chemical properties. The average of the models was shown in the results. Multiple27: Individual models were built on CDK, Dragon, Mold2, and HTS descriptors separately. The average model performance was calculated for each algorithm. Multiple28: The chemical structure descriptors include 51 molecular descriptors generated using the QikProp software (Schrödinger, version 3.2) and 4325 substructural fingerprints generated using publicly available SMARTS sets (FP3, FP4, and MACCS) from OpenBabel, PaDEL, and PubChem. Multiple29: Consensus models were built on top performed individual models built on Chemaxon descriptors, Inductive descriptors, Spectrophores descriptors, SIRMS descriptors, ECFP4 fingerprint, and FCFP4 fingerprint. Multiple30: Individual models were built on HYBOT descriptors and SiRMS descriptors. The average model performance was calculated for each algorithm. Multiple31: the physical, constitutional, geometrical, and topological properties. Multiple32: the combination of simple molecular properties, molecular connectivity and shape, electrotopological state, quantum chemical properties, and geometrical properties. Multiple33: WHIM descriptors, connectivity indices, topological charge indices, 3D-MORSE descriptors, topological descriptors, molecular properties, RDF descriptors, information indices, constitutional descriptors, functional group counts, and getaway descriptors. Multiple34: the combination of structural descriptors and physicochemical, geometrical, and topological descriptors. Multiple35: the combination of element counts, molecular properties, molecular property counts, surface area and volume, and topological descriptors and ECFP6. Multiple36: descriptors used in each model developed by research groups that participated in the Collaborative Acute Toxicity Modeling Suite. Multiple37: Models were built on up to two different sets of molecular descriptors from MOE, PaDEL, MACCS, MORGAN2, and OASIS (OASIS skin sensitization protein binding fingerprint). The average values of different models were calculated in the validation results. Multiple38: outputs from Derek Nexus, exclusion criteria, results from in chemico/in vitro assays, and the kNN potency prediction model into a decision tree to predict skin sensitization potential.

In Feature Selection: NS: not specified. This indicates that the reference does not clearly specify the feature selection methods used. NA: not applicable. This term is used when no feature selection methods are applied in the reference. 5-time: Atom Environments are only included if they appear at least five times in the data set. CAS: CfsSubsetEval attribute selection. CFS: correlation-based feature selection algorithm. F-score: the Fischer score. GTM: generative topographic mapping analysis. HC: high correlation removal for feature selection. LV: low variance removal for feature selection. MC-SA: Monte Carlo simulated annealing (MC-SA) procedure. MG: molecular graph. PCA1: principal component analysis (PCA), PCA2: Pearson correlation analysis. PW: pairwise decorrelation method. RFE: recursive feature elimination. SFFS: sequential forward feature selection algorithm. SW: stepwise feature selection. CR1: conditional removal by eliminating descriptors with constant values across all drugs and those with less than 5% of drugs exhibiting non-zero values. CR2: conditional removal by eliminating descriptors with constant values across all drugs. CR3: High correlation removal for feature selection for FAERS and Tox21 dataset; for gene expression descriptors, Fisher’s exact test was used to determine the gene’s significance (P value < 0.01) and select features. CR4: excluded all descriptors that failed in 5% of molecules and removed low-variance descriptors. CR5: Two methods were used. First, the full set of molecular descriptors were selected, and each molecular descriptor was weighted with respect to the class label. Second, a random number of descriptors were selected and weighted. Varying cutoff weights were used to select descriptors. CR6: Two methods were used: (1) differential gene expression analysis and (2) feature selection based on weight values of feature vectors. CR7: Both the correlative and model-fitting approaches were used to select relevant descriptors. CR8: KNN coupled with genetic algorithms were used to select a minimized optimal subset of molecular descriptors. CR9: (1) remove those near zero or zero variance descriptors; (2) remove any one of two descriptors with correlation > 0.95; and (3) calculate the descriptor importance by receiver operating characteristic (ROC) area and then retain those descriptors with importance > 1.5. CR10: feature selection methods used by each individual model such as GA and RF.

AR: androgen receptor; ECTA: embryonic cell transformation assay; ER: estrogen receptor; LD50: the dose of a substance required to cause death in 50% of a tested population of organisms; IC50: the concentration of a substance required to inhibit a specific biological or biochemical function by 50% in an in vitro assay; Multicell: experimental bioassay results of multiple carcinogenicity sex/species cell (e.g., rat male, rat female, mouse male, etc.); Single-Cell: experimental bioassay results of one or more species; DILI: drug-induced liver injury; DIDT: drug-induced developmental toxicity; EL: ensemble learning with base classifier specified in the parenthesis; CV: cross validation; ASNN: associative neural network; CNN: convolutional neural network; DT: decision tree; GBM: gradient boosting machines; GCNN: graph convolutional neural network; GLM: generalized linear model; RF: random forest; kNN: k-nearest neighbors; LDA: linear discriminant analysis; LR: linear regression; MLP: multilayer perceptron; NB: Naïve Bayes; NN-MDRA: nearest neighbor-multidescriptor read-across; QDA: quadratic discriminant analysis; RF: random forest; RP: recursive partition; RPART: recursive partitioning and regression trees; RR: ridge regression; SVM: support vector machine; TCNN: transformer convolutional neural network; GIN: graph isomorphism network; EAGCNG: edge attention-based multirelational graph convolutional.

Average values of balanced accuracy when multiple values were calculated in the literature.

The ensemble model developed using RF models and various descriptors.

The ensemble model developed using SVM models and various descriptors.

The ensemble model developed using XGBoost models and various descriptors.

The ensemble model developed using kNN models and various descriptors.

The ensemble model developed using SVM, RF, and XGBoost algorithms with different descriptors.

Two models developed with the compounds classified as blockers and non-blockers using thresholds of 1 and 10 µm, respectively.

The ensemble model developed using ASNN, kNN, SVM, and RF models with different descriptors and a 1-µm threshold to classify blockers and non-blockers in the dataset.

The ensemble model developed using MLP and GCNN models with different descriptors.

The ensemble model developed using LR, MLP, and RF models with the same descriptors.

The ensemble model developed using DT models and various descriptors.

Two models generated using compounds from the same dataset, with compounds classified as “active” and “non-active” using two thresholds: DILI severity scores score = 3 and score ⩾ 2, respectively.

Four models built using compounds from the same dataset, with compounds classified as “active” and “non-active” using four thresholds: partition hybrid scoring system threshold = 4, partition hybrid scoring system threshold = 8, Ro2 scoring system threshold = 3, and Ro2 scoring system threshold = 8, respectively.

Two models developed based compounds from the same dataset, with compounds classified as “active” and “non-active” using two thresholds: most and less DILI (arbitrary threshold ⩾ 3) and most DILI with arbitrary threshold = 4, respectively.

Three models developed based on DILIRank’s DILI severity datasets where compounds were classified as “active” versus “non-active” using three thresholds: severe liver damage (threshold ⩾ 6), moderate and severe liver damage (threshold ⩾ 4), and any kind of liver damage (threshold ⩾ 1), respectively.

The ensemble model developed using GLM, RF, SVM, NB, RPART, and QDA models with different descriptors.

The ensemble model developed using kNN, SVM, NB, and DT models with different descriptors.

The ensemble model developed using NB models and various descriptors.

The ensemble model developed using kNN, SVM, and NB algorithms and various descriptors.

The ensemble model developed using MLP, DT, NB, RF, kNN, KStar, Bagging, and AdaBoost models and the same descriptors.

Toxicity of chemicals is determined by combining results of the Ames test, in vitro mammalian assay, and in vivo micronucleus assay.

The in vivo studies observing sperm reduction, gonadal dysgenesis, abnormal ovulation, teratogenicity and infertility growth, and retardation.

Ensemble models developed using MLP, GCNN, and CNN with different descriptors.

Ensemble model developed using LDA, NB, SVM, DT, and kNN models with different descriptors.

Ensemble models developed using all the models built by research groups that participated in the Collaborative Modeling Project for Androgen Receptor Activity.

Ensemble models were built using models developed by research groups that participated in the Collaborative Estrogen Receptor Activity Prediction Project.

Ensemble models were built using ASNN, DNN, XGBoost, EACNG, TCNN, and GIN.

Ensemble models were built using models developed by research groups that participated in the Collaborative Acute Toxicity Modeling Suite.

The ensemble model developed using SVM, RF, and NB models.

As shown in Figure 1, the most studied toxicity types are cardiotoxicity with 504 models, hepatotoxicity with 293 models, and carcinogenicity with 147 models. Despite the 141 models developed for reprotoxicity, 108 models were developed by Feng et al. 17 and Jiang et al.18; therefore, this toxicity type is less studied. For the various endpoints of these toxicity types, both ML and DL have been applied to develop the prediction models.

Figure 1.

Distribution of machine learning and deep learning models for toxicity prediction and publications for different toxicity types. The x-axis indicates toxicity types. The left y-axis shows the number of models (bars), and the right y-axis depicts the number of publications (red squares).

Hepatotoxicity is one of the main causes of drug clinical trial termination and drug withdrawal because the liver is the main organ for the metabolism of drugs and compounds. 19 Drug-induced liver injury (DILI) refers to the damage to a large number of hepatocytes and other liver cells. 20 In recent decades, DILI has become one of the most concerning topics in drug discovery and development.21,22 When building prediction models, DILI is often simplified to a classification problem. For example, in Chen et al.’s 23 work, drugs were annotated into three categories: “no DILI,” “less DILI,” and “most DILI.” Various classification models have been developed based on well-known ML algorithms such as Bayesian, 24 support vector machines (SVMs),25–27 ensemble modeling (EL),28,29 random forest (RF),30–32 k-nearest neighbors (kNN),25,33 and deep neural networks (DNNs) such as multilayer perceptron (MLP)26,34–36 and convolutional neural network (CNN).36,37

Cardiotoxicity is another important toxicity that requires assessment because the related side effects like cardiac arrest may cause serious undesirable consequences. The occurrence of cardiotoxicity is closely connected to the human ether-a-go-go related gene (hERG), a potassium ion channel protein. The inhibition of hERG can lead to potentially fatal QT prolongation syndrome. 38 Therefore, screening of drug candidates with hERG inhibition potential early in drug discovery is crucial to prevent the candidates from entering the next phase in the drug development process. In recent years, the large hERG datasets extracted from BindingDB, 39 PubChem Bioassay, 40 ChEMBL bioactivity database, 41 and other literature-derived data 42 allow for developing QSAR models based on ML and DL algorithms.42–51 In these QSAR models, molecules are categorized as hERG blockers and non-blockers based on the activity threshold that ranges from 1 to 40 µm. Although 1 and 10 µm have been commonly used as the activity thresholds, there is no widely accepted threshold, and multiple threshold settings are often used to change the compositions of the training datasets. Therefore, many ML and DL models, including graph convolutional neural network (GCN) by Chen et al., 52 DNN by Cai et al., 42 hERG-Att by Kim et al., 53 Deep HIT by Ryu et al. 43 and BayeshERG as presented by Kim et al., 53 have been reported for the same training dataset.47,50,51,54 This is one reason that many models (504 models) have been reported for cardiotoxicity prediction. As shown in Table 1, by holdout validation, Liu et al. 55 achieved a balanced accuracy of 0.91 using Bayesian models on a dataset containing 2389 compounds. Chen et al. 52 reported a balanced accuracy of 0.863 on a dataset of 2660 compounds, and Cai et al. 42 reported an average balanced accuracy of 0.873 on 7889 compounds. Using cross validations, Siramshetty et al. 45 obtained an average balanced accuracy of 0.865 with RF on 3223 compounds and Shen et al. 46 reached an average balanced accuracy of 0.912 on 1668 compounds. In external validation conducted by Siramshetty et al. 45 RF and SVM models yielded average balanced accuracy of 0.91 and 0.86 on 4556 compounds, respectively. In addition to hERG inhibition, ML and DL models were developed for predicting cardiotoxicity as a drug-induced side effect.56,57 For example, DL models were developed to predict drug-induced cardiotoxicity. 42

Carcinogenicity is also one of the most important toxicity types since chemical carcinogens can interact with DNA or damage cellular metabolic processes and cause undesirable effects such as cancer. Carcinogenicity of compounds is generally measured using animal experiments including the 2-year animal carcinogenicity study and the 26-week Tg-rasH2 mice carcinogenicity test. 58 However, due to constraints such as labor, time, cost, and ethical concerns with animal studies, computational methods have been used to predict carcinogenicity to supplement rodent carcinogenicity bioassays. Recently, diverse ML approaches have been developed based on the Carcinogenic Potency Database (CPDB). 59 As shown in Table 1, most ML and DL models were built using datasets from rodent bioassays such as rat, mice, and hamster. Carcinogenicity has been widely studied, with 147 models published using ML60–69 and DL algorithms.64,70 Similar to carcinogenicity, mutagenicity may also result in certain diseases such as cancer by causing abnormal genetic mutations such as changes in the DNA of a cell. The Ames test is commonly used to test the mutagenicity of chemicals using a short-term bacterial reverse mutation assay. 71 Currently, most of the databases for mutagenicity are based on in vitro experiments. In the past few years, several ML28,30,61,72–78 and DL72,73,75,77 classification models have been developed for predicting mutagenicity. Most models are built on Ames mutagenicity benchmark datasets developed by Hansen et al. 79

Cytotoxicity is an adverse event that may result in cell lysis, cell growth inhibition, or cell death. The experimental evaluation of cytotoxicity measures the survival rates of a cell line following treatment with a specific substance. 80 In drug discovery, evaluating cytotoxicity is an early step for toxicity assessment of a drug candidate. As shown in Table 1, some computational cytotoxicity prediction models have been developed using ML and DL algorithms such as RF,30,81–85 SVM, 82 and MLP. 83

Reprotoxicity includes endpoints such as developmental toxicity and reproductive toxicity. Developmental toxicity is the adverse effect of a substance on an organism’s development which may cause the death of the developing organism, structural or functional abnormality, or altered growth. Reproductive toxicity can cause significant harm to the fetus, including teratogenicity, growth retardation, and dysplasia. The in vitro testing of pregnant animals, preferably rats and rabbits, allows for the prediction of toxic effects in both the dams and their fetuses.86,87 In addition to traditional in vivo methods, computational approaches, including ML models2,9,17,18,88–91 and DL models, 18 have been used as alternative methods to assess several endpoints of reproductive toxicity such as sperm reduction, gonadal dysgenesis, abnormal ovulation, teratogenicity, and infertility growth retardation.

In vitro, chemical genotoxicity is toxicity from chemical interactions with genomic material. Genotoxicity has been extensively investigated with computational models by associating physicochemical properties and structural features of chemicals with their experimentally tested in vitro genotoxicity endpoints. Both ML and DL models have been reported for predicting genotoxicity with toxicity endpoints on mammalian cells, 92 in vivo micronucleus assay, 93 comet assay, 94 and Ames assay.74,76,77

Acute toxicity represents the immediate adverse change occurring within 24 h of exposure to a substance and the assessment continues for a mandatory observation period of at least 14 days. Assessing acute toxicity is crucial for determining the immediate harmful impacts of chemicals and is a fundamental aspect of chemical safety regulation to classify and manage chemical hazards. 95 For example, EPA has established four categories for oral, dermal, and inhalation toxicities to represent the level of toxicity based on median lethal dose (LD50) or median lethal concentration (LC50). 96 LD50 or LC50 refers to the amount expected to kill 50% of the tested animals. Traditionally, these studies involved conducting experiments on live animals, exposing them to chemicals via different routes such as ingestion, skin contact, or inhalation, which is costly, time-consuming, and ethically problematic due to animal use. To address these challenges, an increasing number of ML97–100 and DL101,102 classification models have been developed to improve toxicity prediction, particularly in the context of acute oral toxicity. Recently, a collaborative effort between the NTP Interagency Center for the Evaluation of Alternative Toxicological Methods (NICEATM) and the EPA National Center for Computational Toxicology (NCCT) has generated a comprehensive repository of acute oral LD50 data on about 12,000 chemicals. 103 These data have been made available to the scientific community to develop new computational models for predicting acute oral toxicity essential for regulatory purposes. Furthermore, in addition to acute oral toxicity, ML and DL models have been applied to study other representative acute toxicity endpoints including Tetrahymena pyriformis IGC50,104,105 fathead minnow LC50,106,107 and Daphnia magna LC50.95,104 These efforts contributed to the advancement of predictive models across a range of acute toxicity assessments.

Skin toxicity refers to the adverse effects or damage inflicted on the skin when exposed to potentially harmful or toxic substances. These effects include irritations, rashes, burns, or other negative reactions on the skin. Skin toxicity plays a vital role in assessing the safety of products, particularly in determining their potential to induce skin-related health issues. The evaluation of skin irritation/corrosion has been included in regulatory requirements and must be fulfilled before a compound enters the market. 108 In addition, skin sensitizing hazard represents another important regulatory endpoint, particularly relevant to allergic contact dermatitis. Currently, the murine lymph node assay (LLNA) 109 has been considered the gold standard in animal experiments for evaluating the potential for skin sensitization. This method quantifies the proliferation rates of cells within the draining lymph nodes of mice. However, to address the concerns associated with in vivo studies and promote ethical alternatives, there has been an increasing number of ML and DL models developed to predict skin sensitization110–113 and skin irritation. 114 These computational models leverage diverse datasets and advanced techniques to provide predictive insights, thereby advancing our ability to assess and mitigate skin-related toxicological risks.

ML and DL models

The toxicity of chemicals can be experimentally determined using animal models, but the experimental evaluation is time-consuming and costly. Therefore, ML and DL have become an attractive approach to evaluate chemical toxicity. There are two types of ML models: regression and classification models. Regression models are built on quantitative toxicity values such as LD50 and LC50, while classification models are built on categorical toxicity values. In the predictive toxicology field, classification models are more popular. In this view, only classification models for predicting two-class toxicity such as active and inactive will be recapped.

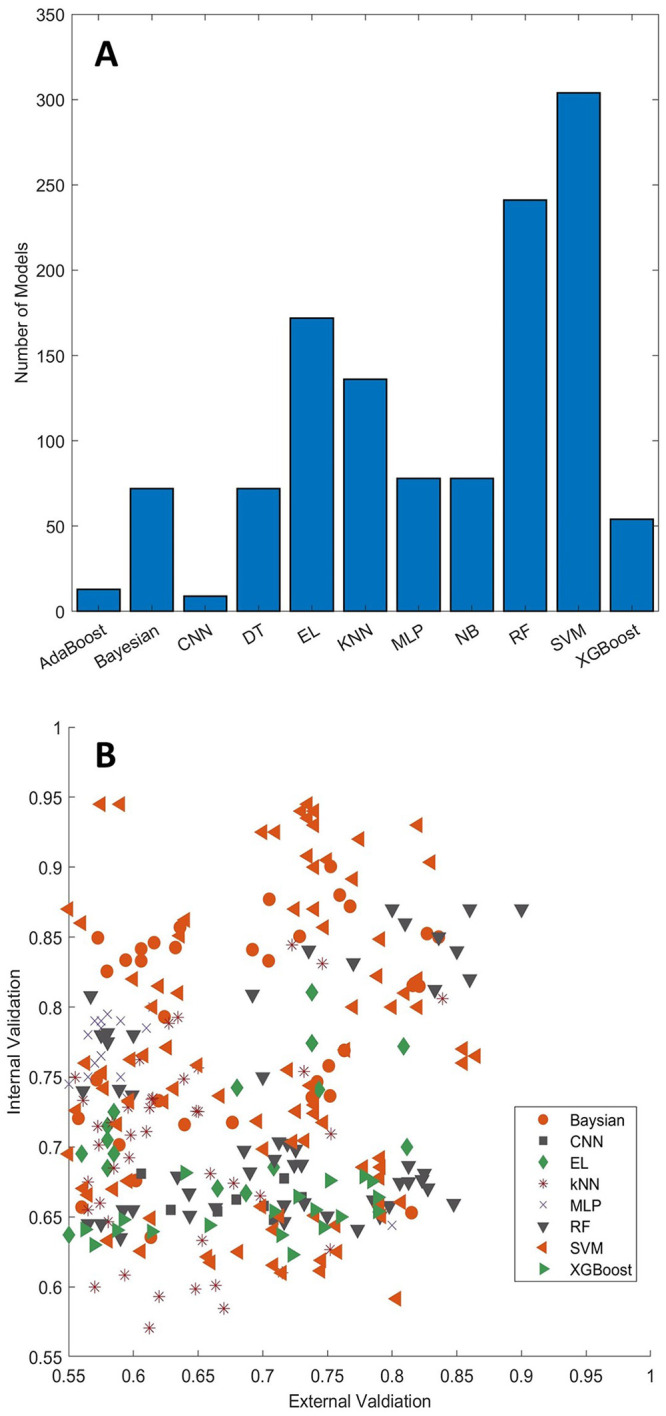

Many ML and DL algorithms such as SVM, RF, kNN, EL, and neural network (NN) have been applied to develop toxicity prediction models. Table 1 lists the ML and DL algorithms that have been used in the reported toxicity prediction models. Figure 2 summarizes the frequency of ML and DL algorithms in the toxicity prediction models as well as model performance in internal and external validations. For ML models, SVM, RF, and EL are the most frequently used algorithms, with 304, 241, and 172 models reported, respectively. For DL models, MLP and CNN are the widely used algorithms, with 78 and 9 models reported, respectively.

Figure 2.

Distribution of toxicity prediction models for machine learning and deep learning algorithms marked at the x-axis (a). Comparison between external validations (x-axis) and internal validations (y-axis) for toxicity prediction models (b). The machine learning and deep learning algorithms are depicted with different shapes and colors as shown in the figure legend. AdaBoost: adaptive boosting; CNN: convolutional neural network; DT: decision tree; EL: ensemble learning; KNN: k-nearest neighbors; MLP: multilayer perceptron; NB: Naïve Bayes; RF: random forest; SVM: support vector machine; XGBoost: extreme gradient boosting.

SVM is one of the most popular supervised ML algorithms and was introduced by Vapnik et al. 115 based on the structural risk minimization principle. In SVM, chemicals described by the original input descriptors are mapped into a higher dimensional space using a kernel function, and a hyperplane is then identified in the mapped space to separate classes of chemicals. When training an SVM model, the algorithmic parameters such as the ones associated with kernel function are tuned to determine the optimal hyperplane that maximizes the distance between the hyperplane and the margin (samples are most close to the hyperplane, they form the support vector) of each class of chemicals. Since SVM can handle correlated descriptors and has good generalization performance, it has been widely used in the development of models for predicting toxicity of chemicals, with 304 models reported.18,25–27,29,32,34,35,64–70,82,89,93,101,116,117

Decision tree (DT) is an upside-down tree-like classification and regression algorithm with the root on the top, leaf nodes at the bottom, and several layers of internal nodes in the middle. A path from the root to a leaf forms a branch which represents a series of decision rules used to classify chemicals. Since the decision rules can be easily retrieved from a DT model, chemical toxicity prediction models constructed with a DT algorithm are easy to interpret the predicted toxicity and intuitive to understand the importance of chemical features of the toxicity. However, the paths of decisions in a DT model use cutoffs for chemical features but do not take into consideration the values of chemical features. This results in chemicals that meet the same cutoffs but have very different feature values being assigned to the same class, which may make the performance of a DT model on testing not as good as in training. Therefore, relatively few prediction models are developed using DT in predictive toxicology, with only 72 models reported for predicting carcinogenicity, 67 genotoxicity,74,92–94 hepatotoxicity,25,33 and reprotoxicity.18,90

An RF model is built based on DT models. It makes predictions by taking majority votes from its member DT models. In RF, chemicals and structural features are randomly selected from the training dataset to construct a set of DT models for making a prediction model. The consensus of DT models generated using different chemicals and structural features selected by randomization is expected to minimize the effect of overfitting of individual DT models and to improve prediction performance. For example, Fujita et al. 92 developed both DT and RF models to evaluate the carcinogenicity of 230 chemicals. The balanced accuracy from the evaluation for the RF model was 0.755, which was substantially higher than the balanced accuracy of 0.54 for the DT model. Although RF is less interpretable, it is computationally efficient and has been very successful in developing classification models for a wide range of toxicity types.18,25,29,30,45,48,50,65,75,93,101,118

Ensemble learning models that combine individual models other than DT models in RF have also been reported for predicting various toxicity endpoints. These ensemble learning models used majority voting of their individual models as the final predictions. Most of the ensemble learning models outperformed the individual models, especially when the individual models are diverse. The ensemble learning models given in Table 1 used combinations of individual models constructed from SVM, DT, kNN, and Naïve Bayes. Table 1 and Figure 2 showed that ensemble learning has been widely used in the predictive toxicology field, with 172 models published.28,29,33,48,50,51,65,74,116,119–121

kNN is one of the simplest ML algorithms. In a kNN model, the activity of a chemical is predicted using k chemicals with the shortest distances to it among the training chemicals in the chemical space that is represented with a set of chemical descriptors. For classification, the class prediction for a chemical is usually determined by majority voting, that is, the class with most of its k-nearest chemicals. kNN algorithm is simple and easy to understand and prediction models constructed with kNN have high interpretability. Therefore, it has been widely used in predictive toxicology with 136 kNN models reported for predicting carconogenicty,62,64,67 cardiotoxicity,45,48,51 genotoxicity, 93 hepatotoxicity,25,26,33 and reprotoxicity.18,90

Artificial neural networks (ANNs) are a set of algorithms that are used to recognize underlying relationships in data through a process that mimics the function of biological NNs. There are three layers in an ANN: an input layer, a hidden layer, and an output layer. Each layer consists of neurons, and each neuron is connected to all the neurons in the next layer by weight. The weights are randomly chosen at the beginning of a training process and are then calculated to minimize errors between predicted values from the output layers and actual values. As an extension to ANNs, DNNs with multiple hidden layers have been successfully applied in many fields due to the increase in computational power. In a DNN, the earlier layers can learn low-level simple features, while the later layers can learn more complex features. This complex model architecture makes DL well suited to build complex relationships between chemical structures and toxic effects that traditional ML models are unable to handle. In the reported DL models for toxicity prediction, MLP and CNN are the most used algorithms, with 78 and 9 models reported, respectively. MLP is a popular DNN with feedforward NN that utilizes a supervised learning technique called backpropagation to recognize underlying relationships in data. MLP models have been developed for predicting cardiotoxity,42,49 cytotoxicity, 83 genotoxicity,78,93,119 hepatotoxicity,26,29,33,34 oral toxicity, 101 and reprotoxicity. 18 CNN is a feedforward NN and typically consists of convolutional and pooling layers, which differs from MLP models. CNN has an advantage over traditional ANNs since it requires fewer free parameters. However, a large amount of data is required for training a CNN model. Therefore, compared with MLP models, fewer CNN models have been reported for toxicity assessment.37,64 Recently, GCN has attracted a lot of attention for its application in the analysis of biomolecular structures, which can be represented as undirected graphs. In the graphical representation of a molecule, atoms are denoted as nodes and bonds as edges. Since GCN can directly process graph structures, it bypasses the limitation typically associated with conventional molecular descriptors. This inherent feature contributes to its enhanced performance in predictive tasks, especially in the toxicity prediction fields where various GCN-based models have been developed to address diverse endpoints. 42,77,122–124 For example, Kearnes et al. 125 developed a GCN model to extract informative features from the graph-based representation of atoms and bonds. Furthermore, researchers have advanced GCN-based models, including graph attention CNN, 126 DeepAffnity, 124 MutagenPre-GCNN, 77 to improve predictive accuracy and identification of structurally significant features.

Figure 2(a) shows the numbers of models developed using different ML algorithms. SVM and RF are the most frequently used ML algorithms. Various validation methods such as holdout validation, cross validation, and external validation have been used for assessing the performance of those ML and DL models developed for predicting the toxicity of chemicals. In a holdout validation, the original dataset is split into a training set and a test dataset. A model is trained on the training dataset and evaluated on the test dataset. In a k-fold cross validation, the original dataset is first randomly divided into k groups. Then, k-1 groups are used to build a model, and the remaining group is used to evaluate the model. This process is iterated k times so that each of the k groups is used only once as the test set. In an external validation, an external dataset is used to validate the performance of the model developed with a training dataset.

As shown in Table 1, most studies used only internal validations (holdout and k-fold cross validation) to assess model performance. About 25% of the models were validated using both internal and external validations. Figure 2(b) compares the internal and external validation results. Not surprisingly, the internal validations had better performance than the external validations. Furthermore, the differences between internal and external validations are not dependent on the ML algorithms used for model development. The comparative analysis suggests that external validation should be used for validating the performance of ML and DL models developed for predicting the toxicity of chemicals. When an external dataset is not available, internal validation provides a useful estimation of model performance though internal validation usually overestimates model performance.

Datasets

ML and DL models are trained using known experimental data to learn the relationships between chemical structures and toxicity endpoints in the training chemicals. Therefore, the quality of experimental data used for training ML and DL models is important for the reliability of developed toxicity prediction models. Many toxicity studies collected experimental data from a variety of data sources and established databases to manage the collected data, including ToxCast/Tox21, 127 ChEMBL, 41 ToxRefDB, 128 PubChem, 40 CPDB, 59 EDKB, 129 and EADB. 5 Since these databases contain data that were generated from different experiments and in various formats, data processing and curation are needed to prepare datasets from these databases for developing ML and DL models. For example, datasets extracted from the ToxCast/Tox21 database have been used to develop models for predicting reprotoxicity,128,130 hepatotoxicity, 131 and other organ toxicity.132,133 The datasets that have been used for developing toxicity prediction models are summarized below.

Compared with large datasets with billions or even trillions of data in image analysis, data size for the predictive toxicology field is typically small due to the high cost and time involved in performing toxicological experiments. Figure 3 shows the size distribution of the datasets that have been used in the development of ML and DL models for predicting various toxicity types. The largest dataset is the cytotoxicity dataset that has 62,655 compounds, 84 and few datasets contain more than 10,000 compounds. Most of the datasets have around 1000 chemicals. The sizes of most datasets are not large enough to develop accurate and reliable DL models. Therefore, most of the toxicity prediction models have been developed using ML algorithms (Figure 2[a]). There are more datasets for cardiotoxicity, carcinogenicity, and hepatotoxicity than other types of toxicity. The average data sizes for cardiotoxicity, hepatotoxicity, and carcinogenicity are 2053, 958, and 896, respectively.

Figure 3.

Histogram of sizes of the datasets used in the development of machine learning and deep learning models for toxicity prediction.

For cardiotoxicity, Cai et al, 42 Chavan et al., 51 Karim et al., 122 and Doddareddy et al., 134 built datasets by collecting data from BindingDB, 39 PubChem, 40 and ChEMBL 41 databases, as well as from the literature. Some cardiotoxicity datasets have thousands of molecules with inhibitory activity of the hERG channel.45,46,48,54,134 It is interesting to note that DL models have been developed for some large cardiotoxicity datasets. For example, various DL algorithms were used in the development of hERG channel blockade prediction models based on 12,620 chemicals that were curated from multiple sources. 122 Different chemical descriptors such as fingerprints and features vectorized from SMILES strings were used in those DL models. However, cross validations had an accuracy between 60% and 86%, and external validations resulted in an accuracy between 75% and 81% for the best models. Compared with ML models (Table 1), DL did not show advantages over ML for such size hERG inhibition datasets.

For hepatotoxicity, some datasets have been generated and curated in the last decade, including ones published by Chen et al., 23 Liew et al., 120 Fourches et al., 135 Zhu et al., 136 and Zhang et al. 137 These datasets served as important resources for developing hepatotoxicity prediction models. As hepatotoxicity is a major concern in drug safety evaluation, DILI in humans is the objective for most of the ML and DL models for predicting hepatotoxicity. DILI in humans is caused by diverse and complicated mechanisms. Thus, predicting DILI in humans is very challenging, and high-quality datasets are vital for developing reliable and accurate prediction models using ML and DL learning algorithms. The DILI datasets used in training the reported ML and DL prediction models were generated using various methods which can be categorized into three approaches. The first approach is based on DFA-approved drug labeling documents.23,138 The second approach is based on drug safety reports such as the FDA adverse event reporting system 136 and Micromedex Healthcare Series reports on adverse reactions. 120 The third approach is to search publications in the literature such as MEDLINE abstracts 135 and publications. 137 Hepatotoxicity endpoints based on animal experiments were also curated for developing ML prediction models. 131 A chemical could be annotated as hepatotoxic in one dataset, but as non-hepatotoxic in another dataset due to the difference in the approaches to define hepatotoxicity, not only leading to quality and reliability concerns on ML models based on such datasets but also resulting in the discordance in predictions from those models. Figure 4 shows comparisons between drugs in the datasets obtained from three sources: postmarket surveillance reports (282 drugs), 136 literature (937 drugs), 135 and drug labeling documents (387 drugs). 138 Of those drugs, 79 drugs are included in all three datasets, 184 drugs are common to the datasets obtained from postmarket surveillance reports and from literature, 100 drugs are included in the datasets obtained from postmarket surveillance reports and from drug labeling documents, and 206 drugs are shared by the datasets obtained from drug labeling documents and from literature. Comparing DILI annotations between datasets for the same drugs revealed that a considerable number of drugs have conflict DILI annotations, raising concerns on utilization of ML and DL models developed based on different datasets. Figure 5 compares DILI annotations of drugs common in pairs of datasets obtained from different sources. Close examination of the figure found that few drugs have conflict DILI annotations between drug labeling documents and postmarket surveillance reports, while a notably large number of drugs have conflict DILI annotations between literature and drug labeling documents and between literature and postmarket surveillance reports. The high conflict rates may be due to the many DILI annotations obtained from literature mining are based on animal experimental data, which are different from observations in humans in postmarket surveillance reports and drug labeling documents. Therefore, a high-quality benchmarking is urgently needed to enhance the development of ML and DL models for predicting hepatotoxicity in drug safety evaluation and chemical risk assessment.

Figure 4.

Venn diagram for comparison of DILI datasets generated from different sources. The dataset PMR obtained from postmarket surveillance reports is represented in the red circle; the dataset DLD generated from drug labeling documents is shown in the purple circle; and the dataset LIT, yielded through mining publications in the literature, is indicated in the green circle.

Figure 5.

Comparison of DILI annotations for the same drugs common to two datasets. Drugs in different categories of annotations are given in bars depicted by the y-axis. Drugs with the same DILI annotations are shown in blue bars. Drugs with conflict DILI annotations are plotted in the orange and green bars. DILI annotations for the same drugs are marked at the x-axis.

For carcinogenicity, most of the developed ML and DL models are based on the dataset extracted from the CPDB. 59 CPDB is a comprehensive resource of long-term animal carcinogenesis studies and collected results on various animal studies. Chemicals are labeled as carcinogens or non-carcinogens according to their carcinogenic potency (TD50) values obtained in the studies. A chemical could be carcinogenic in one animal study but could be shown as non-carcinogenic in another animal study, raising challenges in classifying chemicals as carcinogens or non-carcinogens. Therefore, integrating results from different animal studies such as the dataset from combining rat, dog, and hamster studies 60 has not been well investigated in the development of ML and DL models for predicting the carcinogenic activity of chemicals. Most of the developed carcinogenesis prediction models were developed based on datasets of in vivo studies on rat from the CPDB. However, different datasets of rat carcinogenic activity were derived from the same CPDB data source without clear descriptions on how they are generated, and they were used in the development of ML and DL prediction models, resulting in different prediction performances. Our observations indicate that a well-annotated carcinogenic activity dataset is extremely important for developing reproducible and accurate prediction models using ML and DL algorithms. Furthermore, a clear description of the process that is used for generating a dataset is highly recommended in the publication of an ML or DL model for better understanding and applying the developed model in safety evaluation and risk assessment.