Abstract

Background:

The Orthopaedic Minimal Data Set (OrthoMiDaS) Episode of Care (OME) is a prospectively collected database that captures patient- and surgeon-reported data in a more efficient, comprehensive, and dependable manner than electronic medical record (EMR) review. We aimed to assess and validate the OME as a data capture tool for carpometacarpal (CMC) arthroplasty compared with traditional EMR-based review. Specifically, we aimed to (1) compare the completeness of the OME versus EMR data and (2) evaluate the extent of agreement between the OME and EMR-based datasets for CMC arthroplasty.

Methods:

The first 100 thumb CMC arthroplasties performed after OME inception (February 2015) were included. A blinded EMR-based review of the same cases was performed for 48 perioperative variables, and the results were compared with their OME-sourced counterparts. Outcomes included completion rates and agreement measures in the OME versus EMR-based control datasets.

Results:

The OME demonstrated similar or superior completion rates compared with EMR-based retrospective review. Agreement between the datasets was high: 75.6% (34/45) of variables had an agreement proportion >0.90, and 82.2% (37/45) had an agreement proportion >0.80. Forty percent (14/35) of the variables amenable to κ estimation had almost perfect to substantial agreement (κ > 0.60). Among the 6 variables demonstrating poor agreement, the surgeon-entered OME values were more accurate than the EMR-based review control.

Conclusions:

This study validates the use of the OME for CMC arthroplasty by showing that it can reliably match or exceed traditional chart review for data collection, thereby offering a high-quality tool for future CMC arthroplasty studies.

Keywords: OME, dataset, CMC, arthritis, diagnosis, prospective, database, OrthoMiDaS episode of care, perioperative outcomes, surgical details, complications

Introduction

Thumb carpometacarpal (CMC) osteoarthritis is a common disorder, with a reported prevalence of 5.8% in males and 7.3% in females over 50 years of age. 1 Carpometacarpal arthroplasty is an established, effective procedure for the treatment of basilar thumb joint arthritis. 2 Although most patients have good clinical outcomes, up to 30% of patients report continued thumb pain and/or instability postoperatively; this may be attributed to a variety of confounders, including proximal metacarpal subsidence, method of arthroplasty, untreated concomitant thumb metacarpophalangeal (MCP) joint hyperextension, or scaphotrapezoid joint arthritis.3,4 It is challenging, however, to generalize these results, as patient demographics, bone quality, and operative techniques may vary widely between CMC arthroplasty procedures.5,6 Controlling for these variations would require extensive datasets with accurate and reliable reporting of granular outcomes, a function not afforded by nationally representative datasets or insurance claims databases.

Large institutional databases have sought to provide the granular intra- and perioperative details necessary to define drivers of operative success after CMC arthroplasty.7,8 These datasets, however, are limited by their dependence on electronic medical record (EMR) and operative note review, which is inherently retrospective and prone to reviewer error, incomplete data reporting, and inter-reviewer variability in the interpretation of findings.9-11 Moreover, these data sources often do not record any measures of patient or surgical quality, which will likely be crucial for future reimbursement metrics. 12 To meet the demand for accurate and reliable data capture, the Orthopaedic Minimal Data Set (OrthoMiDaS) Episode of Care (OME) was developed as a continually updating, prospective institutional data collection system.9-11 This prospectively created cohort database enables the capture of patient- and surgeon-reported data in a more efficient, comprehensive, and dependable manner than EMR or operative report review. While this system has been previously validated for lower extremity arthroplasty, upper extremity arthroscopy, and rotator cuff repair, it has yet to be validated for CMC arthroplasty data capture.9-11,13

This investigation aimed to assess the OME as a data capture tool for CMC arthroplasty compared with traditional EMR-based chart and operative note review. Specifically, we aimed to (1) compare the completeness of the OME versus EMR data and (2) evaluate the extent of agreement between the OME and EMR-based datasets for CMC arthroplasty.

Methods

OME System Design

The OME system was established by an integrated multidisciplinary team of orthopaedic providers, software developers, data analysts, database managers, and administrators.9-11,13 It uses the Research Electronic Data Capture (REDCap) system as a platform for continuous, prospective collection of clinically relevant data from surgical episodes in a cost-effective, valid, and scalable manner that can be incorporated into existing workflows.14,15 The OME captures baseline patient determinants, including demographics and comorbidities; surgeon-entered procedural data from the surgical event; and patient-reported outcome measures (PROMs). This system has become our healthcare system's standard of care for all elective knee, hip, shoulder, and hand surgeries.9-11,13,16-20

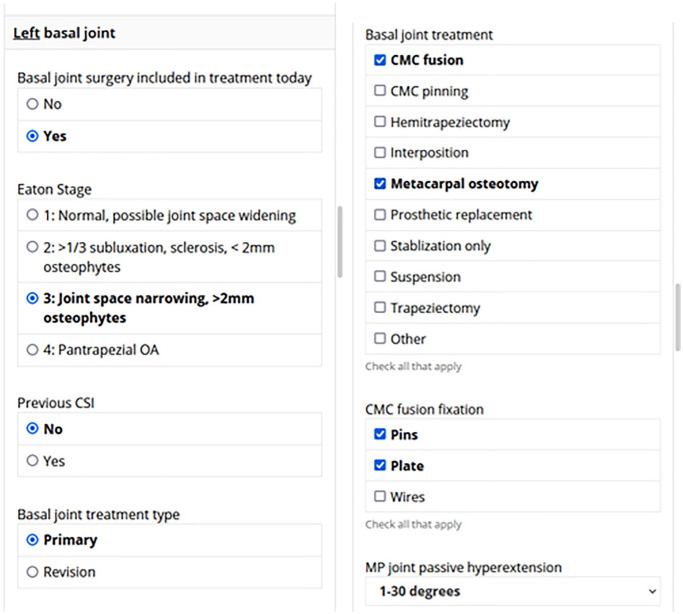

Patient baseline determinants and PROMs are entered preoperatively on a tablet device provided to the patient on the day of surgery. In addition, a restricted link specific to the surgical episode is emailed to the surgeon on the day of surgery. Each link contains standardized surgeon-directed questionnaires that record peri- and intraoperative details, entered prospectively by the surgeon within 48 hours of the end of the procedure. These questionnaires are automatically generated for each surgical episode and exported to a continuously updated REDCap-based master database. Surgeons can complete the questionnaires on a variety of platforms, including their institutional smartphones, tablets, or computers (Figure 1). Captured data include past surgical history, findings from examination under anesthesia, intraoperative parameters, and key predictors of operative outcomes for the surgical episode. Built-in branching logic facilitates data collection by displaying only surgery-specific fields and suppressing prompts irrelevant to the performed procedure. In addition, OME forms cannot be submitted until they are complete, ensuring maximal and timely capture of relevant data.

Figure 1.

Example primary forms of the branching, smartphone-based Orthopaedic Minimal Data Set Episode of Care collection system.

Note. CMC = carpometacarpal; OA = osteoarthritis; CSI = corticosteroid injection; MP = metacarpophalangeal.
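To make the branching and completion rules described above concrete, the following Python sketch models a surgeon form in which each field carries a display condition and submission is blocked until every displayed field is answered. It is a simplified, hypothetical illustration: the field names (`procedure`, `arthroplasty_technique`, `concomitant_mp`, `mp_procedure_detail`) and the `show_if` predicates are assumptions, not the actual OME data dictionary or REDCap's own branching-logic syntax.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Answers = Dict[str, str]


@dataclass
class FormField:
    """One question on a hypothetical surgeon form (not the real OME form)."""
    name: str
    # Branching logic: the field is displayed only when this predicate is true.
    show_if: Callable[[Answers], bool] = lambda answers: True


def visible_fields(fields: List[FormField], answers: Answers) -> List[str]:
    """Names of the fields that branching logic displays for the current answers."""
    return [f.name for f in fields if f.show_if(answers)]


def missing_required(fields: List[FormField], answers: Answers) -> List[str]:
    """Displayed fields that are still unanswered."""
    return [name for name in visible_fields(fields, answers) if not answers.get(name)]


def can_submit(fields: List[FormField], answers: Answers) -> bool:
    """Submission is blocked until every displayed field has an answer."""
    return not missing_required(fields, answers)


# Hypothetical field list for illustration only.
FIELDS = [
    FormField("procedure"),
    FormField("anesthesia_type"),
    FormField("arthroplasty_technique", lambda a: a.get("procedure") == "CMC arthroplasty"),
    FormField("concomitant_mp", lambda a: a.get("procedure") == "CMC arthroplasty"),
    # Detail question shown only if a concomitant MP procedure is reported.
    FormField("mp_procedure_detail", lambda a: a.get("concomitant_mp") == "Yes"),
]

answers = {"procedure": "CMC arthroplasty", "anesthesia_type": "General"}
print(missing_required(FIELDS, answers))  # ['arthroplasty_technique', 'concomitant_mp']
answers.update({"arthroplasty_technique": "LRTI", "concomitant_mp": "No"})
print(can_submit(FIELDS, answers))  # True
```

In this model a field hidden by branching logic is never required, which mirrors the stated aim of avoiding prompts irrelevant to the performed procedure.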

Patient Selection

Institutional review board approval was obtained prior to initiation of the current investigation. The first 100 patients who underwent unilateral CMC joint arthroplasty after OME database initiation on February 18, 2015, were included. This patient pool comprised surgeries performed by the 9 orthopaedic surgeons invited to the platform at a single academic healthcare system.

Data Collection and Validation

Perioperative data and intraoperative details for the initial 100 cases prospectively captured by the OME were extracted from the continuously updating master dataset. These data points were then compared with an EMR control dataset obtained through retrospective chart review of the same 100 surgical episodes. Data for the EMR-based control dataset were collected retrospectively through a thorough review of the narrative operative reports and implant logs recorded in the institutional EMR system (EPIC [Epic Systems, Verona, Wisconsin]). EMR data collection was conducted by 2 independent blinded reviewers who were not involved in prospective OME data recording. A third blinded reviewer subsequently evaluated the retrospectively collected data for any disagreement or dissimilarity between the 2 reviewers.

Outcome Measures and Data Handling

The primary outcomes were data completeness and concordance between the OME and EMR-based control datasets (Online Appendix 1). For each variable, we recorded OME versus EMR completion and OME versus EMR distinct values (ie, values captured only in the OME dataset or only in the EMR control dataset). Distinct values were quantified as the number of nonmissing distinct levels/categories used for each variable.
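As an illustration of how the per-variable completion and distinct-level counts could be tabulated, the following is a minimal Python sketch. It assumes each dataset is a list of per-episode dictionaries, in the same episode order, with a missing value stored as `None` or absent; the variable name `tourniquet` and the example values are hypothetical.

```python
from typing import Dict, List, Optional

# One surgical episode: variable name -> recorded value (None = missing).
Record = Dict[str, Optional[str]]


def completion_count(records: List[Record], variable: str) -> int:
    """Number of episodes with a nonmissing value for `variable`."""
    return sum(1 for r in records if r.get(variable) is not None)


def distinct_levels(records: List[Record], variable: str) -> int:
    """Number of nonmissing distinct levels/categories used for `variable`."""
    return len({r[variable] for r in records if r.get(variable) is not None})


def summarize(ome: List[Record], emr: List[Record], variable: str) -> Dict[str, int]:
    """Side-by-side completion and distinct-level counts for one variable."""
    return {
        "ome_complete": completion_count(ome, variable),
        "emr_complete": completion_count(emr, variable),
        "ome_levels": distinct_levels(ome, variable),
        "emr_levels": distinct_levels(emr, variable),
    }


# Hypothetical example: three episodes, same order in both datasets.
ome = [{"tourniquet": "Yes"}, {"tourniquet": "No"}, {"tourniquet": "Yes"}]
emr = [{"tourniquet": "Yes"}, {"tourniquet": None}, {}]
print(summarize(ome, emr, "tourniquet"))
# {'ome_complete': 3, 'emr_complete': 1, 'ome_levels': 2, 'emr_levels': 1}
```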

The OME data were processed into an analyzable dataset. Records were reordered while maintaining case-specific laterality and the net outcomes of the branching-logic variables (ie, any data elements entered by the surgeon as checkboxes or expandable fields were converted into categorical variables). Unmarked variables were then converted to either "No" or "Missing," as appropriate, using a variable-specific conversion algorithm.
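The variable-specific conversion algorithm is not detailed here, so the sketch below only illustrates one plausible rule, stated as an assumption: an unmarked checkbox becomes "No" when its section was displayed to the surgeon and "Missing" when branching logic never displayed it, and a group of checkbox options is collapsed into a single categorical value. The function names, the "None marked" label, and the option labels are hypothetical.

```python
from typing import Dict, Optional


def checkbox_to_category(checked: Optional[bool], section_shown: bool) -> str:
    """Hypothetical rule for converting a single checkbox to a category."""
    if checked:
        return "Yes"
    # Unmarked box on a displayed section: the surgeon saw it and left it blank.
    if section_shown:
        return "No"
    # Box never displayed by branching logic: nothing was recorded.
    return "Missing"


def collapse_options(options: Dict[str, bool], section_shown: bool) -> str:
    """Collapse a group of checkbox options into one categorical value."""
    if not section_shown:
        return "Missing"
    ticked = sorted(label for label, is_checked in options.items() if is_checked)
    return "; ".join(ticked) if ticked else "None marked"


# Hypothetical anesthesia checkbox group.
anesthesia = {"General": False, "Bier block": True, "Local with tourniquet": False}
print(checkbox_to_category(None, section_shown=True))   # No
print(checkbox_to_category(None, section_shown=False))  # Missing
print(collapse_options(anesthesia, section_shown=True))  # Bier block
```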

Statistical Analysis

Completion rates for data entry were compared between the OME and EMR-based control datasets using the McNemar test with continuity correction. The test was not applied when a variable was 100% complete, because McNemar's test requires a minimum of 2 levels per variable. For each evaluated variable, the agreement proportion was estimated by dividing the number of cases in which the OME and control datasets agreed by the total number of cases.

Agreement for numeric variables was evaluated using the concordance correlation coefficient (CCC), and its strength was graded according to McBride 21 (<0.90: poor agreement; 0.90-0.95: moderate agreement; 0.95-0.99: substantial agreement; and >0.99: almost perfect agreement). Unweighted and quadratically weighted kappa statistics (κ) were calculated for binary/nominal and ordinal variables, respectively. The unweighted κ assigns the same "penalty" to any mismatch in category placement, regardless of the values involved, whereas the quadratically weighted κ penalizes mismatches between hierarchically closer ordinal levels less than those with a greater hierarchical difference. Calculating κ was not feasible for variables that exhibited <2 levels. The κ statistic is a chance-corrected agreement measure, and its strength was reported according to the criteria described by Landis and Koch 22 (<0.00: poor agreement; 0.00-0.20: slight agreement; 0.21-0.40: fair agreement; 0.41-0.60: moderate agreement; 0.61-0.80: substantial agreement; and 0.81-1.00: almost perfect agreement). All analyses were performed using R software (version 3.2.3; R Foundation for Statistical Computing, Vienna, Austria).
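The agreement measures described above can be sketched in a few lines of Python. This is a minimal, dependency-free illustration, not the R code used in the study: it computes the agreement proportion, Cohen's κ with either no weights (every mismatch penalized equally) or quadratic agreement weights (near misses on an ordinal scale receive partial credit), and Lin's CCC with the McBride grading. Returning `None` when fewer than 2 levels are present mirrors the rule that κ was not estimated for such variables; the handling of values that fall exactly on the McBride cut-offs is an assumption.

```python
from typing import List, Optional, Sequence


def agreement_proportion(a: Sequence, b: Sequence) -> float:
    """Share of paired cases in which both datasets hold the same value."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohen_kappa(a: Sequence, b: Sequence, levels: Optional[List] = None,
                weights: str = "unweighted") -> Optional[float]:
    """Cohen's kappa for paired ratings; weights="quadratic" for ordinal data.

    `levels` fixes the category order, which matters for quadratic weights.
    Returns None when fewer than 2 levels are present (kappa not estimable).
    """
    if levels is None:
        levels = sorted(set(a) | set(b))
    k = len(levels)
    if k < 2:
        return None
    index = {level: i for i, level in enumerate(levels)}
    n = len(a)
    # Observed joint proportions (rows: dataset a, columns: dataset b).
    obs = [[0.0] * k for _ in range(k)]
    for x, y in zip(a, b):
        obs[index[x]][index[y]] += 1 / n
    row = [sum(obs[i]) for i in range(k)]
    col = [sum(obs[i][j] for i in range(k)) for j in range(k)]

    def w(i: int, j: int) -> float:
        if weights == "quadratic":
            # Agreement weight shrinks with the squared distance between levels.
            return 1 - (i - j) ** 2 / (k - 1) ** 2
        # Unweighted: full credit only for exact matches.
        return 1.0 if i == j else 0.0

    p_obs = sum(w(i, j) * obs[i][j] for i in range(k) for j in range(k))
    p_exp = sum(w(i, j) * row[i] * col[j] for i in range(k) for j in range(k))
    if abs(1 - p_exp) < 1e-12:
        return None  # chance agreement is total; kappa undefined
    return (p_obs - p_exp) / (1 - p_exp)


def lin_ccc(x: Sequence[float], y: Sequence[float]) -> Optional[float]:
    """Lin's concordance correlation coefficient (population moments)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((u - mx) ** 2 for u in x) / n
    syy = sum((v - my) ** 2 for v in y) / n
    sxy = sum((u - mx) * (v - my) for u, v in zip(x, y)) / n
    denom = sxx + syy + (mx - my) ** 2
    return None if denom == 0 else 2 * sxy / denom


def mcbride_grade(ccc: float) -> str:
    """McBride strength-of-agreement categories for the CCC."""
    if ccc < 0.90:
        return "poor"
    if ccc < 0.95:
        return "moderate"
    if ccc <= 0.99:
        return "substantial"
    return "almost perfect"


# Ordinal example with one near miss (level 1 recorded as level 2).
print(round(cohen_kappa([1, 2, 3], [2, 2, 3], levels=[1, 2, 3]), 3))  # 0.5
print(round(cohen_kappa([1, 2, 3], [2, 2, 3], levels=[1, 2, 3],
                        weights="quadratic"), 3))  # 0.667
```

The example shows the design choice behind quadratic weighting: the same single mismatch lowers the unweighted κ more than the weighted κ, because the two levels involved are adjacent.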

Results

Variable Completion Rate

The OME demonstrated similar or better completion rates than the EMR-based control dataset for all captured variables (48/48; Online Appendix 1). Specifically, the OME dataset exhibited completion rates identical to the EMR control (ie, an equal number of completed records in both cohorts and P = 1) for 68.8% (33/48) of variables, and completion rates that did not differ significantly (ie, an unequal number of completed records, but .05 < P < 1.0) for 27.1% (13/48) of variables. The OME dataset had a significantly higher completion rate in designating the anesthetic type as local anesthetic tourniquet (P = .023) and was the only source reporting the use of Bier blocks (Online Appendix 1).
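The McNemar comparison of completion rates operates on paired completion flags, so only discordant episodes (completed in one dataset but not the other) enter the statistic. The Python sketch below, assuming one boolean completion flag per episode and dataset, applies the continuity correction, clamps the corrected difference at zero (a common convention, assumed here), and converts the 1-degree-of-freedom χ² statistic to a P value with the complementary error function. When the discordant counts are equal, which is exactly the case when both datasets have the same number of completed records, the statistic is 0 and P = 1; with no discordant pairs at all (eg, both datasets 100% complete), the test is not applied.

```python
import math
from typing import Optional, Sequence, Tuple


def mcnemar_cc(ome_done: Sequence[bool],
               emr_done: Sequence[bool]) -> Optional[Tuple[float, float]]:
    """McNemar chi-square test (1 df) with continuity correction.

    Each position is one surgical episode; True means the variable was
    completed in that dataset. Returns (statistic, P), or None when no
    episode is discordant and the test is not applicable.
    """
    b = sum(1 for o, e in zip(ome_done, emr_done) if o and not e)  # OME only
    c = sum(1 for o, e in zip(ome_done, emr_done) if e and not o)  # EMR only
    if b + c == 0:
        return None
    # Continuity-corrected statistic, clamped at zero (assumed convention).
    stat = max(abs(b - c) - 1, 0) ** 2 / (b + c)
    # Upper tail of chi-square with 1 df: P(X > stat) = erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Hypothetical: 10 episodes completed only in the OME, none only in the EMR.
ome = [True] * 100
emr = [False] * 10 + [True] * 90
stat, p = mcnemar_cc(ome, emr)
print(round(stat, 2), round(p, 4))  # 8.1 0.0044
```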

Variable Agreement Proportion

After exclusion of variables reported almost exclusively in the OME dataset (n = 2) or not reported in either cohort (n = 1), data point matching between the OME and control datasets showed that 75.6% (34/45) of variables had an agreement proportion >0.90 and 82.2% (37/45) had an agreement proportion >0.80 (Table 1). Variables reported exclusively in the OME were not amenable to formal agreement evaluation because they were reported in only one dataset. If these OME-specific data points were counted as perfect-agreement variables, 76.6% (36/47) and 83.0% (39/47) of variables would exceed the 0.90 and 0.80 thresholds, respectively.

Table 1.

Percent of Variables Within Each Category of Agreement Proportion Between Orthopaedic Minimal Data Set Episode of Care and Electronic Medical Record Datasets.

| Agreement proportion | Number of variables (N = 45) | % |
|---|---|---|
| 0.0-0.2 | 0 | 0.0 |
| 0.21-0.4 | 0 | 0.0 |
| 0.41-0.6 | 2 | 4.4 |
| 0.61-0.8 | 6 | 13.3 |
| 0.81-1.0 | 37 | 82.2 |
| N/A | 3 | |
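The percentages reported for the agreement-proportion thresholds and in Table 1 follow directly from the variable counts. The short Python check below simply recomputes each percentage, to one decimal place, from its count and denominator: 45 comparable variables, or 47 when the 2 OME-only variables are counted as perfect agreement.

```python
def pct(count: int, total: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100 * count / total, 1)


# Variables above the 0.90 and 0.80 thresholds (45 comparable variables).
print(pct(34, 45), pct(37, 45))  # 75.6 82.2
# Counting the 2 OME-only variables as perfect agreement (47 variables).
print(pct(36, 47), pct(39, 47))  # 76.6 83.0
# Table 1 rows with nonzero counts (0.41-0.6, 0.61-0.8, 0.81-1.0).
print([pct(n, 45) for n in (2, 6, 37)])  # [4.4, 13.3, 82.2]
```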

Variable Agreement Measure

Of the 48 variables, 13 exhibited only one distinct level or had no comparable records; agreement measure estimation was therefore not feasible for them. The remaining 35 variables were analyzed for agreement measure: 40.0% (14/35) had almost perfect to substantial agreement, and 34.3% (12/35) exhibited moderate to fair agreement (Table 2). Six variables demonstrated poor agreement; however, for these variables the OME descriptors were more accurate than the corresponding values in the control dataset, which were based on the EMR reviewer's interpretation (Online Appendix 1).

Table 2.

Percent of Variables Within Each Category of Agreement Measure Between Orthopaedic Minimal Data Set Episode of Care and Electronic Medical Record Datasets.

| Agreement measure (κ) | Interpretation | Included in agreement analysis (N = 35) | % |
|---|---|---|---|
| <0.00 | Poor agreement | 6 | 17.1 |
| 0.00-0.20 | Slight agreement | 3 | 8.6 |
| 0.21-0.40 | Fair agreement | 6 | 17.1 |
| 0.41-0.60 | Moderate agreement | 6 | 17.1 |
| 0.61-0.80 | Substantial agreement | 8 | 22.9 |
| 0.81-1.00 | Almost perfect agreement | 6 | 17.1 |
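The Landis and Koch grading used in Table 2 is a simple binning of κ values. The Python sketch below classifies a κ value into those categories, tallies a list of κ values, and recomputes the Table 2 percentages from the category counts. Treating each published cut-off as an inclusive upper bound (eg, κ = 0.20 is "Slight") is an assumption needed to cover the gaps between the published ranges.

```python
from collections import Counter
from typing import Dict, Iterable


def landis_koch(kappa: float) -> str:
    """Landis and Koch category; cut-offs treated as inclusive upper bounds."""
    if kappa < 0:
        return "Poor"
    if kappa <= 0.20:
        return "Slight"
    if kappa <= 0.40:
        return "Fair"
    if kappa <= 0.60:
        return "Moderate"
    if kappa <= 0.80:
        return "Substantial"
    return "Almost perfect"


def tally(kappas: Iterable[float]) -> Dict[str, int]:
    """Count how many kappa values fall into each category."""
    return dict(Counter(landis_koch(k) for k in kappas))


def percentages(counts: Dict[str, int]) -> Dict[str, float]:
    """Category percentages rounded to one decimal place."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Category counts from Table 2 (35 variables with estimable kappa).
TABLE2_COUNTS = {"Poor": 6, "Slight": 3, "Fair": 6, "Moderate": 6,
                 "Substantial": 8, "Almost perfect": 6}
print(percentages(TABLE2_COUNTS))
# {'Poor': 17.1, 'Slight': 8.6, 'Fair': 17.1, 'Moderate': 17.1, 'Substantial': 22.9, 'Almost perfect': 17.1}
print(landis_koch(0.65), landis_koch(-0.05))  # Substantial Poor
```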

Time to Form Completion

Mean time required for individual form completion by the attending surgeon was 186.8 ± 178.5 seconds.

Discussion

Manual chart review and automated or machine learning-based retrospective data extraction from patients' EMRs have long been the "gold standard" for database compilation.23-25 These methods, however, have marked limitations, including inter-reviewer variability, human error, coding errors, machine learning inaccuracies, inappropriate time-point estimation, high cost, and labor intensiveness.26,27 Furthermore, EMR review cannot accommodate large institutional prospective cohorts that require continuous enrollment, review, and data point updates. The current investigation supports the validity of the OME as a prospective data collection system. This institution-wide system leverages smartphone and tablet technologies to enable prompt, rapid (~3 minutes per form) data entry after each surgical intervention, with completion rates equal to or better than those of retrospective chart review across all evaluated variables. Most variables (75.6%) had agreement proportions above 0.90 relative to the values obtained through retrospective chart review. Furthermore, all OME data points were entered in the immediate postoperative period by the operating surgeon (ie, first-person reporting), as opposed to researcher-dependent chart review, which involves the researcher's own interpretation of EMR-based variables (ie, second- or even third-person reporting). Because the OME is a continuously updating prospective system rather than a labor-intensive and costly chart review process, its high level of agreement with the EMR-based control supports its use as a valid data collection tool.

The findings of the present study should be interpreted in the context of its limitations. Captured variables include peri- and intraoperative details entered by surgeons in the immediate perioperative period. However, certain details, including additional intraoperative procedures such as associated ganglion excisions, Guyon canal decompressions, scapholunate treatments, synovial biopsies, trigger finger releases, and Z-plasties, were not evaluated in the present investigation. Data collection errors pose a risk to any system, including the OME. Because OME data are entered by fellowship-trained orthopaedic surgeons in the immediate postoperative period, we expect this primary input to be more accurate than that of secondary collectors. The branching logic implemented in the OME promotes ease of interaction and timely data collection; however, this design may cause unique events that do not fit the branching logic's flow to be missed. Finally, the present study did not provide cost-related data to evaluate the expenses involved in managing this prospective data collection system. However, the system leverages REDCap, the standard system-wide data storage platform for all research activity, for data input and storage. Furthermore, apart from the costs associated with conceptualization and initiation of the system, maintenance costs are minimal and consist predominantly of database analysts' salaries. Despite these limitations, the present prospective data collection system affords granular and reliable data capture that surpasses traditional EMR-based retrospective data collection.

In a recent investigation, Mohr et al 17 assessed completion and agreement rates among the initial 100 arthroscopic interventions for shoulder instability captured by the OME system versus an EMR-based control dataset. The OME exhibited equivalent or superior completion rates in 36 of the 37 assessed data points and had agreement proportions above 0.90 for approximately 76% of captured variables. In addition, the authors found that postoperative data input did not impede surgeons' workflow, with a median of 103.5 seconds required for form completion. In another investigation, Sahoo et al 9 evaluated the OME's utility in capturing rotator cuff repair data points. The authors found that the OME dataset had higher data counts for 25% (10/40) of variables compared with an EMR-based control, and they reported high levels of proportional and statistical agreement: 17% of variables demonstrated perfect agreement and 37% had almost perfect concordance. The current study's findings are in line with those of previous OME validation analyses and indicate that the OME dataset can be reliably used to assess peri- and intraoperative details for CMC arthroplasty recipients.9-11,13,16-20

While the current analysis is the first to demonstrate the validity of the OME as a data collection tool for CMC arthroplasty peri- and intraoperative variables, several prior investigations have evaluated the OME's utility for a variety of surgical interventions.9-11,13,16-20 Furthermore, the OME has since served as a data source for an increasing number of contemporary investigations, especially in lower extremity total joint arthroplasty.10,28,29 One investigation assessed the utility of the OME in primary total hip arthroplasty by comparing an OME-obtained prospective cohort with an EMR-based control. 11 The authors found that the OME dataset exhibited higher completion rates in 41% of the evaluated variables, in addition to agreement proportions above 0.90 and 0.80 for 54% and 79% of the collected data points, respectively. In another investigation validating the OME for collection of primary total knee arthroplasty perioperative details, Ramanathan et al 10 described almost perfect overall agreement (0.916 ± 0.152) between the data collection system and an EMR-based dataset.

The OME system provides a valid point-of-care data collection modality for reliable documentation of peri- and intraoperative details of CMC arthroplasty. This prospective tool is an efficient and accurate alternative to traditional EMR review. The granularity of the OME, coupled with its prospective design, allows reliable subgroup analyses and evaluation of the patient- and surgeon-specific quality factors that drive outcomes. This advantage is afforded without marked compromise of providers' time or additional expense, and with minimal need for additional personnel. Implementing similar systems across institutions may help advance musculoskeletal research.

Supplemental Material

Supplemental material, sj-docx-1-han-10.1177_15589447221082163 for Validation of a Smartphone-Based Institutional Electronic Data Capture System for Thumb Carpometacarpal Joint Arthroplasty by Morad Chughtai, Joseph P. Scollan, Ahmed K. Emara, Yuxuan Jin, Peter J. Evans, David B. Shapiro and Joseph F. Styron in HAND

Footnotes

Ethical Approval: This study was approved by our institutional review board.

Statement of Human and Animal Rights: This article does not contain any studies with animal subjects. Institutional review board approval was obtained for the present study. All procedures performed were in accordance with the ethical standards of the Helsinki Declaration of 1975, as revised in 2008.

Statement of Informed Consent: Institutional review board approval was obtained prior to the initiation of the current investigation. All patients included in the present study provided full informed consent prior to participation.

The author(s) declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: J.F.S. has the following disclosures, none of which is related to the topic of the current study: Acumed, LLC: paid consultant; Axogen: paid consultant, paid presenter or speaker; EXSOmed: paid consultant, paid presenter or speaker. P.J.E. has the following disclosures, none of which is related to the topic of the current study: Acumed, LLC: paid presenter or speaker; American Association for Hand Surgery: board or committee member; American Society for Surgery of the Hand: board or committee member; Axogen: paid consultant, paid presenter or speaker; Biomet: IP royalties, paid presenter or speaker; Extremity Medical: IP royalties; Hely Weber: IP royalties; Innomed: IP royalties; Lineage Medical: stock or stock options; Nutek: stock or stock options; Tenex: stock or stock options. M.C., J.P.S., A.K.E., D.B.S., and Y.J. have nothing to disclose.

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

ORCID iD: Joseph P. Scollan https://orcid.org/0000-0002-2376-1449

Supplemental material is available in the online version of the article.

References

- 1. van der Oest MJW, Duraku LS, Andrinopoulou ER, et al. The prevalence of radiographic thumb base osteoarthritis: a meta-analysis. Osteoarthritis Cartilage. 2021;29(6):785-792. doi: 10.1016/j.joca.2021.03.004.

- 2. Papatheodorou LK, Winston JD, Bielicka DL, et al. Revision of the failed thumb carpometacarpal arthroplasty. J Hand Surg Am. 2017;42(12):1032.e1-1032.e7. doi: 10.1016/j.jhsa.2017.07.015.

- 3. Burton RI, Pellegrini VD Jr. Surgical management of basal joint arthritis of the thumb. Part II. Ligament reconstruction with tendon interposition arthroplasty. J Hand Surg Am. 1986;11(3):324-332. doi: 10.1016/s0363-5023(86)80137-x.

- 4. Taccardo G, de Vitis R, Parrone G, et al. Surgical treatment of trapeziometacarpal joint osteoarthritis. Joints. 2013;1(3):138-144. doi: 10.11138/jts/2013.1.3.138.

- 5. Matullo KS, Ilyas A, Thoder JJ. CMC arthroplasty of the thumb: a review. Hand (N Y). 2007;2(4):232-239. doi: 10.1007/s11552-007-9068-9.

- 6. Weiss A-PC, Kamal RN, Paci GM, et al. Suture suspension arthroplasty for the treatment of thumb carpometacarpal arthritis. J Hand Surg Am. 2019;44(4):296-303. doi: 10.1016/j.jhsa.2019.02.005.

- 7. Giddins G. Functional outcomes after surgery for thumb carpometacarpal joint arthritis. J Hand Surg Eur Vol. 2020;45(1):64-70. doi: 10.1177/1753193419883968.

- 8. Weiss A-PC. Five-year results of suture suspension thumb CMC arthroplasty: level 4 evidence. J Hand Surg Am. 2018;43(9):S38-S39. doi: 10.1016/j.jhsa.2018.06.084.

- 9. Sahoo S, Mohr J, Strnad GJ, et al. Validity and efficiency of a smartphone-based electronic data collection tool for operative data in rotator cuff repair. J Shoulder Elbow Surg. 2019;28(7):1249-1256. doi: 10.1016/j.jse.2018.12.009.

- 10. Ramanathan D, Gad BV, Luckett MR, et al. Validation of a new web-based system for point-of-care implant documentation in total knee arthroplasty. J Knee Surg. 2018;31(8):767-771. doi: 10.1055/s-0037-1608822.

- 11. Curtis GL, Tariq MB, Brigati DP, et al. Validation of a novel surgical data capturing system following total hip arthroplasty. J Arthroplasty. 2018;33(11):3479-3483. doi: 10.1016/j.arth.2018.07.011.

- 12. Porter ME, Larsson S, Lee TH. Standardizing patient outcomes measurement. N Engl J Med. 2016;374(6):504-506. doi: 10.1056/NEJMp1511701.

- 13. Piuzzi NS, Strnad G, Brooks P, et al. Implementing a scientifically valid, cost-effective, and scalable data collection system at point of care: the Cleveland Clinic OME cohort. J Bone Joint Surg Am. 2019;101(5):458-464. doi: 10.2106/JBJS.18.00767.

- 14. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap): a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377-381. doi: 10.1016/j.jbi.2008.08.010.

- 15. Shah J, Rajgor D, Pradhan S, et al. Electronic data capture for registries and clinical trials in orthopaedic surgery: open source versus commercial systems. Clin Orthop Relat Res. 2010;468:2664-2671. doi: 10.1007/s11999-010-1469-3.

- 16. Brown MC, Westermann RW, Hagen MS, et al. Validation of a novel surgical data capturing system after hip arthroscopy. J Am Acad Orthop Surg. 2019;27(22):e1009-e1015. doi: 10.5435/JAAOS-D-18-00550.

- 17. Mohr J, Strnad GJ, Farrow L, et al. A smart decision: smartphone use for operative data collection in arthroscopic shoulder instability surgery. J Am Med Inform Assoc. 2019;26(10):1030-1036. doi: 10.1093/jamia/ocz074.

- 18. Featherall J, Oak SR, Strnad GJ, et al. Smartphone data capture efficiently augments dictation for knee arthroscopic surgery. J Am Acad Orthop Surg. 2020;28(3):e115-e124. doi: 10.5435/JAAOS-D-19-00074.

- 19. Roth A, Anis HK, Emara AK, et al. The potential effects of imposing a body mass index threshold on patient-reported outcomes after total knee arthroplasty. J Arthroplasty. 2021;36:S198-S208. doi: 10.1016/j.arth.2020.08.060.

- 20. Ramkumar PN, Navarro SM, Haeberle HS, et al. No difference in outcomes 12 and 24 months after lower extremity total joint arthroplasty: a systematic review and meta-analysis. J Arthroplasty. 2018;33(7):2322-2329. doi: 10.1016/j.arth.2018.02.056.

- 21. McBride G. A proposal for strength-of-agreement criteria for Lin's Concordance Correlation Coefficient. NIWA Client Rep. 2005;45(1):307-310.

- 22. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159-174.

- 23. Feng JE, Anoushiravani AA, Tesoriero PJ, et al. Transcription error rates in retrospective chart reviews. Orthopedics. 2020;43(5):e404-e408. doi: 10.3928/01477447-20200619-10.

- 24. Sarkar S, Seshadri D. Conducting record review studies in clinical practice. J Clin Diagn Res. 2014;8(9):JG01-JG04. doi: 10.7860/JCDR/2014/8301.4806.

- 25. Cunningham BP, Harmsen S, Kweon C, et al. Have levels of evidence improved the quality of orthopaedic research? Clin Orthop Relat Res. 2013;471(11):3679-3686. doi: 10.1007/s11999-013-3159-4.

- 26. Gearing RE, Mian IA, Barber J, et al. A methodology for conducting retrospective chart review research in child and adolescent psychiatry. J Can Acad Child Adolesc Psychiatry. 2006;15(3):126-134.

- 27. Hu Z, Melton GB, Moeller ND, et al. Accelerating chart review using automated methods on electronic health record data for postoperative complications. AMIA Annu Symp Proc. 2017;2016:1822-1831.

- 28. Anis HK, Strnad GJ, Klika AK, et al. Developing a personalized outcome prediction tool for knee arthroplasty. Bone Joint J. 2020;102-B(9):1183-1193. doi: 10.1302/0301-620X.102B9.BJJ-2019-1642.R1.

- 29. Piuzzi NS, Hussain ZB, Chahla J, et al. Variability in the preparation, reporting, and use of bone marrow aspirate concentrate in musculoskeletal disorders. J Bone Joint Surg Am. 2018;100(6):517-525. doi: 10.2106/JBJS.17.00451.
