Abstract

Pathologic myopia (PM) is a common blinding retinal degeneration that affects the highly myopic population. Early screening of this condition can reduce the damage caused by the associated fundus lesions and therefore prevent vision loss. Automated diagnostic tools based on artificial intelligence methods can benefit this process by helping clinicians to identify disease signs or to screen mass populations using color fundus photographs as inputs. This paper provides insights about PALM, our open fundus imaging dataset for pathological myopia recognition and anatomical structure annotation. Our database comprises 1200 images with associated labels for the pathologic myopia category and manual annotations of the optic disc, the position of the fovea and delineations of lesions such as patchy retinal atrophy (including peripapillary atrophy) and retinal detachment. In addition, this paper elaborates on other details such as the labeling process used to construct the database and the quality and characteristics of the samples, and provides other relevant usage notes.

Subject terms: Image processing, Eye diseases, Machine learning

Background & Summary

Myopia has become a global public health burden. In 2020, this condition affected nearly 30% of the world population, and that number is expected to rise to 50% by 2050 (ref. 1). Among myopic patients, about 10% have high myopia1, which is defined by a refractive error of at least −6.00D or an axial length of 26.5 mm or larger2. As myopic refraction increases, there is an associated risk of pathological changes to the retina and choroid, i.e., high myopia can develop into pathological myopia (PM)3. PM is characterized by the formation of pathologic changes at the posterior pole and the optic disc and by myopic maculopathy4. Among the lesions usually observed in PM retinas, some of the most common are peripapillary atrophies, tessellations and macular hemorrhages (Fig. 1). These abnormalities can be observed in color fundus photography (CFP) (Fig. 1), which is currently the most cost-effective imaging modality for this condition5. As undetected PM can result in irreversible visual impairment, it is important to diagnose it at an early stage, to ensure regular patient follow-ups and treatments before further complications arise.

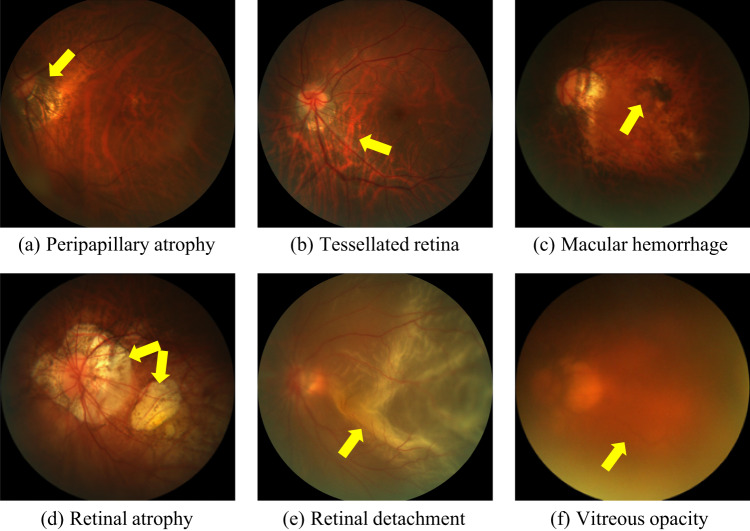

Fig. 1.

Examples of retinal lesions commonly observed in PM cases: (a) Peripapillary atrophies, which occur in the proximity of the optic disc; (b) Tessellated retina, with observable large choroidal vessels at the posterior fundus pole; (c) Macular hemorrhages, mostly along the crack itself and near the center of the fovea or in its immediate vicinity; (d) Retinal atrophy, with pigment clumping in and around the lesion due to migration of the degenerated retinal pigment epithelium cells into the inner retinal layers; (e) Retinal detachment, an emergency situation in which the retina is pulled away from its normal position; (f) Vitreous opacity, in which the vitreous shrinks and forms strands that cast shadows on the retina. All images correspond to training samples from PALM.

In view of the recent developments in artificial intelligence (AI) technology in the field of computer-aided disease diagnosis and treatment, multiple studies started to focus on applying this technology for automated analysis of CFPs6–8. However, only a few studies aimed at PM in particular. We believe this is likely due to the fact that these data-driven models need to be trained using large curated and annotated datasets, which are currently scarce and not publicly available for this specific condition.

To facilitate future research on this topic, we provide PALM, an open database containing 1200 color fundus photographs related to PM9. Unlike other disease datasets already available for the ophthalmic image analysis community such as SCES10, ODIR (https://odir2019.grand-challenge.org/) or AIROGS11, ours includes not only CFPs and the disease labels but also optic disc segmentations, the location of the fovea and manual delineations of disease-related lesions. These additional annotations can assist in building complementary AI models for disease classification and interpretation, which can help clinicians to comprehensively analyze disease patterns and provide a more accurate diagnosis of PM.

The PALM dataset12 was released as part of the PAthologicaL Myopia challenge, which was held in conjunction with the International Symposium on Biomedical Imaging (ISBI) in 2019. To date, our dataset has already been used in more than 100 papers in the field of automated diagnosis of PM13–17 or fundus structure analysis18–20 based on CFPs.

Methods

Data collection

PALM12 contains retinal images retrospectively collected from a myopic examination cohort at the Zhongshan Ophthalmic Center (ZOC), Sun Yat-sen University, China. Each CFP was acquired in a single field of view, i.e., the fundus was photographed with the midpoint of the optic disc (OD) and macula as the center, or in a dual field of view, i.e. with the OD and macula as the center of the image, respectively (Fig. 2).

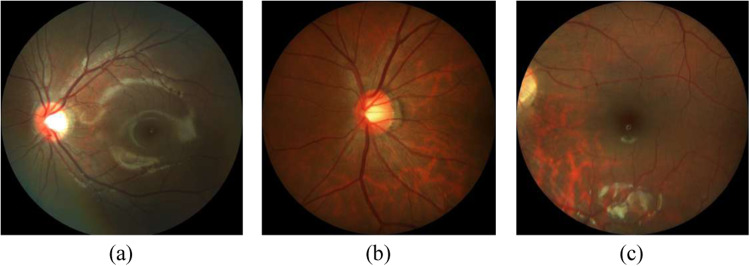

Fig. 2.

Color fundus images from the PALM training set centered at (a) the midpoint between optic disc and fovea, (b) the optic disc, and (c) the fovea.

The protocol for retrieving the images was approved by the ethics board of ZOC. CFPs were included if: (1) they were acquired with a single field of view or with a dual field of view; (2) they did not have noticeable quality issues, such as severe smudges, artifacts, out-of-focus, blurriness, incorrect exposure, etc., that would affect the clarity of the observed target area. Images were excluded if they showed any trace of treatment, severe exposure abnormalities, severe refractive interstitial opacities, large-scale contaminations or if information about their origin was missing.

The CFPs in the final dataset were captured from the left eyes of 720 subjects, with 1–3 CFPs meeting the quality requirements retained for each eye. In total, 1200 CFPs were retained. The 1–3 CFPs captured for each subject were taken at the same examination time. Of the 720 subjects, 48.1% were male, and the average age was 37.5 ± 15.91 years. All subjects in the PALM dataset are of Chinese ethnicity. Among the 1200 images, 1047 were captured with a Zeiss Visucam 500 camera (resolution of 2124 × 2056 pixels), and 153 were captured with a Canon CR-2 camera (resolution of 1444 × 1444 pixels). The database is provided already split into a training, a validation, and a test set (Table 1), with images belonging to the same patient assigned to the same set.
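The paper does not describe how the patient-level partition was produced. As an illustration only, the sketch below shows one common way to keep all images of a subject in the same subset: group images by subject, shuffle the subjects, and cut the subject list. The function name `split_by_subject`, the toy identifiers and the equal fractions are assumptions, and this sketch balances subjects rather than images, so it would not by itself reproduce the exact 400/400/400 image counts of PALM.

```python
import random
from collections import defaultdict


def split_by_subject(image_to_subject, fractions=(1 / 3, 1 / 3, 1 / 3), seed=0):
    """Split images into three lists so that no subject spans two lists.

    image_to_subject: dict mapping an image id to its subject id
    (hypothetical identifiers, not the PALM file names).
    """
    by_subject = defaultdict(list)
    for image, subject in image_to_subject.items():
        by_subject[subject].append(image)

    # Shuffle subjects (not images) so each subject lands in exactly one subset.
    subjects = sorted(by_subject)
    random.Random(seed).shuffle(subjects)

    n = len(subjects)
    cut1 = round(n * fractions[0])
    cut2 = cut1 + round(n * fractions[1])
    groups = (subjects[:cut1], subjects[cut1:cut2], subjects[cut2:])
    return [[img for s in g for img in by_subject[s]] for g in groups]


# Toy example: six subjects with one to three images each.
toy_images = {
    "img01": "s1", "img02": "s1", "img03": "s2",
    "img04": "s3", "img05": "s3", "img06": "s3",
    "img07": "s4", "img08": "s5", "img09": "s5",
    "img10": "s6",
}
train, val, test = split_by_subject(toy_images)
```

Splitting at the subject level avoids near-duplicate views of the same eye appearing in both training and evaluation data.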

Table 1.

Summary of the main characteristics of each subset of the PALM dataset, stratified by disease, structure, lesion, image acquisition type, and acquisition device.

| Set | Num. | PM/Non-PM | With/without OD | With/without fovea | With/without detachment | With/without atrophy | Photo centering (OD/fovea/midpoint of OD and fovea) | Device (Zeiss/Canon) |
|---|---|---|---|---|---|---|---|---|
| Training | 400 | 213/187 | 381/19 | 397/3 | 12/388 | 311/89 | 42/258/100 | 350/50 |
| Validation | 400 | 211/189 | 379/21 | 397/3 | 6/394 | 271/129 | 43/258/99 | 344/56 |
| Testing | 400 | 213/187 | 384/16 | 398/2 | 6/394 | 288/112 | 38/284/78 | 353/47 |
| Total | 1200 | 637/563 | 1144/56 | 1192/8 | 24/1176 | 870/330 | 123/800/277 | 1047/153 |

Disease diagnosis

Disease labels indicating the presence or absence of PM were assigned to each image based on clinical diagnoses provided by the clinicians at the time of examination, which considered in a comprehensive manner the medical history, refractive error, fundus imaging reports, optical coherence tomography (OCT) imaging reports, etc. The guidelines of the International Myopia Institute21 were followed, so that a subject was considered as PM if structural changes in the posterior segment of the eye caused by an excessive axial elongation associated with myopia were observed, including posterior staphyloma, myopic maculopathy, and high myopia-associated glaucoma-like optic neuropathy. These alterations were observed during clinical examination using multiple imaging modalities, including OCT, fluorescein angiography (FA), and OCT angiography (OCTA). Notice that non-PM images do not necessarily correspond to healthy subjects, as shown in Fig. 3.

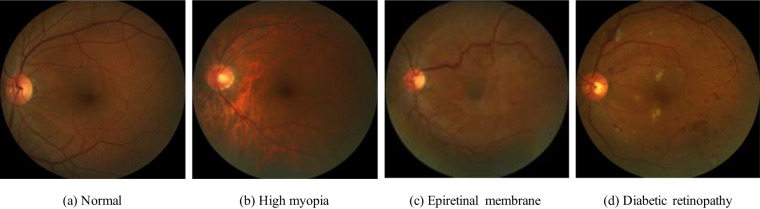

Fig. 3.

Examples of color fundus images from PALM corresponding to the non-PM category. Notice that this subset contains not only healthy subjects (a) but also subjects with other conditions such as high myopia (b), epiretinal membrane (c) and diabetic retinopathy (d), among others.

Manual annotations

Manual delineations of the optic disc and fundus lesions and the annotation of the fovea localization (Fig. 4) were performed by seven ophthalmologists with an average experience of 8 years in the field (ranging from 5 to 10 years) and one senior ophthalmologist, with more than 10 years of experience, all of them part of ZOC staff (Fig. 5). All ophthalmologists annotated the structures independently, without having access to any patient information or knowledge of disease prevalence in the data. Details regarding the annotation protocol followed for each specific target are provided below.

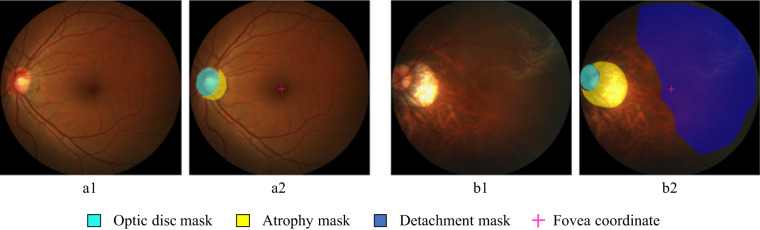

Fig. 4.

Example of the annotation interface used by the experts in (a) a non-PM sample, and (b) a PM sample. (a1) and (b1): original input images, (a2) and (b2): manual annotations.

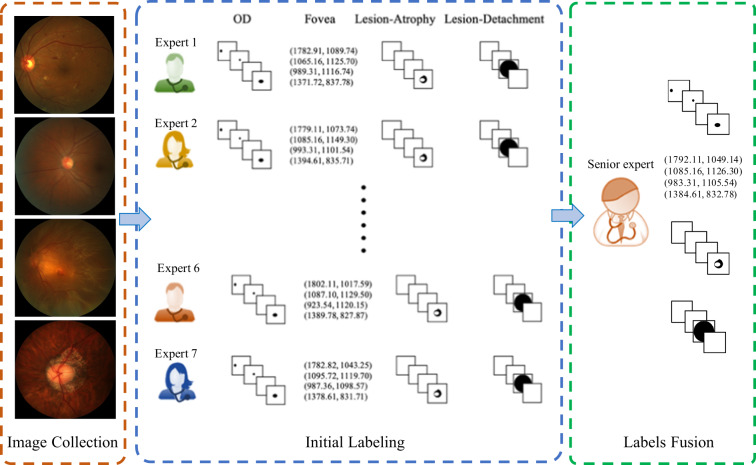

Fig. 5.

Manual annotation process. Manual delineations were performed by seven different experts and reviewed subsequently by one senior expert.

Optic disc annotation

Experts used a free annotation tool with capabilities for image review, zoom, contrast enhancement, and circle and ellipse fitting, to manually draw elliptical structures approximately covering the optic disc. Pixels within the fitted area were then mapped to a binary pixel-wise segmentation mask. Annotations of the same image performed by the seven different graders were merged into a single one by majority voting. The senior ophthalmologist then performed a quality check of this resulting mask to account for any potential mistakes. When errors in the annotations were observed, the senior ophthalmologist analyzed each of the seven masks, removed those that were considered erroneous and repeated the majority voting process with the remaining ones.
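The fusion step described above can be sketched as a pixel-wise vote over the graders' binary masks. This is a minimal illustration, not the authors' code: the function name `majority_vote` is hypothetical, and the strict-majority rule for ties (which can occur if an even number of masks remains after the senior review) is an assumption, since the paper does not specify tie handling.

```python
import numpy as np


def majority_vote(masks):
    """Fuse same-shaped binary masks by strict pixel-wise majority."""
    stack = np.stack([np.asarray(m, dtype=bool) for m in masks])
    votes = stack.sum(axis=0)
    # A pixel is foreground when more than half of the graders marked it
    # (assumption: ties are resolved as background).
    return votes * 2 > stack.shape[0]


# Toy example with three graders and a 2 x 3 image.
grader_masks = [
    np.array([[1, 1, 0], [0, 1, 0]]),
    np.array([[1, 0, 0], [0, 1, 1]]),
    np.array([[1, 1, 0], [0, 0, 0]]),
]
fused = majority_vote(grader_masks)
print(fused.astype(np.uint8))
```

Repeating the vote after the senior ophthalmologist discards erroneous masks amounts to calling the same function on the remaining subset.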

Fovea localization

A tool that allows the user to manually place a crosshair on an image was used to approximate the location of the fovea. The final annotation was produced by averaging the seven coordinates provided by the ophthalmologists, and was further reviewed by the senior ophthalmologist. Out of all the initial fovea position annotations, roughly 0.43% contained inaccurate coordinate information. The senior ophthalmologist eliminated these erroneous coordinates and recalculated the average of the remaining coordinates to obtain the final fovea position.
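As a minimal sketch of this averaging step, the function below takes the graders' coordinates and the indices of any annotations discarded during review, then averages the rest. The name `mean_fovea`, the `rejected` argument and the toy coordinates are illustrative assumptions; in the paper the rejection is a manual decision by the senior ophthalmologist, not an automated rule.

```python
def mean_fovea(coords, rejected=()):
    """Average (x, y) fovea annotations, skipping manually rejected ones.

    coords: list of (x, y) tuples, one per grader.
    rejected: indices of annotations discarded during the senior review.
    """
    rejected = set(rejected)
    kept = [c for i, c in enumerate(coords) if i not in rejected]
    if not kept:
        raise ValueError("no annotations left to average")
    n = len(kept)
    return (sum(x for x, _ in kept) / n, sum(y for _, y in kept) / n)


# Seven hypothetical annotations; the last one is clearly off target.
annotations = [
    (1000, 1000), (1002, 998), (998, 1002), (1001, 1001),
    (999, 999), (1000, 1000), (200, 150),
]
final_position = mean_fovea(annotations, rejected=[6])
print(final_position)  # (1000.0, 1000.0)
```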

Lesion annotation

Two types of lesions related to PM were annotated on each image: patchy retinal atrophy (including peripapillary atrophy) and retinal detachment. Experts used the same annotation tool as for the optic disc, although using a closed curve to outline the lesions. Unlike the SUSTech-SYSU dataset22, a free-form closed curve was used to allow a more accurate approximation of lesion borders. The same revision process used for the optic disc mask was followed for lesion masks as well.

Data validation

Data quality was automatically verified using the Multiple Color-space Fusion Network (MCF-Net) approach by Fu et al.23, which classifies color fundus images into the quality grades good, usable and reject based on different color-space representations at feature and prediction levels. Tables 2 and 3 indicate the number of images grouped by quality according to each disease label and for each split, respectively.

Table 2.

The image quality assessment results in PM and Non-PM samples of the PALM dataset according to the fundus image quality assessment method proposed by Fu et al.23.

| Quality | Good | Usable | Reject |
|---|---|---|---|
| PM | 37 | 123 | 477 |
| Non-PM | 238 | 290 | 35 |

Table 3.

The image quality assessment results in different subsets of the PALM dataset according to the fundus image quality assessment method proposed by Fu et al.23.

| Quality | Good | Usable | Reject |
|---|---|---|---|
| Training set | 84 | 143 | 173 |
| Validation set | 87 | 143 | 170 |
| Testing set | 104 | 127 | 169 |

According to Table 2, 6.2% of the images in the non-PM category are classified as reject, while this number rises to 74.9% in the PM category. This is because Fu et al.’s model23 categorizes images showing unclear visibility of the optic disc, macula, or blood vessels as ‘reject’, which is a common practice in similar studies24–27. In PM images, due to conditions such as macular hemorrhage, retinal atrophy, retinal detachment, or vitreous opacities, there are many instances where the visibility of the optic disc, macula, or blood vessel structures is unclear (as shown in Fig. 1). Nevertheless, these ‘reject’ images should not be excluded as they represent real clinical data. When designing AI image analysis algorithms, these types of images should be taken into account. However, existing open-source datasets tend to select images with clear visibility of the fundus structures, meaning that low-quality images with indistinct depictions of the optic disc, macula, or blood vessel structures are often not included. This limitation reduces the effectiveness of current algorithms when dealing with such images. Therefore, a strength of our dataset lies in its inclusion of numerous low-quality images (reject images) that reflect real clinical scenarios, which is crucial for research involving the analysis of fundus image structures.
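The reject percentages quoted above follow directly from the counts in Table 2. The short check below reproduces them; the dictionary layout and the function name `reject_rate` are only illustrative.

```python
# Counts copied from Table 2 (good / usable / reject per disease label).
table2 = {
    "PM": {"good": 37, "usable": 123, "reject": 477},
    "Non-PM": {"good": 238, "usable": 290, "reject": 35},
}


def reject_rate(counts):
    """Percentage of images graded 'reject' within one category."""
    return 100 * counts["reject"] / sum(counts.values())


for label, counts in table2.items():
    print(label, round(reject_rate(counts), 1))
# PM 74.9
# Non-PM 6.2
```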

In addition, it can be seen from Table 3 that the image quality distribution in the training, validation and testing subsets of the proposed PALM dataset12 is relatively consistent. This ensures that the subsequent evaluation of models will not suffer from unstratified sampling and distribution biases with respect to image quality.

Data Records

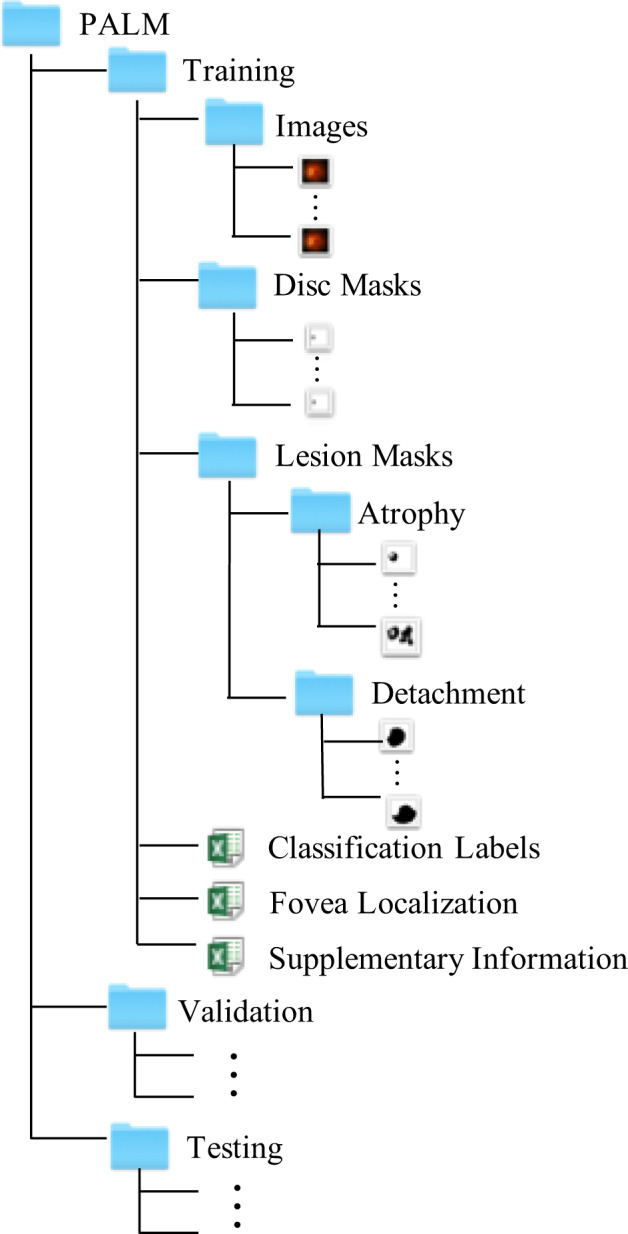

PALM is available on Figshare12. All personal information that could be used to identify the patients was removed before preparation. Data is provided already partitioned in folders Training set, Validation set and Test set, with each subset containing folders for ‘Images’, ‘Disc Masks’, and ‘Lesion Masks’, and three Excel files (i.e. ‘Classification Labels.xlsx’, ‘Fovea Localization.xlsx’, and ‘Supplementary Information.xlsx’), as shown in Fig. 6.

Fig. 6.

Folder organization of our PALM dataset.

The ‘Images’ folder within each subset contains 400 color fundus images, stored in JPEG format, with 8 bits per color channel. Similarly, the ‘Disc Masks’ folder has the binary optic disc masks associated with each fundus picture, as BMP files, also with 8 bits per color channel. The ‘Lesion Masks’ folder, on the other hand, contains two subfolders, one per lesion type, namely ‘Atrophy’ and ‘Detachment’, with binary annotations for patchy retinal atrophy and retinal detachment, respectively. The file format of the lesion segmentation masks is consistent with that of the optic disc masks.

The ‘Classification Labels.xlsx’ file contains the labels for PM classification, with 1 representing PM and 0 non-PM. The ‘Fovea Localization.xlsx’ file provides the x- and y-coordinates of the fovea. Notice that the coordinate (0, 0) is used when the fovea is not visible in the associated image. Additional information, i.e., the equipment used for image acquisition and the type of photo centering, is provided in the ‘Supplementary Information.xlsx’ file. One column uses 1 to denote Zeiss and 2 to denote Canon devices, and a second column uses 1, 2, and 3 to indicate images centered at the optic disc, at the fovea, and at the midpoint of optic disc and fovea, respectively.
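The numeric codes described above can be decoded with a few lookup tables. The sketch below is a hedged illustration: the function name `decode_record`, its argument order and the output keys are hypothetical, because the paper does not list the column headers of the Excel files. Only the code values themselves (0/1 for the label, 1/2 for the device, 1/2/3 for the centering, and (0, 0) for a non-visible fovea) come from the text.

```python
PM_LABEL = {1: "PM", 0: "non-PM"}
DEVICE = {1: "Zeiss", 2: "Canon"}
CENTERING = {
    1: "optic disc",
    2: "fovea",
    3: "midpoint of optic disc and fovea",
}


def decode_record(label, fovea_x, fovea_y, device_code, centering_code):
    """Turn the numeric codes of one image into readable values."""
    # (0, 0) is the sentinel for "fovea not visible in this image".
    fovea = None if (fovea_x == 0 and fovea_y == 0) else (fovea_x, fovea_y)
    return {
        "diagnosis": PM_LABEL[label],
        "fovea": fovea,
        "device": DEVICE[device_code],
        "centering": CENTERING[centering_code],
    }


# Hypothetical rows, for illustration only.
visible = decode_record(1, 1250.5, 1010.0, 1, 2)
hidden = decode_record(0, 0, 0, 2, 1)
print(visible)
print(hidden)
```

Mapping the (0, 0) sentinel to `None` early helps keep missing foveas out of coordinate statistics and regression losses.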

Technical Validation

Tables 4 and 5 provide the proportion of pixels corresponding to regions with retinal detachment and patchy retinal atrophy, respectively, broken down by disease category, acquisition protocol and subset. As expected, no retinal detachment lesions were found in images acquired with the optic disc at the center of the field of view or in images of patients with no PM (Table 4). Patchy retinal atrophies, on the other hand, are observed in both PM and non-PM categories (Table 5), although their size is much larger in PM subjects. Furthermore, these lesions are more frequently observed in images with a visible optic disc, which is expected considering that these lesions appear in the vicinity of this anatomical structure.

Table 4.

Proportion of the detachment mask pixels in different categories of fundus images in the PALM dataset.

| Photo centering | Training, PM | Training, Non-PM | Validation, PM | Validation, Non-PM | Testing, PM | Testing, Non-PM |
|---|---|---|---|---|---|---|
| Optic disc centered | 0% | 0% | 0% | 0% | 0% | 0% |
| Fovea centered | 3.6% | 0% | 1.2% | 0% | 0.8% | 0% |
| Midpoint of the optic disc and fovea centered | 2.4% | 0% | 2.3% | 0% | 3.6% | 0% |

Table 5.

Proportion of the atrophy mask pixels in different categories of fundus images in the PALM dataset.

| Photo centering | Training, PM | Training, Non-PM | Validation, PM | Validation, Non-PM | Testing, PM | Testing, Non-PM |
|---|---|---|---|---|---|---|
| Optic disc centered | 15.1% | 0.6% | 16.8% | 1.1% | 16.8% | 1.5% |
| Fovea centered | 9.8% | 0.3% | 14.3% | 0.3% | 13.3% | 0.3% |
| Midpoint of the optic disc and fovea centered | 14.2% | 0.3% | 15.7% | 0.3% | 20.3% | 0.5% |
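A proportion of lesion pixels like the ones in Tables 4 and 5 can be computed per mask and then averaged over a group of images. The sketch below is one plausible reading, not the authors' procedure: the paper does not state whether the proportions are averaged per image or pooled over all pixels, nor which gray value marks lesion pixels in the BMP masks, so `lesion_value` is left as an explicit argument and both function names are hypothetical.

```python
import numpy as np


def lesion_pixel_fraction(mask, lesion_value):
    """Fraction of pixels in one mask equal to `lesion_value`."""
    mask = np.asarray(mask)
    return np.count_nonzero(mask == lesion_value) / mask.size


def mean_lesion_fraction(masks, lesion_value):
    """Average of the per-image lesion fractions over a group of masks."""
    fractions = [lesion_pixel_fraction(m, lesion_value) for m in masks]
    return sum(fractions) / len(fractions)


# Toy 2 x 4 masks in which 0 is assumed to mark lesion pixels.
mask_a = np.array([[0, 255, 255, 255], [0, 255, 255, 255]])
mask_b = np.array([[255, 255, 255, 255], [255, 255, 255, 255]])
print(lesion_pixel_fraction(mask_a, lesion_value=0))           # 0.25
print(mean_lesion_fraction([mask_a, mask_b], lesion_value=0))  # 0.125
```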

In addition to discussing the characteristics of the lesions in the images, we also analyzed the position of the fovea in the fundus images with respect to the photo centering used. Table 6 shows the mean of the normalized coordinates for the fovea localization among the fundus images with different photo centering in the PALM dataset12, computed as

$$[\bar{x}, \bar{y}] = \frac{1}{N}\sum_{i=1}^{N}\left[\frac{x_i}{W_i}, \frac{y_i}{H_i}\right],$$

where $[x_i, y_i]$ is the coordinate of the fovea in the $i$th image, $H_i$ and $W_i$ are the height and width of the image, and $N$ is the total number of samples in the corresponding category. From the table, we can see that in fundus images centered on the optic disc, the fovea appears on the right side of the images, as fundus pictures correspond in all cases to left eyes. In the images centered on the macula, the fovea appears in the center, and in the images centered on the midpoint of the optic disc and the macula, the fovea appears to the right of the image center. Thus, the fovea position characteristics are consistent with our expectations.
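A minimal sketch of this statistic is given below, assuming that x is divided by the image width and y by the image height, which is the reading suggested by the symbol definitions above. Skipping images whose fovea is marked as not visible with the (0, 0) sentinel is a further assumption, since the paper does not say how those images were handled. The function name and record layout are hypothetical.

```python
def mean_normalized_fovea(records):
    """Mean of (x / width, y / height) over images with a visible fovea.

    records: iterable of (x, y, width, height) tuples.
    """
    # Assumption: (0, 0) marks a non-visible fovea and is left out.
    visible = [(x / w, y / h) for x, y, w, h in records if (x, y) != (0, 0)]
    if not visible:
        return None
    n = len(visible)
    return (sum(u for u, _ in visible) / n, sum(v for _, v in visible) / n)


# Toy records using the two image resolutions reported for PALM.
records = [
    (1062, 1028, 2124, 2056),  # fovea at the image center (Zeiss size)
    (722, 722, 1444, 1444),    # fovea at the image center (Canon size)
    (0, 0, 2124, 2056),        # fovea not visible, skipped
]
print(mean_normalized_fovea(records))  # (0.5, 0.5)
```

Normalizing by image size makes coordinates comparable across the two camera resolutions.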

Table 6.

The mean of the normalized coordinates for the fovea localization among the fundus images with different photo centering in PALM dataset.

| Photo centering | Training | Validation | Testing |
|---|---|---|---|
| Optic disc centered | [0.856, 0.495] | [0.846, 0.506] | [0.851, 0.515] |
| Fovea centered | [0.522, 0.513] | [0.524, 0.516] | [0.5224, 0.514] |
| Midpoint of the optic disc and fovea centered | [0.614, 0.503] | [0.607, 0.503] | [0.607, 0.506] |

Usage Notes

PALM images can be used to perform studies on automated PM classification, optic disc segmentation, fovea localization, and segmentation of atrophic lesions and retinal detachment. In the aforementioned PALM Challenge, these tasks were set up as sub-challenges in which different participating teams proposed their own methods to automate them. The evaluations of their approaches on the validation and test sets for each of the sub-challenges are accessible at https://palm.grand-challenge.org/SemifinalLeaderboard/ and https://palm.grand-challenge.org/Test/.

For the studies on classification, segmentation and localization, we designed a series of baseline models28, which we trained and evaluated using PALM data12. For optic disc and lesion segmentation, we used a standard U-shaped network29 with residual blocks, while for PM classification and fovea localization we utilized ResNet5030 architectures. The corresponding code has been released as open source (see the Code availability section).

In summary, PALM12 is the first dataset for assisting AI researchers in training AI models for automated PM analysis. Disease labels are complemented by a series of manual annotations of lesions and anatomical structures, which enable studies that exploit complementary features to enhance results. Furthermore, PALM12 can be used in combination with other existing fundus image datasets such as REFUGE5 and ADAM31 to produce more robust models for optic disc segmentation, fovea localization and even quality assessment. In addition, researchers can combine the PALM dataset, whose subjects are of Chinese ethnicity, with related datasets that include other ethnicities to conduct ethnicity-specific studies related to pathological myopia, optic cup/disc segmentation, and fovea localization. In the future, as we expand this dataset, we will include data from right eyes to create a more comprehensive resource for research and model development.

Author contributions

Acquisition of data: X.Z. and F.L., Analysis and interpretation of data: H.F. (Huihui Fang), J.W. and X.S., PALM Challenge organization: Y.X., X.Z., H.F. (Huazhu Fu), F.L., X.S., J.I.O, H.B., Drafting the work or revising it critically: H.F. (Huihui Fang), F.L., J.I.O, H.B., H.F. (Huazhu Fu), J.W. and Y.X. F.L. was supported by the Young Talent Support Project of Guangzhou Association for Science and Technology (2022).

Code availability

The source code for the image quality assessment by Fu et al. can be accessed at https://github.com/hzfu/EyeQ. The source code for the baseline model training and testing is available at https://github.com/tianyizheming/ichallenge_baseline.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Huihui Fang, Fei Li.

Contributor Information

Xiulan Zhang, Email: zhangxl2@mail.sysu.edu.cn.

Yanwu Xu, Email: xuyanwu@scut.edu.cn.

References

- 1. Sankaridurg P, et al. Imi impact of myopia. Investigative Ophthalmology & Visual Science. 2021;62:2–2. doi: 10.1167/iovs.62.5.2.

- 2. Percival S. Redefinition of high myopia: the relationship of axial length measurement to myopic pathology and its relevance to cataract surgery. Developments in ophthalmology. 1987;14:42–46. doi: 10.1159/000414364.

- 3. Ohno-Matsui K, et al. Imi pathologic myopia. Investigative Ophthalmology & Visual Science. 2021;62:5–5. doi: 10.1167/iovs.62.5.5.

- 4. Vingolo, E. M., Napolitano, G. & Casillo, L. Pathologic myopia: complications and visual rehabilitation. In Intraocular Lens, 67 (IntechOpen, 2019).

- 5. Orlando JI, et al. Refuge challenge: A unified framework for evaluating automated methods for glaucoma assessment from fundus photographs. Medical image analysis. 2020;59:101570. doi: 10.1016/j.media.2019.101570.

- 6. Li T, et al. Applications of deep learning in fundus images: A review. Medical Image Analysis. 2021;69:101971. doi: 10.1016/j.media.2021.101971.

- 7. Hagiwara Y, et al. Computer-aided diagnosis of glaucoma using fundus images: A review. Computer methods and programs in biomedicine. 2018;165:1–12. doi: 10.1016/j.cmpb.2018.07.012.

- 8. Sengupta S, Singh A, Leopold HA, Gulati T, Lakshminarayanan V. Ophthalmic diagnosis using deep learning with fundus images–a critical review. Artificial Intelligence in Medicine. 2020;102:101758. doi: 10.1016/j.artmed.2019.101758.

- 9. Fu, H. et al. Palm: Pathologic myopia challenge. IEEE Dataport (2019).

- 10. Baskaran M, et al. The prevalence and types of glaucoma in an urban chinese population: the singapore chinese eye study. JAMA ophthalmology. 2015;133:874–880. doi: 10.1001/jamaophthalmol.2015.1110.

- 11. de Vente C, et al. Rotterdam eyepacs airogs train set - part 2/2. 2021. doi: 10.5281/zenodo.5745834.

- 12. Fang H, 2023. Open fundus photograph dataset with pathologic myopia recognition and anatomical structure annotation. Figshare.

- 13. Biswas S, et al. Which color channel is better for diagnosing retinal diseases automatically in color fundus photographs? Life. 2022;12:973. doi: 10.3390/life12070973.

- 14. Son, J., Kim, J., Kong, S. T. & Jung, K.-H. Leveraging the generalization ability of deep convolutional neural networks for improving classifiers for color fundus photographs. Applied Sciences 11, 10.3390/app11020591 (2021).

- 15. Cui, J., Zhang, X., Xiong, F. & Chen, C.-L. Pathological myopia image recognition strategy based on data augmentation and model fusion. Journal of Healthcare Engineering 2021 (2021).

- 16. Rauf N, Gilani SO, Waris A. Automatic detection of pathological myopia using machine learning. Scientific Reports. 2021;11:1–9. doi: 10.1038/s41598-021-95205-1.

- 17. Hemelings R, et al. Pathological myopia classification with simultaneous lesion segmentation using deep learning. Computer Methods and Programs in Biomedicine. 2021;199:105920. doi: 10.1016/j.cmpb.2020.105920.

- 18. Guo, Y. et al. Lesion-aware segmentation network for atrophy and detachment of pathological myopia on fundus images. In 2020 IEEE 17th international symposium on biomedical imaging (ISBI), 1242–1245 (IEEE, 2020).

- 19. Xie R, et al. End-to-end fovea localisation in colour fundus images with a hierarchical deep regression network. IEEE Transactions on Medical Imaging. 2021;40:116–128. doi: 10.1109/TMI.2020.3023254.

- 20. Du R, et al. Deep learning approach for automated detection of myopic maculopathy and pathologic myopia in fundus images. Ophthalmology Retina. 2021;5:1235–1244. doi: 10.1016/j.oret.2021.02.006.

- 21. Flitcroft DI, et al. Imi–defining and classifying myopia: a proposed set of standards for clinical and epidemiologic studies. Investigative ophthalmology & visual science. 2019;60:M20–M30. doi: 10.1167/iovs.18-25957.

- 22. Lin L, et al. The sustech-sysu dataset for automated exudate detection and diabetic retinopathy grading. Scientific Data. 2020;7:1–10. doi: 10.1038/s41597-020-00755-0.

- 23. Fu, H. et al. Evaluation of retinal image quality assessment networks in different color-spaces. In International Conference on Medical Image Computing and Computer-Assisted Intervention, 48–56 (Springer, 2019).

- 24. Raj A, Tiwari AK, Martini MG. Fundus image quality assessment: survey, challenges, and future scope. IET Image Processing. 2019;13:1211–1224. doi: 10.1049/iet-ipr.2018.6212.

- 25. Shao F, Yang Y, Jiang Q, Jiang G, Ho Y-S. Automated quality assessment of fundus images via analysis of illumination, naturalness and structure. IEEE Access. 2017;6:806–817. doi: 10.1109/ACCESS.2017.2776126.

- 26. Fleming AD, Philip S, Goatman KA, Olson JA, Sharp PF. Automated assessment of diabetic retinal image quality based on clarity and field definition. Investigative ophthalmology & visual science. 2006;47:1120–1125. doi: 10.1167/iovs.05-1155.

- 27. Niemeijer M, Abramoff MD, van Ginneken B. Image structure clustering for image quality verification of color retina images in diabetic retinopathy screening. Medical image analysis. 2006;10:888–898. doi: 10.1016/j.media.2006.09.006.

- 28. Fang, H. et al. Dataset and evaluation algorithm design for goals challenge. In International Workshop on Ophthalmic Medical Image Analysis, 135–142 (Springer, 2022).

- 29. Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention, 234–241 (Springer, 2015).

- 30. He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, 770–778 (2016).

- 31. Fang H, et al. Adam challenge: Detecting age-related macular degeneration from fundus images. IEEE Transactions on Medical Imaging. 2022;41:2828–2847. doi: 10.1109/TMI.2022.3172773.
