Abstract

Purpose

Deep learning can be used to automatically digitize interstitial needles in high-dose-rate (HDR) brachytherapy for patients with cervical cancer. The aim of this study was to design a novel attention-gated deep-learning model that may further improve digitization accuracy and better differentiate individual needles.

Methods and Materials

Seventeen patients with cervical cancer, with a total of 56 computed tomography–based interstitial HDR brachytherapy plans from the local hospital, were retrospectively chosen with the local institutional review board's approval. Among them, 50 plans were randomly selected as the training set and the remaining 6 as the validation set. Spatial and channel attention gates (AGs) were added to 3-dimensional convolutional neural networks (CNNs) to highlight needle features and suppress irrelevant regions; this was expected to facilitate convergence and improve the accuracy of automatic needle digitization. Subsequently, the automatically digitized needles were exported to the Oncentra treatment planning system (Elekta Solutions AB, Stockholm, Sweden) for dose evaluation. The geometric and dosimetric accuracy of needle digitization was compared among 3 methods: (1) clinically approved plans with manual needle digitization (ground truth); (2) the conventional deep-learning (CNN) method; and (3) the attention-added deep-learning (CNN + AG) method. The comparison used the Dice similarity coefficient (DSC), tip and shaft positioning errors, and the dose distribution in the high-risk clinical target volume (HR-CTV) and organs at risk, among other metrics.

Results

The attention-gated CNN model was superior to CNN without AGs, with a greater DSC (approximately 94% for CNN + AG vs 89% for CNN). The needle tip and shaft errors of the CNN + AG method (1.1 mm and 1.8 mm, respectively) were also much smaller than those of the CNN method (2.0 mm and 3.3 mm, respectively). Finally, the difference in HR-CTV D90 from the ground truth was smaller with the CNN + AG method than with CNN (0.4% vs 1.7%).

Conclusions

The attention-added deep-learning model was successfully implemented for automatic needle digitization in HDR brachytherapy, with clinically acceptable geometric and dosimetric accuracy. Compared with conventional deep-learning neural networks, attention-gated deep learning may have superior performance and great clinical potential.

Introduction

Cervical cancer is one of the most common causes of cancer death among women.1 Despite being a highly preventable and treatable cancer, an estimated 342,000 people worldwide died of the disease in 2020. High-dose-rate (HDR) brachytherapy after external beam radiation therapy is the standard-of-care treatment for patients with cervical cancer.2 Computed tomography (CT)–based HDR brachytherapy often involves freehand insertion of interstitial needles, multineedle digitization, and treatment planning for precision radiation therapy.

The dose distribution of HDR brachytherapy for patients with cervical cancer often depends on the accuracy of needle digitization, where a small amount of uncertainty in needle rotation or tip position may affect the dwell time and position.3 However, the manual digitization used in the current practice of HDR brachytherapy can be time consuming, user dependent, and error prone. There is an urgent need to implement automatic needle digitization integrated with current treatment planning systems for HDR brachytherapy.

There are challenges in automatic digitization of interstitial needles. First, the geometric relationship of needles can be complex, with crossing or touching needles impeding accurate and reliable needle digitization. Second, the metal artifacts of interstitial needles can be severe in CT simulation and prevent precise automatic needle digitization.4 Consequently, accurate and precise needle digitization independent of planners can be technically difficult.

Several methods of automatic needle or applicator digitization have been proposed previously.4, 5, 6, 7, 8, 9, 10, 11, 12, 13 However, most of them focused on digital reconstruction of tandem and ovoid (T&O) applicators or vaginal cylinder applicators. Zhou et al5 introduced a web-based AutoBrachy system for vaginal cylinder applicators. Deufel et al6 used Hounsfield unit thresholding and a density-based clustering algorithm for T&O with treatment planning evaluation. Recently, deep-learning–based solutions have attracted increasing attention owing to their wide application to medical image analysis.14 For example, deep convolutional neural networks (CNNs), such as Unet, have achieved significant progress in the past few years in tasks including image segmentation,15 image synthesis,16 dose prediction,17,18 and lesion detection.19 For automatic digitization of needles in brachytherapy, deep-learning–based techniques have been used in different image modalities.4,7, 8, 9, 10 Zaffino et al7 adopted a 3-dimensional (3D) Unet model for segmentation of multiple catheters in intraoperative magnetic resonance imaging (MRI). Zhang et al9 presented a 3D Unet model incorporating spatial attention gates and total variation regularization for needle localization in ultrasound-guided HDR prostate brachytherapy. Jung et al4 extended their AutoBrachy system with a 2.5-dimensional Unet model to digitize the interstitial needles in 3D CT images for HDR brachytherapy. Other attempts to automatically segment and digitize applicators based on 3D Unet in CT images were also reported,11, 12, 13 but no attention-added CNN for needle digitization has been implemented thus far.

The standard Unet CNN structure uses skip connections at multiscale levels, which leads to relearning of redundant low-level features.20 This redundancy may slow convergence or reduce task accuracy. We therefore proposed an attention-integrated 3D Unet CNN method to highlight salient regions and suppress irrelevant information in needle digitization. Because incorporating additive spatial attention gates (AGs) into the standard 3D Unet may substantially increase computation,20 we integrated group normalization21 at the encoder to improve convergence speed, which may be especially suitable for a small batch size such as that in our study. The automatic needle digitization was subsequently evaluated in terms of geometric and dosimetric accuracy.

We aim to implement an attention-gated deep-learning model for automatic needle digitization of HDR brachytherapy planning, which may improve efficacy and decrease heterogeneity introduced by manual digitization from different planners in the current practice of HDR brachytherapy.

Methods and Materials

The planning workflow of automatic needle digitization in HDR brachytherapy included 2 main steps: (1) the regions occupied by the interstitial needles were segmented via 3D Unet, and (2) needle trajectories were digitized as channels for the HDR radioactive source and incorporated into the treatment planning process.

Patient selection

Seventeen patients with different stages of cervical cancer who underwent freehand interstitial needle insertions in HDR brachytherapy were retrospectively selected. The patients’ characteristics are shown in Table 1. This study was approved by the local institutional review board. Each patient was treated with 4 or 5 fractions of HDR brachytherapy with 4 to 6 trocar stainless steel needles inserted during each fraction delivered on the Flexitron HDR treatment unit (Elekta AB, Stockholm, Sweden). There were 56 CT sets acquired on the CT simulator (Siemens SOMATOM Sensation Open, Siemens Medical System, Germany) with 5-mm slice thickness, a resolution of 512 × 512, and 0.8 mm in-plane voxel size (range, 0.6-1.0 mm). All interstitial needles were trocar with a diameter of 1.5 mm and a length of 200 mm (Elekta AB). All HDR brachytherapy plans were created on the Oncentra Brachy Planning System, version 4.6 (Elekta AB), by experienced medical physicists and approved clinically by experienced radiation oncologists.

Table 1.

Patient characteristics

| Characteristic | Patients (N = 17) |
|---|---|
| Age, mean ± SD, y | 53.6 ± 11.0 |
| Volume of HR-CTV, mean ± SD, cm³ | 122.8 ± 67.6 |
| Prescription dose | 6 Gy × 4-5 fractions |
| Number of needles, mean ± SD | 4.0 ± 0.6 |
| FIGO clinical stage, no. of patients | |
| IA | 4 |
| IIA | 1 |
| IIB | 4 |
| IIIA | 1 |
| IIIB | 2 |
| IIIC | 5 |
| Total plans | 56 |
| CT slice thickness, mm | 5 |
| CT in-plane voxel size, mean, mm | 0.8 × 0.8 |

Abbreviations: CT = computed tomography; FIGO = International Federation of Gynecology and Obstetrics; HR-CTV = high-risk clinical target volume.

Image preprocessing

Fifty planning CT sets were randomly selected as the training data and the remaining 6 as the validation data. The mask images of manually digitized needles were considered the ground truth when the 3D Unet and attention-gated 3D Unet were trained separately. We applied image augmentation, including rotation, horizontal flip, vertical flip, and scaling, to the CT images and the corresponding needle-mask images to reduce overfitting when training on a relatively small data set. We also cropped the images to 256 × 256 to improve computational efficiency, as is routinely done in the preprocessing of images before training deep-learning models.6
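The key constraint in this preprocessing step is that every geometric transform must be applied identically to a CT slice and its needle mask. The sketch below illustrates that idea for flips and cropping only, using plain nested lists. The function names are hypothetical, and the use of a center crop is an assumption, since the text does not state where the 256 × 256 window was placed.

```python
def hflip(img):
    """Mirror a 2D image (list of rows) left to right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror a 2D image top to bottom."""
    return img[::-1]


def center_crop(img, size):
    """Cut a size x size window from the middle of the image (assumed crop position)."""
    height, width = len(img), len(img[0])
    top, left = (height - size) // 2, (width - size) // 2
    return [row[left:left + size] for row in img[top:top + size]]


def augment_pair(ct, mask, flip_h=False, flip_v=False, crop=None):
    """Apply the same flips and crop to a CT slice and its needle mask."""
    for enabled, transform in ((flip_h, hflip), (flip_v, vflip)):
        if enabled:
            ct, mask = transform(ct), transform(mask)
    if crop is not None:
        ct, mask = center_crop(ct, crop), center_crop(mask, crop)
    return ct, mask


# Toy 4 x 4 example standing in for a 512 x 512 slice cropped to 256 x 256.
ct = [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]
mask = [[0, 0, 0, 0], [0, 0, 1, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
ct_aug, mask_aug = augment_pair(ct, mask, flip_h=True, crop=2)
```

Rotation and scaling follow the same pattern, with the additional requirement that the mask be resampled with nearest-neighbor interpolation so that it stays binary.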

Deep-learning neural networks with and without AGs

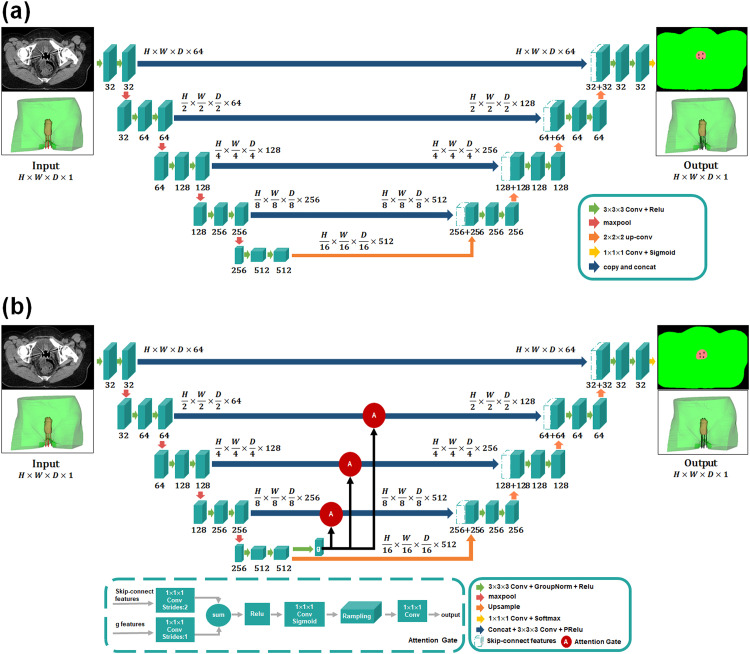

3D Unet (CNN)

The Unet model, a type of CNN in deep-learning algorithms, has been widely used in image segmentation and radiation therapy planning.22, 23, 24, 25 The network architecture of the traditional 3D Unet in our study is illustrated in Fig. 1A. The encoding part consisted of 2 convolutional layers with a kernel size of 3 × 3 × 3, followed by a rectified linear unit (ReLU) and a max-pooling operation. The coarse-feature maps were extracted at multiple scales in the encoding stage and later combined with fine-feature maps in the decoding stage through skip connections. The decoding part consisted of an up-convolution with a stride of 2 followed by ReLU and concatenation, except for the final layer, which was a 1 × 1 × 1 convolution with sigmoid activation. The skip connections combined the coarse- and fine-level feature maps to obtain more refined structures. There were a total of 22 convolutional layers, each with zero padding, in the proposed 3D Unet. Inspired by Kearney et al,20 the entire 3D Unet model was trained with a soft Dice similarity coefficient (DSC) loss function, which is described in Eq. 3.

Figure 1.

The network architecture of (A) 3-dimensional (3D) Unet and (B) attention-gated 3D Unet. The red-circled A represents the attention gate incorporated in the standard convolutional neural network (3D Unet).

3D Unet with attention gates

Attention gates were incorporated into the 3D Unet model described previously to highlight salient features of small needles and progressively suppress feature responses in irrelevant regions. To reduce false-positive errors in needle digitization, we integrated AGs before the skip connections.26 The multiscale coarse features extracted from the encoder were passed through the AGs before the skip connections so that only features relevant to the fine needles were merged in the decoder. The AGs in the CNN + AG method weighted the relevant spatial features (Fig. 1B) and were formulated as follows:

$$q_{att} = \psi^{T}\,\sigma_1\!\left(W_x^{T} x_i + W_g^{T} g_i + b_g\right) + b_\psi \tag{1}$$

$$\alpha_i = \sigma_2\!\left(q_{att}(x_i, g_i; \Theta_{att})\right) \tag{2}$$

where $\sigma_1$ was the ReLU and $\sigma_2$ was a sigmoid activation function to restrict the range of the attention coefficients $\alpha_i$. The gating signal $g$ was the coarse-scale activation map to encode features from large spatial regions, and $x$ represented the fine-level feature map. Attention gates were characterized by the parameters $\Theta_{att}$, which included the 1 × 1 × 1 convolutions ($W_x$, $W_g$, $\psi$) and bias terms ($b_g$, $b_\psi$), and all parameters of AGs could be updated with standard back-propagation. Trilinear interpolation was used for grid resampling of attention coefficients. Therefore, the CNN + AG model was designed to focus on target regions from a wide range of image foreground content.
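To make the gating step concrete, the following sketch computes an attention coefficient for a single voxel. It assumes the common additive attention-gate form (a ReLU on the summed linear projections of the fine-level features and the gating signal, followed by a sigmoid), in which a 1 × 1 × 1 convolution reduces to a per-voxel matrix product across channels. All function names, weights, and feature values are hypothetical and are not taken from the trained model.

```python
import math


def matvec(weights, vector):
    """Per-voxel 1 x 1 x 1 convolution: a matrix-vector product over channels."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def attention_coefficient(x, g, w_x, w_g, b_g, psi, b_psi):
    """Additive attention coefficient in (0, 1) for one voxel.

    x: fine-level feature vector; g: gating vector already resampled
    to the same grid position (assumption of this sketch).
    """
    summed = [a + b + c for a, b, c in zip(matvec(w_x, x), matvec(w_g, g), b_g)]
    hidden = [max(0.0, s) for s in summed]                      # ReLU
    q_att = sum(p * h for p, h in zip(psi, hidden)) + b_psi
    return 1.0 / (1.0 + math.exp(-q_att))                       # sigmoid


def gate(x, alpha):
    """Scale the skip-connection features by the attention coefficient."""
    return [alpha * value for value in x]


# Hypothetical two-channel feature vector and one-channel gating signal.
x = [1.0, 2.0]
g = [0.5]
alpha = attention_coefficient(
    x, g,
    w_x=[[1.0, 0.0], [0.0, 1.0]],
    w_g=[[1.0], [-1.0]],
    b_g=[0.0, 0.0],
    psi=[1.0, 1.0],
    b_psi=-3.0,
)
gated = gate(x, alpha)
```

A coefficient near 0 suppresses the skip-connection features at that voxel, and a coefficient near 1 passes them through unchanged, which is how the gate can damp background responses while keeping needle features.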

Training and validation of deep-learning models

The 3D Unet, both with and without AGs, was trained with a soft Dice loss function to mitigate the class imbalance between needle and background voxels during needle digitization:

$$L_{Dice} = 1 - \frac{2\sum_i P_i T_i}{\sum_i P_i + \sum_i T_i + \varepsilon} \tag{3}$$

where $P$ and $T$ represented the prediction and ground truth, respectively, $i$ indexed the voxels, and $\varepsilon$ was a constant value of 0.0001, which was introduced to prevent division by 0.
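As a minimal sketch of how such a loss behaves, the function below evaluates a soft Dice loss on flattened voxel lists. It assumes the loss is 1 minus the soft DSC and that the small constant appears only in the denominator; the text does not specify either detail, so both are assumptions, and the function name is hypothetical.

```python
def soft_dice_loss(pred, target, eps=1e-4):
    """Soft Dice loss for flattened probability and mask lists.

    pred holds probabilities in [0, 1]; target holds 0/1 labels.
    eps keeps the denominator nonzero when both inputs are empty.
    """
    intersection = sum(p * t for p, t in zip(pred, target))
    denominator = sum(pred) + sum(target) + eps
    return 1.0 - 2.0 * intersection / denominator


# A needle occupies very few voxels, so most entries are background.
target = [0, 0, 0, 0, 0, 0, 1, 1]
good_pred = [0.0, 0.0, 0.0, 0.0, 0.0, 0.1, 0.9, 0.8]
poor_pred = [0.0] * 8  # predicts background everywhere

loss_good = soft_dice_loss(good_pred, target)
loss_poor = soft_dice_loss(poor_pred, target)
```

An all-background prediction would score 75% voxel accuracy on this toy mask, yet its Dice loss is the maximum value of 1, which is why an overlap-based loss suits thin structures such as needles.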

We used the adaptive moment estimation (Adam) optimizer with a learning rate of 5 × 10⁻⁴ to train the deep-learning networks. The maximum number of epochs was set to 200, with a batch size of 1. Training was performed on a workstation with an Intel Core i9-10980XE CPU @ 3.00 GHz and an NVIDIA Quadro RTX 5000 GPU with 16 GB of memory. Both models were implemented in the PyTorch framework.

Needle digitization and dose calculation

After acquiring the segmented contours, we calculated the central trajectory coordinates of each needle using the open-source software 3D Slicer (version 4.10.2). We then performed polynomial curve fitting on these coordinates to reduce systematic error.
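The curve-fitting step can be illustrated with a small least-squares example. The sketch below fits x and y as polynomials in the slice position z for one needle, which assumes the needle runs roughly along the scan axis. The polynomial degree (2), the parameterization by z, and all function names are assumptions made for illustration; the text does not state which degree or software routine was used.

```python
def polyfit(z, values, degree):
    """Least-squares coefficients c[0] + c[1]*z + ... via the normal equations."""
    n = degree + 1
    a = [[sum(zi ** (j + k) for zi in z) for k in range(n)] for j in range(n)]
    b = [sum(vi * zi ** j for zi, vi in zip(z, values)) for j in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for k in range(col, n):
                a[row][k] -= factor * a[col][k]
            b[row] -= factor * b[col]
    # Back substitution.
    coeffs = [0.0] * n
    for j in range(n - 1, -1, -1):
        tail = sum(a[j][k] * coeffs[k] for k in range(j + 1, n))
        coeffs[j] = (b[j] - tail) / a[j][j]
    return coeffs


def polyval(coeffs, z):
    """Evaluate the fitted polynomial at z."""
    return sum(c * z ** k for k, c in enumerate(coeffs))


def fit_needle(centroids, degree=2):
    """Smooth per-slice (x, y, z) centroids of one needle."""
    z = [p[2] for p in centroids]
    cx = polyfit(z, [p[0] for p in centroids], degree)
    cy = polyfit(z, [p[1] for p in centroids], degree)
    return [(polyval(cx, zi), polyval(cy, zi), zi) for zi in z]


# Hypothetical centroids (mm) on slices 5 mm apart, with small in-plane jitter.
centroids = [(10.0, 20.0, 0.0), (10.6, 19.7, 5.0), (10.9, 19.6, 10.0),
             (11.6, 19.2, 15.0), (12.0, 19.0, 20.0)]
smoothed = fit_needle(centroids)
```

The fit needs at least degree + 1 slices per needle; with fewer points the normal equations become singular and a lower degree should be used.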

The central trajectory coordinates of each needle generated by the deep-learning–based method were rewritten into the original treatment plan file. The properties of the treatment plan, such as prescription dose, number of dwell positions, source dwell times, source step size, needle length, and needle offset, were kept the same. Dose recalculation was conducted using the Oncentra Brachy treatment planning system (TPS), version 4.6 (Elekta AB), with the same parameters. Dose-volume histograms (DVHs) and 3D dose distributions were compared between manual digitization and automatic digitization of interstitial needles using the CNN and CNN + AG methods.

Evaluation

Geometric evaluation: Needle digitization

Overlap and distance metrics, including the DSC, Jaccard index (JI), and Hausdorff distance (HD),27 were used to assess segmentation accuracy. The DSC measured the spatial overlap between the prediction and ground truth regions:

$$DSC = \frac{2\,|P \cap T|}{|P| + |T|} \tag{4}$$

The JI measured the similarity between the 2 regions as the ratio of their intersection to their union:

$$JI = \frac{|P \cap T|}{|P \cup T|} \tag{5}$$

where $P$ and $T$ are the deep-learning model prediction and ground truth mask regions, respectively. The HD was defined as

$$HD(A, B) = \max\big(h(A, B),\, h(B, A)\big) \tag{6}$$

$$h(A, B) = \max_{a \in A}\, \min_{b \in B}\, \lVert a - b \rVert \tag{7}$$

where $A$ and $B$ are the voxel sets of the deep-learning model prediction and the ground truth, respectively; $a$ and $b$ are points of sets $A$ and $B$, respectively; and $\lVert a - b \rVert$ is the Euclidean distance between points $a$ and $b$. The HD measures the maximum mismatch between the automatic segmentation and the ground truth. A smaller HD and larger DSC and JI indicate better segmentation performance.
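The three segmentation metrics can be sketched on sets of voxel coordinates using their standard definitions. The function names and the toy voxel sets below are hypothetical. Coordinates are in voxel units here, so a real evaluation would first scale each axis by the voxel spacing to report the HD in millimeters.

```python
import math


def dice(pred, truth):
    """Dice similarity coefficient between two voxel sets."""
    return 2 * len(pred & truth) / (len(pred) + len(truth))


def jaccard(pred, truth):
    """Jaccard index: intersection over union."""
    return len(pred & truth) / len(pred | truth)


def directed_hausdorff(a_set, b_set):
    """Largest distance from a point in a_set to its nearest point in b_set."""
    return max(min(math.dist(a, b) for b in b_set) for a in a_set)


def hausdorff(a_set, b_set):
    """Symmetric Hausdorff distance."""
    return max(directed_hausdorff(a_set, b_set), directed_hausdorff(b_set, a_set))


# Toy needle segments shifted by one voxel along x.
pred = {(0, 0, 0), (1, 0, 0), (2, 0, 0)}
truth = {(1, 0, 0), (2, 0, 0), (3, 0, 0)}

dsc = dice(pred, truth)
ji = jaccard(pred, truth)
hd = hausdorff(pred, truth)
```

The brute-force HD is quadratic in the number of voxels, which is acceptable for thin needle masks but would call for a distance transform or spatial index on larger structures.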

For the geometric evaluation of the needles' central trajectories, we used the tip error and shaft error7 to evaluate the accuracy of needle position. The needle tip error was defined as

$$E_{tip} = \frac{1}{N}\sum_{i=1}^{N} \left| P_i - T_i \right| \tag{8}$$

where $N$ indicated the total number of needle paths, and $P_i$ and $T_i$ were the predicted length and ground truth length for the $i$th needle. The needle shaft error was defined as

$$E_{shaft} = \frac{1}{N}\sum_{i=1}^{N} \frac{1}{M}\sum_{j=1}^{M} \left\lVert P_{i,j}(x, y) - T_{i,j}(x, y) \right\rVert \tag{9}$$

where $M$ indicated the number of measured points in the needle's central trajectory, $P(x,y)$ represented the predicted coordinates, and $T(x,y)$ represented the ground truth coordinates at the $j$th measured point of the $i$th needle. We performed paired t tests to assess whether the differences in geometric results between the CNN and CNN + AG methods were statistically significant (P < .05).
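The two trajectory metrics can be sketched as follows. This version assumes that the tip error is the mean absolute difference between predicted and ground truth needle lengths, and that the shaft error is the mean in-plane Euclidean distance at matched points, averaged first within each needle and then across needles. Those choices, the function names, and the numbers are assumptions for illustration.

```python
import math


def tip_error(pred_lengths, true_lengths):
    """Mean absolute needle length difference over all needles (mm)."""
    diffs = [abs(p - t) for p, t in zip(pred_lengths, true_lengths)]
    return sum(diffs) / len(diffs)


def shaft_error(pred_paths, true_paths):
    """Mean in-plane distance between matched trajectory points (mm).

    Each path is a list of (x, y) points sampled at the same positions
    along the needle in the prediction and the ground truth.
    """
    per_needle = []
    for pred, truth in zip(pred_paths, true_paths):
        dists = [math.hypot(px - tx, py - ty)
                 for (px, py), (tx, ty) in zip(pred, truth)]
        per_needle.append(sum(dists) / len(dists))
    return sum(per_needle) / len(per_needle)


# Hypothetical values for two needles.
e_tip = tip_error([100.0, 98.5], [101.0, 98.0])
e_shaft = shaft_error(
    pred_paths=[[(0.0, 0.0), (3.0, 4.0)], [(1.0, 1.0)]],
    true_paths=[[(0.0, 0.0), (0.0, 0.0)], [(1.0, 2.0)]],
)
```

Averaging within each needle before averaging across needles gives every needle equal weight regardless of how many slices it spans.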

Dosimetric evaluation

Dose recalculation for the needles digitized by the deep-learning models followed the same procedure in the Oncentra Brachy TPS as for manually digitized needles, based on the updated American Association of Physicists in Medicine Task Group Report 43.28 The isodose lines and DVHs were compared between automated and manual needle digitization. The DVH metrics, such as D90% and D100% for the high-risk clinical target volume (HR-CTV) and D2cc for organs at risk (OARs; the bladder wall, rectum wall, intestines, and sigmoid), were reported.29 In general, the dosimetric differences between the manual and automatic plans were assessed as follows:

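$$\Delta D = D_{auto} - D_{manual} \tag{10}$$

$$\Delta D\,(\%) = \frac{D_{auto} - D_{manual}}{D_{manual}} \times 100\% \tag{11}$$

where $D_{auto}$ and $D_{manual}$ are the values of a given DVH metric in the automatically and manually digitized plans, respectively.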
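A short numerical sketch of the dosimetric comparison is given below. It assumes the difference is taken as the automatic-plan value minus the manual-plan value and that the relative difference is normalized to the manual plan. The function name and the dose values are hypothetical and are not study data.

```python
def dose_difference(d_auto, d_manual):
    """Return the absolute (Gy) and relative (%) difference of a DVH metric."""
    absolute = d_auto - d_manual
    relative = 100.0 * absolute / d_manual
    return absolute, relative


# Hypothetical HR-CTV D90 values in Gy for one fraction.
abs_diff, rel_diff = dose_difference(d_auto=6.03, d_manual=6.00)
```

Reporting both forms is useful because a fixed absolute deviation corresponds to very different relative deviations for a target metric such as D90 and a low-dose OAR metric such as D2cc.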

Results

Geometric comparison

The number of interstitial needles was 4 to 6 in the training set and 3 to 6 in the validation set. The average DSC and JI of CNN + AG were 93.7% and 88.2%, respectively (Table 2), demonstrating that deep-learning–based digitization was consistent with the ground truth of manual needle digitization. Furthermore, the mean DSC and JI were statistically significantly higher (P < .05) using CNN + AG than using CNN only. The average HD obtained using CNN + AG was nearly 3 mm smaller than that using CNN only (3.0 mm vs 5.8 mm), showing that deep learning with AGs was more accurate than CNN only. The average tip and shaft errors of CNN + AG relative to the ground truth of manual digitization were 1.1 ± 0.7 mm and 1.8 ± 1.6 mm, respectively, which were also smaller than those of the CNN-only method (2.0 ± 1.6 mm and 3.3 ± 3.3 mm, respectively). Overall, the proposed CNN + AG method was superior to the CNN method, indicating that integrating the attention mechanism with group normalization into the deep-learning model was feasible for needle digitization, even when needles crossed or touched in the CT of freehand needle insertions in HDR brachytherapy.

Table 2.

Needle digitization for 6 validation cases

| Method | DSC, % | JI, % | HD, mm | Tip error, mm | Shaft error, mm | Time, s |
|---|---|---|---|---|---|---|
| 3D CNN | 88.5 ± 1.8 | 79.4 ± 2.8 | 5.8 ± 3.9 | 2.0 ± 1.6 | 3.3 ± 3.3 | 1.3 |
| 3D CNN + AG | 93.7 ± 1.4 | 88.2 ± 2.5 | 3.0 ± 1.9 | 1.1 ± 0.7 | 1.8 ± 1.6 | 1.6 |
| P value | <.05 | <.05 | - | - | - | - |

Abbreviations: 3D CNN = 3-dimensional convolutional neural network; AG = attention gate; DSC = Dice similarity coefficient; HD = Hausdorff distance; JI = Jaccard index.

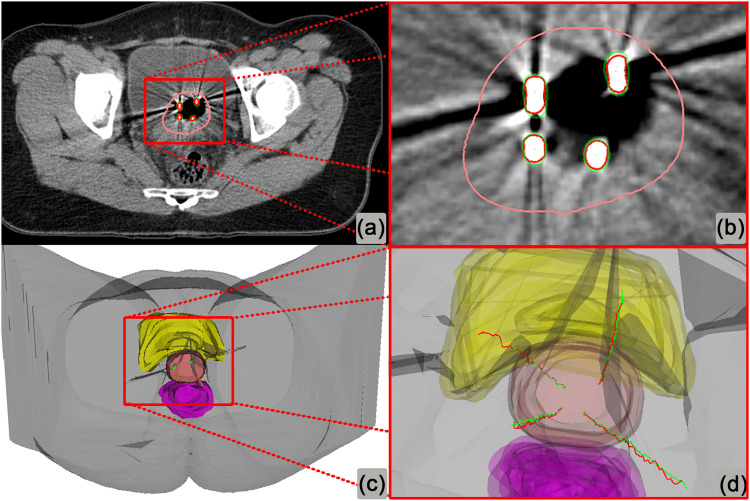

One example of needle digitization generated by attention-gated 3D Unet is shown in Fig. 2. The digitization using attention-gated 3D Unet was in good agreement with the ground truth. The total time of training for the proposed attention-gated 3D Unet versus 3D Unet only was about 12.6 and 3.3 hours, respectively. However, in the validation set, the time of automatic needle digitization was only 1 to 2 seconds per patient, without a significant difference.

Figure 2.

The needle digitization of a patient example using attention-gated 3-dimensional (3D) Unet. Red indicates manual contours and central trajectories of needles; green, automatic needle digitization using attention-gated 3D Unet; pink, high-risk clinical target volume; yellow, bladder; and purple, rectum.

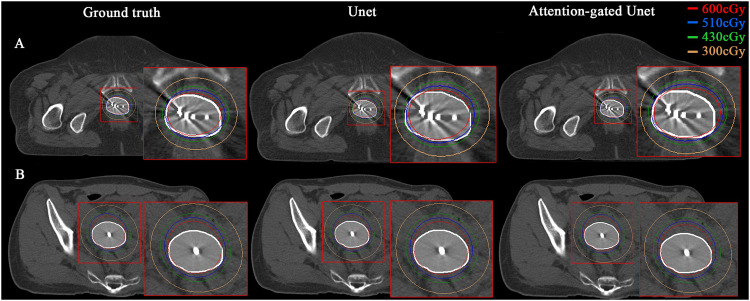

Dosimetric analysis

The dose difference in the CTV and OARs between manual and automatic digitization is shown in Fig. E2. The 3D dose distribution of 2 examples is shown in Fig. 3, where isodose lines generated by the ground truth (manual digitization), CNN, and CNN + AG models were compared in the axial plane. Both the CNN and CNN + AG methods produced dose distributions consistent with manual digitization of interstitial needles (Table E1), which suggests that the CNN + AG method could be a surrogate for manual digitization. The CNN + AG method also demonstrated slightly better performance than the CNN method. The manual digitization process of HDR brachytherapy is time consuming and can take up to 15 minutes. The entire automatic process, comprising needle digitization without additional human guidance and rewriting of the treatment plan into the commercial TPS, takes about 1 minute on average, which reduces the time for needle digitization by approximately 93%. Consequently, the proposed CNN + AG–based automatic digitization method could be integrated into the TPS and used to create clinically acceptable plans, saving time and reducing user dependency in HDR brachytherapy treatment planning.
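The quoted time saving follows directly from the two durations given above, taking the upper bound of 15 minutes for manual digitization and the average of 1 minute for the automatic workflow:

$$\frac{15\ \text{min} - 1\ \text{min}}{15\ \text{min}} \times 100\% \approx 93\%$$

Because 15 minutes is the maximum reported manual time, this figure is best read as the largest expected saving per plan.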

Figure 3.

The 3-dimensional (3D) dose distribution of the ground truth (manual digitization), 3D convolutional neural network (CNN), and 3D CNN plus attention gates–based automatic digitization of 2 patient examples. The white shadow area indicates the high-risk clinical target volume.

Discussion

Needle digitization is one of the most critical steps of CT-based HDR brachytherapy planning and substantially affects planning quality and treatment outcome.29 We have proposed a novel deep-learning method with AGs for automatic digitization to accelerate the workflow of brachytherapy and to avoid potential human errors. Two different 3D Unet–based deep-learning models were created, with and without spatial AGs. Both models had geometric and dosimetric consistency with manual digitization in the TPS. Furthermore, the AGs integrated into the 3D Unet model served as a feature selector by progressively highlighting salient features while suppressing task-irrelevant information. We adopted group normalization to improve the accuracy and convergence speed of automatic needle digitization in this work.

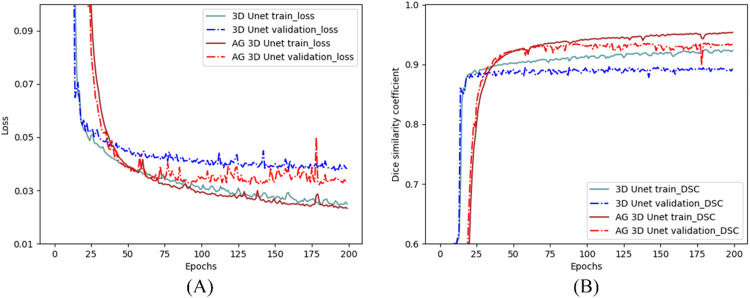

The results also showed that the performance of the CNN + AG method was superior to the CNN model, with statistically significant improvement in DSC and JI (P < .05). In addition, the lowest loss and highest DSC were more quickly reached for the CNN + AG model, showing a faster convergence of the CNN + AG method (Fig. 4).

Figure 4.

The loss and Dice evolution of the training and validation set.

To the best of our knowledge, this work is the first to report both geometric and dosimetric differences between manual and automatic digitization of interstitial needles in HDR brachytherapy using attention mechanisms. Previously, Hu et al11 and Deufel et al6 conducted dosimetric analysis using automatic digitization of Fletcher applicators.

There are several limitations in our study. First, the performance of the CNN + AG model was constrained by the slice thickness of CT simulation. Deufel et al6 suggested that geometric agreement of manual and automatic digitization could be improved by higher resolution and thinner-sliced CT images. Qing et al30 reported that the tip position of needles was affected by the slice thickness of the CT. Hu et al11 reported a greater DSC, lower HD95 distance, and smaller tip error for automatic digitization of the Fletcher applicator when CT slice thickness was reduced to 1.3 mm. In the future, CT slice thickness should be reduced to improve accuracy of automatic needle digitization.

Second, we constructed 3D deep-learning models with and without AGs based on CT only and did not include MRI-guided HDR brachytherapy. Magnetic resonance scans should be introduced as input images in the future, with automatic digitization of MRI-compatible needles. Shaaer et al31 proposed a 2D Unet automatic reconstruction algorithm based on T1- and T2-weighted MRI, which had potential to replace conventional manual catheter reconstruction, although the reconstruction time was still relatively long (approximately 11 minutes).

Third, potential uncertainty in the manual contours still exists and may affect the accuracy of needle digitization; this could be mitigated by adding more cases to train the deep-learning model or by inviting multiple planners to perform the manual digitization step. Future work will involve an end-to-end design for automatic digitization of the HR-CTV, OARs, and needle trajectories with high-resolution CT to improve overall performance.

Conclusions

A deep-learning model incorporated with AGs was proposed and evaluated geometrically and dosimetrically for automatic digitization of interstitial needles in HDR brachytherapy for cervical cancer. This model has clinical potential to improve planning efficiency.

Disclosures

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

Sources of support: This study was supported by the CAS Key Laboratory of Health Informatics, Shenzhen Institute of Advanced Technology (#2011DP173015); the Shenzhen Technology and Innovation Program (JCYJ20210324110210029); and a clinical research grant from Peking University Shenzhen Hospital (LCYJ2021018).

Research data are stored in an institutional repository and will be shared upon request to the corresponding authors.

Supplementary material associated with this article can be found, in the online version, at doi:10.1016/j.adro.2023.101340.

Contributor Information

Yuenan Wang, Email: yuenan.wang@gmail.com.

Xuetao Wang, Email: wangxuetao0625@126.com.

References

- 1. Siegel RL, Miller KD, Fuchs HE, Jemal A. Cancer statistics, 2022. CA Cancer J Clin. 2022;72:7–33. doi: 10.3322/caac.21708.

- 2. Viswanathan AN, Thomadsen B, American Brachytherapy Society Cervical Cancer Recommendations Committee. American Brachytherapy Society consensus guidelines for locally advanced carcinoma of the cervix. Part I: general principles. Brachytherapy. 2012;11:33–46. doi: 10.1016/j.brachy.2011.07.003.

- 3. Tanderup K, Hellebust TP, Lang S, et al. Consequences of random and systematic reconstruction uncertainties in 3D image based brachytherapy in cervical cancer. Radiother Oncol. 2008;89:156–163. doi: 10.1016/j.radonc.2008.06.010.

- 4. Jung H, Shen C, Gonzalez Y, Albuquerque K, Jia X. Deep-learning assisted automatic digitization of interstitial needles in 3D CT image based high dose-rate brachytherapy of gynecological cancer. Phys Med Biol. 2019;64 doi: 10.1088/1361-6560/ab3fcb.

- 5. Zhou Y, Klages P, Tan J, et al. Automated high-dose rate brachytherapy treatment planning for a single-channel vaginal cylinder applicator. Phys Med Biol. 2017;62:4361–4374. doi: 10.1088/1361-6560/aa637e.

- 6. Deufel CL, Tian S, Yan BB, Vaishnav BD, Haddock MG, Petersen IA. Automated applicator digitization for high-dose-rate cervix brachytherapy using image thresholding and density-based clustering. Brachytherapy. 2020;19:111–118. doi: 10.1016/j.brachy.2019.09.002.

- 7. Zaffino P, Pernelle G, Mastmeyer A, et al. Fully automatic catheter segmentation in MRI with 3D convolutional neural networks: Application to MRI-guided gynecologic brachytherapy. Phys Med Biol. 2019;64 doi: 10.1088/1361-6560/ab2f47.

- 8. Pernelle G, Mehrtash A, Barber L, et al. Validation of catheter segmentation for MR-guided gynecologic cancer brachytherapy. Med Image Comput Comput Assist Interv. 2013;16:380–387. doi: 10.1007/978-3-642-40760-4_48.

- 9. Zhang Y, Lei Y, Qiu RLJ, et al. Multi-needle localization with attention U-Net in US-guided HDR prostate brachytherapy. Med Phys. 2020;47:2735–2745. doi: 10.1002/mp.14128.

- 10. Andersén C, Rydén T, Thunberg P, Lagerlöf JH. Deep learning-based digitization of prostate brachytherapy needles in ultrasound images. Med Phys. 2020;47:6414–6420. doi: 10.1002/mp.14508.

- 11. Hu H, Yang Q, Li J, et al. Deep learning applications in automatic segmentation and reconstruction in CT-based cervix brachytherapy. J Contemp Brachytherapy. 2021;13:325–330. doi: 10.5114/jcb.2021.106118.

- 12. Zhang D, Yang Z, Jiang S, Zhou Z, Meng M, Wang W. Automatic segmentation and applicator reconstruction for CT-based brachytherapy of cervical cancer using 3D convolutional neural networks. J Appl Clin Med Phys. 2020;21:158–169. doi: 10.1002/acm2.13024.

- 13. Jung H, Gonzalez Y, Shen C, Klages P, Albuquerque K, Jia X. Deep-learning-assisted automatic digitization of applicators in 3D CT image-based high-dose-rate brachytherapy of gynecological cancer. Brachytherapy. 2019;18:841–851. doi: 10.1016/j.brachy.2019.06.003.

- 14. Litjens G, Kooi T, Bejnordi BE, et al. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005.

- 15. Mohammadi R, Shokatian I, Salehi M, Arabi H, Shiri I, Zaidi H. Deep learning-based auto-segmentation of organs at risk in high-dose rate brachytherapy of cervical cancer. Radiother Oncol. 2021;159:231–240. doi: 10.1016/j.radonc.2021.03.030.

- 16. Dong X, Lei Y, Tian S, et al. Synthetic MRI-aided multi-organ segmentation on male pelvic CT using cycle consistent deep attention network. Radiother Oncol. 2019;141:192–199. doi: 10.1016/j.radonc.2019.09.028.

- 17. Kandalan RN, Nguyen D, Rezaeian NH, et al. Dose prediction with deep learning for prostate cancer radiation therapy: Model adaptation to different treatment planning practices. Radiother Oncol. 2020;153:228–235. doi: 10.1016/j.radonc.2020.10.027.

- 18. Kearney V, Chan JW, Wang T, et al. DoseGAN: A generative adversarial network for synthetic dose prediction using attention-gated discrimination and generation. Sci Rep. 2020;10:11073. doi: 10.1038/s41598-020-68062-7.

- 19. Park S, Lee SM, Lee KH, et al. Deep learning-based detection system for multiclass lesions on chest radiographs: Comparison with observer readings. Eur Radiol. 2020;30:1359–1368. doi: 10.1007/s00330-019-06532-x.

- 20. Kearney V, Chan JW, Wang T, Perry A, Yom SS, Solberg TD. Attention-enabled 3D boosted convolutional neural networks for semantic CT segmentation using deep supervision. Phys Med Biol. 2019;64 doi: 10.1088/1361-6560/ab2818.

- 21. Wu Y, He K. Group normalization. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y, editors. Computer Vision – ECCV 2018. Lecture Notes in Computer Science, vol 11217. Springer; Cham, Switzerland: 2018.

- 22. Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells W, Frangi A, editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Lecture Notes in Computer Science, vol 9351. Springer; Cham, Switzerland: 2015.

- 23. Chen X, Sun S, Bai N, et al. A deep learning-based auto-segmentation system for organs-at-risk on whole-body computed tomography images for radiation therapy. Radiother Oncol. 2021;160:175–184. doi: 10.1016/j.radonc.2021.04.019.

- 24. Rodríguez Outeiral R, Bos P, Al-Mamgani A, Jasperse B, Simões R, van der Heide UA. Oropharyngeal primary tumor segmentation for radiotherapy planning on magnetic resonance imaging using deep learning. Phys Imaging Radiat Oncol. 2021;19:39–44. doi: 10.1016/j.phro.2021.06.005.

- 25. Garrett Fernandes M, Bussink J, Stam B, et al. Deep learning model for automatic contouring of cardiovascular substructures on radiotherapy planning CT images: Dosimetric validation and reader study based clinical acceptability testing. Radiother Oncol. 2021;165:52–59. doi: 10.1016/j.radonc.2021.10.008.

- 26. Schlemper J, Oktay O, Schaap M, et al. Attention gated networks: Learning to leverage salient regions in medical images. Med Image Anal. 2019;53:197–207. doi: 10.1016/j.media.2019.01.012.

- 27. Taha AA, Hanbury A. Metrics for evaluating 3D medical image segmentation: Analysis, selection, and tool. BMC Med Imaging. 2015;15:29. doi: 10.1186/s12880-015-0068-x.

- 28. Rivard MJ, Coursey BM, DeWerd LA, et al. Update of AAPM Task Group No. 43 report: A revised AAPM protocol for brachytherapy dose calculations. Med Phys. 2004;31:633–674. doi: 10.1118/1.1646040.

- 29. Pötter R, Haie-Meder C, Van Limbergen E, et al. Recommendations from gynaecological (GYN) GEC ESTRO working group (II): Concepts and terms in 3D image-based treatment planning in cervix cancer brachytherapy-3D dose volume parameters and aspects of 3D image-based anatomy, radiation physics, radiobiology. Radiother Oncol. 2006;78:67–77. doi: 10.1016/j.radonc.2005.11.014.

- 30. Qing K, Yue NJ, Hathout L, et al. The combined use of 2D scout and 3D axial CT images to accurately determine the catheter tips for high-dose-rate brachytherapy plans. J Appl Clin Med Phys. 2021;22:273–278. doi: 10.1002/acm2.13184.

- 31. Shaaer A, Paudel M, Smith M, Tonolete F, Ravi A. Deep-learning-assisted algorithm for catheter reconstruction during MR-only gynecological interstitial brachytherapy. J Appl Clin Med Phys. 2022;23:e13494. doi: 10.1002/acm2.13494.
