Abstract

The ability to detect and learn about the predictive relations existing between events in the world is essential for adaptive behavior. It allows us to use past events to predict the future and to adjust our behavior accordingly. Pavlovian fear conditioning allows anticipation of sources of danger in the environment. It guides attention away from poorer predictors toward better predictors of danger and elicits defensive behavior appropriate to these threats. This article reviews the differences between learning about predictive relations and learning about contiguous relations in Pavlovian fear conditioning. It then describes behavioral approaches to the study of these differences and to the examination of subtle variations in the nature and consequences of predictive learning. Finally, it reviews recent data from rodent and human studies that have begun to identify the neural mechanisms for direct and indirect predictive fear learning.

The ability to detect and learn about predictive relations existing between events in the world is essential for adaptive behavior. Such learning allows us to use past events to predict the future and to adjust our behavior accordingly. Learning about predictive relations depends on what is already known about the events in the relation: If an outcome is unexpected, we learn about cues that predict its occurrence; if the outcome is already expected, information provided by other cues about its occurrence is redundant and our learning about them is impaired. Pavlovian fear conditioning enables learning about, and adaptive responding to, sources of danger in the environment. It involves encoding the predictive relation between a conditioned stimulus (CS) and an aversive unconditioned stimulus (US). In this way, Pavlovian fear conditioning allows anticipation of sources of danger in the environment. It guides attention away from poorer predictors toward better predictors of danger, and it elicits defensive behavior appropriate to these threats.

A wealth of data accumulated over the past 20 or more years has provided significant insights into the neurobiological mechanisms underlying the formation of fear memories in the mammalian brain. These data support the view that the amygdala is essential for encoding, storing, and retrieving fear memories. In contrast to this knowledge of the brain mechanisms for storage of fear memories, the brain mechanisms for predicting danger are only just beginning to be understood. This article begins by reviewing the differences between learning about predictive relations and learning about contiguous relations in Pavlovian fear conditioning. It then describes some behavioral designs that permit differentiation between the neural mechanisms for predictive versus contiguity learning and also permit examination of the nature and consequences of predictive learning. Finally, it considers recent data from rodent and human experiments that reveal the brain mechanisms for predicting danger.

Predicting danger: More than just contiguous relations

Contingency versus contiguity

Learning about predictive relations should be distinguished from learning about contiguous relations. In the latter, learning proceeds as a function of the contiguous relationship between stimuli. Procedurally, fear conditioning involves a particular temporal relationship between a CS and an aversive US. The CS is arranged by the experimenter to precede the occurrence of the US. If this contiguous relation is broken, so that the trace interval between the offset of the CS and the onset of the US is increased, fear learning is severely retarded (Yeo 1974). If the trace interval is long enough, then learning is abolished. That fear learning entails temporal pairings of two events and does not occur across long trace intervals between those events suggests that the temporal relation between the CS and US is critical for learning. Indeed, most early theories of Pavlovian conditioning took for granted that CS–US contiguity was the critical determinant of learning. Hebb’s learning rule is an important example of this approach (Hebb 1949).

Learning about predictive relations involves learning about more than just the contiguous pairing between the CS and US. It involves learning about the causal relationship between the two events (Dickinson 1980; Rescorla 1988). Predictive learning depends on what is already known about those events. If little is known about the relation between the events, so that the US is not predicted by the CS, then learning occurs. If much is known about this relation, so that the US is adequately predicted by the CS, then learning fails. The Rescorla-Wagner learning rule (Rescorla and Wagner 1972; Wagner and Rescorla 1972) is an important example of a theory that captures this role for prediction in learning. It states that the amount learned about a CS on a conditioning trial, or the associative strength (V) that accrues to a CS on that trial, is a function of the discrepancy, or predictive error, between the actual outcome of the conditioning trial (λ) and the expected outcome of the conditioning trial (∑V). The expected outcome of the conditioning trial is the summed associative strengths of all CSs present on that trial. The error term, (λ − ∑V), drives learning. When the output of this discrepancy is positive, that is, when λ > ∑V, so that the actual outcome of the trial exceeds the predicted outcome, excitatory conditioning occurs. When the output of this discrepancy is zero, that is, when λ = ∑V, so that the actual and predicted outcomes are the same, no conditioning occurs. Finally, when the output of this discrepancy is negative, that is, when λ < ∑V, so that the expected outcome exceeds the actual outcome, then inhibitory conditioning occurs.
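The three cases above can be illustrated with a minimal sketch of a trial-level update. This is only an illustration under stated assumptions: the function name `rw_update`, the single learning-rate parameter `rate`, and the numerical values are hypothetical and are not taken from the studies cited here.

```python
def rw_update(V, present, lam, rate=0.3):
    """Apply one trial-level Rescorla-Wagner update.

    V       -- dict mapping each CS name to its associative strength
    present -- names of the CSs presented on this trial
    lam     -- actual outcome of the trial (0.0 when the US is omitted)
    rate    -- hypothetical learning-rate parameter (an assumption)
    """
    expected = sum(V[cs] for cs in present)   # expected outcome, sum of V
    error = lam - expected                    # predictive error, lam - sum(V)
    updated = dict(V)
    for cs in present:
        updated[cs] = V[cs] + rate * error    # every CS present shares one error
    return updated, error


# The three cases described in the text, for a single CS called "A".
V_pos, err_pos = rw_update({"A": 0.0}, ["A"], lam=1.0)    # US unexpected
V_zero, err_zero = rw_update({"A": 1.0}, ["A"], lam=1.0)  # US fully expected
V_neg, err_neg = rw_update({"A": 1.0}, ["A"], lam=0.0)    # expected US omitted

print(err_pos, V_pos["A"])    # positive error: V increases (excitatory)
print(err_zero, V_zero["A"])  # zero error: V unchanged
print(err_neg, V_neg["A"])    # negative error: V decreases (inhibitory)
```

Because the error is computed from the summed strengths of all CSs present, what is learned about any one CS depends on what the other CSs on the trial already predict.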

Trial-level versus real-time models

In its original application, the Rescorla-Wagner learning rule operated at the level of the conditioning trial. Predictions are made and knowledge updated, based on the outcomes of those predictions, after a CS–US pairing. A more complex but more realistic approach has been to suppose that learning occurs not on a trial-by-trial basis but instead continuously across a trial. The temporal-difference (TD) model (Sutton 1988; Sutton and Barto 1990) is among the most influential of these models (see also Sutton and Barto 1981; Schmajuk and Moore 1988; Lamoureaux et al. 1998). According to this real-time approach, predictions are made and knowledge updated, based on the outcomes of those predictions, continually during a trial. In a trial-by-trial instantiation of the Rescorla-Wagner model, there can be no predictive error until receipt or omission of the US. In time-derivative models of the Rescorla-Wagner learning rule, such as the TD model, there can be multiple sources of predictive error within a trial. For example, there can be predictive error immediately upon receipt of a CS if the associative strength of that CS is different from the associative strength of stimuli present (e.g., contextual cues) in the time immediately before it, just as there may be predictive error upon receipt or omission of the US. Moreover, there can be predictive error within an individual CS presentation so that subjects can learn different things about different temporal components of a CS.
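The idea that predictive error can arise at several points within a trial can be sketched with a deliberately simplified TD(0) learner. The assumptions here are ours, not the cited authors': each time step of the CS carries its own weight, the context alone is taken to predict nothing, and the names `td_trial`, `cs_on`, `us_at`, `gamma`, and `rate` are hypothetical.

```python
def td_trial(w, cs_on=2, us_at=6, n_steps=8, lam=1.0, gamma=1.0, rate=0.2):
    """Run one trial of a simplified TD(0) learner and return its errors.

    w[k] is the prediction carried by the k-th time step of the CS. Time
    steps outside the CS (context alone) are assumed to predict nothing.
    Returns a dict mapping each time step to the error registered on
    arrival at that step.
    """
    def value(t):
        k = t - cs_on
        return w[k] if 0 <= k < len(w) else 0.0

    errors = {}
    for t in range(n_steps - 1):
        outcome = lam if t + 1 == us_at else 0.0          # US arrives at us_at
        delta = outcome + gamma * value(t + 1) - value(t)  # within-trial error
        errors[t + 1] = delta
        k = t - cs_on
        if 0 <= k < len(w):
            w[k] += rate * delta                           # update the active CS step
    return errors


cs_on, us_at = 2, 6
w = [0.0] * (us_at - cs_on)          # one weight per CS time step
first = td_trial(w, cs_on, us_at)    # errors on the first pairing
for _ in range(300):
    last = td_trial(w, cs_on, us_at)  # errors after extended training

print(first[cs_on], first[us_at])  # early: no error at CS onset, error at the US
print(last[cs_on], last[us_at])    # late: error at CS onset, almost none at the US
```

In this sketch the error starts at the time of the US and, with training, comes to be registered at CS onset, where the CS predicts more than the context that preceded it.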

The real-time approach adopted by these models marks an important distinction in the nature of predictive learning. A real-time approach is used extensively in contemporary computational and cognitive neuroscience investigations of predictive learning. This article focuses on trial-level models of predictive learning and discusses real-time models only when behavioral designs explicitly require them. This focus may be justified on two grounds. First, under most of the behavioral conditions considered here (e.g., a CS compound of two simultaneously presented elements, such as a light and noise), the TD model and other real-time models often reduce to the Rescorla-Wagner model. Second, the TD model does not explicitly address the role of attention and learned changes in CS salience and therefore has somewhat less clear applications to understanding the indirect actions of predictive error on learning.

Direct versus indirect predictive learning: Variations in US versus CS processing

A second important distinction is whether predictive error has a direct or indirect action on fear learning. This distinction can be rephrased in terms of whether the important consequence of predictive error is a change in how the nervous system processes the shock US (direct action) or how it processes the CS (indirect action). In the Rescorla-Wagner model, for example, predictive error regulates learning directly by altering the effectiveness of the shock US. An unexpected or surprising shock US is more effective in promoting learning than is an expected or unsurprising shock US. In this way predictive error has an immediate and direct action on learning.

Predictive error can also have delayed and indirect actions on learning. These actions are achieved by regulating the amount of attention allocated to the CS (e.g., Mackintosh 1975; Pearce and Hall 1980). Learning about predictive relations requires attention to the events in those relations. Theories of indirect predictive learning note that the amount of attention allocated to a CS is not static; rather, attention varies as a function of how accurately that CS predicts danger. Better predictors of danger are attended to and learned about, whereas poorer predictors of danger are ignored and consequently are not learned about. Predictive learning is indirect because it involves learned changes in the attentional processing of the CS. Predictive learning is delayed because learning to ignore unreliable predictors of danger, or learning to attend to reliable predictors, requires prior experience with the predictor and the source of danger. An important example of this approach to understanding predictive learning is the Mackintosh model (Mackintosh 1975), which states that the organism extracts the best predictor (e.g., CSA or CSB) of an aversive event by comparing their respective discrepancies |λ − VA| versus |λ − VB|. The smaller the discrepancy, the better the predictor and the more attention allocated to that CS, at the expense of the other CS, on subsequent trials. In this way, learning about predictive relations guides attention toward better predictors of danger and away from poorer predictors.
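The delayed, attention-based action described above can be sketched as follows. The sketch follows the comparison of |λ − VA| with |λ − VB| given in the text, but the size of the attention change (`step`), its bounds (`floor`, `ceiling`), the rate `theta`, and all starting values are hypothetical choices made only for illustration.

```python
def mackintosh_trial(V, alpha, lam=1.0, theta=0.5, step=0.3,
                     floor=0.05, ceiling=1.0):
    """One AB compound trial under a simplified Mackintosh-style rule."""
    # Each CS has its own, unpooled discrepancy.
    err = {cs: lam - V[cs] for cs in ("A", "B")}
    # Learning about each CS is scaled by the attention it currently receives.
    new_V = {cs: V[cs] + alpha[cs] * theta * err[cs] for cs in ("A", "B")}
    # Attention shifts toward the CS with the smaller discrepancy.
    new_alpha = dict(alpha)
    if abs(err["A"]) != abs(err["B"]):
        better = "A" if abs(err["A"]) < abs(err["B"]) else "B"
        worse = "B" if better == "A" else "A"
        new_alpha[better] = min(ceiling, alpha[better] + step)
        new_alpha[worse] = max(floor, alpha[worse] - step)
    return new_V, new_alpha


def run_compound(V_A_start, n_trials=3):
    """Give n_trials AB+ trials; return final V, final alpha, and gains to B."""
    V = {"A": V_A_start, "B": 0.0}
    alpha = {"A": 0.5, "B": 0.5}
    gains = []
    for _ in range(n_trials):
        new_V, alpha = mackintosh_trial(V, alpha)
        gains.append(new_V["B"] - V["B"])
        V = new_V
    return V, alpha, gains


V_blk, alpha_blk, gains_blk = run_compound(V_A_start=1.0)  # CSA pre-trained
V_ctl, alpha_ctl, gains_ctl = run_compound(V_A_start=0.0)  # CSA novel

print(gains_blk)  # learning about CSB shrinks after the first compound trial
print(gains_ctl)  # without pre-training, CSB keeps its share of attention
```

In this sketch the first compound trial supports the same learning about CSB in both groups; the deficit appears only on later trials, once attention to CSB has declined.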

Different circumstances favor a direct action of predictive error over an indirect action and vice versa. These are reviewed below. Recent research, described later in this article, has exploited these circumstances to study the distinct neural substrates of direct and indirect predictive learning. Separating the influences of these two actions is important for empirical investigations into the mechanisms for predictive learning. However, this separation should not be taken to imply that predicting danger always relies exclusively on one action over the other. Predicting danger is multiply determined, involving both direct and indirect actions of predictive error on fear learning.

Behavioral approaches for studying predictive learning

In a standard fear conditioning experiment, a subject (e.g., a rat, mouse, or human) is exposed to pairings of a CS with footshock. The CS is later presented, and the subject’s fear reactions are assessed. Often, performance is compared with that of control conditions, which may include CS-only or US-only presentations during conditioning or unpaired presentations of the CS and US during conditioning. The fact that Pavlovian fear learning is sensitive to both the contiguous and predictive relationship between a CS and US requires the use of a different approach that is able to dissociate these two relations.

Blocking, unblocking, and the role of affective learning

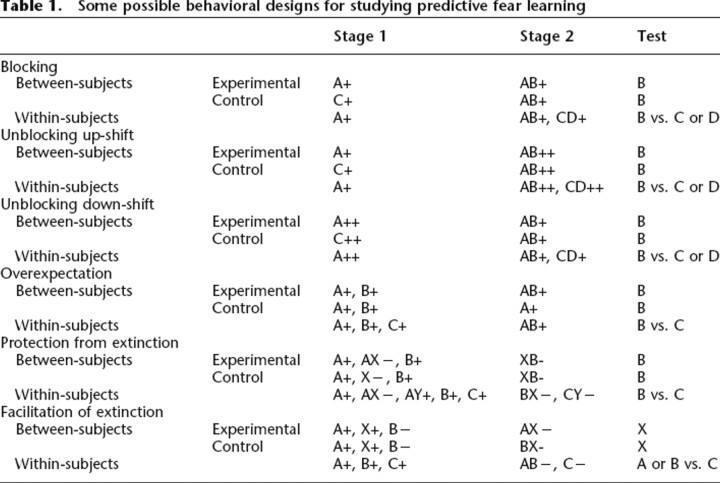

The classic behavioral preparation for studying predictive learning is blocking (Table 1). In stage 1, Kamin (1968, 1969) subjected rats to CSA–shock pairings. In stage 2, rats received a compound stimulus of CSA and CSB followed by shock. Kamin’s seminal finding was that prior conditioning of fear to CSA blocked fear from accruing to CSB compared with a control group that received no stage 1 training. Conditioning to CSB failed, despite adequate CSB–US contiguity, because the shock was not surprising when it was preceded by CSA. The animals could predict the occurrence of the US from CSA, and so conditioning to CSB failed because CSB was uninformative. Blocking has been observed in many species across many conditioning preparations, including appetitive and aversive conditioning, spatial learning, and human causal judgements (Khallad and Moore 1996; Biegler and Morris 1999; Roberts and Pearce 1999; McNish et al. 2000; Dickinson 2001; McNally et al. 2004a). Simple variants of the basic blocking design (Table 1) permit direct selection between the different mechanisms for predictive learning. According to the Rescorla-Wagner model, blocking occurs because the expected shock in stage 2 is less effective than is a surprising shock. According to attentional theories, blocking occurs due to the withdrawal of attention from the added CS in stage 2 because it is a worse predictor of shock than the pre-trained CS. These learned variations in attention can only develop across multiple conditioning trials. So, according to attentional theories, blocking does not occur on the first conditioning trial in stage 2. Rather, it occurs on subsequent trials because the subject ignores the blocked CS on these later trials and therefore does not learn about it (Mackintosh et al. 1980; but see Dickinson et al. 1983). These differences have been exploited to study the neural mechanisms for indirect predictive learning (Iordanova et al. 2006a,b).
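The Rescorla-Wagner account of blocking can be sketched as a short simulation of the two-stage design. The helper `train`, the learning rate, and the trial counts are hypothetical values chosen for illustration; they are not the parameters of Kamin's experiments.

```python
def train(V, present, lam, n_trials, rate=0.3):
    """Run n_trials Rescorla-Wagner trials with the CSs in `present`."""
    for _ in range(n_trials):
        error = lam - sum(V[cs] for cs in present)  # pooled predictive error
        for cs in present:
            V[cs] += rate * error
    return V


# Blocking group: stage 1 (CSA-shock), then stage 2 (CSA + CSB compound-shock).
blocked = {"A": 0.0, "B": 0.0}
train(blocked, ["A"], lam=1.0, n_trials=20)
train(blocked, ["A", "B"], lam=1.0, n_trials=10)

# Control group: no stage 1 training, stage 2 only.
control = {"A": 0.0, "B": 0.0}
train(control, ["A", "B"], lam=1.0, n_trials=10)

print(round(blocked["B"], 4))  # close to zero: CSA already predicts the shock
print(round(control["B"], 4))  # substantial: the shock is surprising in stage 2
```

CSB is paired with shock equally often in both groups, so the difference in what is learned about it reflects the size of the predictive error rather than CSB–US contiguity.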

Table 1. Some possible behavioral designs for studying predictive fear learning

Unblocking also permits study of predictive learning. Kamin (1968, 1969) reported that blocking was abolished (i.e., unblocking occurred) when the intensity of footshock was increased from stage 1 to stage 2. Rats that had received pairings of a CSA with a 1-mA footshock in stage 1 and pairings of the CSA–CSB compound with a 4-mA footshock in stage 2 showed robust conditioning to CSB. Expressed casually, fear accrued normally to CSB because it was predictive of the increase in US intensity. Unblocking also permits direct selection between different mechanisms of predictive learning (Table 1). According to the Rescorla-Wagner model, unblocking will only occur when the number, magnitude, or duration of the US is increased in stage 2. Attentional theories of predictive learning make different predictions regarding the locus of the effects of a surprising US and the circumstances under which unblocking of fear will be observed. Regarding the locus of unblocking, theories of indirect predictive learning hold that a surprising US prevents the decline in attention otherwise suffered by a blocked CS and so permits the CS to associate with shock on subsequent trials (Mackintosh et al. 1977, 1980). Regarding the circumstances that produce unblocking, theories of indirect predictive learning state that unblocking occurs with increases or decreases in the US from stage 1 to stage 2 (Pearce and Hall 1980). Unblocking of fear learning with decreases or increases in stage 2 US intensity has been reported under different circumstances (e.g., Dickinson et al. 1976; McNally et al. 2004a), and these differences have been exploited to directly study the neural mechanisms for direct predictive learning.
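The Rescorla-Wagner prediction about unblocking can be sketched by varying λ between stages. This is an illustration only: λ is treated as an arbitrary stand-in for US intensity (it is not calibrated to milliamps), and the function name `stage2_strength_of_B`, the learning rate, and the trial counts are hypothetical.

```python
def stage2_strength_of_B(lam_stage1, lam_stage2, rate=0.3):
    """Return V for CSB after CSA-US training followed by AB-US training."""
    V = {"A": 0.0, "B": 0.0}
    for _ in range(30):                       # stage 1: CSA alone
        V["A"] += rate * (lam_stage1 - V["A"])
    for _ in range(10):                       # stage 2: AB compound
        error = lam_stage2 - (V["A"] + V["B"])
        V["A"] += rate * error
        V["B"] += rate * error
    return V["B"]


same = stage2_strength_of_B(1.0, 1.0)      # US unchanged: blocking
increase = stage2_strength_of_B(1.0, 2.0)  # US increased: unblocking
decrease = stage2_strength_of_B(1.0, 0.5)  # US decreased

print(round(same, 3), round(increase, 3), round(decrease, 3))
```

With a pooled error term, only the increase yields excitatory strength to CSB; a decrease drives CSB negative rather than producing fear. Attentional accounts, which are not modeled in this sketch, differ on exactly this point.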

The original Sutton-Barto model of TD learning (Sutton and Barto 1981) makes an important and unique claim about predictive learning in a variant of the blocking and unblocking designs. In the standard blocking design, CSA is paired with shock. A compound of CSA and CSB is then paired with shock. The Sutton-Barto model is identical to the Rescorla-Wagner model under these conditions. However, suppose that during a third stage of training, CSB is arranged to precede and overlap with CSA and this compound is then followed by shock. The Sutton-Barto model uniquely predicts that CSA will lose, and CSB will gain, associative strength under these conditions. In other words, a change in the temporal order of CS presentations produces unblocking of CSB and instates blocking of CSA. This prediction has been confirmed in rabbit eyeblink conditioning (Kehoe et al. 1987). It is another demonstration that learning about contiguous relations is distinct from learning about predictive relations. During each stage of the experiment, the pre-trained CS, CSA, stands in a close contiguous relation to the US, yet what is learned about CSA changes across the course of the experiment.

Predictive learning is especially sensitive to the affective value of the US. For example, CSA could signal a shock US during stage 1 of a blocking experiment and the AB compound signal a frightening loud noise US in stage 2 (Bakal et al. 1974). Blocking of fear learning to CSB still occurs despite the use of different USs in stages 1 and 2. This sensitivity to the affective, not sensory, properties of a US is even more dramatically illustrated by the fact that the pre-trained CS need never have been paired with an aversive US at all. For example, the blocking CS, CSA, could be trained to signal the absence of an appetitive event. Having been established as an appetitive conditioned inhibitor, CSA is able to block fear learning from accruing to CSB when the AB compound is paired with shock (Dickinson and Dearing 1979). Betts et al. (1996) provided an important demonstration of the power of the blocking paradigm in dissociating learning about the sensory versus affective properties of a shock US. They arranged that CSA signaled a shock US to one eye of a rabbit. One consequence of these pairings was that the subjects came to show a defensive eyeblink CR to the CS. A second consequence was that the subjects came to fear the CS as indexed by a potentiated startle response. A compound of CSA and novel CSB then signaled the occurrence of shock to the other eye of the same subjects. The outcome of stage 2 training was that subjects showed conditioned eyeblink responses but not potentiated startle to CSB. The subjects had learned that CSB signaled shock to the other eye, but they were not afraid of CSB. This finding is important because it constrains any explanation of the action of predictive error on fear learning. It shows that subjects detect and respond to the shock US during stage 2 of the blocking paradigm and they even learn defensive motor responses to the blocked CS; they simply do not learn to fear that CS. Blocking is specific to the affective properties of the US and is not due to some sensory failure to detect or process the US.

Overexpectation and extinction

The designs described above highlight the role of predictive error in acquiring fear. Analogous designs can be used to study the role of predictive error in the loss of fear. Overexpectation is a powerful design for studying predictive error in the loss of fear (Table 1). In stage 1, rats learn to fear CSA and CSB by pairing each CS with shock. In stage 2, rats in the experimental group receive compound presentations of CSA and CSB with shock, whereas rats in the control groups receive either additional CSA–shock pairings or no additional training. Stage 2 compound training of CSA and CSB reduces the amount of fear provoked by either CS. Expressed casually, the subjects could be said to sum the predictions of CSA and CSB in stage 2 and thus expect two shocks. However, they receive only a single shock.

According to the Rescorla-Wagner model, during stage 1 the V values of CSA and CSB increase toward λ. In stage 2 the sum of these V values, ∑V, exceeds λ. The discrepancy (λ − ∑V) is therefore negative, and CSA and CSB undergo commensurate reductions in their associative strengths until λ = ∑V. Consistent with this interpretation, an increase in shock intensity in stage 2, so that λ = ∑V, prevents overexpectation (Kamin and Gaioni 1974). Similar to blocking, overexpectation is a robust finding. It has been observed in a number of species and conditioning preparations (e.g., Rescorla 1970, 1999; Kamin and Gaioni 1974; Kremer 1978; Khallad and Moore 1996; Lattal and Nakajima 1998; Kehoe and White 2004; McNally et al. 2004a).

Overexpectation permits selection between different accounts of predictive learning. Overexpectation selectively reveals the operation of predictive learning based on the summed, or pooled, associative strengths of all CSs present during stage 2. This is necessarily so because it is the summation of the associative strengths of the two CSs that causes the discrepancy (λ < ∑V) and so causes overexpectation. Whereas some models of predictive learning rely on pooled associative strengths to control learning, others do not. For example, the Mackintosh model of indirect predictive learning (Mackintosh 1975) computes associative strengths of CSA and CSB separately, so that what is learned about CSA, |λ − VA|, and what is learned about CSB, |λ − VB|, on an AB+ trial can be different. Because indirect predictive learning in this model does not pool the predictive strengths of CSA and CSB, it cannot generate the discrepancy (λ < ∑V) and so cannot explain overexpectation. Therefore, overexpectation can be used to select between the neural mechanisms for these different kinds of predictive learning (McNally et al. 2004a).
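The contrast between pooled and separate error terms in overexpectation can be sketched as follows. The sketch assumes that stage 1 has already brought both CSs to asymptote (V = 1.0), and the unpooled rule shown is only the separate error term, not the full attentional model; the function name `compound_stage` and all parameter values are hypothetical.

```python
def compound_stage(V, lam, n_trials, pooled, rate=0.3):
    """Give AB-US compound trials using a pooled or a separate error term."""
    V = dict(V)
    for _ in range(n_trials):
        total = V["A"] + V["B"]               # summed prediction before updating
        for cs in ("A", "B"):
            prediction = total if pooled else V[cs]
            V[cs] += rate * (lam - prediction)
    return V


after_stage1 = {"A": 1.0, "B": 1.0}  # assumed asymptote after CSA+ and CSB+

pooled = compound_stage(after_stage1, lam=1.0, n_trials=20, pooled=True)
separate = compound_stage(after_stage1, lam=1.0, n_trials=20, pooled=False)
bigger_us = compound_stage(after_stage1, lam=2.0, n_trials=20, pooled=True)

print(round(pooled["A"], 3))     # falls toward 0.5: overexpectation
print(round(separate["A"], 3))   # stays at 1.0: no discrepancy is generated
print(round(bigger_us["A"], 3))  # stays at 1.0: the larger US matches the sum
```

Only the pooled error term produces a loss of associative strength, and raising λ to match the summed prediction removes that loss.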

Fear extinction can also be used to study the role of predictive error. Fear extinction may be caused procedurally by repeated presentations of a fear CS in the absence of the aversive US, but extinction learning, similar to overexpectation, is caused by a negative prediction error (Rescorla 2002; Delamater 2004). Extinction occurs when the expected outcome (∑V) exceeds the actual outcome (λ) so that the discrepancy (λ − ∑V) is negative. Simple contiguity-based learning rules, such as Hebb’s learning rule, cannot explain extinction learning. Extinction learning, similar to fear learning, can be blocked (e.g., Lovibond et al. 2000; Rescorla 2003). In a typical “protection from extinction” design, a subject is trained with CSA–US pairings and also trained that CSX is a conditioned inhibitor. If CSA is extinguished in the presence of CSX, the presence of CSX blocks extinction learning to CSA. Protection from extinction occurs because the associative strengths of CSA and the inhibitor CSX nullify each other, so that predictive error, (λ − ∑V), is small and no extinction occurs. Conversely, extinction to CSA can be facilitated if CSX is also trained as a signal for shock and the AX compound is then extinguished (Wagner 1969; Rescorla 2000). Facilitation of extinction occurs because the summed associative strengths of CSA and CSX mean that predictive error, (λ − ∑V), is large and extinction learning is facilitated.
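Protection from extinction and facilitation of extinction can be sketched with the same pooled error term. The starting strengths (an excitor at 1.0, an inhibitor at −1.0), the learning rate, the number of trials, and the names used are hypothetical values chosen for illustration.

```python
def extinguish(V, present, n_trials=2, rate=0.3):
    """Present the CSs in `present` without the US (lam = 0.0)."""
    V = dict(V)
    for _ in range(n_trials):
        error = 0.0 - sum(V[cs] for cs in present)  # negative when fear is expected
        for cs in present:
            V[cs] += rate * error
    return V


# Assumed strengths at the start of extinction.
start = {"A": 1.0, "X_inhibitor": -1.0, "X_excitor": 1.0}

alone = extinguish(start, ["A"])                      # standard extinction
protected = extinguish(start, ["A", "X_inhibitor"])   # CSA with an inhibitor
facilitated = extinguish(start, ["A", "X_excitor"])   # CSA with another excitor

print(round(alone["A"], 2), round(protected["A"], 2), round(facilitated["A"], 2))
```

In all three conditions CSA is presented the same number of times without shock; the amount of extinction tracks the size of the negative predictive error, not the number of nonreinforced presentations.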

Recent data confirm a key claim of real-time models of predictive learning during extinction. Recall that these theories suppose that each temporal element of a CS is itself subject to predictive learning and so subjects could learn different things about different temporal elements of the CS. Kehoe and Joscelyne (2005) trained rabbits with CS–US pairings with US delivery at both 200 msec and 1200 msec after the onset of the CS (the interstimulus intervals [ISIs]). One eyeblink CR emerged at each ISI. Kehoe and Joscelyne (2005) then omitted the first US but retained the second US. Under these conditions the short-latency CR was extinguished, whereas the longer-latency CR was completely preserved. Moreover, the short-latency CR spontaneously recovered between sessions and was rapidly reacquired when the short-latency ISI was reintroduced.

Summary

Fear conditioning, blocking, and overexpectation highlight the different consequences for learning of the contiguous and predictive relationship between a CS and a shock US. In each case the contiguous relationship between a CS and a shock US is intact. However, in each case, the outcome of these pairings is quite different. In fear conditioning, a CS–US pairing gives rise to fear of the CS. In blocking, this pairing gives rise to no fear of the CS. In overexpectation, this pairing actually reduces fear of the CS. A similar reduction of a CR, despite adequate CS–US contiguity, has been observed during conditioning with serial compounds. Protection from extinction and facilitation of extinction similarly highlight the operation of predictive error underlying the processes by which organisms learn that a once dangerous cue is harmless. In both cases a fear CS is repeatedly presented in the absence of shock; however, when it is combined with an inhibitor, extinction learning does not occur, whereas when it is combined with another excitor, extinction learning is facilitated. These designs reveal the important place of predictive learning in fear conditioning. They can be used as powerful behavioral tools to probe the neural mechanisms for predictive learning and to distinguish between subtle variations in the nature and consequences of predictive learning.

Brain mechanisms for direct predictive learning

The amygdala is important for the encoding, storage, and retrieval of fear memories (Davis 1992; Maren 2005). The cellular and molecular mechanisms underlying these processes are remarkably well understood. Activation of NMDA receptors in the amygdala, and recruitment of the signal transduction cascades subsequent to NMDA receptor activation (e.g., Ca2+ and cyclic AMP-dependent signaling), are essential for fear memory formation (Schafe et al. 2001). Evidence from single unit recordings, lesions, or localized pharmacological and molecular manipulations supports this role (Maren and Quirk 2004). This evidence suggests that the multimodal CS and US sensory inputs converge on individual amygdala neurons to induce long-term synaptic plasticity and formation of fear memories (for reviews, see Fanselow and LeDoux 1999; Maren 2001; Schafe et al. 2001; Maren and Quirk 2004; Kim and Jung 2006). Recent empirical work has begun to shed light on the brain mechanisms for direct predictive learning. Interestingly, these depend, at least in part, on structures other than the amygdala.

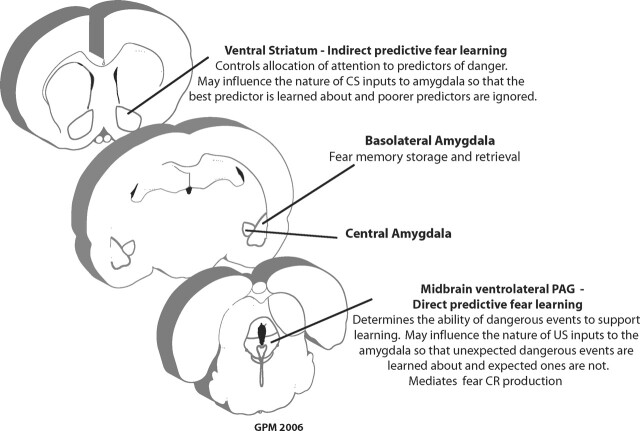

Rescorla (1968) originally suggested that direct predictive learning could be mediated by the discrepancy between the actual conditioned response (CR) produced by the CS and the maximum CR that the US can support. Excitatory fear learning occurs when the current CR is less than the maximal CR supportable by the US (a positive prediction error). Inhibitory fear learning occurs when the current CR exceeds the maximal CR supported by the US (a negative prediction error). No fear learning occurs when the current CR equals the maximal CR supported by the US (no prediction error). According to this suggestion, there should be overlap in the neuroanatomical substrates for predictive learning and for defensive CR production. The midbrain periaqueductal gray (PAG) is an important structure for integrating defensive behavioral and autonomic responses to threats (Carrive 1993; Keay and Bandler 2001, 2004). The PAG receives extensive projections from the CeA and other forebrain structures important for learning, and it controls expression of defensive behaviors as fear CRs. The PAG is organized as a series of four longitudinal columns located dorsomedial (dm), dorsolateral (dl), lateral (l), and ventrolateral (vl) to the cerebral aqueduct that exert differential control over defensive behaviors. Both the dorsal PAG (dPAG) and vlPAG have been implicated in defensive responses. The dPAG is important for controlling unconditioned defensive responses, whereas the vlPAG is important for controlling conditioned defensive responses (Carrive 1993). Recent studies have shown that the vlPAG is also an important site for predictive fear learning.

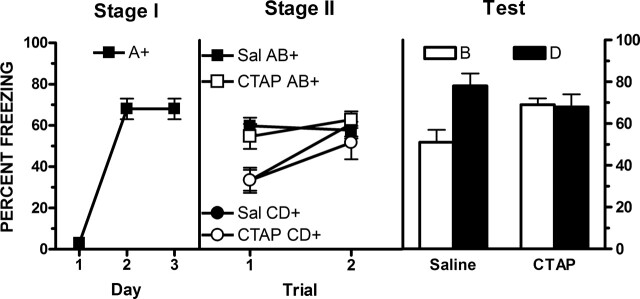

Opioid receptors in the vlPAG contribute to predictive fear learning. Molecular cloning and pharmacological studies have identified four opioid receptor subtypes, μ, δ, κ, and nociceptin opioid receptors (for reviews, see Williams et al. 2001; McNally and Akil 2002), and all of these receptors are expressed in the PAG. These receptors belong to the superfamily of seven transmembrane spanning G-protein–coupled receptors, and the four receptors share a large degree of structural homology. vlPAG μ-opioid receptors mediate predictive fear learning. For example, by using a within-subject design to study blocking, McNally and Cole (2006) trained rats to fear CSA via pairings with shock. In stage 2, they then arranged that an AB compound and a CD compound were each paired with shock. Tests showed that rats were more afraid of D than B, indicating that the presence of the frightening A had blocked conditioning to B. This blocking was prevented (i.e., fear accrued normally to B) if stage 2 training was preceded by either systemic injection of the opioid receptor antagonist naloxone or intra-vlPAG infusions of the μ-opioid receptor antagonist CTAP (Fig. 1). Identical effects of both manipulations have been reported for extinction. Thus, fear extinction learning is prevented by systemic or intra-vlPAG infusions of a nonselective opioid receptor antagonist as well as by vlPAG infusions of a μ-opioid receptor–specific antagonist (McNally and Westbrook 2003; McNally et al. 2004b, 2005). Moreover, fear extinction learning is facilitated by manipulations that enhance vlPAG opioid neuromodulation (McNally 2005). In all cases, the effects of opioid receptor antagonism were dose dependent and neuroanatomically specific to vlPAG. Finally, overexpectation is also prevented by these manipulations (McNally et al. 2004a).

Figure 1. Prevention of blocking by infusions of the μ-opioid receptor antagonist CTAP into the vlPAG prior to stage 2 training. (Reprinted with permission from the American Psychological Association, © 2006, McNally and Cole [2006]).

The common sensitivity of overexpectation, extinction, and blocking to opioid receptor antagonism is important for several reasons. For instance, it underscores the point that predictive error contributes to learning in each of these three behavioral designs. It also identifies the associative mechanism for midbrain contributions to predictive fear learning. It indicates a role for μ-opioid receptors in predictive learning based on pooled associative strengths, (λ − ∑V), rather than learning based on separate computations for CSA, |λ − VA|, and CSB, |λ − VB|. Recall that overexpectation can only be explained by theories of predictive learning that employ a pooled, or summed, error term. A role for opioids in direct as opposed to indirect predictive fear learning under these conditions was confirmed by the use of an unblocking design that manipulated opioid receptor antagonism with increases and decreases in US intensity during stage 2 (McNally et al. 2004a).

The data reviewed thus far have been derived exclusively from studies of fear conditioning in rodents. Recent work in humans has also identified prediction-related activity in the midbrain during aversive conditioning (Seymour et al. 2004). The behavioral design used did not permit examination of whether this prediction-related activity had a direct action on associative formation, as would be expected from the rodent studies reviewed above, or whether this action on associative formation was indirect via changes in attention. The region of activity was ventral to the aqueduct and consistent with dorsal raphe nucleus (DRN) rather than the immediately adjacent vlPAG. However, there are important intra-midbrain circuits involving DRN and PAG (Lovick 1994; Stezhka and Lovick 1994) that may mediate this midbrain contribution to predictive fear learning.

The intracellular mechanisms for direct predictive learning in the midbrain have also begun to be elucidated. The opioid receptors couple to G proteins inhibiting adenylyl cyclase, activating inwardly rectifying K+ channels, and decreasing the conductance of voltage-gated Ca2+ channels (Williams et al. 2001). They also couple to an array of other second messenger systems, which include the MAP kinases. Reductions in vlPAG adenylyl cyclase and cAMP are important signal transduction events for direct predictive learning because this learning is prevented by increasing vlPAG cAMP (McNally et al. 2005). Interestingly, neither vlPAG protein kinase A nor MAP kinases appear to contribute to direct predictive learning (McNally et al. 2005). This stands in contrast to the roles of these kinases in learning about contiguous relations in the amygdala and illustrates that different mechanisms can mediate learning about predictive versus contiguous relations.

These findings support earlier suggestions for a role of opioids and their receptors in predictive learning (Schull 1979; Bolles and Fanselow 1980; Fanselow 1998). The neuroanatomical overlap revealed in these experiments between the midbrain mechanisms for direct predictive learning and CR production may also be a general principle for organization of Pavlovian learning because it has been reported in other conditioning preparations (Kim et al. 1998; Medina et al. 2002). This overlap explains why predictive learning within one response system (e.g., fear) blocks learning within that system but not in another response system (e.g., eyeblink). The within-subject response specificity of blocking would be otherwise impossible.

Brain mechanisms for indirect predictive learning

The indirect actions of predictive learning on Pavlovian fear conditioning are achieved by selective attention. Predictive learning occurs by directing attention toward better predictors of danger and away from poorer predictors. Recent evidence suggests that, during fear learning, this indirect action is achieved in the ventral striatum.

Studies of reward-responsive midbrain dopamine neurons in monkeys indicate that the firing of these cells is closely linked to predictive learning (Schultz 2006). These cells display high levels of firing to unexpected rewards and low levels of firing to expected rewards (Waelti et al. 2001). Conversely, these cells show high levels of firing to CSs that reliably predict rewards and low levels of firing to CSs that are not predictive of rewards (Waelti et al. 2001). Neuroimaging studies in human participants reveal similar changes in activity in target regions of midbrain dopamine cells, the ventral putamen/striatum, as a function of whether a reward is expected or not (Pagnoni et al. 2002; McClure et al. 2003; O’Doherty et al. 2003). These changes also occur during blocking. For example, reward-responsive cells in monkey midbrain acquire stronger responses to a reward-predicting stimulus than to a blocked stimulus (Waelti et al. 2001). In human participants, the ventral putamen/striatum also shows larger responses to a reward-predicting stimulus than to a blocked stimulus (Tobler et al. 2006). Together, these findings strongly implicate dopamine neurotransmission and the ventral putamen/striatum in predicting rewards.

Do these same mechanisms also contribute to predicting danger? There is evidence from human participants that ventral putamen/striatum activity correlates with predictive error during category learning (Rodriguez et al. 2005). In this experiment participants were required to predict an outcome based on visual features of a stimulus. Feedback (correct or incorrect) for these predictions was provided on a trial-by-trial basis. Activity in the ventral putamen/striatum was positively correlated with incorrect predictions (i.e., when the outcome of the trial was surprising). This suggests that the role of this structure may not be limited to learning about rewards. However, there is conflicting evidence from human and rodent studies regarding the existence and interpretation of dopamine release and/or activity in the ventral putamen/striatum during aversive conditioning (for reviews, see Salamone 1994; Horvitz 2000; Pezze and Feldon 2004). Moreover, although there is evidence that dopamine and the ventral striatum make important contributions to fear learning, the nature of this contribution remains poorly understood (Redgrave et al. 1999; Horvitz 2000; Pezze and Feldon 2004). Recent data from both human and rodent studies suggest a specific role for the ventral striatum/putamen in predictive fear learning.

Studies of human conditioning have revealed activity in the ventral striatum during presentations of a CS previously paired with aversive stimulation (Jensen et al. 2003). This can occur during presentations of a trained CS but prior to delivery of the US and thus, under some circumstances, does not appear directly attributable to any relief that might be occasioned by the termination of the US. Moreover, this activity can be related to predictive error. For example, consider a recent experiment that studied predictive error during serial compound conditioning in human participants (Seymour et al. 2004). One pair of CSs was followed by a high-intensity aversive US (i.e., CS1→CS2→high US) and the other by a low-intensity aversive US (i.e., CS3→CS4→low US). Occasionally a CS from one pair would be presented sequentially with a CS from the other pair and followed by the US associated with the second CS (e.g., CS1→CS4→low US). There are a number of sources of predictive error, derived by TD learning rules, under these conditions. The change from the intertrial interval to the unexpected CS1 is a source of large predictive error (positive prediction error), as is the change from CS1 to CS4 on CS1→CS4 trials (negative prediction error). Activity in the ventral putamen/striatum was significantly positively correlated with these TD prediction errors.
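The sign of these TD prediction errors can be worked through with a short sketch. It is an illustration only and does not reproduce the model fitted by Seymour et al. (2004): the state values, the US magnitudes (1.0 for the high and 0.2 for the low US), the absence of temporal discounting, and the names `value` and `td_error` are all assumptions. Values here stand for the predicted intensity of the aversive US, so a positive error means that more danger is signaled than was expected.

```python
GAMMA = 1.0  # no temporal discounting; an assumption made for simplicity

# Hypothetical asymptotic predictions of US intensity after training.
# The intertrial interval (ITI) predicts nothing because CS onset is unsignaled.
value = {"ITI": 0.0, "CS1": 1.0, "CS2": 1.0, "CS3": 0.2, "CS4": 0.2, "end": 0.0}


def td_error(state, next_state, outcome=0.0):
    """TD error: outcome + gamma * V(next_state) - V(state)."""
    return outcome + GAMMA * value[next_state] - value[state]


onset_cs1 = td_error("ITI", "CS1")                     # positive error
switch_to_cs4 = td_error("CS1", "CS4")                 # negative error
expected_low_us = td_error("CS4", "end", outcome=0.2)  # fully predicted US

print(onset_cs1, switch_to_cs4, expected_low_us)
```

In this sketch the unexpected onset of CS1 yields a positive error, the switch from CS1 to CS4 yields a negative error, and the low US that follows CS4 yields no error because it is fully predicted.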

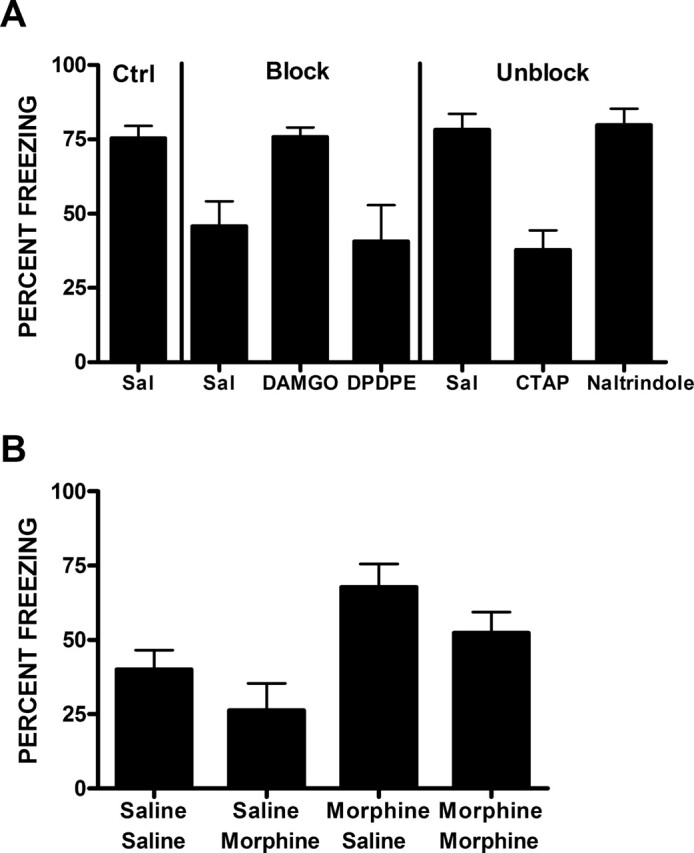

Studies of blocking in rodent fear conditioning have provided evidence that the ventral striatum is critical for predictive fear learning. These studies have also identified some of the important neurotransmitters and neuromodulators underpinning this learning. Blocking of Pavlovian fear conditioning in rodents depends on dopamine neurotransmission in the nucleus accumbens (Acb) (Iordanova et al. 2006a). Blocking is enhanced by manipulations that increase, and is prevented by manipulations that decrease, Acb dopamine neurotransmission. Interestingly, blocking of fear conditioning depends upon the combined activity of D1 and D2 dopamine receptors because only combined microinjections of D1 and D2 selective antagonists, not microinjections of either a D1 or a D2 antagonist alone, prevented blocking (Iordanova et al. 2006a). Blocking and unblocking also depend on accumbal opioid receptors (Iordanova et al. 2006b). Acb microinjections of μ-opioid receptor agonists prevent blocking, whereas microinjections of μ-opioid receptor antagonists prevent unblocking (Fig. 2).

Figure 2.

(A) Prevention of blocking by infusions of the μ-opioid receptor agonist DAMGO, and prevention of unblocking by the μ-opioid receptor antagonist CTAP, upon infusion into the Acb prior to stage 2 training. Infusions of a δ-opioid receptor agonist (DPDPE) or antagonist (Nalt [Naltrindole]) were without effect. (B) μ-opioid receptors in the Acb regulate indirect predictive fear learning. Infusions of a μ-opioid receptor agonist prior to the first (morphine–saline) stage 2 trial prevent blocking, whereas infusions prior to the second trial (saline–saline) do not. (Reprinted with permission from The Society for Neuroscience © 2006, Iordanova et al. [2006b]).

Both human fMRI and rodent fear conditioning therefore identify a role for the ventral putamen/striatum in predictive fear learning. Recent data suggest this role is in indirect predictive learning and that the ventral putamen/striatum controls allocation of attention to predictors of danger. Examination of the associative mechanism for blocking and unblocking during rodent fear conditioning revealed that the ventral striatum determines the attention allocated to a CS and, hence, its subsequent association with shock. A key component of theories of indirect predictive learning is that predictive learning is delayed. Predictive error on trial N acts to regulate what is learned about that CS on trial N + 1 by controlling how much attention is allocated to that CS on the latter trial. For example, Mackintosh et al. (1977) used an unblocking design to show that an increase in shock intensity on the first trial of stage 2 training did not alter how much was learned about the added CS on that trial (as would be predicted by a direct action of predictive error), but rather influenced learning about the added CS on the next trial (as would be predicted by an indirect action of predictive error). Iordanova et al. (2006b) recently used an analogous design to study the role of ventral striatal opioid receptors in predictive learning (Fig. 2). Iordanova et al. first trained rats to fear CSA. In stage 2, they subjected rats to two pairings of CSA and CSB with shock. They compared the effects on blocking of infusions of a μ-opioid receptor agonist into the Acb either before the first or the second of the two stage 2 conditioning trials. According to theories of direct predictive learning, infusions prior to either the first or second stage 2 trial should prevent blocking, whereas according to attentional theories, only infusions prior to the first trial should prevent blocking. 
Attentional models of predictive learning state that during the first AB+ trial in stage 2, the subject learns that CSA signals shock and that CSB signals shock. The subject also learns that CSA is a better predictor of shock than CSB because the associative strength of CSA is higher, owing to its pairing with shock in stage 1 (i.e., |λ − VA| < |λ − VB|). Consequently, the subject attends to CSA and ignores CSB on the second AB+ trial, and learning about CSB on this second trial suffers accordingly. The data showed that only infusions prior to the first trial prevented blocking. Thus, Acb μ-opioid agonists prevent the decline in attention suffered by CSB and allow it to associate with shock on the second trial.
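The delayed character of this attentional account can be made concrete with a toy simulation of the two stage 2 trials. It is a loose sketch in the spirit of attentional models such as Mackintosh (1975), not an implementation of any published model or of the analysis in Iordanova et al. (2006b). The parameter values, the all-or-none drop in attention, and the treatment of the agonist infusion as simply skipping the attention update on the infused trial are assumptions made for illustration.

```python
def stage2(v_a=0.9, lam=1.0, theta=0.5, alpha_start=0.5, alpha_low=0.05,
           infusion_before_trial=None):
    """Simulate two AB+ trials and return V_B after each trial.

    infusion_before_trial: 1, 2, or None. On the infused trial the attention
    update is skipped, a hypothetical stand-in for the Acb agonist infusion.
    V_A is held fixed for simplicity.
    """
    v_b, alpha_b = 0.0, alpha_start
    history = []
    for trial in (1, 2):
        err_a, err_b = abs(lam - v_a), abs(lam - v_b)
        # Learning on this trial uses the attention carried over from before.
        v_b += alpha_b * theta * (lam - v_b)
        history.append(v_b)
        # Attention for the NEXT trial falls if CSB predicted shock worse than CSA.
        if err_b > err_a and trial != infusion_before_trial:
            alpha_b = alpha_low
    return history


control = stage2()
infused_first = stage2(infusion_before_trial=1)
infused_second = stage2(infusion_before_trial=2)

print("control:            ", control)
print("infusion at trial 1:", infused_first)
print("infusion at trial 2:", infused_second)
```

In this sketch learning about CSB on the first trial is identical in all three conditions. Only the infusion before the first trial preserves attention to CSB and so increases what is learned on the second trial; an infusion before the second trial arrives after attention has already declined and leaves the outcome unchanged from control.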

These findings provide compelling evidence that an important role of the ventral striatum during fear learning is the attentional selection between competing predictors of danger. This selection results in the allocation of attention to, and therefore learning about, the best predictor of danger at the expense of poorer predictors. Understanding these brain mechanisms for attentional regulation during fear may have important clinical implications. Many instances of pathological fear and anxiety, including generalized anxiety (Mathews and MacLeod 1985; Mogg et al. 1989), panic disorder (McNally et al. 1990b), post-traumatic stress disorder (McNally et al. 1990a), simple phobia (Watts et al. 1986), and social phobia (Hope et al. 1990), are characterized by attentional bias. Anxiety patients selectively attend to danger and threat-related cues at the expense of other stimuli. This attentional bias may emerge from alterations in ventral striatal mechanisms for predicting danger.

Conclusions

The ability to predict sources of danger in the environment is essential for adaptive behavior and survival. Pavlovian fear conditioning allows anticipation of sources of danger in the environment. It guides attention away from poorer predictors toward better predictors of danger, and it elicits defensive behavior appropriate to these threats. Learning about predictive relations is distinct, at both the behavioral and neural levels, from learning about contiguous relations. Learning about contiguous relations requires activation of amygdala NMDA receptors and recruitment of the signal transduction cascades subsequent to this activation. Direct learning about predictive relations requires μ-opioid receptors in the vlPAG and reductions in vlPAG cAMP. Indirect learning about predictive relations requires D1 and D2 dopamine receptors and μ-opioid receptors in the Acb, which select cues for attentional processing and, hence, learning.

Despite their distinct neural and behavioral bases, learning about predictive relations and learning about contiguous relations cannot occur independently. The mechanisms for predicting danger are complementary to the mechanisms for fear memory formation. Predicting danger depends upon retrieving a fear memory, but it also regulates new fear memory formation by regulating attention to the CS and by regulating what is learned about the shock US. Wagner’s Sometimes Opponent Process (SOP) model of learning and its more recent affective and real-time extensions are important examples of approaches that coherently incorporate both contiguity and predictive mechanisms into a general theory of associative learning (Wagner 1981; Wagner and Brandon 1989, 2001). Within these models, learning depends critically upon the simultaneous activation of mental representations of the CS and the US in the focus of working memory. Contiguous relations are important because only closely spaced presentations of the CS and US allow for their mental representations to be simultaneously active in the focus of working memory and learned about. If a long trace interval is introduced, then the CS representation will have decayed from the focus of working memory by the time the US is presented and thus the CS will not be learned about. Predictive relationships are important because knowledge of the CS–US causal relationship gates the ability of the CS and US representations to be activated to the focus of working memory. Expected CSs and USs are processed differently in memory from unexpected CSs and USs. The amygdala synaptic mechanisms for fear memory formation are sensitive to predictive relations (Bauer et al. 2001). The question is how this sensitivity is achieved. 
Following the general architecture of SOP, the mechanisms of indirect and direct predictive fear learning reviewed here might be understood as regulating the access of the CS and shock US, respectively, to amygdala-based mechanisms for fear memory formation (Fig. 3).

Figure 3.

Roles of the ventral striatum and midbrain in predicting danger.

Studies of the neural mechanisms that allow organisms to predict sources of danger in their environment are beginning to reveal greater complexity and subtlety in the brain mechanisms for fear learning than was previously realized. These studies show that structures not typically viewed as important for fear learning are important for predictive learning. This complexity is perhaps somewhat unsurprising given the key role that predictive learning plays in enabling adaptive responses to threat. What is surprising is that understanding of the brain mechanisms for predicting danger, as opposed to those important for storage of fear memories, is so incomplete. Likewise, knowledge of the brain mechanisms for predicting danger is remarkably limited when compared to knowledge of the brain mechanisms for predicting rewards (Schultz and Dickinson 2000; Schultz 2006). A more complete understanding of these mechanisms is needed. The behavioral approaches reviewed in the first part of this article can be used to dissociate learning about contiguous versus predictive relations during fear conditioning. The empirical studies described in the latter parts of this article, which have adopted these approaches, provide important insights into the neural substrates for predicting danger. However, these studies also leave unanswered many important questions, for example, about the circuit-level and molecular mechanisms that allow the ventral striatum and midbrain to regulate fear learning. The exact relationship between the neural mechanisms for predicting danger and predicting rewards is also unclear. Certain theoretical traditions place emphasis on opponent interactions between aversive (fear) and appetitive (reward) motivational systems in regulating associative learning (Konorski 1967; Dickinson and Dearing 1979). Other theoretical approaches suppose commonalities between the brain mechanisms for predicting danger and for predicting rewards (Redgrave et al. 1999). 
The data reviewed here indicate some overlap between these processes, at least at the level of the ventral striatum. Finally, an increased understanding of the neural mechanisms for predicting danger should shed light on the brain mechanisms for pathological anxiety because many instances of pathological anxiety are characterized by excessive and biased attention toward danger.

Acknowledgments

We thank Gabrielle Weidemann, Mihaela Iordanova, and Jim Kehoe for their helpful discussions. Preparation of this manuscript was supported by a Discovery Project Grant (DP0343808) from the Australian Research Council to G.P.M.

Footnotes

Article and publication are at http://www.learnmem.org/cgi/doi/10.1101/lm.196606

References

- Bakal C.W., Johnson R.D., Rescorla R.A. The effect of change in US quality on the blocking effect. Pavlov. J. Biol. Sci. 1974;9:97–103. doi: 10.1007/BF03000529.

- Bauer E.P., LeDoux J.E., Nader K. Fear conditioning and LTP in the lateral amygdala are sensitive to the same stimulus contingencies. Nat. Neurosci. 2001;4:687–688. doi: 10.1038/89465.

- Betts S.L., Brandon S.E., Wagner A.R. Dissociation of the blocking of conditioned eyeblink and conditioned fear following a shift in US locus. Anim. Learn. Behav. 1996;24:459–470.

- Biegler R., Morris R.G. Blocking in the spatial domain with arrays of discrete landmarks. J. Exp. Psychol. Anim. Behav. Process. 1999;25:334–351.

- Bolles R.C., Fanselow M.S. A perceptual-defensive-recuperative model of fear and pain. Behav. Brain Sci. 1980;3:291–323.

- Carrive P. The periaqueductal gray and defensive behavior: Functional representation and neuronal organization. Behav. Brain Res. 1993;58:27–47. doi: 10.1016/0166-4328(93)90088-8.

- Davis M. The role of the amygdala in fear and anxiety. Annu. Rev. Neurosci. 1992;15:353–375. doi: 10.1146/annurev.ne.15.030192.002033.

- Delamater A.R. Experimental extinction in Pavlovian conditioning: Behavioural and neuroscience perspectives. Q. J. Exp. Psychol. 2004;57B:97–132. doi: 10.1080/02724990344000097.

- Dickinson A. Contemporary learning theory. Cambridge University Press; Cambridge, UK.: 1980.

- Dickinson A. Causal learning: An associative analysis. Q. J. Exp. Psychol. 2001;54B:3–25. doi: 10.1080/02724990042000010.

- Dickinson A., Dearing M.F. 1979. Appetitive-aversive interactions and inhibitory processes. In Mechanisms of learning and motivation: A memorial volume to Jerzy Konorski (eds. A. Dickinson and R.A. Boakes), pp. 203–231. Erlbaum; Hillsdale, NJ

- Dickinson A., Hall G.A., Mackintosh N.J. Surprise and the attenuation of blocking. J. Exp. Psychol. Anim. Behav. Process. 1976;2:313–322.

- Dickinson A., Nicholas D.J., Mackintosh N.J. A re-examination of one-trial blocking in conditioned suppression. Q. J. Exp. Psychol. 1983;35B:67–79.

- Fanselow M.S. Pavlovian conditioning, negative feedback, and blocking: Mechanisms that regulate association formation. Neuron. 1998;20:625–627. doi: 10.1016/s0896-6273(00)81002-8.

- Fanselow M.S., LeDoux J.E. Why we think plasticity underlying Pavlovian fear conditioning occurs in the basolateral amygdala. Neuron. 1999;23:229–232. doi: 10.1016/s0896-6273(00)80775-8.

- Hebb D.O. The organization of behavior. John Wiley and Sons; New York.: 1949.

- Hope D.A., Rapee R.M., Heimberg R.G., Dombeck M. Representations of self in social phobia: Vulnerability to social threat. Cognit. Ther. Res. 1990;14:177–189.

- Horvitz J.C. Mesolimbocortical and nigrostriatal dopamine responses to salient non-reward events. Neuroscience. 2000;96:651–666. doi: 10.1016/s0306-4522(00)00019-1.

- Iordanova M.D., Westbrook R.F., Killcross A. 2006a. Dopamine in the nucleus accumbens regulates error-correction in associative learning. Eur. J. Neurosci. (in press).

- Iordanova M., McNally G.P., Westbrook R.F. 2006b. Opioid receptors in the nucleus accumbens regulate attentional learning in the blocking paradigm. J. Neurosci. (in press).

- Jensen J., McIntosh A.R., Crawley A.P., Mikulis D.J., Remington G., Kapur S. Direct activation of the ventral striatum in anticipation of aversive stimuli. Neuron. 2003;40:1251–1257. doi: 10.1016/s0896-6273(03)00724-4.

- Kamin L.J. 1968. “Attention-like” processes in classical conditioning. In Miami symposium on the prediction of behavior: Aversive stimulation (ed. M.R. Jones), pp. 9–33. University of Miami Press; Miami, FL

- Kamin L.J. 1969. Predictability, surprise, attention, and conditioning. In Punishment and aversive behavior (eds. B. Campbell and R.M. Church) pp. 9–31. Appleton Century Crofts; New York

- Kamin L.J., Gaioni S.J. Compound conditioned emotional response: Conditioning with differentially salient elements in rats. J. Comp. Physiol. Psychol. 1974;87:591–597. doi: 10.1037/h0036989.

- Keay K.A., Bandler R. Parallel circuits mediating distinct emotional coping reactions to different types of stress. Neurosci. Biobehav. Rev. 2001;25:669–678. doi: 10.1016/s0149-7634(01)00049-5.

- Keay K.A., Bandler R. 2004. The periaqueductal gray. In The rat nervous system, 3rd ed. (ed. G. Paxinos), pp. 243–257. Academic Press; San Diego, CA

- Kehoe E.J., Joscelyne A. Temporally specific extinction of conditioned responses in the rabbit (Oryctolagus cuniculus) nictitating membrane preparation. Behav. Neurosci. 2005;119:1011–1022. doi: 10.1037/0735-7044.119.4.1011.

- Kehoe E.J., White N.E. Overexpectation response loss during sustained stimulus compounding in the rabbit nictitating membrane preparation. Learn. Mem. 2004;11:476–483. doi: 10.1101/lm.77604.

- Kehoe E.J., Schreurs B.G., Graham P. Temporal primacy overrides prior training in serial compound conditioning with the rabbit. Anim. Learn. Behav. 1987;15:47–54.

- Khallad Y., Moore J. Blocking, unblocking, and overexpectation in autoshaping with pigeons. J. Exp. Anal. Behav. 1996;65:575–591. doi: 10.1901/jeab.1996.65-575.

- Kim J., Jung M.W. Neural circuits and mechanisms involved in Pavlovian fear conditioning: A critical review. Neurosci. Biobehav. Rev. 2006;30:188–202. doi: 10.1016/j.neubiorev.2005.06.005.

- Kim J.J., Krupa D.J., Thompson R.F. Inhibitory cerebello-olivary projections and blocking effect in classical conditioning. Science. 1998;279:570–573. doi: 10.1126/science.279.5350.570.

- Konorski J. Integrative activity of the brain. University of Chicago Press; Chicago.: 1967.

- Kremer E.F. The Rescorla-Wagner model: Losses in associative strength in compound conditioned stimuli. J. Exp. Psychol. Anim. Behav. Process. 1978;4:22–36. doi: 10.1037//0097-7403.4.1.22.

- Lamoureux J.A., Buhusi C.V., Schmajuk N.A. 1998. A real-time theory of Pavlovian conditioning: Simple CSs and occasion setters. In Occasion setting: Associative learning and cognition in animals (eds. N.A. Schmajuk and P.C. Holland), pp. 383–424. American Psychological Association; Washington, DC

- Lattal K.M., Nakajima S. Overexpectation in appetitive Pavlovian and instrumental conditioning. Anim. Learn. Behav. 1998;26:351–360.

- Lovibond P.F., Davis N.R., O’Flaherty A.S. Protection from extinction in human fear conditioning. Behav. Res. Ther. 2000;38:967–983. doi: 10.1016/s0005-7967(99)00121-7.

- Lovick T.A. Influence of the dorsal and median raphe nuclei on neurons in the periaqueductal gray matter: Role of 5-hydroxytryptamine. Neuroscience. 1994;59:993–1000. doi: 10.1016/0306-4522(94)90301-8.

- Mackintosh N.J. A theory of attention: Variations in the associability of stimulus with reinforcement. Psychol. Rev. 1975;82:276–298.

- Mackintosh N.J., Bygrave D.J., Picton B.M.B. Locus of the effects of a surprising reinforcer in the attenuation of blocking. Q. J. Exp. Psychol. 1977;29:327–336.

- Mackintosh N.J., Dickinson A., Cotton M.M. Surprise and blocking: Effects of the number of compound trials. Anim. Learn. Behav. 1980;8:387–391.

- Maren S. Neurobiology of Pavlovian fear conditioning. Annu. Rev. Neurosci. 2001;24:897–931. doi: 10.1146/annurev.neuro.24.1.897.

- Maren S. Building and burying fear memories in the brain. Neuroscientist. 2005;11:89–99. doi: 10.1177/1073858404269232.

- Maren S., Quirk G.J. Neuronal signalling of fear memory. Nat. Rev. Neurosci. 2004;5:844–852. doi: 10.1038/nrn1535.

- Mathews A., MacLeod C. Selective processing of threat cues in anxiety states. Behav. Res. Ther. 1985;23:563–569. doi: 10.1016/0005-7967(85)90104-4.

- McClure S.M., Berns G.S., Montague P.R. Temporal prediction errors in a passive learning task activate human striatum. Neuron. 2003;38:339–346. doi: 10.1016/s0896-6273(03)00154-5.

- McNally G.P. Facilitation of fear extinction by midbrain periaqueductal gray infusions of RB101(S), an inhibitor of enkephalin-degrading enzymes. Behav. Neurosci. 2005;119:1672–1677. doi: 10.1037/0735-7044.119.6.1672.

- McNally G.P., Akil H. 2002. Opioid peptides and their receptors: Overview and function in pain modulation. In Neuropsychopharmacology: A fifth generation of progress (eds. K. Davis et al.) pp. 35–46. Lippincott Williams & Wilkins; New York

- McNally G.P., Cole S. 2006. Opioid receptors in the midbrain periaqueductal gray regulate prediction errors during Pavlovian fear conditioning. Behav. Neurosci. (in press).

- McNally G.P., Westbrook R.F. Opioid receptors regulate the extinction of Pavlovian fear conditioning. Behav. Neurosci. 2003;117:1292–1301. doi: 10.1037/0735-7044.117.6.1292.

- McNally R.J., Kaspi S.P., Riemann B.C., Zeitlin S.B. Selective processing of threat cues in posttraumatic stress disorder. J. Abnorm. Psychol. 1990a;99:398–402. doi: 10.1037//0021-843x.99.4.398.

- McNally R.J., Riemann B.C., Kim E. Selective processing of threat cues in panic disorder. Behav. Res. Ther. 1990b;28:407–412. doi: 10.1016/0005-7967(90)90160-k.

- McNally G.P., Pigg M., Weidemann G. Blocking, unblocking, and overexpectation of fear: Opioid receptors regulate Pavlovian association formation. Behav. Neurosci. 2004a;118:111–120. doi: 10.1037/0735-7044.118.1.111.

- McNally G.P., Pigg M., Weidemann G. Opioid receptors in the midbrain periaqueductal gray matter regulate the extinction of Pavlovian fear conditioning. J. Neurosci. 2004b;24:6912–6919. doi: 10.1523/JNEUROSCI.1828-04.2004.

- McNally G.P., Lee B., Chiem J.Y., Choi E.A. The midbrain periaqueductal gray and fear extinction: Opioid receptor subtype and roles of cAMP, protein kinase A, and mitogen-activated protein kinase. Behav. Neurosci. 2005;119:1023–1033. doi: 10.1037/0735-7044.119.4.1023.

- McNish K.A., Gewirtz J.C., Davis M. Disruption of contextual freezing, but not contextual blocking of fear-potentiated startle, after lesions of the dorsal hippocampus. Behav. Neurosci. 2000;114:64–76. doi: 10.1037//0735-7044.114.1.64.

- Medina J.F., Nores W.L., Mauk M.D. Inhibition of climbing fibres is a signal for the extinction of conditioned eyelid responses. Nature. 2002;416:330–333. doi: 10.1038/416330a.

- Mogg K., Mathews A., Weinman J. Selective processing of threat cues in anxiety states: A replication. Behav. Res. Ther. 1989;27:317–323. doi: 10.1016/0005-7967(89)90001-6.

- O’Doherty J.P., Dayan P., Friston K., Critchley H., Dolan R.J. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38:329–337. doi: 10.1016/s0896-6273(03)00169-7.

- Pagnoni G., Zink C.F., Montague P.R., Berns G.S. Activity in human ventral striatum locked to errors of reward prediction. Nat. Neurosci. 2002;5:97–98. doi: 10.1038/nn802.

- Pearce J.M., Hall G. A model for Pavlovian learning: Variations in the effectiveness of conditioned but not of unconditioned stimuli. Psychol. Rev. 1980;87:532–552.

- Pezze M.A., Feldon J. Mesolimbic dopamine pathways in fear conditioning. Prog. Neurobiol. 2004;74:301–330. doi: 10.1016/j.pneurobio.2004.09.004.

- Redgrave P., Prescott T.J., Gurney K. Is the short-latency dopamine response too short to signal reward error? Trends Neurosci. 1999;22:146–151. doi: 10.1016/s0166-2236(98)01373-3.

- Rescorla R.A. 1968. Conditioned inhibition of fear. In Fundamental issues in associative learning (eds. N.J. Mackintosh and W.K Honig), pp. 65–90. Dalhousie University Press; Halifax, Canada [Google Scholar]

- Rescorla R.A. Reductions in effectiveness after prior excitatory conditioning in the rat. Learn. Motiv. 1970;1:372–381. [Google Scholar]

- Rescorla R.A. Behavioral studies of Pavlovian conditioning. Annu. Rev. Neurosci. 1988;11:329–352. doi: 10.1146/annurev.ne.11.030188.001553. [DOI] [PubMed] [Google Scholar]

- Rescorla R.A. Summation and overexpectation with qualitatively different outcomes. Anim. Learn. Behav. 1999;27:50–62. [Google Scholar]

- Rescorla R.A. Extinction can be enhanced by a concurrent excitor. J. Exp. Psychol. Anim. Behav. Process. 2000;26:251–260. doi: 10.1037//0097-7403.26.3.251. [DOI] [PubMed] [Google Scholar]

- Rescorla R.A. 2002. Experimental Extinction. In. Handbook of contemporary learning theories (eds. R.R. Mowrer and S.B Klein), pp. 119–154. Erlbaum; Hillsdale, NJ [Google Scholar]

- Rescorla R.A. Protection from extinction. Learn. Behav. 2003;31:124–132. doi: 10.3758/bf03195975. [DOI] [PubMed] [Google Scholar]

- Rescorla R.A., Wagner A.R. 1972. A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and non-reinforcement. In Classical conditioning II: Current research and theory (eds. A.H. Black and W.F. Prokasy) pp. 64–99. Appleton Century Crofts; New York [Google Scholar]

- Roberts A.D., Pearce J.M. Blocking in the Morris swimming pool. J. Exp. Psychol. Anim. Behav. Process. 1999;25:225–235. doi: 10.1037//0097-7403.25.2.225. [DOI] [PubMed] [Google Scholar]

- Rodriguez P.F., Aron A.R., Poldrack R.A. Ventral-striatal/nucleus accumbens sensitivity to prediction errors during classification learning. Hum. Brain Map. 2005;27:306–313. doi: 10.1002/hbm.20186. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Salamone J.D. The involvement of nucleus accumbens dopamine in appetitive and aversive motivation. Behav. Brain Res. 1994;61:117–133. doi: 10.1016/0166-4328(94)90153-8.

- Schafe G.E., Nader K., Blair H.T., LeDoux J.E. Memory consolidation of Pavlovian fear conditioning: A cellular and molecular perspective. Trends Neurosci. 2001;24:540–546. doi: 10.1016/s0166-2236(00)01969-x.

- Schmajuk N.A., Moore J.W. The hippocampus and the classically conditioned nictitating membrane response: A real-time attentional-associative model. Psychobiology. 1988;16:20–35.

- Schull J. 1979. A conditioned opponent theory of Pavlovian conditioning and habituation. In The psychology of learning and motivation, Vol. 13 (ed. G.H. Bower), pp. 57–90. Academic Press; New York

- Schultz W. Behavioral theories and the neurophysiology of reward. Annu. Rev. Psychol. 2006;57:87–115. doi: 10.1146/annurev.psych.56.091103.070229.

- Schultz W., Dickinson A. Neuronal coding of prediction errors. Annu. Rev. Neurosci. 2000;23:473–500. doi: 10.1146/annurev.neuro.23.1.473.

- Seymour B., O’Doherty J.P., Dayan P., Koltzenburg M., Jones A.K., Dolan R.J., Friston K.J., Frackowiak R.S. Temporal difference models describe higher-order learning in humans. Nature. 2004;429:664–667. doi: 10.1038/nature02581.

- Stezhka V.V., Lovick T.A. Inhibitory and excitatory projections from the dorsal raphe nucleus to neurons in the dorsolateral periaqueductal gray matter in slices of midbrain maintained in vitro. Neuroscience. 1994;62:177–187. doi: 10.1016/0306-4522(94)90323-9.

- Sutton R.S. Learning to predict by methods of temporal differences. Mach. Learn. 1988;3:9–44.

- Sutton R.S., Barto A.G. Toward a modern theory of adaptive networks: Expectation and prediction. Psychol. Rev. 1981;88:135–170.

- Sutton R.S., Barto A.G. 1990. Time-derivative models of Pavlovian reinforcement. In Learning and computational neuroscience: Foundations of adaptive networks (eds. M. Gabriel and J. Moore), pp. 497–537. MIT Press; Cambridge, MA

- Tobler P.N., O’Doherty J.P., Dolan R.J., Schultz W. Human neural learning depends on reward prediction errors in the blocking paradigm. J. Neurophysiol. 2006;95:301–310. doi: 10.1152/jn.00762.2005.

- Waelti P., Dickinson A., Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature. 2001;412:43–48. doi: 10.1038/35083500.

- Wagner A.R. 1969. Stimulus selection and a modified “continuity” theory. In The psychology of learning and motivation, Vol. 3 (eds. G.H. Bower and J.T. Spence), pp. 1–41. Academic Press; New York

- Wagner A.R. 1981. SOP: A model of automatic memory processing in animal behavior. In Information processing in animals: Memory mechanisms (eds. N.E. Spear and R.R. Miller), pp. 5–47. Erlbaum; Hillsdale, NJ

- Wagner A.R., Brandon S.E. 1989. Evolution of a structured connectionist model of Pavlovian conditioning (AESOP). In Contemporary learning theories: Pavlovian conditioning and the status of traditional learning theory (eds. S.B. Klein and R.R. Mowrer), pp. 149–189. Erlbaum; Hillsdale, NJ

- Wagner A.R., Brandon S.E. 2001. A componential theory of Pavlovian conditioning. In Contemporary learning theories: Theory and application (eds. R.R. Mowrer and S.B. Klein), pp. 23–64. Erlbaum; Mahwah, NJ

- Wagner A.R., Rescorla R.A. 1972. Inhibition in Pavlovian conditioning: Application of a theory. In Inhibition and learning (ed. M.S. Halliday), pp. 301–336. Academic Press; London

- Watts F.N., McKenna F.P., Sharrock R., Trezise L. Colour naming of phobia related words. Brit. J. Psychol. 1986;77:97–108. doi: 10.1111/j.2044-8295.1986.tb01985.x.

- Williams J.T., Christie M.J., Manzoni O. Cellular and synaptic adaptations mediating opioid dependence. Physiol. Rev. 2001;81:299–343. doi: 10.1152/physrev.2001.81.1.299.

- Yeo A.G. The acquisition of conditioned suppression as a function of interstimulus interval duration. Q. J. Exp. Psychol. 1974;26:405–416. doi: 10.1080/14640747408400430.