Abstract

Let $G$ be a compact group and let $f_{ij} \in L^2(G)$. We define the Non-Unique Games (NUG) problem as finding $g_1, \dots, g_n \in G$ to minimize $\sum_{i,j=1}^{n} f_{ij}(g_i g_j^{-1})$. We introduce a convex relaxation of the NUG problem to a semidefinite program (SDP) by taking the Fourier transform of $f_{ij}$ over $G$. The NUG framework can be seen as a generalization of the little Grothendieck problem over the orthogonal group and of the Unique Games problem. It includes many practically relevant problems, such as the maximum likelihood estimator for registering bandlimited functions over the unit sphere in $d$ dimensions and orientation estimation of noisy cryo-Electron Microscopy (cryo-EM) projection images. We implement an SDP solver for the NUG cryo-EM problem using the alternating direction method of multipliers (ADMM). A numerical study with synthetic datasets indicates that while our ADMM solver is slower than existing methods, it can estimate the rotations more accurately, especially at low signal-to-noise ratio (SNR).

1991 Mathematics Subject Classification. Primary: 00A69; Secondary: 90C34, 20C40

Key words and phrases. Computer vision, pattern recognition, algorithms, optimization, cryo-EM

1. Introduction

We consider problems of the following form:

$$\min_{g_1, \dots, g_n \in G} \sum_{i,j=1}^{n} f_{ij}\left(g_i g_j^{-1}\right) \tag{1.1}$$

where $G$ is a compact group and $f_{ij}: G \to \mathbb{R}$ are suitable functions. We will refer to such problems as Non-Unique Game (NUG) problems over $G$.

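Note that the solution to the NUG problem is not unique. If $g_1, \dots, g_n$ is a solution to (1.1), then so is $g_1 g, \dots, g_n g$ for any $g \in G$, since $(g_i g)(g_j g)^{-1} = g_i g_j^{-1}$. That is, we can only solve (1.1) up to a global shift $g$.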
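As a quick sanity check of this invariance, the minimal sketch below evaluates the NUG objective for $G = SO(2)$. It represents group elements by angles, so that $g_i g_j^{-1}$ corresponds to $\theta_i - \theta_j \bmod 2\pi$. The pairwise costs $f_{ij}(\theta) = 1 - \cos(\theta - b_{ij})$, the random offsets $b_{ij}$, and the helper names `nug_objective` and `make_cost` are hypothetical choices made only for illustration.

```python
import numpy as np

def nug_objective(thetas, f):
    """Sum of f[i][j](theta_i - theta_j mod 2*pi) over all ordered pairs (G = SO(2))."""
    n = len(thetas)
    return sum(
        f[i][j]((thetas[i] - thetas[j]) % (2 * np.pi))
        for i in range(n)
        for j in range(n)
    )

def make_cost(bij):
    # Hypothetical pairwise cost: small when the offset is close to bij.
    return lambda theta: 1.0 - np.cos(theta - bij)

rng = np.random.default_rng(0)
n = 4
b = rng.uniform(0, 2 * np.pi, size=(n, n))          # hypothetical measured offsets
f = [[make_cost(b[i, j]) for j in range(n)] for i in range(n)]

thetas = rng.uniform(0, 2 * np.pi, size=n)
g = 1.234                                           # an arbitrary global shift
base = nug_objective(thetas, f)
shifted = nug_objective((thetas + g) % (2 * np.pi), f)
print(np.isclose(base, shifted))  # True
```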

In many inverse problems, the goal is to estimate multiple group elements from information about group offsets, which can be formulated as (1.1). A simple example is angular synchronization [40], where one is tasked with estimating angles $\theta_1, \dots, \theta_n$ from information about their offsets $\theta_i - \theta_j \bmod 2\pi$. The problem of estimating the angles can then be formulated as an optimization problem depending on the offsets, and thus be written in the form of (1.1). In this case, $G \cong SO(2)$.

One of the simplest instances of (1.1) is the Max-Cut problem, where the objective is to partition the vertices of a graph into two sets so as to maximize the number of edges (the cut) between the two sets. In this case, $G \cong \mathbb{Z}_2$, the group of two elements $\{\pm 1\}$, and $f_{ij}$ is zero if $(i, j)$ is not an edge of the graph and

$$f_{ij}(1) = 0, \qquad f_{ij}(-1) = -1$$

if $(i, j)$ is an edge. In fact, we take a semidefinite programming based approach towards (1.1) that is inspired by, and can be seen as a generalization of, the semidefinite relaxation for the Max-Cut problem by Goemans and Williamson [21].
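To make the correspondence concrete, the following brute-force sketch minimizes the NUG objective over $\{\pm 1\}^n$. It is feasible only for tiny graphs. Edge costs are $f_{ij}(1) = 0$ and $f_{ij}(-1) = -1$, and the sketch assigns them to both ordered pairs $(i, j)$ and $(j, i)$, so the optimal value is $-2$ times the size of the maximum cut. The function name `maxcut_as_nug` is illustrative.

```python
import itertools
import numpy as np

def maxcut_as_nug(edges, n):
    """Brute-force Max-Cut through the NUG objective over G = Z_2 = {+1, -1}.

    Since g_j^{-1} = g_j in {+1, -1}, the argument g_i g_j^{-1} is g_i * g_j.
    """
    edge_set = {(i, j) for i, j in edges} | {(j, i) for i, j in edges}

    def f(i, j, h):
        # f_ij(-1) = -1 on edges; every other value is 0.
        return -1.0 if (i, j) in edge_set and h == -1 else 0.0

    best_val, best_g = np.inf, None
    for g in itertools.product([1, -1], repeat=n):
        val = sum(f(i, j, g[i] * g[j]) for i in range(n) for j in range(n))
        if val < best_val:
            best_val, best_g = val, g
    return best_val, best_g

# A 5-cycle: being an odd cycle, its maximum cut has 4 edges.
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
val, g = maxcut_as_nug(edges, 5)
cut = sum(1 for i, j in edges if g[i] != g[j])
print(val, cut)  # -8.0 4
```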

Another important source of inspiration is the semidefinite relaxation proposed in [15] for the Unique Games problem, a central problem in theoretical computer science [26, 27]. Given integers $n$ and $L$, a Unique-Games instance is a system of linear equations over $\mathbb{Z}_L$ on $n$ variables $\{x_i\}_{i=1}^{n}$. Each equation constrains the difference of two variables. More precisely, for each $(i, j)$ in a subset of the pairs, we associate a constraint

$$x_i - x_j = b_{ij} \pmod{L}.$$

The objective is then to find $x_1, \dots, x_n$ in $\mathbb{Z}_L$ that satisfy as many equations as possible. This can be easily described within our framework by taking, for each constraint,

$$f_{ij}(x_i - x_j) = \begin{cases} -1 & \text{if } x_i - x_j = b_{ij} \pmod{L}, \\ 0 & \text{otherwise}, \end{cases}$$

and $f_{ij} \equiv 0$ for pairs not corresponding to constraints. The term ‘unique’ derives from the fact that the constraints have this special structure: the offset can only take one value to satisfy the constraint, and all other values have the same score. This motivated our choice of nomenclature for the framework treated in this paper. The semidefinite relaxation for the Unique Games problem proposed in [15] was investigated in [8] in the context of the signal alignment problem, where the $f_{ij}$ are not forced to have a special structure (but $G \cong \mathbb{Z}_L$). The NUG framework presented in this paper can be seen as a generalization of the approach in [8] to other compact groups $G$. We emphasize that, unlike [8], which was limited to the case of a finite cyclic group, here we consider compact groups that are possibly infinite and non-commutative.

Besides the signal alignment problem treated in [8], the semidefinite relaxation of the NUG problem we develop generalizes other effective relaxations. When $G \cong \mathbb{Z}_2$, it coincides with the semidefinite relaxations for Max-Cut [21], the little Grothendieck problem over $\mathbb{Z}_2$ [3, 32], recovery in the stochastic block model [2, 7], and synchronization over $\mathbb{Z}_2$ [1, 7, 18]. When $G \cong SO(2)$ and the functions $f_{ij}$ are linear with respect to the representation $\rho: SO(2) \to \mathbb{C}$ given by $\rho(\theta) = e^{i\theta}$, it coincides with the semidefinite relaxation for angular synchronization [40]. Similarly, when $G \cong O(d)$ and the functions $f_{ij}$ are linear with respect to the natural $d$-dimensional representation, the NUG problem essentially coincides with the little Grothendieck problem over the orthogonal group [9, 31]. Other examples include the shape matching problem in computer graphics, for which $G$ is the permutation group (see [24, 16]). In addition, it has been shown in [13] that the formulation of NUG and the algorithms presented in this paper can be extended to simultaneous alignment and classification of a mixture of different signals.

1.1. Orientation estimation in cryo-Electron Microscopy.

A particularly important application of this framework is the orientation estimation problem in cryo-Electron Microscopy [39].

Cryo-EM is a technique used to determine the 3-dimensional structure of biological macromolecules. The molecules are rapidly frozen in a thin layer of ice and imaged with an electron microscope, which gives noisy 2-dimensional projections. One of the main difficulties with this imaging process is that these molecules are imaged at different unknown orientations in the sheet of ice, and each molecule can only be imaged once (due to the destructive nature of the imaging process). More precisely, each measurement consists of a tomographic projection of a copy of the molecule rotated by an unknown rotation. The task is then to reconstruct the molecule density from many such noisy measurements. In principle, it is possible to reconstruct the 3-dimensional density directly from the noisy images without estimating the rotations [25], or by treating the rotations as nuisance parameters [47, 6]. Here, however, we consider the problem of estimating the rotations directly from the noisy images. In Section 2, we describe how this problem can be formulated in the form (1.1).

2. Multireference Alignment

In classical linear inverse problems, one is tasked with recovering an unknown element $x \in \mathcal{X}$ from a noisy measurement of the form $y = Ax + \epsilon$, where $\epsilon$ represents the measurement error and $A$ is a linear observation operator. There are, however, many problems where an additional difficulty is present. One class of such problems consists of non-linear inverse problems in which an unknown transformation acts on $x$ prior to the linear measurement. Specifically, let $\mathcal{X}$ be a vector space and $G$ be a group acting on $\mathcal{X}$. Suppose we have $n$ measurements of the form

$$y_i = P(g_i \circ x) + \epsilon_i, \qquad i = 1, \dots, n, \tag{2.1}$$

where

$x$ is a fixed but unknown element of $\mathcal{X}$,

$g_1, \dots, g_n$ are unknown elements of $G$,

$\circ$ is the action of $G$ on $\mathcal{X}$,

$P: \mathcal{X} \to \mathcal{Y}$ is a linear operator,

$\mathcal{Y}$ is the (finite-dimensional) measurement space,

the $\epsilon_i$’s are independent noise terms.

If the $g_i$’s were known, then the task of recovering $x$ would reduce to a classical linear inverse problem, for which many effective techniques exist. In many situations, it is possible to estimate $x$ directly without estimating $g_1, \dots, g_n$, or by treating these as nuisance parameters. Here, however, we focus on the problem of estimating the group elements $g_1, \dots, g_n$.

There are several common approaches to inverse problems of the form (2.1). One is motivated by the observation that estimating $x$ knowing the $g_i$’s and estimating the $g_i$’s knowing $x$ are both considerably easier tasks. This suggests an alternating minimization approach, where each estimate is updated iteratively. Besides lacking theoretical guarantees, its convergence may also depend on the initial guess. Another approach, which we refer to as pairwise comparisons [40], consists of determining, from pairs of observations $(y_i, y_j)$, the most likely value for $g_i g_j^{-1}$. The problem of estimating the $g_i$’s from these pairwise guesses is fairly well understood [40, 10, 43] and enjoys efficient algorithms and performance guarantees. However, this method suffers from a loss of information, since the most likely value for $g_i g_j^{-1}$ does not capture all of the information in the problem. As a result, this approach tends to fail at low signal-to-noise ratio.

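In contrast, the Maximum Likelihood Estimator (MLE) leverages all information. Assuming that the $\epsilon_i$’s are i.i.d. Gaussian, the MLE for the observation model (2.1) is given by the following optimization problem:

$$\min_{x \in \mathcal{X},\; g_1, \dots, g_n \in G} \sum_{i=1}^{n} \left\| P(g_i \circ x) - y_i \right\|^2. \tag{2.2}$$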

We refer to (2.2) as the Multireference Alignment (MRA) problem. Let us denote the ground truth signal and group elements by $x$ and $g_1, \dots, g_n$, and the solution to the optimization problem by $\hat{x}$ and $\hat{g}_1, \dots, \hat{g}_n$, which we will also refer to as the MLE. Unfortunately, the exponentially large search space and the nonconvex nature of (2.2) often render it computationally intractable. However, for several problems of interest, we can formulate (2.2) as an instance of the NUG problem, for which we develop computationally tractable approximations.

Notice that although the MLE typically enjoys several theoretical properties, their underlying technical conditions do not hold in this case. Specifically, the number of parameters to be estimated is not fixed but rather grows indefinitely with the sample size $n$: for each sample $y_i$ there is a group element $g_i$ that needs to be estimated. As a result, the MLE may not be consistent in this case. In other words, even in the limit $n \to \infty$, the estimator $\hat{x}$ may not converge to the ground truth $x$. Similarly, the estimated group elements $\hat{g}_i$ will not converge to their true values. A different version of the MLE, not considered in this paper, would enjoy these theoretical properties: one in which the group elements are treated as nuisance parameters and are marginalized.

2.1. Registration of signals on the sphere.

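Consider the problem of estimating a bandlimited signal on the circle from noisy, rotated, discretely sampled copies of it. In this problem, $\mathcal{X}$ is the space of bandlimited functions up to degree $t$ on $S^1$, $G = SO(2)$, and the group action is

$$(g \circ x)(\theta) = x(\theta - \theta_g),$$

where $g \in SO(2)$ and we identify $g$ with $\theta_g \in [0, 2\pi)$.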

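The measurements are of the form

$$y_i = P(g_i \circ x) + \epsilon_i, \qquad i = 1, \dots, n,$$

where

$x \in \mathcal{X}$,

$g_i \in SO(2)$,

$P$ samples the function at $L$ equally spaced points in $S^1$,

the $\epsilon_i$ are independent Gaussians.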
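This measurement model can be simulated in a few lines. The minimal sketch below makes the following assumptions:

- $x$ is real-valued and stored through its coefficients $a_k$, $k = -t, \dots, t$, with $x(\theta) = \sum_k a_k e^{ik\theta}$ and $a_{-k} = \overline{a_k}$.
- The action is $(g \circ x)(\theta) = x(\theta - \theta_g)$.
- $P$ samples at $L$ equally spaced points.
- The noise is i.i.d. $N(0, \sigma^2)$.

The name `simulate_registration` and the parameter `sigma` are illustrative.

```python
import numpy as np

def simulate_registration(coef, n, L, sigma, rng):
    """Draw y_i = P(g_i . x) + eps_i, i = 1..n, for a bandlimited x on S^1.

    coef[k + t] holds a_k for k = -t..t. Rotating by theta_g multiplies a_k by
    e^{-ik theta_g}; P samples at L equally spaced points.
    """
    t = (len(coef) - 1) // 2
    k = np.arange(-t, t + 1)
    grid = 2 * np.pi * np.arange(L) / L
    theta_g = rng.uniform(0, 2 * np.pi, size=n)                 # unknown group elements
    shifted = coef[None, :] * np.exp(-1j * np.outer(theta_g, k))
    clean = (shifted @ np.exp(1j * np.outer(k, grid))).real     # x is real-valued
    return clean + sigma * rng.standard_normal((n, L)), theta_g

rng = np.random.default_rng(1)
t = 3
pos = rng.standard_normal(t) + 1j * rng.standard_normal(t)                 # a_1, ..., a_t
coef = np.concatenate([np.conj(pos[::-1]), [rng.standard_normal()], pos])  # a_{-k} = conj(a_k)
y, theta_g = simulate_registration(coef, n=5, L=16, sigma=0.0, rng=rng)
print(y.shape)  # (5, 16)
```

With `sigma=0.0`, each row of `y` is exactly $x(\theta_\ell - \theta_{g_i})$ on the grid, which makes the sketch easy to test. Increasing `sigma` reproduces the hard regime of the last two columns of Figure 2.1.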

Our objective is to estimate $x$ and $g_1, \dots, g_n$. Since estimating $x$ knowing the group elements is considerably easier, we will focus on estimating $g_1, \dots, g_n$. As shown below, this essentially reduces to the problem of aligning (or registering) the observations $y_1, \dots, y_n$.

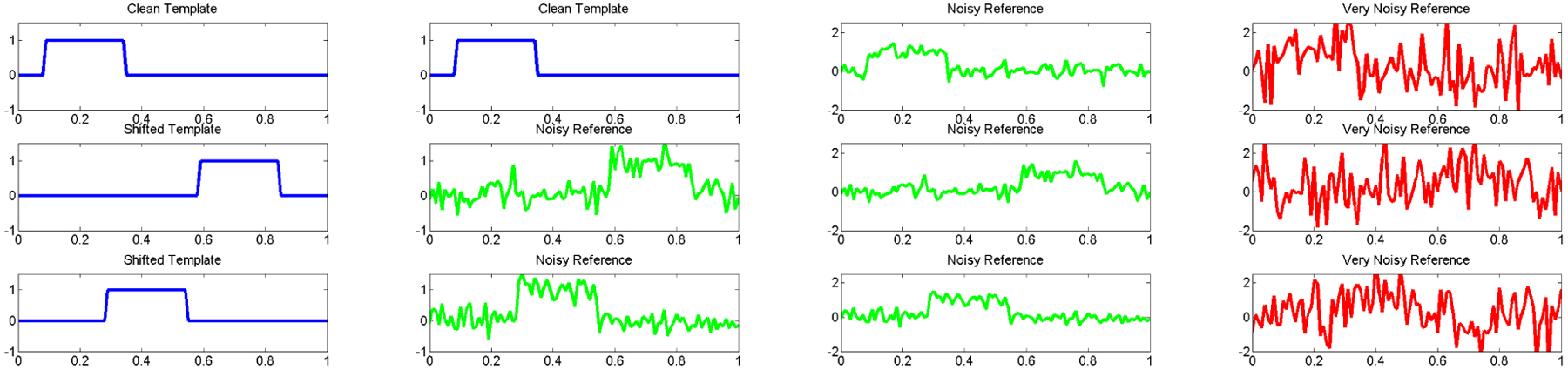

In the absence of noise, the problem of finding the $g_i$’s is trivial (cf. first column of Figure 2.1). With noise, if $x$ is known (as it is in some applications), then the problem of determining the $g_i$’s can be solved by matched filtering (cf. second column of Figure 2.1). However, $x$ is unknown in general. This, together with the high levels of noise, renders the problem significantly more difficult (cf. last two columns of Figure 2.1).

Figure 2.1.

Illustration of the registration problem on $S^1$. The first column consists of a noiseless signal at three different shifts. The second column represents an instance for which the template is known, and matched filtering is effective for estimating the shifts. However, in the examples we are interested in, the template is unknown (last two columns), rendering the problem of estimating the shifts significantly harder.

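We now define the problem of registration in $d$ dimensions in general. $\mathcal{X} = \{x = \sum_k a_k \phi_k\}$ is the space of bandlimited functions up to degree $t$ on $S^d$, where the $\phi_k$’s are orthonormal polynomials on $S^d$, $k$ indexes all of them up to degree $t$, and $G = SO(d+1)$.

The measurements are of the form

$$y_i = P(g_i \circ x) + \epsilon_i, \qquad i = 1, \dots, n, \tag{2.3}$$

where

$g_i \in SO(d+1)$,

$P$ samples the function on $L$ points in $S^d$,

the $\epsilon_i$ are independent Gaussians.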

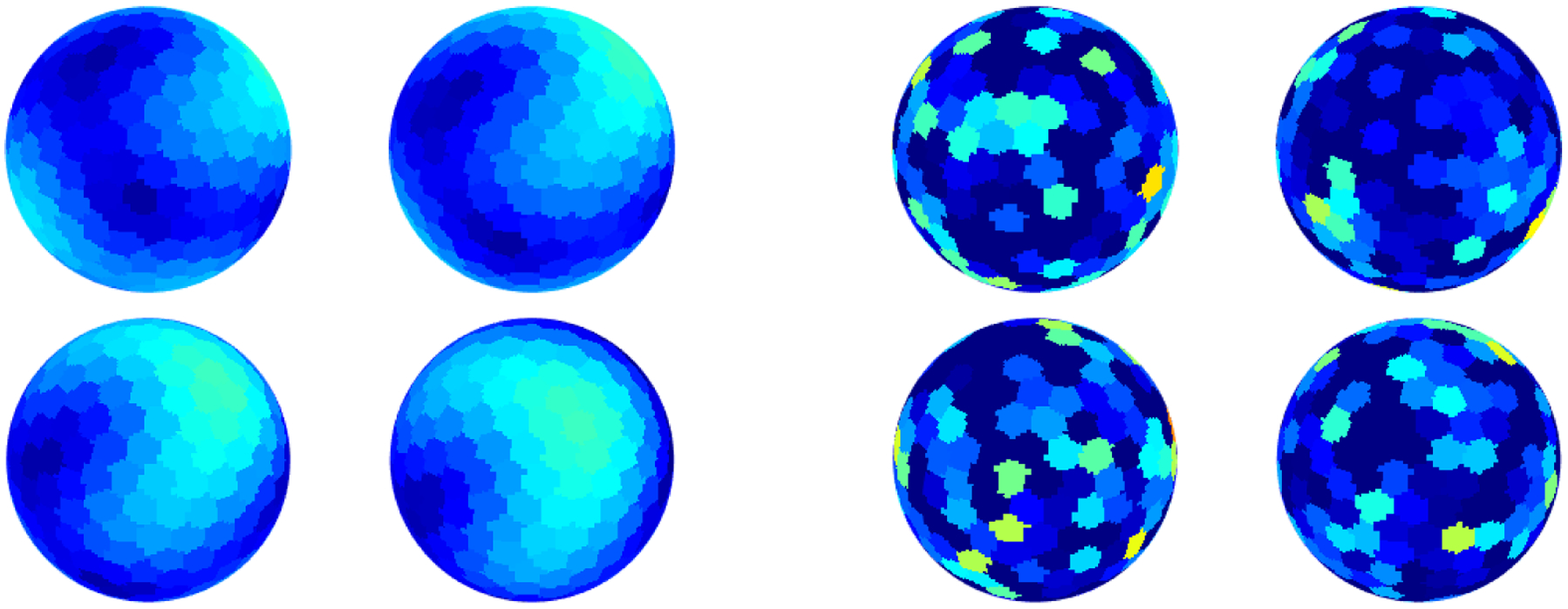

Again, our objective is to estimate $x$ and $g_1, \dots, g_n$. We would like the sampling operator $P$ to be ‘uniform’. One possible sampling scheme is given by spherical designs, surveyed in [11]. An illustration of signals on a sphere, sampled at such points, is provided in Figure 2.2.

Figure 2.2.

An illustration of registration in 2-dimensions. The left four spheres provide examples of clean signals and the right four spheres are of noisy observations. Note that the images are generated using a quantization of the sphere.

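The MRA solution for registration in $d$ dimensions is given by

$$\min_{x \in \mathcal{X},\; g_1, \dots, g_n \in SO(d+1)} \sum_{i=1}^{n} \left\| P(g_i \circ x) - y_i \right\|^2. \tag{2.4}$$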

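We now remove $P$ from (2.4). Let $P^*$ be the adjoint of $P$. $P^*$ is also an approximate inverse of $P$ (up to normalization), because the $L$ points are sampled from a spherical design, which has the property of exactly integrating polynomials (up to a given degree) on the sphere. Then $P^* P \approx I$ (up to normalization), and the approximation error decreases as $L$ increases. Since $g_i$ preserves the norm $\|\cdot\|$, it follows that (2.4) is approximately equivalent to

$$\min_{x \in \mathcal{X},\; g_1, \dots, g_n} \sum_{i=1}^{n} \left\| x - g_i^{-1} \circ P^* y_i \right\|^2. \tag{2.5}$$

For fixed $g_i$’s, the minimizer in $x$ is the average $\frac{1}{n}\sum_{j=1}^{n} g_j^{-1} \circ P^* y_j$. Substituting this average into (2.5) and using the identity $\sum_i \|a_i - \bar a\|^2 = \frac{1}{2n}\sum_{i,j}\|a_i - a_j\|^2$, valid for any vectors $a_i$ with mean $\bar a$, shows that (2.5) is equivalent to

$$\min_{g_1, \dots, g_n} \sum_{i,j=1}^{n} \left\| g_i^{-1} \circ P^* y_i - g_j^{-1} \circ P^* y_j \right\|^2. \tag{2.6}$$

Since $g_i$ preserves the norm, (2.6) is equivalent to

$$\min_{g_1, \dots, g_n} \sum_{i,j=1}^{n} \left\| P^* y_i - g_i g_j^{-1} \circ P^* y_j \right\|^2. \tag{2.7}$$

In summary, (2.4) can be approximated by (2.7), which is an instance of (1.1) with $f_{ij}(g) = \| P^* y_i - g \circ P^* y_j \|^2$.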
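The step from (2.5) to a pairwise objective rests on the identity $\sum_i \|a_i - \bar a\|^2 = \frac{1}{2n}\sum_{i,j}\|a_i - a_j\|^2$, where $\bar a$ is the mean of $a_1, \dots, a_n$. In this setting, $a_i$ plays the role of $g_i^{-1} \circ P^* y_i$. The quick numerical check below uses random vectors as stand-ins for the $a_i$; it illustrates the algebra and is not a registration solver.

```python
import numpy as np

rng = np.random.default_rng(2)
n, dim = 6, 10
a = rng.standard_normal((n, dim))   # stand-ins for g_i^{-1} . P^* y_i

# Objective of (2.5) after plugging in the optimal x (the mean of the a_i) ...
lhs = np.sum((a - a.mean(axis=0)) ** 2)
# ... equals (1 / 2n) times the pairwise objective.
diffs = a[:, None, :] - a[None, :, :]
rhs = np.sum(diffs ** 2) / (2 * n)
print(np.isclose(lhs, rhs))  # True
```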

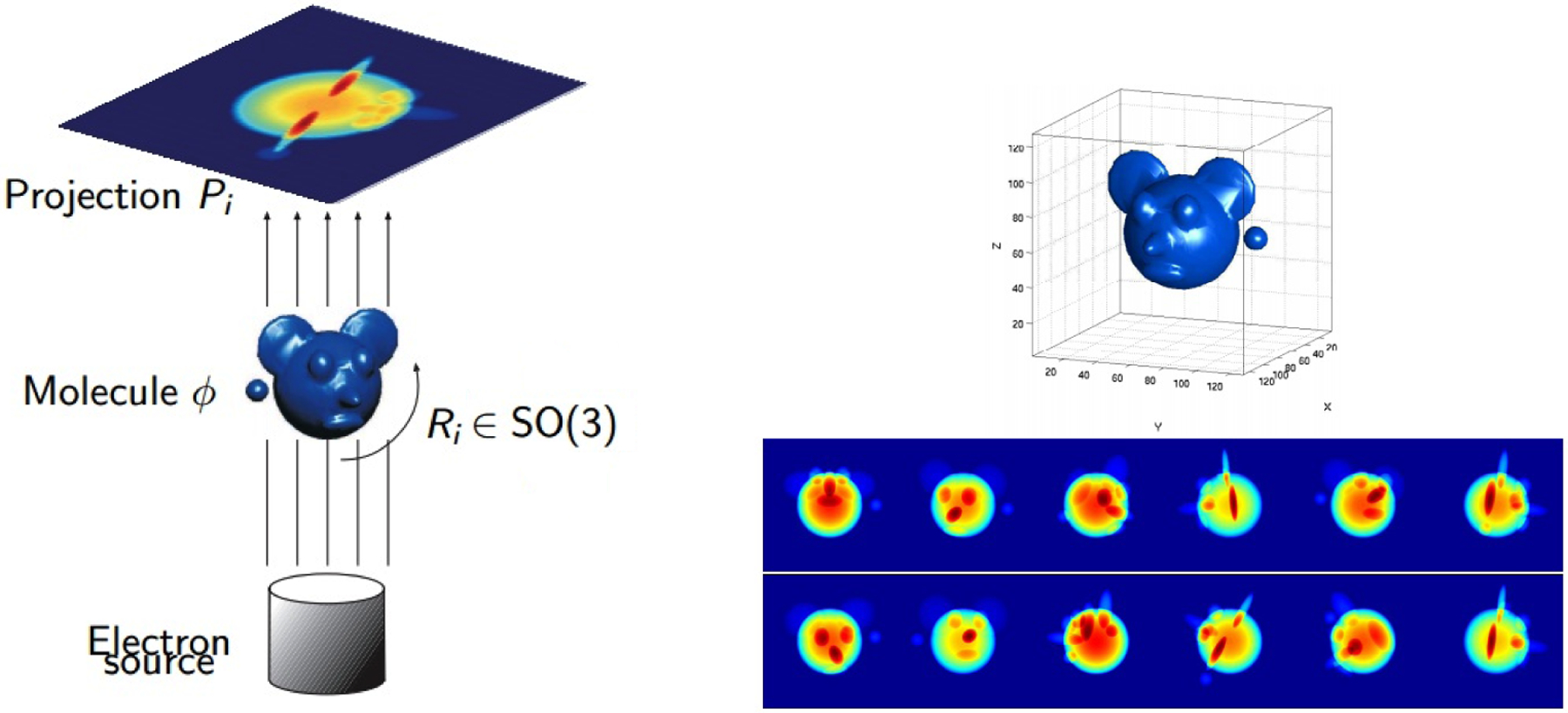

2.2. Orientation estimation in cryo-EM.

The task here is to reconstruct the molecule density from many noisy tomographic projection images (see the right column of Figure 1.1 for an idealized density and measurement dataset). We assume the molecule does not have any non-trivial point group symmetry. The linear inverse problem of recovering the molecule density given the rotations fits in the framework of classical computerized tomography for which effective methods exist. Thus, we focus on the non-linear inverse problem of estimating the unknown rotations and the underlying density.

Figure 1.1.

Illustration of the cryo-EM imaging process: A molecule is imaged after being frozen at a random (unknown) rotation and a tomographic 2-dimensional projection is captured. Given a number of tomographic projections taken at unknown rotations, we are interested in determining such rotations with the objective of reconstructing the molecule density. Images courtesy of Amit Singer and Yoel Shkolnisky [42].

An added difficulty is the high level of noise in the images. In fact, it is already non-trivial to distinguish whether a molecule is present in an image or whether the image consists only of noise (see Figure 2.3 for a subset of an experimental dataset). On the other hand, these datasets consist of many projection images, which renders reconstruction possible.

Figure 2.3.

Sample images from the E. coli 50S ribosomal subunit, generously provided by Dr. Fred Sigworth at the Yale Medical School.

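We now formulate the problem of orientation estimation in cryo-EM. Let $\mathcal{X}$ be the space of bandlimited functions that are also essentially compactly supported in $\mathbb{R}^3$, and let $G = SO(3)$. For perfectly centered images, and ignoring the effect of the microscope’s contrast transfer function, the measurements are of the form

$$y_i = P(g_i \circ x) + \epsilon_i, \qquad i = 1, \dots, n, \tag{2.8}$$

where

$g_i \in SO(3)$,

$P$ maps a density to samples, on a Cartesian grid, of its tomographic projection $(u, v) \mapsto \int_{\mathbb{R}} x(u, v, z)\, dz$ ($P$ is called the discrete X-ray transform),

the $\epsilon_i$’s are i.i.d. Gaussians representing noise.

Our objective is to find $x$ and $g_1, \dots, g_n$.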

The operator $P$ in the orientation estimation problem is different from the one in the registration problem. Specifically, $P$ is a composition of tomographic projection and sampling. To write the objective function for the orientation estimation problem, we will use the Fourier slice theorem [30].

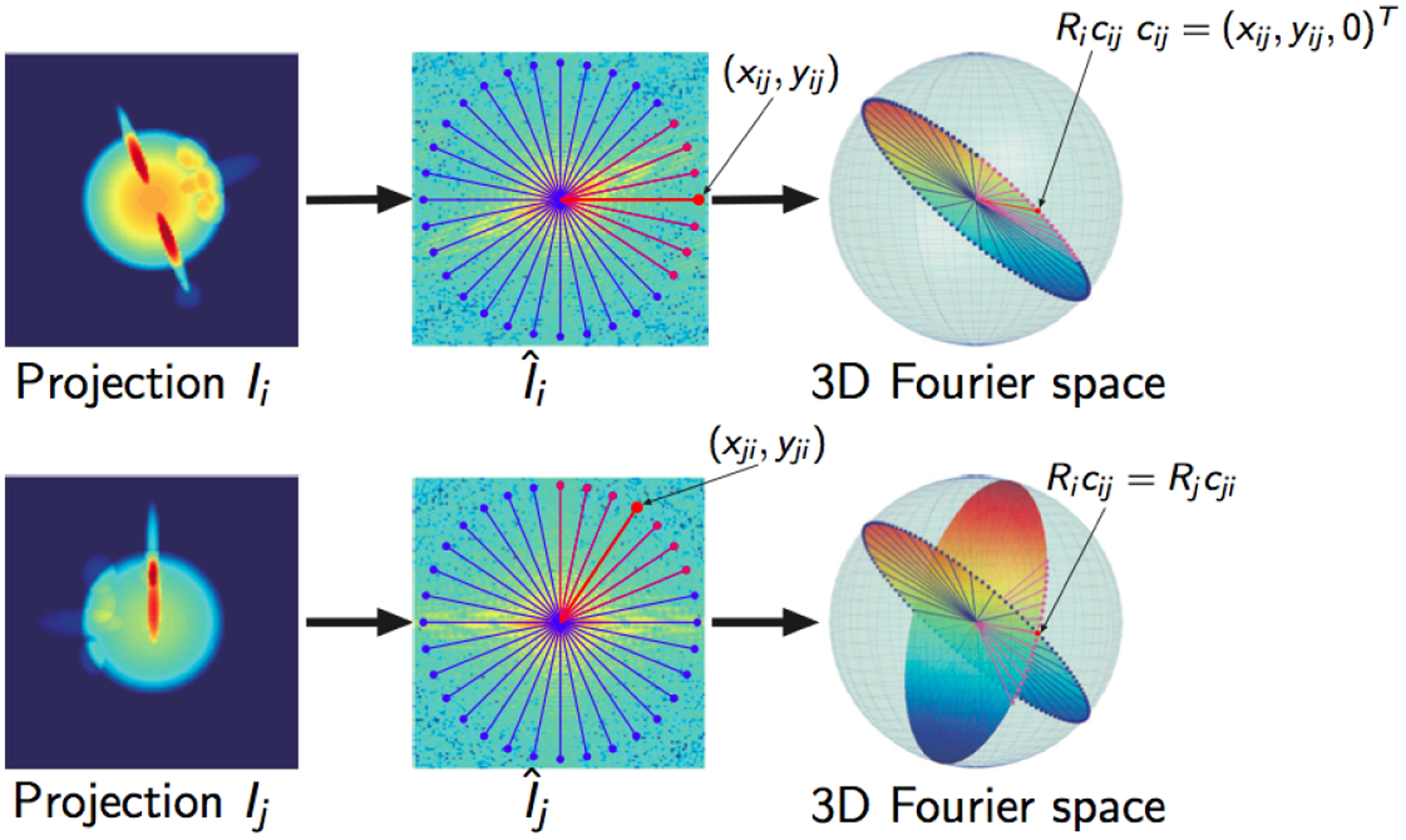

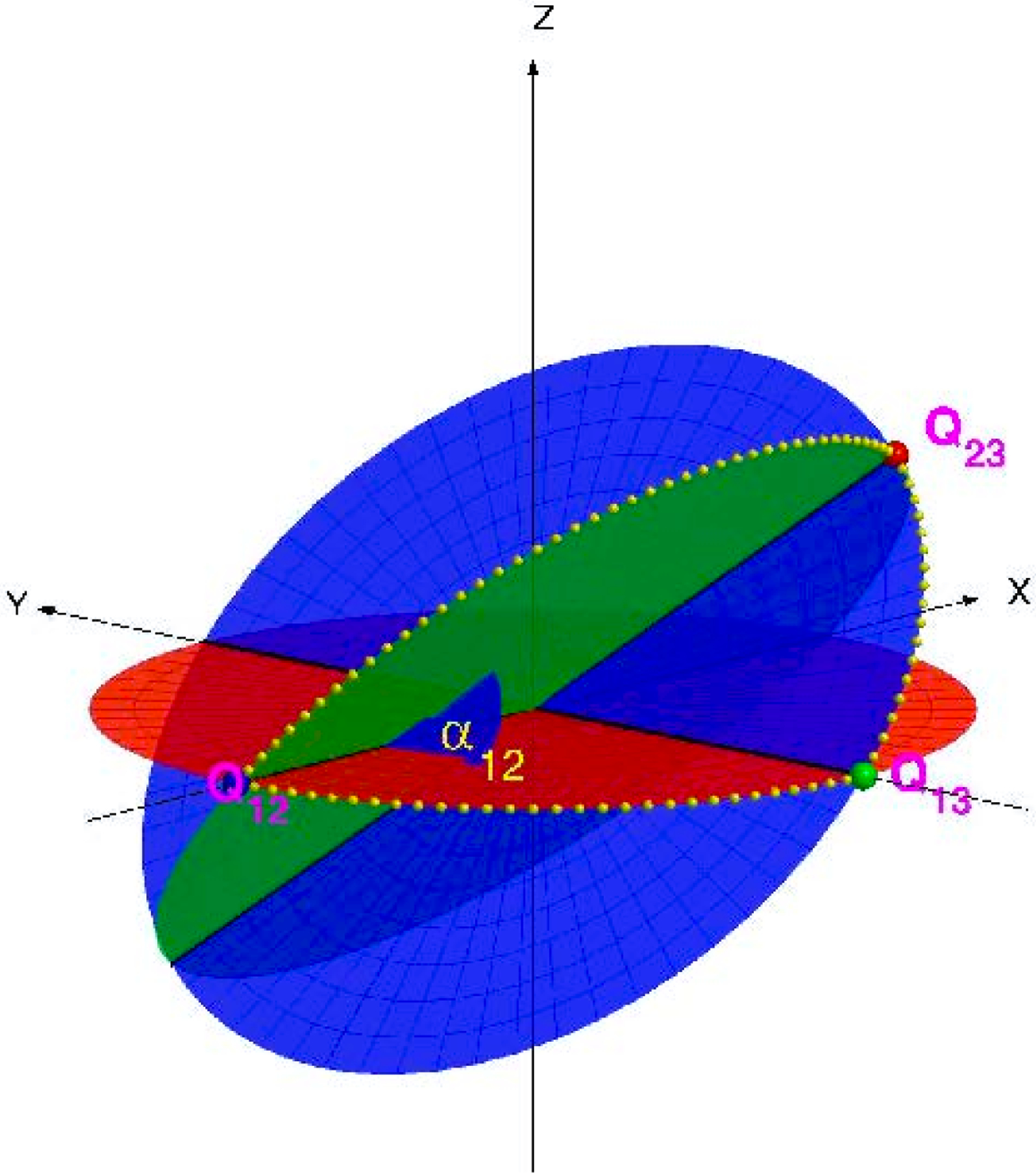

The Fourier slice theorem states that the 2-dimensional Fourier transform of a tomographic projection of a molecular density $x$ coincides with the restriction of the 3-dimensional Fourier transform of $x$ to a plane normal to the projection direction (a slice). See Figure 2.4.

Figure 2.4.

An illustration of the use of the Fourier slice theorem and the common lines approach to the orientation estimation problem in cryo-EM. Image courtesy of Amit Singer and Yoel Shkolnisky [42].

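Let $\hat{y}_i$ be the Fourier transform of $y_i$ in polar coordinates. We identify $\hat{y}_i$ and $\hat{y}_j$ with the $xy$-plane in $\mathbb{R}^3$, and apply $g_i^{-1}$ and $g_j^{-1}$ to $\hat{y}_i$ and $\hat{y}_j$, respectively. Then, the directions of the lines of intersection on $\hat{y}_i$ and $\hat{y}_j$ are given, respectively, by the unit vectors

$$c_{ij} = \frac{g_i\left(g_i^{-1} e_3 \times g_j^{-1} e_3\right)}{\left\| g_i^{-1} e_3 \times g_j^{-1} e_3 \right\|}, \tag{2.9}$$

$$c_{ji} = \frac{g_j\left(g_i^{-1} e_3 \times g_j^{-1} e_3\right)}{\left\| g_i^{-1} e_3 \times g_j^{-1} e_3 \right\|}, \tag{2.10}$$

where $e_3 = (0, 0, 1)^T$ and each $g_i$ is identified with its $3 \times 3$ rotation matrix. See [42] for details.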
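The common-line directions can be computed directly from the two rotations. The minimal sketch below identifies each $g_i$ with a $3 \times 3$ rotation matrix `Ri`. It takes the common line in 3-D to be $g_i^{-1}e_3 \times g_j^{-1}e_3$ and expresses it in each image’s own frame. It also checks numerically two properties: the result depends only on $g_i g_j^{-1}$, and it lies in the image plane. The helper names and the QR-based rotation sampler are illustrative choices, and the sign of the line direction is a convention.

```python
import numpy as np

def random_rotation(rng):
    """A random 3x3 rotation matrix from the QR factorization of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q @ np.diag(np.sign(np.diag(r)))   # make the factorization unique
    if np.linalg.det(q) < 0:               # reflect once to land in SO(3)
        q[:, 0] = -q[:, 0]
    return q

def common_lines(Ri, Rj):
    """Unit directions of the common line in the frames of images i and j."""
    e3 = np.array([0.0, 0.0, 1.0])
    line = np.cross(Ri.T @ e3, Rj.T @ e3)  # intersection of the two rotated planes
    line /= np.linalg.norm(line)
    return Ri @ line, Rj @ line

rng = np.random.default_rng(3)
Ri, Rj, Q = (random_rotation(rng) for _ in range(3))
cij, cji = common_lines(Ri, Rj)
cij_q, cji_q = common_lines(Ri @ Q, Rj @ Q)   # same relative rotation g_i g_j^{-1}
print(np.allclose(cij, cij_q), np.allclose(cji, cji_q))   # True True
print(abs(cij[2]) < 1e-12, abs(cji[2]) < 1e-12)           # True True
```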

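Since the noiseless images should agree on their common lines, we consider the following MRA-like cost function:

$$\min_{g_1, \dots, g_n \in SO(3)} \sum_{i,j=1}^{n} \left\| \hat{y}_i(\cdot, c_{ij}) - \hat{y}_j(\cdot, c_{ji}) \right\|^2, \tag{2.11}$$

where, with a minor abuse of notation, we identify the vector $c_{ij}$ with the angle of a common line in the Fourier transform $\hat{y}_i$ of image $y_i$. Thus, $\hat{y}_i(\cdot, c_{ij})$ denotes the radial line of $\hat{y}_i$ in that direction. The directions $c_{ij}$ and $c_{ji}$ depend only on $g_i g_j^{-1}$: since rotations preserve cross products, $g_i(g_i^{-1}e_3 \times g_j^{-1}e_3) = e_3 \times g_i g_j^{-1} e_3$ with $e_3 = (0, 0, 1)^T$, and similarly for $c_{ji}$. Hence, Equation (2.11) is an instance of (1.1). Note that we could also use a different norm or a weighted norm in the cost function.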

Note that for $n = 2$ images, there is always a degree of freedom along the line of intersection. In other words, we cannot recover the true relative orientation between $g_1$ and $g_2$. However, for $n \geq 3$, this degree of freedom is eliminated. It is also worth mentioning several important references in the context of angular reconstitution [22, 45]. In general, the measurement system suffers from a handedness ambiguity in the reconstruction (see, for example, [42]); this will be discussed in detail later in the paper.

3. Linearization via Fourier expansion

Let us consider the objective function in the general form

$$\sum_{i,j=1}^{n} f_{ij}\left(g_i g_j^{-1}\right). \tag{3.1}$$

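Note that each $f_{ij}$ in (3.1) can be nonlinear and nonconvex. However, since $G$ is compact (and since $f_{ij} \in L^2(G)$), we can expand each $f_{ij}$ in a Fourier series. More precisely, let $\{\rho_k\}$ be the unitary irreducible representations of $G$, with $\rho_0$ the trivial representation. Then we can write

$$f_{ij}(g) = \sum_{k} d_k \operatorname{tr}\left[\hat{f}_{ij}(k)\, \rho_k(g)\right], \tag{3.2}$$

where $\hat{f}_{ij}(k)$ are the Fourier coefficients of $f_{ij}$, which can be computed from $f_{ij}$ via the Fourier transform

$$\hat{f}_{ij}(k) = \int_G f_{ij}(g)\, \rho_k(g)^*\, dg. \tag{3.3}$$

Above, $dg$ denotes the (normalized) Haar measure on $G$, and $d_k$ is the dimension of the representation $\rho_k$. See [17] for an introduction to the representations of compact groups.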
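For a finite cyclic group $G = \mathbb{Z}_L$ (the setting of [8]), every irreducible representation is one-dimensional, $\rho_k(g) = e^{2\pi i k g / L}$. The normalized Haar measure is the uniform average over the $L$ elements. In this case, (3.3) and (3.2) reduce to a discrete Fourier transform pair. The minimal sketch below verifies this numerically for a random function on $\mathbb{Z}_L$.

```python
import numpy as np

L = 8
rng = np.random.default_rng(4)
f = rng.standard_normal(L)   # a function on Z_L: f[g] for g = 0, ..., L-1
g = np.arange(L)

# Irreducible representations rho_k(g) = exp(2 pi i k g / L), each of dimension d_k = 1.
rho = np.exp(2j * np.pi * np.outer(np.arange(L), g) / L)   # rho[k, g]

# (3.3) with the uniform (Haar) measure: hat f(k) = (1/L) sum_g f(g) conj(rho_k(g)).
f_hat = (rho.conj() @ f) / L

# (3.2): f(g) = sum_k d_k tr[hat f(k) rho_k(g)].
f_rec = (f_hat @ rho).real
print(np.allclose(f, f_rec))  # True
```

Here `f_hat` coincides with `np.fft.fft(f) / L`. For infinite groups such as $SO(2)$ and $SO(3)$, the sum over $k$ no longer terminates, which is the issue addressed in Section 4.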

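We express the objective function (3.1) as

$$\sum_{i,j=1}^{n} \sum_{k} d_k \operatorname{tr}\left[\hat{f}_{ij}(k)\, \rho_k(g_i)\, \rho_k(g_j)^*\right],$$

which is linear in $\rho_k(g_i)\rho_k(g_j)^*$. This motivates writing (1.1) as a linear optimization over the variables

$$X^{(k)}_{ij} = \rho_k(g_i)\, \rho_k(g_j)^* = \rho_k\left(g_i g_j^{-1}\right).$$

In other words, we minimize

$$\sum_{k} d_k \operatorname{tr}\left(C^{(k)} X^{(k)}\right),$$

where the coefficient matrices are given blockwise by

$$C^{(k)}_{ij} = \hat{f}_{ji}(k),$$

so that $\operatorname{tr}(C^{(k)} X^{(k)}) = \sum_{i,j} \operatorname{tr}[\hat{f}_{ij}(k) X^{(k)}_{ij}]$. We refer to the $(i, j)$ block of $X^{(k)}$, which corresponds to $\rho_k(g_i g_j^{-1})$, as $X^{(k)}_{ij}$. We now turn our attention to constraints on the variables $X^{(k)}$. It is easy to see that: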

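$$X^{(k)} \succeq 0, \tag{3.4}$$

$$X^{(k)}_{ii} = I_{d_k}, \tag{3.5}$$

$$\operatorname{rank}\left(X^{(k)}\right) = d_k, \tag{3.6}$$

$$X^{(k)}_{ij} \in \operatorname{Im}(\rho_k). \tag{3.7}$$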

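Constraints (3.4), (3.5) and (3.6) ensure that $X^{(k)}$ is of the form

$$X^{(k)} = \begin{bmatrix} R_1 \\ \vdots \\ R_n \end{bmatrix} \begin{bmatrix} R_1 \\ \vdots \\ R_n \end{bmatrix}^*$$

for some unitary matrices $R_1, \dots, R_n \in \mathbb{C}^{d_k \times d_k}$. The constraint (3.7) attempts to ensure that each block $X^{(k)}_{ij}$ is in the image of the representation $\rho_k$ of $G$. Notably, none of these constraints ensures that the blocks $X^{(k)}_{ij}$, for different values of $k$, correspond to the same group element $g_i g_j^{-1}$. Adding such a constraint would yield

$$\begin{aligned}
\min_{\{X^{(k)}\}} \quad & \sum_{k} d_k \operatorname{tr}\left(C^{(k)} X^{(k)}\right) \\
\text{s.t.} \quad & X^{(k)} \succeq 0, \quad X^{(k)}_{ii} = I_{d_k}, \quad \operatorname{rank}\left(X^{(k)}\right) = d_k, \\
& X^{(k)}_{ij} = \rho_k\left(g_i g_j^{-1}\right) \ \text{for all } k,
\end{aligned} \tag{3.8}$$

where $g_i$ and $g_j$ are elements of $G$.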

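Unfortunately, both the rank constraint and the last constraint in (3.8) are, in general, nonconvex. We will relax (3.8) by dropping the rank requirement and replacing the last constraint by positivity constraints that couple different $X^{(k)}$’s. We achieve this by considering the Dirac delta function on $G$. Notice that the Dirac delta function at the identity can be expanded as

$$\delta(g) = \sum_{k} d_k \operatorname{tr}\left[\rho_k(g)\right].$$

If we replace $g$ with $g_i g_j^{-1} g^{-1}$, then we get

$$\delta\left(g_i g_j^{-1} g^{-1}\right) = \sum_{k} d_k \operatorname{tr}\left[\rho_k\left(g_i g_j^{-1}\right) \rho_k(g)^*\right] = \sum_{k} d_k \operatorname{tr}\left[X^{(k)}_{ij}\, \rho_k(g)^*\right].$$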

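To arrive at a convex program, we consider the following convex constraints, which form a natural convex relaxation for Dirac deltas:

$$\sum_{k} d_k \operatorname{tr}\left[X^{(k)}_{ij}\, \rho_k(g)^*\right] \ge 0 \quad \text{for all } g \in G, \tag{3.9}$$

$$\int_G \sum_{k} d_k \operatorname{tr}\left[X^{(k)}_{ij}\, \rho_k(g)^*\right] dg = 1. \tag{3.10}$$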

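This suggests relaxing (3.8) to

$$\begin{aligned}
\min_{\{X^{(k)}\}} \quad & \sum_{k} d_k \operatorname{tr}\left(C^{(k)} X^{(k)}\right) \\
\text{s.t.} \quad & X^{(k)} \succeq 0, \quad X^{(k)}_{ii} = I_{d_k}, \\
& \sum_{k} d_k \operatorname{tr}\left[X^{(k)}_{ij}\, \rho_k(g)^*\right] \ge 0 \quad \text{for all } g \in G, \\
& \int_G \sum_{k} d_k \operatorname{tr}\left[X^{(k)}_{ij}\, \rho_k(g)^*\right] dg = 1.
\end{aligned} \tag{3.11}$$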

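For a nontrivial irreducible representation $\rho_k$, we have $\int_G \rho_k(g)\, dg = 0$. Since $\rho_0$ is the trivial representation, with $d_0 = 1$, the integral constraint in (3.11) is therefore equivalent to the constraint

$$X^{(0)}_{ij} = 1.$$

Thus, we focus on the optimization problem

$$\begin{aligned}
\min_{\{X^{(k)}\}} \quad & \sum_{k} d_k \operatorname{tr}\left(C^{(k)} X^{(k)}\right) \\
\text{s.t.} \quad & X^{(k)} \succeq 0, \quad X^{(k)}_{ii} = I_{d_k}, \quad X^{(0)}_{ij} = 1, \\
& \sum_{k} d_k \operatorname{tr}\left[X^{(k)}_{ij}\, \rho_k(g)^*\right] \ge 0 \quad \text{for all } g \in G \text{ and all } i, j.
\end{aligned} \tag{3.12}$$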

When $G$ is a finite group, it has only a finite number of irreducible representations. This means that (3.12) is a semidefinite program and can be solved, to arbitrary precision, in polynomial time [46]. In fact, when $G \cong \mathbb{Z}_L$, a suitable change of basis shows that (3.12) is equivalent to the semidefinite programming relaxation proposed in [8] for the signal alignment problem.

Unfortunately, many of the applications of interest involve infinite groups. This creates two obstacles to solving (3.12). One is due to the infinite sum in the objective function and the other is due to the infinite number of positivity constraints. In the next section, we address these two obstacles for the groups $SO(2)$ and $SO(3)$.

4. Finite truncations for $SO(2)$ and $SO(3)$ via Fejér kernels

The objective of this section is to replace (3.12) by an optimization problem depending only on finitely many variables $X^{(k)}$. The objective function in (3.12) is converted from an infinite sum to a finite sum by truncating at degree $t$. That is, we fix a $t$ and set $C^{(k)} = 0$ for $k > t$. This amounts to truncating the Fourier series of $f_{ij}$. Unfortunately, constraint (3.9), given by

$$\sum_{k} d_k \operatorname{tr}\left[X^{(k)}_{ij}\, \rho_k(g)^*\right] \ge 0 \quad \text{for all } g \in G,$$

still involves infinitely many variables and consists of infinitely many linear constraints.

We now address this issue for the groups $SO(2)$ and $SO(3)$.

4.1. Truncation for $SO(2)$.

Since we truncated the objective function at degree $t$, it is natural to also truncate the infinite sum in constraint (3.9) at $t$. If we truncated below $t$, then some variables (such as $X^{(t)}$) would not be constrained; if we truncated above $t$, then some variables (such as $X^{(t+1)}$) would not affect the cost function. The irreducible representations of $SO(2)$ are $\rho_k(\theta) = e^{ik\theta}$, $k \in \mathbb{Z}$, with $d_k = 1$ for all $k$. Let us identify $g \in SO(2)$ with $\theta \in [0, 2\pi)$. The straightforward truncation corresponds to approximating the Dirac delta with

$$\sum_{k=-t}^{t} e^{ik\theta}.$$

This approximation is known as the Dirichlet kernel, which we denote as

$$\mathcal{D}_t(\theta) = \sum_{k=-t}^{t} e^{ik\theta} = \frac{\sin\left((t + \frac{1}{2})\theta\right)}{\sin(\theta / 2)}.$$

However, the Dirichlet kernel does not inherit all the desirable properties of the delta function. In fact, $\mathcal{D}_t(\theta)$ is negative for some values of $\theta$.

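Instead, we use the Fejér kernel, which is non-negative, to approximate the Dirac delta. The Fejér kernel is defined as

$$\mathcal{F}_t(\theta) = \frac{1}{t+1} \sum_{m=0}^{t} \mathcal{D}_m(\theta) = \sum_{k=-t}^{t} \left(1 - \frac{|k|}{t+1}\right) e^{ik\theta} = \frac{1}{t+1} \left( \frac{\sin\left((t+1)\theta / 2\right)}{\sin(\theta / 2)} \right)^2,$$

which is the first-order Cesàro mean of the Dirichlet kernel.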
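The contrast between the two kernels is easy to check numerically. The minimal sketch below evaluates the Dirichlet kernel $\sum_{|k| \le t} e^{ik\theta}$ and the Fejér kernel $\sum_{|k| \le t} (1 - \frac{|k|}{t+1}) e^{ik\theta}$ on a grid. It confirms that the former dips below zero while the latter does not. It also compares the Fejér kernel with its closed form $\frac{1}{t+1}\left(\frac{\sin((t+1)\theta/2)}{\sin(\theta/2)}\right)^2$ and with the average of the first $t+1$ Dirichlet kernels. The grid and the value $t = 5$ are arbitrary choices.

```python
import numpy as np

def dirichlet(theta, t):
    k = np.arange(-t, t + 1)
    return np.cos(np.outer(theta, k)).sum(axis=1)   # the sine parts cancel

def fejer(theta, t):
    k = np.arange(-t, t + 1)
    w = 1 - np.abs(k) / (t + 1)                     # Cesàro weights
    return (w * np.cos(np.outer(theta, k))).sum(axis=1)

t = 5
theta = np.linspace(0.01, 2 * np.pi - 0.01, 2000)   # avoid theta = 0 in the closed form
D, F = dirichlet(theta, t), fejer(theta, t)
closed = (np.sin((t + 1) * theta / 2) / np.sin(theta / 2)) ** 2 / (t + 1)
cesaro = np.mean([dirichlet(theta, m) for m in range(t + 1)], axis=0)

print(D.min() < 0)                                      # True: Dirichlet goes negative
print(F.min() > -1e-12)                                 # True: Fejér does not
print(np.allclose(F, closed), np.allclose(F, cesaro))   # True True
```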

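This motivates us to replace constraint (3.9) with

$$\sum_{k=-t}^{t} \left(1 - \frac{|k|}{t+1}\right) X^{(k)}_{ij}\, e^{-ik\theta} \ge 0 \quad \text{for all } \theta \in [0, 2\pi),$$

where, for $k < 0$, $X^{(k)}_{ij}$ denotes $\overline{X^{(-k)}_{ij}}$.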

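This suggests considering

$$\begin{aligned}
\min_{\{X^{(k)}\}} \quad & \sum_{k=-t}^{t} \operatorname{tr}\left(C^{(k)} X^{(k)}\right) \\
\text{s.t.} \quad & X^{(k)} \succeq 0, \quad X^{(k)}_{ii} = 1, \quad X^{(0)}_{ij} = 1, \\
& \sum_{k=-t}^{t} \left(1 - \frac{|k|}{t+1}\right) X^{(k)}_{ij}\, e^{-ik\theta} \ge 0 \quad \text{for all } \theta \in [0, 2\pi),
\end{aligned}$$

which only depends on the variables $X^{(k)}$ for $0 \le k \le t$.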

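Unfortunately, the condition that the trigonometric polynomial $\sum_{k=-t}^{t} \left(1 - \frac{|k|}{t+1}\right) X^{(k)}_{ij} e^{-ik\theta}$ is always non-negative still involves an infinite number of linear inequalities. Interestingly, due to the Fejér-Riesz factorization theorem (see [19]), this condition can be replaced by an equivalent condition involving a positive semidefinite matrix. Every non-negative trigonometric polynomial is the squared modulus of a trigonometric polynomial, which means that the so-called sum-of-squares relaxation [33, 34] is exact. However, while such a formulation would still be an SDP, and thus solvable up to arbitrary precision in polynomial time, it would involve a positive semidefinite variable for every pair $(i, j)$, rendering it computationally challenging. For this reason, we relax the non-negativity constraint by requiring the polynomial to be non-negative only on a finite set $\Theta \subset [0, 2\pi)$. This yields the following optimization problem:

$$\begin{aligned}
\min_{\{X^{(k)}\}} \quad & \sum_{k=-t}^{t} \operatorname{tr}\left(C^{(k)} X^{(k)}\right) \\
\text{s.t.} \quad & X^{(k)} \succeq 0, \quad X^{(k)}_{ii} = 1, \quad X^{(0)}_{ij} = 1, \\
& \sum_{k=-t}^{t} \left(1 - \frac{|k|}{t+1}\right) X^{(k)}_{ij}\, e^{-ik\theta} \ge 0 \quad \text{for all } \theta \in \Theta \text{ and all } i, j.
\end{aligned} \tag{4.1}$$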
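The relaxation (4.1) contains the true solution. For $X^{(k)}_{ij} = e^{ik(\theta_i - \theta_j)}$, the constrained trigonometric polynomial equals the Fejér kernel evaluated at $\theta_i - \theta_j - \theta$, which is real and non-negative. The minimal sketch below checks this on a hypothetical grid $\Theta$ of 32 equally spaced angles. It takes $X^{(-k)}$ as the entrywise conjugate of $X^{(k)}$. The function name `fejer_constraint` is illustrative, and no SDP is solved here.

```python
import numpy as np

def fejer_constraint(X, grid):
    """Evaluate sum_{k=-t}^{t} (1 - |k|/(t+1)) X^(k)_ij e^{-ik theta} for all i, j.

    X = [X^(0), ..., X^(t)] are n x n Hermitian matrices; X^(-k) is taken to be
    the entrywise conjugate of X^(k). Returns an array of shape (len(grid), n, n).
    """
    t = len(X) - 1
    out = np.zeros((len(grid),) + X[0].shape, dtype=complex)
    for k in range(-t, t + 1):
        Xk = X[k] if k >= 0 else np.conj(X[-k])
        weight = 1 - abs(k) / (t + 1)
        out += weight * np.exp(-1j * k * grid)[:, None, None] * Xk[None, :, :]
    return out

rng = np.random.default_rng(5)
n, t = 4, 3
angles = rng.uniform(0, 2 * np.pi, n)
X = []
for k in range(t + 1):
    u = np.exp(1j * k * angles)
    X.append(np.outer(u, u.conj()))        # rank-one X^(k) built from the true angles

grid = 2 * np.pi * np.arange(32) / 32      # hypothetical finite set Theta
vals = fejer_constraint(X, grid)
print(np.abs(vals.imag).max() < 1e-10, vals.real.min() > -1e-10)  # True True
```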

4.2. Truncation for $SO(3)$.

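The irreducible representations of $SO(3)$ are the Wigner-D matrices $D^l: SO(3) \to \mathbb{C}^{(2l+1) \times (2l+1)}$, $l = 0, 1, 2, \dots$, with $d_l = 2l + 1$. See [48] for an introduction to Wigner-D matrices. Let us associate $g$ with the Euler angles $(\alpha, \beta, \gamma)$. A straightforward truncation yields the approximation

$$\delta(g) \approx \sum_{l=0}^{t} (2l + 1) \operatorname{tr}\left[D^l(\alpha, \beta, \gamma)\right].$$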

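Observe that the trace is invariant under conjugation. Now, $g$ can be decomposed as

$$g = h\, r_z(\omega)\, h^{-1},$$

with an $h \in SO(3)$ such that $r_z(\omega)$ is a rotation by an angle $\omega \in [0, \pi]$ about the $z$-axis. We can think of $h$ as a change of basis and of $r_z(\omega)$ as a rotation by $\omega$ in the basis $h$. It follows that

$$\operatorname{tr}\left[D^l(g)\right] = \operatorname{tr}\left[D^l\left(r_z(\omega)\right)\right] = \sum_{m=-l}^{l} e^{im\omega}.$$

The relationship between $(\alpha, \beta, \gamma)$ and $\omega$ is

$$\cos\frac{\omega}{2} = \left| \cos\frac{\beta}{2}\, \cos\frac{\alpha + \gamma}{2} \right|.$$

This relationship can be obtained by directly evaluating $\operatorname{tr}[D^1(\alpha, \beta, \gamma)] = 1 + 2\cos\omega$ using the Wigner-d matrix $d^1(\beta)$:

$$\operatorname{tr}\left[D^1(\alpha, \beta, \gamma)\right] = \sum_{m=-1}^{1} e^{-im(\alpha + \gamma)}\, d^1_{mm}(\beta) = \cos\beta + (1 + \cos\beta)\cos(\alpha + \gamma).$$

Comparing $1 + 2\cos\omega = 4\cos^2(\omega/2) - 1$ with $\cos\beta + (1 + \cos\beta)\cos(\alpha + \gamma) = 4\cos^2(\beta/2)\cos^2((\alpha + \gamma)/2) - 1$ gives the relationship above.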

See [48] also for an introduction to Wigner-d matrices. This straightforward truncation at $t$ yields

$$\sum_{l=0}^{t} (2l + 1) \sum_{m=-l}^{l} e^{im\omega} = \sum_{l=0}^{t} (2l + 1)\, \mathcal{D}_l(\omega),$$

where $\mathcal{D}_l$ is the Dirichlet kernel of Section 4.1. Again, this approximation has the undesirable property that it can be negative for some $\omega$. Recall that we circumvented this property in the 1-dimensional case by taking the first-order Cesàro mean of the Dirichlet kernel. In the 2-dimensional case, we will need the second-order Cesàro mean. Notice that

Fejér proved that [4]

where . It follows that

Let us define

where .

We replace constraint (3.9) with

Secondly, we discretize the group $SO(3)$ to obtain a finite number of constraints. We consider a suitable finite subset $\Omega \subset SO(3)$. In our implementation, we use a Hopf fibration [49] to discretize $SO(3)$. The quotient space $SO(3)/SO(2)$ is equivalent to $S^2$. We take a uniform discretization of $S^1$ and a spherical design [11] of $S^2$. It is possible to find a spherical $t$-design on $S^2$ with $O(t^2)$ points [11]. Following [49], we use $O(t)$ points to discretize $S^1$. The size of our discretization is therefore $|\Omega| = O(t^3)$, so we have to enforce $O(n^2 t^3)$ inequality constraints. The choice of $\Omega$ is left to the user, to strike a balance between computational speed and accuracy. We can then relax the non-negativity constraint, yielding the following semidefinite program¹:

| (4.2) |
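The relation between the Z-Y-Z Euler angles and the rotation angle $\omega$ used in this section can be checked numerically through $\operatorname{tr} R = 1 + 2\cos\omega$. The minimal sketch below compares $\cos^2(\omega/2)$ with $\cos^2(\beta/2)\cos^2((\alpha+\gamma)/2)$ for random angles; squaring sidesteps the sign ambiguity. The helpers `rz`, `ry` and `rotation_angle` are illustrative and use the standard active rotation matrices about the $z$- and $y$-axes.

```python
import numpy as np

def rz(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def ry(b):
    c, s = np.cos(b), np.sin(b)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def rotation_angle(R):
    # tr R = 1 + 2 cos(omega); clipping guards against round-off outside [-1, 1].
    return np.arccos(np.clip((np.trace(R) - 1) / 2, -1.0, 1.0))

rng = np.random.default_rng(6)
ok = []
for _ in range(100):
    alpha, gamma = rng.uniform(0, 2 * np.pi, 2)
    beta = rng.uniform(0, np.pi)
    omega = rotation_angle(rz(alpha) @ ry(beta) @ rz(gamma))   # Z-Y-Z Euler angles
    lhs = np.cos(omega / 2) ** 2
    rhs = (np.cos(beta / 2) * np.cos((alpha + gamma) / 2)) ** 2
    ok.append(np.isclose(lhs, rhs))
print(all(ok))  # True
```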

4.3. An additional constraint on $X^{(1)}$.

In this section, we discuss an additional constraint on $X^{(1)}$. It uses properties of quaternions to constrain each block $X^{(1)}_{ij}$ more directly to the convex hull of $\{D^1(g) : g \in SO(3)\}$.

We consider the standard rotation matrix $R_{ij} \in SO(3)$ and the unit quaternion $q_{ij} \in \mathbb{R}^4$ that represent the same rotation as the block $X^{(1)}_{ij}$ (whose representation is associated with the spherical harmonics of degree 1). We then consider the outer product of the unit quaternion

$$Q_{ij} = q_{ij}\, q_{ij}^T. \tag{4.3}$$

The standard rotation matrix $R_{ij}$ is related to the block $X^{(1)}_{ij}$ by the formula

where,

and

Indeed, one can verify that

where,

and is used simply to rearrange the elements of .

Next, $Q_{ij}$ is mapped to $R_{ij}$ by Rodrigues’ rotation formula (see [37]):

In summary, the mapping from to , which we denote by , is given by the formula:

For each pair $(i, j)$, we wish to impose the constraints implied by Equation (4.3):

But since the rank-one constraint $\operatorname{rank}(Q_{ij}) = 1$ is not convex, we drop it, which gives the following SDP in place of (4.2):

| (4.4) |
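The lifted variable $Q_{ij} = q_{ij} q_{ij}^T$ of (4.3) is convenient because the rotation matrix of a unit quaternion is a linear function of $qq^T$. The minimal sketch below illustrates this fact with the standard quaternion-to-rotation formula, assuming the scalar-first convention $q = (w, x, y, z)$ (other orderings simply permute indices). On the diagonal, it substitutes $w^2 + x^2 + y^2 + z^2 = \operatorname{tr} Q = 1$. The result is compared against Rodrigues’ rotation formula for the same axis and angle. The sketch does not reproduce the further change of basis to the Wigner-D block $X^{(1)}_{ij}$ described above, and the helper names are illustrative.

```python
import numpy as np

def rotation_from_Q(Q):
    """Rotation matrix written as a linear function of Q = q q^T, q = (w, x, y, z)."""
    w, x, y, z = range(4)  # index names for readability
    return np.array([
        [Q[w, w] + Q[x, x] - Q[y, y] - Q[z, z], 2 * (Q[x, y] - Q[w, z]), 2 * (Q[x, z] + Q[w, y])],
        [2 * (Q[x, y] + Q[w, z]), Q[w, w] - Q[x, x] + Q[y, y] - Q[z, z], 2 * (Q[y, z] - Q[w, x])],
        [2 * (Q[x, z] - Q[w, y]), 2 * (Q[y, z] + Q[w, x]), Q[w, w] - Q[x, x] - Q[y, y] + Q[z, z]],
    ])

def rodrigues(axis, angle):
    """R = I + sin(angle) K + (1 - cos(angle)) K^2 for a unit rotation axis."""
    kx, ky, kz = axis
    K = np.array([[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1 - np.cos(angle)) * K @ K

rng = np.random.default_rng(9)
axis = rng.standard_normal(3)
axis /= np.linalg.norm(axis)
angle = 1.1
q = np.concatenate([[np.cos(angle / 2)], np.sin(angle / 2) * axis])   # unit quaternion
R = rotation_from_Q(np.outer(q, q))
print(np.allclose(R, rodrigues(axis, angle)), np.allclose(R @ R.T, np.eye(3)))  # True True
```

Because `rotation_from_Q` is linear, it can be applied to any positive semidefinite, trace-one $Q$, not only to rank-one ones. This is what makes dropping the rank-one constraint a convex relaxation.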

5. Applications

In this section, we consider the application of (4.1) to the problem of registration over the unit circle, and the application of (4.4) to registration over the unit sphere and to orientation estimation in cryo-EM. To solve the SDP for each problem, the only parameters we need to determine are the coefficient matrices $C^{(k)}$ and the truncation parameter $t$. The calculation of the $C^{(k)}$ is detailed in the following subsections. As for $t$, we experimented with a range of values for each problem and chose a value that balances computational time with accuracy in the estimated rotations. The SDP outputs the relative transformations $X^{(k)}_{ij}$, which approximate $\rho_k(g_i g_j^{-1})$, while we need $g_1, \dots, g_n$. For each problem, we describe a rounding procedure to recover the $g_i$’s from the $X^{(k)}$’s.

5.1. Registration in 1-dimension.

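Recall that $\mathcal{X}$ is the space of bandlimited functions up to degree $t$ on $S^1$. That is, for $x \in \mathcal{X}$, we can express

$$x(\theta) = \sum_{k=-t}^{t} a_k e^{ik\theta}.$$

Again, the irreducible representations of $SO(2)$ are $\rho_k(\theta) = e^{ik\theta}$, $k \in \mathbb{Z}$, with $d_k = 1$ for all $k$. Let us identify $g$ with $\theta_g$. Then

$$(g \circ x)(\theta) = x(\theta - \theta_g) = \sum_{k=-t}^{t} a_k e^{-ik\theta_g} e^{ik\theta}.$$

Let $P$ sample the underlying signal at $L \ge 2t + 1$ distinct points. This way, we can determine all the $a_k$’s associated with $x$.

Since $P^* P = I$ (up to normalization), for the adjoint $P^*$ we have

$$P^* y_i = \sum_{k=-t}^{t} a^{(i)}_k e^{ik\theta},$$

where $a^{(i)}_k$ are the (noisy) coefficients associated with $y_i$. Let us identify $g_i g_j^{-1}$ with $\theta$. Then, we can express $f_{ij}$ in terms of $\theta$ and the coefficients of $y_i$ and $y_j$ (with norms taken with respect to the normalized measure $d\theta / 2\pi$):

$$f_{ij}(\theta) = \left\| P^* y_i - \theta \circ P^* y_j \right\|^2 = \sum_{k=-t}^{t} \left| a^{(i)}_k - a^{(j)}_k e^{-ik\theta} \right|^2.$$

The Fourier coefficients of $f_{ij}$ are

$$\hat{f}_{ij}(k) = -\left( a^{(i)}_k \overline{a^{(j)}_k} + \overline{a^{(i)}_{-k}}\, a^{(j)}_{-k} \right) \quad (0 < |k| \le t), \qquad \hat{f}_{ij}(0) = \sum_{m=-t}^{t} \left( |a^{(i)}_m|^2 + |a^{(j)}_m|^2 \right) - 2 \operatorname{Re}\left( \overline{a^{(i)}_0}\, a^{(j)}_0 \right),$$

and $\hat{f}_{ij}(k) = 0$ for $|k| > t$. Note that we re-indexed the coefficients ($k \to -k$) in the second term.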
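These Fourier coefficients can be verified against a direct numerical Fourier transform of $f_{ij}(\theta) = \sum_{k} |a^{(i)}_k - a^{(j)}_k e^{-ik\theta}|^2$. The minimal sketch below assumes complex coefficient vectors indexed $k = -t, \dots, t$ and the convention $f(\theta) = \sum_k \hat{f}(k) e^{ik\theta}$. The arrays `a` and `b` stand for the coefficients of $P^* y_i$ and $P^* y_j$, and the function names are illustrative.

```python
import numpy as np

def f_ij(theta, a, b):
    """f(theta) = sum_k |a_k - b_k e^{-ik theta}|^2, with a, b indexed k = -t..t."""
    t = (len(a) - 1) // 2
    k = np.arange(-t, t + 1)
    return np.sum(np.abs(a - b * np.exp(-1j * k * theta)) ** 2)

def f_hat_closed_form(a, b):
    """hat f(k), k = -t..t, from expanding |a_k - b_k e^{-ik theta}|^2."""
    t = (len(a) - 1) // 2
    out = -(a * np.conj(b) + np.conj(a[::-1]) * b[::-1])   # a[::-1] holds a_{-k}
    out[t] += np.sum(np.abs(a) ** 2 + np.abs(b) ** 2)       # constant term at k = 0
    return out

rng = np.random.default_rng(10)
t = 4
a = rng.standard_normal(2 * t + 1) + 1j * rng.standard_normal(2 * t + 1)
b = rng.standard_normal(2 * t + 1) + 1j * rng.standard_normal(2 * t + 1)

M = 64                                     # quadrature points; any M > 2t is exact here
grid = 2 * np.pi * np.arange(M) / M
vals = np.array([f_ij(s, a, b) for s in grid])
f_hat_numeric = np.array([np.mean(vals * np.exp(-1j * k * grid)) for k in range(-t, t + 1)])
print(np.allclose(f_hat_numeric, f_hat_closed_form(a, b)))  # True
```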

5.1.1. Rounding.

(4.1) gives us the $X^{(k)}$’s. From the $X^{(k)}$’s, we want to extract each $g_i$ (up to a global transformation). Let us consider $X^{(1)}$. We want $X^{(1)}$ to be of the form

$$X^{(1)} = u u^*, \qquad u = \left( e^{i\theta_1}, \dots, e^{i\theta_n} \right)^T.$$

Although $X^{(1)}$ is not guaranteed to be of rank 1, we simply take the top eigenvector $v$ of $X^{(1)}$ as our estimate of $u$ and recover $\theta_i$ as the argument of $v_i$. See [40] for the reasoning behind this approach. In practice, we find that the top eigenvector of $X^{(1)}$ gives a sufficient estimate of $g_1, \dots, g_n$; we do not need to run additional comparisons against $X^{(k)}$ for $k \ge 2$.
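A minimal sketch of this rounding step follows. Since no SDP is solved here, a hypothetical noisy stand-in for the SDP output is formed as $uu^*$ plus a small Hermitian perturbation. The sketch takes the top eigenvector with `numpy.linalg.eigh` and reads off the angles. Because the estimate is only defined up to a global shift, the comparison with the truth first removes the best global shift. The function name `round_so2` and the noise level are illustrative.

```python
import numpy as np

def round_so2(X1):
    """Angles (up to a global shift) from the top eigenvector of a Hermitian X1 ~ u u^*."""
    _, eigvecs = np.linalg.eigh(X1)          # eigenvalues in ascending order
    v = eigvecs[:, -1]                       # top eigenvector
    return np.angle(v) % (2 * np.pi)

rng = np.random.default_rng(7)
n = 20
theta = rng.uniform(0, 2 * np.pi, n)
u = np.exp(1j * theta)
E = 0.2 * (rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n)))
X1 = np.outer(u, u.conj()) + (E + E.conj().T) / 2   # hypothetical noisy SDP output

est = round_so2(X1)
# Compare with the truth after removing the best global shift.
shift = np.angle(np.sum(np.exp(1j * (theta - est))))
err = np.abs(np.angle(np.exp(1j * (est + shift - theta))))
print(err.max())
```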

5.2. Registration in 2-dimension.

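Recall that $\mathcal{X}$ is the space of bandlimited functions up to degree $t$ on $S^2$. That is, for $x \in \mathcal{X}$, we can express

$$x(\omega) = \sum_{l=0}^{t} \sum_{m=-l}^{l} a_{lm} Y_l^m(\omega),$$

where $Y_l^m$ are the spherical harmonics. Again, the irreducible representations of $SO(3)$ are the Wigner D-matrices $D^l$, $l = 0, 1, 2, \dots$, with $d_l = 2l + 1$. Let us associate $g$ with the Euler (Z-Y-Z) angles $(\alpha, \beta, \gamma)$. Then rotating $x$ by $g$ acts on each coefficient vector $a_l = (a_{l,-l}, \dots, a_{l,l})^T$ as

$$a_l \mapsto D^l(\alpha, \beta, \gamma)\, a_l.$$

Let $P$ sample the underlying signal at $L$ points. This way, we can determine all the $a_{lm}$’s associated with $x$.

Again, for the adjoint $P^*$ we have

$$P^* y_i = \sum_{l=0}^{t} \sum_{m=-l}^{l} a^{(i)}_{lm} Y_l^m.$$

Let us identify $g_i g_j^{-1}$ with the Euler (Z-Y-Z) angles $(\alpha, \beta, \gamma)$. Then, we can express $f_{ij}$ in terms of $(\alpha, \beta, \gamma)$ and the coefficient vectors $a^{(i)}_l$ and $a^{(j)}_l$:

$$f_{ij}(\alpha, \beta, \gamma) = \sum_{l=0}^{t} \left\| a^{(i)}_l - D^l(\alpha, \beta, \gamma)\, a^{(j)}_l \right\|^2.$$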

The Fourier coefficients are given by

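Here, we used the orthogonality relationship

$$\int_{SO(3)} D^l_{mm'}(g)\, \overline{D^{l'}_{nn'}(g)}\, dg = \frac{\delta_{ll'}\, \delta_{mn}\, \delta_{m'n'}}{2l + 1}$$

and the property

$$\overline{D^l_{mm'}(g)} = (-1)^{m - m'} D^l_{-m,-m'}(g).$$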

5.2.1. Rounding.

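Again, (4.4) gives us the $X^{(l)}$’s. From the $X^{(l)}$’s, we want to extract each $g_i$ (up to a global transformation). Let us consider $X^{(1)}$. We want $X^{(1)}$ to be of the form

$$X^{(1)} = \begin{bmatrix} D^1(g_1) \\ \vdots \\ D^1(g_n) \end{bmatrix} \begin{bmatrix} D^1(g_1) \\ \vdots \\ D^1(g_n) \end{bmatrix}^*,$$

where each $D^1(g_i)$ is a 3 × 3 matrix. Similarly, $X^{(1)}$ is not guaranteed to be of rank 3, but we simply take the top three eigenvectors of $X^{(1)}$ as our estimate of this $3n \times 3$ factor. From the estimated $3 \times 3$ blocks, we recover $g_1, \dots, g_n$.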
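A minimal sketch of this rounding step follows. It assumes the blocks are expressed in a real basis, so that the ideal matrix is $[R_i R_j^T]_{i,j}$ with $R_i$ the $3 \times 3$ rotation matrix of $g_i$. For the complex Wigner basis, one would first apply the corresponding unitary change of basis. The sketch scales the top three eigenvectors so that each $3 \times 3$ block is orthogonal, and fixes a possible global reflection of the eigenbasis. It then projects each block onto $SO(3)$ with an SVD. A noiseless input is used so the result can be checked exactly; the helper names are illustrative.

```python
import numpy as np

def random_rotation(rng):
    """A random 3x3 rotation matrix from the QR factorization of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q @ np.diag(np.sign(np.diag(r)))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    return q

def round_so3(X, n):
    """Estimate R_1..R_n (up to a global rotation) from a real 3n x 3n X ~ [R_i R_j^T]."""
    _, V = np.linalg.eigh(X)
    V = V[:, -3:] * np.sqrt(n)               # top three eigenvectors, blocks ~ orthogonal
    blocks = V.reshape(n, 3, 3)              # block i = rows 3i..3i+2
    if np.mean([np.linalg.det(B) for B in blocks]) < 0:
        blocks[:, :, 0] *= -1                # undo a global reflection of the eigenbasis
    est = []
    for B in blocks:
        U, _, Wt = np.linalg.svd(B)
        S = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Wt))])
        est.append(U @ S @ Wt)               # nearest rotation matrix to B
    return np.array(est)

rng = np.random.default_rng(11)
n = 10
R = np.array([random_rotation(rng) for _ in range(n)])
W = R.reshape(3 * n, 3)                      # stack R_1, ..., R_n vertically
X = W @ W.T                                  # noiseless stand-in for X^(1) in a real basis
est = round_so3(X, n)
# Relative rotations are invariant to the global ambiguity.
print(np.allclose(est[3] @ est[0].T, R[3] @ R[0].T))  # True
```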

5.3. Orientation estimation in cryo-EM.

We follow [50] to expand the objective function. We emphasize that the theory holds for an arbitrary basis of the space containing the images $y_i$. We choose to construct the $f_{ij}$’s using coefficients and parameters from the Fourier-Bessel expansion. Each projection image can be expanded in a Fourier-Bessel series as

where

The parameters above are defined as follows:

is the radius of the disc containing the support of ,

is the Bessel function of integer order ,

is the root of ,

is a normalization factor.

To avoid aliasing, we truncate the Fourier-Bessel expansion as follows.

See [50] for a discussion on and . For the purpose of this section, let us assume we have for each . (These can be computed from the Cartesian grid sampled images.)

We shall determine the relationship between and , and the lines of intersection between and embedded in . Recall from (2.9) and (2.10) that the directions of the lines of intersection between and are given, respectively, by unit vectors

Let us associate with Euler (Z-Y-Z) angle . Then

under the rotation matrix . The directions of the lines of intersection in and under are in the directions, respectively,

We express the ’s in terms of , and and :

For each , we approximate the integral

with a Gaussian quadrature.

Using the approximation above, we have

where

In terms of the Euler (Z-Y-Z) angles,

The Fourier coefficients are given by

Note that

where is the Wigner -matrix. Let us define

The entry of is approximated by

Here, is the Kronecker delta and absorbed .

5.3.1. Handedness ambiguity in cryo-EM.

There is one additional issue, specific to the cryo-EM problem, that arises from the handedness ambiguity. Suppose an image was projected from some molecular density $x$ under orientation $g$. Let $J = \operatorname{diag}(1, 1, -1)$ be the reflection operator across the imaging plane. Then the molecular density $Jx$, defined by $(Jx)(r) = x(Jr)$, would produce the same projection under orientation $JgJ$. In other words, the same set of projection images can arise from two different molecular densities under different rotations.

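We will formalize and deal with the handedness ambiguity in terms of the Wigner D-matrices. Recall that the Wigner D-matrix corresponding to $(\alpha, \beta, \gamma)$ is

$$D^l_{mm'}(\alpha, \beta, \gamma) = e^{-im\alpha}\, d^l_{mm'}(\beta)\, e^{-im'\gamma}.$$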

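Let $J_l$ be the following $(2l+1) \times (2l+1)$ diagonal matrix:

$$J_l = \operatorname{diag}\left( (-1)^{-l}, (-1)^{-l+1}, \dots, (-1)^{l} \right).$$

(The diagonal alternates between +1 and −1.) Since $JgJ$ has Euler angles $(\alpha, -\beta, \gamma)$ and $d^l_{mm'}(-\beta) = (-1)^{m - m'} d^l_{mm'}(\beta)$, we have $D^l(JgJ) = J_l D^l(g) J_l$. Due to the handedness ambiguity, if $\{X^{(l)}\}$ is a solution to (4.4), then $\{(I_n \otimes J_l)\, X^{(l)}\, (I_n \otimes J_l)\}$ is also a valid solution to (4.4). In fact, for any $\lambda \in [0, 1]$,

$$\lambda X^{(l)} + (1 - \lambda)\, (I_n \otimes J_l)\, X^{(l)}\, (I_n \otimes J_l)$$

is a valid solution to (4.4).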
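This symmetry can be checked numerically at the level of a single block. With $J_l = \operatorname{diag}((-1)^m)_{m=-l}^{l}$, the average $\frac{1}{2}(B + J_l B J_l)$ keeps exactly the entries with $m + m'$ even. Listing the indices in two parity classes then turns such a block into two diagonal blocks of sizes $l+1$ and $l$. In the minimal sketch below, random matrices stand in for the blocks $X^{(l)}_{ij}$, and the helper names are illustrative.

```python
import numpy as np

def J(l):
    """J_l = diag((-1)^m), m = -l..l."""
    return np.diag((-1.0) ** np.arange(-l, l + 1))

def symmetrize(B, l):
    """The lambda = 1/2 average (B + J_l B J_l) / 2 of a (2l+1) x (2l+1) block."""
    return 0.5 * (B + J(l) @ B @ J(l))

def parity_order(l):
    """Index order listing positions 0, 2, 4, ... (m = l mod 2) before positions 1, 3, 5, ..."""
    idx = np.arange(2 * l + 1)
    return np.concatenate([idx[0::2], idx[1::2]])

rng = np.random.default_rng(8)
for l in (1, 2, 3):
    B = rng.standard_normal((2 * l + 1, 2 * l + 1))   # stand-in for one block X^(l)_ij
    S = symmetrize(B, l)
    m = np.arange(-l, l + 1)
    odd = (m[:, None] + m[None, :]) % 2 == 1
    assert np.allclose(S[odd], 0)                   # entries with m + m' odd vanish
    P = S[np.ix_(parity_order(l), parity_order(l))]
    k = l + 1                                       # size of the first diagonal block
    assert np.allclose(P[:k, k:], 0) and np.allclose(P[k:, :k], 0)
print("ok")
```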

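Let us remove this extra degree of freedom $\lambda$. Observe that for $\lambda = 1/2$,

$$\left[ \tfrac{1}{2}\left( X^{(l)}_{ij} + J_l X^{(l)}_{ij} J_l \right) \right]_{mm'} = \frac{1 + (-1)^{m + m'}}{2} \left[ X^{(l)}_{ij} \right]_{mm'},$$

i.e., the odd-indexed entries (those with $m + m'$ odd) are 0. For example, in the case of $l = 1$, the block has the pattern

$$\begin{bmatrix} \ast & 0 & \ast \\ 0 & \ast & 0 \\ \ast & 0 & \ast \end{bmatrix},$$

and in the case of $l = 2$,

$$\begin{bmatrix} \ast & 0 & \ast & 0 & \ast \\ 0 & \ast & 0 & \ast & 0 \\ \ast & 0 & \ast & 0 & \ast \\ 0 & \ast & 0 & \ast & 0 \\ \ast & 0 & \ast & 0 & \ast \end{bmatrix}.$$

We constrain the odd-indexed entries of the $X^{(l)}_{ij}$’s to be 0, so that the SDP finds the solution with $\lambda = 1/2$. Note that, in practice, we do not explicitly add this constraint. Instead, we permute $X^{(l)}_{ij}$ into two disjoint diagonal blocks by listing the indices with $m \equiv l \pmod 2$ first. For example, in the case of $l = 1$,

$$\begin{bmatrix} \ast & \ast & 0 \\ \ast & \ast & 0 \\ 0 & 0 & \ast \end{bmatrix},$$

and in the case of $l = 2$,

$$\begin{bmatrix} \ast & \ast & \ast & 0 & 0 \\ \ast & \ast & \ast & 0 & 0 \\ \ast & \ast & \ast & 0 & 0 \\ 0 & 0 & 0 & \ast & \ast \\ 0 & 0 & 0 & \ast & \ast \end{bmatrix}.$$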

We can conjugate each in (4.4) by a permutation and get

Similarly, we can conjugate by a permutation and get

where

Let us denote the above permutation as . The objective function in (4.4) is preserved if we conjugate both the ’s and the ’s by . I.e.,

where and are blocks corresponding to and , respectively. We apply the same permutation to the ’s in the constraints of (4.4) and reduce (4.4) to

| (5.1) |

where defines the linear relationship between and as

5.3.2. Rounding.

(5.1) gives us the ’s and the ’s. We aggregate and into the matrix . From the ’s, we build the synchronization matrix described in [39] and apply the cryo_syncrotations function on from the ASPIRE software package [5] to recover . Note that we do not truncate the eigenvectors and eigenvalues from .

6. Implementation and results for synthetic cryo-EM datasets

In this section, we give a brief history of methods used for determining orientations of cryo-EM projection images, and where we stand against the current state-of-the-art.

In 1986, Vainshtein and Goncharov developed a common-lines based method for ab-initio modeling in cryo-EM [45]. In 1987, M. Van Heel independently discovered the same method and named it angular reconstitution [22]. Recall that, by the Fourier slice theorem, two cryo-EM projection images (in Fourier space) must intersect along a common line. Given three cryo-EM images from different viewing directions, their common lines uniquely determine their relative orientations up to handedness (see Figure 6.1). The orientations of the remaining images are then determined by their common lines with the first three images. This is angular reconstitution in a nutshell.

Figure 6.1.

Three cryo-EM images uniquely determine their orientations up to handedness. Image courtesy of Singer et al. [41].

In 1992, Farrow and Ottensmeyer expanded upon angular reconstitution by developing a method to sequentially add images via least squares [20]. One major drawback in sequentially assigning orientations of cryo-EM images is the propagation of error due to false common line detection. In 1996, Penczek, Zhu, and Frank tried to circumvent the issue via brute-force search for a global energy minimizer [35]. However, the search space is simply too big for that method to be applicable. In 2006, Mallick et al. introduced a Bayesian method in which common lines between pairs of images are determined by their common lines with different projection triplets [29]. In the method by Mallick et al., at least seven common lines must be correctly and simultaneously determined, which can be problematic. In 2010, Singer et al. lowered the requirement to that only two common lines need to be correctly and simultaneously determined [41]. In 2011, Singer and Shkolnisky built upon [41] by adding a global consistency condition among the orientations [39]. This method is called synchronization, and it is regarded as the current state-of-the-art.

Note that none of the available methods for orientation determination, including the NUG approach proposed in this paper, can be applied directly to raw experimental images. We explain the factors preventing us from doing so in the next subsection. We therefore validate and evaluate the method numerically using synthetic data.

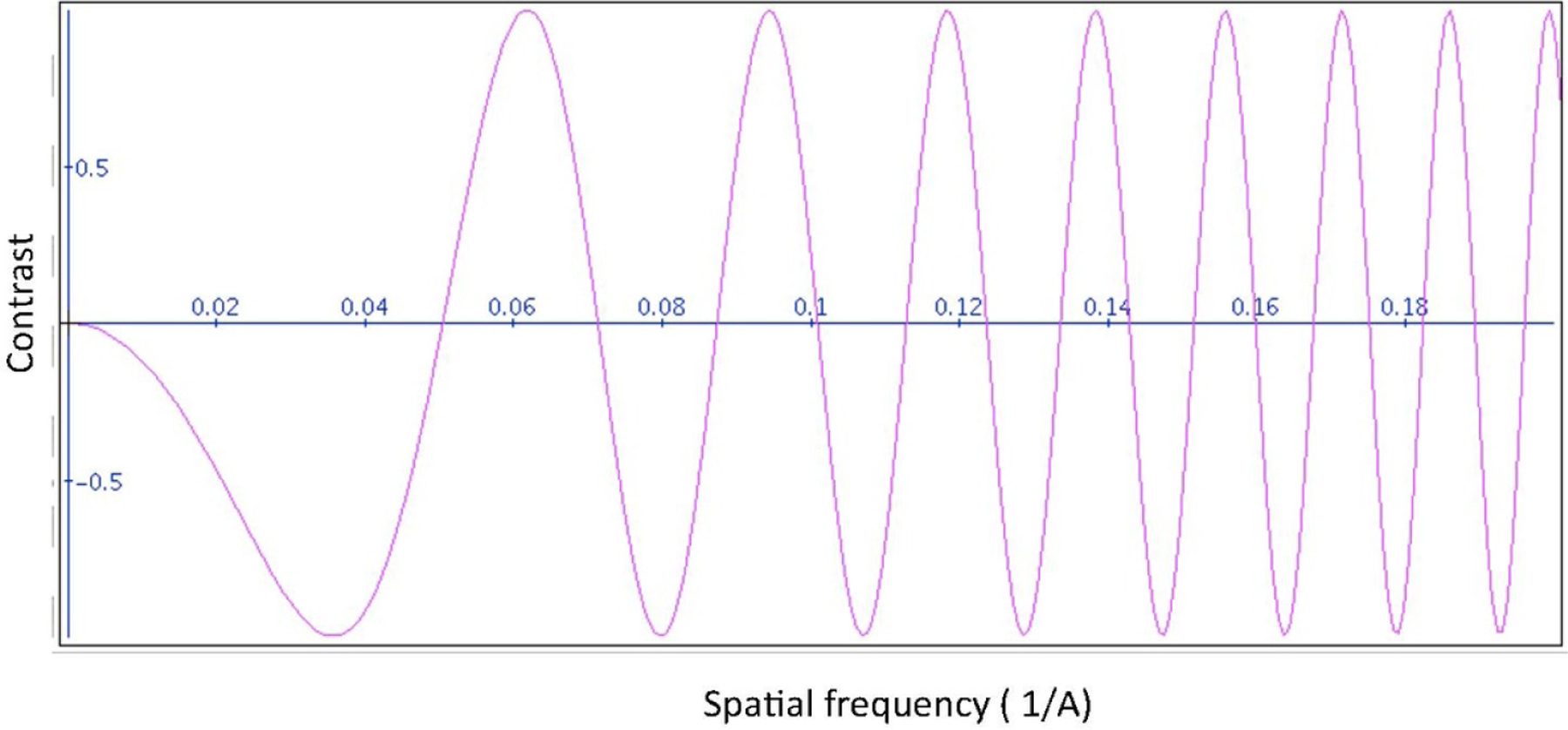

6.1. Shifts, CTF and contrast.

In comparison to the simplistic forward model (2.8), experimental cryo-EM datasets have three major imperfections. First, the images are not centered, so the common lines do not match exactly even under the true orientations. Second, the images are modulated by the contrast transfer function (CTF) of the electron microscope. An example of a CTF, as a function of radial frequency, is shown in Figure 6.2. Making matters mathematically more challenging, the CTF differs from micrograph to micrograph and is typically estimated per micrograph, so all images from the same micrograph are assigned the same CTF; such images are said to belong to the same defocus group. However, as shown in Table 2 (discussed in Section 6.4), shifts and CTFs are considerably less detrimental to NUG's performance than variations in contrast. This brings us to the third obstacle, which does prevent us from directly applying NUG to raw experimental images. The ice layer in which the molecules are frozen is not uniform: it can be thicker or thinner where different projections are taken, and this effect is equivalent to scaling each projection by a contrast factor. As a result, different projections have different contrasts. These effects are typically mitigated in class averages, which are formed by in-plane aligning and averaging raw images that are estimated to have similar viewing directions. It is possible to apply NUG to class averages instead of the raw experimental images. However, the quality of the results then depends crucially on the specific class-averaging procedure and provides little insight into the performance of NUG itself.

Figure 6.2.

Example created by Jiang and Chiu available at http://jiang.bio.purdue.edu/software/ctf/ctfapplet.html

Table 2.

Effects of shifts, CTFs and contrast on the MSE (defined in (6.8)) of the NUG SDP cryo-EM. Shifts are sampled from , CTFs are drawn uniformly from 4 defocus groups and contrasts are sampled from . We used 500 simulated projections of size 64 × 64.

| SNR | benchmark | CTF | CTF and shifts | CTF and contrast |
|---|---|---|---|---|
| 1/4 | 0.0285 | 0.0381 | 0.3382 | 0.7342 |
| 1/8 | 0.0333 | 0.1450 | 0.5741 | > 2.0 |
| 1/16 | 0.0698 | 0.7631 | 1.3391 | > 2.0 |
| 1/32 | 0.4387 | 1.9199 | > 2.0 | > 2.0 |
| 1/64 | 1.8587 | > 2.0 | > 2.0 | > 2.0 |

6.2. ADMM implementation.

There are two particularly challenging parts in obtaining a numerical solution to the NUG SDP (4.4) and (5.1):

- implementing an SDP solver that scales to real-world problems such as orientation estimation in cryo-EM, and
- computing the coefficient matrices for generic objective functions.

For the cryo-EM problem, the NUG SDP is too large to be solved with interior point methods. Interior point methods are known for their accuracy, but this accuracy comes at a high computational cost. In essence, interior point methods solve a system of linear equations that attempts to satisfy both primal and dual feasibility [14]; that is, they must invert a matrix that involves both the primal and the dual variables. The number and size of the signals, together with the number of inequality constraints in (4.4) and (5.1), make this inversion impractical. Instead, we use the alternating direction method of multipliers (ADMM). As the name suggests, ADMM alternates between updates of the primal variable and of the different sets of dual variables. More importantly, each ADMM step is inexpensive: apart from one eigendecomposition involving the primal variable per iteration, the updates consist of linear operations and entrywise projections. In practice, this eigendecomposition is manageable.
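The eigendecomposition is what makes the projection onto the PSD cone possible: zeroing the negative eigenvalues of a symmetric matrix gives the nearest PSD matrix in the Frobenius norm. A minimal NumPy sketch of that projection follows; `project_psd` is a hypothetical helper name, and real symmetric or Hermitian input is assumed.

```python
import numpy as np

def project_psd(M):
    """Nearest PSD matrix (in Frobenius norm) to the Hermitian part of M."""
    H = (M + M.conj().T) / 2          # symmetrize to guard against round-off
    w, V = np.linalg.eigh(H)          # the one eigendecomposition per iteration
    w = np.clip(w, 0.0, None)         # zero out the negative eigenvalues
    return (V * w) @ V.conj().T       # reassemble V diag(w) V^H
```

For an n x n matrix this costs O(n^3), which is why it dominates the per-iteration cost of the solver.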

In general, a closed-form expression for the coefficient matrices is not available, and we may need to employ numerical integration schemes over the group to compute them.

6.2.1. The alternating direction method of multipliers.

In short, ADMM works with the augmented Lagrangian and updates one block of variables at a time: we solve an unconstrained optimization problem over the objective variable and enforce the constraints via dual variables. We express the NUG SDP in a more general form and derive the ADMM updates in that setting. Both (4.4) and (5.1) are SDPs of the following form:

| (6.1) |

We can think of and as the following block matrices:

Note that going from (4.4) and (5.1) to (6.1), we used the fact that

because the ’s are Hermitian. and are linear operators that encapsulate the equality and inequality constraints, respectively. For example, we can think of as

where, for the constraint is the matrix containing at the position of and 0 everywhere else.

More concretely, is equivalent to , where

and vec vectorizes the matrix along the columns. The adjoint operator of is given by

where mat is the inverse of vec. Furthermore, we can verify

,

.
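As a concrete check of these identities, the sketch below builds the matrix form of an operator that reads off single entries of an n x n matrix, using column-major vec, and verifies the adjoint relation ⟨A(X), y⟩ = ⟨X, A*(y)⟩. Because the NUG equality constraints each act on a single entry, A A* is the identity whenever the constrained entries are distinct. The helper names and the choice of diagonal constraints in the test are illustrative assumptions.

```python
import numpy as np

def vec(X):
    """Stack the columns of X into one vector (column-major order)."""
    return X.reshape(-1, order="F")

def mat(x, n):
    """Inverse of vec for an n x n matrix."""
    return x.reshape((n, n), order="F")

def selection_operator(n, entries):
    """Matrix form of A with A(X)_k = X[p_k, q_k] for entries[k] = (p_k, q_k).

    With column-major vec, X[p, q] sits at index p + q * n of vec(X).
    The adjoint is then A*(y) = mat(A.T @ y, n).
    """
    A = np.zeros((len(entries), n * n))
    for k, (p, q) in enumerate(entries):
        A[k, p + q * n] = 1.0
    return A
```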

We now describe the ADMM solver outlined in [44] for SDP (6.1). ADMM is essentially a sequence of partial updates based on the augmented Lagrangian. We therefore define the dual variables, write out the augmented Lagrangian, and derive each update by setting the gradient of the augmented Lagrangian with respect to the corresponding variables to 0.

The dual variables corresponding to (6.1) are

The Lagrangian for (6.1) is

| (6.2) |

We define (6.2) so that the constraints on the primal variable are enforced through the dual variables via

| (6.3) |

Notice that (6.3) is an unconstrained optimization problem over . If is not PSD, then there exists such that . Thus, due to the inner maximization over (6.3) produces a solution satisfying . The equality and inequality constraints are enforced in a similar manner. The maximizing must satisfy

In fact, this gives us the dual problem to (6.1)

The augmented Lagrangian is the Lagrangian with the dual variables regularized by the Frobenius norm:

| (6.4) |

This regularization term is crucial for numerical convergence of the solver.

The updates for the dual variables are given by their individual optimality conditions.

- Solving for and applying the PSD projection, we get

- Solving for , we get

  Note that for the NUG SDP, the equality-constraint operator composed with its adjoint is the identity, because each equality constraint is on a single entry of the primal variable. So, this update simplifies to

- Note that if we solve for , we will have to invert the operator , which is computationally prohibitive. Instead, we add an additional regularization term , where is the largest eigenvalue of and is the element from the previous -update. Solving for and applying the nonnegativity projection, we get
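The proximal term above turns the nonnegative update into a projected gradient step whose step size is the reciprocal of the largest eigenvalue of the operator that would otherwise have to be inverted. The sketch below illustrates this on a stand-in subproblem, min over z ≥ 0 of ½‖Bᵀz − r‖², with the largest eigenvalue of B Bᵀ estimated by power iteration. The subproblem, the function names and the iteration counts are illustrative assumptions, not the exact update of [44].

```python
import numpy as np

def largest_eigenvalue(apply_M, dim, iters=500, seed=0):
    """Power-iteration estimate of the largest eigenvalue of a symmetric PSD operator."""
    v = np.random.default_rng(seed).standard_normal(dim)
    v /= np.linalg.norm(v)
    lam = 0.0
    for _ in range(iters):
        w = apply_M(v)
        lam = float(v @ w)              # Rayleigh quotient of the current unit vector
        v = w / np.linalg.norm(w)
    return lam

def linearized_nonneg_step(z, B, r, lam):
    """One proximal-linearized update for min_{z >= 0} 0.5 * ||B.T @ z - r||^2.

    With lam >= lambda_max(B @ B.T), the proximal term replaces a solve with
    B @ B.T by a gradient step of size 1/lam, followed by projection onto z >= 0.
    """
    grad = B @ (B.T @ z - r)
    return np.maximum(z - grad / lam, 0.0)
```

Repeating `linearized_nonneg_step` converges to the nonnegative least-squares solution without ever forming or inverting B Bᵀ.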

The update for the primal variable is simply a gradient step given by

We initialize the variables as the following:

,

,

,

.

We apply the updates in the following order:

Note that we updated twice, which guarantees the solver’s convergence to the optimizer [44].
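The updates above come from the 3-block semi-proximal ADMM of [44]. As a simpler, self-contained illustration of the same ingredients (closed-form dual updates, a single PSD projection per iteration, and an equality operator with A A* = I), the sketch below implements the 2-block dual ADMM of Wen, Goldfarb and Yin for an SDP whose only constraints are diag(X) = 1 and X PSD, such as the Goemans-Williamson relaxation of Max-Cut [21]. It has no inequality (nonnegativity) block, so it is not the NUG solver itself; the function name, the fixed penalty `mu` and the iteration count are assumptions.

```python
import numpy as np

def project_psd(M):
    """Nearest PSD matrix in Frobenius norm (eigenvalues clipped at zero)."""
    w, V = np.linalg.eigh((M + M.T) / 2)
    return (V * np.clip(w, 0.0, None)) @ V.T

def admm_diag_sdp(C, mu=1.0, iters=5000):
    """Dual ADMM for  min <C, X>  s.t.  diag(X) = 1,  X PSD.

    Dual problem: max 1^T y  s.t.  Diag(y) + S = C,  S PSD.  Here A(X) = diag(X),
    so A A^* = I and the y-update needs no linear solve.
    """
    n = C.shape[0]
    X = np.zeros((n, n))
    S = np.zeros((n, n))
    for _ in range(iters):
        # y-update: minimize the augmented Lagrangian over y (closed form, AA* = I).
        y = mu * (1.0 - np.diag(X)) + np.diag(C - S)
        # S-update: project onto the PSD cone (one eigendecomposition).
        V = C - np.diag(y) - mu * X
        S = project_psd(V)
        # X-update: multiplier step X + (Diag(y) + S - C) / mu, which equals (S - V) / mu.
        X = (S - V) / mu
    return X, y, S
```

For the 4-cycle graph with Laplacian L, taking C = -L/4 turns the Max-Cut relaxation into this form; since the graph is bipartite, the relaxation is tight and (1/4)⟨L, X⟩ should approach 4, the number of edges.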

6.2.2. Fourier coefficients for bandlimited functions on .

The coefficient matrix in (3.12) is composed of

We obtained closed-form expressions for the registration problem (2.4) under the assumption that the noise in the underlying signal is Gaussian. For the cryo-EM problem (2.11), we require a single approximation of an integral using Gaussian quadrature. However, closed-form expressions are not always available. For example, the noise in cryo-EM projections is better modeled by a Poisson distribution [36]. We describe the numerical integration scheme of [28], which can be used to obtain the desired Fourier coefficients. This scheme requires the functions to be bandlimited, and it has better computational complexity than straightforward numerical integration. We first define the quadrature and then outline the evaluation over the quadrature.
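The specific Gaussian quadrature is not spelled out here. As an assumed illustration, an n-point Gauss-Legendre rule in x = cos β integrates polynomials of degree up to 2n − 1 exactly, which covers products of Legendre polynomials P_l(cos β), i.e., the Wigner d functions with m = n = 0 (the substitution x = cos β absorbs the sin β dβ measure). The sketch checks Legendre orthogonality with NumPy's `leggauss`; `legendre_gram` is a hypothetical helper.

```python
import numpy as np
from numpy.polynomial import legendre

def legendre_gram(n_nodes, max_degree):
    """G[l, m] approximating the integral over [-1, 1] of P_l(x) P_m(x) dx,
    for l, m <= max_degree, using an n_nodes-point Gauss-Legendre rule."""
    x, w = legendre.leggauss(n_nodes)
    # Row l holds P_l at the nodes (legval with the unit coefficient vector e_l).
    P = np.stack([legendre.legval(x, e) for e in np.eye(max_degree + 1)])
    return (P * w) @ P.T
```

With 8 nodes, all products up to degree 14 are integrated exactly, so the Gram matrix equals diag(2/(2l + 1)); with only 4 nodes it does not, since P_4 vanishes at every node.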

For function with bandlimit , we have the equality

| (6.5) |

where

and

Recall that

We can re-write the entries in (6.5) as

| (6.6) |

By rearranging the terms in (6.6), it becomes obvious that we should compute in the following order:

- for all and , compute

- for all and , compute

- for all , compute

The complexity to compute for all and is ; and the complexity of the straight-forward evaluation in (6.5) is [28].
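The reordering pays off because, on an equiangular grid in α and γ, the two inner sums are a 2-D discrete Fourier transform for each β sample, costing O(N² log N) with an FFT instead of O(N⁴) when evaluated directly for all frequency pairs. The sketch below assumes exponentials of the form exp(−i(mα + nγ)) (conventions for the Wigner D functions vary) and omits the outer β sum with quadrature weights and Wigner small-d values. The function names are hypothetical.

```python
import numpy as np

def alpha_gamma_sums(f):
    """S[m, b, n] = sum_{a, g} f[a, b, g] * exp(-1j * (m * alpha_a + n * gamma_g)).

    f[a, b, g] holds samples at alpha_a = 2*pi*a/N, beta_b (any grid) and
    gamma_g = 2*pi*g/N; m, n follow NumPy's FFT ordering (frequency -m at index N - m).
    """
    return np.fft.fft2(f, axes=(0, 2))

def alpha_gamma_sums_direct(f):
    """The same sums evaluated directly, for comparison."""
    N = f.shape[0]
    angles = 2 * np.pi * np.arange(N) / N
    E = np.exp(-1j * np.outer(np.arange(N), angles))   # E[m, a] = exp(-i m alpha_a)
    return np.einsum("ma,abg,ng->mbn", E, f, E)
```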

6.2.3. Simplification for cryo-EM.

Recall that the objective function for cryo-EM does not depend on . I.e.,

(6.6) reduces to

| (6.7) |

We compute (6.7) in the following order:

- compute

- for all and , compute

- for all , compute

- for all , compute

The complexity to compute for all and is .

6.3. Rotation MSE and FSC resolution.

We assess the performance of orientation estimation methods using the mean squared error (MSE), defined as follows:

| (6.8) |

Here are the true rotations (which are known in the simulation setting) and are the estimated rotations (note that we previously used the hat notation for the Fourier transform, but here it is used for estimators). Since the estimation is up to a global rotation and possibly handedness, the two sets of rotations are aligned prior to computing the MSE.
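A sketch of the alignment step follows, assuming (6.8) takes the common form (1/n) Σᵢ ‖Rᵢ − Ô R̂ᵢ‖²_F with a global rotation Ô chosen to minimize it. Finding Ô is an orthogonal Procrustes problem solved by one SVD, and the handedness ambiguity is modeled here as R ↦ J R J with J = diag(1, 1, −1); both choices are assumptions rather than details taken from this section. `aligned_mse` is a hypothetical name.

```python
import numpy as np

J = np.diag([1.0, 1.0, -1.0])   # assumed handedness flip: R -> J R J

def aligned_mse(R_true, R_est):
    """(1/n) * sum_i ||R_i - O R_est_i||_F^2, minimized over a global O in SO(3),
    trying both handedness choices for the estimates.

    R_true, R_est: arrays of shape (n, 3, 3).
    """
    best = np.inf
    for R_hat in (R_est, J @ R_est @ J):
        M = np.einsum("kij,klj->il", R_hat, R_true)       # sum_k R_hat_k @ R_true_k.T
        U, _, Vt = np.linalg.svd(M)
        D = np.diag([1.0, 1.0, np.linalg.det(Vt.T @ U.T)])
        O = Vt.T @ D @ U.T                                  # maximizes trace(O @ M) over SO(3)
        err = np.mean(np.sum((R_true - O @ R_hat) ** 2, axis=(1, 2)))
        best = min(best, err)
    return best
```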

In addition, we will include the Fourier Shell Correlation (FSC) of the reconstructed structure against the known structure. We reconstruct the 3-dimensional structure (in Fourier space) using the estimated orientations to get , and compare it against the known structure (in Fourier space). For each spatial frequency , we calculate the FSC

We derive the resolution (in Angstroms) by interpolating the FSC until we reach an yielding . See [23] for a discussion on the FSC and the cutoff value.
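A minimal sketch of the FSC and the resolution read-out follows. It assumes cubic volumes, integer shells obtained by rounding the frequency radius, and linear interpolation between shells; the cutoff is left as a parameter (see [23]). Function names and the pixel-size argument are hypothetical.

```python
import numpy as np

def fourier_shell_correlation(vol1, vol2):
    """FSC between two cubic volumes of side N, one value per shell k = 0, ..., N//2 - 1."""
    F1 = np.fft.fftshift(np.fft.fftn(vol1))
    F2 = np.fft.fftshift(np.fft.fftn(vol2))
    N = vol1.shape[0]
    k = np.arange(N) - N // 2                  # zero frequency sits at index N // 2
    kx, ky, kz = np.meshgrid(k, k, k, indexing="ij")
    shell = np.rint(np.sqrt(kx**2 + ky**2 + kz**2)).astype(int).ravel()
    m = N // 2
    num = np.bincount(shell, weights=np.real(F1 * np.conj(F2)).ravel())[:m]
    p1 = np.bincount(shell, weights=(np.abs(F1) ** 2).ravel())[:m]
    p2 = np.bincount(shell, weights=(np.abs(F2) ** 2).ravel())[:m]
    return num / np.sqrt(p1 * p2)

def resolution_angstrom(fsc, n_side, pixel_size, cutoff):
    """Resolution (Angstroms) at the first crossing of `cutoff`, linearly interpolated.

    Returns None if the FSC never drops below the cutoff, or drops before shell 2.
    """
    below = np.nonzero(fsc < cutoff)[0]
    if below.size == 0 or below[0] < 2:
        return None
    k1 = below[0]
    k0 = k1 - 1
    t = (fsc[k0] - cutoff) / (fsc[k0] - fsc[k1])   # fraction of the way from k0 to k1
    return n_side * pixel_size / (k0 + t)          # shell k <-> k / (n_side * pixel_size)
```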

Note that the rotation MSE is the most direct assessment of orientation estimation methods. After all, the stated objective of orientation estimation in cryo-EM is to estimate the orientations. In addition, the quality of the 3-dimensional reconstruction depends on several other factors such as the signal-to-noise ratio of the images, the number of images, the distribution of the viewing directions and the CTF of the images. We focus on the rotation MSE because the other factors mentioned are independent of the algorithm being used.

6.4. Simulated data.

With the ASPIRE software package in [5], we generate a set of 100 and a set of 500 projection images of size 129 × 129 from the Plasmodium falciparum ribosome (see https://www.ebi.ac.uk/pdbe/emdb/empiar/entry/10028/.) We add Gaussian noise to the simulated projections, and then apply a circular mask to zero out the noise outside of the radius. Table 1 shows a comparison of NUG and the synchronization method for different noise levels. In particular, the estimation error of NUG at SNR=1/64 with 500 images is sufficiently low (MSE=0.05) to result in a meaningful 3-D ab-initio model (with < 30 Angstrom resolution). Numerical experiments were conducted on a cluster of Intel(R) Xeon(R) CPU E7–8880v3 @ 2.30GHz totaling 144 cores and 792GB of RAM. The synchronization method is roughly two orders of magnitude faster than NUG, but NUG gives more accurate estimates at low SNR.
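The noise-and-mask step can be sketched as follows. This is not ASPIRE code: it assumes SNR is defined as the variance of the clean image divided by the noise variance, and that the mask radius defaults to half the image side. `add_noise_and_mask` is a hypothetical name.

```python
import numpy as np

def add_noise_and_mask(clean, snr, radius=None, rng=None):
    """Add white Gaussian noise at a target SNR, then zero the pixels outside a disk.

    Assumes SNR = var(clean image) / noise variance and a square image; the default
    radius is half the image side. Both choices are illustrative assumptions.
    """
    rng = np.random.default_rng() if rng is None else rng
    sigma = np.sqrt(np.var(clean) / snr)                  # noise standard deviation
    noisy = clean + sigma * rng.standard_normal(clean.shape)
    n = clean.shape[0]
    radius = n // 2 if radius is None else radius
    c = (n - 1) / 2.0                                     # center of the pixel grid
    yy, xx = np.mgrid[:n, :n]
    mask = (xx - c) ** 2 + (yy - c) ** 2 <= radius ** 2
    return noisy * mask
```

At SNR = 1/64 the noise standard deviation is 8 times that of the clean image, which illustrates why orientation estimation at this level is hard.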

Table 1.

The resolutions shown are in Angstroms. At high SNR, synchronization is more accurate (its MSE is up to two orders of magnitude smaller). This is not surprising, since NUG relies on various truncations and discretizations made for computational speed. However, as the noise increases, NUG outperforms synchronization.

| Images | SNR | NUG MSE | sync. MSE | NUG res. (Å) | sync. res. (Å) |
|---|---|---|---|---|---|
| 100 | 1/1 | 0.0153 | 1.2759e-04 | 24.6 | 20.8 |
| 100 | 1/2 | 0.0155 | 1.3593e-04 | 21.5 | 20.3 |
| 100 | 1/4 | 0.0174 | 3.6615e-04 | 27.0 | 22.4 |
| 100 | 1/8 | 0.0192 | 0.0037 | 28.7 | 25.8 |
| 100 | 1/16 | 0.0227 | 0.0300 | 29.8 | 30.9 |
| 100 | 1/32 | 0.0298 | 0.1572 | 35.6 | 45.8 |
| 100 | 1/64 | 0.1559 | 2.7818 | 45.3 | 174.1 |
| 100 | 1/128 | 2.1239 | 4.1492 | 97.9 | 175.3 |
| 500 | 1/1 | 0.0125 | 4.1412e-05 | 18.1 | 14.6 |
| 500 | 1/2 | 0.0130 | 5.1825e-05 | 20.8 | 18.2 |
| 500 | 1/4 | 0.0134 | 1.5268e-04 | 21.7 | 17.0 |
| 500 | 1/8 | 0.0143 | 0.0018 | 16.4 | 18.1 |
| 500 | 1/16 | 0.0175 | 0.0189 | 20.8 | 17.7 |
| 500 | 1/32 | 0.0195 | 0.1559 | 24.6 | 30.7 |
| 500 | 1/64 | 0.0460 | 2.2496 | 29.3 | 71.1 |
| 500 | 1/128 | 1.6060 | 3.1661 | 64.2 | 107.6 |

To put the numbers in Table 1 into perspective, note that an MSE of 0.1, for example, can be small enough to lead to a good reconstruction in one instance but too large in another. The 3-dimensional reconstruction from 100 images at low SNR (e.g., SNR=1/128) looks bad even with the true orientations (i.e., MSE=0). On the other hand, the 3-dimensional reconstruction from 500 images at moderate SNR (e.g., SNR=1/32) would look quite decent at MSE=0.1. The latter case is shown in Figure 7 of [39], where SNR=1/32 and the MSE is slightly above 0.1. The resolution measure quantifies the quality of the 3-D ab-initio model. Further indication of the quality of the 3-dimensional reconstruction as a function of the MSE can be found in Table 3 and Figure 8 of [42]. We point out that, for the same computational cost, synchronization can process more images than NUG and may therefore yield better 3-dimensional reconstructions. However, beyond its theoretical importance, NUG might still be useful in situations where the number of images is limited (e.g., when there are only a handful of class averages, or in electron tomography).

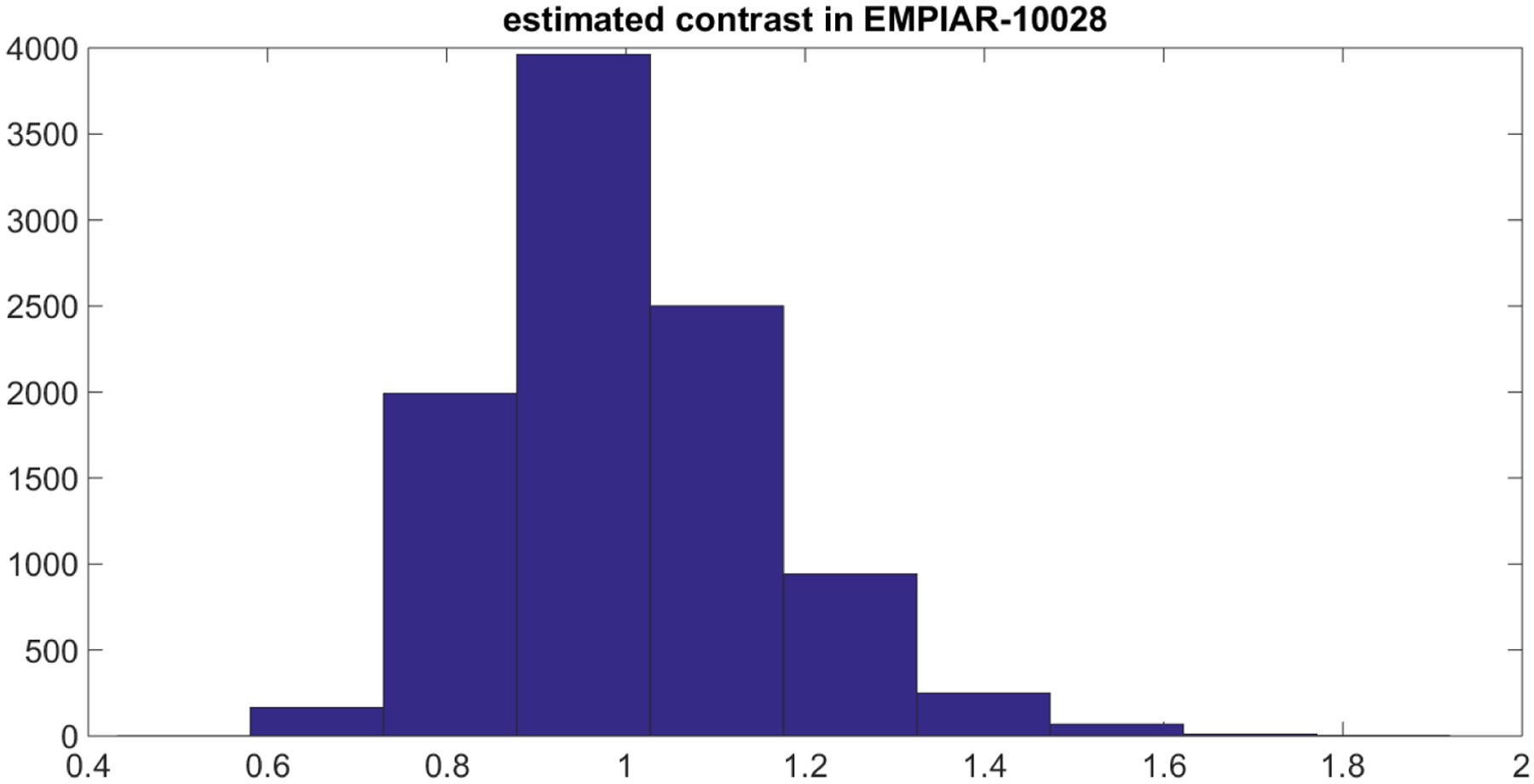

We also illustrate the effects of contrast, CTF and shifts on the performance of NUG in the numerical experiment reported in Table 2. In this experiment, phase flipping was applied to correct the phases of the CTFs but not their magnitudes. As for shifts, we simply ignore them in the NUG SDP; in the future, (4.4) could be extended to include shifts to improve our estimates. See Section 6.1 for a discussion of the effects of contrast, CTF and shifts.
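Phase flipping itself is simple: multiply the image's Fourier transform by the sign of the CTF, which undoes the CTF's sign changes while leaving its magnitude in place. The sketch below uses a toy, radially symmetric CTF-like curve, −sin(π s |k|²) with a hypothetical strength parameter s, purely for illustration; it is not a physical CTF model, which would depend on defocus, electron wavelength and aberrations.

```python
import numpy as np

def toy_ctf(n, strength=40.0):
    """Hypothetical CTF-like curve -sin(pi * strength * |k|^2) on NumPy's unshifted
    FFT grid (k in cycles per pixel). Illustration only, not a physical CTF."""
    f = np.fft.fftfreq(n)
    k2 = f[:, None] ** 2 + f[None, :] ** 2
    return -np.sin(np.pi * strength * k2)

def phase_flip(image, ctf):
    """Multiply the image's 2-D DFT by sign(CTF) and transform back.

    Corrects the sign (phase) of the CTF but not its magnitude. `ctf` must be
    sampled on the same unshifted grid as np.fft.fft2(image).
    """
    flipped = np.fft.fft2(image) * np.sign(ctf)
    # The imaginary part is round-off only: the image is real and sign(ctf) is even in k.
    return np.real(np.fft.ifft2(flipped))
```

After flipping, the image's spectrum equals the clean spectrum times |CTF|, so the phases are restored while the amplitude attenuation remains.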

The distribution for the contrast was based on the following numerical experiment. We used the publicly available experimental dataset EMPIAR-10028 found here: https://www.ebi.ac.uk/pdbe/emdb/empiar/entry/10028/. Using the known 3-dimensional structure of the molecule, we construct 10,000 simulated clean projections of size 64 × 64 at different orientations sampled uniformly over . For each image in the experimental dataset, we solve for in

where ’s are simulated projections generated from the known structure. Figure 6.3 shows the empirical distribution of .
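The fitting problem above is sketched here under an assumption about its form: a least-squares fit of each experimental image I by a nonnegatively scaled clean projection, min over j and α ≥ 0 of ‖I − α P_j‖². For a fixed template, the optimal scale is max(⟨I, P_j⟩, 0)/‖P_j‖², and it reduces the squared residual by α⟨I, P_j⟩, so the whole search costs one matrix-vector product. `estimate_contrast` and its array layout are hypothetical.

```python
import numpy as np

def estimate_contrast(image, templates):
    """Least-squares contrast: min over j and alpha >= 0 of ||image - alpha * templates[j]||^2.

    image: (n, n) array; templates: (num_templates, n, n) array of clean projections.
    Returns (alpha, j) for the best-fitting template.
    """
    P = templates.reshape(len(templates), -1)
    I = image.ravel()
    c = P @ I                               # <I, P_j> for every template
    p = np.einsum("ij,ij->i", P, P)         # ||P_j||^2
    alpha = np.maximum(c, 0.0) / p          # optimal nonnegative scale per template
    gain = alpha * c                        # reduction of the squared residual
    j = int(np.argmax(gain))
    return alpha[j], j
```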

Figure 6.3.

Distribution of contrast in the experimental dataset EMPIAR-10028.

7. Summary

The NUG problem consists of minimizing a sum of pairwise cost functions defined over the ratios between group elements, for arbitrary compact groups. This corresponds to the simultaneous multi-alignment of many data points (e.g., signals, images, or molecule densities) with respect to transformations given by an action of the corresponding group. We presented a methodology to solve this problem that relaxes it to an SDP and solves the resulting SDP with an ADMM algorithm.

In this paper, we focused mainly on alignment over and . A notable example is that of finding a consistent set of pairwise rotations of many different functions defined on a sphere which globally minimize the disagreement between pairs of functions.

The NUG problem arises in cryo-EM, where the task is to recover the relative orientations of many noisy 2-dimensional projection images of a 3-dimensional object, each obtained from a different unknown viewing direction. Once good alignments are estimated, the 3-dimensional object can be reconstructed using standard tomography algorithms. In this paper, we formulate the problem of image alignment in cryo-EM as an instance of NUG and demonstrate its applicability on simulated datasets.

The computational and numerical considerations require truncations of both the cost functions and the group representations; a general approach is proposed as part of the SDP formulation, and additional methods tailored to the case of , and to the special properties of the cryo-EM problem, are presented.

It is noteworthy that, compared to previous work on related alignment problems, the SDP formulation can provide a certificate of optimality in some cases. Specifically, whenever the solution of the SDP also satisfies the nonconvex constraints that have been relaxed, it certifies that optimality has been achieved. In numerical experiments not reported in this paper, we observed optimality of the NUG SDP for some instances of MRA, but not for orientation estimation in cryo-EM. A better theoretical understanding of when the NUG SDP achieves optimality is an interesting open problem. In the context of cryo-EM, NUG, like other common-line based approaches, does not require an initial guess. These properties make NUG a candidate for future work on robust ab initio alignment, which would provide an initialization for other refinement algorithms.

Acknowledgments

The authors thank the anonymous reviewers for their valuable comments that helped improve the paper.

A. Singer was partially supported by NIGMS Award Number R01GM090200, AFOSR FA9550-17-1-0291, Simons Investigator Award, the Moore Foundation Data-Driven Discovery Investigator Award, and NSF BIGDATA Award IIS-1837992. These awards also partially supported A. S. Bandeira, Y. Chen, and R. R. Lederman at Princeton University.

Part of this manuscript was prepared while A. S. Bandeira was with the Courant Institute of Mathematical Sciences and the Center for Data Science at New York University, and partially supported by National Science Foundation Grants DMS-1317308, DMS-1712730, DMS1719545, and by a grant from the Sloan Foundation.

The authors performed most of the research at Princeton University.

Footnotes

Similarly to , it is possible that the non-negativity constraint may be replaced by an SDP or sums-of-squares constraint. [38]

Contributor Information

Afonso S. Bandeira, Department of Mathematics, ETH Zürich Ramistrasse 101, 8092 Zürich, Switzerland

Yutong Chen, Systems Trading, Tudor Investment Corporation, New York, NY USA.

Roy R. Lederman, Department of Statistics and Data Science, Yale University, 24 Hillhouse Avenue, New Haven, CT 06511 USA

Amit Singer, Department of Mathematics, Program in Applied and Computational Mathematics, Princeton University, Fine Hall, Washington Road, Princeton, NJ 08544 USA.

References

- [1]. Abbe E, Bandeira AS, Bracher A, and Singer A. Decoding binary node labels from censored edge measurements: Phase transition and efficient recovery. IEEE Transactions on Network Science and Engineering, 1(1):10–22, Jan 2014.

- [2]. Abbe E, Bandeira AS, and Hall G. Exact recovery in the stochastic block model. IEEE Transactions on Information Theory, 62(1):471–487, 2016.

- [3]. Alon N and Naor A. Approximating the cut-norm via Grothendieck’s inequality. In Proc. of the 36th ACM STOC, pages 72–80. ACM Press, 2004.

- [4]. Askey R. Orthogonal Polynomials and Special Functions. SIAM, 4 edition, 1975.

- [5]. ASPIRE - algorithms for single particle reconstruction software package. http://spr.math.princeton.edu/.

- [6]. Bai XC, McMullan G, and Scheres SH. How cryo-EM is revolutionizing structural biology. Trends in Biochemical Sciences, 40(1):49–57, 2015.

- [7]. Bandeira AS. Random Laplacian matrices and convex relaxations. Foundations of Computational Mathematics, 18(2):345–379, 2018.

- [8]. Bandeira AS, Charikar M, Singer A, and Zhu A. Multireference alignment using semidefinite programming. 5th Innovations in Theoretical Computer Science (ITCS 2014), 2014.

- [9]. Bandeira AS, Kennedy C, and Singer A. Approximating the little Grothendieck problem over the orthogonal and unitary groups. Math. Program., 160:433–475, 2016.

- [10]. Bandeira AS, Singer A, and Spielman DA. A Cheeger inequality for the graph connection Laplacian. SIAM J. Matrix Anal. Appl., 34(4):1611–1630, 2013.

- [11]. Bannai E and Bannai E. A survey on spherical designs and algebraic combinatorics on spheres. European Journal of Combinatorics, 30(6):1392–1425, 2009.

- [12]. Bhamre T, Zhao Z, and Singer A. Mahalanobis distance for class averaging of cryo-EM images. 2017 IEEE 14th International Symposium on Biomedical Imaging, pages 654–658, 2017.

- [13]. Lederman RR and Singer A. A representation theory perspective on simultaneous alignment and classification. Applied and Computational Harmonic Analysis, 2019.

- [14]. Boyd S and Vandenberghe L. Convex Optimization. Cambridge University Press, 2004.

- [15]. Charikar M, Makarychev K, and Makarychev Y. Near-optimal algorithms for unique games. Proceedings of the 38th ACM Symposium on Theory of Computing, 2006.

- [16]. Chen Y, Huang Q-X, and Guibas L. Near-optimal joint object matching via convex relaxation. Proceedings of the 31st International Conference on Machine Learning, 32(2):100–108, 2014.

- [17]. Coifman RR and Weiss G. Representations of compact groups and spherical harmonics. L’Enseignement Mathematique, Vol. 14, 1968.

- [18]. Cucuringu M. Synchronization over Z2 and community detection in signed multiplex networks with constraints. Journal of Complex Networks, 2015.

- [19]. Dumitrescu B. Positive Trigonometric Polynomials and Signal Processing Applications. Springer, 2007.

- [20]. Farrow NA and Ottensmeyer EP. A posteriori determination of relative projection directions of arbitrarily oriented macromolecules. Journal of the Optical Society of America A, 9(10):1749–1760, 1992.

- [21]. Goemans MX and Williamson DP. Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. Journal of the Association for Computing Machinery, 42(6):1115–1145, 1995.

- [22]. Van Heel M. Angular reconstitution: a posteriori assignment of projection directions for 3D reconstruction. Ultramicroscopy, 21:111–123, 1987.

- [23]. Van Heel M and Schatz M. Fourier shell correlation threshold criteria. Journal of Structural Biology, 151:250–262, 2005.

- [24]. Huang Q-X and Guibas L. Consistent shape maps via semidefinite programming. Computer Graphics Forum, 32(5):177–186, 2013.

- [25]. Kam Z. The reconstruction of structure from electron micrographs of randomly oriented particles. Journal of Theoretical Biology, 82(1):15–39, 1980.

- [26]. Khot S. On the power of unique 2-prover 1-round games. Thirty-fourth annual ACM symposium on Theory of Computing, 2002.

- [27]. Khot S. On the unique games conjecture (invited survey). In Proceedings of the 2010 IEEE 25th Annual Conference on Computational Complexity, CCC ‘10, pages 99–121, Washington, DC, USA, 2010. IEEE Computer Society.

- [28]. Kostelec PJ and Rockmore DN. FFTs on the rotation group. Journal of Fourier Analysis and Applications, 14:145–179, 2008.

- [29]. Mallick SP, Agarwal S, Kriegman DJ, Belongie SJ, Carragher B, and Potter CS. Structure and view estimation for tomographic reconstruction: A Bayesian approach. IEEE Conference on Computer Vision and Pattern Recognition, 2:2253–2260, 2006.

- [30]. Natterer F. The Mathematics of Computerized Tomography. Classics in Applied Mathematics, SIAM, 2001.

- [31]. Naor A, Regev O, and Vidick T. Efficient rounding for the noncommutative Grothendieck inequality. In Proceedings of the 45th annual ACM Symposium on Theory of Computing, STOC ‘13, pages 71–80, New York, NY, USA, 2013. ACM.

- [32]. Nesterov Y. Semidefinite relaxation and nonconvex quadratic optimization. Optimization Methods and Software, 9(1–3):141–160, 1998.

- [33]. Parrilo PA. Structured semidefinite programs and semialgebraic geometry methods in robustness and optimization. Thesis, California Institute of Technology, 2000.

- [34]. Blekherman G, Parrilo PA, and Thomas RR. Semidefinite Optimization and Convex Algebraic Geometry. MOS-SIAM Series on Optimization, 2013.

- [35]. Penczek PA, Zhu J, and Frank J. A common-lines based method for determining orientations for n > 3 particle projections simultaneously. Ultramicroscopy, 63:205–218, 1996.

- [36]. Prucnal PR and Saleh BEA. Transformation of image-signal-dependent noise into image-signal-independent noise. Optics Letters, 6:316–318, 1981.

- [37]. Rodrigues O. Des lois géométriques qui régissent les déplacements d’un système solide dans l’espace, et de la variation des coordonnées provenant de ces déplacements considérés indépendamment des causes qui peuvent les produire. J. Math. Pures Appl., 5:380–440, 1840.

- [38]. Saunderson J, Parrilo PA, and Willsky AS. Semidefinite descriptions of the convex hull of rotation matrices. SIAM J. Optim., 25(3):1314–1343, 2015.

- [39]. Shkolnisky Y and Singer A. Viewing direction estimation in cryo-EM using synchronization. SIAM J. Imaging Sciences, 5(3):1088–1110, 2012.

- [40]. Singer A. Angular synchronization by eigenvectors and semidefinite programming. Applied and Computational Harmonic Analysis, 30(1):20–36, 2011.

- [41]. Singer A, Coifman RR, Sigworth FJ, Chester DW, and Shkolnisky Y. Detecting consistent common lines in cryo-EM by voting. Journal of Structural Biology, 169(3):312–322, 2010.

- [42]. Singer A and Shkolnisky Y. Three-dimensional structure determination from common lines in cryo-EM by eigenvectors and semidefinite programming. SIAM J. Imaging Sciences, 4(2):543–572, 2011.

- [43]. Singer A, Zhao Z, Shkolnisky Y, and Hadani R. Viewing angle classification of cryo-Electron Microscopy images using eigenvectors. SIAM Journal on Imaging Sciences, 4:723–759, 2011.

- [44]. Sun D, Toh KC, and Yang L. A convergent 3-block semiproximal alternating direction method of multipliers for conic programming with 4-type constraints. SIAM J. Optim., 25(2):882–915, 2015.

- [45]. Vainshtein BK and Goncharov AB. Determination of the spatial orientation of arbitrarily arranged identical particles of unknown structure from their projections. 1986.

- [46]. Vandenberghe L and Boyd S. Semidefinite programming. SIAM Review, 38:49–95, 1996.

- [47]. Wang L and Sigworth FJ. Cryo-EM and single particles. Physiology, 21:13–18, 2006.

- [48]. Wigner EP. Group Theory and its Application to the Quantum Mechanics of Atomic Spectra. New York: Academic Press, 1959.

- [49]. Yershova A, Jain S, LaValle SM, and Mitchell JC. Generating uniform incremental grids on SO(3) using the Hopf fibration. The International Journal of Robotics Research, 29(7):801–812, 2010.

- [50]. Zhao Z, Shkolnisky Y, and Singer A. Fast steerable principal component analysis. IEEE Transactions on Computational Imaging, 2:1–12, 2016.

- [51]. Zhao Z and Singer A. Rotationally invariant image representation for viewing direction classification in cryo-EM. Journal of Structural Biology, 186(1):153–166, 2014.