Abstract

Vehicle re-identification (Re-ID) aims to identify, given a query vehicle image, the same vehicle in images captured by other cameras. It plays a crucial role in building safe cities and smart cities. With the rapid development and deployment of unmanned aerial vehicle (UAV) technology, vehicle Re-ID in UAV aerial photography scenes has attracted significant attention from researchers. However, because UAVs fly at high altitude, vehicle images are sometimes captured from a nearly vertical angle, which leaves fewer local features for Re-ID. This paper therefore proposes a novel dual-pooling attention (DpA) module. DpA extracts and enhances locally important vehicle information in both the channel and spatial dimensions through two branches, channel-pooling attention (CpA) and spatial-pooling attention (SpA), and uses multiple pooling operations to strengthen attention to fine-grained vehicle information. Specifically, the CpA module operates across the channels of the feature map and fuses the outputs of four pooling operations so that vehicle regions containing discriminative information receive greater attention. The SpA module applies the same pooling strategy to identify discriminative representations and merges vehicle features across image regions in a weighted manner. The features from both dimensions are finally fused, and the network is trained jointly with label smoothing cross-entropy loss and hard mining triplet loss, which addresses the loss of detail information caused by high-altitude UAV shooting. Extensive experiments on the UAV-based vehicle datasets VeRi-UAV and VRU demonstrate the effectiveness of the proposed method.

Subject terms: Electrical and electronic engineering, Information technology

Introduction

As an important component of intelligent transportation systems, vehicle re-identification (Re-ID) aims to find the same vehicle among images taken by different surveillance cameras. Vehicle Re-ID algorithms perform image matching automatically, which helps identify vehicles when external conditions interfere, such as deliberately covered license plates, occlusion by obstacles, or blurred images. This saves manpower and time, provides strong technical support for building and maintaining urban security, and helps safeguard public safety. Driven by deep learning, more and more researchers have turned to deep convolutional neural networks, which overcome the limited feature representation of traditional methods.

Existing vehicle Re-ID work1–6 mainly obtains vehicle data from road surveillance video. The many surveillance cameras deployed on highways, at intersections, and in other areas can each provide vehicle images only from a specific angle and over a small range. In special circumstances, such as camera failure or a target vehicle outside the monitored area, the target vehicle cannot be identified or re-identified. In recent years, unmanned aerial vehicle (UAV) technology7 has made significant progress in flight time, wireless image transmission, automatic control, and related areas. Mobile cameras on UAVs offer a wider range of viewpoints as well as better maneuverability, mobility, and flexibility, and UAVs can track and record specific vehicles in urban areas and on highways8. Vehicle Re-ID in the UAV scenario has therefore received increasing attention from researchers as a complement to the traditional road surveillance scenario, and it has substantial application value in public safety management, traffic monitoring, and vehicle statistics. Figure 1 compares vehicle images from road surveillance with vehicle images from UAV aerial photography. In both cases, each captured image contains a single complete vehicle. The difference is that a UAV usually flies higher than a fixed surveillance camera is mounted, so the vehicle is sometimes viewed from an approximately vertical angle. In addition, the UAV's altitude varies, which causes scale variation in the captured vehicle images.

Figure 1.

Comparison of two types of vehicle images.

Because a UAV usually flies higher than a fixed surveillance camera is mounted, vehicle images are often captured at a nearly vertical angle, so fewer local vehicle features are available for Re-ID. On the one hand, attention mechanisms have proven effective, which makes it worthwhile to build an attention module that focuses on channel information and important regions. On the other hand, different pooling operations emphasize different information. Average pooling9 takes the mean value of each rectangular region, which preserves background information and passes information from all features in the feature map to the next layer. Generalized mean pooling10 can focus on regions of different granularity by adjusting its parameter. Minimum pooling11 focuses on the smallest activations in the feature map. Soft pooling12 uses softmax weighting to retain the basic properties of the input while amplifying stronger feature activations, which minimizes the information lost during pooling and better preserves informative features. Unlike maximum pooling, which propagates a gradient only to the maximum element of each region, soft pooling is differentiable with respect to every input, so each input receives a gradient during backpropagation, which facilitates training. Researchers have proposed a series of further pooling methods13–15, each with its own advantages and disadvantages. Previous studies usually combine only average pooling and maximum pooling to capture key image features and overlook combining multiple pooling methods. Moreover, the pooling layer is an important component of convolutional neural networks: it reduces the number of network training parameters, eases network optimization, and helps prevent overfitting16.
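To make the differences between these operators concrete, the sketch below applies each of them to one pooling region given as a flat list of activations. It is a minimal plain-Python illustration, not the authors' implementation: the function names are hypothetical, and the GeM exponent `rho=3.0` and the clamping constant `eps` are assumed defaults rather than values reported in this paper.

```python
import math


def avg_pool(region):
    """Average pooling: mean of all activations in the region."""
    return sum(region) / len(region)


def min_pool(region):
    """Minimum pooling: smallest activation in the region."""
    return min(region)


def max_pool(region):
    """Maximum pooling, included for comparison."""
    return max(region)


def gem_pool(region, rho=3.0, eps=1e-6):
    """Generalized mean pooling: (mean of x**rho) ** (1/rho).
    Values are clamped to eps, which assumes non-negative (e.g. post-ReLU) inputs."""
    clamped = [max(x, eps) for x in region]
    return (sum(x ** rho for x in clamped) / len(clamped)) ** (1.0 / rho)


def soft_pool(region):
    """SoftPool: activations weighted by their softmax over the region."""
    top = max(region)  # shifting by the max keeps exp() stable without changing the weights
    exps = [math.exp(x - top) for x in region]
    total = sum(exps)
    return sum(e / total * x for e, x in zip(exps, region))


region = [0.1, 0.2, 0.4, 3.0]
for name, fn in [("avg", avg_pool), ("min", min_pool), ("max", max_pool),
                 ("gem", gem_pool), ("soft", soft_pool)]:
    print(name, round(fn(region), 4))
```

Setting `rho=1` reduces GeM to average pooling, and larger `rho` values push it toward maximum pooling, which is the parameter that lets GeM shift between coarse and fine-grained focus. SoftPool's softmax weights favor large activations, so its output lies between the average and the maximum.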

Based on the above analysis, this paper presents a novel dual-pooling attention (DpA) module for UAV vehicle Re-ID. Our main contributions are as follows:

• We design a channel-pooling attention (CpA) module and a spatial-pooling attention (SpA) module. The CpA module focuses on important vehicle features while ignoring unimportant information, and the SpA module captures local-range dependencies between spatial regions. Combining multiple pooling operations lets the network focus on detailed information while suppressing redundant information, and the pooling operations also help prevent overfitting. In addition, omni-dimensional dynamic (OD) convolution is introduced into both the CpA and SpA modules to dynamically extract rich contextual information.

• We combine the two modules into the DpA module and embed it into a conventional ResNet50 backbone to improve the model's channel and spatial awareness. In addition, we train the model with hard mining triplet loss combined with label smoothing cross-entropy loss, which strengthens the discriminative ability of the triplet loss on difficult vehicle samples.

• We conduct extensive experiments to verify the effectiveness of our model. The proposed method achieves 81.74% mean average precision (mAP) on the VeRi-UAV dataset, and 98.83%, 97.90%, and 95.29% mAP on the three test subsets of VRU. These results indicate that the DpA module can address the lack of fine-grained information in vehicle Re-ID images taken by UAVs.

Related work on the vehicle Re-ID task

In recent years, most vehicle Re-ID methods have been developed for traditional road surveillance images. Their main ideas include using local vehicle features to extract detailed feature information17–19, using attention mechanisms to improve the model's focus on important regions20–22, designing appropriate loss functions to optimize network training and improve recognition rates23,24, and using unsupervised learning without manual labeling to improve the model's generalization in complex real-world scenes25–27. For example, Jiang et al.28 designed a global reference attention network (GRA-Net) with three branches that mines a large number of useful discriminative features, making it easier to distinguish similar-looking but different vehicles. EMRN29 proposes a multi-resolution feature dimension uniform module that unifies the dimensions of features extracted from images of varying resolutions, thus addressing the multi-scale problem. GiT30 uses a graph network to build a structure in which graphs and transformers interact continuously, enabling close collaboration between global and local features for vehicle Re-ID. The dual-relational attention module (DRAM)31 models the importance of feature points in both the spatial and channel dimensions to form a three-dimensional attention module that mines more detailed semantic information. In addition, the viewpoint-aware network (VANet)32 learns separate feature metrics for same-viewpoint and different-viewpoint pairs, and generative adversarial networks (GANs) have been used to alleviate the labeling difficulty of Re-ID datasets33.

However, existing vehicle datasets such as VeRi-776 and VehicleID are captured by fixed surveillance cameras and offer limited viewpoint and vehicle diversity, so the feature extraction methods above target only images from traditional road surveillance. Since VARI34, the first vehicle Re-ID dataset based on aerial images, was released in 2019, vehicle Re-ID using UAV images has begun to attract researchers' attention35–37. In UAV surveillance scenarios, the shooting altitude is more flexible and usually higher than that of fixed surveillance cameras, which makes recognition more challenging because most captured vehicle images show incomplete vehicles from top-down viewpoints.

Based on existing research, we broadly classify UAV vehicle Re-ID methods into three categories: methods based on multi-view features, methods that optimize the loss function, and methods that introduce attention mechanisms.

Based on multi-view features

Viewpoint variation is an important challenge in UAV aerial photography. To address it, Song et al.35 designed a multi-branch Siamese (twin) network based on a viewpoint decision model as a deep feature learning network for vehicle images with different viewpoints. The network uses viewpoint information to form composite sample pairs and then learns deep features through multiple separate Siamese branches, improving the learning of images of the same vehicle at different viewpoints; it was validated on VeRi-UAV, a multi-scale vehicle image Re-ID dataset. Organisciak et al.38 also proposed a UAV Re-ID benchmark for evaluating Re-ID performance across viewpoints and scales. In addition, Teng et al.39 proposed a viewpoint adversarial strategy and a multi-scale consensus loss to improve the robustness and discriminative ability of the learned deep features.

Optimizing the loss function

Loss function design can improve how the model samples and weights training pairs, which in turn improves vehicle Re-ID performance in UAV scenarios. Yao et al.40 introduced a weighted triplet loss (WTL) that penalizes the embedded features of harder negative pairs more heavily, which suits the training of UAV vehicle Re-ID networks. In addition, a normalized softmax loss41 was proposed to increase inter-class distance and decrease intra-class distance; combined with the triplet loss for training, it addresses how to robustly learn a common visual representation of a vehicle across viewpoints and how to distinguish different vehicles with similar appearances.

Introducing the attention mechanism

Many researchers have combined attention mechanisms with Re-ID models to further improve feature representation. Lu et al.42 proposed a global attention and full-scale network (GASNet) for UAV-based vehicle Re-ID, which captures vehicle features with global information through a global relationship-aware attention mechanism. More recently, to extract distinguishable vehicle features effectively, Jiao et al.43 proposed an orientation adaptive and salience attentive (OASA) network with a transformer-based salience attentive module that directs the model to focus on subtle but discriminative cues of vehicle instances in aerial images.

In summary, compared with road surveillance from fixed camera locations, images captured in UAV scenarios make vehicle Re-ID more flexible and convenient for public transportation safety management. Relatively few studies address this setting so far because experimental datasets remain difficult to obtain, so this paper focuses on vehicle Re-ID in UAV aerial scenes.

Proposed approach

Overall network architecture

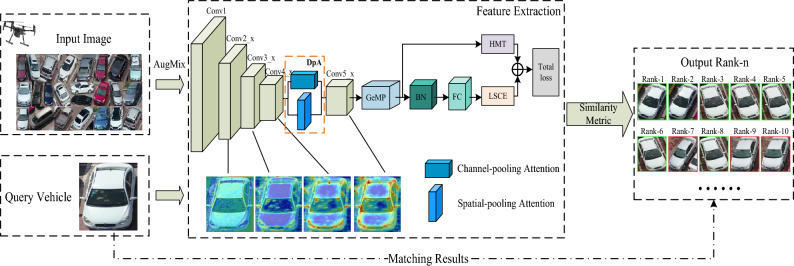

The overall network architecture is shown in Fig. 2. It consists of three parts: input images, feature extraction, and output results. First, the input images are augmented with AugMix44, which randomly applies different augmentation operations to the same image and mixes the results, avoiding the image distortion introduced by earlier mixing-based augmentation such as MixUp. Then, the ResNet50 backbone and the dual-pooling attention (DpA) module extract features. After the gallery images and the query vehicle image are passed through the network, the similarity between the query features and each gallery feature is computed with a distance metric. Finally, the gallery images are ranked by similarity to obtain the vehicle retrieval results.
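A minimal sketch of the final retrieval step, assuming Euclidean distance as the metric (the text does not name the metric) and features already extracted as plain lists; `rank_gallery` is a hypothetical helper, not part of the described system.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank_gallery(query_feat, gallery_feats):
    """Return gallery indices ordered from most to least similar (smallest distance first)."""
    distances = [euclidean(query_feat, g) for g in gallery_feats]
    return sorted(range(len(gallery_feats)), key=lambda i: distances[i])


query = [1.0, 0.0]
gallery = [[0.0, 1.0], [0.9, 0.1], [5.0, 5.0]]
print(rank_gallery(query, gallery))  # → [1, 0, 2]
```

If the features are L2-normalized first, ranking by Euclidean distance and ranking by cosine similarity give the same order, since for unit vectors the squared distance equals 2 minus twice the cosine similarity.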

Figure 2.

The overall framework of the network for vehicle Re-ID.

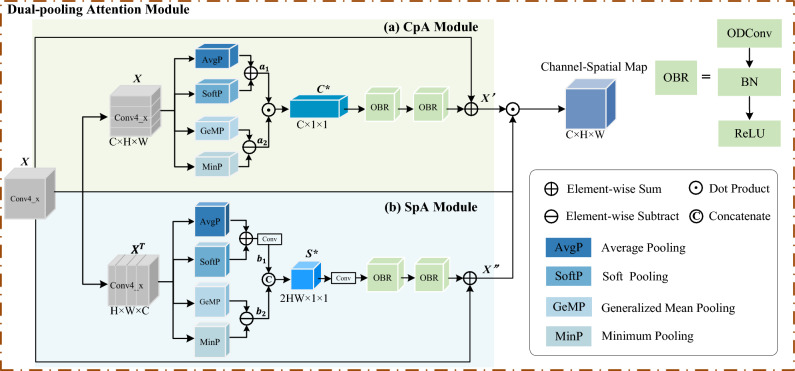

Channel-pooling attention

To focus on the discriminative features of vehicle images and avoid interference from cluttered background information, four pooling methods are introduced to process the channel features, as shown in Fig. 3a. Let the output features of the third residual block (Conv4_x) of ResNet50 be the input matrix $X \in \mathbb{R}^{C \times H \times W}$, where C, H, and W denote the number of channels, height, and width of the feature map, respectively. Four copies of X are made, and average pooling (AvgP)9, generalized mean pooling (GeMP)10, minimum pooling (MinP)11, and soft pooling (SoftP)12 are applied to them. The first three pooling operations change the dimension from $C \times H \times W$ to $C \times 1 \times 1$ channel descriptors: each takes the feature map as input and outputs a vector. For each channel, the outputs of AvgP, MinP, and GeMP are given by:

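$$f^{\mathrm{avg}} = \frac{1}{|R|}\sum_{(p,q)\in R} x_{pq} \tag{1}$$

$$f^{\mathrm{min}} = \min_{(p,q)\in R} x_{pq} \tag{2}$$

where $x_{pq}$ denotes the element located at (p, q) in the rectangular region $R$, and $|R|$ denotes the number of elements in $R$.

$$f^{\mathrm{gem}} = \left(\frac{1}{|R|}\sum_{(p,q)\in R} x_{pq}^{\rho}\right)^{1/\rho} \tag{3}$$

where $x_{pq}$ denotes the element located at (p, q) in the rectangular region $R$, $|R|$ denotes the number of all elements of the feature map, and $\rho$ is the control coefficient.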

Figure 3.

Dual-pooling attention module. (a) CpA represents channel-pooling attention, (b) SpA represents spatial-pooling attention.

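The feature map generated by SoftP remains $C \times H \times W$. SoftP is defined as:

$$f^{\mathrm{soft}} = \sum_{(p,q)\in R} \frac{e^{x_{pq}}}{\sum_{(m,n)\in R} e^{x_{mn}}}\, x_{pq} \tag{4}$$

where $x_{pq}$ is defined as above and $x_{mn}$ denotes the element located at (m, n) in the rectangular region $R$.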

On the one hand, AvgP attends equally to every pixel of the feature map, and SoftP captures important regions better than maximum pooling, so their outputs are summed to obtain $F_1$, which gives more attention to important vehicle features. On the other hand, GeMP adaptively focuses on regions of different granularity through its parameter, whereas MinP focuses on the small activations in the feature map, i.e., the background regions; subtracting the MinP output from the GeMP output therefore yields $F_2$, which emphasizes fine-grained vehicle features and suppresses the background as much as possible. The two results are multiplied element-wise to obtain the channel attention map $M_c$. The channel pooling matrix can be formulated as:

$$F_1 = \mathrm{AvgP}(X) + \mathrm{SoftP}(X) \tag{5}$$

$$F_2 = \mathrm{GeMP}(X) - \mathrm{MinP}(X) \tag{6}$$

$$M_c = F_1 * F_2 \tag{7}$$

where * denotes element-wise multiplication.
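The fusion in Eqs. (5)-(7) can be sketched in plain Python on a C × H × W nested list. This is an illustrative sketch under stated assumptions, not the authors' code: the paper does not specify SoftP's window, so a hypothetical 3 × 3, stride-1 SoftPool (`soft_pool_same`) is assumed in order to keep the map at H × W, and GeM uses an assumed `rho=3.0`. The OBR stage of Eq. (8) is not modeled.

```python
import math


def soft_pool_same(channel, k=3):
    """Stride-1 SoftPool over a k x k window, clipped at the borders, so the output stays H x W.
    The window size is an assumption; the paper only states that SoftP keeps C x H x W."""
    h, w = len(channel), len(channel[0])
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            window = [channel[p][q]
                      for p in range(max(0, i - r), min(h, i + r + 1))
                      for q in range(max(0, j - r), min(w, j + r + 1))]
            m = max(window)  # shift by the max for numerical stability
            exps = [math.exp(v - m) for v in window]
            s = sum(exps)
            out[i][j] = sum(e / s * v for e, v in zip(exps, window))
    return out


def channel_pooling_map(x, rho=3.0, eps=1e-6):
    """Sketch of Eqs. (5)-(7) for x given as C x H x W nested lists:
    F1 = AvgP + SoftP, F2 = GeMP - MinP, M_c = F1 * F2 (element-wise)."""
    m_c = []
    for channel in x:
        flat = [v for row in channel for v in row]
        avg = sum(flat) / len(flat)                                               # AvgP
        gem = (sum(max(v, eps) ** rho for v in flat) / len(flat)) ** (1.0 / rho)  # GeMP
        f2 = gem - min(flat)                                                      # GeMP - MinP
        soft = soft_pool_same(channel)                                            # SoftP map
        # AvgP and F2 are per-channel scalars, broadcast over every spatial position
        m_c.append([[(avg + s) * f2 for s in row] for row in soft])
    return m_c


# Toy input: C = 2 channels, H = 3, W = 4 (non-negative, post-ReLU-style values)
x = [[[0.0, 0.5, 1.0, 0.2], [0.3, 2.0, 0.1, 0.0], [0.4, 0.6, 0.9, 1.5]],
     [[1.0] * 4 for _ in range(3)]]
m_c = channel_pooling_map(x)
print(len(m_c), len(m_c[0]), len(m_c[0][0]))  # → 2 3 4
```

A constant channel (the second one here) gets GeMP equal to MinP, so its F2 and hence its attention values are zero: a channel with no contrast receives no emphasis, which matches the intent of subtracting the background-oriented MinP.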

The OBR module consists of OD convolution, batch normalization (BN), and a rectified linear unit (ReLU) activation, and it is applied twice in succession to the channel attention map $M_c$. Instead of ordinary convolution, dynamic convolution is used here: it linearly combines multiple convolution kernels with weights that depend on the input data, which lets the network learn more flexible attention and strengthens feature extraction. Finally, the original input matrix X is added to the output of the OBR modules, and the sum is normalized by the sigmoid function to obtain the final channel-pooling attention output $X_{\mathrm{CpA}}$. These operations can be defined as:

$$X_{\mathrm{CpA}} = \sigma\big(X + \mathrm{OBR}(\mathrm{OBR}(M_c))\big) \tag{8}$$

where $\sigma(\cdot)$ is the sigmoid activation function and OBR denotes a 3×3 OD convolution followed by BN and ReLU.

Spatial-pooling attention

Spatial attention is computed from feature relations in a manner similar to the channel-pooling attention module. As shown in Fig. 3b, the original feature X, i.e., the output of the third residual block of ResNet50 (Conv4_x), is first transposed to obtain $X' \in \mathbb{R}^{H \times W \times C}$. The H and W dimensions are then merged, reshaping it into an $HW \times C$ matrix. Four copies of this matrix are made, and AvgP, SoftP, GeMP, and MinP are applied along the channel axis, which changes the dimension from $HW \times C$ to $HW \times 1$ spatial descriptors. As in CpA, the outputs of AvgP and SoftP are added and a convolution layer is applied to obtain $F_3$, and the output of MinP is subtracted from that of GeMP to obtain $F_4$. Finally, the two are concatenated to obtain the output $M_s$. The spatial pooling matrix can be formulated as:

$$F_3 = \mathrm{Conv}\big(\mathrm{AvgP}(X') + \mathrm{SoftP}(X')\big) \tag{9}$$

$$F_4 = \mathrm{GeMP}(X') - \mathrm{MinP}(X') \tag{10}$$

$$M_s = [F_3, F_4] \tag{11}$$

where Conv denotes a convolution operation and $[\cdot, \cdot]$ denotes concatenation.

A convolution is then applied to $M_s$ to expand it back to $C \times H \times W$. As in CpA, the OBR module is applied twice to the resulting attention map to dynamically enhance the extraction of spatial features. Finally, the original input X is added to obtain the output matrix $X_{\mathrm{SpA}}$ of the spatial-pooling attention module. These operations can be defined as:

$$X_{\mathrm{SpA}} = X + \mathrm{OBR}\big(\mathrm{OBR}(\mathrm{Conv}(M_s))\big) \tag{12}$$
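Similarly, the spatial descriptors of Eqs. (9)-(11) can be sketched by reshaping the map to HW × C and pooling each row along the channel axis. This is a hypothetical plain-Python illustration, not the authors' implementation: the convolution in Eq. (9), the expansion back to C × H × W, and the OBR stages are omitted, and GeM's `rho=3.0` is an assumption.

```python
import math


def spatial_descriptors(x, rho=3.0, eps=1e-6):
    """Sketch of Eqs. (9)-(11) for x given as C x H x W nested lists.
    Reshapes to HW x C, pools each row along the channel axis, and returns HW rows [F3, F4].
    The convolution in Eq. (9) is omitted (treated as identity) in this sketch."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    # Reshape C x H x W -> HW x C: one row (a C-dim vector) per spatial position
    pixels = [[x[k][i][j] for k in range(c)] for i in range(h) for j in range(w)]
    m_s = []
    for vec in pixels:
        avg = sum(vec) / c                                     # AvgP over channels
        top = max(vec)
        exps = [math.exp(v - top) for v in vec]
        s = sum(exps)
        soft = sum(e / s * v for e, v in zip(exps, vec))       # SoftP over channels
        gem = (sum(max(v, eps) ** rho for v in vec) / c) ** (1.0 / rho)  # GeMP
        f3 = avg + soft          # AvgP + SoftP (Conv omitted)
        f4 = gem - min(vec)      # GeMP - MinP
        m_s.append([f3, f4])     # concatenation [F3, F4]
    return m_s


# Toy input: C = 2, H = 2, W = 3
x = [[[1.0, 0.0, 2.0], [0.5, 0.5, 3.0]],
     [[1.0, 1.0, 0.0], [0.5, 2.0, 1.0]]]
m_s = spatial_descriptors(x)
print(len(m_s), len(m_s[0]))  # → 6 2
```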

Loss functions

Vehicle Re-ID commonly combines an identity loss with a metric loss. Accordingly, during training we use cross-entropy (CE) loss for classification and triplet loss for metric learning. CE loss, widely used in classification tasks, measures the difference between the true and predicted values; the smaller its value, the better the model's predictions. The label smoothing (LS) strategy45 is introduced to reduce overfitting. The label smoothing cross-entropy (LSCE) loss is:

$$L_{\mathrm{LSCE}} = -\sum_{i=1}^{N} q_i \log p_i, \qquad q_i = \begin{cases} 1 - \frac{N-1}{N}\varepsilon, & i = y \\ \frac{\varepsilon}{N}, & i \neq y \end{cases} \tag{13}$$

where N is the number of vehicle identities, y is the ground-truth identity, $p_i$ is the predicted probability of identity i, and the smoothing factor $\varepsilon$ was set to 0.1 in the experiments.
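The smoothed targets of the LSCE loss can be checked with a short sketch. `label_smoothing_ce` is a hypothetical helper that takes the raw logits of one sample, and the smoothing factor defaults to the 0.1 used in the experiments; this is an illustration, not the training code.

```python
import math


def label_smoothing_ce(logits, target, eps=0.1):
    """Cross-entropy against smoothed targets for one sample:
    q_i = 1 - (N - 1) / N * eps for the true class, eps / N otherwise."""
    n = len(logits)
    top = max(logits)
    log_z = top + math.log(sum(math.exp(z - top) for z in logits))  # stable log-sum-exp
    loss = 0.0
    for i, z in enumerate(logits):
        q = 1 - (n - 1) / n * eps if i == target else eps / n
        loss -= q * (z - log_z)  # z - log_z is log p_i
    return loss


logits = [2.0, 1.0, 0.0]
print(label_smoothing_ce(logits, target=0))           # smoothed targets
print(label_smoothing_ce(logits, target=0, eps=0.0))  # plain cross-entropy
```

With uniform logits the loss equals log N regardless of ε, because the smoothed targets still sum to one. In PyTorch, `torch.nn.CrossEntropyLoss` exposes a `label_smoothing` argument that builds the same target distribution.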

Triplet loss first builds triplets consisting of an anchor sample, a positive sample, and a negative sample. Training then pulls the positive sample (same identity) closer to the anchor in feature space and pushes the negative sample (different identity) farther away. In this paper, we use hard mining triplet (HMT) loss, which selects the hardest positive and negative pairs in each batch for training, to better handle difficult vehicle samples. The HMT loss is calculated as follows:

$$L_{\mathrm{HMT}} = \sum_{i=1}^{T}\sum_{a=1}^{S}\Big[m + \max_{p=1,\dots,S} d\big(x_a^{i}, x_p^{i}\big) - \min_{\substack{j=1,\dots,T,\ j\neq i \\ n=1,\dots,S}} d\big(x_a^{i}, x_n^{j}\big)\Big]_+ \tag{14}$$

where T denotes the number of vehicle identities in each training batch and S denotes the number of images per identity. $x_a^i$ is the anchor sample; the maximum selects the positive $x_p^i$ that belongs to the anchor's identity but is least similar to it, and the minimum selects the negative $x_n^j$ that belongs to a different identity but is most similar to it; $d(\cdot,\cdot)$ is the distance between embedded features. m is the margin of the loss, and $[\cdot]_+$ is the max(·, 0) function.
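A sketch of batch-hard mining as in the HMT loss, written in plain Python for readability. The margin `margin=0.3` is an assumed value (m is not reported here), Euclidean distance is assumed for d, and the loss is summed over anchors as in Eq. (14), whereas many implementations average it; `batch_hard_triplet` is a hypothetical name. Each identity in the batch needs at least two images so that a positive exists.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embedded features."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def batch_hard_triplet(features, labels, margin=0.3):
    """Sum over anchors of [margin + hardest positive distance - hardest negative distance]_+."""
    total = 0.0
    for a, (fa, la) in enumerate(zip(features, labels)):
        # Hardest positive: the farthest sample with the same identity (excluding the anchor)
        pos = [euclidean(fa, fp) for p, (fp, lp) in enumerate(zip(features, labels))
               if lp == la and p != a]
        # Hardest negative: the closest sample with a different identity
        neg = [euclidean(fa, fn) for fn, ln in zip(features, labels) if ln != la]
        total += max(margin + max(pos) - min(neg), 0.0)
    return total


# Toy batch: T = 2 identities, S = 2 images each
features = [[0.0, 0.0], [1.0, 0.0], [0.5, 0.0], [2.0, 0.0]]
labels = [0, 0, 1, 1]
print(batch_hard_triplet(features, labels))
```

With the weights $\lambda_1 = \lambda_2 = 1$ used here, the training objective is simply the sum of this value and the LSCE loss.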

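In summary, this work combines the LSCE loss and the HMT loss. The final loss is:

$$L = \lambda_1 L_{\mathrm{LSCE}} + \lambda_2 L_{\mathrm{HMT}} \tag{15}$$

where $\lambda_1$ and $\lambda_2$ are the weights of the two losses, and $\lambda_1 = \lambda_2 = 1$.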

Experimental results and discussion

In this section, we conduct experiments on UAV-based vehicle datasets (VeRi-UAV and VRU) to validate the effectiveness of our method, including a performance comparison with state-of-the-art methods and a set of ablation studies (mainly on VeRi-UAV). Our method achieves 81.7% mAP and 96.6% Rank-1 on VeRi-UAV, and 98.83%, 97.90%, and 95.29% mAP on the three test subsets of VRU. These results indicate that our model effectively mines fine-grained information from vehicle images captured by UAVs, which improves both accuracy and retrieval capability. Combining CpA, which focuses on important vehicle features and ignores unimportant information, with SpA, which captures local-range dependencies between spatial regions, further improves the model's channel and spatial perception. The experiments also verify that hard mining triplet loss combined with label smoothing cross-entropy loss provides strong discriminative ability on difficult vehicle samples.

The following subsections present the datasets, implementation details and evaluation metrics, comparisons with state-of-the-art methods, ablation results, a discussion of validity, and a visual analysis of the retrieval results.

Datasets

Liu et al.46 constructed VeRi-UAV, a UAV vehicle Re-ID dataset that captures vehicles from multiple angles in different areas, including parking lots and highways. VeRi-UAV contains 2,157 images showing 17,516 complete vehicles with 453 IDs. To test Re-ID methods, the authors extracted the 17,516 vehicle images using a vehicle segmentation model. After minor manual adjustments, the dataset contains 9,792 training images, 6,489 test images, and 1,235 query images. Lu et al.42 constructed VRU, currently the largest vehicle Re-ID dataset based on UAV aerial photography. It is divided into a training set and three test sets: small, medium, and large. The training set includes 80,532 images of 7,085 vehicles. The small, medium, and large test sets contain 13,920 images of 1,200 vehicles, 27,345 images of 2,400 vehicles, and 91,595 images of 8,000 vehicles, respectively.

Implementation details and evaluation metric

We initialize the network with ResNet50 weights pre-trained on ImageNet. All experiments were implemented in PyTorch. Training images are drawn with balanced identity sampling; each image is resized to and preprocessed with the AugMix data augmentation method. The model was trained for 60 epochs in total, with a linear learning-rate warm-up. For VeRi-UAV, the training batch size is 32, the optimizer is SGD with an initial learning rate of 0.35e−4, and the learning rate is adjusted with CosineAnnealingLR. For VRU, the training batch size is 64, the optimizer is Adam with an initial learning rate of 1e−4, and MultiStepLR decays the learning rate to 1e−5 at the 30th epoch and to 1e−6 at the 50th epoch. The test batch size is 128 in all experiments.
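The two learning-rate schedules can be sketched as a single function that mirrors the update rules of PyTorch's `MultiStepLR` and `CosineAnnealingLR` after a linear warm-up. The warm-up length (5 epochs), `eta_min=0`, 0-indexed epochs, and applying the cosine decay only over the post-warm-up epochs are assumptions, since the text does not state them; `lr_at_epoch` is a hypothetical helper.

```python
import math


def lr_at_epoch(epoch, base_lr, schedule, total_epochs=60, warmup_epochs=5,
                milestones=(30, 50), gamma=0.1, eta_min=0.0):
    """Learning rate for a 0-indexed epoch: linear warm-up, then step or cosine decay."""
    if epoch < warmup_epochs:
        # Linear warm-up from base_lr / warmup_epochs up to base_lr
        return base_lr * (epoch + 1) / warmup_epochs
    if schedule == "multistep":
        # Multiply by gamma once for every milestone already reached (MultiStepLR rule)
        return base_lr * gamma ** sum(epoch >= m for m in milestones)
    if schedule == "cosine":
        # CosineAnnealingLR rule over the epochs remaining after warm-up
        t = (epoch - warmup_epochs) / (total_epochs - warmup_epochs)
        return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * t))
    raise ValueError(f"unknown schedule: {schedule}")


# VRU setting: Adam, base LR 1e-4, decays at epochs 30 and 50
print([lr_at_epoch(e, 1e-4, "multistep") for e in (0, 10, 30, 50)])
# VeRi-UAV setting: SGD, base LR 0.35e-4, cosine annealing
print(lr_at_epoch(30, 0.35e-4, "cosine"))
```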

In the testing phase, we use Rank-n and mean average precision (mAP) as the main evaluation metrics. Rank-n is the proportion of queries for which a correct vehicle appears among the top n retrieved gallery images. mAP is the mean of the average precision (AP) over all queries. In addition, the mINP metric used in the ablation experiments measures the cost for the model to retrieve the hardest-to-match correct samples, which provides further evidence of effectiveness.
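The per-query metrics can be sketched from a ranked list of match flags. This assumes the gallery has already been filtered according to the dataset's evaluation protocol and that every query has at least one correct match; `evaluate_query` and `summarize` are hypothetical helpers. INP is included because it is the per-query quantity behind the mINP metric used in the ablation study.

```python
def evaluate_query(matches, n_values=(1, 5, 10)):
    """matches[k] is True if the gallery image at rank k + 1 has the query's identity.
    Returns (Rank-n hits, AP, INP) for one query; assumes at least one True."""
    hit_ranks = [r for r, ok in enumerate(matches, start=1) if ok]
    rank_n = {n: hit_ranks[0] <= n for n in n_values}
    # AP: precision at the rank of each correct match, averaged over the matches
    ap = sum(k / r for k, r in enumerate(hit_ranks, start=1)) / len(hit_ranks)
    # INP: correct matches divided by the rank of the hardest (last) correct match
    inp = len(hit_ranks) / hit_ranks[-1]
    return rank_n, ap, inp


def summarize(all_matches, n_values=(1, 5, 10)):
    """Average per-query results into Rank-n (CMC), mAP and mINP."""
    results = [evaluate_query(m, n_values) for m in all_matches]
    q = len(results)
    cmc = {n: sum(r[0][n] for r in results) / q for n in n_values}
    m_ap = sum(r[1] for r in results) / q
    m_inp = sum(r[2] for r in results) / q
    return cmc, m_ap, m_inp


queries = [[True, False, True], [False, True, False]]
print(summarize(queries))
```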

Comparison with state-of-the-art methods

Comparisons on VeRi-UAV

On VeRi-UAV, we compare against the hand-crafted feature-based methods BOW-SIFT47 and LOMO48 and the deep learning-based methods Cross-entropy Loss45, Hard Triplet Loss49, VANet50, Triplet+ID Loss34, RANet51, ResNeSt52, and PC-CHSML46. LOMO48 uses hand-crafted local features to handle viewpoint and illumination changes. BOW-SIFT47 extracts features using content-based image retrieval and SIFT. VANet50 learns viewpoint-aware deep metrics and can retrieve images of different viewpoints despite interference from similar-viewpoint images. RANet51 uses a deep CNN to perform resolution-adaptive recognition. PC-CHSML46 is designed for UAV aerial photography and improves the recognition and retrieval of aerial images by combining pose-calibrated cross-view learning with hard-sample-aware metric learning. Table 1 reports the detailed comparison. First, the deep learning-based approaches clearly outperform the hand-crafted feature-based approaches. Second, compared with methods designed for fixed surveillance scenarios, such as VANet50, DpA performs better under the highly flexible shooting conditions of UAVs. Third, compared with PC-CHSML46, which targets UAV aerial photography, DpA improves mAP, Rank-1, Rank-5, and Rank-10 by 4.2, 10.0, 9.5, and 9.6 percentage points, respectively. These results further verify the effectiveness of the module.

Table 1.

Comparison of various proposed methods on VeRi-UAV dataset (in %).

| Method | mAP | Rank-1 | Rank-5 | Rank-10 |
|---|---|---|---|---|
| BOW-SIFT47 | 6.7 | 18.9 | 34.4 | 43.4 |
| LOMO48 | 25.5 | 51.9 | 70.1 | 77.0 |
| RANet51 | 44.3 | 71.6 | 82.4 | 85.3 |
| ResNeSt52 | 64.4 | 80.1 | 85.5 | 86.9 |
| Triplet+ID Loss34 | 66.1 | 80.9 | 86.9 | 88.4 |
| VANet50 | 66.5 | 81.6 | 87.0 | 88.0 |
| Cross-entropy Loss45 | 67.6 | 94.8 | 96.3 | 97.4 |
| Hard Triplet Loss49 | 73.2 | 84.8 | 88.6 | 89.2 |
| PC-CHSML46 | 77.5 | 86.6 | 89.0 | 89.8 |
| DpA (Ours) | **81.7** | **96.6** | **98.5** | **99.4** |

Bold numbers indicate the best ranked results.

Comparisons on VRU

VRU is a relatively new UAV-based vehicle dataset, so few results have been reported on it. Table 2 compares DpA with other methods42,53–55 on VRU. MGN53 integrates information of different granularities through one global branch and two local branches to improve robustness. SCAN54 uses channel and spatial attention branches to reweight different locations and channels so that the model focuses on regions with discriminative information. Triplet+CE loss55 trains the model with ordinary triplet loss and cross-entropy loss. GASNet42 captures effective vehicle information by extracting viewpoint-invariant and scale-invariant features. Compared with GASNet, DpA improves mAP by 0.32, 0.59, and 1.36 percentage points on the small, medium, and large subsets of VRU, respectively. Taken together, this indicates that the DpA module enhances the model's ability to extract discriminative features and helps address the neglect of local features in UAV scenes.

Table 2.

Comparison of various proposed methods on VRU dataset (in %). Bold numbers indicate the best ranked results.

| Method | Small mAP | Small Rank-1 | Small Rank-5 | Medium mAP | Medium Rank-1 | Medium Rank-5 | Large mAP | Large Rank-1 | Large Rank-5 |
|---|---|---|---|---|---|---|---|---|---|
| MGN53 | 82.48 | 81.72 | 95.08 | 80.06 | 78.75 | 93.75 | 71.53 | 66.25 | 87.15 |
| SCAN54 | 83.95 | 75.22 | 95.03 | 77.34 | 67.27 | 90.51 | 64.51 | 52.44 | 79.63 |
| Triplet+CE loss55 | 97.40 | 95.81 | 99.29 | 95.82 | 93.33 | 98.83 | 92.04 | 87.83 | 97.28 |
| GASNet42 | 98.51 | 97.45 | 99.66 | 97.31 | 95.59 | 99.33 | 93.93 | 90.29 | 98.40 |
| DpA (Ours) | **98.83** | **98.07** | **99.70** | **97.90** | **96.51** | **99.44** | **95.29** | **92.30** | **98.96** |

Ablation experiments

In this section, we conduct ablation experiments on VeRi-UAV to evaluate the effectiveness of the proposed framework. Detailed results are listed in Tables 3, 4, 5 and 6. Note that these experiments additionally report mINP, a recently proposed Re-ID metric: for each query, it is the proportion of correct matches among all gallery samples retrieved up to and including the last correct match, averaged over all queries.

Table 3.

Ablation experiments of DpA module on VeRi-UAV (in %).

| Method | mAP | Rank-1 | Rank-5 | mINP |
|---|---|---|---|---|
| ResNet50+LSCE+HMT (Baseline) | 79.25 | 95.96 | 98.12 | 49.29 |
| Baseline+CpA | *80.87* | 96.32 | *98.21* | *49.88* |
| Baseline+SpA | 79.92 | *96.50* | *98.21* | 48.97 |
| Baseline+DpA | **81.74** | **96.59** | **98.48** | **51.56** |

Bold and italicized numbers indicate the best and second best ranked results, respectively.

Table 4.

Ablation experiments of different attention modules on VeRi-UAV (in %).

| Method | mAP | Rank-1 | Rank-5 | mINP |
|---|---|---|---|---|
| ResNet50+LSCE+HMT (Baseline) | 79.25 | 95.96 | 98.12 | 49.29 |
| Baseline+SE56 | *79.89* | 96.14 | **98.48** | *49.71* |
| Baseline+Non-local57 | 78.85 | *96.50* | *98.21* | 47.42 |
| Baseline+CBAM58 | 78.69 | 96.23 | 97.85 | 48.17 |
| Baseline+CA59 | 79.58 | 96.23 | *98.21* | 47.01 |
| Baseline+DpA | **81.74** | **96.59** | **98.48** | **51.56** |

Bold and italicized numbers indicate the best and second best ranked results, respectively.

Table 5.

Ablation experiment of adding DpA module at different residual blocks of the backbone network on VeRi-UAV (in %).

| No. | Conv3_x | Conv4_x | Conv5_x | mAP | Rank-1 | Rank-5 | mINP | Training time (h) |
|---|---|---|---|---|---|---|---|---|
| 0 | | | | 79.25 | 95.96 | 98.12 | 49.29 | 0.70 |
| 1 | | | | *79.71* | 96.23 | 98.21 | *49.35* | 0.84 |
| 2 | | | | **81.74** | **96.59** | **98.48** | **51.56** | 0.81 |
| 3 | | | | 78.94 | 96.41 | 98.12 | 48.64 | 1.10 |
| 4 | | | | 78.34 | 96.41 | *98.39* | 46.84 | 1.00 |
| 5 | | | | 78.87 | 96.23 | 97.76 | 47.58 | 1.32 |
| 6 | | | | 79.57 | *96.50* | *98.39* | 47.75 | 1.27 |

Bold and italicized numbers indicate the best and second best ranked results, respectively.

Table 6.

Ablation experiments of different metric losses on VeRi-UAV (in %).

| Method | mAP | Rank-1 | Rank-5 | mINP |
|---|---|---|---|---|
| DpA+Circle60 | 72.29 | 95.43 | 97.67 | 38.05 |
| DpA+MS61 | 72.64 | 95.43 | 97.58 | 38.31 |
| DpA+SupCon62 | *74.91* | **97.13** | *98.12* | *39.04* |
| DpA+HMT | **81.74** | *96.59* | **98.48** | **51.56** |

Bold and italicized numbers indicate the best and second best ranked results, respectively.

Evaluation of DpA module

To verify the validity of the DpA module, we use a baseline network consisting of a ResNet50 backbone with generalized mean pooling, a batch normalization layer, a fully connected layer, LSCE loss, and HMT loss. Table 3 shows the ablation results on VeRi-UAV. First, adding CpA to the baseline improves mAP and Rank-1 by 1.62 and 0.36 percentage points, respectively, indicating that CpA enhances channel information and extracts discriminative local vehicle features. Adding SpA alone improves mAP and Rank-1 by 0.67 and 0.54 percentage points, respectively, showing greater focus on important regions in the spatial dimension. Finally, combining CpA and SpA on top of the baseline improves mAP, Rank-1, Rank-5, and mINP over the baseline by 2.49, 0.63, 0.36, and 2.27 percentage points, respectively. We draw two conclusions: first, extracting features in both the channel and spatial dimensions yields more discriminative fine-grained vehicle features; second, connecting the two attention modules in parallel improves Re-ID accuracy.

Comparison of different attention modules

This subsection compares DpA with the existing attention modules SE56, Non-local57, CBAM58, and CA59. SE56 learns a weight for each channel from globally pooled channel statistics, emphasizing the more informative feature channels. Non-local57 models long-range dependencies between pixel positions, strengthening attention to non-local features. CBAM58 sequentially infers attention maps along the channel and spatial dimensions and multiplies each map with the input feature map for adaptive feature refinement. CA59 factorizes channel attention into two one-dimensional feature encodings that aggregate features along the vertical and horizontal directions, efficiently embedding spatial coordinate information into the generated attention maps.
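These modules differ mainly in the shape of the weights they produce. The NumPy sketch below contrasts SE, which yields a single weight per channel shared by every spatial position, with the directional pooling step of CA, which keeps row and column positions. The random matrices passed as `w1` and `w2` stand in for SE's learned fully connected layers, the reduction ratio r = 4 is an arbitrary choice, and CA's subsequent convolutions and gating are omitted; this is an illustration, not either paper's reference code.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def se_block(x, w1, w2):
    """Squeeze-and-excitation on x of shape (C, H, W).

    w1: (C // r, C) and w2: (C, C // r) stand in for the two learned FC layers.
    """
    z = x.mean(axis=(1, 2))                  # squeeze: global average pooling -> (C,)
    s = sigmoid(w2 @ np.maximum(w1 @ z, 0))  # excitation: FC -> ReLU -> FC -> sigmoid
    return x * s[:, None, None]              # one weight per channel, shared by all positions


def coordinate_pooling(x):
    """Directional pooling step of coordinate attention (CA) on x of shape (C, H, W)."""
    pooled_h = x.mean(axis=2)  # (C, H): averaged along the width, row positions kept
    pooled_w = x.mean(axis=1)  # (C, W): averaged along the height, column positions kept
    return pooled_h, pooled_w


rng = np.random.default_rng(0)
C, r = 16, 4
x = rng.random((C, 8, 12)) + 0.1
y = se_block(x, rng.normal(size=(C // r, C)), rng.normal(size=(C, C // r)))
scale = y / x  # SE rescales each channel by a single factor
print(np.allclose(scale, scale[:, :1, :1]))  # True
ph, pw = coordinate_pooling(x)
print(ph.shape, pw.shape)  # (16, 8) (16, 12)
```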

Table 4 compares the different attention mechanisms. First, adding the SE or CA module slightly improves model accuracy, whereas adding the Non-local or CBAM module brings no improvement. Second, compared with CA, the most recent of these modules, the proposed DpA module achieves gains of 2.16% mAP, 0.36% Rank-1, 0.27% Rank-5, and 4.55% mINP on VeRi-UAV. This indicates that DpA is more robust in UAV aerial photography scenarios with near-vertical shooting angles and long shooting distances.

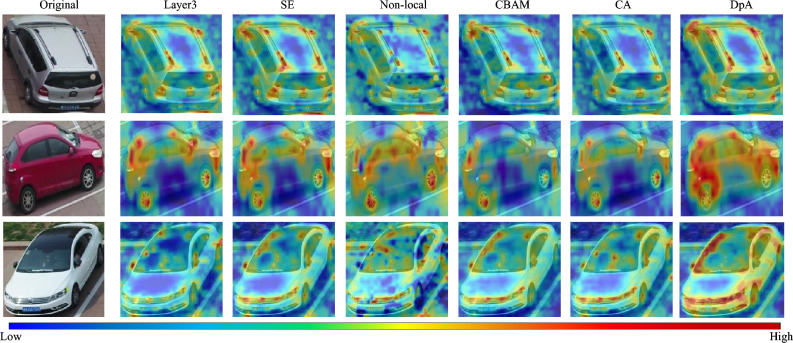

To further validate the effectiveness of the DpA module, we used Grad-CAM++ to visualize the attention maps. Figure 4 shows, from left to right, the attention maps of residual layer 3 (without any attention module), SE, Non-local, CBAM, CA, and DpA. Two observations can be made. First, all six methods focus on the vehicle itself. Second, SE, Non-local, CBAM, and CA pay less attention to local vehicle information and even ignore some important parts, whereas the DpA module produces more pronounced red regions, indicating that it attends more to important cues at different fine-grained levels and thus improves the feature extraction capability of the network.

Figure 4.

Heat map comparison of different attention modules. Red areas indicate the image regions with the highest attention values, and blue areas indicate the regions with the lowest attention values.
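For readers reproducing heat maps like those in Fig. 4, the sketch below shows how Grad-CAM++ turns one layer's activations and gradients into a map. It uses the closed-form pixel weights common in open-source implementations, in which higher-order derivatives reduce to powers of the first-order gradient, and it assumes non-negative (post-ReLU) activations. The function name is hypothetical, and the random arrays are placeholders for what a deep learning framework would return from a forward and backward pass.

```python
import numpy as np


def grad_cam_pp(activations, grads):
    """Grad-CAM++ heat map from one layer's activations A^k and gradients dY/dA^k.

    activations, grads: arrays of shape (K, H, W); activations are assumed
    non-negative (post-ReLU).
    """
    g2, g3 = grads ** 2, grads ** 3
    a_sum = activations.sum(axis=(1, 2), keepdims=True)  # sum of A^k over spatial positions
    pos = grads > 0                                       # only positive gradients contribute
    denom = np.where(pos, 2 * g2 + a_sum * g3, 1.0)       # positive wherever pos is True
    alpha = np.where(pos, g2 / denom, 0.0)                # pixel-wise coefficients
    weights = (alpha * np.maximum(grads, 0)).sum(axis=(1, 2))        # one weight per map, (K,)
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0)  # (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam  # scale to [0, 1] for display


rng = np.random.default_rng(0)
acts = rng.random((4, 7, 7))        # placeholder activations
grads = rng.normal(size=(4, 7, 7))  # placeholder gradients
heat = grad_cam_pp(acts, grads)
print(heat.shape)  # (7, 7)
```

In practice the (H, W) map is upsampled to the input resolution and overlaid on the image with a red-to-blue color map.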

Comparison of DpA module placement in the network

We designed a set of experiments that add DpA modules at different stages of the backbone network to determine the most effective placement. In Table 5, the marked entries indicate the residual blocks of the backbone network after which a DpA module is added.

Table 5 shows the results of adding the DpA module after different residual blocks of the backbone. First, the position at which DpA is added affects the robustness of the network. Specifically, adding the DpA module after the 2nd (No.1), the 3rd (No.2), or both the 3rd and 4th (No.6) residual blocks improves accuracy over the baseline (No.0), indicating that the module effectively extracts fine-grained vehicle features at these locations. In contrast, adding it after the 4th residual block (No.3), after the 2nd and 3rd (No.4), or after the 2nd and 4th (No.5) residual blocks reduces accuracy relative to the baseline (No.0), which suggests that the network's attention becomes more dispersed at these positions and more irrelevant information is introduced. Second, in most cases a single DpA module supports feature learning better than two jointly used DpA modules, while also saving some training time. In particular, No.2, which adds the DpA module after the 3rd residual block, improves mAP by at least 2.17% over every configuration with two DpA modules. Weighing these trade-offs, we add the DpA module only after Conv4_x of ResNet50.
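Because the text counts "residual blocks" from 1 while ResNet50 stages are conventionally named conv2_x to conv5_x, the short sketch below spells out where each Table 5 configuration places DpA. The stage names follow the standard ResNet50 layout; the configuration indices come from the text above, while the helper `build_stage_sequence` and the `DpA@...` labels are hypothetical.

```python
# Standard ResNet50 layout: a stem (conv1) followed by four residual stages.
RESIDUAL_STAGES = ["conv2_x", "conv3_x", "conv4_x", "conv5_x"]

# Configurations compared in Table 5, as 1-based residual-block indices
# after which a DpA module is inserted.
PLACEMENTS = {
    "No.0": [],      # baseline without DpA
    "No.1": [2],
    "No.2": [3],     # the configuration finally adopted (after conv4_x)
    "No.3": [4],
    "No.4": [2, 3],
    "No.5": [2, 4],
    "No.6": [3, 4],
}


def build_stage_sequence(dpa_after):
    """Return the ordered stage names with a DpA entry after each selected block."""
    layers = ["conv1"]
    for idx, stage in enumerate(RESIDUAL_STAGES, start=1):
        layers.append(stage)
        if idx in dpa_after:
            layers.append(f"DpA@{stage}")
    return layers


for name, blocks in PLACEMENTS.items():
    print(name, build_stage_sequence(blocks))
```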

Comparison of different metric losses

Metric losses, which aim to maximize intra-class similarity while minimizing inter-class similarity, have proven effective in Re-ID tasks. Current metric losses treat each instance as an anchor: the HMT loss and circle loss60 use the hardest anchor-positive pairs, the multi-similarity (MS) loss61 selects anchor-positive pairs according to the hardest anchor-negative pairs, and the supervised contrastive (SupCon) loss62 samples all positives of each anchor, which provides richer information but also introduces cluttered triplets. Which loss suits a given scenario often depends on the characteristics of the training dataset. Table 6 shows the results of training with different metric losses on the VeRi-UAV dataset. The HMT loss achieves the highest mAP, Rank-5, and mINP among the compared losses, while SupCon gives a slightly higher Rank-1 (97.13% versus 96.59%). This indicates that the HMT loss, by targeting the network's ability to discriminate hard samples, yields more robust performance for vehicle Re-ID in UAV scenarios.
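A widely used form of hard-mining triplet loss is the batch-hard variant of Hermans et al.49, which pairs each anchor with its farthest positive and closest negative in the mini-batch. Assuming HMT follows that formulation, a minimal NumPy sketch is shown below; the margin of 0.3 is an assumed value, and every anchor is assumed to have at least one positive and one negative in the batch, as P×K identity sampling guarantees.

```python
import numpy as np


def batch_hard_triplet_loss(emb, labels, margin=0.3):
    """Batch-hard triplet loss over embeddings emb (N, D) with integer labels (N,)."""
    diff = emb[:, None, :] - emb[None, :, :]
    dist = np.sqrt((diff ** 2).sum(-1) + 1e-12)  # pairwise Euclidean distances (N, N)
    same = labels[:, None] == labels[None, :]
    eye = np.eye(len(labels), dtype=bool)
    # Hardest positive: the farthest sample with the same identity (excluding itself).
    hardest_pos = np.where(same & ~eye, dist, -np.inf).max(axis=1)
    # Hardest negative: the closest sample with a different identity.
    hardest_neg = np.where(~same, dist, np.inf).min(axis=1)
    return np.maximum(hardest_pos - hardest_neg + margin, 0).mean()


emb = np.array([[0.0, 0.0], [0.0, 0.1], [5.0, 5.0], [5.0, 5.1]])
print(batch_hard_triplet_loss(emb, np.array([0, 0, 1, 1])))      # 0.0: identities well separated
print(batch_hard_triplet_loss(emb, np.array([0, 1, 0, 1])) > 0)  # True: labels conflict with geometry
```

Only the hardest pair per anchor contributes a gradient, which is the property the text credits for HMT's stronger discrimination of difficult samples.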

Discussion

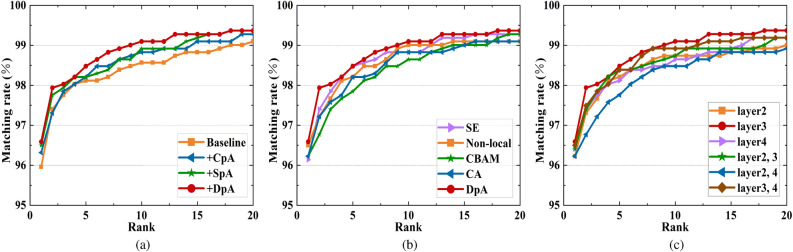

Although existing attention mechanisms are effective on some vision tasks, applying them directly to UAV imagery is less effective because of the particular shooting angles of UAVs. The main idea of this paper is therefore to design an attention module that combines multiple pooling operations and to embed it into the backbone network, improving fine-grained information extraction for vehicle Re-ID in UAV scenes and addressing the lack of local vehicle information caused by near-vertical shooting angles and varying shooting heights. Extensive experiments show that the method performs better on both the VeRi-UAV and VRU datasets. In addition, Fig. 5 shows the matching rates from rank 1 to rank 20 for the models compared above. The curves of our method lie above the others overall, which further confirms its effectiveness for practical vehicle retrieval. Our vehicle Re-ID model therefore not only identifies the same vehicle accurately but also provides technical support for integrating UAV technology into intelligent transportation systems.

Figure 5.

Comparisons of CMC curves for the case of: (a) CpA, SpA and DpA modules, (b) five different attention mechanisms, and (c) DpA placed in different positions of the backbone network.
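Fig. 5 plots CMC curves from rank 1 to rank 20, and the tables report mAP and mINP. The pure-Python sketch below shows how these per-query quantities are commonly computed from a ranked list of match flags; averaging them over all queries gives the CMC curve, mAP, and mINP. The function name and input format are assumptions, the list is assumed to contain every correct gallery match, and protocol details such as excluding same-camera matches are omitted. The mINP term follows the inverse negative penalty of Ye et al., which divides the number of correct matches by the rank of the hardest one.

```python
def query_metrics(matches, max_rank=20):
    """Per-query CMC, average precision and inverse negative penalty.

    matches: 0/1 flags for one query's ranked gallery (1 = same identity).
    """
    hits = [i for i, m in enumerate(matches, start=1) if m]  # 1-based ranks of correct matches
    if not hits:
        raise ValueError("query has no correct match in the gallery")
    first = hits[0]
    # CMC@k is 1 once the first correct match has appeared within the top k.
    cmc = [1.0 if k >= first else 0.0 for k in range(1, max_rank + 1)]
    # AP: precision at each correct match, averaged over the correct matches.
    ap = sum(n / r for n, r in enumerate(hits, start=1)) / len(hits)
    # INP: number of correct matches divided by the rank of the hardest (last) one.
    inp = len(hits) / hits[-1]
    return cmc, ap, inp


cmc, ap, inp = query_metrics([1, 0, 1, 0, 0, 1], max_rank=5)
print(cmc[:3], round(ap, 4), inp)  # [1.0, 1.0, 1.0] 0.7222 0.5
```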

Visualization of model retrieval results

To illustrate the advantages of our model more concretely, Fig. 6 visualizes the top-10 retrieval results of the baseline and our model on the VeRi-UAV dataset. Retrieval results for four randomly selected query images are shown; for each query, the first row corresponds to the baseline method and the second row to our method. Images with green borders are correctly retrieved samples, and images with red borders are incorrectly retrieved samples.

Figure 6.

Visualization of the ranking lists of our model and the baseline on VeRi-UAV. For each query, the top and bottom rows show the ranking results of the baseline and of the model with the DpA module, respectively. Green (red) boxes denote correct (wrong) results.

The comparison reveals two differences. First, the baseline focuses on general appearance features, so its top-ranked negative samples all have similar body postures, whereas our method focuses on vehicle features that carry discriminative information, such as the parts marked with red circles in the query images in Fig. 6 (vehicle type symbol, front window, rear window, and side window). Second, for the second query image, our method retrieves five correct target vehicle samples within the top 5 results, whereas the baseline needs the top 9 results to retrieve five correct samples.

Conclusion and future work

In this work, we propose a dual-pooling attention (DpA) module for vehicle Re-ID that addresses the difficulty of extracting local vehicle features in UAV scenarios caused by high shooting altitudes and near-vertical shooting angles. The DpA module consists of a channel-pooling attention module and a spatial-pooling attention module. The former focuses on the important features of the vehicle while suppressing unimportant information, and the latter captures local-range dependencies within spatial regions. Working along both the channel and spatial dimensions enables effective extraction of fine-grained, important vehicle features. We then fuse the features extracted from the two dimensions and introduce OD convolution to improve the model's channel and spatial awareness, enabling dynamic extraction of rich contextual information. Extensive comparative evaluations show that our approach outperforms state-of-the-art methods on two challenging UAV-based aerial vehicle Re-ID datasets.

The proposed approach still leaves room for improvement. The retrieval visualization in Fig. 6 shows retrieval errors for heavily occluded vehicles. Future work will therefore address occlusion of important vehicle parts, so that the network can adaptively focus on fine-grained information from other parts to improve recognition accuracy and retrieval capability. Moreover, because vehicle Re-ID in UAV aerial photography scenes remains under-researched, there is great potential for future work, such as expanding UAV scene datasets (e.g., placing drones at different angles to capture more vehicle images with multiple views), incorporating spatial-temporal information about vehicles, and combining vehicle images captured by traditional fixed surveillance cameras and UAVs for vehicle Re-ID.

Acknowledgements

This work was funded by the Shandong Province Postgraduate Education Quality Curriculum Project (SDYKC19083), Shandong Province Postgraduate Education Joint Training Base Project (SDYJD18027).

Author contributions

Conceptualization, Y.X.; Methodology, X.G.; Design of experiments and interpretation of results, X.G. and C.Z.; Validation, J.Y.; Data recording and analysis, X.J.; Writing-original draft preparation, X.G.; Writing-review and editing, Y.X. and Z.C. All authors read and approved the final manuscript.

Data availability

The dataset analyzed during this study and the associated data are available in the GitHub repository at the link https://github.com/Gxy0221/g-re-id.git.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Zhu J, et al. Vehicle re-identification using quadruple directional deep learning features. IEEE Trans. Intell. Transport. Syst. 2020;21:410–420. doi: 10.1109/TITS.2019.2901312.

- 2.Shen F, Zhu J, Zhu X, Xie Y, Huang J. Exploring spatial significance via hybrid pyramidal graph network for vehicle re-identification. IEEE Trans. Intell. Transport. Syst. 2022;23:8793–8804. doi: 10.1109/TITS.2021.3086142.

- 3.He, S. et al. Multi-domain learning and identity mining for vehicle re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops 582–583 (2020).

- 4.Zheng Z, Ruan T, Wei Y, Yang Y, Mei T. Vehiclenet: Learning robust visual representation for vehicle re-identification. IEEE Trans. Multimed. 2021;23:2683–2693. doi: 10.1109/TMM.2020.3014488.

- 5.Rong L, et al. A vehicle re-identification framework based on the improved multi-branch feature fusion network. Sci. Rep. 2021;11:1–12. doi: 10.1038/s41598-021-99646-6.

- 6.Shen, F., Du, X., Zhang, L. & Tang, J. Triplet contrastive learning for unsupervised vehicle re-identification. arXiv preprint arXiv:2301.09498 (2023).

- 7.Outay F, Mengash HA, Adnan M. Applications of unmanned aerial vehicle (UAV) in road safety, traffic and highway infrastructure management: Recent advances and challenges. Transport. Res. Part A. 2020;141:116–129. doi: 10.1016/j.tra.2020.09.018.

- 8.Wang S, Jiang F, Zhang B, Ma R, Hao Q. Development of uav-based target tracking and recognition systems. IEEE Trans. Intell. Transport. Syst. 2020;21:3409–3422. doi: 10.1109/TITS.2019.2927838.

- 9.Lin, M., Chen, Q. & Yan, S. Network in network. arXiv preprint arXiv:1312.4400 (2013).

- 10.Gu, Y., Li, C. & Xie, J. Attention-aware generalized mean pooling for image retrieval. arXiv preprint arXiv:1811.00202 (2018).

- 11.Kauffmann J, Müller K-R, Montavon G. Towards explaining anomalies: A deep taylor decomposition of one-class models. Pattern Recognit. 2020;101:107198. doi: 10.1016/j.patcog.2020.107198.

- 12.Stergiou, A., Poppe, R. & Kalliatakis, G. Refining activation downsampling with softpool. In Proceedings of the IEEE/CVF International Conference on Computer Vision 10357–10366 (2021).

- 13.Zhai, S. et al. S3pool: Pooling with stochastic spatial sampling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4970–4978 (2017).

- 14.Gulcehre, C., Cho, K., Pascanu, R. & Bengio, Y. Learned-norm pooling for deep feedforward and recurrent neural networks. In Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2014, Nancy, France, September 15-19, 2014. Proceedings, Part I 14 530–546 (Springer, 2014).

- 15.Zhao, J. & Snoek, C. G. Liftpool: Bidirectional convnet pooling. arXiv preprint arXiv:2104.00996 (2021).

- 16.Sun M, Song Z, Jiang X, Pan J, Pang Y. Learning pooling for convolutional neural network. Neurocomputing. 2017;224:96–104. doi: 10.1016/j.neucom.2016.10.049.

- 17.Liu, X., Zhang, S., Huang, Q. & Gao, W. Ram: a region-aware deep model for vehicle re-identification. In 2018 IEEE International Conference on Multimedia and Expo (ICME) 1–6 (IEEE, 2018).

- 18.Chen, H., Lagadec, B. & Bremond, F. Partition and reunion: A two-branch neural network for vehicle re-identification. In CVPR Workshops 184–192 (2019).

- 19.Wang, Z. et al. Orientation invariant feature embedding and spatial temporal regularization for vehicle re-identification. In Proceedings of the IEEE International Conference on Computer Vision 379–387 (2017).

- 20.Zhang G, et al. Sha-mtl: Soft and hard attention multi-task learning for automated breast cancer ultrasound image segmentation and classification. Int. J. Comput. Assist. Radiol. Surg. 2021;16:1719–1725. doi: 10.1007/s11548-021-02445-7.

- 21.Shen, F. et al. Hsgm: A hierarchical similarity graph module for object re-identification. In 2022 IEEE International Conference on Multimedia and Expo (ICME) 1–6 (IEEE, 2022).

- 22.Pan, X. et al. Vehicle re-identification approach combining multiple attention mechanisms and style transfer. In 2022 3rd International Conference on Pattern Recognition and Machine Learning (PRML) 65–71 (IEEE, 2022).

- 23.Bai Y, et al. Group-sensitive triplet embedding for vehicle reidentification. IEEE Trans. Multimed. 2018;20:2385–2399. doi: 10.1109/TMM.2018.2796240.

- 24.Li K, Ding Z, Li K, Zhang Y, Fu Y. Vehicle and person re-identification with support neighbor loss. IEEE Trans. Neural Netw. Learn. Syst. 2022;33:826–838. doi: 10.1109/TNNLS.2020.3029299.

- 25.Peng J, Wang H, Xu F, Fu X. Cross domain knowledge learning with dual-branch adversarial network for vehicle re-identification. Neurocomputing. 2020;401:133–144. doi: 10.1016/j.neucom.2020.02.112.

- 26.Song L, et al. Unsupervised domain adaptive re-identification: Theory and practice. Pattern Recognit. 2020;102:107173. doi: 10.1016/j.patcog.2019.107173.

- 27.Bashir RMS, Shahzad M, Fraz M. Vr-proud: Vehicle re-identification using progressive unsupervised deep architecture. Pattern Recognit. 2019;90:52–65. doi: 10.1016/j.patcog.2019.01.008.

- 28.Jiang, G., Pang, X., Tian, X., Zheng, Y. & Meng, Q. Global reference attention network for vehicle re-identification. Applied Intelligence 1–16 (2022).

- 29.Shen F, et al. An efficient multiresolution network for vehicle reidentification. IEEE Internet Things J. 2022;9:9049–9059. doi: 10.1109/JIOT.2021.3119525.

- 30.Shen F, Xie Y, Zhu J, Zhu X, Zeng H. Git: Graph interactive transformer for vehicle re-identification. IEEE Trans. Image Process. 2023;32:1039–1051. doi: 10.1109/TIP.2023.3238642.

- 31.Zheng Y, Pang X, Jiang G, Tian X, Meng Q. Dual-relational attention network for vehicle re-identification. Appl. Intell. 2023;53:7776–7787. doi: 10.1007/s10489-022-03801-z.

- 32.Wang Q, et al. Viewpoint adaptation learning with cross-view distance metric for robust vehicle re-identification. Inf. Sci. 2021;564:71–84. doi: 10.1016/j.ins.2021.02.013.

- 33.Wang Q, et al. Inter-domain adaptation label for data augmentation in vehicle re-identification. IEEE Trans. Multimed. 2022;24:1031–1041. doi: 10.1109/TMM.2021.3104141.

- 34.Wang, P. et al. Vehicle re-identification in aerial imagery: Dataset and approach. In Proceedings of the IEEE/CVF International Conference on Computer Vision 460–469 (2019).

- 35.Song, Y., Liu, C., Zhang, W., Nie, Z. & Chen, L. View-decision based compound match learning for vehicle re-identification in uav surveillance. In 2020 39th Chinese Control Conference (CCC) 6594–6601 (IEEE, 2020).

- 36.Shen B, Zhang R, Chen H. An adaptively attention-driven cascade part-based graph embedding framework for UAV object re-identification. Remote Sens. 2022;14:1436. doi: 10.3390/rs14061436.

- 37.Ferdous, S. N., Li, X. & Lyu, S. Uncertainty aware multitask pyramid vision transformer for uav-based object re-identification. In 2022 IEEE International Conference on Image Processing (ICIP) 2381–2385 (IEEE, 2022).

- 38.Organisciak, D. et al. Uav-reid: A benchmark on unmanned aerial vehicle re-identification. CoRR (2021).

- 39.Teng S, Zhang S, Huang Q, Sebe N. Viewpoint and scale consistency reinforcement for UAV vehicle re-identification. Int. J. Comput. Vis. 2021;129:719–735. doi: 10.1007/s11263-020-01402-2.

- 40.Yao A, Huang M, Qi J, Zhong P. Attention mask-based network with simple color annotation for UAV vehicle re-identification. IEEE Geosci. Remote Sens. Lett. 2021;19:1–5.

- 41.Qiao W, Ren W, Zhao L. Vehicle re-identification in aerial imagery based on normalized virtual softmax loss. Appl. Sci. 2022;12:4731. doi: 10.3390/app12094731.

- 42.Lu M, Xu Y, Li H. Vehicle re-identification based on UAV viewpoint: Dataset and method. Remote Sens. 2022;14:4603. doi: 10.3390/rs14184603.

- 43.Jiao, B. et al. Vehicle re-identification in aerial images and videos: Dataset and approach. IEEE Transactions on Circuits and Systems for Video Technology (2023).

- 44.Hendrycks, D. et al. Augmix: A simple data processing method to improve robustness and uncertainty. arXiv preprint arXiv:1912.02781 (2019).

- 45.Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2818–2826 (2016).

- 46.Liu C, et al. Posture calibration based cross-view & hard-sensitive metric learning for UAV-based vehicle re-identification. IEEE Trans. Intell. Transport. Syst. 2022;23:19246–19257. doi: 10.1109/TITS.2022.3165175.

- 47.Liu, X., Liu, W., Ma, H. & Fu, H. Large-scale vehicle re-identification in urban surveillance videos. In 2016 IEEE International Conference on Multimedia and Expo (ICME) 1–6 (IEEE, 2016).

- 48.Liao, S., Hu, Y., Zhu, X. & Li, S. Z. Person re-identification by local maximal occurrence representation and metric learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2197–2206 (2015).

- 49.Hermans, A., Beyer, L. & Leibe, B. In defense of the triplet loss for person re-identification. arXiv preprint arXiv:1703.07737 (2017).

- 50.Chu, R. et al. Vehicle re-identification with viewpoint-aware metric learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision 8282–8291 (2019).

- 51.Yang, L. et al. Resolution adaptive networks for efficient inference. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2369–2378 (2020).

- 52.Zhang, H. et al. Resnest: Split-attention networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2736–2746 (2022).

- 53.Wang, G., Yuan, Y., Chen, X., Li, J. & Zhou, X. Learning discriminative features with multiple granularities for person re-identification. In Proceedings of the 26th ACM International Conference on Multimedia 274–282 (2018).

- 54.Teng, S., Liu, X., Zhang, S. & Huang, Q. Scan: Spatial and channel attention network for vehicle re-identification. In Advances in Multimedia Information Processing–PCM 2018: 19th Pacific-Rim Conference on Multimedia, Hefei, China, September 21-22, 2018, Proceedings, Part III 19 350–361 (Springer, 2018).

- 55.He, L. et al. Fastreid: A pytorch toolbox for general instance re-identification. arXiv preprint arXiv:2006.02631 (2020).

- 56.Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 7132–7141 (2018).

- 57.Wang, X., Girshick, R., Gupta, A. & He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 7794–7803 (2018).

- 58.Woo, S., Park, J., Lee, J.-Y. & Kweon, I. S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV) 3–19 (2018).

- 59.Hou, Q., Zhou, D. & Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 13713–13722 (2021).

- 60.Sun, Y. et al. Circle loss: A unified perspective of pair similarity optimization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 6398–6407 (2020).

- 61.Wang, X., Han, X., Huang, W., Dong, D. & Scott, M. R. Multi-similarity loss with general pair weighting for deep metric learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 5022–5030 (2019).

- 62.Khosla P, et al. Supervised contrastive learning. Adv. Neural Inf. Process. Syst. 2020;33:18661–18673.
