Abstract

For rare diseases, conducting large, randomized trials of new treatments can be infeasible due to limited sample size, and such trials may answer the wrong scientific questions due to heterogeneity of treatment effects. Personalized (N-of-1) trials are multi-period crossover studies that aim to estimate individual treatment effects, thereby identifying the optimal treatments for individuals. This article examines the statistical design issues of evaluating a personalized (N-of-1) treatment program in people with amyotrophic lateral sclerosis (ALS). We propose an evaluation framework based on an analytical model for longitudinal data observed in a personalized trial. Under this framework, we address two design parameters: length of experimentation in each trial and number of trials needed. For the former, we consider patient-centric design criteria that aim to maximize the benefits of enrolled patients. Using theoretical investigation and numerical studies, we demonstrate that, from a patient’s perspective, the duration of an experimentation period should be no longer than one-third of the entire follow-up period of the trial. For the latter, we provide analytical formulae to calculate the power for testing quality improvement due to personalized trials in a randomized evaluation program and hence determine the number of trials required for the program. We apply our theoretical results to design an evaluation program for ALS treatments informed by pilot data and show that the length of experimentation has a small impact on power relative to other factors such as the degree of heterogeneity of treatment effects.

Keywords: ALS, heterogeneity of treatment effects (HTE), minimally clinically important heterogeneity, patient-centered research, rare diseases, sample size formulae

1. Introduction

When managing chronic diseases and conditions, patients commonly try different treatments over time before finding the right treatments. The practice of N-of-1 trials operationalizes this type of patient-centered experimentation by randomizing treatments to single patients in multiple crossover periods, often in a balanced fashion. N-of-1 trials can be used to identify the optimal personalized treatment for single patients in situations involving evidence for heterogeneity of treatment effects (HTE) or the lack of a cure (Davidson et al. 2021). As such, these trials are sometimes called single-patient trials or personalized trials. First introduced by Hogben and Sim (1953), N-of-1 trials have recently been applied to treat rare diseases (Roustit et al. 2018), as well as common chronic conditions such as hypertension (Kronish et al. 2019; Samuel et al. 2019). The use of personalized (N-of-1) trials in treating rare diseases is particularly appealing because demonstrating comparative effectiveness of treatments at the population level via parallel-group randomized trials is often infeasible.

In this article, we consider personalized (N-of-1) trials of treatments for people with amyotrophic lateral sclerosis (ALS). ALS is a rare neurodegenerative disease that affects motor neurons in the brain and spinal cord. Although two modestly effective disease-modifying medications have been approved for the treatment of ALS (Edaravone [MCI-186] ALS 19 Study Group 2017), the disease has no cure, and thus, symptomatic treatments remain an important strategy to improve the quality of life in people with ALS (Mitsumoto, Brooks, and Silani 2014). In particular, muscle cramps are disabling symptoms affecting over 90% of ALS patients, with demonstrated between-patient variability and yet stable manifestation of symptoms within a patient (Caress et al. 2016). Several treatments targeting muscle cramps have been evaluated and have shown mixed results, suggesting the presence of HTE or inadequate statistical power for definitive conclusions (Baldinger, Katzberg, and Weber 2012). Furthermore, ALS itself has been considered markedly heterogeneous in its pathogeneses, disease manifestations, and disease progression (Al-Chalabi and Hardiman 2013; van den Berg et al. 2019). These are the clinical situations in which personalized (N-of-1) trials can help patients identify the best treatments for themselves (n.d.a).

Despite renewed interest in N-of-1 trials and numerous recent applications, the literature has offered little discussion on the evaluation of the usefulness of N-of-1 trials. As N-of-1 trials typically require active physician involvement, intense monitoring, and frequent data collection compared with usual care, these additional costs and resources warrant careful evaluation of effectiveness before such trials are used in practice as a regular clinical service. The primary evaluation question is “Does the practice of N-of-1 trials in clinical care improve outcomes over the standard of care?” However, presuming the quality of treatment decisions based on N-of-1 trials is higher than what standard of care would prescribe, reports of N-of-1 trials often describe only the applications and results of the trials without plans to address the evaluation question. An exception is Kravitz et al. (2018), who compare N-of-1 intervention against the usual care for patients with musculoskeletal pain in a randomized fashion using data collected after experimentation ends and find no evidence of superior outcomes among participants undergoing N-of-1 trials. However, when planning the study, the authors had not considered the underlying model that accounts for variability and correlation in the longitudinal observations and the assumptions on the effect size, which would in turn drive the appropriate sample size of an evaluation program for N-of-1 trials. A design issue related to sample size determination is the duration of experimentation in N-of-1 trials. In this article, we propose a framework to evaluate the quality and effectiveness of N-of-1 trials and develop specific guidance to address these design issues. We will introduce the evaluation framework in Section 2 and define the basic analytical model for analyzing N-of-1 trials in Section 3. The main findings on the experimentation duration and sample size are derived and described in Section 4 and applied to the ALS treatment program in Section 5. The article ends with a discussion in Section 6. All technical details are provided in the Appendices.

2. An Evaluation Framework for Personalized (N-of-1) Trials

2.1. The Anatomy of a Personalized (N-of-1) Trial

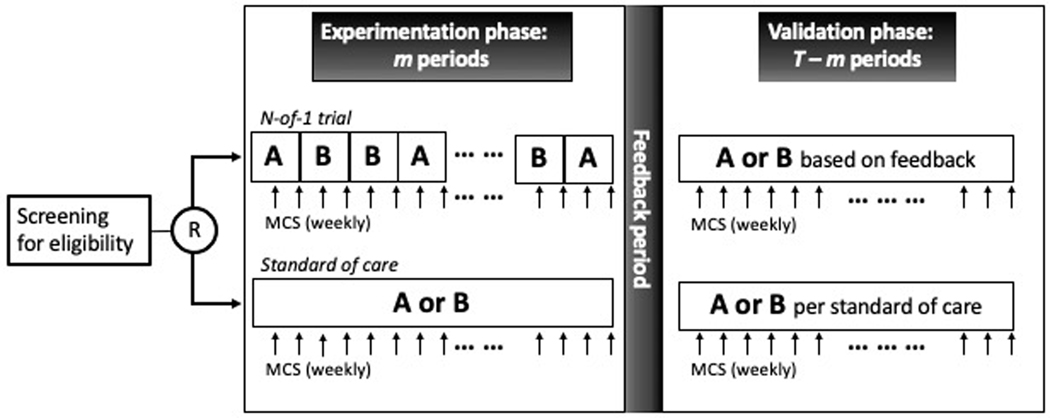

We consider an evaluation program comparing the effectiveness of personalized (N-of-1) trials in treating muscle cramps in people with ALS relative to the institutional standard of care. Under the program, people with ALS will be randomized to receive personalized (N-of-1) trials that compare two standard drugs prescribed for muscle cramps, mexiletine and baclofen. In each trial, a patient will be given the two drugs sequentially over two-week treatment periods in two phases. The first phase consists of treatment periods (with ) when the two drugs are randomized in a multiple crossover fashion. This phase shall be referred to as the experimentation phase. In the remaining treatment periods, the patient will continue with a drug treatment selected based on data in the experimentation phase. This phase shall be referred to as the validation phase (Figure 1).

Figure 1.

Schema of an evaluation program for personalized (N-of-1) trials comparing treatment A and treatment B. Under the evaluation program, patients are randomized to either an N-of-1 trial or the standard of care.

During the treatment periods, the Columbia Muscle Cramp Scale (MCS) will be collected weekly to result in two MCS measurements for each period: one at the end of week 1 and one at the end of week 2. The MCS is a validated, composite score summarizing the frequency, severity, and clinical relevance of cramps in people with ALS (Mitsumoto et al. 2019). While the study does not include washout periods between treatments, only the measurement at the end of each two-week period will be used in the primary analysis in order to avoid carryover effects of the drugs.

Sandwiched between the two treatment phases is a feedback period where the MCS data in the experimentation phase are reviewed with the treating physician and the patient. The feedback period enables data-driven treatment decisions by providing the stakeholders with data visualization as well as numerical comparison (Davidson et al. 2021).

2.2. Standard of Care

In this article, we focus on a randomized controlled evaluation program where patients are randomized between an N-of-1 trial and standard of care (SOC). As depicted in Figure 1, a patient under SOC will be given either mexiletine or baclofen for 36 weeks, corresponding to the 18 two-week treatment periods in the N-of-1 trials, and will have the same follow-up schedule as the N-of-1 trial patients. Treatments in the ‘experimentation phase’ will be determined by the treating physicians. The ‘feedback period’ in the SOC arm may be viewed as a sham intervention and be conducted as a regular clinic visit before the patient continues into the ‘validation phase’ with the same drug in the remaining treatment periods. By the virtue of randomization, MCS collected in the validation phase under SOC will serve as the control data and allow for an unbiased comparison with the validation phase in the N-of-1 trial patients.

Let denote the probability that mexiletine will be prescribed under SOC and the probability baclofen will be prescribed such that . The special case and corresponds to a clinical scenario where mexiletine is considered the standard treatment. Generally, the program probability parameters are somewhere between 0 and 1 when no clear best treatment exists. A program equipoise may be defined as when the treating physicians will give either of the drugs with equal likelihood, that is, . These program parameters apparently affect the quality of treatment under standard of care, and hence the advantage of N-of-1 trials over standard of care. At the end of the evaluation program, these parameters can be estimated using the control data.

2.3. Design Parameters

While the study duration (or the number of treatment periods ) is determined based on feasibility and how long a patient can be followed in the evaluation program, an N-of-1 trial under the evaluation framework is defined by the length of the experimentation phase, and hence the length of the validation phase. Intuitively, the quality of the treatment decision by an N-of-1 trial improves with a larger as more data will be available during the feedback period. On the other hand, a long experimentation phase may place excessive burden on patients without benefitting them and imply a short validation phase for a given . Rather than maximize accuracy, the experimentation length will respond to the question “How much experimentation is needed for an N-of-1 trial to be beneficial to an individual?”

A second design parameter is the specification of an analytical plan used to guide treatment selection during the feedback period. Principled statistical or data science methods should be employed to ensure the analysis is rigorous, while a prespecified plan entails preprogrammed algorithms that in turn facilitate quick feedback to the stakeholders.

Finally, as in conventional randomized controlled trials, the number of patients randomized in an evaluation program will need to be determined to ensure adequate statistical power for the primary evaluation question on whether N-of-1 trials improve outcomes.

To summarize, the design parameters that need to be prespecified at the planning stage of an evaluation program are the primary analysis plan used in the feedback period, the experimentation length () for each individual, and the number of individuals required. These will be discussed in next two sections.

3. An Analytical Model for N-of-1 Trials

Let be the outcome of patient in treatment period and be the corresponding treatment for and . Without loss of generality, we assume a large value of the outcome is desirable. To put the notation in the context of our study, we let denote the negative value of MCS at the end of each two-week treatment period. For the treatments, baclofen is coded as and mexiletine as . In this article, we focus on balanced sequences between baclofen and mexiletine in the experimentation phase, that is, assuming

| (3.1) |

Consider the outcome model

| (3.2) |

where is the patient-specific treatment effect and the noise are mean zero normal with cov and . To reflect heterogeneous symptoms and HTE among the patients, we postulate and . The mean indicates the average treatment effect and the variance indicates the extent of HTE in the disease population. While represents the null scenario where there is no average treatment effect, a large value of indicates the need for personalizing treatments.

Under model (3.2), the optimal treatment for patient can be expressed as , where is an indicator function. During the feedback period, we may present to patient an estimated treatment effect based on the experimentation phase data along with the estimated optimal personalized treatment for the patient:

| (3.3) |

Subsequently, in the event of perfect adherence to analysis result, the patient will receive the estimated optimal treatment (3.3) in the validation phase, that is, for .

Some practical notes on the choice of are in order. For the purposes of providing quick feedback, a broad range of estimators can be considered. The theoretical results derived in the following sections will hold for any estimators that are approximately normally distributed with mean and some finite variance . A simple example is the patient-specific least squares estimator for patient . The least squares estimator is unbiased for the patient-specific treatment effect regardless of the variance-covariance structure of with variance

| (3.4) |

Note that the conditional variance (3.4) is free of the patient-specific parameters and . For the purposes of planning an N-of-1 trial, we will focus on the use of least squares. However, in the actual analysis, if additional information is available to inform the appropriate correlation structure of the data, likelihood-based estimation or weighted least squares accounting for such structure may improve efficiency.
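As a concrete illustration of the feedback-period calculation, the following minimal sketch computes a patient-specific least squares estimate and the resulting treatment recommendation from one patient's experimentation-phase data. It assumes, for illustration only, that the two treatments are coded as -1 and +1 and that the sequence is balanced; under those assumptions the least squares slope reduces to half the difference between the two treatment means. The function names and the example values are hypothetical and are not taken from the evaluation program.

```python
from statistics import mean


def least_squares_effect(outcomes, treatments):
    """Least squares slope of outcome on treatment for a single patient.

    Assumption of this sketch: treatments are coded -1 / +1 and the
    sequence is balanced, so the slope equals half the difference
    between the mean outcomes under the two treatments.
    """
    plus = [y for y, a in zip(outcomes, treatments) if a == 1]
    minus = [y for y, a in zip(outcomes, treatments) if a == -1]
    if not plus or len(plus) != len(minus):
        raise ValueError("the experimentation phase must be balanced")
    return (mean(plus) - mean(minus)) / 2


def estimated_optimal_treatment(outcomes, treatments):
    """Return +1 if the estimated effect is positive, otherwise -1."""
    return 1 if least_squares_effect(outcomes, treatments) > 0 else -1
```

For example, with outcomes recorded as negative MCS values, a patient observed at -10 and -9 under the treatment coded -1 and at -6 and -5 under the treatment coded +1 would be recommended the treatment coded +1 for the validation phase.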

4. How Much Experimentation Is Enough?

4.1. Patient-Centric Criteria and Length of Experimentation Phase

In this subsection, we discuss the choice of the experimentation length of an N-of-1 trial with respect to two different patient-centric criteria, both of which aim to maximize the benefits to patients on N-of-1 trials.

The first criterion is defined as the expected number of periods where a patient receives the optimal treatment. Mathematically, this criterion is denoted as , where is the number of periods in which patient receives the optimal treatment over the treatment periods.

Proposition 1.

Suppose under a balanced experimentation phase (3.1). Then for ,

where are independent standard normal variables. Furthermore, if , then

| (4.1) |

where is the cumulative distribution function of , which is a pivotal distribution.
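The first criterion can be checked numerically. The sketch below is an illustration under assumptions that are ours rather than the article's notation: treatments coded -1 and +1, patient-specific effects drawn from a zero-mean normal distribution with standard deviation `tau`, independent within-patient noise with standard deviation `sigma`, and a least squares estimate with variance `sigma**2 / m` after `m` balanced experimentation periods. Under these assumptions the probability of selecting the correct treatment follows from the standard bivariate normal orthant probability, and a small Monte Carlo run provides an independent check. All function and parameter names are hypothetical.

```python
import math
import random


def expected_optimal_periods(m, T, tau, sigma):
    """Expected number of periods on the optimal treatment (null mean effect).

    Half of the m balanced experimentation periods are optimal by design;
    each of the T - m validation periods is optimal when the estimated and
    true effects share the same sign.
    """
    v = sigma ** 2 / m                      # assumed variance of the estimate
    rho = tau / math.sqrt(tau ** 2 + v)     # corr(true effect, estimate)
    p_correct = 0.5 + math.asin(rho) / math.pi
    return m / 2 + (T - m) * p_correct


def simulate_optimal_periods(m, T, tau, sigma, n_rep=20000, seed=0):
    """Monte Carlo estimate of the same quantity."""
    rng = random.Random(seed)
    sd_hat = sigma / math.sqrt(m)
    total = 0.0
    for _ in range(n_rep):
        beta = rng.gauss(0.0, tau)
        beta_hat = beta + rng.gauss(0.0, sd_hat)
        correct = (beta_hat > 0) == (beta > 0)
        total += m / 2 + (T - m) * correct
    return total / n_rep
```

Setting `m` equal to `T` returns exactly `T / 2`, which matches the observation that experimenting in every period leaves the patient on the optimal treatment half of the time.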

The second patient-centric criterion is defined as the expected average outcome of a patient during an N-of-1 trial. This criterion is denoted as , where is the average outcome of the patient in all treatment periods.

Proposition 2.

Under the same condition as in Proposition 1, for ,

where and respectively denote the standard normal distribution function and density.

We can derive a few practical principles from Proposition 1 and Proposition 2. First, conducting an N-of-1 trial with an experimentation length is generally beneficial for the patient compared to experimentation in all periods. Specifically, we can derive from Proposition 1 that the patient will receive at least half of the time, that is, for all , and attain the minimum when . Analogously from Proposition 2, the expected average outcome will be no smaller than the population average, that is, for all , and equality holds when .

Second, we can derive from the propositions that and are increasing in under the null . In other words, an N-of-1 trial becomes more beneficial to the patient when there is a larger variability in the treatment effects across patients.

Third, and importantly, considering the null case where and when the least squares is used to make inference based on the experimentation phase data is instructive. Under these conditions, we can derive from Proposition 2 that the criterion is maximized at

| (4.2) |

where and is defined in (3.4). While Equation (4.2) gives the optimal length as a function and , it provides some general guidance:

Main Result 1.

The optimal experimentation length is less than one-third of the total N-of-1 trial duration from a patient’s perspective, that is, .
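The one-third bound can be illustrated with a short numerical sketch. The calculation below is derived for this sketch under stated assumptions and is not claimed to reproduce Equation (4.2): treatments coded -1 and +1, zero-mean normal patient-specific effects with standard deviation `tau`, independent noise with standard deviation `sigma`, and a least squares estimate with variance `sigma**2 / m`. Under these assumptions the expected gain in average outcome over the population mean is proportional to `(T - m) / sqrt(tau**2 + sigma**2 / m)`, and setting its derivative to zero gives the quadratic `2 * tau**2 * m**2 + 3 * sigma**2 * m - sigma**2 * T = 0`, whose positive root is necessarily below `T / 3`. The function names are hypothetical.

```python
import math


def expected_gain(m, T, tau, sigma):
    """Expected average-outcome gain over the population mean (null case)."""
    v = sigma ** 2 / m                      # assumed variance of the estimate
    return (T - m) / T * math.sqrt(2 / math.pi) * tau ** 2 / math.sqrt(tau ** 2 + v)


def continuous_optimum(T, tau, sigma):
    """Positive root of 2*tau^2*m^2 + 3*sigma^2*m - sigma^2*T = 0."""
    c = sigma ** 2
    return (-3 * c + math.sqrt(9 * c ** 2 + 8 * tau ** 2 * c * T)) / (4 * tau ** 2)


def best_even_length(T, tau, sigma):
    """Grid search over even experimentation lengths (balanced sequences)."""
    candidates = range(2, T, 2)
    return max(candidates, key=lambda m: expected_gain(m, T, tau, sigma))
```

With `T = 18`, `tau = 2` and `sigma = 6` (illustrative values, not the pilot estimates), the continuous optimum is 4.5 periods and the best even length is 4, both below `T / 3 = 6`.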

4.2. Sample Size

In this subsection, we discuss how much experimentation is adequate in terms of the sample size enrolled to the evaluation program. We first define the quality of an N-of-1 trial as the expected health outcome under the estimated optimal treatment (3.3). Assuming perfect adherence to the analysis results in the feedback period, the quality of an N-of-1 trial can be defined as where and the expectation is taken with respect to the distributions of , and . Analogously, we can define the quality of standard of care as where is the average outcome observed in the validation phase for a patient under SOC and the expectation is taken under the assumption that the treatment in the experimentation phase continues to the validation phase. The primary objective of the evaluation program is to compare the quality of an N-of-1 trial and the quality of SOC. This can be formulated into a hypothesis testing problem with

| (4.3) |

where measures the degree of quality improvement due to N-of-1 trials defined over the patient population. The hypotheses (4.3) can in turn be tested using the regular -statistic:

| (4.4) |

where and are respectively the sample mean and the sample variance of in the patients randomized to an N-of-1 trial and and are the sample mean and sample variance of in the patients randomized to SOC. Using standard arguments gives the power of the -test

| (4.5) |

where is the upper percentile of standard normal. In Appendix C, we derive the expressions for , var , and var in (4.5) under the condition that for all . This condition is met when the N-of-1 trial patients receive the same sequence in the experimentation phase or when has a specific variance-covariance structure. For example, under a compound symmetry, that is, , we can show , that is, having for all . Specifically, under the assumption that the SOC treatment for a given patient is independent of the patient-specific treatment effect , we have

| (4.6) |

| (4.7) |

and

| (4.8) |

The above expressions account for population-level information about the treatments through the program parameter . For example, if emerging evidence in the literature suggests slight advantage of over , we may assume the physicians in the program will select with . In Appendix C, we provide expressions analogous to (4.6)–(4.8) for the situations where the physician may prescribe treatment with patient-specific knowledge in addition to the population-level parameter . However, we note that using expressions (4.6)–(4.8) may adequately reflect the standard of care where treatments are chosen based on population-level information rather than patient-specific knowledge. Furthermore, under the independence assumption of and , the power expression depends only on model parameters for which information may be available to provide preliminary estimates and the known design parameters . Finally, under the null case :

Main Result 2.

All else being equal, the power to demonstrate quality improvement due to N-of-1 trials (vs SOC) increases as heterogeneity of treatment effects increases.
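For planning purposes, the power calculation can be sketched generically. The code below is a normal-approximation power function for a one-sided two-sample comparison, assuming equal allocation between the N-of-1 arm and the SOC arm. The inputs `delta`, `var_n1`, and `var_soc` are placeholders for the quality improvement and the two outcome variances, which would be supplied from expressions (4.6)-(4.8); those expressions are not reproduced here, and all names are hypothetical.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def power_one_sided(delta, var_n1, var_soc, n_per_arm, alpha=0.05):
    """Approximate power of a one-sided two-sample test of improvement.

    delta     : assumed quality improvement (N-of-1 minus SOC)
    var_n1    : assumed outcome variance in the N-of-1 arm
    var_soc   : assumed outcome variance in the SOC arm
    n_per_arm : patients per arm (equal allocation is an assumption)
    """
    se = ((var_n1 + var_soc) / n_per_arm) ** 0.5
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha)
    return _STD_NORMAL.cdf(delta / se - z_alpha)


def required_n_per_arm(delta, var_n1, var_soc, target=0.80, alpha=0.05, n_max=100000):
    """Smallest per-arm sample size whose approximate power reaches the target."""
    for n in range(2, n_max + 1):
        if power_one_sided(delta, var_n1, var_soc, n, alpha) >= target:
            return n
    raise ValueError("target power not reached within n_max patients per arm")
```

Because heterogeneity of treatment effects enters only through the inputs, the sketch does not by itself demonstrate Main Result 2; it only shows how a given improvement and pair of variances translate into power and a required number of patients.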

5. Numerical Illustrations: Application to ALS Patients

5.1. Optimal Length of Experimentation

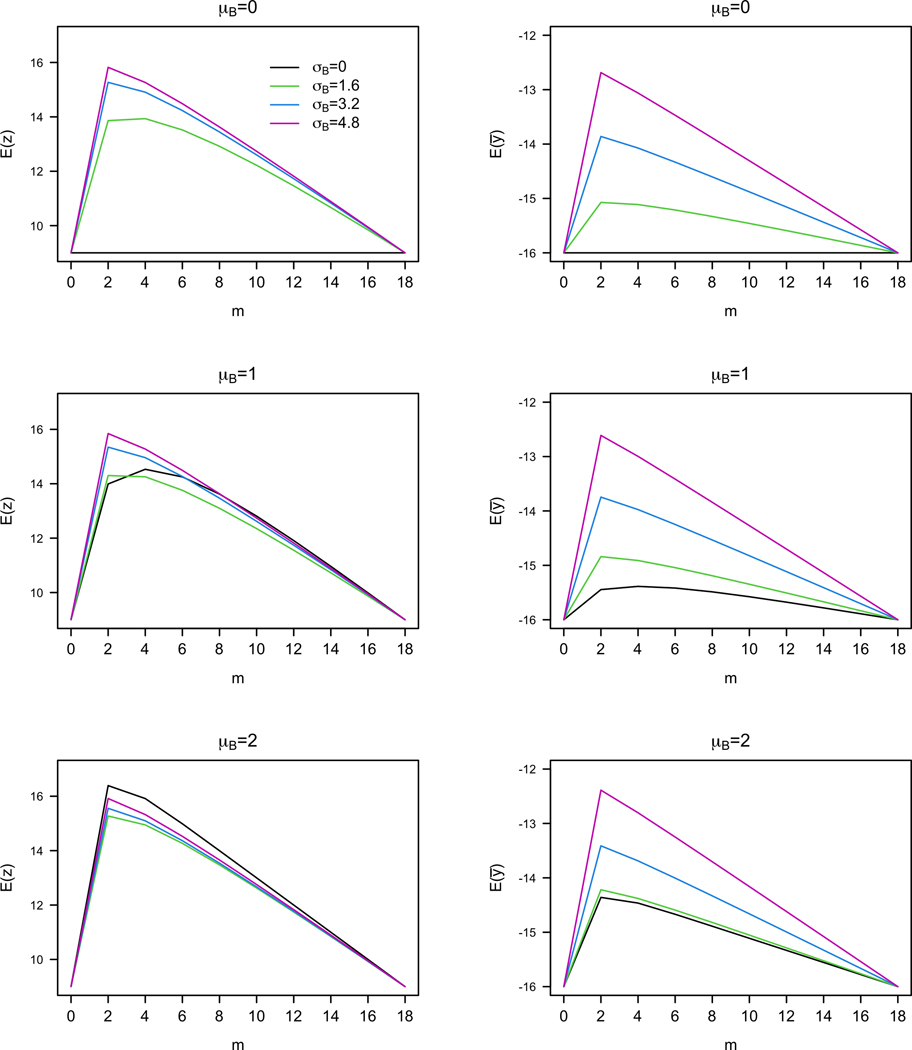

We use the MCS natural history data in (Mitsumoto et al. 2019) to inform the design of the evaluation program for people with ALS. Specifically, we fitted a random effects model to the data and obtained an estimate of and . For simplicity in illustration, we further assume that the within-subject noise is conditionally independent given the population-level parameters. Figure 2 plots the patient-centric criteria against different values of and for . While the two criteria adopt different metrics, they are maximized when is relatively small. In Figure 2 and in all that we have considered (not shown here), the optimal values of range from 2 to 6 for both criteria. This is consistent with what Main Result 1 implies: .

Figure 2.

Patient-centric criteria vs experimentation length under different values of and . Left: Expected number of optimal treatment periods vs . Right: Expected average outcome (negative of MCS) of patient vs .

5.2. Sample Size and Effect Size

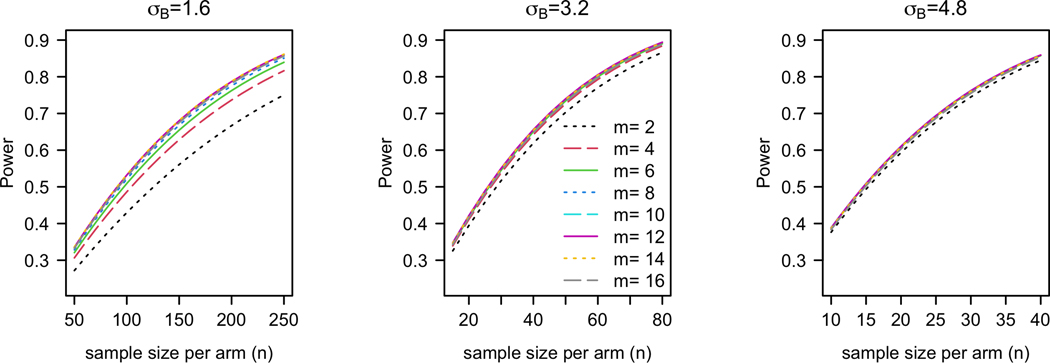

Main Result 2 implies that may be viewed as an effect size in power calculation, while the power also depends on other model parameters and design parameters. As in conventional practice, the choice of an effect size should be based on a clinically meaningful difference, whereas the other model parameters (e.g., , etc) may be based on pilot data if available. Figure 3 plots the power against for three different effect sizes for a one-sided test at 5% significance. Under each effect size, we identify the smallest that achieves 80% power for any and obtain that the required are , and respectively for and 4.8. We note that under a small effect size , the required is greater than . In light of Main Result 1, we may instead adopt in order to maximize the benefits of the N-of-1 trials to the patients. The power of this modified design is 78%, which is slightly lower than the target 80%. Generally, we observe from Figure 3 that the impact of on power is relatively small compared to that of and except when the effect size is small ().

Figure 3.

Power vs for different values of with , , , , and .

To determine if a specific value of corresponds to a clinically meaningful effect size, relating to using (4.6) may be useful, as lives on the same scale as the measurement outcomes. In our application, a 3- to 4-point change on the MCS will represent a clinically meaningful shift. Based on the pilot data and assumptions, the effect sizes translate to a degree of quality improvement respectively. Thus, we set the sample size for this evaluation program at with four treatment periods (two on mexiletine and two on baclofen) based on the results for . Generally, the minimally clinically important heterogeneity (MCIH ) may be determined by relating it to the minimally clinically important change (MCID, ) using (4.6).

5.3. Power for Comparing to Fully Informed SOC

The calculations in the previous subsection assume the null case under which the power (4.5) does not depend on the parameter . Under a non-null case, the value of reflects how informed the practice is about the population-level treatment effect. For example, if evidence in the literature suggests , an informed practice will prescribe with . Specifically, standard of care that is fully informed by the literature may correspond to .

Table 1 shows that as the average treatment effect grows larger and a fully informed SOC practice prescribes more often (i.e., larger ), the power to demonstrate quality improvement in (4.3) becomes smaller. On the one hand, this suggests that if there is overwhelming evidence favoring over in the literature, conducting N-of-1 trials will have a diminished effect provided that the standard of care is fully informed. On the other hand, even with a large average treatment effect , quality improvement due to N-of-1 trials , which is still clinically meaningful and the power is still reasonably high (68%) in this sensitivity analysis. This suggests that evaluating N-of-1 trials is a worthwhile endeavor unless there is overwhelming evidence of a large average treatment effect.

Table 1.

Quality improvement and power for comparing to a fully informed SOC (standard of care) with , and .

| Average treatment effect | SOC prescribing probability | Quality improvement | Power |
|---|---|---|---|
| 0 | 0.50 | 3.8 | 80% |
| 1.2 | 0.60 | 3.7 | 77% |
| 1.6 | 0.63 | 3.6 | 75% |
| 2.4 | 0.69 | 3.3 | 68% |
| 4.8 | 0.84 | 2.3 | 39% |
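The prescribing probabilities in Table 1 can be cross-checked with a short sketch. One reading that is consistent with the tabulated values is that a fully informed practice prescribes the favored treatment with probability equal to the standard normal distribution function evaluated at the ratio of the average treatment effect to the standard deviation of patient-specific effects, with that standard deviation set to 4.8. Both the formula and the value 4.8 are our assumptions for this check, inferred from the numbers in the table rather than quoted from the text.

```python
from statistics import NormalDist

# Assumed standard deviation of patient-specific treatment effects.
TAU = 4.8

# (average treatment effect, tabulated prescribing probability) from Table 1.
TABLE_1 = [(0.0, 0.50), (1.2, 0.60), (1.6, 0.63), (2.4, 0.69), (4.8, 0.84)]


def fully_informed_probability(mu, tau=TAU):
    """Assumed prescribing probability of a fully informed SOC practice."""
    return NormalDist().cdf(mu / tau)


def max_abs_discrepancy(rows=TABLE_1, tau=TAU):
    """Largest gap between the assumed formula and the tabulated values."""
    return max(abs(fully_informed_probability(mu, tau) - p) for mu, p in rows)
```

Under these assumptions every tabulated probability is reproduced to within rounding at two decimal places, which supports, but does not prove, this reading of the table.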

The numerical results in this article, and power and the patient-centric criteria in general, can be computed using tools available at: https://roadmap2health.io/hdsr/n1power/.

6. Discussion

N-of-1 trials have been increasingly used as a design tool to bridge practice and science in rare diseases (Müller et al. 2021)(n.d.b). However, the literature is missing concrete guidelines on N-of-1 designs as to how much experimentation is appropriate. A fundamental issue is the articulation of a framework that will facilitate the evaluation of the usefulness of N-of-1 trials. In this article, we introduce an evaluation framework and outline the basic elements in an evaluation program for N-of-1 trials—namely, an experimentation phase, a feedback period, and a validation phase. In the literature, the reporting of N-of-1 trials mostly focuses only on the results of the experimentation phase, where patients explore the different treatments sequentially under a rigorous clinical protocol such as randomization, blinding, and scheduled follow-up. The feedback period and the validation phase are the critical elements in the planning and the conduct of N-of-1 trials but are, unfortunately, often omitted in the description of the design and the analytical plan.

Specifically, the length of the validation phase, relative to that of the experimentation phase, should be given careful consideration. We have demonstrated theoretically and numerically that the optimal length of experimentation from the patient’s perspective should be no greater than one-third of the entire study duration. This implies a relatively long validation phase, suggesting the importance of reproducing the quality of the decisions due to N-of-1 trials with additional follow-up. Our theoretical results also provide guidance on how many patients are needed in order to adequately power for testing quality improvement. Importantly, the relative length of experimentation and validation has minimal impact on the power. In other words, little conflict exists between the goal of maximizing patient benefits and maximizing power.

The feedback period facilitates evidence-based treatment decisions using data measured in the experimentation phase. Summarizing the relative benefits of the treatments via a single numerical statistic is a pragmatic way to present such evidence, because the information can be objectively presented and quickly digested by stakeholders. We have developed design calculus based on the model-based least squares estimation, which is quick to compute and produces unbiased estimates of patient-specific treatment effects under a broad range of scenarios. Other more sophisticated model-based methods may be used to deal with more complex situations. For example, when we observe a high volume of outcome measures via wearable devices, we could extend model (3.2) to an autoregressive model with multiple observations per treatment period (Kronish et al. 2019). In practice, treatment decisions are likely determined based on the totality of evidence. For example, in situations where a treatment that apparently benefits a patient may have side effects, a possibly less effective treatment may be preferred if it is more easily tolerated. Considerations of multiple outcomes in the analysis during the feedback period will likely increase adherence and will warrant further empirical, domain-specific research. Overall, as the feedback period potentially changes the treatment decisions, and hence the outcomes, in the validation phase, it can be viewed as an integral part of the intervention component. We may thus experiment in a randomized fashion with different elements in the feedback period for different individuals: we may consider presenting different endpoints (e.g., muscle cramp or safety), using a single endpoint, a composite outcome, or multivariate endpoints, using different types of analyses (e.g., intent-to-treat vs per-protocol), and asking patients for their satisfaction and preference (Cheung et al. 2020).

Some considerations, assumptions, and limitations for power calculation in conventional randomized controlled trials also apply for N-of-1 trials. First, power calculation involves the inputs of a number of nuisance model parameters (e.g., ) as well as the effect size (). While the effect size should be determined based on a clinically relevant shift, the other parameters ideally can be based on estimates from pilot data. However, in situations where robust pilot data are not available, a potentially useful strategy is to leverage the concepts of adaptive designs (U.S. Food & Drug Administration 2019) whereby the model parameters are updated using interim data in the evaluation program and the updates in turn inform a reassessment of the degree of quality improvement and the sample size required.

Second, our derivations assume that patients in both arms comply with their treatments in the following sense: patients in the N-of-1 trials adhere to the estimated optimal treatments based on the experimentation phase data, and patients in the SOC continue with the same treatment as in the experimentation phase. If there is prior information about the noncompliance rate, power expressions can be derived accordingly under the proposed framework. However, from the viewpoint that the feedback period is part of the N-of-1 trial intervention, it should be designed to maximize adherence by choosing the outcomes and analyses that most reflect patient preference as discussed in the previous paragraph. Third, approaches to deal with missing data should be prespecified and implemented during the feedback period. An advantage of using model-based estimation is that the model can also serve as the basis for multiple imputations. That being said, no statistical approach can replace a well-conducted trial that is characterized by good compliance with treatment and minimal missing data.

Disclosure Statement

This work was supported by grants R01LM012836 from the NIH/NLM, P30AG063786 from the NIH/NIA, UL1TR001873 from NIH/NCATS, and R01MH109496 from NIH/NIMH. Dr. Mitsumoto’s work was also supported by ALS Association, MDA Wings Over Wall Street, Spastic Paraplegia Foundation, Mitsubishi-Tanabe, and Tsumura. The funders had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; or decision to submit the manuscript for publication. The views expressed in this paper are those of the authors and do not represent the views of the National Institutes of Health, the U.S. Department of Health and Human Services, or any other government entity.

Appendices

Appendix A. Theoretical Results Concerning

A.1. Proof of Proposition 1.

First, consider the case for patient so the optimal treatment is . Then, the number of periods the patient is on the optimal treatment equals

| (A.1) |

The first term in the right-hand-side of (A.1) is the number of optimal treatment periods received in the experimental phase, and the second term is the number in the validation phase. Since , we have

and therefore,

| (A.2) |

Next, under the case , we can analogously derive that

| (A.3) |

Combining (A.2) and (A.3) gives

| (A.4) |

which is free of . Since , the expectations of both sides in (A.4) are to be taken with respect to the distribution of to complete the proof. By change of variable, we have

| (A.5) |
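The counting argument in A.1 can be illustrated numerically. The sketch below is not the authors' code, and its notation is assumed because the symbols in this appendix did not survive: `K` is the total number of periods, `m` the number of experimentation periods in a balanced design (so `m / 2` of them are on the optimal treatment), `b` the patient's true nonzero treatment effect, and `c / m` the variance of a normally distributed effect estimate. Under these assumptions the expected number of periods on the optimal treatment is `m / 2` plus `K - m` times the probability that the estimated effect has the correct sign.

```python
import math
import random


def std_normal_cdf(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def expected_optimal_periods(b, K, m, c):
    """Analytic expectation under the assumed model (b must be nonzero).

    The estimate is assumed N(b, c / m); the validation phase uses the
    treatment favored by the sign of the estimate.
    """
    se = math.sqrt(c / m)
    p_correct = std_normal_cdf(abs(b) / se)
    return m / 2 + (K - m) * p_correct


def simulate_optimal_periods(b, K, m, c, n_rep=100_000, seed=1):
    """Monte Carlo version of the same count, for comparison."""
    rng = random.Random(seed)
    se = math.sqrt(c / m)
    total = 0.0
    for _ in range(n_rep):
        b_hat = rng.gauss(b, se)
        correct = (b_hat > 0) == (b > 0)
        # m/2 optimal periods in experimentation, K-m more if the pick is right
        total += m / 2 + (K - m) * (1 if correct else 0)
    return total / n_rep


if __name__ == "__main__":
    print(expected_optimal_periods(0.5, 12, 4, 1.0))
    print(simulate_optimal_periods(0.5, 12, 4, 1.0))
```

The two printed values should agree up to simulation error. By symmetry, the result does not depend on the sign of `b`.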

Appendix B. Theoretical Results Concerning

B.1. Lemma 1

Derivations of will be facilitated by first noting the following lemma:

Lemma 1.

Let . Then,

where and respectively denote the standard normal distribution function and density function.

Proof of Lemma 1:

Using the definition of expectation, we derive

| (B.1) |

where are independent standard normal variables. Thus, , and the first term in (B.1) can be evaluated as

| (B.2) |

Next, the single integral in the second term in (B.2) can be evaluated using integration by parts

| (B.3) |

Equation (B.3) follows from a straightforward calculation. The proof of Lemma 1 is thus completed by substituting (B.2) and (B.3) into (B.1).
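Identities of this kind, which combine the standard normal distribution function and density, are easy to check by simulation. The exact statement of Lemma 1 is not recoverable from this section, so the sketch below checks a standard related identity rather than the lemma itself: for a bivariate normal pair (X, Y) with correlation rho, E[X 1(Y > 0)] = mu_x Phi(mu_y / sd_y) + rho sd_x phi(mu_y / sd_y). All function and parameter names are illustrative. As in the proof above, the pair is built from two independent standard normal variables.

```python
import math
import random


def phi(x):
    """Standard normal density function."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def Phi(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def truncated_mean_closed_form(mu_x, mu_y, sd_x, sd_y, rho):
    """E[X * 1(Y > 0)] for a bivariate normal (X, Y)."""
    a = mu_y / sd_y
    return mu_x * Phi(a) + rho * sd_x * phi(a)


def truncated_mean_monte_carlo(mu_x, mu_y, sd_x, sd_y, rho,
                               n_rep=200_000, seed=0):
    """Monte Carlo estimate of the same expectation."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_rep):
        z1 = rng.gauss(0.0, 1.0)
        z2 = rng.gauss(0.0, 1.0)
        y = mu_y + sd_y * z1
        # x has correlation rho with y by construction
        x = mu_x + sd_x * (rho * z1 + math.sqrt(1.0 - rho * rho) * z2)
        if y > 0:
            total += x
    return total / n_rep


if __name__ == "__main__":
    args = (0.3, 0.2, 1.0, 1.5, 0.6)
    print(truncated_mean_closed_form(*args))
    print(truncated_mean_monte_carlo(*args))
```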

B.2. Proof of Proposition 2

Recall that denotes the average outcome of patient in all treatment periods in an N-of-1 trial. Hence,

| (B.4) |

Equation (B.4) holds as because of balanced design . Next, since , we have

| (B.5) |

Expression (B.5) is obtained by applying Lemma 1 with . Putting (B.5) into (B.4) gives

| (B.6) |

thus completing the proof of Proposition 2.

B.3. Derivation of optimal experimentation length and Main Result 1

For least squares , the variance , where . Further supposing simplifies (B.6) to

Hence, maximizing as a function of is equivalent to maximizing the function

where is free of . Using standard calculus arguments, we can show that the maximizer of solves the equation or equivalently,

| (B.7) |

The derivation of is completed by multiplying in the numerator and the denominator of (B.7), which gives

Now, since , we have . As a practical note, due to discreteness in , the optimal may be a result of rounding up . Hence a slightly less sharp inequality would be .
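The one-third bound can be checked numerically under a simplified version of the setup in B.3. The notation below is assumed, since the symbols in this section did not survive: `K` is the total number of periods, `m` the experimentation length, `tau2` the variance of individual treatment effects around a zero population mean, and `c / m` the variance of the least squares estimate. Under these assumptions the objective is proportional to `(K - m) / sqrt(tau2 + c / m)`. Setting its derivative to zero gives the quadratic `2 * tau2 * m**2 + 3 * c * m - c * K = 0`, whose positive root can be rationalized to `2 * c * K / (3 * c + sqrt(9 * c**2 + 8 * tau2 * c * K))`. Because the square root is at least `3 * c`, this root is at most `K / 3`.

```python
import math


def objective(m, K, tau2, c):
    """Assumed objective, up to a constant free of m."""
    return (K - m) / math.sqrt(tau2 + c / m)


def continuous_maximizer(K, tau2, c):
    """Positive root of 2*tau2*m^2 + 3*c*m - c*K = 0, in rationalized form."""
    return 2.0 * c * K / (3.0 * c + math.sqrt(9.0 * c * c + 8.0 * tau2 * c * K))


def best_integer_length(K, tau2, c):
    """Brute-force search over whole-period experimentation lengths."""
    return max(range(1, K), key=lambda m: objective(m, K, tau2, c))


if __name__ == "__main__":
    K, tau2, c = 12, 1.0, 1.0
    print(continuous_maximizer(K, tau2, c), best_integer_length(K, tau2, c))
    print(K / 3)
```

In this sketch the bound is approached only as `tau2` goes to zero. Larger heterogeneity pushes the maximizer further below `K / 3`.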

Appendix C. Theoretical Results Concerning Power

In this section, we derive the expressions involved in the power of the -test—namely, , var , and var .

Recall that and respectively denote the probabilities that the treating physicians will prescribe mexiletine and baclofen under the treatment program. Based on model (3.2), we can express the quality of an N-of-1 trial as:

and analogously where is the treatment given to patient in SOC. Hence . Under the independence assumption of and , we further obtain , and

| (C.1) |

where is given in (B.5).

Next,

The last equality is a result of (C.1). Similarly, we can show

Finally, under the null , we have

Main Result 2 is proved by dividing on the numerator and the denominator in the above expression, as a result of which the numerator will be a constant and the denominator will be a decreasing function .

For the situations where the physicians have patient-specific knowledge to inform treatments under the SOC, we may postulate that

| (C.2) |

The parameter indicates how complete the physicians' knowledge is of the best treatments for their specific patients, with indicating perfect knowledge and indicating no additional knowledge beyond the population-level information . Under the SOC treatment system (C.2), we have

| (C.3) |

where

| (C.4) |

Using (B.5), (C.3), and (C.4), after some algebra, we have

It is instructive to consider the null case , under which

Hence, quality improvement due to N-of-1 trials diminishes as the standard of care (SOC) patient-specific knowledge increases. Also under this special case, if and only if

Similarly, we can obtain and under the SOC treatment system (C.2) by plugging (B.5) and (C.3), respectively, into the following:

and

Then under the null , we have

By dividing on the numerator and the denominator in the above expression, we can see that the numerator is an increasing function in and the denominator is a decreasing function in . Hence, Main Result 2 holds under this general case.
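Once the mean quality difference and the two arm variances are available, power and the required number of trials can be computed with a standard two-sample calculation. The sketch below is a generic normal approximation, not the article's exact formulae. The one-sided test, the equal allocation of `n` patients per arm, and the names `delta`, `var_nof1`, and `var_soc` are all assumptions made for illustration.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def approx_power(delta, var_nof1, var_soc, n_per_arm, alpha=0.05):
    """One-sided power for a difference in mean quality (normal approximation)."""
    se = math.sqrt((var_nof1 + var_soc) / n_per_arm)
    z_alpha = _STD_NORMAL.inv_cdf(1.0 - alpha)
    return _STD_NORMAL.cdf(delta / se - z_alpha)


def required_n_per_arm(delta, var_nof1, var_soc, alpha=0.05, power=0.8):
    """Smallest n per arm whose approximate power reaches the target."""
    z_alpha = _STD_NORMAL.inv_cdf(1.0 - alpha)
    z_beta = _STD_NORMAL.inv_cdf(power)
    n = (z_alpha + z_beta) ** 2 * (var_nof1 + var_soc) / delta ** 2
    return math.ceil(n)


if __name__ == "__main__":
    n = required_n_per_arm(delta=0.5, var_nof1=1.0, var_soc=1.0)
    print(n, approx_power(0.5, 1.0, 1.0, n))
```

In a design exercise, `delta`, `var_nof1`, and `var_soc` would be supplied by the expressions derived in this appendix, evaluated at pilot-data estimates.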

References

- Al-Chalabi A, & Hardiman O. (2013). The epidemiology of ALS: A conspiracy of genes, environment and time. Nature Reviews Neurology, 9(11), 617–628. 10.1038/nrneurol.2013.203

- Baldinger R, Katzberg HD, & Weber M. (2012). Treatment for cramps in amyotrophic lateral sclerosis/motor neuron disease. Cochrane Database of Systematic Reviews, Article CD004157. 10.1002/14651858.CD004157.pub2

- Caress JB, Ciarlone SL, Sullivan EA, Griffin LP, & Cartwright MS (2016). Natural history of muscle cramps in amyotrophic lateral sclerosis. Muscle & Nerve, 53(4), 513–517. 10.1002/mus.24892

- Cheung K, Wood D, Zhang K, Ridenour TA, Derby L, St Onge T, Duan N, Duer-Hefele J, Davidson KW, Kronish IM, & Moise N. (2020). Personal preferences for personalized trials among patients with chronic diseases: An empirical Bayesian analysis of a conjoint survey. BMJ Open, 10(6), Article e036056. 10.1136/bmjopen-2019-036056

- Davidson KW, Silverstein M, Cheung K, Paluch RA, & Epstein LH (2021). Experimental designs to optimize treatments for individuals: Personalized N-of-1 trials. JAMA Pediatrics, 175(4), 404–409. 10.1001/jamapediatrics.2020.5801

- Edaravone [MCI-186] ALS 19 Study Group. (2017). Safety and efficacy of edaravone in well defined patients with amyotrophic lateral sclerosis: A randomised, double-blind, placebo-controlled trial. Lancet Neurology, 16(7), 505–512. 10.1016/s1474-4422(17)30115-1

- Hogben L, & Sim M. (1953). The self-controlled and self-recorded clinical trial for low-grade morbidity. British Journal of Preventive and Social Medicine, 7(4), 163–179. 10.1136/jech.7.4.163

- Kravitz RL, Duan N. (Eds), and the DEcIDE Methods Center N-of-1 Guidance Panel (Duan N, Eslick I, Gabler NB, Kaplan HC, Kravitz RL, Larson EB, Pace WD, Schmid CH, Sim I, & Vohra S) (2014). Design and implementation of N-of-1 trials: A user’s guide. Agency for Healthcare Research and Quality. https://effectivehealthcare.ahrq.gov/products/n-1-trials/research-2014-5

- Kravitz RL, Schmid CH, Marois M, Wilsey B, Ward D, Hays RD, Duan N, Wang Y, MacDonald S, Jerant A, Servadio JL, Haddad D, & Sim I. (2018). Effect of mobile device-supported single-patient multi-crossover trials on treatment of chronic musculoskeletal pain: A randomized clinical trial. JAMA Internal Medicine, 178(10), 1368–1378. 10.1001/jamainternmed.2018.3981

- Kronish IM, Cheung YK, Shimbo D, Julian J, Gallagher B, Parsons F, & Davidson KW (2019). Increasing the precision of hypertension treatment through personalized trials: A pilot study. Journal of General Internal Medicine, 34(6), 839–845. 10.1007/s11606-019-04831-z

- Mitsumoto H, Brooks BR, & Silani V. (2014). Clinical trials in amyotrophic lateral sclerosis: Why so many negative trials and how can trials be improved? Lancet Neurology, 13(11), 1127–1138. 10.1016/s1474-4422(14)70129-2

- Mitsumoto H, Chiuzan C, Gilmore M, Zhang Y, Ibagon C, McHale B, Hupf J, & Oskarsson B. (2019). A novel muscle cramp scale (MCS) in amyotrophic lateral sclerosis (ALS). Amyotrophic Lateral Sclerosis and Frontotemporal Degeneration, 20(5–6), 328–335. 10.1080/21678421.2019.1603310

- Müller AR, Brands MMMG, van de Ven PM, Roes KCB, Cornel MC, van Karnebeek CDM, Wijburg FA, Daams JG, Boot E, & van Eeghen AM (2021). The power of 1: Systematic review of N-of-1 studies in rare genetic neurodevelopmental disorders. Neurology, 96(11), 529–540. 10.1212/WNL.0000000000011597

- Roustit M, Giai J, Gaget O, Khouri C, Mouhib M, Lotito A, Blaise S, Seinturier C, Subtil F, Paris A, Cracowski C, Imbert B, Carpentier P, Vohra S, & Cracowski J-L (2018). On-demand sildenafil as a treatment for Raynaud phenomenon: A series of N-of-1 trials. Annals of Internal Medicine, 169(10), 694–703. 10.7326/m18-0517

- Samuel JP, Tyson JE, Green C, Bell CS, Pedroza C, Molony D, & Samuels J. (2019). Treating hypertension in children with n-of-1 trials. Pediatrics, 143(4), Article e20181818. 10.1542/peds.2018-1818

- Stunnenberg B, Raaphorst J, Groenewoud H, Statland J, Griggs R, Woertman W, Stegeman D, Timmermans J, Trivedi J, Matthews E, Saris C, Schouwenberg B, Drost G, van Engelen B, & van der Wilt G. (2018). A series of aggregated randomized-controlled N-of-1 trials with mexiletine in non-dystrophic myotonia: Clinical trial results and validation of rare disease design (P3.440) [70th Annual Meeting of the American Academy of Neurology (AAN), April 21–27, 2018]. Neurology, 90(15 Suppl). https://n.neurology.org/content/90/15_Supplement/P3.440

- U.S. Food & Drug Administration. (2019). Adaptive designs for clinical trials of drugs and biologics: Guidance for Industry. https://www.fda.gov/media/78495/download

- van den Berg LH, Sorenson E, Gronseth G, Macklin EA, Andrews J, Baloh RH, Benatar M, Berry JD, Chio A, Corcia P, Genge A, Gubitz AK, Lomen-Hoerth C, McDermott CJ, Pioro EP, Rosenfeld J, Silani V, Turner MR, Weber M, . . . Mitsumoto H. (2019). Revised Airlie House consensus guidelines for design and implementation of ALS clinical trials. Neurology, 92(14), e1610–e1623. 10.1212/wnl.0000000000007242