Summary

This perspective highlights the importance of addressing social determinants of health (SDOH) in patient health outcomes and health inequity, a global problem exacerbated by the COVID-19 pandemic. We provide a broad discussion on current developments in digital health and artificial intelligence (AI), including large language models (LLMs), as transformative tools in addressing SDOH factors, offering new capabilities for disease surveillance and patient care. Simultaneously, we bring attention to challenges, such as data standardization, infrastructure limitations, digital literacy, and algorithmic bias, that could hinder equitable access to AI benefits. For LLMs, we highlight potential unique challenges and risks including environmental impact, unfair labor practices, inadvertent disinformation or “hallucinations,” proliferation of bias, and infringement of copyrights. We propose the need for a multitiered approach to digital inclusion as an SDOH and the development of ethical and responsible AI practice frameworks globally and provide suggestions on bridging the gap from development to implementation of equitable AI technologies.

Ong et al. discuss opportunities and limitations for AI and large language model (LLM)-based solutions in addressing social determinants of health (SDOH) factors and promoting global health equity. More work is needed to develop responsible AI frameworks and adopt a multitiered approach to digital inclusion.

Introduction

Globally, health inequity is a pervasive and prevalent problem. Indicators of health often follow a social gradient: the lower the socioeconomic position, the worse the health indicator.1,2 Factors contributing to health indicators can be broadly classified into five key domains: genetics, behavior, environmental and physical influences/environmental exposures, medical care, and social factors,3 of which social and behavioral factors account for up to 60% of premature deaths.4

The COVID-19 pandemic, which led to lockdowns worldwide, occurred against this backdrop of existing health inequality.5 COVID-19 infection and mortality rates were disproportionately higher in socially disadvantaged regions, racial and ethnic minority groups, and marginalized populations across the globe.6,7 In addition to a higher endemic prevalence of chronic diseases such as diabetes and cardiopulmonary conditions, social factors are thought to be key contributors to this observed phenomenon, e.g., living in crowded spaces, exposure to secondhand smoke, limited access to ambulatory or acute healthcare, and information asymmetry.8,9 This pandemic has thrown social determinants of health (SDOH) research into the global spotlight: to achieve the goal of global health equity, addressing SDOH is crucial.

SDOH

SDOH is defined by the World Health Organization as non-medical conditions that affect one’s health and impact health-related outcomes.10 Conventionally, SDOH refers to the environments people reside and work in and encompasses systems and forces that influence one’s daily life such as policies related to the economy, political systems, and social norms. Its importance has led to the development of initiatives such as “Healthy People 2030” in the USA, which seeks to remove health disparities. Of note, five key domains related to SDOH were identified, namely economic stability, education access and quality, healthcare access and quality, neighborhood and built environment, and lastly, social and community context.11

A mounting body of evidence has shown that SDOH impacts health outcomes significantly12: lead exposure in suboptimal residential areas is associated with poorer cognitive function and physical development in young children,13 and air pollution, which is prevalent in disadvantaged regions, is causally related to exacerbations of respiratory diseases14; children growing up in impoverished communities are more likely to suffer from emotional and mental stressors and subsequently are at higher risk for premature mortality.15

Overall, despite the wealth of evidence available related to SDOH and health outcomes, there remain significant evidence gaps in the literature, particularly with regard to SDOH with complex health interactions, which include intangible factors such as political, socioeconomic, and cultural constructs that are difficult to quantify.16 Significant technical challenges lie in study design, as some factors may not be amenable to conventional studies such as randomized controlled trials.15 This is compounded by the long lag time before study effects can be observed and the need to collect extensive sociodemographic and economic data. The advent of artificial-intelligence-related technologies that are able to analyze large volumes of healthcare data may aid in better risk stratification of patients and in understanding the complex interactions between SDOH and health outcomes.

Digital health and SDOH

Digital health tools helped to address major global health challenges through improved access to healthcare services, strengthened health promotion and disease prevention, and enhanced care experiences for professionals and patients. Core digital domains include telehealth and artificial intelligence (AI), supported by other technology domains such as big data analytics, the internet of things (IoT), next-generation networks (e.g., 5G), and privacy-preserving platforms, e.g., blockchain.17,18 The COVID-19 global health crisis was a major turning point for digital health. Humanitarian and economic needs presented by the pandemic are driving the development and adoption of new digital technologies at an unprecedented scale and speed. Various digital technologies were in clinical implementation during COVID-19. In particular, AI proved to be a powerful emerging technology that improved care during the pandemic in various ways: forecast of infectious disease dynamics and outcomes of public health interventions, disease surveillance and outbreak detection, real-time population monitoring, and timely and accurate diagnosis of infections, as well as prognosis of disease severity.19

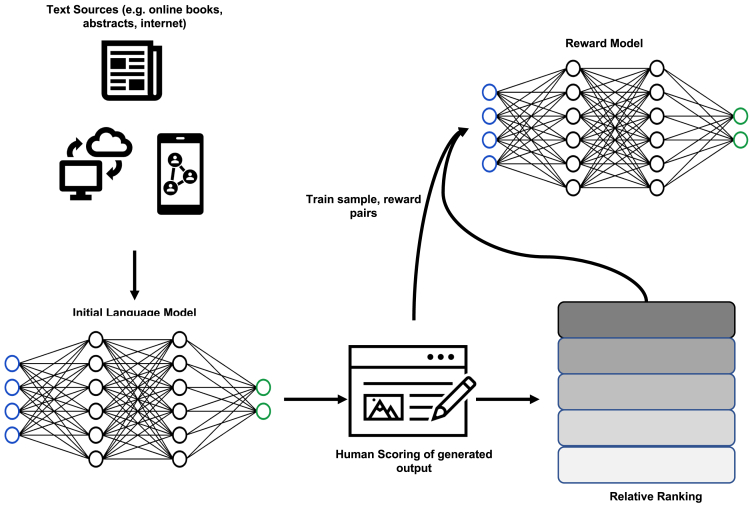

The past months have seen an accelerated development in the field of generative AI with the release of large language models (LLM) such as GPT-4, ChatGPT, and bidirectional encoder representations from Transformers (BERT).20 In brief, Transformer architecture, a type of neural network algorithm, forms the basis of LLMs—also known as “foundation models.” Transformers learn contextual information from sequential data such as written text and audio signals.21 LLMs, trained on a large corpus of data from a wide range of sources, contain up to trillions of parameters that enable them to perform a broad variety of tasks without the need for specific training. Their application for the creation of new content has made them a key technology in the field of generative AI.22 The development of ChatGPT is outlined in Figure 1, illustrating the concept of reinforcement learning with human in the loop. In the biomedical space, proposed applications for LLMs are wide ranging23,24: drug discovery and genome analysis; medical education; searching, analyzing, and interpreting large patient datasets; and generating human-like responses to improve physician-patient communication.

Figure 1.

Reinforcement learning with human in the loop

Despite rapid technological advancements, a concerted effort to address barriers to digital health, such as challenges related to leadership and strategic alignment, information and technology governance, data management capacity, systems integration, and effective solution design, is still lacking.25 Low digital literacy, unequal access to digital health, and biased AI algorithms have raised mounting concerns over health equity.26 As AI applications and LLMs become pervasive, we seek to understand the potential pitfalls of AI in driving health inequalities and identify key opportunities for AI in SDOH from a global perspective.

Opportunities for AI in SDOH

SDOH data used in AI models

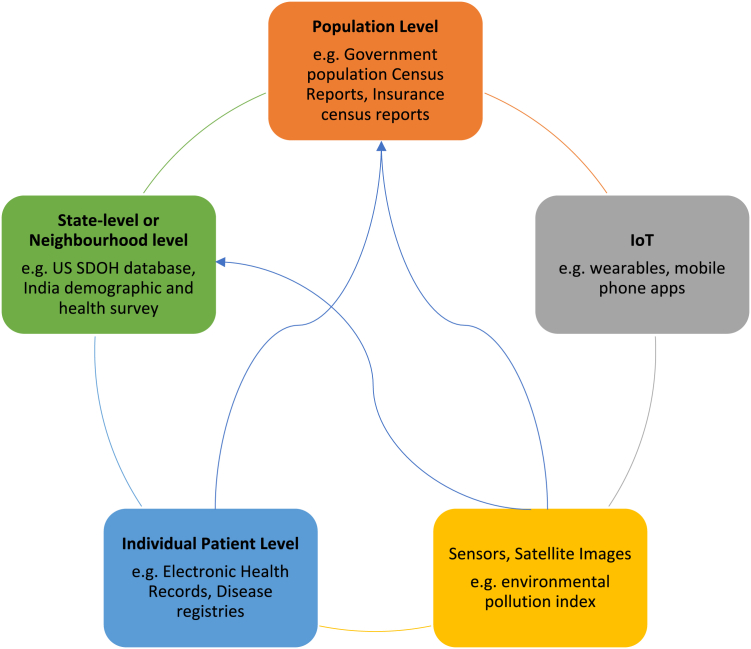

Current AI models incorporating SDOH utilize various levels of data: individual-patient-level data, state- or neighborhood-level data, or country-level data (Figure 2). SDOH data are most commonly collected by public health agencies. The datasets made available to the public are often aggregated and anonymized reports or figures. In AI model development, securing patient-level data is often a priority.27 Between high-income countries (HICs) and low- and middle-income countries (LMICs), we see a significant difference in the amount of open-access patient-level data that are made available under each SDOH domain. Table 1 illustrates examples of common SDOH data used in AI algorithms. As discussed in the sections below, a lack of data standardization poses a significant barrier to AI model development and validation across different populations.

Figure 2.

The interconnectedness of SDOH data sources

Individual patient-level data flow into neighborhood- and population-level data sources such as population census reports.

Table 1.

Examples of publicly available SDOH data in HICs and LMICs

| WHO SDOH domains | Data collected by HICs28 | Data collected by LMICs29 |
|---|---|---|
| Economic stability | household poverty ratio, type of employment, occupation | type of employment, occupation |
| Education | highest education level | highest education level, native language |
| Healthcare services | most recent doctor visit, most recent wellness doctor visit, have a usual care facility, type of usual care facility | any visit to health facility in last 12 months, time spent traveling to healthcare facility |
| Social and community | household composition, caregiving sources, health insurance, working hours | household composition, health insurance |
| Neighborhood and built environment | region of residence | source of drinking water, access to toilet, access to vehicular transport, materials used in the construction of home |

Under each SDOH domain, a lack of congruence and standardization of variables collected reflects differences in prevailing health priorities of the respective region.

SDOH extraction from electronic health records (EHRs)

Up to 80% of medical data are unstructured, and traditional means of extracting information from this text are manual and time consuming. Qualitative information about patients’ lifestyle and social determinants is often embedded within unstructured clinical notes of EHRs. Advances in natural language processing (NLP) offer an efficient and automated approach to identify, collect, and analyze useful SDOH information from existing EHR systems and databases.30 Of note, deep learning (DL) algorithms such as convolutional neural networks (CNNs) and BERT have been applied to SDOH annotation from unstructured clinical text.31,32 However, the NLP methodology with the best performance for identification of less-well-studied SDOH and longitudinal analyses of SDOH-related data remains a topic of contention. LLM-based extraction from clinical text has also been shown to capture more SDOH information than is available in structured data.33
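
To make the extraction task concrete, the following is a minimal Python sketch that flags SDOH mentions in a free-text note using keyword patterns. It is a deliberately simple rule-based stand-in, not the CNN-, BERT-, or LLM-based methods cited above; the category names, the patterns in `SDOH_PATTERNS`, and the sample note are all hypothetical and do not represent a validated lexicon.

```python
import re

# Hypothetical keyword patterns per SDOH category (illustrative only,
# not a validated clinical lexicon).
SDOH_PATTERNS = {
    "housing": re.compile(
        r"\b(?:homeless|shelter|evicted|unstable housing)\b", re.IGNORECASE
    ),
    "employment": re.compile(
        r"\b(?:unemployed|laid off|lost (?:his|her|their) job)\b", re.IGNORECASE
    ),
    "social_support": re.compile(
        r"\b(?:lives alone|no family support|socially isolated)\b", re.IGNORECASE
    ),
}


def extract_sdoh(note: str) -> dict[str, list[str]]:
    """Return the matched phrases for each SDOH category found in a note."""
    found: dict[str, list[str]] = {}
    for category, pattern in SDOH_PATTERNS.items():
        matches = [m.group(0).lower() for m in pattern.finditer(note)]
        if matches:
            found[category] = matches
    return found


# Hypothetical note text, written for this example.
note = "Patient is unemployed, lives alone, and was recently evicted."
print(extract_sdoh(note))
```

A keyword approach of this kind misses negation ("not homeless"), paraphrase, and context, which is one reason learned models are preferred for this task in the studies cited.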

Research on SDOH and health outcomes

Several reviews have evaluated the role of AI in assessing social determinants, especially substance abuse, employment status, and socioeconomic status on health outcomes.34 Across these studies, the roles of AI and SDOH were most extensively evaluated in patients with mental health and chronic diseases such as diabetes, particularly with the incorporation of SDOH factors for clinical risk prediction. The relationship between social determinants and health outcomes is often indirect and complex, involving different biopsychosocial processes.15 Advancements in multilevel modeling have made it possible to combine individual-level and group-level SDOH data to improve disease surveillance and prediction and evaluation of population health interventions.35 In addition, temporal evolution of SDOH factors, geospatial relationships, and their impact on health outcomes have also been poorly characterized by conventional statistical methods. AI models generally outperform parametric models in this respect.36 However, inherent weaknesses such as lack of interpretability with consequent difficulty in deriving mechanistic explanations may limit widespread application of AI models in SDOH assessment.

Improve access to care

AI healthcare applications range broadly from disease diagnosis, patient triage, disease surveillance, and prediction to personalizing treatment, health policy, and planning.37 Applications of AI in LMICs are still fairly limited in scope, most frequently adopted for the screening of non-communicable diseases such as diabetic retinopathy, diagnosis of infectious diseases such as tuberculosis, and augmentation of maternal and child health.38,39 At a policy level, AI modeling has been used to accurately identify high-risk individuals for more targeted health policy generation.40

AI has the potential to democratize specialized care in under-resourced settings and improve care accessibility, an important SDOH. Democratization, used in our context, refers to the endeavor of making healthcare universally accessible. In LMICs, telehealth platforms can be coupled with innovative diagnostic tests and home-based monitoring tools to promote delivery of patient-centric specialist care in a primary care setting. For example, DL systems have been integrated with smartphone-based applications to detect prevalent eye diseases such as glaucoma and diabetic retinopathy in LMICs.41 Dacal et al. described an end-to-end solution for diagnosis of soil-transmitted helminth infections in Kenya that includes a mobile app for image digitization, a DL algorithm for automatic detection of pathogen, and a telemedicine platform for analysis.42 High-risk pregnancy detection in rural areas of Africa is now possible without trained sonographers through the use of a low-cost ultrasound probe attached to a smartphone app installed with a real-time DL algorithm.43 While current studies predominantly address the accuracy of various AI tools in different healthcare settings, we acknowledge that a direct correlation between tool accuracy and the enhancement of care quality or accessibility has not been explicitly demonstrated. Empirical studies that holistically examine the integration of AI tools into care systems, their impact on patient outcomes, and broader systems-level outcomes are necessary to solidify the claim that AI can truly democratize care and enhance its accessibility and quality.

The role of AI in health service democratization is evident in high-income settings as well, particularly in the delivery of specialized care in radiology, pathology, and ophthalmology. These countries possess the ability to scale and implement AI tools at local or international levels for healthcare service delivery. AI tools currently used in the screening and detection of debilitating diseases such as diabetic retinopathy (DR) demonstrate high levels of precision and cost effectiveness when implemented country wide.44,45 Better health equity is then achieved through efficient distribution of specialized person power and resources. In addition to right siting of specialized resources, AI enhances the overall quality of healthcare. AI-powered algorithms have outperformed physicians and traditional screening tools in detecting and diagnosing serious diseases such as skin and breast cancers.46,47 Detection of these conditions at early stages significantly increases response to traditional lines of treatments that are potentially cheaper and more accessible to the general population.

LLMs in SDOH

LLMs like ChatGPT have the ability to generate and replicate human responses in a highly convincing manner. This linguistic ability, coupled with multilingual capabilities,48,49 is purported to be capable of enhancing communication between healthcare providers and patients across geographical and language borders. Through the provision of health education and responses to patient enquiries, basic medical advice can be administered without the need to engage healthcare professionals directly, which addresses operational challenges in LMICs and remote areas where healthcare professionals are in critically short supply. These models can support time-consuming, administrative activities such as discharge report writing, patient stay summaries, and patient history interrogation.50 In addition, LLMs may overcome the infrastructural limitations (discussed in the section below) imposed by semantic and syntactic interoperability in EHR systems.51 However, despite the huge promise of LLMs in transforming healthcare, the deployment of these models needs to be approached with caution. The lack of reliable and robust methods to distinguish between LLM-generated and human-generated content amplifies healthcare professionals’ and patients’ vulnerability to anthropomorphism.52,53 Inherent risks presented by LLM-based applications include inadvertent disinformation or “hallucinations,” proliferation of bias, and infringement of copyrights, all of which can have far-reaching societal and health equity implications. These technologies in their current form have the potential to incite harm, especially in mental health, where NLP algorithms have shown biases relating to religion, race, gender, nationality, sexuality, and age.20 There is a need for a framework to govern the development, deployment, technology assessment, and eventual regulation of LLMs for medical applications. We discuss some of the challenges and barriers relating to LLMs and SDOH below.

Challenges and barriers

Lack of SDOH data standardization

The lack of standardized data collection methods and definitions has impeded progress in global SDOH research. Take housing data for example: it can include different definitions, metadata, and measurements. There is a lack of standardized data elements, assessment tools, measurable inputs, and data collection practices in clinical notes for patient-level SDOH information.30 SDOH also presents at individual, neighborhood, and population levels. LMICs face capacity constraints in handling large datasets. Data sources owned by the private sector may not be publicly available, as discussed above. Data are often neither harmonized nor interoperable across sectors, making data integration a challenge and subsequent analysis/modeling difficult.29 The lack of standardized terminologies and definitions will also limit semantic interoperability, posing a challenge to the implementation of algorithms. This issue is not specific to LMICs. In Denmark, for example, the healthcare sector is highly digitized, with a long history of health data registry establishment. Robust healthcare research is made possible due to individual-level record linkage between all data sources.54 However, important SDOH variables such as lifestyle factors are still not routinely captured in a standardized manner. Furthermore, multicohort validation of AI models relies heavily on standardized frameworks for SDOH data collection.

Initial efforts have been made to improve standardization of SDOH data and integrate data into care delivery. The AMA Integrated Health Model Initiative (IHMI) and Gravity project spearheaded by the American Medical Association (AMA) seek to develop consensus-driven standards for collection and transfer of SDOH data with subsequent integration into CPT codes.55 NHS England published a consensus common outcomes framework (COF), which recommended the use of 3 primary care codes to standardize recording of social-prescribing activities.56 However, the codes lack the level of granularity that is required for the purpose of SDOH research, AI modeling, and implementation. Another widely available tool is the ICD-10 Z-codes (Z55–Z65) that represent SDOH data such as employment status, access to shelter, and social insurance or welfare support. However, despite global adoption of ICD-10, Z-codes are highly underutilized, with fewer than 2% of hospitalized patients having a Z-coded diagnosis.57
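
As an illustration of how Z-code utilization could be measured in practice, the sketch below checks whether a diagnosis code falls in the Z55–Z65 range named above and computes the share of encounters carrying at least one such code. The function names and the sample encounter lists are hypothetical, and the pattern assumes codes written in the common ICD-10 style (for example `Z59.0` or `Z590`); local coding systems may format codes differently.

```python
import re

# Matches "Z" + two-digit category + optional subcategory (e.g. Z59, Z59.0, Z590).
_Z_CODE = re.compile(r"^Z(\d{2})(?:\.?[0-9A-Z]{1,4})?$")


def is_sdoh_z_code(code: str) -> bool:
    """True if the code belongs to ICD-10 categories Z55 through Z65."""
    match = _Z_CODE.match(code.strip().upper())
    return bool(match) and 55 <= int(match.group(1)) <= 65


def z_code_rate(encounters: list[list[str]]) -> float:
    """Fraction of encounters with at least one Z55-Z65 diagnosis code."""
    if not encounters:
        return 0.0
    flagged = sum(any(is_sdoh_z_code(c) for c in codes) for codes in encounters)
    return flagged / len(encounters)


# Hypothetical encounters, each a list of diagnosis codes.
encounters = [["E11.9", "Z59.0"], ["I10"], ["Z56.0"], ["J45.909"]]
print(z_code_rate(encounters))
```

Applied to real discharge data, a rate computed this way is the kind of figure behind the underutilization estimate quoted above.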

Health infrastructure limitations and environmental impact

A lack of essential physical facilities, regulatory barriers, and limited population readiness pose significant challenges to equitable implementation of AI. Variances in internet connectivity, linguistic compatibility, operational skills, and hardware prerequisites may challenge access to benefits conferred by AI models and LLMs. On the level of physical infrastructure, LMICs and remote areas may not possess reliable telecommunication networks, stable internet connections, or sufficient equipment. Recognizing infrastructure limitations is essential for successful implementation of digital tools; for example, mobile applications in many cases need to be able to work in both online and offline modes.58 Notably, disparities in technological access may further exacerbate global inequalities by disproportionately favoring specific cohorts.59 Implementing digital solutions with lower technical requirements and cost is promising. A multidisciplinary approach supported by government, hospital systems, physicians, AI companies, and industry partners is necessary to create a robust local infrastructure of IT systems, clinical services, and digital communications networks.60 Investment by LMICs in infrastructure for local validation and model recalibration will also lay the groundwork for eventual contribution of local data to international data repositories.61 Aside from physical infrastructure, regulatory barriers pose a critical challenge to successful clinical deployment of AI models and digital health solutions. While a plethora of fully validated AI models can be found in published literature, clinical implementation in practice is hampered by the lack of a clear regulatory approval and deployment roadmap.62 The development of a regulatory framework for AI models is at a nascent stage. The establishment of national and international regulatory standards could accelerate technology adoption.

Intensive computing processes of AI and LLMs alike give rise to environmental and potential labor impacts. LLMs, in particular, have significant energy demands during training and, correspondingly, result in high carbon emissions.63 This can exacerbate existing environmental challenges including rising carbon footprint, water use, and soil pollution or sealing, which have implications for environmental quality and a collective influence on SDOH.52,64 Particular to LMICs, the engagement of low-cost workers to perform annotation of toxic language (including text containing hate speech, sexual abuse, and violence) may contribute to negative psychological health outcomes among these workers.65,66 It is thus imperative to examine the impact of the aforementioned labor practices to ensure that advancements in AI do not come at the expense of employee well-being.

Digital literacy, inclusivity, and culture of acceptance

Digital literacy and digital inclusivity are important indicators of population readiness. Certain population groups fare better in a digitized world, for example the younger, the better educated, and/or urban dwellers. These groups consistently report higher internet access and digital skills and will benefit the most from digital and AI health technologies. Conversely, vulnerable groups commonly experience high barriers to access, creating a digital health paradox. Singapore is a digitally advanced nation with almost universal digital availability, yet when COVID-19 forced rapid digital adoption, gaps in access by vulnerable groups such as low-income households, the elderly, and migrant workers were found.67 Placing emphasis on digital inclusivity as a “super” SDOH could mitigate health inequalities brought about by AI and digital health technologies. Underpinning successful implementation of digital health is innate trust and belief in the benefits of digital technologies at an individual level. Careful, calibrated change management strategies are critical but often neglected by governments and healthcare leaders. This is also critical to the cultural transformation of digital health from traditional health services.

Pertaining to LLMs, we discussed in the above section the potential of fine-tuned or domain-specific LLMs in mitigating healthcare worker burdens through automation of clinical documentation and enhancement of healthcare conversations. However, widespread adoption of such technology is currently impeded by concerns related to accuracy and consistency. Medical document summarization involves more than just noting facts; it also involves creation of an accurate narrative of the patient. Nuances of medical humanities, patient preferences, and complexities of socioeconomic and psychological status have yet to be fully replicated by generative AI.68 These current limitations suggest that healthcare professionals may still have to manually review outputs for accuracy, which in turn may be more time intensive and cognitively fatiguing.

Bias in algorithms and privacy concerns

Inadequate representation in training datasets may jeopardize the effectiveness of AI-based health interventions in vulnerable populations. AI models often generalize poorly in populations outside of their training and validation cohorts. While models may perform well in well-represented regions, disparate performance in less-represented cohorts and minority populations may perpetuate existing health disparities.69 Clinical AI models often draw data from EHRs, biobanks, or genome databases, in which groups with irregular or limited access to the healthcare systems, e.g., ethnic minorities and immigrants, will be poorly represented.70,71 Data shifts and model deterioration may result in missed diagnosis, inaccurate prognosis, and suboptimal treatment recommendations in unrepresented cohorts.72,73 In the same vein, algorithms trained using large datasets have been shown to be biased against individuals of different races and socioeconomic status. For instance, chest X-ray classifiers trained using datasets dominated by White patients performed poorly in Medicaid patients,74 and a risk prediction algorithm trained using large datasets failed to triage African Americans for necessary care.75 This will remain a pervasive problem since more than half of the datasets used for clinical AI development originate from either the USA or China.61 Careful validation of AI algorithms is imperative in ensuring reproducible results before being deployed in other populations. To effectively combat such bias, models should be trained using multi-institutional datasets and surveyed for disparate performance during model deployment.76 In addition to a lack of representation in datasets, AI-driven clinical decision support may inadvertently reproduce the biased judgements and decision-making that are entrenched in real-world practice. Biases captured in unstructured clinical notes may be picked up by and replicated in NLP-based AI tools.

Similarly, bias in pretraining or fine-tuning datasets of LLMs may encode historical and ongoing bias. Prejudiced, stereotyped, and discriminatory outputs reflect empirically observed patterns of judgements from pretraining datasets. In an experiment using commercial LLMs, kidney function estimation queries returned answers based on an outdated and race-biased medical formula.77 At this juncture, we caution against unchecked, unaccounted, and untested applications of LLMs in clinical decision support. LLMs may inadvertently perpetuate these biases and contribute toward widening health inequities.
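
The recommendation above to survey models for disparate performance can be sketched as a per-subgroup audit. The Python example below computes sensitivity (true-positive rate) for each subgroup and the gap between the best- and worst-served groups. Sensitivity is only one possible audit metric and is chosen here as an assumption; the group labels and records are hypothetical.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute sensitivity per subgroup.

    records: iterable of (group, y_true, y_pred) with binary labels (0 or 1).
    Returns {group: sensitivity} for groups with at least one positive case.
    """
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}


def sensitivity_gap(records):
    """Difference between the highest and lowest subgroup sensitivity."""
    rates = sensitivity_by_group(records)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Hypothetical audit data: (subgroup, true label, model prediction).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]
print(sensitivity_by_group(records))
print(sensitivity_gap(records))
```

A persistent gap between subgroups would signal underdiagnosis in the worse-served group and prompt recalibration or retraining on more representative data.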

In the realm of data and model responsibility, the party liable for the outcomes of AI and LLMs remains unclear, and it is important to note that the absence of autonomy and sentience implies a lack of moral agency in AI.78 Pertaining to data privacy, patient-related data are entrusted to healthcare institutions and professionals, and the use of big data may lead to potential unauthorized usage of personal data in predictive analyses.79 A lack of robust data privacy frameworks may introduce vulnerabilities for penetration and manipulation of sensitive patient information. Reidentification of anonymized medical data is possible with few spatiotemporal datapoints despite deidentification efforts.80 Vulnerable populations are in jeopardy when SDOH data carrying sensitive and stigmatizing information are uncovered. Examples of cybersecurity measures include user authentication, audit trails, data encryption, and secure data transmission mechanisms.58

Developments in privacy-preserving technologies are gaining traction. Homomorphic encryption is one such solution that permits analyses to be performed securely on encrypted data without the need for decryption. Generative adversarial networks (GANs) are generative models that learn from the distribution of healthcare data or medical images to create large, realistic synthetic data.76 The privacy of patients is protected, as data cannot be attributed to any single individual. In addition, GANs can be used to augment healthcare datasets with class imbalance or insufficient variability. Models trained on GAN-augmented data outperform those trained on traditionally augmented data while using a fraction of the original training dataset.81 GANs thus present a novel tool to overcome both the privacy concerns and the algorithmic bias discussed above. Federated machine learning is a data-private, multisite, collaborative learning approach that distributes model training and aggregates model weights without the need to share individual patient datasets.82,83 Blockchain technology facilitates secure and auditable exchange of sensitive patient data, safeguarding data integrity and immutability. Blockchain can be coupled with the aforementioned privacy-preserving techniques to augment AI algorithms while providing an additional layer of data security.84,85
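
The weight aggregation step of federated learning described above can be illustrated with a short sketch. The example below averages per-site model weights in proportion to each site's local sample count, in the style of federated averaging (FedAvg). Whether the cited studies use this exact aggregation rule is an assumption, and the site weights and sizes are hypothetical; a real deployment would add secure communication and repeated training rounds.

```python
def federated_average(site_weights, site_sizes):
    """Aggregate per-site model weights without sharing patient data.

    site_weights: list of equal-length lists of floats, one list per site.
    site_sizes: number of local training samples at each site.
    Returns the sample-size-weighted average of the weights.
    """
    if len(site_weights) != len(site_sizes) or not site_weights:
        raise ValueError("Need one non-empty weight vector per site size.")
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical example: two hospitals train locally and share only weights.
hospital_a = [1.0, 2.0]   # trained on 100 local records
hospital_b = [3.0, 4.0]   # trained on 300 local records
print(federated_average([hospital_a, hospital_b], [100, 300]))
```

Only the weight vectors and sample counts leave each site; the patient-level records used for local training never do.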

Future directions

Emphasis on digital inclusion

In recent decades, digital literacy and internet access have been increasingly recognized as “super” SDOH due to their complex interlinkage with, and ability to affect, other SDOH.86 A key barrier to digital literacy lies in the digital divide, which afflicts disadvantaged populations more significantly. For example, approximately one-sixth of low-income households in the USA do not have broadband internet access.87

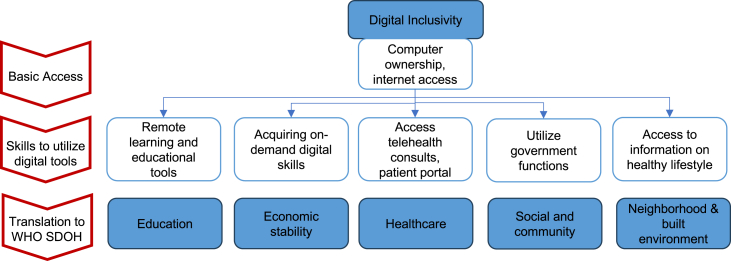

With expanding applications of AI and digital health, it is imperative to devise strategies to bridge the digital divide in our pursuit of health equity. The concept of digital inclusion encompasses activities that allow for equitable access and use of information communication technologies (https://www.digitalinclusion.org/definitions/). Digital inclusion goes beyond simple possession of internet-enabled devices and internet access; elements such as digital literacy training, availability of technical support, and applications to empower users are equally crucial (https://www.digitalinclusion.org/definitions/). Within the EU, only about half of the population possesses basic digital skills, despite widespread access to the internet (https://digital-strategy.ec.europa.eu/en/library/ict-work-digital-skills-workplace). We propose a multitiered approach to evaluate and address digital inclusion as an SDOH (Figure 3).

Figure 3.

A multitiered approach for digital inclusion using WHO SDOH framework

The framework was adapted from Van Dijk.88

Global agenda for ethical and equitable AI practices

The establishment of global guidelines and frameworks is necessary to guide the development, implementation, and evaluation of AI-based digital health technologies to ensure efficacy, sustainability, and equitability. Various guidelines and frameworks have been developed to guide the ethical use of AI, including guidance from international bodies, e.g., the WHO (https://www.who.int/publications/i/item/9789240029200) and UNI Global Union (https://uniglobalunion.org/report/10-principles-for-ethical-artificial-intelligence/); however, significant divergences exist in the interpretation and prioritization of specific ethics principles.89 In addition, there is a lack of representation from LMICs such as African and South American countries, revealing a power imbalance in this ethical debate. Relevant stakeholders from different global regions should be involved in the development of a global consensus framework while respecting variability in regional priorities, capabilities, and challenges.60

On the research front, a global research agenda for AI interventions relevant to addressing SDOH will ensure that tools developed respond to population needs.37 Furthermore, AI research in LMICs can be enhanced by international frameworks to promote model transparency, such as the “transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD)” reporting guideline and “developmental and exploratory clinical investigations of decision support systems driven by AI (DECIDE-AI).”90,91

Bridge development to implementation

Greater efforts are also required to bridge development to implementation for AI-based technologies to maximize gain. Lessons drawn from implementation science suggest a need to map out unique circumstances and processes within each local setting to identify potential interactions and barriers to technology implementation. Active engagement of local stakeholders such as government agencies and non-governmental organizations (NGOs) in the process of workflow design is essential, especially in resource-limited settings.38,92 For example, DL algorithms developed using computed tomography (CT) images cannot be generalized or implemented in a region where CT scanners are unavailable. In the same vein, there is a need to conduct economic evaluations of AI-based technologies under different resource settings. In under-resourced settings, the key is to prevent life-threatening conditions, and thus the referral thresholds used by these digital tools will need to be carefully set based on a health economic analysis that is feasible for the specific country. Cost-effectiveness analyses of AI vs. standard of care reveal useful information for policy makers and regulators, such as the optimal operating model or whether the new initiative should be adopted at all.44,93
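As an illustration of how such an economic comparison is commonly summarized, the sketch below computes an incremental cost-effectiveness ratio (ICER, the extra cost per extra unit of health gained) and a net monetary benefit for an AI-assisted strategy against standard of care. It is a minimal sketch under stated assumptions: the names `Strategy`, `icer`, and `net_monetary_benefit`, the cost and quality-adjusted life year (QALY) figures, and the willingness-to-pay thresholds are all hypothetical and are not taken from the cited analyses.

```python
from dataclasses import dataclass


@dataclass
class Strategy:
    """Expected per-patient cost and health effect of one care strategy."""
    name: str
    cost: float   # expected cost per patient, in any single currency
    qalys: float  # expected quality-adjusted life years per patient


def icer(new: Strategy, comparator: Strategy) -> float:
    """Incremental cost-effectiveness ratio: extra cost per extra QALY."""
    delta_qalys = new.qalys - comparator.qalys
    if delta_qalys == 0:
        raise ValueError("identical effects: ICER is undefined")
    return (new.cost - comparator.cost) / delta_qalys


def net_monetary_benefit(new: Strategy, comparator: Strategy,
                         willingness_to_pay: float) -> float:
    """Value of the extra health gain minus the extra cost.

    A positive result means the new strategy is cost-effective at the
    given willingness-to-pay per QALY.
    """
    delta_qalys = new.qalys - comparator.qalys
    delta_cost = new.cost - comparator.cost
    return willingness_to_pay * delta_qalys - delta_cost


# Hypothetical figures, for illustration only.
standard = Strategy("standard of care", cost=100.0, qalys=10.0)
ai_screening = Strategy("AI-assisted screening", cost=600.0, qalys=10.5)

print(icer(ai_screening, standard))  # 1000.0 per QALY gained
# The same tool can be worthwhile in one setting and not in another.
print(net_monetary_benefit(ai_screening, standard, 2000.0) > 0)  # True
print(net_monetary_benefit(ai_screening, standard, 500.0) > 0)   # False
```

With these made-up numbers, the same tool is cost-effective where the willingness-to-pay is 2000 per QALY but not where it is 500, which is one way to see why the evaluation has to be repeated for each resource setting.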

The “black box” nature of DL- and LLM-based models, compounded by the tendency of LLMs to “hallucinate,” is a key impediment to the development of trustworthy and explainable AI models. Beyond improving the accuracy of AI model outputs, significant effort and progress have been made in developing explainability techniques for machine learning and DL models, such as saliency-based explainable AI (XAI).94 However, the output of such techniques, e.g., Shapley additive explanations (SHAP), may not be sufficiently intuitive for non-specialists to facilitate rapid decision-making in clinical practice.95 In addition, there is still a paucity of evidence to quantify improved clinician or patient trust in AI model output through incorporation of these explainability techniques. We encourage more research and exploration in examining factors that foster greater user trust in AI models and the design of conceptual models and frameworks96 to bridge the gap between model development and effective implementation.
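To give a sense of what a SHAP-style explanation contains, the sketch below computes exact Shapley values for a toy risk score by averaging each feature's marginal contribution over every ordering of the features. This is a brute-force illustration of the underlying idea, not the algorithm used by the SHAP library; the model `risk_score`, its coefficients, and the feature names are hypothetical.

```python
from itertools import permutations
from math import factorial


def risk_score(features):
    """Hypothetical toy model: two additive terms plus one interaction."""
    return (2.0 * features["age_over_65"]
            + 1.0 * features["diabetes"]
            + 3.0 * features["age_over_65"] * features["smoker"])


def shapley_values(model, patient, baseline):
    """Average marginal contribution of each feature over all orderings."""
    names = list(patient)
    totals = {name: 0.0 for name in names}
    for order in permutations(names):
        current = dict(baseline)      # start from the reference patient
        previous = model(current)
        for name in order:
            current[name] = patient[name]   # reveal one feature at a time
            value = model(current)
            totals[name] += value - previous
            previous = value
    n_orders = factorial(len(names))
    return {name: total / n_orders for name, total in totals.items()}


patient = {"age_over_65": 1, "diabetes": 1, "smoker": 1}
baseline = {"age_over_65": 0, "diabetes": 0, "smoker": 0}

print(shapley_values(risk_score, patient, baseline))
# {'age_over_65': 3.5, 'diabetes': 1.0, 'smoker': 1.5}
```

The attributions sum to the difference between the patient's score and the baseline score (6.0), but the interaction term is split evenly between age and smoking, so smoking receives 1.5 even though it has no standalone coefficient in the model. Reading such numbers correctly takes some familiarity with the method, which is consistent with the concern raised above.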

Conclusion

Achieving health equity remains a challenge, and addressing SDOH is progressively becoming a global priority. In a post-pandemic world, the widening digital and health divide has prompted caution in the use of AI and digital health technologies. Significant challenges and barriers to equitable AI implementation remain, especially with regard to overcoming infrastructure limitations and algorithmic bias. Newer techniques and global collaborative efforts have the potential to overcome these barriers and allow disruptive and transformative digital health tools to flourish.

Acknowledgments

D.S.W.T. is supported by grants from the National Medical Research Council Singapore (MOH-000655–00 and MOH-001014–00), the Duke-NUS Medical School Singapore (Duke-NUS/RSF/2021/0018, 05/FY2020/EX/15-A58, and 05/FY2022/EX/66-A128), and the Agency for Science, Technology and Research Singapore (A20H4g2141 and H20C6a0032) for research in AI. J.C.L.O. is supported by grants from the National Medical Research Council Singapore (MOH-CIAINV21nov-001) and AI Singapore OTTIC (AISG2-TC-2022-006).

Declaration of interests

D.S.W.T. holds patents on a DL system for the detection of retinal diseases.

References

- 1. Morse D.F., Sandhu S., Mulligan K., Tierney S., Polley M., Chiva Giurca B., Slade S., Dias S., Mahtani K.R., Wells L., et al. Global developments in social prescribing. BMJ Glob. Health. 2022;7:e008524. doi: 10.1136/bmjgh-2022-008524.

- 2. McGinnis J.M., Foege W.H. Actual causes of death in the United States. JAMA. 1993;270:2207–2212.

- 3. Schroeder S.A. We Can Do Better — Improving the Health of the American People. N. Engl. J. Med. 2007;357:1221–1228. doi: 10.1056/NEJMsa073350.

- 4. Marmot M. Universal health coverage and social determinants of health. Lancet. 2013;382:1227–1228. doi: 10.1016/S0140-6736(13)61791-2.

- 5. Bambra C., Riordan R., Ford J., Matthews F. The COVID-19 pandemic and health inequalities. J. Epidemiol. Community Health. 2020;74:964–968. doi: 10.1136/jech-2020-214401.

- 6. Millett G.A., Jones A.T., Benkeser D., Baral S., Mercer L., Beyrer C., Honermann B., Lankiewicz E., Mena L., Crowley J.S., et al. Assessing differential impacts of COVID-19 on black communities. Ann. Epidemiol. 2020;47:37–44. doi: 10.1016/j.annepidem.2020.05.003.

- 7. Unruh L.H., Dharmapuri S., Xia Y., Soyemi K. Health disparities and COVID-19: A retrospective study examining individual and community factors causing disproportionate COVID-19 outcomes in Cook County, Illinois. PLoS One. 2022;17:e0268317. doi: 10.1371/journal.pone.0268317.

- 8. Vardavas C.I., Nikitara K. COVID-19 and smoking: A systematic review of the evidence. Tob. Induc. Dis. 2020;18:20. doi: 10.18332/tid/119324.

- 9. Goh O.Q., Islam A.M., Lim J.C.W., Chow W.C. Towards health market systems changes for migrant workers based on the COVID-19 experience in Singapore. BMJ Glob. Health. 2020;5:e003054. doi: 10.1136/bmjgh-2020-003054.

- 10. World Health Organisation. Social Determinants of Health. Available from: https://www.who.int/health-topics/social-determinants-of-health#tab=tab_1. Accessed March 13, 2023.

- 11. Healthy People 2030. Social Determinants of Health. https://health.gov/healthypeople/priority-areas/social-determinants-health. Accessed December 3, 2023.

- 12. Daniel H., Bornstein S.S., Kane G.C., Health and Public Policy Committee of the American College of Physicians, Carney J.K., Gantzer H.E., Henry T.L., Lenchus J.D., Li J.M., McCandless B.M., et al. Addressing Social Determinants to Improve Patient Care and Promote Health Equity: An American College of Physicians Position Paper. Ann. Intern. Med. 2018;168:577–578. doi: 10.7326/M17-2441.

- 13. Lidsky T.I., Schneider J.S. Lead neurotoxicity in children: basic mechanisms and clinical correlates. Brain. 2003;126:5–19. doi: 10.1093/brain/awg014.

- 14. Lanphear B.P., Kahn R.S., Berger O., Auinger P., Bortnick S.M., Nahhas R.W. Contribution of residential exposures to asthma in US children and adolescents. Pediatrics. 2001;107:E98. doi: 10.1542/peds.107.6.e98.

- 15. Braveman P., Gottlieb L. The social determinants of health: it's time to consider the causes of the causes. Public Health Rep. 2014;129:19–31. doi: 10.1177/00333549141291S206.

- 16. Editorial. Social Determinants of Health (SDOH). NEJM Catalyst. 2017. https://catalyst.nejm.org/doi/full/10.1056/CAT.17.0312

- 17. Gunasekeran D.V., Tham Y.C., Ting D.S.W., Tan G.S.W., Wong T.Y. Digital health during COVID-19: lessons from operationalising new models of care in ophthalmology. Lancet Digit. Health. 2021;3:e124–e134. doi: 10.1016/S2589-7500(20)30287-9.

- 18. Ting D.S.W., Carin L., Dzau V., Wong T.Y. Digital technology and COVID-19. Nat. Med. 2020;26:459–461. doi: 10.1038/s41591-020-0824-5.

- 19. Syrowatka A., Kuznetsova M., Alsubai A., Beckman A.L., Bain P.A., Craig K.J.T., Hu J., Jackson G.P., Rhee K., Bates D.W. Leveraging artificial intelligence for pandemic preparedness and response: a scoping review to identify key use cases. NPJ Digit. Med. 2021;4:96. doi: 10.1038/s41746-021-00459-8.

- 20. Arora A., Arora A. The promise of large language models in health care. Lancet. 2023;401:641. doi: 10.1016/S0140-6736(23)00216-7.

- 21. Vaswani A., Shazeer N., Parmar N., Uszkoreit J., Jones L., Gomez A.N., Kaiser L., Polosukhin I. Attention is all you need. arXiv. 2017.

- 22. Sanderson K. GPT-4 is here: what scientists think. Nature. 2023;615:773. doi: 10.1038/d41586-023-00816-5.

- 23. Thirunavukarasu A.J., Ting D.S.J., Elangovan K., Gutierrez L., Tan T.F., Ting D.S.W. Large language models in medicine. Nat. Med. 2023;29:1930–1940. doi: 10.1038/s41591-023-02448-8.

- 24. Tan T.F., Thirunavukarasu A.J., Campbell J.P., Keane P.A., Pasquale L.R., Abramoff M.D., Kalpathy-Cramer J., Lum F., Kim J.E., Baxter S.L., Ting D.S.W. Generative Artificial Intelligence Through ChatGPT and Other Large Language Models in Ophthalmology: Clinical Applications and Challenges. Ophthalmol. Sci. 2023;3:100394. doi: 10.1016/j.xops.2023.100394.

- 25. Zelmer J., Sheikh A., Zimlichman E., Bates D.W. Transforming Care and Outcomes with Digital Health Through and Beyond the Pandemic. NEJM Catalyst. 2022.

- 26. d'Elia A., Gabbay M., Rodgers S., Kierans C., Jones E., Durrani I., Thomas A., Frith L. Artificial intelligence and health inequities in primary care: a systematic scoping review and framework. Fam. Med. Community Health. 2022;10. doi: 10.1136/fmch-2022-001670.

- 27. Mullangi S., Aviki E.M., Hershman D.L. Reexamining Social Determinants of Health Data Collection in the COVID-19 Era. JAMA Oncol. 2022;8:1736–1738. doi: 10.1001/jamaoncol.2022.4543.

- 28. Centers for Disease Control and Prevention. 2021 National Health Interview Survey Sample Adult Interview. 2021. https://www.cdc.gov/nchs/nhis/2021nhis.htm

- 29. Torres I., Thapa B., Robbins G., Koya S.F., Abdalla S.M., Arah O.A., Weeks W.B., Zhang L., Asma S., Morales J.V., et al. Data Sources for Understanding the Social Determinants of Health: Examples from Two Middle-Income Countries: the 3-D Commission. J. Urban Health. 2021;98:31–40. doi: 10.1007/s11524-021-00558-7.

- 30. Patra B.G., Sharma M.M., Vekaria V., Adekkanattu P., Patterson O.V., Glicksberg B., Lepow L.A., Ryu E., Biernacka J.M., Furmanchuk A., et al. Extracting social determinants of health from electronic health records using natural language processing: a systematic review. J. Am. Med. Inform. Assoc. 2021;28:2716–2727. doi: 10.1093/jamia/ocab170.

- 31. Lybarger K., Ostendorf M., Yetisgen M. Annotating social determinants of health using active learning, and characterizing determinants using neural event extraction. J. Biomed. Inform. 2021;113. doi: 10.1016/j.jbi.2020.103631.

- 32. Stemerman R., Arguello J., Brice J., Krishnamurthy A., Houston M., Kitzmiller R. Identification of social determinants of health using multi-label classification of electronic health record clinical notes. JAMIA Open. 2021;4:ooaa069. doi: 10.1093/jamiaopen/ooaa069.

- 33. Guevara M., Chen S., Thomas S., Chaunzwa T.L., Franco I., Kann B., Moningi S., Qian J., Goldstein M., Harper S., et al. Large Language Models to Identify Social Determinants of Health in Electronic Health Records. 2023.

- 34. Bompelli A., Wang Y., Wan R., Singh E., Zhou Y., Xu L., Oniani D., Kshatriya B.S.A., Balls-Berry J.E., Zhang R. Social and Behavioral Determinants of Health in the Era of Artificial Intelligence with Electronic Health Records: A Scoping Review. Health Data Sci. 2021;2021:9759016. doi: 10.34133/2021/9759016.

- 35. Shaban-Nejad A., Michalowski M., Buckeridge D.L. Health intelligence: how artificial intelligence transforms population and personalized health. NPJ Digit. Med. 2018;1:53. doi: 10.1038/s41746-018-0058-9.

- 36. Kino S., Hsu Y.T., Shiba K., Chien Y.S., Mita C., Kawachi I., Daoud A. A scoping review on the use of machine learning in research on social determinants of health: Trends and research prospects. SSM Popul. Health. 2021;15. doi: 10.1016/j.ssmph.2021.100836.

- 37. Schwalbe N., Wahl B. Artificial intelligence and the future of global health. Lancet. 2020;395:1579–1586. doi: 10.1016/S0140-6736(20)30226-9.

- 38. Ugarte-Gil C., Icochea M., Llontop Otero J.C., Villaizan K., Young N., Cao Y., Liu B., Griffin T., Brunette M.J. Implementing a socio-technical system for computer-aided tuberculosis diagnosis in Peru: A field trial among health professionals in resource-constraint settings. Health Informatics J. 2020;26:2762–2775. doi: 10.1177/1460458220938535.

- 39. Bellemo V., Lim Z.W., Lim G., Nguyen Q.D., Xie Y., Yip M.Y.T., Hamzah H., Ho J., Lee X.Q., Hsu W., et al. Artificial intelligence using deep learning to screen for referable and vision-threatening diabetic retinopathy in Africa: a clinical validation study. Lancet Digit. Health. 2019;1:e35–e44. doi: 10.1016/S2589-7500(19)30004-4.

- 40. Carroll N.W., Jones A., Burkard T., Lulias C., Severson K., Posa T. Improving risk stratification using AI and social determinants of health. Am. J. Manag. Care. 2022;28:582–587. doi: 10.37765/ajmc.2022.89261.

- 41. Bastawrous A., Rono H.K., Livingstone I.A.T., Weiss H.A., Jordan S., Kuper H., Burton M.J. Development and Validation of a Smartphone-Based Visual Acuity Test (Peek Acuity) for Clinical Practice and Community-Based Fieldwork. JAMA Ophthalmol. 2015;133:930–937. doi: 10.1001/jamaophthalmol.2015.1468.

- 42. Dacal E., Bermejo-Peláez D., Lin L., Álamo E., Cuadrado D., Martínez Á., Mousa A., Postigo M., Soto A., Sukosd E., et al. Mobile microscopy and telemedicine platform assisted by deep learning for the quantification of Trichuris trichiura infection. PLoS Negl. Trop. Dis. 2021;15:e0009677. doi: 10.1371/journal.pntd.0009677.

- 43. Pokaprakarn T., Prieto J.C., Price J.T., Kasaro M.P., Sindano N., Shah H.R., Peterson M., Akapelwa M.M., Kapilya F.M., Sebastião Y.V., et al. AI Estimation of Gestational Age from Blind Ultrasound Sweeps in Low-Resource Settings. NEJM Evid. 2022;1. doi: 10.1056/evidoa2100058.

- 44. Xie Y., Nguyen Q.D., Hamzah H., Lim G., Bellemo V., Gunasekeran D.V., Yip M.Y.T., Qi Lee X., Hsu W., Li Lee M., et al. Artificial intelligence for teleophthalmology-based diabetic retinopathy screening in a national programme: an economic analysis modelling study. Lancet Digit. Health. 2020;2:e240–e249. doi: 10.1016/S2589-7500(20)30060-1.

- 45. Han J.E.D., Liu X., Bunce C., Douiri A., Vale L., Blandford A., Lawrenson J., Hussain R., Grimaldi G., Learoyd A.E., et al. Teleophthalmology-enabled and artificial intelligence-ready referral pathway for community optometry referrals of retinal disease (HERMES): a Cluster Randomised Superiority Trial with a linked Diagnostic Accuracy Study-HERMES study report 1-study protocol. BMJ Open. 2022;12:e055845. doi: 10.1136/bmjopen-2021-055845.

- 46. Winkler J.K., Fink C., Toberer F., Enk A., Deinlein T., Hofmann-Wellenhof R., Thomas L., Lallas A., Blum A., Stolz W., Haenssle H.A. Association Between Surgical Skin Markings in Dermoscopic Images and Diagnostic Performance of a Deep Learning Convolutional Neural Network for Melanoma Recognition. JAMA Dermatol. 2019;155:1135–1141. doi: 10.1001/jamadermatol.2019.1735.

- 47. Liu Y., Kohlberger T., Norouzi M., Dahl G.E., Smith J.L., Mohtashamian A., Olson N., Peng L.H., Hipp J.D., Stumpe M.C. Artificial Intelligence-Based Breast Cancer Nodal Metastasis Detection: Insights Into the Black Box for Pathologists. Arch. Pathol. Lab Med. 2019;143:859–868. doi: 10.5858/arpa.2018-0147-OA.

- 48. Yang W., Li C., Zhang J., Zong C. BigTranslate: Augmenting Large Language Models with Multilingual Translation Capability over 100 Languages. Preprint at arXiv. 2023. doi: 10.48550/arXiv.2305.18098.

- 49. Doddapaneni S., Ramesh G., Khapra M.M., Kunchukuttan A., Kumar P. A Primer on Pretrained Multilingual Language Models. Preprint at arXiv. 2021. doi: 10.48550/arXiv.2107.00676.

- 50. Shah N.H., Entwistle D., Pfeffer M.A. Creation and Adoption of Large Language Models in Medicine. JAMA. 2023;330:866–869. doi: 10.1001/jama.2023.14217.

- 51. Harrer S. Attention is not all you need: the complicated case of ethically using large language models in healthcare and medicine. EBioMedicine. 2023;90. doi: 10.1016/j.ebiom.2023.104512.

- 52. Bender E.M., Gebru T., McMillan-Major A., Shmitchell S. On the Dangers of Stochastic Parrots. Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency. 2021:610–623.

- 53. Shanahan M. Talking about Large Language Models. Preprint at arXiv. 2022.

- 54. Schmidt M., Schmidt S.A.J., Adelborg K., Sundbøll J., Laugesen K., Ehrenstein V., Sørensen H.T. The Danish health care system and epidemiological research: from health care contacts to database records. Clin. Epidemiol. 2019;11:563–591. doi: 10.2147/CLEP.S179083.

- 55. Robeznieks A. Quality data Is Key to Addressing Social Determinants of Health. 2023. https://www.ama-assn.org/delivering-care/health-equity/quality-data-key-addressing-social-determinants-health

- 56. Jani A., Liyanage H., Okusi C., Sherlock J., Hoang U., Ferreira F., Yonova I., de Lusignan S. Using an Ontology to Facilitate More Accurate Coding of Social Prescriptions Addressing Social Determinants of Health: Feasibility Study. J. Med. Internet Res. 2020;22. doi: 10.2196/23721.

- 57. Truong H.P., Luke A.A., Hammond G., Wadhera R.K., Reidhead M., Joynt Maddox K.E. Utilization of Social Determinants of Health ICD-10 Z-Codes Among Hospitalized Patients in the United States, 2016-2017. Med. Care. 2020;58:1037–1043. doi: 10.1097/MLR.0000000000001418.

- 58. Were M.C., Kamano J.H., Vedanthan R. Leveraging Digital Health for Global Chronic Diseases. Glob. Heart. 2016;11:459–462. doi: 10.1016/j.gheart.2016.10.017.

- 59. Weidinger L. Ethical and Social Risks of Harm from Language Models. Preprint at arXiv. 2021. doi: 10.48550/arXiv.2112.04359.

- 60. Challenges in digital medicine applications in under-resourced settings. Nat. Commun. 2022;13:3020. doi: 10.1038/s41467-022-30728-3.

- 61. Celi L.A., Cellini J., Charpignon M.L., Dee E.C., Dernoncourt F., Eber R., Mitchell W.G., Moukheiber L., Schirmer J., Situ J., et al. Sources of bias in artificial intelligence that perpetuate healthcare disparities-A global review. PLOS Digit. Health. 2022;1:e0000022. doi: 10.1371/journal.pdig.0000022.

- 62. Nagendran M., Chen Y., Lovejoy C.A., Gordon A.C., Komorowski M., Harvey H., Topol E.J., Ioannidis J.P.A., Collins G.S., Maruthappu M. Artificial intelligence versus clinicians: systematic review of design, reporting standards, and claims of deep learning studies. BMJ. 2020;368:m689. doi: 10.1136/bmj.m689.

- 63. Rae J.W. Scaling Language Models: Methods, Analysis & Insights from Training Gopher. Preprint at arXiv. 2021. doi: 10.48550/arXiv.2112.11446.

- 64. Jiang L.Y., Liu X.C., Nejatian N.P., Nasir-Moin M., Wang D., Abidin A., Eaton K., Riina H.A., Laufer I., Punjabi P., et al. Health system-scale language models are all-purpose prediction engines. Nature. 2023;619:357–362. doi: 10.1038/s41586-023-06160-y.

- 65. Perrigo B. Exclusive: The $2 Per Hour Workers Who Made ChatGPT Safer. Time. January 18, 2023. https://time.com/6247678/openai-chatgpt-kenya-workers/

- 66. Steiger M., Bharucha T.J., Venkatagiri S., Riedl M.J., Lease M. The Psychological Well-Being of Content Moderators: The Emotional Labor of Commercial Moderation and Avenues for Improving Support. Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems. 2021.

- 67. Ng I.Y.H., Lim S.S., Pang N. Making Universal Digital Access Universal: Lessons from COVID-19 in Singapore. Univ. Access Inf. Soc. 2022:1–11.

- 68. Preiksaitis C., Sinsky C.A., Rose C. ChatGPT is not the solution to physicians' documentation burden. Nat. Med. 2023;29:1296–1297. doi: 10.1038/s41591-023-02341-4.

- 69. Abràmoff M.D., Tarver M.E., Loyo-Berrios N., Trujillo S., Char D., Obermeyer Z., Eydelman M.B., Foundational Principles of Ophthalmic Imaging and Algorithmic Interpretation Working Group of the Collaborative Community for Ophthalmic Imaging Foundation, Washington, D.C., Maisel W.H. Considerations for addressing bias in artificial intelligence for health equity. NPJ Digit. Med. 2023;6:170. doi: 10.1038/s41746-023-00913-9.

- 70. Popejoy A.B., Ritter D.I., Crooks K., Currey E., Fullerton S.M., Hindorff L.A., Koenig B., Ramos E.M., Sorokin E.P., Wand H., et al. The clinical imperative for inclusivity: Race, ethnicity, and ancestry (REA) in genomics. Hum. Mutat. 2018;39:1713–1720. doi: 10.1002/humu.23644.

- 71. Manrai A.K., Funke B.H., Rehm H.L., Olesen M.S., Maron B.A., Szolovits P., Margulies D.M., Loscalzo J., Kohane I.S. Genetic Misdiagnoses and the Potential for Health Disparities. N. Engl. J. Med. 2016;375:655–665. doi: 10.1056/NEJMsa1507092.

- 72. Rajkomar A., Hardt M., Howell M.D., Corrado G., Chin M.H. Ensuring Fairness in Machine Learning to Advance Health Equity. Ann. Intern. Med. 2018;169:866–872. doi: 10.7326/M18-1990.

- 73. Leslie D., Mazumder A., Peppin A., Wolters M.K., Hagerty A. Does "AI" stand for augmenting inequality in the era of covid-19 healthcare? BMJ. 2021;372:n304. doi: 10.1136/bmj.n304.

- 74. Seyyed-Kalantari L., Liu G., McDermott M., Chen I.Y., Ghassemi M. CheXclusion: Fairness gaps in deep chest X-ray classifiers. Pac. Symp. Biocomput. 2021;26:232–243.

- 75. Obermeyer Z., Powers B., Vogeli C., Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. 2019;366:447–453. doi: 10.1126/science.aax2342.

- 76. Zhang A., Xing L., Zou J., Wu J.C. Shifting machine learning for healthcare from development to deployment and from models to data. Nat. Biomed. Eng. 2022;6:1330–1345. doi: 10.1038/s41551-022-00898-y.

- 77. Omiye J.A., Lester J.C., Spichak S., Rotemberg V., Daneshjou R. Large language models propagate race-based medicine. NPJ Digit. Med. 2023;6:195–204. doi: 10.1038/s41746-023-00939-z.

- 78. Verdicchio M., Perin A. When Doctors and AI Interact: on Human Responsibility for Artificial Risks. Philos. Technol. 2022;35:11–28. doi: 10.1007/s13347-022-00506-6.

- 79. Price W.N., Cohen I.G. Privacy in the age of medical big data. Nat. Med. 2019;25:37–43. doi: 10.1038/s41591-018-0272-7.

- 80. Reddy S., Allan S., Coghlan S., Cooper P. A governance model for the application of AI in health care. J. Am. Med. Inform. Assoc. 2020;27:491–497. doi: 10.1093/jamia/ocz192.

- 81. Salehinejad H., et al. Generalization of deep neural networks for chest pathology classification in X-rays using generative adversarial networks. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). 2018:990–994.

- 82. Sheller M.J., Edwards B., Reina G.A., Martin J., Pati S., Kotrotsou A., Milchenko M., Xu W., Marcus D., Colen R.R., Bakas S. Federated learning in medicine: facilitating multi-institutional collaborations without sharing patient data. Sci. Rep. 2020;10. doi: 10.1038/s41598-020-69250-1.

- 83. Sun C., van Soest J., Koster A., Eussen S.J.P.M., Schram M.T., Stehouwer C.D.A., Dagnelie P.C., Dumontier M. Studying the association of diabetes and healthcare cost on distributed data from the Maastricht Study and Statistics Netherlands using a privacy-preserving federated learning infrastructure. J. Biomed. Inform. 2022;134. doi: 10.1016/j.jbi.2022.104194.

- 84. Tan T.E., Anees A., Chen C., Li S., Xu X., Li Z., Xiao Z., Yang Y., Lei X., Ang M., et al. Retinal photograph-based deep learning algorithms for myopia and a blockchain platform to facilitate artificial intelligence medical research: a retrospective multicohort study. Lancet Digit. Health. 2021;3:e317–e329. doi: 10.1016/S2589-7500(21)00055-8.

- 85. Chang Y., Fang C., Sun W. A Blockchain-Based Federated Learning Method for Smart Healthcare. Comput. Intell. Neurosci. 2021;2021. doi: 10.1155/2021/4376418.

- 86. Gibbons C. Digital Access Disparities: Policy and Practice Overview. Panel Discussion, Digital Skills and Connectivity as Social Determinants of Health. Sheon A., Conference Report: Digital Skills: A Hidden “Super” Social Determinant of Health: Interdisciplinary Association for Population Health Science. 2018. doi: 10.1007/978-0-387-72815-5_8.

- 87. Sieck C.J., Sheon A., Ancker J.S., Castek J., Callahan B., Siefer A. Digital inclusion as a social determinant of health. NPJ Digit. Med. 2021;4:52. doi: 10.1038/s41746-021-00413-8.

- 88. Van Dijk J.A.G.M. A framework for digital divide research. Electron. J. Commun. 2002;12. http://www.cios.org/EJCPUBLIC/012/1/01211.html

- 89. Jobin A., Ienca M., Vayena E. The global landscape of AI ethics guidelines. Nat. Mach. Intell. 2019;1:389–399.

- 90. Vasey B., Nagendran M., Campbell B., Clifton D.A., Collins G.S., Denaxas S., Denniston A.K., Faes L., Geerts B., Ibrahim M., et al. Reporting guideline for the early-stage clinical evaluation of decision support systems driven by artificial intelligence: DECIDE-AI. Nat. Med. 2022;28:924–933. doi: 10.1038/s41591-022-01772-9.

- 91. Collins G.S., Dhiman P., Andaur Navarro C.L., Ma J., Hooft L., Reitsma J.B., Logullo P., Beam A.L., Peng L., Van Calster B., et al. Protocol for development of a reporting guideline (TRIPOD-AI) and risk of bias tool (PROBAST-AI) for diagnostic and prognostic prediction model studies based on artificial intelligence. BMJ Open. 2021;11:e048008. doi: 10.1136/bmjopen-2020-048008.

- 92. Gama F., Tyskbo D., Nygren J., Barlow J., Reed J., Svedberg P. Implementation Frameworks for Artificial Intelligence Translation Into Health Care Practice: Scoping Review. J. Med. Internet Res. 2022;24. doi: 10.2196/32215.

- 93. Rossi J.G., Rojas-Perilla N., Krois J., Schwendicke F. Cost-effectiveness of Artificial Intelligence as a Decision-Support System Applied to the Detection and Grading of Melanoma, Dental Caries, and Diabetic Retinopathy. JAMA Netw. Open. 2022;5:e220269. doi: 10.1001/jamanetworkopen.2022.0269.

- 94. Borys K., Schmitt Y.A., Nauta M., Seifert C., Kramer N., Friedrich C.M., Nensa F. Explainable AI in medical imaging: An overview for clinical practitioners - Saliency-based XAI approaches. Eur. J. Radiol. 2023;162:110787. doi: 10.1016/j.ejrad.2023.110787.

- 95. Stephens A.F., Šeman M., Hodgson C.L., Gregory S.D. SHAP Model Explainability in ECMO – PAL mortality prediction: A Critical Analysis. Author’s reply. Intensive Care Med. 2023;49:1560–1562. doi: 10.1007/s00134-023-07237-y.

- 96. Benda N.C., Novak L.L., Reale C., Ancker J.S. Trust in AI: why we should be designing for APPROPRIATE reliance. J. Am. Med. Inform. Assoc. 2021;29:207–212. doi: 10.1093/jamia/ocab238.