Summary

Communicating emotional intensity plays a vital ecological role because it provides valuable information about the nature and likelihood of the sender’s behavior1–3. For example, attack often follows signals of intense aggression if receivers fail to retreat4,5. Humans regularly use facial expressions to communicate such information6–11. Yet, how this complex signalling task is achieved remains unknown. We addressed this question using a perception-based data-driven method to mathematically model the specific facial movements that receivers use to classify the six basic emotions—happy, surprise, fear, disgust, anger, and sad—and judge their intensity, in two distinct cultures (East Asian, Western European; total n = 120). In both cultures, receivers expected facial expressions to dynamically represent emotion category and intensity information over time using a multi-component compositional signalling structure. Specifically, emotion intensifiers peaked earlier or later than emotion classifiers and represented intensity using amplitude variations. Emotion intensifiers are also more similar across emotions than classifiers are, suggesting a latent broad-plus-specific signalling structure. Cross-cultural analysis further revealed similarities and differences in expectations that could impact cross-cultural communication. Specifically, East Asian and Western European receivers have similar expectations about which facial movements represent high intensity for threat-related emotions, such as anger, disgust, and fear, but differ on those that represent low threat emotions, such as happiness and sadness. Together, our results provide new insights into the intricate processes by which facial expressions can achieve complex dynamic signalling tasks, by revealing the rich information embedded in facial expressions.

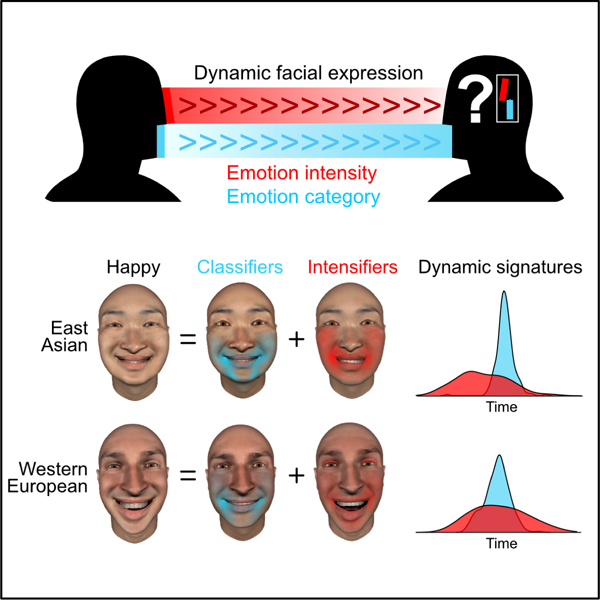

Graphical Abstract

In brief

Chen et al. examine how facial expressions dynamically represent emotion category and intensity information. Using a perception-based data-driven method and information-theoretic analyses, they reveal, in two distinct cultures, that facial expressions represent emotion and intensity information using a specific compositional dynamic structure.

RESULTS AND DISCUSSION

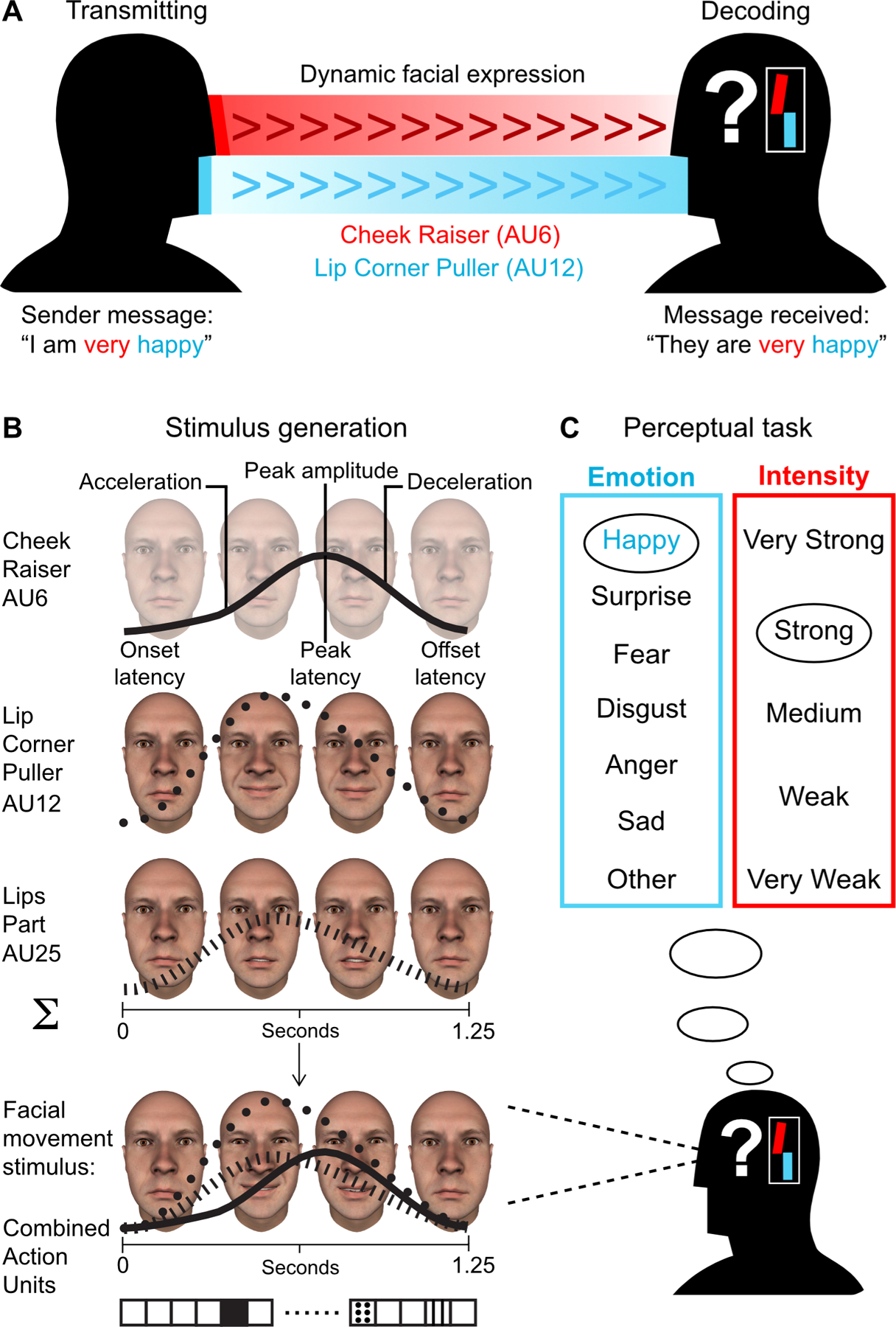

In human societies, communicating emotions and their intensity is often achieved using the information-rich dynamic signals of facial expressions6,8,10,11. For example, different smiles distinguish contentment from delight7,12,13 while different snarls distinguish irritation from fury14 (see also 15,16 for vocal expressions and 17,18 for body movements). Yet, how facial expressions achieve the complex task of dynamically conveying emotion category and intensity information simultaneously remains unknown. Existing knowledge suggests that, as multi-component signals, facial expressions could represent such information using specific compositions of different facial movements (called Action Units, AUs19) as emotion classifiers and emotion intensifiers, as illustrated in Figure 1A. For example, to communicate the emotion message “I am very happy”, the sender would encode it in a dynamic facial expression composed of different facial movements (i.e., AUs): cheek raising (Cheek Raiser—AU6, in red) and smiling (Lip Corner Puller—AU12, in blue; color saturation represents AU amplitude over time). When communication is successful, the receiver accurately decodes the message “they are very happy” from the facial expression signal by comparing it to their prior knowledge (represented by the color-coded signal in the receiver’s mind). Thus, here, the smile (Lip Corner Puller—AU12) elicited in the receiver the perception of the emotion ‘happy’ while the cheek raiser (Cheek Raiser—AU6 in red) elicited the perception of ‘strong’ intensity7,12,20–24. In addition, specific temporal features—such as when facial movements are activated and how strongly they are activated—could also contribute to communicating emotion intensity (see 13,25–27 for smiles). Finally, culture-specific values and preferences often lead to different interpretations of intense facial expressions of emotion28–32, suggesting differences in how emotion intensity is communicated across cultures.

Figure 1. Illustration of communication framework and data-driven method used to model the facial movements that represent emotion and intensity.

(A) General framework of facial expression communication. To communicate the emotion message “I am very happy”, the sender encodes it in a dynamic facial expression signal—here, the signal comprises two facial movements (i.e., Action Units, AUs19): cheek raiser (Cheek Raiser—AU6 in red) and smiling (Lip Corner Puller—AU12, in blue; color saturation represents peak amplitude over time). Successful communication relies on mutual expectations of social signals (here, facial expressions) between the sender and receiver. Here, the receiver accurately decodes the message, “they are very happy”, from the dynamic signal using their prior knowledge (represented by the color-coded signals in the receiver’s mind). Specifically, the receiver perceived the emotion category ‘happy’ based on Lip Corner Puller (AU12) and perceived strong emotion intensity based on Cheek Raiser (AU6).

(B) Stimulus generation. On each experimental trial, we sampled a random combination of facial movements (i.e., AUs, median = 3 AUs across trials) from a core set of 41 AUs and assigned a random movement to each AU using six temporal parameters (see labels illustrating the solid black curve). In this illustrative trial, three AUs are randomly selected (Cheek Raiser—AU6, Lip Corner Puller—AU12, and Lips Part—AU25) and each is activated with a random movement (see solid, dotted, and dashed curves representing each AU). The AUs are then combined to produce a photo-realistic facial animation, shown here as four snapshots across time (see bottom row of faces; see also Video S1 for another illustrative stimulus). The vector represents the three AUs randomly sampled in this example trial.

(C) Perceptual task. The receiver classifies the facial expression stimulus as one of the six emotions—‘happy,’ ‘surprise,’ ‘fear,’ ‘disgust,’ ‘anger,’ or ‘sad’ (blue box)—and rates its intensity on a 5-point scale from ‘very weak’ to ‘very strong’ (red box), but only if they perceive that the facial animation represents that emotion and intensity to them; otherwise, they select ‘other’. Here, the receiver perceived the facial expression stimulus as representing ‘happy’ at ‘strong’ intensity (see ellipses). Each receiver (60 Western European, 60 East Asian) completed 2,400 such trials with all facial expressions displayed on same-ethnicity male and female face identities.

Here, we addressed the ecologically important question of how facial expressions represent emotions and their intensity by modelling the dynamic compositions of facial movements that represent the six basic emotions—happy, surprise, fear, disgust, anger, and sad—and their intensity in two cultures with known differences in facial expression perception—Western European and East Asian29,33–35. Specifically, we modelled these signals using the perceptual expectations of receivers because understanding any system of communication fundamentally relies on explaining what specific signals drive the receivers’ perceptual responses9,36–38, thus reflecting the symbiotic relationship between signal production and perception4,6,39–43. We used an established approach33,44,45,14,46,34,47,48 that combines classic reverse correlation methods used in ethology39, vision science49,50, neuroscience51–53, and engineering36,54–56 with a modern computer graphics-based generative model of human facial movements, subjective human perception33,34,46, and information-theoretic analysis tools57. Figure 1B–C illustrates the approach.

On each experimental trial, we used a generative model of human facial movements58 to agnostically generate a facial expression. Specifically, we pseudo-randomly sampled a biologically plausible combination of AUs from a set of 41 core AUs and assigned a random movement to each AU (see Figure 1B and Stimuli and procedure—STAR Methods). The receiver viewed the random facial animation and classified it as one of the six basic emotions—’happy,’ ‘surprise,’ ‘fear,’ ‘disgust,’ ‘anger,’ or ‘sad’—and rated its intensity on a 5-point scale from ‘very weak’ to ‘very strong,’ only if they perceived that the facial animation accurately represented the emotion and intensity to them. Otherwise, they selected ‘other’. Each receiver (60 Western European, 60 East Asian, see Participants—STAR Methods) completed 2,400 such trials with all facial animations displayed on same-ethnicity photorealistic face identities of real people (see Stimuli and procedure—STAR Methods).

Therefore, on each experimental trial, we captured the dynamic facial movements that elicit the perception of a given emotion category and its intensity—e.g., ‘happy’ at ‘strong’ intensity—in each individual receiver in each culture. Following the experiment, we measured the statistical relationship between the facial movements presented on each trial (i.e., the dynamic AUs) and the receiver’s emotion category and intensity responses separately using the general measure of Mutual Information (MI)57,59. This analysis produced a statistically robust model of the facial movements that elicit the perception of each emotion category and its intensity for each receiver—that is, a quantitative estimate of the physical form of specific AUs and their temporal parameters based on a space of 41 AUs x 6 temporal parameters (see Modelling emotion classifier and intensifier facial movements—STAR Methods). Thus, our data-driven approach can objectively and precisely characterize the facial movements that receivers use to classify emotions and judge their intensity by using the implicit prior knowledge they have developed from interacting with their environment36,60,61.
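As a rough illustration of the quantity involved, the sketch below computes a plug-in estimate of Mutual Information (in bits) between two discrete variables, such as whether an AU was present on a trial and whether the receiver gave a particular response. The function name and the toy trial data are hypothetical, and the sketch omits the statistical testing described in the STAR Methods; it is not the study's analysis code.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of mutual information (bits) between two discrete sequences."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("sequences must be non-empty and of equal length")
    n = len(xs)
    joint = Counter(zip(xs, ys))   # counts of each (x, y) pair
    count_x = Counter(xs)          # marginal counts of x
    count_y = Counter(ys)          # marginal counts of y
    mi = 0.0
    for (x, y), c in joint.items():
        p_xy = c / n
        # p(x, y) / (p(x) * p(y)) simplifies to c * n / (count_x * count_y)
        mi += p_xy * math.log2(c * n / (count_x[x] * count_y[y]))
    return mi


# Hypothetical toy data (not from the study): eight trials recording whether
# an AU was present (1) or absent (0) and whether the receiver chose 'happy'.
au_present = [1, 1, 1, 1, 0, 0, 0, 0]
said_happy = [1, 1, 1, 0, 0, 0, 0, 1]
mi_bits = mutual_information(au_present, said_happy)
```

Because the result is expressed in bits, values for different AUs and different response types can be compared on a common scale.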

Emotions and their intensity are represented by specific facial movements

We used the above method to model the facial movements that communicate the six basic emotions and their intensity in two distinct cultures and validated each facial expression model (see Validating emotion classifier and intensifier facial movements—STAR Methods and Figure S1B). To ensure that the AUs resulting from the within-receiver analysis reflect the estimated effects in the sampled population, we computed the Bayesian estimate of population prevalence and retained for further analysis only the AUs whose effects were observed in a number of receivers above the population prevalence threshold (see Bayesian estimate of population prevalence—STAR Methods). Figure 2A shows the composition of emotion classifier and intensifier facial movements for each emotion using East Asian receivers as an example (Figure S1A shows both cultures).
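To make the idea of a prevalence estimate concrete, the following sketch shows one common formulation: if k of n receivers show a significant within-receiver effect at false positive rate alpha, and the test is assumed to have perfect sensitivity, the maximum a posteriori prevalence under a uniform prior is (k/n - alpha) / (1 - alpha), floored at zero. The function names, the alpha value, and the example counts are assumptions for illustration; the procedure and threshold actually used are those given in the STAR Methods.

```python
def prevalence_map(k, n, alpha=0.05):
    """MAP estimate of population prevalence (uniform prior, sensitivity assumed to be 1)."""
    if n <= 0 or not 0 <= k <= n:
        raise ValueError("need n > 0 and 0 <= k <= n")
    return max(0.0, (k / n - alpha) / (1.0 - alpha))


def prevalence_posterior_grid(k, n, alpha=0.05, steps=1001):
    """Normalized posterior over prevalence, evaluated on an evenly spaced grid."""
    grid = [i / (steps - 1) for i in range(steps)]
    weights = []
    for g in grid:
        # Probability that a randomly chosen receiver tests significant
        theta = alpha + (1.0 - alpha) * g
        weights.append(theta ** k * (1.0 - theta) ** (n - k))
    total = sum(weights)
    return grid, [w / total for w in weights]


# Hypothetical example: an effect found in 30 of 60 receivers.
map_estimate = prevalence_map(30, 60)
grid, post = prevalence_posterior_grid(30, 60)
grid_mode = grid[post.index(max(post))]
```

The grid posterior is included only to show that its mode agrees with the closed-form estimate; it could also be used to read off a lower bound on prevalence.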

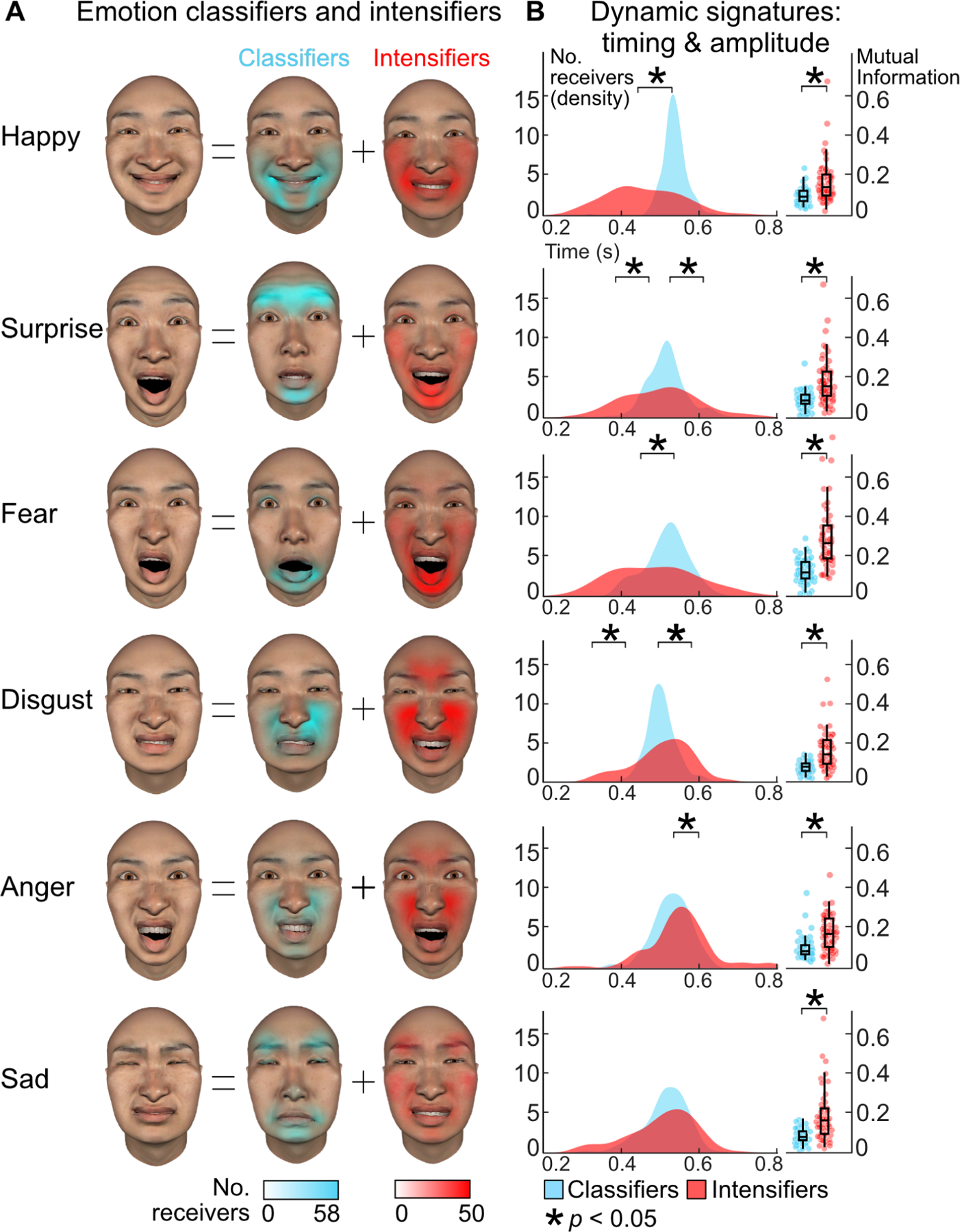

Figure 2. Facial expressions are composed of emotion classifiers and intensifiers.

(A) Emotion classifiers and intensifiers. Color-coded face maps show the composition of emotion classifier (blue) and intensifier (red) facial movements for each emotion, using East Asian receivers as an example (see Figure S1 for both cultures). Color saturation shows the number of receivers showing the effect (see color bar below; see Modelling emotion classifier and intensifier facial movements—STAR Methods). For example, ‘anger’ is classified using lip raising (Upper Lip Raiser—AU10) and intensified using snarling (Nose Wrinkle—AU9), wide open eyes (Upper Lid Raiser—AU5), and mouth gaping (Mouth Stretch—AU27).

(B) Dynamic signatures: timing & amplitude. Color-coded plots show the distribution of emotion classifiers (blue) and intensifiers (red) across time for each emotion. Distribution height represents the kernel density estimate of the number of receivers at each time point (see Figure S2 for individual data points and both cultures). Asterisks indicate a statistically significant difference. Results show that emotion classifiers and intensifiers reach their peak amplitude at significantly different times (see Temporal analysis of emotion classifier and intensifier facial movements—STAR Methods). Color-coded points and box plots to the right show the relationship between the amplitude of emotion classifiers (blue) or intensifiers (red) and emotion intensity judgments, measured using Mutual Information (MI). Each point represents an individual receiver, averaged across all classifiers or intensifiers. Box plots show the distribution of MI values across receivers, for classifiers and intensifiers separately. Asterisks indicate a statistically significant difference (see more details in Table S2). Results show that the amplitude of emotion intensifiers has a stronger relationship with emotion intensity judgments than the amplitude of emotion classifiers does (see Measuring the relationship between facial movement amplitude and emotion intensity judgments—STAR Methods).

Emotion classifiers and intensifiers have distinct dynamic signatures

Given their potentially different communicative roles, emotion classifier and intensifier signals could also have distinct dynamic signatures, such as differences in the order in which they are activated44,62 and how strongly they are activated13,25–27. To test this, we first analyzed the dynamics of the classifier and intensifier facial movements separately for each of the six emotions and each culture (see Temporal analysis of emotion classifier and intensifier facial movements—STAR Methods). Figure 2B shows the results using East Asian receivers as an example (Figure S2 shows both cultures). For each emotion, color-coded plots on the left show the estimated distribution of peak amplitude times of emotion classifiers (blue) and intensifiers (red) across time. Comparison of their distributions showed statistically significant differences (p < 0.05 for all emotions and both cultures, except sad in East Asian culture; see Figure S2). Next, we examined the relationship between the peak amplitude of emotion classifiers and intensifiers and the receivers’ emotion intensity judgments (see Relationship between facial movement amplitude and emotion intensity judgments—STAR Methods and Table S2). Figure 2B shows the results using box plots, with individual points representing individual receivers. Results show that emotion intensifier amplitudes are statistically significantly more strongly associated with emotion intensity judgments than emotion classifier amplitudes are (p < 0.05 for all emotions and both cultures, except sad for Western European receivers; see Table S2). Thus, in line with existing accounts of general communication4,5,63,64, our results show that, in each culture, receivers expect facial expressions of emotion to represent emotion categories and their intensity using a multi-component signal that has a distinctive temporal signature and latent structure for broad and specific signalling.

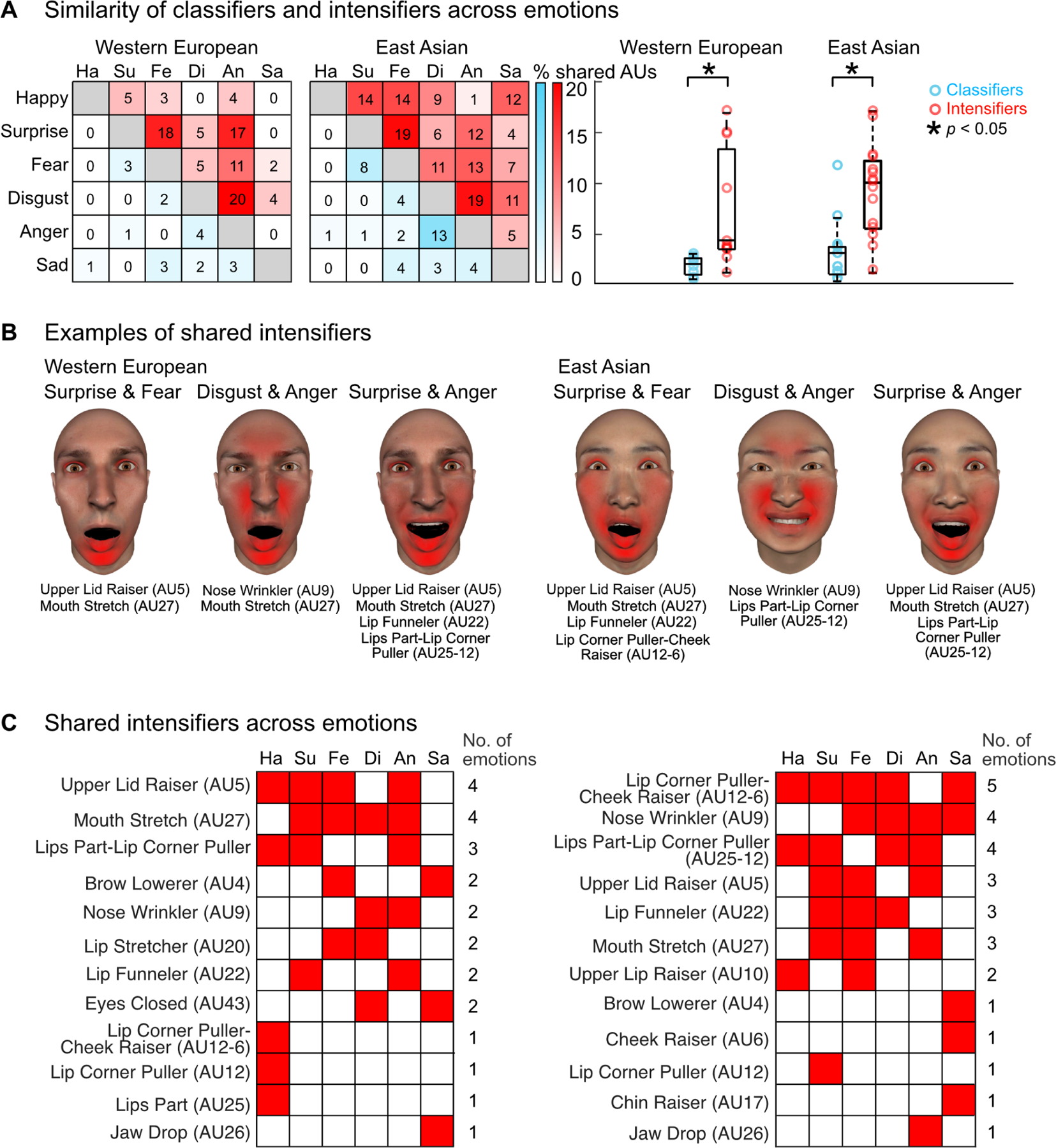

Emotion classifier facial movements are more distinct than emotion intensifiers

Across emotions, a visual inspection of Figure 2A suggests that emotion classifier facial movements are more distinct than emotion intensifiers. For example, East Asian receivers classify ‘surprise’, ‘fear’ and ‘anger’ using different facial movements—i.e., eyebrow raising (Inner-Outer Brow Raising—AU1-2), lip stretching (Lip Stretcher—AU20) and lip raising (Upper Lip Raiser—AU10), respectively—but perceive their high intensity using similar facial movements, such as wide-open eyes (Upper Lid Raiser—AU5) and mouth gaping (Mouth Stretch—AU27). Formal analyses confirmed this observation (see Distinctiveness of emotion classifier and intensifier facial movements—STAR Methods). Figure 3 shows the results. In Figure 3A, the color-coded matrix on the left shows the percentage of shared AUs across each pair of emotions. Higher color saturation indicates more similarity (see color bar to right; exact percentages are shown in each square). Box plots on the right show that the average similarity of emotion intensifiers across emotions is statistically significantly higher than that of emotion classifiers (p < 0.05, two-sample t-test). Figure 3B shows examples of AUs that intensify several emotions—for example, in Western receivers, the wide-open eyes (Upper Lid Raiser—AU5) and gaping mouth (Mouth Stretch—AU27) intensify both ‘surprise’ and ‘fear’. In Figure 3C, red squares indicate the emotions that each AU intensifies, with the total number of emotions shown on the right. For example, for East Asian receivers, lip pulling with cheek raising (Lip Corner Puller-Cheek Raiser—AU12-6) intensifies five of the six emotions (i.e., ‘happy’, ‘surprise’, ‘fear’, ‘disgust’ and ‘sad’). Thus, our results show that in both cultures, emotion classifier facial movements are more distinct across emotions than emotion intensifiers are. This suggests that they could play different communicative roles, with classifiers representing finer-grained emotion categories that enable precise adaptive action while intensifiers represent broader, attention-grabbing information that could quickly signal potential threats and trigger avoidance behaviors20,63–65.
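The pairwise comparison described above can be sketched as follows. The text does not state how the percentage of shared AUs is normalized, so this sketch assumes shared AUs divided by the union of the two AU sets; the function names are hypothetical, and the toy AU sets are a simplification of the example in the text rather than the full models.

```python
from itertools import combinations


def shared_percentage(aus_a, aus_b):
    """Percentage of AUs shared by two emotions (assumed: intersection over union)."""
    a, b = set(aus_a), set(aus_b)
    union = a | b
    if not union:
        return 0.0
    return 100.0 * len(a & b) / len(union)


def pairwise_similarity(au_sets):
    """Map each unordered emotion pair to its percentage of shared AUs."""
    return {
        (e1, e2): shared_percentage(au_sets[e1], au_sets[e2])
        for e1, e2 in combinations(sorted(au_sets), 2)
    }


# Simplified toy sets based on the example in the text (illustrative only).
classifiers = {"surprise": {"AU1-2"}, "fear": {"AU20"}, "anger": {"AU10"}}
intensifiers = {
    "surprise": {"AU5", "AU27"},
    "fear": {"AU5", "AU27"},
    "anger": {"AU5", "AU27", "AU9"},
}

classifier_sim = pairwise_similarity(classifiers)
intensifier_sim = pairwise_similarity(intensifiers)
mean_classifier = sum(classifier_sim.values()) / len(classifier_sim)
mean_intensifier = sum(intensifier_sim.values()) / len(intensifier_sim)
```

In this toy case the classifier sets share nothing, while the intensifier sets overlap heavily, which is the qualitative pattern the formal analysis tests.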

Figure 3. Similarity of classifiers and intensifiers across emotions.

(A) For each culture, the color-coded matrix shows the percentage of emotion classifiers (blue) and intensifiers (red) that are shared across each emotion pair. Higher color saturation indicates more similarity; exact percentages are shown in each square. Color-coded circles and boxplots to the right show the data aggregated across emotion pairs; each circle represents one emotion pair shown in the matrix. Asterisks indicate a statistically significant difference. Results show that emotion intensifiers are significantly more similar across emotions than classifiers are (p < 0.05, two-sample t-test, see Signal distinctiveness of classifier and intensifier facial movements across emotions—STAR Methods).

(B) Examples of shared intensifiers. For each culture, face maps show examples of intensifier AUs (labels below) that are shared across emotion pairs. For example, for East Asian receivers, the wide-open eyes (Upper Lid Raiser—AU5), gaping mouth (Mouth Stretch—AU27) and lip pulling (Lips Part-Lip Corner Puller—AU25-12) intensify both ‘surprise’ and ‘anger’.

(C) Shared intensifiers across emotions. For each culture, red squares in the matrix show the emotion(s) that each AU intensifies. The total number of emotions is shown on the right. Intensifiers are ranked from the highest to lowest number of emotions. For example, for Western receivers, the wide-open eyes (Upper Lid Raiser—AU5) and gaping mouth (Mouth Stretch—AU27) intensify 4 out of 6 emotions. For East Asian receivers, lip pulling with cheek raising (Lip Corner Puller-Cheek Raiser—AU12-6), nose wrinkling (Nose Wrinkler—AU9) and lip pulling with open mouth (Lips Part-Lip Corner Puller—AU25-12) each intensify at least 4 emotions.

Emotion classifier and intensifier facial movements vary across cultures

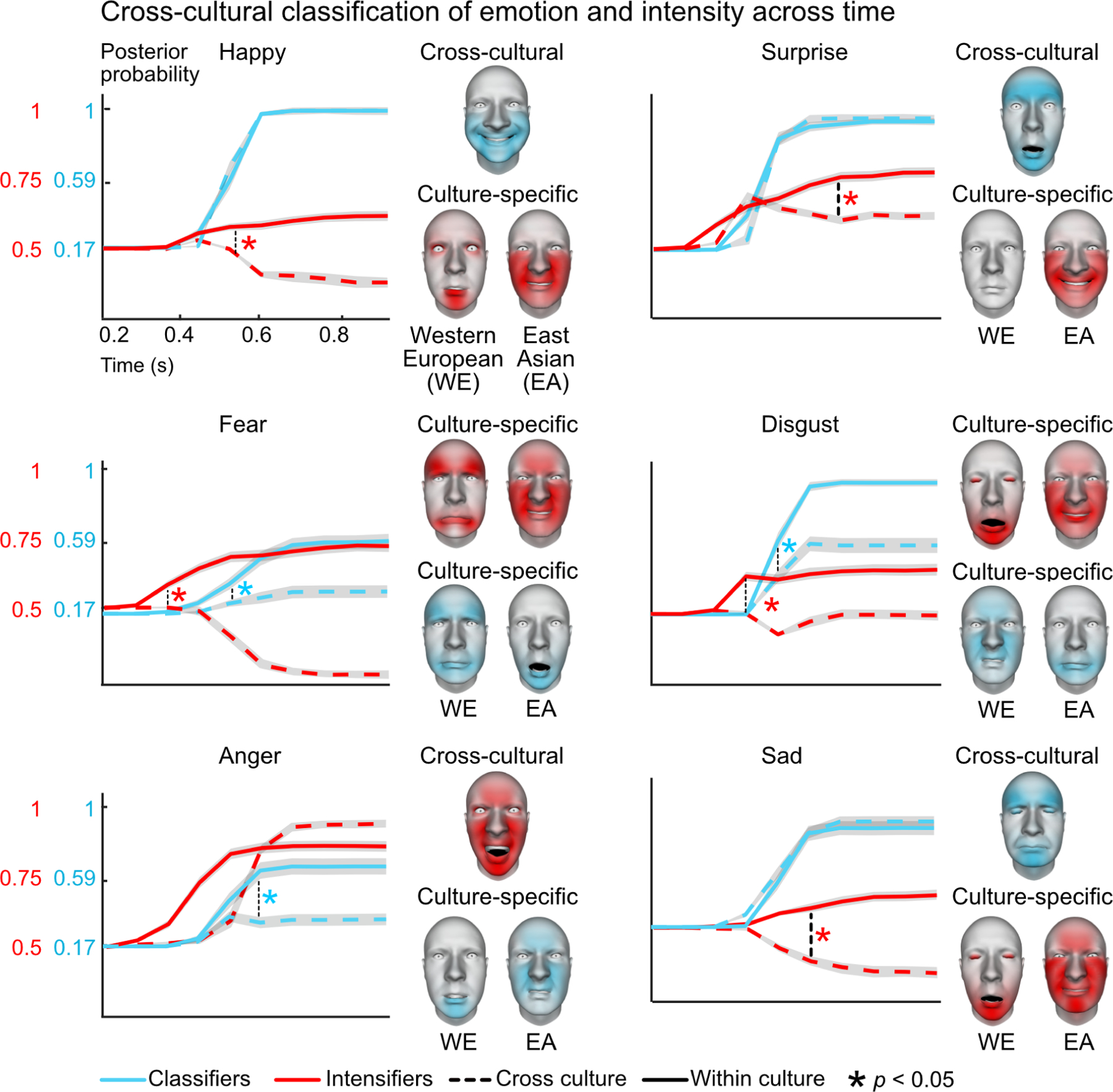

In line with current knowledge of cultural variance in facial expressions9,10,33–35,66,67, a visual inspection of our results suggests that emotion classifiers and intensifiers might differ across cultures, which could impact cross-cultural communication. To test this, we compared the facial expression dynamic temporal signatures across cultures using a Bayesian classifier approach. Specifically, we trained naïve Bayesian classifiers to classify either the six emotion categories or high intensity for each emotion, using the classifier and intensifier facial movements from each culture. We applied this procedure both for within-culture and cross-culture classification using a half-split method (see Cross-cultural classification of emotion and intensity—STAR Methods). Figure 4 shows the results using one example train-test set (see also Figure S3A). For each emotion, color-coded lines (dashed represents cross-culture results; solid represents within-culture results for comparison) show the average (mean) Bayesian classifier performance for the emotion category (blue) and its high intensity (red) at each time point of the unfolding dynamics of facial movements (x-axis). Asterisks and vertical lines indicate when cross-cultural accuracy is significantly lower than within-culture accuracy. Overall, results show that cross-culture classification accuracy is lower than the within-culture classifications—for example, ‘happy,’ ‘surprise,’ and ‘sad’ are classified accurately across cultures but their intensity is not. The opposite pattern is observed for ‘anger’—its intensity is classified accurately across cultures, but the emotion is not (see Figure S3B for specific confusions). ‘Disgust’ and ‘fear’ show an overall lower cross-cultural accuracy.
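The logic of comparing within-culture and cross-culture classification can be illustrated with a minimal Bernoulli naive Bayes classifier. This is a simplified sketch only: it uses static binary features rather than the dynamic facial movement features analyzed at each time point, and the feature vectors, labels, and function names are hypothetical toy values, not the study's data or code.

```python
import math


def train_bernoulli_nb(X, y, smoothing=1.0):
    """Fit class log-priors and Laplace-smoothed per-feature activation probabilities."""
    model = {}
    n_features = len(X[0])
    for c in sorted(set(y)):
        rows = [x for x, label in zip(X, y) if label == c]
        probs = [
            (sum(r[j] for r in rows) + smoothing) / (len(rows) + 2 * smoothing)
            for j in range(n_features)
        ]
        model[c] = (math.log(len(rows) / len(X)), probs)
    return model


def posterior(model, x):
    """Posterior probability of each class for one binary feature vector."""
    log_scores = {}
    for c, (log_prior, probs) in model.items():
        score = log_prior
        for xj, pj in zip(x, probs):
            score += math.log(pj if xj else 1.0 - pj)
        log_scores[c] = score
    top = max(log_scores.values())
    unnorm = {c: math.exp(s - top) for c, s in log_scores.items()}
    z = sum(unnorm.values())
    return {c: v / z for c, v in unnorm.items()}


def accuracy(model, X, y):
    """Proportion of samples whose most probable class matches the label."""
    hits = 0
    for x, label in zip(X, y):
        post = posterior(model, x)
        hits += max(post, key=post.get) == label
    return hits / len(y)


# Hypothetical toy features [f0, f1, f2]. Both cultures signal 'happy' with f0.
# Culture A signals 'anger' with f1, culture B with f2 (a culture-specific cue).
X_a_train = [[1, 0, 1], [1, 0, 0], [0, 1, 0], [0, 1, 0]]
y_a_train = ["happy", "happy", "anger", "anger"]
X_a_test, y_a_test = [[1, 0, 0], [0, 1, 0]], ["happy", "anger"]
X_b_test = [[1, 0, 0], [1, 0, 0], [0, 0, 1], [0, 0, 1]]
y_b_test = ["happy", "happy", "anger", "anger"]

model_a = train_bernoulli_nb(X_a_train, y_a_train)
within_acc = accuracy(model_a, X_a_test, y_a_test)
cross_acc = accuracy(model_a, X_b_test, y_b_test)
```

In this toy setup the shared cue transfers across cultures while the culture-specific cue does not, so accuracy drops when the classifier trained on one culture is tested on the other.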

Figure 4. Cross-cultural classification of emotion and intensity across time.

Each line plot shows the average (mean) Bayesian classifier performance (i.e., posterior probability) over time for the emotion category (blue) and intensity (red). Dashed lines represent cross-culture results; solid lines represent within-culture results for comparison (see also Figure S3). Shaded areas show the standard error of the mean (SEM) across the test samples. Asterisks and vertical lines show when cross-cultural accuracy is statistically significantly lower compared to within-culture performance (see legend below; see Cross-cultural classification of emotion and intensity—STAR Methods). Face maps to the right show the AUs that drive high cross-cultural accuracy (‘Cross-cultural’) or those that contribute to low cross-cultural accuracy (‘Culture-specific’). For example, ‘sad’ is classified accurately across cultures based on culturally-shared emotion classifiers, brow lowering (Brow Lowerer—AU4) and lip pursing (Chin Raiser—AU17, Lip Pressor—AU24), whereas its intensity is misclassified based on culture-specific intensifiers (e.g., Western European—mouth opening, Jaw Drop—AU26; East Asian—cheek raising, Cheek Raiser—AU6; see Table S3 for per-AU results).

To identify the specific facial movements that drive this performance, we used a leave-one-out approach (see Cross-cultural classification of emotion and intensity—STAR Methods). In Figure 4, color-coded face maps show the results (see also Table S3). For example, ‘sad’ is classified accurately across cultures based on culturally shared emotion classifier facial movements, brow lowering (e.g., Brow Lowerer—AU4) and chin raising (Chin Raiser—AU17). However, its intensity is misclassified due to culture-specific emotion intensifiers (e.g., Western European: Eyes Closed—AU43 and Jaw Drop—AU26; East Asian: Lip Corner Puller-Cheek Raiser—AU12-6 and Nose Wrinkler—AU9). In contrast, ‘anger’ is misclassified based on culture-specific emotion classifiers (e.g., Western European: Lower Lip Depressor—AU16; East Asian: Upper Lip Raiser Left/Right—AU10L/R). However, its intensity is classified accurately based on culturally shared emotion intensifiers (e.g., Upper Lid Raiser—AU5; Nose Wrinkler—AU9; Mouth Stretch—AU27). Our results reveal systematic cultural similarities and differences in the facial movements that represent emotion categories and their intensity. This suggests that emotion communication may be less nuanced across cultures than within culture.

Conclusions and future directions

We aimed to understand how facial expressions achieve the complex task of dynamically signalling multi-layered emotion messages—i.e., emotion categories and their intensity. Using a perception-based data-driven approach within a communication theory framework, we show that, in two distinct cultures, receivers expect facial expressions of emotion to comprise multi-component signals that play two distinct communicative roles—representing finer-grained emotion information (i.e., classifiers) and coarser-grained intensity information (i.e., intensifiers)—with distinct dynamic signatures. Further, cultural variance in these expectations suggests that communicating emotions across cultures could be less nuanced than within culture. Together, our results provide new insights into understanding how facial expressions dynamically represent complex emotion information to receivers in different cultures and raise new questions about how the brain dynamically parses these complex signals. We expand on these points below.

In each culture, emotion classifier facial movements are more distinct across emotions whereas emotion intensifiers are more similar23,65. This suggests that specific facial movements represent finer-grained emotion category information while others represent coarser-grained intensity information44,68. These results provide further support for theories proposing that facial expressions represent both specific and broad emotion information9,65,66,68,69. Further, these results address the seeming paradox of why some facial expressions of emotion comprise similar facial movements, which should reduce signal specificity9. Rather, our results show that such facial movements likely play the different role of representing broad intensity information while other more distinct facial movements represent emotion categories. Relatedly, because our results quantify the strength of the statistical relationship between a specific facial movement and emotion category or intensity perception, they provide an effect size of the causal relationship between stimulus features and perception on the common scale of bits. Consequently, our facial expression models can be used to generate predictions of emotion categorizations and explain the causal role of individual AUs48.

Receivers in each culture also expected emotion classifier and intensifier facial movements to be displayed in a particular temporal order, which largely mirrors the results of studies examining produced facial expressions in laboratory70 and real-world settings71,72. Understanding the temporal dynamics of facial movements has important implications for theories of emotion processing in relation to models of visual attention. Specifically, the human visual system is a bandwidth-limited information channel, in which attention (covert and overt) “guides” the accrual of task-relevant stimulus information for categorical decision73–75. Our results suggest that attention is deployed in space and time—i.e., reading out different facial movements at different time points—to guide the accrual of information. By contrast, certain events could “grab” a receiver’s attention and interrupt this accrual73—for example, the eye whites (Upper Lid Raiser—AU5), which are known to grab attention76,77, are dynamically represented in the occipito-ventral pathway before those of other facial features lower down the face53,78–80. Therefore, the expected temporal decoupling of emotion classifier and intensifier facial movements could reflect a symbiotic adaptation between the receiver’s bandwidth-limited information channel that must accrue dynamic visual information78,79 and the sender’s transmission of a multi-component emotion message. Technological developments in facial expression analysis tools81–83 will enable future work to precisely code these temporal dynamics in real-time production and further examine the relationship between production and perception. How these temporally distinct expressive facial features are processed in the brain is now the focus of ongoing studies78,84.

Cross-cultural analysis showed similarities and differences in the facial movements that receivers expect to represent emotions and their intensity. In line with existing theories32,35,85–87, this suggests that emotion communication could be less nuanced across cultures than within cultures due to culture-specific accents and/or dialects in facial expressions (but see 88). Further, some facial movements served different communicative roles across cultures—for example, Lip Corner Puller-Cheek Raiser (AU12-6) is primarily an emotion classifier in Western European receivers but an intensifier in East Asian receivers. This suggests that the communicative function of human facial movements is not fixed89, highlighting the potential role of culture in shaping facial expression signals28,33,35. Equally, we found clear cultural similarities in facial movements—specifically, for ‘happy,’ ‘surprise,’ and ‘sad’ and high intensity for ‘anger’—that could reflect the preservation of high-priority threat-related messages. For example, intense anger is a costly-to-miss signal because it is critically related to the high likelihood of threat4,64. In contrast, intensity is relatively less important for low-threat emotions, such as happy and sad (but see 90), compared to their specific emotion category information that would enable different adaptive responses91,92. Therefore, these results suggest that facial expressions could have evolved to preserve the communication of high-priority messages across cultures using similar facial movements, possibly with biological roots93,94. For example, our results show that facial movements such as eye constriction and mouth gaping intensify several emotions in both cultures, mirroring existing work on cross-cultural intensifier signals7,12,20. Together, these results provide new insights into the cultural diversity of facial expressions and support existing theories that highlight the importance of cultural accents9,32,33,35,95,96 and the breadth of cultural differences13,28,29,34,97,98.

Finally, facial expressions in the real world are typically presented alongside other sources of information, including the sender’s ethnicity99–105, age106–109 and sex110–113, their voice114, gestures and body movements115,116, and the social and cultural context9,117–121, and under various constraints of the communication channel, including proximal versus distal122 and clear versus occluded123 conditions, all of which could influence how facial expressions are produced and perceived. Future research should further investigate how the precise interplay of different sources of face information (i.e., shape, complexion, movements) contributes to emotion perception36,45,104,107,124,125.

In sum, by revealing the complexities of their dynamic signalling, our results show how facial expressions can perform the complex task of representing multi-component emotion messages. Together, our results provide new insights into the longstanding goal of deciphering the communicative system of human facial expressions with implications for existing theories of emotion communication within and across cultures, and the design of culturally sensitive socially interactive artificial agents126,127.

STAR★Methods

RESOURCE AVAILABILITY

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the Lead Contact, Chaona Chen (Chaona.Chen@glasgow.ac.uk).

Materials availability

This study did not generate new unique reagents.

Data and code availability

Data reported in this study and the custom code for analyses are deposited at the Open Science Framework (https://osf.io/3m95w/). Custom code for the experiment and visualization is available on request from the Lead Contact.

EXPERIMENTAL MODEL AND STUDY PARTICIPANT DETAILS

Participants

We recruited a total of 120 human participants (referred to in the main text as ‘receivers’)—60 white Western European (59 European, 1 North American, 31 females, mean age = 22 years, SD = 1.71 years) and 60 East Asian (60 Chinese, 24 females, mean age = 23 years, SD = 1.55 years). To control for the possibility that the perception of facial expressions could be influenced by cross-cultural experiences, we recruited participants with minimal exposure to and engagement with other cultures, as per self-report (see Screening questionnaire below). We recruited all Western participants in the UK and collected the data at the University of Glasgow. We recruited all East Asian participants in China and collected the data at the University of Electronic Science and Technology of China using the same experimental settings. All East Asian participants had a minimum International English Language Testing System (IELTS) score of 6.0 (competent user). All participants had normal or corrected-to-normal vision and were free of any emotion-related atypicalities (autism spectrum disorder, depression, anxiety), learning difficulties (e.g., dyslexia), synesthesia, and disorders of face perception (e.g., prosopagnosia), as per self-report. We obtained each participant’s written informed consent before testing and paid £6 or ¥50 per hour for their participation. The University of Glasgow and the University of Electronic Science and Technology of China authorized the experimental protocol (reference ID 300160203 and 1061422030722173, respectively).

Screening questionnaire

To control for the effects of exposure to/experience of other cultures, each potential receiver completed the following questionnaire. We selected only those answering “no” to all questions for participation in the experiments.

Western European receivers

Have you ever:

lived in a non-Western* country before (e.g., on a gap year, summer work, move due to parental employment)?

visited a non-Western country (e.g., vacation)?

dated a non-Westerner?

had a very close friendship with a non-Westerner?

been involved with any non-Western culture societies/groups?

*by Western groups/countries, we are referring to Europe (East and West), USA, Canada, United Kingdom, Australia and New Zealand.

East Asian receivers

Have you ever:

lived in a non-East Asian* country before (e.g., on a gap year, summer work, move due to parental employment)?

visited a non-East Asian country (e.g., vacation)?

dated a non-East Asian person?

had a very close friendship with a non-East Asian person?

been involved with any non-Eastern culture societies/groups?

*by East Asian groups/countries, we are referring to China, Japan, Korea, Thailand and Taiwan.

METHOD DETAILS

Stimuli and procedure

We modelled the dynamic compositions of facial movements that represent the six basic emotions and their intensity in two cultures using the perceptual expectations of receivers. We therefore aimed to examine the relationship between facial movement signals and the receiver’s perceptual responses rather than the relationship between internal emotion states and external facial displays9,128–130. To generate random combinations of facial movements on each experimental trial, we used a dynamic facial movement generator comprising a library of individual 3D facial Action Units (AUs)19 captured using a stereoscopic system from real people who are trained to accurately produce individual Action Units on their face (see 58 for full details). On each experimental trial, the facial movement generator randomly selected a set of individual AUs from a core set of 41 AUs using a binomial distribution (i.e., minimum = 1, maximum = 5 on each trial, median = 3 AUs across trials). A random movement is then applied to each AU separately using random values selected for each of six temporal parameters (onset latency, acceleration, peak amplitude, peak latency, deceleration, and offset latency—see labels illustrating the solid black curve in Figure 1B). These dynamic AUs are then combined to produce a photo-realistic facial animation. The receiver viewed the random facial animation and categorized it according to one of the six basic emotion categories—i.e., ‘happy,’ ‘surprise,’ ‘fear,’ ‘disgust,’ ‘anger’ or ‘sad’—and rated its intensity on a 5-point scale from ‘very weak’ to ‘very strong’. If the receiver did not perceive that the facial animation accurately represented any of the six emotions, including if it represented a compound or blended emotion131, such as ‘happily disgusted,’ we instructed receivers to select ‘other’ without rating intensity. 
Therefore, we explicitly used a behavioral task that does not force receivers to select facial expressions that they do not perceive as representing the six basic emotion categories and their intensity levels (see 132 for discussion on methodology; see 6,133 for similar applications; see 14,33,34,47 for validation examples). Each receiver completed 2,400 such trials. We based the number of trials per receiver on previous research using a similar generative model of facial movements, sampling procedure, experimental task, data-driven reverse correlation method, and modelling procedure to derive statistically robust and validated facial expression models14,33,34,44–48,134. We also used a densely sampled design135–138 for a thorough exploration of the relationship between stimulus features and each receiver’s perception. To control for the potential influence of face ethnicity on the perception of facial expressions139,140, we displayed all facial expression stimuli on the same set of same-ethnicity face identities for all receivers in each culture. All face identities are of real people, captured using a high-resolution 3D face capture system (see 58 for full details). For Western European receivers, we used 8 white Western European face identities (4 males, 4 females, mean age = 23 years, SD = 4.10 years); for East Asian receivers, we used 8 Chinese face identities (4 males, 4 females, mean age = 22.1 years, SD = 0.99 years). We displayed all stimuli on a black background using a 19-inch flat panel Dell monitor (60 Hz refresh rate, 1024 × 1280-pixel resolution) in the center of the receiver’s visual field. Each facial animation played for 1.25 s followed by a black screen. A chin rest maintained a constant viewing distance of 68 cm, with stimuli subtending 14.25° (vertical) × 10.08° (horizontal) of visual angle to represent the average visual angle of a human face141 during typical social interaction142. 
Each receiver completed the experiment over a series of eighteen ~20-minute sessions with a short break (~5 min) after each session and a longer break (at least 1 hour) after 3 consecutive sessions.
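
The random sampling of AUs and temporal parameters described above can be illustrated with a minimal Python sketch. It is not the generator used in the study: the binomial parameters (`n_max`, `p`), the redraw when no AU is selected, the uniform [0, 1) parameter values, and the AU indices 1 to 41 are all assumptions made for illustration.

```python
import random

N_AUS = 41  # size of the core AU set (from the text)
TEMPORAL_PARAMS = (
    "onset_latency", "acceleration", "peak_amplitude",
    "peak_latency", "deceleration", "offset_latency",
)

def sample_trial(rng, n_max=5, p=0.6):
    """Draw one random stimulus: a set of AUs, each with six temporal parameters.

    ASSUMPTIONS (not from the paper): the binomial parameters (n_max, p),
    redrawing when zero AUs are selected, and uniform [0, 1) parameter values.
    """
    n_active = 0
    while n_active < 1:  # enforce the stated minimum of one AU per trial
        n_active = sum(rng.random() < p for _ in range(n_max))
    # Hypothetical AU indices 1..41; these are not FACS AU numbers.
    active = rng.sample(range(1, N_AUS + 1), n_active)
    return {au: {name: rng.random() for name in TEMPORAL_PARAMS} for au in active}

rng = random.Random(0)
trials = [sample_trial(rng) for _ in range(2400)]  # 2,400 trials per receiver
```

Each element of `trials` maps the selected AUs to their six temporal parameters, which a rendering system would then turn into an animation.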

QUANTIFICATION AND STATISTICAL ANALYSIS

Modelling emotion classifier and intensifier facial movements

To identify the facial movements (i.e., AUs) that receivers used to classify the six basic emotions and judge their intensity, we measured the statistical relationship between each AU and each receiver’s emotion classification and intensity responses separately, using Mutual Information (MI)57,59. We computed MI to derive emotion classifier facial movements and intensifier facial movements as follows.

Classifier facial movements

To identify the facial movements that receivers used to classify each of the six emotion categories, we computed the MI between each AU (present vs. absent on each trial) and each receiver’s emotion classification responses (e.g., the individual trials categorized as ‘happy’ vs. those that were not categorized as ‘happy’). Table S1 shows the average proportion of emotion responses across receivers in both cultures. A high MI value would indicate a strong relationship between the AU and the receiver’s emotion response. A low MI value would indicate a weak relationship. To establish statistical significance, we derived a chance level distribution for each receiver using a non-parametric permutation test and maximum statistics143. Specifically, we randomly shuffled the receiver’s emotion category responses across the 2,400 trials, repeated this for 1,000 iterations, computed the maximum MI across all AUs at each iteration to control the family-wise error rate (FWER) over AUs, and used the 95th percentile of the resulting distribution of maximum MI values as a threshold for inference (FWER p < 0.05 within-receiver test). For AUs with statistically significantly high MI values, we identified those with a positive relationship with the receiver’s emotion responses (i.e., the receiver categorized a given emotion when the AU is present) using Point-wise Mutual Information (PMI)144. Specifically, a positive PMI value indicates that the presence (vs absence) of an AU (e.g., Lip Corner Puller—AU12) increases the probability of observing a specific emotion response (e.g., selecting ‘happy’) in the receiver.
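
As a rough illustration of this pipeline, the sketch below computes MI and PMI from trial counts and derives a maximum-statistic permutation threshold. It uses simple plug-in estimators on simulated toy data; the function names, the toy effect sizes, and the reduced number of permutations are assumptions, and the deposited analysis code may use different estimators.

```python
import math
import random
from collections import Counter

def mutual_information(x, y):
    """Plug-in MI (bits) between two equal-length sequences of discrete labels."""
    n = len(x)
    pxy, px, py = Counter(zip(x, y)), Counter(x), Counter(y)
    return sum((c / n) * math.log2(c * n / (px[a] * py[b]))
               for (a, b), c in pxy.items())

def pmi(x, y, a=1, b=1):
    """Point-wise MI (bits) for the joint event (x == a, y == b)."""
    n = len(x)
    p_ab = sum(1 for xi, yi in zip(x, y) if xi == a and yi == b) / n
    if p_ab == 0:
        return float("-inf")  # the two events never co-occur
    p_a = sum(1 for xi in x if xi == a) / n
    p_b = sum(1 for yi in y if yi == b) / n
    return math.log2(p_ab / (p_a * p_b))

def max_stat_threshold(au_matrix, response, n_perm=1000, q=0.95, seed=0):
    """q-th quantile of the permutation distribution of the maximum MI over AUs."""
    rng = random.Random(seed)
    shuffled = list(response)
    maxima = []
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break any AU-response relationship
        maxima.append(max(mutual_information(au, shuffled) for au in au_matrix))
    maxima.sort()
    return maxima[min(n_perm - 1, int(q * n_perm))]

# Toy data (simulated, not from the study): AU 0 drives the response, AUs 1-2 do not.
rng = random.Random(1)
n_trials = 400
aus = [[rng.randint(0, 1) for _ in range(n_trials)] for _ in range(3)]
response = [int(rng.random() < (0.8 if a else 0.1)) for a in aus[0]]

mi_values = [mutual_information(au, response) for au in aus]
threshold = max_stat_threshold(aus, response, n_perm=200)
significant = [i for i, mi in enumerate(mi_values) if mi > threshold]
```

Taking the maximum over AUs at each permutation is what gives family-wise control: an AU is retained only if its MI exceeds what the best of all AUs achieves by chance.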

Intensifier facial movements

To identify the AUs that intensify each emotion, we first extracted the individual trials that each receiver categorized as a given emotion (e.g., ‘anger’) and normalized the intensity ratings across these trials for each receiver and emotion separately, so that the intensity ratings reflected each receiver’s relative judgements of intensity of each emotion. We then computed, for each emotion and receiver separately, the Mutual Information (MI) between each AU (present vs. absent on each trial) and each receiver’s corresponding intensity ratings. A high MI would indicate a strong relationship between the AU and the receiver’s judgments of intensity of each emotion. A low MI value would indicate a weak relationship.

To establish statistical significance, we used the same non-parametric permutation test with maximum statistics as described above and identified the AUs with statistically significantly high MI values (FWER p < 0.05 within-receiver test). We then identified AUs with a positive relationship with the receiver’s high intensity responses using PMI as described above. Here, a positive PMI value indicates that the presence (vs absence) of an AU (e.g., Upper Lid Raiser—AU5) increases the probability of the receiver perceiving that a given emotion (e.g., anger) is highly intense.

Bayesian estimate of population prevalence

To obtain a population inference from the within-receiver results, we used a Bayesian estimate of population prevalence135. In this approach, we model the population from which our experimental receivers are sampled with a binary property: each possible receiver either would, or would not, show a true positive effect in the specific test considered (e.g., that the receiver uses a specific AU to classify an emotion). A certain proportion of the population possesses this binary effect. We then perform an inference against the null hypothesis that the value of this population proportion parameter—i.e., the prevalence—is 0. For example, with 60 receivers in each culture as considered, at p < 0.05 with Bonferroni corrections over all AUs, we can reject the null hypothesis that the proportion of the population with the effect is 0 when significant within-receiver results are observed in at least 10 out of the 60 receivers. We therefore retained for further analysis AUs with effects observed in a number of receivers at or above this population prevalence threshold (e.g., 10/60 receivers).
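
The logic of the receiver-count threshold can be illustrated with a simplified binomial calculation: if the true prevalence were 0, each receiver would reach significance only at the within-receiver false positive rate. The sketch below is a frequentist analogue under stated assumptions, not the Bayesian procedure of ref. 135, and it need not reproduce every threshold reported in this paper. The number of Bonferroni comparisons (`N_TESTS = 41`, one per AU) and the function names are assumptions.

```python
from math import comb

def binomial_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))

def min_significant_receivers(n, alpha_within, alpha_group):
    """Smallest k with P(X >= k) < alpha_group when the true prevalence is 0."""
    for k in range(n + 1):
        if binomial_tail(k, n, alpha_within) < alpha_group:
            return k
    return None  # no count of receivers would reject the null

N_TESTS = 41  # ASSUMPTION: one Bonferroni comparison per AU in the core set
k_min = min_significant_receivers(60, 0.05, 0.05 / N_TESTS)
```

With these assumed inputs, `k_min` is 10 for 60 receivers, in line with the threshold stated above.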

Validating emotion classifier and intensifier facial movements

We derived the classifier and intensifier facial movements using a highly powered and statistically robust within-receiver analysis145 where results are based on the analysis of trials categorized by each individual receiver separately (vs aggregating across receivers). We demonstrate the validity of our within-receiver modelling approach using an external validation task with a new group of human validators14,34,46,134,146 (see also 34,48 for cross-validation of the approach with production data). Here, we further validated the results of classifier and intensifier facial movements using a naïve Bayesian classifier machine learning approach as follows.

Emotion classifier facial movements

To validate the emotion classifier facial movements in each culture, we built a naïve Bayesian classifier to classify AU patterns according to the six emotion categories in a six-alternative forced choice task using a split-half method. First, for each individual receiver in each culture, we trained a Bayesian classifier using a randomly sampled half of the stimulus AU patterns (i.e., randomly generated facial expressions presented on each experimental trial) that the receiver categorized as each of the six emotions. To ensure a balanced training set across the six emotions, we randomly selected the same number of experimental trials for each emotion (on average 167 trials per receiver per emotion), based on the lowest number of trials across emotions. We then used the trained Bayesian classifier to classify the other half of the experimental trials as three testing sets: (1) with the full set of AUs presented on each experimental trial, (2) with the classifier AUs removed from each experimental trial, and (3) with the intensifier AUs removed from each experimental trial. Thus, if the classifier AUs contribute to accurately classifying the six emotions, classification accuracy (i.e., posterior probabilities) would decrease more when the classifier AUs are removed (set 2) compared to the full set of AUs (set 1) or when the intensifier AUs are removed (set 3). We repeated this procedure for 1,000 iterations and computed the averaged posterior probability over the iterations. To examine the differences in the posterior probabilities of the three test sets, we applied a one-way ANOVA for each emotion and each culture separately, with multiple-comparison tests between each pair of test sets. 
Results show that in both cultures, the posterior probability of correctly classifying each emotion significantly decreased with the classifier AUs removed compared to the full set of AUs or with the intensifier AUs removed (p < 0.05 with Bonferroni correction over emotions). On average, with classifier AUs removed, the posterior probabilities decreased by 14.31% for Western European receivers and 14.29% for East Asian receivers across emotions (see Figure S1B for more details).
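
The split-half ablation logic can be sketched with a small Bernoulli naïve Bayes classifier on binary AU patterns. Everything below is illustrative: the data are simulated, the toy sizes (3 emotions, 6 AUs), the Laplace smoothing, the equal class priors, and all function names are assumptions, and a single fixed split replaces the 1,000 random splits used in the study.

```python
import math
import random

def train_bernoulli_nb(patterns, labels, n_classes, n_aus):
    """Estimate per-class AU presence probabilities with add-one smoothing."""
    counts = [[1.0] * n_aus for _ in range(n_classes)]
    totals = [2.0] * n_classes
    for pattern, label in zip(patterns, labels):
        totals[label] += 1
        for au, present in enumerate(pattern):
            counts[label][au] += present
    return [[c / totals[k] for c in counts[k]] for k in range(n_classes)]

def posterior(model, pattern):
    """Posterior over classes for one AU pattern, assuming equal class priors."""
    log_like = [
        sum(math.log(p if present else 1.0 - p) for p, present in zip(probs, pattern))
        for probs in model
    ]
    peak = max(log_like)  # subtract the maximum for numerical stability
    weights = [math.exp(v - peak) for v in log_like]
    total = sum(weights)
    return [w / total for w in weights]

def remove_aus(pattern, aus):
    """Return a copy of the pattern with the listed AUs switched off."""
    return [0 if i in aus else v for i, v in enumerate(pattern)]

# Simulated toy data: AU k is diagnostic of emotion k; AUs 3-5 carry no information.
rng = random.Random(0)
N_CLASSES, N_AUS = 3, 6  # toy sizes; the study used 6 emotions and 41 AUs

def simulate(label):
    return [int(rng.random() < (0.9 if au == label else 0.3)) for au in range(N_AUS)]

labels = [i % N_CLASSES for i in range(600)]  # balanced across emotions
patterns = [simulate(k) for k in labels]
half = len(labels) // 2
model = train_bernoulli_nb(patterns[:half], labels[:half], N_CLASSES, N_AUS)

def mean_true_posterior(removed=frozenset()):
    """Mean posterior probability of the correct emotion on the held-out half."""
    scores = [posterior(model, remove_aus(p, removed))[k]
              for p, k in zip(patterns[half:], labels[half:])]
    return sum(scores) / len(scores)

full = mean_true_posterior()                      # test set 1: all AUs
ablated = mean_true_posterior(removed={0, 1, 2})  # test set 2: toy "classifier" AUs removed
```

In this toy setting, removing the diagnostic AUs pushes the mean posterior for the correct emotion toward chance, which is the pattern the validation looks for.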

Emotion intensifier facial movements

To validate the emotion intensifier facial movements, we used the same approach described above by training a naïve Bayesian classifier to classify high intensity emotions. We first re-binned each receiver’s intensity ratings (from 1 to 5) into low and high intensity with an equal-population transformation (i.e., towards an equal number of trials in low and high intensity bins). Specifically, this iterative heuristic procedure combines the smallest bin with its smallest neighbor to reduce the number of bins while maintaining as equal sampling as possible, without splitting the trials of any original bin. We applied this procedure to ensure that the intensity ratings reflected each receiver’s relative judgements of intensity of each emotion. For each emotion, we then trained a classifier to classify the high intensity emotion using a two-alternative forced choice task (i.e., high intensity or not). We examined the differences in the posterior probabilities to correctly classify high intensity in the three test sets using the same analysis described above. Here, if the intensifier AUs contribute to classifying high intensity, the posterior probabilities would decrease more when the intensifier AUs are removed compared to the full set of AUs or when the classifier AUs are removed.
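
The re-binning heuristic described above can be sketched as follows. The function name, the returned mapping, and the tie-breaking rule (the leftmost candidate wins) are assumptions; the example counts are invented for illustration.

```python
def rebin_equal_population(ratings, n_bins=2, levels=(1, 2, 3, 4, 5)):
    """Merge adjacent rating levels until n_bins groups remain.

    At each step the least-populated group is merged with its less-populated
    neighbour, so trials that share a rating always stay in the same bin.
    Returns a dict mapping each original rating to its new bin index.
    """
    groups = [[lvl] for lvl in levels]
    counts = [sum(1 for r in ratings if r == lvl) for lvl in levels]
    while len(groups) > n_bins:
        i = min(range(len(groups)), key=counts.__getitem__)       # smallest bin
        neighbours = [j for j in (i - 1, i + 1) if 0 <= j < len(groups)]
        j = min(neighbours, key=counts.__getitem__)               # its smallest neighbour
        lo, hi = sorted((i, j))
        groups[lo:hi + 1] = [groups[lo] + groups[hi]]
        counts[lo:hi + 1] = [counts[lo] + counts[hi]]
    return {lvl: b for b, grp in enumerate(groups) for lvl in grp}

# Invented example: 100 trials with rating counts 10, 30, 40, 15, 5.
ratings = [1] * 10 + [2] * 30 + [3] * 40 + [4] * 15 + [5] * 5
mapping = rebin_equal_population(ratings)
```

For these counts the merges proceed as 5 into 4, then 1 into 2, then {4, 5} into 3, so ratings 1 and 2 form the low bin (40 trials) and ratings 3 to 5 form the high bin (60 trials).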

Results show that in both cultures, the posterior probability of correctly classifying high intensity significantly decreased with intensifier AUs removed compared to the full set of AUs or with classifier AUs removed (p < 0.05 with Bonferroni correction over emotions). On average, performance decreased by 11.71% for Western European receivers and 12.50% for East Asian receivers across emotions (see Figure S1B for more details).

Influence of face ethnicity and individual identity

We also examined whether the ethnicity or the individual identity of the face stimuli influenced the reported results—i.e., whether the AUs represent emotion category and intensity information independent of the face they are displayed on. To do so, we conducted two separate analyses.

Face ethnicity.

In our current design, participants in each culture viewed facial expressions displayed on same-ethnicity faces; therefore, it is possible that any differences in the AUs attributed to culture could instead be due to differences in the face stimuli. To test this, we analyzed an existing data set from a study33 that used the same data-driven reverse correlation method, the same behavioral task (i.e., emotion categorization and intensity ratings), the same receiver group cultures and ethnicities (i.e., 15 white Western European and 15 Chinese East Asian) and a fully balanced stimulus set (i.e., all receivers viewed the same set of same- and other-ethnicity face identities). We repeated the same analysis procedure using Mutual Information (MI) as described in Modelling emotion classifier and intensifier facial movements—STAR Methods, for each face ethnicity separately. We then used these two MI values to compute the Conditional Mutual Information (CMI)57,59 between each AU and each receiver’s emotion classification responses given a specific face ethnicity. By its statistical definition, CMI is the sum of the MI values of each condition weighted by the probability of each condition. In this case, CMI equals the average (mean) of the two MI values because each receiver viewed the same number of trials displayed on same- and other-ethnicity face identities. A high CMI value (with respect to the unconditional MI calculated over all trials) would indicate that the relationship between an AU and the receiver’s responses is biased by the ethnicity of the face stimuli. A low CMI value would indicate that it is not. We established a statistical threshold using non-parametric permutation testing (i.e., by randomly re-shuffling the same- or other face ethnicity of individual trials) with maximum statistics. 
Results showed that a maximum of 3 out of 15 Western European receivers and 3 out of 15 East Asian receivers showed a statistically significant effect (e.g., Western European receivers: Mouth Stretch—AU27 for anger; East Asian receivers: Mouth Stretch for surprise; see per AU results in Additional Information available on the Open Science Framework repository, https://osf.io/3m95w/). We then obtained a population prevalence inference from these within-receiver results using the population prevalence as described in Bayesian estimate of population prevalence—STAR Methods. With 15 receivers per culture in this study as considered, at p < 0.05 with Bonferroni corrections over all AUs, we can reject the null hypothesis that the proportion of the population with the effect is 0 when significant within-receiver results are observed in at least 4 out of the 15 receivers. In both cultures, the number of receivers showing a statistically significant effect is below this population prevalence threshold (n < 4 in each culture). Failure to reject the null hypothesis does not provide conclusive proof that there is no effect related to face ethnicity but does suggest that any such effect is too weak to introduce a meaningful confound in our results.
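
The definition of CMI used above, a probability-weighted sum of per-condition MI values, can be written out directly. The sketch uses a plug-in MI estimator and an invented eight-trial example; the function names are assumptions.

```python
import math
from collections import Counter

def mutual_information(x, y):
    """Plug-in MI (bits) between two equal-length sequences of discrete labels."""
    n = len(x)
    pxy, px, py = Counter(zip(x, y)), Counter(x), Counter(y)
    return sum((c / n) * math.log2(c * n / (px[a] * py[b]))
               for (a, b), c in pxy.items())

def conditional_mutual_information(x, y, z):
    """CMI(X; Y | Z) as the sum over conditions z of p(z) * MI(X; Y | Z = z)."""
    n = len(z)
    cmi = 0.0
    for value, count in Counter(z).items():
        idx = [i for i, zi in enumerate(z) if zi == value]
        cmi += (count / n) * mutual_information([x[i] for i in idx],
                                                [y[i] for i in idx])
    return cmi

# Invented example: AU presence (x), response (y), face ethnicity condition (z).
z = [0, 0, 0, 0, 1, 1, 1, 1]   # equal number of trials per condition
x = [0, 0, 1, 1, 0, 0, 1, 1]
y = [0, 0, 1, 1, 0, 1, 0, 1]   # tracks x in condition 0 only
cmi = conditional_mutual_information(x, y, z)
```

Because the two conditions have equal trial counts here, `cmi` equals the mean of the two per-condition MI values (1 bit and 0 bits), matching the equal-weighting case described in the text.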

Face identity.

We similarly examined whether the individual identity of the face stimuli influenced our results using the current data set and the same CMI analysis described above. Results showed that a maximum of 1 out of 60 Western European receivers and 2 out of 60 East Asian receivers showed a statistically significant effect (e.g., Western European receivers: Inner Brow Raiser—AU1 for anger; East Asian receivers: Lid Tightener Left—AU7L for happy; see per AU results in Additional Information available on the Open Science Framework repository, https://osf.io/3m95w/). In both cultures, the number of receivers showing a statistically significant effect is below the population prevalence threshold (n < 10 in each culture). Thus, the modulating effect of face identity is not expected to be prevalent in the populations sampled. Together, our results show that the perception of AUs as emotion classifiers and intensifiers is not modulated by face ethnicity or face identity, which corresponds with existing work showing that facial movements can override the social perceptions derived from static, non-expressive faces45.

Temporal analysis of emotion classifier and intensifier facial movements

To examine the temporal distribution of the emotion classifier and intensifier facial movements, we compared their peak latencies—i.e., when in time the AU reached its peak amplitude. To do so, for each emotion classifier/intensifier AU and each receiver, we first extracted its individual trial peak latencies where the receiver had categorized the stimulus as the emotion in question or rated it as high intensity. Because peak latencies are continuous values, whereas the measures we used to relate AU presence to the receiver’s responses (i.e., Mutual Information and Point-wise Mutual Information, as described in Modelling emotion classifier and intensifier facial movements—STAR Methods) are better suited to discrete variables, we computed the average (median) AU peak latency across these experimental trials and compared the distributions of classifier and intensifier AUs using the shift function147. Specifically, the shift function estimates the difference between two distributions using each quantile with a bootstrap estimation of the standard errors, thus enabling comparisons using the entire range of the distributions (versus group averages). Here, we compared the distributions of the peak latencies of the emotion classifier AUs and the emotion intensifier AUs. We applied this analysis to receivers who showed a significant effect for the AU in question (i.e., based on the results of Modelling emotion classifier and intensifier facial movements—STAR Methods). Figure S2 shows the temporal distribution of the classifier and intensifier facial movements in each culture, pooled across receivers. Specifically, in each decile, we computed the 95% bootstrap confidence interval (CI) of the difference in AU peak latencies to identify any statistically significant differences (i.e., where CIs do not include 0). 
If the CIs > 0, this suggests that the intensifier AUs peaked before the classifier AUs; if the CIs < 0, this suggests that the intensifier AUs peaked after the classifier AUs (see Figure S2).
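
A simplified version of the decile comparison can be sketched as below. It is not the published shift function implementation (ref. 147): it uses linearly interpolated sample quantiles and a plain percentile bootstrap, and the difference is taken as classifier minus intensifier so that positive values correspond to intensifiers peaking earlier. The latency values are invented.

```python
import random

def quantile(values, q):
    """Linearly interpolated sample quantile, for 0 <= q <= 1."""
    s = sorted(values)
    pos = q * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (pos - lo) * (s[hi] - s[lo])

DECILES = [d / 10 for d in range(1, 10)]

def shift_function(classifier, intensifier, n_boot=1000, seed=0):
    """Decile differences (classifier - intensifier) with 95% percentile-bootstrap CIs."""
    rng = random.Random(seed)
    lo_idx = round(0.025 * n_boot)
    hi_idx = round(0.975 * n_boot) - 1
    results = []
    for q in DECILES:
        diffs = []
        for _ in range(n_boot):
            c = rng.choices(classifier, k=len(classifier))    # resample with replacement
            i = rng.choices(intensifier, k=len(intensifier))
            diffs.append(quantile(c, q) - quantile(i, q))
        diffs.sort()
        observed = quantile(classifier, q) - quantile(intensifier, q)
        results.append((q, observed, (diffs[lo_idx], diffs[hi_idx])))
    return results

# Invented peak latencies (s): intensifier AUs peak earlier than classifier AUs.
classifier_latency = [0.6 + 0.003 * k for k in range(100)]
intensifier_latency = [0.1 + 0.003 * k for k in range(100)]
result = shift_function(classifier_latency, intensifier_latency, n_boot=500)
```

Each tuple in `result` holds a decile, the observed difference, and its CI; a CI entirely above 0 at a decile corresponds to intensifiers peaking before classifiers at that part of the distribution.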

Relationship between facial movement amplitude and emotion intensity judgments

To examine the relationship between the amplitude of facial movements and emotion intensity judgements, we computed the Mutual Information (MI) between the amplitude of each emotion classifier and intensifier AU (re-binned into 5 bins using the equal-population transformation) and each individual receiver’s emotion intensity ratings (i.e., low vs high intensity; see Modelling emotion classifier and intensifier facial movements). High MI values indicate a strong relationship between the amplitude of facial movement and emotion intensity judgement. Low MI values indicate a weak relationship. We then computed the averaged (mean) MI values across the AUs for classifiers and intensifiers separately. In Figure 2B, color-coded boxplots on the right show the results, with individual points representing individual receivers (see Table S2 for both cultures). In each emotion and each culture, we identified any statistically significant differences using a paired-sample t-test (two-tailed, Bonferroni corrected over emotions; p < 0.05, see asterisks in Figure 2B and Table S2).

Signal distinctiveness of classifier and intensifier facial movements across emotions

To examine the distinctiveness of the emotion classifier and intensifier facial movements across emotions, we computed the average (mean) percentage of shared AUs between each pair of emotions (e.g., ‘happy’ and ‘surprise’) for classifiers and intensifiers separately, and for each culture. Specifically, for each emotion pair (e.g., ‘anger’ and ‘disgust’) separately, we computed the average percentage of shared AUs across all within- and between-receiver pairs within each culture. Results show that, in each culture, intensifiers are more similar across emotions than classifiers are (two-tailed two-sample t-tests; Western European: t(17) = 2.56, p = 0.02, East Asian: t(23) = 3.41, p = 0.003).
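
One way to compute a percentage of shared AUs between emotion pairs is sketched below. The text does not specify the denominator, so the intersection-over-union definition used here is an assumption, as are the function names. The AU sets use placeholder labels and are not results from the study; the sketch also handles a single AU model rather than all within- and between-receiver pairs.

```python
from itertools import combinations

def shared_percentage(aus_a, aus_b):
    """Percentage of AUs common to two sets, relative to their union (ASSUMED definition)."""
    a, b = set(aus_a), set(aus_b)
    union = a | b
    return 100.0 * len(a & b) / len(union) if union else 0.0

def mean_pairwise_overlap(models):
    """Mean shared-AU percentage over all emotion pairs in one AU model."""
    pairs = list(combinations(sorted(models), 2))
    return sum(shared_percentage(models[a], models[b]) for a, b in pairs) / len(pairs)

# Placeholder AU sets (invented labels, not findings from the paper).
classifiers = {"e1": {"a", "b"}, "e2": {"c", "d"}, "e3": {"a", "e"}}
intensifiers = {"e1": {"p", "q"}, "e2": {"p", "q"}, "e3": {"p", "r"}}

classifier_overlap = mean_pairwise_overlap(classifiers)
intensifier_overlap = mean_pairwise_overlap(intensifiers)
```

In this invented example the intensifier sets overlap more across emotions than the classifier sets do, which is the kind of contrast the t-tests above evaluate.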

Cross-cultural classification of emotion and intensity

To compare the dynamic signalling of emotion categories and their intensity across cultures, we used a machine learning approach. Specifically, we built at each of 10 time points a naïve Bayesian classifier to perform one of two tasks—to classify the six emotion categories or to classify high intensity for each emotion. For the emotion classification task, we trained the Bayesian classifier at each time point using all classifier AUs that had reached their peak amplitude up until that time point, derived from one culture, and tested the classifier’s performance using the corresponding classifier AUs from the other culture (and vice versa). For the intensity classification task, we trained the Bayesian classifiers at each time point to discriminate facial expression intensity using the classifier-plus-intensifier AUs (high intensity) and classifier-only AUs (not high intensity) for each emotion, and tested them using the corresponding AUs from the other culture (and vice versa). For each test set, we computed the posterior probability of each test sample (i.e., the facial expression model from each individual receiver). Line plots in Figure 4 show the averaged (mean) posterior probabilities and standard error of the mean (SEM) across test samples. Next, we compared the cross-cultural classification accuracy to the within-culture classification accuracy, derived by training the Bayesian classifiers for the same tasks within each culture using a split-half method. Specifically, for each culture separately, we randomly sampled half of the data for training and tested on the other half. We repeated this for 1,000 iterations and computed the averaged posterior probability of each test sample across iterations. 
To examine whether cross-culture accuracy differs from within-culture accuracy at each time point, we compared the posterior probabilities of cross-culture and within-culture test samples using a two-sample t-test (p < 0.05 with Bonferroni correction over emotions; see Figure S3A). We then identified the first time point at which (1) the within-culture classification accuracy is significantly higher than chance (derived by randomly re-shuffling the emotion categories or intensity) and (2) the cross-culture classification accuracy is significantly lower than the within-culture classification accuracy (see asterisks in Figure 4 and Figure S3A). We further examined the cross-cultural misclassifications by analyzing the specific emotion confusions across time. As shown in Figure S3B, we identified when the cross-cultural misclassifications between emotions are significantly higher than the within-culture misclassifications between emotions (two-sample t-test, two-tailed, p < 0.05 with Bonferroni correction over emotions). Results showed statistically significant cross-cultural confusions between emotions—for example, ‘fear’ is often misclassified as ‘disgust’ across cultures.
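
The time-resolved inputs to these classifiers can be pictured as cumulative binary AU patterns, where an AU switches on once it has reached its peak. The sketch below assumes 10 equally spaced time points across the 1.25 s animation, which the text does not state; the function name, AU indices, and latencies are hypothetical.

```python
def cumulative_patterns(peak_latency, n_aus, n_timepoints=10, duration=1.25):
    """Binary AU pattern at each time point: 1 once the AU has reached its peak.

    peak_latency maps an AU index to its peak latency in seconds.
    ASSUMPTION: time points are equally spaced over the stimulus duration.
    """
    edges = [duration * (t + 1) / n_timepoints for t in range(n_timepoints)]
    return [
        [int(au in peak_latency and peak_latency[au] <= edge) for au in range(n_aus)]
        for edge in edges
    ]

# Hypothetical model with two AUs that peak at 0.3 s and 0.9 s.
patterns_over_time = cumulative_patterns({0: 0.3, 2: 0.9}, n_aus=3)
```

A separate classifier would then be trained on the patterns available at each time point, so that accuracy can be tracked as the expression unfolds.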

Next, we identified the AUs that drive significantly lower versus accurate cross-culture performance using a leave-one-out approach. In each test, we removed one AU from the training and test sets and measured the change in cross-culture classification accuracy. If removing the AU decreased cross-cultural accuracy, we can infer that the AU is cross-cultural; if removing an AU decreased cross-cultural misclassifications, we can infer that the AU is culture-specific. In Figure 4, color-coded face maps show the AUs that, when removed, impose a statistically significant change in cross-cultural classification performance (two-tailed two-sample t-test, p < 0.05; see Table S3 for individual AU results).
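
The leave-one-out logic can be illustrated with a toy example. The scoring function below is a stand-in (a softmax over negative Hamming distance to one prototype per emotion), not the naïve Bayesian classifier used in the study, and the AU patterns for the two "cultures" are invented so that AUs 0 and 1 are shared while AU 2 signals different emotions in each culture.

```python
import math

def drop_au(patterns, au):
    """Copy binary AU patterns with one AU switched off."""
    return [[0 if i == au else v for i, v in enumerate(p)] for p in patterns]

def mean_true_class_score(prototypes, test_patterns, test_labels):
    """Toy stand-in for classifier accuracy: softmax over negative Hamming distance."""
    scores = []
    for pattern, label in zip(test_patterns, test_labels):
        weights = [math.exp(-sum(a != b for a, b in zip(pattern, proto)))
                   for proto in prototypes]
        scores.append(weights[label] / sum(weights))
    return sum(scores) / len(scores)

def leave_one_au_out(prototypes, test_patterns, test_labels):
    """Change in cross-culture score when each AU is removed from train and test."""
    baseline = mean_true_class_score(prototypes, test_patterns, test_labels)
    return {
        au: mean_true_class_score(drop_au(prototypes, au),
                                  drop_au(test_patterns, au), test_labels) - baseline
        for au in range(len(prototypes[0]))
    }

# Invented patterns: list index = emotion label, columns = AUs 0..2.
culture_a = [[1, 0, 1], [0, 1, 0]]  # training culture
culture_b = [[1, 0, 0], [0, 1, 1]]  # test culture
changes = leave_one_au_out(culture_a, culture_b, [0, 1])
```

Here removing AU 0 or AU 1 lowers the cross-culture score (shared signals), whereas removing AU 2 raises it (a culture-specific signal), mirroring the two inferences described above.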

Supplementary Material

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Deposited Data | | |
| Deposited data | This paper | https://osf.io/3m95w/ |
| Software and Algorithms | | |
| MATLAB R2021a | MathWorks | RRID: SCR_001622 |
| Psychtoolbox-3 | http://psychtoolbox.org/ | RRID: SCR_002881 |
| Custom Code for analyses | This paper | https://osf.io/3m95w/ |
| Bayesian inference of population prevalence | Ince et al.135 | https://doi.org/10.1016/j.tics.2022.05.008 |
| Shift function | Rousselet et al.147 | https://doi.org/10.1111/ejn.13610 |

Highlights

Emotion categories and intensity are represented by specific facial movements

Emotion classifier facial movements are highly distinct; intensifiers are not

Emotion classifiers and intensifiers have distinct temporal signatures

Cultural variance in facial signals may impact cross-cultural communication

Acknowledgements

This work was supported by the Leverhulme Trust (Early Career Fellowship, ECF-2020-401), the University of Glasgow (Lord Kelvin/Adam Smith Fellowship, 201277) and the Chinese Scholarship Council (201306270029) awarded to C.C. (Chaona Chen); the National Science Foundation (2150830) and the National Institute for Deafness and Other Communication Disorders (R01DC018542) awarded to D.S.M.; the Natural Science Foundation of China (62276051) awarded to H.M.Y.; the China Scholarship Council (201606070109) awarded to Y.D.; the Wellcome Trust (214120/Z/18/Z) awarded to R.A.A.I.; the Wellcome Trust (Senior Investigator Award, UK; 107802) and the Multidisciplinary University Research Initiative/Engineering and Physical Sciences Research Council (USA, UK; 172046-01) awarded to P.G.S.; and the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement FACESYNTAX no. 759796), the British Academy (SG113332 and SG171783), the Economic and Social Research Council (ES/K001973/1 and ES/K00607X/1) and the University of Glasgow (John Robertson Bequest) awarded to R.E.J. The funders had no role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Declaration of interests

The authors declare no competing interest. P.G.S. is on the advisory board of this journal.

Video S1. Illustration video of stimulus generation, Related to Figure 1B and Stimuli and procedure—STAR Methods.

The video illustrates the stimulus generation using an example trial. On each trial, a dynamic face movement generator randomly selected a combination of individual face movements called Action Units (AUs) from a core set of 41 AUs (minimum = 1, maximum = 4, median = 3 AUs selected on each trial). A random movement is assigned to each AU individually using six temporal parameters—onset latency, acceleration, peak amplitude, peak latency, deceleration, and offset latency—represented here using the color-coded curve below each face. In this example trial, four AUs are randomly selected—Nose Wrinkler (AU9) color-coded in orange, Mouth Stretch (AU27) color-coded in purple, Brow Lowerer (AU4) color-coded in blue, and Lip Corner Puller (AU12) color-coded in yellow. The randomly activated AUs are then combined to produce a random facial animation (here, ‘Stimulus trial’). The receiver viewed the random facial animation played once for a duration of 1.25 seconds and classified it as one of the six basic emotions—‘happy,’ ‘surprise,’ ‘fear,’ ‘disgust,’ ‘anger,’ or ‘sad’—and rated its intensity on a 5-point scale from ‘very weak’ to ‘very strong’, but only if they perceived that the facial animation accurately represented the emotion and intensity.

References

- 1. Frijda NH, Ortony A, Sonnemans J, and Clore GL (1992). The complexity of intensity: Issues concerning the structure of emotion intensity

- 2. Ortony A, Clore G, and Collins A (1988). The Cognitive Structure of Emotions (Cambridge University Press).

- 3. Ben-Ze’ev A (2001). The Subtlety of Emotions (MIT Press).

- 4. Bradbury JW, and Vehrencamp SL (1998). Principles of Animal Communication (Sinauer Associates).

- 5. Seyfarth RM, and Cheney DL (2003). Signalers and receivers in animal communication. Annual Review of Psychology 54, 145–173.

- 6. Darwin C (1872). The Expression of the Emotions in Man and Animals, 3rd ed. (Oxford University Press, USA).

- 7. Messinger DS, Fogel A, and Dickson KL (2001). All smiles are positive, but some smiles are more positive than others. Developmental Psychology 37, 642.

- 8. Andrew RJ (1963). Evolution of Facial Expression. Science 142, 1034–1041.

- 9. Barrett LF, Adolphs R, Marsella S, Martinez AM, and Pollak SD (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest 20, 1–68.

- 10. Fridlund AJ (2014). Human Facial Expression: An Evolutionary View (Academic Press).

- 11. Russell JA, Bachorowski J-A, and Fernández-Dols J-M (2003). Facial and vocal expressions of emotion. Annual Review of Psychology 54, 329–349.

- 12. Ekman P, Davidson RJ, and Friesen WV (1990). The Duchenne smile: Emotional expression and brain physiology: II. Journal of Personality and Social Psychology 58, 342.

- 13. Hess U, Blairy S, and Kleck RE (1997). The intensity of emotional facial expressions and decoding accuracy. Journal of Nonverbal Behavior 21, 241–257.

- 14. Jack RE, Sun W, Delis I, Garrod OG, and Schyns PG (2016). Four not six: Revealing culturally common facial expressions of emotion. Journal of Experimental Psychology: General 145, 708.

- 15. Bachorowski J-A (1999). Vocal expression and perception of emotion. Current Directions in Psychological Science 8, 53–57.

- 16. Scherer KR, Johnstone T, and Klasmeyer G (2003). Vocal Expression of Emotion (Oxford University Press).

- 17. Dael N, Mortillaro M, and Scherer KR (2012). Emotion expression in body action and posture. Emotion 12, 1085.

- 18. Wallbott HG (1998). Bodily expression of emotion. European Journal of Social Psychology 28, 879–896.

- 19. Ekman P, and Friesen WV (1978). Manual for the Facial Action Coding System (Consulting Psychologists Press).

- 20. Messinger DS, Mattson WI, Mahoor MH, and Cohn JF (2012). The eyes have it: Making positive expressions more positive and negative expressions more negative. Emotion 12, 430.

- 21. Girard JM, Shandar G, Liu Z, Cohn JF, Yin L, and Morency L-P (2019). Reconsidering the Duchenne Smile: Indicator of Positive Emotion or Artifact of Smile Intensity? In 2019 8th International Conference on Affective Computing and Intelligent Interaction (ACII) (IEEE), pp. 594–599.

- 22. Messinger DS, Cassel TD, Acosta SI, Ambadar Z, and Cohn JF (2008). Infant smiling dynamics and perceived positive emotion. Journal of Nonverbal Behavior 32, 133–155.

- 23. Malek N, Messinger D, Gao AYL, Krumhuber E, Mattson W, Joober R, Tabbane K, and Martinez-Trujillo JC (2019). Generalizing Duchenne to sad expressions with binocular rivalry and perception ratings. Emotion 19, 234.

- 24. Miller EJ, Krumhuber EG, and Dawel A (2022). Observers perceive the Duchenne marker as signaling only intensity for sad expressions, not genuine emotion. Emotion 22, 907–919. 10.1037/emo0000772.

- 25. Nowicki S Jr, and Carton J (1993). The measurement of emotional intensity from facial expressions. The Journal of Social Psychology 133, 749–750.

- 26. Orgeta V, and Phillips LH (2007). Effects of Age and Emotional Intensity on the Recognition of Facial Emotion. Experimental Aging Research 34, 63–79. 10.1080/03610730701762047.

- 27. Biele C, and Grabowska A (2006). Sex differences in perception of emotion intensity in dynamic and static facial expressions. Exp Brain Res 171, 1–6. 10.1007/s00221-005-0254-0.

- 28. Tsai JL, Blevins E, Bencharit LZ, Chim L, Fung HH, and Yeung DY (2019). Cultural variation in social judgments of smiles: The role of ideal affect. Journal of Personality and Social Psychology 116, 966.

- 29. Matsumoto D, and Ekman P (1989). American-Japanese cultural differences in intensity ratings of facial expressions of emotion. Motivation and Emotion 13, 143–157.

- 30. Engelmann JB, and Pogosyan M (2013). Emotion perception across cultures: the role of cognitive mechanisms. Frontiers in Psychology 4, 118. 10.3389/fpsyg.2013.00118.

- 31. Krys K, Vauclair C-M, Capaldi CA, Lun VM-C, Bond MH, Domínguez-Espinosa A, Torres C, Lipp OV, Manickam LSS, and Xing C (2016). Be careful where you smile: culture shapes judgments of intelligence and honesty of smiling individuals. Journal of Nonverbal Behavior 40, 101–116.

- 32. Hess U, Beaupré MG, and Cheung N (2002). Who to whom and why–cultural differences and similarities in the function of smiles. An empirical reflection on the smile 4, 187.

- 33. Jack RE, Garrod OGB, Yu H, Caldara R, and Schyns PG (2012). Facial expressions of emotion are not culturally universal. Proceedings of the National Academy of Sciences of the United States of America 109, 7241–7244. 10.1073/pnas.1200155109.

- 34. Chen C, Crivelli C, Garrod OG, Schyns PG, Fernández-Dols J-M, and Jack RE (2018). Distinct facial expressions represent pain and pleasure across cultures. Proceedings of the National Academy of Sciences 115, E10013–E10021.

- 35. Elfenbein HA (2013). Nonverbal dialects and accents in facial expressions of emotion. Emotion Review 5, 90–96.

- 36. Jack RE, and Schyns PG (2017). Toward a social psychophysics of face communication. Annual Review of Psychology 68, 269–297.

- 37. Schyns PG, Zhan J, Jack RE, and Ince RA (2020). Revealing the information contents of memory within the stimulus information representation framework. Philosophical Transactions of the Royal Society B 375, 20190705.

- 38. Krakauer JW, Ghazanfar AA, Gomez-Marin A, MacIver MA, and Poeppel D (2017). Neuroscience needs behavior: correcting a reductionist bias. Neuron 93, 480–490.

- 39. Tinbergen N (1948). Social releasers and the experimental method required for their study. The Wilson Bulletin, 6–51.

- 40. Shannon CE (1948). A mathematical theory of communication. ACM SIGMOBILE Mobile Computing and Communications Review 5, 3–55.

- 41. Guilford T, and Dawkins MS (1993). Receiver psychology and the design of animal signals. Trends in Neurosciences 16, 430–436.

- 42. Edelman GM, and Gally JA (2001). Degeneracy and complexity in biological systems. Proceedings of the National Academy of Sciences 98, 13763–13768.

- 43. McGregor PK (2005). Animal Communication Networks (Cambridge University Press).

- 44. Jack RE, Garrod OGB, and Schyns PG (2014). Dynamic Facial Expressions of Emotion Transmit an Evolving Hierarchy of Signals over Time. Current Biology 24, 187–192. 10.1016/j.cub.2013.11.064.

- 45. Gill D, Garrod OG, Jack RE, and Schyns PG (2014). Facial movements strategically camouflage involuntary social signals of face morphology. Psychological Science 25, 1079–1086.

- 46. Rychlowska M, Jack RE, Garrod OG, Schyns PG, Martin JD, and Niedenthal PM (2017). Functional smiles: Tools for love, sympathy, and war. Psychological Science 28, 1259–1270.

- 47. Liu M, Duan Y, Ince RAA, Chen C, Garrod OGB, Schyns PG, and Jack RE (2022). Facial expressions elicit multiplexed perceptions of emotion categories and dimensions. Current Biology 32, 200–209.e6. 10.1016/j.cub.2021.10.035.

- 48. Snoek L, Jack RE, Schyns PG, Garrod OGB, Mittenbühler M, Chen C, Oosterwijk S, and Scholte HS (2023). Testing, explaining, and exploring models of facial expressions of emotions. Science Advances 9, eabq8421. 10.1126/sciadv.abq8421.

- 49. Mangini MC, and Biederman I (2004). Making the ineffable explicit: Estimating the information employed for face classifications. Cognitive Science 28, 209–226.

- 50. Murray RF (2011). Classification images: A review. Journal of Vision 11, 2–2.

- 51. Hubel D, and Wiesel TN (1959). Receptive fields of single neurons in the cat’s striate visual cortex. J. Phys 148, 574–591.

- 52. Nishimoto S, Ishida T, and Ohzawa I (2006). Receptive field properties of neurons in the early visual cortex revealed by local spectral reverse correlation. Journal of Neuroscience 26, 3269–3280.

- 53. Zhan J, Ince RA, Van Rijsbergen N, and Schyns PG (2019). Dynamic construction of reduced representations in the brain for perceptual decision behavior. Current Biology 29, 319–326.e4.

- 54. Thompson WB, Owen JC, Germain H. de S., Stark SR, and Henderson TC (1999). Feature-based reverse engineering of mechanical parts. IEEE Transactions on Robotics and Automation 15, 57–66.

- 55. Volterra V, and Whittaker ET (1959). Theory of Functionals and of Integral and Integro-Differential Equations (Dover Publications).

- 56. Wiener N (1958). Nonlinear Problems In Random Theory Cambridge

- 57. Ince RA, Giordano BL, Kayser C, Rousselet GA, Gross J, and Schyns PG (2017). A statistical framework for neuroimaging data analysis based on mutual information estimated via a gaussian copula. Human Brain Mapping 38, 1541–1573.

- 58. Yu H, Garrod OGB, and Schyns PG (2012). Perception-driven facial expression synthesis. Computers & Graphics 36, 152–162. 10.1016/j.cag.2011.12.002.

- 59. Cover TM, and Thomas JA (2012). Elements of Information Theory (John Wiley & Sons).

- 60. Jack RE, Crivelli C, and Wheatley T (2018). Data-Driven Methods to Diversify Knowledge of Human Psychology. Trends in Cognitive Sciences 22, 1–5.

- 61. Jack RE, and Schyns PG (2015). The Human Face as a Dynamic Tool for Social Communication. Current Biology 25, R621–R634. 10.1016/j.cub.2015.05.052.

- 62. Delis I, Jack R, Garrod O, Panzeri S, and Schyns P (2014). Characterizing the Manifolds of Dynamic Facial Expression Categorization. Journal of Vision 14, 1384–1384.

- 63. Endler JA (1993). Some general comments on the evolution and design of animal communication systems. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences 340, 215–225.

- 64. Guilford T, and Dawkins MS (1991). Receiver psychology and the evolution of animal signals. Animal Behaviour 42, 1–14.

- 65. Messinger DS (2002). Positive and negative: Infant facial expressions and emotions. Current Directions in Psychological Science 11, 1–6.

- 66. Ekman P (2006). Darwin and Facial Expression: A Century of Research in Review (The Institute for the Study of Human Knowledge).

- 67. Chen C, and Jack RE (2017). Discovering cultural differences (and similarities) in facial expressions of emotion. Current Opinion in Psychology 17, 61–66.

- 68. Liu M, Duan Y, Ince RA, Chen C, Garrod OG, Schyns PG, and Jack RE (2021). Facial expressions elicit multiplexed perceptions of emotion categories and dimensions. Current Biology.

- 69. Fujimura T, Matsuda Y-T, Katahira K, Okada M, and Okanoya K (2012). Categorical and dimensional perceptions in decoding emotional facial expressions. Cognition & Emotion 26, 587–601.

- 70. Krumhuber EG, Skora L, Küster D, and Fou L (2017). A review of dynamic datasets for facial expression research. Emotion Review 9, 280–292.

- 71. Jeganathan J, Campbell M, Hyett M, Parker G, and Breakspear M (2022). Quantifying dynamic facial expressions under naturalistic conditions. eLife 11, e79581. 10.7554/eLife.79581.

- 72. Bartlett MS, Littlewort G, Frank M, Lainscsek C, Fasel I, and Movellan J (2006). Fully automatic facial action recognition in spontaneous behavior. In (IEEE), pp. 223–230.

- 73. Pratto F, and John OP (1991). Automatic vigilance: the attention-grabbing power of negative social information. Journal of Personality and Social Psychology 61, 380.

- 74. Lachter J, Forster KI, and Ruthruff E (2004). Forty-five years after Broadbent (1958): still no identification without attention. Psychological Review 111, 880.

- 75. Smith ML, Cottrell GW, Gosselin F, and Schyns PG (2005). Transmitting and decoding facial expressions. Psychological Science 16, 184–189.

- 76. Jessen S, and Grossmann T (2014). Unconscious discrimination of social cues from eye whites in infants. Proceedings of the National Academy of Sciences 111, 16208–16213.

- 77. Whalen PJ, Kagan J, Cook RG, Davis FC, Kim H, Polis S, McLaren DG, Somerville LH, McLean AA, Maxwell JS, et al. (2004). Human amygdala responsivity to masked fearful eye whites. Science 306, 2061.