Abstract

While musculoskeletal imaging volumes are increasing, there is a relative shortage of subspecialized musculoskeletal radiologists to interpret the studies. Will artificial intelligence (AI) be the solution? For AI to be the solution, the wide implementation of AI-supported data acquisition methods in clinical practice requires establishing trusted and reliable results. This implementation will demand close collaboration between core AI researchers and clinical radiologists. Upon successful clinical implementation, a wide variety of AI-based tools can improve the musculoskeletal radiologist’s workflow by triaging imaging examinations, helping with image interpretation, and decreasing the reporting time. Additional AI applications may also be helpful for business, education, and research purposes if successfully integrated into the daily practice of musculoskeletal radiology. The question is not whether AI will replace radiologists, but rather how musculoskeletal radiologists can take advantage of AI to enhance their expert capabilities.

© RSNA, 2024

Supplemental material is available for this article.

See also the review “Present and Future Innovations in AI and Cardiac MRI” by Morales et al in this issue.

An earlier incorrect version appeared online. This article was corrected on January 19, 2024.

Summary

With the ongoing trend of increased imaging rates and decreased acquisition times, a variety of artificial intelligence tools can support musculoskeletal radiologists by providing more optimized and efficient workflows.

Essentials

■ The wide implementation of artificial intelligence (AI)–supported data acquisition methods in clinical practice requires establishing a defined and accepted reference standard, thus demanding close collaboration between core AI researchers and clinical radiologists.

■ The use of AI may provide support for workflow optimization in multiple nondiagnostic tasks, including patient scheduling, safety, and operational efficiency.

■ Most algorithms are designed for a very specific task, such as identifying a single pathologic finding or characteristic, to answer a very specific question.

■ The radiologist, health care system, and AI system developer all could be held liable for damages caused by a medical AI error.

Introduction

The availability of various forms of artificial intelligence (AI) in the field of medical imaging has exploded over the past few years. Recent radiology conferences and meetings, as exemplified by the RSNA Annual Meeting held in 2022, include many scientific and educational presentations related to the use of AI in radiology. The subspecialty of musculoskeletal radiology is no exception to this ongoing trend. With the growing number of cross-sectional images acquired in ever shorter periods of time, the workload for musculoskeletal radiologists is rapidly increasing, but without a corresponding increase in the number of highly experienced musculoskeletal radiologists available to interpret those images efficiently. A big question we need to ask ourselves is “Will AI be the solution?” This article provides an overview of AI applications for musculoskeletal radiology, including basic principles, image acquisition and interpretation, and prediction of future outcomes. The article also discusses AI implementation challenges, the noninterpretive uses of AI, and how AI may transform the daily professional lives of musculoskeletal radiologists.

Using AI for Image Acquisition I: Accelerated Image Acquisition—Faster and Better or Faster and Fake?

The use of AI methods has the potential to improve the efficiency of MRI scan acquisition, and research in this area has picked up substantially since the first developments in 2016 (1–3). Due to the specific demand to resolve fine image details, musculoskeletal radiology was identified early as a high-impact application. We focus on the use of AI for MRI scan acquisition because MRI seems to be the modality that most benefits from application of AI methods.

Despite promising initial results of accelerated superresolution musculoskeletal MRI of peripheral joints (4–6) and accelerated deep learning (DL)–based knee imaging (7–11), the crucial open research question is whether AI methods are robust and reliable enough for the challenging environment of routine clinical imaging (12,13). AI methods, in particular DL models, have the computational capacity to generate completely artificial photorealistic images (14). When these models are used for MRI acquisition, there is a danger that they will generate images that do not truly represent the imaged anatomy while at the same time showing no recognizable image imperfections. This is a key difference from techniques like parallel imaging (15–17) and compressed sensing (18), where errors in the image generation process lead to aliasing artifacts, noise amplification, or blurry and cartoonish-looking images easily recognized by radiologists. This is illustrated in Figure 1, which shows the potential and challenges of AI methods (19–21). The challenge with AI-generated images is that it is not possible to distinguish between false-negative and true-negative outputs. Thus, the wide implementation of AI-supported data acquisition methods in clinical practice requires establishing trusted and reliable results by using a defined and accepted reference standard (22,23). The establishment of this reference standard will demand close collaboration between core AI researchers and clinical radiologists.

Figure 1:

Analysis of the results from the 2019 fastMRI challenge to reconstruct knee MRI scans for (B–D) four-times accelerated images acquired on clinical 1.5-T MRI scanners and (G–I) eight-times accelerated images acquired on clinical 3.0-T MRI scanners without any parallel imaging or partial Fourier acceleration and (A, F) the inverse Fourier transform reconstructed images from the fully sampled ground truth. The k-space data were then retrospectively undersampled to obtain the target acceleration rates. The first, second, and third place images each indicate deep learning (DL) reconstructions with different models that placed at the top during the 2019 fastMRI challenge. (E, J) After the conclusion of the challenge, the challenge organizers performed a combined parallel imaging and compressed sensing reconstruction as a state-of-the-art reference. All DL reconstructions showed substantial improvement in image quality in comparison with this reference. However, a horizontal tear of the medial meniscus (rectangle in F) is visible on the ground truth image but lost in the reconstructions from the eight-times accelerated acquisitions. For the four-times accelerated image acquisition, a bone marrow lesion (rectangle in A) is clearly visible on all reconstructions. In general, the four-times accelerated reconstructions from the challenge participants delivered clinical image quality, which indicates that this acceleration rate is acceptable for this particular configuration of hardware and pulse sequence parameters.
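The retrospective undersampling described in the caption, and the aliasing that conventional reconstruction makes visible, can be illustrated with a toy one-dimensional example. This is only a sketch under simplifying assumptions: it uses a 16-point intensity profile instead of a knee image, keeps every second k-space sample (a regular pattern chosen for illustration, not the sampling pattern used in the fastMRI challenge), and reconstructs with a zero-filled inverse Fourier transform. The function names `dft`, `idft`, and `undersample` are hypothetical helpers written for this example.

```python
import cmath

def dft(signal):
    """Discrete Fourier transform: image space -> k-space."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(spectrum):
    """Inverse discrete Fourier transform: k-space -> image space."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

def undersample(kspace, factor):
    """Retrospectively keep every `factor`-th k-space sample and zero the rest."""
    return [value if k % factor == 0 else 0j for k, value in enumerate(kspace)]

# Toy 1D "anatomy": one bright structure on a dark background.
profile = [0.0] * 16
profile[3], profile[4], profile[5] = 1.0, 2.0, 1.0

kspace = dft(profile)                       # fully sampled reference data
fully_sampled = [abs(v) for v in idft(kspace)]
zero_filled = [abs(v) for v in idft(undersample(kspace, 2))]

# With two-fold regular undersampling, the structure appears twice at half
# intensity: once in place and once shifted by half the field of view.
for position, (reference, accelerated) in enumerate(zip(fully_sampled, zero_filled)):
    print(position, round(reference, 3), round(accelerated, 3))
```

In this toy case the error is an obvious fold-over ghost, which is the kind of recognizable artifact the text contrasts with plausible-looking but incorrect DL reconstructions.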

Using AI for Image Acquisition II: Beyond Speed—What Can Be Seen Now with the Help of AI?

Next-generation AI

The first generation of DL image reconstruction algorithms focused on reducing artifacts and accelerating image acquisition by means of denoising and parallel imaging–induced aliasing artifact reduction (7,22). But new generations are multidimensional. These next-generation algorithms target all components of the signal-to-noise equation to improve image quality, efficiency, and diagnostic performance in different types of musculoskeletal MRI (4,5).

These recent complex DL-based end-to-end image reconstruction methods apply to the entire range of ultralow- to ultrahigh-field-strength MRI (4,24). These methods also apply to a broad spectrum of pulse sequences, including two- and three-dimensional gradient and spin-echo–based pulse sequences (5,8,25,26). The multidimensional abilities of such end-to-end image reconstruction methods include raw data–based advanced denoising and dithering functionalities; multisection controlled aliasing in parallel imaging resulting in higher acceleration, or CAIPIRINHA (9); and coil g-factor corrections and superresolution interpolation (27) capabilities for musculoskeletal MRI with improved temporal, spatial, and contrast resolution (4).

Low-Field-Strength MRI

Modern low-field-strength MRI systems offer new opportunities for more affordable and accessible MRI worldwide (28). For example, a recently introduced 0.55-T modern low-field-strength whole-body MRI system has a lower cost of ownership than current clinical 1.5- and 3.0-T MRI scanners (29). Low-field-strength MRI offers a combination of strategies, including modern signal transmission and receiver chains, high-performance radiofrequency coils, flexible surface coils (30), near signal-neutral simultaneous multislice acceleration (9), and DL-based advanced image reconstruction algorithms. These strategies improve the signal yield substantially for faster scans with better image quality and future potential to rival 1.5- and 3.0-T MRI (Fig 2) (31,32).

Figure 2:

MRI scans of the right knee in a 42-year-old male patient acquired using a modern 0.55-T low-field-strength whole-body MRI system. (A–E) Coronal T1-weighted two-dimensional turbo spin-echo images of the knee without intravenous contrast material with (A) conventional image reconstruction and (B–E) deep learning (DL) superresolution image reconstruction applying increasing levels of denoising (B, lowest; E, highest). Compared with (A) conventional image reconstruction, (B–E) DL superresolution image reconstruction improved image quality with improved signal-to-noise ratio, contrast, and sharpness, resulting in better fine bone detail visibility (arrows). Increasing denoising levels (B, lowest; E, highest) elevated signal-to-noise ratio but decreased the detail of bone texture (arrows).

Metal Artifact Reduction

Future capabilities of DL image reconstruction aim to improve MRI metal artifact reduction methods (33–37), including correction of acceleration artifacts, image sharpness, and implant-induced artifacts (38). Efficiency gains and expansion of the diagnostic utility of musculoskeletal MRI will also arise from DL-based image contrast transformations. These transformations include generating synthetic CT-like images from MRI data sets (39–41), synthesizing MRI contrasts and quantitative maps (42,43), and generating fat-suppressed images from non–fat-suppressed MRI data (44).

How AI May Change Daily Clinical Routine: Impact on Workflow

The use of AI tools can aid all actions of the radiologist’s workflow, from ordering imaging to reporting (Fig 3). First, the prescription of imaging is usually based on the patient’s symptoms and laboratory test results. Machine learning–derived algorithms may enhance existing clinical decision support tools for referring providers. For example, an algorithm may suggest appropriate imaging examinations depending on the clinical scenario (45–48). The use of AI-enhanced image acquisition, particularly acceleration, will increase the musculoskeletal radiologist’s workload by decreasing image acquisition time, with more examinations performed in a given period. Study protocoling is a time-consuming process, but automation will be possible with machine learning systems (49), including the selection of MRI sequences (50) or the need for intravenous contrast material (51). Radiologists assisted by AI in their interpretative tasks may have a faster reading time than unassisted radiologists, as demonstrated for fracture detection on radiographs or lesion detection on knee MRI scans (52–54). Additionally, AI may prioritize and highlight patients with urgent findings (55). Future AI applications may also incorporate prior examinations for comparisons (56). Finally, AI could aid the reporting workflow and build a patient’s specific structured report (57). Machine learning models may also identify follow-up recommendations in reports (58). Recently, AI chatbots, including ChatGPT, developed by OpenAI, have become available for widespread public use, although it is unknown if future evolutions of these chatbots will impact radiologists’ clinical workflow. The use of AI may also provide support for workflow optimization in multiple nondiagnostic tasks, including patient scheduling, safety, and operational efficiency (59–62). In academic centers, AI may be helpful for educational and research activities. 
For example, an AI algorithm developed for efficient data set building is able to classify radiographs according to the anatomic region in the assessment of musculoskeletal diseases (63). Moreover, AI may assist in automated measurements for orthopedic purposes (64,65).

Figure 3:

Diagram shows impact of artificial intelligence (AI) on radiologist workflow, with decreased (green boxes) or increased (blue box) workload. Deep learning reconstruction will increase workload by decreasing image acquisition time, with more examinations performed in a given period.

AI and Image Interpretation: Where Are We Today and What Can We Expect?

AI can help in the interpretation of radiologic images in musculoskeletal imaging in various steps (66). These include detection and localization of a pathologic abnormality, such as fractures; characterization of abnormal imaging findings, such as aggressive versus nonaggressive bone lesions; and segmentation and quantification of an anatomic structure or a pathologic feature, such as segmentation of vertebral contours. The Table summarizes examples of DL tasks in musculoskeletal imaging already documented in the literature (Figs 4–7).

Examples of Deep Learning Tasks in Musculoskeletal Imaging

Figure 4:

MRI scans of the lumbar spine in a 68-year-old female patient. (A) Sagittal (Sag) and (B) axial T2-weighted fast spin-echo sequence. The CoLumbo software (Smart Soft Healthcare) segments the vertebral discs (blue), the herniated discs (red), the dural sac (cyan), and the foraminal nerve roots (pink) and measures the size of the herniated discs and the surface of the dural sac. The horizontal oblique green line shows the level at which the axial image was obtained, and the vertical yellow line shows the level at which the sagittal image was obtained. Repetition time (TR) and echo time (TE) are given in milliseconds. The scale is at 5 mm. A = anterior, FS = factor speed, frFSE = fast relaxation fast spin echo, I = inferior, P = posterior, T = thickness.

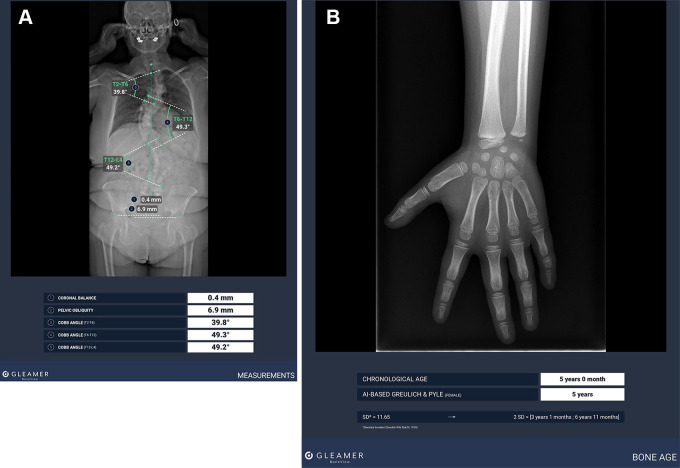

Figure 5:

(A) Lodwick IC vertebral lytic lesion (box) in a 61-year-old female patient detected with an artificial intelligence (AI) algorithm (Guerbet) for the detection of bone lesions on a thoracic axial section CT scan with a bone windowing. (B) Lodwick IC vertebral mixed lytic and sclerotic lesion (box) in a 57-year-old male patient detected with an AI algorithm (Guerbet) for the detection of bone lesions on a thoracic axial section CT scan with a bone windowing.

Figure 6:

(A) Anteroposterior radiograph shows a lytic bone lesion (box) within the right humerus of a 49-year-old female patient detected with an artificial intelligence algorithm. (B) Coronal T2-weighted fat-suppressed fast spin-echo MRI scan confirmed the presence of an intraosseous T2 hyperintense lesion, corresponding to the radiographic abnormality.

Figure 7:

(A) Standard radiograph with artificial intelligence (AI)–aided Cobb angle measurements for scoliosis evaluation using BoneView, version 2.2.1 (Gleamer). (B) Standard radiograph with AI-aided bone age estimation using BoneView, version 2.2.1.

Recent research has shown that fracture detection software can improve the ability of radiologist and nonradiologist readers to detect fractures in adult patients as well as pediatric patients, with high sensitivity and specificity and time savings (53,67–69) (Fig 8). One study (70) analyzed bone radiographs with use of AI software and compared the discrepancies between what the software found and the radiologists’ reports. A significant proportion of the traumatic abnormalities seen on bone radiographs (ie, fractures, dislocations, focal bone lesions, and joint effusions) were not described in the reports and could have been caught by the software (70). Evidence from these publications supports the usefulness of AI to reduce the risk of medical errors. Another software package incidentally detected osteoporotic vertebral fractures on thoracoabdominopelvic CT scans (71), which can improve the detection and subsequent management of osteoporosis without the need for bone densitometry. One software package has shown good performance in the detection of anterior cruciate ligament ruptures (72) and meniscal tears (73) at MRI of the knee. Another has shown encouraging results in the detection and segmentation of disk herniation and lumbar stenosis (74). Other studies (75,76) have shown that automatic measurement of bone age by AI software gives results close to visual estimation made by human expert consensus.

Figure 8:

Example of a tibial spine fracture (fract) (boxes) with joint effusion in a 28-year-old male patient presenting with a traumatic acute knee injury, detected by artificial intelligence software (BoneView, version 2.2.1; Gleamer) on (A–C) standard radiographs and confirmed on (D) proton density–weighted fat-suppressed MRI scan without intravenous contrast material and (E) coronal CT reconstruction.

If the detection capacity of AI increases to the point of detecting anomalies that are very difficult or impossible to see with the human eye, AI can become a powerful tool to aid imaging diagnosis. Initial results seem promising for wrist fractures, particularly scaphoid fractures (77).

Finally, the use of radiomics may enable tasks beyond the current capabilities of radiologists. These radiomic tasks include the differentiation between the benign and malignant origin of certain tumors or the prognostication of further outcomes based on imaging features not discernable by human eyes (78,79).

AI and Prediction of Future Outcomes: Relevant for Clinical Routine?

Beyond the interpretive and noninterpretive tasks reviewed earlier, AI shows great potential for more complex tasks, such as disease prognostication and prediction of clinical outcomes over time, which may increase the value of imaging and allow our field to take a big step forward toward precision medicine. These tasks may particularly take advantage of the great amount of quantitative data contained in medical images. These quantitative data are currently underused, particularly because of the need for tedious segmentation tasks.

In the future, it is expected that prognostic and predictive AI models may be built through a pipeline that includes segmentation, imaging feature extraction and selection, and integration of imaging and patient data, such as clinical, genetic, and biologic data, with use of convolutional neural networks (80,81). The quantitative features that may be extracted from medical images in this process are either handcrafted by means of a radiomic pipeline or developed end-to-end by DL approaches (82).
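The handcrafted branch of such a pipeline can be sketched in a few lines: given an image and a segmentation mask, simple first-order features are computed from the pixels inside the mask. This is a toy illustration, not a radiomics library; the function name `first_order_features`, the feature set, and the 4 × 4 example image are assumptions made for this sketch, and real pipelines typically add shape and texture features followed by feature selection.

```python
import statistics

def first_order_features(image, mask):
    """Compute simple intensity statistics for pixels where the mask is nonzero."""
    values = [
        pixel
        for image_row, mask_row in zip(image, mask)
        for pixel, inside in zip(image_row, mask_row)
        if inside
    ]
    return {
        "n_pixels": len(values),                 # size of the segmented region
        "mean": statistics.fmean(values),        # average intensity
        "std": statistics.pstdev(values),        # population standard deviation
        "min": min(values),
        "max": max(values),
    }

# Toy image with a 2 x 2 "lesion" and its segmentation mask.
image = [
    [0, 0, 0, 0],
    [0, 10, 20, 0],
    [0, 30, 40, 0],
    [0, 0, 0, 0],
]
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

features = first_order_features(image, mask)
print(features)
```

A feature vector of this kind could then be combined with clinical variables as input to a predictive model, which is the integration step described above.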

The use of predictive algorithms is still in its infancy, and the literature on potential applications in musculoskeletal imaging is scarce. Integration into clinical patient management is largely unclear to date. In the field of osteoarthritis, being able to predict subsets of patients who will develop the disease or whose disease will progress is fundamental for both the design of clinical trials and patient care, allowing early preventive measures. Imaging biomarkers from radiographs and three-dimensional MRI scans, in isolation or integrated with clinical data, have been successfully used to predict incident osteoarthritis or disease progression (83–85). In the field of oncology, models have the potential to predict the response of sarcomas to treatment or the survival of patients with tumors (86). In the field of osteoporosis, studies have assessed the ability of models to predict the risk of bone loss, osteoporotic fractures, or falls in patients with osteoporosis (87,88).

However, many challenges are yet to be overcome for such algorithms to make their way to clinical practice. These challenges include the requirement for large, good-quality data sets, which is more problematic for uncommon conditions such as musculoskeletal tumors, among others. Multi-institutional collaboration will be essential to the creation of such data sets, but this introduces issues of its own, such as differences in imaging protocols. Thorough clinical validation, the development of uncertainty assessments, and improved interpretability will be key to the wide acceptance of these algorithms.

AI Challenges I: Image Interpretation—Can the Algorithm Be Trusted?

The quantity of data available to train AI models, in particular DL models, is a crucial factor, and it is as important as the quality and curation of the data (and the accompanying annotations) in determining the success of the models. In general, the accuracy and robustness of the resulting AI models increase with the amount of training data provided. AI models have demonstrated impressive performance in a variety of medical and health care applications, particularly in the context of radiology. Despite this impressive performance, the apparent “black box” character (ie, opaque inner workings) of many of these methods makes it difficult to translate AI models into clinical practice. This raises questions concerning the use of AI in clinical practice because the output of the model (such as the prediction or classification result) is challenging for humans to comprehend and anticipate.

Recent regulatory recommendations stress the importance of explainability and interpretability of AI-based technologies (89), particularly in domains such as health care, where there is a direct impact on humans. While explainability and interpretability are desirable, there is no universal agreement on what this entails. The great majority of methods used to address explainability and interpretability in DL models concentrate on highlighting the regions of the image that contribute to the DL model’s output (90). However, it can be challenging to understand these so-called saliency maps (91).
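One simple way to produce such a map is occlusion: regions of the image are masked one at a time, and the drop in the model output is recorded for each region. The sketch below illustrates only this one technique, which is an assumption chosen for clarity rather than the method used in the cited work. The `toy_model` function is a hypothetical stand-in for a trained network, and `occlusion_saliency` is an illustrative helper.

```python
def toy_model(image):
    """Hypothetical classifier score that depends only on the upper-left 2 x 2 region."""
    return (image[0][0] + image[0][1] + image[1][0] + image[1][1]) / 4.0

def occlusion_saliency(model, image, patch=2, fill=0.0):
    """Assign each patch the drop in model output observed when that patch is masked."""
    height, width = len(image), len(image[0])
    baseline = model(image)
    saliency = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            occluded = [row[:] for row in image]          # copy, then mask one patch
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = baseline - model(occluded)             # large drop = influential region
            for r in rows:
                for c in cols:
                    saliency[r][c] = drop
    return saliency

image = [[1.0] * 4 for _ in range(4)]
saliency_map = occlusion_saliency(toy_model, image)
for row in saliency_map:
    print(row)
```

Here the map correctly highlights the only region the toy model uses. With a real network, the result depends on patch size and fill value, which is one reason such maps can be hard to interpret.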

Another important aspect of trustworthy AI systems is the absence of bias, or in other words, fairness. In the context of decision-making algorithms, fairness can refer to the lack of any prejudice or bias toward an individual or a group based on a set of protected traits, such as race, sex, or age. Ensuring fairness in decision-making algorithms can be challenging. AI methods that implicitly learn rules or patterns from data exacerbate this issue. As a result, it is often difficult to identify decision-making that is unfair (92).

Biases in the data used to train the AI models are frequently (but not always) the root of the problem in data-driven AI approaches. Such data bias can take many different forms. The first step in addressing these biases and creating “fair” AI strategies is to identify them. In particular, transparency on the properties of training, test, and validation data sets in the development and evaluation of an AI algorithm is a crucial component of a fair strategy (93).
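A first step toward identifying such biases is to report model performance separately for each subgroup. The following sketch compares sensitivity between two groups; the labels, predictions, group names, and the helper `sensitivity_by_group` are all invented for illustration and do not come from any study cited here.

```python
def sensitivity_by_group(y_true, y_pred, groups):
    """Return the sensitivity (true-positive rate) separately for each group."""
    positives = {}
    true_positives = {}
    for truth, prediction, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] = positives.get(group, 0) + 1
            true_positives[group] = true_positives.get(group, 0) + (1 if prediction == 1 else 0)
    return {group: true_positives[group] / positives[group] for group in positives}

# Invented example: 1 = disease present, 0 = disease absent.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 0]
groups = ["A"] * 5 + ["B"] * 5

per_group = sensitivity_by_group(y_true, y_pred, groups)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, "gap:", gap)
```

A large gap does not by itself explain the cause, but it flags where the composition of the training and test data should be examined.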

AI Challenges II: How to Assess the Quality and Performance of AI Algorithms

AI models are developed using a training data set and evaluated on an independent holdout test data set. The holdout test data consist of data not used for model training and provide an unbiased assessment of model performance (94). These data sets need to be assigned at random to prevent information from leaking from the training phase into the testing phase. During model training, a subset of the training data set is used as a validation data set for parameter tuning and optimal model selection, with typically 80% of the data comprising the training set and 20% comprising the validation set. Model evaluation on the holdout test data set estimates the model’s true performance and generalizability. External test data sets can be used to further assess model generalizability by evaluating model performance on a population distinct from the one used for model training and validation (95).
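The partitioning described above can be sketched as follows. This is a minimal illustration, assuming the split is made at the patient level so that images from one patient never appear in more than one subset; the 20% holdout fraction, the fixed seed, and the function name `split_dataset` are assumptions for this example, while the 80%/20% training-validation ratio follows the text.

```python
import random

def split_dataset(patient_ids, test_fraction=0.2, val_fraction=0.2, seed=42):
    """Randomly split patient IDs into training, validation, and holdout test sets."""
    ids = sorted(set(patient_ids))            # one entry per patient
    random.Random(seed).shuffle(ids)          # reproducible random assignment

    n_test = round(len(ids) * test_fraction)
    test = ids[:n_test]                       # holdout: never used during training
    development = ids[n_test:]

    n_val = round(len(development) * val_fraction)
    val = development[:n_val]                 # used for tuning and model selection
    train = development[n_val:]               # used to fit the model
    return train, val, test

train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))
```

An external test set would be drawn from a different institution or population altogether and is therefore not produced by this function.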

In general, data sets in the medical domain contain an unbalanced number of classes (ie, categories that a label can belong to). For example, the number of patients without a disease is typically larger than the number with a disease. Training DL models by using conventional approaches tends to result in prediction errors, with a bias toward the larger group. Various algorithmic techniques have been used to overcome this problem, including oversampling from the underrepresented class, undersampling from the overrepresented class, hybrid approaches, synthesizing additional data, and weighting the loss function or model prediction error during training based on the frequency of patients with and without the disease. Performance metrics such as accuracy, sensitivity, specificity, and area under the receiver operating characteristic curve are commonly used to compare different DL models by quantifying how well the models distinguished between classes when trained and tested on a balanced data set. Tables S1 and S2 summarize the metrics commonly used to evaluate DL model performance for musculoskeletal interpretive imaging applications (95).
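Two of the ideas in this paragraph, weighting by class frequency and the common performance metrics, can be made concrete with a small worked example. The labels and scores below are invented, the helper names are hypothetical, and the inverse-frequency formula is one common choice among several; the area under the curve is computed as the fraction of positive-negative pairs that the model ranks correctly.

```python
from collections import Counter

def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    return {label: len(labels) / (len(counts) * count) for label, count in counts.items()}

def binary_metrics(y_true, y_pred):
    """Sensitivity, specificity, and accuracy from binary labels and predictions."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / len(y_true),
    }

def auc(y_true, scores):
    """Area under the ROC curve as the probability of ranking a positive above a negative."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))

# Invented, imbalanced example: 8 patients without and 2 with the disease.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
scores = [0.1, 0.2, 0.3, 0.2, 0.4, 0.6, 0.1, 0.3, 0.7, 0.5]
y_pred = [1 if s >= 0.5 else 0 for s in scores]      # threshold of 0.5 is an assumption

weights = inverse_frequency_weights(y_true)
metrics = binary_metrics(y_true, y_pred)
area = auc(y_true, scores)
print(weights, metrics, area)
```

In this example the rare class receives four times the weight of the common class, so errors on patients with the disease contribute more to a weighted loss.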

Several guidelines for reporting evaluation of machine learning models (96–99) are available in a checklist format concordant with the Enhancing the Quality and Transparency of Health Research, or EQUATOR, Network guidelines (100), such as the Checklist for AI in Medical Imaging, or CLAIM. This checklist addresses applications of AI in medical imaging, including classification, image reconstruction, text analysis, and workflow optimization, to guide authors in presenting their AI research (101).

AI Challenges III: How to Integrate AI into Current Systems—Challenges and Solutions

The integration of AI into reporting and picture archiving and communication system (PACS) workflows should be seamless and not be a burden to radiologists. It is important to note that PACS usually are not able to run applications, such as AI applications, beyond those bundled in their software. Separate AI platforms can contain various types of algorithms for image analysis. These AI platforms are usually separate from the clinical information systems, such as PACS. Thus, images from PACS are sent to the platform and processed, and results are returned for the radiologist to use during interpretation. Instead of being bundled with PACS, the AI platform may reside within the network firewall of the institution as an on-premises device. However, this entails institutional responsibilities for service, maintenance, and updates.

Rather than rely on institutional support, a more favorable solution is to use cloud service support. Yet, information security remains a concern for many institutions. With good data hygiene as a goal (ie, ensuring all data processed by an institution are “clean” or “error-free”), the ideal deployment combines a small footprint, lean use of data, and cybersecurity measures such as encryption and anonymous routing. This encompasses data deidentification, flow to the server housing the algorithms, and flow of the output back into the institutional information systems without protected health information leaving the enterprise firewall. Platforms that orchestrate AI applications from a multitude of vendors can eliminate the need for multiple contracts and connections to PACS and allow institutions to pick best-of-breed applications (ie, “app store” paradigm). One example of this is the Sectra Amplifier Marketplace. Most models are converging on AI as a service for delivery, hosting, operating, and managing. This allows for seamless integration of AI into a unified workspace without too many “widgets” cluttering the user interface. Guidelines for integrating AI with radiology reporting workflows are available from professional societies (102). More generally, the integration of AI software into existing systems is only one aspect to consider as part of the evaluation of a commercial AI solution.
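The deidentification step in this data flow can be sketched for study metadata as follows. This is an illustrative sketch only: the list of identifying fields, the `PseudoID` key, the secret key, and the helper names are assumptions, and the example does not address identifiers burned into the pixel data. A production system would follow the institution's policy and an established deidentification profile.

```python
import hashlib
import hmac

# Hypothetical list of directly identifying fields to strip before transfer.
PHI_FIELDS = {"PatientName", "PatientBirthDate", "PatientAddress", "ReferringPhysicianName"}

def pseudonymize(patient_id, secret_key):
    """Map a patient ID to a stable pseudonym with a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

def deidentify(metadata, secret_key):
    """Return a copy of the metadata without the listed identifying fields."""
    cleaned = {
        key: value
        for key, value in metadata.items()
        if key not in PHI_FIELDS and key != "PatientID"
    }
    # The key stays inside the institution, so returned results can be
    # matched to the patient without the identifier leaving the firewall.
    cleaned["PseudoID"] = pseudonymize(metadata["PatientID"], secret_key)
    return cleaned

study = {
    "PatientName": "DOE^JANE",
    "PatientID": "12345",
    "PatientBirthDate": "19800101",
    "Modality": "MR",
    "BodyPartExamined": "KNEE",
}
key = b"institution-secret"          # placeholder; a real key would be stored securely
outbound = deidentify(study, key)
print(outbound)
```

Because the pseudonym is deterministic for a given key, the output returned by the AI platform can be routed back to the correct study inside the institutional systems.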

Other aspects to consider are detailed in the Evaluating Commercial AI Solutions in Radiology, or ECLAIR, guidelines. These guidelines include the relevance of the solution from the point of view of each stakeholder, the validation and performance of the solution, its usability, as well as regulatory and legal aspects (103). Finally, work is still needed in the medicoeconomic assessment of AI-based solutions, as well as the monitoring of their performance over time.

AI Challenges IV: How AI May Transform Musculoskeletal Radiology—A Clinical Perspective

Recently, we have observed increasing efforts to use AI in the context of image acquisition in the field of musculoskeletal MRI, although AI-based reconstructions are still being evaluated and validated (19). Regarding AI support for the musculoskeletal radiologist after image acquisition, several challenges currently hinder the widespread integration of AI algorithms into the daily clinical routine. Most algorithms are designed for a very specific task, such as identifying a single pathologic finding or characteristic, to answer a very specific question. Two examples where this resulted in successful clinical translation in the domain of musculoskeletal radiology are fracture detection and determination of bone age on conventional radiographs (53,67,104,105). Several applications focusing on these two questions are commercially available and successfully validated and integrated into the routine workflow. Other applications may show adequate performance but may be less relevant in a clinical context, such as classifying disease severity (eg, Kellgren-Lawrence scoring on radiographs) or measurement automation (106,107). Clinical translation of AI is more challenging when performing standardized multitissue assessment, such as for complex examinations like MRI of joints. In the context of trauma, an algorithm may differentiate a normal anterior cruciate ligament from a partially or completely torn ligament (108) or a meniscal tear from a normal meniscus (109) but at the same time may not be able to detect and classify additional associated tissue damage. In addition, AI models are only as accurate as the ground truth used in training (109).

Generalizability is the successful application of an algorithm to different clinical settings. Many of the currently tested algorithms have been trained on highly standardized data sets from a single source. Thus, varying image protocols, image parameters (even when the protocol is roughly comparable), or scanner types across different field strengths may hinder clinical translation (110). Different algorithms may have been trained with different sets of data being used as “ground truth.” In such a circumstance, one type of AI algorithm may not work well in different heterogeneous and nonstandardized data sets. The largest publicly available and highly standardized MRI database in the domain of musculoskeletal imaging, the Osteoarthritis Initiative (https://nda.nih.gov/oai/), which has followed almost 5000 participants over a 10-year period, offers a great opportunity for training and testing certain AI applications and has been applied to AI research widely (85,111,112). However, translating Osteoarthritis Initiative data to our own daily practice requires testing and validation before widespread clinical use (113). Algorithms trained on a specific set of data like the Osteoarthritis Initiative often perform very well on similar data but then show poor performance using data not included in the training set. Unfortunately, no analytic approach is currently available to predict the transferability of an algorithm except for testing the algorithm on data sets from external sources (110). To overcome some of these limitations in the context of algorithm development, a discussion paper on good machine learning practice by the U.S. Food and Drug Administration calls for the use of clinically relevant data “acquired in a consistent, clinically relevant and generalizable manner” with “appropriate separation between training, tuning, and test datasets” (114).

AI Challenges V: Who Is Liable When AI Makes an Error?

Who bears responsibility for an error when a radiologist uses an AI system? Should radiologists always follow an AI system’s advice? And if not, are they liable if ignoring the advice results in patient harm? Medical malpractice centers on the theory of negligence: A plaintiff must demonstrate a duty of care, a breach of that duty, harm to the patient, and that the breach of duty caused the harm (115). However, the novelty of clinical AI and the lack of related case law limit the application of the usual principles of medical malpractice (116). As a result, the issues of liability around the use of AI are complex and largely unsettled and will likely vary among national and regional jurisdictions. It is difficult to predict how liability will be defined and how the implications will differ in various parts of the world.

Two scenarios have been proposed in which a physician’s use of an AI system that results in harm to a patient could lead to liability (117). In the first scenario, the AI system makes a correct recommendation that falls within the standard of care and the physician ignores the recommendation; a survey of potential jurors found physicians liable under those conditions (118). In the second scenario, the physician follows an incorrect AI recommendation that falls outside of the standard of care; here, potential jurors were less inclined to find the physician liable (118).

Injured patients also could choose to sue the health care system or radiology practice under a different legal theory: vicarious liability, which requires only a showing of harm if the employee-physician has acted within established protocols (115). Could developers be held liable if their AI system results in harm? Software historically has not been regarded as a “product” to which product liability law would apply. But these precedents could be reversed as courts develop a body of AI jurisprudence and as AI systems increasingly pose risks for bodily harm (115).

In general, the radiologist, health care system, and AI system developer all could be held liable for damages caused by a medical AI error. The radiologist could be held liable for any misdiagnosis or failure to diagnose resulting from the use of the AI system, the health care system for any harm caused as a result of inadequate training or supervision of staff, and the developer for any defects in the AI system or for failure to provide adequate instructions for its use.

How Musculoskeletal Radiologists Can Effectively Use AI

It is crucial that musculoskeletal radiologists actively engage in research and clinical efforts to integrate AI into our clinical practice. Contrary to views held a few years ago, the question is not whether AI will replace radiologists, but rather how radiologists can take advantage of AI and associated technologies to enhance their capabilities as experts in musculoskeletal imaging. Only radiologists’ active engagement will prevent nonradiologists from creating an AI-driven image interpretation workflow that excludes radiologists. Beyond image acquisition and interpretation, AI also has noninterpretive uses that support the workflow of radiologists, as described earlier (119,120). Continued efforts should be made in developing AI-aided algorithms for musculoskeletal radiologists, encompassing not only image acquisition and interpretation but also workflow improvement, including automated musculoskeletal MRI protocol selection and optimization (51), worklist prioritization (121), and the creation of a dynamic hanging protocol based on image content rather than metadata alone (119), as well as business applications such as optimization of billing, report classification, and claim denial reconciliation (119).

Conclusion

With the ongoing trend of increased imaging prescription and decreased acquisition time, a wide variety of artificial intelligence (AI)–based tools can improve the musculoskeletal radiologist’s workflow by triaging imaging examinations, helping with image interpretation, and decreasing the reporting time. Musculoskeletal radiologists should also consider additional AI applications for business, education, and research purposes when integrating AI into their daily practice. The question is not whether AI will replace radiologists, but rather how musculoskeletal radiologists can take advantage of AI to enhance their expert capabilities.

Acknowledgments

The authors acknowledge the valuable assistance of Richard Ljuhar, PhD; Jean-Baptiste Chastenet De Gery; Nicolas Jirikoff, Eng; Nynke Breimer, LLM, MBA; and Daniel Forsberg, PhD, for clarifying technical details on how AI can be integrated into clinical information systems.

Disclosures of conflicts of interest: A.G. Consulting fees from Pfizer, TissueGene, Coval, Medipost, TrialSpark, Novartis, and ICM; stock or stock options in Boston Imaging Core Lab; consultant to the editor of Radiology. P.O. Grant from the Swiss National Science Foundation; patent for method and system for generating synthetic images with switchable image contrasts (EP19169543). M.T. No relevant relationships. J.F. Grants from GE HealthCare and Siemens; payment for lectures from GE HealthCare and Siemens; patents or patents pending with Siemens Healthcare, Johns Hopkins University, and New York University; participation on a data safety monitoring board or advisory board for Siemens, SyntheticMR, GE HealthCare, QED, ImageBiopsy Lab, Boston Scientific, Mirata Pharmaceuticals, and Guerbet; leadership role in the RSNA, International Society for Magnetic Resonance in Medicine, American Roentgen Ray Society, European Society of Musculoskeletal Radiology, and Society of Skeletal Radiology; equipment, materials, or gifts from GE HealthCare, Siemens, QED, and SyntheticMR. R.K. No relevant relationships. N.E.R. Chief medical officer and co-founder of Gleamer; stock options in Gleamer. J.C. Consulting fees from Pfizer, Regeneron, Globus, and Covera Health; member of the editorial board for RSNA, American College of Radiology, and International Society of Osteoarthritis Imaging; deputy editor of Radiology. C.E.K. Consulting fees from Deccan Value Investors; advisory panel member for Sectra (travel paid) and member of the LEDE advisory board at the Center for Artificial Intelligence in Medicine and Imaging at Stanford University; editor of Radiology: Artificial Intelligence. F.K. Research partnerships with Siemens Healthineers and Meta AI; grants from the National Institute of Biomedical Imaging and Bioengineering; patent US20170309019A1 (system, method and computer-accessible medium for learning an optimized variational network for medical image reconstruction); stock options in Subtle Medical. D.R. Grants from the Engineering and Physical Sciences Research Council, Wellcome Trust, German Research Foundation (DFG), Alexander von Humboldt Foundation, Federal Ministry of Education and Research (BMBF), InnovateUK, and British Heart Foundation; consulting fees from HeartFlow and IXICO. F.W.R. Grant from Else Kröner-Fresenius-Stiftung; consulting fees from Grünenthal; participation on the data safety monitoring board or advisory board for Grünenthal; editor-in-chief of Osteoarthritis Imaging and associate editor of Radiology; stock or stock options in Boston Imaging Core Lab. D.H. Royalties from Wolters Kluwer.

Abbreviations:

- AI: artificial intelligence

- DL: deep learning

- PACS: picture archiving and communication system

References

- 1. Wang G. A perspective on deep imaging. IEEE Access 2016;4:8914–8924.

- 2. Hammernik K, Klatzer T, Kobler E, et al. Learning a variational network for reconstruction of accelerated MRI data. Magn Reson Med 2017;79(6):3055–3071.

- 3. Wang S, Su Z, Ying L, et al. Accelerating magnetic resonance imaging via deep learning. Proc IEEE Int Symp Biomed Imaging 2016;2016:514–517.

- 4. Lin DJ, Walter SS, Fritz J. Artificial intelligence-driven ultra-fast superresolution MRI: 10-fold accelerated musculoskeletal turbo spin echo MRI within reach. Invest Radiol 2023;58(1):28–42.

- 5. Khodarahmi I, Fritz J. The value of 3 tesla field strength for musculoskeletal magnetic resonance imaging. Invest Radiol 2021;56(11):749–763.

- 6. Del Grande F, Rashidi A, Luna R, et al. Five-minute five-sequence knee MRI using combined simultaneous multislice and parallel imaging acceleration: comparison with 10-minute parallel imaging knee MRI. Radiology 2021;299(3):635–646.

- 7. Fritz J, Kijowski R, Recht MP. Artificial intelligence in musculoskeletal imaging: a perspective on value propositions, clinical use, and obstacles. Skeletal Radiol 2022;51(2):239–243.

- 8. Fritz J, Ahlawat S, Fritz B, et al. 10-min 3D turbo spin echo MRI of the knee in children: arthroscopy-validated accuracy for the diagnosis of internal derangement. J Magn Reson Imaging 2019;49(7):e139–e151.

- 9. Fritz J, Fritz B, Zhang J, et al. Simultaneous multislice accelerated turbo spin echo magnetic resonance imaging: comparison and combination with in-plane parallel imaging acceleration for high-resolution magnetic resonance imaging of the knee. Invest Radiol 2017;52(9):529–537.

- 10. Hammernik K, Klatzer T, Kobler E, et al. Learning a variational network for reconstruction of accelerated MRI data. Magn Reson Med 2018;79(6):3055–3071.

- 11. Johnson PM, Recht MP, Knoll F. Improving the speed of MRI with artificial intelligence. Semin Musculoskelet Radiol 2020;24(1):12–20.

- 12. Knoll F, Hammernik K, Kobler E, Pock T, Recht MP, Sodickson DK. Assessment of the generalization of learned image reconstruction and the potential for transfer learning. Magn Reson Med 2019;81(1):116–128.

- 13. Antun V, Renna F, Poon C, Adcock B, Hansen AC. On instabilities of deep learning in image reconstruction and the potential costs of AI. Proc Natl Acad Sci USA 2020;117(48):30088–30095.

- 14. Karras T, Aila T, Laine S, Lehtinen J. Progressive Growing of GANs for Improved Quality, Stability, and Variation. arXiv 1710.10196 [preprint] https://arxiv.org/abs/1710.10196. Posted October 2017. Updated February 2018. Accessed January 8, 2023.

- 15. Sodickson DK, Manning WJ. Simultaneous acquisition of spatial harmonics (SMASH): fast imaging with radiofrequency coil arrays. Magn Reson Med 1997;38(4):591–603.

- 16. Pruessmann KP, Weiger M, Scheidegger MB, Boesiger P. SENSE: sensitivity encoding for fast MRI. Magn Reson Med 1999;42(5):952–962.

- 17. Griswold MA, Jakob PM, Heidemann RM, et al. Generalized autocalibrating partially parallel acquisitions (GRAPPA). Magn Reson Med 2002;47(6):1202–1210.

- 18. Lustig M, Donoho D, Pauly JM. Sparse MRI: the application of compressed sensing for rapid MR imaging. Magn Reson Med 2007;58(6):1182–1195.

- 19. Knoll F, Zbontar J, Sriram A, et al. fastMRI: a publicly available raw k-space and DICOM dataset of knee images for accelerated MR image reconstruction using machine learning. Radiol Artif Intell 2020;2(1):e190007.

- 20. Knoll F, Murrell T, Sriram A, et al. Advancing machine learning for MR image reconstruction with an open competition: overview of the 2019 fastMRI challenge. Magn Reson Med 2020;84(6):3054–3070.

- 21. Knoll F, Bredies K, Pock T, Stollberger R. Second order total generalized variation (TGV) for MRI. Magn Reson Med 2011;65(2):480–491.

- 22. Recht MP, Zbontar J, Sodickson DK, et al. Using deep learning to accelerate knee MRI at 3 T: results of an interchangeability study. AJR Am J Roentgenol 2020;215(6):1421–1429.

- 23. Johnson PM, Lin DJ, Zbontar J, et al. Deep learning reconstruction enables prospectively accelerated clinical knee MRI. Radiology 2023;307(2):e220425.

- 24. Kijowski R, Fritz J. Emerging technology in musculoskeletal MRI and CT. Radiology 2023;306(1):6–19.

- 25. Chazen JL, Tan ET, Fiore J, Nguyen JT, Sun S, Sneag DB. Rapid lumbar MRI protocol using 3D imaging and deep learning reconstruction. Skeletal Radiol 2023;52(7):1331–1338.

- 26. Fritz J, Guggenberger R, Del Grande F. Rapid musculoskeletal MRI in 2021: clinical application of advanced accelerated techniques. AJR Am J Roentgenol 2021;216(3):718–733.

- 27. Chaudhari AS, Fang Z, Kogan F, et al. Super-resolution musculoskeletal MRI using deep learning. Magn Reson Med 2018;80(5):2139–2154.

- 28. Arnold TC, Freeman CW, Litt B, Stein JM. Low-field MRI: clinical promise and challenges. J Magn Reson Imaging 2023;57(1):25–44.

- 29. Campbell-Washburn AE, Ramasawmy R, Restivo MC, et al. Opportunities in interventional and diagnostic imaging by using high-performance low-field-strength MRI. Radiology 2019;293(2):384–393.

- 30. Wang B, Siddiq SS, Walczyk J, et al. A flexible MRI coil based on a cable conductor and applied to knee imaging. Sci Rep 2022;12(1):15010.

- 31. Khodarahmi I, Keerthivasan MB, Brinkmann IM, Grodzki D, Fritz J. Modern low-field MRI of the musculoskeletal system: practice considerations, opportunities, and challenges. Invest Radiol 2023;58(1):76–87.

- 32. Khodarahmi I, Brinkmann IM, Lin DJ, et al. New-generation low-field magnetic resonance imaging of hip arthroplasty implants using slice encoding for metal artifact correction: first in vitro experience at 0.55 T and comparison with 1.5 T. Invest Radiol 2022;57(8):517–526.

- 33. Sutter R, Ulbrich EJ, Jellus V, Nittka M, Pfirrmann CW. Reduction of metal artifacts in patients with total hip arthroplasty with slice-encoding metal artifact correction and view-angle tilting MR imaging. Radiology 2012;265(1):204–214.

- 34. Fritz J, Ahlawat S, Demehri S, et al. Compressed sensing SEMAC: 8-fold accelerated high resolution metal artifact reduction MRI of cobalt-chromium knee arthroplasty implants. Invest Radiol 2016;51(10):666–676.

- 35. Fritz J, Meshram P, Stern SE, Fritz B, Srikumaran U, McFarland EG. Diagnostic performance of advanced metal artifact reduction MRI for periprosthetic shoulder infection. J Bone Joint Surg Am 2022;104(15):1352–1361.

- 36. Hayter CL, Koff MF, Shah P, Koch KM, Miller TT, Potter HG. MRI after arthroplasty: comparison of MAVRIC and conventional fast spin-echo techniques. AJR Am J Roentgenol 2011;197(3):W405–W411.

- 37. Murthy S, Fritz J. Metal artifact reduction MRI in the diagnosis of periprosthetic hip joint infection. Radiology 2023;306(3):e220134.

- 38. Seo S, Do WJ, Luu HM, Kim KH, Choi SH, Park SH. Artificial neural network for slice encoding for metal artifact correction (SEMAC) MRI. Magn Reson Med 2020;84(1):263–276.

- 39. Jans LBO, Chen M, Elewaut D, et al. MRI-based synthetic CT in the detection of structural lesions in patients with suspected sacroiliitis: comparison with MRI. Radiology 2021;298(2):343–349.

- 40. Fritz J. Automated and radiation-free generation of synthetic CT from MRI Data: does AI help to cross the finish line? Radiology 2021;298(2):350–352.

- 41. Hilbert T, Omoumi P, Raudner M, Kober T. Synthetic contrasts in musculoskeletal MRI: a review. Invest Radiol 2023;58(1):111–119.

- 42. Sveinsson B, Chaudhari AS, Zhu B, et al. Synthesizing quantitative T2 maps in right lateral knee femoral condyles from multicontrast anatomic data with a conditional generative adversarial network. Radiol Artif Intell 2021;3(5):e200122.

- 43. Yi PH, Fritz J. Radiology alchemy: GAN we do it? Radiol Artif Intell 2021;3(5):e210125.

- 44. Fayad LM, Parekh VS, de Castro Luna R, et al. A deep learning system for synthetic knee magnetic resonance imaging: is artificial intelligence-based fat-suppressed imaging feasible? Invest Radiol 2021;56(6):357–368.

- 45. Kahn CE Jr. Artificial intelligence in radiology: decision support systems. RadioGraphics 1994;14(4):849–861.

- 46. Bizzo BC, Almeida RR, Michalski MH, Alkasab TK. Artificial intelligence and clinical decision support for radiologists and referring providers. J Am Coll Radiol 2019;16(9 Pt B):1351–1356.

- 47. Oosterhoff JHF, Doornberg JN; Machine Learning Consortium. Artificial intelligence in orthopaedics: false hope or not? A narrative review along the line of Gartner’s hype cycle. EFORT Open Rev 2020;5(10):593–603.

- 48. Bulstra AEJ; Machine Learning Consortium. A machine learning algorithm to estimate the probability of a true scaphoid fracture after wrist trauma. J Hand Surg Am 2022;47(8):709–718. [Published correction appears in J Hand Surg Am 2022;47(12):1222.]

- 49. Tadavarthi Y, Makeeva V, Wagstaff W, et al. Overview of noninterpretive artificial intelligence models for safety, quality, workflow, and education applications in radiology practice. Radiol Artif Intell 2022;4(2):e210114.

- 50. Lee YH. Efficiency improvement in a busy radiology practice: determination of musculoskeletal magnetic resonance imaging protocol using deep-learning convolutional neural networks. J Digit Imaging 2018;31(5):604–610.

- 51. Trivedi H, Mesterhazy J, Laguna B, Vu T, Sohn JH. Automatic determination of the need for intravenous contrast in musculoskeletal MRI examinations using IBM Watson’s natural language processing algorithm. J Digit Imaging 2018;31(2):245–251.

- 52. Canoni-Meynet L, Verdot P, Danner A, Calame P, Aubry S. Added value of an artificial intelligence solution for fracture detection in the radiologist’s daily trauma emergencies workflow. Diagn Interv Imaging 2022;103(12):594–600.

- 53. Guermazi A, Tannoury C, Kompel AJ, et al. Improving radiographic fracture recognition performance and efficiency using artificial intelligence. Radiology 2022;302(3):627–636.

- 54. Astuto B, Flament I, K Namiri N, et al. Automatic deep learning–assisted detection and grading of abnormalities in knee MRI studies. Radiol Artif Intell 2021;3(3):e200165. [Published correction appears in Radiol Artif Intell 2021;3(3):e219001.]

- 55. Katzman BD, van der Pol CB, Soyer P, Patlas MN. Artificial intelligence in emergency radiology: a review of applications and possibilities. Diagn Interv Imaging 2023;104(1):6–10.

- 56. Acosta JN, Falcone GJ, Rajpurkar P. The need for medical artificial intelligence that incorporates prior images. Radiology 2022;304(2):283–288.

- 57. European Society of Radiology (ESR). What the radiologist should know about artificial intelligence – an ESR white paper. Insights Imaging 2019;10(1):44.

- 58. Carrodeguas E, Lacson R, Swanson W, Khorasani R. Use of machine learning to identify follow-up recommendations in radiology reports. J Am Coll Radiol 2019;16(3):336–343.

- 59. Choy G, Khalilzadeh O, Michalski M, et al. Current applications and future impact of machine learning in radiology. Radiology 2018;288(2):318–328.

- 60. Letourneau-Guillon L, Camirand D, Guilbert F, Forghani R. Artificial intelligence applications for workflow, process optimization and predictive analytics. Neuroimaging Clin N Am 2020;30(4):e1–e15.

- 61. Ranschaert E, Topff L, Pianykh O. Optimization of radiology workflow with artificial intelligence. Radiol Clin North Am 2021;59(6):955–966.

- 62. Kapoor N, Lacson R, Khorasani R. Workflow applications of artificial intelligence in radiology and an overview of available tools. J Am Coll Radiol 2020;17(11):1363–1370.

- 63. Hinterwimmer F, Consalvo S, Wilhelm N, et al. SAM-X: sorting algorithm for musculoskeletal x-ray radiography. Eur Radiol 2023;33(3):1537–1544.

- 64. Jang SJ, Kunze KN, Bornes TD, et al. Leg-length discrepancy variability on standard anteroposterior pelvis radiographs: an analysis using deep learning measurements. J Arthroplasty 2023;9(23):00221–00228.

- 65. Day J, de Cesar Netto C, Richter M, et al. Evaluation of a weightbearing CT artificial intelligence-based automatic measurement for the M1-M2 intermetatarsal angle in hallux valgus. Foot Ankle Int 2021;42(11):1502–1509.

- 66. Chea P, Mandell JC. Current applications and future directions of deep learning in musculoskeletal radiology. Skeletal Radiol 2020;49(2):183–197.

- 67. Duron L, Ducarouge A, Gillibert A, et al. Assessment of an AI aid in detection of adult appendicular skeletal fractures by emergency physicians and radiologists: a multicenter cross-sectional diagnostic study. Radiology 2021;300(1):120–129.

- 68. Hayashi D, Kompel AJ, Ventre J, et al. Automated detection of acute appendicular skeletal fractures in pediatric patients using deep learning. Skeletal Radiol 2022;51(11):2129–2139.

- 69. Nguyen T, Maarek R, Hermann AL, et al. Assessment of an artificial intelligence aid for the detection of appendicular skeletal fractures in children and young adults by senior and junior radiologists. Pediatr Radiol 2022;52(11):2215–2226.

- 70. Regnard NE, Lanseur B, Ventre J, et al. Assessment of performances of a deep learning algorithm for the detection of limbs and pelvic fractures, dislocations, focal bone lesions, and elbow effusions on trauma x-rays. Eur J Radiol 2022;154:110447.

- 71. Kolanu N, Silverstone EJ, Ho BH, et al. Clinical utility of computer-aided diagnosis of vertebral fractures from computed tomography images. J Bone Miner Res 2020;35(12):2307–2312.

- 72. Tran A, Lassalle L, Zille P, et al. Deep learning to detect anterior cruciate ligament tear on knee MRI: multi-continental external validation. Eur Radiol 2022;32(12):8394–8403.

- 73. Rizk B, Brat H, Zille P, et al. Meniscal lesion detection and characterization in adult knee MRI: a deep learning model approach with external validation. Phys Med 2021;83:64–71.

- 74. Lehnen NC, Haase R, Faber J, et al. Detection of degenerative changes on MR images of the lumbar spine with a convolutional neural network: a feasibility study. Diagnostics (Basel) 2021;11(5):902.

- 75. Martin DD, Calder AD, Ranke MB, Binder G, Thodberg HH. Accuracy and self-validation of automated bone age determination. Sci Rep 2022;12(1):6388.

- 76. Thodberg HH, Thodberg B, Ahlkvist J, Offiah AC. Autonomous artificial intelligence in pediatric radiology: the use and perception of BoneXpert for bone age assessment. Pediatr Radiol 2022;52(7):1338–1346.

- 77. Hendrix N, Scholten E, Vernhout B, et al. Development and validation of a convolutional neural network for automated detection of scaphoid fractures on conventional radiographs. Radiol Artif Intell 2021;3(4):e200260.

- 78. Malinauskaite I, Hofmeister J, Burgermeister S, et al. Radiomics and machine learning differentiate soft-tissue lipoma and liposarcoma better than musculoskeletal radiologists. Sarcoma 2020;2020:7163453.

- 79. Fanciullo C, Gitto S, Carlicchi E, Albano D, Messina C, Sconfienza LM. Radiomics of musculoskeletal sarcomas: a narrative review. J Imaging 2022;8(2):45.

- 80. Bach Cuadra M, Favre J, Omoumi P. Quantification in musculoskeletal imaging using computational analysis and machine learning: segmentation and radiomics. Semin Musculoskelet Radiol 2020;24(1):50–64.

- 81. Visser JJ, Goergen SK, Klein S, et al. The value of quantitative musculoskeletal imaging. Semin Musculoskelet Radiol 2020;24(4):460–474.

- 82. Bera K, Braman N, Gupta A, Velcheti V, Madabhushi A. Predicting cancer outcomes with radiomics and artificial intelligence in radiology. Nat Rev Clin Oncol 2022;19(2):132–146.

- 83. Morales Martinez A, Caliva F, Flament I, et al. Learning osteoarthritis imaging biomarkers from bone surface spherical encoding. Magn Reson Med 2020;84(4):2190–2203.

- 84. Tiulpin A, Klein S, Bierma-Zeinstra SMA, et al. Multimodal machine learning-based knee osteoarthritis progression prediction from plain radiographs and clinical data. Sci Rep 2019;9(1):20038.

- 85. Calivà F, Namiri NK, Dubreuil M, Pedoia V, Ozhinsky E, Majumdar S. Studying osteoarthritis with artificial intelligence applied to magnetic resonance imaging. Nat Rev Rheumatol 2022;18(2):112–121.

- 86. Zhong J, Hu Y, Si L, et al. A systematic review of radiomics in osteosarcoma: utilizing radiomics quality score as a tool promoting clinical translation. Eur Radiol 2021;31(3):1526–1535.

- 87. Smets J, Shevroja E, Hügle T, Leslie WD, Hans D. Machine learning solutions for osteoporosis—a review. J Bone Miner Res 2021;36(5):833–851.

- 88. Fields BKK, Demirjian NL, Cen SY, et al. Predicting soft tissue sarcoma response to neoadjuvant chemotherapy using an MRI-based delta-radiomics approach. Mol Imaging Biol 2023;25(4):776–787.

- 89. Ethics guidelines for trustworthy AI. European Commission. https://ec.europa.eu/futurium/en/ai-alliance-consultation.1.html. Accessed February 5, 2023.

- 90. Reyes M, Meier R, Pereira S, et al. On the interpretability of artificial intelligence in radiology: challenges and opportunities. Radiol Artif Intell 2020;2(3):e190043.

- 91. Ghassemi M, Oakden-Rayner L, Beam AL. The false hope of current approaches to explainable artificial intelligence in health care. Lancet Digit Health 2021;3(11):e745–e750.

- 92. Seyyed-Kalantari L, Zhang H, McDermott MBA, Chen IY, Ghassemi M. Underdiagnosis bias of artificial intelligence algorithms applied to chest radiographs in under-served patient populations. Nat Med 2021;27(12):2176–2182.

- 93. Recht MP, Dewey M, Dreyer K, et al. Integrating artificial intelligence into the clinical practice of radiology: challenges and recommendations. Eur Radiol 2020;30(6):3576–3584.

- 94. Holdout Data. C3.ai. https://c3.ai/glossary/data-science/holdout-data/#:~:text=What%20is%20Holdout%20Data%3F,also%20be%20called%20test%20data. Accessed May 24, 2023.

- 95. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature 2015;521(7553):436–444.

- 96. Luo W, Phung D, Tran T, et al. Guidelines for developing and reporting machine learning predictive models in biomedical research: a multidisciplinary view. J Med Internet Res 2016;18(12):e323.

- 97. Handelman GS, Kok HK, Chandra RV, et al. Peering into the black box of artificial intelligence: evaluation metrics of machine learning methods. AJR Am J Roentgenol 2019;212(1):38–43.

- 98. Park SH, Han K. Methodologic guide for evaluating clinical performance and effect of artificial intelligence technology for medical diagnosis and prediction. Radiology 2018;286(3):800–809.

- 99. Bluemke DA, Moy L, Bredella MA, et al. Assessing radiology research on artificial intelligence: a brief guide for authors, reviewers, and readers—from the Radiology Editorial Board. Radiology 2020;294(3):487–489.

- 100. Altman DG, Simera I, Hoey J, Moher D, Schulz K. EQUATOR: reporting guidelines for health research. Lancet 2008;371(9619):1149–1150.

- 101. Mongan J , Moy L , Kahn CE Jr . Checklist for artificial intelligence in medical imaging (CLAIM): a guide for authors and reviewers . Radiol Artif Intell 2020. ; 2 ( 2 ): e200029 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 102. Integrating artificial intelligence with the radiology reporting workflows (RIS and PACS) . The Royal College of Radiologists . https://www.rcr.ac.uk/publication/integrating-artificial-intelligence-radiology-reporting-workflows-ris-and-pacs. Updated 2021. Accessed February 5, 2023 .

- 103. Omoumi P, Ducarouge A, Tournier A, et al. To buy or not to buy—evaluating commercial AI solutions in radiology (the ECLAIR guidelines). Eur Radiol 2021;31(6):3786–3796.

- 104. Halabi SS, Prevedello LM, Kalpathy-Cramer J, et al. The RSNA pediatric bone age machine learning challenge. Radiology 2019;290(2):498–503.

- 105. Larson DB, Chen MC, Lungren MP, Halabi SS, Stence NV, Langlotz CP. Performance of a deep-learning neural network model in assessing skeletal maturity on pediatric hand radiographs. Radiology 2018;287(1):313–322.

- 106. Olsson S, Akbarian E, Lind A, Razavian AS, Gordon M. Automating classification of osteoarthritis according to Kellgren-Lawrence in the knee using deep learning in an unfiltered adult population. BMC Musculoskelet Disord 2021;22(1):844.

- 107. Schock J, Truhn D, Abrar DB, et al. Automated analysis of alignment in long-leg radiographs by using a fully automated support system based on artificial intelligence. Radiol Artif Intell 2020;3(2):e200198.

- 108. Liu F, Guan B, Zhou Z, et al. Fully automated diagnosis of anterior cruciate ligament tears on knee MR images by using deep learning. Radiol Artif Intell 2019;1(3):180091.

- 109. Fritz B, Marbach G, Civardi F, Fucentese SF, Pfirrmann CWA. Deep convolutional neural network-based detection of meniscus tears: comparison with radiologists and surgery as standard of reference. Skeletal Radiol 2020;49(8):1207–1217. [Published correction appears in Skeletal Radiol 2020;49(8):1219.]

- 110. Prevedello LM, Halabi SS, Shih G, et al. Challenges related to artificial intelligence research in medical imaging and the importance of image analysis competitions. Radiol Artif Intell 2019;1(1):e180031.

- 111. Pedoia V, Lee J, Norman B, Link TM, Majumdar S. Diagnosing osteoarthritis from T2 maps using deep learning: an analysis of the entire Osteoarthritis Initiative baseline cohort. Osteoarthritis Cartilage 2019;27(7):1002–1010.

- 112. Wirth W, Eckstein F, Kemnitz J, et al. Accuracy and longitudinal reproducibility of quantitative femorotibial cartilage measures derived from automated U-Net-based segmentation of two different MRI contrasts: data from the osteoarthritis initiative healthy reference cohort. MAGMA 2021;34(3):337–354.

- 113. Binvignat M, Pedoia V, Butte AJ, et al. Use of machine learning in osteoarthritis research: a systematic literature review. RMD Open 2022;8(1):e001998.

- 114. Proposed regulatory framework for modifications to artificial intelligence/machine learning (AI/ML)-based software as a medical device (SaMD): Discussion Paper and Request for Feedback. U.S. Food and Drug Administration. https://www.fda.gov/files/medical%20devices/published/US-FDA-Artificial-Intelligence-and-Machine-Learning-Discussion-Paper.pdf. Accessed January 20, 2023.

- 115. Harvey HB, Gowda V. Clinical applications of AI in MSK imaging: a liability perspective. Skeletal Radiol 2022;51(2):235–238.

- 116. Sullivan HR, Schweikart SJ. Are current tort liability doctrines adequate for addressing injury caused by AI? AMA J Ethics 2019;21(2):E160–E166.

- 117. Price WN 2nd, Gerke S, Cohen IG. Potential liability for physicians using artificial intelligence. JAMA 2019;322(18):1765–1766.

- 118. Tobia K, Nielsen A, Stremitzer A. When does physician use of AI increase liability? J Nucl Med 2021;62(1):17–21.

- 119. Richardson ML, Garwood ER, Lee Y, et al. Noninterpretive uses of artificial intelligence in radiology. Acad Radiol 2021;28(9):1225–1235.

- 120. Shin Y, Kim S, Lee YH. AI musculoskeletal clinical applications: how can AI increase my day-to-day efficiency? Skeletal Radiol 2022;51(2):293–304.

- 121. Wong TT, Kazam JK, Rasiej MJ. Effect of analytics-driven worklists on musculoskeletal MRI interpretation times in an academic setting. AJR Am J Roentgenol 2019;212(5):1091–1095.

- 122. Koike Y, Yui M, Nakamura S, et al. Artificial intelligence-aided lytic spinal bone metastasis classification on CT scans. Int J CARS 2023;29.

- 123. Ceccarelli F, Sciandrone M, Perricone C, et al. Biomarkers of erosive arthritis in systemic lupus erythematosus: application of machine learning models. PLoS One 2018;13(12):e0207926. [Published correction appears in PLoS One 2019;14(1):e0211791.]

- 124. Won D, Lee HJ, Lee SJ, Park SH. Spinal stenosis grading in magnetic resonance imaging using deep convolutional neural networks. Spine 2020;45(12):804–812.

- 125. Zhao Y, Zhang J, Li H, Gu X, Li Z, Zhang S. Automatic Cobb angle measurement method based on vertebra segmentation by deep learning. Med Biol Eng Comput 2022;60(8):2257–2269.

- 126. Xie L, Zhang Q, He D, et al. Automatically measuring the Cobb angle and screening for scoliosis on chest radiograph with a novel artificial intelligence method. Am J Transl Res 2022;14(11):7880–7888.

- 127. Archer H, Reine S, Alshaikhsalama A, et al. Artificial intelligence-generated hip radiological measurements are fast and adequate for reliable assessment of hip dysplasia: an external validation study. Bone Jt Open 2022;3(11):877–884.

- 128. Zheng HD, Sun YL, Kong DW, et al. Deep learning-based high-accuracy quantitation for lumbar intervertebral disc degeneration from MRI. Nat Commun 2022;13(1):841.

- 129. Fan X, Qiao X, Wang Z, Jiang L, Liu Y, Sun Q. Artificial intelligence-based CT imaging on diagnosis of patients with lumbar disc herniation by scalpel treatment. Comput Intell Neurosci 2022;2022:3688630.