Abstract

Background

Interprofessional education (IPE) facilitates interprofessional collaborative practice (IPCP) to encourage teamwork among dental care professionals and is increasingly becoming a part of training programs for dental and dental technology students. However, the focus of previous IPE and IPCP studies has largely been on subjective student and instructor perceptions without including objective assessments of collaborative practice as an outcome measure.

Objective

The purposes of this study were to develop the framework for a novel virtual and interprofessional objective structured clinical examination (viOSCE) applicable to dental and dental technology students, to assess the effectiveness of the framework as a tool for measuring the outcomes of IPE, and to promote IPCP among dental and dental technology students.

Methods

The framework of the proposed novel viOSCE was developed using the modified Delphi method and then piloted. The lead researcher and a group of experts determined the content and scoring system. Subjective data were collected using the Readiness for Interprofessional Learning Scale and a self-made scale, and objective data were collected using examiner ratings. Data were analyzed using nonparametric tests.

Results

We successfully developed a viOSCE framework applicable to dental and dental technology students. Of 50 students, 32 (64%) participated in the pilot study and completed the questionnaires. On the basis of the Readiness for Interprofessional Learning Scale, the subjective evaluation indicated that teamwork skills improved. On the mutual evaluation scale, the only statistically significant difference between the 2 professional groups was in participant motivation (P=.004). On the viOSCE evaluation scale, the difference between the professional groups in the rating of removable prosthodontics was statistically significant. A trend toward a negative correlation between subjective and objective scores was noted, but it was not statistically significant.

Conclusions

The results confirm that viOSCE can be used as an objective evaluation tool to assess the outcomes of IPE and IPCP. This study also revealed an interesting relationship between mutual evaluation and IPCP results, further demonstrating that IPE and IPCP outcomes urgently need to be supplemented with objective evaluation tools. Therefore, our future studies will focus on implementing viOSCE as part of a larger, more comprehensive objective structured clinical examination to test whether students meet undergraduate graduation requirements.

Keywords: dentist, dental technician, objective structured clinical examination, OSCE, interprofessional education, interprofessional collaborative practice

Introduction

Interprofessional Collaboration Between Dentists and Dental Technicians

Conflicts are part of the life of any organization, and the dental professions are not spared. Jurisdictional battles and supremacy struggles are not alien to dentistry [1]. Unfortunately, despite the well-documented benefits of interprofessional education (IPE) for interprofessional collaborative practice (IPCP) [2], there is a paucity of data on using IPE to promote IPCP among dental professionals. Dentists and dental technicians need to communicate effectively and contribute their professional skills to ensure that their decisions are in the best interests of their patients [3]. A clear understanding of the interactions within the dental care team can promote teamwork [4], establish cooperative goals [5], encourage mutual respect [6], and promote IPCP between dental students and dental technology students [7].

IPE encourages teamwork among dental care professionals [8-11] and is increasingly becoming part of the training programs for dental and dental technology students [12-17]; however, gaps remain. Perhaps the largest gap is that previous IPE and IPCP studies have focused predominantly on student and instructor perceptions and have lacked objective assessment of collaborative practice as an outcome measure [18,19]. This marked gap has necessitated the development of a conceptual framework to evaluate the impact of IPE on IPCP and to strengthen the evidence for IPE as a tool to improve IPCP between dental and dental technology students [20].

The Objective Structured Clinical Examination

The objective structured clinical examination (OSCE) is an assessment tool based on the principles of objectivity and standardization, in which individual students move through a circuit of time-limited stations so that their professional performance can be assessed in a simulated environment. At each station, trained assessors mark the student against standardized scoring rubrics [21]. OSCE has been widely adopted as a summative assessment in the medical undergraduate curriculum and is universally accepted as the gold standard for assessing clinical competence in dental education [22,23]; furthermore, its effectiveness has been confirmed by several studies [24-26]. Building on this extensive application of OSCE, the interprofessional OSCE (iOSCE) was initially developed to simulate IPCP [27]. Unlike the conventional OSCE, iOSCE involves students from different professions, encourages them to work as a team, and requires the entire team to participate in all tasks [28], so that the outcomes of IPE can be evaluated objectively. Within this framework, several iOSCE variants have been developed to accommodate the training needs of health care teams that address different disease categories (eg, team OSCE [29,30], group OSCE [31,32], and interprofessional team OSCE [28,33]). These variants can be roughly divided into synchronous [33-36] and asynchronous [29,37] task-based variants. A team working in an operating room typically works synchronously, whereas a team of dentists and dental technicians typically works asynchronously. Although the use of iOSCE in medical education has been extensively reported [27-30,38-42], to the best of our knowledge, its use for asynchronous work, especially in cross-professional education of dental and dental technology students, has not been reported.

Although iOSCE may provide an ideal solution for dental and dental technology students to perform IPCP simulation based on real patient cases, the COVID-19 pandemic [43] highlighted the limitations of this traditional approach. For example, a plaster model generated from a clinical case and passed multiple times among students and examiners may pose a risk of infection. In addition, OSCEs usually include diagnostic stations, each typically equipped with a trained standardized patient whom the students question and examine to reach a diagnosis. The risk of infection at this type of station was heightened during the pandemic. Moreover, compared with the traditional OSCE, iOSCEs are more time consuming and resource intensive [44,45], especially in dental education; hence, a virtual approach, as developed and piloted in this study, is justified [46,47]. Notably, the conventional virtual OSCE (vOSCE) has been described in medicine as a method of conducting an OSCE using internet technology [47-49], mainly because the students requiring assessment were in scattered locations. However, this approach does not fully leverage virtual technology in dentistry. Digital dental technologies and cloud-based dental laboratory workflows, which now form a core professional course in dental technology education [51-53], could be practiced within the vOSCE framework [50]. Developing an iOSCE based on virtual technology could allow digital dental technology to be included in the blueprint design of examination stations. This combination could simulate the critical needs of present-day dental laboratories and improve students' understanding of the current demands of the profession.

Objective

To address these research gaps, this study presents a new virtual iOSCE (viOSCE) to objectively assess the effectiveness of IPE as a tool to promote IPCP among dental and dental technology students. We describe the development and piloting of a viOSCE framework and its virtual techniques, evaluate the usability of viOSCE for IPE, and document its effect on IPCP among dental and dental technology students. Data from both subjective and objective evaluations were collected, and their correlation was assessed.

Methods

Development of viOSCE

The principal investigator (PI) first restricted the scope of viOSCE content to the prosthodontics course. Content related to implantology and orthodontics was excluded because it is not part of the core undergraduate coursework for dental or dental technology students. Following the Association for Medical Education in Europe guide [54], a modified Delphi method was used to generate content for viOSCE. The Delphi method is a decision-making process in which expert opinion, gathered through surveys under the guidance and direction of the PI, is used to reach group consensus through collaboration, independent analysis, and iteration [55]; it is the most frequently used method for generating OSCE content [54]. The panel of experts in this study consisted of 9 instructors (including the PI) from the College of Stomatology, Chongqing Medical University. All 9 instructors had experience teaching prosthodontics and teaching digital technology practice to undergraduate dental and dental technology students. They had also participated in the design of and examiner training for traditional OSCEs, but only the PI had experience in IPE and vOSCE design.

In this study, 4 iterations (rounds) were completed before the viOSCE station design was finalized. In the first round, the PI identified 10 potential topics for viOSCE based on the syllabus of the prosthodontics course for dentistry and dental technology students, gave initial suggestions for the station design, and emailed a draft document to the panel of experts. Each expert independently selected the 5 topics that they considered most important in the syllabus and most suitable for assessment using viOSCE. In the second round, the PI identified the 3 topics with the highest selection rate and, using existing virtual technology support, designed draft blueprints for 20 stations based on these topics. These drafts were emailed to the expert panel, whose members commented on the potential effectiveness of interprofessional collaboration at the stations, made necessary corrections, and returned the drafts to the PI. In the third round, the PI summarized all the changes made by the expert panel and decided on 7 stations based on the availability of virtual technology and the time required at each station within the allotted examination time. Stations that were time consuming, required multiple supporting devices, or required very large spaces were rejected. Next, the blueprint design of the selected viOSCE stations was completed, the virtual technical support was finalized, and the PI emailed the final viOSCE station blueprint to the expert panel. The expert panel created the scoring rubrics based on this blueprint and returned them to the PI for finalization. In the final round, the PI compiled all the information and met with the panel to reach consensus on the viOSCE station blueprint and scoring rubrics.
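To make the second-round selection rule concrete, the sketch below tallies how often each candidate topic was chosen across experts and keeps the 3 topics with the highest selection rate. It is a minimal illustration only: the topic labels and votes are hypothetical, the function name `top_topics` is ours, and breaking ties alphabetically is an assumption, because the study does not describe how ties were handled.

```python
from collections import Counter


def top_topics(selections, k=3):
    """Rank candidate topics by selection rate and return the top k.

    selections: one list of chosen topics per expert.
    Returns (topic, rate) pairs; ties are broken alphabetically (assumption).
    """
    n_experts = len(selections)
    # set() guards against an expert listing the same topic twice
    counts = Counter(topic for chosen in selections for topic in set(chosen))
    rates = {topic: count / n_experts for topic, count in counts.items()}
    ranked = sorted(rates.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:k]


# Hypothetical votes from 3 experts, each choosing 5 of the 10 candidate topics
votes = [
    ["topic A", "topic B", "topic C", "topic D", "topic E"],
    ["topic A", "topic B", "topic C", "topic F", "topic G"],
    ["topic A", "topic B", "topic D", "topic H", "topic I"],
]
print(top_topics(votes))
```

With the real panel, `selections` would hold 9 lists of 5 topics each; the rate is simply the share of experts who picked a topic.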
Once all the experts approved the viOSCE test station blueprint and scoring rubrics, the PI declared the viOSCE design as complete and declared the panel of experts the viOSCE examiner panel (Figure 1).

Figure 1.

The viOSCE development process based on the modified Delphi method. PI: principal investigator; viOSCE: virtual and interprofessional objective structured clinical examination.

The viOSCE Framework

The developed viOSCE framework consisted of 3 topics, namely, fixed prosthodontics, removable prosthodontics, and clinical diagnostics, with 7 collaborative examination stations (4 asynchronous and 3 synchronous). All these stations were designed and developed using the modified Delphi method (Figure 2).

Figure 2.

The framework of the viOSCE. CAD: computer-aided design; RPD: removable partial denture; viOSCE: virtual and interprofessional objective structured clinical examination.

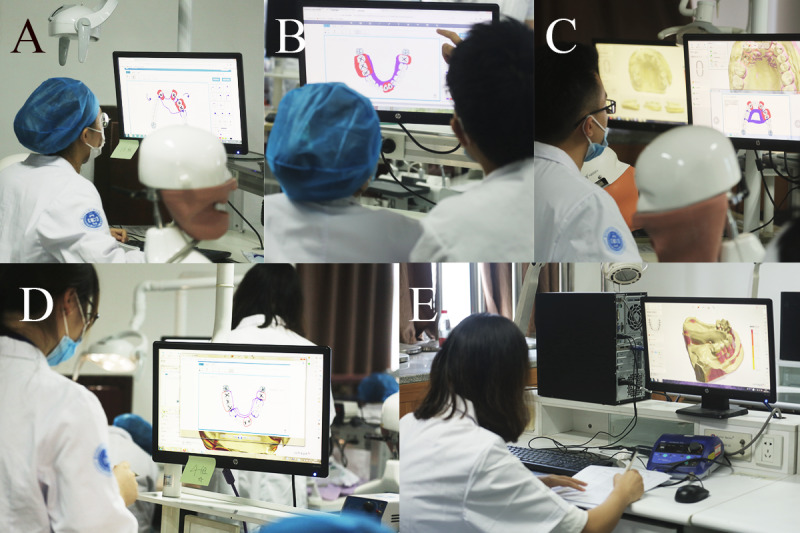

At the fixed prosthodontics stations, the dental student prepared tooth 8 (maxillary right central incisor) on the simulator (Nissin Dental Products) and then worked with the dental technology student to scan the preparation using an intraoral scanner (Panda P2; Freqty Technology). At the intraoral scanning station, the 2 students worked collaboratively: the dental student performed the intraoral scan, and the dental technology student checked the scan results to determine whether they could be used at the computer-aided design (CAD) wax pattern station. After obtaining a digital model, the dental technology student used a CAD system (Dental system; 3shape) to design a single crown on the digital model of the preparation. Individual scoring rubrics were designed for tooth preparation, intraoral scanning, and the CAD wax pattern, and 3 examiners scored the 3 stations, 1 examiner per station (Figure 3 and Tables 1-3).

Figure 3.

The fixed prosthodontics stations of the viOSCE. (A) A dental student prepared tooth 8 on the simulator. (B) Dental and dental technology students scanned the preparation using an intraoral scanner. (C) A dental technology student created a digital wax pattern using the computer-aided design system. (D) A viOSCE examiner scored the preparation process and results. (E) A viOSCE examiner scored the intraoral scanning process and results. (F) A viOSCE examiner scored the digital wax patterns. viOSCE: virtual and interprofessional objective structured clinical examination.

Table 1.

The scoring rubric used to assess the tooth preparation stations.

| Scoring component | Points |
|---|---|
| Preparation before operation | 5 |
| Fine motor skills | 15 |
| Preparation during operation | 15 |
| Incisal reduction | 10 |
| Axial reduction | 15 |
| 2-plane reduction | 5 |
| Taper | 5 |
| Margin placement | 10 |
| Details | 20 |

Table 2.

The scoring rubric used to assess the intraoral scan stations.

| Scoring component | Points |
|---|---|
| Scanning preparation | 25 |
| Scanning operation | 35 |
| Scanning integrity | 35 |
| Software tool selection | 5 |

Table 3.

The scoring rubric used to assess the computer-aided design wax pattern stations (crowns).

| Scoring component | Points |
|---|---|
| Order creation | 5 |
| Margin | 10 |
| Occlusion | 15 |
| Proximal contact area | 10 |
| Shape | 35 |
| Cement space | 5 |
| Restoration effect | 20 |
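Each rubric in Tables 1-3 assigns a maximum number of points to each component, and the maxima of every rubric add up to 100. The following minimal sketch, assuming that an examiner's station total is simply the sum of the component points awarded, validates and totals one set of marks. The function `station_score` and the rule that an unmarked component counts as 0 are illustrative assumptions, not part of the study's procedure.

```python
# Maximum points per component, transcribed from Tables 1-3
RUBRICS = {
    "tooth preparation": {
        "Preparation before operation": 5,
        "Fine motor skills": 15,
        "Preparation during operation": 15,
        "Incisal reduction": 10,
        "Axial reduction": 15,
        "2-plane reduction": 5,
        "Taper": 5,
        "Margin placement": 10,
        "Details": 20,
    },
    "intraoral scan": {
        "Scanning preparation": 25,
        "Scanning operation": 35,
        "Scanning integrity": 35,
        "Software tool selection": 5,
    },
    "CAD wax pattern (crown)": {
        "Order creation": 5,
        "Margin": 10,
        "Occlusion": 15,
        "Proximal contact area": 10,
        "Shape": 35,
        "Cement space": 5,
        "Restoration effect": 20,
    },
}


def station_score(station, awarded):
    """Validate an examiner's component marks and return the station total.

    awarded: dict of component name -> points; components left out count
    as 0 (an illustrative assumption).
    """
    rubric = RUBRICS[station]
    unknown = set(awarded) - set(rubric)
    if unknown:
        raise ValueError(f"components not in rubric: {sorted(unknown)}")
    total = 0
    for component, max_points in rubric.items():
        points = awarded.get(component, 0)
        if not 0 <= points <= max_points:
            raise ValueError(f"{component}: {points} is outside 0-{max_points}")
        total += points
    return total


# Every rubric totals 100 points
assert all(sum(rubric.values()) == 100 for rubric in RUBRICS.values())

# Hypothetical marks for one intraoral scan station
print(station_score("intraoral scan", {
    "Scanning preparation": 20,
    "Scanning operation": 30,
    "Scanning integrity": 35,
    "Software tool selection": 5,
}))
```

Rejecting marks above a component's maximum catches transcription errors before station totals are analyzed.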

At the removable prosthodontics stations, the PI selected and prepared real patient cases and intraoral digital models, which were then approved by the expert panel. The intraoral digital model was obtained by scanning a clinical plaster model with a laboratory scanner (E4; 3shape). Each dental student used our previously developed Objective Manipulative Skill Examination of Dental Technicians (OMEDT) system [56] to examine the intraoral digital model and design a removable partial denture (RPD) framework. At the end of the design task, the dental student submitted the design and discussed it with the dental technology student, after which the dental student could modify the design if desired. Next, each dental technology student used a CAD system (Dental system; 3shape) to design the RPD framework on the intraoral digital model based on the final design. A viOSCE examiner scored the initial RPD design using the OMEDT system and then scored the final RPD design and the digital RPD framework. The design discussion station was not scored by a separate examiner (Figure 4 and Tables 4 and 5).

Figure 4.

The removable prosthodontics stations of the viOSCE. (A) A dental student designed the framework of an RPD using the Objective Manipulative Skill Examination of Dental Technicians system. (B) Dental and dental technology students discussed the RPD design. (C) A dental technology student created a digital framework of an RPD using the computer-aided design system. (D) A viOSCE examiner scored the first RPD design using the Objective Manipulative Skill Examination of Dental Technicians system. (E) A viOSCE examiner scored the final RPD design and the digital framework of the RPD. RPD: removable partial denture; viOSCE: virtual and interprofessional objective structured clinical examination.

Table 4.

The scoring rubric used to assess the removable partial denture design stations.

| Scoring component | Points |
|---|---|
| Case observation | 20 |
| Design choices | 40 |
| Drawing | 20 |
| Consistency with task description | 10 |
| Neatness and accuracy in presentation | 10 |

Table 5.

The scoring rubric used to assess the computer-aided design wax pattern stations (removable partial denture [RPD] framework).

| Scoring component | Points |
|---|---|
| Order creation | 5 |
| Surveying | 10 |
| Virtual cast preparation | 20 |
| Framework design | 40 |
| Form | 25 |

The clinical diagnostics station used a virtual standardized patient (VSP) with a haptic device (UniDental; Unidraw). The VSP hardware does not have an anthropomorphic shape; instead, it interacts through vocal, visual, and haptic devices. On the basis of a novel oral knowledge graph and coupled, pretrained BERT models, the VSP can accurately interpret a dentist's underlying intention and express symptom characteristics in a natural style [57]. Using this algorithm, the PI adapted and entered the details of a real patient case, and the dental technology student worked with the dental student as a chairside dental technician to reach a diagnosis based on the information obtained from interacting with the VSP. In this study, the clinical case programmed into the VSP was a patient who required root canal treatment and full crown restoration. At the end of the dental student's diagnosis and simulation, the dental technology student was required to assist the dental student in designing the restoration plan and to help the patient choose the crown restoration material (which often determines the price of the treatment). Thus, the dental and dental technology students finalized the prosthodontic treatment plan collaboratively. The visual device rendered a virtual dental clinic environment and a VSP model, allowing the students to view the VSP from global, extraoral, and intraoral perspectives. The haptic device allowed the dental students to perform intraoral and extraoral examinations using essential tools to explore the diagnostic evidence.

Owing to the complexity of collaborative diagnosis, 2 examiners scored the station manually and independently using the previously developed scoring rubrics, and the UniDental system generated an additional machine score according to the previously developed scoring rubrics. The mean of these 3 scores was the final score for the station. To ensure the relative independence and internal consistency of all scores, the examiners were not informed that a machine score existed. The PI exported the machine score data from the VSP at the end of the experiment (Figure 5 and Table 6).
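Because the final clinical diagnostics score is the unweighted mean of the 2 examiner scores and the machine score, it can be computed as sketched below. The range check assumes that all 3 scores are expressed on the 100-point scale of Table 6; the function name and the example scores are hypothetical.

```python
from statistics import mean


def diagnostics_final_score(examiner_1, examiner_2, machine):
    """Final clinical diagnostics score: the unweighted mean of the 2 examiner
    scores and the VSP machine score, each assumed to be on a 0-100 scale."""
    scores = (examiner_1, examiner_2, machine)
    if any(not 0 <= s <= 100 for s in scores):
        raise ValueError("each score must lie between 0 and 100")
    return mean(scores)


# Hypothetical scores for one student group
print(diagnostics_final_score(80, 85, 78))
```

Weighting the machine score equally with each examiner is what the text describes; a different weighting would only require changing the averaging line.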

Figure 5.

The clinical diagnostics station of the viOSCE. (A) The VSP with the haptic device, UniDental. (B) Dental and dental technology students performed intraoral palpation on the VSP using the haptic device. (C) Two viOSCE examiners scored the process and clinical diagnostic results. viOSCE: virtual and interprofessional objective structured clinical examination; VSP: virtual standardized patient.

Table 6.

The scoring rubric used to assess the clinical diagnostic stations.

| Scoring component | Points |
|---|---|
| History taking | 25 |
| Intraoral examination | 25 |
| Auxiliary examination | 10 |
| Case analysis | 20 |
| Plan design | 20 |

Performance Evaluation of viOSCE

In this study, fourth-year undergraduate dental students and third-year undergraduate dental technology students participated in viOSCE because students at this stage of education had completed preclinical professional training. Overall, 50 eligible students, 25 (50%) dental students and 25 (50%) dental technology students, were recruited into the viOSCE user evaluation experiment and divided into pairs, each comprising 1 dental student and 1 dental technology student. The PI and examiner teams did not influence or determine team formation. All participating students were informed that because viOSCE was in the experimental phase, it was conducted as a small extracurricular skills competition, thus allowing self-evaluation outside a final examination setting, as previously reported [58]. This approach simulated an examination situation without affecting the students' final examination grades. One month before the experiment, the PI held an online meeting to explain viOSCE and the relevant knowledge points to the students and to stress the need to practice fully during the upcoming month. At the end of the meeting, the students completed the Readiness for Interprofessional Learning Scale (RIPLS) pretest questionnaire, a 19-item, 5-point Likert-scale questionnaire; this type of questionnaire is the most frequently used method for the subjective evaluation of IPE and IPCP [18].
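As a hedged illustration of how RIPLS responses are commonly aggregated into the subscales reported in the Results, the sketch below sums the 1-5 ratings within each subscale. The item-to-subscale mapping (items 1-9 teamwork and collaboration, 10-12 negative professional identity, 13-16 positive professional identity, 17-19 roles and responsibilities) is an assumption based on the widely used four-subscale structure and should be checked against the version actually administered; no items are reverse-scored here, and the function name is hypothetical.

```python
# Assumed item-to-subscale mapping (four-subscale RIPLS structure);
# verify against the questionnaire version actually administered.
SUBSCALES = {
    "teamwork and collaboration": range(1, 10),
    "negative professional identity": range(10, 13),
    "positive professional identity": range(13, 17),
    "roles and responsibilities": range(17, 20),
}


def ripls_subscale_scores(responses):
    """Sum raw 1-5 Likert ratings into subscale scores (no reverse scoring).

    responses: dict mapping item number (1-19) to the student's rating.
    """
    if sorted(responses) != list(range(1, 20)):
        raise ValueError("expected ratings for items 1-19")
    if any(not 1 <= rating <= 5 for rating in responses.values()):
        raise ValueError("ratings must be between 1 and 5")
    return {name: sum(responses[item] for item in items)
            for name, items in SUBSCALES.items()}


# Hypothetical student who answered 4 ("agree") to every item
print(ripls_subscale_scores({item: 4 for item in range(1, 20)}))
```

Keeping the negative professional identity items unreversed means that a drop in that subscale reads as an improvement, which matches how the decrease is interpreted in the Results.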

viOSCE was piloted after the 1-month preparation period. The examiner panel scored performance according to the previously prepared scoring rubrics, whereas some scores were generated automatically by a machine. Afterward, the participating students completed the posttest questionnaires: the RIPLS and a self-made questionnaire comprising a mutual evaluation scale and a viOSCE evaluation scale. The mutual evaluation scale asked each student to rate the performance of their partner, whereas the viOSCE evaluation scale asked the students to rate viOSCE itself. The mutual evaluation scale comprised 6 items, and the viOSCE evaluation scale comprised 7 items (Textboxes 1 and 2). All items on both scales were scored out of a maximum of 100. Before issuing the questionnaire, the panel reviewed all the questions, clarified ambiguities, and removed any double-barreled questions [59,60]. At the end of the experiment, one-on-one interviews were conducted with all the students to explore their perceptions of viOSCE.

Textbox 1.

The mutual evaluation scale administered to dental and dental technology student groups who participated in the virtual and interprofessional objective structured clinical examination (viOSCE).

Items

Final contribution

Person-organization fit

Performance in viOSCE

Professional skill

Practice volume before viOSCE

Motivation to participate

Textbox 2.

The virtual and interprofessional objective structured clinical examination (viOSCE) evaluation scale administered to dental and dental technology student groups who participated in viOSCE.

Items

Evaluation of viOSCE effectiveness

Evaluation of equipment, network operation and maintenance

Evaluation of viOSCE examiners

Evaluation of viOSCE staff

Rationality of the clinical diagnostic design

Rationality of the fixed prosthodontics design

Rationality of the removable prosthodontics design

Statistical Analysis

Data were tabulated in a Microsoft Excel spreadsheet and imported into IBM SPSS Statistics for Windows (version 26.0; IBM Corp) for analysis. GraphPad PRISM 8.0 software (GraphPad Software) was used to create the graphs. Responses were summarized using descriptive statistics and presented as percentages and in graphical format. The Shapiro-Wilk test was used to test for normal distribution. Specific analyses included descriptive statistics, 2-tailed paired t tests, the Wilcoxon signed rank test for data that were not normally distributed, and Spearman correlation analyses.

Ethical Considerations

The research ethics committee of the Affiliated Hospital of Stomatology, Chongqing Medical University, approved this study protocol (COHS-REC-2022; LS number: 096). All participants provided written informed consent before participation in the study.

Results

Of the 50 students, 32 (64%) completed the experiment. Interviews were conducted with the students who dropped out. The main reason for dropping out was the students' belief that they or their partners had not practiced sufficiently to perform well in the experiment. One group had a verbal confrontation approximately an hour before the experiment began, mainly because the dental technology student accused the dental student of not practicing sufficiently. In accordance with the study protocol, the PI mediated the conflict at the end of the experiment; both students were counseled and reconciled.

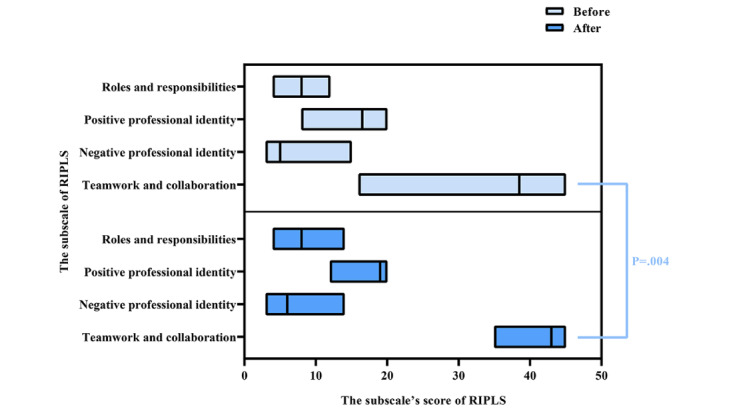

Data from the RIPLS, mutual evaluation scale, and viOSCE evaluation scale were first analyzed to determine the impact of viOSCE on the subjective evaluation of IPCP. All students (32/32, 100%) who completed the experiment were administered the RIPLS questionnaire before and after the experiment. The Cronbach α values were .835 for the pretest data and .731 for the posttest data, indicating acceptable reliability and internal consistency. The data did not pass the Shapiro-Wilk test for normality; therefore, they were analyzed using the Wilcoxon signed rank test. Scores on the teamwork and collaboration subscale increased significantly after the experiment (P=.004). Scores on the negative professional identity subscale decreased nonsignificantly (P=.21), and scores on the positive professional identity subscale and on the roles and responsibilities subscale increased nonsignificantly (P=.13 and P=.96, respectively). Figure 6 depicts the RIPLS data before and after the viOSCE pilot.
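The Wilcoxon signed rank test compares paired pretest and posttest scores without assuming normality: nonzero differences are ranked by absolute value, the ranks of the positive differences are summed (W+), and W+ is compared with its expected value under the null hypothesis. The pure-Python sketch below uses the large-sample normal approximation with a tie correction and no continuity correction; these choices are assumptions, so its P values may differ slightly from those reported by SPSS, particularly for small samples. The function names and the pretest and posttest values are hypothetical.

```python
import math


def rank_with_ties(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def wilcoxon_signed_rank(before, after):
    """Two-sided Wilcoxon signed rank test using the normal approximation.

    Zero differences are dropped, the variance is corrected for tied |d|,
    and no continuity correction is applied. Returns (W+, z, p).
    """
    if len(before) != len(after):
        raise ValueError("before and after must be paired")
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = rank_with_ties([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    expected = n * (n + 1) / 4
    tie_sizes = {}
    for d in diffs:
        tie_sizes[abs(d)] = tie_sizes.get(abs(d), 0) + 1
    variance = n * (n + 1) * (2 * n + 1) / 24
    variance -= sum(t ** 3 - t for t in tie_sizes.values()) / 48
    z = (w_plus - expected) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w_plus, z, p


# Hypothetical teamwork subscale totals for 6 students, before and after
pre = [34, 36, 33, 38, 35, 37]
post = [38, 39, 33, 40, 39, 41]
print(wilcoxon_signed_rank(pre, post))
```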

Figure 6.

RIPLS data before and after the virtual and interprofessional objective structured clinical examination pilot study. RIPLS: Readiness for Interprofessional Learning Scale.

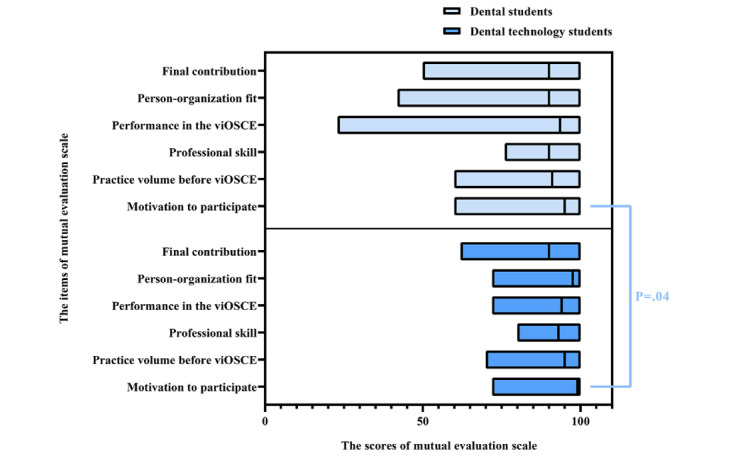

After the experiment, the mutual evaluation scale was administered to all participating students (32/32, 100%) who completed the experiment. The Cronbach α value was .873, suggesting good reliability and internal consistency. Comparison of the results of the dental and dental technology students revealed that only the mutual evaluation scores for competition motivation differed significantly between the 2 groups (P=.04). The dental and dental technology students evaluated each other's motivation to participate in the competition (competition motivation), and the dental students had higher scores than the dental technology students. Figure 7 depicts the mutual evaluation scale scores of the dental and dental technology students.
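Cronbach α, used above to judge the internal consistency of each scale, is α = k/(k − 1) × (1 − sum of the item variances / variance of the total scores), where k is the number of items. A minimal pure-Python sketch follows; the function name and the toy score matrix are hypothetical. Population variances are used, which gives the same α as sample variances because the n/(n − 1) factor cancels in the ratio.

```python
from statistics import pvariance


def cronbach_alpha(rows):
    """Cronbach's alpha for a matrix of scores (one row per respondent,
    one column per item):
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(rows[0])
    if k < 2 or any(len(row) != k for row in rows):
        raise ValueError("need a rectangular matrix with at least 2 items")
    item_variance_sum = sum(pvariance(column) for column in zip(*rows))
    total_variance = pvariance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical ratings from 4 students on a 3-item scale
scores = [
    [2, 3, 3],
    [4, 4, 5],
    [3, 3, 4],
    [5, 4, 5],
]
print(round(cronbach_alpha(scores), 3))
```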

Figure 7.

Mutual evaluation scale data for the dental and dental technology students. viOSCE: virtual and interprofessional objective structured clinical examination.

Similarly, after the experiment, the viOSCE evaluation scale was administered to all students (32/32, 100%). The Cronbach α value was .706, suggesting acceptable reliability and internal consistency. Comparison of the viOSCE evaluation scale results of the dental and dental technology students using the Wilcoxon signed rank test revealed that, of the 7 items, only the evaluation scores for the removable prosthodontics design differed significantly (P=.01). Figure 8 depicts the viOSCE evaluation scale scores of the dental and dental technology students.

Figure 8.

viOSCE evaluation scale data for dental and dental technology students. viOSCE: virtual and interprofessional objective structured clinical examination.

To explore the validity of the examiner panel scores in viOSCE, correlation analysis was conducted on the scores of each station under the 3 topics using the Spearman correlation coefficient. For the fixed prosthodontics topic, a strong, statistically significant positive correlation was noted between the scores of the tooth preparation station and the CAD wax pattern station (r=0.67; P=.005). The positive correlations between the scores of the intraoral scan station and the CAD wax pattern station and between the intraoral scan station and the tooth preparation station were not statistically significant (r=0.179; P=.51 and r=0.387; P=.14, respectively). For the removable prosthodontics topic, 11 (69%) of the 16 student groups decided to modify the RPD design initially made by the dental student. A negative but nonsignificant correlation was noted between the scores of the RPD design station and the CAD wax pattern station (r=−0.111; P=.68). For the clinical diagnostics topic, the correlation analysis focused on the machine scores and the examiner scores to determine the usability of the VSP in viOSCE and the consistency between machine scoring and examiner scoring. The scores of the 2 examiners were significantly positively correlated, and the machine scores were also significantly positively correlated with the scores of each examiner. The results are shown in Table 7 and Figure 9.

Table 7.

Spearman correlation analysis of the virtual and interprofessional objective structured clinical examination scores.

| Topic and station | Correlation coefficient | P value (2-tailed) | Student groups (n=16), n (%) |
|---|---|---|---|
| Fixed prosthodontics | | | |
| Tooth preparation vs CAD wax pattern | 0.670 | .005 | 16 (100) |
| Intraoral scan vs tooth preparation | 0.387 | .14 | 16 (100) |
| Intraoral scan vs CAD wax pattern | 0.179 | .51 | 16 (100) |
| Removable prosthodontics | | | |
| RPD design vs CAD wax pattern | −0.111 | .68 | 16 (100) |
| Clinical diagnostics | | | |
| Machine score vs examiner 1 score | 0.601 | .01 | 16 (100) |
| Machine score vs examiner 2 score | 0.629 | .009 | 16 (100) |
| Examiner 1 score vs examiner 2 score | 0.855 | <.001 | 16 (100) |

CAD: computer-aided design; RPD: removable partial denture.

Figure 9.

Spearman correlation analysis of the virtual and interprofessional objective structured clinical examination scores. (A) For the fixed prosthodontics topic, the positive correlations between the scores of the tooth preparation station and the CAD wax pattern station were significant. (B) For the clinical diagnostics topic, the positive correlations between the virtual standardized patient machine score and the examiners’ scores were significant. CAD: computer-aided design.

To explore the relationship between the objective and subjective evaluations, correlation analyses were conducted between the viOSCE scores and the RIPLS scores and between the viOSCE scores and the mutual evaluation scale scores. The RIPLS scores (subjective evaluation) were negatively but nonsignificantly correlated with the viOSCE scores (objective evaluation). Similarly, the correlation between the mutual evaluation scale scores and the viOSCE scores was not significant. The SD of the mutual evaluation scale scores tended to be smaller among students with the highest and lowest viOSCE scores and larger among those with intermediate viOSCE scores (Table 8 and Figure 10).
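The study does not spell out how the intragroup mean and SD of the mutual evaluation scores were computed. One plausible operationalization, offered only as an assumption, is sketched below: each member's 6 item ratings of the partner are averaged, the intragroup mean is the mean of the 2 members' averages, and the spread is their sample SD. The function name and the ratings are hypothetical.

```python
from statistics import mean, stdev


def intragroup_summary(ratings_from_dental, ratings_from_technology):
    """Hypothetical summary of one pair's mutual evaluation.

    Each argument holds the item scores (0-100) one member gave the partner.
    Returns (intragroup mean, sample SD of the 2 members' mean ratings).
    """
    if len(ratings_from_dental) != len(ratings_from_technology):
        raise ValueError("both members must rate the same items")
    member_means = [mean(ratings_from_dental), mean(ratings_from_technology)]
    return mean(member_means), stdev(member_means)


group_mean, group_sd = intragroup_summary([80, 90, 85, 75, 70, 80], [90] * 6)
print(group_mean, round(group_sd, 2))
```

A larger SD under this definition means the 2 partners rated each other more differently.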

Table 8.

Spearman correlation analysis between the objective evaluation (examiner panel scores) and the subjective evaluations (Readiness for Interprofessional Learning Scale [RIPLS] and mutual evaluation scale).

| Comparison | Correlation coefficient | P value (2-tailed) | Participants (n=16), n (%) |
|---|---|---|---|
| Examiner panel scores of viOSCE<sup>a</sup> vs intragroup mean RIPLS score (before the test) | −0.272 | .15 | 16 (100) |
| Examiner panel scores of viOSCE vs intragroup mean RIPLS score (after the test) | −0.302 | .13 | 16 (100) |
| Examiner panel scores of viOSCE vs intragroup mean mutual evaluation scale score | −0.038 | .44 | 16 (100) |

<sup>a</sup>viOSCE: virtual and interprofessional objective structured clinical examination.

Figure 10.

Correlation analysis of the viOSCE scores and the mutual evaluation scale. (A) Correlation analysis of the viOSCE scores and the SD of the scores for each item on the mutual evaluation scale. (B) Correlation analysis of the viOSCE scores and the SD of the mean scores on the mutual evaluation scale. viOSCE: virtual and interprofessional objective structured clinical examination.

In the one-on-one interviews, 29 (91%) of the 32 students considered viOSCE effective and wanted it to be used to assess their IPCP ability in the graduation examination. At the fixed prosthodontics station, 9 (56%) of the 16 dental technology students complained that their partners had not created enough lingual space at the tooth preparation station, which made it difficult to design the crown wax pattern; the corresponding dental students reported that they had been unaware of this problem before the viOSCE. At the removable prosthodontics station, almost all the dental students (15/16, 94%) reported that advice from their dental technology partners had helped them complete the RPD design and acknowledged that they had had insufficient practice in RPD design. Conversely, the dental technology students reported that helping the dental students complete the RPD design made them feel satisfied with their professional competence and truly part of the team. At the clinical diagnosis station, the dental students felt that their clinical experience was insufficient, especially when their dental technology partners arrived at a diagnostic plan faster than they did.

In terms of positive feedback, the students believed that viOSCE strengthened their relationships with their partners, helped them recognize the continuity and connection between their own work and their partners' work, and gave them a deeper understanding of IPCP. Negative feedback focused mainly on their lack of clinical knowledge, inadequate preparation, and long waiting times at some stations.

Discussion

Principal Findings

The IPCP outcomes of dentists and dental technicians reflect the quality of their IPE, skill training, and clinical experience. Our results contribute to the much-needed literature on IPE assessment and suggest that teamwork skills can be improved through IPCP and effectively assessed using this new evaluation tool. Our use of a modified Delphi process is consistent with Simmons et al [27], who found the modified Delphi process to be an effective tool for building consensus among professionals on the required foundational work. In addition, our study demonstrated the effectiveness of an interprofessional OSCE (iOSCE) in both asynchronous and synchronous collaboration scenarios and provides a methodological reference for developing new iOSCEs for dental health care professionals. Because collaboration between dentists and dental technicians can take place both asynchronously, through prescriptions, and synchronously, through chairside discussions [61-63], it was appropriate for the viOSCE framework to include both scenarios.

The viOSCE scores in this study also reflect the effectiveness of the framework design. In the fixed prosthodontics section, the examiner scores of the tooth preparation station and the CAD wax pattern station were significantly and positively correlated. This finding is consistent with the asynchronous delivery workflow of actual clinical practice, in which the dentist's tooth preparation largely determines the quality of the dental technician's crown wax pattern, and it was supported by the qualitative data from the one-on-one interviews. In the removable prosthodontics section, the negative correlation between the scores of the RPD design station and the CAD wax pattern station was not statistically significant. This may be because nearly all the groups (15/16, 94%) worked together to modify the RPD design, possibly to compensate for the dental students' lack of training, which is consistent with other studies reporting dentists' inadequate competence in RPD design [64,65].

Because an OSCE is essentially a simulated scenario-based examination, the use of virtual technology to build simulated scenarios has become an important direction for OSCE research, especially in dental education [43]. The COVID-19 pandemic has further increased dental educators' interest in this area, as dental clinical practice typically takes place in an aerosolized environment that can carry viruses [66]. A safe and robust learning environment in the simulation clinic is therefore also critical for helping students make up for lost educational time. The virtual technologies used to construct the simulated clinical environment in this study were VSP and CAD. Previously, Janda et al [67] developed a virtual patient as a supplement to standard instruction in the diagnosis and treatment planning of periodontal disease. However, the virtual patient could not fully understand complex or ambiguous questions, which left students frustrated during practice [67].

Tanzawa et al [68] developed a robot patient that could reproduce an authentic clinical situation and introduced it into an OSCE. However, the robot's dialogue recognition was limited to prespecified exchanges: it could not interpret subjective patient descriptions or the intent behind the dentist's questions, and it did not support intraoral or extraoral examinations for gathering diagnostic evidence [68]. To address these gaps, our study used a VSP with intention recognition and haptic feedback to construct more realistic virtual dental clinical practice and diagnosis scenarios. The diagnostic evidence that students collected through history taking, inspection, and palpation was automatically compiled for the final differential diagnosis, so omissions during the examination led to misdiagnosis, as they would in a real clinic; in this respect, the system simulated a high-fidelity clinical environment. Indeed, 1 (6%) of the 16 student groups misdiagnosed their VSP because of incomplete history taking and palpation. The machine scores were significantly correlated with the scores of each of the 2 examiners, supporting the robustness of the high-fidelity simulation scenarios constructed with the VSP and of its machine scoring. On the basis of these results, the use of VSP should be expanded and integrated into daily teaching to give students more opportunities for clinical practice training.

Consistent with the results of a previous OMEDT study [56], the use of CAD technology in viOSCE significantly reduced the time that the dental technology students spent at each station. Some dental technology students complained that the CAD program was slow. On further investigation, we found that they had imported both impressions at the same time, whereas in dental laboratory practice, dental technicians usually import the impressions separately to avoid computational problems. This finding reveals a gap in the teaching of virtual dental laboratory skills that needs to be addressed.

The teamwork and collaboration subscale scores increased significantly by the end of the study (P=.004), suggesting that viOSCE can improve students' teamwork skills. The other 3 subscale scores also increased, but not significantly, which may be explained by the timing of the viOSCE. The optimal time to expose medical students to IPE is still debated [18]. As a clinical IPCP intervention introduced during the clinical year, viOSCE had no significant effect on the negative professional identity, positive professional identity, or roles and responsibilities subscales. This may be because the students' professional perceptions had already become fixed by this stage, making them difficult to change significantly through IPE or IPCP interventions, a conclusion supported by a previous study [69].

The mutual evaluation scale showed a statistically significant difference in participant motivation between the 2 professional groups, which could be explained by the results of the roles and responsibilities subscale: 4 (25%) of the 16 dental technology students stated that they did not plan to practice as dental technicians because they intended to pursue other careers. The differences in the scores of the other items were not statistically significant, suggesting that viOSCE fostered team spirit similarly in both groups. This result supports the suitability of the OSCE design as a final evaluation of IPE and IPCP. In addition, the mean score for each item on the viOSCE evaluation scale was >60, indicating that the students were satisfied with the design and operation of viOSCE. The differences in these scores between the 2 professional groups were not statistically significant, except at the removable prosthodontics station, probably because the dental technology students were unfamiliar with the CAD program.

Overall, the internal consistency of all subjective evaluations was acceptable, and the results met expectations. The correlation between the subjective and objective evaluations also yielded an interesting observation. The SD of the mutual evaluation scale scores tended to be smaller among dental and dental technology students with higher or lower viOSCE scores and larger among those with intermediate scores. Although this trend was not statistically significant, possibly because of the small sample size, this preliminary finding provides empirical support for an account of clinical experience published previously by Preston [70], who reported that the intensity of the relationship between dentists and dental technicians is determined by the difference in their professional skills. If both parties are highly skilled, their cooperative relationship will have few problems. The more discriminating and demanding the technician or dentist becomes, the more the relationship is strained when either fails to perform up to the other's standards. These results suggest that studies of IPE and IPCP for dentists and dental technicians should not assess only improvements in traditional dimensions such as team collaboration skills and professional identity; the quality of the final work product must also be assessed. This also reaffirms the effectiveness of viOSCE as an objective, quantitative evaluation tool for IPE and IPCP.

Limitations and Future Studies

The main limitation of our study is the small convenience sample of participating students, which could have led to self-selection bias. Future studies should use a larger sample to obtain more data and further verify the robustness of the viOSCE framework. Whether viOSCE should be incorporated into a larger, more comprehensive OSCE that tests whether students meet undergraduate graduation requirements will be the focus of our next study. We also plan to explore the standalone use of the novel VSP in the education of dental students.

Recommendations

On the basis of our results, we provide the following recommendations:

- All dental health professionals should be educated to deliver patient-centered care as members of an interdisciplinary team [16].
- Skills targeted by IPE interventions should be introduced during preclinical training.
- Because collaboration within the dental care team is complex, training to improve the team's collaborative ability should be evaluated using both subjective and objective assessments.
- viOSCE and scale-based assessments should be introduced to assess IPE and IPCP at the clinical stage of training.

Conclusions

In this study, a novel viOSCE framework was developed and piloted. Data from subjective evaluation scales and objective examiner scores were collected and analyzed, confirming the effectiveness of viOSCE as an objective evaluation tool for IPE and IPCP. Future studies should include more randomly selected students, with a formally calculated sample size, to further develop research on IPE and IPCP in dentistry and dental technology and ultimately improve the quality of dental clinical practice.

Acknowledgments

This study was funded by a major project of Chongqing Higher Education and Teaching Reform of the People’s Republic of China (reference number 201019 to JS and reference number 193070 to MP) and the Teaching Reformation Fund of the College of Stomatology, Chongqing Medical University (KQJ202105 to YD and KQJ202003 to MP). All authors thank Beijing Unidraw Virtual Reality Technology Research Institute Co Ltd for assisting in the pilot study and the maintenance of the virtual standardized patient and Objective Manipulative Skill Examination of Dental Technicians systems.

Abbreviations

- CAD: computer-aided design
- IPCP: interprofessional collaborative practice
- IPE: interprofessional education
- OMEDT: Objective Manipulative Skill Examination of Dental Technicians
- OSCE: objective structured clinical examination
- PI: principal investigator
- RIPLS: Readiness for Interprofessional Learning Scale
- RPD: removable partial denture
- viOSCE: virtual and interprofessional objective structured clinical examination
- vOSCE: virtual objective structured clinical examination
- VSP: virtual standardized patient

Data Availability

The data sets generated and analyzed during this study are available from the corresponding author upon reasonable request.

Footnotes

Authors' Contributions: MP was the principal investigator who organized the group of experts that developed the framework of the virtual and interprofessional objective structured clinical examination (viOSCE), designed the validity experiment, collected and analyzed the data, and drafted the manuscript. YD organized the viOSCE pilot study and assisted with fundraising and distribution, expert panel recruitment, and data collection. XZ and JW led an engineering team from Beijing Unidraw Virtual Reality Technology Research Institute Co Ltd to develop the virtual standardized patient and Objective Manipulative Skill Examination of Dental Technicians systems and assisted in maintaining both systems during the viOSCE pilot study. Li Jiang assisted in preparing the clinical cases and equipment for the viOSCE, checked all the details, and was responsible for maintaining order at the viOSCE facility. PJ and JS supervised and directed the project. Lin Jiang supervised the progress of the project and assisted in recruiting the participating students. All authors approved the manuscript.

Conflicts of Interest: None declared.

References

- 1.Adams TL. Inter-professional conflict and professionalization: dentistry and dental hygiene in Ontario. Soc Sci Med. 2004 Jun;58(11):2243–52. doi: 10.1016/j.socscimed.2003.08.011.S0277953603004283 [DOI] [PubMed] [Google Scholar]

- 2.Teuwen C, van der Burgt S, Kusurkar R, Schreurs H, Daelmans H, Peerdeman S. How does interprofessional education influence students' perceptions of collaboration in the clinical setting? A qualitative study. BMC Med Educ. 2022 Apr 27;22(1):325. doi: 10.1186/s12909-022-03372-0. https://bmcmededuc.biomedcentral.com/articles/10.1186/s12909-022-03372-0 .10.1186/s12909-022-03372-0 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Rosenstiel SF, Land MF, Walter R. Contemporary Fixed Prosthodontics - Elsevier eBook on VitalSource. Amsterdam, the Netherlands: Elsevier Health Sciences; 2022. [Google Scholar]

- 4.Reeson MG, Walker-Gleaves C, Jepson NJ. Emerging themes: a commentary on interprofessional training between undergraduate dental students and trainee dental technicians. In: Williams PA, editor. Dental Implantation & Technology. Hauppauge, NY: Nova Science Publishers; 2009. pp. 57–69. [Google Scholar]

- 5.Malament KA, Pietrobon N, Neeser S. The interdisciplinary relationship between prosthodontics and dental technology. Int J Prosthodont. 1996;9(4):341–54. [PubMed] [Google Scholar]

- 6.Barr H, Koppel I, Reeves S, Hammick M, Freeth DS. Effective Interprofessional Education: Argument, Assumption and Evidence. Hoboken, NJ: John Wiley & Sons; 2008. [Google Scholar]

- 7.Evans J, Henderson A, Johnson N. The future of education and training in dental technology: designing a dental curriculum that facilitates teamwork across the oral health professions. Br Dent J. 2010 Mar 13;208(5):227–30. doi: 10.1038/sj.bdj.2010.208. https://core.ac.uk/reader/143878464?utm_source=linkout .sj.bdj.2010.208 [DOI] [PubMed] [Google Scholar]

- 8.Morison S, Marley J, Stevenson M, Milner S. Preparing for the dental team: investigating the views of dental and dental care professional students. Eur J Dent Educ. 2008 Feb;12(1):23–8. doi: 10.1111/j.1600-0579.2007.00487.x.EJE487 [DOI] [PubMed] [Google Scholar]

- 9.Formicola AJ, Andrieu SC, Buchanan JA, Childs GS, Gibbs M, Inglehart MR, Kalenderian E, Pyle MA, D’Abreu KD, Evans L. Interprofessional education in U.S. and Canadian dental schools: an ADEA team study group report. J Dent Educ. 2012 Sep;76(9):1250–68. doi: 10.1002/j.0022-0337.2012.76.9.tb05381.x. doi: 10.1002/j.0022-0337.2012.76.9.tb05381.x. [DOI] [PubMed] [Google Scholar]

- 10.Kersbergen MJ, Creugers NH, Hollaar VR, Laurant MG. Perceptions of interprofessional collaboration in education of dentists and dental hygienists and the impact on dental practice in the Netherlands: A qualitative study. Eur J Dent Educ. 2020 Feb;24(1):145–53. doi: 10.1111/eje.12478. https://europepmc.org/abstract/MED/31677206 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Hissink E, Fokkinga WA, Leunissen RR, Lia Fluit CR, Loek Nieuwenhuis AF, Creugers NH. An innovative interprofessional dental clinical learning environment using entrustable professional activities. Eur J Dent Educ. 2022 Feb;26(1):45–54. doi: 10.1111/eje.12671. https://europepmc.org/abstract/MED/33512747 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Evans JL, Henderson A, Johnson NW. Interprofessional learning enhances knowledge of roles but is less able to shift attitudes: a case study from dental education. Eur J Dent Educ. 2012 Nov;16(4):239–45. doi: 10.1111/j.1600-0579.2012.00749.x. doi: 10.1111/j.1600-0579.2012.00749.x. [DOI] [PubMed] [Google Scholar]

- 13.Evans JL, Henderson A, Johnson NW. Traditional and interprofessional curricula for dental technology: perceptions of students in two programs in Australia. J Dent Educ. 2013 Sep 01;77(9):1225–36. doi: 10.1002/j.0022-0337.2013.77.9.tb05596.x. https://onlinelibrary.wiley.com/doi/abs/10.1002/j.0022-0337.2013.77.9.tb05596.x . [DOI] [PubMed] [Google Scholar]

- 14.Evans J, Henderson AJ, Sun J, Haugen H, Myhrer T, Maryan C, Ivanow KN, Cameron A, Johnson NW. The value of inter-professional education: a comparative study of dental technology students' perceptions across four countries. Br Dent J. 2015 Apr 24;218(8):481–7. doi: 10.1038/sj.bdj.2015.296.sj.bdj.2015.296 [DOI] [PubMed] [Google Scholar]

- 15.Storrs MJ, Alexander H, Sun J, Kroon J, Evans JL. Measuring team-based interprofessional education outcomes in clinical dentistry: psychometric evaluation of a new scale at an Australian dental school. J Dent Educ. 2015 Mar;79(3):249–58.79/3/249 [PubMed] [Google Scholar]

- 16.Sabato EH, Fenesy KE, Parrott JS, Rico V. Development, implementation, and early results of a 4-year interprofessional education curriculum. J Dent Educ. 2020 Jul;84(7):762–70. doi: 10.1002/jdd.12170. doi: 10.1002/jdd.12170. [DOI] [PubMed] [Google Scholar]

- 17.Storrs MJ, Henderson AJ, Kroon J, Evans JL, Love RM. A 3-year quantitative evaluation of interprofessional team-based clinical education at an Australian dental school. J Dent Educ. 2022 Jun;86(6):677–88. doi: 10.1002/jdd.12873. [DOI] [PubMed] [Google Scholar]

- 18.Berger-Estilita J, Fuchs A, Hahn M, Chiang H, Greif R. Attitudes towards Interprofessional education in the medical curriculum: a systematic review of the literature. BMC Med Educ. 2020 Aug 06;20(1):254. doi: 10.1186/s12909-020-02176-4. https://bmcmededuc.biomedcentral.com/articles/10.1186/s12909-020-02176-4 .10.1186/s12909-020-02176-4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Velásquez ST, Ferguson D, Lemke KC, Bland L, Ajtai R, Amezaga B, Cleveland J, Ford LA, Lopez E, Richardson W, Saenz D, Zorek JA. Interprofessional communication in medical simulation: findings from a scoping review and implications for academic medicine. BMC Med Educ. 2022 Mar 26;22(1):204. doi: 10.1186/s12909-022-03226-9. https://bmcmededuc.biomedcentral.com/articles/10.1186/s12909-022-03226-9 .10.1186/s12909-022-03226-9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Medicine I. Measuring the Impact of Interprofessional Education on Collaborative Practice and Patient Outcomes. Washington, DC: The National Academies Press; 2015. [PubMed] [Google Scholar]

- 21.Khan KZ, Ramachandran S, Gaunt K, Pushkar P. The objective structured clinical examination (OSCE): AMEE guide no. 81. part I: an historical and theoretical perspective. Med Teach. 2013 Sep;35(9):e1437–46. doi: 10.3109/0142159X.2013.818634. [DOI] [PubMed] [Google Scholar]

- 22.Manogue M, Brown G. Developing and implementing an OSCE in dentistry. Eur J Dent Educ. 1998 May;2(2):51–7. doi: 10.1111/j.1600-0579.1998.tb00039.x. doi: 10.1111/j.1600-0579.1998.tb00039.x. [DOI] [PubMed] [Google Scholar]

- 23.Brown G, Manogue M, Martin M. The validity and reliability of an OSCE in dentistry. Eur J Dent Educ. 1999 Aug;3(3):117–25. doi: 10.1111/j.1600-0579.1999.tb00077.x. doi: 10.1111/j.1600-0579.1999.tb00077.x. [DOI] [PubMed] [Google Scholar]

- 24.Näpänkangas R, Karaharju-Suvanto T, Pyörälä E, Harila V, Ollila P, Lähdesmäki R, Lahti S. Can the results of the OSCE predict the results of clinical assessment in dental education? Eur J Dent Educ. 2016 Feb;20(1):3–8. doi: 10.1111/eje.12126. doi: 10.1111/eje.12126. [DOI] [PubMed] [Google Scholar]

- 25.Park SE, Anderson NK, Karimbux NY. OSCE and case presentations as active assessments of dental student performance. J Dent Educ. 2016 Mar;80(3):334–8. doi: 10.1002/j.0022-0337.2016.80.3.tb06089.x. doi: 10.1002/j.0022-0337.2016.80.3.tb06089.x. [DOI] [PubMed] [Google Scholar]

- 26.Park SE, Price MD, Karimbux NY. The dental school interview as a predictor of dental students' OSCE performance. J Dent Educ. 2018 Mar;82(3):269–76. doi: 10.21815/JDE.018.026.82/3/269 [DOI] [PubMed] [Google Scholar]

- 27.Simmons B, Egan-Lee E, Wagner SJ, Esdaile M, Baker L, Reeves S. Assessment of interprofessional learning: the design of an interprofessional objective structured clinical examination (iOSCE) approach. J Interprof Care. 2011 Jan 20;25(1):73–4. doi: 10.3109/13561820.2010.483746. [DOI] [PubMed] [Google Scholar]

- 28.Symonds I, Cullen L, Fraser D. Evaluation of a formative interprofessional team objective structured clinical examination (ITOSCE): a method of shared learning in maternity education. Med Teach. 2003 Jan 03;25(1):38–41. doi: 10.1080/0142159021000061404.07NCJ26L2VL39EX7 [DOI] [PubMed] [Google Scholar]

- 29.Emmert MC, Cai L. A pilot study to test the effectiveness of an innovative interprofessional education assessment strategy. J Interprof Care. 2015 Jun 19;29(5):451–6. doi: 10.3109/13561820.2015.1025373. [DOI] [PubMed] [Google Scholar]

- 30.Barreveld AM, Flanagan JM, Arnstein P, Handa S, Hernández-Nuño de la Rosa MF, Matthews ML, Shaefer JR. Results of a team objective structured clinical examination (OSCE) in a patient with pain. Pain Med. 2021 Dec 11;22(12):2918–24. doi: 10.1093/pm/pnab199.6306103 [DOI] [PubMed] [Google Scholar]

- 31.Yusuf IH, Ridyard E, Fung TH, Sipkova Z, Patel CK. Integrating retinal simulation with a peer-assessed group OSCE format to teach direct ophthalmoscopy. Can J Ophthalmol. 2017 Aug;52(4):392–7. doi: 10.1016/j.jcjo.2016.11.027. doi: 10.1016/j.jcjo.2016.11.027.S0008-4182(16)30287-3 [DOI] [PubMed] [Google Scholar]

- 32.Brazeau C, Boyd L, Crosson J. Changing an existing OSCE to a teaching tool: the making of a teaching OSCE. Acad Med. 2002 Sep;77(9):932. doi: 10.1097/00001888-200209000-00036. [DOI] [PubMed] [Google Scholar]

- 33.Burn CL, Nestel D, Gachoud D, Reeves S. Board 191 - program innovations abstract simulated patient as co-facilitators: benefits and challenges of the interprofessional team OSCE (submission #1677) Simul Healthc. 2013;8(6):455–6. doi: 10.1097/01.sih.0000441456.58165.96. https://journals.lww.com/simulationinhealthcare/abstract/2013/12000/board_191___program_innovations_abstract_simulated.100.aspx . [DOI] [Google Scholar]

- 34.Oza SK, Boscardin CK, Wamsley M, Sznewajs A, May W, Nevins A, Srinivasan M, E. Hauer K. Assessing 3rd year medical students’ interprofessional collaborative practice behaviors during a standardized patient encounter: a multi-institutional, cross-sectional study. Med Teach. 2014 Oct 14;37(10):915–25. doi: 10.3109/0142159x.2014.970628. [DOI] [PubMed] [Google Scholar]

- 35.Boet S, Bould MD, Sharma B, Revees S, Naik VN, Triby E, Grantcharov T. Within-team debriefing versus instructor-led debriefing for simulation-based education: a randomized controlled trial. Ann Surg. 2013 Jul 15;258(1):53–8. doi: 10.1097/SLA.0b013e31829659e4. [DOI] [PubMed] [Google Scholar]

- 36.Oza SK, Wamsley M, Boscardin CK, Batt J, Hauer KE. Medical students' engagement in interprofessional collaborative communication during an interprofessional observed structured clinical examination: A qualitative study. J Interprof Educ Pract. 2017 Jun;7:21–7. doi: 10.1016/j.xjep.2017.02.003. doi: 10.1016/j.xjep.2017.02.003. [DOI] [Google Scholar]

- 37.Pogge EK, Hunt RJ, Patton LR, Reynolds SC, Davis LE, Storjohann TD, Tennant SE, Call SR. A pilot study on an interprofessional course involving pharmacy and dental students in a dental clinic. Am J Pharm Educ. 2018 Apr 01;82(3):6361. doi: 10.5688/ajpe6361. https://europepmc.org/abstract/MED/29692442 .S0002-9459(23)01907-1 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Gerstner L, Johnson BL, Hastings J, Vaskalis M, Coniglio D. Development and success of an interprofessional objective structured clinical examination for health science students. JAAPA. 2018 Dec;31(12):1–2. doi: 10.1097/01.JAA.0000549503.50739.ba. https://journals.lww.com/jaapa/citation/2018/12000/development_and_success_of_an_interprofessional.23.aspx . [DOI] [Google Scholar]

- 39.Doloresco F, Maerten-Rivera J, Zhao Y, Foltz-Ramos K, Fusco NM. Pharmacy students' standardized self-assessment of interprofessional skills during an objective structured clinical examination. Am J Pharm Educ. 2019 Dec 12;83(10):7439. doi: 10.5688/ajpe7439. https://europepmc.org/abstract/MED/32001878 .S0002-9459(23)02254-4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Cyr PR, Schirmer JM, Hayes V, Martineau C, Keane M. Integrating interprofessional case scenarios, allied embedded actors, and teaching into formative observed structured clinical exams. Fam Med. 2020 Mar;52(3):209–12. doi: 10.22454/FamMed.2020.760357. [DOI] [PubMed] [Google Scholar]

- 41.Glässel A, Zumstein P, Scherer T, Feusi E, Biller-Andorno N. Case vignettes for simulated patients based on real patient experiences in the context of OSCE examinations: workshop experiences from interprofessional education. GMS J Med Educ. 2021;38(5):Doc91. doi: 10.3205/zma001487. https://europepmc.org/abstract/MED/34286071 .zma001487 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.González-Pascual JL, López-Martín I, Saiz-Navarro EM, Oliva-Fernández Ó, Acebedo-Esteban FJ, Rodríguez-García M. Using a station within an objective structured clinical examination to assess interprofessional competence performance among undergraduate nursing students. Nurse Educ Pract. 2021 Oct;56:103190. doi: 10.1016/j.nepr.2021.103190. doi: 10.1016/j.nepr.2021.103190.S1471-5953(21)00226-2 [DOI] [PubMed] [Google Scholar]

- 43.Donn J, Scott JA, Binnie V, Mather C, Beacher N, Bell A. Virtual objective structured clinical examination during the COVID-19 pandemic: an essential addition to dental assessment. Eur J Dent Educ. 2023 Feb 29;27(1):46–55. doi: 10.1111/eje.12775. [DOI] [PubMed] [Google Scholar]

- 44.Bobich AM, Mitchell BL. Transforming dental technology education: skills, knowledge, and curricular reform. J Dent Educ. 2017 Sep;81(9):eS59–64. doi: 10.21815/JDE.017.035.81/9/eS59 [DOI] [PubMed] [Google Scholar]

- 45.Kuuse H, Pihl T, Kont KR. Dental technology education in Estonia: student research in Tallinn health care college. Prof Stud Theory Pract. 2021;23(8):63–80. https://svako.lt/uploads/svako-pstp-2021-8-23-63-80.pdf . [Google Scholar]

- 46.Blythe J, Patel NS, Spiring W, Easton G, Evans D, Meskevicius-Sadler E, Noshib H, Gordon H. Undertaking a high stakes virtual OSCE ("VOSCE") during COVID-19. BMC Med Educ. 2021 Apr 20;21(1):221. doi: 10.1186/s12909-021-02660-5. https://bmcmededuc.biomedcentral.com/articles/10.1186/s12909-021-02660-5 .10.1186/s12909-021-02660-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Silverman JA, Foulds JL. Development and use of a virtual objective structured clinical examination. Can Med Educ J. 2020 Dec;11(6):e206–7. doi: 10.36834/cmej.70398. https://europepmc.org/abstract/MED/33349786 .CMEJ-11-e206 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Jang HW, Kim KJ. Use of online clinical videos for clinical skills training for medical students: benefits and challenges. BMC Med Educ. 2014 Mar 21;14(1):56. doi: 10.1186/1472-6920-14-56. https://bmcmededuc.biomedcentral.com/articles/10.1186/1472-6920-14-56 .1472-6920-14-56 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Weiner DK, Morone NE, Spallek H, Karp JF, Schneider M, Washburn C, Dziabiak MP, Hennon JG, Elnicki DM, University of Pittsburgh Center of Excellence in Pain Education E-learning module on chronic low back pain in older adults: evidence of effect on medical student objective structured clinical examination performance. J Am Geriatr Soc. 2014 Jun 15;62(6):1161–7. doi: 10.1111/jgs.12871. https://europepmc.org/abstract/MED/24833496 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Tordiglione L, De Franco M, Bosetti G. The prosthetic workflow in the digital era. Int J Dent. 2016;2016:9823025. doi: 10.1155/2016/9823025. doi: 10.1155/2016/9823025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Zheng L, Yue L, Zhou M, Yu H. Dental laboratory technology education in China: current situation and challenges. J Dent Educ. 2013 Mar;77(3):345–7. doi: 10.1002/j.0022-0337.2013.77.3.tb05476.x. doi: 10.1002/j.0022-0337.2013.77.3.tb05476.x. [DOI] [PubMed] [Google Scholar]

- 52.Hwang JS. A study current curriculum on the department dental technology for advanced course at colleges. J Tech Dent. 2022 Mar 30;44(1):8–14. doi: 10.14347/jtd.2022.44.1.8. https://www.jtd.or.kr/journal/view.html?uid=916&vmd=Full . [DOI] [Google Scholar]

- 53.Takeuchi Y, Koizumi H, Imai H, Furuchi M, Takatsu M, Shimoe S. Education and licensure of dental technicians. J Oral Sci. 2022 Oct 01;64(4):310–4. doi: 10.2334/josnusd.22-0173. doi: 10.2334/josnusd.22-0173. [DOI] [PubMed] [Google Scholar]

- 54. Khan KZ, Ramachandran S, Gaunt K, Pushkar P. The Objective Structured Clinical Examination (OSCE): AMEE Guide No. 81. Part I: an historical and theoretical perspective. Med Teach. 2013 Sep;35(9):e1437–46. doi: 10.3109/0142159X.2013.818634.

- 55. Rowe G, Wright G. The Delphi technique as a forecasting tool: issues and analysis. Int J Forecast. 1999;15(4):353–75. doi: 10.1016/S0169-2070(99)00018-7.

- 56. Pang M, Zhao X, Lu D, Dong Y, Jiang L, Li J, Ji P. Preliminary user evaluation of a new dental technology virtual simulation system: development and validation study. JMIR Serious Games. 2022 Sep 12;10(3):e36079. doi: 10.2196/36079. https://games.jmir.org/2022/3/e36079/

- 57. Song W, Hou X, Li S, Chen C, Gao D, Wang X, Sun Y, Hou J, Hao A. An intelligent virtual standard patient for medical students training based on oral knowledge graph. IEEE Trans Multimedia. 2023;25:6132–45. doi: 10.1109/tmm.2022.3205456. https://ieeexplore.ieee.org/document/9887972

- 58. Garcia SM, Tor A, Schiff TM. The psychology of competition: a social comparison perspective. Perspect Psychol Sci. 2013 Nov 04;8(6):634–50. doi: 10.1177/1745691613504114.

- 59. Ellard JH, Rogers TB. Teaching questionnaire construction effectively: the ten commandments of question writing. Contempor Soc Psychol. 1993;17(1):17–20. doi: 10.1525/9780520930223-004. https://psycnet.apa.org/record/1993-43043-001

- 60. Menold N. Double barreled questions: an analysis of the similarity of elements and effects on measurement quality. J Off Stat. 2020;36(4):855–86. doi: 10.2478/jos-2020-0041. https://sciendo.com/article/10.2478/jos-2020-0041

- 61. Mykhaylyuk N, Mykhaylyuk B, Dias NS, Blatz MB. Interdisciplinary esthetic restorative dentistry: the digital way. Compendium. 2021 Nov;42(10). https://www.aegisdentalnetwork.com/cced/2021/11/interdisciplinary-esthetic-restorative-dentistry-the-digital-way

- 62. Coachman C, Sesma N, Blatz MB. The complete digital workflow in interdisciplinary dentistry. Int J Esthet Dent. 2021;16(1):34–49.

- 63. Nassani MZ, Ibraheem S, Shamsy E, Darwish M, Faden A, Kujan O. A survey of dentists' perception of chair-side CAD/CAM technology. Healthcare (Basel). 2021 Jan 13;9(1):68. doi: 10.3390/healthcare9010068. https://www.mdpi.com/resolver?pii=healthcare9010068

- 64. Afzal H, Ahmed N, Lal A, Al-Aali KA, Alrabiah M, Alhamdan MM, Albahaqi A, Sharaf A, Vohra F, Abduljabbar T. Assessment of communication quality through work authorization between dentists and dental technicians in fixed and removable prosthodontics. Appl Sci. 2022 Jun 20;12(12):6263. doi: 10.3390/app12126263. https://www.mdpi.com/2076-3417/12/12/6263

- 65. Punj A, Bompolaki D, Kurtz KS. Dentist-laboratory communication and quality assessment of removable prostheses in Oregon: a cross-sectional pilot study. J Prosthet Dent. 2021 Jul;126(1):103–9. doi: 10.1016/j.prosdent.2020.05.014.

- 66. Hung M, Licari FW, Hon ES, Lauren E, Su S, Birmingham WC, Wadsworth LL, Lassetter JH, Graff TC, Harman W, Carroll WB, Lipsky MS. In an era of uncertainty: impact of COVID-19 on dental education. J Dent Educ. 2021 Feb;85(2):148–56. doi: 10.1002/jdd.12404.

- 67. Schittek Janda M, Mattheos N, Nattestad A, Wagner A, Nebel D, Färbom C, Lê DH, Attström R. Simulation of patient encounters using a virtual patient in periodontology instruction of dental students: design, usability, and learning effect in history-taking skills. Eur J Dent Educ. 2004 Aug;8(3):111–9. doi: 10.1111/j.1600-0579.2004.00339.x.

- 68. Tanzawa T, Futaki K, Tani C, Hasegawa T, Yamamoto M, Miyazaki T, Maki K. Introduction of a robot patient into dental education. Eur J Dent Educ. 2012 Feb 14;16(1):e195–9. doi: 10.1111/j.1600-0579.2011.00697.x.

- 69. Hudson JN, Lethbridge A, Vella S, Caputi P. Decline in medical students' attitudes to interprofessional learning and patient-centredness. Med Educ. 2016 May;50(5):550–9. doi: 10.1111/medu.12958.

- 70. Preston JD. The dental technician-educated or trained? Int J Prosthodont. 1991;4(5):413. http://www.quintpub.com/journals/ijp/abstract.php?article_id=7166

Data Availability Statement

The data sets generated and analyzed during this study are available from the corresponding author upon reasonable request.