SUMMARY

Changes in an animal’s behavior and internal state are accompanied by widespread changes in activity across its brain. However, how neurons across the brain encode behavior and how this is impacted by state is poorly understood. We recorded brain-wide activity and the diverse motor programs of freely-moving C. elegans and built probabilistic models that explain how each neuron encodes quantitative behavioral features. By determining the identities of the recorded neurons, we created an atlas of how the defined neuron classes in the C. elegans connectome encode behavior. Many neuron classes have conjunctive representations of multiple behaviors. Moreover, while many neurons encode current motor actions, others integrate recent actions. Changes in behavioral state are accompanied by widespread changes in how neurons encode behavior, and we identify these flexible nodes in the connectome. Our results provide a global map of how the cell types across an animal’s brain encode its behavior.

INTRODUCTION

Animals generate diverse behavioral outputs that vary depending on their environment, context, and internal state. The neural circuits that control these behaviors are distributed across the brain. However, it is challenging to record activity across the brain of a freely-moving animal and relate brain-wide activity to comprehensive behavioral information. For this reason, it has remained unclear how neurons and circuits across entire nervous systems represent an animal’s varied behavioral repertoire and how this flexibly changes depending on context or state.

Recent studies suggest that internal states and moment-by-moment behaviors are associated with widespread changes in neural activity1–7. Behavioral states, like quiet versus active wakefulness, and homeostatic states, like thirst, are associated with activity changes in many brain regions1,7,8. In addition, instantaneous motor actions are associated with altered neural activity across many brain regions5,7. However, our understanding of how global dynamics spanning many brain regions encodes behavior remains limited. In mammals, representations of motor actions are found in cortex, cerebellum, spinal cord, and more. Given the vast number of cell types involved and their broad spatial distributions, characterizing this entire system is not yet tractable.

The C. elegans nervous system consists of 302 neurons with known connectivity9–13. C. elegans generates a well-defined repertoire of motor programs: locomotion, feeding, head oscillations, defecation, egg-laying, and postural changes. C. elegans express different behaviors as they switch behavioral states14,15. For example, animals enter sleep-like states during development and after intense stress16,17, awake animals exhibit different foraging states like roaming versus dwelling18–21, and aversive stimuli induce sustained states of increased arousal22,23. In C. elegans, it may be feasible to decipher how behavior is encoded across an entire nervous system and how this can flexibly change across behavioral states.

Previous studies identified some C. elegans neurons that reliably encode specific behaviors. The neurons AVA, AIB, and RIM encode backwards motion; AVB, RIB, AIY and RID encode forwards motion; SMD encodes head curvature; and HSN encodes egg-laying24–31. In addition, corollary discharge signals from RIM and RIA propagate information about motor state to other neurons32–34. Proprioceptive responses to postural changes have also been observed in a handful of neurons35–37. Large-scale recordings suggest that there are widespread activity changes related to behavior. Brain-wide recordings in immobilized animals identified population activity patterns associated with fictive locomotion25,26. In moving animals, velocity and curvature can be decoded from population activity3. While this suggests that many neurons carry behavioral information, we still lack an understanding of how quantitative behavioral features are encoded by most C. elegans neurons.

Here, we elucidate how neurons across the C. elegans brain encode the animal’s behavior. We developed technologies to record high-fidelity brain-wide activity and the diverse motor programs of >60 freely-moving animals. We then devised a probabilistic encoding model that fits most recorded neurons, providing an interpretable description of how each neuron encodes behavior. By also determining neural identity in 40 of these datasets, we created an atlas of how most C. elegans neuron classes encode behavior. This revealed the encoding properties of all recorded neurons and showed that ~30% of the neurons flexibly change how they encode behavior in a state-dependent manner. Our results reveal how activity across the defined cell types of an animal’s brain encodes its behavior.

RESULTS

Technologies to record brain-wide activity and behavior

We built a microscopy platform for brain-wide calcium imaging in freely-moving animals and wrote software to automate processing of these recordings. We constructed a transgenic C. elegans strain that expresses NLS-GCaMP7f and NLS-mNeptune2.5 in all neurons. Recording nuclear-localized GCaMP makes it feasible to record brain-wide activity, though this approach misses local calcium signals in neurites34. Transgenic animals’ behavior was normal, based on assays for chemotaxis and learning (Fig. S1A). Animals were recorded on a microscope with two light paths 38,39. The lower light path is coupled to a spinning disk confocal for volumetric imaging of fluorescence in the head. The upper light path has a near-infrared (NIR) brightfield configuration to capture images for behavior quantification (Movie S1). To allow for closed-loop animal tracking, the location of the worm’s head is identified in real time with a deep neural network40 and input into a PID controller that moves the microscope stage to keep the animal centered.

We wrote software to automatically extract calcium traces from these videos (Fig. 1D). First, a 3D U-Net41 uses the time-invariant mNeptune2.5 to locate and segment all neurons in all timepoints. We then register images from different timepoints to one another and use clustering to link neurons’ identities over time (see Methods). To test whether this accurately tracks neurons, we recorded a control strain expressing NLS-GFP at different levels in different neurons (Peat-4::NLS-GFP), along with pan-neuronal NLS-mNeptune2.5 (Fig. S1B). Mistakes in linking neurons’ identities would be obvious here, since GFP levels would fluctuate in a neural trace if timepoints were sampled from different neurons. This analysis showed that neural traces were correctly sampled from individual neurons in 99.7% of the frames. We estimated motion artifacts by recording a strain with pan-neuronal NLS-GFP and NLS-mNeptune2.5 (Fig. 1E–G; Fig. S1C). Fluorescent signals were far more narrowly distributed for GFP compared to GCaMP7f, suggesting that motion artifacts are negligible (Fig. 1F). Nevertheless, we used the GFP datasets to control for any such artifacts in all analyses below (see Methods). Compared to previous imaging systems38, there was an order of magnitude increase in SNR of the GCaMP traces from this platform (likely due to 3D U-Net segmentation; see Methods).

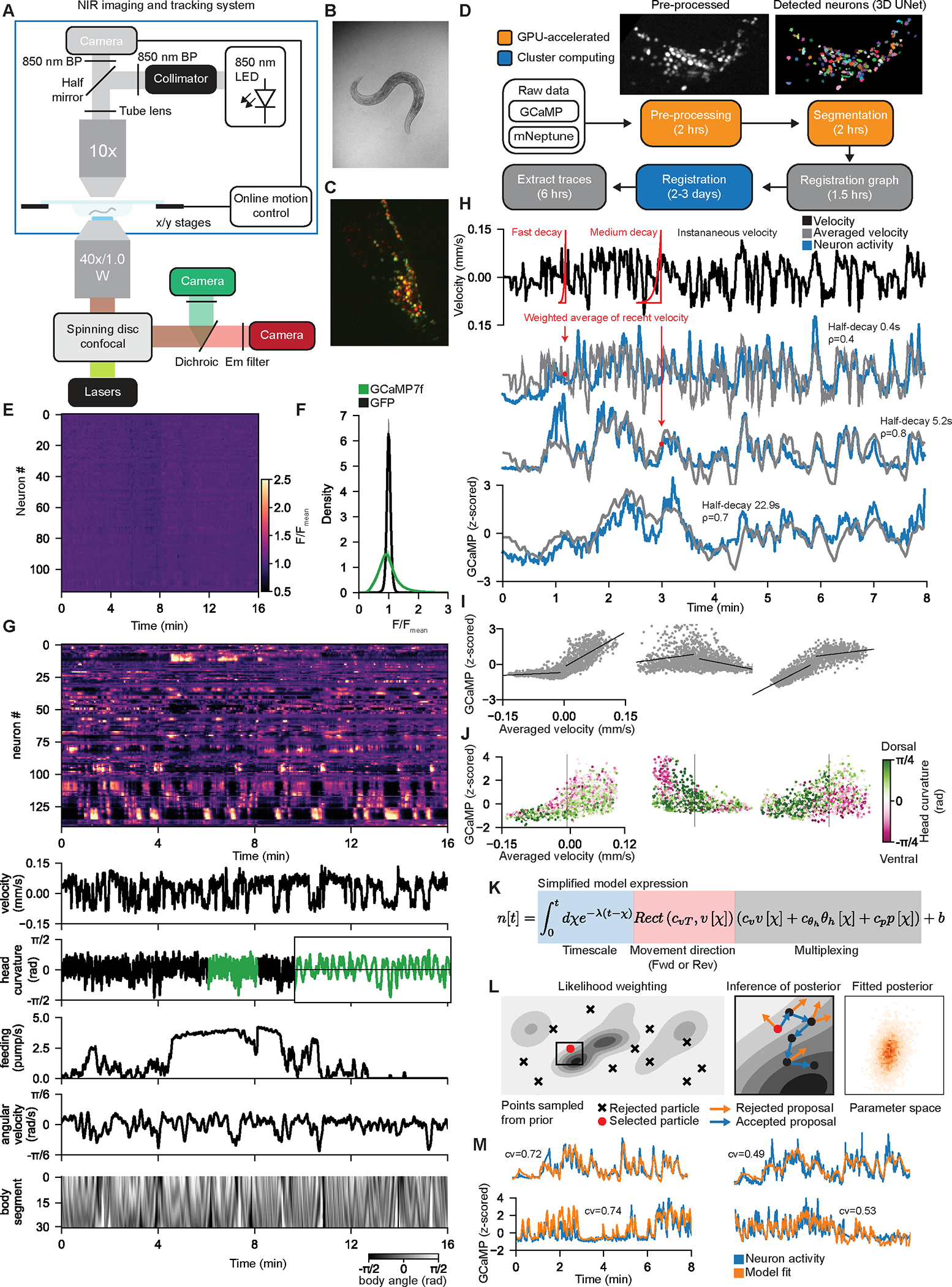

Figure 1. A probabilistic encoder model reveals how neurons across the C. elegans brain represent behavior.

(A) Light path of the microscope. Top: behavioral data are collected in NIR brightfield. Images (panel B) are processed by the online tracking system, which sends commands to the stage to cancel out the motion. Bottom: spinning disk confocal for imaging head fluorescence.

(B-C) Example images from the two light paths in (A). Panel (C) is a maximum intensity projection.

(D) Software pipeline to extract GCaMP signals from the confocal volumes. See Methods.

(E) Heatmap of neural traces collected from a pan-neuronal GFP control animal. Data are shown using same color scale as GCaMP data in (G).

(F) Comparison of signal variation in all neurons from GFP and GCaMP recordings.

(G) Example dataset, with GCaMP data and behavioral features. GCaMP data displayed on same color scale as (E). Body segment is a vector of body angles from head to tail. Inset (green) shows a zoomed region to illustrate fast head oscillations.

(H) Three example neurons from one animal that encode velocity over different timescales. Each neuron (blue) is correlated with an exponentially-weighted (red kernels) moving average (gray) of the animal’s recent velocity, over different timescales. Inset shows half-decay times of exponentials and correlations of neurons to gray traces.

(I) Example tuning scatterplots for three neurons (different from those in H) showing how their activity relates to velocity. Dots are individual timepoints.

(J) Example tuning scatterplots for three neurons that combine information about head curvature (color) and velocity (x-axis). Dots are individual timepoints. For each neuron, the red and green dots separate from one another only for negative or positive velocity values.

(K) Simplified expression of the deterministic component of CePNEM. Here, we represent the effect of timescale via an integral, whereas Equation 1 in the text represents timescale via recursion.

(L) Left and Middle: Fitting procedure. Likelihood weighting selects a particle with the best fit to the data and uses it to initialize a Monte Carlo process that infers the posterior distribution (see Methods for details). Gray shading indicates model likelihood. Right: example posterior distribution for a neural trace, shown for two model parameters for illustrative purposes.

(M) Example neural traces and median of all posterior CePNEM fits for that neuron. Inset cross-validation (cv) scores are pseudo-R2 scores on withheld testing data (see Methods).

We also wrote software that extracts behavioral variables from the brightfield images: velocity, body posture, feeding (or pharyngeal pumping), angular velocity, and head curvature (bending of the head, associated with steering). Animals did not exhibit egg-laying or defecation in these recording conditions. Together, these advances permit us to quantify brain-wide calcium signals and a diverse list of behavioral variables from freely-moving C. elegans.

A probabilistic neural encoding model reveals how C. elegans neurons encode behavior

We recorded brain-wide activity and behavior from 14 animals as they explored sparse food over 16 minutes (data available at www.wormwideweb.org). We obtained data from 143 ± 12 head neurons per animal (example in Fig. 1G). 94.7% of the recorded neurons exhibited clear dynamics and could be classified as active (see Methods). Our goal was to build models of how each neuron “encodes” or “represents” the animal’s behavior, in other words how its activity is quantitatively associated with behavioral features. Our initial efforts revealed three features of neural encoding that we describe here. We systematically identify neurons with these features below (Fig. 2).

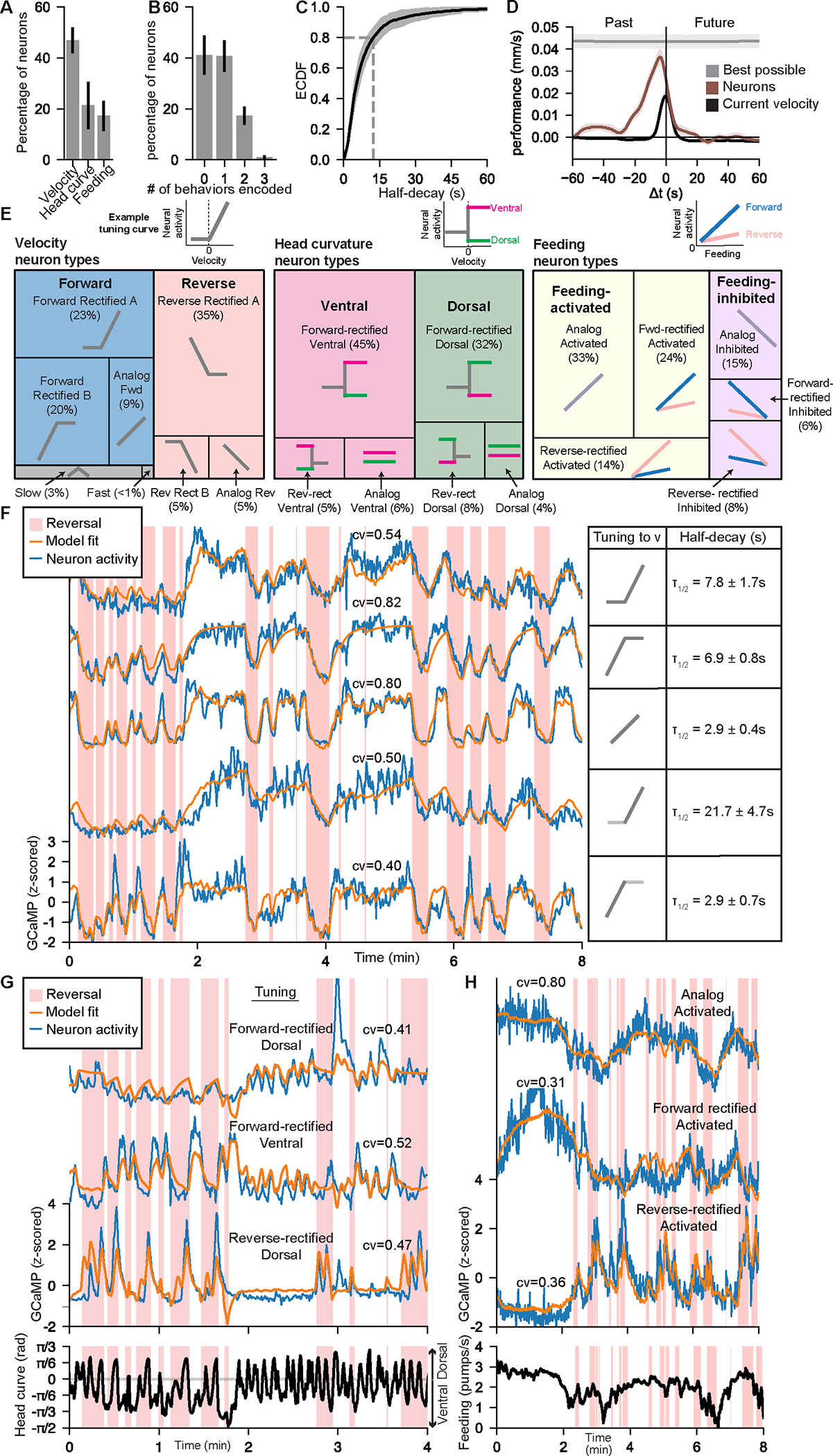

Figure 2. Varied representations of behavior across the C. elegans brain.

(A) Fraction of neurons per animal that encode indicated behaviors. If a neuron encoded >1 behavior, it is represented in multiple categories. Error bars show standard deviation between animals.

(B) Fraction of neurons per animal that encode 0, 1, 2, or 3 of the behaviors. Error bars show standard deviation between animals.

(C) ECDF of the median model half-decay time for neurons that encode at least one behavior. Shading shows standard deviation between animals.

(D) Performance of linear decoders that predict velocity at times offset from current neural activity (brown). Performance is the difference in error between the actual decoders and control scrambled decoders. Predicted velocity values were averaged over a 10-sec sliding window centered Δ𝑡 seconds from the current time. Decoders trained to make this prediction based on current velocity (black) or velocity values at all times (gray) are also shown. Shading shows standard deviation across animals.

(E) Distributions of how neurons encode the indicated behaviors. Neurons were categorized based on their tuning curves to each behavior (see Methods). Example tuning curves are shown above and prototypical tuning curves for each category are shown.

(F) Five example neurons that encode forward locomotion, together with CePNEM-derived tuning curves for each neuron, and the mean and standard deviation of each neuron’s half-decay time.

(G) Three example neurons that encode head curvature in conjunction with movement direction, together with CePNEM-derived tuning parameters.

(H) Three example neurons that encode feeding information, together with CePNEM-derived tuning parameters.

First, neurons encoded behavior over a wide range of timescales. For example, the activity of individual neurons that encode velocity was precisely correlated with an exponentially weighted average of the animal’s recent velocity. The decays of the exponentials, which determine how much a given neuron’s activity weighs past versus present velocity, varied widely across neurons (range of half-decay: 0.9 – 31.7 sec; GCaMP7f half-decay is <1 sec42,43). Fig. 1H illustrates this by showing correlations between individual neurons’ activities and velocity that has been convolved with exponential filters with varying decay times (see also Fig. S1D–E). We also observed a broad range of timescales for neurons that encode other behaviors (see below). This suggests that C. elegans neurons differ in how much they reflect the animal’s past versus present behavior.

Second, neurons reflected individual behaviors in a heterogeneous fashion. For example, for neurons that encode velocity, this encoding can be captured by a tuning curve that relates the neuron’s activity to velocity. Some neurons displayed analog tuning, but others displayed “rectification”, where the slopes of their tuning curves during reverse and forward velocity differed (Fig. 1I). While many neurons were more active during forward or reverse movement, others encoded slow locomotion regardless of movement direction (Fig. 1I, middle). This suggests that neurons that encode velocity can represent overall speed, movement direction, or finely tuned aspects of forward or reverse movement.

Third, many neurons conjunctively represented multiple motor programs. For example, most neurons whose activities were correlated with oscillatory head bending showed different tunings to head curvature during forwards versus reverse movement (Fig. 1J). Similarly, many neurons conjunctively represented the animal’s velocity and feeding rate. This suggests that many C. elegans neurons encode multiple motor programs in combination.

Based on these observations, we constructed an encoding model that uses behavioral features to predict each neuron’s activity (Equation 1; Fig. 1K). This model provides a quantitative explanation of how each neuron’s activity is related to behavior. The relationship between activity and behavior for a given neuron could be due to that neuron causally influencing behavior or, alternatively, due to the neuron receiving proprioceptive or corollary discharge signals. In contrast to decoding analyses3, which reveal the presence of behavioral information in groups of neurons, an encoding model can provide precise information about how each neuron’s dynamics relate to behavior. Each neuron’s activity was modeled as a weighted average of the animal’s recent behavior with a single decay parameter , allowing for different timescale encoding. Neurons can additively weigh multiple behavioral predictor terms (based on coefficients , and ), which can interact with the animal’s movement direction parameterized by . This allows for rectified and non-rectified tunings to behavior, as well as conjunctive encoding of multiple behaviors. We compared the goodness of fit of this full model to partial models with parameters deleted (and to a linear model) and found that deletion of any parameter significantly increased model error (Fig. S1F–G).

The model parameters are interpretable, describing how each neuron encodes each behavioral feature. However, because the model is fit on a finite amount of data, these parameters have a level of uncertainty that is important to estimate. Therefore, we determined the posterior distribution of all model parameters that were consistent with our recorded data, where consistency was defined as likelihood in the context of a Gaussian process residual model parameterized by , and (see Methods). This allowed us to quantify our uncertainty in each model parameter and perform meaningful statistical analyses. The posterior distribution was determined using a custom inference algorithm implemented with the probabilistic programming system Gen44 (Fig. 1L). We confirmed the validity of this approach using simulation-based calibration, a technique that ensures that approximations from such inference algorithms are sufficiently accurate (Fig. S2A)45.

Equation 1: The C. elegans Probabilistic Neural Encoding Model (CePNEM) expression

| Parameter | Meaning |

|---|---|

| Observed velocity, head curvature, and pumping rate | |

| Modeled neural activity. | |

| Locomotion direction rectification term with different values based on forwards versus reverse movement. | |

| Locomotion direction rectification parameter. | |

| Velocity, head curvature, and feeding parameters. | |

| Exponentially weighted moving average (EWMA) timescale parameter. | |

| Baseline activity parameter. | |

| Initial condition parameter. | |

| White noise parameter. | |

| Autocorrelative residual parameter. | |

| Autocorrelative residual timescale parameter. | |

| Gaussian process. | |

| Gaussian process kernels. |

We fit this model (The C. elegans Probabilistic Neural Encoding Model, or CePNEM) on all neurons and found significant encoding of at least one behavioral feature in 83 ± 10 out of 143 neurons per animal (examples in Fig. 1M and Fig. S2B; see also Fig. S2C and Methods for statistics). To ensure that these results were not due to motion artifacts, we applied the model to animals expressing pan-neuronal GFP and found that only 2.1% of GFP neurons significantly encoded behavior (versus 58.6% in GCaMP datasets; Fig. S2D). We were also concerned whether the model could potentially explain neural activity via overfitting and tested this using two approaches. First, we tested whether neural activity from one animal could be explained using behavioral features from other animals. However, only 2.7% of neurons encoded this incorrect behavior (Fig. S2D). Second, we performed 5-fold cross-validation across recorded neurons and found a high level of performance on withheld testing data (Fig. S2E).

There were active neurons with calcium dynamics not well fit by CePNEM (see Fig. S2F). However, it was ambiguous whether these neurons encoded behavior in a manner not captured by CePNEM or whether their activity was related to other ongoing sensory or internal variables. To distinguish between these possibilities, we examined the model residuals, i.e. the neural activity unexplained by CePNEM. We attempted to decode behavioral features using all neurons’ model residuals and, as a control, the original neural activity traces. Decoding from the full neural traces was successful, but decoding from the residuals was close to chance (Fig. S2G). This suggests that neural variance unexplained by CePNEM is unrelated to the overt behaviors quantified here. These residuals may be related to sensory inputs, internal states, or behaviors that we were unable to detect. Decoding of specific behavior features was also most successful from neurons that CePNEM suggested encode those features (Fig. S2H). Thus, CePNEM determines the encoding features of neurons in a manner that is concordant with decoding analyses.

Diverse representations of behavior across the C. elegans brain

We used the CePNEM results to analyze how the neurons across each animal’s brain encode its behavior. Among the recorded neurons, encoding of velocity was most prevalent, followed by head curvature and feeding (Fig. 2A). 58.6% of recorded neurons encoded at least one behavior (Fig. 2B), with approximately one third of these conjunctively encoding multiple behaviors (Fig. 2B). Most neurons primarily encoded current behavior, but a sizeable subset weighed past behavior (Fig. 2C). Long timescale encoding was especially prominent among forward-active velocity neurons (Fig. S2I–J). This suggested that current neural activity may contain information about past velocity. Indeed, we were able to train a linear decoder to predict past velocity up to at least 20 sec prior based on current neural activity (Fig. 2D; black line shows this was not due to current velocity predicting past velocity). A similar decoder could predict past head bending behavior, albeit less robustly (Fig. S2K). However, we were not able to predict future velocity or head bending from current neural activity (Fig. 2D, S2K).

We analyzed how each behavior was represented across the full set of neurons, first focusing on velocity. Using the CePNEM fits, we determined the shapes of each neuron’s tuning curve to velocity (see Methods). There were eight ways that a neuron could be tuned to velocity (Fig. 2E; examples in Fig. 2F). Most neurons (83%) exhibited rectified tunings, in which the encoding of forward and reverse speed differed. A smaller set of neurons represented analog velocity and others encoded slow locomotion. To highlight how CePNEM accurately captures the dynamics of neurons with different tunings, Fig. 2F shows five neurons with higher activity during forward movement, but with different dynamics. The CePNEM fits to each neuron reveal how they encode velocity with different tunings and timescales.

Among the neurons that encoded head curvature, many did so in a manner that depended on locomotion direction (Fig. 2E). Thus, we categorized these neurons based on both their head curvature tuning and velocity tuning. Most neurons only displayed head curvature-associated activity changes during forward or reverse movement, with more neurons in the forward-rectified group (Fig. 2E; examples in Fig. 2G). These results indicate that the network that controls head steering is broadly impacted by the animal’s movement direction, which could relate to the fact that steering behavior must be controlled differently during forward versus reverse movement (see also Fig. S2L). In addition to these neurons that encode the animal’s acute head curvature, a smaller group of neurons encoded angular velocity (Fig. S2M).

Neural representations of the animal’s feeding rates were also diverse (Fig. 2E; examples in Fig. 2H). Many neurons displayed analog tuning to feeding rates; others encoded feeding in conjunction with movement direction. Neurons could be positively or negatively correlated with feeding.

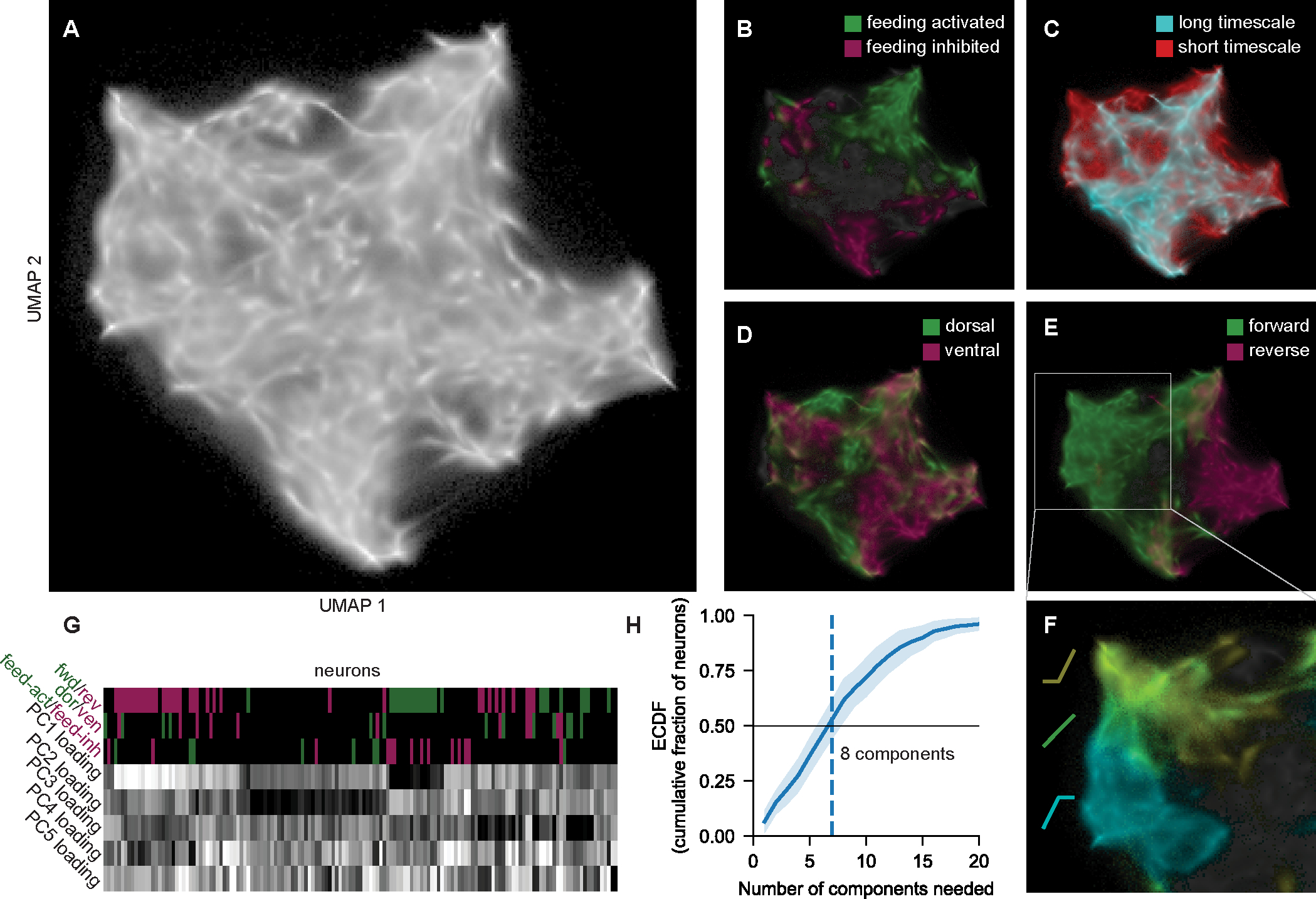

The above analyses suggest a surprising amount of heterogeneity in how C. elegans neurons encode behavior. To obtain a more global view of these representations, we embedded the neurons into a two-dimensional UMAP subspace where proximity between neurons indicates how similarly they encode behavior (Fig. 3A; see Fig. S3A–D for related analyses). This analysis could reveal clusters of cells that encode behavior the same way or, alternatively, the neurons could be evenly distributed if the representations were more heterogeneous. We found that the neurons were diffusely distributed, with no evident clustering (Fig. 3A). However, neurons’ localization still depended on their encoding (Fig. 3B–E). For example, encoding of velocity was graded along one axis, and encoding of feeding was graded along the other. The continuous distribution of neurons was especially evident when examining neurons with related tuning curves (Fig. 3F). Other standard clustering approaches also suggested that the neurons were not clusterable into discrete groups based on their encoding (Fig. S3E). These results suggest that in general the C. elegans neurons represent behavior along a continuum.

Figure 3. Global analysis of how neurons encode behavior in the C. elegans nervous system.

(A) UMAP embedding of all neurons in 14 animals, where proximity indicates encoding similarity (see Methods). Here, we projected all points from each neuron’s CePNEM posterior. Fig. S3D shows only one dot per neuron.

(B-E) UMAP space where neurons are colored by their behavioral encodings. Long versus short timescale is split at half-decay time of 20 sec.

(F) Zoomed portion of UMAP space, where neurons are color-coded by their velocity tuning curves.

(G) Example animal, showing neurons’ tuning to behavior and loadings onto the top five PCs. Neurons are hierarchically clustered by their PC loadings.

(H) Number of PCs needed to explain 75% of the variance in a given neuron, averaged across neurons in 14 animals. Data are means and standard deviation across animals.

See also Figure S3.

How do these diverse representations of behavior arise? C. elegans neural activity can be decomposed into different modes of dynamics shared by the neurons26, identifiable through Principal Component Analysis (PCA). In our data, the first three PCs explained 42% of the variance in neural activity, and 18 PCs were required to explain 75% of the variance (Fig. S3F). Single neurons were almost exclusively described as complex mixtures of PCs rather than single PCs (Fig. 3G–H). The weights of the PCs on different neurons were diverse, and hierarchical clustering of these data revealed little structure. However, as expected, the loadings were still predictive of the neuron’s encoding type (Fig. 3G). Overall, these results suggest that there are many ongoing modes of dynamics shared among neurons, which relate to their distinct representations of behavior.

An atlas of how the defined neuron classes in the C. elegans connectome encode behavior

We next sought to map these diverse representations of behavior onto the defined cell types of the C. elegans connectome. Thus, we collected additional datasets in which we determined neural identity using NeuroPAL46, a transgene in which three fluorescent proteins are expressed under well-defined genetic drivers. This makes it possible to determine neural identity based on neuron position and multi-spectral fluorescence. We crossed the pan-neuronal NLS-GCaMP7f transgene to NeuroPAL (using otIs670, a low brightness NeuroPAL integrant). Data were collected as above, except animals were immobilized by cooling47 after each freely-moving recording. We then collected multi-spectral NeuroPAL fluorescence (Fig. S4A) and registered those images to the freely-moving images.

We collected data from 40 NeuroPAL/GCaMP7f animals. Compared to the above datasets, a similar number of neurons encoded behavior (52.0%, compared to 58.6%); behavioral parameters and other metrics of neural activity were also mostly similar (Fig. S4B–E; Fig. S3B; though NeuroPAL animals reversed more frequently and had a slight ventral bias). Across recordings, we obtained data from 78 of the 80 neuron classes in the head. While most neuron classes are single left/right pairs, 13 classes consist of 2–3 pairs of neurons in 4- or 6-fold symmetric arrangements. In these cases, we separately analyzed each neuron pair. Left/right pairs were pooled for all neuron classes except four that displayed asymmetric activities (ASE, SAAD, IL1, IL2; see Methods). We generated CePNEM fits for all of these neurons to reveal how they encode behavior (Fig. 4A; Table S1; Fig. S4F–H). The encoding properties of the neuron classes determined via CePNEM predicted their activity changes in event-triggered averages aligned to key behaviors (Fig. S4G). For well-studied neurons, our results provided a clear match to previous work: AVB, RIB, AIY, and RID encoded forward movement; AVA, RIM, and AIB encoded reverse movement; and SMDD and SMDV encoded dorsal and ventral head curvature, respectively24–30.

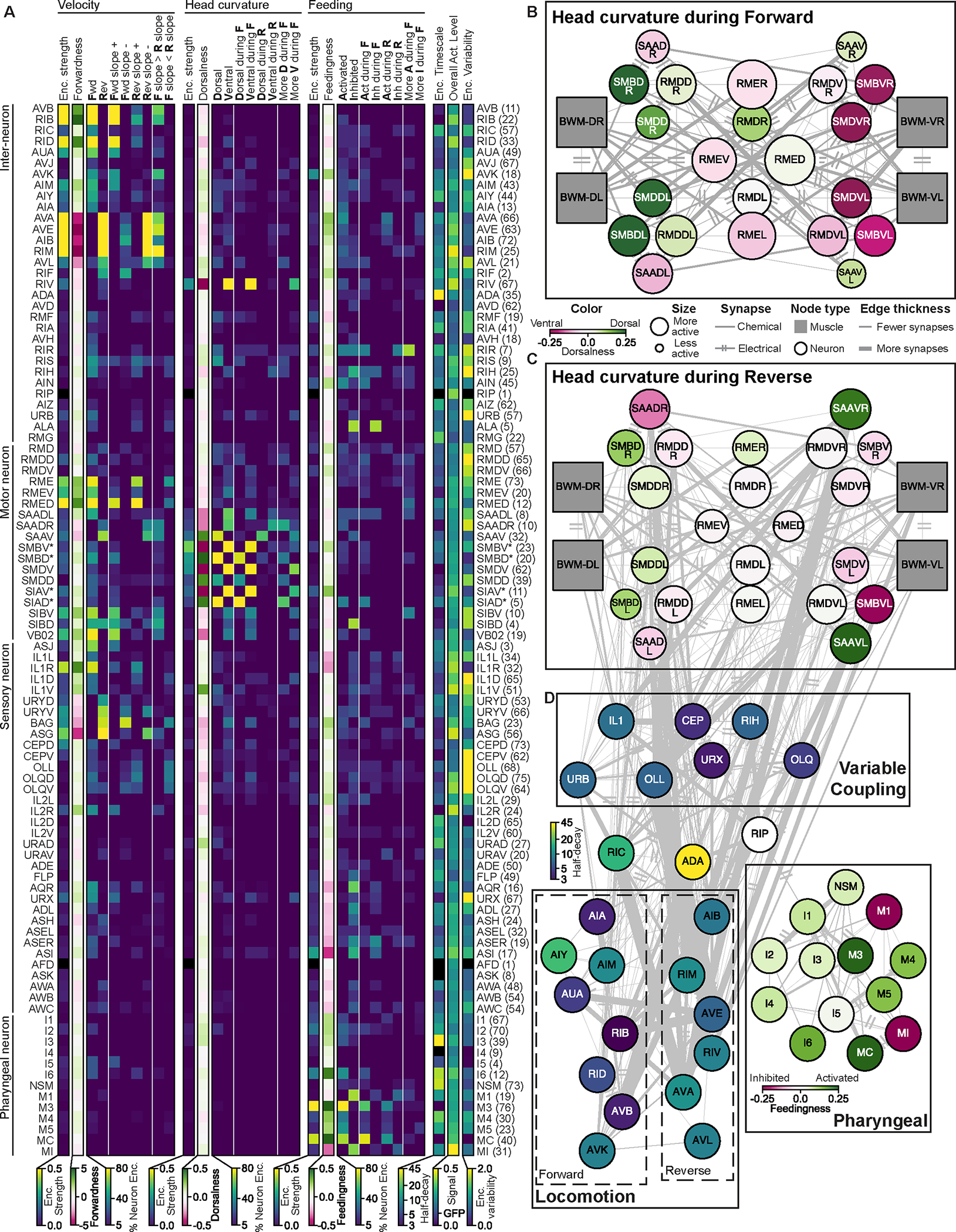

Figure 4. An atlas of how the different C. elegans neuron classes encode behavior.

(A) An atlas of how the indicated neuron classes encode behavior, derived from analysis of fit CePNEM models. Columns show:

• Encoding strength: approximate variance in neural activity explained by each behavioral variable.

• Forwardness, Dorsalness, and Feedingness: slope of the tuning to each behavior.

• Enc. timescale: median half-decay time

• Overall act. level: standard deviation of the calcium traces when normalized as F/Fmean.

• Enc. Variability: how differently the neuron class encoded behavior across recordings. Other columns show the fraction of recorded neurons that significantly encoded behaviors:

• Fwd, Rev, Dorsal, Ventral, Activated, and Inhibited: neurons with that overall tuning to behavior.

• Fwd slope −, Fwd slope +, Rev slope −, and Rev slope +: neurons with that slope in their velocity tuning curves during the specified movement direction.

• F slope > R slope and F slope < R slope: neurons displaying rectification in their velocity tuning curves.

• Dorsal during F, Ventral during F, Dorsal during R, Ventral during R, Act during F, Inh during F, Act during R, and Inh during R: neurons with that tuning to behavior during the specified movement direction (Forward or Reverse).

• More D during F, More V during F, More A during F, and More I during F: neurons with different tunings to behavior during forward versus reverse.

Parenthesis on right indicates the number of CePNEM fits per neuron class (first and second halves of videos, which have different model fits, are counted separately).

(B-C) Circuit diagram of neurons that innervate head muscles with overlaid behavioral encodings during forward (B) and reverse (C) movement. Edge thickness indicates number of synapses between neurons. Left/right neurons shown separately, because one of these pairs (SAAD) exhibited asymmetric activity, suggesting an asymmetry in this circuit.

(D) Circuit diagrams of behavioral circuits.

See also Figures S4, Figure S5, and Table S1.

This analysis revealed many features of how the C. elegans nervous system is organized to control behavior. Among the velocity-encoding neurons, those that encode forward movement displayed a wide range of tunings to velocity and included many neurons not previously implicated (AIM, AUA, and others). The reverse neurons were more uniform in their tunings to velocity, but several also represented head curvature, suggesting that they may control turning during reversals. Neural representations of velocity also spanned multiple timescales. For example, RIC, ADA, AVK, AIM, and AIY integrated the animal’s recent velocity over tens of seconds. We silenced some neurons that encoded velocity (AIM, RIC, AUA, AVL, RIF) and found that this specifically altered animals’ velocity (Fig. S4I). In addition, we optogenetically stimulated ASG sensory neurons, which encoded reverse movement, and found that this triggered reversals (Fig. S4I). Thus, results from the neuron atlas can predict causal effects on behavior.

These data also revealed neural dynamics in the circuit that controls head steering. The neuron classes in this network are often 4-fold symmetric, consisting of separate neuron pairs that innervate the ventral and dorsal head muscles. These opposing dorsal and ventral neurons were functionally antagonistic in our analysis (Fig. 4A–C). We found that the neural control of head steering is different during forward versus reverse motion (Fig. 4B–C). Some neurons that encode head curvature are more active during forward (RMED/V) or reverse (SAAV) movement. Others have more robust tuning to head curvature during forward movement (SMDD/V, SMBD/V). In addition, RMDD was more active during dorsal head bending during forwards motion, but preferred ventral head bending during reverse movement. The forward-rectified tuning of SMD was previously described and matches our results25. Our data now show that this entire network shifts its functional properties depending on movement direction. This suggests that the network functions differently while animals steer forwards towards a target compared to when they back away from one. We ablated some neurons that jointly encoded movement direction and head curvature (SAA, SMB) and found that this altered animal’s head bending and velocity (Fig. S4I).

Most neurons that encoded feeding were in the pharyngeal nervous system, but several extrapharyngeal neurons also encoded feeding, including AIN, ASI, and AVK. Neurons within the pharyngeal system encoded feeding with both positive (I6, M3, M4, etc) and negative (M1, MI) relationships. Optogenetically silencing neurons that encoded feeding (M4, MC) specifically inhibited feeding behavior (Fig. S4I).

Finally, we observed that many neurons (OLL, OLQ, IL1, RIH, URB, others) had tunings to different motor programs that were variable across animals (Fig. 4D). To directly examine this, we computed a variability index that describes how dissimilar each neuron class’s encoding of behavior was across all datasets (Fig. 4A; see Methods). While many neuron classes had invariant representations of behavior across animals (AVA, AIM, many others), others had high variability (Fig. 4A; Fig. S5A). NeuroPAL labeling and registration procedures for the neurons with high variability were determined with equal confidence to the other neuron classes, suggesting that identification errors are unlikely to explain these observations (Fig. S5B–D). Further supporting this, these neurons also changed encoding over the course of continuous recordings (see below). The ability of models trained on one set of animals to generalize to other animals inversely scaled with the neuron class’s variability index (Fig. S5E). For neurons with high variability, it is informative to look at the range of possible encodings reported in Fig. 4A rather than just the encoding strength metric. Overall, these datasets provide a functional map of how most neuron classes in the C. elegans nervous system encode the animal’s behavior.

Different encoding features are localized to distinct regions of the connectome

We next examined how these representations of behavior relate to connectivity in the C. elegans connectome. We first examined whether synaptically connected neurons had similar dynamics. Indeed, connected neurons – especially those connected through electrical synapses – were more highly correlated than neurons that were not synaptically connected (Fig. 5A). In addition, neurons were more strongly correlated (either positively or negatively) to their synaptic input and output neurons, compared to random controls (Fig. 5B).

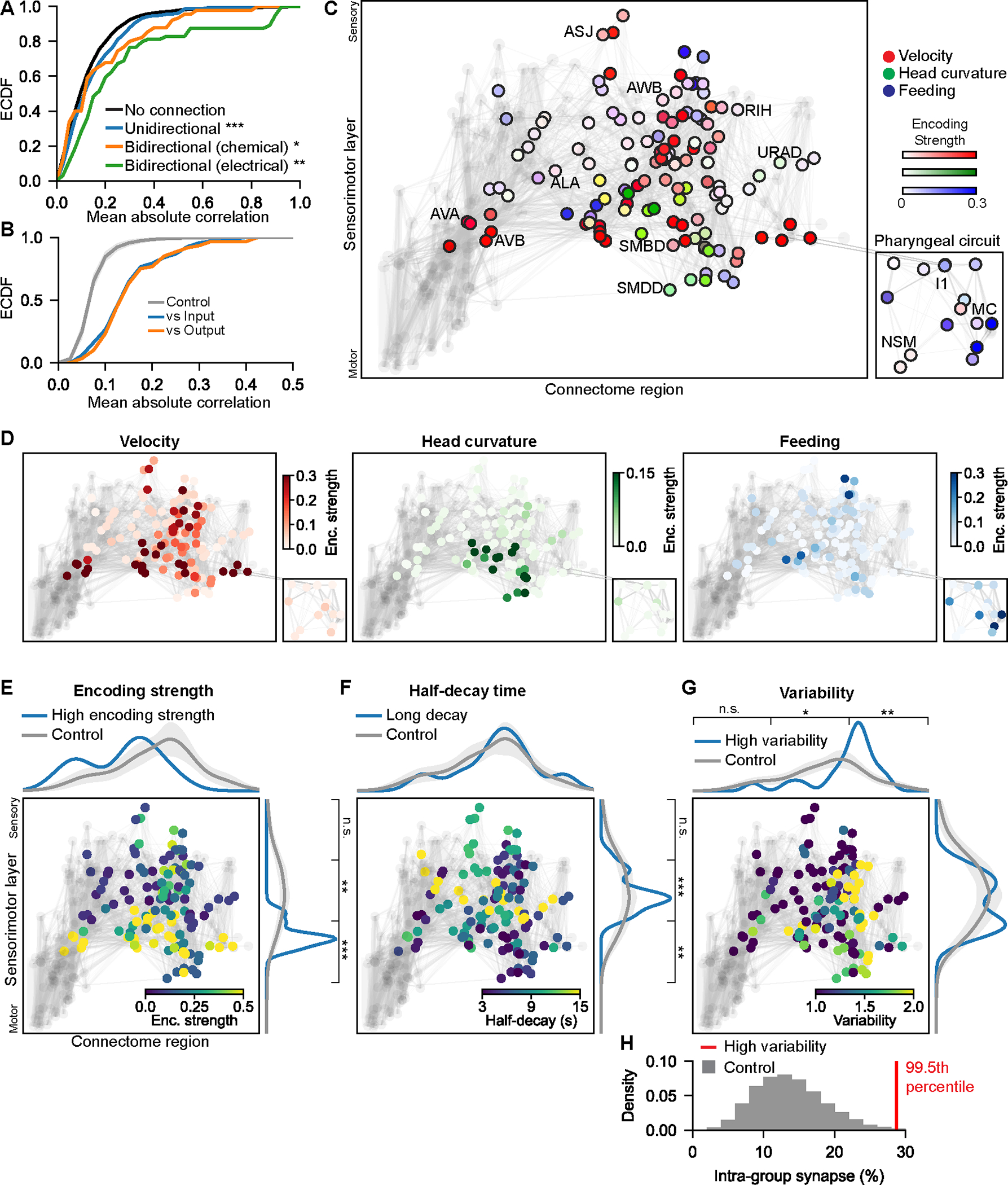

Figure 5. Neural encoding features map onto different regions of the connectome.

(A) Cumulative distribution of the correlation coefficients of activities of pairs of neurons connected in different ways. Left/right pairs were merged for this analysis, so it only considers relationships between different neuron classes. *p<0.05 **p<0.005 ***p<0.0005, Mann-Whitney U-test.

(B) Median correlation coefficients between each neuron and its synaptic inputs (blue) or outputs (orange). Control (gray) shows randomly selected neurons of equal group size.

(C) Neurons (circles) and connections (gray lines) in the C. elegans connectome, with behavior encoding information. Connectome region (x-axis): neurons with similar wiring are adjacent on this axis, computed as the second eigenvector of the laplacian of the connectome graph. Sensorimotor layer (y-axis): neurons arranged from sensory to motor (see Methods). Some neurons are labeled to provide rough orientation to the layout.

(D) Same as in (C), but one behavior per plot.

(E-G) Distribution of encoding features in the connectome, arranged as in (C). Marginal distributions (blue) show values of each behavioral feature along each axis. Gray control lines show how behavioral features are distributed when randomly shuffled. *p< 0.05 **p<0.005, ***p<0.0005, one sample Z-test for proportion.

(H) The number of synapses connecting the neurons with high variability (see Methods) is shown as a red line. Gray shows the number of synapses connecting random neuron groups. Inset shows rank of the true value in this shuffle distribution.

This raised the possibility that local communities of neurons in the connectome may encode related behavioral information. To examine this, we determined the localization of behavioral information in the connectome. We examined localization with respect to whether neurons are connected to one another, and whether neurons are closer to sensory versus motor layers (x- and y-axes of the Fig. 5C–G). Velocity information was widespread, whereas head curvature and feeding were located in more restricted connectomic regions (Fig. 5C–D). In general, behavioral information was most prominent at lower sensorimotor layers, closer to motor output (Fig. 5E). Neurons with long timescale information were located at middle sensorimotor layers, primarily in interneurons that innervated premotor and motor neurons (Fig. 5F). The neurons with variable encoding across animals were largely localized in one synaptic community (Fig. 5G–H), suggesting that they comprise an interconnected circuit that exhibits variable coupling. Together, these observations suggest that different features of behavior encoding are located in different regions of the C. elegans connectome.

The encoding of behavior is dynamic in many neurons

We noted that the encoding properties of some neurons appeared to change over time in a single recording. Therefore, we analyzed our data to determine whether neural representations of behavior dynamically change. We fit two CePNEM models trained on the first and second halves of the same neural trace and used the Gen statistical framework to test whether the model parameters significantly changed between time segments (see Methods; and Fig. S6A–B). Based on this test, ~31% of neurons that encoded behavior changed that encoding over the course of our continuous recordings. A similar fraction (24%) of neurons changed encoding in the NeuroPAL strain. These identified neurons substantially overlapped with those that variably encode behavior between animals (Fig. 6A; Fig. S6C) and were densely interconnected (Fig. 6B; see also Fig. S6D). Neurons changed encoding in different ways: some changed which behaviors they encoded; others showed gains or losses of encoding; and others showed subtle changes in tuning (Fig. 6C; examples in Fig. 6D–E). This suggests that some neurons in the C. elegans connectome are variably coupled to behavioral circuits and remap how they couple to these circuits over time.

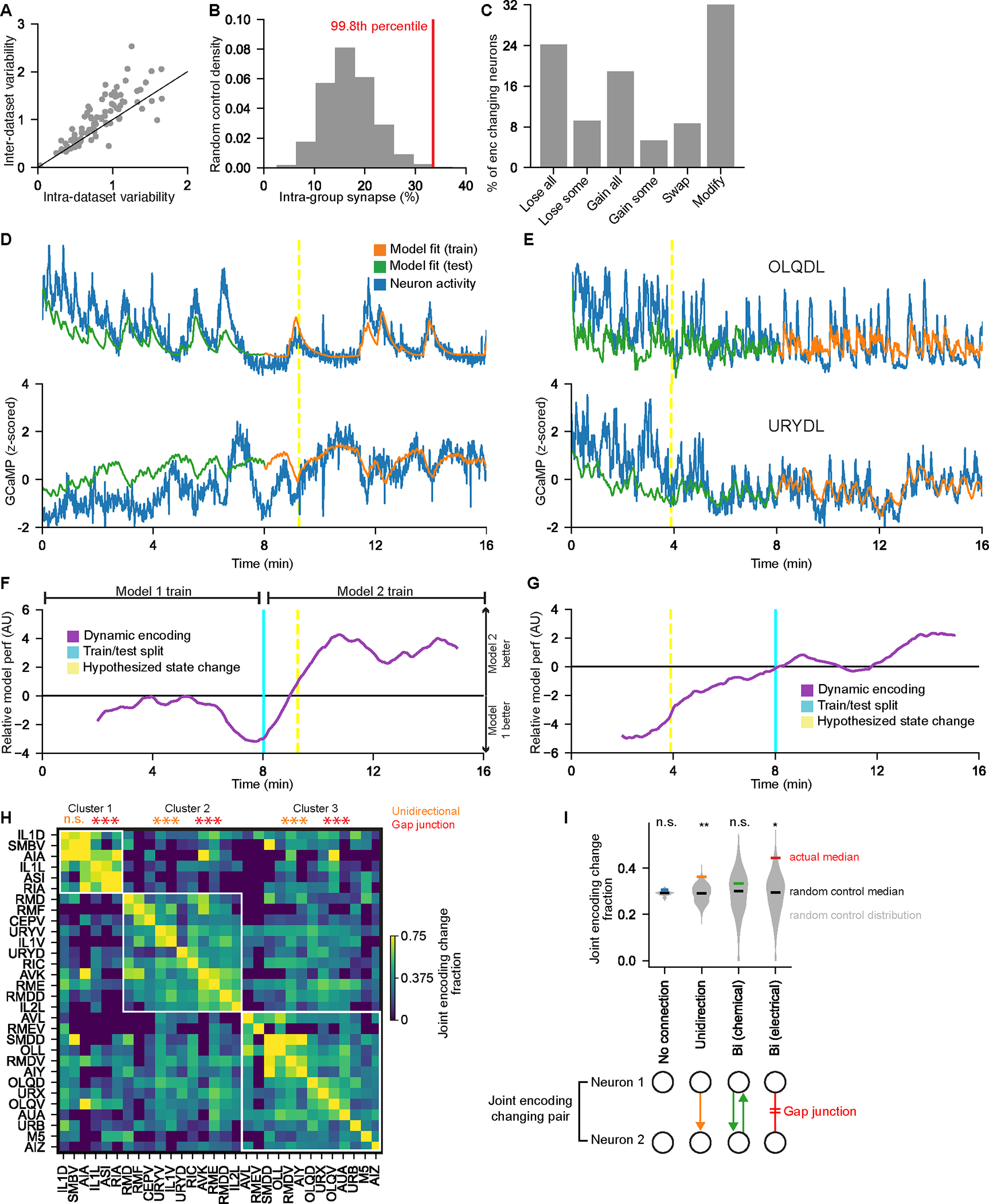

Figure 6. Neural representations of behavior dynamically change over time.

(A) Analysis of inter- versus intra-dataset encoding variability. Each dot is a neuron class.

(B) For the group of neurons that frequently change encoding, red line shows percent of synapses onto these neurons that come from neurons within the group. Gray controls are the same values for random groups of neurons of similar size. Inset percentile shows rank of true number.

(C) How neurons changed encoding across SWF415 animals. Categories are: “lose all” (lost tuning to behavior), “lose some” (lost tuning to one or more behavior), “gain all”, “gain some”, “swap” (both gained and lost tuning to behaviors), and “modify” (encode the same behavior(s), but differently).

(D) Two example neurons with CePNEM fits, showing a change in neural encoding of behavior. Yellow dashed lines indicate times when neurons across the full dataset displayed a sudden shift in encoding (see (F)).

(E) Example neurons OLQDL and URYDL, depicted as in (D).

(F) Data from same animal as (D) showing a sharp change in neural encoding of behavior. We fit CePNEM models to the first and second halves of the recording (Model 1 and Model 2). We then computed the difference between the errors of the two median model fits and smoothened with a 200-timepoint moving average. This was then averaged across encoding changing neurons. A sudden change (yellow line) indicates a sudden shift in behavior encoding across neurons.

(G) Data from the same animal as (E) showing a sudden change in neural encoding, displayed as in (F).

(H) Fraction of times that neuron classes changed encoding at the same moment, relative to their encoding changes overall. Rows were clustered and white outlines depict main clusters. **p<0.005, empirical p-value that clustering would perform as well during random shuffles. Within each cluster, the neurons were more likely to have unidirectional synapses and/or gap junctions with one another compared to random shuffles, as indicated. ***p<0.0005, empirical p-value.

(I) Neuron pairs with unidirectional synapses or electrical synapses were more likely to change encoding together, compared to random shuffles (gray distributions). *p<0.05, **p<0.005, empirical p-value.

See also Figure S6.

We next sought to understand the temporal structure of these encoding changes. For instance, individual neurons could remap independently or in a synchronized manner. We developed a metric to identify when an encoding change took place based on the difference between the errors of models trained on different time regions of the same trace (Fig. 6F–G; controls in Fig. S6E–F). We observed sharp changes (yellow lines) where many neurons simultaneously changed encoding in many datasets (Fig. 6F–G), although in some datasets there were gradual shifts (Fig. S6G–H). Certain neuron classes were more likely to change encoding at the same time as one another such that they could be grouped into clusters (Fig. 6H). The neurons that remapped their encoding at the same time were more likely to be synaptically connected, especially via gap junctions (Fig. 6I). Moreover, the number of neurons that changed encoding was positively correlated with the degree of behavioral change across the hypothesized moment of the change (Fig. S6I). Therefore, at times there is a coordinated remapping where many neurons change how they represent behavior.

The encoding of behavior is influenced by the behavioral state of the animal

We next tested whether changes in the animal’s behavioral state could elicit these synchronous encoding changes. Behavioral states are persistent changes in behavior that outlast the sensory stimuli that initiate them48,49. Previous work has shown that aversive stimuli can induce this type of response in C. elegans22,23,50. Therefore, we recorded 30 datasets where we delivered a sudden, noxious heat stimulus to animals part way through the recording (19 of these datasets had NeuroPAL labels). For stimulation, we heated the agar around the worm’s head by 10°C for 1 second (Fig. 7A; temperature decayed to baseline within 3 seconds). This elicited an immediate avoidance (reversal) behavior and reduction in feeding (Fig. 7B). Animals continued to exhibit reduced feeding and increased reversals for minutes after the stimulus, revealing a persistent behavioral state change (Fig. 7B). However, behavior reverted to normal within an hour and animal viability was not adversely impacted by the stimulus (Fig. S7A–B).

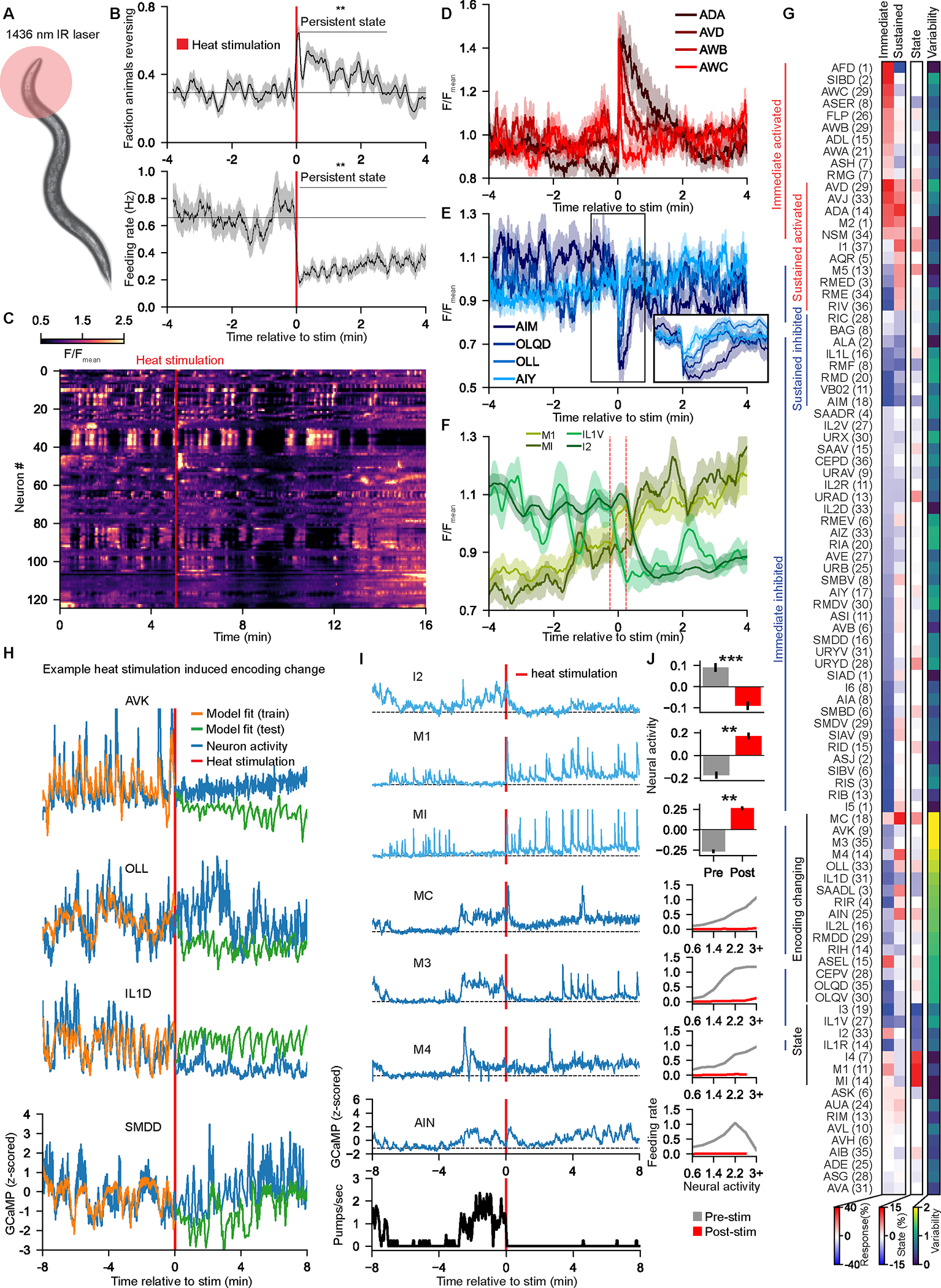

Figure 7. Behavioral state changes cause a widespread remapping of how neurons encode behavior.

(A) Illustrative cartoon: a 1436nm IR laser transiently increases the temperature around the animal’s head by 10°C for 1 sec.

(B) Event-triggered averages of behavior of 32 animals in response to the heat stimulus. **p<0.05, Wilcoxon signed rank test, pre- versus post-stimulus.

(C) Neural data from an animal that received a heat stimulus (red line).

(D-F) Event-triggered averages of neural activity aligned to the heat stimulus for some neurons with (D) excitatory or (E) inhibitory responses to the stimulus, or (F) persistent activity changes. ETAs in (F) are smoothed over 30 seconds; dashed lines indicate where the stim is within the moving average window.

(G) Responses of different neuron classes to the heat stimulus (n=19 animals):

• Immediate (<4 seconds) and sustained (15–30 seconds) GCaMP responses

• Persistent activity changes. See Methods.

• Encoding variability pre- vs post-stimulus. See Methods.

(H) Example neurons that showed abrupt changes in their behavior encoding immediately after the stimulus.

(I) Example dataset. Light blue neurons had persistent activity changes. Dark blue neurons changed encoding after the stimulus.

(J) Top three plots: Average activity, computed as , before and after the heat stimulus. Error bars show SEM across animals. **p<0.005, ***p<0.0005, Wilcoxon signed rank test. Bottom four plots: tuning curves to feeding behavior for each neuron class (pre- versus post-heat-stimulus data). Data are pooled across 19 animals.

See also Figure S7.

We measured brain-wide responses during this behavioral state change (Fig. 7C–G). Several neurons displayed transient responses to the sensory stimulus, including thermosensory neurons AFD, AWC, FLP, and others (Fig. 7D–E; see also Fig. S7C–D)51,52. Other neurons displayed minutes-long responses to the stimulus. We also identified some neurons with persistent changes in activity that lasted for the rest of the recordings after the stimulus (Fig. 7F). Finally, we found that 35% of the neurons that encoded behavior changed encoding time-locked to the heat stimulus (compared to 24% in animals without any stimulus; p<0.05, Mann-Whitney U-Test; Fig. S7E; examples in Fig. 7H). The neurons that changed encoding were stereotyped across animals, especially the neurons related to feeding, which is the behavior most robustly altered by the heat stimulus (Fig. S7F; see also Fig. S7G–H). Thus, inducing a behavioral state change elicits a reliable shift in the network that remaps the relationship between neural activity and behavior.

We examined how these activity changes related to the behavioral changes that comprise the aversive behavioral state, focusing on the robust suppression of feeding. Three neurons that encoded feeding showed persistent activity changes that paralleled the state: I2 activity persistently decreased and MI and M1 activity increased. In addition, four feeding neurons showed a change in encoding after the heat stimulus. These neurons, MC, M3, M4, and AIN, had correlated activity bouts aligned with bouts of feeding prior to the heat stimulus (Fig. 7I–J). After the stimulus, activity bouts still occurred in these neurons, but this was not accompanied by feeding. Notably, at baseline, MI and M1 activity were highest during pauses in feeding (Fig. 7I–J). This suggests that MI and M1 might inhibit feeding and that the state-dependent increase in MI and M1 activity might suppress feeding normally elicited by MC/M3/M4/AIN. Overall, these results show how changes in behavioral state are accompanied by persistent activity changes and alterations in how neural activity is functionally coupled to behavior.

DISCUSSION

Animals must adapt their behavior to a constantly changing environment. How neurons represent these behaviors and how these representations flexibly change in the context of the whole nervous system was unknown. To address this question, we developed technologies to acquire high quality brain-wide activity and behavioral data. Using the probabilistic encoder model CePNEM, we constructed a brain-wide map of how each neuron encodes behavior. By also determining the ground-truth identity of these neurons, we overlaid this map upon the physical wiring diagram. Behavioral information is richly expressed across the brain in many different forms – with distinct tunings, timescales, and levels of flexibility – that map onto the defined neuron classes of the C. elegans connectome.

Previous work showed that animal behaviors are accompanied by widespread changes in activity across the brain, resulting in a low-dimensional neural space53. Here we found that an extra layer of complexity emerged when we determined each neuron’s encoding of behavior. Representations were complex and diverse, and this heterogeneity could be largely explained by four motifs: varying timescales, non-linear tunings to behavior, conjunctive representations of multiple motor programs, and different levels of flexibility. Having many different forms of behavior representation present may confer the nervous system with computational flexibility. Depending on the context, the brain may be able to combine different representations to construct new coordinated behaviors. We did not distinguish whether a given neuron’s encoding of behavior reflected the neuron causally driving behavior versus receiving a corollary discharge or proprioceptive signal related to behavior32–37. Future work separating these classes of signals across the C. elegans network should reveal the full set of causal interactions between neurons and behavior.

While many neurons encoded current behavior, others integrated recent motor actions with varying timescales. This allows the brain to encode the animal’s locomotion state of the recent past. Combining representations with different timescales could allow the animal’s nervous system to perform computations that relate past and present behavior. We also observed that the dynamics of the nervous system can change over longer time courses. In particular, many neurons flexibly remapped their relationships to behavior over minutes. These changes may be triggered by changes in neuromodulation or other state-dependent shifts in circuit function. This remapping may then change sensorimotor responses and the generation of behavior.

Our results here reveal how neurons across the C. elegans nervous system encode the animal’s behavior. Under the environmental conditions explored here, we observed that ~30% of the worm’s nervous system can flexibly remap. Future studies conducted in a wider range of contexts will reveal whether this comprises the core flexible neurons in the connectome or, alternatively, whether the neurons that remap differ depending on context or state.

LIMITATIONS OF THE STUDY

We wish to highlight three limitations of our study. First, our neural recordings were performed using nuclear-localized GCaMP. While this makes brain-wide recordings feasible, the spatial and temporal resolution of this imaging is more limited than other approaches. Second, some recorded neurons were not well fit by CePNEM. Our results suggest that these neurons may carry sensory, internal, or behavioral information not studied here, but additional work will be necessary to resolve this. Finally, we examined animals under a limited set of environmental conditions. Future recordings in different contexts may identify other types of behavior encoding not yet revealed in our recordings.

STAR METHODS

RESOURCE AVAILABILITY

Lead Contact

Further information and requests for resources and reagents should be directed to and will be fulfilled by the lead contact, Steven Flavell (flavell@mit.edu).

Materials Availability

All plasmids, strains, and other reagents generated in this study are freely available upon request. The key strains SWF415 and SWF702 are openly available through the Caenorhabditis Genetics Center (CGC).

Data and Code Availability

Data: All brain-wide recordings and accompanying behavioral data are freely available in a browsable and downloadable format at www.wormwideweb.org. The data files have also been deposited at Zenodo and Github and are publicly available as of the date of publication. DOIs are listed in the key resources table.

Code: All original code has been deposited at Github and Zenodo and is publicly available as of the date of publication. DOIs are listed in the key resources table.

Any additional information required to reanalyze the data reported in this paper is available from the Lead Contact upon request.

KEY RESOURCES TABLE.

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |

|---|---|---|

| Antibodies | ||

| Bacterial and Virus Strains | ||

| E. coli: Strain OP50 | Caenorhabditis Genetics Center (CGC) | OP50 |

| Chemicals, Peptides, and Recombinant Proteins | ||

| Rhodamine 110 | Millipore Sigma |

Cat#83695 |

| Rhodamine B | Millipore Sigma |

Cat#83689 |

| Deposited Data | ||

| Original code and data related to recording and analyzing neural activity and behavior | This paper | Data: https://doi.org/10.5281/zenodo.8150515 and www.wormwideweb.org Code: https://doi.org/10.5281/zenodo.8151918 and https://github.com/flavell-lab/AtanasKim-Cell2023 |

| Experimental Models: Organisms/Strains | ||

| C. elegans: flvIsl 7[tag-168: :NLS-GCaMP7F, gcy-28.d::NLS-tag-RFPt, ceh-36:NLS-tag-RFPt, inx-1::tag-RFPt, mod-1 ::tag-RFPt, tph-1 (short)::NLS-tag-RFPt, gcy-5::NLS-tag-RFPt, gcy-7::NLS-tag-RFPt]; flvIs18[tag-168: :NLS-mNeptune2.5]; lite-1(ce314); gur-3(ok2245) | This paper | SWF415 |

| C. elegans: flvIs17; otIs670 [low-brightness NeuroPAL]; lite-1(ce314); gur-3(ok2245) | This paper | SWF702 |

| C. elegans: flvEx450[eat-4::NLS-GFP, tag-168: :NLS-mNeptune2.5]; lite-1(ce314); gur-3(ok2245) | This paper | SWF360 |

| C. elegans: flvEx451[tag-168::NLS-GFP, tag-168::NLS-mNeptune2.5]; lite-1(ce314); gur-3(ok2245) | This paper | SWF467 |

| C. elegans: flvEx207[nlp-70::HisCl1, elt-2::nGFP] | This paper | SWF515 |

| C. elegans: flvEx301[tbh-1:: TeTx: :sl2-mCherry, elt-2::nGFP] | This paper | SWF688 |

| C. elegans: flvEx481[flp-8::inv[unc-103-sl2-GFP], ceh-6::cre, myo-2::mChrimson] | This paper | SWF996 |

| C. elegans: flvEx482[unc-25::inv[unc-103-sl2-GFP], flp-22::cre, myo-2::mChrimson] | This paper | SWF997 |

| C. elegans: kyEx4268 [mod-1::nCre, myo-2::mCherry]; kyEx4499 [odr-2(2b)::inv[TeTx::sl2GFP], myo-3::mCherry] | This paper | SWF703 |

| C. elegans: leIs4207 [Plad-2::CED-3 (p15), Punc-42::CED-3 (p17), Plad-2::GFP, Pmyo-2::mCherry] | This paper | UL4207 |

| C. elegans: leIs4230 [Pflp-12s::CED-3 (p15), Pflp-12s::CED-3 (p17), Pflp-12s::GFP, Pmyo-2::mCherry] | This paper | UL4230 |

| C. elegans: flvEx485[gcy-21::Chrimson-t2a-mScarlett, elt-2::nGFP] | This paper | SWF1000 |

| C. elegans: flvEx502[ceh-28::GtACR2-t2a-GFP, myo-2::mCherry] | This paper | SWF1026 |

| C. elegans: flvEx499[ceh-19::inv[GtACR2-sl2-GFP], ins-10::nCre, myo-2::mCherry] | This paper | SWF1023 |

| Recombinant DNA | ||

| pSF300 [tag-168 ::NLS-GCaMP7F] | This paper | pSF300 |

| pSF301[tag-168::NLS-mNeptune2.5] | This paper | pSF301 |

| pSF302[tag-168::NLS-GFP] | This paper | pSF302 |

| pSF303 [tag-168::NLS-tag-RFPt] | This paper | pSF303 |

| Software and Algorithms | ||

| NIS-Elements (v4.51.01) | Nikon | https://www.nikoninstruments.com/products/software |

| Other | ||

| Zyla 4.2 Plus sCMOS camera | Andor | N/A |

| Ti-E Inverted Microscope | Nikon | N/A |

EXPERIMENTAL MODEL AND STUDY PARTICIPANT DETAILS

C. elegans

C. elegans Bristol strain N2 was used as wild-type. All transgenic and mutant strains used in this study are listed in the Key Resources Table. One day-old adult hermaphrodite animals were used for experiments, after growth on nematode growth medium (NGM) supplemented with OP50. For crosses, animals were genotyped by PCR. For making transgenic animals, DNA was injected into the gonads of young adult hermaphrodites.

METHOD DETAILS

Transgenic animals

Four transgenic strains were used for large-scale recordings in this study, as described in the text. The first (SWF415) contained two integrated transgenes: (1) flvIs17: tag-168::NLS-GCaMP7f, along with NLS-TagRFP-T expressed under the followed promoters: gcy-28.d, ceh-36, inx-1, mod-1, tph-1(short), gcy-5, gcy-7; and (2) flvIs18: tag-168::NLS-mNeptune2.5. The second strain we recorded (SWF702) contained two integrated transgenes: (1) flvIs17: described above; and (2) otIs670: low-brightness NeuroPAL (Yemini et al., 2021). Strains were backcrossed 5 generations after integration events. The third and fourth strains are non-integrated transgenic strains expressing NLS-GFP and NLS-mNeptune2.5 in defined neurons, listed in the Key Resources Table (SWF360 and SWF467).

We also generated strains for neural activation and silencing. The promoters used for cell-specific expression were as follows: RIC (Ptbh-1), AIM (Pnlp-70), AUA (Pflp-8+Pceh-6; intersectional Cre/Lox), AVL (Punc-25+Pflp-22; intersectional Cre/Lox), RIF (Podr-2b+Pmod-1; intersectional Cre/Lox), SAA (Plad-2+Punc-42; split Caspase), SMB (Pflp-12, 350bp), ASG (Pgcy-21), M4 (Pceh-28), MC (Pceh-19+Pins-10; intersectional Cre/Lox). The split caspase plasmids have been previously described54. For Cre/Lox intersection expression, we used the inverted/floxed plasmid design that has been previously described18. All promoters, including Cre/Lox intersectional combinations, were validated via co-expression of fluorophores (which were co-expressed via sl2 or t2a in each strain). Cell ablation lines were confirmed by loss of co-expressed GFP signal in the ablated cells.

Recordings of neural activity and behavior

Microscope

Animals were recorded under a dual light-path microscope that is similar though not identical to one that we have previously described20. The light path used to image GCaMP, mNeptune, and the fluorophores in NeuroPAL at single cell resolution is an Andor spinning disk confocal system with Nikon ECLIPSE Ti microscope. Light supplied from a 150 mW 488 nm laser, 50 mW 560 nm laser, 100 mW 405 nm laser, or 140 mW 637 nm laser passes through a 5000 rpm Yokogawa CSU-X1 spinning disk unit with a Borealis upgrade (with a dual-camera configuration). A 40x water immersion objective (CFI APO LWD 40X WI 1.15 NA LAMBDA S, Nikon) with an objective piezo (P-726 PIFOC, Physik Instrumente (PI)) was used to image the volume of the worm’s head (a Newport NP0140SG objective piezo was used in a subset of the recordings). A custom quad dichroic mirror directed light emitted from the specimen to two separate sCMOS cameras (Zyla 4.2 PLUS sCMOS, Andor), which had in-line emission filters (525/50 for GcaMP/GFP, and 610 longpass for mNeptune2.5; NeuroPAL filters described below). Data was collected at 3 × 3 binning in a 322 × 210 region of interest in the center of the field of view, with 80 z planes collected at a spacing of 0.54 um. This resulted in a volume rate of 1.7 Hz (1.4 Hz for the datasets acquired with the Newport piezo).

The light path used to image behavior was in a reflected brightfield (NIR) configuration. Light supplied by an 850-nm LED (M850L3, Thorlabs) was collimated and passed through an 850/10 bandpass filter (FBH850–10, Thorlabs). Illumination light was reflected towards the sample by a half mirror and was focused on the sample through a 10x objective (CFI Plan Fluor 10x, Nikon). The image from the sample passed through the half mirror and was filtered by another 850-nm bandpass filter of the same model. The image was captured by a CMOS camera (BFS-U3–28S5M-C, FLIR).

A closed-loop tracking system was implemented in the following fashion. The NIR brightfield images were analyzed at a rate of 40 Hz to determine the location of the worm’s head. To determine this location, the image at each time point is cropped and then analyzed via a custom-trained network with transfer learning using DeepLabCut40 that identified the location of three key points in the worm’s head (nose, metacorpus of pharynx, and grinder of pharynx). The tracking target was determined to be halfway between the metacorpus and grinder (central location of neuronal cell bodies). Given the target location and the error, the PID controller configured in disturbance rejection sends velocity commands to the stage to cancel out the motion at an update rate of 40 Hz. This permitted stable tracking of the C. elegans head.

Mounting and recording

L4 worms were picked 18–22 hours before the imaging experiment to a new NGM agar plate seeded with OP50 to ensure that we recorded one day-old adult animals. A concentrated OP50 culture to be used in the mounting buffer for the worm was inoculated 18h before the experiment and cultured in a 37C shaking incubator. After 18h of incubation, 1mL of the OP50 culture was pelleted, then resuspended in 40uL of M9. This was used as the mounting buffer. Before each recording, we made a thin, flat agar pad (2.5cm × 1.8cm × 0.8mm) with NGM containing 2% agar. On the 4 corners of the agar pad, we placed a single layer of microbeads with a diameter of 80um to alleviate the pressure of the coverslip on the worm. Then a worm was picked to the middle of the agar pad, and 9.5uL of the mounting buffer was added on top of the animal. Finally, a glass coverslip (#1.5) was added on top of the worm. This caused the mounting buffer to spread evenly across the slide. We waited for 5 minutes after mounting the animal before imaging.

Procedure for NeuroPAL imaging

For NeuroPAL recordings, animals were imaged as described above, but they were subsequently immobilized by cooling, after which multi-spectral information was captured. The slide was mounted back on the confocal with a thermoelectric cooling element attached to it, set to cool the agar temperature to 4°C 55. A closed-loop temperature controller (TEC200C, Thorlabs) with a micro-thermistor (SC30F103A, Amphenol) embedded in the agar kept the agar temperature at the 1 °C set point. Once the temperature reached the set point, we waited 5 minutes for the worm to be fully immobilized before imaging. Details on exactly which multi-spectral images were collected are in the NeuroPAL annotation section below.

Heat stimulation

For experiments involving heat stimulation, animals were recorded using the procedure described above, but were stimulated with a 1436-nm 500-mW laser (BL1436-PAG500, Thorlabs) a single time during the recording. The laser was controlled by a driver (LDC220C, Thorlabs) and cooled by the built-in TEC and a temperature controller (TED200C, Thorlabs). The light emitted by the laser fiber was collimated by a collimator (CFC8-C, Thorlabs) and expanded to be about 600 um at the sample plane. The laser light was fed into the NIR brightfield path via a dichroic with 1180-nm cutoff (DMSP1180R, Thorlabs). We determined the amplitude and kinetics of the heat stimulus in calibration experiments where temperature was determined based on the relative intensities of rhodamine 110 (temperature-insensitive) and rhodamine B (temperature-sensitive). This procedure was necessary because the thermistor size was considerably larger than the 1436-nm illumination spot, so it could not provide a precise measurement of temperature within the spot. Slides exactly matching our worm imaging slides were prepared with dyes added (and without worms). Dyes were suspended in water at 500mg/L and diluted into both agar and mounting buffer at a 1:100 dilution (final concentration of 5mg/L). Rhodamine 110 was imaged using a 510/20 bandpass filter and rhodamine B was imaged with a 610LP filter. We recorded data using the confocal light path during a calibration procedure where a heating element ramped the temperature of the entire agar pad from room temp to >50°C. Temperature was simultaneously recorded via a thermistor embedded on the surface of the agar, approximating the position of the worm. Fluorescence was also recorded at the same time, at the precise position where the worm’s head is imaged. This yielded a calibration curve that mapped the ratio of Rhodamine B/Rhodamine 110 intensity at the site of the worm’s head onto precise temperatures. Slides were then stimulated with the 1436-nm laser using identical setting to the experiments with animals. The response profile of the ratio of the fluorescent dyes was then converted to temperature. We quantitatively characterized the change in temperature, noting the mean temperature over the first second of stimulation (set to be exactly 10.0°C) and its decay (0.39 sec exponential decay rate, such that it returns to baseline within 3 sec).

Extraction of behavioral parameters from NIR videos

We quantified behavioral parameters of recorded animals by analyzing the NIR brightfield recordings. All of these behaviors are initially computed at the NIR frame rate of 20Hz, and then transformed into the confocal time frame using camera timestamps, averaging together all of the NIR data corresponding to each confocal frame.

Velocity

First, we read out the (x,y) position of the stage (in mm) as it tracks the worm. To account for any delay between the worm’s motion and stage tracking, at each time point we added the distance from the center of the image (corresponding to the stage position) to the position of the metacorpus of pharynx (detected from our neural network used in tracking). This then gave us the position of the metacorpus over time. To decrease the noise level (eg: from neural network and stage jitter), we then applied a Group-Sparse Total-Variation Denoising algorithm to the metacorpus position. Differentiating the metacorpus position then gives us a movement vector of the animal.

Because this movement vector was computed from the location of the metacorpus, it contains two components of movement: the animal’s velocity in its direction of motion, and oscillations of the animal’s head perpendicular to that direction. To filter out these oscillations, we projected the movement vector onto the animal’s facing direction, i.e. the vector from the grinder of the pharynx to its metacorpus (computed from the stage-tracking neural network output). The result of this projection is a signed scalar, which is reported as the animal’s velocity.

Worm spline and body angle

To generate curvature variables, we trained a 2D U-Net to detect the worm from the NIR images. Specifically, this network performs semantic segmentation to mark the pixels that correspond to the worm. To ensure consistent results if the worm intersects itself (for instance, during an omega-turn), we use information from worm postures at recent timepoints to compute where a self-intersection occurred, and mask it out. Next, we compute the medial axis of the segmented and masked image and fit a spline to it. Since the tracking neural network was more accurate at detecting the exact position of the worm’s nose, we set the first point of the spline to the point closest to the tracking neural network’s nose position. We next compute a set of points along the worm’s spline with consistent spacing (8.85 μm along the spline) across time points, with the first point at the first position on the spline. Body angles are computed as the angles that vectors between adjacent points make with the x-axis; for example, the first body angle would be the angle that the vector between the first and second point makes with the x-axis, the second body angle would be , and so on.

Head curvature

Head curvature is computed as the angle between the points 1, 5, and 8 (ie: the angle between and ). These points are 0 μm, 35.4 μm, and 61.9 μm along the worm’s spline, respectively.

Angular velocity

Angular velocity is computed as smoothed , which is computed with a linear Savitzky-Golay filter with a width of 300 time points (15 seconds) centered on the current time point.

Body curvature

Body curvature is computed as the standard deviation of for between 1 and 31 (ie: going up to 265 μm along the worm’s spline). This value was selected such that this length of the animal would almost never be cropped out of the NIR camera’s field of view. To ensure that these angles are continuous in , they may each have added or subtracted as appropriate.

Feeding (pumping rate)

Pumping rate was manually annotated using Datavyu, by counting each pumping stroke while watching videos slowed down the 25% of their real-time speeds. The rate is then filtered via a moving average with a width of 80 time points (4 seconds) to smoothen the trace into a pumping rate rather than individual pumping strokes.

Extraction of normalized GCaMP traces from confocal images

We developed the Automatic Neuron Tracking System for Unconstrained Nematodes (ANTSUN) software pipeline to extract neural activity (normalized GCaMP) from the confocal data consisting of a time series of z-stacks of two channels (TagRFP-T or mNeptune2.5 for the marker channel and gCaMP7f for the neural activity channel). Each processing step is outlined below.

Pre-processing

The raw images first go through several pre-processing steps before registration and trace extraction. For datasets with a gap in the middle, all of the following processing is done separately and independently on each half of the dataset.

Shear correction

Shear correction is performed on the marker channel, and the same parameters are also used to transform the activity channel. Since the images in a z-stack are acquired over time, there exists some translation across the images within the same z-stack, causing some shearing. To resolve this, we wrote a custom GPU accelerated version of the FFT based subpixel alignment algorithm 56. Using the alignment algorithm, each successive image pair is aligned with x/y-axis translations.

Image cropping

We crop the z-stacks to remove the irrelevant non-neuron pixels. For each z-stack in the time series, the shear-corrected stack is first binarized by thresholding intensity. Using principal component analysis on the binarized worm pixels, the rotation angle about the z-axis is determined. Then the stack is rotated about the z-axis with the determined angle to align the worm’s head. Then the 3D bounding box is determined using the list of worm pixels after the rotation. Finally, the rotated z-stack is cropped using the determined 3D bounding box. Similar to shear correction, this procedure is first done on the marker channel, and the same parameters are then applied to the activity channel.

Image filtering using total variation minimization

To filter out noise on the marker channel images, we wrote a custom GPU accelerated version of the total variation minimization filtering method, commonly known as the ROF model 57. This method excels at filtering out noise while preserving the sharp edges in the images. Note that the activity channel is kept unfiltered for GCaMP extraction.

Registering volumes across time points

To match the neurons across the time series, we register the processed z-stacks across time points. However, simply registering all time points to a single fixed time point is intractable because of the high amount of both global and small-scale deformations. To resolve this, we compute a similarity metric across all possible time point pairs that reports the similarity of worm postures. We then use this metric to construct a registration graph where nodes are timepoints and edges are added between timepoints with high posture similarity. The graph is constrained to be fully connected with an average connectedness of 10. Therefore, it is possible to fully link each time point to every other time point. Using this graph, we register strategically chosen pairs of z-stacks from different time points (i.e. the ones with edges). The details of the procedure are outlined below.

Posture similarity determination

For each z-stack, we first find the anterior tip of the animal using a custom trained 2D U-Net, which outputs the probability map of the anterior tip given a maximum intensity projection of the z-stack. We then fit a spline across the centerline of the neuron pixels beginning at the determined anterior tip, which is the centroid of the U-Net prediction. Using the spline, we compare across time points pairs to determine the similarity.

Image registration graph construction

Next, we construct a graph of registration problems, with time points as vertices. For each time point, an edge is added to the graph between that time point and each of the ten time points with highest similarity to it. The graph is then checked for being connected.

Image registration

For each registration problem from the graph, we perform a series of registrations that align the volumes, iteratively in multiple steps in increasing complexity: Euler (rotation and translation), affine (linear deformation), and B-spline (non-linear deformation). In particular, the B-spline registration is performed in four scales, decreasing from global (the control points are farther apart) to local (the control points are placed closer together) registration. The image registrations and transformations are performed using elastix on OpenMind, a high-performance computing cluster. They are performed on the mNeptune2.5 marker channel.

Channel alignment registration

To align the two cameras used to acquire the marker and the activity channels, we perform Euler (translation and rotation) registration across the two channels over all time points. Then we average the determined transformation parameters from the different time points and apply across all time points.

Neuron ROI determination

To segment out the pixels and find the neuron ROIs, we first use a custom trained 3D U-Net. The instance segmentation results from the U-Net are further refined with the watershed algorithm.

Simultaneous semantic and instance segmentation with 3D U-Net

We trained a 3D U-Net to simultaneously perform semantic and instance segmentation of the neuronal ROIs in the z-stacks of the unfiltered marker images. To achieve instance segmentation, we labeled and assigned high weights to the boundary pixels of the neurons, which guides the network to learn to segment out the boundaries and separate out neighboring neurons. Given a z-stack, the network outputs the probability of each pixel being a neuron. We threshold and binarize this probability volume to mark pixels that are neurons.

Instance segmentation refinement

To refine the instance segmentation results from the 3D U-Net, we perform instance segmentation using the watershed algorithm. This generates, for each time point, a set of ROIs in the marker image corresponding to distinct neurons.

Neural trace extraction

ROI Similarity Matrix

To link neurons over time, we first create a symmetric similarity matrix, where is the number of total ROIs detected by our instance segmentation algorithm across all time points. Thus, for each index in this matrix, we can define the corresponding time point and the corresponding ROI from that time point. This matrix is sparse, as its th entry is nonzero only if there was a registration between and that maps the ROI to . In the case of such a registration existing, the th entry of the matrix is set to a heuristic intended to estimate confidence that the ROIs and are actually the same neuron at different timepoints. This heuristic includes information about the quality of the registration mapping to (computed using Normalized Correlation Coefficient), the fractional volume of overlap between the registration-mapped and (i.e. position similarity), the difference in marker expression between and (i.e. similarity of mNeptune expression), and the fractional difference in volume between and (i.e. size similarity of ROIs). The diagonal of the matrix is additionally set to a nonzero value.

Clustering the Similarity Matrix

Next, we cluster the rows of this similarity matrix using a custom clustering method; each resulting cluster then corresponds to a neuron. First, we construct a distance matrix between rows of the similarity matrix using L2 Euclidean distance. Next, we apply minimum linkage hierarchical clustering to this distance matrix, except that after a merge is proposed, the resulting cluster is checked for ROIs belonging to the same time point. If too many ROIs in the resulting cluster belong to the same time point, that would signify an incorrect merge, since neurons should not have multiple different ROIs at the same time point. Thus, if that happens, the algorithm does not apply that merge, and continues with the next-best merge. This continues until the algorithm’s next best merge reaches a merge quality threshold, at which point it is terminated, and the clusters are returned. These clusters define the grouping of ROIs into neurons.

Linking multiple datasets

For datasets that were recorded with a gap in the middle, the above process was performed separately on each half of the data. Then, the above process was repeated to link the two halves of the data together, except that only two edges that must connect to the other half of the data are added to the registration graph per time point, and the clustering algorithm does not merge clusters beyond size 2.

Trace extraction

Next, neural traces are extracted from each ROI in each time point belonging to that neuron’s cluster. Specifically, we obtain the mean of the pixels in the ROI at that time point. This is done in both the marker and activity channels. They are then put through the following series of processing steps:

Background-subtraction, using the median background per channel per time point.

Deletion of neurons with too low of signal in the activity channel (mean activity lower than the background – this was not done in the SWF360 control dataset due to the presence of GFP-negative neurons in that strain), or too few ROIs corresponding to them (less than half of the total number of time points).

Correction to account for laser intensity changing halfway through our recording sessions (done separately on each channel based on intensity calibration measurements taken at various values of laser power).

Linear interpolation to any time point that lacked an ROI in the cluster.

Division of the activity channel traces by the marker channel traces, to filter out various types of motion artifacts. These divided traces are the neural activity traces.

Bleach correction