Abstract

Different aspects of cognitive functions are affected in patients with Alzheimer’s disease. To date, little is known about the associations between features from brain-imaging and individual Alzheimer’s disease (AD)-related cognitive functional changes. In addition, how these associations differ among different imaging modalities is unclear. Here, we trained and investigated 3D convolutional neural network (CNN) models that predicted sub-scores of the 13-item Alzheimer’s Disease Assessment Scale–Cognitive Subscale (ADAS–Cog13) based on MRI and FDG–PET brain-imaging data. Analysis of the trained network showed that each key ADAS–Cog13 sub-score was associated with a specific set of brain features within an imaging modality. Furthermore, different association patterns were observed in MRI and FDG–PET modalities. According to MRI, cognitive sub-scores were typically associated with structural changes of subcortical regions, including the amygdala, hippocampus, and putamen. Comparatively, according to FDG–PET, cognitive functions were typically associated with metabolic changes of cortical regions, including the cingulate gyrus, occipital cortex, middle frontal gyrus, precuneus cortex, and the cerebellum. These findings brought insights into complex AD etiology and emphasized the importance of investigating different brain-imaging modalities.

Supplementary Information

The online version contains supplementary material available at 10.1186/s40708-024-00218-x.

Keywords: Alzheimer's disease, Neural network, Brain imaging, Cognitive function

Introduction

Alzheimer’s disease (AD) is the most frequent cause of dementia [1]. AD pathology is characterized by the accumulation of toxic species, such as amyloid beta plaques and tau tangles, alterations in glucose metabolism, as well as brain atrophy [2]. The progression of AD impacts an individual’s cognitive functions, such as memory, language, and spatial navigation [3, 4]. The pathological changes of the AD brain are best captured through neuroimaging techniques, such as magnetic resonance imaging (MRI) for brain structural changes and positron emission tomography (PET) for metabolic and chemical composition changes [5, 6]. On the other hand, the cognitive function of AD patients can be evaluated via the Alzheimer’s Disease Assessment Scale–Cognitive subscale (ADAS–Cog), which quantifies cognitive functions from different aspects (e.g., word recall, orientation, comprehension, etc.) with continuous values, and is frequently used in research and clinical settings [7, 8].

Study of brain-imaging data is important for understanding AD etiology and improving AD diagnosis, prognosis [9, 10], and development of treatments. To date, researchers have identified brain-imaging biomarkers that are strongly associated with AD diagnosis: atrophy in the hippocampus and the medial temporal lobe, hypometabolism of glucose in the cingulate cortex, etc. [10–13]. Furthermore, advanced statistical models have been trained to accurately classify AD vs. healthy control brains based on imaging data [14, 15]. However, most studies focused on AD diagnosis instead of individual components of cognitive function, which assesses brain functions from various aspects (e.g., as quantified by ADAS–Cog13 sub-scores). Associations between individual cognitive functions and brain-imaging features remain unclear. Furthermore, how these associations vary in different imaging modalities such as MRI vs. PET remains to be studied. To the best of our knowledge, no previous study has systematically investigated these questions.

To understand the relationship between ADAS–Cog13 sub-scores and brain-imaging features, we first linked these two types of data through a statistical model. Here, we chose to use a three-dimensional (3D) convolutional neural network (CNN) model to predict ADAS–Cog13 sub-scores, since CNNs have demonstrated superior performance in classification and regression when dealing with imaging data [16, 17]. We trained the model, validated it, and further investigated the model. While neural networks (NNs) were commonly used as “black-box” tools in the past, recent advances in methods for interpreting NNs allow researchers to identify features important for the models’ performance [18–20]. In this study, we applied occlusion [18–20], a commonly used method, to investigate the trained model and identified brain features most important for predicting ADAS–Cog13 sub-scores.

In this study, we obtained 9862 brain MRI and PET images, along with ADAS–Cog13 sub-scores, for subjects from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (http://adni.loni.usc.edu). We used the same pipeline to train CNN models for predicting key ADAS–Cog13 sub-scores with different imaging modalities. We further investigated these trained models to identify brain regions strongly associated with ADAS–Cog13 sub-scores. Our analytical pipeline brought new insights into associations between brain features and individual cognitive functions and can be applied to studying other brain diseases where imaging data are available.

Materials and methods

ADNI data

Data used in the preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (http://adni.loni.usc.edu). The ADNI was launched in 2003 as a public–private partnership, led by Principal Investigator Michael W. Weiner, MD. The primary goal of ADNI has been to test whether serial magnetic resonance imaging (MRI), positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment can be combined to measure the progression of mild cognitive impairment (MCI) and early Alzheimer's disease (AD). For up-to-date information, see www.adni-info.org. As specified by the ADNI protocol, each participant within the study was willing, spoke English or Spanish, was able to perform all test procedures described in the protocol and had a study partner able to provide an independent evaluation of functioning. In this study, we included all T1 images from the ADNI 1, ADNI 2, ADNI GO, and ADNI 3 cohorts, totaling 9862 unique imaging entries with a cognitively normal (CN), mild cognitive impairment (MCI) or AD diagnosis. The detailed imaging acquisition parameters are available on the ADNI website (https://adni.loni.usc.edu/methods/mri-tool/mri-acquisition/). By diagnosis, there were 3314 CN, 4412 MCI, and 2136 AD entries. By imaging modality, 4014 were MRI, 3953 were FDG–PET, and 1895 were AV45–PET. Demographic and clinical characteristics of these entries are shown in Table 1.

Table 1.

Demographic information of ADNI samples

| | | Normal cognition (n = 3314) | MCI (n = 4412) | Alzheimer’s disease (n = 2136) |
|---|---|---|---|---|
| | | Count (%) | Count (%) | Count (%) |
| Sex | F | 1722 (52) | 1747 (39.6) | 909 (42.6) |
| | M | 1592 (48) | 2665 (60.4) | 1227 (57.4) |
| | | Mean (stdev) | Mean (stdev) | Mean (stdev) |
| Age | | 76.9 (6.6) | 75.1 (7.8) | 76.7 (7.5) |
| Total ADAS–Cog13 | | 9.4 (4.6) | 16.3 (7.5) | 32.5 (11.2) |

Random forest (RF) classifier for Alzheimer’s disease (AD) vs. non-AD (nAD)

A random forest model [21] was used to classify AD vs. nAD with the 13 ADAS–Cog13 sub-scores as predictors. MCI and CN participants were grouped into the nAD class. To balance the weights of the 13 ADAS–Cog13 sub-scores, each sub-score was normalized to a scale of 0 to 1 using min–max scaling. Train/test splitting with a ratio of 80:20 was carried out before the model training process. The training and testing sets were balanced by random sampling to adjust for the ratio of nAD and AD. During the training process, model hyperparameters were optimized using grid search [22]. The top ADAS–Cog13 sub-scores that were representative of AD cognitive performance were used as regression outputs of the subsequent 3D CNN models.
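The two data-preparation steps described above, min–max scaling and class balancing, can be illustrated with a minimal sketch. It is not the original code: the function names are hypothetical, and balancing is assumed here to mean random undersampling of the larger class, which is only one possible reading of "balanced by random sampling".

```python
import random

def min_max_scale(column):
    """Rescale one sub-score column to the range [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]

def balance_by_undersampling(rows, labels, seed=0):
    """Assumed balancing scheme: randomly keep as many rows from each
    class as there are rows in the smallest class."""
    rng = random.Random(seed)
    by_class = {}
    for row, label in zip(rows, labels):
        by_class.setdefault(label, []).append(row)
    n_keep = min(len(members) for members in by_class.values())
    balanced = []
    for label, members in by_class.items():
        for row in rng.sample(members, n_keep):
            balanced.append((row, label))
    rng.shuffle(balanced)
    return balanced

# Toy example: one sub-score column with raw values between 0 and 10.
q1_scaled = min_max_scale([2.0, 7.0, 10.0, 0.0, 5.0])

# Toy example: four nAD rows and two AD rows become two of each.
toy_rows = [[0.1], [0.2], [0.3], [0.4], [0.8], [0.9]]
toy_labels = ["nAD", "nAD", "nAD", "nAD", "AD", "AD"]
balanced = balance_by_undersampling(toy_rows, toy_labels)
```

Scaling every sub-score to the same range keeps sub-scores with larger raw ranges from dominating purely because of their units.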

Imaging data pre-processing

3D brain images used in this study underwent multiple preprocessing steps in FreeSurfer [23], as illustrated in Additional file 1: Fig. S1. First, images were registered with the MNI152 standard space structural brain template [24]. Brain volumes and positions were standardized. Second, a standardized brain mask was applied to strip the cranium and brain stem, retaining only the cerebrum and cerebellum.

For MRI imaging data, white stripe normalization [25] was applied. The normal-appearing white matter (NAWM) with least pathological variation was selected as the reference tissue [25]. All MRI images were then transformed by matching their distributions of NAWM to that of the reference MRI with a fixed mean (µref) and standard deviation (σref), as shown in Additional file 1: Fig. S1. Note that some MRI images failed white stripe normalization, showing a mismatched NAWM peak following normalization (Additional file 1: Fig. S1). Abnormal and low-quality MRI images were excluded from subsequent experiments.
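The distribution-matching step can be pictured as a linear intensity rescaling. The sketch below is an illustration under simplifying assumptions rather than the white stripe implementation itself: it assumes the NAWM voxels of an image have already been selected, and the function name `match_nawm` is hypothetical.

```python
from statistics import mean, pstdev

def match_nawm(voxels, nawm_voxels, mu_ref, sigma_ref):
    """Linearly rescale an image so that its NAWM intensities have
    mean mu_ref and standard deviation sigma_ref."""
    mu = mean(nawm_voxels)
    sigma = pstdev(nawm_voxels)
    if sigma == 0:
        raise ValueError("NAWM intensities are constant; cannot rescale")
    return [(v - mu) / sigma * sigma_ref + mu_ref for v in voxels]

# Toy example: three NAWM voxels mapped to a reference of mean 1.0, sd 0.1.
nawm = [90.0, 100.0, 110.0]
rescaled_nawm = match_nawm(nawm, nawm, mu_ref=1.0, sigma_ref=0.1)
```

An image whose NAWM peak still does not line up with the reference after such a rescaling would be a candidate for the exclusion described above.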

For PET imaging data, normalization was done following the ADNI protocol, where the intensities were pre-normalized by the ratio between the radiotracer and the body weight [26]. In addition, we applied a customized “cohort normalization” to scale PET images into a range between 0 and 1 at a cohort level. Mathematically, all PET images were divided by the maximum of nstats from the training cohort, where nstats was the voxel intensity at the 99.9th percentile of the PET image from an individual patient. We used the 99.9th percentile instead of the maximum intensity value to avoid the influence of outliers.
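The cohort normalization can be sketched in a few lines. This is an illustrative sketch only: images are represented as flat lists of voxel intensities, the function names are hypothetical, and a nearest-rank percentile is assumed because the percentile convention is not stated.

```python
def percentile_999(voxels):
    """Nearest-rank 99.9th percentile of one image (assumed convention)."""
    ordered = sorted(voxels)
    # ceil(0.999 * n) computed in integer arithmetic to avoid float rounding.
    rank = -(-999 * len(ordered) // 1000)
    return ordered[rank - 1]

def cohort_scale(training_images):
    """Maximum over the training cohort of each image's 99.9th percentile."""
    return max(percentile_999(img) for img in training_images)

def cohort_normalize(voxels, scale):
    """Divide every voxel by the cohort-level scale."""
    return [v / scale for v in voxels]

# Toy cohort: two "images" with 1000 voxels each.
image_a = [float(v) for v in range(1, 1001)]   # 99.9th percentile is 999.0
image_b = [0.5] * 1000                         # 99.9th percentile is 0.5
scale = cohort_scale([image_a, image_b])
normalized = cohort_normalize([999.0, 499.5], scale)
```

Voxels brighter than the cohort scale end up slightly above 1 in this sketch; whether such values were clipped is not stated in the text.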

Building 3D convolutional neural networks (3D CNNs)

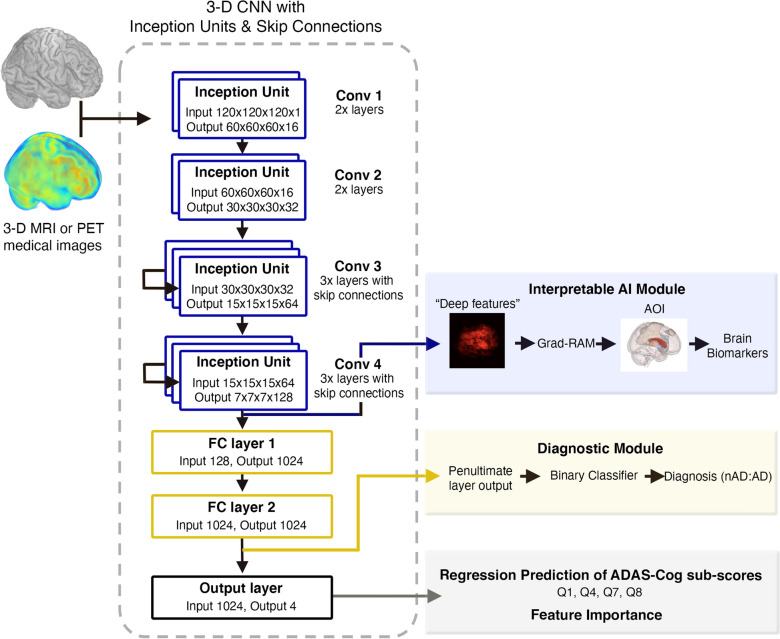

We built a 3D convolutional neural network (3D CNN) with skip connections and inception units to analyze 3D brain-imaging data (Fig. 1). The backbone of the 3D CNN is based on the Python TensorFlow VGG16 model [27, 28] and consists of 14 layers (10 convolutional (conv) layers and 4 max pooling layers), followed by 2 fully connected layers (FC1 and FC2) and a final output layer that generates predictions of four ADAS–Cog13 sub-scores, as in a multi-task feature learning process [29]. When training the model, a batch size of 8 imaging samples was used. The rectified linear unit (ReLU) was applied as the activation function for all the conv layers. Batch normalization (BN) was applied before the activation function. The channel numbers (nchannel) for the conv layers inside Conv 1, Conv 2, Conv 3 and Conv 4 were 16, 32, 64 and 128, respectively. At the end of each of Conv 1, Conv 2, Conv 3 and Conv 4, a max pooling operation with a kernel size of 2 was applied to halve the spatial size of the activation maps. Conv 3 and Conv 4 were modified into residual blocks [30] with skip connections, which aggregate the outputs of the 1st and 2nd conv layers in the group before feeding the result into the 3rd conv layer. The output of Conv 4, with a size of (nbatch, 7, 7, 7, 128), was flattened into an array of size (nbatch, 43,904) before proceeding to the fully connected (FC) layers, FC1 and FC2. Both FC1 and FC2 contained 1024 neurons and produced arrays of size (nbatch, 1024). The tanh activation function was applied to both FC layers. Finally, the output layer, with a customized sigmoid activation function containing a trainable parameter, converted the FC2 layer output into the final predictions of size (nbatch, 4).
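The tensor sizes quoted above can be checked with simple arithmetic. The sketch below assumes a cubic input of 112 voxels per side, a value that is not stated in the text but is the size that four halvings reduce to 7; it also assumes the conv layers preserve spatial size, so that only pooling shrinks the activation maps.

```python
def flattened_size(input_side, n_pool=4, last_channels=128):
    """Spatial side length and flattened feature count after n_pool
    max pooling steps with kernel size 2."""
    side = input_side
    for _ in range(n_pool):
        side //= 2  # each pooling step halves the side length
    return side, side ** 3 * last_channels

# Assumed input side of 112 voxels (hypothetical, inferred from 7 * 2**4).
side, n_features = flattened_size(112)
```

With these assumptions the result reproduces the (7, 7, 7, 128) activation map and the 43,904 flattened features fed into FC1.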

Fig. 1.

3D CNN model architecture. The CNN model is composed of 10 convolutional layers grouped into four blocks: Conv 1 (2 layers), Conv 2 (2 layers), Conv 3 (3 layers) and Conv 4 (3 layers). This model processes brain-imaging data and can predict both AD diagnosis (yellow box) and ADAS–Cog13 sub-scores (grey box)

To train the model, the samples were split into training and test sets with a ratio of 80:20. The mean squared error (MSE) between the true and predicted ADAS–Cog13 scores was used as the loss function. Model parameters were then optimized using the Adam algorithm [31] to minimize the MSE on the training set. Cosine annealing [32] was used as the learning rate scheduler to help the model converge rapidly to a local minimum and, at the same time, to prevent the model from getting stuck in a single local minimum by abruptly increasing the learning rate to its maximum at the beginning of each cycle. The maximum and minimum learning rates used in our study were 0.01 and 0.0001, respectively. To boost model accuracy, an ensemble technique was applied by selecting the 5–7 best models (models with minimum MSE on the test set) from the saved models and calculating the mean of their predictions for each ADAS–Cog13 sub-score.
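The learning rate schedule can be made concrete with a short sketch of cosine annealing with restarts. The maximum and minimum learning rates are taken from the text; the cycle length of 10 steps is a hypothetical value chosen only for illustration, and the function name is not from the original code.

```python
import math

def cosine_annealing_lr(step, cycle_len, lr_max=0.01, lr_min=0.0001):
    """Learning rate at a given step: decays along a half cosine within
    each cycle and jumps back to lr_max when a new cycle starts."""
    t = step % cycle_len  # position inside the current cycle
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * t / cycle_len))

# Two cycles of a hypothetical 10-step schedule.
schedule = [cosine_annealing_lr(step, cycle_len=10) for step in range(20)]
```

Within each cycle the rate falls smoothly from 0.01 toward 0.0001, and the jump back to 0.01 at the restart is what gives the optimizer a chance to leave a poor local minimum.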

To evaluate model performance, we used multiple metrics, including the mean absolute error (MAE) and R2. The MAE for each ADAS–Cog13 sub-score was defined as the mean of |ytrue–ypred| across the cohort, where ytrue and ypred are the true and predicted ADAS–Cog13 scores.
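Both metrics are straightforward to compute. In the sketch below the MAE follows the definition given above, while R2 is assumed to be the usual coefficient of determination, since the text does not spell out its formula.

```python
def mean_absolute_error(y_true, y_pred):
    """Mean of |ytrue - ypred| across the cohort."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot (assumed definition)."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

# Toy example with four subjects and one sub-score.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 5.0]
mae = mean_absolute_error(y_true, y_pred)
r2 = r_squared(y_true, y_pred)
```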

To compare the model performance with clinical practice, we calculated the inter-test variability (ITV) for each ADAS–Cog13 sub-score on a complete ADNI ADAS–Cog13 data set with 9862 unique samples (the same cohort as listed in Table 1). ITV was calculated as the maximum difference in recorded score for each ADNI participant within a period of ± 3 months. Mathematically, the ITV for entry i ($\mathrm{ITV}_i$) was defined as:

$$\mathrm{ITV}_i = \max_{j \in V_i} s_j - \min_{j \in V_i} s_j \qquad (1)$$

where $\{s_j : j \in V_i\}$ were the ADAS–Cog13 sub-scores (Q1, Q4, Q7 or Q8) from all the visits $V_i$ that were within ± 3 months with regard to entry i. The mean ITV for each sub-score represents the mean of all ADNI participants’ ITV.
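The ITV calculation can be illustrated with a small sketch. It reads "maximum difference" as the range (maximum minus minimum) of the scores inside the window, and approximates ± 3 months as ± 91 days; both choices are assumptions, as are the function name and the toy visit dates.

```python
from datetime import date

def inter_test_variability(visits, index, window_days=91):
    """ITV for one entry: range of the sub-scores recorded within
    +/- window_days of that entry's visit date (the entry itself included).

    visits is a list of (visit_date, score) pairs for one participant."""
    ref_date, _ = visits[index]
    in_window = [score for d, score in visits
                 if abs((d - ref_date).days) <= window_days]
    return max(in_window) - min(in_window)

# Toy participant with four visits and one sub-score.
visits = [
    (date(2020, 1, 1), 5),
    (date(2020, 2, 15), 8),
    (date(2020, 3, 20), 6),
    (date(2020, 9, 1), 2),
]
itv_first = inter_test_variability(visits, 0)   # window holds scores 5, 8, 6
itv_last = inter_test_variability(visits, 3)    # window holds only score 2
```

An entry with no other visit inside its window has an ITV of zero in this sketch; how such entries were handled when averaging is not stated in the text.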

Diagnostic extension and validation of 3D CNN models

The diagnostic extension of the 3D CNN predicted AD vs. nAD, where the penultimate layer (FC2) output with a size of (nentries, 1024) was utilized to train a binary classification model, where nentries was the number of entries. Classification models, including logistic regression [33], k-nearest neighbor (KNN) [34] and random forest [21], were trained to predict diagnosis using the values in the penultimate layer. Similar to the main AI model, multiple sub-models with the same architecture were ensembled and a voting classifier was applied to generate final predictions.

The validation data set for nAD and AD diagnosis was obtained from the Rush Alzheimer’s Disease Center (RADC) Religious Order Study (ROS) [35], a clinical–pathologic study of aging and dementia. The demographic information of samples from RADC is summarized in Additional file 1: Table S1. MRI images from RADC were processed in the same way as for ADNI images.

Identifying features important for 3D CNN model

We estimated feature importance scores of 56 brain regions using the occlusion method as described in previous studies [18–20]. The feature importance of a specific brain region quantifies its importance that was not encoded in other brain regions. In the analyses, we used the Harvard–Oxford cortical and subcortical structural atlases from the Center for Morphometric Analysis (https://cma.mgh.harvard.edu). With the occlusion method, the change of model prediction error after removing a brain region was measured. More specifically, the CNN feature importance score of brain region i (Eq. 2) was defined in terms of $\Delta\mathrm{MAE}_i$, the absolute change of CNN MAE after removing the ith brain region from the model input.
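The occlusion procedure can be sketched on toy data. This sketch is illustrative only: images are flat lists of voxels, each region is a boolean mask, the occluded voxels are filled with zeros (an assumption, since the fill value is not stated), and the toy model stands in for the trained CNN. It computes only the absolute change in MAE per region, not any further scaling the importance score may involve.

```python
def mae(model, images, targets):
    """Mean absolute error of a model over a set of images."""
    return sum(abs(model(img) - t) for img, t in zip(images, targets)) / len(targets)

def occlude(image, region_mask, fill=0.0):
    """Replace the voxels of one region with a constant fill value."""
    return [fill if inside else v for v, inside in zip(image, region_mask)]

def occlusion_delta_mae(model, images, targets, region_masks):
    """Absolute change in MAE after removing each region from the input."""
    baseline = mae(model, images, targets)
    deltas = {}
    for name, mask in region_masks.items():
        occluded = [occlude(img, mask) for img in images]
        deltas[name] = abs(mae(model, occluded, targets) - baseline)
    return deltas

def toy_model(image):
    """Hypothetical stand-in for the CNN: uses only the first two voxels."""
    return image[0] + image[1]

images = [[1.0, 2.0, 9.0], [3.0, 1.0, 4.0]]
targets = [3.0, 4.0]
masks = {
    "region_a": [True, True, False],   # voxels the toy model relies on
    "region_b": [False, False, True],  # voxel the toy model ignores
}
deltas = occlusion_delta_mae(toy_model, images, targets, masks)
```

Occluding the region the toy model depends on raises its error sharply, while occluding the ignored region leaves the error unchanged, which is the contrast the importance score is meant to capture.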

Calculating pairwise correlation among ADAS–Cog13 sub-scores

We calculated pairwise correlations among ADAS–Cog13 sub-scores Q1, Q4, Q7, and Q8 based on their associations with 56 brain regions. To be specific, for each ADAS–Cog13 sub-score we obtained feature importance scores of 56 brain regions in a CNN model. We then calculated Spearman’s correlation between each pair of sub-scores based on the 56-dimensional feature importance scores. The pairwise correlations among sub-scores were obtained for MRI and FDG–PET-based CNN models separately.
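Spearman's correlation between two importance vectors is the Pearson correlation of their ranks. The sketch below is a plain-Python illustration with tied values given their average rank; the short toy vectors stand in for the 56-dimensional importance scores and are not real data.

```python
def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson correlation of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def spearman(x, y):
    """Spearman's rank correlation."""
    return pearson(average_ranks(x), average_ranks(y))

# Toy importance vectors for two sub-scores over four regions.
importance_q1 = [0.30, 0.10, 0.25, 0.05]
importance_q4 = [0.28, 0.12, 0.20, 0.02]
rho_same_order = spearman(importance_q1, importance_q4)
rho_one_swap = spearman([1, 2, 3, 4], [1, 3, 2, 4])
```

Because only ranks enter the calculation, two sub-scores correlate strongly whenever they order the brain regions similarly, regardless of the absolute size of the importance scores.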

Results

Identifying ADAS–Cog13 sub-scores important for AD diagnosis

We identified the ADAS–Cog13 sub-scores most important for AD diagnosis by training a random forest (RF) model for classifying AD vs. non-AD (nAD) patients based on these sub-scores using the ADNI samples (see Methods section and Table 1). The RF classifier was able to classify the AD diagnosis with an accuracy of 95% (98% precision and 90% recall). In this model, the top four ADAS–Cog13 sub-scores were responsible for 82% of the RF feature importance: word recall (Q1), 16%; delayed word recall (Q4), 29%; orientation (Q7), 25%; word recognition (Q8), 11% (Additional file 1: Table S2). These top four sub-scores are representative of AD cognitive performance and were, therefore, used as regression outputs of the subsequent 3D CNN models.

Predicting key ADAS–Cog13 sub-scores with CNNs based on brain images

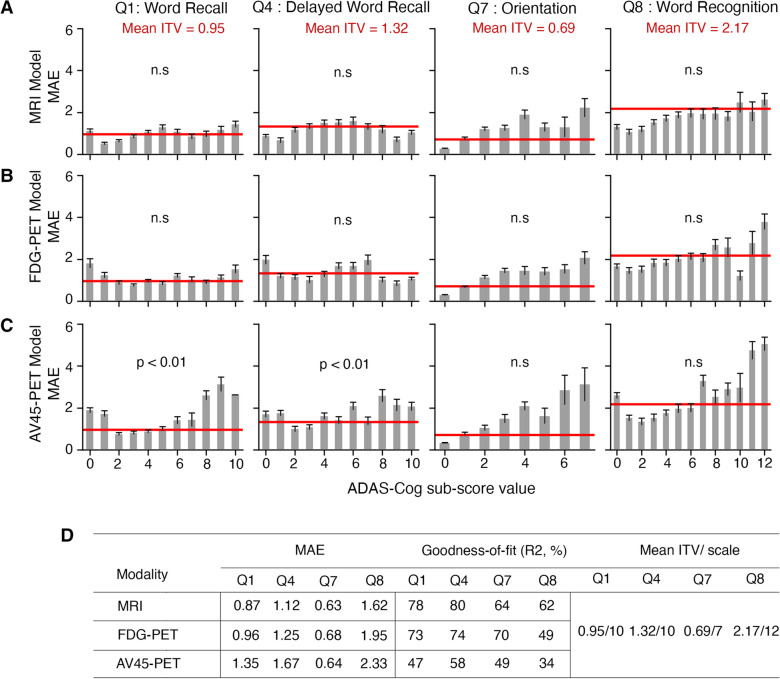

We trained 3D CNNs that utilized brain images to predict 4 ADAS–Cog13 sub-scores (Q1, Q4, Q7, and Q8) based on MRI, FDG–PET, and AV45–PET imaging modalities (see Methods section for details). We found that the MRI-based CNN model performed the best in predicting sub-scores, with R2 of 78%, 80%, 64%, and 62% for Q1, Q4, Q7 and Q8, respectively (Fig. 2D). The FDG–PET-based 3D CNN performed similarly to the MRI model, except for poor performance on Q8 (49%). The AV45–PET-based model had the lowest prediction accuracy (Fig. 2D).

Fig. 2.

Accuracy of CNN models in predicting ADAS–Cog13 sub-scores. A–C Graphical representation of the mean absolute error (MAE) across ADAS–Cog13 sub-scores for the MRI (A), FDG–PET (B) and AV45–PET (C) CNNs. The inter-test variabilities (ITVs) for the four ADAS–Cog13 sub-scores are represented by the red horizontal lines. One-tailed t tests were performed to compare MAEs and ITVs for each ADAS–Cog13 sub-score. ‘n.s.’ means there is no significant difference between MAE and ITV. D Test set MAE and R2 for the MRI, FDG–PET and AV45–PET-based CNN models, along with the mean ITV and scale of each ADAS–Cog13 sub-score

To assess the models’ accuracy in predicting ADAS–Cog13 sub-scores, we compared the mean absolute error (MAE) of our model predictions with the inter-test variability (ITV) of the sub-scores, which reflects natural fluctuations of ADAS–Cog13 (see Methods section for details). The MAEs of the MRI- and FDG–PET-based 3D CNN models did not differ significantly from the ITVs for Q1, Q4, Q7, or Q8 (Fig. 2A, B, D). This indicated that errors in the model predictions are comparable to intrinsic variations in the sub-scores. In comparison, the AV45–PET-based model performed worse, with MAEs significantly higher than ITVs for Q1 and Q4 (Fig. 2C, D).

To further assess the 3D CNN models’ accuracy, we extended them for classifying nAD vs. AD (i.e., AD diagnosis). The deep learning features from the final fully connected layer (FC2) were used for AD classification (Fig. 1). The highest classification accuracy was achieved by the FDG–PET model with a k-nearest neighbor (KNN) extension (AUROC = 90%), followed by the MRI model (AUROC = 89%) and AV45–PET model (AUROC = 84%) (Additional file 1: Fig. S2, Additional file 1: Table S3). To further test the generalizability of the 3D CNN models, an external validation was done by applying our models to the RADC data set (see Methods section and Additional file 1: Table S1) to classify nAD vs. AD. Despite significant differences in age, overall patient populations and scanning protocols between ADNI and RADC data sets, our model achieved an AUROC of 0.74 for RADC data.

Identifying brain regions associated with ADAS–Cog13 sub-scores

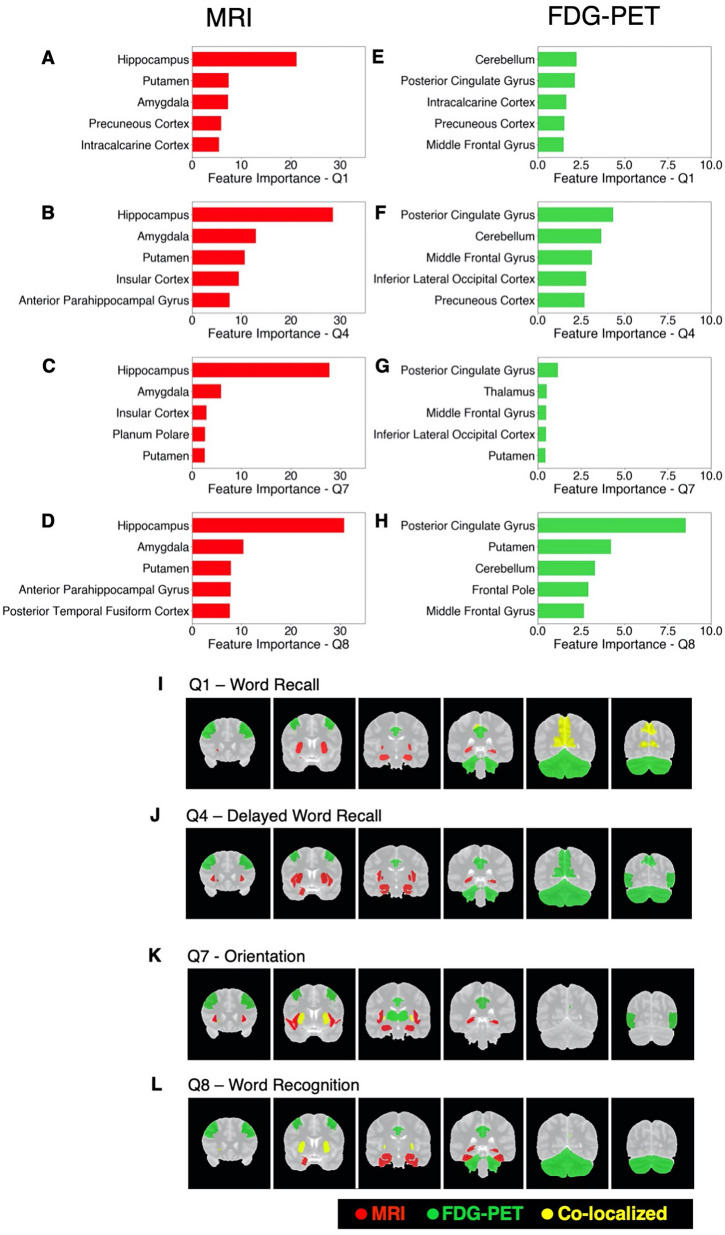

After model training, we investigated MRI and FDG–PET-based models with occlusion method to identify brain regions important for predicting ADAS–Cog13 sub-scores (see Methods section for details).

We found that the MRI and FDG–PET CNNs utilized different brain regions for predicting ADAS–Cog13 sub-scores. Furthermore, each ADAS–Cog13 sub-score was associated with a specific set of brain features. In the MRI-based 3D CNN model, sub-score Q1 was most strongly associated with brain structural changes in the hippocampus and the putamen, etc. Q4, Q7, and Q8 were strongly associated with changes in the hippocampus and the amygdala, etc. (Fig. 3A–D). Three regions (the amygdala, the hippocampus, and the putamen) appeared among the top ten for all four ADAS–Cog13 sub-scores. Additional file 2: Table S4 lists feature importance scores of all brain regions in the MRI-based CNN. In the FDG–PET-based 3D CNN model, Q1 and Q4 were most strongly associated with brain metabolic changes in the cerebellum and the cingulate gyrus (posterior division), etc. Q7 was strongly associated with changes in the cingulate gyrus (posterior division) and the thalamus, etc. Q8 was associated with changes in the cingulate gyrus (posterior division) and the putamen, etc. (Fig. 3E–H). Five brain regions appeared among the top ten for all four ADAS–Cog13 sub-scores: the cingulate gyrus (posterior division), middle frontal gyrus, precuneous cortex, lateral occipital cortex (inferior division), and cerebellum. Additional file 3: Table S5 lists feature importance scores of all brain regions in the FDG–PET-based CNN. Figure 3I–L visualizes the top five regions associated with each ADAS–Cog13 sub-score in the MRI model (red) and the FDG–PET model (green), respectively. A few important brain regions overlap between the MRI and FDG–PET-based CNNs, as highlighted in yellow.

Fig. 3.

Important brain regions in MRI and FDG–PET-based 3D CNN models. A–D Feature importance scores of the top five brain regions for ADAS–Cog13 sub-scores Q1, Q4, Q7, and Q8 in the MRI-based CNN. E–H Feature importance scores of the top five brain regions for Q1, Q4, Q7, and Q8 in the FDG–PET-based CNN. I–L Coronal views of the top five important brain regions for each ADAS–Cog13 sub-score in the MRI (red) and the FDG–PET (green) 3D CNNs. Regions that are important for both MRI and FDG–PET models are highlighted in yellow

We further investigated the brain-imaging associations in three disease sub-groups: cognitively normal (CN), MCI, and AD. We observed that the importance score of a specific brain region varied across disease sub-groups. For example, the hippocampus was among the most important regions in the MRI-based CNN model in CN subjects for all ADAS–Cog13 sub-scores, while its importance in the model diminished in MCI and AD patients. The cerebellum was among the most important regions in the FDG–PET model in AD subjects for sub-score Q7, while its importance was much lower in CN and MCI subjects (Additional file 1: Fig. S3).

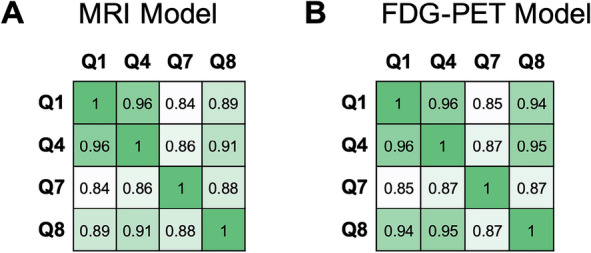

Pairwise correlation among ADAS–Cog13 sub-scores

We also calculated pairwise correlations among ADAS–Cog13 sub-scores Q1, Q4, Q7, and Q8 in terms of their associations with brain features (see Methods section for details). In both MRI and FDG–PET modalities, pairwise correlations among these sub-scores were significant (FDR adjusted p value < 0.05). In the MRI-based model, the strongest correlation was between Q1 and Q4 (Spearman’s correlation = 0.94), followed by Q4 and Q8 (Spearman’s correlation = 0.91). In comparison, Q7 had lower correlations with other sub-scores. All pairwise correlations are shown in Fig. 4A. Note that Q1 measures word recall, Q4 measures delayed word recall, Q7 measures orientation, and Q8 measures word recognition. The pairwise correlations of sub-scores based on brain feature importance scores were higher within language-related sub-scores than those between language and orientation sub-scores. In the FDG–PET model, the strongest pairwise correlation of cognitive functions was between Q1 and Q4 (Spearman’s correlation = 0.96), followed by Q4 and Q8 (Spearman’s correlation = 0.95). All pairwise correlations are shown in Fig. 4B. As observed for the MRI-based model, the pairwise correlations were higher within language-related sub-scores than those between language and orientation-related sub-scores.

Fig. 4.

Pairwise correlations among ADAS–Cog13 sub-scores Q1, Q4, Q7, and Q8, calculated based on each sub-score’s association with brain regions reflected in CNN. A Correlations in MRI-based CNN. B Correlations in FDG–PET-based CNN

Discussion

In this study, we made a first attempt to train 3D CNN models based on brain-imaging data for predicting ADAS–Cog13 sub-scores that were crucial for AD diagnosis. We then investigated the CNN models to identify brain regions strongly associated with these sub-scores.

The MRI and FDG–PET-based 3D CNN models predicted the ADAS–Cog13 sub-scores with R2 above 60%, except for the low performance of the FDG–PET-based model in predicting sub-score Q8. The MAEs of these models’ predictions of ADAS–Cog13 sub-scores were comparable to the ITVs of these sub-scores, indicating that errors in the CNN models’ predictions were comparable to natural variations of the ADAS–Cog13 sub-scores. We provided additional internal validation of the MRI and FDG–PET-based models by demonstrating that they can be applied to classifying nAD vs. AD subjects accurately (with an AUROC of 0.89 and 0.90, respectively). Furthermore, the MRI-based model performed well in classifying nAD vs. AD subjects when applied to an external test data set, RADC, without any modifications. Compared to the MRI and FDG–PET-based CNN models, the AV45–PET-based CNN model performed worse in predicting ADAS–Cog13 sub-scores but had comparable performance in identifying AD vs. nAD. As revealed in previous studies, amyloid deposition as measured by AV45–PET has an impact on cognition in early stages, while ADAS–Cog13 is not sensitive enough for measuring changes in the early cognitive stage of MCI or AD [36, 37]. This is a potential explanation for the poor performance of the AV45–PET-based model in predicting ADAS–Cog13 sub-scores. In real-world practice, many clinical trials for AD drugs monitor cognitive endpoints such as ADAS–Cog13 and amyloid beta based on AV45–PET imaging [38]. Our observation suggests that cognitive functions had a stronger association with MRI and FDG–PET imaging signals than with AV45–PET imaging signals. In addition, MRI and/or FDG–PET neuroimaging biomarkers monitored during drug treatments can provide valuable information on changes in brain structure and metabolism in response to treatment and on overall progression of disease.

After training MRI and FDG–PET-based 3D CNN models, we identified brain regions associated with key ADAS–Cog13 sub-scores by investigating these models with the occlusion method. Thanks to the statistical nature of this method, we were able to quantify the contribution of each brain region to the prediction of ADAS–Cog13 sub-scores that was not encoded in other brain regions. We found that these models utilized distinct sets of brain regions for predicting the sub-scores. For example, the hippocampus had a high importance score in predicting all ADAS–Cog13 sub-scores in the MRI-based CNN model (Fig. 3, Additional file 2: Table S4). This is a subcortical region important for memory formation and is well-known to undergo atrophy in AD patients [10, 13]. In comparison, the hippocampus did not appear to be highly important for the FDG–PET-based CNN model. Instead, a network of cortical regions, led by the cingulate gyrus, appeared to be highly important for all the sub-scores in the FDG–PET-based model (Additional file 3: Table S5). This finding corroborated previous studies that reported abnormal metabolism in the cingulate cortex of AD patients [11, 12]. Furthermore, we found that the cerebellum, which is essential for motor activity and motor learning, was an important region associated with cognitive functions, especially Q1, Q4, and Q8, in the FDG–PET modality. Previous studies have reported that metabolites of cerebellar neurons promote amyloid-β clearance, and that cerebellar glucose metabolism was significantly lower in AD patients compared to control subjects [39, 40].

Our analyses further showed that within an imaging modality (MRI or FDG–PET) each ADAS–Cog13 sub-score was associated with a specific set of brain regions. In the MRI-based model, sub-score Q1 was most strongly associated with brain structural changes in the hippocampus and the putamen, etc. Q4, Q7, and Q8 were strongly associated with changes in the hippocampus and the amygdala, etc. In the FDG–PET-based model, Q1 and Q4 were most strongly associated with brain metabolic changes in the cerebellum and the cingulate gyrus (posterior division), etc. Q7 was strongly associated with changes in the cingulate gyrus (posterior division) and the thalamus, etc. Q8 was associated with changes in the cingulate gyrus (posterior division) and the putamen, etc. These findings indicate a complex underlying relationship between structural and functional changes in brain regions (as measured by brain biomarkers) and changes in specific cognitive functions as observed in AD etiology. Nevertheless, the cognitive function pairs that were similar to each other were highly correlated in terms of their associations with brain regions (as shown in Fig. 4). We further made a first attempt to investigate the CNN models within each disease sub-group and found that ranks of brain region importance scores differed among disease sub-groups (Additional file 1: Fig. S3). For example, the hippocampus, among the most important regions in the MRI-based model in CN subjects for all ADAS–Cog13 sub-scores, showed a lower importance score in MCI and AD patients. This indicated that AD etiology is dynamic, with different brain regions becoming strongly associated with cognitive function as the disease progresses.

Our study had some limitations. First, when identifying the most important AD sub-scores, we grouped MCI and CN participants into the nAD class to increase the sample size. While we acknowledge there are differences between MCI and CN brains, this grouping approach will not impact our findings on the association between cognitive function and brain structure, because our CNN model used cognitive function, instead of diagnosis, as the response variable. Second, we chose our 3D CNN model structure and parameters based on previous knowledge of training CNNs. CNNs have many variations in their structures and parameters. Exploring more combinations of CNN structures and parameters may improve the model’s accuracy in predicting ADAS–Cog13 sub-scores. Third, our definition of brain feature importance was based on the occlusion method, while alternative definitions such as GRAD–RAM are available and may reveal other insights [20, 41]. Fourth, our current model predicted cognitive functions collected at a single timepoint. A natural extension of this model would be to incorporate a time factor, so that it predicts the change of cognitive function in the future.

In clinical practice, our findings may help to refine the process of AD early interventions and clinical trials. It is known that the changes of the brain, although associated with cognitive function changes, can occur a long time before changes in cognitive function. For example, it was reported that brain structural changes were detectable in the hippocampus and the medial temporal lobe up to 10 years before any AD symptom arises [42]. In addition, researchers were able to predict progression from mild cognitive impairment to AD 2 years in advance using FDG–PET or MRI data [14, 43]. Based on our analyses, we further suggest that brain features identified in our model, along with the cognitive scores predicted based on brain-imaging data, may assist AD risk assessment before diagnoses, allowing early disease intervention. During patient enrollment for clinical trials, our model may also help to stratify the patients in terms of their disease progression risk and increase the power of these trials. Most such current applications use more traditional radio-imaging features, such as volume, average grey value etc., which ignore the deeper associations in the grey values across the 3D space. CNN models can capture deeper associations and generate more nuanced brain feature-based patient stratification. We further propose that brain structural and metabolic features be monitored after initiation of drug intervention: changes of these features, while highly associated with ADAS–Cog sub-scores, may occur well before any change of cognitive functions and can, therefore, suggest AD stabilization (or even reversion) and help clinicians to better understand and evaluate drug efficacy.

Conclusions

In summary, we developed 3D CNN models for analyzing 3D brain-imaging data. These models predicted ADAS–Cog13 sub-scores based on different imaging modalities. By investigating the trained CNN models, we gained, for the first time, a comprehensive view of the imaging modality-specific brain features that are associated with key ADAS–Cog13 sub-scores. Our models can accelerate clinical trials for AD and can be further expanded to analyze imaging data for other types of brain disease.

Supplementary Information

Additional file 1: Fig. S1. Imaging preprocessing. A Schematic representation of the image processing pipeline. B, C Example of white-stripe normalization for MRI images, with pixel intensity distributions of the reference MRI (grey lines), a successfully normalized MRI (black lines) and an abnormal MRI that fails white-stripe normalization (red lines), from raw imaging (B) to normalized imaging (C). Fig. S2. Receiver Operating Characteristic (ROC) curves of classification models on the ADNI and RADC data sets. A–C MRI, FDG–PET, and AV45–PET-based CNN models with diagnostic extension applied to ADNI samples. D MRI-based CNN models with diagnostic extension applied to RADC samples. Random forest (black), K nearest neighbours (grey), and logistic regression (blue) were used for the diagnosis extension. Fig. S3. Feature importance scores of selected brain regions within MRI (A–D) and FDG–PET (E–H) in different diagnosis sub-groups. Table S1. Demographic information of RADC samples. Table S2. Random forest feature importance for predicting AD vs. nAD based on ADAS–Cog13 sub-scores. Table S3. Accuracy of CNN model extension for AD diagnosis.

Additional file 2: Table S4. Feature importance scores of brain regions in MRI-based 3D CNN model.

Additional file 3: Table S5. Feature importance scores of brain regions in FDG–PET-based 3D CNN model.

Additional file 4: Table S6. Feature importance scores of brain regions in FDG–PET-based 3D CNN model.

Acknowledgements

For the Alzheimer’s Disease Neuroimaging Initiative: data used in preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in the analysis or writing of this report. A complete listing of ADNI investigators can be found at: http://adni.loni.usc.edu/wpcontent/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf.

Author contributions

KN, PBC: research conception and design. KN, JY, SS, LW: methodology, data analysis and interpretation. All authors: manuscript writing. JS: project supervision.

Funding

No funding was received for conducting this study.

Availability of data and materials

Not applicable.

Declarations

Ethics approval and consent to participate

No ethical approval was required.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Nelson PT, et al. Alzheimer’s disease is not “brain aging”: neuropathological, genetic, and epidemiological human studies. Acta Neuropathol. 2011;121:571–587. doi: 10.1007/s00401-011-0826-y.

- 2.Kent SA, Spires-Jones TL, Durrant CS. The physiological roles of tau and Aβ: implications for Alzheimer’s disease pathology and therapeutics. Acta Neuropathol. 2020. doi: 10.1007/s00401-020-02196-w.

- 3.Corey-Bloom J. The ABC of Alzheimer's disease: cognitive changes and their management in Alzheimer's disease and related dementias. Int Psychogeriatr. 2002;14(Suppl 1):51–75. doi: 10.1017/s1041610203008664.

- 4.Coughlan G, Laczo J, Hort J, Minihane AM, Hornberger M. Spatial navigation deficits - overlooked cognitive marker for preclinical Alzheimer disease? Nat Rev Neurol. 2018;14:496–506. doi: 10.1038/s41582-018-0031-x.

- 5.Whitwell JL, et al. Imaging correlations of tau, amyloid, metabolism, and atrophy in typical and atypical Alzheimer's disease. Alzheimers Dement. 2018;14:1005–1014. doi: 10.1016/j.jalz.2018.02.020.

- 6.Dukart J, et al. Relationship between imaging biomarkers, age, progression and symptom severity in Alzheimer's disease. NeuroImage Clin. 2013;3:84–94. doi: 10.1016/j.nicl.2013.07.005.

- 7.Cano SJ, et al. The ADAS-cog in Alzheimer's disease clinical trials: psychometric evaluation of the sum and its parts. J Neurol Neurosurg Psychiatry. 2010;81:1363–1368. doi: 10.1136/jnnp.2009.204008.

- 8.Balsis S, Benge JF, Lowe DA, Geraci L, Doody RS. How do scores on the ADAS-cog, MMSE, and CDR-SOB correspond? Clin Neuropsychol. 2015;29:1002–1009. doi: 10.1080/13854046.2015.1119312.

- 9.Johnson KA, Fox NC, Sperling RA, Klunk WE. Brain imaging in Alzheimer disease. Cold Spring Harb Perspect Med. 2012;2:a006213. doi: 10.1101/cshperspect.a006213.

- 10.Weiner MW, et al. 2014 update of the Alzheimer's disease neuroimaging initiative: a review of papers published since its inception. Alzheimers Dement. 2015;11:e1–120. doi: 10.1016/j.jalz.2014.11.001.

- 11.Choi H, et al. Cognitive signature of brain FDG PET based on deep learning: domain transfer from Alzheimer's disease to Parkinson's disease. Eur J Nucl Med Mol Imaging. 2020;47:403–412. doi: 10.1007/s00259-019-04538-7.

- 12.Mullins R, Reiter D, Kapogiannis D. Magnetic resonance spectroscopy reveals abnormalities of glucose metabolism in the Alzheimer's brain. Ann Clin Transl Neurol. 2018;5:262–272. doi: 10.1002/acn3.530.

- 13.Scheltens P, et al. Atrophy of medial temporal lobes on MRI in "probable" Alzheimer's disease and normal ageing: diagnostic value and neuropsychological correlates. J Neurol Neurosurg Psychiatry. 1992;55:967–972. doi: 10.1136/jnnp.55.10.967.

- 14.Ning K, et al. Classifying Alzheimer's disease with brain imaging and genetic data using a neural network framework. Neurobiol Aging. 2018;68:151–158. doi: 10.1016/j.neurobiolaging.2018.04.009.

- 15.Young J, et al. Accurate multimodal probabilistic prediction of conversion to Alzheimer's disease in patients with mild cognitive impairment. Neuroimage Clin. 2013;2:735–745. doi: 10.1016/j.nicl.2013.05.004.

- 16.Hinton GE, Salakhutdinov RR. Reducing the dimensionality of data with neural networks. Science. 2006;313:504–507. doi: 10.1126/science.1127647.

- 17.LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 18.Levakov G, Rosenthal G, Shelef I, Raviv TR, Avidan G. From a deep learning model back to the brain-Identifying regional predictors and their relation to aging. Hum Brain Mapp. 2020;41:3235–3252. doi: 10.1002/hbm.25011.

- 19.Yang C, Rangarajan A, Ranka S. Visual explanations from deep 3D convolutional neural networks for Alzheimer's disease classification. AMIA Annu Symp Proc. 2018;2018:1571–1580.

- 20.Zeiler MD, Fergus R (2014) Visualizing and understanding convolutional networks. ECCV 2014: Computer Vision.

- 21.Breiman L. Random forests. Mach Learn. 2001;45:5–32. doi: 10.1023/A:1010933404324.

- 22.Bergstra J, Bengio Y. Random search for hyper-parameter optimization. J Mach Learn Res. 2012;13:281–305.

- 23.Fischl B. FreeSurfer. Neuroimage. 2012;62:774–781. doi: 10.1016/j.neuroimage.2012.01.021.

- 24.Mazziotta J, et al. A probabilistic atlas and reference system for the human brain: International Consortium for Brain Mapping (ICBM). Philos Trans R Soc B Biol Sci. 2001;356:1293–1322. doi: 10.1098/rstb.2001.0915.

- 25.Shinohara RT, et al. Statistical normalization techniques for magnetic resonance imaging. NeuroImage Clin. 2014;6:9–19. doi: 10.1016/j.nicl.2014.08.008.

- 26.Jagust WJ, et al. The Alzheimer's disease neuroimaging initiative positron emission tomography core. Alzheimers Dement. 2010;6:221–229. doi: 10.1016/j.jalz.2010.03.003.

- 27.Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

- 28.Abadi M, Agarwal A, Barham P, Brevdo E, et al (2015) TensorFlow: large-scale machine learning on heterogeneous systems.

- 29.Argyriou A, Evgeniou T, Pontil M (2006) Multi-task feature learning. NeurIPS, 41–48

- 30.He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition. 770–778.

- 31.Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980

- 32.Huang G et al (2017) Snapshot ensembles: Train 1, get m for free. arXiv preprint arXiv:1704.00109

- 33.Fan R-E, Chang K-W, Hsieh C-J, Wang X-R, Lin C-J. LIBLINEAR: A library for large linear classification. J Mach Learn Res. 2008;9:1871–1874.

- 34.Bentley J. Multidimensional binary search trees used for associative searching. CACM. 1975;18(9):509–517. doi: 10.1145/361002.361007.

- 35.Bennett D, Schneider J, Arvanitakis Z, Wilson R. Overview and findings from the religious orders study. Curr Alzheimer Res. 2012;9:628–645. doi: 10.2174/156720512801322573.

- 36.Landau SM, et al. Amyloid deposition, hypometabolism, and longitudinal cognitive decline. Ann Neurol. 2012;72:578–586. doi: 10.1002/ana.23650.

- 37.Podhorna J, Krahnke T, Shear M, Harrison JE, Alzheimer's Disease Neuroimaging Initiative. Alzheimer's Disease Assessment Scale-Cognitive subscale variants in mild cognitive impairment and mild Alzheimer's disease: change over time and the effect of enrichment strategies. Alzheimers Res Ther. 2016;8:8. doi: 10.1186/s13195-016-0170-5.

- 38.Selkoe DJ. Alzheimer disease and aducanumab: adjusting our approach. Nat Rev Neurol. 2019;15:365–366. doi: 10.1038/s41582-019-0205-1.

- 39.Du J, et al. Metabolites of cerebellar neurons and hippocampal neurons play opposite roles in pathogenesis of Alzheimer's disease. PLoS ONE. 2009;4:e5530. doi: 10.1371/journal.pone.0005530.

- 40.Ishii K, et al. Reduction of cerebellar glucose metabolism in advanced Alzheimer's disease. J Nucl Med. 1997;38:925–928.

- 41.Selvaraju RR et al (2017) Grad-CAM: Visual explanations from deep networks via gradient-based localization. Proceedings of the IEEE international conference on computer vision. 618–626

- 42.Tondelli M, et al. Structural MRI changes detectable up to ten years before clinical Alzheimer's disease. Neurobiol Aging. 2012;33(825):e825–836. doi: 10.1016/j.neurobiolaging.2011.05.018.

- 43.Cabral C, Morgado PM, Campos Costa D, Silveira M, Alzheimer's Disease Neuroimaging Initiative. Predicting conversion from MCI to AD with FDG-PET brain images at different prodromal stages. Comput Biol Med. 2015;58:101–109. doi: 10.1016/j.compbiomed.2015.01.003.
