Summary:

Alarm fatigue (and resultant alarm non-response) threatens the safety of hospitalized patients. Historically, threats to patient safety, including alarm fatigue, have been evaluated from a Safety I perspective that analyzes rare events such as failure to respond to patients’ critical alarms. Safety II approaches call for learning from the everyday adaptations clinicians make to keep patients safe. To identify such sources of resilience in alarm systems, we conducted 59 in-situ simulations of a critical hypoxemic-event alarm on medical/surgical and intensive care units at a tertiary care pediatric hospital between December 2019 and May 2022. Response timing, observations of the environment, and post-simulation debrief interviews were captured. Four primary means of successful alarm response were mapped to domains of the Systems Engineering Initiative for Patient Safety (SEIPS) framework to inform alarm system design and improvement.

INTRODUCTION:

Alarm fatigue (and resultant alarm non-response) threatens the safety of hospitalized patients [1–3]. Consistent with best practices, our institution applies established methods such as root-cause analysis and failure mode and effects analysis to learn from events where a patient experienced harm following non-response to a critical, actionable alarm. Although such strategies (Safety I) are helpful, they generally conceptualize systems as predictable and composed of linear cause-and-effect relationships and thus incompletely account for the variability and complexity of clinical tasks such as alarm response [4–6]. The tendency of Safety I approaches to oversimplify complex work has limited their impact and utility [6]. Increasingly, safety scientists have argued for frameworks that recognize the inherent variability of healthcare, viewing the adaptations made by clinicians under varying conditions as sources of resilience rather than undesirable process deviations [4,6,7]. Safety II approaches recognize that “things go right much more often than they go wrong” [4] and thus seek to learn from everyday work instead of from (rare) adverse events [6].

Understanding sources of system resilience (Safety II) and supporting those features may prevent system failures. The sources of alarm system resilience, which allow nurses to respond to critical alarms most of the time, have not been well characterized. More broadly, few tools exist to evaluate Safety II features of the health system. As part of a larger quality improvement effort aimed at evaluating response to critical alarms, we applied observational approaches to characterize sources of alarm system resilience.

METHODS:

Setting:

In this single-center quality improvement initiative, we conducted in-situ simulations to evaluate response to critical alarms in medical-surgical and critical care units of a pediatric hospital. The existing alarm system consists of bedside cardiorespiratory monitors, central monitors, and a secondary notification system routing critical alarms to the primary nurse’s mobile phone. Using previously described methods [8], we simulated clinically significant patient alarms on eligible patients purposefully sampled based on risk of harm from alarm fatigue in our current alarm system (rather than patients receiving heightened surveillance).

Simulation:

We generated fictitious critical hypoxemic-event alarms (simulated alarm) and routed the resultant notification to nurses’ mobile phones (secondary notification) and the unit’s centralized, remote display monitors (central monitors). The simulated alarms appeared to originate from an actual patient’s bedside monitor but neither sounded in the patient room nor altered the actual patient’s physiologic waveform or numeric vital sign readout on the bedside monitor. Simulations concluded when a staff member responded to the simulated alarm (simulated-alarm response), arrived at the bedside for routine care (routine care), or after 10 minutes elapsed (non-response). Successful response was defined as either response to the simulated alarm or routine care; we considered arriving at the bedside (for any reason) to be alarm response because it is unlikely alarms would go unnoticed at the bedside.
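The outcome definitions above can be sketched as a small classification routine. This is an illustrative sketch only, not the project's data-collection tooling: the `Simulation` record, its field names, and the handling of an arrival at exactly 10 minutes are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Simulations ended after 10 minutes without anyone arriving at the bedside.
TIMEOUT_SECONDS = 10 * 60


@dataclass
class Simulation:
    # Hypothetical record layout; field names are assumptions, not the study's.
    seconds_to_bedside: Optional[float]  # None if nobody arrived
    responding_to_alarm: bool = False    # as reported in the post-simulation debrief


def classify(sim: Simulation) -> str:
    """Return one of the three simulation end states described in the text."""
    # Assumption: an arrival after the 10-minute window counts as non-response.
    if sim.seconds_to_bedside is None or sim.seconds_to_bedside > TIMEOUT_SECONDS:
        return "non-response"
    if sim.responding_to_alarm:
        return "simulated-alarm response"
    return "routine care"


def is_successful(outcome: str) -> bool:
    """Successful response is either alarm response or routine-care arrival."""
    return outcome in {"simulated-alarm response", "routine care"}
```

For example, `classify(Simulation(40.0, True))` yields a simulated-alarm response, whereas `classify(Simulation(None))` yields non-response.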

We coordinated timing of simulations with unit leadership to reduce risks to patient safety and interruptions in care. Consistent with our hospital culture around mock codes, simulations were not considered an evaluation of individual performance. We partnered with a simulation expert to ensure psychological safety and maintain principles of just culture. Nurse names were recorded to limit the number of times an individual participated in simulations; names or outcomes of individual simulations were not shared with leadership.

Observational Data Collection:

Observers had clinical, human-factors engineering, and/or research expertise and were unknown to unit staff. In keeping with best practices [9,10], direct observation training included immersion in project objectives and familiarization with data collection tools and unit environment (e.g., location of monitors). Observers positioned themselves unobtrusively with a line of sight to patient rooms. They documented clinician interactions with central monitors or their mobile phones and how the simulations ended. Observers used REDCap-based [11] questionnaires (Appendix 1) to record time to response, responder role, and observations about the environment and alarm perception. Immediately after each simulation, we debriefed with unit staff and inquired whether the staff member was responding to the alarm or had arrived at the bedside for routine care. This work was deemed exempt from IRB review.

Analysis:

The observer team met weekly to review questionnaires, characterize modes of successful alarm response, and categorize them according to pertinent Systems Engineering Initiative for Patient Safety (SEIPS) domains: technology and tools, organization, clinical team, physical environment, and tasks [12,13]. We then applied interaction mapping, a SEIPS analytic tool, to evaluate and describe how the SEIPS factors interacted with each other to produce the observed modes of successful alarm response [14]. Our interprofessional team iteratively reviewed data categorizations and resolved discrepancies during weekly discussions to achieve consensus [15].

RESULTS:

We conducted 59 critical hypoxemic-event alarm simulations, 39 on medical/surgical units and 20 in ICUs, between December 2019 and May 2022 with an overall successful response rate of 78% (46 of 59). Of the 46 responses, staff members responded to the simulated alarm in 85% (39 of 46) and arrived at the bedside for routine care in 15% (7 of 46). The median response time was 40 seconds. All responders were nurses. Non-response rate was 22% (13 of 59).
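The proportions reported above follow directly from the simulation counts. The short sketch below recomputes them as a consistency check; the counts are taken from the text, while the helper name `pct` is an assumption introduced for illustration.

```python
def pct(numerator: int, denominator: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


# Counts reported in the text.
total = 59            # all simulations
alarm_response = 39   # staff responded to the simulated alarm
routine_care = 7      # staff arrived at the bedside for routine care

successful = alarm_response + routine_care  # 46 successful responses
non_response = total - successful           # 13 non-responses

rates = {
    "successful response": pct(successful, total),
    "alarm response among successes": pct(alarm_response, successful),
    "routine care among successes": pct(routine_care, successful),
    "non-response": pct(non_response, total),
}
```

Note that the first and last rates use all 59 simulations as the denominator, while the middle two use the 46 successful responses.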

We observed four modes of critical alarm response:

Secondary notification:

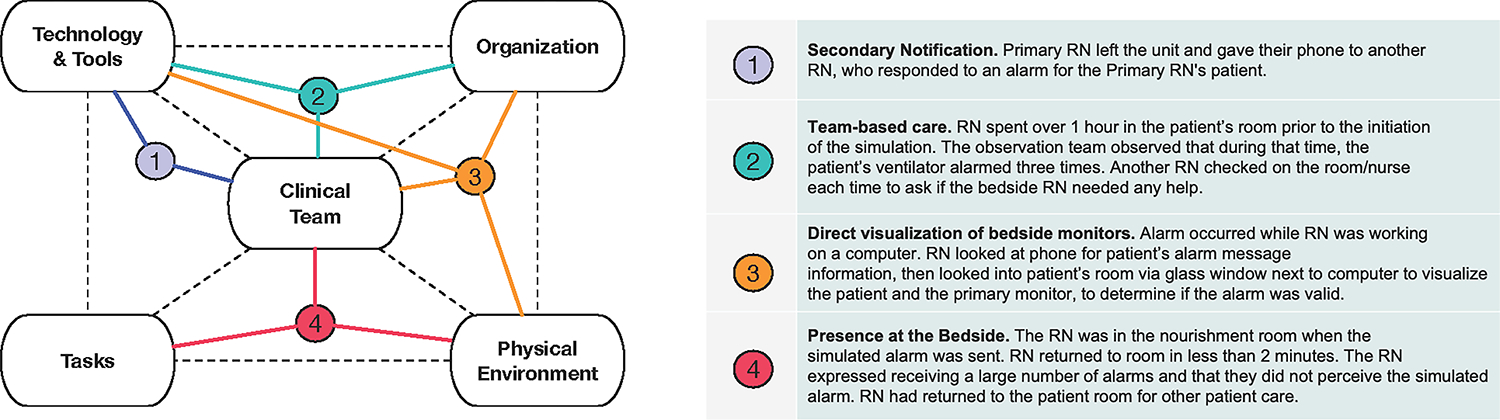

Secondary notification of an alarm on nurses’ phones was the leading means of alarm perception, observed in 82% (32 of 39) of instances where staff members responded to the simulated alarm. During debriefs, nurses described utilizing device features to enhance perception of notifications, for instance using auditory and/or vibratory manipulations and strategic positioning of phones (e.g., clipping phone to collar). Within the SEIPS framework, we mapped this mode of response to interactions between team members and technology (Figure 1).

Figure 1.

SEIPS interaction diagram highlighting sources of system resilience.

The SEIPS framework (left, dotted lines) overlaid with examples of sources of system resilience for alarm response observed during the simulations. The diagram highlights system interactions that contribute to successful alarm response.

Team-based care:

In 15% (6 of 39) of instances where staff members responded to the simulated alarm, the respondent was not the primary nurse (e.g., leadership nurses, a nurse holding the primary nurse’s phone). Observed team-based behaviors included nurses checking on each other, nurses checking on other nurses’ patients, and phone handoffs when nurses left the unit. These modes of response rely on interactions between team members, organizational features of the nurse resource support systems, and technology.

Direct visualization of bedside monitors from outside the patient room:

Particularly in the ICU, where nurses typically care for 1–2 closely roomed patients, direct visualization of patients and bedside monitors was a frequent mode of alarm response. Most ICU nurses (60%, 12 of 20) were directly outside the patient room at the time of simulation, allowing for instantaneous alarm perception and response. By comparison, on medical/surgical units (where nurses typically care for ≥3 patients dispersed throughout the unit), nurses were most frequently observed working at centralized nursing stations (49%, 19 of 39). Aligning with the SEIPS environmental segment, proximity to patients, transparent doors/windows, computer workstations outside patient rooms, and bedside monitor visibility were factors that supported response to the simulated alarm. Central monitors were a technological element of the physical environment that allowed nurses to perceive alarms without being at the bedside. In 15% (6 of 39) of the instances where a staff member responded to the simulated alarm, the nurse evaluated the alarm waveform on a central monitor. In 21% (8 of 39), the primary nurse did not perceive the secondary notification, but the central monitor prompted another unit nurse to respond, illustrating the importance of interactions among technology, clinical team members, organizational culture, and physical environment in promoting alarm response.

Frequent presence at the bedside:

In 12% (7 of 59) of simulations the responding nurse did not perceive the simulated alarm via secondary notification, direct visualization, or a central monitoring station, but nonetheless presented to the bedside for a different reason as part of routine care (e.g., medication administration) and would have been positioned to respond to a critical alarm. Within the SEIPS framework, we conceptualized that frequent nurse/patient interactions during the course of routine care provide a “safety net” layer for alarm response allowing nurses to detect a critical situation in a timely fashion (even when technologically delivered notifications were not perceived). Organizational features (e.g., staffing models), tasks, and physical environment characteristics (e.g., size of unit and proximity to patients) facilitate the frequency of bedside interactions and contribute to resilience in alarm response.

DISCUSSION/CONCLUSION:

Despite variable inpatient clinical environments, nurses perceive and quickly respond to rare, critical alarms most of the time. In-situ simulations allowed us to characterize modes of alarm response and the sources of alarm system resilience supporting response. Each mode of response reflects adaptive interactions between different elements of the team, physical environment, technology, organizational structure, and tasks that comprise the sociotechnical work environment.

Attention to sources of resilience is critical in developing safer alarm systems. For instance, clinicians’ sight lines to patients and monitors are important elements of the physical environment that should be factored into unit design. Likewise, delegating nursing tasks to other staff members may reduce time at the bedside in ways that impact alarm recognition and response. A Safety II perspective allows for expanding beyond individual nurse-focused understanding of alarm response toward appreciation of the complex sociotechnical system interactions enabling completion of this critical task.

We analyzed observations using interaction mapping [14], a strategy for SEIPS-based analysis depicting how multiple system elements intersect to produce outcomes. Others have analyzed in-situ simulations to understand system resilience (in other settings) using the Functional Resonance Analysis Method [16]; we found the SEIPS tools better suited to evaluating alarm response because they are designed for analysis of the interactions between sociotechnical system elements.

Our results must be contextualized within the limitations of the approach. We used data collected as part of a broader improvement project focused on time to alarm response, potentially affecting nurses’ alarm response. Social desirability bias may have influenced nurses’ debriefing responses; to mitigate this, we relied primarily on observational data. Because of the design of the simulation, we primarily observed nurses’ response to alarms and may not have appreciated ways other team members serve as safety net responders. We did not debrief all unit members who did not respond, potentially missing some data about the response system. Research is needed to expand on opportunities for resilience identified here. Although generalizability of quality improvement results is limited, our description of in-situ simulation and use of SEIPS analytic tools may be helpful to others interested in operationalizing Safety II methods.

Funding:

Pediatric patient safety learning laboratory to re-engineer continuous physiologic monitoring systems, R18HS026620 from the Agency for Healthcare Research and Quality (AHRQ), U.S. Department of Health and Human Services (HHS). Additionally, Dr. Rasooly’s effort was funded by K08 HS028682 from the Agency for Healthcare Research and Quality (AHRQ), U.S. Department of Health and Human Services (HHS).

Footnotes

Conflicts of Interest: No conflicts of interest to state.

Contributor Information

Melissa McLoone, 3500 Civic Center Blvd, The Hub, Philadelphia, PA 19104, United States; Department of Nursing Practice and Education, Children’s Hospital of Philadelphia, Philadelphia, PA, USA.

Meghan McNamara, 3500 Civic Center Blvd, The Hub, Philadelphia, PA 19104, United States; Department of Nursing Practice and Education, Children’s Hospital of Philadelphia, Philadelphia, PA, USA.

Megan A. Jennings, 3500 Civic Center Blvd, The Hub, Philadelphia, PA 19104, United States; Department of Nursing Practice and Education, Children’s Hospital of Philadelphia, Philadelphia, PA, USA.

Hannah R. Stinson, 3500 Civic Center Blvd, The Hub, Philadelphia, PA 19104, United States; Department of Anesthesia and Critical Care Medicine, Children’s Hospital of Philadelphia, Philadelphia, PA, USA; Department of Anesthesia and Critical Care, University of Pennsylvania, Perelman School of Medicine, Philadelphia, PA, USA.

Brooke T. Luo, 3500 Civic Center Blvd, The Hub, Philadelphia, PA 19104, United States; Section of Pediatric Hospital Medicine, Children’s Hospital of Philadelphia, Philadelphia, PA, USA; Department of Biomedical and Health Informatics, Children’s Hospital of Philadelphia, Philadelphia, PA, USA; Department of Pediatrics, Children’s Hospital of Philadelphia, Philadelphia, PA, USA.

Daria Ferro, 3500 Civic Center Blvd, The Hub, Philadelphia, PA 19104, United States; Section of Pediatric Hospital Medicine, Children’s Hospital of Philadelphia, Philadelphia, PA, USA; Department of Biomedical and Health Informatics, Children’s Hospital of Philadelphia, Philadelphia, PA, USA; Department of Pediatrics, Children’s Hospital of Philadelphia, Philadelphia, PA, USA.

Kimberly Albanowski, 3500 Civic Center Blvd, The Hub, Philadelphia, PA 19104, United States; Section of Hospital Medicine, Children’s Hospital of Philadelphia, Philadelphia, PA, USA.

Halley Ruppel, 2716 South Street, Philadelphia, PA 19146, United States; University of Pennsylvania School of Nursing, Philadelphia, PA, USA; Center for Pediatric Clinical Effectiveness, Children’s Hospital of Philadelphia, Philadelphia, PA, USA; Leonard Davis Institute of Health Economics, University of Pennsylvania, Philadelphia, PA, USA.

James Won, Center for Healthcare Quality & Analytics (CHQA), Children’s Hospital of Philadelphia, Philadelphia, PA, USA.

Christopher P. Bonafide, 3500 Civic Center Blvd, The Hub, Philadelphia, PA 19104, United States; Section of Hospital Medicine, Children’s Hospital of Philadelphia, Philadelphia, PA, USA; Center for Pediatric Clinical Effectiveness, Children’s Hospital of Philadelphia, Philadelphia, PA, USA; Department of Pediatrics, Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA, USA; Penn Implementation Science Center at the Leonard Davis Institute of Health Economics (PISCE@LDI), University of Pennsylvania, Philadelphia, PA, USA.

Irit R. Rasooly, 3500 Civic Center Blvd, The Hub, Philadelphia, PA 19104, United States; Section of Hospital Medicine, Children’s Hospital of Philadelphia, Philadelphia, PA, USA; Center for Pediatric Clinical Effectiveness, Children’s Hospital of Philadelphia, Philadelphia, PA, USA; Department of Pediatrics, Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA, USA.

References

- 1. Sendelbach S, Funk M. Alarm fatigue: a patient safety concern. AACN Adv Crit Care 2013;24:378–86; quiz 387–8. doi: 10.1097/NCI.0b013e3182a903f9

- 2. Medical device alarm safety in hospitals. Jt. Comm. Sentin. Event Alert. 2013. https://www.jointcommission.org/-/media/tjc/documents/resources/patient-safety-topics/sentinel-event/sea_50_alarms_4_26_16.pdf (accessed 24 Jun 2020).

- 3. Winters BD, Cvach MM, Bonafide CP, et al. Technological distractions (part 2): a summary of approaches to manage clinical alarms with intent to reduce alarm fatigue. Crit Care Med 2018;46:130–7. doi: 10.1097/CCM.0000000000002803

- 4. Hollnagel E, Wears R, Braithwaite J. From safety-I to safety-II: a white paper. Published simultaneously by the University of Southern Denmark, University of Florida, USA, and Macquarie University, Australia: 2015. http://resilienthealthcare.net/onewebmedia/WhitePaperFinal.pdf (accessed 7 Nov 2022).

- 5. Peerally MF, Carr S, Waring J, et al. The problem with root cause analysis. BMJ Qual Saf 2016;:bmjqs-2016–005511. doi: 10.1136/bmjqs-2016-005511

- 6. Verhagen MJ, de Vos MS, Sujan M, et al. The problem with making Safety-II work in healthcare. BMJ Qual Saf 2022;31:402–8. doi: 10.1136/bmjqs-2021-014396

- 7. Biddle L, Wahedi K, Bozorgmehr K. Health system resilience: a literature review of empirical research. Health Policy Plan 2020;35:1084–109. doi: 10.1093/heapol/czaa032

- 8. Luo B, McLoone M, Rasooly IR, et al. Analysis: Protocol for a New Method to Measure Physiologic Monitor Alarm Responsiveness. Biomed Instrum Technol 2020;54:389–96. doi: 10.2345/0899-8205-54.6.389

- 9. Alfred M, Del Gaizo J, Kanji F, et al. A better way: training for direct observations in healthcare. BMJ Qual Saf 2022;31:744–53. doi: 10.1136/bmjqs-2021-014171

- 10. Catchpole K, Neyens DM, Abernathy J, et al. Framework for direct observation of performance and safety in healthcare. BMJ Qual Saf 2017;26:1015–21. doi: 10.1136/bmjqs-2016-006407

- 11. Harris PA, Taylor R, Minor BL, et al. The REDCap consortium: Building an international community of software platform partners. J Biomed Inform 2019;95:103208. doi: 10.1016/j.jbi.2019.103208

- 12. Holden RJ, Carayon P, Gurses AP, et al. SEIPS 2.0: a human factors framework for studying and improving the work of healthcare professionals and patients. Ergonomics 2013;56:1669–86. doi: 10.1080/00140139.2013.838643

- 13. Carayon P, Schoofs Hundt A, Karsh B-T, et al. Work system design for patient safety: the SEIPS model. Qual Health Care 2006;15:i50–8. doi: 10.1136/qshc.2005.015842

- 14. Holden RJ, Carayon P. SEIPS 101 and seven simple SEIPS tools. BMJ Qual Saf 2021;:bmjqs-2020–012538. doi: 10.1136/bmjqs-2020-012538

- 15. Cohen DJ, Crabtree BF. Evaluative criteria for qualitative research in health care: controversies and recommendations. Ann Fam Med 2008;6:331–9. doi: 10.1370/afm.818

- 16. MacKinnon RJ, Pukk-Härenstam K, Kennedy C, et al. A novel approach to explore Safety-I and Safety-II perspectives in in situ simulations—the structured what if functional resonance analysis methodology. Adv Simul 2021;6:21. doi: 10.1186/s41077-021-00166-0
