Abstract

Purpose

Overdiagnosis is a concept central to making informed breast cancer screening decisions, and yet some people may react to overdiagnosis with doubt and skepticism. The present research assessed four related reactions to overdiagnosis: Reactance, self-Exemption, Disbelief, and Source derogation (“REDS”). The degree to which the concept of overdiagnosis conflicts with participants’ prior beliefs and health messages (“Information Conflict”) was also assessed as a potential antecedent of REDS. We developed a scale to assess these reactions, evaluated how those reactions are related, and identified their potential implications for screening decision-making.

Methods

Female participants aged 39-49 years read information about overdiagnosis in mammography screening and completed survey questions assessing their reactions to that information. We used a multidimensional theoretical framework to assess dimensionality and overall and domain-specific internal consistency of the REDS and Information Conflict questions. Exploratory and confirmatory factor analyses were performed using data randomly split into a training set and test set. Correlations between REDS, Information Conflict, screening intentions and other outcomes were evaluated.

Results

Five-hundred-twenty-five participants completed an online survey. Exploratory and confirmatory factor analyses identified that Reactance, self-Exemption, Disbelief, Source derogation, and Information Conflict represent unique constructs. A reduced 20-item scale was created by selecting 4 items per construct, which showed good model fit. Reactance, Disbelief and Source derogation were associated with lower intent to use information about overdiagnosis in decision-making and the belief that informing people about overdiagnosis is unimportant.

Conclusions

Reactance, self-Exemption, Disbelief, Source derogation (REDS), and Information Conflict are distinct but correlated constructs that are common reactions to overdiagnosis. Some of these reactions may have negative implications for making informed screening decisions.

INTRODUCTION

A person’s ability to make informed, high quality health decisions depends on their acceptance of well-supported scientific conclusions. Considerable research has established health communication methods that maximize comprehension, such as avoiding high literacy words, minimizing medical jargon and presenting numerical information with graphical visualizations.1-4 Yet one of the most recognized challenges in health communication—one that goes beyond comprehension—is how to maximize openness and willingness to accept evidence-based information (and relatedly, minimize acceptance of claims that are unsupported by evidence), and thereby promote informed decision-making.5-7

Breast cancer screening is an area of medicine in which informed decision-making has been emphasized. For example, from 2009-2023 the United States Preventive Services Task Force (USPSTF) recommended that women in their 40s make individual decisions with their doctor about when to start having regular mammograms and how frequently to screen.8,9 One of the key reasons for that recommendation was evidence that there are harms of screening that may outweigh the benefits for some people. In particular, screening can cause overdiagnosis, defined as the diagnosis of some cases of cancer that will not grow or spread, for which the diagnosis and consequent treatment are therefore not beneficial but rather harmful.10-12 As of this writing, a draft USPSTF guideline appears poised to change the recommended age to start screening from 50 to 40. However, promoting informed decision-making remains a priority. In 2022 the USPSTF wrote that informed decision-making is a “core value” in U.S. healthcare, and that “it is the ethical right of patients to be provided with information and to make decisions collaboratively with their clinician” (p.1171).13 The article further elaborated that informed decision making is important at all levels of USPSTF recommendations.

Informed decision making may be a core value,13 but communicating evidence for mammography benefits and harms presents challenges. Prior research has found that many people overestimate the benefit of mammograms,14 have never heard about overdiagnosis,15 and are very enthusiastic about screening.16-18 Overdiagnosis, in particular, presents a communication challenge.11 Overdiagnosis is a difficult concept to explain because knowledge of it is derived from population data rather than individual cases, and the idea of overdiagnosis contradicts many people’s basic beliefs about cancer (e.g., that cancer is always life-threatening).11 Perhaps as a result, people do not always immediately accept information about overdiagnosis, and instead express skepticism. For example, in a 2016 survey fewer than 1-in-4 people thought that overdiagnosis in breast cancer screening was “believable”.15

In light of these considerations, the focus of the present research was to identify, and explore the potential consequences of, reactions to overdiagnosis which suggest that a person is skeptical about the evidence. In so doing, we created a measurement scale that assesses different kinds of skeptical reactions, and which may facilitate a broader understanding of why people do not always accept evidence-based health information.

A Conceptual Framework: Defensive Information Processing

Defensive coping is defined as reactions that help the perceiver avoid unwelcome ideas or implications of a message (also known as motivated reasoning).19,20 Learning that mammograms can cause harm might be an unwelcome message for many people, especially (for example) individuals who are very worried about the possibility that they might one day develop breast cancer. McQueen and colleagues provide a comprehensive review of the various defensive reasoning strategies that people engage to avoid unwelcome health messages.20 Defensive responses can occur early in information processing stages; for example, a person might try to avoid exposure to unwanted messages by selecting certain news sources and not others, or avoiding seeing a doctor who might deliver unwelcome information about their health. Once exposed to an unwanted message, a person might disengage attention, and avoid comprehension or further elaboration of that message.

In the present research, our focus was on skeptical reactions to evidence which occur after people have processed a message. McQueen and colleagues list a number of defensive reactions that occur at this later stage of information processing, falling into two broad categories: suppression and counterarguing.20 Suppression reactions downplay the personal implications of the message, for example, accepting that the information may be relevant to others but rejecting its relevance to oneself. Suppression has also been described as downplaying benefits or harms, but in situations that call for informed decisions, people are expected to subjectively evaluate the importance of benefits and harms. Therefore, a person who considers overdiagnosis as less important than a small reduction in cancer risk is not necessarily acting defensively, and is not considered as such in this research. Counterarguing reactions attempt to discount the message itself, for example, by deciding the message is manipulative (reactance), not believable (disbelief), or by questioning its source (source derogation).

Based on these concepts, we identified relevant defensive reactions and abbreviate these reactions using the mnemonic “REDS”: 1. Reactance, defined as perceived manipulation20; 2. self-Exemption, defined as rejecting the relevance of the information for oneself; 3. Disbelief, defined as doubting that the information is true; and 4. Source derogation, defined as perceiving the source as untrustworthy and/or incompetent. Each of these reactions reflects skepticism toward information, and each may result in that information not being considered as part of the decision. In the present research we focused on these four reactions, while acknowledging that there are other reactions to overdiagnosis that might be relevant to screening decisions that we did not include due to the limitations of our research aims and scope.

Beyond Defensive Processing: Information Conflict

Until this point, we have characterized Reactance, self-Exemption, Disbelief and Source derogation (henceforth, REDS) as defensive reactions; that is, responses that help people to reject information that is aversive, and come to a more palatable conclusion.19,20 However, it is alternatively possible that REDS responses are not defensive per se, and instead stem from a normative probabilistic inference that when information is very different from prior messages and beliefs, it is unlikely to be true.21,22 We named this experienced discrepancy between new information and past messages or beliefs “Information Conflict”.

The literature on mental models—defined as knowledge structures that guide our understanding of how something works—is relevant here.23,24 When new information conflicts with a person’s existing mental model (i.e., high Information Conflict), they can either revise their mental model to fit the new data or they can reject the information and keep their mental model intact.25 Sometimes people find it easier to identify explanations for why information is unlikely to be true, rather than change their mental model. Overdiagnosis is a concept that conflicts with the common belief that cancer is always deadly and treatment is always necessary, so rather than revise those key beliefs about cancer, it may be easier to reason that information about overdiagnosis is somehow not true or trustworthy. In this study, we therefore assessed Information Conflict and explored the possibility that it is an antecedent of REDS.

The Present Research

The objective of this study is to develop a measure of REDS and Information Conflict in reaction to information about overdiagnosis, to determine whether they are distinct constructs, and explore the association of REDS and Information Conflict with screening judgments. We studied this issue among women age 39-49, because at the time of the study mammography screening guidelines in the United States recommended that women make an informed choice about when to start regular mammography screening (e.g., at 40 or 50 years of age).8,9

As we lack pre-existing measures of REDS and Information Conflict, our methods included item development and exploratory and confirmatory factor analyses. A comprehensive measure of defensive information processing for colon cancer exists, but it focuses on reactions to messages strongly promoting screening and does not easily translate to other health contexts. However, that measure served as a conceptual guide for the present research.20 Furthermore, although prior research has assessed the believability of overdiagnosis and other health information,15,26 for the presently developed measure we sought to capture a broader array of reactions to screening evidence and create items that could be adapted to other health domains.

This research posed the following research questions (RQ):

RQ 1: Are REDS and Information Conflict all distinct constructs?

RQ 2: If REDS and Information Conflict are distinct constructs, how do they correlate with each other?

RQ 3: Is there an association of REDS and Information Conflict with cancer screening judgments? Specifically, we hypothesized that people who express more REDS will be less likely (relative to people who express less REDS) to say that the information about overdiagnosis will cause them to wait until 50 to screen regularly. As a secondary outcome, we assessed the perceived importance of informing women about overdiagnosis. We predicted that participants who expressed more REDS would be more likely to believe that it is unimportant for anyone to learn about overdiagnosis.

METHODS

The preregistration for this study can be found on AsPredicted.org, #74897. Our conceptual model included the four dimensions of REDS (Reactance, self-Exemption, Disbelief, Source derogation) and Information Conflict, each reflecting different reactions to overdiagnosis.20,22,27 Information Conflict is not included in the REDS acronym because it was not conceptualized as a defensive reasoning mechanism, and theory suggests it could be an antecedent of REDS.

Item Development

Item development was performed by the study team based on this five-dimensional model. Item development began with a search for existing similar scales, and consideration of relevant question items. Three items to assess Disbelief were identified from an existing scale.26 The study team, which included experts in survey design, scale development, cancer screening and overdiagnosis, then engaged in an iterative process of item generation. The first author generated an initial set of items, which were then added to, edited, and discussed by the study team. The research group followed the prescription that item generation should err on the side of over-inclusiveness by including items that might ultimately prove to be tangential to the core construct.28 After multiple rounds of item generation and discussion, this process resulted in a total of 35 items. Our goal was to have both a psychometrically valid and clinically useful tool, so we sought to limit each construct to 4 items (the minimum number of items required to estimate fit of a single factor in confirmatory factor analysis). All items were worded as statements so as to be assessed on an agree/disagree Likert scale (1=“strongly disagree” to 5=“strongly agree”).

Overdiagnosis Information Materials

The information about breast cancer overdiagnosis included four pages adapted from a decision aid about breast screening for women over 50 in Australia.29 Key information from that decision aid was used in this study with permission from the original authors. We made modifications to those materials which we describe in detail in the supplement. Briefly, the process of modification included 1. Identifying essential elements explaining overdiagnosis from the original decision aid, 2. Revising rates of overdiagnosis using updated literature, 3. Soliciting feedback from 5 internationally recognized experts in cancer screening and overdiagnosis, and 4. Conducting cognitive interviews with patients to refine the materials to ensure that the explanation of overdiagnosis was understandable. Full materials can be found in the supplement.

Participants and Survey Overview

The information about breast cancer overdiagnosis was distributed electronically to female participants aged 39-49 from across the United States using Dynata, a company that maintains a panel of millions of individuals worldwide who opted in to participate in surveys in exchange for small cash incentives. Participants were invited to participate in this online survey and told that they would evaluate one part of a larger booklet that provides information about the benefits and harms of breast cancer screening. After reading the information about overdiagnosis, participants responded to items intended to measure REDS and Information Conflict, outcome measures and standard demographics.

Outcome Measures and Associated Hypotheses

The only question assessed prior to presenting the information about overdiagnosis was whether participants had ever heard of overdiagnosis as a harm of breast cancer screening. After reading the information about overdiagnosis, participants were asked to respond to the REDS and Information Conflict items described previously. Participants then responded to the primary outcome measure, impact of breast cancer overdiagnosis on screening intentions. This measure had four categorical response options: “This information made me want to start regular screening sooner,” “This information made me want to wait to get regular screening until I'm older,” “This information will not influence my decision,” and “Other, please specify.” A slight adjustment in wording was made for participants who had received breast cancer screening in the past (e.g., “start regular screening” was replaced with “continue regular screening”). The primary analytic outcome was defined as a binary indicator of whether respondents selected “made me want to wait to get regular screening until I'm older” (1) versus the remaining response options (0). We hypothesized that participants who expressed more REDS in response to information about overdiagnosis would be less likely to state that this information influenced them to wait to get regular breast cancer screening until they are older. This directional hypothesis was preregistered.

As a secondary outcome, respondents were asked, “Based on what you have learned, do you think overdiagnosis is important for women to know about before starting mammography screening?” (1=not important, 5=very important). Prior to data analysis, we hypothesized that women who expressed more REDS would perceive overdiagnosis as less important for women to know about.

We included five exploratory outcome measures for the purpose of exploring their association with REDS and Information Conflict. These were all assessed after the overdiagnosis information and primary outcome measures. Knowledge about overdiagnosis was assessed with five questions used in prior research.29 A knowledge score was computed as the proportion of questions answered correctly. We also administered the Medical Maximizing-Minimizing Scale, which assesses an individual’s desire for more vs. less intensive approaches to healthcare, and computed an average score in which higher scores indicate a preference for receiving more intensive healthcare and lower scores indicate a preference for less intensive healthcare.30 Trust in the healthcare system was assessed using an average score from a standardized scale31 where higher values correspond to greater trust. Finally, health literacy32 and subjective numeracy were assessed.33

At the end of the survey, standard demographics and participant characteristics were collected in order to describe the degree to which the sample is similar to the general population that would be eligible for breast cancer screening. These measures included age, race, ethnicity, educational attainment, health insurance status, general health status,34 prior diagnosis of breast cancer and BRCA1/2 gene mutation. The survey also included two attention check items appearing in the second half of the survey (e.g., “To show that you are still reading these questions, select ‘strongly agree’”) and we pre-registered a plan to remove from analyses participants who failed both checks.

Analyses

Respondent data were excluded if they did not complete the survey, or if they responded incorrectly to both attention check items. In our preregistration, we planned to conduct maximum likelihood exploratory factor analysis (EFA) with an appropriate rotation, but upon completing data collection we decided to perform both exploratory and confirmatory factor analyses (CFA) using this data set, based on advice from our analytic team, to make more efficient use of these data. Therefore, the data were randomly split into a training set and test set for psychometric analyses. Based on rules of thumb of 10-15 participants per item,35 and since we expected to reduce the data to 4 questions per construct (20 items total), we selected a test set of 225 respondents and used the remainder for training. EFA was conducted in the training set using Promax rotation and CFA was conducted in the test set to assess model fit. We assessed model fit with chi-square, Root Mean Square Error of Approximation (RMSEA), Comparative Fit Index (CFI) and Tucker-Lewis index (TLI) using robust maximum likelihood estimation. An acceptable model fit would be indicated by chi-square p-value>0.05, RMSEA<0.08, and CFI and TLI >0.90. A likelihood ratio test (LRT) using the chi-square statistic was used to assess whether nested models with constrained parameters were a better fit.
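The random split and the participants-per-item rule of thumb can be illustrated with a minimal Python sketch. The function name `split_train_test`, the fixed seed, and the use of sequential respondent IDs are assumptions made for illustration; the sample size of 525 is the final analytic sample reported in the Results, and the sketch is not the study's analysis code.

```python
import random


def split_train_test(respondent_ids, test_size, seed=0):
    """Randomly partition respondent IDs into a training set and a test set."""
    rng = random.Random(seed)  # fixed seed is an assumption, used only for reproducibility
    shuffled = list(respondent_ids)
    rng.shuffle(shuffled)
    test = sorted(shuffled[:test_size])
    train = sorted(shuffled[test_size:])
    return train, test


# Rule of thumb from the text: 10-15 participants per item, 20 items expected.
n_items = 20
low, high = 10 * n_items, 15 * n_items  # 200 to 300 respondents

# Hypothetical sequential IDs 1..525 stand in for the real respondents.
train_ids, test_ids = split_train_test(range(1, 526), test_size=225)

print(len(train_ids), len(test_ids))  # 300 225
print(low <= len(test_ids) <= high)   # True
```

Under these assumptions, a test set of 225 falls inside the 200-300 range implied by the rule of thumb for a 20-item measure.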

We planned that if there were more than 4 items per factor retained in the EFA based on factor loadings >0.50, we would reduce the items by retaining items with the highest factor loadings. After obtaining the data and noting many items with high factor loadings, we decided to also consider retaining items based on the most clear and unambiguous wording and lower literacy words. Decisions on item reduction were made by consensus among the investigative team. Reliability and internal consistency were assessed for the final measures in the training set using overall and domain-specific Cronbach’s alpha and by computing item-scale correlations corrected for item overlap and scale reliability.
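For readers unfamiliar with Cronbach's alpha, the following is a minimal sketch of the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of the total score). The function name `cronbach_alpha` and the toy responses are invented for illustration and are not study data; the corrected item-scale correlations mentioned above are not covered here.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k item responses."""
    k = len(item_scores[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in item_scores]) for i in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Toy 4-item subscale with 5 respondents (invented for illustration).
toy = [
    [1, 2, 1, 2],
    [3, 3, 4, 3],
    [5, 4, 5, 5],
    [2, 2, 3, 2],
    [4, 5, 4, 4],
]
alpha = cronbach_alpha(toy)
print(round(alpha, 2))  # 0.96
```

Values closer to 1 indicate that the items move together; a subscale whose items were identical for every respondent would yield an alpha of exactly 1.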

Subscale items were scored from 1 to 5 with reverse coding for positively-worded items, and then averaged to obtain a subscale score. Descriptive statistics were presented for the full data to summarize the scores for each of the subscales. Within-subjects (or repeated-measures) ANOVA was used to assess for statistically significant differences between the subscales. Pairwise comparisons between the subscales were performed using paired t-tests and p-values were adjusted for multiple comparisons using Holm’s method.36 Pearson correlation coefficients (and Holm-adjusted p-values) were used to assess the association between each of the subscales.
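The subscale scoring rule can be sketched as follows. The item keys, the example responses, and the assumption that all four illustrated Disbelief items are positively worded (and therefore reverse coded so that higher scores indicate more disbelief) are ours, introduced only for illustration.

```python
SCALE_MIN, SCALE_MAX = 1, 5


def reverse_code(response):
    """Flip a 1-5 Likert response so that 1 <-> 5, 2 <-> 4, and 3 stays 3."""
    return SCALE_MIN + SCALE_MAX - response


def subscale_score(responses, reverse_items):
    """Average the item responses after reverse coding the flagged items.

    responses: dict mapping item name -> response (1-5)
    reverse_items: set of item names to reverse code
    """
    recoded = [
        reverse_code(value) if item in reverse_items else value
        for item, value in responses.items()
    ]
    return sum(recoded) / len(recoded)


# Hypothetical responses from one participant; item keys are invented shorthand.
disbelief = {"believable": 4, "convincing": 5, "accurate": 4, "agree_overall": 3}
score = subscale_score(disbelief, reverse_items=set(disbelief))
print(score)  # 2.0
```

In this example a participant who largely agreed that the information was believable receives a low Disbelief score of 2.0 on the 1-5 scale.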

Once a good fitting measurement model was obtained from EFA and CFA, we planned that further tests would use the full data with point biserial correlations between each subscale and the binary primary outcome. We also explored Pearson correlations between the subscales and secondary outcomes. Holm’s method was used to account for multiple comparisons within each outcome.36
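A point biserial correlation is numerically the Pearson correlation between a binary variable and a continuous one, and Holm's method is a step-down adjustment of the ordered p-values. The sketch below illustrates both with plain Python; the function names and all numbers are invented for illustration and do not reproduce the study's results.

```python
import math


def pearson_r(x, y):
    """Pearson correlation; equals the point biserial r when x is coded 0/1."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted p-values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Toy data: binary outcome (1 = "wait until older") and a subscale score.
outcome = [0, 0, 1, 1]
subscale = [3.0, 4.0, 1.0, 2.0]
r = pearson_r(outcome, subscale)
print(round(r, 3))  # -0.894

# Toy unadjusted p-values for three comparisons within one outcome.
adjusted = holm_adjust([0.01, 0.04, 0.03])
print([round(p, 3) for p in adjusted])  # [0.03, 0.06, 0.06]
```

The negative toy correlation corresponds to the hypothesized direction: higher subscale scores accompany a lower likelihood of selecting the "wait" option.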

RESULTS

A total of 668 female participants aged 39-49 completed the survey, of whom 137 (21%) did not respond correctly to both attention checks. There were 6 (1%) respondents who did not complete all survey items, and minimal missing data in the sample (<1% per item), so a complete case analysis was performed using a final sample of 525 respondents. Table 1 shows sample demographics and characteristics. The test set consisted of 225 randomly selected respondents, and the training set comprised the remaining 300 respondents.

Table 1.

Respondent characteristics (N=525).

| Patient Characteristic | n (%) / Median (IQR)¹ |
|---|---|
| Age | 43 (41, 46) |
| Race | |
| American Indian | 6 (1.1%) |
| Asian | 26 (5.0%) |
| Black | 43 (8.2%) |
| Native Hawaiian/Pacific Islander | 2 (0.4%) |
| White | 418 (80%) |
| Other | 29 (5.5%) |
| Hispanic | 81 (15%) |
| Education | |
| High school diploma or less | 130 (24.4%) |
| Trade school | 20 (3.8%) |
| Some college no degree | 101 (19%) |
| Associate’s degree | 71 (14%) |
| Bachelor’s degree or more | 202 (38.3%) |
| Work in a medical field | 68 (13%) |
| Have health insurance | 445 (85%) |
| General health status | |
| Excellent | 77 (15%) |
| Very Good | 147 (28%) |
| Good | 168 (32%) |
| Fair | 92 (18%) |
| Poor | 40 (7.6%) |
| Prior breast cancer diagnosis | 17 (3.2%) |
| Have BRCA1/BRCA2 gene mutation | |
| Yes | 28 (5.3%) |
| No | 258 (49%) |
| I don’t know | 238 (45%) |
| Health literacy (How often do you need to have someone help you when you read instructions, pamphlets, or other written material from your doctor or pharmacy?) | |
| Never | 308 (59%) |
| Rarely | 90 (17%) |
| Sometimes | 62 (12%) |
| Often | 36 (6.9%) |
| Always | 28 (5.3%) |
| Average numeracy score | 4.33 (3.33, 5.33) |
| Prior knowledge about overdiagnosis | |
| I have never heard of it | 289 (55%) |
| I’m not sure | 79 (15%) |
| I have heard of overdiagnosis but I do not know much about it | 99 (19%) |
| I have heard of overdiagnosis and I understand it well | 58 (11%) |

¹ Missingness was minimal (n=1) for some characteristics.

Research Question (RQ) 1: Dimensionality and item reduction

To address RQ 1, we assessed dimensionality of the proposed scale items to identify whether Reactance, self-Exemption, Disbelief, Source derogation and Information Conflict are all separable constructs. No items exhibited substantial kurtosis or skewness, so all were included in the EFA on the training data. Maximum likelihood EFA was conducted with Promax rotation. Six factors were extracted with eigenvalues > 1 that jointly explained 62% of the variance. Factor loadings are displayed in Table 2. Examining item loadings >0.5 suggested that factor 1 (eigenvalue = 5.43) represented Reactance with 7 items; factor 2 (eigenvalue = 5.33) represented Source derogation and included 7 items; factor 3 (eigenvalue = 3.74) represented Disbelief and included 6 items; factor 4 (eigenvalue = 3.05) represented self-Exemption and included 4 items; and factor 5 (eigenvalue = 2.57) represented Information Conflict and included 5 items. Factor 6 (eigenvalue = 1.61) included two items that were related to conspiracy-like beliefs (e.g., that the people who created this information were being paid by government to ration healthcare). These 2 items were anticipated to be overinclusive and potentially outside of the REDS constructs, and their loading on a separate factor confirmed that this was the case. Since these 2 items did not load onto the five intended constructs, we performed another EFA with five factors, which explained 59% of the variance. There were 4 items with factor loadings <0.5 that were identified for removal, resulting in an initial 31 items (Table 2).
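As a rough arithmetic check on the six-factor solution, the reported eigenvalues can be related to the reported proportion of variance explained. The sketch below assumes standardized items, so that total variance equals the number of items (35), and treats the reported values as ordinary eigenvalues; both are assumptions on our part, and the calculation is illustrative rather than a reproduction of the original analysis.

```python
# Eigenvalues as reported for the six extracted factors.
eigenvalues = [5.43, 5.33, 3.74, 3.05, 2.57, 1.61]
n_items = 35  # total item pool entered into the EFA

# Retention rule used in the text: keep factors with eigenvalue > 1.
retained = [ev for ev in eigenvalues if ev > 1]

# With standardized items, total variance equals the number of items.
share_explained = sum(retained) / n_items

print(len(retained))                 # 6
print(round(100 * share_explained))  # 62
```

Under these assumptions the six eigenvalues sum to about 21.73, which is roughly 62% of 35 and agrees with the figure reported above. The 59% reported for the five-factor solution comes from a separately fitted model and is not expected to follow from these six values.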

Table 2.

Factor loadings for all survey items. Bolded font indicates 31 items with factor loadings >0.5. Asterisked (*) items indicate items retained for 20-item measure.

| Survey Item | Factor 1 “Reactance” | Factor 2 “Source derogation” | Factor 3 “Disbelief” | Factor 4 “self-Exemption” | Factor 5 “Information Conflict” | Factor 6 “Conspiracy beliefs” |
|---|---|---|---|---|---|---|
| Eigenvalues | 5.43 | 5.33 | 3.74 | 3.05 | 2.57 | 1.61 |
| *In my opinion, the information about overdiagnosis was believable | −0.05 | 0.09 | 0.77 | −0.07 | 0.03 | 0.02 |
| *In my opinion, the information about overdiagnosis was convincing | −0.04 | 0.17 | 0.65 | −0.12 | 0.09 | −0.04 |
| *In my opinion, the information about overdiagnosis was accurate | −0.04 | −0.03 | 0.89 | 0.03 | −0.04 | 0.06 |
| In my opinion, the information about overdiagnosis was credible | −0.05 | −0.04 | 0.86 | 0.02 | 0.02 | −0.01 |
| In my opinion, the information about overdiagnosis was reliable | 0.07 | 0.02 | 0.91 | −0.03 | 0.01 | 0.00 |
| In my opinion, the information about overdiagnosis was doubtful | 0.29 | −0.07 | −0.10 | 0.21 | 0.03 | 0.08 |
| *Overall, how much do you disagree or agree with the information you read about overdiagnosis? | −0.01 | 0.32 | 0.51 | 0.09 | −0.08 | −0.13 |
| *In my opinion, the information presented about overdiagnosis seemed exaggerated | 0.88 | −0.01 | −0.04 | −0.08 | 0.02 | 0.03 |
| In my opinion, the information presented about overdiagnosis seemed dishonest | 0.76 | −0.04 | 0.00 | 0.06 | 0.01 | −0.07 |
| In my opinion, the information presented about overdiagnosis seemed fake | 0.80 | 0.04 | −0.10 | 0.10 | −0.02 | −0.09 |
| *In my opinion, the information presented about overdiagnosis seemed biased | 0.79 | −0.01 | −0.03 | −0.03 | −0.01 | 0.06 |
| *In my opinion, the information presented about overdiagnosis seemed deceptive | 0.89 | −0.05 | 0.07 | −0.03 | 0.08 | −0.04 |
| In my opinion, the information presented about overdiagnosis seemed like it was trying to manipulate me | 0.94 | 0.03 | −0.01 | −0.15 | 0.01 | 0.05 |
| *In my opinion, the information presented about overdiagnosis seemed like it was trying to pressure me to make a particular decision | 0.87 | −0.05 | 0.03 | −0.07 | −0.01 | 0.00 |
| In my opinion, the information presented about overdiagnosis seemed balanced | 0.09 | 0.65 | 0.06 | 0.13 | −0.11 | −0.06 |
| *Overdiagnosis might happen to some women but would never happen to me | −0.06 | −0.05 | −0.02 | 0.80 | −0.05 | 0.01 |
| *The information about overdiagnosis may be true for some women but is not true for me | −0.09 | −0.05 | 0.00 | 0.81 | 0.06 | 0.01 |
| The information about overdiagnosis applies to me personally | 0.33 | 0.36 | 0.18 | 0.19 | −0.14 | −0.10 |
| *The numbers presented about overdiagnosis may be true for the average woman but do not apply to me | −0.13 | −0.06 | −0.04 | 0.91 | 0.03 | 0.00 |
| A mammogram would never cause me harm | 0.17 | 0.08 | 0.04 | 0.20 | −0.01 | 0.13 |
| *A mammogram would never benefit me | 0.04 | 0.08 | 0.02 | 0.77 | −0.01 | −0.18 |
| *In my opinion, the researchers who created this information about overdiagnosis seem trustworthy | −0.05 | 0.87 | 0.01 | −0.04 | 0.02 | 0.07 |
| *In my opinion, the researchers who created this information about overdiagnosis seem to have my best interests in mind | 0.08 | 0.92 | −0.04 | −0.02 | −0.05 | 0.00 |
| In my opinion, the researchers who created this information about overdiagnosis are trying to help people like me | 0.01 | 0.94 | −0.06 | −0.10 | 0.10 | 0.04 |
| *In my opinion, the researchers who created this information about overdiagnosis are experts | −0.04 | 0.78 | 0.03 | 0.00 | 0.00 | 0.05 |
| *In my opinion, the researchers who created this information about overdiagnosis are competent | −0.05 | 0.88 | −0.03 | −0.05 | 0.05 | 0.15 |
| In my opinion, the researchers who created this information about overdiagnosis understand science | −0.17 | 0.77 | −0.08 | 0.09 | 0.12 | 0.04 |
| In my opinion, the researchers who created this information about overdiagnosis are probably on the payroll of insurance companies | 0.02 | 0.17 | −0.01 | −0.06 | −0.04 | 0.98 |
| In my opinion, the researchers who created this information about overdiagnosis are probably helping the government to ration healthcare | −0.02 | 0.06 | 0.10 | 0.19 | −0.06 | 0.63 |
| The information presented about overdiagnosis conflicts with other things I know to be true | 0.12 | −0.10 | 0.03 | 0.04 | 0.65 | 0.11 |
| *The information presented about overdiagnosis is different from what my doctor has told me about cancer screening. | 0.08 | 0.01 | 0.04 | 0.05 | 0.68 | 0.03 |
| The information presented about overdiagnosis can’t be true, given other things I know about cancer and cancer screening. | 0.19 | −0.20 | 0.02 | 0.25 | 0.19 | 0.20 |
| *The information presented about overdiagnosis conflicts with other health messages I have heard. | 0.09 | −0.13 | 0.07 | 0.05 | 0.63 | 0.04 |
| *The information presented about overdiagnosis is very different from what I believed before. | −0.06 | 0.16 | −0.08 | −0.04 | 0.80 | −0.08 |
| *The information presented about overdiagnosis is surprising | −0.03 | 0.19 | 0.01 | −0.02 | 0.71 | −0.18 |

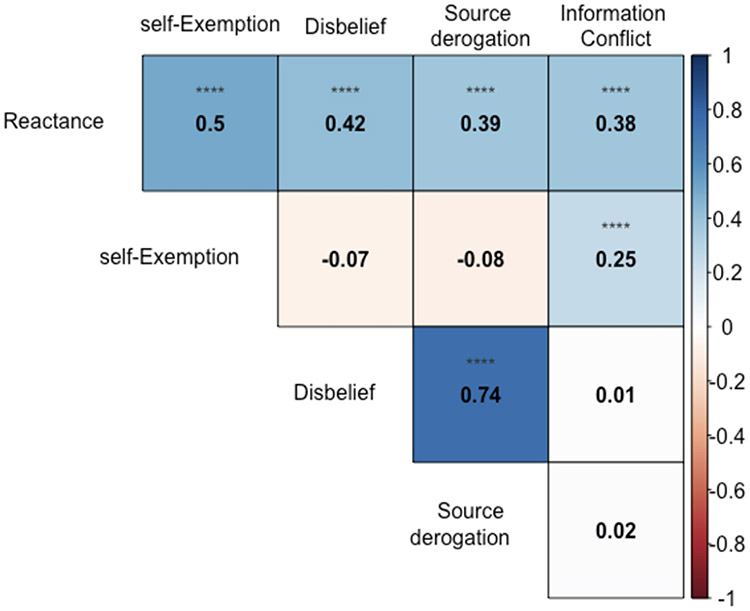

The team then sought to further reduce the number of items per construct to four. This was accomplished either by accepting the highest-loading items or, based on group discussion, by selecting high-loading items that had the most clear and unambiguous wording and/or lower literacy words. Final selected items are denoted with asterisks in Table 2. The reliability of the resultant 20 items together was high (alpha=0.88) and reliability for the subscales was also high: Reactance (alpha=0.92), self-Exemption (alpha=0.85), Disbelief (alpha=0.89), Source derogation (alpha=0.91), Information Conflict (alpha=0.79). Intercorrelations between the subscales ranged from −0.08 to 0.74 (Figure 1). Item-scale correlations within the subscales ranged from 0.64 to 0.87. Due to the high correlation between the Disbelief and Source derogation constructs (r=0.74), we compared a 2-factor model of the two constructs with a 1-factor model in which the correlation between them was constrained to 1, and found significant evidence that these should be considered distinct constructs (LRT p<0.001).

Figure 1.

Pearson correlation coefficients for associations between subscales. Color saturation indicates strength of associations with blue indicating positive correlations and red indicating negative correlations. Asterisks indicate statistical significance with p-value adjusted using Holm’s method (*0.01<p≤0.05, **0.001<p≤0.01, ***0.0001<p≤0.001, ****p≤0.0001)
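The Holm adjustment and the asterisk thresholds described in this caption can be sketched as follows. This is an illustrative implementation of Holm's step-down procedure, not the authors' analysis code; the function names `holm_adjust` and `significance_stars` and the example p-values are hypothetical.

```python
def holm_adjust(p_values):
    """Return Holm-adjusted p-values in the same order as the input."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: an adjusted p never falls below an earlier one.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


def significance_stars(p):
    """Map an adjusted p-value to the asterisk notation used in the captions."""
    if p <= 0.0001:
        return "****"
    if p <= 0.001:
        return "***"
    if p <= 0.01:
        return "**"
    if p <= 0.05:
        return "*"
    return "ns"


# Hypothetical unadjusted p-values for four pairwise tests.
raw = [0.01, 0.04, 0.03, 0.005]
for p_raw, p_adj in zip(raw, holm_adjust(raw)):
    print(p_raw, round(p_adj, 3), significance_stars(p_adj))
```

Holm's method controls the family-wise error rate like the Bonferroni correction but is uniformly less conservative, because only the smallest p-value receives the full multiplier.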

The EFA results indicated that meaningful definitions of all the constructs could be identified, and that using each of the constructs as domains in a five-factor model for assessing perceptions would be appropriate. In CFA for a five-factor model with the reduced 20 items, we found that there was good model fit. Though the model chi-square was statistically significant (254.4; p<0.001), this statistic is sensitive to sample size, and RMSEA (0.057), CFI (0.96), and TLI (0.95) all supported a good-fitting model.
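To illustrate how a chi-square statistic relates to RMSEA, here is a rough sketch using the standard textbook formula. Several inputs are assumptions rather than values reported in this section: the degrees of freedom are counted for a simple-structure model (each item loading on one factor, factor variances fixed to 1, all factor covariances free, no correlated residuals), and the sample size `n=250` is a placeholder. Software packages differ in details such as using n versus n−1 or applying robust corrections, so the output is not expected to reproduce the reported 0.057.

```python
import math


def cfa_degrees_of_freedom(n_items, n_factors):
    """Degrees of freedom for a simple-structure CFA (assumed specification)."""
    # Unique variances and covariances among the observed items.
    moments = n_items * (n_items + 1) // 2
    # Free parameters: one loading and one residual variance per item,
    # plus one covariance for every pair of factors.
    free_parameters = n_items + n_items + n_factors * (n_factors - 1) // 2
    return moments - free_parameters


def rmsea(chi_square, df, n):
    """Root mean square error of approximation (n - 1 convention)."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


df = cfa_degrees_of_freedom(n_items=20, n_factors=5)
print(df)
# n=250 is a hypothetical placeholder, not the study's test-set size.
print(round(rmsea(chi_square=254.4, df=df, n=250), 3))
```

Because RMSEA divides the excess of chi-square over its degrees of freedom by the sample size, a model can have a significant chi-square and still show a small RMSEA when the sample is large.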

RQ 2: Descriptive Analyses and Associations Among Constructs

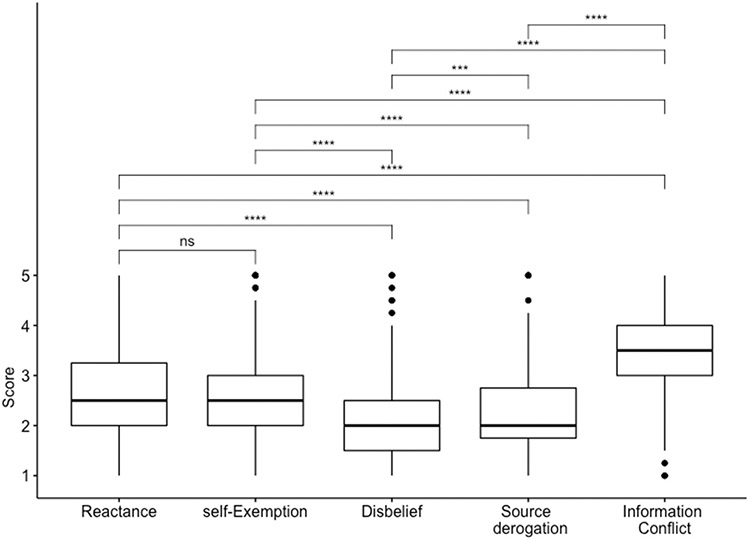

To address RQ2, we assessed correlations among these constructs to identify how they are related to each other. First, the boxplots in Figure 2 show sample medians and interquartile ranges (IQR) for REDS and Information Conflict [Means and standard deviations: Reactance: 2.62 (1.07), self-Exemption: 2.55 (0.91), Disbelief: 2.15 (0.84), Source derogation: 2.25 (0.83), Information Conflict: 3.56 (0.84)]. Figure 2 shows that participants expressed more Information Conflict than REDS, and within REDS experienced more Reactance than the other constructs. There was a statistically significant difference in the scores for the different constructs (within-subjects ANOVA, p<0.001). In pairwise comparisons between the different constructs, there was a statistically significant difference between all constructs except for Reactance and self-Exemption.

Figure 2.

Box plots of subscale medians and interquartile ranges. Asterisks indicate statistical significance of pairwise t-test with p-value adjusted using Holm’s method (ns: p>0.05, *0.01<p≤.05, **0.001<p≤0.01, ***0.0001<p≤0.001, ****p≤0.0001)
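Because every participant contributes a score on every subscale, the pairwise comparisons are within-subject, so a paired t-test is the natural form. The sketch below computes only the paired t statistic and its degrees of freedom; converting these to a p-value requires the t distribution, which is left to a statistics package. The function name `paired_t` and the scores are hypothetical, not the study's data.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for two matched score lists."""
    if len(x) != len(y):
        raise ValueError("paired samples must have equal length")
    # Work with each participant's difference between the two subscales.
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("differences have zero variance")
    t_statistic = mean(diffs) / (sd / math.sqrt(n))
    return t_statistic, n - 1


# Hypothetical subscale means for four participants.
information_conflict = [3, 4, 5, 4]
disbelief = [2, 2, 4, 4]
t_stat, dof = paired_t(information_conflict, disbelief)
print(round(t_stat, 3), dof)
```

With five subscales there are ten such pairwise comparisons, which is why the resulting p-values are adjusted for multiplicity before the asterisks are assigned.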

Correlations between mean scores among each of the 4-item scales (Figure 1) indicated that Reactance was moderately correlated with all four other constructs, with the highest correlation between Reactance and Disbelief (r=0.50). Meanwhile, self-Exemption had weak correlations with Disbelief (r=−0.07) and Source derogation (r=−0.08), suggesting that a person’s belief that information is (or is not) personally relevant is unrelated to the overall believability of that information. Disbelief and Source derogation showed the strongest correlation among all the subscales, r=0.74 (note that during dimension reduction these were identified to be distinct constructs despite this high correlation). Unexpectedly, Information Conflict was only weakly correlated with Disbelief (r=0.01) and Source derogation (r=0.02), but was moderately positively correlated with Reactance (r=0.38) and self-Exemption (r=0.25).
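The correlations above are computed on subscale mean scores, that is, each participant's average across the four items of a subscale. A minimal sketch of that two-step calculation follows; the helper names `subscale_means` and `pearson_r`, the item positions, and the responses are hypothetical and chosen only to make the arithmetic easy to follow.

```python
import math


def subscale_means(responses, item_indices):
    """Average the listed item positions for each respondent."""
    return [
        sum(row[i] for i in item_indices) / len(item_indices)
        for row in responses
    ]


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cross / math.sqrt(ss_x * ss_y)


# Hypothetical data: columns 0-1 belong to one subscale, columns 2-3 to another.
toy = [
    [1, 2, 5, 4],
    [2, 2, 4, 4],
    [4, 5, 2, 1],
    [5, 4, 1, 2],
]
scale_a = subscale_means(toy, [0, 1])
scale_b = subscale_means(toy, [2, 3])
print(round(pearson_r(scale_a, scale_b), 2))
```

In this toy example the two subscale means are perfect mirror images, so the correlation sits at the negative extreme; real subscales, as in Figure 1, fall well inside that range.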

RQ 3: Associations with Outcome Measures

To address RQ 3, we examined correlations between REDS, Information Conflict, and the primary and secondary outcome measures to explore the potential implications of REDS and Information Conflict for screening decision-making (Table 3). Consistent with the pre-registered hypothesis, greater Reactance, Disbelief and Source derogation were each significantly associated with being less likely to want to wait to receive screening as a result of learning about overdiagnosis (and more likely to report that the information did not affect their decision or made them want to screen earlier) (all ps<0.003). Also consistent with expectations (but not preregistered), participants expressing greater Reactance, Disbelief and Source derogation were less likely to think that it was important to inform women about overdiagnosis. However, neither of these outcomes was significantly associated with self-Exemption. Additionally, Information Conflict was not significantly associated with either outcome.

Table 3.

Pearson correlation coefficients for associations between REDS, Information Conflict, and outcomes. Asterisks indicate statistical significance with p-value adjusted using Holm’s method (*p≤0.05, **p≤0.01, ***p≤0.001)

| Outcome 1 | Reactance | Self-Exemption | Disbelief | Source derogation | Information conflict |

|---|---|---|---|---|---|

| Screening intentions | −0.21*** | −0.07 | −0.13** | −0.15** | 0.03 |

| Perceived importance of informing people about overdiagnosis | −0.33*** | 0.004 | −0.59*** | −0.60*** | −0.02 |

| Exploratory correlations | | | | | |

| Knowledge score | −0.25*** | −0.20*** | −0.08 | −0.08 | −0.05 |

| Trust in the healthcare system | −0.12* | −0.11* | −0.08 | −0.13** | −0.14** |

| Medical maximizer-minimizer scale | 0.17*** | 0.24*** | −0.15*** | −0.19*** | 0.13*** |

| Health literacy | −0.26*** | −0.47*** | 0.28*** | 0.25*** | −0.18*** |

| Subjective numeracy | −0.10* | −0.09* | 0.16** | 0.16** | −0.18** |

All outcomes are coded such that higher values indicate increased levels of the outcome; for screening intentions, 0=made me want to screen sooner or did not affect my decision, 1=made me want to wait to receive screening

Exploratory results

Exploratory analyses included investigating associations between REDS and post-intervention knowledge, healthcare system trust, Medical Maximizing-Minimizing, health literacy and subjective numeracy (Table 3). Participants who had less post-intervention knowledge about overdiagnosis were more likely to express Reactance and self-Exemption, but were not more likely to express Disbelief, Source derogation or Information Conflict. Low healthcare system trust was weakly associated with greater REDS (all four constructs) and Information Conflict. Medical maximizing, lower literacy, and lower numeracy were all associated with more Reactance, self-Exemption, and Information Conflict, and less Disbelief and Source derogation.

DISCUSSION

Beliefs about mammography are informed by health messages, doctors’ advice, and broader mental models about what breast cancer is and how it behaves.37 The notion of overdiagnosis may conflict with some or even all of those prior messages and beliefs. Many people who learn about overdiagnosis believe it is important information but that it does not outweigh the potential benefit of screening;38,39 however, the present research was concerned with the possibility that for some people overdiagnosis is a concept that is difficult to accept. The present research showed that a substantial number of people react to information about overdiagnosis with Reactance, self-Exemption, Disbelief and Source derogation.

REDS are reactions which suggest that a person is skeptical of the information, and as a result, may be unwilling to consider the information as a factor in their decision-making. Consistent with this idea, individuals who expressed Reactance, Disbelief, and Source derogation were less likely to say their decisions would be influenced by information about overdiagnosis, and less likely to think it is important for people to be informed about overdiagnosis. At the same time, self-Exemption was not significantly related to these outcomes, suggesting that self-Exemption may not have as strong an impact on screening judgments as R, D, and S.

Information Conflict (the perception that overdiagnosis conflicts with prior knowledge and messages) was the most common reaction to overdiagnosis, which suggests that people are still not being informed about overdiagnosis routinely. Information Conflict was correlated with both Reactance and self-Exemption, which is consistent with the idea that Information Conflict may be an antecedent of REDS. However, Information Conflict was not associated with Disbelief or Source derogation. One possible interpretation of the pattern of correlations observed in Figure 1 is that when a message is very different from prior beliefs and health messages (i.e., when people experience Information Conflict), this causes people to suspect that the message is manipulative (R) or that it is not personally relevant (E), and then to react with additional responses that further undermine the message (D and S). Although future research is clearly needed to provide evidence for causal relationships, one implication is that if REDS reactions do stem directly or indirectly from Information Conflict, then these reactions might lessen over time as messages about overdiagnosis are repeated and become more familiar.

Results involving exploratory correlations were interesting but sometimes difficult to interpret. REDS and Information Conflict were all slightly negatively associated with trust in the healthcare system (although the correlation with Disbelief did not reach statistical significance), which is consistent with prior research showing that conflicting or changing health information can negatively impact trust.40-42 However, another explanation is that those with low trust in the healthcare system are more skeptical of new health information.

Lower post-intervention knowledge was associated with greater Reactance and self-Exemption only, and it is unclear from these data whether people who expressed more R and E learned less from the materials or had less knowledge to begin with. Health literacy, numeracy and medical maximizing all showed similar patterns of correlations: Individuals with lower health literacy, lower numeracy and greater maximizing preferences expressed greater Reactance, self-Exemption, and Information Conflict, but less Disbelief and Source derogation. These patterns of associations were not predicted and so we cannot infer a causal direction, although these findings may be useful for hypothesis generation.

Limitations

A limitation of this research is that it was not designed to identify whether REDS are best characterized as defensive responses (stemming from a motivation to avoid aversive conclusions) versus a normative probabilistic inference (i.e., information that conflicts starkly with previously held beliefs and messages is unlikely to be true). We did find some support for the latter idea, insofar as Information Conflict was associated with Reactance and self-Exemption. However, we suspect that both characterizations of REDS may have merit. A mental models approach could be useful in discerning these different characterizations of REDS.24,43 A related limitation is that, due to the correlational design, we cannot make strong conclusions about causal mechanisms. However, these data can inform future research that tests questions of causality.

Moreover, this study examined REDS and Information Conflict only in reaction to overdiagnosis in breast cancer screening. REDS may or may not have similar implications when people are confronted with information about overdiagnosis for other health conditions. Future research could use the measures developed in this study to examine similar questions in other contexts where overdiagnosis occurs, for example, in prostate cancer screening.

Although we solicited feedback from experts and conducted cognitive interviews with patients to test the materials prior to conducting this study, another limitation is that the presentation of overdiagnosis may have been suboptimal in some ways. For example, the presentation of numerical values did not use icon arrays and did not use constant denominators, and as a result, it may have been difficult for some participants to understand. Moreover, we did not collect extensive information about family history of breast cancer, prior mammography results or experiences with procedures such as biopsies. These experiences could influence REDS, Information Conflict and screening intentions. This research also does not reflect reactions that people might have to information that includes both screening benefits and harms. Future research should identify how prior healthcare experiences and more complete information about mammography screening may influence REDS responses and Information Conflict, and their implications for health decisions.

Finally, the present sample was not obtained using probability sampling, and so we cannot claim that it is nationally representative or that the observed rates of REDS and Information Conflict are reflective of the broader U.S. population. This sample was more educated and lacked racial and ethnic diversity compared to the U.S. population, and so future research is needed to validate the measures in more diverse populations. Future research should continue to evaluate the psychometric properties of the instrument, including but not limited to test-retest reliability, model fit in samples drawn from different populations, and predictive validity for real-world decisions.

Conclusions and Future Directions

This study reports the initial validation of a scale to assess REDS and Information Conflict. REDS and Information Conflict may impact the communication of evidence-based health information, and could have consequences for how patients incorporate and consider information on overdiagnosis as part of cancer screening decisions. Measurement of these constructs may also prove useful in communication across other health domains. In health communication, experts often focus on message effectiveness when the message is meant to persuade, and on outcomes such as knowledge and decisional conflict in preference-sensitive message contexts.44 The study of REDS and Information Conflict allows a deeper understanding of how health messages might be failing, for example, by being at odds with conventional expectations. By expanding the domains of perceived message effectiveness to REDS, researchers may increase their chances of identifying why, specifically, a category of messages or decision aids might fail, and in the process infer how the next generation of messages might be designed to alleviate the failure.

Highlights:

Overdiagnosis is a concept central to making informed breast cancer screening decisions, and yet when provided information about overdiagnosis some people are skeptical.

This research developed a measure that assessed different ways in which people might express skepticism about overdiagnosis (reactance, self-exemption, disbelief, source derogation), and also the perception that overdiagnosis conflicts with prior knowledge and health messages (information conflict).

These different reactions are distinct but correlated, and are common reactions when people learn about overdiagnosis.

Reactance, disbelief and source derogation are associated with lower intent to use information about overdiagnosis in decision-making, as well as the belief that informing people about overdiagnosis is unimportant.

Acknowledgements and transparency:

All individuals who contributed to this manuscript are authors on this manuscript. This study was pre-registered: https://aspredicted.org/nt2jp.pdf. Data, study materials and pre-registration can be found here: https://osf.io/eux4q/

Financial support for this study was provided entirely by a grant from the National Cancer Institute, R37CA254926. The funding agreement ensured the authors’ independence in designing the study, interpreting the data, writing, and publishing the report.

REFERENCES

- 1. Berkman ND, Sheridan SL, Donahue KE, et al. The Effect of Interventions To Mitigate the Effects of Low Health Literacy. Published March 2011. Accessed January 27, 2014. http://www.ncbi.nlm.nih.gov/books/NBK82433/

- 2. DeWalt DA, Pignone MP. Reading is fundamental: the relationship between literacy and health. Archives of Internal Medicine. 2005;165(17):1943.

- 3. Fagerlin A, Ubel PA, Smith DM, Zikmund-Fisher BJ. Making numbers matter: present and future research in risk communication. American Journal of Health Behavior. 2007;31(Supplement 1):S47–S56.

- 4. Pignone M, DeWalt DA, Sheridan S, Berkman N, Lohr KN. Interventions to improve health outcomes for patients with low literacy. Journal of General Internal Medicine. 2005;20(2):185–192.

- 5. Chou WYS, Oh A, Klein WMP. Addressing Health-Related Misinformation on Social Media. JAMA. 2018;320(23):2417–2418. doi: 10.1001/jama.2018.16865

- 6. Goldacre B. Media misinformation and health behaviours. The Lancet Oncology. 2009;10(9):848. doi: 10.1016/S1470-2045(09)70252-9

- 7. Scherer LD, Pennycook G. Who is susceptible to online health misinformation? American Journal of Public Health. 2020;110(S3):S276–S277.

- 8. Siu AL. Screening for Breast Cancer: U.S. Preventive Services Task Force Recommendation Statement. Ann Intern Med. 2016;164(4):279–296. doi: 10.7326/M15-2886

- 9. Oeffinger KC, Fontham ETH, Etzioni R, et al. Breast Cancer Screening for Women at Average Risk: 2015 Guideline Update From the American Cancer Society. JAMA. 2015;314(15):1599. doi: 10.1001/jama.2015.12783

- 10. Carter SM, Rogers W, Heath I, Degeling C, Doust J, Barratt A. The challenge of overdiagnosis begins with its definition. BMJ. 2015;350(mar04 2):h869–h869. doi: 10.1136/bmj.h869

- 11. McCaffery KJ, Jansen J, Scherer LD, et al. Walking the tightrope: communicating overdiagnosis in modern healthcare. BMJ. Published online February 5, 2016:i348. doi: 10.1136/bmj.i348

- 12. Welch HG, Black WC. Overdiagnosis in cancer. Journal of the National Cancer Institute. 2010;102(9):605–613.

- 13. Davidson KW, Mangione CM, Barry MJ, et al. Collaboration and shared decision-making between patients and clinicians in preventive health care decisions and US Preventive Services Task Force Recommendations. JAMA. 2022;327(12):1171–1176.

- 14. Hoffmann TC, Del Mar C. Patients’ Expectations of the Benefits and Harms of Treatments, Screening, and Tests: A Systematic Review. JAMA Internal Medicine. 2015;175(2):274. doi: 10.1001/jamainternmed.2014.6016

- 15. Nagler RH, Franklin Fowler E, Gollust SE. Women’s Awareness of and Responses to Messages About Breast Cancer Overdiagnosis and Overtreatment: Results From a 2016 National Survey. Medical Care. 2017;55(10):879–885. doi: 10.1097/MLR.0000000000000798

- 16. Schwartz LM, Woloshin S, Fowler FJ Jr, Welch HG. Enthusiasm for cancer screening in the United States. JAMA. 2004;291(1):71–78.

- 17. Waller J, Osborne K, Wardle J. Enthusiasm for cancer screening in Great Britain: a general population survey. British Journal of Cancer. 2015;112(3):562–566. doi: 10.1038/bjc.2014.643

- 18. Scherer LD, Valentine KD, Patel N, Baker SG, Fagerlin A. A bias for action in cancer screening? Journal of Experimental Psychology: Applied. 2019;25(2):149.

- 19. Kunda Z. The case for motivated reasoning. Psychological Bulletin. 1990;108(3):480.

- 20. McQueen A, Vernon SW, Swank PR. Construct definition and scale development for defensive information processing: An application to colorectal cancer screening. Health Psychology. 2013;32(2):190–202. doi: 10.1037/a0027311

- 21. Botvinik-Nezer R, Jones M, Wager T. Fraud beliefs following the 2020 US presidential election: A belief systems analysis. Published online 2021.

- 22. Druckman JN, McGrath MC. The evidence for motivated reasoning in climate change preference formation. Nature Climate Change. Published online January 21, 2019:1. doi: 10.1038/s41558-018-0360-1

- 23. Johnson-Laird PN. Mental models of meaning. Published online 1981.

- 24. Morgan MG, Fischhoff B, Bostrom A, Atman CJ. Risk Communication: A Mental Models Approach. Cambridge University Press; 2002.

- 25. Chi MT. Three types of conceptual change: Belief revision, mental model transformation, and categorical shift. In: International Handbook of Research on Conceptual Change. Routledge; 2009:89–110.

- 26. Gollust SE, Cappella JN. Understanding public resistance to messages about health disparities. Journal of Health Communication. 2014;19(4):493–510.

- 27. McQueen A, Swank PR, Vernon SW. Examining patterns of association with defensive information processing about colorectal cancer screening. Journal of Health Psychology. 2014;19(11):1443–1458.

- 28. Clark LA, Watson D. Constructing validity: Basic issues in objective scale development. Psychological Assessment. 1995;7(3):309.

- 29. Hersch J, Barratt A, Jansen J, et al. Use of a decision aid including information on overdetection to support informed choice about breast cancer screening: a randomised controlled trial. The Lancet. 2015;385(9978):1642–1652. doi: 10.1016/S0140-6736(15)60123-4

- 30. Scherer LD, Caverly TJ, Burke J, et al. Development of the Medical Maximizer-Minimizer Scale. Health Psychology. 2016;35(11):1276–1287. doi: 10.1037/hea0000417

- 31. Shea JA, Micco E, Dean LT, McMurphy S, Schwartz JS, Armstrong K. Development of a Revised Health Care System Distrust Scale. Journal of General Internal Medicine. 2008;23(6):727–732. doi: 10.1007/s11606-008-0575-3

- 32. Chew LD, Griffin JM, Partin MR, et al. Validation of Screening Questions for Limited Health Literacy in a Large VA Outpatient Population. Journal of General Internal Medicine. 2008;23(5):561–566. doi: 10.1007/s11606-008-0520-5

- 33. Fagerlin A, Zikmund-Fisher BJ, Ubel PA, Jankovic A, Derry HA, Smith DM. Measuring numeracy without a math test: Development of the subjective numeracy scale. Medical Decision Making. 2007;27(5):672–680.

- 34. Ware JE Jr, Kosinski M, Keller SD. A 12-Item Short-Form Health Survey: construction of scales and preliminary tests of reliability and validity. Medical Care. 1996;34(3):220–233.

- 35. Tabachnick BG, Fidell LS. Using Multivariate Statistics. 4th ed. Allyn and Bacon, Boston, MA; 2001.

- 36. Holm S. A simple sequentially rejective multiple test procedure. Scandinavian Journal of Statistics. Published online 1979:65–70.

- 37. Silverman E, Woloshin S, Schwartz LM, Byram SJ, Welch HG, Fischhoff B. Women’s views on breast cancer risk and screening mammography: a qualitative interview study. Medical Decision Making. 2001;21(3):231–240.

- 38. Hersch J, Jansen J, Barratt A, et al. Women’s views on overdiagnosis in breast cancer screening: a qualitative study. BMJ. 2013;346. Accessed May 13, 2015. http://www.bmj.com/content/346/bmj.f158.abstract

- 39. Waller J, Douglas E, Whitaker KL, Wardle J. Women’s responses to information about overdiagnosis in the UK breast cancer screening programme: a qualitative study. BMJ Open. 2013;3(4):e002703. doi: 10.1136/bmjopen-2013-002703

- 40. Nagler RH. Adverse Outcomes Associated With Media Exposure to Contradictory Nutrition Messages. Journal of Health Communication. 2014;19(1):24–40. doi: 10.1080/10810730.2013.798384

- 41. Nagler RH, Yzer MC, Rothman AJ. Effects of Media Exposure to Conflicting Information About Mammography: Results From a Population-based Survey Experiment. Ann Behav Med. doi: 10.1093/abm/kay098

- 42. Gollust SE, Fowler EF, Nagler RH. Prevalence and potential consequences of exposure to conflicting information about mammography: Results from nationally-representative survey of US Adults. Health Communication. Published online 2021:1–14.

- 43. Byram SJ, Schwartz LM, Woloshin S, Fischhoff B. Women’s beliefs about breast cancer risk factors: a mental models approach. In: Rationality and Social Responsibility. Psychology Press; 2008.

- 44. Kennedy ADM. On what basis should the effectiveness of decision aids be judged? Health Expectations. 2003;6(3):255–268.
