Abstract

In the digital age, where information is a cornerstone for decision-making, social media's not-so-regulated environment has intensified the prevalence of fake news, with significant implications for both individuals and societies. This study employs a bibliometric analysis of a large corpus of 9678 publications spanning 2013–2022 to scrutinize the evolution of fake news research, identifying leading authors, institutions, and nations. Three thematic clusters emerge: Disinformation in social media, COVID-19-induced infodemics, and techno-scientific advancements in auto-detection. This work introduces three novel contributions: 1) a pioneering mapping of fake news research to Sustainable Development Goals (SDGs), indicating its influence on areas like health (SDG 3), peace (SDG 16), and industry (SDG 9); 2) the utilization of Prominence percentile metrics to discern critical and economically prioritized research areas, such as misinformation and object detection in deep learning; and 3) an evaluation of generative AI's role in the propagation and realism of fake news, raising pressing ethical concerns. These contributions collectively provide a comprehensive overview of the current state and future trajectories of fake news research, offering valuable insights for academia, policymakers, and industry.

Keywords: Deep fake, Ethics, Fake news, Generative AI, Prominence percentile, Sustainable development goal

1. Introduction

With the proliferation of digital social media platforms and instant messaging services, traditional journalism has increasingly integrated sensationalism into the news [1]. Using literary tactics to attract the reader's attention is not wrong in itself, but convoluting the truth and spreading misinformation is. The need to gain popularity and likeability amidst stiff competition has led some news and social media providers to overlook the ethical aggregation and dissemination of information. Fake news, or the dissemination of false information as if it were true [2,3], has become a serious problem because it intentionally manipulates facts to spread misinformation and deceive the audience.

Research into fake news within the context of generative AI is crucial, as advanced AI algorithms have increasingly become tools for both generating and detecting deceptive information [4,5,6]. Understanding the capabilities of generative AI in creating convincing fake narratives is vital for crafting more effective countermeasures, as well as for assessing the ethical implications associated with AI-enabled misinformation.

The availability of information and its quality have a proportional relationship with decision-making. However, the inherent biases, either implicit or confirmatory, cloud the knowledge gained, impairing objective decision-making [7,8]. Prior studies have shown how personal experiences from physical interactions embed information that leads one to develop a positive, negative, or neutral outlook on current circumstances. More recent studies [9] indicate that different circuits within the brain are attributable to ingraining positive or negative experiences and the decision to rely on them. Thus, the resources that provide information directly impact our opinions, reactions, and behaviour. Humans also tend to gravitate towards sensational and negative news or information, irrespective of their relevance.

Fake news encapsulates both misinformation and disinformation [2,10], the latter two differing from one another along continuums of truth and intent. Disinformation reflects the spread of untrue information known to be false by the person disseminating the information [3], thereby reflecting falsehood and malicious intent. Misinformation refers to the spread of false information perceived to be true by the sender, thereby reflecting falsehood and non-malicious intent.

The recent past has seen significant growth in fake news, with surprisingly significant contributions from mainstream media, as Tsfati et al. [11] reported. Their review paper succinctly presents worldwide examples of the wide-ranging impact of fake news, especially where a political agenda lies at the core of a disinformation campaign, even swaying election results in developed countries. This weaponizing tendency of fake news with financial and ideological allegiances was further elaborated by Tandoc Jr. [12] in his review. The study by Baptista et al. [13] details the linguistic characteristics and motivations that aid in sharing and consuming fake news. This finding requires delving into both ends of the spectrum, i.e., specific elements of the shared content and the demographics of those engulfed by fake news. Their work exposes the underlying causes for creating and disseminating most fake news, which is driven by social reputation reinforcement and the justification of beliefs that may be deep, partisan, polarized or ideological [14]. The persuasive language and graphics are intended to provoke and go viral. Perhaps the only way to mitigate the impact of fake news is to create awareness among those most vulnerable [15]. A complementary review by Bryanov et al. [16] elaborates on the traits of people most susceptible to fake news. The tendency to dwell on or believe fake news depended on consumers' naiveté, emotionality, and lack of analytical or reflective thinking. Other factors, such as frivolous time spent online or on social media, also heightened their propensity. The question remains: what type of systemic changes can transform this draw toward fake news? Soler-Costa et al. [17] refer to the dire need for promoting 'netiquette', a burgeoning field of research combining ethics, education, and behaviour on the internet.

Social media has been a powerful tool during emergencies [18,19] for mobilizing swift public involvement in rescue operations and online support. Because users are often unaware of how to verify what they read, the risk of misinformation is high in such situations. Before the pandemic, research on health misinformation [20,21] portrayed disinformation primarily related to vaccines and infectious diseases. However, there was a lack of sufficient data and theory-driven methods to draw adequate conclusions on user susceptibility. COVID-19 flooded social media with information, and the tremendous anxiety and panic augmented the spread of related news with little or no self-regulation of facts [22]. The coining of the new term 'infodemic' during the pandemic [23] is a testament to the aggressive, discordant dimension of fake news. Suarez-Lledo et al. [24] developed methodologies to measure the spread of health misinformation and found Twitter to be the most exploited platform, which was further confirmed by Ling et al. [25]. In some cases, the spread of fake news reached up to 87 %. Gabarron et al. [26] comprehensively reviewed 22 studies on the COVID pandemic that highlighted mitigation strategies such as debunking hoaxes, improving health literacy and strengthening social media regulatory policies. While these forward-looking suggestions are helpful, their implementation in developing or populous countries with many illiterate and vulnerable citizens can be challenging. Akram et al. [27] portray a noticeable gap in comprehending the direct impact of disinformation on the construction of communal psychosocial narratives.

With the 2030 deadline for the Sustainable Development Goals (SDGs) rapidly drawing near, the urgency to meet these objectives is mounting, stimulating intensified research activity in various fields. The SDGs were articulated with a vision to empower people, communities and nations with economic prosperity and healthy living and to protect the environment using balanced approaches. Fake news that compromises the integrity of organizations, personalities or countries can directly impact their economic stature and public trust. Several sustainable development goals, such as SDGs 4 (Quality Education), 10 (Reduced Inequalities), 13 (Climate Action), and 16 (Peace, Justice, and Institutions), will remain unachievable due to the debilitating impact of fake news. The issue of misinformation is complex in that it is prevalent even among trained scientific personnel. For example, publications that lack sufficient rigour are non-reproducible and potentially unreliable. When others cite such studies, the domino effect of misinformation continues [6]. SDG 4 targets the provision of equal opportunity in education to all. When even well-educated professionals, such as members of the scientific community, are susceptible to fake news, it is unsurprising that the dynamics and purposes of misinformation are much less evident to the wider public. For scientific phenomena that have a long-term impact, such as those addressed by SDG 13, verification is difficult, which can lead to the exposition and spread of misleading information. The costs of disproving falsehoods are also staggering and unrealistic. Despite numerous studies aiming to map research domains to the SDGs [28,29,30], there is a conspicuous lack of systematic study of fake news-related research mapped to individual SDGs. Our study seeks to fill this void, leveraging the Elsevier SDG Mapping Initiative to introduce a fresh viewpoint on this topic [31].

Our research aims to address the following questions.

1. What are the evaluation patterns of fake news research, as measured by the temporal growth in publications and citations, contributions from top institutions, highly cited journals, and the impact of collaboration?
2. What are the thematic clusters based on keyword co-occurrence analysis?
3. How well does fake news research map to Sustainable Development Goals?
4. What is the role of generative AI in the propagation of fake news?
5. Which are the emerging topics related to fake news research based on prominence percentile?

The study starts by describing the protocol used for the systematic literature review, followed by the results and discussion sections. The overall research performance and temporal evolution of publications and citations, patterns of top institutions and highly cited journals, and the impact of collaboration are highlighted in the discussion section. The next section describes the thematic structure of fake news research based on keywords. This is followed by a section describing the mapping of fake news research to SDGs. In the following section, we discuss fake news research in the context of generative AI, which is a first. Then, we discuss implications for future research based on prominence topics and keyphrases, which is a unique contribution of our study. The conclusions and limitations of the study are discussed in the last section.

2. Methods

The field of bibliometrics studies publication and citation patterns using quantitative techniques. Bibliometrics can be either descriptive, i.e., analyzing the publications of an organization, or evaluative, i.e., exploring how publications influenced subsequent research by others through techniques such as citation analysis. It comprises performance analysis, which uses publication and citation data to assess scientific actors such as countries and institutions, and science mapping analysis, which evaluates the social and cognitive makeup of a study area [32,33].

To analyze the recognition and influence of fake news publications, the top-cited publications and the journals that most frequently cite them were identified. The Scopus database was chosen because it applies strict quality standards for indexation and the journals it indexes are more comprehensive [34]. Scopus is the most important citation and abstract database and the most widely used search database [35]. Science mapping based on keyword co-occurrence analysis was used to trace the thematic development of the study topic (Chen et al., 2017). To investigate the evolution of themes in fake news research, author and index keywords were retrieved and thematically organized into groups [16,36].

VOSviewer and SciVal are used for bibliometric analysis and network visualizations [29]. VOSviewer creates and displays bibliometric maps [37], revealing the dynamic and structural features of scientific research [38]. This software is used for keyword co-occurrence analyses and bibliographic coupling. Bibliographic coupling [39,40], which occurs when two documents cite the same third document, is used in the study to understand patterns of intellectual content shared between publications. The Elsevier SDG Mapping Initiative [41], a feature integrated within the Scopus database, utilizes distinct SDG-specific queries created in line with the targets and sub-targets of each goal. This tool has been refined through an extensive process of review and feedback, complemented by a machine learning model to ensure a precision rate above 80 %. Furthermore, the Scopus database enhances the research procedure by offering preset search queries for each SDG.
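To make the definition of bibliographic coupling concrete, the following minimal Python sketch counts the references shared by each pair of documents. The document identifiers, reference identifiers, and the function name `coupling_strengths` are hypothetical and chosen only for illustration. The sketch shows the raw counting idea and is not a reproduction of how VOSviewer computes or normalizes link strength.

```python
from itertools import combinations

# Hypothetical reference lists: document ID -> set of cited work IDs.
references = {
    "doc_a": {"ref_1", "ref_2", "ref_3"},
    "doc_b": {"ref_2", "ref_3", "ref_4"},
    "doc_c": {"ref_5"},
}


def coupling_strengths(refs):
    """Return {(doc_i, doc_j): shared reference count} for coupled pairs."""
    strengths = {}
    for left, right in combinations(sorted(refs), 2):
        shared = refs[left] & refs[right]  # works cited by both documents
        if shared:
            strengths[(left, right)] = len(shared)
    return strengths


strengths = coupling_strengths(references)
print(strengths)  # {('doc_a', 'doc_b'): 2}
```

In this toy input, `doc_a` and `doc_b` are coupled with strength 2 because both cite `ref_2` and `ref_3`, while `doc_c` shares no references and therefore has no link.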

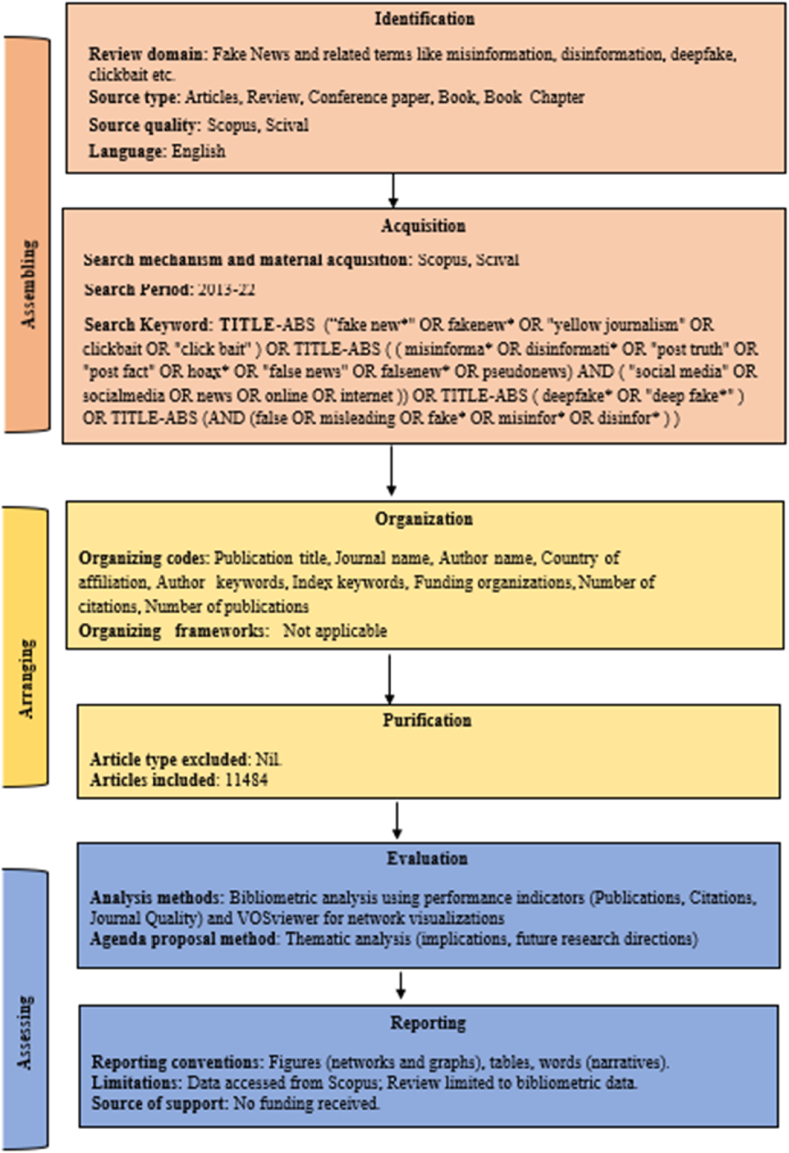

Preparing a protocol is the first step in a systematic literature review. A protocol ensures careful planning, consistency in implementation, and transparency, enabling replication. The SPAR-4-SLR protocol developed by Paul et al. [42] has been used in this study, as highlighted in Fig. 1. This protocol consists of three main steps: Assembling (identification and acquisition), Arranging (organization and purification), and Assessing (evaluation and reporting). Data identification took place between May 1 and May 6, 2023.

Fig. 1.

SPAR-4-SLR protocol-based research design.

3. Results and discussion

3.1. Performance analysis

3.1.1. Publications and citations trends

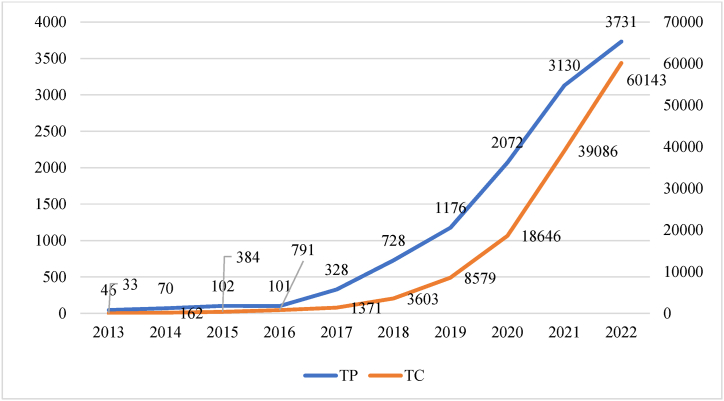

Fig. 2 shows the publication and citation trends of articles per year related to fake news research. COVID-19 has been not only a viral pandemic but also a pandemic of false information, which the World Health Organization describes as an “infodemic” [43]. Fake news attracted growing academic attention alongside the COVID-19 pandemic between 2020 and 2022. The largest number of publications appeared in 2022. Almost 77 % of all publications during 2013–2022 appeared in 2020, 2021 and 2022, which possibly reflects the growing influence of social media and COVID-19. About 31 % of publications were related to both fake news and COVID-19.

Fig. 2.

Publications and Citations trends. Note: TP = Total publications; TC = Total citations.

3.1.2. Top institutions

It is essential to recognize the top contributing institutions researching fake news-related topics. Table 1 shows the top institutions with their total publications (TP), total citations (TC), and mean citations per publication (TC/TP), ranked separately by TP and by TC. In the citation ranking, 80 % of the top 10 institutions are in the United States. The Massachusetts Institute of Technology (MIT) has the highest citation count (TC:7168), but the National Bureau of Economic Research (NBER) has the highest citation mean (TC/TP:981). The four institutions leading in TC are all from the United States: MIT, New York University, Stanford University and Harvard University, with TCs of 7168, 5070, 4821 and 4171, respectively. Italy and Singapore are the only two non-US countries with institutions in the citation ranking.

Table 1.

Top 10 institutions ranked according to publications and citations.

| Institution (ranked by TP) | Country | TP | TC | TC/TP | Institution (ranked by TC) | Country | TC | TP | TC/TP |
|---|---|---|---|---|---|---|---|---|---|
| Harvard University | United States | 99 | 4171 | 42.1 | Massachusetts Institute of Technology | United States | 7168 | 68 | 105.4 |
| Nanyang Technological University | Singapore | 97 | 2759 | 28.4 | New York University | United States | 5070 | 70 | 72.4 |
| University of Oxford | United Kingdom | 91 | 1346 | 14.8 | Stanford University | United States | 4821 | 66 | 73.0 |
| Chinese Academy of Sciences | China | 81 | 2345 | 29.0 | Harvard University | United States | 4171 | 99 | 42.1 |
| Arizona State University | United States | 80 | 2312 | 28.9 | University of Southern California | United States | 3995 | 73 | 54.7 |
| University of Texas at Austin | United States | 80 | 986 | 12.3 | Indiana University Bloomington | United States | 3238 | 67 | 48.3 |
| Pennsylvania State University | United States | 76 | 2227 | 29.3 | National Bureau of Economic Research | United States | 2943 | 3 | 981.0 |
| University of Southern California | United States | 73 | 3995 | 54.7 | National Research Council of Italy | Italy | 2909 | 40 | 72.7 |
| University of Chinese Academy of Sciences | China | 71 | 2151 | 30.3 | University of Washington | United States | 2883 | 54 | 53.4 |
| New York University | United States | 70 | 5070 | 72.4 | Nanyang Technological University | Singapore | 2759 | 97 | 28.4 |

Note: TP = Total publications; TC = Total citations; TC/TP = Total citations per publication.
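The citation mean reported in Table 1 is simple arithmetic, and the short Python sketch below recomputes it for a few rows copied from the table. The function name `citations_per_publication` and the choice of rows are illustrative assumptions. The sketch shows how the ranking changes depending on whether institutions are sorted by TP, by TC, or by TC/TP.

```python
# (institution, TP, TC) values copied from Table 1.
rows = [
    ("Harvard University", 99, 4171),
    ("Massachusetts Institute of Technology", 68, 7168),
    ("New York University", 70, 5070),
    ("Stanford University", 66, 4821),
    ("National Bureau of Economic Research", 3, 2943),
]


def citations_per_publication(tc, tp):
    """Mean citations per publication (TC/TP), rounded to one decimal."""
    return round(tc / tp, 1)


# The same institutions ordered by three different criteria.
by_tp = sorted(rows, key=lambda r: r[1], reverse=True)
by_tc = sorted(rows, key=lambda r: r[2], reverse=True)
by_mean = sorted(rows, key=lambda r: r[2] / r[1], reverse=True)

for name, tp, tc in by_mean:
    print(f"{name}: TC/TP = {citations_per_publication(tc, tp)}")
```

A small TP can produce a very high TC/TP, as with NBER's three publications, which is why the two rankings in Table 1 are best read together.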

3.1.3. Highly cited journals

The distribution of publications and citations among the sources that contributed the most to fake news research between 2013 and 2022 was analyzed. Table 2 shows the top ten sources publishing fake news research, with metrics aimed at assessing their impact and quality. The “Journal of Medical Internet Research” and “PLoS ONE” stand out with exceptionally high TC values of 3500 and 3444, respectively, along with Q1 SJR rankings. These metrics signify not only the prolific citation of their articles but also their top-tier quality within their subject areas. In contrast, “Lecture Notes in Computer Science” has a lower TC/TP ratio of 5.8 and a Q3 SJR rank, which may suggest a narrower impact or specialized focus. Furthermore, “Proceedings of the National Academy of Sciences of the United States of America” exhibits an extraordinarily high TC/TP ratio of 144.8, underscoring the high-impact nature of its publications despite a low TP of 16.

Table 2.

Top ten journals based on citations.

| Journal Title | TC | TP | TC/TP | SJR |
|---|---|---|---|---|
| Journal of Medical Internet Research | 3500 | 86 | 40.7 | Q1 |
| PLoS ONE | 3444 | 94 | 36.6 | Q1 |
| Lecture Notes in Computer Science | 2584 | 444 | 5.8 | Q3 |
| Digital Journalism | 2334 | 44 | 53.0 | Q1 |
| Proceedings of the National Academy of Sciences of the United States of America | 2317 | 16 | 144.8 | Q1 |
| IEEE Access | 1943 | 80 | 24.3 | Q1 |
| International Journal of Environmental Research and Public Health | 1782 | 84 | 21.2 | Q1 |
| New Media and Society | 1525 | 50 | 30.5 | Q1 |
| Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition | 1427 | 18 | 79.3 | – |
| JMIR Public Health and Surveillance | 1296 | 24 | 54.0 | Q1 |

Note: TP = Total publications; TC = Total citations; TC/TP = Total citations per publication; SJR= Scimago Journal Rank.

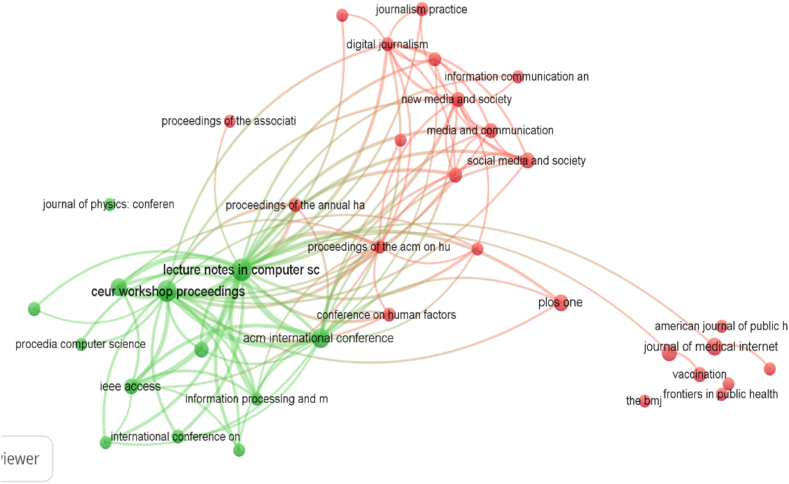

Bibliographic coupling occurs when two documents cite a common third document and is frequently used as a measure of similarity among documents [44,45]. Because the reference list of a published document does not change, the coupling links between documents remain constant over time [46]. The network map in Fig. 3 shows two main clusters of bibliographically coupled publication sources. A threshold of 20 publications per source was applied, and 37 publication sources met it. Cluster 1 (Red) has 24 publication sources, and Cluster 2 (Green) has 13. The publication source with the most publications and citations in Cluster 1 is the Journal of Medical Internet Research (TP:86, TC:2909), and in Cluster 2 it is Lecture Notes in Computer Science (TP:277, TC:1812).

Fig. 3.

Bibliographic coupling of journals.

The first cluster has the Proceedings of the ACM on Human-Computer Interaction (LS:12005) with the highest link strength and is mainly sharing links with New Media and Society (LS:11756) and Digital Journalism (LS:10847). The second cluster has Lecture Notes in Computer Science with the highest link strength. It is mainly coupled with CEUR Workshop Proceedings (LS:28091), ACM International Conference Proceeding Series (LS:21534), and IEEE Access (LS:14352).

3.1.4. Impact of collaboration

The extent to which an entity's publications have international, national, or institutional co-authorship and single authorship indicates collaboration. Table 3 displays the collaboration pattern over the years 2013–2022. Notably, international collaboration yields the highest TC/TP ratio of 22.0 despite accounting for only 19.3 % of the total publications share. This suggests that publications arising from international collaborations are generally more cited and potentially have a broader impact. Publications resulting from only national collaboration represent 24.2 % of the share and have a lower TC/TP ratio of 16.1, indicating a comparatively reduced but still significant impact. Publications originating from institutional collaborations occupy the largest share at 34.3 %, yet they have a lower TC/TP ratio of 12.9, suggesting that such collaborations, while more frequent, do not necessarily lead to higher-impact papers. Finally, single-author papers, with no collaboration, account for 22.2 % of the total share but have the lowest TC/TP ratio of 6.8, which may indicate a more limited scope or impact.

Table 3.

Impact of collaboration.

| Metric | % Share | TC | TC/TP |
|---|---|---|---|
| International collaboration | 19.3 % | 48,734 | 22.0 |
| Only national collaboration | 24.2 % | 44,753 | 16.1 |
| Only institutional collaboration | 34.3 % | 50,883 | 12.9 |
| Single authorship (no collaboration) | 22.2 % | 17,387 | 6.8 |

Note: TP = Total publications; TC = Total citations; TC/TP = Total citations per publication.

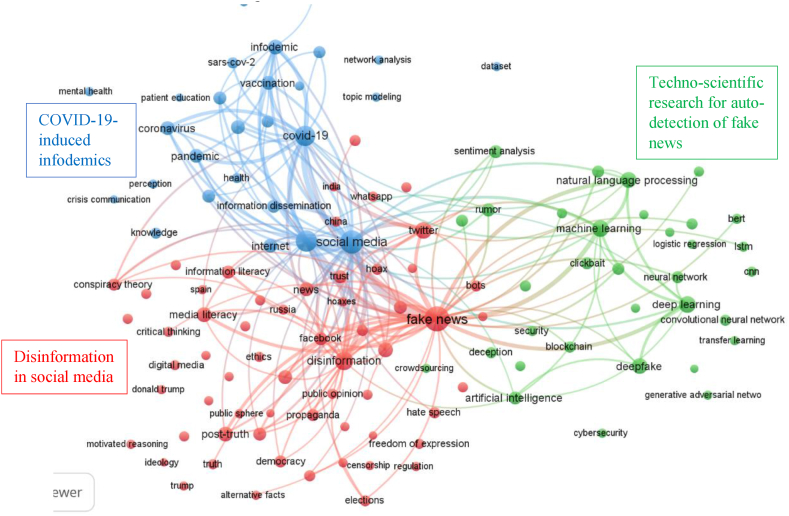

3.2. Thematic clusters based on keyword co-occurrences

Keyword co-occurrence analysis reveals the structure of a research landscape by identifying relationships between keywords. Node size signifies keyword frequency and importance, colour distinguishes thematic clusters, and edge thickness indicates the strength of keyword associations. The keyword co-occurrence network has resulted in three thematic clusters, as seen in Fig. 4.

Fig. 4.

Keyword co-occurrence network.
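The counting step behind a keyword co-occurrence network can be sketched in a few lines of Python. The keyword lists and the function name `cooccurrence_counts` below are hypothetical and serve only as an illustration. The sketch covers the two quantities described above, keyword frequency (node size) and pairwise co-occurrence (edge thickness), and leaves out the clustering and layout steps that a tool such as VOSviewer performs.

```python
from collections import Counter
from itertools import combinations

# Hypothetical author-keyword lists, one list per publication.
publications = [
    ["fake news", "social media", "misinformation"],
    ["fake news", "deep learning"],
    ["misinformation", "social media", "covid-19"],
]


def cooccurrence_counts(keyword_lists):
    """Return (keyword frequencies, co-occurrence counts per keyword pair)."""
    occurrences = Counter()
    links = Counter()
    for keywords in keyword_lists:
        # Normalize case and drop duplicates within one publication.
        unique = sorted({keyword.lower() for keyword in keywords})
        occurrences.update(unique)                 # node size
        links.update(combinations(unique, 2))      # edge weight
    return occurrences, links


occurrences, links = cooccurrence_counts(publications)
print(occurrences.most_common(3))
print(links[("misinformation", "social media")])  # 2
```

Sorting the keywords before pairing ensures that each pair is stored under a single key regardless of the order in which the keywords appear in a publication.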

Table 4 has further details about each cluster.

Table 4.

Thematic clusters based on keyword co-occurrence.

| Cluster | Cluster 1 (red) | Cluster 2 (green) | Cluster 3 (blue) |
|---|---|---|---|
| Cluster theme | Disinformation in social media | Techno-scientific research for auto-detection of fake news | COVID-19-induced infodemics |
| TP | 3273 | 4293 | 3983 |
| TC/TP | 15.6 | 15.6 | 17.9 |
| TC | 51141 | 66856 | 71370 |
| Top ten keywords | fake news disinformation media literacy fact checking post-truth journalism political communication trust | machine learning deep learning natural language processing deep fake artificial intelligence rumour sentiment analysis text classification neural network support vector machine | social media misinformation covid-19 infodemic coronavirus pandemic vaccination internet public health health communication vaccine hesitancy |
| Top three cited articles, their focus, number of citations | Del Vicario, M.D. et al., Misinformation online, TC = 1029; Wang, Y. et al., Health Misinformation on Social Media, TC = 589; Zubiaga, A. et al., Rumours in social media, TC = 79 | Tandoc, E.C. et al., Typology for fake news, TC = 981; Rossler, A. et al., Machine learning detection, TC = 977; Ruchansky, N. et al., Deep learning for fake news, TC = 588 | Kata, A., Anti-vaccine, TC = 584; Cinelli, M. et al., COVID-19 infodemic, TC = 742; Pennycook, G. et al., COVID-19 Misinformation, TC = 717 |

Next, we analyze the top three cited articles from each of the clusters.

3.2.1. Cluster 1: disinformation in social media (red)

Cluster 1 is focused on disinformation in news, journalism, social media, Twitter, Facebook, political conspiracy theory, propaganda, digital media and bots. The cluster also includes media literacy, fact-checking, information literacy, credibility, and truth to counter disinformation. With the proliferation of social media platforms and instant messaging services, traditional journalism has increasingly integrated sensationalism into the news. The recent past has seen considerable growth in fake news with surprisingly significant contributions from mainstream media, as reported by Tsfati et al. [11].

The first article in this cluster, by Del Vicario et al., states that misinformation online, including fake news, has become a major issue on social media platforms and is recognized as a threat to society by the World Economic Forum [47]. Once false information is accepted, it can be difficult for individuals to correct it. Social norms and personal beliefs can influence the acceptance of news items [48,49]. The second article emphasizes that health misinformation on social media often revolves around themes such as vaccines, infectious diseases, cancer, smoking, and nutrition [21]. The spread, impact, and strategies for coping with this type of misinformation on social media are also important areas of study. The open nature of social media platforms provides opportunities for researchers to study how users share and discuss rumours and to develop techniques for automatically assessing their veracity, such as natural language processing and data mining. The authors of the last article divide rumour analysis into four tasks: rumour detection, rumour tracking, rumour stance classification, and rumour veracity classification [50]. These approaches can help to better understand the nature and impact of rumours on social media.

3.2.2. Cluster 2: techno-scientific research towards the detection of fake news (green)

Articles included in Cluster 2 often involve the use of machine learning, artificial intelligence, deep learning, and natural language processing techniques. These techniques can be used to automate the process of classifying news as fake or real. Deepfake, in particular, uses deep learning techniques to generate realistic images and videos, which has led to an increase in research on techniques for detecting deepfake images and videos. Textual fake news techniques often utilize embedding and named entity recognition, along with textual entailment, to determine the logical flow of the text. The proliferation of fake news on social media and other online platforms, often spread through clickbait and online social networks, has made the development of these detection techniques increasingly important.

Fake news can be classified into several categories based on levels of deception and facticity: (1) news satire, (2) news parody, (3) fabrication, (4) manipulation, (5) advertising, and (6) propaganda (Tandoc et al., 2017). In addition to these categories, fake news can also be spread by news bots, which create a network of fake sites that imitate the omnipresence of news [2]. The next article looks at the ease of manipulating and generating images, which has led to the development of deep learning methods for detecting synthetic or modified images, including facial images and poor-quality video [51]. These approaches can help to counter the spread of fake news and disinformation on social media and other online platforms. Fake news can be characterized by three factors: the content of the article, the user response it receives, and the sources promoting it. The last article in this cluster proposes a model called Capture, Score, and Integrate (CSI) that combines all three characteristics to improve the accuracy and automation of fake news detection; the authors report that it outperforms existing models and extracts meaningful latent representations of both users and articles [52].

3.2.3. Cluster 3: COVID-19-induced infodemics (blue)

COVID-19 has led to an increase in the spread of misinformation, also known as an “infodemic,” on social media. This flood of information, combined with anxiety and panic about the virus, has led to the rapid spread of false or misleading news with little fact-checking. This has caused panic, mental distress, and vaccine hesitancy in countries around the world, including the US and the UK [22,53,54]. The term “infodemic” has been coined to describe the aggressive and discordant nature of fake news related to the pandemic [23]. Individuals need to be vigilant and critically evaluate the sources and accuracy of information they encounter about COVID-19, especially given the potential impact on public health and vaccine uptake.

In the current healthcare landscape, there has been a shift in power from doctors to patients, and there is a growing trend of questioning the legitimacy of science and redefining expertise. This has created an environment in which anti-vaccine activists are able to spread their messages effectively [55]. Research has shown that individuals often turn to the internet for vaccination advice and that these sources can influence vaccination decisions [56]. In the next article, Cinelli et al. analyzed data from multiple social media platforms to study the diffusion of information about COVID-19. They analyzed engagement and interest in the topic with epidemic models and identified the spread of misinformation from questionable sources [57]. The last article in this cluster looks at cognitive factors, such as a lack of critical thinking, that contribute to the sharing of misinformation online. Simple interventions, like reminding people to fact-check before sharing, can help reduce the spread of false information [58].

3.3. Fake news research mapped to SDGs

The study of fake news and its relationship to Sustainable Development Goals (SDGs) is highly relevant for researchers for several reasons. Table 5 shows the total articles on fake news research sorted according to SDGs. Only 27 % of the corpus is found to be mapped to SDGs using the SDG mapping algorithm [31]. The greatest number of articles (TP:1610) is in SDG 3 (Good Health and Well-Being), followed by SDG 16 (Peace, Justice, and Strong Institutions) (TP:857) and SDG 9 (Industry, Innovation, and Infrastructure) (TP:214).

Table 5.

Total publications on fake news research mapped to SDGs.

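| SDG Name | SDG Mapped publications |
|---|---|
| SDG 3 (Good Health and Well-Being) | 1610 |
| SDG 16 (Peace, Justice, and Strong Institutions) | 857 |
| SDG 9 (Industry, Innovation, and Infrastructure) | 214 |
| – | 180 |
| – | 159 |
| – | 117 |
| – | 116 |
| – | 86 |
| – | 70 |
| – | 49 |
| – | 40 |
| – | 26 |
| – | 26 |
| – | 25 |
| – | 17 |
| – | 11 |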

Fake news can influence individual behaviours in ways that undermine the SDGs. For example, misinformation about vaccines can discourage people from getting vaccinated, undermining SDG 3 (Good Health and Well-being). SDG 16 aims to promote peace, justice, and strong institutions. By fostering social tensions, fuelling conflict, and eroding trust in institutions, fake news threatens this goal and disrupts peace and transparency, which can result in chaos and anarchy. Fake news can significantly distort public understanding of important issues, often sensationalizing or trivializing matters that require serious attention. For example, by spreading denialism or downplaying the severity of climate change, misinformation undermines SDG 13 (Climate Action).

In light of Table 5 and the discussion of thematic clusters in fake news research, one can observe a concentration of articles dealing with COVID-19, especially those mapped to SDG 3 (Good Health and Well-being) (Table 6). Research by Cinelli et al., Pennycook et al., and Loomba et al. [53,57,58] underscores this focus, indicating acute academic attention toward health misinformation amidst a global pandemic. This is further emphasized by their high citations-per-year ratios, such as the 323 citations per year of Loomba et al. [53], indicative of the timely and societal relevance attributed to this subset of research.

Table 6.

Highly cited SDG-mapped publications.

| Title | TC | Year | TC/Year | Authors | Journal title | SDG Mappings |
|---|---|---|---|---|---|---|
| The spread of true and false news online | 3210 | 2018 | 642 | Vosoughi, S. et al. | Science |  |
| The COVID-19 social media infodemic | 742 | 2020 | 247 | Cinelli, M. et al. | Scientific Reports |  |
| Fighting COVID-19 Misinformation on Social Media: Experimental Evidence for a Scalable Accuracy-Nudge Intervention | 717 | 2020 | 239 | Pennycook, G. et al. | Psychological Science |  |
| Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA | 646 | 2021 | 323 | Loomba, S. et al. | Nature Human Behaviour |  |
| Beyond Misinformation: Understanding and Coping with the “Post-Truth” Era | 606 | 2017 | 101 | Lewandowsky, S. et al. | Journal of Applied Research in Memory and Cognition |  |
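The TC/Year column in Table 6 appears to be the total citation count divided by the number of years from publication through 2022, the last year of the corpus. This counting window is an assumption inferred from the table values, not a definition given in the text. A minimal Python sketch under that assumption (the function name is hypothetical):

```python
LAST_YEAR = 2022  # assumed end of the counting window (last year of the corpus)

def citations_per_year(total_citations: int, pub_year: int, last_year: int = LAST_YEAR) -> int:
    """Average citations per year, counting the publication year as a full year."""
    years_in_print = last_year - pub_year + 1
    return round(total_citations / years_in_print)

# (first author, TC, publication year) as listed in Table 6
rows = [
    ("Vosoughi", 3210, 2018),
    ("Cinelli", 742, 2020),
    ("Pennycook", 717, 2020),
    ("Loomba", 646, 2021),
    ("Lewandowsky", 606, 2017),
]

for author, tc, year in rows:
    print(author, citations_per_year(tc, year))
```

Under this assumption the sketch reproduces the tabulated TC/Year values, which suggests the ratio is an average over the years since publication rather than a count for any single year.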

4. Fake news in the era of generative AI

Advancements in Artificial Intelligence (AI) have significantly simplified daily human activities. Developed by OpenAI and introduced in late 2022, the Chat Generative Pretrained Transformer (ChatGPT) serves as an exemplar of these AI technologies. ChatGPT operates as a text-based conversational agent, providing textual responses to user queries [59]. AI algorithms have been shown to be useful in detecting fake news or misinformation that may interfere with efficiency and optimization [60,61]. Proponents of using AI to detect fake news suggest that certain principles need to be followed: software designers should develop strategies to combat fake news, users should be able to report fake news when they detect it, and users should be kept informed about the dissemination of fake news [62]. For example, deep learning, machine learning, and natural language processing can extract text- or image-based cues to train models that help predict the authenticity of news [2,63]. Alternatively, AI can be used to examine the social context of a news article, including features of the poster and of the post, such as the number of shares or retweets [2].

However, Generative AI (GAI) tools like ChatGPT can also facilitate the spread of misinformation or fake news [64] to the detriment of those seeking information on virtually any topic, particularly health, finances, and politics. In extreme cases, misinformation spread through AI-generated videos or written content can set factions against one another, leading to violence and death.

To understand the scope of GAI tools in the spread of fake news, it is important to understand the difference between posting a query to a search engine and posting the same question to an AI-based chatbot. Asking a search engine such as Google or Bing a question, such as “Are COVID vaccines safe?”, returns a variety of sites that a user can then choose to peruse, many of which may contain false information but some of which may be accurate. With generative AI, by contrast, the choice component is removed, as the platform pools content from across various news sources and provides a single response to the requestor [65]. “At first glance, one has a self-contained answer that appears entirely trustworthy. But in truth, the reader has no idea if the source is the BBC, QAnon or a Russian bot, and no alternative views are provided” [65].

Goldstein et al. [66] discussed manipulation through fake persona creation, AI-generated imagery, outsourcing, etc., which may lead to false data interpretation toward career-oriented paths. Despite OpenAI's promises, the newer AI tool produces incorrect information more frequently and more persuasively than its predecessor. NewsGuard found an 80–98 % probability of fake news on the generative AI sites ChatGPT and Bard [67]. While problematic in itself, this is compounded by the fact that users of generative AI technologies are often unable to distinguish true information from misinformation [68]. Additionally, the sources on which large language models are trained may be full of misinformation, leading the models to produce false content [61].

One of the most pressing concerns about GAI tools is the accuracy and bias of the information these models generate [69], particularly when they are used to improve public health and advance scientific research [5]. There are also questions about the ownership and control of the data used to train these models and the potential for these models to exacerbate existing health disparities. Additionally, there is concern about the ability of these tools to protect sensitive health data [69]. GAI tools can generate compelling fake news and propaganda, which could be used to manipulate public opinion. Bioethics studies ethical, social, and legal issues in biomedicine and biomedical research. ChatGPT has raised the same problems for bioethics as current medical AI, including data ownership, consent for data use, data representativeness and bias, and privacy [70].

Similarly, GAI tools can manipulate financial decision-making [71]. Actors intending to raise their own profits and harm other businesses may create fake websites or disseminate false information online, which is then absorbed into AI models. People who rely on these models for answers to financial questions may see their individual or business futures destroyed. Social bots may be created to post false information designed to benefit particular companies or organizations to the detriment of others [2,72]. Whether the topic is financial or otherwise, social bots engage with information as soon as it is created in order to increase its perceived legitimacy and spread [2]. In addition, the bots target users who are most likely to share or repost the information, again with the goal of increasing the spread of the disinformation.

The impact of GAI tools on the general public and the research community is also a topic of discussion. There are concerns about the impact of large language models (LLMs) on the research community, including the potential for these models to be used for plagiarism, the impact of preprints on disseminating research [6], and the declaration of the use of Generative AI tools in manuscript writing [73]. Grünebaum et al. [74] presented advancements in natural language processing and how they can be applied to medicine, including the potential use of ChatGPT as a clinical tool. However, ChatGPT has limitations, including the possibility of producing seemingly credible but incorrect responses. The authors also discuss guidelines for using chatbots in medical publications and the implications of nonhuman “authors” for the integrity of scientific publications and medical knowledge. Amaro et al. (2023) conducted an experiment with 62 university students who use ChatGPT extensively, investigating whether early or late knowledge that ChatGPT may produce fake information affects user perceptions differently. The participants were randomly assigned to a control group or one of two treatment groups, interacted with ChatGPT, and answered questions about their perceptions of its trustworthiness, usability, and satisfaction, along with the net promoter score (NPS). The study found that the occasional production of fake information by ChatGPT harmed user trust and satisfaction, although this effect was mitigated when users were informed of the possibility of fake information early on. Usability and the NPS were also higher when the fake information was detected late in the interaction.

5. Emerging research topics based on prominence percentile

Prominence is an indicator of the momentum or visibility of a particular research topic and has the potential to predict whether a topic will grow or decline in the near future, regardless of whether the topic is considered to be emergent [75]. Prominence (momentum) correlates with funding: the higher the momentum of a topic, the more funds per author are available for research on it.

It is calculated by weighting three metrics for the papers clustered in a topic:

- Citation Count in year n to papers published in n and n-1
- Scopus Views Count in year n to papers published in n and n-1
- Average CiteScore for year n
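The weighting of the three metrics can be sketched in Python as follows. The paper does not state the weights or the normalization; this sketch assumes the scheme commonly described for SciVal's Prominence indicator, in which each metric is log-transformed, standardized across all topics, and combined with weights of 0.495, 0.391, and 0.114. These weights, the function names, and the toy input values are assumptions for illustration only.

```python
import math
from statistics import mean, pstdev

# Assumed weights (citations, views, CiteScore); not given in this paper.
WEIGHTS = (0.495, 0.391, 0.114)

def standardize(values):
    """Log-transform each value, then convert to z-scores across topics."""
    logged = [math.log(v + 1) for v in values]
    mu, sigma = mean(logged), pstdev(logged)
    return [(x - mu) / sigma if sigma else 0.0 for x in logged]

def prominence_scores(citations, views, citescores, weights=WEIGHTS):
    """Weighted sum of the three standardized metrics, one score per topic."""
    columns = [standardize(col) for col in (citations, views, citescores)]
    return [sum(w * z for w, z in zip(weights, row)) for row in zip(*columns)]

def percentile_ranks(scores):
    """Percentage of topics whose score is at or below each topic's score."""
    n = len(scores)
    return [100.0 * sum(s <= x for s in scores) / n for x in scores]

# Hypothetical metric values for four topics (illustration only).
citations = [1200, 300, 45, 800]
views = [9000, 2500, 400, 7000]
citescores = [6.1, 3.2, 1.5, 4.8]

scores = prominence_scores(citations, views, citescores)
print(percentile_ranks(scores))
```

Because the percentile is rank-based, a topic's Prominence Percentile depends on where its score falls relative to all other topics, not on the absolute size of any one metric.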

Table 7 shows the emerging research topics related to fake news in the top 1 % Prominence Percentile (PP), with each topic having at least 50 publications.

Table 7.

Emerging research topics according to Prominence Percentile.

| Research Topic | PP | TP | TC | TC/TP |
|---|---|---|---|---|
| Object Detection; Deep Learning; IOU | 99.999 | 56 | 190 | 3.4 |
| COVID-19; Psychological Support; Mindfulness | 99.997 | 100 | 1872 | 18.7 |
| Bitcoin; Ethereum; Internet Of Things | 99.990 | 53 | 1205 | 22.7 |
| Vaccine Hesitancy; Measles; Anti-Vaccination Movement | 99.971 | 399 | 8230 | 20.6 |
| Embedding; Named Entity Recognition; Entailment | 99.949 | 220 | 1954 | 8.9 |
| Rumour; Social Media; Disinformation | 99.820 | 3128 | 62517 | 20.0 |
| Intergovernmental Panel on Climate Change; Climate Change; Skepticism | 99.735 | 93 | 1577 | 17.0 |
| Political Participation; Social Media; Media Use | 99.538 | 313 | 6107 | 19.5 |

Now, we analyze the top-cited publications from each of the emerging topics (Table 8).

Table 8.

Top cited publication from each of the topics from Table 7.

| Topic No. | Topic name | Publication title | Authors | TC |
|---|---|---|---|---|
| 1 | Object Detection; Deep Learning; IOU | Adversarial Perturbations Fool Deepfake Detectors | Gandhi and Jain (2020) | 37 |
| 2 | COVID-19; Psychological Support; Mindfulness | The Covid-19 ‘infodemic’: a new front for information professionals | Naeem and Bhatti (2020) | 161 |
| 3 | Bitcoin; Ethereum; Internet Of Things | A Comprehensive Review of the COVID-19 Pandemic and the Role of IoT, Drones, AI, Blockchain, and 5G in Managing its Impact | Chamola et al. (2020) | 681 |
| 4 | Vaccine Hesitancy; Measles; Anti-Vaccination Movement | Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA | Loomba et al. (2021) | 644 |
| 5 | Embedding; Named Entity Recognition; Entailment | “Liar, liar pants on fire”: A new benchmark dataset for fake news detection | Wang, W.Y. (2017) | 497 |
| 6 | Rumour; Social Media; Disinformation | The spread of true and false news online | Vosoughi et al. (2018) | 3203 |
| 7 | Intergovernmental Panel on Climate Change; Climate Change; Skepticism | NASA Faked the Moon Landing-Therefore, (Climate) Science Is a Hoax: An Anatomy of the Motivated Rejection of Science | Lewandowsky et al. (2013) | 368 |
| 8 | Political Participation; Social Media; Media Use | Science audiences, misinformation, and fake news | Scheufele and Krause (2019) | 381 |

According to the top-cited article in Topic 1, by Gandhi and Jain (2020), a deepfake is AI-synthesized counterfeit multimedia content produced with deep learning approaches. Deepfakes were introduced in 2017 by a Reddit community user who swapped the faces in pornographic videos with those of Hollywood celebrities. Recently, deepfakes have become a growing concern in fields ranging from media integrity to personal privacy. A deepfake detector's role is to correctly classify a deepfake as fake. To fool such detectors, Gandhi and Jain (2020) enhanced deepfake images using adversarial perturbations, which they created with the Fast Gradient Sign Method and the Carlini and Wagner norm attack. The experimental outcome suggests that the deepfake detectors achieved 75 % accuracy on unperturbed deepfakes, whereas accuracy fell to 27 % on perturbed deepfakes.
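To make the attack idea concrete, the Python sketch below applies the Fast Gradient Sign Method to a toy logistic-regression "detector". It is not Gandhi and Jain's implementation, which targets neural-network deepfake detectors operating on images; the weights, input, and epsilon are hypothetical values chosen only to show the update x_adv = x + ε · sign(∇ₓL).

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict_fake_prob(weights, bias, x):
    """Toy detector: probability that input x is fake."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def fgsm(weights, bias, x, true_label, epsilon):
    """One FGSM step: move x by epsilon along the sign of the loss gradient.

    For logistic regression with cross-entropy loss, dL/dx_i = (p - y) * w_i.
    """
    p = predict_fake_prob(weights, bias, x)
    grad = [(p - true_label) * w for w in weights]
    sign = [(g > 0) - (g < 0) for g in grad]
    return [xi + epsilon * s for xi, s in zip(x, sign)]

# Hypothetical detector parameters and a "fake" input (label 1).
weights = [2.0, -1.5, 0.5]
bias = -0.2
x = [0.9, 0.1, 0.4]

x_adv = fgsm(weights, bias, x, true_label=1, epsilon=0.5)

print("clean     P(fake) =", round(predict_fake_prob(weights, bias, x), 3))
print("perturbed P(fake) =", round(predict_fake_prob(weights, bias, x_adv), 3))
```

In this toy setting, a single signed step in each feature is enough to push the detector's output for a fake input below the 0.5 decision threshold, which is the qualitative effect the accuracy drop above describes.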

A highly cited article in Topic 2 concludes that in the wake of the COVID-19 pandemic, the public has witnessed a massive rise in fake news or disinformation about the unfamiliar disease. Malicious actors exploit the vulnerabilities created by the pandemic to present manipulated information. Falsehoods about the new pandemic spread much faster than the pandemic itself, resulting in an infodemic: an overflow of both credible and unreliable information that leaves the public confused. Naeem and Bhatti [76] highlighted how fighting the infodemic is a new front during the outbreak. The authors stressed the need to bust common myths, such as that consuming alcohol or eating garlic can protect against COVID-19, or that taking hot baths or spraying chlorine kills the coronavirus. The situation was so serious that Tedros Adhanom Ghebreyesus, WHO Director-General, said: ‘We're not just fighting an epidemic; we're fighting an infodemic.’

Technology has been critical in responding to the Coronavirus Disease (COVID-19) epidemic. Chamola et al. (2020), in Topic 3, highlighted the role of several technologies, such as the Internet of Things (IoT), Unmanned Aerial Vehicles (UAVs), blockchain, Artificial Intelligence (AI), and 5G, in mitigating the impact of COVID-19. The Internet of Medical Things (IoMT), also called the healthcare IoT, played a crucial role in remote patient monitoring, telemedicine, etc. Maintaining social distance is an effective way of controlling the spread of COVID-19, and UAVs allow medical supplies to be delivered to remote and unreachable locations with little or no human interaction. Drones are also deployed to monitor sensitive places for crowd surveillance. Similarly, the satellite-based global positioning system (GPS) is helpful for tracking the real-time as well as the historical location of a person. To curb the spread of the disease using GPS location, India's Ministry of Electronics and IT (MeitY) developed an app called Aarogya Setu. The prime objective of this app was contact tracing. However, besides identifying the various zones of an individual, the app was also invaluable for vaccine distribution. AI, likewise among the benchmark technological developments of recent times, can help combat the aftermath of the virus through disease surveillance, busting fake news, risk prediction, etc. Correspondingly, blockchain has a considerable role in boosting the modern healthcare system, including patient traceability and encrypted storage and retrieval of electronic health records.

In Topic 4, Loomba et al. (2021) report that nearly 3 % of all COVID-19 vaccine-related articles contain at least one piece of disinformation regarding the vaccine, and that the volume of such misleading articles has increased rapidly in recent years. Loomba et al. [53] conducted a trial in the UK and the USA to quantify the impact of disinformation and myths about vaccines. The authors also observed a decline in vaccine acceptance due to the perception of herd immunity and concerns about the long-term side effects of vaccines. Further, scientific-sounding misinformation, such as claims that the virus emerged from 5G mobile networks or that a trial participant died after vaccination, was more strongly linked with a decline in vaccine acceptance.

According to Wang (2017), under Topic 5, the size and quality of the dataset significantly affect a model's accuracy and predictive ability, and larger amounts of data result in more accurate machine-learning models. Existing statistical models have proven less effective for fake news detection because of the absence of labeled benchmark datasets. Wang [21] introduced the ‘LIAR’ dataset, consisting of 12.8K human-labeled short statements, which is significantly larger than earlier datasets of its kind. Finally, the author underlined that combining meta-data with text improves automatic fake news detection.

In Topic 6, Vosoughi et al. [77] confirmed that fake news has evolved rapidly with the emergence of social media. Fake news is fabricated content intentionally published and propagated through various social media. To understand the speed with which fake news spreads, Vosoughi et al. [77] analyzed a data set of rumour cascades on Twitter from 2006 to 2017. The authors found that the top 1 % of fake news cascades spread to between 1000 and 10,000 people in a given period, whereas true news rarely reached more than 1000 people during the same span. They therefore concluded that lies spread faster than the truth.

In Topic 7, the study by Lewandowsky et al. (2013) shows that the impact of science denial extends beyond COVID-19. Some people remain unconvinced about issues such as climate change caused by carbon dioxide and childhood vaccination. Lewandowsky et al. [78] found that conspiracy beliefs are among the strongest contributors to science skepticism and the rejection of science. The authors surveyed visitors to climate blogs to understand the reasoning behind the acceptance and rejection of climate science. The general public remains doubtful of scientific testimony. Apart from conspiracist thinking, endorsement of free markets was also observed to be one of the significant factors behind this rejection.

We live in a time and society of uncertainty that readily accepts fake news and rejects science without hesitancy. In Topic 8, Scheufele and Krause [79] surveyed how sociocultural circumstances undermine the general public's belief in science. A gap between public perception and scientific consensus has long been prevalent on issues including vaccine safety and climate change. The primary cause of this disconnect between the public and science is the dissemination of misinformation and disinformation by media houses and political environments. Therefore, epistemic knowledge, including information and disinformation on a specific topic, is far more crucial to forming an impression.

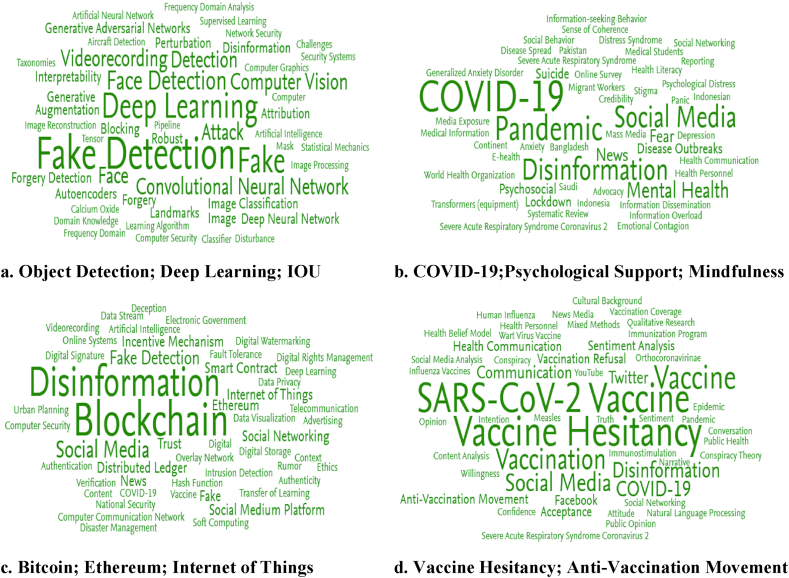

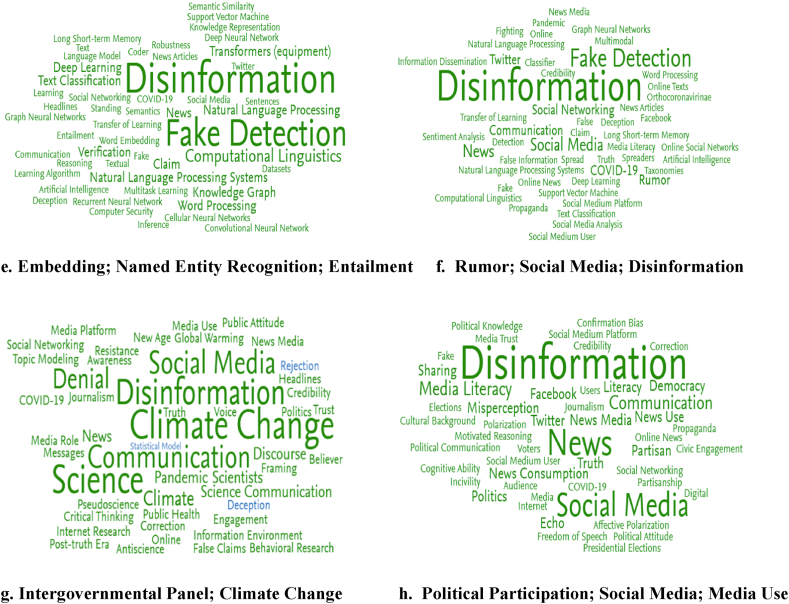

5.1. Keyphrases of emerging topics

Next, we summarize the keyphrases under each of the emerging topics. Several studies have used keyphrase analysis to identify research hotspots for a research theme [30,80]. SciVal allows the extraction of top keyphrases by text mining the titles and abstracts of articles. Fig. 5a to h shows the keyphrases for each of the eight emerging topics. The top keyphrases are “Fake Detection”, “COVID-19”, “Disinformation”, “Vaccine Hesitancy” and “Climate Change”.
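SciVal's keyphrase extraction is proprietary and its details are not described here. As a rough illustration of the general idea only, the Python sketch below counts the most frequent two-word phrases in a handful of made-up titles after removing a small stop-word list. The titles, the stop-word list, and the function name are all hypothetical, and the approach is far cruder than a production text-mining pipeline.

```python
import re
from collections import Counter

# Minimal illustrative stop-word list (an assumption, not SciVal's).
STOP_WORDS = {"a", "an", "and", "for", "in", "of", "on", "the", "to", "with"}

def top_bigrams(texts, k=3):
    """Return the k most frequent two-word phrases across the given texts."""
    counts = Counter()
    for text in texts:
        # Lowercase, tokenize, and drop stop words before pairing neighbours.
        tokens = [t for t in re.findall(r"[a-z0-9\-]+", text.lower()) if t not in STOP_WORDS]
        counts.update(" ".join(pair) for pair in zip(tokens, tokens[1:]))
    return counts.most_common(k)

# Made-up titles for illustration; not drawn from the corpus.
titles = [
    "Fake news detection with deep learning",
    "Vaccine hesitancy and fake news on social media",
    "Deep learning for fake news detection",
]

print(top_bigrams(titles, k=2))
```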

Fig. 5.

Keyphrases for the top prominent topics

6. Conclusions

Fake news impairs decision-making since it leads to biases in information interpretation. People tend to accept negative information irrespective of its impact since it increases their attention and arousal. However, positive news has a less significant effect on the human psyche. In the evolving landscape of fake news research, understanding the factors that contribute to the field's research performance becomes critical. Firstly, the surge in academic interest in fake news coincides with the emergence of the COVID-19 pandemic, illuminating the role of a contemporary crisis in shaping research agendas. Secondly, the analysis reveals a geographical concentration of research output, predominantly from U.S.-based institutions like MIT, thereby emphasizing the need for a more globally diverse academic contribution. Thirdly, the role of specific journals, such as the Journal of Medical Internet Research and PLoS ONE, as platforms for impactful work in this area is evident. Lastly, international collaborations stand out for generating the most impactful publications, as indicated by the highest citation impacts.

The keyword co-occurrence analysis identifies three distinct clusters of research in the domain of fake news. Cluster 1 emphasizes the role of social media and traditional journalism in perpetuating disinformation, underscoring the need for media literacy interventions. Cluster 2 highlights the utility of machine learning and natural language processing for fake news detection, accentuating the technological arms race in combating deceptive content. Cluster 3 focuses on the COVID-19 “infodemic,” revealing both the gravity and the breadth of misinformation amid global crises. The clusters illustrate an intricate web of challenges ranging from technological to psychological and societal. Their collective implication highlights the urgency of multi-disciplinary approaches to counteract the sophisticated and pervasive nature of fake news.

For the first time, direct mapping of fake news to SDGs was conducted. Despite the heavy focus on SDG 3, especially concerning the health misinformation perpetuated during the COVID-19 pandemic, other SDGs are also well-represented (SDG 16, 9, 10). Research on SDG 16 reveals the broader social implications of fake news, particularly how it undermines peace, justice, and strong institutions. Furthermore, SDG 9, focusing on Industry, Innovation, and Infrastructure, also indicates an academic interest in technological approaches to combating disinformation. SDG 10, focused on reduced inequalities, suggests an understanding of the socio-economic dimensions of misinformation.

For the first time, our study used the metric of Prominence percentile for identifying the most salient and urgently discussed topics related to fake news. For instance, the high prominence of topics such as “Object Detection in Deep Learning” and “COVID-19; Psychological Support; Mindfulness” indicates not only scientific but also economic prioritization. The notable prominence of topics related to misinformation and public sentiment, such as “Rumour; Social Media; Disinformation,” underscores the critical need for research into ethical frameworks and computational tools for information verification.

The prevalence of large language models like ChatGPT in various domains, from healthcare to information dissemination, is undeniable. While they show promise in democratizing access to information and aiding in research, ethical and accuracy-related challenges loom large. Notably, the models' capacity for generating misleading or false information raises ethical concerns, such as in the realm of fake news generation. The consequence extends from eroding trust in AI systems to affecting user perceptions, as corroborated by empirical studies. Additionally, personal harm can befall users as misinformation about health and finances, among other things, is generated and disseminated. Data ownership, user consent, and representational bias are additional layers of complexity in this discourse. Therefore, it is crucial to address these issues comprehensively for the responsible and equitable application of these potent tools in diverse sectors.

There is a need for future research to focus on the characteristics of individuals that lead them to be drawn to fake news [81]. It would be interesting for researchers to examine further the personality characteristics that may make some people more likely to believe and spread fake news than others. This could involve examining factors such as persuasibility, influenceability, and other traits that have been studied in the past. It would also be interesting for future research to examine personality characteristics that lead individuals to be drawn to generative AI techniques and for what purpose. Are some people blanket users of generative AI tools, whereas others are more selective users? What distinguishes the two, and how do those distinguishing features relate to the consumption of fake news?

It is also worth considering why fake news and disinformation only began to receive significant attention in the research literature in 2012, despite the widespread use of social media for several years prior. It is possible that there were specific events or circumstances in countries such as the US, UK, and India that prompted the sudden emergence of research on this topic. Further research could explore the factors that may have led to the increased interest in fake news and disinformation and how they relate to the rise of social media and other technological developments. Emerging economies that are seeing higher adoption of internet technologies should also be the focus of future research [82].

In scrutinizing the landscape of fake news research, several limitations must be acknowledged. First, the exclusive use of bibliometric data may not capture the full scope of scholarly activity, as grey literature, internal reports, and methodological papers often go unindexed. This omission could potentially skew the representation of research priorities. Second, bibliometric indicators such as citation counts and Scopus Views Count inherently favor well-established fields over nascent areas of study, possibly underestimating the prominence of emerging topics. Third, the methodology does not account for the multi-disciplinary nature of SDGs, possibly leading to an incomplete or biased mapping of the publications to SDGs.

Data availability statement

Data will be made available on reasonable request. Data associated with our study has not been deposited into a publicly available repository.

Funding statement

This research received no specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

CRediT authorship contribution statement

Raghu Raman: Writing – review & editing, Writing – original draft, Conceptualization. Vinith Kumar Nair: Writing – review & editing, Methodology, Investigation, Data curation. Prema Nedungadi: Writing – review & editing, Writing – original draft. Aditya Kumar Sahu: Writing – review & editing, Investigation. Robin Kowalski: Writing – review & editing, Writing – original draft. Sasangan Ramanathan: Writing – review & editing. Krishnashree Achuthan: Writing – review & editing, Writing – original draft, Methodology, Investigation, Conceptualization.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

We want to express our immense gratitude to our beloved Chancellor, Mata Amritanandamayi Devi (AMMA), for providing the motivation and inspiration for this research work.

Contributor Information

Raghu Raman, Email: raghu@amrita.edu.

Vinith Kumar Nair, Email: vinithkumarnair@am.amrita.edu.

Prema Nedungadi, Email: prema@amrita.edu.

Aditya Kumar Sahu, Email: s_adityakumar@av.amrita.edu.

Robin Kowalski, Email: rkowals@clemson.edu.

Sasangan Ramanathan, Email: sasangan@amrita.edu.

Krishnashree Achuthan, Email: krishna@amrita.edu.

References

- 1.Gordon A.D., Kittross J.M., Merrill J.C., Babcock W.A., Dorsher M., Armstrong J.A.…Singer J.B. Controversies in Media Ethics. Routledge; 2012. Infotainment, sensationalism, and “reality”; pp. 432–458. [Google Scholar]

- 2.Aimeur E., Amri S., Brassard G. Fake news, disinformation, and misinformation: a review. Social Network Analysis and Mining. 2023;13 doi: 10.1007/s13278-023-01028-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Lim W.M. Fact or fake? The search for truth in an infodemic of disinformation, misinformation, and malinformation with deepfake and fake news. J. Strat. Market. 2023 doi: 10.1080/09625254X.2023.2253805. [DOI] [Google Scholar]

- 4.Amaro I., Barra P., Della Greca A., Francese R., Tucci C. Believe in artificial intelligence? A user study on the ChatGPT's fake information impact. IEEE Transactions on Computational Social Systems. 2023 [Google Scholar]

- 5.De Angelis L., Baglivo F., Arzilli G., Privitera G.P., Ferragina P., Tozzi A.E., Rizzo C. ChatGPT and the rise of large language models: the new AI-driven infodemic threat in public health. Front. Public Health. 2023;11 doi: 10.3389/fpubh.2023.1166120. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Dadkhah M., Oermann M.H., Mihály H., Raman R., Dávid L.D. Diagnosis; 2023. Detection of Fake Papers in the Era of Artificial Intelligence. [DOI] [PubMed] [Google Scholar]

- 7.Kosnik L.R.D. Refusing to budge: a confirmatory bias in decision making? Mind Soc. 2008;7(2):193–214. [Google Scholar]

- 8.Lai C.K., Hoffman K.M., Nosek B.A. Reducing implicit prejudice. Social and Personality Psychology Compass. 2013;7(5):315–330. [Google Scholar]

- 9.LeDoux J. Rethinking the emotional brain. Neuron. 2012;73(4):653–676. doi: 10.1016/j.neuron.2012.02.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Nour N., Gelfand J. Deepfakes: a digital transformation leads to misinformation. The Gray Journal. 2022;18(2):85–94. doi: 10.26069/greynet-2022-000.471-gg. [DOI] [Google Scholar]

- 11.Tsfati Y., Boomgaarden H.G., Strömbäck J., Vliegenthart R., Damstra A., Lindgren E. Causes and consequences of mainstream media dissemination of fake news: literature review and synthesis. Annals of the International Communication Association. 2020;44(2):157–173. [Google Scholar]

- 12.Tandoc E.C., Jr. The facts of fake news: a research review. Sociology Compass. 2019;13(9) [Google Scholar]

- 13.Baptista J.P., Gradim A. Understanding fake news consumption: a review. Soc. Sci. 2020;9(10):185. [Google Scholar]

- 14.Marwick A.E. Why do people share fake news? A sociotechnical model of media effects. Georgetown law technology review. 2018;2(2):474–512. [Google Scholar]

- 15.Gunawan B., Ratmono B.M., Abdullah A.G., Sadida N., Kaprisma H. Research mapping in the use of technology for fake news detection: bibliometric analysis from 2011 to 2021. Indonesian Journal of Science and Technology. 2022;7(3):471–496. [Google Scholar]

- 16.Bryanov K., Vziatysheva V. Determinants of individuals' belief in fake news: a scoping review determinants of belief in fake news. PLoS One. 2021;16(6) doi: 10.1371/journal.pone.0253717. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Soler-Costa R., Lafarga-Ostáriz P., Mauri-Medrano M., Moreno-Guerrero A.J. Netiquette: ethic, education, and behavior on internet—a systematic literature review. Int. J. Environ. Res. Publ. Health. 2021;18(3):1212. doi: 10.3390/ijerph18031212. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Hagg E., Dahinten V.S., Currie L.M. The emerging use of social media for health-related purposes in low and middle-income countries: a scoping review. Int. J. Med. Inf. 2018;115:92–105. doi: 10.1016/j.ijmedinf.2018.04.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Simon T., Goldberg A., Adini B. Socializing in emergencies—a review of the use of social media in emergency situations. Int. J. Inf. Manag. 2015;35(5):609–619. [Google Scholar]

- 20.Li Y.J., Cheung C.M., Shen X.L., Lee M.K. Health misinformation on social media: a literature review. PACIS 2019 Proceedings. 2019:194. [Google Scholar]

- 21.Wang Y., McKee M., Torbica A., Stuckler D. Systematic literature review on the spread of health-related misinformation on social media. Soc. Sci. Med. 2019;240 doi: 10.1016/j.socscimed.2019.112552. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Rocha Y.M., de Moura G.A., Desidério G.A., de Oliveira C.H., Lourenço F.D., de Figueiredo Nicolete L.D. The impact of fake news on social media and its influence on health during the COVID-19 pandemic: a systematic review. J. Publ. Health. 2021:1–10. doi: 10.1007/s10389-021-01658-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Alvarez-Galvez J., Suarez-Lledo V., Rojas-Garcia A. Determinants of infodemics during disease outbreaks: a systematic review. Front. Public Health. 2021;9 doi: 10.3389/fpubh.2021.603603. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Suarez-Lledo V., Alvarez-Galvez J. Prevalence of health misinformation on social media: systematic review. J. Med. Internet Res. 2021;23(1) doi: 10.2196/17187. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Ling G.J., Yaacob A., Latif R.A. A bibliometric analysis on the influence of social media during the COVID-19 pandemic. SEARCH Journal of Media and Communication Research. 2021;13(3):35–55.

- 26. Gabarron E., Oyeyemi S.O., Wynn R. COVID-19-related misinformation on social media: a systematic review. Bull. World Health Organ. 2021;99(6):455. doi: 10.2471/BLT.20.276782.

- 27. Akram M., Nasar A., Arshad-Ayaz A. A bibliometric analysis of disinformation through social media. Online J. Commun. Media Technol. 2022;12(4).

- 28. Achuthan K., Nair V.K., Kowalski R., Ramanathan S., Raman R. Cyberbullying research—alignment to sustainable development and impact of COVID-19: bibliometrics and science mapping analysis. Comput. Hum. Behav. 2023;140.

- 29. Raman R., Subramaniam N., Nair V.K., Shivdas A., Achuthan K., Nedungadi P. Women entrepreneurship and sustainable development: bibliometric analysis and emerging research trends. Sustainability. 2022;14(15):9160.

- 30. Raman R., Nair V.K., Prakash V., Patwardhan A., Nedungadi P. Green-hydrogen research: what have we achieved, and where are we going? Bibliometrics analysis. Energy Rep. 2022;8:9242–9260.

- 31. Jayabalasingham B., Boverhof R., Agnew K., Klein L. Identifying research supporting the United Nations sustainable development goals. Mendeley Data. 2019;1:1.

- 32. Donthu N., Kumar S., Mukherjee D., Pandey N., Lim W.M. How to conduct a bibliometric analysis: an overview and guidelines. J. Bus. Res. 2021;133:285–296.

- 33. Donthu N., Kumar S., Mukherjee D., Pandey N., Lim W.M. How to conduct a bibliometric analysis: an overview and guidelines. J. Bus. Res. 2021;133:285–296.

- 34. Sau K., Nayak Y. Scopus based bibliometric and scientometric analysis of occupational therapy publications from 2001 to 2020 [version 1; peer review: 1 approved with reservations]. F1000Research. 2022;11:155.

- 35. Patra R.K., Pandey N., Sudarsan D. Bibliometric analysis of fake news indexed in Web of Science and Scopus (2001–2020). Global Knowledge, Memory and Communication. 2022; ahead-of-print.

- 36. Chaudhari D.D., Pawar A.V. Propaganda analysis in social media: a bibliometric review. Information Discovery and Delivery. 2021;49(1):57–70.

- 37. van Eck N.J., Waltman L. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics. 2010;84(2):523–538. doi: 10.1007/s11192-009-0146-3.

- 38. Ha L., Andreu Perez L., Ray R. Mapping recent development in scholarship on fake news and misinformation, 2008 to 2017: disciplinary contribution, topics, and impact. Am. Behav. Sci. 2021;65(2):290–315.

- 39. Ding Y., Wang Y., Wang Y. It's time to confront fake news and rumors on social media: a bibliometric study based on VOSviewer. 2021 IEEE 4th International Conference on Computer and Communication Engineering Technology (CCET). 2021:226–232.

- 40. Pool J., Fatehi F., Akhlaghpour S. Infodemic, misinformation and disinformation in pandemics: scientific landscape and the road ahead for public health informatics research. Stud. Health Technol. Inf. 2021;281:764–768. doi: 10.3233/SHTI210278.

- 41. Roberge G., Kashnitsky Y., James C. Elsevier 2022 Sustainable Development Goals (SDG) Mapping. Elsevier Data Repository. 2022; vol. 1.

- 42. Paul J., Lim W.M., O'Cass A., Hao A.W., Bresciani S. Scientific procedures and rationales for systematic literature reviews (SPAR-4-SLR). Int. J. Consum. Stud. 2021;45:O1–O16.

- 43. Renstrom J. How Science Itself Fuels a Culture of Misinformation. Thewire.In. 2022, June 4. https://science.thewire.in/the-sciences/how-science-fuels-misinformation-culture/

- 44. Aria M., Cuccurullo C. bibliometrix: an R-tool for comprehensive science mapping analysis. Journal of Informetrics. 2017;11(4):959–975. doi: 10.1016/j.joi.2017.08.007.

- 45. Zupic I., Čater T. Bibliometric methods in management and organization. Organ. Res. Methods. 2015;18(3):429–472. doi: 10.1177/1094428114562629.

- 46. Silva P.C.D. Pandemic metaphors: bibliometric study of the COVID-19 (co) llateral effects. Research, Society and Development. 2020;9(11).

- 47. Howell L. Digital wildfires in a hyperconnected world. WEF Report 2013. 2013. https://www.weforum.org/reports/world-economic-forum-global-risks-2013-eighth-edition/

- 48. Del Vicario M., Bessi A., Zollo F., Petroni F., Scala A., Caldarelli G.…Quattrociocchi W. The spreading of misinformation online. Proc. Natl. Acad. Sci. USA. 2016;113(3):554–559. doi: 10.1073/pnas.1517441113.

- 49. Frenda S.J., Nichols R.M., Loftus E.F. Current issues and advances in misinformation research. Curr. Dir. Psychol. Sci. 2011;20(1):20–23.

- 50. Zubiaga A., Aker A., Bontcheva K., et al. Detection and resolution of rumours in social media: a survey. ACM Comput. Surv. 2018;51(2):32.

- 51. Rossler A. FaceForensics++: learning to detect manipulated facial images. 2019 IEEE/CVF International Conference on Computer Vision (ICCV). 2019:1–11.

- 52. Ruchansky N., Seo S., Liu Y. CSI: a hybrid deep model for fake news detection. Proceedings of the 2017 ACM on Conference on Information and Knowledge Management. 2017, November:797–806.

- 53. Loomba S., de Figueiredo A., Piatek S.J., de Graaf K., Larson H.J. Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nat. Human Behav. 2021;5(3):337–348. doi: 10.1038/s41562-021-01056-1.

- 54. Sallam M., Dababseh D., Eid H., Al-Mahzoum K., Al-Haidar A., Taim D.…Mahafzah A. High rates of COVID-19 vaccine hesitancy and its association with conspiracy beliefs: a study in Jordan and Kuwait among other Arab countries. Vaccines. 2021;9(1):42. doi: 10.3390/vaccines9010042.

- 55. Kata A. Anti-vaccine activists, Web 2.0, and the postmodern paradigm–An overview of tactics and tropes used online by the anti-vaccination movement. Vaccine. 2012;30(25):3778–3789. doi: 10.1016/j.vaccine.2011.11.112.

- 56. Larson H.J., Cooper L.Z., Eskola J., Katz S.L., Ratzan S. Addressing the vaccine confidence gap. Lancet. 2011;378(9790):526–535. doi: 10.1016/S0140-6736(11)60678-8.

- 57. Cinelli M., Quattrociocchi W., Galeazzi A., Valensise C.M., Brugnoli E., Schmidt A.L.…Scala A. The COVID-19 social media infodemic. Sci. Rep. 2020;10(1):1–10. doi: 10.1038/s41598-020-73510-5.

- 58. Pennycook G., McPhetres J., Zhang Y., Lu J.G., Rand D.G. Fighting COVID-19 misinformation on social media: experimental evidence for a scalable accuracy-nudge intervention. Psychol. Sci. 2020;31(7):770–780. doi: 10.1177/0956797620939054.

- 59. OpenAI. Optimizing language models for dialogue. https://openai.com/blog/chatgpt/

- 60. Akhtar P., Ghouri A.M., Khan H.U.R., Amin ul Haq M., Awan U., Zahoor N., Khan Z., Ashraf A. Detecting fake news and disinformation using artificial intelligence and machine learning to avoid supply chain disruptions. Ann. Oper. Res. 2023;327(2):633–657. doi: 10.1007/s10479-022-05015-5.

- 61. Montoro-Montarroso A., Cantón-Correa J., Rosso P., Chulvi B., Panizo-Lledot Á., Huertas-Tato J., Calvo-Figueras B., José Rementeria M., Gómez-Romero J. Fighting disinformation with artificial intelligence: fundamentals, advances and challenges. El Prof. Inf. 2023;32(3):1–16. doi: 10.3145/epi.2023.may.22.

- 62. Wellner G., Mykhailov D. Caring in an algorithmic world: ethical perspectives for designers and developers in building AI algorithms to fight fake news. Sci. Eng. Ethics. 2023;29(4):30. doi: 10.1007/s11948-023-00450-4.

- 63. Kim B., Xiong A., Lee D., Han K. A systematic review on fake news research through the lens of news creation and consumption: research efforts, challenges, and future directions. PLoS One. 2021;16(12) doi: 10.1371/journal.pone.0260080.

- 64. Shin D., Kee K.F. Editorial note for special issue on AI and fake news, mis(dis)information, and algorithmic bias. J. Broadcast. Electron. Media. 2023;67(3):241–245. doi: 10.1080/08838151.2023.2225665.

- 65. Cecil J. Can you believe it? World Today. 2023 (April/May).

- 66. Goldstein J.A., DiResta R. Research note: this salesperson does not exist: how tactics from political influence operations on social media are deployed for commercial lead generation. Harvard Kennedy School (HKS) Misinformation Review. 2022;3(5).

- 67. AI Chatbots Spreading Fake News? ChatGPT, Google Bard Producing News-Related Misinformation, Finds Report. Technology; 2023. https://www.latestly.com/technology/

- 68. Laird J. ChatGPT makes spotting fake news impossible for most people. 2023, June 29. https://tech.co/news/chatgpt-spotting-fake-news-impossible#:~:text=The%20survey%20found%20that%20not,them%20when%20they%20were%20false

- 69. WHO cautions against the usage of AI chatbots like ChatGPT, Bard in healthcare. (May 16, 2023). Available at: https://www.latestly.com/technology/who-cautions-against-the-usage-of-ai-chatbots-like-chatgpt-bard-in-healthcare-5131887.html

- 70. Cohen I.G. What Should ChatGPT Mean for Bioethics? 2023 doi: 10.1080/15265161.2023.2233357. Available at: SSRN 4430100.

- 71. Mridha M.F., Keya A.J., Hamid M.A., Monowar M.M., Rahman M.S. A comprehensive review on fake news detection with deep learning. IEEE Access. 2021;9:156151–156170. doi: 10.1109/ACCESS.2021.3129329.

- 72. Hajli N., Saeed U., Tajvidi M., Shirazi F. Social bots and the spread of disinformation in social media: the challenges of artificial intelligence. Br. J. Manag. 2022;33:1238–1253. doi: 10.1111/1467-8551.12554.

- 73. Raman R. Transparency in research: an analysis of ChatGPT usage acknowledgment by authors across disciplines and geographies. Account. Res. 2023 doi: 10.1080/08989621.2023.2273377.

- 74. Grünebaum A., Chervenak J., Pollet S.L., Katz A., Chervenak F.A. The exciting potential for ChatGPT in obstetrics and gynecology. Am. J. Obstet. Gynecol. 2023;228(6):696–705. doi: 10.1016/j.ajog.2023.03.009.

- 75. Klavans R., Boyack K.W. Research portfolio analysis and topic prominence. Journal of Informetrics. 2017;11(4):1158–1174.

- 76. Naeem S.B., Bhatti R. The Covid‐19 ‘infodemic’: a new front for information professionals. Health Inf. Libr. J. 2020;37(3):233–239. doi: 10.1111/hir.12311.

- 77. Vosoughi S., Roy D., Aral S. The spread of true and false news online. Science. 2018;359(6380):1146–1151. doi: 10.1126/science.aap9559.

- 78. Lewandowsky S., Ecker U.K., Cook J. Beyond misinformation: understanding and coping with the "post-truth" era. Journal of Applied Research in Memory and Cognition. 2017;6(4):353–369.

- 79. Scheufele D.A., Krause N.M. Science audiences, misinformation, and fake news. Proc. Natl. Acad. Sci. USA. 2019;116(16):7662–7669. doi: 10.1073/pnas.1805871115.

- 80. He M., Zhang Y., Gong L., Zhou Y., Song X., Zhu W.…Zhang Z. Bibliometric analysis of hydrogen storage. Int. J. Hydrogen Energy. 2019;44(52):28206–28226.

- 81. Lazer D., Baum M., Grinberg N., Friedland L., Joseph K., Hobbs W., Mattsson C. Combating Fake News: an Agenda for Research and Action. 2017.

- 82. Abu Arqoub O., Abdulateef Elega A., Efe Özad B., Dwikat H., Adedamola Oloyede F. Mapping the scholarship of fake news research: a systematic review. Journal. Pract. 2022;16(1):56–86.

Data Availability Statement

Data will be made available on reasonable request. Data associated with our study has not been deposited into a publicly available repository.