Abstract

Recent advances in MRI‐guided radiation therapy (MRgRT) and deep learning techniques encourage fully adaptive radiation therapy (ART), real‐time MRI monitoring, and the MRI‐only treatment planning workflow. Given the rapid growth and emergence of new state‐of‐the‐art methods in these fields, we systematically review 197 studies written on or before December 31, 2022, and categorize the studies into the areas of image segmentation, image synthesis, radiomics, and real time MRI. Building from the underlying deep learning methods, we discuss their clinical importance and current challenges in facilitating small tumor segmentation, accurate x‐ray attenuation information from MRI, tumor characterization and prognosis, and tumor motion tracking. In particular, we highlight the recent trends in deep learning such as the emergence of multi‐modal, visual transformer, and diffusion models.

Keywords: deep learning, MRI‐guided, radiation therapy, radiotherapy, review

1. INTRODUCTION

Recent innovations in magnetic resonance imaging (MRI) and deep learning are complementary and hold great promise for improving patient outcomes. With the advent of the Magnetic Resonance Imaging Guided Linear Accelerator (MRI‐LINAC) and MR‐guided radiation therapy (MRgRT), MRI allows for accurate and real‐time delineation of tumors and organs at risk (OARs) that may not be visible with traditional CT‐based plans. 1 Deep learning methods augment the capabilities of MRI by reducing acquisition times, generating electron density information crucial to treatment planning, and increasing spatial resolution, contrast, and image quality. In addition, MRI auto‐segmentation and dose calculation methods greatly reduce the required human effort on tedious treatment planning tasks, enabling physicians to further optimize treatment outcomes. Finally, deep learning methods offer a powerful tool in predicting the risk of tumor recurrence and adverse effects. These advancements in MRI and deep learning usher in the era of fully adaptive radiation therapy (ART) and the MRI‐only workflow. 2

Deep learning methods represent a broad class of neural networks which derive abstract context through millions of sequential connections. While applicable to any imaging modality, these algorithms are especially well suited to MRI due to its high information density. 3 Deep learning demonstrates state of the art performance over traditional hand‐crafted and machine learning methods but are computationally intensive and require large datasets. For MRI and other imaging tasks, convolutional neural networks (CNNs), built on local context, have traditionally dominated the field. However, advancements in network architecture, availability of more powerful computers, large high‐quality datasets, and increased academic interest have led to rapid innovation. Especially exciting are the rapid adaptation of cutting‐edge transformer and generative methods, which utilize data from multiple input modalities.

Deep learning techniques can be organized according to their applications in MRgRT in the following groups: segmentation, synthesis, radiomics (classification), and real‐time/4D MRI. Shown in Figure 1 is an example of all of these groups working together for an MRI‐only workflow for prostate cancer. Segmentation methods automatically delineate tumors, organs at risk (OARs), and other structures. However, deep learning approaches face challenges when adapting to small tumors, multiple organs, low contrast, and differing ground truth contour quality and style. These challenges differ greatly depending on the region of the body, so segmentation methods are primarily organized by anatomical region. 4

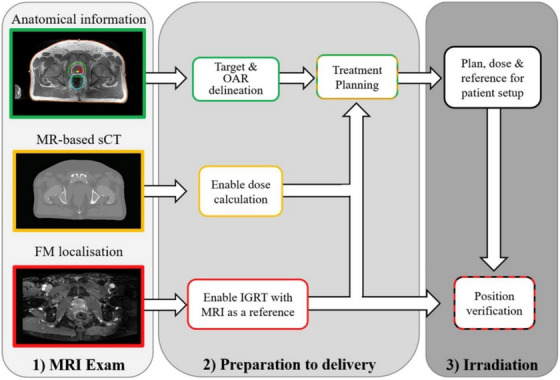

FIGURE 1.

An MRI‐only workflow for prostate cancer. From top to bottom, a diagnostic MRI is taken to determine the sites of the target volume and organs at risk (OARS) which can be aided by segmentation models and prognostic radiomics models. Simultaneously, sCT enables x‐ray attenuation information. Finally, fiducial markers (FMs) are identified to define the prostate position which can be monitored during treatment with real time MRI. Reprinted by permission from Elsevier: Clinical Oncology, Magnetic Resonance Imaging only Workflow for Radiotherapy Simulation and Planning in Prostate Cancer by Kerkmeijer et al. 2018. 160

Synthesis methods are best understood by their input and output modalities. Going from MRI to CT, synthetic CT (sCT) provides accurate attenuation information not apparent in MRI, augmenting the information of co‐registered CT images. In an MRI‐only workflow, sCT avoids registration errors and the radiation exposure associated with traditional CT. 5 In addition, synthetic relative proton stopping power (sRPSP) maps can be generated to directly obtain dosimetric information for proton radiation therapy. 6 The dosimetric uncertainty can be further enhanced with deep learning dose calculation methods, which greatly reduce inference time and could yield lower dosimetric uncertainties compared to traditional Monte Carlo (MC) methods. Synthetic MRI (sMRI), generated from CT, is appealing by combining the speed and dosimetric information of CT with MRI's high soft tissue contrast. However, CT's lower soft tissue contrast makes this application much more challenging, but sMRI has still found success in improving CT‐based segmentation accuracy. 7 , 8 , 9 Alternatively, there are rich intra‐modal applications by generating one MRI sequence from another. For example, the spatial resolution of clinical MRI can be increased by predicting a higher resolution image 10 , 11 and applying contrast can be avoided with synthetic contrast MRI. 12

Radiomics represents an eclectic body of works but can be divided into studies which classify structures in an MRI image 13 or prognostic models which use MR images to predict treatment outcomes such as tumor recurrence or adverse effects. 14 , 15 Deep learning methods in real‐time and 4D MRI overcome MRI's long acquisition time and the low field strengths of the MRI‐LINAC by reconstructing images from undersampled k‐space, 16 synthesizing additional MRI slices, 17 and exploiting periodic motion to improve image quality. 18

In this review, we systematically examine studies that apply deep learning to MRgRT, categorizing them based on their application and highlighting interesting or important contributions. We identify four distinct areas of deep learning methods which enhance the clinical workflow: segmentation, synthesis, radiomics (classification), and real‐time/4D MRI. For each category, the sections are ordered as follows: foundational deep learning methods, challenges specific to MRI, commonly used evaluation metrics, and finally subcategories including a brief overview followed by interesting or influential studies in that subcategory. Since deep learning methods build on each other, the unfamiliar reader is encouraged to read the explanations on deep learning methods sequentially. In Section 7, we discuss current trends in deep learning architectures and how they may benefit new clinical techniques and MRI technologies like the MRI‐LINAC and higher strength MRI scanners.

2. LITERATURE SEARCH

This systematic review surveys literature which implements deep learning methods and MRI for radiation therapy research. “Deep learning” is defined to be any method which includes a neural network directly or indirectly. These include machine learning models and other hybrid architectures which take deep learning derived features as input. Studies including MRI as at least part of the dataset are included. Studies must list their purpose as being for radiation therapy and include patients with tumors. Studies on immunotherapy and chemotherapy without radiation therapy are excluded. Conference abstracts and proceedings are excluded due to an absence of strict peer review.

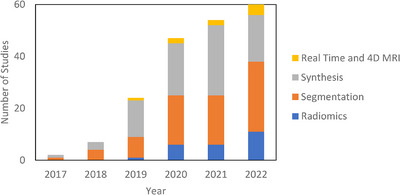

The literature search was performed on PubMed on December 31, 2022, with the following search criteria in the title or abstract: “deep learning and (MRI or MR) and radiation therapy” and is displayed in Table 1. This search yielded 335 results. Of these results, 197 were included based on manual screening using the aforementioned criteria. Seventy‐eight were classified as segmentation, 81 as synthesis, 24 as radiomics (classification), and 14 as real‐time or 4D MRI. There is inevitably some overlap in these categories. In particular, studies which use sMRI for the purposes of segmentation are classified as synthesis and papers which deal with real‐time or 4D MRI are placed in Section 6: Real‐Time and 4D MRI. Figure 2 shows the papers sorted by category and year. Compared to other review papers, this review paper is more comprehensive in its literature search and is the first specifically on the topic of deep learning in MRgRT. In addition, this work uniquely focuses on the underlying deep learning methods as opposed to their results. Figure 3 shows technical trends in deep learning methods implementing 3D convolution, attention, recurrent, and GAN techniques.

FIGURE 2.

Number of deep learning studies with applications towards MRgRT per year by category including references 161–277 in Supporting Information.

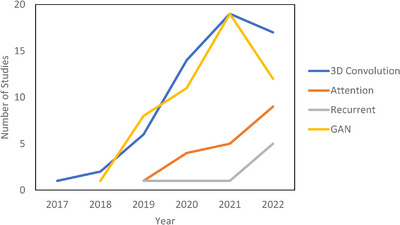

FIGURE 3.

Technical trends in deep learning.

3. IMAGE SEGMENTATION

Contouring (segmentation) in MRgRT is the task of delineating targets of interest on MR images, which can be broadly divided into distinct categories: contouring of organs at risk and other anatomical structures expected to receive radiation dose and contouring of individual tumors. Both tumor and multi‐organ segmentation suffer from intra‐ and inter‐ observer variability. 19 MRI does not capture the true extent of the tumor volume, as well as poorly defined boundaries and similar structures like calcifications lead to institutional and intra‐observer variability. Physician contouring conventions and styles further complicate the segmentation task and lead to inter‐observer variability. 20 , 21 Multi‐organ segmentation is mostly challenged by the large number of axial slices and OARs which make the task tedious and prone to error. Automated solutions to MRI segmentation have been proposed to reduce physician‐workload and provide expert‐like performance. In this section, we first review foundational deep learning methods starting with CNN and moving into recurrent and transformer architectures.

3.1. Deep learning methods

Since the application of CNNs to MRI‐based segmentation in 201722, fully convolutional networks (FCNs) have outperformed competing atlas‐based and hand‐crafted auto‐segmentation methods, often matching the intra‐observer variability among physicians. 23 FCNs employ convolutional layers which are trained to detect patterns in either nearby voxels or feature maps output from previous convolutional layers. In contrast with traditional CNNs, FCNs forgo densely connected layers. This design choice enables voxel‐wise segmentation, allows for variable sized images, and reduces model complexity and training time. Different types of convolutions include atrous and separable convolutions. Atrous convolutions sample more sparsely to gain a wider field of view and can be mix‐and‐matched to capture large and small features in the same layer. Separable convolutions divide a 2D convolution into two 1D convolutions to use fewer parameters for similar results. By connecting multiple convolutional layers together with non‐linear activation functions, larger and more abstract regions of the input image are analyzed to form the encoder. For pixelwise segmentation, the final feature map is expanded to the original image resolution through a corresponding series of transposed convolutional layers forming the decoder. All FCNs include pooling layers to conserve computational resources whereby the resolution of feature maps is reduced by choosing the largest (max‐pooling) or average local pixel. 24

Advances from the field of natural language processing (NLP) have had a tremendous impact on segmentation tasks. Recurrent neural networks (RNNs) are defined by the output of their node being connected to the input of their node. To avoid an infinite loop, the output is only allowed to connect to its input a set number of times. This property allows for increased context and the ability to handle sequential data which is especially important in language translation. Applied to CNNs, each recurrent convolutional layer (convolution + activation function) is performed multiple times which creates a wider field of view and more context with each subsequent convolution. However, recurrent layers can suffer from a vanishing gradient problem. Long short‐term memory blocks (LSTM) solve this by adding a “forget” gate, which forgets irrelevant information. In addition, LSTMs are more capable of making long range connections. Similar to the LSTM gate, the gated recurrent unit (GRU) has an update and reset gate which decide which information to pass on and which to forget. Both LSTM and GRU also have bidirectional versions which pass information forward and backwards. 25 , 26 Relative performance between the LSTM and GRU gates are situational with the GRU gate being less computationally expensive. 27

Related developments from NLP are the concepts of attention and the transformer. In terms of MRI, attention is the idea that certain regions of the MRI volume are more important to the segmentation task and should have more resources allocated to them. ROI schemes can then be defined as a form of hard attention by only considering the region around a tumor. A version of soft attention would weight the region around the tumor heavily and process the information in high resolution but also give a smaller weighting to nearby organs and process it in lower resolution. 28 In practice, attention modules include a fully connected feedforward neural network to generate weights between a feature map of the encoder and a shallower feature map in the decoder. These weights are improved upon through backpropagation of the entire network to give higher representational power to contextually significant areas of the image. This fully connected network can also be replaced with other models such as the RNN, GRU, or LSTM. 29 If the same feature map is compared with itself, this is called self‐attention and is the basis for the transformer architecture. 30 The transformer can be thought of as a global generalization of the convolution and can even replace convolutional layers. The advantages of the transformer are explicit long‐range context and the transformer's multi‐head attention block allows for attention to be focused on different structures in parallel. However, transformers require more data to train and can be very computationally expensive. Such computational complexity can be remedied by including convolutional layers in hybrid CNN‐transformer architectures, 31 by making long range connections between voxels sparse, 32 or by implementing more efficient self‐attention models like FlashAttention. 33

From the field of neuroscience, deep spiking neural networks (DSNNs) attempt to more closely model biological neurons by connecting neurons with asynchronous time dependent spikes instead of the continuous connections between neurons of traditional neural networks. Potential advantages include lower power use, real‐time unsupervised learning, and new learning methods. However, these advantages are only fully realized with special neuromorphic hardware, are difficult to train, and currently lag conventional approaches. For these reasons, they are currently only represented by one paper in this review. 34

3.2. Challenges in MRI

The properties of MRI datasets have driven innovation. Multiple MRI sequences, with and without contrast, are often available. To capture all data, the different sequences are co‐registered and input as multiple channels yielding multiple segmentations. These segmentations are combined to produce a final segmentation using an average, weighted average, or more advanced method. To account for MRI's high through‐plane resolution relative to its in‐plane resolution, 3D convolutional layers are often utilized to capture features not apparent in 2D convolution. However, 3D convolutions are computationally expensive, so numerous 2.5D architectures have been proposed. 35 , 36 , 37 In a 2.5D architecture, adjacent MRI slices are input as channels, and 2D convolutions are performed. It is also common to see new papers forgo the 3D convolution to save resources for new computationally intense methods. An unfortunate fact is that high‐quality MRI datasets are often small. To remedy this, data augmentation methods such as rotating and flipping the MR images are ubiquitous. In addition, the generation of synthetic images to increase dataset size and generalizability is an exciting field of research. 38 Public datasets and competitions have also helped in this regard. For example, the Brain Tumor Segmentation Challenge (BraTS) dataset, 39 updated since 2012, has been a primary contributor to brain segmentation progress, spawning the popular DeepMedic framework. 40 Another approach for small datasets is transfer learning. In transfer learning, a model is trained on a large dataset, and then retrained on a smaller dataset with the idea that many of the previously found features are transferable. 41

A major issue faced in MRI‐segmentation can be characterized as “the small tumor problem”. Small structures like tumors or brachytherapy fiducial markers represent a small fraction of the total MRI volume, where CNNs can struggle to find them or be confused by noise. Further exacerbating the problem is that applying a deep CNN to whole MR images consumes extensive computational resources, so the MRI must be downsampled. In this case, the down sampling is very likely to cause small tumors to be missed entirely. One of the simplest ways to improve performance is to alter the loss function. Standard loss functions are cross‐entropy and dice loss which seek to maximize voxel wise classification accuracy and overlap between the predicted and ground truth contours, respectively. These can be modified to achieve higher sensitivity to small structures at the expense of accuracy. Focal loss is the cross‐entropy loss modified for increased sensitivity 42 and Tversky loss does the same for the dice loss. 43 In addition, borders of the contours are the most important part of the segmentation, so boundary loss functions seek to improve model performance by placing increased emphasis on regions near the contour edge. 44 , 45 Another approach to solve the problem, albeit at the expense of long‐range context, is with two stage networks. In the first stage, regions of interest (ROIs) are identified, and target structures are then contoured in the ROIs in the second stage. Notable efforts include Mask R‐CNN 46 and Retina U‐Net 47 which implement convolution‐based ROI sub‐networks with advanced correction algorithms. Seqseg instead replaces the correction algorithms with a reinforcement learning based model. 45 An agent is guided by a reward function to iteratively improve the conformity of the bounding box. Seqseg reported comparable performance with higher bounding box recall and intersection over union (IoU) compared to Mask R‐CNN.

Many new models for MRI segmentation have been created by modifying U‐Net. U‐Net derives its name from its shape which features convolutional layers in the encoder and transposed convolutional layers in the decoder. Its main innovation, however, is its long‐range skip connections between the encoder and decoder. Dense U‐Net densely connects convolutional layers in blocks, 48 ResU‐Net includes residual connections, 49 Retina U‐Net is a two‐stage network, RU‐Net includes recurrent connections, R2U‐Net adds residual recurrent connections. 50 Attention modules have also been added at the skip connections. 51 , 52 Both V‐Net 53 and nnUNet 54 were designed with 3D convolutional layers with nnUNet additionally automating preprocessing and learning parameter optimization. Pix2pix uses U‐Net as the generator with a convolutional discriminator (PatchGAN). 55 Other state‐of‐the‐art architectures include Mask R‐CNN, DeepMedic, and DeepLabV3+. 56 Mask R‐CNN is a two‐stage network with a ResNet backbone. Mask Scoring RCNN (MS‐RCNN) improves upon Mask R‐CNN by adding a module which penalizes ROIs with high classification accuracy but low segmentation performance. 57 DeepMedic, designed for brain tumor segmentation, is an encoder‐only CNN which inputs a ROI and features two independent row‐resolution and normal resolution channels. These channels are joined in a fully connected convolutional layer to predict the final segmentation. The convolutions in the encoder‐only style reduce the final segmentation map dimensions compared to the original ROI (25 × 25 × 25 vs. 9 × 9 × 9 voxels). DeepLabV3+ leverages residual connections and multiple separable atrous convolutions. Xception improves upon the separable convolution by reversing the order of the convolutions and including ReLU blocks after each operation for non‐linearity. 58

3.3. Evaluation metrics

To evaluate performance, various evaluation metrics are employed with the Dice similarity coefficient (DSC) being the most prevalent. The DSC is defined in Equation 1 as the overlap between the ground truth physician contours and the predicted algorithmic volumes with a value of 0 corresponding to no overlap and 1 corresponding to complete overlap. Mathematically, it is defined as follows where VOLGT is the ground truth volume and VOLPT is the predicted volume59:

| (1) |

Additional metrics include the Hausdorff distance 59 which measures the farthest distance between two points of the ground truth and algorithmic volumes, volume difference, 60 which is simply the difference in volumes, and the Jaccard Index, 61 which is similar to the DSC and measures the overlap between VOLPT and VOLGT relative to their combined volumes. A discussion of these metrics is found in Müller et al. 62 However, performance between datasets must be evaluated with caution due to high inter‐observer variation between physicians and dataset quality.

3.4. Brain

Largely unaffected by patient motion and comprised of detailed soft tissue structures, the brain is an ideal site to benchmark segmentation performance for MRI and represents the dominant category in MRI segmentation research. Unique to brain MRI preprocessing is skull stripping, where the skull and other non‐brain tissue are removed from the image. This can significantly improve results, especially for networks with limited training data. 63 Shown in Table 2, the majority of the studies focus on segmenting different brain tumors such as glioma, Glioblastoma Multiforme (GBM), and metastases. A small minority of studies focuses on OARs like the hippocampus. Advancements in brain segmentation have come, in large part, from the yearly Multimodal Brain Tumor Image Segmentation Benchmark (BraTS) challenge, which includes high quality T1‐weighted (T1W), T2‐weighted (T2W), T1‐contrast (T1C), and T2 ‐Fluid‐Attenuated Inversion Recovery (FLAIR) sequences with the purpose of segmenting the whole tumor (WT), tumor core (TC), and enhancing tumor (ET) volumes. The WT is defined as the entire spread of the tumor visible on MRI; The ET is the inner core which shows significant contrast compared to healthy brain tissue, and the TC is the entire core including low contrast tissue. The most popular architectures are DeepMedic, created for the BraTS challenge, and U‐Net.

Notable efforts in the BraTS challenge include Momin et al achieving an exceptional WT dice score of 0.97 ± 0.03 with a Retina U‐Net based model and mutual enhancement strategy. In their model, Retina U‐Net finds a ROI and segments the tumor. This feature map is fed into the classification localization map (CLM module) which further classifies the tumor into subregions. The CLM shares the encoding path with a segmentation module, so classification and segmentation share information and are improved iteratively. 64 Huang et al. focuses on correctly segmenting small tumors. Based on DeepMedic, the method incorporates a prior scan and custom loss function, the volume‐level sensitivity–specificity (VSS), which rates and significantly improves the metastasis sensitivity and specificity to segment small brain metastases. 65 Another paper improves small tumor detection by 2.5 times compared to the standard dice loss by assigning a higher weight to small tumors. 66 Both Tian et al. 67 and Ghaffari et al. 68 utilize transfer learning datasets to cope with limited data. Ahmadi et al. achieves competitive results in the BraTS challenge with a DSNN. 34

3.5. Head and neck

The head and neck (H&N) region contains many small structures, making high‐resolution and high‐contrast imaging of great importance. MRI is especially preferred over CT imaging for patients with amalgam dental fillings due to the metallic content that can cause intense streaking artifacts on CT. 69 In addition, MRI is the standard of care for nasopharyngeal carcinoma (NPC), leading to significant research attention on auto‐segmentation algorithms for H&N MR images (Table 3). Other research efforts include segmentation of oropharyngeal cancer, glands, and lymph nodes in the American Association of Physicists in Medicine (AAPM)’s RT‐MAC challenge, 70 as well as multi‐organ segmentation.

Notable efforts include the two‐stage multi‐channel Seqseg architecture for NPC segmentation. 71 Seqseg uses reinforcement learning to refine the position of the bounding box, implements residual blocks, recurrent channel and region‐wise attention, and a custom loss function that emphasizes segmentation of the edges of the tumor. Outierial et al. 72 improves the dice score by 0.10 with a two‐stage approach compared to single‐state 3D U‐Net for oropharyngeal cancer segmentation. For multiparametric MRI (mp‐MRI), Deng et al. 73 concludes that the union output from T1W and T2W sequences has similar performance to T1C MRI, suggesting that contrast may not be necessary for NPC segmentation. Similarly, Wahid et al. 74 finds that T1W and T2W sequences significantly improve performance, but dynamic contrast‐enhanced MRI (DCE) and diffusion‐weighted imaging (DWI) have little effect. The first stage segments the OARs in low resolution to create a bounding box, followed by U‐Net segmenting the ROI in high resolution. Jiang et al. segments the parotid glands using T2W MRI and unpaired CT images with ground truth contours. First, sMRI is generated from the CT volumes using a GAN. In the second step, U‐Net generates probabilistic segmentation maps for both the sMRI and MRI based on the CT ground truth contours. These maps, along with sMRI and MRI data, are then input into the organ attention discriminator, which is designed to learn finer details during training, ultimately producing the final segmentations. 75

3.6. Abdomen, heart, and lung

In contrast to the brain, the abdomen is susceptible to respiratory and digestive motion of the patient often leading to poorly defined boundaries. While motion management techniques like patient breath‐hold and not eating or drinking before treatment can mitigate these effects, the long acquisition time of MRI will inevitably lead to errors. Often physicians must rely on anatomical knowledge to deduce the boundaries of OARs. This makes segmentation challenging for CNN‐based architectures, which build from local context. In addition, registration errors make including multiple sequences impractical. OARs segmented in the abdomen include the liver, kidneys, stomach, bowel, and duodenum. The liver and kidneys are not associated with digestion and are relatively stable while the stomach, bowel, and duodenum are considered unstable. The duodenum is the most difficult for segmentation algorithms due to its small size, low contrast, and variability in shape. In addition, radiation induced duodenal toxicity is often dose‐limiting in dose escalation studies making accurate segmentation of high importance. 76 Similar problems occur in the heart and lung because of their periodic motion with the lung being particularly challenging since it is filled with low‐signal air. However, MR segmentation of cardiac subregions have shown growing interest as these are not visible on CT and have different tolerances to radiation. 77

The results are summarized in Table 4. Due to the large number of organs segmented in several of these studies, only the stomach and duodenum dice scores are reported to establish how the algorithms handle unstable organs. Zhang et al. 78 generates a composite image from the current slice, prior slice, and contour map to predict the current segmentation with U‐Net. Luximon et al. 78 takes a similar approach by having a physician contour every 8th slice. These contours are then linearly interpolated and improved upon with a 2D Dense U‐Net. The remaining studies do not require previous information and struggle to segment the duodenum. Ding et al. 79 improves upon a physician‐defined acceptable contour rate by up to 39% with an active contour model. Morris et al. segments heart substructures with a 2 channel 3D U‐Net. 80 Wang et al. segments lung tumors with high accuracy relying on segmentation maps from previous weeks with the aim of adaptive radiation therapy (ART). 81 An addition study by the same group feeds the features from the CNN into a GRU based RNN to predict tumor position over the next 3 weeks. Attention is included to weigh the importance of the prior weeks’ segmentation maps. 82

3.7. Pelvis

The anatomy of the pelvis allows both external beam radiation therapy (EBRT) and brachytherapy approaches for radiation therapy. Therefore, MRI segmentation studies have proposed methods to contour fiducial markers and catheters for cervical and prostate therapy, as well as tumors and OARs. However, a current challenge is that fiducials and catheters are designed for CT and are not optimal for MRI segmentation. For example, in prostate EBRT, gold fiducial markers localize the prostate with high contrast and correct for motion. However, metal does not emit a strong signal on MRI, so fiducials on MRI are characterized by an absence of signal, which can be confused with calcifications. Despite this, MRI is enabling treatments with higher tumor conformality. For instance, the gross tumor volume (GTV) of prostate cancer is not well delineated on CT but is often visible on MRI. In addition, the prostate apex is significantly clearer on MRI. 83 MRI‐based focal boost radiation therapy, in addition to a single dose level to the whole prostate, escalates additional dose to the GTV to reduce tumor recurrence. 84 , 85

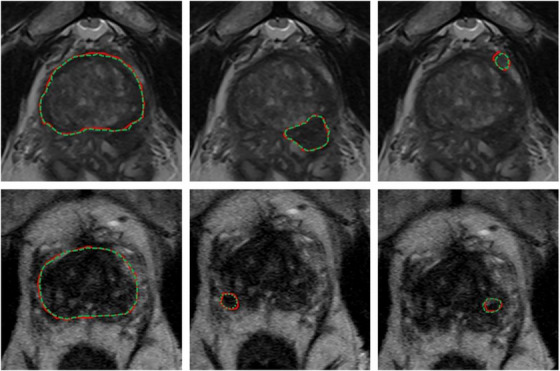

Table 5 shows relevant auto‐segmentation techniques applied to the pelvic region. Shaaer et al. 86 segments catheters with a T1W and T2W MRI‐based U‐Net model and takes advantage of catheter continuity to refine the contours in post processing. Zabihollahy et al. 87 creates an uncertainty map of cervical tumors by retraining the U‐Net model with a randomly set dropout layer. This technique is called Monte Carlo Dropout (MCDO). Cao et al. 23 takes pre‐implant MRI and post‐implant CT as input channels to their network. After preforming intra‐observer variability analysis, they achieve performance more similar to a specialist radiation oncologist for cervical tumors in brachytherapy than a non‐specialist. Eidex et al. 61 segments dominant intraprostatic lesions (DILs) and the prostate for focal boost radiation therapy with a Mask R‐CNN based architecture. Sensitivity is found to be an important factor in evaluating model performance because weak models can appear strong by missing difficult lesions entirely. Figure 4 shows an example of automatic contours of the prostate and DIL on T2w MRI which would not be visible on CT. STRAINet 88 realizes exceptional performance by utilizing a GAN with stochastic residual and atrous convolutions. In contrast with standard residual connections, each element of the input feature map which does not undergo convolution has a 1% chance of being set to zero. Singhrao et al. 89 implements a pix2pix architecture for fiducial detection achieving 96% detection with the misses caused by calcifications.

FIGURE 4.

Expert (red) versus proposed auto‐segmented (green dashed) prostate and DIL contours on axial MRI. From left to right: prostate manual and auto‐segmented contours overlaid on MRI, and two DIL manual and auto‐segmented contours overlaid on MRI. The upper and lower rows are representative of two patients. Reprinted by permission from John Wiley and Sons: Medical Physics, MRI‐based prostate and dominant lesion segmentation using cascaded scoring convolutional neural network by Eidex et al. 61 © 2022.

4. IMAGE SYNTHESIS

Image synthesis is an exciting field of research, defined as translating one imaging modality into another. Benefits of synthesis include avoiding potential artifacts, reducing patient cost and discomfort, and avoiding radiation exposure. 90 In addition, utilizing multiple modalities introduces registration errors which can be avoided with synthetic images. Current methods in MRgRT include synthesis of sCT from MRI, sMRI from CT, and relative proton stopping power images from MRI. Other areas of synthesis research include creating higher resolution MRI (super‐resolution) and predicting organ displacement based on periodic motion in 4D MRI. Segmentation can also be thought of as a special case of synthesis because the input MRI is translated into voxel‐wise masks which assume discrete values according to their class. The distinction between synthesis and segmentation is particularly muddied when the segmentation ground truth is from a different imaging modality. 91

4.1. Generative models

Synthesis architectures are fundamentally interchangeable with segmentation architectures but have diverged in practice. For example, U‐Net, described in detail in Section 3, is the predominant backbone in both areas. However, synthesis models require that the entire image be translated, so that they do not include two‐stage architectures and are dominated by generational adversarial network (GAN)‐based architectures. The GAN is comprised of a CNN or self‐attention‐based generator which generates synthetic images. The generator competes with a discriminator which attempts to correctly classify synthetic and real images. As the GAN trains, a loss function is applied to the discriminator when it mislabels the image, whereas a loss function is applied to the generator when the discriminator is correct. The model is ideally considered trained once the discriminator can no longer correctly identify the synthetic images. Conditional GANs (cGANs) expand on the standard GAN by also inputting a vector with random values or additional information into both the generator and discriminator. 92 In the case of MRI, the values of the vector can correspond to the MRI sequence type and clinical data to account for differences in patient population and setup. The CycleGAN adds an additional discriminator and generator loop. 93 For example, an MRI would be translated into a sCT. The sCT would then be translated into a sMRI. Since the input is ultimately tested against itself, this allows for training with unpaired data. The need for co‐registration is eliminated but requires significantly more data to achieve comparable results with paired training.

Despite their success, GANs can at times be unstable during training and may encounter difficulties with complex synthesis problems. One approach to enhancing training performance and stability is the implementation of the Wasserstein GAN (WGAN). Contrary to the discriminator in traditional GANs, which classifies images as either real or fake, the WGAN evaluates the probability distributions of the real and synthetic images and calculates the Wasserstein distance, or the distance between these distributions. The discriminator strives to maximize this distance while the generator endeavors to minimize it. Although not exclusive to WGANs, spectral normalization is frequently incorporated to constrain the training weights of the discriminator, thereby preventing gradient explosion. 94 The Wasserstein GAN with Gradient Penalty (WGAN‐GP) further amends the WGAN by adding a gradient penalty to the loss function, which helps to stabilize training and improve the model's performance. 95 Another innovative approach that claims superior performance to the WGAN is the Relativistic GAN (RGAN). The RGAN postulates that the generator should not only increase the likelihood of synthetic images appearing realistic but also enhance the probability that real images appear fake to the discriminator. Absent this condition, in the late stages of training with a well‐trained generator, the discriminator may conclude that every image it encounters is real, contradicting the a priori knowledge that half of the images are synthetic. A standard GAN can be converted to a RGAN by modifying its loss function. 96

Diffusion models, another approach to synthesis, are more recent entrants to the field. These models gradually add and remove noise from the image to better learn the latent space. The key advantage of diffusion models is increased stability during training. Despite these advantages, diffusion models are computationally intensive since the noise is added in small steps and the model must learn to reconstruct the images at varying noise levels. 97 Given their promising performance and stability, diffusion models represent an exciting avenue for future exploration in MRgRT applications and achieve state‐of‐the‐art performance in many computer vision tasks.

4.2. Evaluation metrics

To evaluate image synthesis performance, various metrics are used to compare voxel values between the ground truth and synthesized volume. The most common metric is the mean absolute error (MAE), 98 , 99 which is reported in Tables 6 and 7 if available. The MAE is defined below in Equation 2, where xi and yi are the corresponding voxel values of the ground truth and synthesized volume, respectively, and n is the number of voxels.

| (2) |

For sCT studies, the MAE is typically reported in Hounsfield units (HU) but can also be dimensionless if reported with normalized units. In contrast, MRI intensity is only relative and not in definitive units like CT, so MAE is less clinically meaningful than other metrics for MRI synthesis studies. Therefore, peak signal to noise ratio (PSNR) is preferentially reported. 100 Other common metrics in literature are the mean error, 101 which forgoes the absolute value in MAE, the mean squared error (MSE), 102 which substitutes absolute value for the square, and the Structural Similarity Index (SSIM), which varies from −1 to 1 where −1 represents extremely dissimilar images and 1 represents identical images. 103 A full discussion of these metrics can be found in Necasova et al. 104 Since sCT is primarily intended for treatment planning, dosimetric quantities which measure the deviation between CT‐ and sCT‐derived plans are often reported. One of the most common metrics is gamma analysis. Repurposed as a metric to compare treatment plan dose to actual dose on LINACs, gamma analysis looks at each point on the dose distribution and evaluates if the acceptance criteria are met. The American Association of Physicists in Medicine (AAPM) Task Group 119 recommends a low dose threshold of 10%, meaning that points, which receive less than 10% of the maximum dose are excluded from the calculation. Other metrics include the mean dose difference and the minimum dose delivered to 95% of the clinical treatment volume (D95) difference.

4.3. MRI‐based synthetic CT

MRI‐based sCT is the most extensively researched and influential application of synthesis models in radiation therapy. While MR images provide excellent soft tissue contrast, they do not contain the necessary attenuation information for dose calculation that is embedded in CT images. Owing to this limitation, CT has traditionally been the workhorse for treatment planning while MRI has been relegated to diagnostic applications. However, CT suffers from lower soft tissue contrast and imparts a non‐negligible radiation dose, especially for patients receiving standard fractionated image guided radiation therapy (IGRT). In addition, metallic materials found in dental work and implants can lead to severe artifacts in CT, reducing the quality of the treatment plan. By augmenting CT with sCT, these problems can be avoided. Furthermore, according to the “As Low As Reasonably Achievable” (ALARA) principle, the replacement of CT with sCT for an MRI only workflow could be justified with its high accuracy, especially in radiosensitive populations like pediatric patients. 105 , 106

The primary challenge to sCT methods is the accurate reconstruction of bone and air, due to their low proton density and weak signal. This can make it difficult for sCT to distinguish between the two, leading to large errors. In addition, further complicating the issue is that bone makes up a small fraction of the patient volume in radiation therapy tasks or applications which is similar to the “small tumor problem” seen in segmentation. Other issues that can arise include small training sets, misalignment between CT and MRI, and causes of high imaging variability such as intestinal gas.

Calculation of dose distribution using MRI‐based sCT can be enhanced by replacing traditional Monte Carlo simulation (MC) techniques with deep learning. MC accurately predicts the dose distribution based on physical principles, including the electron return effect (ERE), which adds additional dose to boundaries with different proton densities in the presence of a magnetic field. However, the technique can be extremely slow, as it relies on randomly generating paths of tens of thousands of particles. The higher number of particles reduces dosimetric uncertainty. This problem is particularly noticeable in proton therapy, where MC or pencil beam algorithm (PBA) calculations can take several minutes on a CPU, and it can take hours to optimize a single treatment plan. 107 As a result, compromises must be made in clinical practice between dosimetric uncertainty, MC run time, and treatment plan optimization. Deep learning methods show exceptional potential to improve upon MC dose calculation models. Once trained, deep learning algorithms take only a few seconds to synthesize a dose distribution. In addition, they can be trained on extremely high accuracy MC generated dose distributions that would be impractical in everyday clinical practice.

Sampling notable MRI‐based sCT works for photon radiation therapy, several take advantage of cGANs to include additional information. Liu et al. improve upon the CycleGAN by including a dense block, which captures structural and textural information and better handles local mismatches between MRI and ground truth CT images. In addition, a compound loss function with adversarial and distance losses improves boundary sharpness. An example patient is shown in Figure 5. 108 A conditional CycleGAN in Boni et al. passes in MR manufacturer information and achieves good results despite using unpaired data and different centers for their training and test sets. 109 Many studies also experiment with multiple sequences. Massa et al. train a U‐Net with Inception‐V3 blocks on 1.5T T1W, T2W, T1C, and FLAIR sequences separately and finds no statistical difference. 110 However, Koike et al. use multiple MR sequences for sCT generation employing a cGAN to provide better image quality and dose distribution results compared with those from only a single T1W sequence. 111 Dinkla et al. find that sCT removes dental artifacts. 112 Reaungamornrat et al. decompose features into modality specific and modality invariant spaces between high‐ and low‐resolution Dixon MRI with the Huber distance. In addition, separable convolutions are used to reduce parameters, and a relativistic loss function is applied to improve training stability. 113 Finally, Zhao et al. represent the first MRI‐based sCT paper to implement a hybrid transformer‐CNN architecture outperforming other state‐of‐the‐art methods. Their method implements a conditional GAN. The generator consists of CNN blocks in the shallow layers to capture local context and save computational resources, while transformers are used in deeper layers to provide better global context. 114

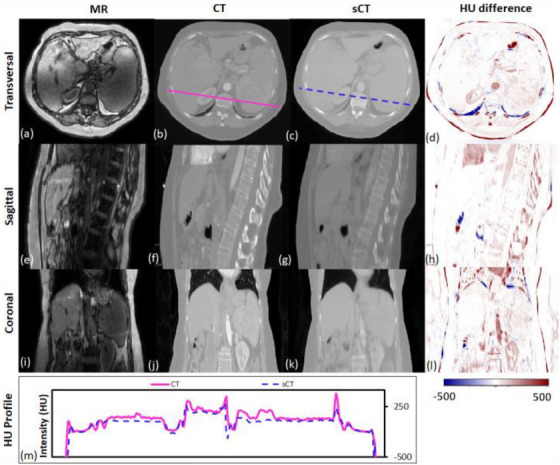

FIGURE 5.

Traverse, sagittal, and coronal images of a representative patient. MRI, CT, and sCT images and the HU difference map between CT and sCT are presented. The CT (solid line) and sCT (dashed line) voxel‐based HU profiles of the traverse images are compared in the lowermost panel. Reprinted by permission from British Journal of Radiology, MRI‐based treatment planning for liver stereotactic body radiotherapy: validation of a deep learning‐based synthetic CT generation method by Liu et al.108© 2019.

4.4. Synthetic CT for proton radiation therapy

Generating sCTs from MRI for the purposes of proton therapy is not fundamentally different from the process for photon therapy. However, proton therapy takes advantage of the Bragg peak, which concentrates the radiation in a small region to spare healthy tissue. While this is beneficial, this puts a tighter constraint on sCT errors. Another difference is that sCT images must first be converted to relative proton stopping power maps before they can be used in treatment planning. Therefore, directly generating synthetic proton relative stopping power (sRPSP) maps instead of sCT would be ideal. Boron therapy is a form of targeted radiation therapy in which boronated compounds are delivered to the site of the tumor and irradiated with neutrons. The boron undergoes a fission reaction, releasing alpha particles that kill the tumor cells. However, the targeting mechanism typically relies on targeting cancer cells’ high metabolic rate. Epidermal tissue that also has a high metabolic rate uptakes boron, making skin dose an important concern in boron therapy. Therefore, methods for generating sCT images for boron therapy should emphasize accurate reconstruction around the skin.

Shown in Table 7, many methods show high dosimetric accuracy for proton therapy. Liu et al develops a conditional cycleGAN to synthesize both high and lower energy CT. 115 Wang et al. create the first synthetic relative proton stopping power maps from MRI with a cycleGAN and loss function to take advantage of paired data. Their method achieves an excellent MAE of 42 ± 13 HU, but struggles with dosimetric accuracy. 6 Maspero et al. achieve a 2%/2 mm gamma pass rate above 99% for proton therapy by averaging predictions from three separate GANs trained on axial, sagittal, and coronal views, respectively. 116 Replacing traditional MC dose calculation methods, Tsekas et al. generate VMAT (volumetric modulated arc therapy) dose distributions in static positions with sCT. 117 Finally, SARU, a self‐attention Res‐UNet, lowers skin dose for boron therapy, achieving better results than the pix2pix method. 118

4.5. CT and CBCT‐based synthetic MRI

Generating sMRI from CT leverages MRI's high soft tissue contrast for improved segmentation accuracy and pathology detection for CT‐only treatment planning. In addition, the ground truth x‐ray attenuation information is maintained compared to an MRI‐only workflow. Cone beam CT (CBCT) is primarily used for patient positioning before each fraction of radiation therapy. Kilovoltage (kV) and megavoltage (MV) energies are standard in CBCT with kV images providing superior contrast and MV images providing superior tissue penetration. However, noise and artifacts can often reduce CBCT image quality. 119 Generating CBCT‐based sMRI can yield higher image quality and soft‐tissue contrast while also retaining CBCT's fast acquisition speed. CT and CBCTs’ rapid acquisition time can make it preferable over MRI for patients with claustrophobia during the MR simulation or for pediatric patients who would require additional sedation. In addition, MRI is not suitable for patients with metal implants such as pacemakers. However, sMRI is significantly more challenging to generate compared to sCT. This is primarily due to the recovery of soft tissue structures visible only in MRI. For this reason, sMRI is often used to improve segmentation results in CT and CBCT. However, some studies report direct use of sMRI for segmentation.

The studies of CT and CBCT‐based synthetic MRI are summarized in Table 8. For CT‐based sMRI, Dae et al implements a cycleGAN for sMRI synthesis with dense blocks in the generator. The sMRIs are input into MS‐RCNN improving segmentation performance. 120 Lei et al incorporates dual pyramid networks to extract features from both sMRI and CT and includes attention to achieve exceptional results. 121 BPGAN synthesizes both sMRI and sCT bidirectionally with a cycleGAN. Pathological prior information, an edge retention loss, and spectral normalization improve accuracy and training stability. 122 Both CBCT‐based sMRI studies, from Emory's Deep Biomedical Imaging Lab, significantly improve CBCT segmentation results. In their first paper, Lei et al generates sMRI with a CycleGAN, then inputs this into an attention U‐Net. 8 Fu et al. makes additional improvements by generating the segmentations with inputs from both CBCT and sMRI and also including additional pelvic structures. Example contours overlaid onto CBCT and sMRI are shown in Figure 6. 123

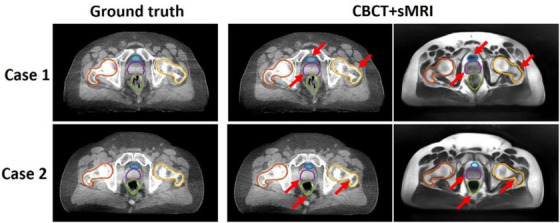

FIGURE 6.

Contours of segmented pelvic organs for two representative patients. Ground truth contours are overlaid onto CBCT. The predicted contours of the proposed method are overlaid on CBCT and sMRI. Red arrows highlight regions in which CBCT and sMRI provide complementary information for bony structure and soft tissue segmentation. Reprinted by permission from John Wiley and Sons: Medical Physics, Pelvic multi‐organ segmentation on cone‐beam CT for prostate adaptive radiotherapy by Fu et al. 123 © 2020.

4.6. Intramodal MRI synthesis and super resolution

It can be beneficial to synthesize MRI sequences from other MRI sequences. Intra‐modal applications include generating synthetic contrast MRI to prevent the need for injected contrast, super‐resolution MRI to improve image quality and reduce acquisition time, and synthetic 7T MRI due to its lack of widespread availability and improve spatial resolution and contrast. 124 To reduce complexity and cost, a potential approach to radiation therapy is to rotate the patient instead of using a gantry. However, the patient's organs deform under gravity, requiring multiple MRIs at different angles for MRgRT. MR images of patients rotated at different angles can better enable gantry free radiation therapy. In this section, synthesis studies which synthesize other MRI sequences are discussed and summarized in Table 9.

Preetha et al. synthesize T1C images with a multi‐channel T1W, T2W, and FLAIR MRI sequences using the pix2pix architecture. 12 Another study included a A cycleGAN with a ResUNet generator to generate lateral and supine MR images for gantry‐free radiation therapy. 125 ResUNet is also implemented to generate ADC uncertainty maps from ADC maps for prostate cancer and mesothelioma. 126 Studies designed explicitly for super‐resolution include Chun et al. and Zhao et al. In the former study, a U‐Net based denoising autoencoder is trained to remove noise from clinical MRI. 11 The same architecture is employed in Kim et al 127 for real‐time 3D MRI to increase spatial resolution. In addition, dynamic keyhole imaging is formulated to reduce acquisition time by only sampling central k‐space data associated with contrast. The peripheral k‐space data associated with edges is added from previously generated super‐resolution images in the same position. 127 Zhao et al. make use of super‐resolution for brain tumor segmentation, increasing the dice score from 0.724 to 0.786 with 4x super resolution images generated from a GAN architecture. The generator has low‐ and high‐resolution paths and dense blocks. 10 Often in clinical practice, the through place resolution is increased to reduce the MRI scan time. Xie et al achieves near perfect accuracy in recovering 1 from 3 mm through plane resolution by training parallel CycleGANs, which predict the higher resolution coronal and sagittal slices, respectively. These predictions are then fused to create the final 3D prediction. 128

5. RADIOMICS (CLASSIFICATION)

Unlike synthesis which maps one imaging modality to another, radiomics extracts imaging data to classify structures or to predict a value. Deep learning applications to MRI‐based radiomics often achieve state‐of‐the‐art performance over hand‐crafted methods in detection and treatment outcome prediction tasks. Traditional radiomics algorithms apply various hand‐crafted matrices based on shape, intensity, texture, and imaging filters to generate features. The majority of these features have no predictive power, and would confuse the model if all were directly implemented. Therefore, an important step is feature reduction which screens out features without statistical significance. Typically, this is done with a regression such as analysis of variance (ANOVA), Least Absolute Shrinkage and Selection Operator (LASSO), or ridge regression. Alternatively, a CNN or other neural network can learn significant features. The advantage of the deep learning approach is that the network can learn any relevant features including handcrafted ones. However, this assumes a large enough dataset which can be problematic for small medical datasets. Hand‐crafted features have no such constraint and are easily interpretable. It is often the case that a hybrid approach including both hand‐crafted and deep learning features yields the highest performance. Biometric data like tumor grade, patient age, and biomarkers can also be included as features. Once the significant features are found, supervised machine learning algorithms like support vector machines, artificial neural networks, and random forests are employed to make a prediction from these features. Recently, CNNs like Xception and InceptionResNet, 129 recurrent neural networks with GRU and LSTM blocks, and transformers have also found favor in this task, as introduced in Section 3. Radiomics can also be done purely with deep learning as it is done with segmentation and synthesis. In this section, we divide the studies into those detecting or classifying objects in the image and studies predicting a value such as the likelihood of distant metastases, treatment response, and adverse effects. While detection is traditionally under the purview of segmentation, the architectures of detection methods and the classification task are in common with other radiomics methods, and so are discussed here.

While radiomics algorithms can excel on local datasets, the main concern for MRI applications is the generalizability of the methods. Variability in MR imaging characteristics such as field strength, scanner manufacturer, pulse sequence, ROI or contour quality, and the feature extraction method can result in different features being significant. This variability can largely be mitigated by normalizing the data to a reference MRI and including data from multiple sources. 130

5.1. Evaluation metrics

Classification accuracy is an appealing evaluation metric due to its simplicity, but accuracy can be misleading with unbalanced data. For example, if 90% of tumors in the dataset are malignant, a model can achieve 90% accuracy by labeling every tumor as malignant. Precision, 131 the ratio of true positives to all examples labeled as positive by the classifier, and recall, 15 the ratio of true positives to all actual positives, will also both differ if given imbalanced data. The F1 score 132 is defined in Equation 3, ranging from 0 to 1 and combining precision and recall to provide a single metric. A high F1 value indicates both high precision and recall and is resilient towards unbalanced data.

| (3) |

The most common evaluation metric resistant to unbalanced data is the area under the curve (AUC) of a receiver operating characteristics (ROC) curve. 133 , 134 , 135 In a ROC curve, the x‐axis represents the false positive (FP) rate, while the y‐axis relates the true positive (TP) rate. In addition, the ROC curve can be viewed as a visual representation to help find the best trade‐off between sensitivity and specificity for the clinical application by comparing one minus the specificity versus the sensitivity of the model. The AUC value provides a measurement for the overall performance of the model with a value of 0.5 representing random chance and a value of 1 being perfect classification. If the AUC value is below 0.5, the classifier would simply need to invert its predictions to achieve higher accuracy. It is important to note that all these metrics are for binary classification but are commonly used in multi‐class classification by comparing a particular class with an amalgamation of every other category. Finally, the concordance index (C‐index) measures how well a classifier predicts a sequence of events and is most appropriate for prognostic models which predict the timing of adverse effects, tumor recurrence, or patient survival times. The C‐index ranges from 0 to 1 with a value of 1 being perfect prediction. 136 , 137 A full discussion of evaluation metrics for classification tasks is found in Hossin and Suliaman. 138

5.2. Cancer detection and staging

Effectively detecting and classifying tumors is vital for treatment planning. Deep learning detection methods supersede segmentation algorithms when the tumors are difficult to accurately segment or cannot easily be distinguished from other structures. In addition, detection models can further improve segmentation results by eliminating false positives. When applied to MRI, detection studies also have the potential to differentiate between cancer types and tumor stage to potentially avoid unnecessary invasive procedures like biopsy.

As shown in Table 10, The majority of works in detection are for brain lesion classification. Chakrabarty et al. attain exceptional results in differentiating between common types of brain tumors with a 3D CNN and outperforms traditional hand‐crafted methods. 133 Radiation‐induced cerebral microbleeds appear as small dark spots in 7T time of flight magnetic resonance angiography (TOF MRA) and can be difficult to distinguish from look‐a‐like structures. Chen et al. utilize a 3D ResNet model to differentiate between true cerebral microbleeds and mimicking structures with high accuracy. 139 Finally, Gao et al. distinguish between radiation necrosis and tumor recurrence for gliomas, significantly outperforming experienced neurosurgeons with a CNN. 140

5.3. Treatment response

The decision to treat with radiation therapy is often definitive. Since radiation dose will unavoidably be delivered to healthy tissue, treatment response and the risk of adverse effects are heavily considered. Further compounding the decision, dose to healthy tissue is cumulative that is complicating any subsequent treatments. In addition, unknown distant metastasis can derail radiation therapy's curative potential. Therefore, predicting treatment response and adverse effects are of high importance, and significant work has gone into applying deep learning algorithms to prognostic models.

Diffusion‐weighted imaging (DWI) has attracted strong interest in studies which predict the outcome of radiation therapy. DWI measures the diffusion of water through tissue often yielding high contrast for tumors. Cancers can be differentiated by altering DWI's sensitivity to diffusion with the b value, in which higher b values correspond to an increased sensitivity to diffusion. By sampling at multiple b‐values, the attenuation of the MR signal can be measured locally in the form of apparent diffusion coefficient (ADC) values. A drawback of DWI is that the spatial resolution is often significantly worse than T1W and T2W imaging. 141 Unlike segmentation and synthesis which require highly accurate structural information, high spatial resolution is not necessary for treatment outcome prediction, so the functional information from DWI is most easily exploited in predictive algorithms.

The majority of studies summarized in Table 11 seek to predict treatment outcomes and tumor recurrence. Zhu et al. take the interesting approach of concatenating DWI histograms across twelve b values to create a “signature image.” A CNN is then applied to the signature image to achieve exceptional performance in predicting pathological complete response. 14 Jing et al., in addition to MRI data includes clinical data like age, gender, and tumor stage to improve predictive performance. 142 Keek et al. achieves better results in predicting adverse effects by combining hand‐crafted radiomics and deep learning features. 15 Other notable papers include Huisman et al., which uses an FCN suggesting that radiation therapy accelerates brain aging by 2.78 times, 143 Hua et al., which predicts distant metastases with an AUC of 0.88, 144 and Jalalifar et al., which achieves excellent results by feeding in clinical and deep learning features into an LSTM model 145 An additional study by Jalalifar et al. finds the best performance for local treatment response prediction using a hybrid CNN‐transformer architecture when compared to other methods. Residual connections and algorithmic hyperparameter selection further improve results. 146

6. REAL‐TIME AND 4D MRI

Real‐time MRI during treatment has recently been made possible in the clinical setting with the creation of the MRI‐LINAC. Popular models include the Viewray MRIdian (ViewRay Inc, Oakwood, Ohio, USA) and the Elekta Unity (Elekta AB, Stockholm). Electron return effect (ERE), which increasing dose at boundaries with differing proton densities such as the skin at an external magnetic field, guides the architecture of these models. 147 At higher field strengths, the ERE becomes more significant, but MR image quality increases. In addition, a higher field strength can reduce the acquisition time for real‐time MRI. Therefore, a balance must be struck. Both the Elekta Unity and Viewray Mridian with 1.5T and 0.35T magnetic fields, respectively, compromise by choosing lower field strengths The Elekta Unity prioritizes image quality and real‐time tracking capabilities at the expense of a more severe ERE. 148 The MRI‐LINAC has enabled an exciting new era of ART wherein anatomical changes and changes to the tumor volume can be accurately discerned and optimized between treatment fractions. In addition, unique to MRgRT, the position of the tumor can be directly monitored during treatment, potentially leading to improved tumor conformality and improved patient outcomes. 149

Periodic respiratory and cardiac motion are common sources of organ deformation and should be accounted for optimal dose delivery to the PTV. Tracking these motions is problematic with conventional MRI since scans regularly take approximately 2 min per slice leading to a total typical scan time of 20–60 min. 150 In addition to motion restriction techniques like patient‐breath hold, cine MRI accounts for motion in real‐time by reducing acquisition times to 15 seconds or less. This is achieved by only sampling one (2D) or more (3D) slices with short repetition times, increasing slice thickness, and undersampling. In addition, the MR signal is sampled radially in k‐space to reduce motion artifacts. Capturing a 3D volume across multiple timesteps of periodic motion is known as 4D MRI. 151

Deep learning methods can further reduce acquisition time by reconstructing intensely undersampled cine MRI slices. In addition to reconstructing from undersampled k‐space MRI sequences, several approaches further reduce acquisition time. In the first approach, cine MRI and/or k‐space trajectories are used to predict the timestep of a previously taken 4D MRI. However, this method requires a lengthy 4D MRI and does not adapt to changes in the tumor volume over the course of the treatment. Additional approaches include synthesizing a larger volume than cine MRI slice captures to reduce acquisition time, predicting the deformation vector field (DVF) which relays real‐time organ deformation information, or determining the 3D iso‐probability surfaces of the organ to stochastically determine tumor position if real‐time motion adaptation is not possible.

Shown in Table 12, this category is experiencing rapid growth with majority of papers being published within the current year. Notable works include Gulamhussene et al., which predict a 3D volume from 2D cine MRI or a 4D volume from a sequence of 2D cine MR slices. A simple U‐Net, introduced in Section 3, is implemented to reduce inference time. The performance degrades for synthesized slices far away from the input slices but achieves an exceptional target registration error. 17 Nie et al. instead uses autoregression and the LSTM time series modeling to predict the diaphragm position and to find the matching 4D MRI volume. Autoregression outperforms an LSTM model which could be attributed to a low number of patients. 152 Patient motion is alternatively predicted in Terpestra et al. by using undersampled 3D cine MRI to generate the DVF with a CNN with low target registration error. 153 Similarly, Romaguera et al. predict liver deformation using a residual CNN and prior 2D cine MRI. This prediction is then input into a transformer network to predict the next slice. 154 Driever et al. simply segments the stomach with U‐Net and constructs iso‐probability surfaces centered about the center of mass to isolate respiratory motion. These probability distributions can then be implemented in treatment planning. 155

7. OVERVIEW AND FUTURE DIRECTIONS

Over the last 6 years, we have seen growing adoption of MRgRT and the rapid development of powerful deep learning techniques which encourage an efficient, adaptive MRI only workflow. Shown in Figure 2, powerful models which better exploit MRI's 3D and long‐range context and generative learning continue to gain research interest and be improved upon. In addition, the MRI‐LINAC has spawned the exciting new field of real time MRI. In this section, we discuss the progress of deep learning applications to MRgRT as well as promising future trends of clinical interest.

In our literature search, we identify three overarching trends for deep learning models in MRgRT:

(1) multimodal approaches—Methods which leverage many different types of information such as different MR sequences, clinical data, and synthetically generated information have demonstrated state‐of‐the art results and often outperform models using only one source of data. Following this trend, we anticipate that data sources commonly applied to radiomics methods like genomics data, 156 biomarkers, 142 and additional imaging sources to have an increased role in other MRgRT applications. Including synthetic data can also enhance performance leading to the blurring of the subfields of MRgRT (image segmentation, image synthesis, radiomics, and real time MRI). For example, studies achieved higher performance on CT segmentation tasks by performing the contouring on sMRI images. 8 Therefore, it is foreseeable that future models may consider information from all subfields for optimal adaptive treatment planning.

(2) transformer models—Transformer models have been proved to be powerful by directly learning global relations in MRI but can become computationally expensive. Currently a balance is often struck with hybrid CNN‐Transformer models in which convolutional layers capture fine detail in early layers while transformers capture global context in deeper layers. These may transition to purely transformer models as computational resources and more efficient approaches are developed. Attention mechanisms for multi‐modal segmentation and synthesis have improved upon multi‐modal image synthesis by preferentially weighting input channels with stronger context. We predict that this success will be improved upon with transformers. An additional exciting property of transformer models is that they first divide images into a 1D input sequence of patches, so it trivial to add additional patches to represent diverse data sources. Along with recent computationally efficient multimodal approaches, 157 this feature makes transformers a strong contender to effectively employ data from many sources.

Finally, transformer models show promise in better handling small tumor volumes. While state‐of‐the‐art CNN models first identify a ROI so that the network can better focus on relevant features, transformers can directly identify important regions of the image while still considering long‐range context. This emphasis on global context may also help when significant motion blurring or other artifacts prevent CNNs from learning meaningful local features and a holistic understanding is required to achieve accurate results.

(3) generative models—Generative models create data and have proved especially powerful in image segmentation and synthesis tasks. GANs and its variants such as the WGAN‐GP and CycleGAN have been the dominant model in MRgRT applications but are difficult to train and often suffer from instability. Although not represented in any publications at the time of this review, diffusion models solve these issues and have achieved state state‐of‐the art results in computer vision tasks. We expect diffusion models to quickly gain interest for MRgRT applications as these algorithms improve and become less computationally intensive.

Alongside the development of novel algorithms, breakthroughs in MRI technology and the clinical workflow will undoubtedly benefit from advanced deep learning architectures. Image segmentation models mitigate tedious contouring and intra‐observer variability. This need will rise with the advent of the MRI‐LINAC and real‐time MRI, as tracking tumor motion will necessitate real‐time contouring or prediction of the deformable vector field. In addition, the increased soft tissue contrast of MRI has allowed for the differentiation of substructures with differing radiation tolerances, requiring additional contouring. Although already finding success in a variety of tasks like generating sCT, sMRI, and super‐resolution, synthesis models are expected to continuously adapt to emerging technologies. For instance, the image quality of MR‐LINACs is comparably poor due to lower field strengths necessary to mitigate the ERE and could be enhanced with image synthesis models based on high quality diagnostic scans. Furthermore, 7T MRI is gaining clinical interest due to its higher resolution and image contrast, but scanners are not widespread and may cause side effects like nausea. 158 Image synthesis models could increase the quality of existing 1.5T and 3T scanners in a similar fashion. Finally, radiomics models might also find new applications in providing insights into treatment progress and outcomes. 159

8. CONCLUSION

New deep learning approaches to MRgRT are rapidly improving state‐of‐the‐art performance in segmentation, synthesis, radiomics, and real‐time MRI. Trends such as multimodal approaches, transformer models, and generative models demonstrate great potential in tackling current areas of research such as generating accurate x‐ray attenuation information, the “small tumor problem” in image segmentation, and generating high quality radiomics predictions. In addition, these approaches pave the way to better integrate real time MRI into the clinical workflow and improve image quality at shorter acquisition times and lower field strengths.

AUTHOR CONTRIBUTIONS

Conception and design: Zach Eidex, Tian Liu, Xiaofeng Yang; Data collection: Zach Eidex, Yifu Ding, Jing Wang; Analysis and interpretation: Zach Eidex, Elham Abouei, Richard L.J. Qiu; Draft manuscript preparation: Zach Eidex, Richard L.J. Qiu, Tonghe Wang, Xiaofeng Yang.

CONFLICT OF INTEREST STATEMENT

The author declares no conflicts of interest.

Supporting information

Supporting information

Supporting information

ACKNOWLEDGMENTS

This research is supported in part by the National Cancer Institute of the National Institutes of Health under Award Numbers R01CA215718, R56EB033332, R01EB032680 and P30 CA008748.

Eidex Z, Ding Y, Wang J, et al. Deep learning in MRI‐guided radiation therapy: A systematic review. J Appl Clin Med Phys. 2024;25:e14155. 10.1002/acm2.14155

REFERENCES

- 1. Chin S, Eccles CL, McWilliam A, et al. Magnetic resonance‐guided radiation therapy: a review [published online ahead of print 20191023]. J Med Imaging Radiat Oncol. 2020;64(1):163‐177. [DOI] [PubMed] [Google Scholar]

- 2. Murgic J, Chung P, Berlin A, et al. Lessons learned using an MRI‐only workflow during high‐dose‐rate brachytherapy for prostate cancer [published online ahead of print 20160129]. Brachytherapy. 2016;15(2):147‐155. [DOI] [PubMed] [Google Scholar]

- 3. Johnstone E, Wyatt JJ, Henry AM, et al. Systematic review of synthetic computed tomography generation methodologies for use in magnetic resonance imaging‐only radiation therapy [published online ahead of print 20170908]. Int J Radiat Oncol Biol Phys. 2018;100(1):199‐217. [DOI] [PubMed] [Google Scholar]

- 4. Fu Y, Lei Y, Wang T, Curran WJ, Liu T, Yang X. A review of deep learning based methods for medical image multi‐organ segmentation [published online ahead of print 20210513]. Phys Med. 2021;85:107‐122. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Fu Y, Lei Y, Wang T, Curran WJ, Liu T, Yang X. Deep learning in medical image registration: a review. Phys Med Biol. 2020;65(20). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Wang C, Uh J, Patni T, et al. Toward MR‐only proton therapy planning for pediatric brain tumors: synthesis of relative proton stopping power images with multiple sequence MRI and development of an online quality assurance tool [published online ahead of print 20220211]. Med Phys. 2022;49(3):1559‐1570. [DOI] [PubMed] [Google Scholar]

- 7. Dong X, Lei Y, Tian S, et al. Synthetic MRI‐aided multi‐organ segmentation on male pelvic CT using cycle consistent deep attention network [published online ahead of print 20191017]. Radiother Oncol. 2019;141:192‐199. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Lei Y, Wang T, Tian S, et al. Male pelvic multi‐organ segmentation aided by CBCT‐based synthetic MRI [published online ahead of print 20200204]. Phys Med Biol. 2020;65(3):035013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Kieselmann JP, Fuller CD, Gurney‐Champion OJ, Oelfke U. Cross‐modality deep learning: contouring of MRI data from annotated CT data only [published online ahead of print 20201213]. Med Phys. 2021;48(4):1673‐1684. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Zhou Z, Ma A, Feng Q, et al. Super‐resolution of brain tumor MRI images based on deep learning [published online ahead of print 20220915]. J Appl Clin Med Phys. 2022;23(11):e13758. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Chun J, Zhang H, Gach HM, et al. MRI super‐resolution reconstruction for MRI‐guided adaptive radiotherapy using cascaded deep learning: in the presence of limited training data and unknown translation model [published online ahead of print 20190807]. Med Phys. 2019;46(9):4148‐4164. [DOI] [PubMed] [Google Scholar]

- 12.Jayachandran Preetha C, Meredig H, Brugnara G, et al. Deep‐learning‐based synthesis of post‐contrast T1‐weighted MRI for tumour response assessment in neuro‐oncology: a multicentre, retrospective cohort study [published online ahead of print 20211020]. Lancet Digit Health. 2021;3(12):e784‐e794. [DOI] [PubMed] [Google Scholar]

- 13. Yang Z, Chen M, Kazemimoghadam M, et al. Deep‐learning and radiomics ensemble classifier for false positive reduction in brain metastases segmentation [published online ahead of print 20220119]. Phys Med Biol. 2022;67(2). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Zhu HT, Zhang XY, Shi YJ, Li XT, Sun YS. The conversion of MRI data with multiple b‐Values into signature‐like pictures to predict treatment response for rectal cancer [published online ahead of print 20211216]. J Magn Reson Imaging. 2022;56(2):562‐569. [DOI] [PubMed] [Google Scholar]

- 15. Keek SA, Beuque M, Primakov S, et al. Predicting adverse radiation effects in brain tumors after stereotactic radiotherapy with deep learning and handcrafted radiomics [published online ahead of print 20220713]. Front Oncol. 2022;12:920393. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16. Shao HC, Li T, Dohopolski MJ, et al. Real‐time MRI motion estimation through an unsupervised k‐space‐driven deformable registration network (KS‐RegNet) [published online ahead of print 20220629]. Phys Med Biol. 2022;67(13). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Gulamhussene G, Meyer A, Rak M, et al. Predicting 4D liver MRI for MR‐guided interventions [published online ahead of print 20220909]. Comput Med Imaging Graph. 2022;101:102122. [DOI] [PubMed] [Google Scholar]

- 18. Frueh M, Kuestner T, Nachbar M, Thorwarth D, Schilling A, Gatidis S. Self‐supervised learning for automated anatomical tracking in medical image data with minimal human labeling effort [published online ahead of print 20220827]. Comput Methods Programs Biomed. 2022;225:107085. [DOI] [PubMed] [Google Scholar]

- 19. Fiorino C, Reni M, Bolognesi A, Cattaneo GM, Calandrino R. Intra‐ and inter‐observer variability in contouring prostate and seminal vesicles: implications for conformal treatment planning. Radiother Oncol. 1998;47(3):285‐292. [DOI] [PubMed] [Google Scholar]

- 20. Behjatnia B, Sim J, Bassett LW, Moatamed NA, Apple SK. Does size matter? Comparison study between MRI, gross, and microscopic tumor sizes in breast cancer in lumpectomy specimens [published online ahead of print 20100222]. Int J Clin Exp Pathol. 2010;3(3):303‐309. [PMC free article] [PubMed] [Google Scholar]

- 21. Balagopal A, Morgan H, Dohopolski M, et al. PSA‐Net: deep learning–based physician style–aware segmentation network for postoperative prostate cancer clinical target volumes. Artificial Intelligence in Medicine. 2021;121:102195. [DOI] [PubMed] [Google Scholar]

- 22. Liu Y, Stojadinovic S, Hrycushko B, et al. A deep convolutional neural network‐based automatic delineation strategy for multiple brain metastases stereotactic radiosurgery [published online ahead of print 20171006]. PLoS One. 2017;12(10):e0185844. [DOI] [PMC free article] [PubMed] [Google Scholar]