Abstract

Transcranial alternating current stimulation (tACS) has attracted interest as a technique for causal investigations into how rhythmic fluctuations in neural activity influence cognition and for promoting cognitive rehabilitation. We conducted a systematic review and meta-analysis of the effects of tACS on cognitive function across 102 published studies, which included 2893 individuals in healthy, aging, and neuropsychiatric populations. A total of 304 effects were extracted from these 102 studies. We found modest to moderate improvements in cognitive function with tACS that were evident in several cognitive domains, including working memory, long-term memory, attention, executive control, and fluid intelligence. Improvements in cognitive function were generally stronger after completion of tACS (“offline” effects) than during stimulation (“online” effects). Offline improvements in cognitive function were greater in studies that used current flow models to optimize or confirm neuromodulation targets by simulating the electric fields generated in the brain by tACS protocols. In studies targeting two brain regions concurrently, cognitive function changed bidirectionally (improved or declined) according to the relative phase, or alignment, of the alternating currents in the two regions (in phase versus antiphase). We also found improvements in cognitive function when older adults and individuals with neuropsychiatric illnesses were analyzed separately. Overall, our findings contribute to the debate surrounding the effectiveness of tACS for cognitive rehabilitation, quantitatively demonstrate its potential, and indicate directions for optimal tACS clinical study design.

INTRODUCTION

Rhythmic fluctuations in neural activity are among the most salient neural phenomena associated with cognition. Studies spanning nearly a century have informed our current understanding of the role of these brain rhythms in cognitive function (1). Variability and abnormalities in these spectral signatures of brain activity have been associated with differences in cognitive function among individuals, including diverse cognitive deficits and symptoms across a spectrum of neuropsychiatric illnesses (2). Consequently, successful modulation of these rhythms in a safe, noninvasive manner has emerged as a potential strategy for improving cognitive function. Transcranial alternating current stimulation (tACS) is a noninvasive technique that mediates frequency-specific entrainment of cortical activity (3). The rapidly growing number of publications assessing the state of the field (4–6), emergence of new rhythmic neuromodulation protocols (7–9), and conflicting evidence about the effectiveness of tACS (10) suggest the need for a systematic and quantitative examination of the existing literature.

Here, we performed a systematic review and meta-analysis of 102 studies that examine the modulation of cognitive function using tACS. We included randomized, sham-controlled studies published between January 2006 and January 2021 targeting the following cognitive domains in healthy young and older adults and in clinical populations: visual attention, working memory, long-term memory, executive control, fluid intelligence, learning, decision-making, motor learning, and motor memory.

RESULTS

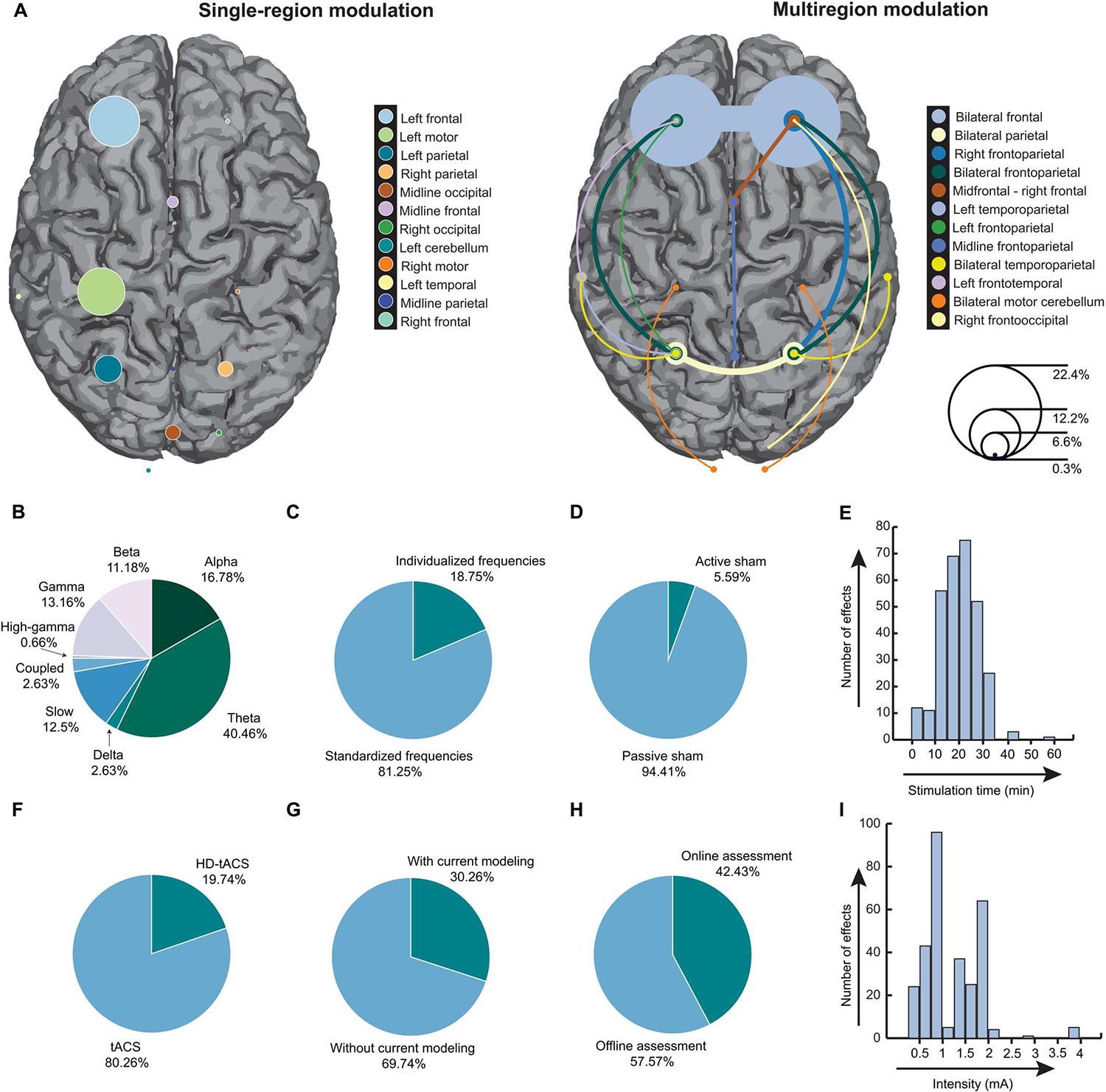

A total of 102 published studies, reporting 304 effect size estimates, met the eligibility criteria and were included in the meta-analysis (the search and selection strategy is reported in fig. S1). Together, the studies included 2893 participants (1290 males and 1603 females) with an average age of 30.82 ± 15.9 years. Of these, 333 participants were older adults with an average age of 67.35 ± 6.96 years. A total of 177 participants had a clinical disorder (average age of 39.37 ± 19.31 years). These clinical conditions included major depressive disorder, attention deficit hyperactivity disorder (ADHD), epilepsy, Parkinson’s disease, schizophrenia, and mild cognitive impairment. We did not observe any overlap between the participant pools of the 102 studies, although some effects were statistically dependent because they came from multiple experiments or measures within the same study; these dependencies were accounted for using a robust variance estimation (RVE) approach for effect size estimation (see Materials and Methods) (11). The characteristics, demographics, and citations of the included studies are available in data file S1. An overview of the included effects is shown in Fig. 1 and figs. S2 and S3.

Figure 1 summarizes the experimental and neuromodulation parameters used in the tACS protocols underlying the 304 effects in the 102 published studies of our meta-analysis. Bilateral frontal brain regions were the most common targets (Fig. 1A), and theta was the most commonly used modulation frequency (Fig. 1B). The tACS protocols underlying most effects used a standardized (as opposed to personalized) modulation frequency (Fig. 1C) and a passive sham control (Fig. 1D), and stimulation lasted between 20 and 25 min (Fig. 1E). Most effects involved conventional sponge-based systems as opposed to high-definition systems (Fig. 1F), did not involve simulation of the current flow (Fig. 1G), were examined after (offline) as opposed to during (online) tACS (Fig. 1H), and were obtained with a peak-to-peak modulation intensity between 0.75 and 1 mA (Fig. 1I).
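The robust variance estimation (RVE) approach mentioned above handles effects that share a study. The Python sketch below shows an intercept-only, correlated-effects version under simplifying assumptions: the between-study variance `tau2` is supplied by the caller rather than estimated, and no small-sample correction is applied. The function name `rve_intercept` is hypothetical, and this is not necessarily the exact estimator or software configuration used in this meta-analysis (see Materials and Methods).

```python
import math
from collections import defaultdict


def rve_intercept(effects, variances, study_ids, tau2=0.0):
    """Sketch of a correlated-effects RVE estimate of the overall mean effect.

    Each effect from study j gets weight 1 / (k_j * (mean_v_j + tau2)), where k_j is
    the number of effects contributed by study j and mean_v_j is their mean sampling
    variance. tau2 is passed in by the caller (assumption); the full method estimates
    it from the data. No small-sample correction is applied.
    Returns (estimate, robust_standard_error).
    """
    # Group effects and their sampling variances by study.
    by_study = defaultdict(list)
    for g, v, s in zip(effects, variances, study_ids):
        by_study[s].append((g, v))

    # All effects of the same study share one weight.
    weighted = []  # (study_id, effect, weight)
    for s, items in by_study.items():
        k = len(items)
        mean_v = sum(v for _, v in items) / k
        w = 1.0 / (k * (mean_v + tau2))
        weighted.extend((s, g, w) for g, _ in items)

    total_w = sum(w for _, _, w in weighted)
    estimate = sum(w * g for _, g, w in weighted) / total_w

    # Sandwich variance: weighted residuals are summed within each study before
    # squaring, so correlated effects from one study are not treated as independent.
    resid_sum = defaultdict(float)
    for s, g, w in weighted:
        resid_sum[s] += w * (g - estimate)
    robust_var = sum(r ** 2 for r in resid_sum.values()) / total_w ** 2
    return estimate, math.sqrt(robust_var)
```

Because the total weight of a study is 1 / (mean_v + tau2) regardless of how many effects it contributes, a study does not gain extra influence simply by reporting more correlated measures. Published RVE implementations typically add small-sample corrections, which likely explains the fractional degrees of freedom (for example, df = 20.1) reported later in the Results.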

Fig. 1. Summary characteristics of tACS studies included in the meta-analysis.

(A) A proportional representation of the brain regions targeted by tACS across the 304 effects in the 102 published studies of the meta-analysis. The left panel shows single-region brain targets, and the right panel describes studies targeting at least two brain regions, including bilateral tACS study designs and phase-dependent multisite high-definition (HD)–tACS study designs. The sizes of the circles over the respective brain regions correspond to the proportion of effects targeting those regions (right inset, scale). The most common targets were bilateral frontal regions (22.4% of all effects examined; right), followed by left frontal regions (12.2% of all effects examined; left). (B) The different stimulation frequencies used and the corresponding proportion of effects are shown. Note that frequencies were entered as a continuous variable in the analysis but are represented here categorically using conventional frequency ranges (slow: <0.5 Hz; delta: 0.5 to 4 Hz; theta: 4 to 8 Hz; alpha: 8 to 12 Hz; beta: 12 to 30 Hz; gamma: 30 to 40 Hz; high-gamma: >40 Hz). Instances when complex phase-amplitude coupled waveforms were used are marked as “coupled.” (C) The proportion of effects in which tACS frequencies were personalized to each individual versus those in which a standardized frequency was used is shown. (D) The proportion of effects in which a tACS condition of interest was compared against an active sham condition versus a passive sham condition is shown. (E) The graph shows the distribution of neuromodulation duration across the examined effects. A stimulation duration of 20 to 25 min was the most common in the literature. (F) The proportion of effects using conventional tACS versus HD-tACS is shown. (G) The proportion of effects observed in experiments with and without current flow models is shown. (H) The proportion of effects in which the behavioral measurements were made during tACS administration (“online”) versus after tACS had finished (“offline”) is shown. (I) The graph shows the distribution of peak-to-peak modulation intensity across the examined effects. The most popular intensity ranges in the literature were 0.75 to 1 mA and 1.75 to 2 mA.
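Because frequencies were analyzed as a continuous variable but displayed in conventional bands (Fig. 1B), a small helper can reproduce that binning. This Python sketch assumes that a frequency lying exactly on an interior boundary belongs to the lower band (for example, 4 Hz to delta and 40 Hz to gamma), which keeps the stated “slow: <0.5 Hz” and “high-gamma: >40 Hz” limits intact; the caption does not specify boundary handling, and the function name `frequency_band` is hypothetical. Phase-amplitude coupled waveforms (the “coupled” category) are outside its scope.

```python
def frequency_band(freq_hz: float) -> str:
    """Map a stimulation frequency (Hz) to the conventional ranges listed for Fig. 1B.

    Assumption: interior boundaries go to the lower band (4 Hz -> delta, 40 Hz -> gamma).
    """
    if freq_hz < 0:
        raise ValueError("frequency must be non-negative")
    if freq_hz < 0.5:
        return "slow"
    # (inclusive upper edge in Hz, band name), checked in ascending order
    for upper, name in [(4.0, "delta"), (8.0, "theta"), (12.0, "alpha"),
                        (30.0, "beta"), (40.0, "gamma")]:
        if freq_hz <= upper:
            return name
    return "high-gamma"
```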

tACS improves cognitive function across cognitive domains

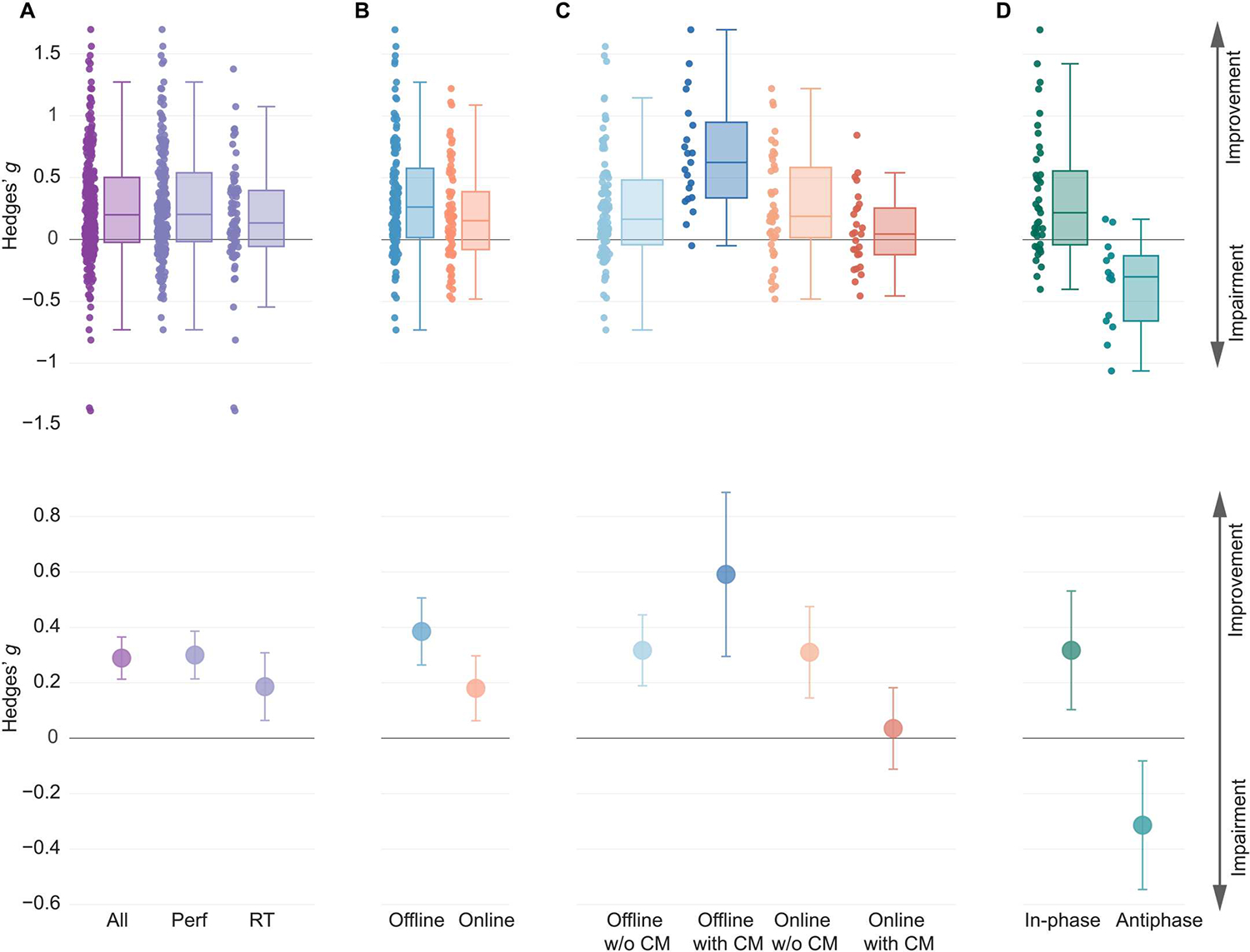

When effects were pooled across studies in all cognitive domains (visual attention, working memory, long-term memory, executive control, fluid intelligence, learning, decision-making, motor learning, and motor memory), tACS robustly improved cognitive function. In the outcome-based analysis, we estimated the overall effect size across experiments that were explicitly designed to improve functional outcomes or were exploratory in nature (number of studies, N = 96; number of effects, k = 265). For these experiments, we estimated the magnitude of improvement in cognitive function outcomes in the active tACS condition relative to sham treatment. Because the analysis spanned cognitive domains, each studied with many different experimental designs and behavioral variables, a wide variety of cognitive function outcomes were included. These variables could be broadly categorized into performance-based measures (for example, accuracy, sensitivity, or task score) and reaction time (RT)–based measures. We examined the effect of tACS on all variables combined (“All”) and separately for performance-based (“Performance”) and RT-based (“RT”) measures (Fig. 2). We found a modest to moderate positive effect of tACS across All measures [N = 96, k = 265, Hedges’ g = 0.29, 95% confidence interval (CI) [0.21, 0.37], P < 0.0001, df = 95, I² = 66.25, where df refers to degrees of freedom and I² reflects heterogeneity; Fig. 2A]. To account for the heterogeneity across effects, we repeated the analysis after excluding effects that were deemed outliers (see Materials and Methods). Improvements in cognitive function persisted, suggesting that this finding was not driven by a minority of studies with large effect sizes (N = 94, k = 249, Hedges’ g = 0.29, 95% CI [0.23, 0.36], P < 0.0001, df = 93, I² = 54.01).
We further confirmed the robustness of this observation through sensitivity analyses in which we varied the assumed correlation between experiments belonging to the same study and the assumed correlation between the active and control conditions in studies with within-subjects designs. Active tACS produced significant effects relative to sham treatment across all correlation values (P values < 0.0001; tables S1 and S2). We also observed improvements separately in Performance and RT measures with active tACS relative to sham treatment, both before and after outlier removal (Fig. 2A and tables S1 and S2). Excluding effects in clinical populations did not change the pattern of results in these omnibus analyses.
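Effect sizes throughout the Results are reported as Hedges’ g. As a minimal sketch, the Python function below computes g and its sampling variance for an active-versus-sham comparison between two independent groups, using the standard small-sample correction factor J = 1 − 3/(4df − 1). The `higher_is_better` flag is a hypothetical convenience reflecting an assumed coding in which faster RTs count as improvement. Within-subjects designs, which the sensitivity analyses above handle through an assumed correlation between conditions, need a different variance formula that this sketch does not cover.

```python
import math


def hedges_g(mean_active, mean_sham, sd_active, sd_sham, n_active, n_sham,
             higher_is_better=True):
    """Hedges' g (bias-corrected standardized mean difference) for two independent groups.

    Returns (g, var_g). With higher_is_better=False (e.g., reaction times), the sign is
    flipped so that a positive g always means improvement with active tACS (assumed coding).
    """
    df = n_active + n_sham - 2
    pooled_sd = math.sqrt(((n_active - 1) * sd_active ** 2
                           + (n_sham - 1) * sd_sham ** 2) / df)
    d = (mean_active - mean_sham) / pooled_sd
    if not higher_is_better:
        d = -d  # lower (faster) RTs under active tACS count as improvement
    j = 1 - 3 / (4 * df - 1)  # small-sample bias correction factor J
    var_d = (n_active + n_sham) / (n_active * n_sham) + d ** 2 / (2 * (n_active + n_sham))
    return j * d, j ** 2 * var_d
```

For example, group means of 10 and 8 with SDs of 2 and 20 participants per group give d = 1 and g = 1 − 3/151, slightly below d because of the small-sample correction.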

Fig. 2. Summary of meta-analysis results.

(A) Box plots and point estimates of outcome-based effects on cognitive function overall (Performance, RT, and clinical symptoms combined) and on Performance and RT-based outcomes separately are shown. (B) Box plots and point estimates for offline and online effects on outcome-based Performance measures are shown. (C) Box plots and point estimates of interaction effects according to the timing of behavioral assessment and use of current flow models (CM) that simulate the flow of electrical current and strength of the electric field in the human brain are shown. Performance, RT measures, and clinical symptoms were combined. (D) Box plots and point estimates of hypothesis-based effects of phase manipulation are shown. “In-phase” represents the improving effect of in-phase synchronization on functional outcome, and “antiphase” represents the disrupting effect of antiphase synchronization on functional outcome. All plots show data before outlier removal. In all plots, individual points represent individual effect size point estimates for each experiment. Box plot center line, median; box plot limits, upper and lower quartiles; whiskers, maximum and minimum values. Error bars around point estimates reflect 95% confidence intervals. Perf, Performance; RT, reaction time; Offline w/o CM, offline effects in experiments without the current flow model; Offline with CM, offline effects in experiments with the current flow model; Online w/o CM, online effects in experiments without the current flow model; Online with CM, online effects in experiments with the current flow model.

In addition to examining improvements in cognitive function, we used a hypothesis-based analysis to test whether changes in cognitive function aligned with the explicit hypotheses formulated by individual studies. Here, too, we found evidence of changes in cognitive function in the hypothesized direction (tables S1 to S3). Together, these observations suggest that tACS improves cognitive function when effects are pooled across cognitive domains, in both Performance-based and RT-based measures, and largely in line with study hypotheses.

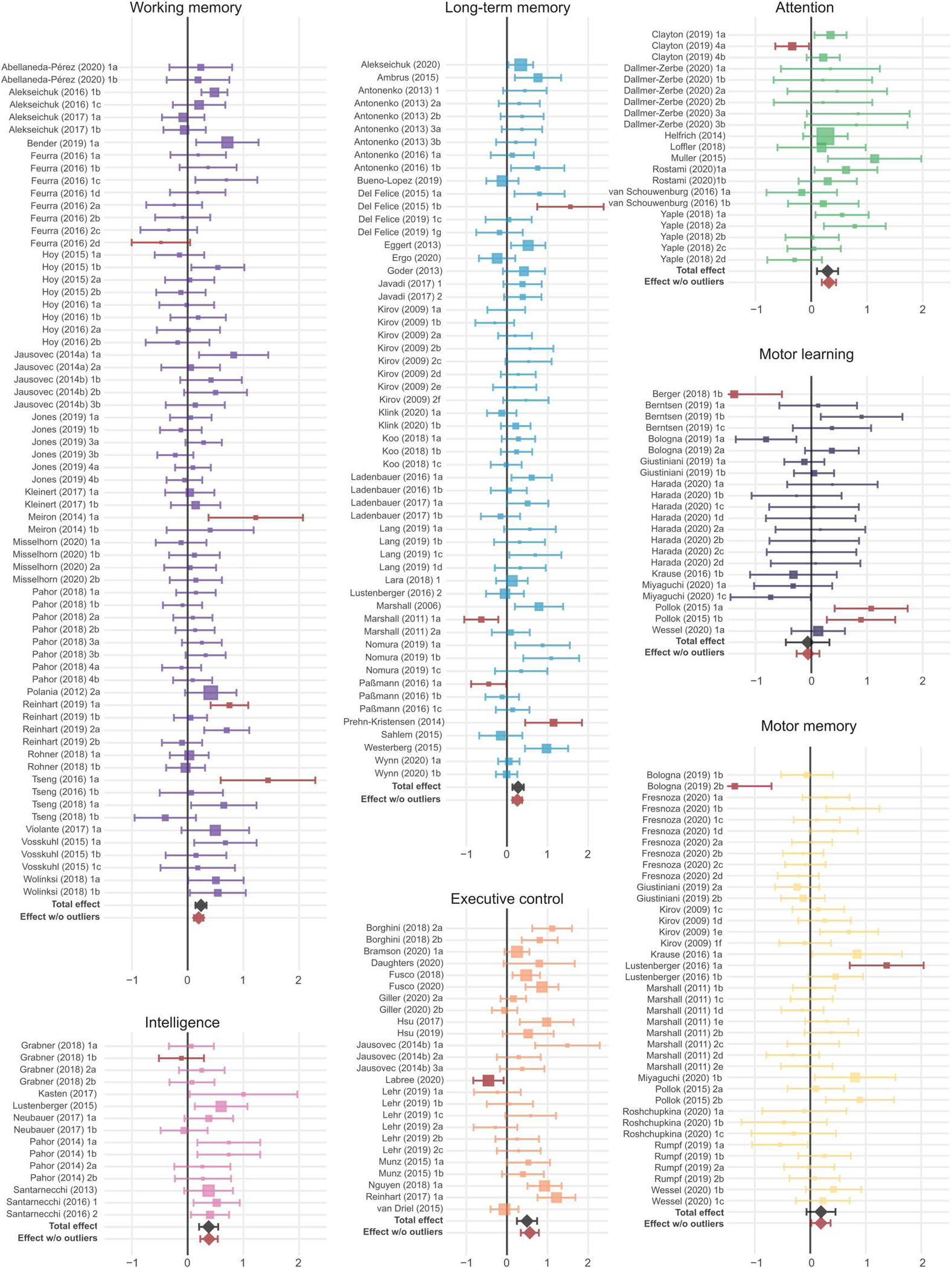

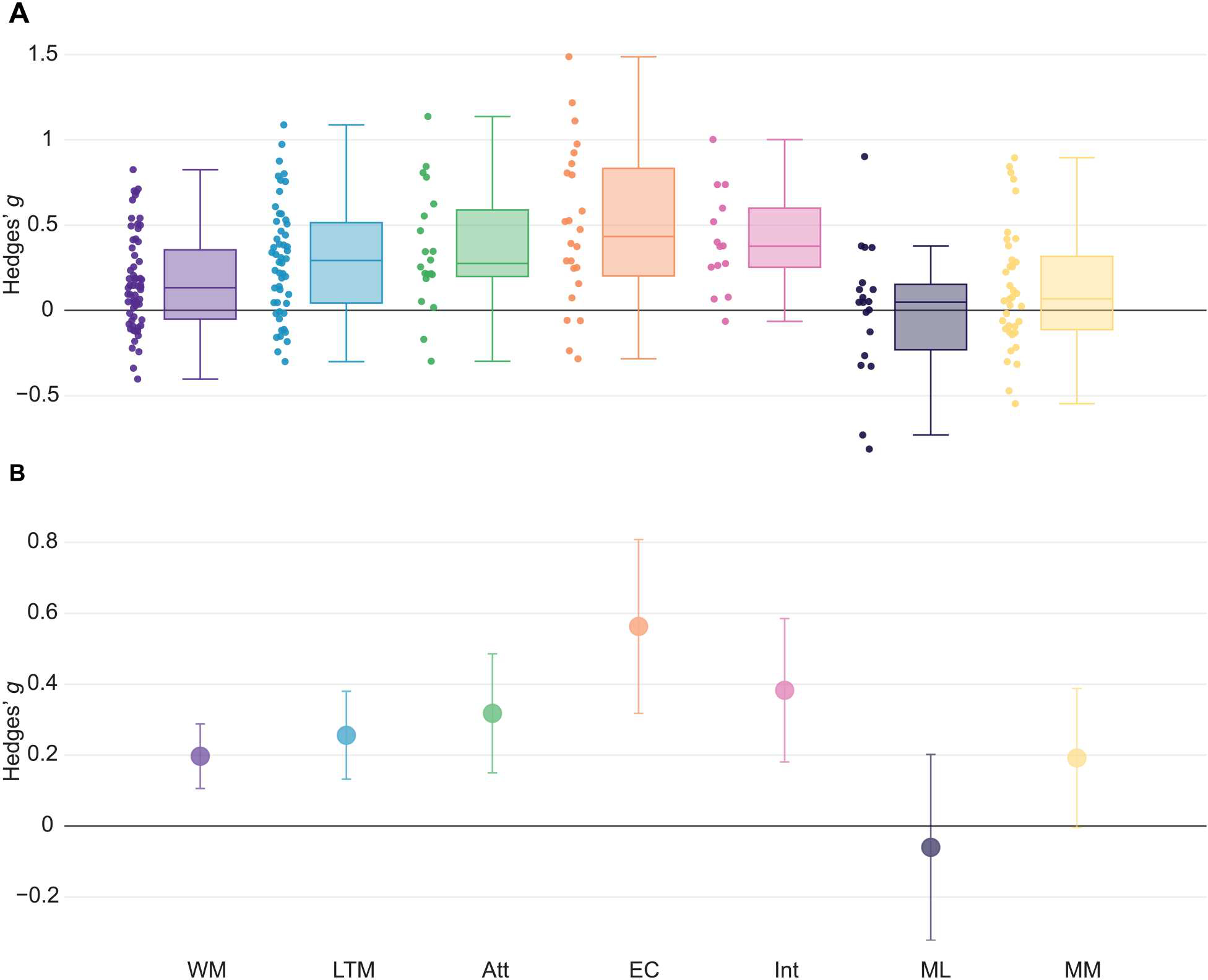

Improvements in specific cognitive domains with tACS

Improvements in cognitive outcomes were also evident when cognitive domains were analyzed separately. First, we examined the impact of tACS on All effects within each cognitive domain (Figs. 3 and 4 and fig. S4; see tables S4 to S9 for the full description). We found improvements with tACS in working memory (N = 22, k = 63, Hedges’ g = 0.20, 95% CI [0.11, 0.29]), long-term memory (N = 26, k = 52, Hedges’ g = 0.26, 95% CI [0.13, 0.38]), attention (N = 8, k = 20, Hedges’ g = 0.32, 95% CI [0.15, 0.49]), and intelligence (N = 7, k = 14, Hedges’ g = 0.38, 95% CI [0.18, 0.59]) (Figs. 3 and 4). Moderate- to large-sized improvements were observed in studies testing executive control (N = 14, k = 24, Hedges’ g = 0.56, 95% CI [0.32, 0.81]) (Figs. 3 and 4). When examining Performance measures separately, significant effects were observed after outlier removal in working memory, long-term memory, attention, and executive control (table S5). When examining RT measures separately, significant effects were observed for attention and intelligence (table S6). Excluding studies with clinical populations did not alter the pattern of results for these analyses, except that the significant effect of tACS on Performance measures in the attention domain before outlier removal was reduced to a trend level (table S10). Other cognitive domains, such as motor learning, motor memory, learning, and decision-making, either showed no significant modulation with tACS or did not provide sufficient df for a reliable analysis. Results for these domains are reported in tables S4 to S9.

Fig. 3. Forest plots of the effects on All outcomes organized by cognitive domains.

Outcome-based Hedges’ g effect size estimates are represented along with 95% confidence intervals for individual experiments within each cognitive domain and for each cognitive domain overall (total effect) before outlier removal (the black diamond). Experiments identified as outliers within the respective cognitive domains are marked in brown, and the overall effect size after removal of these outliers is indicated by the brown diamond. Note that outlier detection was performed individually for each analysis, so outlier identification may differ between the omnibus and the domain-specific analyses. Sizes of squares reflect the weight attributed to a given experiment by the robust variance estimation procedure, with larger sizes reflecting greater contributions of that experiment to the overall effect size. Experiment names correspond to the list of studies in data file S1.

Fig. 4. Summary of effects on All outcomes in cognitive domains after outlier removal.

(A) Box plots of outcome-based effect size estimates in cognitive domains after outlier removal are shown. Individual points represent individual effect size point estimates for each experiment within a specific domain. (B) Overall effect size estimates after outlier removal in cognitive domains with the corresponding 95% confidence intervals are shown. Box plot center line, median; box limits, upper and lower quartiles; whiskers, maximum and minimum values. WM, working memory; LTM, long-term memory; Att, attention; EC, executive control; Int, intelligence; ML, motor learning; MM, motor memory.

Offline improvements in Performance measures with tACS are stronger than online improvements

We next performed a meta-regression analysis of the impact of different experimental design variables on tACS outcomes (see Materials and Methods). The regression was performed at the omnibus level with All measures, and separately for Performance and RT measures, across cognitive domains. We found a significant effect of the time of behavioral assessment relative to neuromodulation with tACS. The “assessment timing” covariate determined whether the behavioral assessment primarily occurred while tACS was being performed (“online”) or after tACS had been completed (“offline”). We found that offline improvements in Performance measures with active tACS relative to sham treatment were greater than online improvements (Hedges’ g = 0.39 and 0.17, respectively; P = 0.010) (Fig. 2B). Significant differences were evident even after removal of potential outliers (Hedges’ g = 0.36 and 0.18, respectively; P = 0.02). We did not observe significant differences when examining RT measures alone or when examining All measures together. These results suggest stronger offline improvements with tACS compared with online improvements, primarily for Performance measures.
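The assessment-timing comparison can be pictured as a meta-regression with a single 0/1 moderator. The Python sketch below fits a weighted least-squares model with inverse-variance weights, assuming a fixed between-study variance `tau2` and model-based (not robust) standard errors. It is a simplified illustration, not the RVE meta-regression described in Materials and Methods, and `meta_regression` is a hypothetical name. With the moderator coded 1 for offline and 0 for online, the slope estimates the offline-minus-online difference in g.

```python
import numpy as np


def meta_regression(effects, variances, moderator, tau2=0.0):
    """Weighted least-squares meta-regression with an intercept and one moderator.

    Model: g_i = b0 + b1 * x_i + e_i, with weights 1 / (v_i + tau2).
    Returns (coefficients, model_based_standard_errors).
    """
    y = np.asarray(effects, dtype=float)
    x = np.asarray(moderator, dtype=float)
    w = 1.0 / (np.asarray(variances, dtype=float) + tau2)
    X = np.column_stack([np.ones_like(x), x])  # intercept column and moderator column
    XtW = X.T * w                             # equivalent to X.T @ diag(w)
    cov = np.linalg.inv(XtW @ X)              # covariance of the coefficients
    beta = cov @ (XtW @ y)
    return beta, np.sqrt(np.diag(cov))
```

For example, with equal variances, effects of 0.1 and 0.3 for online experiments and 0.5 and 0.7 for offline experiments give an intercept of 0.2 (the online mean) and a slope of 0.4 (the offline-minus-online difference).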

Current flow models strengthen offline but weaken online effects of tACS

Given the significant main effect of assessment timing on performance metrics, we explored interactions between assessment timing and other modulation parameters on performance effects. We found a significant interaction of assessment timing with the use of current flow models, that is, simulations used to examine the pattern of current flow and the resultant electric field strengths in the human brain (P < 0.0001; Fig. 2C). Pairwise comparisons showed stronger offline improvements with tACS in experiments using current flow models compared with those without current flow models (Hedges’ g = 0.59 and 0.32, respectively; P = 0.009), and this effect remained significant after removal of outliers (Hedges’ g = 0.54 and 0.3, respectively; P = 0.012). In contrast, online effects were weaker in studies using current flow models than in those without them (Hedges’ g = 0.04 and 0.3, respectively; P = 0.01), and this effect was not influenced by removal of outliers (Hedges’ g = 0.07 and 0.29, respectively; P = 0.015). Typically, studies used current flow models to simulate the voltage gradients in the targeted brain regions, presumably to select appropriate montages, that is, the spatial arrangement of the stimulation electrodes on the scalp and the current intensity at each electrode. A subset of studies further used these models to optimize modulation parameters such as electrode locations and current intensities, as is typically the case with high-definition (HD)–tACS study designs. We also observed a marginal interaction between the use of HD-tACS and assessment timing on performance effects before outlier removal (P = 0.015), but this effect did not survive correction for multiple comparisons and outlier removal. These results suggest that using current flow models influences tACS outcomes, strengthening offline effects while reducing the magnitude of online effects.
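Pairwise comparisons such as offline effects with versus without current flow models contrast two subgroup estimates. A common large-sample approach, sketched below in Python, divides the difference by the square root of the summed squared standard errors and refers the result to a standard normal distribution. This assumes the two subgroups are independent and uses a normal rather than small-sample reference distribution; it illustrates the idea and is not necessarily the test behind the P values above. The name `compare_subgroups` is hypothetical.

```python
import math


def compare_subgroups(g1, se1, g2, se2):
    """Two-sided z-test for the difference between two independent subgroup estimates.

    Returns (difference, z, two_sided_p).
    """
    diff = g1 - g2
    se_diff = math.sqrt(se1 ** 2 + se2 ** 2)  # variances add for independent estimates
    z = diff / se_diff
    p = math.erfc(abs(z) / math.sqrt(2))     # equals 2 * (1 - Phi(|z|))
    return diff, z, p
```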

Potential influence of neuromodulation intensity and frequency on cognitive outcomes

We found some evidence for an influence of neuromodulation intensity and frequency on cognitive enhancement. First, the meta-regression on RT measures across cognitive domains suggested an inverse relationship between modulation intensity and improvement in RT outcomes (β = −0.15, P = 0.048; outliers removed), suggesting that higher modulation intensities are not always associated with further improvement in outcomes. Given prior evidence of nonlinear relationships with transcranial direct current stimulation (tDCS) (12), we tested in an exploratory analysis whether a second-order polynomial better predicted the relationship between modulation intensity and RT effect sizes. However, we did not find evidence in favor of such a relationship (P values > 0.636). In addition, this inverse relationship did not remain significant after exclusion of studies with clinical populations (table S10), suggesting that this effect may be more prominent in clinical populations. Next, we repeated the meta-regression analyses on All effects separately for the cognitive domains in which we observed significant effects with tACS: working memory, long-term memory, attention, executive control, and intelligence. We found evidence for a modest association between the effect size of All working memory measures and the modulation frequency, with lower frequencies showing slightly greater effectiveness (β = −0.006, P = 0.032; outliers removed). Given the modest variation in effects across frequencies and the absence of this relationship when clinical studies were excluded (table S10), this association requires further examination in future studies. 
After exclusion of both studies with clinical populations and outliers, we also found an inverse relationship between modulation intensity and executive control effect sizes across All (β = −0.722, P = 0.036), Performance (β = −0.814, P = 0.043), and RT measures (β = −0.966, P = 0.03; table S10). These associations also align with the inverse relationships between intensity and RT effects across cognitive domains identified above but were not present before outlier removal or with inclusion of clinical studies. In sum, these findings suggest a possible role of neuromodulation intensity in influencing cognitive outcomes, particularly RT measures and measures of executive control, as well as potentially stronger benefits in working memory with lower modulation frequencies.
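The exploratory test for a nonlinear intensity-response relationship can be framed as adding a squared intensity term to the meta-regression and testing its coefficient. The Python sketch below does this with inverse-variance weights and a Wald z-test, assuming a fixed `tau2` and model-based standard errors; the function name `quadratic_term_test` is hypothetical, and the original analysis may have compared models differently. Centering intensity before squaring would reduce collinearity between the linear and quadratic terms without changing the fitted curve or the quadratic coefficient.

```python
import math

import numpy as np


def quadratic_term_test(effects, variances, intensity, tau2=0.0):
    """Wald test of b2 in g_i = b0 + b1*x_i + b2*x_i**2 with weights 1 / (v_i + tau2).

    Returns (coefficients, z, two_sided_p). Standard errors are model-based.
    """
    y = np.asarray(effects, dtype=float)
    x = np.asarray(intensity, dtype=float)
    w = 1.0 / (np.asarray(variances, dtype=float) + tau2)
    X = np.column_stack([np.ones_like(x), x, x ** 2])  # intercept, linear, quadratic
    XtW = X.T * w
    cov = np.linalg.inv(XtW @ X)
    beta = cov @ (XtW @ y)
    z = beta[2] / math.sqrt(cov[2, 2])
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return beta, z, p
```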

Bidirectional modulation of cognitive function using phase synchronization

Increasingly, tACS is being used to modulate synchronized activity between multiple brain regions (5). In these designs, two brain regions are targeted with alternating currents at the same frequency, but the relative alignment of the two sinusoidal currents (their relative phase) may differ. In-phase and antiphase synchronization are two popular designs in which the two targeted brain regions are stimulated with a relative phase of 0° or 180°, respectively. Although these designs produce complex biophysical effects in the brain (13), recent studies have suggested that in-phase synchronization may improve neuronal communication and cognitive function, whereas antiphase synchronization may have the opposite effect (14, 15). Using the hypothesis-based approach, we tested whether in-phase synchronization improved cognitive function and whether antiphase synchronization impaired cognitive function in multisite tACS studies that explicitly stated these hypotheses. Examining in-phase and antiphase experiments across All measures, we found that multisite tACS significantly changed behavior in the expected direction relative to sham treatment (before outlier removal: N = 22, k = 62, Hedges’ g = 0.35, 95% CI [0.17, 0.53], P = 0.0006, df = 20.1, I² = 72.42; table S11). Significant effects were evident when examining Performance measures alone but not RT measures (table S11). When separately examining in-phase studies across All measures, we found significant improvements in cognitive function (before outlier removal: N = 19, k = 41, Hedges’ g = 0.32, 95% CI [0.10, 0.53], P = 0.0061, df = 17.3, I² = 75.89; Fig. 2D). However, these effects were not significant when Performance and RT measures were examined separately (table S11). Antiphase experiments showed evidence for impairment of cognitive function (because effects were coded in the hypothesized direction, a positive g here indicates impairment; N = 9, k = 14, Hedges’ g = 0.31, 95% CI [0.08, 0.55], P = 0.0146, df = 7.48, I² = 52.91; Fig. 2D), and these effects were also evident in Performance measures alone (table S11). Our analysis suggests that tACS can modulate Performance-based measures of cognitive function bidirectionally through in-phase and antiphase synchronization.
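To make the in-phase and antiphase designs concrete, the Python sketch below samples two same-frequency sinusoidal currents separated by a chosen relative phase. It takes a peak-to-peak intensity, the quantity used to describe protocols in Fig. 1I, and halves it to obtain the zero-to-peak amplitude. The function name and parameters are hypothetical, and real multisite HD-tACS montages distribute current across electrode arrays, which this waveform-only sketch ignores.

```python
import math


def two_site_currents(freq_hz, peak_to_peak_ma, relative_phase_deg,
                      duration_s, sample_rate_hz):
    """Sample two same-frequency sinusoidal currents offset by a relative phase.

    relative_phase_deg = 0 gives in-phase stimulation; 180 gives antiphase stimulation.
    Returns two lists of current values in mA.
    """
    amplitude = peak_to_peak_ma / 2.0  # zero-to-peak amplitude
    offset = math.radians(relative_phase_deg)
    n_samples = int(duration_s * sample_rate_hz)
    site_a, site_b = [], []
    for i in range(n_samples):
        angle = 2 * math.pi * freq_hz * i / sample_rate_hz
        site_a.append(amplitude * math.sin(angle))
        site_b.append(amplitude * math.sin(angle + offset))
    return site_a, site_b
```

At 180°, the second current is the sign-inverted copy of the first at every sample, whereas at 0° the two waveforms are identical.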

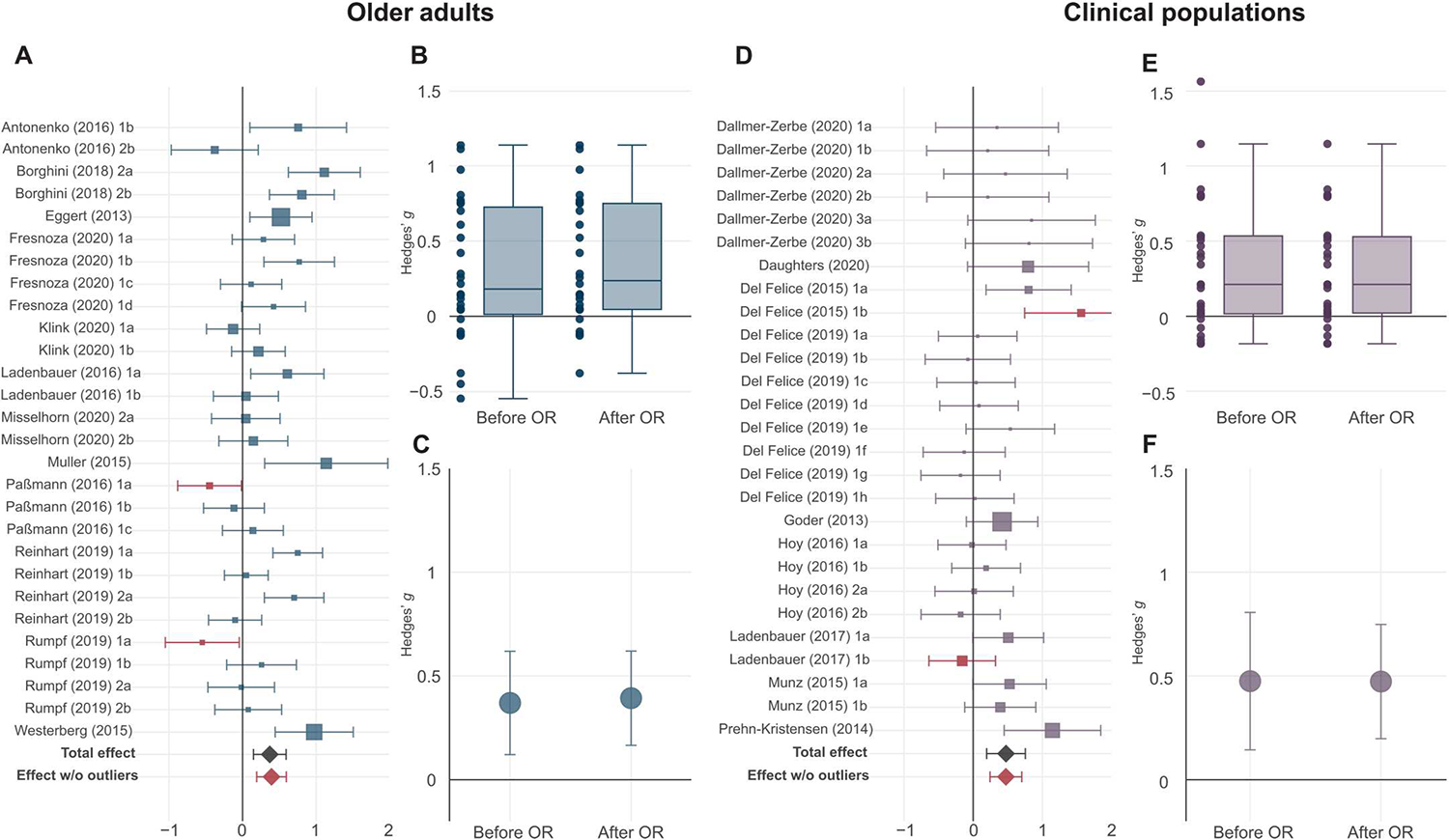

Cognitive improvements in older adults and clinical subgroups

The translational potential of tACS for improving cognitive function remains an open question. To address it, we performed additional analyses specifically for studies involving healthy older adults and those involving clinical populations. We sought evidence for the effect of tACS on cognitive measures in both subgroups and on characteristic symptom scores in the clinical populations (see Materials and Methods). We found a significant effect of tACS on cognitive function in older adults when examining All measures together (N = 12, k = 28, Hedges’ g = 0.37, 95% CI [0.12, 0.62], P = 0.008, df = 10.8, I² = 73.84; Fig. 5, A to C), which became stronger after rejection of outliers (table S12). The effect was even stronger when examining Performance measures alone (table S12). However, examination of RT measures alone was not reliable because of low df (table S12). In clinical populations, we found a significant effect of tACS on All cognitive measures (excluding clinical symptoms; N = 9, k = 27, Hedges’ g = 0.48, 95% CI [0.14, 0.81], P = 0.01, df = 7.66, I² = 54.81; Fig. 5, D to F), which remained significant after rejection of outliers (table S12). Performance measures in clinical populations also showed significant modulation with tACS, which was sustained after removal of outliers (table S12). However, the low number of RT effects and clinical symptom scores in clinical populations prevented us from drawing reliable inferences about them (table S12). Thus, more research is needed to examine the impact of tACS on clinical symptoms specifically, but there is evidence for cognitive enhancement with tACS in both older adults and clinical populations.

Fig. 5. Effects of tACS on healthy older adults and in clinical populations.

(A) Forest plot of outcome-based effect sizes along with 95% confidence intervals for All outcomes in older adults is shown. The overall effect size before outlier removal is indicated by the black diamond. Outlier experiments are highlighted in brown, and the overall effect size after outlier removal is indicated by the brown diamond. Sizes of squares reflect the weight attributed to a given experiment by the robust variance estimation procedure, with larger sizes reflecting greater contributions of that experiment to the overall effect size. Experiment names correspond to the list of studies in data file S1. (B) Box plot of the effects of tACS on All outcomes in healthy older adults before (left) and after (right) outlier removal (OR) is shown. Individual points represent individual effect size point estimates for each experiment. (C) Point estimate with the corresponding 95% confidence intervals of the overall effect of tACS on healthy older adults (All outcomes) before (left) and after (right) outlier removal is shown. (D) Forest plot of outcome-based effect sizes along with 95% confidence intervals for All cognitive measures (not including clinical symptoms) in clinical neuropsychiatric populations is shown. (E) Box plot of the effects of tACS on All cognitive measures in clinical neuropsychiatric populations before (left) and after (right) outlier removal is shown. (F) Point estimate with the corresponding 95% confidence intervals of the overall effect of tACS in clinical neuropsychiatric populations (All cognitive measures) before (left) and after (right) outlier removal is shown. Box plot center line, median; box limits, upper and lower quartiles; whiskers, maximum and minimum values.

Quality assessments and publication bias

We used established methods to examine the likelihood of bias and to assess the quality of the studies included in our analyses. First, we used the revised Cochrane risk-of-bias (RoB) tools for randomized controlled trials (RCTs) (16). Four studies (17–20) that were explicitly identified as clinical trials underwent this rigorous assessment. Two studies were deemed to be at low risk of bias, one study raised some concerns related to the potential effects of missing data, and one study raised some concerns related to both randomization and carryover effects, leading to a “high risk” assessment (fig. S5). None of the remaining studies were registered as clinical trials. For these non-RCTs, we made some accommodations to the risk-of-bias tools (see Materials and Methods) to tentatively gauge bias in the existing literature. Applying the accommodated risk-of-bias criteria broadly suggested concerns in randomization, blinding, carryover effects, and potential reporting biases (fig. S6). A consistent qualitative observation was insufficient reporting of randomization protocols.

Next, we examined publication bias using a funnel plot, which shows individual effect sizes against their corresponding SEs (fig. S7A), and the trim-and-fill procedure (21). Although the plot was visually asymmetrical, the trim-and-fill procedure did not detect any missing studies (P = 0.5). After we excluded the outliers, however, the trim-and-fill procedure detected four effects missing on the left side of the funnel plot (P = 0.03), indicating the presence of publication bias. After imputing the missing effects (fig. S7B), the adjusted total effect size across all outcome-based measures and cognitive domains decreased in magnitude but remained significant (Hedges’ g = 0.28, 95% CI [0.22, 0.33], P < 0.0001). We then used Egger’s regression test to assess funnel plot asymmetry (22). This test indicated asymmetry before outlier removal (z = 3.870, P < 0.001), after outlier removal (z = 4.637, P < 0.001), and after the trim-and-fill procedure (z = 3.276, P = 0.001), suggesting some residual asymmetry. We also used the test of excess significance to determine whether the number of significant effect sizes in the meta-analysis exceeded the number expected on the basis of their statistical power (23). The number of significant effects was higher than expected both before (P < 0.0001; observed number = 67 of 265, expected number = 34.114) and after (P < 0.0001; observed number = 51 of 249, expected number = 30.9) removal of outliers.
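Two of the bias checks above can be sketched in a few lines of Python. `egger_intercept` implements the classical form of Egger’s test (ordinary least squares of g/SE on 1/SE, with the intercept as the asymmetry estimate); the z statistics reported above suggest a variant with a normal reference distribution, so this sketch is illustrative only. `power_two_sided` and `excess_significance` follow the logic of the test of excess significance, in which the expected number of significant effects is the sum of each effect’s power to detect an assumed true effect (commonly the summary estimate). All function names are hypothetical, and the trim-and-fill procedure is not sketched.

```python
import math


def egger_intercept(effects, ses):
    """Classical Egger regression: OLS of g_i / se_i on 1 / se_i.

    Returns (intercept, t_statistic); the t statistic has len(effects) - 2 df.
    An intercept far from zero suggests funnel plot asymmetry.
    """
    y = [g / s for g, s in zip(effects, ses)]  # standardized effects
    x = [1.0 / s for s in ses]                 # precisions
    n = len(y)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se_intercept = math.sqrt(rss / (n - 2) * (1.0 / n + mx ** 2 / sxx))
    return intercept, intercept / se_intercept


def power_two_sided(theta, se, z_crit=1.959963984540054):
    """Power of a two-sided z-test (alpha = 0.05) to detect a true effect theta."""
    def phi(v):  # standard normal CDF
        return 0.5 * math.erfc(-v / math.sqrt(2))
    return phi(theta / se - z_crit) + phi(-theta / se - z_crit)


def excess_significance(observed, expected, n):
    """Chi-square (1 df) test of observed vs expected counts of significant effects."""
    a = ((observed - expected) ** 2 / expected
         + (observed - expected) ** 2 / (n - expected))
    p = math.erfc(math.sqrt(a / 2))  # upper tail of chi-square with 1 df
    return a, p
```

Plugging in the counts reported above before outlier removal, `excess_significance(67, 34.114, 265)` gives a statistic of about 36.4 and a P value far below 0.0001, in line with the reported result.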

Last, we examined how many studies were preregistered given that the rate of positive results was noted to be much higher in studies that were not registered reports (24). In addition to the four RCTs identified above (17–20), only two other studies explicitly noted the preregistration of study procedures before execution (25, 26). Overall, these analyses suggest a potential presence of selective outcome reporting in the literature and highlight the need for more RCTs with aptly controlled designs and rigorous procedures.

DISCUSSION

In a meta-analysis across 102 published studies of tACS that included 2893 individuals and 304 effects covering nine cognitive domains, we found evidence for improvements in measures of cognitive function. When examining these domains individually, we found reliable evidence for moderate to large improvements in measures of executive control and modest to moderate improvements in measures of attention, long-term memory, working memory, and fluid intelligence. Together, these findings suggest a potential role for tACS as an investigational tool for studying neurophysiological mechanisms of cognitive function and a rehabilitative tool for cognitive enhancement.

Meta-regression analyses showed a significant effect of assessment timing, indicating that offline improvements in Performance measures were stronger than online improvements. Stronger offline effects suggest that changes in neural activity with tACS may accumulate over time. Such accumulation might be driven by spike timing–dependent neural plasticity (5), although nonspecific factors arising from variability in tACS designs across studies cannot be ruled out. These offline improvements were even stronger in studies that used current flow models to simulate the pattern of current flow and the strength of the resultant electric field in the human brain. This influence might be driven by more spatially focused stimulation of the region of interest or by stimulation of the region at higher intensities, both of which become possible with this additional modeling step. Why current flow models are associated with weaker online effects is an intriguing question. It is possible that a complex interaction between the preferred resonance frequencies of distinct neuronal populations in the targeted brain regions and the applied electric field at a specific frequency might disrupt behavior during the online phase. Besides the use of current flow models, neuromodulation intensity also appeared to influence tACS effect sizes. However, whereas studies in nonhuman primates suggest that neuronal entrainment (the locking of spiking activity to specific phases of the applied electric field) increases with modulation intensity, we found some evidence for an inverse relationship. Such an association may suggest an optimal degree of phase-locking that promotes functional specificity without diminishing flexibility (27) and might also agree with nonlinear relationships reported using tDCS (12). 
These parameters merit further systematic investigation in future empirical studies to identify improved neuromodulation designs.

Our findings suggest potentially promising translational benefits of tACS neuromodulation for cognitive function. We found improvements in cognitive function after tACS in both older adults and clinical populations. These studies included randomized, double-blind, placebo-controlled clinical trials in individuals with various neurological or psychiatric disorders (17–20). Moreover, bidirectional modulation of cognitive function with phase-dependent tACS at multiple sites, at least for Performance-based measures, offers additional translational benefits because certain cognitive functions may show hyper- or hypoactivity under different clinical conditions, for instance, reward processing in major depressive disorder versus bipolar disorder (28). Phase-specific synchrony modulation may provide the flexibility to optimize cognitive function according to the characteristic symptoms of neuropsychiatric illnesses along specific diagnostic dimensions (29). Additional studies that incorporate recommendations for rational clinical trial designs with tACS (6) can provide further insights into the replicability and sustainability of these findings.

There are some limitations in the present work. Although we examined individual cognitive domains, the studies within each domain used diverse experimental tasks and neuromodulation protocols targeting different brain regions and frequencies. A quantitative examination of specific protocols within each domain is not yet possible because too few replications exist. We hope that, as more replication attempts accumulate, our findings can be complemented by circumscribed investigations of each cognitive domain with specific neuromodulation protocols. Our analyses of the literature also suggest the presence of publication bias. In addition, most studies included in our analysis (98 of 102) were not registered RCTs. More robust meta-analytic conclusions can be drawn in the future if studies strive to incorporate practices that improve methodological and reporting rigor, including preregistration of hypotheses and study procedures, clear identification of confirmatory and exploratory analyses, explicit statements about deviations from study procedures, limiting the number of comparisons, and reporting of randomization methodology. In addition, studies following methodologically sound procedures should be encouraged to report null effects to minimize the likelihood of publication bias. Last, although the present analyses suggest enhancements in cognitive function with tACS, they do not inform the sustainability of these improvements. Future studies should quantify how long any changes in cognitive function persist so that this critical question can be examined.

We view the present study as a stepping stone toward further systematic investigations into tACS. Our quantitative consolidation of the existing published body of work suggests a promising role for neuromodulation of brain rhythms using tACS as a tool for cognitive enhancement. The enhancements observed in older adults and psychiatric populations motivate further examination into the translational potential of tACS in future clinical studies that are more systematic and rigorous.

MATERIALS AND METHODS

Study design

The objective of this study was to perform a systematic review and meta-analysis of peer-reviewed, published studies using tACS to modulate cognitive function in healthy, aging, and psychiatric populations. To perform this study, we searched for tACS studies (see the “Search strategy” section below); extracted information about the study design, experimental design, and neuromodulation protocol (see the “Data extraction” section below); and used meta-analytic statistical tools for quantification of overall effect sizes, subgroup analyses, and meta-regression analyses (see the “Statistical analysis” section below). Our study was not preregistered.

We used a robust variance estimation (RVE) approach (11) to include eligible data from all experiments while accounting for the statistical dependence between multiple experiments nested within the same study. We then determined the overall effect of rhythmic neuromodulation using tACS on cognitive function measures across all cognitive domains. We separately examined the ability of tACS to improve cognitive function independently of the study hypothesis and to change cognitive function in a hypothesized direction. We also examined the impact of neuromodulation within each cognitive domain. We extracted a battery of neuromodulation parameters for each experiment and performed moderator analyses using meta-regression. With multisite tACS designs becoming more common (5), we performed a separate subgroup analysis on studies using such designs and examined the impact of relative phase on tACS outcomes. Last, to address the translational potential of tACS, we separately examined effects in two subgroups: older adults and clinical populations.
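The RVE idea can be illustrated with a short Python sketch. It is not the robumeta implementation used in this study: it applies correlated-effects weights (each effect in study j receives weight 1/[k_j(mean v_j + τ²)], where k_j is the number of effects in that study) and a study-clustered sandwich estimator for the SEs, but it treats the between-study variance `tau2` as known and omits the small-sample corrections that robumeta applies. In robumeta, τ² is estimated from the data, which is where the assumed within-study correlation (0.8 by default) enters. The function name `rve_meta_regression` and all toy numbers are hypothetical.

```python
import numpy as np


def rve_meta_regression(y, v, X, study, tau2=0.0):
    """Sketch of correlated-effects RVE meta-regression (no small-sample correction).

    y     : effect sizes (e.g., Hedges' g), one per experiment
    v     : sampling variances of y
    X     : design matrix, shape (n_effects, n_predictors); include a column of ones
    study : study label for each effect (effects sharing a label are dependent)
    tau2  : between-study variance, treated as known in this sketch (assumption)
    """
    y = np.asarray(y, dtype=float)
    v = np.asarray(v, dtype=float)
    X = np.asarray(X, dtype=float)
    study = np.asarray(study)
    labels = np.unique(study)

    # Correlated-effects weights: every effect in study j gets 1 / (k_j * (mean(v_j) + tau2)).
    w = np.empty_like(y)
    for s in labels:
        idx = study == s
        w[idx] = 1.0 / (idx.sum() * (v[idx].mean() + tau2))

    # Weighted least-squares point estimates: beta = (X'WX)^-1 X'Wy.
    XtW = X.T * w
    bread = np.linalg.inv(XtW @ X)
    beta = bread @ (XtW @ y)

    # Sandwich covariance: weighted residuals are summed within each study, so
    # effects from the same study are not treated as independent.
    resid = y - X @ beta
    meat = np.zeros((X.shape[1], X.shape[1]))
    for s in labels:
        idx = study == s
        score = X[idx].T @ (w[idx] * resid[idx])
        meat += np.outer(score, score)
    vcov = bread @ meat @ bread
    return beta, np.sqrt(np.diag(vcov))


if __name__ == "__main__":
    # Made-up data: 5 effects from 3 studies; moderator = offline (1) vs online (0).
    g = [0.40, 0.55, 0.10, 0.35, 0.20]
    var = [0.05, 0.06, 0.04, 0.05, 0.07]
    offline = [1, 1, 0, 1, 0]
    study_id = ["A", "A", "B", "C", "C"]
    X = np.column_stack([np.ones(len(g)), offline])
    beta, se = rve_meta_regression(g, var, X, study_id, tau2=0.02)
    print("intercept (online):", beta[0], "SE:", se[0])
    print("offline - online:", beta[1], "SE:", se[1])
```

Because the sandwich estimator relies on between-study variation in the residuals, its SEs are unreliable when few studies contribute (the toy example has only three), which is one reason small-sample corrections matter in practice.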

Search strategy

A series of iterative searches for articles published between January 2006 and January 2021 was performed through the PubMed and PsycInfo databases. We used the following Boolean keywords in the search: (i) “transcranial alternating current stimulation” or “tACS” and “cognition” and (ii) “transcranial alternating current stimulation” or “tACS” and “clinical” or “disorder.” Relevant papers found in these initial searches identified nine domains of cognition [working memory, long-term memory, attention, executive control, motor learning, motor memory, fluid intelligence, learning (non-motor), and decision-making] and six clinical populations (schizophrenia, depression, anxiety, obsessive-compulsive disorder, Parkinson’s disease, and ADHD) that were targeted using tACS. These cognitive domains were identified on the basis of multiple factors. First, we determined which cognitive functions the authors of each study claimed to examine and how they interpreted their findings. When this approach did not yield a conclusive answer, we examined the cognitive task and the measures of behavioral performance used in the study and qualitatively compared them with those used in other studies claiming to examine the same cognitive function. We also examined the cortical structures targeted by a given study and their similarity to those targeted by other studies of similar cognitive functions. One study (18) examined cognitive function solely with a battery of neuropsychological assessments that could not be cleanly assigned to one specific category. Its effects were included in the omnibus analyses but not in any subgroup analysis of specific cognitive functions. A second series of searches was then completed using the “tACS” or “transcranial alternating current stimulation” keywords with each permutation of the identified cognitive domains and clinical populations.
An additional search was conducted for a less-common form of tACS called transcranial oscillatory direct current stimulation (toDCS), where the alternating current applied is unipolar (i.e., superimposed on a direct current), instead of the more common bipolar waveform, using the search terms “oscillatory transcranial direct current stimulation,” “oscillatory tDCS,” “frequency modulated transcranial direct current stimulation,” or “frequency modulated tDCS.” Only a small subset of studies using toDCS was identified, all belonging to the long-term memory domain. For completeness, we included these studies under the tACS umbrella because they are localized to a single cognitive domain. Only studies with human participants were extracted from the search results. We also examined the bibliographies of the studies identified during the search that satisfied the eligibility criteria (see below) for suggestions about further relevant studies. No limitations were set on the language or publication date. Abstracts and titles were independently reviewed for relevance by two reviewers.
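The second round of keyword searches can be sketched as below, under the assumption that “each permutation” means pairing the tACS keywords with each cognitive domain and each clinical population in turn; crossing domains with populations would be another reading. The quoting and Boolean syntax are illustrative rather than the exact strings submitted to PubMed or PsycInfo, and the term “learning” stands in for the non-motor learning domain.

```python
# Hypothetical reconstruction of the second-round keyword queries; the exact
# database syntax (field tags, quoting) used in PubMed/PsycInfo is not specified.
TACS_TERMS = ['"transcranial alternating current stimulation"', '"tACS"']

COGNITIVE_DOMAINS = [
    "working memory", "long-term memory", "attention", "executive control",
    "motor learning", "motor memory", "fluid intelligence", "learning",
    "decision-making",
]
CLINICAL_POPULATIONS = [
    "schizophrenia", "depression", "anxiety", "obsessive-compulsive disorder",
    "Parkinson's disease", "ADHD",
]


def build_queries(topics):
    """Pair the tACS keyword block with each topic term using Boolean AND."""
    tacs_block = " OR ".join(TACS_TERMS)
    return [f'({tacs_block}) AND "{topic}"' for topic in topics]


if __name__ == "__main__":
    for query in build_queries(COGNITIVE_DOMAINS + CLINICAL_POPULATIONS):
        print(query)
```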

Eligibility criteria

Studies were included if they (i) used a sham control group and (ii) had at least one primary outcome of modulating one or more of the following cognitive domains: working memory, long-term memory, attention, executive control, motor learning, motor memory, fluid intelligence, learning (non-motor), and decision-making. We applied the following exclusion criteria: (i) case studies or studies with a final sample size of fewer than five participants, (ii) studies that used multiple concurrent methods of modulating cognition, and (iii) experiments that were explicitly labeled as controls. Eligibility assessment at all stages of the review was performed independently by two reviewers. The sample size cutoff of five was chosen to balance two goals: minimizing the likelihood of including articles that could be deemed case studies, from which generalizable outcomes are difficult to infer, and ensuring the inclusion of studies with clinical populations, which are already scarce in the literature. Studies using multiple concurrent methods were excluded because the relative contribution of tACS to the changes in cognitive function could not be determined unambiguously.

Data extraction

After a full-text review, data extraction was performed independently by the first two authors and was verified by the third author. All primary outcome measures for all arms of each study were included in quantitative analysis as separate experiments. The extracted data included the means and SDs of the primary outcome variable for the experimental and control measurements, when reported, and several design parameters needed for meta-regression (see the “Subgroup analysis and meta-regression” section below). Unreported means and SDs were requested from the corresponding authors or extracted from the figures using the WebPlotDigitizer online tool (30). A more detailed discussion of how the effect sizes were categorized for each analysis is provided in the Supplementary Materials and Methods.

Quality assessment

Quality assessment of eligible studies was performed by two independent authors. We examined risk of bias in the RCTs included in the meta-analysis using the RoB 2.0 tool (16). The tool helps to identify the risk of bias arising from five sources: (i) the randomization process, (ii) deviations from intended interventions, (iii) missing outcome data, (iv) measurement of the outcome, and (v) selection of the reported result. The tool is designed primarily for examining risk of bias in RCTs, and four such trials were identified in the analysis. None of the remaining studies were RCTs, and many used tACS as a causal investigational tool. Nonetheless, we attempted to examine bias in these studies. Because a stringent application of the RoB 2.0 tool to studies not designed as RCTs may be unreasonable, we made some accommodations to its criteria so that it could still offer a reasonable measure of bias. These accommodations were as follows. First, the absence of information regarding the precise method of randomization for participant allocation was not deemed risk-worthy if the study confirmed that participant allocation to the study conditions was randomized in some manner. Although this is a critical factor for clinical trials, we relaxed this requirement because these studies were not clinical trials and usually followed randomization procedures common to the field. Second, we assumed that a given study did not deviate from the intended intervention protocol unless deviations were explicitly stated. Third, we assumed that the outcome assessors in a study, who in many cognitive paradigms are the participants themselves, were not aware of the intervention if the study was at least single-blinded and no evidence of unsuccessful blinding was provided.
Last, we assumed that the analysis plan described in the methods of each study was prespecified before data collection and that any analyses performed post hoc were explicitly specified. Following these assumptions, each study was categorized as having a “low risk of bias,” “some concerns,” or “high risk of bias” on the basis of the two independent assessments. We discuss the findings from these analyses in the context of these assumptions, when appropriate.
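The text does not state how domain-level judgments were combined into an overall rating or how disagreements between the two assessors were resolved. As a hedged sketch, the function below applies the mechanical part of the overall-judgment rule published with RoB 2 (any high-risk domain gives an overall high risk, all low-risk domains give an overall low risk, and anything else gives some concerns). The `reconcile` helper, which simply flags disagreements for discussion, is a hypothetical addition.

```python
LOW = "low risk of bias"
SOME = "some concerns"
HIGH = "high risk of bias"
VALID = {LOW, SOME, HIGH}


def overall_rob(domains):
    """Overall RoB 2 judgment from the five domain-level judgments.

    Implements only the mechanical part of the RoB 2 guidance: any 'high'
    domain -> high; all 'low' -> low; otherwise 'some concerns'. RoB 2 also lets
    reviewers escalate several 'some concerns' domains to 'high', which is a
    judgment call not automated here.
    """
    if len(domains) != 5 or not set(domains) <= VALID:
        raise ValueError("expected five judgments from the RoB 2 categories")
    if HIGH in domains:
        return HIGH
    if all(d == LOW for d in domains):
        return LOW
    return SOME


def reconcile(rater_a, rater_b):
    """Hypothetical helper: agreeing overall judgments are accepted; disagreements
    are returned as None to flag them for discussion between the two assessors."""
    a, b = overall_rob(rater_a), overall_rob(rater_b)
    return a if a == b else None
```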

Statistical analysis

Computation of effect sizes

We used the standardized mean difference (SMD) between active tACS and sham conditions as the measure of effect size. To obtain an unbiased SMD, we first calculated Cohen’s d and its variance and then applied a small-sample correction factor to compute Hedges’ g and its corresponding variance. The pool of selected studies included both experiments with independent groups (between-subjects design) and crossover experiments (within-subjects design). Statistically, combining studies with different designs in a single meta-analysis does not present a problem if the necessary adjustments are made in the computation of effect sizes for each design (31). We used the following formulas to compute Hedges’ g and its variance (31).

Between-subjects design.

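Hedges’ $g = J \times d$, where

$$
d = \frac{M_1 - M_2}{S_{\text{within}}}, \qquad S_{\text{within}} = \sqrt{\frac{(n_1 - 1)S_1^2 + (n_2 - 1)S_2^2}{n_1 + n_2 - 2}}, \qquad V_d = \frac{n_1 + n_2}{n_1 n_2} + \frac{d^2}{2(n_1 + n_2)}
$$

$$
J = 1 - \frac{3}{4(n_1 + n_2 - 2) - 1}, \qquad V_g = J^2 \times V_d
$$

where $M_1$ and $M_2$ are the group means, $S_1$ and $S_2$ are the group SDs, $n_1$ and $n_2$ are the numbers of participants in each group, $J$ is the correction factor, and $V_d$ and $V_g$ are the variances of $d$ and Hedges’ $g$, respectively.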
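The between-subjects formulas translate directly into code. A minimal Python sketch, assuming group 1 is the active tACS group and group 2 the sham group (the function name is hypothetical):

```python
import math


def hedges_g_between(m1, sd1, n1, m2, sd2, n2):
    """Hedges' g and its variance for two independent groups (active vs. sham)."""
    df = n1 + n2 - 2
    # Pooled within-group SD
    s_within = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    d = (m1 - m2) / s_within
    v_d = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    j = 1 - 3 / (4 * df - 1)  # small-sample correction factor
    return j * d, j**2 * v_d


if __name__ == "__main__":
    g, v_g = hedges_g_between(m1=10.0, sd1=2.0, n1=10, m2=9.0, sd2=2.0, n2=10)
    print(f"g = {g:.3f}, V_g = {v_g:.4f}")
```

For these made-up numbers, d = 0.5 and the correction factor J = 1 - 3/71 shrinks it to g of about 0.479; the correction matters most for the small samples common in tACS studies.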

Within-subjects design.

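Hedges’ $g = J \times d$, where

$$
d = \frac{M_1 - M_2}{S_{\text{within}}}, \qquad S_{\text{within}} = \frac{S_{\text{diff}}}{\sqrt{2(1 - r)}}, \qquad S_{\text{diff}} = \sqrt{S_1^2 + S_2^2 - 2 r S_1 S_2}
$$

$$
V_d = \left(\frac{1}{n} + \frac{d^2}{2n}\right) 2(1 - r), \qquad J = 1 - \frac{3}{4(n - 1) - 1}, \qquad V_g = J^2 \times V_d
$$

where $M_1$ and $M_2$ are the condition means, $S_1$ and $S_2$ are the condition SDs, $r$ is the correlation between the active and sham conditions, $n$ is the total sample size or the number of pairs, $J$ is the correction factor, and $V_d$ and $V_g$ are the variances of $d$ and Hedges’ $g$, respectively.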

When the SD of the difference between the active and sham conditions ($S_{\text{diff}}$) was reported instead of the individual condition SDs, Cohen’s $d$ was computed using the following formula:

$$
d = \frac{M_1 - M_2}{S_{\text{diff}}} \sqrt{2(1 - r)}
$$

The next steps in the calculation of Hedges’ $g$ were identical to those described above.
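A corresponding Python sketch for crossover designs covers both the case with per-condition SDs and the case where only the SD of the differences is available. It also loops over the correlations used in the sensitivity analysis (r = 0.3, 0.5, and 0.7). Function names and example numbers are hypothetical.

```python
import math


def hedges_g_within_from_sdiff(m1, m2, s_diff, n, r=0.5):
    """Hedges' g and its variance for a crossover design, given the SD of differences."""
    s_within = s_diff / math.sqrt(2 * (1 - r))  # recover the within-condition SD
    d = (m1 - m2) / s_within
    v_d = (1 / n + d**2 / (2 * n)) * 2 * (1 - r)
    j = 1 - 3 / (4 * (n - 1) - 1)  # small-sample correction, df = n - 1
    return j * d, j**2 * v_d


def hedges_g_within(m1, sd1, m2, sd2, n, r=0.5):
    """Hedges' g and its variance for a crossover design, given per-condition SDs."""
    s_diff = math.sqrt(sd1**2 + sd2**2 - 2 * r * sd1 * sd2)
    return hedges_g_within_from_sdiff(m1, m2, s_diff, n, r)


if __name__ == "__main__":
    # Sensitivity of a made-up crossover experiment to the assumed correlation r.
    for r in (0.3, 0.5, 0.7):
        g, v_g = hedges_g_within(m1=10.0, sd1=2.0, m2=9.0, sd2=2.0, n=20, r=r)
        print(f"r = {r}: g = {g:.3f}, V_g = {v_g:.4f}")
```

When the two condition SDs are equal, d does not depend on r, because S_diff / sqrt(2(1 - r)) reduces to the common SD; only the variance changes, growing as r decreases. The choice of r therefore mainly shifts the weights that crossover experiments receive.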

Note that, for within-subjects designs, the calculation of Hedges’ g involves the correlation coefficient r between the active and control conditions, which is not reported in most studies. To overcome this problem, we estimated r in the subset of studies where it was possible (n = 13) using the method of Morris and DeShon (32) and used the average value of this correlation in subsequent computations. This analysis yielded an average correlation of 0.5. Therefore, in our analyses, we assumed a correlation of 0.5 and performed an additional sensitivity analysis with r = 0.3 and r = 0.7.

A classic interpretation of effect size proposed by Cohen (33) categorizes effect sizes into small (g = 0.2), moderate (g = 0.5), and large (g = 0.8). Accordingly, we categorized effect sizes between 0.2 and 0.5 as modest to moderate, those between 0.5 and 0.8 as moderate to large, and those above 0.8 as large.
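The labeling rule can be written as a small function. How values falling exactly on 0.5 or 0.8 were labeled is not stated, so the half-open intervals below are an assumption, as is the placeholder label for magnitudes under 0.2.

```python
def describe_effect(g):
    """Map Hedges' g onto the labels used in the text (boundaries assumed half-open)."""
    size = abs(g)  # the sign encodes direction; the label describes magnitude only
    if size < 0.2:
        return "below 0.2 (not labeled in the text)"
    if size < 0.5:
        return "modest to moderate"
    if size < 0.8:
        return "moderate to large"
    return "large"
```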

Synthesis of the effect sizes

One important assumption of conventional meta-analysis is the independence of the effect sizes. However, a large portion of included studies report effect sizes that are not independent of each other. Such dependence arises from (i) multiple experiments conducted on the same group(s) of participants, (ii) multiple tasks used to measure the same cognitive function in the same experiment, (iii) using the same control group for comparison with more than one treatment group, and (iv) multiple time points of outcome assessment. In all these cases, the effect sizes are correlated, which violates the independence assumption of standard meta-analytic procedures. Therefore, to compute pooled effect sizes, we used the RVE method (11), which handles the statistical dependence of multiple effect sizes within a study by accounting for the correlation between them and adjusting the SEs of the pooled effect size estimates. Moreover, RVE adjusts the weights of the effect sizes to account for their nesting within studies. By default, the RVE method assumes a correlation of 0.8 between dependent effect sizes; we also conducted a sensitivity analysis with r = {0, 0.2, 0.4, 0.6, 1}. In a few cases (see data file S1), we averaged the effect sizes within one study. This was done if (i) the means and SDs of the outcome were extracted from a figure that provided measurements at different time points or task blocks that were irrelevant to our analysis or (ii) the study used multiple variations of the same task, irrelevant to our analysis, to measure the same behavioral outcome. In these cases, the average effect size was computed as the mean of the individual effect sizes, and the average variance was computed using the formula provided in (31), assuming a correlation of 0.5.
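The within-study averaging step can be sketched as follows. The variance formula is the one given by Borenstein et al. (31) for the mean of correlated outcomes, here assuming the same correlation (0.5 by default) between every pair of effects; the function name is hypothetical.

```python
import math


def average_dependent_effects(effects, variances, r=0.5):
    """Average m correlated effect sizes from one study.

    Mean of the effects; variance = (1/m)^2 * (sum_i V_i + sum_{i != j} r * sqrt(V_i * V_j)),
    assuming the same correlation r between every pair of effects.
    """
    m = len(effects)
    if m == 0 or m != len(variances):
        raise ValueError("need matching, non-empty lists of effects and variances")
    mean_effect = sum(effects) / m
    total = sum(variances)
    for i in range(m):
        for j in range(m):
            if i != j:
                total += r * math.sqrt(variances[i] * variances[j])
    return mean_effect, total / m**2
```

With r = 1 and equal variances, the averaged effect keeps the full variance of a single effect, reflecting that perfectly correlated outcomes add no information; with r = 0.5 the variance shrinks, but less than it would for independent outcomes.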

We performed two types of pooled effect size estimation. First, we performed the “outcome-based” analysis, in which we estimated the pooled effect size using RVE for all studies that either predicted improvement of the outcome or were exploratory. For this analysis, the directionality of effects was reversed for the outcomes that were expected to decrease with behavioral improvement (e.g., RTs, error rates, etc.), with higher effect sizes representing an increase in cognitive performance or an improvement in clinical symptoms. Second, we performed the “hypothesis-based” analysis, in which we estimated the pooled effect size for all studies that had a defined hypothesis. For this analysis, the directionality of effects was adjusted according to the hypothesized direction. For instance, consider the case of an experiment hypothesized to improve a cognitive outcome. If such an observation is made, then we adjust the sign of the effect size, if needed, to ensure that the effect size entered into our analysis is positive. On the other hand, if the direction of change in the dependent variable is contrary to the expected hypothesis, then the effect size entered into the analysis is adjusted to always be negative. In both analyses, we first pooled all effect sizes regardless of the dependent variable targeted in the experiment. Next, we computed pooled effect sizes separately for Performance and RT measures.
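The two orientation rules can be expressed as simple sign flips. This sketch assumes that each raw effect was first computed as active minus sham on the measure's original scale; the function names are hypothetical.

```python
def outcome_based(g_raw, lower_is_better):
    """Orient a raw effect (active minus sham on the measured scale) so that
    positive always means better performance or fewer symptoms."""
    return -g_raw if lower_is_better else g_raw


def hypothesis_based(g_raw, predicted_direction):
    """Orient a raw effect so that positive means the change went in the predicted
    direction (+1: tACS expected to increase the measure, -1: to decrease it)."""
    if predicted_direction not in (1, -1):
        raise ValueError("predicted_direction must be +1 or -1")
    return predicted_direction * g_raw


if __name__ == "__main__":
    # Made-up RT outcome: responses slower under active tACS (raw g = +0.4),
    # in a study that predicted tACS would slow responses.
    print(outcome_based(0.4, lower_is_better=True))       # performance got worse
    print(hypothesis_based(0.4, predicted_direction=+1))  # hypothesis supported
```

The example shows why the two analyses can disagree: slower responses count against tACS in the outcome-based analysis but as support for the hypothesis in the hypothesis-based analysis.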

Subgroup analysis and meta-regression

Subgroup and moderator analyses were performed only within the outcome-based approach, except for the subgroup analysis on bidirectional manipulation in multisite tACS designs, which used hypothesis-based effect sizes. Subgroup analysis was performed by clustering studies by cognitive domain. Moderation analysis at the level of all effects was performed using meta-regression with the following predictor variables: timing of behavioral assessment relative to modulation (online and offline), stimulation state (at rest and during the task), duration of modulation (less than or equal to 20 min and more than 20 min), peak-to-peak modulation intensity [analyzed both as a continuous variable and categorically (less than or equal to 1 mA and more than 1 mA)], current density, application of individualized modulation frequency (individual and nonindividual), intentional manipulation of tACS phase (with and without phase manipulation), high-definition tACS (HD-tACS and conventional tACS), protocol optimization (using current flow models to optimize stimulation locations and intensities versus not using current flow models at all or using them only to simulate, but not optimize, stimulation locations and intensities), neuroanatomical guidance (using electroencephalography, magnetic resonance imaging, or transcranial magnetic stimulation to guide the tACS montage), blinding (single and double), and age of the participants (younger and older adults). We selected 20 min and 1 mA as the cutoff values for categorical assessment of modulation duration and intensity because these were the median values across the experiments included in the meta-analysis. Moreover, a 1-mA intensity cutoff facilitated comparison with a previous meta-analysis (4). The timing of behavioral assessment refers to whether the behavioral task performance included in the meta-analysis primarily occurred after tACS had ended (offline) or while tACS was ongoing (online).
By contrast, the stimulation state variable refers to the state of the participant while tACS was being delivered (at rest or engaged in a task). This dual characterization allowed us to include experiments in which tACS was delivered during one kind of task but the effects included in the meta-analysis came from a different task. After the meta-regression, we performed an exploratory analysis of interactions between significant predictors and other variables. Significance of interactions was estimated using the likelihood ratio test, which compares the goodness of fit of two competing models (with and without an interaction term). When an interaction was significant, we performed pairwise comparisons of predictor combinations. Additional post hoc moderation analyses were performed for the working memory, long-term memory, attention, executive control, and fluid intelligence domains. Here, in addition to the abovementioned predictors, we also included modulation frequency; montage (anterior, posterior, and anterior-posterior); lateralization of modulation (bilateral and unilateral); specific phase manipulation (in-phase, antiphase, and no manipulation); and, in the case of working memory, task (change detection, n-back, and digit span). Modulation frequency was examined as a continuous variable. For studies that used individualized frequencies or amplitudes, the average frequency or amplitude across all participants was used for analyses. Predictor variables with fewer than 10 effect sizes were not analyzed, following the recommendation of Higgins and Thompson (34).
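For an interaction that adds a single parameter (for example, between two binary predictors), the likelihood ratio test reduces to a few lines. This sketch assumes the log-likelihoods come from maximum likelihood rather than REML fits, because REML likelihoods are not comparable between models with different fixed effects. For interactions with more than 1 df, the chi-square upper tail with the matching df (e.g., `scipy.stats.chi2.sf`) would replace the `erfc` shortcut. The function name and example values are hypothetical.

```python
import math


def lrt_one_df(loglik_reduced, loglik_full):
    """Likelihood ratio test for one extra parameter (e.g., a binary x binary interaction).

    The statistic 2 * (LL_full - LL_reduced) is compared with a chi-square
    distribution with 1 df, whose upper tail equals erfc(sqrt(x / 2)).
    """
    stat = 2.0 * (loglik_full - loglik_reduced)
    stat = max(stat, 0.0)  # guard against tiny negative values from optimizer noise
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


if __name__ == "__main__":
    # Made-up maximum likelihood log-likelihoods for models without/with the interaction.
    stat, p = lrt_one_df(loglik_reduced=-41.7, loglik_full=-38.9)
    print(f"LR = {stat:.2f}, p = {p:.4f}")
```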

Outlier removal

We assessed the sensitivity of any observed effects to potential outliers. For all analyses where reliable estimation was possible (df ≥ 4), we detected outliers using studentized deleted residuals (35) and examined effects both before and after outlier removal. We did not perform outlier analyses for cognitive domains in which too few df (df < 4) precluded reliable estimation of effects.
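A minimal Python sketch of leave-one-out studentized deleted residuals for a random-effects model, in the spirit of Viechtbauer and Cheung (35), is shown below. It swaps in the DerSimonian-Laird estimator of τ² (metafor refits with the model's own estimator, REML by default) and treats all effects as independent, ignoring the within-study nesting handled by RVE. Function names and data are hypothetical.

```python
import numpy as np


def dl_tau2(y, v):
    """DerSimonian-Laird estimate of the between-study variance."""
    w = 1.0 / v
    mu = np.sum(w * y) / np.sum(w)
    q = np.sum(w * (y - mu) ** 2)
    c = np.sum(w) - np.sum(w**2) / np.sum(w)
    return max(0.0, (q - (len(y) - 1)) / c)


def studentized_deleted_residuals(y, v):
    """Leave-one-out residuals: (y_i - mu_(-i)) / sqrt(v_i + tau2_(-i) + Var(mu_(-i)))."""
    y = np.asarray(y, dtype=float)
    v = np.asarray(v, dtype=float)
    out = np.empty_like(y)
    for i in range(len(y)):
        keep = np.arange(len(y)) != i
        tau2 = dl_tau2(y[keep], v[keep])      # refit without effect i
        w = 1.0 / (v[keep] + tau2)
        mu = np.sum(w * y[keep]) / np.sum(w)  # pooled estimate without effect i
        var_mu = 1.0 / np.sum(w)
        out[i] = (y[i] - mu) / np.sqrt(v[i] + tau2 + var_mu)
    return out


if __name__ == "__main__":
    g = [0.10, 0.20, 0.15, 0.12, 0.18, 2.00]  # made-up; the last value is extreme
    var = [0.01] * 6
    print(np.round(studentized_deleted_residuals(g, var), 2))
```

A cutoff must still be chosen to flag outliers; |t| > 1.96 is one common choice but is an assumption here, since the threshold is not stated in this section.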

Testing heterogeneity and publication bias

We tested for heterogeneity (I²) using the method proposed by Higgins and Thompson (36) to measure the inconsistency of effect sizes across studies within each cognitive domain. The I² statistic represents the percentage of variation in effect sizes beyond that expected from sampling error. Although the interpretation of heterogeneity can depend on multiple factors, an I² of 25 to 75% is typically considered moderate, whereas I² > 75% is considered substantial heterogeneity (37). Heterogeneity can be inflated by studies with extremely high or low effect sizes. Thus, when heterogeneity was at or approached a substantial level, we tested for the presence of outliers, both for All, Performance, and RT measures across cognitive domains and for each cognitive domain individually. To identify outliers, we used studentized deleted residuals, following the recommendation of Viechtbauer and Cheung (35). Identified outliers were excluded, and the pooled effect size was recomputed. Publication bias was assessed with a funnel plot of individual effect sizes against their SEs. Special attention was paid to asymmetry of the plot and to the absence of effects in the lower-left corner of the funnel, which would indicate a lack of published small-sample studies that found negative effect sizes. We also used Egger’s regression test for funnel plot asymmetry to detect publication bias (22). Furthermore, we applied the trim-and-fill method to estimate and impute missing effect sizes (21). In addition, we used the test of excess significance (23) to determine whether the number of significant effects in the meta-analysis exceeded the number expected given the power of the tests, using the “tes” function in the “metafor” package (38).
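Two of these quantities can be sketched in Python: I² computed from Cochran's Q with inverse-variance weights (36), and the classic Egger regression of the standardized effect on precision (22). As far as we understand its defaults, metafor's `regtest()` instead fits a meta-regression with the SE as a moderator, so its numbers will generally differ from this OLS formulation. Trim-and-fill and the test of excess significance are not sketched, and the function names are hypothetical.

```python
import numpy as np


def i_squared(y, v):
    """Higgins-Thompson I^2 (in %) from Cochran's Q with inverse-variance weights."""
    y = np.asarray(y, dtype=float)
    w = 1.0 / np.asarray(v, dtype=float)
    mu = np.sum(w * y) / np.sum(w)
    q = np.sum(w * (y - mu) ** 2)
    df = len(y) - 1
    return 0.0 if q <= 0 else max(0.0, (q - df) / q) * 100.0


def egger_test(y, se):
    """Classic Egger regression: regress y/SE on 1/SE by OLS.

    Returns the intercept and its t statistic; an intercept far from zero
    suggests funnel plot asymmetry. Compare t with a t distribution on n - 2 df.
    """
    y = np.asarray(y, dtype=float)
    se = np.asarray(se, dtype=float)
    z = y / se                                          # standardized effects
    X = np.column_stack([np.ones_like(se), 1.0 / se])   # intercept + precision
    beta, *_ = np.linalg.lstsq(X, z, rcond=None)
    resid = z - X @ beta
    sigma2 = resid @ resid / (len(y) - 2)               # residual variance
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return beta[0], beta[0] / np.sqrt(cov[0, 0])
```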

Estimation of effect sizes and meta-regression was performed using the robumeta package (39); the metafor package (38) was used for computation of studentized deleted residuals, parsing interactions, and testing for publication bias. All analyses were performed in R version 4.0.2.

Supplementary Material

Acknowledgments:

We thank A. Cronin-Golomb and J. Q. Wu for helpful discussions.

Funding:

This work was supported by grants from the National Institutes of Health (R01-MH114877 and R01-AG063775 to R.M.G.R.) and a gift from an individual philanthropist (to R.M.G.R.).

Footnotes

Competing interests: The authors declare that they have no competing interests.

Data and materials availability: All data associated with this study are present in the paper or the Supplementary Materials. The data used to perform the analyses and the citations for all studies included in the meta-analysis are available in data file S1. The full analysis code and data files executable by the provided code can be accessed from Zenodo with the DOI: 10.5281/zenodo.7868618.

REFERENCES AND NOTES

- 1. Sejnowski TJ, Paulsen O, Network oscillations: Emerging computational principles. J. Neurosci. 26, 1673–1676 (2006).

- 2. Uhlhaas PJ, Singer W, Neuronal dynamics and neuropsychiatric disorders: Toward a translational paradigm for dysfunctional large-scale networks. Neuron 75, 963–980 (2012).

- 3. Johnson L, Alekseichuk I, Krieg J, Doyle A, Yu Y, Vitek J, Johnson M, Opitz A, Dose-dependent effects of transcranial alternating current stimulation on spike timing in awake nonhuman primates. Sci. Adv. 6, eaaz2747 (2020).

- 4. Schutter DJLG, Wischnewski M, A meta-analytic study of exogenous oscillatory electric potentials in neuroenhancement. Neuropsychologia 86, 110–118 (2016).

- 5. Grover S, Nguyen JA, Reinhart RMG, Synchronizing brain rhythms to improve cognition. Annu. Rev. Med. 72, 29–43 (2021).

- 6. Frohlich F, Riddle J, Conducting double-blind placebo-controlled clinical trials of transcranial alternating current stimulation (tACS). Transl. Psychiatry 11, 1–12 (2021).

- 7. Alekseichuk I, Turi Z, Amador de Lara G, Antal A, Paulus W, Spatial working memory in humans depends on theta and high gamma synchronization in the prefrontal cortex. Curr. Biol. 26, 1513–1521 (2016).

- 8. Polanía R, Moisa M, Opitz A, Grueschow M, Ruff CC, The precision of value-based choices depends causally on fronto-parietal phase coupling. Nat. Commun. 6, 1–10 (2015).

- 9. de Lara GA, Alekseichuk I, Turi Z, Lehr A, Antal A, Paulus W, Perturbation of theta-gamma coupling at the temporal lobe hinders verbal declarative memory. Brain Stimul. 11, 509–517 (2018).

- 10. Lafon B, Henin S, Huang Y, Friedman D, Melloni L, Thesen T, Doyle W, Buzsáki G, Devinsky O, Parra LC, Liu AA, Low frequency transcranial electrical stimulation does not entrain sleep rhythms measured by human intracranial recordings. Nat. Commun. 8, 1–14 (2017).

- 11. Hedges LV, Tipton E, Johnson MC, Robust variance estimation in meta-regression with dependent effect size estimates. Res. Synth. Methods 1, 39–65 (2010).

- 12. Esmaeilpour Z, Marangolo P, Hampstead BM, Bestmann S, Galletta E, Knotkova H, Bikson M, Incomplete evidence that increasing current intensity of tDCS boosts outcomes. Brain Stimul. 11, 310–321 (2018).

- 13. Alekseichuk I, Falchier AY, Linn G, Xu T, Milham MP, Schroeder CE, Opitz A, Electric field dynamics in the brain during multi-electrode transcranial electric stimulation. Nat. Commun. 10, 2573 (2019).

- 14. Reinhart RMG, Disruption and rescue of interareal theta phase coupling and adaptive behavior. Proc. Natl. Acad. Sci. U.S.A. 114, 11542–11547 (2017).

- 15. Reinhart RMG, Nguyen JA, Working memory revived in older adults by synchronizing rhythmic brain circuits. Nat. Neurosci. 22, 820–827 (2019).

- 16. Sterne JAC, Savović J, Page MJ, Elbers RG, Blencowe NS, Boutron I, Cates CJ, Cheng H-Y, Corbett MS, Eldridge SM, Emberson JR, Hernán MA, Hopewell S, Hróbjartsson A, Junqueira DR, Jüni P, Kirkham JJ, Lasserson T, Li T, McAleenan A, Reeves BC, Shepperd S, Shrier I, Stewart LA, Tilling K, White IR, Whiting PF, Higgins JPT, RoB 2: A revised tool for assessing risk of bias in randomised trials. BMJ 366, l4898 (2019).

- 17. Alexander ML, Alagapan S, Lugo CE, Mellin JM, Lustenberger C, Rubinow DR, Fröhlich F, Double-blind, randomized pilot clinical trial targeting alpha oscillations with transcranial alternating current stimulation (tACS) for the treatment of major depressive disorder (MDD). Transl. Psychiatry 9, 106 (2019).

- 18. Del Felice A, Castiglia L, Formaggio E, Cattelan M, Scarpa B, Manganotti P, Tenconi E, Masiero S, Personalized transcranial alternating current stimulation (tACS) and physical therapy to treat motor and cognitive symptoms in Parkinson’s disease: A randomized cross-over trial. Neuroimage Clin. 22, 101768 (2019).

- 19. Mellin JM, Alagapan S, Lustenberger C, Lugo CE, Alexander ML, Gilmore JH, Jarskog LF, Fröhlich F, Randomized trial of transcranial alternating current stimulation for treatment of auditory hallucinations in schizophrenia. Eur. Psychiatry 51, 25–33 (2018).

- 20. Daughters SB, Yi JY, Phillips RD, Carelli RM, Fröhlich F, Alpha-tACS effect on inhibitory control and feasibility of administration in community outpatient substance use treatment. Drug Alcohol Depend. 213, 108132 (2020).

- 21. Duval S, Tweedie R, Trim and fill: A simple funnel-plot-based method of testing and adjusting for publication bias in meta-analysis. Biometrics 56, 455–463 (2000).

- 22. Egger M, Davey Smith G, Schneider M, Minder C, Bias in meta-analysis detected by a simple, graphical test. BMJ 315, 629–634 (1997).

- 23. Ioannidis JPA, Trikalinos TA, An exploratory test for an excess of significant findings. Clin. Trials 4, 245–253 (2007).

- 24. Scheel AM, Schijen MRMJ, Lakens D, An excess of positive results: Comparing the standard psychology literature with registered reports. Adv. Methods Pract. Psychol. Sci. 4, 25152459211007468 (2021).

- 25. Bramson B, den Ouden HEM, Toni I, Roelofs K, Improving emotional-action control by targeting long-range phase-amplitude neuronal coupling. eLife 9, e59600 (2020).

- 26. Clayton MS, Yeung N, Cohen Kadosh R, The effects of 10 Hz transcranial alternating current stimulation on audiovisual task switching. Front. Neurosci. 12, 67 (2018).

- 27. Tognoli E, Kelso JAS, The metastable brain. Neuron 81, 35–48 (2014).

- 28. Whitton AE, Treadway MT, Pizzagalli DA, Reward processing dysfunction in major depression, bipolar disorder and schizophrenia. Curr. Opin. Psychiatry 28, 7–12 (2015).

- 29. Insel T, Cuthbert B, Garvey M, Heinssen R, Pine DS, Quinn K, Sanislow C, Wang P, Research domain criteria (RDoC): Toward a new classification framework for research on mental disorders. Am. J. Psychiatry 167, 748–751 (2010).

- 30. Rohatgi A, WebPlotDigitizer (2020); https://automeris.io/WebPlotDigitizer/.

- 31. Borenstein M, Hedges LV, Higgins JPT, Rothstein HR, Introduction to Meta-Analysis (John Wiley & Sons, 2021).

- 32. Morris SB, DeShon RP, Combining effect size estimates in meta-analysis with repeated measures and independent-groups designs. Psychol. Methods 7, 105–125 (2002).

- 33. Cohen J, Statistical Power Analysis for the Behavioral Sciences (Lawrence Erlbaum Associates, ed. 2, 1988).

- 34. Higgins JPT, Thompson SG, Controlling the risk of spurious findings from meta-regression. Stat. Med. 23, 1663–1682 (2004).

- 35. Viechtbauer W, Cheung MW-L, Outlier and influence diagnostics for meta-analysis. Res. Synth. Methods 1, 112–125 (2010).

- 36. Higgins JPT, Thompson SG, Quantifying heterogeneity in a meta-analysis. Stat. Med. 21, 1539–1558 (2002).

- 37. Higgins JPT, Thompson SG, Deeks JJ, Altman DG, Measuring inconsistency in meta-analyses. BMJ 327, 557–560 (2003).

- 38. Viechtbauer W, Conducting meta-analyses in R with the metafor package. J. Stat. Softw. 36, 1–48 (2010).

- 39. Fisher Z, Tipton E, robumeta: An R-package for robust variance estimation in meta-analysis. arXiv:1503.02220 [stat.ME] (7 March 2015).
