Abstract.

Significance

Over the past decade, machine learning (ML) algorithms have rapidly become much more widespread for numerous biomedical applications, including the diagnosis and categorization of disease and injury.

Aim

Here, we seek to characterize the recent growth of ML techniques that use imaging data to classify burn wound severity and report on the accuracies of different approaches.

Approach

To this end, we present a comprehensive literature review of preclinical and clinical studies using ML techniques to classify the severity of burn wounds.

Results

Most of these reports used digital color photographs as input data to the classification algorithms, but ML approaches increasingly use input data from more advanced optical imaging modalities (e.g., multispectral and hyperspectral imaging, optical coherence tomography) as well as multimodal techniques. The classification accuracy of the different methods is reported; it typically ranges from to 90% relative to the current gold standard of clinical judgment.

Conclusions

The field would benefit from systematic analysis of the effects of different input data modalities, training/testing sets, and ML classifiers on the reported accuracy. Despite this current limitation, ML-based algorithms show significant promise for assisting in objectively classifying burn wound severity.

Keywords: machine learning, burn severity, burn assessment, burn wound, debridement, optical imaging, artificial intelligence, tissue classification

1. Introduction

Incorporating emerging technologies into the clinical workflow for the early staging of burn severity may provide a crucial inroad toward improved diagnostic accuracy and personalized treatment.1 Early knowledge of the severity of the burn gives the clinician the ability to discuss treatment options and prognostication for hospital stays, healing, and scarring. Within the spectrum of burn severity, superficial partial thickness burns often do not require skin grafting but can be managed with daily wound care or covered with various synthetic or biologic dressings. Full thickness burns typically require skin grafting because they will take weeks to heal and can result in symptomatic and constricting scars. Deep partial thickness burns can act like full thickness burns and require skin grafting. Distinguishing the burn severity along the spectrum can be difficult early after injury and is subjective when based on previous clinical experience. Modalities that provide additional objective data promptly after injury help the clinician to manage the wound properly to enable healing of the damaged tissue and reduce infection, contracture, and other unfavorable outcomes.2 In many cases, some portions of a burn wound need grafting but other portions do not, so it is vital to develop imaging techniques that can spatially segment tissue regions with deeper burns from locations where the burn is more superficial. For the above reasons, burn wound assessment is a prime example of an application for which the combination of optical imaging devices and machine learning (ML) algorithms has recently made notable progress toward translation to clinical care.

ML algorithms are becoming ubiquitous in a wide variety of disciplines. In the medical field, ML is attractive due to its potential for objective classification of disease and injury, categorization of stage of disease and severity of injury, informing treatment, and prognosticating clinical outcomes. ML can conveniently manage and interpret high-dimensional multimodal clinical datasets to facilitate the translation of these data into powerful tools to help inform clinical decision making.3–5 Over the past decade, numerous research groups have begun to test the efficacy of merging ML algorithms with imaging technologies for classifying burn wounds.6–12 The input data in these studies are frequently obtained from standard red, green, and blue (RGB) color images. However, other emerging techniques (e.g., multispectral imaging, optical coherence tomography, spatial frequency domain imaging, and terahertz imaging) are being developed for this application as well. This literature is growing at a rapid rate, both in terms of the number of new reported studies and the range of different technologies used for obtaining input data to train ML classifiers (Fig. 1). The “ground-truth” categorization of burn severity used for training the algorithms is typically the clinical impression, which is regarded as the diagnostic/prognostic “gold standard” but can be incorrect in to 50% of cases.13–16 The outputs of the algorithm are typically (1) the segmentation of burned versus unburned tissue and (2) the classification of depth or severity of the burn. The literature encompasses studies both in preclinical animal models and in clinical settings.

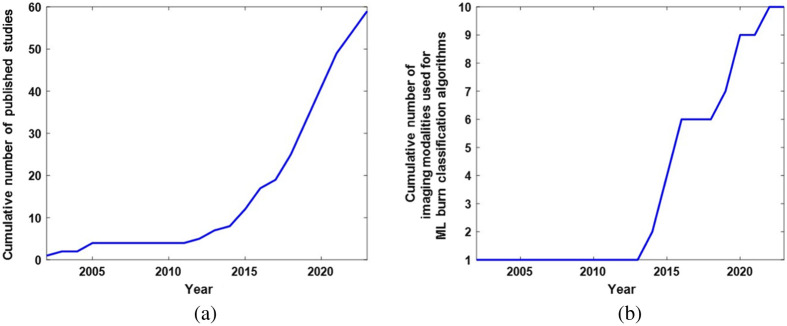

Fig. 1.

Over the past decade, there has been a rapid increase in the number of studies developing machine learning approaches for burn wound classification using imaging data. (a) Cumulative number of published studies on ML burn classification methods using imaging data as a function of time over the past two decades. A progressively steeper increase in the cumulative number of publications is observed, especially over the past decade. (b) Cumulative number of different imaging modalities employed to train ML-based burn wound classification algorithms in published studies, plotted as a function of time over the past two decades. As with the cumulative number of published studies, the cumulative number of imaging modalities used in these applications has increased sharply over the past decade.

The purpose of this review article is to provide an overview of the progress thus far in the combination of ML algorithms with different optical imaging modalities to assist with burn wound assessment. The review is organized according to the type of imaging modality used as input data for different ML studies, which are summarized in Table 1. Section 2 focuses on ML techniques using data from conventional color photography. Section 3 discusses the use of ML algorithms for analyzing multispectral (and hyperspectral) imaging data. Section 4 analyzes the use of other modalities of imaging data (optical coherence tomography, ultrasound, thermal imaging, laser speckle and laser Doppler imaging, and terahertz imaging) with ML technology. Section 5 provides a summary of the findings of this review and briefly discusses potential future directions.

Table 1.

Summary of different ML-based burn classification studies using imaging data as inputs to the ML algorithms. Modality, data processing techniques, ML classifiers, validation procedures, and reported accuracy values are shown in the columns of the table.

| Data modality | Studies | Pre-processing | ML classifier | Validation methods | Accuracy |
|---|---|---|---|---|---|
| Digital color | 17–52 | E.g., , , ; texture analysis | E.g., SVM, LDA, KNN, deep CNN | E.g., k-fold CV; separate test set | 80.9% ± 6.4% without deep learning; 86.2% ± 9.8% with deep learning (see Fig. 7) |
| Multispectral | 53 | Outlier detection | SVM, KNN | Tenfold CV | 76% |
| | 54 | | LDA, QDA, KNN | | 68%–71% |
| | 55 | | CNN | | Sensitivity = 81%; PPV = 97% |
| Hyperspectral | 56 | Denoising | Unsupervised segmentation | Comparison between segmentation and histology | Not reported |
| Multispectral SFDI | 57 | Calibrated reflectance | SVM | Tenfold CV | 92.5% |
| Digital color + multispectral | 58,59 | Texture analysis, mode filtering | QDA | Twelvefold CV (in Ref. 59) | 78% |
| | 60 | | QDA + k-means clustering | 34-fold CV | 24% better than QDA alone for identifying non-viable tissue |
| | 61 | Outlier removal using Mahalanobis distance | | | |
| OCT | 62 | OCT and pulse speckle imaging | Naïve Bayes classifier | | ROC AUC = 0.86 |
| | 63 | A-line, B-scan, and phase data | Multilevel ensemble classifier | Tenfold CV | 92.5% |
| | 64 | Eight OCT parameters | Linear model classifier | Test set | 91% |
| Ultrasound | 65 (ex vivo) | Texture analysis | SVM and kernel Fisher | | 93% |
| | 66 (in situ postmortem) | B-mode ultrasound data | Deep CNN | | 99% |
| Thermography | 67 | Thermography and multispectral | CNN pattern recognition | Training, validation, and test sets | Precision = 83% |
| | 68 | Temperature difference relative to healthy skin | Random forest | Training and validation sets | 85% |
| Blood flow | 69 | LSI | CNN | | >93% |
| Terahertz imaging | 70,71 | Wavelet denoising, Wiener deconvolution | SVM, LDA, Naïve Bayes, neural network | Fivefold CV | ROC AUC = 0.86–0.93 |
| | 72 | Permittivity | Three-layer fully connected neural network | Fivefold CV | ROC AUC = 0.93 |

Note: PPG, photoplethysmography; OCT, optical coherence tomography; LSI, laser speckle imaging; SVM, support vector machine; LDA, linear discriminant analysis; KNN, K-nearest neighbors; QDA, quadratic discriminant analysis; CNN, convolutional neural network; CV, cross-validation; ROC, receiver operating characteristic; AUC, area under curve.

2. Use of ML with Color Photography

To date, the majority of studies that have examined the use of ML to categorize burns have done so using color photography data as inputs. These studies date back nearly two decades17–20 but have become significantly more numerous over the past decade, especially during the past 5 years.

2.1. Early Studies

The first reported work in this area17–20 analyzed images in the color space, which is representative of the human perception of color. Parameters related to texture and color were extracted from the images and used as inputs to the ML algorithm, which was based on a neural network known as a Fuzzy-ARTMAP (fuzzy logic merged with adaptive resonance theory for analog multidimensional mapping). This approach was tested on clinically obtained color images of full thickness, deep dermal, and superficial dermal burns. In Refs. 18 and 19, with a dataset of 62 images, the ML classification method provided a mean overall accuracy (across all three categories) of 82%, relative to the “gold standard” of visual inspection by burn care experts. In Ref. 20, with a dataset of 35 images, the mean overall accuracy of the ML classifier was 89%.

Additional research began to emerge roughly half a decade later. A 2012 study21 compared support vector machine (SVM), K-nearest neighbor (KNN), and Bayesian classifiers, using image segmentation to identify burn regions and input parameters from texture analysis and h-transformed data to classify burn severity. Fourfold cross-validation provided the highest classification accuracy of burn severity (89%) when SVM was used. A “blind test” of the SVM provided a classification accuracy of 75% for distinguishing between grades of burn severity. A 2013 report22 compared SVM, KNN, and template matching (TM) algorithms for classifying three different burn severity categories (superficial, partial thickness, and full thickness) in patients with a range of different demographic characteristics (age, gender, and ethnicity). Using a sample size of 120 images (40 superficial burns, 40 partial thickness burns, and 40 full thickness burns), the overall classification accuracy was 88% for the SVM, 75% for the TM, and 66% for the KNN.
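The k-fold cross-validation procedure used in these comparisons can be illustrated with a short sketch. The Python code below is only a minimal illustration and is not the implementation used in Refs. 21 or 22: the two-dimensional feature vectors, the labels, and the function names (`k_fold_indices`, `knn_predict`, `cross_validate`) are hypothetical, and a simple KNN stands in for the classifiers compared in those studies.

```python
import math
from collections import Counter


def k_fold_indices(n_samples, k):
    """Split range(n_samples) into k contiguous folds of near-equal size."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def knn_predict(train_x, train_y, query, n_neighbors=3):
    """Majority vote among the n_neighbors closest training points (Euclidean)."""
    order = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], query))
    votes = Counter(train_y[i] for i in order[:n_neighbors])
    return votes.most_common(1)[0][0]


def cross_validate(features, labels, k=4, n_neighbors=3):
    """Mean accuracy over k folds; each fold is held out exactly once."""
    accuracies = []
    for test_idx in k_fold_indices(len(features), k):
        held_out = set(test_idx)
        train_x = [features[i] for i in range(len(features)) if i not in held_out]
        train_y = [labels[i] for i in range(len(labels)) if i not in held_out]
        correct = sum(
            knn_predict(train_x, train_y, features[i], n_neighbors) == labels[i]
            for i in test_idx
        )
        accuracies.append(correct / len(test_idx))
    return sum(accuracies) / len(accuracies)


# Hypothetical, well-separated toy features (e.g., two texture/color parameters).
toy_features = [(0.10, 0.20), (0.90, 0.80), (0.20, 0.10), (0.80, 0.90),
                (0.15, 0.25), (0.85, 0.75), (0.05, 0.10), (0.95, 0.90)]
toy_labels = ["superficial", "full thickness"] * 4

mean_accuracy = cross_validate(toy_features, toy_labels, k=4, n_neighbors=3)
```

Because every sample is held out exactly once, the cross-validated accuracy uses all of the data for testing. A "blind test" on separately collected images, as in Ref. 21, can still give a lower value.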

Another 2013 study23 used multidimensional scaling (MDS) to quantify features of color images that were related to burn depth. Parameters from this model were input into a KNN algorithm for classification. The KNN provided 66% accuracy for distinguishing between three different burn depth categories (superficial dermal, deep dermal, and full thickness) and 84% accuracy for distinguishing between burns requiring grafts versus burns not needing grafting. When principal component analysis (PCA) was performed and the three most significant principal components were used as inputs into the KNN, the accuracy decreased to 51% for the three-group classification (superficial dermal, deep dermal, and full thickness) and 72% for the two-group classification (graft needed versus no graft needed). When the MDS parameters were input into an SVM, the accuracy values were 76% and 82% for the aforementioned three-group classification and two-group classification, respectively. A similar study in 201524 reported an accuracy of 80% for distinguishing burns in need of grafts from burns not in need of grafts, when MDS parameters were used in conjunction with an SVM classification algorithm on an independent test dataset of 74 images.

Another 2015 study25 used an SVM to classify burns by severity (second degree, third degree, and fourth degree) with 73.7% accuracy when twofold cross-validation was performed. A 2017 report26 compared 20 different algorithms for classifying burn severity into three categories (superficial partial thickness, deep partial thickness, and full thickness), using both tenfold cross-validation and an independent test dataset. The highest classification accuracy (73%) obtained using cross-validation was achieved with a simple logistic regression algorithm. Five of the algorithms were identified as the most accurate for classifying burns in the test dataset, and their mean accuracies were all 69%. The low classification accuracy was primarily attributed to difficulties in using the algorithms to distinguish between superficial partial thickness and deep partial thickness burns. This issue was linked to the observation that the superficial partial thickness burns often included some deep partial thickness burn regions, and vice versa, making classification difficult.

2.2. Studies from 2019 to 2023: Emergence of Deep Learning Approaches

At the time of this report, the majority of studies on ML methods for classifying color images of burns were published from 2019 to 2023. Although some preliminary burn classification work using digital color images and deep learning technology had been reported prior to 2019,27 the period from 2019 to 2023 saw a substantial increase in the use of deep learning approaches for burn wound classification.28–45

Several studies in this time period used deep learning algorithms to segment images into burned and unburned regions.31,34,35,38–40 A 2019 study31 used 1,000 images to train a mask region-based convolutional neural network (Mask R-CNN) algorithm, comparing several different underlying network types and obtaining a maximum accuracy of 85% for identifying burn regions in images of different severities of burns. Another 2019 study38 used deep learning with semantic segmentation to distinguish between burn, skin, and background portions of images. Two 2021 studies34,35 (Fig. 2) used deep learning algorithms to segment burned versus unburned tissue to determine the total body surface area (TBSA) that was burned.

Fig. 2.

Results of a deep learning algorithm for classifying burn severity using color photography data, mapped across the tissue surfaces of patients. Data from color images (a) were input into a multi-layer deep learning procedure including segmentation and feature fusion. The algorithm classified burn regions (c) as superficial partial-thickness (blue), deep partial-thickness (green), and full-thickness (red). Ground-truth categorization (b) is shown for comparison (adapted from Ref. 34, with permission).

A 2019 study28 used deep-CNN-based approaches for burn classification, comparing four different networks. The ResNet-101 deep CNN algorithm distinguished between four different burn categories (superficial partial-thickness, superficial-to-intermediate partial-thickness, intermediate-to-deep partial-thickness, and deep partial-thickness to full-thickness) with a mean accuracy of 82% when tenfold cross-validation was performed. In another study by this same group,29 a tensor decomposition technique was employed to extract parameters related to texture for input into a cluster analysis algorithm that segmented the images into three categories (non-tissue “background,” healthy skin, and burned skin) with a sensitivity of 96%, positive predictive value of 95%, and faster computation time than other image analysis techniques (e.g., PCA). A third report from this group30 used digital color images acquired with a commercial camera specialized for tissue imaging (TiVi700, WheelsBridge AB, Sweden) that uses polarization filters. The polarized images were used to train a U-Net deep CNN for distinguishing between four different burn severities (superficial partial-thickness, superficial-to-intermediate partial-thickness, intermediate-to-deep partial-thickness, and deep partial-thickness to full-thickness, which are defined based on healing times). The accuracy of this technique was 92% when a separate test set (consisting of data not included in the training set) was used.

A 2020 study32 used a ResNet-50 deep CNN to classify burns into three levels of severity (shallow, moderate, and deep, which is based on the time/intervention required to heal) with an overall accuracy of when applied to a separate test dataset. Another 2020 study41 used a deep CNN to classify burns as superficial, deep dermal, or full thickness in a separate test dataset with an average accuracy of 79%. A third study from 202033 employed separate SVMs, trained with features identified by deep CNNs, for each body part examined (inner forearm, hand, back, and face), achieving burn severity (low, moderate, and severe) classification accuracy of 92% and 85% for two different test datasets. A 2021 report42 used deep neural network and recurrent neural network approaches to classify burns as first, second, or third degree with accuracies of 80% and 81%, respectively.

A 2023 study36 performed deep learning on color images of patients with a wide range of Fitzpatrick skin types to identify burned regions and classify whether those regions required surgical intervention. Patients were split into two subsets: those with Fitzpatrick Skin Types I-II and those with Fitzpatrick Skin Types III-VI. The classification algorithm employed a deep CNN made available via commercially available software (Aiforia Create, Helsinki, Finland). For distinguishing burns for which surgical intervention was needed from burns that did not require surgical intervention, using a separate test dataset, the area under the receiver operating characteristic curve (ROC AUC) was nearly identical for the dataset from patients with lighter skin (AUC = 0.863) and the dataset from patients with darker skin (). This result is expected due to the fact that the burn removed the epidermis (where melanin is located). Despite the high AUC, the overall accuracy of the algorithm was 64.5%. Two studies by the same group43,44 used new deep CNN algorithms to classify burn severity (superficial, deep dermal, and full thickness) with accuracy and distinguish between burns in need of grafting and burns not requiring grafts with accuracy, using fivefold cross-validation.
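A high ROC AUC paired with a modest overall accuracy is not contradictory, because the two metrics measure different things. AUC reflects how well the classifier ranks cases across all thresholds, whereas accuracy is computed at a single operating threshold. The sketch below illustrates this general point with invented scores and labels; it does not reproduce the data or analysis of Ref. 36, and the function names are hypothetical.

```python
def roc_auc(scores, labels):
    """AUC as the probability that a random positive outscores a random negative.

    Ties count as one half (the Mann-Whitney U formulation).
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def accuracy_at_threshold(scores, labels, threshold):
    """Fraction of cases classified correctly when score >= threshold means positive."""
    predictions = [1 if s >= threshold else 0 for s in scores]
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


# Invented classifier scores: 1 = surgery needed, 0 = no surgery needed.
toy_scores = [0.30, 0.35, 0.40, 0.45, 0.60, 0.05, 0.10, 0.15, 0.20, 0.32]
toy_truth = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

auc = roc_auc(toy_scores, toy_truth)
accuracy_default = accuracy_at_threshold(toy_scores, toy_truth, threshold=0.50)
accuracy_tuned = accuracy_at_threshold(toy_scores, toy_truth, threshold=0.25)
```

In this toy case, the ranking is nearly perfect, yet a threshold of 0.50 misclassifies most positive cases. Lowering the threshold recovers much of the accuracy without changing the AUC.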

Another 2022 study37 compared “traditional” (non-deep-learning-based) ML algorithms with deep learning approaches for classifying images of burns in patients. For distinguishing between first degree (superficial), second degree (partial thickness), and third degree (full thickness) burns in a separate test set, the most accurate “traditional” ML approach was a random forest classifier with an augmented training dataset (accuracy = 80%). For performing the same classification, the most accurate deep learning approach was a deep CNN that used transfer learning from a pre-trained model (VGG16). The accuracy of this algorithm was 96%, considerably higher than the best “traditional” method. A 2021 study45 performed a similar comparison, in which a deep learning approach incorporating CNN and transfer learning classified burn images into three categories (superficial dermal, partial thickness, and full thickness) with an accuracy of 87% compared with 82% when an SVM was used.

Over the period from 2019 to 2023, there were also several studies that used “traditional” (non-deep-learning-based) ML algorithms for burn wound classification.46–52 One such study46 (Fig. 3) used an SVM trained on a subset of the test set to distinguish between burns requiring a graft versus burns not requiring a graft, with an accuracy of 82%. In a subsequent report,47 this same group used a kurtosis metric, obtained following the segmentation of color images via simple linear iterative clustering (SLIC), as an input into an SVM for classifying burns as requiring grafting versus not requiring grafting. Using an open-access database (BURNS BIP-US) to form the training set and test set, the classification accuracy of the SVM was 89%. Another recent study48 used a procedure to extract feature vectors (incorporating data related to texture and color) from different regions of color images to categorize the burns as first degree, second degree, or third degree in each region with sensitivity and precision for each category when fourfold cross-validation was used. A recent set of studies49–52 developed burn classification ML algorithms for a diverse range of datasets spanning patients with notably different skin tones. The percentage of studies that used non-deep-learning ML algorithms was significantly lower in the 2019 to 2023 period than in the pre-2019 period, owing to the substantially increased prevalence of deep learning techniques.

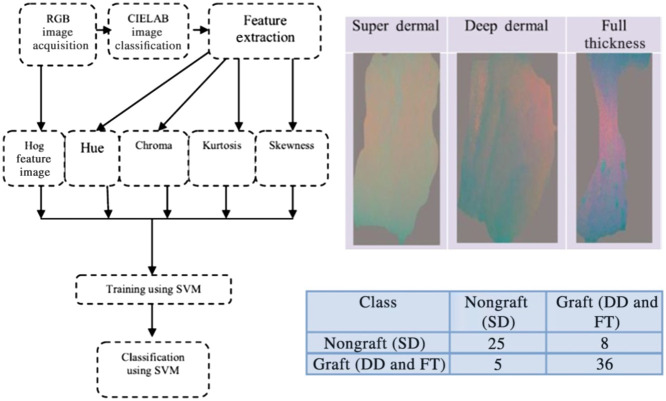

Fig. 3.

Workflow of human burn severity classification method using parameters obtained from color images. Four different parameters (hue, chroma, kurtosis, and skewness) are extracted from the color images in the CIELAB space. Additionally, the histogram of oriented gradients (HOG) feature is calculated to provide local information about the shape of the region of the tissue that was burned. These parameters are employed to train an SVM to classify the severity of the burn. The combination of , , and parameters shows different types of contrast for different categories of burns (superficial dermal, deep dermal, and full thickness). For distinguishing superficial dermal burns (which do not require grafting) from the other two categories (which require grafting), 61 out of 74 burns (82%) were classified correctly (adapted from Ref. 46, with permission).
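The four color-derived parameters named in the caption of Fig. 3 have standard definitions, which the following sketch illustrates. It is an illustration under stated assumptions rather than the pipeline of Ref. 46: chroma and hue are computed from the CIELAB a* and b* coordinates in the usual way, skewness and kurtosis are computed as population moments of an arbitrary list of values (the channel to which they are applied is not specified here), and the function names and sample values are hypothetical.

```python
import math


def chroma_and_hue(a_star, b_star):
    """Chroma and hue angle (degrees, 0-360) from CIELAB a* and b* coordinates."""
    chroma = math.hypot(a_star, b_star)
    hue = math.degrees(math.atan2(b_star, a_star)) % 360.0
    return chroma, hue


def skewness_and_kurtosis(values):
    """Population skewness and (non-excess) kurtosis from central moments."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2


# Hypothetical a*, b* values for one pixel and a small list of pixel values.
chroma, hue = chroma_and_hue(3.0, 4.0)
skewness, kurtosis = skewness_and_kurtosis([1.0, 2.0, 3.0, 4.0, 5.0])
```

In practice, such parameters would be computed over the segmented burn region and concatenated with the HOG descriptor to form the SVM input vector.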

3. Use of ML with Multispectral and Hyperspectral Imaging

3.1. ML and Multispectral Imaging

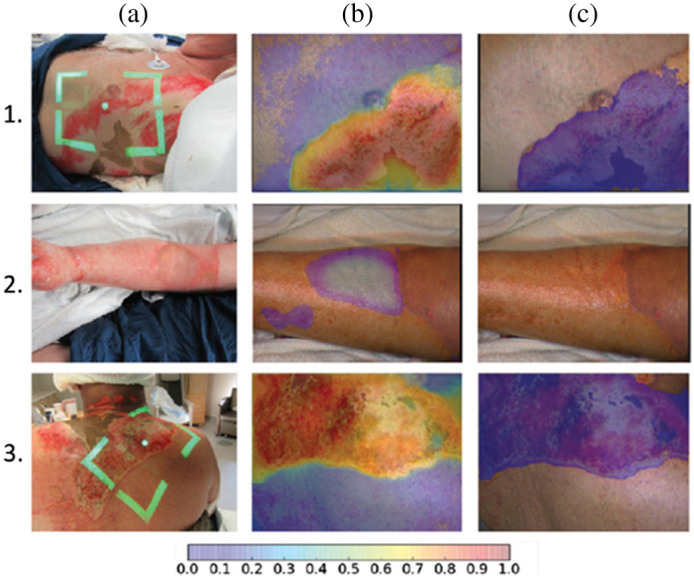

Studies over the past decade have merged ML approaches with multispectral imaging to enhance the input datasets used for training the burn classification algorithms. A 2015 report53 used a broadband light source and monochrome camera with a filter wheel in front, to acquire images in eight wavelength bands with center wavelengths ranging from 420 to 972 nm. The system was used to image burns in male Hanford pigs, and a maximum likelihood estimation-based algorithm for outlier detection was employed for post-processing. Subsequently, the remaining dataset (with outliers removed) was input into the KNN and SVM algorithms for distinguishing between six different types of tissue (healthy, wound bed, partial injury, full injury, blood, and hyperemia). When a tenfold cross-validation procedure was used, the overall classification accuracy was 76%. The authors noted a particular challenge with classifying blood due to the multiple peaks of its absorption spectrum in the wavelength range imaged. Another recent report54 compared the classification accuracy of eight different ML algorithms for differentiating among the aforementioned six tissue categories using multispectral imaging data from male Hanford pigs as inputs. Four of the algorithms (linear discriminant analysis, weighted-linear discriminant analysis, quadratic discriminant analysis, and KNN) had average accuracy values between 68% and 71%, and the other four algorithms (decision tree, ensemble decision tree, ensemble KNN, and ensemble linear discriminant analysis) had average accuracy values ranging from 37% to 62%. A more recent clinical study55 (Fig. 4) used a multispectral imager consisting of a light-emitting diode and a camera with a filter wheel including filters centered at eight different VIS-NIR wavelength bands (420, 581, 601, 620, 669, 725, 860, and 855 nm). 
Patients with three different burn categories (superficial partial-thickness, deep partial-thickness, and full-thickness, as confirmed via biopsy and histopathology) were imaged within the first 10 days following injury. The imaging data were used to train three different CNNs for distinguishing non-healing burns (deep partial-thickness and full-thickness) from all other tissue types. The most accurate CNN (a Voting Ensemble algorithm) provided a sensitivity of 80.5% and a PPV of 96.7% for the aforementioned healing versus non-healing classifications. CNN-based classification was also applied to the subset of burns that had initially been classified as “indeterminate depth” by clinicians at the time they were imaged. For this subset, the sensitivity of the ML algorithm was 70.3% and the PPV was 97.1% for correctly classifying the burns into healing versus non-healing categories.
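Sensitivity and PPV, the two metrics reported for this study, follow directly from the counts of true positives, false negatives, and false positives. The sketch below shows the calculation on invented labels; the label strings, the function name, and the data are hypothetical and are not taken from Ref. 55.

```python
def sensitivity_and_ppv(true_labels, predicted_labels, positive="non-healing"):
    """Sensitivity = TP / (TP + FN); PPV = TP / (TP + FP).

    Assumes at least one truly positive case and at least one positive prediction.
    """
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    return tp / (tp + fn), tp / (tp + fp)


# Invented example: five non-healing and five healing regions.
toy_truth = ["non-healing"] * 5 + ["healing"] * 5
toy_predictions = ["non-healing"] * 3 + ["healing"] * 7

sensitivity, ppv = sensitivity_and_ppv(toy_truth, toy_predictions)
```

A conservative classifier of this kind misses some non-healing burns, which lowers its sensitivity, but it rarely labels a healing burn as non-healing, which keeps its PPV high. This is the same qualitative pattern as the values reported above.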

Fig. 4.

Tissue classification results using multispectral imaging data to train CNN. (a) Digital color images from three burn patients. The patients in Rows 1 and 3 had severe burns; the patient in Row 2 had a superficial burn. (b) A probability map of burn severity, where purple/blue colors represent a low probability of a severe burn and orange/red colors denote a high probability of a severe burn. The clear-appearing region in the middle of burn (2b) represents a set of pixels with probability < 0.05 of severe burn. (c) A segmented probability map in which purple pixels denote a probability of severe burn that exceeds a user-defined threshold. The algorithm performed well at correctly identifying the two severe burns and distinguishing them from the superficial burn (reproduced from Ref. 55, with permission).

3.2. ML and Hyperspectral Imaging

ML techniques have also recently been used with hyperspectral imaging systems to incorporate even more robust input datasets into burn classification algorithms. A 2016 study56 (Fig. 5) used two different cameras (one in the 400 to 1000 nm spectral range and another in the 960 to 2500 nm spectral range) to image burns in Noroc pigs (50%/25%/25% hybrid of Norwegian Landrace, Yorkshire, and Duroc). Both cameras performed line scans using push-broom techniques. The resulting hyperspectral imaging data were input into an unsupervised algorithm for performing image segmentation in both the spatial and spectral dimensions. This segmentation technique was found to compare favorably with K-means segmentation for distinguishing different burn severities. A recent case study73 performed hyperspectral (400 to 1000 nm) imaging of a human partial thickness burn and used principal component analysis and a spectral unmixing technique to categorize different types of tissue.

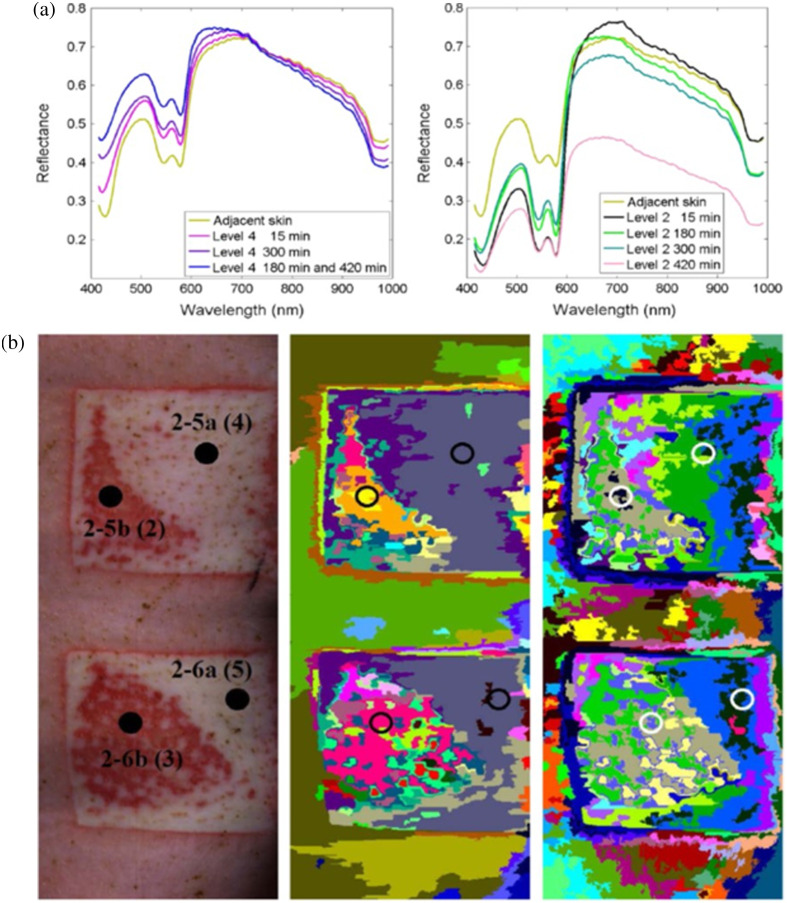

Fig. 5.

Classification of burn severity using hyperspectral imaging data. (a) Notable differences are seen in the 400 to 600 nm range of the reflectance spectra of more severe burns (Level 4) and less severe burns (Level 2) in a porcine model. These differences are likely attributable to changes in the concentration of hemoglobin (which strongly absorbs light in this wavelength regime) due to different levels of damage to the tissue vasculature. (b) Different burn severities (left column) are classified using two different segmentation algorithms: a spectral-spatial algorithm (center column) and a K-means algorithm (right column) (adapted from Ref. 56, with permission).

3.3. Combining Multispectral Imaging with Color Image Analysis and Photoplethysmography

A set of four recent studies58–61 used a combination of (1) multispectral imaging (eight wavelengths, ranging from 420 to 855 nm), (2) texture analysis of color image data, and (3) photoplethysmography (PPG) for classifying burn wounds. The initial work of this group58,59 demonstrated that inputting data from these three modalities into a quadratic discriminant analysis (QDA) ML algorithm provided an accuracy of 78% for classifying four different tissue types (deep burn, shallow burn, viable wound bed, and healthy skin) in Hanford pigs. This represented a dramatic improvement over the classification accuracy obtained using just PPG (45%), a notable improvement over that obtained with only color image texture analysis parameters (62%), and a slight improvement over the result from using only multispectral imaging data (75%). These values of overall accuracy were impacted significantly by the fact that the classification algorithms typically yielded accuracy values below 50% for classifying shallow burns. In a follow-up study by the same group,60 the QDA technique (which is supervised) was combined with a k-means clustering algorithm (which is unsupervised) to classify human burn wounds. The combination of k-means clustering and QDA resulted in an overall mean accuracy of 74% for distinguishing between viable and non-viable skin compared with 70% when only QDA was used. An additional report61 incorporated a post-processing procedure using Mahalanobis distance calculations to help remove outliers, but it only used multispectral and color images (not PPG). This algorithm provided an accuracy of 66% for classifying non-viable human tissue compared with 58% when classification was performed without the outlier-removal routine. Thus, in this study, the omission of PPG data likely contributed to the decreased classification accuracy.
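Outlier removal based on Mahalanobis distance can be sketched as follows. This is a generic illustration for two-dimensional feature vectors and is not the procedure of Ref. 61: the threshold, the toy data, and the function names are assumptions.

```python
import math


def mean_and_covariance(points):
    """Sample mean and 2x2 sample covariance (n - 1 denominator) of 2D points."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    return (mx, my), (sxx, sxy, syy)


def mahalanobis_distance(point, mean, covariance):
    """Distance of a 2D point from the mean, scaled by the inverse covariance."""
    sxx, sxy, syy = covariance
    det = sxx * syy - sxy * sxy
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    squared = (syy * dx * dx - 2.0 * sxy * dx * dy + sxx * dy * dy) / det
    return math.sqrt(squared)


def remove_outliers(points, threshold):
    """Keep only points whose Mahalanobis distance does not exceed the threshold."""
    mean, covariance = mean_and_covariance(points)
    return [p for p in points if mahalanobis_distance(p, mean, covariance) <= threshold]


# Invented feature vectors: nine clustered samples and one distant sample.
toy_points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (2.0, 2.0), (1.5, 1.5),
              (1.0, 1.5), (2.0, 1.5), (1.5, 1.0), (1.5, 2.0), (10.0, 10.0)]
kept_points = remove_outliers(toy_points, threshold=2.5)
```

Because the distant sample also inflates the estimated covariance, its distance is bounded above, so the threshold has to be chosen with the sample size in mind.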

3.4. Multispectral Spatial Frequency Domain Imaging

A recent report by our group57 (Fig. 6) used an emerging technique called multispectral spatial frequency domain imaging for ML-based burn wound classification in a Yorkshire pig model. In this study, light with combinations of eight different visible to near-infrared wavelengths (470 to 851 nm) and spatially modulated sinusoidal patterns of five different spatial frequencies (0 to ) was used to image different severities of pig burns. The rationale behind using the spatially modulated light was that the different spatial frequencies are known to have different mean penetration depths into the tissue, thereby potentially providing more detailed information about the extent of burn-related tissue damage beneath the surface. Calibrated diffuse reflectance images from different combinations of the wavelengths and spatial frequencies were then input into an SVM to classify the severity of the burns. When images from all 40 combinations of the five spatial frequencies and eight wavelengths, acquired 1 day post-burn, were used to train the SVM, burn severity classification (no graft required versus graft required) with an accuracy of 92.5% was obtained for a tenfold cross-validation. For comparison, when only the unstructured (spatial frequency = 0) images at the eight different wavelengths were used as inputs (to mimic standard multispectral imaging), the accuracy of the classification algorithm was 88.8% for the same cross-validation procedure.
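The difference between the two input configurations compared above (all 40 wavelength and spatial-frequency combinations versus the eight unstructured images) can be made explicit with a short sketch. The index-based keys, the placeholder reflectance values, and the function names are hypothetical. The sketch assumes that spatial-frequency index 0 corresponds to the unstructured (spatial frequency = 0) illumination.

```python
from itertools import product


def feature_keys(n_wavelengths=8, n_frequencies=5, planar_only=False):
    """(wavelength index, spatial-frequency index) pairs used as classifier inputs.

    Assumption: frequency index 0 denotes the unstructured (spatial frequency = 0) case.
    """
    frequency_indices = [0] if planar_only else list(range(n_frequencies))
    return list(product(range(n_wavelengths), frequency_indices))


def pixel_feature_vector(reflectance, keys):
    """Flatten the calibrated reflectance values of one pixel into an ordered list."""
    return [reflectance[key] for key in keys]


all_keys = feature_keys()
planar_keys = feature_keys(planar_only=True)

# Placeholder reflectance values for a single pixel (not measured data).
toy_reflectance = {key: 0.5 for key in all_keys}
full_vector = pixel_feature_vector(toy_reflectance, all_keys)
planar_vector = pixel_feature_vector(toy_reflectance, planar_keys)
```

The full configuration gives each pixel a 40-element feature vector, whereas the planar-only configuration, which mimics standard multispectral imaging, gives an 8-element vector.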

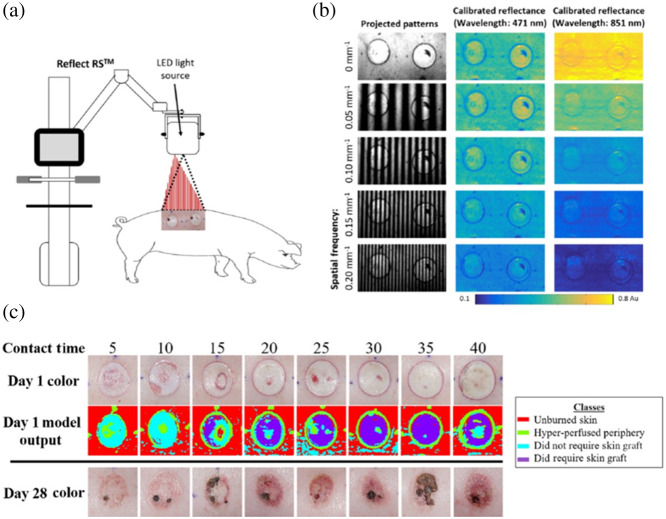

Fig. 6.

ML-based classification of burn severity in a preclinical model using multispectral spatial frequency domain imaging (SFDI) data. (a) A commercial device (Modulim Reflect RS™) projected patterns of light with different wavelengths and spatially modulated (sinusoidal) patterns onto a porcine burn model and detected the backscattered light using a camera. (b) The backscattered images at the different spatial frequencies were demodulated and calibrated to obtain reflectance maps at each wavelength. The relationship between reflectance and spatial frequency was different at the different wavelengths (e.g., 471 nm versus 851 nm, as shown here). (c) The reflectance data at each wavelength were used to train an SVM to distinguish between four different types of tissue (unburned skin, hyper-perfused periphery, burns that did not require grafting, and burns that required grafting). The ML algorithm reliably distinguished more severe burns (originating from longer thermal contact times) from less severe burns. When using a tenfold cross-validation procedure, the overall diagnostic accuracy of the method was 92.5% (adapted from Ref. 57, with permission).

4. Use of ML with Other Imaging Techniques

4.1. Optical Coherence Tomography (OCT)

Several studies have used OCT, either alone or in combination with another technology, to acquire data for input into ML burn classification algorithms. A 2014 report62 acquired pulse speckle imaging (PSI) data along with OCT data 1 h post-burn to distinguish full-thickness, partial-thickness, and superficial burns in a Yorkshire pig model. A Naïve Bayes classifier using data from the combination of these two techniques yielded an area under the receiver operating characteristic curve (ROC AUC) of 0.86 for classifying the three categories of burns, compared with 0.62 when only OCT data were used and 0.78 when only PSI data were used. A 2019 study74 combined OCT with Raman spectroscopy (using laser excitation at 785 nm) to inform ML-based classification of full-thickness, partial-thickness, and superficial partial-thickness porcine burns ex vivo. Raman spectroscopy provided parameters related to tissue biochemical composition, specifically, the NCαC/CC proline ring ratio (), CH bending/Amide III ratio (), and bending/Amide ICO stretch ratio (). OCT provided parameters related to tissue structure. The combination of OCT and Raman spectroscopy data resulted in an ROC AUC of 0.94 for classifying the three different types of burns. A recent study on human skin in vivo63 used parameters measured with polarization-sensitive OCT (phase information, in addition to A and B scans) in a multi-level ensemble classification technique, distinguishing burns with an accuracy of 93%. An additional human study64 extracted eight features from OCT data and input them into an ML-based linear classifier to distinguish margins of surgically resected burn tissue from healthy surrounding tissue. For a training set of 34 tissue samples and a test set of 22 tissue samples, the sensitivity and specificity of the classification algorithm were 92% and 90%, respectively.
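The performance metrics quoted in this section (ROC AUC, sensitivity, and specificity) can be computed as in the following sketch. It covers the binary case only; the three-category AUC values reported above would require an extension such as one-versus-rest averaging, and the cited studies do not necessarily compute their metrics this way. The labels and scores are made-up toy values.

```python
def roc_auc(labels, scores):
    """Binary ROC AUC via pairwise ranking (Mann-Whitney U statistic).

    Equals the probability that a randomly chosen positive case receives a
    higher score than a randomly chosen negative case; ties count as 0.5.
    """
    pos = [s for lab, s in zip(labels, scores) if lab == 1]
    neg = [s for lab, s in zip(labels, scores) if lab == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def sensitivity_specificity(labels, predictions):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    tp = sum(1 for lab, p in zip(labels, predictions) if lab == 1 and p == 1)
    fn = sum(1 for lab, p in zip(labels, predictions) if lab == 1 and p == 0)
    tn = sum(1 for lab, p in zip(labels, predictions) if lab == 0 and p == 0)
    fp = sum(1 for lab, p in zip(labels, predictions) if lab == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)

# Toy example (hypothetical): 1 = burn tissue, 0 = healthy tissue.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))
print(sensitivity_specificity([1, 1, 1, 0, 0], [1, 1, 0, 0, 1]))
```

Unlike accuracy, the AUC does not depend on a single decision threshold, which is one reason why values reported as AUC and values reported as accuracy are not directly comparable across studies.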

4.2. Ultrasound

Within the past several years, ML burn classification algorithms based on ultrasound data have also been demonstrated. A 2020 report65 performed texture analysis of ultrasound images from porcine tissue ex vivo. The resulting data were used to train an algorithm that combined kernel Fisher discriminant analysis with an SVM to distinguish between four different burn severities. This technique provided 93% accuracy for classifying four different burn duration/temperature combinations meant to correspond to superficial partial-thickness, deep partial-thickness, light full-thickness, and deep full-thickness burns. A subsequent porcine study, involving ex vivo and postmortem in situ skin,66 employed a deep CNN with an encoder–decoder network, using ultrasound data (B-mode) as inputs, to distinguish between the four aforementioned burn categories with an accuracy of 99%.

4.3. Thermal Imaging

Recent studies have also incorporated thermal imaging data in the infrared wavelength regime into ML algorithms to help classify burn severity. A 2016 study67 used data from color images and thermal images in tandem to inform an ML-based classification algorithm that combined multiple techniques, including pattern recognition routines and CNNs. A 2018 report68 used thermography with a commercial infrared camera (T400, FLIR System, Wilsonville, OR) to determine the difference in temperature between burns of different treatment groups (amputation, skin graft, and re-epithelialization without grafting) for patients within several days post-burn. An ML algorithm using a random forest technique was developed to predict the burn treatment group from these temperature data, yielding an accuracy of 85%.

4.4. Blood Flow Imaging

Blood flow measurements using coherent light-based techniques (Laser Speckle Imaging, Laser Doppler Imaging) have frequently been employed to identify signatures of burn severity.75–81 Recent research has begun to incorporate data from such measurements into ML algorithms to classify the severity of burns. A recent study69 used Laser Speckle Imaging data from a Yorkshire pig burn model as inputs into a CNN to categorize burn depth and predict whether a graft would fail. The algorithm provided accuracies of over 93% for both of these classifications.

4.5. Terahertz Imaging

Terahertz (THz) imaging is of interest in a burn severity classification context because, in theory, THz imaging enables wound visualization through gauze bandages. Recent studies have used data from THz imaging systems as inputs into ML algorithms to diagnose burn severity. Khani et al.70 and Osman et al.71 used a portable time-domain THz scanner to measure three different severities of burns (full thickness, deep partial thickness, and superficial partial thickness) in female Yorkshire70 and female Landrace71 porcine models. When the THz imaging data were employed to train ML-based classifiers, the areas under the ROC curves for distinguishing between these burn categories ranged from 0.86 to 0.93. In a subsequent study, Khani et al.72 used Debye parameters from THz imaging to assess the permittivity of burns in a Landrace pig model, potentially providing a simplified methodology for training ML-based procedures for classifying burn severity.
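For readers unfamiliar with Debye parameters, the standard double Debye relaxation model expresses the complex permittivity at each frequency in terms of five quantities, which is what makes it attractive as a compact feature set. The sketch below evaluates that textbook model; the parameter values are hypothetical placeholders, not values from Ref. 72, and the sign convention (negative imaginary part for loss) is an assumption of this example.

```python
import math

def double_debye_permittivity(freq_hz, eps_inf, eps_s, eps_2, tau1_s, tau2_s):
    """Complex permittivity from the double Debye relaxation model.

    eps_inf: high-frequency limit; eps_s: static (zero-frequency) limit;
    eps_2: intermediate permittivity; tau1_s, tau2_s: slow and fast
    relaxation times in seconds.
    """
    omega = 2 * math.pi * freq_hz
    return (
        eps_inf
        + (eps_s - eps_2) / (1 + 1j * omega * tau1_s)
        + (eps_2 - eps_inf) / (1 + 1j * omega * tau2_s)
    )

# Hypothetical placeholder parameters, chosen only for illustration.
params = dict(eps_inf=4.0, eps_s=78.0, eps_2=6.0, tau1_s=1e-11, tau2_s=1e-13)
for f_thz in (0.2, 0.5, 1.0):
    eps = double_debye_permittivity(f_thz * 1e12, **params)
    print(f"{f_thz:.1f} THz: real = {eps.real:.2f}, imaginary = {eps.imag:.2f}")
```

Fitting these five parameters to a measured spectrum reduces each pixel to a handful of numbers, which is one way such a model could simplify the inputs to a classifier.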

5. Discussion and Conclusions

5.1. Summary of Literature to Date, and Current Limitations

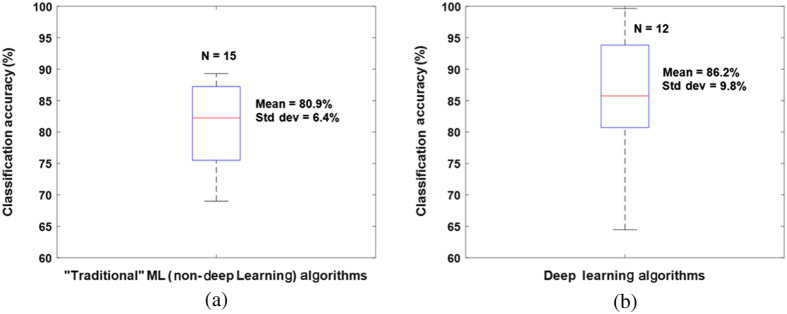

Table 1 summarizes the methodologies, classifiers, validation methods, and classification accuracies of the ML methods trained on the imaging modalities described in this review. Over half of these studies used conventional digital color images as inputs to the ML algorithms. A box plot illustrating the distribution of the accuracies of color image-based ML algorithms using “traditional” (non-deep-learning based) ML methods and deep learning approaches is provided in Fig. 7. It is important to note the wide range of classification accuracies reported in these studies. The initial purpose of this review was to provide a quantitative comparison between the accuracy of ML algorithms for burn classification using different tissue imaging modalities. However, upon review of the literature, it became clear that the large number of additional variables that differ between the studies makes it extremely difficult to objectively identify the most accurate technique(s). These covariates include differences in the preclinical models or patient populations studied; the sizes of the datasets used for training; the specific ML classifiers employed; the training, validation, and testing procedures utilized; and the number and complexity of categories used for classification. Table 1 summarizes several of these covariates, but more work is needed to quantify, in a statistically rigorous manner, the specific effects of each of these covariates on the reported classification accuracies of the ML algorithms. The rate of growth of this literature and the expansion of different techniques used for obtaining input data to train ML classifiers are depicted in Fig. 1. As the literature in this area continues to expand, it will become even more critical to perform rigorous meta-analyses of the reported results to determine which aspects of the algorithms are most crucial for enabling optimal classification accuracy. For example, the recent trend toward increased use of deep learning approaches appears promising for improving the accuracy of burn wound severity classification, but this hypothesis must be confirmed more rigorously across a wider range of datasets of varying degrees of diversity and complexity.

Fig. 7.

Box plots showing the means, standard deviations, and distributions of reported accuracy values from burn wound classification studies using (a) “traditional” (non-deep learning) ML algorithms and (b) deep learning ML algorithms with digital color images as inputs. Classification results from 15 different “traditional” ML algorithms and 12 different deep learning algorithms were used; the data are from Refs. 19–26, 28, 30, 32, 33, 36, 37, 41–43, and 45–47. Several studies comparing multiple ML algorithms21,23,26,33,37,43,45 provided multiple data points that were included in these box plots. Overall, the deep learning algorithms trended toward higher mean accuracy, and the five highest accuracy values were all from deep learning algorithms. However, the deep learning algorithms still had a wide range of reported accuracy values, likely due to the substantial presence of other factors that differed between the studies (e.g., size and composition of dataset; training, validation, and testing procedures; type of ML algorithm employed; types of data pre-processing; and categories used for classification).
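The summary statistics shown in a box plot of this kind can be computed as sketched below. The accuracy values in the example are hypothetical placeholders and are not the values extracted from the cited studies; the quartile convention (`method="inclusive"`) is likewise an assumption, since plotting packages differ in how they interpolate quartiles.

```python
import statistics

def summarize(accuracies):
    """Mean, sample standard deviation, and box-plot quartiles of a group."""
    q1, median, q3 = statistics.quantiles(accuracies, n=4, method="inclusive")
    return {
        "n": len(accuracies),
        "mean": statistics.mean(accuracies),
        "sd": statistics.stdev(accuracies),
        "min": min(accuracies),
        "q1": q1,
        "median": median,
        "q3": q3,
        "max": max(accuracies),
    }

# Hypothetical placeholder accuracies (percent), one value per reported algorithm.
groups = {
    "traditional ML": [72.0, 80.0, 85.0, 90.0],
    "deep learning": [75.0, 88.0, 93.0, 97.0],
}
for name, values in groups.items():
    s = summarize(values)
    print(f"{name}: mean = {s['mean']:.1f}, sd = {s['sd']:.1f}, "
          f"median = {s['median']:.1f}, range = {s['min']:.1f} to {s['max']:.1f}")
```

Because several studies contribute more than one data point, the values within a group are not independent, so summaries of this kind describe the literature rather than support formal hypothesis tests.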

Furthermore, data from additional emerging technologies such as photoacoustic imaging82,83 may be of significant use for training ML-based burn classification algorithms, motivating additional comparisons with existing literature to assess the effectiveness of these new approaches relative to previously employed imaging modalities. In addition to the potential emergence of new data modalities to provide inputs to ML burn classification algorithms, expanded sets of parameters measured via technologies described in this report may also enhance the input data used for training such algorithms. One example of this possibility is the use of multispectral SFDI (described in Sec. 3.4) to obtain information about the water content of burns with different severities, as an additional input into the ML-based classification procedure. For instance, our group has previously used SFDI to show that water content (denoting edema) can be significantly greater in deep partial-thickness burns than in superficial partial-thickness burns.84 This finding is a potentially important inroad into addressing the ongoing clinical need for techniques to more accurately distinguish between these two types of burns, which can appear very similar visually but require very different medical treatment protocols to facilitate healing.

In addition to the need to systematically assess the effects of different components of the ML algorithms on the resulting accuracy, it is also crucial to make sure that the training of the ML classifier is optimal for clinical translation. Multiple studies cited in this report clearly illustrated that certain burn categories were more difficult to accurately classify than others. Potential reasons for this challenge may include biophysical variations between the tissues within a given category or the possibility that certain tissue sites could contain a mixture of different burn categories (e.g., superficial partial thickness and deep partial thickness burn regions) within the same imaged area and sampled tissue volume. Training ML algorithms that are robust in the presence of this level of physiological realism should be prioritized in future studies to facilitate appropriate clinical translation. Establishing clear consensus definitions of each category or one classification system can allow for a better comparison between algorithms and techniques. Also, in clinical settings, it can be a major challenge to obtain enough imaging data from tissue that can unequivocally be classified into each of the “ground-truth” burn severity categories needed to train the ML algorithms. In preclinical studies, the variation in physiology between the different porcine models provides another potential confounding variable that makes direct comparison between studies difficult and may have implications for the effective clinical translation of classifiers.

The “ground-truth” diagnostic information used for training ML-based burn wound classification algorithms is typically provided by clinical observation. It is important to note that, in some cases, the clinical impression itself may not be accurate, especially at time points soon after the creation of the burn. Previous studies have reported that clinical observation can, in some cases, only be accurate for classifying to 80% of burn wounds.13–16 Certain critical distinctions (e.g., distinguishing superficial partial-thickness burns, which will heal without skin grafting, from deep partial-thickness burns, which require grafting) can be particularly challenging for clinicians to make promptly and accurately via observation alone.15 A recent multi-center initiative85 used histology data to train an algorithm for distinguishing between four different burn severities. This algorithm was applied to a dataset of 66 patients (117 burns, 816 biopsies), and following histopathological examination, it was found that 20% of the burns had been mis-classified as severe enough to need grafting. These limitations of current clinical practice provide clear motivation for the development of ML-based classification algorithms but also introduce difficulty in accurately training and validating the algorithms. Furthermore, in many of the reported studies, there was not a clear description of the exact type of “clinical impression” that was used for the “ground-truth” diagnosis/prognosis when training the algorithms. Among the studies that did describe the clinical impression process in more detail, there was notable variation in the time points used for clinical assessment. This absence of a consistent gold standard across studies introduced a further confounding variable that made it difficult to quantitatively compare the accuracies provided by the different imaging modalities.
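The effect of an imperfect reference standard on reported accuracy can be illustrated with a simple calculation. The sketch below assumes a two-category problem (for example, graft required versus no graft required) in which the algorithm's errors and the clinician's errors are statistically independent; both are simplifying assumptions that real data are unlikely to satisfy exactly, and the function name is hypothetical.

```python
def expected_agreement(true_accuracy, reference_accuracy):
    """Expected agreement between an algorithm and an imperfect reference.

    In a binary problem the two agree when both are right or both are wrong
    (two wrong answers necessarily coincide when there are two categories).
    """
    both_right = true_accuracy * reference_accuracy
    both_wrong = (1 - true_accuracy) * (1 - reference_accuracy)
    return both_right + both_wrong

# A reference standard that is correct for 80% of burns caps the measurable
# agreement of even a perfect algorithm at 80%.
for true_acc in (1.0, 0.95, 0.9):
    measured = expected_agreement(true_acc, reference_accuracy=0.8)
    print(f"true accuracy {true_acc:.2f} -> measured agreement {measured:.2f}")
```

Under these assumptions, an algorithm that is actually better than the clinical reference would be scored as less accurate than it truly is, which illustrates why validation against histology or healing outcome is valuable.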

5.2. Conclusions

In this report, we have assembled a comprehensive summary of the literature to date that has used imaging technology to inform ML algorithms to identify burn wounds and classify their severity. Numerous studies indicate that these approaches hold significant promise for helping to inform prompt and accurate clinical decisions as to whether surgical treatment (i.e., grafting) of a burn wound is necessary to enable proper recovery. However, the literature to date is quite disparate, consisting of numerous different combinations of tissue segmentation/tissue classifications, imaging technologies, ML classifiers, and methods for training and validating the algorithms. Because so many independent variables differ between studies, it is currently extremely difficult to perform rigorous, systematic, quantitative comparisons of the accuracy of different methodologies with respect to any single independent variable (e.g., imaging modality or ML classifier used). Therefore, to facilitate the optimal translation of these technologies to a wide range of clinical settings, it is crucial for future studies to emphasize the advantages and limitations of their methodologies relative to other reported approaches, with the long-term goal of developing a standardized methodology throughout the field. Incorporating the most informative of these techniques into a user-friendly, real-time interface, ideally one that can be employed in the operating room, is essential for clinical adoption.

Acknowledgments

We gratefully acknowledge support from the National Institutes of Health (NIH), including the National Institute of General Medical Sciences (NIGMS) (R01GM108634). In addition, this material is based, in part, upon technology development supported by the U.S. Air Force Office of Scientific Research (FA9550-20-1-0052). We also thank the Arnold and Mabel Beckman Foundation. The content is solely the authors’ responsibility. Any opinions, findings, and conclusions or recommendations expressed in this material are the authors’ and do not necessarily reflect or represent official views of the NIGMS, NIH, U.S. Air Force, or Department of Defense.

Contributor Information

Robert H. Wilson, Email: rwilson3@udayton.edu.

Rebecca Rowland, Email: rakrowland@comcast.net.

Gordon T. Kennedy, Email: gordon.kennedy@uci.edu.

Chris Campbell, Email: cacampbe@hs.uci.edu.

Victor C. Joe, Email: vcjoe@hs.uci.edu.

Theresa L. Chin, Email: chintl1@hs.uci.edu.

David M. Burmeister, Email: david.burmeister@usuhs.edu.

Robert J. Christy, Email: Christyr1@uthscsa.edu.

Anthony J. Durkin, Email: adurkin@uci.edu.

Disclosures

Dr. Durkin is a co-founder of Modulim but does not participate in the operation or management of Modulim and has not shared these results with Modulim. He is compliant with UCI and NIH conflict of interest management policy (revisited annually). The other authors have no financial interests or commercial associations representing conflicts of interest with the information presented here.

Code and Data Availability

As this study is a review of existing literature, the data utilized in this study can be found within the prior publications cited below.

References

- 1. Kaiser M., et al., “Noninvasive assessment of burn wound severity using optical technology: a review of current and future modalities,” Burns 37, 377–386 (2011). doi:10.1016/j.burns.2010.11.012

- 2. Rowan M. P., et al., “Burn wound healing and treatment: review and advancements,” Crit. Care 19, 243 (2015). doi:10.1186/s13054-015-0961-2

- 3. Dilsizian S. E., Siegel E. L., “Artificial intelligence in medicine and cardiac imaging: harnessing big data and advanced computing to provide personalized medical diagnosis and treatment,” Curr. Cardiol. Rep. 16, 441 (2014). doi:10.1007/s11886-013-0441-8

- 4. Handelman G. S., et al., “eDoctor: machine learning and the future of medicine,” J. Intern. Med. 284, 603–619 (2018). doi:10.1111/joim.12822

- 5. Topol E. J., “High-performance medicine: the convergence of human and artificial intelligence,” Nat. Med. 25, 44–56 (2019). doi:10.1038/s41591-018-0300-7

- 6. Liu N. T., Salinas J., “Machine learning in burn care and research: a systematic review of the literature,” Burns 41, 1636–1641 (2015). doi:10.1016/j.burns.2015.07.001

- 7. Moura F. S. E., Amin K., Ekwobi C., “Artificial intelligence in the management and treatment of burns: a systematic review,” Burns Trauma 9, tkab022 (2021). doi:10.1093/burnst/tkab022

- 8. Huang S., et al., “A systematic review of machine learning and automation in burn wound evaluation: a promising but developing frontier,” Burns 47, 1691–1704 (2021). doi:10.1016/j.burns.2021.07.007

- 9. Boissin C., Laflamme L., “Accuracy of image-based automated diagnosis in the identification and classification of acute burn injuries. A systematic review,” Eur. Burn J. 2, 281–292 (2021). doi:10.3390/ebj2040020

- 10. Feizkhah A., et al., “Machine learning for burned wound management,” Burns 48, 1261 (2022). doi:10.1016/j.burns.2022.04.002

- 11. Robb L., “Potential for machine learning in burn care,” J. Burn Care Res. 43(3), 632–639 (2022). doi:10.1093/jbcr/irab189

- 12. Despo O., et al., “BURNED: towards efficient and accurate burn prognosis using deep learning,” 2017, Stanford University, http://cs231n.stanford.edu/reports/2017/pdfs/507.pdf.

- 13. Devgan L., et al., “Modalities for the assessment of burn wound depth,” J. Burns Wounds 5, e2 (2006).

- 14. McGill D. J., et al., “Assessment of burn depth: a prospective, blinded comparison of laser Doppler imaging and videomicroscopy,” Burns 33, 833–842 (2007). doi:10.1016/j.burns.2006.10.404

- 15. Monstrey S., et al., “Assessment of burn depth and burn wound healing potential,” Burns 34, 761–769 (2008). doi:10.1016/j.burns.2008.01.009

- 16. Watts A. M. I., et al., “Burn depth and its histological measurement,” Burns 27, 154–160 (2001). doi:10.1016/S0305-4179(00)00079-6

- 17. Acha Pinero B., Serrano C., Acha J. I., “Segmentation of burn images using the L*u*v* space and classification of their depths by color and texture information,” Proc. SPIE 4684, 1508–1515 (2002). doi:10.1117/12.467117

- 18. Acha B., et al., “CAD tool for burn diagnosis,” in Proc. Inf. Process. Med. Imaging 18th Annu. Conf., Taylor C. J., Noble J. A., Eds., Springer, pp. 294–305 (2003).

- 19. Acha B., et al., “Segmentation and classification of burn images by color and texture information,” J. Biomed. Opt. 10(3), 034014 (2005). doi:10.1117/1.1921227

- 20. Serrano C., et al., “A computer assisted diagnosis tool for the classification of burns by depth of injury,” Burns 31, 275–281 (2005). doi:10.1016/j.burns.2004.11.019

- 21. Wantanajittikul K., et al., “Automatic segmentation and degree identification in burn color images,” in 4th 2011 Biomed. Eng. Int. Conf., Chiang Mai, Thailand, pp. 169–173 (2012).

- 22. Suvarna M., Kumar S., Niranjan U. C., “Classification methods of skin burn images,” Int. J. Comput. Sci. Inf. Technol. 5(1), 109–118 (2013). doi:10.5121/ijcsit.2013.5109

- 23. Acha B., et al., “Burn depth analysis using multidimensional scaling applied to psychophysical experiment data,” IEEE Trans. Med. Imaging 32(6), 1111–1120 (2013). doi:10.1109/TMI.2013.2254719

- 24. Serrano C., et al., “Features identification for automatic burn classification,” Burns 41, 1883–1890 (2015). doi:10.1016/j.burns.2015.05.011

- 25. Tran H., et al., “Burn image classification using one-class support vector machine,” in Context-Aware Systems and Applications. ICCASA 2015, Vinh P., Alagar V., Eds., Vol. 165, pp. 233–242, Springer, Cham (2015).

- 26. Kuan P. N., et al., “A comparative study of the classification of skin burn depth in human,” JTEC 9, 15–23 (2017).

- 27. Tran H. S., Le T. H., Nguyen T. T., “The degree of skin burns images recognition using convolutional neural network,” Indian J. Sci. Technol. 9(45), 106772 (2016). doi:10.17485/ijst/2016/v9i45/106772

- 28. Cirillo M. D., et al., “Time-independent prediction of burn depth using deep convolutional neural networks,” J. Burn Care Res. 40(6), 857–863 (2019). doi:10.1093/jbcr/irz103

- 29. Cirillo M. D., et al., “Tensor decomposition for colour image segmentation of burn wounds,” Sci. Rep. 9, 3291 (2019). doi:10.1038/s41598-019-39782-2

- 30. Cirillo M. D., et al., “Improving burn depth assessment for pediatric scalds by AI based on semantic segmentation of polarized light photography images,” Burns 47(7), 1586–1593 (2021). doi:10.1016/j.burns.2021.01.011

- 31. Jiao C., et al., “Burn image segmentation based on mask regions with convolutional neural network deep learning framework: more accurate and more convenient,” Burns Trauma 7, 6 (2019). doi:10.1186/s41038-018-0137-9

- 32. Wang Y., et al., “Real-time burn depth assessment using artificial networks: a large-scale, multicentre study,” Burns 46, 1829–1838 (2020). doi:10.1016/j.burns.2020.07.010

- 33. Chauhan J., Goyal P., “BPBSAM: body part-specific burn severity assessment model,” Burns 46, 1407–1423 (2020). doi:10.1016/j.burns.2020.03.007

- 34. Liu H., et al., “A framework for automatic burn image segmentation and burn depth diagnosis using deep learning,” Comput. Math. Methods Med. 2021, 5514224 (2021). doi:10.1155/2021/5514224

- 35. Chang C. W., et al., “Deep learning-assisted burn wound diagnosis: diagnostic model development study,” JMIR Med. Inf. 9(12), e22798 (2021). doi:10.2196/22798

- 36. Boissin C., et al., “Development and evaluation of deep learning algorithms for assessment of acute burns and the need for surgery,” Sci. Rep. 13, 1794 (2023). doi:10.1038/s41598-023-28164-4

- 37. Suha S. A., Sanam T. F., “A deep convolutional neural network-based approach for detecting burn severity from skin burn images,” Mach. Learn. Appl. 9, 100371 (2022). doi:10.1016/j.mlwa.2022.100371

- 38. Şevik U., et al., “Automatic classification of skin burn colour images using texture-based feature extraction,” IET Image Process. 13(11), 2018–2028 (2019). doi:10.1049/iet-ipr.2018.5899

- 39. Chauhan J., Goyal P., “Convolution neural network for effective burn region segmentation of color images,” Burns 47(4), 854–862 (2021). doi:10.1016/j.burns.2020.08.016

- 40. Chang C. W., et al., “Application of multiple deep learning models for automatic burn wound assessment,” Burns 49(5), 1039–1051 (2023). doi:10.1016/j.burns.2022.07.006

- 41. Khan F. A., et al., “Computer-aided diagnosis for burnt skin images using deep convolutional neural network,” Multimedia Tools Appl. 79, 34545–34568 (2020). doi:10.1007/s11042-020-08768-y

- 42. Karthik J., Nath G. S., Veena A., “Deep learning-based approach for skin burn detection with multi-level classification,” in Advances in Computing and Network Communications, Lecture Notes in Electrical Engineering, Thampi S. M., et al., Eds., Vol. 736, pp. 31–40, Springer, Singapore (2021).

- 43. Yadav D. P., Jalal A. S., Prakash V., “Human burn depth and grafting prognosis using ResNeXt topology based deep learning network,” Multimedia Tools Appl. 81, 18897–18914 (2022). doi:10.1007/s11042-022-12555-2

- 44. Yadav D. P., et al., “Spatial attention-based residual network for human burn identification and classification,” Sci. Rep. 13(1), 12516 (2023). doi:10.1038/s41598-023-39618-0

- 45. Pabitha C., Vanathi B., “Densemask RCNN: a hybrid model for skin burn image classification and severity grading,” Neural Process. Lett. 53, 319–337 (2021). doi:10.1007/s11063-020-10387-5

- 46. Yadav D. P., et al., “Feature extraction based machine learning for human burn diagnosis from burn images,” IEEE J. Transl. Eng. Health Med. 7, 1800507 (2019). doi:10.1109/JTEHM.2019.2923628

- 47. Yadav D. P., “A method for human burn diagnostics using machine learning and SLIC superpixels based segmentation,” IOP Conf. Ser: Mater. Sci. Eng. 1116, 012186 (2021). doi:10.1088/1757-899X/1116/1/012186

- 48. Rangel-Olvera B., Rosas-Romano R., “Detection and classification of burnt skin via sparse representation of signals by over-redundant dictionaries,” Comput. Biol. Med. 132, 104310 (2021). doi:10.1016/j.compbiomed.2021.104310

- 49. Abubakar A., Ugail H., Bukar A. M., “Noninvasive assessment and classification of human skin burns using images of Caucasian and African patients,” J. Electron. Imaging 29(4), 041002 (2019). doi:10.1117/1.JEI.29.4.041002

- 50. Abubakar A., Ugail H., Bukar A. M., “Assessment of human skin burns: a deep transfer learning approach,” J. Med. Biol. Eng. 40, 321–333 (2020). doi:10.1007/s40846-020-00520-z

- 51. Abubakar A., et al., “Burns depth assessment using deep learning features,” J. Med. Biol. Eng. 40, 923–933 (2020). doi:10.1007/s40846-020-00574-z

- 52. Abubakar A., Ajuji M., Yahya I. U., “Comparison of deep transfer learning techniques in human skin burns discrimination,” Appl. Syst. Innov. 3, 20 (2020). doi:10.3390/asi3020020

- 53. Li W., et al., “Outlier detection and removal improves accuracy of machine learning approach to multispectral burn diagnostic imaging,” J. Biomed. Opt. 20(12), 121305 (2015). doi:10.1117/1.JBO.20.12.121305

- 54. Squiers J. J., et al., “Multispectral imaging burn wound tissue classification system: a comparison of test accuracies between several common machine learning algorithms,” Proc. SPIE 9785, 97853L (2016). doi:10.1117/12.2214754

- 55. Thatcher J. E., et al., “Clinical investigation of a rapid non-invasive multispectral imaging device utilizing an artificial intelligence algorithm for improved burn assessment,” J. Burn Care Res. 44, 969–981 (2023). doi:10.1093/jbcr/irad051

- 56. Paluchowski L. A., et al., “Can spectral-spatial image segmentation be used to discriminate experimental burn wounds?” J. Biomed. Opt. 21(10), 101413 (2016). doi:10.1117/1.JBO.21.10.101413

- 57. Rowland R., et al., “Burn wound classification model using spatial frequency-domain imaging and machine learning,” J. Biomed. Opt. 24(5), 056007 (2019). doi:10.1117/1.JBO.24.5.056007

- 58. Heredia-Juesas J., et al., “Non-invasive optical imaging techniques for burn-injured tissue detection for debridement surgery,” in Annu. Int. Conf. IEEE Eng. Med. Biol. Soc., pp. 2893–2896 (2016). doi:10.1109/EMBC.2016.7591334

- 59. Heredia-Juesas J., et al., “Burn-injured tissue detection for debridement surgery through the combination of non-invasive optical imaging techniques,” Biomed. Opt. Express 9(4), 1809–1826 (2018). doi:10.1364/BOE.9.001809

- 60. Heredia-Juesas J., et al., “Merging of classifiers for enhancing viable vs non-viable tissue discrimination on human injuries,” in 40th Annu. Int. Conf. of the IEEE Eng. in Med. and Biol. Soc. (EMBC) (2018). doi:10.1109/EMBC.2018.8512378

- 61. Heredia-Juesas J., et al., “Mahalanobis outlier removal for improving the non-viable detection on human injuries,” in 40th Annu. Int. Conf. of the IEEE Eng. in Med. and Biol. Soc. (EMBC) (2018). doi:10.1109/EMBC.2018.8512321

- 62.Ganapathy P., et al. , “Dual-imaging system for burn depth diagnosis,” Burns 40, 67–81 (2014). 10.1016/j.burns.2013.05.004 [DOI] [PubMed] [Google Scholar]

- 63.Dubey K., Srivastava V., Dalal K., “In vivo automated quantification of thermally damaged human tissue using polarization sensitive optical coherence tomography,” Comput. Med. Imaging Graphics 64, 22–28 (2018). 10.1016/j.compmedimag.2018.01.002 [DOI] [PubMed] [Google Scholar]

- 64.Singla N., Srivastava V., Mehta D. S., “In vivo classification of human skin burns using machine learning and quantitative features captured by optical coherence tomography,” Laser Phys. Lett. 15, 025601 (2018). 10.1088/1612-202X/aa9969 [DOI] [Google Scholar]

- 65.Lee S., et al. , “Real-time burn classification using ultrasound imaging,” Sci. Rep. 10, 5829 (2020). 10.1038/s41598-020-62674-9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Lee S., et al. , “A deep learning model for burn depth classification using ultrasound imaging,” J. Mech. Behav. Biomed. Mater. 125, 104930 (2022). 10.1016/j.jmbbm.2021.104930 [DOI] [PubMed] [Google Scholar]

- 67.Badea M.-S., et al. , “Severe burns assessment by joint color-thermal imagery and ensemble methods,” in IEEE 18th Int. Conf. e-Health Networking, Appl. and Serv. (Healthcom) (2016). 10.1109/HealthCom.2016.7749450 [DOI] [Google Scholar]

- 68.Martínez-Jiménez M. A., et al. , “Development and validation of an algorithm to predict the treatment modality of burn wounds using thermographic scans: prospective cohort study,” PLoS One 13(11), e0206477 (2018). 10.1371/journal.pone.0206477 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69. Liu N. T., et al., “Predicting graft failure in a porcine burn model of various debridement depths via laser speckle imaging and deep learning,” J. Burn Care Res. 41(Suppl. 1), S77 (2020). 10.1093/jbcr/iraa024.118

- 70. Khani M. E., et al., “Supervised machine learning for automatic classification of in vivo scald and contact burn injuries using the terahertz Portable Handheld Spectral Reflection (PHASR) Scanner,” Sci. Rep. 12, 5096 (2022). 10.1038/s41598-022-08940-4

- 71. Osman O. B., et al., “Deep neural network classification of in vivo burn injuries with different etiologies using terahertz time-domain spectral imaging,” Biomed. Opt. Express 13(4), 1855–1868 (2022). 10.1364/BOE.452257

- 72. Khani M. E., et al., “Triage of in vivo burn injuries and prediction of wound healing outcome using neural networks and modeling of the terahertz permittivity based on the double Debye dielectric parameters,” Biomed. Opt. Express 14(2), 918–931 (2023). 10.1364/BOE.479567

- 73. Calin M. A., et al., “Characterization of burns using hyperspectral imaging technique – A preliminary study,” Burns 41, 118–124 (2015). 10.1016/j.burns.2014.05.002

- 74. Rangaraju L. P., et al., “Classification of burn injury using Raman spectroscopy and optical coherence tomography: an ex-vivo study on porcine skin,” Burns 45, 659–670 (2019). 10.1016/j.burns.2018.10.007

- 75. Hoeksema H., et al., “Accuracy of early burn depth assessment by laser Doppler imaging on different days post burn,” Burns 35, 36–45 (2009). 10.1016/j.burns.2008.08.011

- 76. Ponticorvo A., et al., “Quantitative assessment of graded burn wounds in a porcine model using spatial frequency domain imaging (SFDI) and laser speckle imaging (LSI),” Biomed. Opt. Express 5(10), 3467–3481 (2014). 10.1364/BOE.5.003467

- 77. Burmeister D. M., et al., “Utility of spatial frequency domain imaging (SFDI) and laser speckle imaging (LSI) to non-invasively diagnose burn depth in a porcine model,” Burns 41, 1242–1252 (2015). 10.1016/j.burns.2015.03.001

- 78. Burke-Smith A., Collier J., Jones I., “A comparison of non-invasive imaging modalities: infrared thermography, spectrophotometric intracutaneous analysis and laser Doppler imaging for the assessment of adult burns,” Burns 41, 1695–1707 (2015). 10.1016/j.burns.2015.06.023

- 79. Shin J. Y., Yi H. S., “Diagnostic accuracy of laser Doppler imaging in burn depth assessment: systematic review and meta-analysis,” Burns 42, 1369–1376 (2016). 10.1016/j.burns.2016.03.012

- 80. Ponticorvo A., et al., “Quantitative long-term measurements of burns in a rat model using spatial frequency domain imaging (SFDI) and laser speckle imaging (LSI),” Lasers Surg. Med. 49, 293–304 (2017). 10.1002/lsm.22647

- 81. Ponticorvo A., et al., “Evaluating clinical observation versus spatial frequency domain imaging (SFDI), laser speckle imaging (LSI) and thermal imaging for the assessment of burn depth,” Burns 45, 450–460 (2019). 10.1016/j.burns.2018.09.026

- 82. Ida T., et al., “Real-time photoacoustic imaging system for burn diagnosis,” J. Biomed. Opt. 19(8), 086013 (2014). 10.1117/1.JBO.19.8.086013

- 83. Ida T., et al., “Burn depth assessments by photoacoustic imaging and laser Doppler imaging,” Wound Repair Regen. 24(2), 349–355 (2016). 10.1111/wrr.12374

- 84. Nguyen J. Q., et al., “Spatial frequency domain imaging of burn wounds in a preclinical model of graded burn severity,” J. Biomed. Opt. 18(6), 066010 (2013). 10.1117/1.JBO.18.6.066010

- 85. Phelan H. A., et al., “Use of 816 consecutive burn wound biopsies to inform a histologic algorithm for burn depth categorization,” J. Burn Care Res. 42(6), 1162–1167 (2021). 10.1093/jbcr/irab158

Data Availability Statement

As this study is a review of existing literature, the data utilized in this study can be found within the prior publications cited in this review.