Abstract

In many neural populations, the computationally relevant signals are posited to be a set of ‘latent factors’ – signals shared across many individual neurons. Understanding the relationship between neural activity and behavior requires the identification of factors that reflect distinct computational roles. Methods for identifying such factors typically require supervision, which can be suboptimal if one is unsure how (or whether) factors can be grouped into distinct, meaningful sets. Here, we introduce Sparse Component Analysis (SCA), an unsupervised method that identifies interpretable latent factors. SCA seeks factors that are sparse in time and occupy orthogonal dimensions. With these simple constraints, SCA facilitates surprisingly clear parcellations of neural activity across a range of behaviors. We applied SCA to motor cortex activity from reaching and cycling monkeys, single-trial imaging data from C. elegans, and activity from a multitask artificial network. SCA consistently identified sets of factors that were useful in describing network computations.

Introduction

The study of computation by neural populations has experienced a conceptual shift. Traditionally, one first characterized single-neuron response properties, then extrapolated to determine how the population would behave. This approach worked well in situations where population-level function (e.g. saccade generation) was a straightforward extension of single-neuron responses (e.g. tuning for target amplitude and direction in the superior colliculus)1,2. Yet with time, it became appreciated that this strategy often needs to be inverted. In many areas, single-neuron responses are complex, heterogeneous, and make little sense except in the context of population-level computation. This realization led to an increased desire for tools for investigating computation at the population level.

Population-level computations, in many biological and artificial networks, involve ‘latent factors’3–10. Latent factors are simply signals that are shared across the population. In many networks, the factors are the computationally relevant signals, as they are consistent across trials, while the activity of individual neurons is noisy and variable11. The evolution and interactions of factors can yield explanations of how a particular computation is accomplished (e.g.,11–20). However, factors cannot be observed directly; instead, they must be estimated from population activity using dimensionality reduction. This need for inference poses a hurdle. While there are typically many statistically valid estimates of factors, not all provide equally interpretable views of the underlying computational mechanisms.

As a concrete example of the importance and utility of extracting interpretable factors, consider motor cortical activity during delayed reaching (Fig. 1A). Individual-neuron responses are complex and difficult to interpret21. Most neurons are active during both the delay period and during movement, with no clear similarity between these two activity patterns22–25. Yet at the population level, one can identify two distinct sets of factors. ‘Preparatory factors’ (Fig. 1A, left) are active solely during the delay, while ‘execution-related factors’ (Fig. 1A, right) are active solely during the reach26,27. This separation maps onto basic hypotheses regarding network computation, where execution dynamics generate activity patterns that form descending commands15, while preparatory factors seed an initial state from which upcoming execution-related factors evolve22,28,29. This framework, and the ability to empirically separate preparatory and execution factors, has made it possible to address a variety of outstanding questions regarding how movements are prepared27, executed15, and arranged in sequences30. This progress was possible only because it was suspected, in advance, that preparation and execution might be distinct processes subserved by distinct sets of factors. Careful task design also allowed researchers to know when, within each trial, these two processes occurred and where the likely boundary was between them.

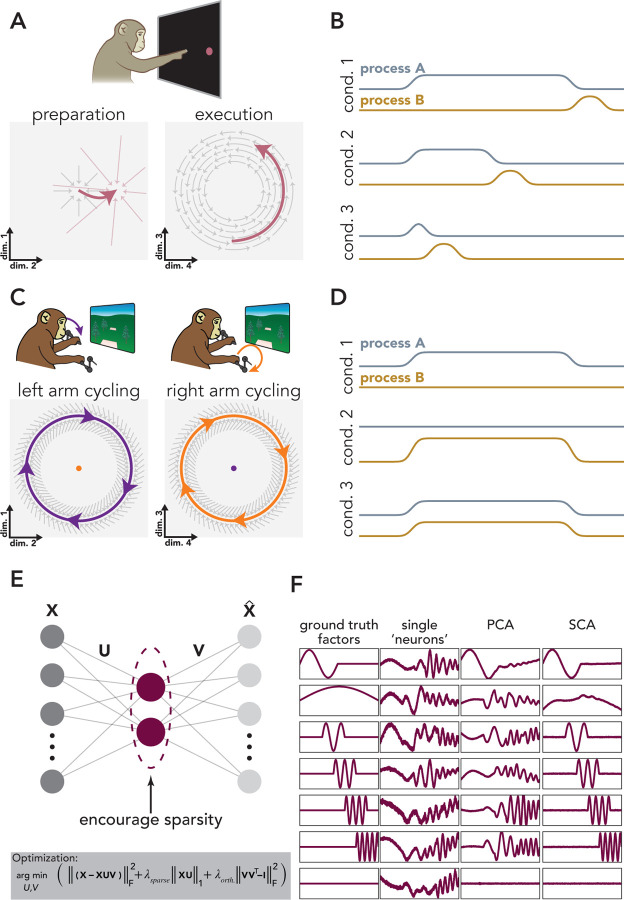

Figure 1. SCA seeks non-synchronous factors.

A. Non-synchronous factors during reaching. Left: preparation: Condition-specific inputs (small red arrows) cause preparatory factors (thick red arrow) to settle at a single fixed point. Absent this input, activity would decay to a baseline determined by the intrinsic dynamics (gray arrows). Right: execution dynamics shared across conditions (gray arrows) and the initial state of the system (beginning of red arrow) determine the trajectory of the execution-related factors (red arrow). B. Time course of two non-synchronous neural processes that are not independent (e.g., A). The two processes exhibit a degree of temporal flexibility because the duration of Process A (silver trace) can differ across conditions. However, Process B (gold trace) always follows the end of process A. C. Non-synchronous factors during cycling. Motor cortical dynamics (gray arrows) maintain stable limit cycles that generate left-arm moving (purple) and right-arm moving (orange) factors. These two processes occur in orthogonal subspaces; the space that captures a large amount of left-arm variance (left) only accounts for a small fraction of the neural variance when the right-arm is moving (orange dot, left space), and vice versa. D. Time course of two non-synchronous processes that are independent (e.g., C). E. SCA architecture and cost function. F. SCA recovers ground-truth latent factors in idealized data. We constructed a population of 50 ‘neurons’ via orthonormal mappings from the ground-truth factors, plus Gaussian noise.

Reach preparation and execution provide a specific example of a general need: parsing population activity into dimensions that respect basic divisions between the computational roles of different factors, if such divisions exist. It has become common to use unsupervised dimensionality reduction methods, such as principal component analysis (PCA) as a first step in estimating factors. Yet a well-known hurdle is that unsupervised methods frequently fail to provide the desired parcellation; PCA-estimated factors are often as ‘mixed’ as the individual neurons one started with. The field has thus developed a variety of approaches that can be more appropriate in specific situations. A simple form of supervision is to manually label times, dividing data into groups based on prior knowledge or assumptions, before applying an otherwise-unsupervised method (e.g.,17,19). Alternatively, explicitly supervised methods such as demixed PCA (dPCA) use labeling based both on time within a trial and on the condition label of that trial31. Related methods such as targeted dimensionality reduction (TDR) and its successor, model-based TDR,32 use regression to identify factors that covary with task-related variables12. Finally, methods such as jPCA15 avoid labels, but seek hypothesized structure that is typically dataset- or experiment-specific (e.g., factors that obey linear dynamics). All these approaches are limiting in the many situations where one may not know the correct labels, temporal divisions, or potential forms of population-level structure.

Here, we present an unsupervised method for identifying interpretable latent factors. Sparse Component Analysis (SCA) seeks factors that are sparse in time and evolve within orthogonal dimensions. In seeking this simple structure, SCA is able to recover factors that only reflect a single computational role. SCA does so by leveraging the fact that many neural computations involve distinct processes, each associated with different sets of latent factors, which are not always active at the same time. SCA is a variant of dictionary learning33,34, with a simple cost function that reflects very general assumptions about neural computations and therefore can be usefully applied to a wide range of datasets.

We applied SCA to data collected from three experimental models (rhesus monkeys, C. elegans, and artificial networks) during a variety of behavioral tasks. SCA identified factors that have been previously described using supervised methods, verifying that the method can recover expected features without the need for supervision. SCA also identified previously undescribed factors, validating the method as a tool for scientific discovery. In monkey motor cortical activity collected during a reaching task, SCA identified separate factors related to reach preparation, execution, and postural maintenance. In activity recorded during unimanual cycling, a potentially more complicated behavior that involves more extended movements, SCA produced surprisingly analogous factors to those observed during reaching – factors related to movement preparation, movement execution, and postural maintenance. When applied to bimanual cycling activity, SCA identified factors that were selective for one of the two arms. Surprisingly, many of these factors were active during both unimanual movements and bimanual movements, demonstrating that SCA will not ‘invent’ sparsity that is not present in data. Next, we applied SCA to neural data collected from C. elegans during mating. SCA identified factors, and individual neurons, related to specific mating motifs. Finally, in a multi-task RNN, SCA recovered factors that reflected the compositional nature of the network’s computations13,35.

Results

An unsupervised method for identifying interpretable factors

When attempting to understand a given neural computation, it is often useful to partition neural activity into signals that reflect distinct computational roles. Typically, this partitioning is accomplished via careful task design. By controlling the timing of key task events, researchers determine when different neural processes occur. Once distinct processes are confined to specific task epochs, unsupervised dimensionality reduction methods can be used to identify the latent factors active during that epoch. For example, in a delayed reaching task, researchers control the duration of the delay period and therefore determine when preparatory and execution-related processes occur23,24. These two processes not only occur at different times, but also within different sets of dimensions; the neural dimensions occupied during preparation are nearly orthogonal to those occupied during execution26. Preparatory and execution-related factors can therefore be identified by training PCA on delay-period or execution-period activity (Fig. 1A).

Preparatory and execution-related factors exhibit a property that may be common to sets of factors that perform different computational roles: they are ‘non-synchronous’, meaning that these two sets of factors can be active at different times. In other words, preparation and execution exhibit temporal flexibility relative to one another. Preparation can be lengthy or brief27,36,37, preparation for a second movement can occur simultaneously with execution of a first30,36, or can occur after an initial movement ends30. This flexibility is incomplete – preparation must presumably always precede execution – yet it is still the case that the envelopes of preparation and execution are quite different in different situations (Fig. 1B). A second example of non-synchronous factors is observed during bimanual coordination (Fig. 1C,D). Distinct sets of factors are active when the left or the right arm moves38,39 and are co-active when both arms move simultaneously 40. As was true for preparatory and execution factors, left-arm and right-arm factors evolve in orthogonal sets of dimensions38,39. Further examples of non-synchronous factors that occupy orthogonal dimensions have been observed across brain regions and species, during a wide variety of tasks (e.g.,16,17,19,31,41–43). In the past, discerning the presence of non-synchronous factors required either clever task design (e.g., the classic delayed-reach paradigm) or supervised methods to separate one factor from another (e.g., dPCA, TDR). A natural goal is thus a method that identifies non-synchronous factors in an unsupervised manner.

We designed SCA (Fig. 1E) to accomplish this goal. SCA was designed to identify factors that are active at different times and/or overlap in time but display the temporal flexibility described above. SCA finds:

The first term ensures that the learned latent factors capture a large fraction of the neural variance, the second term encourages the factors to be sparse in time (either within a trial or across conditions), and the final term encourages the loadings to be orthogonal.

Let be a matrix of neural data, where and indicate the number of recorded neurons and number of time points, respectively. If there are multiple experimental conditions, neural data within can be concatenated across conditions, so represents the total number of time points across conditions. In order to find the low-dimensional factors, we learn the factors via a mapping, , from the neural activity space (Fig. 1E), where indicates the number of requested factors. is a matrix that reconstructs the high-dimensional neural activity from the factors. We also include offset terms in both these mappings (omitted for brevity in the equation below, see Methods for full equation). We weight the samples in , via the diagonal matrix , such that the reconstruction of timepoints of high firing-rate neural activity are not given inherent precedence over low firing rate activity (e.g., the reconstruction of preparatory activity does not suffer from the attempt to reconstruct the relatively higher firing rates associated with execution-related activity, see details in Methods).

It may seem odd to encourage sparsity in the factors, as the factors of interest are often non-sparse, and may be active during many, or even most, task epochs. However, encouraging sparsity means that the cost–function is minimized when non-synchronous factors are isolated in different dimensions. For example, a solution in which factor 1 is active only during preparation and factor 2 is active only during movement is preferred to one in which both dimensions are active at both times. Thus, SCA aims to yield factors that can be naturally grouped in ways that aid scientific interpretation.

Importantly, this cost function is agnostic regarding the experimental ‘meaning’ of different times. For example, SCA has no knowledge of whether activity corresponds to an imposed preparatory period versus an execution epoch or whether activity occurs during one experimental condition versus another. The same cost function can therefore be used across a great variety of experimental situations, as we illustrate below.

To begin, we verified that SCA recovers the expected factors from a population of artificially-generated ‘neurons’ (Fig. 1F). By design, the activity of these individual neurons reflects the influence of multiple non-synchronous factors (Fig. 1F, second column). PCA, as expected, does not resolve this mixing (Fig. 1F, third column); individual PCA factors and neurons are both combinations of the factors. SCA, however, recovers the ground truth factors (Fig. 1F, fourth column). While it is useful to validate that SCA can recover non-synchronous factors in idealized data, in empirical neural data, we do not have access to the ‘ground truth’ factors. In such cases, why are SCA factors preferable over PCA factors if both are statistically valid views of the data? SCA factors are preferable if they invite testable interpretations about how a given computation is performed. We demonstrate below, using a variety of datasets, that SCA often provides such factors.

Center-out reaching

Motor cortical activity during center-out reaching has been studied (and modeled) extensively14,15,27,44,45. This task is thus a useful testbed for SCA because the field has developed a reasonably mature understanding of the neural mechanisms that underlie this behavior. Reaching involves three distinct processes, each reflected by a set of latent factors. Preparatory factors become active shortly after the onset of the target and remain relatively static throughout the delay period27,46. Shortly after the delivery of the go-cue (and approximately 150 ms before the hand begins to move), activity in preparatory dimensions collapses while execution-related factors simultaneously become active26,27. Finally, factors related to postural-maintenance become active while the animal holds its hand at the peripheral target47,48. To determine whether SCA could identify preparatory, execution-related, and posture-related factors, without the supervision such parcellation previously required, we applied SCA to trial-averaged activity from motor cortex, recorded while monkeys performed a delayed center-out reaching task (Fig. 2A,B).

Figure 2. SCA identifies preparatory, execution-related, and posture-related factors in motor cortical reaching data.

A. Trial timing. B. Example behavior for one condition (averaged across trials). C. Six example motor cortical neurons. Each trace corresponds to a reach direction. Gray rectangles indicate the median duration of all reaches. D. Top eight PCA factors. The factors have been ordered by time of maximum occupancy. E. Projections in eight SCA dimensions. As in D, factors have been ordered by time of maximum occupancy. F. Outward reach activity projected into three SCA dimensions. G. Projection of return reach activity in the same space as F.

In this task, monkeys held their hand at a central touchpoint and were shown one of eight peripheral targets. Following an unpredictable go cue, the animals quickly captured the instructed target and maintained this position until a juice reward was delivered. After receiving a reward, the monkey returned to the starting position to begin the next trial. This return reach was self-initiated; the task did not require the monkey to return to the touchpoint within a certain amount of time.

The fact that reaching is composed of three distinct processes was not apparent either at the level of single units (Fig. 2D) nor factors identified via PCA (Fig. 2D). In both, neural activity relating to preparatory, execution-related, and posture-related processes are mixed. For example, the neuron shown in the top row of Fig. 2C was active during the delay period, the outward reach, the hold period (when the monkey was holding the captured target), and the return reach. Many of the PCA factors were also continuously active throughout the trial (e.g., Fig. 2D, factors 2 and 3). While individual neurons and PCA factors hint at the existence of discrete processes – for example, the ‘tuning’ of individual neurons often changed between the delay period and the execution epoch (e.g., Fig. 2C, top row) and some individual PCA factors were more active during a single task epoch (e.g., Fig. 2D, top row) – these hints are somewhat subtle. Neither view of the data readily offers an interpretable view of the underlying computation.

SCA, conversely, produced a remarkably clear view of the divisions between neural processes (Fig. 2E). Without supervision, SCA found factors that were primarily active during only one task epoch: preparation, reach execution, or postural-maintenance. Consider activity before and during the outward reach (Fig. 2E). Shortly after target onset, activity emerges in dimensions 1 and 2 and is maintained throughout the delay period, a pattern expected for preparatory activity during this task22,27,46. Shortly before reach onset, activity in these first two dimensions collapses, while activity in dimensions 4, 6, 7, and 8 grows. Dimensions 6, 7, and 8 are occupied briefly – for approximately the same duration as the outward reach – while activity in dimensions 4 and 5 remain high. These patterns are consistent with dimensions 1 and 2 being preparatory dimensions, dimensions 6, 7, and 8 being execution-related dimensions, and dimensions 4 and 5 being posture-related dimensions.

The factors’ patterns of activity around the return reach agree with this interpretation. The putative posture-related factors (dimensions 4 and 5) remain active while the monkey maintains his hand position at the peripheral target. Approximately 250 ms before the self-initiated return reach, activity in these posture dimensions begins to recede. At the same time, activity reemerges in dimension 1, and grows more dramatically in dimension 3. That dimension 3 is only occupied prior to the return reach is not unexpected given kinematic differences between the outward and return reaches, a point we return to below. Before the onset of the return reach, activity in dimensions 1 and 3 decreases, while dimensions 6, 7, and 8 (the putative execution-related dimensions) become transiently occupied, as they were during the outward reach. Similar results were found using data from a second monkey (Figure S1); each SCA factor was primarily active during the preparatory, execution, or posture epoch.

In order to visualize the relationship between factors, it is often useful to plot activity in a state space (Fig. 2F,G). Here, we’ve plotted activity in a three dimensional space defined by one preparatory dimension (SCA dimension 1) and two execution-related dimensions (SCA dimensions 6 and 8). This view highlights both the relationship between preparatory activity and execution activity as well as the relationship between execution-related factors. During outward reaches (Fig. 2F), preparatory activity sets the initial state for execution-related activity22. Just before movement, preparatory activity diminishes, while activity emerges in execution-related dimensions (Fig. 2F, gray plane). Activity within this plane appears to be dictated by a single set of dynamics, which are shared across conditions15. During return reaches (Fig. 2G), the same process unfolds in the same three dimensions (although the preparatory activity is weaker in this dimension, see below). The ordering of conditions is largely reversed between outward and return reaches. This reversal reflects task geometry; conditions that begin with an outwards reach to the right are followed by a return reach to the left.

Quantitative Comparisons and Characterization

Qualitatively, SCA appears to produce more interpretable factors than PCA. Specifically, SCA factors seem to correspond to distinct computational roles, while PCA factors reflect a mixture of computational processes. To validate this impression, we performed a number of quantitative comparisons between SCA and PCA factors. We compared SCA factors to factors recovered by weighted PCA (wPCA, see Methods), a PCA variant that is more comparable to SCA in that it also uses sample-weighting. The only substantial difference between these two methods is that wPCA does not attempt to identify sparse factors.

First, we found that the improved interpretability of SCA factors does not come at the cost of decreased reconstruction accuracy. Although SCA factors are only active during a single epoch, SCA and wPCA factors account for virtually identical amounts of total neural variance (Fig. 3A). Additionally, SCA and wPCA perform equally well when asked to generalize to held-out neurons and conditions (Fig. 3A, inset).

Figure 3. Quantitative comparisons of SCA and PCA when applied to reaching data.

A. SCA and PCA account for virtually identical fractions of neural variance. Inset. SCA and PCA were trained on the activity from a subsample of neurons during all but one condition. R2 was calculated between the predicted and actual activity of held-out neurons during held-out conditions (see Methods). Solid and dashed traces correspond to the mean R2 across conditions (PCA and SCA, respectively) and shading indicates standard error. B. Left: Fraction of total occupancy (cross-condition variance) accounted for by each epoch, for each SCA dimension. Right: Mean and standard deviation of occupancy concentration (see Methods) calculated across bootstrapped neural populations (p < 0.01, n=100 resampled populations). C. Reconstructing a single SCA or PCA factor from preparatory, execution, or posture-related factors. Error bars correspond to mean and standard deviation across resampled populations (n=100). Reconstructions of SCA factors were significantly higher than those of PCA factors (p < 0.01, bootstrap test). Horizontal lines correspond to reconstruction performance when using all sets of supervised factors (preparatory, execution-related, and posture-related) to reconstruct each SCA or PCA factor. The difference in R2 between predicting factors using a single set of supervised factors vs. all supervised factors (i.e., the difference between the solid horizontal line and the filled circle) was significantly smaller for SCA than PCA (p < 0.01, bootstrap test).

To quantify the degree to which SCA identifies latents related to a single computation, we measured the cross-condition variance (i.e., ‘occupancy’, see Methods) of each dimension during preparatory, execution, and posture-related epochs (Fig. 3B, left). If a factor is only active (i.e., that dimension is ‘occupied’) during a single task epoch, then the occupancy will be high during one epoch and low during the other two. Indeed, all SCA dimensions were primarily occupied during a single epoch; in all 8 dimensions, a single epoch accounted for greater than 60% of that dimension’s total occupancy. Across all dimensions, occupancy was significantly more concentrated in SCA factors than wPCA factors (Fig. 3B, right, p < 0.01 bootstrap test). This result did not trivially follow from SCA prioritizing sparsity. We performed a control analysis, where we trained SCA and wPCA only on delay-period activity, divided the delay period into three ‘epochs’, and calculated the occupancy concentration of the resulting factors. Here, occupancy concentration did not significantly differ between the SCA and wPCA factors (p = 0.68, bootstrap test). SCA cannot recover non-synchronous factors when they do not exist. Excepting the very end of the delay period (when execution-related factors become active), only preparatory factors are active during the delay period26,27, so performing SCA and wPCA on delay-period activity produces factors that are comparably sparse.

To directly compare SCA factors to those recovered by a supervised method, we used the method introduced by Elsayed et al.26 to identify three orthogonal subspaces, one for each task epoch (Fig. 3C). We wish to know whether SCA naturally discovers the same divisions – factors specific to preparation, execution and posture – sought by the supervised method. If so, then each SCA factor should be a linear combination of supervised-method factors that are restricted to be of the same type (e.g. only preparation-epoch factors or only execution-epoch factors). This was indeed the case. Across all SCA dimensions, the mean best fit was 0.93±0.04 (R2±std). This fit was just slightly worse than that achieved with no restrictions: fitting with all sets of supervised dimensions yielded an R2 of 0.99. Thus, SCA found a very similar division of factors as the supervised method, despite having no prior knowledge of epoch boundaries (or even how many epochs there might be). wPCA, which is not designed to find sets of distinct factors, found more ‘mixed’ factors. Repeating the analysis above, the fit to PCA factors was 0.79±0.04 when restricted to supervised factors of a single type, versus 0.99 when using all supervised factors. In addition to better matching supervised-factors, SCA also provides more consistent factors across resampled neural populations (Fig. S2).

SCA performed similarly across a range of hyperparameters. SCA has three hyperparameters:, which scales the penalty for non-sparse latent factors, , which scales the penalty for non-orthogonal decoding dimensions, and a requested number of latent factors. We found that the main result from the reaching datasets – that preparatory, execution, and posture-related processes occur in largely non-overlapping subspaces – was robust to relatively large changes in all hyperparameters (Figure S3). The largest difference that we observed with changes in hyperparameters was whether activity related to outwards and return reaches was captured within the same or different dimensions (Figures S4, S5). For example, consider the execution-related dimensions from monkey B (Fig. 2C, dimensions 6, 7, 8). Requesting eight dimensions produces latents where execution-related activity for outward and return reaches is captured within the same dimensions, but requesting twenty-four dimensions produces dimensions where these patterns are somewhat segregated (Figure S4). While many dimensions are occupied for both outward and return movements (e.g., Figure S4 dimensions 5, 6, and 15), there are others that are only occupied during the outward reach or return reach (e.g., Figure S4, dimension 7 and 14).

SCA can segregate activity related to outward and return movements because the neural spaces that are occupied during these two reaches are only partially overlapping. The return reaches are notably slower than the outward reaches (29% and 52% longer durations and 30% and 49% lower peak speeds, monkeys B and A, respectively). The alignment index is a concise method for measuring the overlap between two k-dimensional spaces26. Two spaces that are completely orthogonal have an alignment index of 0, while two spaces with perfect alignment have an alignment index of 1. The alignment index between delay-period activity and outward-reach related activity is 0.1, in agreement with previous results26,27,30. In contrast, the alignment index between outward-reach activity and return-reach activity is 0.43. SCA reflects the true alignment between activity during these task periods. Regardless of the number of dimensions requested, preparatory and execution-related factors are segregated into different dimensions, which is the desired behavior given the near-orthogonality of the dimensions occupied during preparation and execution. On the other hand, during outward and return reaches, neural activity occupies partially overlapping neural dimensions, and thus the degree to which outward- and return-reach related activity are separated by SCA depends on the number of dimensions requested. If few SCA factors are recovered, activity relating to outward and return reaches is largely placed within the same dimensions, while requesting a greater number of dimensions leads to greater separation.

Importantly, SCA, unlike alternative methods that also yield temporally sparse factors, does not seem to find ‘overly-sparse’ factors – i.e., prioritizing sparsity does not lead to a distorted view of the data. Independent component analysis (ICA) is an unsupervised dimensionality reduction method that seeks to minimize the mutual information between factors49 and often yields factors that are sparse in time50. In reaching data, we found that the sparsity of a given ICA factor is heavily influenced by the total number of requested dimensions (Figure S4). When using ICA, requesting a large number of dimensions will result in variance being ‘spread out’ across all dimensions. SCA does not exhibit this behavior. The orthogonality term in the SCA cost function discourages the recovery of factors with similar loadings (Figure S4). Therefore, requesting a large number of dimensions yields factors that are qualitatively similar to those returned when fewer dimensions are requested, in addition to many factors that only reflect noise.

A related concern is that SCA might provide a distorted view of factor dynamics. Suppose factors reflect purely oscillatory dynamics: trajectories rotate from dimension-one into dimension-two, and then back into dimension-one. One might worry that SCA could distort such structure, making dynamics appear more ‘non-normal’: activity rotating from dimension-one into dimension-two, and then into dimension-three without returning51–53. In fact, such distortion appears minimal. We applied SCA to activity from an RNN that uses oscillatory dynamics to generate empirical patterns of EMG (Figure S6). SCA recovered the oscillatory latents. This argues that, for the monkey whose data is shown in Fig. 2E, there is indeed a large ‘non-normal’ component to the dynamics, with activity rotating from each dimension into the next, and mostly not returning. In contrast, for an additional reaching monkey, SCA recovered strongly oscillatory latent factors (Figure S6). This suggests that the exact form of the rotational dynamics during reaching is heterogeneous across animals and that SCA can help identify this heterogeneity (see Figure S6 for further discussion).

Unimanual Cycling

Motor cortex activity during delayed center-out reaching was a natural choice for validating SCA because the distinct component processes that produce a reach have been characterized using supervised methods. We could thus ask whether SCA recovered factors that reflect the known computational division of labor. We now turn to a behavior, cycling54, where less is known about potential divisions. Unlike delayed reaching, which involves brief (~250 ms) movements whose timing is tightly controlled by the task requirements, cycling movements unfold over multiple seconds. Here, the number, or even existence, of distinct sets of computationally distinct factors has not been established.

In this task, monkeys used a hand-held pedal to traverse a virtual track (Fig. 4A). On each trial, the monkey performed either a seven, four, two, one, or one-half cycle movement to travel from a starting position to a final target. The monkey performed forward and backward cycles in multi-trial blocks, with the virtual environment cuing the monkey as to the required cycling direction. Each trial began with the pedal in one of two positions, either at the top of the cycle or the bottom (‘top-start’ and ‘bottom-start’, respectively). As in the reaching task, each trial began with a variable delay period. During this epoch, a target appeared in the distance, conveying the number of cycles necessary to complete the trial.

Figure 4. SCA identifies preparatory, execution-related, posture-related, and stopping-related factors in motor cortical unimanual cycling data.

A. Top and Middle: Task cartoon. Monkeys pedaled forward (top) or backward (middle), pedaled for seven, four, two, one, or half a cycle, and began each trial at either the top of a cycle or the bottom. Bottom: legend for B-D. B. Left: vertical pedal position during four conditions: seven-cycle, forward (green traces) and backward (red traces), top-start (light traces) and bottom-start (dark traces). Center: vertical pedal position during four four-cycle conditions. Right: pedal position during four half-cycle conditions. C. Trial-averaged EMG activity from the trapezius muscle. Same format as B. D. Projections in example putative posture-related (dimension 14), preparatory (dimension 20), steady-state (i.e., execution-related, dimension 1), and stopping-related (dimension 26) SCA dimensions.

We applied SCA to trial-averaged motor cortical activity from all twenty conditions. The recovered factors fell into four categories, putatively related to postural maintenance, movement preparation, steady-state cycling, and stopping (top to bottom in Fig. 4D). One example factor from each category is plotted in Fig. 4D. All SCA factors are plotted in Figure S7. Note that SCA does not assign factors to different categories, nor does the method even ‘know’ if distinct categories exist. This illustrates an appealing feature of SCA; by simply prioritizing sparsity, the method can find factors that naturally cluster into categories amenable to interpretation. This clustered structure is not imposed by SCA, but is inherent to the data and revealed by SCA. In the case of reaching, factors clustered into categories (preparatory, execution, posture) that agreed with prior analysis and interpretation. For cycling, less is presently known and the labels presently applied to each set of factors should thus be considered tentative and descriptive.

For example, we refer to factors like that in the top row of Fig. 4D as ‘posture’ factors, because they are active only when the monkey maintains its arm at a certain position. Whether such factors generate postural muscle activity and/or reflect proprioceptive feedback remains to be determined. Yet even in advance of determining the source of the posture-related information, inspection reveals a consistent relationship between posture factors and the specific posture being held. For example, the ‘posture’ factor in Fig. 4D is most active when the animal’s arm is stationary at the bottom of a cycle, which occurs at the start and end of bottom-start, 7-cycle and 4-cycle conditions (represented by the dark red and dark green traces). At the end of 0.5-cycle conditions, this pattern appears to reverse, with bright green and red traces (top-start forward and backward, respectively) showing the greatest activity. This pattern makes sense: for the 0.5-cycle conditions, top-start conditions end at the bottom of the cycle.

The second set of SCA factors likely reflect preparatory processes (e.g., Fig. 4D, dimension 20). As during reaching, preparatory factors become active (and differentiate among conditions) during the delay period, peak approximately 140 ms before movement onset, and remain inactive during movement. The preparatory state just prior to movement onset was similar regardless of the number of upcoming cycles. This is true not only for factor 20 (Fig. 4D), but for all preparatory factors (Figure S7). This observation agrees with prior work: motor cortex activity does not reflect behavior that will occur multiple seconds in the future, in contrast to the supplementary motor area55. Notice that activity in preparatory dimensions remains low even after the movement ends, whereas activity in the posture dimensions tends to increase at the end of the trial.

SCA also recovers factors related to steady-state cycling (e.g., Fig. 4D, dimension 1). These factors are quiescent during the delay period and become active at approximately the same time that activity in the preparatory dimension begins to fall – analogous to the evolution of preparatory and execution factors during reaching. As in this example, steady-state factors were similarly active for top-start and bottom-start conditions, with responses that were 180 degrees out of phase, consistent with behavior (Figure S7, light and dark traces). This is consistent with the observation that the steady-state factor-trajectories are similar regardless of starting position54 and illustrates that SCA does not separate conditions into different dimensions if the data do not support that division. In contrast, forward and backward cycling occupied overlapping, but not identical, subspaces. Some steady-state factors (Fig. 4D, dimension 1) are active during both, while others are primarily active for only one direction (e.g., Figure S7, dimension 3). In agreement with the above observations, the alignment index was high when comparing top-start and bottom-start cycling (0.85±0.05, mean and standard deviation across pairs of top-start/bottom-start conditions) and smaller when comparing forward and backward cycling (0.40±0.03).

The final set of SCA factors were only active during the final cycle of a movement. For example, the factor in dimension 26 (Fig. 4) was active only when the animals stopped at the bottom after cycling forward. For 7- and 4-cycle bouts, this occurred during bottom-start conditions (due to the integer number of cycles). During 0.5-cycle bouts, this occurred during top-start conditions (which start at the top of the cycle and end at the bottom). SCA does not find separate dimensions for each individual cycle – e.g., no factors were active only during the third cycle of a seven-cycle bout. Nor were there factors that were active for 7-cycle bouts but not 4-cycle bouts. This is consistent with the prior finding that motor cortex activity repeats across steady-state cycles (i.e., all but the first and last cycle) regardless of distance55. This again illustrates that SCA does not create separation where none exists. The presence of a distinct stopping dimension is suggested by prior observations55 and agrees with analyses based on the alignment index (Figure S8). Alignment is high across all steady-state cycles and considerable when comparing initial and steady-state cycles. Alignment is much lower between steady-state cycles and the final cycle. Thus, SCA usefully identifies a feature – factors active only when stopping – whose existence is confirmed by additional analyses. SCA produced similar results for a second monkey (monkey D, Figures S9, S10): factors naturally fell into posture-related, preparatory, steady-state, and stopping-related categories (Figure S10).

In cycling, as in reaching, SCA provided a clean segregation of neural activity into groups of interpretable factors. Three of these groups (posture-related, preparatory, and steady-state) mirror the sets of factors recovered from reaching (posture-related, preparatory, and execution-related). Additionally, SCA recovered a fourth category, stopping-related factors. The existence of these stopping factors may not have been predicted from behavior alone, yet their relationship to behavior is clear.

Limitations of SCA

We pause here to offer two practical caveats. The first is to note the instances when SCA will (and won’t) be successful. In both reaching and cycling, SCA was able to parse neural activity into preparatory, execution-related (steady-state), and posture-related factors because the factors related to these processes were non-synchronous: these processes did not always occur at the same time. Consider the idealized data shown in Fig. 1F. Here, there was significant overlap between when the true factors were active, yet SCA was able to recover these factors because for any pair, there were times when one factor was active and the second was not. Similarly, in the reaching dataset (Fig. 2E), SCA was able to separate posture-related factors from execution-related factors even though posture-related activity (particularly the activity in dimension 4) emerged at the same time as execution-related activity (dimensions 6–8). SCA cannot accurately segregate two computationally-distinct factors if those factors are perfectly co-active. For example, prior work54 has demonstrated that the largest signals during steady-state cycling primarily serve an internal computational role: maintaining low-’tangling’. Other factors, identifiable via regression, are more directly related to producing descending motor commands. These two sets of factors (‘low-tangling factors’ and ‘muscle-readout factors’) are close to orthogonal and serve two distinct computational purposes. However, SCA has no way of disentangling these two components as they occur simultaneously at all times, across all conditions.

The second consideration involves interpreting SCA factors. Even if SCA successfully parses a given factor such that it only reflects a single computational role, the precise mechanistic role that factor plays can be ambiguous, especially if that factor is only observed during a small number of self-similar experimental conditions. As a concrete example, we return to motor cortical activity during a reaching task27. In this task, the monkey performed center-out reaches either with or without a delay period. If we apply SCA only to activity recorded during non-delayed reaching, it is unclear whether there is preparatory activity (Figure S11). There exist two factors (1 and 2) that appear likely to be related to preparation yet could just as easily reflect early execution-related activity. Only when activity from delayed conditions is projected into the same dimensions does it become clear that these factors do indeed reflect preparatory computations. Thus, even though SCA correctly separated preparatory from execution-related factors using only non-delayed conditions, additional data (from delayed conditions) was required to know how to group factors based on their functional role. This highlights the fact that while SCA often recovers factors that are amenable to interpretation, it does not itself supply that interpretation. This is very much intended: the goal of SCA is to remain unsupervised, while still aiding interpretation in ways that previously required the use of more supervised methods.

Bimanual cycling

In the reaching and cycling data above, SCA identified factors that were active at different times within a trial, presumably reflecting different computational roles (e.g., Fig. 1A,B). We now ask whether SCA can identify factors that are potentially differentially active across experimental conditions (e.g., Fig. 1C,D). We analyzed data from two monkeys performing a bimanual version of the cycling task38. Each trial required the monkey to perform seven complete cycles, cycling either forward or backward, starting at either the top or bottom of the cycle. Depending on the condition, the monkey cycled with the left arm, right arm, or both arms simultaneously (Fig. 5A,B). Monkeys performed two types of bimanual movements: with the arms in phase (bimanual-0) and with the arms 180° out of phase (bimanual-180).

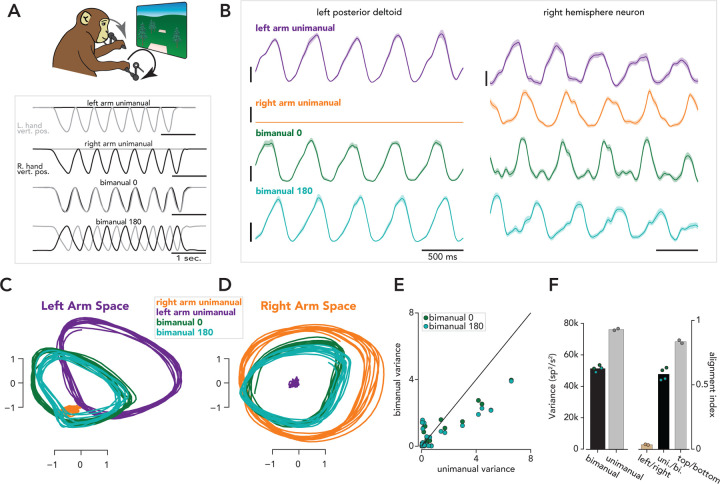

Figure 5. SCA identifies distinct left-arm and right-arm factors during unimanual cycling and partial overlap in the factors active during unimanual and bimanual cycling.

A. Task schematic (top) and trial structure (bottom). Monkeys performed seven-cycle movements with either the left arm only (left arm unimanual), right arm only (right arm unimanual), both arms with a 0 degree phase offset between arms (bimanual 0), or a 180 degree phase offset (bimanual 180). Conditions either began at the top or bottom of a cycle. Traces correspond to hand positions during four example trials. B. Left. Trial-averaged EMG (mean and standard deviation) from the posterior deltoid during four conditions. Right. Example motor cortex neuron. C. All forward conditions plotted in a space that is spanned by the two SCA dimensions that accounted for the largest fraction of left arm unimanual variance. D. Same as C, but for SCA dimensions that captured a large fraction of right arm unimanual variance. E. Bimanual and unimanual variance in 50 SCA dimensions. F. Left: total neural variance during bimanual and unimanual conditions. Blue and green dots correspond to variance during a single bimanual condition, and gray dots correspond to the sum of the variances during left arm unimanual and right arm unimanual conditions. The two dots indicate variances during top-start and bottom-start conditions. Bar heights correspond to means across dots. Right: Alignment indices between left and right unimanual conditions (tan, each dot corresponds to a pair of top start/bottom start conditions), unimanual and bimanual conditions (black, each dot corresponds to the comparison between a single bimanual condition and a pair of unimanual conditions), and top start and bottom start unimanual conditions (gray, each dot corresponds to a top start/bottom start pair).

Neural activity was recorded from the primary motor cortices of both hemispheres. As illustrated by the example neuron in Figure 5B, most M1 neurons are strongly active during both ipsilateral and contralateral movements38,39,56,57. At the same time, it is well-established that the impacts of M1 microstimulation and inactivation are almost purely contralateral. A potential resolution to this seeming paradox is that left-arm and right-arm signals may be carried by distinct population-level factors that have distinct downstream effects38,39. Can SCA identify putative candidate factors, without knowing the underlying hypothesis and accompanying condition-labels?

We applied SCA to steady-state activity (i.e., activity from cycles 2–6) from all recorded neurons, during all 16 conditions (two cycling directions by two starting positions by four handedness requirements). The factors recovered by SCA point towards a marked division between the neural processes that generate left- and right-arm movements. Figure 5C,D plots the evolution of four SCA factors in state-space. Left-arm factors (e.g., Fig. 5C) were active for all conditions during which the left arm moved (left-arm unimanual, bimanual-0, and bimanual-180 conditions, purple, light green, and dark green traces, respectively) and were inactive when the right arm moved alone (orange traces). Right-arm factors (e.g., Fig. 5D) were active for all conditions during which the right arm moved (right-arm unimanual, bimanual-0, and bimanual-180, orange, light green, and dark green traces, respectively) and were inactive when the left arm moved alone (purple). This near-complete arm specificity was true of most SCA dimensions; if a factor was active when the left arm moved alone, it was rarely active when the right arm moved alone (Figure S12). The existence of arm-specific factors agrees with prior findings from both cycling38 and reaching39.

SCA revealed that the same factors that drive unimanual cycling also contribute to bimanual cycling. Factors active when the left-arm moves alone (e.g., Fig. 5C, purple traces) are also active during both bimanual conditions (Fig. 5C, light and dark green traces). The same is true for right-arm factors (Fig. 5D). A similar result has recently been observed during reaching40. SCA recovered additional factors that were only active during bimanual cycling (Figure S12). The set of bimanual-only factors reflected the form of coordination: bimanual-0 versus bimanual-180. Interestingly (and consistent with prior reaching results40) total neural variance during bimanual conditions is lower than the sum of the variance during the component unimanual conditions (Figure 5F, left). This was true of most individual dimensions as well (Figure 5E) and is reflected in the modestly smaller bimanual-condition orbits (dark and light green trajectories) in Figure 5C,D.

The SCA factors imply a specific computational division of labor. Computations related to movements of the left or right arm are separate and distinct, as suggested by the existence of distinct left-arm and right-arm factors (Fig. 5C,D). These computations can occur separately (as is the case during unimanual movements) or simultaneously (as is the case during bimanual movements). SCA also suggests that the computations that produce bimanual movements are similar, yet not identical, to those that produce unimanual movements, as evidenced by the existence of ‘bimanual-only’ factors and the fact that factors active during unimanual movements are also active during bimanual movements. Importantly, SCA did not find unique sets of factors for all possible distinctions. For example, SCA did not recover any ‘top-start only’ or ‘third-cycle only’ factors, consistent with the results in Figure 4. We validated the computational organization suggested by SCA using the PCA-based alignment index. When comparing the population response during left-arm and right-arm unimanual conditions, the alignment index was very low (0.03, Fig. 5F, right, light brown bar, computed for forward cycling), while the alignment index between unimanual and bimanual movements was higher (0.58, Fig. 5F, right, black bar). The alignment between top-start and bottom-start conditions was higher still (0.83, Fig. 5F, right, gray bar). Figure 5 presented results from forward cycling conditions in one monkey (monkey F). As noted above, SCA was trained on all 16 conditions simultaneously, and our primary findings held true both for backward conditions (Figure S12) and for a second monkey (monkey E, Figures S13, S14).

In a second monkey, SCA recovered factors relating to a behavioral idiosyncrasy. Monkey E increased his pedaling speed toward the end of each trial (Figure S14). Prior work, where cycling speed was intentionally varied, makes the strong prediction that increasing speed should be accompanied by a translation in state space, yielding a spiral trajectory58. Because the translation should be orthogonal to the rhythmic trajectory itself, there should exist at least one factor with a pronounced ramp, yet minimal rhythmic variation. With SCA, this predicted structure was readily identifiable (Figure S15).

SCA identifies factors related to specific mating behaviors in C. elegans

In mammalian cortex, neurons tend to exhibit heterogeneous responses with ‘mixed selectivity’59–65, consistent with single-neuron rates reflecting random projections of population factors21,42,66,67. Is SCA useful in networks where neurons are more specialized? To probe the scope of SCA’s utility, we applied the method to single-trial calcium-imaging data recorded during C. elegans mating68, a complex behavior composed of discrete behavioral motifs (Fig. 6A). Prior work has mapped the individual neurons in the posterior brain of male C. elegans (the ganglia responsible for generating mating-related behaviors69–71) and found that the activity of many neurons is tightly linked to a few (or even a single) behavioral motif68,72–74.

Figure 6. SCA identifies factors related to specific mating motifs in C. elegans.

A. Behavioral motifs exhibited by worm-109. Continuous behavior was automatically classified; not all worms exhibited all behavioral motifs. B. Top: ethogram for a single mating bout in one worm (worm-109). Bottom: Activity from forty-nine neurons during the same mating bout. C. Left: Four SCA factors, all from worm-109. Activity of these four factors most closely corresponds to vulva detection, copulation, turning, and excretory pore detection (top to bottom, respectively). Right: Loadings for the four SCA dimensions plotted on the left. D. Correlations between all worm-109 factors and all behaviors. Top: SCA factors, Bottom: PCA factors. E. Maximum correlations between activity in a single SCA or PCA dimension and behavior (p < 0.001, rank-sum test, maximum calculated across behaviors, see Methods). The rightwards shift of the purple trace (relative to the gray trace) indicates that the correlations between SCA factors and behavior were higher than those between PCA factors and behavior.

We analyzed behavioral and calcium imaging data from the posterior brain of eight male worms during mating (49–54 simultaneously recorded and identified neurons, Fig. 6B, bottom traces). The worms’ behavior throughout the trial (i.e., a mating bout) was classified into one of twelve motifs (see Methods, Fig. 6B, top). We applied SCA to the neural activity of each individual worm separately. Note that, as in the previous analyses, SCA was not provided any task-related timing information (i.e., when different motifs occurred).

Activity in many individual SCA dimensions was tightly related to a single behavioral motif. Consider the top row of Fig. 6C, dimension 5 (for all SCA factors from all worms see Figures S16, S17). This factor’s magnitude (white trace) is low except around vulva detection (orange rectangles). The three remaining factors (Fig. 6C, dimensions 1, 10, and 11) have similarly tight relationships with the onset of copulation (red), turning (blue), and excretory pore detection (black), respectively. The loadings learned by SCA are consistent with previously described functional roles of many individual neurons (Fig. 6C, right). Neurons PCB, PCC, and HOB are sensory neurons known to contribute to vulva detection68,73, and these neurons contribute heavily to the factor that is active during vulva detection (Fig. 6C, top trace and leftmost column). Similarly, neuron PVV contributes heavily to the factor related to turning (Fig. 6C, third trace and third column), in agreement with prior work, which demonstrated that PVV drives turning behavior during mating68.

We measured the correlation between each SCA factor and all behaviors (see Methods). The majority of SCA factors from worm-109 were closely related to a single behavior (Fig. 6D, top); most wPCA factors, on the other hand, were weakly correlated with multiple behaviors (Fig. 6D, bottom). Across all SCA factors, in all worms, the mean correlation between the factors and the most closely related behavior was 0.47 ±0.02 (standard error) (Fig. 6E). Performing the same analysis with PCA factors yielded significantly lower correlations (0.37 ±0.01, p < 0.001, rank sum test), indicating that the SCA factors bore a closer relationship to the timing of individual motifs than did wPCA factors.

We next asked whether SCA could recover similar neural dimensions from different worms. If a given set of neurons are responsible for generating a specific motif across animals, then SCA should recover factors that are primarily related to that motif, and the accompanying loadings should be similar between worms. As described above, many SCA factors were tightly related to specific motifs. For example, we found SCA factors that were closely related to copulation in all worms that exhibited this behavioral motif (six of eight, Fig. 7A). Similarly, factors related to vulva detection, turning, and excretory pore detection were also common across worms (Fig. 7A).

Figure 7. SCA identifies similar dimensions across individual worms.

A. Example SCA factors related to specific behavioral motifs. B. Loadings for SCA dimensions related to the same behavioral motif. Note that there are different numbers of columns for each motif because not all worms exhibited the same motifs, and SCA did not always identify a dimension that clearly corresponded to a particular motif. The latter is partially due to different combinations of neurons being recorded across worms. C. ‘Hybrid factors’ generated from SCA weights from worm 184 and the neural activity of five other worms that copulated. D. Correlations between hybrid factors generated from SCA or PCA weights and behavior (p < 0.001, rank-sum test). The rightwards shift of the purple trace (relative to the gray trace) indicates that the correlations between SCA factors and behavior were higher than those between PCA factors and behavior.

The SCA loadings were also similar across worms. Qualitatively, SCA recovered similar relationships between individual neurons and particular motifs, across worms. For example, for copulation-related factors, SCA tended to learn large weights for neurons SPC, SPD, and HOA, neurons that are known to play an important role in copulation (Fig. 7B, red box)68,72. Similarly, for turning-related SCA dimensions (Fig. 7B, blue box), SCA recovered large weights for neuron PVV (a neuron known to be important for turning). To quantify the similarity between SCA loadings, we measured the angle between the loading vectors of individual worms (Figure S18). When compared to the angle between wPCA loadings, we found a modest but highly significant difference between SCA angles and wPCA angles (Figure S18), indicating that the SCA loadings are indeed more replicable across worms than wPCA loadings.

To further test the generalizability of the SCA loadings, we used the SCA loadings from one worm to predict the behavior of a second worm (Fig. 7C). Given an SCA factor that was related to a particular motif in one worm (worm-A) and a second worm that exhibited the same motif (worm-B), we generated a ‘hybrid factor’ using the SCA loadings from worm-A and the neural activity from worm-B. We then asked how well the hybrid factor correlated with the behavior of worm-B. For an example, see Fig. 7C. Here, we used the SCA loadings from worm-184 and the neural activity of the other five worms that copulated to generate five hybrid factors (Fig. 7C, traces). These hybrid factors were well correlated with copulation timing (rho = 0.59±0.13, mean and standard deviation, across worms). Across all worms and motifs, we found that hybrid factors generated from SCA loadings were significantly more correlated with behavior than the comparable factors generated from wPCA loadings (mean rho = 0.48±0.02 versus 0.39±0.02, p < 0.001, rank sum test, Fig. 7D).

Identifying compositionality in a multitask RNN

As demonstrated above, SCA leverages the fact that many neural computations (e.g., reaching) are composed of multiple distinct processes (e.g., preparation and execution). This discrete computational structure opens the possibility of reusing component processes across multiple tasks. Component processes (i.e., component computations) could be used as ‘building blocks’ that are reassembled or reordered to perform different tasks. While such ‘compositionality’ has yet to be observed in neural data (partially due to the difficulty in training a single animal to perform multiple tasks simultaneously), reuse of component computations has been observed in artificial networks. Driscoll et al.13, extending earlier work by Yang et al.35, found that single networks trained to perform fifteen different cognitive tasks learned a small number of component computations. These computational building blocks were used across multiple tasks and even allowed networks to quickly learn new tasks. Importantly, Driscoll et al., found that compositionality was ubiquitous across networks, even when such structure was obscured at the level of individual neurons. In networks composed of units with non-negative activation functions (softplus or rectified tanh), individual units primarily participated in a small number of component computations. In tanh networks, however, units tended to be active during most component computations.

To determine whether SCA could recover the compositionality masked at the level of individual neurons, we performed SCA on the activity of a tanh network trained to perform 15 cognitive tasks (Fig. 8A, see Methods and Figure S19 for a thorough description of each task). In all tasks, the network received scaled, directional inputs (in the form of the cosine and sine of different angles) and generated a 2-dimensional directional output. As was previously reported13, the majority of neurons were active during most tasks (Fig. 8B).

Figure 8. SCA identifies compositionality in a multitask network.

A. Network architecture. B. Variance of individual units across task epochs. Epochs were delineated by the timing of the network inputs, and the number of epochs differed across tasks. C. Activity in three SCA dimensions during nine tasks. The first factor (top row) reflected the stimulus, the second (middle row) reflected the network’s ultimate decision, and the third (bottom row) reflected the timing of the network’s output.

During the ‘Delay-pro’ task, the network received a directional stimulus and generated an output in the same direction after receiving a ‘go cue’. The ‘Delay-anti’ task was identical, except the network needed to generate an output that was 180° from the input stimulus.

The ‘Memory-pro’ and ‘-anti’ tasks were the same as the ‘Delay-’ tasks, except the network did not receive a continual directional stimulus.

The remaining five tasks required the network to compare the amplitude of two successive stimuli and respond in the direction of the larger of the two. For ‘Compare-stim 1’ and ‘Compare-stim 2’, the network compared the amplitude of stimuli delivered via a single pair of stimulus dimensions (stimulus 1 or stimulus 2). For the ‘ContextCompare-’ tasks, the network received stimuli from both pairs of stimulus dimensions and needed to ignore one (‘ContextCompareStim 1’ and ‘ContextCompareStim 2’) or compare across both pairs of inputs (‘ContextCompareStimuli’). During network training, the duration of the stimulus periods (and memory periods, when present) varied randomly from trial to trial to prevent the network from predicting task period transitions.

The factors identified by SCA reflected the compositionality present in the network and pointed towards a computational strategy that could be used to solve the tasks. To illustrate this strategy, we highlight the activity of three SCA factors during nine tasks (Fig. 8C, for a more thorough description of all recovered factors during all tasks, see Figure S20). The network’s putative strategy could be summarized as follows: use a single set of factors to represent the stimuli (e.g., Fig. 8C, dimension 1), a second set of factors to reflect the network’s decision (e.g., Fig. 8C, dimension 6), and condition-invariant factors to determine when activity flows into output-potent dimensions (e.g., Fig. 8C, dimension 17).

Across tasks, the network received directional input via two ‘channels’ (two, 2-dimensional directional inputs, Fig. 8A, stimulus 1 and 2). For some tasks, the network received input from only one of these channels (e.g., Delay-pro, Compare-stim2), while in others, both stimulus channels were active (e.g., ContextCompare). SCA identified a factor that reflected the stimulus across all tasks, regardless of input channel (Fig. 8C, dimension 1). Activity in this dimension reflected the time courses of the stimuli; during the Delay-tasks, activity in this dimension was constant after the stimulus was delivered. The same dimension reflects the stimuli regardless of the input source; activity in dimension 1 is highly similar during Compare-stim1 and Compare-stim2 even though the input sources are different in these two tasks. SCA also identified dimensions that only reflected the input from stimulus channel 2 (Figure S20, dimensions 3 and 4).

A second set of factors (e.g., Fig. 8C, dimension 6) reflected the network’s ultimate output. Consider activity during the Memory tasks. Here, the network receives a brief directional stimulus. In the Memory-pro task, the network must report this same direction after the go cue is delivered (i.e., when the fixation signal is removed) while in the Memory-anti task, the network must report the direction 180° from the instructed direction. After the stimulus is removed, activity grows in dimension 6, but with opposite ordering (purple traces are above the green traces in dimension 6 during Memory-pro, while the reverse is true during Memory-anti). This ordering reflects the ultimate ‘decision’ of the network. We use the term ‘decision’ loosely here, in reference to the fact that activity in dimension 6 reflects the ultimate output of the network, even before that output is produced. During Compare- and ContextCompare tasks, where the correct output is not known until the second of two stimuli is delivered (see the legend of Fig. 8C for more details), activity remains low until the second stimulus is removed.

Finally, the network uses a third set of factors to determine the timing of the output (e.g., Fig. 8C, dimension 17). The evolution of these factors was highly stereotyped across conditions and tasks, reminiscent of the condition-invariant ‘trigger signal’ that has been observed during reaching in non-human primates75, decision making in rodents43, and speech in humans67. In reaching monkeys, the trigger signal translates preparatory activity to a region of state space dominated by rotational dynamics14,15,75. While preparatory activity is output-null (with respect to motor cortical output26,76, execution-related activity has a non-zero projection onto output dimensions. Likewise, dimension 6 is output-null, and it is likely that the activity in dimension 17 translates the activity in these dimensions to new dimensions (not shown) that have non-zero projections onto the network’s readout dimensions.

While these three sets of SCA factors do not offer a full description of how the network solves these tasks (this would require direct interrogation and characterization of the network dynamics as in13), they suggest important aspects of the computational strategy. Such a clear representation of the network’s underlying computations could not be gleaned from the activity of single units (Fig. 8B) nor from PCA (Figure S21).

Discussion

For many networks, computation is best understood in terms of population-level factors. This is known to be true of a sizable class of network models (e.g.,11,12,14,17, and strongly suspected to be true of many cortical (and perhaps subcortical) areas. The idea of a factor is simple: factors are the non-noise signals present in a network – i.e., signals that are reliable enough to subserve computation. Factors cannot be directly observed; they need to be estimated from the activity of many individual neurons. If one simply wishes to recover factors that can account for the activity of individual neurons, the choice of basis set does not matter (as long as it spans the full factor space). Yet this choice may matter a great deal to an experimenter attempting to understand the system; interpretation may be greatly aided by recovering factors that can be parcellated into sets that serve somewhat different functions. This has been a long-standing goal in our field, but gaining such interpretability has, historically, required supervision, either explicitly, in the form of supervised dimensionality reduction methods (e.g.,12,31,32,77) or implicitly, in the form of manual parsing of the neural activity into separate task epochs (e.g.,17,19,26,66).

Here, we introduced SCA, an unsupervised method that has many of the advantages of supervised methods, but with less need for the experimenter to anticipate or guess at the types of structure that might be observed. We found that, when applied to a variety of datasets, SCA often identified factors that reflected a single computational role. For example, when applied to data during reaching, SCA identified factors that could be grouped into one of three sets: one reflecting preparation, one reflecting execution, and one reflecting postural maintenance. This parcellation agreed with that previously found using supervised methods26,48, demonstrating that SCA can be useful in situations where one had previously needed supervised methods, and confirming that prior approaches identified naturally present divisions in the data. Across a variety of datasets, we repeatedly found that SCA identified nearly orthogonal sets of factors that had different computational ‘meanings’, in the sense that were associated with a particular behavior (e.g., cycling with one arm versus the other, Fig. 5), a particular aspect of a larger behavior (e.g., stopping at the end of a bout of cycling, Fig. 4), or internal process (e.g., reach preparation, Fig. 2). At a technical level, this demonstrates the utility of SCA. At a scientific level, it supports the emerging idea that a common network strategy is to use distinct factors, occupying orthogonal dimensions, to perform distinct computations or distinct aspects of a larger computation.

An advantage of SCA, and the reason we could usefully apply it to many datasets, is that it makes minimal, but powerful, assumptions: neural activity is low dimensional, factors associated with different computational roles are non-synchronous, and such non-synchronous factors tend to occupy unaligned (nearly orthogonal) subspaces. The assumption that factors are non-synchronous is much less stringent than it might initially appear. It is not necessary that factors be active at completely different times, merely that there be some flexibility in when they occur relative to one another. For example, SCA was able to segregate left-arm and right-arm factors even though these two sets of factors are simultaneously active during both bimanual cycling conditions (Fig. 5C,D). Additionally, SCA identified stopping factors during unimanual cycling (Fig. 4D), despite the fact that, whenever the stopping factors were active, steady-state factors were also active. SCA was able to separate these two sets of factors because there were times when steady-state factors were active but stopping factors were not.

Despite using a simple cost function, SCA allowed a surprisingly clear parcellation of the factors underlying multiple behaviors. This clarity was maintained across a broad range of hyperparameters (Figures S3, S4, S5). In addition to recovering ‘expected’ factors, SCA also found previously uncharacterized factors, such as preparatory cycling factors (Fig. 4) and bimanual-only cycling factors (Fig. 5). While the computational role of these (and any other newly characterized factors) cannot be determined without further study (e.g., more/different experimental conditions), their potential role is clear. This interpretability is what makes SCA a powerful method; in all examined datasets, SCA yielded factors that invited hypotheses of how a given neural computation was performed.

Interpretability

‘Interpretable’ is a label that we, as researchers, use when we are able to assign meaning to or derive meaning from a particular neural signal or set of signals. The ‘meaning’ that we ascribe to SCA factors relates to their putative computational role; SCA factors are interpretable insofar as they aid us in making testable predictions about how a particular computation occurs. By seeking sparsity and orthogonality, SCA facilitates the grouping of factors into sets that ostensibly relate to distinct computational processes. Non-synchronous factors are assumed to reflect different computational roles (e.g., preparatory and execution-related factors), while synchronous factors are assumed to perform closely related roles (e.g., two posture-related factors). The factors identified by SCA tend to form natural groups (e.g., preparatory, posture, and execution factors) to which one may be able to assign meaning. Yet SCA does not itself determine how individual factors should be grouped, nor does the method assign ‘meaning’ to a set of factors; parcellation and interpretation of factors requires understanding of the task being performed and considerable scientific knowledge. For example, the execution-related factors for monkey B (Fig. 2E, dimensions 6–8) are not perfectly synchronous, yet we assume that they contribute to a single computational process because they are all only active during a reach and prior work has demonstrated how non-normal dynamics can be used to generate a reach51. The goal of SCA is not to obviate the need for scientific reasoning, but rather to make such reasoning easier by supplying factors that are likely to lend themselves to straightforward interpretations.

Alternatively, factors can be interpretable insofar as they correlate with a particular behavior. The ‘meaning’ that is assigned to such factors does not necessarily relate to an internal computational role, but rather references the close relationship between a factor and an externally measured signal. There are a wide variety of methods that seek such factors. For example, two recent methods, pi-VAE78 and CEBRA79, use generative modeling and contrastive learning, respectively, to find low-dimensional spaces in which similar locations in state-space correspond to similar behaviors. Two additional methods, preferential subspace identification (PSID)80 and targeted neural dynamical modeling (TNDM)81, use instead a dynamical systems approach to identify ‘behaviorally-relevant’ and ‘behaviorally-irrelevant’ factors (i.e., factors that do and do not correlate with a particular behavior). Note that the goals of SCA and methods that seek behaviorally-correlated factors are typically quite different and are thus complementary. For example, in the case of the cycling data (Fig. 4), the factors identified by SCA are those that one would likely wish to use when reverse engineering the solution used by a network (biological or artificial) to produce its output. However, if one’s goal is to predict behavior from neural activity, SCA is likely not the best choice, as the factors that are active during forwards and backwards cycling are quite different82 (e.g., Fig. S10). A natural choice would be to use a method like CEBRA to identify a single set of factors that are highly active during both forwards and backwards cycling.

Factors and neurons

The degree to which computationally distinct factors relate to physically distinct neurons will vary by brain area and species. In C. elegans, where the connectivity of individual neurons is preserved across animals, SCA identifies factors that relate to specific component processes (behavioral motifs, Fig. 6A), and many of these factors primarily reflect the activity of a small number of neurons (e.g., Fig. 6B, excretory pore detection factors). In this system, distinct processes are often reliant on the activity of single neurons (e.g., ‘excretory pore detection’ requires PHA activity68) and can be understood, at the circuit level, in terms of interactions between individual neurons. In contrast, in monkeys, there exist distinct reach-preparation factors but very few ‘preparation only’ neurons; nearly all neurons that are active during preparation are active during execution, especially if many conditions are tested. Similarly, there exist distinct left-arm and right-arm factors, but relatively few ‘right-arm-only’ neurons, even in primary motor cortex38. This appears to be a common property in much of cortex21,61,66,76. Nevertheless, SCA identifies similar factors across animals (e.g., Fig. 2 and Figure S1 and Fig. 3 and Figure S9), reflecting the fact that the underlying factors that generate a behavior can be similar across animals83, even if there is not a one-to-one mapping between neurons.

Alternative strategies and related methods

SCA is specifically designed to leverage particular structure within neural activity, yet there are alternative approaches for identifying non-synchronous factors. Traditionally, non-synchronous sets of factors are identified via careful task design. Controlling when different component processes occur (e.g., preparation during a reaching task) allows a researcher to use an unsupervised method to identify factors that only reflect a particular computational role. However, even with careful task design, it can be difficult to predict when different processes occur or even how many distinct processes contribute to a particular computation. For example, it is not obvious, from behavior alone, that stopping-related factors should exist (Fig. 4).

SCA uses end-to-end optimization to identify factors that can account for large amounts of neural variance and are temporally sparse. One could instead use a step-wise strategy – i.e., first identify a set of factors, then subsequently rotate this basis set to increase the sparsity of the factors. A recent study84 used such an approach to characterize population-level activity in human motor cortex during real and imagined movement. Here, the authors used PCA to identify a low-dimensional space, then used a varimax rotation to maximize the variance of the projections (across factors) at each time point. While the varimax rotation does not directly optimize for sparsity, such an approach may approximate the solution found by SCA. The results of Dekleva et al. provide further evidence of the utility of identifying non-synchronous factors.