Abstract

Objectives

The purpose of this research was to develop software that automatically integrates and overlays 3D virtual models of kidneys harboring renal masses into the da Vinci robotic console, assisting the surgeon during the intervention.

Introduction

Precision medicine, especially in the field of minimally invasive partial nephrectomy, aims to use 3D virtual models as guidance for augmented reality robotic procedures. However, the co-registration of the virtual images over the real operative field is still performed manually.

Methods

In this prospective study, two strategies for automatically overlaying the model onto the real kidney were explored: computer vision technology, which leverages the super-enhancement of the kidney produced by intraoperative injection of indocyanine green (ICG), and convolutional neural network technology, which processes live endoscopic images after the software has been trained on frames from prerecorded videos of the same surgery. The team, comprising a bioengineer, a software developer and a surgeon, collaborated to create hyper-accuracy 3D models for automatic 3D augmented reality (AR)-guided robot-assisted partial nephrectomy (RAPN). Demographic and clinical data were collected for each patient.

Results

Two groups were defined: group A (first technology, 12 patients) and group B (second technology, 8 patients). The groups had comparable preoperative and postoperative characteristics. The median co-registration time was 7 s (IQR 3–11) with the first technology and 11 s (IQR 6–13) with the second. No major intraoperative or postoperative complications were recorded. There were no differences in functional outcomes between the groups at any time point considered.

Conclusion

The first technology allowed successful anchoring of the 3D model to the kidney, although minimal manual refinements were required. The second technology improved automatic kidney detection without relying on indocyanine green injection, resulting in better identification of organ boundaries during tests. Further studies are needed to confirm this preliminary evidence.

Keywords: three-dimensional imaging, robotic surgery, renal cell carcinoma, kidney cancer, nephron-sparing surgery, artificial intelligence

Introduction

Modern medicine is increasingly focusing on patients' individual characteristics, defining a new field: precision medicine. 1 This approach includes the use of preclinical, clinical, and surgical tools to guarantee the best treatment for each patient. To address this need, surgery has started to evolve towards instruments that minimize the impact of interpatient anatomical variability, one of the greatest obstacles to the standardization of surgery. 2

To overcome this problem, imaging techniques offer the best tools to represent the patient's anatomy thoroughly, and CT and MRI are the most suitable, given the high level of detail they provide. 3 Although very important and informative, these techniques have intrinsic limits, such as the two-dimensional nature of the images and the minimal difference in contrast enhancement between some organs. 4 The surgeon, in fact, needs to perform a mental reconstruction (“building in mind”) to fully understand the anatomy and the spatial relationships between intra-abdominal organs and between healthy and pathological tissues. This is why three-dimensional virtual models (3DVMs) have gained popularity over time and are well suited to the goals of precision medicine. 5 These models are easy to understand, rich in detail and, most of all, completely patient-tailored. Several publications have already highlighted the advantages of this technology,6–9 which, on the other hand, requires a high level of expertise and latest-generation software.

3D models find application in a wide range of scenarios, from the preoperative discussion of a clinical case (eg, a complex renal mass) to the training of novice surgeons,10–12 to intraoperative navigation. 13

But the real step forward in this setting is undoubtedly represented by the possibility to be guided by such reconstructions during surgery, performing augmented reality (AR) procedures.

Some preliminary studies have already developed and tested this AR technology in different surgical settings, mainly prostate and renal cancer surgery. 14

Pioneering results have been achieved for robot-assisted radical prostatectomy (AR-RARP), 15 partial nephrectomy (AR-RAPN) 16 and kidney transplantation (AR-RAKT) in patients with large atheromatous plaques, 17 demonstrating advantages in terms of safety and/or oncologic and functional outcomes.18, 19

In all these experiences, a specific software-based system was used to superimpose the 3DVMs on the endoscopic view displayed by the remote da Vinci surgical console via TilePro™ multi-input display technology (Intuitive Surgical Inc., Sunnyvale, CA, USA). Using a 3D mouse, a dedicated assistant manually panned, zoomed, and rotated the 3DVM over the operative field.

However, the constant need for an assistant to overlay the 3D model correctly on the real anatomy during the different phases of the procedure significantly increased technology-related costs, in terms of both human resources and money; this limitation needs to be overcome to make the technology widely available. 20

To advance this field of research, we aimed to explore innovative strategies for fully automated overlay of HA3D™ models. The goal of this study is to present the feasibility of two different technologies for automating the anchoring of the 3DVM to the real organ and to assess their accuracy in the overlay process.

Patients and Methods

This prospective observational study aimed to test the feasibility of two different automatic AR software systems and included consecutive patients with a radiological diagnosis of a single, organ-confined renal mass and an indication for RAPN from January 2020 to December 2022. Patients were divided into two groups according to the time the procedure was performed (group A from 01/2020 to 12/2021 and group B from 01/2022 to 12/2022). The study was conducted in accordance with good clinical practice guidelines, and informed consent was obtained from the patients for the use of their CT images to create the 3D models. After consultation with our Ethics Committee, no specific approval was required, because the study focused only on the feasibility of the technology, without any influence on intraoperative decision-making or outcomes. All patients underwent four-phase contrast-enhanced abdominal computed tomography (CT) within 3 months before surgery. Exclusion criteria were anatomic abnormalities (eg, horseshoe or ectopic kidney), multiple renal masses, qualitatively inadequate preoperative imaging (eg, CT images with a slice acquisition interval >3 mm or suboptimal enhancement) and imaging older than 3 months. In addition, the intraoperative presence of both the bioengineer and the software developer was essential for the automatic AR procedure and, consequently, for the study purpose; patients were therefore excluded if either of these professionals was absent.

Regardless of the technological strategy tested, automatic 3D-AR-guided RAPN required a team of different professionals: the bioengineer was responsible for creating the 3D model, the software developer for setting up the software for automatic superimposition, and the surgeon for performing the surgery using these technologies.

Bioengineers at Medics3D (www.medics3d.com, Turin, Italy) created hyper-accuracy 3D (HA3D™) models with dedicated software, starting from multiphase CT images in DICOM format. As previously described,15–17 the images are segmented and the patient-specific model is built, displaying in detail the organ, tumor, arterial and venous branches, and collecting system. Each model was generated in .stl format and then uploaded to a dedicated platform, from which it could be downloaded and displayed by any authorized user.

To achieve totally automatic intraoperative AR navigation, the software developers tested two different strategies. The common goal was to infer the 6 degrees of freedom (6-DoF) of the kidney (3 for position and 3 for rotation) in Euclidean coordinates from the RGB (red, green, blue) images streamed by the intraoperative endoscope. The 6-DoF pose was then used to determine the anchor point for overlaying the 3DVM on the image, thereby augmenting the endoscope video stream.
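To make the 6-DoF pose concrete, the following minimal sketch applies a pose (tx, ty, tz, rx, ry, rz) to model vertices and projects them onto the endoscope image with an ideal pinhole camera. The Euler-angle order (R = Rz·Ry·Rx), the camera intrinsics (fx, fy, u0, v0) and all function names are illustrative assumptions; the study does not describe how IGNITE or iKidney parameterize the pose or calibrate the endoscope.

```python
import math


def _matmul3(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rotation_matrix(rx, ry, rz):
    """Rotation matrix R = Rz @ Ry @ Rx for angles in radians (assumed convention)."""
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    r_x = [[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]]
    r_y = [[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]]
    r_z = [[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]]
    return _matmul3(r_z, _matmul3(r_y, r_x))


def model_to_camera(vertices, pose):
    """Apply a 6-DoF pose (tx, ty, tz, rx, ry, rz) to model vertices: p_cam = R p + t."""
    tx, ty, tz, rx, ry, rz = pose
    r = rotation_matrix(rx, ry, rz)
    t = (tx, ty, tz)
    return [
        tuple(sum(r[i][k] * v[k] for k in range(3)) + t[i] for i in range(3))
        for v in vertices
    ]


def project(points_cam, fx, fy, u0, v0):
    """Pinhole projection of camera-space points (z > 0) to pixel coordinates (u, v)."""
    return [(fx * x / z + u0, fy * y / z + v0) for x, y, z in points_cam]


# Hypothetical model vertices (mm) and a pose 100 mm in front of the camera,
# rotated 90 degrees about the optical axis.
vertices = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
pose = (0.0, 0.0, 100.0, 0.0, 0.0, math.pi / 2)
pixels = project(model_to_camera(vertices, pose), fx=800.0, fy=800.0, u0=320.0, v0=240.0)
# pixels ≈ [(320.0, 240.0), (320.0, 320.0)]
```

Three of the six values fix where the model sits and three fix how it is turned; the overlay is then drawn wherever the projected vertices land in the frame.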

The first strategy employed computer vision (CV) technology, specifically the adaptive thresholding method, which segments an RGB image by isolating the pixels whose color falls within a defined range. This strategy exploited the distinctive reddish shade of the kidney to isolate it from the rest of the image, allowing the organ itself to be used as a “landmark” to determine the position of the 3DVM. The rotation of the superimposed virtual model was obtained using heuristics that fitted the borders of the segmented kidney to a bounding ellipse and then predicted the organ's rotation from the orientation of the ellipse's major and minor axes. These heuristics relied on empirical assumptions about the range of rotations the kidney can assume intraoperatively, since it is constrained by its blood vessels and other structures. One of the main challenges was the color spectrum of the intraoperative field seen through the endoscopic camera, dominated by shades of red, which sometimes defeated the thresholding-based segmentation.
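The segmentation-plus-ellipse heuristic can be illustrated with a short sketch. It uses a fixed color range rather than the adaptive thresholding mentioned above, and it derives the ellipse from the second-order central moments of the mask pixels. Images are assumed to be lists of rows of (R, G, B) tuples; the color bounds and function names are hypothetical and are not taken from IGNITE.

```python
import math


def color_range_mask(image, lower, upper):
    """True where each (R, G, B) channel lies within [lower, upper] (fixed bounds)."""
    return [
        [all(lo <= c <= hi for c, lo, hi in zip(pixel, lower, upper)) for pixel in row]
        for row in image
    ]


def fit_moment_ellipse(mask):
    """Return (centroid, major-axis angle in radians, major length, minor length)
    of the ellipse with the same second-order central moments as the mask.
    Image coordinates: x to the right, y downwards."""
    pts = [(x, y) for y, row in enumerate(mask) for x, on in enumerate(row) if on]
    if not pts:
        return None
    n = len(pts)
    xc = sum(x for x, _ in pts) / n
    yc = sum(y for _, y in pts) / n
    mu20 = sum((x - xc) ** 2 for x, _ in pts) / n
    mu02 = sum((y - yc) ** 2 for _, y in pts) / n
    mu11 = sum((x - xc) * (y - yc) for x, y in pts) / n
    # Orientation of the major axis from the covariance of the pixel coordinates.
    angle = 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)
    # Eigenvalues of the covariance matrix = variances along the two ellipse axes.
    half_trace = (mu20 + mu02) / 2.0
    spread = math.sqrt(((mu20 - mu02) / 2.0) ** 2 + mu11 ** 2)
    lam_major, lam_minor = half_trace + spread, max(half_trace - spread, 0.0)
    # For a uniformly filled ellipse, variance along an axis = (semi-axis)^2 / 4.
    return (xc, yc), angle, 4.0 * math.sqrt(lam_major), 4.0 * math.sqrt(lam_minor)
```

The angle describes an axis, so it is only defined up to 180 degrees, and it captures in-plane rotation only; this is why additional assumptions about the plausible rotation ranges are needed.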

This problem of similar colors across the surgical field was overcome by super-enhancing the color of the target organ with the NIRF Firefly® fluorescence imaging technology offered by the dedicated da Vinci camera. 21 The final software (named IGNITE) was able to identify the position of the real organ, leveraging its bright green appearance against the dark surrounding background, and to anchor the 3D virtual kidney automatically. 22

The second strategy was based on machine learning, specifically convolutional neural network (CNN) technology, which allows live images from the endoscope to be processed directly. To make this technology work, frames were extracted from prerecorded robotic partial nephrectomy videos so that the CNN could be trained to recognize the kidney's position and segment its tissue. 23 Starting from a self-assembled set of labeled kidney images obtained from a video library of RAPNs, the software developer processed the data using ResNet-50 (ie, Residual Network) with its output layer customized to return the kidney segmentation mask. In this mask, black pixels identify the background and elements not of interest, red pixels identify the kidney, and blue pixels identify the surgical instruments. Once trained, the network took endoscope frames as input and returned this segmentation mask, which was then processed by CV algorithms as in the first method. The rotation of the organ can be estimated by analyzing the geometric properties of the kidney, particularly its center of mass, the orientation of its major and minor axes, and its size. Once these parameters were determined, the final software (named iKidney) processed them and superimposed the 3D model on the live endoscopic video, transmitting the merged result to the robotic console. The CNN-based strategy circumvented the need for fluorescence by providing very accurate segmentation of the kidney from endoscope RGB images. (Note: in terms of Intersection over Union (IoU), the standard measure of segmentation CNN performance, our trained network achieved an IoU between 0.937 and 0.985.)
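The color-coded mask and the IoU figures reported above can be illustrated together. For a given class, IoU = |P ∩ T| / |P ∪ T|, where P and T are the predicted and reference pixel sets. The sketch below assumes pure red (255, 0, 0) for kidney and pure blue (0, 0, 255) for instruments, and it treats an empty union as perfect agreement; these conventions and the function names are assumptions, not details of iKidney.

```python
BACKGROUND, KIDNEY, INSTRUMENT = 0, 1, 2

# Color code described in the text; the exact RGB values are assumed.
PALETTE = {(0, 0, 0): BACKGROUND, (255, 0, 0): KIDNEY, (0, 0, 255): INSTRUMENT}


def decode_mask(rgb_mask):
    """Convert a color-coded mask (rows of RGB tuples) into class labels."""
    return [[PALETTE[tuple(pixel)] for pixel in row] for row in rgb_mask]


def iou(pred, truth, label=KIDNEY):
    """Intersection over Union of one class between predicted and reference label maps."""
    inter = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            in_pred, in_truth = p == label, t == label
            inter += in_pred and in_truth
            union += in_pred or in_truth
    # Assumed convention: a class absent from both maps counts as perfect agreement.
    return inter / union if union else 1.0
```

For example, a predicted kidney region of two pixels that shares one pixel with a two-pixel reference region gives IoU = 1/3.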

All RAPNs were performed by a highly experienced surgeon using one of the two technologies described. Regardless of the technology used, after trocar placement and robot docking, the anterior face of the kidney was exposed to visualize the organ.

When the CV-based strategy was used, indocyanine green (0.1–0.5 mg/kg) was injected intravenously, making the whole organ bright green against dark grey or black surrounding tissues. At this point, the registration phase (ie, the 3DVM overlay) required precise evaluation of both the position and the rotation of the kidney: the green kidney was enclosed in a virtual box, and its pixels were analyzed to find its most probable center of mass, which set the x and y coordinates. Estimation of the organ's rotation was partially hampered by the presence of the vascular pedicle and the ureter, which could make the model less precise. For this reason, an expert operator was required to fine-tune the model's position manually to minimize mismatches. The model was then automatically anchored, allowing the surgeon to switch back to normal color vision and perform the robotic surgery.
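The box-and-centroid step can be sketched as follows, assuming that fluorescent pixels are those whose green channel is both bright and clearly higher than red and blue. The thresholds `min_green` and `margin`, and the function names, are hypothetical; the exact pixel test used by IGNITE is not described.

```python
def fluorescence_mask(image, min_green=120, margin=40):
    """True where a pixel is bright green and green clearly dominates red and blue.
    min_green and margin are hypothetical thresholds."""
    return [[g >= min_green and g - max(r, b) >= margin for r, g, b in row] for row in image]


def bounding_box(mask):
    """Smallest box (x_min, y_min, x_max, y_max) enclosing all True pixels, or None."""
    xs = [x for row in mask for x, on in enumerate(row) if on]
    ys = [y for y, row in enumerate(mask) for on in row if on]
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)


def center_of_mass(mask, box):
    """Mean (x, y) of the True pixels inside the box: the 2D anchor point."""
    x0, y0, x1, y1 = box
    pts = [(x, y) for y in range(y0, y1 + 1) for x in range(x0, x1 + 1) if mask[y][x]]
    n = len(pts)
    return sum(x for x, _ in pts) / n, sum(y for _, y in pts) / n
```

Restricting the search to the box keeps the centroid computation cheap and ignores stray green pixels far from the organ only if they fall outside it; a real system would likely also discard small disconnected regions.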

When the second, CNN-based strategy was used, the hindrances represented by the vessels and collecting system were excluded by the software, which automatically limited the degrees of freedom, recalculated the rotations and produced an adjusted result. The iKidney software then used this information to superimpose the 3DVM on the live endoscopic images.
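One simple way to limit the degrees of freedom is to clamp each estimated rotation angle to a per-axis range that the tethered kidney can plausibly assume. The sketch below does this; the limit values are invented placeholders rather than values from the study, and the text does not specify how iKidney actually constrains or recalculates the rotations.

```python
# Hypothetical per-axis rotation limits (degrees); the study does not report actual ranges.
ROTATION_LIMITS_DEG = {"rx": (-30.0, 30.0), "ry": (-45.0, 45.0), "rz": (-90.0, 90.0)}


def wrap_degrees(angle):
    """Map any angle to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def constrain_rotation(rotation_deg, limits=ROTATION_LIMITS_DEG):
    """Clamp each estimated Euler angle to an anatomically plausible range."""
    constrained = {}
    for axis, angle in rotation_deg.items():
        low, high = limits[axis]
        constrained[axis] = min(max(wrap_degrees(angle), low), high)
    return constrained
```

Wrapping before clamping matters: an estimate of 350 degrees is the same orientation as -10 degrees and should not be clamped to the upper limit.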

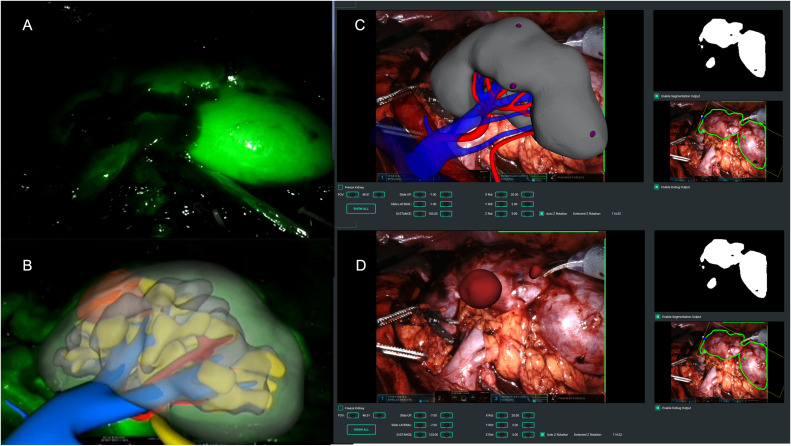

In the case of endophytic masses, regardless of the technology used, the surgeon marked the lesion's perimeter on the organ surface, guided by the 3DVM, and then performed the resection along the plane indicated by the model under automatic AR guidance (Figure 1).

Figure 1.

Intraoperative automatic augmented reality co-registration based on the indocyanine green (ICG) computer vision strategy and on the convolutional neural network (CNN) strategy. (A) Right kidney brightening after ICG injection; (B) automatic overlay of the virtual kidney on the real one using computer vision technology; (C) automatic overlay of the virtual kidney on the real one using CNN technology, without the need for organ super-enhancement; (D) removal of the right renal parenchyma to expose the small endophytic renal mass to be removed.

For each patient enrolled, we prospectively collected demographic data, including age, body mass index (BMI) and comorbidities classified according to the Charlson Comorbidity Index (CCI), 24 as well as clinical tumor size, side, location, and complexity according to the PADUA score. 25 Perioperative data, including management of the renal pedicle, type and duration of ischemia, anchoring time of the 3D model, static and dynamic overlay errors and postoperative complications graded according to the Clavien-Dindo classification, 26 were also collected. Finally, pathological data, including TNM stage, and functional outcomes (ie, serum creatinine and estimated glomerular filtration rate (eGFR) 3 months after surgery) were collected.
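Tables 1 and 2 report eGFR computed with the MDRD formula. As a sketch, the 4-variable MDRD equation can be written as below, assuming the IDMS-traceable version with constant 175 (the original version used 186); the paper does not state which variant was applied, and the function name is hypothetical. The result is expressed in mL/min/1.73 m².

```python
def mdrd_egfr(scr_mg_dl, age_years, female, black):
    """IDMS-traceable 4-variable MDRD eGFR in mL/min/1.73 m^2 (assumed variant).

    eGFR = 175 * SCr^-1.154 * age^-0.203 * 0.742 (if female) * 1.212 (if Black)
    """
    if scr_mg_dl <= 0 or age_years <= 0:
        raise ValueError("serum creatinine and age must be positive")
    egfr = 175.0 * scr_mg_dl ** -1.154 * age_years ** -0.203
    if female:
        egfr *= 0.742
    if black:
        egfr *= 1.212
    return egfr


# Example: a hypothetical 66-year-old man with serum creatinine 0.98 mg/dL.
example = mdrd_egfr(0.98, 66, female=False, black=False)
```

Because serum creatinine enters with a negative exponent, a postoperative rise in creatinine translates directly into a lower eGFR.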

Statistical Analysis

Patient characteristics were compared using Fisher's exact test for categorical variables and the Mann-Whitney test for continuous variables. Results were expressed as median (interquartile range [IQR]) or mean (standard deviation [SD]) for continuous variables, and as frequency and proportion for categorical variables. Baseline and postoperative functional data (eg, serum creatinine, eGFR) were compared using paired-sample t-tests. Data were analyzed using jamovi version 2.3. The reporting of this study conforms to the STROBE guidelines. 27 No power calculation was performed to estimate the sample size.
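For readers reproducing the categorical comparisons, a two-sided Fisher's exact test for a 2×2 table can be written directly from the hypergeometric distribution. This is an illustrative sketch (the study itself used jamovi); it follows the common convention of summing the probabilities of all tables with the observed margins that are no more likely than the observed table. In practice, SciPy's `scipy.stats.fisher_exact` provides the same test.

```python
from math import comb


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher's exact test p-value for the table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance so tables tied with the observed one are included.
    total = sum(p for p in (prob(x) for x in range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))
    return min(1.0, total)
```

For example, `fisher_exact_2x2(4, 8, 2, 6)` compares collecting-system opening (4/12 vs 2/8) between groups; the observed table is the most likely one under the fixed margins, so the test returns 1.0 (up to floating-point rounding).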

Results

A total of 20 patients underwent AR-RAPN during the enrollment phase: 12 were included in group A and 8 in group B. The demographic and preoperative characteristics of the patients are shown in Table 1. The mean age was 66 (15) years in group A and 67 (14) years in group B, the mean BMI was 25.8 (3.4) kg/m2 and 26.1 (4.2) kg/m2, respectively, and the median CCI was 2 (IQR 2–3) in both groups. The median tumor size was 36 mm (IQR 25–48) in group A and 32 mm (IQR 22–43) in group B, and the median PADUA score was 9 (IQR 8–10) in both groups. The median anchoring time was 7 s (IQR 3–11) with the first technology (ie, the ICG-guided procedure) and 11 s (IQR 6–13) with the second (ie, the CNN-based procedure). Temporary failures of automatic 3D model co-registration occurred in a static setting in 2 patients in group A and 1 patient in group B, and in a dynamic setting in 1 patient in each group. Complete co-registration failure occurred in 1 patient in group A and in none in group B; in this single case, a drop-in ultrasound probe was used to detect the neoplasm, and the surgery was performed under ultrasound guidance instead of AR guidance. The mean ischemia time in group A was 17.3 (3.5) min for global clamping and 23.2 (4.3) min for selective clamping, compared with 18.1 (3.7) min and 23.1 (4.6) min, respectively, in group B. Pure enucleation was performed in 4 (33.3%) and 2 (25%) cases, and the collecting system was opened in 4/12 (33.3%) and 2/8 (25%) procedures, in groups A and B, respectively. The mean operative time was 87.9 (21.7) min in group A and 89.2 (23.3) min in group B. The mean estimated blood loss (EBL) was 183.8 (131.9) ml and 189.9 (135.2) ml in groups A and B, respectively, with no differences according to hilar clamping strategy.
No major intraoperative or postoperative complications (ie, Clavien-Dindo grade >2) were recorded in either group. Perioperative and postoperative data were collected up to 3 months after surgery. Pathological and functional data are reported in Table 2. No positive surgical margins were recorded. There were no differences in functional outcomes between the groups at any time point considered.

Table 1.

Perioperative Variables.

| Variables | Group A | Group B |
|---|---|---|
| Number of patients | 12 | 8 |
| Age (years), mean (SD) | 66 (15) | 67 (14) |
| BMI (kg/m2), mean (SD) | 25.8 (3.4) | 26.1 (4.2) |
| CCI, median (IQR) | 2 (2–3) | 2 (2–3) |
| ASA score, median (IQR) | 2 (1–2) | 2 (1–2) |
| CT lesion size (mm), median (IQR) | 36 (25–48) | 32 (22–43) |
| Clinical stage, No. (%) | | |
| • cT1a | 5 (41.7) | 3 (37.5) |
| • cT1b | 4 (33.3) | 3 (37.5) |
| • >cT2 | 3 (25) | 2 (25) |
| Tumor location, No. (%) | | |
| • Upper pole | 2 (16.7) | 2 (25) |
| • Mesorenal | 7 (58.3) | 4 (50) |
| • Lower pole | 3 (25) | 2 (25) |
| Tumor growth pattern, No. (%) | | |
| • >50% exophytic | 2 (16.7) | 1 (12.5) |
| • <50% exophytic | 3 (25) | 2 (25) |
| • Endophytic | 7 (58.3) | 5 (62.5) |
| Kidney face location, No. (%) | | |
| • Anterior face | 8 (66.7) | 6 (75) |
| • Posterior face | 4 (33.3) | 2 (25) |
| Kidney rim location, No. (%) | | |
| • Lateral margin | 9 (75) | 6 (75) |
| • Medial margin | 3 (25) | 2 (25) |
| PADUA score, median (IQR) | 9 (8–10) | 9 (8–10) |
| Preoperative SCr (mg/dL), mean (SD) | 0.98 (0.49) | 0.92 (0.46) |
| Preoperative eGFR (mL/min/1.73 m²), MDRD formula, mean (SD) | 86.6 (18.82) | 88.2 (17.85) |
| Operative time (min), mean (SD) | 87.9 (21.7) | 89.2 (23.3) |
| Hilar clamping, No. (%) | | |
| • Global ischemia | 4 (33.3) | 3 (37.5) |
| • Selective ischemia | 6 (50) | 4 (50) |
| • Clampless | 2 (16.7) | 1 (12.5) |
| Ischemia time (min), mean (SD) | | |
| • Global ischemia | 17.3 (3.5) | 18.1 (3.7) |
| • Selective ischemia | 23.2 (4.3) | 23.1 (4.6) |
| EBL (cc), mean (SD) | 183.8 (131.9) | 189.8 (135.2) |
| Transfusion rate, No. (%) | 1 (8.3) | 1 (12.5) |
| Extirpative technique, No. (%) | | |
| • Pure enucleation | 4 (33.3) | 2 (25) |
| • Enucleoresection | 8 (66.7) | 6 (75) |
| Opening of collecting system, No. (%) | | |
| • Yes | 4 (33.3) | 2 (25) |
| • No | 8 (66.7) | 6 (75) |
| Intraoperative complications, No. (%) | 0 (0) | 0 (0) |
| Postoperative complications, No. (%) | 2 (16.7) | 1 (12.5) |
| Postoperative complications, Clavien-Dindo >2, No. (%) | 0 (0) | 0 (0) |

ASA: American Society of Anesthesiologists; BMI: body mass index; CCI: Charlson Comorbidity Index; CT: computed tomography; EBL: estimated blood loss; eGFR: estimated glomerular filtration rate; IQR: interquartile range; MDRD: Modification of Diet in Renal Disease; SCr: serum creatinine; SD: standard deviation.

Table 2.

Functional and Pathological Variables.

| Variables | Group A | Group B |
|---|---|---|
| Postoperative SCr (mg/dL), mean (SD) | 1.1 (0.83) | 1.1 (0.79) |
| Postoperative eGFR (mL/min/1.73 m²), MDRD formula, mean (SD) | 76.8 (22.17) | 77.9 (23.18) |
| Pathological stage, No. (%) | | |
| • | 1 (8.3) | 1 (12.5) |
| • | 6 (50) | 5 (62.5) |
| • | 5 (41.7) | 2 (25) |
| Pathological size (mm), mean (SD) | 44.8 (22.4) | 45.1 (24.2) |
| Positive surgical margin rate, No. (%) | 0 (0) | 0 (0) |
| Histopathological findings, No. (%) | | |
| • | 8 (66.7) | 5 (62.5) |
| • | 2 (16.7) | 2 (25) |
| • | 1 (8.3) | 1 (12.5) |
| • | 1 (8.3) | 0 (0) |
| ISUP grade, No. (%) | | |
| • | 4 (33.3) | 2 (25) |
| • | 5 (41.7) | 5 (62.5) |
| • | 1 (8.3) | 1 (12.5) |
| • | 2 (16.7) | 0 (0) |

eGFR: estimated glomerular filtration rate; ISUP: International Society of Urological Pathology; IQR: interquartile range; MDRD: Modification of Diet in Renal Disease; SCr: serum creatinine; SD: standard deviation.

Discussion

Among the new ancillary technologies developed to guide the surgeon intraoperatively during robotic procedures,21, 28, 29 augmented reality plays an increasingly important role.5

The main advantage of this technology is that it avoids the “building in mind” process needed to interpret two-dimensional cross-sectional imaging; in addition, the surgeon can be constantly assisted during the procedure by a virtual model reproducing the patient-specific anatomy.15, 16 Until now, AR has been used intraoperatively to co-register 3D models over the real anatomy, but the process was complex and entirely manual, requiring the presence of an expert operator. Moreover, this process may be imprecise because of the operator's experience (eg, misjudging the kidney's rotation) and discrepancies with the real anatomy. 20 The real advance in this field would therefore be the possibility of being guided by such reconstructions through an independent, fully automatic anchoring system, enabling purely automatic AR procedures,30–32 and potentially overcoming the current limitations caused by imprecise knowledge of the anatomical structures relevant to RAPN. Even when the anatomy of the kidney and renal pedicle is studied properly before surgery, the intraoperative location of these structures, particularly the vessels, is not always clear, leading many surgeons to adopt approaches that do not require identifying, isolating, dissecting and clamping the renal pedicle. This is the case for preoperative arterial embolization and off-clamp approaches, in which enucleation is performed without any management of the renal pedicle.33, 34 Even in these cases, however, automatic AR technology can help by giving the surgeon, especially one still on the learning curve, constant and accurate knowledge of the location of the arterial branches, so that they can confidently embark on an off-clamp approach, knowing that they can switch to selective or global clamping if needed.

With the current study, we present our preliminary experience of automatic AR-RAPN aided by two different technologies based on artificial intelligence strategies that leverage image detection and processing as well as deep learning. These artificial intelligence tools have spread widely in recent years because they can analyze huge amounts of data in a short time, shortening diagnostic workup and data processing, as happened with COVID-19 during the pandemic.35, 36 Focusing on a selection of patients' characteristics and symptoms, deep learning-based software was able to score the risk of COVID-19 infection. 35 Similarly, some deep learning algorithms were able to identify interstitial pneumonia after analyzing a large number of CT images, 36 while others contributed to nutritional monitoring in medical cohorts by automatically analyzing the eating habits of a selected population. 37 Leveraging the potential shown by these types of studies, we tested deep learning strategies on intraoperative images during RAPN, to make the target organ identifiable by the software and to allow the 3DVM to be anchored in a totally automatic manner.

The first strategy, based on computer vision (CV) algorithms, requires the identification of intraoperative anatomical landmarks that serve as reference points. In our previous experience, we applied this technology during robot-assisted radical prostatectomy (RARP), using the bladder catheter as a reference, 38 while during RAPN we chose the whole dissected kidney as the intraoperative landmark to be identified by the software. To make it easier for the software to detect, the kidney was also super-enhanced with ICG.

The automatic ICG-guided AR technology anchored the 3DVM to the real kidney without human intervention in 11 of 12 cases, with a median registration time of 7 s. In particular, the IGNITE software correctly estimated the position of the kidney and its orientation along the three spatial axes, and kept the model overlaid even during camera movements. 22

However, an assistant was required to fine-tune the overlay of the 3DVM, particularly the rotation of the organ in the abdominal cavity. In addition, ICG may have enhanced some microvascular features, potentially leading to overlay errors.

The first strategy also had some technical limitations, mainly due to specific intraoperative factors such as variations in lighting, endoscope movements and ICG-related color differences reflecting vascular heterogeneity.

To overcome these issues, a new software version based on a convolutional neural network (CNN) was created. 23 With this technology, each pixel belonging to the kidney could be identified independently, avoiding the need for specific landmarks. By manually extracting and labeling still frames from recorded robotic procedures, it is possible to determine the rotation, position and scale of the organ. The labeled images are then used as a training set for deep learning algorithms that assist intraoperative 3D organ registration.

Thanks to the CNN, the kidney could be detected using its intrinsic characteristics (ie, its pixels) rather than its ICG-induced appearance, improving organ detection and making intraoperative ICG injection unnecessary. With this second technology, identification of the kidney's borders was more precise, increasing the registration success rate during preliminary computational tests. In fact, when the two strategies were compared in terms of co-registration outcomes, the CNN-based strategy required a longer co-registration time but had no complete failures in anchoring the virtual model to the real organ (Table 3).

Table 3.

Automatic co-Registration Data with the two Different Technologies.

| Variables | Group A | Group B |
|---|---|---|
| Co-registration time (s), median (IQR) | 7 (3–11) | 11 (6–13) |
| Static co-registration temporary failure, No. of patients (%) | 2 (16.7%) | 1 (12.5%) |
| Dynamic co-registration temporary failure, No. of patients (%) | 1 (8.3%) | 1 (12.5%) |
| Co-registration complete failure, No. of patients (%) | 1 (8.3%) | 0 (0%) |

IQR: interquartile range.

However, this second technology showed some limitations, such as an occasional lack of precision in anchoring the model mask to the real kidney, probably due to co-registration errors of the 3DVM along its three main axes. Nevertheless, tests of this second technology showed that the iKidney software could guarantee a visually accurate 3DVM overlay during surgery (as assessed by the operating surgeons) if at least one of the axes of the kidney mask was fully visible.

Since a fully automatic method is not yet available, minimal human assistance was still required to set the origin of the axes during the initial registration phase (ie, fixing the rotation and/or correcting the translation).

Another factor that may reduce the accuracy of the CNN technology is the movement of the robotic instruments during 3DVM co-registration. De Backer et al 39 recently published an intriguing study addressing this limitation of AR, describing a deep learning-based algorithm able to detect in real time all “nonorganic” items (eg, instruments) in the surgical field during RAPN and robot-assisted kidney transplantation.

All things considered, one open question remains: how many and which technologies can be integrated to create the “ultimate” artificial intelligence software, able to co-register a virtual model automatically over the real anatomy in a live surgical setting with high precision? The growing body of literature continues to provide new evidence in this field, exploring its multifaceted potential. 40

This study has several limitations. The software is still at an embryonic, experimental stage and can only be used during a well-defined part of the surgical procedure: it requires the target organ to be stationary in the surgical field, with no instruments (eg, robotic scissors or clamps) obstructing the overlay process. In addition, many professionals are currently needed, representing a large commitment of human resources. With continued development, these limitations are expected to diminish as the automatic co-registration process becomes standardized.

Future research in this field will aim to improve these algorithms to make co-registration faster and more efficient, without any need for human assistance. This will require not only larger amounts of data for analysis but also a translational effort to integrate the different artificial intelligence tools, in order to manage the complexity of this multifaceted, still-developing technology.

Acknowledgements

The authors would like to thank Dr Andrea Bellin and Dr Simona Barbuto (Medics3D) for their contribution to the current study.

Footnotes

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethics Statement: The study was conducted in accordance with good clinical practice guidelines and informed consent was obtained from the patients.

Funding: The authors received no financial support for the research, authorship, and/or publication of this article.

ORCID iD: Davide Campobasso https://orcid.org/0000-0003-2072-114X

References

- 1. Autorino R, Porpiglia F, Dasgupta P, et al. Precision surgery and genitourinary cancers. Eur J Surg Oncol. 2017;43(5):893-908. doi: 10.1016/j.ejso.2017.02.005

- 2. Ghazi A, Melnyk R, Hung AJ, et al. Multi-institutional validation of a perfused robot-assisted partial nephrectomy procedural simulation platform utilizing clinically relevant objective metrics of simulators (CROMS). BJU Int. 2021;127(6):645-653. doi: 10.1111/bju.15246

- 3. Gottlich HC, Gregory AV, Sharma V, et al. Effect of dataset size and medical image modality on convolutional neural network model performance for automated segmentation: A CT and MR renal tumor imaging study. J Digit Imaging. 2023;36(4):1770-1781. doi: 10.1007/s10278-023-00804-1

- 4. Kliewer MA, Hartung M, Green CS. The search patterns of abdominal imaging subspecialists for abdominal computed tomography: Toward a foundational pattern for new radiology residents. J Clin Imaging Sci. 2021;11:1. Published 2021 Jan 9. doi: 10.25259/JCIS_195_2020

- 5. Parikh N, Sharma P. Three-dimensional printing in urology: History, current applications, and future directions. Urology. 2018;121:3-10. doi: 10.1016/j.urology.2018.08.004

- 6. Checcucci E, Amparore D, Pecoraro A, et al. 3D mixed reality holograms for preoperative surgical planning of nephron-sparing surgery: Evaluation of surgeons’ perception. Minerva Urol Nephrol. 2021;73(3):367-375. doi: 10.23736/S2724-6051.19.03610-5

- 7. Amparore D, Pecoraro A, Checcucci E, et al. 3D Imaging technologies in minimally invasive kidney and prostate cancer surgery: Which is the urologists’ perception? Minerva Urol Nephrol. 2022;74(2):178-185. doi: 10.23736/S2724-6051.21.04131-X

- 8. Porpiglia F, Bertolo R, Checcucci E, et al. Development and validation of 3D printed virtual models for robot-assisted radical prostatectomy and partial nephrectomy: Urologists’ and patients’ perception. World J Urol. 2018;36(2):201-207. doi: 10.1007/s00345-017-2126-1

- 9. Checcucci E, Amparore D, Fiori C, et al. 3D Imaging applications for robotic urologic surgery: An ESUT YAUWP review. World J Urol. 2020;38(4):869-881. doi: 10.1007/s00345-019-02922-4

- 10. Bertolo RG, Zargar H, Autorino R, et al. Estimated glomerular filtration rate, renal scan and volumetric assessment of the kidney before and after partial nephrectomy: A review of the current literature. Minerva Urol Nefrol. 2017;69(6):539-547. doi: 10.23736/S0393-2249.17.02865-X

- 11.Yang H, Wu K, Liu H, et al. An automated surgical decision-making framework for partial or radical nephrectomy based on 3D-CT multi-level anatomical features in renal cell carcinoma. Eur Radiol. 2023;33(11):7532-7541. doi: 10.1007/s00330-023-09812-9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Jeong S, Caveney M, Knorr J, et al. Cost-effective and readily replicable surgical simulation model improves trainee performance in benchtop robotic urethrovesical anastomosis. Urol Pract. 2022;9(5):504-511. doi: 10.1097/UPJ.0000000000000312 [DOI] [PubMed] [Google Scholar]

- 13.Piramide F, Kowalewski KF, Cacciamani G, et al. Three-dimensional model-assisted minimally invasive partial nephrectomy: A systematic review with meta-analysis of comparative studies. Eur Urol Oncol. 2022;5(6):640-650. doi: 10.1016/j.euo.2022.09.003 [DOI] [PubMed] [Google Scholar]

- 14.Amparore D, Piramide F, De Cillis S, et al. Robotic partial nephrectomy in 3D virtual reconstructions era: Is the paradigm changed? World J Urol. 2022;40(3):659-670. doi: 10.1007/s00345-022-03964-x [DOI] [PubMed] [Google Scholar]

- 15.Porpiglia F, Checcucci E, Amparore D, et al. Three-dimensional elastic augmented-reality robot-assisted radical prostatectomy using hyperaccuracy three-dimensional reconstruction technology: A step further in the identification of capsular involvement. Eur Urol. 2019;76(4):505-514. doi: 10.1016/j.eururo.2019.03.037 [DOI] [PubMed] [Google Scholar]

- 16.Porpiglia F, Checcucci E, Amparore D, et al. Three-dimensional augmented reality robot-assisted partial nephrectomy in case of Complex tumours (PADUA ≥10): A new intraoperative tool overcoming the ultrasound guidance. Eur Urol. 2020;78(2):229-238. doi: 10.1016/j.eururo.2019.11.024 [DOI] [PubMed] [Google Scholar]

- 17.Piana A, Gallioli A, Amparore D, et al. Three-dimensional augmented reality-guided robotic-assisted kidney transplantation: Breaking the limit of atheromatic plaques. Eur Urol. 2022;82(4):419-426. doi: 10.1016/j.eururo.2022.07.003 [DOI] [PubMed] [Google Scholar]

- 18.Checcucci E, Pecoraro A, Amparore D, et al. The impact of 3D models on positive surgical margins after robot-assisted radical prostatectomy. World J Urol. 2022;40(9):2221-2229. doi: 10.1007/s00345-022-04038-8 [DOI] [PubMed] [Google Scholar]

- 19.Amparore D, Pecoraro A, Checcucci E, et al. Three-dimensional virtual Models’ assistance during minimally invasive partial nephrectomy minimizes the impairment of kidney function. Eur Urol Oncol. 2022;5(1):104-108. doi: 10.1016/j.euo.2021.04.001 [DOI] [PubMed] [Google Scholar]

- 20.Amparore D, Checcucci E, Fiori C, Porpiglia F. Reply to mengda zhang and long wang’s letter to the editor re: Francesco porpiglia, enrico checcucci, daniele amparore, et al. Three-dimensional augmented reality robot-assisted partial nephrectomy in case of Complex tumours (PADUA ≥ 10): A new intraoperative tool overcoming the ultrasound guidance. Eur Urol. 2020;77(6):e163-e164. In press. doi: 10.1016/j.eururo.2019.11.024, doi: [DOI] [PubMed] [Google Scholar]

- 21.Diana P, Buffi NM, Lughezzani G, et al. The role of intraoperative indocyanine green in robot-assisted partial nephrectomy: Results from a large, multi-institutional series. Eur Urol. 2020;78(5):743-749. doi: 10.1016/j.eururo.2020.05.040 [DOI] [PubMed] [Google Scholar]

- 22.Amparore D, Checcucci E, Piazzolla P, et al. Indocyanine green drives computer vision based 3D augmented reality robot assisted partial nephrectomy: The beginning of “automatic” overlapping era. Urology. 2022;164:e312-e316. doi: 10.1016/j.urology.2021.10.053 [DOI] [PubMed] [Google Scholar]

- 23.Padovan E, Marullo G, Tanzi L, et al. A deep learning framework for real-time 3D model registration in robot-assisted laparoscopic surgery. Int J Med Robot. 2022;18(3):e2387. doi: 10.1002/rcs.2387 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Charlson ME, Pompei P, Ales KL, MacKenzie CR. A new method of classifying prognostic comorbidity in longitudinal studies: Development and validation. J Chronic Dis. 1987;40(5):373-383. doi: 10.1016/0021-9681(87)90171-8 [DOI] [PubMed] [Google Scholar]

- 25.Ficarra V, Novara G, Secco S, et al. Preoperative aspects and dimensions used for an anatomical (PADUA) classification of renal tumours in patients who are candidates for nephron-sparing surgery. Eur Urol. 2009;56(5):786-793. doi: 10.1016/j.eururo.2009.07.040 [DOI] [PubMed] [Google Scholar]

- 26.Dindo D, Demartines N, Clavien PA. Classification of surgical complications: A new proposal with evaluation in a cohort of 6336 patients and results of a survey. Ann Surg. 2004;240(2):205-213. doi: 10.1097/01.sla.0000133083.54934.ae [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.von Elm E, Altman DG, Egger M, et al. The strengthening the reporting of observational studies in epidemiology (STROBE) statement: Guidelines for reporting observational studies [published correction appears in Ann Intern Med. 2008 jan 15;148(2):168]. Ann Intern Med. 2007;147(8):573-577. doi: 10.7326/0003-4819-147-8-200710160-00010 [DOI] [PubMed] [Google Scholar]

- 28.Sun Y, Wang W, Zhang Q, Zhao X, Xu L, Guo H. Intraoperative ultrasound: Technique and clinical experience in robotic-assisted renal partial nephrectomy for endophytic renal tumors. Int Urol Nephrol. 2021;53(3):455-463. doi: 10.1007/s11255-020-02664-y [DOI] [PubMed] [Google Scholar]

- 29.Iarajuli T, Caviasco C, Corse T, et al. Does utilizing IRIS, a segmented three-dimensional model, increase surgical precision during robotic partial nephrectomy? . J Urol. 2023;210(1):171-178. doi: 10.1097/JU.0000000000003453 [DOI] [PubMed] [Google Scholar]

- 30.Roberts S, Desai A, Checcucci E, et al. “Augmented reality” applications in urology: A systematic review. Minerva Urol Nephrol. 2022;74(5):528-537. doi: 10.23736/S2724-6051.22.04726-7 [DOI] [PubMed] [Google Scholar]

- 31.Sica M, Piazzolla P, Amparore D, et al. 3D Model artificial intelligence-guided automatic augmented reality images during robotic partial nephrectomy. Diagnostics (Basel). 2023;13(22):3454. Published 2023 Nov 16. doi: 10.3390/diagnostics13223454 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Di Dio M, Barbuto S, Bisegna C, et al. Artificial intelligence-based hyper accuracy three-dimensional (HA3D®) models in surgical planning of challenging robotic nephron-sparing surgery: A case report and snapshot of the state-of-the-art with possible future implications. Diagnostics (Basel). 2023;13(14):2320. Published 2023 Jul 10. doi: 10.3390/diagnostics13142320 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Gallucci M, Guaglianone S, Carpanese L, et al. Superselective embolization as first step of laparoscopic partial nephrectomy. Urology. 2007;69(4):642-646. doi: 10.1016/j.urology.2006.10.048 [DOI] [PubMed] [Google Scholar]

- 34.Simone G, Papalia R, Guaglianone S, Forestiere E, Gallucci M. Preoperative superselective transarterial embolization in laparoscopic partial nephrectomy: Technique, oncologic, and functional outcomes. J Endourol. 2009;23(9):1473-1478. doi: 10.1089/end.2009.0334 [DOI] [PubMed] [Google Scholar]

- 35.Wang W, Pei Y, Wang SH, Gorrz JM, Zhang YD. PSTCNN: Explainable COVID-19 diagnosis using PSO-guided self-tuning CNN. Biocell. 2023;47(2):373-384. doi: 10.32604/biocell.2021.0xxx [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Wang W, Zhang X, Wang SH, Zhang YD. COVID-19 diagnosis by WE-SAJ. Syst Sci Control Eng. 2022;10(1):325-335. doi: 10.1080/21642583.2022.2045645 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Mohanty SP, Singhal G, Scuccimarra EA, et al. The food recognition benchmark: Using deep learning to recognize food in images. Front Nutr. 2022;9:875143. Published 2022 May 6. doi: 10.3389/fnut.2022.875143 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Tanzi L, Piazzolla P, Porpiglia F, Vezzetti E. Real-time deep learning semantic segmentation during intra-operative surgery for 3D augmented reality assistance. Int J Comput Assist Radiol Surg. 2021;16(9):1435-1445. doi: 10.1007/s11548-021-02432-y [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.De Backer P, Van Praet C, Simoens J, et al. Improving augmented reality through deep learning: Real-time instrument delineation in robotic renal surgery. Eur Urol. 2023;84(1):86-91. doi: 10.1016/j.eururo.2023.02.024 [DOI] [PubMed] [Google Scholar]

- 40.Franco A, Amparore D, Porpiglia F, Autorino R. Augmented reality-guided robotic surgery: Drilling down a giant leap into small steps. Eur Urol. 2023;84(1):92-94. doi: 10.1016/j.eururo.2023.03.021 [DOI] [PubMed] [Google Scholar]