Abstract

Objectives: In an era characterized by dynamic technological advancements, the well-being of the workforce remains a cornerstone of progress and sustainability. The evolving industrial landscape in the modern world has had a considerable influence on occupational health and safety (OHS). Ensuring the well-being of workers and creating safe working environments are not only ethical imperatives but also integral to maintaining operational efficiency and productivity. We aim to review the advancements that have taken place with a potential to reshape workplace safety with integration of artificial intelligence (AI)-driven new technologies to prevent occupational diseases and promote safety solutions.

Methods: The published literature was identified using the scientific databases Embase, PubMed, and Google Scholar, with a lower time bound of 1974 to capture chronological advances in occupational disease detection and technological solutions employed in industrial set-ups.

Results: AI-driven technologies are revolutionizing how organizations approach health and safety, offering predictive insights, real-time monitoring, and risk mitigation strategies that not only minimize accidents and hazards but also pave the way for a more proactive and responsive approach to safeguarding the workforce.

Conclusion: As industries embrace the transformative potential of AI, a new frontier of possibilities emerges for enhancing workplace safety. This synergy between OHS and AI marks a pivotal moment in the quest for safer, healthier, and more sustainable workplaces.

Keywords: occupational diseases, pneumoconiosis, artificial intelligence, machine learning, neural network, workplace safety

Key points

Artificial Intelligence (AI) is an evolving field that is rapidly integrating into traditional applications of occupational health and safety. However, there is a noticeable deficiency in systematic summarization on this subject. The present study illuminates cutting-edge AI solutions for the detection of occupational lung diseases through the utilization of chest X-ray algorithms and technological innovations aimed at enhancing worker safety. This synopsis delivers a thorough and current assessment of AI-driven solutions that are beneficial for safeguarding health and minimizing the risk of injury. It offers invaluable insights for practitioners, policymakers, and researchers operating within this domain.

1. Introduction

Occupational health and safety focuses on safeguarding the well-being of individuals at the workplace. Although various occupational diseases are associated with workplaces, lung diseases associated with exposure to coal, silica, asbestos, aluminum, cotton, lead, beryllium, and other agents remain the most frequently diagnosed work-related conditions.1 Despite efforts, occupational diseases and accidents continue to pose a global public health concern, resulting in significant health and economic repercussions2 both in lower- to middle-income nations like Russia, China, and India and in higher-income countries.3,4 Poor occupational health and safety conditions across the globe cause 60 to 150 million cases of occupational disease, which are estimated to cost approximately 4%-5% of the total GDP of different countries.5 According to the Global Burden of Disease, 125 000 people have died from coal workers’ pneumoconiosis (CWP), silicosis, and asbestosis.6 Although there has been a decline in the global occurrence of pneumoconiosis since 2015, a significant patient population still exists.7-9 The mortality rate among those afflicted with pneumoconiosis has remained high in recent times, resulting in more than 21 000 fatalities annually from 2015 onward.2

In addition, industries like manufacturing, construction, transportation, and storage encounter high rates of work-related accidents.10 The International Labour Organization (ILO) projects that approximately 2.3 million individuals globally lose their lives to work-related incidents or illnesses annually, equating to over 6000 fatalities each day. On a global scale, there are about 340 million work-related accidents and 160 million individuals affected by occupational illnesses every year.11

This review encapsulates the advancements that have taken place with a potential to reshape workplace safety with integration of AI-driven new technologies to prevent occupational diseases and promote safety solutions.

2. Methodology

The published literature was identified using the scientific databases Embase, PubMed, and Google Scholar, with a lower time bound of 1974 (the year in which the first use of a digital approach to screening occupational lung diseases on chest radiographs was reported), to capture chronological advances in occupational disease detection and technological solutions employed in industrial set-ups. The following search terms were used in different combinations: “Artificial Intelligence,” “Occupational Health and Safety,” “Health and Safety Automation,” “Workplace Monitoring,” “Occupational Disease Detection,” “Incident Prevention,” “Wearable Technology,” “Workplace Injuries,” and “Occupational Accidents.” Searches in the above-mentioned databases were further refined using terms such as “Deep Learning,” “Computer Vision,” or “Natural Language Processing.”

2.1. AI and occupational disease prevention

Diagnosing occupational diseases poses a significant challenge in clinical settings, primarily because of their extended latency periods, exemplified by conditions like pneumoconiosis,12 silicosis, asbestosis, lung cancer, and chronic obstructive pulmonary disease (COPD),13 which often complicate overall management. With recent advancements, AI algorithms with deep learning (DL) functionality have shown great promise in lung image processing, making a significant impact on disease diagnosis based on plain radiographs.14 AI algorithms can analyze lung images, such as chest X-rays (CXRs), computed tomography (CT) scans, or magnetic resonance imaging (MRI) scans, leading to accurate detection and diagnosis of various lung conditions for improved decision making. They can detect and classify abnormalities and identify nodules, masses, or patterns indicative of diseases like mesothelioma, COPD, and silicosis.

A CXR is conducted for every individual being assessed for pneumoconiosis, which remains a significant occupational health concern among workers exposed to dust. This condition poses a notable global burden, particularly affecting low- and middle-income nations.15 Confirmatory diagnosis of CWP, silicosis, or asbestosis involves complex decision making and poses a critical challenge to radiologists. AI models can be very effective in analyzing imaging data with high precision and accuracy.16 Advanced AI techniques can help in data augmentation, mitigation of image noise, and synthetic data generation, producing lung images that resemble real patient data. This information can be valuable in predicting the future state of an affected worker and in limiting exposure to hazardous work processes in dust-exposed industries. With these new capabilities, imaging has a vital role in the assessment of pulmonary diseases, with global interest in commercial AI algorithms developed for chest imaging and recognized by regulatory bodies, making them commercially available in more than 20 countries.17,18

Early algorithm-based attempts can be traced back to Kruger et al,19 when medical decision making applied hybrid optical-digital methods involving the optical Fourier transformation for screening for diagnosis related to maintenance and compensation for affected individuals. Initial applications aimed at textural feature extraction used classical methods including wavelets, density distribution, histograms, and co-occurrence matrices to evaluate entropy, correlation, homogeneity, variance, and skewness to measure the shape and size of opacities in X-ray images. Texture analysis helped in generating insights into tissue composition, structure, and disease characterization. Investigations were subsequently augmented by the application of the multilayer perceptron (MLP)20 and multiresolution support vector machine (SVM)-based algorithms, and advanced by the application of convolutional neural networks (CNNs) and DL algorithms for CXR image analysis.19,21,22
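To make the co-occurrence-based texture measures mentioned above concrete, the following minimal Python sketch computes a gray-level co-occurrence matrix and derives entropy, homogeneity, and correlation from it. It is illustrative only and is not taken from any of the cited studies: the function names `glcm` and `texture_features`, the horizontal one-pixel offset, the symmetric counting, and the tiny 4-level patch are all assumptions.

```python
import math
from collections import Counter


def glcm(image, dx=1, dy=0):
    """Symmetric, normalized gray-level co-occurrence matrix.

    `image` is a list of rows of integer gray levels. The result maps each
    gray-level pair (i, j) to the probability of seeing i and j at the
    given pixel offset (assumed here: one pixel to the right).
    """
    counts = Counter()
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                a, b = image[r][c], image[r2][c2]
                counts[(a, b)] += 1
                counts[(b, a)] += 1  # count both directions (symmetric GLCM)
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()}


def texture_features(p):
    """Entropy, homogeneity, and correlation of a normalized GLCM."""
    entropy = -sum(v * math.log2(v) for v in p.values() if v > 0)
    homogeneity = sum(v / (1 + (i - j) ** 2) for (i, j), v in p.items())
    mu_i = sum(i * v for (i, _), v in p.items())
    mu_j = sum(j * v for (_, j), v in p.items())
    var_i = sum((i - mu_i) ** 2 * v for (i, _), v in p.items())
    var_j = sum((j - mu_j) ** 2 * v for (_, j), v in p.items())
    if var_i > 0 and var_j > 0:
        cov = sum((i - mu_i) * (j - mu_j) * v for (i, j), v in p.items())
        correlation = cov / math.sqrt(var_i * var_j)
    else:
        correlation = 1.0  # convention chosen here for a perfectly uniform patch
    return {"entropy": entropy, "homogeneity": homogeneity, "correlation": correlation}


# Hypothetical 4-level patch standing in for a region of a chest radiograph.
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 3, 3],
    [2, 2, 3, 3],
]
print(texture_features(glcm(patch)))
```

In this sketch a uniform patch yields zero entropy and maximal homogeneity, whereas a finely alternating pattern yields higher entropy and negative correlation, which is the kind of contrast that classical texture analysis exploited.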

Building on the above, AI has been used for addressing and redressing various aspects of occupational health and hazards, primarily in enhancing diagnostic precision and accuracy in the area of occupational lung diseases, some of which are summarized in the following 4 subsections.

2.2. Background noise removal using neural networks in CXRs

Occupational lung disease diagnosis, as per the ILO, is based on 2 important evaluation categories: number and area density classification, and the size of abnormalities in the region of interest (ROI) of a postero-anterior chest radiograph. The primary challenge encountered during texture analysis of chest radiographs is the intricate “background” superimposed by overlapping normal anatomical structures, requiring the analysis to exhibit a certain degree of insensitivity. Though background trend correction attempts have long been made to remove relatively small ROIs,23 significant progress has been demonstrated by Kondo and Kouda24 using a back propagation neural network (NN) with 3 layers for detection of small rounded opacities by filtering out rib and vessel shadows in the CXRs. The NN application developed a suitable bi-level ROI image. The comprehensive assessment, which was conducted based on size and shape categorization, demonstrated that the proposed approach, which uses a “moving normalization” technique to remove background noise, yields significantly more dependable outcomes than conventional methods. The algorithm calculates the number density and area density of rounded opacities, which yield a classification result by comparison with the values of ILO standard X-ray images.25 A quantified decisive classification has important implications for job relocation and workmen’s accident compensation due to pneumoconiosis.26,27
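As a rough illustration of the two density measures described above, the sketch below counts rounded opacities and their total area in a bi-level (0/1) ROI image. It is a simplified stand-in, not the cited algorithm: the name `opacity_densities`, the use of 4-connected components to define one opacity, and the normalization by ROI pixel count are assumptions made for this example.

```python
def opacity_densities(roi):
    """Return (number density, area density) for a bi-level ROI.

    `roi` is a list of rows of 0/1 values, where 1 marks an opacity pixel.
    Each 4-connected group of 1-pixels is treated as one opacity (assumption).
    Both densities are expressed per ROI pixel.
    """
    rows, cols = len(roi), len(roi[0])
    seen = [[False] * cols for _ in range(rows)]
    n_opacities = 0
    opacity_pixels = 0
    for r in range(rows):
        for c in range(cols):
            if roi[r][c] and not seen[r][c]:
                n_opacities += 1
                seen[r][c] = True
                stack = [(r, c)]
                while stack:  # iterative flood fill over one opacity
                    y, x = stack.pop()
                    opacity_pixels += 1
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and roi[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            stack.append((ny, nx))
    area = rows * cols
    return n_opacities / area, opacity_pixels / area


# Hypothetical 4 x 5 bi-level ROI containing three separate opacities.
roi = [
    [1, 1, 0, 0, 0],
    [1, 0, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [0, 1, 0, 0, 0],
]
number_density, area_density = opacity_densities(roi)
print(number_density, area_density)
```

In a full pipeline, the two values would then be compared with the corresponding values measured on reference radiographs to assign a category; that comparison step is omitted here because the reference values are not given in the text.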

2.3. NN-based DL applications in non-texture analysis

NN techniques have evolved significantly and, unlike manual feature extraction for texture analysis, additionally offer time-saving benefits. DL methods have revolutionized non-texture CXR analysis by offering automated disease detection, segmentation, localization, and interpretability. These advancements have significantly improved the accuracy, efficiency, and utility of pneumoconiosis classification and interpretation in clinical practice. Okumura et al28 developed a detection scheme for pneumoconiosis based on a rule-based plus artificial neural network (ANN) analysis by employing 3 enhancement methods (window function, top-hat transformation, and gray-level co-occurrence matrix analysis) to differentiate abnormal patterns. When applied to chest radiographs of severe and low-grade pneumoconiosis to differentiate between normal and abnormal ROIs, the method achieved areas under the curve (AUCs) of 0.93 ± 0.02 and 0.72 ± 0.03, respectively (mean ± SD). Further diagnostic performance was reported by Okumura et al,29 using a 3-stage ANN, with AUCs of 0.89 ± 0.09 and 0.84 ± 0.12 for low and severe pneumoconiosis, respectively. Evidently, these NN algorithms are limited in their ability to learn complex representations of pneumoconiosis CXRs efficiently and do not achieve high levels of accuracy. This can hinder their effectiveness in tackling intricate tasks and broader applications.
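For readers less familiar with the AUC values quoted above, the following sketch shows one standard way to compute an AUC from classifier scores (the rank-based, Mann-Whitney formulation) and to summarize several runs as mean ± SD. The scores and the idea of summarizing over repeated runs are hypothetical; the cited studies do not state that they computed their values this way.

```python
from statistics import mean, stdev


def auc(scores_abnormal, scores_normal):
    """Probability that a random abnormal ROI scores higher than a random
    normal ROI, counting ties as one half (rank-based AUC)."""
    wins = 0.0
    for a in scores_abnormal:
        for n in scores_normal:
            if a > n:
                wins += 1.0
            elif a == n:
                wins += 0.5
    return wins / (len(scores_abnormal) * len(scores_normal))


# Hypothetical classifier outputs for two evaluation runs.
runs = [
    ([0.9, 0.8], [0.1, 0.2]),   # perfectly separated scores
    ([0.9, 0.4], [0.5, 0.1]),   # one abnormal ROI scored below a normal ROI
]
aucs = [auc(abnormal, normal) for abnormal, normal in runs]
print(f"AUC = {mean(aucs):.3f} ± {stdev(aucs):.3f}")
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which is why the drop from 0.93 to 0.72 between severe and low-grade cases indicates a much harder task.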

In the age of AI, medical imaging has embraced the utilization of DL techniques. Among these techniques, convolutional neural networks (CNNs) brought about a paradigm shift in medical image processing. The classical CNNs are LeNet, AlexNet, GoogLeNet, and ResNet. LeNet marks a significant milestone in the early stages of CNN development, employing a 32 × 32 image size for digit classification.30 Nevertheless, its performance reached a plateau due to inherent limitations. The year 2012 witnessed exceptional achievements of AlexNet in the ImageNet31 competition, paving the way for the application of CNNs in various other domains.32 In 2014, the network architecture known as GoogLeNet33 was introduced. Following that, in 2015, the ResNet34 model was presented, representing a significant advancement in both network depth and convergence speed.

In subsequent years, researchers started adapting CNN architectures to the specific challenges of CXR classification. One notable multiplier came with the introduction of transfer learning,35,36 where pretrained CNN models from large-scale image datasets were fine-tuned for CXR analysis. This approach addressed the scarcity of labeled medical data, as collecting medical images with accurate annotations can be time-consuming and costly.37

Advanced investigations of CNN-based pneumoconiosis CXR applications have been performed by a handful of researchers, notably Devnath et al,38 Devnath et al,39 Devnath et al,40 Arzhaeva et al,41 Zhang et al,42 and Xiaohua et al.43-46 The majority of lung diagnostic techniques typically utilize an ImageNet-pretrained CNN model. For the specific task of pneumoconiosis diagnosis, Zheng et al47 utilized various CNN models including LeNet, AlexNet, and GoogLeNet (Inception-v1 and v2). The optimized structure Inception-CF (or GoogLeNet-CF) achieved an accuracy of approximately 96.88% when the number of training images was increased to 1600, compared with GoogLeNet (94.2%), InceptionV2 (90.70%), AlexNet (87.90%), and LeNet (71.6%). In another application of deep CNNs, with one of the largest datasets of 33 493 CXRs, the accuracy was found to be 92% with a very high sensitivity (99%), which minimizes the chances of missed diagnoses.46 The model’s exceptional sensitivity46 recommended it as the ideal instrument for pneumoconiosis screening during occupational health assessments in China, as it detects almost all possible instances of the condition. Wang et al employed the Inception-V3 CNN (GoogLeNet) architecture for pneumoconiosis detection. This approach yielded an AUC of 0.878 (95% CI, 0.811-0.946), indicating the potential viability of employing DL methods in pneumoconiosis screening.43
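The distinction between accuracy and sensitivity drawn above can be illustrated with a short calculation from a confusion matrix. The counts below are invented for illustration and are not the data of the cited study; they were chosen only so that the resulting accuracy and sensitivity resemble the percentages quoted in the text. The function name `screening_metrics` is likewise hypothetical.

```python
def screening_metrics(tp, fn, tn, fp):
    """Accuracy, sensitivity, and specificity from confusion-matrix counts.

    tp: diseased workers correctly flagged    fn: diseased workers missed
    tn: healthy workers correctly cleared     fp: healthy workers flagged
    """
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),   # share of true cases that are detected
        "specificity": tn / (tn + fp),   # share of healthy workers cleared
    }


# Hypothetical screening result for 100 diseased and 100 healthy workers.
metrics = screening_metrics(tp=99, fn=1, tn=85, fp=15)
print(metrics)
```

In this made-up example only 1 of 100 true cases is missed, at the cost of 15 false alarms; for a screening tool, where a missed case is more harmful than a follow-up examination, that trade-off is usually the preferred one.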

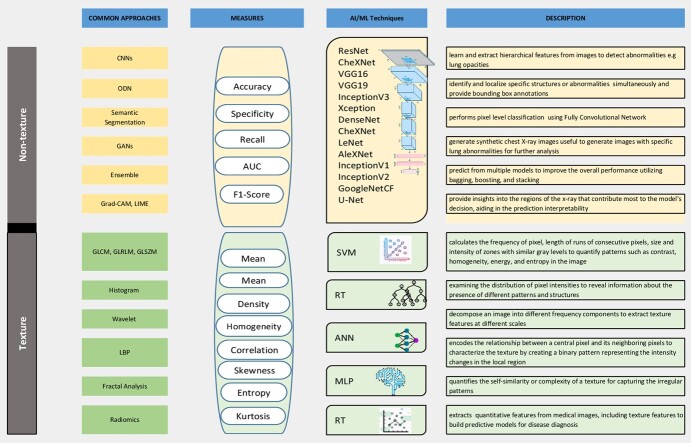

Multiple investigations were carried out by Devnath et al,48 including CNN classifier performance with and without deep transfer learning to study the impact on classification of black lung disease using the models VGG16, VGG19, InceptionV3, Xception, ResNet50, DenseNet121,48 and CheXNet.40,49 Due to the limited data size (71 posteroanterior [PA] CXRs), the authors also employed Cycle-Consistent Adversarial Networks (CycleGAN) and the Keras Image Data Generator to generate additional augmented and synthetic radiographs, including ILO standard radiographs. Accuracy varied from 80% to 88% in the following order of performance: InceptionV3 > CheXNet, Xception, ResNet > DenseNet > VGG16 > VGG19, with CheXNet judged the most suitable overall among all 7 DL models for small datasets.40 In another investigation, Devnath et al39 employed a pair of CNN models for the extraction of multidimensional features from pneumoconiosis CXR images. These models encompassed an unpretrained DenseNet and a pretrained CheXNet architecture. The extracted features were then fed into a conventional machine learning (ML) classifier, specifically an SVM. Notably, the hybrid CheXNet approach attained an accuracy of 92.68% in the automated identification of pneumoconiosis, surpassing various alternative methods based on both traditional ML and DL. A visual schema for AI methodologies is given in Figure 1.

Figure 1.

Illustration of artificial intelligence (AI) techniques used for imaging diagnostics.

2.4. Preclinical stage classification of pneumoconiosis using DL methods

After a diagnosis of pneumoconiosis is made, typically the patient’s condition is already at a highly critical and challenging stage for treatment. To effectively manage this illness, it is imperative to identify it during its preclinical stage with a sense of urgency. Doing so would lead to a decrease in its occurrence and a reduction in its severity among individuals exposed to its risks in the workforce.2 AI-based research for preclinical stage diagnosis has been attempted recently by Wang et al using a novel approach of a 3-stage cascaded learning model. In the initial phase, training involved a YOLOv250 network to detect lung regions within digital chest radiography (DR) images.44 Subsequently, 6 distinct CNN models underwent training to recognize the preclinical phase of CWP. Lastly, a hybrid ensemble learning (EL) model was constructed in the third phase, utilizing a soft voting approach to combine the outcomes from the 6 CNN models. The 1447 digital radiographs used included ones of drillers, coal-getters, auxiliary workers, general workers, and other coal workers. The 6 CNNs included Inception-V3, ShuffleNet, Xception, DenseNet, ResNet101, and MobileNet for training by the authors. The results showed the AUC of the cascade model to be 93.1% with an accuracy of 84.7%.44 The performance of the suggested model is highly promising and represents progress in the effective preclinical screening of coal workers.
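The soft voting step in the third stage can be sketched as follows. This is a generic illustration of soft voting, not the authors' implementation: the function name `soft_vote`, the equal default weights, and the six probability vectors are assumptions.

```python
def soft_vote(prob_lists, weights=None):
    """Average the per-class probabilities of several models and return
    (winning class index, averaged probabilities)."""
    n_models = len(prob_lists)
    n_classes = len(prob_lists[0])
    if weights is None:
        weights = [1.0] * n_models  # equal weighting (assumption)
    total_weight = sum(weights)
    averaged = [
        sum(w * probs[k] for w, probs in zip(weights, prob_lists)) / total_weight
        for k in range(n_classes)
    ]
    label = max(range(n_classes), key=averaged.__getitem__)
    return label, averaged


# Hypothetical outputs of 6 CNNs for one radiograph: [P(normal), P(preclinical CWP)].
cnn_outputs = [
    [0.60, 0.40],
    [0.45, 0.55],
    [0.30, 0.70],
    [0.55, 0.45],
    [0.20, 0.80],
    [0.52, 0.48],
]
label, averaged = soft_vote(cnn_outputs)
print(label, averaged)
```

In this example the six models are split 3 to 3 if each casts a single hard vote, whereas soft voting resolves the tie in favor of the preclinical class because the models predicting it are more confident. This use of confidence information is the usual motivation for preferring soft over hard voting.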

2.5. Vision transformer-based pneumoconiosis CT image classifications

The Transformer51 architecture, initially introduced for natural language processing (NLP) applications, has rapidly found its way into computer vision with the development of the Vision Transformer (ViT)52 owing to its competitive performance in comparison with conventional CNNs on several image classification benchmarks. Although conventional ML techniques have been applied effectively to categorize abnormalities in 2D CXR images of pneumoconiosis,28,29,39,49,53-55 evidence on the use of 3D CT images has been lacking in the literature. From a data quality perspective, chest CT is known for its higher resolution and enhanced diagnostic insights, and has emerged as a reliable method for assessing lung disorders due to its greater sensitivity compared with CXR. Recently, a study conducted by Huang et al,45 at the largest center for authenticating occupational diseases in western China, reported the application of a transformer-based factorized encoder (TBFE) to 3D CT images of pneumoconiosis. The study demonstrated the ability of TBFE to reasonably classify the severity of pneumoconiosis by analyzing intra-slice and inter-slice interaction information. Compared with other widely used 3D-CNN methods, including CheXNet, COVID-Net, versions of ResNet, ResNext, and Seresnext, TBFE performed significantly better; in particular, classification accuracy for stage 0 was also improved, suggesting that the proposed approach has improved capability in predicting the initial phase of pneumoconiosis. The reported results showed the superiority of TBFE over the other 3D-CNN networks, with an accuracy of 97.06% and an F1 score of 93.33% with high precision and recall. Based on these performance indicators, TBFE may assist in early diagnosis of pneumoconiosis based on CT images.

3. AI and occupational safety enhancements

Artificial intelligence plays a crucial role in various aspects of the work process, revolutionizing how tasks are performed and managed. Through computational methods that analyze and process data, AI enables specific actions that impact labor dynamics. Noteworthy AI technologies encompass ML, DL, NLP, and rule-based expert systems (RBES). These AI technologies have also made their way into occupational health, facilitating the examination of both structured and unstructured data. They find application in diverse areas, such as coordinating machinery and industrial processes, workforce management (especially from a human resources [HR] perspective), customer risk assessment, benefits analysis, and staff safety evaluation.56 To safeguard their workforce, employers can adopt various strategies recommended by the US National Institute for Occupational Safety and Health (NIOSH). These measures include providing information and training on job hazards, implementing comprehensive safety programs, and supplying personal protective equipment (PPE).

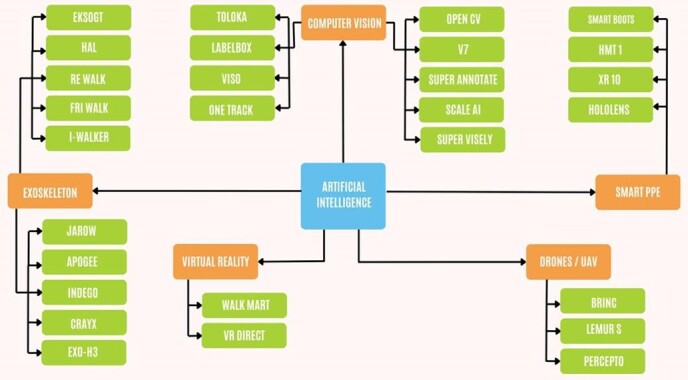

Despite these efforts, human error remains a significant cause of workplace accidents. This is where AI can play a vital role. Due to its ability to rapidly process and analyze data, AI can effectively identify potential risks and hazards that may go unnoticed by humans. AI has introduced new approaches to work, exemplified by the assessment of employee performance using technological processes.57 A flowchart encapsulating the various developments is given in Figure 2.

Figure 2.

Artificial intelligence (AI)-based solutions in advancement of occupational safety at the workplace.

3.1. AI-driven exoskeletons

The utilization of exoskeletons, wearable robotic suits designed to enhance the dynamics of limbs and joints, has emerged as a means to boost productivity and protect worker well-being.58 Numerous research studies indicate a rise in the utilization of various artificial neural network (ANN) structures in modern exoskeleton-related technologies.59 Additionally, traditional control methods are being combined with intelligent or adaptive optimizers to develop resilient or hybrid systems. Throughout the history of biomechatronics and intelligent systems, ANNs have been a foundational element.60 These networks have facilitated the growth of intelligent medical assistive devices like brain-machine interface-controlled prostheses and advanced robotic exoskeletons designed for rehabilitation tasks.59 Exoskeletons facilitate biometric analysis and rehabilitation after injuries, and they can alleviate pressure on the spine, resulting in better physical health for employees.61 German Bionic’s two commercially available exoskeletons, the Cray X and the recently launched Apogee, are designed to be worn like a backpack.62 They are powered by electric motors and sense when the user is moving, providing up to 30 kg of extra force to the back, core, and legs when and where it is needed.63 They provide additional support to the lower back, the part of the body that typically takes the most stress from heavy lifting. Additionally, several powered exoskeletons such as Indego,64 Exo H3,65 ReWalk,66 HAL,67 and Ekso GT,68 and smart walkers such as JARoW,69 i-Walker,70 and the FriWalk robotic walker,71 have been developed in recent years for user assistance and/or rehabilitation in clinics.

Occupational exoskeletons are strategically engineered to mitigate the risk of injuries to the back and shoulders. Their purpose is to provide support to workers and enhance overall workplace safety, particularly in situations where conventional ergonomic measures are not feasible. Prominent corporations like Toyota, Ford, and Boeing have been integrating exoskeleton technology within specific teams of their workforce for nearly a decade. During this period, these companies have witnessed a noteworthy 83% reduction in injuries within the groups utilizing exoskeletons.72 Workers who used exoskeletons reported minimal discomfort, a decrease in injuries, and lower workers’ compensation expenses. Studies have demonstrated that occupational exoskeletons and exosuits can alleviate muscle strain and fatigue during physical tasks in a range of industries, for example, logistics, construction, manufacturing, military, and healthcare.73

3.2. Workplace safety and AI-enabled PPE

Given the importance of workers’ safety and the frequency of accidents at the workplace, it becomes imperative to modernize conventional tools and procedures within the workplace to align with the emerging technological landscape. Over the past few years, studies have also explored the utilization of AI in the manufacturing sector.74-77 This integration not only interconnects the industry but also maximizes the safety and security of workers. With the advent of smart PPE and wearable technologies, it becomes feasible to gather data regarding both the workforce and their surroundings. This data-driven approach holds the potential to curtail the frequency of accidents and work-related ailments, thereby ushering in a notable enhancement in overall workplace conditions.78

Advancements in smart PPE enable the tracking of essential health indicators and the assessment of industrial surroundings. Multiple strategies exist for the incorporation of wearables into work settings, allowing for an examination of how networks of interconnected devices can contribute to safeguarding individuals. These solutions employ a range of AI techniques, including NNs, fuzzy logic, Bayesian networks, decision trees, and other hybrid inference methods. Typically, conventional safety systems in workplaces are tailored to meet the specific requirements of individual companies and primarily respond to measured stimuli, provided these stimuli surpass a minimum threshold (a reactive “action-reaction” approach). This approach offers limited adaptability or flexibility when encountering new situations. In contrast, AI systems with learning mechanisms introduce a different approach. These systems rely on a set of rules, coupled with knowledge acquired from solutions to known problems, to anticipate the outcomes of uncharted scenarios. Incorporating technologies like NNs,79 case-based reasoning (CBR) systems,80 DL,81 or hybrid neuro-symbolic algorithms,82 such systems can assess whether a given situation poses a risk based on certain contextual conditions.
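The contrast drawn above between a reactive threshold system and a system that reasons from previously solved cases can be sketched in a few lines. The example below pairs a simple threshold rule with a minimal case-based reasoning (CBR) step that retrieves the most similar past cases and reuses their majority outcome. The feature pair (heart rate and ambient temperature, both scaled to 0-1), the case base, the alarm limit of 0.75, and the function names are all hypothetical.

```python
import math
from collections import Counter


def threshold_alarm(reading, limit):
    """Reactive rule: raise an alarm only when one reading exceeds its limit."""
    return reading > limit


def cbr_assess(case_base, query, k=3):
    """Minimal CBR: retrieve the k most similar past cases (Euclidean
    distance) and reuse their majority label for the new situation."""
    ranked = sorted(case_base, key=lambda case: math.dist(case[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical past cases: (scaled heart rate, scaled ambient temperature) -> outcome.
case_base = [
    ((0.20, 0.30), "safe"),
    ((0.30, 0.20), "safe"),
    ((0.25, 0.35), "safe"),
    ((0.70, 0.80), "risk"),
    ((0.80, 0.70), "risk"),
    ((0.75, 0.65), "risk"),
]

query = (0.65, 0.70)  # both readings are elevated, but each is below the limit
print([threshold_alarm(value, 0.75) for value in query])  # no single limit exceeded
print(cbr_assess(case_base, query))                        # combination resembles past risk cases
```

Here neither reading crosses its individual limit, so the reactive rule stays silent, while the case-based assessment flags the combination because it resembles earlier risky situations. A deployed system would also need the revise and retain steps of the CBR cycle, which are omitted from this sketch.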

3.2.1. Smart boots

Smart boots are equipped with sensors and AI algorithms that continuously monitor the wearer’s surroundings, detect unsafe conditions, and provide real-time alerts or interventions to prevent accidents. Whether detecting slippery surfaces, identifying obstacles, or monitoring environmental factors, smart boots offer a proactive approach to mitigating risks. This innovation not only demonstrates a commitment to employee well-being but also reflects the potential of AI to revolutionize occupational safety standards. Smart boot innovations encompass a range of capabilities, including fall detection, geofencing, nocturnal flashlight functionality, local data storage and analysis, a bidirectional alert communication system, and tactile feedback. Technology companies have also developed a hardware module that integrates with conventional safety shoes, endowing them with intelligent functionalities.83
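Of the capabilities listed above, fall detection lends itself to a compact illustration. A common heuristic, assumed here rather than taken from any specific product, looks for a brief period of near free fall in the accelerometer signal followed shortly by a strong impact. The thresholds, the sample window, and the function name `detect_fall` are hypothetical.

```python
import math


def detect_fall(samples, free_fall_g=0.4, impact_g=2.5, max_gap=10):
    """Return the sample index of a suspected fall impact, or None.

    `samples` is a sequence of (ax, ay, az) accelerometer readings in g.
    A fall is flagged when the acceleration magnitude drops below
    `free_fall_g` and then exceeds `impact_g` within `max_gap` samples.
    All three thresholds are illustrative assumptions.
    """
    free_fall_at = None
    for i, (ax, ay, az) in enumerate(samples):
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        if magnitude < free_fall_g:
            free_fall_at = i  # remember the latest free-fall sample
        elif (magnitude > impact_g and free_fall_at is not None
              and i - free_fall_at <= max_gap):
            return i
    return None


# Hypothetical traces: standing still, a fall, and a hard stomp without free fall.
standing = [(0.0, 0.0, 1.0)] * 5
fall = [(0.0, 0.0, 1.0)] * 3 + [(0.0, 0.0, 0.2)] * 2 + [(0.5, 0.5, 3.0)] + [(0.0, 0.0, 1.0)] * 3
stomp = [(0.0, 0.0, 1.0)] * 3 + [(0.0, 0.0, 3.0)]

print(detect_fall(standing), detect_fall(fall), detect_fall(stomp))
```

Requiring the free-fall phase before the impact is what keeps an ordinary heavy step from triggering an alert; learned classifiers, as mentioned in the text, would typically replace these fixed thresholds.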

3.2.2. Smart helmets

Smart helmets are equipped with a suite of sensors including global positioning system (GPS), an RFID (radio frequency identification) sensor, an ultra-wideband (UWB) sensor, and an around-view monitor (AVM). These sensors collectively monitor the location of workers, their activities, the surrounding environment, and personal health. Several sensors on the helmet have the capability to detect air quality, serving as an essential tool to alert wearers through alarms and on-site safety officers via alerts about the presence of hazardous gases and pollutants. The prominent smart helmets currently available in the market are: Guardhat Communicator; HMT1; XR10 with HoloLens 2; and Smart Helmet by Excellent Web World. The Smart Helmet developed by Excellent Web World stands out by effectively gathering and transmitting job site data along with personal information to promote a safe workplace and a healthy workforce. This feature proves invaluable for individuals working in confined spaces, tunnels, or areas with gas lines.84

3.3. Workplace safety through AI-based robots

AI-based robotics can significantly improve workplace safety and reduce injuries and fatalities by eliminating workers’ exposure to dangerous machinery and workplace hazards.85-87 Recent evidence from Gihleb et al (2022), based on establishment-level data on injury rates, showed that a 1 SD increase in robot exposure (1.34 robots per 1000 workers) is associated with a reduction in work-related injury rates of approximately 1.2 injuries per 100 full-time workers (0.15 SDs; 95% CI, 0.53-1.8).84,86

High-risk work environments such as hazardous material handling, working at heights, and in confined spaces, can exploit these technological advances driven by data science and AI. Nevertheless, robotics come with their own set of risks and dangers that can also have an adverse impact on the working environment.88,89 Artificial intelligence, ML, and DL are pivotal technologies within the realm of robotics,90 and by 2024 it is projected that up to 75% of enterprises will have embraced AI within their operational workflows.91 Due to increasing robotics use in industry, the Center for Occupational Robotics Research (CORR) was established by NIOSH, in 2017, with the purpose of assessing the possible advantages and drawbacks of incorporating robots into the workforce.92

The implementation of autonomous mobile robots (AMRs) contributes to enhanced safety measures in the workplace.93 Automated guided vehicles (AGVs) and AMRs have been widely used in construction and hospital logistics, including disinfection equipment.94,95 To illustrate the capability of an AMR, the robots developed by a Denmark-based robotics company can handle a payload of 1350 kg, manage tasks effectively within dynamic environments, and minimize the role of humans in tasks that entail collision risks or physical strain injuries such as back injuries and falls. AMRs, for instance, are equipped with multisensor safety systems comprising laser scanners, 3D cameras, and proximity sensors that feed data into an advanced planning algorithm. This system guides the robot’s path, enabling adjustments or stops when necessary. Additionally, advanced AI robots possess features that facilitate safe decision-making in case of sensor malfunction, further supporting their status as among the safest AMRs globally.96
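The combination of multisensor input and safe behavior under sensor malfunction can be illustrated with a simplified speed-selection rule. The sketch below is not the logic of any particular commercial robot: the function name `amr_speed_command`, the stop and slow-down distances, the cruise speed, and the choice to treat any missing reading as a reason to stop are assumptions.

```python
def amr_speed_command(readings, stop_m=0.5, slow_m=2.0, cruise_mps=1.5):
    """Choose a speed (m/s) from the nearest obstacle distance per sensor.

    `readings` maps a sensor name to its nearest obstacle distance in
    meters, or to None when that sensor reports a malfunction.
    Fail-safe assumption: with no readings or any malfunctioning sensor,
    the robot stops rather than relying on the remaining sensors.
    """
    if not readings or any(distance is None for distance in readings.values()):
        return 0.0
    nearest = min(readings.values())
    if nearest <= stop_m:
        return 0.0
    if nearest < slow_m:
        # Scale speed linearly between the stop and slow-down distances.
        return cruise_mps * (nearest - stop_m) / (slow_m - stop_m)
    return cruise_mps


# Hypothetical sensor snapshots.
print(amr_speed_command({"laser": 5.0, "camera_3d": 4.0, "proximity": 3.0}))
print(amr_speed_command({"laser": 1.25, "camera_3d": 4.0, "proximity": 3.0}))
print(amr_speed_command({"laser": None, "camera_3d": 4.0, "proximity": 3.0}))
```

Taking the minimum across sensors means the most pessimistic reading governs the robot's speed, and stopping on any missing reading trades availability for safety. A production planner would also replan the path rather than only adjust speed.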

The current landscape of AI robot designs encompasses various models created for diverse applications. Notably, these robots incorporate the latest AI approximations, as true AI is still in the research phase. Some prominent AI robots in the industry are listed below.

Digit by Agility Robotics: A humanoid bipedal robot capable of navigating complex terrain and delivering packages. Digit can climb stairs, catch itself during falls, and plan footsteps.97 Digit could be deployed in environments where certain tasks are dangerous for workers, eg, emergency response and disaster recovery tasks.

Atlas and Spot by Boston Dynamics: These AI-based robots are advanced robotic platforms with diverse capabilities. In search-and-rescue operations, Atlas and Spot could locate and provide assistance to personnel in hazardous or hard-to-reach areas.98 Their features make them suitable for handling and transporting hazardous materials, chemical agents, or explosives, reducing the exposure of the human workforce to risks such as fires or explosions in industrial units.

HRP-5P by AIST: Developed by Japan’s Institute of Advanced Industrial Science and Technology (AIST), HRP-5P excels in heavy labor tasks and construction activities. Its capabilities include autonomously installing gypsum boards on walls and handling large plywood panels, demonstrating its potential for practical use in the construction industry and for mitigating hazards in heavy labor tasks.99

Aquanaut by Houston Mechatronics: Aquanaut serves as an unmanned underwater submersible designed for extended submerged tasks. It can travel over 200 km underwater and manipulate objects in hazardous underwater environments. With onboard cameras, sensors, and powerful arms, Aquanaut minimizes the need for human involvement.100 The robot may aid in risky underwater exploration missions.

Stuntronics by Disney: Disney’s Stuntronics is an advanced stunt-double robot that performs acrobatics for movies. It employs sensors and autonomous pose control to execute complex maneuvers with precision and repeatability. Occupational injuries among stunt performers are well documented,101-103 and this technology aims to replace human stunt doubles in risky scenes.

Although still in its nascent stages, AI robotics endeavors to enhance workplace safety, driving us toward greater technological breakthroughs.

3.4. AI computer vision in monitoring and surveillance tools for workplace safety

Computer vision holds great promise in enhancing workplace safety through AI applications.104 It enables various functions, including monitoring employee conduct, identifying potential risks, and issuing real-time alerts. An excellent example of its implementation is the use of thermal cameras to identify heat stress in workers. This technology allows employers to continuously monitor their employees’ body temperatures and promptly offer necessary support, such as cooling breaks or appropriate PPE. Furthermore, computer vision is instrumental in surveillance efforts. AI-powered cameras can track employee movements and swiftly detect potential hazards, such as trip hazards or unsecured equipment. Moreover, these cameras can also identify instances when a person enters a restricted or hazardous area, thus ensuring a safer work environment.105,106
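As a minimal sketch of the restricted-area check described above, the example below assumes that an upstream person detector (not shown) has already produced one floor-plane position per worker. The function names, worker identifiers, and zone coordinates are hypothetical; the geometric test is the standard ray-casting point-in-polygon method, not the method of any particular commercial platform.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def point_in_polygon(x: float, y: float, polygon: Sequence[Point]) -> bool:
    """Ray casting: count how many polygon edges a ray going right from (x, y) crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Consider only edges that straddle the horizontal line through y.
        if (y1 > y) != (y2 > y):
            # x-coordinate where the edge crosses that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def find_intrusions(detections: Dict[str, Point],
                    restricted_zone: Sequence[Point]) -> List[str]:
    """Return the IDs of detected workers whose position lies inside the zone."""
    return [worker_id for worker_id, (x, y) in detections.items()
            if point_in_polygon(x, y, restricted_zone)]


# Hypothetical 4 m x 4 m restricted zone and three detected workers.
zone = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
workers = {"w1": (2.0, 2.0), "w2": (5.0, 2.0), "w3": (3.5, 0.5)}
alerts = find_intrusions(workers, zone)  # IDs that would trigger a real-time alert
```

In a deployed system the positions would come from camera calibration and a trained detector, and an alert would typically require the intrusion to persist over several frames to avoid false alarms; both are outside the scope of this sketch.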

The proliferation of AI, driven by technological advances in data management, computer vision, and ML, has brought about a paradigm shift. This trend is evident in the emergence of several AI-powered platforms that cater to diverse needs, from data labeling and curation to object detection and video analysis. These platforms include Scale AI, Supervisely, V7, Viso, Labelbox, Toloka, Superannotate, and OpenCV; the last of these remains an integral part of the AI landscape, offering an open-source library for computer vision and ML.

In the evolving realm of DL, various computer vision challenges and concerns, including classification, recognition, language processing, video analysis, gesture detection, and robotics, have emerged. However, it remains uncertain whether all computer vision problems will be considered solved in the current landscape of DL advancements. The advent of CNNs has indeed transformed the entire field of computer vision through recent model-based developments. These models have streamlined the process of creating sophisticated DL configurations, allowing for easy development by fine-tuning pretrained weights.107

3.5. AI-based virtual reality for training employees

Virtual reality (VR) has emerged as a valuable tool for safety training, especially in high-risk industries where learning through real-life experience can be dangerous. Employers can use VR to give employees practical knowledge and experience of risky situations, building risk-preventive knowledge and thereby minimizing the risk of workplace fatalities and injuries.108 This technology is cost-effective and goal-oriented, and it enhances accident prevention by minimizing errors. VR-based safety training replaces traditional methods such as PowerPoint presentations and videos, offering a more engaging learning experience with better retention.109 Industries such as chemicals, construction, mining, and defense have adopted VR-based training programs because of their cost-effectiveness and the associated reduction in workplace injuries and casualties.

Examples of VR applications in different industries include:

Chemical Processing: the Immersive Virtual Reality Plant experience guides employees through dangerous scenarios, preparing them to respond appropriately.

Construction: Building Information Modelling technology creates VR simulations, familiarizing workers with hazardous zones and safety practices.

Mining: VR is used to train students in emergency response at the University of New South Wales School of Mining Engineering.

Military: the Naval Engineering Academy uses VR technology from Ethosh to train sailors in emergency and disaster response, ensuring precise and effective protocols are followed.

Occupational safety training organizations, for example, AST Arbeitssicherheit & Technik, have started implementing VR platforms in digital instruction on how to safely handle earth-moving machinery.110 Chemical and consumer goods company Henkel partnered with VRdirect to create a VR training experience for educating employees about health and safety risks at the workplace.111 The training gamifies the process by tasking employees with identifying potential risks in busy scenes, making it both effective and enjoyable. Additionally, various other industrial corporations such as Walmart, FedEx, and BP have incorporated VR into their industrial safety training.112 A classification of VR applications is shown in Figure 3.

Figure 3.

Classification of virtual reality (VR) applications in industrial safety.

3.6. AI-driven site drones

The incorporation of drones and unmanned aerial vehicles (UAVs) represents a stride toward executing a broad array of tasks without direct human intervention. UAV platforms that incorporate ML have employed numerous algorithms. Of these, random forest accounts for the largest share of usage,20,22,24 owing to its effectiveness in managing data noise. The second most prevalent algorithm is the support vector machine, accounting for 21% of total usage.7,26,27 Following closely are CNNs14,16,17 and k-nearest neighbors,27,28 with 16% and 11% shares of all employed algorithms, respectively. The remaining algorithms, including Naïve Bayes, liquid state, multi-agent learning, and ANNs, have seen only sporadic use.

For the construction sector, UAVs have the potential to prevent injuries, fatalities, exposure to toxic chemicals, electrical hazards, and accidents involving vehicles and equipment. According to the United States Drone Market Report 2019, the commercial drone market in the United States has expanded significantly, with projections indicating a tripling in size by 2024. Furthermore, the attributes of drones, including precise controls, computer vision, GPS capabilities, geofencing, substantial carrying capacities, and AI, position construction-focused UAVs to effectively oversee safety protocols in commercial construction projects, thereby sparing human involvement in perilous situations. UAVs can navigate challenging zones of job sites faster than humans. They can be equipped with video cameras, sensors, and communication equipment to promptly relay real-time data relevant to construction tasks. Moreover, they can undertake tasks akin to those executed by manned vehicles, with higher efficiency and reduced expense.113 Notably, recent advancements in UAV design, encompassing battery longevity, GPS, navigation, and control reliability, have facilitated the creation of economical and lightweight aerial systems.114 The availability of such cost-effective and user-friendly UAVs has led to a substantial surge in their use within the last decade.115-117 The expanding role of UAVs in the construction industry spans various domains, including aiding construction project planning through aerial site mapping, overseeing construction workflow, managing job site logistics, and conducting inspections to gauge structural integrity and maintenance needs. Furthermore, UAVs have found application in a spectrum of other domains within architecture, engineering, and construction. These encompass traffic surveillance,118 landslide monitoring,119,120 cultural heritage preservation,119,121 and urban planning.122,123 Some of the best uses of drones in construction safety are outlined below.

3.6.1. Pre-construction site inspections

From the pre-construction phase onward, site inspections are of pivotal importance throughout the various stages of a building project. Certain areas may be unstable or challenging to access, elevating risks for human inspectors. Drone technology enhances the safety of site inspections by enabling remote assessment without the need to venture into hazardous zones. Drone inspections can be carried out swiftly from off-site, curtailing expenses associated with additional workforce and delays.114 Equipped with 3D mapping software, near- and far-infrared cameras, and laser range finders, drones offer precise measurements, reducing the necessity for repeated inspections.115,119

3.6.2. Maintenance inspections

Construction personnel engaged in activities on skyscrapers, towers, and bridges face risks associated with great heights, and accessing these sites often entails substantial expense. Drones can conduct scheduled maintenance inspections of these tall structures, mitigating the need for human inspectors.113

Folio3, a California-based technology company, develops and supplies AI-powered solutions, including automated drone systems that streamline processes for businesses across various industries. Similarly, Percepto brings together hardware engineers, software developers, machine vision specialists, robotics enthusiasts, and experienced industry professionals to build comprehensive software and hardware solutions for AI-driven drones. Its AIM visual data management system finds applications in drones, robots, and cameras. This software uses AI and DL to conduct site surveys for tasks such as construction inspections, 3D modeling, and security patrols. Having gained the confidence of regulators globally, Percepto’s drone-in-a-box is available in 3 distinct models and is used in mining and energy facilities. These models detect gas leaks, monitor construction sites, and fulfill various other functions.

The LEMUR S drone, developed by Brinc, a company headquartered in Nevada, is a quadcopter equipped with night vision and offers a 31-minute flight duration. It can also remain in an idle state for up to 10 hours while continuously recording video and audio. Designed specifically for high-risk scenarios, the LEMUR S is well suited to first responders and search and rescue teams. Its integrated microphone and video recording features enable both conversations and reconnaissance activities.124

4. Conclusions

Artificial intelligence-based tools offer huge potential gains from algorithm-based CXR analysis for developing countries, where there could be a delay in availability of specialized radiologists. AI methods prove useful in addressing the challenge of diagnosing occupational lung diseases from chest radiographs. Additionally, AI algorithms are crucial in evaluating compensation claims for dust-exposed workers in pneumoconiosis boards, mitigating delays caused by non-confirmatory diagnoses, including conditions like silicosis. Some of the latest developments, such as the TBFE, have shown promising results in pneumoconiosis detection during its preclinical stages. The resurgence of pneumoconiosis in nations such as the United States and Australia accentuates the imperative for AI advancements. Even with advanced healthcare systems, rigorous workplace safety protocols, and mechanized mining techniques designed to reduce worker particle exposure, the absence of a definitive treatment for pneumoconiosis necessitates a deeper integration of AI solutions.

By collecting and analyzing data on worker activities, equipment performance, and environmental conditions, AI tools provide valuable insights that enable proactive risk management. This proactive approach empowers organizations to swiftly address emerging dangers, implement necessary safety measures, and intervene before accidents occur. These tools establish a data-driven feedback loop, enabling continuous improvement by identifying risk patterns, analyzing trends, and deploying targeted interventions to prevent recurrence. Ultimately, workplace monitoring and surveillance tools will play a central role in fostering a safer and more secure work environment, significantly reducing the incidence of occupational injuries.

Artificial intelligence offers promise in settings where linguistic diversity and multiple risk factors compound workers’ vulnerability to a poor understanding of workplace risks. AI technologies can be used to understand and address the needs of various occupational groups, better inform policy, and help formulate strategies to improve workers’ health and safety environment.

Author contributions

I.A.S. and S.D.M. performed the literature search and collected references. I.A.S. prepared the draft and S.D.M. critically revised the manuscript and created figures. I.A.S. and S.D.M. read and approved the final manuscript.

Funding

No funding was involved in this work.

Conflicts of interest

The authors declare no conflicts of interest for this article.

Data availability

Data sharing is not applicable to this article because no new data were created or analyzed in this study.

Acknowledgments

We would like to express the deepest gratitude for the encouragement and guidance from the Director of the National Institute of Occupational Health.

Contributor Information

Immad A Shah, Division of Health Sciences, ICMR-National Institute of Occupational Health, Ahmedabad, Gujarat, India.

SukhDev Mishra, Department of Biostatistics, Division of Health Sciences, ICMR-National Institute of Occupational Health, Ahmedabad, Gujarat, India.

References

- 1. Matyga AW, Chelala L, Chung JH. Occupational lung diseases: spectrum of common imaging manifestations. Korean J Radiol. 2023;24(8):795-806. 10.3348/kjr.2023.0274

- 2. Qi X-M, Luo Y, Song M-Y, et al. Pneumoconiosis: current status and future prospects. Chin Med J. 2021;134(8):898-907. 10.1097/CM9.0000000000001461

- 3. Takala J, Hämäläinen P, Sauni R, Nygård CH, Gagliardi D, Neupane S. Global-, regional- and country-level estimates of the work-related burden of diseases and accidents in 2019. Scand J Work Environ Health. 2023. 10.5271/sjweh.4132

- 4. Yates DH, Perret JL, Davidson M, Miles SE, Musk AW. Dust diseases in modern Australia: a discussion of the new TSANZ position statement on respiratory surveillance. Med J Aust. 2021;215(1):13-15.e1. 10.5694/mja2.51097

- 5. Youxin L. Economic burden. In: Guidotti TL, ed. Global Occupational Health, online edn. Oxford University Press; 2011:536-543. 10.1093/acprof:oso/9780195380002.003.0029. Accessed May 1, 2011.

- 6. Lozano R, Naghavi M, Foreman K, et al. Global and regional mortality from 235 causes of death for 20 age groups in 1990 and 2010: a systematic analysis for the Global Burden of Disease Study 2010. Lancet. 2012;380(9859):2095-2128.

- 7. James SL, Abate D, Abate KH, et al. Global, regional, and national incidence, prevalence, and years lived with disability for 354 diseases and injuries for 195 countries and territories, 1990–2017: a systematic analysis for the Global Burden of Disease Study 2017. Lancet. 2018;392(10159):1789-1858. 10.1016/S0140-6736(18)32279-7

- 8. Vos T, Allen C, Arora M, et al. Global, regional, and national incidence, prevalence, and years lived with disability for 310 diseases and injuries, 1990–2015: a systematic analysis for the Global Burden of Disease Study 2015. Lancet. 2016;388(10053):1545-1602. 10.1016/S0140-6736(16)31678-6

- 9. GBD 2015 Mortality and Causes of Death Collaborators. Global, regional, and national incidence, prevalence, and years lived with disability for 301 acute and chronic diseases and injuries in 188 countries, 1990-2013: a systematic analysis for the Global Burden of Disease Study 2013. Lancet. 2015;386(9995):743-800.

- 10. United Nations Global Compact Academy. A safe and healthy working environment. 2022. Accessed November 23, 2023. https://unglobalcompact.org/take-action/safety-andhealth

- 11. WHO/ILO. WHO/ILO Joint Estimates of the Work-Related Burden of Disease and Injury, 2000–2016: Global Monitoring Report. World Health Organization (WHO) and International Labour Organization (ILO); 2021:9240034943.

- 12. Blackley DJ, Halldin CN, Laney AS. Continued increase in prevalence of coal workers’ pneumoconiosis in the United States, 1970–2017. Am J Public Health. 2018;108(9):1220-1222. 10.2105/AJPH.2018.304517

- 13. Vlahovich KP, Sood A. A 2019 update on occupational lung diseases: a narrative review. Pulm Ther. 2021;7(1):75-87. 10.1007/s41030-020-00143-4

- 14. Çallı E, Sogancioglu E, van Ginneken B, van Leeuwen KG, Murphy K. Deep learning for chest X-ray analysis: a survey. Med Image Anal. 2021;72:102125. 10.1016/j.media.2021.102125

- 15. Li J, Yin P, Wang H, et al. The burden of pneumoconiosis in China: an analysis from the Global Burden of Disease Study 2019. BMC Public Health. 2022;22(1):1114.

- 16. Cellina M, Cè M, Irmici G, et al. Artificial intelligence in lung cancer imaging: unfolding the future. Diagnostics. 2022;12(11):2644. 10.3390/diagnostics12112644

- 17. Choe J, Lee SM, Hwang HJ, et al. Artificial intelligence in lung imaging. Semin Respir Crit Care Med. 2022;43(06):946-960.

- 18. Rajpurkar P, Lungren MP. The current and future state of AI interpretation of medical images. N Engl J Med. 2023;388(21):1981-1990. 10.1056/NEJMra2301725

- 19. Kruger R, Thompson W, Turner A. Computer diagnosis of pneumoconiosis. IEEE Trans Syst Man Cybern. 1974;SMC-4(1):40-49. 10.1109/TSMC.1974.5408519

- 20. Haykin S. Neural Networks and Learning Machines. 3rd ed. Pearson Education India; 2009.

- 21. Litjens G, Kooi T, Bejnordi BE, et al. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60-88. 10.1016/j.media.2017.07.005

- 22. Sundararajan R, Xu H, Annangi P, Tao X, Sun X, Mao L. A multiresolution support vector machine based algorithm for pneumoconiosis detection from chest radiographs. Paper presented at: 2010 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, 14-17 April 2010. Rotterdam, The Netherlands; 2010.

- 23. Katsuragawa S, Doi K, MacMahon H, Nakamori N, Sasaki Y, Fennessy JJ. Quantitative computer-aided analysis of lung texture in chest radiographs. Radiographics. 1990;10(2):257-269. 10.1148/radiographics.10.2.2326513

- 24. Kondo H, Kouda T. Computer-aided diagnosis for pneumoconiosis using neural network. Paper presented at: 14th IEEE Symposium on Computer-Based Medical Systems (CBMS); July 2001. Bethesda, MD, USA; 2001:467-472. 10.1109/CBMS.2001.941763

- 25. Kondo H, Zhang L, Kouda T. Computer aided diagnosis for pneumoconiosis radiographs using neural network. International Archives of Photogrammetry and Remote Sensing. 2000;33:453-458.

- 26. Jagoe JR. Gradient pattern coding—an application to the measurement of pneumoconiosis in chest X rays. Comput Biomed Res. 1979;12(1):1-15. 10.1016/0010-4809(79)90002-8

- 27. Sishodiya PK. Silicosis—an ancient disease: providing succour to silicosis victims, lessons from Rajasthan model. Indian J Occup Environ Med. 2022;26(2):57-61.

- 28. Okumura E, Kawashita I, Ishida T. Development of CAD based on ANN analysis of power spectra for pneumoconiosis in chest radiographs: effect of three new enhancement methods. Radiol Phys Technol. 2014;7(2):217-227. 10.1007/s12194-013-0255-9

- 29. Okumura E, Kawashita I, Ishida T. Computerized classification of pneumoconiosis on digital chest radiography artificial neural network with three stages. J Digit Imaging. 2017;30(4):413-426. 10.1007/s10278-017-9942-0

- 30. Lecun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proc IEEE. 1998;86(11):2278-2324. 10.1109/5.726791

- 31. Deng J, Dong W, Socher R, Li LJ, Kai L, Li F-F. ImageNet: a large-scale hierarchical image database. Paper presented at: 2009 IEEE Conference on Computer Vision and Pattern Recognition; 20-25 June, 2009. Miami, FL, USA; 2009:248-255. 10.1109/CVPR.2009.5206848

- 32. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Communications of the ACM. 2017;60(6):84-90. 10.1145/3065386

- 33. Szegedy C, Wei L, Yangqing J, et al. Going deeper with convolutions. Paper presented at: 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 7-12 June, 2015. Boston, MA; 2015:1-9.

- 34. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. Paper presented at: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 27-30 June, 2016. Las Vegas, NV, USA.

- 35. Bozinovski S. Reminder of the first paper on transfer learning in neural networks, 1976. Informatica (Slovenia). 2020;44:291-302.

- 36. Bozinovski S, Fulgosi A. The influence of pattern similarity and transfer of learning upon training of a base perceptron B2 (original in Croatian: Utjecaj slicnosti likova i transfera ucenja na obucavanje baznog perceptrona B2). Paper presented at: Proc. Symp. Informatica, Bled; 1976:3-121.

- 37. Wang S, Li C, Wang R, et al. Annotation-efficient deep learning for automatic medical image segmentation. Nat Comm. 2021;12(1):5915.

- 38. Devnath L, Summons P, Luo S, et al. Computer-aided diagnosis of coal workers’ pneumoconiosis in chest X-ray radiographs using machine learning: a systematic literature review. Int J Environ Res Public Health. 2022;19(11):6439. 10.3390/ijerph19116439

- 39. Devnath L, Luo S, Summons P, Wang D. Automated detection of pneumoconiosis with multilevel deep features learned from chest X-ray radiographs. Comput Biol Med. 2021;129:104125. 10.1016/j.compbiomed.2020.104125

- 40. Devnath L, Luo S, Summons P, Wang D. Performance comparison of deep learning models for black lung detection on chest X-ray radiographs. In: Proceedings of the 3rd International Conference on Software Engineering and Information Management, Sydney. Association for Computing Machinery, New York, NY, United States; 2020:150-154.

- 41. Arzhaeva Y, Wang D, Devnath L, et al. Development of Automated Diagnostic Tools for Pneumoconiosis Detection from Chest X-Ray Radiographs. CSIRO; 2019. Report No. EP192938.

- 42. Zhang L, Rong R, Li Q, et al. A deep learning-based model for screening and staging pneumoconiosis. Sci Rep. 2021;11(1):2201.

- 43. Xiaohua W, Juezhao Y, Qiao Z, et al. Potential of deep learning in assessing pneumoconiosis depicted on digital chest radiography. Occup Environ Med. 2020;77(9):597-602. 10.1136/oemed-2019-106386

- 44. Wang Y, Cui F, Ding X, et al. Automated identification of the preclinical stage of coal workers’ pneumoconiosis from digital chest radiography using three-stage cascaded deep learning model. Biomed Signal Process Control. 2023;83:104607. 10.1016/j.bspc.2023.104607

- 45. Huang Y, Si Y, Hu B, et al. Transformer-based factorized encoder for classification of pneumoconiosis on 3D CT images. Comput Biol Med. 2022;150:106137. 10.1016/j.compbiomed.2022.106137

- 46. Li X, Liu CF, Guan L, Wei S, Yang X, Li SQ. Deep learning in chest radiography: detection of pneumoconiosis. Biomed Environ Sci. 2021;34(10):842-845.

- 47. Zheng R, Deng K, Jin H, Liu H, Zhang L. An improved CNN-based pneumoconiosis diagnosis method on X-ray chest film. Paper presented at: Human Centered Computing: 5th International Conference, HCC 2019; August 5-7, 2019. Čačak, Serbia. Revised selected papers; 2019.

- 48. Huang G, Liu Z, Maaten LVD, Weinberger KQ. Densely connected convolutional networks. Paper presented at: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 21-26 July, 2017. Honolulu, HI, USA.

- 49. Devnath L, Luo S, Summons P, Wang D. An accurate black lung detection using transfer learning based on deep neural networks. Paper presented at: 2019 International Conference on Image and Vision Computing New Zealand (IVCNZ); 2-4 December, 2019. Dunedin, New Zealand.

- 50. Redmon J, Farhadi A. YOLO9000: Better, faster, stronger. Paper presented at: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 21-26 July, 2017. Honolulu, HI, USA.

- 51. Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need. Adv Neural Inf Process Syst. 2017;30:5998-6008.

- 52. Dosovitskiy A, Beyer L, Kolesnikov A, et al. An image is worth 16x16 words: transformers for image recognition at scale. arXiv. 2020. arXiv:2010.11929

- 53. Okumura E, Kawashita I, Ishida T. Computerized analysis of pneumoconiosis in digital chest radiography: effect of artificial neural network trained with power spectra. J Digit Imaging. 2011;24(6):1126-1132. 10.1007/s10278-010-9357-7

- 54. Zhu B, Luo W, Li B, et al. The development and evaluation of a computerized diagnosis scheme for pneumoconiosis on digital chest radiographs. Biomed Eng Online. 2014;13(1):141.

- 55. Wang D, Arzhaeva Y, Devnath L, et al. Automated pneumoconiosis detection on chest X-rays using cascaded learning with real and synthetic radiographs. Paper presented at: 2020 Digital Image Computing: Techniques and Applications (DICTA); 29 November to 2 December, 2020. Melbourne, Australia.

- 56. Deranty J-P, Corbin T. Artificial intelligence and work: a critical review of recent research from the social sciences. AI & Soc. 2022. 10.1007/s00146-022-01496-x

- 57. Brown RC. Made in China 2025: implications of robotization and digitalization on MNC labor supply chains and workers’ labor rights in China. Tsinghua China L Rev. 2016;9:186. 10.2139/ssrn.3058380

- 58. Sawicki GS, Beck ON, Kang I, Young AJ. The exoskeleton expansion: improving walking and running economy. J Neuroeng Rehabil. 2020;17(1):1-9. 10.1186/s12984-020-00663-9

- 59. Smita N, Rajesh KD. Application of artificial intelligence (AI) in prosthetic and orthotic rehabilitation. In: Volkan S, Sinan Ö, Pınar Boyraz B, eds. Service Robotics. IntechOpen; 2020: ch. 2.

- 60. Bonato P. Advances in wearable technology and applications in physical medicine and rehabilitation. J Neuroeng Rehabil. 2005;2(1):2.

- 61. Ajunwa I, Greene D. Platforms at work: automated hiring platforms and other new intermediaries in the organization of work. In: Work and Labor in the Digital Age. 2019:61-91. 10.1108/S0277-283320190000033005

- 62. German Bionic. Exoskeleton tools for workplace safety. 2023. Accessed August 16, 2023. https://germanbionic.com/en/solutions/exoskeletons/

- 63. Orthexo. Exoskeleton tools for workplace safety. 2023. Accessed November 15, 2023. https://orthexo.de/en/

- 64. Murray SA, Farris RJ, Golfarb M, Hartigan C, Kandilakis C, Truex D. Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). Piscataway, NJ: IEEE; 2018. 10.1109/embc.2018.8512810

- 65. Inkol KA, McPhee J. Assessing control of fixed-support balance recovery in wearable lower-limb exoskeletons using multibody dynamic modelling. Paper presented at: 8th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob) 2020. New York City, New York, USA.

- 66. Zeilig G, Weingarden H, Zwecker M, Dudkiewicz I, Bloch A, Esquenazi A. Safety and tolerance of the ReWalk™ exoskeleton suit for ambulation by people with complete spinal cord injury: a pilot study. J Spinal Cord Med. 2012;35(2):96-101. 10.1179/2045772312Y.0000000003

- 67. Jansen O, Grasmuecke D, Meindl RC, et al. Hybrid assistive limb exoskeleton HAL in the rehabilitation of chronic spinal cord injury: proof of concept; the results in 21 patients. World Neurosurg. 2018;110:e73-e78. 10.1016/j.wneu.2017.10.080

- 68. Evans RW, Shackleton CL, West S, et al. Robotic locomotor training leads to cardiovascular changes in individuals with incomplete spinal cord injury over a 24-week rehabilitation period: a randomized controlled pilot study. Arch Phys Med Rehabil. 2021;102(8):1447-1456. 10.1016/j.apmr.2021.03.018

- 69. Lee G, Ohnuma T, Chong NY. Design and control of JAIST active robotic walker. Intell Serv Robot. 2010;3(3):125-135. 10.1007/s11370-010-0064-5

- 70. Morone G, Annicchiarico R, Iosa M, et al. Overground walking training with the i-Walker, a robotic servo-assistive device, enhances balance in patients with subacute stroke: a randomized controlled trial. J Neuroeng Rehabil. 2016;13(1):1-10. 10.1186/s12984-016-0155-4

- 71. Andreetto M, Divan S, Fontanelli D, Palopoli L. Passive robotic walker path following with bang-bang hybrid control paradigm. Paper presented at: 2016. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2016. IEEE; 2016:1054-1060. 10.1109/IROS.2016.7759179 [DOI] [Google Scholar]

- 72.Ford pilots new exoskeleton technology to help lessen chance of worker fatigue, injury [press release]. 2017. https://media.ford.com/content/fordmedia/fna/us/en/news/2017/11/09/ford-exoskeleton-technology-pilot.html. Accessed November 15, 2023.

- 73. Lamers EP, Zelik KE. Design, modeling, and demonstration of a new dual-mode back-assist exosuit with extension mechanism. Wearable Technol. 2021;2:e1. 10.1017/wtc.2021.1 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74. Cockburn D, Jennings NR. ARCHON: A distributed artificial intelligence system for industrial applications. In: O’Hare GMP, Jennings NR, eds. Foundation of Distributed Artificial Intelligence. University of Southampton Institutional Repository (01/01/96). Wiley; 1996a:319-344. [Google Scholar]

- 75. Podgorski D, Majchrzycka K, Dąbrowska A, Gralewicz G, Okrasa M. Towards a conceptual framework of OSH risk management in smart working environments based on smart PPE, ambient intelligence and the internet of things technologies. Int J Occup Saf Ergon. 2017;23(1):1-20. 10.1080/10803548.2016.1214431 [DOI] [PubMed] [Google Scholar]

- 76. Li B-h, Hou B-c, Yu W-t, Lu X-b, Yang C-w. Applications of artificial intelligence in intelligent manufacturing: a review. Frontiers of Information Technology and Electronic Engineering. 2017;18(1):86-96. doi:10.1631/FITEE.1601885

- 77. Sun S, Zheng X, Gong B, Garcia Paredes J, Ordieres-Meré J. Healthy operator 4.0: a human cyber–physical system architecture for smart workplaces. Sensors. 2020;20(7):2011. doi:10.3390/s20072011

- 78. Márquez-Sánchez S, Campero-Jurado I, Herrera-Santos J, Rodríguez S, Corchado JM. Intelligent platform based on smart PPE for safety in workplaces. Sensors. 2021;21(14):4652. doi:10.3390/s21144652

- 79. Yegnanarayana B. Artificial Neural Networks. PHI Learning Pvt Ltd; 2009.

- 80. Leake DB. Case-Based Reasoning: Experiences, Lessons, and Future Directions. MIT Press; 1996.

- 81. Goodfellow I, Bengio Y, Courville A. Deep Learning. MIT Press; 2016.

- 82. Riverola FF, Corchado JM. Sistemas híbridos neuro-simbólicos: una revisión. Inteligencia Artificial. Revista Iberoamericana de Inteligencia Artificial. 2000;4(11):12-26.

- 83. Intellinium. Connected safety shoes, smart PPE and connected worker. 2023. https://intellinium.io/smart-connected-safety-shoes/. Accessed November 15, 2023.

- 84. CONEXPO-CON/AGG. Improving jobsite productivity and safety with smart helmets. 2022. https://www.conexpoconagg.com/news/improving-jobsite-productivity-and-safety-with-sma#:~:text=The%20helmet%20comes%20equipped%20with,%2C%20environment%2C%20and%20personal%20health. Accessed November 15, 2023.

- 85. Howard J. Artificial intelligence: implications for the future of work. Am J Ind Med. 2019;62(11):917-926. doi:10.1002/ajim.23037

- 86. Gihleb R, Giuntella O, Stella L, Wang T. Industrial robots, workers’ safety, and health. Labour Econ. 2022;78:102205. doi:10.1016/j.labeco.2022.102205

- 87. Bebon J. Using robotics to improve workplace safety. EHS Administration, Technology and Innovation; 2023. https://ehsdailyadvisor.blr.com/2023/06/using-robotics-to-improve-workplace-safety/

- 88. Yang S, Zhong Y, Feng D, Li RYM, Shao XF, Liu W. Robot application and occupational injuries: are robots necessarily safer? Saf Sci. 2022;147:105623. doi:10.1016/j.ssci.2021.105623

- 89. Kabe T, Tanaka K, Ikeda H, Sugimoto N. Consideration on safety for emerging technology: case studies of seven service robots. Saf Sci. 2010;48(3):296-301. doi:10.1016/j.ssci.2009.11.008

- 90. Woschank M, Rauch E, Zsifkovits H. A review of further directions for artificial intelligence, machine learning, and deep learning in smart logistics. Sustainability. 2020;12(9):3760. doi:10.3390/su12093760

- 91. Brian M. Gartner’s IT automation trends for 2023. 2023. Accessed August 16, 2023. https://www.advsyscon.com/blog/gartner-it-automation/#:~:text=By%202024%2C%2075%25%20of%20organizations,tenfold%20growth%20in%20compute%20requirements

- 92. The National Institute for Occupational Safety and Health (NIOSH). NIOSH’s role in robotics. 2017. Accessed August 16, 2023.

- 93. Blanchard D, Sauelko A, Stempak N. Improving workplace safety with robots. EHS Today; 2023. https://www.ehstoday.com/safety-technology/article/21266877/improving-workplace-safety-with-robots

- 94. Zhang J, Yang X, Wang W, Guan J, Ding L, Lee VCS. Automated guided vehicles and autonomous mobile robots for recognition and tracking in civil engineering. Autom Constr. 2023;146:104699. doi:10.1016/j.autcon.2022.104699

- 95. Fragapane G, Hvolby H-H, Sgarbossa F, Strandhagen JO. Autonomous mobile robots in hospital logistics. In: IFIP International Conference on Advances in Production Management Systems. Novi Sad, Serbia. Cham: Springer International Publishing. doi:10.1007/978-3-030-57993-7_76

- 96. Moshayedi AJ, Xu G, Liao L, Kolahdooz A. Gentle survey on MIR industrial service robots: review & design. J Mod Process Manuf Prod. 2021;10(1):31-50.

- 97. Agility Robotics Inc. Agility Robotics launches next generation of Digit: world’s first human-centric, multi-purpose robot made for logistics work. 2022. https://agilityrobotics.com/news/2022/future-robotics-l3mjh. Accessed August 16, 2023.

- 98. Boston Dynamics. Robotics' role in public safety. Boston Dynamics; 2022.

- 99. National Institute of Advanced Industrial Science and Technology (AIST). Development of a humanoid robot prototype, HRP-5P, capable of heavy labor. 2018. https://www.aist.go.jp/aist_e/list/latest_research/2018/20181116/en20181116.html. Accessed August 16, 2023.

- 100. Ackerman E. Meet Aquanaut, the underwater transformer. IEEE Spectr. 2019;25. https://spectrum.ieee.org/open-source-ai-2666932122. Accessed November 15, 2023.

- 101. Russell JA, McIntyre L, Stewart L, Wang T. Concussions in dancers and other performing artists. Phys Med Rehabil Clin N Am. 2021;32(1):155-168. doi:10.1016/j.pmr.2020.09.007

- 102. Senn AB, McMichael LP, Stewart LJ, Russell JA. Head trauma and concussions in film and television stunt performers: an exploratory study. J Occup Environ Med. 2023;65(1):e21-e27. doi:10.1097/JOM.0000000000002738

- 103. Cohen A, Nguyen D, King D, Fraser C. Falling into line: eye tests and impact monitoring in stunt performers. Clin Exp Ophthalmol. 2016;44:77.

- 104. Akinsemoyin A, Awolusi I, Chakraborty D, Al-Bayati AJ, Akanmu A. Unmanned aerial systems and deep learning for safety and health activity monitoring on construction sites. Sensors. 2023;23(15):6690. doi:10.3390/s23156690

- 105. Ray SJ, Teizer J. Dynamic blindspots measurement for construction equipment operators. Saf Sci. 2016;85:139-151. doi:10.1016/j.ssci.2016.01.011

- 106. Kim Y, Choi Y. Smart helmet-based proximity warning system to improve occupational safety on the road using image sensor and artificial intelligence. Int J Environ Res Public Health. 2022;19(23):16312. doi:10.3390/ijerph192316312

- 107. Patel P, Thakkar A. The upsurge of deep learning for computer vision applications. International Journal of Electrical and Computer Engineering. 2020;10(1):538. doi:10.11591/ijece.v10i1.pp538-548

- 108. Li X, Yi W, Chi H-L, Wang X, Chan APC. A critical review of virtual and augmented reality (VR/AR) applications in construction safety. Autom Constr. 2018;86:150-162. doi:10.1016/j.autcon.2017.11.003

- 109. Gao Y, González VA, Yiu TW, et al. Immersive virtual reality as an empirical research tool: exploring the capability of a machine learning model for predicting construction workers’ safety behaviour. Virtual Reality. 2022;26(1):361-383. doi:10.1007/s10055-021-00572-9

- 110. How AST revolutionizes safety training with virtual reality [press release]. July 2022. https://www.vrdirect.com/success-stories/how-ast-revolutionizes-safety-training-with-vr/. Accessed November 15, 2023.

- 111. VRdirect GmbH. VR for training & HR archives. 2023. https://www.vrdirect.com/blog/category/vr-for-training-hr/feed/. Accessed August 16, 2023.

- 112. Kolo K. Virtual reality is revolutionizing enterprise and industrial training. VR/AR Association; 2021. Accessed August 16, 2023. https://www.thevrara.com/blog2/2021/9/14/virtual-reality-is-revolutionizing-enterprise-and-industrial-training-see-success-stories-from-walmart-verizon-porsche-bp-henkel-fedex

- 113. Albeaino G, Gheisari M, Franz BW. A systematic review of unmanned aerial vehicle application areas and technologies in the AEC domain. J Inform Technol Constr. 2019;24:381-405.

- 114. Zhou S, Gheisari M. Unmanned aerial system applications in construction: a systematic review. Constr Innov. 2018;18(4):453-468. doi:10.1108/CI-02-2018-0010

- 115. Ham Y, Han KK, Lin JJ, Golparvar-Fard M. Visual monitoring of civil infrastructure systems via camera-equipped unmanned aerial vehicles (UAVs): a review of related works. Visualiz Eng. 2016;4(1):1-8. doi:10.1186/s40327-015-0029-z

- 116. Liu P, Chen AY, Huang Y-N, et al. A review of rotorcraft unmanned aerial vehicle (UAV) developments and applications in civil engineering. Smart Struct Syst. 2014;13(6):1065-1094. doi:10.12989/sss.2014.13.6.1065

- 117. Zucchii M. Drones: a gateway technology to full site automation. Engineering News-Record. 2015. Accessed August 16, 2023. https://www.enr.com/articles/9040-drones-a-gateway-technology-to-full-site-automation

- 118. Hart WS, Gharaibeh NG. Use of micro unmanned aerial vehicles in roadside condition surveys. Paper presented at: Transportation and Development Institute Congress 2011: Integrated Transportation and Development for a Better Tomorrow, March 13-16, 2011. Chicago, Illinois: First Congress of the Transportation and Development Institute of ASCE; 2011.

- 119. Koutsoudis A, Vidmar B, Ioannakis G, Arnaoutoglou F, Pavlidis G, Chamzas C. Multi-image 3D reconstruction data evaluation. J Cult Herit. 2014;15(1):73-79. doi:10.1016/j.culher.2012.12.003

- 120. Niethammer U, James M, Rothmund S, Travelletti J, Joswig M. UAV-based remote sensing of the Super-Sauze landslide: evaluation and results. Eng Geol. 2012;128:2-11. doi:10.1016/j.enggeo.2011.03.012

- 121. Uysal M, Toprak A, Polat N. Photo realistic 3D modeling with UAV: Gedik Ahmet Pasha Mosque in Afyonkarahisar. Int Arch Photogramm Remote Sens Spat Inf Sci. 2013;40:659-662. doi:10.5194/isprsarchives-XL-5-W2-659-2013

- 122. Banaszek A, Zarnowski A, Cellmer A, Banaszek S. Application of new technology data acquisition using aerial (UAV) digital images for the needs of urban revitalization. Paper presented at: "Environmental Engineering" 10th International Conference. Lithuania: Vilnius Gediminas Technical University; 2017.

- 123. Bulatov D, Solbrig P, Gross H, Wernerus P, Repasi E, Heipke C. Context-based urban terrain reconstruction from UAV-videos for geoinformation applications. Int Arch Photogramm Remote Sens Spat Inf Sci. 2012;38:75-80. doi:10.5194/isprsarchives-XXXVIII-1-C22-75-2011

- 124. Daley S. AI drones: how artificial intelligence works in drones and examples. BuiltIn; 2022. https://builtin.com/artificial-intelligence/drones-ai-companies. Accessed August 18, 2023.

Data Availability Statement

Data sharing is not applicable to this article because no new data were created or analyzed in this study.