Abstract

Importance. With the booming growth of artificial intelligence (AI), especially recent advances in deep learning, the use of deep learning-based methods for medical image analysis has become an active research area in both the medical industry and academia. This paper reviews recent progress in deep learning research for medical image analysis and its clinical applications. It also discusses existing problems in the field and suggests possible solutions and future directions.

Highlights. This paper reviews the advancement of convolutional neural network-based techniques in clinical applications. Specifically, it covers state-of-the-art clinical applications in four major human body systems: the nervous system, the cardiovascular system, the digestive system, and the skeletal system. Overall, the best available evidence indicates that deep learning models perform well in medical image analysis. However, many algorithms are derived from small-scale medical datasets, and this limits their clinical applicability. Future directions could include federated learning, benchmark dataset collection, and the use of domain knowledge as priors.

Conclusion. Recent deep learning technologies have achieved great success in medical image analysis with high accuracy, efficiency, stability, and scalability. Technological advances that reduce the demand for high-quality, large-scale datasets could be one of the future developments in this area.

1. Introduction

With rapid developments in artificial intelligence (AI) technology, using AI to mine clinical data has become a major trend in the medical industry [1]. Medical image analysis is one of the critical parts of clinical diagnosis and decision-making. Applying advanced AI algorithms to it has become an active research area in both industry and academia [2, 3]. Recent applications of deep learning in medical image analysis involve various computer vision tasks such as classification, detection, segmentation, and registration. Among them, classification, detection, and segmentation are the fundamental and most widely used tasks.

Although a number of reviews on deep learning methods for medical image analysis exist [4-13], most of them focus either on general deep learning techniques or on specific clinical applications. The most comprehensive review is the work of Litjens et al. published in 2017 [12]. Deep learning is a quickly evolving research field, and numerous state-of-the-art works have been proposed since then. In this paper, we review the latest developments in medical image analysis with comprehensive and representative clinical applications.

We briefly review the common medical imaging modalities and the technologies for specific tasks in medical image analysis, including classification, detection, segmentation, and registration. We also present more detailed clinical applications for different types of diseases. Finally, we discuss the existing problems in the field and provide possible solutions and future research directions.

2. AI Technologies in Medical Image Analysis

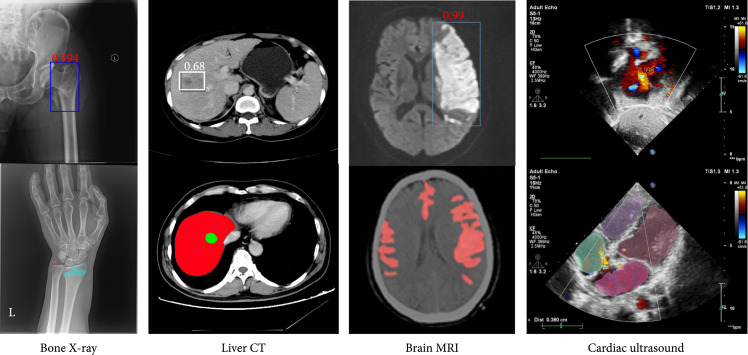

Different medical imaging modalities have unique characteristics and respond differently to human body structures and organ tissues, so they are used for different clinical purposes. The modalities commonly used for diagnostic analysis in clinical practice include projection imaging (such as X-ray imaging), computed tomography (CT), ultrasound imaging, and magnetic resonance imaging (MRI). MRI sequences include T1, T1-w, T2, T2-w, diffusion-weighted imaging (DWI), apparent diffusion coefficient (ADC), and fluid-attenuated inversion recovery (FLAIR). Figure 1 demonstrates a few examples of medical image modalities and their corresponding clinical applications.

Figure 1.

Examples of medical image modalities and their corresponding applications (original copy).

2.1. Image Classification for Medical Image Analysis

As a fundamental task in computer vision, image classification plays an essential role in computer-aided diagnosis. A straightforward use of image classification in medical image analysis is to classify an input image or a series of images in one of two ways. Either the images contain one (or a few) predefined diseases, or they are free of disease (i.e., a healthy case) [14, 15]. Typical clinical applications of image classification include skin disease identification in dermatology [16, 17] and eye disease recognition in ophthalmology (such as diabetic retinopathy [18, 19], glaucoma [20], and corneal diseases [21]). Classification of pathological images for various cancers, such as breast cancer [22] and brain cancer [23], also belongs to this area.

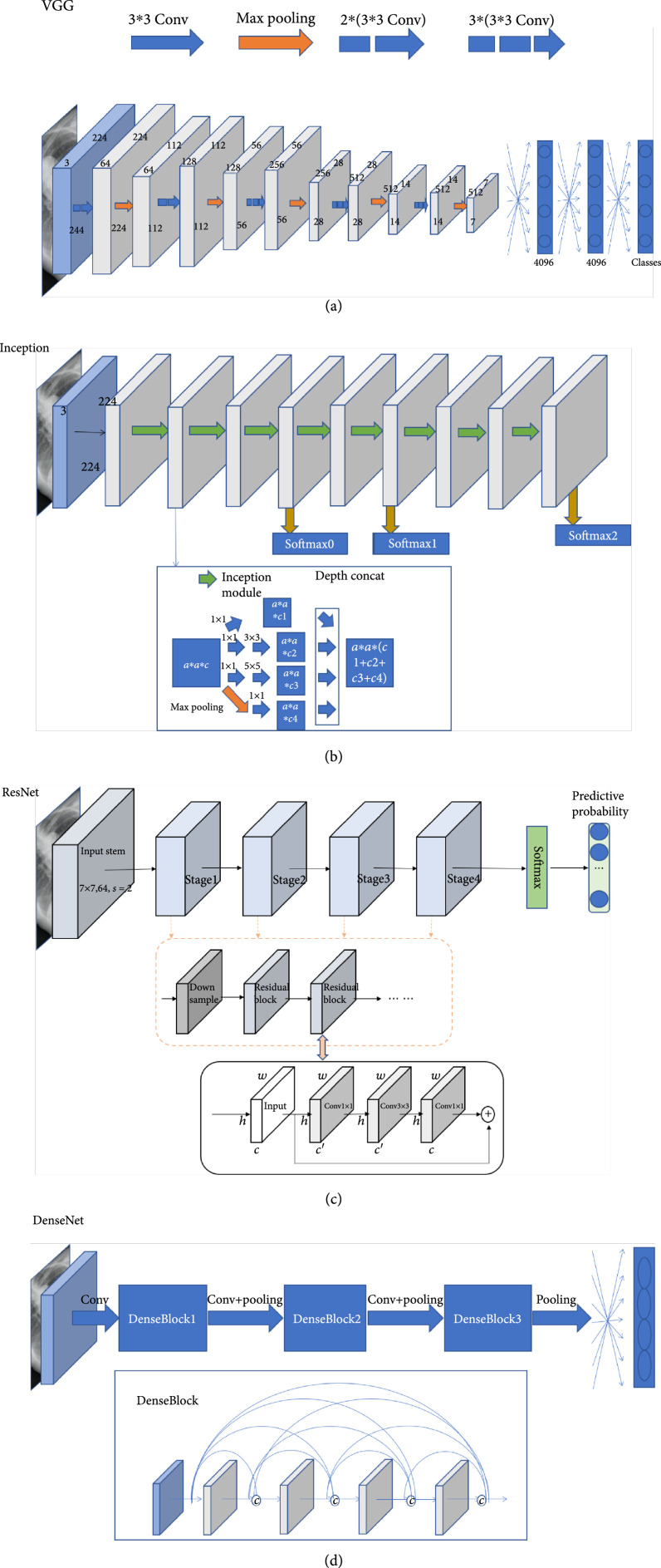

The convolutional neural network (CNN) is the dominant framework for image classification [24], and CNN architectures have continuously improved with the development of deep learning. AlexNet [25] was a pioneering CNN. It was composed of stacked convolutional layers with ReLU activations, interleaved with max pooling operations with stride 2 for downsampling. VGGNet [26] simplified the structure of AlexNet by using only small 3 × 3 convolution kernels and 2 × 2 max pooling. It showed improved performance simply by increasing the depth of the network. The Inception network [27] and its variants [28, 29] combined and stacked 1 × 1, 3 × 3, and 5 × 5 convolution kernels and pooling in parallel branches. This increased the width and the adaptability of the network. ResNet [30] and DenseNet [31] both used skip connections to alleviate the vanishing gradient problem. SENet [32] proposed a squeeze-and-excitation module that enables the model to pay more attention to the most informative channel features. The EfficientNet family [33] applied AutoML and a compound scaling method. This scales the width, depth, and resolution of the network uniformly in a principled way, improving accuracy and efficiency. Figure 2 demonstrates some of the commonly used CNN-based classification network architectures.
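To make the squeeze-and-excitation idea concrete, the NumPy sketch below applies one SE gate to a single feature map. It is illustrative only. The function name `squeeze_excitation` is hypothetical, and the random weights stand in for parameters that SENet [32] would learn during training. The reduction ratio `r = 4` is a toy choice; SENet used a larger default ratio of 16. Batching is omitted.

```python
import numpy as np


def squeeze_excitation(x, w1, b1, w2, b2):
    """Apply one squeeze-and-excitation gate to a feature map x of shape (C, H, W).

    w1: (C // r, C) and b1: (C // r,) form the channel-reduction layer;
    w2: (C, C // r) and b2: (C,) form the channel-expansion layer.
    """
    # Squeeze: global average pooling yields one descriptor per channel.
    z = x.mean(axis=(1, 2))                     # shape (C,)
    # Excitation: bottleneck MLP with ReLU, then a sigmoid gate per channel.
    h = np.maximum(0.0, w1 @ z + b1)            # shape (C // r,)
    s = 1.0 / (1.0 + np.exp(-(w2 @ h + b2)))    # shape (C,), values in (0, 1)
    # Recalibration: rescale every channel by its gate value.
    return x * s[:, None, None], s


rng = np.random.default_rng(0)
C, H, W, r = 8, 5, 5, 4
x = rng.standard_normal((C, H, W))
w1, b1 = rng.standard_normal((C // r, C)), np.zeros(C // r)
w2, b2 = rng.standard_normal((C, C // r)), np.zeros(C)
y, s = squeeze_excitation(x, w1, b1, w2, b2)
print(y.shape, np.round(s, 2))
```

The gate values `s` indicate which channels the block amplifies or suppresses. In SENet the block is inserted after convolutional stages, for example inside residual blocks, so that the recalibration is learned jointly with the rest of the network.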

Figure 2.

Examples of CNN-based classification networks (original copy).

Besides the direct use for image classification, CNN-based networks can also be applied as the backbone models for other computer vision tasks, such as detection and segmentation.

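Researchers use several metrics to evaluate image classification algorithms:

- Precision is the proportion of true positives among the samples predicted as positive.
- Recall is the proportion of all positive samples in the test set that are correctly identified as positive.
- Accuracy measures the overall proportion of correctly classified samples.
- The F1 score is the harmonic mean of precision and recall, so it takes both into account.
- The ROC (receiver operating characteristic) curve is commonly used to evaluate binary classification models.
- The kappa coefficient measures agreement between predicted and true labels beyond what chance would produce, which makes it useful for multiclass classification tasks.

These metrics are defined as follows:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN},$$

$$\text{Accuracy} = \frac{TP + TN}{N}, \qquad F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}},$$

$$\kappa = \frac{p_o - p_e}{1 - p_e}, \qquad p_e = \frac{1}{N^2} \sum_{k} a_k b_k.$$

Here, we denote TP as true positives, FP as false positives, FN as false negatives, TN as true negatives, and N as the number of testing samples. p_o is the observed agreement (i.e., the accuracy), and a_k and b_k are the numbers of samples that truly belong to and are predicted as class k, respectively.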
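The classification metrics above can be computed directly from confusion-matrix counts. The following plain-Python sketch is a minimal illustration, not a reference implementation, and it rests on several assumptions:

- The function names are hypothetical, and zero-division guards are omitted.
- The kappa function assumes a square confusion matrix with rows as true classes and columns as predictions.
- The AUC is computed through its rank interpretation (the probability that a randomly chosen positive scores higher than a randomly chosen negative) rather than by tracing the ROC curve.

```python
def binary_metrics(tp, fp, fn, tn):
    """Precision, recall, accuracy, and F1 score from binary confusion counts."""
    n = tp + fp + fn + tn                       # N: number of testing samples
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    accuracy = (tp + tn) / n
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return precision, recall, accuracy, f1


def cohen_kappa(confusion):
    """Cohen's kappa for a K x K confusion matrix (rows: truth, columns: prediction)."""
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    p_o = sum(confusion[i][i] for i in range(k)) / n          # observed agreement
    true_totals = [sum(confusion[i]) for i in range(k)]       # a_k
    pred_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]  # b_k
    p_e = sum(a * b for a, b in zip(true_totals, pred_totals)) / n ** 2  # chance agreement
    return (p_o - p_e) / (1 - p_e)


def roc_auc(scores, labels):
    """AUC as the probability that a random positive outscores a random negative."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


print(binary_metrics(tp=40, fp=10, fn=20, tn=30))
print(cohen_kappa([[20, 5], [10, 15]]))
print(roc_auc([0.9, 0.8, 0.4, 0.3], [1, 0, 1, 0]))
```

For these toy counts, precision is 0.8 and recall is about 0.667. The F1 score (about 0.727) therefore lies between them but closer to the lower value, as a harmonic mean does. The kappa of 0.4 is well below the 0.7 accuracy because half of the observed agreement would be expected by chance.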

2.2. Object Detection for Medical Image Analysis

Generally speaking, object detection involves both identification and localization. The identification task determines whether objects of certain classes appear in regions of interest (ROIs), whereas the localization task determines the position of each object in the image. In medical image analysis, detection commonly aims to find the earliest signs of abnormality in patients. Example clinical applications of detection include lung nodule detection in chest CT or X-ray images [34, 35] and lesion detection on CT images [36, 37] or mammograms [38].

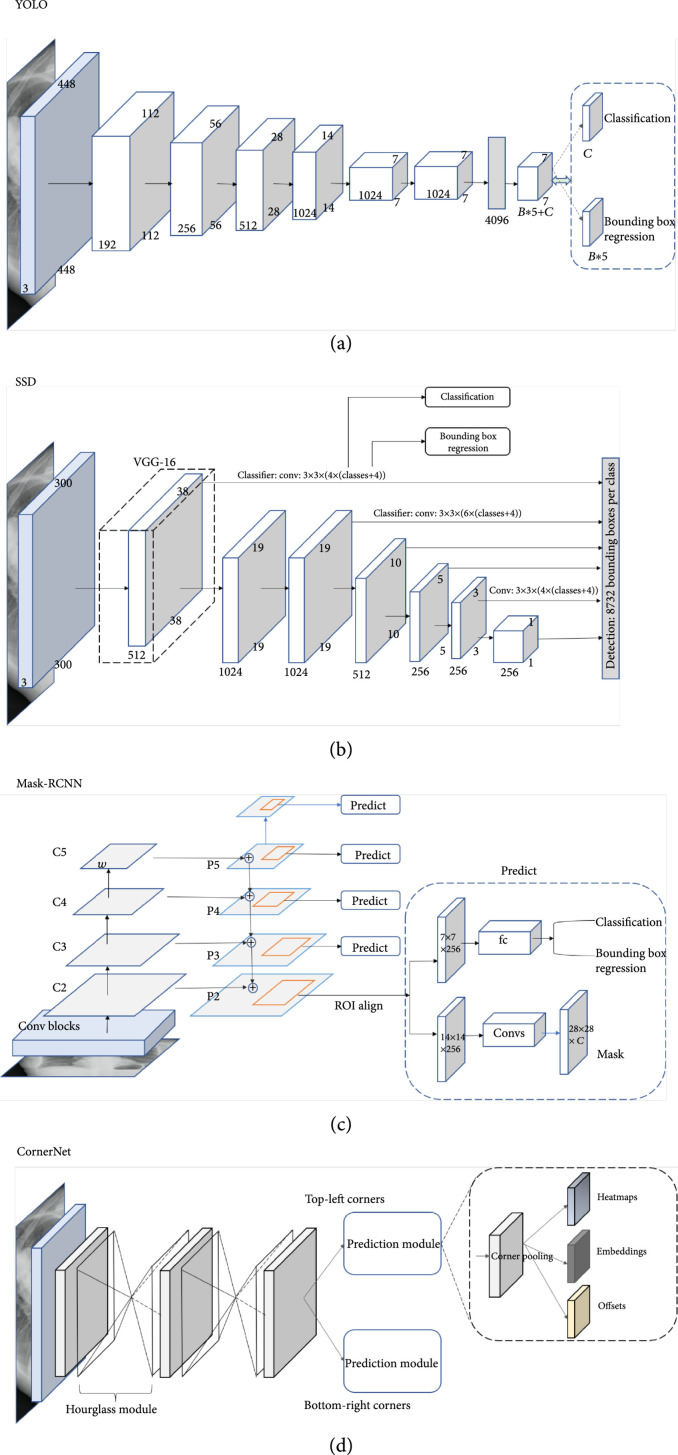

Object detection algorithms can be categorized into two approaches: anchor-based and anchor-free. Anchor-based algorithms can be further divided into single-stage and two-stage (or multistage) algorithms. In general, single-stage algorithms are computationally efficient, whereas two-stage/multistage algorithms achieve better detection performance.

The YOLO family [39] and the single-shot multibox detector (SSD) [40] are two classic and widely used single-stage detectors with simple model architectures. As shown in Figures 3(a) and 3(b), both architectures are based on feed-forward convolutional networks. These networks produce a fixed number of bounding boxes, together with scores for the presence of object instances of given classes in those boxes. A nonmaximum suppression step is then applied to generate the final predictions. Unlike YOLO, which works on a single-scale feature map, SSD uses multiscale feature maps and thereby achieves better detection performance.

Two-stage frameworks first generate a set of ROIs and then classify each of them with a network. The Faster R-CNN framework [41] and its descendant Mask R-CNN [42] are the most popular two-stage frameworks. As shown in Figure 3(c), Faster/Mask R-CNN first generates object proposals through a region proposal network (RPN) and then classifies the generated proposals. The major difference between Faster R-CNN and Mask R-CNN is that Mask R-CNN adds an instance segmentation branch.

More recently, research has trended toward anchor-free algorithms, of which CornerNet [43] is a popular example. As illustrated in Figure 3(d), CornerNet is a single convolutional neural network that does not use anchor boxes. Instead, it detects each object bounding box as a pair of keypoints: the top-left corner and the bottom-right corner.
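The nonmaximum suppression step mentioned above is usually a greedy procedure. It keeps the highest-scoring box, discards remaining boxes whose intersection over union (IoU) with it exceeds a threshold, and repeats. The NumPy sketch below illustrates this under assumptions of our own: boxes given as [x1, y1, x2, y2] pixel coordinates, a single class, and an IoU threshold of 0.5. The function names are hypothetical and are not the internals of YOLO, SSD, or any specific library.

```python
import numpy as np


def box_iou(box, boxes):
    """IoU between one box and an (M, 4) array of boxes, all as [x1, y1, x2, y2]."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy nonmaximum suppression; returns indices of the boxes that are kept."""
    order = np.argsort(scores)[::-1]          # highest score first
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        # Discard boxes that overlap the kept box more than the threshold.
        overlaps = box_iou(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]
    return keep


boxes = np.array([[10, 10, 50, 50],       # candidate lesion box
                  [12, 12, 52, 52],       # near-duplicate of the first box
                  [100, 100, 140, 140]],  # separate finding
                 dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(nms(boxes, scores))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.82, so it is suppressed. The distant third box survives. A lower threshold suppresses more aggressively, which risks merging nearby but distinct findings, so the threshold is a tuning choice rather than a fixed rule.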

Figure 3.

Examples of object detection frameworks (original copy).

There are two main metrics for evaluating detection methods: the mean average precision (mAP) and the number of false positives per image at a given recall (FP/I @ recall). mAP is the mean of the average precisions (APs) over all categories. FP/I @ recall measures the number of false positives (FPs) per image at a given recall rate, which reflects the balance between false positives and the miss rate.
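Both detection metrics can be computed once each detection has been matched to the ground truth. The NumPy sketch below makes the following assumptions:

- Matching is already done, so each detection is flagged as a TP or an FP, typically at an IoU threshold such as 0.5.
- AP uses all-point interpolation, which is one of several conventions; PASCAL VOC 11-point and COCO 101-point variants also exist.
- All function names are hypothetical.

```python
import numpy as np


def _ranked(scores, is_tp):
    """Sort detections by descending confidence; return cumulative TP and FP counts."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]
    return np.cumsum(tp), np.cumsum(1.0 - tp)


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class (area under the precision-recall curve)."""
    cum_tp, cum_fp = _ranked(scores, is_tp)
    recall = cum_tp / num_gt
    precision = cum_tp / (cum_tp + cum_fp)
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    # Interpolation: precision at recall r is the best precision at any recall >= r.
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    # Sum the rectangles where recall increases.
    idx = np.nonzero(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


def fp_per_image_at_recall(scores, is_tp, num_gt, num_images, target_recall):
    """False positives per image at the first score threshold reaching target_recall."""
    cum_tp, cum_fp = _ranked(scores, is_tp)
    reached = np.nonzero(cum_tp / num_gt >= target_recall)[0]
    if reached.size == 0:
        return None  # the detector never reaches this recall
    return float(cum_fp[reached[0]] / num_images)


# Toy example: two classes, detections already matched to ground truth.
ap_a = average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0], num_gt=2)
ap_b = average_precision([0.95, 0.5], [1, 1], num_gt=3)
print(ap_a, ap_b, np.mean([ap_a, ap_b]))  # mAP is the mean of per-class APs
print(fp_per_image_at_recall([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0], 2, 2, 1.0))
```

In this toy case, class A reaches full recall only after one false positive, so its AP is 5/6 and its FP/I at 100% recall over two images is 0.5. Class B never finds its third object, which caps its AP at 2/3.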

2.3. Segmentation for Medical Image Analysis

Image segmentation is a pixel labeling problem, which partitions an image into regions with similar properties. For medical image analysis, segmentation is aimed at determining the contour of an organ or anatomical structure in images. Segmentation tasks in clinical applications include segmenting a variety of organs, organ structures (such as the whole heart [44] and pancreas [45]), tumors, and lesions (such as the liver and liver tumor [46]) across different medical imaging modalities.

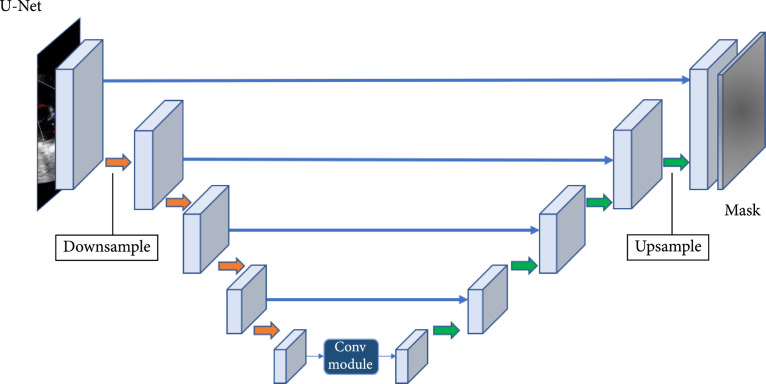

Since the fully convolutional network (FCN) [47] was proposed, image segmentation has achieved great success. FCN was the first to turn classification CNNs into networks for dense segmentation, using in-network upsampling and a pixelwise loss. Through a skip architecture, it combined coarse, semantic information from deep layers with fine, local information from shallow layers to produce dense predictions. According to the input data dimension, medical image segmentation methods can be divided into two categories: 2D methods and 3D methods.

The U-Net architecture [48] is the most popular FCN for medical image segmentation. As shown in Figure 4, U-Net consists of a contracting path (the downsampling side) and an expansive path (the upsampling side). The contracting path follows the typical CNN architecture. It repeatedly applies two unpadded 3 × 3 convolutions, each followed by a ReLU, and then a 2 × 2 max pooling operation with stride 2 for downsampling. At each downsampling step, the number of feature channels is doubled. Each step in the expansive path consists of three operations:

- an upsampling of the feature map followed by a 2 × 2 convolution ("up-convolution") that halves the number of feature channels;
- a concatenation with the correspondingly cropped feature map from the contracting path;
- two 3 × 3 convolutions, each followed by a ReLU.

Many variants of U-Net-based architectures have been proposed. Isensee et al. [49] proposed a general framework for medical image segmentation called nnU-Net (no new U-Net). It uses a dataset fingerprint, which represents the key properties of the dataset, and a pipeline fingerprint, which represents the key design choices of the algorithm. From these, a set of heuristic rules derived from domain knowledge systematically optimizes the segmentation pipeline. nnU-Net achieved state-of-the-art performance on 19 different datasets with 49 segmentation tasks. These tasks covered a variety of organs, organ structures, tumors, and lesions in a number of imaging modalities (such as CT and MRI).
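The size and channel bookkeeping of the contracting and expansive paths can be traced with a few lines of arithmetic. The Python sketch below assumes the unpadded ("valid") configuration of the original U-Net paper: a 572 × 572 input, 64 channels at the first level, and four pooling steps. Many later implementations pad their convolutions instead, in which case no cropping is needed. The function name is hypothetical.

```python
def unet_shape_trace(input_size=572, base_channels=64, depth=4):
    """Trace (stage, spatial size, channels[, crop]) through an unpadded U-Net."""
    size, ch = input_size, base_channels
    trace, skips = [], []
    # Contracting path: two 3x3 valid convolutions (-4 px), then 2x2 max pool, stride 2.
    for _ in range(depth):
        size -= 4
        skips.append(size)
        trace.append(("down", size, ch))
        if size % 2:
            raise ValueError("feature map must have an even size before pooling")
        size, ch = size // 2, ch * 2          # halve resolution, double channels
    size -= 4                                  # two convolutions at the bottleneck
    trace.append(("bottleneck", size, ch))
    # Expansive path: 2x2 up-convolution (double size, halve channels),
    # concatenate the cropped skip map (2 * ch channels), then two 3x3 convolutions
    # that bring the channel count back to ch.
    for skip_size in reversed(skips):
        size, ch = size * 2, ch // 2
        crop = (skip_size - size) // 2        # border removed per side of the skip map
        size -= 4
        trace.append(("up", size, ch, crop))
    return trace


for stage in unet_shape_trace():
    print(stage)
```

With these defaults, the trace ends at a 388 × 388 map with 64 channels. The original U-Net then maps this to class scores with a 1 × 1 convolution. The shrinkage from 572 to 388 is why predictions cover only the central part of each input tile.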

Figure 4.

Examples of image segmentation frameworks (original copy).

The Dice similarity coefficient and intersection over union (IoU) are the two major metrics for evaluating segmentation methods. They are defined as follows:

$$\text{Dice} = \frac{2TP}{2TP + FP + FN}, \qquad \text{IoU} = \frac{TP}{TP + FP + FN},$$

where TP, FP, and FN denote true positive, false positive, and false negative pixels (or voxels), respectively.
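Both overlap metrics follow directly from pixelwise (or voxelwise) counts. The NumPy sketch below is a minimal version for binary masks. The function name is hypothetical. Returning 1.0 when both masks are empty is a convention we assume here, and libraries differ on this edge case.

```python
import numpy as np


def dice_and_iou(pred, target):
    """Dice coefficient and IoU of two binary masks of equal shape (2D or 3D)."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = np.count_nonzero(pred & target)
    fp = np.count_nonzero(pred & ~target)
    fn = np.count_nonzero(~pred & target)
    if tp + fp + fn == 0:
        return 1.0, 1.0  # both masks empty: treated as perfect agreement
    return 2 * tp / (2 * tp + fp + fn), tp / (tp + fp + fn)


pred = np.zeros((4, 4), dtype=bool)
target = np.zeros((4, 4), dtype=bool)
pred[0:2, 0:2] = True      # 4 predicted pixels
target[0:2, 1:3] = True    # 4 ground-truth pixels, 2 of them overlap the prediction
dice, iou = dice_and_iou(pred, target)
print(dice, iou)
```

Here Dice = 0.5 and IoU = 1/3. This illustrates the general relation Dice = 2 · IoU / (1 + IoU), so Dice is never smaller than IoU for the same prediction.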

2.4. Image Registration for Medical Image Analysis

Image registration, also known as image warping or image fusion, is the process of aligning two or more images. The goal of medical image registration is to establish optimal correspondence between images acquired:

- at different times (for longitudinal studies);
- with different imaging modalities (such as CT and MRI);
- across different patients (for intersubject studies);
- from distinct viewpoints.

Image registration is a crucial preprocessing step in many clinical applications. These include computer-aided intervention and treatment planning [50] and image-guided/assisted surgery or simulation [51]. Another is the fusion of anatomical images (e.g., CT or MRI) with functional images (such as positron emission tomography, single-photon emission computed tomography, or functional MRI) for disease diagnosis and monitoring [52].

Image registration methods can be categorized in several ways:

- by the imaging modalities involved: monomodal or multimodal;
- by the nature of the geometric transformation: rigid or nonrigid;
- by data dimensionality: 2D/2D, 3D/3D, 2D/3D, etc.;
- by the similarity measure: feature-based or intensity-based.

Traditionally, image registration has been treated as an optimization problem that iteratively searches for the best geometric transformation. The search optimizes a similarity measure such as the sum of squared differences (SSD), mutual information (MI), or cross-correlation (CC). Since the beginning of the deep learning renaissance, various deep learning-based registration methods have been proposed and have achieved state-of-the-art performance [53].
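The classic optimization view can be illustrated with the three similarity measures named above. The NumPy sketch below implements SSD, normalized cross-correlation (a common normalized form of CC), and a histogram-based MI estimate. It then recovers a simulated translation by exhaustive search over integer shifts. This setup is a deliberate simplification and an assumption on our part. Real intensity-based registration optimizes continuous rigid, affine, or deformable parameters with interpolation and iterative, often multiresolution, optimizers, and typically uses MI for multimodal pairs. Function names and the 16-bin histogram are illustrative choices.

```python
import numpy as np


def ssd(a, b):
    """Sum of squared differences (lower is better)."""
    return float(np.sum((a - b) ** 2))


def ncc(a, b):
    """Normalized cross-correlation in [-1, 1] (higher is better)."""
    a, b = a - a.mean(), b - b.mean()
    return float(np.sum(a * b) / np.sqrt(np.sum(a * a) * np.sum(b * b)))


def mutual_information(a, b, bins=16):
    """Mutual information (in nats) estimated from a joint intensity histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)
    py = pxy.sum(axis=0, keepdims=True)
    nz = pxy > 0
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px * py)[nz])))


def search_translation(fixed, moving, max_shift=5):
    """Exhaustively search integer shifts of `moving` that minimize SSD to `fixed`."""
    best_shift, best_cost = (0, 0), np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            # np.roll wraps around the border: a toy stand-in for interpolation.
            warped = np.roll(moving, shift=(dy, dx), axis=(0, 1))
            cost = ssd(fixed, warped)
            if cost < best_cost:
                best_shift, best_cost = (dy, dx), cost
    return best_shift


rng = np.random.default_rng(42)
fixed = rng.random((64, 64))
moving = np.roll(fixed, shift=(-3, 2), axis=(0, 1))   # simulated misalignment
shift = search_translation(fixed, moving)
aligned = np.roll(moving, shift=shift, axis=(0, 1))
print(shift, ncc(fixed, aligned), mutual_information(fixed, aligned))
```

The search recovers the inverse of the simulated shift, (3, -2). At that shift, SSD drops to zero, NCC reaches 1, and MI reaches its maximum.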

Yang et al. [54] proposed a fully supervised deep learning method to align 2D/3D intersubject brain MR images in a single step via a U-Net-like FCN. Jun et al. [55] also applied a CNN to perform deformable registration of abdominal MR images to compensate for respiratory deformation. Despite the success of supervised learning-based methods, acquiring reliable ground-truth transformations remains very challenging. Weakly supervised and/or unsupervised methods can effectively alleviate the lack of training data with ground truth. Li and Fan [56] trained an FCN to perform deformable registration of 3D brain MR images using self-supervision. Inspired by the spatial transformer network (STN) [57], Kuang et al. [58] applied an STN-based CNN to perform deformable registration of T1-weighted brain MRI volumes.
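Unsupervised deformable methods such as those above typically combine three parts: a network that predicts a dense displacement field, a differentiable resampling step, and a loss that needs no ground-truth transformation. The NumPy/SciPy sketch below shows only the non-learned parts. It rests on the following assumptions:

- The images are 2D, and the displacement field has shape (2, H, W) in pixels.
- Resampling is bilinear, via `scipy.ndimage.map_coordinates`.
- Mean squared error is the similarity term.
- A first-order smoothness penalty is added with an arbitrary weight of 0.01.

It is not the method of any cited paper. In a learned model, the field would come from a CNN, and the loss would be minimized with an autodiff framework. The function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import map_coordinates


def warp(moving, displacement):
    """Backward-warp a 2D image with a dense displacement field of shape (2, H, W).

    Output pixel (y, x) samples `moving` at (y + dy, x + dx) with bilinear
    interpolation, the resampling that an STN-style layer makes differentiable.
    """
    h, w = moving.shape
    grid_y, grid_x = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    coords = np.stack([grid_y + displacement[0], grid_x + displacement[1]])
    return map_coordinates(moving, coords, order=1, mode="nearest")


def unsupervised_loss(fixed, moving, displacement, smooth_weight=0.01):
    """Image dissimilarity after warping plus a smoothness penalty on the field."""
    similarity = np.mean((fixed - warp(moving, displacement)) ** 2)
    grad_y = np.diff(displacement, axis=1)   # finite differences along rows
    grad_x = np.diff(displacement, axis=2)   # finite differences along columns
    smoothness = np.mean(grad_y ** 2) + np.mean(grad_x ** 2)
    return similarity + smooth_weight * smoothness


moving = np.zeros((32, 32))
moving[10:20, 12:22] = 1.0                    # a bright square
field = np.zeros((2, 32, 32))
field[1] = 2.0                                # uniform 2-pixel displacement along x
fixed = warp(moving, field)                   # square appears 2 pixels further left
print(unsupervised_loss(fixed, moving, field))                 # 0.0: aligned, smooth
print(unsupervised_loss(fixed, moving, np.zeros_like(field)))  # > 0: misaligned
```

The smoothness term is what keeps an unsupervised model from matching intensities with physically implausible, jagged deformations. Its weight trades alignment accuracy against regularity.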

Recently, generative adversarial network (GAN)- and reinforcement learning (RL)-based methods have also attracted considerable attention. Yan et al. [59] performed rigid registration of 3D MR and ultrasound images. In their work, the generator was trained to estimate the rigid transformation. The discriminator was trained to distinguish images aligned by ground-truth transformations from images aligned by predicted ones. Krebs et al. [60] applied an RL method to perform nonrigid deformable registration of 2D/3D prostate MRI images. They used a low-resolution deformation model for registration and fuzzy action control to influence action selection.

For performance evaluation, the Dice coefficient and mean squared error (MSE) are the two major metrics. Target registration error (TRE) can also be used when landmark correspondences are available.
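TRE is the distance between landmarks mapped by the estimated transformation and their true corresponding positions. The NumPy sketch below assumes that landmark correspondences are known and that the transformation is rigid, x' = Rx + t. Many studies report the mean TRE, as here, while others report the root-mean-square or median, so the aggregation is also an assumption. The function names and coordinates are hypothetical.

```python
import numpy as np


def apply_rigid(points, rotation, translation):
    """Map N x 3 landmark coordinates through x' = R x + t."""
    points = np.asarray(points, dtype=float)
    return points @ np.asarray(rotation, dtype=float).T + np.asarray(translation, dtype=float)


def target_registration_error(moved, target):
    """Mean Euclidean distance between corresponding landmarks."""
    diff = np.asarray(moved, dtype=float) - np.asarray(target, dtype=float)
    return float(np.linalg.norm(diff, axis=1).mean())


source = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 10.0, 0.0]])  # landmarks in mm
target = apply_rigid(source, np.eye(3), [1.0, 0.0, 0.0])   # true transform: 1 mm shift in x
moved = apply_rigid(source, np.eye(3), [1.5, 0.0, 0.0])    # estimated: 1.5 mm shift in x
print(target_registration_error(moved, target))            # 0.5 (mm)
```

Because TRE is measured in physical units at clinically meaningful points, it complements image-level metrics such as Dice and MSE. Those can look good even when specific anatomical landmarks are misaligned.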

3. Clinical Applications

In this section, we review state-of-the-art clinical applications in four major systems of the human body: the nervous system, the cardiovascular system, the digestive system, and the skeletal system. Specifically, we discuss AI algorithms for diagnostic medical image analysis of the following representative conditions: brain diseases, cardiac diseases, liver diseases, and orthopedic trauma.

3.1. Brain

In this section, we discuss three critical brain diseases: stroke, intracranial hemorrhage, and intracranial aneurysm.

3.1.1. Stroke

Stroke is one of the leading causes of death and disability worldwide and imposes an enormous burden on health care systems [61]. Accurate and automatic segmentation of stroke lesions can provide insightful information for neurologists.

Recent studies have demonstrated strong performance in stroke lesion segmentation. Chen et al. [62] used DWI images as input to segment acute ischemic lesions and achieved an average Dice score of 0.67. Clèrigues et al. [63] proposed a deep learning methodology for acute and subacute stroke lesion segmentation using multimodal MRI images. They achieved Dice scores of 0.84 and 0.59 for the two segmentation tasks, respectively. Liu et al. [64] used a U-shaped network (Res-CNN) to automatically segment acute ischemic stroke lesions from multimodality MRIs, with an average Dice coefficient of 0.742. Zhao et al. [65] proposed a semisupervised learning method that used weakly labeled subjects to detect suspicious acute ischemic stroke lesions. It achieved a mean Dice coefficient of 0.642. In contrast to these MRI-based methods, a 2D patch-based deep learning approach was proposed to segment the acute stroke lesion core from CT perfusion images [66], with an average Dice coefficient of 0.49.

3.1.2. Intracranial Hemorrhage

Recent studies have also shown great promise in the automated detection of intracranial hemorrhage and its subtypes. Chilamkurthy et al. [67] achieved an AUC of 0.92 for detecting intracranial hemorrhage based on 313,318 head CT scans from 20 centers. They also released a publicly available dataset called CQ500. They used the original clinical radiology reports and the consensus of three independent radiologists as the gold standard to evaluate their method.

Ye et al. [68] proposed a novel three-dimensional (3D) joint convolutional and recurrent neural network (CNN-RNN) for the detection of intracranial hemorrhage. They developed and evaluated their method on a total of 2,836 subjects (ICH/normal, 1,836/1,000) from three institutions. For the subtype classification task, their algorithm achieved AUCs of 0.94 for intraparenchymal, 0.93 for intraventricular, 0.96 for subdural, 0.94 for extradural, and 0.89 for subarachnoid hemorrhage.

Ker et al. [69] applied image thresholding in the preprocessing step of their 3D CNN-based acute brain hemorrhage diagnosis. This improved the classification F1 score from 0.919 to 0.952. Singh et al. [70] also proposed an image preprocessing method to improve 3D CNN-based acute brain hemorrhage detection, which normalizes 3D volumetric scans using intensity profiles. On the CQ500 dataset [67], their experiments yielded best F1 scores of 0.96, 0.93, 0.98, and 0.99 for four types of acute brain hemorrhage (subarachnoid, intraparenchymal, subdural, and intraventricular, respectively).

3.1.3. Intracranial Aneurysm

Intracranial aneurysm is a common life-threatening disease, usually caused by trauma, vascular disease, or congenital development, with a prevalence of 3.2% in the population [71]. Rupture of an intracranial aneurysm is a serious event with high mortality and morbidity rates [72], so accurate detection of intracranial aneurysms is important. Computed tomography angiography (CTA) and magnetic resonance angiography (MRA) are noninvasive methods widely used for the diagnosis and presurgical planning of intracranial aneurysms [73].

Nakao et al. [74] used a CNN classifier to predict whether each voxel was inside or outside an aneurysm. Its input was a set of maximum intensity projection (MIP) images generated from a volume of interest around the voxel. They detected 94.2% of aneurysms with 2.9 false positives per case. Stember et al. [75] employed a CNN based on the U-Net architecture to detect aneurysms on MIP images and then to derive aneurysm size. Sichtermann et al. [76] built a system on an open-source neural network named DeepMedic to detect intracranial aneurysms from 3D time-of-flight (TOF) MRA data. Ueda et al. [77] adopted ResNet to detect aneurysms on MRA images. It reached sensitivities of 91% and 93% on the internal and external test datasets, respectively. Park et al. [78] proposed a segmentation model called HeadXNet to segment aneurysms on CTA images.

Recently, Shi et al. [79] proposed a 3D patch-based deep learning model for detecting intracranial aneurysms in CTA images. The model used both spatial and channel attention within a residual encoder-decoder architecture. Experimental results on multicohort studies demonstrated its clinical applicability.

3.2. Cardiac/Heart

Echocardiography, CT, and MRI are commonly used imaging modalities for noninvasive assessment of the function and structure of the cardiovascular system. Automatic analysis of images from these modalities can help physicians in several ways. It can support the study of heart muscle structure and function, help find the cause of a patient's heart failure, and help identify potential tissue damage.

3.2.1. Identification of Standard Scan Planes

Identification of standard scan planes is an important step in clinical echocardiogram interpretation, since many cardiac diseases are diagnosed based on standard scan planes. Zhang et al. [80] built a fully automated, scalable analysis pipeline for echocardiogram interpretation. The pipeline covered view identification, cardiac chamber segmentation, quantification of structure and function, and disease detection. They trained a 13-layer CNN on 14,035 echocardiograms spanning a 10-year period to identify 23 viewpoints. They also trained a cardiac chamber segmentation network across 5 common standard scan planes. The segmentation output was then used for three purposes: to quantify chamber volumes and LV mass, to determine ejection fraction, and to facilitate automated determination of longitudinal strain through speckle tracking.

Howard et al. [81] trained a two-stream network on over 8,000 echocardiographic videos to identify 14 different scan planes. The network combined a time-distributed network that extracts spatial features with a temporal network that extracts optical flow features of objects moving between frames. Experiments showed that the method could halve the error rate of video scan plane classification. The misclassifications it made closely resembled differences of opinion between human experts.

3.2.2. Segmentation of Cardiac Structures

Vigneault et al. [82] presented a novel deep CNN architecture called Ω-Net for fully automatic whole-heart segmentation. The network was trained end to end from scratch to segment five foreground classes (the four cardiac chambers plus the LV myocardium) in three views (SA, 4C, and 2C). The data were acquired on both 1.5-T and 3-T magnets as part of a multicenter trial involving 10 institutions.

Xiong et al. [83] developed a 16-layer CNN model called AtriaNet to automatically segment the left atrial (LA) epicardium and endocardium. AtriaNet uses a multiscale dual-pathway architecture with two input patches of different sizes centered on the same region. This design captures both the local atrial tissue geometry and the global positional information of the LA. In benchmarking experiments, AtriaNet outperformed the state-of-the-art CNNs of the time, with Dice scores of 0.940 and 0.942 for the LA epicardium and endocardium, respectively.

Moccia et al. [84] modified and trained ENet, a fully convolutional neural network, to provide scar-tissue segmentation in the left ventricle. Bai et al. [85] proposed an image sequence segmentation algorithm that combines a fully convolutional network with a recurrent neural network. It incorporates both spatial and temporal information into the segmentation task. The method achieved average Dice metrics of 0.960 for the ascending aorta and 0.953 for the descending aorta.

Morris et al. [86] developed a novel pipeline for sensitive cardiac substructure segmentation. Paired MRI/CT data were placed into separate image channels and used to train a 3D neural network on entire 3D images. The paired MR/CT multichannel inputs yielded robust segmentations on noncontrast CT inputs. Data augmentation and 3D conditional random field (CRF) postprocessing further improved the agreement of the deep learning contours with the ground truth.

3.2.3. Coronary Artery Segmentation

Shen et al. [87] proposed a joint framework for coronary CTA segmentation based on deep learning and the traditional level set method. A 3D FCN was used to learn the 3D semantic features of coronary arteries. An attention gate was added to the network to enhance the vessels and suppress irrelevant regions. The level set method then refined the output of the 3D FCN with the attention gate, smoothing the boundary to better fit the ground-truth segmentation. The coronary CTA dataset used in this work consisted of 11,200 CTA images from 70 groups of patients, of which 20 groups were used as a test set. The proposed algorithm produced significantly better segmentation results than a vanilla 3D FCN, both qualitatively and quantitatively.

He et al. [88] developed a novel blood vessel centerline extraction framework using a hybrid representation learning approach. The main idea was to combine two networks. CNNs learn the local appearance of vessels in image crops, while a point-cloud network learns the global geometry of vessels in the entire image. This combination resulted in an efficient, fully automatic, and template-free approach to centerline extraction from 3D images. The approach was validated on CTA datasets and outperformed both traditional and CNN-based baselines.

3.2.4. Coronary Artery Calcium and Plaque Detection

Zhang et al. [89] established an end-to-end learning framework for artery-specific coronary calcification identification in noncontrast cardiac CT. At test time, it directly yields results from the given CT scans. In this framework, a 2D U-DenseNet, a combination of DenseNet and U-Net, extracted intraslice calcification features. Because lesions can span multiple adjacent slices, the authors used a 3D U-Net to extract interslice calcification features. The joint semantic features of the 2D and 3D modules benefited artery-specific calcification identification. The method was validated by cross-validation on 169 noncontrast cardiac CT exams collected from two centers. For calcification number, it achieved a sensitivity of 0.905 and a PPV of 0.966. For calcification volume, it achieved a sensitivity of 0.933, a PPV of 0.960, and an F1 score of 0.946.

Liu et al. [90] proposed a vessel-focused 3D convolutional network for automatic segmentation of coronary artery plaque of three subtypes: calcified, noncalcified, and mixed calcified plaques. They first extracted the coronary arteries from the CT volumes and then reformatted the artery segments into straightened volumes. Finally, they employed a 3D vessel-focused convolutional neural network for plaque segmentation. The method was trained and tested on multiphase coronary CT angiography (CCTA) volumes from 25 patients. On the test set, it achieved Dice scores of 0.83, 0.73, and 0.68 for calcified, noncalcified, and mixed calcified plaques, respectively, suggesting potential value for clinical application.
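The reported volume score of 0.946 is consistent with the F1 score, that is, the harmonic mean of the reported sensitivity (recall) and PPV (precision):

$$F_1 = \frac{2 \times 0.960 \times 0.933}{0.960 + 0.933} = \frac{1.79136}{1.893} \approx 0.946.$$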

3.3. Liver

CT and MRI are widely used for the early detection, diagnosis, and treatment of liver diseases. Automatic segmentation of the liver and/or liver lesions in CT or MRI is of great importance for radiotherapy planning, liver transplantation planning, and related tasks.

3.3.1. Liver Lesion Detection and Segmentation

Vorontsov et al. [91] used deep CNNs to detect and segment liver tumors. For lesions smaller than 10 mm, 10-20 mm, and larger than 20 mm, the method achieved the following results:

- detection sensitivities of 10%, 71%, and 85%;
- positive predictive values of 25%, 83%, and 94%;
- Dice similarity coefficients of 0.14, 0.53, and 0.68.

Wang et al. [92] proposed an attention network that uses an extra network to gather information from adjacent slices for lesion segmentation. This method achieved a Dice per case score of 74.1% on the LiTS test dataset. To improve performance on small lesions, Seo et al. [93] proposed a modified U-Net (mU-Net), which obtained a Dice score of 89.72% on the validation set for liver tumor segmentation. Tang et al. [94] proposed an edge-enhanced network for liver tumor segmentation, with a Dice per case score of 74.8% on the LiTS test dataset.

3.3.2. Liver Lesion Classification

Compared with liver lesion segmentation and detection, there are few works on lesion classification. No public dataset exists for this task, and collecting enough data is difficult. Zhen et al. [95] proposed a deep learning-based liver tumor classification system trained on 1,210 patients and validated on 201 patients. Using only unenhanced images, the system distinguished malignant from benign liver tumors with an AUC of 94.6%. Its performance improved substantially when clinical information was added.

3.3.3. Liver Fibrosis Staging

Liver fibrosis staging is important for the prevention and treatment of chronic liver disease. Although few deep learning works address liver fibrosis staging, these methods have shown their capability for this task. Liu et al. [96] proposed a method that uses CNNs and an SVM to classify the liver capsule on ultrasound images and obtain the stage score, with a classification AUC of 97.03%. Yasaka et al. proposed two deep CNN models to obtain stage scores from CT [97] and MRI [98] images, achieving AUCs of 0.73-0.76 and 0.84-0.85, respectively. Choi et al. [99] trained a deep learning model on 7,491 patients and validated it on 891 patients, achieving an AUC of 0.95-0.97 on the validation dataset. Recently, a model based on multimodal ultrasound images achieved an AUC of 0.93-0.95 [100]. It used transfer learning to improve classification performance.

3.3.4. Other Liver Disease

Preoperative prediction of microvascular invasion (MVI) is valuable for treatment planning in liver cancer patients, since MVI is an adverse prognostic factor for these patients [101]. Men et al. [102] proposed 3D CNNs with LSTM to predict MVI on enhanced MRI images, achieving an AUC of 89%. Jiang et al. [103] also reported a 3D CNN-based model using enhanced CT images, with an AUC of 90.6%.

3.4. Bone

Bone fracture, also called orthopedic trauma, is a relatively common condition. With the development of deep learning technology, bone fracture recognition in X-ray images has been a promising research direction since 2017. In general, there are two main approaches to bone fracture recognition: the classification-based approach and the object detection-based approach.

3.4.1. Classification-Based Approach

In the classification-based approach, researchers usually assign a whole-image label of “no fracture” or “fracture.” The pioneering work on this pipeline was by Olczak et al. [104]. Using VGGNet as the backbone, they trained the model to recognize fractures on 256,000 well-labeled images of wrists, hands, and ankles. Validated on a large amount of data, the model set a strong and credible baseline accuracy of 83%. Urakawa et al. [105] used the same network architecture as Olczak et al. to classify intertrochanteric hip fractures on 3,346 radiographs and reported an accuracy of 95.5%, compared with 92.2% for orthopedic surgeons. Gale et al. [106] and Krogue et al. [107] both applied DenseNet to classify hip fracture radiographs: Gale et al. used 53,000 clinical X-rays and obtained an area under the ROC curve (AUC) of 0.994, whereas Krogue et al. labeled 3,034 images and obtained an AUC of 0.973.
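Because the classification-based approach assigns one label per image, its outputs are usually turned into “fracture”/“no fracture” decisions by thresholding a predicted probability, and then summarized as accuracy, sensitivity, and specificity. The sketch below assumes a threshold of 0.5 and hypothetical model outputs; the cited studies may use different thresholds and evaluation protocols.

```python
def binary_metrics(probs, labels, threshold=0.5):
    """Image-level metrics where 1 = "fracture" and 0 = "no fracture".

    A predicted probability >= threshold is called a fracture (assumed rule).
    """
    preds = [1 if p >= threshold else 0 for p in probs]
    pairs = list(zip(preds, labels))
    tp = sum(1 for p, y in pairs if p == 1 and y == 1)
    tn = sum(1 for p, y in pairs if p == 0 and y == 0)
    fp = sum(1 for p, y in pairs if p == 1 and y == 0)
    fn = sum(1 for p, y in pairs if p == 0 and y == 1)
    nan = float("nan")
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn) if tp + fn else nan,  # recall on fracture images
        "specificity": tn / (tn + fp) if tn + fp else nan,  # recall on normal images
    }


# Hypothetical model outputs for six radiographs (three with fractures).
metrics = binary_metrics([0.92, 0.71, 0.40, 0.15, 0.55, 0.05], [1, 1, 1, 0, 0, 0])
print(metrics)
```

Accuracy alone can look favorable when fracture and normal images are unbalanced, which is why sensitivity and specificity are often reported alongside it.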

3.4.2. Object Detection-Based Approach

The object detection-based approach aims to localize fractures within the image. Gan et al. [108] trained a Faster R-CNN model to locate the wrist fracture region and then passed the resulting region of interest (ROI) to an Inception network for classification. On a set of 2,340 anteroposterior wrist radiographs, the model achieved an AUC of 0.96 and outperformed radiologists by 9% in accuracy. Thian et al. [109] employed the same Faster R-CNN architecture on a larger dataset of 7,356 wrist radiographs and obtained a comparable AUC of 0.957. Also working on wrist radiographs but following the idea of semantic segmentation, Lindsey et al. [110] adopted an extension of U-Net to predict a fracture probability heat map for each image pixel. Despite using 135,409 wrist radiographs, the study reported only an average sensitivity of 91.5% and specificity of 93.9% for clinicians aided by the trained model, which appears inferior to the studies above. Wu et al. [111] proposed an end-to-end multidomain fracture detection network that treats each body part as a domain. The network consists of two subnetworks: a domain classification network that predicts the domain type of an image and a fracture detection network that detects fractures in X-ray images from different domains. By constructing feature enhancement modules and a multifeature-enhanced R-CNN, the network extracts more representative features for each domain. Experiments on real clinical data demonstrated its effectiveness, with the best F1-score on all domains compared with existing state-of-the-art Faster R-CNN-based methods. Recently, Wu et al. [112] proposed a novel feature ambiguity mitigation model to improve bone fracture detection in X-ray radiographs, studying a total of 9,040 radiographic images of various body parts, including the hand, wrist, elbow, shoulder, pelvis, knee, ankle, and foot. Experimental results demonstrated performance improvements for all body parts.
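Detection-based methods are typically scored by matching predicted boxes to annotated fracture boxes and then computing precision, recall, and an F1-score. The sketch below is one common convention, assumed here rather than taken from the cited papers: axis-aligned boxes given as (x1, y1, x2, y2), greedy matching in order of confidence, and an intersection-over-union (IoU) threshold of 0.5. The function names and example boxes are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def detection_f1(preds: List[Tuple[Box, float]], gts: List[Box], thr: float = 0.5) -> float:
    """F1-score with greedy matching: higher-confidence predictions claim the
    best-overlapping unmatched ground-truth box whose IoU is >= thr."""
    matched = set()
    tp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            v = iou(box, gt)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    fp, fn = len(preds) - tp, len(gts) - tp
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical image: two annotated fractures, one correct and one spurious detection.
gts = [(10, 10, 50, 50), (100, 100, 140, 140)]
preds = [((12, 12, 50, 50), 0.9), ((200, 200, 220, 220), 0.8)]
f1 = detection_f1(preds, gts)
print(f1)
```

Here one true positive, one false positive, and one missed fracture give precision and recall of 0.5 each, so the F1-score is 0.5.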

4. Challenges and Future Directions

Although deep learning models have achieved great success in medical image analysis, small-scale medical datasets remain the main bottleneck in this field. Inspired by transfer learning, one possible solution is domain transfer, which adapts a model trained on natural images to medical images, or a model trained on one imaging modality to another. Another is federated learning [113], in which multiple data centers train a model collaboratively without pooling their raw data. In addition, researchers have begun to collect benchmark datasets for various medical image analysis purposes. Table 1 summarizes examples of publicly available datasets.

Table 1. Publicly available benchmark datasets.

| Dataset name | Organ/modality | Image size | No. of classes | No. of cases | Tasks | Resources |
|---|---|---|---|---|---|---|
| LIDC-IDRI | Lung/CT | | 3 | 1,018 | Lung nodules | [114] |
| LUNA | Lung/CT | | 1 | 888 | Lung nodules | [115] |
| DDSM | Breast/mammography | | 3 | 2,500 | Breast mass | [116] |
| DeepLesion | Diverse organs/CT | | 3+ | 4,427 | Lung nodules, liver tumors, lymph nodes | [117] |
| LiTS | Liver/CT | | 2 | 131 | Liver, liver tumors | [118] |
| Brain tumor | Brain/MRI | | 3 | 484 | Edema, tumor, necrosis | [119] |
| Heart | Heart/MRI | | 1 | 20 | Left ventricle | [119] |
| Prostate | Prostate/MRI | | 2 | 32 | Peripheral and transition zone | [119] |
| Pancreas | Pancreas/CT | | 2 | 282 | Pancreas, pancreas cancer | [119] |
| Spleen | Spleen/CT | | 1 | 41 | Spleen | [119] |
| Colon | Colon/CT | | 1 | 126 | Colon cancer | [119] |
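Federated averaging (FedAvg) is one common way to realize the federated learning option discussed above; whether [113] uses exactly this scheme is an assumption here. The minimal NumPy sketch below has three hypothetical data centers, each fitting a logistic-regression model (no bias term) on its own synthetic data. Only weight vectors leave a site, and a server averages them in proportion to local sample counts. All names, data, and hyperparameters are illustrative.

```python
import numpy as np


def local_update(w, X, y, lr=0.1, epochs=5):
    """A few epochs of full-batch gradient descent on the logistic loss,
    using only this site's data; returns the updated weight vector."""
    w = w.copy()
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-X @ w))  # predicted probabilities
        grad = X.T @ (p - y) / len(y)     # gradient of the mean logistic loss
        w -= lr * grad
    return w


def fed_avg(sites, dim, rounds=20, lr=0.1, epochs=5):
    """Federated averaging over `sites`, a list of (X, y) pairs that each
    stay at their own data center. Only weights are exchanged."""
    w_global = np.zeros(dim)
    sizes = np.array([len(y) for _, y in sites], dtype=float)
    for _ in range(rounds):
        local_ws = [local_update(w_global, X, y, lr, epochs) for X, y in sites]
        # Server step: average local models, weighted by local sample counts.
        w_global = np.average(np.stack(local_ws), axis=0, weights=sizes)
    return w_global


# Hypothetical example: three centers holding different amounts of synthetic data.
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
sites = []
for n in (50, 80, 120):
    X = rng.normal(size=(n, 2))
    y = (X @ true_w > 0).astype(float)
    sites.append((X, y))

w = fed_avg(sites, dim=2)
X_all = np.vstack([X for X, _ in sites])
y_all = np.concatenate([y for _, y in sites])
accuracy = np.mean(((X_all @ w) > 0) == (y_all == 1))
print(w, accuracy)
```

Pooling the data is done here only to evaluate the toy model; in a real deployment each center would evaluate locally, since keeping raw images on site is the point of the approach.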

Class imbalance is another major problem in medical image analysis. Several novel loss functions, such as focal loss [120], grading loss [121], contrastive loss [122], and triplet loss [123], have been proposed to tackle it. Exploiting domain knowledge is another direction. For instance, Jiménez-Sánchez et al. [124] proposed a curriculum learning method to classify proximal femoral fractures in X-ray images, whose core idea is to control the sampling weight of each training sample according to prior knowledge. Chen et al. [125] also proposed a novel pelvic fracture detection framework based on the assumption that the pelvis is bilaterally symmetric.
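To illustrate how a loss function can counter class imbalance, focal loss as commonly formulated for binary classification rescales cross-entropy as FL(p_t) = -α_t (1 - p_t)^γ log(p_t), where p_t is the predicted probability of the true class. Confidently classified samples, which typically come from the majority class, therefore contribute little to the loss. The NumPy sketch below assumes γ = 2 and α = 0.25, values often used as defaults; they are not taken from any study reviewed here.

```python
import numpy as np


def binary_focal_loss(p, y, gamma=2.0, alpha=0.25, eps=1e-7):
    """Mean binary focal loss.

    p: predicted probabilities of the positive class; y: 0/1 labels.
    alpha weights the positive class and (1 - alpha) the negative class.
    """
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)  # avoid log(0)
    y = np.asarray(y, dtype=float)
    p_t = np.where(y == 1, p, 1.0 - p)            # probability of the true class
    alpha_t = np.where(y == 1, alpha, 1.0 - alpha)
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))


# An easy positive (p = 0.9) versus a hard positive (p = 0.1).
easy = binary_focal_loss([0.9], [1])
hard = binary_focal_loss([0.1], [1])
print(easy, hard)
```

With γ = 0 the modulating factor disappears and the expression reduces to α-weighted cross-entropy; increasing γ shifts the training signal toward hard, often minority-class, examples.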

5. Conclusion

The rise of advanced deep learning methods has enabled great success in medical image analysis with high accuracy, efficiency, stability, and scalability. In this paper, we reviewed the recent progress of CNN-based deep learning techniques in clinical applications, including image classification, object detection, segmentation, and registration. We further reviewed image analysis-based diagnostic applications in four major systems of the human body: the nervous system, the cardiovascular system, the digestive system, and the skeletal system. More specifically, we discussed state-of-the-art works on brain diseases, cardiac diseases, liver diseases, and orthopedic trauma. This paper also described the existing problems in the field and provided possible solutions and future research directions.

Acknowledgments

This study was supported in part by grants from the Zhejiang Provincial Key Research & Development Program (No. 2020C03073).

Authors’ Contributions

Y. Yu and X. Liu conceptualized, organized, and revised the manuscript. X. Liu contributed to all aspects of the preparation of the manuscript. K. Gao, B. Liu, C. Pan, K. Liang, L. Yan, J. Ma, F. He, S. Pan, and S. Zhang were involved in the writing of the manuscript. All authors contributed to this paper. Xiaoqing Liu, Kunlun Gao, Bo Liu, Chengwei Pan, Kongming Liang, and Lifeng Yan contributed equally to this work.

References

- 1. Shen H. T., Zhu X., Zhang Z., Wang S.-H., Chen Y., Xu X., and Shao J., “Heterogeneous data fusion for predicting mild cognitive impairment conversion,” Information Fusion, vol. 66, pp. 54-63, 2021.

- 2. Zhu Y., Kim M., Zhu X., Kaufer D., and Wu G., “Long range early diagnosis of Alzheimer's disease using longitudinal MR imaging data,” Medical Image Analysis, vol. 67, p. 101825, 2021.

- 3. Zhu X., Song B., Shi F., Chen Y., Hu R., Gan J., Zhang W., Li M., Wang L., Gao Y., Shan F., and Shen D., “Joint prediction and time estimation of COVID-19 developing severe symptoms using chest CT scan,” Medical Image Analysis, vol. 67, p. 101824, 2021.

- 4. Mitra S., and Uma Shankar B., “Medical image analysis for cancer management in natural computing framework,” Information Sciences, vol. 306, pp. 111-131, 2015.

- 5. Miranda E., Aryuni M., and Irwansyah E., “A survey of medical image classification techniques,” in 2016 International Conference on Information Management and Technology (ICIMTech), Bandung, Indonesia, 2016.

- 6. Shen D., Wu G., and Suk H.-I., “Deep learning in medical image analysis,” Annual Review of Biomedical Engineering, vol. 19, no. 1, pp. 221-248, 2017.

- 7. Suzuki K., “Survey of deep learning applications to medical image analysis,” Medical Imaging Technology, vol. 35, pp. 212-226, 2017.

- 8. Zhou S. K., Greenspan H., and Shen D., Deep Learning for Medical Image Analysis, Academic Press, 2017.

- 9. Ker J., Wang L., Rao J., and Lim T., “Deep learning applications in medical image analysis,” IEEE Access, vol. 6, pp. 9375-9389, 2018.

- 10. Liu S., Wang Y., Yang X., Lei B., Liu L., Li S. X., Ni D., and Wang T., “Deep learning in medical ultrasound analysis: a review,” Engineering, vol. 5, no. 2, pp. 261-275, 2019.

- 11. Maier A., Syben C., and Lasser T., “A gentle introduction to deep learning in medical image processing,” Zeitschrift für Medizinische Physik, vol. 29, pp. 86-101, 2019.

- 12. Litjens G., Kooi T., Bejnordi B. E., Setio A. A. A., Ciompi F., Ghafoorian M., van der Laak J. A. W. M., van Ginneken B., and Sánchez C. I., “A survey on deep learning in medical image analysis,” Medical Image Analysis, vol. 42, pp. 60-88, 2017.

- 13. Singh S. P., Wang L., Gupta S., Goli H., Padmanabhan P., and Gulyás B., “3D deep learning on medical images: a review,” Sensors, vol. 20, no. 18, article 5097, 2020.

- 14. Yadav S., and Jadhav S., “Deep convolutional neural network based medical image classification for disease diagnosis,” Journal of Big Data, vol. 6, no. 1, p. 113, 2019.

- 15. Wang C., Zhang F., Yu Y., and Wang Y., “BR-GAN: bilateral residual generating adversarial network for mammogram classification,” Medical Image Computing and Computer Assisted Intervention - MICCAI 2020. MICCAI 2020, Martel A. L. et al., Eds., Springer, Cham, vol. 12262, Lecture Notes in Computer Science, 2020.

- 16. Esteva A., Kuprel B., Novoa R. A., Ko J., Swetter S. M., Blau H. M., and Thrun S., “Dermatologist-level classification of skin cancer with deep neural networks,” Nature, vol. 542, no. 7639, pp. 115-118, 2017.

- 17. Wu H., Yin H., Chen H., Sun M., Liu X., Yu Y., Tang Y., Long H., Zhang B., Zhang J., Zhou Y., Li Y., Zhang G., Zhang P., Zhan Y., Liao J., Luo S., Xiao R., Su Y., Zhao J., Wang F., Zhang J., Zhang W., Zhang J., and Lu Q., “A deep learning, image based approach for automated diagnosis for inflammatory skin diseases,” Annals of Translational Medicine, vol. 8, no. 9, p. 581, 2020.

- 18. Ting D. S. W., Cheung C. Y. L., Lim G., Tan G. S. W., Quang N. D., Gan A., Hamzah H., Garcia-Franco R., San Yeo I. Y., Lee S. Y., Wong E. Y. M., Sabanayagam C., Baskaran M., Ibrahim F., Tan N. C., Finkelstein E. A., Lamoureux E. L., Wong I. Y., Bressler N. M., Sivaprasad S., Varma R., Jonas J. B., He M. G., Cheng C. Y., Cheung G. C. M., Aung T., Hsu W., Lee M. L., and Wong T. Y., “Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes,” JAMA, vol. 318, no. 22, pp. 2211-2223, 2017.

- 19. Gulshan V., Peng L., Coram M., Stumpe M. C., Wu D., Narayanaswamy A., Venugopalan S., Widner K., Madams T., Cuadros J., Kim R., Raman R., Nelson P. C., Mega J. L., and Webster D. R., “Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs,” JAMA, vol. 316, no. 22, pp. 2402-2410, 2016.

- 20. Bai X., Niwas S. I., Lin W., Ju B.-F., Kwoh C. K., Wang L., Sng C. C., Aquino M. C., and Chew P. T. K., “Learning ECOC code matrix for multiclass classification with application to glaucoma diagnosis,” Journal of Medical Systems, vol. 40, no. 4, 2016.

- 21. Gu H., Guo Y., Gu L., Wei A., Xie S., Ye Z., Xu J., Zhou X., Lu Y., Liu X., and Hong J., “Deep learning for identifying corneal diseases from ocular surface slit-lamp photographs,” Scientific Reports, vol. 10, no. 1, p. 17851, 2020.

- 22. Spanhol F. A., Oliveira L. S., Cavalin P. R., Petitjean C., and Heutte L., “Deep features for breast cancer histopathological image classification,” in 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 2017, pp. 1868-1873.

- 23. Ker J., Bai Y., Lee H. Y., Rao J., and Wang L., “Automated brain histology classification using machine learning,” Journal of Clinical Neuroscience, vol. 66, pp. 239-245, 2019.

- 24. Ciresan D., Meier U., and Schmidhuber J., “Multi-column deep neural networks for image classification,” in 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 2012, pp. 3642-3649.

- 25. Krizhevsky A., Sutskever I., and Hinton G. E., “ImageNet classification with deep convolutional neural networks,” Communications of the ACM, vol. 60, no. 6, pp. 84-90, 2017.

- 26. Simonyan K., and Zisserman A., “Very deep convolutional networks for large-scale image recognition,” in International Conference on Learning Representations, San Diego, CA, USA, 2014.

- 27. Szegedy C., Liu W., Jia Y., Sermanet P., Reed S., Anguelov D., Erhan D., Vanhoucke V., and Rabinovich A., “Going deeper with convolutions,” in 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 2015, pp. 1-9.

- 28. Szegedy C., Vanhoucke V., Ioffe S., Shlens J., and Wojna Z., “Rethinking the inception architecture for computer vision,” 2015, https://arxiv.org/abs/1512.00567.

- 29. Szegedy C., Ioffe S., Vanhoucke V., and Alemi A., “Inception-v4, Inception-ResNet and the impact of residual connections on learning,” 2016, https://arxiv.org/abs/1602.07261.

- 30. He K., Zhang X., Ren S., and Sun J., “Deep residual learning for image recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 2016.

- 31. Huang G., Liu Z., Van Der Maaten L., and Weinberger K. Q., “Densely connected convolutional networks,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 2017.

- 32. Hu J., Shen L., and Sun G., “Squeeze-and-Excitation Networks,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, Utah, USA, 2018.

- 33. Tan M., and Le Q. V., “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks,” in Proceedings of the 36th International Conference on Machine Learning, Long Beach, California, USA, 2019, pp. 6105-6114.

- 34. Lo S.-C. B., Lou S.-L. A., Lin J.-S., Freedman M. T., Chien M. V., and Mun S. K., “Artificial convolution neural network techniques and applications for lung nodule detection,” IEEE Transactions on Medical Imaging, vol. 14, no. 4, pp. 711-718, 1995.

- 35. Liu J., Zhao G., Yu F., Zhang M., Wang Y., and Yu Y., “Align, attend and locate: chest x-ray diagnosis via contrast induced attention network with limited supervision,” in 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 2019, pp. 10632-10641.

- 36. Li Z., Zhang S., Zhang J., Huang K., Wang Y., and Yu Y., “MVP Net: multi-view FPN with position-aware attention for deep universal lesion detection,” Medical Image Computing and Computer Assisted Intervention - MICCAI 2019. MICCAI 2019, Shen D. et al., Eds., Springer, Cham, vol. 11769, Lecture Notes in Computer Science, 2019.

- 37. Zhang S., Xu J., Chen Y.-C., Ma J., Li Z., Wang Y., and Yu Y., “Revisiting 3D context modeling with supervised pre-training for universal lesion detection in CT slices,” Medical Image Computing and Computer Assisted Intervention - MICCAI 2020. MICCAI 2020, Martel A. L. et al., Eds., Springer, Cham, vol. 12264, Lecture Notes in Computer Science, 2020.

- 38. Liu Y., Zhang F., Zhang Q., Wang S., Wang Y., and Yu Y., “Cross-view correspondence reasoning based on bipartite graph convolutional network for mammogram mass detection,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, June 2020.

- 39. Redmon J., Divvala S., Girshick R., and Farhadi A., “You only look once: unified, real-time object detection,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 779-788.

- 40. Liu W., Anguelov D., Erhan D., Szegedy C., Reed S., Fu C.-Y., and Berg A. C., “SSD: single shot MultiBox detector,” Computer Vision - ECCV 2016. ECCV 2016, Leibe B., Matas J., Sebe N., and Welling M., Eds., Springer, Cham, vol. 9905, Lecture Notes in Computer Science, 2016.

- 41. Ren S., He K., Girshick R., and Sun J., “Faster R-CNN: towards real-time object detection with region proposal networks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 39, no. 6, pp. 1137-1149, 2017.

- 42. He K., Gkioxari G., Dollár P., and Girshick R., “Mask R-CNN,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2017, pp. 2961-2969.

- 43. Law H., and Deng J., “CornerNet: detecting objects as paired keypoints,” Computer Vision - ECCV 2018. ECCV 2018, Ferrari V., Hebert M., Sminchisescu C., and Weiss Y., Eds., Springer, Cham, vol. 11218, Lecture Notes in Computer Science, pp. 765-781, 2018.

- 44. Ye C., Wang W., Zhang S., and Wang K., “Multi-depth fusion network for whole-heart CT image segmentation,” IEEE Access, vol. 7, pp. 23421-23429, 2019.

- 45. Fang C., Li G., Pan C., Li Y., and Yu Y., “Globally guided progressive fusion network for 3D pancreas segmentation,” Medical Image Computing and Computer Assisted Intervention - MICCAI 2019. MICCAI 2019, Shen D. et al., Eds., Springer, Cham, vol. 11765, Lecture Notes in Computer Science, 2019.

- 46. Li X., Chen H., Qi X., Dou Q., Fu C. W., and Heng P. A., “H-DenseUNet: hybrid densely connected UNet for liver and tumor segmentation from CT volumes,” IEEE Transactions on Medical Imaging, vol. 37, no. 12, pp. 2663-2674, 2018.

- 47. Long J., Shelhamer E., and Darrell T., “Fully convolutional networks for semantic segmentation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 39, no. 4, pp. 640-651, 2017.

- 48. Ronneberger O., Fischer P., and Brox T., “U-Net: Convolutional networks for biomedical image segmentation,” Medical Image Computing and Computer-Assisted Intervention - MICCAI 2015. MICCAI 2015, Navab N., Hornegger J., Wells W., and Frangi A., Eds., Springer, Cham, vol. 9351, Lecture Notes in Computer Science, 2015.

- 49. Isensee F., Jaeger P. F., Kohl S. A. A., Petersen J., and Maier-Hein K. H., “Automated design of deep learning methods for biomedical image segmentation,” 2019, https://arxiv.org/abs/1904.08128.

- 50. Staring M., van der Heide U. A., Klein S., Viergever M. A., and Pluim J., “Registration of cervical MRI using multifeature mutual information,” IEEE Transactions on Medical Imaging, vol. 28, no. 9, pp. 1412-1421, 2009.

- 51. Miller K., Wittek A., Joldes G., Horton A., Dutta-Roy T., Berger J., and Morriss L., “Modelling brain deformations for computer-integrated neurosurgery,” International Journal for Numerical Methods in Biomedical Engineering, vol. 26, no. 1, pp. 117-138, 2010.

- 52. Huang X., Ren J., Guiraudon G., Boughner D., and Peters T. M., “Rapid dynamic image registration of the beating heart for diagnosis and surgical navigation,” IEEE Transactions on Medical Imaging, vol. 28, no. 11, pp. 1802-1814, 2009.

- 53. Haskins G., Kruger U., and Yan P., “Deep learning in medical image registration: a survey,” Machine Vision and Applications, vol. 31, no. 1-2, 2020.

- 54. Yang X., Kwitt R., and Niethammer M., “Fast predictive image registration,” Deep Learning and Data Labeling for Medical Applications, pp. 48-57, 2016.

- 55. Lv J., Yang M., Zhang J., and Wang X., “Respiratory motion correction for free-breathing 3D abdominal MRI using CNN-based image registration: a feasibility study,” The British Journal of Radiology, vol. 91, 2018.

- 56. Li H., and Fan Y., “Non-rigid image registration using self-supervised fully convolutional networks without training data,” in 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 2018, pp. 1075-1078.

- 57. Jaderberg M., Simonyan K., Zisserman A., and Kavukcuoglu K., “Spatial transformer networks,” Advances in Neural Information Processing Systems, vol. 28, pp. 2017-2025, 2015.

- 58. Kuang D., and Schmah T., “FAIM - a ConvNet method for unsupervised 3D medical image registration,” 2018, https://arxiv.org/abs/1811.09243.

- 59. Yan P., Xu S., Rastinehad A. R., and Wood B. J., “Adversarial image registration with application for MR and TRUS image fusion,” 2018, https://arxiv.org/abs/1804.11024.

- 60. Krebs J., Mansi T., Delingette H., Zhang L., Ghesu F. C., Miao S., Maier A. K., Ayache N., Liao R., and Kamen A., “Robust non-rigid registration through agent-based action learning,” Medical Image Computing and Computer Assisted Intervention - MICCAI 2017. MICCAI 2017, Descoteaux M., Maier-Hein L., Franz A., Jannin P., Collins D., and Duchesne S., Eds., Springer, Cham, vol. 10433, Lecture Notes in Computer Science, pp. 344-352, 2017.

- 61. Katan M., and Luft A., “Global burden of stroke,” Seminars in Neurology, vol. 38, no. 2, p. 208, 2018.

- 62. Chen L., Bentley P., and Rueckert D., “Fully automatic acute ischemic lesion segmentation in DWI using convolutional neural networks,” NeuroImage: Clinical, vol. 15, pp. 633-643, 2017.

- 63. Clèrigues A., Valverde S., Bernal J., Freixenet J., Oliver A., and Lladó X., “Acute and sub-acute stroke lesion segmentation from multimodal MRI,” Computer Methods and Programs in Biomedicine, vol. 194, article 105521, 2020.

- 64. Liu L., Chen S., Zhang F., Wu F. X., Pan Y., and Wang J., “Deep convolutional neural network for automatically segmenting acute ischemic stroke lesion in multi-modality MRI,” Neural Computing and Applications, vol. 32, no. 11, pp. 6545-6558, 2020.

- 65. Zhao B., Ding S., Wu H., Liu G., Cao C., Jin S., and Liu Z., “Automatic acute ischemic stroke lesion segmentation using semi-supervised learning,” 2019, https://arxiv.org/abs/1908.03735.

- 66. Clèrigues A., Valverde S., Bernal J., Freixenet J., Oliver A., and Lladó X., “Acute ischemic stroke lesion core segmentation in CT perfusion images using fully convolutional neural networks,” Computers in Biology and Medicine, vol. 115, article 103487, 2019.

- 67. Chilamkurthy S., “Deep learning algorithms for detection of critical findings in head CT scans: a retrospective study,” The Lancet, vol. 392, no. 10162, pp. 2388-2396, 2018.

- 68. Ye H., Gao F., Yin Y., Guo D., Zhao P., Lu Y., Wang X., Bai J., Cao K., Song Q., Zhang H., Chen W., Guo X., and Xia J., “Precise diagnosis of intracranial hemorrhage and subtypes using a three-dimensional joint convolutional and recurrent neural network,” European Radiology, vol. 29, no. 11, pp. 6191-6201, 2019.

- 69. Ker J., Singh S. P., Bai Y., Rao J., Lim T., and Wang L., “Image thresholding improves 3-dimensional convolutional neural network diagnosis of different acute brain hemorrhages on computed tomography scans,” Sensors, vol. 19, no. 9, p. 2167, 2019.

- 70. Singh S., Wang L., Gupta S., Gulyas B., and Padmanabhan P., “Shallow 3D CNN for detecting acute brain hemorrhage from medical imaging sensors,” IEEE Sensors Journal, p. 1, 2020.

- 71. Vlak M. H., Algra A., Brandenburg R., and Rinkel G. J. E., “Prevalence of unruptured intracranial aneurysms, with emphasis on sex, age, comorbidity, country, and time period: a systematic review and meta-analysis,” Lancet Neurology, vol. 10, no. 7, pp. 626-636, 2011.

- 72. Nieuwkamp D. J., Setz L. E., Algra A., Linn F. H. H., de Rooij N. K., and Rinkel G. J. E., “Changes in case fatality of aneurysmal subarachnoid haemorrhage over time, according to age, sex, and region: a meta-analysis,” The Lancet Neurology, vol. 8, no. 7, pp. 635-642, 2009.

- 73. Turan N., Heider R. A., Roy A. K., Miller B. A., Mullins M. E., Barrow D. L., Grossberg J., and Pradilla G., “Current perspectives in imaging modalities for the assessment of unruptured intracranial aneurysms: a comparative analysis and review,” World Neurosurgery, vol. 113, pp. 280-292, 2018.

- 74. Nakao T., Hanaoka S., Nomura Y., Sato I., Nemoto M., Miki S., Maeda E., Yoshikawa T., Hayashi N., and Abe O., “Deep neural network-based computer assisted detection of cerebral aneurysms in MR angiography,” Journal of Magnetic Resonance Imaging, vol. 47, no. 4, pp. 948-953, 2018.

- 75. Stember J. N., Chang P., Stember D. M., Liu M., Grinband J., Filippi C. G., Meyers P., and Jambawalikar S., “Convolutional neural networks for the detection and measurement of cerebral aneurysms on magnetic resonance angiography,” Journal of Digital Imaging, vol. 32, no. 5, pp. 808-815, 2019.

- 76. Sichtermann T., Faron A., Sijben R., Teichert N., Freiherr J., and Wiesmann M., “Deep learning-based detection of intracranial aneurysms in 3D TOF-MRA,” American Journal of Neuroradiology, vol. 40, no. 1, pp. 25-32, 2019.

- 77. Ueda D., Yamamoto A., Nishimori M., Shimono T., Doishita S., Shimazaki A., Katayama Y., Fukumoto S., Choppin A., Shimahara Y., and Miki Y., “Deep learning for MR angiography: automated detection of cerebral aneurysms,” Radiology, vol. 290, no. 1, pp. 187-194, 2019.

- 78. Park A., Chute C., Rajpurkar P., Lou J., Ball R. L., Shpanskaya K., Jabarkheel R., Kim L. H., McKenna E., Tseng J., Ni J., Wishah F., Wittber F., Hong D. S., Wilson T. J., Halabi S., Basu S., Patel B. N., Lungren M. P., Ng A. Y., and Yeom K. W., “Deep learning-assisted diagnosis of cerebral aneurysms using the HeadXNet model,” JAMA Network Open, vol. 2, no. 6, article e195600, 2019.

- 79. Shi Z., Miao C., Schoepf U. J., Savage R. H., Dargis D. M., Pan C., Chai X., Li X. L., Xia S., Zhang X., Gu Y., Zhang Y., Hu B., Xu W., Zhou C., Luo S., Wang H., Mao L., Liang K., Wen L., Zhou L., Yu Y., Lu G. M., and Zhang L. J., “A clinically applicable deep-learning model for detecting intracranial aneurysm in computed tomography angiography images,” Nature Communications, vol. 11, no. 1, p. 6090, 2020.

- 80. Zhang J., Gajjala S., Agrawal P., Tison G. H., Hallock L. A., Beussink-Nelson L., Lassen M. H., Fan E., Aras M. A., Jordan C. R., Fleischmann K. E., Melisko M., Qasim A., Shah S. J., Bajcsy R., and Deo R. C., “Fully automated echocardiogram interpretation in clinical practice,” Circulation, vol. 138, no. 16, pp. 1623-1635, 2018.

- 81.Howard J. P., Tan J., Shun-Shin M. J., Mahdi D., Nowbar A. N., Arnold A. D., Ahmad Y., McCartney P., Zolgharni M., Linton N. W. F., Sutaria N., Rana B., Mayet J., Rueckert D., Cole G. D., and Francis D. P., “Improving ultrasound video classification: an evaluation of novel deep learning methods in echocardiography,” Journal of Medical Artificial Intelligence, vol. 3, 2020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82.Vigneault D. M., Xie W., HodDavid C. Y., Bluemke D. A., and Noble J. A., “Ω-Net (Omega-Net): fully automatic, multi-view cardiac MR detection, orientation, and segmentation with deep neural networks ,” Medical Image Analysis, vol. 48, pp. 95-106, 2018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Xiong Z., Fedorov V. V., Fu X., Cheng E., Mecleod R., and Zhao J., “Fully automatic left atrium segmentation from late gadolinium enhanced magnetic resonance imaging using a dual fully convolutional neural network,” IEEE Transactions on Medical Imaging, vol. 38, no. 2, pp. 515-524, 2019 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Moccia S., Banali R., Martini C., Muscogiuri G., Pontone G., Pepi M., and Caiani E. G., “Development and testing of a deep learning-based strategy for scar segmentation on CMR-LGE images,” Magnetic Resonance Materials in Physics, Biology and Medicine, vol. 32, no. 2, pp. 187-195, 2019 [DOI] [PubMed] [Google Scholar]

- 85.Bai W., Suzuki H., Qin C., Tarroni G., Oktay O., Matthews P. M., and Rueckert D., “Recurrent neural networks for aortic image sequence segmentation with sparse annotations,” Medical Image Computing and Computer Assisted Intervention - MICCAI 2018. MICCAI 2018, Frangi A., Schnabel J., Davatzikos C., Alberola-López C., and Fichtinger G., Eds., Springer, Cham, vol. 11073, Lecture Notes in Computer Science, 2019 [Google Scholar]

- 86.Morris E. D., Ghanem A. I., Dong M., Pantelic M. V., Walker E. M., and Glide-Hurst C. K., “Cardiac substructure segmentation with deep learning for improved cardiac sparing,” Medical Physics, vol. 74, no. 2, pp. 576-586, 2020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 87.Shen Y., Fang Z., Gao Y., Xiong N., Zhong C., and Tang X., “Coronary arteries segmentation based on 3D FCN with attention gate and level set function,” IEEE Access, vol. 7, 2019 [Google Scholar]

- 88.He J., Pan C., Yang C., Zhang M., Yang W., Zhou X., and Yizhou Y., “Learning hybrid representations for automatic 3D vessel centerline extraction,” Medical Image Computing and Computer Assisted Intervention - MICCAI 2020. MICCAI 2020, Martel A. L.et al., , Eds., Springer, Cham, vol. 12266, Lecture Notes in Computer Science, 2020 [Google Scholar]

- 89.Zhang W., Zhang J., Du X., Zhang Y., and Li S., “An end-to-end joint learning framework of artery-specific coronary calcium scoring in non-contrast cardiac CT,” Computing, vol. 101, no. 6, pp. 667-678, 2019 [Google Scholar]

- 90.Liu J., Jin C., Feng J., Du Y., Lu J., and Zhou J., “A vessel-focused 3D convolutional network for automatic segmentation and classification of coronary artery plaques in cardiac CTA,” Statistical Atlases and Computational Models of the Heart. Atrial Segmentation and LV Quantification Challenges. STACOM 2018, Pop M.et al., , Eds., Springer, Cham, vol. 11395, Lecture Notes in Computer Science, 2018 [Google Scholar]

- 91.Vorontsov E., Cerny M., Régnier P., di Jorio L., Pal C. J., Lapointe R., Vandenbroucke-Menu F., Turcotte S., Kadoury S., and Tang A., “Deep learning for automated segmentation of liver lesions at CT in patients with colorectal cancer liver metastases,” Radiology: Artificial Intelligence, vol. 1, no. 2, article 180014, 2019 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92.Wang X., Han S., Chen Y., Gao D., and Vasconcelos N., “Volumetric attention for 3D medical image segmentation and detection,” Medical Image Computing and Computer Assisted Intervention - MICCAI 2019. MICCAI 2019, Shen D.et al., , Eds., Springer, Cham, vol. 11769, Lecture Notes in Computer Science, 2019 [Google Scholar]

- 93. Seo H., Huang C., Bassenne M., Xiao R., and Xing L., “Modified U-Net (mU-Net) with incorporation of object-dependent high level features for improved liver and liver-tumor segmentation in CT images,” IEEE Transactions on Medical Imaging, vol. 39, no. 5, pp. 1316-1325, 2020.

- 94. Tang Y., Tang Y., Zhu Y., Xiao J., and Summers R. M., “E2Net: an edge enhanced network for accurate liver and tumor segmentation on CT scans,” 2020, https://arxiv.org/abs/2007.09791.

- 95. Zhen S.-h., Cheng M., Tao Y.-b., Wang Y.-f., Juengpanich S., Jiang Z. Y., Jiang Y. K., Yan Y. Y., Lu W., Lue J. M., Qian J. H., Wu Z. Y., Sun J. H., Lin H., and Cai X. J., “Deep learning for accurate diagnosis of liver tumor based on magnetic resonance imaging and clinical data,” Frontiers in Oncology, vol. 10, p. 680, 2020.

- 96. Liu X., Song J. L., Wang S. H., Zhao J. W., and Chen Y. Q., “Learning to diagnose cirrhosis with liver capsule guided ultrasound image classification,” Sensors, vol. 17, p. 149, 2017.

- 97. Yasaka K., Akai H., Kunimatsu A., Abe O., and Kiryu S., “Deep learning for staging liver fibrosis on CT: a pilot study,” European Radiology, vol. 28, no. 11, pp. 4578-4585, 2018.

- 98. Yasaka K., Akai H., Kunimatsu A., Abe O., and Kiryu S., “Liver fibrosis: deep convolutional neural network for staging by using gadoxetic acid-enhanced hepatobiliary phase MR images,” Radiology, vol. 287, no. 1, pp. 146-155, 2018.

- 99. Choi K. J., Jang J. K., Lee S. S., Sung Y. S., Shim W. H., Kim H. S., Yun J., Choi J. Y., Lee Y., Kang B. K., Kim J. H., Kim S. Y., and Yu E. S., “Development and validation of a deep learning system for staging liver fibrosis by using contrast agent-enhanced CT images in the liver,” Radiology, vol. 289, no. 3, pp. 688-697, 2018.

- 100. Xue L. Y., Jiang Z. Y., Fu T. T., Wang Q. M., Zhu Y. L., Dai M., Wang W. P., Yu J. H., and Ding H., “Transfer learning radiomics based on multimodal ultrasound imaging for staging liver fibrosis,” European Radiology, vol. 30, no. 5, pp. 2973-2983, 2020.

- 101. Tang Z., Liu W. R., Zhou P. Y., Ding Z. B., Jiang X. F., Wang H., Tian M. X., Tao C. Y., Fang Y., Qu W. F., Dai Z., Qiu S. J., Zhou J., Fan J., and Shi Y. H., “Prognostic value and predication model of microvascular invasion in patients with intrahepatic cholangiocarcinoma,” Journal of Cancer, vol. 10, no. 22, pp. 5575-5584, 2019.

- 102. Men S., Ju H., Zhang L., and Zhou W., “Prediction of microvascular invasion of hepatocellar carcinoma with contrast-enhanced MR using 3D CNN and LSTM,” in 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 2019, pp. 810-813.

- 103. Jiang Y.-Q., Cao S.-E., Cao S., Chen J.-N., Wang G.-Y., Shi W.-Q., Deng Y.-N., Cheng N., Ma K., Zeng K.-N., Yan X.-J., Yang H.-Z., Huan W.-J., Tang W.-M., Zheng Y., Shao C.-K., Wang J., Yang Y., and Chen G.-H., “Preoperative identification of microvascular invasion in hepatocellular carcinoma by XGBoost and deep learning,” Journal of Cancer Research and Clinical Oncology, vol. 147, pp. 821-833, 2021.

- 104. Olczak J., Fahlberg N., Maki A., Razavian A. S., Jilert A., Stark A., Sköldenberg O., and Gordon M., “Artificial intelligence for analyzing orthopedic trauma radiographs,” Acta Orthopaedica, vol. 88, no. 6, pp. 581-586, 2017.

- 105. Urakawa T., “Detecting intertrochanteric hip fractures with orthopedist-level accuracy using a deep convolutional neural network,” Skeletal Radiology, vol. 48, no. 2, pp. 239-244, 2019.

- 106. Gale W., Oakden-Rayner L., Carneiro G., Bradley A. P., and Palmer L. J., “Detecting hip fractures with radiologist-level performance using deep neural networks,” 2017, https://arxiv.org/abs/1711.06504.

- 107. Krogue J. D., “Automatic hip fracture identification and functional subclassification with deep learning,” Radiology: Artificial Intelligence, vol. 2, no. 2, article e190023, 2020.

- 108. Gan K., Xu D., Lin Y., Shen Y., Zhang T., Hu K., Zhou K., Bi M., Pan L., Wu W., and Liu Y., “Artificial intelligence detection of distal radius fractures: a comparison between the convolutional neural network and professional assessments,” Acta Orthopaedica, vol. 90, no. 4, pp. 394-400, 2019.

- 109. Thian Y. L., Li Y., Jagmohan P., Sia D., Chan V. E. Y., and Tan R. T., “Convolutional neural networks for automated fracture detection and localization on wrist radiographs,” Radiology: Artificial Intelligence, vol. 1, article e180001, 2019.

- 110. Lindsey R., Daluiski A., Chopra S., Lachapelle A., Mozer M., Sicular S., Hanel D., Gardner M., Gupta A., Hotchkiss R., and Potter H., “Deep neural network improves fracture detection by clinicians,” Proceedings of the National Academy of Sciences of the United States of America, vol. 115, no. 45, pp. 11591-11596, 2018.

- 111. Wu S., Yan L., Liu X., Yu Y., and Zhang S., “An end-to-end network for detecting multi-domain fractures on X-ray images,” in 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, October 2020.

- 112. Wu H.-Z., Yan L. F., Liu X. Q., Yu Y. Z., Geng Z. J., Wu W. J., Han C. Q., Guo Y. Q., and Gao B. L., “The feature ambiguity mitigate operator model helps improve bone fracture detection on X-ray radiograph,” Scientific Reports, vol. 11, no. 1, article 1589, 2021.

- 113. Kairouz P., McMahan H. B., Avent B., Bellet A., Bennis M., Bhagoji A. N., Bonawitz K., Charles Z., Cormode G., Cummings R., D'Oliveira R. G. L., Eichner H., El Rouayheb S., Evans D., Gardner J., Garrett Z., Gascón A., Ghazi B., Gibbons P. B., Gruteser M., Harchaoui Z., He C., He L., Huo Z., Hutchinson B., Hsu J., Jaggi M., Javidi T., Joshi G., Khodak M., Konečný J., Korolova A., Koushanfar F., Koyejo S., Lepoint T., Liu Y., Mittal P., Mohri M., Nock R., Özgür A., Pagh R., Raykova M., Qi H., Ramage D., Raskar R., Song D., Song W., Stich S. U., Sun Z., Suresh A. T., Tramèr F., Vepakomma P., Wang J., Xiong L., Xu Z., Yang Q., Yu F. X., Yu H., and Zhao S., “Advances and open problems in federated learning,” 2019, https://arxiv.org/abs/1912.04977.

- 114. Armato S. G. III, McLennan G., Bidaut L., McNitt-Gray M. F., Meyer C. R., Reeves A. P., Zhao B., Aberle D. R., Henschke C. I., Hoffman E. A., Kazerooni E. A., MacMahon H., van Beek E. J. R., Yankelevitz D., Biancardi A. M., Bland P. H., Brown M. S., Engelmann R. M., Laderach G. E., Max D., Pais R. C., Qing D. P. Y., Roberts R. Y., Smith A. R., Starkey A., Batra P., Caligiuri P., Farooqi A., Gladish G. W., Jude C. M., Munden R. F., Petkovska I., Quint L. E., Schwartz L. H., Sundaram B., Dodd L. E., Fenimore C., Gur D., Petrick N., Freymann J., Kirby J., Hughes B., Vande Casteele A., Gupte S., Sallam M., Heath M. D., Kuhn M. H., Dharaiya E., Burns R., Fryd D. S., Salganicoff M., Anand V., Shreter U., Vastagh S., Croft B. Y., and Clarke L. P., “The lung image database consortium (LIDC) and image database resource initiative (IDRI): a completed reference database of lung nodules on CT scans,” Medical Physics, vol. 38, no. 2, pp. 915-931, 2011.

- 115. Setio A. A. A., Traverso A., de Bel T., Berens M. S. N., Bogaard C., Cerello P., Chen H., Dou Q., Fantacci M. E., Geurts B., van der Gugten R., Heng P. A., Jansen B., de Kaste M. M. J., Kotov V., Lin J. Y. H., Manders J. T. M. C., Sóñora-Mengana A., García-Naranjo J. C., Papavasileiou E., Prokop M., Saletta M., Schaefer-Prokop C. M., Scholten E. T., Scholten L., Snoeren M. M., Torres E. L., Vandemeulebroucke J., Walasek N., Zuidhof G. C. A., van Ginneken B., and Jacobs C., “Validation, comparison, and combination of algorithms for automatic detection of pulmonary nodules in computed tomography images: the LUNA16 challenge,” Medical Image Analysis, vol. 42, pp. 1-13, 2017.

- 116. Bowyer K., Kopans D., Kegelmeyer W. P., Moore R., Sallam M., Chang K., and Woods K., “The digital database for screening mammography,” in Third International Workshop on Digital Mammography, 1996, vol. 58, p. 27.

- 117. Yan K., Wang X., Lu L., and Summers R., “DeepLesion: automated mining of large-scale lesion annotations and universal lesion detection with deep learning,” Journal of Medical Imaging, vol. 5, 2018.

- 118. Bilic P., Christ P. F., Vorontsov E., Chlebus G., Chen H., Dou Q., Fu C. W., Han X., Heng P. A., Hesser J., and Kadoury S., “The liver tumor segmentation benchmark (LiTS),” 2019, https://arxiv.org/abs/1901.04056.

- 119. Simpson A. L., Antonelli M., Bakas S., Bilello M., Farahani K., van Ginneken B., Kopp-Schneider A., Landman B. A., Litjens G., Menze B., Ronneberger O., Summers R. M., Bilic P., Christ P. F., Do R. K. G., Gollub M., Golia-Pernicka J., Heckers S. H., Jarnagin W. R., McHugo M. K., Napel S., Vorontsov E., Maier-Hein L., and Cardoso M. J., “A large annotated medical image dataset for the development and evaluation of segmentation algorithms,” 2019, https://arxiv.org/abs/1902.09063.

- 120. Lin T.-Y., Goyal P., Girshick R., He K., and Dollár P., “Focal loss for dense object detection,” in 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 2017.

- 121. Husseini M., Sekuboyina A., Loeffler M., Navarro F., Menze B. H., and Kirschke J. S., “Grading loss: a fracture grade-based metric loss for vertebral fracture detection,” 2020, https://arxiv.org/abs/2008.07831.

- 122. Hadsell R., Chopra S., and LeCun Y., “Dimensionality reduction by learning an invariant mapping,” in 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition - Volume 2 (CVPR'06), New York, NY, USA, 2006, vol. 2, pp. 1735-1742.

- 123. Schroff F., Kalenichenko D., and Philbin J., “FaceNet: a unified embedding for face recognition and clustering,” in 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 2015, pp. 815-823.

- 124. Jiménez-Sánchez A., Mateus D., Kirchhoff S., Kirchhoff C., Biberthaler P., Navab N., González Ballester M. A., and Piella G., “Medical-based deep curriculum learning for improved fracture classification,” Medical Image Computing and Computer Assisted Intervention - MICCAI 2019. MICCAI 2019, Shen D. et al., Eds., Springer, Cham, vol. 11769, Lecture Notes in Computer Science, 2019.

- 125. Chen H., Wang Y., Zheng K., Li W., Cheng C.-T., Harrison A. P., Xiao J., Hager G. D., Lu L., Liao C.-H., and Miao S., “Anatomy-aware Siamese network: exploiting semantic asymmetry for accurate pelvic fracture detection in X-ray images,” 2020, https://arxiv.org/abs/2007.01464.