Abstract

Since 2016, deep learning (DL) has advanced tomographic imaging with remarkable successes, especially in low-dose computed tomography (LDCT) imaging. Despite being driven by big data, LDCT denoising and pure end-to-end reconstruction networks often suffer from their black-box nature and from major issues such as instabilities, which are major barriers to applying deep learning methods in low-dose CT applications. An emerging trend is to integrate imaging physics and models into deep networks, enabling a hybridization of physics/model-based and data-driven elements. In this paper, we systematically review the physics/model-based data-driven methods for LDCT, summarize the loss functions and training strategies, evaluate the performance of different methods, and discuss relevant issues and future directions.

1. Introduction

Since the invention of computed tomography (CT) in the 1970s, it has become an indispensable imaging modality for screening, diagnosis, and therapeutic planning. Due to the potential damage to healthy tissues, radiation dose minimization for x-ray CT has been widely studied over the past two decades. In some major clinical tasks, the radiation dose of a single CT scan can be up to 43 milli-Sieverts (mSv) [1], which is an order of magnitude higher than the amount of natural background radiation one receives annually. The radiation dose can be reduced by physically lowering the x-ray flux, which is called low-dose CT (LDCT). However, LDCT degrades the signal-to-noise ratio (SNR) and compromises the subsequent image quality.

Conventional tomographic reconstruction algorithms can hardly achieve satisfactory LDCT image quality. To meet the clinical requirements, advanced algorithms are required to suppress noise and artifacts associated with LDCT. Up to now, promising results have been obtained, improving LDCT quality and diagnostic performance in various clinical scenarios. Generally speaking, LDCT algorithms can be divided into four categories: sinogram domain filtering, image domain post-processing, model-based iterative reconstruction (MBIR), and deep learning (DL) methods.

Sinogram domain filtering directly performs denoising in the space of projection data. Then, the denoised raw data can be reconstructed into high-quality CT images using analytic algorithms. Depending on the noise distribution, appropriate filters can be designed. Structural adaptive filtering [2] is a representative algorithm in this category, which effectively refines the clarity of LDCT images. The main advantage of sinogram domain filtering is that it can suppress noise based on the known distribution. However, any model mismatch or inappropriate operations in the projection domain will introduce global interference, compromising the accuracy and robustness of sinogram domain filtering results.

Image domain post-processing is more flexible and stable than sinogram domain filtering. Based on appropriate prior assumptions about CT images, such as sparsity, several popular methods were developed [3]. These methods can effectively denoise LDCT images, but their prototypes were often developed for natural image processing. LDCT images differ from natural images in many respects. For example, LDCT image noise does not follow any known distribution, depends on underlying structures, and is difficult to model analytically. The image noise distribution is complex, and so is the image content prior. These factors limit the performance of image domain post-processing.

MBIR combines the advantages of the two kinds of methods mentioned above and works to minimize an energy-based objective function. The energy model usually consists of two parts: the fidelity term with the noise model in the projection domain and the regularization term with the prior model in the image domain. Since the noise model for LDCT in the projection domain is well-established, research efforts in developing MBIR are more focused on the prior model. Utilizing the well-known image sparsity for LDCT, a number of methods were proposed [4-6]. MBIR algorithms usually deliver robust performance and achieve clinically satisfactory results once the regularization terms are properly designed and the balancing parameters are well tuned. However, these requirements may restrict the applicability of an MBIR algorithm, since customizing it takes extensive experience and skill. MBIR algorithms also suffer from high computational cost.

Recently, DL was introduced for tomographic imaging. Driven by big data, DL promises to overcome the main shortcomings of conventional algorithms, which demand the explicit design of regularizers and cannot guarantee optimality or generalizability. DL methods extract information from a large amount of data to ensure the objectivity and comprehensiveness of the extracted information. By learning the mapping from LDCT scans to normal-dose CT (NDCT) images, a series of studies were performed [7-11]. These methods can be seen as a combination of image domain post-processing and data-driven methods. They inherit the advantages of the post-processing algorithms and DL methods, and have high processing efficiency, excellent performance, and great clinical potential. However, they also have drawbacks. These methods usually use the approximate or pseudo-inversion of the raw data as the input of the network. The initially reconstructed images may miss some structures, which cannot be easily restored by the network if raw data are unavailable. On the other hand, noise and artifacts in filtered back-projection (FBP) reconstructions could be perceived as meaningful structures by a denoising network. Both circumstances will compromise the diagnostic performance, resulting in either false positives or false negatives.

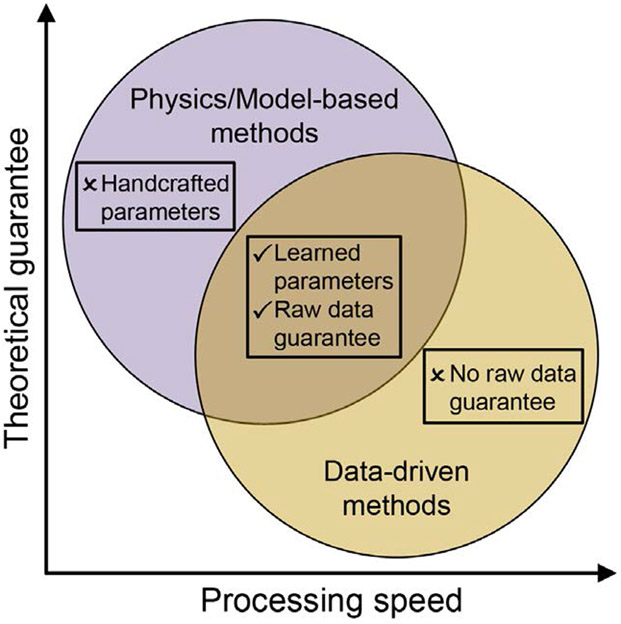

Naturally, synergizing physics/model-based methods and data-driven methods will enjoy the best of both worlds. While deep image denoising only handles reconstructed images, MBIR methods are more robust and safer. In each iteration of MBIR, raw data are used to rectify intermediate results and improve the data consistency. By introducing the CT physics or MBIR model, researchers can embed the raw data constraint into the network, which avoids the information loss in the process of image reconstruction. Over the past years, a number of physics/model-based data-driven methods for LDCT have been proposed [12-14]. As shown in Fig. 1, these methods address the shortcomings of the physics/model-based methods and data-driven networks, and achieve an excellent balance between the improved accuracy with learned parameters and the robustness aided by data fidelity.

Figure 1: Advantages of synergizing physics/model-based methods and data-driven methods.

In this paper, we review these methods. In the next section, the problem of LDCT is described, and the conventional modeling and optimization methods are introduced. In the third section, different kinds of methods that incorporate physics/models into the deep learning framework are summarized. In the fourth section, several experiments are conducted to compare different hybrid methods for LDCT. In the fifth section, we discuss relevant issues. Finally, we conclude the paper in the last section.

2. Physics/Model-based LDCT Methods

2.1. CT Physics

Assuming that an x-ray tube has an incident flux $I_0$, which can be measured in an air scan, the number of photons received by a detector, $I$, can be formulated as $I = I_0 \exp\left(-\int_{l} \mu \, \mathrm{d}l\right)$, where $\mu$ is the linear attenuation coefficient, and $l$ represents the x-ray path. After a logarithmic transformation, the line integral can be obtained as

$$y = -\ln\frac{I}{I_0} = \int_{l} \mu \, \mathrm{d}l. \tag{1}$$

Such line integrals are typically organized into projections and stored as a sinogram. The line integrals in the form of Eq. (1) can be discretized into a linear system $y = Ax$, where $x$ denotes the attenuation coefficient distribution to be solved, $y$ represents the projection data, and $A$ is the system matrix for a pre-specified scanning geometry.
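As a small numerical illustration of the forward model above, the following sketch applies the Beer-Lambert law and the logarithmic transform to a toy problem. The two-pixel object, the three-ray system matrix, and the flux value are hypothetical choices made only for this example; the function names are likewise illustrative.

```python
import numpy as np

def detected_photons(A, mu, I0):
    """Beer-Lambert law: expected photon count for each ray."""
    return I0 * np.exp(-A @ mu)

def line_integrals(I, I0):
    """Logarithmic transform that converts photon counts to line integrals."""
    return -np.log(I / I0)

# Toy geometry (hypothetical): 3 rays crossing a 2-pixel object.
# A[i, j] is the intersection length (cm) of ray i with pixel j.
A = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [0.7, 0.7]])
mu = np.array([0.2, 0.5])   # attenuation coefficients (1/cm)
I0 = 1.0e5                  # incident flux, as measured in an air scan

I = detected_photons(A, mu, I0)
y = line_integrals(I, I0)   # equals A @ mu in the noise-free case
```

In the noise-free case the log-transformed data reproduce the discretized line integrals exactly, which is why the reconstruction problem can be posed as a linear system.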

2.2. CT Noise

The noise in CT data mainly consists of the following two components [15]:

Statistical noise: Statistical noise, also known as quantum noise, is the main noise component in LDCT and originates from statistical fluctuations in the emission of x-ray photons.

Electronic noise: Electronic noise occurs when analog signals are converted into digital signals.

2.3. Simulation

In clinical practice, it is difficult to obtain paired LDCT and NDCT datasets from two separate scans due to uncontrollable organ movement and radiation dose limitation. As a result, numerical simulation is important to produce LDCT data from an NDCT scan.

In [16], a noise simulation method was proposed for LDCT research, and applied to generate the public dataset “the 2016 NIH-AAPM-Mayo Clinic Low-Dose CT Grand Challenge”. The number of detected x-ray photons can be approximately considered as normally distributed, and formulated as: $\hat{I} \sim \mathcal{N}\left(\alpha I, \alpha I + \sigma_e^2\right)$, where $I = I_0 \exp(-y)$, $\sigma_e^2$ represents the variance of electronic noise, and $\alpha$ denotes the dose factor.
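A minimal sketch of such a simulation is given below. It assumes the normal approximation in which the detected counts have mean equal to the dose-scaled noise-free counts and variance equal to that mean plus the electronic-noise variance; this is a simplified stand-in and not the exact procedure of [16]. The function name, the flux value, and the constant test sinogram are hypothetical, while the 20% dose factor and the electronic-noise variance of 8.2 follow the experimental settings reported later in this paper.

```python
import numpy as np

def simulate_low_dose(y, I0, alpha, sigma_e2, rng):
    """Add Gaussian-approximated quantum and electronic noise to line integrals y."""
    I_mean = alpha * I0 * np.exp(-y)          # expected photon counts at the reduced dose
    I_var = I_mean + sigma_e2                 # quantum variance plus electronic variance
    I_noisy = rng.normal(I_mean, np.sqrt(I_var))
    I_noisy = np.clip(I_noisy, 1.0, None)     # keep counts positive before the logarithm
    return -np.log(I_noisy / (alpha * I0))    # noisy line integrals

rng = np.random.default_rng(0)
y_clean = np.full(10000, 2.0)                 # hypothetical constant sinogram
y_ld = simulate_low_dose(y_clean, I0=1.0e5, alpha=0.2, sigma_e2=8.2, rng=rng)
y_nd = simulate_low_dose(y_clean, I0=1.0e5, alpha=1.0, sigma_e2=8.2, rng=rng)
```

Comparing the spread of `y_ld` and `y_nd` shows the expected behavior: lowering the dose factor leaves the mean line integral essentially unchanged but increases its standard deviation.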

2.4. Conventional CT Reconstruction Methods

Conventional CT image reconstruction takes both measurement data and prior knowledge into account, and is performed by minimizing an energy model iteratively. The general energy model for LDCT reconstruction can be formulated as

$$\hat{x} = \arg\min_{x} \Phi(x) + \lambda R(x), \tag{2}$$

which has two parts: a fidelity term $\Phi(x)$ and a regularization term $R(x)$, with $\lambda$ being the penalty parameter.

The fidelity term measures the consistency of the reconstruction result with the measurement data. The weighted least-squares (WLS) function is usually adopted as the fidelity term: $\Phi(x) = \frac{1}{2}\|y - Ax\|_{\Sigma^{-1}}^{2}$, where $\Sigma$ is a diagonal matrix whose main-diagonal elements are the estimated variances of the data. Since the ideal flux is unknown, the variance is usually estimated as $\sigma_i^2 = (\hat{I}_i + \sigma_e^2)/\hat{I}_i^2$, where $\hat{I}_i$ is the measured photon count of the $i$-th ray [6].

Dedicated regularization terms were designed for different types of images, depending on the nature of the images and the researchers' expertise. Over the past years, various regularization terms were proposed along with the improved understanding of CT image properties. Importantly, the well-known sparsity can be expressed as $R(x) = \|\Psi x\|_1$, where $\|\cdot\|_1$ is the $\ell_1$ norm, and $\Psi$ is a sparsifying transform matrix. Commonly used sparsifying transforms include the gradient transform (total variation) [4], learned sparsifying transforms [5, 6, 17], etc. Subsequently, further leveraging the two-dimensional structure of an image, low-rank regularization became popular for LDCT reconstruction [18-21]. The low-rank constraint can be relaxed to minimization of the nuclear norm $\|x\|_{*} = \sum_i s_i(x)$, where $s_i(x)$ is the $i$-th largest singular value of $x$.

Generally, the models in Eq. (2) do not have closed-form solutions and need to be optimized iteratively. Sometimes, auxiliary and dual variables are introduced to simplify the calculation and facilitate convergence. The idea of introducing auxiliary variables is in the same spirit as plug-and-play (PnP) [22, 23], which conveniently decouples the regularization (prior) step from the inversion step. For example, based on the PnP scheme with the WLS fidelity, Eq. (2) can be rewritten as

$$\min_{x, z} \frac{1}{2}\|y - Ax\|_{\Sigma^{-1}}^{2} + \lambda R(z), \quad \text{s.t. } x = z. \tag{3}$$

Then, the primal variable $x$ and the auxiliary variable $z$ can be alternately optimized. Two representative alternating optimization algorithms are the alternating direction method of multipliers (ADMM) and Split-Bregman, which divide the model into sub-problems and solve them accordingly.
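To make the iterative minimization of a WLS-based energy model concrete, the sketch below runs plain gradient descent on a WLS fidelity term with a simple quadratic (Tikhonov) regularizer. The Tikhonov term is an assumption chosen only because it admits a closed-form reference solution for checking the iteration; it is not one of the sparsity or low-rank priors discussed above. The random matrix is a stand-in for a real CT system matrix, and all names are illustrative.

```python
import numpy as np

def wls_gradient_descent(A, y, w, lam, n_iter=5000):
    """Minimize 0.5*(y-Ax)^T W (y-Ax) + 0.5*lam*||x||^2 by gradient descent."""
    H = A.T @ (w[:, None] * A) + lam * np.eye(A.shape[1])  # Hessian of the objective
    step = 1.0 / np.linalg.eigvalsh(H).max()               # step 1/L guarantees descent
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (w * (A @ x - y)) + lam * x
        x = x - step * grad
    return x

rng = np.random.default_rng(0)
A = rng.normal(size=(30, 8))                  # stand-in for a system matrix
x_true = rng.random(8)
var = 0.01 * (1.0 + rng.random(30))           # per-ray noise variances
y = A @ x_true + rng.normal(0.0, np.sqrt(var))
w = 1.0 / var                                 # WLS weights are inverse variances
lam = 0.1

x_gd = wls_gradient_descent(A, y, w, lam)
# Closed-form minimizer, available only because the regularizer is quadratic.
x_ref = np.linalg.solve(A.T @ (w[:, None] * A) + lam * np.eye(8), A.T @ (w * y))
```

For non-quadratic regularizers such as total variation, no such closed form exists, which is where splitting schemes like ADMM and Split-Bregman become useful.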

3. Physics/Model-based Data-driven Methods

The popular approach for LDCT denoising with DL employs convolution layers and activation functions to build a neural network, whose input and output are both images. These methods are simple to implement and deliver impressive denoising performance, but they can hardly recover details lost in the input image. On the other hand, the MBIR algorithm is safer. In each iteration of MBIR, it uses the measurement to correct an intermediate result. Constrained by the measurement, the MBIR result respects the data consistency and restores missing structures well in the reconstructed image. Following the idea of the DL-based post-processing method, it is natural to synergize the physics/model-based and data-driven methods. Such hybrid methods would not only have data-driven benefits but also have better robustness and interpretability out of the physics/model-based formulation. Table 1 summarizes these methods. The rest of this section introduces this kind of method.

Table 1: Representative physics/model-based data-driven methods.

| Method | Reference | Highlight |
|---|---|---|
| - | Würfl et al. [25] | Learned filters of FBP |
| HDNet | Hu et al. [26] | Dual domain processing with FBP |
| VVBPTensorNet | Tao et al. [27] | Filtered back-projection view-by-view |
| CLEAR | Zhang et al. [28] | Multi-level consistency loss |
| AUTOMAP | Zhu et al. [29] | Learned transform with FC |
| iRadonMap | He et al. [24] | Transform based on scan trajectory |
| DSigNet | He et al. [30] | Downsampling of geometry and volume |
| iCTNet | Li et al. [31] | Shared parameters for different views |
| - | Fu et al. [32] | Hierarchical architecture |
| KSAE | Wu et al. [33] | Learned sparsifying transform |
| CNNRPGD | Gupta et al. [12] | Learned projection operation |
| REDAEP | Zhang et al. [34] | Learned denoising autoencoding prior |
| MomentumNet | Chun et al. [35] | Momentum-based extrapolation |
| SUPER | Ye et al. [36] | Combination of learned regularizations |
| LEARN | Chen et al. [13] | Unrolled gradient descent algorithm |
| LPD | Adler et al. [14] | Unrolled PDHG algorithm |
| AHP-Net | Ding et al. [37] | Learned hyperparameters with FC |
| MetaInvNet | Zhang et al. [38] | Learned initializer for conjugate gradient |
| FistaNet | Xiang et al. [39] | Unrolled FISTA algorithm |

3.1. Physics-based Data-driven Methods

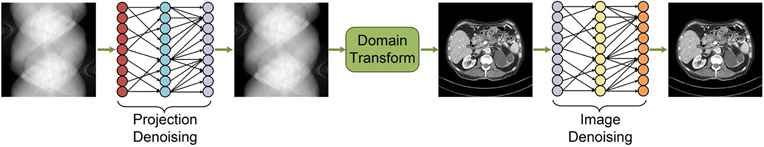

As shown in Fig. 2, the physics-based data-driven methods include a differentiable domain transform based on the CT physics between the projection and image domains in the network. The input and output of the network are usually projection data and image data, respectively. In the beginning, the network used a conventional domain transform from the projection domain to the image domain. Then, inspired by the work on learned domain transformation, researchers built networks with fully connected (FC) layers to replace the conventional domain transform and learn the inverse Radon transform directly [24].

Figure 2: General workflow for the physics-based data-driven LDCT denoising.

Conventional transform

The architecture of this kind of network typically features two sub-networks: one in the projection domain and the other in the image domain, both of which are usually implemented with convolutional neural networks (CNNs). The projection data are fed into the first sub-network, in which the measurement data can be denoised. The denoised projection is then converted into an image using a differentiable conventional transformation. Finally, the image is processed by the second sub-network to improve the reconstruction quality. Many network architectures widely used for image processing in the literature can be adapted into the sub-networks in both domains. Since the statistical distributions of CT noise in the projection and image domains are quite different, the denoising processes in the two domains can be complementary, making the overall denoising more effective and more stable. The differentiable domain transform allows information exchange between the two sub-networks. The simplest domain transform is back-projection, which is a differentiable linear transform [25]. A more reasonable yet very efficient transform is filtered back-projection (FBP) [26, 28]. A main advantage of FBP is that the projection data can be directly transformed into a suitable numerical range, which is friendlier to the subsequent image domain processing. Another interesting domain transform is the view-by-view FBP [27]. This transform back-projects the projection data into multiple channels in the image space, each of which is the back-projection from one projection view. It decouples the data from multiple views to obtain more information. These domain transforms are limited by the understanding and modeling of CT physics. With deep learning, it is feasible to learn the involved kernels and perform the domain transform.
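The view-by-view idea can be sketched with a matrix-based back-projection. In the example below, each projection view is back-projected into its own image channel, and summing the channels recovers the ordinary back-projection. For brevity the ramp filtering step of FBP is omitted, so this is unfiltered back-projection; the random system matrix, the sizes, and the function name are hypothetical and do not reproduce the implementation in [27].

```python
import numpy as np

def view_by_view_backprojection(A, sino, n_views):
    """Back-project each view separately; returns an array of shape (n_views, n_pixels)."""
    n_det = sino.size // n_views
    channels = np.empty((n_views, A.shape[1]))
    for v in range(n_views):
        rows = slice(v * n_det, (v + 1) * n_det)   # rows of A belonging to view v
        channels[v] = A[rows].T @ sino[rows]
    return channels

rng = np.random.default_rng(0)
n_views, n_det, n_pix = 4, 5, 9
A = rng.random((n_views * n_det, n_pix))   # stand-in system matrix, rows grouped by view
sino = rng.random(n_views * n_det)

channels = view_by_view_backprojection(A, sino, n_views)
full_bp = A.T @ sino                        # conventional back-projection of all views
```

Because the transform is linear, gradients pass through it unchanged in form, which is what allows the projection-domain and image-domain sub-networks to be trained jointly.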

Algorithm 1: Training a denoiser-based method in an iteration-independent/dependent fashion.

Learned transform

The learned transform can use FC layers to learn the physics-based transform from the projection domain to the image space. AUTOMAP is a representative network, which maps tomographic data to a reconstructed image through FC layers [29]. However, such an architecture is unaffordable for most medical imaging cases because of its high computational and memory costs. As a result, major efforts were made to improve the learned transforms by reducing the computational overhead [24, 30, 31]. Since each pixel traces a sinusoidal curve in the projection domain, [24] proposed to sum linearly along the trajectory so that the weights of the FC layers are sparse. In an improved version of this work, the geometry and volume were down-sampled to further reduce the computational cost [30]. Another effective way to reduce the cost is to use shared parameters. In [31], the measurements of different views are processed with shared parameters for the domain transform. In [32], the authors proposed a hierarchical architecture, where the shared parameters are gradually localized to the pixel level. Compared with the conventional transform, the learned transform has the potential to achieve better performance.

By incorporating the CT physics into the denoising process and working in both projection and image domains, image denoising can be effectively performed. However, the issue of generalizability is important for clinical applications. The learned transform has limited generalizability because it can only be applied to a fixed imaging geometry. When the geometry and volume differ from what is assumed in the training setup, the trained network becomes inapplicable. In contrast, the traditional transform is more stable and only requires adjusting the corresponding parameters for different geometries and volumes. Therefore, further development of learned transforms needs to make them more flexible and more generalizable.

3.2. Model-based Data-driven Methods

Given the generalizability, stability and interpretability of the MBIR algorithm, it is desirable to combine MBIR and DL for LDCT denoising. Deep learning is effective in solving complicated problems with big data. MBIR-based reconstruction has a fixed fidelity term and requires effort to find a good regularizer. For model-based data-driven reconstruction, researchers replaced the handcrafted regularization terms with neural networks and produced results often superior to the traditional MBIR counterparts. According to how the neural network is embedded into the MBIR scheme, the model-based data-driven methods can be divided into two categories: denoiser and unrolling.

Denoiser

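This approach follows the conventional iterative optimization scheme. In its iterative process, a neural network is introduced following the idea of PnP [23]. Drawing on the PnP framework, regularization by denoising (RED) was proposed [40]. With this regularization, the CT optimization model can be formulated as

$$\min_{x} \frac{1}{2}\|y - Ax\|_{\Sigma^{-1}}^{2} + \frac{\lambda}{2} x^{T}\left(x - D(x)\right), \tag{4}$$

where $D(\cdot)$ is a denoiser implemented by the neural network. The optimization process can be expressed as

$$\begin{aligned} x^{k+1} &= \arg\min_{x} \frac{1}{2}\|y - Ax\|_{\Sigma^{-1}}^{2} + \frac{\beta}{2}\|x - z^{k}\|_{2}^{2}, \\ z^{k+1} &= \arg\min_{z} \frac{\lambda}{2} z^{T}\left(z - D(z)\right) + \frac{\beta}{2}\|x^{k+1} - z\|_{2}^{2}, \end{aligned} \tag{5}$$

where $z$ is an auxiliary variable and $\beta$ is the penalty parameter coupling $x$ and $z$. The optimization of $x$ can be done using a method for solving the quadratic problem. The solution of $z$ can be obtained either directly from the denoiser

$$z^{k+1} = D\left(x^{k+1}\right), \tag{6}$$

or as a semi-denoised result

$$z^{k+1} = \frac{\lambda D\left(x^{k+1}\right) + \beta x^{k+1}}{\lambda + \beta}. \tag{7}$$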

Of course, there are other solutions and combinations [34, 36].
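The alternating scheme described above can be sketched as follows, under several assumptions that are not taken from the cited works: the data-fidelity update solves a quadratic problem with a proximity penalty `beta` toward the auxiliary variable, the auxiliary update is either the denoiser output or a weighted blend of the denoiser output and the current estimate, and a 3-tap moving average stands in for a trained denoising network. All names and parameter values are illustrative.

```python
import numpy as np

def smooth_denoiser(x):
    """Stand-in for a trained network D(x): a 3-tap moving average (assumption)."""
    return np.convolve(np.pad(x, 1, mode="edge"), np.ones(3) / 3.0, mode="valid")

def pnp_reconstruct(A, y, w, denoiser, beta=1.0, lam=None, n_iter=50):
    """Alternate a quadratic data-fidelity update with a denoising update."""
    n = A.shape[1]
    M = A.T @ (w[:, None] * A) + beta * np.eye(n)    # normal matrix of the x-subproblem
    b = A.T @ (w * y)
    z = np.zeros(n)
    history = []
    for _ in range(n_iter):
        x = np.linalg.solve(M, b + beta * z)         # quadratic x-update
        d = denoiser(x)
        if lam is None:
            z = d                                     # fully denoised update
        else:
            z = (lam * d + beta * x) / (lam + beta)   # semi-denoised update
        history.append(x)
    return x, history

rng = np.random.default_rng(0)
A = rng.normal(size=(40, 16))                         # stand-in system matrix
x_true = np.repeat([0.2, 0.5, 0.3, 0.4], 4)           # piecewise-constant 1D "image"
y = A @ x_true + rng.normal(0.0, 0.05, size=40)
w = np.full(40, 1.0 / 0.05**2)                        # inverse-variance weights

x_pnp, hist_pnp = pnp_reconstruct(A, y, w, smooth_denoiser, beta=500.0)
x_red, hist_red = pnp_reconstruct(A, y, w, smooth_denoiser, beta=500.0, lam=500.0)
```

In this toy setting both variants settle to a fixed point within a few dozen iterations. With a learned denoiser, such behavior is not automatic and depends on properties of the network, which is why the convergence analyses discussed later in the paper matter.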

There are two ways to train the denoiser. The first is to train a general denoiser for all iterations [12, 33, 34]. In this option, the denoiser can be trained with noisy images and the corresponding labels as training pairs. However, it is difficult for such a denoiser to achieve optimal denoising performance in every iteration. The second option can partially solve this problem by training an iteration-dependent denoiser dynamically [35, 36]. In each iteration, the denoiser denoises an intermediate image with different parameters to optimize the denoising performance. Of course, training model parameters for each iteration demands a much higher computational cost. Algorithm 1 supports either iteration-independent or iteration-dependent denoisers, where $y$ denotes projection data, $x^{*}$ represents a noise-free image, and the mean square error (MSE) is assumed as the loss function.

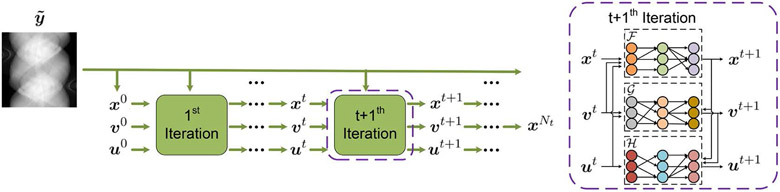

Unrolling

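Unrolling expands the iterative optimization process into a finite number of stages and maps them to a neural network [13,14,37-39,41]. As a general objective function, Eq. (3) can be extended using the augmented Lagrangian method (ALM) as follows:

$$L_{\rho}(x, z, u) = \frac{1}{2}\|y - Ax\|_{\Sigma^{-1}}^{2} + \lambda R(z) + \frac{\rho}{2}\|x - z + u\|_{2}^{2} - \frac{\rho}{2}\|u\|_{2}^{2}, \tag{8}$$

where $u$ is the scaled dual variable and $\rho$ is the penalty parameter. A general iterative optimization method, ADMM, can be formulated as

$$\begin{aligned} x^{k+1} &= \arg\min_{x} \frac{1}{2}\|y - Ax\|_{\Sigma^{-1}}^{2} + \frac{\rho}{2}\|x - z^{k} + u^{k}\|_{2}^{2}, \\ z^{k+1} &= \arg\min_{z} \lambda R(z) + \frac{\rho}{2}\|x^{k+1} - z + u^{k}\|_{2}^{2}, \\ u^{k+1} &= u^{k} + x^{k+1} - z^{k+1}. \end{aligned} \tag{9}$$

Each of these variables can be optimized using a corresponding algorithm. In an unrolling-based data-driven method, each optimization problem can be solved using a sub-network. When the total number of iterations is fixed, Eq. (9) can be realized as a neural network:

$$\begin{aligned} x^{k+1} &= \mathcal{F}_{x}\left(x^{k}, z^{k}, u^{k}, y\right), \\ z^{k+1} &= \mathcal{F}_{z}\left(x^{k+1}, z^{k}, u^{k}\right), \\ u^{k+1} &= \mathcal{F}_{u}\left(x^{k+1}, z^{k+1}, u^{k}\right), \end{aligned} \tag{10}$$

where $\mathcal{F}_{x}$, $\mathcal{F}_{z}$, and $\mathcal{F}_{u}$ denote the three sub-networks, respectively. Fig. 3 shows a top-level view of the workflow.

Figure 3: Workflow of an unrolled data-driven reconstruction process.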

With different energy models and optimization algorithms, various network architectures were developed for unrolled data-driven image reconstruction. In [13], the simplest gradient descent algorithm was unrolled into a neural network. In [14], the primal-dual hybrid gradient (PDHG) algorithm was designed as a generalized unrolling technique. In [39], the momentum method commonly used for traditional optimization was adapted into a reconstruction network for better performance with a limited number of iterations. However, it is difficult to directly judge the performance of the network based on the performance of the unrolled optimization scheme, and it remains an important topic how to unroll an optimization scheme and train it optimally.
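The simplest case, unrolled gradient descent, can be sketched as a forward pass through a fixed number of stages. This is only an illustration of the structure: in an actual unrolled network the per-stage step sizes and regularization modules would be trainable (for instance small CNNs), whereas here they are fixed scalars and a fixed linear function, and no training is performed. The random system matrix and all names are hypothetical.

```python
import numpy as np

def unrolled_forward(A, y, x0, step_sizes, reg_fns):
    """Forward pass of K unrolled gradient-descent stages.

    Stage k computes x <- x - step_k * (A^T (A x - y) + reg_k(x)).
    """
    x = x0
    for step, reg in zip(step_sizes, reg_fns):
        x = x - step * (A.T @ (A @ x - y) + reg(x))
    return x

rng = np.random.default_rng(0)
A = rng.normal(size=(20, 6))             # stand-in system matrix
x_true = rng.random(6)
y = A @ x_true
x0 = np.zeros(6)

K = 10                                   # number of unrolled stages
L = np.linalg.norm(A, 2) ** 2 + 0.01     # Lipschitz constant of the stage gradient
step_sizes = [1.0 / L] * K               # would be learned per stage in a real network
reg_fns = [lambda x: 0.01 * x] * K       # placeholder for learned regularization modules

x_K = unrolled_forward(A, y, x0, step_sizes, reg_fns)
```

Because the number of stages is fixed, the whole computation is a finite composition of differentiable operations, so the step sizes and regularization modules can be optimized end-to-end by back-propagation once implemented in an automatic differentiation framework.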

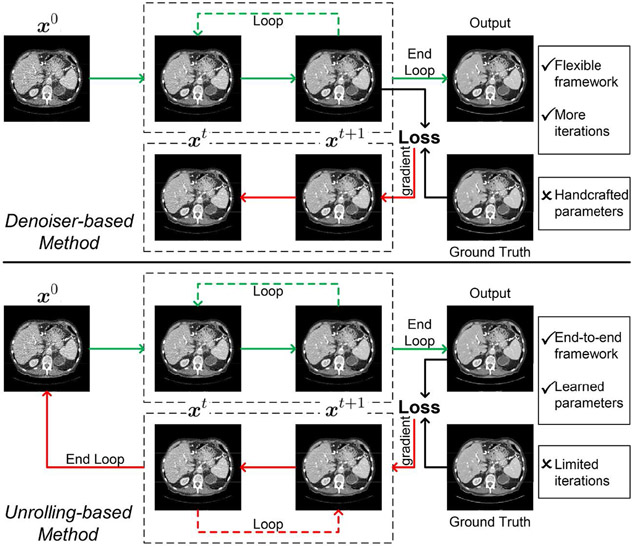

The denoiser-based method is based on the traditional iterative algorithm, where the training and optimization are separated. On the other hand, the unrolling-based method is an end-to-end procedure, where the optimization is incorporated into the training. Fig. 4 shows the forward and backward processes for denoiser-based and unrolling-based methods, where the green and red arrows represent forward and backward directions respectively. As shown in Fig. 4, the forward data streams for the two methods are similar, but the backward data stream is end-to-end for the unrolling-based method; i.e., the complete backward data stream is a back-propagation of error signals from the output to the input. While the denoiser-based method adopts a separate training strategy, the unrolling-based method can be trained in a unified fashion, where all parameters, including the regularization parameters, can be obtained from training. However, the unrolled model unavoidably requires more memory, which limits the number of iterations that can be unrolled. In many cases, the model performance is closely related to the number of iterations. In contrast, the denoiser-based method allows for more iterations, and the trained denoiser can be embedded in different optimization schemes, making the denoiser-based method more flexible. Nevertheless, the denoiser-based method still requires its parameters to be set manually, and these parameters have a significant impact on the performance. Hence, it is more important for the denoiser-based method to set parameters appropriately and to be coupled with a method for adaptive parameter adjustment.

Figure 4: Forward and backward processes for denoiser-based (top) and unrolling-based (bottom) methods, respectively.

3.3. Loss Functions

The commonly used loss functions for LDCT imaging are mean square error (MSE) and mean absolute error (MAE). To better remove noise and artifacts, total variation (TV) regularization, which performs well in compressed sensing methods for image denoising, is often used as an auxiliary loss [42]. In [8], a discriminator was used to make the denoised image have the same data distribution as that of clinical images. Additionally, a model pre-trained for the classification task was used to extract features, and the perceptual loss was computed in the feature space. The adversarial loss and perceptual loss can improve the visual performance and suppress over-smoothing. However, the adversarial loss for generative adversarial networks may introduce erroneous structures [43]. Similarly, the perceptual loss could generate checkerboard artifacts [44] when the constraint is imposed on a feature space downsampled with max pooling. In [42], the structural similarity index metric (SSIM) was introduced to promote structures closer to the ground truth. Similarly, to protect edges in denoised images, the Sobel operator was applied to extract edges and keep the edge coherence [10]. The identity loss is also relevant for image denoising tasks: if a noise-free image is fed to the network, the network should be dormant, i.e., the network output should be close to the clean input [9]. To maintain the measurement consistency, the result of the network needs to be transformed into the projection domain to compute the MSE or MAE loss [42]. Table 2 summarizes these commonly used loss functions.

Table 2: Representative loss functions.

| Loss function | Pros | Cons |
|---|---|---|
| MSE | Good denoising performance | Oversmoothing |
| MAE | Good denoising performance | Oversmoothing |
| TV loss [42] | Good denoising performance | Oversmoothing |
| Adversarial loss [8] | Good visual effect | Erroneous structures |
| Perceptual loss [8] | Good visual effect | Erroneous structures |
| SSIM loss [38] | Better structural protection | - |
| Edge incoherence [10] | Better structural protection | - |
| Identity loss [9] | More robust network | - |
| Projection loss [42] | Higher measurement consistency | Worse denoising performance |
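Several of the losses in Table 2 have simple closed forms, sketched below in NumPy for a single 2D image. The definitions are common textbook variants chosen for illustration (for example, an anisotropic TV given by the sum of absolute finite differences) and may differ in normalization from the cited works. The adversarial, perceptual, SSIM, and edge losses are omitted because they require trained networks or more elaborate operators. The weights in the combined loss and the identity system matrix are hypothetical.

```python
import numpy as np

def mse_loss(pred, target):
    """Mean square error."""
    return np.mean((pred - target) ** 2)

def mae_loss(pred, target):
    """Mean absolute error."""
    return np.mean(np.abs(pred - target))

def tv_loss(img):
    """Anisotropic total variation: sum of absolute vertical and horizontal differences."""
    return np.abs(np.diff(img, axis=0)).sum() + np.abs(np.diff(img, axis=1)).sum()

def projection_loss(pred, sino, A):
    """MSE between the forward projection of the prediction and the measured sinogram."""
    return np.mean((A @ pred.ravel() - sino) ** 2)

def identity_loss(net, clean):
    """A network fed a clean image should return (nearly) the same image."""
    return mse_loss(net(clean), clean)

pred = np.array([[0.0, 1.0],
                 [2.0, 3.0]])
target = np.zeros((2, 2))
A = np.eye(4)                    # trivial stand-in for a system matrix
sino = np.zeros(4)

total = (mse_loss(pred, target)
         + 0.1 * tv_loss(pred)
         + 0.5 * projection_loss(pred, sino, A))   # hypothetical weights
```

In practice the relative weights of such terms strongly affect the result and are tuned experimentally.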

4. Experimental Comparison

In this section, we report our comparative study on the performance of some popular physics/model-based data-driven methods and different loss functions. This evaluation was performed with a unified code framework to ensure fairness as much as possible. All code has been succinctly documented to help readers understand the models. For simplicity and fairness, the MSE loss function and the AdamW optimizer were employed for all the methods when evaluating the models. LPD was adopted as the backbone for the evaluation of different loss functions. Training was performed in a naive way, without any tricks. For fair comparison, all the models were trained for up to 200 epochs, which is sufficient for convergence of all the methods. The penalty/regularization parameters of the models were carefully tuned in our experiments so that each model performs as well as possible on the relevant dataset. After training, the model that performed best on the validation set was taken as the final model and used for testing. Of course, there are many factors that affect the performance of a neural network. Therefore, the results in this paper are for reference only and may not perfectly reflect the performance of these methods.

4.1. Dataset

The dataset used for our experiments is the public LDCT data from “the 2016 NIH-AAPM-Mayo Clinic Low-Dose CT Grand Challenge”. The dataset contains 2378 slices of 3 mm full-dose CT images from 10 patients. In this study, 600 images from seven patients were randomly chosen as the training set, 100 images from one patient were used as the validation set, and 200 images from the remaining two patients formed the testing set. The projection data were simulated with the distance-driven method. The geometry and volume were set according to the scanning parameters associated with the dataset. The noise simulation was done using the algorithm in [16]. The incident photon number for NDCT is the same as that provided in the dataset. The incident photon number for LDCT was set to 20% of that for NDCT. The variance of the electronic noise was assumed to be 8.2 according to the recommendation in [16].

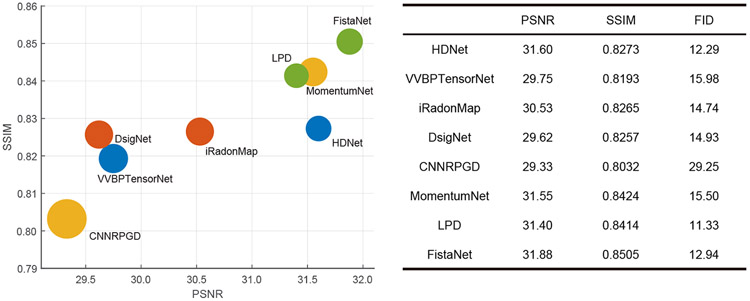

4.2. Model Study

For the model evaluation, all methods were trained by minimizing the MSE loss function. The commonly used PSNR and SSIM metrics were adopted to quantify the performance of different denoising methods. To evaluate the visual effect of the results, we introduced the Fréchet inception distance (FID) score [45]. A smaller FID score means a visual impression closer to the ground truth. Fig. 5 shows the means of PSNR, SSIM, and FID scores on the whole testing set. In Fig. 5, the 2D positions of the different methods are specified by the horizontal and vertical coordinates representing PSNR and SSIM of the results respectively, and the radii of the circles indicate the FID values of different methods. It can be seen that the unrolling-based methods have more robust performance. FistaNet and LPD are in favorable spots. The denoiser-based methods also have outstanding performance, especially MomentumNet, which is based on an iteration-dependent denoiser. The comparison between MomentumNet and CNNRPGD shows that the iteration-dependent denoiser has clearly better performance. However, the training of an iteration-dependent denoiser is more complicated and time-consuming. The training time of MomentumNet for 200 epochs is more than 5 days, which is much longer than that needed by CNNRPGD. Additionally, the denoiser-based methods require manually set regularization parameters, which often have a greater impact on the performance than the network architecture and require a major fine-tuning effort. HDNet delivers the best performance among the physics-based methods, which suggests that the simple FBP transform is effective for dual-domain-based reconstruction. For the learned transform-based methods, since the FC layers are large, the training process is relatively difficult, compromising the stability of reconstruction results.

Figure 5: Quantitative results obtained using different methods on the whole testing set.
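For reference, PSNR is computed from the MSE and the dynamic range of the image. The sketch below uses the standard definition; the choice of `data_range` (for example, the width of the display window or of the full intensity range) is an assumption that must be fixed consistently across methods, and the value used here is purely illustrative.

```python
import numpy as np

def psnr(pred, target, data_range):
    """Peak signal-to-noise ratio in dB for a given dynamic range."""
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")      # identical images
    return 10.0 * np.log10(data_range ** 2 / mse)

target = np.zeros((4, 4))
pred = np.full((4, 4), 0.1)      # uniform error of 0.1 on a unit range
value = psnr(pred, target, data_range=1.0)
```

A uniform error of 0.1 on a unit dynamic range gives an MSE of 0.01 and therefore a PSNR of about 20 dB.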

Table 3 shows the computational time of the compared methods. It can be seen that most methods can complete the reconstruction in a short time, which is beneficial for clinical applications. Given a large number of iterations, the computational time of the denoiser-based iterative methods is much greater than that of the other methods.

Table 3: Computational costs of the compared methods.

| Method | Training time | Testing time | Number of parameters |
|---|---|---|---|
| HDNet | 11.67 h | 0.16 s | 75.3 M |
| VVBPTensorNet | 9.77 h | 0.19 s | 0.47 M |
| iRadonMap | 11.30 h | 0.12 s | 270 M |
| DSigNet | 9.58 h | 0.27 s | 19.2 M |
| CNNRPGD | 3.10 h | 3.22 s | 34.6 M |
| MomentumNet | 122.50 h | 1.16 s | 7.5 M |
| LPD | 7.95 h | 0.20 s | 0.25 M |
| FistaNet | 7.83 h | 0.21 s | 0.78 M |

Unlike the unrolling-based methods, which are end-to-end networks, the denoiser-based methods are implemented in an iterative framework. Therefore, it is important to study their convergence properties. In [12] and [35], the authors proved that the denoiser-based iterative methods can converge. Furthermore, when the denoiser is applied to other iterative optimization schemes with good convergence properties, the resulting methods should converge similarly, although this demands a more rigorous justification in the future.

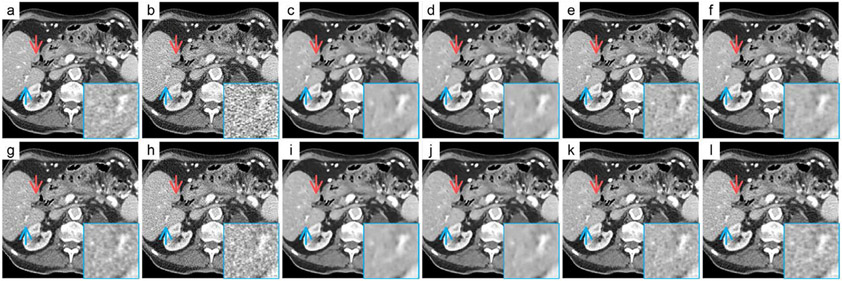

4.3. Loss Function Study

To evaluate the effects of loss functions, we combined the loss functions in various ways and applied each representative combination to a unified LPD model. Table 4 shows these combinations of the loss functions. The corresponding reconstructions of an abdominal slice are shown in Fig. 6. Note that the weights of the combinations were fine-tuned experimentally for optimal visibility. In Fig. 6, the LPDs trained with different loss functions can all keep the key information on the metastases indicated by the red arrows. The area indicated by the blue arrows is enlarged for better visualization. Since the network architecture is the same, the restored information is basically the same across the results, but the main differences among them can still be visually appreciated. MSE and MAE have an evident over-smoothing effect. The adversarial and perceptual losses can effectively improve the visual impression, giving the reconstructed textures similar to the ground truth. With the help of the adversarial and TV losses, the network can achieve satisfactory results via unsupervised learning.

Table 4: Loss functions used for experimental comparison.

| | c | d | e | f | g | h | i | j | k | l |

|---|---|---|---|---|---|---|---|---|---|---|

| MSE | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||

| MAE | ✓ | |||||||||

| TV loss | ✓ | |||||||||

| Adversarial loss | ✓ | ✓ | ✓ | ✓ | ||||||

| Perceptual loss | ✓ | ✓ | ||||||||

| SSIM loss | ✓ | |||||||||

| Edge incoherence | ✓ | ✓ | ||||||||

| Identity loss | ✓ | |||||||||

| Projection loss | ✓ | |||||||||

| supervised learning | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| unsupervised learning | ✓ | ✓ |

Figure 6:

Results obtained using LPD with different combinations of loss functions. (a) Normal-dose, (b) Low-dose, (c)-(l) the reconstructions with the combinations of loss functions shown in Table 4. The display window is [−160, 240] HU.
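The idea of training one network with a weighted combination of loss terms can be sketched as follows. This is a minimal NumPy illustration, not the training code used for the experiments: only the MSE, MAE, and TV terms are included because the adversarial and perceptual losses require additional trained networks, and the function names and weight values are assumptions chosen for the example.

```python
import numpy as np

def mse_loss(pred, target):
    """Mean squared error between prediction and target."""
    return float(np.mean((pred - target) ** 2))

def mae_loss(pred, target):
    """Mean absolute error between prediction and target."""
    return float(np.mean(np.abs(pred - target)))

def tv_loss(pred):
    """Anisotropic total variation of a 2D image, averaged over pixels."""
    dh = np.abs(np.diff(pred, axis=0)).sum()
    dw = np.abs(np.diff(pred, axis=1)).sum()
    return float((dh + dw) / pred.size)

def combined_loss(pred, target, weights):
    """Weighted sum of the available loss terms; missing weights count as zero."""
    terms = {
        "mse": mse_loss(pred, target),
        "mae": mae_loss(pred, target),
        "tv": tv_loss(pred),
    }
    return sum(weights.get(name, 0.0) * value for name, value in terms.items())

# Hypothetical weights; in practice they are tuned experimentally.
target = np.zeros((8, 8))
pred = np.ones((8, 8))
loss = combined_loss(pred, target, {"mse": 1.0, "tv": 0.1})
```

Note that the TV term depends only on the prediction, which is why it can also be used when no reference image is available.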

5. Discussions

Physics/model-based data-driven methods have received increasing attention in the tomographic imaging field because they incorporate CT physics or models into neural networks synergistically, resulting in superior imaging performance. With rapid development over the past years, researchers have proposed a number of physics/model-based networks from different angles. Although they are promising, these models still need further improvement. We believe that the following issues are worth further investigation.

The first issue is the generalizability of learned transform-based data-driven methods. Training a separate network for each imaging geometry is unaffordable in clinical applications. Therefore, a major problem with these methods is how to make a trained model applicable to multiple geometries and volumes. Interpolation can help match the size of the input data to that required by a reconstruction network. Furthermore, a deep learning method can be a good solution to convert projection data from a source geometry to a target geometry.

The second issue is the parameter setting for the denoiser-based data-driven methods. Currently, this kind of method requires handcrafted settings, which limits its generalization to different datasets. The introduction of adaptive or learned parameters is worthy of attention. Reinforcement learning could be another option to select hyper-parameters automatically. The above issues are of specific interest for physics/model-based data-driven methods for LDCT. From a larger perspective, the tomographic imaging field has other open topics and challenges, which are also closely related to LDCT.
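As a rough illustration of the interpolation step mentioned under the first issue, the sketch below linearly resamples a sinogram to the number of views and detector bins that a network expects. It is a simplified example under stated assumptions: the sinogram is stored as a `(views, detectors)` array, both axes are treated as uniformly sampled, and no conversion between geometries (for example fan-beam to parallel-beam) is attempted. The function name `resample_sinogram` is hypothetical.

```python
import numpy as np

def resample_sinogram(sino, n_views_out, n_dets_out):
    """Linearly resample a (views, detectors) sinogram onto a new uniform grid."""
    n_views_in, n_dets_in = sino.shape

    # Detector axis: interpolate every view on a normalized [0, 1] coordinate.
    det_in = np.linspace(0.0, 1.0, n_dets_in)
    det_out = np.linspace(0.0, 1.0, n_dets_out)
    tmp = np.stack([np.interp(det_out, det_in, row) for row in sino])

    # View axis: interpolate every detector column in the same way.
    view_in = np.linspace(0.0, 1.0, n_views_in)
    view_out = np.linspace(0.0, 1.0, n_views_out)
    return np.stack([np.interp(view_out, view_in, col) for col in tmp.T], axis=1)

# Toy sinogram that varies linearly along both axes.
sino = np.linspace(0.0, 1.0, 5)[:, None] + np.linspace(0.0, 2.0, 7)[None, :]
resampled = resample_sinogram(sino, n_views_out=9, n_dets_out=13)
```

Resampling of this kind only matches array sizes. It does not account for differences in source-to-detector distance or beam shape, which is where a learned geometry conversion could help.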

Transformer

The transformer is an emerging deep learning architecture that has shown great potential in various areas [46]. In the denoising task, a transformer directs attention to various important features, resulting in adaptive denoising based on image content and features. Coupled with the transformer, physics/model-based data-driven methods will have more design routes. It is expected that transformers will further improve the performance of physics/model-based data-driven methods.

Self-supervised learning

Obtaining paired training data has always been a challenge for data-driven tomography. Unsupervised [9] and self-supervised learning [47], which do not require paired/labeled data, are now mainstream approaches to this problem. Self-supervised training treats the input itself as the target in appropriate ways to calculate losses, and performs denoising according to the statistical characteristics of the underlying data. A combination of self-supervised training and physics/model-based data-driven methods can therefore help meet the challenge of LDCT in clinical applications.
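One common way to treat the input as its own target is blind-spot masking: a random subset of pixels is hidden from the denoiser, and the loss is evaluated only at those pixels against their original noisy values. The sketch below illustrates this generic idea in NumPy. It is not the method of [47], and the function name, the neighbour-mean replacement rule, and the `mask_fraction` parameter are assumptions made for the example.

```python
import numpy as np

def masked_self_supervised_loss(noisy, denoiser, mask_fraction=0.05, rng=None):
    """Blind-spot style loss computed from a single noisy 2D image.

    Masked pixels are replaced by the mean of their four neighbours, so the
    denoiser cannot simply copy the value it is asked to predict.
    """
    rng = np.random.default_rng() if rng is None else rng
    mask = rng.random(noisy.shape) < mask_fraction
    if not mask.any():
        return 0.0

    padded = np.pad(noisy, 1, mode="edge")
    neighbour_mean = (
        padded[:-2, 1:-1] + padded[2:, 1:-1] + padded[1:-1, :-2] + padded[1:-1, 2:]
    ) / 4.0
    corrupted = np.where(mask, neighbour_mean, noisy)

    pred = denoiser(corrupted)
    # The noisy input itself serves as the target, but only at the masked pixels.
    return float(np.mean((pred[mask] - noisy[mask]) ** 2))

# Usage with a trivial placeholder denoiser (identity) on a synthetic noisy image.
rng = np.random.default_rng(0)
noisy = 1.0 + 0.1 * rng.normal(size=(32, 32))
loss = masked_self_supervised_loss(noisy, lambda img: img, mask_fraction=0.5, rng=rng)
```

In a real training loop, `denoiser` would be a network and this loss would be minimized over its parameters. A physics/model-based variant could apply the same masking idea in the projection domain.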

Task-driven tomography

Tomographic imaging always serves diagnosis and intervention. Thus, reconstructed images are often processed or analyzed before being clinically useful. To optimize the whole workflow, we can take the downstream image analysis tasks into account to improve the performance of the reconstruction network in a task-specific fashion. A physics/model-based task/data-driven method can be designed with shared feature layers linked to task loss functions. A deep tomographic imaging network that incorporates a task-driven technique can reconstruct results that are more suitable for the intended task in terms of diagnostic performance.

Domain generalization

Deep learning-based tomographic imaging may suffer from a domain heterogeneity problem caused by different distributions of training data, which originate from different scanners, populations, tasks, settings, and so on [48]. Existing tomographic imaging methods could generalize poorly on datasets in shifted domains, especially unseen ones. Domain generalization, which aims to learn a model from one or several different but related domains that generalizes well to unseen domains, has attracted increasing attention [49]. This is a promising direction to address the data domain heterogeneity and advance the clinical translation of deep tomographic imaging methods.

Image quality assessment

At present, reconstructed image quality is still evaluated mainly with popular quantitative metrics. In many cases, however, these classic metrics are not consistent with visual impression and clinical utility. In particular, medical images are evaluated very differently from natural images. Therefore, establishing a set of metrics suitable for evaluating the diagnostic performance of tomographic imaging remains an open problem. For natural image processing, neural networks have been reported for image quality assessment (IQA) [50], which suggests new solutions for medical image quality evaluation. Ideally, DL-based IQA should not only judge the reconstruction quality and diagnostic performance but also help tomographic imaging in the form of loss functions. It is expected that more DL-based IQA methods will be developed for medical imaging, and can eventually perform advanced numerical observer studies as well as human reader studies.
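For reference, two widely used quantitative metrics can be computed as sketched below. The paragraph above does not name specific metrics, so the choice of RMSE and PSNR here is an assumption, as are the function names and the `data_range` argument (the span of valid intensities, for example the width of the display window in HU).

```python
import numpy as np

def rmse(pred, target):
    """Root-mean-square error between a reconstruction and its reference."""
    return float(np.sqrt(np.mean((pred - target) ** 2)))

def psnr(pred, target, data_range):
    """Peak signal-to-noise ratio in dB for a given intensity range."""
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))

# Toy example: a uniform error of 0.1 on an image with unit intensity range.
target = np.zeros((4, 4))
pred = target + 0.1
print(f"RMSE = {rmse(pred, target):.3f}, PSNR = {psnr(pred, target, 1.0):.1f} dB")
```

Both metrics average pixel-wise errors over the whole image, so a small lesion that is blurred away changes them very little. This is one reason such scores can disagree with diagnostic utility.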

6. Conclusion

In this paper, we have systematically reviewed the physics/model-based data-driven methods for LDCT. In important clinical applications of LDCT imaging, DL-based methods bring major gains in image quality and diagnostic performance and are undoubtedly becoming the mainstream of LDCT imaging research and translation. In the next few years, research efforts should cover dataset enrichment, network adaptation, and clinical evaluation, as well as methodological innovation and theoretical investigation. From a larger perspective, DL-based tomographic imaging is only in its infancy. It offers many problems to solve for numerous healthcare benefits and opens a new era of AI-empowered medicine.

Acknowledgments

This work was supported in part by the Sichuan Science and Technology Program under Grant 2021JDJQ0024, in part by the Sichuan University ‘From 0 to 1’ Innovative Research Program under Grant 2022SCUH0016, in part by the National Natural Science Foundation of China under Grant 62101136, in part by the Shanghai Sailing Program under Grant 21YF1402800, in part by the Shanghai Municipal of Science and Technology Project under Grant 20JC1419500, in part by Shanghai Center for Brain Science and Brain-inspired Technology, in part by the National Institute of Biomedical Imaging and Bioengineering (NIBIB) of the National Institutes of Health (NIH) under Grant R01EB026646, and in part by the National Institute of General Medical Sciences (NIGMS) of the National Institutes of Health (NIH) under Grant R42GM142394.

Footnotes

The code is released at https://github.com/Deep-Imaging-Group/Physics-Model-Data-Driven-Review; related datasets and checkpoints can also be found on that page.

Contributor Information

Wenjun Xia, School of Cyber Science and Engineering, Sichuan University, Chengdu 610065, China.

Hongming Shan, Institute of Science and Technology for Brain-Inspired Intelligence and MOE Frontiers Center for Brain Science, Fudan University, Shanghai, and also with Shanghai Center for Brain Science and Brain-Inspired Technology, Shanghai 200433, China.

Ge Wang, Department of Biomedical Engineering, Rensselaer Polytechnic Institute, Troy, NY 12180 USA.

Yi Zhang, School of Cyber Science and Engineering, Sichuan University, Chengdu 610065, China.

References

- [1] Smith-Bindman R, Lipson J, Marcus R et al., “Radiation dose associated with common computed tomography examinations and the associated lifetime attributable risk of cancer,” Arch. Int. Med., vol. 169, no. 22, pp. 2078–2086, 2009.

- [2] Balda M, Hornegger J, and Heismann B, “Ray contribution masks for structure adaptive sinogram filtering,” IEEE Trans. Med. Imaging, vol. 31, no. 6, pp. 1228–1239, 2012.

- [3] Chen Y, Yin X, Shi L et al., “Improving abdomen tumor low-dose CT images using a fast dictionary learning based processing,” Phys. Med. Biol., vol. 58, no. 16, pp. 5803–5820, 2013.

- [4] Yu G, Li L, Gu J et al., “Total variation based iterative image reconstruction,” in Int. Workshop on Comput. Vis. Biomed. Image Appl. Springer, 2005, pp. 526–534.

- [5] Wen B, Ravishankar S, and Bresler Y, “Structured overcomplete sparsifying transform learning with convergence guarantees and applications,” Int. J. Comput. Vis., vol. 114, no. 2, pp. 137–167, 2015.

- [6] Chun IY, Zheng X, Long Y, and Fessler JA, “Sparse-view x-ray CT reconstruction using regularization with learned sparsifying transform,” in 14th Int. Meeting Fully 3-D Image Recon. Radiol. Nucl. Med. SPIE, 2017, pp. 115–119.

- [7] Chen H, Zhang Y, Kalra MK et al., “Low-dose CT with a residual encoder-decoder convolutional neural network,” IEEE Trans. Med. Imaging, vol. 36, no. 12, pp. 2524–2535, 2017.

- [8] Yang Q, Yan P, Zhang Y et al., “Low-dose CT image denoising using a generative adversarial network with wasserstein distance and perceptual loss,” IEEE Trans. Med. Imaging, vol. 37, no. 6, pp. 1348–1357, 2018.

- [9] Kang E, Koo HJ, Yang DH et al., “Cycle-consistent adversarial denoising network for multiphase coronary CT angiography,” Med. Phys., vol. 46, no. 2, pp. 550–562, 2019.

- [10] Shan H, Padole A, Homayounieh F et al., “Competitive performance of a modularized deep neural network compared to commercial algorithms for low-dose ct image reconstruction,” Nat. Mach. Intell., vol. 1, no. 6, pp. 269–276, 2019.

- [11] Nishii T, Kobayashi T, Tanaka H et al., “Deep learning-based post hoc CT denoising for myocardial delayed enhancement,” Radiology, p. 220189, 2022.

- [12] Gupta H, Jin KH, Nguyen HQ et al., “CNN-based projected gradient descent for consistent CT image reconstruction,” IEEE Trans. Med. Imaging, vol. 37, no. 6, pp. 1440–1453, 2018.

- [13] Chen H, Zhang Y, Chen Y et al., “LEARN: Learned experts’ assessment-based reconstruction network for sparse-data CT,” IEEE Trans. Med. Imaging, vol. 37, no. 6, pp. 1333–1347, 2018.

- [14] Adler J and Öktem O, “Learned primal-dual reconstruction,” IEEE Trans. Med. Imaging, vol. 37, no. 6, pp. 1322–1332, 2018.

- [15] Diwakar M and Kumar M, “A review on CT image noise and its denoising,” Biomed. Signal Proc. Control, vol. 42, pp. 73–88, 2018.

- [16] Yu L, Shiung M, Jondal D et al., “Development and validation of a practical lower-dose-simulation tool for optimizing computed tomography scan protocols,” J. Comput. Assist. Tomogr., vol. 36, no. 4, pp. 477–487, 2012.

- [17] Zheng X, Ravishankar S, Long Y, and Fessler JA, “PWLS-ULTRA: An efficient clustering and learning-based approach for low-dose 3D CT image reconstruction,” IEEE Trans. Med. Imaging, vol. 37, no. 6, pp. 1498–1510, 2018.

- [18] Cai J-F, Jia X, Gao H et al., “Cine cone beam CT reconstruction using low-rank matrix factorization: algorithm and a proof-of-principle study,” IEEE Trans. Med. Imaging, vol. 33, no. 8, pp. 1581–1591, 2014.

- [19] Kim K, Ye JC, Worstell W et al., “Sparse-view spectral CT reconstruction using spectral patch-based low-rank penalty,” IEEE Trans. Med. Imaging, vol. 34, no. 3, pp. 748–760, 2014.

- [20] Xia W, Wu W, Niu S et al., “Spectral CT reconstruction—ASSIST: Aided by self-similarity in image-spectral tensors,” IEEE Trans. Comput. Imaging, vol. 5, no. 3, pp. 420–436, 2019.

- [21] Chen X, Xia W, Liu Y et al., “FONT-SIR: Fourth-order nonlocal tensor decomposition model for spectral CT image reconstruction,” IEEE Trans. Med. Imaging, 2022.

- [22] Venkatakrishnan SV, Bouman CA, and Wohlberg B, “Plug-and-play priors for model based reconstruction,” in IEEE Global Conf. Signal Inform. Proc. IEEE, 2013, pp. 945–948.

- [23] Rond A, Giryes R, and Elad M, “Poisson inverse problems by the plug-and-play scheme,” J. Vis. Commun. Image Represent., vol. 41, pp. 96–108, 2016.

- [24] He J, Wang Y, and Ma J, “Radon inversion via deep learning,” IEEE Trans. Med. Imaging, vol. 39, no. 6, pp. 2076–2087, 2020.

- [25] Würfl T, Hoffmann M, Christlein V et al., “Deep learning computed tomography: Learning projection-domain weights from image domain in limited angle problems,” IEEE Trans. Med. Imaging, vol. 37, no. 6, pp. 1454–1463, 2018.

- [26] Hu D, Liu J, Lv T et al., “Hybrid-domain neural network processing for sparse-view CT reconstruction,” IEEE Trans. Radiat. Plasma Med. Sci., vol. 5, no. 1, pp. 88–98, 2020.

- [27] Tao X, Wang Y, Lin L et al., “Learning to reconstruct CT images from the VVBP-tensor,” IEEE Trans. Med. Imaging, 2021.

- [28] Zhang Y, Hu D, Zhao Q et al., “CLEAR: comprehensive learning enabled adversarial reconstruction for subtle structure enhanced low-dose CT imaging,” IEEE Trans. Med. Imaging, vol. 40, no. 11, pp. 3089–3101, 2021.

- [29] Zhu B, Liu JZ, Cauley SF et al., “Image reconstruction by domain-transform manifold learning,” Nature, vol. 555, no. 7697, pp. 487–492, 2018.

- [30] He J, Chen S, Zhang H et al., “Downsampled imaging geometric modeling for accurate CT reconstruction via deep learning,” IEEE Trans. Med. Imaging, vol. 40, no. 11, pp. 2976–2985, 2021.

- [31] Li Y, Li K, Zhang C et al., “Learning to reconstruct computed tomography images directly from sinogram data under a variety of data acquisition conditions,” IEEE Trans. Med. Imaging, vol. 38, no. 10, pp. 2469–2481, 2019.

- [32] Fu L and De Man B, “A hierarchical approach to deep learning and its application to tomographic reconstruction,” in 15th Int. Meeting Fully 3-D Image Recon. Radiol. Nucl. Med., vol. 11072. SPIE, 2019, p. 1107202.

- [33] Wu D, Kim K, El Fakhri G, and Li Q, “Iterative low-dose CT reconstruction with priors trained by artificial neural network,” IEEE Trans. Med. Imaging, vol. 36, no. 12, pp. 2479–2486, 2017.

- [34] Zhang F, Zhang M, Qin B et al., “REDAEP: Robust and enhanced denoising autoencoding prior for sparse-view CT reconstruction,” IEEE Trans. Radiat. Plasma Med. Sci., vol. 5, no. 1, pp. 108–119, 2020.

- [35] Chun IY, Huang Z, Lim H et al., “Momentum-Net: Fast and convergent iterative neural network for inverse problems,” IEEE Trans. Pattern Anal. Mach. Intell., 2020.

- [36] Ye S, Li Z, McCann MT et al., “Unified supervised-unsupervised (super) learning for x-ray CT image reconstruction,” IEEE Trans. Med. Imaging, vol. 40, no. 11, pp. 2986–3001, 2021.

- [37] Ding Q, Nan Y, Gao H, and Ji H, “Deep learning with adaptive hyper-parameters for low-dose CT image reconstruction,” IEEE Trans. Comput. Imaging, vol. 7, pp. 648–660, 2021.

- [38] Zhang H, Liu B, Yu H et al., “MetaInv-Net: Meta inversion network for sparse view CT image reconstruction,” IEEE Trans. Med. Imaging, vol. 40, no. 2, pp. 621–634, 2020.

- [39] Xiang J, Dong Y, and Yang Y, “Fista-net: Learning a fast iterative shrinkage thresholding network for inverse problems in imaging,” IEEE Trans. Med. Imaging, vol. 40, no. 5, pp. 1329–1339, 2021.

- [40] Romano Y, Elad M, and Milanfar P, “The little engine that could: Regularization by denoising (RED),” SIAM J. Imaging Sci., vol. 10, no. 4, pp. 1804–1844, 2017.

- [41] Gilton D, Ongie G, and Willett R, “Neumann networks for linear inverse problems in imaging,” IEEE Trans. Comput. Imaging, vol. 6, pp. 328–343, 2019.

- [42] Unal MO, Ertas M, and Yildirim I, “An unsupervised reconstruction method for low-dose CT using deep generative regularization prior,” Biomed. Signal Proc. Control, vol. 75, p. 103598, 2022.

- [43] Ledig C, Theis L, Huszár F et al., “Photo-realistic single image super-resolution using a generative adversarial network,” in IEEE Conf. Comput. Vis. Pattern Recognit. IEEE, 2017, pp. 4681–4690.

- [44] Sugawara Y, Shiota S, and Kiya H, “Super-resolution using convolutional neural networks without any checkerboard artifacts,” in 25th IEEE Int. Conf. Image Proc. IEEE, 2018, pp. 66–70.

- [45] Heusel M, Ramsauer H, Unterthiner T et al., “GANs trained by a two time-scale update rule converge to a local nash equilibrium,” in Adv. Neur. Inf. Proc. Syst., vol. 30, 2017.

- [46] Liu Z, Lin Y, Cao Y et al., “Swin transformer: Hierarchical vision transformer using shifted windows,” in IEEE/CVF Int. Conf. Comput. Vis., 2021, pp. 10012–10022.

- [47] Kim K and Ye JC, “Noise2Score: Tweedie’s approach to self-supervised image denoising without clean images,” in Adv. Neur. Inf. Proc. Syst., vol. 34, 2021.

- [48] Xia W, Lu Z, Huang Y et al., “CT reconstruction with PDF: parameter-dependent framework for data from multiple geometries and dose levels,” IEEE Trans. Med. Imaging, vol. 40, no. 11, pp. 3065–3076, 2021.

- [49] Wang J, Lan C, Liu C et al., “Generalizing to unseen domains: A survey on domain generalization,” IEEE Trans. Knowl. Data Eng., 2022.

- [50] Wang Z, Bovik AC, Sheikh HR et al., “Image quality assessment: from error visibility to structural similarity,” IEEE Trans. Image Process., vol. 13, no. 4, pp. 600–612, 2004.