Abstract

Discrete-choice experiments (DCEs) are a frequently used method to explore the preferences of patients and other decision-makers in health. Pretesting is an essential stage in the design of a high-quality choice experiment and involves engaging with representatives of the target population to improve the readability, presentation, and structure of the preference instrument. The goal of pretesting in DCEs is to improve the validity, reliability, and relevance of the survey, while decreasing sources of bias, burden, and error associated with preference elicitation, data collection, and interpretation of the data. Despite its value to inform DCE design, pretesting lacks documented good practices or clearly reported applied examples. The purpose of this paper is: (1) to define pretesting and describe the pretesting process specifically in the context of a DCE, (2) to present a practical guide and pretesting interview discussion template for researchers looking to conduct a rigorous pretest of a DCE, and (3) to provide an illustrative example of how these resources were operationalized to inform the design of a complex DCE aimed at eliciting tradeoffs between personal privacy and societal benefit in the context of a police method known as investigative genetic genealogy (IGG).

Key Points

| Pretesting is one of several essential stages in the design of a high-quality discrete-choice experiment (DCE) and involves engaging with representatives of the target population to improve the readability, presentation, and structure of the preference instrument. |

| There is limited available guidance for pretesting DCEs and few transparent examples of how pretesting is conducted. |

| Here, we present and apply a guide which prompts researchers to consider aspects of the content, presentation, comprehension, and elicitation when conducting a DCE pretest. |

| We also present a pretesting interview discussion template to support researchers in operationalizing this guide to their own DCE pretest interviews. |

Introduction

Discrete-choice experiments (DCEs) are a frequently used method to explore the preferences of patients and other stakeholders in health [1–5]. The growth in the application of DCEs can be explained by an abundance of foundational theory and methods guidance [6–9], the establishment of good research practices [1, 8, 10, 11], and interest in the approach by decision makers [12, 13]. In recent years, greater emphasis has been placed on confirming the quality and internal and external validity of DCEs to ensure their usefulness, policy relevance, and impact [5, 14–16].

The value that decision-makers place in DCE findings is in large part dependent on the quality of the instrument design process itself. Numerous quality indicators of DCEs have been discussed in the literature, including validity and reliability [5], match to research question [17], patient-centricity [18], heterogeneity assessment [19], comprehensibility [20], and burden [21]. Developing a DCE that reflects these qualities requires a rigorous design process, which is often achieved through activities such as evidence synthesis, expert consultation, stakeholder engagement, pretesting, and pilot testing [15, 17]. Of these, there is ample guidance on activities related to evidence synthesis [22, 23] including qualitative methods [24, 25], stakeholder engagement [26, 27], and pilot testing [11, 27].

By contrast, there remains a paucity of literature on the procedures, methodologies, and theory for pretesting DCEs; even studies that report having completed pretesting typically provide minimal explanation of their approach. Existing literature on pretesting DCEs has typically reported on pretesting procedures within an individual study, rather than providing generalized or comprehensive guidance for the field. Practical guidance on how to conduct pretesting for all components of a DCE is needed to help establish a shared understanding and transparency around pretesting. Ultimately, this information can lead to improvements in the overall DCE design process and in the confidence in findings from DCE research.

This paper has three objectives. The first objective is to define pretesting and describe the pretesting process specifically in the context of a DCE. The second objective is to present a guide and corresponding interview discussion template which can be applied by researchers when conducting a pretest of their own studies. The third objective is to provide an illustrative example of how these resources were applied to the pretest of a complex DCE instrument aimed at eliciting trade-offs between personal privacy and societal benefit in the context of a police method known as investigative genetic genealogy (IGG).

What is Pretesting?

Pretesting describes the process of identifying problem areas of surveys and making subsequent modifications to rectify these problems. Pretesting can be used to evaluate and improve the content, format, and structure of a survey instrument. It generally does this by engaging members of the target population to review and provide feedback on the instrument. Additionally, pretesting can be used to reduce survey burden, improve clarity, identify potential ethical issues, and mitigate sources of bias [28]. Pretesting is considered critical to improving survey validity in the general survey design field [29]. Empirical evidence demonstrates that pretesting can help identify problems, improve survey quality and reliability, and improve participant satisfaction in completing surveys [30].

Pretesting typically begins after the design of a complete survey draft. It takes place between a participant from the target population and one or more survey researchers. It is typical to explain to the participant that the activity is a pretest, and that their responses will be used to inform the design of the survey. Researchers often take field notes during the pretest. After each pretest, or at most after a small set of pretests, research teams debrief to review findings and make survey modifications. The survey is iterated throughout this process.

Several approaches can be used to collect data during a pretest of a survey generally as well as specifically for surveys including DCEs [31]. One approach is cognitive interviewing, which tests the readability and potential bias of an instrument through prospective or retrospective prompts [32]. Cognitive interviewing can ask participants to “think aloud” over the course of the survey, allowing researchers to understand how participants react to questions and how they arrive at their answers, as well as to follow up with specific probes. Another approach is the debriefing approach, wherein participants independently complete the survey or a section of it. Researchers then ask participants to reflect on what they have read, describe what they believe they were asked, and reflect on any specific aspects of interest to researchers such as question phrasing or order of survey content [33].

In behavioral coding approaches, researchers observe participants as they silently complete the activity, noting areas of perceived hesitation or confusion [33]. This is sometimes done through eye-tracking approaches, wherein eye movements are studied to explore how information is being processed [34]. Pretesting can also occur through codesign approaches, which are more participatory in nature. In a codesign approach, researchers may ask participants not just to reflect on the instrument as it is presented but to actively provide input that can be used to refine the instrument [35]. Across all methods, strengths and weaknesses of the instrument can be identified inductively or deductively.

Pretesting in the explicit context of choice experiments has not been formally defined. Rather, it has been used to describe a range of exploratory and flexible approaches for assessing how participants perceive and interact with a choice experiment [1, 36]. Recently, there has been greater emphasis on the interpretation, clarity, and ease of using choice experiments [37] given their increasing complexity and administration online [38–40]. We propose a definition of pretesting for choice experiments here (Box 1).

Pretesting of DCEs is as much an art as it is a science. Specifically, pretesting is often a codevelopment type of engagement with potential survey respondents. This engagement can empower the pretesting participants to suggest changes and to highlight issues. The research team (and potentially other stakeholders) work with these pretesting participants to solve issues jointly. As a type of engagement (as opposed to a qualitative study), we argue that it is process heavy, with the desired outcome of the engagement often being the development of a better instrument. Pretesting may also be incomplete and involve making judgement calls about what may or may not work or what impacts certain additions or subtractions may have.

| Box 1. Defining pretesting in choice experiments | |

|---|---|

| One of the key stages of developing a choice experiment, pretesting is a flexible process where representatives of the target population are engaged to improve the readability, presentation, and structure of the survey instrument (including educational material, choice experiment tasks, and all other survey questions). The goal of pretesting a DCE is to improve the validity, reliability, and relevance of the survey, while decreasing sources of bias, burden, and error associated with preference elicitation, data collection, and interpretation of the data. |

Additional considerations above and beyond those made during general survey design are required when pretesting surveys including DCEs. Specific efforts should be made to improve the educational material used to motivate and prepare people to participate in the survey. A great deal of effort should be placed on the choice experiment tasks and the process by which information is presented, preferences are elicited, and tradeoffs are made [11, 25, 38, 41]. More than one type of task format and/or preference elicitation mechanism may be assessed during pretesting. It is also important to assess all other survey questions to ensure that data is collected appropriately and to assess the burden and impact of the survey. Pretesting can be aimed at reducing any error associated with preference elicitation and data collection. Information garnered during pretesting may help generate hypotheses or give the research team greater insights into how people make decisions. Hence, pretesting, as with piloting a study, may provide insights that may help with the interpretation of the data from the survey, both a priori and a posteriori.

Pretesting is one of several activities used to inform DCE development (Table 1). Activities that precede pretesting include evidence synthesis through activities such as literature reviews, stakeholder engagement with members of the target population, and expert input from professionals in the field. These activities can be used to identify and refine the scope of the research questions as well as develop draft versions of the survey instrument. Pilot testing typically follows pretesting. Although the terms have sometimes been used interchangeably [42], pretesting and pilot testing are distinct aspects of survey design with unique objectives and approaches. While both methods generally seek to improve surveys, pretesting centers on understanding areas in need of improvement, whereas quantitative pilot testing typically explores results and whether the survey questions and choice experiment are performing as intended.

Table 1.

Stages of choice experiment design

| Stage | Objective | Typical approaches | Example activities related to DCE development | Key Refs. |
|---|---|---|---|---|
| Evidence synthesis | To gather information from various published sources to comprehensively understand a topic | Literature reviews; Environmental scans; Meta analyses | Identify concepts and questions of interest; Extract potential attributes/levels; Identify points of contention in the literature | [22, 23, 54] |
| Expert input | To ensure clinical accuracy and/or policy relevance of the instrument | Expert advisory boards; Engagement methods; Qualitative methods | Identify concepts and questions of interest; Identify relevant tradeoffs and policy analysis; Refine to verify clinical accuracy | [15, 24, 25, 55] |
| Patient and end-user input | To improve content and relevance of the instrument from the patient or end-user’s perspective | Community advisory boards; Engagement methods; Qualitative methods | Identify concepts and questions of interest; Foster engagement and enthusiasm in research; Assess relevance of instrument | [26, 27, 36, 55, 56] |
| Pretesting | To improve the readability, presentation, and structure of the instrument | Cognitive interviewing; Debriefing; Codesign/cocreation | Refine and reframe attributes and levels; Identify and resolve areas of burden; Identify if tradeoffs are being made | [57–59] |
| Pilot testing | To preliminarily explore results and check if instrument is behaving as intended | Survey methods; Completion with subset of target population | Test if results are aligned with expected trends; Test if results demonstrate tradeoffs; Look for drop off in responses | [11, 27, 60] |

Adapted and expanded from Janssen et al., 2016

A Guide for Pretesting Discrete-Choice Experiments

We developed a practical guide to help researchers conduct more thorough pretesting of DCEs. This guide is based on our research team’s practical experience in pretesting dozens of DCE instruments. The guide is organized into four domains for assessment during the pretest of a DCE (content, presentation, comprehension, and elicitation) and poses guiding questions for researchers to consider across each domain (Table 2). These questions are not meant to be asked verbatim to pretesting participants but rather are ones that the researcher might ask themselves to help guide their own pretesting process. This guide is relevant to inform the pretesting of DCE materials including background/educational material, example tasks, choice experiment tasks, and any other survey questions related to the DCE, such as debriefing questions. In practice there is often overlap across questions relevant to different domains.

Table 2.

Guide for Pretesting DCEs

| Domain | Guiding questions for researchers |
|---|---|
| Content: Relevance and comprehensiveness of the DCE | Do attributes collectively capture the concept of interest? Are attributes/levels appropriately ordered? Are levels appropriate values? Are topic and elicitation relevant to the decision context? |
| Presentation: Visualization and formatting of the DCE | Do color, images, and layout effectively convey the intended messaging? Are materials (i.e., introduction and example tasks) informative? Is the order that information is presented in logical? Is presentation optimized to reduce participant burden? |
| Comprehension: Understanding of the DCE | Are key terms understood? Can respondents envision the scenario? Is there an appropriate recall of key terms? Are all materials consistently comprehendible? |
| Elicitation: Process of making a choice in the choice task of the DCE | Are tradeoffs being made? Are underlying heuristics driving decision making? Is outside information being included in decision making? Are responses consistent with participant preferences? |

DCE discrete-choice experiment

The content domain of the guide primes researchers to consider the relevance and comprehensiveness of the DCE. Addressing the content of the DCE includes refining and reframing attributes and levels. Additional considerations in this domain include whether attributes collectively capture the concept of interest, if attribute levels are presented in an appropriate and logical order, and if the level values are appropriate. Finally, the elicitation should be scrutinized for its relevance to the decision context.

The presentation domain of the guide recommends researchers consider aspects of the DCE such as its visualization and formatting. Addressing the presentation of the DCE could include identifying areas with response burden and discussing ways to reduce that burden (e.g., altering the organization of the survey or creating a color-coding scheme). Additional aspects of consideration include whether the visual aspects of the DCE, such as color, images, and layout, effectively convey the intended messaging; whether materials, including the introduction and example tasks, are informative; whether materials are logically ordered; whether numeric and risk concepts have been optimally presented; and whether the presentation can be further optimized to reduce time, cognitive, or other burdens on the participant.

The comprehension domain of the guide reminds researchers to consider how well the DCE is being understood by participants. Addressing the comprehension of the DCE could include identifying if key terms are understood and recalled and if materials are consistently comprehensible. Additionally, it is valuable to assess whether participants are able to envision the proposed scenario and decision context in which they are being asked to make choices.

The elicitation domain of the guide refers to the process of making a choice within a given choice task. Addressing the elicitation of the DCE could include identifying whether and/or why tradeoffs are being made, if underlying heuristics are driving decision making, if choices are being made based on outside information, and if participant preferences are reflected by their choices.
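One way a research team might keep field notes aligned with the four domains is to tag each observation with the domain it falls under and review the notes domain by domain during debriefs. The following is a minimal sketch of that idea, not part of the guide itself. The domain names come from Table 2, while the `Observation` class, the `group_by_domain` function, and the example notes are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass

# The four domain names are taken from Table 2; everything else is hypothetical.
DOMAINS = ("content", "presentation", "comprehension", "elicitation")


@dataclass
class Observation:
    """A single field note from one pretest interview (hypothetical structure)."""
    interview_id: int
    domain: str
    note: str

    def __post_init__(self):
        # Reject notes that are not tagged with one of the four guide domains.
        if self.domain not in DOMAINS:
            raise ValueError(f"unknown domain: {self.domain!r}")


def group_by_domain(observations):
    """Collect note text under each domain, keeping empty domains visible."""
    grouped = {domain: [] for domain in DOMAINS}
    for obs in observations:
        grouped[obs.domain].append(obs.note)
    return grouped


# Invented example notes, for illustration only.
notes = [
    Observation(1, "comprehension", "Could not recall a key term"),
    Observation(1, "presentation", "Hard to tell the two profiles apart"),
    Observation(2, "elicitation", "Chose on one attribute only"),
]
summary = group_by_domain(notes)
```

Keeping empty domains in the summary makes it easy to see which domains have not yet surfaced any issues, which may prompt more targeted probing in later interviews. Because the domains overlap in practice, a team might reasonably tag one observation under more than one domain.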

Applying the Guide to a Complex DCE

In this section, we describe how we applied the novel pretesting guide to inform the design of a DCE regarding preferences for the use of a new police method called investigative genetic genealogy (IGG). IGG is the practice of uploading crime scene DNA to genetic genealogy databases with the intention of identifying the criminal offender’s genetic relatives and, eventually, locating the offender in their family tree [43]. Although IGG has brought justice to victims and their families by helping to close hundreds of cases of murder and sexual assault, including by identifying the notorious Golden State Killer [44], there are concerns that IGG may interfere with the privacy interests of genetic genealogy database participants and their families. Studies predating IGG have demonstrated that individuals have concerns about genetic privacy, yet they are still sometimes willing to share their genetic data under specific conditions [45–48].

Understanding the tradeoffs that the public makes when assessing the acceptability of the use of genetic data during IGG has become increasingly important as policy makers consider new protections for personal data maintained in commercial genetic databases and restrictions on the practice of IGG [49–51]. To help inform these conversations, we designed a DCE to measure public preferences regarding when and how law enforcement should be permitted to participate in genetic genealogy databases.

Approach

Our study team followed good practices in choice experiment design. A literature search was conducted to understand the context for the forthcoming choice experiment by exploring salient ethical, legal, and social implications of IGG. We sought expert input through a series of qualitative interviews with law enforcement, forensic scientists, genetic genealogy firms, and genetic genealogists to obtain a technically precise and comprehensive description of current IGG practices and forecasts of its future. Public input on IGG was elicited through eight US-based, geographically diverse focus groups to identify what the general population believes are the most salient attributes of law enforcement participation in genetic genealogy databases. The findings from these activities informed the elicitation question, identification and selection of attributes and levels, and use of an opt-out in the initial version of the DCE.

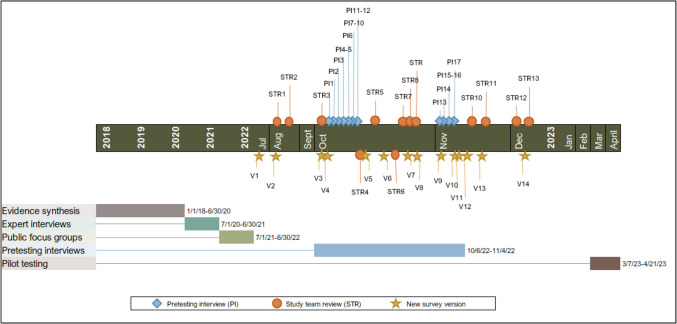

Pretesting of the DCE occurred from October to November 2022 (Fig. 1). Pretest participants from our target population were recruited through the AmeriSpeak panel, a US-based population panel [52]. All interviews were conducted over Zoom and recorded. One research team member led the pretest interview, while another took field notes on a physical copy of the survey and flagged areas for potential modification. We followed a hybrid cognitive interview and debrief style pretest and set expectations for participants by stating that “sometimes we will ask you to read some sections of the survey aloud, and other times we will ask you to read in your head but to let us know if there is anything unclear.”

Fig. 1.

DCE development timeline

We applied a version of our pretesting discussion template (Box 2) with increased specificity regarding the IGG content of the DCE. This discussion template operationalizes the domains and overarching questions posed by the guide and organizes them according to the typical flow of a DCE embedded in a survey. The template provides examples of questions to be asked of participants directly when reflecting upon the different sections of the DCE within the survey, including the introduction to the choice experiment, review of attributes/levels, example task, choice tasks, debriefing, and finally format and structure considerations pertinent to the DCE. Interviews explored the domains of the guide for all aspects of the DCE. Tradeoffs were assessed in several ways: asking participants to think aloud as they completed choice tasks to ascertain how they were making tradeoffs, probing why one profile was chosen over another and whether tradeoffs needed to be made when making their choice, and probing whether any attributes or levels were more impactful in their decision making than others.

| Box 2. Pretesting interview discussion template |

|---|

| Introduction to the choice experiment |

| This is the introduction to the next part in the survey. Do you mind reading this aloud? |

| Can you read the description out loud? Is anything unclear? |

| Do you have any issues or questions? |

| Review of attributes/levels (one attribute at a time) |

| Can you explain the attribute and its levels in your own words based on what you know so far? |

| Are the description of the attribute and its levels clear? Would you make any change to how they are described? |

| What do you think about the levels of the attribute presented? Are they too similar or too different? |

| Do you think the levels for this attribute are presented in the right order? |

| Example task |

| Can you explain to me in your own words what you think is being asked in this task? |

| Is the choice we are asking you to make clear? Can you imagine the scenario we are describing here? |

| Do you think you could answer this question? |

| Was this example task useful? Do you feel prepared to do the next task on your own? |

| Choice tasks |

| Can you think aloud for me as you review this choice task? |

| Do you like either of these profiles better than the other? |

| If so, can you explain why? |

| Was there any tradeoff you had to make in choosing that profile? |

| Was there something you like better in the profile you didn’t choose? |

| Was there anything in the profile you chose that was not ideal? |

| Were any of the attributes more important in your decision making than others? |

| Is there any information outside of what we’ve presented here that factored into your choice? |

| Should there have been an opportunity to choose neither profile? As in, to opt-out of making a choice? |

| How many of these tasks could you do before getting tired? Is there anything we could change to make it easier to do more of these tasks? |

| [If presenting multiple choice task formats] Which of these formats do you like more? |

| Debriefing |

| How consistent were your answers with your preferences? |

| How easy were the choice tasks to understand? |

| How easy were the choice tasks to answer? |

| How long do you think it would have taken you to complete X tasks? |

| Format and structure |

| What kind of device do you usually use to take surveys (e.g., phone, computer)? |

| If by phone, what orientation do you typically use when taking surveys on your phone? Would you flip it to landscape to accommodate a wide choice task? Or prefer it to be up/down? |

Input from pretest interviews was routinely integrated into new versions of the survey. Suggested modifications for which there was high consensus across the research team (e.g., changes to syntax) were made immediately following a pretesting interview. Lower consensus modifications and more substantive changes to the instrument were made every three to four pretests, or as soon as the problem and solution became clear to the research team. All activities were approved by the Baylor University IRB (H-47654).
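The revision cadence described above can be sketched as a simple rule. This is an illustrative sketch only, not the procedure the team actually used. The `ProposedChange` class, the `changes_to_apply` function, and the default `review_every=3` are assumptions, and the judgment-based trigger ("as soon as the problem and solution became clear") is deliberately not modeled.

```python
from dataclasses import dataclass


@dataclass
class ProposedChange:
    """A suggested survey modification (hypothetical structure)."""
    description: str
    high_consensus: bool  # e.g., a syntax fix the whole team agrees on


def changes_to_apply(pending, interviews_since_review, review_every=3):
    """Split pending changes into those to apply now and those to hold.

    High-consensus changes are applied after every interview. All other
    changes wait until `review_every` interviews have accumulated since
    the last batch review (an assumed stand-in for "every three to four").
    """
    batch_due = interviews_since_review >= review_every
    apply_now = [c for c in pending if c.high_consensus or batch_due]
    hold = [c for c in pending if not (c.high_consensus or batch_due)]
    return apply_now, hold


# Invented example changes, for illustration only.
pending = [
    ProposedChange("Fix wording in an attribute label", high_consensus=True),
    ProposedChange("Reframe an attribute", high_consensus=False),
]
now_after_one, held_after_one = changes_to_apply(pending, interviews_since_review=1)
now_after_three, held_after_three = changes_to_apply(pending, interviews_since_review=3)
```

In practice the decision to make a substantive change rests on team discussion rather than a counter; the sketch only makes the two-speed structure of the process explicit.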

Results

In total, 17 pretesting interviews were conducted with sociodemographically diverse participants in the USA. Interviews averaged 50 min in length (range 30–74 min) for a roughly 20-min survey. Substantial modifications to the DCE were made within each of the four domains posed in the guide (Table 3). Regarding content, early in pretesting, participants indicated they had difficulty fully understanding and connecting with each attribute and level introduced. To address this, attributes and levels were reframed to include meaningful potential benefits not initially accounted for, to encourage better understanding of why each attribute and level was being discussed. Following this change, participants expressed that they were able to provide more genuine responses in the DCE.

Table 3.

Application of the pretesting guide to a DCE on IGG

| Domain | Issues identified | Example survey modifications made | Impact |
|---|---|---|---|
| Content: Relevance and comprehensiveness of the DCE | Participants indicated that they had difficulty understanding and connecting with each attribute and level | Attributes and levels were reframed to include a meaningful potential benefit not initially accounted for | Changing the framing of the attributes and levels promoted more genuine responses in the DCE |
| Presentation: Visualization and formatting of the DCE | Participants had difficulty distinguishing between the DCE choice task profiles | Attribute levels were color coded in the DCE choice tasks | Response burden decreased and time spent on answering tasks decreased |
| Comprehension: Understanding of the DCE | Participants had difficulty recalling key terms, presented through an educational video | Educational video explaining IGG and key terms was swapped for a simple text-based description with comprehension questions | Improved comprehension and recall of terms |
| Elicitation: Process of making a choice in the choice task of the DCE | Some participants indicated they would “never support IGG” and that profiles were inconsistent with preferences | An opt-out was added to each choice task | Response burden decreased and willingness to complete tasks increased |

DCE discrete-choice experiment, IGG investigative genetic genealogy

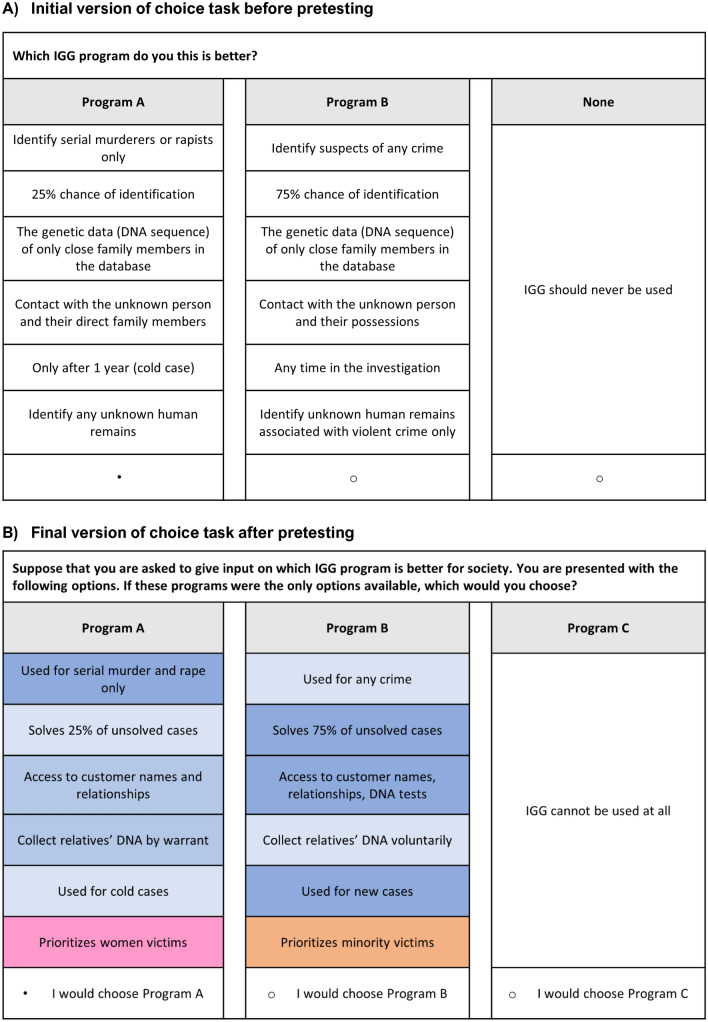

Initially, the DCE choice tasks simply listed the appropriate attribute levels for each profile. For all choice tasks, attributes remained in the same position with levels changing according to the experimental design. During pretesting, participants expressed that it was difficult to distinguish differences between the two profiles. To address this, attribute levels were color coded, allowing for easier comparison across attribute levels in each profile. As a result, we observed a decrease in the amount of time it took for participants to complete the tasks and greater certainty in their choices.
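A color-coding scheme of this kind can be sketched in a few lines. The example below assumes that each level within an attribute is tied to a fixed color, so that two profiles showing the same level share a color and differing levels stand out; how colors were actually assigned in the study is not specified here. The attribute names, level names, palette, and function names are all hypothetical placeholders.

```python
# Hypothetical attributes and levels, for illustration only; the study's
# actual attribute and level wording is not reproduced here.
ATTRIBUTE_LEVELS = {
    "Attribute A": ["Level 1", "Level 2", "Level 3"],
    "Attribute B": ["Level 1", "Level 2"],
}
PALETTE = ["#1b9e77", "#d95f02", "#7570b3"]  # assumed color choices


def color_for(attribute, level):
    """Return the color tied to a level's position within its attribute."""
    index = ATTRIBUTE_LEVELS[attribute].index(level)
    return PALETTE[index % len(PALETTE)]


def render_task(profile_1, profile_2):
    """Build rows of (attribute, (level, color), (level, color)).

    Attributes keep the same row position in every task; only the levels
    (and therefore the colors) change between profiles.
    """
    rows = []
    for attribute in ATTRIBUTE_LEVELS:
        left, right = profile_1[attribute], profile_2[attribute]
        rows.append((
            attribute,
            (left, color_for(attribute, left)),
            (right, color_for(attribute, right)),
        ))
    return rows


task = render_task(
    {"Attribute A": "Level 1", "Attribute B": "Level 2"},
    {"Attribute A": "Level 3", "Attribute B": "Level 2"},
)
```

Under this assumed scheme, a row in which both profiles carry the same color signals that the attribute does not differ between them, which may help participants focus on the attributes that do. Color should not be the only cue, since some respondents may have difficulty distinguishing certain colors.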

At the outset, to improve comprehension, we included a brief video in the survey that provided a general overview of IGG. We anticipated that this video would be more engaging than explaining key information about IGG via text. However, early in pretesting we established that the video was introducing too many key terms that were not featured in the survey itself. We also learned that pretest participants were inclined to skip videos and “get on” with the rest of the survey. To address this, we replaced the educational video with a short, high-level description of only the most relevant terms, and included two comprehension questions to confirm understanding. Participants in later rounds of pretesting expressed satisfaction with the approach, and comprehension of key terms appeared to increase.

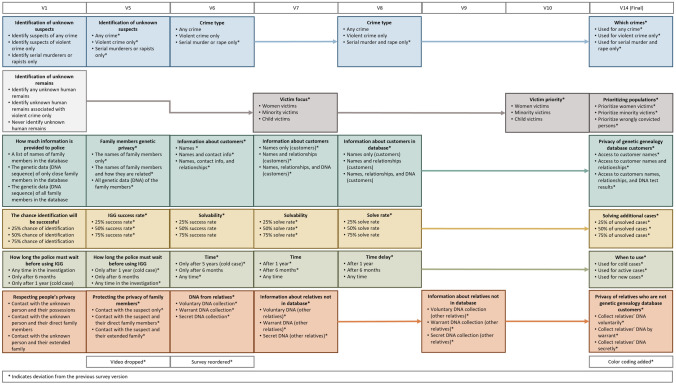

Attributes and levels evolved over the pretesting process (Fig. 2). Changes included reordering the levels, rewording both the attributes and levels, and adding or removing an attribute. A change in the order in which levels were introduced occurred in the attribute “how long the police must wait before using IGG.” This change, prompted by a participant’s suggestion, made the levels and their descriptions flow more sensibly. Each interview provided insights into how individuals may interpret each of the attributes and levels; this prompted modifications, both major and minor, to the language used to label and describe the concepts. This included simplifying language and being intentional with the use of words. Changes were also made by taking into consideration how the attribute and level would be displayed in the choice task. The attribute “identification of unknown remains” was dropped after the first version of the survey and later replaced by “victim focus.” This change came about because the interpretation and understanding of the initial attribute varied too much from person to person. Simplifying the language allowed for a clearer understanding of the attribute and its levels.

Fig. 2.

Major changes in DCE across the pretesting process

The choice task itself also evolved during pretesting (Fig. 3). Each choice task initially contained the two profiles for participants to choose between, as well as the ability to choose that IGG cannot be used at all. Early in pretesting, some participants indicated that they would have a hard time completing the DCE portion of the survey without the ability to opt-out of IGG because they “would never support the use of IGG” and therefore felt the profiles would be inconsistent with their preferences. The language that was used to describe the opt-out was modified throughout pretesting. With an opt-out option incorporated, we observed that participants who were opposed to the use of IGG found it easier to answer the questions and were more willing to complete all tasks in the section.

Fig. 3.

Choice task before and after pretesting

Discussion

In this paper we explore the concept of pretesting in the context of DCEs, highlighting the unique considerations for pretesting choice experiments that go above and beyond those of survey research more generally. To demonstrate these considerations, we present a novel guide for pretesting DCEs. We anticipate that this guide will provide practical guidance on pretesting DCEs and help researchers to conduct more comprehensive and relevant pretesting. Applying the guide to our own DCE helped identify multiple areas for improvement and resulted in substantial modifications to the instrument.

There is a need for greater transparency around all stages of DCE design, especially pretesting. Fewer than one-fifth of DCE studies report including pretesting in their development [53]. Among those that do report having done a pretest, it is not typical that they report their specific methods and approaches to pretesting. Increasing transparency of reporting around pretesting will help identify the diversity of methods used for pretesting in DCEs, help establish norms for pretesting, and facilitate a better understanding of how to conduct a high-quality pretest.

We hope that this guide will spur future work to establish good practices in pretesting. This guide can be used to promote a common understanding of what pretesting is, as a concept, and what the process entails. There is an array of interpretations of what pretesting is, with inconsistent methods and applications. Continued discussion on pretesting as a concept as well as procedural guidance is needed to ensure pretesting practices are incorporating good research practices. To support the development of good practices, research should seek to answer questions such as: When are certain pretesting approaches most effective? What are indicators of pretesting success? How do we ascertain when pretesting is complete and a survey is ready for pilot testing?

There is not typically a clear indication of when the pretesting phase of instrument design is complete. In the absence of established indicators of completeness, our team’s experience has been that the decision to stop pretesting relies upon a number of factors, including budget, timeline, and perceived improvement/quality in the instrument. In the current application of pretesting a DCE for IGG, we conducted what we believe to be a rigorous pretesting process with 17 pretesting interviews. Our decision to close pretesting was based on our increasing confidence that participants were understanding the survey and the experiment embedded within it, that they were making tradeoffs, and that those tradeoffs were reflecting their preferences. The success of our pretesting process was ultimately reflected in the subsequent pilot test, which demonstrated that the DCE evoked tradeoffs consistent with those expressed by pretesting participants. Notably, the opt-out, which was included because of input during pretesting, offered meaningful insight into preference heterogeneity.

There are several aspects of this research which limit its generalizability. First, this guide was developed to advise primarily on questions relevant for pretesting DCEs rather than across broad methods of preference or priority elicitation. We anticipate that the domains posed in the guide are broadly applicable to all forms of preference elicitation, but the content of specific guiding questions would likely vary based on the method being applied. For instance, best–worst scaling case 1 does not use levels; therefore, questions about level ordering posed in the current guide are not relevant.

Second, pretesting is a semistructured, rapid-cycle activity that is not meant to be generalizable. The researcher is themselves an instrument in the pretesting process, and unique characteristics such as the researcher’s former experiences and knowledge of the research topic/population will influence how, when, and why changes are ultimately made to the survey. Researchers can be reflexive about their role in the instrument design process and should seek to internally understand their own motivations or rationales for making changes.

Conclusions

Pretesting is an essential but often under-described stage in the DCE design process. This paper provides practical guidance to help facilitate comprehensive and relevant pretesting of DCEs and operationalizes this guidance using a pretesting interview discussion template. These resources can facilitate future activities and discussions to develop good practices for pretesting, which may ultimately help facilitate higher-quality preference research with greater value to decision makers.

Author Contributions

Study design: CG, JB, NLC; Study conduct: NBC, WB, NLC, JB; Manuscript drafting: NBC, JB, NLC; Critical manuscript revision: All authors.

Funding

This research was supported by the National Human Genome Research Institute of the National Institutes of Health under Award Number R01HG011268. The content is solely the responsibility of the authors and does not represent the official views of the National Institutes of Health, the authors’ employers, or any institutions with which they are or have been affiliated. Dr. Bridges holds an Innovation in Regulatory Science Award from the Burroughs Wellcome Fund. There are no other funding sources or COI to declare.

Data Availability

Data are available upon reasonable request from Norah Crossnohere.

Declarations

Conflicts of Interest

Authors have no conflicts of interest to declare.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

References

- 1. Bridges JFP, et al. Conjoint analysis applications in health—a checklist: a report of the ISPOR good research practices for conjoint analysis task force. Value Health. 2011;14(4):403–413. doi: 10.1016/j.jval.2010.11.013.

- 2. Marshall D, et al. Conjoint analysis applications in health—how are studies being designed and reported?: an update on current practice in the published literature between 2005 and 2008. Patient. 2010;3(4):249–256. doi: 10.2165/11539650-000000000-00000.

- 3. Soekhai V, et al. Discrete choice experiments in health economics: past, present and future. Pharmacoeconomics. 2019;37(2):201–226. doi: 10.1007/s40273-018-0734-2.

- 4. Determann D, et al. Designing unforced choice experiments to inform health care decision making: implications of using opt-out, neither, or status quo alternatives in discrete choice experiments. Med Decis Mak. 2019;39(6):681–692. doi: 10.1177/0272989X19862275.

- 5. Janssen EM, et al. Improving the quality of discrete-choice experiments in health: How can we assess validity and reliability? Expert Rev Pharmacoecon Outcomes Res. 2017;17(6):531–542. doi: 10.1080/14737167.2017.1389648.

- 6. Louviere JJ, Flynn TN, Carson RT. Discrete choice experiments are not conjoint analysis. J Choice Model. 2010;3(3):57–72. doi: 10.1016/S1755-5345(13)70014-9.

- 7. Bliemer MC, Rose JM. Designing stated choice experiments: state-of-the-art. In: 11th international conference on travel behaviour research, Kyoto. Kyoto University. 2006.

- 8. Hensher DA, Rose JM, Greene WH. Applied choice analysis: a primer. Cambridge: Cambridge University Press; 2005.

- 9. Ryan M, Gerard K, Amaya-Amaya M, editors. Using discrete choice experiments to value health and health care. 2008. p. 1–255.

- 10. Hauber AB, et al. Statistical methods for the analysis of discrete choice experiments: a report of the ISPOR conjoint analysis good research practices task force. Value Health. 2016;19(4):300–315. doi: 10.1016/j.jval.2016.04.004.

- 11. Lancsar E, Louviere J. Conducting discrete choice experiments to inform healthcare decision making. Pharmacoeconomics. 2008;26(8):661–677. doi: 10.2165/00019053-200826080-00004.

- 12. Ho M, et al. A framework for incorporating patient preferences regarding benefits and risks into regulatory assessment of medical technologies. Value Health. 2016;19(6):746–750. doi: 10.1016/j.jval.2016.02.019.

- 13. FDA. Patient preference information—voluntary submission, review in premarket approval applications, humanitarian device exemption applications, and de novo requests, and inclusion in decision summaries and device labeling: guidance for industry, Food and Drug Administration staff, and other stakeholders. 2016.

- 14. Bridges JFP, et al. A roadmap for increasing the usefulness and impact of patient-preference studies in decision making in health: a good practices report of an ISPOR task force. Value Health. 2023;26(2):153–162. doi: 10.1016/j.jval.2022.12.004.

- 15. Janssen EM, Segal JB, Bridges JFP. A framework for instrument development of a choice experiment: an application to type 2 diabetes. Patient. 2016;9(5):465–479. doi: 10.1007/s40271-016-0170-3.

- 16. de Bekker-Grob EW, et al. Can healthcare choice be predicted using stated preference data? Soc Sci Med. 2020;246:112736. doi: 10.1016/j.socscimed.2019.112736.

- 17. van Overbeeke E, et al. Factors and situations influencing the value of patient preference studies along the medical product lifecycle: a literature review. Drug Discov Today. 2019;24(1):57–68. doi: 10.1016/j.drudis.2018.09.015.

- 18. Naunheim MR, et al. Patient preferences in subglottic stenosis treatment: a discrete choice experiment. Otolaryngol Head Neck Surg. 2018;158(3):520–526. doi: 10.1177/0194599817742851.

- 19. Wright SJ, et al. Accounting for scale heterogeneity in healthcare-related discrete choice experiments when comparing stated preferences: a systematic review. Patient. 2018;11(5):475–488. doi: 10.1007/s40271-018-0304-x.

- 20. Haynes A, et al. Physical activity preferences of people living with brain injury: formative qualitative research to develop a discrete choice experiment. Patient. 2023;16(4):385–398. doi: 10.1007/s40271-023-00628-9.

- 21. Himmler S, et al. What works better for preference elicitation among older people? Cognitive burden of discrete choice experiment and case 2 best-worst scaling in an online setting. J Choice Model. 2021;38:100265. doi: 10.1016/j.jocm.2020.100265.

- 22. Petrou S, McIntosh E. Women's preferences for attributes of first-trimester miscarriage management: a stated preference discrete-choice experiment. Value Health. 2009;12(4):551–559. doi: 10.1111/j.1524-4733.2008.00459.x.

- 23. Jiang S, et al. Patient preferences in targeted pharmacotherapy for cancers: a systematic review of discrete choice experiments. Pharmacoeconomics. 2023;41(1):43–57. doi: 10.1007/s40273-022-01198-8.

- 24. Hollin IL, et al. Reporting formative qualitative research to support the development of quantitative preference study protocols and corresponding survey instruments: guidelines for authors and reviewers. Patient. 2020;13(1):121–136. doi: 10.1007/s40271-019-00401-x.

- 25. Coast J, et al. Using qualitative methods for attribute development for discrete choice experiments: Issues and recommendations. Health Econ. 2012;21(6):730–741. doi: 10.1002/hec.1739.

- 26. Hollin IL, et al. Developing a patient-centered benefit-risk survey: a community-engaged process. Value Health. 2016;19(6):751–757. doi: 10.1016/j.jval.2016.02.014.

- 27. Seo J, et al. Developing an instrument to assess patient preferences for benefits and risks of treating acute myeloid leukemia to promote patient-focused drug development. Curr Med Res Opin. 2018;34(12):2031–2039. doi: 10.1080/03007995.2018.1456414.

- 28. Beatty PC, Willis GB. Research synthesis: The practice of cognitive interviewing. Public Opin Q. 2007;71(2):287–311. doi: 10.1093/poq/nfm006.

- 29. Alaimo K, Olson CM, Frongillo EA. Importance of cognitive testing for survey items: an example from food security questionnaires. J Nutr Educ. 1999;31(5):269–275. doi: 10.1016/S0022-3182(99)70463-2.

- 30. Lenzner T, Hadler P, Neuert C. An experimental test of the effectiveness of cognitive interviewing in pretesting questionnaires. Qual Quant. 2023;57(4):3199–3217. doi: 10.1007/s11135-022-01489-4.

- 31. Ruel E, Wagner WE, III, Gillespie BJ. The practice of survey research: theory and applications. Thousand Oaks: SAGE Publications Inc; 2016.

- 32. Lenzner T, Neuert C, Otto W. Cognitive pretesting. GESIS Survey Guidelines. 2016. p. 3.

- 33. DeMaio TJ, Rothgeb J, Hess J. Improving survey quality through pretesting. Washington, DC: US Bureau of the Census; 1998.

- 34. Vass C, et al. An exploratory application of eye-tracking methods in a discrete choice experiment. Med Decis Mak. 2018;38(6):658–672. doi: 10.1177/0272989X18782197.

- 35. Cope L, Somers A. Effective pretesting: an online solution. Res Notes. 2011;43:32–35.

- 36. Barber S, et al. Development of a discrete-choice experiment (DCE) to elicit adolescent and parent preferences for hypodontia treatment. Patient. 2019;12(1):137–148. doi: 10.1007/s40271-018-0338-0.

- 37. Windle J, Rolfe J. Comparing responses from internet and paper-based collection methods in more complex stated preference environmental valuation surveys. Econ Anal Policy. 2011;41(1):83–97. doi: 10.1016/S0313-5926(11)50006-2.

- 38. Abdel-All M, et al. The development of an Android platform to undertake a discrete choice experiment in a low resource setting. Archives Pub Health. 2019;77(1):20. doi: 10.1186/s13690-019-0346-0.

- 39. Determann D, et al. Impact of survey administration mode on the results of a health-related discrete choice experiment: online and paper comparison. Value Health. 2017;20(7):953–960. doi: 10.1016/j.jval.2017.02.007.

- 40. Clark MD, et al. Discrete choice experiments in health economics: a review of the literature. Pharmacoeconomics. 2014;32(9):883–902. doi: 10.1007/s40273-014-0170-x.

- 41. Pearce AM, et al. How are debriefing questions used in health discrete choice experiments? An online survey. Value Health. 2020;23(3):289–293. doi: 10.1016/j.jval.2019.10.001.

- 42. Hovén E, et al. Psychometric evaluation of the Swedish version of the PROMIS sexual function and satisfaction measures in clinical and nonclinical young adult populations. Sex Med. 2023;11(1):qfac006. doi: 10.1093/sexmed/qfac006.

- 43. Kling D, et al. Investigative genetic genealogy: Current methods, knowledge and practice. Forensic Sci Int Genet. 2021;52:102474. doi: 10.1016/j.fsigen.2021.102474.

- 44. Gafni M, Krieger L. Here’s the ‘open-source’ genealogy DNA website that helped crack the Golden State Killer case. 2018. https://www.eastbaytimes.com/2018/04/26/ancestry-23andme-deny-assisting-law-enforcement-in-east-area-rapist-case/.

- 45. Nagaraj CB, et al. Attitudes of parents of children with serious health conditions regarding residual bloodspot use. Public Health Genom. 2014;17(3):141–148. doi: 10.1159/000360251.

- 46. Skinner C, et al. Factors associated with African Americans’ enrollment in a national cancer genetics registry. Public Health Genom. 2008;11(4):224–233. doi: 10.1159/000116883.

- 47. Robinson JO, et al. Participants and study decliners’ perspectives about the risks of participating in a clinical trial of whole genome sequencing. J Empir Res Hum Res Ethics. 2016;11(1):21–30. doi: 10.1177/1556264615624078.

- 48. Oliver JM, et al. Balancing the risks and benefits of genomic data sharing: Genome research participants’ perspectives. Public Health Genom. 2012;15(2):106–114. doi: 10.1159/000334718.

- 49. Ram N, Murphy EE, Suter SM. Regulating forensic genetic genealogy. Science. 2021;373(6562):1444–1446. doi: 10.1126/science.abj5724.

- 50. Beal-Cvetko B. Legislature passes 'Sherry Black bill' to regulate genealogy search by law enforcement. 2023. https://ksltv.com/529189/legislature-passes-sherry-black-bill-to-regulate-genealogy-search-by-law-enforcement/.

- 51. Guerrini CJGD, Kramer S, Moore C, Press M, McGuire A. State genetic privacy statutes: good intentions, unintended consequences? Harvard Law Petrie-Flom Center: Bill of Health. 2023.

- 52. AmeriSpeak. 2023. https://www.amerispeak.org/about-amerispeak.

- 53. Vass C, Rigby D, Payne K. The role of qualitative research methods in discrete choice experiments: a systematic review and survey of authors. Med Decis Mak. 2017;37(3):298–313. doi: 10.1177/0272989X16683934.

- 54. Helter TM, Boehler CEH. Developing attributes for discrete choice experiments in health: a systematic literature review and case study of alcohol misuse interventions. J Subst Use. 2016;21(6):662–668. doi: 10.3109/14659891.2015.1118563.

- 55. Föhn Z, et al. Stakeholder engagement in designing attributes for a discrete choice experiment with policy implications: an example of 2 Swiss studies on healthcare delivery. Value Health. 2023;26(6):925–933. doi: 10.1016/j.jval.2023.01.002.

- 56. Aguiar M, et al. Designing discrete choice experiments using a patient-oriented approach. Patient. 2021;14(4):389–397. doi: 10.1007/s40271-020-00431-w.

- 57. Kløjgaard ME, Bech M, Søgaard R. Designing a stated choice experiment: the value of a qualitative process. J Choice Model. 2012;5(2):1–18. doi: 10.1016/S1755-5345(13)70050-2.

- 58. Mangham LJ, Hanson K, McPake B. How to do (or not to do) … Designing a discrete choice experiment for application in a low-income country. Health Policy Plan. 2008;24(2):151–158. doi: 10.1093/heapol/czn047.

- 59. Ostermann J, et al. Heterogeneous HIV testing preferences in an urban setting in Tanzania: results from a discrete choice experiment. PLoS ONE. 2014;9(3):e92100. doi: 10.1371/journal.pone.0092100.

- 60. Barthold D, et al. Improvements to survey design from pilot testing a discrete-choice experiment of the preferences of persons living with HIV for long-acting antiretroviral therapies. Patient. 2022;15(5):513–520. doi: 10.1007/s40271-022-00581-z.
