Abstract

BACKGROUND:

Moyamoya disease (MMD) is a rare and complex pathological condition characterized by an abnormal collateral circulation network at the base of the brain. The progression of MMD is unpredictable and influenced by many factors, which complicates its diagnosis and staging. MMD can affect the blood vessels supplying the eyes, resulting in a range of ocular symptoms. In this study, we developed a deep learning model using real-world data to assist in diagnosing MMD and determining its stage from retinal photographs.

METHODS:

This retrospective observational study, conducted from August 2006 to March 2022, included 498 retinal photographs from 78 patients with MMD and 3835 photographs from 1649 healthy participants. Photographs were preprocessed, and a ResNeXt50 model was developed. Model performance was measured using receiver operating characteristic curves and the area under the receiver operating characteristic curve, as well as accuracy, sensitivity, and F1-score. Heatmaps and progressive erasing plus progressive restoration were used to validate the faithfulness of the model's attention maps.

RESULTS:

Overall, 322 retinal photographs from 67 patients with MMD and 3752 retinal photographs from 1616 healthy participants were used to develop a screening and stage prediction model for MMD. The average age of the patients with MMD was 44.1 years, and the average follow-up time was 115 months. Stage 3 photographs were the most prevalent, followed by stages 4, 5, 2, and 1, healthy (stage 0), and stage 6. The MMD screening model had an average area under the receiver operating characteristic curve of 94.6%, with 89.8% sensitivity and 90.4% specificity at the best cutoff point. Most MMD stage prediction models had an area under the receiver operating characteristic curve of 78% or higher, with stage 3 performing the best at 93.6%. Heatmaps identified the vascular region of the fundus as important for prediction, and progressive erasing plus progressive restoration showed that the area under the receiver operating characteristic curve remained at 70% or higher when only the most important 50% of the image was retained.

CONCLUSIONS:

This study demonstrated that retinal photographs could be used as potential biomarkers for screening and staging of MMD and the disease stage could be classified by a deep learning algorithm.

Keywords: brain, collateral circulation, humans, moyamoya disease, prognosis

Moyamoya disease (MMD) is a rare but well-known complex vaso-occlusive disease.1 MMD is characterized by an abnormally fine network of collateral circulation formed at the base of the brain due to progressive bilateral stenosis or occlusion of the main branches of the circle of Willis.1–3 Decision-making for medical management is complicated by its bilateral involvement and the narrowing of the distal internal carotid arteries (ICAs).4–6 The progression pattern differs between the 2 hemispheres, and predicting the natural course and progression of MMD is challenging.6 With a sufficiently long follow-up period, disease progression becomes observable in most patients through symptoms or imaging studies, but diverse manifestations can be seen in the interim.7 Factors reported to date to determine the course and prognosis of MMD include the extent and speed of vascular occlusion, the extent of collateral circulation development, required blood flow, age at symptom onset, neurological abnormalities, and the presence of an infarct on imaging studies. Therefore, the natural course of MMD has been considered to depend on the balance between the extent of vascular occlusion and the effective collateral circulation developed at a particular time.

Due to these characteristics of MMD, objective tools for its diagnosis and monitoring are essential. Conventional angiography, magnetic resonance imaging (MRI), or magnetic resonance angiography (MRA) is used for diagnosing MMD.2 For decision-making and proper management, clinical symptomatic follow-up and assessment of angiographic status are critical.7 However, the invasiveness and cost of these imaging techniques limit their widespread use.8 Retinal examination, which measures changes in retinal vessels caused by the progression of MMD, may serve as an alternative diagnostic tool.9 MMD affects the segment of the ICA from which the ophthalmic artery branches, which may predispose patients to retinal vessel abnormalities. Ethmoidal collaterals to the frontal lobe commonly arise from the ophthalmic artery; as a result, an enlarged ophthalmic artery and increased retinal vascular filling are often seen on digital subtraction angiography and MRA. Multiple ocular conditions, such as morning glory optic disc anomaly, chorioretinal coloboma, anterior ischemic optic neuropathy, ocular ischemic syndrome, and retinal vascular occlusions, have been associated with this disease.9–15 Although these ophthalmic manifestations of MMD have diagnostic potential, they have not yet been adequately studied, because minute changes are difficult to observe and quantitative changes are difficult to measure.

Herein, we aimed to develop a deep learning model that detects MMD and determines its stage from specific features of retinal photographs of patients with MMD, to validate the model internally, and to identify retinal screening biomarkers of the disease. To our knowledge, this is the first large, longitudinal study based on real-world data to determine the occurrence and stage of MMD through retinal photography.

METHODS

Data Availability

The study code was written in Python (v3.6.8) and is available on GitHub at https://github.com/DigitalHealthcareLab/23moyamoya.

Study Design and Overview

This retrospective observational study was conducted from August 1, 2006, to March 31, 2022, in accordance with the ethical principles outlined in the Declaration of Helsinki16 and was approved by the institutional review board of the Severance Hospital (institutional review board number: 2020-0473-0001). The need for informed consent was waived by the ethics committee, as this study utilized routinely collected data that were anonymously managed at all stages, including data cleaning and statistical analyses. We followed the Strengthening the Reporting of Observational Studies in Epidemiology statement.17

We developed and validated a deep learning model to detect MMD and determine its Suzuki stage using retinal photographs.1 Figure S1 presents an overview of the study. We collected 498 retinal photographs from 78 patients diagnosed with MMD by MRA between August 2006 and March 2022 at the Severance Hospital, South Korea.2 Clinical and demographic data, along with information on encephaloduroarteriosynangiosis surgery and follow-up duration, were collected for patients with MMD. The stage of MMD was evaluated from the angiography results of the left and right hemispheres by a pediatric neurosurgeon with over 20 years of experience, and each retinal image was matched to the angiography closest to it in time. The median interval between retinal photography and angiography was 279 days (interquartile range, 95.25–592 days). We further collected 3835 retinal photographs from 1649 healthy participants who visited the hospital for a health checkup between May 2007 and November 2022. Photographs from participants without a history of eye disease, surgery, or other suspicious ophthalmic findings were considered healthy retinal images; participants were excluded from the healthy group if the cup-to-disc ratio was higher than 0.7 or if myopic changes were observed. Furthermore, we excluded poor-quality photographs, characterized by indiscernible optic discs, pixel values below 20 at the edge of the photograph, or >11% cropping at the top and bottom that made the blood vessels indiscernible.18 After exclusion, 322 retinal photographs from 67 patients with MMD and 3752 retinal photographs from 1616 healthy participants were obtained and reviewed by a certified ophthalmologist with over 15 years of experience.
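The time-matching step pairs each retinal photograph with the angiography closest to it in time and summarizes the gaps as a median and interquartile range. A minimal sketch of that arithmetic, assuming a simple nearest-date rule; the function names and dates are hypothetical and are not taken from the study code:

```python
from datetime import date
from statistics import median, quantiles  # quantiles requires Python 3.8+


def nearest_angiography(photo_date, angio_dates):
    """Return the angiography date closest in time to one retinal photograph."""
    return min(angio_dates, key=lambda d: abs((d - photo_date).days))


def interval_summary(photo_dates, angio_dates):
    """Median and interquartile range (in days) of photo-to-angiography gaps."""
    gaps = [abs((nearest_angiography(p, angio_dates) - p).days) for p in photo_dates]
    # method="inclusive" interpolates linearly between order statistics
    q1, _, q3 = quantiles(gaps, n=4, method="inclusive")
    return median(gaps), (q1, q3)


# Hypothetical dates for a single patient (not study data)
angios = [date(2015, 3, 1), date(2019, 6, 15)]
photos = [date(2015, 5, 10), date(2018, 12, 1), date(2020, 1, 20)]
print(interval_summary(photos, angios))  # (196, (133.0, 207.5))
```

The gaps here are 70, 196, and 219 days; fractional quartiles such as the reported 95.25 days arise naturally from this kind of interpolation.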

Preprocessing of Retinal Photography

To ensure that the models focused on the retina itself rather than on incidental differences among images, such as the background, 6 preprocessing steps were adopted. First, the photographs were scaled to a single color to reduce color differences between photographs. Second, dark areas were eliminated to emphasize vessels over background elements. Third, histogram equalization was applied to enhance generalization and reduce overfitting. Fourth, all photographs were standardized to a square and padded with a black background to eliminate variations in cropping at the top and bottom. Fifth, the photographs were cropped to a circle to remove noninformative areas. Finally, 5.5% of the height was cropped from the top and from the bottom of every image to ensure uniformity of shape and scale. Details of this procedure are outlined in Figure S2.
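The six steps can be sketched with NumPy alone. This is an illustration, not the study's pipeline: "scaled to a single color" is interpreted here as gray-world channel balancing, "eliminating dark areas" as cropping to the bounding box of pixels brighter than a hypothetical threshold of 20, and the input is assumed to be an 8-bit RGB array.

```python
import numpy as np


def gray_world(img):
    """Step 1 (assumed interpretation): rescale channels so their means are equal."""
    f = img.astype(np.float64)
    ch_means = f.reshape(-1, f.shape[-1]).mean(axis=0)
    scale = ch_means.mean() / np.maximum(ch_means, 1e-6)
    return np.clip(f * scale, 0, 255).astype(np.uint8)


def crop_dark_border(img, threshold=20):
    """Step 2: keep the bounding box of pixels brighter than `threshold` (hypothetical)."""
    bright = img.max(axis=-1) > threshold
    rows, cols = np.where(bright.any(axis=1))[0], np.where(bright.any(axis=0))[0]
    if rows.size == 0:
        return img
    return img[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]


def equalize_histogram(img):
    """Step 3: per-channel histogram equalization via a cumulative-histogram lookup table."""
    out = np.empty_like(img)
    for c in range(img.shape[-1]):
        ch = img[..., c]
        cdf = np.bincount(ch.ravel(), minlength=256).cumsum()
        cdf_min = cdf[cdf > 0][0]
        denom = max(int(cdf[-1] - cdf_min), 1)
        lut = np.clip(np.round((cdf - cdf_min) / denom * 255), 0, 255).astype(np.uint8)
        out[..., c] = lut[ch]
    return out


def pad_to_square(img):
    """Step 4: center the image on a black square canvas."""
    h, w, c = img.shape
    side = max(h, w)
    out = np.zeros((side, side, c), dtype=img.dtype)
    top, left = (side - h) // 2, (side - w) // 2
    out[top:top + h, left:left + w] = img
    return out


def circular_crop(img):
    """Step 5: black out everything outside the inscribed circle."""
    side = img.shape[0]
    yy, xx = np.ogrid[:side, :side]
    r = side / 2
    inside = (yy + 0.5 - r) ** 2 + (xx + 0.5 - r) ** 2 <= r ** 2
    return np.where(inside[..., None], img, 0).astype(img.dtype)


def crop_top_bottom(img, frac=0.055):
    """Step 6: remove 5.5% of the height from the top and from the bottom."""
    cut = int(round(img.shape[0] * frac))
    return img[cut:img.shape[0] - cut]


def preprocess(img):
    for step in (gray_world, crop_dark_border, equalize_histogram,
                 pad_to_square, circular_crop, crop_top_bottom):
        img = step(img)
    return img


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fake = np.zeros((480, 640, 3), dtype=np.uint8)
    fake[40:440, 120:520] = rng.integers(30, 220, size=(400, 400, 3), dtype=np.uint8)
    print(preprocess(fake).shape)
```

Resizing to the model's input size is left to the training stage, which the study performs separately.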

Model Development and Statistical Analysis

All data set construction steps were conducted at the level of age-matched participants to create data sets for screening MMD and predicting its stages; these steps also served to prevent overfitting, information leakage, and the risk of bias.19 We used retinal photographs from both eyes separately, as MMD progression manifests in each hemisphere separately. Patients with stages 0 to 5 were randomly split at a ratio of 8:2 to create the test data set; for stage 6, a small subset with only 2 patients, 1 patient was randomly selected for the test data set. Healthy participants were randomly selected to match the age distribution of the patients with MMD in the test data set. The final test data set comprised 83 retinal photographs of 17 patients with MMD and 83 retinal photographs of 33 healthy participants, and both the screening model and the stage prediction model used this same test data set. All photographs of patients with MMD that were not included in the test data set were used as the training data set. The healthy training data sets for screening MMD were randomly selected 3 times to match the age distribution of the MMD training data set, and this selection was repeated 10 times to create a 10-fold training data set to reduce bias. The healthy training data sets for stage prediction were also randomly selected to follow the age distribution, with their size determined by the number of MMD images of the target stage.
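Two ideas in this paragraph are easy to get wrong in code: splitting by patient rather than by photograph (so both eyes and all visits of one patient stay on the same side), and drawing healthy controls to mirror the age distribution of the cases. A minimal sketch, assuming 10-year age bins and a fixed random seed, neither of which is stated in the text; all names are hypothetical:

```python
import random
from collections import defaultdict


def split_patients(patient_ids, test_frac=0.2, seed=0):
    """Split at the patient level so no patient's photographs appear in both sets."""
    ids = sorted(set(patient_ids))
    random.Random(seed).shuffle(ids)
    n_test = round(len(ids) * test_frac)
    return set(ids[n_test:]), set(ids[:n_test])  # (train, test)


def age_matched_sample(case_ages, control_ages, per_case=1, bin_width=10, seed=0):
    """Draw controls so each age bin gets `per_case` controls for every case in it.

    case_ages: ages of the MMD cases to match.
    control_ages: {control_id: age} pool of healthy participants.
    """
    rng = random.Random(seed)
    pool = defaultdict(list)
    for cid, age in sorted(control_ages.items()):
        pool[age // bin_width].append(cid)
    need = defaultdict(int)
    for age in case_ages:
        need[age // bin_width] += per_case
    chosen = []
    for b in sorted(need):
        candidates = pool.get(b, [])
        chosen.extend(rng.sample(candidates, min(need[b], len(candidates))))
    return chosen


train_ids, test_ids = split_patients(range(10))
controls = {f"h{i}": 20 + i for i in range(40)}  # hypothetical healthy pool, ages 20-59
print(sorted(test_ids), age_matched_sample([25, 33, 47], controls))
```

Raising `per_case` (for example, to 3) draws proportionally more controls per case while keeping the same age profile.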

For model development, we adopted ResNeXt50, a widely used convolutional neural network architecture.20 Transfer learning from ImageNet weights was used to provide base knowledge: 4 of the 5 convolutional blocks of the pretrained model were frozen, because the last block learns the most task-specific information from images.21 The photographs were resized to 224×224 pixels before being input into the model. Augmentation methods such as random rotation or horizontal flipping could confuse models that aim to capture possible differences between the left and right brain hemispheres; therefore, we used only vertical (up-down) flipping, brightness, and saturation augmentation during training to prevent overfitting.18 Model weights were optimized using the Adam algorithm with a batch size of 100 and an initial learning rate of 0.01. To prevent overfitting, ReduceLROnPlateau was applied, which reduces the learning rate if the validation loss does not decrease for a set number of epochs (3 in our case).22 Early stopping was also applied to halt training when no further improvement occurred after a set number of epochs (10 in our case), which further minimized overfitting.
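Both training controls described above count epochs without improvement in validation loss: one lowers the learning rate after 3 such epochs, the other stops training after 10. A framework-independent sketch of that bookkeeping, assuming a reduction factor of 0.1 (the text does not state it); deep learning libraries implement the same idea with their own off-by-one and cooldown conventions, so this is illustrative rather than the study's code:

```python
class PlateauController:
    """Tracks validation loss to (a) cut the learning rate after `lr_patience`
    epochs without improvement and (b) request early stopping after
    `stop_patience` such epochs. factor=0.1 is an assumed value."""

    def __init__(self, lr=0.01, factor=0.1, lr_patience=3, stop_patience=10):
        self.lr = lr
        self.factor = factor
        self.lr_patience = lr_patience
        self.stop_patience = stop_patience
        self.best = float("inf")
        self.bad_since_best = 0  # epochs since the last improvement
        self.bad_since_cut = 0   # epochs without improvement since the last LR cut
        self.stop = False

    def step(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.bad_since_best = self.bad_since_cut = 0
        else:
            self.bad_since_best += 1
            self.bad_since_cut += 1
            if self.bad_since_cut >= self.lr_patience:
                self.lr *= self.factor
                self.bad_since_cut = 0
            if self.bad_since_best >= self.stop_patience:
                self.stop = True
        return self.lr


ctrl = PlateauController()
for epoch, loss in enumerate([1.0, 0.9, 0.95, 0.96, 0.97]):
    lr = ctrl.step(loss)
    print(epoch, round(lr, 6), ctrl.stop)
```

In a real training loop, the returned learning rate would be written back to the optimizer after each validation pass, and the loop would break once `ctrl.stop` is true.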

To train the screening model (the first aim), each fold randomly selected patients with MMD and healthy participants with a consistent age distribution; the retinal photographs of the selected participants were used as the validation data set, and the remaining photographs were used for training. To train the stage prediction models (the second aim), we selected retinal photographs corresponding to the healthy and target stages and applied the same procedure. We additionally checked model performance on retinal images of patients whose stage had progressed, and we conducted experiments for a stage progression prediction model using images from healthy, stage 3, and stage 4 participants. For all experiments, the models trained with the 10-fold data sets were evaluated on the same test data set, using an area under the receiver operating characteristic curve (AUC) of 80% or greater as the performance threshold; the AUC is one of the most commonly used performance metrics in clinical settings.23 We further measured accuracy, sensitivity, and the F1-score for staging MMD using the retinal photographs. The best cutoff point was determined using the Youden index.24 CIs were computed using a bootstrapping procedure with 83 samples, and 95% CIs were reported as the 2.5th and 97.5th percentiles. Image-based attention maps were generated from the test data set using gradient-weighted class activation mapping (GradCAM) with the model that achieved the highest AUC.25 We also used progressive erasing plus progressive restoration to validate whether the global attention map faithfully reflected how the model makes predictions and to investigate how model performance degraded upon removing either the most or the least important regions.26
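The evaluation steps in this paragraph (AUC, a Youden-index cutoff, and percentile bootstrap CIs) can be sketched in plain Python. This is an illustrative implementation, not the study code: the AUC uses the rank (Mann-Whitney) formulation, the rule "predict MMD if score ≥ t" is an assumption, and each bootstrap resample has the size of the evaluated set, which is one possible reading of the "83 samples" in the text.

```python
import random


def auc(labels, scores):
    """Rank-based AUC: chance that a random positive outscores a random negative (ties = 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def youden_cutoff(labels, scores):
    """Return (threshold, J) maximizing J = sensitivity + specificity - 1,
    predicting positive when score >= threshold."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best_t, best_j = None, -1.0
    for t in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= t)
        tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < t)
        j = tp / n_pos + tn / n_neg - 1
        if j > best_j:
            best_t, best_j = t, j
    return best_t, best_j


def bootstrap_auc_ci(labels, scores, n_boot=1000, seed=0):
    """Percentile bootstrap 95% CI for the AUC (2.5th and 97.5th percentiles)."""
    rng = random.Random(seed)
    idx = range(len(labels))
    stats = []
    while len(stats) < n_boot:
        sample = [rng.choice(idx) for _ in idx]
        ys = [labels[i] for i in sample]
        if 0 < sum(ys) < len(ys):  # a resample needs both classes to define an AUC
            stats.append(auc(ys, [scores[i] for i in sample]))
    stats.sort()
    return stats[int(0.025 * (n_boot - 1))], stats[int(0.975 * (n_boot - 1))]


labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(auc(labels, scores), youden_cutoff(labels, scores))
```

The Youden index weighs sensitivity and specificity equally; a screening setting that penalizes missed cases more heavily would call for a different cutoff rule.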

RESULTS

Basic Characteristics

The data set for developing a screening model for MMD comprised 322 retinal photographs from 67 patients with MMD and 3752 retinal photographs from 1616 healthy participants (Table). The mean age was 44.1±17.8 years for patients with MMD and 40.2±12.2 years for healthy participants; age was calculated at the date of each retinal photograph. The mean follow-up time for patients with MMD was 115 (SD, 62.7) months. Of the patients with MMD, 14 underwent encephaloduroarteriosynangiosis, a cerebral revascularization procedure.27 By angiographic stage, stage 3 images were the most common (118 images; 36.6%), followed by stage 4 (60; 18.6%), stage 5 (56; 17.4%), stage 2 (34; 10.6%), and stage 1 (25; 7.8%), with 22 healthy (stage 0) images (6.8%) and 7 stage 6 images (2.2%). The number of patients and images for each Suzuki stage and hemisphere is described in Tables S1 and S2.
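The stage shares follow directly from the image counts. A short arithmetic check that the counts sum to the 322 MMD photographs and that each share is count / 322; labeling the 22 healthy images as stage 0 follows the heatmap section and is otherwise an assumption:

```python
# Image counts per Suzuki stage among the MMD photographs, as reported in the text
counts = {"stage 0 (healthy)": 22, "stage 1": 25, "stage 2": 34, "stage 3": 118,
          "stage 4": 60, "stage 5": 56, "stage 6": 7}
total = sum(counts.values())
shares = {k: round(100 * v / total, 1) for k, v in counts.items()}
print(total)
for stage, pct in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{stage}: {pct}%")
```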

Table.

Basic Characteristics of the Study Participants

Deep Learning Model for MMD Screening

The performance of the screening model in distinguishing patients with MMD from healthy participants was quantified across the 10-fold data sets. The screening model achieved an average AUC of 94.6% (95% CI, 93.2%–95.4%). The average receiver operating characteristic curves are shown in Figure 1. At the best cutoff point, determined by the Youden index, the model obtained a sensitivity of 89.8%, a specificity of 90.4%, an accuracy of 87.1%, and an F1-score of 86.4%. These sensitivity and accuracy values are higher than those reported for MRA in a previous study (sensitivity, 73.1%; accuracy, 78.8%),28 although the two were not evaluated in the same cohort.
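Sensitivity, specificity, accuracy, and the F1-score all follow from the confusion matrix at a chosen cutoff. A minimal sketch with a hypothetical function name and toy inputs; note that accuracy equals (P × sensitivity + N × specificity) / (P + N), where P and N are the numbers of positive and negative images, so on a single test set at a single cutoff it always lies between sensitivity and specificity.

```python
def binary_metrics(labels, scores, cutoff):
    """Confusion-matrix metrics when an image is called MMD if score >= cutoff."""
    tp = fp = tn = fn = 0
    for y, s in zip(labels, scores):
        pred = 1 if s >= cutoff else 0
        if pred == 1 and y == 1:
            tp += 1
        elif pred == 1:
            fp += 1
        elif y == 0:
            tn += 1
        else:
            fn += 1
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * sensitivity / (precision + sensitivity)
          if precision + sensitivity else 0.0)
    return {"sensitivity": sensitivity, "specificity": specificity,
            "accuracy": accuracy, "precision": precision, "f1": f1}


print(binary_metrics([1, 1, 1, 0, 0, 0], [0.9, 0.8, 0.3, 0.6, 0.2, 0.1], cutoff=0.25))
```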

Figure 1.

Receiver operating characteristic (ROC) curves evaluating the performance of the moyamoya disease (MMD) screening model in distinguishing fundus images from patients with MMD and age-matched healthy individuals. AUC indicates area under the ROC curve.

Deep Learning Model for Moyamoya Staging

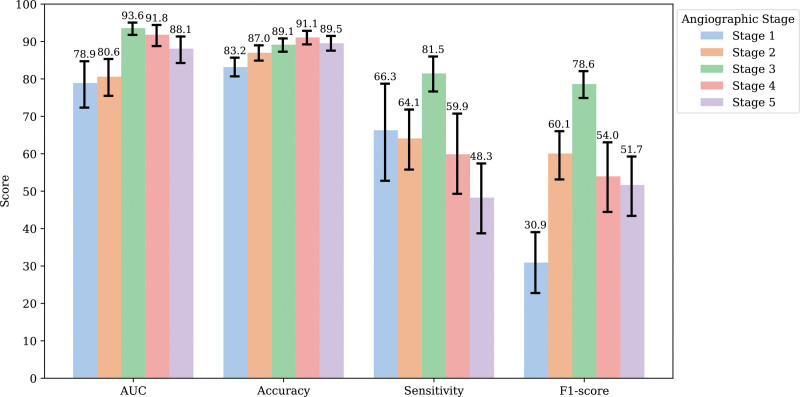

Among the stage prediction models, most exhibited an average AUC of 78% or higher, and the stage 3 model performed best, with an average AUC of 93.6% (Figure 2). All stage models achieved an accuracy of >80%, and accuracy tended to increase with stage; the stage 4 model had the highest average accuracy, at 91.1%. Overall, the stage 3 model performed best, with an AUC of 93.6%, a sensitivity of 81.5%, and an F1-score of 78.6%. We further assessed model performance on retinal images from patients whose disease stage had progressed (Figure S3). The model identified progression from stage 4 to stage 5 in a patient over a 4-year period, predicting stage 4 with a confidence of 97% and stage 5 with a confidence of 99%. In differentiating healthy, stage 3, and stage 4 participants, the stage prediction model achieved a micro-AUC of 92.9% and an AUC of 88.5% (Figure S4).
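For the 3-class comparison (healthy, stage 3, stage 4), a micro-AUC pools every one-vs-rest decision into a single binary problem, whereas a macro-AUC averages the per-class AUCs. The text does not state which averaging the 88.5% figure uses; treating it as a macro average is an assumption. A minimal sketch of both summaries, with made-up probabilities:

```python
def auc(labels, scores):
    """Rank-based binary AUC (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def ovr_aucs(y_true, probs):
    """One-vs-rest AUCs for a multiclass model.

    y_true: class index per image; probs: per-image list of class probabilities.
    Returns (per-class AUCs, macro AUC, micro AUC).
    """
    n_classes = len(probs[0])
    per_class = [auc([int(y == c) for y in y_true], [p[c] for p in probs])
                 for c in range(n_classes)]
    macro = sum(per_class) / n_classes  # unweighted mean of per-class AUCs
    # micro: pool every (image, class) decision into one binary problem
    flat_y = [int(y == c) for y in y_true for c in range(n_classes)]
    flat_s = [p[c] for p in probs for c in range(n_classes)]
    return per_class, macro, auc(flat_y, flat_s)


# Hypothetical classes: 0 = healthy, 1 = stage 3, 2 = stage 4
y = [0, 1, 2, 0, 1, 2]
p = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.1, 0.5, 0.4],
     [0.4, 0.4, 0.2], [0.3, 0.3, 0.4], [0.2, 0.2, 0.6]]
print(ovr_aucs(y, p))
```

Because the micro average weights each image-class decision equally, it can exceed the macro average when the easier classes dominate the pooled scores.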

Figure 2.

Performance of the moyamoya stage prediction model. AUC indicates area under the receiver operating characteristic curve.

GradCAM heatmaps highlighted the regions of the retinal photographs on which the model relied. A representative heatmap generated from the MMD stage prediction model using GradCAM is shown in Figure 3. For the healthy retina, represented by stage 0, the heatmap highlighted areas that were irrelevant to or distant from major vascular structures. In contrast, retinal images corresponding to stages 1 to 5 of MMD highlighted the main vasculature. Staging is intended not to reflect the severity of the disease but to track angiographic progression and guide treatment decisions, such as additional cerebrovascular examinations, in relation to clinical conditions. Differences in the highlighted areas between stages were less pronounced. This suggests that our deep learning model relies on the fundus vessels to assess the stage in patients with MMD.
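GradCAM weights each feature map of a chosen convolutional layer by the spatially averaged gradient of the target class score, sums the weighted maps, and applies a ReLU. A minimal NumPy sketch of that computation, assuming the activations and gradients of the last convolutional block have already been extracted (for example, through framework hooks, not shown); nearest-neighbor upsampling is a simplification, since implementations commonly use bilinear interpolation:

```python
import numpy as np


def grad_cam(activations, gradients):
    """activations, gradients: (K, H, W) arrays for the target layer and class."""
    weights = gradients.mean(axis=(1, 2))             # alpha_k: pooled gradients
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A_k -> (H, W)
    cam = np.maximum(cam, 0)                          # ReLU keeps positive evidence only
    if cam.max() > 0:
        cam = cam / cam.max()                         # scale to [0, 1] for display
    return cam


def upsample_nearest(cam, out_h, out_w):
    """Stretch a coarse heatmap to image resolution by repeating cells."""
    rows = (np.arange(out_h) * cam.shape[0]) // out_h
    cols = (np.arange(out_w) * cam.shape[1]) // out_w
    return cam[np.ix_(rows, cols)]


rng = np.random.default_rng(0)
acts, grads = rng.random((8, 7, 7)), rng.standard_normal((8, 7, 7))
print(upsample_nearest(grad_cam(acts, grads), 224, 224).shape)
```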

Figure 3.

Heatmaps of the moyamoya disease stage prediction models. GradCAM indicates gradient-weighted class activation mapping.

We further used progressive erasing plus progressive restoration to validate the faithfulness of the global attention map and to investigate how model performance degrades when either the most or the least important regions are removed. Figure 4 shows the AUC for each label of the test set with different parts of the image removed. When the least important image areas were gradually erased according to the global attention map, removing 50% of the image did not reduce the AUC below 70%. When >90% of the image was removed, the most important area remaining was the vascular part of the fundus. For stages 1, 2, and 5, performance exceeded the 80% threshold.
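The erasing procedure ranks pixels by attention, blanks a growing fraction (least important first or most important first), and re-scores the model on what remains. A minimal sketch, assuming a per-image attention map already upsampled to image resolution; `evaluate` is a hypothetical callback (for example, one returning the model's AUC on the masked images), and how the study aggregated its global attention map is not reproduced here:

```python
import numpy as np


def erase_by_attention(image, attention, erase_frac, which="least"):
    """Blank the `erase_frac` share of pixels ranked least (or most) important.

    image: (H, W, C) array; attention: (H, W) map at the same resolution.
    """
    flat = attention.ravel()
    n_erase = int(round(erase_frac * flat.size))
    order = np.argsort(flat, kind="stable")  # ascending importance
    if which == "most":
        order = order[::-1]
    keep = np.ones(flat.size, dtype=bool)
    keep[order[:n_erase]] = False
    keep = keep.reshape(attention.shape)
    return np.where(keep[..., None], image, 0).astype(image.dtype)


def erasing_curve(images, attentions, evaluate,
                  erase_fracs=(0.0, 0.25, 0.5, 0.75, 0.9), which="least"):
    """Map each erased fraction to evaluate(masked_images)."""
    return {f: evaluate([erase_by_attention(img, att, f, which)
                         for img, att in zip(images, attentions)])
            for f in erase_fracs}
```

A faithful attention map should make the "least" curve fall slowly and the "most" curve fall quickly; the gap between the two is what the procedure inspects.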

Figure 4.

Data-driven identification of informative regions using the deep learning model. AUC indicates area under the receiver operating characteristic curve.

DISCUSSION

In this study, we observed that retinal photographs could serve as screening and staging markers for MMD. Using a deep learning algorithm, we classified the stages of MMD from retinal images with performance comparable to that reported for MRA.28 The deep learning algorithm analyzes alterations in the major retinal vessels according to the stage of MMD. Whereas conventional fundus examinations detect abnormalities only after retinal ischemic changes, hemorrhage, or edematous lesions caused by occlusion have manifested, this study revealed a new diagnostic approach capable of identifying changes in retinal vascular architecture. To our knowledge, this study is the first to quantitatively screen for and evaluate the stage of MMD in a longitudinal patient group.

To date, alterations in the retinal vasculature of patients with MMD, detectable through digital subtraction angiography and MRA owing to ethmoidal collateralization to the frontal lobe, have been neglected. The association between MMD and ocular diseases is attributed to vascular abnormalities.1,11,29 Furthermore, MMD is a condition in which stenosis and occlusion of the ICA progress, and the primary site of involvement is near the ophthalmic artery; therefore, retinal vascular alterations are plausible.10,30–33 However, changes in the retinal vessels may not be significant in young individuals because of their ability to endure ischemic stress,14 and early signs of retinal vessel alterations due to MMD may be missed.14 Nevertheless, hemodynamic stress that accumulates in the retina before the onset of conditions such as central retinal artery occlusion or central retinal vein occlusion strains the retinal vascular structures, leading to microdamage.34 Tortuosity of the retinal arteries and posterior segment neovascularization with venous dilatation in children indicate significant hemodynamic stress on the retina.35 Chronically progressing fibrous intimal thickening of arteries that compress veins increases the risk of thrombogenesis and contributes to the development of retinal vein occlusion.36 Measuring these changes in the retinal vessels may therefore have high diagnostic value for confirming the progression of MMD.2

MRA has been widely used in the diagnosis and staging of MMD.2 However, the quality of MRA images can be influenced by various factors, including the expertise of the technician or radiologist and the equipment and software used.37 Because MRA directly visualizes the cerebral blood vessels, a high degree of accuracy would be expected. Nevertheless, the reported sensitivity of MRA, MRI, MRA plus MRI, and high-resolution MRI for diagnosing MMD ranges from 73% to 92%, with a specificity of 100%.28,38,39 These limitations pose a significant challenge when MRA is used as a routine follow-up tool. Based on the results of this study, retinal photography may overcome some of these disadvantages of MRA, because image acquisition is standardized and leaves minimal room for operator-dependent variation.

Despite the small data set, the screening model for MMD using retinal photographs achieved an AUC of 94.6% and a sensitivity of 89.8%, which were higher than those of a previously reported MMD screening model using plain skull radiographs (AUC, 91%; sensitivity, 80.7%).40 Studies of deep learning–based stage prediction for MMD are currently lacking, possibly owing to the rarity of MMD and the difficulty of diagnosing its early stages (stages 1 and 2) using MRA.1,41 This study nevertheless suggests that Suzuki stages can be predicted, although the models for the early stages, which had fewer images (25 for stage 1 and 34 for stage 2), performed relatively poorly compared with those for stage 3 (118 images) and stage 4 (60 images). In MMD, although the stage may not clearly reflect disease severity based on the status of the cerebral vasculature alone, changes in retinal blood flow may be estimated as the disease advances. To confirm this, further research with prospective, large-scale data is required.

In the present study, we used GradCAM to generate heatmaps of the regions that the model deemed essential for predicting the occurrence and stage of MMD. Deep learning models are often characterized as black boxes because of their complex internal logic, which may hamper real-world application.42 Because MMD involves ICA occlusion and ICA stenosis affects the retinal veins, it is important for the models to highlight the main vascular region of the images.43 GradCAM heatmaps of retinal images from patients with MMD focused on the main vasculature, whereas the heatmap of a healthy participant highlighted nonvascular areas. The deep learning method was effective in providing evidence for the presence of retinal vascular occlusion in patients with MMD.30 We also assessed the interpretability of the screening and stage prediction models for MMD, adopting a progressive erasing plus progressive restoration algorithm to validate the faithfulness of the attention map by progressively erasing the least important image regions and measuring model performance on the remaining important areas. Our stage prediction models for MMD highlighted the main vasculature and achieved AUC values of ≥70% even when only the most important 50% of the image was retained. Thus, our models demonstrated potential for screening and stage progression prediction in MMD.

Measuring MMD stage progression with retinal photographs offers several advantages. First, retinal examination with a fundus camera is exceptionally safe relative to other diagnostic methods. The eye is the only organ in the human body in which blood vessels are directly visible and can hence be examined with the naked eye,44 so there is no need for contrast agents or radiation exposure. With the growing use of nonmydriatic fundus cameras, pupil dilation is no longer required, and retinal photographs can be taken without delay. Second, retinal imaging is easily accessible. Compared with MRI and MRA, retinal imaging devices have low installation costs, require a small workforce for measurement, and occupy little space. The recent distribution of these devices to primary eye care facilities and district hospitals has substantially expanded patient accessibility compared with other currently available MMD diagnostic technologies. Moreover, portable fundus cameras facilitate measurement in individuals with limited mobility, including disabled individuals and children.45 Third, the cost is reasonable. Although the cost of a retinal examination varies from country to country, it is low relative to that of MRI or MRA in all health care systems. Finally, fundus imaging is one of the most standardized examinations, and its interpretation is less challenging than that of MRA. The rapid evolution of deep learning in ophthalmology and its ability to detect minute changes in the retinal vasculature suggest that the diagnostic value of retinal images in MMD will increase further.46,47 Together, these benefits make the examination widely available to patients, allowing for early detection and prompt intervention.

This study has several limitations. First, the sample size was relatively small, although the patients were age-matched in an attempt to increase the statistical power. Additionally, owing to the rarity of MMD, the number of patients and images for each stage was insufficient. Although the number of patients was small compared with that of other medical studies employing deep learning algorithms, such as those on diabetic retinopathy, to the best of our knowledge, this study has the largest number of participants among studies conducted on MMD. Second, the algorithm was validated at a single institution; additional external validation in multiethnic and multicenter cohorts is required to confirm the robustness of the MMD deep learning algorithm. Third, owing to the retrospective design, our data set lacked fundus photographs of children with MMD. Retinal fundus photography is not commonly performed in children, and therefore, fundus images for the early Suzuki stages were lacking. Prospective data collection is needed to determine the performance of the model in subgroups based on the 2 age peaks of MMD. Finally, in patients with other vascular comorbidities, such as atherosclerosis, this algorithm may give confounding results. Additional research is required to ascertain the capacity of the algorithm to distinguish between disorders that induce comparable vascular alterations.

CONCLUSIONS

We developed a deep learning algorithm to classify MMD stages using retinal photographs. To our knowledge, this study is the first to confirm the feasibility of retinal examination for this purpose; its performance was comparable to that reported for MRA, and it offers advantages in safety, accessibility, and ease of use. Further research on external validation and multistage classification is needed; nevertheless, this study demonstrated the substantial potential of retinal photographs as a novel diagnostic tool for detecting MMD progression.

ARTICLE INFORMATION

Acknowledgments

The authors would like to thank the Medical Informatics Collaborative Unit members of the Yonsei University College of Medicine for their assistance in data analysis. J. Hong: methodology, data curation, visualization, and writing—original draft. Dr Yoon: validation and writing—review and editing. Dr Shim: conceptualization and supervision. Dr Park: conceptualization and project.

Sources of Funding

This study received financial support from the Ministry of Science and Information and Communication Technologies of Korea through the Medical AI Education and Overseas Expansion Support Project (S1124-22-1001) and a faculty research grant from the Yonsei University College of Medicine (6-2020-0217). Funding was used toward labor costs for data collection and analysis. The funding sources had no role in the design and conduct of the study.

Disclosures

None.

Supplemental Material

Tables S1–S2

Figures S1–S4


Nonstandard Abbreviations and Acronyms

- AUC: area under the receiver operating characteristic curve
- GradCAM: gradient-weighted class activation mapping
- ICA: internal carotid artery
- MMD: moyamoya disease
- MRA: magnetic resonance angiography
- MRI: magnetic resonance imaging

J. Hong and S. Yoon contributed equally.


Supplemental Material is available at https://www.ahajournals.org/doi/suppl/10.1161/STROKEAHA.123.044026.

Contributor Information

JaeSeong Hong, Email: jayhong7200@yuhs.ac.

Sangchul Yoon, Email: littleluke@yuhs.ac.

REFERENCES

- 1. Suzuki J, Takaku A. Cerebrovascular “moyamoya” disease. Arch Neurol. 1969;20:288–299. doi: 10.1001/archneur.1969.00480090076012

- 2. Kuroda S, Fujimura M, Takahashi J, Kataoka H, Ogasawara K, Iwama T, Tominaga T, Miyamoto S. Diagnostic criteria for moyamoya disease - 2021 revised version. Neurol Med Chir (Tokyo). 2022;62:2022–0072. doi: 10.2176/jns-nmc.2022-0072

- 3. Research Committee on the Pathology and Treatment of Spontaneous Occlusion of the Circle of Willis; Health Labour Sciences Research Grant for Research on Measures for Intractable Diseases. Guidelines for diagnosis and treatment of moyamoya disease (spontaneous occlusion of the circle of Willis). Neurol Med Chir (Tokyo). 2012;52:245–266. doi: 10.2176/nmc.52.245

- 4.Kuroda S, Houkin K. Moyamoya disease: current concepts and future perspectives. Lancet Neurol. 2008;7:1056–1066. doi: 10.1016/S1474-4422(08)70240-0 [DOI] [PubMed] [Google Scholar]

- 5.Kurokawa T, Chen Y, Tomita S, Kishikawa T, Kitamura K. Cerebrovascular occlusive disease with and without the moyamoya vascular network in children. Neuropediatrics. 1985;16:29–32. doi: 10.1055/s-2008-1052540 [DOI] [PubMed] [Google Scholar]

- 6.Kuroda S, Ishikawa T, Houkin K, Nanba R, Hokari M, Iwasaki Y. Incidence and clinical features of disease progression in adult moyamoya disease. Stroke. 2005;36:2148–2153. doi: 10.1161/01.STR.0000182256.32489.99 [DOI] [PubMed] [Google Scholar]

- 7.Ha EJ, Kim KH, Wang KC, Phi JH, Lee JY, Choi JW, Cho B, Y J, Byun YH, Kim S. Long-term outcomes of indirect bypass for 629 children with moyamoya disease. Stroke. 2019;50:3177–3183. doi: 10.1161/STROKEAHA.119.025609 [DOI] [PubMed] [Google Scholar]

8. Li J, Jin M, Sun X, Li J, Liu Y, Xi Y, Wang Q, Zhao W, Huang Y. Imaging of moyamoya disease and moyamoya syndrome. J Comput Assist Tomogr. 2019;43:257–263. doi: 10.1097/RCT.0000000000000834

9. Lee AG, Al Othman B, Prospero Ponce C, Kini A. Ocular manifestations of moyamoya disease. American Academy of Ophthalmology. Accessed June 30, 2023. https://eyewiki.aao.org/Ocular_Manifestations_of_Moyamoya_Disease

10. Chace R, Hedges TR. Retinal artery occlusion due to moyamoya disease. J Clin Neuroophthalmol. 1984;4:31–34.

11. Barrall JL, Summers CG. Ocular ischemic syndrome in a child with moyamoya disease and neurofibromatosis. Surv Ophthalmol. 1996;40:500–504. doi: 10.1016/s0039-6257(96)82016-9

12. Chen CS, Lee AW, Kelman S, Wityk R. Anterior ischemic optic neuropathy in moyamoya disease: a first case report. Eur J Neurol. 2007;14:823–825. doi: 10.1111/j.1468-1331.2007.01819.x

13. Brodsky MC, Parsa CF. The moyamoya optic disc. JAMA Ophthalmol. 2015;133:164. doi: 10.1001/jamaophthalmol.2014.602

14. Seong HJ, Lee JH, Heo JH, Kim DS, Kim YB, Lee CS. Clinical significance of retinal vascular occlusion in moyamoya disease. Retina. 2021;41:1791–1798. doi: 10.1097/IAE.0000000000003181

15. Taşkintuna I, Öz O, Teke MY, Koçak H, Firat E. Morning glory syndrome. Retina. 2003;23:400–402. doi: 10.1097/00006982-200306000-00018

16. The World Medical Association. Declaration of Helsinki - ethical principles for medical research involving human subjects. Accessed June 30, 2023. https://www.wma.net/wp-content/uploads/2018/07/DoH-Oct2008.pdf

17. von Elm E, Altman DG, Egger M, Pocock SJ, Gøtzsche PC, Vandenbroucke JP; STROBE Initiative. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. Lancet. 2007;370:1453–1457. doi: 10.1016/S0140-6736(07)61602-X

18. Rim TH, Lee G, Kim Y, Tham Y, Lee CJ, Baik SJ, Kim YA, Yu M, Deshmukh M, Lee BK, et al. Prediction of systemic biomarkers from retinal photographs: development and validation of deep-learning algorithms. Lancet Digit Health. 2020;2:e526–e536. doi: 10.1016/S2589-7500(20)30216-8

19. Naseer M, Prabakaran BS, Hasan O, Shafique M. UnbiasedNets: a dataset diversification framework for robustness bias alleviation in neural networks. Mach Learn. 2023. doi: 10.1007/s10994-023-06314-z

20. Xie S, Girshick R, Dollar P, Tu Z, He K. Aggregated residual transformations for deep neural networks. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE; 2017:5987–5995. doi: 10.1109/CVPR.2017.634

21. Yosinski J, Clune J, Bengio Y, Lipson H. How transferable are features in deep neural networks? Advances in Neural Information Processing Systems 27. 2014.

22. Al-Kababji A, Bensaali F, Dakua SP. Scheduling techniques for liver segmentation: ReduceLRonPlateau vs OneCycleLR. 2022:204–212. doi: 10.1007/978-3-031-08277-1_17

23. Hanley JA, McNeil BJ. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology. 1982;143:29–36. doi: 10.1148/radiology.143.1.7063747

24. Schisterman EF, Perkins NJ, Liu A, Bondell H. Optimal cut-point and its corresponding Youden index to discriminate individuals using pooled blood samples. Epidemiology. 2005;16:73–81. doi: 10.1097/01.ede.0000147512.81966.ba

25. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. In: 2017 IEEE International Conference on Computer Vision (ICCV). IEEE; 2017:618–626. doi: 10.1109/ICCV.2017.74

26. Engelmann J, Storkey A, Bernabeu MO. Global explainability in aligned image modalities. 2021. arXiv preprint; arXiv:2112.09591.

27. Gonzalez NR, Dusick JR, Connolly M, Bounni F, Martin N, Wiele B, Liebeskind D, Saver J. Encephaloduroarteriosynangiosis for adult intracranial arterial steno-occlusive disease: long-term single-center experience with 107 operations. J Neurosurg. 2015;123:654–661. doi: 10.3171/2014.10.JNS141426

28. Yamada I, Suzuki S, Matsushima Y. Moyamoya disease: comparison of assessment with MR angiography and MR imaging versus conventional angiography. Radiology. 1995;196:211–218. doi: 10.1148/radiology.196.1.7784569

29. Kim SK, Seol HJ, Cho BK, Hwang YS, Lee DS, Wang KC. Moyamoya disease among young patients: its aggressive clinical course and the role of active surgical treatment. Neurosurgery. 2004;54:840–844; discussion 844. doi: 10.1227/01.neu.0000114140.41509.14

30. Wang YY, Zhou KY, Ye Y, Song F, Yu J, Chen J, Yao K. Moyamoya disease associated with morning glory disc anomaly and other ophthalmic findings: a mini-review. Front Neurol. 2020;11:338. doi: 10.3389/fneur.2020.00338

31. Wagner SK, Fu DJ, Faes L, Liu X, Huemer J, Khalid H, Ferraz D, Korot E, Kelly C, Balaskas K, et al. Insights into systemic disease through retinal imaging-based oculomics. Transl Vis Sci Technol. 2020;9:6. doi: 10.1167/tvst.9.2.6

32. Ho H, Cheung CY, Sabanayagam C, Yip W, Ikram MK, Ong PG, Mitchell P, Chow KY, Cheng CY, Tai ES, et al. Retinopathy signs improved prediction and reclassification of cardiovascular disease risk in diabetes: a prospective cohort study. Sci Rep. 2017;7:41492. doi: 10.1038/srep41492

33. Cheung CYL, Tay WT, Ikram MK, Ong YT, De Silva DA, Chow KY, Wong TY. Retinal microvascular changes and risk of stroke. Stroke. 2013;44:2402–2408. doi: 10.1161/STROKEAHA.113.001738

34. Ashok Kumar M, Amirtha Ganesh B. CRAO in moyamoya disease. J Clin Diagn Res. 2013;7:545–547. doi: 10.7860/JCDR/2013/4579.2819

35. Allen MT, Matthews KA. Hemodynamic responses to laboratory stressors in children and adolescents: the influences of age, race, and gender. Psychophysiology. 1997;34:329–339. doi: 10.1111/j.1469-8986.1997.tb02403.x

36. Ahn J, Hwang DDJ. Peripapillary retinal nerve fiber layer thickness in patients with unilateral retinal vein occlusion. Sci Rep. 2021;11:18115. doi: 10.1038/s41598-021-97693-7

37. Magalhães AC, Polachini Júnior I. Clinical applications of magnetic resonance angiography. Rev Hosp Clin Fac Med Sao Paulo. 1995;50:212–221.

38. Ryoo S, Cha J, Kim SJ, Choi JW, Kim KH, Jeon P, Kim JS, Hong SC, Bang OY. High-resolution magnetic resonance wall imaging findings of moyamoya disease. Stroke. 2014;45:2457–2460. doi: 10.1161/STROKEAHA.114.004761

39. Hasuo K, Mihara F, Matsushima T. MRI and MR angiography in moyamoya disease. J Magn Reson Imaging. 1998;8:762–766. doi: 10.1002/jmri.1880080403

40. Kim T, Heo J, Jang DK, Sunwoo L, Kim J, Lee KJ, Kang S, Park SJ, Kwon O, Oh CW. Machine learning for detecting moyamoya disease in plain skull radiography using a convolutional neural network. EBioMedicine. 2019;40:636–642. doi: 10.1016/j.ebiom.2018.12.043

41. Houkin K, Aoki T, Takahashi A, Abe H. Diagnosis of moyamoya disease with magnetic resonance angiography. Stroke. 1994;25:2159–2164. doi: 10.1161/01.str.25.11.2159

42. London AJ. Artificial intelligence and black-box medical decisions: accuracy versus explainability. Hastings Cent Rep. 2019;49:15–21. doi: 10.1002/hast.973

43. Wu DH, Wu LT, Wang YL, Wang JL. Changes of retinal structure and function in patients with internal carotid artery stenosis. BMC Ophthalmol. 2022;22:123. doi: 10.1186/s12886-022-02345-7

44. Meienberg O. [The eye as a reference organ for diseases of the nervous system. Simple examination methods for office practice and at the bedside]. Schweiz Rundsch Med Prax. 1990;79:1161–1165.

45. Iqbal U. Smartphone fundus photography: a narrative review. Int J Retina Vitreous. 2021;7:44. doi: 10.1186/s40942-021-00313-9

46. Cheung CY, Ran AR, Wang S, Chan VTT, Sham K, Hilal S, Venketasubramanian N, Cheng C, Sabanayagam C, Tham YC, et al. A deep learning model for detection of Alzheimer’s disease based on retinal photographs: a retrospective, multicentre case-control study. Lancet Digit Health. 2022;4:e806–e815. doi: 10.1016/S2589-7500(22)00169-8

47. De Fauw J, Ledsam JR, Romera-Paredes B, Nikolov S, Tomasev N, Blackwell S, Askham H, Glorot X, O’Donoghue B, Visentin D, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat Med. 2018;24:1342–1350. doi: 10.1038/s41591-018-0107-6

Data Availability Statement

The study code was written in Python (v3.6.8) and is available on GitHub at https://github.com/DigitalHealthcareLab/23moyamoya.