Abstract

Decades of research have estimated the effect of entering a community college on bachelor’s degree attainment. In this study, we examined the influence of methodological choices, including sample restrictions and identification strategies, on estimated effects from studies published between 1970 and 2017. After systematically reviewing the literature, we leveraged meta-analysis to assess average estimates and examine the role of moderators. In our preferred model, entering a community college was associated with a 23-percentage-point decrease in the probability of baccalaureate attainment, on average, compared with entering a four-year college. The size of effects appeared to grow over the past three decades, though this coincides with substantial shifts in the college-going population. Methodological choices, particularly how researchers define the treatment group, explain some variation in estimates across studies. We conclude with a discussion of the implications for future inquiry and for policy.

Keywords: community colleges, higher education, diversionary effect, community college transfer, bachelor’s degree attainment, meta-analysis

Community colleges offer an entry point to postsecondary education for many individuals in the United States who might otherwise forgo college. The aspirations of community college entrants are high, but fewer than a third transfer; even fewer attain a four-year credential (Shapiro et al. 2017). Peer-reviewed studies tend to find negative effects of community college entrance on baccalaureate attainment (e.g., Goodman, Hurwitz, and Smith 2015; Long and Kurlaender 2009; Rouse 1995).1 However, the presence of some null and positive effects contributes to continued debate (Denning 2017; Melguizo and Dowd 2009; Melguizo, Kienzl, and Alfonso 2011).

To estimate the effect of community college entrance on baccalaureate attainment, it is necessary to disentangle that effect from the systematic differences between students who start at community colleges and those who start at four-year colleges. Methodological choices seem to play a role in the magnitude, and even the direction, of estimated effects (Kalogrides 2008). In this article, we review evidence regarding the effect of attending community college on bachelor’s degree completion. After conducting a systematic review of the literature, we use meta-analysis to examine the following research questions:

What is the average effect of entering a community college, compared with a baccalaureate-granting college, on bachelor’s degree attainment across 50 years of research, controlling for a host of methodological choices?

Which methodological choices and study features, such as sample restrictions, identification strategy, covariates, and data characteristics, predict observed effects?

This study makes several contributions. Although several reviews examine scholarship on community colleges, most provide narrative summaries of the growing literature (e.g., Belfield and Bailey 2011; Goldrick-Rab 2010; Romano 2011; Schudde and Goldrick-Rab 2015). To our knowledge, this is the first meta-analysis of the effect of community college attendance on baccalaureate attainment and thus the first to draw conclusions about the size of effects based on decades of research. By highlighting the role that research design and identification strategy play in shaping results, our study will inform future research on this topic. The results also have implications for ongoing policy discussions, including interventions to improve transfer across postsecondary sectors and the growing “free community college” movement. We elaborate on the policy implications in our discussion.

COMMUNITY COLLEGES AS AN ENTRYWAY INTO POSTSECONDARY EDUCATION

Several key theories behind the community college effects literature stem from sociology. Clark’s (1960a, 1960b) cooling-out hypothesis offers a functional argument—but limited empirical evidence—for the systematic letdown of community college students’ educational expectations. Brint and Karabel’s (1989) The Diverted Dream ascribes a more pernicious nature to community college’s role. They describe community colleges as diversionary institutions, channeling students into vocational programs and lower postsecondary tracks and away from baccalaureate programs. Brint and Karabel argue that many community college students are capable of succeeding at a four-year college, and community colleges preserve the social hierarchy and maintain the selectivity of elite institutions. More recent scholarship acknowledges the structural obstacles at community colleges that thwart student pathways, rather than attributing the failure to a purposeful letdown by institutional actors (Rosenbaum, Deil-Amen, and Person 2007; Scott-Clayton 2011).

The community college pathway to a baccalaureate appeals to policy makers and students as a low-cost alternative to the first two years at a four-year college. In practice, however, students who intend to transfer face challenges at several stages: during the early period of college, when some students fall behind academically; during the process of transferring from a two-year to a four-year college; and during the period after transitioning to a new institution (Monaghan and Attewell 2015). The different phases of transfer are intricately connected. For instance, credit loss is a particularly salient problem facing transfer students (Monaghan and Attewell 2015). Although students may not become cognizant of credit loss until after moving to a baccalaureate-granting institution, the problem begins with the accumulation of unnecessary course work early in college (Bailey et al. 2016; Jenkins and Fink 2016). Most community college entrants do not complete the transfer process, and most leave college empty-handed (Shapiro et al. 2017).

Isolating the Effects

Identifying the effects of enrolling initially at a community college is challenging because community college students differ systematically from four-year college entrants across a variety of factors. On average, individuals who start at a community college are more likely to identify as non-white, come from households with lower socioeconomic status (SES) and wealth, and enter college less academically prepared (Monaghan and Attewell 2015; Xu, Jaggars, and Fletcher 2016). These differences pose a serious challenge to analysts interested in estimating the effects of community college attendance. In the following sections, we describe potential implications of methodological choices made throughout the literature, including the definition of treatment and comparison groups and the selection of identification strategies.

Defining treatment and control groups and related sample restrictions.

In a counterfactual approach to answering causal questions with observational data, social scientists anticipate that an individual can be exposed to only one of two alternative conditions: the condition of interest, referred to as the treatment, or its alternative, referred to as the control (Morgan and Winship 2007). Defining treatment and control groups and modeling selection into treatment or control are crucial to estimating treatment effects. Throughout the literature, decisions regarding the definition of treatment and control inform sample selection strategies.

Which community college students are susceptible to ‘diversion’?

There is a fundamental disagreement in the literature about which community college students to include in the treatment group. Many scholars claim that only community college students with clear aspirations toward a baccalaureate should be included in analyses, because including students with no intention of transferring would overestimate the attainment gap between two- and four-year college entrants (Alfonso 2006; Kalogrides 2008; Melguizo and Dowd 2009). Across the literature, several studies follow this logic, restricting the treatment group to baccalaureate aspirants based on stated educational intentions (Alba and Lavin 1981; Breneman and Nelson 1981; Christie and Hutcheson 2003; Long and Kurlaender 2009; Monaghan and Attewell 2015). However, given the potential for students with varied expectations to transfer, it is problematic to condition rates of degree attainment on aspirations/expectations (Goldrick-Rab 2010). An alternative approach among studies using covariate adjustment is to include educational aspirations as a covariate in the model (Alfonso 2006; Brand, Pfeffer, and Goldrick-Rab 2014; Reynolds 2012; Velez 1985).

Other researchers take the debate even further, arguing that because baccalaureates are conferred at the university level, only students who make it to a four-year university truly have the opportunity to attain a bachelor’s degree. Kalogrides (2008:24) reasons that limiting the treatment group to those who successfully transfer to a baccalaureate-granting institution avoids the challenge of identifying which community college students “really aspire to transfer” (emphasis added), ensuring a “fair comparison.” Using this logic, several authors restrict their community college sample to students who already transferred (Dietrich and Lichtenberger 2015; Glass and Harrington 2002; Lee, Mackie-Lewis, and Marks 1993; Melguizo 2009; Melguizo et al. 2011; Melguizo and Dowd 2009; Nutting 2011).

Because barriers to student success arise at various phases in the community college experience, limiting the treatment group to students who transferred may not fully capture the diversionary effects of community college entrance. Researchers focused on community college students who transferred to a university tend to find null or positive effects on baccalaureate attainment, with community college students’ attainment matching or exceeding that of “native” students (those who started at a four-year college). Studies that include community college entrants without restricting on transfer tend to find negative effects. Sample restrictions based on vertical transfer minimize researchers’ ability to detect diversion by focusing narrowly on students who “survived” the first two phases of transfer, a form of survivorship bias. Some researchers acknowledge this limitation, noting that they test the diversion effect on a “very selective sample of community college students” and that results are generalizable only to “successful transfers” (Melguizo and Dowd 2009:58).

Who is the appropriate comparison group?

Researchers also face the difficult task of determining which students compose the comparison group. Most existing research treats initial enrollment at a baccalaureate-granting institution as the counterfactual to entering a community college. Depending on the definition of the treatment group, similar restrictions should be placed on the control group to ensure an appropriate comparison.

For the most part, peer-reviewed publications using transfers as the treatment group take steps to produce an equivalent group of four-year college entrants. For example, Melguizo (2009) and Melguizo and Dowd (2009) compare community college transfer students to rising juniors at a four-year college (i.e., students who completed their first two years of course work), although the requirement for accumulated credits was not equivalent across the two groups. Other studies require certain credit thresholds for both groups (Monaghan and Attewell 2015; Xu et al. 2016). However, some samples in the literature allow “cohort mismatch,” in which the two groups do not align in terms of credits completed or academic status (e.g., comparing community college students who transferred as juniors with a cohort of university entrants without capturing college attrition). Cohort mismatch appears to advantage the treatment group (Arnold 2001; Galloway 2000; Johnson 2014; Morris 2005; Nurkowski 1995; Richardson and Doucette 1980). Failing to account for early college attrition in the treatment group inflates the estimate of degree attainment compared with the control group.

In studies that use the full sample of community college students as the treatment group, researchers are tasked with deciding whether the appropriate comparison group should include all four-year college entrants in a given population. Anticipating that community college students are more comparable to students who enter broad-access universities, some scholars restrict their control group to students attending nonselective four-year colleges (Long and Kurlaender 2009; Monaghan and Attewell 2015).

Recent research explores the implications of various counterfactuals. Brand and colleagues (2014) decompose the control condition—students who did not initially enroll in a community college—into multiple subgroups that represent educational alternatives to community college entrance within one year of high school graduation: no postsecondary schooling and entering a nonselective, selective, or highly selective four-year college. They found variation in the community college effect based on the control group: the diversionary effect was substantially larger when the control group included students attending very selective four-year colleges rather than only students at nonselective institutions. They also found no evidence of a negative effect of community college attendance when comparing community college entrants with students who did not immediately enroll in college within a year of graduating high school. Brand and colleagues’ (2014) study is the only one, to our knowledge, to stratify the control group and explicitly discuss the implications of selecting different counterfactual conditions for the estimated effects.

Identification Strategies and Covariate Selection

Scholars interested in the effects of initially enrolling at a community college on baccalaureate attainment have used a variety of identification strategies (i.e., statistical approaches for isolating the relationship between the two variables). The simplest studies use unadjusted mean comparisons of attainment rates among community college and university entrants. This approach dominates the literature, particularly among unpublished studies. Other studies address selection into college sector methodologically, attempting to adjust for precollege differences among students who pursue different postsecondary pathways.

The most common approach is to use statistical adjustments based on observable measures (covariate adjustment through regression and propensity score matching). In discussing covariate adjustment to attenuate selection effects, Anderson (1984) argues that one must control for both educational expectations and postsecondary enrollment patterns. More than three decades later, capturing educational expectations (either as a covariate or through sample restrictions) appears common across the literature. However, most researchers fail to control for such enrollment patterns as stopping out (gaps in enrollment), enrollment intensity (full- vs. part-time), and delayed enrollment (taking time off between secondary and postsecondary schooling) (notable exceptions include Doyle 2009; Long and Kurlaender 2009; Stephan, Rosenbaum, and Person 2009; Wang 2015; Xu et al. 2016). Most research also appears indifferent to time, failing to consider it in research design or statistical models, despite the fact that degree attainment is an “inherently time-dependent process” and institutional transfer likely lengthens time to degree (Doyle 2009:200).2 Most research using multivariate regression shows unambiguously negative associations between community college attendance and baccalaureate completion (Alfonso 2006; Anderson 1984; Rouse 1995; Sandy, Gonzalez, and Hilmer 2006; Smith and Stange 2016).

Propensity score matching (PSM) offers a modest improvement in identification strategy compared with regression with statistical controls. The approach models selection into treatment, estimating the relationship between observable attributes and treatment status, in order to match individuals who end up in different conditions despite similar overall probabilities of entering treatment. Unlike regression, PSM excludes observations from the analytic sample that differ substantially from the average attributes of students in the opposite condition, referred to as restricting the sample to the common support. Several studies use PSM to isolate the effects of community college (Brand et al. 2014; Doyle 2009; Long and Kurlaender 2009; Monaghan and Attewell 2015; Reynolds 2012; Wang 2015; Xu et al. 2016). Estimates range from a 17-percentage-point decrease in the predicted probability of earning a degree when comparing baccalaureate-aspiring community college entrants with nonselective, public, four-year college entrants (Monaghan and Attewell 2015) to a 36-percentage-point decrease among community college students in science, technology, engineering, and mathematics pathways compared to students at four-year colleges (Wang 2015).
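To make the idea of common support concrete, the following minimal Python sketch applies one widely used rule: keep only observations whose estimated propensity score falls inside the range where the two groups' scores overlap. The min-max rule, the function name `common_support`, and the scores are illustrative assumptions; the studies reviewed here may define common support differently.

```python
def common_support(scores_treat, scores_ctrl):
    """Trim both groups to the overlap of their propensity score ranges.

    Assumes the min-max overlap rule; other trimming rules exist.
    """
    low = max(min(scores_treat), min(scores_ctrl))
    high = min(max(scores_treat), max(scores_ctrl))
    keep_treat = [s for s in scores_treat if low <= s <= high]
    keep_ctrl = [s for s in scores_ctrl if low <= s <= high]
    return (low, high), keep_treat, keep_ctrl


# Hypothetical propensity scores (probability of entering a community college)
treat_scores = [0.35, 0.60, 0.80, 0.95]
ctrl_scores = [0.05, 0.30, 0.55, 0.85]

bounds, kept_treat, kept_ctrl = common_support(treat_scores, ctrl_scores)
print(bounds, kept_treat, kept_ctrl)
```

In this toy example, the treated observation with a score of 0.95 and the comparison observations with scores of 0.05 and 0.30 fall outside the overlap and would be dropped before matching.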

Rather than perform covariate adjustment, a smaller group of studies relies on exogenously induced variation in treatment status. An instrumental variable (IV) approach exploits variation in the treatment caused by factors otherwise unrelated to the outcome. IV estimates apply only to those whose selection into treatment is influenced by the value of the instrument. Miller (2007), Long and Kurlaender (2009), and Rouse (1995) all use distance to college as an instrument to examine the effects of beginning at a community college. Rouse (1995:222–23) describes the potential endogeneity of her instruments and the need to include statistical controls that may be correlated with unobservable measures in the final model.

Like IV estimators, a regression discontinuity (RD) estimator provides an estimate of local average treatment effects, focusing on observations that fall on either side of an assignment threshold that determines treatment condition. One study in our sample uses this identification strategy. Goodman and colleagues (2015) leverage admissions cutoffs imposed by the Georgia State University System based on SAT score. They show that among students with modest academic abilities as measured by the SAT, those who enrolled in a community college instead of a public university in Georgia were 41 percentage points less likely to earn a bachelor’s degree, on average. This estimate was larger in magnitude than the one they obtained using regression (26 percentage points).

METHOD

A close review of the literature illustrates varied patterns in effects across sampling and identification strategies. In our meta-analyses, we rely on two primary samples of studies to further evaluate these patterns: all relevant studies in the literature and a smaller sample of studies that meet stricter inclusion criteria. First, we examine the patterns of effects across methodological choices using descriptive statistics and ordinary least squares (OLS) regression. To estimate the average effect of initial enrollment in a community college and examine the role of various study characteristics as moderators (our research questions), we use meta-analysis with cluster-robust variance estimation.

Search Strategy, Inclusion Criteria, and Coding

To identify the sample of studies, we searched relevant databases, including Sociological Abstracts, Education Source, the Education Resources Information Center (ERIC), PsycINFO, ProQuest Dissertations and Theses, PAIS, and SAGE Premiere, as well as the academic search engine Google Scholar. Our searches included the following terms and phrases in various combinations: community college (or community college effect), baccalaureate attainment (or bachelor’s degree or college completion), and native.

The search terms resulted in varying hits across each database. For instance, our search of community college effect and baccalaureate attainment returned 743 reports from Sociological Abstracts, 423 from Education Source, 1,063 from ERIC, 63 from PsycINFO, 990 from ProQuest Dissertations and Theses, 367 from PAIS, 617 from SAGE Premiere, and 464 from Google Scholar. Many searches had overlapping results. For each list, we winnowed the results based on study title, eliminating papers that were unrelated to community college and baccalaureate attainment. For those with relevant titles, we reviewed the abstract to confirm a focus on baccalaureate attainment among community college students. Our search results yielded more than 20,000 items (including many redundancies), which we narrowed to 245 possible studies through our title and abstract review.

Inclusion criteria for full analytic sample.

We performed a closer examination of each paper to ensure it met the following inclusion criteria: it focused on institutions within the United States, included a comparison group, used baccalaureate attainment as an outcome, and was conducted after 1965, when the Higher Education Act was enacted and postsecondary education began to resemble the current structure. These criteria resulted in 50 studies using 60 unique data sources (we counted data sources as distinct if they did not include overlapping students) for a total of 140 effect sizes in our full sample.

Coding.

We each independently coded all studies that met the inclusion criteria, capturing the following characteristics: (a) year the study was conducted; (b) peer-reviewed publication status; (c) use of sample restrictions; (d) use of a non-probability-sampling method; (e) cohort alignment (i.e., did the treatment and control groups have the same approximate year of entry, academic standing, or credits accumulated?); (f) data source; (g) identification strategy; (h) year of college entry; (i) follow-up length; (j) sample size of treatment, control, and overall; and (k) additional information to calculate the effect size. We agreed approximately 93 percent of the time and resolved differences through discussion (see Table S1 in the online appendix for a complete list of studies that includes analytic variables). Studies included in the meta-analysis but not cited in the article are listed in the appendix’s Additional References section.

Inclusion criteria for best-evidence sample.

We restricted the full sample to studies that meet higher standards for inclusion to create our “best-evidence” sample. We eliminated studies that use only mean comparison because the literature shows systematic differences between students who initially enter community colleges versus four-year institutions. After assessing our systematic review, we also eliminated studies that restrict the community college subsample to students who transferred, because such studies cannot capture diversionary effects from the various phases of the transfer process (this also removed some effect sizes from Monaghan and Attewell 2015 and Xu et al. 2016). Finally, we eliminated one study in which the sampling frame is not transparent (other studies that use convenience sampling were previously eliminated due to other restrictions). Our best-evidence sample includes 21 studies, 17 different data sources, and 36 effect sizes (see Table S1 in the online appendix).

Open-source data.

To improve the reproducibility of this meta-analysis and make it feasible for others to build on this work, we provide our full data sets—the data collected and coded from the literature—and R code for our meta-analyses online (Schudde and Brown, 2019), as recommended by Lakens, Hilgard, and Staaks (2016).

Effect Size Measure

For all analyses, we use risk difference as the effect size. In this case, it represents the difference in the rate of bachelor’s degree attainment between community college entrants and four-year college entrants. The risk difference is a useful and widely used measure of effect size in studies where the response variable is dichotomous (Borenstein et al. 2009b). It is quite intuitive and aligns with the presentation of results in the literature: most recent studies present effects in terms of predicted probability (average marginal effects) rather than odds ratios.3
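As a brief illustration of the effect size measure, the Python sketch below computes a risk difference and its large-sample sampling variance from group counts, using the standard formula p_t(1 − p_t)/n_t + p_c(1 − p_c)/n_c. The function name `risk_difference` and the counts are hypothetical and are not drawn from any study in the review.

```python
from math import sqrt


def risk_difference(events_treat, n_treat, events_ctrl, n_ctrl):
    """Return the risk difference (treatment minus control) and its variance."""
    p_treat = events_treat / n_treat
    p_ctrl = events_ctrl / n_ctrl
    rd = p_treat - p_ctrl
    # Large-sample variance of a difference between two independent proportions
    var = p_treat * (1 - p_treat) / n_treat + p_ctrl * (1 - p_ctrl) / n_ctrl
    return rd, var


# Hypothetical counts: 150 of 500 community college entrants and
# 330 of 600 four-year entrants earn a bachelor's degree.
rd, var = risk_difference(150, 500, 330, 600)
print(f"risk difference = {rd:.3f}, standard error = {sqrt(var):.3f}")
```

A negative value indicates a lower rate of bachelor’s degree attainment among community college entrants than among four-year entrants.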

When studies report effects at multiple time points (only three studies do so), we capture the estimate with the longest follow-up. We capture multiple effect sizes in studies that use more than one identification strategy (e.g., mean comparison, regression, IV approach) or various sample restrictions. We collected outcomes from the following specifications: (a) mean comparison (no covariates); (b) the most complete model—or preferred model, as indicated in the paper—from the primary identification strategy beyond mean comparison; and (c) an additional preferred model if the researcher uses a second identification strategy.

Analytic Strategy

Traditional meta-analytic techniques are based on the assumption that effect size estimates are independent and have sampling distributions with known conditional variances (Cooper, Hedges, and Valentine 2009). Our data structure is unlikely to align with that assumption, given our desire to keep multiple effect sizes from the same author or data set to understand variation in estimates across methodological choices. Several studies in our sample use the same nationally representative data, estimating effect sizes from an overlapping set of students. Thus, we expect correlated dependence, which can occur when a single study provides multiple measures of effect size from the same sample (for an overview about dependence in effect sizes, see Hedges, Tipton, and Johnson 2010). In some cases, we include authors who use different, nonoverlapping samples of students from the same state. Given that the same authors performed the analyses, we expect correlation between the true effect parameters—hierarchical dependence. In other cases, single studies use multiple data sources (e.g., Sandy et al. 2006), making the data structure cross-classified.

Treating dependent effect sizes as independent can increase Type I error by reducing variance estimates, and it may give more weight to studies that have multiple effect sizes (Borenstein et al. 2009a; Scammacca, Roberts, and Stuebing 2014). To minimize bias induced by dependence in effect sizes, our analytic approach must account for correlated effect sizes. Our data structure does not perfectly align with an approach that deals with dependence through either a hierarchical or a correlated weighting scheme. Our primary goal is to address dependence in effect sizes without dropping or averaging effect size estimates, which would disregard variation in estimates across methods, a key interest in our inquiry. To accomplish this, we use cluster-robust variance estimation, a procedure for handling statistically dependent effect sizes in meta-analysis (Hedges et al. 2010). The strategy creates clusters of effects, allowing for dependence within clusters but maintaining independence, as far as possible, between clusters, and estimates robust standard errors for the effect. We used the rma.mv function in the metafor package for R for all analyses (Viechtbauer 2017). To calculate robust variance estimates, we also used the clubSandwich package (Pustejovsky 2017a).
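The logic of cluster-robust variance estimation can be sketched in a few lines of Python. The example below is a deliberately simplified stand-in, not the analysis reported here: it assumes an intercept-only model with inverse-variance weights and the basic (CR0) sandwich estimator without small-sample adjustment, whereas the analyses in this article use a multilevel random-effects model with adjusted estimators. The function name, effect sizes, variances, and cluster labels are all hypothetical.

```python
from collections import defaultdict
from math import sqrt


def weighted_mean_cr0(effects, variances, clusters):
    """Inverse-variance weighted mean with a CR0 cluster-robust standard error."""
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    mean = sum(w * t for w, t in zip(weights, effects)) / total_weight

    # Sum the weighted residuals within each cluster; squaring the cluster
    # totals (rather than individual residuals) allows arbitrary dependence
    # among effect sizes that share a cluster.
    cluster_scores = defaultdict(float)
    for w, t, c in zip(weights, effects, clusters):
        cluster_scores[c] += w * (t - mean)

    robust_var = sum(s ** 2 for s in cluster_scores.values()) / total_weight ** 2
    return mean, sqrt(robust_var)


# Hypothetical effect sizes: two come from the same author/data-source cluster "A"
effects = [-0.30, -0.20, -0.10, -0.25]
variances = [0.01, 0.01, 0.02, 0.02]
clusters = ["A", "A", "B", "C"]

pooled, robust_se = weighted_mean_cr0(effects, variances, clusters)
print(f"pooled risk difference = {pooled:.3f}, robust SE = {robust_se:.4f}")
```

With few clusters, the unadjusted CR0 estimator tends to understate uncertainty, which is one reason small-sample adjustments matter in practice.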

Modeling approach.

We seek to estimate the average risk difference in bachelor’s degree attainment between community college and four-year college entrants. We use the model

$$T_{ijk} = \mathbf{x}_{ijk}\boldsymbol{\beta} + \eta_j + \nu_i + \epsilon_{ijk},$$

where $T_{ijk}$ represents the effect size estimate for study $i$, data source $j$, and specification $k$; $\mathbf{x}_{ijk}$ is a vector of covariates; $\eta_j$ is a random effect at the data-source level; $\nu_i$ is a random effect at the study level; and $\epsilon_{ijk}$ is the sampling error in the effect size estimate.

To address the cross-classified structure of our data, we use a random-effects model that allows for variation in the true effects across data source and study while accounting for additional dependence across effect size estimates from the same study. Random effects capture variation at the data source and study levels, but they do not deal with dependence at the effect size level. We expect effect sizes obtained from the same data source, including different authors using the same data source and different model specifications from the same author using the same data source, will have correlated sampling errors. To allow for correlation among effect sizes drawn from the same author and data source, we impute covariance matrices for the sampling errors (as discussed in Borenstein et al. 2009c; Hedges 2007).

Pustejovsky (2017b) illustrates the procedure we use to construct these matrices. For the meta-analyses presented as our main results, we set the correlation for effect sizes drawn from the same author and data set at 0.8—as we assume a fairly high degree of correlation. We ran sensitivity analyses allowing for alternative assumptions to test the robustness of our results to a lower correlation between effect sizes (similar to Wilson and Tanner-Smith 2013).
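One way to picture the imputed covariance structure is a constant-correlation matrix: within an author/data-source cluster, the covariance between two sampling errors is set to r times the square root of the product of their variances, with r = 0.8 in the main analyses. The Python sketch below builds such a matrix for a single cluster. It is an illustration under that constant-correlation assumption, and the function name and variances are hypothetical.

```python
from math import sqrt


def impute_covariance(variances, r=0.8):
    """Build a within-cluster covariance matrix with constant correlation r."""
    n = len(variances)
    matrix = []
    for a in range(n):
        row = []
        for b in range(n):
            if a == b:
                # Diagonal: the sampling variance of each effect size
                row.append(variances[a])
            else:
                # Off-diagonal: assumed correlation times both standard errors
                row.append(r * sqrt(variances[a] * variances[b]))
        matrix.append(row)
    return matrix


# Hypothetical sampling variances for two effect sizes in one cluster
cov = impute_covariance([0.01, 0.04], r=0.8)
print(cov)
```

Rerunning the same construction with a smaller r corresponds to the kind of sensitivity analysis described above.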

To further address the dependence of effect sizes, we calculate robust variance estimates with small-sample adjustments on regression coefficients (Tipton 2015) and F statistics for categorical effect size moderators from an approximate Hotelling’s T-squared test when we use multiple dummy or indicator variables (Tipton and Pustejovsky 2015). This approach is not a panacea for the complexity of our data structure, but it avoids the pitfalls of ignoring either correlated or hierarchical dependence.

Analytic samples.

We found substantial variation in effect sizes within the full sample, mostly stratified by the methodological choice to restrict the treatment group to focus only on transfer students. When the sample of effect sizes is too heterogeneous, it can be difficult to provide reliable meta-regression estimates (Deeks, Higgins, and Altman 2011a). Thus, we ran meta-regressions on two subsamples of the full set of studies—a subsample that uses only community college transfer students and a subsample that uses all community college entrants—and the best-evidence sample. The subsample that includes all community college entrants in the treatment group contains 21 studies from 17 distinct data sources and yields 68 effect sizes.4 The posttransfer subsample contains 31 studies and 48 unique data sources with 72 effect sizes. Two studies (Monaghan and Attewell 2015; Xu et al. 2016) are included in both the all-entrants and posttransfer samples because they contain specifications that use different sample restrictions for different effect sizes. The covariates included in the final model vary across analytic sample; some measures are relevant only to some samples.

Centering analytic variables.

To produce useful and meaningful parameter estimates in multilevel models, researchers must choose between centering Level 1 predictors around the grand mean (CGM) and centering within cluster (CWC) (Enders and Tofighi 2007). We define clusters as effect sizes with the same author and data source. To determine our centering approach, we considered our research questions and examined whether there is variation in measures within clusters (see Table S2 in the online appendix). For predictors where 80 percent or more of the clusters display no variation, we use CGM, because most of the variation seems to be between clusters. For predictors with variation in over 20 percent of clusters, we use CWC (in the all-entrants sample: background characteristics, aspirations, and identification strategy measures; in the best-evidence sample: identification strategy measures). For identification strategy, we anticipate meaningful variation within and between clusters and include two versions of each identification strategy dummy variable, one using CWC and one with cluster-level values centered around the grand mean. These can be interpreted, respectively, as representing the average difference in effect size between an identification strategy and the reference strategy, as applied to the same author/data-source cluster, and the degree to which effect size is predicted by the typical identification strategy applied to a given author/data-source cluster. Within-cluster effects may be of greater interest because they isolate variation in identification strategy from other sources of variation across authors and data sources, much as fixed-effects regression controls for confounding factors that are constant at the cluster level.
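The two versions of a centered predictor can be illustrated with a short Python sketch. For a dummy variable, the within-cluster (CWC) version subtracts each cluster's mean from the raw value, and the between-cluster version subtracts the grand mean from the cluster mean. The sketch assumes the grand mean is taken over all effect sizes, which is one reasonable reading of the procedure; the function name and data are hypothetical.

```python
from collections import defaultdict


def center_predictor(values, clusters):
    """Return (within-cluster centered values, grand-mean centered cluster means)."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for x, c in zip(values, clusters):
        sums[c] += x
        counts[c] += 1
    cluster_means = {c: sums[c] / counts[c] for c in sums}
    # Assumption: grand mean computed over all effect sizes, not over clusters
    grand_mean = sum(values) / len(values)

    within = [x - cluster_means[c] for x, c in zip(values, clusters)]
    between = [cluster_means[c] - grand_mean for c in clusters]
    return within, between


# Hypothetical dummy: 1 if the effect size comes from propensity score matching
psm = [1, 0, 1, 1, 0]
cluster_ids = ["A", "A", "A", "B", "B"]

psm_within, psm_between = center_predictor(psm, cluster_ids)
print(psm_within)
print(psm_between)
```

Entering both versions in a meta-regression separates the contrast between strategies applied within the same author/data-source cluster from differences between clusters that typically use different strategies.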

RESULTS

General Characteristics and Patterns of Effects

Table 1 provides descriptive statistics for the samples of effect sizes. Slightly over half of the effect sizes restrict the community college subsample to students who transferred, 19 percent restrict to baccalaureate aspirants, and 18 percent use different cohorts for the treatment and control groups. Forty-three percent of effect sizes control for student background variables (e.g., SES, high school grade point average, gender), with fewer controlling for educational aspirations (38 percent) and enrollment patterns (16 percent).

Table 1.

Descriptive Characteristics of Full and Best-Evidence Samples.

| Characteristic | Full sample: count | Full sample: % | Best-evidence sample: count | Best-evidence sample: % |
|---|---|---|---|---|
| Peer-reviewed publication | 62 | 44 | 26 | 72 |
| Sample restrictions | | | | |
| Posttransfer | 84 | 52 | 0 | 0 |
| Different cohort definitions | 26 | 18 | 0 | 0 |
| Baccalaureate aspirants | 27 | 19 | 12 | 33 |
| Identification strategy | | | | |
| Mean comparison | 76 | 54 | 0 | 0 |
| Regression | 39 | 28 | 19 | 53 |
| Exploit exogenous variation | 9 | 6 | 7 | 19 |
| Propensity score matching | 18 | 13 | 10 | 28 |
| Statistical controls | | | | |
| Background characteristics | 60 | 42 | 36 | 100 |
| Aspirations | 33 | 23 | 21 | 58 |
| Enrollment pattern | 23 | 16 | 10 | 28 |
| Data characteristics | | | | |
| Year began collegeᵃ | 1990.8 (11.44) | | 1991.17 (10.94) | |
| Follow-up periodᵃ | 6.68 (1.91) | | 7.31 (2.04) | |
| Control-group graduation rateᵃ | .64 (0.17) | | .6 (0.14) | |
| Effect size | −.16 (0.18) | | −.23 (0.1) | |

Note: = number of data sources; = number of effect sizes.

ᵃ Continuous variable.

The risk difference varies widely, ranging from −0.56 to 0.34. Given our interest in three analytic samples (transfer students, all community college entrants, and best evidence), it is useful to consider the variation in the unadjusted average risk difference across those samples. For illustrative purposes, we first estimate the overall mean effect size and prediction intervals for each sample using an empty model that accounts for dependency. The unadjusted risk difference is −0.04 for the transfer student sample (95 percent confidence interval [CI] at data level [−0.28, 0.20], 95 percent CI at study level [−0.17, 0.10]), −0.31 for the all community college entrants sample (95 percent CI at data level [−0.32, −0.31], 95 percent CI at study level [−0.61, 0.02]), and −0.23 for the best-evidence sample (95 percent CI at data level [−0.36, −0.11], 95 percent CI at study level [−0.38, −0.08]) (see Model 1 in Tables 2, 3, and 4, respectively).
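To make the idea of an empty model that accounts for dependency concrete, the sketch below pools effect sizes with inverse-variance weights and computes a cluster-robust (sandwich) standard error for the mean. It is a simplified stand-in under stated assumptions, not the estimator used in this study: it uses fixed inverse-variance weights, applies no small-sample correction, and ignores the study-level and data-level variance components. The function name and the input numbers are hypothetical.

```python
from collections import defaultdict
from math import sqrt


def pooled_risk_difference(effects, variances, clusters):
    """Intercept-only weighted mean with a basic cluster-robust standard error.

    Assumption: weights are 1 / sampling variance. Residuals are summed within
    each cluster before squaring, so correlated effect sizes from the same
    cluster are not treated as independent pieces of evidence.
    """
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    mean = sum(w * t for w, t in zip(weights, effects)) / total_weight

    weighted_residual_sums = defaultdict(float)
    for w, t, c in zip(weights, effects, clusters):
        weighted_residual_sums[c] += w * (t - mean)

    robust_variance = sum(s ** 2 for s in weighted_residual_sums.values()) / total_weight ** 2
    return mean, sqrt(robust_variance)


# Hypothetical risk differences from two author/data-source clusters.
mean_rd, robust_se = pooled_risk_difference(
    effects=[-0.30, -0.20],
    variances=[0.01, 0.01],
    clusters=["cluster_a", "cluster_b"],
)
print(round(mean_rd, 3), round(robust_se, 4))
```

With only a handful of clusters this uncorrected standard error is too optimistic, which is one reason small-sample adjustments and degrees-of-freedom checks matter in practice.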

Table 2.

The Relationship between Methodological Choices and the Estimated Diversionary Effects of Community College Entrance: Results from Studies That Restrict Treatment to Transfer Students.

| Variable | Model 1: b (SE) | Model 1: df | Model 1: p | Model 2: b (SE) | Model 2: df | Model 2: p | Model 3: b (SE) | Model 3: df | Model 3: p | Model 4: b (SE) | Model 4: df | Model 4: p | Model 5ᵃ: b (SE) | Model 5ᵃ: df | Model 5ᵃ: p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Intercept | −.04 (.02) | 46.7 | .075 | −.06 (.02) | 41.8 | .008 | −.05 (.02) | 41.6 | .032 | −.06 (.02) | 42.6 | .011 | −.06 (.02) | 22.7 | .008 |
| Sample restrictions | | | | | | | | | | | | | | | |
| Different cohort definition | | | | .15 (.06) | 2.0 | .128 | .16 (.06) | 2.0 | .124 | .15 (.06) | 2.2 | .117 | .15 (.06) | 2.5 | .095 |
| Baccalaureate aspirations | | | | .02 (.07) | 1.0 | .840 | −.06 (.04) | 1.1 | .346 | −.08 (.05) | 1.1 | .292 | −.14 (.12) | 1.2 | .451 |
| Identification strategyᵇ | | | | | | | | | | | | | | | |
| Regression | | | | | | | .04 (.05) | 2.1 | .523 | .02 (.05) | 1.1 | .707 | .02 (.05) | 1.2 | .695 |
| PSM | | | | | | | .17 (.06) | 1.7 | .131 | .14 (.07) | 1.2 | .261 | .14 (.07) | 1.2 | .259 |
| Exogenous variation | | | | | | | .12 (.03) | 2.2 | .053 | .08 (.03) | 1.3 | .146 | .08 (.02) | 1.3 | .137 |
| Statistical controls | | | | | | | | | | | | | | | |
| Background | | | | | | | | | | .05 (.00) | 1.4 | .000 | .05 (.00) | 2.6 | .000 |
| Aspirations | | | | | | | | | | −.04 (.05) | 1.2 | .526 | −.06 (.05) | 1.2 | .420 |
| Enrollment pattern | | | | | | | | | | −.17 (.05) | 1.5 | .114 | −.17 (.06) | 1.5 | .127 |
| Data characteristics | | | | | | | | | | | | | | | |
| Year began collegeᶜ | | | | | | | | | | | | | .00 (.00) | 14.5 | .096 |
| Years follow-upᶜ | | | | | | | | | | | | | .03 (.02) | 7.3 | .238 |
| Control group graduationᶜ | | | | | | | | | | | | | −.46 (.13) | 16.0 | .003 |
| Variance components | | | | | | | | | | | | | | | |
| Study level | .00 (.01) | | | .01 (.01) | | | .01 (.01) | | | .01 (.01) | | | .01 (.01) | | |
| Data level | .02 (.01) | | | .01 (.01) | | | .01 (.01) | | | .01 (.01) | | | .01 (.00) | | |

Note: The analytic sample includes 31 studies with 48 data sources and 72 effect sizes. The table presents unstandardized coefficients (b), standard errors (SE), degrees of freedom (df), and p values (p) obtained from mixed-effects meta-regression models using robust standard errors and an estimated intercorrelation within author/data-source clusters of 0.80. b represents the change in the risk difference for every one-unit change in the predictor. Intercept represents the estimated risk difference for entering a community college while holding other measures in the model at their means (all variables are grand-mean centered). Coefficients in bold are statistically significant. PSM = propensity score matching.

ᵃ In Model 5, we drop one study, Gonzales (1999), that does not provide information about the length of follow-up. We ran additional analyses including Gonzales (1999) and dropping the years follow-up measure; this did not alter the patterns of effects.

ᵇ Reference category is mean comparison.

ᶜ Continuous variable.

Table 3.

The Relationship between Methodological Choices and the Estimated Diversionary Effects of Community College Entrance: Results from Studies That Include All Community College Entrants.

| Variable | Model 1: b (SE) | Model 1: df | Model 1: p | Model 2: b (SE) | Model 2: df | Model 2: p | Model 3: b (SE) | Model 3: df | Model 3: p | Model 4: b (SE) | Model 4: df | Model 4: p | Model 5: b (SE) | Model 5: df | Model 5: p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Intercept | −.31 (.03) | 1.2 | .000 | −.31 (.03) | 1.2 | .000 | −.28 (.03) | 11.4 | .000 | −.29 (.03) | 12.3 | .000 | −.28 (.02) | 8.7 | .000 |
| Sample restrictions | | | | | | | | | | | | | | | |
| Baccalaureate aspirations | | | | .06 (.02) | 1.0 | .231 | .06 (.02) | 1.0 | .214 | .07 (.03) | 1.0 | .223 | .07 (.03) | 1.0 | .245 |
| Identification strategyᵃ | | | | | | | | | | | | | | | |
| Regression (CWC) | | | | | | | .17 (.03) | 4.4 | .003 | .11 (.06) | 5.2 | .095 | .11 (.06) | 5.2 | .095 |
| PSM (CWC) | | | | | | | .23 (.04) | 3.3 | .011 | .17 (.07) | 4.5 | .070 | .17 (.07) | 4.5 | .070 |
| Exogenous variation (CWC) | | | | | | | .24 (.03) | 4.5 | .000 | .13 (.06) | 5.2 | .069 | .13 (.06) | 5.2 | .069 |
| Regression | | | | | | | −.52 (.10) | 3.3 | .012 | −.53 (.15) | 3.1 | .036 | −.20 (.25) | 3.9 | .465 |
| PSM | | | | | | | −.20 (.11) | 3.6 | .153 | −.22 (.14) | 2.9 | .224 | .09 (.21) | 3.8 | .681 |
| Exogenous variation | | | | | | | .14 (.19) | 6.8 | .488 | .17 (.20) | 5.7 | .419 | .24 (.15) | 5.5 | .175 |
| Statistical controls | | | | | | | | | | | | | | | |
| Background (CWC) | | | | | | | | | | .03 (.07) | 4.3 | .682 | .03 (.07) | 4.3 | .687 |
| Aspirations (CWC) | | | | | | | | | | .06 (.07) | 4.3 | .392 | .06 (.07) | 4.3 | .391 |
| Enrollment pattern | | | | | | | | | | .01 (.06) | 3.0 | .876 | .01 (.06) | 3.0 | .871 |
| Data characteristics | | | | | | | | | | | | | | | |
| Year began collegeᵇ | | | | | | | | | | | | | −.01 (.00) | 6.4 | .009 |
| Years follow-upᵇ | | | | | | | | | | | | | .02 (.01) | 2.4 | .147 |
| Control group graduationᵇ | | | | | | | | | | | | | −.45 (.04) | 1.0 | .058 |
| Variance components | | | | | | | | | | | | | | | |
| Study level | .02 (.01) | | | .02 (.01) | | | .01 (.01) | | | .01 (.01) | | | .01 (.01) | | |
| Data level | .00 (.01) | | | .00 (.01) | | | .01 (.01) | | | .01 (.01) | | | .00 (.00) | | |

Note: The analytic sample includes 20 studies with 16 data sources and 66 effect sizes. The table presents unstandardized coefficients (b), standard errors (SE), degrees of freedom (df), and p values (p) from mixed-effects meta-regression models using robust standard errors and an estimated intercorrelation within author/data-source clusters of 0.80. b represents the change in risk difference for every one-unit change in the predictor. The intercept represents the estimated risk difference for entering a community college, controlling for other measures in the model. Unless otherwise noted, variables are grand-mean centered. For categorical variables with sufficient variation within author/data-source clusters, we center within cluster (marked CWC). For identification strategy measures, we include a version with cluster-level values centered around the grand mean. Coefficients in bold are statistically significant. PSM = propensity score matching.

ᵃ Reference category is mean comparison.

ᵇ Continuous variable.

Table 4.

The Relationship between Methodological Choices and the Estimated Diversionary Effects of Community College Entrance: Results from the Best-Evidence Sample.

| Variable | Model 1: b (SE) | Model 1: df | Model 1: p | Model 2: b (SE) | Model 2: df | Model 2: p | Model 3: b (SE) | Model 3: df | Model 3: p |
|---|---|---|---|---|---|---|---|---|---|
| Intercept | −.23 (.02) | 15.2 | .000 | −.23 (.02) | 13.8 | .000 | −.23 (.01) | 8.9 | .000 |
| Identification strategyᵃ | | | | | | | | | |
| PSM (CWC) | | | | .06 (.07) | 1.8 | .463 | .06 (.07) | 1.8 | .462 |
| Exogenous variation (CWC) | | | | .01 (.00) | 2.0 | .080 | .01 (.00) | 2.0 | .080 |
| PSM | | | | .04 (.05) | 5.2 | .448 | .12 (.06) | 4.7 | .089 |
| Exogenous variation | | | | −.02 (.06) | 4.6 | .761 | .06 (.05) | 5.0 | .293 |
| Data characteristics | | | | | | | | | |
| Year began collegeᵇ | | | | | | | −.01 (.00) | 5.6 | .006 |
| Years follow-upᵇ | | | | | | | .00 (.01) | 3.3 | .913 |
| Control group graduationᵇ | | | | | | | −.12 (.10) | 5.4 | .315 |
| Variance components | | | | | | | | | |
| Study level | .01 (.01) | | | .01 (.01) | | | .01 (.00) | | |
| Data level | .00 (.01) | | | .00 (.01) | | | .00 (.00) | | |

Note: The analytic sample includes 21 studies with 17 data sources and 36 effect sizes. The table presents unstandardized coefficients (b), standard errors (SE), degrees of freedom (df), and p values (p) from mixed-effects meta-regression models using robust standard errors and an estimated intercorrelation within author/data-source clusters of 0.80. b represents the change in risk difference for every one-unit change in the predictor. The intercept represents the estimated risk difference for entering a community college, controlling for other measures in the model. Unless otherwise noted, variables are grand-mean centered. For categorical variables with sufficient variation within author/data-source clusters, we center within cluster (marked CWC). For identification strategy measures, we include a version with cluster-level values centered around the grand mean. Coefficients in bold are statistically significant. PSM = propensity score matching.

ᵃ Reference category is regression.

ᵇ Continuous variable.

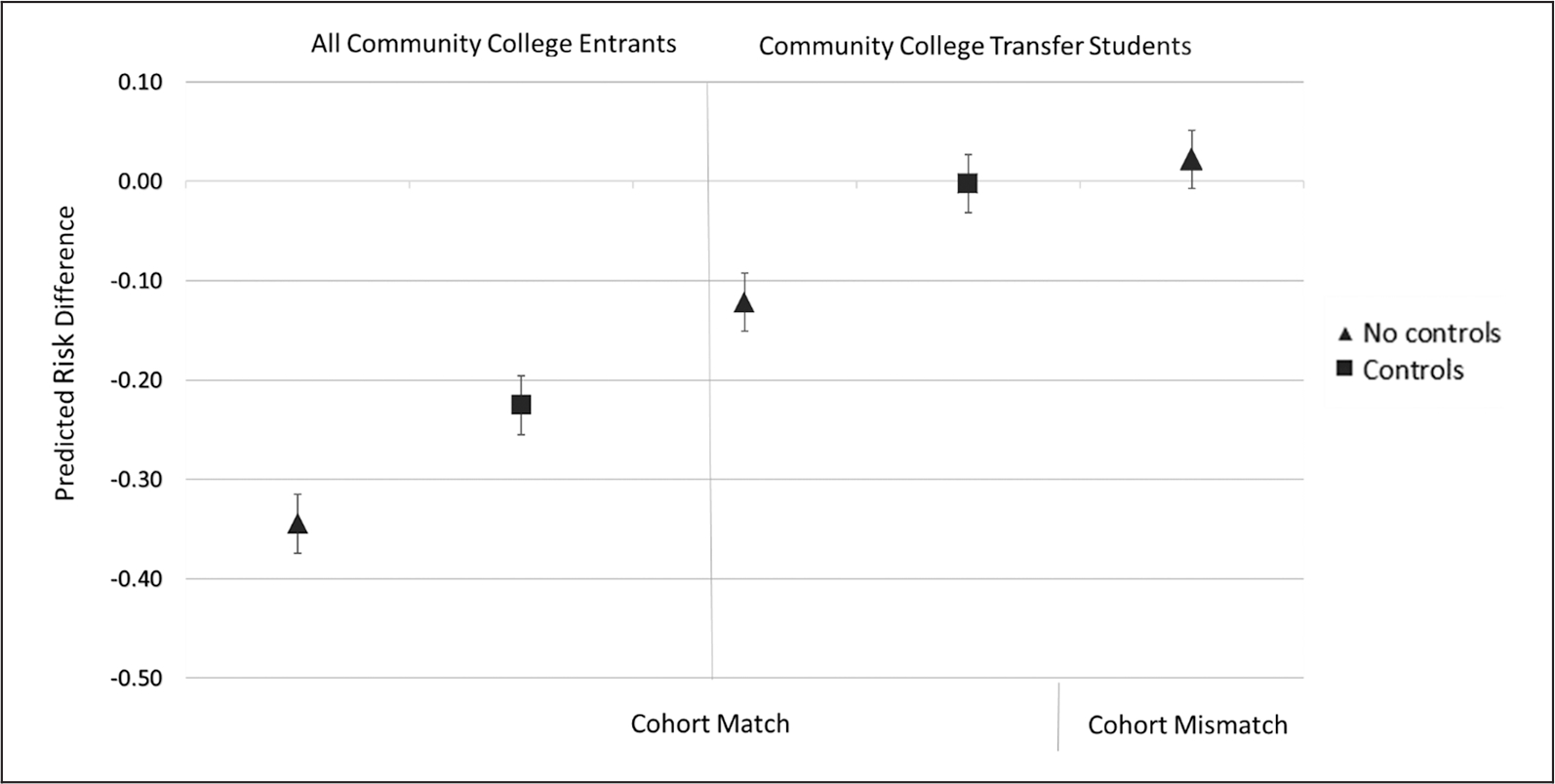

To further illustrate the variation in effects across the full sample of studies, Figure 1 displays the average effect sizes across three methodological choices: restricting the sample based on whether students transferred, including statistical controls, and using different cohorts for treatment and control. The first two points plot the average effects for studies that compare all community college entrants with a matched cohort of their peers. Studies that include all community college entrants produce larger diversionary effects, on average, than do those that restrict the sample to transfer students. Studies that fail to include statistical controls find the largest difference in degree attainment between community college and four-year college entrants. Including statistical controls attenuates that difference.

Figure 1.

Average risk difference for groups of studies based on methodological choices.

Note: Estimates are drawn from an ordinary least squares regression analysis performed on the full sample of studies (see Table S3, column 1, in the online appendix). The no controls estimates include effect sizes that simply compare the means for baccalaureate attainment of community college and four-year college entrants; controls includes effect sizes from analyses that use statistical controls. The left-hand panel shows effect sizes among studies that capture all community college entrants in the treatment group; the right-hand panel shows studies that restrict the treatment group to community college entrants who transferred to a four-year college (some of those studies ensure students in the treatment and control groups entered college at the same time; others do not match based on entry cohort).

The right-hand panel of Figure 1 represents the average point estimates of studies that restrict the treatment to students who transferred. Overall, effects are smaller among studies that restrict the community college group based on transfer compared to studies that do not. Average estimates are larger for studies that do not include statistical controls than for those that do. Including covariates moves the average effect on baccalaureate attainment toward zero. Studies that rely on mismatched cohorts for the treatment and control groups—comparing groups who did not start college at the same time or accumulated a different number of credits—tend to find somewhat positive effects of starting at a community college (although null on average). Overall, the results reported in Figure 1 illustrate the role that methodological choice plays in predicting the diversionary effect of community colleges, supporting our decision to include several of these key variables in our random-effects meta-analysis model.

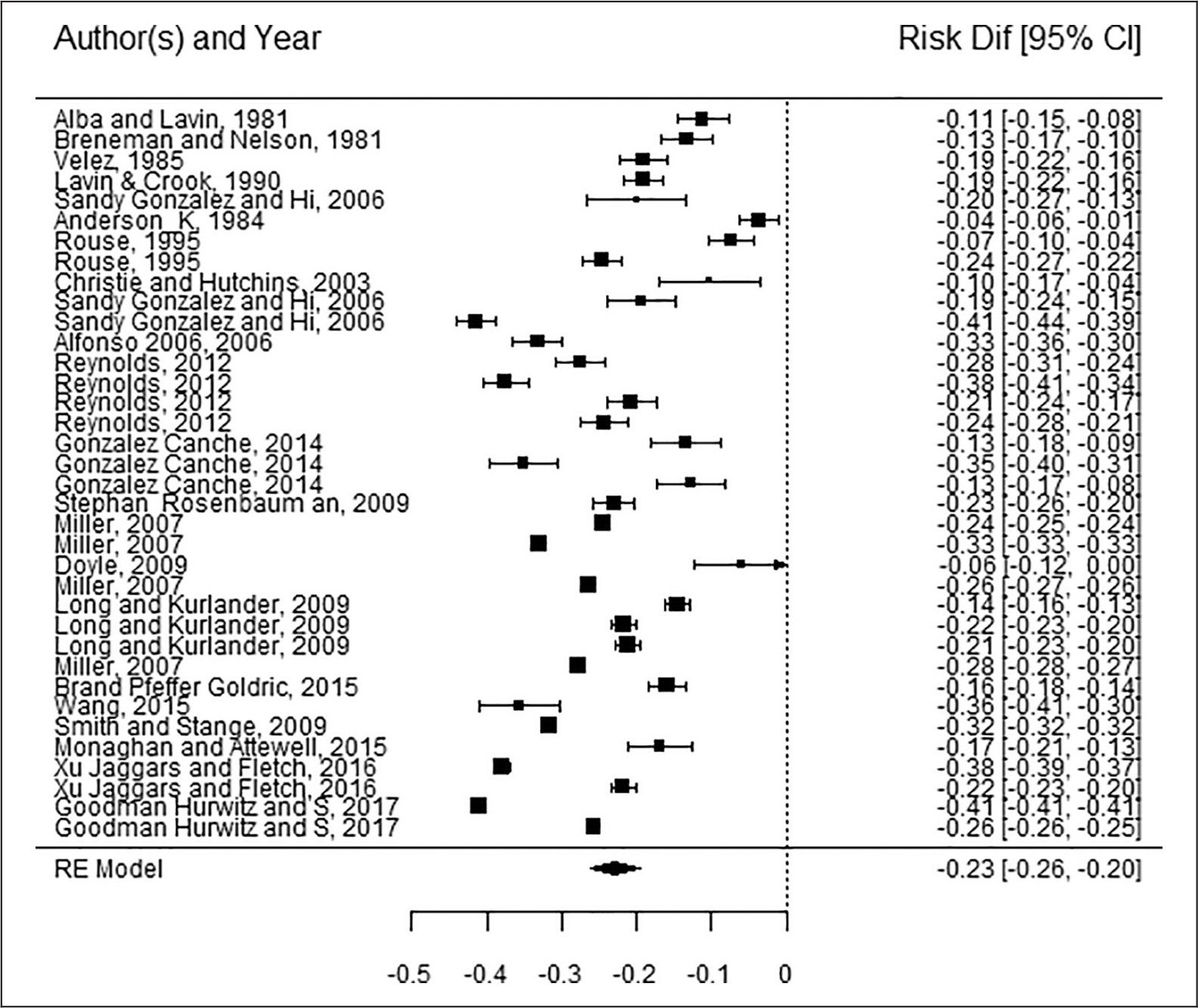

The best-evidence sample includes 36 effect sizes from 21 studies and 17 unique data sources. All studies in the best-evidence sample control for student background. This sample has a longer average follow-up period than the full sample, at 7.31 years. The range of the average risk difference in the best-evidence sample is smaller and all negative, −0.036 to −0.41. To further illustrate the variation in effect sizes, Figure 2 presents a forest plot of the best-evidence sample.

Figure 2.

Forest plot: Best-evidence sample.

Note: The figure presents the unadjusted risk difference with 95 percent confidence intervals from each effect size in the best-evidence sample, ordered by year of college entry.
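For readers less familiar with the effect size plotted in the forest plot, the sketch below computes one unadjusted risk difference and a Wald-type 95 percent confidence interval from graduation counts. The counts are hypothetical, the function name is illustrative, and the large-sample interval shown is an assumption rather than a description of how the underlying studies computed their intervals.

```python
from math import sqrt


def risk_difference(cc_graduates, cc_entrants, four_year_graduates, four_year_entrants, z=1.96):
    """Risk difference (community college minus four-year) with a Wald interval."""
    p_cc = cc_graduates / cc_entrants
    p_four_year = four_year_graduates / four_year_entrants
    rd = p_cc - p_four_year
    # Standard error of a difference between two independent proportions.
    se = sqrt(p_cc * (1 - p_cc) / cc_entrants
              + p_four_year * (1 - p_four_year) / four_year_entrants)
    return rd, se, (rd - z * se, rd + z * se)


# Hypothetical cohort: 300 of 1,000 community college entrants and
# 600 of 1,000 four-year entrants earn a bachelor's degree.
rd, se, (lower, upper) = risk_difference(300, 1000, 600, 1000)
print(f"RD = {rd:.2f}, 95% CI [{lower:.3f}, {upper:.3f}]")
```

A negative value means community college entrants completed bachelor's degrees at a lower rate than four-year entrants, matching the sign convention used throughout the tables.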

Meta-analysis of Full Sample of Studies

We present the results from our meta-analyses for three different samples: the full sample of studies that restrict the treatment group to transfer students, the full sample of studies that do not restrict the treatment group based on transfer status (“all community college entrants”), and the sample of studies that meets our best-evidence criteria. Tables 2, 3, and 4 show results for stepwise models (adding in blocks of covariates leading to the final model) for each sample, respectively.

Restricting based on transfer.

Table 2 presents estimates from studies that restrict the sample of community college students based on transfer. Column 1 (Model 1) provides the weighted risk difference, after accounting for dependency in the data, with no predictors in the model. Models 2 through 5 illustrate changes in the intercept and moderator coefficients as we add explanatory variables incrementally.

Several methodological choices contribute to the variation in the intercept between Model 1 and Model 5. Studies that control for student background appear to find a smaller attainment gap between community college transfer students and native four-year students (perhaps because restricting the sample to transfer students positively selects students): the estimated risk difference is 5 percentage points more favorable to community college transfer students than in studies that do not include background measures (Model 5: b = .05, p < .001). We also find a relationship between the rate of degree attainment among the control group and the observed gap between treatment and control. For every 10-percentage-point increase in the control-group graduation rate, the risk difference becomes more negative by approximately 4.6 percentage points (b = −.46, p = .003). To examine whether the relationship between control-group graduation rate and risk difference is related to our choice of risk difference as the effect size measure, we repeated the analysis with a relative effect size measure (risk ratio) and found a similar relationship.
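As a worked reading of the control-group graduation coefficient, take the rounded value b = −.46 from Table 2, Model 5, and a control-group graduation rate that is 0.10 (10 percentage points) higher, holding the other moderators fixed. The symbols below are introduced only for this illustration:

```latex
\Delta \widehat{RD} = b \times \Delta x = (-0.46) \times 0.10 = -0.046
```

That is, the estimated risk difference is about 4.6 percentage points more negative, subject to rounding in the reported coefficient.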

The final model suggests that among studies that restrict the treatment group based on transfer, entering a community college is associated with a 6-percentage-point decrease in the probability of earning a baccalaureate compared with starting at a four-year college, holding all other study indicators constant (Model 5: b = −.06, p = .008). The variance components obtained from the random-effects models illustrate that among studies using a subsample of community college students who transferred, there is greater variation in effect sizes at the data level than at the study level.

All community college entrants.

Results look somewhat different among studies that include all community college entrants in the treatment, as shown in Table 3 (the analysis excludes one influential data point⁵). The intercept is much larger in the all-entrants sample. The intercept-only model suggests that starting at a community college is associated with a 31-percentage-point decrease in the probability of earning a baccalaureate compared with entering a four-year college (Model 1: b = −.31, p < .001).

Restricting the sample based on educational aspirations does not significantly influence the risk difference (Models 2 through 5). Identification strategy, on the other hand, appears to have some influence. Model 3 incorporates a categorical measure of identification strategy, where the reference category is mean comparison. For effect sizes obtained from the same author and data source, using a more rigorous identification strategy than mean comparison shrinks the risk difference toward zero, reducing the apparent diversionary effect of community colleges. Within the same cluster, regression closes the estimated risk difference by 17 percentage points (b = .17, p = .003), PSM by 23 percentage points (b = .23, p = .011), and an exogenous variation strategy by 24 percentage points (b = .24, p < .001) compared with mean comparison. Results from a postregression approximate Hotelling T-squared test suggest the overall effect of identification strategy is statistically significant within cluster; it is not significant between clusters. Incorporating measures capturing the type of controls used in the study (i.e., student background, aspirations, and enrollment patterns) diminishes the relationship between identification strategy and risk difference (see Models 4 and 5). In the final model, the effect for identification strategy is not significant. We ran an alternative model to examine the possibility of multicollinearity; we wanted to determine whether including both the type of statistical controls used and the identification strategy explains away the effect of identification strategy (because covariate adjustment likely drives some of the influence of identification strategy in Model 3). In an alternative final model (see Table S5 in the online appendix) that omits the statistical controls block of variables, the CWC identification strategy measures remain statistically significant after controlling for date of entry, years follow-up, and control-group graduation rate.

The final model includes the year students in the sample started college, the years of follow-up data used, and the graduation rate of the control group. For every one-year increase in the year students began college, the difference in graduation rate between community college students and native students increases by approximately 1 percentage point (b = −.01, p = .009). The final model suggests that among studies that do not restrict the treatment group based on transfer status, entering a community college is associated with a 28-percentage-point decrease in the probability of baccalaureate attainment compared with entering a four-year college, holding all other study indicators constant (Model 5: b = −.28, p < .001). The variance components obtained from the random-effects models suggest there is greater variation in effect sizes at the study level than at the data level.

Best-evidence analysis.

Table 4 presents results from the best-evidence sample.⁶ The intercept, the predicted risk difference, does not substantively change even as we add measures into the model in this sample, which includes only studies that use appropriate comparison groups and statistical controls. In Model 2, identification strategy appears less predictive here than in the all-entrants sample. This is likely because all effect sizes in the best-evidence sample were obtained using, at the least, covariate adjustment. Identification strategy does not significantly predict risk difference, suggesting that among studies that meet our inclusion criteria for best evidence, PSM and strategies that leverage exogenous variation do not result in significantly different estimates than regression, the reference category (Model 2, identification strategy [CWC]).

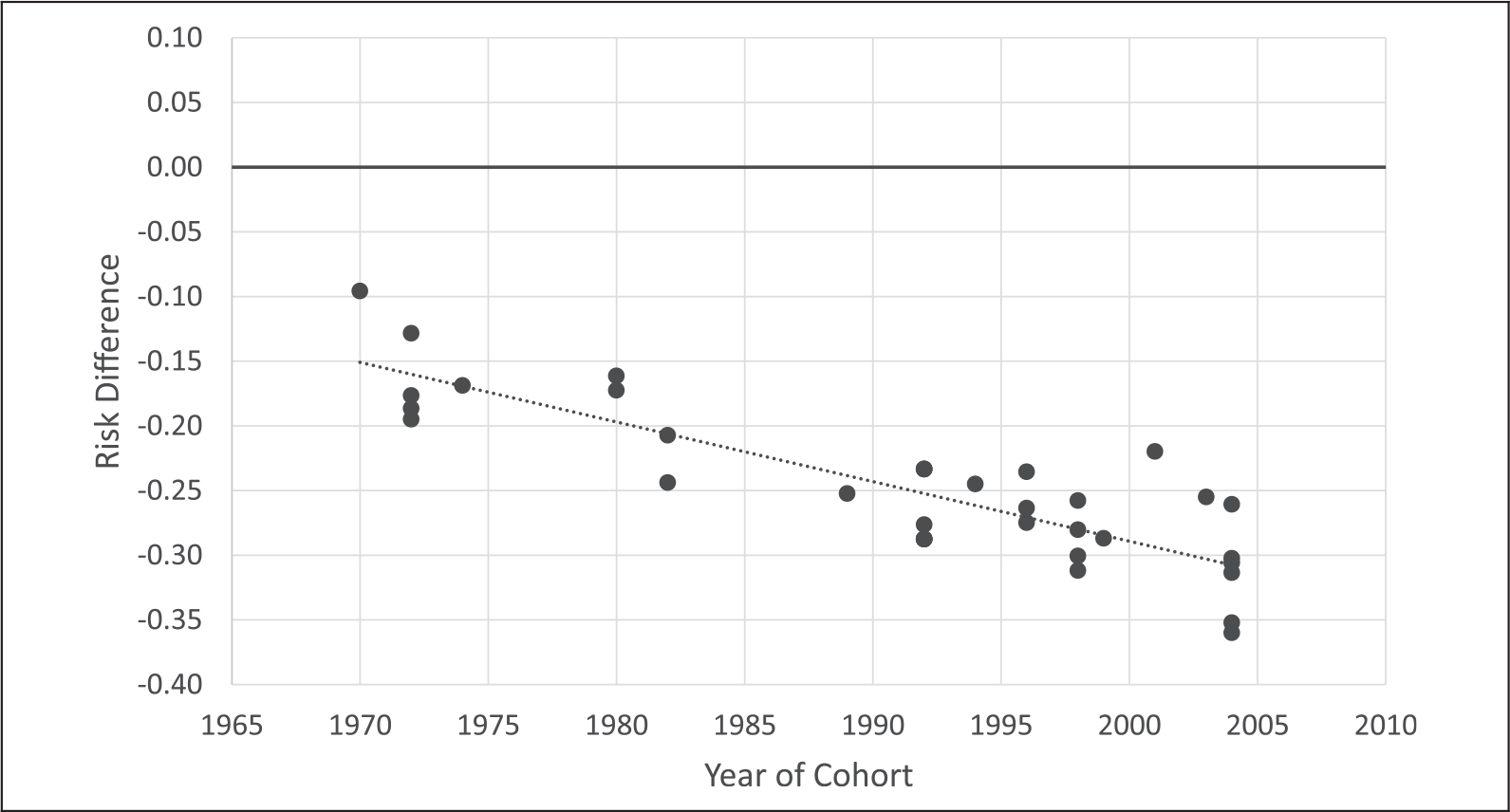

The final model, Model 3, suggests that entering a community college rather than a four-year institution is associated with a 23-percentage-point decrease in baccalaureate attainment among studies that rely on regression, when controlling for all other variables (b = −.23, p < .001). The estimated gap in baccalaureate attainment between community college and four-year entrants grows with the year students began college. Each additional year in the college entry date widens the gap in risk difference by almost 1 percentage point (b = −.01, p = .006). To further illustrate the relationship between year and the community college effect, Figure 3 plots the predicted risk difference obtained in Model 3 by the year students began college.

Figure 3.

Scatterplot of predicted risk difference by year students began college: Best-evidence sample.

Note: The scatterplot presents adjusted estimates of risk difference for each effect size, obtained from the final model performed on the best-evidence sample (Table 4, Model 3).
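The trend in Figure 3 can be approximated from the rounded coefficients in Table 4, Model 3. The sketch below is a rough illustration under stated assumptions: the year variable is assumed to be centered at the best-evidence sample mean reported in Table 1 (1991.17), all other moderators are held at their centering values, and the slope of −.01 is rounded, so predictions far from the mean year are only indicative. The function and constant names are hypothetical.

```python
INTERCEPT = -0.23    # Table 4, Model 3 intercept (rounded)
YEAR_SLOPE = -0.01   # Table 4, Model 3 coefficient for year began college (rounded)
MEAN_YEAR = 1991.17  # Table 1 best-evidence mean; assumed centering point


def predicted_risk_difference(year, intercept=INTERCEPT, slope=YEAR_SLOPE, mean_year=MEAN_YEAR):
    """Linear prediction of the risk difference for a given college entry year."""
    return intercept + slope * (year - mean_year)


for entry_year in (1981.17, 1991.17, 2001.17):
    print(entry_year, round(predicted_risk_difference(entry_year), 2))
```

Under these assumptions, cohorts entering a decade after the mean year have a predicted gap roughly 10 percentage points larger than cohorts entering at the mean year.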

Sensitivity analyses.

We test whether the final models for each analytic sample are robust to changing the estimated correlation between effect sizes drawn from the same study and data source. Findings for the studies that restrict the community college sample to transfer students are mostly robust across values of the assumed correlation ranging from 0 to 1 (Table S6 in the online appendix). When the correlation is set at .9, the intercept and the coefficient for different cohort definition are no longer statistically significant, but the overall interpretation of results holds. The sensitivity analyses for the studies focused on all community college entrants (Table S7 in the online appendix) and the best-evidence sample (Table S8 in the online appendix) suggest the findings are largely robust across levels of the assumed correlation.

In addition to using random-effects models, we ran parallel analyses using OLS for each of our analytic samples to ensure that applying weights did not substantially alter the effects. The pattern and direction of effects look similar to those from our meta-analyses (see Table S3 in the online appendix, columns 2, 3, and 4).

Finally, to test for publication bias, we generated funnel plots for the final models applied to the posttransfer subsample and best-evidence sample, presented in Figures S1 and S2 in the online appendix (we do not present one for the all-entrants subsample because it largely overlaps with the best-evidence sample, although it has more unpublished work). The distributions are not perfectly funnel shaped, but they are symmetric, with an approximately equal number of effect sizes on each side of the axis. We also applied a rank correlation test (Begg and Mazumdar 1994), which tests the interdependence of variance and effect size. The correlation is not significant for the posttransfer final model or the best-evidence final model, suggesting publication bias is unlikely.
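The logic of a rank correlation test for funnel plot asymmetry can be sketched as follows. This is a simplified version written for illustration, not the procedure run for this study: it standardizes each effect size around the inverse-variance pooled mean, computes Kendall's tau between those deviates and the sampling variances, and uses a normal approximation without a tie or continuity correction. The function name and the data are hypothetical.

```python
from math import erf, sqrt


def rank_correlation_test(effects, variances):
    """Kendall's tau between standardized effect sizes and their variances."""
    n = len(effects)
    weights = [1.0 / v for v in variances]
    pooled = sum(w * t for w, t in zip(weights, effects)) / sum(weights)
    pooled_variance = 1.0 / sum(weights)

    # Standardized deviates: each effect's distance from the pooled mean,
    # scaled by the variance of that distance.
    deviates = [(t - pooled) / sqrt(v - pooled_variance)
                for t, v in zip(effects, variances)]

    concordant = discordant = 0
    for i in range(n):
        for j in range(i + 1, n):
            sign = (deviates[i] - deviates[j]) * (variances[i] - variances[j])
            if sign > 0:
                concordant += 1
            elif sign < 0:
                discordant += 1

    tau = (concordant - discordant) / (n * (n - 1) / 2)
    z = (concordant - discordant) / sqrt(n * (n - 1) * (2 * n + 5) / 18)
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))  # two-sided, normal approximation
    return tau, z, p_value


# Hypothetical pattern in which less precise estimates are systematically more negative.
tau, z, p_value = rank_correlation_test(
    effects=[-0.10, -0.20, -0.30, -0.40],
    variances=[0.001, 0.002, 0.003, 0.004],
)
print(tau, round(z, 2), round(p_value, 3))
```

A tau near zero, as reported for the posttransfer and best-evidence final models, indicates that imprecise estimates are not systematically larger or smaller than precise ones.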

DISCUSSION

Community colleges play a vital role in improving postsecondary access, particularly for first-generation college students, non-white students, and students from low-income families (Leigh and Gill 2003; Long and Kurlaender 2009; Rouse 1995). Understanding the influence of community college entry on baccalaureate attainment is necessary to inform student choice, explore the implications of policies that promote community college attendance, and improve transfer and attainment for community college enrollees. In this article, we reviewed research on the effect of enrolling at a community college on the probability of earning a baccalaureate. We then used meta-analysis to estimate the average effect of enrolling initially at a community college versus a four-year college on bachelor’s degree attainment. Findings from our best-evidence sample suggest that on average, entering a community college, compared with entering a four-year college, decreases the probability of earning a baccalaureate by 23 percentage points. We also examined moderators of the diversionary effect, informed by our systematic review.

We illustrated how restricting the sample based on transfer status influences the estimated diversionary effect. Studies that use a treatment group restricted to students who transferred find much smaller gaps in attainment between community college students and four-year college entrants than do studies that keep all community college entrants. These patterns held when we divided the full sample into two analytic samples based on the decision to restrict treatment based on transfer status. Estimates from the transfer sample suggest that starting at community college, rather than a four-year college, is associated with a 6-percentage-point decrease in the probability of earning a bachelor’s degree, whereas estimates from the all-entrants sample indicate a 28-percentage-point decrease. Evidence from the full sample of studies illustrates the importance of methodological choices, particularly decisions related to sample restrictions. Overall, the findings suggest that even across different definitions of the treatment group, the average effects of initially entering a community college on baccalaureate degree attainment are negative.

Identification strategy partially explains the gap in degree attainment between community college and four-year college students in the all-entrants sample but not in the other samples. In results from the all-entrants sample, effect sizes drawn from the same author and data source using regression, PSM, or an IV/RD approach obtain a more conservative estimate of the baccalaureate gap than do those using mean comparison. Identification strategy does not seem to have a strong relationship with risk difference in the transfer student sample, but this may be due at least partly to lack of statistical power—the bulk of studies in the posttransfer subsample rely on mean comparison (only one study uses IV and a handful use PSM). We also found little evidence of an influence of identification strategy among studies that meet the more stringent inclusion standards for best evidence, but all the studies use covariate adjustment (regression was the baseline).

An interesting result from our final model in the best-evidence sample (and one that was also present in the all-entrants sample) was that the year students began college significantly predicts the risk difference, increasing the estimated influence of community college entrance on earning a bachelor’s degree. Looking over the full sample of studies, the majority of our best-evidence studies use data from the new millennium, and those effects appear stronger than those from earlier decades (a point we did not account for in our systematic review). The results suggest, particularly within the best-evidence sample, that the diversionary effects of community colleges are growing over time. Several factors could be responsible for this trend. As more students entered postsecondary education and college costs soared (particularly in the four-year sector), stratification across postsecondary sectors appears to have grown: there is potentially more variation in student background across community college and four-year college entrants than in the past (Alon 2009). Broader shifts in enrollment and selection could influence the gap over time, as could the shifting role and mission of colleges and the resources available per student. The patterns we observe may reflect these changes, but the origin of the growing gap is beyond the scope of our inquiry. We hope our observation of this pattern spurs additional research.

Implications for Future Research

By highlighting the role of methodological choice, our results should encourage thoughtful research design for future inquiries. Researchers interested in the effects of community college entrance must carefully consider which community college students are of interest in their inquiry and the appropriate counterfactual. Restricting the treatment group to community college entrants who transferred to a four-year college obscures pretransfer attrition that contributes to diversionary effects. To date, the most common counterfactual to community college attendance used throughout the literature is initially entering a four-year college, but a more appropriate counterfactual may be nonenrollment (Brand et al. 2014; Rouse 1995; Sandy et al. 2006). For that reason, the average effects we find here might “overstate the penalty” to community college attendance by comparing community college students only to four-year college entrants (Brand et al. 2014:451).

Tied to the discussion of counterfactuals is the need for additional research on other outcomes. If research explores alternative counterfactuals, like nonenrollment, baccalaureate attainment would be an inappropriate outcome measure, as the comparison group is ineligible to earn a degree. Using alternatives such as labor market outcomes could build our knowledge of the positive and negative consequences of community college attendance. Although some studies estimate “democratizing effects” of community college through additional years of education, the literature is comparatively small. Ideally, community colleges should be evaluated by more than one outcome.

Finally, although our findings suggest the average effects on baccalaureate attainment are negative, future research should further explore heterogeneous effects and changes in the relationship over time. Extant work suggests effects may differ across race (evidence appears mixed; see González Cánche 2012; Long and Kurlaender 2009) and family income (Goodman et al. 2015). Additionally, future studies should extend the length of the follow-up period. The gap in baccalaureate attainment between community college entrants and four-year entrants might decrease as follow-up increases. Transfer students might require additional time to meet degree requirements at a new institution (perhaps due to credit loss or inefficiency). In our study, there may have been too little variation in follow-up lengths to detect a relationship between length of follow-up and risk difference.

Implications for Policy

Most community college entrants intend to earn a bachelor’s degree, but our results indicate that entering a community college instead of a four-year college lowers students’ probability of doing so. Scholars, practitioners, and policy makers must continue to innovate and evaluate interventions at community colleges to ensure public and individual investments are worthwhile. Efforts to minimize wasted credits, whether through accelerating developmental education (i.e., course work students can take to meet college-readiness standards but that does not count toward a degree) or improving the transfer pipeline between institutions, are increasingly common, but there is a pressing need for evidence that links effective practices and policies to long-term student outcomes (Schudde and Grodsky 2018). Despite widespread efforts to streamline pathways through programs of study (e.g., Guided Pathways initiatives) and across institutions (e.g., statewide articulation agreements), policy makers and practitioners need rigorous evidence about whether and in which contexts these efforts are effective.

The effects observed in this article may offer insights into unintended consequences of policies that place additional demands on these institutions. Scholarship programs touting free community college for eligible students may increase enrollment at community colleges. The Tennessee Promise program, which offers free community college tuition to eligible graduating high school seniors, was associated with increased first-year enrollment at community and technical colleges (increases of 25 and 20 percent in first-time freshmen, respectively; Goodman et al. 2015). Across Tennessee, public baccalaureate-granting universities faced losses in freshman enrollment of 8 percent, with almost a 5 percent loss at University of Tennessee campuses. This suggests the policy redirected at least some students away from universities and toward community colleges.

The effects of free community college are likely complex and will take time to sort out. On the one hand, free community college may radically democratize higher education (as noted in our discussion of counterfactuals, our analyses cannot speak to the effect of community college entrance for students who would otherwise not attend college). On the other hand, we might see increased diversion away from baccalaureate attainment among students who choose to attend a community college over a baccalaureate-granting institution. This tension should be part of the conversation as states and colleges roll out new policies. Policy makers may want to offer two free years of college at any public postsecondary institution, not just community colleges (Goldrick-Rab and Kendall 2014). That said, states will continue to rely on community colleges to offer affordable postsecondary access for a broad swath of constituents. Continued investment in improving the outcomes of community college enrollees is crucial to improving individual status attainment and achieving population-level educational attainment goals.

CONCLUSION

Community colleges welcome a diverse population of students with a variety of needs, offering “something for everyone,” and they are expected to do so with fewer resources per student than four-year institutions (Dougherty 1994; Dowd 2007:407). Many community college entrants face financial and geographic constraints that make community college their most feasible pathway to a baccalaureate. However, our findings support a theory of diversionary effects. Compared with entering a baccalaureate-granting institution, entering a community college substantially lowers a student’s probability of earning a bachelor’s degree. Scholars and practitioners will need to further investigate effective means to smooth transfer pathways from community colleges to universities. These efforts will likely require in-depth organizational research to inform interventions and broader structural reforms throughout public higher education, as institutional transfer involves the intersection of complex organizational structures, hidden curricula, and state and institutional policies (Rosenbaum et al. 2007; Schudde, Bradley, and Absher forthcoming).

Author Biographies

Lauren Schudde is an assistant professor of educational leadership and policy at the University of Texas at Austin. Her research examines the impact of educational policies and practices on college student outcomes, with a primary interest in how higher education can be better leveraged to ameliorate socioeconomic inequality in the United States. Ongoing projects examine how community college students respond to institutional transfer policies, the influence of developmental-education math reform on student outcomes, and labor market returns to different types of postsecondary degrees.

Raymond Stanley Brown is a doctoral student in educational leadership and policy at the University of Texas at Austin. His research uses quantitative methods to examine whether and how programmatic and policy changes improve student outcomes in higher education.

Footnotes

We use baccalaureate-granting and four-year college or institution interchangeably.

Only one study, Doyle (2009), uses event history analysis to capture the time-dependent nature of degree attainment. A smaller subset of literature uses years of schooling as an outcome, meant to assess the “democratizing” effect of community college entrance (e.g., Leigh and Gill 2003; Rouse 1995).

Almost all the studies we reviewed provide a risk difference estimate, with three exceptions: Weiss (2011) and Stratton (2015) present only log odds or odds ratios from logistic regression, and Doyle (2009) presents a hazard ratio from a Cox proportional hazards model. We transformed logit results from odds ratios into the risk difference using a two-by-two contingency table (Deeks, Higgins, and Altman 2011b). For Doyle (2009), we first transformed the hazard ratio into a risk ratio (Shor, Roelfs, and Vang 2017) and then the risk ratio into an estimate of risk difference (Deeks, Higgins, and Altman 2011a). We referred back to Beginning Postsecondary Students Longitudinal Study 1995–1996 technical reports to obtain the average graduation rate for the control group, which is not available in Doyle’s paper. Producing an accurate risk ratio estimate from the hazard ratio requires that the risk stay relatively constant over time, which is unlikely for college graduation. Because we cannot know the accuracy of our risk difference estimate for Doyle (2009), we ran alternative analyses without the effect size; results are robust to its inclusion.
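
The conversions described in this note can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' code: the function names and the input values (an odds ratio of 0.5, a hazard ratio of 0.5, and a control-group graduation rate of 0.6) are hypothetical, and the hazard-ratio step assumes proportional hazards, so that the treated group's survival function equals the control group's survival function raised to the power of the hazard ratio.

```python
def risk_difference_from_odds_ratio(odds_ratio: float, control_risk: float) -> float:
    """Convert an odds ratio to a risk difference, given the control-group risk.

    Equivalent to rebuilding a two-by-two table from the control-group risk
    and the odds ratio, then subtracting the two row proportions.
    """
    control_odds = control_risk / (1.0 - control_risk)
    treated_odds = odds_ratio * control_odds
    treated_risk = treated_odds / (1.0 + treated_odds)
    return treated_risk - control_risk


def risk_ratio_from_hazard_ratio(hazard_ratio: float, control_risk: float) -> float:
    """Approximate a risk ratio from a hazard ratio (assumes proportional hazards).

    Under proportional hazards, S_treated = S_control ** HR, where S = 1 - risk.
    """
    treated_risk = 1.0 - (1.0 - control_risk) ** hazard_ratio
    return treated_risk / control_risk


def risk_difference_from_risk_ratio(risk_ratio: float, control_risk: float) -> float:
    """Convert a risk ratio to a risk difference, given the control-group risk."""
    return (risk_ratio - 1.0) * control_risk


# Hypothetical inputs for illustration only; none of these come from the studies.
CONTROL_RISK = 0.6   # assumed graduation rate among four-year entrants
ODDS_RATIO = 0.5     # assumed odds ratio from a logistic regression
HAZARD_RATIO = 0.5   # assumed hazard ratio from a Cox model

rd_from_or = risk_difference_from_odds_ratio(ODDS_RATIO, CONTROL_RISK)
rr_from_hr = risk_ratio_from_hazard_ratio(HAZARD_RATIO, CONTROL_RISK)
rd_from_hr = risk_difference_from_risk_ratio(rr_from_hr, CONTROL_RISK)

print(f"Risk difference from odds ratio:   {rd_from_or:.3f}")
print(f"Risk ratio from hazard ratio:      {rr_from_hr:.3f}")
print(f"Risk difference from hazard ratio: {rd_from_hr:.3f}")
```

Because the hazard-ratio step relies on proportional hazards over the whole follow-up window, its output is best read as an approximation, which is consistent with the caution expressed in this note.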

We include studies that did and did not restrict the sample based on baccalaureate aspirations in the all-community-college-entrants group. The coefficient assessing the influence of sample restrictions based on aspirations is small and not statistically significant (see Table 3).

Viechtbauer and Cheung 2010) that prompted us to run analyses with and without effect sizes from Smith and Stange (2016) (see Table S4 in the online appendix for results that include the study). The associated hat value for both effect sizes from their study is about 0.54, which is more than three times as large as the average hat value for the sample.

Although we drop Smith and Stange (2016) from the all-entrants analysis in the full sample, it is included in the best-evidence sample and does not appear to be an influential data point.

SUPPLEMENTAL MATERIAL

Appendixes are available in the online version of this journal.

REFERENCES

References marked with an asterisk indicate studies included in the meta-analysis.

- *Alba Richard, and Lavin David E. 1981. “Community Colleges and Tracking in Higher Education.” Sociology of Education 54(4):223–37.

- Alexander Karl, Bozick Robert, and Entwisle Doris. 2008. “Warming Up, Cooling Out, or Holding Steady? Persistence and Change in Educational Expectations after High School.” Sociology of Education 81(4):371–96. doi: 10.1177/003804070808100403.

- *Alfonso Mariana. 2006. “The Impact of Community College Attendance on Baccalaureate Attainment.” Research in Higher Education 47(8):873–903. doi: 10.1007/s11162-006-9019-2.

- Alon Sigal. 2009. “The Evolution of Class Inequality in Higher Education: Competition, Exclusion, and Adaptation.” American Sociological Review 74(5):731–55. doi: 10.1177/000312240907400503.

- *Anderson Kristine L. 1984. Institutional Differences in College Effects. Washington, DC: National Institute of Education. https://eric.ed.gov/?id=ED256204.

- *Arnold James C. 2001. “Student Transfer between Oregon Community Colleges and Oregon University System Institutions.” New Directions for Community Colleges 114:45–59. doi: 10.1002/cc.20.

- Bailey Thomas, Jenkins R. Davis, Fink John, Cullinane Jenna, and Schudde Lauren. 2016. “Policy Levers to Strengthen Community College Transfer Student Success in Texas: Report to the Greater Texas Foundation.” New York: Community College Research Center. doi: 10.7916/D8JS9W20.

- Begg Colin B., and Mazumdar Madhuchhanda. 1994. “Operating Characteristics of a Rank Correlation Test for Publication Bias.” Biometrics 50(4):1088–101. doi: 10.2307/2533446.

- Belfield Clive R., and Bailey Thomas. 2011. “The Benefits of Attending Community College: A Review of the Evidence.” Community College Review 39(1):46–68. doi: 10.1177/0091552110395575.

- Borenstein Michael, Hedges Larry V., Higgins Julian P. T., and Rothstein Hannah R. 2009a. “Complex Data Structures: Overview.” Pp. 215–16 in Introduction to Meta-analysis, edited by Borenstein M, Hedges LV, Higgins J, and Rothstein HR. Chichester, UK: Wiley.

- Borenstein Michael, Hedges Larry V., Higgins Julian P. T., and Rothstein Hannah R. 2009b. “Effect Sizes Based on Binary Data (2 × 2 Tables).” Pp. 33–39 in Introduction to Meta-analysis, edited by Borenstein M, Hedges LV, Higgins J, and Rothstein HR. Chichester, UK: Wiley.

- Borenstein Michael, Hedges Larry V., Higgins Julian P. T., and Rothstein Hannah R. 2009c. “Multiple Outcomes or Time-Points Within a Study.” Pp. 225–38 in Introduction to Meta-analysis, edited by Borenstein M, Hedges LV, Higgins J, and Rothstein HR. Chichester, UK: Wiley.

- *Brand Jennie E., Pfeffer Fabian T., and Goldrick-Rab Sara. 2014. “The Community College Effect Revisited: The Importance of Attending to Heterogeneity and Complex Counterfactuals.” Sociological Science 1(October):448–65. doi: 10.15195/v1.a25.

- *Breneman David W., and Nelson Susan C. 1981. Financing Community Colleges: An Economic Perspective. Washington, DC: Brookings Institution.

- Brint Steven, and Karabel Jerome. 1989. The Diverted Dream: Community Colleges and the Promise of Educational Opportunity in America, 1900–1985. New York: Oxford University Press.

- *Christie Ray L., and Hutcheson Philo. 2003. “Net Effects of Institutional Type on Baccalaureate Degree Attainment of Traditional Students.” Community College Review 31(2):1–20. doi: 10.1177/009155210303100201.

- Clark Burton. 1960a. “The Cooling-Out Function in Higher Education.” American Journal of Sociology 65(6):569–76. doi: 10.1086/222787.

- Clark Burton. 1960b. The Open Door College: A Case Study. New York: McGraw-Hill.

- Cooper Harris, Hedges Larry V., and Valentine Jeffrey C. 2009. The Handbook of Research Synthesis and Meta-analysis, 2nd ed. New York: Russell Sage Foundation.

- Deeks Jonathan J., Higgins Julian P. T., and Altman Douglas G. 2011a. “Effect Measures for Dichotomous Outcomes.” Chap. 9.2.2 in Cochrane Handbook for Systematic Reviews of Interventions (Version 5.1.0), edited by Higgins JPT, and Green S. http://handbook-5-1.cochrane.org.

- Deeks Jonathan J., Higgins Julian P. T., and Altman Douglas G. 2011b. “Heterogeneity.” Chap. 9.5 in Cochrane Handbook for Systematic Reviews of Interventions (Version 5.1.0), edited by Higgins JPT, and Green S. http://handbook-5-1.cochrane.org.

- Denning Jeffrey T. 2017. “College on the Cheap: Consequences of Community College Tuition Reductions.” American Economic Journal: Economic Policy 9(2):155–88.

- *Dietrich Cecil C., and Lichtenberger Eric J. 2015. “Using Propensity Score Matching to Test the Community College Penalty Assumption.” Review of Higher Education 38(2):193–219. doi: 10.1353/rhe.2015.0013.

- Dougherty Kevin. 1994. The Contradictory College: The Conflicting Origins, Impacts, and Futures of the Community College. Albany, NY: SUNY Press.

- Dowd Alicia C. 2007. “Community Colleges as Gateways and Gatekeepers: Moving beyond the Access ‘Saga’ toward Outcome Equity.” Harvard Educational Review 77(4):407–19. doi: 10.17763/haer.77.4.1233g31741157227.

- *Doyle William R. 2009. “The Effect of Community College Enrollment on Bachelor’s Degree Completion.” Economics of Education Review 28(2):199–206. doi: 10.1016/j.econedurev.2008.01.006.

- Enders Craig K., and Tofighi Davood. 2007. “Centering Predictor Variables in Cross-Sectional Multilevel Models: A New Look at an Old Issue.” Psychological Methods 12(2):121–38. doi: 10.1037/1082-989X.12.2.121.

- *Galloway Yvette C. 2000. “The Success of Community College Transfer Students as Compared to Native Four-Year Students at a Major Research University: A Look at Graduation Rates and Academic and Social Integration Factors.” EdD dissertation, College of Education, North Carolina State University, Raleigh.

- *Garcia Phillip. 1994. “Graduation and Time to Degree: A Research Note from the California State University.” Paper presented at the Association for Institutional Research Annual Forum, New Orleans, LA.

- *Glass J. Conrad, and Harrington Anthony R. 2002. “Academic Performance of Community College Transfer Students and ‘Native’ Students at a Large State University.” Community College Journal of Research & Practice 26(5):415–30. doi: 10.1080/02776770290041774.

- Goldrick-Rab Sara. 2010. “Challenges and Opportunities for Improving Community College Student Success.” Review of Educational Research 80:437–69. doi: 10.3102/0034654310370163.

- Goldrick-Rab Sara, and Kendall Nancy. 2014. Redefining College Affordability: Securing America’s Future with a Free Two Year College Option. https://www.luminafoundation.org/files/resources/redefining-college-affordability.pdf.