Abstract

Introduction

Slow patient accrual in cancer clinical trials is a persistent concern. In 2021, the University of Kansas Comprehensive Cancer Center (KUCC), an NCI-designated comprehensive cancer center, implemented the Curated Cancer Clinical Outcomes Database (C3OD) to perform trial feasibility analyses using real-time electronic medical record data. In this study, we propose a Bayesian hierarchical model to evaluate annual cancer clinical trial accrual performance.

Methods

The Bayesian hierarchical model uses Poisson models to describe the accrual performance of individual cancer clinical trials and a hierarchical component to describe the variation in performance across studies. Additionally, this model evaluates the impacts of the C3OD and the COVID-19 pandemic using posterior probabilities across evaluation years. The performance metric is the ratio of the observed accrual rate to the target accrual rate.

Results

Posterior medians of the annual accrual performance at the KUCC from 2018 to 2023 are 0.233, 0.246, 0.197, 0.150, 0.254, and 0.340. The COVID-19 pandemic partly explains the drop in performance in 2020 and 2021. The posterior probability that annual accrual performance is better with C3OD in 2023 than pre-pandemic (2019) is 0.935.

Conclusions

This study comprehensively evaluates the annual performance of clinical trial accrual at the KUCC, revealing a negative impact of COVID-19 and an ongoing positive impact of C3OD implementation. Two sensitivity analyses further validate the robustness of our model. Evaluating annual accrual performance across clinical trials is essential for a cancer center. The performance evaluation tools described in this paper are highly recommended for monitoring clinical trial accrual.

Keywords: Patient recruitment, Performance assessment, Bayesian methods

1. Introduction

Patient accrual in cancer clinical trials is challenging. According to recent research, only about 5% of adult cancer patients participate in clinical trials [1,2]. Slow accrual may lead to multiple negative outcomes, including longer study completion times, lower statistical power, and early study termination. In 2010, the National Cancer Institute (NCI) and the American Society of Clinical Oncology (ASCO) hosted a Cancer Trial Accrual Symposium, where new interventions to facilitate clinical trial enrollment were identified [3]. Three new interventions highlighted after the symposium include using available site data for patient recruitment, developing clinical trial management tools to evaluate site progress, and implementing site and clinical trialist performance standards that qualify clinical investigators based in part on their accrual performance.

The University of Kansas Comprehensive Cancer Center (KUCC) is one of the fifty-three NCI-designated comprehensive cancer centers. The KUCC Biostatistics and Informatics Shared Resource (BISR) Core develops innovative tools to support and enhance patient accrual, echoing the suggestions of the 2010 NCI-ASCO Cancer Trial Accrual Symposium. In 2018, the BISR Core developed the Curated Cancer Clinical Outcomes Database (C3OD) to accelerate eligibility screening [4]. The C3OD centralizes real-time data from multiple sources and platforms, including electronic medical records, the tumor registry, the biospecimen repository, and other data sources, allowing researchers to screen and recruit potential clinical trial participants more rapidly. In 2020, the BISR Core standardized monitoring tools and processes to improve the efficiency and reliability of investigator-initiated trials (IITs) sponsored by internal or external funding agencies [5]. Starting in 2021, the KUCC Executive Resourcing Committee made C3OD feasibility analysis a requirement for all KUCC trials.

Patient accrual monitoring is essential for cancer studies due to potentially small patient populations and prolonged recruitment time. The BISR Core has developed multiple innovative Bayesian models for patient accrual monitoring. Gajewski et al. [6] introduced the idea of predicting accrual using the Bayesian framework. Jiang et al. extended the model using adaptive priors [7] and developed an R package and a smartphone application to apply the Bayesian accrual model for interim reviews of clinical studies [8]. Liu et al. developed a Bayesian accrual model with the web-based KUCC Accrual Application, which provides accrual information such as the predicted completion date and probability of achieving accrual targets for all active cancer studies at the KUCC [9]. Liu et al. also proposed a Bayesian multicenter accrual model by combining a subject accrual model and a varying center activation time model [10].

Although the KUCC Accrual Application provides daily updates on patient accrual for each active study, there is an increasing need to evaluate overall accrual performance across studies by year. A 2021 study assessed the accrual sufficiency of Phase 2 and 3 genitourinary cancer clinical trials marked completed or terminated on ClinicalTrials.gov [11]. Accrual sufficiency was defined as the ratio of actual accrual to the pre-specified targeted number of patients (anticipated accrual). A binary outcome of sufficient accrual was calculated using a cutoff of 85% [11,12]. However, while this method can suggest sufficient accrual of an individual cancer clinical trial, it cannot quantify individual trial accrual performance or compare accrual performance between trials. In a 2016 study, the Ohio State University Comprehensive Cancer Center (OSUCCC) evaluated the enhancement of patient enrollment using an annual accrual rate [13]. This annual accrual rate was calculated as the number of patients accrued to clinical studies in a given calendar year divided by the number of new analytical cases seen at the cancer center for that same year, as determined by the tumor registry. Although this method could estimate annual accrual performance, it does not show cumulative accrual performance since study activation.
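For comparison with the model-based approach developed later, the two existing metrics described above can be computed directly. The Python sketch below uses hypothetical counts and illustrative field names; treating a ratio of exactly 85% as sufficient is an assumption, since the boundary handling of the cutoff is not stated here.

```python
from dataclasses import dataclass


@dataclass
class CompletedTrial:
    """Hypothetical record of a closed trial (field names are illustrative)."""
    actual_accrual: int
    anticipated_accrual: int


def accrual_sufficiency(trial: CompletedTrial) -> float:
    """Ratio of actual accrual to the pre-specified target (anticipated accrual)."""
    return trial.actual_accrual / trial.anticipated_accrual


def is_sufficient(trial: CompletedTrial, cutoff: float = 0.85) -> bool:
    """Binary outcome; counting a ratio equal to the cutoff as sufficient is assumed."""
    return accrual_sufficiency(trial) >= cutoff


def annual_accrual_rate(accrued_in_year: int, new_analytic_cases: int) -> float:
    """Patients accrued to studies in a calendar year divided by the
    tumor-registry count of new analytic cases seen in that year."""
    return accrued_in_year / new_analytic_cases


trials = [CompletedTrial(34, 40), CompletedTrial(12, 40)]
print([round(accrual_sufficiency(t), 3) for t in trials])  # [0.85, 0.3]
print([is_sufficient(t) for t in trials])                  # [True, False]
print(annual_accrual_rate(150, 3000))                      # 0.05
```

The binary flag treats a trial at 84% of its target the same as one at 30%, which is the loss of information noted above.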

In 2020, the U.S. Clinical and Translational Science Award (CTSA) Consortium developed a standard metric called Median Accrual Ratio (MAR) to assess the performance of clinical trial recruitment. MAR is defined as the median across a set of clinical trials of the following within-trial ratio [14]:
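
$$\text{Accrual ratio} = \frac{\text{number of participants accrued} \,/\, \text{months since study activation}}{\text{target number of participants} \,/\, \text{planned accrual period in months}}$$
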
Corregano et al. developed a similar metric called the Accrual Index [15]. However, the within-trial ratio equals 0 whenever no participants have been accrued at the time of evaluation, so the MAR equals 0 whenever more than half of the evaluated trials have not yet enrolled anyone. Hence, the MAR may not accurately evaluate the accrual performance of cancer clinical trials, given their longer recruitment periods.
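A small Python sketch illustrates the within-trial ratio and the MAR on a hypothetical portfolio in which most trials have not yet enrolled; the function names and numbers are illustrative and are not part of the CTSA specification.

```python
from statistics import median


def within_trial_ratio(accrued: int, months_open: float,
                       target: int, planned_months: float) -> float:
    """Observed accrual rate divided by the target accrual rate."""
    return (accrued / months_open) / (target / planned_months)


def median_accrual_ratio(trials) -> float:
    """MAR: median of the within-trial ratios across a set of trials."""
    return median(within_trial_ratio(*t) for t in trials)


# Hypothetical portfolio: (accrued, months open, target, planned months).
portfolio = [
    (0, 6, 40, 24),    # long-recruitment trial with no enrollment yet
    (0, 9, 30, 36),
    (0, 4, 20, 24),
    (5, 12, 40, 24),   # ratio 0.25
    (10, 12, 20, 24),  # on target, ratio 1.0
]
print(median_accrual_ratio(portfolio))  # 0.0
```

Three of the five trials have zero accrual, so the MAR is 0 even though one trial is exactly on target.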

The remainder of the manuscript is structured as follows. In Section 2, we present the motivating data collected at the KUCC and detail both the primary and sensitivity analyses. Given the primary interest of the KUCC Clinical Trials Office in assessing a cancer center's annual subject accrual performance, we first propose a Bayesian hierarchical model with noninformative priors and no covariate adjustment, outlined in Section 2.1. This primary model combines the accrual performance of individual studies and the variation in performance across studies within a specific timeframe. In addition, recognizing that the speed of subject accrual may vary among study types and acknowledging the value of Bayesian informative priors, we propose two additional models for sensitivity analyses, discussed in Section 2.2. In Section 3, we present all analysis results. The discussion and conclusions are presented in Section 4. We conclude that our proposed primary model is a robust and effective tool for evaluating the annual accrual performance of cancer clinical trials at a cancer center. This model allows us to evaluate accrual performance during the COVID-19 pandemic and the impact of the C3OD at the KUCC.

2. Methods

2.1. Data and primary analysis

We extract data on all active cancer clinical trials hosted on the KUCC Accrual App as of December 31 of each year from 2018 through 2023. Suppose that the accrual target of a cancer clinical trial at the KUCC is to recruit $N$ participants within $T$ months and that, at the time of observation, $n$ patients have been recruited within $t$ months. If a trial was completed before the date of data extraction, $t$ is reduced to the actual trial operation time.

We assume that for each clinical trial, the number of enrolled participants ($n$) after $t$ months of enrollment follows a Poisson distribution, $n \sim \text{Poisson}(\lambda)$, where $\lambda$ represents the expected number of participants accrued over that fixed period. We denote by $N^*$ the adjusted accrual target given $t$ months. Because $N^*$ is proportional to the accrual time at the targeted accrual speed, we calculate it as $N^* = N t / T$. In addition, we introduce a non-negative parameter $\theta$ as the accrual performance of this trial and set $\lambda = \theta N^*$. The observed accrual performance of a single clinical trial can then be rewritten as the ratio of the observed accrual rate to the target accrual rate, which is exactly the within-trial accrual ratio calculated in the CTSA Consortium paper [14]:

$$\hat{\theta} = \frac{n}{N^*} = \frac{n / t}{N / T}.$$

The accrual performance of a single clinical trial is equal to or greater than 1 if accrual is on target or exceeds the target.

Because study startup is typically time-consuming, we give each study at least a year as a “burn-in” period before evaluating its annual performance. For example, for the 2018 evaluation, we evaluate only studies that started in 2017 and use the dataset created on December 31, 2018. Moreover, for the $i$th study record ($i = 1, \ldots, 532$), we calculate a year index $j_i = \text{Year}_i - 2017$, where $\text{Year}_i$ represents the year of evaluation, so that $j_i \in \{1, \ldots, 6\}$ for 2018 through 2023.
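The per-trial quantities defined in this section reduce to simple arithmetic. The following Python sketch computes the prorated accrual target, the observed performance (the ratio of the observed to the target accrual rate), and the year index (evaluation year minus 2017); the function names and the example trial are hypothetical.

```python
def adjusted_target(target_n: int, target_months: float, months_open: float) -> float:
    """N* = N * t / T: the accrual target prorated to the months actually open."""
    return target_n * months_open / target_months


def observed_performance(accrued: int, target_n: int,
                         target_months: float, months_open: float) -> float:
    """Observed performance n / N*, equivalently (n / t) / (N / T)."""
    return accrued / adjusted_target(target_n, target_months, months_open)


def year_index(evaluation_year: int) -> int:
    """Map evaluation years 2018..2023 to year indices 1..6."""
    return evaluation_year - 2017


# Hypothetical trial: target of 40 patients in 24 months; 5 enrolled in 12 months.
print(observed_performance(5, 40, 24, 12))   # 0.25
# Enrolling 20 patients in the same 12 months would be exactly on target.
print(observed_performance(20, 40, 24, 12))  # 1.0
print(year_index(2018), year_index(2023))    # 1 6
```

For a trial completed before the extraction date, `months_open` would be the actual operation time, as described above.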

The primary Bayesian hierarchical model of performance evaluation is given by , where , and

The hyper-prior distributions are

As described above, the trial-level parameter is the accrual performance of an individual cancer clinical trial. The major inferential parameter is the annual accrual performance across all active cancer clinical trials at the KUCC in a given evaluation year. This primary Bayesian hierarchical model accounts for within- and across-trial variation using the Poisson and hierarchical components, respectively.

To further assess the impact of C3OD on patient accrual following its implementation in 2021, we construct five distributions representing the differences in annual accrual performance across different years: between 2023 (minimally affected by the COVID-19 pandemic and the third year with C3OD) and 2022 (slightly affected by the COVID-19 pandemic and the second year with C3OD), between 2023 and 2021 (affected by the COVID-19 pandemic and the first year with C3OD), between 2023 and 2019, between 2022 and 2021, and between 2022 and 2019 (prior to the COVID-19 outbreak). We then analyze the density curves of these distributions and calculate the area under the density curve from 0 to infinity to determine the posterior probability that one year's performance is superior to the other.
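Given MCMC draws, the area under the density of a difference to the right of 0 is estimated by the fraction of draws in which the difference is positive. The Python sketch below assumes paired draws taken from the same MCMC iterations, so that the difference is computed iteration by iteration; the simulated lognormal draws are purely illustrative and are not the KUCC posterior.

```python
import numpy as np


def prob_greater(draws_a: np.ndarray, draws_b: np.ndarray) -> float:
    """Monte Carlo estimate of P(a > b | data) from paired MCMC draws,
    i.e., the posterior mass of the difference a - b above 0."""
    diff = np.asarray(draws_a) - np.asarray(draws_b)
    return float(np.mean(diff > 0))


# Illustrative draws only (simulated, not the KUCC posterior).
rng = np.random.default_rng(2024)
perf_late = rng.lognormal(mean=np.log(0.30), sigma=0.15, size=50_000)
perf_early = rng.lognormal(mean=np.log(0.25), sigma=0.15, size=50_000)
print(round(prob_greater(perf_late, perf_early), 3))
```

Counting positive differences avoids estimating a density curve and integrating it numerically.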

The posterior distributions of the model parameters are analytically intractable, so we use Markov chain Monte Carlo (MCMC) sampling with Metropolis-Hastings updates to estimate them. Specifically, in the statistical software R, we use the package R2OpenBUGS [16] to call OpenBUGS (version 3.2.3 rev 2012) to run 50,000 MCMC iterations (after discarding 10,000 iterations as burn-in) and report the posterior medians, standard deviations (SDs), and 95% Bayesian credible intervals (CIs).
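OpenBUGS chooses its own samplers, so the following is only a minimal, hand-written illustration of a random-walk Metropolis-Hastings update for a single trial's performance parameter under the Poisson model described above. The Normal(0, 10²) prior on the log performance, the step size, and the function names are assumptions for illustration, and the sketch omits the hierarchical component entirely. It runs 60,000 iterations and discards the first 10,000, mirroring the iteration counts above.

```python
import numpy as np


def log_post(phi: float, n: int, n_star: float, prior_sd: float = 10.0) -> float:
    """Log posterior of phi = log(theta), up to a constant, for
    n ~ Poisson(theta * N*) with an assumed Normal(0, prior_sd^2) prior on phi."""
    theta = np.exp(phi)
    return n * phi - theta * n_star - 0.5 * (phi / prior_sd) ** 2


def rw_metropolis(n: int, n_star: float, iters: int = 60_000, burn_in: int = 10_000,
                  step: float = 0.3, seed: int = 1) -> np.ndarray:
    """Random-walk Metropolis-Hastings on phi; returns post-burn-in theta draws."""
    rng = np.random.default_rng(seed)
    phi = 0.0
    current = log_post(phi, n, n_star)
    draws = np.empty(iters)
    for it in range(iters):
        proposal = phi + step * rng.standard_normal()
        candidate = log_post(proposal, n, n_star)
        # Symmetric proposal: accept with probability min(1, posterior ratio).
        if np.log(rng.uniform()) < candidate - current:
            phi, current = proposal, candidate
        draws[it] = phi
    return np.exp(draws[burn_in:])


# Hypothetical trial: 30 enrolled against a prorated target of 60.
theta = rw_metropolis(n=30, n_star=60.0)
print(np.median(theta), np.percentile(theta, [2.5, 97.5]))
```

With this nearly flat prior on the log scale, the posterior median should sit close to the observed ratio of 0.5, slightly below it.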

2.2. Sensitivity analyses

2.2.1. Sensitivity analysis by adjusting for a suspension period

Patient recruitment at the KUCC was suspended from April 2 to May 7, 2021, because the informed consent process was under evaluation. Hence, we perform two analyses, one adjusting and one not adjusting for the suspension period. Under the adjustment rule, for studies that ended after April 2 and were activated before May 7, the days that overlap with the suspension period are subtracted from the study's accrual time; otherwise, no adjustment is needed. If the two analyses show no substantial difference in results, we report the analysis with adjustment; otherwise, we report and compare both.
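The overlap rule amounts to intersecting each study's active window with the suspension window. The Python sketch below counts overlapping calendar days inclusively and converts days to months using 365.25/12 days per month; both conventions and the function names are assumptions rather than the KUCC implementation. For ongoing studies, the end date would be the data extraction date.

```python
from datetime import date

SUSPENSION_START = date(2021, 4, 2)
SUSPENSION_END = date(2021, 5, 7)


def suspension_overlap_days(activated: date, ended: date) -> int:
    """Days (counting both endpoints, an assumption) during which the study's
    active window [activated, ended] overlaps the suspension window."""
    start = max(activated, SUSPENSION_START)
    stop = min(ended, SUSPENSION_END)
    return max(0, (stop - start).days + 1)


def adjusted_months_open(activated: date, ended: date,
                         days_per_month: float = 365.25 / 12) -> float:
    """Accrual time in months after subtracting overlapping suspension days."""
    open_days = (ended - activated).days + 1  # inclusive, matching the overlap count
    return (open_days - suspension_overlap_days(activated, ended)) / days_per_month


print(suspension_overlap_days(date(2020, 1, 1), date(2021, 12, 31)))  # 36
print(suspension_overlap_days(date(2021, 4, 20), date(2021, 12, 31)))  # 18
print(suspension_overlap_days(date(2019, 1, 1), date(2021, 3, 1)))    # 0
```

Studies that ended before April 2 or were activated after May 7 produce an empty intersection and are left unadjusted, as the rule requires.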

2.2.2. Sensitivity analysis by adjusting for study type

To account for potential variation in accrual speed across study types, we add a study-type level, derived from the study title, to the Bayesian hierarchical model:

The hyper-prior distributions are

Note that $i$ is the study record index ($i = 1, \ldots, 532$), $j$ is the year index ($j = 1, \ldots, 6$), and $k$ is the study type index ($k = 1, 2, 3$ for Phase 1, 2, and 3 studies and $k = 4$ for other studies, e.g., ancillary studies). Seamless Phase 1/2 and 2/3 studies are coded as Phase 2 and Phase 3 studies, respectively. Therefore, $\mu_{jk}$ ($k = 1, 2, 3$) and $\mu_{j4}$ represent the expected annual accrual performance for Phase $k$ and other studies in the $j$th year, respectively.

By replacing $\mu_{jk}$ with $\alpha_j + \beta_k$, where $\beta_4 = 0$, we construct a more parsimonious model that reduces the number of parameters to be estimated by 15 (from $6 \times 4 = 24$ year-by-type parameters to six $\alpha_j$'s and three $\beta_k$'s). In this model, the $\alpha_j$'s are the annual accrual performance of other studies, and their trend is expected to be consistent with the trend in the unadjusted annual accrual performance from the primary model. $\beta_k$ represents the difference in annual accrual performance between Phase $k$ studies and other studies within the same year. Finally, we use the Bayesian Deviance Information Criterion (DIC) to compare the two study-type models and select one for the study-type-adjusted analysis.
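For reference, DIC is the posterior mean deviance $\bar{D}$ plus the effective number of parameters $p_D = \bar{D} - D(\bar{\theta})$, following the standard definition of Spiegelhalter et al.; lower values are preferred. The Python sketch below computes it for a Poisson likelihood from a matrix of posterior draws of trial-level performance. The function names are hypothetical, and the Jeffreys-type gamma draws in the usage lines are a stand-in for real MCMC output.

```python
import math

import numpy as np


def poisson_deviance(n: np.ndarray, lam: np.ndarray) -> float:
    """-2 times the Poisson log-likelihood: sum of n*log(lam) - lam - log(n!)."""
    log_fact = np.array([math.lgamma(k + 1) for k in n])
    loglik = n * np.log(lam) - lam - log_fact
    return float(-2.0 * loglik.sum())


def dic(n: np.ndarray, n_star: np.ndarray, theta_draws: np.ndarray):
    """Return (DIC, pD) with pD = Dbar - D(theta_bar).
    theta_draws has shape (iterations, trials); lambda_i = theta_i * N*_i."""
    d_bar = float(np.mean([poisson_deviance(n, th * n_star) for th in theta_draws]))
    d_hat = poisson_deviance(n, theta_draws.mean(axis=0) * n_star)
    p_d = d_bar - d_hat
    return d_bar + p_d, p_d


# Hypothetical data: enrolled counts and prorated targets for four trials.
rng = np.random.default_rng(7)
n = np.array([3, 10, 0, 6])
n_star = np.array([6.0, 8.0, 5.0, 12.0])
# Stand-in posterior draws: Gamma(n + 0.5, rate = N*) per trial.
theta_draws = rng.gamma(shape=n + 0.5, scale=1.0 / n_star, size=(5_000, n.size))
value, p_d = dic(n, n_star, theta_draws)
print(round(value, 2), round(p_d, 2))
```

Because these stand-in draws are independent across four trials, $p_D$ should come out near 4; partial pooling in a hierarchical model would shrink it.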

2.2.3. Sensitivity analysis by incorporating previous year's result as a prior

In the primary Bayesian hierarchical model, we assume that the accrual performance in each year is independent of that in the other years, with each year assigned an independent normal prior. To explore the potential influence of past performance and leverage the flexibility of the Bayesian framework, we propose a sensitivity analysis using a Bayesian hierarchical Normal Dynamic Linear Model (NDLM), specified as follows.

The hyper-prior distribution is

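A first-order NDLM links each year's level to the previous year's. The full model is fit jointly by MCMC within the hierarchy; the Python sketch below is only a simplified, conjugate analogue under stated assumptions: a Kalman forward filter for a local level model with known variances, in which each year's prior mean is the previous year's posterior mean and the prior variance adds an evolution variance. The inputs are hypothetical log-scale yearly estimates, not KUCC results, and the function name is illustrative.

```python
import numpy as np


def local_level_filter(y, obs_var, evol_var, m0=0.0, c0=1e6):
    """Forward filter for a first-order NDLM (local level model):
        y_j  = mu_j + v_j,      v_j ~ N(0, obs_var[j])
        mu_j = mu_{j-1} + w_j,  w_j ~ N(0, evol_var)
    Returns filtered means and variances of mu_j given y_1, ..., y_j."""
    m, c = m0, c0
    means, variances = [], []
    for yj, vj in zip(y, obs_var):
        r = c + evol_var          # prior variance: last year's posterior plus drift
        k = r / (r + vj)          # Kalman gain
        m = m + k * (yj - m)      # prior mean is last year's posterior mean
        c = (1.0 - k) * r
        means.append(m)
        variances.append(c)
    return np.array(means), np.array(variances)


# Hypothetical yearly estimates of log accrual performance and their variances.
log_perf = np.log([0.20, 0.22, 0.18, 0.15, 0.24, 0.30])
means, variances = local_level_filter(log_perf, obs_var=[0.03] * 6, evol_var=0.02)
print(np.round(np.exp(means), 3))
```

With an evolution variance of 0 the filter pools all years equally, and as it grows each year's estimate approaches that year's own data, which corresponds to the independent-years assumption of the primary model.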
3. Results

We report the analysis adjusted for the suspension period at the KUCC because its statistical inference did not differ substantially from that of the unadjusted analysis.

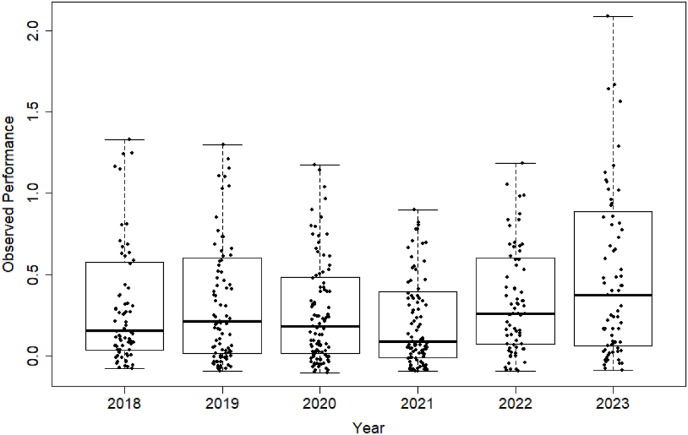

Table 1 summarizes the observed accrual performance of cancer clinical trials at the KUCC by evaluation year, and the scattered boxplots in Fig. 1 visualize it. In each scattered boxplot, data points more than 1.5 times the interquartile range (IQR) below the first quartile or more than 1.5 times the IQR above the third quartile are considered outliers and are omitted from the figure to better illustrate the data distribution.
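The 1.5 × IQR rule above (Tukey's fences) can be sketched in Python as follows. Quartiles here use NumPy's default linear interpolation, which is an assumption and may differ slightly from the plotting software used for Fig. 1; the example values are hypothetical.

```python
import numpy as np


def drop_tukey_outliers(values):
    """Keep points within [Q1 - 1.5*IQR, Q3 + 1.5*IQR] (Tukey's fences)."""
    x = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    keep = (x >= q1 - 1.5 * iqr) & (x <= q3 + 1.5 * iqr)
    return x[keep]


# Hypothetical observed performances; 8.0 lies above the upper fence.
print(drop_tukey_outliers([0.0, 0.1, 0.2, 0.3, 8.0]))
```

Omitting such points only affects the display; as noted below, validated high values remain in the analysis.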

Table 1.

Summary of observed accrual performance of cancer clinical trials at the KUCC by evaluation year.

| Evaluation Year | Number of Clinical Trials | Observed Accrual Performance: Median (Min, Max) |
|---|---|---|
| 2018 | 76 | 0.204 (0, 2.502) |
| 2019 | 91 | 0.230 (0, 6.471) |
| 2020 | 107 | 0.177 (0, 8.177) |
| 2021 | 104 | 0.077 (0, 8.156) |
| 2022 | 75 | 0.276 (0, 2.227) |
| 2023 | 79 | 0.326 (0, 11.102) |

Fig. 1.

Scattered boxplots of observed accrual performance of cancer clinical trials at the KUCC by evaluation year. Data points are jittered horizontally to reduce overlap.

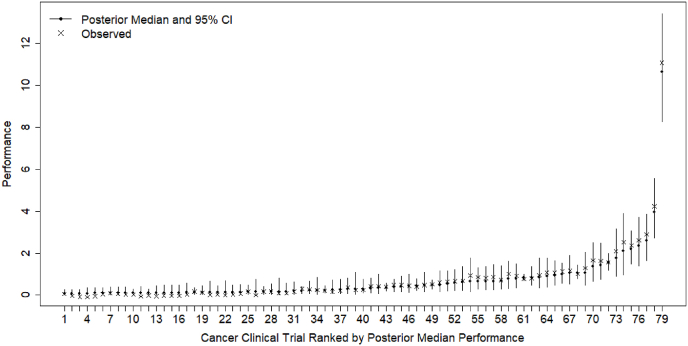

Fig. 2 shows the posterior and observed accrual performance of all active cancer clinical trials at the KUCC in 2023, and Figures S1–S5 in the supplemental files show the corresponding results for previous evaluation years. In these figures, the observed accrual performance and posterior results of individual cancer clinical trials are plotted as crosses and bullet points, respectively, and ranked in ascending order. The vertical lines represent the posterior 95% CIs; a wider CI indicates greater posterior uncertainty in accrual performance. Fig. 2 also shows higher uncertainty in a trial's accrual performance when it is much higher or lower than the average accrual performance across trials estimated in that evaluation year. Although some trials have high, outlying accrual performance, these values were validated by the KUCC Clinical Trials Office, so we retained them.

Fig. 2.

Posterior and observed accrual performance of all active cancer clinical trials at the KUCC in 2023.

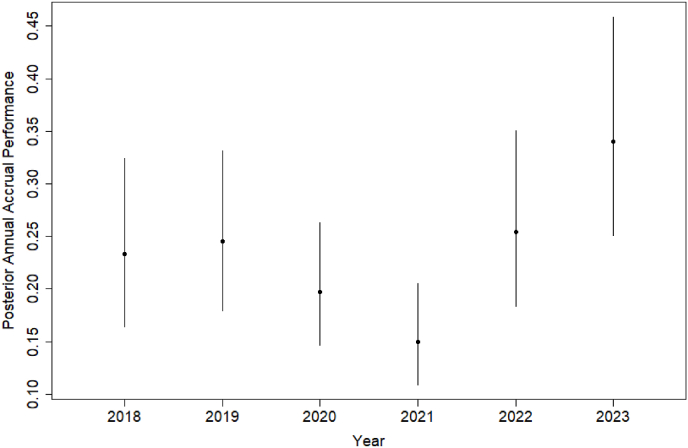

Table 2 summarizes the posterior distributions of annual accrual performance of all cancer clinical trials at the KUCC by evaluation year, and Fig. 3 shows the posterior medians and 95% CIs as line plots. The posterior median annual accrual performance at the KUCC increased from 0.233 in 2018 to 0.246 in 2019, decreased to 0.197 in 2020 and 0.150 in 2021, and rebounded to 0.254 in 2022 and 0.340 in 2023. The COVID-19 pandemic, which began in 2020, disrupted patient care and increased staff turnover, resulting in low accrual to clinical trials in the U.S. [17]. This impact was especially pronounced before 2022, prior to the widespread availability of COVID-19 vaccines and antiviral treatments under the FDA's Emergency Use Authorizations. The trend in our results reflects this negative impact of the COVID-19 pandemic.

Table 2.

Summary of posterior distributions of annual accrual performance of all cancer clinical trials at the KUCC by evaluation year.

| Evaluation Year | Number of Clinical Trials | Posterior Median | Posterior SD | 95% CI |
|---|---|---|---|---|
| 2018 | 76 | 0.233 | 0.041 | (0.165, 0.324) |
| 2019 | 91 | 0.246 | 0.039 | (0.180, 0.331) |
| 2020 | 107 | 0.197 | 0.030 | (0.146, 0.263) |
| 2021 | 104 | 0.150 | 0.025 | (0.109, 0.206) |
| 2022 | 75 | 0.254 | 0.043 | (0.184, 0.351) |
| 2023 | 79 | 0.340 | 0.053 | (0.251, 0.459) |

Fig. 3.

Posterior distributions of annual accrual performance of cancer clinical trials at the KUCC by evaluation year.

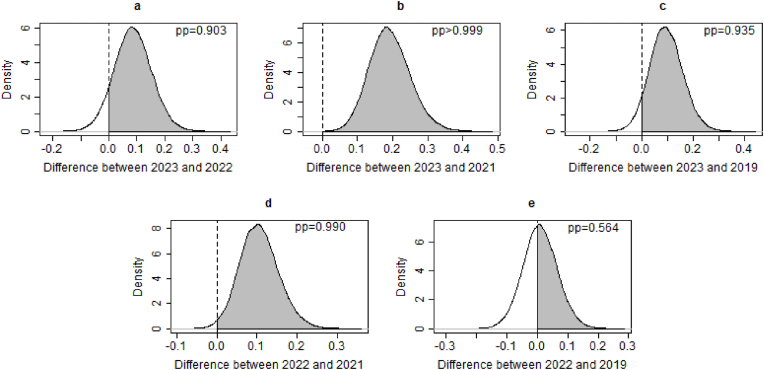

The KUCC C3OD has supported the Executive Resourcing Committee's review of clinical trials and patient enrollment since 2021. Fig. 4 displays the posterior distributions of the differences in annual accrual performance between years before and after the implementation of C3OD. First, the posterior probabilities of superior annual accrual performance in 2023 compared with 2022 and 2021 are 0.903 and greater than 0.999, respectively, and the posterior probability that annual accrual performance in 2022 is better than in 2021 is 0.990. Second, the posterior probabilities of better performance in 2023 and 2022 compared with 2019 are 0.935 and 0.564, respectively. These results suggest a return to pre-pandemic accrual levels in 2022 and an improvement in accrual in 2023 facilitated by C3OD, and thus continuous gains in subject accrual with the use of C3OD. However, a substantial increase was not observed immediately after C3OD's implementation. This delay may be attributable to the time required for screening and consenting after C3OD identifies potential participants. Consequently, future analyses should assess C3OD's influence over a longer period and allow a longer “burn-in” period for annual accrual evaluation.

Fig. 4.

Posterior distributions of differences in annual accrual performance across different years. Note that pp stands for posterior probability.

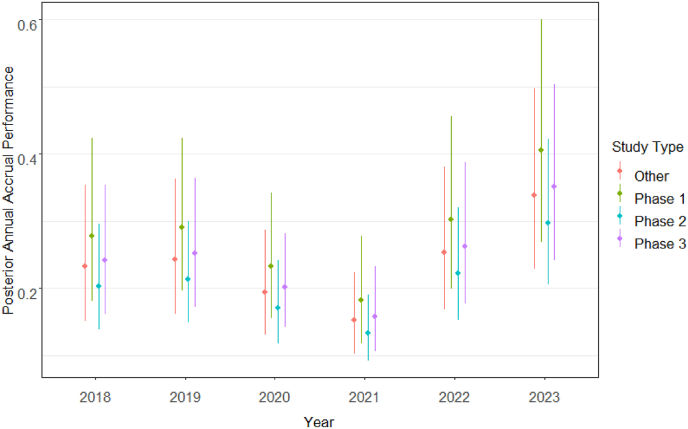

The study-type sensitivity analysis favored the parsimonious model because of its efficiency (fewer parameters) and a slightly lower Deviance Information Criterion (1971 vs. 1972). Table 3 details the estimated annual accrual performance for each study type and evaluation year based on this model, and Fig. 5 shows the posterior medians and 95% credible intervals as line plots. The trends in Fig. 5 align with those in the unadjusted annual accrual performance in Fig. 3, with Phase 1 studies exhibiting the highest accrual performance, followed by Phase 3 and other studies, and Phase 2 studies exhibiting the lowest. Adjusting for study type reveals an improvement in accrual for 2023 compared to 2022 (), 2021 () and 2019 ().

Table 3.

Summary of posterior distributions of annual accrual performance of all cancer clinical trials at the KUCC by study type and evaluation year.

| Evaluation Year | Study Type | Number of Studies (%) | Posterior Median | Posterior SD | 95% CI |
|---|---|---|---|---|---|
| 2018 | Phase 1 | 12 (15.8%) | 0.277 | 0.063 | (0.181, 0.424) |
| | Phase 2 | 28 (36.8%) | 0.204 | 0.041 | (0.138, 0.297) |
| | Phase 3 | 22 (28.9%) | 0.241 | 0.049 | (0.161, 0.354) |
| | Other | 14 (18.4%) | 0.233 | 0.052 | (0.151, 0.354) |
| 2019 | Phase 1 | 22 (24.2%) | 0.291 | 0.058 | (0.196, 0.424) |
| | Phase 2 | 35 (38.5%) | 0.213 | 0.039 | (0.149, 0.300) |
| | Phase 3 | 22 (24.2%) | 0.252 | 0.049 | (0.173, 0.364) |
| | Other | 12 (13.2%) | 0.243 | 0.051 | (0.162, 0.363) |
| 2020 | Phase 1 | 19 (17.8%) | 0.233 | 0.048 | (0.155, 0.343) |
| | Phase 2 | 31 (29.0%) | 0.170 | 0.032 | (0.118, 0.242) |
| | Phase 3 | 39 (36.4%) | 0.202 | 0.035 | (0.143, 0.281) |
| | Other | 18 (16.8%) | 0.195 | 0.040 | (0.131, 0.287) |
| 2021 | Phase 1 | 15 (14.4%) | 0.182 | 0.041 | (0.118, 0.278) |
| | Phase 2 | 43 (41.3%) | 0.134 | 0.025 | (0.092, 0.190) |
| | Phase 3 | 22 (21.2%) | 0.158 | 0.032 | (0.106, 0.233) |
| | Other | 24 (23.1%) | 0.153 | 0.031 | (0.103, 0.224) |
| 2022 | Phase 1 | 16 (21.3%) | 0.303 | 0.065 | (0.200, 0.456) |
| | Phase 2 | 25 (33.3%) | 0.222 | 0.043 | (0.152, 0.321) |
| | Phase 3 | 18 (24.0%) | 0.263 | 0.054 | (0.177, 0.388) |
| | Other | 16 (21.3%) | 0.253 | 0.055 | (0.168, 0.381) |
| 2023 | Phase 1 | 15 (19.0%) | 0.405 | 0.085 | (0.268, 0.601) |
| | Phase 2 | 25 (31.6%) | 0.297 | 0.055 | (0.206, 0.423) |
| | Phase 3 | 20 (25.3%) | 0.352 | 0.067 | (0.241, 0.504) |
| | Other | 19 (24.1%) | 0.339 | 0.069 | (0.228, 0.497) |

Fig. 5.

Posterior distributions of annual accrual performance of cancer clinical trials at the KUCC by study type and evaluation year.

The NDLM posterior distributions of annual accrual performance show discrepancies from the primary model only in the third decimal place (see Table S1 in supplemental files).

4. Discussion and conclusions

In this paper, we proposed three Bayesian hierarchical models to assess the annual clinical trial accrual performance of a cancer center and applied them to accrual data from 532 trials at the KUCC from 2018 to 2023. The primary model accounts for both within- and between-trial variation at a cancer center using a Poisson model and a hierarchical component. The other two models were used in the sensitivity analyses: one incorporates study type as a covariate in the primary model, and the other is an NDLM with an informative prior based on the previous year's performance evaluation.

We conclude that the primary model serves as a robust and satisfactory tool for assessing the annual accrual performance of cancer clinical trials at a cancer center, underscoring a key strength of this paper. This conclusion is supported by the sensitivity analyses with the KUCC accrual data, which reveal consistent trends and only minor deviations from the primary model's results. Moreover, the primary model is favored over the NDLM since the primary model's priors are noninformative and independent of the data. Another strength of the primary model is its capability to construct posterior probabilities to examine potential effects of policy changes or unforeseen events over time.

Through our application at the KUCC, we investigated accrual performance from 2018 to 2023 and identified a negative impact of the COVID-19 pandemic and a beneficial impact of C3OD, a tool designed to expedite eligibility screening at the KUCC. Although the KUCC maintained only 79 active studies in 2023, roughly 75% of its pre-pandemic peak, the median observed accrual performance reached a new peak of 0.326. This suggests that accrual performance evaluation and the closure of underperforming studies are essential for a research institute's development. These steps also agree with the recommendations of the 2010 NCI-ASCO Cancer Trial Accrual Symposium.

One limitation of this study is that the estimated performance of cancer clinical trial accrual may potentially be confounded by other factors, such as sponsor type, cancer type, and stage of investigation. For example, in the analysis of the KUCC accrual data, the current C3OD workflow does not consider the clustering that may occur among similar types of cancers with overlapping inclusion and exclusion criteria. Consequently, the impact of C3OD on accrual performance could be confounded by cancer type.

In summary, the main strength of this study is a robust and effective tool for evaluating the annual accrual performance of cancer clinical trials at a cancer center. The Bayesian tool provides important posterior quantities, such as the posterior distributions of individual study performance and of institutional accrual performance. It can also accommodate the assessment of temporal changes, e.g., policy changes (C3OD) and uncontrollable events (the pandemic). However, a notable limitation is the potential influence of additional factors on performance evaluation, e.g., trials that compete for the same patient pool. Hence, for future studies, we recommend adopting our proposed primary model and incorporating potential covariates, such as competing trials, in sensitivity analyses. Incorporating more relevant covariates may yield more accurate performance estimates.

When discussing the success of a cancer clinical trial, we often focus on its clinical outcome. However, achieving patient accrual is the foundation of trial success. Evaluating annual accrual performance across clinical trials is essential to building a successful clinical research portfolio for a cancer center. The performance evaluation tools described in this paper are applicable and extensible, so we highly recommend them for institutions conducting clinical trials.

Funding

This research is partially funded by the NCI University of Kansas Comprehensive Cancer Center (KUCC, P30CA168524).

CRediT authorship contribution statement

Xiaosong Shi: Writing – review & editing, Writing – original draft, Visualization, Validation, Software, Methodology, Formal analysis, Conceptualization. Dinesh Pal Mudaranthakam: Writing – review & editing, Validation, Software, Funding acquisition, Data curation. Jo A. Wick: Writing – review & editing, Supervision, Methodology, Funding acquisition. David Streeter: Writing – review & editing, Software, Data curation. Jeffrey A. Thompson: Writing – review & editing, Software, Funding acquisition, Data curation. Natalie R. Streeter: Writing – review & editing, Data curation, Conceptualization. Tara L. Lin: Writing – review & editing, Funding acquisition, Data curation, Conceptualization. Joseph Hines: Writing – review & editing, Data curation. Matthew S. Mayo: Writing – review & editing, Software, Methodology, Data curation, Conceptualization. Byron J. Gajewski: Writing – review & editing, Supervision, Methodology, Conceptualization.

Declaration of generative AI and AI-assisted technologies in the writing process

During the preparation of this work, the authors used ChatGPT and Google Gemini to improve the language and readability. After using these tools, the authors reviewed and edited the content as needed and took full responsibility for the content of the publication.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.conctc.2024.101281.

Appendix A. Supplementary data


Data availability

Data will be made available on request.

References

- 1. Guerra CE, Fleury ME, Byatt LP, et al. Strategies to advance equity in cancer clinical trials. American Society of Clinical Oncology Educational Book; 127–137.

- 2. Unger JM, Hershman DL, Till C, et al. “When offered to participate”: a systematic review and meta-analysis of patient agreement to participate in cancer clinical trials. JNCI: Journal of the National Cancer Institute; 113: 244–257.

- 3. Denicoff AM, McCaskill-Stevens W, Grubbs SS, et al. The National Cancer Institute–American Society of Clinical Oncology cancer trial accrual symposium: summary and recommendations. Journal of Oncology Practice; 9: 267–276.

- 4. Mudaranthakam DP, Thompson J, Hu J, et al. A curated cancer clinical outcomes database (C3OD) for accelerating patient recruitment in cancer clinical trials. JAMIA Open; 1: 166–171.

- 5. Mudaranthakam DP, Phadnis MA, Krebill R, et al. Improving the efficiency of clinical trials by standardizing processes for investigator initiated trials. Contemporary Clinical Trials Communications; 18: 100579.

- 6. Gajewski BJ, Simon SD, Carlson SE. Predicting accrual in clinical trials with Bayesian posterior predictive distributions. Stat. Med.; 27: 2328–2340.

- 7. Jiang Y, Simon S, Mayo MS, et al. Modeling and validating Bayesian accrual models on clinical data and simulations using adaptive priors. Stat. Med.; 34: 613–629.

- 8. Jiang Y, Guarino P, Ma S, et al. Bayesian accrual prediction for interim review of clinical studies: open source R package and smartphone application. Trials. 2016; 17. doi: 10.1186/s13063-016-1457-3.

- 9. Liu J, Wick JA, Mudaranthakam DP, et al. Accrual prediction program: a web-based clinical trials tool for monitoring and predicting accrual for early-phase cancer studies. Clin. Trials; 16: 657–664.

- 10. Liu J, Wick J, Jiang Y, et al. Bayesian accrual modeling and prediction in multicenter clinical trials with varying center activation times. Pharmaceut. Stat.; 19: 692–709.

- 11. Stensland K, Kaffenberger S, Canes D, et al. Assessing genitourinary cancer clinical trial accrual sufficiency using archived trial data. JCO Clinical Cancer Informatics; 614–622.

- 12. Carlisle B, Kimmelman J, Ramsay T, et al. Unsuccessful trial accrual and human subjects protections: an empirical analysis of recently closed trials. Clin. Trials; 12: 77–83.

- 13. Porter M, Ramaswamy B, Beisler K, et al. A comprehensive program for the enhancement of accrual to clinical trials. Ann. Surg. Oncol.; 23: 2146–2152.

- 14. Daudelin DH, Peterson LE, Selker HP. Pilot test of an accrual common metric for the NIH Clinical and Translational Science Awards (CTSA) consortium: metric feasibility and data quality. J. Clin. Trans. Sci. 2020; 5. doi: 10.1017/cts.2020.537.

- 15. Corregano L, Bastert K, Correa da Rosa J, et al. Accrual index: a real-time measure of the timeliness of clinical study enrollment. Clinical and Translational Science; 8: 655–661.

- 16. Sturtz S, Ligges U, Gelman A. R2OpenBUGS: a package for running OpenBUGS from R. R package version 3.2-3.2.1; 2020.

- 17. Boughey JC, Snyder RA, Kantor O, et al. Impact of the COVID-19 pandemic on cancer clinical trials. Ann. Surg. Oncol.; 28: 7311–7316.
