Abstract

An early achievement in language is carving a variable acoustic space into categories. The canonical story is that infants accomplish this by the second year, when only unsupervised learning is plausible. I challenge this view, synthesizing five lines of developmental, phonetic and computational work. First, unsupervised learning may be insufficient given the statistics of speech (including infant-directed). Second, evidence that infants “have” speech categories rests on tenuous methodological assumptions. Third, the fact that the ecology of the learning environment is unsupervised does not rule out more powerful error driven learning mechanisms. Fourth, several implicit supervisory signals are available to older infants. Finally, development is protracted through adolescence, enabling richer avenues for development. Infancy may be a time of organizing the auditory space, but true categorization only arises via complex developmental cascades later in life. This has implications for critical periods, second language acquisition, and our basic framing of speech perception.

1. Introduction

The ability to categorize sounds is fundamental for spoken language comprehension. While phonemes subjectively feel like invariant percepts, their instantiations vary across talkers, coarticulatory context and speaking rates (Hillenbrand et al., 1995; McMurray & Jongman, 2011). Thus, listeners must abstract over this variation to recognize a phoneme and generalize to new exemplars (e.g., new talkers). That is, they must categorize (Holt & Lotto, 2010). Categorization in speech is partially an acquisition problem. Languages carve up the acoustic / phonetic space into different sets of categories. Spanish, for example, has five vowels, while English has 12. As a result, an early aspect of language acquisition is identifying the categories of the language.

For some years, there was a near consensus on the development of speech categorization: Children acquire speech categories during infancy in a way that can be described as perceptual narrowing (Kuhl et al., 2005; Werker & Curtin, 2005; Werker & Yeung, 2005), which is thought to be undergirded by a form of unsupervised statistical learning (Maye et al., 2003). However, as I argue here, this theory was built on an understanding of the basic nature of speech perception that turned out to be wrong (categorical perception, Liberman et al., 1957), and which enabled an inappropriately rich interpretation of infant methods (Feldman et al., 2021). On a more positive note, recent research has expanded the range of learning mechanisms (Nixon & Tomaschek, 2021), the available information in the learning environment (Feldman, Myers, et al., 2013; Teinonen et al., 2008) and our understanding of the developmental progression of skills (Hazan & Barrett, 2000; McMurray et al., 2018). This suggests alternative conceptualizations of the problem. While this is not yet a complete theoretical picture, this review will hopefully prompt a shift toward a new synthesis.

It is important to note two limits to my argument. First, speech categorization is but one small part of the auditory skills necessary for speech perception (see Werker & Yeung, 2005, for a nice review). Second, I use the term categorization in the broadest sense, skipping debates about the nature of the representation, to focus on a core that most theories agree on: the functional ability to group acoustically distinct sounds together (e.g., across talkers, contexts) and to contrast these sounds from others along linguistically relevant dimensions using phoneme-sized chunks of the signal (see Schatz et al., 2021, for a similar definition).

I start with a short review of speech categorization, before presenting the canonical view. I then address the challenges to that consensus before outlining new paths for future work.

1.1. Speech perception as categorization

Phonemes are defined by continuous acoustic cues such as Voice Onset Time (VOT: the time difference between the release of the articulators and the vowel; Lisker & Abramson, 1964), formant frequencies (resonant frequencies of the vocal tract that contrast vowels; Hillenbrand et al., 1995), or the spectral peak of a fricative (Jongman et al., 2000). Cues are continuous and take a range of values. VOT, for example, ranges from −180 to 180 msec, with English voiced and voiceless sounds typically near 0 and 60 msec (Lisker & Abramson, 1964). Cues vary across contextual factors like talker, speaking rate, and coarticulatory context. Consequently, a phoneme can be described as a region of allowable cue values—a category.

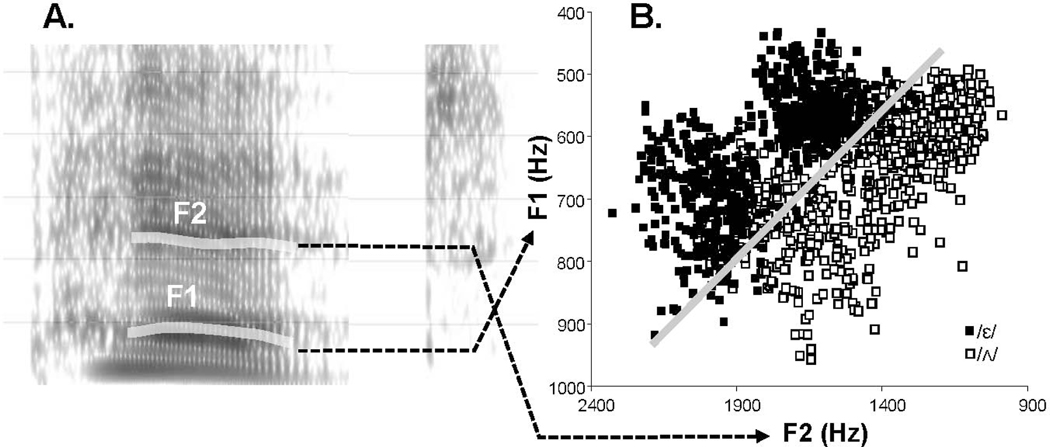

As an example, Figure 1A shows a spectrogram of the word pet. Marked are the first and second formants (F1 and F2), whose frequencies are cues to vowel identity. Figure 1B shows the distribution of F1 and F2 frequencies across a number of talkers and contexts (adapted from Cole et al., 2010). Typically, the /ɛ/ in pet (filled squares) has a lower F1 and a higher F2 than the /ʌ/ in putt (open squares), as shown by the fact that its distribution is generally shifted up and to the right. However, across many talkers and contexts, there is variability in both. Thus, the /ɛ/ and /ʌ/ categories can be described as regions of acoustic phonetic space with a multi-dimensional boundary (the gray line) and some overlap due to context.

Figure 1:

A) Spectrogram of the word pet. The first and second formants or energy bands (the critical cues for vowel discrimination) are marked. B) Formant frequencies measured for several hundred utterances containing the vowels /ɛ/ and /ʌ/ (Cole et al., 2010). This shows clustering in a 2-dimensional space. These utterances span 10 talkers, 6 neighboring contexts, and four neighboring vowels, thus capturing significant natural variation; together, these factors account for approximately 90% of the variance in the formant frequencies.

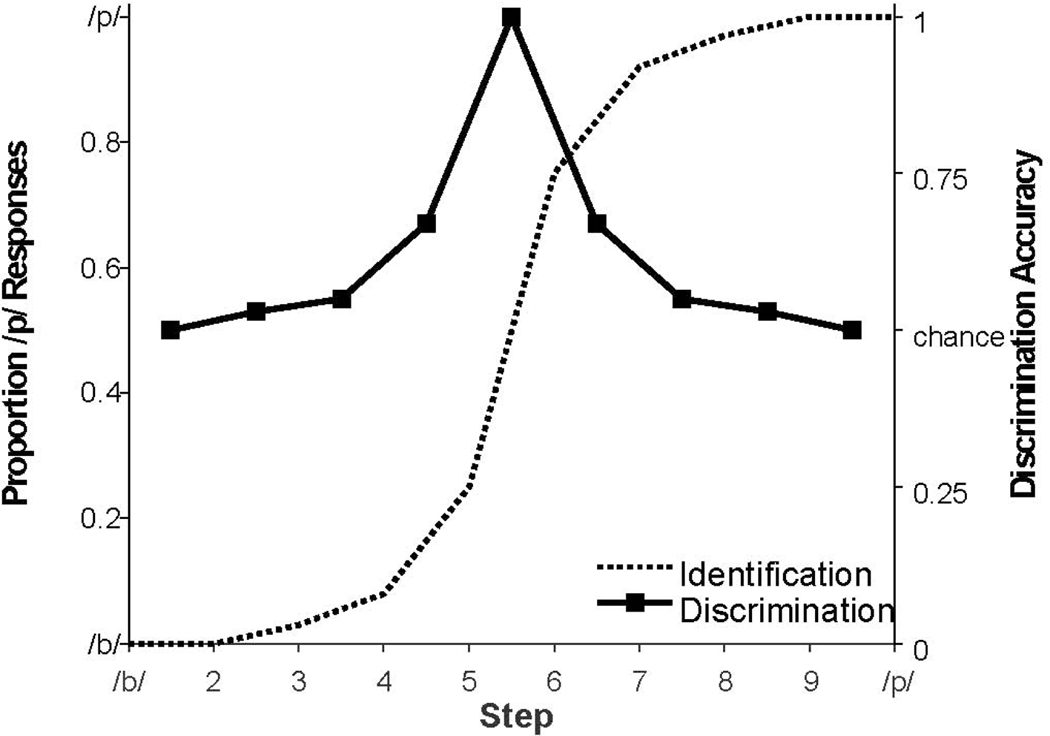

Classic approaches to adult speech categorization assumed categorical perception (CP, Figure 2; Liberman et al., 1957). Categorical Perception was initially an empirical finding, that listeners were poorer at discriminating phonemes within the same category (e.g., two /b/’s with VOTs of 0 and 15 msec, if the boundary is at 20 msec) than two equivalently distant phonemes spanning the boundary (a /b/ with a VOT of 15 msec and a /p/ at 30 msec). In principle, discrimination tasks do not require listeners to categorize or label the stimuli—listeners can decide that two /b/’s are same or different based on their acoustic properties alone. Thus, the fact that discrimination—which reflects auditory encoding—was shaped by the categories of the language was thought to indicate a tight coupling between the acoustic encoding and categorization (e.g., a warping of the acoustic space).

Figure 2:

Canonical results in a Categorical Perception (CP) paradigm. Step refers to position along a speech continuum, where step 1 might be a prototypical /b/ and step 10, a prototypical /p/. Identification (dashed line, left axis) is the proportion of responses matching one of the endpoints and shows a steep boundary at 5. Discrimination is assessed between neighboring points (e.g., between steps 1 and 2, 2 and 3, and so forth). The peak indicates that discrimination is better between steps 5 and 6 (spanning the boundary) than between 1 and 2 (both /b/’s). This kind of isomorphism between discrimination and identification appeared to make it straightforward to assume categorization on the basis of differences in discrimination alone.

CP influenced developmental work in two ways. First, it suggested that listeners rapidly transduce auditory inputs to a quasi-symbolic representation. That is, the “goal” of development is to learn to discard irrelevant variation and achieve this kind of abstraction. Second, the tight coupling between identification and discrimination was crucial for overcoming the limits of infant methods. As I describe, most infant methods are essentially discrimination measures. By assuming CP, one can use discrimination measures to make inferences about categorization.

Unfortunately, CP turned out to be wrong, both empirically and theoretically (see McMurray, submitted, for a comprehensive review). Empirically, many studies have demonstrated that the discrimination peak at the boundary is an artifact of memory demands or bias in discrimination tasks. When less biased or demanding tasks are used, within-category discrimination is as good as between-category discrimination (Carney et al., 1977; Gerrits & Schouten, 2004; Massaro & Cohen, 1983; Pisoni & Lazarus, 1974; Schouten et al., 2003). Moreover, neuroimaging methods have more directly assessed encoding of cues like VOT and find no evidence for warping (Frye et al., 2007; Toscano et al., 2018; Toscano et al., 2010). Pisoni and Tash (1974) suggest a way to synthesize a continuous encoding of auditory cues like VOT and the empirically observed differences in discrimination for within- and between-category contrasts: If phonological categories are separate from the auditory encoding, but both can contribute to discrimination, then discrimination should be better across the boundary (where both factors conspire to support discrimination). Thus, the argument is not that prior observations of differences in discrimination are not replicable findings; rather, it is that differences in discrimination for within- and between-category contrasts do not reflect differences in how those contrasts are encoded at an auditory or cue level (e.g., how a VOT is coded). Rather, those differences arise from how the categories that are extracted from that encoding shape downstream memory and other processes.
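The logic of this two-source account can be illustrated with a toy calculation. The sketch below is not Pisoni and Tash's model; it simply assumes a logistic identification function with a boundary at 20 msec and a discrimination score that sums an unwarped auditory term and a category term. The function names, slope, and weights are all hypothetical values chosen for illustration.

```python
import math

def p_voiceless(vot_ms, boundary_ms=20.0, slope=0.5):
    """Logistic identification function: probability of labeling a token /p/.
    The boundary and slope are illustrative assumptions."""
    return 1.0 / (1.0 + math.exp(-slope * (vot_ms - boundary_ms)))

def discriminability(vot_a, vot_b, w_auditory=0.02, w_category=0.5):
    """Toy score that adds two independent sources of evidence (weights assumed)."""
    # Auditory term: depends only on the raw cue difference, so there is no warping.
    auditory = w_auditory * abs(vot_a - vot_b)
    # Category term: depends on how differently the two tokens would be labeled.
    category = w_category * abs(p_voiceless(vot_a) - p_voiceless(vot_b))
    return auditory + category

within = discriminability(0.0, 15.0)    # two /b/ tokens, 15 msec apart
between = discriminability(15.0, 30.0)  # a /b/ and a /p/, also 15 msec apart
print(f"within-category: {within:.3f}, between-category: {between:.3f}")
```

Both pairs are separated by the same 15 msec, so the auditory term is identical for each. Any advantage for the pair spanning the boundary comes entirely from the category term, which is the sense in which a discrimination peak need not imply a warped auditory encoding.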

Theoretically, work has also moved past the claim of CP that categories are discrete and that listeners seek to suppress within-category detail. Instead, speech categories have a gradient structure: listeners track the degree to which a given cue value is a good or poor exemplar of a category (Andruski et al., 1994; McMurray et al., 2002; Miller, 1997). This gradiency may help listeners be more flexible in resolving ambiguities (Kapnoula et al., 2021), integrating cues (Kapnoula et al., 2021; Kapnoula et al., 2017), and tracking the degree of uncertainty (Clayards et al., 2008). This suggests the goal of the developing system may not be to ignore irrelevant variation, but to acquire categories that capture the structure of this variation.

1.2. The Canonical View of Speech Category Acquisition.

Classic work suggests infants rapidly tune into the categories of their language during the first year (e.g., Eimas et al., 1971; Werker & Tees, 1984) (see Kuhl, 2004; Werker & Curtin, 2005, for reviews). Prior to 6 months, infants discriminate most speech sounds. These abilities become tuned to the native language by 12–18 months, with decreases in discrimination for non-native contrasts, and increases for contrasts used in the language (Galle & McMurray, 2014; Kuhl, Stevens, Deguchi, et al., 2006; Tsuji & Cristia, 2014). This is not the only pattern: some contrasts are initially hard to discriminate and acquired with experience (Eilers et al., 1977; Narayan et al., 2010). But even in these less common cases, this occurs early in development. If one assumes that these discrimination results reflect categorization (CP), these studies suggest emergence of language-specific categorization in the first year of life.

This has led to a consensus loosely framed around perceptual narrowing (Maurer & Werker, 2014): infants’ perceptual systems narrow to focus only on differences that are contrastive in their language. This narrowing includes within-category distinctions that are lost to support CP (e.g., two VOTs that both comprise a /b/), as well as phonemic contrasts that are not used in the native language (e.g., alveolar /d/ and dental /d̪/ which are contrasted in Hindi). Critically, by claiming that this is perceptual narrowing (contrasted with interpretive narrowing: Hay et al., 2015), this is not simply a claim that infants have a set of categories. Rather it claims that auditory encoding is narrower (warped), consistent with the strong theoretical claims of CP.

Even in infancy, these strong claims are not fully supported. First, infants’ speech categories appear to have a gradient or prototype-like structure (Kuhl, 1991; McMurray & Aslin, 2005; Miller & Eimas, 1996), ruling out the strong assumption of CP that within-category contrasts are not discriminable. Second, for both voicing (Galle & McMurray, 2014) and vowels (Tsuji & Cristia, 2014), meta-analyses suggest that the dominant pattern in development may be increasing sensitivity to between-category contrasts (see also Kuhl, Stevens, Deguchi, et al., 2006), not loss of sensitivity to within-category contrasts. Nonetheless, these findings have largely not been seen as a challenge to narrowing (Maurer & Werker, 2014).

The canonical view is that this development occurs early, under most accounts by 12–18 months. Critically at these ages, researchers have assumed that infants have little access to anything to help them learn. They do not get feedback on speech perception from caregivers. Speech production is poorly organized at this age, and higher-level factors like lexical structure may not be available as there are not sufficient words in the lexicon. Given the lack of available teaching signals, the canonical view assumes that this early acquisition of speech categories proceeds as a form of perceptual or statistical learning (Jusczyk, 1993; Werker & Curtin, 2005).

The most prominent of these accounts takes the form of unsupervised distributional (statistical) learning (de Boer & Kuhl, 2003; Maye & Gerken, 2000; Maye et al., 2003; McMurray, Aslin, et al., 2009). The idea is that infants track how often specific cue values occur (e.g., how often a specific VOT is heard, Figure 3A). These distributions are shaped by the categories of the language such that each category corresponds to a “cluster.” By identifying these clusters, infants can derive the likely categories of the input.
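To make the idea of identifying clusters concrete, the sketch below fits a two-component Gaussian mixture to unlabeled VOT values using expectation-maximization. This is one common way to formalize clustering and is not taken from any of the cited models. The simulated data are an assumption: means of 0 and 60 msec follow the typical English values noted earlier, while the standard deviations, sample sizes, and the function name `fit_two_clusters` are hypothetical.

```python
import math
import random

def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

def fit_two_clusters(values, n_iter=50):
    """Fit a two-component 1-D Gaussian mixture with EM; no category labels are used."""
    lo, hi = min(values), max(values)
    means = [lo + 0.25 * (hi - lo), lo + 0.75 * (hi - lo)]
    sds = [(hi - lo) / 4.0] * 2
    weights = [0.5, 0.5]
    for _ in range(n_iter):
        # E-step: how responsible is each cluster for each token?
        resp = []
        for x in values:
            likes = [w * normal_pdf(x, m, s) for w, m, s in zip(weights, means, sds)]
            total = sum(likes) or 1e-300
            resp.append([like / total for like in likes])
        # M-step: re-estimate each cluster from its responsibility-weighted tokens.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            means[k] = sum(r[k] * x for r, x in zip(resp, values)) / nk
            var = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, values)) / nk
            sds[k] = max(math.sqrt(var), 1e-3)
            weights[k] = nk / len(values)
    return means, sds, weights

# Simulated, unlabeled VOTs (msec); the spreads and counts are assumed values.
rng = random.Random(0)
vots = [rng.gauss(0, 8) for _ in range(500)] + [rng.gauss(60, 15) for _ in range(500)]
rng.shuffle(vots)

means, sds, weights = fit_two_clusters(vots)
print([round(m, 1) for m in means])
```

Note that the number of clusters is fixed at two here. A learner that must also discover how many categories exist faces a harder problem than this sketch suggests.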

Figure 3:

A) Histograms of VOT from McMurray et al. (2013) (adult directed speech) show a clear bimodal distribution with peaks corresponding to /b/ and /p/. B) Similarly for sibilant fricatives (from McMurray & Jongman, 2011), the spectral mean (the frequency at which most energy is clustered) shows a bimodal distribution with peaks for /s/ and /ʃ/. C) However, for vowels (Cole et al., 2010) when the phonemic identity is not known (the same data as Figure 1B), the most important clustering is by talker gender.

A number of studies have isolated this form of learning in artificial language learning experiments (Maye et al., 2008; Maye et al., 2003; Yoshida et al., 2010). In this distributional learning paradigm, infants hear a series of stimuli whose cues form clusters. After this, discrimination reflects the number of clusters in the input (e.g., a baby exposed to a distribution with two modes will discriminate tokens that straddle those modes, while a baby exposed to a unimodal distribution does not). Distributional learning is not limited to infancy, but is also shown by adults (Escudero et al., 2011; Escudero & Williams, 2014; Goudbeek et al., 2008).

As a package, the canonical view offers a clear picture. Infants acquire speech categories early, and the relative dearth of skills and environmental factors to support learning suggests that only unsupervised learning (e.g., distributional) can achieve these goals.¹ This early mastery of speech perception skills then sets the foundation for later language skills (word learning, syntax, etc.) (Werker & Yeung, 2005). While this offers a simple and compelling story, mounting research, more advanced models, and new thinking suggest a richer story is needed.

2.0. The Ecology and Mechanisms of Learning.

In making the case for a new approach to speech category acquisition, it is helpful to draw a distinction between the ecology of learning and the mechanisms of learning (for analogous arguments in other areas of language, see Claussenius-Kalman et al., 2021; Ramscar, 2021). Figure 4 shows a schematic. As a simplification, I will describe the speech system as the system that maps an incoming auditory token to a category (the white box).

Figure 4.

A schematic illustrating the difference between the ecology and mechanisms of learning. For simplification, the speech system (central white box) is defined as the aspect of the system that maps acoustic inputs to categories. In most models, learning mechanisms are presumed to operate within this system (even as they may get information from other systems). The ecology of the learning system includes properties of the environment such as the distribution of cues, or the availability of signals such as visual cues. It can include factors that are internal to the child such as the lexicon, knowledge of the reading system, or speech production (many of which are discussed later).

In this framing, the ecology of learning refers to anything outside of the speech system that may impact learning—the broader developmental context in which that capacity is acquired. In contrast, the mechanisms of learning live inside the speech system (though they may use information from outside it) and describe at a computational level how experiences (shaped by the ecology) are internalized in the system. To think about this concretely, in any computational model of category learning there are a small set of operations that take some inputs, map them to categories and update the representations or connections that enable such a mapping. This comprises the speech system, and the latter updating rule constitutes the learning mechanism. However, in addition to this, any model must also include a lot more code that specifies the structure of the auditory inputs, the order in which they are presented, other information that may be available to the system (e.g., referents, visual cues), and cognitive operations outside of speech that may impose structure on the speech system (e.g., attention). These constitute the ecology of learning—the rest of the system.

Critically, the canonical view has often conflated the ecology of the learning environment with the learning mechanism: because the ecology of infancy is largely unsupervised (there are few sources of information outside the speech signal to help separate categories), the learning mechanism must also be unsupervised. I argue that this is a mistake. Instead, thinking about the learning ecology and mechanism independently may enable richer and more powerful theories of development. For example, we may be able to accommodate more powerful error-driven [supervised] learning mechanisms even in an unsupervised ecology. Or we may be able to identify subtle sources of information in the environment that could contribute to an unsupervised (or supervised) learning mechanism.

2.1. Ecology of the Learning Environment

The Ecology of Learning includes the ecology of the environment (Figure 4, dark gray box) which includes the available sources of input (e.g., the distribution of speech cues, the types of visual cues available). It also includes the internal ecology of the learner—the skills and capacities (including the results of prior learning) the child brings to bear on the problem at that moment in development, but which lie outside of the speech system. This might include speech production, the words that are known, reading, or domain general cognitive skills (many of which are discussed below).

A critical aspect of the learning ecology is whether it includes a potential teaching signal or whether learning in this system must be purely unsupervised. In this framing, a supervised learning ecology means that there is some source of information that comes from outside of the speech system that can serve as an additional marker for which category a speech sound should be in. This need not be from an explicit teacher (though it could be). For example, the presence of a visual referent, or a response from a conversation partner that indicates the child misperceived a sound could serve as supervisory signals from outside the speech system that tell the child how a sound should have been grouped. Similarly, the child’s own productions could serve as a supervisory signal that is outside of the speech system but internal to the child. Whenever some non-speech information is available to help guide the development of speech categorization, we can describe the learning ecology as supervised or partially supervised.

In contrast, an unsupervised ecology entails learning based on only the inputs available to the speech system. Because early infancy is a time when there is little information outside of speech perception (e.g., words, references, visual speech cues, production, spelling), the canonical view has argued that the ecology is largely unsupervised, though I question that in sections below.

2.2. Mechanisms of Learning

The mechanisms of learning describe at a more computational level how experiences (shaped by the ecology) are internalized in the system. These are presumed to lie within the speech system as the rules which form or alter the maps between speech cues and categories. As I describe shortly, these can take multiple forms. For example, they may use traditional error-driven principles like the Rescorla-Wagner rule (Rescorla & Wagner, 1972) or back-propagation, they may follow Hebbian principles (cells that fire together wire together) or something else (e.g., exemplar models). Critically, the same basic computational principles may (or may not) take advantage of teaching signals available in the external ecology. For this reason, part of my argument is that we should stop talking about learning rules as supervised or unsupervised (they can be described much more clearly in terms of the nature of the updating) and instead reserve those terms for the ecology.
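The contrast between these two kinds of updating can be sketched in a few lines. The code below is a minimal illustration, not an implementation of any cited model: the error-driven function is a delta-rule update in the spirit of Rescorla-Wagner, the Hebbian function strengthens links by co-activation alone, and the two cue units, the learning rate, and all function names are hypothetical.

```python
def predict(weights, cues):
    """Linear map from cue activations to category activations."""
    n_categories = len(weights[0])
    return [sum(c * row[k] for row, c in zip(weights, cues)) for k in range(n_categories)]

def hebbian_update(weights, cues, outputs, rate=0.1):
    """Strengthen each cue-to-category link in proportion to co-activation."""
    return [[w + rate * c * o for w, o in zip(row, outputs)]
            for row, c in zip(weights, cues)]

def error_driven_update(weights, cues, outputs, targets, rate=0.1):
    """Delta-rule update: change each link in proportion to prediction error."""
    return [[w + rate * c * (t - o) for w, o, t in zip(row, outputs, targets)]
            for row, c in zip(weights, cues)]

# Two hypothetical cue units ("short VOT", "long VOT") and two categories.
weights = [[0.0, 0.0], [0.0, 0.0]]
cues = [1.0, 0.0]     # a short-VOT token
targets = [1.0, 0.0]  # where this signal comes from is a question about the ecology

for _ in range(100):
    outputs = predict(weights, cues)
    weights = error_driven_update(weights, cues, outputs, targets)

print(predict(weights, cues))
```

The update rule itself is agnostic about where `targets` comes from. It could be supplied by a referent or a caregiver, or generated internally as a prediction, which is why the supervised versus unsupervised distinction fits the ecology better than the rule.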

2.3. The Canonical View Conflates Ecology and Mechanism.

Traditional thinking, represented by the canonical view, often conflates the ecology of learning and the learning mechanism. Much of the canonical view of infant speech category acquisition is motivated by researchers’ assumptions about the learning ecology of infants: the lack of social feedback, and under-developed skills like speech production, the lexicon, or reading skills, that could support learning. Under the canonical view, this largely unsupervised ecology has suggested to many researchers (including earlier versions of me²) that only passive mechanisms of learning (e.g., counting distributions, clustering and/or Hebbian learning) are likely to be relevant to the problems faced in infancy.

In retrospect, this is over-simplified: the ecology and mechanisms of learning are not isomorphic. Even in an unsupervised ecology, error driven learning—even purely within the speech system—is possible using internally generated prediction error (Elman, 1990; Nixon & Tomaschek, 2021). Conversely, even with an explicit teacher in the ecology, the learning mechanisms could take the form of simple associations (without evaluating error). Thus, it is important to independently consider both the ecology and mechanisms of learning in evaluating the canonical view and developing new approaches.

3. Challenges to the Canonical Model

The canonical model has been challenged in five ways. First, recent work has asked if distributional learning is sufficient for acquiring speech categorization, investigating both the strength of evidence for this form of learning in the lab, and the statistical properties of the infant language learning environment (the ecology). Second, computational work has challenged the supposed need for unsupervised learning mechanisms, illustrating how more powerful learning mechanisms could be involved, even in an unsupervised ecology. Third, alternative interpretations of the classic results in infancy challenge the notion that infants even acquire speech categories during the first year or so. Fourth, a richer understanding of the ecology of the infant learning environment suggests new avenues for learning that are not entirely unsupervised. Finally, it is now clear that the development of speech categories is protracted through childhood and even adolescence, when the ecology of learning can change dramatically to support phonetic category acquisition in new ways.

3.1. The limits of distributional learning

Distributional learning appeared to offer a compelling approach to early speech category acquisition: it requires no explicit teacher, and it is consistent with phonetics showing how many speech cues are distributed. Figure 3A,B show examples: by counting how often individual cue values (e.g., specific VOTs) occur, clusters that correspond to the categories of the language appear. A language like English or Spanish with two categories along the VOT dimension will show two clusters; a language with three (like Thai) will show three (Kessinger & Blumstein, 1998; Lisker & Abramson, 1964). Since distributional learning can estimate the number and location of these clusters without a teacher, this model fits with assumptions about the learning ecology of the infants. However, this simple story has been challenged in two ways.

3.1.1. Empirical Results.

The initial case for distributional learning came from artificial language learning experiments in which infants were briefly exposed to a series of speech sounds whose cue values formed a unimodal or bimodal distribution. After this, discrimination differed based on this exposure (Maye et al., 2008; Maye et al., 2003). However, subsequent work has shown that this effect is hard to replicate (e.g., Yoshida et al., 2010), and a recent meta-analysis concluded that effects may be small and impacted by the manner of discrimination testing (Cristia, 2018). While this does not rule out this mechanism, it offers some context, as a small effect in a controlled laboratory (where only a single cue is manipulated) may not easily translate to the much more statistically complex real world.

3.1.2. The Statistics of The Environment.

A bigger concern is that richer analyses of the statistics of the input suggest that distributional learning may not be sufficient for many problems. First, for many types of categories, clusters do not appear. For example, while Figure 1B shows what appears to be clustering among two vowels observed, this figure is misleading as the vowels are color coded. When we remove the colors (Figure 3C) the patterning by vowel disappears; instead, the only visible clusters correspond to talker gender! Similarly, while sibilant fricatives like /s/ and /ʃ/ show clear structure (Figure 3B), non-sibilants like /θ, ð, f, v/ do not have such structure and few phonetic cues for these sounds have been identified (Jongman et al., 2000). In fact, even combining 24 different cues with a supervised classifier, McMurray and Jongman (2011) were only able to classify these sounds at about 60% accuracy. Notably, this work was based on clear lab-produced speech; in the real world the situation is likely worse. Finally, Swingley (2019) showed little evidence for robust bimodality for the Dutch vowel length distinction, and in fact, bimodality was just as strong for English, which does not use that distinction phonologically.

Moreover, many studies of phonetic clustering assume phonemes are equally likely. However, if some phonemes are less likely than others, small clusters of low frequency phonemes can be masked by the larger clusters of higher frequency ones (Bion et al., 2013). Thus, for many phonemes, the statistical distributions of speech cues may not reflect the phonology of the language, and clusters may not be sufficiently separable to learn categories.
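The masking effect can be demonstrated with a simple numerical sketch. The code below counts the local maxima of a two-category mixture density on a grid, once with equally frequent categories and once with one rare category. The separation of 2.5 standard deviations and the 95/5 frequency split are assumed values for illustration only; they are not taken from Bion et al. (2013) or any corpus.

```python
import math

def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

def count_modes(weight_a, mean_a=0.0, mean_b=2.5, sd=1.0, step=0.01):
    """Count local maxima of a two-category mixture density evaluated on a grid.
    weight_a is the relative frequency of category A; B gets the remainder."""
    n_points = int((mean_b - mean_a + 8 * sd) / step) + 1
    xs = [mean_a - 4 * sd + i * step for i in range(n_points)]
    dens = [weight_a * normal_pdf(x, mean_a, sd)
            + (1 - weight_a) * normal_pdf(x, mean_b, sd) for x in xs]
    return sum(1 for i in range(1, len(dens) - 1)
               if dens[i - 1] < dens[i] > dens[i + 1])

print("equal frequency:", count_modes(0.5))
print("one rare category:", count_modes(0.95))
```

The category means and variances are identical in the two calls; only the relative frequency changes. A learner that looks for peaks in the cue distribution would therefore see different numbers of categories depending on phoneme frequency alone.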

3.1.3. Infant Directed Speech.

One might argue that infant directed speech (IDS) could help. Caregivers could tune their productions to make clusters more distinct, a modification of the ecology to support a fairly weak learning mechanism. This was suggested by Kuhl et al. (1997): across several languages, adults stretched their vowel spaces when talking to infants.

This claim was not widely supported by later research. First, IDS is not universally employed across the world (McClay et al., in press), suggesting it may not fully solve the problem. Second, more extensive analyses of IDS suggest that it doesn’t help separate categories. In some cases, IDS reduces the distances between category centers (Benders et al., 2019), and in others it increases the variance relative to adult-directed speech (Benders, 2013; Cristia & Seidl, 2013; McMurray et al., 2013; Figure 5 for examples from vowel productions). Both conspire to make phoneme categories less discriminable. Instead, speech modifications in IDS may derive from broader factors like speaking rate and prosody (McMurray et al., 2013) or even the fact that parents smile more when talking to infants than adults (Benders, 2013). While these studies examined individual phonetic contrasts (e.g., voicing), Martin et al. (2015) offer a comprehensive analysis. They used machine learning to examine the pairwise discriminability of almost all phoneme contrasts in a natural corpus and found few for which IDS showed superior discriminability to adult directed (see also, Ludusan et al., 2021).

Figure 5:

Vowel formant measurements from McMurray et al. (2013) for A) adult directed speech; and B) infant directed speech. In each plot, the ellipses indicate the SD of the first and second formant for that vowel. Squares indicate the mean values. The connected circles reflect the other register (e.g., for panel A the mean of IDS, and for panel B, ADS) to show the change between registers.

The claim that IDS is not beneficial for phonetic category acquisition has not gone uncontested. For example, Tsao et al. (2006) link the amount of vowel space expansion in IDS to children’s speech discrimination. However, discrimination was not tested for vowels, and it is possible that the vowel space expansion is a broader marker of things like the quality of the language learning environment, rather than a causal factor in learning. There are also arguments that the enhanced variation in IDS could be beneficial as a form of desirable difficulty (Eaves Jr et al., 2016); however, studies of the properties of real IDS suggest it may not be characterized by the kind of variation necessary for these learning effects (Ludusan et al., 2021).

Beyond the intriguing issue of whether IDS is “intended” to facilitate category acquisition, these studies do not suggest that it dramatically restructures the input to overcome the broader problems with distributional learning such as the heavy overlap between many categories, the effects of differing phoneme frequency, or phonetically irrelevant factors like gender. This does not deny that distributional learning likely plays a role in development or that IDS modifies the distributions. However, it suggests that at best, distributional learning may only be part of the solution to the problem of acquiring speech categories.

3.1.4. What does distributional learning accomplish?

Supporting this, Schatz et al. (2021) present the most comprehensive study. They trained the best available unsupervised machine learning tools on English and Japanese recordings of natural utterances, and then tested them in an analog of an infant discrimination task. While the models showed sensitivity to the contrasts of the language (e.g., English-trained models could discriminate r/l, Japanese-trained models could not), they did not do so by acquiring anything like phonetic categories; they learned over 600 categories that were far shorter than typical phonemes (<20 msec), and not context invariant. This raises the possibility that while distributional learning can support the kinds of behaviors we study, it may not support phonetic category acquisition.

3.2. What do babies know and when do they know it?

These investigations of distributional learning were largely premised on the idea that infants do acquire categories by some point in their second year (Werker & Yeung, 2005). If babies have categories at this point, then an unsupervised mechanism is needed to acquire them. Given the challenges to distributional learning (and the questions raised by Schatz et al., 2021), it is worth reexamining the evidence for this claim. Categorization is defined as the ability to treat a set of discriminably different tokens similarly (often by labeling them or identifying them the same) (Goldstone & Kersten, 2003). Neither of these criteria is well met by most infant methods.

The bulk of infant methods necessarily measure discrimination. It is simply not possible to tell a prelinguistic infant to do one thing if they hear a /b/ and something else if they hear a /p/. In the high amplitude sucking procedure (Eimas et al., 1971), infants cue a stimulus by sucking on an artificial nipple. Initially this stimulus is a repeating baseline, but when the sucking rate declines (habituates), this stimulus is switched. Consequently, if the infant hears the difference (discrimination) the novel stimulus should reestablish sucking. Similarly, in the head turn preference procedure (Jusczyk & Aslin, 1995), infants fixate a blinking light (or a central image) to cue an auditory stimulus. Again, a habituation paradigm is employed – when looking starts to extinguish, the stimulus is switched. Similarly, ERP methods (e.g., Garcia-Sierra et al., 2016) typically use variations of the mismatch negativity paradigm which assess neural response to a deviant stimulus against a constant background. There are multiple variants of these sorts of tasks, but all feature a baseline stimulus that is switched to assess discrimination.

Given this, how can categorization be inferred? When these methods were first employed, the dominant theories of speech perception assumed categorical perception (CP), which suggested discrimination and identification were isomorphic. If a listener failed to discriminate two tokens, the tokens were assumed to be in the same category. However, under the modern gradient view described previously, it is not clear that such inferences are warranted, for three reasons. First, the argument against CP in adults suggests that poor discrimination of certain contrasts does not reflect a failure to encode those contrasts at an auditory level; rather, it reflects the fact that discrimination (and presumably habituation tasks in infancy) does not just reflect auditory encoding but can reflect other things. Thus, the canonical pattern of infant discrimination is not wrong as a description of performance; however, it may not reflect auditory differences (narrowing). Second, consistent with the more gradient model, infants have been shown to discriminate within-category contrasts (McMurray & Aslin, 2005; Miller & Eimas, 1996), and a systematic review by Galle and McMurray (2014) demonstrates that this ability grows over the first few years of life (and beyond: McMurray et al., 2018). This doesn’t argue that children can’t categorize (in fact, it suggests their categories may have a graded prototype structure). However, it does undercut the premise that we can infer categorization directly from the contrasts that infants succeed and fail at discriminating. Finally, the fact that we are inferring categorization from lack of discrimination undercuts the standard definition of categorization in cognitive psychology: that tokens must be discriminable to count as being categorized!

A more limited literature has attempted identification tasks. For example, in the conditioned head turn procedure (Kuhl, 1979), infants are trained to turn their head whenever they hear a specific stimulus – a form of identification. However, this is often done against a repeating baseline, becoming a form of discrimination (can the child detect a difference; e.g., Werker & Tees, 1984). Further, since there is no way to “tell” the infant to respond at the category level (as opposed to responding to an individual exemplar), researchers must train infants on multiple varying exemplars (e.g., multiple talkers), essentially teaching infants a category, rather than assessing an already established one. To overcome this, there have been attempts to train infants to make differential eye-movements in response to two stimuli (e.g., look left for a /b/, and right for a /p/) (Albareda-Castellot et al., 2011; McMurray & Aslin, 2004; Shukla et al., 2011). Here, the contrast implicitly expressed by the tokens assigned to each response can isolate the response to a single relevant dimension (e.g., VOT). However, again the infant must be trained on at least the two anchor tokens. Moreover, these tasks are difficult: babies struggle to learn the mappings, it is hard to construct visual displays that elicit the anticipatory looks, and eye-tracking infants is no mean feat. Thus, they are not widely utilized.

Finally, all infant methods typically assess performance after multiple repetitions. In a familiarization/habituation design, the child might hear 30 repetitions of the baseline and then another 10 or 20 of the deviant stimuli, getting multiple bites at the apple. Results are averaged across babies and, as a result, successful discrimination could derive from the performance of a subset of children, a subset of trials, or even a subset of repetitions within a trial. That is, what we interpret as successful categorization of a speech sound may actually reflect a 1 in 20 chance of categorizing any given sound (since performance spans multiple repetitions), or it may reflect a small bias toward the correct category after any single sound that accumulates across the repetitions. Adding to the troubles, all it takes to claim that babies have successfully discriminated two sounds is statistical significance; results from individual babies (or trials) are assumed to be not meaningful and there is no absolute measure of performance like accuracy.
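
The arithmetic behind this concern can be made concrete with a minimal sketch. It assumes, purely for illustration, that each repetition is an independent opportunity to register the change with a fixed per-repetition probability; the function name and the independence assumption are mine and are not drawn from any of the cited studies.

```python
def p_at_least_one(p_single: float, n_repetitions: int) -> float:
    """Probability of at least one success across n independent repetitions."""
    return 1.0 - (1.0 - p_single) ** n_repetitions


if __name__ == "__main__":
    # Hypothetical per-repetition success rate of 1 in 20.
    for n in (1, 10, 20):
        print(n, round(p_at_least_one(0.05, n), 3))
```

Under these assumptions, a 1 in 20 chance on any single repetition yields roughly a 64% chance of at least one success across 20 repetitions, which is why a significant group-level effect need not imply reliable token-by-token performance.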

In contrast, methods used with adults or older children employ a single presentation and the listener responds after each one. They also assess accuracy, allowing analyses of individual subjects. As a result, the standard of evidence for having speech categories is far higher for older children and adults than for infants (see Simmering, 2016, for a demonstration of this in working memory).

None of this argues that older infants do not have speech categories and at this point this hypothesis cannot be rejected. However, the available evidence also does not permit a strong claim of categorization, echoing a broader debate about how richly one should interpret infant methods (Haith, 1998). This raises the need to consider alternatives.

Feldman et al. (2021) offer a compelling reconceptualization (which builds on earlier insights by Jusczyk, 1993). Drawing on advances in machine learning, they argue that infants are not learning categories at all. Rather, they are learning the relevant auditory “space.” This could include learning what dimensions are relevant (e.g., VOT is relevant, but pitch is not), what regions are important (e.g., F1 is unlikely to go outside of 100 to 800 Hz) or how dimensions or cues seem to work together (e.g., VOT is usually correlated with speaking rate). In many ways, this may be what the micro-categories discovered by Schatz et al. (2021) are attempting to capture. This continuous representation of the space can be built on by later learning mechanisms that harness the richer environment of older children to find true categories. If infants have developed such a space, the lengthy baseline presentations of most infant methods may be sufficient to generate the patterns of discrimination that many have taken to infer the availability of native-language categories.

There is increasing empirical support for the claim that infants are largely learning dimensions, and not categories. A number of studies examining later infancy (12–24 months) suggest that infants go through a stage of determining what cues are relevant for word learning (Dietrich et al., 2007; Hay et al., 2015; Rost & McMurray, 2009, 2010), usually guided by statistical properties of the input such as the relative variation of various acoustic dimensions (Apfelbaum & McMurray, 2011; Rost & McMurray, 2010). Moreover, this view is also consistent with an unexamined assumption of many computational models of infant categorization (Elman & Zipser, 1988; Gauthier et al., 2007; Guenther & Gjaja, 1996; Nixon, 2020; Toscano & McMurray, 2010; Westermann & Miranda, 2004). In these models, the “goal” of unsupervised learning is to develop such a continuous space, whether in the hidden units, in a composite dimension, or in a self-organizing map. Somewhat ironically, the authors must go to some pains to interpret continuous patterns in the representational space as a form of categorization. But what if the space is all there is (at this point in development)?

While it is too early to rule out that infants are acquiring categories, it is also clear that a pure distributional learning account is insufficient to develop them and that the only evidence for true categorization in infancy is indirect and based on a faulty assumption of CP. In fact, the alternative approach advocated by Feldman et al. (2021) could represent a more robust form of learning. Acquiring categories too early could be bad: if the input statistics are poorly structured, distributional learning may collapse categories that later need to be separated (a costly mistake that may be difficult to correct later in development). In contrast, if distributional learning needs only to construct a map of the perceptual space, mistakes would be less costly. Consequently, an account in which infant development primarily serves to organize the acoustic space is a compelling alternative.

3.3. Broadening the array of relevant learning mechanisms.

By conflating the ecology of learning with the mechanisms of learning, the canonical view underestimates the types of learning that even infants could engage to acquire speech categories. Mechanisms of learning can be broadly categorized in two ways, which I loosely term associative/statistical and discriminative/error-driven (see also Ramscar, 2021). Associative or statistical mechanisms emphasize extracting regularities in the world for their own sake. Because of this, they are often linked to unsupervised ecologies and have thus been widely considered in speech category acquisition. In contrast, discriminative learning requires a teaching signal of some form: learning is optimized to the goals of a task, to minimize the discrepancy between the performance of the system and some ideal. A core basis of the canonical view is that the necessity of a teaching signal in error-driven learning would seem to rule out discriminative learning mechanisms as a description of infant speech category acquisition.

When it comes to supervised or unsupervised learning, the mechanism and ecology of learning are widely conflated in both the infant and computational literature; for example, error-driven learning is often simply called supervised. However, in the framework of ecology vs. mechanism, these are logically separable, and this may have important implications for our understanding of infant development. The dismissal of error-driven learning, for example, assumes that the teaching signal must come from a teacher or at least from something outside the speech perception system. But what if the teaching signal comes for free, as part of the incoming speech signal? This would enable a more powerful error-driven learning mechanism to rely on an unsupervised ecology. Similarly, statistical or associative learning need not be unsupervised: such learning could operate by linking information in the ecology (e.g., a visual referent) to regions of the cue space. While not error driven, these associative mechanisms are supervised as they rely on information from outside the speech system (that can augment the perhaps ambiguous speech information to separate categories). That is, the nature of the computations that govern learning (the mechanism) is as important as the nature of the inputs that guide it (the ecology).

When we characterize the ecology of learning independently of the mechanism, four permutations are relevant for the acquisition of speech categorization (Table 1). We can characterize a learning system as having a supervised ecology if it relies on information from outside the speech system, and an unsupervised ecology if it does not. Similarly, learning mechanisms can be characterized as discriminative or associative based on whether an error signal is used to modify the internal representations, regardless of where the prediction error comes from. We start this section by considering how both associative and discriminative learning can work in even an unsupervised ecology (Table 1, Columns 1 & 3). However, as our understanding of the ecology of speech category acquisition grows, we later must consider supervised ecologies (Columns 2 & 4).

Table 1:

Learning mechanisms and learning ecologies. Note that in keeping with the dimensions I am trying to contrast, the unsupervised or supervised tags refer specifically to the ecology (whether the learning rule needs a teaching signal from outside of the speech system), not the classic definitions of learning rules. Examples are not meant to be definitive statements that these paradigms are solely discriminative or error driven. It is often possible to use either an associative or discriminative rule to model the same phenomena.

| Mechanism | Associative / Statistical | Associative / Statistical | Discriminative (Error Driven) | Discriminative (Error Driven) |
|---|---|---|---|---|
| Ecology | Unsupervised | Supervised | Unsupervised (Self-Supervised) | Supervised |
| Input | Cue values representing auditory input | Cue values representing auditory input | Cue values representing auditory input | Cue values representing auditory input |
| Nature of output | Category | Category | Some aspect of environment | Category |
| Output | Computed from input; Refined by internal decision process | Set by teaching signal (during learning) | Computed from input | Computed from input |
| Error | ⊘ | ⊘ | Match of predictions to environment | Match of predictions to teaching signal? |
| Representations strengthened by | Input & Output Activity | Input & Output Activity | Prediction – Output | Prediction – Output |
| Examples | Competitive learning, Distributional learning, Mixture of Gaussians, Generative models | Exemplar models, use of referents to separate categories | Prediction-error based models; simple recurrent nets | Classical, operant conditioning |

3.3.1. Associative/statistical mechanisms in an unsupervised ecology.

Associative or statistical learning mechanisms harken back to Hebb’s (1949) proposal that the strength of the connection between two neurons is a function of the degree to which both are active. Critically, no teaching signal is provided, and learning is not intended to achieve any particular goal. Rather, the system adapts itself in a way that reflects patterns in the input (or patterns of neural activity). Hebb’s proposal has been implemented in numerous connectionist architectures (Kohonen, 1982; Rumelhart & Zipser, 1986), but associative learning of this type is not limited to connectionist models of learning. Classic conceptualizations of statistical learning, in which learners directly track transition probabilities or frequencies of occurrence, also reflect this task-free form of learning. In this way, distributional learning as classically described in the infancy literature (Maye et al., 2003) is a key example of this kind of learning. Statistical learning is also characteristic of some probabilistic models (e.g., mixtures of Gaussians) which simply optimize the most likely set of probability distributions to describe the input (de Boer & Kuhl, 2003; McMurray, Aslin, et al., 2009; Vallabha et al., 2007). The most recent Bayesian generative models (Feldman, Griffiths, et al., 2013; Feldman et al., 2009) also fit this framework (and are perhaps the most powerful statistical learners) as they attempt to identify the underlying probability distributions that were most likely to have generated the observed inputs.

As these associative learning mechanisms do not require a teaching signal, they are an obvious candidate for speech category acquisition given the presumed unsupervised ecology (Table 1, Column 1). In virtually all formulations of these models, the input represents a description of the cue-space (e.g., VOTs, formant frequencies), and the output is a category assigned to that token (e.g., /b/ or /p/). Learning then just links regions of the cue space to the outputs. But without any externally provided category, how does the model know what to link? Virtually all associative models that have been applied to categorization take roughly the same approach: the model starts with random mappings of some form and makes a guess in some internal decision space. Then a decision rule is applied to the output layer to “clean up” or stabilize that guess (e.g., choose the winning category), and those mappings are strengthened. The arbitrarily chosen region of the decision space (the output units) across repeated exposures essentially becomes an internal tag for that region of the category space – a label.
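
The guess, clean up, and strengthen cycle described above can be sketched in a few lines. This is a minimal winner-take-all competitive learner on a single cue, not an implementation of any of the cited models; the VOT distribution, the starting prototype values, the learning rate, and all function names are hypothetical choices made for illustration.

```python
import random


def competitive_learning(tokens, prototypes, lr=0.02):
    """Winner-take-all learning: only the closest prototype moves toward each token."""
    protos = list(prototypes)
    for x in tokens:
        # Decision rule: the output unit whose prototype is nearest to the token wins.
        winner = min(range(len(protos)), key=lambda k: abs(x - protos[k]))
        # Strengthen the winning mapping; no external label is ever consulted.
        protos[winner] += lr * (x - protos[winner])
    return protos


def make_vot_tokens(n=4000, seed=0):
    """Hypothetical bimodal VOT input (ms): two equally frequent categories."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 8.0) if rng.random() < 0.5 else rng.gauss(50.0, 8.0)
            for _ in range(n)]


if __name__ == "__main__":
    # Arbitrary starting mappings; neither unit begins at a category centre.
    protos = competitive_learning(make_vot_tokens(), prototypes=[20.0, 30.0])
    print(sorted(round(p, 1) for p in protos))
```

With well-separated modes, the two arbitrarily initialized units drift toward the two modes of the input and come to act as internal tags for those regions. The same sketch also illustrates the limits discussed earlier: with heavily overlapping or unequally frequent categories, nothing in this rule guarantees that the units settle on the linguistically correct regions.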

This basic scheme appears in connectionist approaches using explicitly Hebbian learning in a competitive framework (McMurray, Horst, et al., 2009), and also in mixtures of Gaussians where an array of potential categories are posited (each represented by a mean value of the phonetic cue space), and the best is selected and updated (de Boer & Kuhl, 2003; McMurray, Aslin, et al., 2009; Vallabha et al., 2007). Generative models do something more complex, but the act of choosing the most likely distributions is not dissimilar to that of strengthening a mapping between cues and categories.

This scheme also appears in self-organizing feature maps (Kohonen, 1982), with an important distinction. Here the goal is not to learn categories, but to remap the input to a space that devotes more representation to frequently occurring cue-values. These models have been used for speech category acquisition (Gauthier et al., 2007; Guenther & Gjaja, 1996; Westermann & Miranda, 2004), and they capture Feldman et al.’s (2021) argument that unsupervised learning is primarily about building a perceptual space. But like all statistical or associative models, the models’ ability to form these maps is based solely on Hebbian learning: cue values that are highly frequent tend to reinforce themselves over time and nothing other than the distribution of cue values tells the model how to do this.

All of these statistical/associative models generally show some form of narrowing as regions of the space that are not relevant for categorization are minimized, pruned, or assigned to low probabilities. But they also show strengthening as correct categories are built and refined.

3.3.2. Unsupervised Discriminative Learning.

In contrast to statistical/associative mechanisms, in discriminative learning, the system is adapted to the goals of a specific task. In these models (Table 1, Column 4), the system receives an input and generates an output. This output is a form of prediction. For example, the model may predict the correct referent for a word. The system then receives explicit feedback about whether those predictions are right or not (was that referent present). Learning is not based just on that teaching signal (e.g., learn that when you hear this word, output that referent). Rather learning is optimized to minimize the prediction error. In the context of speech categorization, the model is not necessarily told what categories go with which sounds, it is just trained to minimize prediction error.

Discriminative models harken back to thinking in the animal learning (behaviorist) literature (Rescorla & Wagner, 1972) (and see Rescorla, 1988, for an excellent discussion of discriminative vs. associative learning). However, connectionist thinking has significantly expanded it by generalizing it to multi-layer networks where the same learning rule is used to tune internal representations between inputs and outputs (back-propagation; Rumelhart et al., 1986); and by demonstrating the power of applying discriminative learning to distributed representations that capture the similarity structure of a set of inputs.

As numerous authors have argued and demonstrated, the error-driven nature of discriminative learning does considerably more than just track co-occurrences (as in an associative model); rather, the model becomes a prediction engine that can display many non-obvious patterns of learning. For example, if a model is trained to successfully predict an outcome, when a new input pattern is introduced, it may not be learned at all, even if that input perfectly predicts the outcome (blocking). That is, the model does not learn all contingencies; it learns the ones it needs to accomplish this task (Rescorla, 1988). Because these models are optimized to a task, and do not simply capture regularities, discriminative learning is much more powerful (particularly when combined with internal representations as in back-propagation). It can find non-obvious statistical regularities that solve the problem, regularities that may not be discovered by simpler associative frameworks. Critically, in the case of speech category learning, discriminative learning does not require clearly separable statistical clusters: it can arbitrarily map any region of the acoustic space to an output, and can learn what appear to be ill-formed or poorly shaped categories (cf. O’Donoghue et al., 2022, for an extreme example).
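
Blocking can be illustrated with a minimal sketch of the Rescorla and Wagner (1972) error-driven update, contrasted with a simple co-occurrence tracker. The cue labels, trial counts, and learning rate are hypothetical, and the co-occurrence function is only a stand-in for an associative learner rather than any specific published model.

```python
def rescorla_wagner(trials, cues, lr=0.2):
    """Error-driven learning: all present cues share a single prediction error."""
    weights = {c: 0.0 for c in cues}
    for present, outcome in trials:
        prediction = sum(weights[c] for c in present)
        error = outcome - prediction
        for c in present:
            weights[c] += lr * error
    return weights


def cooccurrence_rate(trials, cue):
    """Associative stand-in: how often the outcome occurs when the cue is present."""
    with_cue = [outcome for present, outcome in trials if cue in present]
    return sum(with_cue) / len(with_cue)


# Blocking design: cue A alone predicts the outcome, then A and B appear together.
BLOCKING = [(("A",), 1.0)] * 50 + [(("A", "B"), 1.0)] * 50
# Control design: A and B only ever appear together.
CONTROL = [(("A", "B"), 1.0)] * 50

if __name__ == "__main__":
    print("B after blocking:", rescorla_wagner(BLOCKING, ("A", "B"))["B"])
    print("B in control:   ", rescorla_wagner(CONTROL, ("A", "B"))["B"])
    print("B co-occurrence:", cooccurrence_rate(BLOCKING, "B"))
```

In the blocking design, cue B acquires almost no weight because cue A already predicts the outcome and leaves no error to drive learning, whereas in the control design B acquires about half of the available strength. The co-occurrence tracker, by contrast, treats B as a perfect predictor in both designs.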

A key problem for discriminative learning in infant speech category acquisition is the teaching signal. In an unsupervised ecology, where does the teaching signal come from? How does the infant know the correct category for a given input? A clever solution has been proposed under the rubric of “self-supervised” discriminative learning (Elman, 1990). In these schemes the model’s job is not to predict the category, but to predict some aspect of the input itself. For example, Elman and Zipser (1988) trained a model whose input was a spectral representation of speech, and its job was to output the same input (an autoencoder). Critically, between the input and the prediction there were only a small number of intermediate units. Thus, to successfully recover the input, the model had to figure out how to compress it into a low-dimensional space. Analysis of what these features are doing showed clear evidence for categories. Similarly, Nixon and Tomaschek (2021) present an unsupervised model which adapts this to a predictive framework. Their model uses each time window of the speech input to predict the next time window. After training, the predictions were transformed into a metric of discrimination, which showed evidence for something resembling categories. Intriguingly, in both cases these models do not have explicit category labels: they are trained to reproduce or predict the input, not the category. Nonetheless, the perceptual space they organize reflects category-like structure and could serve as a platform for later category learning (reflecting Feldman et al., 2021). Thus, these models demonstrate that without a teacher, and without any information from outside the speech system (an unsupervised ecology), error-driven learning is feasible and may permit a much more powerful learning mechanism than statistical/associative learning (distributional learning).
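
The logic of self-supervision can be sketched with a toy autoencoder. This is a deliberately simplified linear model with two hypothetical, correlated cues and a single intermediate unit; it is not the spectral-input network of Elman and Zipser (1988) nor the predictive model of Nixon and Tomaschek (2021), and the data, starting weights, and function names are all assumptions made for illustration.

```python
import random


def make_tokens(n=200, seed=1):
    """Hypothetical tokens with two correlated cues drawn from two clusters."""
    rng = random.Random(seed)
    tokens, labels = [], []
    for i in range(n):
        centre = -1.0 if i % 2 == 0 else 1.0
        tokens.append((centre + rng.gauss(0, 0.2), centre + rng.gauss(0, 0.2)))
        labels.append(0 if centre < 0 else 1)  # used only for evaluation, never for training
    return tokens, labels


def encode(x, w):
    """Activation of the single intermediate unit."""
    return w[0] * x[0] + w[1] * x[1]


def train_autoencoder(tokens, epochs=50, lr=0.01):
    """Error-driven learning in which the teaching signal is the input itself."""
    w = [0.1, 0.05]  # input -> intermediate unit (arbitrary small start)
    v = [0.05, 0.1]  # intermediate unit -> reconstructed input
    for _ in range(epochs):
        for x in tokens:
            h = encode(x, w)
            err = [x[0] - v[0] * h, x[1] - v[1] * h]  # reconstruction error
            back = err[0] * v[0] + err[1] * v[1]      # error passed back to the encoder
            for i in range(2):
                v[i] += lr * err[i] * h
                w[i] += lr * back * x[i]
    return w, v


def reconstruction_error(tokens, w, v):
    """Mean squared reconstruction error across tokens."""
    total = 0.0
    for x in tokens:
        h = encode(x, w)
        total += (x[0] - v[0] * h) ** 2 + (x[1] - v[1] * h) ** 2
    return total / len(tokens)


if __name__ == "__main__":
    tokens, labels = make_tokens()
    w, v = train_autoencoder(tokens)
    print("reconstruction error:", round(reconstruction_error(tokens, w, v), 3))
    print("codes:", [round(encode(x, w), 2) for x in tokens[:6]])
```

Although the model is never given a category label, the intermediate unit ends up with clearly different activations for the two clusters, because compressing the input well requires preserving the dimension along which the clusters differ.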

Importantly, some of the hallmarks of discriminative learning have been shown in adult speech category acquisition: Nixon (2020) trained listeners on artificial auditory categories and found ordering effects like blocking and overshadowing that are hallmarks of discriminative but not associative learning. It remains to be seen if infants would show similar effects. Nonetheless, the viability of the self-supervised paradigms proposed by Nixon and Elman overcomes the critical problem posed by the presumed unsupervised ecology of infant learning.

3.3.3. Supervised Ecologies.

Discriminative learning was originally developed for learning in a supervised ecology (e.g., operant learning). It is clear what a truly supervised ecology would look like in this case: the learner hears a sound, predicts the correct category, receives feedback on this decision and updates their representations based on prediction error (Table 1, Column 4). In speech categorization, this would appear to be an uncommon situation: when does a child realize that they categorized a speech sound wrong? However, as I argue next, there may be other sources of supervision in the learning ecology that could serve this role: the system could predict visual speech cues or the presence of a referent and use that to generate the prediction error. The key is that these live outside the speech system and that they offer information that can help disambiguate category membership.

But what about supervised associative learning? Imagine a situation in which the child heard speech sounds in the presence of a referent (e.g., a doll vs. a ball). By strengthening the link between the visual referent and that region of the cue space, this might help separate categories that are otherwise acoustically very close. Indeed, second language learning paradigms like those of Wade and Holt (2005) implement this. In their study, adult learners played a game in which speech sounds were paired with consistent visual events; while not an explicit teaching signal, these events can be associated with auditory features to build categories. While such learning could certainly be accomplished with discriminative mechanisms (and perhaps more powerfully), they are not strictly necessary; simply linking regions of the space to an external tag can do the job. Indeed, that is the basis (and the power) of all exemplar models of speech perception (Goldinger, 1998).
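
A minimal sketch shows how linking regions of the cue space to an external tag can work without any error signal. It assumes a single cue (VOT, in ms), a handful of hypothetical stored exemplars tagged with the word they accompanied, and an exponential similarity function; it is an illustration of the general exemplar idea, not the model of Goldinger (1998).

```python
import math


def exemplar_classify(token, exemplars, sensitivity=0.2):
    """Pick the external tag whose stored exemplars are most similar to the token."""
    votes = {}
    for cue, tag in exemplars:
        # Similarity decays exponentially with distance in the cue space.
        votes[tag] = votes.get(tag, 0.0) + math.exp(-sensitivity * abs(token - cue))
    return max(votes, key=votes.get)


# Hypothetical memory: each stored VOT value is tagged with the word heard with it.
EXEMPLARS = [(0.0, "bear"), (5.0, "bear"), (12.0, "bear"),
             (40.0, "pear"), (50.0, "pear"), (60.0, "pear")]

if __name__ == "__main__":
    for vot in (8.0, 45.0):
        print(vot, exemplar_classify(vot, EXEMPLARS))
```

Nothing here computes a prediction error: storing tagged exemplars and summing similarity is enough to map regions of the cue space onto the externally supplied tags.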

The canonical view is that the ecology of infancy appeared to rule out a supervised ecology for speech category acquisition. Nonetheless beyond phonemes (outside of my narrow definition of speech perception), there may be possibilities. Words or referents could serve in such a role (as in exemplar models), or later in development, an orthographic system may help. However, this demands a reconsideration of the timecourse of development and the ecology of the infant (or even child) language learning environments.

3.4. Is the ecology of infant speech category learning truly unsupervised?

Recent empirical and computational studies question the assumption of a purely unsupervised ecology. While clearly infants do not get direct feedback on their categorization, there may be implicit supervisory signals that help separate categories. This could overcome the limits of distributional learning as an implicit supervisor could harness the power of supervised learning (discriminative or non-discriminative) to arbitrarily delineate regions of the acoustic input.

For example, computational work by Westermann and Miranda (2004) proposes that during the second year of life a child’s productions might stabilize speech categories. Empirical work by Teinonen et al. (2008) suggests visual cues could help even earlier. In a distributional learning experiment, six-month-olds showed better b/d discrimination when sounds were accompanied by a video showing the matching articulation. However, visual cues are not robust for many phonemes (Binnie et al., 1974), suggesting the need for a broader source of support.

One obvious candidate for such a signal is words: a child could note that sounds with lower formants at onset tend to refer to different things (balls) than those with higher formant frequencies (dolls). This was proposed by Walley et al. (2003) as the lexical restructuring hypothesis to account for the refinement of speech categories by older children. As children learn dense neighborhoods of words, speech perception adapts to support finer-grained discriminations. This kind of referential or lexical support could be available as early as six months (Bergelson & Swingley, 2012), and would obviously become more helpful later in development as the lexicon grows. Even a few referents may help. Yeung, Werker and colleagues (Yeung et al., 2014; Yeung & Werker, 2009) showed that nine-month-olds could learn to discriminate a non-native phonetic contrast only when the two sounds were paired with distinct images. Moreover, visual referents may not just support the ability to categorize along dimensions like VOT: computational work by Apfelbaum and McMurray (2011) suggests that the ability to contrast referents may help infants discover what dimensions are relevant for contrasting words (e.g., VOT is relevant, but not talker).

One concern is scaling this up. Infants rarely encounter isolated objects (though this may be more common than thought in explicit teaching situations: Pereira et al., 2013). Räsänen and Rasilo (2015) offer a computational demonstration that even sporadically available visual referents can help. They built a model that segmented real speech in the presence of visual referents. Critically, there were often multiple visual referents available, but across presentations, one was consistently more likely to co-occur with a word (cross-situational learning). These referents led to improved segmentation, suggesting the plausibility of using visual referents and meaning as an implicit supervisor.
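
The cross-situational logic can be sketched with simple co-occurrence counts. This is a far simpler learner than the speech segmentation model of Räsänen and Rasilo (2015); the toy lexicon, the probability that the intended referent is visible, the number of distractors, and the function names are all hypothetical.

```python
import random
from collections import defaultdict


def simulate_scenes(lexicon, n_trials=600, p_target=0.8, n_distractors=2, seed=0):
    """Each scene pairs a word with several referents; the target is only sometimes present."""
    rng = random.Random(seed)
    words = sorted(lexicon)
    referents = sorted(lexicon.values())
    scenes = []
    for _ in range(n_trials):
        word = rng.choice(words)
        others = [r for r in referents if r != lexicon[word]]
        scene = set(rng.sample(others, n_distractors))
        if rng.random() < p_target:
            scene.add(lexicon[word])
        scenes.append((word, scene))
    return scenes


def learn_by_cooccurrence(scenes):
    """Map each word to the referent it co-occurred with most often."""
    counts = defaultdict(lambda: defaultdict(int))
    for word, scene in scenes:
        for referent in scene:
            counts[word][referent] += 1
    return {word: max(c, key=c.get) for word, c in counts.items()}


# Hypothetical toy lexicon: word form -> intended referent.
LEXICON = {"ball": "BALL", "doll": "DOLL", "cup": "CUP",
           "dog": "DOG", "shoe": "SHOE", "book": "BOOK"}

if __name__ == "__main__":
    learned = learn_by_cooccurrence(simulate_scenes(LEXICON))
    print(learned == LEXICON)
```

No single scene identifies the referent, but across scenes the intended referent co-occurs with its word more often than any distractor, so an ambiguous and sporadic signal can still act as an implicit supervisor.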

Even absent a referent, a broader consideration of the complete wordform could help learn speech categories (Feldman, Griffiths, et al., 2013). For example, children could notice that /æ/ occurs in different words (cat, dad) than /ɛ/ (leg, egg). In this case, the surrounding phonemes (the /k/ and /t/ in cat) serve the implicit supervisory role, helping to separate these close vowels (a form of bootstrapping). Feldman, Griffiths, et al. (2013) demonstrated the feasibility of this computationally, and later empirical work showed that 8- to 14-month-old infants can learn this way (Feldman, Myers, et al., 2013; Thiessen, 2007; Thiessen & Pavlik, 2016).

Together, this work argues for a variety of implicit supervisory signals available in the ecology of infancy: production, visual speech cues, referents, and segmented wordforms. Likely more sources of supervision will yet be discovered. Such mechanisms make it clear that the ecology of infant learning is not fully unsupervised, and this could enable infants to harness a variety of speech-external information sources to separate categories, as well as the greater power of supervised associative learning or discriminative learning to help solve problems that distributional learning cannot. However, many of these mechanisms become more useful later in development. Vocabulary grows dramatically during the second year of life and even more dramatically during the school age years; and visual speech skills develop throughout childhood (McGurk & MacDonald, 1976). This raises the possibility that higher level aspects of language (e.g., words) may be at least initially learned without fully specified phonemes (e.g., on the basis of some kind of more holistic representation of sound). It also raises the question of whether speech category acquisition is limited to infancy.

3.5. Beyond infancy.

The foregoing discussion points to the idea that acquiring speech categories may need to be protracted: the supervisory signals (e.g., the lexicon, production) needed to overcome the limits of distributional learning are more useful later in development. And the limits of infant methods make it more plausible that infants simply are not acquiring speech categories, but reorganizing the acoustic space (Feldman et al., 2021; Schatz et al., 2021), leaving true categories for later.

Hints within the infancy literature have pointed to this for a while. For example, work on the intersection of speech perception and word learning during the second year seems to suggest a second wave of perceptual development from 12–24 months. Stager and Werker showed that 14-month-old infants who succeed at discriminating similar phonemes like /b/ and /d/ struggle to map those onto words in a challenging habituation/word learning task. But by 24 months they succeed. Initial explanations for this pointed to the task demands of word learning (Fennell & Waxman, 2010; Yoshida et al., 2009); however, in parallel, Rost, McMurray and colleagues (Galle et al., 2015; Rost & McMurray, 2009, 2010) began exploring perceptual phenomena. They argued that if infants’ perceptual categories were under-developed at that point, then enhanced acoustic variability (which should increase task demands) may be needed to buttress these categories. This is exactly what they found: more variation led to better learning. Intriguingly, it was not variation in relevant phonetic cues (e.g., VOT) that drove better learning; rather, infants needed variation in irrelevant factors like talker and pitch (Galle et al., 2015; Rost & McMurray, 2010) (and Bulgarelli et al., 2021, quantified this variability in the real world). This suggests that the critical problem at these ages is learning which dimensions are relevant (see also Dietrich et al., 2007; Hay et al., 2015), and it is in line with Feldman et al.’s (2021) proposal of a reorganizing space. This pushes the developmental window at least to 24 months.

This may not be late enough. Research on older children, which is largely ignored by major theories of language development, pushes this window farther (Bernstein, 1983; Hazan & Barrett, 2000; Nittrouer, 1992, 2002; Nittrouer & Miller, 1997; Nittrouer & Studdert-Kennedy, 1987; Slawinski & Fitzgerald, 1998). Critically, older children can do much more sophisticated tasks, including identification/labeling tasks that help eliminate some of the limitations of relying on discrimination. These studies use tasks in which children hear tokens from a speech continuum spanning two phonemes (e.g., /b/ to /p/) and label each token by letter or word (e.g., bear/pear). From this, one can construct standard categorization functions (as is commonly done in adults) and compare them across ages. These studies have examined a variety of phonetic contrasts including approximants (Slawinski & Fitzgerald, 1998), voicing and place of articulation in stops (Bernstein, 1983; Hazan & Barrett, 2000) and fricatives (Nittrouer, 1992, 2002; Nittrouer & Miller, 1997; Nittrouer & Studdert-Kennedy, 1987). Many have further pushed the envelope by examining the contributions of multiple cues (e.g., VOT and F0 for voicing) (Bernstein, 1983; Nittrouer, 2004; Nittrouer & Miller, 1997). Collectively, they show changes from 3–12 years of age, with a gradual steepening of the slope across the school-age years (see Figure 6 for a schematic), suggesting increasingly precise categorization.

Figure 6:

Typical results of experiments examining speech categorization in school-age children (e.g., Hazan & Barrett, 2000). Schematic results from tasks in which children hear a token from a speech continuum (e.g., spanning /b/ to /p/) and label it. Younger children typically show shallower slopes than older.
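The identification functions described above are commonly summarized by a logistic curve with a category boundary and a slope. The following is a minimal sketch of that idea, not the analysis used in any of the cited studies. The continuum steps, the boundary of 20 msec, the two slope values, and the function names `identification` and `fit_logistic` are all illustrative assumptions.

```python
import math

def identification(step, boundary, slope):
    """Proportion of /p/ responses at a continuum step (logistic function)."""
    return 1.0 / (1.0 + math.exp(-slope * (step - boundary)))

def fit_logistic(steps, props, eps=1e-6):
    """Estimate (boundary, slope) by least squares on logit-transformed proportions."""
    logits = []
    for p in props:
        p = min(max(p, eps), 1 - eps)  # keep the logit finite at 0 or 1
        logits.append(math.log(p / (1 - p)))
    n = len(steps)
    mean_x = sum(steps) / n
    mean_y = sum(logits) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(steps, logits))
    sxx = sum((x - mean_x) ** 2 for x in steps)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    boundary = -intercept / slope  # step at which responses are 50/50
    return boundary, slope

# Hypothetical VOT continuum (msec) and schematic response proportions.
steps = list(range(0, 45, 5))
younger = [identification(s, boundary=20, slope=0.15) for s in steps]  # shallow
older = [identification(s, boundary=20, slope=0.45) for s in steps]    # steep

young_fit = fit_logistic(steps, younger)
old_fit = fit_logistic(steps, older)
print("younger: boundary=%.1f slope=%.2f" % young_fit)
print("older:   boundary=%.1f slope=%.2f" % old_fit)
```

In this sketch both groups share the same boundary, and only the slope differs, which is the pattern the schematic in Figure 6 depicts. Real data would be noisy proportions, for which a maximum-likelihood logistic fit would be more appropriate than the logit regression used here.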

More recently, my own lab conducted such a study using eye-tracking in the Visual World Paradigm (the VWP) (McMurray et al., 2018). Unlike traditional categorization tasks, the VWP uses eye-movements to trace out the real-time dynamics of how a phoneme or word is identified over time: the processes that lead up to the final response reflected in the categorization decisions. Children from 7 to 18 years performed a typical identification task in which they heard a word from either a /b/-/p/ or /s/-/ʃ/ continuum and clicked on a matching picture. To find the picture, children make a series of fixations; these begin immediately after the word, with 3–4 fixations as the trial unfolds. Since fixations only reflect processing up to the point they are launched, the sequence of fixations reflects the unfolding commitment to a category. That is, the degree to which a child fixates /b/ vs. /p/ at 500 msec reflects the degree to which the child is committed to one or the other at that time. This revealed two key findings.

First, we converted the likelihood of fixating each word at a particular time into a standard identification function (Figure 7A-D; and see http://osf.io/w5bqg for animations). That is, at 500 msec (for example), we asked how biased the fixations are toward /b/ or /p/ at each continuum step, analogous to the data in Figure 6. This can then be done at consecutive time steps to visualize how phoneme categorization unfolds over time and development. Initially, these curves were mostly flat (Figure 7A). However, at early moments in processing (Figure 7B,C), there were marked developmental differences in categorization. This was particularly true between the 7–8 year-olds and the older groups, but some differences were also significant even between the 12- and 17-year-old age groups (particularly for the slope of the function)! It would be easy to dismiss this: perhaps younger children are just slower, or perhaps they are just noisier. But during real language use, children may not have the luxury of waiting 1000 msec for their decision to unfold and to overcome any noise in the system. It doesn’t matter why speech categorization is incomplete if it is not available quickly and robustly enough to support language processing. In this light, at 300 msec (approximately the length of a word), it is notable that the 7–8 year-olds were basically at chance (Figure 7B).

Figure 7:

Results from McMurray et al. (2018). Fixations in the Visual World Paradigm (VWP) are converted to a measure analogous to standard phoneme identification (Figure 6) to assess how categorization unfolds over time. Here a fixation bias of −1 indicates that the listener is fully committed to /b/ at that time, while a bias of +1 indicates a complete commitment to /p/. At 300 msec, 7–8-year-old children show little departure from 0 at any step along the continuum, suggesting they have not yet begun to categorize the sounds. However, older children show some departure, with differences between 11–12 and 17–18 y.o. children. At later times (B-D), the categorization function expands, but developmental differences can be seen throughout. E) Proportion of looks to the competitor (area under the curve) as a function of distance from the category boundary, for trials in which the child chose the “correct” phoneme. Older children (blue lines), like adults, show a gradient response, with competition falling off as the continuum step departs in either direction from the boundary at 0. This suggests robust sensitivity to fine-grained detail. In contrast, younger children show heightened competition overall and reduced sensitivity.
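To make the fixation-bias measure concrete, the sketch below computes a bias between −1 (all looks to the /b/ item) and +1 (all looks to the /p/ item) at a chosen time point for each continuum step. The exact computation used in McMurray et al. (2018) is not given here, so the normalized difference in looks is an assumption, as are the trial format, the toy data, and the function names `fixated_object` and `fixation_bias`.

```python
from collections import defaultdict

def fixated_object(fixations, t_ms):
    """Return the object being fixated at time t_ms, or None if no fixation covers it."""
    for start, end, obj in fixations:
        if start <= t_ms < end:
            return obj
    return None

def fixation_bias(trials, t_ms):
    """Bias per continuum step at t_ms: (looks to /p/ - looks to /b/) / (looks to either).

    Assumed definition: -1 means every look is on the /b/ item, +1 every look on /p/.
    Trials with no look to either item at t_ms are ignored.
    """
    counts = defaultdict(lambda: {"b": 0, "p": 0})
    for step, fixations in trials:
        obj = fixated_object(fixations, t_ms)
        if obj in ("b", "p"):
            counts[step][obj] += 1
    return {
        step: (c["p"] - c["b"]) / (c["p"] + c["b"])
        for step, c in counts.items()
    }

# Hypothetical trials: (continuum step in msec VOT, [(start_ms, end_ms, object), ...]).
trials = [
    (0,  [(0, 250, "other"), (250, 1500, "b")]),
    (0,  [(0, 400, "other"), (400, 1500, "b")]),
    (40, [(0, 250, "other"), (250, 1500, "p")]),
    (40, [(0, 600, "b"), (600, 1500, "p")]),
]

early = fixation_bias(trials, 300)   # commitment is still incomplete
late = fixation_bias(trials, 1000)   # commitment has resolved
print(early, late)
```

Repeating the computation at consecutive time points yields one identification-like function per time slice, which is the logic behind panels A-D of Figure 7.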

Second, a critical value of this variant of the VWP is that one can examine fixations only on trials where the child categorized the stimuli the same way. That is, given that the child ultimately heard a /b/, were their fixations influenced by fine-grained differences along the continuum (McMurray et al., 2002)? Any differences in fixations thus reflect truly within-category sensitivity (since ultimately all the tokens were categorized the same way). A true categorical model predicts a flat function: if listeners are discarding within-category detail, a 20 msec VOT (near the boundary) should elicit no more activation for competitors than a 0 msec VOT (a clear /b/), since they are both /b/’s. In contrast, adults typically show increasing competitor fixations as the VOT approaches the boundary (McMurray et al., 2002): listeners are considering the competitor more, even though they are fully confident in its category membership. This was a key source of evidence against CP.

When we did this with children (Figure 7E), 7–8 year olds’ fixations did not reflect fine-grained changes in VOT; they showed the much flatter functions predicted by CP, and this ability showed clear developmental differences between all three age groups. The ability to encode these gradient differences (appearing as more competition near the boundary) did not emerge until later in development. Thus, over development, children were not reducing sensitivity to within-category detail (as predicted by perceptual narrowing); they were increasing it.
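The contrast between the categorical and gradient predictions can be expressed as the slope of competitor fixations against distance from the category boundary, computed only over trials that received the same response. The sketch below is illustrative only: the distances, the fixation proportions, and the function name `ols_slope` are hypothetical and are not data or methods from McMurray et al. (2002, 2018).

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx

# Hypothetical distance from the boundary (msec VOT), for trials all labeled /b/.
distance = [5, 10, 15, 20]

# Hypothetical proportion of looks to the /p/ competitor at each distance.
categorical = [0.10, 0.10, 0.10, 0.10]  # within-category detail discarded: flat
gradient = [0.25, 0.20, 0.15, 0.10]     # more competition near the boundary

flat_slope = ols_slope(distance, categorical)
gradient_slope = ols_slope(distance, gradient)
print(flat_slope, gradient_slope)
```

Under these assumptions, a slope near zero corresponds to the flat function predicted by a categorical model, while a negative slope (competition falling off with distance from the boundary) corresponds to the gradient pattern described for adults and older children.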

3.5.1. The Canonical Model in light of Older Children.

These studies of later development promote an important rethinking of the canonical model. By childhood, the ecology of speech development changes dramatically, making all sorts of new developmental pathways possible. Even at the level of distributional learning, children have a much larger social network than infants, creating new opportunities for perceptual learning. However, opportunities for implicit supervision also expand. School-age children have large vocabularies and increasingly sophisticated lexical processing skills (McMurray et al., 2022) to deal with competing words. Visual speech perception skills improve (McGurk & MacDonald, 1976), and speech production is more precise (Sadagopan & Smith, 2008). Finally, children are more active conversational participants: if they mishear a word, they may be more likely to use later context or ask a question to correct it (and learn from the mistake). These all support both implicit and explicit supervisory signals.

Even more importantly, by age 5–6, many North American children begin learning to read. Reading can further push vocabulary and language development more broadly (Duff et al., 2015). Moreover, in alphabetic languages, the act of learning to read and spell may help children to develop more precise representations of sound (Dich & Cohn, 2013), and letters may serve as a sort of implicit supervisor for auditory categories. A wealth of studies link dyslexia to impaired speech categorization (Noordenbos & Serniclaes, 2015). Under the canonical view, these deficits are causal: speech categories fail to develop in infancy, leading to later reading deficits. However, the converse may also be true: if speech perception develops partially in response to reading, speech perception deficits could be caused by a failure to learn to read well.

The ecology of learning in later childhood is much richer than in infancy: there is a more complex input to support unsupervised learning, better and new avenues for supervised learning, and a richer set of internal skills that can be brought to bear (reading, larger vocabularies). Perhaps then, the initial developments in infancy are fairly limited, and children rely on later and much richer experiences to develop full blown speech categories. There may be advantages to slower development, when a more favorable ecology for learning is available. This may help explain why higher level auditory skills like word recognition (Rigler et al., 2015; Sekerina & Brooks, 2007) and talker identification (Creel & Jimenez, 2012) also develop slowly.

4. Extensions

While the canonical model cannot be conclusively discarded, the foregoing review makes it clear that a) unsupervised learning may not be sufficient; b) there is not strong evidence that infants “have” speech categories by 12 months; c) error-driven learning mechanisms can be engaged even in infancy; d) several implicit supervisory signals are available to older infants; and e) development is protracted through the school-age years, enabling richer mechanisms of change. These facts have implications beyond the immediate problem of speech category learning for three domains: critical periods, second language (L2) learning, and the problem of lack of invariance that motivates much work in speech perception. I briefly discuss each before concluding.

4.1. Critical Periods

While a complete treatment of critical periods is outside the scope of this paper (see Thiessen et al., 2016, for ideas consistent with those presented here), the concept of a critical or sensitive period has long been linked to the canonical view (Kuhl et al., 2005; Maurer & Werker, 2014; Werker & Hensch, 2015). The belief was (and is) that infancy is a special time in which people are prepared to rapidly do this kind of perceptual learning, and if one doesn’t acquire speech perception skills within this window, it could be problematic. Indeed, the difficulty that most adults face in learning non-native phonetic contrasts seems to support this view (Strange & Shafer, 2008). However, if infancy only sets the stage for more protracted learning during childhood (or young adulthood), this seems problematic for critical periods.

However, these ideas may be consistent with two more recent lines of work. First, Hartshorne et al. (2018) pointed out that most studies of critical periods in language focus on attainment at a certain point (e.g., at presumed adulthood). However, if language learning is highly protracted (e.g., it takes a decade or more), people who start late also have less exposure to the new language by the time they are tested at 25 or so. Hartshorne et al. incorporated this logic into a simple learning model that estimated L2 performance (on a variety of language skills) for over 600,000 English learners from both the length of exposure to the language and the age at which exposure began (the sensitive period). This led to estimates of about 30 years to fully master English syntax, with a sensitive period at 17.5 years of age. While their model focused on syntax, this is a close match to data suggesting protracted development of speech perception (Hazan & Barrett, 2000; McMurray et al., 2018). While there is no doubt that adults do struggle with L2 speech sounds, this suggests the answer may not be as simple as “they started too late”.

Second, a wealth of work has documented rapid, robust and powerful forms of perceptual learning for speech in adults, usually in the context of adaptation and retuning to novel speech (e.g., Bradlow & Bent, 2008; Caplan et al., 2021; Davis et al., 2005; Norris et al., 2003). Indeed, a handful of studies have compared children and adults in controlled laboratory learning studies and report similar or even better learning of speech categories by adults than children (Fuhrmeister et al., 2020; Heeren & Schouten, 2010; Wang & Kuhl, 2003). While this learning is not infinitely flexible, it undercuts the claim that plasticity is uniquely a province of infancy, and it suggests that plasticity is not lost at the closing of some critical window.