Abstract

Objective: The aim of this study was to preliminarily determine the feasibility of probabilistically generating problem-specific computerized provider order entry (CPOE) pick-lists from a database of explicitly linked orders and problems from actual clinical cases.

Design: In a pilot retrospective validation, physicians reviewed internal medicine cases consisting of the admission history and physical examination and orders placed using CPOE during the first 24 hours after admission. They created coded problem lists and linked orders from individual cases to the problem for which they were most indicated. Problem-specific order pick-lists were generated by including a given order in a pick-list if the probability of linkage of order and problem (PLOP) equaled or exceeded a specified threshold. PLOP for a given linked order-problem pair was computed as its prevalence among the other cases in the experiment with the given problem. The orders that the reviewer linked to a given problem instance served as the reference standard to evaluate its system-generated pick-list.

Measurements: Recall, precision, and length of the pick-lists.

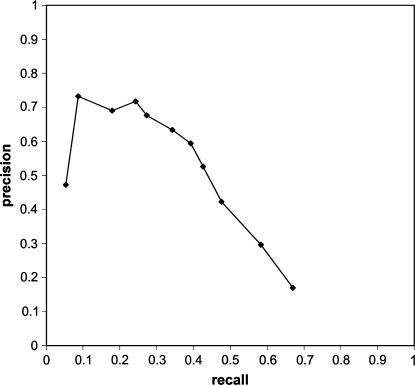

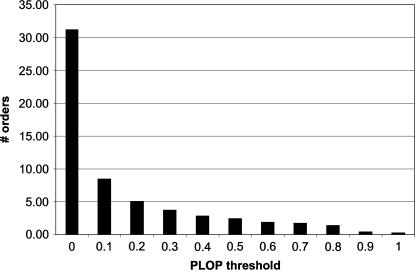

Results: Average recall reached a maximum of .67 with a precision of .17 and pick-list length of 31.22 at a PLOP threshold of 0. Average precision reached a maximum of .73 with a recall of .09 and pick-list length of .42 at a PLOP threshold of .9. Recall varied inversely with precision, consistent with classic information retrieval behavior.

Conclusion: We preliminarily conclude that it is feasible to generate problem-specific CPOE pick-lists probabilistically from a database of explicitly linked orders and problems. Further research is necessary to determine the usefulness of this approach in real-world settings.

Two of the main technologies driving current interest in health care information technology are computerized provider order entry (CPOE) and electronic health records (EHR).1,2 Both technologies have been touted by influential groups as critical to improving patient safety and reducing medical errors,3,4 although good reasons exist to implement such systems even independently of these issues.5

Several investigators have begun to show that integrating CPOE with clinical documentation functionality in EHR systems may create synergies. Rosenbloom et al.6 have reported improving levels of computerized physician documentation by integrating clinical documentation functionality into CPOE work flow. Jao et al.7 reported preliminary results suggesting that integrating problem list maintenance functionality into CPOE work flow may improve problem list maintenance. We suggest that coupling CPOE with problem list maintenance even more tightly may lead to further synergies. In this paper, we explore one potential synergy. We investigated how explicitly linking orders to the problems that prompted them could enable generation of problem-specific CPOE pick-lists probabilistically, without the need for predefined order sets.

Background

Several studies in the outpatient setting have shown that CPOE may reduce clinician order entry time8 or at least be time neutral.9,10 Nonetheless, an impediment to physician acceptance of CPOE systems in the inpatient setting is the (real or perceived) increased time burden of order entry compared with paper-based ordering.11,12,13,14 A major solution that has emerged is the use of predefined, problem/situation-specific order sets. An order set is a static collection of orderable items that are appropriate for a given clinical situation. Order sets are usually presented to the end user as pick-lists. The liberal use of order sets has been named as a key factor in achieving physician acceptance of CPOE implementations.15,16 Other solutions for decreasing the time burden of CPOE have also emerged. Lovis et al.17 have reported promising results with a natural language–based command-line CPOE interface, although incidental findings from the same study suggest that order sets are faster. Preconfigured orders (e.g., medication orders whose dose, frequency, and route of administration have already been specified) and hierarchical order menus have also emerged as time-saving techniques for placing common orders.18

Centrally defined order sets are believed to increase clinical practice consistency and adherence to evidence-based practice guidelines3; however, from the end user's perspective, an important advantage of order sets is that they save time when entering orders via CPOE.15,19 Indeed, personal order sets, which individual users can create, seem to vary considerably from user to user, yet they are popular among end users,19,20 ostensibly because of their time-saving advantages. Few if any reports have described the extent to which currently deployed order sets, whether centrally or individually defined, are based on scientific evidence.

In many currently available CPOE systems, problem-specific order pick-list functionality is present in the form of static order sets or collections of orders on menus. Centrally defined order sets frequently have the benefit of institutional backing, yet static order sets have several inherent problems. First, Payne et al.18 have shown that centralized order set configuration is time-consuming and that 87% of their centrally defined order sets were never even used, although their study did show higher proportional use of preconfigured orders. This suggests that much time and many resources are being spent on constructing unused order sets and other order configuration entities. Second, newly emerging best practices may be slow to be incorporated into centralized order sets.21 Third, centralized order sets are often defined only for frequently occurring problems, leaving rarer problems unsupported. Finally, as the number of order sets in a CPOE system increases, locating the appropriate order set among all the available order sets can be difficult.

We believe that explicitly linking patients' problems with the orders used to manage those problems may create synergies among many processes in the health care information flow, including order entry itself, results retrieval, clinical documentation, reimbursement, public health reporting, clinical research, quality assurance, and many others. We believe that we can harness these synergies by employing the problem-driven order entry (PDOE) process. PDOE requires the clinician to perform two activities with the EHR/CPOE system, which together are necessary and sufficient to embody the process: (1) enter the patient's problems using a controlled terminology and (2) enter every order in the context of the one problem for which it is most indicated. Orders may be entered via a pick-list or another mechanism such as a command-line interface, but each order must be entered in the context of a problem. Through this process, PDOE produces a database of linked order-problem pairs (LOPPs). We hypothesize that it is feasible to use a database of LOPPs to generate useful problem-specific order pick-lists for CPOE by employing a simple probabilistic pick-list generation algorithm. This approach is a form of case-based reasoning.

With respect to the orders that a pick-list contains, we suggest that the ideal problem-specific pick-list embodies the following criteria: (1) all orders that the user wants to place for a given problem are in the pick-list (i.e., perfect recall), (2) all orders in the pick-list are orders that the user wants to place for the given problem (i.e., perfect precision), and (3) the orders in the pick-list reflect clinical best practice and are backed by scientific evidence. Based on these criteria, the information retrieval metrics of recall and precision are necessary, albeit not sufficient, measures of the usefulness of problem-specific order pick-lists. Acknowledging this limitation, we believe that they are reasonable for this pilot study.

Methods

Case Selection

After we obtained an institutional review board exemption, we queried the legacy CPOE system22 at Johns Hopkins Hospital (JHH) for orders and identifying data for all patients admitted between June 19 and October 16, 2003. We joined records from this query with case mix data to identify cases in which the patient was admitted to the Internal Medicine service (one of several general internal medicine services at JHH) and the length of stay was less than seven days. We also retrieved each case's International Classification of Diseases, Ninth Revision (ICD-9)–coded admission diagnosis. We arbitrarily chose the seven-day length of stay cutoff to try to limit the complexity of the cases, reasoning that complex cases tend to have longer lengths of stay. We wanted to limit case complexity to simplify the core study tasks for our reviewers.

We included in the study those cases whose ICD-9–coded admission diagnosis occurred more than six times within this set. We arbitrarily set the cutoff at six to yield what we believed would be a manageably small number of cases given our resource constraints, but perhaps one sufficient to demonstrate the ability of our approach to generate useful pick-lists. Although the utility of ICD-9–coded diagnoses for clinical purposes has been questioned,23,24 we used them to identify subsets of cases that would be at least moderately similar to one another. We aimed for moderate similarity among cases in our sample because LOPP similarity across cases is the basis of our probabilistic pick-list generation process, and we hoped to demonstrate its feasibility using our sample.

Based on two prospective criteria, we excluded cases with insufficient data to carry out the requirements of this study: (1) unavailability of the paper medical record or (2) an order count less than ten. We arbitrarily decided that cases with fewer than ten orders would not contribute enough data to be cost-effective in this experiment.
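
The selection and exclusion rules above can be summarized as a short filter. The following Python sketch is illustrative only: the `Admission` record and its field names are hypothetical stand-ins for the joined CPOE and case mix data, and the diagnosis frequency is counted after the service and length-of-stay filters but before the exclusions, as the text describes.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Admission:
    # Hypothetical fields standing in for the joined CPOE and case mix records.
    case_id: str
    service: str
    length_of_stay_days: float
    icd9_admission_dx: str
    order_count: int
    paper_record_available: bool


def select_cases(admissions, service="Internal Medicine", max_los_days=7,
                 dx_frequency_cutoff=6, min_orders=10):
    """Apply the inclusion and exclusion criteria described in the text."""
    # Inclusion: admitting service and a length of stay under seven days.
    eligible = [a for a in admissions
                if a.service == service and a.length_of_stay_days < max_los_days]
    # Inclusion: the admission diagnosis occurs more than six times in that set.
    dx_counts = Counter(a.icd9_admission_dx for a in eligible)
    included = [a for a in eligible
                if dx_counts[a.icd9_admission_dx] > dx_frequency_cutoff]
    # Exclusion: paper record unavailable, or fewer than ten orders.
    return [a for a in included
            if a.paper_record_available and a.order_count >= min_orders]
```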

Case Preparation

The paper medical records for our study set were requisitioned and retrieved, and the handwritten history and physical document (H & P) written by the physician who entered the admission orders was photocopied, digitally scanned, and manually de-identified. This physician was usually an intern, although many patients were admitted by a moonlighting resident trained at Hopkins. In a few cases, the admitting physician's H & P was not available in the chart, so the attending physician's or medical student's H & P was used instead; in these cases, the person who entered the orders (i.e., an intern) was different from the person who wrote the H & P. We chose the H & P because it is an information-rich narrative document25 that tends to explicitly or implicitly offer reasons for orders placed during the early part of a patient's hospital stay. This experiment required third-party physicians to enumerate patients' problems and to link orders to the problem for which they were indicated, so we needed documentation that correlated temporally with the orders. Because we were limited by time and manpower, we focused on the first 24 hours after admission, when a disproportionately large number of orders are placed.

For cases that met our inclusion criteria, we selected the order records for all orders placed within the 24-hour period following the first order placed for a given case. Because the legacy CPOE system allowed end users to modify orders' text descriptions and to enter free-text orders, these order records required standardization before use in this experiment; the standardized orders essentially served as our controlled terminology for describing orders. To standardize the records, we first manually deleted order records that we considered either meaningless or possibly better entered in some form other than an order, as future clinical information technology infrastructures might allow. We considered an order record meaningless if it did not describe an executable order (e.g., “NURSING MISCELLANEOUS DISCHARGE ORDER”). We considered a record possibly better entered in some other form if it was administrative in nature and did not deal specifically with patient care (e.g., “DISCHARGE NEW PATIENT” or “CHANGE INTERN TO”) or if the concept it described might be more usefully classified as a problem (e.g., “ALLERGY”).

Next, we manually standardized the syntax of the remaining order records by eliminating order attribute information, that is, text referring to medication dose, timing, and route of administration. Terms for medications that referred to qualitatively distinct preparations were kept as separate terms (e.g., the sustained-release form of a drug was considered different from the regular form). We also mapped highly similar free-text orders to a single term (e.g., “EKG” to “12-LEAD EKG”).
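
Although the standardization above was done manually, a rough sketch of how the deletion and attribute-stripping steps might be partially automated is shown below. The stop list, synonym table, and regular expression are hypothetical examples built from the orders quoted in the text, not the procedure used in this study; a pattern this simple would miss many real attribute formats. Note that it strips only text from the dose onward, so preparation words such as "SUSTAINED RELEASE" survive and remain distinct terms.

```python
import re

# Hypothetical stop list built from the examples in the text.
NON_ORDERS = {
    "NURSING MISCELLANEOUS DISCHARGE ORDER",        # not an executable order
    "DISCHARGE NEW PATIENT", "CHANGE INTERN TO",    # administrative
    "ALLERGY",                                      # better modeled as a problem
}
# Hypothetical synonym table mapping free-text variants to one term.
SYNONYMS = {"EKG": "12-LEAD EKG"}
# Hypothetical attribute pattern: a dose such as "40 MG" and everything after it
# (route, frequency), leaving the drug name and any preparation words intact.
ATTRIBUTE_RE = re.compile(r"\s+\d+(?:\.\d+)?\s*(?:MG|MCG|G|UNITS?|ML)\b.*$")


def standardize(order_texts):
    """Drop non-orders, strip attributes, and map synonyms to one term."""
    result = []
    for text in order_texts:
        text = " ".join(text.upper().split())  # normalize case and whitespace
        if text in NON_ORDERS:
            continue
        text = ATTRIBUTE_RE.sub("", text)
        result.append(SYNONYMS.get(text, text))
    return result
```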

Next, we eliminated duplicate order records within individual cases. Duplicates were frequent, for example, when serial measurements of cardiac enzymes were ordered to assess a patient for myocardial infarction; JHH's CPOE system recorded serial orders as multiple instances of the same order, all of which were retrieved in our query. We chose to eliminate such duplicates because many CPOE systems, regardless of how they store serial orders, allow a serial test to be ordered through a single order whose serial nature is expressed as an attribute, rather than requiring a separate order for each instance of the test; in this study, we simulated that order entry process. Finally, the display sequence of orders within each case was randomized at the storage level to control for possible confounding of order-problem linking by order sequence.
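
The de-duplication and randomization steps can be expressed compactly. In this hypothetical Python sketch, each case's standardized orders are a list of strings; `dict.fromkeys` keeps the first occurrence of each order, and a seeded `random.Random` instance shuffles the result. The function name and the `seed` parameter are assumptions for illustration.

```python
import random


def prepare_case_orders(orders, seed=None):
    """Remove within-case duplicate orders, then randomize display sequence."""
    # dict.fromkeys keeps one copy of each order (first occurrence, in order).
    unique = list(dict.fromkeys(orders))
    # A local generator keeps the shuffle reproducible when a seed is given.
    rng = random.Random(seed)
    rng.shuffle(unique)
    return unique
```

For example, three serial "TROPONIN I" records in one case collapse to a single order before shuffling.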

Reviewers

Ten physicians from seven different institutions agreed to be reviewers for this study, serving as proxies for the actual physicians who authored the H & Ps and entered the orders for our study cases.

Study Tasks and Data Collection

Each of the ten reviewers was assigned 17 cases. Five of these 17 cases, a stratified sample consisting of one case randomly selected from each of the five most frequent ICD-9 admission diagnoses, were redundantly assigned to all reviewers for a substudy of problem list agreement, whose results are not discussed in this report. Of the remaining 120 cases, 12 were randomly and nonredundantly assigned to each reviewer. For each assigned case, the reviewers reviewed the H & P and orders, entered a coded problem list, and linked each order to the problem for which it was most indicated (i.e., created a LOPP instance). The reviewers could not add or delete orders. They entered all data directly using PDOExplorer, a Web-based interface to a relational database that we designed specifically for this study.
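
The assignment scheme (five shared cases plus 12 unique cases per reviewer) can be sketched as follows. The text does not specify the randomization procedure, so this Python sketch assumes simple random draws from a seeded generator; `assign_cases` and its inputs are hypothetical. With the 125 analyzable cases and ten reviewers, it gives each reviewer 17 cases and deals the 120 nonshared cases without overlap.

```python
import random


def assign_cases(cases_by_dx, reviewers, n_private=12, seed=None):
    """cases_by_dx maps each ICD-9 admission diagnosis to a list of case IDs."""
    rng = random.Random(seed)
    # Shared set: one randomly chosen case from each of the five most frequent diagnoses.
    top_dx = sorted(cases_by_dx, key=lambda dx: len(cases_by_dx[dx]), reverse=True)[:5]
    shared = [rng.choice(cases_by_dx[dx]) for dx in top_dx]
    # All other cases are shuffled and dealt out nonredundantly.
    remaining = [c for dx in cases_by_dx for c in cases_by_dx[dx] if c not in shared]
    if len(remaining) < n_private * len(reviewers):
        raise ValueError("not enough cases for nonredundant assignment")
    rng.shuffle(remaining)
    assignments = {}
    for i, reviewer in enumerate(reviewers):
        private = remaining[i * n_private:(i + 1) * n_private]
        assignments[reviewer] = shared + private
    return assignments
```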

The reviewers created coded problem lists by entering problems as free text and mapping them to a Unified Medical Language System (UMLS) concept.26 UMLS look-up functionality was implemented using the findCUI method of the KSSRetriever class in the UMLSKS Java API, called with the English-language 2003AC UMLS release27 and the normalized string index as arguments. Because this was an internal research application, we did not restrict the source vocabularies against which problem list entries could be mapped. After selecting a UMLS concept, reviewers could add one of the following qualifiers: “history of,” “rule out,” “status-post,” and “prevention of/prophylaxis.” We actively discouraged use of qualifiers in the study instructions and by visual prompting in the user interface. If the UMLSKS returned no potential matches for a given input term, or if the reviewer judged that none of the returned UMLS concepts was an appropriate match, the reviewer could search UMLS with a new term. Reviewers could also add the free-text term itself to the problem list, which we likewise actively discouraged in the study instructions and by visual prompting in the user interface. Every problem list automatically contained the universal problem “Hospital admission, NOS.” Although the reviewers were free not to link any orders to this problem, we suggested in the instructions that it might be appropriate for orders relating simply to the patient's admission to the hospital (e.g., “notify house officer if…”). Reviewers were not permitted to delete this problem.

Data collection took place between mid-February and mid-April 2004. Reviewers were trained either in person or via telephone. During this training, they familiarized themselves with PDOExplorer using a practice case that was excluded from data analysis. The complete written instructions were displayed before reviewers began their first real case and were available in PDOExplorer for review at any point during the experiment. Although we briefed the reviewers on the experiment's hypothesis, we did not give them guidance on how to make case-specific decisions. The reviewers were not shown their actual cases before data collection.

“Problem instance” refers to a single assertion of a problem by a reviewer for a specific patient. Similarly, “order instance” refers to a single clinical order for a specific patient, and “LOPP instance” refers to the linking of an order instance to a problem instance.
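
These definitions, together with the problem qualifiers described earlier, suggest a simple data model. The Python sketch below is a hypothetical illustration, not the PDOExplorer schema: a problem is identified by a concept (a UMLS concept in the study, a readable label here) plus an optional qualifier, so “rule out myocardial infarction” and “myocardial infarction” compare as different problems. It assumes at most one instance of a given problem per case, so a (case, problem) pair identifies a problem instance. The helper `pdoe_violations` checks the PDOE requirement that each order instance in a case is linked to exactly one problem.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Qualifier(Enum):
    HISTORY_OF = "history of"
    RULE_OUT = "rule out"
    STATUS_POST = "status-post"
    PREVENTION = "prevention of/prophylaxis"


@dataclass(frozen=True)
class Problem:
    concept: str                            # a UMLS concept, or unmapped free text
    qualifier: Optional[Qualifier] = None   # a qualified problem is a distinct problem


# Hypothetical representation of the universal problem on every problem list.
HOSPITAL_ADMISSION = Problem("Hospital admission, NOS")


@dataclass(frozen=True)
class LOPPInstance:
    """One order instance linked to one problem instance within a case."""
    case_id: str
    order: str          # standardized order term
    problem: Problem


def pdoe_violations(case_id: str, orders: List[str],
                    lopps: List[LOPPInstance]) -> List[str]:
    """Return the case's orders that are not linked to exactly one problem."""
    links = Counter(link.order for link in lopps if link.case_id == case_id)
    return [o for o in orders if links[o] != 1]
```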

Analysis

The main unit of analysis in this experiment was the simulated order pick-list generated for each problem instance that participated in at least one LOPP instance (i.e., each “linked problem” instance). Our pick-list generation algorithm included a given order in the pick-list of a given problem instance if the probability of linkage of order and problem (PLOP) equaled or exceeded a specified threshold, which we refer to in the remainder of this paper as the “PLOP threshold.” We computed the PLOP as the proportion of cases containing the linked problem that also contained the LOPP. The LOPPs of the problem instance whose pick-list was being evaluated were excluded from the pool used to calculate its PLOP values.
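
The PLOP computation and threshold rule can be sketched in Python as follows. This is a minimal illustration under stated assumptions: each case is a mapping from problem to the set of orders linked to it (so a problem appears at most once per case), and excluding the evaluated problem instance is implemented by skipping its whole case. PLOP values are exact `Fraction`s so that comparisons against thresholds such as 0.3 are not disturbed by floating-point rounding. Only orders linked to the problem in at least one other case are candidates, so a threshold of 0 includes every such order, and a problem seen in no other case gets an empty pick-list. The function names are hypothetical.

```python
from collections import Counter
from fractions import Fraction


def plop_table(cases, problem, exclude_case):
    """PLOP of every order linked to `problem` in cases other than `exclude_case`.

    `cases` maps case ID -> {problem: set of orders linked to it in that case}.
    Returns {order: Fraction}, or {} if no other case contains the problem.
    """
    others = [links[problem] for cid, links in cases.items()
              if cid != exclude_case and problem in links]
    if not others:
        return {}
    counts = Counter(order for orders in others for order in orders)
    return {order: Fraction(n, len(others)) for order, n in counts.items()}


def generate_pick_list(cases, problem, exclude_case, threshold):
    """Orders whose PLOP equals or exceeds the threshold, most probable first."""
    plops = plop_table(cases, problem, exclude_case)
    chosen = [o for o, p in plops.items() if p >= threshold]
    return sorted(chosen, key=lambda o: (-plops[o], o))
```

For example, if a problem appears in three other cases and an order is linked to it in one of them, that order's PLOP is 1/3, so it is included at thresholds 0 through 0.3 and excluded at 0.4 and above.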

We calculated recall, precision, and pick-list length for each pick-list at PLOP thresholds from 0 to 1 in increments of 0.1. Problems with qualifiers were treated as distinct from their base problem and from each other (e.g., “rule out myocardial infarction” was considered different from both “myocardial infarction” and “history of myocardial infarction”). For a given pick-list and its reference standard, recall is the proportion of orders in the reference standard that are also in the pick-list, and precision is the proportion of orders in the pick-list that are also in the reference standard. Every problem instance had its own independent reference standard, namely, the set of orders that the reviewer linked to that problem instance. Just as a clinician in real time can enter inappropriate orders for a problem, a reviewer could have linked inappropriate orders to a problem. We used these reference standards in lieu of the orders that the clinician actually caring for a given patient might have considered indicated for a given problem instance.
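
Recall and precision for a single pick-list follow directly from these set definitions. In the Python sketch below (function names are hypothetical), `precision` returns `None` for an empty pick-list, where the ratio is undefined; `recall` assumes a nonempty reference standard, which holds because only linked problem instances are evaluated. The thresholds are generated as exact fractions rather than by repeatedly adding 0.1.

```python
from fractions import Fraction
from typing import Optional

# PLOP thresholds 0, 0.1, ..., 1 as exact fractions (avoids drift such as 0.1 * 3).
THRESHOLDS = [Fraction(i, 10) for i in range(11)]


def recall(pick_list, reference) -> float:
    """Share of the reference-standard orders that appear in the pick-list."""
    reference = set(reference)
    return len(reference & set(pick_list)) / len(reference)


def precision(pick_list, reference) -> Optional[float]:
    """Share of pick-list orders in the reference standard; None if the list is empty."""
    picked = set(pick_list)
    if not picked:
        return None
    return len(picked & set(reference)) / len(picked)
```

For instance, a reference standard of {TROPONIN I, ASPIRIN} and a pick-list of {TROPONIN I, 12-LEAD EKG, CHEST X-RAY} give a recall of 1/2 and a precision of 1/3.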

To summarize pick-list performance, we averaged recall, precision, and length over all pick-lists in the experiment at each PLOP threshold. Precision is undefined for a pick-list that contains no orders, so such empty pick-lists were excluded from the average for precision. We also computed cumulative frequency distributions for recall, precision, and pick-list length at each PLOP threshold.
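
The per-threshold summaries can be sketched as below. This hypothetical Python helper takes one (recall, precision, length) tuple per pick-list at a single threshold, averages precision only over pick-lists whose precision is defined (with `None` marking undefined values), and computes a cumulative frequency as the proportion of values at or below each cut point. The function names are assumptions for illustration.

```python
from statistics import mean


def summarize(results):
    """results: (recall, precision or None, length) for each pick-list at one threshold."""
    avg_recall = mean(r for r, _, _ in results)
    defined = [p for _, p, _ in results if p is not None]
    avg_precision = mean(defined) if defined else None
    avg_length = mean(n for _, _, n in results)
    return avg_recall, avg_precision, avg_length


def cumulative_frequency(values, cut_points):
    """Proportion of values at or below each cut point."""
    values = list(values)
    return [sum(v <= c for v in values) / len(values) for c in cut_points]
```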

Results

Demographics of Physician Reviewers

All but two of our reviewers had formal training in internal medicine; the exceptions were an emergency medicine physician with eight years of post-residency clinical experience and a family practitioner with two years of post-residency clinical experience. Of those trained in internal medicine, one had one year of post-residency clinical experience, one had two years, one had seven years, two were in the third year of their residency, one was in the second year of her residency, one had completed 1½ years of residency, and one had postponed his clinical training after his internship. Five of these physicians were engaged in formal medical informatics training at the time of data collection.

Description of the Sample

A total of 137 cases met our inclusion criteria. The medical records department was unable to provide us with the paper medical records for nine cases, which were either missing or in use for other purposes such as reimbursement coding. We eliminated three cases for containing fewer than ten orders; these cases had only two orders each. Table 1 shows the ICD-9 admission diagnoses of the cases that were used in this study.

Table 1.

Frequency Distribution of ICD-9 Admission Diagnoses in Sample

| ICD-9 Diagnosis | Frequency |
|---|---|
| Chest pain NOS | 22 |
| Syncope and collapse | 19 |
| Chest pain NEC | 14 |
| Congestive heart failure | 10 |
| Shortness of breath | 9 |
| Gastrointestinal hemorrhage NOS | 9 |
| Pneumonia, organism NOS | 8 |
| Asthma with acute exacerbation | 8 |
| Intermediate coronary syndrome | 7 |
| Cellulitis of leg | 7 |
| Abdominal pain, unspecified site | 7 |

ICD-9 = International Classification of Diseases, Ninth Revision; NEC = not elsewhere classified; NOS = not otherwise specified.

Table 2 shows descriptive statistics about orders and reviewer-entered problems in the study. Where distributions are normal, the means and 95% confidence intervals are given; where distributions are skewed, the medians and ranges are given.

Table 2.

Descriptive Statistics about Sample

| Item | Value |
|---|---|
| No. of distinct orders in sample | 433 |
| Median no. of instances per order | 2 (range: 1–120) |
| Average no. of orders per case | 30.46 (95% CI: 28.79–32.12) |
| Average no. of linked order-problem pairs per case | 30.34 (95% CI: 28.68–32.00) |
| Median no. of instances per linked order-problem pair | 1 (range: 1–116) |
| Median no. of linked-to orders per problem instance | 2 (range: 1–25) |
| No. of distinct problems in experiment | 291 |
| No. of distinct UMLS-coded problems in experiment | 288 |
| % distinct problems in experiment coded with UMLS | 99.0% |
| No. of distinct linked-to problems in experiment | 213 |
| Median no. of instances per linked-to problem | 1 (range: 1–120) |
| Average no. of linked problem instances per case | 7.35 (95% CI: 6.89–7.81) |

CI = confidence interval; UMLS = Unified Medical Language System.

Pick-list Information Retrieval Performance

Figure 1 shows average recall and precision across PLOP thresholds from 0 (right-most point) to 1 (left-most point). A PLOP threshold of 0 causes a given order to be included in the pick-list if it is linked to any other instance of the given problem in the experiment; a PLOP threshold of 1 causes a given order to be included only if it is linked to all other instances of the given problem in the experiment. Each point on the curve summarizes the results at one PLOP threshold: it represents the estimated precision of a randomly chosen pick-list that contained at least one order and the estimated recall of a randomly chosen pick-list regardless of whether it contained any orders. Figure 2 shows average pick-list length as a function of PLOP threshold. The cumulative frequency distributions of recall, precision, and pick-list length by PLOP threshold are shown in Tables 3, 4, and 5, respectively.

Figure 1.

Average recall and precision of probabilistically generated, problem-specific order pick-lists as a function of probability of linkage of order and problem (PLOP) threshold. Each data point represents the average recall and precision at a different PLOP threshold, ranging from 0 (right-most point) to 1 (left-most point).

Figure 2.

Average length of probabilistically generated, problem-specific order pick-lists as a function of probability of linkage of order and problem threshold.

Table 3.

Cumulative Frequency Distribution of Recall by PLOP Threshold

Columns are PLOP thresholds; each cell gives the cumulative frequency, that is, the proportion of the N pick-lists whose recall is at or below the row value.

| Recall | 0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.24 | 0.27 | 0.35 | 0.39 | 0.41 | 0.48 | 0.55 | 0.60 | 0.67 | 0.76 | 0.92 |
| 0.1 | 0.24 | 0.27 | 0.35 | 0.39 | 0.42 | 0.49 | 0.56 | 0.61 | 0.68 | 0.88 | 0.92 |
| 0.2 | 0.25 | 0.29 | 0.38 | 0.42 | 0.46 | 0.53 | 0.61 | 0.64 | 0.72 | 0.90 | 0.93 |
| 0.3 | 0.26 | 0.30 | 0.40 | 0.46 | 0.50 | 0.56 | 0.63 | 0.67 | 0.74 | 0.91 | 0.94 |
| 0.4 | 0.28 | 0.33 | 0.46 | 0.52 | 0.56 | 0.62 | 0.68 | 0.72 | 0.78 | 0.92 | 0.95 |
| 0.5 | 0.34 | 0.43 | 0.56 | 0.61 | 0.65 | 0.69 | 0.76 | 0.78 | 0.87 | 0.94 | 0.96 |
| 0.6 | 0.35 | 0.45 | 0.58 | 0.64 | 0.67 | 0.71 | 0.80 | 0.82 | 0.91 | 0.94 | 0.96 |
| 0.7 | 0.38 | 0.51 | 0.63 | 0.69 | 0.71 | 0.76 | 0.85 | 0.87 | 0.91 | 0.94 | 0.96 |
| 0.8 | 0.42 | 0.58 | 0.68 | 0.73 | 0.76 | 0.80 | 0.86 | 0.88 | 0.92 | 0.94 | 0.96 |
| 0.9 | 0.45 | 0.62 | 0.72 | 0.76 | 0.78 | 0.81 | 0.87 | 0.89 | 0.92 | 0.94 | 0.96 |
| 1 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| N | 882 | 882 | 882 | 882 | 882 | 882 | 882 | 882 | 882 | 882 | 882 |

PLOP = probability of linkage of order and problem.

Table 4.

Cumulative Frequency Distribution of Precision by PLOP Threshold

Columns are PLOP thresholds; each cell gives the cumulative frequency, that is, the proportion of the N pick-lists whose precision is at or below the row value.

| Precision | 0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.12 | 0.15 | 0.24 | 0.26 | 0.26 | 0.23 | 0.24 | 0.21 | 0.23 | 0.21 | 0.41 |
| 0.1 | 0.45 | 0.25 | 0.25 | 0.26 | 0.26 | 0.24 | 0.24 | 0.21 | 0.23 | 0.21 | 0.41 |
| 0.2 | 0.73 | 0.43 | 0.33 | 0.33 | 0.29 | 0.26 | 0.26 | 0.22 | 0.25 | 0.23 | 0.44 |
| 0.3 | 0.84 | 0.55 | 0.42 | 0.35 | 0.31 | 0.28 | 0.28 | 0.25 | 0.28 | 0.24 | 0.47 |
| 0.4 | 0.90 | 0.69 | 0.50 | 0.42 | 0.36 | 0.31 | 0.31 | 0.26 | 0.30 | 0.27 | 0.53 |
| 0.5 | 0.97 | 0.86 | 0.68 | 0.52 | 0.44 | 0.40 | 0.33 | 0.29 | 0.32 | 0.29 | 0.59 |
| 0.6 | 0.97 | 0.93 | 0.71 | 0.53 | 0.45 | 0.41 | 0.35 | 0.30 | 0.32 | 0.29 | 0.59 |
| 0.7 | 0.98 | 0.97 | 0.75 | 0.58 | 0.48 | 0.44 | 0.37 | 0.33 | 0.33 | 0.30 | 0.59 |
| 0.8 | 0.98 | 0.98 | 0.83 | 0.64 | 0.50 | 0.46 | 0.38 | 0.34 | 0.36 | 0.30 | 0.59 |
| 0.9 | 0.98 | 0.98 | 0.84 | 0.68 | 0.55 | 0.48 | 0.41 | 0.36 | 0.40 | 0.30 | 0.59 |
| 1 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| N | 754 | 754 | 752 | 728 | 702 | 596 | 523 | 447 | 376 | 266 | 116 |

Table 5.

Cumulative Frequency Distribution of Pick-list Length by PLOP Threshold

Columns are PLOP thresholds; each cell gives the cumulative frequency, that is, the proportion of the N pick-lists whose length is at or below the row value.

| Pick-list Length | 0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.15 | 0.15 | 0.15 | 0.17 | 0.20 | 0.32 | 0.41 | 0.49 | 0.57 | 0.70 | 0.87 |
| 2 | 0.24 | 0.28 | 0.52 | 0.63 | 0.72 | 0.78 | 0.82 | 0.84 | 0.84 | 0.97 | 0.97 |
| 4 | 0.35 | 0.42 | 0.66 | 0.73 | 0.83 | 0.84 | 0.85 | 0.86 | 0.86 | 0.99 | 0.99 |
| 8 | 0.46 | 0.55 | 0.82 | 0.85 | 0.86 | 0.86 | 0.86 | 0.86 | 1.00 | 1.00 | 1.00 |
| 16 | 0.59 | 0.79 | 0.85 | 0.99 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| 32 | 0.70 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| 64 | 0.82 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| 128 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| N | 882 | 882 | 882 | 882 | 882 | 882 | 882 | 882 | 882 | 882 | 882 |

Our experiment achieved a maximum average recall of .67 at a PLOP threshold of 0 with a corresponding average precision of .17 and average pick-list length of 31.22. This level of recall means that on average, the pick-list contained 67% of the orders that the reviewing physician linked to a given problem instance. This level of precision means that, on average, 17% of the orders in the pick-list were among those that the reviewing physician linked to that problem in that case. As the PLOP threshold was increased, the average precision increased in an approximately linear fashion to a maximum of .73 at a PLOP threshold of .9 with a corresponding average recall of .09 and average pick-list length of .42.

Discussion

Order sets (or, more generally, order pick-lists) for CPOE serve two purposes: (1) to increase clinician adherence to established best practices and (2) to increase order entry speed. The basic task of entering an order into a CPOE system consists of two subtasks: (1) retrieving a given order from the set of all available orders in the system and (2) populating that order's attributes with the appropriate values. The efficiency of these subtasks may contribute to the speed of order entry. Our probabilistic pick-list generation process serves the second purpose (order entry speed) by hastening the first subtask (order retrieval), and it does so without the need for predefined order sets. We preliminarily evaluated the usefulness of these probabilistically generated pick-lists in a laboratory setting by computing the information retrieval measures of recall and precision. With this pilot study, we have shown that our approach can produce pick-lists with fair to good recall or precision, depending on the PLOP threshold.

The pick-list generation algorithm that we used in this experiment produces pick-lists of orderable items but does not address how to populate orders' attributes. We realize that order sets and order menus in conventional CPOE systems frequently contain statically preconfigured orders and that they can save clinicians time when entering orders. We removed order attribute information in our experiment because we did not want to conflate retrieval of an order class with specification of its attributes. We can imagine methods for CPOE systems to configure orders dynamically. One such method might use a rule-based decision support system to suggest appropriate dosing parameters for a given medication based on patient-specific data available in an EHR and a medication knowledge base. Another such method might suggest attributes for a given medication order based on how those attributes have been populated when that medication has been ordered in the past, either for a population or for a specific patient. This latter method is analogous to the process of pick-list generation that we describe in this paper; the method is simply applied to the attribute population subtask of order entry rather than the order retrieval subtask.
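
The second method, suggesting attributes from past orders, could in principle reuse the frequency logic of pick-list generation. The Python sketch below is hypothetical and was not part of this study: it proposes the most common attribute tuple (for example, dose, route, and frequency) previously recorded for a medication, but only when that tuple accounts for at least `min_share` of the medication's past orders. `suggest_attributes` and `min_share` are assumed names, with `min_share` playing a role loosely analogous to the PLOP threshold.

```python
from collections import Counter


def suggest_attributes(history, medication, min_share=0.5):
    """Suggest the most common attribute tuple previously used for a medication.

    history: iterable of (medication, attributes) pairs, where attributes is a
    hashable tuple such as (dose, route, frequency). Returns None if the
    medication has no history or no tuple reaches min_share of its past orders.
    """
    past = Counter(attrs for med, attrs in history if med == medication)
    if not past:
        return None
    attrs, n = past.most_common(1)[0]
    return attrs if n / sum(past.values()) >= min_share else None
```

The same function could be applied to a population's order history or to a single patient's, corresponding to the two variants mentioned above.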

Our results generally exhibited classic information retrieval behavior,28 with precision increasing and recall decreasing as the stringency of the inclusion criterion (i.e., the PLOP threshold) increased. As expected, average recall reached its maximum at the PLOP threshold of 0 and its minimum at the PLOP threshold of 1. Although average precision reached its minimum at the PLOP threshold of 0 as expected, it did not reach its maximum at a PLOP threshold of 1. The curve flattened at PLOP thresholds from 0.7 to 0.9, and average precision dropped precipitously at the highest PLOP threshold (indicated by the left-most segment in Figure 1), where classic information retrieval behavior would have predicted its maximum. We believe that these artifacts were caused primarily by the presence of numerous very low-frequency problems in our sample, but a thorough discussion of this issue is beyond the scope of this paper.

Numerous controlled terminologies have been created specifically for coding problem lists,29,30,31,32 several of which are UMLS source terminologies.31,33 SNOMED CT was not yet included in UMLS when we performed this experiment, but it has recently been selected to be the standard problem list terminology by the National Committee on Vital and Health Statistics.34,35 We chose to use UMLS as a controlled terminology in its own right to maximize concept coverage36; however, any terminology with sufficient coverage of our domain would have been suitable. Although others have reported using UMLS as a problem list terminology,37,38,39 we were aware that UMLS was not designed to be used as a controlled terminology in its own right.

Among the terminology-related issues that we encountered, problem coding was at times inconsistent across reviewers and cases. For example, the reviewers used three different UMLS concepts to represent type 2 diabetes: diabetes mellitus; diabetes, non–insulin-dependent; and diabetes. By choosing to use UMLS as a terminology, we knowingly accepted concept redundancy and ambiguity in exchange for concept coverage. In the interest of user interface simplicity, we chose not to display additional concept-specific information available through UMLS, which might have improved problem coding consistency.

Even though problem coding inconsistency among reviewers, possibly caused in part by using UMLS as a terminology, may have decreased pick-list information retrieval performance, the empiric nature of our probabilistic pick-list generation process makes it tolerant of inconsistent problem coding. So long as the system has previously “seen” a problem coded in a particular way, it would be able to generate a pick-list for it, possibly allowing clinicians to locate an appropriate set of problem-specific orders more easily than conventional CPOE approaches allow. While this feature could be achieved by storing problems as free text, mapping problems to a controlled terminology may lead to more consistent referencing of problems and would also enable additional CPOE system features (e.g., problem-specific decision support) that free-text problems do not directly support. Our approach to probabilistic pick-lists circumvents some of the cognitive difficulties that currently available CPOE systems impose through their dependence on users' internalization of a rigid system-embedded conceptual model.40

Implications of Implementing Probabilistic Pick-lists in Real-World Computerized Provider Order Entry Systems

Our probabilistic pick-list generation process requires a database of LOPP instances, which PDOE provides. This is why we consider probabilistic pick-list generation together with PDOE, even though PDOE could exist without probabilistic pick-lists. We have heard anecdotal reports of health care institutions that have begun requiring clinicians to link orders explicitly to problems in some manner. We believe that this paper is the first to discuss the use of dynamic CPOE pick-lists in general and probabilistic CPOE pick-lists in particular.

We suggest that probabilistic CPOE pick-lists may complement rather than replace deterministic pick-lists such as order sets. Our problem-driven approach to generating probabilistic pick-lists offers certain advantages over deterministic pick-lists, but it also introduces some potential disadvantages. The main advantage of probabilistic pick-lists is that they can provide problem-specific pick-list functionality without the need to predefine order sets. This might be especially useful for rare problems that do not have order sets defined or in the early stages of a CPOE system roll-out before many (or any) order sets have been created. Probabilistic pick-lists may be able to serve as “rough drafts” to hasten the construction of deterministic order sets. We can also imagine hybrid approaches whereby both deterministically and probabilistically selected orders are included in the same problem-specific pick-list. A hybrid approach could simultaneously leverage deterministic pick-lists' encouragement of adherence to established best practices and probabilistic pick-lists' dynamic adaptation to changes in clinical practice.21
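One way to assemble a hybrid pick-list is sketched below. This is a hypothetical design that we did not implement or evaluate. The sketch assumes that an order set is an ordered list of orders and that PLOP values for the problem are already available. It keeps every order-set item in its curated position and then appends probabilistic items that meet the threshold and are not already present, ranked by descending PLOP. Each item is tagged with its source so that a user interface could distinguish the two kinds.

```python
from typing import Dict, List, Tuple


def hybrid_pick_list(
    order_set: List[str], plops: Dict[str, float], threshold: float
) -> List[Tuple[str, str]]:
    """Merge a curated order set with probabilistic suggestions.

    Order-set items keep their curated order. Probabilistic items that meet
    the threshold and are not already in the order set follow, ranked by
    descending PLOP (ties broken alphabetically). Each item is tagged with
    its source.
    """
    merged = [(order, "order set") for order in order_set]
    curated = set(order_set)
    extras = sorted(
        (order for order, plop in plops.items()
         if plop >= threshold and order not in curated),
        key=lambda order: (-plops[order], order),
    )
    merged.extend((order, "probabilistic") for order in extras)
    return merged


# Hypothetical order set and PLOP values for one problem.
plops = {"ECG": 0.9, "troponin": 0.8, "chest x-ray": 0.6, "lipid panel": 0.2}
for item in hybrid_pick_list(["aspirin", "ECG"], plops, threshold=0.5):
    print(item)
```

Here "ECG" appears once, as an order-set item, even though its PLOP also qualifies it. "Lipid panel" is excluded because its PLOP falls below the threshold. Other reconciliation policies are equally plausible.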

Independent of pick-list generation, PDOE itself may offer benefits. One immediate potential benefit of PDOE is strong encouragement of clinicians to maintain accurate and up-to-date coded problem lists, which themselves have many benefits.41,42 Explicitly linking orders to problems provides a logical scaffold for organizing an EHR. Such a scaffold would allow automatic display of order-related information (e.g., active orders, test results, order status, and medication administration record information) in the context of its relevant problem, and it would tightly integrate problem-specific order entry with problem-specific clinical documentation.43 The database of LOPP instances created by PDOE would enable dynamic generation of order pick-lists that take into account factors such as problem-specific ordering history by clinician, problem-specific ordering history by patient, and interactions between pick-lists for a patient's problems.
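A minimal sketch of a LOPP instance store that supports such history-specific pick-lists follows. The record fields, the `plop_by` function, and the example data are hypothetical. In particular, the sketch assumes that each LOPP instance carries case, patient, and clinician identifiers. It computes PLOP over cases in which at least one order was linked to the problem, because in this simplified store a problem with no linked orders leaves no LOPP instance.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Set


@dataclass(frozen=True)
class LOPP:
    """One hypothetical linked order-problem pair instance."""
    case_id: str
    patient_id: str
    clinician_id: str
    problem: str  # coded problem, e.g., a UMLS concept
    order: str


def plop_by(
    lopps: Iterable[LOPP],
    problem: str,
    clinician_id: Optional[str] = None,
    patient_id: Optional[str] = None,
) -> Dict[str, float]:
    """PLOP per order for `problem`, optionally restricted to one clinician's
    or one patient's ordering history."""
    orders_by_case: Dict[str, Set[str]] = defaultdict(set)
    for lopp in lopps:
        if lopp.problem != problem:
            continue
        if clinician_id is not None and lopp.clinician_id != clinician_id:
            continue
        if patient_id is not None and lopp.patient_id != patient_id:
            continue
        orders_by_case[lopp.case_id].add(lopp.order)
    # Count each order at most once per case, then divide by the number of cases.
    counts = Counter(order for orders in orders_by_case.values() for order in orders)
    return {order: n / len(orders_by_case) for order, n in counts.items()}


store = [
    LOPP("c1", "p1", "dr_a", "pneumonia", "ceftriaxone"),
    LOPP("c1", "p1", "dr_a", "pneumonia", "blood cultures"),
    LOPP("c2", "p2", "dr_b", "pneumonia", "levofloxacin"),
    LOPP("c2", "p2", "dr_b", "pneumonia", "blood cultures"),
    LOPP("c3", "p3", "dr_a", "pneumonia", "ceftriaxone"),
]
print(plop_by(store, "pneumonia"))
print(plop_by(store, "pneumonia", clinician_id="dr_a"))
```

Restricting the history to one clinician changes the PLOP values, and therefore the pick-list, without any change to the underlying algorithm.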

A potential disadvantage of probabilistic pick-lists is that they may encourage popular but inappropriate ordering behavior. Such behavior includes errors of commission and of omission involving both primary and corollary orders. Probabilistic pick-lists are only as good as the aggregate thoughts and actions of the clinicians who previously entered orders for a given problem. The orders on probabilistic pick-lists might be popular but not adhere to established best practices. This issue may be especially salient when the most current best practice for a given problem departs from historic best practice. For example, best-practice antibiotic selection for nosocomial infections changes as new microbial antibiotic resistance patterns emerge. If pick-lists are based solely on past LOPP frequency, as they are when using the simple pick-list generation algorithm that we describe in this paper, then new best-practice orders for a given problem might take a long time to be incorporated into its pick-list (i.e., the system might have to encounter many LOPP instances with the new order), depending on the given PLOP threshold and the frequency of the given problem. Further, probabilistically included items in potential “hybrid” pick-lists will need to be reconciled with deterministically included items. We will need to develop more sophisticated pick-list generation algorithms to address these and other issues.
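The delay can be estimated with simple arithmetic. Suppose a problem has been seen in N earlier cases, none of which linked a new best-practice order, and every subsequent case does link it. After m such cases, the order's PLOP is m/(N + m), which reaches a threshold t < 1 only when m ≥ tN/(1 - t). The hypothetical helper below computes that minimum m. It rests on best-case assumptions: older cases are not down-weighted, and every new case adopts the new order. Real delays would therefore likely be longer.

```python
import math
from typing import Optional


def new_cases_needed(n_prior: int, threshold: float) -> Optional[int]:
    """Minimum number of new cases, each linking a new order to the problem,
    before the order's PLOP reaches `threshold`, given `n_prior` earlier cases
    with the problem that never linked it. Returns None if unreachable."""
    if threshold >= 1:
        # m / (n_prior + m) equals 1 only when there are no earlier cases.
        return 1 if n_prior == 0 else None
    # Solve m / (n_prior + m) >= t for m:  m >= t * n_prior / (1 - t).
    # At least one new case is needed for the order to be a candidate at all.
    m = max(1, math.ceil(threshold * n_prior / (1 - threshold)))
    # Correct for floating-point rounding so the result agrees with the
    # plain `plop >= threshold` comparison used to build pick-lists.
    while m > 1 and (m - 1) / (n_prior + m - 1) >= threshold:
        m -= 1
    while m / (n_prior + m) < threshold:
        m += 1
    return m


for t in (0.5, 0.7, 0.9):
    print(t, new_cases_needed(50, t))
```

For example, with 50 earlier cases the new order would need 50 consecutive new cases to reach a threshold of 0.5, and 450 to reach a threshold of 0.9. How long that takes in calendar time depends on how often the problem is encountered.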

As aggregate clinician ordering behavior changes, probabilistic pick-lists would also change. The cognitive burden associated with dynamically generated and evolving pick-lists is another disadvantage.44 Building an intuitive user interface for probabilistic CPOE pick-lists will thus require especially careful design.

Problem-driven order entry will cost clinicians time to enter coded problem lists. Although our anecdotal experience with the reviewers in this study did not suggest that this step is highly burdensome, mapping free-text problems to a controlled terminology could potentially be performed automatically45 to save time. The added step of placing an order in the context of a problem may also lengthen the order entry process. This might be especially burdensome for “one-offs” or very short ordering sessions,13 yet it is also possible that PDOE could decrease time spent on tasks other than order entry, such as searching for patient-specific clinical information. Such effects have been described for conventional CPOE and EHR systems.8,9,12,13

Study Limitations

Our study is limited by both sample and methodology. Our sample included only 120 cases. All were inpatient cases from the domain of general internal medicine and only covered the time period of the first 24 hours after admission. We selected the cases in our sample by frequency of ICD-9 admission diagnosis. While our sample included only 11 common ICD-9 admission diagnoses, the diversity of problems represented in our sample was substantially greater, as indicated by the 291 distinct problems that our reviewers entered in this experiment. Because our sample was small and nonrandom, however, our results may not be generalizable to problems and orders associated with less common internal medicine ICD-9 admission diagnoses or to problems and orders more commonly encountered in other medical and surgical domains (e.g., pre- and post-procedure orders).

Our sample cannot be used to study how well our probabilistic pick-list generation process might perform over patients' entire hospital stays or in the outpatient setting because it encompassed only the 24-hour post-admission time period. Our sample also cannot be used for comprehensively studying how probabilistic pick-lists' information retrieval performance changes over time as the database of LOPP instances grows because our set of cases was not chronologically continuous.

We designed our study as a retrospective validation. For logistical reasons, the critical steps of problem list creation and order-problem linking were performed by reviewers rather than by the clinicians who actually cared for the patients whose cases we studied. In a few cases, the H & P was written by a clinician other than the clinician who entered the orders; an H & P written by the ordering clinician might have contained more or less detail and different interpretations of clinical data. Both of these issues may have introduced noise in identifying problems and linking orders to problems. We believe that a retrospective validation was a reasonable study design given the early stage of development of the process that we sought to study, but we acknowledge that pick-list performance in the real world might differ from what we measured.

We averaged recall, precision, and pick-list length at each PLOP threshold to generate the data points plotted in ▶ and ▶. Averaging allowed us to summarize the overall experimental results in a single graph, but in doing so, it necessarily obscured information about the distribution of data at each PLOP threshold. Because our data were not normally distributed, we could not conveniently characterize them with confidence intervals or similar parametric estimates. This problem is well known in the information retrieval community,46 whose members nonetheless routinely average recall and precision.47 We present cumulative frequency distributions in lieu of confidence intervals.46
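The two summaries, the average and the cumulative frequency distribution, can be computed side by side as in the sketch below. The sketch represents each pick-list's metric at a given PLOP threshold as a number, or as `None` when the metric is undefined (e.g., precision of an empty pick-list). It excludes undefined values from both the mean and the distribution. That exclusion is an assumption of the sketch; other conventions, such as scoring empty pick-lists as zero precision, would change the averages. The function name `summarize` is hypothetical.

```python
from statistics import mean
from typing import List, Optional, Tuple


def summarize(
    values: List[Optional[float]],
) -> Tuple[Optional[float], List[Tuple[float, float]]]:
    """Return the mean of the defined values and their cumulative frequency
    distribution as (value, fraction of defined values <= value) pairs."""
    defined = sorted(v for v in values if v is not None)
    if not defined:
        return None, []
    n = len(defined)
    cdf: List[Tuple[float, float]] = []
    for i, v in enumerate(defined, start=1):
        # Emit one point per distinct value, at its last occurrence.
        if i == n or defined[i] != v:
            cdf.append((v, i / n))
    return mean(defined), cdf


# Hypothetical precision values for five pick-lists at one PLOP threshold;
# None marks an empty pick-list, whose precision is undefined.
avg, cdf = summarize([1.0, 0.5, None, 0.0, 0.5])
print(avg)
print(cdf)
```

The mean here is 0.5, a value that many different distributions could produce. The cumulative pairs add information the mean hides, for example that a quarter of the defined precision values were 0.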

Our experimental design limited the metrics that we could employ to study the usefulness of probabilistic pick-lists. We described above our reasoning for choosing the information retrieval metrics of recall and precision. Before definitive conclusions can be drawn about the usefulness of PDOE and probabilistic pick-list generation, other factors must be evaluated. One important factor is the actual time required to enter orders using probabilistic pick-lists with PDOE. Another is how well orders placed via probabilistic pick-lists reflect established best practices. Other factors worth evaluating are the external benefits of PDOE, such as its effect on problem list maintenance and on the efficiency of performing other clinical tasks.

Our analysis aggregated results only at the level of the individual pick-lists. Pick-list performance may vary by patient, ordering session, clinician type, individual clinician, problem, problem type, etc. Future studies, which will require larger, more diverse, and ideally prospective samples, would benefit from analysis at other levels of aggregation.

Conclusions

We preliminarily conclude that it is feasible to use a simple probabilistic algorithm to generate problem-specific CPOE pick-lists from a database of explicitly linked orders and problems. Further research is necessary to determine the usefulness of this approach in real-world settings.

Dr. Rothschild is currently with the Department of Biomedical Informatics, Columbia University, New York, NY.

Presented in part in a plenary session at the 2004 National Library of Medicine Training Program Directors' Meeting in Indianapolis, IN, and in poster form at MEDINFO 2004 in San Francisco.

Supported by the National Library of Medicine training grant 5T15LM007452 (ASR).

The authors thank the following physicians for serving as reviewers: David Camitta, Richard Dressler, Cupid Gascon, Mark Laflamme, Arun Mathews, Zeba Mathews, Greg Prokopowicz, Danny Rosenthal, Shannon Sims, and Chuck Tuchinda. The authors thank Bill Hersh, George Hripcsak, and Gil Kuperman for their expert advice on analysis and manuscript preparation.

References

- 1. 14th Annual HIMSS Leadership Survey sponsored by Superior Consultant Company: Health care CIO Survey Final Report. [cited 2004 October 27]. Available from: http://www.himss.org/2003survey/docs/Healthcare_CIO_final_report.pdf.

- 2. Goldsmith J, Blumenthal D, Rishel W. Federal health information policy: a case of arrested development. Health Aff. 2003;22:44–55.

- 3. Kohn LT, Corrigan JM, Donaldson MS, editors. To err is human: building a safer health system. Washington, DC: National Academy Press, 2000.

- 4. Metzger J, Turisco F. Computerized physician order entry: a look at the vendor marketplace and getting started. [cited 2004 October 27]. Available from: http://www.leapfroggroup.org/media/file/Leapfrog-CPO_Guide.pdf.

- 5. McDonald CJ, Overhage JM, Mamlin BW, Dexter PD, Tierney WM. Physicians, information technology, and health care systems: a journey, not a destination. J Am Med Inform Assoc. 2004;11:121–4.

- 6. Rosenbloom ST, Grande J, Geissbuhler A, Miller RA. Experience in implementing inpatient clinical note capture via a provider order entry system. J Am Med Inform Assoc. 2004;11:310–5.

- 7. Jao C, Hier DB, Wei W. Simulating a problem list decision support system: can CPOE help maintain the problem list? Medinfo. 2004;2004(CD):1666.

- 8. Overhage JM, Perkins S, Tierney WM, McDonald CJ. Controlled trial of direct physician order entry: effects on physicians' time utilization in ambulatory primary care internal medicine practices. J Am Med Inform Assoc. 2001;8:361–71.

- 9. Rodriguez NJ, Murillo V, Borges JA, Ortiz J, Sands DZ. A usability study of physicians interaction with a paper-based patient record system and a graphical-based electronic patient record system. Proc AMIA Symp. 2002:667–71.

- 10. Pizziferri L, Kittler A, Volk L, Honour M, Gupta S, Wang S, et al. Primary care physician time utilization before and after implementation of an electronic health record: a time-motion study. J Biomed Inform. In press 2005. Epub 2005 Jan 31.

- 11. Massaro T. Introducing physician order entry at a major academic medical center: I. Impact on organizational culture and behavior. Acad Med. 1993;68:20–5.

- 12. Shu K, Boyle D, Spurr C, Horsky J, Heiman H, O'Connor P, et al. Comparison of time spent writing orders on paper with computerized physician order entry. Medinfo. 2001;10:1207–11.

- 13. Bates DW, Boyle DL, Teich JM. Impact of computerized physician order entry on physician time. Proc Annu Symp Comput Appl Med Care. 1994:996.

- 14. Tierney WM, Miller ME, Overhage JM, McDonald CJ. Physician inpatient order writing on microcomputer workstations. Effects on resource utilization. JAMA. 1993;269:379–83.

- 15. Ahmad A, Teater P, Bentley TD, Kuehn L, Kumar RR, Thomas A, et al. Key attributes of a successful physician order entry system implementation in a multi-hospital environment. J Am Med Inform Assoc. 2002;9:16–24.

- 16. Ash JS, Stavri PZ, Kuperman GJ. A consensus statement on considerations for a successful CPOE implementation. J Am Med Inform Assoc. 2003;10:229–34.

- 17. Lovis C, Chapko MK, Martin DP, Payne TH, Baud RH, Hoey PJ, et al. Evaluation of a command-line parser-based order entry pathway for the Department of Veterans Affairs electronic patient record. J Am Med Inform Assoc. 2001;8:486–98.

- 18. Payne TH, Hoey PJ, Nichol P, Lovis C. Preparation and use of preconstructed orders, order sets, and order menus in a computerized provider order entry system. J Am Med Inform Assoc. 2003;10:322–9.

- 19. Ash JS, Gorman PN, Lavelle M, Payne TH, Massaro TA, Frantz GL, et al. A cross-site qualitative study of physician order entry. J Am Med Inform Assoc. 2003;10:188–200.

- 20. Thomas SM, Davis DC. The characteristics of personal order sets in a computerized physician order entry system at a community hospital. Proc AMIA Symp. 2003:1031.

- 21. Bates DW, Kuperman GJ, Wang S, Gandhi T, Kittler A, Volk L, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003;10:523–30.

- 22. Weiner M, Gress T, Thiemann DR, Jenckes M, Reel SL, Mandell SF, et al. Contrasting views of physicians and nurses about an inpatient computer-based provider order-entry system. J Am Med Inform Assoc. 1999;6:234–44.

- 23. Campbell JR, Carpenter P, Sneiderman C, Cohn S, Chute CG, Warren J. Phase II evaluation of clinical coding schemes: completeness, taxonomy, mapping, definitions, and clarity. CPRI Work Group on Codes and Structures. J Am Med Inform Assoc. 1997;4:238–51.

- 24. Chute CG, Cohn SP, Campbell KE, Oliver DE, Campbell JR. The content coverage of clinical classifications. For The Computer-Based Patient Record Institute's Work Group on Codes & Structures. J Am Med Inform Assoc. 1996;3:224–33.

- 25. Wagner M, Bankowitz R, McNeil M, Challinor S, Jankosky J, Miller R. The diagnostic importance of the history and physical examination as determined by the use of a medical decision support system. Symp Comput Appl Med Care. 1989:139–44.

- 26. Humphreys BL, Lindberg DA, Schoolman HM, Barnett GO. The Unified Medical Language System: an informatics research collaboration. J Am Med Inform Assoc. 1998;5:1–11.

- 27. UMLS Knowledge Sources. January Release 2003AA Documentation. 14th ed. Bethesda (MD): National Library of Medicine, 2003.

- 28. Hersh WR. Information retrieval: a health and biomedical perspective. 2nd ed. New York: Springer-Verlag, 2003.

- 29. Elkin PL, Mohr DN, Tuttle MS, Cole WG, Atkin GE, Keck K, et al. Standardized problem list generation, utilizing the Mayo canonical vocabulary embedded within the Unified Medical Language System. Proc AMIA Annu Fall Symp. 1997:500–4.

- 30. Chute CG, Elkin PL, Fenton SH, Atkin GE. A clinical terminology in the post modern era: pragmatic problem list development. Proc AMIA Symp. 1998:795–9.

- 31. Brown SH, Miller RA, Camp HN, Guise DA, Walker HK. Empirical derivation of an electronic clinically useful problem statement system. Ann Intern Med. 1999;131:117–26.

- 32. Hales JW, Schoeffler KM, Kessler DP. Extracting medical knowledge for a coded problem list vocabulary from the UMLS Knowledge Sources. Proc AMIA Symp. 1998:275–9.

- 33. UMLS Metathesaurus Fact Sheet. [cited 2005 January 5]. Available from: http://www.nlm.nih.gov/research/umls/sources_by_categories.html.

- 34. Wasserman H, Wang J. An applied evaluation of SNOMED CT as a clinical vocabulary for the computerized diagnosis and problem list. AMIA Annu Symp Proc. 2003:699–703.

- 35. Consolidated Health Informatics Standards Adoption Recommendation: Diagnosis and Problem List. [cited 2005 January 5]. Available from: http://www.whitehouse.gov/omb/egov/downloads/dxandprob_full_public.doc.

- 36. Campbell JR, Payne TH. A comparison of four schemes for codification of problem lists. Proc Annu Symp Comput Appl Med Care. 1994:201–5.

- 37. Johnson KB, George EB. The rubber meets the road: integrating the Unified Medical Language System Knowledge Source Server into the computer-based patient record. Proc AMIA Annu Fall Symp. 1997:17–21.

- 38. Elkin PL, Tuttle M, Keck K, Campbell K, Atkin G, Chute CG. The role of compositionality in standardized problem list generation. Medinfo. 1998;9:660–4.

- 39. Goldberg H, Goldsmith D, Law V, Keck K, Tuttle M, Safran C. An evaluation of UMLS as a controlled terminology for the Problem List Toolkit. Medinfo. 1998;9:609–12.

- 40. Horsky J, Kaufman DR, Oppenheim MI, Patel VL. A framework for analyzing the cognitive complexity of computer-assisted clinical ordering. J Biomed Inform. 2003;36:4–22.

- 41. Weed LL. Medical records that guide and teach. N Engl J Med. 1968;12:593–600, 652–7.

- 42. Safran C. Searching for answers on a clinical information-system. Methods Inf Med. 1995;34:79–84.

- 43. Rothschild AS, Lehmann HP. Problem-driven order entry: an introduction. Medinfo. 2004;2004(CD):1838.

- 44. Poon AD, Fagan LM, Shortliffe EH. The PEN-Ivory project: exploring user-interface design for the selection of items from large controlled vocabularies of medicine. J Am Med Inform Assoc. 1996;3:168–83.

- 45. Aronson AR. Effective mapping of biomedical text to the UMLS Metathesaurus: the MetaMap program. Proc AMIA Symp. 2001:17–21.

- 46. van Rijsbergen CJ. Information retrieval. London: Butterworths, 1979.

- 47. Voorhees E. Overview of TREC 2003. [cited 2005 January 7]. Available from: http://trec.nist.gov/pubs/trec12/papers/OVERVIEW.12.pdf.