Significance

From Mesopotamian cities to industrialized nations, gossip has been at the center of bonding human groups. Yet the evolution of gossip remains a puzzle. The current article argues that gossip evolves because its dissemination of individuals’ reputations induces individuals to cooperate with those who gossip. As a result, gossipers proliferate as well as sustain the reputation system and cooperation.

Keywords: gossip, cooperation, indirect reciprocity, evolutionary game theory, agent-based model

Abstract

Gossip, the exchange of personal information about absent third parties, is ubiquitous in human societies. However, the evolution of gossip remains a puzzle. The current article proposes an evolutionary cycle of gossip and uses an agent-based evolutionary game-theoretic model to assess it. We argue that the evolution of gossip is the joint consequence of its reputation dissemination and selfishness deterrence functions. Specifically, the dissemination of information about individuals’ reputations leads more individuals to condition their behavior on others’ reputations. This induces individuals to behave more cooperatively toward gossipers in order to improve their reputations. As a result, gossiping has an evolutionary advantage that leads to its proliferation. The evolution of gossip further facilitates these two functions of gossip and sustains the evolutionary cycle.

Gossip, the exchange of personal information about absent third parties, has long been a significant part of human life (1). As early as ancient Mesopotamia, gossip was prevalent in cities and markets (2). In ancient Greece, stories of gossip were widely recorded in literary works such as The Odyssey and Aesop’s Fables (3, 4). In hunter–gatherer societies, gossip is at the center of daily life (5). In modern societies, people are estimated to spend approximately an hour per day gossiping (6). Though individual differences exist, almost everyone gossips: young and old, women and men, rich and poor, and across personality types (6).

Despite its ubiquity, the evolution of gossip remains a puzzle. Previous theories tried to explain the origin of gossip in terms of its role in human survival, particularly for bonding large groups and sustaining cooperation (7–10). Gossip disseminates information about people’s reputations and as such enables people to choose to help cooperative others and avoid being exploited by selfish ones—a mechanism that is widely studied as indirect reciprocity that sustains cooperation (11–14). Beyond that, the possibility of being gossiped about also elicits people’s reputational concerns. As a result, people tend to behave more cooperatively under the threat of gossip (15, 16).

Though this research has elucidated the benefit of gossip for the group, the evolution of gossip is puzzling from a number of perspectives. First, from a gossiper’s perspective, it remains unclear why individuals evolve to gossip in the first place. To gossip means to voluntarily share one’s informational resources with others. It consumes time and energy but does not necessarily provide a direct benefit for gossipers themselves. As is widely acknowledged in evolutionary theory, a behavior usually is selected because it enhances the reproductive fitness of its performers (17). Given that gossip is so prevalent across human groups, a theory of gossip needs to explain why gossiping is an adaptive strategy and evolved at all. Second, from a gossip receiver’s perspective, gossip cannot be transformed into material benefits unless receivers utilize the gossip to guide their behavior. Thus, a theory of gossip also needs to illustrate why people evolve to utilize the information in gossip and how gossip specifically benefits its receivers. Finally, beyond disseminating reputational information as noted above, gossip also has deterrent power in that the existence of gossipers alone can motivate cooperative behavior (15). But why do people behave more cooperatively in front of gossipers? A theory of gossip also needs to account for this.

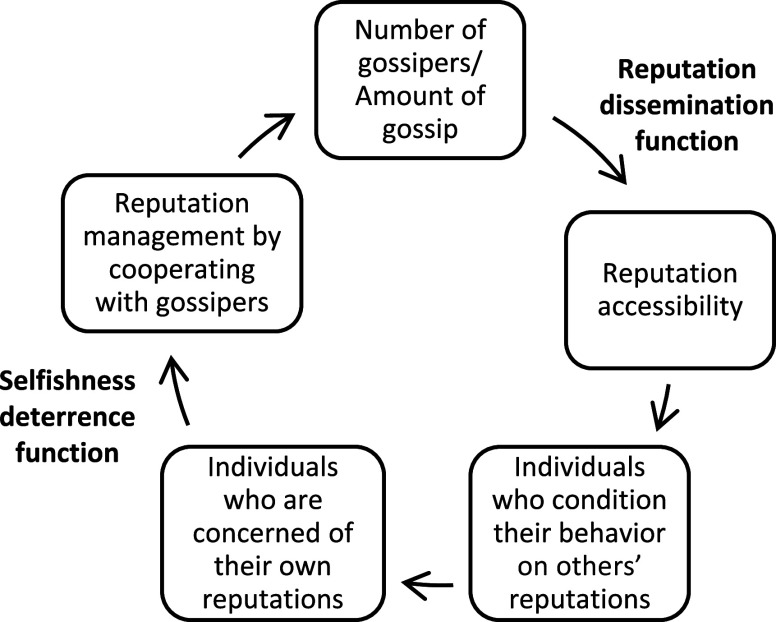

Altogether, the evolution of gossip is puzzling and cannot be simply explained by its role in promoting group cooperation. A theory that is tailored to the features and functions of gossip is needed to understand its origin and persistence. To explain this puzzle, the current article proposes an evolutionary cycle of gossip (Fig. 1). In this theory, gossip is expected to evolve under the joint effect of its reputation dissemination and selfishness deterrence functions. Specifically, we argue that the reputation dissemination function of gossip makes reputations more accessible and, thus, leads more people to take others’ reputations into account when interacting with them (12). As more people condition their behavior on others’ reputations, more people get concerned about their own reputations, too. This reputational concern drives them to manage their reputations by behaving more cooperatively when interacting with gossipers. Put differently, a gossiper can deter individuals from acting selfishly by making it known that their reputations will be spread to others, which manifests as the selfishness deterrence function of gossip (15). The deterrent power of gossipers gives gossipers an evolutionary advantage over nongossipers, thus leading to their proliferation. This further facilitates the reputation dissemination and selfishness deterrence functions of gossip, which jointly sustain the evolutionary cycle. As will be shown, ultimately, gossiping and reputational concerns coevolve and boost cooperation.

Fig. 1.

The evolutionary cycle of gossip. From the top of the circle, when there are some gossipers existing in the population, the reputation dissemination function of gossip makes reputations more accessible and, thus, leads more individuals to condition their behavior on others’ reputations. As a result, more individuals get concerned about their own reputations, which drives them to manage their reputations by behaving more cooperatively when interacting with gossipers, manifesting the selfishness deterrence function of gossip. The deterrent power of gossip gives gossipers an evolutionary advantage over nongossipers, which leads to the evolution of more gossipers. Again, the evolution of more gossipers facilitates the reputation dissemination and selfishness deterrence functions of gossip and sustains this cycle.

We designed an evolutionary game-theoretic modeling framework that incorporates the two gossiping functions to formalize and assess this theory. With a series of agent-based models, we first test whether the reputation dissemination and selfishness deterrence functions of gossip are jointly sufficient to sustain the evolution of gossip. We then test the causal pathways in the evolutionary cycle step by step.

Agents’ Strategies

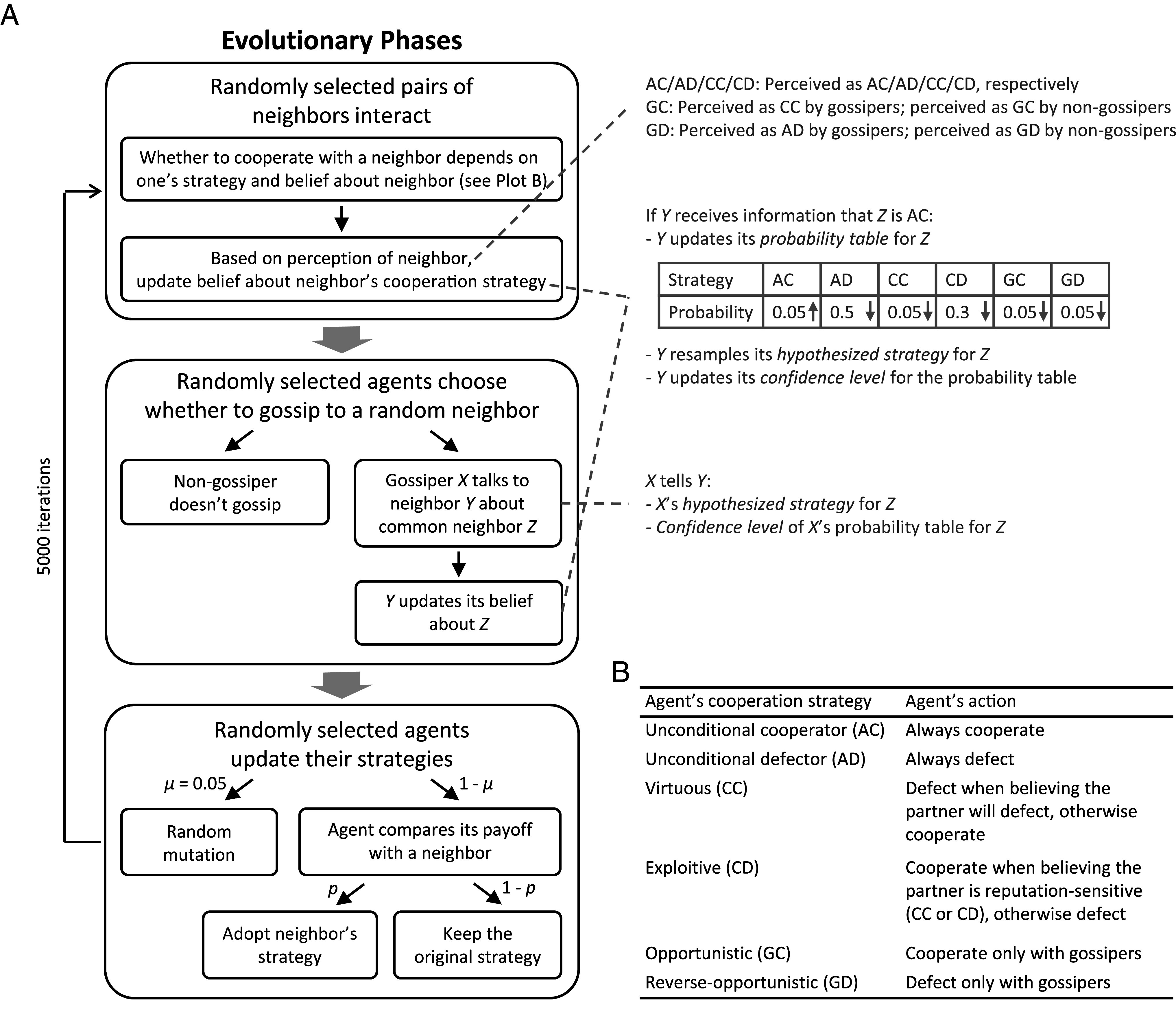

In our model, each agent makes two decisions: 1) whether to cooperate with an agent and 2) whether to gossip to an agent (gossip receiver) about a third party (gossip target). Each agent has two strategies that guide their decisions—a cooperation strategy and a gossiping strategy.

An agent’s cooperation strategy decides under what circumstances the agent cooperates with an interaction partner. We implement six options of cooperation strategies that fall into three categories to examine the two functions of gossip. The first category includes two unconditional strategies—unconditional cooperators (AC) who always cooperate and unconditional defectors (AD) who never cooperate (i.e., always defect). Agents of this category are insensitive to social information and care about neither others’ nor their own reputations. They are influenced by neither the reputation dissemination nor the selfishness deterrence function of gossip.

The second category includes two reputation-sensitive strategies. These agents condition their cooperation behavior on others’ reputations. Though there are technically infinite ways of how an individual can utilize reputation information, we categorize them into two types that are directly related to our theory: 1) agents who use the reputation information to protect the self and 2) agents who use the reputation information to exploit others. Thus, we implement two reputation-sensitive strategies: Virtuous agents (CC) use the reputation information to protect themselves. They defect when they believe that their interaction partner will defect with them; otherwise, they cooperate. Exploitive agents (CD), however, exploit others when they can. They cooperate only when they believe that their interaction partner is also reputation-sensitive and, thus, not easily exploited*; otherwise, they defect. Reputation-sensitive agents benefit directly from the reputation dissemination function of gossip.

The third category includes two gossiper-sensitive strategies. These agents are subject to the selfishness deterrence function of gossip. They behave differently when interacting with gossipers vs. nongossipers in order to manage their reputation in gossip (18). Specifically, opportunistic agents (GC) cooperate with gossipers and defect with nongossipers while reverse-opportunistic agents (GD) defect with gossipers and cooperate with nongossipers (see Fig. 2B for a summary of the six cooperation strategies).
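The six cooperation strategies can be read as a single decision function. The following Python sketch is illustrative only: the rules for AC, AD, CD, GC, and GD follow the descriptions above, whereas the way a virtuous (CC) agent predicts each partner type is our interpretation of the text and is not taken from SI Appendix, Table S1. The function and argument names are hypothetical.

```python
# Cooperation decisions for the six strategies described above.
# AC, AD, CD, GC, and GD follow the text directly. How a virtuous (CC) agent
# predicts each partner type is an interpretation, not taken from Table S1.

REPUTATION_SENSITIVE = {"CC", "CD"}


def partner_expected_to_cooperate(believed_partner, self_is_gossiper):
    """Would a partner of the believed type cooperate with a virtuous agent?"""
    if believed_partner in ("AC", "CC", "CD"):
        # Assumption: CC and CD partners cooperate with a reputation-sensitive agent.
        return True
    if believed_partner == "AD":
        return False
    if believed_partner == "GC":  # opportunists cooperate only with gossipers
        return self_is_gossiper
    if believed_partner == "GD":  # reverse-opportunists cooperate only with nongossipers
        return not self_is_gossiper
    raise ValueError(f"unknown strategy: {believed_partner}")


def cooperates(own, believed_partner, partner_is_gossiper, self_is_gossiper):
    """Return True if an agent with cooperation strategy `own` cooperates."""
    if own == "AC":
        return True
    if own == "AD":
        return False
    if own == "CC":  # virtuous: defect only when the partner is expected to defect
        return partner_expected_to_cooperate(believed_partner, self_is_gossiper)
    if own == "CD":  # exploitive: cooperate only with reputation-sensitive partners
        return believed_partner in REPUTATION_SENSITIVE
    if own == "GC":  # opportunistic: cooperate only with gossipers
        return partner_is_gossiper
    if own == "GD":  # reverse-opportunistic: cooperate only with nongossipers
        return not partner_is_gossiper
    raise ValueError(f"unknown strategy: {own}")
```

Note that only the reputation-sensitive strategies consult `believed_partner`, and only the gossiper-sensitive strategies consult `partner_is_gossiper`, which mirrors the three categories above.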

Fig. 2.

Plot (A) illustrates the evolutionary phases. Our evolutionary game consists of three phases: 1) interaction, 2) gossiping, and 3) strategy updating. In the interaction phase, a set of agents are selected to play a cooperation game with a neighbor and gain corresponding payoffs. In the gossiping phase, another set of agents are selected as speakers. If the speaker is a gossiper, they will gossip about a certain number of targets to a randomly selected neighbor. During the strategy-updating phase, another set of agents are selected to update their strategies. By repeating the iterations, we observe the evolutionary trajectories of different strategies and behaviors. Plot (B) illustrates an agent’s action as a function of their own strategy and their belief about the interaction partner’s strategy.

An individual makes their cooperation decision based on their own strategy and their belief about the partner’s strategy (see SI Appendix, Table S1 for decisions under different “self-strategy × hypothesized-strategy-of-partner” combinations). How agents form beliefs about each other is elaborated on later. If an agent decides to cooperate, they pay a cost to do so, and their partner receives a larger benefit. Such a scenario, also known as a cooperation game, has been widely applied in research to capture human interactions (see Materials and Methods for more information) (7, 19–21).
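The payoff structure of such a cooperation game can be sketched as follows. The benefit and cost values are placeholders chosen for illustration (the actual values are given in Materials and Methods), and we assume here that both players decide within the same game.

```python
def play_cooperation_game(a_cooperates, b_cooperates, benefit=3.0, cost=1.0):
    """Return (payoff_a, payoff_b) for one pairwise cooperation game.

    `benefit` and `cost` are hypothetical values; the text only requires
    that the benefit to the partner exceed the cost to the cooperator.
    """
    if benefit <= cost:
        raise ValueError("benefit must exceed cost")
    # Each cooperator pays the cost; each player gains the benefit
    # only if the other player cooperated.
    payoff_a = (benefit if b_cooperates else 0.0) - (cost if a_cooperates else 0.0)
    payoff_b = (benefit if a_cooperates else 0.0) - (cost if b_cooperates else 0.0)
    return payoff_a, payoff_b
```

Under these placeholder values, mutual cooperation pays more than mutual defection, yet defecting against a cooperator pays the most, which is what makes exploitation tempting.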

An agent’s gossiping strategy decides whether the agent gossips. There are two gossiping strategies: gossipers (AG), who always gossip, and nongossipers (AN), who never gossip. If an agent decides to gossip, they share their knowledge about the target with the receiver, and the receiver integrates the shared information into their own representation of the target, a point we return to below. An agent may have any combination of a cooperation strategy and a gossiping strategy. Thus, there are 6 × 2 = 12 combinations. Every agent knows whether another agent is a gossiper or nongossiper. However, agents need to gradually form beliefs about each other’s cooperation strategy through either direct interactions or gossip. As a result, each agent may form a unique belief about another agent’s cooperation strategy.

The Reputation System

A key innovation of our model is the process of how agents form their beliefs about others. We assume that for each other agent Y, an agent X estimates the probability of Y having each cooperation strategy, as shown in the probability table in SI Appendix, Fig. S1. X also has a hypothesized strategy of Y, SXY, which is chosen at random from the probability distribution given in the probability table. Additionally, X has a confidence level for their probability table for Y, defined as the maximum value in the table. When X has no information about Y, the probability of every strategy is equal, the hypothesized strategy is randomly selected from a uniform distribution over all the possible strategies, and the confidence is the lowest. But as X gains knowledge about Y, this probability table becomes uneven, so that X is more likely to believe that Y has one of the cooperation strategies rather than the others—resembling the formation of X’s belief about Y (SI Appendix, section 1.1). With this setup, agents form assumptions of each other’s underlying strategy, instead of evaluating each other simply as “good” or “bad” dichotomously or on a one-dimensional spectrum as in previous models (12, 22–25).
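A minimal Python sketch of this belief representation is given below. It shows the probability table, the confidence level (the maximum entry), and the sampling of a hypothesized strategy. The function names are hypothetical, and the sketch is not the authors’ implementation.

```python
import random

STRATEGIES = ("AC", "AD", "CC", "CD", "GC", "GD")


def uniform_belief():
    """Probability table of an agent who has no information about another agent."""
    return {s: 1.0 / len(STRATEGIES) for s in STRATEGIES}


def confidence(belief):
    """Confidence level, defined in the text as the maximum value in the table."""
    return max(belief.values())


def hypothesized_strategy(belief, rng):
    """Draw a hypothesized strategy at random from the probability table."""
    strategies = list(belief)
    weights = [belief[s] for s in strategies]
    return rng.choices(strategies, weights=weights, k=1)[0]


rng = random.Random(0)           # seeded only to make the sketch reproducible
belief_x_about_y = uniform_belief()
guess = hypothesized_strategy(belief_x_about_y, rng)
```

With no information, the confidence is 1/6, the lowest possible value for six strategies; as the table becomes more uneven, both the confidence and the chance of drawing the dominant strategy increase.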

We set up the reputation system in this way for the following reasons. First, our setup allows for the inference of each other’s conditional behavior. This is especially important with the existence of exploitive agents who identify unconditional cooperators and exploit them, which cannot be achieved by a binary reputation system. Second, this avoids the complexity of higher-order moral judgments on conditional behavior (23, 24). Traditional models usually determine an agent’s reputation binarily using first- and/or higher-order assessments. In the first-order assessment, agents are judged as “good” or “bad” based solely on their behavior (i.e., whether they cooperated or defected) (12). In the second-order assessment, an individual is judged based on both their behavior and their partner’s reputation. In the third-order assessment, the individual’s own reputation is also taken into consideration (24). Higher-order assessments are found to be essential to sustain cooperation (26). However, there are many ways to decide which behavior should be considered “good” under which condition. There are 256 different assessment rules with all three levels of assessments, eight of which are able to maintain a high level of cooperation (26). If individuals are also judged based on whether they cooperate or defect with a gossiper or a nongossiper, there will be even more options of assessment rules. To avoid the complexity of exploring all these assessment rules, we let individuals gain knowledge of each other’s underlying strategy directly. This way, one’s reputation no longer needs to contain a binary moral judgment, but becomes a prediction of one’s situational behavior—their cooperation strategy. Finally, echoing the second point, we assume that from direct interaction and/or gossip, individuals can gain richer information about others than just observing their cooperation behavior or evaluating someone as “good” or “bad”. 
People are highly attuned to drawing personality inferences when interacting with each other. In addition to observing another’s behavior, much information about personality traits can be inferred from a person’s physiognomy, outer appearance, demeanor, language, etc. (27, 28). Thus, we assume that individuals form beliefs about each other’s cooperation strategy as a whole, which will be elaborated on in the next section. Although we use a reputation dynamic different from previous models, our reputation system serves a reputation dissemination function similar to that in previous models that used a binary reputation system, a point that we return to in the Discussion.
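The figure of 256 assessment rules cited above can be checked by simple counting, assuming binary actions and binary reputations as in the cited models. A third-order rule must assign "good" or "bad" to every combination of the actor’s behavior, the partner’s reputation, and the actor’s own reputation:

```python
from itertools import product

ACTIONS = ("cooperate", "defect")
REPUTATIONS = ("good", "bad")

# Every situation a third-order assessment rule has to judge:
# (actor's behavior, partner's reputation, actor's own reputation).
situations = list(product(ACTIONS, REPUTATIONS, REPUTATIONS))

# A rule labels each situation "good" or "bad", so the number of rules
# is 2 raised to the number of situations.
n_rules = len(REPUTATIONS) ** len(situations)
```

There are 8 situations and therefore 2^8 = 256 rules. Adding one more binary condition, such as whether the partner is a gossiper, would double the number of situations and square the number of rules.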

Ways Agents Gain Information about Others

There are two ways in which agents gain knowledge about each other. The first is through direct interaction. When an agent directly interacts with another in a cooperation game, they gain some information about their partner’s cooperation strategy. Specifically, when an agent interacts with a partner who is an unconditional cooperator (AC), unconditional defector (AD), virtuous (CC), or exploitive (CD) agent, this partner will be correctly identified as AC, AD, CC, or CD, respectively. However, the case is different for a partner who is opportunistic (GC) or reverse-opportunistic (GD). As mentioned above, GC and GD behave differently when interacting with gossipers (compared to nongossipers) to manage their reputation in gossip. As a result, when a gossiper interacts with a GC, the GC will be perceived as virtuous (CC) by the gossiper due to the reputation management. Similarly, a GD will be perceived as an AD by a gossiper because a GD intentionally defects with gossipers.† In contrast, when a GC or GD interacts with a nongossiper, there will be no reputation management and they will be correctly identified as GC or GD, respectively (see the first side note in Fig. 2A). Note that GDs, who intentionally leave a bad reputation in gossip, should be rare in real life, but we implement this strategy so that our strategy set is not artificially biased toward gossipers (29).
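The perception rule described in this paragraph can be written compactly. The sketch below covers the default case in which gossiper-sensitive agents manage their reputations; the function name is hypothetical.

```python
def perceived_strategy(true_strategy, observer_is_gossiper):
    """Strategy that an observer attributes to a partner after a direct interaction."""
    if observer_is_gossiper:
        if true_strategy == "GC":
            return "CC"  # opportunists appear virtuous to gossipers
        if true_strategy == "GD":
            return "AD"  # reverse-opportunists appear as unconditional defectors
    # AC, AD, CC, and CD are always identified correctly, and nongossipers
    # also identify GC and GD correctly.
    return true_strategy
```

Only gossipers receive a distorted signal, so any inaccuracy introduced by reputation management enters the population through gossipers’ beliefs and the gossip they pass on.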

After interacting with a partner, an agent integrates the information gained about the partner’s strategy into their belief about the partner. Specifically, in their probability table of the partner, the probability corresponding to the perceived strategy increases by dirW, a parameter reflecting the amount of information gained from a single direct interaction (i.e., interaction depth). Accordingly, the agent is more likely to believe that this partner is of the perceived type (see the second side note in Fig. 2A and Materials and Methods).
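As an illustration, the belief update after a direct interaction might look as follows. The sketch assumes that the increased probability is capped at 1 and that the remaining probabilities are rescaled proportionally so that the table still sums to 1; the value of `dir_w` is a placeholder, and the exact procedure is specified in Materials and Methods.

```python
def update_after_interaction(belief, perceived, dir_w=0.5):
    """Raise the probability of the perceived strategy by dir_w and renormalize.

    Assumptions: the new probability is capped at 1, and all other entries
    shrink by a common factor so that the table sums to 1.
    """
    old = belief[perceived]
    new = min(1.0, old + dir_w)
    # Common shrink factor for the other entries (0 if nothing is left over).
    scale = (1.0 - new) / (1.0 - old) if old < 1.0 else 0.0
    updated = {s: p * scale for s, p in belief.items()}
    updated[perceived] = new
    return updated


prior = {s: 1.0 / 6.0 for s in ("AC", "AD", "CC", "CD", "GC", "GD")}
posterior = update_after_interaction(prior, "CC", dir_w=0.5)
```

Starting from a uniform table, one interaction with `dir_w = 0.5` raises the perceived strategy from 1/6 to 2/3 and lowers each of the other five from 1/6 to 1/15.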

The second way that an agent gains knowledge about another is through gossip. When a gossiper X gossips to a receiver Y about a target Z, X shares their hypothesized strategy of Z, SXZ, along with the confidence level of X’s probability table for Z, CXZ. Y then updates their belief about Z: In Y’s probability table of Z, the probability corresponding to the gossiped strategy increases by an amount determined by CXZ and two parameters, bias and indirW (Materials and Methods). The parameter bias controls the extent to which agents give more weight to more confident gossip (30, 31). The parameter indirW controls the extent to which agents’ beliefs about others are influenced by gossip in general. As the probability of one strategy increases, the probabilities of the others decrease proportionally so that all the probabilities sum to 1 (Materials and Methods).
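The exact increment is defined in Materials and Methods and is not reproduced in this section. Purely for illustration, the Python sketch below assumes an increment of the form indirW × CXZ^bias, which is one simple functional form consistent with the roles of the two parameters described above. This form, the default parameter values, and the cap at 1 are all assumptions.

```python
def gossip_increment(speaker_confidence, indir_w, bias):
    """Assumed increment: indir_w * confidence ** bias (illustrative form only)."""
    return indir_w * speaker_confidence ** bias


def receive_gossip(belief, gossiped_strategy, speaker_confidence,
                   indir_w=0.5, bias=5.0):
    """Update the receiver's table for the target after hearing gossip."""
    inc = gossip_increment(speaker_confidence, indir_w, bias)
    old = belief[gossiped_strategy]
    new = min(1.0, old + inc)  # assumption: cap at 1
    # Shrink the other entries proportionally so that the table sums to 1.
    scale = (1.0 - new) / (1.0 - old) if old < 1.0 else 0.0
    updated = {s: p * scale for s, p in belief.items()}
    updated[gossiped_strategy] = new
    return updated
```

Under this assumed form, a bias of 0 makes every piece of gossip count equally regardless of the speaker’s confidence, whereas a larger bias sharply discounts unconfident gossip because the confidence is at most 1.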

Phases of the Evolutionary Game

We initialize the population with N agents with random strategies embedded in a small-world network (SI Appendix, section 1.2.1) (32). We then begin an evolutionary game consisting of three phases: 1) interaction, 2) gossiping, and 3) strategy updating (Fig. 2). In the interaction phase, a set of agents are selected to play a cooperation game with a neighbor and gain corresponding payoffs. In the gossiping phase, another set of agents are selected as speakers (i.e., given the opportunity to gossip). If the speaker is a gossiper, they will gossip about a certain number of targets to a randomly selected neighbor. During the strategy-updating phase, another set of agents are selected to update their strategies. If an agent is selected, they compare their payoff with that of a randomly selected neighbor. The lower the agent’s payoff is compared with the neighbor’s payoff, the higher the likelihood that the agent will be replaced by a new agent with the neighbor’s strategy (Materials and Methods). This resembles the cultural transmission process in which strategies related to higher fitness are more likely to be adopted by the next generation (33). Thus, throughout these iterations, agents interact, gossip, and gradually form beliefs about each other’s cooperation strategy. We then observe the evolutionary trajectories of 1) the proportion of gossipers, 2) the proportions of different cooperation strategies, and 3) cooperation rate (Materials and Methods). A summary of the default model parameters is in SI Appendix, Table S3.
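The strategy-updating step can be sketched with a Fermi (logistic) imitation rule, a common choice in evolutionary game models. Whether the model uses exactly this rule is an assumption; the precise form is given in Materials and Methods. The parameter `selection_strength` and the function names are hypothetical.

```python
import math
import random


def replacement_probability(own_payoff, neighbor_payoff, selection_strength=1.0):
    """Fermi rule (assumed): the probability grows as the neighbor's payoff lead grows."""
    gap = neighbor_payoff - own_payoff
    return 1.0 / (1.0 + math.exp(-selection_strength * gap))


def updated_strategy(own, own_payoff, neighbor, neighbor_payoff, rng,
                     selection_strength=1.0):
    """Return the strategy occupying the focal agent's position after updating.

    `own` and `neighbor` are (cooperation strategy, gossiping strategy) pairs.
    """
    p = replacement_probability(own_payoff, neighbor_payoff, selection_strength)
    return neighbor if rng.random() < p else own


rng = random.Random(42)  # seeded only to make the sketch reproducible
result = updated_strategy(("AD", "AN"), 1.0, ("GC", "AG"), 4.0, rng)
```

With equal payoffs the replacement probability is 0.5, and it approaches 1 as the neighbor’s advantage grows, matching the qualitative description above.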

We also varied many of these parameters in further analyses to assess their effects and the robustness of the findings, including 1) bias toward more confident gossip when listeners process gossip, 2) frequency of direct interactions, 3) frequency of conversations, 4) interaction depth (the amount of information gained from a single direct interaction), 5) general trust of gossip (the extent to which agents’ representations of others are influenced by gossip), 6) network structures, 7) frequency of strategy updating, and 8) network mobility (SI Appendix, sections 2.2.3 and 2.3.1).

Results

In what follows we review the results of our simulation. First, we illustrate whether the reputation dissemination and selfishness deterrence functions of gossip are jointly sufficient to sustain the evolution of gossip (Fig. 1). Next, we assess the causal pathways in the evolutionary cycle step by step. In our first step (Step 1), we test whether the reputation dissemination function of gossip increases reputation accessibility and, consequently, increases the proportion of reputation-sensitive agents. In our second step (Step 2), we test whether the existence of gossiper-sensitive agents and their reputation management in front of gossipers—the selfishness deterrence function—lead to the evolution of gossip.

The Evolutionary Cycle of Gossip.

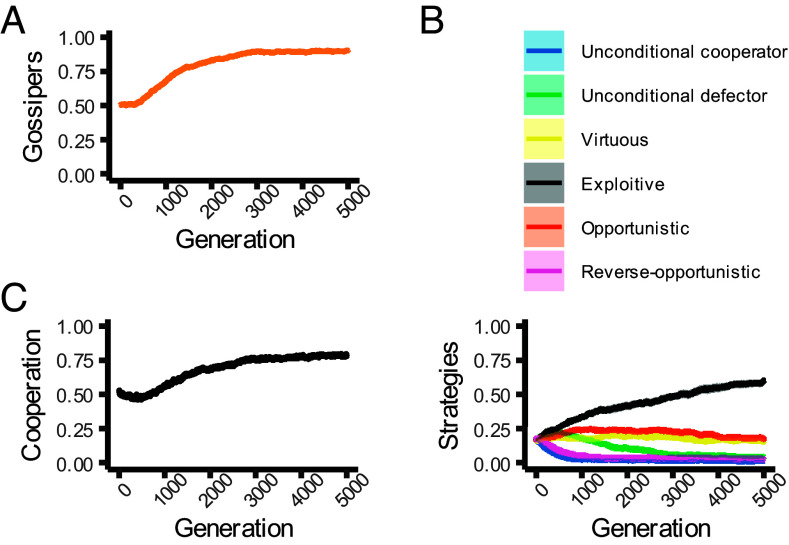

Fig. 3 illustrates the evolutionary trajectories when all pathways in Fig. 1 are included. Fig. 3A shows that the majority (90%, calculated as the average from the 4,000th to the 5,000th iterations) of the population evolves to be gossipers when all pathways are included. In fact, further analyses show that the evolution of gossipers is so robust that even if gossiping is costly, as long as this cost is not too high, gossipers still evolve (SI Appendix, Fig. S5). This is consistent with the empirical observation that people are willing to gossip even at personal costs (15). Fig. 3B shows the trajectory of each cooperation strategy. The strategy that is adopted the most is exploitive (CD, 57%). The next most adopted strategy is opportunistic (GC): 18% of agents behave cooperatively when and only when under the threat of gossip. The evolution of opportunists indicates that it is evolutionarily adaptive to develop reputational concerns even if that means one will lose the instant benefit of being selfish when interacting with gossipers (15, 16). Following that are virtuous agents (CC, 16%). The other three strategies each constitute a very small proportion of the population (<5%). Fig. 3C illustrates the trajectory of cooperation. Despite the large proportion of exploitive and opportunistic agents, the cooperation rate ends up being high (overall 78%; see SI Appendix, Fig. S6 for cooperation rates among different strategies). This is because the majority of the population becomes reputation-sensitive so that the exploitives cannot easily exploit them. At the same time, the majority of the population also evolves to be gossipers and successfully deters the opportunists from defection.

Fig. 3.

The evolutionary trajectories of different strategies and behaviors. The lines are average trajectories from 30 simulation runs with all the six cooperation strategies under the default parameter choice. The shadows show the SEs of the average trajectories. Plot (A) illustrates the evolution of gossipers. Plot (B) illustrates the evolution of different cooperation strategies. Plot (C) illustrates the evolution of cooperation.

Step 1: Effects of the Reputation Dissemination Function.

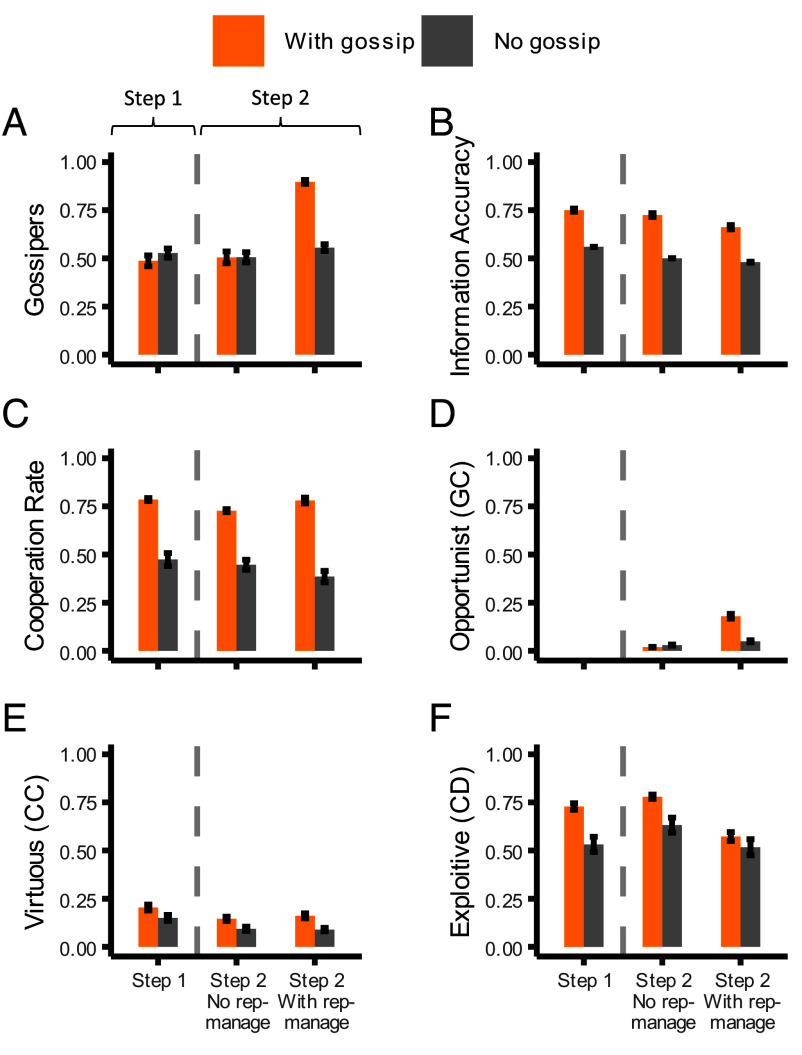

The results above show that the current model setup is sufficient to sustain the evolution of gossip. Next, we test the causal pathways in the evolutionary cycle step by step. In the first step, we test whether the reputation dissemination function increases reputation accessibility and, consequently, causes the evolution of reputation-sensitive agents. We implement only four cooperation strategies this time: unconditional cooperators (AC), unconditional defectors (AD), virtuous agents (CC), and exploitive agents (CD). We run two sets of simulations that contrast two conditions: In one condition, agents gossip, whereas in the other condition, agents do not gossip (with gossip vs. no gossip; see SI Appendix, section 1.3 for more details about the method).‡ Fig. 4B (Step 1) shows that the information disseminated through gossip indeed increases reputation accessibility: when agents can gossip, they form more accurate hypotheses of their neighbors’ strategies [t(30) = 23.29, P < 0.001; Welch’s t was used for all t tests]. Moreover, as expected, Fig. 4 E and F (Step 1) show that when agents can gossip, more reputation-sensitive agents (agents who condition their behavior on others’ reputations) evolve [virtuous agents: t(58) = 2.45, P = 0.017; exploitive agents: t(39) = 4.55, P < 0.001]. Additionally, gossip increases the overall cooperation rate [t(32) = 9.03, P < 0.001, see Fig. 4C, Step 1; see SI Appendix, Fig. S10 for a scatter plot with results from each individual simulation run].
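For readers unfamiliar with Welch’s t test, the following Python sketch computes the statistic and its Welch–Satterthwaite degrees of freedom from two samples. It is a generic textbook implementation with hypothetical names, not the analysis code used for the reported results.

```python
import math
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Return (t, df) for Welch's unequal-variance t test."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard errors of the two sample means.
    se2_a = variance(sample_a) / n_a
    se2_b = variance(sample_b) / n_b
    t = (mean(sample_a) - mean(sample_b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1)
    )
    return t, df


t_stat, dof = welch_t([1, 2, 3, 4], [2, 3, 4, 5])
```

The degrees of freedom depend on how unequal the two variances are. Assuming 30 runs per condition, as stated in the caption of Fig. 4, they range from 29 (very unequal variances) to 58 (equal variances), which is why the reported tests carry different degrees of freedom.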

Fig. 4.

Results of Steps 1 and 2. Each condition is the average of 30 simulation runs. The value is calculated as the average value from the 4,000th to the 5,000th iterations of each simulation run. The error bars show the SEs. On the Left side of each plot are the results from Step 1; on the Right are the results from Step 2. Plot (A) shows that gossipers evolve only when both reputation dissemination and selfishness deterrence functions exist (i.e., with-gossip and with-rep-manage). Plots (B and C) show that the existence of gossipers (yellow) increases reputation accessibility and cooperation. Plot (D) shows that opportunists evolve only when both reputation dissemination and selfishness deterrence functions exist. Plots (E and F) show that the existence of gossipers increases the proportion of reputation-sensitive agents.

In SI Appendix, section 2.2.1, we also manipulate reputation accessibility directly by exogenously implementing agents’ hypothesized strategies of each other with a certain level of accuracy. Results show that increased reputation accessibility directly causes the evolution of more reputation-sensitive agents (SI Appendix, Fig. S8). Altogether, these results support that gossip increases reputation accessibility and, consequently, causes more individuals to condition their behavior on others’ reputations. Moreover, the evolution of reputation-sensitive agents also leads to the evolution of more cooperation. These findings are consistent with previous theoretical and empirical work on indirect reciprocity and the reputation dissemination function of gossip (12, 13, 19).

However, Fig. 4A (Step 1) shows that, when gossip only has its reputation dissemination function, gossipers do not evolve [t test on the proportion of gossipers between the two conditions: t(56) = −1.11, P = 0.270; for the with-gossip condition, one-sample t test against chance (50%): t(29) = −0.47, P = 0.643]. In fact, further analyses show that if gossip has only its reputation dissemination function and gossiping is made even a little costly, few gossipers survive (SI Appendix, Fig. S9 and section 2.2.2). Therefore, the fact that gossip disseminates reputation information and facilitates cooperation is not sufficient to explain the evolution of gossipers (34). If so, what is missing in the causal chain?

Step 2: The Joint Effects of Reputation Dissemination and Selfishness Deterrence.

In the next set of simulations, we show that the selfishness deterrence function of gossip is also needed to explain the evolution of gossipers. In particular, we test whether the existence of opportunistic agents and their reputation management in front of gossipers are the keys to the evolution of gossipers. We ran another set of simulations with all six cooperation strategies and manipulated two variables this time. As in Step 1, the first variable is whether gossipers can gossip (with-gossip vs. no-gossip). The second variable is whether gossiper-sensitive agents can manage their reputations when interacting with gossipers (with-rep-manage vs. no-rep-manage). In the condition where agents can manage their reputations (i.e., with-rep-manage), the default model is used: Opportunistic agents (GC) cooperate with gossipers and present themselves to gossipers as virtuous agents, whereas they defect with nongossipers and present their real strategy to nongossipers. Reverse-opportunistic agents (GD), on the contrary, defect with gossipers and present themselves to gossipers as unconditional defectors, whereas they cooperate with nongossipers and present their real strategy to nongossipers. In the condition where agents cannot manage their reputations (i.e., no-rep-manage), opportunistic agents still cooperate with gossipers and defect with nongossipers, and reverse-opportunistic agents still defect with gossipers and cooperate with nongossipers, but they no longer dissemble their real strategies. Instead, both GC and GD present their real strategies to both gossipers and nongossipers.§ By contrasting the two conditions, we test whether gossipers evolve only when the selfishness deterrence function of gossip exists, in other words, when opportunistic agents exist and can manage their reputations. Our rationale is that if these opportunistic agents cannot manage their reputations in gossip (i.e., as in the no-rep-manage condition), being a gossiper is no longer a deterrent, and gossipers will not evolve as a result (see SI Appendix, section 1.4 for more details about the method).

Our arguments are supported by Fig. 4. Fig. 4D (Step 2) shows that more opportunists evolve when opportunists can manage their reputations by cooperating with gossipers [i.e., in the with-gossip and with-rep-manage condition; interaction effect: F(1, 116) = 103.23, P < 0.001; simple main effect of rep-manage under with-gossip conditions: t(30) = 12.89, P < 0.001]. Most importantly, Fig. 4A (Step 2) shows that many more gossipers evolve when both the reputation dissemination and selfishness deterrence functions of gossip exist [interaction effect: F(1, 116) = 57.14, P < 0.001; simple main effect of rep-manage under with-gossip conditions: t(35) = 12.05, P < 0.001; see SI Appendix, Fig. S19 for a scatter plot with results from each individual simulation run]. Together, these results illustrate that the reputation dissemination and selfishness deterrence functions of gossip are both needed for the evolution of gossipers.

We also rerun the model using a variety of other model choices to check the boundary conditions of our results and explore the environmental factors that moderate the evolution of gossip. We find that a crucial factor in gossip’s ability to increase reputation accessibility is that people have to give confident gossip sufficiently higher weight than unconfident gossip (30, 31). Otherwise, if any gossiper can gossip and agents weigh any gossip equally, the existence of gossip will decrease information accuracy and harm cooperation, and moreover, not many gossipers will evolve in this case. Other than this parameter, gossipers evolve under a wide range of parameter choices though the proportions of them vary as a function of these parameters. Particularly, more gossipers evolve if individuals are in a social network where they have a lot of stable connections, if they have in-depth interactions with these social connections, if they interact frequently, if they have conversations with each other frequently, if their beliefs are influenced by gossip from moderately to greatly, and if the evolution happens slowly (SI Appendix, section 2.3.1).

Discussion

From ancient Greece to industrialized nations, gossip has been at the center of bonding human groups (7, 8), but we still have limited knowledge of why gossip evolves and how it is maintained. In this article, we propose the evolutionary cycle of gossip and use an agent-based evolutionary game-theoretic model to support it. We argue that the reputation dissemination and selfishness deterrence functions of gossip jointly lead to the evolution of gossip. Specifically, the reputation dissemination function increases reputation accessibility and increases the proportion of individuals who condition their behavior on others’ reputations. As a result, individuals become motivated to manage their own reputations by behaving more cooperatively when interacting with gossipers, manifesting the selfishness deterrence function of gossip. Gossipers, thus, gain an advantage over nongossipers, which leads to their evolution. The evolution of gossip further facilitates the two functions of gossip and sustains the cycle. Eventually, gossipers proliferate and facilitate cooperation (1, 7, 9–11, 13, 15, 16, 35).

Our model also illustrates the evolution of the opportunists—a strategy that seems dishonest at first but is the key for boosting gossip and cooperation. As opportunists actively manage their reputations, they decrease information accuracy and discount the reputation dissemination function of gossip (see Fig. 4B, Step 2). But paradoxically, these opportunists are critical in sustaining the evolution of gossipers. Moreover, being a gossiper deters the selfishness in opportunists and facilitates a system of mutual surveillance (36, 37). Eventually, opportunists and gossipers coevolve.

Like other models, our model starts with several oversimplified assumptions (38). We had to choose a small set of strategies from all the possible variants of human behaviors. We choose three categories of cooperation strategies, with the rationale that 1) the contrast between reputation-sensitive and unconditional agents examines the reputation dissemination function, and 2) the contrast between gossiper-sensitive and unconditional agents examines the selfishness deterrence function of gossip. However, we acknowledge that this is not an exhaustive strategy set. For example, we did not include the well-known tit-for-tat (TFT) strategy (39). Nevertheless, we believe that our virtuous agents (CC) play a similar role to TFT because they also defect with prospective defectors and cooperate with prospective cooperators. This is particularly true when the parameter dirW (interaction depth) is high and the parameter indirW (reliance on gossip) is low.

As for other potential strategies, because agents can condition their behavior on the hypothesized strategy of others, the strategy space will double in size whenever a new strategy is added. Each of the prior strategies will have two variants, depending on whether it cooperates or defects with the newly added strategy. Because of this, it is not feasible to test an exhaustive strategy set. Nevertheless, in SI Appendix, section 3, we provide some thought experiments to infer the possible effects of other strategies that would not change the generalizability of our findings.

Notably, our findings regarding the reputation dissemination function replicate previous literature. For example, ref. 12 found that indirect reciprocity can only sustain cooperation when the probability that a player knows the reputation of another player exceeds the ratio of the cooperation cost to the benefit. Although we cannot match this threshold numerically, we also found that information accuracy and cooperation have a stepwise relationship: When information accuracy is below a certain threshold, cooperation is rare; but once information accuracy passes that threshold, the cooperation rate becomes high and increases as information accuracy increases (SI Appendix, Fig. S8).

Our method of implementing reputation dissemination also echoes previous literature. For example, as in refs. 22 and 25, we also implemented two ways for agents to gain information—1) direct interaction or 2) gossip. The major difference between our and previous models is the content of gossip. Since our model does not have a binary reputation, instead of spreading the target’s image score, gossipers spread the hypothesized strategy of the target. In addition, we simply assume that all gossipers spread both positive and negative information honestly, without differentiating between different types of gossipers. Nevertheless, our findings regarding the reputation dissemination function of gossip overlap greatly with previous literature. For example, both our and previous models found that it is important for conditional cooperators to have enough accurate information in order to beat unconditional defectors. If the information pool is polluted either by intentional lies (22, 25) or random errors (our model), conditional cooperators will not be able to recognize and cooperate with each other, a result that echoes literature in indirect reciprocity (12). In this sense, our bias parameter plays a similar role to the “conditional_advisor” strategy in ref. 25, a strategy that does not trust gossip from lying defectors.

Our results are also consistent with previous findings regarding the environmental moderators of the effects of gossip. For example, both ref. 25 and our model found that as direct interactions increase, the reputation dissemination function of gossip becomes less crucial (SI Appendix, Fig. S12). Both ref. 25 and our model found that gossip is less helpful for cooperation when there is too little or too much gossip (SI Appendix, Fig. S13). Consistent with ref. 22 which found that gossiping is more effective for larger population size, we found that the reputation dissemination function of gossip is more prominent in a network with a higher average degree (SI Appendix, Fig. S16). In summary, although the reputation dynamics are implemented in a different way in our model, they have produced a similar reputation dissemination function to other models.

Beyond previous models, one major innovation of our model is that we implemented gossiper-sensitive strategies and found that the selfishness deterrence function of gossip is the key to the evolution of gossipers. Previous literature found that although gossip benefits indirect reciprocity, having even a little cost to reputation building destroys indirect reciprocal cooperation (40). In fact, in Step 1, when only the reputation dissemination function of gossip exists, we also found that even if gossiping is just a little costly, few gossipers can survive (SI Appendix, Fig. S9 and section 2.2.2). However, in Step 2, after adding gossiper-sensitive strategies, a substantial proportion of gossipers evolve even when gossiping has a cost (SI Appendix, Fig. S5). This both illustrates the consistency of our model with previous literature and shows that the selfishness deterrence function is essential for sustaining gossip even when gossiping is costly.

Moreover, the predictions of our model are also consistent with empirical findings. For example, empirical studies suggest that gossip is more prevalent in rural towns because of the low population growth, closeness, and connectivity between individuals (41, 42). Our model also found that more gossipers evolve when strategy updating is infrequent, direct interactions are frequent and deep, network degree is high, and mobility is low (SI Appendix, section 2.3.1). Nonetheless, the evolutionary cycle of gossip still requires more validation from future empirical studies. For example, if the reputation management of opportunists is critical for the evolution of gossipers, we should expect fewer gossipers in a group where reputation cannot be easily managed (e.g., when individuals’ behaviors are monitored and made public by a centralized system).

Admittedly, it is an oversimplification to make gossipers always gossip and share accurate information unconditionally. Although we believe the two gossiping strategies (AG and AN) should capture the major variance between gossipers and nongossipers, more complicated gossiping strategies may be implemented in the future to address the evolution of different types of gossipers. Particularly, it might be interesting to study the evolution of conditional and dishonest gossipers and examine their impacts on cooperation (22, 25). We also have assumed that people are influenced by positive and negative gossip equally, which is not necessarily the case in real life (43).

We also note that other mechanisms may contribute to the evolution of gossip as well. For example, gossiping conversations may facilitate intimacy between individuals (8, 44, 45). Gossiping can be simply entertaining and serve as “a bulwark against life’s monotony” (1). A group with much gossip may be more cooperative and thus more likely to survive group selection (19). While our model cannot cover all these mechanisms, we show that even without these mechanisms, it is sufficient for gossipers to evolve given the dual roles of reputation dissemination and selfishness deterrence in cooperation. More generally, we would suspect that this modeling framework can be fruitfully integrated with other models of cooperation and be used to examine various hypotheses about gossip and cooperation as well as more general intra- and interpersonal processes that are so fundamental to human interaction.

Materials and Methods

Pairwise Cooperation Game.

When agents engage in a pairwise cooperation game, an agent X’s decision whether to cooperate with an agent Y depends on X’s strategy and X’s hypothesized strategy SXY for Y (SI Appendix, Table S1). If X chooses to cooperate, then X pays a cost, c, for Y to receive a larger benefit, b (19). If X chooses to defect, they pay no cost and Y receives nothing from this decision. In the current model, b = 3 and c = 1 (46). The payoff matrix of this pairwise cooperation game can be found in SI Appendix, Table S2. When a pair of agents play the cooperation game, the two players make decisions simultaneously.
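As a minimal sketch of the payoff rule just described, the following Python function computes both players’ payoffs for one simultaneous round. The function name `play_round` and the boolean encoding of decisions are illustrative assumptions, not taken from the authors’ code; the authoritative payoff matrix is the one in SI Appendix, Table S2.

```python
B = 3  # benefit received by the partner of a cooperating agent (b in the text)
C = 1  # cost paid by an agent who cooperates (c in the text)

def play_round(x_cooperates: bool, y_cooperates: bool) -> tuple[int, int]:
    """Return (payoff of X, payoff of Y) for one simultaneous round.

    Each agent pays C if it cooperates and receives B if its partner cooperates.
    """
    payoff_x = (B if y_cooperates else 0) - (C if x_cooperates else 0)
    payoff_y = (B if x_cooperates else 0) - (C if y_cooperates else 0)
    return payoff_x, payoff_y

# Mutual cooperation gives each agent b - c; a lone cooperator pays c for nothing.
mutual = play_round(True, True)       # (2, 2)
exploited = play_round(True, False)   # (-1, 3)
```

Under these values, mutual cooperation yields 2 for each agent, whereas defecting against a cooperator yields 3, which is what makes unconditional defection tempting in the absence of reputation.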

When two agents interact, each gains information about the other’s strategy. If an agent Y perceives that X’s strategy is Sm, then in Y’s probability table for X, the probability of Sm (i.e., $p_m$) will be increased by dirW until it reaches 1, as shown in Eq. 1, where $p_m$ is the original probability corresponding to Sm and $p'_m$ is the new probability. The parameter dirW (0 < dirW ≤ 1) controls the amount of information gained from a single interaction. When dirW is large, Y’s belief about X will be greatly influenced by even one single interaction. This parameter can be interpreted as interaction depth.

$$p'_m = \min(p_m + \mathit{dirW},\ 1) \tag{1}$$

As a consequence, the probability of other strategies will be decreased proportionally for the table to sum to 1, as shown in Eq. 2. In Eq. 2, $p_i$ is the original probability corresponding to each strategy except Sm and $p'_i$ is the new probability (see SI Appendix, Fig. S2 for a numerical illustration).

$$p'_i = p_i \times \frac{1 - p'_m}{1 - p_m}, \quad i \neq m \tag{2}$$

These changes to the probability table will also change Y’s confidence level CYX and hypothesized strategy SYX as described in the main text. In the default model, dirW was set as 0.5. Other values of dirW were explored in robustness tests.
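The update from a direct interaction can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the authors’ implementation: the function name `update_from_interaction`, the dictionary representation of a probability table, and the four strategy labels in the example are chosen here for illustration. The sketch reads Eq. 1 as a capped increment and Eq. 2 as a proportional rescaling of the remaining probabilities.

```python
def update_from_interaction(probs: dict[str, float], observed: str,
                            dir_w: float = 0.5) -> dict[str, float]:
    """Return a new probability table after observing strategy `observed`.

    The observed strategy's probability rises by dir_w, capped at 1 (Eq. 1);
    all other probabilities shrink by a common factor so the table sums to 1 (Eq. 2).
    """
    old = probs[observed]
    new = min(old + dir_w, 1.0)
    remaining = 1.0 - old
    # If the observed strategy already had probability 1, the others are all 0.
    scale = (1.0 - new) / remaining if remaining > 0 else 0.0
    updated = {strategy: p * scale for strategy, p in probs.items()}
    updated[observed] = new
    return updated

# Example with a uniform table over four hypothetical strategy labels.
table = {"AD": 0.25, "CC": 0.25, "CD": 0.25, "GC": 0.25}
after_one = update_from_interaction(table, "CC")      # CC rises to 0.75
after_two = update_from_interaction(after_one, "CC")  # CC is capped at 1
```

With the default dirW = 0.5, two consistent observations are enough to move a uniform belief to certainty, which illustrates why a large dirW corresponds to deep interactions.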

Gossiping Process.

When X gossips to Y about Z, X shares two pieces of information: X’s hypothesized strategy for Z, SXZ, and the confidence level CXZ. Research has shown that people are biased to learn from others that are more confident (30, 31). To implement this in our model, Y assigns the following weight wXZ to X’s gossip about Z, as shown in Eq. 3.

$$w_{XZ} = \left(C_{XZ}\right)^{\mathit{bias}} \tag{3}$$

The parameter bias ≥0 controls the extent to which agents are biased toward more confident gossip. When bias = 0, the weight wXZ will always be 1, in which case agents value all gossip equally. As bias becomes larger, agents give higher weight to more confident gossip and lower weight to unconfident gossip, as shown in SI Appendix, Fig. S3. The default value of bias was set as 5. As shown in SI Appendix, sections 2.2.3.1 and 2.3.1.1, a sufficiently high bias is essential for agents to form accurate beliefs (i.e., hypothesized strategies) of each other.

Next, Y updates Y’s probability table for Z based on the gossip from X. Specifically, if X tells Y that Z’s strategy is Sm, then in Y’s probability table for Z, the probability of Sm will be increased by indirW × wXZ until it reaches 1, as shown in Eq. 4, where $p_m$ and $p'_m$ are the original and new probabilities of Sm in Y’s table for Z. The probabilities of other strategies will be decreased proportionally, following the same rule as in Eq. 2 and SI Appendix, Fig. S2.

$$p'_m = \min(p_m + \mathit{indirW} \times w_{XZ},\ 1) \tag{4}$$

The parameter indirW (0 ≤ indirW ≤ 1) controls the maximum weight of a piece of gossip. The larger indirW is, the more agents change their probability tables based on what they hear from gossip. By default, indirW = 0.5, though other values were also explored. After changing the probability table, Y also updates the hypothesized strategy SYZ and confidence level CYZ as explained earlier.
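The gossip-based update can be sketched in the same way. The functional form of the weight is an assumption here: the sketch uses confidence raised to the power bias, which satisfies the properties stated above (the weight is always 1 when bias = 0, and larger bias favors confident gossip), but other forms with these properties are possible. The function names and the dictionary representation are likewise illustrative.

```python
def gossip_weight(confidence: float, bias: float = 5.0) -> float:
    """Weight a listener gives to gossip, given the gossiper's confidence.

    ASSUMPTION: weight = confidence ** bias. It equals 1 for any confidence
    when bias == 0 and penalizes unconfident gossip as bias grows.
    """
    return confidence ** bias

def update_from_gossip(probs: dict[str, float], reported: str, confidence: float,
                       indir_w: float = 0.5, bias: float = 5.0) -> dict[str, float]:
    """Return the listener's new probability table for the gossip target.

    The reported strategy's probability rises by indir_w * weight, capped at 1;
    the other probabilities are rescaled proportionally so the table sums to 1.
    """
    old = probs[reported]
    new = min(old + indir_w * gossip_weight(confidence, bias), 1.0)
    remaining = 1.0 - old
    scale = (1.0 - new) / remaining if remaining > 0 else 0.0
    updated = {strategy: p * scale for strategy, p in probs.items()}
    updated[reported] = new
    return updated

uniform = {"AD": 0.25, "CC": 0.25, "CD": 0.25, "GC": 0.25}
from_confident = update_from_gossip(uniform, "CC", confidence=1.0)
from_unsure = update_from_gossip(uniform, "CC", confidence=0.5)
```

Under this assumed form and the default bias = 5, fully confident gossip moves the listener’s belief as much as indirW allows, while gossip at confidence 0.5 barely changes it, so unreliable hearsay has little influence on the information pool.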

In the default model, we did not consider the cost of gossiping, based on the assumption that the effort spent on gossiping is negligible compared with the effort needed for a cooperative behavior. However, in robustness tests, we explored situations in which gossiping is costly. If gossiping is costly, each time an agent gossips about a target, they pay a gossip cost, gCost.

Phases in Each Iteration.

To initialize each simulation, N = 200 agents with random cooperation and gossiping strategies are embedded in a social network. Each agent has a uniformly distributed probability table and a randomly selected hypothesized strategy for every other agent. Then, the simulation repeats iterations consisting of the following three steps: 1) interaction phase, 2) gossiping phase, and 3) strategy updating phase.

Interaction phase.

At the beginning of each iteration, N × intF agents are randomly selected (with replacement). Each of these selected agents, one by one, randomly selects a neighbor, if any, and plays a pairwise cooperation game with the neighbor, as elaborated above. intF indicates the frequency of direct interactions. The default value of intF was 0.1, though other values were also explored in robustness tests. Through direct interactions, agents get payoffs and update their beliefs about their interaction partners.
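A minimal sketch of the selection step in the interaction phase follows. The adjacency representation (a dictionary mapping each agent to a set of neighbors), the function name `select_interaction_pairs`, and the rounding of N × intF to an integer are assumptions made for illustration.

```python
import random

def select_interaction_pairs(neighbors: dict, int_f: float = 0.1, rng=random) -> list:
    """Pick round(N * int_f) initiators with replacement and pair each with a random neighbor.

    Initiators without any neighbor are skipped. Pairs are returned in the
    order in which they would play, one by one.
    """
    agents = list(neighbors)
    n_selected = round(len(agents) * int_f)
    initiators = rng.choices(agents, k=n_selected)  # sampling with replacement
    pairs = []
    for agent in initiators:
        if neighbors[agent]:
            partner = rng.choice(sorted(neighbors[agent]))
            pairs.append((agent, partner))
    return pairs

# Tiny hypothetical network: agent 3 is isolated and can never play.
network = {0: {1}, 1: {0, 2}, 2: {1}, 3: set()}
pairs = select_interaction_pairs(network, int_f=2.0, rng=random.Random(0))
```

With the default N = 200 and intF = 0.1, this selects 20 initiators per iteration, so most agents do not play in a given iteration.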

Gossiping phase.

Next, N × talkF agents are randomly selected (with replacement) as speakers. talkF indicates the frequency of conversations. The default value of talkF was 10, assuming that conversations are very frequent (6). An agent may therefore speak more than once in an iteration. Other values of talkF were also explored in robustness tests. For each selected speaker X, a listener Y is randomly selected from the speaker’s neighbors, if any. If X is a gossiper (i.e., X’s gossiping strategy is AG), then there are two cases:

If there are no more than targetN common neighbors between the speaker and the listener (i.e., agents that are neighbors of both X and Y), X will gossip about all of them to Y, if any. In our model, we used targetN = 2.

If there are more than targetN common neighbors, X will gossip about targetN randomly selected ones to Y (without replacement).

As a result, the listener updates their probability tables for the targets, as elaborated above. If X is a nongossiper (AN), no action will be conducted. The conversations are conducted one by one.
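The choice of gossip targets in the two cases above can be sketched as follows. The function name `choose_targets` and the adjacency representation (a dictionary mapping each agent to a set of neighbors) are illustrative assumptions; the sketch treats the boundary case of exactly targetN common neighbors as gossiping about all of them.

```python
import random

def choose_targets(neighbors: dict, speaker, listener,
                   target_n: int = 2, rng=random) -> list:
    """Return the agents a gossiping speaker talks about to the listener.

    Targets are common neighbors of speaker and listener. If there are at most
    target_n of them, all are returned; otherwise target_n are drawn at random
    without replacement.
    """
    common = sorted(set(neighbors[speaker]) & set(neighbors[listener]))
    if len(common) <= target_n:
        return common
    return rng.sample(common, target_n)

# Hypothetical example: X and Y share three neighbors, so two are sampled.
network = {
    "X": {"Y", "A", "B", "C"},
    "Y": {"X", "A", "B", "C"},
    "A": {"X", "Y"}, "B": {"X", "Y"}, "C": {"X", "Y"},
}
targets = choose_targets(network, "X", "Y", rng=random.Random(1))
```

Because targets are restricted to common neighbors, gossip in this design only concerns agents that the listener may later interact with directly.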

Strategy updating phase.

N × updF agents are selected (without replacement) as students who update their strategies based on the Fermi rule (47). updF indicates the frequency of strategy updating or the speed of evolution. updF = 0.01 was used by default, though other values were also explored in robustness tests. When updating their strategies, each student randomly selects a neighbor, if any, as their teacher. With a probability of P, the student will adopt both the gossiping and cooperation strategies of the teacher. The magnitude of P is decided by the difference between the student’s payoff, $\pi_S$, and the teacher’s payoff, $\pi_T$, as shown in Eq. 5 and SI Appendix, Fig. S4. In general, the higher the teacher’s payoff is relative to the student’s, the more likely the student is to adopt the teacher’s strategies. The parameter s = 5 represents the strength of selection. One’s payoff is calculated as the average payoff from all the cooperation interactions in the current iteration minus the total gossip cost in the current iteration, if any.¶ If an agent did not play a cooperation game with anyone in the interaction phase of the current iteration, the payoff from the latest iteration in which the agent played a cooperation game is used. If the teacher or the student has not yet been involved in any cooperation game during the simulation, the student does not update their strategy. The strategy updating process resembles natural selection, in which individuals with higher fitness are more likely to reproduce.

$$P = \frac{1}{1 + e^{-s(\pi_T - \pi_S)}} \tag{5}$$
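For concreteness, the standard form of the Fermi rule can be sketched as below. The function name `adoption_probability` is illustrative, and the sketch assumes the usual logistic form in which the payoff difference is taken as teacher minus student.

```python
import math

def adoption_probability(student_payoff: float, teacher_payoff: float,
                         s: float = 5.0) -> float:
    """Probability that the student adopts the teacher's strategies (Fermi rule).

    The probability is 0.5 when payoffs are equal and approaches 1 as the
    teacher's payoff advantage grows; s is the strength of selection.
    """
    return 1.0 / (1.0 + math.exp(-s * (teacher_payoff - student_payoff)))

equal = adoption_probability(1.0, 1.0)     # 0.5
better_teacher = adoption_probability(0.0, 1.0)
worse_teacher = adoption_probability(1.0, 0.0)
```

With s = 5, a payoff advantage of 1 for the teacher already gives an adoption probability above 0.99, so selection in the default model is strong but not deterministic.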

In addition to the payoff-based strategy updating, with a probability of µ = 0.05, the student will randomly select a gossiping strategy and a cooperation strategy from all the possible strategies, regardless of their payoff. This resembles the random mutation in evolution.

If a student changes their strategy in the payoff-based updating or they are selected for random mutation, their payoff will be reset to 0, their behavioral history will be deleted, their probability table for everyone else will be reset to uniform, their hypothesized strategy for everyone else will be randomly selected, and their confidence level for everyone else will drop back to 1/n, where n is the total number of possible strategies. In addition, everyone else’s probability table for this student will be reset to uniform, their hypothesized strategies for this student will be randomly selected, and the confidence level for this student will drop back to 1/n. The strategy updating happens in parallel for all the students.

The population repeatedly performs the three steps for iterN = 5,000 iterations in each simulation run. This is long enough for the proportion of different strategies to fluctuate around a stabilized value. A summary of default model parameters can be found in SI Appendix, Table S3. For each simulation, the following four characteristics were extracted: 1) cooperation rate, calculated by the proportion of “to cooperate” actions in all agents’ latest interactions, 2) the proportion of gossipers, 3) information accuracy, calculated by the average of the proportion that an agent’s hypothesized strategy for a neighbor is the same as that neighbor’s real strategy,# and 4) the proportions of different cooperation strategies in the population.
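The information accuracy measure can be sketched as follows. This is one reading of the definition above, assumed here for illustration: for each agent, compute the share of neighbors whose hypothesized strategy matches their real strategy, then average these shares over agents that have at least one neighbor. The function name and data layout are hypothetical.

```python
def information_accuracy(neighbors: dict, hypothesized: dict, true_strategy: dict) -> float:
    """Average, over agents, of the share of neighbors whose strategy is guessed correctly.

    neighbors[a] is the set of a's neighbors, hypothesized[a][b] is a's
    hypothesized strategy for b, and true_strategy[b] is b's real strategy.
    Agents without neighbors are ignored.
    """
    shares = []
    for agent, nbrs in neighbors.items():
        if not nbrs:
            continue
        correct = sum(hypothesized[agent][n] == true_strategy[n] for n in nbrs)
        shares.append(correct / len(nbrs))
    return sum(shares) / len(shares) if shares else float("nan")

# Hypothetical three-agent example: a is right about b only, b is right, c is wrong.
network = {"a": {"b", "c"}, "b": {"a"}, "c": {"a"}}
real = {"a": "CC", "b": "AD", "c": "CC"}
beliefs = {"a": {"b": "AD", "c": "AD"}, "b": {"a": "CC"}, "c": {"a": "AD"}}
accuracy = information_accuracy(network, beliefs, real)  # (0.5 + 1 + 0) / 3
```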

Supplementary Material

Appendix 01 (PDF)

Acknowledgments

We thank Paul J. Hanges, James A. Grand, and Joshua C. Jackson for valuable suggestions on the manuscript. This research was funded in part by Air Force Office of Scientific Research Grant 1010GWA357. The views expressed are those of the authors and do not reflect the official policy or position of the funders. We acknowledge the University of Maryland supercomputing resources (http://hpcc.umd.edu/) made available for conducting the research reported in this article. Portions of this article originally appeared as part of the first author’s master’s thesis.

Author contributions

X.P., V.H., D.S.N., and M.J.G. designed research; X.P. performed research; X.P. contributed new reagents/analytic tools; X.P. analyzed data; and X.P., D.S.N., and M.J.G. wrote the paper.

Competing interests

The authors declare no competing interest.

Footnotes

Reviewers: C.H., Max-Planck-Institut für Evolutionsbiologie; and M.M., London School of Economics and Political Science.

*Our rationale to make an exploitive agent cooperate with another reputation-sensitive agent is based on Kantian reasoning: When an exploitive agent meets a reputation-sensitive agent, they both assume that their interaction partner will act as they do. Under this new constraint, they should both cooperate to maximize their utility (49).

†Our rationale for making GCs be perceived as CCs is that among all the strategies, CCs and CDs are most likely to be treated cooperatively by others. Moreover, compared with CDs, CCs also represent a more virtuous strategy. Thus, if the GC strategy resembles a person who wants to manage their reputation positively, they should present themselves as a CC. In contrast, our rationale for making GDs be perceived as ADs is that among all the strategies, ADs are most likely to be defected against by others. We also tried another reputation management option for GD, in which GDs are perceived as CCs by nongossipers but as GDs by gossipers. That way, GC and GD strategies are completely symmetric. The results remain robust. See SI Appendix, section 2.3.2 for details.

‡Agents are still tagged as “gossipers” or “nongossipers,” but gossipers do not gossip.

§Though these agents can no longer dissemble their real strategies to gossipers, we still let them behave discriminatively toward gossipers vs. nongossipers. This is to rule out the alternative explanation that the results are purely driven by pro- vs. antigossiper behavior instead of reputation management.

¶The total gossip cost is subtracted after calculating the average payoff from cooperation interactions.

#We only calculate their information accuracy about the immediate neighbors in the social network because these neighbors are the only agents that an individual interacts with.

Contributor Information

Xinyue Pan, Email: xinyuepan@cuhk.edu.cn.

Michele J. Gelfand, Email: gelfand1@stanford.edu.

Data, Materials, and Software Availability

Code and data for these results are available at the Open Science Framework (https://osf.io/w3kjq/?view_only=7b1fa51a66874a0fbcaf92f3e036c4c7) (48). All other data are included in the manuscript and/or SI Appendix.

References

- 1. Foster E. K., Research on gossip: Taxonomy, methods, and future directions. Rev. Gen. Psychol. 8, 78–99 (2004).

- 2. Tsouparopoulou C., “Spreading the royal word: The (im)materiality of communication in Early Mesopotamia” in Communication and Materiality, Enderwitz S., Sauer R., Eds. (De Gruyter, 2015), pp. 7–24.

- 3. Aesop, Aesop’s Fables (OUP Oxford, 2002).

- 4. Homer H., The Odyssey (Xist Publishing, 2015).

- 5. Wiessner P. W., Embers of society: Firelight talk among the Ju/’hoansi Bushmen. Proc. Natl. Acad. Sci. U.S.A. 111, 14027–14035 (2014).

- 6. Robbins M. L., Karan A., Who gossips and how in everyday life? Soc. Psychol. Pers. Sci. 11, 185–195 (2020).

- 7. Dunbar R. I. M., Gossip in evolutionary perspective. Rev. Gen. Psychol. 8, 100–110 (2004).

- 8. Dunbar R. I. M., Marriott A., Duncan N. D. C., Human conversational behavior. Hum. Nat. 8, 231–246 (1997).

- 9. Emler N., “Gossip, reputation, and social adaptation” in Good Gossip, Goodman R. F., Ben-Ze’ev A., Eds. (University Press of Kansas, 1994), pp. 117–138.

- 10. Giardini F., Wittek R., “Gossip, reputation, and sustainable cooperation: Sociological foundations” in The Oxford Handbook of Gossip and Reputation, Giardini F., Wittek R., Eds. (Oxford University Press, 2019), pp. 21–46.

- 11. Enquist M., Leimar O., The evolution of cooperation in mobile organisms. Anim. Behav. 45, 747–757 (1993).

- 12. Nowak M. A., Sigmund K., Evolution of indirect reciprocity by image scoring. Nature 393, 573–577 (1998).

- 13. Sommerfeld R. D., Krambeck H.-J., Semmann D., Milinski M., Gossip as an alternative for direct observation in games of indirect reciprocity. Proc. Natl. Acad. Sci. U.S.A. 104, 17435–17440 (2007).

- 14. Henrich J., Muthukrishna M., The origins and psychology of human cooperation. Annu. Rev. Psychol. 72, 207–240 (2021).

- 15. Feinberg M., Willer R., Stellar J., Keltner D., The virtues of gossip: Reputational information sharing as prosocial behavior. J. Pers. Soc. Psychol. 102, 1015–1030 (2012).

- 16. Piazza J., Bering J. M., Concerns about reputation via gossip promote generous allocations in an economic game. Evol. Hum. Behav. 29, 172–178 (2008).

- 17. Neuberg S. L., Kenrick D. T., Schaller M., “Evolutionary social psychology” in Handbook of Social Psychology, Fiske S. T., Gilbert D., Lindzey G., Eds. (John Wiley & Sons Inc., 2010), pp. 761–796.

- 18. Leary M. R., Kowalski R. M., Impression management: A literature review and two-component model. Psychol. Bull. 107, 34–47 (1990).

- 19. Nowak M. A., Five rules for the evolution of cooperation. Science 314, 1560–1563 (2006).

- 20. Axelrod R., An evolutionary approach to norms. Am. Political Sci. Rev. 80, 1095–1111 (1986).

- 21. Kameda T., Takezawa M., Hastie R., The logic of social sharing: An evolutionary game analysis of adaptive norm development. Pers. Soc. Psychol. Rev. 7, 2–19 (2003).

- 22. Seki M., Nakamaru M., A model for gossip-mediated evolution of altruism with various types of false information by speakers and assessment by listeners. J. Theor. Biol. 407, 90–105 (2016).

- 23. Sigmund K., Moral assessment in indirect reciprocity. J. Theor. Biol. 299, 25–30 (2012).

- 24. Nowak M. A., Sigmund K., Evolution of indirect reciprocity. Nature 437, 1291–1298 (2005).

- 25. Nakamaru M., Kawata M., Evolution of rumours that discriminate lying defectors. Evol. Ecol. Res. 6, 261–283 (2004).

- 26. Ohtsuki H., Iwasa Y., How should we define goodness?—Reputation dynamics in indirect reciprocity. J. Theor. Biol. 231, 107–120 (2004).

- 27. Macrae C. N., Quadflieg S., “Perceiving people” in Handbook of Social Psychology, Fiske S. T., Gilbert D., Lindzey G., Eds. (John Wiley & Sons Inc., 2010), pp. 428–463.

- 28. Willis J., Todorov A., First impressions: Making up your mind after a 100-ms exposure to a face. Psychol. Sci. 17, 592–598 (2006).

- 29. García J., Traulsen A., Evolution of coordinated punishment to enforce cooperation from an unbiased strategy space. J. R. Soc. Interface 16, 20190127 (2019).

- 30. Birch S. A. J., Akmal N., Frampton K. L., Two-year-olds are vigilant of others’ non-verbal cues to credibility. Dev. Sci. 13, 363–369 (2010).

- 31. Jaswal V. K., Malone L. S., Turning believers into skeptics: 3-year-olds’ sensitivity to cues to speaker credibility. J. Cognit. Dev. 8, 263–283 (2007).

- 32. Milgram S., The small world problem. Psychol. Today 2, 60–67 (1967).

- 33. Pan X., Gelfand M., Nau D., Integrating evolutionary game theory and cross-cultural psychology to understand cultural dynamics. Am. Psychol. 76, 1054–1066 (2021).

- 34. Paine R., What is gossip about? An alternative hypothesis. Man 2, 278–285 (1967).

- 35. Ge E., Chen Y., Wu J., Mace R., Large-scale cooperation driven by reputation, not fear of divine punishment. R. Soc. Open Sci. 6, 190991 (2019).

- 36. Kurland N. B., Pelled L. H., Passing the word: Toward a model of gossip and power in the workplace. Acad. Manage. Rev. 25, 428–438 (2000).

- 37. Kniffin K. M., Sloan Wilson D., Evolutionary perspectives on workplace gossip: Why and how gossip can serve groups. Group Organ. Manage. 35, 150–176 (2010).

- 38. Smaldino P. E., “Models are stupid, and we need more of them” in Computational Social Psychology, Vallacher R. R., Read S. J., Nowak A., Eds. (Routledge, 2017), pp. 311–331.

- 39. Axelrod R., The Evolution of Cooperation (Basic Books, New York, 1984).

- 40. Suzuki S., Kimura H., Indirect reciprocity is sensitive to costs of information transfer. Sci. Rep. 3, 1435 (2013).

- 41. Haugen M. S., Villa M., Big Brother in rural societies: Youths’ discourses on gossip. Norwegian J. Geogr. 60, 209–216 (2006).

- 42. Parr H., Philo C., Burns N., Social geographies of rural mental health: Experiencing inclusions and exclusions. Trans. Inst. Br. Geogr. 29, 401–419 (2004).

- 43. De Bruin E. N. M., Van Lange P. A. M., Impression formation and cooperative behavior. Euro. J. Social Psychol. 29, 305–328 (1999).

- 44. Emmers-Sommer T. M., The effect of communication quality and quantity indicators on intimacy and relational satisfaction. J. Soc. Pers. Relat. 21, 399–411 (2004).

- 45. Shaw A. K., Tsvetkova M., Daneshvar R., The effect of gossip on social networks. Complexity 16, 39–47 (2011).

- 46. Roos P., Gelfand M., Nau D., Lun J., Societal threat and cultural variation in the strength of social norms: An evolutionary basis. Organ. Behav. Hum. Decis. Process. 129, 14–23 (2015).

- 47. Roca C. P., Cuesta J. A., Sánchez A., Evolutionary game theory: Temporal and spatial effects beyond replicator dynamics. Phys. Life Rev. 6, 208–249 (2009).

- 48. Pan X., Hsiao V., Nau D. S., Gelfand M., Code and data from “Explaining the evolution of gossip.” Open Science Framework. https://osf.io/w3kjq/?view_only=7b1fa51a66874a0fbcaf92f3e036c4c7. Deposited 24 November 2023.

- 49. Laffont J.-J., Macroeconomic constraints, economic efficiency and ethics: An introduction to Kantian economics. Economica 42, 430–437 (1975).
