Abstract

A fluent conversation with a virtual assistant, person-tailored news feeds, and deep-fake images created within seconds—all those things that have been unthinkable for a long time are now a part of our everyday lives. What these examples have in common is that they are realized by different means of machine learning (ML), a technology that has fundamentally changed many aspects of the modern world. The possibility to process enormous amounts of data in multi-hierarchical, digital constructs has paved the way not only for creating intelligent systems but also for obtaining surprising new insight into many scientific problems. However, in the different areas of biosciences, which typically rely heavily on the time-consuming collection of experimental data, applying ML methods is more challenging: Here, difficulties can arise from small datasets and the inherent, broad variability and complexity associated with studying biological objects and phenomena. In this Review, we give an overview of commonly used ML algorithms (which are often referred to as “machines”) and learning strategies as well as their applications in different bio-disciplines such as molecular biology, drug development, biophysics, and biomaterials science. We highlight how selected research questions from those fields were successfully translated into machine-readable formats, discuss typical problems that can arise in this context, and provide an overview of how to resolve those encountered difficulties.

I. INTRODUCTION

In many areas of medicine and materials science, analyzing complex datasets is a crucial task; those datasets, for instance, consist of images that can be used to identify pathologies or to quantify the progress of diseases1–3 as well as for detecting defects on materials4–6 and monitoring experimental7–9 and production10,11 processes. When performed manually, those tasks require time-consuming expert involvement but, nevertheless, may remain error-prone and biased. This is where computer-based decision processes can help. Over the past decade, machine learning (ML) approaches have gained vastly increased attention and have been successfully applied to different problems. Machine learning is a field of data science that encompasses a variety of algorithms that automatically learn from provided information and then draw conclusions. Such approaches aim at simplifying, extending, or replacing human decision and analysis processes. Examples include object detection12,13 and monitoring,14,15 identification of patterns or correlations between datasets,16,17 as well as data classification,18–20 regression,21,22 or clustering23,24 (Fig. 1).

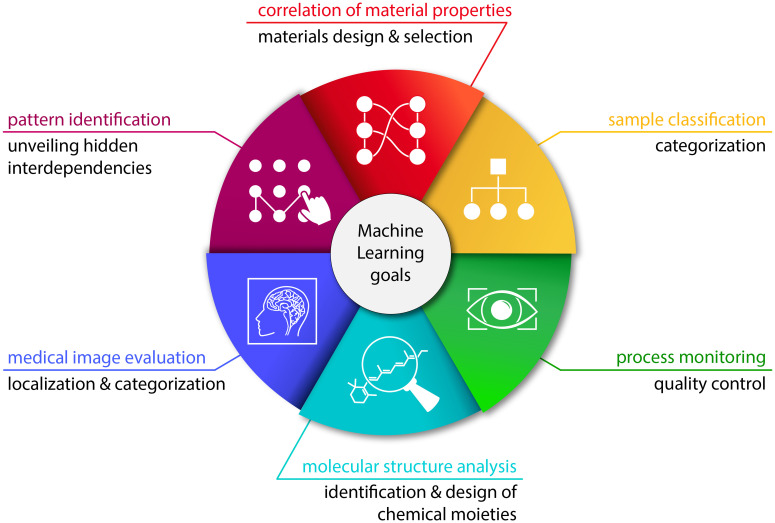

FIG. 1.

Typical objectives of machine learning approaches. A ML-based analysis of data from the biosciences can have different goals. Typical examples include the correlation of material properties, the classification of samples, the identification of patterns, process monitoring, molecular structure analysis, and the evaluation of medical images. Correlating material properties can, for instance, be useful to predict the behavior or certain characteristics of materials to provide guidance for a target-oriented design or selection process. Sample classification finds broad applications in areas where samples need to be assigned to discrete categories, e.g., for the classification of disease patterns based on various biomarkers. ML-based process monitoring can be an essential part of quality control to automatically identify and react to defects or variations in the process flow. Analyzing molecular structures by means of ML allows us to scan large databases to identify or even to design chemical moieties with certain properties. Another growing area of application for ML is the automated evaluation of medical images to, e.g., localize and categorize organs or pathological manifestations in tissues. Finally, one versatile purpose of ML is to identify patterns in databases to unveil hidden dependencies between different characteristics and attributes.

A key task for which machine learning has turned out to be highly helpful is image analysis.25–28 Here, image segmentation and object detection methods can be used to automatically identify and locate the presence of certain objects within an image or video.29–31 By receiving example images as an input, the algorithms learn to find informative regions in the pictures and extract characteristic features such as edges or specific shapes from them.32,33 At the moment, such approaches are extensively applied to face recognition or autonomous driving tasks; yet, this technique offers great potential in other areas as well where decisions are made based on visual impressions: The progression of glaucoma,34–36 dementia,37,38 or cancer39–42 was successfully extracted from medical images, cell nuclei were detected in microscope images,43,44 microtissue-contraction measurements were automatically analyzed in laboratory experiments,45 and additive manufacturing processes of biomaterials were optimized.46,47

In addition to analyzing images, ML algorithms can also handle other data types such as numerical values or text. Instead of an image, the samples then comprise multiple input parameters (commonly referred to as features) and—optionally—an output label or value. In materials science, such data analyses can uncover links among the composition, structure, and characteristics of known materials and extrapolate this knowledge to propose potential new materials with predefined properties.48–50 Here, the algorithms search for patterns and correlations in the dataset, from which conclusions can be drawn.51 With such an approach, it was possible to explore therapeutics that target specific diseases,52–55 to study glycan functions,56,57 to enhance single molecule sensing,58 and to improve manufacturing processes such as 3D bioprinting59 or microparticle production.60

By mapping such input data, e.g., experimental findings, onto output labels, predictive algorithms can be established. Depending on the type of possible outputs, one can distinguish between classification and regression attempts. Classification describes the prediction of discrete outputs, i.e., samples are assigned to specific classes. Examples are the categorization of surfaces with regard to their wetting behavior61 or sorting the state of polymer conformations.62 In contrast, regression algorithms predict properties that can be described by continuous values such as interaction affinities,63–65 transcriptional activities of DNA motifs,66 or material parameters describing mechanical responses.67,68 These approaches are especially useful when mathematical equations based on physical models are still unknown.

II. PRINCIPLES, ADVANTAGES, AND LIMITATIONS OF DIFFERENT ML ALGORITHMS

Considering the large variety of available ML algorithms, selecting the most suitable one for a given problem is not always trivial: The best choice depends on the problem statement, the database, the desired output, interpretability, and many other factors. In Sec. II, we give an overview of common learning strategies, we highlight selected ML models (including random ensemble-based, probabilistic, linear, and deep learning methods), and we explain their working principles and characteristics. Although some models can make use of different learning strategies, in the following, each of them is assigned to the most commonly used one. Graphical representations of the algorithms discussed here are depicted in Fig. 2, and an overview of the advantages and disadvantages is given in Table I.

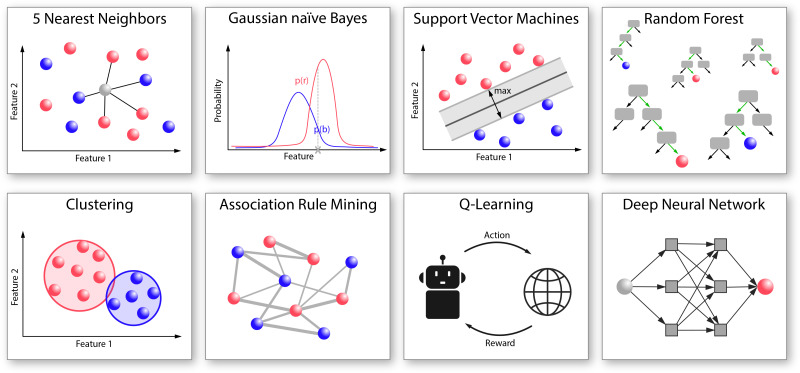

FIG. 2.

Schematic representation of typical ML algorithms used for analyzing problems from the different fields of biosciences. The k nearest neighbor (KNN) algorithm classifies a query sample according to the k samples that are most similar to it, i.e., which have the lowest distance in an n-dimensional hyperspace (here, n corresponds to the number of analyzed features). The Gaussian Naïve Bayes algorithm determines conditional probabilities and classifies samples based on a “most probable” principle. Support vector machines define hyperplanes in the n-dimensional feature space to distinctly separate samples of different classes while maximizing the distance of all samples to this separating hyperplane. The Random Forest classifier combines many randomly generated, uncorrelated decision trees to perform predictions in a popular-vote-like manner. Clustering refers to algorithms that group unlabeled data based on their characteristics. Association rule mining describes the process of finding dependencies that govern correlations and associations between samples. Q-learning assesses the quality of each action available for a given state by rewarding a subset of desired outcomes. Deep neural networks mimic the structure of the human brain by combining activatable units in consecutive, interconnected layers that process information in various manners.

TABLE I.

Overview of the advantages and disadvantages of the different ML algorithms discussed here.

| Algorithm (Refs.) | Advantages | Disadvantages |
| --- | --- | --- |
| K nearest neighbors 69–72 | No training phase needed | High dimensionality leads to decreased accuracies |
| | Intuitive and simple algorithm | Can become slow for big datasets |
| | Easily adapts to new training data | Needs feature scaling |
| | Only one hyperparameter to tune | Has problems with imbalanced datasets |
| | | Missing values are problematic |
| Naïve Bayes 73–78 | Very fast | The assumption of independent features that equally contribute to the output rarely holds true |
| | Needs less training data than most other algorithms | Zero probability problem: If one feature of a sample exhibits a value of zero probability according to the trained model, the class will be assigned a probability of zero. |
| | Works well with high-dimensional data | |
| Support vector machines 79–86 | Kernel functions can be used to solve complex problems | Choosing an appropriate kernel can be difficult |
| | Effective in high-dimensional spaces even for comparably small sample sizes | Training times can become long with large datasets |
| | Memory efficient, as it uses a subset of training points for the decision function | Limited capability to handle noisy or strongly overlapping classes |
| Random forest 87–93 | Robust to outliers, noise, and imbalanced datasets | Long training times for large datasets |
| | Lower risk of overfitting | Little control over model formation |
| | Runs efficiently with large datasets | Limited ability to extrapolate |
| | Easy data preparation | |
| | Can handle high dimensionalities | |
| Clustering 94–99 | Can handle unlabeled data | It can be difficult to interpret the sorting decision |
| | Algorithms of different complexity are available | Big datasets can lead to long running times |
| | Can be used on very small and very large datasets | The criteria to stop clustering or the number of clusters need to be defined |
| Association rule mining 100–104 | Offers an easy way to detect correlations in unsorted datasets | Does not guarantee statistical significance |
| | Unveils relationships between elements | Requires nominal variables; continuous values need to be translated |
| Q-Learning 105–109 | After sufficient training, it finds optimal actions | Can be computationally expensive since each state/action pair needs to be evaluated multiple times |
| | Can solve problems without explicitly being told how to | Does not include risk assessments into the decision making |
| | | Can have problems with high dimensionality |
| Deep neural networks 110–113 | Highly flexible and suitable to approximate complex functions | Requires lots of training data |
| | | Can be difficult to interpret (black box) |
| | Once trained, the predictions are fast | Training can be computationally expensive |
| | There are multiple different network architectures already available | Finding the best network architecture can be challenging |

Overall, data fed into an algorithm can serve three different purposes: First, a “training set” is required to allow the algorithms to develop a model. Second, a “test set” is used for validation; this set contains data the algorithms are only confronted with once they have established the model. Third, once validation has been successful, so-called “query samples” are fed into the algorithm to be classified or to have predictions made for them. In all those datasets, input variables that quantify individual measurable characteristics of a data point are referred to as “features,” outputs assigned to training or test samples are called “labels,” and the output of the algorithm (be it continuous or discrete values) created for a query sample is called a “prediction.”
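The three roles of data described above can be illustrated with a minimal Python sketch. The helper `train_test_split`, the 30% hold-out fraction, and the toy samples are assumptions made for illustration only; they do not stem from any of the studies discussed here.

```python
import random

def train_test_split(samples, test_fraction=0.25, seed=0):
    """Shuffle the labeled samples and hold out a fraction as the test set."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical labeled data: (features, label) pairs.
labeled = [((0.1 * i, 1.0 - 0.1 * i), "A" if i < 5 else "B") for i in range(10)]
train_set, test_set = train_test_split(labeled, test_fraction=0.3)

# A query sample carries features only; the model output for it is the prediction.
query_features = (0.35, 0.65)
```

The test set is kept strictly apart from the training set so that validation reflects performance on data the model has not seen before.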

A. Supervised learning

In supervised learning, models are developed based on labeled data—similar to how parents teach their children to name objects. The algorithm needs to be provided a training dataset, containing a sufficiently large number of samples; each of them is represented by input data—i.e., information (descriptors) that is likely to characterize the desired output—and corresponding output labels. Such datasets could, for example, comprise histological images of cancerous tissue (input) labeled with the name of the affected organ (output),114 or they could link the composition of a polymeric biomaterial (input) to its mechanical behavior (output).115,116 With such information offered, the ML models aim at identifying relationships between the input and the output and can then perform classification or prediction tasks for new data they were not confronted with before.

1. k nearest neighbor (KNN) algorithms

The simple but powerful k nearest neighbor algorithm follows the assumption that similarity between samples is accompanied by proximity in the data space; in other words, similar samples are expected to come with similar inputs. Instead of developing a generalized model, predictions are made by comparing a query sample to the training data. Then, the k nearest neighbors, i.e., the most similar samples according to their feature values, are identified, and a prediction is made considering the labels of those data points in a popular-vote-like manner. The number of neighbors k can be varied to find a valid compromise between robustness toward outliers (which is achieved for high values of k) and distinctness (which is a typical result for low values of k).117,118

KNN algorithms can be used for multi-class problems,119 and their accuracy can easily be improved by adding more data points to the training set. Providing more input data, however, typically comes at the cost of long computational runtimes.69 Moreover, KNN algorithms have limitations when it comes to handling imbalanced datasets70 (e.g., training data with a dominant class): For predictions to be reliable, a certain amount of data points from all classes is required to achieve a suitable (local) density in the data space. Also, KNN algorithms tend to struggle with large numbers of input features, a phenomenon known as the “curse of dimensionality.”71 Finally, as the input features are usually weighted equally when calculating the distance of a query sample to its nearest neighbors, it is important to ensure that the input features have the same scale72 (which is why some preprocessing of the data might be required).
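The voting principle and the need for feature scaling can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: Euclidean distance, min-max scaling, and the hypothetical "soft"/"stiff" samples are choices made for this example and are not prescribed by the KNN method itself.

```python
from collections import Counter
import math

def min_max_scale(rows):
    """Rescale every feature to [0, 1] so that no feature dominates the distance."""
    columns = list(zip(*rows))
    lows = [min(c) for c in columns]
    spans = [(max(c) - min(c)) or 1.0 for c in columns]
    def scale(row):
        return [(v - lo) / s for v, lo, s in zip(row, lows, spans)]
    return [scale(r) for r in rows], scale

def knn_predict(train_x, train_y, query, k=3):
    """Majority vote among the k training samples closest to the query."""
    order = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]

# Hypothetical data: feature 1 spans about 0-1, feature 2 spans about 100-850.
raw_x = [[0.1, 300.0], [0.2, 100.0], [0.15, 500.0],
         [0.9, 850.0], [0.8, 780.0], [0.85, 150.0]]
labels = ["soft", "soft", "soft", "stiff", "stiff", "stiff"]
query = [0.25, 800.0]

scaled_x, scale = min_max_scale(raw_x)
prediction_scaled = knn_predict(scaled_x, labels, scale(query), k=3)
prediction_unscaled = knn_predict(raw_x, labels, query, k=3)
```

In this toy dataset, the unscaled distance is dominated by the second feature and yields "stiff," whereas the scaled version yields "soft"; which of the two is more meaningful depends on the problem, but the example shows how strongly the feature scale can influence the vote.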

2. Naïve Bayes methods

Naïve Bayes approaches are probabilistic learning methods that are mostly used for classification tasks. Here, the training data are used to determine likelihood distributions (e.g., Gaussian, multinomial, Bernoulli, or categorical distributions120,121) of the feature values representing each class. Then, the probability that a query sample belongs to one of the classes is calculated based on the naïve assumption that all features are independent and contribute equally to the output. The corresponding mathematical relationship is formulated in Bayes' theorem.122 Although Naïve Bayes approaches typically rely on over-simplified assumptions, those algorithms can outperform even highly sophisticated methods.123

Compared to other algorithms, Naïve Bayes classifiers can be extremely fast73 and require only a small amount of training data.74 Owing to the independent likelihood estimation applied to each feature, those algorithms also perform well when tasked with high-dimensional problems75 (i.e., those where many input features are considered) and multi-class classifications119—and they can process both categorical124,125 and continuous input data.126 However, the simplified assumptions made by Naïve Bayes classifiers do not always hold true when real-life problems are studied: Here, only rarely are all features of a sample truly independent;76 similarly, it is not likely that all sample features contribute equally to the output77 and that all feature distributions meet the assumed profile. Furthermore, categorical inputs of the query sample that were not present in the training data will lead to an incorrect probability of zero, known as the “zero frequency problem.”78
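As a minimal sketch of the Gaussian variant, the following Python code estimates one mean and one variance per feature and class and then picks the class with the highest posterior. The function names, the small variance offset of 1e-9, and the toy measurements are assumptions introduced for illustration.

```python
import math
from collections import defaultdict

def fit_gaussian_nb(samples, labels):
    """Estimate a class prior and per-feature mean/variance for every class."""
    grouped = defaultdict(list)
    for x, y in zip(samples, labels):
        grouped[y].append(x)
    model = {}
    for y, rows in grouped.items():
        stats = []
        for column in zip(*rows):
            mean = sum(column) / len(column)
            var = sum((v - mean) ** 2 for v in column) / len(column) + 1e-9
            stats.append((mean, var))
        model[y] = (len(rows) / len(samples), stats)
    return model

def predict_gaussian_nb(model, x):
    """Return the class with the highest log-posterior under feature independence."""
    def log_posterior(prior, stats):
        total = math.log(prior)
        for value, (mean, var) in zip(x, stats):
            total += -0.5 * math.log(2 * math.pi * var) - (value - mean) ** 2 / (2 * var)
        return total
    return max(model, key=lambda y: log_posterior(*model[y]))

# Hypothetical two-feature measurements for two classes.
X = [[1.0, 2.1], [1.2, 1.9], [0.8, 2.0], [3.0, 0.9], [3.2, 1.1], [2.9, 1.0]]
y = ["healthy", "healthy", "healthy", "diseased", "diseased", "diseased"]
nb_model = fit_gaussian_nb(X, y)
nb_prediction = predict_gaussian_nb(nb_model, [1.1, 2.0])
```

Because the per-feature likelihoods are simply multiplied (here, their logarithms are summed), the cost of training and prediction grows only linearly with the number of features.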

3. Support vector machines (SVMs)

Support vector machines (SVMs) define hyperplanes in the n-dimensional feature space, which then can be used to either distinctly separate the dataset into single-variety classes (i.e., for classification) or to approximate the training data (i.e., for regression). To allow for handling problems that would otherwise involve complex mathematical operations, kernel functions that transform input data into higher dimensionality can be integrated into those models.127,128

Since only a subset of training points is used for calculating the decision function, support vector methods can handle data spaces of high dimensionality79,80 while remaining efficient regarding memory and runtime.81 However, for large datasets, the training times can increase significantly.82 Due to the large variety of kernel functions that can be selected and specified for creating the decision function,83,84 the algorithms are very versatile and can even be applied to unstructured data. Still, support vector classifiers can have problems with handling very noisy data129 or classes that strongly overlap.85,86
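The role of the support vectors and the kernel can be made explicit with the commonly used form of the decision function. The notation below is an assumption introduced for illustration and does not appear in the cited works: $\mathcal{S}$ denotes the set of support vectors, $y_i \in \{-1, +1\}$ their class labels, $\alpha_i$ and $b$ the coefficients obtained during training, and $\gamma$ the width parameter of a radial basis function kernel (one of several possible kernel choices).

```latex
f(\mathbf{x}) = \operatorname{sign}\left( \sum_{i \in \mathcal{S}} \alpha_i \, y_i \, K(\mathbf{x}_i, \mathbf{x}) + b \right),
\qquad
K(\mathbf{x}_i, \mathbf{x}) = \exp\left( -\gamma \, \lVert \mathbf{x}_i - \mathbf{x} \rVert^2 \right)
```

Only the training points in $\mathcal{S}$ enter the sum, which is why the stored model stays compact even when the training set is large.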

4. Decision trees and random forest (RF) algorithms

Decision trees are flow chart-like representations of hierarchical decision-making models that are created by analyzing a labeled training set. They consist of nodes (i.e., consecutive stages in which distinct decisions are made) and branches that connect these nodes. Starting with a root node, the training data are (based on individual input features) split in a stepwise manner by creating and answering simple true/false questions. A new (=query) sample can then be classified/predicted by running through the tree using the input values of this new sample and the previously established decision rules.

According to the principle of swarm intelligence, the accuracy of such an approach can be improved by combining an ensemble of non-correlating decision trees—a random forest.130 Enforcing this mandatory variation among the trees is mainly achieved by applying two methods known as feature randomization (here, only a random subset of features is provided for splitting the data) and bootstrap aggregation (short: bagging, i.e., training each tree on a sample drawn with replacement from the training set, so that some samples are left out and others appear multiple times).131

Random forest algorithms can achieve very high accuracies even in high-dimensional data spaces.87 These algorithms run efficiently for large datasets,88 and they can handle variable input data types, including binary, categorical, and numerical features.132 They are well suited to unbalanced data,89 robust toward non-linearity90 and outliers,91 and—when a sufficient number of independent decision trees is used—rather insensitive to overfitting. Moreover, the decision criteria chosen by the decision trees can be extracted and used to rank the importance of individual features for the categorization process.133,134 However, the self-directed formation of the different trees strongly restricts options to influence random forest algorithms. Importantly, random forest models are not able to extrapolate correlations, and this limits them to making predictions within the created knowledge space.92 Finally, even though running efficiently once the model has been established, training can be computationally costly93 since many trees (usually between 100 and 1000) must be created to obtain a robust random forest.
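The two randomization steps and the voting scheme can be sketched as follows. To keep the example short, each "tree" is reduced to a single split (a decision stump) on one randomly chosen feature; this simplification, the function names, and the toy data are assumptions made for illustration and do not represent a full random forest implementation.

```python
import random
from collections import Counter

def fit_stump(rows, labels, feature):
    """Find the threshold on one feature that misclassifies the fewest samples."""
    majority = Counter(labels).most_common(1)[0][0]
    best = (len(rows) + 1, None, majority, majority)  # (errors, threshold, left, right)
    values = sorted({r[feature] for r in rows})
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [y for r, y in zip(rows, labels) if r[feature] <= threshold]
        right = [y for r, y in zip(rows, labels) if r[feature] > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    return feature, best[1], best[2], best[3]

def fit_forest(rows, labels, n_trees=25, seed=0):
    """Bagging plus feature randomization, with one-split trees as base learners."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        picks = [rng.randrange(len(rows)) for _ in rows]  # bootstrap: draw with replacement
        feature = rng.randrange(len(rows[0]))             # random feature for this tree
        forest.append(fit_stump([rows[i] for i in picks],
                                [labels[i] for i in picks], feature))
    return forest

def predict_forest(forest, x):
    """Each tree votes; the most frequent label wins."""
    votes = []
    for feature, threshold, left_label, right_label in forest:
        if threshold is None:  # degenerate tree: only one feature value was drawn
            votes.append(left_label)
        else:
            votes.append(left_label if x[feature] <= threshold else right_label)
    return Counter(votes).most_common(1)[0][0]

# Hypothetical, well-separated two-class data.
rows = [[1.0, 1.5], [1.5, 1.0], [2.0, 2.0], [1.2, 1.8],
        [5.0, 5.5], [5.5, 5.0], [6.0, 6.0], [5.2, 5.8]]
labels = ["low"] * 4 + ["high"] * 4
forest = fit_forest(rows, labels)
```

Since every tree sees a different bootstrap sample and a different feature, individual trees may err, but the majority vote remains stable.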

B. Unsupervised learning

When it is not clear yet what the algorithm is supposed to find, or if labeled data are not available, unsupervised machine learning is more suitable. In such a data-driven approach, the algorithm is simply fed with unsorted input data and allowed to draw its own conclusions by either autonomously clustering the samples or by identifying trends, similarities, extreme points, or patterns in the data. With such a strategy, it was possible to quantify the morphological heterogeneity of cells based on a specified set of geometrical parameters135 and to automatically control the quality of electro-spun nanofibers.136

1. Clustering

An important concept in the field of unsupervised learning is clustering; this approach can be used to identify patterns in a set of unlabeled data. Here, a dataset (containing input values only) is analyzed by sorting the samples into subgroups (clusters) by identifying similarities among them. A common subtype of this approach is k-means clustering. Here, the samples are assigned to k clusters in an exclusive manner by iteratively adjusting cluster centroids until the variety of samples within the formed clusters is minimized while the variety between the clusters is maximized. K-means clustering algorithms are simple and fast, which is why they can handle large datasets.94 They can easily adapt to new samples or data, and their sorting result can be influenced by predefining the initial centroids.95,96 Yet, identifying the correct number k of clusters to be formed can be far from trivial and might require preliminary analyses.97,98 Also, as is common for distance-based algorithms, high data dimensionalities can cause issues.99 Finally, basic k-means algorithms encounter problems when the created clusters differ in terms of size or density; however, generalization methods can be applied to deal with this particular issue.137
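The iterative centroid adjustment can be sketched in Python as shown below. The fixed number of 20 iterations, the predefined initial centroids, and the toy points are assumptions chosen for illustration; practical implementations typically add a convergence criterion and repeated initializations.

```python
import math

def k_means(points, centroids, n_iter=20):
    """Alternate between assigning points to the nearest centroid and re-centering."""
    centroids = [list(c) for c in centroids]
    assignment = []
    for _ in range(n_iter):
        assignment = [min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
                      for p in points]
        for j in range(len(centroids)):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, assignment

# Hypothetical unlabeled samples forming two groups.
points = [[1.0, 1.0], [1.2, 0.8], [0.8, 1.2], [5.0, 5.0], [5.2, 4.8], [4.8, 5.2]]
centers, clusters = k_means(points, centroids=[[0.0, 0.0], [6.0, 6.0]])
```

Passing the initial centroids explicitly mirrors the option mentioned above of influencing the sorting result by predefining them.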

In addition to the rather simple k-means clustering algorithms, there are also other clustering variants that are selected when more complex datasets need to be processed. Mean-shift clustering, for example, searches for regions of high data density by sliding pre-defined analysis windows over the data until the windows containing the highest number of data points are identified. There are two main advantages of this algorithm variant: First, the number of final clusters does not need to be pre-defined; second, centroids in close proximity to each other are automatically merged. Another very powerful density-based approach is the DBSCAN method (density-based spatial clustering of applications with noise), which is capable of identifying clusters of any shape and size while detecting and ignoring outliers. In addition, methods that establish clusters of different hierarchies were shown to work efficiently as well.138
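A compact sketch of the DBSCAN procedure is given below. The parameter names `eps` (neighborhood radius) and `min_pts` (minimum number of neighbors for a dense region), their values, and the toy points are assumptions for illustration; the brute-force neighbor search is only adequate for very small datasets.

```python
import math

def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    def neighbors(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1              # provisional noise; may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster     # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_pts:   # j is a core point: keep expanding
                queue.extend(reachable)
    return labels

# Two dense groups and one isolated outlier (hypothetical data).
points = [[0, 0], [0, 0.5], [0.5, 0], [0.5, 0.5],
          [5, 5], [5, 5.5], [5.5, 5], [5.5, 5.5], [10, 0]]
cluster_labels = dbscan(points, eps=1.0, min_pts=3)
```

In contrast to k-means, the number of clusters is not specified in advance, and the isolated point is reported as noise instead of being forced into a cluster.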

2. Association rule mining

Another popular example of an unsupervised ML method is association rule mining. This approach aims at unveiling correlations between variables in a set of unlabeled data. Such association rules can be interpreted as “if–then” statements, where certain variables (antecedents) are linked to correlating ones (consequents). To identify the most important rules, the dataset is first searched for such if–then patterns, which are then ranked using different significance measures. A major drawback of this approach is that calculating those metrics for all identified relations quickly becomes computationally expensive. The so-called Apriori algorithm provides a good solution to this problem: Here, item sets containing variables or subsets with low importance in one metric are quickly eliminated, and this drastically reduces the amount of data that need to be analyzed regarding the other measures. In addition, there is a broad variety of other approaches for association rule mining that allow for handling different datasets and problems of higher complexity.100–102 Yet, in any case, a sufficiently high data density is essential for these algorithms to avoid random correlations from becoming too prominent.
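The pruning idea can be sketched in Python as follows. The example assumes two widely used measures, support (fraction of records containing an item set) and confidence (support of the full item set divided by the support of the antecedent); the thresholds and the toy records are hypothetical.

```python
from itertools import combinations

def frequent_itemsets(transactions, min_support):
    """Level-wise search: only item sets whose subsets are all frequent get counted."""
    n = len(transactions)
    support = {}
    current = {frozenset([item]) for t in transactions for item in t}
    size = 1
    while current:
        counted = {s: sum(s <= t for t in transactions) / n for s in current}
        frequent = {s: v for s, v in counted.items() if v >= min_support}
        support.update(frequent)
        size += 1
        candidates = {a | b for a in frequent for b in frequent if len(a | b) == size}
        # Pruning step: drop candidates that contain an infrequent subset.
        current = {c for c in candidates
                   if all(frozenset(sub) in frequent for sub in combinations(c, size - 1))}
    return support

def mine_rules(support, min_confidence):
    """Turn frequent item sets into 'if antecedent then consequent' rules."""
    found = []
    for itemset, s in support.items():
        for r in range(1, len(itemset)):
            for antecedent in map(frozenset, combinations(itemset, r)):
                confidence = s / support[antecedent]
                if confidence >= min_confidence:
                    found.append((set(antecedent), set(itemset - antecedent), s, confidence))
    return found

# Hypothetical records with nominal entries.
transactions = [{"fever", "cough"}, {"fever", "cough", "fatigue"},
                {"cough"}, {"fever", "cough"}, {"fatigue"}]
support = frequent_itemsets(transactions, min_support=0.4)
rules = mine_rules(support, min_confidence=0.8)
```

Here, the pairs containing "fatigue" fall below the support threshold, so no three-element item set is ever evaluated; this is the saving that the pruning step provides.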

C. Reinforcement learning

A third learning strategy is reinforcement learning—an action-focused training approach. Here, the machine chooses from different possible actions and is punished or rewarded depending on whether or not it made a “correct” choice. Typically, this is implemented by the algorithm trying to optimize a reward function: Here, positive values are assigned when the algorithm chooses the desired outcome, which presents an incentive for the machine to make this choice; consistently, assigning negative values to “wrong” choices serves as a punishment rendering undesired behavior less likely. With this reward/penalty strategy, a machine can, for example, learn to play a simple board game by repeatedly exploring possible actions in a trial-and-error-like fashion and trying to maximize the cumulative reward that is granted upon victory. So far, in materials science, reinforcement learning has been applied to a lesser extent than supervised or unsupervised learning strategies. Nevertheless, reinforcement-based training strategies were shown to be suitable for controlling the growth of microbial co-cultures in bioreactors139 and for automatically designing RNA sequences with desired secondary structures.140

1. Q-learning

Q-learning is a simple but efficient method to teach an algorithm to automatically act and react in the context of playing a game or to perform certain workflows. By repeatedly (over thousands or even millions of trials) exploring all available actions during the training phase and iteratively assessing their quality based on the final received reward, the algorithm learns to identify the best available action for a given state.

A major advantage of Q-learning is that it does not require an actual model of the environment. The algorithm does not undergo any explicit external teaching step but learns on its own by autonomously exploring the possible options. This allows for gaining competence in areas that might otherwise remain unexplored by humans. Such wide-ranging exploration, however, can easily become computationally expensive. Another drawback is that—in its basic form—Q-learning is only useful for stationary environments; for non-stationary problems, new training is required to adapt the decision values. However, there are several modified versions of Q-learning, where these issues are dealt with.105–107
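A minimal tabular sketch of this procedure is shown below for a toy environment: a corridor of five cells in which only reaching the rightmost cell is rewarded. The environment, the learning rate `alpha`, the discount factor `gamma`, the number of episodes, and the purely random exploration are all assumptions made for illustration.

```python
import random

N_STATES, GOAL = 5, 4      # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)         # step left or step right

def step(state, action):
    """Deterministic toy environment: reward 1 only when the goal is reached."""
    nxt = min(max(state + action, 0), GOAL)
    return nxt, (1.0 if nxt == GOAL else 0.0), nxt == GOAL

def train_q_table(episodes=300, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # one quality value per state/action pair
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = rng.randrange(len(ACTIONS))     # purely exploratory action choice
            nxt, reward, done = step(state, ACTIONS[a])
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q

q_table = train_q_table()
# After training, the best action per state is the one with the highest quality value.
greedy_policy = [ACTIONS[row.index(max(row))] for row in q_table[:GOAL]]
```

The update uses only observed transitions and rewards, i.e., no model of the environment is needed; the table, however, grows with the number of state/action pairs, which is where the computational cost mentioned above originates.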

D. Deep learning

In addition to the learning strategies discussed so far, there are also “deep learning” approaches. Deep learning can be performed in a supervised, unsupervised, or reinforced manner and aims at mimicking the anatomical structure of biological neural networks and the decision-making process of the human brain. To this end, multi-hierarchical structures of algorithms are established that can handle and analyze data at different levels of abstraction. This approach holds the potential to analyze even highly complex problems but comes at a price: Owing to the autonomous, multi-stage data processing procedure, such algorithms act as a black box. In addition to the provided input, only the generated results are accessible: It remains concealed how exactly the algorithm arrived at a particular decision, and this makes it difficult to rationalize the models suggested by deep learning. Nevertheless, deep learning models have demonstrated tremendous success across a plethora of research areas including biomaterials science; for instance, they precisely predicted the skin permeation behavior of drugs released from biopolymeric films,141 supported the design of anti-fouling polymer coatings and materials,142–145 size-tunable poly(lactic-co-glycolic acid) particles,146 or nucleus-targeting polypeptides,147 they successfully detected single molecule activity from patch-clamp electrophysiology trials,148 and they could accurately model biopolymerization processes.149,150

1. Deep neural networks (DNNs)

Deep neural networks (DNNs) denote digital constructs that mimic the architecture and mode of operation of the human brain. Here, the key players are artificial neurons—small, digital units that can be triggered with a (typically) non-linear activation function. Those neurons are structured in subsequent, interconnected layers, and the individual computations made by each neuron are eventually combined into a final output. Each neuron transforms the received input variables and transmits the result to the next layer. Between the input and output layers, there can be a variable number of “hidden” layers comprising different numbers of neurons with distinct activation functions. A basic example of a DNN making use of forward-only data processing is the so-called multi-layer perceptron (MLP). MLPs are suitable for supervised learning problems (both regression and classification tasks) and are basically able to model any non-linear function, which is why they are also referred to as “universal function approximators.” Recurrent neural networks (RNNs) are extensions of such DNNs and aim at including more complex information into the decision-making process: Different from MLPs, RNNs combine information from preceding and subsequent layers with the goal of not only analyzing single elements but also considering their context.
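The forward-only processing in an MLP can be sketched with a tiny network comprising two inputs, two hidden neurons, and one output neuron. The weights below are hand-picked (not trained) so that the network reproduces the XOR function, a simple relationship that no single linear layer can model; the ReLU activation and all names are assumptions made for illustration.

```python
def relu(v):
    """Non-linear activation: pass positive values, block negative ones."""
    return max(0.0, v)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: weighted sum per neuron, then the activation."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def mlp_forward(x):
    # Hand-picked (not trained) weights, chosen so that the network computes XOR.
    hidden = dense(x, weights=[[1.0, 1.0], [1.0, 1.0]], biases=[0.0, -1.0],
                   activation=relu)
    output = dense(hidden, weights=[[1.0, -2.0]], biases=[0.0],
                   activation=lambda v: v)
    return output[0]

xor_outputs = [mlp_forward(x) for x in ([0, 0], [0, 1], [1, 0], [1, 1])]
```

In practice, such weights are not set by hand but are adjusted iteratively during training; the sketch only illustrates how information is passed from layer to layer.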

DNNs are especially suitable for large-scale datasets, for problems that are too complex for other ML algorithms, and when the problem space is not well understood. Their architecture can be flexibly adapted to other problems, applications, learning strategies, or data types. These networks are able to handle data of high dimensionality, can analyze problems at different levels of abstraction, and learn progressively over time. For DNNs to outperform other ML techniques, though, usually a very large amount of data is needed, and this comes with high computational costs. However, once the costly training phase is completed, making predictions on query samples can be very fast. For instance, a deep model that learned to segment and track cells from microscopy images (which involved large experimental and computational costs) was able, after training, to perform segmentation tasks in less than a second.151 Owing to the high complexity of DNNs in combination with the low transparency of their decision-making process, choosing the right approach and interpreting the obtained results or models can be extremely challenging.

2. Convolutional neural networks (CNNs)

When aiming at processing images or videos, convolutional neural networks (CNNs) usually are the method of choice. When given an image as an input, CNNs use trainable weights to assign importance gradings to different aspects of an image or to objects within the image. The networks can then be used to analyze or classify images, or to identify trained objects within an image. For this purpose, CNNs mainly make use of three procedures: convolution, pooling, and flattening. For image convolution, filters are applied to each pixel. This can help the network to identify certain structures such as edges or peaks. Pooling can lower the computational cost by combining pixels from the same region into one, thus reducing the size of the image. After applying (multiple) convolution and pooling steps, the individual pixels of the resulting image matrix are fed into a standard neural network, a process referred to as “flattening.”
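The three procedures can be sketched in Python for a tiny grayscale image. The fixed vertical-edge filter, the 2 × 2 max pooling, and the 5 × 5 toy image are assumptions chosen for illustration; in a real CNN, the filter values are trainable weights.

```python
def convolve2d(image, kernel):
    """'Valid' convolution as used in CNNs (no kernel flipping, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(len(image[0]) - kw + 1)]
            for i in range(len(image) - kh + 1)]

def max_pool(feature_map, size=2):
    """Keep only the largest value in each non-overlapping size x size block."""
    return [[max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]

def flatten(feature_map):
    """Turn the 2D feature map into a 1D vector for a subsequent dense network."""
    return [v for row in feature_map for v in row]

# Toy image: dark on the left, bright on the right (one vertical edge).
image = [[0, 0, 0, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]

feature_map = convolve2d(image, vertical_edge)   # responds only at the edge
pooled = max_pool(feature_map)                   # 4 x 4 map reduced to 2 x 2
vector = flatten(pooled)                         # input for a standard neural network
```

The filter responds only where the brightness changes from left to right, and pooling preserves this response while reducing the number of values to be processed.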

III. SELECTED EXAMPLES OF MACHINE LEARNING APPLICATIONS FROM DIFFERENT BIOSCIENCES

For years, ML approaches have been an integral part of many scientific areas and have been used to develop computer vision for autonomous systems,152,153 to design synthetic materials,154,155 or for human behavioral analysis.156–159 Yet, their application in biophysics or biomaterials science has been less frequent. The scientific questions addressed in these bio-disciplines are characterized by a very high complexity that arises from biological variance and, thus, noisy, divergent data. Hence, it can be quite challenging to translate experimental results from those areas into a format that can be well interpreted by ML models and algorithms. However, once this major hurdle is overcome, ML approaches can deliver highly valuable insight into bio-based data as well: ML was successfully implemented in the fields of biofabrication,160–165 biosensors and -markers,166–174 pharmaceutical science,175–185 pathophysiology,186–198 biomacromolecule science,199–210 gene analysis,211–221 biomaterials,222–231 and process optimization232–240 (Fig. 3; for more details, see Table II). In this section, we discuss selected examples from those areas, and we highlight what type of data was used by the different ML algorithms to obtain predictions or classifications that, with classical data analysis approaches, would have been either far more time-consuming to achieve or outright impossible.

FIG. 3.

Research areas from the biosciences in which machine learning has already been successfully applied. ML approaches were successfully implemented in different fields dealing with biofabrication, biomarkers and sensors, pharmaceuticals, pathophysiology, biomacromolecules, gene analysis, biomaterials, or process optimization. Biofabrication includes various production methods, such as 3D printing or electrospinning; here, ML can be used for process and quality control or for the a priori definition of process parameters. In the context of biomarkers and biosensors, ML can support the identification and the monitoring of diagnostic molecules, and it can assist in the analysis of signals. Pharmaceutical sciences benefit from ML in drug screening and design applications as well as in extensive studies on drug delivery, response, and efficiency. In the context of pathophysiology, ML can help with the classification of diseases as well as with diagnostics, prognostics, and the assessment of risk levels. Moreover, an ML-driven analysis of biomacromolecules can help us to investigate polymer-ligand binding, to predict molecule conformations, and to correlate molecular structures with their properties. As part of gene analysis, ML can be employed in the fields of epigenetics, chemogenomics, taxonomy, and genome editing. Biomaterials science and development profit strongly from an ML-driven correlation of properties and functions of different materials including particles, films, or three-dimensional bulk materials. As a final example, process optimization can be achieved by ML-based monitoring and an analysis of microscopy or other experimental procedures/bioengineering processes.

TABLE II.

Overview of studies from various research areas, in which ML was applied.

| Question | Approach | Outcome | Study |
|---|---|---|---|
| Predicting biophysical interactions | | | |
| Affinity of protein-peptide interactions across multiple protein families | Hierarchical statistical model | Interaction affinities were successfully predicted based on the amino acid sequences and the inferred structured Hamiltonians (mathematical functions that map the state of a system to its energy). The model outperformed both other computational methods293–295 and high-throughput experimental assays developed for the same purpose. Good performance in high-data and low-data domains. | 16 |
| Protein-ligand binding | SVM, random forest, gradient boosting tree, and a CNN | Successful prediction of protein-ligand binding affinities based on molecular descriptors obtained from topological models. Comparable to or even outperforming other state-of-the-art models.296–299 Powerful feature engineering. | 63 |
| Compound-protein interactions | Combination of GNNs and CNNs (both supervised); networks were analyzed with neural attention mechanisms | Data-driven representations of compounds (as graphs) and proteins (as sequences of characters) were achieved that proved to be more robust than traditional chemical and biological feature vectors. Competitive or even better performance compared to state-of-the-art models.300,301 | 64 |
| Wettability of a surface based on its topography | KNN, linear regression, Naïve Bayes, random forest, and a DNN | Successful mapping of surface topography parameters to the wetting behavior of the surfaces. Feature elimination was performed to reduce dimensionality and to identify the most influential surface parameters, the choice of which otherwise relies on expert assessment. The random forest outperformed the other models. | 61 |
| Pathogen attachment to macromolecular coatings | Bayesian regularized artificial neural networks | Successful mapping of individual pathogen attachment to copolymers represented by a set of molecular descriptors. Multiple-pathogen modeling was achieved. | 145 |
| Functional interactions between human genes | Decision tree, logistic regression, Naïve Bayes, random forest | Phylogenetic profiling was performed, and the combination with ML considerably improved the prediction of functional interactions between genes. The random forest outperformed the other models. | 217 |
| Cytotoxicity of nanoparticles (NPs) | Association rule mining | Knowledge about the toxicity of inorganic, organic, and carbon-based NPs was extracted from the literature. NP properties most relevant for their toxicity were identified with a focus on hidden relationships. | 257 |
| Molecular analysis | | | |
| Identifying polymer states | DNN | Based on a simulated 3D polymer configuration represented by spatial coordinates, the model can identify different configurational patterns. Phase transition points identified by the model compared well with those obtained from independent specific-heat calculations. | 62 |
| Designing functional protein sequences | Generative model | The model was trained on evolutionary protein sequence data and, by this, learned sequence constraints. A diverse library of nanobody sequences was designed that significantly increases the efficiency of discovering stable, functional nanobodies compared to synthetic libraries. | 202 |
| Predicting protein liquid–liquid phase separation | DNN | The ML classifier was trained based on a pre-analysis of datasets comprising proteins of different phase separation tendencies and learned the underlying principles of phase separation behavior with similar accuracy to classifiers using knowledge-based features. | 209 |
| Analyzing the structural folding of proteins | Naïve Bayes, SVM, Bayesian generalized linear model | The classifiers accurately predicted main folds of proteins based on provided biophysical properties of the amino acids. The Bayesian model outperformed the other two models. | 252 |
| Investigating structures and functions of proteins | Unsupervised language processing (transformer neural networks) | Based on the amino acid character sequences of more than 250 × 10^6 proteins as an input, knowledge of intrinsic biological properties was developed without supervision. | 259 |
| Sensing of single molecules | CNN | A CNN was trained to classify translocation events of single molecules based on time-series signals obtained from nanopore sensors. The network was able to automatically extract such information with higher accuracies than previously possible. | 58 |
| Disease classification | | | |
| Automated detection of glaucoma | Modified CNN (DenseNet), decision trees | Multiple different models were combined to automatically detect glaucoma based on medical images as well as demographic and systemic data. The model shed light onto features that were previously not considered for diagnosis. | 36 |
| Predicting the primary origin of cancer | CNN with an attention model | The model was trained based on labeled images of tumors of known primary origin. The trained model first classified unknown tumors to be either metastatic or primary; then it predicted their site of origin with high accuracy. | 114 |
| Detection of brain tumors | Random forest, SVM, decision trees | Based on geometric features extracted from MRI images, the different models were able to distinguish normal from abnormal brain images. The SVM had the highest sensitivity for detecting brain tumors, whereas the RF had the highest accuracy. | 88 |
| Assessing sepsis through biomarker host response | Naïve Bayes, decision trees | Multiple biomarker measures from plasma samples were used to distinguish septic from healthy cohorts with high accuracies. Naïve Bayes and decision trees performed better than other classifiers, especially regarding the small data size. | 168 |
| COVID-19 detection from x-ray images | Pretrained CNN | Transfer learning (based on a CNN trained on images of general objects) was employed to train a CNN to analyze chest x-ray images. The model successfully distinguished between healthy patients and those suffering from pulmonary diseases; from ill patients, it could identify those with COVID-19 and marked regions of interest in the x-ray images. | 249 |
| Classification of EEG signals in dementia | MLP, logistic regression, SVM | Different feature sets extracted from EEG signals obtained from neurological patients were analyzed and used to make highly accurate predictions of cognitive disorders. The MLP outperformed the other models, and a combination of two different feature sets was shown to entail the most accurate results. | 196 |
| Biomaterials design | | | |
| Antifouling polymer brushes | DNN, SVR | A DNN was trained on a benchmark database to rationalize the antifouling properties of existing polymer brushes. A functional group-based SVR was then used to design new antifouling polymer brushes that indeed showed excellent protein resistance properties. | 142 |
| Abiotic nuclear-targeting mini-proteins | Directed, evolution-inspired deep learning | The ML model was provided with data from high-throughput experiments and was then capable of predicting activities of mini-proteins in cells and of deciphering sequence-activity predictions for new designs. The ML-designed mini-proteins were more effective than any previously known variant. | 147 |
| Gas-separation polymer membranes | Regression | A rather small set of known polymer membranes (represented by binary fingerprints) and their experimental gas permeability data were used to train the model to predict the gas-separation behavior of a large dataset of polymers that have not been tested for these properties yet. Tested membranes produced from the most promising candidates (based on the prediction) were shown to exhibit excellent gas-separation performance. | 21 |
| Mechanically tough bio-nano-composites | Decision tree and random forest (both as regressors) | Using material compositions linked to the resulting fracture toughness obtained from experimental trials and finite element analysis, the ML models successfully predicted composition/strength relationships, which assist the design of new composites without time-consuming trial-and-error experimentation. | 68 |
| Stabilized silver clusters | SVM | The algorithm learned how the sequence of 10 base pair DNA strands correlates to the wavelength of fluorescent light emitted from silver-DNA clusters. With the motifs extracted from the analysis, the model was able to predict the fluorescence color of silver clusters with DNA sequences of variable length. | 166 |
| 3D-printable bioinks | Regression | Different bioink formulations were evaluated regarding their rheological properties and printability, and a general relationship between those properties was established. | 59 |
| Cell image analysis | | | |
| Extracting biological information from bright field images | Generative adversarial neural network | After being trained on a dataset comprising bright field and fluorescently labeled cell images, the model was able to virtually stain cellular compartments, which eliminates the need for actual (possibly toxic) staining. Quantitative measures of cellular structures were then extracted from the virtually stained images. | 278 |
| Identifying cell morphologies | Image segmentation, principal component analysis, k-means clustering | Cell contours were first identified by image segmentation. After aligning the cell shapes, a principal component analysis was conducted and the cell shape was reconstructed based on the determined eigenvectors. Finally, different shape modes were identified by k-means clustering. The protocol is highly automated and very fast in quantifying the cell morphologies. | 135 |
| Predicting osteogenic differentiation | SVM | Based on the cell morphology recorded after 1 day of incubation on nanofiber scaffolds, a pretrained classifier was able to successfully predict the osteogenic differentiation fate of cells. | 226 |
| Detecting leukemia | CNN | Characteristic features of white blood cell leukemia were extracted from images and sorted regarding importance. By applying statistics-based feature elimination, the model outperformed several CNN-only based models. | 302 |
| Tracking cell migration | CNN | Stain-free, instance-aware segmentation of cells from phase contrast images was achieved with a CNN and provided unique identifiers for each cell. Based on those identifiers, the same cell could be followed in a series of images taken at different times. Highly accurate visualization and analysis of cell migration was achieved. | 237 |
| Pharmaceutical development | | | |
| Analyzing existing drugs regarding their suitability to target SARS-CoV-2 | Natural language processing with self-attention mechanism | A pre-trained model was used to predict binding affinities between antiviral drugs (represented as strings) and amino acid sequences of the target proteins without providing explicit structural information on the binding epitope. A list of antiviral drugs with good inhibitory potencies against SARS-CoV-2 related proteins was identified. | 55 |
| Identifying self-aggregating drug formulations | Random forest | First, an RF model was used to identify self-aggregating drugs. Then, another RF model precisely predicted the co-aggregation properties of different drugs and excipients and was able to find suitable excipients for a novel drug. | 177 |
| Generation of anticancer molecules | Conditional generative model | A reinforcement learning-based model was trained to design anticancer molecules with specific drug sensitivity and toxicity properties to target individual transcriptomic profiles. Such designed molecules exhibit (in silico) physicochemical properties comparable to those of existing cancer drugs. | 271 |
| Predicting cancer patient drug responses | Linear regression, ridge regression, support vector regression | Based on transcriptomic data obtained from 3D culture models, different biomarkers were identified that allow for accurate patient/drug response predictions. | 273 |
| Identifying drug targets | Naïve Bayes | Multiple different data types were combined to train the model based on a dataset of known molecule/target correlations. Novel drug binding targets were predicted. | 180 |
| Biofabrication | | | |
| Predicting the molecular weight of synthesized bio-molecules | MLP, SVM | Biopolymers were synthesized via enzymatic polymerization, and various reaction parameters were tuned to alter the molecular weight of the product. An SVM was shown to be highly suitable to predict the molecular weight despite the small training data size. | 150 |
| Controlling the size of elastin-based particles | K-means clustering | A dataset comprising the properties of elastin-based particles and the corresponding fabrication parameters was analyzed by the clustering algorithm. The influence of the fabrication parameters on the size of the created particles was revealed, and this information was used to fine-tune the fabrication process. | 60 |
| Controlling microbial co-cultures in bioreactors | Q-learning | Process feedback via a trained reinforcement learning model successfully supported maintaining populations at pre-defined target levels. The model was shown to be robust toward variations in the initial states and targets and outperformed standard control approaches. | 139 |
| Identifying high-quality printing configurations | Random forest | With the printing conditions (resulting from the material composition) and the printing parameters as inputs, a classification model could distinguish between “high” and “low” quality prints, and a regression model returned a direct quality metric. The random forest outperformed a simple linear model. | 245 |
| Monitoring anomalies in 3D bioprinting | CNN, SVM | SVM models were trained to predict whether a specific defect is directly visible in the image of a printed object. A CNN was trained to provide information about the applied printing pattern and the occurring printing anomalies. The combined model accurately detected and recognized anomalies in various different printing patterns. | 274 |

A. Supervised learning approaches

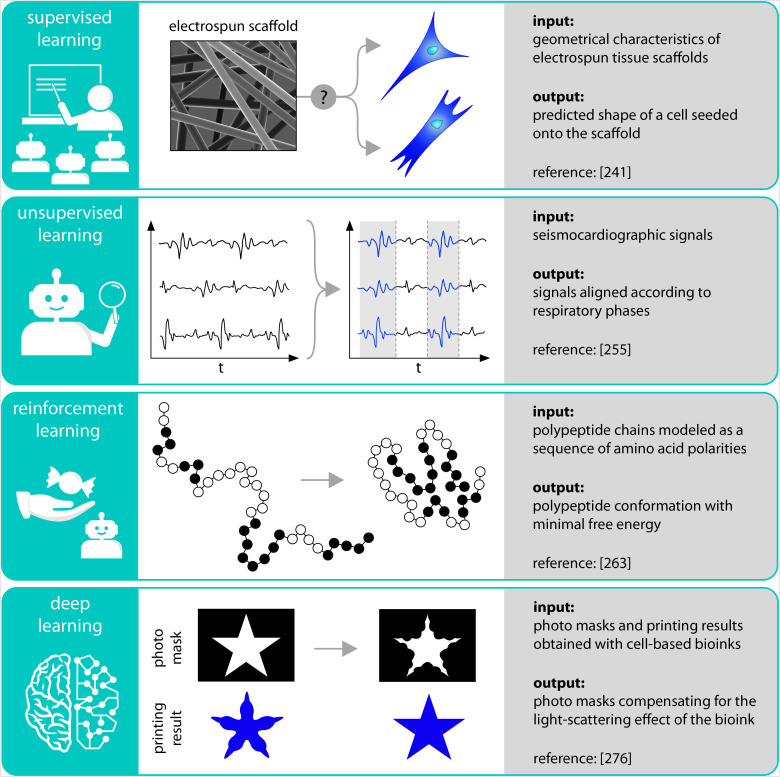

When applying supervised learning strategies, the researchers still have a good level of control over how the algorithms are trained and what type of predictions they try to achieve. For instance, Tourlomousis et al.241 used a supervised SVM algorithm to investigate the mechano-sensing response of cells to electrospun fibrous materials (Fig. 4). To this end, they compared the morphologies of cells after they were cultivated on different substrate geometries. They correlated cell morphology parameters (e.g., cell area, ellipticity, or number of focal adhesions per cell) obtained from confocal microscopy images with architectural features of the substrate (e.g., fiber diameter, pore size, or degree of uniform fiber alignment). With this ML strategy, it was possible to investigate yet unexplored design spaces to yield specific designs qualified at the single-cell level. The authors demonstrated that certain geometrical characteristics of fiber-based materials can be mapped onto unique aspects of cell morphologies, which is an important step toward a shape-driven pathway to controlling cellular phenotypes.

FIG. 4.

Schematic representation of selected examples from the biosciences, where ML algorithms have been successfully applied. By using a supervised approach, the geometrical characteristics of electrospun scaffolds were successfully linked to the resulting shape of cells seeded onto the scaffold. An unsupervised ML algorithm could group seismocardiographic signals according to the respiratory phases during which they were acquired to allow for a more direct signal comparison. Reinforcement learning was employed to find energetically optimal conformations of polypeptides. Finally, deep learning was applied to generate photo masks that compensate for the light-scattering effects of cells present in the used bioink.

Other studies went beyond purely analyzing datasets and used the knowledge generated by ML algorithms to tailor materials for specific applications. For instance, Sujeeun et al.242 utilized multiple supervised learning algorithms for the development of scaffolds for tissue regeneration; such scaffolds are typically used to provide structural support for cell attachment and to enable cell proliferation. Here, the main challenge was to browse through a plethora of available polymeric materials to identify the most suitable candidate that meets specified requirements regarding, e.g., biocompatibility, biodegradability, mechanical strength, porosity, and wound healing behavior. To do so, in vitro cell viability data (obtained from an MTT [3-(4,5-dimethylthiazol-2-yl)-2,5-diphenyltetrazolium bromide] assay) were combined with physico-chemical properties (e.g., the dimensions of fibers and pores, Young's modulus, or water contact angles) of different scaffolds to model the material-cell interactions. The established correlations then served for reverse-engineering scaffolds with desired performance. Six basic supervised approaches, including KNN and SVM, were compared, and a random forest classifier achieved the highest accuracy. Moreover, this RF algorithm could provide deeper insight into the identified correlations and demonstrated that two selected material characteristics (the pore and fiber diameter) have the strongest influence on the material-cell interaction. Finally, by performing preliminary in vivo biocompatibility experiments, the authors were able to show that the determined correlations also hold true (at least to a certain extent) when the material is placed into a living organism. The authors mention, however, that an integration of more advanced techniques, such as reinforcement learning or transfer learning (see Chap. 4), should be considered to obtain a more generalized and robust model that is applicable to unknown scaffolds.

In addition to those applications related to tissue engineering, basic supervised ML algorithms have proven useful for various other tasks: RF models, for example, can support the design of self-assembling dipeptide hydrogels243 and anti-biofouling surfaces244 and can supervise 3D bioprinting.245 With KNN and SVM algorithms, it is possible to differentiate healthy from apoptotic cells,246 to detect pneumonia247 or COVID-19248,249 by extracting features from x-ray images, to diagnose Parkinson's disease based on recordings of speech disorders,250 and to classify white blood cells.251 Finally, Naïve Bayes models can classify protein folding patterns,252 identify post-transcriptional modifications in RNA sequences,253 and support the detection of brain tumors.254

B. Unsupervised learning approaches

Different from supervised learning approaches, unsupervised algorithms process unlabeled data. For instance, Gamage et al.255 employed a k-means clustering algorithm to group seismocardiographic signals (SCG) according to the patients' different respiratory states (Fig. 4). SCG is a noninvasive technique that monitors heart function by measuring cardiac-related vibrations on the chest surface. Since the measured signals are typically a convolution of respiratory movements and heart contractions, a direct comparison of two different measurements is difficult. By subdividing the obtained signals and using the vibration amplitudes in those subsignals as input features to cluster the generated subsequences based on their similarity, the SCG data were automatically separated into classes of different lung volumes (high or low) or different flow directions (inhaling or exhaling process). Indeed, within those categories, a comparison of the vibration signals to assess cardiac health (and to detect anomalies) is feasible. Hence, an ML-supported analysis of SCG signals may eliminate the necessity of additional (simultaneous, but independent) respiratory measurements.
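The grouping step can be sketched with a minimal k-means implementation (Lloyd's algorithm) in pure Python. This is an illustration only, not the procedure of Gamage et al.: the two-component feature vectors, the choice of two clusters, the initial centroids, and the name `kmeans` are hypothetical stand-ins for the amplitude features described above.

```python
import math


def kmeans(points, initial_centroids, n_iter=20):
    """Minimal Lloyd's algorithm: alternate between assigning each point
    to its nearest centroid and moving each centroid to the mean of its
    assigned points."""
    centroids = [list(c) for c in initial_centroids]
    labels = [0] * len(points)
    for _ in range(n_iter):
        # Assignment step: index of the closest centroid for every point.
        labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        # Update step: recompute each centroid as the mean of its members.
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:  # keep the old centroid if a cluster is empty
                centroids[k] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Hypothetical amplitude features of six signal segments (unlabeled).
segments = [(0.9, 1.1), (1.0, 0.9), (1.1, 1.0),
            (3.0, 3.2), (3.1, 2.9), (2.9, 3.0)]

# Two clusters, initialized (arbitrarily) at the first and fourth segment.
labels, centroids = kmeans(segments, [segments[0], segments[3]])
print(labels)
```

No labels enter the computation; the two groups emerge solely from the similarity of the feature vectors. The number of clusters and the initialization still have to be chosen by the user, and both can influence the result on real data.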

Another unsupervised clustering approach was reported by Helfrecht et al.256 who aimed at identifying secondary and tertiary structures in proteins and rationalizing their formation. Here, the idea was not to use common structural descriptions of molecules that are based on predefined motifs such as intramolecular hydrogen bonds or distinct dihedral angle patterns (two strategies, which often rely on human intuition/approximations and only cover a predefined subset of molecular motifs) but to develop a more general approach that is readily applicable to various macromolecules. To this end, the positions of all atoms in a given protein backbone were combined into an input vector whose complexity was reduced to 6–10 features based on a principal component analysis; then, a density-based algorithm was employed to cluster those reduced vectors: Regions in the feature space with high data density were defined as clusters that are separated from each other by low-density areas. Even though several of the formed molecule clusters can belong to the same category of secondary structures (e.g., α-helices or β-strands), a similarly good overall classification could be achieved with this ML-based approach as with traditional methods. Furthermore, the authors compared unsupervised and supervised methods: Their example highlighted that a supervised approach is suitable to adapt existing motif definitions or to test whether the chosen input data sufficiently represent the output. Unsupervised learning, in contrast, turned out to be better suited for finding new patterns in the feature space.
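The idea of defining clusters as high-density regions separated by low-density areas can be sketched with a minimal DBSCAN-style routine. DBSCAN is used here only as a well-known representative of density-based clustering; the text above does not state which density-based algorithm was used in the study. The two-dimensional toy points (standing in for PCA-reduced vectors), the parameters `eps` and `min_pts`, and the function name `dbscan` are assumptions, and the dimensionality reduction step is omitted.

```python
import math


def dbscan(points, eps, min_pts):
    """Minimal DBSCAN. Returns one label per point; -1 marks noise."""
    NOISE, UNVISITED = -1, None
    labels = [UNVISITED] * len(points)

    def neighbors(i):
        # All points within distance eps of point i (including i itself).
        return [j for j, q in enumerate(points)
                if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:      # too sparse: provisionally noise
            labels[i] = NOISE
            continue
        cluster += 1                  # i is a core point: start a new cluster
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:    # former noise becomes a border point
                labels[j] = cluster
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:  # j is a core point too: keep expanding
                queue.extend(nbrs)
    return labels


# Hypothetical reduced feature vectors: two dense groups and one outlier.
reduced = [(0.0, 0.0), (0.0, 0.1), (0.1, 0.0), (0.1, 0.1),
           (5.0, 5.0), (5.0, 5.1), (5.1, 5.0), (5.1, 5.1),
           (2.5, 2.5)]

labels = dbscan(reduced, eps=0.3, min_pts=3)
print(labels)
```

Unlike k-means, the number of clusters is not specified in advance, and isolated points are left unassigned rather than being forced into a group.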

In addition to clustering, which is certainly one of the most important techniques for which unsupervised ML is applied, unsupervised association rule mining was shown to be a useful tool to highlight hidden correlations between data such as between the material properties and production process of nanoparticles and their cytotoxicity.257 Moreover, unsupervised learning methods were successfully employed for image processing or pattern analysis. For instance, the autonomous detection of characteristic features from abdominal computed tomography (CT) images enabled the reconstruction of CT images captured with low radiation doses.258 Owing to this reduced radiation exposure, the concomitant risk of side effects for patients (such as developing new cancer) is minimized while sufficient image quality is maintained. Furthermore, unsupervised models were able to rationalize and predict selected functional properties (e.g., the biological activity259 or thermostability260) of proteins based on their sequences only, they could unravel the structure of block copolymer micelles,261 and they managed to successfully and automatically recognize the origin tissue of metastatic tumor cells.262

C. Reinforcement learning approaches

Reinforcement learning does not aim at identifying correlations or classifying samples according to given labels (which are typical goals for supervised and unsupervised learning strategies) but makes use of learning procedures to perform certain actions “correctly.” An interesting example for a reinforcement-based learning approach was presented by Jafari and Javidi.263 Here, the researchers tried to obtain a complete prediction of the conformation of a polypeptide based on hydrophobic interactions only (Fig. 4). For this purpose, polypeptides were modeled as a sequence of amino acid polarities: For instance, the sequence “HHHPP” would represent a polypeptide with three hydrophobic (H) amino acids followed by two polar (P) ones. Then, the possible conformational space of a polypeptide is given as a two-dimensional Cartesian grid with two constraints: First, two consecutive amino acids must be vertical or horizontal neighbors in the grid; second, two amino acids cannot be superimposed. To find the ideal overall conformation, a Q-learning algorithm with a dedicated reward function was employed, which aimed at minimizing the free energy of the polypeptide. In this model, the only actions available to the algorithm are moving a given amino acid from its current position in the grid to a neighboring position. With this approach, conformations of minimal free energy were identified (and found to agree with classical calculations using complex models) without explicitly implementing biophysical knowledge; moreover, it was faster than other state-of-the-art approaches. Remarkably, the “long short-term memory” network (a subtype of recurrent neural networks) used in this study proved to be particularly capable of handling sequential data such as chains of amino acids.
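The grid encoding and its two constraints can be made concrete with a short sketch. It does not reproduce the Q-learning procedure of the study: for a four-residue toy chain, an exhaustive search over all placements stands in for the learning algorithm, and the energy function (one unit of energy gained per pair of hydrophobic residues that are grid neighbors without being chain neighbors) is the common convention of hydrophobic-polar lattice models, assumed here because the reward function is not specified above. The names `fold`, `energy`, and `best_fold` are hypothetical.

```python
from itertools import product

# Unit steps on the grid; chaining such steps guarantees that consecutive
# amino acids are vertical or horizontal neighbors (first constraint).
MOVES = {"U": (0, 1), "D": (0, -1), "L": (-1, 0), "R": (1, 0)}


def fold(moves):
    """Place residues on the grid by following `moves` from the origin.
    Returns None if two residues would be superimposed (second constraint)."""
    positions = [(0, 0)]
    for move in moves:
        dx, dy = MOVES[move]
        nxt = (positions[-1][0] + dx, positions[-1][1] + dy)
        if nxt in positions:
            return None
        positions.append(nxt)
    return positions


def energy(sequence, positions):
    """Assumed energy: -1 for every pair of H residues that are adjacent
    on the grid but not adjacent along the chain."""
    total = 0
    for i in range(len(sequence)):
        for j in range(i + 2, len(sequence)):
            if sequence[i] == "H" and sequence[j] == "H":
                dist = (abs(positions[i][0] - positions[j][0])
                        + abs(positions[i][1] - positions[j][1]))
                if dist == 1:
                    total -= 1
    return total


def best_fold(sequence):
    """Exhaustive search (feasible only for very short chains)."""
    best_energy, best_moves = None, None
    for moves in product(MOVES, repeat=len(sequence) - 1):
        positions = fold(moves)
        if positions is None:
            continue
        e = energy(sequence, positions)
        if best_energy is None or e < best_energy:
            best_energy, best_moves = e, "".join(moves)
    return best_energy, best_moves


sequence = "HPPH"  # toy chain: hydrophobic ends, polar middle
best_energy, best_moves = best_fold(sequence)
print(best_energy, best_moves)
```

For this toy chain, the lowest energy is reached when the chain bends into a square so that the two hydrophobic ends touch. The number of possible placements grows exponentially with the chain length, which is why a learned search strategy becomes attractive for longer sequences.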

Interestingly, reinforcement learning was also successfully used for target-oriented design tasks such as de novo drug development: Popova et al.264 employed reinforcement learning to combine two independent supervised learning algorithms; here, the first one was capable of creating drug-like molecules, and the second one could predict certain properties of molecular structures. After individual, supervised training phases of both algorithms (in which each learned how to fulfill its particular task), they were jointly re-trained in a reinforcement approach to deliberately bias the creation of new molecules toward variants with desired properties: The first algorithm received a reward only if the properties predicted by the second matched the predefined goal. By adjusting this reward, the created molecule library was successfully tailored to contain drugs with specific physical properties, biological activity, or chemical substructures. Overall, this study impressively demonstrated how reinforcement learning can be used for generating property-optimized chemical libraries of novel compounds.

Overall, reinforcement learning is currently gaining importance. It was recently used to control and optimize bioprocesses,265 to adapt cold atmospheric plasma conditions to optimally eliminate cancer cells,266 or to identify efficient surgical cardiac ablation strategies for atrial fibrillation.267 Moreover, reinforcement learning was shown to be useful for controlling tumor growth,268 for optimizing cancer therapy,269,270 and for the development and dosing of anti-cancer drugs.271–273

D. Deep learning approaches

Deep learning is a special subtype of machine learning, in which all types of learning approaches (supervised, unsupervised, or reinforcement) are implemented by algorithms that try to mimic the structure and function of the human brain. These algorithms are often difficult to interpret, but they come with the advantage of high variability and the potential to model even highly complex systems. A process-oriented application of deep learning that recently gained considerable importance addresses 3D bioprinting: Here, deep learning-based algorithms can be used for monitoring the printing procedure to determine optimal process parameters or for detecting anomalies in the printed products.274,275 Moreover, an advanced deep learning approach was demonstrated by Guan et al.276; here, the researchers set out to compensate for cell-induced light-scattering effects in light-based bioprinting, a common fabrication technology used for tissue engineering and regenerative medicine purposes (Fig. 4). To obtain the desired structures, a typical approach is to illuminate a reservoir containing the bioink while using a photo mask that only allows curing in predefined regions. However, the light-scattering effect brought about by cells embedded into the bioink impacts the photopolymerization process and entails a reduced printing resolution. To determine the correlation between the used photo mask and the resulting printing pattern, a convolutional neural network was employed: Pairs of graphical representations of the photo mask on the one hand and the printing result on the other hand were processed with several subsequent convolution and deconvolution steps to model the transformation of the former into the latter. With such a trained network, a photo mask was generated that was supposed to compensate for the light-scattering effect of this particular bioink sample based on a desired printing output. Indeed, with this approach, a considerable improvement of the printing resolution was achieved; without the help provided by ML, a similar result would have required an extensive and costly trial-and-error style optimization for each individual structure.

Overall, deep learning techniques have proven to be particularly useful for processing and analyzing images. This includes assessing the damage mechanics of bone tissue based on microCT images,277 extracting quantitative properties of cells from bright-field images,278 or compensating optical errors in microscopy images to obtain reliable images even under difficult conditions.279 Skärberg et al.280 employed a deep learning approach to analyze images of porous polymer films; here, the aim was to obtain a better understanding of how to tune those materials for controlled drug release. To this end, they collected combined focused ion beam and scanning electron microscopy images of polymer films with different porosities and fed them into a convolutional neural network (CNN) for segmentation. From the obtained dataset, 100 images (which corresponds to ∼0.4% of the total dataset) were manually segmented and used for training. To increase the dataset size, those images were subdivided, resulting in over 19 × 10⁶ training samples. The trained CNN was then able to automatically identify pores in the images; thus, important information was retrieved that is needed for further sample analysis but that otherwise could only be gathered through expensive expert assessments. In fact, the results obtained with the CNN were comparable to manual segmentations and better than those previously obtained with a random forest classifier that was trained on scale-space features. Hence, extending the training set by augmenting data (for more information on this particular method, see Sec. IV) was an important step to achieve a robust ML model capable of competing with actual expert judgments.
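To illustrate how subdividing a small number of images can yield millions of training samples, the following minimal Python sketch counts and extracts overlapping patches with a sliding window. The image size, patch size, and stride are hypothetical values chosen for illustration only; they are not the settings reported by Skärberg et al., and the function names are assumptions of this sketch.

```python
def count_patches(height, width, patch, stride):
    """Number of patch positions a sliding window visits in one image."""
    if patch > height or patch > width:
        return 0
    rows = (height - patch) // stride + 1
    cols = (width - patch) // stride + 1
    return rows * cols


def extract_patches(image, patch, stride):
    """Cut a 2D image (list of rows) into square patches of side `patch`."""
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height - patch + 1, stride):
        for left in range(0, width - patch + 1, stride):
            patches.append([row[left:left + patch] for row in image[top:top + patch]])
    return patches


# Toy 6 x 8 "image" whose pixel values encode their position.
toy_image = [[10 * r + c for c in range(8)] for r in range(6)]
toy_patches = extract_patches(toy_image, patch=4, stride=2)

# Hypothetical image size and patch settings, chosen only for illustration.
per_image = count_patches(height=512, width=512, patch=64, stride=1)
total_from_100 = 100 * per_image
print(len(toy_patches), per_image, total_from_100)
```

Because neighboring patches overlap strongly, the resulting samples are highly correlated; the number of patches therefore overstates the amount of independent information contained in the training set.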

The potential applications of deep learning approaches are virtually limitless, and many highly sophisticated neural network architectures have been developed and applied to different problem sets. For instance, generative models, such as generative adversarial neural networks, Gaussian mixture models, or hidden Markov models, are unsupervised approaches that can learn patterns from given input data; then, those models can generate new examples that could plausibly stem from the original dataset. Such algorithms were shown to be useful for the design and discovery of drugs,281,282 for the development of complex materials with desired elasticity and porosity283 or tissue engineering-related properties,284 for creating synthetic data (e.g., photo-realistic images285 or biomedical signals286) for network training, and for analytical tasks such as identifying cell morphologies typical for cancer.287 Whether for an automated evaluation of tumor spheroid behavior in 3D cultures288 or for identifying cancer based on RNA data,289 for predicting the in vivo fate of nanomaterials based on mass spectrometry,290 for detecting the presence of viral DNA sequences in metagenomic contigs,291 or for autonomously detecting sleep apnea events in electrocardiogram signals,292 deep learning can be considered the ML equivalent of a Swiss Army knife as it can be a helpful tool in many fields of research.

IV. BIGGER IS BETTER BUT HARD TO GET—HOW TO HANDLE SMALL DATA

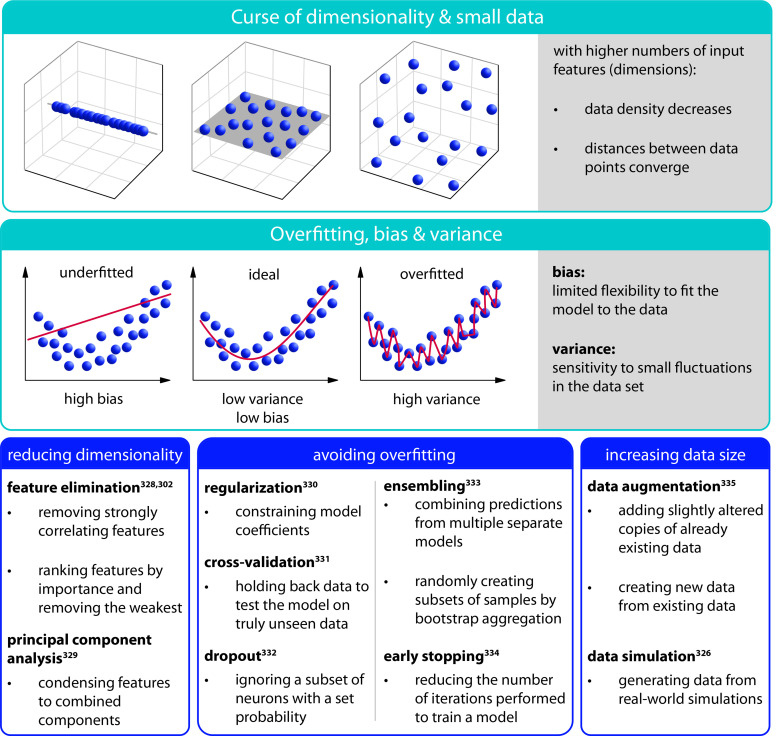

The performance of all ML algorithms critically depends on the amount of existing knowledge, i.e., the size of the database available for training. Whereas “Big Data” is a phrase commonly used in the context of machine learning, generating large volumes of data from experimental trials is often very challenging: The costs and time requirements associated with experimental studies are typically significant. When the training set is too small, commonly encountered problems include overfitting, biased predictions, or a phenomenon known as the “curse of dimensionality” (Fig. 5). Overfitting refers to algorithms that represent the training data in too much detail. Typically, this happens when a model reproduces the variations (and, sometimes, even the noise) in the training data to such an extent that its performance suffers when it is confronted with new data.303 Data bias denotes a type of prejudice or favoritism toward a certain class or a decision that is based on wrong assumptions, which are made based on (non-ideal) training data.304 This can, for instance, occur when the sample set used does not sufficiently represent the whole problem (hence possibly neglecting concealed factors) or when the model does not properly fit the training data.305,306 Finally, a prominent issue of small datasets occurs with increasing dimensionality (i.e., with increasing numbers of features added): When the total amount of training data stays the same, the density of data points decreases with every dimension added to a multi-dimensional feature space, and low data density can lead to reduced accuracy. Thus, a frequently asked question is: How many data points are actually necessary to establish robust models that provide reliable results? Answering this question is, however, not trivial as several factors need to be taken into account: the complexity of the problem, the chosen algorithm, the number and type of input features, and the noise level in the available data.
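The loss of data density with increasing dimensionality can be made tangible with a small numerical experiment. The sketch below is an illustration under simple assumptions (points drawn uniformly from a unit hypercube, Euclidean distances, helper names invented here): it computes how many samples would be needed to keep a fixed number of points per feature axis, and how the relative difference between the nearest and the farthest neighbor of a query point shrinks when features are added while the sample size stays constant.

```python
import math
import random


def samples_for_constant_density(points_per_axis, n_features):
    """Samples needed to keep the same grid density when features are added."""
    return points_per_axis ** n_features


def relative_contrast(n_points, n_features, seed=0):
    """(farthest - nearest) / nearest distance from a random query point."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(n_features)]
    distances = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(n_features)]
        distances.append(math.dist(query, point))
    nearest, farthest = min(distances), max(distances)
    return (farthest - nearest) / nearest


# Same sample size (200 points), increasing number of features.
for d in (2, 10, 100):
    print(d, samples_for_constant_density(10, d), round(relative_contrast(200, d), 2))
```

A small relative contrast means that all data points appear almost equally far away from the query, which is why distance-based methods tend to lose discriminative power in sparse, high-dimensional feature spaces.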

FIG. 5.

Typical challenges that can arise when applying ML methods and available remedies to deal with them. High dimensionality, small datasets, overfitting, bias, and variance are common difficulties encountered when using ML. High dimensionality entails a decrease in the data density in the feature space and leads to an equalization of distances between data points. This becomes particularly problematic when datasets are too small to compensate for these effects. Overfitting refers to ML models that approximate the training data too well. Overfitted models show a high sensitivity to small fluctuations in the dataset, a phenomenon which is referred to as “high variance.” In contrast, when a model is not able to sufficiently capture the relationship between input and output, it is underfitting the training data. Such limited flexibility to fit the model to the data is called “bias.” Those problems, however, can be tackled with the following strategies: Reducing the dimensionality can be achieved by performing a feature elimination302,328 or by condensing the feature space via a principal component analysis.329 Overfitting can be avoided or at least reduced by including regularization,330 early stopping,334 or dropout332 in the ML models, by using multiple independent predictors (ensembles),333 or by validating the models using cross-validation.331 Finally, the size of a dataset can be increased by simulating326 or augmenting data.335

There are some established rules of thumb that can help researchers to navigate this issue: In a regression problem, the number of training samples should be ten times as high as the number of dimensions of the investigated problem, and at least 1000 images per class should be available for computer vision tasks.307 However, under certain conditions, good prediction accuracies have also been reported for much smaller datasets. For instance, Shaikhina et al.308 successfully established a deep neural network (DNN) for predicting the compressive strength of human trabecular bones in severe osteoarthritic conditions, and they achieved this by using data from only 35 bone specimens. Here, the versatile design of DNNs came in handy: The number of hidden layers as well as the number of neurons and their activation functions were iteratively adjusted until the predictive accuracy of the model reached a maximum. Similarly, basic (non-deep) ML models can be optimized with respect to both the problem at hand and the available dataset: Every ML model is characterized by a set of distinct parameters, which are typically referred to as hyperparameters. Examples of such hyperparameters are the number of neighbors considered in a KNN model, the allowed dimensions of the trees in an RF model, or the penalty assigned to misclassified samples in an SVM; more advanced parameters can be adjusted as well. With such optimized algorithms, even fewer than 65 samples were shown to be sufficient to train various algorithms including RF or SVM models.309,310
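As a minimal illustration of hyperparameter optimization on a very small dataset, the following Python sketch selects the number of neighbors k of a KNN classifier by leave-one-out cross-validation. The one-feature toy dataset and all function names are hypothetical and serve only to demonstrate the principle; they are not taken from the studies cited above.

```python
def knn_predict(train, query, k):
    """Majority vote among the k training points closest to `query` (binary labels)."""
    neighbors = sorted(train, key=lambda item: abs(item[0] - query))[:k]
    votes_for_one = sum(label for _, label in neighbors)
    return 1 if votes_for_one > k / 2 else 0


def leave_one_out_accuracy(data, k):
    """Hold out each sample once, train on the rest, and average the hits."""
    hits = 0
    for i, (x, y) in enumerate(data):
        rest = data[:i] + data[i + 1:]
        hits += knn_predict(rest, x, k) == y
    return hits / len(data)


# Toy one-feature dataset (hypothetical values): two clusters and one mislabeled sample.
data = [(1.0, 0), (1.2, 0), (1.4, 0), (1.6, 0), (1.8, 0), (1.5, 1),
        (3.0, 1), (3.2, 1), (3.4, 1), (3.6, 1), (3.8, 1)]

# Odd candidate values avoid ties in the binary majority vote.
scores = {k: leave_one_out_accuracy(data, k) for k in (1, 3, 5)}
best_k = max(scores, key=scores.get)  # first k with the highest accuracy
print(scores, best_k)
```

In this toy example, k = 1 lets the single mislabeled sample mislead its neighbors, whereas larger values of k average this noise out. Leave-one-out validation is used here because it makes full use of a small dataset; for larger datasets, k-fold cross-validation is computationally cheaper.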

Another important realization in this context is that, even though each research topic is distinct, most questions asked are not entirely unique. Thus, machine learning models that were trained for a certain task can often be used as a starting point for similar problems (this is referred to as transfer learning).311 Then, only a few data points of the target problem are needed to transfer models generated from the source task to the target task, a procedure known as few-shot312 or even one-shot learning.313 With this approach, neural networks trained on large-scale image datasets of various macroscopic objects were successfully employed to classify electroencephalogram (EEG) signals obtained from patients diagnosed with delirium314 or to identify diseases on grape leaves.315

Of course, no algorithm can generate knowledge where no data exist—all models are based on the assumption that the training data cover a suitable and representative subset of the problem at hand. Inter- or extrapolation procedures can (to a certain extent) fill in local gaps, where data are missing, but the machines and models generated by them will only be as reliable as the data fed into them. Even though there might not be a pre-trained algorithm for every research problem, there is a huge amount of data documented in the literature or even stored in readily accessible repositories. From those sources, it is often possible to selectively extract a subset of data to complement one's own dataset, thus increasing the amount of training data. Intriguingly, the collection of such supplemental data is not limited to data already available in a numerical form; especially the extraction of data from texts has been quite successful recently:316 for instance, unsupervised algorithms were—without having been provided with explicit chemical knowledge—able to understand the structure of the periodic table from text-based sources only, and they could recognize complex structure-property relationships of materials for specific applications, such as energy conversion,317 nanomedicine,318 or pharmaceutics,319 even years before they were actually realized.320,321

Gathering data from various sources can, of course, involve considerable effort in terms of retrieving and formatting. Other (possibly less expensive) approaches to extend the training dataset and thereby improve model generalization and robustness make use of augmented or synthetic data. Data augmentation refers to a strategy where slightly altered copies of existing data are added to the training set. In the case of images, for example, augmented data can be created by rotating, shifting, splitting, zooming, or flipping the original pixel matrix.322 With these transformations, Liang et al.323 used 48 microscopy images obtained from collagenous tissue to create >300 000 training images; with this augmented dataset, they then successfully trained a CNN to predict non-linear stress-strain responses of the tissue. Importantly, such an approach is not limited to images: other data types can be augmented as well, e.g., by superimposing random noise324 or by adding synthetically generated features; examples of the latter include crude estimations of the property-to-predict325 or calculated characteristics derived from empirical models.326 When training samples are created entirely from simulations, this is referred to as synthetic or in silico data. Indeed, by complementing experimental datasets with large amounts of such in silico data, Tulsyan et al.327 were able to develop a reliable ML-based monitoring system for biopharmaceutical manufacturing processes, a task that was previously very difficult due to the lack of data.
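The geometric transformations mentioned above can be sketched in a few lines of Python. The example below is a simplified illustration, not the procedure used in the cited studies: it creates the eight rotated and mirrored variants of a small pixel matrix and, for non-image data, superimposes Gaussian noise on a one-dimensional signal. The function names and the noise level are assumptions of this sketch.

```python
import random


def rotate90(image):
    """Rotate a 2D pixel matrix (list of rows) clockwise by 90 degrees."""
    return [list(row) for row in zip(*image[::-1])]


def flip_horizontal(image):
    """Mirror the pixel matrix left to right."""
    return [row[::-1] for row in image]


def augment(image):
    """Return the 8 rotated/mirrored variants of an image (original included)."""
    variants = []
    current = image
    for _ in range(4):
        variants.append(current)
        variants.append(flip_horizontal(current))
        current = rotate90(current)
    return variants


def add_noise(values, sigma, seed=0):
    """Superimpose Gaussian noise on a 1D signal (for non-image data)."""
    rng = random.Random(seed)
    return [v + rng.gauss(0.0, sigma) for v in values]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
augmented = augment(image)
noisy = add_noise([0.0, 0.5, 1.0], sigma=0.05)
print(len(augmented), noisy)
```

Such transformations are only appropriate when they leave the label unchanged; for samples with a meaningful orientation (e.g., a preferred fiber direction), rotations or flips may alter the property that is to be predicted.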

V. CONCLUSION AND OUTLOOK

Ongoing challenges encountered in the context of ML include having to deal with insufficient data quality, data scarcity, under- or overfitting of the models on the training data, biased training sets, and high computational costs. Indeed, for a long time, the application of ML techniques for bio-related research questions has been severely restricted by the range of difficulties associated with such problems, i.e., small datasets, complex problem definitions, and biological variability. However, some of those issues can now successfully be dealt with: Once the research questions have been translated into computer-readable formats, various methods can be used to increase the data density and to optimize the models in a way that common problems, such as overfitting and bias, are reduced. Even though the training phase of such algorithms might be computationally and/or experimentally costly, once trained, the models can make predictions very quickly.328–335

In particular, the black-box character of most deep learning methods and the increasing complexity of advanced algorithms, in combination with the lack of experienced users, entail a new set of hurdles on the path to fully exploiting the potential of ML. ML nowadays includes a diverse spectrum of algorithms that can be employed for a plethora of purposes, and the continuous advancement and expansion of the ML portfolio opens up an ever-increasing number of possible applications in all kinds of scientific areas. Generative adversarial neural networks, for example, have successfully been employed to mimic different types of data (including images, numerical, or binary data), which can then be used to increase the training dataset and/or to generate results. Evolutionary machine learning approaches, in which neural networks are developed automatically in a process inspired by biological evolution, can reduce the expert knowledge needed for creating deep ML models. Attention mechanisms are very recent but promising strategies to improve the performance of deep models by putting a stronger focus on a few, more relevant aspects while paying less attention to the rest. Finally, by representing their variables as statistical distributions, Bayesian neural networks are especially suitable for research problems dealing with sparse data. With these improved techniques available now, current ML models are well equipped to explore the diverse range of structures, effects, and mechanisms of bio-related systems in more detail, and we expect to encounter many more exciting results in the near future.

ACKNOWLEDGMENTS

The authors thank Jochen Mück for helpful discussions regarding terminology. This project was conducted in the framework of the innovation network “ARTEMIS” by the Technical University of Munich.

AUTHOR DECLARATIONS

Conflict of Interest

The authors have no conflicts to disclose.

DATA AVAILABILITY