Abstract

Smallholder farms are major contributors to agricultural production, food security, and socio-economic growth in many developing countries. However, they generally lack the resources to fully realize their potential. Consequently, they require innovative, evidence-based and lower-cost solutions to optimize their productivity. Recently, precision agricultural practices facilitated by unmanned aerial vehicles (UAVs) have gained traction in the agricultural sector and have great potential for smallholder farm applications. Furthermore, advances in geospatial cloud computing have opened new possibilities in remote sensing. In light of these developments, this study explored and demonstrated the utility of the advanced image processing capabilities of the Google Earth Engine (GEE) geospatial cloud computing platform for processing and analysing a very high spatial resolution multispectral UAV image to map land use land cover (LULC) within smallholder farms. The results showed that LULC could be mapped at a 0.50 m spatial resolution with an overall accuracy of 91%. Overall, we found GEE to be a highly useful platform for conducting advanced image analysis on UAV imagery and for rapidly communicating results. Notwithstanding the limitations of the study, the findings are promising and demonstrate how modern agricultural practices can be implemented to improve agricultural management on smallholder farms.

Keywords: Smallholder farms, Drones, Geospatial cloud computing, Landcover, Machine learning

1. Introduction

Smallholder farms, which are typically less than 2 ha in size, contribute disproportionately to food production relative to the area they occupy and can therefore play a pivotal role in tackling food security challenges [[1], [2], [3], [4]]. In many developing countries, smallholder farms are not only major contributors to agricultural production and food security but also one of the main drivers of socio-economic growth [3,5]. Despite their importance, smallholder farms generally lack the resources of their larger-scale commercial counterparts and, owing to capacity constraints, do not receive sufficient support from government-led initiatives [4].

Consequently, the agricultural productivity of these farms may fall short of its potential, limiting how effectively they contribute to addressing food security and socio-economic challenges [3,6,7]. To remedy this situation, local government and agricultural decision-makers in these regions require innovative, evidence-based, context-specific and lower-cost solutions that can assist in better managing agricultural practices on smallholder farms to optimize their productivity [7,8].

Quantifying spatio-temporal land use land cover (LULC) dynamics within smallholder farms can play an important role in managing resources more effectively and enhancing the productivity of these farms. It allows changes in crop types, management practices, climatic influences, water use efficiency and vegetation health to be assessed in near-real-time, so that quick and decisive operational management actions can be taken [4,5,[9], [10], [11], [12], [13]]. Several studies have demonstrated the potential of remote sensing technologies for agricultural applications such as cropland mapping [11,[13], [14], [15], [16]]. Satellite earth observation datasets and associated products can provide information at various spatial, spectral and temporal resolutions. However, their application in smallholder farm settings is limited [11].

The spatial resolution of open-access datasets and products is often too coarse to capture the spatial heterogeneity generally found within smallholder farms [7,11,14]. Conversely, more advanced satellite earth observation systems and manned aerial vehicles, which can capture data at finer spatial resolutions (metre to sub-metre), are often too costly for widespread and long-term smallholder agricultural applications [14]. Furthermore, satellite revisit and repeat cycles, coupled with the influence of cloud cover, reduce the frequency at which usable data can be captured and processed [7,17].

Recently, precision agricultural practices facilitated by unmanned aerial vehicles (UAVs) have gained traction in the agricultural sector [14,18,19]. UAVs have been shown to hold vast potential for agricultural applications, as their low flight altitudes allow them to capture very high spatial resolution data at various spectral resolutions (depending on the optical properties of the on-board camera). Moreover, data can be captured at user-determined intervals and are less severely affected by cloud cover, allowing data to be captured more frequently than with satellite-based approaches [14,17,20,21]. These features, together with the relatively low cost of UAVs, have seen them emerge as a promising tool for smallholder agricultural applications [14,22].

Furthermore, with advances in geospatial cloud computing platforms such as Google Earth Engine (GEE) many more users are now able to implement sophisticated image analysis techniques without being limited by computational power and access to specialised proprietary software [[23], [24], [25]]. According to Bennet et al. [21], with the increased use of UAVs for various applications and the growing popularity of utilizing cloud-based computing platforms for image analysis, it is important to develop reproducible, adaptable and distributable techniques that can facilitate more efficient analysis of UAV imagery for future applications.

Despite their immense potential for accurately mapping crops on smallholder farms, as shown by Chew et al. [5], Alabi et al. [12] and Hall et al. [26], the application of UAV and cloud computing technologies in these settings remains limited [5,27]. Considering that the potential of these technologies remains largely untapped for smallholder farm applications, this study aims to explore and demonstrate the utility of GEE for developing a semi-automated workflow to i) process multispectral UAV imagery for mapping LULC within smallholder rural farms at a localized level and ii) share these results widely in a simplified manner through a user-friendly, interactive, web-based data visualization app.

2. Materials and methods

2.1. Study site description

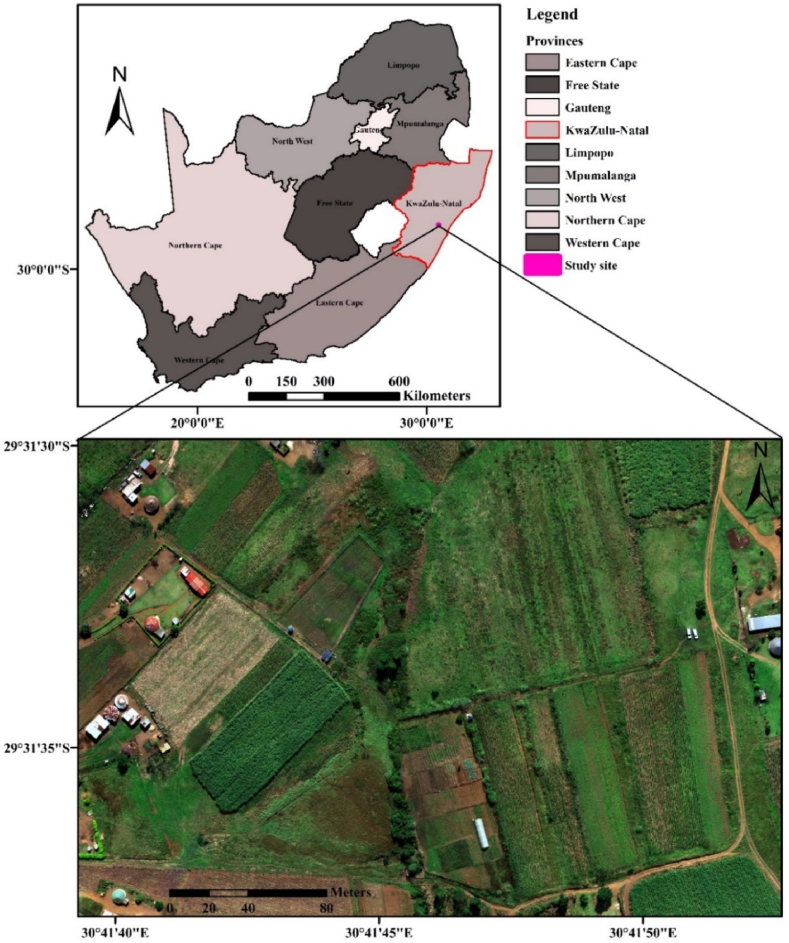

The study site is located within Ward 8 of the rural community of Swayimane, which forms part of the uMshwati Local Municipality in the KwaZulu-Natal province of South Africa (Fig. 1). Swayimane lies at approximately 880 m a.s.l. and covers a geographic extent of approximately 36 km². The study area experiences a generally warm climate, with cool, dry winters and warm, wet summers, and a mean annual temperature of 18.0 °C [28]. Mean annual precipitation ranges between 600 and 1200 mm, most of which falls during the summer months as high-intensity thundershowers [28].

Fig. 1.

Location of the study site at Swayimane within the KwaZulu-Natal province of South Africa.

The community of Swayimane is largely dependent on subsistence farming to support its livelihoods. Farming activities consist of cropping and animal husbandry, with crops dominating the agrarian system. Farmers grow various crops, including maize, amadumbe (taro), sugarcane and sweet potato [28]. Although the study area is characterised by good rainfall and deep soils, these soils are depleted in some mineral elements that are essential for good, sustained crop production [29]. Further compounding this situation is the influence of short-term droughts on crop performance. With projected increases in the frequency of extreme events, threats to food security and livelihoods are an ever-present danger that the immediate and surrounding communities are expected to face [29,30].

Considering the socio-economic circumstances of this community together with the biophysical factors that influence crop production, this study site provides an ideal opportunity to demonstrate how UAVs can offer a relatively cost-effective means of providing spatially explicit information in near real-time. This information can then inform and guide operational decision-making at a localized level to enhance crop production and mitigate the risk of future crop failure.

2.2. Data acquisition and processing

The images for the study area were collected using a DJI Matrice 300 (M300) UAV fitted with a MicaSense Altum multispectral sensor and Downwelling Light Sensor 2 (DLS-2). The Altum sensor captures both multispectral (blue, green, red, red-edge and near-infrared) and thermal data (https://micasense.com/altum/); the thermal band was not used in this study. The sensor was configured to capture images with side and front overlaps of 70% and 80%, respectively, across the study area.
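As a rough illustration of what the 70% side and 80% front overlaps imply for flight planning, the sketch below converts a single image's ground footprint into flight-line and photo spacing. The footprint dimensions are hypothetical inputs (they depend on the sensor geometry and flying height, which are not reported here), and the function name is illustrative; flight-planning software typically performs an equivalent calculation internally.

```python
def flight_spacing(footprint_across_m: float, footprint_along_m: float,
                   side_overlap: float, front_overlap: float) -> tuple:
    """Return (flight-line spacing, photo spacing) in metres.

    footprint_across_m: ground width of one image, perpendicular to the flight line
    footprint_along_m:  ground length of one image, along the flight line
    side_overlap, front_overlap: overlaps expressed as fractions in [0, 1)
    """
    for overlap in (side_overlap, front_overlap):
        if not 0.0 <= overlap < 1.0:
            raise ValueError("overlap must be a fraction in [0, 1)")
    # Adjacent flight lines share side_overlap of the across-track footprint.
    line_spacing = footprint_across_m * (1.0 - side_overlap)
    # Consecutive photos share front_overlap of the along-track footprint.
    photo_spacing = footprint_along_m * (1.0 - front_overlap)
    return line_spacing, photo_spacing


# Hypothetical 100 m x 80 m footprint with the overlaps used in this study.
lines_m, photos_m = flight_spacing(100.0, 80.0, side_overlap=0.70, front_overlap=0.80)
print(f"flight-line spacing ~ {lines_m:.1f} m, photo spacing ~ {photos_m:.1f} m")
```

Higher overlaps shrink both spacings, which increases the number of images (and flight time) but gives the photogrammetric software more redundant views for building the orthomosaic.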

The study area boundary, which covered a geographic extent of approximately 0.11 km², was digitised in Google Earth and saved as a Keyhole Markup Language (KML) file. Following creation of a DJI user account, the KML file was imported into an internet-connected DJI smart controller and used to design an optimal flight path covering the entire study area. The M300 was flown at an altitude of 100 m, which was sufficient to capture data at a 0.07 m pixel resolution for the entire study area. Changes in light intensity during the day were accounted for through calibration before and after each flight mission using the MicaSense Altum calibrated reflectance panel (CRP). The UAV images were acquired between 8:00 and 10:00 a.m. UTC on 28 April 2021. All captured images were pre-processed using Pix4Dfields software (version 1.8) before further analysis. Pre-processing essentially involved atmospheric and radiometric corrections, after which the corrected images were mosaicked into a single georeferenced orthomosaic.
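Because ground sample distance (GSD) drives both image detail and flight effort, it can help to see how the reported 0.07 m at 100 m would change with flying height. The sketch below assumes a pinhole-camera model (GSD = H × pixel pitch / focal length), flat terrain and a constant height above ground, so GSD scales linearly with altitude; the function name is illustrative and the only inputs taken from this study are the 100 m and 0.07 m reference values.

```python
def gsd_at_altitude(altitude_m: float, ref_altitude_m: float = 100.0,
                    ref_gsd_m: float = 0.07) -> float:
    """Estimate ground sample distance (m/pixel) at another flying height.

    Under a pinhole-camera model GSD = H * pixel_pitch / focal_length, so for a
    fixed camera the GSD scales linearly with height H above ground. The default
    reference pair (100 m -> 0.07 m) is the value reported in this study.
    """
    if altitude_m <= 0 or ref_altitude_m <= 0:
        raise ValueError("altitudes must be positive")
    return ref_gsd_m * altitude_m / ref_altitude_m


for h in (50.0, 100.0, 120.0):
    print(f"{h:>5.0f} m -> {gsd_at_altitude(h):.3f} m/pixel")
```

Over sloping terrain the height above ground varies along the flight path, so the real GSD would vary across the mosaic rather than follow this single linear estimate.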

The orthomosaic was saved in GeoTIFF format, as this is the format required for upload to GEE [21], and was then uploaded to and imported into GEE for further processing. For each training and validation point, the values of all the spectral bands captured by the on-board sensor, together with nine vegetation indices (Table 1), were extracted and used as covariates to train the classification algorithm [9]. The selection of vegetation indices (VIs) was subjective and guided by their frequency of application and performance in the literature [[31], [32], [33], [34], [35], [36]]. However, the methods implemented herein allow additional VIs to be included, or feature selection (to optimize the combination of bands and VIs) to be employed, during classification.
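Extracting covariates for each point amounts to finding the pixel that contains the point and reading every band at that location. In GEE this happens server-side (for example with `ee.Image.sampleRegions`); the plain-Python sketch below only illustrates the underlying lookup for a north-up raster in a projected coordinate system with square pixels. The function names, the dictionary-of-grids band layout and the coordinates are hypothetical.

```python
def world_to_pixel(x, y, origin_x, origin_y, pixel_size):
    """Map projected coordinates (x, y) to (row, col) in a north-up raster.

    origin_x, origin_y: coordinates of the upper-left corner of the upper-left pixel.
    Columns increase eastwards and rows increase southwards.
    """
    col = int((x - origin_x) // pixel_size)
    row = int((origin_y - y) // pixel_size)
    return row, col


def sample_point(bands, x, y, origin_x, origin_y, pixel_size):
    """Return {band_name: value} for the pixel containing (x, y)."""
    row, col = world_to_pixel(x, y, origin_x, origin_y, pixel_size)
    values = {}
    for name, grid in bands.items():
        if not (0 <= row < len(grid) and 0 <= col < len(grid[0])):
            raise IndexError("point falls outside the raster")
        values[name] = grid[row][col]
    return values


# Hypothetical 2 x 2 raster with 0.5 m pixels and two bands.
bands = {"red": [[0.05, 0.06], [0.07, 0.08]],
         "nir": [[0.40, 0.42], [0.44, 0.46]]}
print(sample_point(bands, 1000.75, 1999.25, 1000.0, 2000.0, 0.5))
```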

Table 1.

List of vegetation indices used in this study.

| Name | Equation |
|---|---|
| Normalized Difference Vegetation Index | |
| Green Normalized Difference Vegetation Index | |
| Red-Edge Normalized Difference Vegetation Index | |
| Enhanced Vegetation Index | |
| Soil Adjusted Vegetation Index | |
| Simple Blue and Red-Edge Ratio | |
| Simple NIR and Red-Edge Ratio | |
| Simple NIR Ratio | |
| Green Chlorophyll Index | |
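Table 1 lists the indices by name only. The sketch below computes them from the five Altum reflectance bands using their commonly cited forms; these forms are assumptions, since the exact variants used in this study are not given here. In particular, the SAVI soil factor L = 0.5, the standard EVI coefficients and the orientation of the blue/red-edge ratio are assumed, and the dictionary keys are illustrative.

```python
def vegetation_indices(blue: float, green: float, red: float,
                       red_edge: float, nir: float,
                       savi_l: float = 0.5) -> dict:
    """Commonly cited forms of the Table 1 indices for one pixel's reflectance (0-1).

    Assumed forms: SAVI soil factor savi_l = 0.5, standard EVI coefficients
    (G = 2.5, C1 = 6, C2 = 7.5, L = 1) and a blue/red-edge ratio taken as
    blue / red_edge. Zero denominators are not guarded against here.
    """
    return {
        "NDVI": (nir - red) / (nir + red),
        "GNDVI": (nir - green) / (nir + green),
        "NDRE": (nir - red_edge) / (nir + red_edge),
        "EVI": 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0),
        "SAVI": (1.0 + savi_l) * (nir - red) / (nir + red + savi_l),
        "SR_blue_rededge": blue / red_edge,
        "SR_nir_rededge": nir / red_edge,
        "SR_nir_red": nir / red,
        "GCI": nir / green - 1.0,
    }


# Hypothetical reflectance values for a vigorous crop pixel.
for name, value in vegetation_indices(0.04, 0.08, 0.05, 0.20, 0.45).items():
    print(f"{name:>16}: {value:.3f}")
```

In GEE the same arithmetic would be applied per pixel across the whole image rather than to single values, but the formulas are identical.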

Training data points for the image classification were acquired through a combination of field data collection and visual inspection of the GeoTIFF orthomosaic [9]. Five broad LULC classes were identified (Table 2), and training data were collected by identifying pixels within the image that were completely covered by one of these classes. The selection of these pixels was guided by a priori knowledge of the study area acquired during multiple site visits. A total of 150 points were randomly captured for each class, giving 750 points, which were divided into training (70%) and validation (30%) sets for assessing classification accuracy [9,37]. For each training and validation point, covariates (UAV spectral bands and VIs) were extracted from the image captured on 28 April 2021.
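The paper does not state whether the 70/30 split was stratified by class. The sketch below shows one way to perform a per-class (stratified) split so that each of the five classes keeps its 70/30 proportion; the point identifiers, random seed and function name are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(points, train_fraction=0.7, seed=42):
    """Split (point_id, class_label) pairs into training and validation lists,
    applying train_fraction separately within each class."""
    by_class = defaultdict(list)
    for point in points:
        by_class[point[1]].append(point)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, validation = [], []
    for label in sorted(by_class):
        members = list(by_class[label])
        rng.shuffle(members)
        n_train = round(len(members) * train_fraction)
        train.extend(members[:n_train])
        validation.extend(members[n_train:])
    return train, validation


classes = ["Buildings and infrastructure", "Bareground", "Crops",
           "Grassland", "Trees and shrubs"]
# 150 hypothetical point IDs per class, 750 in total.
points = [(f"{c}-{i}", c) for c in classes for i in range(150)]
train, validation = stratified_split(points)
print(len(train), len(validation))
```

Stratifying guarantees 105 training and 45 validation points per class here; a purely random split of all 750 points would only achieve these proportions on average.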

Table 2.

A description of the LULC classes that were identified and categorized for mapping.

| Broad LULC classes | LULC present in each broad LULC class |
|---|---|
| 1. Buildings and infrastructure | Buildings, roads, water tanks and solar panels |
| 2. Bareground | Bare soil, harvested crops, dirt roads |
| 3. Crops | Maize, sugarcane, amadumbe, sweet potato and butternut |
| 4. Grassland | Grassland |
| 5. Trees and shrubs | Intermediate and tall trees or shrubs |

To model and predict the five broad LULC classes, four commonly used machine learning classification algorithms available in GEE were deployed: i) Classification and Regression Tree (CART), ii) Support Vector Machine (SVM), iii) Random Forest (RF) and iv) Gradient Tree Boost (GTB) [38]. Default hyper-parameter values were used for each algorithm during model development and validation, except that the number of decision trees (n = 10) was specified for the RF and GTB classifiers, as this parameter must be set when using these algorithms in GEE. The performance of each algorithm was then assessed by comparing the overall accuracy (OA), user's accuracy (UA), producer's accuracy (PA) and kappa coefficient, and the model that achieved the highest accuracy scores across these performance metrics was selected to perform the image classification.
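All four performance metrics can be derived from a single confusion (error) matrix of the validation points. The sketch below assumes rows are reference classes and columns are predicted classes; GEE's confusion-matrix objects expose equivalent quantities (for example `accuracy()`, `kappa()`, `producersAccuracy()` and `consumersAccuracy()`), so this is only meant to make the arithmetic explicit. The example matrix is hypothetical.

```python
def accuracy_metrics(cm):
    """Return (OA, PA list, UA list, kappa) for a square confusion matrix.

    cm[i][j] = number of validation points whose reference class is i and
    whose predicted class is j. Assumes every row and column total is non-zero.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    row_totals = [sum(cm[i]) for i in range(k)]                       # reference totals
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predicted totals

    oa = sum(cm[i][i] for i in range(k)) / n
    pa = [cm[i][i] / row_totals[i] for i in range(k)]  # producer's accuracy = 1 - omission error
    ua = [cm[j][j] / col_totals[j] for j in range(k)]  # user's accuracy = 1 - commission error

    # Chance agreement expected from the marginal totals, then Cohen's kappa.
    pe = sum(row_totals[i] * col_totals[i] for i in range(k)) / (n * n)
    kappa = (oa - pe) / (1.0 - pe)
    return oa, pa, ua, kappa


# Hypothetical two-class example: crops vs grassland.
oa, pa, ua, kappa = accuracy_metrics([[45, 5],
                                      [10, 40]])
print(f"OA={oa:.2f} PA={[round(p, 2) for p in pa]} UA={[round(u, 2) for u in ua]} kappa={kappa:.2f}")
```

Kappa discounts the agreement expected by chance from the class totals, which is why it is lower than OA (0.70 vs 0.85 in this toy matrix).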

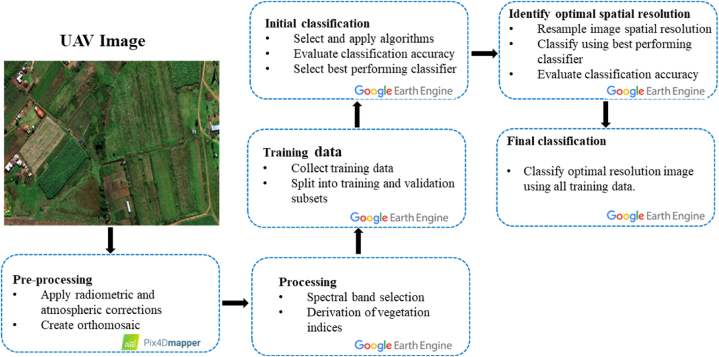

Once the best-performing classifier had been identified and applied to the original image (0.07 m), the original UAV image was resampled in GEE using bilinear interpolation to produce coarser-resolution images (0.50, 1.00 and 5.00 m). The classification was then repeated on these images using the best-performing classifier to identify the optimal resolution for mapping LULC within the study area. Once the optimal spatial resolution had been established, a final classification (C1) was performed using all of the previously collected training data points, the best-performing classifier and the optimal spatial resolution. An overview of the classification workflow is provided in Fig. 2.

Fig. 2.

A conceptual representation of the classification workflow used to create the LULC maps for the study area.
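Bilinear resampling estimates each output pixel as a distance-weighted average of the four nearest input pixel centres. The plain-Python sketch below coarsens a single-band grid by sampling at output pixel centres; it is a simplified illustration, not GEE's internal implementation (in GEE, the resampling method is applied when an image is reprojected, and `ee.Image.reduceResolution` is the usual route when true area-averaging is wanted). Function names and the test grid are hypothetical.

```python
def bilinear_sample(grid, x, y):
    """Interpolate grid (a list of equal-length rows, at least 2 x 2) at fractional
    column x and row y, measured in input pixel-centre coordinates."""
    rows, cols = len(grid), len(grid[0])
    x0 = min(int(x), cols - 2)  # left neighbour column (clamped at the edge)
    y0 = min(int(y), rows - 2)  # upper neighbour row (clamped at the edge)
    dx, dy = x - x0, y - y0
    top = grid[y0][x0] * (1 - dx) + grid[y0][x0 + 1] * dx
    bottom = grid[y0 + 1][x0] * (1 - dx) + grid[y0 + 1][x0 + 1] * dx
    return top * (1 - dy) + bottom * dy


def resample_bilinear(grid, src_res, dst_res):
    """Coarsen grid from src_res to dst_res (same units, dst_res >= src_res)."""
    factor = dst_res / src_res
    out_rows = int(len(grid) / factor + 1e-9)
    out_cols = int(len(grid[0]) / factor + 1e-9)
    out = []
    for r in range(out_rows):
        y = (r + 0.5) * factor - 0.5  # output pixel centre in input coordinates
        out.append([bilinear_sample(grid, (c + 0.5) * factor - 0.5, y)
                    for c in range(out_cols)])
    return out


# Hypothetical 10 x 10 linear ramp at 1 m resampled to 2 m.
ramp = [[2 * c + 3 * r for c in range(10)] for r in range(10)]
coarse = resample_bilinear(ramp, 1.0, 2.0)
print(len(coarse), len(coarse[0]), coarse[0][0])
```

At a large factor such as 0.50/0.07 (about 7.1), each output value in this point-sampling form depends on only four of the roughly 51 underlying pixels, whereas area-averaging would use all of them; the two approaches can therefore produce somewhat different spectral values at coarse resolutions.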

3. Results

3.1. Identifying the best performing classifier for the original UAV image

The best-performing classification algorithm was RF, which marginally outperformed the GTB classifier. The RF classification achieved an OA of 86.00% (Table 3). The average class-specific PA and UA for the RF classification were 85.40% (±8.30) and 85.20% (±8.32), respectively. The buildings and infrastructure class was predicted most accurately within the RF classification, whereas the crops class was predicted least accurately.

Table 3.

Classification accuracies for the original spatial resolution (0.07 m) UAV image classified using the various classification algorithms available in GEE.

| Classification algorithm | CART | SVM | RF | GTB |
|---|---|---|---|---|
| Overall accuracy (%) | 81.00 | 83.00 | 86.00 | 85.00 |
| Kappa coefficient | 0.77 | 0.79 | 0.82 | 0.81 |

| LULC class | CART PA (%) | CART UA (%) | SVM PA (%) | SVM UA (%) | RF PA (%) | RF UA (%) | GTB PA (%) | GTB UA (%) |
|---|---|---|---|---|---|---|---|---|
| Buildings and infrastructure | 98.00 | 88.00 | 88.00 | 100.00 | 98.00 | 93.00 | 98.00 | 91.00 |
| Bareground | 85.00 | 100.00 | 100.00 | 89.00 | 93.00 | 97.00 | 88.00 | 97.00 |
| Crops | 80.00 | 69.00 | 67.00 | 77.00 | 78.00 | 78.00 | 84.00 | 79.00 |
| Grassland | 69.00 | 79.00 | 78.00 | 73.00 | 80.00 | 80.00 | 76.00 | 80.00 |
| Trees & shrubs | 76.00 | 76.00 | 86.00 | 80.00 | 81.00 | 81.00 | 81.00 | 79.00 |

3.2. Comparison of best performing classifier at different spatial resolutions

The classification results for each of the different spatial resolution UAV images classified using the RF algorithm are shown in Table 4. An interactive web-based app to visualize the differences between the classifications at the various spatial resolutions can be accessed from https://shaedengokool.users.earthengine.app/view/swayimane-landcover-maps. The overall classification accuracy and kappa coefficient for each of these classifications were relatively high. However, the highest accuracy was achieved at a spatial resolution of 0.50 m, whereas the lowest was obtained at 5.00 m.

Table 4.

Classification accuracies for the UAV images at differing spatial resolutions classified using the RF classification algorithm.

| Spatial resolution | 0.07 m | 0.50 m | 1.00 m | 5.00 m |
|---|---|---|---|---|
| Overall accuracy (%) | 86.00 | 91.00 | 88.00 | 81.00 |
| Kappa coefficient | 0.82 | 0.89 | 0.84 | 0.77 |

| LULC class | 0.07 m PA (%) | 0.07 m UA (%) | 0.50 m PA (%) | 0.50 m UA (%) | 1.00 m PA (%) | 1.00 m UA (%) | 5.00 m PA (%) | 5.00 m UA (%) |
|---|---|---|---|---|---|---|---|---|
| Buildings and infrastructure | 98.00 | 93.00 | 96.00 | 100.00 | 97.00 | 93.00 | 87.00 | 84.00 |
| Bareground | 93.00 | 97.00 | 100.00 | 95.00 | 90.00 | 100.00 | 77.00 | 69.00 |
| Crops | 78.00 | 78.00 | 87.00 | 77.00 | 78.00 | 76.00 | 84.00 | 86.00 |
| Grassland | 80.00 | 80.00 | 82.00 | 94.00 | 88.00 | 84.00 | 89.00 | 87.00 |
| Trees & shrubs | 81.00 | 81.00 | 95.00 | 90.00 | 85.00 | 88.00 | 71.00 | 82.00 |

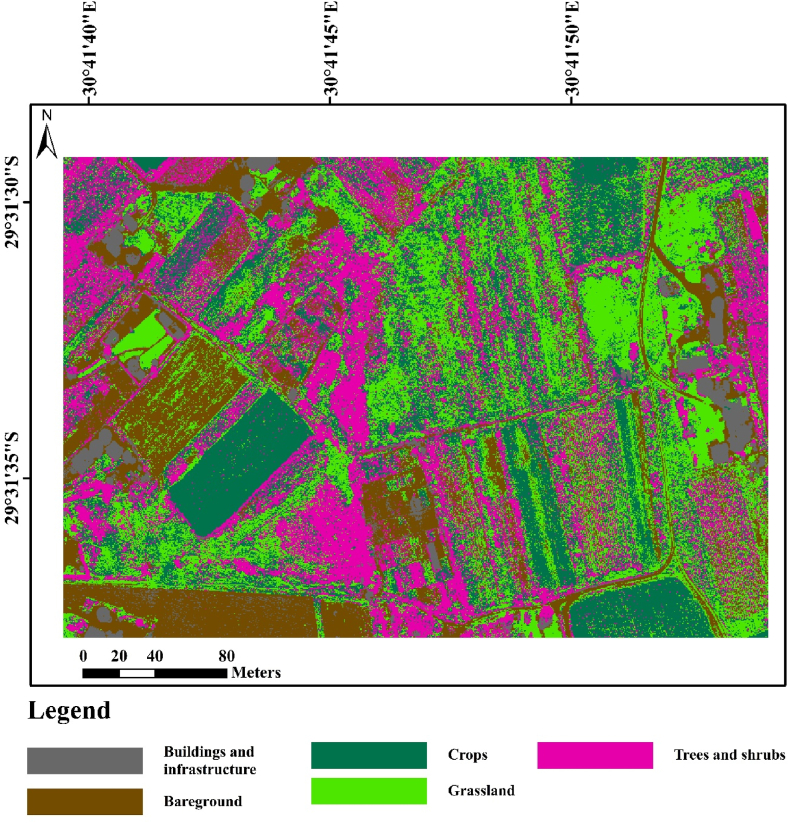

The classification using the 0.50 m spatial resolution image achieved an OA of 91.00%, with average PA and UA of 92.00% (±7.31) and 91.20% (±8.70), respectively. The buildings and infrastructure and bareground classes were predicted most accurately in this classification, whereas crops were predicted least accurately. Based on these results for the optimal classifier and spatial resolution, the original UAV image (0.07 m) was resampled to a 0.50 m spatial resolution before the final LULC classification (C1) was performed using the RF model (Fig. 3).

Fig. 3.

LULC classification result across the study area at a 0.50-m spatial resolution for the 28th April 2021.
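The average PA and UA reported for the 0.50 m classification (92.00 ± 7.31% and 91.20 ± 8.70%) are consistent with the mean and sample standard deviation (n − 1 denominator) of the five class-specific accuracies in Table 4. The short check below reproduces them with Python's standard `statistics` module; the dictionary layout and function name are illustrative.

```python
from statistics import mean, stdev

# Class-specific accuracies (%) for the 0.50 m RF classification, from Table 4.
pa_050 = {"Buildings and infrastructure": 96.0, "Bareground": 100.0,
          "Crops": 87.0, "Grassland": 82.0, "Trees & shrubs": 95.0}
ua_050 = {"Buildings and infrastructure": 100.0, "Bareground": 95.0,
          "Crops": 77.0, "Grassland": 94.0, "Trees & shrubs": 90.0}


def summarize(accuracies):
    """Mean and sample standard deviation (n - 1 denominator), rounded to 2 dp."""
    values = list(accuracies.values())
    return round(mean(values), 2), round(stdev(values), 2)


print("PA:", summarize(pa_050))  # mean and SD of producer's accuracies
print("UA:", summarize(ua_050))  # mean and SD of user's accuracies
```

Using the population standard deviation (`statistics.pstdev`) instead would give smaller spreads (about 6.54 and 7.78), so the choice of denominator is worth stating when reporting ± values.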

4. Discussion

This study explored the utility of the GEE geospatial cloud computing platform for processing and analysing a multispectral UAV image to map LULC within smallholder rural farms, with a particular focus on croplands. In general, we were able to map LULC at a relatively high accuracy. The average PA and UA for crops across the various classification algorithms for the original UAV image (0.07 m) was approximately 75%. However, once the best-performing classifier and the optimal spatial resolution had been identified and applied, the average PA and UA for crops increased to approximately 82%, which is relatively close to the 85% target accuracy specified by McNairn et al. [39]. These results are consistent with, and within the range of, values reported in similar studies [5,12,26]. Overall, the C1 classification adequately captured the spatial heterogeneity within the study area and distinguished crops from other LULC classes fairly accurately.

Regarding the choice of classification algorithm, the various machine learning algorithms available within GEE performed relatively well at mapping LULC within the study area (OA between 81% and 86%). However, the training and evaluation of these algorithms relied on data that were largely collected from visual inspection of the UAV true-colour image. Although this approach is easy to apply and expedites the classification process, it may contribute to misclassification: some data points may be incorrectly identified or may not adequately represent the unique spectral properties of each class to be mapped. Consequently, it is recommended that more ground truth data be included in the training and evaluation process where possible, so that a more accurate and objective assessment of these classification algorithms can be obtained [9].

Furthermore, since no real-time kinematic (RTK) or post-processed kinematic (PPK) corrections were applied to the images, positional inaccuracies of pixels within the image may exist. These may also contribute to misclassification, as the spectral signature extracted from the image for a particular training point may not correspond to the LULC class on the ground. It is therefore recommended that ground control points (GCPs) be used to georeference the image, or that PPK or RTK corrections be applied where possible, to minimise the impact of potential positional inaccuracies [40].

During the initial classifications to establish the best-performing classifier, class-specific accuracies ranged between 67% and 100%. The major source of inaccuracy in these classifications was generally the confusion between crops, grasslands, and trees and shrubs. This confusion may largely be a consequence of the limited training data used during the classification process. Training data for crops were collected mainly from sugarcane and maize, since these are the dominant crops and cover the largest geographic extent within the study area. Consequently, fewer training points were available for the other crops, which may have led to their misclassification and contributed to the overall classification inaccuracy for this broad LULC class.

Comparisons between the classification algorithms showed that the ensemble machine learning algorithms (RF and GTB) performed best, consistent with the findings of Orieschnig et al. [38]. This may be due to the ability of ensemble methods to combine several predictive models into a single model, which can reduce variance and bias and thereby improve classification accuracy relative to other machine learning models [38,41]. Furthermore, these models are generally less sensitive to input data and are better suited to generalized LULC applications [38]. While the RF and GTB models performed better than the SVM and CART models, the accuracies presented herein may not represent the maximum achievable, as they are largely influenced by the user-specified parameter choices for each algorithm [42].
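How a Random Forest turns many individual trees into a single prediction can be illustrated with a plurality vote over the per-tree class labels for one pixel. The sketch below is a simplified illustration only: GTB, by contrast, combines sequentially fitted trees additively rather than by voting, and GEE's classifiers handle this aggregation internally. The ten tree outputs are hypothetical, echoing the n = 10 trees used in this study.

```python
from collections import Counter


def majority_vote(tree_predictions):
    """Return the most frequent class label among per-tree predictions.

    Counter.most_common orders equal counts by first occurrence, so ties go to
    the label that appears first in tree_predictions.
    """
    if not tree_predictions:
        raise ValueError("at least one tree prediction is required")
    return Counter(tree_predictions).most_common(1)[0][0]


# Hypothetical outputs of 10 trees for a single pixel.
votes = ["Crops", "Grassland", "Crops", "Crops", "Trees and shrubs",
         "Grassland", "Crops", "Crops", "Grassland", "Crops"]
print(majority_vote(votes))
```

Because each tree is trained on a different bootstrap sample, individual trees make partly independent errors, and voting averages many of them out; this is the variance-reduction effect referred to above.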

While it was beyond the scope of this study, Abdi [42] recommends that, where possible, each algorithm be evaluated through an equally robust hyper-parameter selection so as not to bias the comparison of model performance. During this process, the algorithms should be evaluated with respect to both their overall accuracy and their ability to adequately represent each LULC class [42].

Regarding the influence of spatial resolution on classification accuracy, OA and kappa increased as the image was coarsened from 0.07 m (86%, 0.82) to 0.50 m (91%, 0.89), decreased at 1.00 m (88%, 0.84) and reached their lowest values at 5.00 m (81%, 0.77).

These results are consistent with the findings of Zhao et al. [43] and Liu et al. [44], which indicate that using the finest spatial resolution image available does not necessarily improve classification accuracy, although there is a limit to which spatial resolution can be coarsened. Instead, an intermediate spatial resolution at which spectral intra-class variation and the mixed-pixel effect are minimized is most appropriate for LULC mapping [44]. While the 0.50 m image produced the highest overall accuracy and kappa coefficient, this resolution should not be viewed as a generalized optimum, as the optimal resolution may vary for specific LULC classes or agricultural applications [43,44]. Although C1 was not produced at the finest spatial resolution available, the 0.50 m resolution more than adequately captures the spatial heterogeneity within the study area.

In this study, we elected to define and map five broad LULC classes. However, for the land cover map to serve a wider range of potential applications, it would be advantageous to map LULC in greater detail (e.g., identifying individual crop types). The relatively low spectral resolution of the UAV on-board sensor may pose a challenge here, as it may be difficult to distinguish between spectrally similar features [44,45]. Higher spectral resolution imaging sensors can address this limitation, but at a higher cost.

Fusing high spatial resolution UAV imagery with freely available, higher spectral resolution satellite imagery presents an alternative and more pragmatic approach to potentially address these challenges and reduce costs; however, further testing is advocated before such approaches are readily implemented [43,45,46]. Overall, this study could have benefited from more training data, higher spectral resolution data or model hyper-parameter tuning. Nevertheless, the results were promising and can serve as a foundation for developing improved LULC maps for the study area in the future.

Additionally, object-based image analysis (OBIA) techniques have been gaining popularity as an alternative to traditional pixel-based approaches (such as those applied in this study) for classifying high-resolution imagery. Similarly, deep-learning algorithms have shown much promise for producing accurate crop type maps. These approaches, albeit more complex and computationally intensive, may represent useful alternatives for improving classification accuracy [4,47].

The ability to accurately map the location and spatial distribution of crops can play a pivotal role in improving the management of smallholder farms [7,48]. Given the unique characteristics of UAVs and UAV imagery, these technologies are well suited for mapping LULC within smallholder farms, as demonstrated in this study. However, their application in smallholder farming has been relatively limited, largely owing to their perceived high costs coupled with a lack of technical skills to operate them and derive meaningful information from the acquired images [7].

Through creative ownership solutions and the decrease in acquisition and operational costs, UAV technologies are now more accessible to various users [7]. Furthermore, the use of freely available open-source UAV image processing software (rather than the proprietary software used in this study) such as OpenDroneMap (https://www.opendronemap.org/) and geospatial cloud computing platforms such as GEE can be used to develop automated or semi-automated processing and analysis procedures which can be shared and used by expert and non-expert users. This may serve to further reduce operational costs and improve the efficiency with which tasks are executed.

Although the choice of classification algorithms and the amount of UAV-acquired data that can be uploaded and stored in GEE are limited, the platform provides substantial, freely available processing power and permits the development of dynamic web-based apps, which are also freely available and can be used to rapidly communicate results in an aesthetically appealing and comprehensible manner to a wide range of users [25]. Considering these advancements and innovative solutions, local government, agricultural authorities and researchers are now better placed to help smallholder farmers exploit the benefits of modern agricultural practices and overcome many of the limitations they have traditionally faced.

5. Conclusions

In recent times, the use of UAVs has been gaining traction in the agricultural sector. With the emergence of geospatial cloud computing platforms, there are now greater opportunities for local government, agricultural authorities and researchers in developing countries to integrate technological advances into agricultural practices that can be used to aid smallholder farmers in optimizing their productivity. The synergistic application of these technologies offers the opportunity to develop bespoke, innovative and lower-cost solutions which can facilitate improved agricultural management within smallholder farms. Considering these developments, in this study we aimed to demonstrate how GEE can be leveraged to maximize the potential of UAVs for mapping LULC in smallholder farms.

The results demonstrated that LULC could be mapped fairly accurately (an OA of 91% at 0.50 m), with the resultant map adequately representing the spatial heterogeneity within the study area. Furthermore, spatial resolution can be sacrificed up to a point, to expedite data collection and image processing or to reduce costs by purchasing cheaper, lower spatial resolution imaging sensors, without greatly compromising classification accuracy.

Notwithstanding the limitations of the study, GEE was found to be particularly useful for performing computationally intensive and advanced image analysis on a UAV image. The availability of such approaches is particularly beneficial to data-scarce and resource-poor regions, as it provides a wide range of users with a powerful tool to guide and support decision-making. This in turn can help ensure that smallholder farmers in developing countries are not excluded from the big data revolution in agriculture.

Funding

This research was funded by the Water Research Commission (WRC) of South Africa through the WRC Project Number K5/2971//4 entitled: “Use of drones to monitor crop health, water stress, crop water requirements and improve crop water productivity to enhance precision agriculture and irrigation scheduling” as well as the WRC Project Number C2021-2022-00800 titled: “Leveraging the Google Earth Engine to analyse very-high spatial resolution unmanned aerial vehicle data to guide and inform precision agriculture in smallholder farms.”

Data availability Statement

The data presented in this study are available on request from the corresponding author.

CRediT authorship contribution statement

Shaeden Gokool: Writing – review & editing, Writing – original draft, Visualization, Validation, Software, Methodology, Investigation, Formal analysis, Data curation, Conceptualization. Maqsooda Mahomed: Writing – review & editing, Visualization, Validation, Software, Methodology, Investigation, Formal analysis. Kiara Brewer: Writing – review & editing, Visualization, Validation, Software, Resources, Methodology, Investigation, Formal analysis, Data curation. Vivek Naiken: Writing – review & editing, Software, Resources, Investigation, Formal analysis, Data curation. Alistair Clulow: Writing – review & editing, Validation, Software, Resources, Methodology, Investigation, Formal analysis. Mbulisi Sibanda: Writing – review & editing, Visualization, Validation, Software, Resources, Project administration, Methodology, Investigation, Funding acquisition, Formal analysis, Data curation. Tafadzwanashe Mabhaudhi: Writing – review & editing, Visualization, Validation, Software, Resources, Project administration, Methodology, Investigation, Funding acquisition, Formal analysis, Data curation.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

The authors would like to acknowledge the smallholder farming community of Swayimane for all their efforts throughout this project, with special mention to Luyanda Gwala. The authors would also like to thank the anonymous reviewers for their invaluable feedback on the previous versions of this manuscript.

Contributor Information

Shaeden Gokool, Email: GokoolS@ukzn.ac.za, shaedengokool@gmail.com.

Kiara Brewer, Email: 216012945@stu.ukzn.ac.za.

Vivek Naiken, Email: NaikenV@ukzn.ac.za.

Alistair Clulow, Email: ClulowA@ukzn.ac.za.

Mbulisi Sibanda, Email: msibanda@uwc.ac.za.

Tafadzwanashe Mabhaudhi, Email: Mabhaudhi@ukzn.ac.za.

References

1. Wolfenson K. Coping with the Food and Agriculture Challenge: Smallholders' Agenda. Food and Agriculture Organization of the United Nations; Rome, Italy: 2013.

2. Lowder S., Skoet J., Singh S. What Do We Really Know about the Number and Distribution of Farms and Family Farms in the World? Background Paper for the State of Food and Agriculture 2014. ESA Working Paper 14–02. Food and Agriculture Organization of the United Nations (FAO), Agricultural Development Economics Division; Rome: 2014.

3. Kamara A., Conteh A., Rhodes E.R., Cooke R.A. The relevance of smallholder farming to African agricultural growth and development. Afr. J. Food Agric. Nutr. Dev. 2019;19(1):14043–14065. doi: 10.18697/ajfand.84.BLFB1010.

4. Kpienbaareh D., Sun X., Wang J., Luginaah I., Kerr R.B., Lupafya E., Dakishoni L. Crop type and land cover mapping in Northern Malawi using the integration of Sentinel-1, Sentinel-2, and PlanetScope satellite data. Rem. Sens. 2021;13. doi: 10.3390/rs13040700.

5. Chew R., Rineer J., Beach R., O'Neil M., Ujeneza N., Lapidus D., Miano T., Hegarty-Craver M., Polly J., Temple D.S. Deep Neural Networks and Transfer learning for food crop identification in UAV images. Drones. 2020;4(1):7. doi: 10.3390/drones4010007.

6. Department of Agriculture, Forestry and Fisheries (DAFF). A Framework for the Development of Smallholder Farmers through Cooperatives Development. Directorate Co-operative and Enterprise Development, Department of Agriculture, Forestry and Fisheries; South Africa: 2012.

7. Nhamo L., Magidi J., Nyamugama A., Clulow A.D., Sibanda M., Chimonyo V.G.P., Mabhaudhi T. Prospects of improving agricultural and water productivity through unmanned aerial vehicles. Agriculture. 2020;10:256. doi: 10.3390/agriculture10070256.

8. Agidew A.A., Singh K.N. The implications of land use and land cover changes for rural household food insecurity in the Northeastern highlands of Ethiopia: the case of the Teleyayen sub-watershed. Agric. Food Secur. 2017;6. doi: 10.1186/s40066-017-0134-4.

9. Midekisa A., Holl F., Savory D.J., Andrade-Pacheco R., Gething P.W., Bennett A., Sturrock H.J.W. Mapping land cover change over continental Africa using Landsat and Google Earth Engine cloud computing. PLoS One. 2017;12(9). doi: 10.1371/journal.pone.0184926.

10. Ketema H., Wei W., Legesse A., Wolde Z., Temesgen H., Yimer F., Mamo A. Quantifying smallholder farmers' managed land use/land cover dynamics and its drivers in contrasting agro-ecological zones of the East African Rift. Global Ecol. Conserv. 2020;21. doi: 10.1016/j.gecco.2019.e00898.

11. Rao P., Zhou W., Bhattarai N., Srivastava A.K., Singh B., Poonia S., Lobell D.B., Jain M. Using Sentinel-1, Sentinel-2, and Planet imagery to map crop type of smallholder farms. Rem. Sens. 2021;13(10). doi: 10.3390/rs13101870.

12. Alabi T.R., Adewopo J., Duke O.P., Kumar P.L. Banana mapping in heterogenous smallholder farming systems using high-resolution remote sensing imagery and machine learning models with implications for Banana Bunchy top disease surveillance. Rem. Sens. 2022;14. doi: 10.3390/rs14205206.

13. Ren T., Xu H., Cai X., Yu S., Qi J. Smallholder crop type mapping and rotation monitoring in mountainous areas with Sentinel-1/2 imagery. Rem. Sens. 2022;14. doi: 10.3390/rs14030566.

14. Cucho-Padin G., Loayza H., Palacious S., Balcazar M., Carbajal M., Quiroz R. Development of low-cost remote sensing tools and methods for supporting smallholder agriculture. Appl. Geomat. 2020;12:247–263. doi: 10.1007/s12518-019-00292-5.

15. Sishodia R., Ray R.L., Singh S.K. Applications of remote sensing in precision agriculture: a review. Rem. Sens. 2020;12. doi: 10.3390/rs12193136.

16. Zhao J., Zhong Y., Hu X., Wei L., Zhang L. A robust spectral-spatial approach to identifying heterogeneous crops using remote sensing imagery with high spectral and spatial resolutions. Remote Sens. Environ. 2020;239. doi: 10.1016/j.rse.2019.111605.

17. Manfreda S., McCabe M., Miller P., Lucas R., Pajuelo M.V., Mallinis G., Ben Dor E., Helman D., Estes L., Ciraolo G., Müllerová J., Tauro F., De Lima M.I., De Lima J.L.M.P., Maltese A., Frances F., Caylor K., Kohv M., Perks M., Ruiz-Pérez G., Su Z., Vico G., Toth B. On the use of unmanned aerial systems for environmental monitoring. Rem. Sens. 2018;10:641. doi: 10.3390/rs10040641.

18. Radoglou-Grammatikis P., Sarigiannidis P., Lagkas T., Moscholios I. A compilation of UAV applications for precision agriculture. Comput. Networks. 2020;172. doi: 10.1016/j.comnet.2020.107148.

19. Delavarpour N., Koparan C., Nowatzki J., Bajwa S., Sun X. A technical study on UAV characteristics for precision agriculture applications and associated practical challenges. Rem. Sens. 2021;13:1204. doi: 10.3390/rs13061204.

20. Torres-Sánchez J., Peña J.M., de Castro A.I., López-Granados F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014;103:104–113. doi: 10.1016/j.compag.2014.02.009.

21. Bennet M.K., Younes N., Joyce K. Automating drone image processing to map coral reef substrates using Google Earth Engine. Drones. 2020;4(3). doi: 10.3390/drones4030050.

22. Salamí E., Barrado C., Pastor E. UAV flight experiments applied to the remote sensing of vegetated areas. Rem. Sens. 2014;6:11051–11081.

23. Huang Y., Chen Z.-X., Yu T., Huang X.-Z., Gu X.-F. Agricultural remote sensing big data: management and applications. J. Integr. Agric. 2018;17(9):1915–1931.

24. Gorelick N., Hancher M., Dixon M., Ilyuschenko S., Thau D., Moore R. Google Earth Engine: planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017;202:18–27.

25. Tamiminia H., Salehi B., Mahdianpari M., Quackenbush L., Adeli S., Brisco B. Google Earth Engine for geo-big data applications: a meta-analysis and systematic review. ISPRS J. Photogrammetry Remote Sens. 2020;164:152–170. doi: 10.1016/j.isprsjprs.2020.04.001.

26. Hall O., Dahlin S., Marstorp H., Bustos M.F.A., Öborn I., Jirström M. Classification of maize in complex smallholder farming systems using UAV imagery. Drones. 2018. doi: 10.3390/drones2030022.

27. Gokool S., Mahomed M., Kunz R., Clulow A., Sibanda M., Naiken V., Chetty K., Mabhaudhi T. Crop monitoring in smallholder farms using unmanned aerial vehicles to facilitate precision agriculture practices: a scoping review and bibliometric analysis. Sustainability. 2023;15(4). doi: 10.3390/su15043557.

28. Brewer K., Clulow A., Sibanda M., Gokool S., Naiken V., Mabhaudhi T. Predicting the chlorophyll content of maize over phenotyping as a proxy for crop health in smallholder farming systems. Rem. Sens. 2022;14(3). doi: 10.3390/rs14030518.

29. uMngeni Resilience Project (URP). Building Resilience in the Greater uMngeni Catchment, South Africa. Adaptation Fund; 2014. https://www.adaptation-fund.org/project/building-resilience-in-the-greater-umngeni-catchment/ (accessed 10 June 2021).

30. Mahomed M., Clulow A.D., Strydom S., Mabhaudhi T., Savage M.J. Assessment of a Ground-Based Lightning Detection and Near-Real-Time Warning System in the Rural Community of Swayimane, KwaZulu-Natal, South Africa. Weather Clim. Soc. 2021;13(3):605–621. doi: 10.1175/WCAS-D-20-0116.1.

31. Xue J., Su B. Significant remote sensing vegetation indices: a review of developments and applications. J. Sens. 2017;2017. doi: 10.1155/2017/1353691.

32. Bolyn C., Michez A., Gaucher P., Lejeune P., Bonnet S. Forest mapping and species composition using supervised per pixel classification of Sentinel-2 imagery. Biotechnol. Agron. Soc. Environ. 2018;22:172–187.

33. Yeom J., Jung J., Chang A., Ashapure A., Maeda M., Maeda A., Landivar J. Comparison of vegetation indices derived from UAV data for differentiation of tillage effects in agriculture. Rem. Sens. 2019;11(13). doi: 10.3390/rs11131548.

34. de Castro A.I., Shi Y., Maja J.M., Peña J.M. UAVs for vegetation monitoring: overview and recent scientific contributions. Rem. Sens. 2021;13. doi: 10.3390/rs13112139.

35. Rebelo A., Gokool S., Holden P., New M. Can Sentinel-2 be used to detect invasive alien trees and shrubs in Savanna and Grassland Biomes? Remote Sens. Appl. Soc. Environ. 2021;23. doi: 10.1016/j.rsase.2021.100600.

36. Wei H., Grafton M., Bretherton M., Irwin M., Sandoval E. Evaluation of the use of UAV-derived vegetation indices and environmental variables for grapevine water status monitoring based on machine learning algorithms and SHAP analysis. Rem. Sens. 2022;14(23). doi: 10.3390/rs14235918.

37. Odindi J., Mutanga O., Rouget M., Hlanguza N. Mapping alien and indigenous vegetation in the KwaZulu-Natal Sandstone Sourveld using remotely sensed data. Bothalia. 2016;46(2):a2103. doi: 10.4102/abc.v46i2.2103.

38. Orieschnig C.A., Belaud G., Venot J.P., Massuel S., Ogilvie A. Input imagery, classifiers, and cloud computing: insights from multi-temporal LULC mapping in the Cambodian Mekong Delta. Eur. J. Remote Sens. 2021;54(1):398–416. doi: 10.1080/22797254.2021.1948356.

39. McNairn H., Champagne C., Shang J., Holmstrom D.A., Reichert G. Integration of optical and Synthetic Aperture Radar (SAR) imagery for delivering operational annual crop inventories. ISPRS J. Photogrammetry Remote Sens. 2009;64:434–449. doi: 10.1016/j.isprsjprs.2008.07.006.

40. Martínez-Carricondo P., Agüera-Vega F., Carvajal-Ramírez F. Accuracy assessment of RTK/PPK UAV-photogrammetry projects using differential corrections from multiple GNSS fixed base stations. Geocarto Int. 2023;38(1). doi: 10.1080/10106049.2023.2197507.

41. Wagle N., Acharya T.D., Kolluru V., Huang H., Lee D.H. Multi-temporal land cover change mapping using Google Earth Engine and ensemble learning methods. Appl. Sci. 2020;10. doi: 10.3390/app10228083.

42. Abdi A.M. Land cover and land use classification performance of machine learning algorithms in a boreal landscape using Sentinel-2 data. GIScience Remote Sens. 2020;57(1):1–20. doi: 10.1080/15481603.2019.1650447.

43. Zhao L., Shi Y., Liu B., Hovis C., Duan Y., Shi Z. Finer classification of crops by fusing UAV images and Sentinel-2A data. Rem. Sens. 2019;11. doi: 10.3390/rs11243012.

44. Liu M., Yu T., Gu X., Sun, Yang G., Zhang Z., Mi X., Cao W., Li J. The impact of spatial resolution on the classification of vegetation types in highly fragmented planting areas based on unmanned aerial vehicle hyperspectral images. Rem. Sens. 2020;12(1). doi: 10.3390/rs12010146.

45. Böhler J.E., Schaepman M.E., Kneubühler M. Crop classification in a heterogeneous arable landscape using uncalibrated UAV data. Rem. Sens. 2018;10(8). doi: 10.3390/rs10081282.

46. Adão T., Hruška J., Pádua L., Bessa J., Peres E., Morais R., Sousa J.J. Hyperspectral imaging: a review on UAV-based sensors, data processing and applications for agriculture and forestry. Rem. Sens. 2017;9. doi: 10.3390/rs9111110.

47. Tassi A., Vizzari M. Object-oriented LULC classification in Google Earth Engine combining SNIC, GLCM, and machine learning algorithms. Rem. Sens. 2020;12. doi: 10.3390/rs12223776.

48. Timmermans A. Mapping cropland in smallholder farmer systems in South-Africa using Sentinel-2 imagery. MSc Dissertation, Masters in Environmental Bioengineering, Faculté des Bioingénieurs, Université Catholique de Louvain; Belgium: 2018.
