Abstract

Machine learning (ML), particularly deep learning (DL), has made rapid and substantial progress in synthetic biology in recent years. Biotechnological applications of biosystems, including pathways, enzymes, and whole cells, are being probed frequently with time. The intricacy and interconnectedness of biosystems make it challenging to design them with the desired properties. ML and DL have a synergy with synthetic biology. Synthetic biology can be employed to produce large data sets for training models (for instance, by utilizing DNA synthesis), and ML/DL models can be employed to inform design (for example, by generating new parts or advising unrivaled experiments to perform). This potential has recently been brought to light by research at the intersection of engineering biology and ML/DL through achievements like the design of novel biological components, best experimental design, automated analysis of microscopy data, protein structure prediction, and biomolecular implementations of ANNs (Artificial Neural Networks). I have divided this review into three sections. In the first section, I describe predictive potential and basics of ML along with myriad applications in synthetic biology, especially in engineering cells, activity of proteins, and metabolic pathways. In the second section, I describe fundamental DL architectures and their applications in synthetic biology. Finally, I describe different challenges causing hurdles in the progress of ML/DL and synthetic biology along with their solutions.

Introduction

Over the past two decades, biology has undergone a massive transformation that makes it possible to effectively build biological systems. The fundamental force behind this abrupt transition is the genomic revolution,1 which made it possible to sequence the DNA of a cell. With CRISPR-based technologies,2 it is now possible to accurately modify DNA in vivo, which is among the newest advances and techniques made possible by this genomic revolution. Precision DNA editing and high-throughput phenotypic data offer an exciting opportunity to connect phenotypic alterations to underlying code modifications. The goal of synthetic biology is to develop biological systems that meet specific requirements,3 for instance, cells responding in a particular way to external stimuli or generating the requisite quantity of biofuel. To achieve this, synthetic biologists make use of engineering design concepts to employ engineering’s predictability to regulate intricate biological systems. Standardized genetic components and the Design–Build–Test–Learn (DBTL) cycle are two examples of engineering approaches that are applied iteratively to get the desired result. According to the synthetic biology DBTL cycle, this discipline goes through the following four stages: (i) Design: Conjecture a DNA pattern or series of cellular alterations that can accomplish specified objectives of the plan. (ii) Build: This mainly entails the development of the DNA fragment and its effective incorporation into a cell. (iii) Test: Provide data to determine how well the assessed phenotype reaches the desired outcome and assesses the impact of off targeted or unintended effects. (iv) Learn: Use the test data to discover principles that direct the cycle toward the desired outcomes more effectively than a random search might. It frequently involves identifying errors that result from unintended off-target impacts. Modification to a pathway can result in a flux redistribution leading to byproducts, toxicity, slower cell growth, or several other outcomes that must be addressed. The next set of designs can be guided by artificial intelligence (AI), which would decrease the number of DBTL repetitions required to attain the desired result. Synthetic biology generally entails genomic alterations to urge a cell to produce products or behave in a specific manner.

ML has come to light as a promising option to speed up the progress in synthetic biology design by uncovering patterns in the data-rich accomplishments provided by systems biology. DL generally employs representations with numerous layers of artificial neurons to discover the link between the inputs and outputs. Examples comprise frameworks that use sequence information to predict the activation of components like promoters or precise protein structure forecasting algorithms.4−7 One of the main characteristics of DL models is their ability to gradually extract insights from input data by systematically transmitting information between layers of an artificial neural network (ANNs).8 For example, early layers of the network may retrieve low-level properties like vertical or horizontal edges when examining a microscope image, while the subsequent layers combine this data to determine the shape or patterns of cells in the image.9,10 DL networks can also encode intricate nonlinear connections between input values. For instance, a DL model that infers a protein’s function from its amino acid sequence can discover that specific combinations of amino acids operate synergistically to increase activity above what would be predicted based on the individual amino acids’ contributions.11

There are various obstacles that must be solved to advance synthetic biology and DL in the future. Synthetic biologists are not taught DL techniques typically; therefore, it might be challenging to keep up with two fields that are expanding quickly at the same time. Moreover, synthetic biology data sets have discipline-specific limitations. Natural sequence information is one area where there is a wealth of data, but the diversity of these data sets is constrained since nonfunctional patterns or those that have high levels of expression are often underrepresented. As a result of practical limitations in the execution and evaluation of synthetic biology components, the quantity of information available for other applications is greatly limited.

This review seeks to assist synthetic biologists in comprehending and applying ML and DL strategies in their research by presenting an overview of techniques and summarizing recent advances at the nexus of ML/DL and engineering biology (Figure 1). I begin by describing barriers in the progress of synthetic biology and the predictive potential of ML in overcoming these barriers. Then, I have described ML scenarios, mathematical frameworks, and their applicability in cell, protein, and metabolic engineering. Afterward, I review prevalent DL network architectures pertinent to engineering biology applications. Next, I describe recent advances that leverage DL to enable synthetic biology, emphasizing examples from component design, imaging, structure-based learning, and other fields. Finally, I present challenges pertinent to ML, DL, and synthetic biology and their possible solutions.

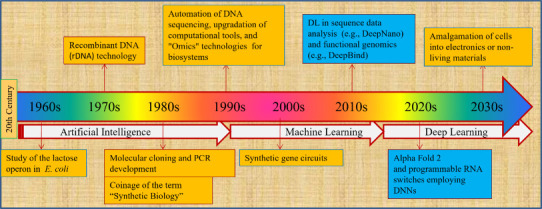

Figure 1.

An overview of the advances in ML/DL and synthetic biology since the 1960s.

Predictive Potential of ML

By learning the basic pattern in experimental results, machine learning can give predictive power without the requirement of complete mechanistic insight. Training data is employed to statistically relate a set of inputs to a set of outputs using sufficiently expressive models that reflect practically any relationship and is free from assumptions in prior knowledge. Machine learning has been applied in this context to forecast pathway dynamics, tune pathways via translational control, detect cancers in breast tissues, diagnose skin cancer, and determine RNA and DNA protein-binding motifs.12−14 Moreover, machine learning can be utilized to create synthetic biology systems by understanding the connection between phenotype and the genetic parts employed in genetic circuits, allowing for more stable circuits. However, ML algorithms are data hungry. They require a large amount of data to be trained and be efficacious. The recent machine learning revolution was enabled not by new techniques but by (i) increasing computational power and (ii) the accessibility of massive training libraries.15,16 Artificial vision would have probably not extended superhuman performance if it had to be taught on pictures taken on photographic film and mailed physically from photographers to AI researchers. The accessibility of vast image libraries facilitated by automated digital image collecting using charge-coupled device (CCD) cameras, as well as their distribution via the Internet, has been vital to its advancement.

Categories of Ml Methods

ML is an AI subset that enables computers to acquire knowledge from experience. ML algorithms employ computational approaches to “learn” particulars directly from data without depending on a preordained equation as a representation. The ML algorithms advance their performance adaptively in the presence of excess samples available for learning. In general, the more the training data, the more accurate and precise the learned function. Tens of thousands of ML algorithms exist, and hundreds of new ones are developed annually. When creating an ML model, input representation, loss function, output variables, hyperparameters, and model evaluation are significant considerations. The types of ML are described below in brief.

Supervised Machine Learning (SML)

SML is the most fundamental type of ML in which an algorithm is instructed on the labeled data. SML methods identify patterns of correlation between input attributes and output variables. The objective is to learn a task that perfectly delineates the relationship between the input attributes and output value in labeled data. Generally, there is direct a relation between the training data and the accuracy of learned tasks, however, the entailed size of training data also relies upon the attributes employed for the specific task. This solution is subsequently deployed for usage with the final data set, from which it learns in the same way as it learned from the training data set. In regression type, an output label is real-valued continuous variables whereas in classification type, the output label is a discrete variable (Figure 2A).

Figure 2.

Schematic representation of machine learning scenarios and mathematical frameworks. (A) SML in which data sets involve ground truth labels. (B) UML in which data sets do not involve ground truth labels. (C) Reinforcement learning where interaction between an algorithmic agent and simulated environment takes place. (D) Linear regression/classification that can be employed to fit models in which the output is a scalar value and data can be predicted by a straight line. (E) Support vector machines locate a separating hyper-plane that parts data into classes. (F) RFs employ the “bagging” technique to construct complete decision trees (DTs) in parallel using random bootstrap instances of the data sets and attributes. RFs select the most labels between different randomized DTs. (G) k-NN is employed for both regression as well as classification, and the input comprises the k nearest training instances in the data set. The output relies on whether the k-NN is employed for regression or classification. (H) NNs generally form a feedforward network of weights in which inputs trigger the hidden layers which give output. However, NNs also form a feedback network in which NNs learn by back-propagation through the networks.

Unsupervised Machine Learning (UML)

UML has the advantage of working with unlabeled data. The algorithms employ clustering approaches, clustering data points with identical attributes into prominent features with little information loss. Hence, the appraisal generally depends on fact-finding analysis. These algorithms attempt to apply approaches to the input data to explore for rules, find patterns, summarize and cluster data points, derive useful insights, and better communicate the data to users (Figure 2B). For more details on SML and UML, I refer the readers to an ML-based book.17

Reinforcement Learning (RL)

RL is directly inspired by how humans learn from events in their daily lives. It has an algorithm that uses trial and error to better itself and learn from new scenarios. Favorable outputs are rewarded, and nonfavorable outputs are rejected. Reinforcement learning, which is built on the psychological idea of conditioning, works by setting the algorithm in a workplace setting with an interpreter and rewards. The output result is delivered to the interpreter at every algorithmic iteration, which decides if the outcome is beneficial or not. If the result is favorable, the interpreter reinforces it by rewarding the algorithm whereas, in case of unfavorable results, the algorithm is compelled to repeat until a better result is found. Generally, the reward system is closely related to the efficacy of the outcome. Due to the availability of large training data sets from simulations under various genetic settings, RL algorithms can provide an efficient computational method to aid in decision-making in the DBTL cycle (Figure 2C).

Semisupervised Machine Learning (SSML)

By employing small labeled and large unlabeled data sets, SSML boosts the efficiency of a supervised model. It can reduce the requirement for vast amounts of organized and human-labeled data along with filtering the systemic noise arising in biological measurements due to various experimental variables. Because SSML is compatible with small training sets, it may have considerable potential in organisms, particularly metazoans with fewer experiment-aided genetic interactive gene pairs.

Active Learning (AL)

AL is a special case of SML. This method is used to create an effective classifier while minimizing the amount of the training data set by actively organizing the valuable data points.

Transfer Learning (TL)

Standard ML approaches presume that the training and testing contexts have the same probability distribution. This assumption, however, does not hold in the situation of merging biological data from several platforms. TL refers to the situation when a classifier is trained on one data set and then tested on another data set that may have a completely diverse probability distribution function. Biological data produced from several platforms and maybe employing various technologies is an obvious option for transfer learning approaches. For example, features acquired from the prediction of yeast growth rate may be transferred to other predictive tasks,18 including predicting ethanol generation in yeast.

Common Ml Algorithms Used in Synthetic Biology

In this section, I discuss a few specific algorithms employed in synthetic biology applications.

Linear Regression or Classification

The linear regression algorithm19 is based on SML. It carries out a regression task. In this algorithm, a linear equation is used to simulate the connection between inputs and outputs. Linear models are simple to design and analyze, but the connection between the objective variable and the attribute in several applications extends more than a linear function. However, linear regression is not appropriate for classification since it concerns continuous values, while classification issues require discrete values. The second issue is the shifting in threshold value caused by the addition of new data points (Figure 2D).

Support Vector Machines (SVMs)

Several researchers prefer SVM20 because it produces substantial accuracy while using minimal computing power. SVM is useful for both classification and regression tasks. Nonetheless, it is commonly employed in classification tasks. The SVM algorithm learns a collection of ideal hyperplanes that can classify samples. For each class, it maximizes the distance between the hyperplane and the closest data point. The data points (support vectors) assist in developing SVM. Increasing the margin distance gives some reinforcement, allowing future data points to be classified with greater certainty. Soft margin SVMs encompass “slack” variables that permit a few data points to be incorrectly categorized and are effective when data is not differentiable (Figure 2E).

Random Forests (RFs)

Random forest21 is a popular ML technique that integrates the output of numerous decision trees to produce a single conclusion. Its ease of usage, flexibility, and ability to tackle classification and regression challenges have boosted its popularity. The RF model is composed of several decision trees (DTs). While DTs are popular SML algorithms, they might suffer from bias and overfitting. When numerous DTs create an ensemble in the RF algorithm, the results are more accurate when the individual trees are not correlated with one another. The RF algorithm is a bagging method extension that employs both bagging and feature randomization to produce an uncorrelated forest of DTs. DTs build tree-like classifiers by progressively splitting data about specific attributes, most frequently employing classification performance to determine which trait and value to split (Figure 2F). RF techniques have three major hyperparameters that must be regulated before training. These hyperparameters include node size, number of attributes sampled, and number of trees. From there, the RF classifier can be applied to address regression or classification issues.

k-Nearest Neighbors

The k-nearest neighbors (KNNs)22 technique is a straightforward SML approach that can be used to address classification and regression issues. However, it is mostly employed to solve classification difficulties. Most SML methods use training data to learn a task and predict unknown data, while NNs preserve the training data and the pairing distances between them to classify unknown data points with the labels of close training data points. It is known as a lazy learner since it does not do any training when given training data. Instead, it simply saves the information during the training period and makes no calculations. It does not create a model until a query is run on the data set. As a result, KNN is significant for data mining. Here, “K” refers to the number of nearest neighbors employed for predicting unknown points (Figure 2G).

Neural Networks

Neural networks (NNs),23 also called simulated neural networks (SNNs) or artificial neural networks (ANNs), are nonlinear statistical decision-making or data modeling tools. They can be applied to identify patterns in data or to model intricate connections among inputs and outputs. Each node in a NN, which is commonly referred to as a neuron, is connected to every other node by a link, each of which is assigned a weight and threshold. The network is referred to as feedforward when neurons are exclusively connected to other neurons in succeeding layers. On the contrary, a network is referred to as recurrent when neurons in the same layer communicate with one another. The output layer serves as the last layer that gives the model predictions, while the input layer is the first layer that receives the representations of each incident as input. Hidden layers (any layers of neurons) exist in between the input and output layers. Each neuron multiplies the input by the link weights and transforms the data using an activation function to send information to the neurons it is connected to (Figure 2H). Any node whose output exceeds the defined threshold value is activated and begins providing data to the network’s next layer. Instead, no data is transmitted to the network’s next layer. NNs depend on training data to develop and enhance their accuracy over time (Figure 2H).

Applications of ML in Biosystems Design

The different ML approaches outlined in the preceding section stipulate a toolkit to solve the issues related to designing biological components. An ML model can be used to simulate synthetic biology applications with input and output variables that are easily quantifiable. In this section, I shall describe the assimilation of machine learning in synthetic biology, with a strong focus on cell and metabolic engineering subfields. I shall also discuss how this assimilation can help synthetic biology overcome the current difficulties in understanding the intricacies of biological systems.24

Applications in Cell Engineering

Cell engineering is an area of synthetic biology that involves the assembly of biomolecules to form genetic circuits/networks that can coordinate with internal cell machinery to improve, restore, or add unique functionalities to a designated host cell.25 The biological components typically comprise elements that control transcription, translation, and transcriptional factors that can be utilized to control the activity of supplemental proteins.

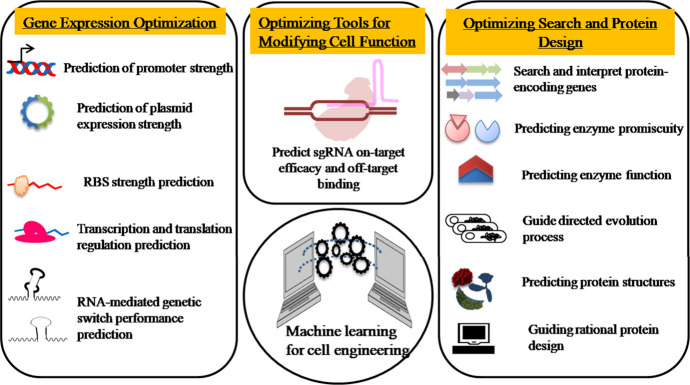

Synthetic biologists have worked to describe the performance outcomes of recognized biological components, comprehend their fundamental mode of action, and evaluate the interactions of all these components inside the host cell by trial-and-error research protocol.26 Although cell engineering methods have become more advanced, synthetic biologists still confront several challenges. Designing innovative biological components and discovering the interactions among host cell machinery and engineered features can be difficult due to a lack of understanding of design guidelines, causing troubleshooting issues. To that end, ML provides a way for optimally constructing and fine-tuning biomolecules in the host cell with predictable implications. It has multiple applications in gene expression optimization, cellular function modification, and protein designing (Figure 3).

Figure 3.

Applications of ML in cell engineering. ML can be employed for (i) improving gene expression, (ii) bettering tools for altering cellular functions, and (iii) upgrading protein search and design.

Several researchers started to use neural networks to guide the data-driven design of promoters4,27,28 and RBS sequences29 for regulating gene expression. Meng et al. used neural networks to estimate promoter strength using altered promoters and RBS motifs as inputs.30 Interestingly, their technique outperformed even mechanistic frameworks based on position weight matrices and methods of thermodynamics.31−33

ML can determine gene expression by optimizing the biological modules involved in translation and transcription, in addition to promoters and RBS sequences. Tunney et al. employed a feedforward neural network architecture, in which information is continuously “fed forward” from one stratum to the next, mimicking biological processes for predicting ribosome distribution across mRNA transcripts and translation elongation speeds from mRNA transcript coding sequences.34 Besides the development of biological components to control gene expression, more efficient strategies for changing cell function are required. This can be accomplished by removing undesirable genes or permanently incorporating foreign biomolecules into the cell genome utilizing genome editing systems such as the CRISPR-Cas system. Even though these tools have transformed the synthetic biology field, there is still potential to optimize CRISPR-Cas tools for identifying and optimizing sgRNA binding to the intended target site while decreasing off-target binding. Previous research employed the support vector machine algorithm, a form of supervised ML, to improve CRISPR-Cas9 efficiency35,36 but was hampered by the small size and poor quality of training data. The integration of higher-throughput screening techniques and deep learning, on the other hand, has enhanced the efficiency of modern sgRNA activity prediction algorithms. The DeepCpf1 tool, for example, prognosticates on-target knockout efficiency (indel frequencies)37 using DNNs trained on vast sgRNA (AsCpf1: Cpf1 from Acidaminococcus sp. BV3L6) task data sets.

In cell engineering, ML can be used to identify and describe protein-encoding genes in the genome. It is beneficial for creating and constructing metabolic pathways in the production host cells.38 The hidden Markov model has traditionally been utilized for this purpose.39,40 Genes are found in the genome using protein-coding signatures such as the Shine-Dalgarno sequence and subsequently functionally annotated using a sequence homology analysis against a database of known proteins. ML might discover and detect enzymes that can catalyze new reactions via enzyme promiscuity, in addition to assessing enzyme function. Chemoinformatic methods, molecular mechanics, and partitioned quantum mechanics, for example, can be employed to envisage metabolite-protein correlations in silico.41 These strategies, however, are computationally complex and necessitate domain expertise. Similarly, more robust, and efficient approaches, such as the Gaussian process model42 and support vector machine,43 are increasingly being employed to explore and match promiscuous enzymes to reactions. These approaches predict protein sequences (for example, K-mers), reaction signatures (for instance, chemical transformation properties, functional groups), and protein substrate affinity (Km values). Metabolic engineers now enjoy novel approaches to finding enzymes for innovative biochemical reactions while no recognized enzyme is available. Very recently, Yu et al.44 presented a CLEAN (Contrastive Learning-enabled Enzyme Annotation) ML algorithm for assigning Enzyme Commission (EC) numbers to enzymes with improved reliability, sensitivity, and accuracy compared to BLASTp, which is a commonly used tool for comparing protein sequences. The key features of CLEAN include its contrastive learning framework, which enables it to perform better in several aspects like (i) annotation of understudied enzymes, (ii) identification of promiscuous enzymes, and (iii) correction of mislabeled enzymes. Hence, CLEAN appears to be a promising tool for enzyme function prediction, leveraging contrastive learning to enhance accuracy and reliability, making it valuable for researchers in diverse biological and biotechnological domains.

Another ML application involves the designing and engineering of proteins. The most prevalent method is directed evolution, in which proteins undergo repeating processes of mutation and selection until the intended function and performance are obtained.45 By lowering the number of experimental repetitions required to achieve the desired protein, ML can steer the directed evolutionary process. It entails using past experimental data, which includes the sequence of each protein and its functional performance, to produce a library of variants with more fitness. Wu et al. simultaneously deployed different ML models and selected the models with the maximum accuracy to effectively produce nitric oxide dioxygenase and human guanine nucleotide-binding proteins from Rhodothermus marinus.46 Machine learning-aided directed evolution has also been employed to boost enzyme output,47 change the colors of fluorescent proteins,48 and improve the thermostability of proteins.49

Aside from directed evolution, ML can help with rational protein design. UniRep, for example, may use neural networks to learn statistical depictions of proteins (for instance, structural, evolutionary, functional, and physicochemical properties) from 24 million UniRef50 sequences.50 The method could predict the stability of a vast proportion of de novo proteins as well as functional alterations caused by genetic variations in wild-type proteins. Even with a small pool of training data, Biswas et al. used UniRep to improve the design of a green fluorescent protein (GFP) from Aequorea Victoria jellyfish and TEM-1-lactamase enzyme from E. coli.51 Another study employed neural networks that had been trained to correlate amino acids with the spatial orientation of oxygen, carbon, sulfur, and nitrogen atoms within a protein. The researchers succeeded in recognizing unique gain-of-function mutations and enhancing the protein function of three separate proteins.52,53

Applications in Metabolic Engineering

Rather than designing and regulating the synthesis of a single protein and single gene expression, the subfield entails rebuilding pathways that affect the engineered organism’s metabolism. Metabolic engineering entails changing cells’ natural chemical interactions to focus on generating desired biological molecules. It is typically a multistep process that involves multiple enzymes. While the cells can synthesize various enzyme pathways and specific products, they usually require a small group of ubiquitous metabolites or cofactors.54 Hence, while attempting to maximize the yields of a particular metabolite, it is vital to consider the overall cellular state of affairs.55 A single compound, for example, could be a result of several metabolic pathways.56 While high-yield pathways have been built via rational design,57−59 these efforts are most effective for simple pathways and necessitate extensive knowledge of the enzyme processes entailed and significant experimental expertise.

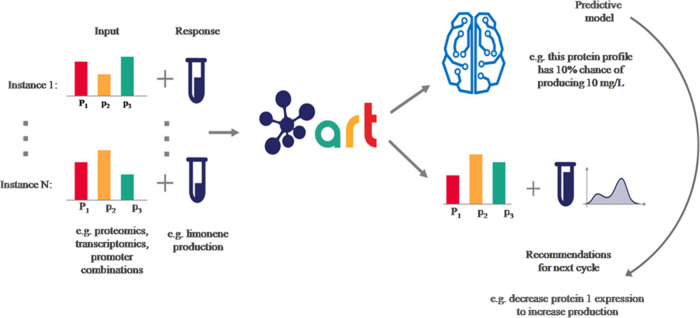

One big problem for ML in metabolic engineering is producing large biological data sets for training algorithms. To address this constraint, Radivojevic et al. created automated recommendation tool (ART), a machine-learning tool that combines network optimization with experimental design.54 The team achieved predictive modeling using 19 constructed strains in a test cycle by recommending experiment strategies to fulfill the desired aim. To summarize, ART provides a technology designed specifically for the demands of synthetic biologists to use the power of ML to facilitate predictable biology (Figure 4). By enabling successful inverse design, this combination of synthetic biology, ML, and automation has the potential to transform bioengineering.55−57

Figure 4.

ART gives predictions and recommendations for the following cycle. ART employs experimental data for (i) constructing a probable predictive representation that predicts response from input variables and (ii) utilizes this model to give a set of recommended inputs for the following experiment that will assist in reaching the desired goal. The predicted response for the directed inputs is specified as an entire probability distribution, efficiently quantifying unpredictability. Instances have relevance to each of the diverse examples of input and response employed for training the algorithm. Reproduced with permission from ref (54). (Licensed under a Creative Commons Attribution 4.0: http://creativecommons.org/licenses/by/4.0/). Copyright 2020, Radivojević et al. Nature Research.

Despite their fundamentally distinct foundations, there is growing interest in combining mechanistic modeling with ML. In general, this takes advantage of the benefits of both methodologies to deliver data-driven forecasts and deep insight into the underlying biology. Imposing model limitations based on biological settings, for example, have been demonstrated to improve prediction accuracy by ignoring biologically implausible solution spaces.58

One avenue being investigated is the use of data derived from mechanistic representations as input for ML. Because complete genome sequences are now available, genome-scale models (GEMs) have gained favor as an engineering tool for forecasting system-wide events. GEMs are constructed from the ground up, based on stoichiometry and mass balance concepts, and include all known genes that contribute to metabolism, allowing for a full assessment of the metabolic status in a given organism.59,60 Computer modeling flux estimates, for example, have been demonstrated to improve the predictive capacity of ML in yeast and cyanobacteria whole-genome models.61,62 Similarly, genome-scale representations can be employed to recognize engineering objectives and focus on the realms of machine-learning algorithms.63 Another technique is to utilize machine learning to forecast the parameters employed in mechanistic models. Heckmann et al. demonstrated that enzyme turnover rates predicted by ML algorithms beat naively earmarked values at flux estimations.64 In one study, supervised ML algorithms and FBA were used in tandem to estimate bacterial central metabolism using input features from 37 different bacteria species, all of which had C13 metabolic flux data.65

One significant work attempted to comprehend the metabolism-regulatory mechanism by examining alterations in the metabolome and proteome of 97 kinase Saccharomyces cerevisiae mutants. The investigation demonstrated that in the absence of an underlying molecular framework machine learning can be employed to map alterations in regular enzyme expression profiles, which can subsequently be used to determine the metabolic phenotype.66 Burstein et al. used an ML and experimental strategy on the genome scale to find 40 new virulent bacterial effectors in Legionella pneumophila.67 Automation of significant aspects during fermentation is often unfeasible; however, soft sensors enable correlation between easily detected offline and online parameters to predict relevant offline variables in real time. One study employing structure additive regression (STAR) illustrates a model that can be created gradually, making it easier to analyze and adjust for operators.68 Furthermore, novel biosensor development strategies have been explored to build new soft sensors with potentially higher predictive ability over significant offline variables.69,70

Data are abundant in industrial bioengineering that is suitable for data mining and inclusion into ML models. Because of its capacity to extract the most significant predictors from vast, overlapping data sets, principal component analysis (PCA) has proven to be the most popular technique in the field.71 A significant amount of data in the industry and the literature needs to be normalized and standardized, which has shown to be a difficult challenge for biological systems data sets. For instance, Oyetunde et al. manually collected data containing 1200 cellular factories from approximately 100 papers to forecast the efficiency of an E. coli-based cell factory relying on all biologically significant parameters that were consistent among publications.72 They emphasized the need for standardization of data.

One of the ultimate goals of metabolic engineering is to merge pathway design with host strain and culture condition optimization into a single pipeline (Figure 5). A standard workflow improves reproducibility, decreases the time required from project conception to realization,73 and allows for the usage of experimental automation to enhance throughput. Despite the benefits of a complete pipeline for metabolic engineering, there is a paucity of scientific literature explaining such methodologies. It opens the door for industries to establish unique techniques for engineering organisms for industrial purposes and for academics to investigate ways to use ML algorithms and techniques in streamlining the engineering of biosynthetic systems in organisms.

Figure 5.

Applications of ML in metabolic engineering systems. In general, a metabolic engineering venture can be divided into three parts: (i) metabolic pathway design, (ii) boosting cells for production, and (iii) upgrading industrial operations for product yield. Numerous computing tools have been developed to direct designing throughout the process. (A) One can design pathways for the synthesis of target products by employing predicted genomic functions or proven chemical reactions. It can assist in locating hosts with inherent industrial applicability. (B) To increase production titer, frequency, and productivity, strains are engineered. Mechanistic techniques leverage the understanding of fundamental biology to predict metabolite synthesis. On the other hand, data-driven methods use patterns found in massive data sets to recommend improvements. Subsequent initiatives have attempted to integrate the two methodologies to boost predictive power. (C) The output of downstream bioprocesses is maximized. The time needed to adapt a lab strain for industrial output can be significantly decreased with in silico prediction.

Fundamental Building Blocks for DL Models

DL is a subset of ML that learns complicated patterns in data using networks with numerous layers of artificial neurons. An artificial neuron in ANNs is a mathematical function that simulates the activity of a biological neuron. ANN models are employed to classify data, recognize patterns, and accomplish multiple tasks. Although a single-layer neural network can be used for making predictions, extra hidden layers are used to enable optimization and increase accuracy. There are multiple DL architectures, and in this review article, I cover some popular ones employed in synthetic biology based applications.

Multilayer Perceptrons (MLPs)

A standard ANN architecture employs a collection of “neurons”, and each neuron receives a series of numeric inputs. The inputs are multiplied by weight factors, and a constant termed bias is introduced. This value is subsequently processed by a nonlinear function to produce the neuron’s output. Initially, researchers utilized a sigmoid for the nonlinear function, but for computational performance, most recent DL network implementations employ ReLU (rectified linear units) for the neurons within the network’s hidden layers. There are typically multiple neurons, with the same inputs multiplied by various weights for each neuron. For instance, if the inputs are DNA sequence data, the weights regulate how each nucleotide influences the final output, including transcriptional activity. When given a multidimensional array as input, it can be unraveled into a vector (for example, a 4 × 50 matrix peeled into a 200-dimensional vector).

MLPs connect groups of neurons in fully linked networks so that the output of one layer enters the next. This hierarchical structure enables the detection of low-level traits in the early layers and far more complex characteristics in the later layers. The depth provided by numerous successive layers is where the prefix “deep” in the phrase “deep learning” derives from. Each neuron’s output is fully linked to all nodes on the next layer downstream in the MLP architecture. The network’s internal layers are referred to as hidden layers, while the final layer is known as the output layer. In contrast to the prior layers, which have several outputs, the output layer is unique in that it typically collapses to a single value or a limited number of values. In the network that delineates promoter data set to transcriptional activity, for example, the output may be a single integer that quantifies transcriptional activity.

Convolutional Neural Networks (CNNs)

CNNs can save localized position data about how neighboring data is structured with one other. Furthermore, they employ a parameter-sharing approach in which the same model weights are used throughout the entire input. As a result, CNNs are particularly well suited to jobs like image processing, where neighboring pixels contain relevant information, and operations like edge detection must be executed effectively across the image. The input is convolved using a filter (or filters) and then fed through a nonlinear activating function for every convolutional layer of the network. Filters are valuable for detecting specific patterns.

Traditional filter-based analytic tasks use hand-selected numeric values in the filter to define features that a user believes are likely to be significant, such as edge detection. CNNs, on the other hand, employ filter parameters as model weights that the network learns (Table 1). CNNs often undertake sequential analysis actions that can abstract properties, including color gradients and patterns, using a set of convolution steps. Convolution layers are generally sandwiched between layers that conduct other mathematical functions, including pooling, which is employed to focus information by lowering data dimensionality. CNNs can also incorporate components of other network architectures, such as fully connected layers after convolutional layers.

Table 1. Some Key DL Architectures and Terms Used in the Manuscript.

Recurrent Neural Networks (RNNs)

RNNs are a type of model that is intended for usage with sequential data. They work by iterating through the data set and iteratively updating the model’s internal representation (or memory) based on the internal state’s content and the succeeding values in the input sequence (Table 1). These networks have traditionally been employed for language comprehension, where the organization of words is significant for context and interpretation. These networks are also suitable for analyzing biological time series information or sequence data. When processing DNA sequences, for example, the relative location of start and stop codons is crucial in determining protein expression. Nevertheless, the repetitive nature of these networks has significant drawbacks. Most crucially, because of the fading gradients issue, basic RNNs do not acquire long-term relations between elements that are located far apart in sequence space,73 and their iterative nature prevents parallelism in execution, restricting their scalability.

The introduction of LSTM (long short-term memory) networks significantly improved the performance of RNNs.74 LSTM models were created to improve RNNs’ limited temporal memory by including a long-term memory state in which the model must make clear-cut decisions regarding adding or removing information to the long-term memory. For instance, if a model is seeking to predict if a protein would be translated from a particular mRNA, the existence of a stop codon is likely to be stored in long-term memory until a downstream start codon is detected. More information on LSTM models is included in the review by Van Houdt et al.,75 and Angenent-Mari et al.76 provide an example of their use in synthetic biology.

Transformers

The transformer is a more contemporary model built for sequential data that addresses the problems of limited memory experienced with RNN variants while also being computationally more methodical and parallelizable due to recurrence reduction. The transformer outperformed RNNs and LSTMs on all sequence-based tasks, demonstrating paradigm-shifting performance.77 Transformers have even outperformed CNNs on computer vision challenges,78 despite the fact that they were not initially designed for such tasks. This transforming performance is achieved by renouncing the notion of model memory and instead permitting the model to examine and produce outputs for every node in the whole sequence of data at the same time.

The model chooses which sections of the sequence to gather information from for each output. This is accomplished through a mechanism known as “attention”, in which the model may learn what information is relevant at each stage in the sequence and concentrate on passing that knowledge forward (Table 1). A model anticipating the behavior of a short RNA that may form secondary structures, for example, is likely to focus on sequences that are supporting to the sequence of relevance (e.g., outputs for “CGA” will contain a significant amount of data from the other section of the sequence having “UCG”). The mathematical intricacies of the attention mechanism are outside the scope of this review article, but readers should read Chaudhari et al.79 for further information.

Graph Neural Networks (GNNs) and Geometric Approaches

Learning methods for image and sequence data take advantage of the data’s methodical Euclidean structure and the intuitive notion of spatial locality that it provides. These structural attributes are not shared by other structured data, including secondary structure graphs of RNA and DNA, structural formula graphs of molecules, and atomic coordinate data for proteins. Nonetheless, they possess their own symmetries and notions of locality that can lead to developing learning frameworks. GNNs can expand the sharing of information in Euclidean neural networks to the graph structure, offering a scaled and generalized method for conveying information between nodes via the irregular edge connections that operate to encode the locality of the structure (Table 1).

It enables the learning of high-quality renderings of a structured data that can then be employed for edge prediction tasks or node labeling or pooled across the structure and supplied into an MLP to conduct regression or classification at the molecular scale. Bronstein et al.80 provide a thorough and inclusive primer for understanding ML from a geometric standpoint, and Zhou et al.81 provide a description of the intricacies of GNN formation.

Generative Models

Generative models82−84 are a class of artificial intelligence models that aim to learn and replicate patterns present in the data they were trained on. These models are trained on a data set and then used to generate new, similar data. There are various types of generative models, and they operate in different ways. Some common types include the following.

Generative Adversarial Networks (GANs)

GANs consist of two neural networks, a generator, and a discriminator, which are trained simultaneously through adversarial training. The generator creates synthetic data, and the discriminator’s role is to distinguish between real and generated data. The competition between these two networks helps the model generate increasingly realistic data.85

Variational Autoencoders (VAEs)

VAEs are probabilistic generative models that learn a probabilistic mapping between the data space and a latent space.86 They aim to encode input data into a probabilistic distribution in the latent space, allowing for the generation of new samples by sampling from this distribution.

Autoencoders

Autoencoders consist of an encoder and a decoder. The input data is compressed by the encoder into a latent space representation, which the decoder then uses to recreate the original data. While not inherently generative, variations like variational autoencoders can be used for generative purposes.

Boltzmann Machines

Boltzmann machines are a type of stochastic recurrent neural network. They use a network of binary-valued nodes and learn to model the probability distribution of the training data.87 They can be used for generating new samples.

Generative models have various applications, such as image and text generation, data augmentation, style transfer, and more. They play a crucial role in unsupervised learning tasks and can be used to explore and understand the underlying structure of the data they are trained on.

Applications of DL in Synthetic Biology

In this section, I investigate examples of deep learning in synthetic biology research (Figure 7A). I discuss current advances in the design of biological parts, imaging applications, structure-based learning, optimal experimental design, and implementations of biomolecular neural networks.

Figure 7.

DL enabled applications of synthetic biology. (A) Representative cases of pertinent inputs to DL networks and their allied output predictions. (B) Given a fresh input, predictions can be made using deep learning. Using a desired output as a starting point, models can likewise be utilized in reverse to produce new designs.

Design and Simulation of Biological Components

Deep learning has recently made substantial progress in predicting the function of biological components, like ribosome binding sites (RBSs), promoters, and 3′ and 5′ untranslated regions (UTRs).4,76,88−95 Since these components are frequently constrained in length, for instance, approximately 50 nucleotides for a 5′ UTR sequence or approximately three hundred for a promoter-DNA synthesis can be used to create massive randomized or semirandomized libraries whose function can be assessed using massively parallelized reporter assays combined with the next-generation array. The capacity to synthesize enormous libraries is an excellent example of how synthetic biology methods may produce training sets for data-hungry models.

Deep learning algorithms have recently been utilized to detect96,97 and potentially interpret protein sequences98 in genomes from superior-quality experimental data sets. DeepRibo, a deep neural network (DNN)-based technique that uses increased ribosome profiling coverage indicators and potential open-reading frame patterns to map and detect translated open-reading frames in the prokaryotes is one approach currently being used to locate protein sequences. REPARATION, a similar tool, uses a random forest classifier to do the same task.99 After discovering new proteins, functional interpretation of their sequences can be accomplished using DNN-based techniques such as DeepEC, which uses a protein sequence to determine enzyme commission numbers (EC numbers) quickly and precisely.98 EC numbers categorize enzymes according to the chemical reactions they catalyze and assist in studying enzyme functions. Alternative EC number prediction algorithms, in addition to DeepEC, are Cat Fam,100 DEEPre,101 ECPred,102 DETECT v2,103 PRIAM,104 and EFI CAz2.5.105

Sample et al.90 created Optimus 5-Prime, a DL model that precisely predicts how the 5′ UTR sequence regulates ribosome loading (Figure 6). Even though data sets relating sequence to translation performance from endogenous human 5′ UTRs exist,106,107 these innate data sets are not best suited for model training since sequences with detrimental effects are plausible to be underrepresented in innate illustrations, and endogenous transcript data are not diverse enough to capture a wide range of expression profiles. To address these concerns, Sample et al. synthesized and evaluated data from a 280,000-member library of random 50-nucleotide 5′ UTR segments upstream of the green fluorescent protein coding region (Figure 6). The Optimus 5-Prime model was trained using data from transfected HEK293T cells, with inputs being one-hot encoding renditions of the 5′ UTR sequencing and the output being the average ribosome load values. The researchers utilized CNN, and the model performed admirably, predicting up to 93% of the test set’s average ribosome loading values.

Figure 6.

Library consists of 280,000 random 50 nucleotide oligomers as 5′ untranslated regions (UTRs) for enhanced green fluorescent protein (eGFP). (A) Shows the usage of a 5′ UTR to assess the potential of 5′ UTR single nucleotide variants (SNVs) and engineer state-of-the-art sequences for prime protein expression. (B) The construction of the library of 280,000 members by the insertion of a T7 promoter accompanied by 25 nucleotides of stipulated 5′ UTR pattern, a random 50-nucleotide pattern, and the eGFP coding sequences (CDSs) into the backbone of a plasmid. In vitro transcribed (IVT) library mRNA was generated by in vitro transcription from a linear DNA template acquired by a polymerase chain reaction from the plasmid library. HEK293T cells were transfected with IVT library mRNA; cells were collected after 12 h; and polysome fractions were then collected and sequenced. In vitro transcribed library mRNA transfected HEK293T cells were recovered after 12 h, and then polysome profiling was conducted. For each UTR, read counts per fraction were utilized to calculate mean ribosome load (MRL), and the resulting information was employed to train a CNN. (C) The uAUGs (out-of-frame upstream start codons) decrease ribosome loading (positions that are in frame with the enhanced green fluorescent protein coding sequences are shown by the vertical lines). Analogous but very weak periodicity was observed in the case of GUGs and CUGs. (D) Shows the repressive efficacy of all out-of-frame variance of NNNAUGNN. (E) Shows the nucleotide frequencies deliberated for the 20 least repressive (weak) and most repressive (strong) translation initiation site sequences. Adapted with permission from ref (90). Copyright 2019, Nature Publishing Group.

For promoter designs, similar strategies that integrate DNA synthesis, DL, and massively parallel reporter assays have been applied. Traditionally, synthetic biologists have used a restricted number of native regulators in their construction designs. Although there are artificial promoter libraries,108−110 they are typically variants of existing sequences, like those obtained through mutagenesis, limiting diversity. Moreover, because they are underrepresented in natural situations, there is a scarcity of strong promoters. Kotopka and Smolke4 used massively parallel reporter tests to characterize a promoter variant library. The design kept the conserved sequences within the promoter and randomly generated the rest (∼80% of the sequences).

It demonstrates a potential method for accessing bigger sequence spaces by combining sensible and randomized designs. The researchers utilized a blend of high-throughput DNA sequencing (FACS-seq) and fluorescence-activated cell counting to categorize cells based on their expression levels, then sequenced the promoter regions within every bin. These data were utilized to train a CNN, which takes a DNA sequence as input and predicts activity. Generally, the model predictions translated well to test data, with R2 values greater than 0.79 for all libraries, a noteworthy achievement given the complexity of the sequences. This method of employing massively parallel reporter assays is broadly applicable. Jores et al.111 created synthetic promoters for plant species such as Arabidopsis, sorghum, and maize, and instructed a CNN to forecast promoter strength. MPRA (Massively parallel reporter assays) are not the only technique to create big data sets, and alternative ways may be less prone to processing biases. Hollerer et al. employed genetic reporters to generate a large data set that correlates directly sequence to function, which they then used to design a deep learning model that accurately predicts the translation pursuit of an RBS.91 The researchers constructed a library of 300,000 bacterial RBSs and inserted them upstream of a site-specific recombinase, which flips a specific DNA sequence in a region close to the recombinase.

The researchers were able to test function by assessing the proportion of constructs that had undergone recombination for each RBS variant by sequencing the area comprising both the RBS and the recombinase domains. This data set was utilized to instruct a ResNet53 (a CNN version), which resulted in a model that prognosticated the RBS function with inflated accuracy (R2 = 0.927). It is worth mentioning that the basic approach utilized to construct a physical DNA-recorded linkage between DNA sequence and gene regulatory element functionality is not limited to RBS optimization but could also be used for translational or transcriptional biosensor design or promoter sequence optimization. Despite the high promise of employing synthetic sequences to produce diverse libraries, this strategy has certain limitations. Deep learning studies have repeatedly encountered the difficulty that employing purely randomized sequences sequels a large number of sections that do not work. On the other hand, because natural elements are biased in their depiction, exclusively random parts are likewise prone to fail. Researchers have worked around this issue by adopting semirational strategies, including interspersing regulatory elements believed to give functional regulators with randomized sequences2 and then employing model predictions to choose libraries augmented for elements with an intermediary or strong activity.110,91 Furthermore, the sequence length will eventually limit the library’s diversity. The capability to synthesize and sequence larger sections may sequel reduced coverage and biased data quality in the case of lengthier sequences. Furthermore, researchers must negotiate between sequencing read length, sequencing depth, and library size.

The advantages of emphasizing particular sequence areas as “modules” must be balanced against the reality that gene regulation is complicated. Zrimec et al.112 demonstrated the importance of interactions between coding and noncoding domains in ascertaining gene expression levels. However, they illustrated that DNA sequences can be utilized to assess mRNA abundance straight with some precision (R2 = 0.6 on the mean across a wide range of model organisms, such as Saccharomyces cerevisiae, Arabidopsis thaliana, Homo sapiens, and others), the interplay between regulatory motifs, rather than the motifs themselves, ascertained mRNA abundance. These findings serve as a straightforward reminder that biological components do not function in isolation.

Generative Strategies for Novel Synthetic Components

Synthetic biology applications are typical prerequisites for a model to be predictive as well as generative (Figure 7B). Nondeep learning applications have been highly beneficial to the engineering biology field. The RBS calculator,113 for example, may produce unique designs based on a thermodynamic framework, and synthetic 5′ UTR sequences have been auspiciously generated using genetic algorithms.90 Mechanistic modeling techniques are very potent; nevertheless, they require the professional expertise of which attributes contribute to performance. Deep-learning-based generative techniques are an attractive field of research, as these tools approach the capacity to work backward, for example, from translation efficiency specifications to candidate sequence designs. Kotopka and Smolke2 employed a CNN model to execute sequence-design approaches in their research on yeast promoters, demonstrating that the best algorithms provided potent synthetic constitutive and inducible promoters.

Traditional techniques to design optimization, on the other hand, might be vulnerable to practical drawbacks such as computing inefficiency and a proclivity to become stuck at classical optimization minima. Moreover, these algorithms have no limitations on sequencing diversity, which might be troublesome for generating a large number of distinct library variants. Deep generative models, which include models such as variational autoencoders, generative adversarial networks, and autoregressive models, have the capability to fill these gaps. Linder et al.114 built a deep exploration network framework as an example of this method. They used a similarity metric that discourages sequence similarities that surpass a threshold to maximize fitness for the intended function while simultaneously explicitly emphasizing sequence diversity. Generative models have also shown success in the field of peptide engineering for simple challenges involving short-chain peptides, such as antibacterial peptide design.115,116

Applications Based on Structure

Rapid advancements in the field of geometric DL have facilitated a surge in exploration into structure-to-function learning in the field of biotechnology. The AlphaFold2 protein structure predicting model,117 which promises protein structure prediction fidelity high enough to be used as a successor for costly and time-taking protein crystallography, is perhaps the most high-profile example. As inputs, the model uses the protein sequence and several sequence alignments akin to proteins to learn about three separate data structures: (i) a sequence-level representation, (ii) a pairwise nucleotide interaction representation, and (iii) the protein’s atom-level three-dimensional (3-D) structure production. The 3-D structure is depicted as a cloud of unconnected nodes that correspond to the backbone constituents of each nucleotide and their respective amino acid side chains. To make use of the translational and rotational symmetries inherent in 3-D space geometry, a geometric equivariant attention mechanism is applied. Protein sequence-function mapping and engineering are further aspects of interest in the protein arena.118−122 Gelman et al.11 reported that on receiving training on data from deep mutational scanning tests, deep networks, including convolutional networks, can effectively predict function for new unidentified sequence variants.

When compared to the protein folding problem, the lack of known structural data makes predicting the 3-D RNA structure more difficult. Although over 100,000 protein structures have been identified, only a few RNA structures have high-fidelity structures. Townshend et al.123 used an intriguing strategy to overcome this restriction, in which they reframed the task as one of scoring the structural predictions given by the FARFAR2 algorithm rather than predicting the structure of RNA end-to-end with a DL model. It allowed for a substantial augmentation of the available data set, which only contains 18 RNA structures. It is insignificant to build thousands of proposed structures for every RNA molecule in the training data set, instead of learning to identify the similarity between proposed structures and the rational truth. The learned structural scoring function, termed the Atomic Rotationally Equivariant Scorer (ARES), outperforms existing nonmachine learning procedures in terms of accuracy. In recent years, structural modeling on small-molecule graphs has grown fast in the realms of drug discovery124,125 and drug repurposing.126 Stokes et al.,127 for example, used graph neural networks (GNNs) in tandem with screening assays to predict antibiotic activity in small molecules, identifying a new medication termed halicin as an efficient antibiotic in animal models.

Protein engineering entails either synthesizing new proteins or altering the sequence and structure of existing proteins.128 Large DL models are splendidly capable of learning various properties of proteins.128,129 Better wild-type templates can be generated by employing structural data. The usage of a local structural environment for identifying sites suitable to optimize wild-type proteins is one promising approach for this purpose. Recent research based on plastic degrading enzymes showed the power of this strategy.130 For determining which sites, the estimated probabilities of wild-type AÃs (amino acids) were relatively low, and Lu et al.130 employed the MutCompute131 algorithm. This suggests that certain alternative AÃs may be more “suited” to the appropriate structural microenvironment. Dauparas et al. trained ProteinMPNN (a graph based NN) on 19,700 high resolution single chain structures from PDB. They demonstrated that ProteinMPNN can extricate different failed designs by advising optimized protein sequences for the given templates.132 In a recent study, SoluProt133 and the enzyme miner integrated pipeline were employed for mining industrially pertinent haloalkane dehalogenases134 and fluorinases.135

Applications for Imaging and Computer Vision

DL has enabled unprecedented development in computer vision.136 Imaging applications in synthetic biology can involve automated detection of appropriate ties within an image, including colony growth on a plate or microscopy data analysis. Classification (for example, determination of the existence of a colony) and segmentation (for example, identifying the sets of pixels related to each cell in an image) are two examples of image analysis tasks. Classification is the simplest of these tasks, and basic CNN algorithms from computer vision, such as AlexNet,137 LeNet-5,138 and ResNets,139 were developed for it. Deep neural networks with numerous parameters (for example, AlexNet makes use of approximately 60 million parameters) were usually used in these classical algorithms. To decrease this complexity, smaller versions, including MobileNetv2140 (approximately 3 million parameters), have been developed, providing a realistic alternative.

Locating the exact position of an entity within an image is a more complicated task that is especially useful for quantification. Segmentation, for example, can be used to locate the position of cells within microscope images so that fluorescence measurements can be retrieved. With the advent of the U-Net algorithm,141 a CNN that performed extraordinarily well on biological data, the field witnessed a big advance. DeepCell,142 YeaZ,143 DeLTA,144,145 CellPose,146 and MiSiC147 are some significant DL algorithms that are applicable for single-cell resolution data.146 Image analysis algorithms can also handle more powerful analytics tasks, including monitoring cells from frame to frame in time-lapse photos and dealing with 3D image data.

Optimal Experimental Design

When compared to other domains, data tagging for synthetic biology challenges is frequently quite expensive, requiring professional knowledge of the subject and, in some cases, sophisticated laboratory-based data-gathering systems. This cost is especially problematic for deep learning models requiring outstanding training data. It increases interest in ensuring practitioners do not squander time and resources in classifying data, not adding much to a model. The selection of appropriate data to label or tests to run is an optimum experimental design termed active learning in the ML community. The usage of this method to solve DL problems can greatly minimize data set development costs.148,149

DL algorithms for optimal experimental design are not yet extensively employed in engineering biology; nonetheless, the ability of laboratory automation and initial findings based on simulation indicates that this is a viable area for future research. Treloar et al.150 employed deep reinforcement learning for controlling a simulated chemostat representation of a microbial coculture developing in a continuous bioreactor. The authors showed that by running five bioreactors in tandem for 24 h a reasonable control policy can be gained and that deep reinforcement learning can be employed to determine the best pattern of inputs and control actions to pertain to a continuous chemostat to increase the product performance of a microbial coculture bioprocess. It is a computational example of a DL-driven optimal experimental design in which reinforcement learning is employed to estimate near-optimal patterns of bioreactor inputs to manage a complicated system (Figure 8). Future work in optimum experimental design can rely on existing ML algorithms, such as those used in metabolic engineering applications.54,63,151−153

Figure 8.

Learning a proposed plan in 24 h. (A) Training of reinforcement learning agent was conducted online for 24 h on a model comprising five parallel chemostats. (B) Shows the reward obtained from the surroundings. Despite a little standard difference in reward, all five chemostats had been relocated to the intended population levels by the completion of the simulation. (C) Exhibit the population curve of one chemostat. The population levels change, and random actions are conducted throughout the exploration phase. When the exploring rate declines, the population levels approach the target values. Reproduced from ref (150) (an open access article distributed under the terms of the Creative Commons Attribution License). Copyright 2020, Treloar et al.

Biomolecular Applications of DL Networks

Although DL models are generally executed using computers, new research has shown that ANN mimics can be built utilizing biomolecular elements. These designs create biochemical systems and live cells that can compute and “learn” to resolve simple benchmark optimization issues. One of the primary reasons for this is that inducible gene expressions to chemical inducers often resemble a sigmoidal function of the inducer concentration and can therefore act as the nonlinear function in the neuron model.

On this basis, Moorman et al.154 introduced the theoretical design of a biomolecular neural network which is a dynamical chemical reaction network that reliably executes ANN computations and illustrated its applicability for classification tasks. The authors emphasized the significance of molecular entrapment in attaining negative weight values and the sigmoidal activation function in its elementary unit known as a biomolecular perceptron. Samaniego et al.154,155 theoretically showed that interlinked phosphorylation/dephosphorylation cycles can function as multilayer biomolecular neural systems. From an application point of view, they created signaling networks that potentially function as linear and nonlinear classifiers.

Sarkar et al.156 experimentally applied a single-layer ANN in Escherichia coli (E. coli) cells. They demonstrated the application of engineered bacteria as ANN-empowered wetware capable of performing complex computing operations, including multiplexing, demultiplexing, majority functions, encoding, decoding, and Feynman and Fredkin gates. In another study, Li et al.157 applied ANNs to a consortia of bacteria interacting via quorum-sensing molecules. They employed these engineered bacteria to identify 3 × 3 binary patterns. Sarkar et al.158 used elementary genetic circuits dispersed across different bacteria to solve chemically derived 2 × 2 maze issues by selectively articulating four distinct fluorescent proteins, illustrating the feasibility of using engineered bacteria to conduct distributed cellular computing and optimizations (Figure 9A). van der Linden et al.159 used genetic engineering to create a perceptron competent of binary classification. It was accomplished by constructing a synthetic in vitro transcription and translation (TxTl)-based weighted sum operation (WSO) circuit linked to a thresholding function employing toehold switch riboregulators. The synthetic genetic circuit was employed for binary classification, which involves expressing a single output protein only if the necessary minimum of inputs is exceeded (Figure 9B).

Figure 9.

(A) Application of the distribution of simple genetic circuits among bacterial populations to solve chemically produced 2 × 2 maze issues by selectively articulating four distinct fluorescent proteins. Reproduced with permission from ref (158). Copyright 2021, American Chemical Society (https://pubs.acs.org/doi/10.1021/acssynbio.1c00279, further permissions related to the material excerpted should be directed to the ACS). (B) Synthetic in vitro TxTl-based perceptron comprised of WSO linked to a thresholding function. Reproduced with permission from ref (159). Copyright 2022, American Chemical Society (https://pubs.acs.org/doi/10.1021/acssynbio.1c00596, further permissions related to the material excerpted should be directed to the ACS).

Pandi et al.160 described a method for biological computing using metabolic components applied in whole-cell and cell-free systems. The implementation depends on metabolic transducers, which are analog adders that perform a linear combination of the concentrations of numerous input metabolites with customizable weights and are used to generate metabolic perceptrons. Relying on this, the authors constructed two four-input metabolic perceptrons for binary classifying metabolite combinations, providing the framework for quick and scalable multiplex sensing using metabolic perceptron networks. Faure et al.161 recently demonstrated that artificial metabolic networks may be utilized to create RNNs that can be trained to anticipate growth rates or an organism’s consensual metabolic behavior in response to its surroundings. Because the proposed artificial metabolic networks can improve multiple objective functions, they might be employed to find optimal solutions in a variety of industrial applications, including finding the best media for the bioproduction of desired compounds or engineering microorganism-based judgment devices for multiplexed identification of metabolic biomarkers or environmental contaminants. Such biological evidence of ANNs and ML paradigms executed at the biomolecular level opens routes for novel research into the engineering of living cells for resolving complex computing, governing, and optimization problems.

Challenges

AI has started to find its way into many synthetic biology applications, but significant sociological and technological barriers remain between the two sectors. Large volumes of high-quality data are needed for machine learning to train algorithms. Getting these data is the major challenge in synthetic biology. Large-scale data generation is a serious difficulty in synthetic biology sectors where deep learning models are known to be notoriously data hungry. Training data, imbalanced data, uncertainty scaling, catastrophic scaling, overfitting, and vanishing gradient problem are some of the issues162−164 of DL.

Technological Challenges

The technical hurdles of applying AI to synthetic biology (Figure 10A) are as follows: (i) data is dispersed across multiple modalities, hard to combine, nonstructured, and generally lacks the scope in which it was gathered; (ii) models likely require more data than is typically gathered in a single trial and inadequate predictability and turmoil quantification; and (iii) there are no measurements or benchmarks to accurately assess prediction accuracy in the higher range task to be performed. Moreover, investigations are typically planned to investigate only positive outcomes, confounding or biasing the model’s judgment.

Figure 10.

(A) Challenges of amalgamating ML/DL techniques with applications of synthetic biology. (B) A standard ML/DL framework can help synthetic biology research. The intermediate stages are typically the center of attention, yet the foundation is critical and requires massive resource investment.

Data Challenges

The first big obstacle to combining AI and synthetic biology is the lack of adequate data sets. To use AI for synthetic biology, massive amounts of classified, organized, high-quality, and context-rich data from investigations are required. Despite advancements in establishing databases,165 including varied biological sequences (like whole genomes) and characteristics, there remains a dearth of labeled data. I refer to “labeled data” as phenotypic data that has been mapped to assessments that capture its bioactivity or cellular responses. The inclusion of such metrics and labels, as in other sectors, will accelerate the maturation of AI/ML and synthetic biology solutions to surpass human competency. The issue of irreproducibility in scientific research is indeed a serious concern that has garnered increasing attention in recent years. Irreproducibility refers to the inability of other researchers to replicate the results and findings of a study using the same methods and data. This problem undermines the reliability and credibility of scientific research, as reproducibility is a fundamental principle of the scientific method. Numerous research reports claim a significant outcome; however, their results cannot be reproduced. Studies showing that research is frequently not repeatable have drawn more attention to this issue in recent years. For instance, a 2016 Nature survey166 found that over 70% of scientists in the field of biology alone were unable to replicate the results of other scientists, and almost 60% of researchers were unable to replicate their own findings. Addressing irreproducibility requires a collaborative effort from researchers, institutions, journals, and funding agencies to establish a culture of transparency, rigor, and accountability in scientific research.

A lack of funding in data engineering is partly to blame for the scarcity of suitable data sets. Artificial intelligence advancements typically eclipse the computing infrastructure needs that underpin and ensure its success. Data engineering is a prime component of the basic infrastructure often regarded as the pyramid of needs167 (Figure 10B) by the AI community. Data engineering includes the phases of experimental design, data gathering, organization, accessing, and interpretation. Most AI application examples include a consistent, systematic, reproducible data engineering process. While we can currently collect biological data on an unprecedented scale and in unprecedented detail,168 this data is not always instantly suited for machine learning. Many barriers remain in the way of the acceptance of society standards for storing and sharing measurements, experimental procedures, as well as other metadata that would render them more accessible to AI approaches.165,169 To make such norms quickly deployable and to encourage shared metrics of data performance analysis, intensive formalization work and agreement are required. In brief, AI models necessitate reliable and comparable measurements throughout all trials, which lengthens the experimental timeline. This prerequisite adds a tremendous burden to experimentalists, following intricating protocols to produce scientific breakthroughs. As a result, the long-term demands of data collection are sometimes sacrificed to achieve the short timelines that are frequently placed on such initiatives.

It frequently leads to sparse data sets that reflect only a portion of the various layers that comprise the omics data stack. Data representation has an increasing impact on the capacity to merge these siloed sources for modeling in these circumstances. Today, tremendous effort is expended across a wide range of industry verticals to gather and organize unmanageable digital data for analysis through data cleansing, data set alignment, extraction, transformation, and load operations (ETL). These tasks consume nearly half to 80% of a data scientist’s time, reducing their potential to extract insights.170 Coping with a wide range of data forms (data multimodality) is problematic for researchers of synthetic biology, and the intricacy of pretreatment tasks increases considerably as data variety increases compared to data volume.

Algorithmic/Modeling-Based Challenges

Several efficient models driving current AI developments (for example, in natural language processing and computer vision) are not flavorful when examining omics data. When used for data obtained in a given experiment, common approaches of these models can undergo the “curse of dimensionality”. For instance, a single researcher can generate proteomics, transcriptomics, and genome data for an entity under a specific circumstance, yielding over 12,000 observations (dimensions). For such a study, the number of annotated events (e.g., failure or success) typically ranges from tens to hundreds. For such wide data types, the system dynamics (time resolution) are rarely recorded. These measuring gaps make drawing conclusions about complicated and dynamic systems difficult.