Abstract

Background

Ecological momentary assessment (EMA) involves completing multiple surveys over time in daily life, capturing in-the-moment experiences in real-world contexts. EMA use in psychosis studies has surged over several decades. To critically examine EMA use in psychosis research and assist future researchers in designing new EMA studies, this systematic review aimed to summarize the methodological approaches used for positive symptoms in psychosis populations and evaluate feasibility with a focus on completion rates.

Methods

A systematic search of PubMed, PsycINFO, MEDLINE, Web of Science, EBSCOhost, and Embase databases was conducted using search terms related to EMA and psychosis. After excluding duplicate samples, a meta-analysis of EMA survey completion rates was conducted, along with a meta-regression to examine predictors of completion.

Results

Sixty-eight studies were included in the review. Characteristics and reporting of EMA methodologies were variable across studies. The meta-mean EMA survey completion computed from the 39 unique studies that reported a mean completion rate was 67.15% (95% CI = 62.3, 71.9), with an average of 86.25% of the sample meeting a one-third EMA completion criterion. No significant predictors of completion were found in the meta-regression. A variety of EMA items were used to measure psychotic experiences, of which few were validated.

Conclusions

EMA methods have been widely applied in psychosis studies using a range of protocols. Completion rates are high, providing clear evidence of feasibility in psychosis populations. Recommendations for reporting in future studies are provided.

Keywords: Experience sampling methodology, ecological momentary assessment, psychosis, schizophrenia

Introduction

Ecological momentary assessment (EMA), otherwise known as experience sampling methodology (ESM), involves the repeated measurement of momentary experiences in the context of daily life.1,2 Measuring experiences as they arise in their natural environment can overcome retrospective recall bias3,4 and increase ecological validity.5 Furthermore, repeat assessment results in micro-longitudinal data, enabling examination of temporal relationships between variables.6–9 Analysis of EMA data can include group and individual level trends in momentary experiences over time,10 including within treatment.11–15

EMA has been used within psychosis research for several decades.16 While early research (eg, Delespaul and colleagues17) made use of paper and pencil questionnaires which participants were prompted to complete at set times by a beeper (digital reminder system), smartphone apps and other digital devices are now typically relied on in modern EMA studies,18 affording automated data capture and more control over when the surveys can be completed. Such devices have also extended EMA methods to include the collection of “passive data,” referring to data captured on an ongoing basis without direct input from the participant, such as motion tracking, geolocation, or call/text logs.19,20 This concept, often called digital phenotyping,21 offers the advantages of EMA plus additional behavioral, cognitive, and environmental data (for systematic review, see Benoit et al22).

A rich history of EMA research in psychosis has revealed important insights and emerging trends in clinical treatment. Despite this legacy, the methodologies employed in these studies are highly variable, making the design of new EMA studies and the synthesis of results challenging. Furthermore, while some articles provide guidance on best practices for conducting and reporting on EMA studies in psychiatric populations,23–26 and some narrative reviews have reported overall findings in the field,16,27 a thorough systematic examination of EMA methods in psychosis studies has never been conducted. Meta-analyses have been published on compliance with EMA surveys in psychiatric populations25,28; however, individuals diagnosed with a psychotic condition are a population with unique experiences that warrant explicit consideration in designing EMA studies. Positive symptoms are an important focus as distinct phenomena that show a high degree of temporal variation and are theoretically well suited to self-report, as they are relatively discrete and recognizable events. It is also important to understand the willingness of individuals to reflect on and report these experiences in the moment using EMA.

To address this gap in the literature, the current systematic review aimed to summarize the methodological characteristics employed in EMA studies in psychosis which measured positive psychotic symptoms and critically examine these approaches to assist future researchers in designing new EMA psychosis studies. A further aim was to evaluate the feasibility of EMA methods in psychosis, with a focus on EMA survey completion rates. The current review focused on digital EMA as the most common tool used in modern EMA research, which produces more reliable, timestamped completion rate data. Furthermore, as the use of passive EMA data in psychosis has recently been reviewed22 and the methodological considerations differ, the focus of the current review is on active EMA methods only (ie, momentary experiences captured via surveys on a digital device).

Method

The current review followed the PRISMA protocol for conducting systematic reviews (see supplementary material S1 for checklist29) and the protocol was registered with PROSPERO (CRD42020153429). The search was conducted on May 20, 2023.

Search Protocol

Relevant studies were retrieved by searching PubMed, PsycINFO, MEDLINE, Web of Science, EBSCOhost, and Embase with combinations of the following search terms within keywords, title, or abstract (see supplementary material S2 for search syntax for each database):

(ema OR esm OR diary OR diaries OR “momentary assessment” OR “experience sampling” OR “ambulatory assessment” OR “ambulatory monitoring”)

AND

(schizo* OR psychosis OR psychotic OR “positive symptom*” OR hallucinat* OR “hearing voices” OR “voice hear*” OR delusion* OR paranoi* OR “unusual beliefs” OR persecut*).

Identified studies were imported into Endnote, and duplicates were systematically removed. The abstract of each study was screened by IHB for basic criteria, and full texts were then independently evaluated by 2 authors (IHB and SA/EE/SC) against the inclusion and exclusion criteria, with an Excel spreadsheet used to record screening. Reference lists of studies meeting inclusion criteria and of prior relevant reviews were manually searched for additional articles.

Study Selection

Inclusion criteria were: (1) published in a peer-reviewed journal in English, between 1980 and the date of search, (2) more than 50% of study sample were individuals diagnosed with a psychotic disorder of any kind, (3) measurement of positive psychotic symptoms (eg, auditory verbal hallucinations, delusions, etc) using digital EMA, defined as a method of assessment undertaken in an ecologically valid context at multiple points throughout the day over an extended period using a digital device (ie, smartphone, personal digital assistant, SMS), and (4) full description of EMA protocol used ie, studies needed to report the EMA device used (smartphone app, PDA, or other), sampling frequency and length (ie, how often the surveys were delivered and over how many days), survey length (ie, number of EMA items), analytical approach (eg, multilevel modeling, aggregated analysis) OR EMA completion rates.

Exclusion criteria were: (1) case studies, reviews, theses, and book chapters, (2) EMA method was not digital (ie, paper and pencil surveys), (3) data collection did not occur within naturalistic settings, (4) insufficient reporting of methodological characteristics AND EMA completion rates, (5) collection of passive EMA data only, (6) ecological momentary intervention (EMI) studies where EMA was not a distinct and separate part of the intervention, (7) data reported in another study (eg, where this was explicitly reported within the manuscript, with the larger or more study retained). Studies were identified as having a duplicate sample if this was mentioned in the method, or if the sample details were very similar, in which case authors were contacted to confirm. Note that these duplicate studies were retained in the overall systematic review to provide details on publications in this research area; however, they were excluded from the data synthesis to avoid biased results. Studies were also excluded from the meta-regression if they did not report completion rates.

Data Extraction

Three authors (IHB, SA, and EE) agreed on the data extraction protocol, with one (IHB/SC) completing the full data extraction using an Excel spreadsheet and a second (SA/EE/SC) checking a random selection of 80% of these. Extracted data included the following: (1) basic study information (authors, country, and year), (2) sample characteristics (number of participants, diagnosis, age, and gender), (3) EMA characteristics (length of assessment period, number of surveys per day), (4) EMA tool (smartphone, personal digital assistant, and SMS), (5) analytical approach (time-lagged, multilevel regression, group comparisons, summary statistics), (6) EMA survey completion rates (if reported), (7) EMA items used to measure psychotic symptoms (if reported), (8) variables assessed using EMA.

Meta-Analysis and Meta-Regression

Statistical analyses were performed with R30 by author SA. Studies reporting a completion rate from unique samples were combined using a random-effects meta-analysis model implemented with the metamean function from the meta package (v4.17-0).31 This produced a weighted, pooled estimate of the mean completion rate across studies. A forest plot was generated to show the effect sizes for each of the relevant studies with the estimated meta-mean and the corresponding 95% confidence intervals. To further contextualize completion rates, a series of exploratory univariate random-effects meta-regression analyses were conducted with the aim of explaining the heterogeneity using the moderator effects of covariates assumed to impact adherence. We assessed (1) participant reimbursement, (2) number of surveys a day, (3) length of protocol, (4) device type, (5) study reporting quality (indicated by total score on the STROBE), (6) participant age, (7) gender, (8) Positive and Negative Symptom Scale (PANSS) total score, and (9) inpatient status (compared to community outpatients and mixed samples). Heterogeneity between the studies was measured through the I² statistic (with a value higher than 75% considered large). The proportion of between-study variance explained by the model was calculated through tau squared. For both meta-analysis and meta-regression, we included data where covariate data were reported (ie, participant reimbursement, number of surveys, etc). Both the meta-regression and meta-analysis methods require an estimate of spread using standard deviations (SD). As SD information was unavailable for a minority of studies, we imputed missing SD using predictive mean matching.32 Baujat plots33 were visually inspected to assess the influence of each individual study on the beta coefficients against its relative contribution to the overall heterogeneity estimate.
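To make the pooling step concrete, the following is a minimal Python sketch of a random-effects meta-analysis of means. It is not the authors' R code: it assumes the DerSimonian-Laird estimator for the between-study variance (the source does not state which estimator was used), a normal-approximation 95% confidence interval, and entirely hypothetical input values. The function name `random_effects_pooled_mean` is illustrative only.

```python
import math


def random_effects_pooled_mean(means, sds, ns):
    """Pool study-level means with a DerSimonian-Laird random-effects model.

    means, sds, ns: per-study mean completion rate, SD, and sample size.
    Returns the pooled mean, a normal-approximation 95% CI, tau^2, and I^2 (%).
    """
    # Sampling variance of each study mean: SD^2 / n
    variances = [sd ** 2 / n for sd, n in zip(sds, ns)]

    # Fixed-effect (inverse-variance) weights and pooled mean
    w_fixed = [1.0 / v for v in variances]
    sum_w = sum(w_fixed)
    fixed_mean = sum(w * m for w, m in zip(w_fixed, means)) / sum_w

    # Cochran's Q and the DerSimonian-Laird estimate of tau^2
    q = sum(w * (m - fixed_mean) ** 2 for w, m in zip(w_fixed, means))
    df = len(means) - 1
    c = sum_w - sum(w ** 2 for w in w_fixed) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # I^2: share of total variability attributed to between-study heterogeneity
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    # Random-effects weights add tau^2 to each within-study variance
    w_random = [1.0 / (v + tau2) for v in variances]
    sum_wr = sum(w_random)
    pooled = sum(w * m for w, m in zip(w_random, means)) / sum_wr
    se = math.sqrt(1.0 / sum_wr)

    return {
        "pooled": pooled,
        "ci_low": pooled - 1.96 * se,
        "ci_high": pooled + 1.96 * se,
        "tau2": tau2,
        "i2": i2,
    }


# Hypothetical completion rates (%), SDs, and sample sizes for three studies
example = random_effects_pooled_mean(
    means=[60.0, 70.0, 80.0], sds=[20.0, 20.0, 20.0], ns=[40, 40, 40]
)
```

Under this model, an I² above 75% would be read as large heterogeneity, matching the threshold stated above.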

Study Quality Analysis

One author (EE/SC) rated each included study against the 16 items of the adapted STROBE Checklist for Reporting EMA Studies (CREMAS34). The extent to which the included studies fully met, partially met, or did not meet the criteria outlined in the CREMAS is reported quantitatively, along with a descriptive summary of the range of reporting practices.

Results

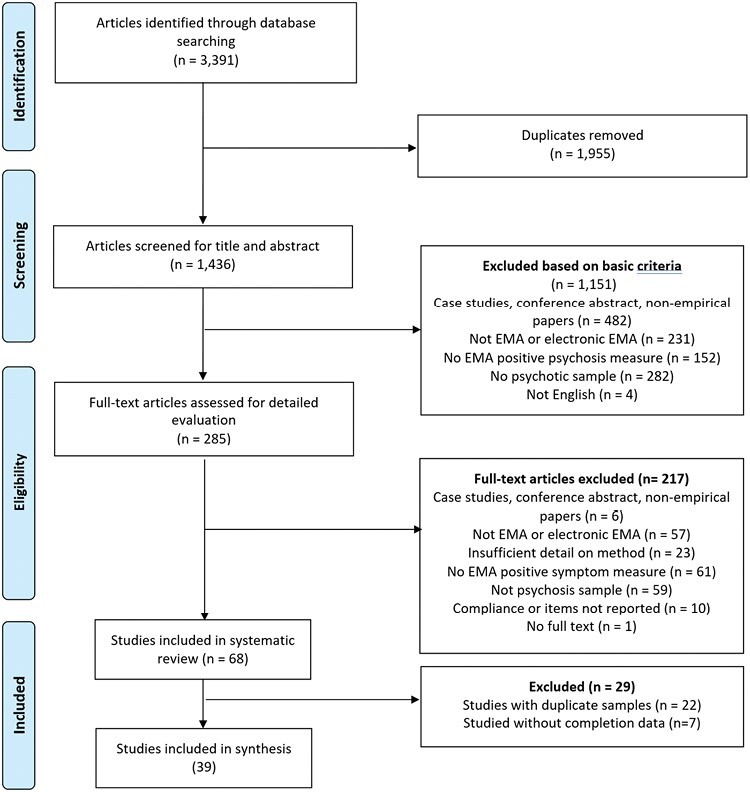

Of 3391 studies identified, 285 underwent detailed evaluation, and 68 met inclusion criteria for the systematic review (see PRISMA flow diagram in figure 1). Of these 68 studies, 22 were removed from the data synthesis due to having duplicate samples, leaving 46 studies with unique samples. A further 7 were excluded from the meta-regression because they did not report completion rates. Of the remaining 39 studies, one35 included 2 different samples, resulting in 40 independent samples included in the meta-regression.

Fig. 1.

PRISMA flow diagram.

The study and sample characteristics for all included studies are outlined in table 1 (see supplementary material S3 for a list of included studies). Of the 46 studies with a unique sample, 34 were observational studies aiming to examine psychosis mechanisms or processes, 6 examined the feasibility, acceptability, or validity of EMA measures or methodologies in psychosis samples, and 6 were clinical trials of digital interventions involving EMA or using EMA within the trial methodology. There was a total of 2655 participants across these 46 unique samples, with a mean age of 36.90 years (SD = 10.27 years) and an average of 43% females. The mean illness duration was 12.81 years (SD = 8.62 years). Psychotic symptom severity at the person level was captured using a variety of measures, most frequently the PANSS (mean total score from 21 studies = 51.74; SD = 14.80).

Table 1.

Study and Sample Characteristics of all Included Studies

| No. | Study | Location | Type of Study | Sample Size | Clinical Condition | Mean Age | Gender (% Female) | Mean Illness Severity and Measure | Mean Illness Duration | Recruitment Context |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Ainsworth, Palmier-Claus35 | UK | Acceptability study | 24 | SZ 92% SZA 8% | 33.00 | 21 | PANSS Total: 56.10 | NA | Community outpatients |
| 2 | Bartolomeo, Raugh36 | USA | Observational study | 50 | SZ or SZA 100% | 39.53 | 66 | PANSS Total 62.14 | NA | Community outpatients |
| 3 | Bell, Rossell11 | Australia | Clinical trial | 17 | BDwPF 11.8% SZA 29.4% SZ 52.9% USPD 5.9% | 39.12 | 65 | PSYRATS AVH Total: 28.47 | Not reported | Community outpatients |
| 4 | Bell, Velthorst37 | UK | Observational study | 33 | NAP 100% | 39.1 | 21 | PANSS Positive Mean: 1.85 | NA | Psychiatric inpatients |
| 5 | Ben-Zeev, Ellington38 | USA | Observational study | 145 | SZ 72% SZA 28% | 46.50 | 39 | PANSS Total: 66.77 | 24.35 | Community outpatients |
| 6 | Ben-Zeev, Frounfelker39 | USA | Observational study | 24 | SZ/SZA 100% | 44.87 | 29 | SAPS: 23.58 SANS: 10.54 | 16.95 | Community outpatients |
| 7 | Ben-Zeev, McHugo40 | USA | Validation study | 24 | SZ 100% | 44.87 | 29 | PANSS Total: 23.58 | 16.95 | Community outpatients |
| 8 | Ben-Zeev, Morris41 | USA | Observational study | 130 | SZ 72% SZA 28% | 46.20 | 41 | PANSS total: 66.69 | 25.37 | Community outpatients |
| 9 | Berry, Emsley42 | UK | Observational study | 19 | FEP 47% SZ 27% SZA 21% PNOS 5% | 33.70 | 63 | NA | NA | Community outpatients |
| 10 | Brand, Bendall43 | Australia | Observational study | 28 | 35.7% SZ 25% SZA 14.3% MDwPF 17.8% PNOS | 44.96 | 64 | PSYRATS Total = 28.21 | 22.27 | Community outpatients |
| 11 | Buck, Munson44 | USA | Observational study | 465 | BD 39.8% MDD 66.7% PTSD 43.2% SZA 25.6% SZ 29.2% SUD 32.5% | 40.67 | 52 | NA | NA | NA |
| 12 | Cristóbal‐Narváez, Sheinbaum45 | Spain | Observational study | 96 | FEP 100% | 22.23 | 31 | NA | NA | Community outpatients |
| 13 | Daemen, van Amelsvoort46 | UK | Observational study | 147 | PD 100% | 34.3 | 33 | PANSS Positive symptoms Total: 12.1 | 12.29 | NA |
| 14 | Daemen, van Amelsvoort47 | UK, Netherlands Belgium | Observational study | 194 | PD 100% | 34.5 | 35 | NA | NA | |
| 15 | Dokuz, Kani48 | Turkey | Observational study | 57 | SZ 92.7% SZA 4.9% DelD 2.4% | 32.76 | 17 | PANSS Positive Mean: 1.85 | 11.32 | Community outpatients |
| 16 | Dupuy, Abdallah49 | France | Observational study | 33 | SZ 100% | 33.90 | 27 | PANSS total = 69.00 | 9.54 | Community outpatients |
| 17 | Fett, Hanssen50 | UK | Observational study | 29 | SZ 79.3% SZA 13.8% PNOS 6.9% | 39.10 | 25 | NA | NA | Community outpatients |
| 18 | Fielding-Smith, Greenwood51 | UK | Observational study | 35 | SZ 38.7% SZA 6.5% Other PD 9.7% BPD 32.3% MDDwPF 9.7% BD 3.2% | 41.90 | 58 | NA | NA | Community outpatients |
| 19 | Gaudiano, Ellenberg52 | USA | Observational study | 23 | SZ spectrum/MDwPF 100% | 40.00 | 78 | NA | NA | Recently released inpatients |
| 20 | Geraets, Snippe53 | Germany | Clinical trial | 91 | SZ 87% SZA 5% PDNOS 8% | 39.50 | 31 | NA | 15.65 | Community outpatients |
| 21 | Gohari, Moore54 | USA | Observational study | 101 | SZ or SZA 100% | NA | NA | | | |
| 22 | Granholm, Loh55 | USA | Feasibility and validity study | 54 | SZ 79% SZA 21% | 44.06 | 37 | PANSS Total: 61.89 | 23.83 | Community outpatients |
| 23 | Hanssen, Balvert56 | Netherlands | Clinical trial | 50 | SZ spectrum 100% | 39.10 | 36 | PANSS Total = 57.30 | NA | Community outpatients |
| 24 | Hartley, Haddock57 | UK | Observational study | 32 | SZ 47% PDNOS 44% SZA 6% APD 3% | 33.00 | 33 | PANSS Total: 62.60 | NA | Community outpatients |
| 25 | Hartley, Haddock58 | UK | Observational study | 32 | SZ 47% PDNOS 44% SZA 6% APD 3% | 33.00 | 33 | PANSS Total: 62.60 | NA | Community outpatients |
| 26 | Harvey, Miller59 | USA | Validation study | 173 | SZ 59% BD 41% | 40.6 | 59 | NA | NA | Community outpatients |
| 27 | Jongeneel, Aalbers9 | Netherlands | Clinical trial | 95 | SZ 44.4% PNOS 20% SZA 11.1% PTSD 8.9% BPD 5.6% Mood disorder 5.6% Other 4.4% | 43.00 | 54 | NA | 15.90 | Community outpatients |
| 28 | Kammerer, Mehl60 | Germany | Observational study | 67 | SZ 70% SZA 24% DelD 6% | 38.04 | 40 | PANSS total = 66.31 | NA | Community outpatients |
| 29 | Kimhy, Lister61 | USA | Observational study | 54 | SZ 76% SZA 20% SZphreniform 4% | 32.31 | 60 | NA | NA | Community outpatients |
| 30 | Kimhy, Vakhrusheva62 | USA | Observational study | 33 | SZ 100% | 27.80 | 45 | NA | NA | Inpatients |
| 31 | Kimhy, Wall63 | USA | Observational study | 40 | SZ 68% SZA 18% PDNOS 7% SZphreniform 7% | 30.50 | 38 | NA | NA | Inpatients and outpatients |
| 32 | Kimhy, Delespaul64 | USA | Feasibility and acceptability study | 11 | SZ 100% | 34.50 | 55 | NA | 10.30 | Inpatients |
| 33 | Klippel65 | Netherlands | Observational study | 59 | FEP 54% ARMS 46% | NA | NA | NA | NA | Community outpatients |
| 34 | Ludwig, Mehl66 | Germany | Observational study | 71 | Psychotic disorder 100% | 37.80 | 42 | PANSS Total = 66.32 | 13.46 | Community outpatients |
| 35 | Lüdtke, Kriston67 | Germany | Observational study | 35 | SZ 97% SZA 3% | 39.00 | 54 | NA | NA | Community outpatients |
| 36 | Lüdtke, Moritz68 | Germany Norway | Clinical trial | 30 | NAP 100% | 42.87 | 53 | PANSS Positive Total: 13.03 | NA | NA |
| 37 | Miller, Harvey69 | USA | Validation study | 173 | SZ/SZA 59% BD 41% | 40.60 | 57 | NA | NA | Community outpatients |
| 38 | Moitra, Park70 | USA | Feasibility and validation study | 24 | BDwPF 4.2% MDDwPF 8.3% SZA 45.8% SZ 4.2% USPD 37.5% | 38.00 | 75 | BPRS Total = 50.60 | NA | Inpatients and outpatients |
| 39 | Monsonet, Rockwood71 | Spain | Observational study | 43 | SZ 74.4% DDwPF 2.6% BDwPF 23% | 24.59 | 31 | NA | NA | Community outpatients |
| 40 | Morgan, Strassnig72 | USA | Observational study | 240 | SZ 50.7% BD 49.2% | 40.18 | 59 | PANSS Positive Symptoms SZ Total: 15.88 BD Total: 10.32 | NA | NA |
| 41 | Mulligan, Haddock73 | UK | Observational study | 22 | SZ 59% SZA 5% NAP 36% | 37.40 | 41 | PANSS total: 64.80 | 12.00 | Community outpatients |
| 42 | Nittel, Lincoln74 | Germany | Observational study | 37 | SZ 72% SZA 22% SPD 3% DelD 3% | 35.87 | 56 | PANSS total 62.70 | 10.68 | Community outpatients |
| 43 | Orth, Hur75 | USA | Observational study | 37 | SZ 34.4% SZ-BD type 21.9% SZA depressive type 18.8% BPwPF 15.6% MDDwPF 9.4% | 41.66 | 44 | The Expanded Brief Psychiatric Rating Scale - the suspiciousness item 2.13 | NA | Community outpatients |
| 44 | Palmier-Claus, Ainsworth18 | UK | Feasibility and validation study | 44 | SZ 53% SZA 8% SZphreniform 5% | 31.43 | 22 | PANSS Total = 56.70 | 7.70 | Community outpatients |
| 45 | Parrish, Chalker76 | USA | Observational study | 96 | SZ 10.4% SZA 42.7% BDwPF 16.7% MDDwPF 2.1% | 43.90 | 55 | PANSS positive = 18.0 PANSS negative = 13.30 | NA | Community outpatients |
| 46 | Parrish, Chalker77 | USA | Observational study | 128 | SZ 33.5% SZA 43.7% ADwPF 22.6% | 43.4 | 56 | PANSS Positive Symptoms 18.1 | NA | NA |
| 47 | Perez, Tercero78 | USA | Observational study | 173 | SZ 59% BD 41% | 40.6 | 59 | NA | NA | NA |
| 48 | Pieters, Deenik79 | Netherlands | Feasibility and acceptability study | 33 | SZ 48.5% SZA 12.1% Other PD 39.4% | 37.6 | 24 | BPRS Positive Symptoms Total: 12.9 | NA | Community outpatients |
| 49 | Pos, Meijer80 | Netherlands | Observational study | 50 | SZ/SZphreniform 60% PNOS 18% SZA 6% Other 16% | 23.70 | 20 | Green Paranoid Thought Scales = 51.90 | NA | Community outpatients |
| 50 | Radley, Barlow81 | UK | Observational study | 35 | Psychosis 100% | 41 | 80 | NA | NA | NA |
| 51 | Raugh, James20 | USA | Observational study | 52 | SZ 41.6% SZA 54.1% BDwPF 4.1% | 38.56 | 65 | NA | NA | Community outpatients |
| 52 | Raugh, Strauss82 | USA | Feasibility and acceptability study | 54 | SZ 40.7% SZA 53.7% BDwPF 5.6% | 39.17 | 65 | PANSS Positive 13.58 | NA | Community outpatients |
| 53 | Reininghaus, Kempton83 | UK | Observational study | 51 | FEP 54% ARMS 46% | 28.30 | 45 | NA | NA | Community outpatients |
| 54 | Reininghaus, Gayer-Anderson84 | UK | Observational study | 50 | FEP 54% ARMS 46% | 28.40 | 44 | NA | NA | Community outpatients |
| 55 | Reininghaus, Oorschot85 | UK | Observational study | 51 | FEP 54% ARMS 46% | 28.30 | 45 | NA | NA | Community outpatients |
| 56 | Sa, Wearden86 | UK | Observational study | 25 | FEP 33% SZ 38% SZA 5% PNOS 10% Other 14% | 52.00 | 29 | NA | NA | Community outpatients |
| 57 | Schick, Van Winkel87 | Netherlands Belgium | Observational study | 96 | NAP 100% | 33.45 | 33 | PANSS Positive 11.83 | NA | Community outpatients |
| 58 | Smelror, Bless88 | Norway | Clinical trial | 2 | NAP 100% | 17.70 | NA | PANSS positive mean score = 14.00 | 2.80 (1.80) | Inpatients and outpatients |
| 59 | So, Chung89 | China | Observational study | 47 | SZ spectrum 100% | 43.83 | 63 | PANSS Total = 51.98 | NA | Outpatients |
| 60 | So, Chau90 | Hong Kong | Observational study | 25 | SZ 38% PNOS 38% MDwPF 15% DelD 8% | 34.07 | 43 | PANSS total = 64.07 | NA | NA |
| 61 | So, Peters91 | UK | Observational study | 26 | SZ 41% PNOS 23% MDwPF 14% SZA 9% SZphreniform 5% DelD 5% APD 5% | 36.12 | 50 | PANSS total = 64.23 | NA | Psychiatric inpatients |
| 62 | So, Peters92 | UK | Observational study | 26 | SZ 33% PNOS 33% MDwPF 13% SZA 7% DelD 7% APD 7% | 36.12 | 50 | PANSS total = 64.23 | NA | Psychiatric inpatients |
| 63 | Steenkamp, Parrish93 | USA | Observational study | 222 | SZ 28.2% SZA 33% BDwPF 36.4% MDDwPF 2.4% | 41.7 | 65 | PANSS Positive Symptoms Total: 16.3 | NA | NA |
| 64 | Strauss, Esfahlani94 | USA | Observational study | 30 | SZ/SZA 100% | 41.39 | 43 | NA | NA | Outpatients |
| 65 | Swendsen, Ben-Zeev95 | USA | Observational study | 145 | SZ 68% SZ 32% | 46.50 | 39 | PANSS total = 66.77 | NA | Community outpatients |
| 66 | Vilardaga, Hayes96 | USA | Observational study | 25 | SZA 56% SZ 28% BD 4% Other 12% | 45.00 | 40 | NA | NA | Community outpatients |
| 67 | Westermann, Grezellschak97 | Germany | Observational study | 15 | SZ 100% | 39.90 | 40 | CAPE positive = 1.90 CAPE negative = 2.21 | 18.50 | Community outpatients |
| 68 | Wright, Palmer-Cooper98 | UK | Observational study | 41 | SZ spectrum 71% BD 21% PTSD 21% OCD 21% ASD 21% | 31.85 | 46 | NA | NA | Community outpatients |

Note: AD, affective disorder; APD, acute psychotic disorder; ARMS, at-risk mental state; ASD, autism spectrum disorder; BDwPF, bipolar disorder with psychotic features; BD, bipolar disorder; BPD, borderline personality disorder; BPRS, Brief Psychiatric Rating Scale; CAPE, Community Assessment of Psychic Experiences; CHR, clinical high risk; CPD, cluster C personality disorder; DD, depressive disorder; DelD, delusional disorder; FEP, first episode psychosis; MDDwPF, major depressive disorder with psychotic features; MDwPF, mood disorder with psychotic features; NAP, non-affective psychosis; OCD, obsessive-compulsive disorder; PNOS, psychotic disorder not otherwise specified; PTSD, post-traumatic stress disorder; PANSS, Positive and Negative Symptom Scale; SAPS, Scale for the Assessment of Positive Symptoms; SANS, Scale for the Assessment of Negative Symptoms; SZA, schizo-affective disorder; SZ, schizophrenia; SZphreniform, schizophreniform; SUD, substance use disorder; UHR, ultrahigh risk; USPD, unspecified psychotic disorder.

The majority (25 studies) included samples with a psychotic disorder only, 16 had a mixed sample with psychotic disorders and mood disorders with psychotic features, 4 had a mixed sample including personality disorders, 5 included participants with first-episode psychosis or meeting ultrahigh risk criteria, and 2 had a mixed sample including PTSD. Most studies recruited participants from community outpatient services (35 studies), 4 from psychiatric inpatient services only, and 2 from both inpatient and outpatient services; in 5 studies the recruitment context was unclear.

Methodological Features of EMA Studies

Table 2 presents details of EMA study methodology. Reporting of EMA protocols varied between studies. The most common EMA protocol, adopted in 10 studies, involved 10 surveys per day (range = 3–10) over 6 days (range = 1–30) delivered within a pseudorandomized schedule of prompts spread evenly across four 3-hour blocks during 12–15 waking hours (most often 9 AM to 9 PM), in which participants had 15 minutes to respond. Few studies provided a thorough justification for their approach. Eighteen studies included a financial reward for EMA completion, of which 14 provided a reward for participating in the whole EMA procedure and 4 provided rewards for each completed survey.
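As an illustration of how a stratified pseudorandomized prompt schedule of this kind might be generated, the Python sketch below divides the sampling window into equal strata and draws one random prompt time per stratum. It is not taken from any of the reviewed studies: the one-stratum-per-prompt design, the default 9 AM to 9 PM window, the 15-minute minimum gap, and the function names `stratified_prompt_schedule` and `to_clock` are all assumptions made for the example.

```python
import random


def stratified_prompt_schedule(n_prompts=10, start_min=9 * 60, end_min=21 * 60,
                               min_gap_min=15, rng=None):
    """Return n_prompts prompt times (minutes after midnight) for one day.

    The window [start_min, end_min) is split into n_prompts equal strata and
    one time is drawn uniformly within each stratum (assumed design). Draws
    are repeated until consecutive prompts are at least min_gap_min apart.
    """
    rng = rng or random.Random()
    block_len = (end_min - start_min) / n_prompts
    if block_len < min_gap_min:
        raise ValueError("window too short for the requested minimum gap")

    while True:
        # One whole-minute prompt time per stratum, in chronological order
        times = [int(start_min + (i + rng.random()) * block_len)
                 for i in range(n_prompts)]
        gaps_ok = all(later - earlier >= min_gap_min
                      for earlier, later in zip(times, times[1:]))
        if gaps_ok:
            return times


def to_clock(minutes):
    """Format minutes after midnight as HH:MM (24-hour clock)."""
    return f"{minutes // 60:02d}:{minutes % 60:02d}"


# Example: one day's schedule with a seeded generator for reproducibility
schedule = stratified_prompt_schedule(rng=random.Random(2023))
clock_times = [to_clock(t) for t in schedule]
```

Stratifying the draws keeps prompts spread across the day while leaving the exact timing unpredictable to the participant. A response window (15 minutes in the most common protocol) would be enforced separately by the survey software.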

Table 2.

EMA Study Methodology

| Study | Length of EMA Period (Days) | Number Surveys Per Day | Total Number of Items Per Survey | Sampling Schedule | EMA Completion Reward | Technology Used | Analysis Approach | |

|---|---|---|---|---|---|---|---|---|

| 1 | Ainsworth, Palmier-Claus35 | 6 | 4 | 35 | Stratified pseudorandomized prompts are scheduled at least 1 h apart within 12 h window from 9 AM to 9 PM. 15 min windows to complete survey. | $38 USD | SMS and mobile app | Multilevel modeling |

| 2 | Bartolomeo, Raugh36 | 6 | 8 | 16 | Stratified pseudorandomized prompts are scheduled at least 18 min to 3 h apart within 12 h window from 9 AM to 9 PM. 25 min window to complete: 10 min prior to and 15 min after receiving a notification | $ 20 USD per hour for laboratory testing, $1 USD per EMA survey completed, $80 USD for returning the phone at the end of the study. | Smartphone app mEMA app from ilumivu |

Multilevel modeling |

| 3 | Bell, Rossell11 | 6 | 10 | 39 | Stratified pseudorandomized prompts are scheduled within 4 blocks of a personalized 12 h waking window. 15 min window to complete survey. | None | Smartphone app Movisens |

NA |

| 4 | Bell, Velthorst37 | 7 | 10 | 34 | Stratified pseudorandomized prompts scheduled at least 1 h apart within 12 h window from 8 AM to 10:30 PM with at least 15 min and at most 1.5 h between 2 consecutive beep. |

40 pounds | iPod | Time-lagged multilevel modeling |

| 5 | Ben-Zeev, Ellington38 | 7 | 4 | 8 | Randomized prompts are scheduled at equal intervals across 13 h window from 9 AM to 10 PM. | $35 USD | PDA Purdue Momentary Assessment Tool (PMAT) |

Multilevel modeling |

| 6 | Ben-Zeev, Frounfelker39 | 7 | 6 | 24 | Stratified pseudorandomized prompts are scheduled within 4 blocks of a personalized 12 h waking window. 15 min window to complete survey. | NA | PDA Experience Sampling Program version 4.0 |

Time-lagged multilevel modeling |

| 7 | Ben-Zeev, McHugo40 | 7 | 6 | 13 | Randomized prompts are scheduled at equal intervals across 13 h window from 9 AM to 10 PM. | NA | PDA Experience Sampling Program version 4.0 |

Proximal and time-lagged multilevel modeling |

| 8 | Ben-Zeev, Morris41 | 7 | 4 | 15 | Stratified pseudorandomized prompts are scheduled within 4 blocks of a personalized 12 h waking window. 15-min window to complete survey. | NA | PDA Purdue Momentary Assessment Tool (PMAT) |

Time-lagged multilevel modeling |

| 9 | Berry, Emsley42 | 6 | 6 | 33 | Pseudorandomized prompts are scheduled within personalized 11-h waking window. 15 min window to complete survey. | None | Smartphone | Multilevel modeling |

| 10 | Brand, Bendall43 | 6 | 10 | 4 | Stratified pseudorandomized prompts are scheduled at least 1 h apart within 10 h window from 10 AM to 8 PM. 15 min windows to complete survey. | NA | Mobile app Movisens |

Proximal and time-lagged multilevel modeling |

| 11 | Buck, Munson44 | 30 | 4 | 12 | Stratified pseudorandomized prompts scheduled at least 3 apart within 12-h window from 9 AM to 9 PM | $75USD | Smartphone app | Proximal and time-lagged multilevel modeling |

| 12 | Cristóbal‐Narváez, Sheinbaum45 | 7 | 8 | 13 | Randomized prompts are scheduled across 12 h window from 10 AM to m-10 PM. | NA | PDA | Multilevel modeling |

| 13 | Daemen, van Amelsvoort46 | 6 | 10 | NA | Stratified pseudorandomized prompts are scheduled within 90-min window between 7:30 AM and 10:30 PM 10-min windows to complete survey. | NA | Smartphone app PsyMate |

Proximal aggregated and time-lagged multilevel modeling |

| 14 | Daemen, van Amelsvoort47 | 6 | 10 | 10 | Stratified pseudorandomized prompts are scheduled at least 90-min apart within 12 h window from 7:30 AM to 10:30 PM. | NA | Smartphone app PsyMate |

Proximal and time-lagged multilevel modeling |

| 15 | Dokuz, Kani48 | 6 | 10 | NA | Stratified pseudorandomized prompts are scheduled at least 90-min apart within 16 h window from 7:00 to 23:00 or 08:00 to 00:00. 15 min windows to complete survey. | NA | Smartphone | Multilevel modeling |

| 16 | Dupuy, Abdallah49 | 7 | 5 | NA | Stratified pseudorandomized prompts are scheduled within 3 blocks of a 15 h waking window. | 100 Euros for EMA and MRI component of study | Mobile app | Time-lagged multilevel modeling |

| 17 | Fett, Hanssen50 | 7 | 10 | 34 | Pseudorandomized prompts are scheduled within 14.5-h window between 8 AM and 10.30 PM. | 40 British Pounds | iPod/iPhone | Proximal and time-lagged multilevel modeling |

| 18 | Fielding-Smith, Greenwood51 | 9 | 10 | 8 | Stratified pseudorandomized sampling within equal blocks during day. Max 15 min to respond. | NA | Mobile app Movisens |

Proximal and time-lagged Multilevel modeling |

| 19 | Gaudiano, Ellenberg52 | 28 | 4 | 13 | Randomized prompts are scheduled within 12 hour window 9 AM–9 PM. One fixed prompt at 9 PM. | $0.50 per survey, up to $60 | Smartphone app MyExperience Tool |

Time-lagged multilevel modeling |

| 20 | Geraets, Snippe53 | 6 to 10 | 10 | 16 | Stratified pseudorandomized prompts are scheduled at least 1.5 h apart within 13 h window from 7:30 AM to 10:30 PM. | 30 Euro | Mobile app PsyMate |

Proximal and time-lagged Multilevel modeling and network analysis |

| 21 | Gohari, Moore54 | 30 | 3 | NA | Stratified pseudorandomized prompts are scheduled at least 2 h apart within participant’s sleep and wake schedule. 1 h windows to complete survey. | NA | Android smartphone | Multilevel modeling |

| 22 | Granholm, Loh55 | 7 | 4 | 14 | Randomized prompts are scheduled at equal intervals across 13 h window from 9 AM to 10 PM. | $35 USD | PDA Purdue Momentary Assessment Tool (PMAT) | Multilevel modeling |

| 23 | Hanssen, Balvert56 | 21 | 6 | 15 | Stratified pseudorandomized prompts are scheduled at least 1.5 h apart within 12 h window from 10 AM to 10 PM | 150 Euros | Mobile app SMARTapp | Multilevel modeling |

| 24 | Hartley, Haddock57 | 6 | 10 | 5 | Stratified pseudorandomized prompts are scheduled intervals across 15 h between 9 AM and 12 AM. 15 min window to complete survey. | NA | PDA ESP software | Proximal and time-lagged multilevel modeling |

| 25 | Hartley, Haddock58 | 6 | 10 | 7 | Stratified pseudorandomized prompts are scheduled intervals across 15 h between 9 AM and 12 AM. 15 min window to complete survey. | NA | PDA ESP software | Proximal and time-lagged multilevel modeling |

| 26 | Harvey, Miller59 | 30 | 3 | NA | Stratified pseudorandomized prompts are scheduled at least 2 h apart within 12 h window from 9 AM to 9 PM. 1 h windows to complete survey. | NA | SMS web link sent to Smartphone | Multilevel modeling |

| 27 | Jongeneel, Aalbers9 | 6 | 10 | 45 | Stratified pseudorandomized prompts scheduled intervals across 15 h between 7:30 AM and 10:30 PM. 15 min window to complete survey. | NA | Mobile app PsyMate | Proximal and time-lagged, Time series network analysis |

| 28 | Kammerer, Mehl60 | 6 | 10 | 9 | Stratified pseudorandomized prompts are scheduled intervals at least 30 min apart across 12 h between 10 AM and 10 PM. | 45 Euros | Mobile app Movisens | Proximal and time-lagged multilevel modeling |

| 29 | Kimhy, Lister61 | 1.5 | 10 | 10 | Randomized prompts within 12 h window between 10 AM and 10 PM. | NA | PDA iESP software | Descriptive statistics, t-tests, correlations, moderation |

| 30 | Kimhy, Vakhrusheva62 | 2 | 10 | 16 | Randomized prompts within 12 h window between 10 AM and 10 PM. | NA | PDA iESP software | Multilevel modeling |

| 31 | Kimhy, Wall63 | 3 | 10 | NA | Randomized prompts within 12 h window between 10 AM and 10 PM. | NA | PDA iESP software | Multilevel modeling |

| 32 | Kimhy, Delespaul64 | 1 | 10 | 16 | Stratified pseudorandomized prompts scheduled intervals across 12 h between 10 AM and 10 PM. 3 min window to complete survey. | NA | PDA iESP software | Multilevel modeling |

| 33 | Klippel65 | 6 | 10 | 23 | Stratified pseudorandomized prompts. 10 min to complete survey | NA | PDA PsyMate | Multilevel modeling |

| 34 | Ludwig, Mehl66 | 6 | 10 | 19 | Stratified pseudorandomized prompts scheduled intervals across 12 h between 10 AM and 10 PM. 30 min window to complete survey. | 45 Euros | Mobile app Movisens | Time-lagged Multilevel modeling |

| 35 | Lüdtke, Kriston67 | 2 | 8 | 5 | Fixed intervals of 4 h periods across 12 h window from 9 AM to 9 PM. 5 min to complete survey | NA | PC, laptop, smartphone, or tablet EFS Survey | Time-lagged Multilevel modeling |

| 36 | Lüdtke, Moritz68 | 7 | 10 | 13 | NA | NA | Smartphone | Time-lagged Multilevel modeling |

| 37 | Miller, Harvey69 | 30 | 3 | 10 | Stratified pseudorandomized prompts scheduled intervals across 12 h between 9 AM and 9 PM. 1 h window to complete survey. | NA | Mobile app | Multilevel modeling |

| 38 | Moitra, Park70 | 28 | 4 | 13 | Randomized prompts are scheduled within 12 h window 9 AM–9 PM. One fixed prompt at 9 PM. | $0.50 USD per survey | PDA MyExperience | Correlations |

| 39 | Monsonet, Rockwood71 | 7 | 8 | NA | Stratified pseudorandomized prompts are scheduled within 11 h window from 11 AM to 10 PM. 15 min windows to complete survey. | NA | PDA and Smartphone app | Multilevel modeling |

| 40 | Morgan, Strassnig72 | 30 | 3 | NA | Stratified pseudorandomized prompts are scheduled at least 1 h apart within 12 h window from 9 AM to 9 PM. 1 h windows to complete survey. | $1USD for each survey and $50USD endpoint assessment | SMS Web link sent to Smartphone | Multilevel modeling |

| 41 | Mulligan, Haddock73 | 7 | 5 | 16 | Stratified random intervals within tailored waking hours. 10 min to complete survey. | NA | PDA CamNtech PRO-Diary | Time-lagged multilevel modeling |

| 42 | Nittel, Lincoln74 | 6 | 10 | 16 | Randomized prompts within 14 h window between 9 AM and 11 PM. | 30 Euros | iPod touch iDialogPad | Time-lagged multilevel modeling |

| 43 | Orth, Hur75 | 7 | 8 | NA | Stratified pseudorandomized prompts are scheduled at least 1 h apart within 13 h window from 8 AM to 9 PM. 15 min windows to complete survey. | monetary bonus for completing 80% of EMAs | Smartphone app OmniTrack for Research | Proximal and time-lagged Multilevel modeling |

| 44 | Palmier-Claus, Ainsworth18 | 7 | 6 | 31 | Pseudorandomized prompts are scheduled at least 1 h apart across 12 h window from 9 AM to 9 PM. 15 min window to complete survey | None | Smartphone app ClinTouch | Aggregated descriptive statistics and correlation |

| 45 | Parrish, Chalker76 | 10 | 3 | 7 | Fixed schedule is chosen by participant (morning, afternoon, and evening). 1 h window to complete survey. | $1.66 per survey, max $50 | Mobile app | Proximal and time-lagged multilevel modeling |

| 46 | Parrish, Chalker77 | 10 | 3 | NA | Stratified pseudorandomized prompts are scheduled at least 2 h. 15 min windows to complete survey. | $1.66USD for each survey completed, for a maximum of $50USD | NeuroUX platform web link sent to Smartphone | Multilevel modeling |

| 47 | Perez, Tercero78 | 3 | 30 | NA | Stratified pseudorandomized prompts are scheduled at least 2 h apart within 12 h window from 9 AM to 9 PM. 1 h windows to complete survey. | NA | SMS Web link sent to Smartphone | Multilevel modeling |

| 48 | Pieters, Deenik79 | 7 | 8 | 24 | Stratified pseudorandomized prompts are scheduled at least 90-min apart within 12 h window from 10 AM to 10 PM. 15 min windows to complete survey. | NA | Smartphone app PsyMate | Descriptive statistics |

| 49 | Pos, Meijer80 | 6 | 10 | 52 | Randomized prompts within 15 h window between 7:30 AM and 10:30 PM. | NA | PDA PsyMate | Multilevel modeling |

| 50 | Radley, Barlow81 | 10 | 6 | 21 | Stratified pseudorandomized prompts are scheduled at least 1 h apart within 14 h window from 9 AM to 11 PM. 5 min windows to complete survey. | 25 pounds | Smartphone app mobileQ | Time-lagged Multilevel modeling |

| 51 | Raugh, James20 | 6 | 8 | NA | Stratified pseudorandomized prompts are scheduled at least 90-min apart within 12 h window from 9 AM to 9 PM. 25 min windows to complete survey. | $1 USD per survey completed, and an $80 USD bonus for returning the study phone. | Smartphone app Ilumivu and the Alert app from Empatica | Multilevel modeling |

| 52 | Raugh, Strauss82 | 6 | 8 | NA | Stratified pseudorandomized prompts scheduled at least 90-min apart within 12 h window from 9 AM to 9 PM? minute windows to complete survey. | NA | Smartphone app Ilumivu and the Alert app from Empatica | Proximal and time-lagged Multilevel modeling |

| 53 | Reininghaus, Kempton83 | 6 | 10 | 24 | Stratified pseudorandomized prompts within 90-min blocks. 10 min window to complete survey. | NA | PDA PsyMate | Multilevel modeling |

| 54 | Reininghaus, Gayer-Anderson84 | 6 | 10 | 18 | Stratified pseudorandomized prompts within 90-min blocks. 10 min window to complete survey. | NA | PDA PsyMate | Multilevel modeling |

| 55 | Reininghaus, Oorschot85 | 6 | 10 | 24 | Stratified pseudorandomized prompts within 90-min blocks. 10-min window to complete survey. | NA | PDA PsyMate | Multilevel modeling |

| 56 | Sa, Wearden86 | 6 | 10 | 26 | Stratified pseudorandomized prompts scheduled intervals across 15 h between 9 AM and 12 AM. 15 min window to complete survey. | NA | PDA ESP software | Proximal and time-lagged Multilevel modeling |

| 57 | Schick, Van Winkel87 | 6 | 10 | NA | 10 times a day on 6 consecutive days with a semi-random sampling scheme within a fixed, predefined time frame | NA | Smartphone app PsyMate | Multilevel modeling |

| 58 | Smelror, Bless88 | 12 (average) | 5 | 5 | Stratified pseudorandomized prompts scheduled intervals across 12 h between 10 AM and 10 PM. 15 min window to complete survey. | NA | iPod touch App developed in house | Summary statistics, Spearman’s correlation |

| 59 | So, Chung89 | 6 | 10 | 9 | Randomized prompts at least 30 min apart within 10–12 h waking window. 15 min window to complete survey. | NA | Smartphone/ipod touch | Proximal and time-lagged multilevel modeling |

| 60 | So, Chau90 | 14 | 7 | 7 | Stratified pseudorandomized prompts scheduled intervals across 12 h tailored to sleep–wake cycle. 20 min window to complete survey. | NA | PDA Purdue Momentary Assessment Tool (PMAT) | Time-lagged multilevel modeling |

| 61 | So, Peters91 | 14 | 7 | 23 | Stratified pseudorandomized prompts scheduled intervals across 12 h tailored to sleep–wake cycle. 20 min window to complete survey. | NA | PDA Purdue Momentary Assessment Tool (PMAT) | Multilevel modeling |

| 62 | So, Peters92 | 14 | 7 | 20 | Stratified pseudorandomized prompts scheduled intervals across 12 h tailored to sleep–wake cycle. 20 min window to complete survey. | NA | PDA Purdue Momentary Assessment Tool (PMAT) | Multilevel modeling |

| 63 | Steenkamp, Parrish93 | 10 | 3 | NA | Stratified pseudorandomized prompts are scheduled at least 2 h apart within 12 h window from 9 AM to 9 PM. 1 h windows to complete survey. | Each survey completed, participants received $1.66 USD with a maximum of $50 USD. | SMS Web link sent to phone | Multilevel modeling |

| 64 | Strauss, Esfahlani94 | 6 | 4 | 46 | Stratified pseudorandomized prompts scheduled intervals across 12 h between 9 AM–9 PM. 15 min window to complete survey. | NA | PDA Experience Sampling Program software | Markov chain analysis + proximal and time-lagged multilevel modeling + network analysis |

| 65 | Swendsen, Ben-Zeev95 | 7 | 4 | 10 | Stratified pseudorandomized prompts scheduled intervals across 12 h tailored to sleep–wake cycle. 15 min window to complete survey. | $35 USD | PDA Purdue Momentary Assessment Tool (PMAT) | Time-lagged Multilevel modeling |

| 66 | Vilardaga, Hayes96 | 6 | 9 | 14 | Stratified pseudorandomized prompts scheduled intervals across 12 h tailored to sleep–wake cycle. 15 min window to complete survey. | $10 USD + $5 for meeting 80% survey completion | PDA MyExperience | Proximal and time-lagged Multilevel modeling |

| 67 | Westermann, Grezellschak97 | 7 | 10 | 8 | Unclear | 30 Euro | Smartphone app Movisens | Markov chain multilevel modeling |

| 68 | Wright, Palmer-Cooper98 | 14 | 7 | 27 | NA | NA | Smartphone app mindLAMP | Proximal and time-lagged multilevel modeling |

Note: USD, US Dollar; SMS, short messaging service; PDA, personal digital assistant.

*The term multilevel modeling was used as a broad term for analyses employing a multilevel structure, including Hierarchical linear model, Linear mixed model, Mixed multilevel regression, Multilevel linear mixed effects model, Multilevel linear regression, etc.

The most common device used was a smartphone (24 studies), followed by a PDA (17 studies), iPod (4 studies), SMS (2 studies), and computer (1 study). Notably, more recent EMA studies (published since 2017) tended to use specialized smartphone apps designed for EMA. Of the 35 studies that reported the EMA applications used, the most common were the Experience Sampling Program/iESP (7 studies) and PsyMate (7 studies), followed by Movisens (6 studies), MyExperience (3 studies), Purdue Momentary Assessment Tool (2 studies), and individual apps used in only 1 study each (ClinTouch, SMARTapp, CamNtech PRO-Diary, EFS Survey, iDialogPad, Ilumivu, mindLAMP, mobileQ, and OmniTrack).

The method of analysis used depended on the aims of the study. The most common aim was to examine momentary predictors of psychotic experiences using some form of multilevel modeling (61 studies). Some studies used time-lagged analysis only (12 studies) to examine predictors “over time,” whereas others examined both time-lagged and contemporaneous (“in the moment”) predictors (20 studies) or used network analysis (3 studies). Five studies used descriptive statistics, correlations, or group comparison analyses based on aggregated EMA data.
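The distinction between contemporaneous and time-lagged predictors can be illustrated with a minimal sketch of how a lagged variable might be built before model fitting. The field names (`pid`, `day`, `beep`, `worry`) and the rule that lags never cross a missed prompt or the overnight gap are assumptions made for illustration; the reviewed studies did not necessarily handle their data this way.

```python
from collections import defaultdict

def add_lagged_predictor(records, var="worry"):
    """Attach the previous prompt's value of `var` within the same participant-day.

    records: list of dicts with 'pid', 'day', 'beep' (prompt number) and `var`.
    The first prompt of a day, or a prompt that follows a missed one, gets None,
    so no value is carried across a gap (an illustrative assumption).
    """
    groups = defaultdict(list)
    for row in records:
        groups[(row["pid"], row["day"])].append(row)

    out = []
    for rows in groups.values():
        by_beep = {r["beep"]: r for r in rows}
        for r in sorted(rows, key=lambda r: r["beep"]):
            prev = by_beep.get(r["beep"] - 1)  # immediately preceding prompt only
            lagged = prev[var] if prev is not None else None
            out.append({**r, f"{var}_lag1": lagged})
    return out

# Hypothetical data: one participant, prompt 3 on day 1 was missed.
records = [
    {"pid": 1, "day": 1, "beep": 1, "worry": 3, "voices": 2},
    {"pid": 1, "day": 1, "beep": 2, "worry": 5, "voices": 4},
    {"pid": 1, "day": 1, "beep": 4, "worry": 2, "voices": 1},
    {"pid": 1, "day": 2, "beep": 1, "worry": 4, "voices": 3},
]
lagged = add_lagged_predictor(records)
```

In a contemporaneous model, `voices` would be regressed on `worry` from the same prompt; in a time-lagged model, on `worry_lag1`, with prompts nested within participants in both cases.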

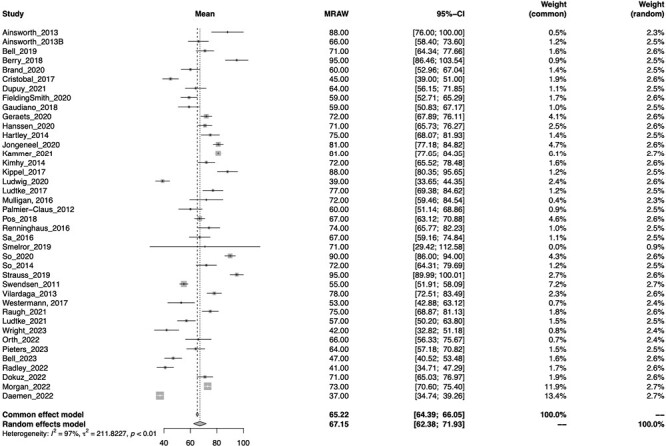

Completion Rates of EMA Surveys

Table 3 reports the completion rates across all 68 studies. Across the 40 unique samples that clearly reported a completion rate, the meta-analysis estimated a meta-mean completion rate of 67.15% (95% CI = 62.3, 71.9). A forest plot of mean completion rates for each study and the overall meta-mean estimate is provided in figure 2. The Q value for heterogeneity was 1559.00, P < .001, and the I² index for the meta-analysis was 97.6%, suggesting high levels of heterogeneity between studies, which supports the use of a random-effects model. It is important to note that the total completion rate may be overestimated because some studies derived this value from the subsample that met the 33% cutoff (ie, “completers”). An additional completion measure reported across studies was the percentage of the sample that met a minimum cutoff of completed EMA surveys (33%, or one-third, of prompts in 14 studies, 30% in 3 studies, 20%–25% in 2 studies, and a study-specific criterion in 3 studies). An average of 86.25% (range 62% to 100%) of participants across the 40 samples met the 33% (or 30%) cutoff criterion. To assess for possible publication bias, a sensitivity analysis was conducted comparing studies with small and large sample sizes (split at the median of 35 participants). No significant difference was detected (P > .05), suggesting that the completion rate was not influenced by sample size.
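For readers unfamiliar with the pooled estimate and heterogeneity statistics reported above, the following is a minimal sketch of random-effects pooling of study-level mean completion rates. It assumes the DerSimonian-Laird estimator, which the text does not name as the estimator used in this review, and the input means and standard errors are hypothetical rather than taken from table 3.

```python
import math

def random_effects_pool(means, ses):
    """Pool study means with a DerSimonian-Laird random-effects model (assumed estimator)."""
    k = len(means)
    v = [se ** 2 for se in ses]                  # within-study variances
    w = [1.0 / vi for vi in v]                   # inverse-variance (fixed-effect) weights
    fixed = sum(wi * yi for wi, yi in zip(w, means)) / sum(w)

    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, means))
    df = k - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)                # between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0

    w_re = [1.0 / (vi + tau2) for vi in v]       # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, means)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return {"pooled": pooled, "ci": (pooled - 1.96 * se, pooled + 1.96 * se),
            "q": q, "df": df, "i2": i2, "tau2": tau2}

# Hypothetical completion rates (%) and standard errors for five studies
means = [69.0, 45.0, 88.0, 72.0, 60.0]
ses = [2.0, 3.0, 1.5, 2.5, 2.0]
result = random_effects_pool(means, ses)
```

As a check on the reported figures, applying I² = (Q − df)/Q to Q = 1559.00 with 38 or 39 degrees of freedom gives approximately 97.5% to 97.6%, in line with the reported value.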

Table 3.

EMA Completion Rates

| # | Study | Percentage of Sample Meeting >33% Cutoff | Percentage of EMA Surveys Completed | Other Measure of Completion | Other Completion Outcome | Predictors of EMA Completion |

|---|---|---|---|---|---|---|

| 1 | Ainsworth, Palmier-Claus35 | 88% (smartphone) 71% (SMS) | 69% (smartphone) 56% (SMS) | — | — | EMA completion significantly higher with the smartphone app compared to the SMS procedure. Completion rate lower in week 2 compared to week 1. |

| 2 | Bartolomeo, Raugh36 | — | 66% (of completers) | % sample ≥ 20% cutoff | 94% | SZ sample had lower completion rates than controls. SZ sample had lower personal education compared to controls, no other differences on demographics. |

| 3 | Bell, Rossell11 | 100% | 72% | — | — | No relationship between completion rates and confidence in using smartphone apps, demographic, or clinical variables. |

| 4 | Bell, Velthorst37 | 87.8% | 68% (of completers) | — | — | No differences in completion rates between patients vs. controls or patients vs. relatives. However, differences in demographics (sex, education, living status, and ethnicity). No differences in age across samples. |

| 5 | Ben-Zeev, Ellington38 | — | — | % completing ≥2 full days of monitoring | 87% | — |

| 6 | Ben-Zeev, Frounfelker39 | — | 88% | — | — | — |

| 7 | Ben-Zeev, McHugo40 | — | 98% | — | — | — |

| 8 | Ben-Zeev, Morris41 | — | — | % completing ≥2 full days of monitoring | 87% | — |

| 9 | Berry, Emsley42 | — | 67% | — | — | No relationship between completion rate and age, clinical status, gender, own or loaned smartphone, employment status, or education. |

| 10 | Brand, Bendall43 | 83% | 71% | — | — | Completion rate lower in week 4 compared to week 1. |

| 11 | Buck, Munson44 | — | — | — | — | — |

| 12 | Cristóbal‐Narváez, Sheinbaum45 | — | — | — | — | — |

| 13 | Daemen, van Amelsvoort46 | 100% | 63% | — | — | No differences in completion rates between patients vs controls or patients vs relatives. Differences between groups on age, gender, education, and marital status. |

| 14 | Daemen, van Amelsvoort47 | — | — | — | — | No differences in completion rates between patients vs controls or patients vs relatives. Patients had higher levels of child abuse. |

| 15 | Dokuz, Kani48 | 88% | 71% (of completers) | — | — | No relationships between ESM data and demographics, or duration of illness, or time without treatment. |

| 16 | Dupuy, Abdallah49 | — | 95% | — | — | — |

| 17 | Fett, Hanssen50 | 86% | 77% | — | — | — |

| 18 | Fielding-Smith, Greenwood51 | 89% | 60% (of completers) | — | — | — |

| 19 | Gaudiano, Ellenberg52 | — | 45% | % completing ≥10 EMA survey | 62% | Male gender and cannabis (but not alcohol or other drug) use is associated with lower completion rate. No relationship between completion rate and age, educational attainment, ethnicity/race (non-Latino White vs. minority), positive, negative, and affective symptom severity, or cognitive functioning. Completion rate lower in week 4 compared to week 1. |

| 20 | Geraets, Snippe53 | — | 64% | — | — | — |

| 21 | Gohari, Moore54 | — | 75% | — | — | — |

| 22 | Granholm, Loh55 | — | 69% (of completers) | % completing >4 EMA surveys | 87% | Greater cognitive impairment in those who did not meet completion criteria. No relationship between completion rate and age, gender, positive, negative or total symptoms severity, or number of days in the study. |

| 23 | Hanssen, Balvert56 | — | 59% | % completing >30% EMA surveys | 89% | No significant differences were found for completion between the feedback and no-feedback group. |

| 24 | Hartley, Haddock57 | 75% | 59% (of completers) | % who “completed” the EMA phase | 84% | No difference in education, employment status or gender, age, positive, negative or general symptoms, severity of delusions, or hallucinations between those that did and did not meet completion criteria. |

| 25 | Hartley, Haddock58 | 75% | 59% (of completers) | % who “completed” the EMA phase | 84% | No difference in education, employment status or gender, age, positive, negative or general symptoms, severity of delusions or hallucinations between those that did and did not meet completion criteria. |

| 26 | Harvey, Miller59 | — | 80% | — | — | SZ and BD differed in demographics and PANSS symptoms. |

| 27 | Jongeneel, Aalbers9 | — | 72% (of completers) | — | — | — |

| 28 | Kammerer, Mehl60 | — | 72% | — | — | — |

| 29 | Kimhy, Lister61 | — | 75% | — | — | — |

| 30 | Kimhy, Vakhrusheva62 | — | 81% (of completers) | — | — | — |

| 31 | Kimhy, Wall63 | — | 89% (of completers) | — | — | — |

| 32 | Kimhy, Delespaul64 | 91% | 81% (of completers) | — | — | — |

| 33 | Klippel65 | 86% | — | — | — | — |

| 34 | Ludwig, Mehl66 | — | 72% | — | — | — |

| 35 | Lüdtke, Kriston67 | — | — | — | — | — |

| 36 | Lüdtke, Moritz68 | — | 82% (of completers) | — | — | — |

| 37 | Miller, Harvey69 | — | 80% | — | — | — |

| 38 | Moitra, Park70 | — | 39% | — | — | — |

| 39 | Monsonet, Rockwood71 | — | — | — | — | Compliance rates did not differ between smartphone or PDA. |

| 40 | Morgan, Strassnig72 | — | 73% | — | — | SZ had differences with BD on education, age, and mother’s education. |

| 41 | Mulligan, Haddock73 | 100% | 77% | — | — | — |

| 42 | Nittel, Lincoln74 | 87% | — | — | — | No difference in completion rates between recruitment methods (outpatients vs recruited via leaflet). |

| 43 | Orth, Hur75 | — | 67% (of completers) | % sample ≥ 25% cutoff | 86% | Compliance rates did not differ on individual levels of paranoia. |

| 44 | Palmier-Claus, Ainsworth18 | 82% | 72% (of completers) | — | — | More severe positive symptoms, but not negative symptoms, depression or age, predicted decreased likelihood of meeting 33% completion criterion. No relationship between these variables and completion rate. |

| 45 | Parrish, Chalker76 | — | 81% (of completers) | — | — | Completion not related to age, years of education, gender, symptom severity, cognition, or diagnosis; weak negative correlation with mania. |

| 46 | Parrish, Chalker77 | — | 80% | — | — | — |

| 47 | Perez, Tercero78 | — | 75% | — | — | No differences between SZ and BD. |

| 48 | Pieters, Deenik79 | — | 64% | — | — | — |

| 49 | Pos, Meijer80 | — | — | — | — | — |

| 50 | Radley, Barlow81 | 97% | 69% (of completers) | — | — | Time of day did not predict missing data. Participants missed more surveys towards the end of the study. |

| 51 | Raugh, James20 | — | 54% | % sample ≥ 20% cutoff | — | — |

| 52 | Raugh, Strauss82 | — | 62% | % sample ≥ 20% cutoff | 92% | SZ sample had lower completion rates than controls. |

| 53 | Reininghaus, Kempton83 | 86% | 60% (of completers) | — | — | — |

| 54 | Reininghaus, Gayer-Anderson84 | — | — | — | — | — |

| 55 | Reininghaus, Oorschot85 | 86% | 60% (of completers) | — | — | — |

| 56 | Sa, Wearden86 | — | 67% (of completers) | % completing ≥20 beeps | 100% | — |

| 57 | Schick, Van Winkel87 | 100% | 68% | — | — | — |

| 58 | Smelror, Bless88 | — | 74% (of completers) | — | — | — |

| 59 | So, Chung89 | — | 67% | % completing >30% EMA surveys | 77% | There was no significant difference in age, gender, years of education, number of psychiatric admissions, or any of the clinical and self-report measures between those that did and did not meet completion criteria. |

| 60 | So, Chau90 | — | 85% (of completers) | % completing >30% EMA surveys | 58% | No difference in clinical severity measures for those that did and did not meet completion criteria. |

| 61 | So, Peters91 | — | 71% (of completers) | % completing >30% EMA surveys | 62% | No relationship between completion rate and number of days in study. |

| 62 | So, Peters92 | — | 71% (of completers) | % completing >30% EMA surveys | 62% | — |

| 63 | Steenkamp, Parrish93 | — | — | — | — | — |

| 64 | Strauss, Esfahlani94 | — | 90% (of completers) | % completing >25% EMA surveys | 93% | — |

| 65 | Swendsen, Ben-Zeev95 | — | 72% (of completers) | — | — | No relationship between completion rate and number of days in study. |

| 66 | Vilardaga, Hayes96 | — | 55% (of completers) | — | — | — |

| 67 | Westermann, Grezellschak97 | — | 78% | — | — | No relationship between completion rate and number of days in study. |

| 68 | Wright, Palmer-Cooper98 | 76% | 42.2% | — | — | Compliance was related to working and lower levels of self-reflectiveness. No relationships with demographics or clinical variables. |

Note: BD, Bipolar Disorder; EMA, ecological momentary assessment; SMS, short messaging service; SZ, Schizophrenia.

Fig. 2.

Forest plot of meta-mean of total completion rate.

Predictors of completion rates

Predictors of completion rates were reported in 26 studies. Of the 15 studies that examined the relationship between demographic or clinical variables and completion rates, 9 found no relationship with age, gender, or symptom severity measures. In the remaining 6 studies, lower completion rates were predicted by more severe manic symptoms (1 study), more severe positive symptoms (2 studies), less severe negative symptoms (1 study), not being in employment and lower levels of self-reflection (1 study), greater cognitive impairment (1 study), and male gender and cannabis (but not other drug or alcohol) use (1 study). Three studies compared completion rates between psychosis samples and controls, with 2 finding slightly lower rates in clinical samples and 1 finding no difference. Eight studies examined the relationship between completion and number of days in the study, with 4 finding no relationship and 4 finding lower completion in the last week of assessments than in the first week. Three studies examined the effects of EMA delivery methods. One found no significant difference in EMA completion rates between participants receiving EMA-derived personalized feedback and those receiving no feedback. Another study reported significantly higher EMA completion using a smartphone app than an SMS-based EMA procedure, and another found no difference between a smartphone app and PDA. Where significant, effect sizes were small.

As shown in table 4, the exploratory meta-regressions found no significant (P > .05) associations between completion rates and protocol length (questions), duration of ESM protocol in days, participant payment, inpatient status, whether the device was a PDA or not, PANSS total, overall study quality, participant age, or percentage of female participants.

Table 4.

Meta-Regression Coefficients

| Variable | n | Coefficient | 95% CI | P value |

|---|---|---|---|---|

| Total CREMAS Score | 40 | 1.16 | −1.14, 3.47 | .35 |

| PDA vs. smartphone or SMS | 40 | 4.53 | −5.78, 14.8 | .37 |

| Number of ESM surveys per day | 40 | −0.60 | −2.64, 1.42 | .54 |

| Duration of ESM study protocol in days | 38 | −0.29 | −1.15, 0.56 | .48 |

| Reimbursement vs. no reimbursement | 18 | −11.85 | −31.95, 8.23 | .22 |

| Participant Age | 39 | 0.19 | −0.56, 0.95 | .60 |

| Outpatients vs. mixed in- and outpatient | 36 | −7.39 | −61.27, 46.48 | .78 |

| Inpatients vs. mixed in- and outpatient | 36 | −1.60 | −53.12, 49.92 | .95 |

| PANSS total score | 21 | 0.15 | −0.24, 0.56 | .41 |

| Percentage of females | 38 | 0.05 | −0.24, 0.34 | .72 |

Note: PDA, personal digital assistant; PANSS, Positive and Negative Syndrome Scale; CREMAS, STROBE Checklist for Reporting EMA Studies.
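To make the quantities in table 4 concrete, the sketch below fits a single-moderator meta-regression by weighted least squares and returns a coefficient, 95% CI, and P value. It is a simplified illustration only: the between-study variance `tau2` is passed in as a known value rather than estimated, the normal approximation is used for inference, and all data are hypothetical. It is not presented as the procedure used in this review.

```python
import math

def meta_regression_slope(y, v, x, tau2=0.0):
    """Weighted least-squares slope of effect size y on moderator x.

    y: study-level completion rates; v: their sampling variances;
    x: moderator values (eg, protocol duration in days);
    tau2: between-study variance, treated as known here (an assumption).
    """
    w = [1.0 / (vi + tau2) for vi in v]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    se = math.sqrt(1.0 / sxx)
    z = slope / se
    p = math.erfc(abs(z) / math.sqrt(2))         # two-sided normal P value
    return slope, (slope - 1.96 * se, slope + 1.96 * se), p

# Hypothetical studies: completion (%) against protocol duration (days)
y = [70.0, 65.0, 72.0, 60.0, 68.0]
v = [4.0, 4.0, 4.0, 4.0, 4.0]
x = [6, 7, 14, 28, 30]
slope, ci, p = meta_regression_slope(y, v, x, tau2=100.0)
```

A confidence interval that spans zero, as in every row of table 4, corresponds to a nonsignificant moderator.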

Constructs Examined and Properties of EMA Psychosis Items

A full list of the EMA items used to measure psychotic experiences is detailed in supplementary material S4. The most commonly measured constructs, aside from psychotic symptoms, were emotional states such as positive or negative affect (51 studies). Also measured were context and activities (15 studies), social processes (26 studies), psychological processes such as worry, rumination, dissociation, metacognition, aberrant salience, and thought control (9 studies), coping or behavioral responses (6 studies), appraisals (5 studies), emotion regulation (9 studies), and sleep (1 study).

Sixty studies reported the EMA items used to measure psychosis. EMA surveys varied in length, up to 52 items, with the average number of items being 19. A range of psychotic symptoms and dimensions were measured, including hearing voices (42 studies), seeing visions (24 studies), specific delusional beliefs (41 studies), and unusual experiences such as dissociation (11 studies). For hallucinatory experiences, 1 or 2 items referring to “hearing voices” and “seeing visions” were most commonly used, whereas delusions were more likely to be captured at the level of the belief type with multiple items (eg, paranoia, mind reading, thought broadcasting, and ideas of reference). Twenty-six studies used the same response scale from 1 (not at all) to 7 (very/very much/very much so), with the remainder using varied rating scales.

Only 33 of the 68 studies reported any indices of reliability or validity for EMA scales and none had been fully validated. Internal consistency for psychosis scales was reported in 20 studies, and correlations between these scales and related constructs were examined for construct validity in 13 studies. Scales were most often constructed by adapting standard measures (eg, PANSS, Psychotic Symptom Rating Scales; PSYRATS) in conjunction with face validity procedures involving expert feedback from researchers, clinicians, and those with lived experience of psychosis.

Methodological Quality

See supplementary material S5 for a descriptive summary of reporting practices of all included studies using the STROBE Checklist for Reporting EMA Studies (CREMAS). Studies varied in reporting quality. Most studies mentioned EMA appropriately in title/keywords (55 studies; CREMAS item 1) and provided a good rationale for using EMA (41 studies; item 2). Although two-thirds of studies described EMA training adequately (item 3), the remaining studies provided little-to-no information on this. As expected, given the study inclusion criteria, EMA procedures were well described (items 4–8), although only one study provided information on the proportion of weekends/weekdays in the monitoring period (item 6) and whether prompt frequency differed on weekend days vs. weekdays (item 8). Around one-third of studies described design features to address potential sources of bias and/or participant burden (item 9). Although most studies reported attrition (item 10) and completion rates (item 13), the form in which these were reported was not always consistent and no studies reported these figures per monitoring day (or wave, where applicable). Studies usually reported (or provided enough information to calculate) the number of expected prompts (item 11) but rarely stated the number of delivered prompts. Similarly (item 12), 43 studies reported planned response latency (eg, 15-minute response window) but only one study reported actual latency (average time from prompt signal to answering the prompt). With regards to missing data (item 14), 25 studies examined whether EMA completion was related to demographic or time-varying variables, but the implications of this for the analysis were rarely discussed. 
Two-thirds of all reviewed studies detailed the limitations of the EMA method in the specific study context (item 15) and provided a general interpretation of the results with reference to the benefits of EMA and/or discussed new ways that EMA has been used or could be used in the future (item 16).

Discussion

This systematic review aimed to critically examine studies employing EMA methods for positive psychotic symptoms in psychosis samples by summarizing key methodological features and evaluating feasibility, with a particular focus on completion rates. The reviewed studies provide clear evidence that using digital devices to measure momentary positive psychotic experiences in daily life is feasible and acceptable for people with psychosis across a range of demographic and clinical characteristics.

Principal Findings in Context

Across 39 studies with unique samples, the meta-mean EMA completion rate was 67.15%, with 86.25% of participants meeting the standard study cutoff criterion of 33% (or 30%) of EMA surveys completed, supporting the feasibility and acceptability of the method in psychosis populations. Note that while 7 studies were excluded because they did not report a completion rate, it is unlikely these would have had a meaningful influence on the results given the number of studies included and the relatively narrow confidence interval of the pooled completion rate estimate (62.3%–71.9%). The overall completion rate found in this review is notably lower than those reported in systematic reviews and meta-analyses of EMA use in chronic health conditions (74%–78% EMA completion99,100) and in mental health research including clinical and nonclinical samples (78%–82% EMA completion25,28,101). This finding is consistent with a prior meta-analysis, which found lower completion rates in samples of people diagnosed with a psychotic disorder compared to other psychiatric conditions.25 Together, these results suggest that a slightly lower rate of completion may be expected in psychosis populations compared to psychiatric conditions overall.
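The two completion measures summarized above, and the way restricting to “completers” can inflate the mean, can be shown with a small worked sketch. The counts are hypothetical, the protocol of 60 expected surveys is chosen to match the most common design (10 surveys per day over 6 days), and treating the one-third cutoff as inclusive is an assumption.

```python
def completion_summary(completed, expected, cutoff=1 / 3):
    """Summarize EMA completion for one sample.

    completed: surveys completed by each participant (hypothetical counts);
    expected: surveys scheduled per participant;
    cutoff: minimum proportion completed to count as a "completer".
    """
    rates = [c / expected for c in completed]
    completers = [r for r in rates if r >= cutoff]
    return {
        "mean_all": sum(rates) / len(rates),
        "mean_completers": sum(completers) / len(completers) if completers else None,
        "pct_meeting_cutoff": len(completers) / len(rates),
    }

# Six hypothetical participants, 60 scheduled surveys each
completed = [50, 42, 10, 38, 5, 45]
summary = completion_summary(completed, expected=60)
```

In this example the mean completion rate is about 53% across all participants but about 73% among completers, which is why rates reported “of completers” in table 3 are likely to overstate completion in the full sample.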

The methodological characteristics of the studies were variable. The most consistent EMA protocol (adopted in 10 studies) involved 10 surveys per day over 6 days delivered within a pseudorandomized schedule of prompts spread evenly across equal blocks during 12 waking hours (most often 9 AM to 9 PM), in which participants had 15 minutes to respond to each survey. Few studies examined the influence of methodology on completion rates, with one study finding an advantage of a smartphone app over an SMS-delivered EMA protocol35 and another finding no difference between PDA and smartphone app delivery.71 Four (of 8) studies found that completion reduced over time, and there was no effect of personalized feedback (vs. no feedback). Similarly, there was no evidence from the meta-regression findings that EMA completion rates were associated with protocol length and duration, inpatient status, or participant age, nor was there any association with other clinical or demographic variables. In contrast, prior meta-analyses in psychiatric populations have found very small links between male gender and lower compliance,25,28 which was most pronounced with higher sampling rates (around 97 total surveys28). It is possible that the effect did not emerge in this review due to the lower average sampling rate (60 surveys), or that gender does not influence compliance in psychosis populations.
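The most common protocol described above can be sketched as a simple schedule generator: the waking window is divided into equal blocks and one prompt is drawn at random within each block. The function name, its defaults, and the rule that consecutive prompts are at least 15 minutes apart (so a new prompt does not fall inside the previous response window) are illustrative assumptions rather than features of any specific EMA app.

```python
import random

def stratified_schedule(n_prompts=10, start_min=9 * 60, window_min=12 * 60,
                        min_gap=15, rng=None):
    """Return one day's prompt times in minutes after midnight.

    One prompt is drawn uniformly within each of n_prompts equal blocks.
    Any remainder of window_min not divisible by n_prompts is left unused.
    """
    rng = rng or random.Random()
    block = window_min // n_prompts              # block length in whole minutes
    if min_gap > block:
        raise ValueError("min_gap must not exceed the block length")
    times = []
    for i in range(n_prompts):
        lo = start_min + i * block
        hi = lo + block - 1
        if times:
            lo = max(lo, times[-1] + min_gap)    # enforce the minimum spacing
        times.append(rng.randint(lo, hi))
    return times

def as_clock(minutes):
    """Format minutes after midnight as HH:MM."""
    return f"{minutes // 60:02d}:{minutes % 60:02d}"

rng = random.Random(0)                           # seeded for reproducibility
week = [stratified_schedule(rng=rng) for _ in range(6)]   # 6 days x 10 prompts
first_day = [as_clock(t) for t in week[0]]
```

With the defaults, each block is 72 minutes long, giving 60 scheduled surveys over 6 days between 9 AM and 9 PM.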

It is clear that EMA is a flexible method that can be used successfully across a wide range of groups and for a range of purposes. While the majority of the studies reviewed here used EMA to examine hypothesized relationships between specific variables of interest in a research context (eg, whether worry predicts hallucinatory experiences57), some studies have also investigated direct clinical applications of EMA methods in psychosis samples.11 Similarly, there is increasing interest in using repeated self-reported assessments via smartphone apps to monitor early predictors of psychosis relapse102 and fluctuations in other clinical outcomes.56 Key advantages of EMA as a research method, such as increased ecological validity and reduced recall bias, also extend to clinical use. However, it is important to note that the current study excluded EMI studies where EMA was not a distinct component; therefore, rates of EMA completion in these types of interventions are not reflected in the current findings.

Limitations in EMA Survey Design

Many of the reviewed studies cited the brevity of EMA items as a major limitation. EMA items used to assess psychotic symptoms were often simplistic and may therefore have lacked sensitivity to the multidimensional experience of hallucinations and delusions. Delusional experiences tended to be captured in more detail than hallucinations, despite hearing voices having highly varied phenomenological features (voice content, distress, beliefs about voices, etc103). While some studies assessed delusions using multiple questions which were sometimes individually tailored, voice-hearing experiences were assessed using more general questions. Fewer studies assessed negative or cognitive symptoms; however, this is not unexpected given these studies were only included if they measured positive symptoms as well. EMA scales used to measure psychosis constructs were also highly varied between studies, presenting issues for comparability of findings and conducting meta-analysis. While the findings suggest EMA is an acceptable data collection method for people diagnosed with schizophrenia, the scales presented here used many different EMA items, assessing a variety of positive symptom constructs which makes comparison of results challenging.

The approach to developing EMA scales typically involved adapting cross-sectional measures, with very few studies following formal validation procedures, and indices of reliability and validity were rarely reported. While the bipolar field has begun to research specific EMA scales for this disorder, such efforts are currently lacking for schizophrenia.104 Notably, the focus of this review was not to evaluate psychometric properties of EMA scales of psychotic experiences, but rather to report approaches used in existing studies. The lack of studies reporting psychometric properties highlights a need for future studies to develop and validate EMA scales for psychotic experiences. Considerations for the brevity of these scales should balance validity with the time-intensive nature of EMA, as well as appropriate sampling schedules based on the construct of interest, where more frequent measurements may be required for brief or more rapidly fluctuating phenomena. Efforts in the psychosis field have commenced towards the development of validated EMA scales, including the ESM Item Repository led by Myin-Germeys and colleagues.105

EMA Completion Rate Reporting: Limitations and Considerations

Most studies reported the EMA rationale, methods, limitations, and conclusions adequately; however, many did not fully meet CREMAS reporting recommendations. In particular, reporting of EMA completion rates was often inconsistent, with details regularly omitted. This accords with the findings of prior reviews, which have also identified inconsistent reporting in EMA studies in other populations.25,101 The adherence of research participants to the assessment protocol “must be sufficient to obtain a meaningful sample of experience during the sampling period.”59 Hence, it is crucial that studies report EMA completion in enough detail for findings to be critically evaluated.

Thirty-nine studies with unique samples reported percent EMA completion (or an equivalent that allowed percent completion to be calculated) and, where reported, an average of 86.25% of the sample met the 33% (one-third) completion rate criterion. There is some dispute regarding the recommendation23 that at least 33% of EMA surveys should be completed for a participant’s data to be included in analysis. Arguably, this cutoff is arbitrary. Indeed, the source most commonly cited on the topic106 provides no justification for this value.107 Furthermore, the most widely used approach for statistically analyzing EMA data (multilevel modeling using maximum likelihood estimation) allows all available observations to be included,107,108 obviating the need to exclude low-responders. In fact, by excluding participants who respond to fewer than a third of EMA prompts, existing selection biases may be exaggerated, since lower-functioning individuals are not only less likely to take part, but also more likely to drop out. We contend that the reliance on the 33% cutoff should be critically reviewed and that a more nuanced approach may be necessary. For example, some have argued59,109 that the density of sampling in EMA studies (ie, percentage completion on a particular day) may be as important as overall percentage completion. This is particularly relevant where time-lagged analyses, examining associations between subsequent EMA surveys, are used.
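
The point about sampling density can be illustrated with a small sketch. The two hypothetical participants below have identical overall completion (50%), yet only one of them contributes any pairs of consecutive answered prompts, which are the observations a lag-1 analysis relies on. The data layout (one list of 0/1 responses per day) and the function names are assumptions made for illustration only.

```python
def completion(days):
    """Overall proportion of delivered prompts that were answered."""
    answered = sum(sum(day) for day in days)
    delivered = sum(len(day) for day in days)
    return answered / delivered


def lagged_pairs(days):
    """Count within-day pairs of consecutive prompts that were both answered."""
    return sum(
        1
        for day in days
        for current, following in zip(day, day[1:])
        if current and following
    )


# Hypothetical response patterns: 1 = answered, 0 = missed.
clustered = [[1, 1, 1, 1], [0, 0, 0, 0]]  # all responses on one day
scattered = [[1, 0, 1, 0], [0, 1, 0, 1]]  # every other prompt answered

if __name__ == "__main__":
    for name, days in (("clustered", clustered), ("scattered", scattered)):
        print(name, completion(days), lagged_pairs(days))
```

In this toy example a single overall completion figure hides the difference between the two patterns, which is why per-day reporting may be informative alongside it.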

Recommendations for Methods and Reporting of Future EMA Studies

There is a need for clear and consistent guidelines for EMA reporting in psychosis studies, and for researchers and journals to adopt these. Based on reporting limitations observed in the reviewed studies, and in line with recommendations outlined in the adapted STROBE Checklist for Reporting EMA Studies (CREMAS34), here we make 7 specific recommendations in table 5. These recommendations aim to facilitate comparison of results across studies and critical evaluation of findings, and to ensure that studies collect sufficient data for future meta-analyses to be possible. In each case, we present an observed limitation in the reviewed studies and an accompanying recommendation. While guidelines for conducting and reporting in EMA studies of psychiatric populations have been published more broadly,25–28 the lack of adherence to these in subsequent studies has been notable.26 We hope that by providing considered and explicit recommendations for psychosis populations, we may help future researchers adopt a more consistent standard in future publications, ultimately leading to more transparent findings that are more readily synthesizable in future reviews.

Table 5.

Recommendations for Reporting in Future EMA Studies in Psychosis

| Recommendations | Rationale |
|---|---|
| Recommendation 1: Studies should consistently report completion rates in terms of: (1) the total number of prompts participants responded to out of the total number of actually delivered prompts (expressed as a fraction and a percentage), and (2) the average number of prompts responded to per participant (as a fraction and a percentage). | No single index of EMA completion was reported across all studies. Percentage of total EMA survey completion was often reported (or calculable from reported data). However, the number of actually delivered EMA prompts (as opposed to the intended number of prompts) was rarely reported, making it difficult to evaluate the accuracy of calculations based on supplied data. |
| Recommendation 2: Studies should report the standard deviation of all reported completion indices. For example, the time to complete each EMA session may offer individual participant data on how they completed the questions. These timestamped data are already recorded with most EMA tools like smartphones. | Studies did not always report a measure of variability in completion between participants, precluding precise meta-examination of completion rates. |
| Recommendation 3: Studies should provide information on the distribution of responses per day across the sample (eg, graphically) and per wave, if applicable. | None of the reviewed studies reported completion rate per monitoring day (or wave). As others have argued,50,53 density of sampling in EMA studies may be as important as overall percentage completion. |
| Recommendation 4: Studies should report the number of EMA prompts scheduled and the number completed, also expressed as a percentage. If “completer” analyses are conducted, a clear rationale must be provided. | Many studies only reported percentage EMA completion for study/EMA “completers” (often defined as those completing >33% EMA surveys), making comparison across studies difficult. |
| Recommendation 5: Where appropriate, researchers should examine possible predictors of non-completion to determine biases within the data, and reasons for non-completion should be gathered and reported where possible. We contend that examining predictors of completion as a continuous variable will provide more valid insights than comparing the binary variable of “completers” and “non-completers.” We also suggest reporting correlations between completion rates and key predictors to aid future meta-analytic work. Further, implications for missing data assumptions should be explicitly considered. | Detailed reasons for non-completion were not always reported. Although some studies examined predictors of EMA completion (which amounts to an examination of missing data), these were rarely explicitly considered in relation to missing data assumptions or potential biases within the data. |
| Recommendation 6: Future studies should include more detailed EMA items, particularly for assessing hallucinations (eg, include measures of voice content, beliefs, and distress), and evaluate their psychometric properties empirically. Studies should also consider and justify sampling frequency based on the phenomena of interest. | Psychotic symptom items were often brief, and evidence of psychometric validation was often lacking. Items assessing hallucinations were particularly underspecified and lacked complexity/granularity of the voice-hearing experience. Studies lacked consideration for the frequency of sampling based on the research questions and phenomena being measured. |
| Recommendation 7: Studies may wish to use the following standard format for reporting the EMA protocol (adjusting details as needed): “A 15-item EMA survey was delivered over a 7 d period, with 10 prompts per day occurring at stratified pseudorandomized intervals within 5 blocks of 2 h, within a 10 hour window from 9 AM to 7 PM.” | Although generally well-reported in the reviewed studies, it is worth reiterating the CREMAS recommendations on reporting the EMA protocol itself. Namely, the following should be reported: number of EMA items per survey, frequency of prompts (eg, per day), sampling schedule (eg, fixed time, randomized within time windows), length of survey period (eg, number of days), prompting schedule (ie, event-based, user-initiated, time based), and number and duration of waves (eg, 2 monitoring periods over the course of 1 year), if applicable. |
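
As an illustration of Recommendations 1 and 2, the sketch below computes a pooled completion rate (responses out of actually delivered prompts), the mean and standard deviation of per-participant completion, and the proportion of participants meeting the one-third criterion. The input format (a mapping from a participant identifier to answered and delivered counts), the function name, and the example numbers are all hypothetical and are not drawn from any reviewed study.

```python
from statistics import mean, stdev


def completion_indices(records):
    """Summarize EMA completion from per-participant counts.

    records: dict mapping participant id -> (answered, delivered),
    where delivered is the number of prompts actually delivered.
    Requires at least two participants with delivered > 0.
    """
    total_answered = sum(answered for answered, _ in records.values())
    total_delivered = sum(delivered for _, delivered in records.values())
    per_person = [
        answered / delivered
        for answered, delivered in records.values()
        if delivered > 0
    ]
    return {
        # Recommendation 1 (1): pooled responses / delivered prompts.
        "pooled_pct": 100 * total_answered / total_delivered,
        # Recommendation 1 (2): average per-participant completion.
        "mean_pct": 100 * mean(per_person),
        # Recommendation 2: between-participant variability (sample SD).
        "sd_pct": 100 * stdev(per_person),
        # Share of participants at or above the one-third criterion.
        "pct_meeting_one_third": 100
        * sum(p >= 1 / 3 for p in per_person)
        / len(per_person),
    }


if __name__ == "__main__":
    # Hypothetical counts for three participants.
    example = {"p1": (30, 60), "p2": (45, 60), "p3": (10, 50)}
    for key, value in completion_indices(example).items():
        print(f"{key}: {value:.2f}")
```

The pooled and per-participant figures differ whenever participants receive different numbers of prompts, which is one reason for reporting both.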

Review Limitations

The current review has several limitations. First, the focus of this review was solely on EMA as a research methodology for positive symptoms in psychosis studies; therefore, the findings may not apply to other psychotic experiences such as negative symptoms and cognitive disorganization. Second, the decision to exclude passive sensing studies meant that these and related search terms (eg, digital phenotyping) were not included. As studies using passive sensing sometimes include EMA as a subcomponent, this meant that some relevant studies may have been missed. In recognizing the progression of the field towards multi-modal technologies that incorporate both passive sensing and active EMA data collection, the findings of this review apply to the distinct use of EMA only. Third, broader terms related to the use of mobile technology for assessment and treatment (eg, “mobile health”) were not included in order to focus the search on EMA as a methodology. However, this may have resulted in similar app-based monitoring studies being missed. Fourth, while included studies provided a good representation of typical research participants with psychosis, some populations were underrepresented, including inpatient, ultrahigh risk, and first episode psychosis samples. Fifth, while the meta-regression reflected trends across studies, these cannot be assumed to apply to individual-level relations. Furthermore, while attempts were made to exclude studies where data were reported elsewhere, this was not always clear nor determinable from the study details, which may potentially distort estimates. Similarly, reporting of completion rate criteria was not always transparent, with some studies reporting completion rates from analyzed samples which included only data from participants who met the 33% cutoff criterion. It is possible that future studies which include the full sample may expect a slightly lower rate of completion. Finally, the aforementioned lack of clear and consistent methodological reporting within this body of research also impairs the quality of this review.

Conclusions

In conclusion, this review has determined that EMA is a feasible and acceptable methodology for use with psychosis populations, with high completion rates that appear unrelated to clinical or demographic predictors. Variations in study reporting highlighted a need for clear and consistent guidelines for EMA studies in psychosis, for which recommendations have been provided in this review. Further research is needed to develop validated EMA scales for psychotic experiences.

Supplementary Material

Acknowledgment