Key Points

Question

What is the current state of dermatology mobile applications (apps) enabled by artificial intelligence?

Findings

In this cross-sectional study involving 41 apps, several concerning and unsafe aspects were identified. These include a lack of supporting evidence, insufficient clinician/dermatologist input, opacity in algorithm development, questionable data usage practices, and inadequate user privacy protection.

Meaning

Presently, artificial intelligence dermatology apps may cause harm associated with bias, inconsistent validation, and misleading user communication; thus, further work is necessary to standardize the information provided by these apps, ensuring both patients and dermatologists are supported with accurate and reliable data while minimizing associated risks.

Abstract

Importance

With advancements in mobile technology and artificial intelligence (AI) methods, there has been a substantial surge in the availability of direct-to-consumer mobile applications (apps) claiming to aid in the assessment and management of diverse skin conditions. Despite widespread patient downloads, these apps exhibit limited evidence supporting their efficacy.

Objective

To identify and characterize current English-language AI dermatology mobile apps available for download, focusing on aspects such as purpose, supporting evidence, regulatory status, clinician input, data privacy measures, and use of image data.

Evidence Review

In this cross-sectional study, both Apple and Android mobile app stores were systematically searched for dermatology-related apps that use AI algorithms. Each app’s purpose, target audience, evidence-based claims, algorithm details, data availability, clinician input during development, and data usage privacy policies were evaluated.

Findings

A total of 909 apps were initially identified. Following the removal of 518 duplicates, 391 apps remained. Subsequent review excluded 350 apps due to nonmedical nature, non-English languages, absence of AI features, or unavailability, ultimately leaving 41 apps for detailed analysis. The findings revealed several concerning aspects of the current landscape of AI apps in dermatology. Notably, none of the apps were approved by the US Food and Drug Administration, and only 2 of the apps included disclaimers for the lack of regulatory approval. Overall, the study found that these apps lack supporting evidence, input from clinicians and/or dermatologists, and transparency in algorithm development, data usage, and user privacy.

Conclusions and Relevance

This cross-sectional study determined that although AI dermatology mobile apps hold promise for improving access to care and patient outcomes, in their current state, they may pose harm due to potential risks, lack of consistent validation, and misleading user communication. Addressing challenges in efficacy, safety, and transparency through effective regulation, validation, and standardized evaluation criteria is essential to harness the benefits of these apps while minimizing risks.

This cross-sectional study identifies and characterizes the current English-language artificial intelligence dermatology mobile applications available for download in US application stores.

Introduction

The surge of mobile applications (apps) claiming to assist with skin conditions presents opportunities and risks.1,2 However, the performance of these apps is inconsistent, and none have gained approval from the US Food and Drug Administration.3,4 Studies focusing on smartphone apps for skin cancer risk have found variable accuracy and sensitivity, with poor performance overall.2,3,4,5,6 Meanwhile, the fast-paced emergence of direct-to-consumer health apps has posed challenges to regulatory bodies worldwide, and regulatory strategies for health apps are still in development.7,8 Although the availability of dermatology mobile apps with artificial intelligence (AI) features can have enormous benefits, ranging from skin cancer detection to acne management, the current state of evidence supporting their clinical use remains unclear. The goal of this study is to identify and characterize the current dermatology mobile apps with AI features available for download in app stores.

Methods

From November to December 2023, we searched for publicly available dermatology-related mobile apps using the search terms dermatology, derm, and skin in both the Apple and Android app stores. We included mobile apps in the review that were related to dermatology and included AI features. The searches and identification of apps for inclusion in the study were performed independently by 2 investigators (V.P. and W.M.). A third investigator (S.W.) independently reviewed the identified apps and resolved any discrepancies to determine the final list of apps for inclusion in the analysis.

Based on the official description given in the app store and any referenced websites or publications, the following details were extracted: purpose, target audience, evidence, average rating (out of 5 stars), number of ratings, number of downloads, cost, country of development, algorithm details, information regarding the training and testing datasets and data availability, disclaimers, whether dermatologist or nondermatologist clinician input was provided in the app development process, and terms of use (specifically focusing on whether user image data are stored and, if so, how image data are stored and how the data can be used by the app developers). A third investigator (S.W.) independently reviewed the findings and resolved any discrepancies. To complete a more comprehensive evaluation, app developers were contacted to supply additional information (eMethods and eFigure 1 in Supplement 1). Institutional review board approval and informed consent were not required because only publicly available data unrelated to patient care were used in this study.

Results

Initially, 909 apps were identified. Following the elimination of 518 duplicates, 391 apps were retained. Subsequent scrutiny excluded 350 apps based on nonmedical content, non-English language, absence of AI features, or unavailability, resulting in 41 apps for in-depth analysis (eFigure 2 in Supplement 1). Additionally, outreach to the 41 app developers yielded 14 responses; however, these responses either did not furnish new information or were incorporated into the existing results and Supplement 1.
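The screening counts above can be checked with simple arithmetic. The following is a minimal sketch; the variable names are illustrative, and the developer response rate is derived here from the reported counts rather than stated in the article.

```python
# Counts as reported in the Results; variable names are illustrative.
identified = 909
duplicates_removed = 518
excluded_on_review = 350  # nonmedical, non-English, no AI features, or unavailable

after_deduplication = identified - duplicates_removed
included = after_deduplication - excluded_on_review

# Derived value (not reported in the article): share of developers who responded.
developer_responses = 14
response_rate = round(100 * developer_responses / included, 1)

print(f"After deduplication: {after_deduplication}")
print(f"Included in analysis: {included}")
print(f"Developer response rate: {response_rate}%")
```

Both intermediate totals match the figures given in the text (391 apps after deduplication and 41 apps included).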

The main target audience of 32 apps (78.0%) was the lay public or patients, while 4 apps (9.8%) focused on clinicians and 5 apps (12.2%) were designed for both. Twelve apps (29.3%) were exclusive to Android, 13 (31.7%) were exclusive to Apple, and 16 (39.0%) were available on both platforms. The 3 most common app purposes were skin cancer detection (14 apps [34.1%]), diagnosis and/or identification of skin and/or hair conditions (13 [31.7%]), and mole tracking (7 [17.1%]). Other purposes included tracking skin conditions (6 apps [14.6%]); acne diagnosis, treatment, and/or monitoring (5 [12.2%]); atopic dermatitis management (2 [4.9%]); and sun protection (2 [4.9%]) (Table 1; Table 2).
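The percentages in this paragraph all use the 41 included apps as the denominator. The sketch below recomputes them at 1 decimal place; the helper `pct` and the dictionary labels are hypothetical names introduced for illustration, and the counts are those reported above.

```python
TOTAL_APPS = 41  # apps included in the analysis


def pct(count: int, total: int = TOTAL_APPS) -> float:
    """Return count as a percentage of total, rounded to 1 decimal place."""
    return round(100 * count / total, 1)


# Counts as reported in the Results; the keys are illustrative labels.
audience = {"lay public or patients": 32, "clinicians": 4, "both": 5}
platform = {"Android only": 12, "Apple only": 13, "both stores": 16}
purpose = {
    "skin cancer detection": 14,
    "diagnosis/identification of skin or hair conditions": 13,
    "mole tracking": 7,
    "tracking skin conditions": 6,
    "acne diagnosis, treatment, or monitoring": 5,
    "atopic dermatitis management": 2,
    "sun protection": 2,
}

# Audience and platform are mutually exclusive groupings, so each sums to 41.
assert sum(audience.values()) == TOTAL_APPS
assert sum(platform.values()) == TOTAL_APPS
# Purpose counts sum to more than 41, consistent with apps listing several purposes.
assert sum(purpose.values()) > TOTAL_APPS

for group in (audience, platform, purpose):
    for label, count in group.items():
        print(f"{label}: {count} ({pct(count)}%)")
```

The purpose counts add up to more than 41 because a single app can list more than one purpose, as the Purpose column of the tables shows.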

Table 1. Application Details, Including Name, Availability, Purpose, Rating, Number of Ratings, and Cost<sup>a</sup>

| App name | Availability<sup>b</sup> | Purpose | Rating (Android) | Rating (Apple) | No. of ratings (Android) | No. of ratings (Apple) | Cost |
|---|---|---|---|---|---|---|---|
| Aysa | Both | Identification of skin conditions | 4.8 | 4.6 | 1440 | 360 | No cost |
| DermEngine | Both | Mole tracking, visual search for similar images | NA | 4.1 | NA | 9 | No cost |
| Model Dermatology | Both | Identification of skin conditions | 4.4 | 4.5 | 2260 | 10 | No cost |
| Skin-Check | Apple | Skin cancer detection, mole tracking | NA | 4.7 | NA | 227 | No cost |
| VisualDx | Both | Identification of skin conditions | 3.9 | 4.5 | 434 | 176 | No cost<sup>c</sup> |

Abbreviations: app, application; NA, not available due to either an insufficient number of ratings or because the app is not available in the app store.

<sup>a</sup> Supporting evidence in the form of peer-reviewed publications is detailed in eTable 4 in Supplement 1.

<sup>b</sup> Android, Apple, or both app stores.

<sup>c</sup> Subscription required.

Table 2. Information on Application Purpose, Evidence, and Ratings for Applications Without Supporting Evidence

| App name | Availability<sup>a</sup> | Purpose | Rating (Android) | Rating (Apple) | No. of ratings (Android) | No. of ratings (Apple) | Cost |
|---|---|---|---|---|---|---|---|
| Acne Doc | Android | Acne management | NA | NA | NA | NA | No cost |
| Acno | Both | Acne management | NA | NA | NA | NA | No cost |
| AI Dermatologist: Skin Scanner | Both | Skin cancer detection, mole tracking, identification of skin conditions | 4.5 | 4.6 | 2890 | 3077 | No cost<sup>b</sup> |
| AI Skin Disease Detection | Android | Diagnosis of skin conditions | NA | NA | NA | NA | No cost |
| AI Tool for Skin Problems | Android | Tracking skin conditions | 2.7 | NA | 216 | NA | No cost<sup>c</sup> |
| Atopic Dermatitis | Apple | Atopic dermatitis management | NA | NA | NA | NA | No cost |
| Blemish Types, Skin Cancer ID | Android | Skin cancer detection, mole tracking | NA | NA | NA | NA | No cost |
| Cube-Check Ur Birthmark Easily | Apple | Identification of skin conditions | NA | NA | NA | NA | No cost |
| Derma AI | Android | Skin cancer detection | NA | NA | NA | NA | No cost |
| DermoApp: SkinCancer Detection | Android | Skin cancer detection | 3.1 | NA | 55 | NA | No cost |
| DermObserver: Dermatology Scan | Android | Skin cancer detection | NA | NA | NA | NA | No cost |
| EczemaLess | Both | Eczema tracking and management | 4.4 | 4.7 | 149 | 61 | No cost |
| Emdee Skin | Android | Skin cancer detection | NA | NA | NA | NA | No cost |
| FaceDia - Acne Diagnostic Scan | Android | Acne diagnosis and treatment | NA | NA | NA | NA | No cost |
| Human Disease Checker With AI | Android | Diagnosis of skin conditions | NA | NA | NA | NA | No cost |
| iHairium: Hair & Skin Health | Apple | Diagnosis of hair conditions | NA | 4.8 | NA | 173 | No cost |
| Kara AI Skin Expert Assistant | Both | Tracking skin conditions | NA | NA | NA | NA | No cost |
| MatchLab AI | Apple | Tracking skin conditions | NA | 5 | NA | 1 | No cost |
| MDAcne | Both | Acne treatment and monitoring | 4.5 | 4.6 | 4340 | 18 000 | No cost |
| Medgic - AI Skin Analysis | Android | Identification of skin conditions | 3.3 | NA | 5610 | NA | No cost |
| Medic Scanner–Skin Analyze | Android | Skin cancer detection | 3.7 | NA | 71 | NA | No cost |
| Melatect<sup>d</sup> | Apple | Skin cancer detection | NA | 5 | NA | 1 | No cost |
| MetisAI+ | Apple | Identification of skin conditions | NA | NA | NA | NA | No cost |
| Miiskin Skin & Dermatology | Both | Mole tracking, acne tracking | 4 | 4.4 | 1815 | 985 | No cost |
| Mole Checker Skin Dermatology | Apple | Skin cancer detection | NA | 4.8 | NA | 71 | Fee |
| Piel | Apple | Skin cancer detection | NA | 3.7 | NA | 6 | No cost |
| Proton Health: Acne & Eczema | Both | Identification of skin conditions | NA | 4.5 | NA | 2 | No cost |
| Rash ID - Rash Identifier | Apple | Diagnosis of skin conditions | NA | 3.2 | NA | 383 | Fee |
| Scanoma–Mole Check | Both | Skin cancer detection | 4.3 | 4.7 | 310 | 749 | No cost |
| Skin Bliss: Skincare Routines | Both | Tracking skin conditions | 4.7 | 4.8 | 5440 | 346 | No cost |
| SkinChange.AI | Apple | Mole tracking | NA | 5 | NA | 6 | No cost |
| SkinIO | Both | Skin cancer detection, mole tracking | 4.3 | 4.6 | 72 | 44 | No cost |
| Skinlog \| Skin Diary, Analysis | Both | Tracking skin conditions | 4.7 | 4.7 | 218 | 17 | No cost |
| Skinner: Analyze Your Skin | Apple | Identification of skin conditions | NA | 1.3 | NA | 3 | No cost |
| Sun Index–Vitamin D & UV | Both | Tracking skin conditions, sun protection | 3.5 | 3.9 | 698 | 230 | No cost |
| UV Safe–Sun Protection | Apple | Skin cancer detection, sun protection | NA | 4.4 | NA | 17 | No cost |

Abbreviations: app, application; NA, not available due to an insufficient number of ratings or because the app is not available in the app store.

<sup>a</sup> Android, Apple, or both app stores.

<sup>b</sup> In-app purchases.

<sup>c</sup> Fee applied for online consultation with a dermatologist to obtain a confirmed diagnosis.

<sup>d</sup> A preprint is available for Melatect,10 but Melatect is included in this table because no peer-reviewed publication is available as supporting evidence.

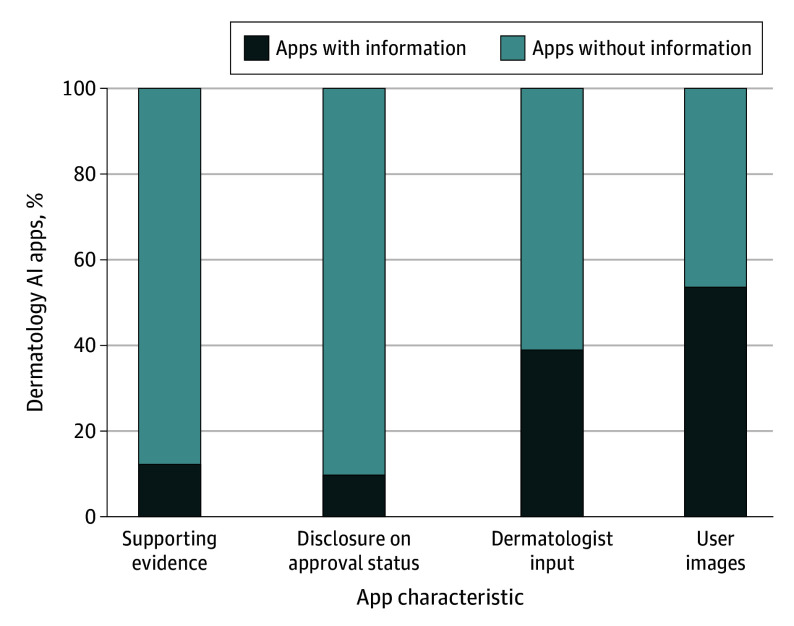

Ten apps (24.4%) claimed diagnostic capability. Notably, none of these had scientific publications as supporting evidence, and 2 lacked warnings in their descriptions cautioning about the potential inaccuracy of results and the absence of formal medical diagnoses. Fourteen apps (34.1%) were based in the US, with only 2 providing a disclaimer for lacking US Food and Drug Administration approval. Additionally, 14 apps (34.1%) were based in Europe, and only 2 possessed CE Mark (Conformité Européenne) status, indicating regulatory health and safety product approval in the European Union (eTable 1 in Supplement 1; Figure).

Figure. Characteristics of Dermatology Artificial Intelligence (AI) Applications.

Only 5 applications (apps) had supporting evidence in the form of peer-reviewed journal publications. Only 4 apps disclosed their CE (Conformité Européenne) Mark or US Food and Drug Administration approval status. Fewer than half of the apps had dermatologist input, and nearly half of the apps did not specify how user images can be used.

Notably, only 5 apps had supporting peer-reviewed journal publications, and only 1 of these publications was a multicenter prospective diagnostic clinical trial (Table 1, Table 2, Figure).9 One app had a preprint article available10 (Table 1, Table 2). In addition, 24 apps (58.5%) lacked any information on training and testing datasets. Among apps with dataset information, the majority offered only general descriptions, providing vague details such as “photographs,” “publicly available,” or “proprietary.” Only 6 apps (14.6%) provided information on data availability, and none of these datasets were provided by the app developers themselves. Instead, they were existing public datasets, such as the International Skin Imaging Collaboration Archive11 and HAM10000 (Human Against Machine with 10000 training images)12 (eTable 2 in Supplement 1). Furthermore, 21 apps (51.2%) lacked information on algorithm details (eTable 2 in Supplement 1). The majority of the apps did not specify any clinician input. Only 16 apps (39.0%) specified including dermatologist input (eTable 2 in Supplement 1; Figure).

Only 12 apps (29.3%) indicated that they do not store user-submitted images. Of the 16 apps (39.0%) specifying storing user images, 12 reported using secure cloud servers. Regarding how submitted image data would be used, 19 apps (46.3%) did not provide any details. In contrast, 20 apps (48.8%) used the data for analysis to provide user results, while 12 apps (29.3%) specified using the data for research and further app development (eTable 3 in Supplement 1; Figure).

Discussion

The findings of this cross-sectional study highlight that dermatology AI apps lack transparency about the effectiveness of the AI models, the data used for development, and how user images are used. This raises concerns about biases, inappropriate recommendations, and user privacy. The absence of regulatory approval and limited clinician involvement, particularly from dermatologists, further compounds these concerns.5 In fact, the American Academy of Dermatology warns that apps claiming to diagnose or provide treatment plans can provide inaccurate information. AI dermatology apps hold promise for improving access and patient outcomes,13 but in their current state they may cause harm due to the potential risks, lack of consistent validation, and misleading user communication. Although a randomized clinical trial is the ideal study design for evaluating an intervention, it is not always necessary for vetting diagnostic apps; diagnostic validation studies, for instance, can also be used to demonstrate an app’s utility. However, current AI apps in dermatology generally lack transparency regarding the methods of app development and validation. Addressing challenges in efficacy, safety, and transparency through effective regulation, validation, and standardized evaluation criteria is essential to harness the benefits of these apps while minimizing risks. App developers should, at a minimum, disclose information on the specific AI algorithms used; the datasets used for training, testing, and/or validation; the extent of clinician input; the existence of supporting publications; the use and handling of user-submitted images; and the implementation of measures to safeguard data privacy.14
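As an illustration only, the 6 minimum disclosure items listed above could be captured in a simple structured record so that gaps are easy to spot. This is a hypothetical sketch: the class `AppDisclosure`, its field names, and the example values are assumptions introduced here, not part of the study or of any published checklist.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class AppDisclosure:
    """Hypothetical record of the 6 disclosure items named in the Discussion."""
    algorithm_details: Optional[str] = None
    datasets: Optional[str] = None  # training, testing, and/or validation data
    clinician_input: Optional[str] = None
    supporting_publications: Optional[str] = None
    image_use_and_handling: Optional[str] = None
    privacy_safeguards: Optional[str] = None


def missing_items(disclosure: AppDisclosure) -> List[str]:
    """Return the names of items that are absent or empty."""
    return [f.name for f in fields(disclosure) if not getattr(disclosure, f.name)]


# Made-up example: an app that describes its algorithm and data but nothing else.
example = AppDisclosure(
    algorithm_details="convolutional neural network",
    datasets="publicly available dermoscopy images",
)
print(missing_items(example))
```

A record like this makes undisclosed items explicit instead of leaving them to be inferred from an app store description.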

Limitations

The app identification search was limited by the availability of apps in the Apple and Android app stores, which are subject to regional variations; the searches were limited to the current English-language artificial intelligence dermatology mobile apps available for download in US app stores. Potential exists for the omission of apps in use globally that were not captured by our study. Furthermore, app stores undergo dynamic changes with continual additions and removals. Although this study yielded meaningful findings, the dynamic nature of the field requires ongoing research and monitoring to remain relevant in a continuously evolving landscape.

Conclusions

This cross-sectional study uncovered numerous concerns regarding the current state of AI dermatology apps. Most notably, the use of these apps has risks associated with a lack of consistent validation and transparent user communication. These findings are important to increase awareness of the current limitations and the need to further develop methods for creation of AI dermatology apps with effective regulation, validation, and standardized evaluation criteria.

eMethods

eFigure 1. Form Sent to App Contacts as Formal Survey for More Comprehensive Evaluation

eFigure 2. Flowchart of Dermatology AI App Identification

eTable 1. Information on App Country of Origin and FDA Approval / CE Mark

eTable 2. Information on Training/Testing Data Sets, Data Availability, Algorithm, and Clinician Input

eTable 3. Information on Terms of Use

eTable 4. References for Apps with Evidence

Data Sharing Statement

References

1. Brewer AC, Endly DC, Henley J, et al. Mobile applications in dermatology. JAMA Dermatol. 2013;149(11):1300-1304. doi: 10.1001/jamadermatol.2013.5517

2. Sun MD, Kentley J, Mehta P, Dusza S, Halpern AC, Rotemberg V. Accuracy of commercially available smartphone applications for the detection of melanoma. Br J Dermatol. 2022;186(4):744-746. doi: 10.1111/bjd.20903

3. Freeman K, Dinnes J, Chuchu N, et al. Algorithm based smartphone apps to assess risk of skin cancer in adults: systematic review of diagnostic accuracy studies. BMJ. 2020;368:m127. doi: 10.1136/bmj.m127

4. Abbasi J. Artificial intelligence-based skin cancer phone apps unreliable. JAMA. 2020;323(14):1336. doi: 10.1001/jama.2020.4543

5. Matin RN, Dinnes J. AI-based smartphone apps for risk assessment of skin cancer need more evaluation and better regulation. Br J Cancer. 2021;124(11):1749-1750. doi: 10.1038/s41416-021-01302-3

6. Chuchu N, Takwoingi Y, Dinnes J, et al. Smartphone applications for triaging adults with skin lesions that are suspicious for melanoma. Cochrane Database Syst Rev. 2018;12(12):CD013192. doi: 10.1002/14651858.CD013192

7. Diao JA, Venkatesh KP, Raza MM, Kvedar JC. Multinational landscape of health app policy: toward regulatory consensus on digital health. NPJ Digit Med. 2022;5(1):61. doi: 10.1038/s41746-022-00604-x

8. Essén A, Stern AD, Haase CB, et al. Health app policy: international comparison of nine countries’ approaches. NPJ Digit Med. 2022;5(1):31. doi: 10.1038/s41746-022-00573-1

9. Menzies SW, Sinz C, Menzies M, et al. Comparison of humans versus mobile phone-powered artificial intelligence for the diagnosis and management of pigmented skin cancer in secondary care: a multicentre, prospective, diagnostic, clinical trial. Lancet Digit Health. 2023;5(10):e679-e691. doi: 10.1016/S2589-7500(23)00130-9

10. Meel V, Bodepudi A. Melatect: a machine learning model approach for identifying malignant melanoma in skin growths. arXiv. Preprint posted September 21, 2021. doi: 10.48550/arXiv.2109.03310

11. International Skin Imaging Collaboration. ISIC. Accessed January 10, 2024. https://www.isic-archive.com/

12. Tschandl P, Rosendahl C, Kittler H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci Data. 2018;5:180161. doi: 10.1038/sdata.2018.161

13. Wongvibulsin S, Frech TM, Chren MM, Tkaczyk ER. Expanding personalized, data-driven dermatology: leveraging digital health technology and machine learning to improve patient outcomes. JID Innov. 2022;2(3):100105. doi: 10.1016/j.xjidi.2022.100105

14. Daneshjou R, Barata C, Betz-Stablein B, et al. Checklist for evaluation of image-based artificial intelligence reports in dermatology: CLEAR derm consensus guidelines from the international skin imaging collaboration artificial intelligence working group. JAMA Dermatol. 2022;158(1):90-96. doi: 10.1001/jamadermatol.2021.4915
