Abstract

Objectives:

The link between memory ability and speech recognition accuracy is often examined by correlating summary measures of performance across various tasks, but interpretation of such correlations critically depends on assumptions about how these measures map onto underlying factors of interest. The current work presents an alternative approach, wherein latent factor models are fit to trial-level data from multiple tasks to directly test hypotheses about the underlying structure of memory and the extent to which latent memory factors are associated with individual differences in speech recognition accuracy. Latent factor models with different numbers of factors were fit to the data and compared to one another to select the structures which best explained vocoded sentence recognition in a two-talker masker across a range of target-to-masker ratios, performance on three memory tasks, and the link between sentence recognition and memory.

Design:

Young adults with normal hearing (N = 52 for the memory tasks, of which 21 participants also completed the sentence recognition task) completed three memory tasks and one sentence recognition task: reading span, auditory digit span, visual free recall of words, and recognition of 16-channel vocoded Perceptually Robust English Sentence Test Open-set (PRESTO) sentences in the presence of a two-talker masker at target-to-masker ratios between +10 and 0 dB. Correlations between summary measures of memory task performance and sentence recognition accuracy were calculated for comparison to prior work, and latent factor models were fit to trial-level data and compared against one another to identify the number of latent factors which best explains the data. Models with one or two latent factors were fit to the sentence recognition data and models with one, two, or three latent factors were fit to the memory task data. Based on findings with these models, full models that linked one speech factor to one, two, or three memory factors were fit to the full data set. Models were compared via Expected Log pointwise Predictive Density (ELPD) and post-hoc inspection of model parameters.

Results:

Summary measures were positively correlated across memory tasks and sentence recognition. Latent factor models revealed that sentence recognition accuracy was best explained by a single factor that varied across participants. Memory task performance was best explained by two latent factors, of which one was generally associated with performance on all three tasks and the other was specific to digit span recall accuracy at lists of 6 digits or more. When these models were combined, the general memory factor was closely related to the sentence recognition factor, whereas the factor specific to digit span had no apparent association with sentence recognition.

Conclusions:

Comparison of latent factor models enables testing hypotheses about the underlying structure linking cognition and speech recognition. This approach showed that multiple memory tasks assess a common latent factor that is related to individual differences in sentence recognition, although performance on some tasks was associated with multiple factors. Thus, while these tasks provide some convergent assessment of common latent factors, caution is needed when interpreting what they tell us about speech recognition.

INTRODUCTION

Speech recognition accuracy in degraded listening conditions partially depends on the listener’s cognitive ability (Arlinger et al., 2009), such that measures of individual differences in working memory ability tend to correlate with speech recognition accuracy (Akeroyd, 2008). However, measures of various cognitive constructs tend to positively correlate with one another (Miyake et al., 2000). As a result, most measures of cognitive ability have been found to correlate weakly with speech recognition accuracy to some extent (Dryden et al., 2017). These correlations are difficult to interpret because they could arise from a weak direct link between the measured cognitive abilities and speech recognition, or alternatively they could arise indirectly from covariance between the measured cognitive abilities and other cognitive abilities that directly support speech recognition. Discovering such correlations is an important initial step in the development of theory, but studies in this field are often conducted with the goal of identifying latent factors, i.e. the underlying individual differences that affect performance on multiple tasks of interest. The work presented here demonstrates a method of moving beyond examining correlations by instead identifying and comparing latent factor models to explain the individual differences that link performance across cognitive and speech recognition tasks.

In the psychology literature, individual performance on specific tasks is typically modeled as arising from an underlying set of latent factors (Engle et al., 1999; Miyake et al., 2000). While the exact nature and relationship of those latent factors to one another is an area of active research (Friedman & Miyake, 2017; Kovacs & Conway, 2016; Rey-Mermet et al., 2018; Shipstead et al., 2016; Troche et al., 2021), the norm is to explicitly state and test assumptions about how task performance maps onto latent variables. This approach is advantageous in that it can identify latent factors which contribute to performance across multiple tasks, estimate individual differences in those factors, and identify tasks for which performance depends on multiple latent factors. In contrast, not using this approach makes the implicit assumption that the observed task performance directly reflects the latent variable of interest (Oberauer & Lewandowsky, 2019). Such assumptions can be problematic, as it is not always evident at face value whether two tasks measure the same latent factor. For example, although both the Stroop and flanker tasks have long been regarded as tests of inhibition, recent work indicates that they are unrelated measures which do not measure a common latent variable (Rey-Mermet et al., 2018; Rouder & Haaf, 2019). Similarly, the antisaccade task, a measure of attentional control that requires looking away from a cued location, and complex span working memory tasks do not obviously relate to one another, yet individual differences in performance on complex span tasks predict speed and accuracy on the antisaccade task (Kane et al., 2001; Unsworth et al., 2004). Thus, there is a need to test hypotheses about what latent factors any given task measures to advance our understanding of the cognitive processes that affect speech recognition.

An influential model of the link between working memory and speech recognition is the Ease of Language Understanding (ELU) model (Rönnberg, 2003; Rönnberg et al., 2008, 2013, 2022). The premise of the ELU model is that speech recognition occurs via two processes. The first process occurs automatically and rapidly whenever sensory inputs unambiguously match a lexical representation in long-term memory, and the second process is an explicit and slower mechanism for restoring or inferring an interpretation of ambiguous input. This slow, explicit mechanism is conceptualized as working memory.

There is a wealth of empirical findings that have motivated the development of several theories regarding the structure of working memory (Oberauer et al., 2018). The ELU model is based on one of these models of working memory, Baddeley’s multicomponent model (Baddeley, 2012). In this model, working memory is defined as the ability to concurrently store and process information. Working memory is distinct from short-term memory, which is the temporary storage of information without processing. The ELU model emphasizes working memory as a critical ability for speech recognition because tasks that measure working memory tend to predict speech recognition accuracy, whereas tasks that measure short-term memory do not (Rönnberg et al., 2013). From this perspective, individuals differ from one another in terms of their short-term capacity and their ability to process information in memory, and it is specifically the processing ability that accounts for correlations between working memory tasks and speech recognition accuracy. Working memory is often experimentally tested with complex span tasks, which include a primary memory task interleaved with a secondary processing task. One of the most widely used complex span tasks is reading span (Daneman & Carpenter, 1980). In reading span tasks, participants read a sequence of sentences one at a time and make a judgment about each sentence, such as whether it is factually true or semantically sensible. At the end of the sequence, they then recall the last word of each sentence. Interleaving both a storage task (storing and recalling words in order) and a processing task (judging sentence truth) is what critically distinguishes reading span from simple span tasks that only include a primary recall task, such as serial recall of lists of digits in the order they were presented. This processing requirement is the reason complex span tasks are hypothesized to relate to speech recognition while simple span tasks are not (Rönnberg et al., 2016, 2021).

However, alternative theories about the structure of working memory have been proposed. For example, some models of working memory posit the presence of a limited capacity focus of attention that is used to maintain information in a readily accessible state. When the capacity of the focus of attention is overloaded, information is displaced into an activated state in long-term memory and must be retrieved back into the focus of attention before it can be used (Cowan, 2001; Oberauer, 2002). In these models, individuals differ from one another in both their ability to maintain information in the focus of attention and retrieve activated information from long-term memory back into the focus of attention (Unsworth & Engle, 2007b). From this perspective, maintenance and retrieval demands vary across memory tasks, although simple and complex span tasks both measure these processes to some extent (Unsworth & Engle, 2007a). Specifically, the dual-task structure of complex span tasks requires retrieval of activated items in long-term memory regardless of list length because the secondary task displaces those items from the focus of attention. In contrast, simple span requires retrieval only at longer list lengths, in which the amount of information in the list overloads an individual’s ability to maintain that information in the focus of attention. In support of this idea, previous research has reported correlations between the number of items recalled at longer list lengths (5 – 7 item lists) in serial recall tasks with recall accuracy for complex span at all list lengths (Unsworth & Engle, 2006). Similarly, free recall tasks, which present long lists of items and require participants to recall as many items as they can in any order, load onto the same latent factor as complex span tasks (Unsworth & Engle, 2007b), which supports the theory that individual differences in retrieval ability affect performance in both tasks. More generally, Wilhelm et al. (2013) demonstrated that performance across a variety of memory tasks was highly correlated and loaded onto the same underlying factor, which was in turn closely related to fluid intelligence.

The storage and processing model (which the ELU model is based on) and the maintenance and retrieval model make different predictions about which memory tasks will overlap in the latent factors they measure. The storage and processing model predicts that all tasks which depend on storing information will load onto a common storage factor, and tasks with an explicit processing component will also depend on a distinct processing factor. Thus, if we use a complex span task, which includes a processing component, in conjunction with serial or free recall tasks, which do not include a processing component, we should be able to partition individual differences in storage and processing ability from one another. Specifically, all tasks should associate with a common storage factor, while complex span tasks will also depend on individual differences in processing ability. In contrast, the maintenance and retrieval model predicts that complex span tasks at all list lengths and serial or free recall tasks with long list lengths that overload the focus of attention will depend on retrieval ability, whereas short list lengths in serial or free recall tasks should only depend on maintenance ability. Thus, including tasks for which these models make different predictions will enable us to identify the underlying latent factor structure. Identifying latent factors and estimating individual differences in ability for each factor will enable us to identify which latent memory factor(s) determine individual differences in speech recognition accuracy.

For this study, three memory tasks were selected to determine how these tasks overlap in the latent memory factor(s) they measure and determine the relationship between sentence recognition and memory factors. Reading span was included for its theoretical importance in the ELU model and because it often associates with speech recognition in older adults (O’Neill et al., 2019; O’Neill, Parke, et al., 2021). Reading span requires concurrent storage and processing of information within a trial, as described above. Forward digit span was included because performance on this task has been previously found to correlate with vocoded sentence recognition accuracy (Bosen & Barry, 2020), which is part of the listening condition used in the current study. This correlation indicates that digit span and sentence recognition abilities are related to some extent, but do not tell us whether digit span assesses the same underlying speech-related factors as reading span. Forward digit span is a serial recall task, which requires storing a sequence of digits in order but does not require any manipulation or processing of that sequence. Digit sequence length can be manipulated to control the amount of information that needs to be retrieved from activated long-term memory during recall. Visual free recall of words was included because it has been shown to associate with complex span task performance (Unsworth & Engle, 2007b) but does not include an explicit processing component. In a typical free recall task, more items are presented than can be stored in short-term memory to place a demand on retrieval ability. To our knowledge, free recall has not been previously examined with respect to speech recognition. Together, these memory tasks enable the identification of latent memory factors that are associated with sentence recognition.

The ELU model states that working memory is needed to infer missing speech information whenever the input is ambiguous (Rönnberg et al., 2013). Specifically, when few ambiguous inputs are present, individual differences in speech recognition should be governed by the automatic, rapid process. As more ambiguous inputs are introduced, i.e. as listening conditions become more difficult, then individual differences in recognition accuracy should be dominated by the explicit, slow process, with the automatic process playing a smaller role. By extension, the ELU model predicts that individual differences in working memory factor(s) should have stronger effects on speech recognition accuracy in more difficult listening conditions. Currently, it is unknown how the transition from automatic to explicit processing occurs across changes in listening condition. If the transition is sharp, then there should be a threshold below which working memory factor(s) should consistently associate with speech recognition accuracy. Alternatively, if the transition is more gradual, then the relationship between working memory and speech recognition accuracy should change as a function of listening condition difficulty. It could be the case that a single working memory factory becomes more strongly associated with recognition accuracy as difficulty increases. Alternatively, it could be that factors associated with automatic and explicit processing trade off in their relationship with speech recognition accuracy. If so, individual differences in the factors that support automatic processing would be most strongly associated with speech recognition in easy listening conditions and factors that support explicit processing would be most associated with difficult listening conditions.

Some evidence against a change in latent factors as a function of listening condition difficulty was found by Bosen & Barry (2020), in which individual differences in sentence recognition accuracy were moderately correlated across three listening conditions that were degraded with a vocoder to produce different levels of spectral resolution. At a group level, keyword recognition accuracy declined as the number of channels in the vocoder was reduced, from 88.1% for a 16-channel vocoder to 77.1% for an 8-channel vocoder and 39.1% for a 4-channel vocoder. However, individual differences in keyword recognition accuracy across the 16 and 4 channel conditions were correlated with an r = 0.66, which is only slightly smaller than the correlations between the 16 and 8 channel (r = 0.75) and the 8 and 4 channel conditions (r = 0.73). If the latent factors which supported sentence recognition changed as a function of accuracy within the tested range then we would expect individual differences in sentence recognition to be weakly correlated, if at all, across the 16 and 4 channel conditions. However, we noted above that interpreting correlations in performance across tasks can be challenging, so the present study was designed to identify the latent factors underlying sentence recognition across a manipulation of recognition accuracy.

For this study, we measured sentence recognition accuracy in young adults with normal hearing for sentences spoken by multiple talkers which were mixed with competing two-talker speech and then vocoded. This listening condition was selected to elicit a high cognitive demand for successful sentence recognition. Changes in talker elicit a processing cost as the listener normalizes to characteristics of the new talker (Magnuson et al., 2021; Mullennix et al., 1989; Mullennix & Pisoni, 1990). Listening to speech in the presence of competing speech requires selecting and attending to the target talker while ignoring competitors, with the greatest interference from the competing talkers occurring with a two-talker masker (Freyman et al., 2004). Vocoding the combined target and masking speech further increases the cognitive demand because it removes some of the cues that support stream segregation (Bernstein et al., 2016; Brungart, 2001; Qin & Oxenham, 2003; Shinn-Cunningham & Best, 2008). The inclusion of these elements in the listening condition used here should make it more likely that we would observe changes in the latent factors that support speech recognition across manipulations of recognition accuracy, if such changes exist. Using a degraded listening condition with a high cognitive demand should also facilitate the identification of the relationship between memory and speech recognition. As a secondary motivation, this listening condition is translationally relevant because identifying indexical properties and recognizing speech in the presence of other talkers is particularly difficult for individuals with cochlear implants (Smith et al., 2019; Stickney et al., 2004) and is a listening situation these individuals frequently encounter in daily life (O’Neill, Basile, et al., 2021). Thus, the results of this experiment could inform the design of future studies to identify the cognitive constructs that facilitate speech recognition in listeners with cochlear implants.

Sentence recognition accuracy was tested across a range of target-to-masker ratios. At favorable target-to-masker ratios, we predicted that sentence recognition accuracy would not be substantially impeded by the competing speech, so the correlation between sentence recognition accuracy and performance on the memory tasks should replicate the findings of prior work which used vocoded speech without competing speech (Bosen & Barry, 2020). At more difficult target-to-masker ratios the ELU model predicts that there is a greater requirement for slow, explicit processing, which may manifest as increased effect of specific memory factor(s) on recognition accuracy in the difficult conditions.

MATERIALS AND METHODS

Young adults with normal hearing completed three memory tasks and repeated vocoded sentences in the presence of a two-talker masker at a range of target-to-masker ratios. Performance in the sentence recognition and memory tasks was compared across individuals, first by correlating average performance in each task across participants and second by fitting latent factor models to trial-level data. Task design documentation, data, and analysis scripts are available as supplemental material at https://osf.io/j2s45/.

Participants and Experimental Environment

A total of 52 people participated in this study, 27 in the lab and 25 via remote testing. 27 young adults (21 women, 6 men, range of 19 – 34 years of age, mean of 25.2 years) were recruited for this study in our lab. These participants were screened for typical hearing (pure-tone thresholds < 20 dB HL at octave frequencies between 0.5 and 8 kHz) and did not report any developmental, intellectual, or neurological disorders that would interfere with any of the tests used here. For these participants, the tasks described below were implemented in MATLAB (Mathworks, Natick, MA, USA) and the Psychtoolbox-3 library (Kleiner et al., 2007) was used for visual presentation in the free recall task. Tasks were completed in an echo-attenuated sound booth. Visual stimuli were presented on a computer monitor located in the booth and auditory stimuli were presented from a loudspeaker, both of which were located side-by-side approximately 1 m straight ahead of the participant. Auditory stimuli were presented at an average level of 65 dB SPL. Of these 27 participants, 21 completed all tasks described below, while 6 completed the memory tasks described here and a pilot sentence recognition task which used a different approach to vocoding and mixing the target and masker speech than the one described below. Given the small in-lab sample size of the pilot sentence recognition task, those results are not reported here.

In-person data collection was discontinued at the onset of the COVID-19 pandemic. We originally intended to collect more data in the pilot sentence recognition task by recruiting participants to complete the study in a remote testing format, although concerns about differences in audio equipment and auditory fidelity across participants prevent us from reporting their sentence recognition results until we can replicate our findings under more controlled listening conditions. However, prior results indicate that assessment of auditory digit span is robust to degradations in auditory quality (Bosen & Barry, 2020; Bosen & Luckasen, 2019) and the other memory tasks were visually presented, so data collected remotely for these tasks should be comparable to those collected in the laboratory. Thus, performance on the memory tasks for these remote participants is included to determine if in-lab and remote testing yield a similar range of group performance as a reference for future studies and to increase the sample size for analyses which compare individual differences in performance across memory tasks.

25 participants (20 women, 5 men, range of 19 – 26 years of age, mean of 22 years) completed the study via remote testing. Remote testing was conducted via a WebEx video call with an experimenter, who provided instructions and links to websites which implemented the tasks. Participants were asked to wear headphones throughout the study and were free to use any headphones they had available. At the start of the experiment, participants were sent a link to a calibration noise which matched the long-term spectral characteristics of the digit stimuli and were asked to adjust the volume of their headphones so that the calibration noise was at a “loud, but comfortable” listening level. These individuals completed the digit span and free recall tasks described here using an implementation of the tasks written using the jsPsych library (de Leeuw, 2015) and hosted on the website https://www.cognition.run/. Reading span was administered through Inquisit 6 Online (Millisecond Software, 2020), which requires participants to download a driver program to their computer to ensure reliable timing for stimulus presentation and responses.

All participants provided informed written consent and were compensated hourly for participation. This study was approved by our Institutional Review Board.

Memory Tasks

All participants completed reading span, free recall, and digit span in that order.

Reading Span

Participants completed an automated reading span task on a computer (Unsworth et al., 2005) implemented in Inquisit Lab 5 (Millisecond Software, 2019), available at https://www.millisecond.com/download/library/rspan/ at the time of publication. The version of the reading span task used here is slightly different than the one developed by Daneman & Carpenter (1980), in that the items to be remembered are distinct letters presented after each sentence rather than the last word of the sentence. This version was used to prevent participants from using semantic information from sentences to facilitate recall (Conway et al., 2005). In this task, participants were shown alternating sequences of letters and sentences between 3 and 7 letter-sentence pairs long, with each length presented three times in a random order. Participants were asked to judge whether or not the sentences were sensical and to remember the sequence of letters in order. Nonsensical sentences were created by taking a semantically sensible sentence and changing one word (e.g. “Andy was stopped by the policeman because he crossed the yellow heaven.”). After each sentence a prompt appeared stating “This sentence makes sense”, to which participants clicked on true or false dialog boxes with a mouse. At the end of each sequence, a keypad appeared on screen containing all 12 possible letters and participants were asked to click on the letters in the order they were presented using a mouse. The task instructions encouraged participants to maintain at least 85% accuracy on sentence judgment and percent accuracy for sentence judgment was displayed on screen to ensure participants attended both the judgment and the recall portions of the task. Practice trials of letter recall, sentence judgment, and a mixture of both were provided prior to completing the experimental trials. The time to respond in the practice sentence judgment trials was used to calculate a timeout period, defined as the mean time to respond plus 2.5 standard deviations for each participant. In experimental trials, sentence judgment responses timed out and were marked as incorrect if a response was not provided within the timeout period. This timeout period was intended to ensure participants could not chose to pause and rehearse the letter sequence after making sentence judgments.

Free Recall

The structure of this task was previously described by (Engle et al., 1999). Participants saw 10 lists of 12 words flashed on a computer screen. Each word was presented for 750 ms with a 250 ms blank screen between words. At the end of each list, participants said aloud as many words as they could remember in any order within a 30 s time limit. Participants were told it is easier to recall words from the end of the list first before recalling any other words they could remember to be consistent with the methods described by Engle et al. (1999), although they were free to use whatever response strategy they preferred. Verbal responses were recorded and transcribed offline by the second author. Unclear responses were reviewed by both authors and consensus was reached.

We designed a novel word list for this study. A list of potential words was taken from the consonant-nucleus-consonant word lists provided by Storkel (2013). Three-phoneme, four-letter words were then selected to form two practice lists and 10 test lists. Lexical frequency for each potential word was obtained from Brysbaert & New (2009), and high frequency words were preferred because they tend to be easier to recall in other types of memory tasks (Roodenrys & Quinlan, 2000). Words with a high (>30) or low (<10) number of phonological neighbors were excluded because lexical neighborhood density can also affect recall (Roodenrys et al., 2002). Words were compiled into lists that were approximately balanced with respect to the number of neighbors, the biphone segment sum, and lexical frequency. Meaningful phrases, rhyming words and semantically related words were avoided within lists. A spreadsheet of words used and their lexical statistics is provided in the OSF repository associated with this manuscript.

Digit Span

Participants repeated spoken lists of between 2 and 9 digits, with each list length presented 5 times for a total of 40 trials. Prerecorded digits were spoken by a single female talker at a rate of one digit per second. All participants heard the same 40 lists in the same order. List length was randomized and was not known at the onset of each list. This task was previously described by Bosen and Barry (2020), with the exception that in the prior study digits were vocoded and participants heard 10 lists of each length. We conducted a sensitivity analysis to estimate the distribution of measurement error as a function of the number of lists tested at each length and found that we only needed 5 lists per length to obtain similar levels of precision as the 10-list version used in the prior study. Task reliability for a version which uses 5 lists per length was then estimated by randomly splitting the 10-list data from the prior study into two sets of 5 lists per length, summing the number of digits correct recalled by each subject in each set, and correlating sum digits recalled across sets (Parsons et al., 2019). This process was repeated 5000 times to estimate a range of reliabilities, which yielded an average reliability for the task with 5 lists per length of r = 0.87, with a 95% confidence interval ranging from 0.81 to 0.93. Thus, halving the length of the digit span task relative to the prior study provides a shorter task with good reliability. The scripts used to conduct these analyses are provided in the OSF repository associated with this manuscript.

Sentence Recognition Task

Following the memory tasks, participants heard and repeated Perceptually Robust English Sentence Test Open-set (PRESTO) sentences (Gilbert et al., 2013; Tamati et al., 2013). These sentences vary in talker gender, talker dialect, syntactic structure, and semantic contents, which limits the ability to strategically use these cues to facilitate sentence recognition. These sentences were mixed with a two-talker masker across a range of target-to-masker ratios and then vocoded.

The two-talker masker was generated by concatenating PRESTO sentences that were not used as target sentences, splitting the concatenated audio into two halves, and summing those halves. Silence was automatically trimmed from the onset and offset of each sentence to ensure a maximum gap between sentence offset and onset of 100 ms.

Target sentences were taken from PRESTO lists 7, 8, 13, 15, 17, and 23, as these lists have good equivalency and consistency (Faulkner et al., 2015). Each list contained 76 keywords which were unevenly distributed across 18 sentences. For each target sentence, a random sample of the masker was selected that was 1 s longer than the target sentence and aligned so that the masker started and ended 0.5 s before and after the target sentence. This delay between masker and target onset and offset helped participants identify the target speech. The gain of the masker was adjusted such that the long-term average target-to-masker ratio across sentences was 0 (list 7), +2 (list 8), +4 (list 13), +6 (list 15), +8 (list 17), and +10 dB (list 23) to produce a broad range of keyword recognition accuracy. Based on previous work with IEEE sentences in steady-state noise (O’Neill et al., 2019), the masker was expected to have little effect on sentence recognition at +10 dB target-to-masker ratio, with a substantial reduction of accuracy to a low, but non-zero, level at 0 dB.

Stimuli were presented such that the level of the target sentences in quiet was fixed at an average of 65 dB SPL, so overall stimulus level varied with target-to-masker ratio. Target-masker pairings were the same across all participants to avoid variability in target-masker interactions (Buss et al., 2021). Sentences were also presented in a fixed order, starting with the highest target-to masker ratio (+10 dB for list 23) and stepping down to the most difficult (0 dB for list 7).

Target-masker pairs were vocoded with a 16 channel, sine-wave carrier vocoder, selected to match the 16-channel vocoder used by Bosen & Barry (2020). A 16 channel vocoder is expected to yield high keyword recognition accuracy at the higher target-to-masker ratios used here. Stimuli were passed through rectangular filters with edge frequencies that were equally spaced on the Greenwood (1990) function (filter edges of 100, 158, 230, 319, 430, 566, 735, 944, 1202, 1522, 1917, 2406, 3011, 3760, 4686, 5832, and 7250 Hz). The envelope of each filter’s output was calculated with a Hilbert transform and then low-pass filtered with a 300 Hz fourth-order Butterworth filter. Low-pass envelopes were then multiplied with a sine-wave carrier with a frequency of the geometric mean of the filter edges, and the products were summed to produce the vocoded stimulus.

No training was provided for the sentence recognition task. Verbal responses were recorded to every sentence for each participant and scored offline for number of keywords correct by the second author. Scoring followed the rules provided with the PRESTO sentences (Gilbert et al., 2013), which required exact matches for morphological markers. Unclear responses were reviewed and scored by both authors and consensus was reached.

Data Analysis

The data were analyzed in two ways. First, individual performance on each task was estimated by calculating task-level summary statistics. We report descriptive statistics for the range and mean performance on each task to facilitate comparison of participants in the current study to prior work. Pairwise linear correlations were calculated between each task-level statistic and tested for significance to demonstrate that our results replicate previously discovered positive correlations between memory task performance and sentence recognition accuracy. Second, latent factor models were fit to trial-level task performance and compared against one another to identify the number of latent factors which best explains the data.

Task-Level Summary Statistics

For reading span and digit span, responses were scored by the number of edits required to transform the given response into the target list (Gonthier, 2022), such that each edit (an insertion, deletion, or swap of a letter or digit) reduced the score for that trial by one, down to a minimum of zero. This scoring method differs from the common approach of scoring based on the total number of letters recalled in the correct position across all lists (Conway et al., 2005; Friedman & Miyake, 2005). The advantage of scoring by number of edits is that it yields more intuitive scores that are not sensitive to error position. For example, if the target sequence is ‘12345’, then responses of ‘2345’ and ‘1234’ would both receive a score of 4 of 5 items correct when scoring by number of edits. Scoring by correct in position would instead assign scores of 0 of 5 and 4 of 5 items correct, respectively, despite the fact that the only error in either response is a single deletion. Preliminary analysis with the latent factor models described below suffered from the inclusion of trials which were assigned a score of 0 by the correct in position scoring method, as these trials tended to have very high leverage over the model fit. Scoring by number of edits yielded similar task-level individual difference scores relative to the group average but made the distribution of trial-level scores more consistent within participants. We also scored the data by correct in position for comparison to prior work, but used edit distance scoring for all other analyses. For both scoring methods, reading span had a maximum possible cumulative score of 75 letters, and digit span had a maximum possible cumulative score of 220 digits.

For free recall, any responses that matched a word shown in the list were scored as correct, and the average number of words recalled across all 10 lists was calculated for each participant. Repeated responses were not counted more than once.

For sentence recognition, the proportion of keywords correctly identified by each participant for each target-to-masker ratio was calculated to quantify sentence recognition accuracy. Pairwise correlations of task performance were calculated across all target-to-masker ratios and the memory tasks.

Latent Model Description

Key aspects of the latent model approach are described here, with additional technical details provided in supplemental digital content 1. Instead of aggregating data across trials in each task, trial level data were coded as the number of correct responses out of the total number of items in that trial for every participant and task. These data can be modeled as samples from a binomial distribution, such that for a given trial i, task t, and participant p, the number of items correct, Xitp, is binomially distributed as a function of the total number of items in the trial, ni, and the probability of a correct response for that participant and task, αtp (expressed in log odds). The total number of items in the trial is dependent on the task (either number of keywords or number of items to be remembered) and can vary across trials within a task. Incorporating the variable number of keywords in each PRESTO sentence into the model reflects the variable amount of information obtained in each trial. Each list length in the reading span and digit span tasks is modeled as a separate task to allow the probability of a correct response to vary across list lengths.

The probability of a correct response is the sum of the group-level average probability of a correct response for each task, μt, and individual differences in latent factor(s), ηfp, scaled by the amount each factor contribution to performance on each task, λtf.

This model allows each latent factor to affect performance on each task and enables us to estimate the likelihood that the contribution of each factor to each task, λtf, is non-zero, as described below. In models with more than one factor, the factors were constrained to be orthogonal to one another. The orthogonality constraint was imposed to prevent the model from “collapsing”, wherein two different latent factors might take the same values during model estimation and thereby reduce the effective number of latent factors in the model. Despite this constraint, it is still possible for models with an excess of latent factors to collapse, as was observed for a few models described below. An alternative model formulation is to allow each latent factor to affect performance on a single task, but allow latent factors to be correlated with one another (see Friedman et al., 2008 for an example of both model structures applied to the same data set). Here we opted to allow factors to affect multiple tasks to examine the convergent and divergent loading of tasks onto distinct latent factors. To ensure the model had a fixed scale and was therefore identifiable, each latent factor η was restricted such that the mean across participants was zero and the standard deviation was 1 in each sample drawn by the fitting algorithm described below. To ensure that factors could not change their ordering (i.e. higher values lead to worse task performance), all λ parameters were constrained to be positive.

To examine relationships between speech and memory latent factors, individual differences in speech recognition factors, ηspeech, were modeled as a linear regression against memory factors with regression coefficients βf and normally distributed error terms, σspeech, which were constrained to be positive.

Latent Factor Model Comparison

Models that varied in the number of latent factors, η, were fit to three portions of the data to determine the number of latent factors which best accounted for task performance. For each portion of the data, we started with a model with one latent factor, then iteratively increased the number of latent factors in the model until the model with the highest number of factors demonstrated poor convergence and high correlations between latent factors. For the sentence recognition data alone, this iterative approach yielded a comparison between models with one or two latent factors. For the memory task data alone, models with one, two, and three latent factors were compared. For the entire dataset, models with one, two, or three latent memory factors and one sentence recognition factor were compared, based on the results obtained for the two separate portions of the data.

Models were fit using the Stan programming language (v2.26.1, Carpenter et al., 2017) using the RStan interface (v2.26.11, Stan Development Team 2020) in R (v4.2.0, R Development Team, 2022). Stan code describing each model and R code used to fit and analyze models are provided in the OSF repository associated with this manuscript. Stan estimates the posterior distribution of model parameters via Markov Chain Monte Carlo sampling. This approach to modeling allows us to examine the estimated posterior probability distribution of each model parameter given the data and thereby use Bayesian inference to test hypotheses. An overview of the workflow involved in Bayesian inference is described by Gelman et al., (2020). We can use the probability distributions generated by Stan to estimate values of interest, such as the Maximum A Posteriori value of a parameter (MAP, i.e. the most likely value or mode of the parameter), and to calculate the likelihood that the value of a parameter lies outside of a null hypothesis, which in our case is the likelihood that a model parameter lies outside of a negligible region around zero.

The posterior distributions generated by model fitting can be used to estimate the likelihood that the experimental data could have arisen from the given model, which can in turn be used to select the model which best explains the data from among a candidate set. Pareto smoothed importance-sampling leave-one-out cross-validation (Vehtari et al., 2017) was used to estimate the goodness of fit of each model, quantified as the expected log pointwise predictive density (ELPD). ELPD includes a penalty term for the number of effective parameters to penalize more complex models which do not have a better explanatory power than simpler models. When comparing models, both the ELPD difference between models and the standard error of that difference are calculated. If the ELPD difference between models is greater than 4 and the standard error is smaller than the difference then we select the model with the better fit as a substantially better explanation for the data (Sivula et al., 2020).

Model Parameter Significance

In addition to examining the likelihood of whole models, we can also examine the likelihood that parameters within those models are non-negligible. There are several approaches to testing parameter values in a Bayesian context, depending on the hypothesis being tested (see Makowski et al., 2019 for an overview). Here, we are interested in testing whether the contribution of each latent factor to performance on each task, λtf, and whether the regression coefficients of the latent speech factor onto each latent memory factor, βf, were likely to be greater than a negligible region around zero (Morey & Wagenmakers, 2014). For this test, we computed Bayes Factors as the ratio of probability density inside or outside a Region of Practical Equivalence (Kruschke, 2018; Morey & Rouder, 2011). We tested whether the effect of factors on task performance, λ, were likely to have values above a region of 0 to 0.02. This small negligible region was selected to account for the fact that effect size likely depends on overall task performance, and tasks with low overall performance, such as Free Recall in the manner the data were coded, could have small but meaningful effect sizes. We also tested whether regression coefficients, β, were likely to have values above 0.3. This negligible region was selected based on the meta-analysis by Dryden et al., (2017), which found that most cognitive measures correlated with speech recognition accuracy at around r = 0.3, so normalized regression coefficients less than this value are unlikely to reflect a specific relationship between the construct being measured and sentence recognition. We adopt the convention of labeling Bayes Factors greater than 3 in favor of the parameter value lying outside of the negligible region as “substantial” evidence in favor of that parameter being meaningful in the model (Wetzels et al., 2011).

RESULTS

Task Performance

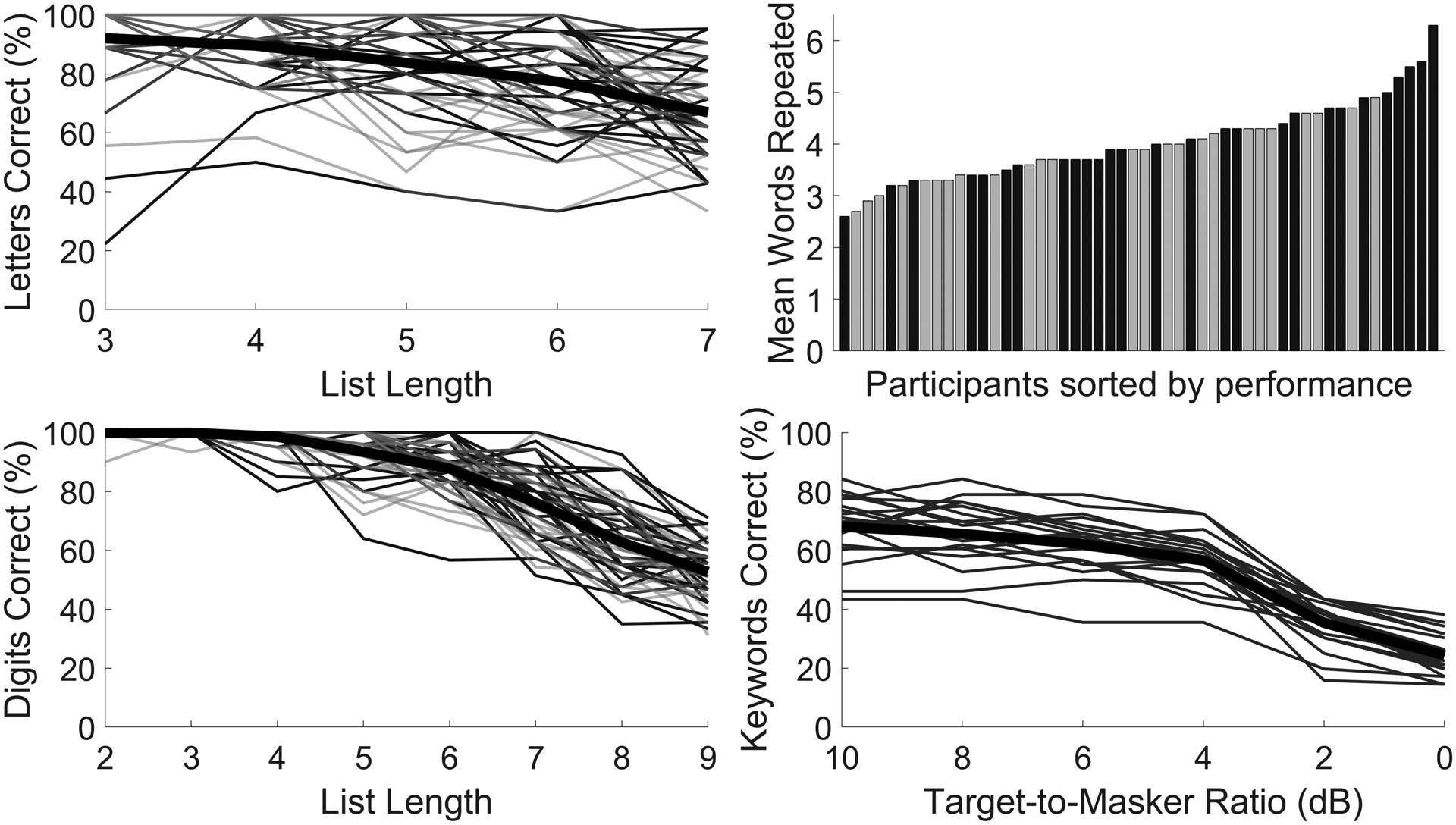

The distribution of performance on each task is depicted in Figure 1. Comparison of the range of performance on each of these tasks to prior work and estimates of task reliability are provided in supplemental digital content 2. To summarize, individual differences in performance on all tasks were reliable and followed trends that have been previously reported in the literature, and the in-lab and remote testing participant groups did not differ from one another on memory task performance.

Figure 1.

Performance on working memory and speech tasks. For reading span (top left) and digit span (bottom left), edit distance score as a percentage of maximum possible score is shown as a function of list length. Thin lines show recall accuracy for each participant, and the thick line shows the group average. In-lab participants are depicted with black lines and remote participants are depicted with gray lines. For free recall (top right), the number of items in each list was fixed, so the average number of items recalled across all lists is shown as a bar for each participant. In-lab participants are depicted with black bars and remote participants are depicted with gray bars. For speech recognition (bottom right), keyword recognition accuracy is shown a as a function of target-to-masker ratio. Thin lines show keyword recognition accuracy for each participant, and the thick line shows the group average.

Task-level summary statistic correlations between sentence recognition and memory tasks

Individual performance relative to the group was consistent across target-to-masker ratios in the sentence recognition task and across memory tasks, as shown by the correlations in Table 1. Significant positive correlations across target-to-masker ratios indicates that individuals with relatively high/low keyword recognition accuracy had high/low accuracy across target-to-masker ratios. Memory task performance was positively correlated with sentence recognition at all target-to-masker ratios, although many of these correlations did not reach significance (p < 0.05).

Table 1.

Correlation of speech recognition accuracy at each target-to-masker ratio and performance for each memory task.

| Correlations | 10 dB | 8 dB | 6 dB | 4 dB | 2 dB | 0 dB | Reading Span | Digit Span | Free Recall |

|---|---|---|---|---|---|---|---|---|---|

| 10 dB | - | ||||||||

| 8 dB | 0.74 | - | |||||||

| 6 dB | 0.70 | 0.84 | - | ||||||

| 4 dB | 0.71 | 0.84 | 0.87 | - | |||||

| 2 dB | 0.44 | 0.53 | 0.70 | 0.47 | - | ||||

| 0 dB | 0.48 | 0.68 | 0.65 | 0.56 | 0.75 | - | |||

| Reading Span | 0.69 | 0.44 | 0.37 | 0.37 | 0.22 | 0.35 | - | ||

| Digit Span | 0.64 | 0.41 | 0.31 | 0.40 | 0.15 | 0.20 | 0.50 | - | |

| Free Recall | 0.68 | 0.54 | 0.66 | 0.55 | 0.58 | 0.42 | 0.44 | 0.40 | - |

Pearson’s linear correlation coefficients (r) are reported for each pair of values across participants. Correlation coefficients that have p-values less than 0.05 are in bold.

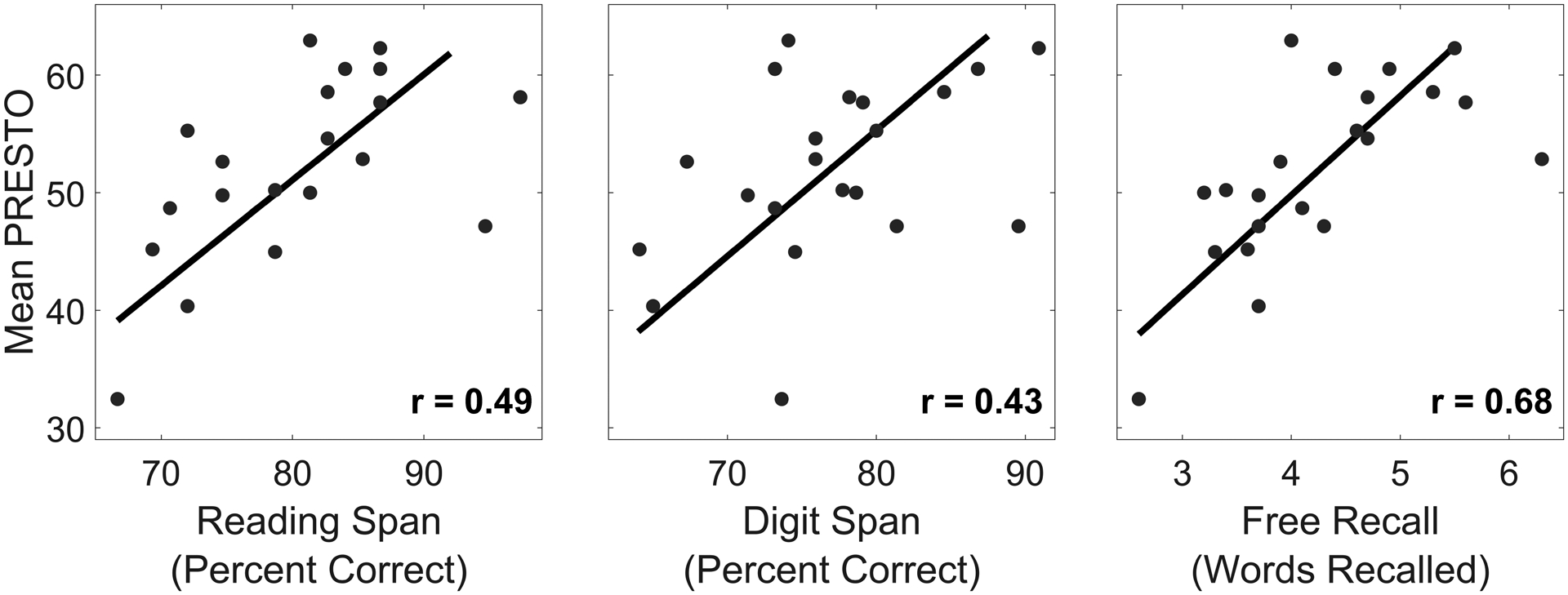

To visualize these correlations, Figure 2 shows the association between PRESTO sentence recognition accuracy averaged across target-to-masker ratios and performance on the memory tasks. Significant correlations (p < 0.05) were observed for each memory task but were marginal for reading and digit span (p = 0.02 and p = 0.05, respectively).

Figure 2.

Mean PRESTO sentence recognition accuracy across target-to-masker ratios as a function of performance in each memory task. Each dot shows data from one individual, and lines show standard major axis regression fits (Legendre, 2013) across individuals. All correlations were significant (p < 0.05).

Latent factor models of sentence recognition and memory task accuracy

Models with one or two latent individual difference factors were fit to keyword recognition accuracy for PRESTO sentences across target-to-masker ratios to test which model provided the best explanation for these speech data. A comparison of expected log pointwise predictive density across models indicated that a model with a single latent factor was a better explanation for the data than a model with two latent factors, as summarized in Table 2. Post-hoc inspection of the two-factor model fit indicated that even though the model was constrained so that latent factors were orthogonal within each sample, the posterior modes of both factors were highly correlated (r > 0.99). Exploratory attempts to constrain the model to reduce this correlation were unable to remove this correlation or improve the model fit. Thus, the model fit comparison and correlation between factors in the two-factor model both indicate that one latent factor is the best explanation for individual differences in sentence recognition accuracy across target-to-masker ratios in this study. This finding is consistent with the strong correlations across target-to-masker ratios in Table 1.

Table 2.

Comparison of model fits to PRESTO sentence recognition accuracy.

| Model | ELPDloo | ELPDloo SE | ploo | Ploo SE | ΔELPD | ΔELPD SE |

|---|---|---|---|---|---|---|

| One Factor | −3891.3 | 48.8 | 57.1 | 1.6 | - | - |

| Two Factors | −3900.5 | 49.2 | 87.3 | 2.4 | −9.3 | 3.3 |

Expected Log pointwise Predictive Density (ELPDloo), number of effective model parameters (ploo), and the standard errors for both estimates (SE) were estimated for both model fits using the LOO package in R (Vehtari et al., 2017). Model fits were compared to obtain an estimated difference in expected log posterior density relative to the best-fitting model (ΔELPD) and standard error for the difference. Comparison of model fits indicates that the one-factor model has a substantially better fit to the observed data.

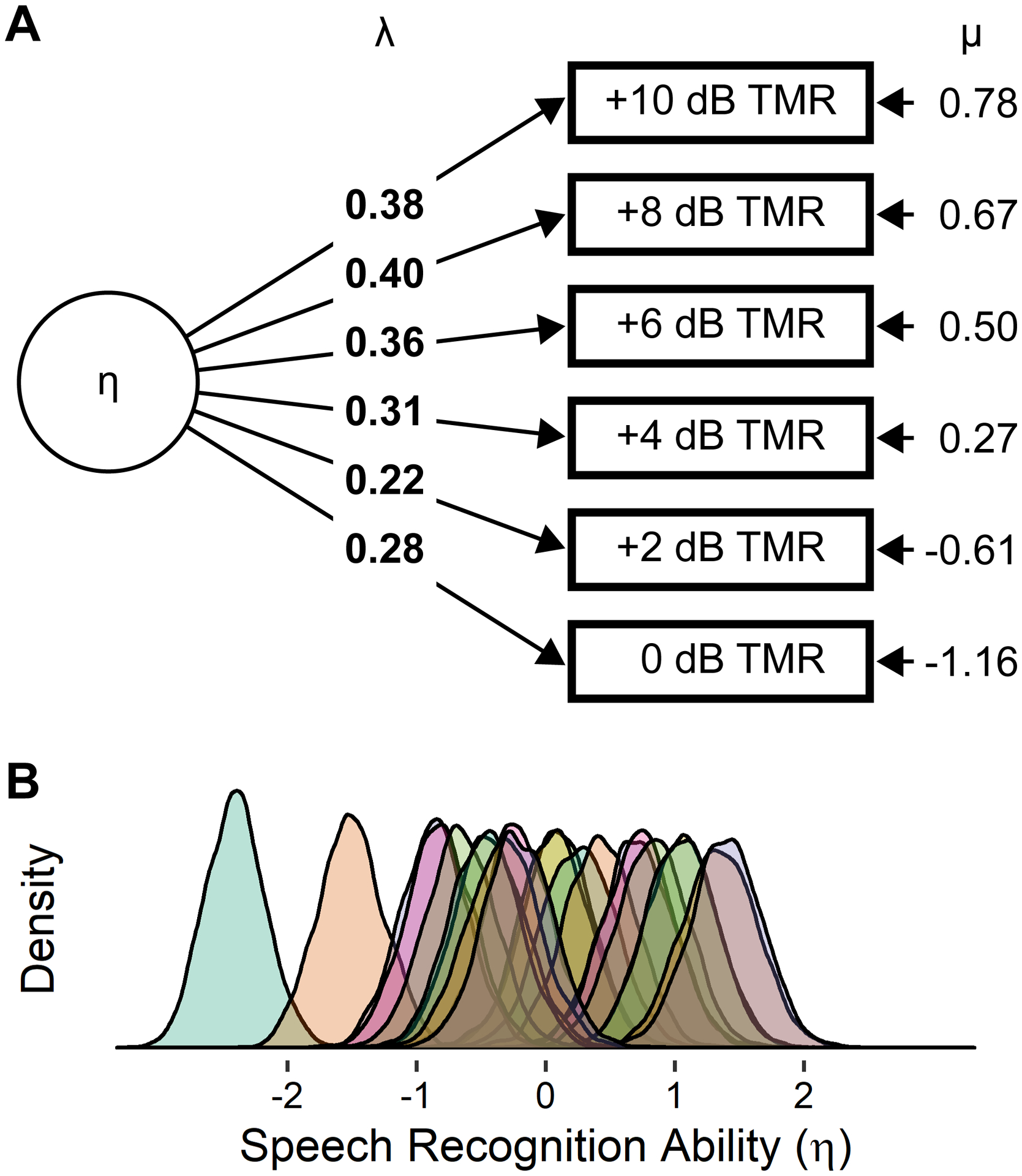

The one-factor model fit is depicted in Figure 3A. Individual differences in the latent factor, η, significantly contributed to keyword recognition accuracy in every target-to-masker ratio (bayes factors greater than 100 for all λ parameters), and group-level keyword recognition accuracy, μ, declined from +10 dB down to 0 dB, consistent with the trend depicted in Figure 1. Note that the estimated values of contribution of the latent factor to performance are not directly comparable across target-to-masker ratios because they are on a log-odds scale and the effect of a change in log-odds on percent correct is relative to where that change occurs on the scale. For example, in the +10 dB and +4 dB target-to-masker ratio conditions, the group-level accuracy is 0.78 and 0.27, respectively, which corresponds to keyword recognition probabilities of 68.6% and 56.7%. Going up by one standard deviation in the latent factor (0.78 + 0.38 and 0.27 + 0.31) yields an increase to 76.1% and 64.1%, respectively, or a difference of 7.6% and 7.4% relative to the mean. Thus, although the contribution of the latent factor to each condition differs across target-to-masker ratios (0.38 vs 0.31), they both yield a similar change in the probability of correctly recognizing keywords because they are relative to different group-level intercepts.

Figure 3.

The best-fitting latent factor model for speech recognition across target-to-masker ratios. (A) Group-level keyword recognition accuracy for each target-to-masker ratio is given as μ (reported in log odds), and individual performance varied relative to the group based on the product of their individual speech recognition ability η and the amount that individual ability contributed to accuracy for each target-to-masker ratio, λ. (B) Each shaded region depicts the posterior probability density of speech recognition ability η for one participant.

The estimated posterior densities for the single latent sentence recognition factor are depicted in Figure 3B. These densities show the range of values that the latent factor could likely have for each participant and can be used in two ways. First, individual differences in sentence recognition ability can be estimated as a point value by finding the most likely value for each participant (i.e. the maximum a posteriori value or mode of the distribution), which is analogous to a random intercept in a mixed effects model. Second, density can be used to estimate a range of likely values for the parameter for each participant, which can be quantified in a variety of ways (e.g. Kruschke, 2018; Morey et al., 2016). Estimates of 95% highest density interval and visual inspection of Figure 3B both indicate that individuals are heterogeneous in their latent sentence recognition ability, which demonstrates that substantial individual differences exist in sentence recognition accuracy in this population for these stimuli and that sufficient data was collected per participant to estimate those differences across participants.

For the three memory tasks, models with one, two, or three latent factors were fit to data from all three tasks, again to select the latent structure which best explained task performance. A comparison of model fits is provided in Table 3. As in the speech model, post-hoc inspection of the three-factor model fit found that the posterior modes of all three factors were highly correlated (r > 0.8), indicating that the three-factor model had an excess of latent factors. Further inspection of posterior densities for model parameters found that they tended to be multi-modal, indicating that there were multiple model fits that could explain the data. Multiple fits can arise from swapping parameters throughout model fitting, such that there is not clear mapping of each latent factor onto performance in each task. As in the speech model, exploratory attempts to constrain the model did not eliminate these correlations or improve the fit of the three-factor model. This evidence favors the two-factor model as the best explanation for the memory data, which is a finding that is not evident from correlations of task-level performance reported in Table 1.

Table 3.

Comparison of model fits to reading span, digit span, and free recall task performance.

| Model | ELPDloo | ELPDloo SE | ploo | Ploo SE | ΔELPD | ΔELPD SE |

|---|---|---|---|---|---|---|

| Two Factors | −3843.7 | 62.9 | 163.4 | 7.3 | - | - |

| Three factors | −3846.8 | 62.8 | 219.1 | 9.4 | −3.1 | 4.1 |

| One Factor | −3866.1 | 63.6 | 99.1 | 4.6 | −22.4 | 10.4 |

Model metrics are reported as in Table 2. Comparison of model fits indicates that the two-factor model has a substantially better fit to the observed data than the one-factor model and had a marginally better fit than the three-factor model.

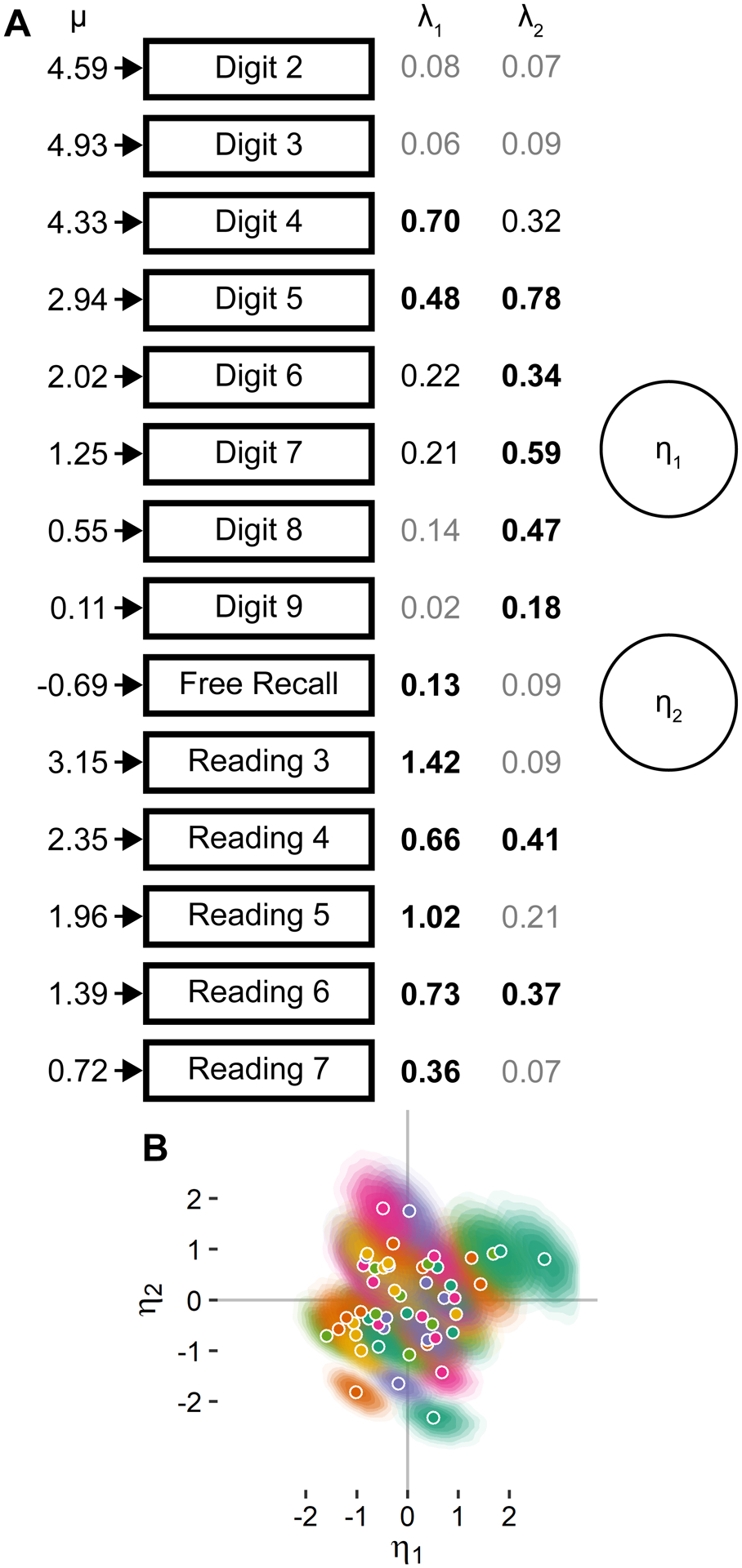

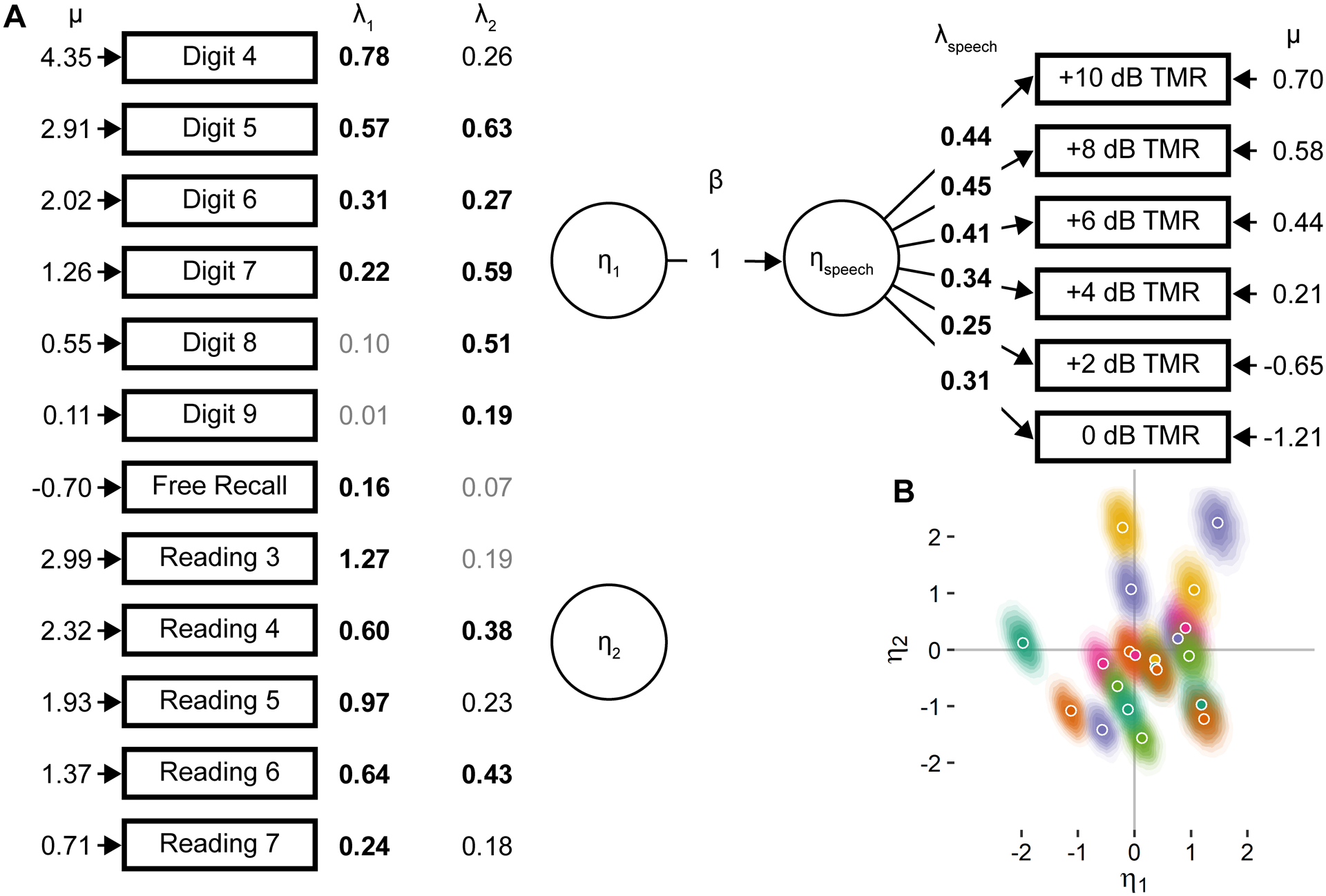

The two-factor memory model is depicted in Figure 4A. Group-level accuracy, μ, declines with increasing list length for digit and reading span, consistent with the trends depicted in Figure 1. Performance on each task was determined by individual differences in two latent factors, η1 and η2. Scale parameters λ1 and λ2 are comparable within a task, so the relative contribution of each latent factor to performance on each task is a meaningful comparison. The first level of comparison of scale parameters is to examine whether Bayes Factors support each parameter lying outside the region of practical equivalence, which is depicted as coefficients in bold in Figure 4. As shown, η1 predominantly determined performance on the free recall task and reading span at all list lengths and had some contribution to digit span at short list lengths. η2 predominantly determined performance in the digit span task at longer list lengths and had a secondary effect on performance in the reading span task. The second level of comparison is to examine the relative magnitude of the coefficients that are likely to be nonzero. η1 has a larger contribution to reading span performance than η2 even when λ 2 is nonzero. Conversely, η2 has a larger contribution to performance on the digit span task for lists of length 5 and greater, which is where most of the variance in performance on this task arises. Because performance on the digit span task for lengths of 2 and 3 was almost completely at ceiling, meaningful individual differences in performance on these list lengths were not observed, which is reflected in the fact that the scale parameters for these list lengths are unlikely to differ from zero.

Figure 4.

The best-fitting latent factor model for working memory tasks and across list lengths within digit and reading span tasks. (A) Parameters are depicted as in Figure 3, although arrows depicting the contribution of each latent factor, η, to accuracy on each task, λ, are omitted for clarity. Model parameters λ that had a Bayes Factor greater than 3:1 in favor of lying outside of the region of practical equivalence (see text for details) are in bold, parameters with Bayes Factors between 3:1 and 1:1 are shown in black, and parameters with Bayes Factors less than 1:1 are shown in gray. (B) The two latent working memory factors differ across individuals and are not correlated across the group. Most likely values of each latent factor for each participant are depicted as circles, and shaded gradients around each most likely value depict the corresponding probability density for that participant.

Estimated posterior densities for η1 and η2 are shown in Figure 4B. Because these data are two-dimensional, posterior density is depicted as a shade gradient around the most likely values of each of these parameters for each participant. As shown, the most likely parameter values are uncorrelated across participants, indicating that the model was able to recover two independent factors per participant that accounted for their performance across memory tasks. Visual inspection of the gradient regions for each participant indicate that they were centered around the most likely value and took on an approximately multivariate Gaussian shape, indicating that latent factors estimates were independent of one another. As in Figure 3, the separation of posterior density between participants indicates that meaningful differences in each latent factor exist across participants in this population and the data collected per participant were sufficient to precisely estimate those differences.

Finally, models with a varying number of memory factors and a single speech factor were fit to the entire data set to select the latent structure which best explained the link between memory and sentence recognition. The decision to compare these specific models was made post-hoc after seeing the results of the models depicted in Figures 3 and 4. Only a single speech factor was included here because the two-factor speech model completely collapsed into a single factor. The memory model with three factors appeared to have an excess of interchangeable factors that was less severe than the two-factor speech model, so we believed that it was possible that adding their relationship to the speech factor could constrain the model in such a way that allows for all three memory factors to have a unique relationship with the speech factors and memory tasks. For these models, data from digit span at list lengths 2 and 3 were excluded because they did not contribute to estimation of individual differences in latent memory factors. Preliminary model fits with one latent memory factor and the speech factor converged to a single solution, with a regression coefficient of β = 0.62 and σspeech = 0.78 (Bayes Factor of 26.7 that β lay outside the region of practical equivalence). However, the model we initially used failed to converge when the number of latent memory factors was greater than one. Post-hoc inspection of posterior densities indicated that model fitting failed because the model trended toward values of β1 = 1 and σspeech = 0 (i.e. ηspeech = η1), which were parameter values at the boundaries of the initial model. Based on this observation, a second model was created that fixed ηspeech = η1 and was fit with two and three latent memory parameters. Comparison of models with different free or fixed model parameters is valid because they are both used to explain the same data set and thereby generate comparable estimates of likelihood of the data arising from each model. A comparison of model fits is provided in Table 4. As was expected based on the memory models reported in Table 3 and Figure 4, the model with two or three latent memory factors had similar model fits to the data, but inspection of the three-factor model found that latent factors 2 and 3 were highly correlated, which supports the selection of the two-factor model as the best explanation for the data.

Table 4.

Comparison of model fits to performance on all memory and speech tasks.

| Model | ELPDloo | ELPDloo SE | ploo | Ploo SE | ΔELPD | ΔELPD SE |

|---|---|---|---|---|---|---|

| Three factors | −7704.7 | 75.7 | 254.9 | 8.9 | - | - |

| Two Factors | −7706.4 | 76.0 | 195.0 | 6.3 | −1.7 | 6.4 |

| One Factor | −7737.1 | 76.3 | 153.6 | 4.8 | −32.4 | 11.8 |

The full two-factor model is depicted in Figure 5A. The effect of latent factors on their respective memory and speech task performance is similar to the component models depicted in Figures 3 and 4. The latent sentence recognition factor affected recognition accuracy across all target-to-masker ratios and was the same factor that predominantly affected performance in the free recall and reading span tasks. Because this model fixed β1 = 1 estimating Bayes Factor is not possible for this model parameter. However, the fact that this model is a better explanation for the data than the one-factor model indicates that η1 is closely related to ηspeech, even if their true relationship is not unitary. Posterior densities for η1 and η2 are shown in Figure 5B in the same format as in Figure 4B, and similarly indicate that these latent factors are independent of one another and meaningfully vary across individuals. A post-hoc power analysis, described in supplemental digital content 3, demonstrated that the amount of data collected per participant was sufficient to select from among models with the number of latent factors tested here and to estimate individual differences in latent factors with high reliability.

Figure 5.

The best-fitting latent factor model linking individual differences in latent working memory abilities, η1 and η2, to individual differences in speech recognition ability, ηspeech. (A) The parameters linking individual differences in latent factors to performance in each task and task condition are depicted as in Figures 3 and 4. In this model, the regression coefficient β1 was fixed to 1 because preliminary models failed to converge when attempting to approach this boundary value. (B) The two latent individual difference factors obtained by this model fit are depicted as in Figure 4B for participants who completed both the memory and speech tasks.

DISCUSSION

In this study, we examined the relationship among three memory tasks, digit span, reading span, and free recall, and recognition of vocoded sentences in the presence of a two-talker masker across a range of target-to-masker ratios. Performance across memory tasks was positively correlated. Memory tasks had positive correlations with recognition accuracy for vocoded speech-in-speech, although only some correlations were significant. These results indicate that all three memory tasks measure underlying abilities which are associated with sentence recognition. Subsequent latent factor modeling of accuracy in each trial of each task indicated the presence of two distinct memory factors, one of which was closely related to sentence recognition and one of which was not.

Individual differences in sentence recognition across target-to-masker ratios

As shown in Figure 3, individual differences in PRESTO sentence recognition across target-to-masker ratios were best explained by a single latent factor that differed across participants. The posterior densities of these individual differences, depicted in Figures 3B and 5B, indicates that these differences are likely to be meaningful variability within the population of young adults with normal hearing, rather than measurement error. This finding is consistent with the results from Bosen and Barry (2020), which found that individual differences in sentence recognition accuracy were correlated across spectral resolutions. Together, these results indicate that the extent to which latent factors are responsible for individual differences in sentence recognition accuracy does not depend on recognition accuracy in the task. Thus, our results indicate that the explicit, slow processing component of the ELU model consistently affects speech recognition accuracy in young adults with normal hearing within the range of performance we have observed (group averages of 88% to 23% across this study and Bosen and Barry, 2020). It is possible that the factors that affect speech recognition change outside of this range, although in such cases accuracy would be close to floor or ceiling and individual differences in speech recognition accuracy would be challenging to precisely assess. Thus, if there is a threshold for which cognition “kicks in” (Rönnberg et al., 2010), it is likely that this threshold is reached whenever recognition accuracy is not perfect, or alternatively whenever inference is needed to identify unclear words regardless of accuracy (Winn & Teece, 2022). If the transition from automatic to explicit processing occurs when recognition accuracy is nearly perfect, then alternative methodology is likely needed to identify this transition, such as examining the time course of lexical activation (Farris-Trimble et al., 2014) or listening effort (Winn & Teece, 2022).

An important design detail in the present study is that the target-masker pairs were fixed across all participants, which allows for fair comparison of individual differences in sentence recognition accuracy without the confounding effects of variability in the vocoder’s effect on target intelligibility (DiNino et al., 2016), in target audibility relative to a fluctuating speech masker (Buss et al., 2021), and in cross-talker differences in intelligibility (Markham & Hazan, 2004). This finding is in agreement with prior work by Carbonell (2017), who found consistent individual differences in monosyllabic word recognition when words were vocoded, time-compressed, or presented in the presence of four competing talkers. It seems plausible that these individual differences in sentence recognition accuracy are driven by individual differences in cognitive ability, but a study that uses multiple types of speech materials and adverse listening conditions within participants is needed to determine whether multiple latent factors are needed to account for individual differences across stimuli and degraded listening conditions.

There appears to be a discontinuity in recognition accuracy in Figure 1, where accuracy drops between the +4 and +2 dB target-to-masker ratio conditions. Such a discontinuity could indicate that the attentional mechanisms for picking out the target relative to the masker were impaired when the target-to-masker ratio was close to zero (Ihlefeld & Shinn-Cunningham, 2008). Vocoding reduces access to auditory cues that facilitate segregation of concurrent auditory streams (Qin & Oxenham, 2003), so the discontinuity could reflect loss of access to an obvious level cue to distinguish the target speech from the maskers. If a distinct attentional mechanism is responsible for stream segregation we might expect to see recognition accuracy at +2 dB and 0 dB target-to-masker ratio associate more with a second latent sentence recognition factor, but that was not the case here. It is possible that individual differences in segregation ability would manifest as a second latent factor when stream segregation cues are available, so it would be informative to repeat this study without vocoding the target and masker speech to determine if multiple latent factors are evident in sentence recognition when stream segregation cues are available.

Multiple memory factors explain cross-task performance

Examination of the most likely model to explain memory (Tables 3 and 4) supports the existence of two independent memory factors. The presence of multiple latent factors provides evidence against a single unifying explanation for individual differences in task performance, such as motivation or general cognitive ability. However, these factors did not seem to align with our expectations based on the storage and processing or maintenance and retrieval models as described by prior literature, and instead supported the existence of one factor that predominantly affected performance on free recall and reading span and a second factor that dominated digit span performance at long list lengths, as shown in Figures 4 and 5.

A post-hoc explanation for the observed results is that serial recall of digits, and to a lesser extent serial recall of letters in the reading span task, depends on domain-specific knowledge of the stimuli used for the task (Botvinick, 2005; Waris et al., 2017). Recall accuracy for digit sequences has been shown to depend on how often transitions between successive digits occur in natural language samples (Jones & Macken, 2015), which supports the idea that individual differences in digit span task performance might depend on experience specifically with storing and retrieving digit sequences, in addition to general memory ability. Previous work with older adults who hear with cochlear implants found a correlation between digit serial recall accuracy and self-reported vocabulary (Bosen et al., 2021), which further suggests that the memory factor which primarily determined digit span task performance could be reflective of verbal crystallized intelligence or experience. This factor made no contribution to sentence recognition outcomes in the best fitting model tested here. In contrast, the memory factor that accounted for performance in the free recall task and was the primary determinant of performance in the reading span task was equivalent to the latent sentence recognition factor in the best-fitting model. While it seems unlikely that these two latent factors are truly unitary, the evidence is in favor of a strong relationship between these latent factors.

Our results suggest a refinement to the Ease of Language Understanding model. There is ample evidence that performance on complex span tasks such as reading span, which include interleaved storage and processing components, correlates with speech recognition accuracy in a variety of conditions. However, our results demonstrate that complex span tasks are not unique predictors of speech recognition accuracy. Performance on the free recall task also correlated with speech recognition accuracy and loaded onto a common latent factor with reading span. The common feature linking these tasks is the ability to retrieve recently presented verbal information (Unsworth & Engle, 2007b). Thus, we posit that the slow, explicit process of the Ease of Language Understanding model is dependent on individual differences in activation and subsequent retrieval of information in long-term memory, which is line with the role of long-term memory in the Ease of Language Understanding Model (Rönnberg et al., 2021).

While performance on all three tasks was affected by the factor related to sentence recognition ability to some extent, digit span task performance seems to be primarily determined by a second factor that is not related to sentence recognition ability. It appears that prior work (Bosen & Barry, 2020) found correlations between digit span performance and sentence recognition despite the choice of task, not because of it. Re-examination of the correlations shown in Figure 2 are consistent with this interpretation, with the weakest correlation for digit span and the strongest correlation for free recall. Our findings indicate that caution is needed when interpreting the presence or absence of correlations between various cognitive tasks and speech recognition, as it cannot be assumed that any given task is a pure measure of the cognitive construct it is purported to measure.

When does memory affect speech recognition in young adults with normal hearing?

As discussed in the introduction, performance on many cognitive tasks weakly correlates with speech recognition accuracy (Dryden et al., 2017), so it is generally unsurprising to find positive correlations such as the ones shown in Table 1 and Figure 2 so long as the tasks used have sufficient reliability (Heinrich & Knight, 2020; Parsons et al., 2019). Latent factor modeling demonstrated that all three tasks used here measure the latent memory factor which was related to sentence recognition to some extent, so it appears that observing associations between memory and sentence recognition does not depend on the use of a specific memory task. Comparison of the current results with prior literature indicates that observing a correlation between memory task performance and speech recognition accuracy in young adults with normal hearing seems to require specific conditions in the speech recognition task that are present in the PRESTO sentences, as described below.

One potential condition is adaptation to a novel listening condition. When participants who are unfamiliar with vocoded speech hear it for the first time they undergo a period of learning which improves speech recognition accuracy, which occurs within about 10 – 12 sentences (Davis et al., 2005). If the rate at which participants could learn to interpret vocoded speech were a major factor determining the presence or absence of a correlation, then we would expect accuracy for the first target-to-masker ratio tested (+10 dB) to have the strongest correlation with individual differences in memory ability because that is when learning would occur. The magnitude of correlations between memory task performance and sentence recognition accuracy in Table 1 is indeed larger for the +10 dB condition, but significant correlations are present at other target-to-masker ratios. Thus, it is possible that individual differences in learning the novel listening condition were partially responsible for the observed link between memory and sentence recognition, although the consistency of individual differences in recognition accuracy across target-to-masker ratios suggests that a transient learning mechanism would only account for a small portion of this link.

Another potential condition is the presence of the two-talker masker. Bosen & Barry (2020) observed correlations between serial recall accuracy and vocoded PRESTO sentence recognition when vocoder spectral resolution was manipulated, rather than target-to-masker ratio as in the current study. The presence of correlations in that study without including the two-talker masker used here indicates that the inclusion of the masker does not determine the presence or absence of a correlation between memory and sentence recognition accuracy. However, a within-participant comparison of recognition accuracy with and without the two-talker masker would be needed to determine whether the magnitude of the correlation changes across these listening conditions.

The use of vocoded speech is also unlikely to determine the presence or absence of correlations, as O’Neill et al., (2019) used vocoded IEEE sentences and found no correlation between sentence recognition accuracy and performance on the reading span task in young adults with normal hearing. A similar lack of correlation was also reported by Shader et al., (2020) between vocoded IEEE sentence recognition and performance on the List Sorting working memory test from the NIH Toolbox. Contrasting their findings with the ones presented in the current manuscript indicates that the strength of the correlations that we observed were likely driven by the use of PRESTO sentences.